Topic 1: Parallel Architecture Lingo & Commonly Used Terms


Topic 1: Parallel Architecture Lingo & Commonly Used Terms

Complexity is just the lack of knowledge

9/6/2006, ELEG 652-010-06F

Reading List

- Slides: Topic 1x
- Culler & Singh: Chapter 1, Chapter 2
- Other assigned readings from homework and classes

Parallel Computer Lingo

- Massive Parallel Processor Computer
- Amdahl's Law
- (Cache Coherent) Non Uniform Memory Space
- Flynn's Taxonomy
- Dance Hall Memory Systems
- Parallel Programming Models
- Parallelism and Concurrency
- Distributed Memory Machines and Beowulfs
- Symmetric Multi Processor Computer
- Super Scalar Computers
- Vector Computers and Processing
- Class A and B applications
- (Memory) Consistency
- Very Long Instruction Word Computers
- (Memory) Coherency

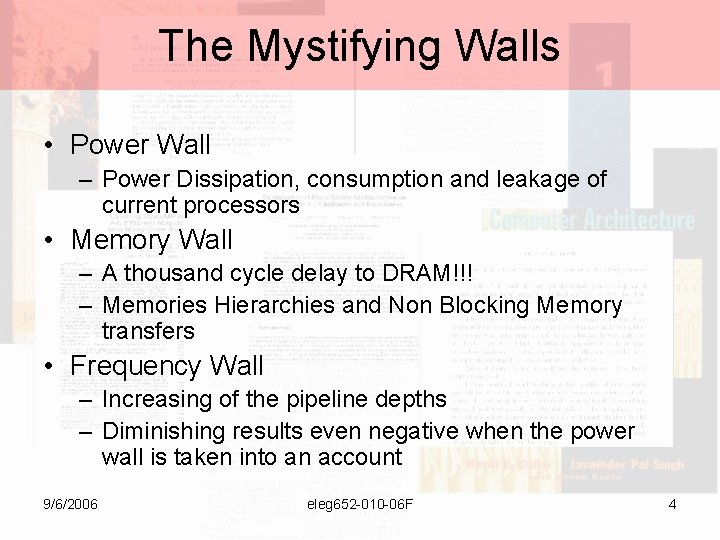

The Mystifying Walls

- Power Wall
  - Power dissipation, consumption, and leakage of current processors
- Memory Wall
  - A thousand-cycle delay to DRAM
  - Memory hierarchies and non-blocking memory transfers
- Frequency Wall
  - Increasing pipeline depths
  - Diminishing, even negative, returns once the power wall is taken into account

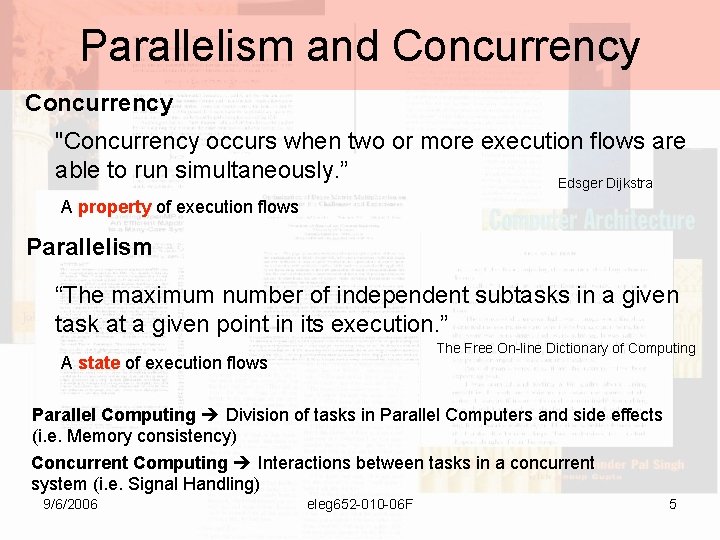

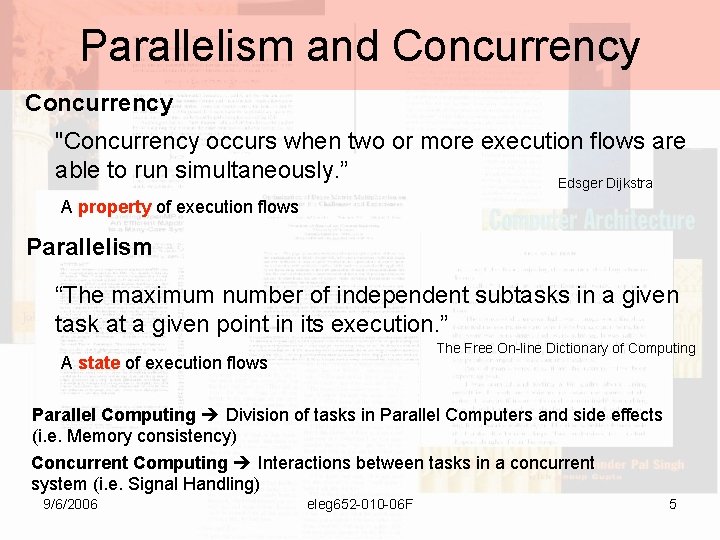

Parallelism and Concurrency

- Concurrency: "Concurrency occurs when two or more execution flows are able to run simultaneously." (Edsger Dijkstra)
  - A property of execution flows
- Parallelism: "The maximum number of independent subtasks in a given task at a given point in its execution." (The Free On-line Dictionary of Computing)
  - A state of execution flows
- Parallel Computing: division of tasks in parallel computers and its side effects (e.g. memory consistency)
- Concurrent Computing: interactions between tasks in a concurrent system (e.g. signal handling)

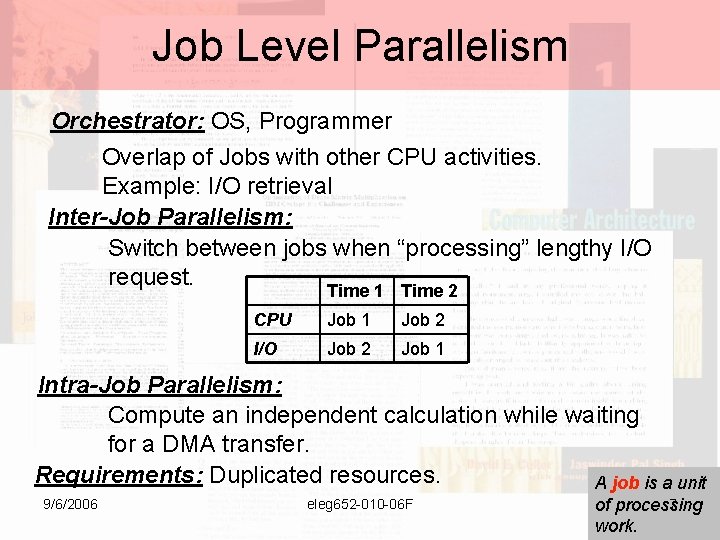

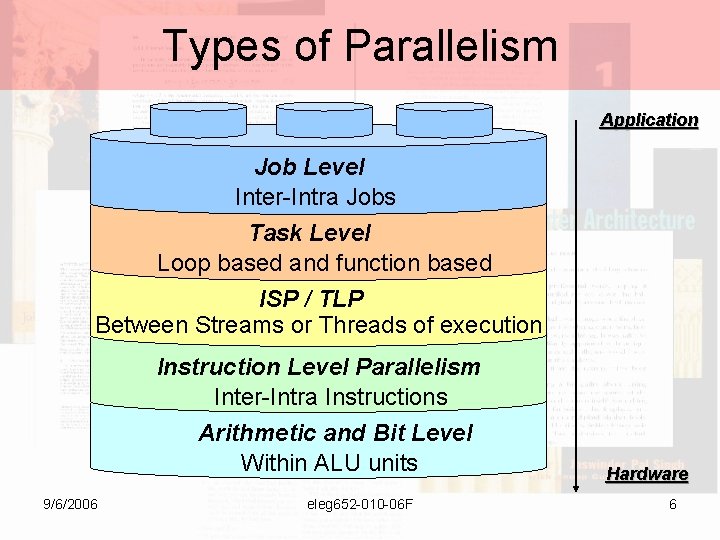

Types of Parallelism

Levels are listed from the application (top) down to the hardware (bottom).

| Level | Scope |
| --- | --- |
| Job Level | Inter-/intra-job |
| Task Level | Loop based and function based |
| ISP / TLP | Between streams or threads of execution |
| Instruction Level Parallelism | Inter-/intra-instruction |
| Arithmetic and Bit Level | Within ALU units |

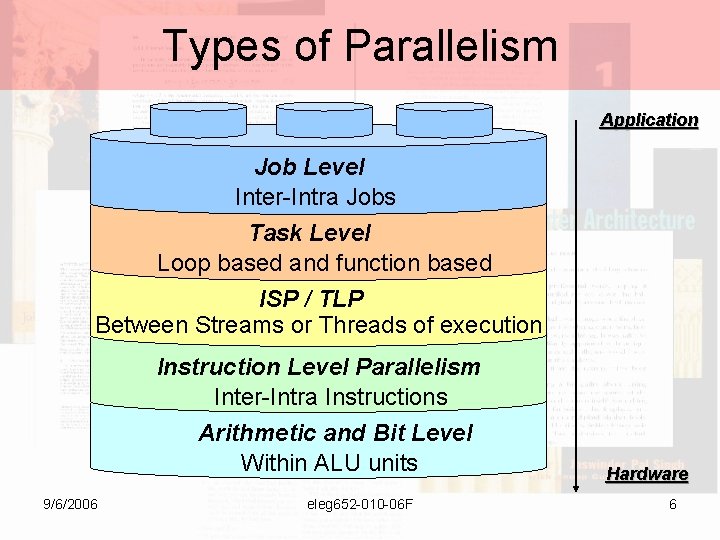

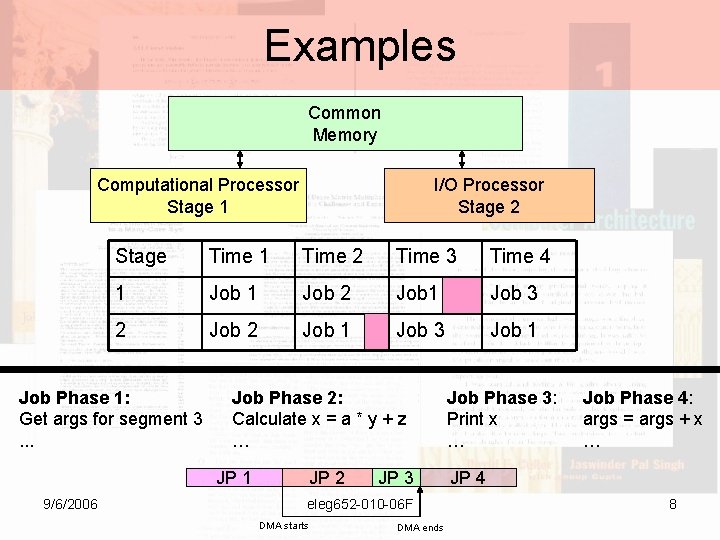

Job Level Parallelism

- A job is a unit of processing work.
- Orchestrator: OS, programmer
- Overlap of jobs with other CPU activities. Example: I/O retrieval
- Inter-job parallelism: switch between jobs when "processing" a lengthy I/O request.
- Intra-job parallelism: compute an independent calculation while waiting for a DMA transfer.
- Requirements: duplicated resources

| Resource | Time 1 | Time 2 |
| --- | --- | --- |
| CPU | Job 1 | Job 2 |
| I/O | Job 2 | Job 1 |

Examples

(Figure: a computational processor and an I/O processor sharing a common memory; jobs move through Stage 1 and Stage 2 over Time 1 to Time 4.)

Job phases within one job:

- Job Phase 1 (JP 1): Get args for segment 3 ... (DMA starts)
- Job Phase 2 (JP 2): Calculate x = a * y + z ...
- Job Phase 3 (JP 3): Print x ... (DMA ends)
- Job Phase 4 (JP 4): args = args + x ...

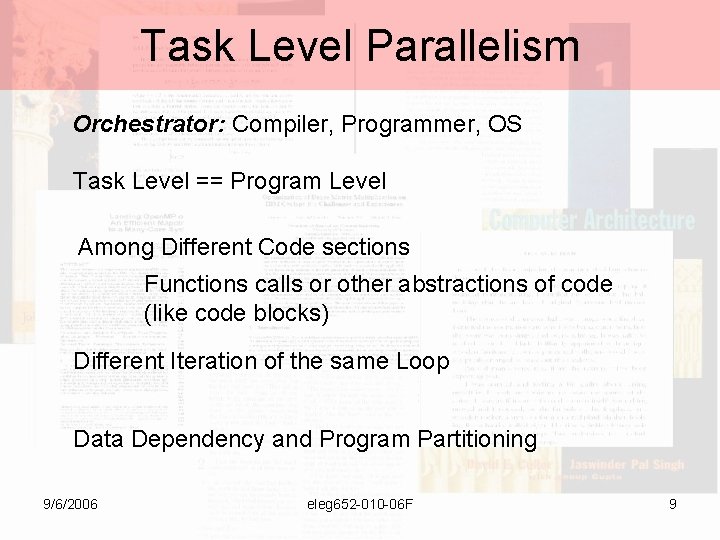

Task Level Parallelism

- Orchestrator: compiler, programmer, OS
- Task level == program level
- Among different code sections
  - Function calls or other abstractions of code (like code blocks)
  - Different iterations of the same loop
- Data dependency and program partitioning

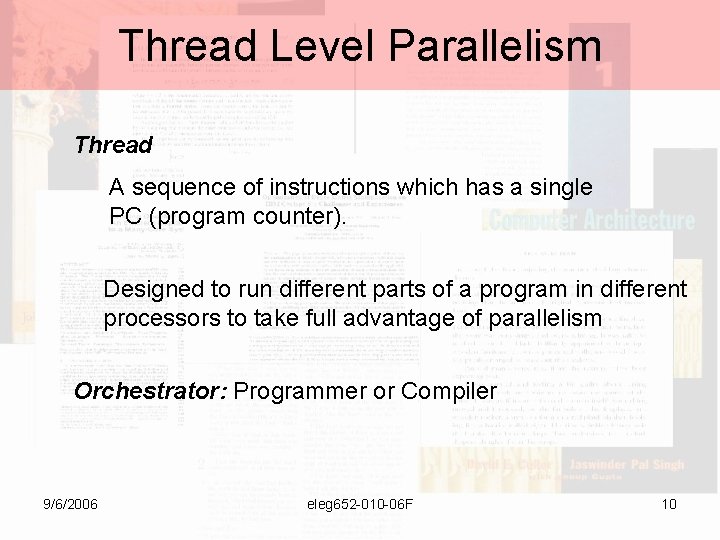

Thread Level Parallelism

- Thread: a sequence of instructions which has a single PC (program counter).
- Designed to run different parts of a program on different processors to take full advantage of parallelism
- Orchestrator: programmer or compiler

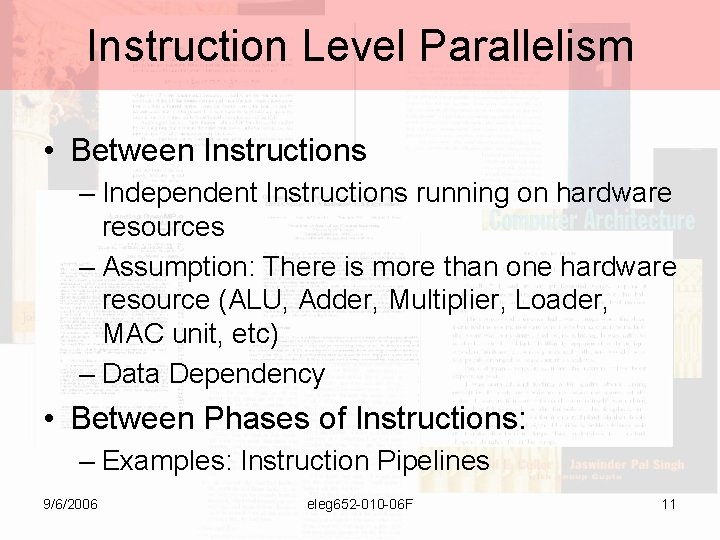

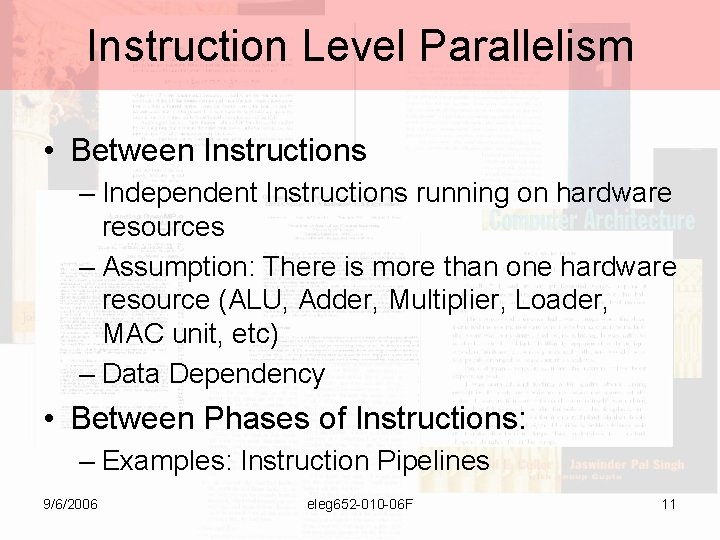

Instruction Level Parallelism

- Between instructions
  - Independent instructions running on hardware resources
  - Assumption: there is more than one hardware resource (ALU, adder, multiplier, loader, MAC unit, etc.)
  - Data dependency
- Between phases of instructions
  - Example: instruction pipelines

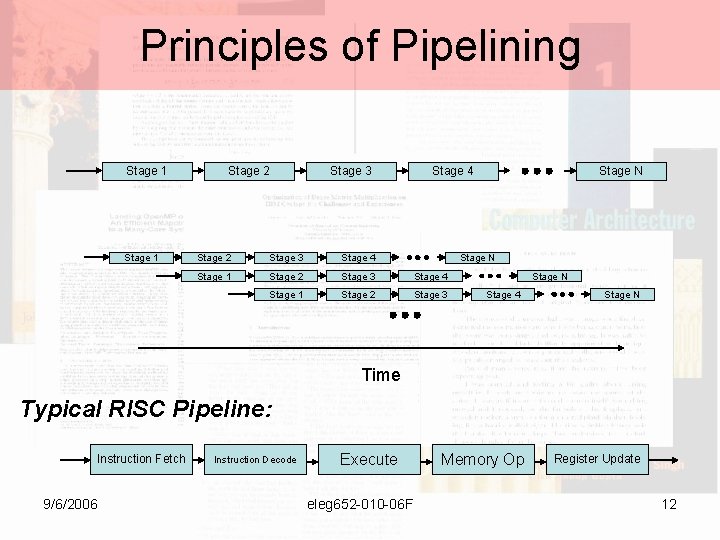

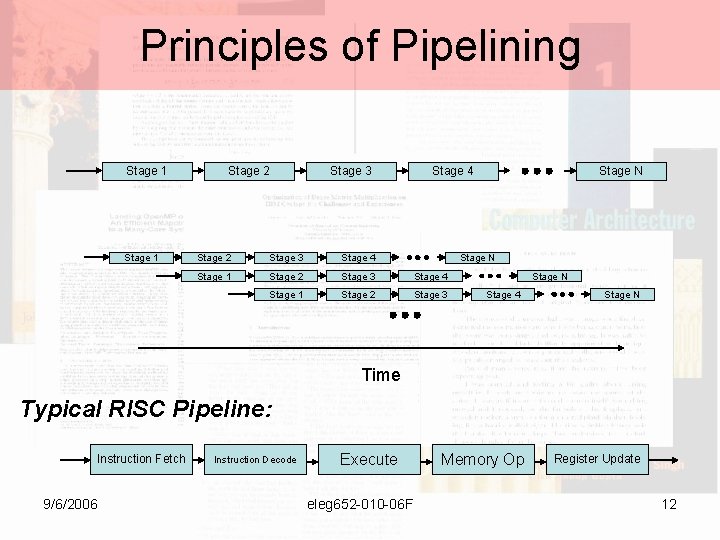

Principles of Pipelining

(Figure: successive tasks pass through Stage 1, Stage 2, Stage 3, Stage 4, ..., Stage N, overlapped in time.)

Typical RISC pipeline: Instruction Fetch, Instruction Decode, Execute, Memory Op, Register Update

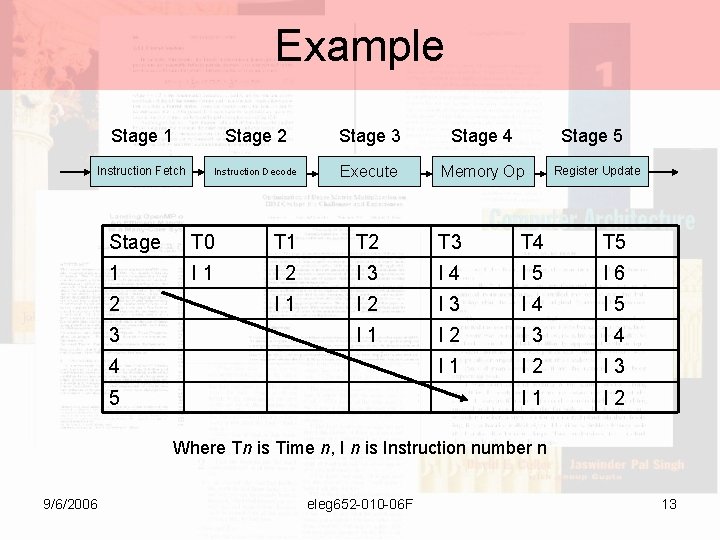

Example

| Stage | T0 | T1 | T2 | T3 | T4 | T5 |
| --- | --- | --- | --- | --- | --- | --- |
| 1: Instruction Fetch | I1 | I2 | I3 | I4 | I5 | I6 |
| 2: Instruction Decode | | I1 | I2 | I3 | I4 | I5 |
| 3: Execute | | | I1 | I2 | I3 | I4 |
| 4: Memory Op | | | | I1 | I2 | I3 |
| 5: Register Update | | | | | I1 | I2 |

Where Tn is time n and In is instruction number n.
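The stage-by-time table above can be reproduced with a short sketch. It assumes an ideal pipeline (one instruction issued per cycle, no hazards); the function names `pipeline_schedule` and `total_cycles` are illustrative and not part of the slides.

```python
# Stage names follow the typical RISC pipeline listed on the slide.
STAGES = ["Instruction Fetch", "Instruction Decode", "Execute",
          "Memory Op", "Register Update"]


def pipeline_schedule(num_instructions, num_cycles, num_stages=len(STAGES)):
    """Return table[stage][cycle] = instruction number, or None if the stage is idle.

    Assumption: ideal pipeline, so instruction k enters stage s at cycle (k - 1) + s.
    """
    table = [[None] * num_cycles for _ in range(num_stages)]
    for instr in range(1, num_instructions + 1):
        for stage in range(num_stages):
            cycle = (instr - 1) + stage
            if cycle < num_cycles:
                table[stage][cycle] = instr
    return table


def total_cycles(num_instructions, num_stages=len(STAGES)):
    """Cycles needed to drain the pipeline: fill time plus one cycle per extra instruction."""
    return num_stages + num_instructions - 1


if __name__ == "__main__":
    for stage, row in enumerate(pipeline_schedule(6, 6), start=1):
        cells = [f"I{i}" if i else "--" for i in row]
        print(f"Stage {stage}: " + " ".join(cells))
```

Under these assumptions, N instructions finish in `num_stages + N - 1` cycles rather than `num_stages * N`, which is the overlap the table illustrates.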

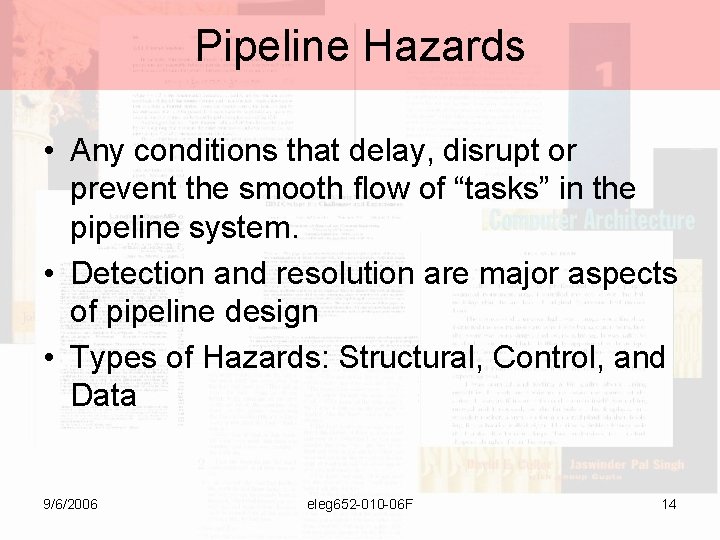

Pipeline Hazards

- Any conditions that delay, disrupt, or prevent the smooth flow of "tasks" in the pipeline system.
- Detection and resolution are major aspects of pipeline design.
- Types of hazards: structural, control, and data

![Types of Parallel Computers Flynns Taxonomy 1972 Classification due on how instructions Types of [Parallel] Computers • Flynn’s Taxonomy (1972) – Classification due on how instructions](https://slidetodoc.com/presentation_image_h/097cc429e3c67e0684db72281b7f1134/image-15.jpg)

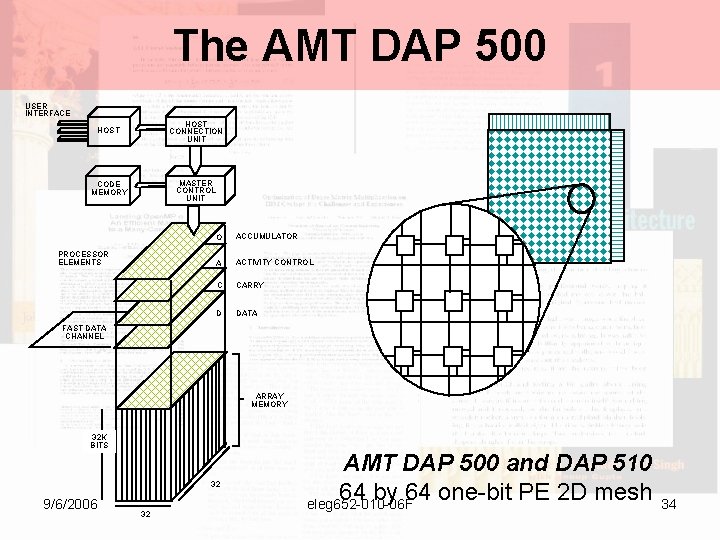

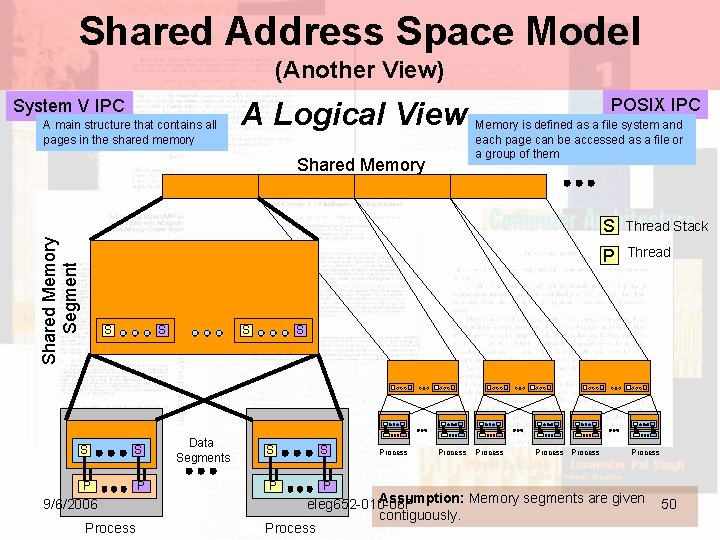

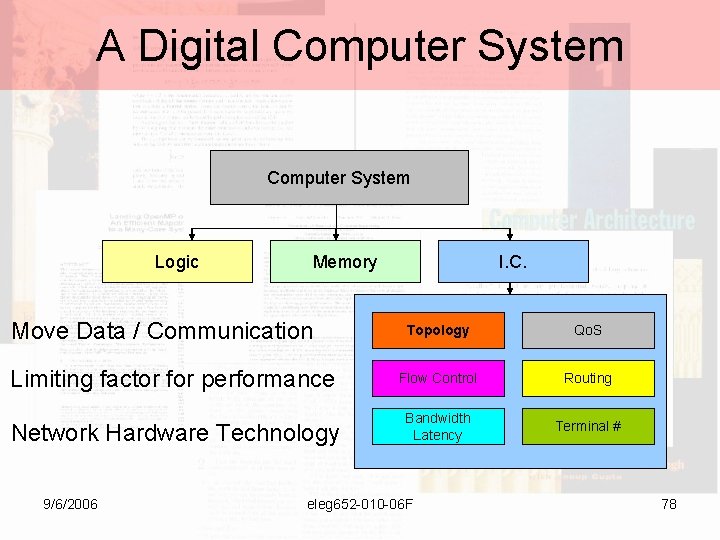

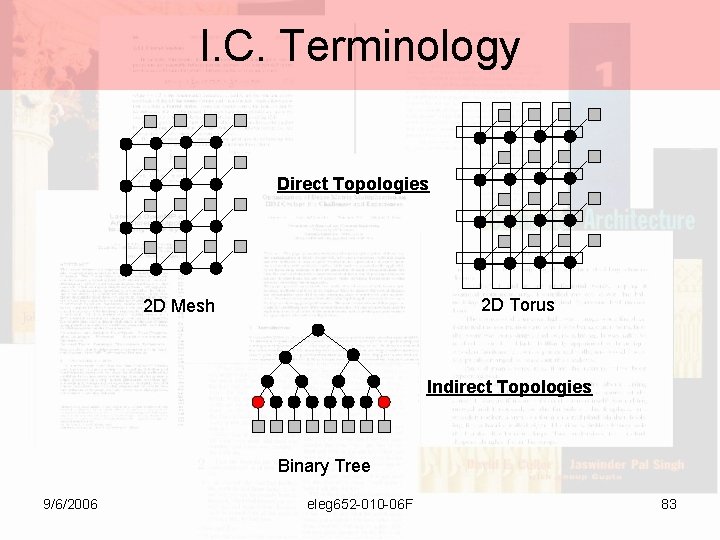

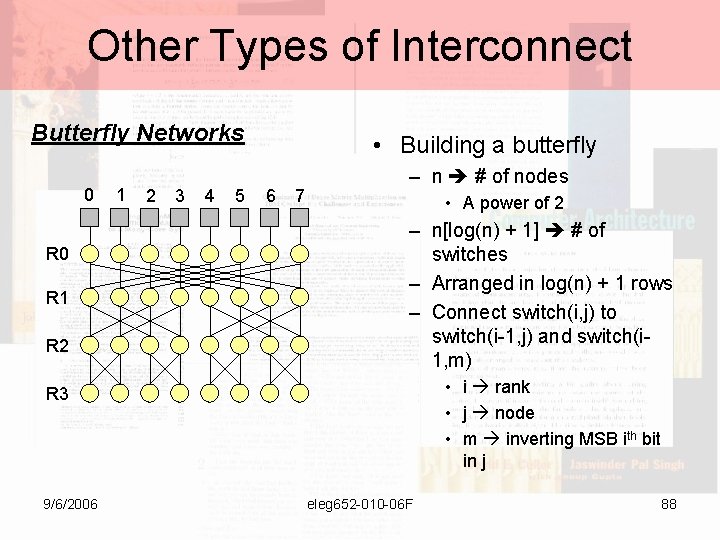

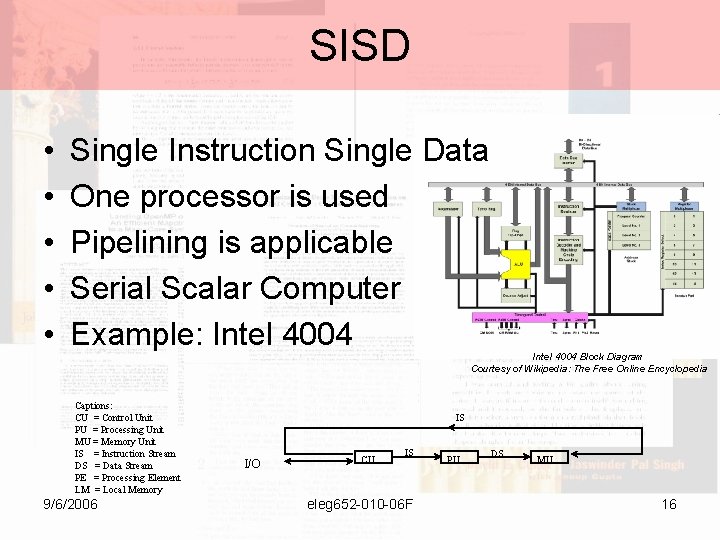

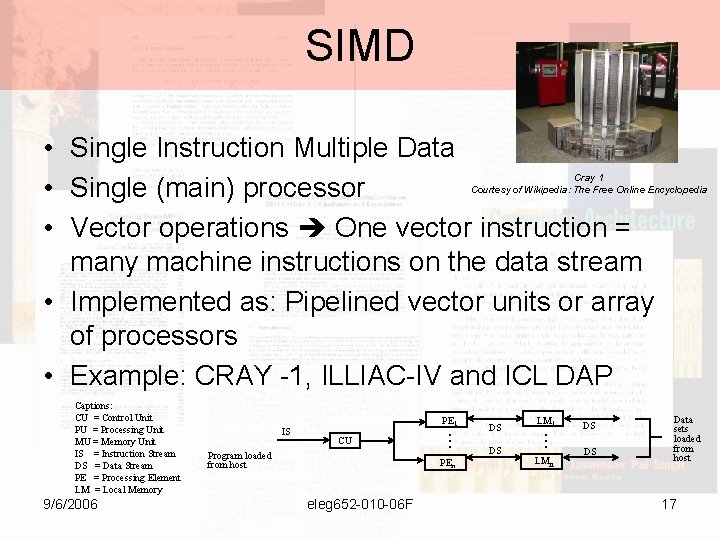

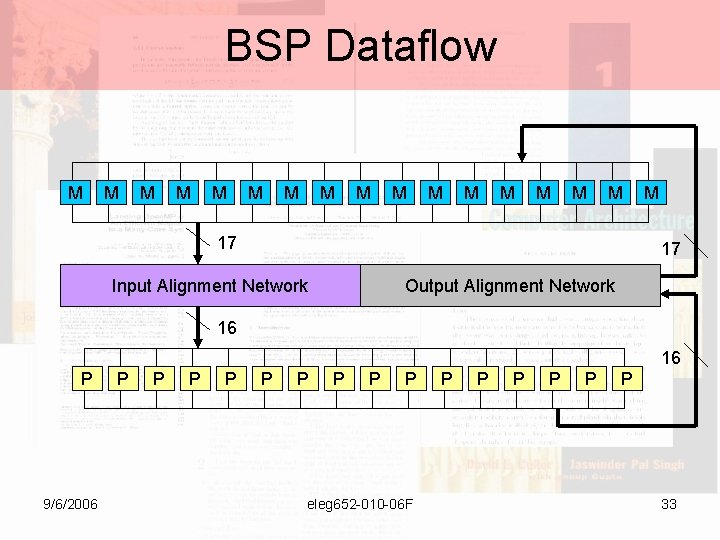

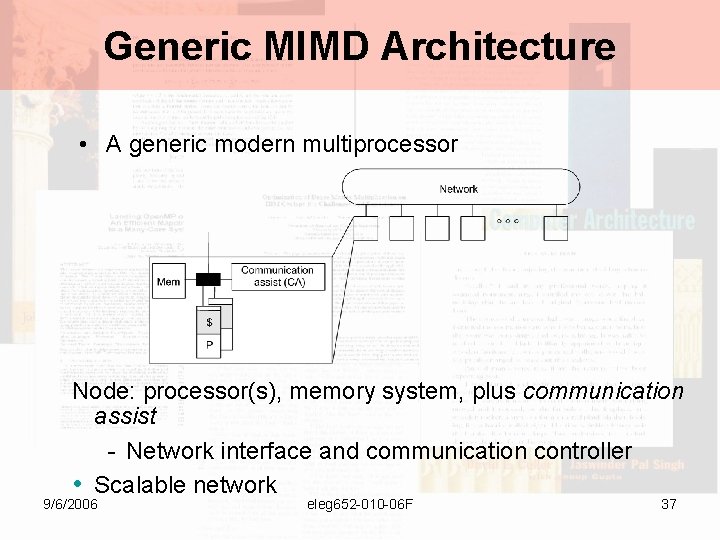

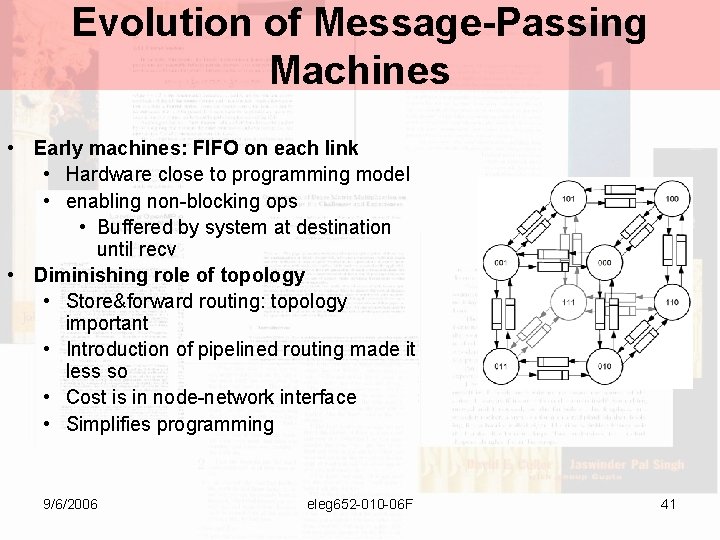

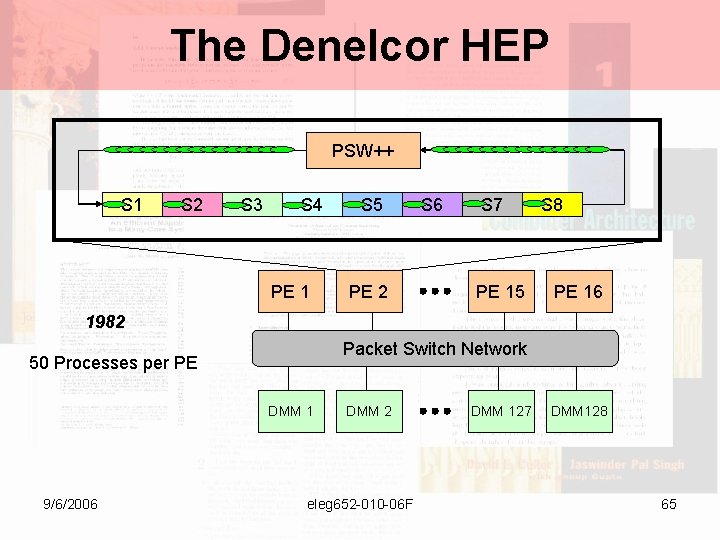

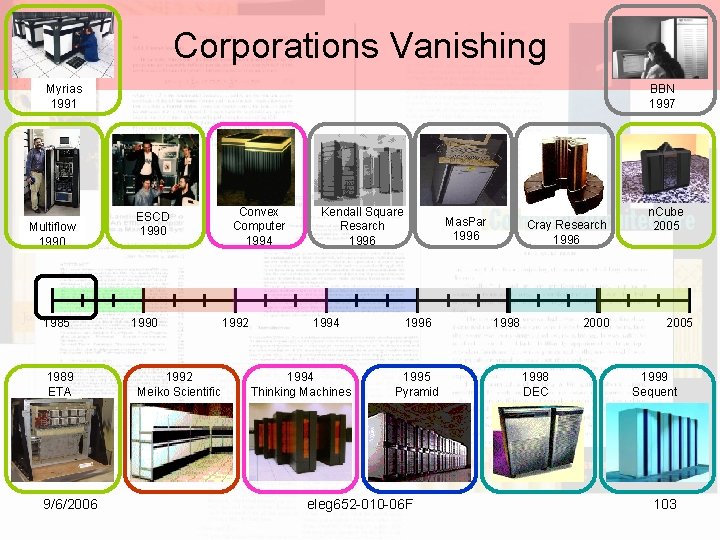

Types of [Parallel] Computers

- Flynn's Taxonomy (1972)
  - Classification based on how instructions (streams) operate on data (streams)
  - A stream is a collection of items (instructions or data) being executed or operated on by the processor
- Types of computers: SISD, SIMD, MISD, and MIMD

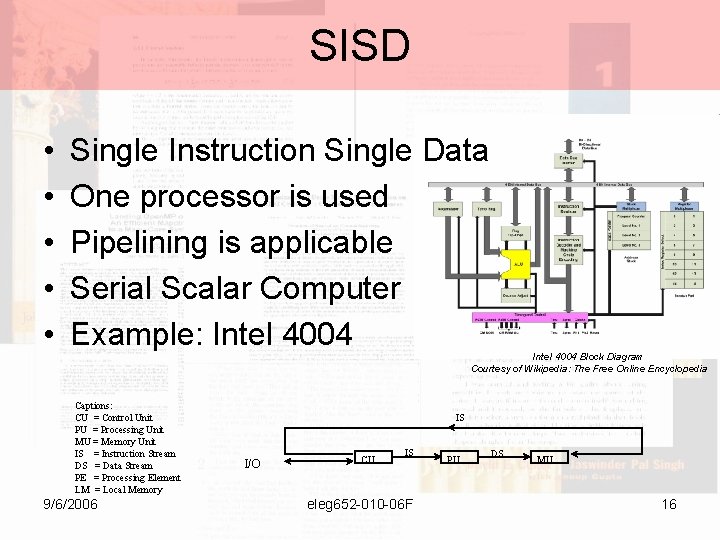

SISD

- Single Instruction Single Data
- One processor is used
- Pipelining is applicable
- Serial scalar computer
- Example: Intel 4004

(Block diagram courtesy of Wikipedia, The Free Online Encyclopedia: I/O, CU, PU, and MU connected by the instruction stream IS and the data stream DS.)

Captions: CU = Control Unit, PU = Processing Unit, MU = Memory Unit, IS = Instruction Stream, DS = Data Stream, PE = Processing Element, LM = Local Memory

SIMD

- Single Instruction Multiple Data
- Single (main) processor
- Vector operations: one vector instruction = many machine instructions on the data stream
- Implemented as pipelined vector units or an array of processors
- Examples: CRAY-1, ILLIAC-IV, and ICL DAP

(Figure: Cray 1, courtesy of Wikipedia, The Free Online Encyclopedia. Block diagram: the CU, with the program loaded from the host, broadcasts IS to PE1 ... PEn; each PE exchanges DS with its local memory LM1 ... LMn, with data sets loaded from the host.)

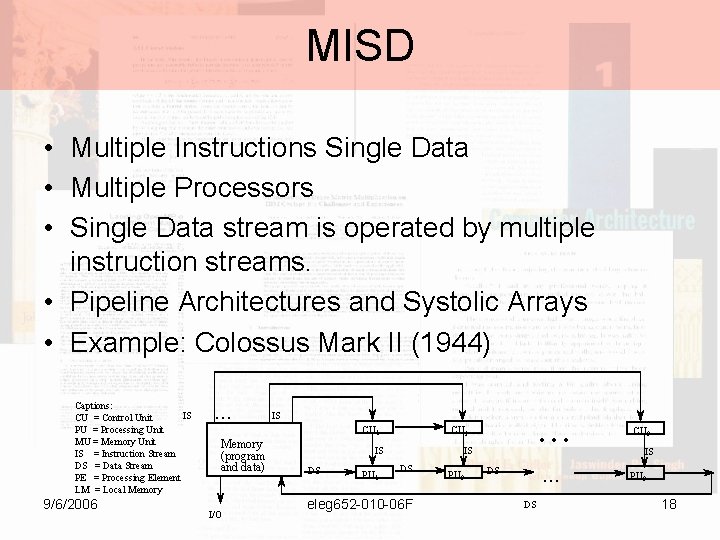

MISD

- Multiple Instructions Single Data
- Multiple processors
- A single data stream is operated on by multiple instruction streams.
- Pipeline architectures and systolic arrays
- Example: Colossus Mark II (1944)

(Block diagram: memory (program and data) and I/O feed control units CU1, CU2, ..., each issuing its own IS to PU1, PU2, ...; a single DS passes through the PUs.)

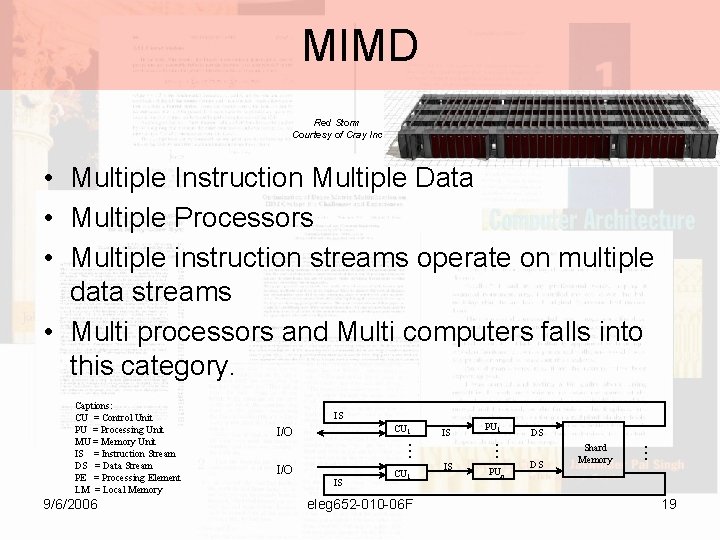

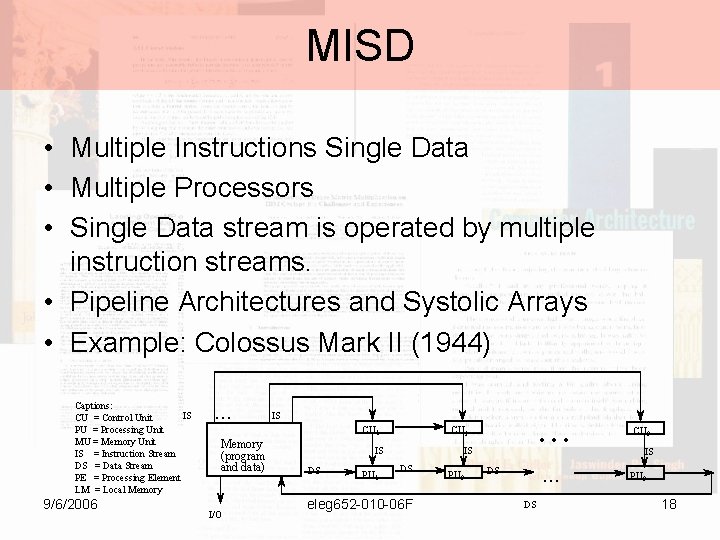

MIMD

- Multiple Instruction Multiple Data
- Multiple processors
- Multiple instruction streams operate on multiple data streams
- Multiprocessors and multicomputers fall into this category.

(Figure: Red Storm, courtesy of Cray Inc. Block diagram: control units CU1 ... CUn, each with I/O, issue IS to PU1 ... PUn; the PUs exchange DS with shared memory.)

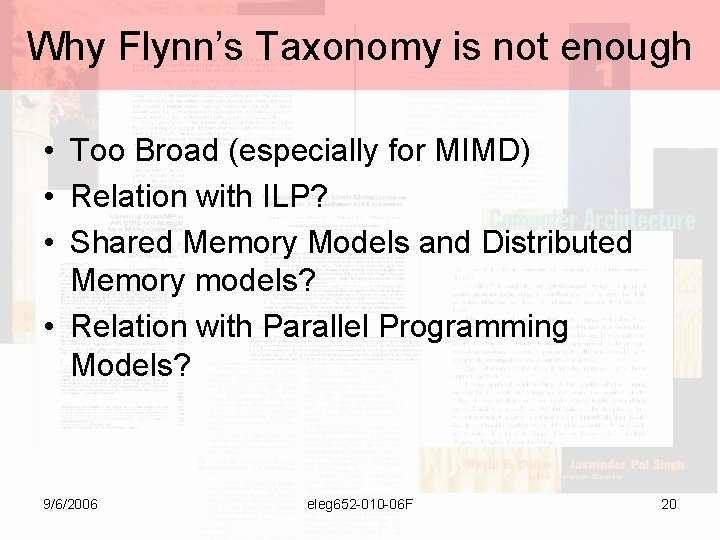

Why Flynn's Taxonomy is not enough

- Too broad (especially for MIMD)
- Relation with ILP?
- Shared memory models and distributed memory models?
- Relation with parallel programming models?

Another Classification: ILP Architectures

- Multiple instruction issuing plus deep pipelining
- Superscalar
  - Dynamic instruction dispatching
  - More than one instruction per cycle
  - Intel Pentium 4
- Very Long Instruction Word
  - Static instruction arrangement
  - More than one instruction per cycle
  - Texas Instruments C 68 DSP processors
- Super pipelined
  - MIPS 4000/8000
  - DEC Alpha
- Hybrid

More Classifications: Structural Composition

- Vector Computer / Processor Array
  - Serial processor connected to a series of synchronized processing elements (PE)
- Multiprocessors
  - Multiple-CPU computer with shared memory
  - Centralized Multiprocessor
    - A group of processors sharing a bus and the same physical memory
    - Uniform Memory Access (UMA)
    - Symmetric Multi Processors (SMP)

More Classifications: Structural Composition (continued)

- Multiprocessors (continued)
  - Distributed Multiprocessors
    - Memory is distributed across several processors
    - Memory forms a single logical memory space
    - Non-uniform memory access multiprocessor (NUMA)
- Multicomputers
  - Disjoint local address spaces for each processor
  - Asymmetrical Multicomputers
    - Consist of a front end (user interaction and I/O devices) and a back end (parallel tasks)

More Classifications: Structural Composition (continued)

- Multicomputers (continued)
  - Symmetrical Multicomputers
    - All components (computers) have identical functionality
    - Clusters and networks of workstations
- Massive Parallel Processing Machines (MPP)
  - Any combination of the above classes in which the number of (computational) components is on the order of hundreds or even thousands
  - Highly coupled with fast interconnect networks
  - All [current] supercomputers fall into this range

The Software Dilemma

- OS and application software depend on the programming model
- Programming model: a contract between software and hardware
- Many programming models exist because there are many types of architectures.
- Dilemma: many directions, how to unify them?

Topic 1a: In Depth Examples

SIMD Computers of Yesteryear

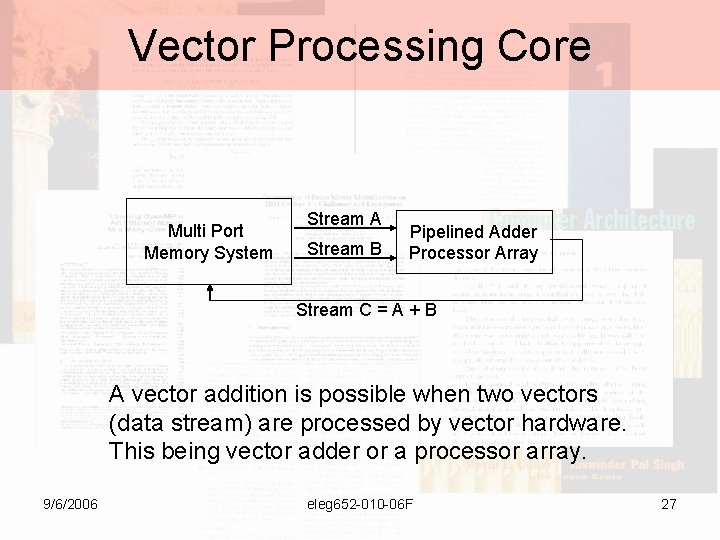

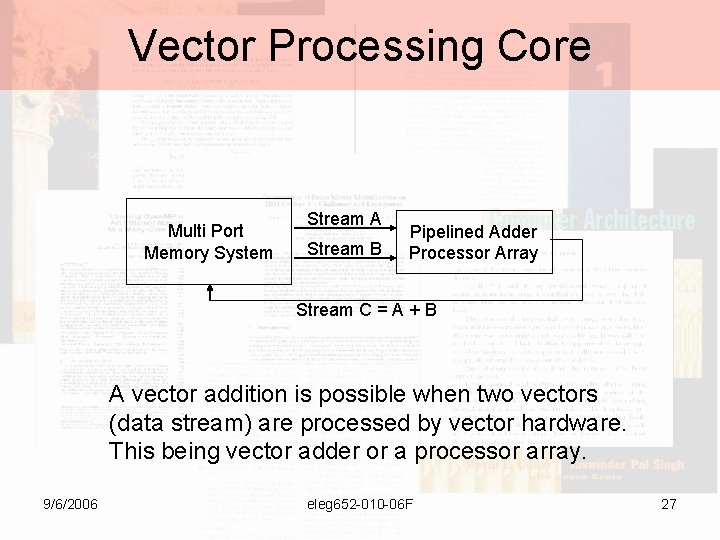

Vector Processing Core

(Figure: a multi-port memory system feeds Stream A and Stream B into a pipelined adder or a processor array, which produces Stream C = A + B.)

A vector addition is possible when two vectors (data streams) are processed by vector hardware, which may be either a pipelined vector adder or a processor array.

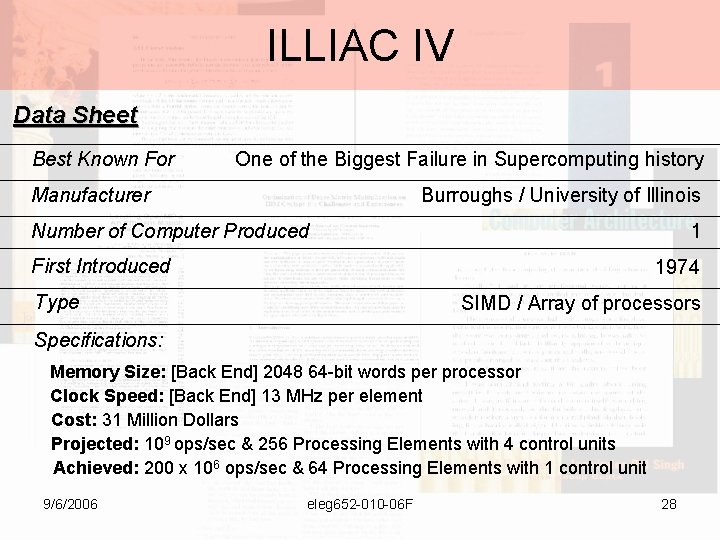

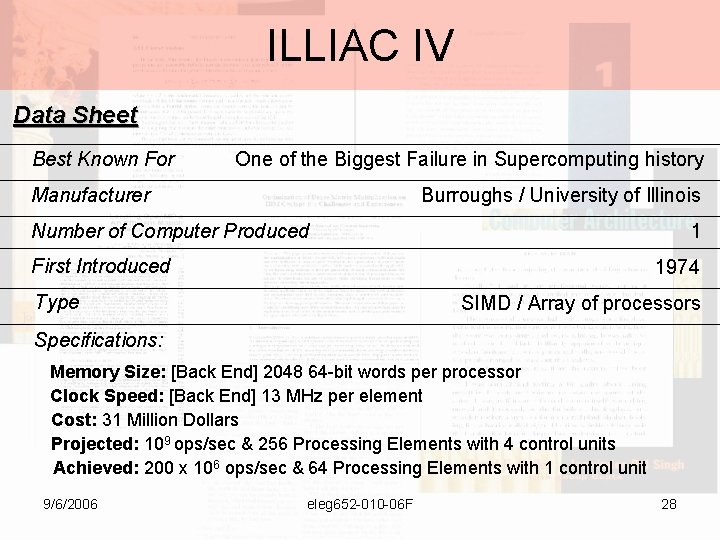

ILLIAC IV Data Sheet

| Item | Value |
| --- | --- |
| Best known for | One of the biggest failures in supercomputing history |
| Manufacturer | Burroughs / University of Illinois |
| Number of computers produced | 1 |
| First introduced | 1974 |
| Type | SIMD / array of processors |
| Memory size (back end) | 2048 64-bit words per processor |
| Clock speed (back end) | 13 MHz per element |
| Cost | 31 million dollars |
| Projected | 10^9 ops/sec, 256 processing elements with 4 control units |
| Achieved | 200 × 10^6 ops/sec, 64 processing elements with 1 control unit |

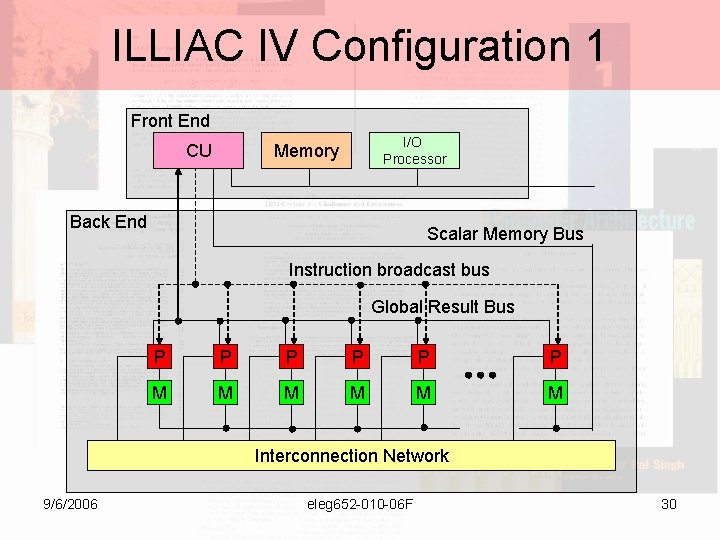

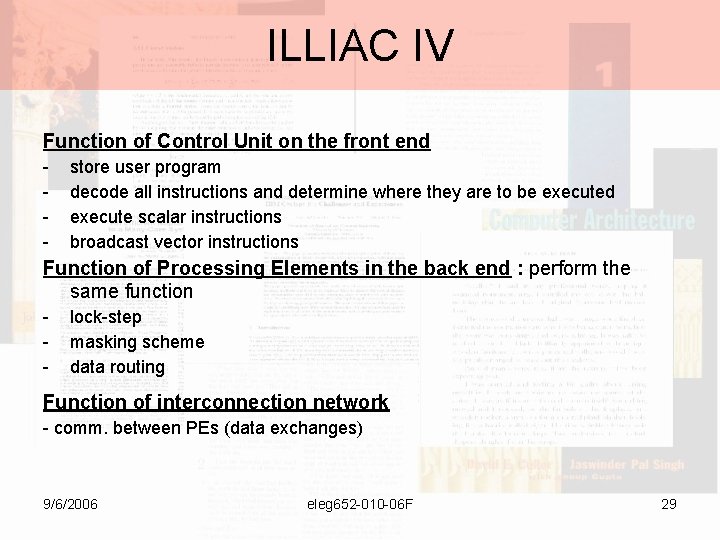

ILLIAC IV

- Function of the control unit on the front end
  - Store the user program
  - Decode all instructions and determine where they are to be executed
  - Execute scalar instructions
  - Broadcast vector instructions
- Function of the processing elements in the back end
  - Perform the same function in lock-step
  - Masking scheme
  - Data routing
- Function of the interconnection network
  - Communication between PEs (data exchanges)
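The division of labor above (a control unit broadcasting one instruction, PEs executing it in lock-step under a mask, and a network routing data between PEs) can be sketched as follows. This is a toy model, not ILLIAC IV code: `broadcast` and `route` are hypothetical helper names, and each PE's local memory is reduced to a single value.

```python
def broadcast(op, local_values, mask):
    """Control unit broadcasts one operation; only PEs whose mask bit is set apply it.

    Masked-off PEs keep their old value, which models the masking scheme.
    """
    return [op(x) if enabled else x for x, enabled in zip(local_values, mask)]


def route(local_values, shift):
    """Data routing: every PE sends its value to the PE `shift` positions away (with wraparound)."""
    n = len(local_values)
    out = [None] * n
    for i, value in enumerate(local_values):
        out[(i + shift) % n] = value
    return out


if __name__ == "__main__":
    pes = [1, 2, 3, 4]
    # Same instruction on every enabled PE, executed in lock-step.
    pes = broadcast(lambda x: x * 2, pes, mask=[True, False, True, False])
    print(pes)
    # Each PE passes its value to its right-hand neighbor.
    print(route(pes, 1))
```

The list comprehension stands in for lock-step execution: every PE sees the same operation in the same step, and the mask is the only per-PE control.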

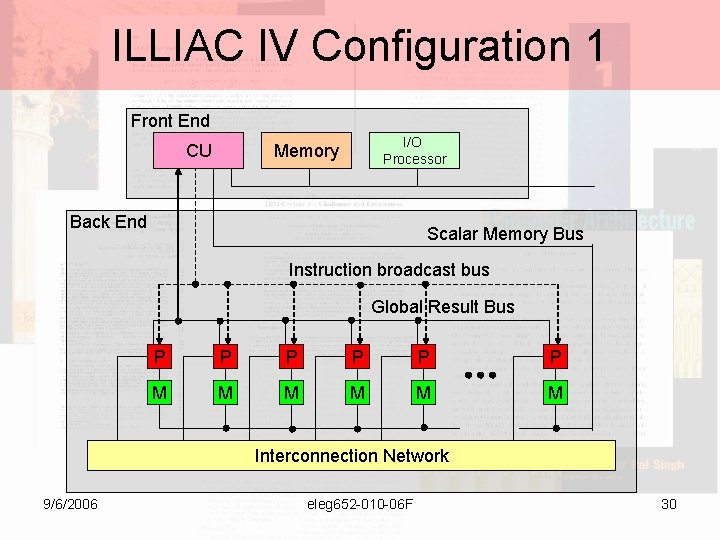

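ILLIAC IV: Configuration 1

(Figure: the front end holds the CU, I/O processor, and scalar memory on a memory bus. The back end holds the processors (P), each with its own memory (M), fed by the instruction broadcast bus, reporting on the global result bus, and linked by the interconnection network.)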

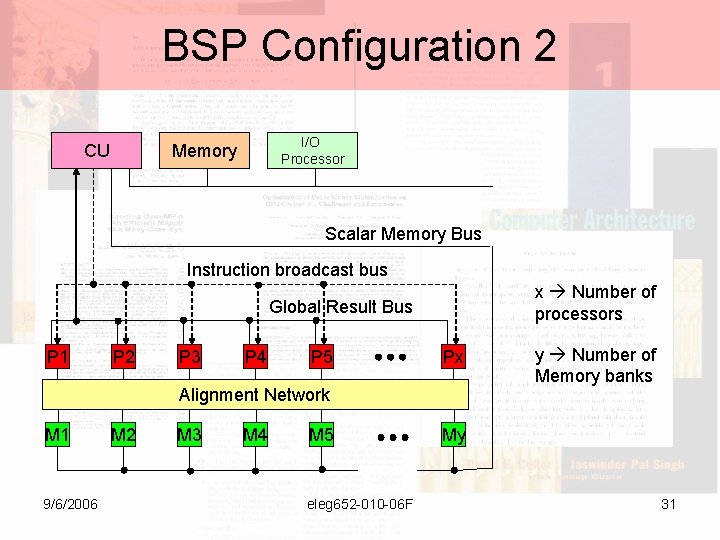

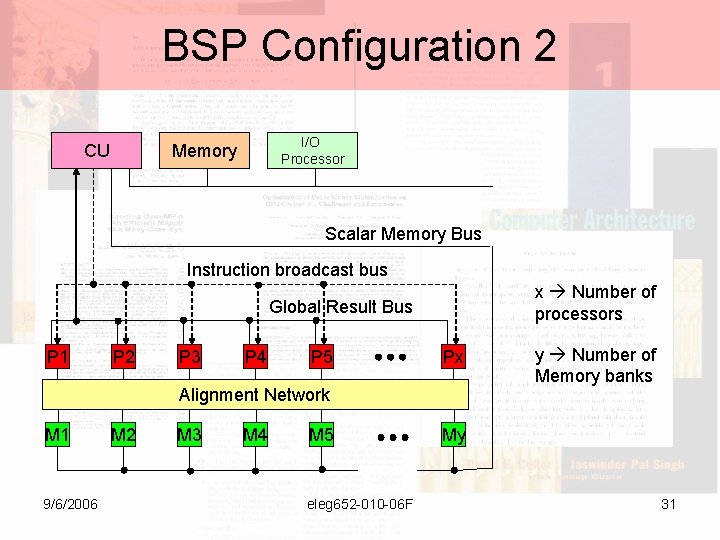

BSP: Configuration 2

(Figure: CU, I/O processor, and scalar memory on a memory bus; an instruction broadcast bus and a global result bus serve x processors P1 ... Px; an alignment network connects the processors to y memory banks M1 ... My.)

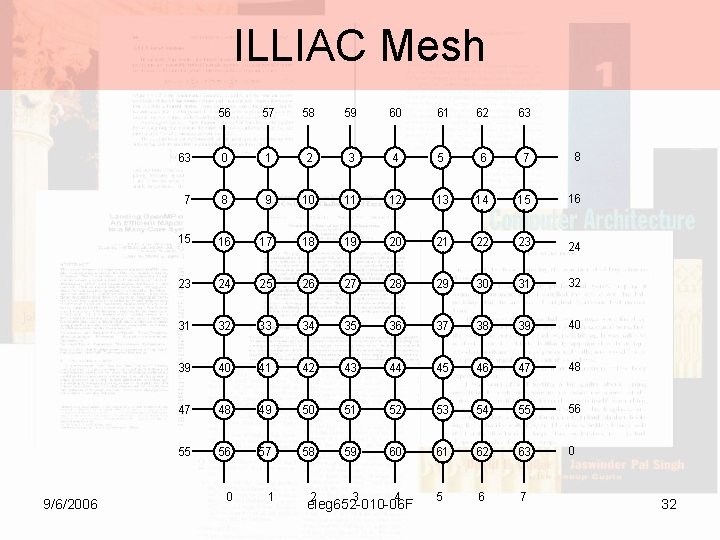

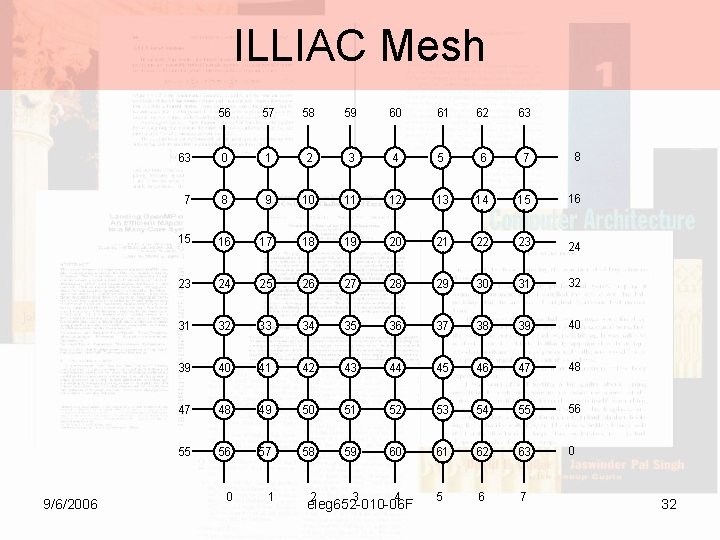

ILLIAC Mesh

(Figure: 64 PEs numbered 0 to 63, arranged row by row in an 8 × 8 grid. Wraparound links are drawn by repeating row 56 to 63 above row 0 to 7, repeating row 0 to 7 below row 56 to 63, and placing PE 63 next to PE 0.)

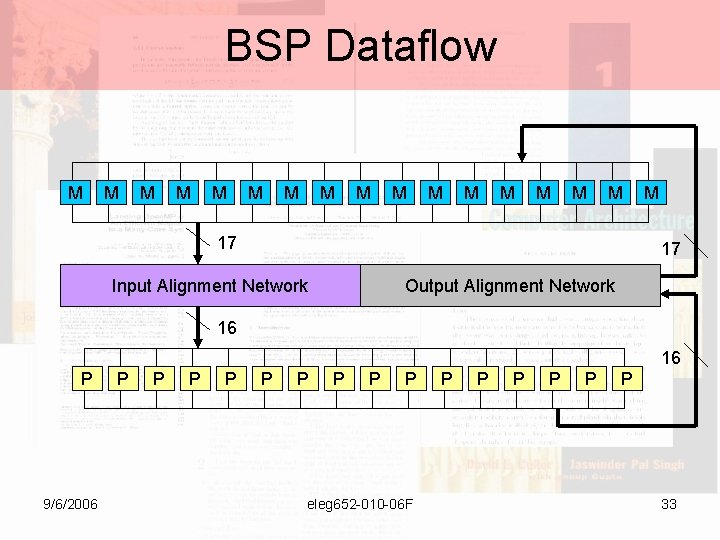

BSP Dataflow

(Figure: 17 memory banks (M) feed an input alignment network, which feeds 16 processors (P); results return to the memory banks through an output alignment network.)

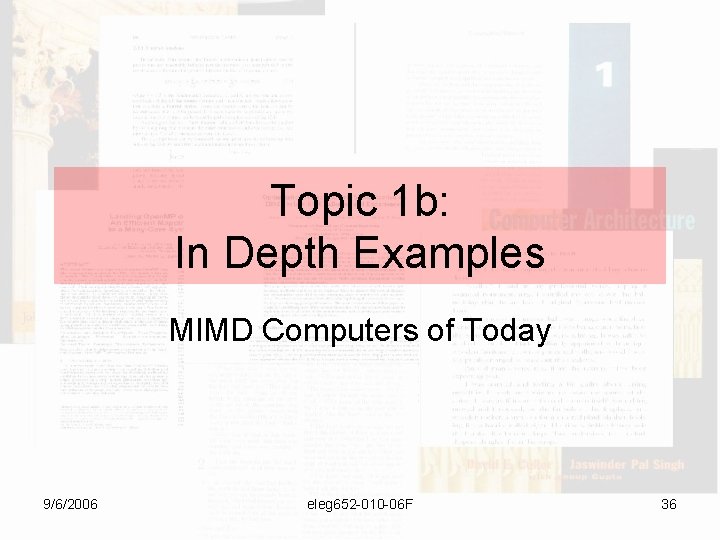

The AMT DAP 500

(Figure: user interface, host connection unit, code memory, master control unit, processor elements, fast data channel, and array memory of 32K bits. Processor element registers: O, A = accumulator, C = carry, D = data; activity control.)

AMT DAP 500 and DAP 510: 32 by 32 and 64 by 64 one-bit PEs in a 2D mesh

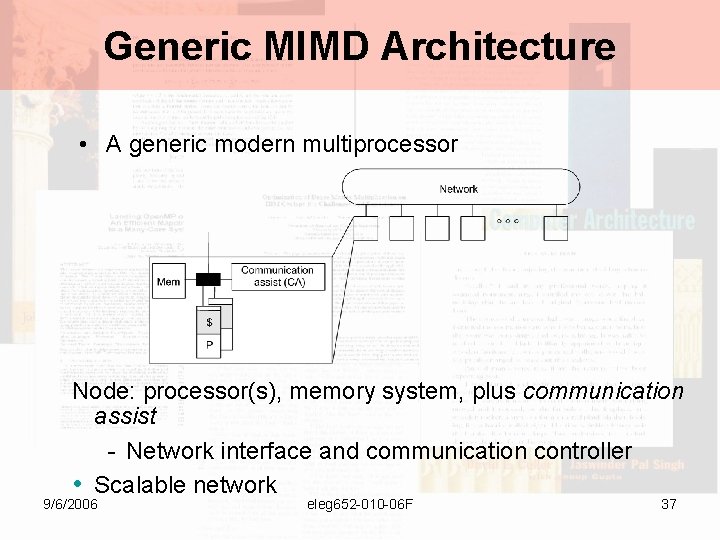

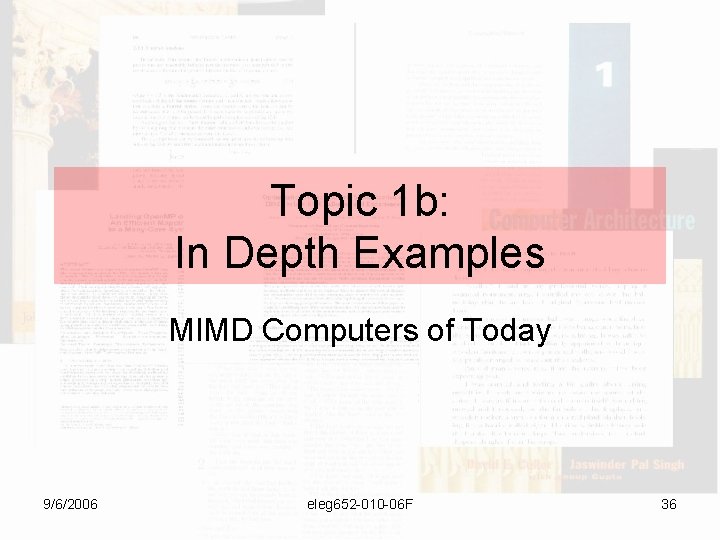

SIMD across the Years

(a) Multivector track

- CDC 7600 (CDC, 1970)
- Cray 1 (Russell, 1978)
- CDC Cyber 205 (Levine, 1982)
- ETA 10 (ETA, Inc. 1989)
- Cray Y-MP (Cray Research, 1989)
- Cray/MPP (Cray Research, 1993)
- Fujitsu, NEC, Hitachi models

(b) SIMD track

- Illiac IV (Barnes et al, 1968)
- Goodyear MPP (Batcher, 1980)
- BSP (Kuck and Stokes, 1982)
- IBM GF/11 (Beetem et al, 1985)
- DAP 610 (AMT, Inc. 1987)
- CM-2 (TMC, 1990)
- MasPar MP-1 (Nickolls, 1990)

Topic 1b: In Depth Examples

MIMD Computers of Today

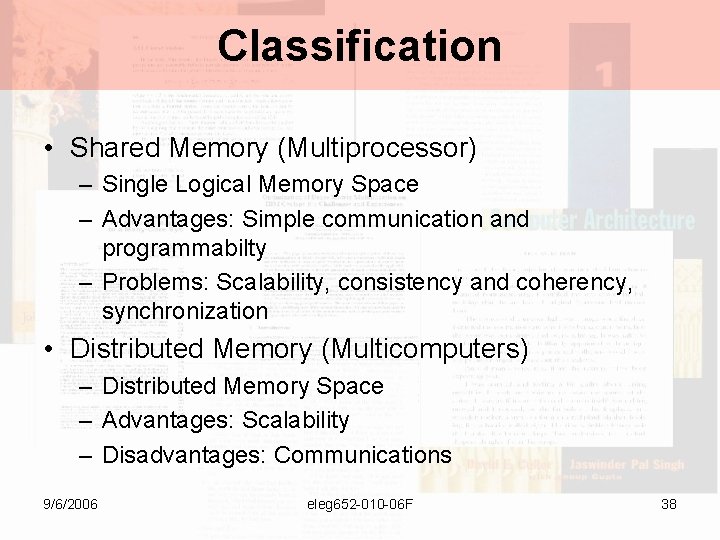

Generic MIMD Architecture

- A generic modern multiprocessor
  - Node: processor(s), memory system, plus communication assist (network interface and communication controller)
- Scalable network

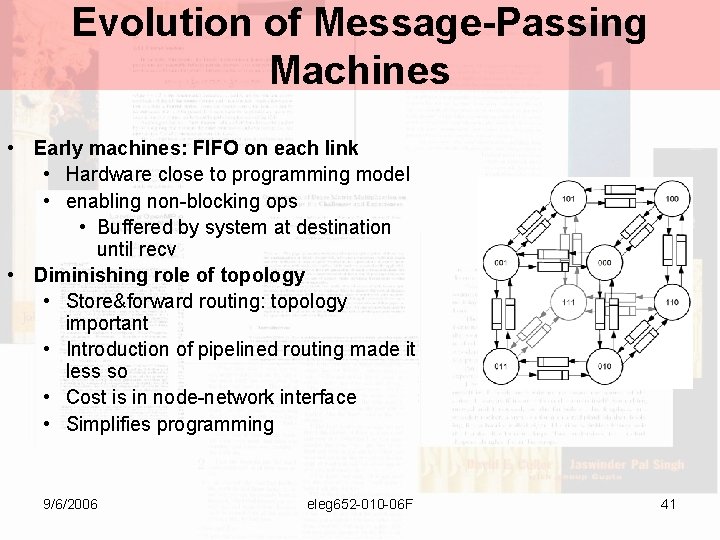

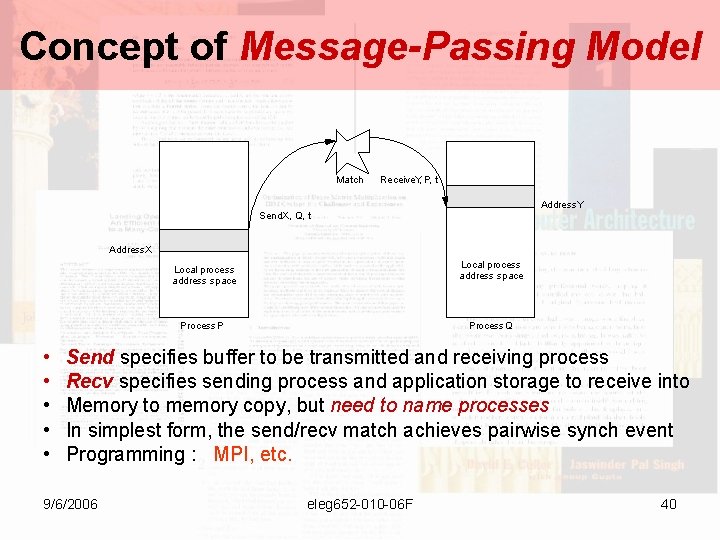

Classification

- Shared Memory (Multiprocessor)
  - Single logical memory space
  - Advantages: simple communication and programmability
  - Problems: scalability, consistency and coherency, synchronization
- Distributed Memory (Multicomputers)
  - Distributed memory space
  - Advantages: scalability
  - Disadvantages: communications

Distributed Memory MIMD Machines (Multicomputers, MPPs, clusters, etc.)

- Message passing programming models
- Interconnect networks
- Generations/history:
  - 1983-87: COSMIC CUBE, iPSC/I, II (software routing)
  - 1988-92: mesh-connected (hardware routing), Intel Paragon
  - 1993-99: CM-5, IBM-SP
  - 1996- : clusters

Concept of Message-Passing Model

(Figure: process P executes Send X, Q, t from address X in its local address space; process Q executes Receive Y, P, t into address Y in its local address space; the two are matched.)

- Send specifies the buffer to be transmitted and the receiving process
- Recv specifies the sending process and the application storage to receive into
- Memory to memory copy, but processes need to be named
- In its simplest form, the send/recv match achieves a pairwise synchronization event
- Programming: MPI, etc.
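The send/recv match described above can be sketched in plain Python. This is a toy model rather than MPI: threads stand in for processes P and Q, and the `Mailboxes` class, its `send`/`recv` signatures, and the tag value 7 are assumptions made for illustration only.

```python
import queue
import threading


class Mailboxes:
    """Toy message layer: one FIFO per (source, destination, tag) triple."""

    def __init__(self):
        self._boxes = {}
        self._lock = threading.Lock()

    def _box(self, source, dest, tag):
        with self._lock:
            return self._boxes.setdefault((source, dest, tag), queue.Queue())

    def send(self, data, source, dest, tag):
        # Copy the buffer so the receiver never aliases the sender's memory.
        self._box(source, dest, tag).put(list(data))

    def recv(self, source, dest, tag):
        # Blocks until a send with the same (source, dest, tag) has been posted.
        return self._box(source, dest, tag).get()


def run_example():
    net = Mailboxes()
    received = {}

    def process_p():
        x = [1, 2, 3]                       # buffer at "address X"
        net.send(x, source="P", dest="Q", tag=7)

    def process_q():
        received["y"] = net.recv(source="P", dest="Q", tag=7)  # storage "Y"

    threads = [threading.Thread(target=process_q),
               threading.Thread(target=process_p)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received["y"]


if __name__ == "__main__":
    print(run_example())
```

The blocking `recv` is what makes the match a pairwise synchronization event: Q cannot proceed until P's message has arrived.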

Evolution of Message-Passing Machines

- Early machines: FIFO on each link
  - Hardware close to the programming model
  - Later enabling non-blocking ops, buffered by the system at the destination until recv
- Diminishing role of topology
  - Store & forward routing: topology important
  - Introduction of pipelined routing made it less so
  - Cost is in the node-network interface
  - Simplifies programming

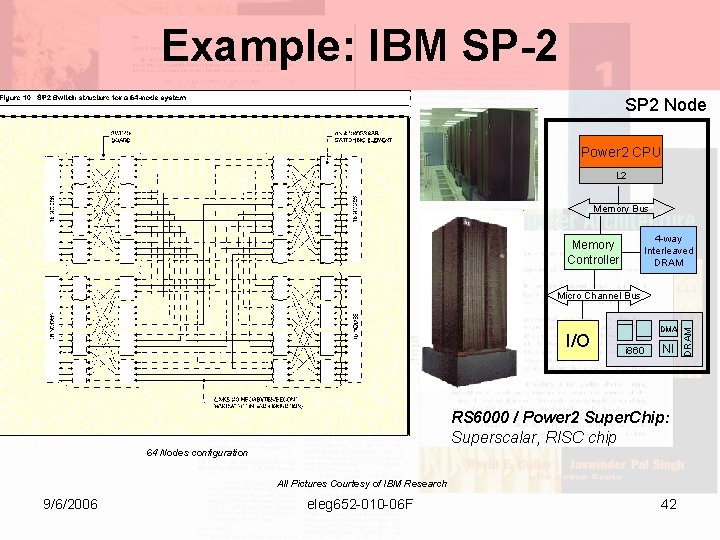

Example: IBM SP-2

(Figure: SP2 node with a Power 2 CPU and L2 cache on the memory bus; a memory controller with 4-way interleaved DRAM; a Micro Channel I/O bus carrying the NI, which includes DMA, an i860, and DRAM.)

- RS6000 / Power 2 SuperChip: superscalar RISC chip
- 64-node configuration

All pictures courtesy of IBM Research

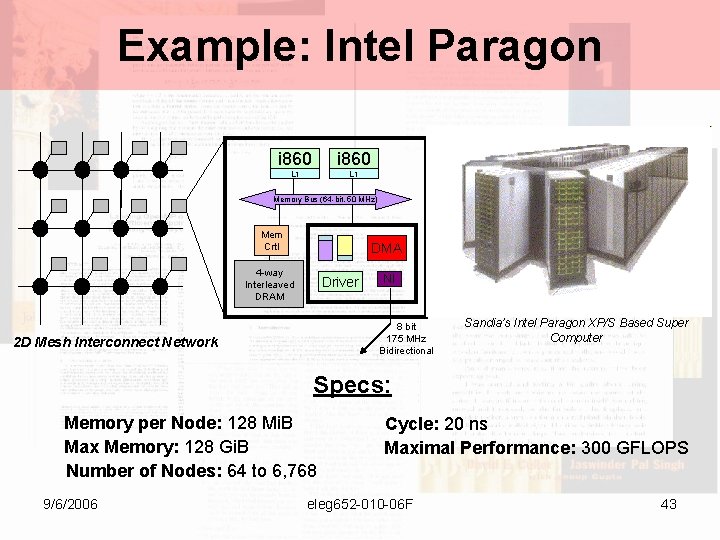

Example: Intel Paragon

(Figure: i860 processors with L1 caches on a 64-bit, 50 MHz memory bus; memory controller with 4-way interleaved DRAM; DMA, driver, and NI; 8-bit, 175 MHz bidirectional 2D mesh interconnect network. Photo: Sandia's Intel Paragon XP/S based supercomputer.)

Specs:

- Memory per node: 128 MiB
- Max memory: 128 GiB
- Number of nodes: 64 to 6,768
- Cycle: 20 ns
- Maximal performance: 300 GFLOPS

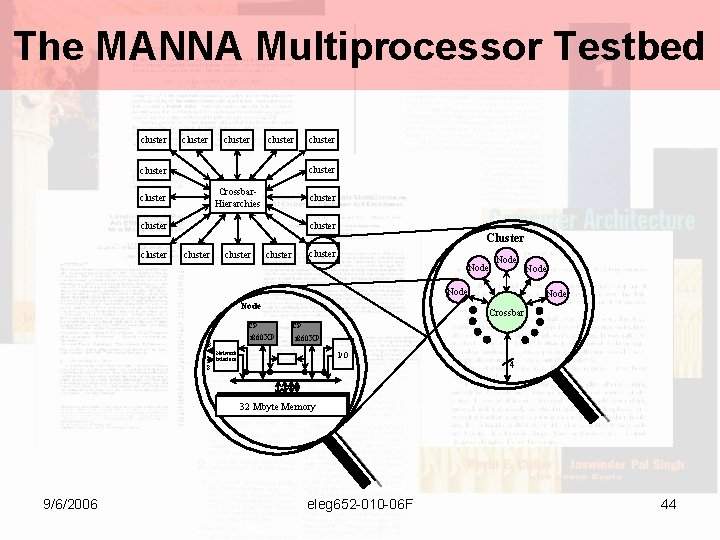

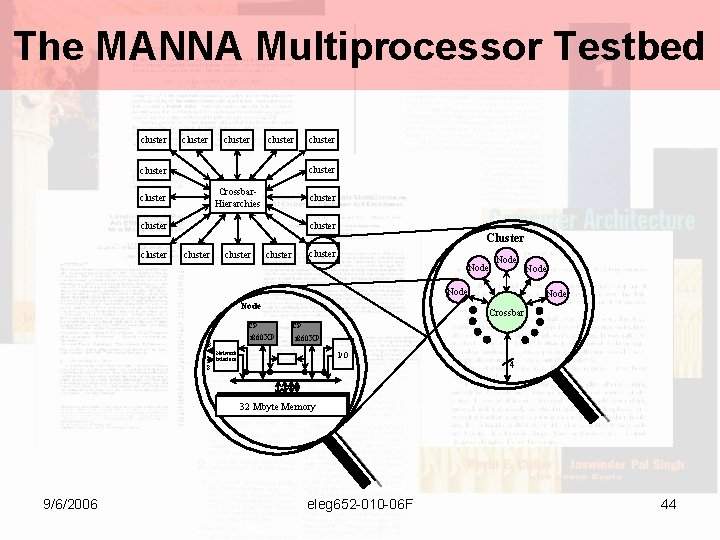

The MANNA Multiprocessor Testbed

(Figure: clusters connected through crossbar hierarchies. Each node has two i860 XP processors (CP), a network interface, I/O, and 32 Mbyte of memory, attached to a crossbar.)

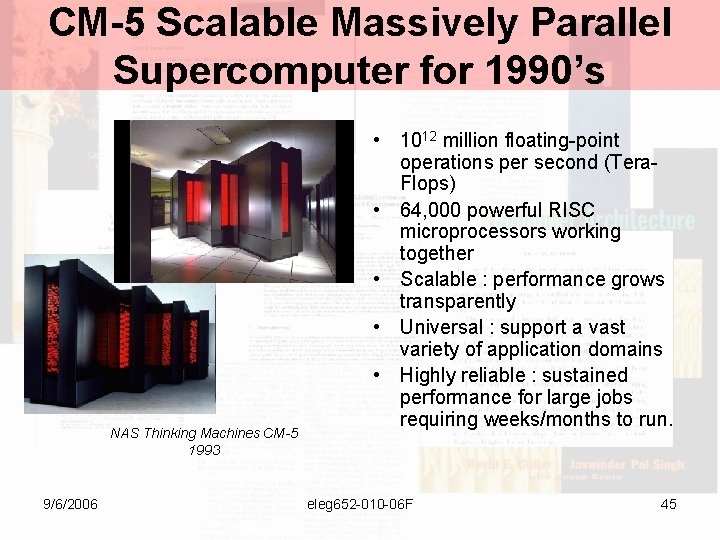

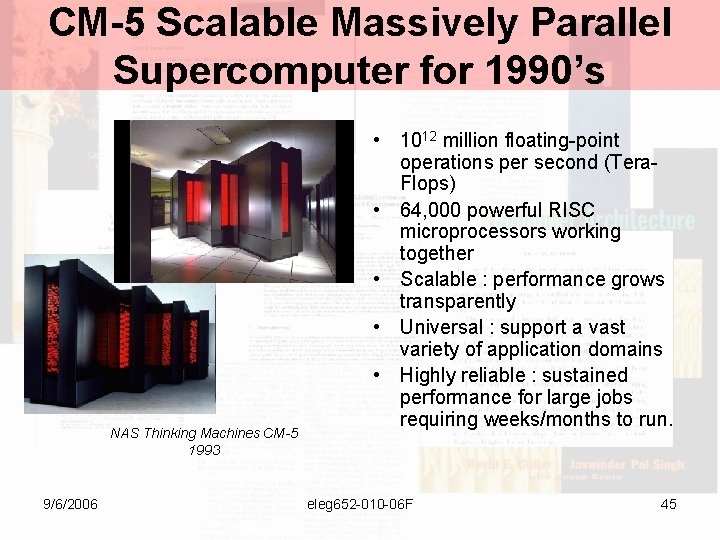

CM-5: Scalable Massively Parallel Supercomputer for the 1990s

(Photo: NAS Thinking Machines CM-5, 1993)

- 10^12 floating-point operations per second (TeraFLOPS)
- 64,000 powerful RISC microprocessors working together
- Scalable: performance grows transparently
- Universal: supports a vast variety of application domains
- Highly reliable: sustained performance for large jobs requiring weeks or months to run

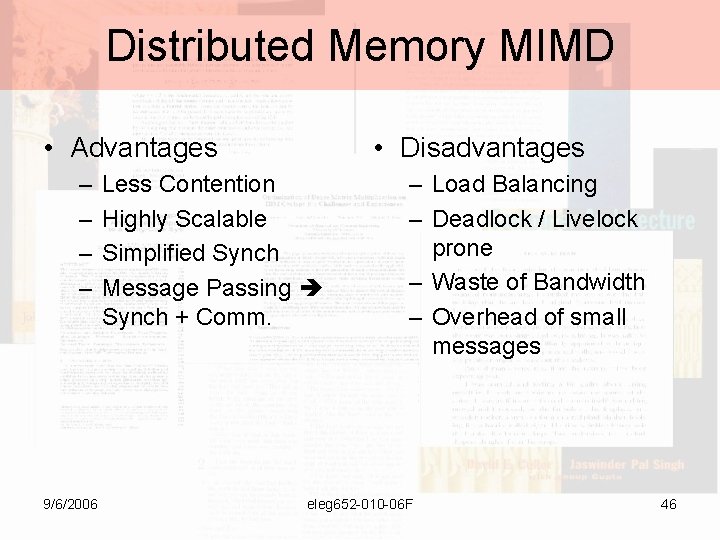

Distributed Memory MIMD

- Advantages
  - Less contention
  - Highly scalable
  - Simplified synchronization: message passing = synchronization + communication
- Disadvantages
  - Load balancing
  - Deadlock / livelock prone
  - Waste of bandwidth
  - Overhead of small messages

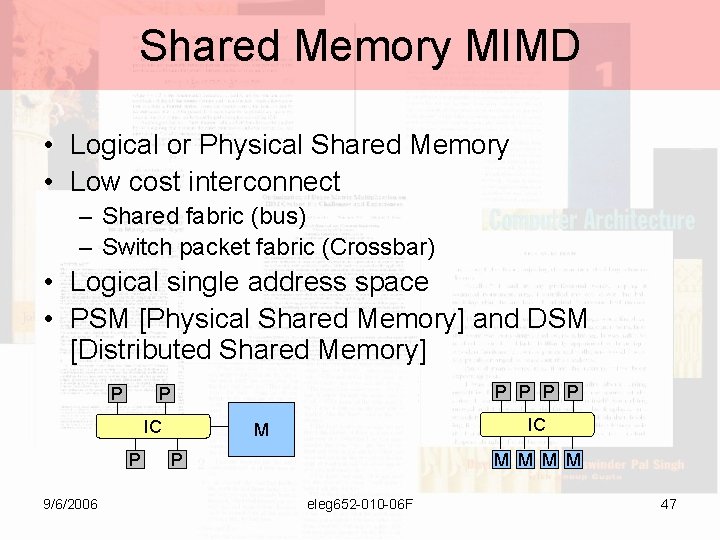

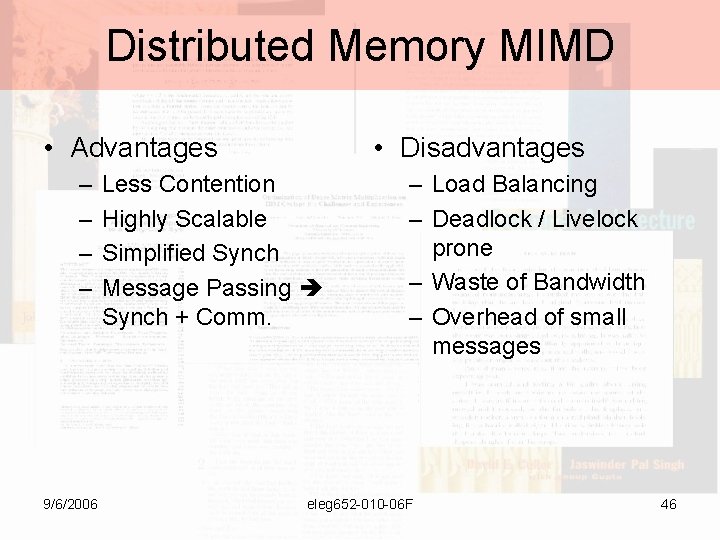

Shared Memory MIMD

- Logical or physical shared memory
- Low cost interconnect
  - Shared fabric (bus)
  - Switched packet fabric (crossbar)
- Logical single address space
- PSM [Physical Shared Memory] and DSM [Distributed Shared Memory]

(Figure: processors (P) connected through an interconnect (IC) to memories (M), in PSM and DSM arrangements.)

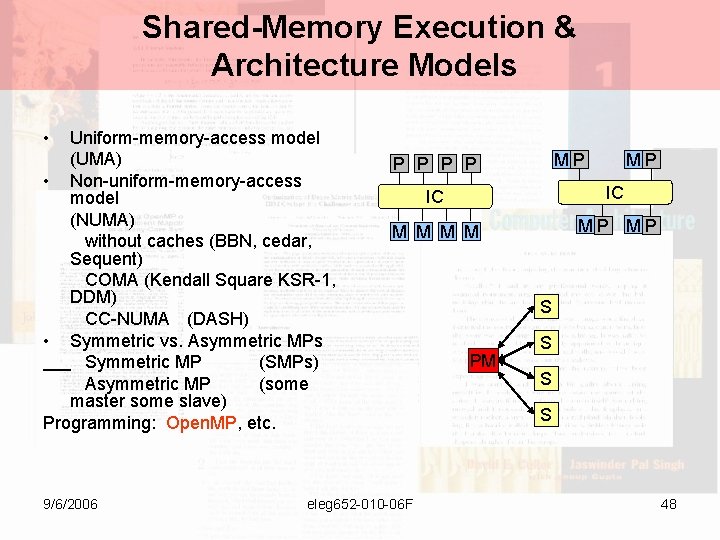

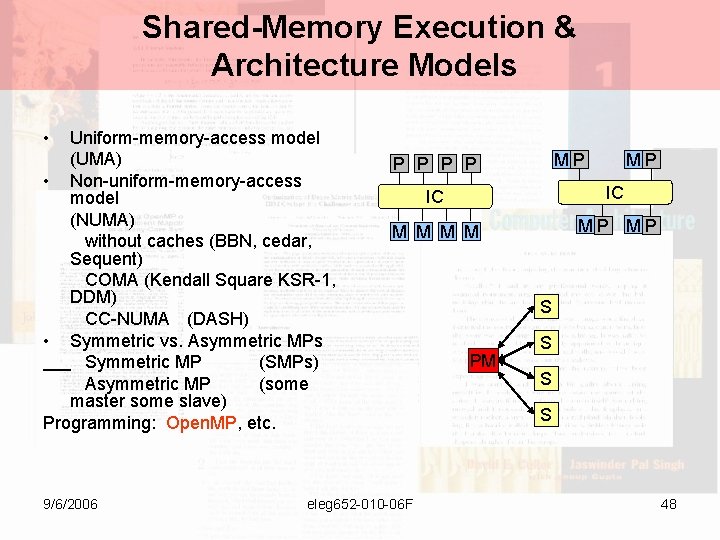

Shared-Memory Execution & Architecture Models

- Uniform-memory-access model (UMA)
- Non-uniform-memory-access model (NUMA)
  - Without caches (BBN, Cedar, Sequent)
  - COMA (Kendall Square KSR-1, DDM)
  - CC-NUMA (DASH)
- Symmetric vs. asymmetric MPs
  - Symmetric MP (SMPs)
  - Asymmetric MP (some master, some slave)
- Programming: OpenMP, etc.

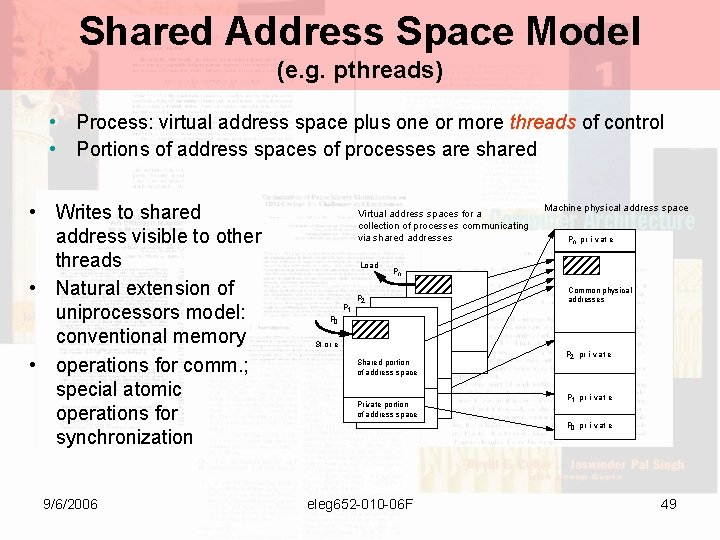

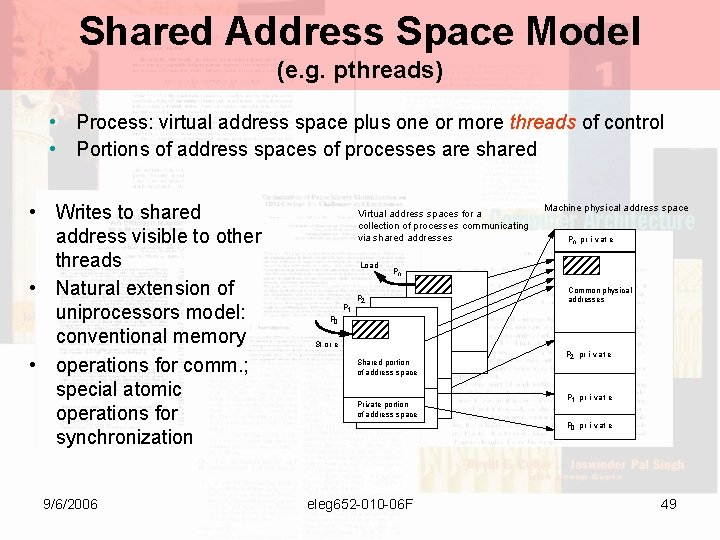

Shared Address Space Model (e.g. pthreads)

- Process: virtual address space plus one or more threads of control
- Portions of the address spaces of processes are shared
- Writes to a shared address are visible to other threads
- Natural extension of the uniprocessor model: conventional memory operations for communication; special atomic operations for synchronization

(Figure: virtual address spaces for a collection of processes P0 ... Pn communicating via shared addresses. The shared portion of each address space maps to common physical addresses in the machine physical address space, accessed with loads and stores; each process also has a private portion.)
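A minimal sketch of this model follows, using Python threads in place of pthreads. The function `shared_counter` and its parameters are illustrative assumptions: ordinary reads and writes to a shared variable carry the communication, and a lock plays the role of the special atomic operation used for synchronization.

```python
import threading


def shared_counter(num_threads=4, increments=1000):
    """Several threads update one shared location; a lock serializes the updates."""
    shared = {"count": 0}          # lives in the address space shared by all threads
    lock = threading.Lock()        # stands in for an atomic synchronization primitive

    def worker():
        for _ in range(increments):
            with lock:             # without the lock, read-modify-write could interleave
                shared["count"] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["count"]


if __name__ == "__main__":
    print(shared_counter())
```

No data is sent explicitly: every thread simply writes to the same address, and the write becomes visible to the others.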

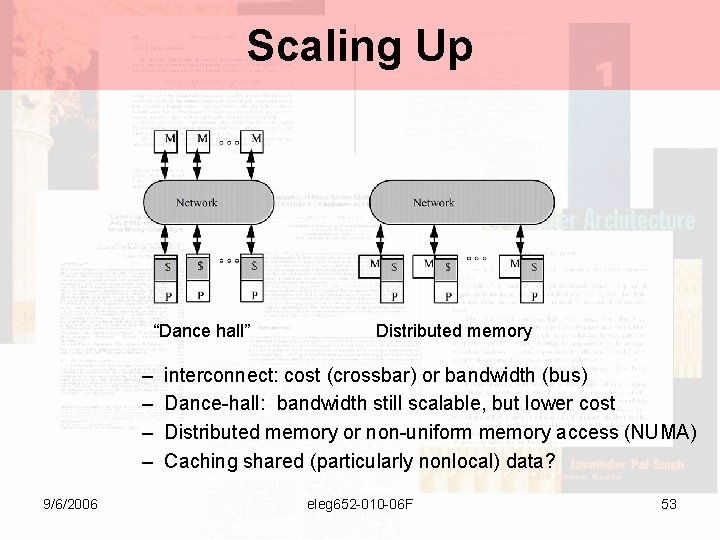

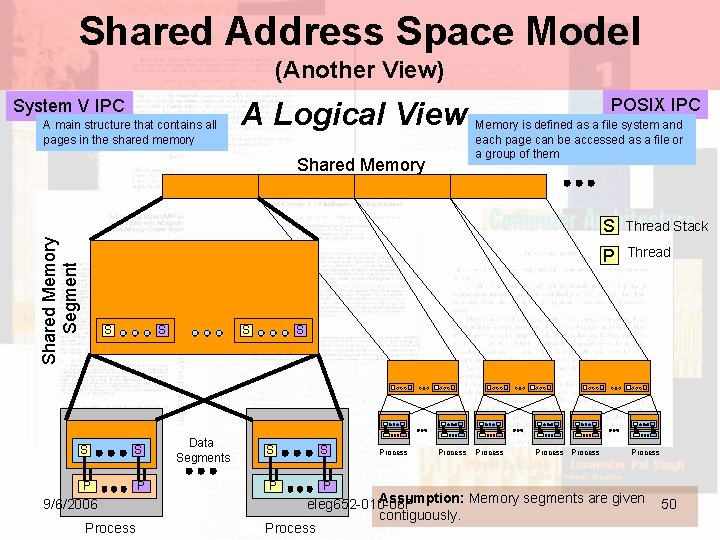

Shared Address Space Model (Another View)

- System V IPC: a main structure that contains all pages in the shared memory
- POSIX IPC: memory is defined as a file system, and each page can be accessed as a file or a group of files
- Assumption: memory segments are given contiguously

(Figure: a logical view of processes, each with data segments and thread stacks, attached to a shared memory segment.)

Shared Address Space Architectures

- Any processor can directly reference any memory location (communication is implicit)
- Convenient:
  - Location transparency
  - Similar programming model to time-sharing on uniprocessors
- Popularly known as shared memory machines or the shared memory model
- Ambiguous: memory may be physically distributed among processors

Shared-Memory Parallel Computers (late 1990s to early 2000s)

- SMPs (Intel-Quad, SUN SMPs)
- Supercomputers
  - Cray T3E
  - Convex 2000
  - SGI Origin/Onyx
  - Tera Computers

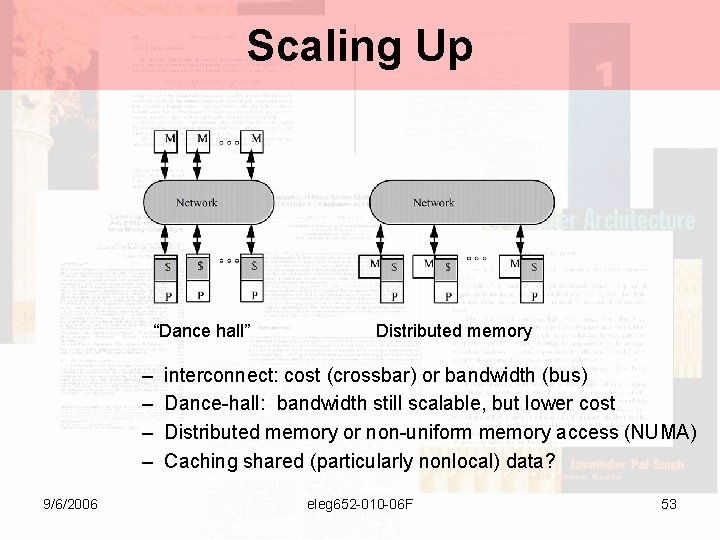

Scaling Up

(Figure: two organizations, "dance hall" and distributed memory.)

- Interconnect: cost (crossbar) or bandwidth (bus)
- Dance hall: bandwidth still scalable, but at lower cost
- Distributed memory, or non-uniform memory access (NUMA)
- Caching shared (particularly nonlocal) data?

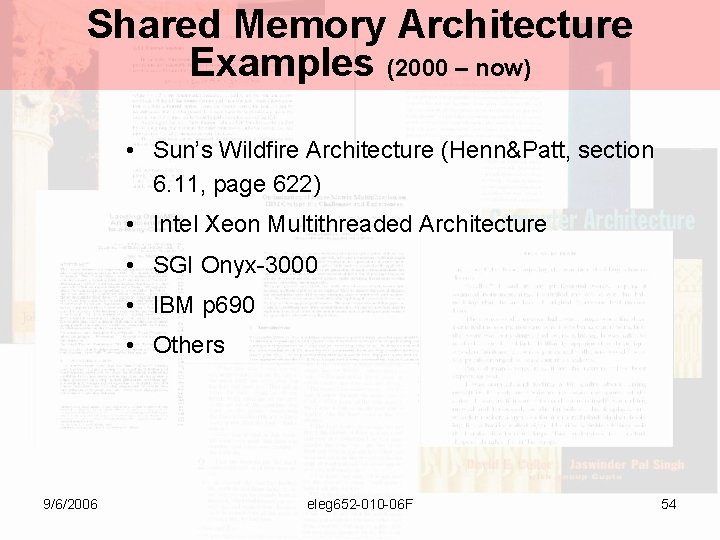

Shared Memory Architecture Examples (2000 to now)

- Sun's Wildfire Architecture (Hennessy & Patterson, section 6.11, page 622)
- Intel Xeon Multithreaded Architecture
- SGI Onyx-3000
- IBM p690
- Others

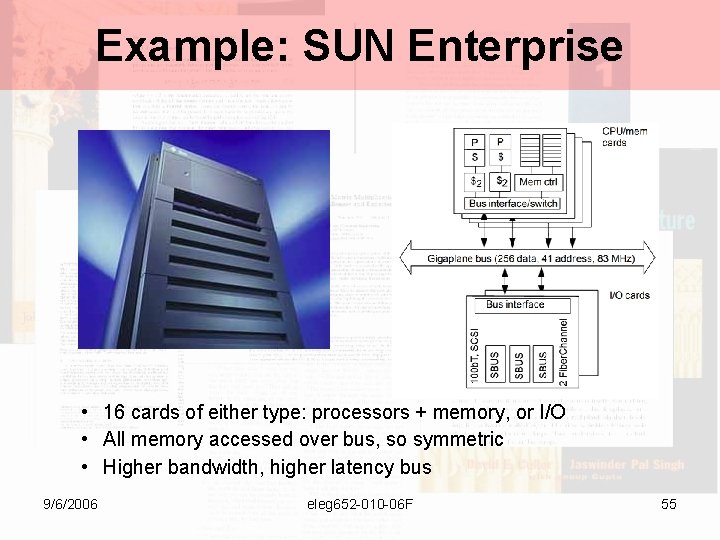

Example: SUN Enterprise

- 16 cards of either type: processors + memory, or I/O
- All memory is accessed over the bus, so it is symmetric
- Higher bandwidth, higher latency bus

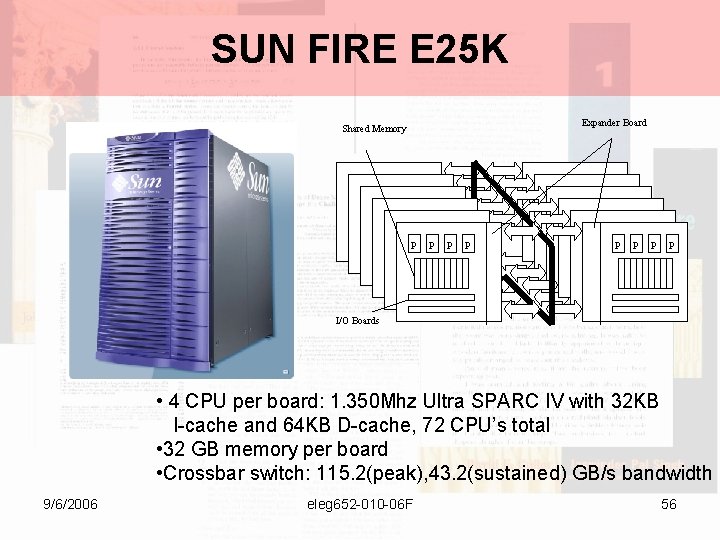

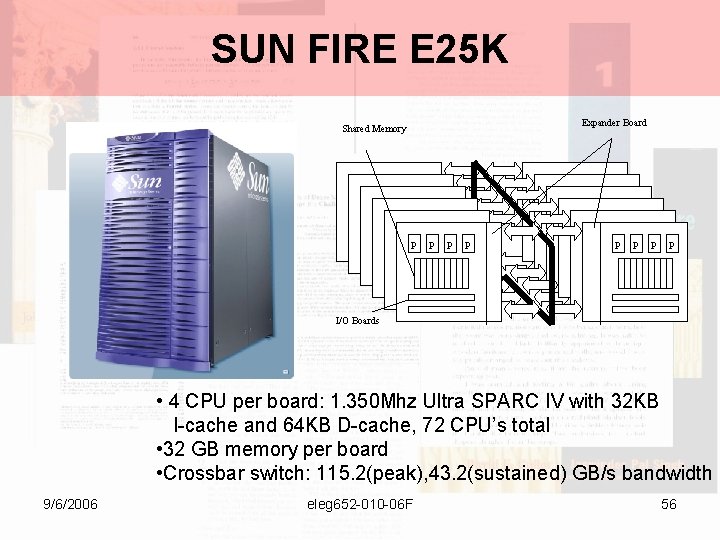

SUN FIRE E25K

(Figure: expander boards, each with four processors (p), shared memory, and I/O boards.)

- 4 CPUs per board: 1,350 MHz UltraSPARC IV with 32 KB I-cache and 64 KB D-cache, 72 CPUs total
- 32 GB memory per board
- Crossbar switch: 115.2 GB/s (peak), 43.2 GB/s (sustained) bandwidth

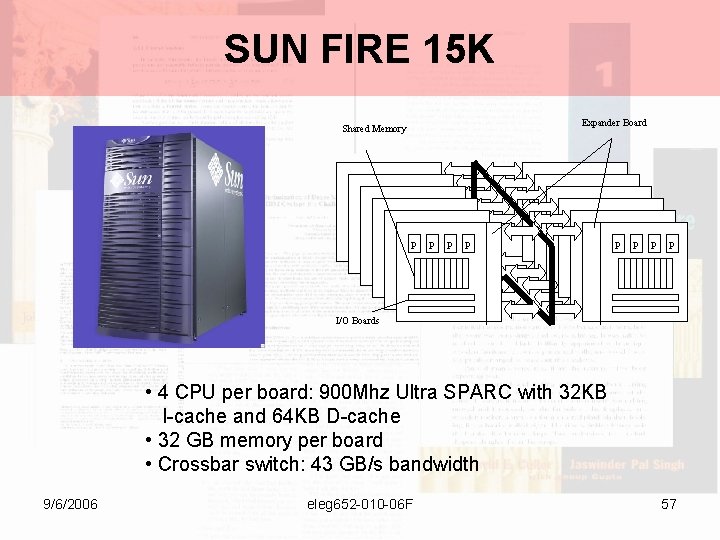

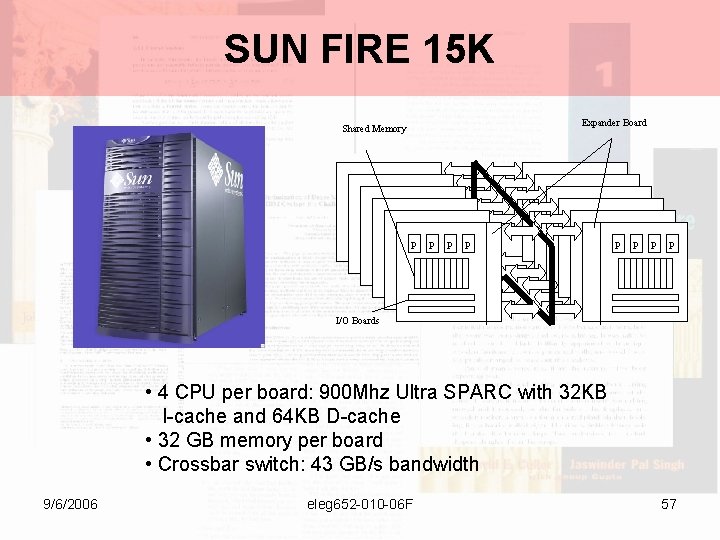

SUN FIRE 15K

(Figure: expander boards, each with four processors (p), shared memory, and I/O boards.)

- 4 CPUs per board: 900 MHz UltraSPARC with 32 KB I-cache and 64 KB D-cache
- 32 GB memory per board
- Crossbar switch: 43 GB/s bandwidth

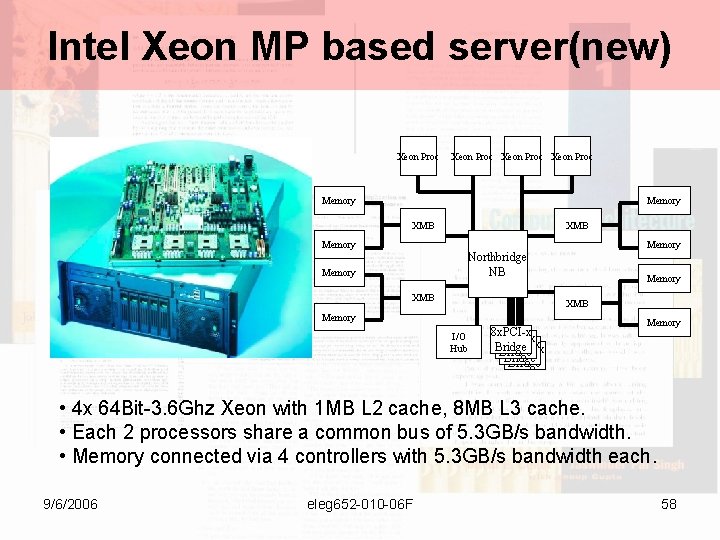

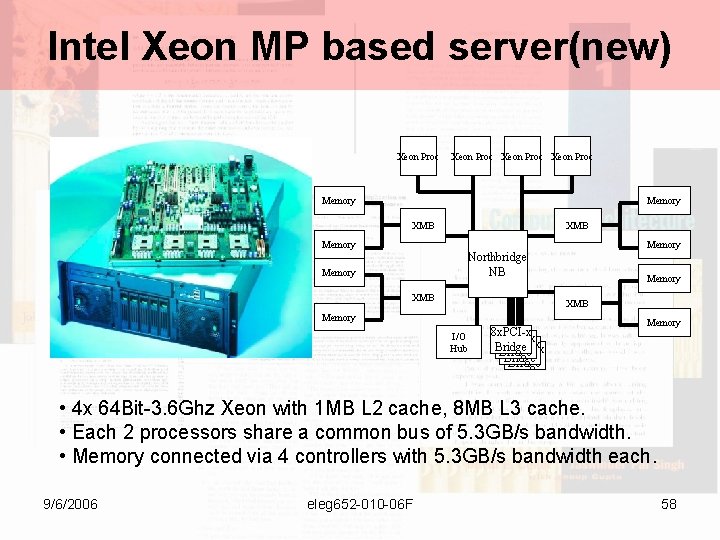

Intel Xeon MP based server (new)

(Figure: Xeon processors connected to a northbridge (NB); memory attached through XMB memory bridges; I/O hub with 8x PCI-X bridge.)

- 4 × 64-bit 3.6 GHz Xeon with 1 MB L2 cache and 8 MB L3 cache
- Each pair of processors shares a common bus with 5.3 GB/s bandwidth
- Memory is connected via 4 controllers with 5.3 GB/s bandwidth each

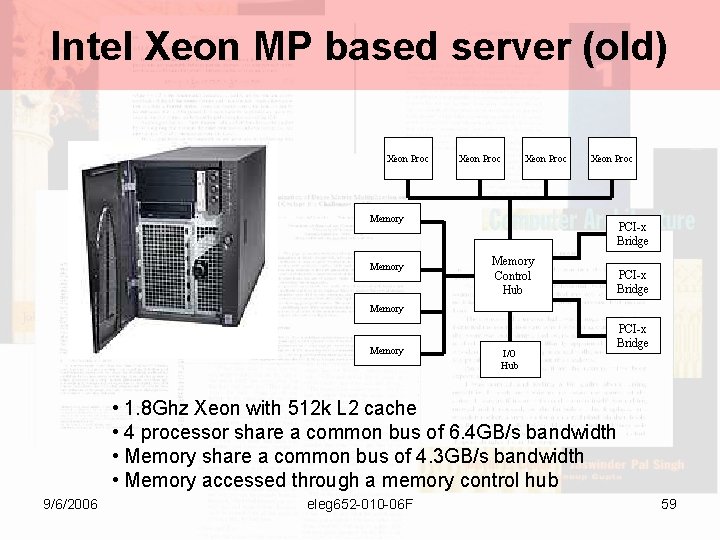

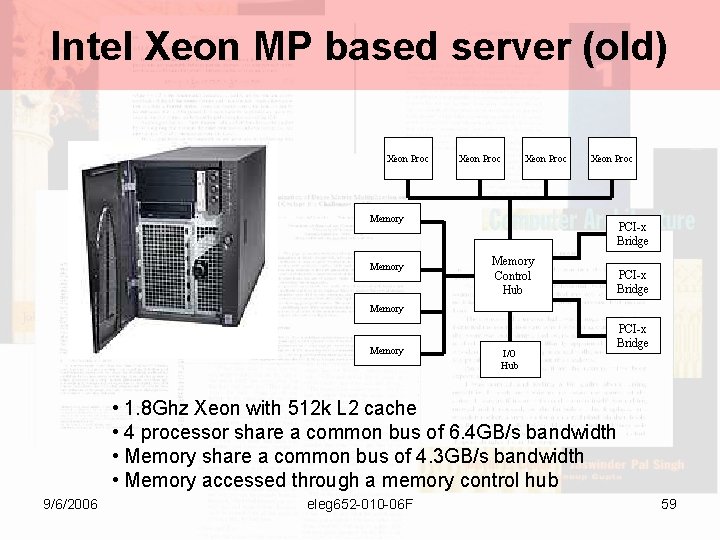

Intel Xeon MP based server (old)

(Figure: Xeon processors on a shared bus to a memory control hub; memory and PCI-X bridges attached through the hub and an I/O hub.)

- 1.8 GHz Xeon with 512 KB L2 cache
- 4 processors share a common bus with 6.4 GB/s bandwidth
- Memory shares a common bus with 4.3 GB/s bandwidth
- Memory is accessed through a memory control hub

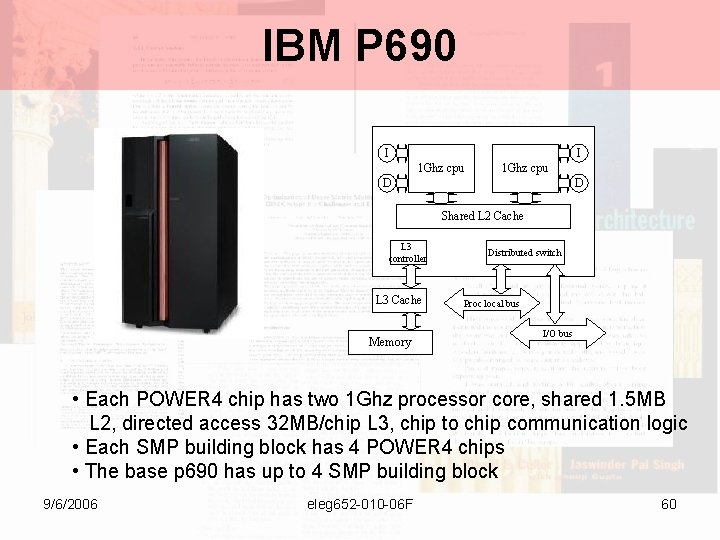

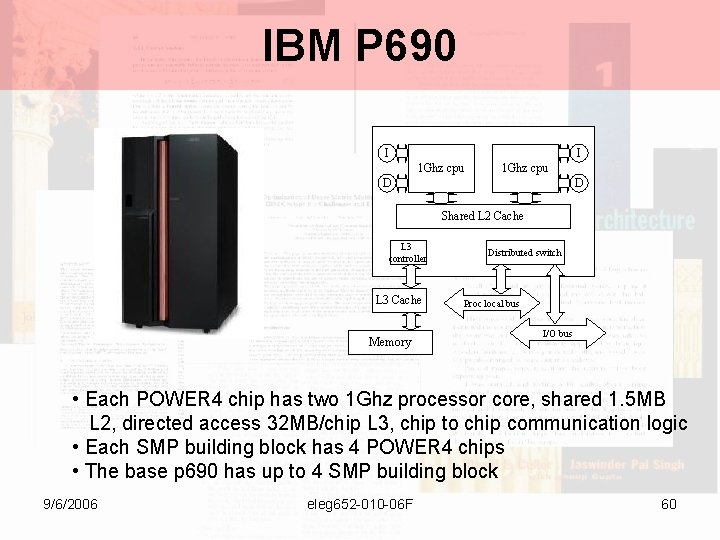

IBM p690

(Figure: two 1 GHz CPUs, each with I and D caches, a shared L2 cache, an L3 controller with L3 cache and memory, a distributed switch, a processor local bus, and an I/O bus.)

- Each POWER4 chip has two 1 GHz processor cores, a shared 1.5 MB L2, direct access to 32 MB/chip of L3, and chip-to-chip communication logic
- Each SMP building block has 4 POWER4 chips
- The base p690 has up to 4 SMP building blocks

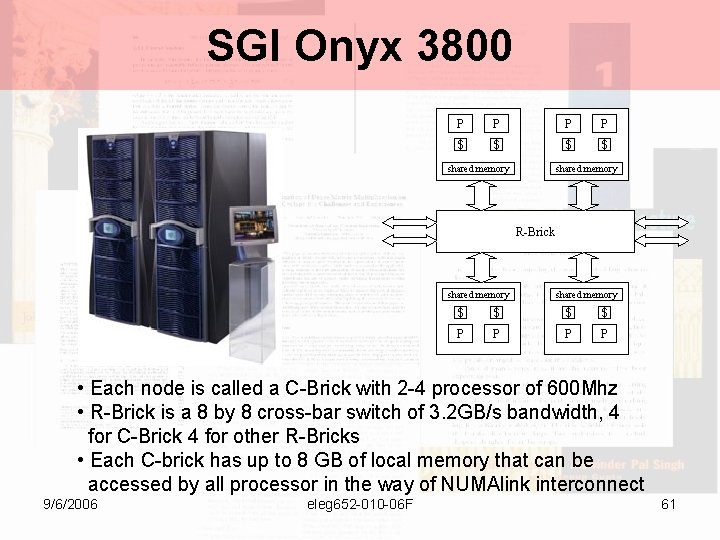

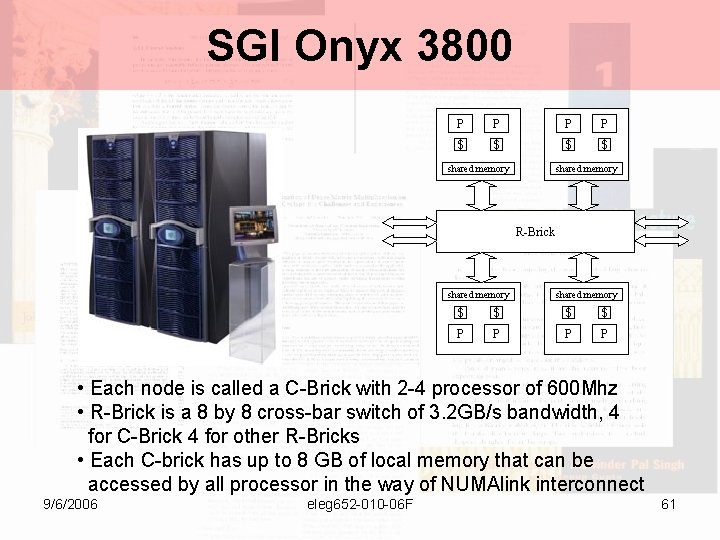

SGI Onyx 3800

(Figure: C-Bricks, each with processors (P), caches ($), and shared memory, connected through an R-Brick.)

- Each node is called a C-Brick, with 2 to 4 processors at 600 MHz
- An R-Brick is an 8 by 8 crossbar switch with 3.2 GB/s bandwidth: 4 ports for C-Bricks and 4 for other R-Bricks
- Each C-Brick has up to 8 GB of local memory that can be accessed by all processors through the NUMAlink interconnect

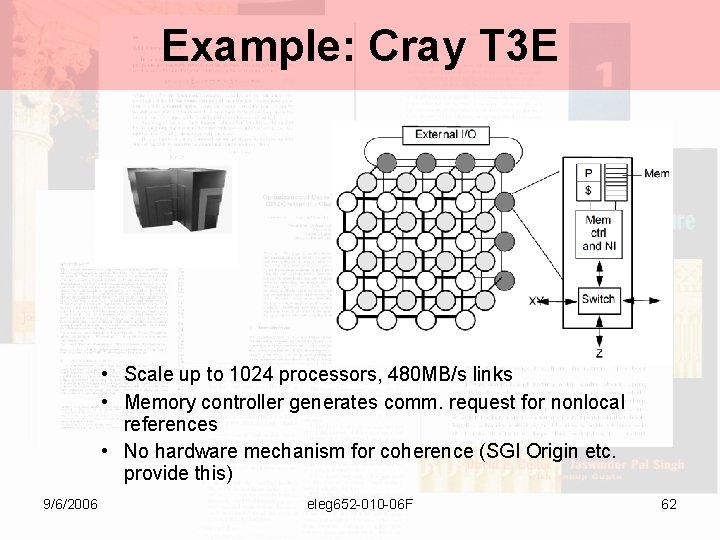

Example: Cray T3E

- Scales up to 1024 processors, 480 MB/s links
- Memory controller generates communication requests for nonlocal references
- No hardware mechanism for coherence (SGI Origin etc. provide this)

Multithreaded Execution and Architecture Models

- Dataflow models
  - Dependent on the data for execution
- Control-flow based models
  - Dependent on the logic of the application for the use of resources
- Hybrid models
  - Concepts of tokens associated with both data and logic

Multithreaded Execution and Architecture Models

- The "time sharing" of one instruction processing unit, in a pipelined fashion, by all instruction streams
- Used by the Denelcor Heterogeneous Element Processor (HEP) supercomputer
- Used in Hyper-Threading technology (Intel's HT) and Simultaneous Multi-Threading (IBM's SMT and in general)
- Key: logically or physically replicated and independent hardware resources (e.g. control unit, register files, etc.)

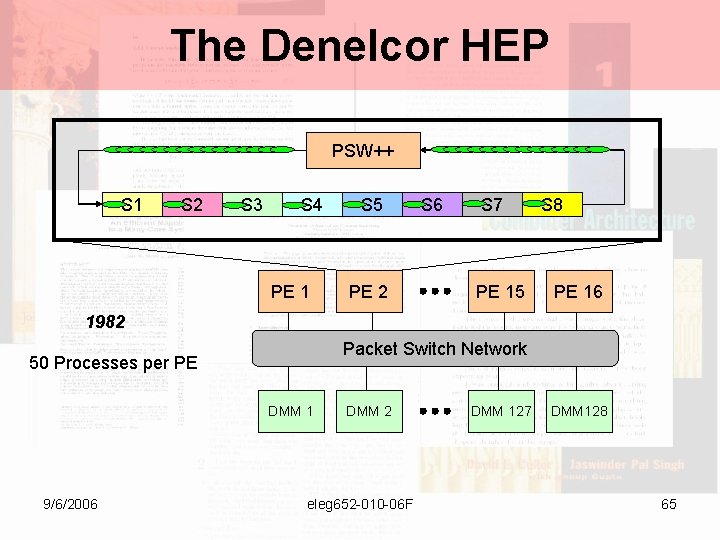

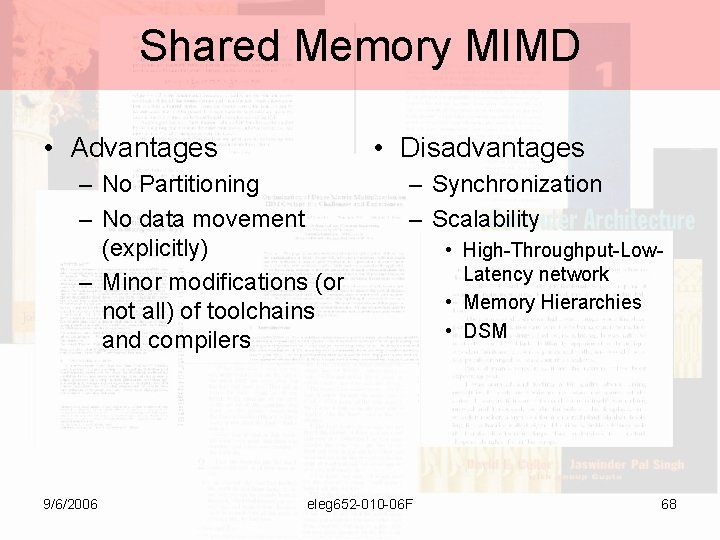

The Denelcor HEP (1982)

(Figure: PE 1 ... PE 16, each an eight-stage pipeline S1 ... S8 with PSW++ and up to 50 processes per PE, connected through a packet switch network to data memory modules DMM 1 ... DMM 128.)

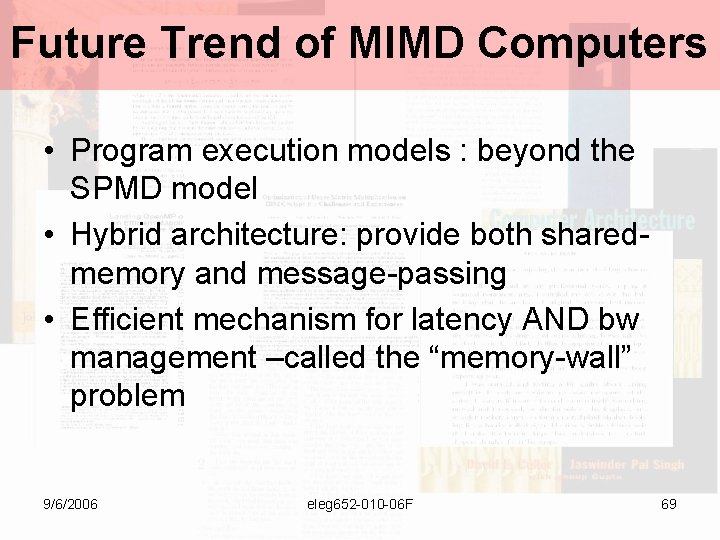

Denelcor HEP

- Many instruction streams, single P-unit
- 16 PEM + 128 DMM: 64 bit/DMM
- Packet-switching network =
- I-stream creation is under program control
- 50 I-streams
- Programmability: SISAL, Fortran

Tera MTA (1990)

- A shared memory LIW multiprocessor
- 128 fine-grained threads, with 32 registers each, to tolerate FU, synchronization, and memory latency
- Explicit-dependence lookahead increases single-thread concurrency
- Synchronization uses full/empty bits

Shared Memory MIMD

- Advantages
  - No partitioning
  - No (explicit) data movement
  - Minor modifications (or none at all) of toolchains and compilers
- Disadvantages
  - Synchronization
  - Scalability
    - High-throughput, low-latency network
    - Memory hierarchies
    - DSM

Future Trend of MIMD Computers

- Program execution models: beyond the SPMD model
- Hybrid architectures: provide both shared-memory and message-passing
- Efficient mechanisms for latency AND bandwidth management, the so-called "memory wall" problem

Side Note 1: SPMD Model

- Single Program Multiple Data Streams
- A piece of code is run by multiple processors, which operate on different data streams
- Used as a programming model for shared memory machines
- Used by OpenMP, etc.
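As a rough illustration of SPMD, the sketch below runs the same function on several workers, each selecting its own slice of the data by rank. It uses Python threads rather than OpenMP, and `spmd_sum`, `program`, and the cyclic data distribution are assumptions chosen for brevity.

```python
import threading


def spmd_sum(data, num_workers=4):
    """Every worker runs the same program; only the rank (and hence the data) differs."""
    partial = [0] * num_workers

    def program(rank):
        # Cyclic distribution: worker `rank` takes elements rank, rank + P, rank + 2P, ...
        my_data = data[rank::num_workers]
        partial[rank] = sum(my_data)   # each worker writes only its own slot

    workers = [threading.Thread(target=program, args=(rank,))
               for rank in range(num_workers)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return sum(partial)                # final reduction after all workers finish


if __name__ == "__main__":
    print(spmd_sum(list(range(100))))
```

Because each worker writes to a distinct slot of `partial`, no lock is needed until the final reduction, which runs after all workers have joined.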

Recent High-End MIMD Parallel Architecture Projects

- ASCI Projects (USA)
  - ASCI Blue
  - ASCI Red
  - ASCI Blue Mountains
- HTMT Project (USA)
- The Earth Simulator (Japan)
- IBM BG/L Architecture
- HPCS architectures (USA)
- IBM Cyclops-64 Architecture
- Japan and others

Topic 1c: Amdahl's Law

The Reason to Study Parallelism

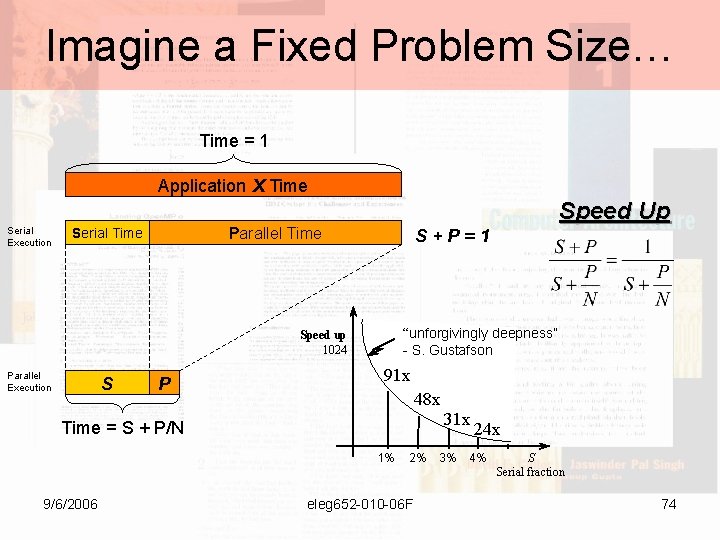

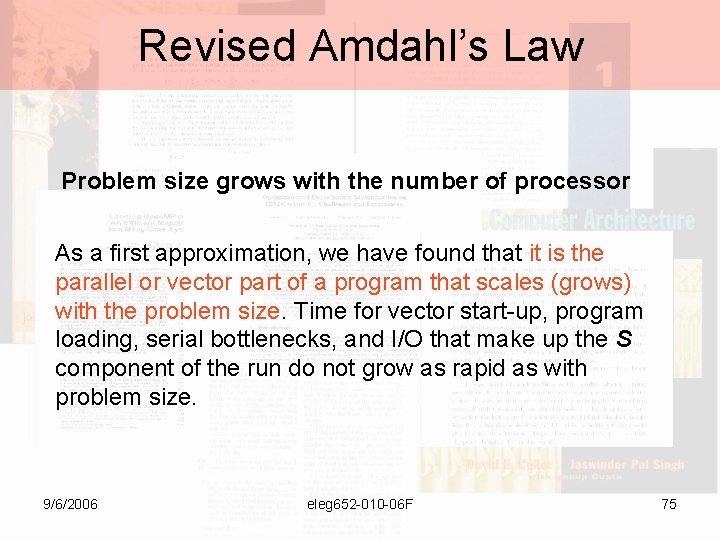

Amdahl's Law

"When the fraction of serial work in a given problem is small, say s, the maximum speedup obtainable (from an even infinite number of processors) is only 1/s."

By Amdahl, G.: "Validity of the single processor approach to achieve large-scale computer capabilities", AFIPS Conf. Proceedings 30, 1967, pp. 483-485

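Imagine a Fixed Problem Size…

- Application X, with serial part S and parallel part P, where S + P = 1
- Serial execution: Time = 1
- Parallel execution on N processors: Time = S + P/N
- Speedup = Serial Time / Parallel Time

"unforgivingly deepness" - S. Gustafson

(Plot: speedup, on an axis up to 1024, versus serial fraction S.)

| Serial fraction S | 1% | 2% | 3% | 4% |
| --- | --- | --- | --- | --- |
| Speedup | 91x | 48x | 31x | 24x |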
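The fixed-size speedup can be checked numerically. The sketch below evaluates Speedup = 1 / (S + P/N) with P = 1 - S, assuming N = 1024 processors as on the plot axis; `amdahl_speedup` is an illustrative name.

```python
def amdahl_speedup(serial_fraction, num_processors):
    """Fixed-size speedup: serial time 1 divided by parallel time S + P/N."""
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_processors)


if __name__ == "__main__":
    for percent in (0, 1, 2, 3, 4):
        s = percent / 100.0
        print(f"S = {percent}%: speedup = {amdahl_speedup(s, 1024):.1f}x")
```

With these inputs the formula gives roughly 91x, 48x, and 24x at 1%, 2%, and 4%, in line with the slide; at 3% it gives about 32x, slightly above the 31x shown on the plot. The steep drop from 1024x at S = 0 is the point of the slide.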

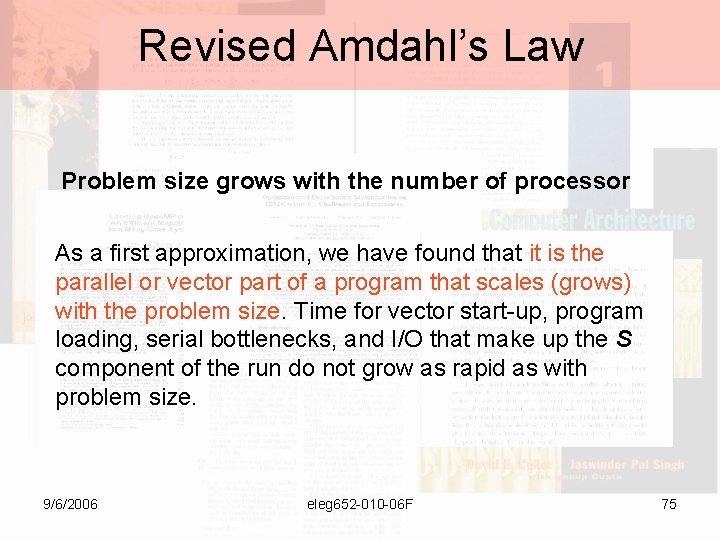

Revised Amdahl's Law

Problem size grows with the number of processors.

As a first approximation, we have found that it is the parallel or vector part of a program that scales (grows) with the problem size. The times for vector start-up, program loading, serial bottlenecks, and I/O that make up the S component of the run do not grow as rapidly with problem size.

Imagine a Growing Problem Size…

- Application X, with serial part S and parallel part P, where S + P = 1
- Parallel execution on N processors: Time = 1
- Serial execution of the same (scaled) problem: Time = S + N * P
- Speedup = Serial Time / Parallel Time
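For the growing-problem case, dividing the serial time S + N * P by the parallel time 1 gives the scaled speedup directly. A small sketch, again assuming N = 1024 and using the illustrative name `scaled_speedup`:

```python
def scaled_speedup(serial_fraction, num_processors):
    """Scaled speedup: serial time S + N*P over parallel time S + P = 1."""
    parallel_fraction = 1.0 - serial_fraction
    return serial_fraction + num_processors * parallel_fraction


if __name__ == "__main__":
    for percent in (1, 2, 3, 4):
        s = percent / 100.0
        print(f"S = {percent}%: scaled speedup = {scaled_speedup(s, 1024):.2f}x")
```

Unlike the fixed-size case, the speedup here falls only linearly as S grows, because the parallel part of the work scales with the number of processors.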

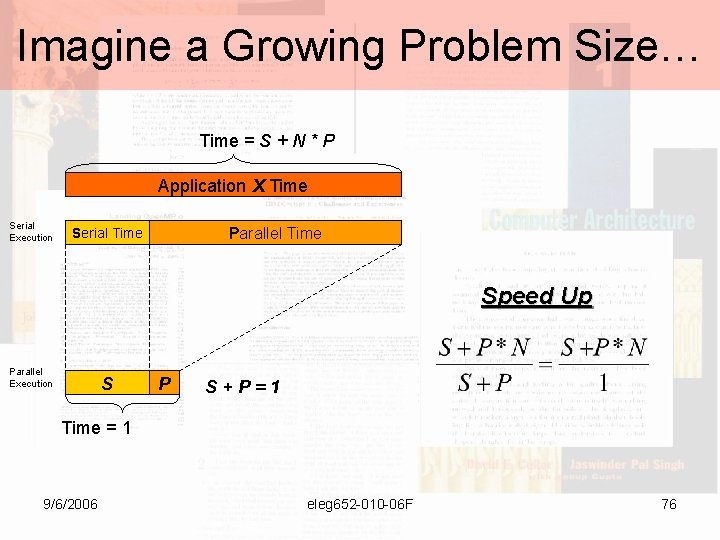

Topic 1d: Interconnection Networks

How do you talk to others?

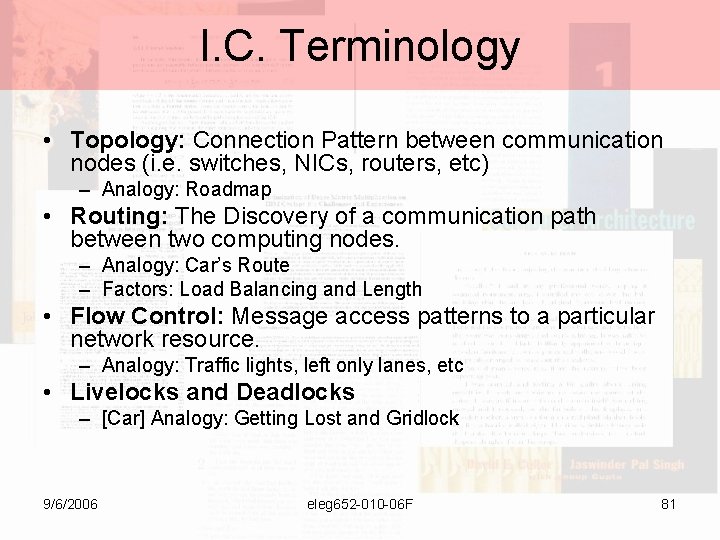

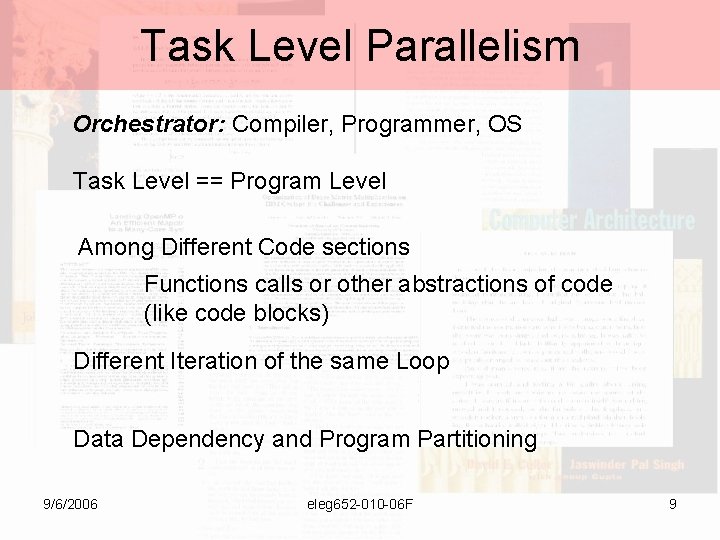

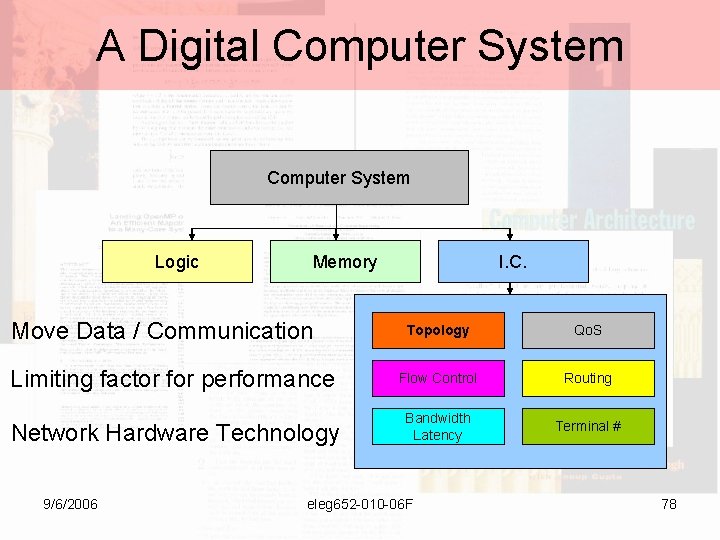

A Digital Computer System

- Components: logic, memory, and moving data (communication)
- Interconnect (I.C.): a limiting factor for performance
- Network: topology, routing, flow control, QoS
- Hardware technology: bandwidth, latency, number of terminals

![A Generic Computer The MIMD Example Small Size Interconnect Network Bus and one level A Generic Computer The MIMD Example Small Size Interconnect Network Bus and [one level]](https://slidetodoc.com/presentation_image_h/097cc429e3c67e0684db72281b7f1134/image-79.jpg)

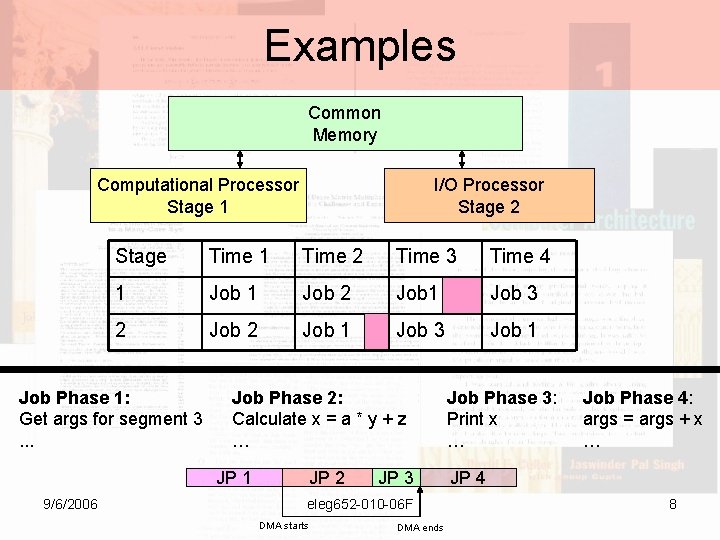

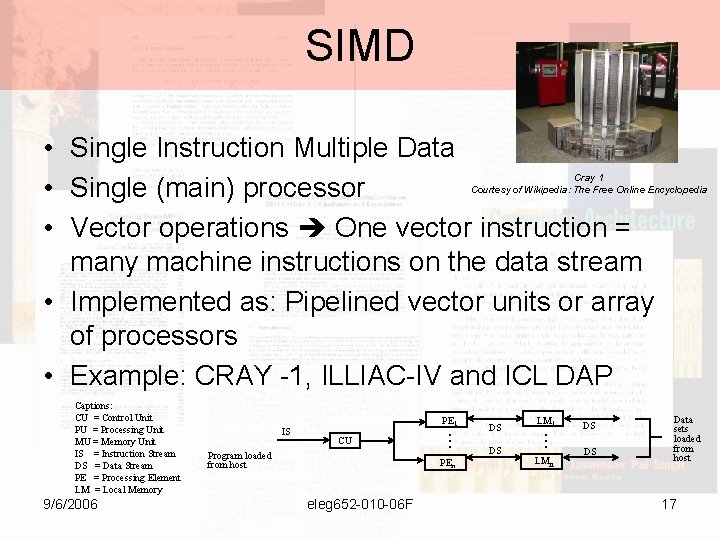

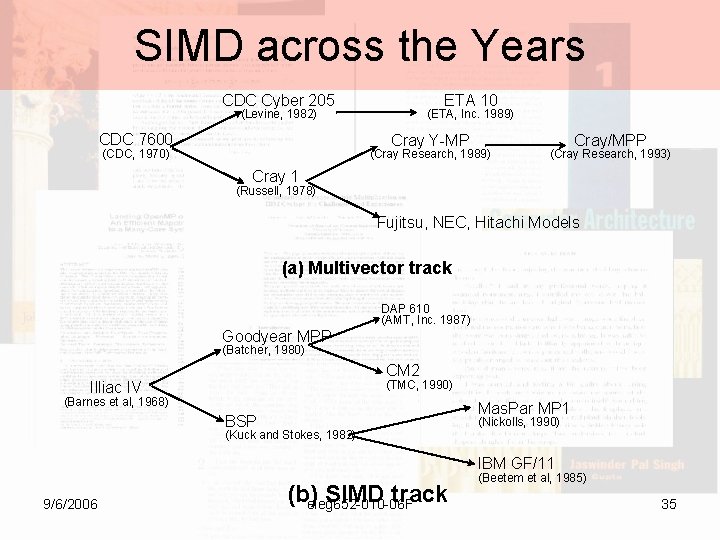

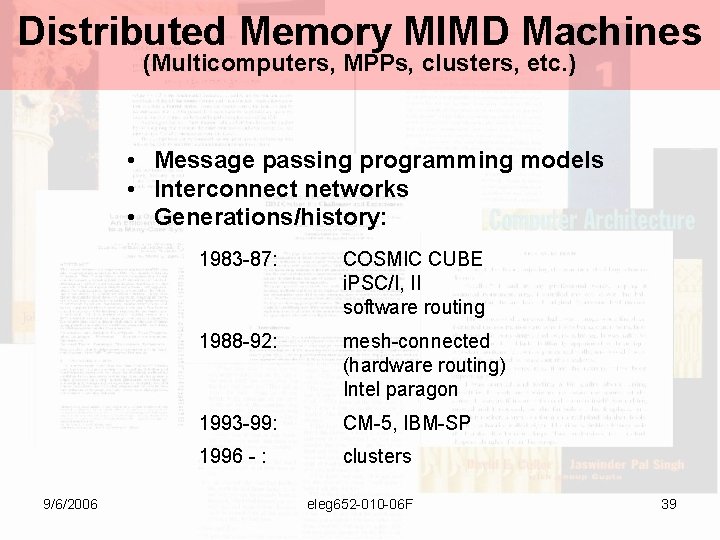

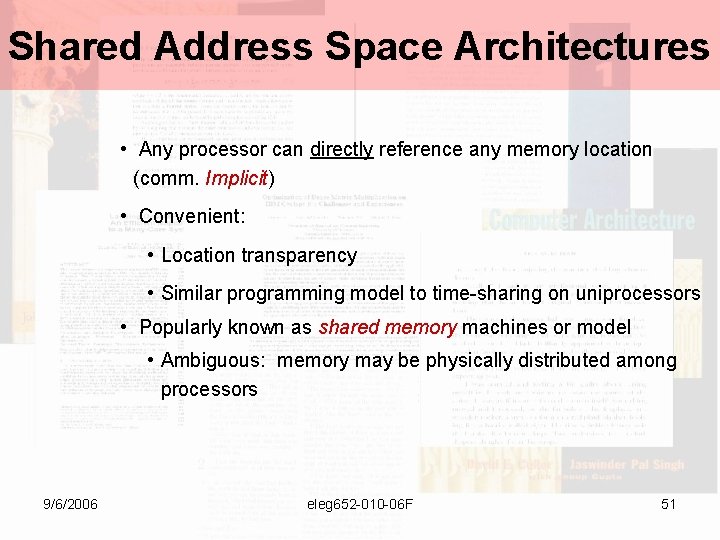

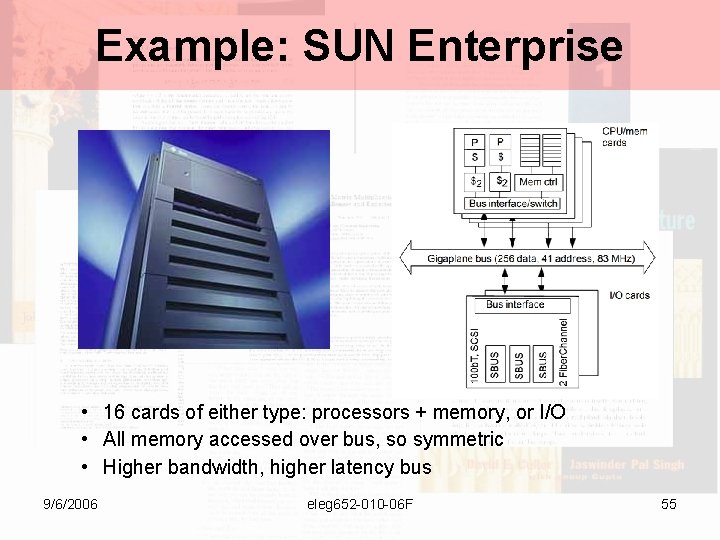

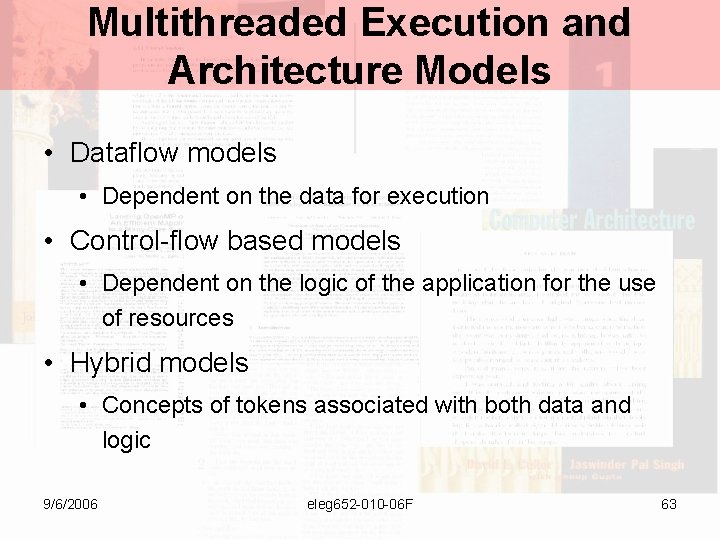

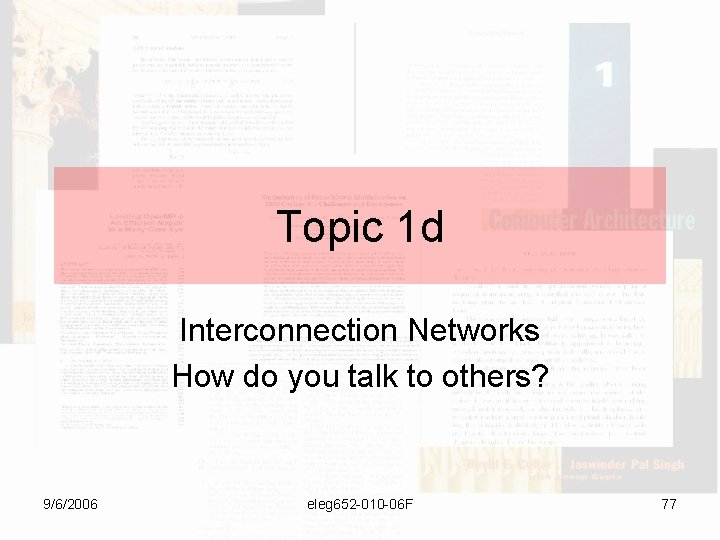

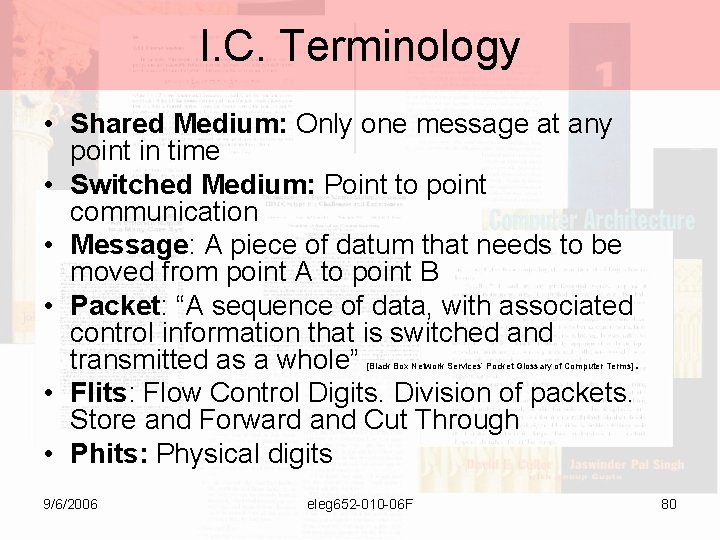

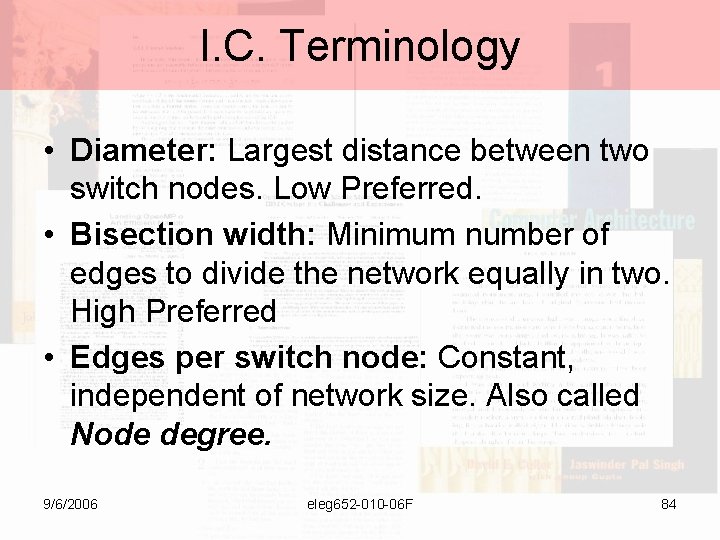

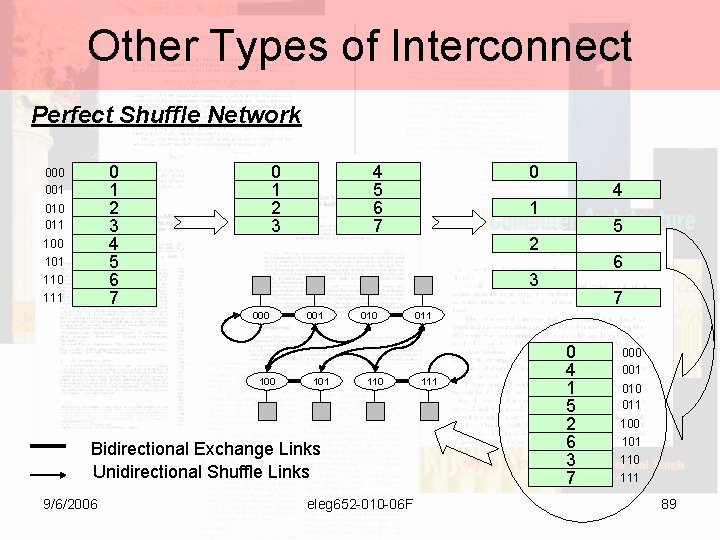

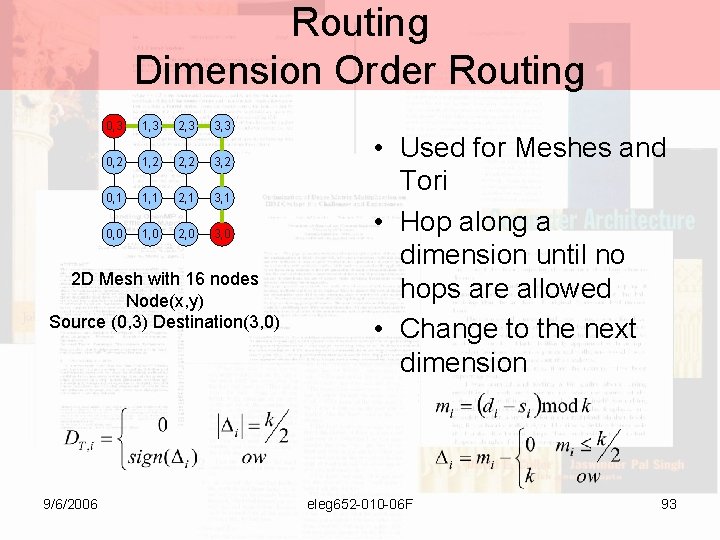

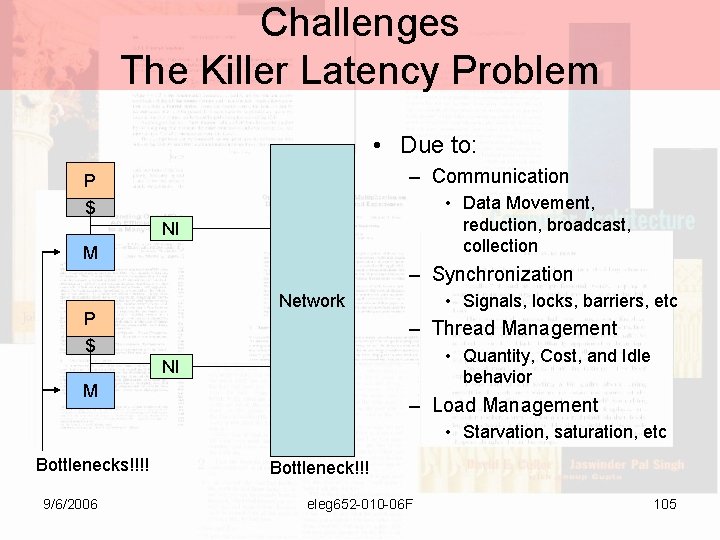

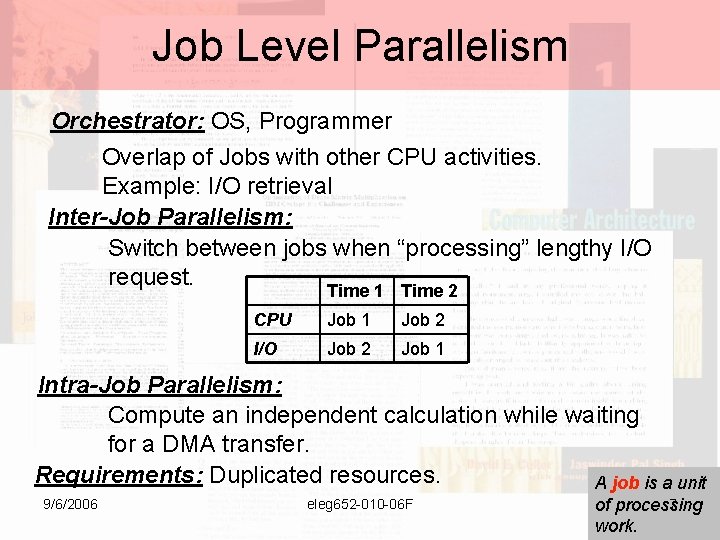

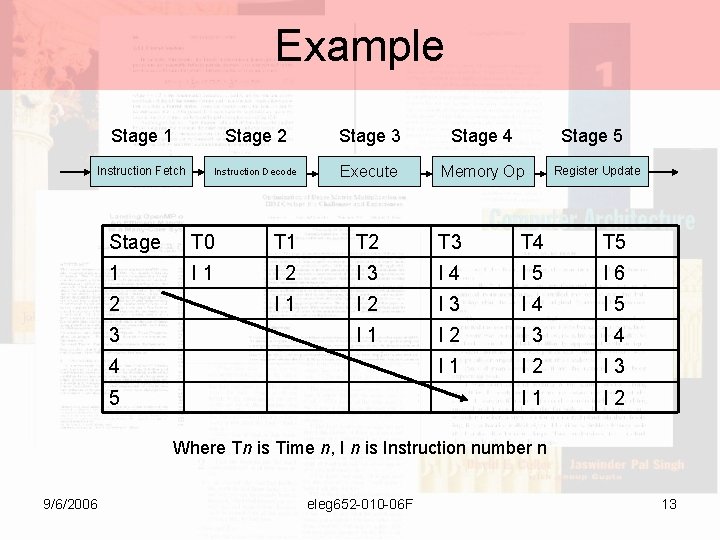

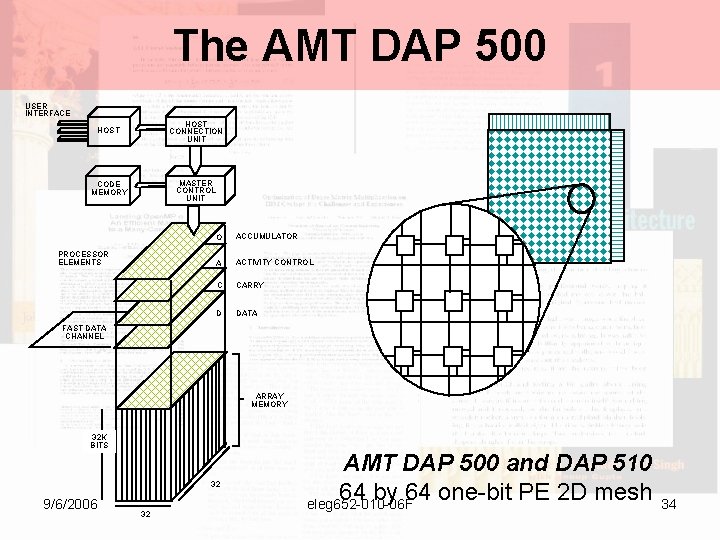

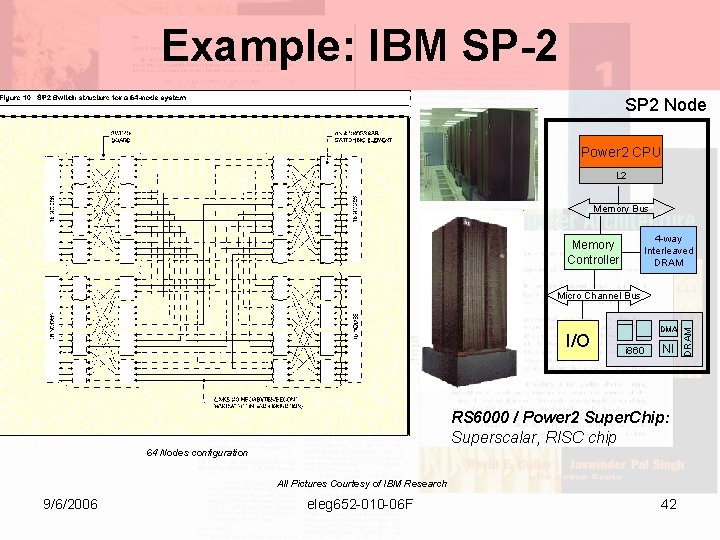

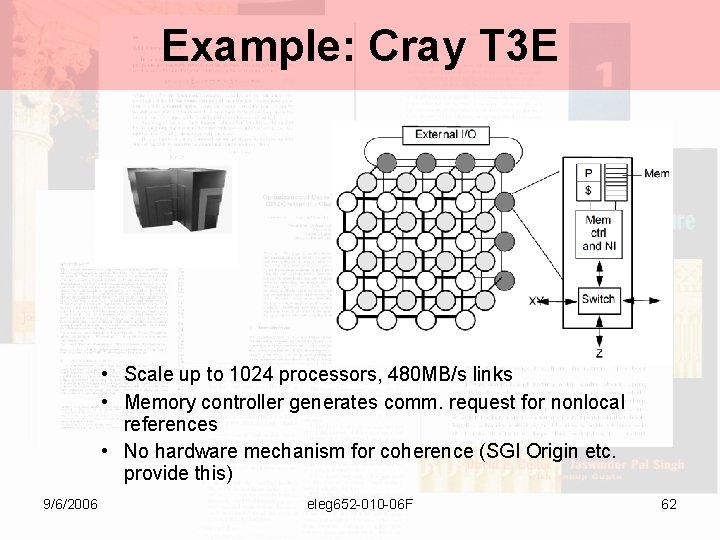

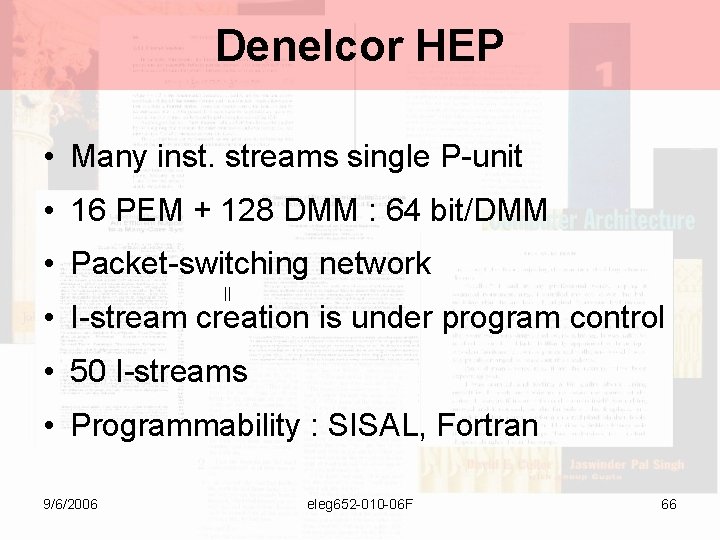

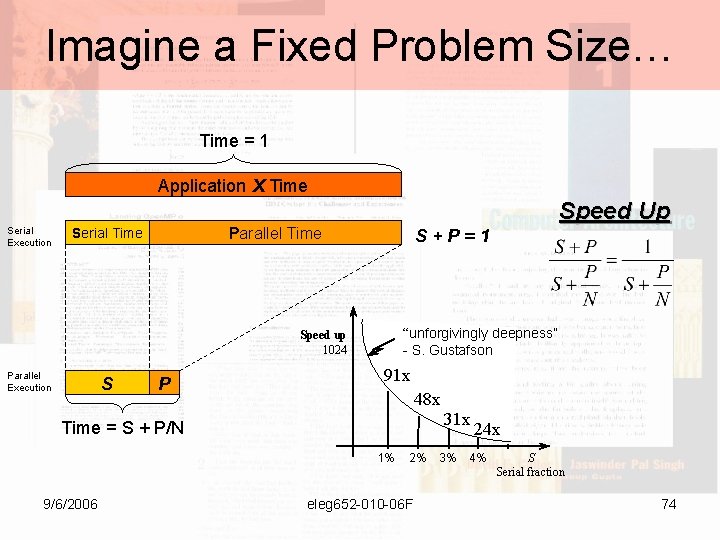

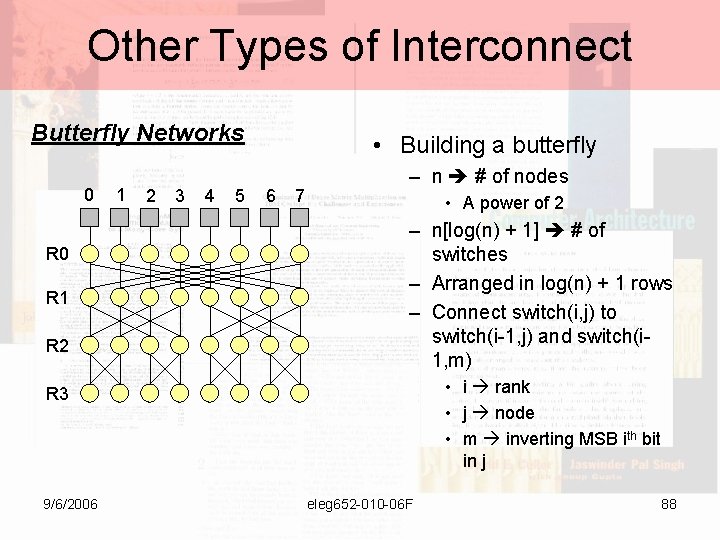

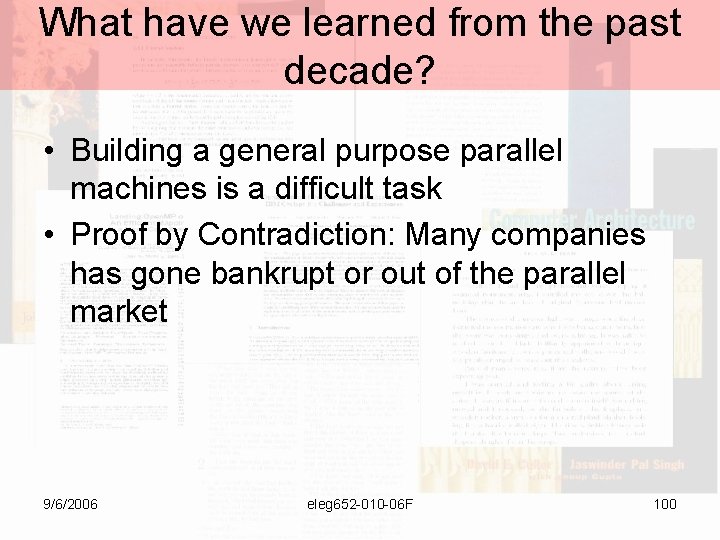

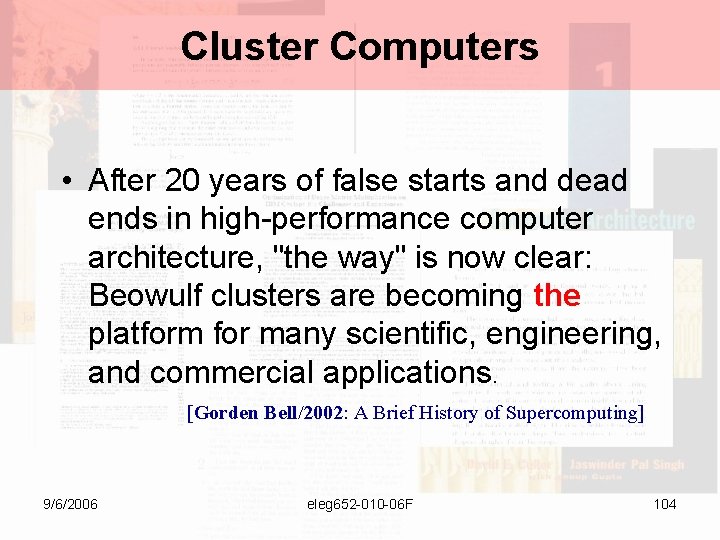

A Generic Computer: The MIMD Example

(Figure: nodes, each with processors (P), caches ($), memory, and a communication assist, attached to an interconnect (IC).)

- Small size interconnect network: bus and [one level] crossbars
- Full featured interconnect networks: packet switching fabrics
- Key: scalable network
- Objectives: make efficient use of scarce communication resources, providing high-bandwidth, low-latency communication between nodes with minimum cost and energy

I.C. Terminology

- Shared medium: only one message at any point in time
- Switched medium: point to point communication
- Message: a piece of data that needs to be moved from point A to point B
- Packet: "A sequence of data, with associated control information, that is switched and transmitted as a whole." [Black Box Network Services' Pocket Glossary of Computer Terms]
- Flits: flow control digits. Division of packets. Store and forward, and cut through
- Phits: physical digits

I.C. Terminology

- Topology: connection pattern between communication nodes (e.g. switches, NICs, routers, etc.)
  - Analogy: roadmap
- Routing: the discovery of a communication path between two computing nodes
  - Analogy: a car's route
  - Factors: load balancing and length
- Flow control: message access patterns to a particular network resource
  - Analogy: traffic lights, left-only lanes, etc.
- Livelocks and deadlocks
  - [Car] analogy: getting lost and gridlock

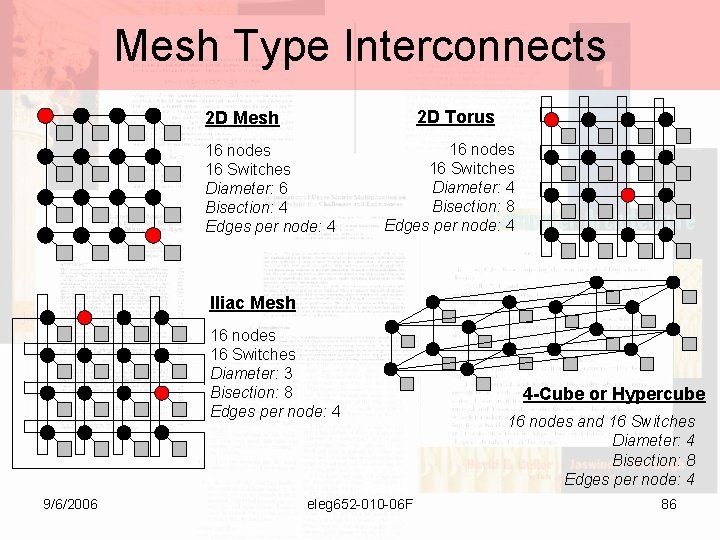

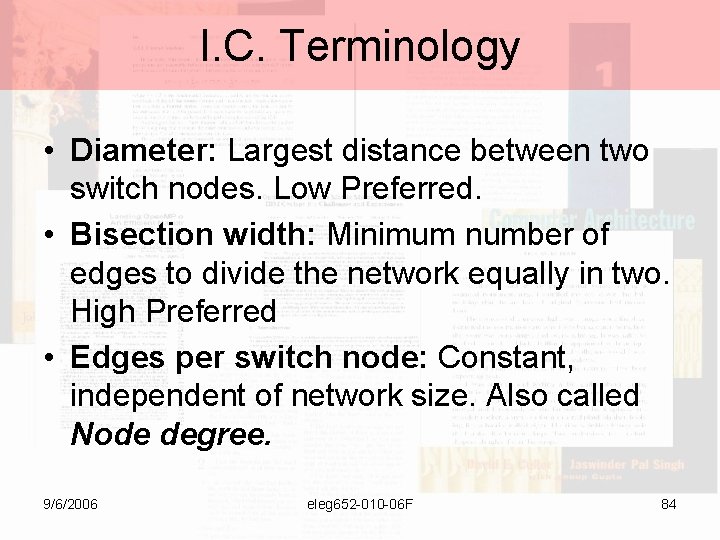

I.C. Terminology

- Direct topology: the ratio between switch nodes and processor nodes is 1:1
- Indirect topology: the ratio between switch nodes and processor elements is more than 1:1
- Self-throttling network: a network in which congestion is reduced because the actors "detect" such congestion and begin to slow down the rate of requests

I.C. Terminology

- Direct topologies: 2D torus, 2D mesh
- Indirect topologies: binary tree

I.C. Terminology

- Diameter: largest distance between two switch nodes. Low preferred.
- Bisection width: minimum number of edges that must be cut to divide the network into two equal halves. High preferred.
- Edges per switch node: constant, independent of network size. Also called node degree.

![Network Architecture Topics Topology Mesh Interconnect Routing Dimensional Order Routing Flow Network Architecture Topics • • • Topology [Mesh Interconnect] Routing [Dimensional Order Routing] Flow](https://slidetodoc.com/presentation_image_h/097cc429e3c67e0684db72281b7f1134/image-85.jpg)

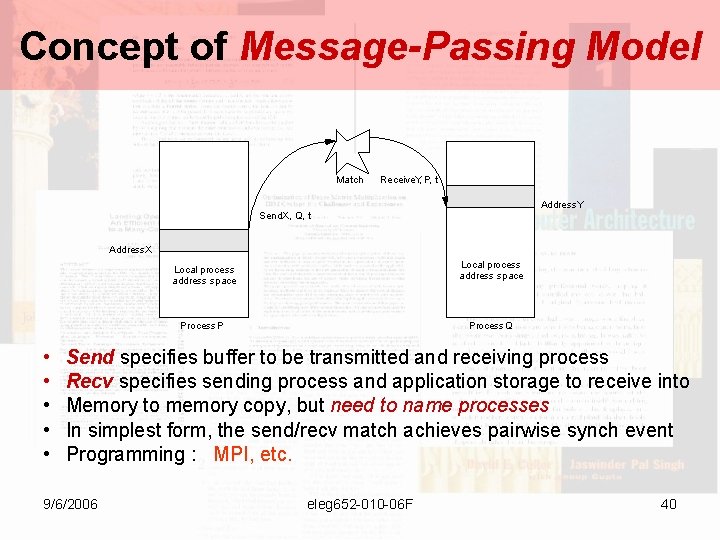

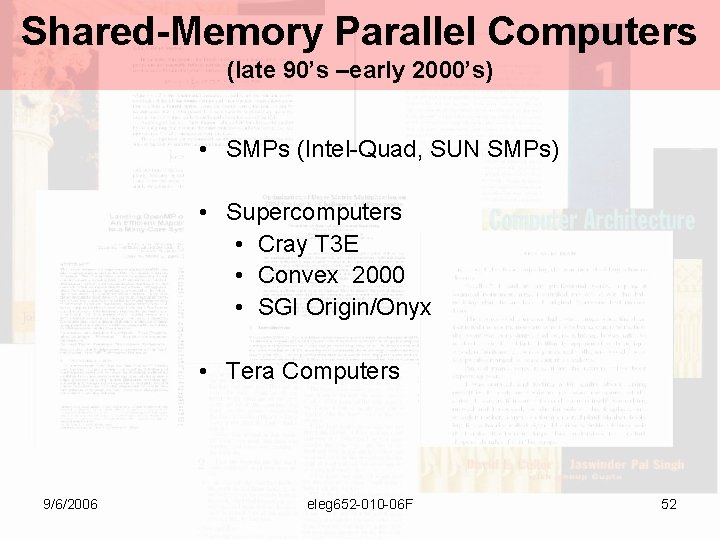

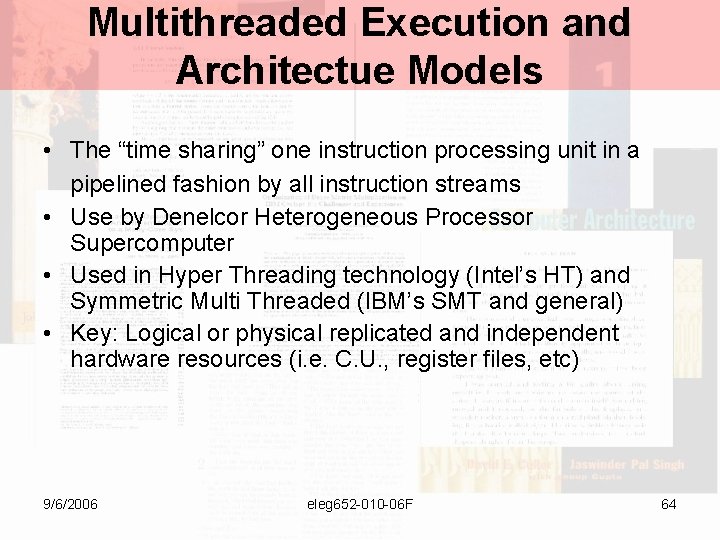

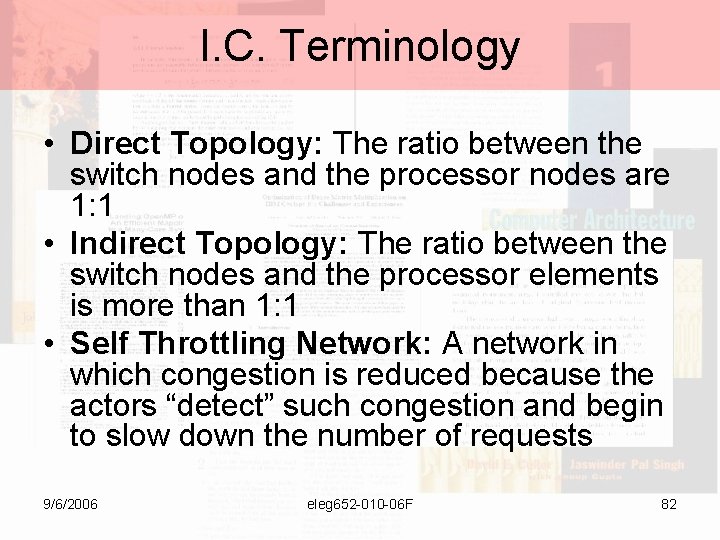

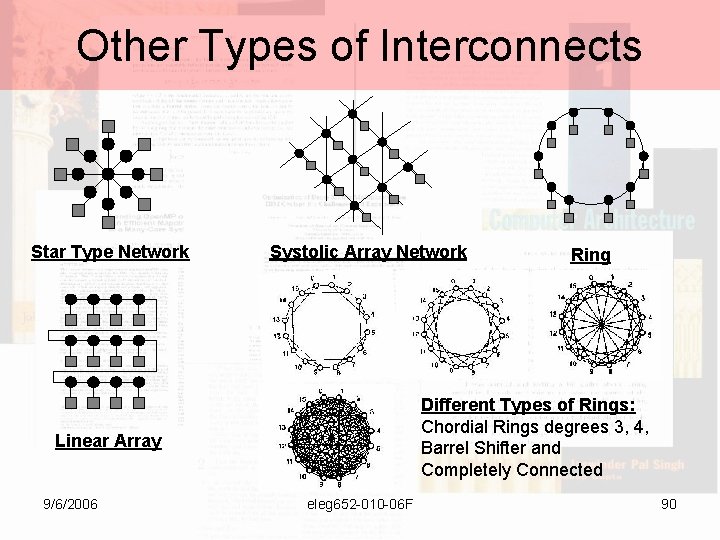

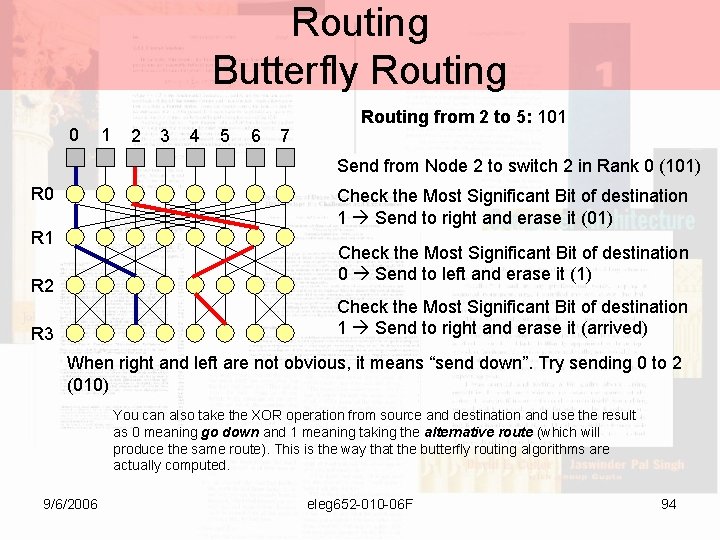

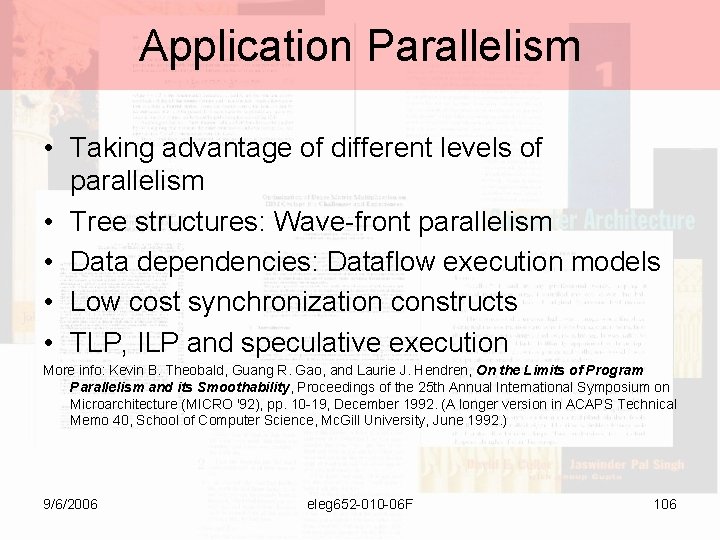

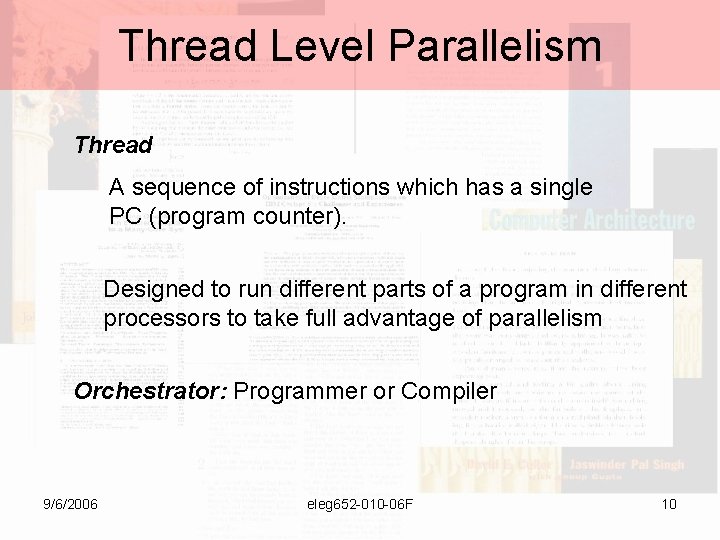

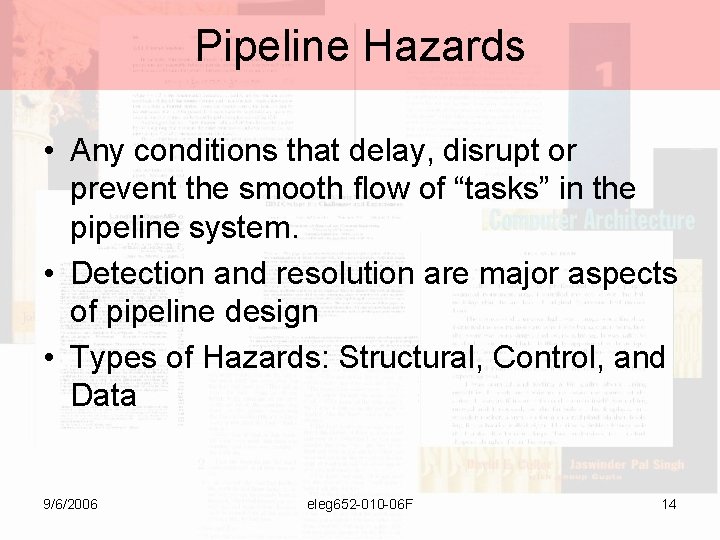

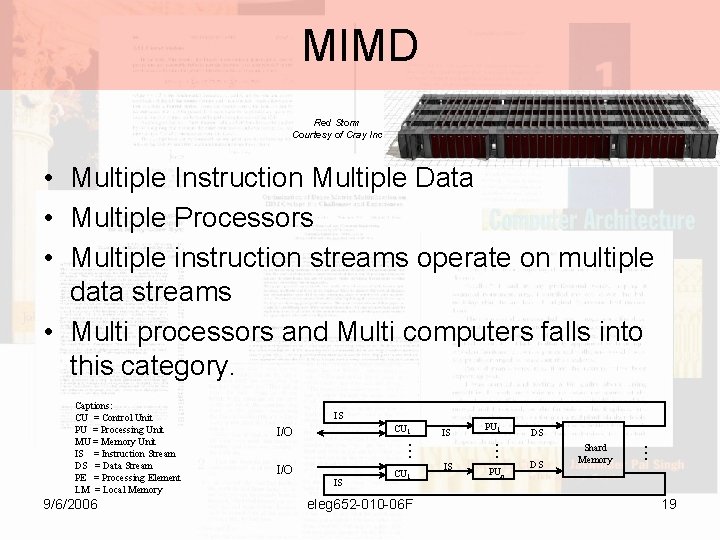

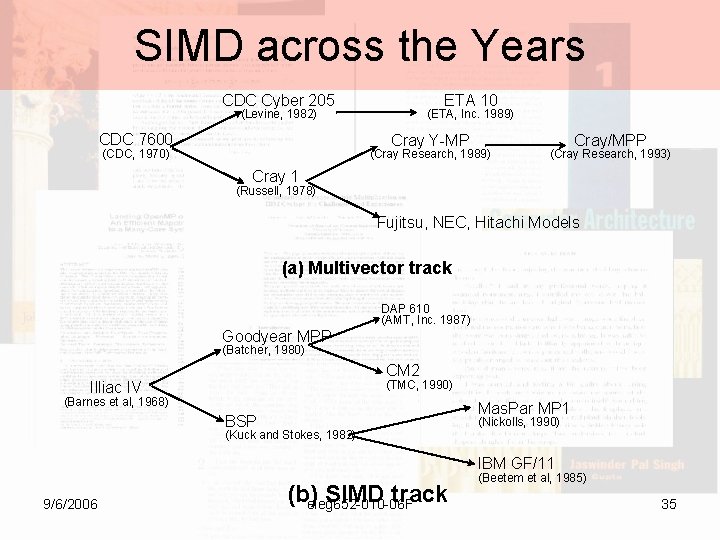

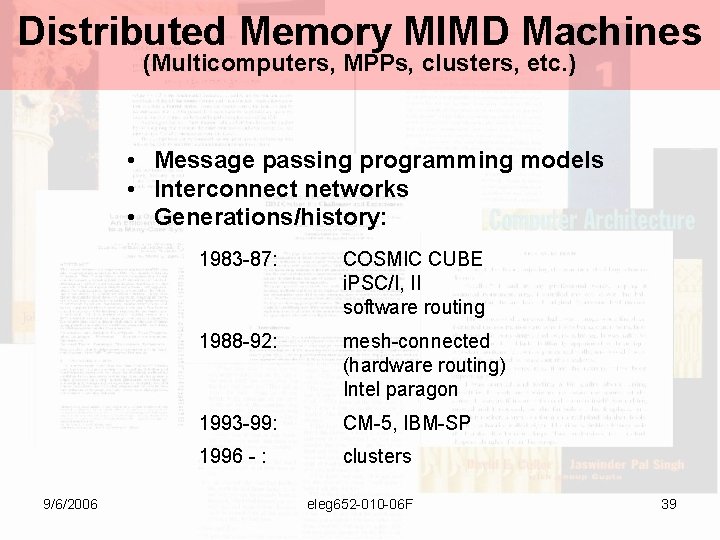

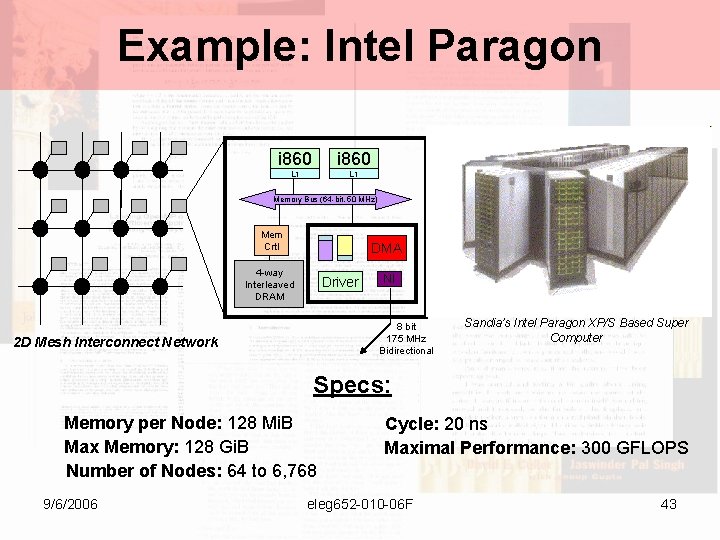

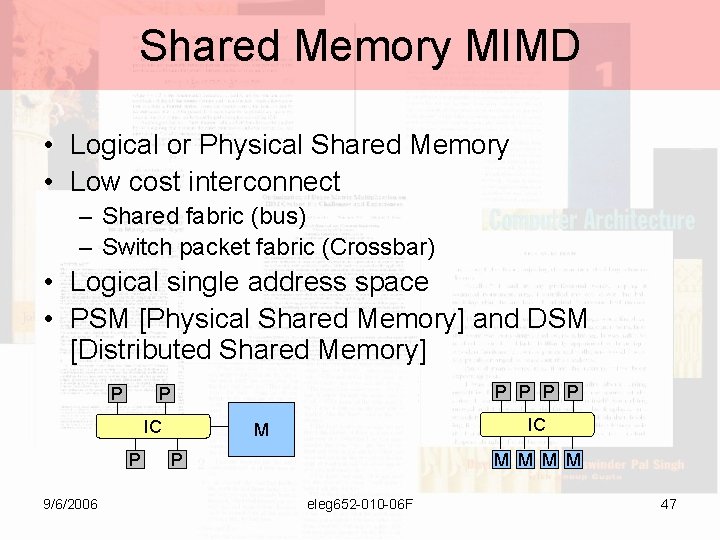

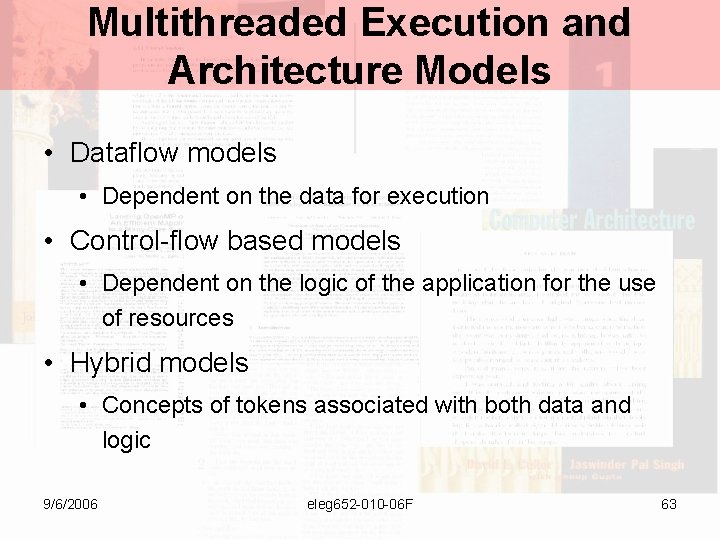

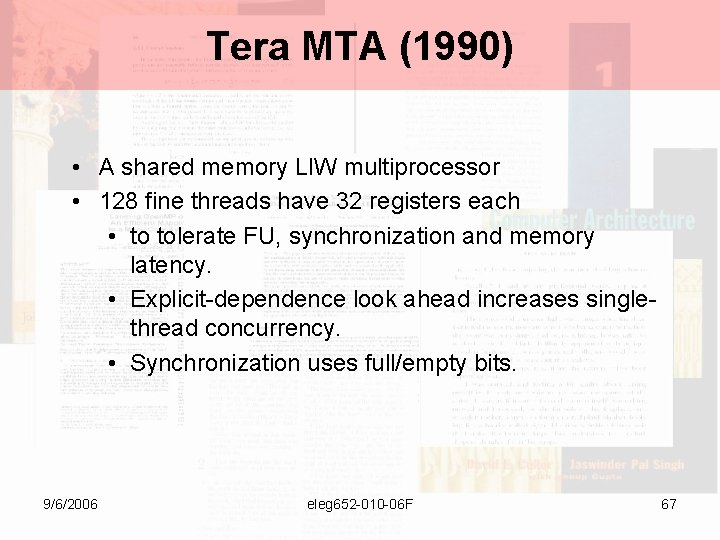

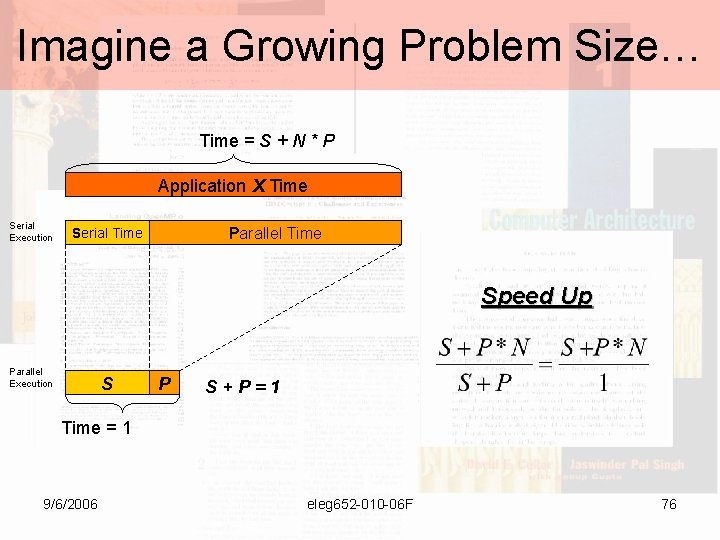

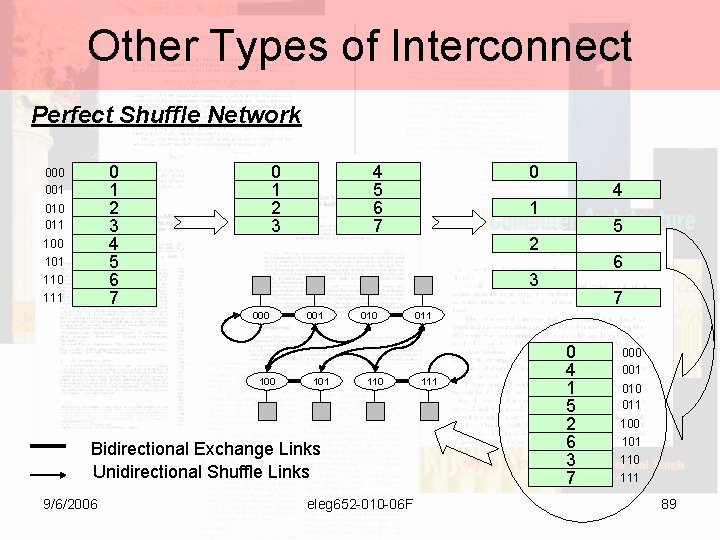

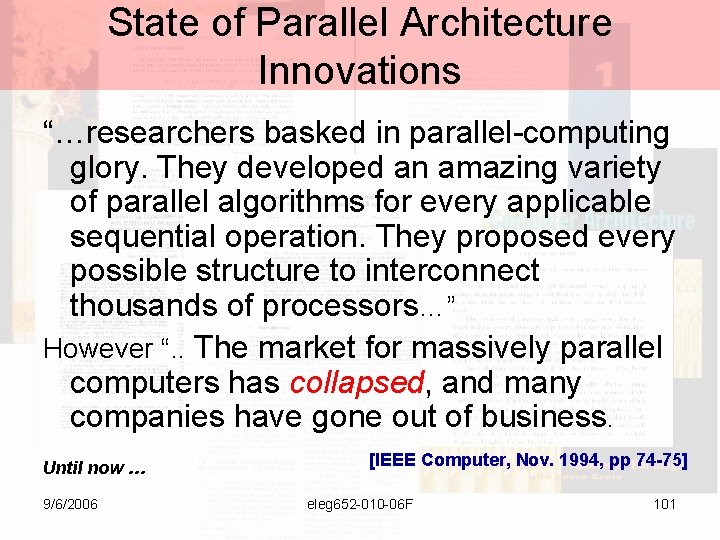

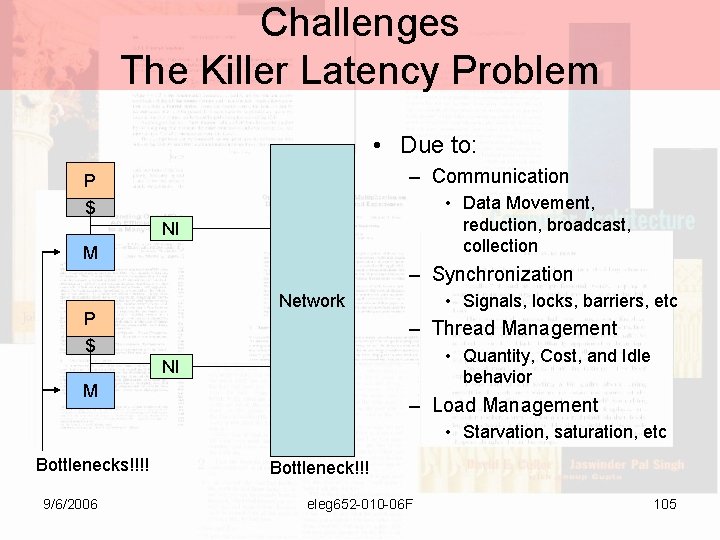

Network Architecture Topics
• Topology [Mesh Interconnect]
• Routing [Dimensional Order Routing]
• Flow Control [Flits’ Store and Forward]
• Router Design [Locking Buffer]
• Performance Analysis
[Dally and Towles, 2003]

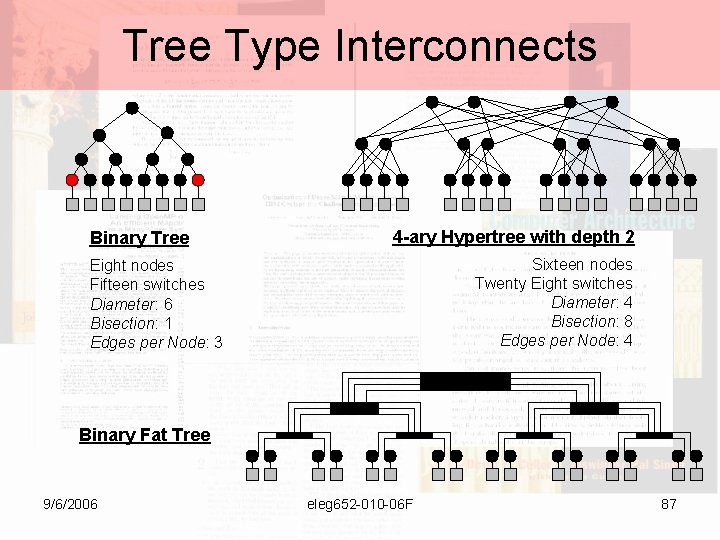

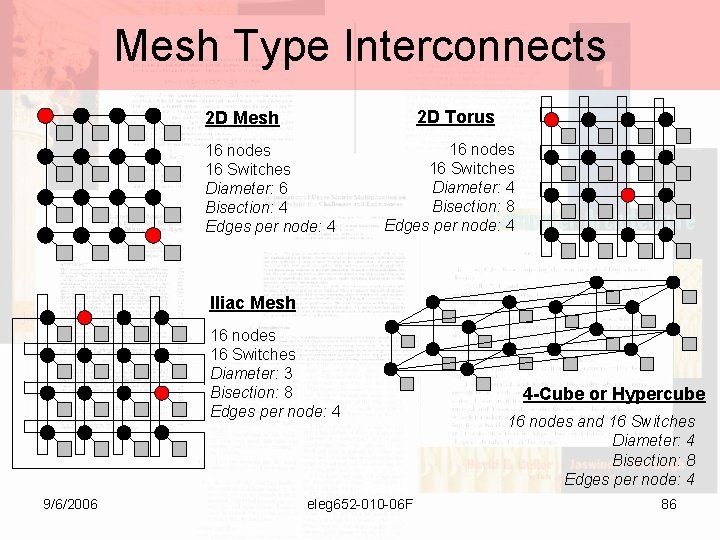

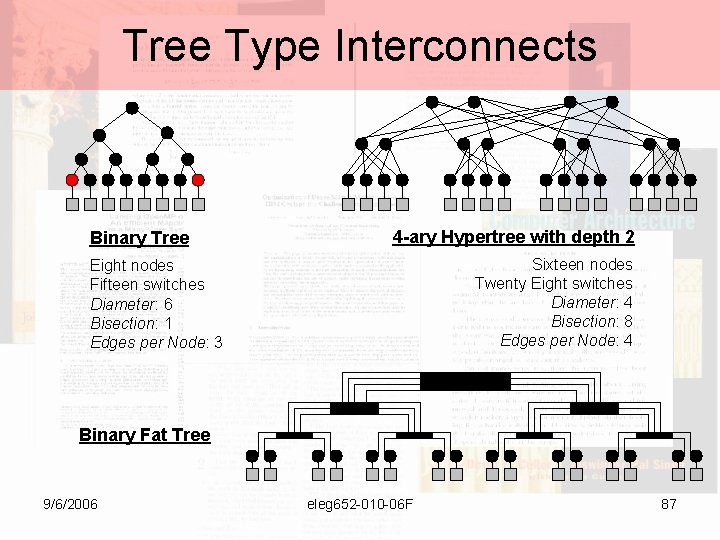

Mesh Type Interconnects
• 2D Mesh: 16 nodes, 16 switches. Diameter: 6. Bisection: 4. Edges per node: 4
• 2D Torus: 16 nodes, 16 switches. Diameter: 4. Bisection: 8. Edges per node: 4
• Illiac Mesh: 16 nodes, 16 switches. Diameter: 3. Bisection: 8. Edges per node: 4
• 4-Cube or Hypercube: 16 nodes and 16 switches. Diameter: 4. Bisection: 8. Edges per node: 4
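The diameter and edges-per-node figures for the 16-switch mesh, torus, and hypercube can be checked by brute force. The following is a minimal sketch, not part of the original slides: the function names are hypothetical, each topology is built as an adjacency list, and a breadth-first search is run from every switch. Bisection width is left out because computing it in general is much harder than a search.

```python
from collections import deque
from itertools import product


def build_grid(side, wrap):
    """side x side 2D mesh; wrap=True adds the wraparound links of a torus."""
    graph = {}
    for x, y in product(range(side), repeat=2):
        neighbors = []
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            if wrap:
                neighbors.append((nx % side, ny % side))
            elif 0 <= nx < side and 0 <= ny < side:
                neighbors.append((nx, ny))
        graph[(x, y)] = neighbors
    return graph


def build_hypercube(dim):
    """Two switches are linked when their labels differ in exactly one bit."""
    return {v: [v ^ (1 << b) for b in range(dim)] for v in range(1 << dim)}


def diameter(graph):
    """Largest shortest-path distance between any two switches."""
    worst = 0
    for start in graph:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in graph[node]:
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    queue.append(nxt)
        worst = max(worst, max(dist.values()))
    return worst


def max_degree(graph):
    """Largest number of distinct links at any single switch."""
    return max(len(set(neighbors)) for neighbors in graph.values())


mesh = build_grid(4, wrap=False)
torus = build_grid(4, wrap=True)
cube = build_hypercube(4)
print(diameter(mesh), diameter(torus), diameter(cube))
```

In the mesh, only interior switches reach degree 4; edge and corner switches have fewer links, which is why `max_degree` reports the maximum rather than a uniform value.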

Tree Type Interconnects
• Binary Tree: Eight nodes, fifteen switches. Diameter: 6. Bisection: 1. Edges per node: 3
• 4-ary Hypertree with depth 2: Sixteen nodes, twenty-eight switches. Diameter: 4. Bisection: 8. Edges per node: 4
• Binary Fat Tree

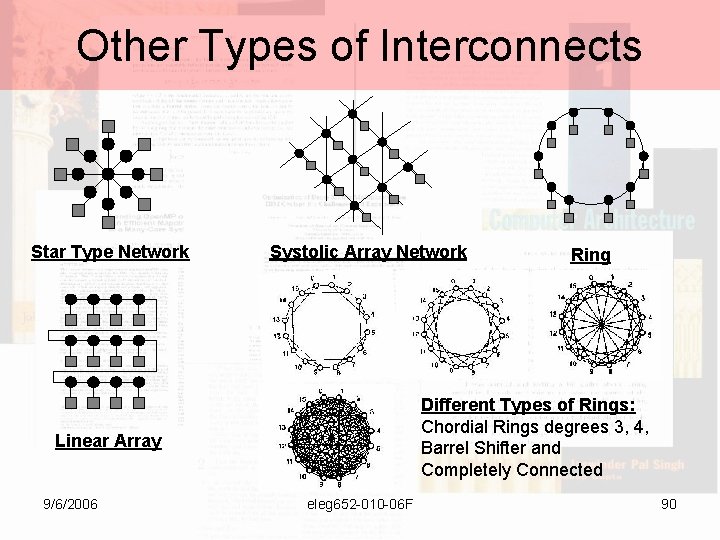

Other Types of Interconnect: Butterfly Networks
[Figure: butterfly network with nodes 0 to 7 and switch ranks R0 to R3]
• Building a butterfly
  – n: number of nodes (a power of 2)
  – n[log(n) + 1]: number of switches
  – Arranged in log(n) + 1 rows (ranks)
  – Connect switch(i, j) to switch(i-1, j) and switch(i-1, m)
    • i: rank
    • j: node
    • m: j with its ith most significant bit inverted
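The construction rule can be sketched in a few lines. This is an illustrative sketch only: `build_butterfly` is a hypothetical name, log is taken as base 2, and each switch is represented as a `(rank, column)` pair that maps to the two switches it connects to on the previous rank.

```python
def build_butterfly(n):
    """Return {switch: [linked switches on the previous rank]} for n nodes."""
    k = n.bit_length() - 1                  # log2(n)
    if n < 2 or (1 << k) != n:
        raise ValueError("n must be a power of 2")
    links = {(0, j): [] for j in range(n)}  # rank 0 has no previous rank
    for i in range(1, k + 1):
        mask = 1 << (k - i)                 # i-th most significant bit of a k-bit label
        for j in range(n):
            m = j ^ mask                    # j with that bit inverted
            links[(i, j)] = [(i - 1, j), (i - 1, m)]
    return links


butterfly = build_butterfly(8)
print(len(butterfly))        # total number of switches
print(butterfly[(1, 0)])     # rank-1 switch 0 links to rank-0 switches 0 and 4
```

For n = 8 the dictionary holds 8 × (3 + 1) = 32 switches, which agrees with the n[log(n) + 1] count.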

Other Types of Interconnect: Perfect Shuffle Network
[Figure: eight nodes, 0 to 7 (000 to 111), joined by unidirectional shuffle links and bidirectional exchange links; the shuffled order is 0 4 1 5 2 6 3 7]
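The two link types can be written as bit operations on the node label. The sketch below uses the standard definitions (a shuffle link rotates the label left by one bit, an exchange link flips the least significant bit); the function names are hypothetical and not taken from the slides.

```python
def shuffle(i, k):
    """Shuffle link: rotate the k-bit label i left by one position."""
    msb = (i >> (k - 1)) & 1
    return ((i << 1) & ((1 << k) - 1)) | msb


def exchange(i):
    """Exchange link: flip the least significant bit."""
    return i ^ 1


K = 3                                             # three-bit labels, eight nodes
targets = [shuffle(i, K) for i in range(8)]       # where each node's shuffle link goes
by_position = sorted(range(8), key=lambda i: targets[i])  # which node lands in each slot
print(targets)
print(by_position)
```

Listing the nodes by the slot they land in gives 0 4 1 5 2 6 3 7, the interleaving of the two halves that gives the perfect shuffle its name.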

Other Types of Interconnects
• Star Type Network
• Systolic Array Network
• Linear Array
• Ring
• Different types of rings: chordal rings of degree 3 and 4, barrel shifter, and completely connected

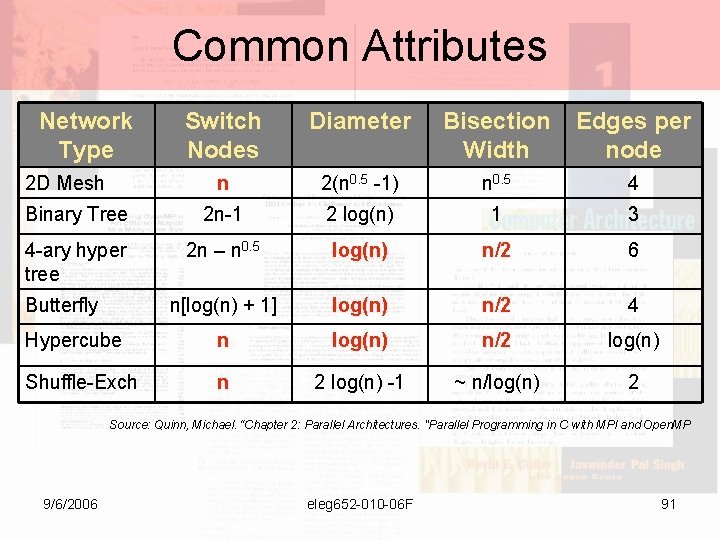

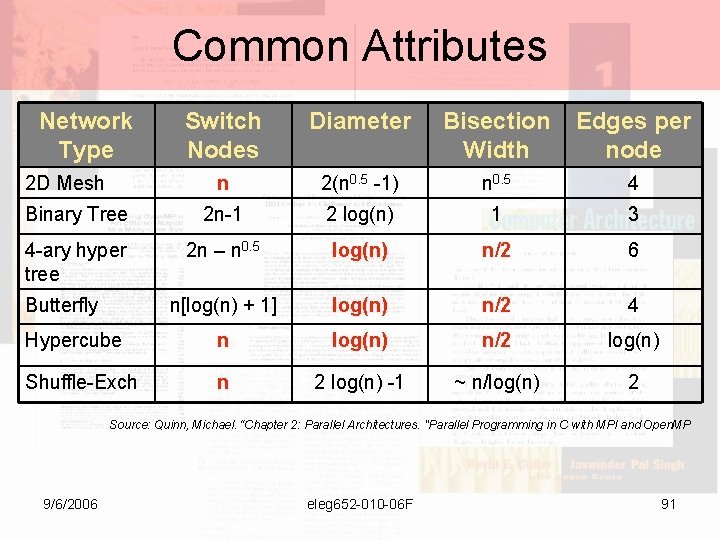

Common Attributes

| Network Type | Switch Nodes | Diameter | Bisection Width | Edges per node |
|---|---|---|---|---|
| 2D Mesh | n | 2(n^0.5 - 1) | n^0.5 | 4 |
| Binary Tree | 2n - 1 | 2 log(n) | 1 | 3 |
| 4-ary hypertree | 2n - n^0.5 | log(n) | n/2 | 6 |
| Butterfly | n[log(n) + 1] | log(n) | n/2 | 4 |
| Hypercube | n | log(n) | n/2 | log(n) |
| Shuffle-Exchange | n | 2 log(n) - 1 | ~ n/log(n) | 2 |

Source: Quinn, Michael. “Chapter 2: Parallel Architectures.” Parallel Programming in C with MPI and OpenMP
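To see what the formulas give for a concrete size, they can be evaluated directly. This is a small sketch under two assumptions that the slide does not state: log is base 2, and n is a power of 2 (and a perfect square where n^0.5 appears). The function name is hypothetical.

```python
import math


def topology_attributes(n):
    """Return {network: (switch nodes, diameter, bisection width, edges per node)}."""
    lg = n.bit_length() - 1   # log2(n), assuming n is a power of 2
    root = math.isqrt(n)      # n^0.5, exact only when n is a perfect square
    return {
        "2D Mesh": (n, 2 * (root - 1), root, 4),
        "Binary Tree": (2 * n - 1, 2 * lg, 1, 3),
        "4-ary Hypertree": (2 * n - root, lg, n // 2, 6),
        "Butterfly": (n * (lg + 1), lg, n // 2, 4),
        "Hypercube": (n, lg, n // 2, lg),
        "Shuffle-Exchange": (n, 2 * lg - 1, n / lg, 2),  # bisection is approximate
    }


for name, row in topology_attributes(16).items():
    print(name, row)
```

For n = 16 the mesh row gives diameter 6 and bisection 4, and the hypercube row gives diameter 4 and bisection 8, matching the 16-node figures quoted on the mesh-type interconnect slide.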

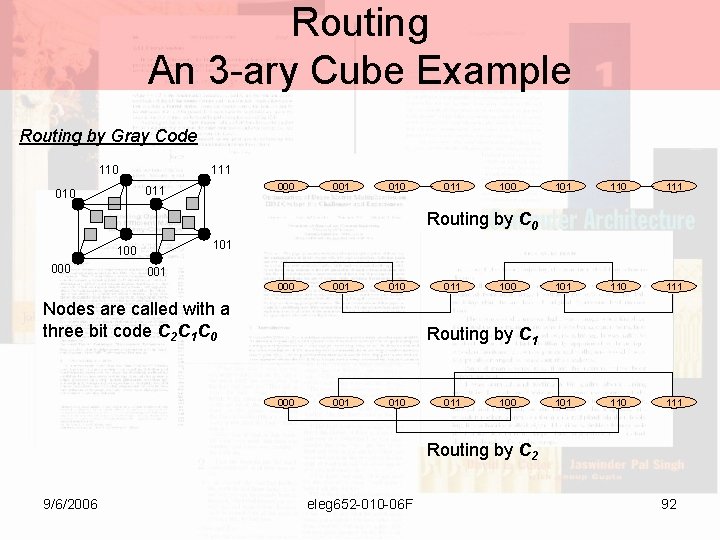

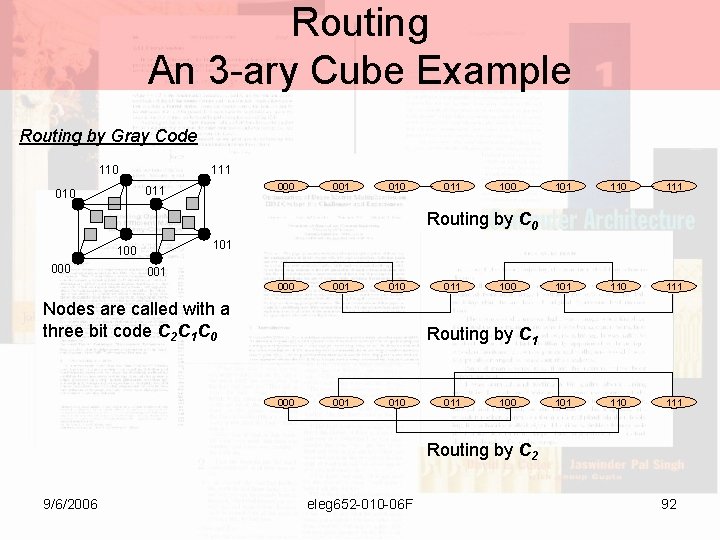

Routing: A 3-Cube Example
• Routing by Gray Code
• Nodes are called with a three bit code C2 C1 C0
[Figure: eight nodes labeled 000 to 111, with panels “Routing by C0”, “Routing by C1”, and “Routing by C2”]
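One common way to route on a cube whose nodes carry a three-bit code C2 C1 C0 is to correct the differing bits one dimension at a time, so that every hop changes exactly one bit. The sketch below assumes that reading of the figure (C0 first, then C1, then C2); the slide itself does not spell out the order, and `hypercube_route` is a hypothetical name.

```python
def hypercube_route(src, dst, dims=3):
    """Visit dimensions C0, C1, ... and flip each bit where src and dst differ."""
    path = [src]
    cur = src
    for bit in range(dims):
        if ((cur ^ dst) >> bit) & 1:   # labels differ in this dimension
            cur ^= 1 << bit            # hop along this dimension
            path.append(cur)
    return path


route = hypercube_route(0b000, 0b111)
print([format(node, "03b") for node in route])
```

The number of hops equals the number of bits in which source and destination differ, so it never exceeds the cube's dimension.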

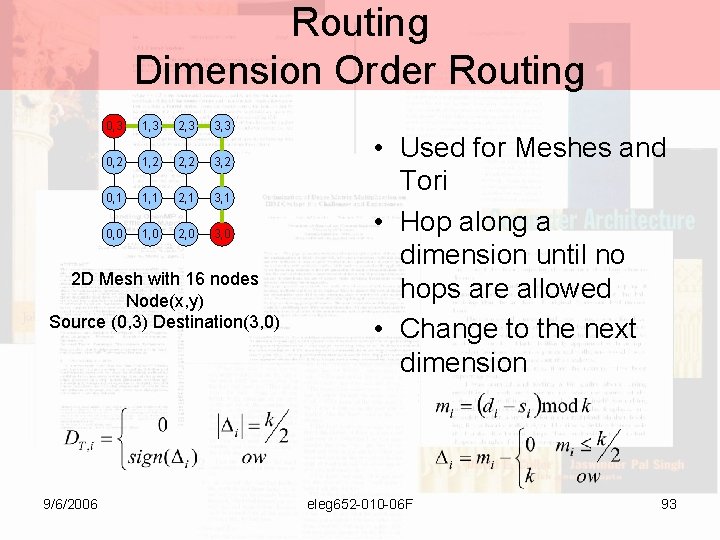

Routing: Dimension Order Routing
• Used for meshes and tori
• Hop along one dimension until no more hops are needed in that dimension
• Then change to the next dimension
[Figure: 2D mesh with 16 nodes, Node(x, y), from (0, 0) at the bottom left to (3, 3) at the top right. Source (0, 3), Destination (3, 0)]
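The slide's example, from source (0, 3) to destination (3, 0), can be traced with a short sketch. It assumes x is resolved before y and covers only a mesh (no wraparound links, so it is not a torus router); the function name is hypothetical.

```python
def dimension_order_route(src, dst):
    """Route on a 2D mesh: finish all hops in x, then all hops in y."""
    x, y = src
    path = [(x, y)]
    while x != dst[0]:                 # first dimension
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    while y != dst[1]:                 # next dimension
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


path = dimension_order_route((0, 3), (3, 0))
print(path)
print(len(path) - 1, "hops")
```

The route takes six hops, which equals the diameter of the 16-node mesh because the source and destination sit in opposite corners.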

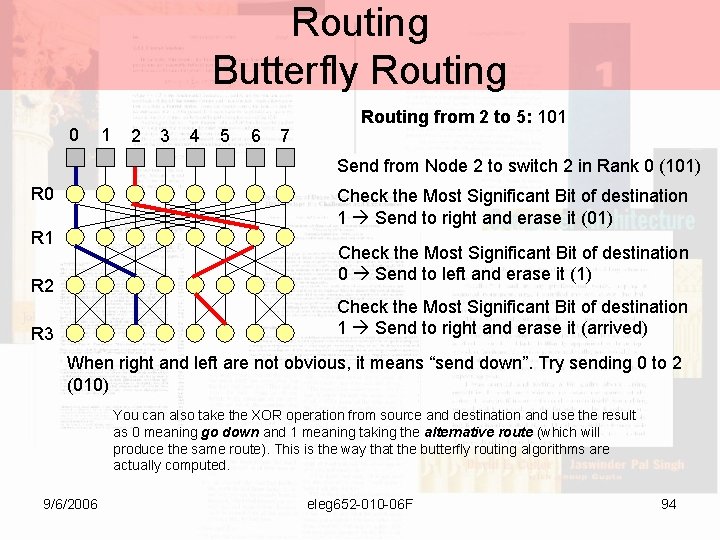

Routing: Butterfly Routing
[Figure: butterfly network with nodes 0 to 7 and ranks R0 to R3]
Routing from 2 to 5 (destination 101):
• Send from node 2 to switch 2 in rank 0 (remaining destination bits: 101)
• Check the most significant bit of the destination: 1. Send to the right and erase it (01)
• Check the most significant bit of the destination: 0. Send to the left and erase it (1)
• Check the most significant bit of the destination: 1. Send to the right and erase it (arrived)
When right and left are not obvious, it means “send down”. Try sending 0 to 2 (010).
You can also take the XOR of the source and destination and read the result bit by bit, with 0 meaning go down and 1 meaning take the alternative route (which will produce the same route). This is the way that butterfly routing algorithms are actually computed.
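The bit-by-bit procedure can be sketched as follows. The sketch assumes the wiring rule from the butterfly construction slide (moving from rank i-1 to rank i may invert the ith most significant bit of the column) and reports each hop as "straight" or "cross" rather than left or right, since the left/right sense depends on the drawing. `butterfly_route` is a hypothetical name.

```python
def butterfly_route(src, dst, n=8):
    """Return the (rank, column) path and the move taken at each rank."""
    k = n.bit_length() - 1               # log2(n), number of hops
    col = src
    path = [(0, col)]
    moves = []
    for rank in range(1, k + 1):
        mask = 1 << (k - rank)           # bit examined at this step, MSB first
        new_col = (col & ~mask) | (dst & mask)   # copy that bit from the destination
        moves.append("cross" if new_col != col else "straight")
        col = new_col
        path.append((rank, col))
    return path, moves


print(butterfly_route(2, 5))
print(butterfly_route(0, 2))
```

The moves agree with the XOR shortcut: 2 XOR 5 = 111 gives three cross hops, and 0 XOR 2 = 010 gives straight, cross, straight.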

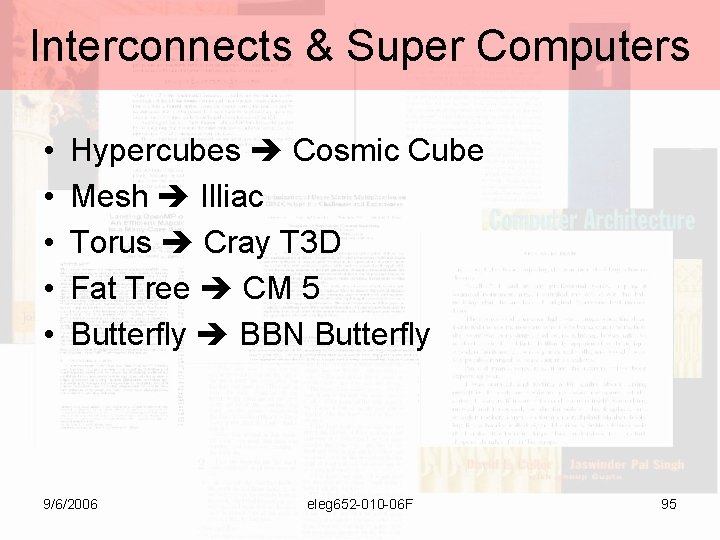

Interconnects & Super Computers
• Hypercubes: Cosmic Cube
• Mesh: Illiac
• Torus: Cray T3D
• Fat Tree: CM-5
• Butterfly: BBN Butterfly

Topic 1e: High Performance Parallel Systems

Class A and Class B Applications
• Class A
  – Applications that are highly parallelizable
  – Low in communication
  – Regular
  – Logic flow depends little on the input data
  – Examples: Matrix multiply, dot product, image processing (e.g. JPEG compression and decompression), etc.
• Class B
  – Applications in which parallelism is hidden or nonexistent
  – High communication / synchronization needs
  – Logic flow depends greatly on input data (while loops, conditional structures)
  – Examples: Sorting methods, graph and tree searching

Science Grand Challenges
• Quantum chemistry, statistical mechanics and relativistic physics
• Cosmology and astrophysics
• Computational fluid dynamics and turbulence
• Material design and superconductivity
• Biology, pharmacology, genome sequencing, genetic engineering, protein folding, enzyme activity, and cell modeling
• Medicine and modeling of human organs and bones
• Global weather and environmental modeling

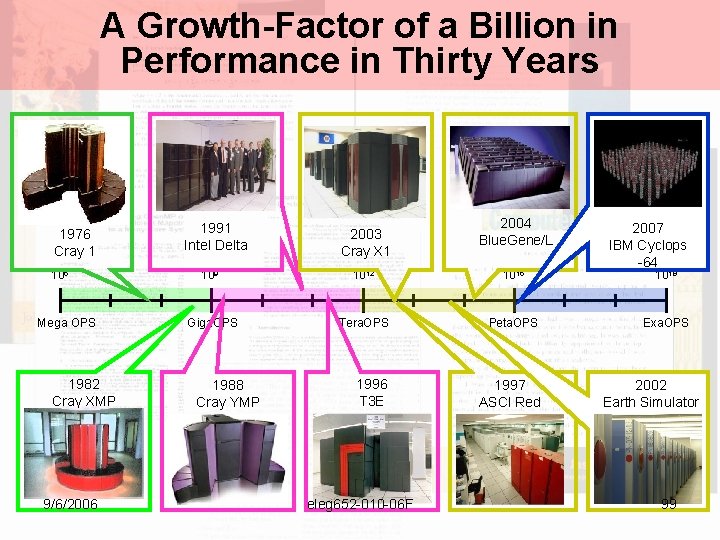

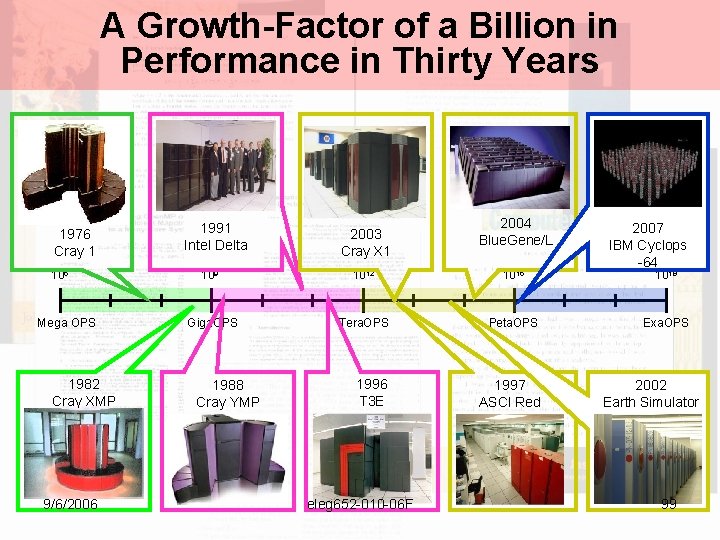

A Growth-Factor of a Billion in Performance in Thirty Years
[Figure: timeline of machines against a performance scale of 10^6 (MegaOPS), 10^9 (GigaOPS), 10^12 (TeraOPS), 10^15 (PetaOPS), and 10^18 (ExaOPS)]
• 1976: Cray 1
• 1982: Cray XMP
• 1988: Cray YMP
• 1991: Intel Delta
• 1996: T3E
• 1997: ASCI Red
• 2002: Earth Simulator
• 2003: Cray X1
• 2004: BlueGene/L
• 2007: IBM Cyclops-64

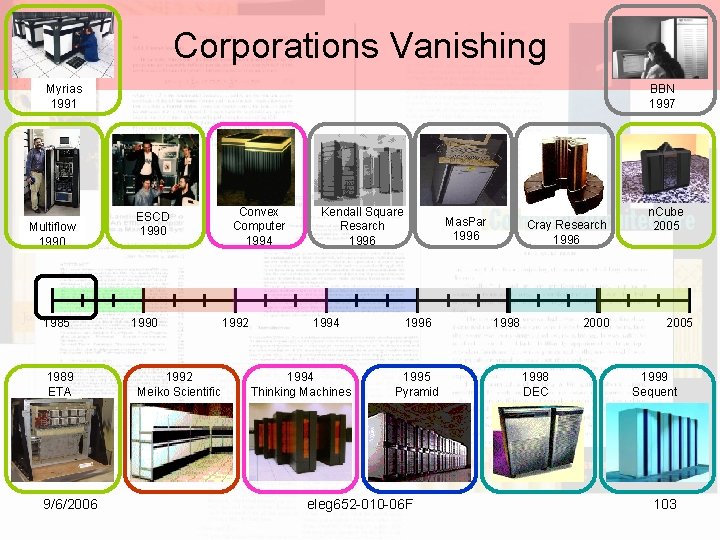

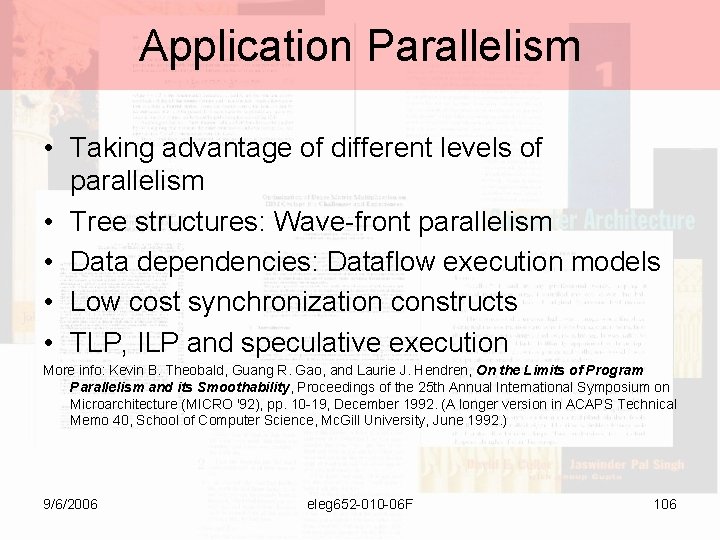

What have we learned from the past decade?
• Building a general purpose parallel machine is a difficult task
• Proof by contradiction: Many companies have gone bankrupt or left the parallel market

State of Parallel Architecture Innovations
“…researchers basked in parallel-computing glory. They developed an amazing variety of parallel algorithms for every applicable sequential operation. They proposed every possible structure to interconnect thousands of processors…”
However
“…The market for massively parallel computers has collapsed, and many companies have gone out of business. Until now …”
[IEEE Computer, Nov. 1994, pp. 74-75]

State of Parallel Architecture Innovations
“…The term 'proprietary architecture' has become pejorative. For computer designers, the revolution is over and only 'fine tuning' remains…” [“End of Architecture”, Burton Smith, 1990s]
Reasons for this: lack of parallel-processing software

Corporations Vanishing
[Figure: timeline, 1985 to 2005, of companies that went bankrupt or left the parallel market: Myrias, Multiflow, ETA, BBN, ESCD, Meiko Scientific, Convex Computer, Kendall Square Research, Thinking Machines, MasPar, Pyramid, Cray Research, DEC, nCube, Sequent]

Cluster Computers
• After 20 years of false starts and dead ends in high-performance computer architecture, "the way" is now clear: Beowulf clusters are becoming the platform for many scientific, engineering, and commercial applications. [Gordon Bell, 2002: A Brief History of Supercomputing]

Challenges: The Killer Latency Problem
• Due to:
  – Communication
    • Data movement, reduction, broadcast, collection
  – Synchronization
    • Signals, locks, barriers, etc.
  – Thread Management
    • Quantity, cost, and idle behavior
  – Load Management
    • Starvation, saturation, etc.
[Figure: two nodes, each with a processor (P), cache ($), memory (M), and network interface (NI), connected through a network, with the bottlenecks marked]

Application Parallelism
• Taking advantage of different levels of parallelism
• Tree structures: Wave-front parallelism
• Data dependencies: Dataflow execution models
• Low cost synchronization constructs
• TLP, ILP and speculative execution
More info: Kevin B. Theobald, Guang R. Gao, and Laurie J. Hendren, On the Limits of Program Parallelism and its Smoothability, Proceedings of the 25th Annual International Symposium on Microarchitecture (MICRO '92), pp. 10-19, December 1992. (A longer version in ACAPS Technical Memo 40, School of Computer Science, McGill University, June 1992.)

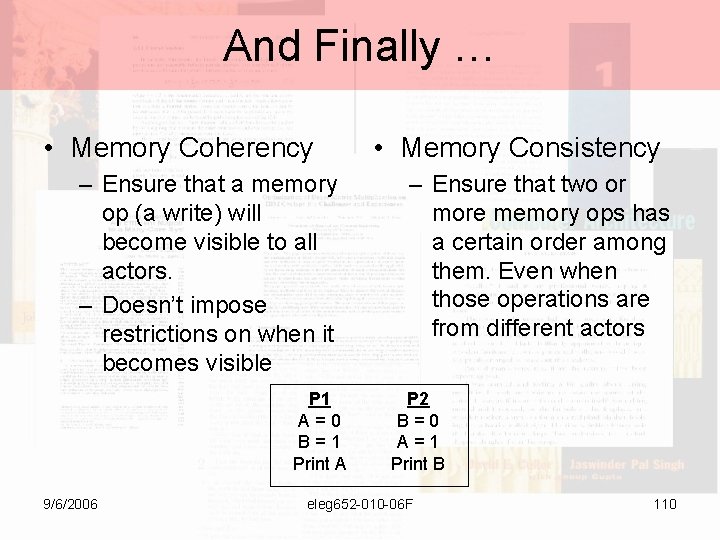

There is an “old network saying”:
• Bandwidth problems can be cured with money. Latency problems are harder because the speed of light is fixed - you can not bribe God. - David Clark, MIT

Proposed Solutions
• Low round-trip latency on small messages is very important to many Class B applications
• Minimize synchronization and communication latency: PIM, hardware lock free data structures, …
• Fully utilize available bandwidth to hide latency: helper / loader threads, double buffering

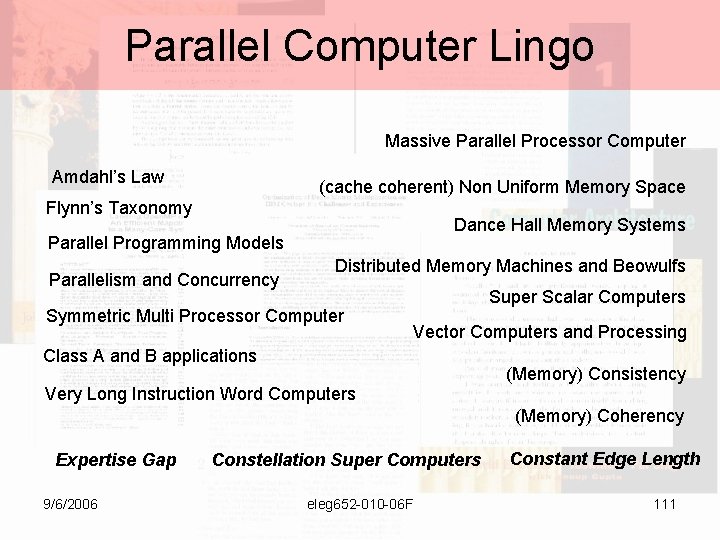

The “Killer Latency” Problem
It makes the “performance debugging” of parallel programs very hard!
[Figure: performance versus effort + expert knowledge, with points A1, A2, …, An and levels marked “reasonable performance” and “best performance”]

And Finally …
• Memory Coherency
  – Ensures that a memory op (a write) will become visible to all actors
  – Doesn’t impose restrictions on when it becomes visible
• Memory Consistency
  – Ensures that two or more memory ops have a certain order among them, even when those operations are from different actors
Example:
  P1: A=0; B=1; Print A
  P2: B=0; A=1; Print B
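To make the P1/P2 example concrete, the sketch below enumerates every interleaving that keeps each processor's own program order and applies the operations to a single shared memory. That is the sequential consistency model, which is an assumption here since the slide does not name a particular model; the helper names are hypothetical.

```python
def interleavings(a, b):
    """Yield every merge of lists a and b that preserves the order within each."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


# (operation, variable, value written or name of the printing processor)
P1 = [("write", "A", 0), ("write", "B", 1), ("print", "A", "P1")]
P2 = [("write", "B", 0), ("write", "A", 1), ("print", "B", "P2")]


def outcomes():
    """Collect every (value printed by P1, value printed by P2) pair."""
    seen = set()
    for order in interleavings(P1, P2):
        memory = {}
        printed = {}
        for op, var, arg in order:
            if op == "write":
                memory[var] = arg
            else:
                printed[arg] = memory[var]
        seen.add((printed["P1"], printed["P2"]))
    return seen


print(sorted(outcomes()))
```

Under this assumption all four (Print A, Print B) pairs turn out to be reachable. Coherency guarantees that each write eventually becomes visible; which of these outcomes a program may actually observe is what the consistency model pins down.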

Parallel Computer Lingo
• Massive Parallel Processor Computer
• Amdahl’s Law
• (cache coherent) Non Uniform Memory Space
• Flynn’s Taxonomy
• Dance Hall Memory Systems
• Parallel Programming Models
• Parallelism and Concurrency
• Distributed Memory Machines and Beowulfs
• Symmetric Multi Processor Computer
• Super Scalar Computers
• Vector Computers and Processing
• Class A and B applications
• (Memory) Consistency
• Very Long Instruction Word Computers
• (Memory) Coherency
• Expertise Gap
• Constellation Super Computers
• Constant Edge Length

Bibliography
• Quinn, Michael. Parallel Programming in C with MPI and OpenMP. McGraw-Hill. ISBN 0070282256-2
• Dally, William, and Towles, Brian. Principles and Practices of Interconnection Networks. Morgan Kaufmann. ISBN 0-12-200751-4
• Black Box Network Services’ Pocket Glossary of Computer Terms
• Intel Introduction to Multiprocessors: http://www.intel.com/cd/ids/developer/asmona/eng/238663.htm?prn=y