Summarization and Personal Information Management Carolyn Penstein Rosé

- Slides: 46

Summarization and Personal Information Management Carolyn Penstein Rosé Language Technologies Institute/ Human-Computer Interaction Institute

Announcements
- Questions?
- Plan for Today
  - Finish blog analysis: Ku, Liang, and Chen, 2006
  - Chat analysis: Zhou & Hovy, 2005 (student presentation by Justin Weisz)
  - Time permitting: supplementary discussion about chat analysis (Arguello & Rosé, 2005)

Food for thought!!
- I propose that we redesign the way discussions appear on the Internet. Current discussion interfaces are terrible anyway.
  - And we can design them in ways that favor summarization or promote brevity.
  - Can we get users to write in newsroom, inverted-pyramid style, with all of the important information at the top?
  - Getting users to do work that algorithms can't do is currently in vogue. Even better if they can do some work that will assist algorithms…

More food!
- While relying on "the masses" to provide free information seems to be the popular Web 2.0 thing, it is only really viable at large scales. Slashdot moderation can give good feedback about which posts are informative, but the same methods wouldn't work to summarize, for instance, a class discussion board with 20 members.

Ku, Liang, and Chen, 2006

Opinion-Based Summarization
- At a high level, they are trying to determine what opinion a post represents
  - By "opinion" they mean polarity: whether it is positive or negative
  - They then try to identify which sentences are responsible for expressing that positive or negative view
- A summary is an extract from a blog post that expresses an opinion concisely

Data
- English data came from a TREC blog analysis competition
- They annotated positive and negative polarity at the word, sentence, and document levels
  - They report the reliability of their coding, but say little about the definitions of polarity that guided their judgments
  - Three coders worked on the annotation
- They did the same with Chinese data

Algorithm
- First, identify which words express an opinion
- Use the opinion words to identify whether a sentence expresses an opinion (and whether that opinion is positive or negative)
- Once you have the subset of sentences that express an opinion, decide which opinion the post expresses
- The intuition: opinion classification is hard because a post contains many comments that are not part of the expression of the opinion, and this text acts as a distractor

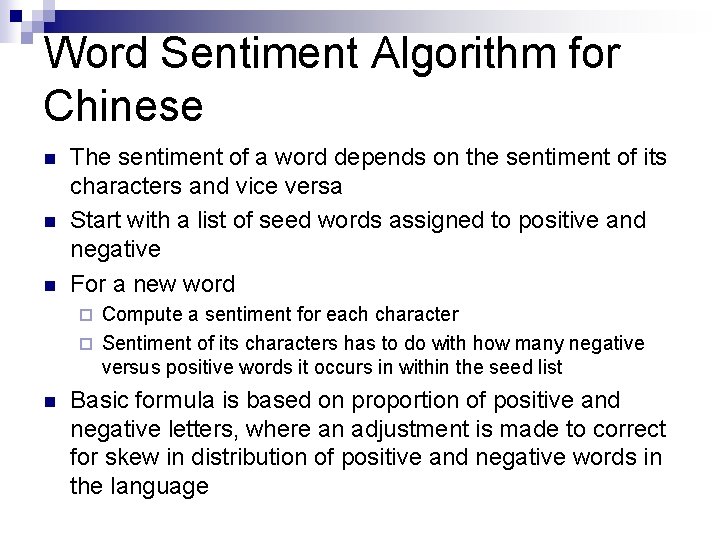
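The three steps of the algorithm can be sketched as a small pipeline. This is a minimal illustration, not Ku, Liang, and Chen's implementation: the lexicon `LEXICON` and its scores are made up, a sentence score is assumed to be the plain sum of its word scores, and the post's polarity is assumed to be the sign of the summed scores of its opinionated sentences.

```python
from typing import Dict, List, Tuple

# Hypothetical toy lexicon (word -> sentiment score). In the paper, word scores come
# from the word-level algorithm; these values are invented for illustration.
LEXICON: Dict[str, float] = {"great": 0.8, "love": 0.9, "terrible": -0.9, "slow": -0.5}


def word_score(word: str) -> float:
    """Step 1: opinion strength of a word; 0.0 means it expresses no opinion."""
    return LEXICON.get(word.lower().strip(".,!?"), 0.0)


def sentence_score(sentence: str) -> float:
    """Step 2: sentence polarity, assumed here to be the sum of its word scores."""
    return sum(word_score(w) for w in sentence.split())


def opinion_summary(sentences: List[str]) -> Tuple[str, List[str]]:
    """Step 3: decide the post's polarity from opinionated sentences only, so that
    non-opinion 'distractor' sentences are ignored, and return them as an extract."""
    scored = [(s, sentence_score(s)) for s in sentences]
    opinionated = [(s, sc) for s, sc in scored if sc != 0.0]
    total = sum(sc for _, sc in opinionated)
    if total == 0.0:
        return "neutral", []
    polarity = "positive" if total > 0 else "negative"
    # The extract keeps the opinionated sentences that agree with the post's polarity.
    extract = [s for s, sc in opinionated if (sc > 0) == (total > 0)]
    return polarity, extract


if __name__ == "__main__":
    post = [
        "I bought this phone last week.",
        "I love the screen.",
        "The battery is great.",
        "Shipping was slow.",
        "My cousin has the same model.",
    ]
    print(opinion_summary(post))
    # ('positive', ['I love the screen.', 'The battery is great.'])
```

The two neutral sentences never influence the decision, which is the point of filtering to opinionated sentences first.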

Word Sentiment Algorithm for Chinese
- The sentiment of a word depends on the sentiment of its characters, and vice versa
- Start with a list of seed words labeled positive or negative
- For a new word, compute a sentiment for each of its characters
  - A character's sentiment depends on how many positive versus negative seed words it occurs in
- The basic formula is based on the proportions of positive and negative characters, with an adjustment that corrects for the skewed distribution of positive and negative words in the language

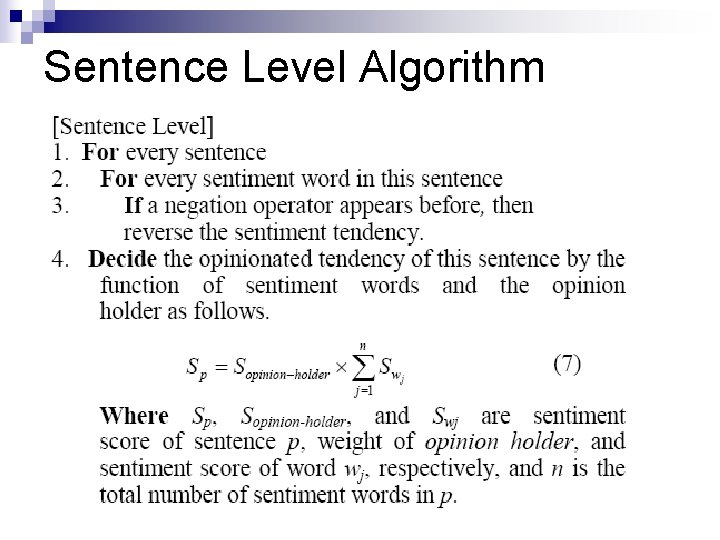

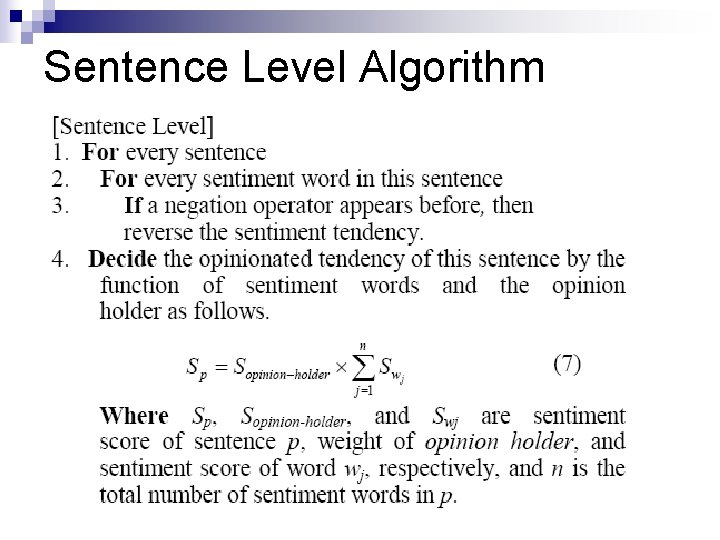
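A sketch of the character-based scoring described for Chinese. The normalization (dividing each character's positive and negative seed counts by the respective totals before comparing them) is one way to correct for the skew between positive and negative words, patterned on the formula in Ku, Liang, and Chen; treat the exact form, the averaging of character scores into a word score, and the seed lists as assumptions.

```python
from collections import Counter
from typing import Dict, List


def character_scores(pos_seeds: List[str], neg_seeds: List[str]) -> Dict[str, float]:
    """Score each character by how many positive vs. negative seed words contain it."""
    # fp[c] / fn[c]: number of positive / negative seed words containing character c
    fp = Counter(c for word in pos_seeds for c in set(word))
    fn = Counter(c for word in neg_seeds for c in set(word))
    total_p = sum(fp.values()) or 1
    total_n = sum(fn.values()) or 1
    scores = {}
    for c in set(fp) | set(fn):
        # Normalizing by the totals corrects for having more seeds of one polarity.
        p = fp[c] / total_p
        n = fn[c] / total_n
        scores[c] = (p - n) / (p + n)  # in [-1, 1]; p + n > 0 because c was seen
    return scores


def word_score(word: str, scores: Dict[str, float]) -> float:
    """Assumed word score: mean of its character scores (unseen characters count as 0)."""
    if not word:
        return 0.0
    return sum(scores.get(c, 0.0) for c in word) / len(word)


if __name__ == "__main__":
    s = character_scores(["好人", "美好"], ["坏人", "丑恶"])
    print(word_score("好美", s), word_score("坏人", s))  # 1.0 -0.5
```

Here 人 appears in one positive and one negative seed, so it scores 0.0 and contributes nothing to 坏人, whose score comes entirely from 坏.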

Sentence Level Algorithm

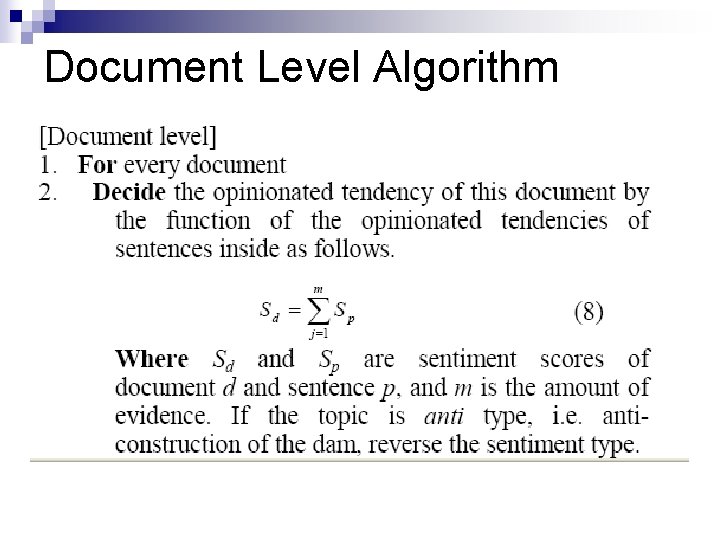

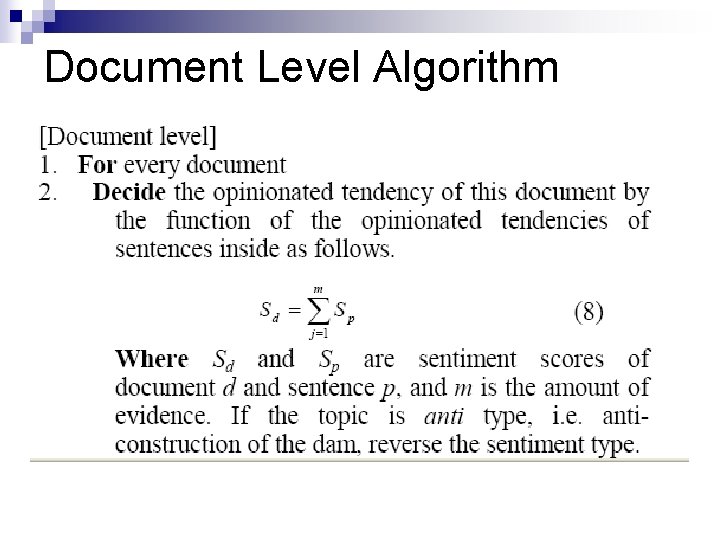

Document Level Algorithm

Identifying Opinion Words
- Their approach does not require cross-validation
- SVM and C5 were tested using two-fold cross-validation (A and B were the two folds)
- Precision is higher for their approach
  - Statistical significance?

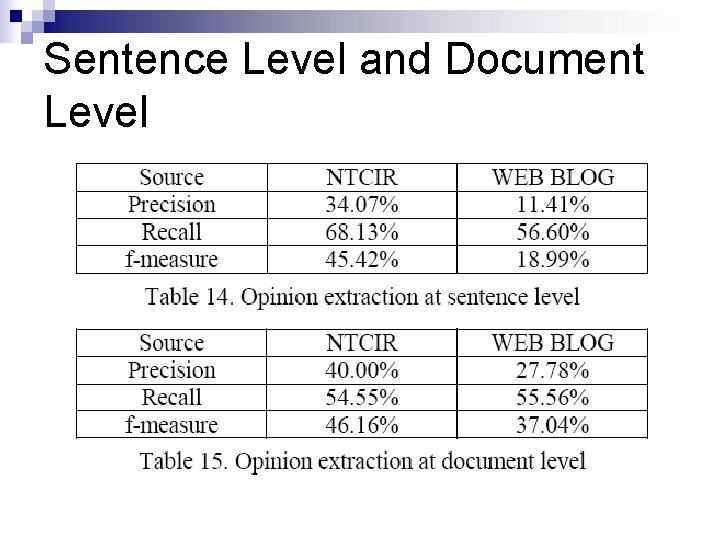

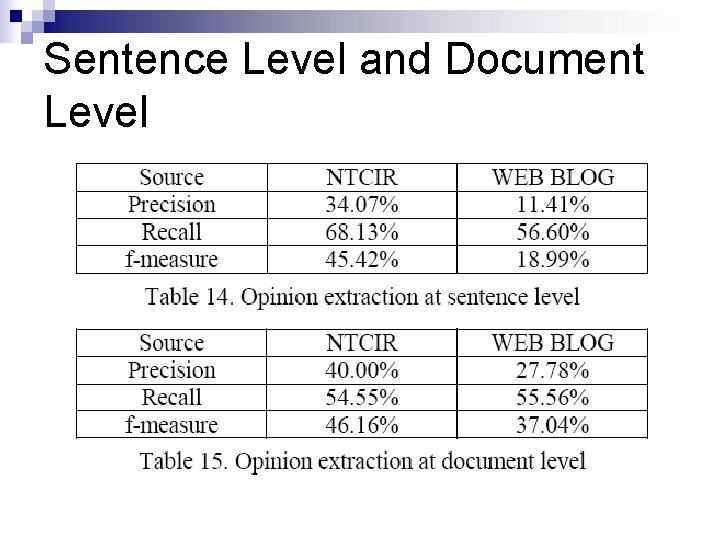
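The two-fold protocol used for the SVM and C5 comparison (train on A and test on B, then swap) can be sketched as below, with precision computed on each held-out fold. The learner is a deliberately trivial, hypothetical stand-in (a word counts as an opinion word if it shares a character with a training opinion word), not SVM or C5, and the folds are invented.

```python
from typing import Callable, List, Optional, Tuple

Example = Tuple[str, bool]  # (word, is_opinion_word)
Classifier = Callable[[str], bool]


def train_shared_character(train: List[Example]) -> Classifier:
    """Hypothetical toy learner: flag words sharing a character with a training opinion word."""
    opinion_chars = {c for word, label in train if label for c in word}
    return lambda word: any(c in opinion_chars for c in word)


def precision(model: Classifier, test: List[Example]) -> Optional[float]:
    """Correct positive predictions / all positive predictions (None if nothing predicted)."""
    labels_of_predicted = [label for word, label in test if model(word)]
    if not labels_of_predicted:
        return None
    return sum(labels_of_predicted) / len(labels_of_predicted)


def two_fold(fold_a: List[Example], fold_b: List[Example],
             learner: Callable[[List[Example]], Classifier]) -> Tuple[Optional[float], Optional[float]]:
    """Train on A and test on B, then train on B and test on A."""
    return precision(learner(fold_a), fold_b), precision(learner(fold_b), fold_a)


if __name__ == "__main__":
    fold_a = [("好人", True), ("美好", True), ("桌子", False)]
    fold_b = [("好看", True), ("美丽", True), ("人口", False), ("椅子", False)]
    print(two_fold(fold_a, fold_b, train_shared_character))  # (0.6666666666666666, 1.0)
```

Every example is used for testing exactly once, which is why a method that needs training data is evaluated this way while a method that needs none can be run on all of the data directly.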

Sentence Level and Document Level

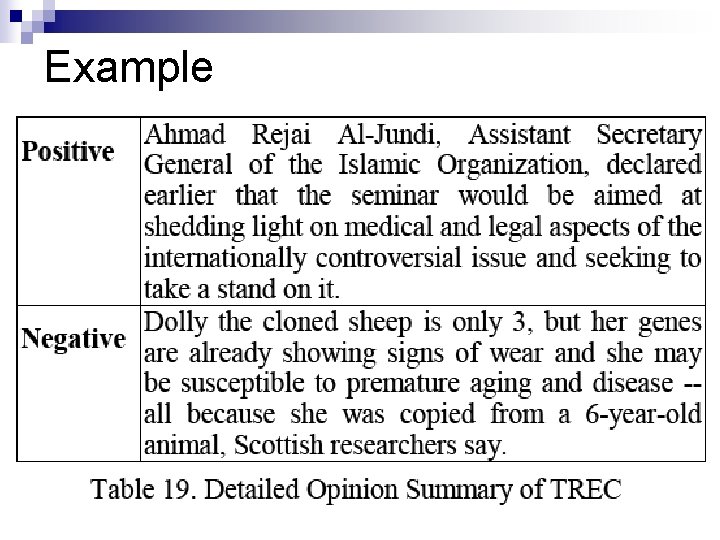

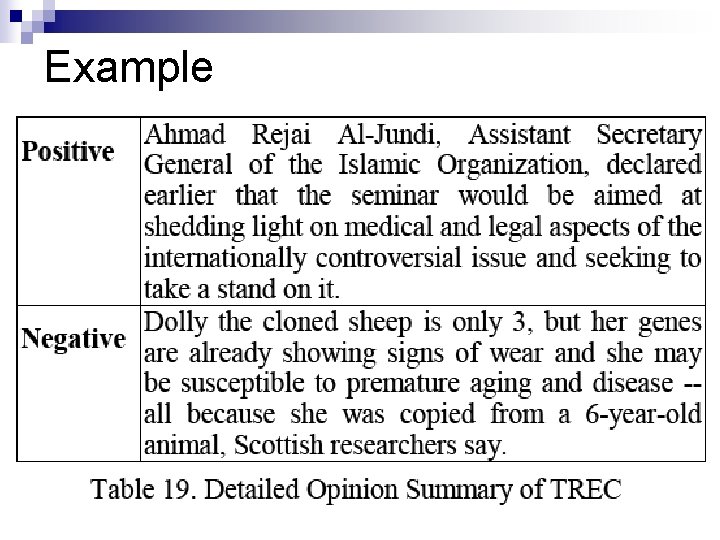

Now let’s see what you think…
- They give examples of their summaries but no real quantitative evaluation of their quality
- Look at the summaries and see whether you think they really express a positive or negative opinion
  - Why or why not?

Example

Discussion
- If you didn’t think the summaries were expressing a positive or negative opinion, what do you think they were expressing instead?
- What is the difference between an opinion and a sentiment?
- What would you have done differently?

VOTE!
- In-class final versus take-home final
- Public poster session versus private poster session

Zhou & Hovy, 2005: Student Presentation by Justin Weisz

Evaluation Issues!
- A variety of student comments related to the evaluation
- We will discuss those next week when we start the evaluation segment

Important Ideas
- Topic segmentation
- Topic clustering
- Both are hard in chat!
- Their corpus was atypical for chat:
  - Very long contributions
  - Email mixed with chat
- Let’s take a moment to think about chat more broadly…

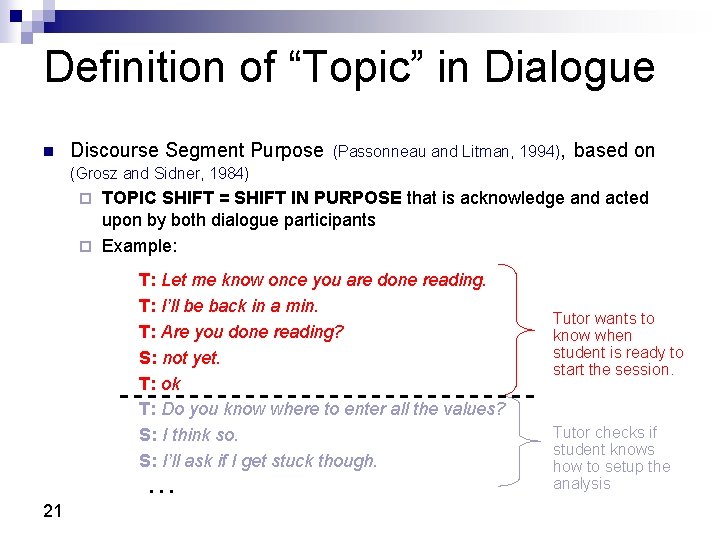

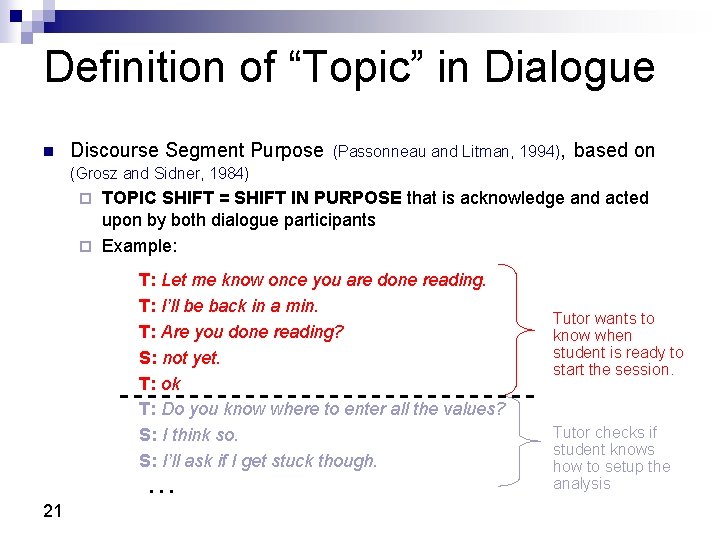

Definition of “Topic” in Dialogue
- Discourse Segment Purpose (Passonneau and Litman, 1994), based on (Grosz and Sidner, 1984)
  - TOPIC SHIFT = SHIFT IN PURPOSE that is acknowledged and acted upon by both dialogue participants
  - Example:
    - Tutor wants to know when the student is ready to start the session: T: Let me know once you are done reading. T: I’ll be back in a min. T: Are you done reading? S: not yet. T: ok
    - Tutor checks whether the student knows how to set up the analysis: T: Do you know where to enter all the values? S: I think so. S: I’ll ask if I get stuck though.

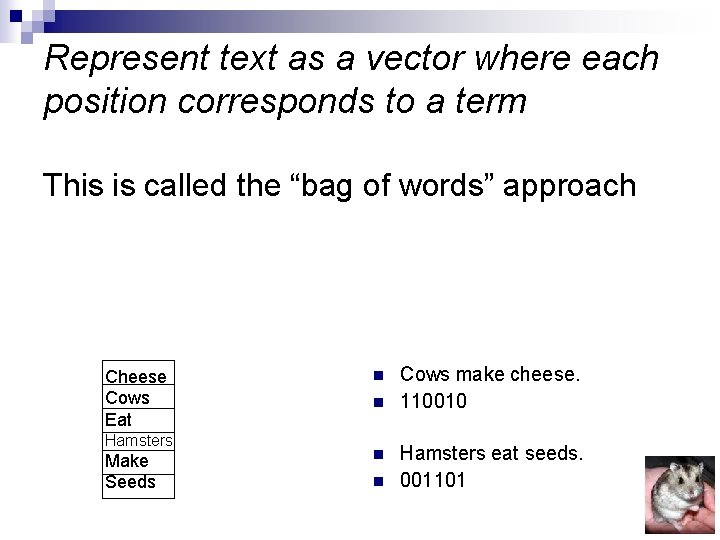

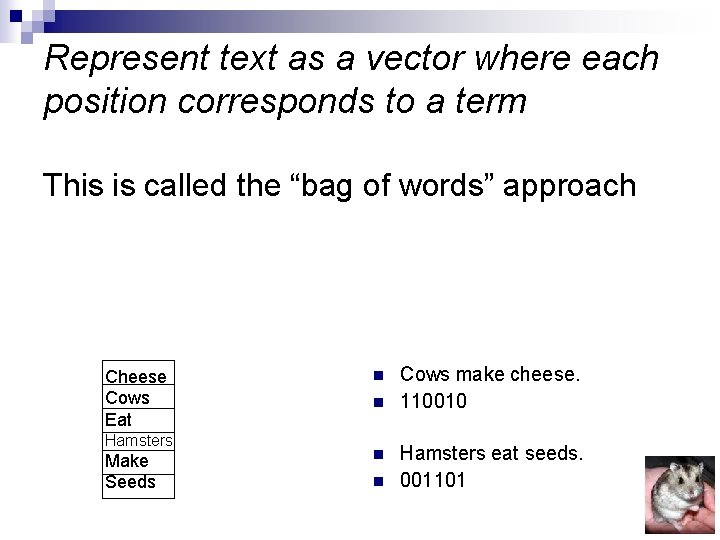

Represent text as a vector where each position corresponds to a term. This is called the “bag of words” approach.

| | Cheese | Cows | Eat | Hamsters | Make | Seeds |
|---|---|---|---|---|---|---|
| Cows make cheese. | 1 | 1 | 0 | 0 | 1 | 0 |
| Hamsters eat seeds. | 0 | 0 | 1 | 1 | 0 | 1 |
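A small sketch that reproduces the slide's binary vectors (110010 and 001101) for the six-term vocabulary. It records only presence or absence, as the slide does, and assumes case-insensitive matching on alphabetic tokens; a count-based bag of words would store term frequencies instead.

```python
import re
from typing import List

VOCABULARY = ["Cheese", "Cows", "Eat", "Hamsters", "Make", "Seeds"]


def bag_of_words(sentence: str, vocabulary: List[str]) -> str:
    """Binary bag of words: 1 if the vocabulary term occurs in the sentence, else 0.
    Word order is discarded; only membership matters."""
    tokens = {t.lower() for t in re.findall(r"[A-Za-z]+", sentence)}
    return "".join("1" if term.lower() in tokens else "0" for term in vocabulary)


if __name__ == "__main__":
    print(bag_of_words("Cows make cheese.", VOCABULARY))    # 110010
    print(bag_of_words("Hamsters eat seeds.", VOCABULARY))  # 001101
```

Because order is thrown away, "cheese make cows" maps to the same vector as "Cows make cheese."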

Student Questions
- I don't know what Maximum Entropy is.
- However, I don’t see how I can distinguish segment clusters (corresponding to subtopics) in terms of their degree of importance.
- I vaguely understood the use of SVM was basically to classify the sentences as belonging in the summary or not.
  - I don't understand why SVM doesn't require "feature selection". Doesn't all machine learning require features?

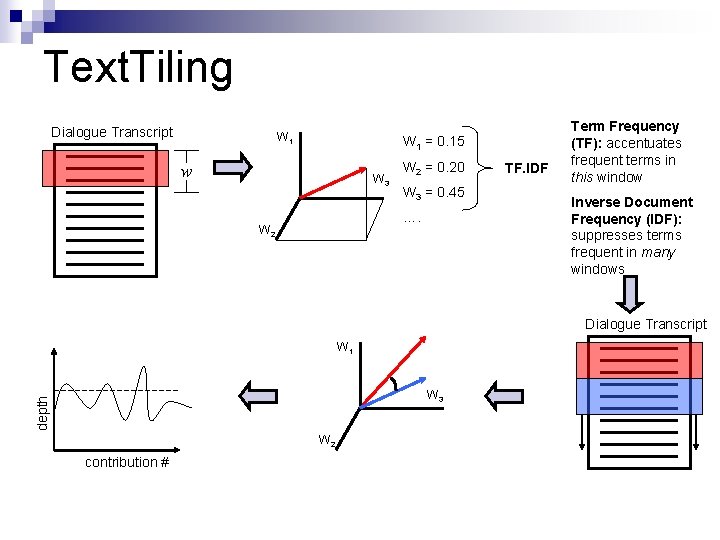

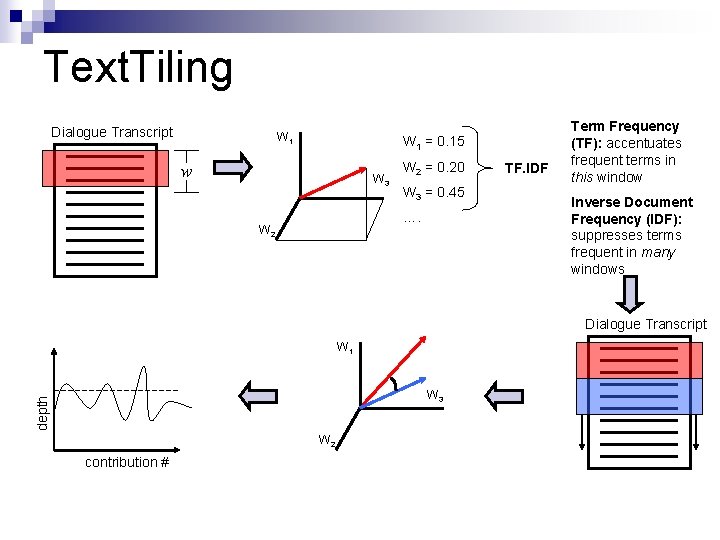

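TextTiling
- [Figure: dialogue transcript divided into windows W1, W2, W3, …, with example values for a term w: W1 = 0.15, W2 = 0.20, W3 = 0.45]
- TF.IDF
  - Term Frequency (TF): accentuates terms that are frequent in this window
  - Inverse Document Frequency (IDF): suppresses terms that are frequent in many windows
- [Figure: depth score plotted against contribution # across windows W1, W2, W3 of the dialogue transcript]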
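A simplified TextTiling-style sketch, assuming each contribution is a whitespace-tokenized string. It compares TF.IDF vectors of the windows on either side of each gap, turns the cosine curve into depth scores, and, as a simplifying assumption, proposes a boundary wherever the depth exceeds the mean depth. Hearst's original works on token-sequence blocks, smooths the curve, and uses a different cutoff; IDF here is computed over contributions rather than windows.

```python
import math
from collections import Counter
from typing import Dict, List


def idf_table(contributions: List[List[str]]) -> Dict[str, float]:
    """IDF over contributions: terms found in many contributions get low weight."""
    n = len(contributions)
    df = Counter(t for c in contributions for t in set(c))
    return {t: math.log(n / d) for t, d in df.items()}


def window_vector(window: List[List[str]], idf: Dict[str, float]) -> Dict[str, float]:
    """TF.IDF vector for a window of contributions."""
    tf = Counter(t for c in window for t in c)
    return {t: f * idf[t] for t, f in tf.items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(v * b.get(t, 0.0) for t, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def depth_scores(sims: List[float]) -> List[float]:
    """Depth of each valley: climb to the nearest peak on each side and add the drops."""
    depths = []
    for i, s in enumerate(sims):
        left = i
        while left > 0 and sims[left - 1] >= sims[left]:
            left -= 1
        right = i
        while right < len(sims) - 1 and sims[right + 1] >= sims[right]:
            right += 1
        depths.append((sims[left] - s) + (sims[right] - s))
    return depths


def segment(texts: List[str], window: int = 2) -> List[int]:
    """Return indices of contributions that start a new topic."""
    contribs = [t.lower().split() for t in texts]
    idf = idf_table(contribs)
    sims = []
    for i in range(1, len(contribs)):  # gap i lies between contribution i-1 and i
        left = window_vector(contribs[max(0, i - window):i], idf)
        right = window_vector(contribs[i:i + window], idf)
        sims.append(cosine(left, right))
    depths = depth_scores(sims)
    if not depths:
        return []
    cutoff = sum(depths) / len(depths)  # simplifying assumption: mean depth
    return [j + 1 for j, d in enumerate(depths) if d > cutoff]
```

On chat, many adjacent windows share no terms at all, so the cosine curve is full of zeros; that flat-bottomed curve is one reason lexical cohesion alone struggles on this data.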

Student Comment
- I think they should have reported how well TextTiling performed on chat/email data. It was originally designed for expository text.

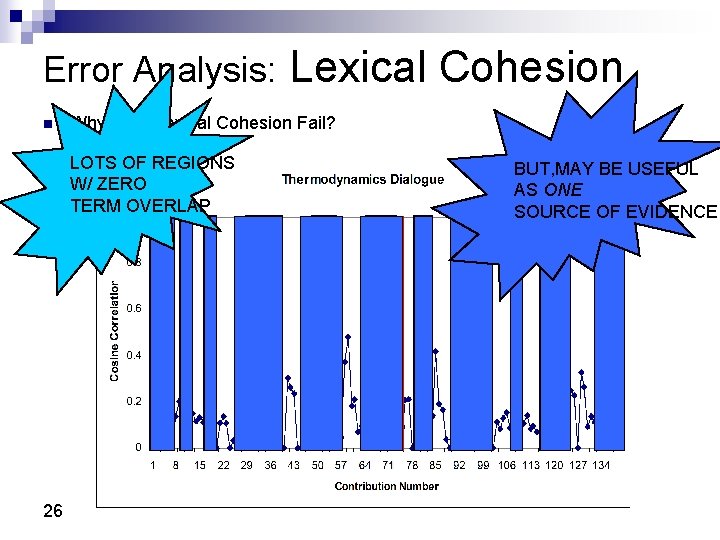

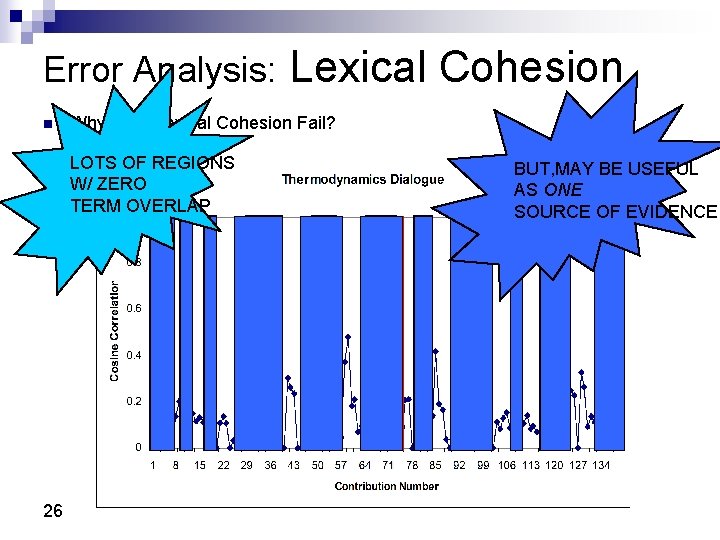

Error Analysis: Why Does Lexical Cohesion Fail?
- LOTS OF REGIONS WITH ZERO TERM OVERLAP
- But lexical cohesion MAY BE USEFUL AS ONE SOURCE OF EVIDENCE

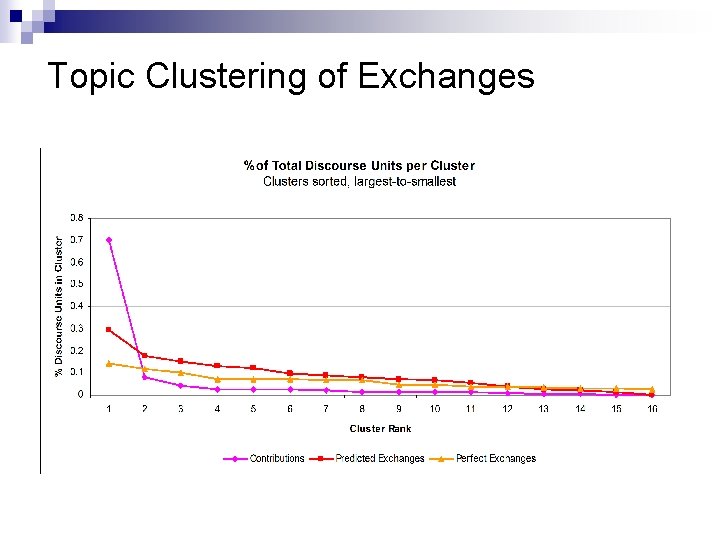

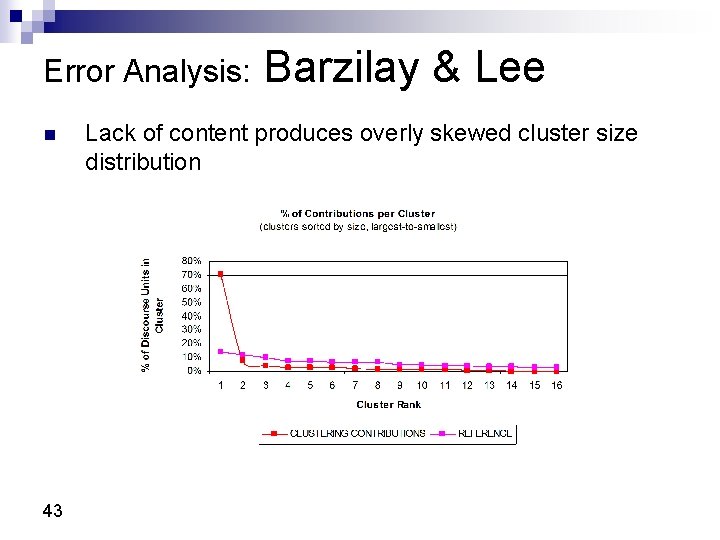

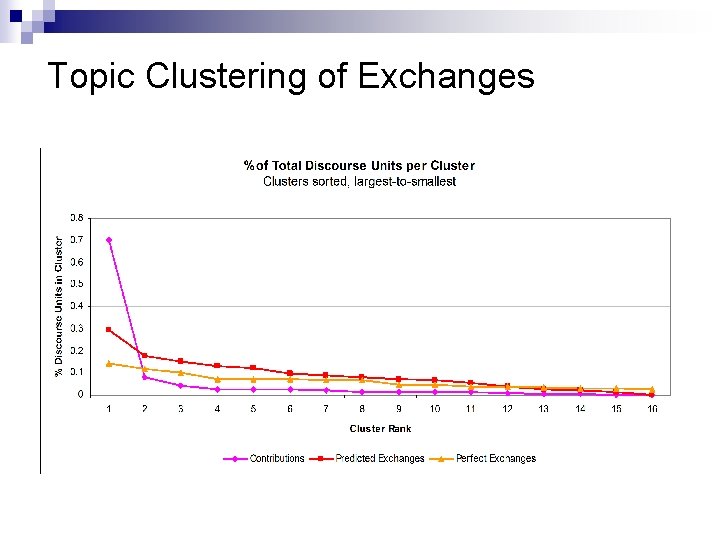

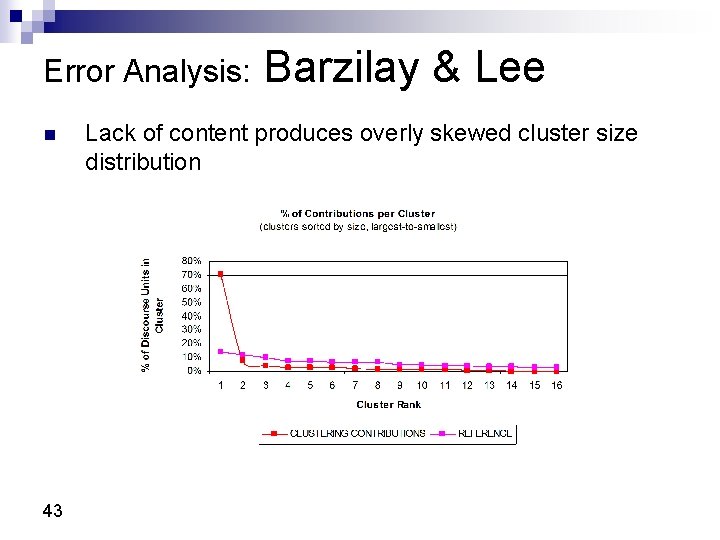

Clustering Contributions in Chat
- Lack of content produces an overly skewed cluster size distribution

Dialogue Exchanges
- A more coarse-grained discourse unit
- Initiation, Response, Feedback (IRF) structure (Stubbs, 1983; Sinclair and Coulthard, 1975)
- Example (figure labels: [initiation] [response] [feedback] [initiation]):
  - T: Hi
  - T: Are you connected?
  - S: Ya.
  - T: Are you done reading?
  - S: Yah
  - T: ok
  - T: Your objective today is to learn as much as you can about Rankin Cycles

Topic Clustering of Exchanges

Related Student Quote
- Taking discourse structure into account
  - I feel it is a good strategy for the task. Beyond what they did, I would like to reconstruct the relationship between sub-topics. This would increase the summary quality and capture what happened in the discussion.

Insert Justin’s Presentation Here

Museli: A Multi-Source Evidence Integration Approach to Topic Segmentation of Spontaneous Dialogue Jaime Arguello Carolyn Rosé Language Technologies Institute Carnegie Mellon University

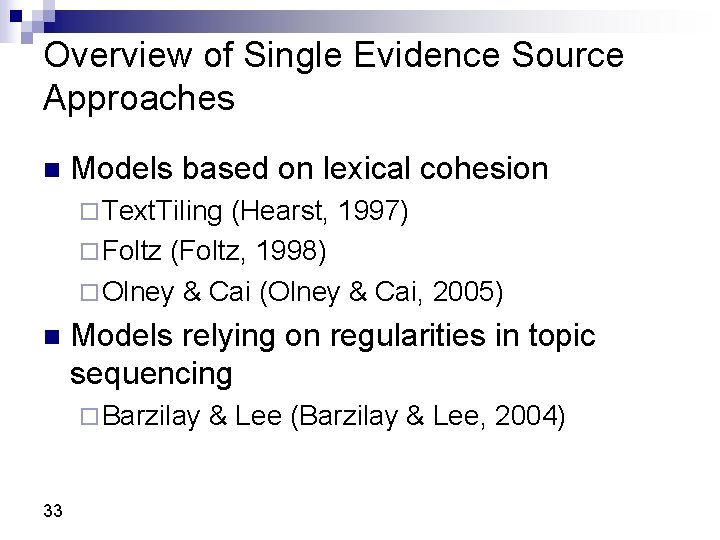

Overview of Single Evidence Source Approaches
- Models based on lexical cohesion
  - TextTiling (Hearst, 1997)
  - Foltz (Foltz, 1998)
  - Olney & Cai (Olney & Cai, 2005)
- Models relying on regularities in topic sequencing
  - Barzilay & Lee (Barzilay & Lee, 2004)

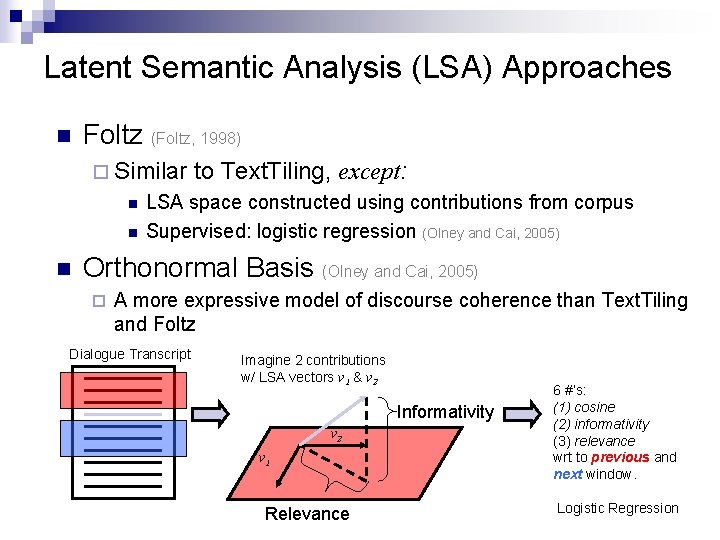

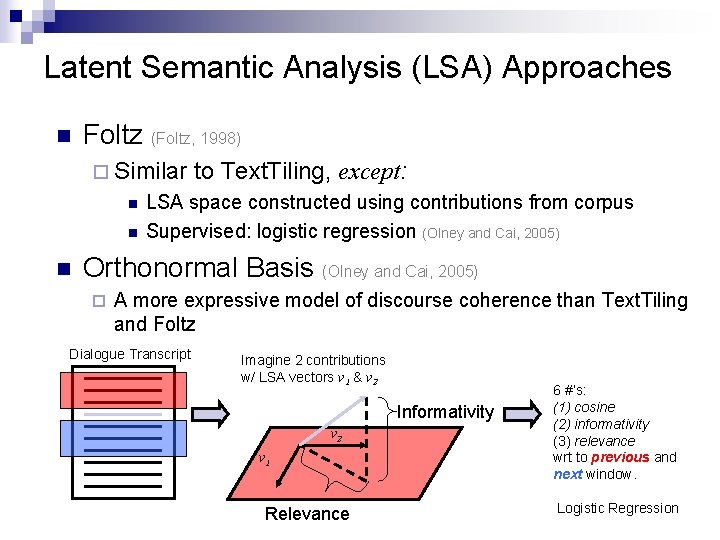

Latent Semantic Analysis (LSA) Approaches
- Foltz (Foltz, 1998)
  - Similar to TextTiling, except:
    - LSA space constructed using contributions from the corpus
    - Supervised: logistic regression (Olney and Cai, 2005)
- Orthonormal Basis (Olney and Cai, 2005)
  - A more expressive model of discourse coherence than TextTiling and Foltz
  - [Figure: imagine two contributions in the dialogue transcript with LSA vectors v1 and v2; informativity and relevance of v2 relative to v1]
  - Six numbers, (1) cosine, (2) informativity, and (3) relevance, each with respect to the previous and the next window, are fed to a logistic regression

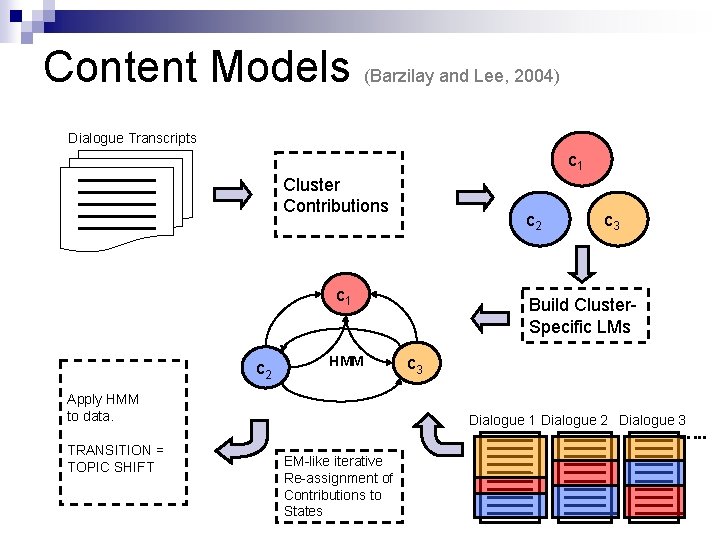

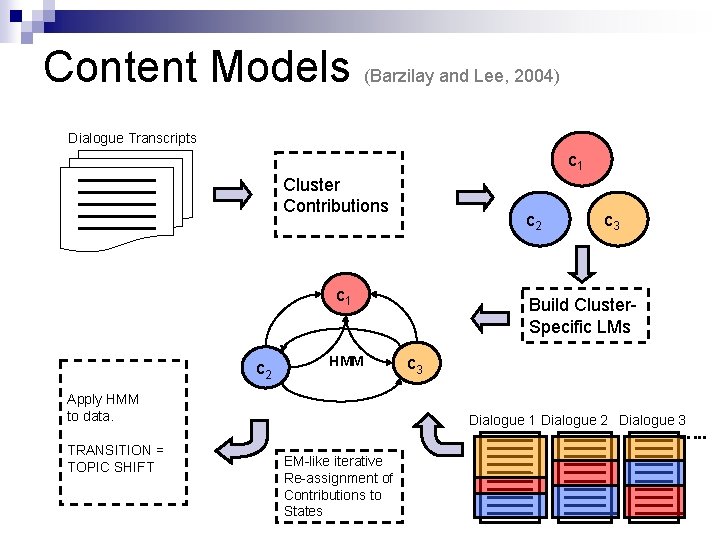
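The informativity and relevance quantities can be sketched as follows, assuming LSA vectors are already available as plain lists of floats. The window is turned into an orthonormal basis with Gram-Schmidt; relevance is taken as the length of the new vector's projection onto that basis and informativity as the length of what is left over. This follows one reading of Olney and Cai's orthonormal-basis idea; the function names are hypothetical, the cosine is taken against the window's summed vector as an assumption, and the logistic regression trained on the six numbers is not shown.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def norm(a: Sequence[float]) -> float:
    return math.sqrt(dot(a, a))


def orthonormal_basis(vectors: List[Vector]) -> List[Vector]:
    """Gram-Schmidt: orthonormal basis for the span of the window's LSA vectors."""
    basis: List[Vector] = []
    for v in vectors:
        w = list(v)
        for b in basis:
            c = dot(w, b)
            w = [wi - c * bi for wi, bi in zip(w, b)]
        length = norm(w)
        if length > 1e-12:  # skip vectors already in the span
            basis.append([wi / length for wi in w])
    return basis


def coherence_features(v: Vector, window: List[Vector]) -> Tuple[float, float, float]:
    """Return (cosine, informativity, relevance) of v with respect to a window."""
    proj = [0.0] * len(v)
    for b in orthonormal_basis(window):
        c = dot(v, b)
        proj = [p + c * bi for p, bi in zip(proj, b)]
    residual = [vi - pi for vi, pi in zip(v, proj)]  # part of v the window cannot explain
    centroid = [sum(col) for col in zip(*window)]
    denom = norm(v) * norm(centroid)
    cos = dot(v, centroid) / denom if denom else 0.0
    return cos, norm(residual), norm(proj)


def six_features(v: Vector, prev_window: List[Vector],
                 next_window: List[Vector]) -> Tuple[float, ...]:
    """Cosine, informativity, relevance w.r.t. the previous and the next window."""
    return coherence_features(v, prev_window) + coherence_features(v, next_window)
```

Unlike a single cosine, the split separates "how much of this contribution is new" from "how much of it continues the window", which is what makes the model more expressive than TextTiling and Foltz.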

Content Models (Barzilay and Lee, 2004)
- Cluster the contributions from the dialogue transcripts (Dialogue 1, Dialogue 2, Dialogue 3, …) into clusters c1, c2, c3
- Build cluster-specific LMs; each cluster becomes an HMM state
- Apply the HMM to the data: TRANSITION = TOPIC SHIFT
- EM-like iterative re-assignment of contributions to states
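A compact sketch of the decoding step in a content model: each cluster gets an add-one-smoothed unigram language model, the clusters act as HMM states, Viterbi assigns each contribution a state, and a state change is read as a topic shift. Assumptions: the initial clusters are given, the transition model is a single hypothetical `stay` probability with the remainder spread evenly over the other states, and the EM-like loop (re-assigning contributions, retraining the LMs, and decoding again until assignments stabilize) is left out.

```python
import math
from collections import Counter
from typing import List, Tuple

LanguageModel = Tuple[Counter, int, int]  # (counts, total tokens, smoothing vocabulary size)


def train_cluster_lms(clusters: List[List[str]]) -> List[LanguageModel]:
    """One add-one-smoothed unigram LM per cluster of contributions."""
    vocab = {t for cluster in clusters for c in cluster for t in c.lower().split()}
    lms = []
    for cluster in clusters:
        counts = Counter(t for c in cluster for t in c.lower().split())
        lms.append((counts, sum(counts.values()), len(vocab) + 1))  # +1 for unseen words
    return lms


def log_emission(lm: LanguageModel, contribution: str) -> float:
    counts, total, v = lm
    return sum(math.log((counts[t] + 1) / (total + v)) for t in contribution.lower().split())


def viterbi(contribs: List[str], lms: List[LanguageModel], stay: float = 0.8) -> List[int]:
    """Most likely state (cluster) sequence; `stay` is the self-transition probability, 0 < stay < 1."""
    if not contribs:
        return []
    k = len(lms)
    log_stay = math.log(stay)
    log_move = math.log((1 - stay) / (k - 1)) if k > 1 else float("-inf")

    def trans(i: int, j: int) -> float:
        return log_stay if i == j else log_move

    scores = [math.log(1 / k) + log_emission(lm, contribs[0]) for lm in lms]
    backpointers = []
    for c in contribs[1:]:
        new_scores, ptr = [], []
        for j, lm in enumerate(lms):
            best = max(range(k), key=lambda i: scores[i] + trans(i, j))
            new_scores.append(scores[best] + trans(best, j) + log_emission(lm, c))
            ptr.append(best)
        scores = new_scores
        backpointers.append(ptr)
    state = max(range(k), key=lambda i: scores[i])
    path = [state]
    for ptr in reversed(backpointers):  # follow backpointers from the last contribution
        state = ptr[state]
        path.append(state)
    return path[::-1]


def topic_shifts(path: List[int]) -> List[int]:
    """A transition between states is read as a topic shift at that contribution."""
    return [i for i in range(1, len(path)) if path[i] != path[i - 1]]
```

The `stay` probability penalizes switching states, so a shift is only predicted when the new cluster's language model explains the contribution clearly better; short, content-poor chat contributions rarely provide that evidence.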

MUSELI
- Integrates multiple sources of evidence of topic shift
- Features:
  - Lexical cohesion (via cosine correlation)
  - Time lag between contributions
  - Unigrams (previous and current contribution)
  - Bigrams (previous and current contribution)
  - POS bigrams (previous and current contribution)
  - Contribution length
  - Previous/current speaker
  - Contribution of content words

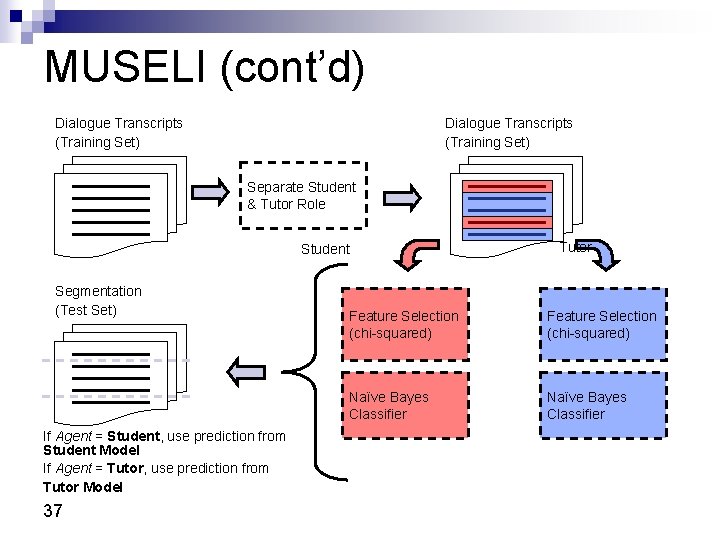

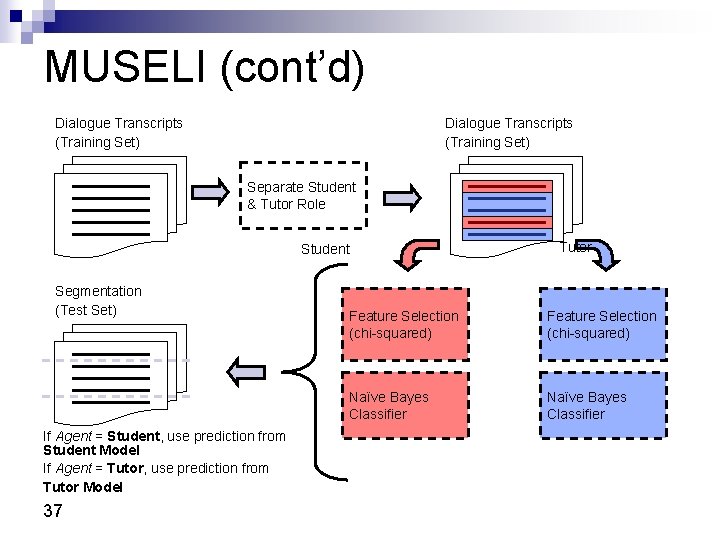

MUSELI (cont’d)
- Training: separate the dialogue transcripts (training set) by role into student and tutor contributions; for each role, apply feature selection (chi-squared) and train a Naïve Bayes classifier
- Segmentation (test set):
  - If agent = Student, use the prediction from the Student model
  - If agent = Tutor, use the prediction from the Tutor model

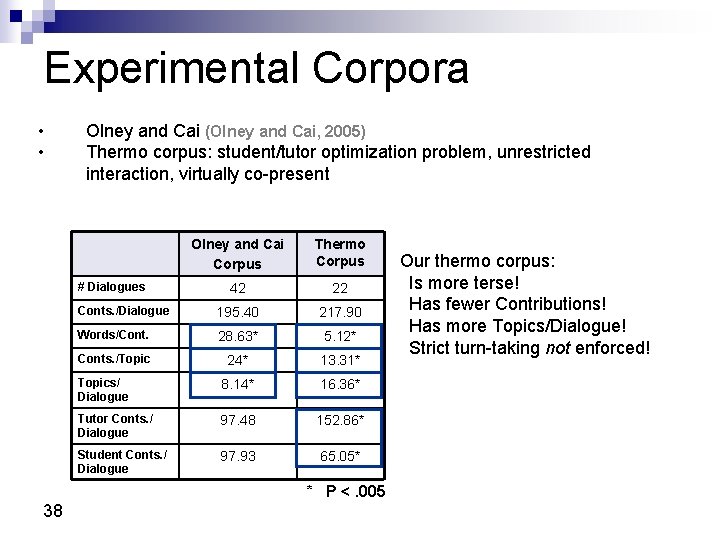

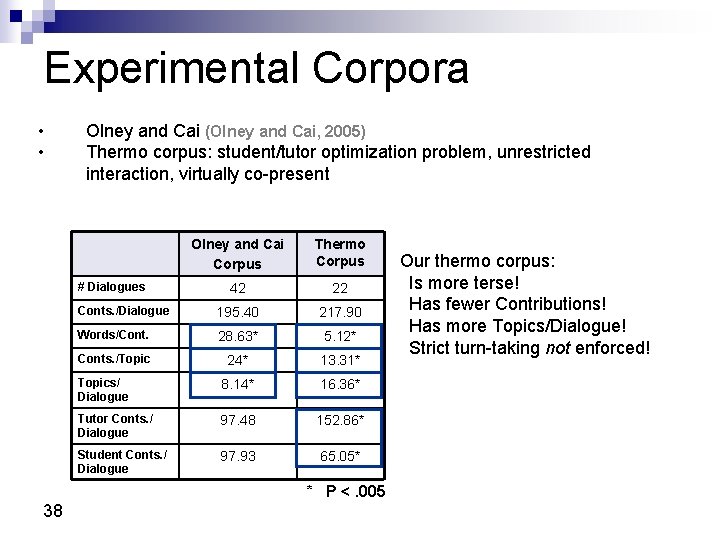
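The role-specific setup can be sketched as below: contributions are split by speaker role, each role gets its own chi-squared feature selection and Naive Bayes classifier, and at test time each contribution is routed to the model for its speaker. The Bernoulli event model with add-one smoothing, the cutoff `k`, and the toy feature sets are assumptions for illustration; the slide does not say which Naive Bayes variant Museli uses.

```python
import math
from typing import Dict, FrozenSet, List, Tuple

Example = Tuple[FrozenSet[str], bool]  # (binary features of a contribution, starts NEW_TOPIC?)


def chi_squared(examples: List[Example], feature: str) -> float:
    """Chi-squared statistic of the 2x2 table: feature present/absent vs. label."""
    a = sum(1 for f, y in examples if feature in f and y)
    b = sum(1 for f, y in examples if feature in f and not y)
    c = sum(1 for f, y in examples if feature not in f and y)
    d = len(examples) - a - b - c
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    return len(examples) * (a * d - b * c) ** 2 / denom if denom else 0.0


class BernoulliNaiveBayes:
    def __init__(self, examples: List[Example], k: int):
        vocab = {t for f, _ in examples for t in f}
        # Keep the k features most associated with the label (ties broken alphabetically).
        self.features = sorted(vocab, key=lambda t: (-chi_squared(examples, t), t))[:k]
        self.prior: Dict[bool, float] = {}
        self.p: Dict[bool, Dict[str, float]] = {}
        for label in (True, False):
            subset = [f for f, y in examples if y == label]
            self.prior[label] = (len(subset) + 1) / (len(examples) + 2)
            self.p[label] = {t: (sum(t in f for f in subset) + 1) / (len(subset) + 2)
                             for t in self.features}

    def predict(self, feats: FrozenSet[str]) -> bool:
        def log_posterior(label: bool) -> float:
            score = math.log(self.prior[label])
            for t in self.features:
                p = self.p[label][t]
                score += math.log(p if t in feats else 1 - p)
            return score
        return log_posterior(True) > log_posterior(False)


def train_role_models(data: List[Tuple[str, FrozenSet[str], bool]],
                      k: int = 2) -> Dict[str, BernoulliNaiveBayes]:
    """Train one model per speaker role (e.g. 'student', 'tutor')."""
    roles = {role for role, _, _ in data}
    return {role: BernoulliNaiveBayes([(f, y) for r, f, y in data if r == role], k)
            for role in roles}


def segment(models: Dict[str, BernoulliNaiveBayes],
            contributions: List[Tuple[str, FrozenSet[str]]]) -> List[bool]:
    """Route each contribution to the model for its speaker's role."""
    return [models[role].predict(feats) for role, feats in contributions]
```

Splitting by role lets each model keep the features that signal a shift for that speaker (in the toy data, "now" for tutors and "question" for students) instead of averaging over two very different kinds of contributions.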

Experimental Corpora
- Olney and Cai corpus (Olney and Cai, 2005)
- Thermo corpus: student/tutor optimization problem, unrestricted interaction, virtually co-present

| | Olney and Cai Corpus | Thermo Corpus |
|---|---|---|
| # Dialogues | 42 | 22 |
| Conts./Dialogue | 195.40 | 217.90 |
| Words/Cont. | 28.63* | 5.12* |
| Conts./Topic | 24* | 13.31* |
| Topics/Dialogue | 8.14* | 16.36* |
| Tutor Conts./Dialogue | 97.48 | 152.86* |
| Student Conts./Dialogue | 97.93 | 65.05* |

Starred values: p < .005

Our thermo corpus:
- Is more terse!
- Has fewer contributions per topic!
- Has more topics/dialogue!
- Strict turn-taking not enforced!

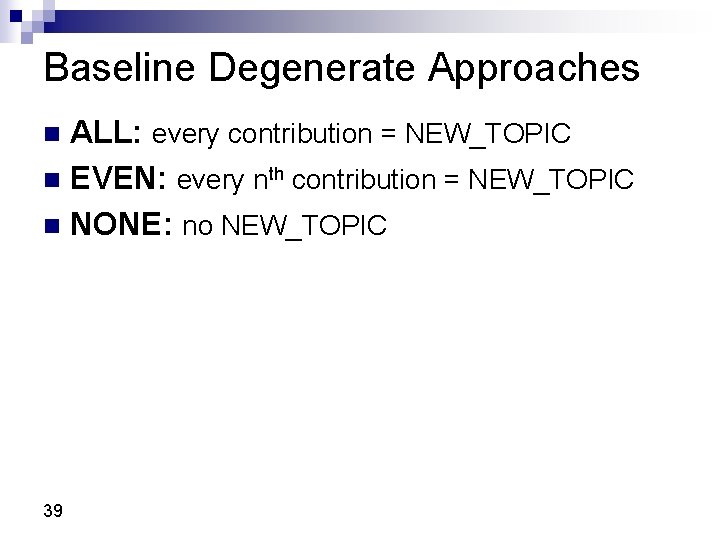

Baseline Degenerate Approaches
- ALL: every contribution = NEW_TOPIC
- EVEN: every nth contribution = NEW_TOPIC
- NONE: no NEW_TOPIC

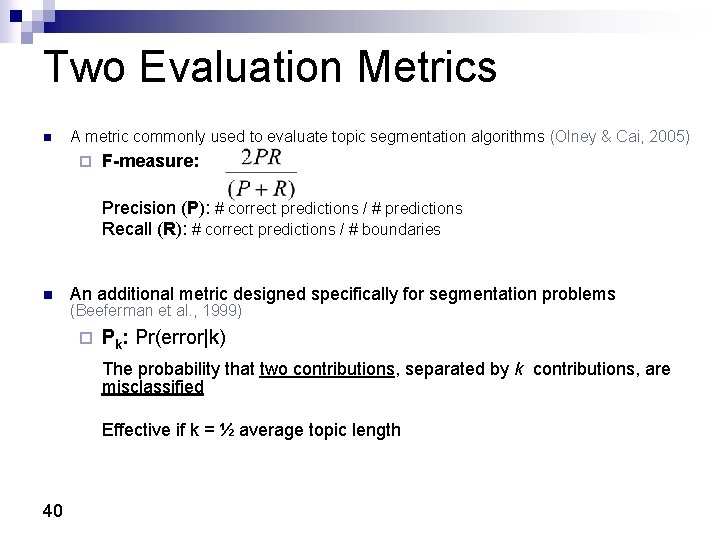

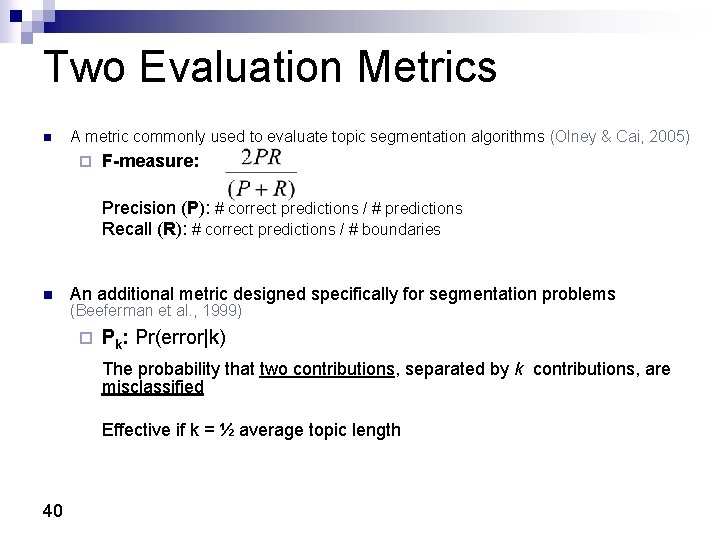

Two Evaluation Metrics
- A metric commonly used to evaluate topic segmentation algorithms (Olney & Cai, 2005)
  - F-measure
    - Precision (P): # correct predictions / # predictions
    - Recall (R): # correct predictions / # boundaries
- An additional metric designed specifically for segmentation problems (Beeferman et al., 1999)
  - Pk: Pr(error | k), the probability that two contributions separated by k contributions are misclassified, i.e. the reference and the hypothesis disagree about whether the two belong to the same segment
  - Effective if k = ½ the average topic length

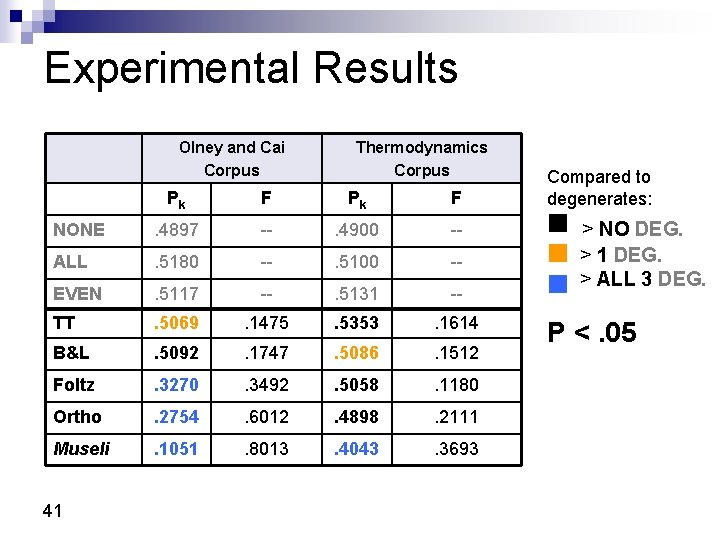

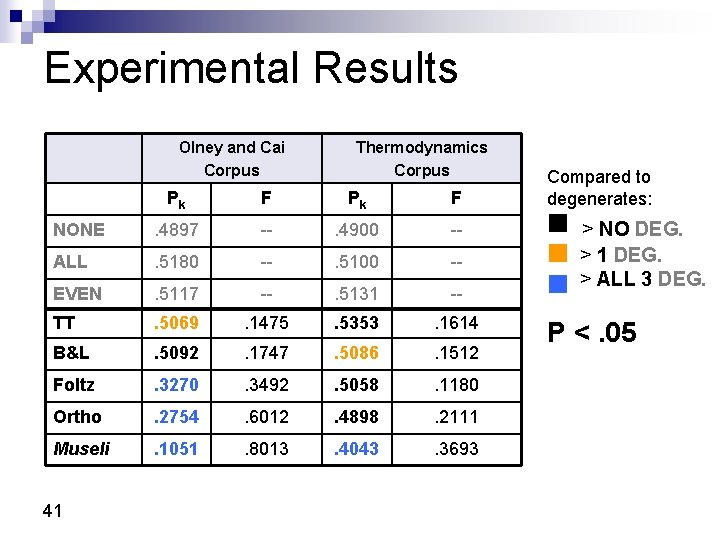

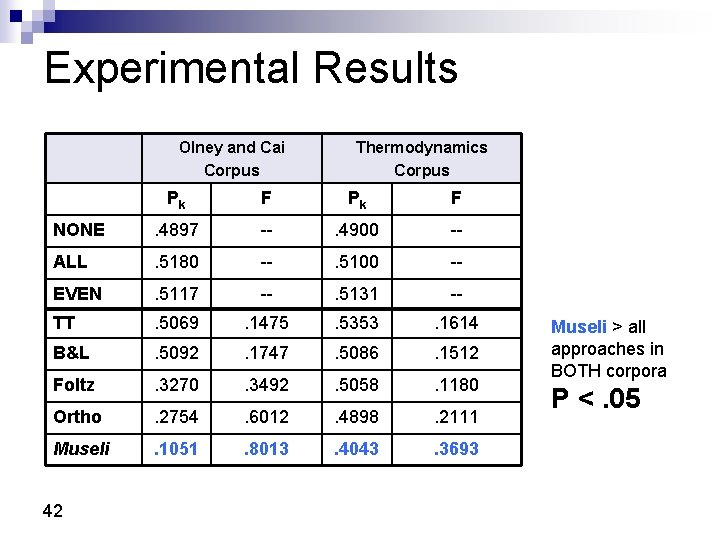
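Both metrics, together with the three degenerate baselines ALL, EVEN, and NONE, can be sketched as follows. Assumptions: a segmentation is a list of booleans where `True` at index i means contribution i starts a new topic (index 0 is ignored because a dialogue always starts one), F-measure credits only exact boundary matches, and `None` stands in for the "--" entries where precision or recall is undefined. The Pk loop follows Beeferman et al.'s definition: compare each pair of positions k apart and count how often reference and hypothesis disagree about whether the two fall in the same segment.

```python
from typing import List, Optional, Set

Segmentation = List[bool]  # True at index i: contribution i starts a new topic


def boundaries(seg: Segmentation) -> Set[int]:
    return {i for i, b in enumerate(seg) if b and i > 0}


def f_measure(ref: Segmentation, hyp: Segmentation) -> Optional[float]:
    """Exact-match boundary F-measure; None when precision or recall is undefined."""
    r, h = boundaries(ref), boundaries(hyp)
    if not r or not h:
        return None
    correct = len(r & h)
    if correct == 0:
        return 0.0
    precision = correct / len(h)  # correct predictions / predictions
    recall = correct / len(r)     # correct predictions / reference boundaries
    return 2 * precision * recall / (precision + recall)


def segment_ids(seg: Segmentation) -> List[int]:
    """Label each contribution with the index of the segment it belongs to."""
    ids, current = [], 0
    for i, b in enumerate(seg):
        if b and i > 0:
            current += 1
        ids.append(current)
    return ids


def pk(ref: Segmentation, hyp: Segmentation, k: int) -> float:
    """Fraction of position pairs (i, i+k) on which ref and hyp disagree about
    whether the two contributions belong to the same segment."""
    n = len(ref)
    if len(hyp) != n or not 0 < k < n:
        raise ValueError("need equal lengths and 0 < k < len(ref)")
    r, h = segment_ids(ref), segment_ids(hyp)
    errors = sum((r[i] == r[i + k]) != (h[i] == h[i + k]) for i in range(n - k))
    return errors / (n - k)


# Degenerate baselines: NONE, ALL, and EVEN (a boundary every `step` contributions).
def none_baseline(n: int) -> Segmentation:
    return [False] * n


def all_baseline(n: int) -> Segmentation:
    return [i > 0 for i in range(n)]


def even_baseline(n: int, step: int) -> Segmentation:
    return [i > 0 and i % step == 0 for i in range(n)]
```

For example, with a reference of three topics over eight contributions, `[False, False, False, True, False, False, True, False]`, NONE gets no F-measure at all while still scoring a finite Pk, which is why the degenerate rows in the results tables show Pk values but "--" for F.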

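Experimental Results

| | Olney and Cai Corpus Pk | Olney and Cai Corpus F | Thermodynamics Corpus Pk | Thermodynamics Corpus F |
|---|---|---|---|---|
| NONE | .4897 | -- | .4900 | -- |
| ALL | .5180 | -- | .5100 | -- |
| EVEN | .5117 | -- | .5131 | -- |
| TT | .5069 | .1475 | .5353 | .1614 |
| B&L | .5092 | .1747 | .5086 | .1512 |
| Foltz | .3270 | .3492 | .5058 | .1180 |
| Ortho | .2754 | .6012 | .4898 | .2111 |
| Museli | .1051 | .8013 | .4043 | .3693 |

Compared to degenerates (p < .05): > no degenerate baseline, > 1 degenerate baseline, > all 3 degenerate baselines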

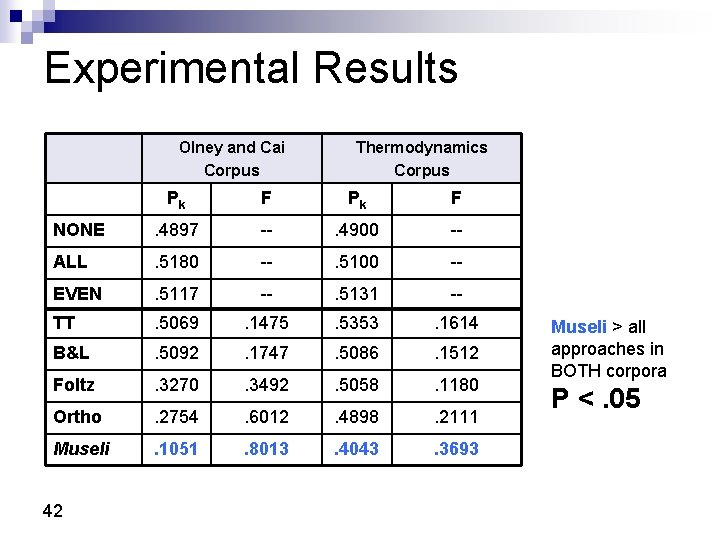

Experimental Results (same table as the previous slide): Museli > all approaches in BOTH corpora, p < .05

Error Analysis: Barzilay & Lee
- Lack of content produces an overly skewed cluster size distribution

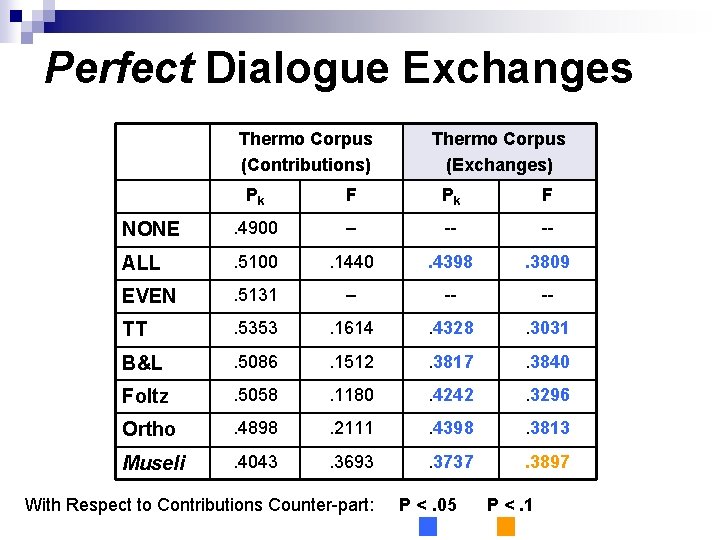

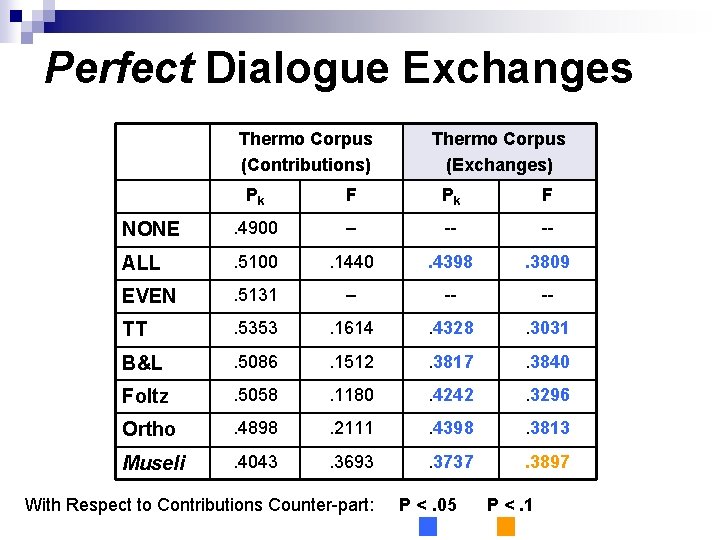

Perfect Dialogue Exchanges

| | Thermo Corpus (Contributions) Pk | Thermo Corpus (Contributions) F | Thermo Corpus (Exchanges) Pk | Thermo Corpus (Exchanges) F |
|---|---|---|---|---|
| NONE | .4900 | -- | -- | -- |
| ALL | .5100 | .1440 | .4398 | .3809 |
| EVEN | .5131 | -- | -- | -- |
| TT | .5353 | .1614 | .4328 | .3031 |
| B&L | .5086 | .1512 | .3817 | .3840 |
| Foltz | .5058 | .1180 | .4242 | .3296 |
| Ortho | .4898 | .2111 | .4398 | .3813 |
| Museli | .4043 | .3693 | .3737 | .3897 |

With respect to the contributions counterpart: p < .05, p < .1

Predicted Dialogue Exchanges
- Museli approach for predicting exchange boundaries
  - F-measure: .5295
  - Pk: .3358
- B&L with predicted exchanges:
  - F-measure: .3291
  - Pk: .4154
  - An improvement over B&L with contributions, p < .05

Questions?

Force and newtons law review

Force and newtons law review Entity summarization

Entity summarization Text summarization vietnamese

Text summarization vietnamese Medical summarization outsourcing

Medical summarization outsourcing Text summarization vietnamese

Text summarization vietnamese Text summarization vietnamese

Text summarization vietnamese Abstractive summarization

Abstractive summarization Carolyn mendiola

Carolyn mendiola Synchronicity market timing

Synchronicity market timing How to tell wild

How to tell wild Carolyn sourek

Carolyn sourek Carolyn talbot

Carolyn talbot Carolyn johnston md

Carolyn johnston md Carolyn shread

Carolyn shread Carolyn graham jazz chants

Carolyn graham jazz chants Carolyn hanesworth

Carolyn hanesworth Stury

Stury Carolyn marano

Carolyn marano Kaylene crum

Kaylene crum Carolyn maull

Carolyn maull Yvonne has 10 tulip bulbs in a bag

Yvonne has 10 tulip bulbs in a bag Carolyn ells

Carolyn ells Carolyn hotchkiss

Carolyn hotchkiss Carolyn laorno

Carolyn laorno Carolyn washburn

Carolyn washburn Carolyn cherry

Carolyn cherry Carolyn knoepfler

Carolyn knoepfler Carolyn saxby facts

Carolyn saxby facts Beggs and brill pressure drop calculation

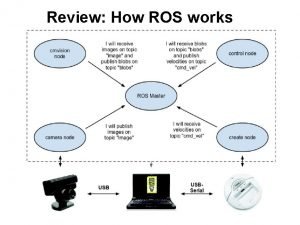

Beggs and brill pressure drop calculation Ros lidar mapping

Ros lidar mapping Catkin build ros

Catkin build ros Universal robot ros driver

Universal robot ros driver Ros business consulting

Ros business consulting Ros smith

Ros smith Haemotypsis

Haemotypsis Ros crash course

Ros crash course Ros cheat sheet melodic

Ros cheat sheet melodic Polimeri adalah

Polimeri adalah Ros navigation stack

Ros navigation stack Ros tf listener

Ros tf listener Ros lecture

Ros lecture Pityriasis ros

Pityriasis ros Ros adn

Ros adn Patient chief complaint

Patient chief complaint Włoski fizyk pionier radiotechniki

Włoski fizyk pionier radiotechniki Ricardo ros

Ricardo ros Later adulthood physical development

Later adulthood physical development