The True Sample Complexity of Active Learning


Steve Hanneke, Machine Learning Department, Carnegie Mellon University (shanneke@cs.cmu.edu)

Joint work with Maria-Florina Balcan, Computer Science Department, Carnegie Mellon University (ninamf@cs.cmu.edu), and Jennifer Wortman, Computer and Information Science, University of Pennsylvania (wortmanj@seas.upenn.edu)

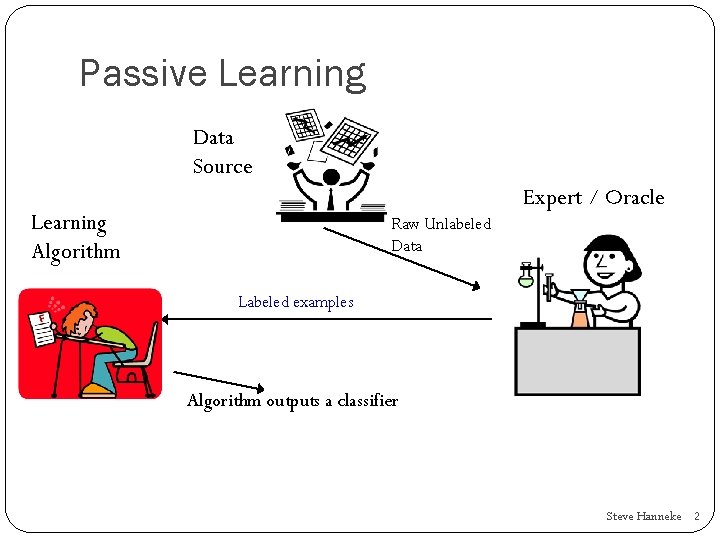

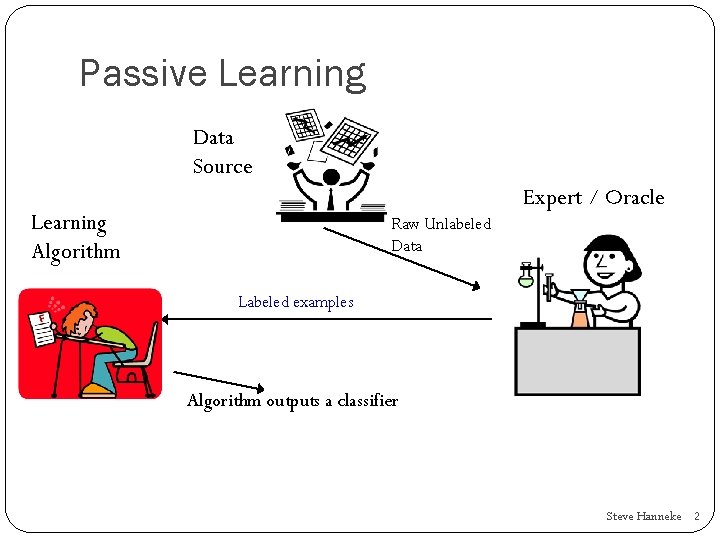

Passive Learning
A data source supplies raw unlabeled data, an expert/oracle labels it, and the learning algorithm receives the labeled examples and outputs a classifier.

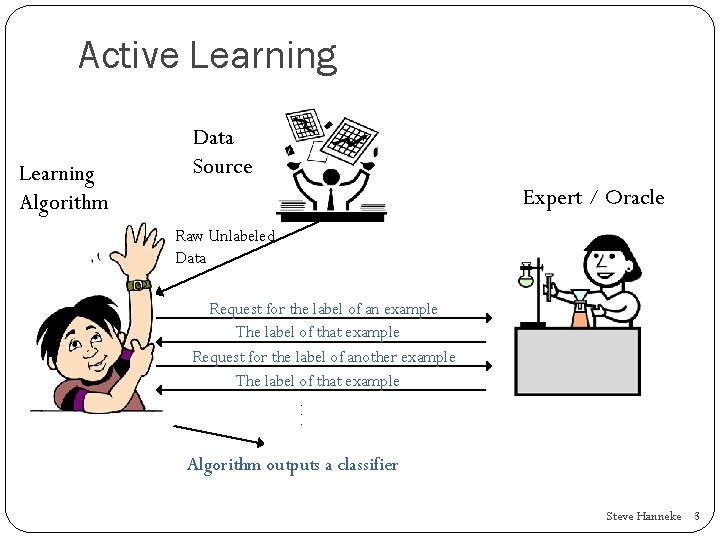

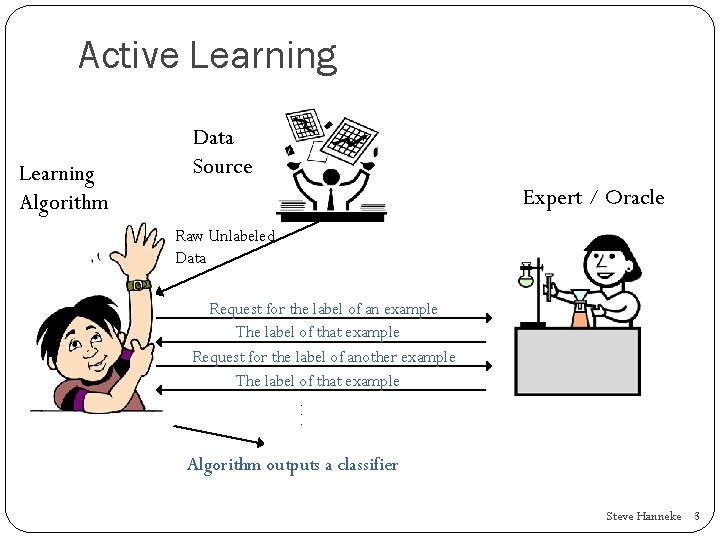

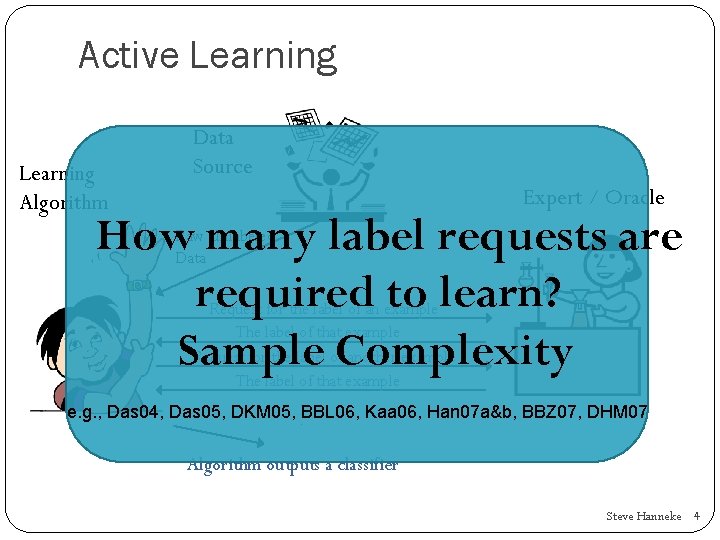

Active Learning
The algorithm receives raw unlabeled data from the data source. It then repeatedly requests the label of an example from the expert/oracle and receives that example's label. Finally, the algorithm outputs a classifier.

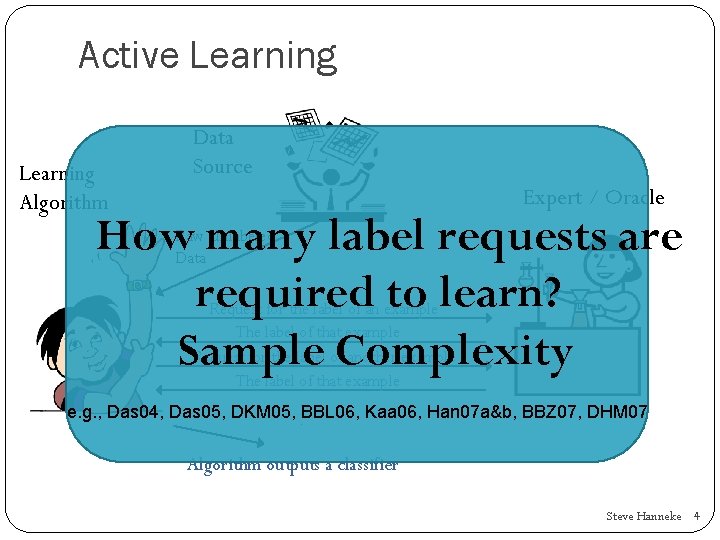

How many label requests are required to learn? This number is the sample complexity of active learning (e.g., Das 04, Das 05, DKM 05, BBL 06, Kaa 06, Han 07a&b, BBZ 07, DHM 07).
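The protocol above can be sketched as a small Python interface. This is a hypothetical illustration, not code from the talk: `LabelOracle`, `passive_learner`, and `active_learner` are invented names, and examples are assumed to be real numbers labeled ±1. The oracle counts every label request, which is the quantity the sample complexity measures.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical names throughout; examples are floats, labels are +1 / -1.
Label = int
Labeled = Dict[float, Label]  # example -> label obtained from the oracle


class LabelOracle:
    """Plays the role of the Expert / Oracle and counts label requests."""

    def __init__(self, target: Callable[[float], Label]) -> None:
        self._target = target
        self.requests = 0

    def label(self, x: float) -> Label:
        self.requests += 1
        return self._target(x)


def passive_learner(pool: List[float], oracle: LabelOracle) -> Labeled:
    # Passive: every example from the data source arrives labeled,
    # so the label cost equals the number of examples used.
    return {x: oracle.label(x) for x in pool}


def active_learner(
    pool: List[float],
    oracle: LabelOracle,
    choose: Callable[[List[float], Labeled], Optional[float]],
    budget: int,
) -> Labeled:
    # Active: the algorithm sees raw unlabeled data and decides which
    # example to request next; it stops when `choose` returns None or
    # the label budget is exhausted.
    labeled: Labeled = {}
    used = 0
    while used < budget:
        x = choose(pool, labeled)
        if x is None:
            break
        labeled[x] = oracle.label(x)
        used += 1
    return labeled
```

The design point is that in the active setting the learner, through `choose`, decides which labels to pay for; in the passive setting every example costs a label.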

Active Learning Sometimes Helps An Example: 1 -dimensional threshold functions. - + Steve Hanneke 5

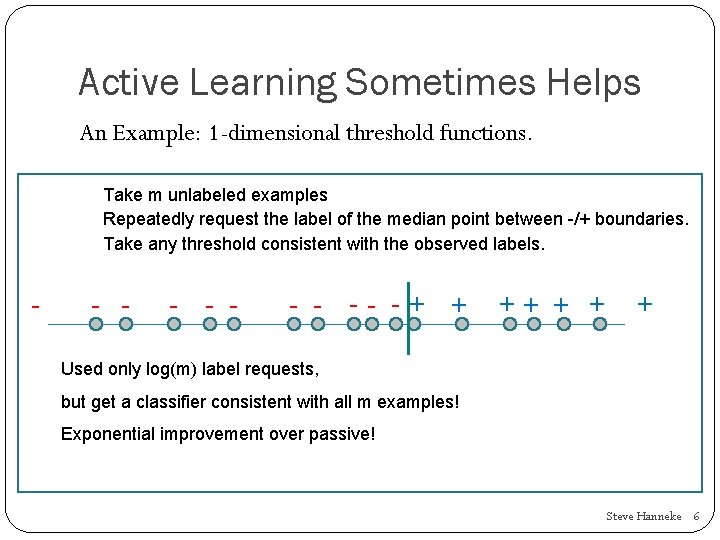

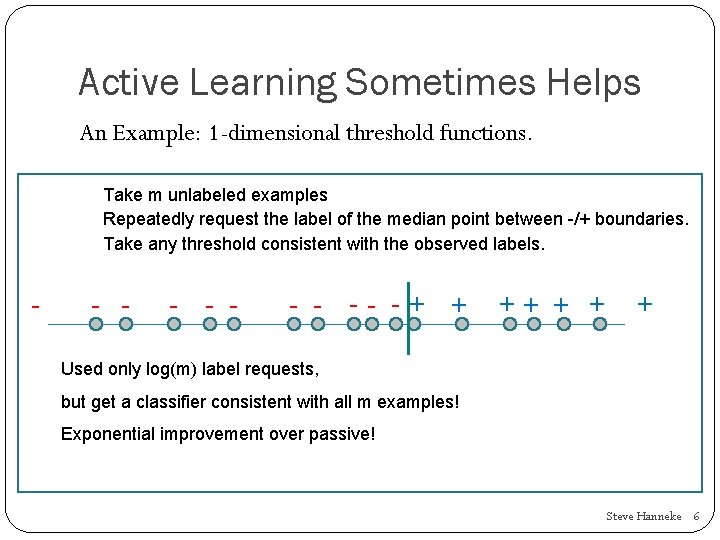

Active Learning Sometimes Helps
An example: 1-dimensional threshold functions.
- Take m unlabeled examples.
- Repeatedly request the label of the median point between the current − and + boundaries.
- Take any threshold consistent with the observed labels.

This uses only log(m) label requests, yet produces a classifier consistent with all m examples: an exponential improvement over passive learning!
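The threshold procedure can be sketched in Python as a binary search over the sorted unlabeled points. This is a minimal sketch, assuming the target has the form +1 if x ≥ t and −1 otherwise for an unknown t; the function name `learn_threshold_actively` is hypothetical.

```python
from typing import Callable, List, Tuple


def learn_threshold_actively(
    points: List[float], query: Callable[[float], int]
) -> Tuple[float, int]:
    """Binary-search learner for 1-D thresholds (a sketch).

    Assumes the target is x -> +1 if x >= t else -1 for an unknown t.
    Returns (threshold, number_of_label_requests); the classifier
    x -> +1 if x >= threshold else -1 agrees with the target on all points.
    """
    xs = sorted(points)
    lo, hi = 0, len(xs)  # labels of xs[:lo] are implied '-', xs[hi:] implied '+'
    requests = 0
    while lo < hi:
        mid = (lo + hi) // 2      # median of the still-ambiguous points
        requests += 1
        if query(xs[mid]) == 1:
            hi = mid              # xs[mid] and everything right of it are '+'
        else:
            lo = mid + 1          # xs[mid] and everything left of it are '-'
    # lo == hi is the index of the first '+' point (len(xs) if there is none).
    threshold = xs[lo] if lo < len(xs) else float("inf")
    return threshold, requests
```

For m = 1000 points the loop makes at most ⌈log₂(1001)⌉ = 10 label requests, while a passive learner would pay for all 1000 labels.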

Outline
- Formal Model
- An Illustrative Example
- Exciting New Results
- Conclusions & Open Problems

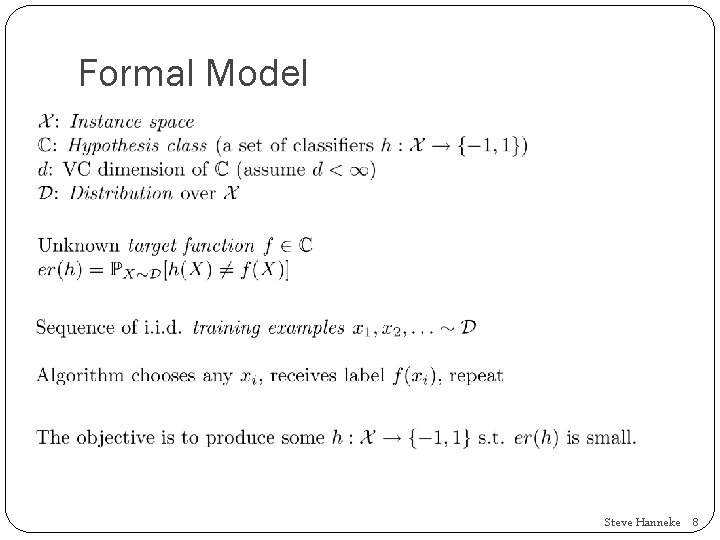

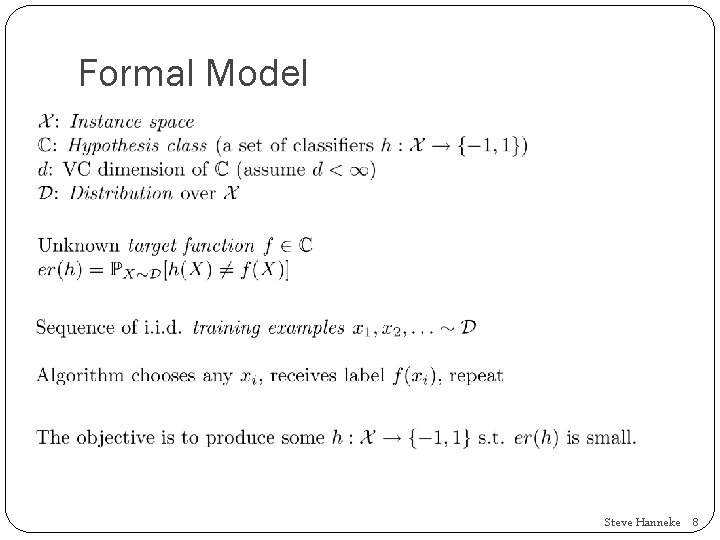

Formal Model

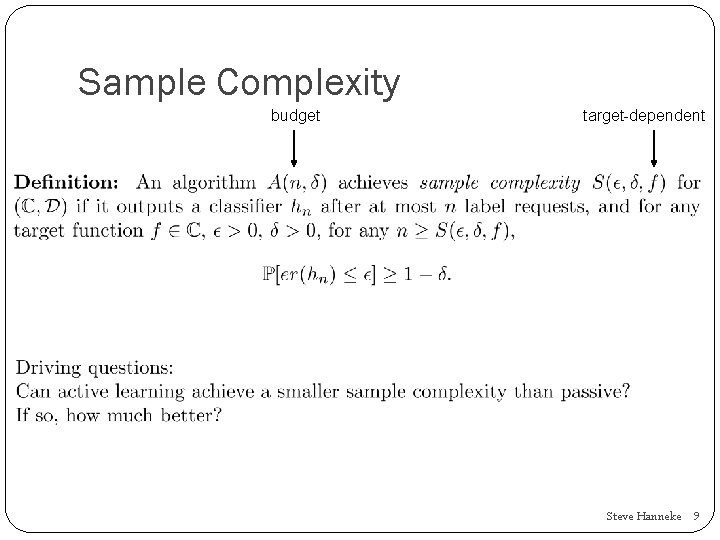

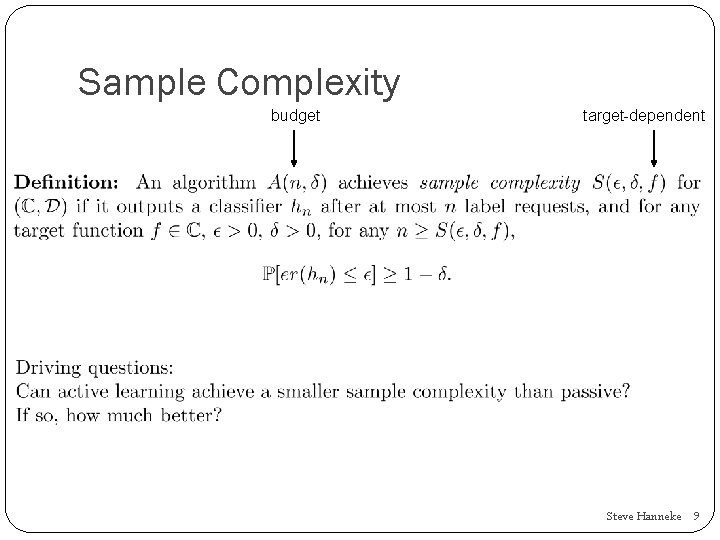

Sample Complexity (with a label budget; target-dependent)


A More Subtle Example: Intervals
Suppose D is uniform on [0, 1].
[Figure: [0, 1] labeled − near both ends and + on an interval in between]

![A More Subtle Example Intervals Suppose D is uniform on 0 1 A More Subtle Example: Intervals Suppose D is uniform on [0, 1] } }](https://slidetodoc.com/presentation_image_h2/3ab3bfe38d67da5c0e450f71f19bcdf6/image-11.jpg)

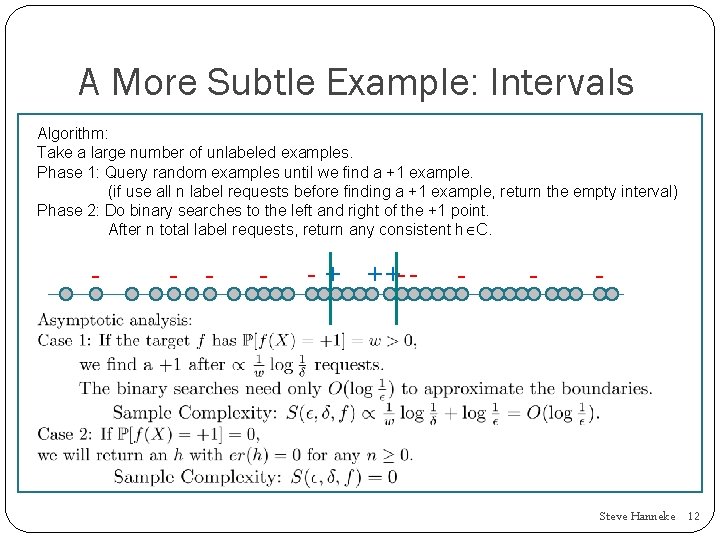

Now suppose the target labels everything “−1”.
[Figure: [0, 1] divided into many small disjoint intervals]
How many labels are needed to verify that a given h has er(h) < ε? We need Ω(1/ε) label requests to guarantee that the target is not one of these small intervals. Does this mean the sample complexity is Ω(1/ε)?
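The Ω(1/ε) claim can be checked with a short counting argument. The sketch below is our own reconstruction, not taken from the slides: it assumes the classifier being verified is h ≡ −1, that D is uniform on [0, 1], that the competing targets are disjoint intervals of width ε, and that the querying strategy is deterministic.

```latex
\begin{aligned}
&N = \lfloor 1/\varepsilon \rfloor, \qquad
  I_j = \big[(j-1)\varepsilon,\ j\varepsilon\big), \quad j = 1, \dots, N
  \quad \text{(pairwise disjoint)},\\
&f_j(x) = +1 \text{ if } x \in I_j,\ -1 \text{ otherwise}
  \;\Longrightarrow\; \mathrm{er}_{f_j}(h) = P_D(I_j) = \varepsilon \not< \varepsilon,\\
&\text{each query } x_1, \dots, x_n \text{ lies in at most one } I_j
  \;\Longrightarrow\; n < N \text{ leaves some } I_{j^\ast} \text{ with no query},\\
&f \equiv -1 \text{ and } f_{j^\ast} \text{ then agree on } x_1, \dots, x_n
  \;\Longrightarrow\; \text{verifying } \mathrm{er}(h) < \varepsilon \text{ needs } n \ge N = \Omega(1/\varepsilon).
\end{aligned}
```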

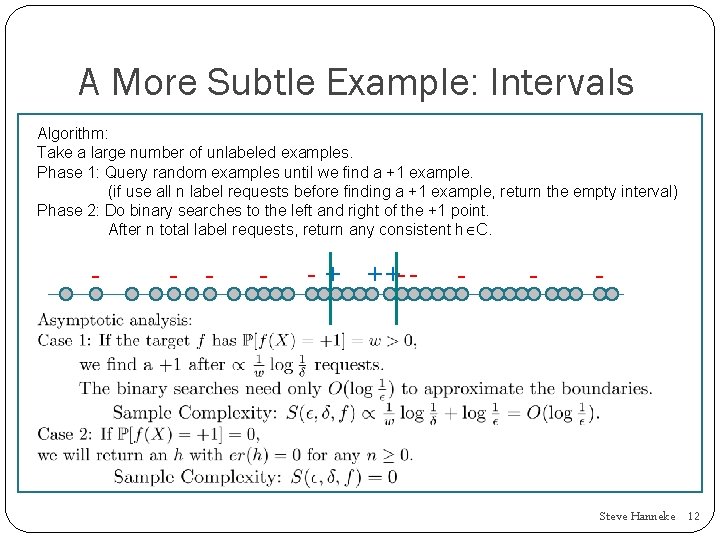

A More Subtle Example: Intervals
Algorithm: Take a large number of unlabeled examples.
- Phase 1: Query random examples until we find a +1 example. (If we use all n label requests before finding a +1 example, return the empty interval.)
- Phase 2: Do binary searches to the left and right of the +1 point.
- After n total label requests, return any consistent h ∈ C.
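A possible Python rendering of the two-phase interval algorithm follows. It is a sketch under stated assumptions: the target is +1 on a single (possibly empty) interval and −1 elsewhere, the name `learn_interval_actively` is hypothetical, and it stops as soon as both binary searches finish rather than spending all n requests.

```python
import random
from typing import Callable, List, Optional, Tuple


def learn_interval_actively(
    points: List[float],
    query: Callable[[float], int],
    budget: int,
    rng: random.Random,
) -> Tuple[Optional[Tuple[float, float]], int]:
    """Two-phase interval learner (a sketch; details are assumptions).

    Returns ((a, b), requests), meaning h(x) = +1 iff a <= x <= b,
    or (None, requests) for the empty interval.
    """
    xs = sorted(points)
    requests = 0

    # Phase 1: query random examples until a +1 example is found.
    order = list(range(len(xs)))
    rng.shuffle(order)
    pos: Optional[int] = None
    for i in order:
        if requests >= budget:
            break
        requests += 1
        if query(xs[i]) == 1:
            pos = i
            break
    if pos is None:
        return None, requests  # no +1 found: return the empty interval

    # Phase 2a: binary search left of pos for the first '+' index.
    lo, hi = 0, pos  # invariant: xs[hi] is a known '+'
    while lo < hi and requests < budget:
        mid = (lo + hi) // 2
        requests += 1
        if query(xs[mid]) == 1:
            hi = mid
        else:
            lo = mid + 1
    left = hi

    # Phase 2b: binary search right of pos for the last '+' index.
    lo, hi = pos, len(xs) - 1  # invariant: xs[lo] is a known '+'
    while lo < hi and requests < budget:
        mid = (lo + hi + 1) // 2
        requests += 1
        if query(xs[mid]) == 1:
            lo = mid
        else:
            hi = mid - 1
    right = lo

    # The '+' points form a contiguous run in sorted order, so
    # [xs[left], xs[right]] holds only true '+' points and is
    # consistent with every label observed so far.
    return (xs[left], xs[right]), requests
```

On the all-negative target from the previous slide, Phase 1 never finds a +1 example and the algorithm returns the empty interval, which has zero error; it simply cannot certify that fact with few labels.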

Can Active Learning Always Help?

![Active Learning Always Helps HLW 94 passive algorithm has O1 sample complexity Steve Active Learning Always Helps! [HLW 94] passive algorithm has O(1/ ) sample complexity. Steve](https://slidetodoc.com/presentation_image_h2/3ab3bfe38d67da5c0e450f71f19bcdf6/image-14.jpg)

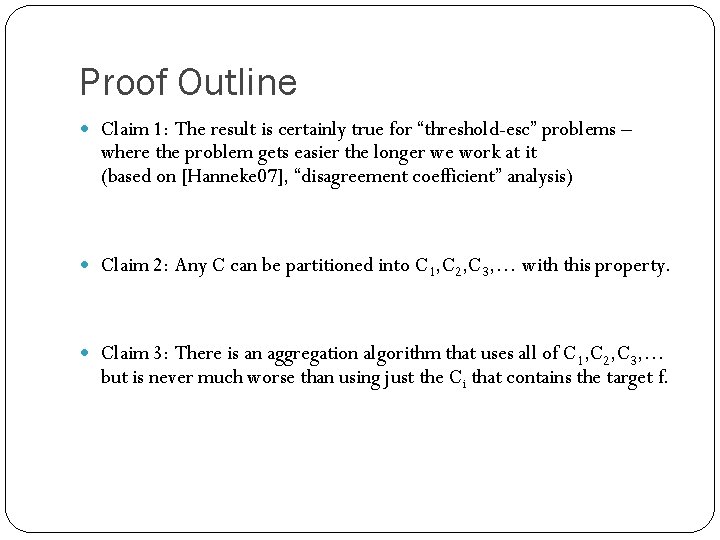

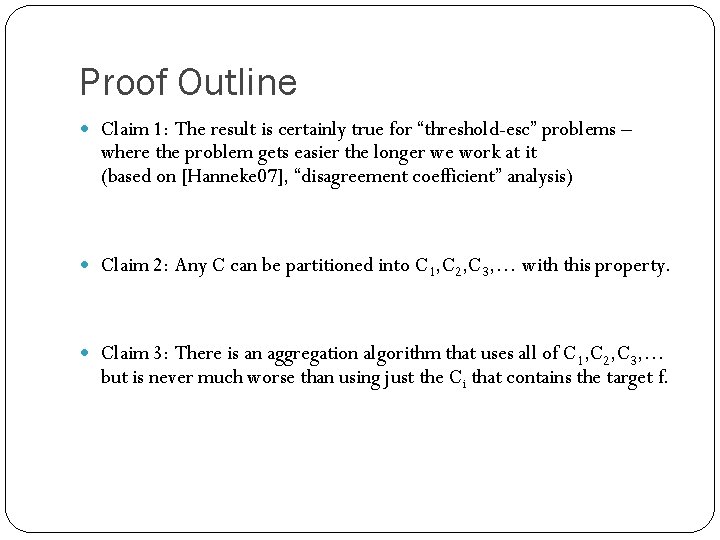

Active Learning Always Helps!
[HLW 94]: the passive algorithm has O(1/ε) sample complexity.

Proof Outline
- Claim 1: The result is certainly true for “threshold-esque” problems, where the problem gets easier the longer we work at it (based on the “disagreement coefficient” analysis of [Hanneke 07]).
- Claim 2: Any C can be partitioned into C1, C2, C3, … with this property.
- Claim 3: There is an aggregation algorithm that uses all of C1, C2, C3, … but is never much worse than using just the Ci that contains the target f.

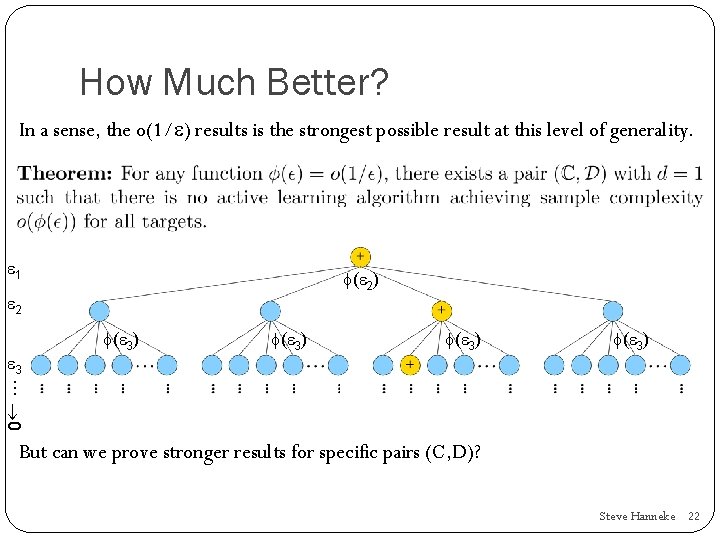

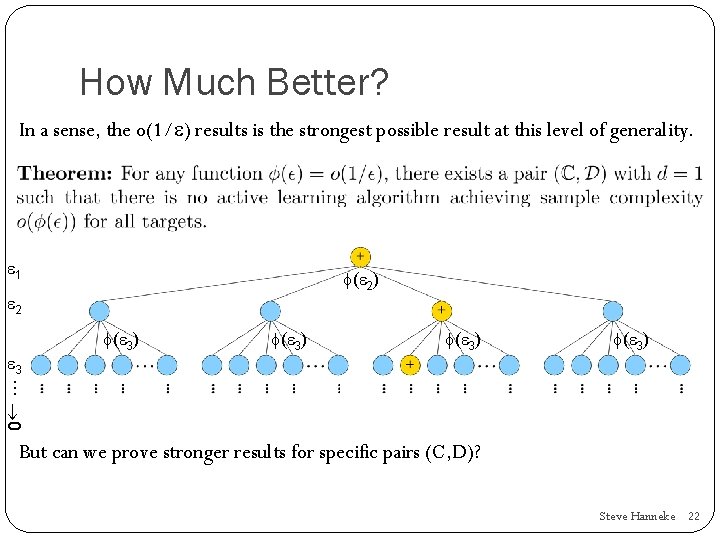

How Much Better?
In a sense, the o(1/ε) result is the strongest possible result at this level of generality. But can we prove stronger results for specific pairs (C, D)?
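For reference, o(1/ε) has its standard asymptotic meaning; the two example rates below are our own illustrations, not taken from the slides.

```latex
S(\varepsilon) = o(1/\varepsilon)
  \iff \lim_{\varepsilon \to 0^{+}} \varepsilon\, S(\varepsilon) = 0,
  \qquad \text{e.g.} \quad
  \varepsilon \cdot \varepsilon^{-1/2} = \sqrt{\varepsilon} \to 0,
  \qquad
  \varepsilon \cdot \log^{2}(1/\varepsilon) \to 0.
```

By contrast, a rate of order exactly 1/ε keeps ε·S(ε) bounded away from zero, so an o(1/ε) guarantee is strictly stronger than the O(1/ε) passive bound.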

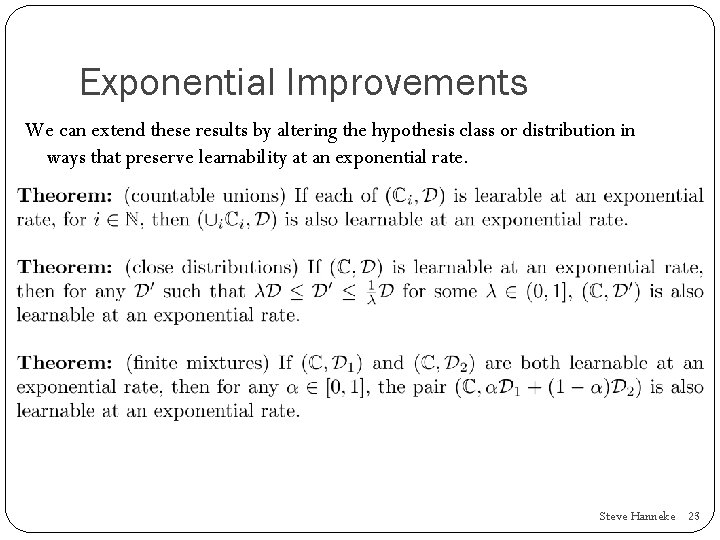

Exponential Improvements
It is often possible to achieve polylogarithmic sample complexity for all targets. For example:
- Linear separators, under uniform distributions on an r-sphere
- Axis-aligned rectangles, under uniform distributions on [0, 1]^r
- Decision trees with axis-aligned splits, under uniform distributions on [0, 1]^r
- Finite unions of intervals on the real line (arbitrary distributions)

Polylog sample complexities can also be preserved under some transformations: unions, “close” distributions, and mixtures of distributions.

Conclusions
- Active learning can always achieve a strictly superior asymptotic sample complexity compared to passive learning.
- For most natural learning problems, the improvements are exponential.
- Often the amount of improvement is not detectable from observable quantities (e.g., for intervals).

Open Problems
- Generalizing to agnostic learning
- General conditions for learnability at an exponential rate
- Reversing the order of quantifiers regarding D in the model

Thank You
Special thanks to Yishay Mansour for suggesting this perspective, and to Eyal Even-Dar, Michael Kearns, Larry Wasserman & Eric Xing for discussions and feedback.

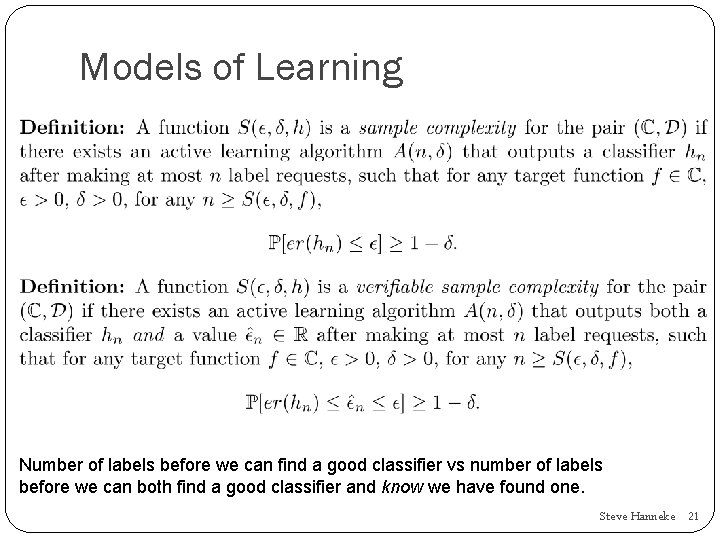

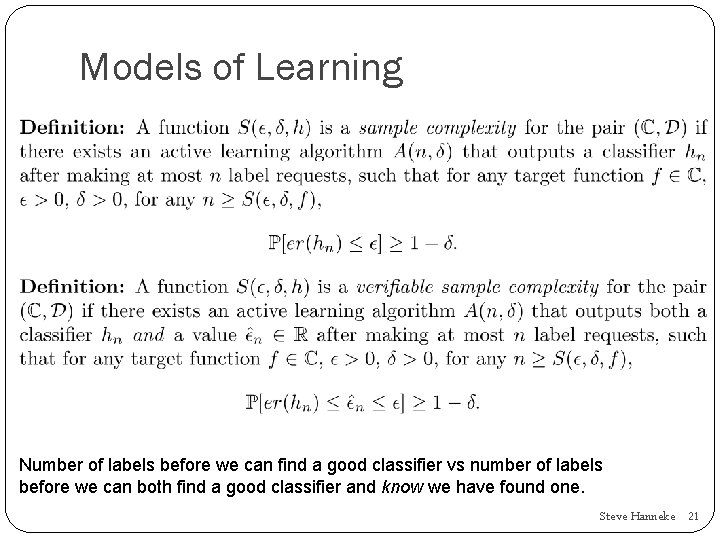

Models of Learning
The number of labels needed before we can find a good classifier, vs. the number of labels needed before we can both find a good classifier and know that we have found one.

How Much Better? In a sense, the o(1/ ) results is the strongest possible result at this level of generality. 1 ( 2) 2 3 ( 3) … 0 But can we prove stronger results for specific pairs (C, D)? Steve Hanneke 22

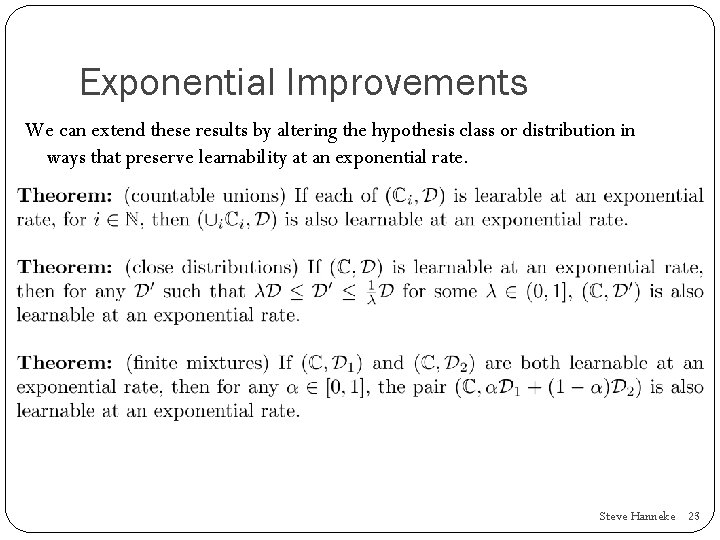

Exponential Improvements
We can extend these results by altering the hypothesis class or the distribution in ways that preserve learnability at an exponential rate.

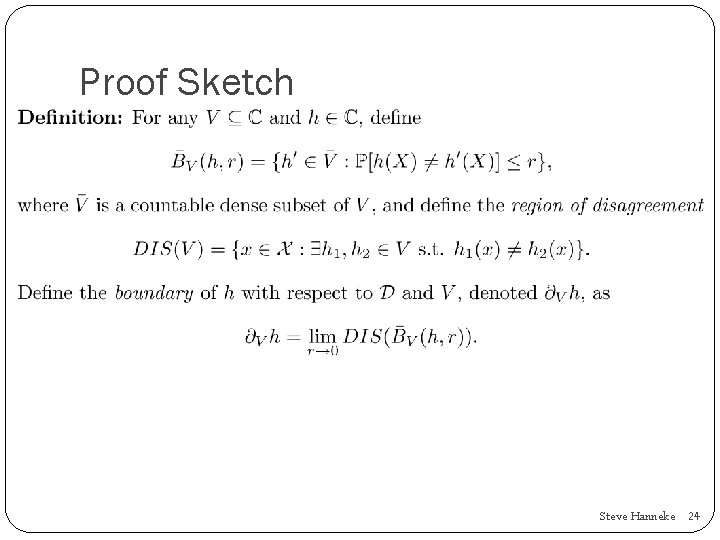

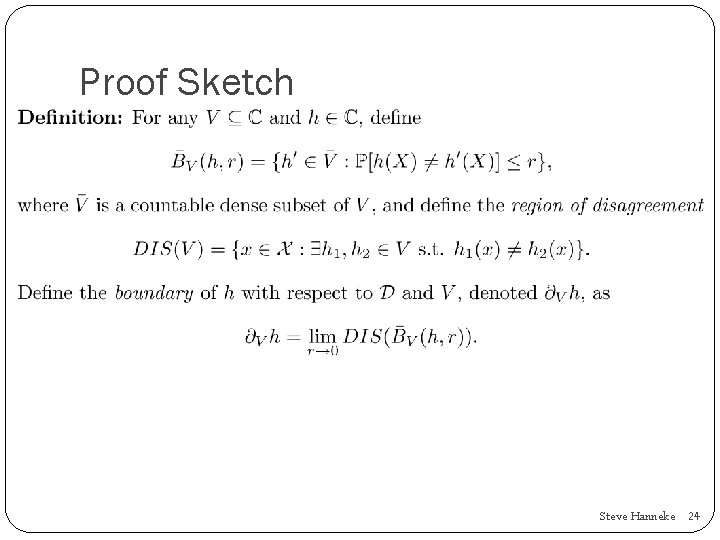

Proof Sketch

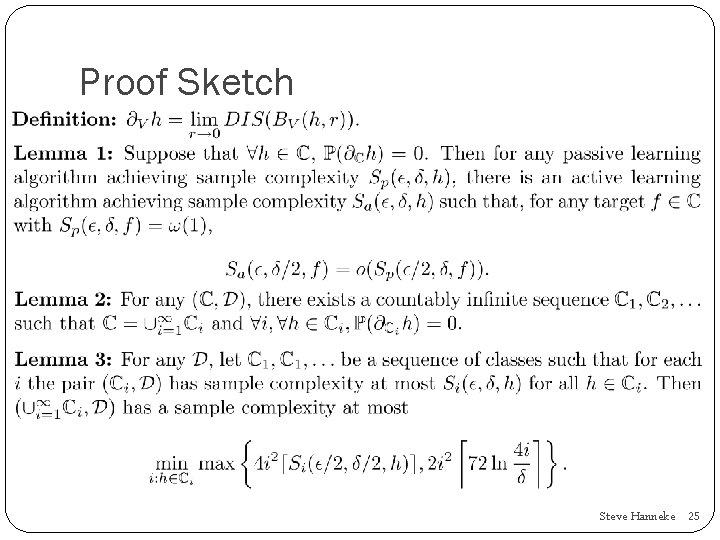

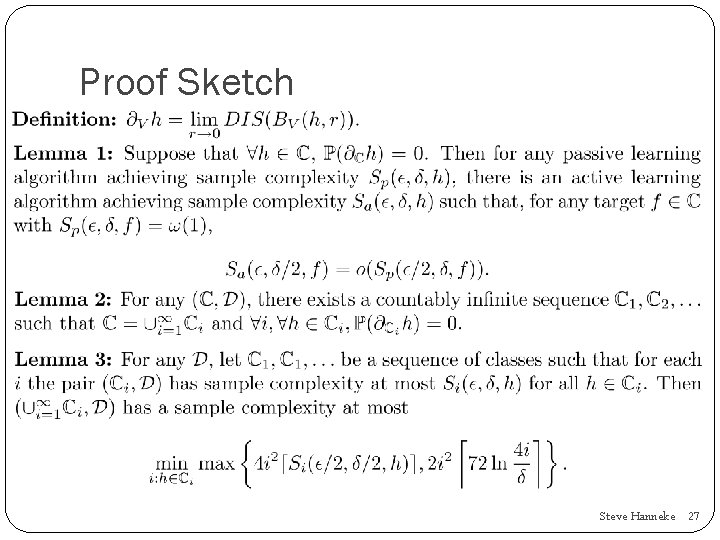

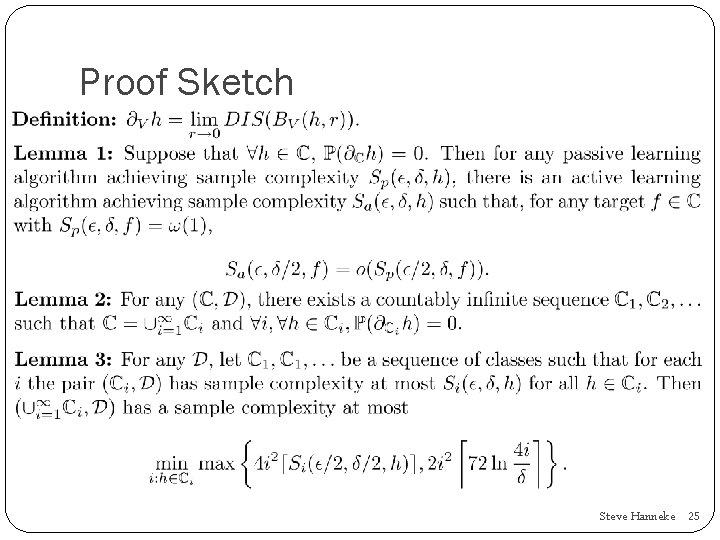

Proof Sketch Steve Hanneke 25

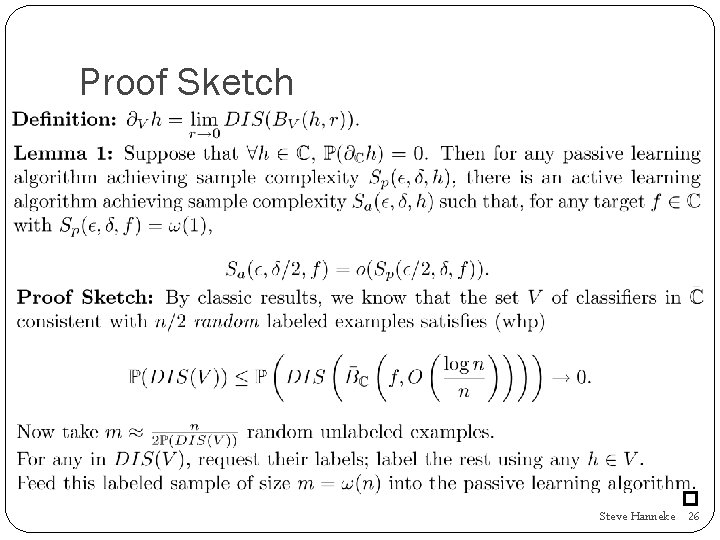

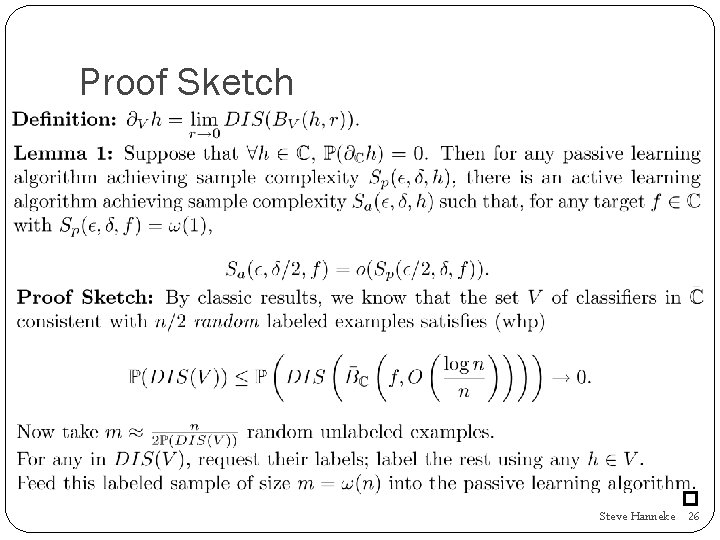

Proof Sketch Steve Hanneke 26

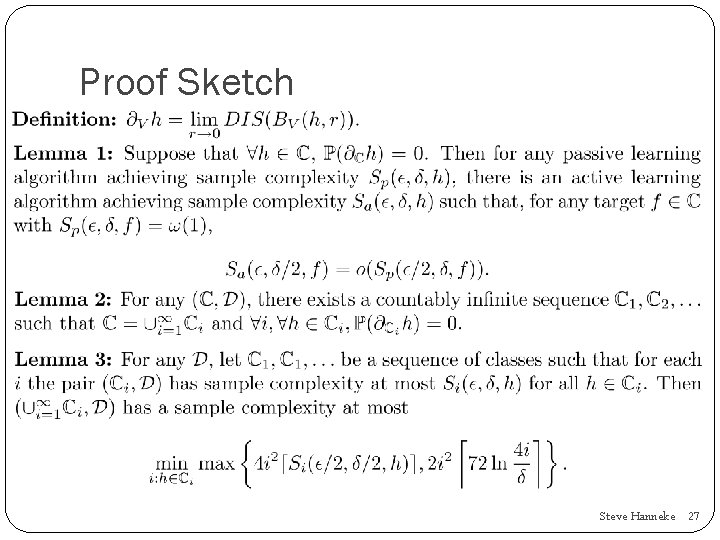

Proof Sketch Steve Hanneke 27

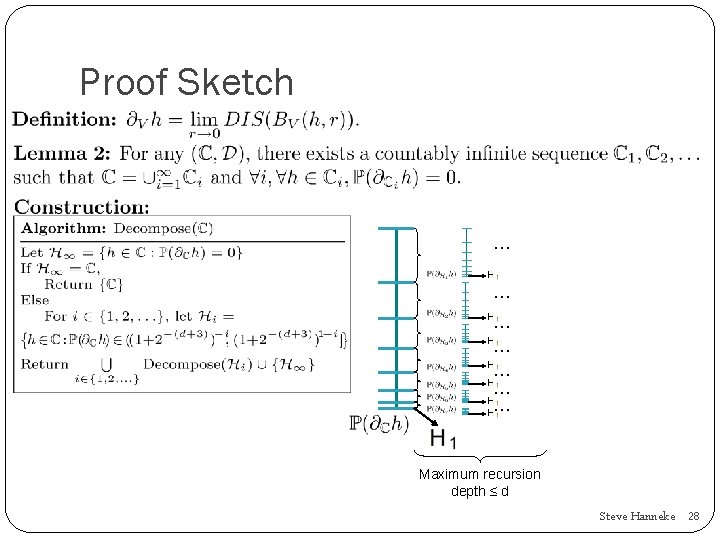

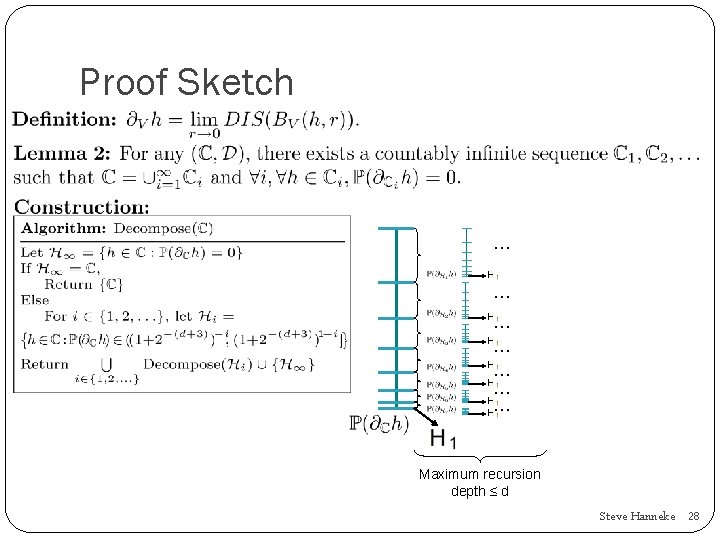

Proof Sketch (continued): maximum recursion depth ≤ d

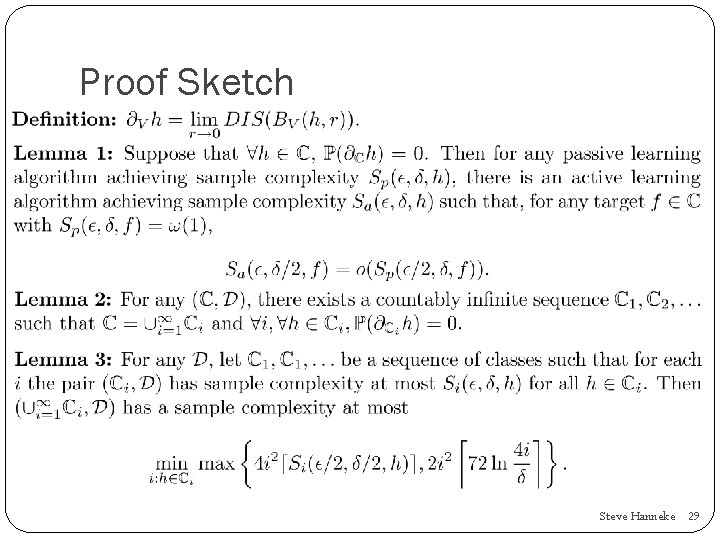

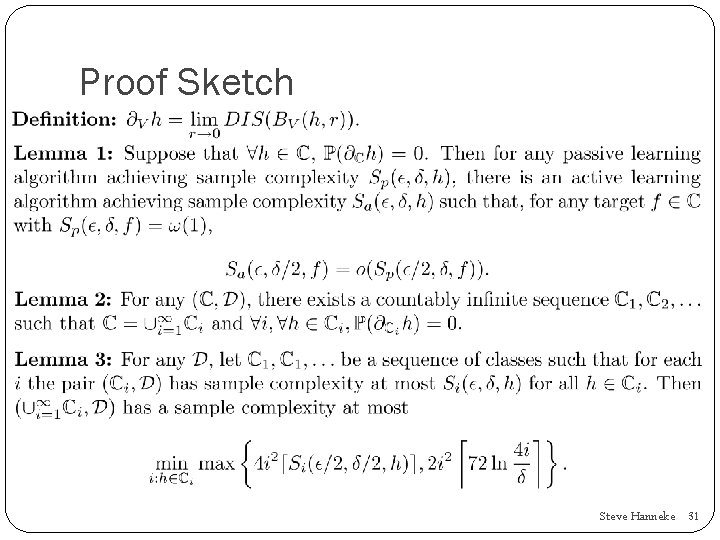

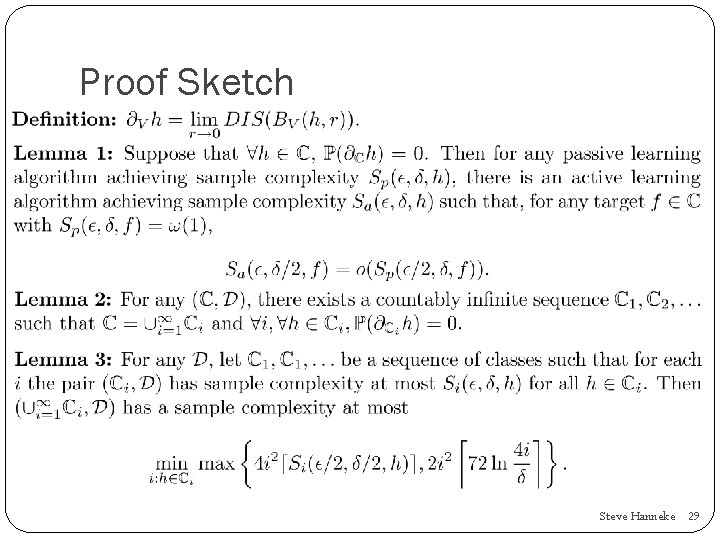

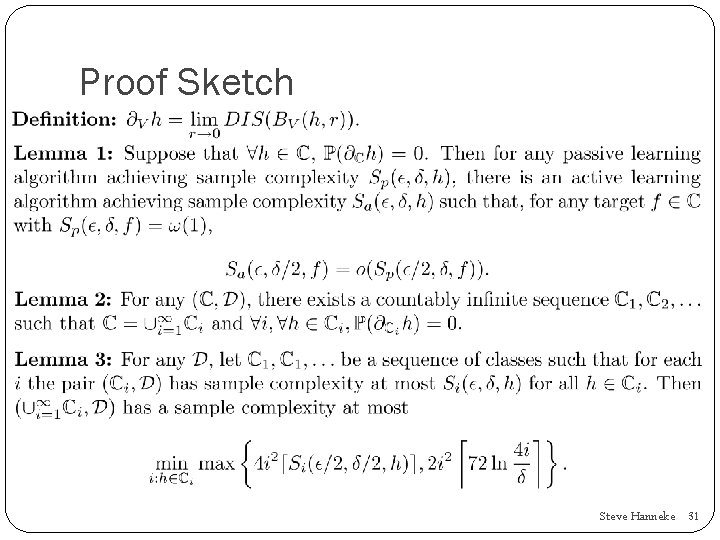

Proof Sketch Steve Hanneke 29

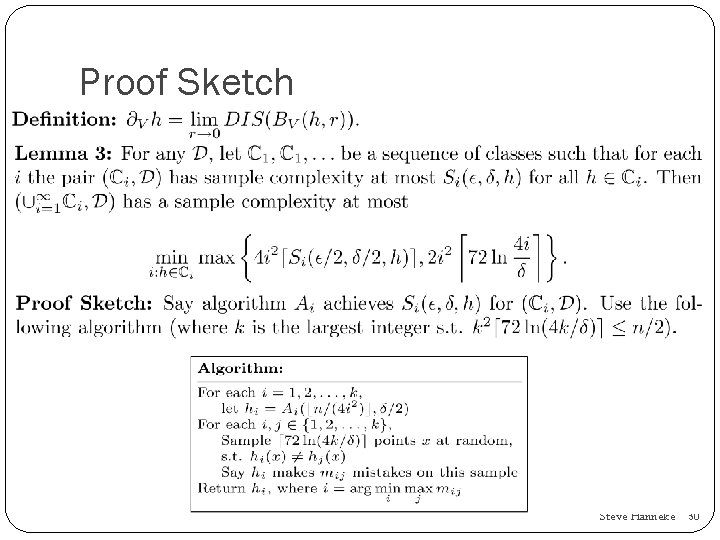

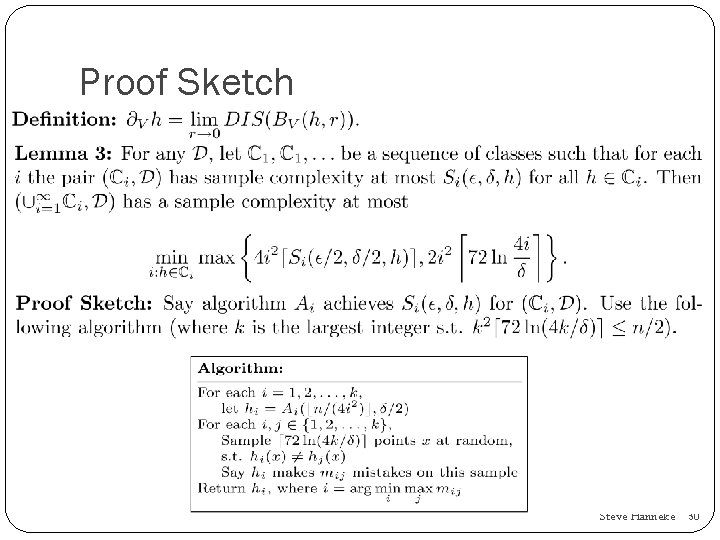

Proof Sketch Steve Hanneke 30

Proof Sketch Steve Hanneke 31