Active Learning for Reward Estimation in Inverse Reinforcement Learning


- Slides: 22

Active Learning for Reward Estimation in Inverse Reinforcement Learning

M. Lopes (ISR), Francisco Melo (INESC-ID), L. Montesano (ISR)

Learning from Demonstration

- Natural/intuitive
- Does not require expert knowledge of the system
- Does not require tuning of parameters
- ...

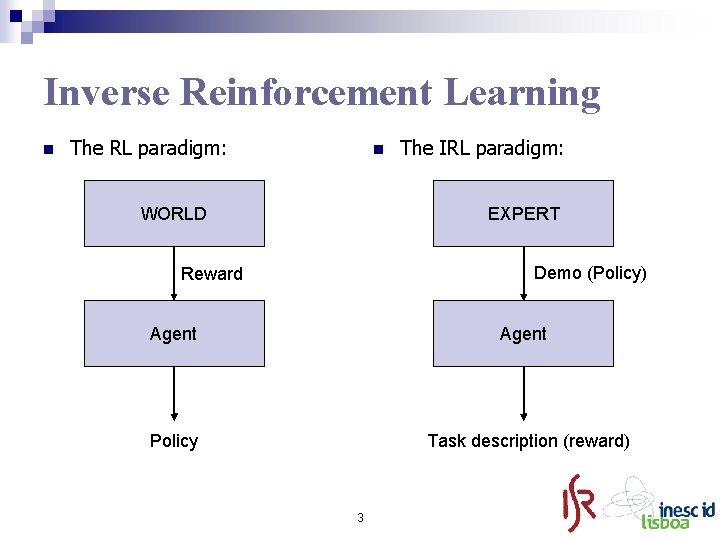

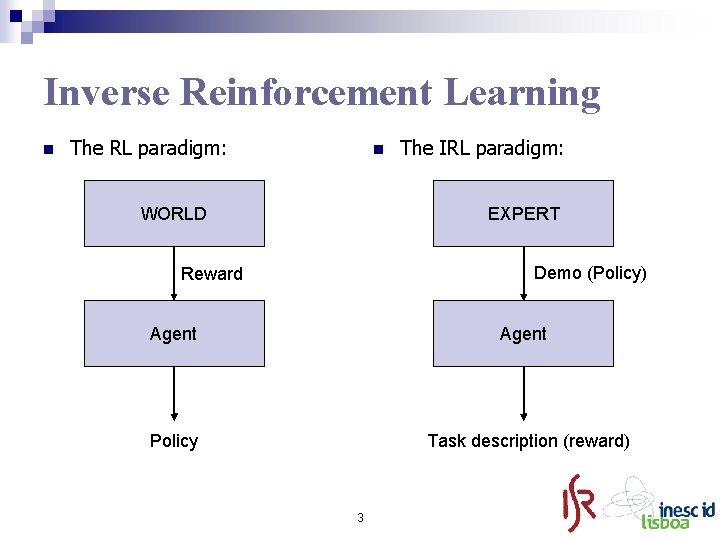

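Inverse Reinforcement Learning

- The RL paradigm: the agent interacts with the world and, given a task description (reward), computes a policy
- The IRL paradigm: the agent is given an expert's demonstration (policy) and recovers the task description (reward)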

However...

- IRL is an ill-defined problem:
  - One reward, multiple policies
  - One policy, multiple rewards
- Complete demonstrations are often impractical

By actively querying the demonstrator...

- The agent gains the ability to choose the “best” situations to be demonstrated
- Less extensive demonstrations are required

Outline

- Motivation
- Background
- Active IRL
- Results
- Conclusions

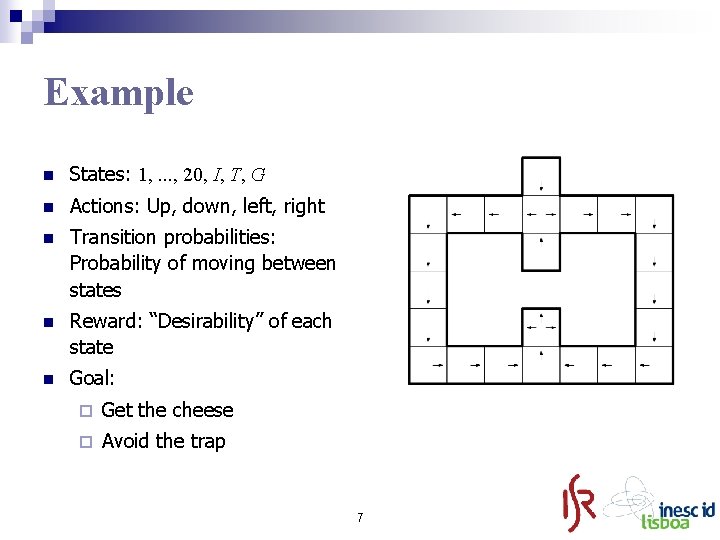

Markov Decision Processes

A Markov decision process is a tuple $(X, A, P, r, \gamma)$:

- Set of possible states of the world: $X = \{1, \dots, |X|\}$
- Set of possible actions of the agent: $A = \{1, \dots, |A|\}$
- State evolves according to the probabilities $P[X_{t+1} = y \mid X_t = x, A_t = a] = P_a(x, y)$
- Reward $r$ defines the task of the agent

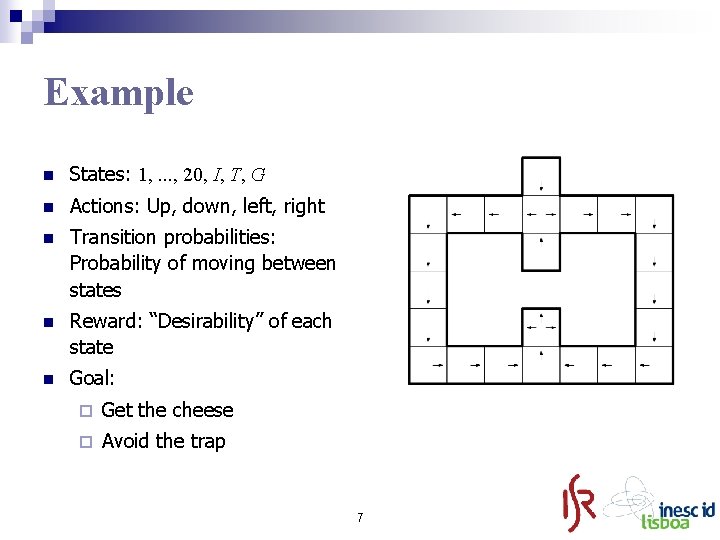

Example

- States: 1, ..., 20, I, T, G
- Actions: up, down, left, right
- Transition probabilities: probability of moving between states
- Reward: “desirability” of each state
- Goal:
  - Get the cheese
  - Avoid the trap

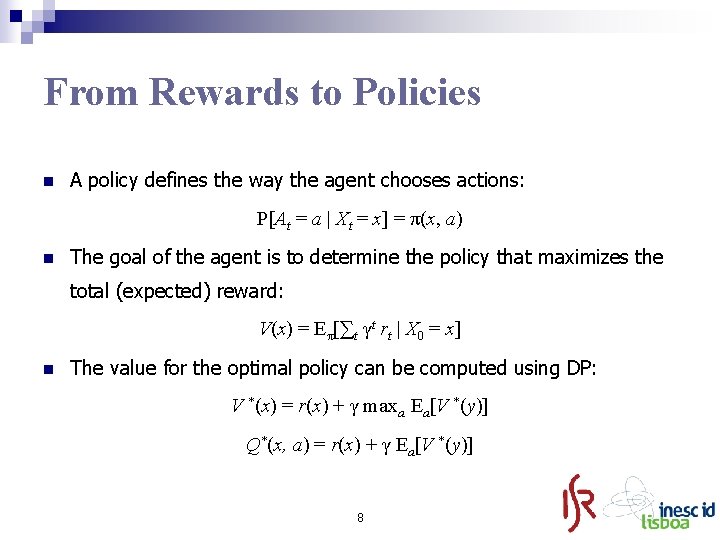

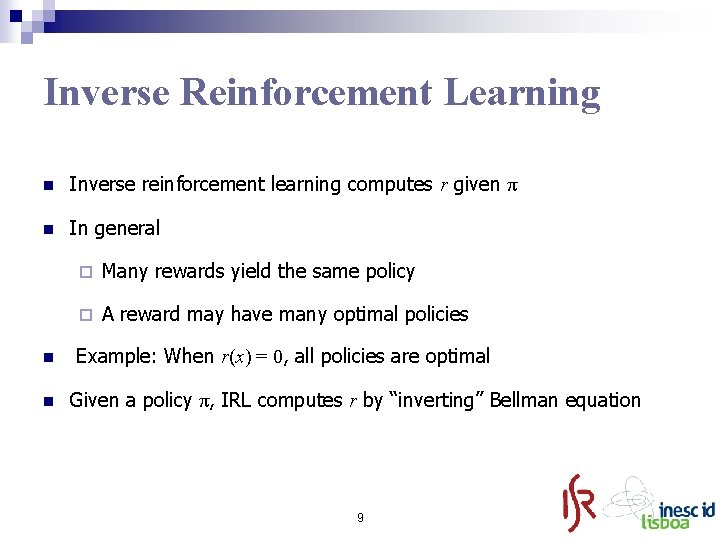

From Rewards to Policies

- A policy defines the way the agent chooses actions: $P[A_t = a \mid X_t = x] = \pi(x, a)$
- The goal of the agent is to determine the policy that maximizes the total (expected) reward: $V(x) = E_\pi\left[\sum_t \gamma^t r_t \mid X_0 = x\right]$
- The value for the optimal policy can be computed using DP:
  - $V^*(x) = r(x) + \gamma \max_a E_a[V^*(y)]$
  - $Q^*(x, a) = r(x) + \gamma E_a[V^*(y)]$
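The DP recursion for $V^*$ and $Q^*$ can be illustrated with a short value-iteration sketch. This is only a minimal illustration, not the presenters' code: the function name `value_iteration`, the array layout `P[a, x, y]`, the stopping tolerance, and the three-state corridor are all assumptions made for the example.

```python
import numpy as np

def value_iteration(P, r, gamma, tol=1e-8, max_iter=10_000):
    """Iterate V(x) <- r(x) + gamma * max_a E_a[V(y)] until it stops changing.

    P: array of shape (|A|, |X|, |X|) with P[a, x, y] = P_a(x, y)
    r: array of shape (|X|,) holding the state reward r(x)
    Returns V* of shape (|X|,) and Q* of shape (|X|, |A|).
    """
    V = np.zeros(r.shape[0])
    for _ in range(max_iter):
        # Q[x, a] = r(x) + gamma * sum_y P_a(x, y) V(y)
        Q = r[:, None] + gamma * np.einsum("axy,y->xa", P, V)
        V_new = Q.max(axis=1)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    Q = r[:, None] + gamma * np.einsum("axy,y->xa", P, V)
    return V, Q

# Hypothetical 3-state corridor: action 0 moves left, action 1 moves right,
# walls keep the agent in place, and only the right-most state is rewarded.
P = np.zeros((2, 3, 3))
for x in range(3):
    P[0, x, max(x - 1, 0)] = 1.0
    P[1, x, min(x + 1, 2)] = 1.0
r = np.array([0.0, 0.0, 1.0])

V, Q = value_iteration(P, r, gamma=0.9)
greedy = Q.argmax(axis=1)  # greedy (optimal) action index per state
```

In this toy corridor the greedy policy moves right in every state, since the only reward sits at the right end.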

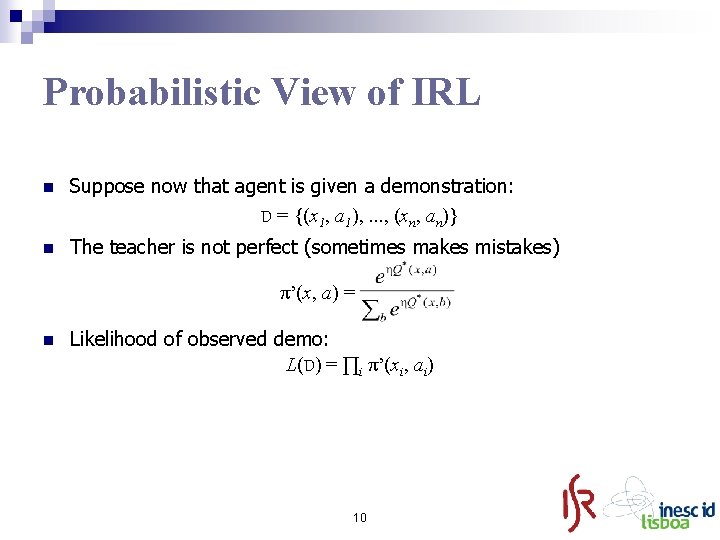

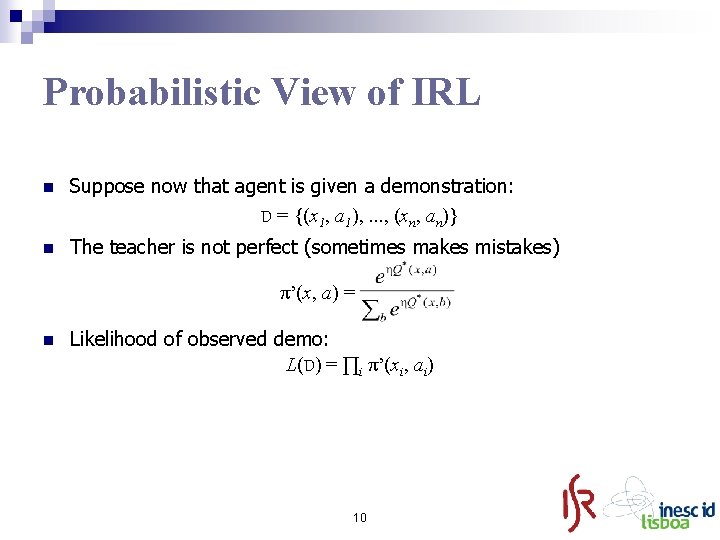

Inverse Reinforcement Learning

- Inverse reinforcement learning computes $r$ given $\pi$
- In general:
  - Many rewards yield the same policy
  - A reward may have many optimal policies
- Example: when $r(x) = 0$, all policies are optimal
- Given a policy $\pi$, IRL computes $r$ by “inverting” the Bellman equation

Probabilistic View of IRL

- Suppose now that the agent is given a demonstration: $D = \{(x_1, a_1), \dots, (x_n, a_n)\}$
- The teacher is not perfect (sometimes makes mistakes): $\pi'(x, a) = \dfrac{e^{Q(x, a)}}{\sum_b e^{Q(x, b)}}$
- Likelihood of the observed demo: $L(D) = \prod_i \pi'(x_i, a_i)$
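A small numerical sketch may help make the noisy-teacher model concrete. It assumes the exponential weights are normalized over actions (a softmax) and that `Q` is stored as an array indexed `Q[x, a]`; the function names and the toy Q-values are hypothetical.

```python
import numpy as np

def softmax_policy(Q):
    """Return pi'(x, a) proportional to exp(Q(x, a)), normalized over actions.

    Q: array of shape (|X|, |A|).
    """
    z = Q - Q.max(axis=1, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def log_likelihood(Q, demo):
    """log L(D) = sum_i log pi'(x_i, a_i) for demo = [(x_1, a_1), ...]."""
    pi = softmax_policy(Q)
    return float(sum(np.log(pi[x, a]) for x, a in demo))

# Toy Q-values (assumed): in state 0 action 1 is preferred 3:1,
# in state 1 both actions are equally good.
Q = np.array([[0.0, np.log(3.0)],
              [0.0, 0.0]])
demo = [(0, 1), (1, 0)]

pi = softmax_policy(Q)
L = np.exp(log_likelihood(Q, demo))  # 0.75 * 0.5
```

Working with the log-likelihood rather than the product itself avoids underflow on long demonstrations; the maximizer is the same.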

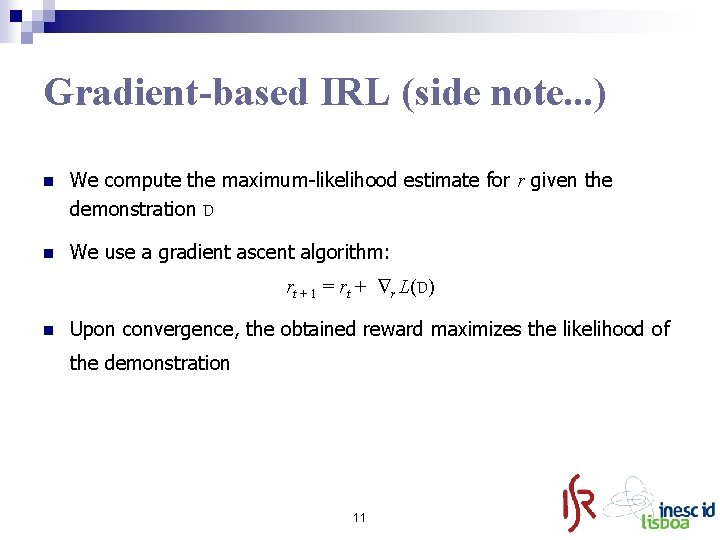

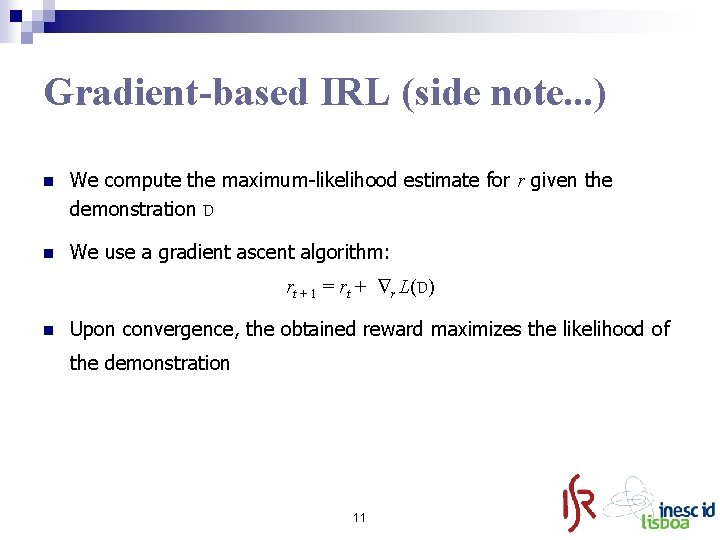

Gradient-based IRL (side note...)

- We compute the maximum-likelihood estimate for $r$ given the demonstration $D$
- We use a gradient ascent algorithm: $r_{t+1} = r_t + \nabla_r L(D)$
- Upon convergence, the obtained reward maximizes the likelihood of the demonstration
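The update rule can be sketched on a toy problem. The sketch below departs from the slide in ways that should be read as assumptions: it ascends the log-likelihood instead of $L(D)$ (same maximizer), uses a fixed step size, approximates the gradient by finite differences rather than an analytic expression, runs a fixed number of steps instead of testing convergence, and uses a hypothetical three-state corridor. All function names are illustrative.

```python
import numpy as np

def q_values(P, r, gamma=0.9, iters=200):
    """Q*(x, a) for state reward r, via a fixed number of DP sweeps."""
    V = np.zeros(len(r))
    for _ in range(iters):
        Q = r[:, None] + gamma * np.einsum("axy,y->xa", P, V)
        V = Q.max(axis=1)
    return r[:, None] + gamma * np.einsum("axy,y->xa", P, V)

def log_lik(P, r, demo, gamma=0.9):
    """Log-likelihood of the demo under the softmax teacher pi' ~ exp(Q*)."""
    Q = q_values(P, r, gamma)
    Q = Q - Q.max(axis=1, keepdims=True)
    log_pi = Q - np.log(np.exp(Q).sum(axis=1, keepdims=True))
    return float(sum(log_pi[x, a] for x, a in demo))

def gradient_ascent(P, demo, n_states, step=0.1, n_steps=50, eps=1e-4):
    """r <- r + step * grad, with a forward-difference gradient estimate."""
    r = np.zeros(n_states)
    for _ in range(n_steps):
        base = log_lik(P, r, demo)
        grad = np.zeros(n_states)
        for i in range(n_states):
            r_eps = r.copy()
            r_eps[i] += eps
            grad[i] = (log_lik(P, r_eps, demo) - base) / eps
        r = r + step * grad
    return r

# Hypothetical corridor: action 0 = left, action 1 = right, walls block.
P = np.zeros((2, 3, 3))
for x in range(3):
    P[0, x, max(x - 1, 0)] = 1.0
    P[1, x, min(x + 1, 2)] = 1.0

demo = [(0, 1), (1, 1)]  # the teacher moves right in states 0 and 1
r_hat = gradient_ascent(P, demo, n_states=3)
```

Because the demonstrated actions all point right, the estimated reward ends up higher at the right end of the corridor than at the left end.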

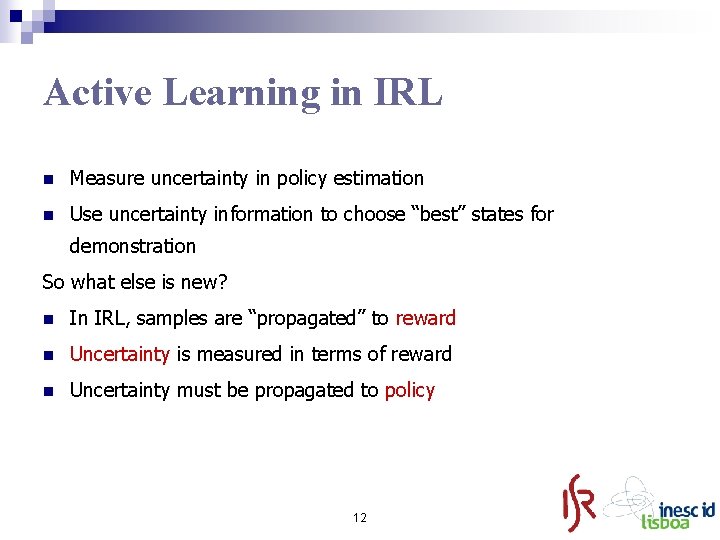

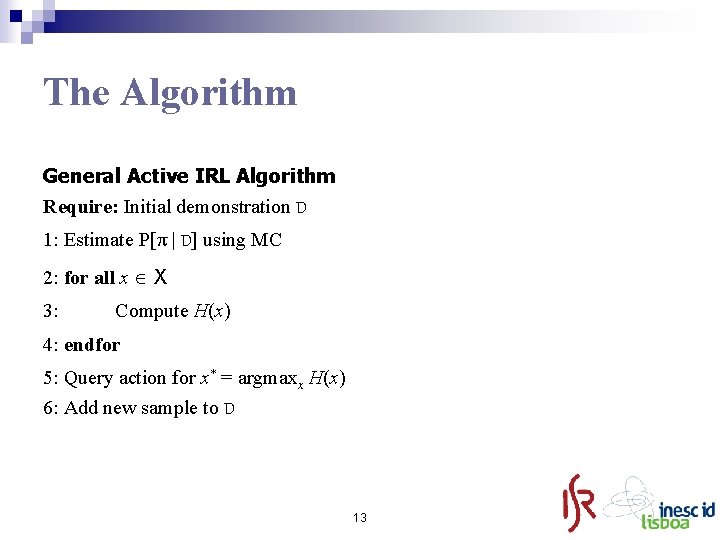

Active Learning in IRL

- Measure uncertainty in policy estimation
- Use uncertainty information to choose the “best” states for demonstration

So what else is new?

- In IRL, samples are “propagated” to reward
- Uncertainty is measured in terms of reward
- Uncertainty must be propagated to policy

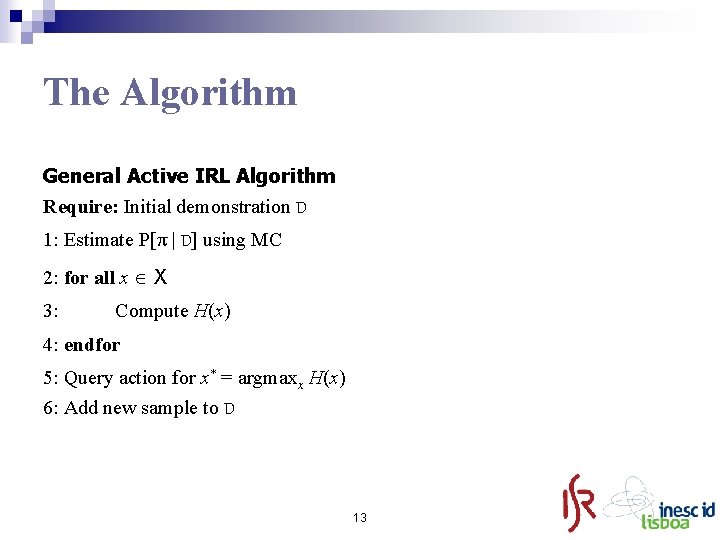

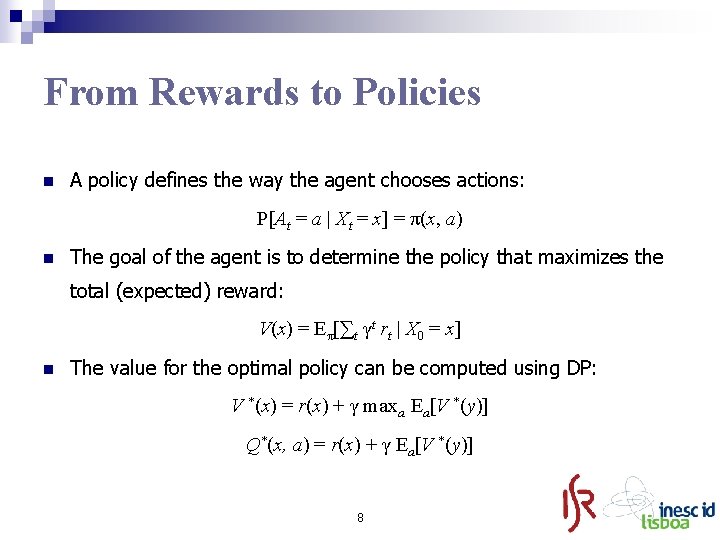

The Algorithm

General Active IRL Algorithm

Require: Initial demonstration $D$

1. Estimate $P[\pi \mid D]$ using MC
2. for all $x \in X$
3. Compute $H(x)$
4. endfor
5. Query action for $x^* = \arg\max_x H(x)$
6. Add new sample to $D$
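The steps above can be sketched as a query loop. This is a skeleton under stated assumptions, not the authors' implementation: the outer loop over several queries, the callables `estimate_entropy` and `query_expert`, and the toy stand-ins used to exercise the loop are all hypothetical. In a real system `estimate_entropy` would wrap the MC estimate of $P[\pi \mid D]$ and the computation of $H(x)$.

```python
def active_irl(demo, states, estimate_entropy, query_expert, n_queries):
    """Repeat steps 1-6 of the algorithm n_queries times.

    estimate_entropy(x, demo) -> float : uncertainty H(x) given the current demo
    query_expert(x) -> action          : the demonstrator's action in state x
    """
    demo = list(demo)
    for _ in range(n_queries):
        # Steps 1-4: compute the uncertainty H(x) for every state.
        H = {x: estimate_entropy(x, demo) for x in states}
        # Step 5: query the demonstrator at the most uncertain state.
        x_star = max(H, key=H.get)
        # Step 6: add the new sample to the demonstration.
        demo.append((x_star, query_expert(x_star)))
    return demo

# Toy stand-ins (assumptions): demonstrated states have zero uncertainty,
# other states are more uncertain the closer they are to state 0,
# and the expert always answers with action 1.
def toy_entropy(x, demo):
    seen = {s for s, _ in demo}
    return 0.0 if x in seen else 1.0 / (1 + x)

def toy_expert(x):
    return 1

demo_out = active_irl([(0, 1)], range(5), toy_entropy, toy_expert, n_queries=3)
```

Passing the uncertainty estimate and the expert as callables keeps the loop independent of how the posterior is approximated.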

![The Selection Criterion](https://slidetodoc.com/presentation_image_h/790d260bfb6bf93887f79c338fb3742f/image-14.jpg)

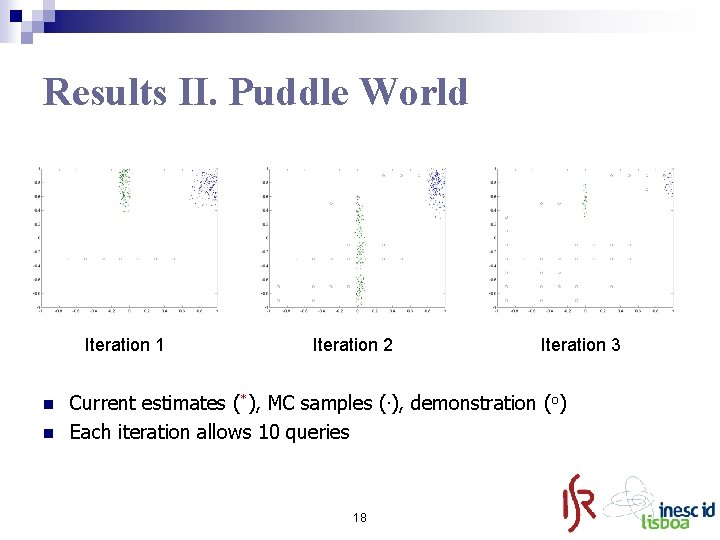

The Selection Criterion

- The distribution $P[r \mid D]$ induces a distribution on policies
- Use MC to approximate $P[r \mid D]$
- For each $(x, a)$, $P[r \mid D]$ induces a distribution on $\pi(x, a)$: $\mu_{xa}(p) = P[\pi(x, a) = p \mid D]$
- Compute the entropy $H(\mu_{xa})$ for each action $a_1, a_2, \dots, a_N$
- Compute the per-state average entropy: $H(x) = \frac{1}{|A|} \sum_a H(\mu_{xa})$
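One way to approximate this criterion from MC samples is sketched below. The slide does not say how the entropy of $\mu_{xa}$ is evaluated, so the histogram discretization of $p \in [0, 1]$ into `n_bins` equal bins is an assumption, as are the function name `state_entropies` and the toy samples.

```python
import numpy as np

def state_entropies(policy_samples, n_bins=10):
    """Per-state average entropy H(x) = 1/|A| * sum_a H(mu_xa).

    policy_samples: array of shape (N, |X|, |A|); each slice is one policy
    pi(x, a) computed from a reward sampled from P[r | D].
    mu_xa is approximated by a histogram over n_bins equal bins of [0, 1].
    """
    n, n_states, n_actions = policy_samples.shape
    # Bin index of each sampled probability; p = 1.0 falls in the last bin.
    bins = np.minimum((policy_samples * n_bins).astype(int), n_bins - 1)
    H = np.zeros(n_states)
    for x in range(n_states):
        for a in range(n_actions):
            mu = np.bincount(bins[:, x, a], minlength=n_bins) / n
            nz = mu[mu > 0]  # skip empty bins, treating 0 * log 0 as 0
            H[x] += -(nz * np.log(nz)).sum()
    return H / n_actions

# Toy samples (assumed): every sample agrees on state 0,
# while the samples split evenly between two policies in state 1.
agree = [[0.9, 0.1], [0.9, 0.1]]
flip = [[0.9, 0.1], [0.1, 0.9]]
samples = np.array([agree, flip, agree, flip])

H = state_entropies(samples)
x_star = int(np.argmax(H))  # state to query next
```

Here state 0 has zero entropy because all samples agree, so the query goes to state 1, where the sampled policies disagree.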

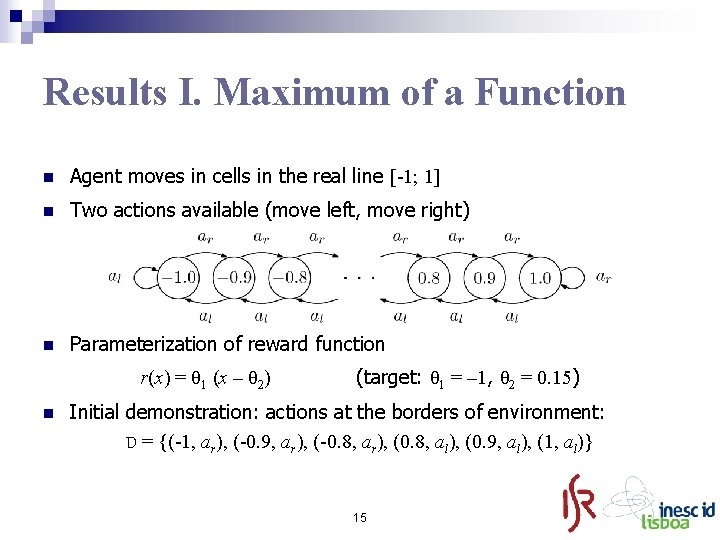

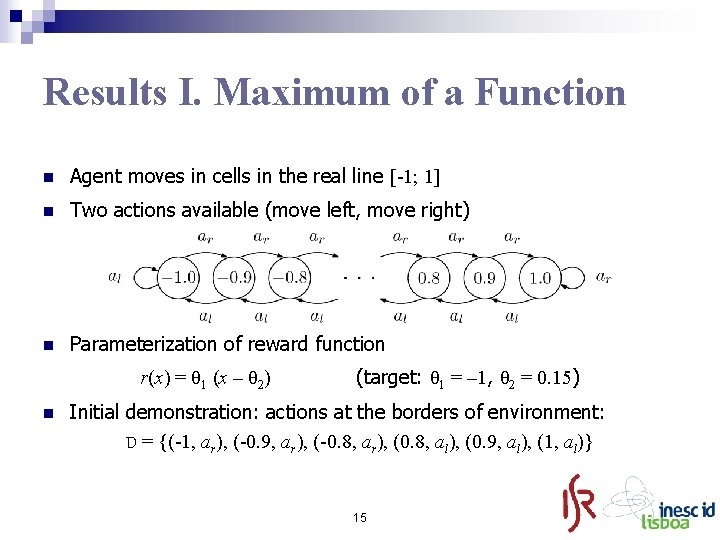

Results I. Maximum of a Function

- Agent moves in cells in the real line $[-1, 1]$
- Two actions available (move left, move right)
- Parameterization of reward function: $r(x) = \theta_1 (x - \theta_2)$ (target: $\theta_1 = -1$, $\theta_2 = 0.15$)
- Initial demonstration: actions at the borders of the environment: $D = \{(-1, a_r), (-0.9, a_r), (-0.8, a_r), (0.8, a_l), (0.9, a_l), (1, a_l)\}$

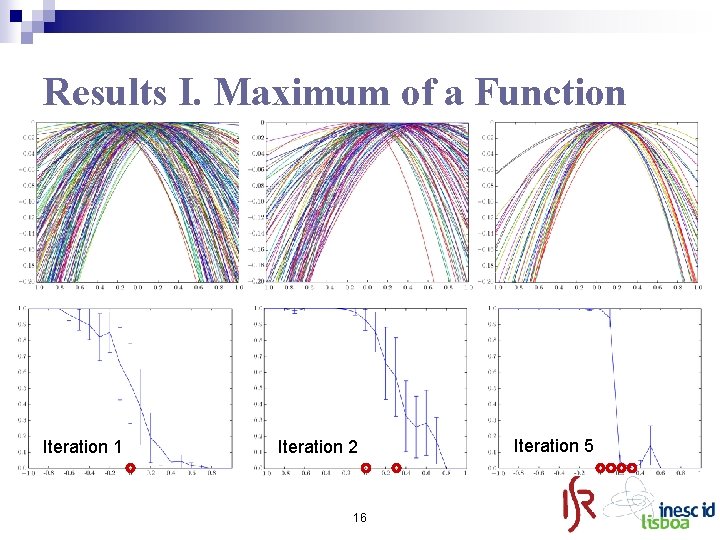

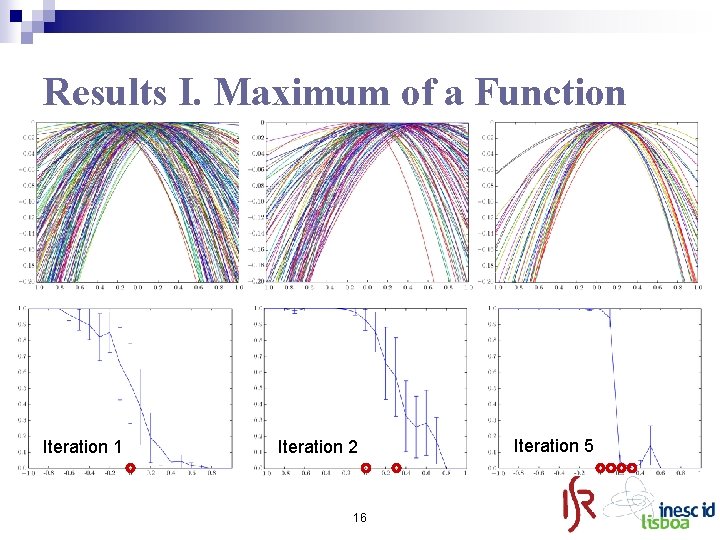

Results I. Maximum of a Function (figure panels: Iteration 1, Iteration 2, Iteration 5)

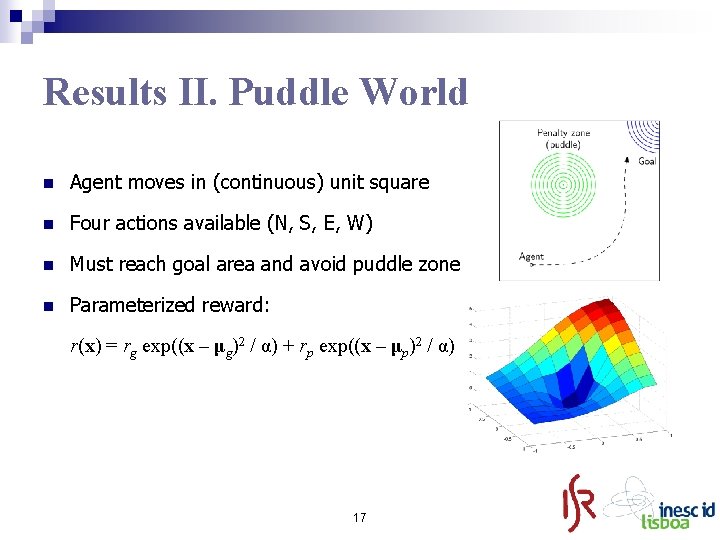

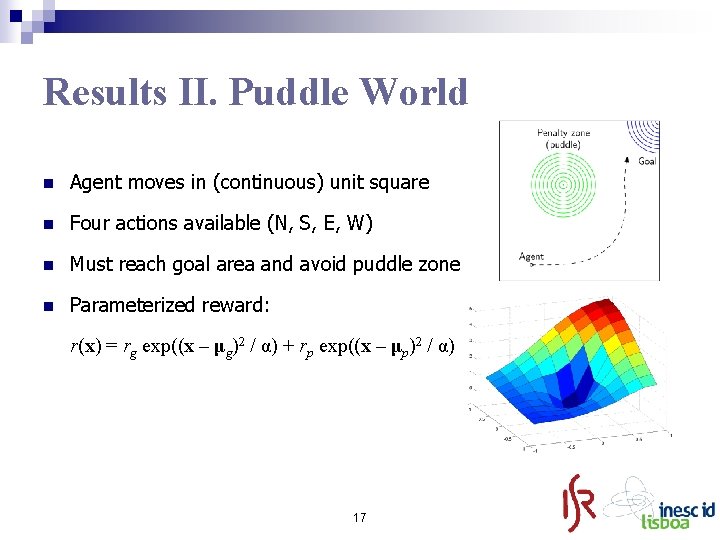

Results II. Puddle World

- Agent moves in the (continuous) unit square
- Four actions available (N, S, E, W)
- Must reach the goal area and avoid the puddle zone
- Parameterized reward: $r(x) = r_g \exp((x - \mu_g)^2 / \alpha) + r_p \exp((x - \mu_p)^2 / \alpha)$

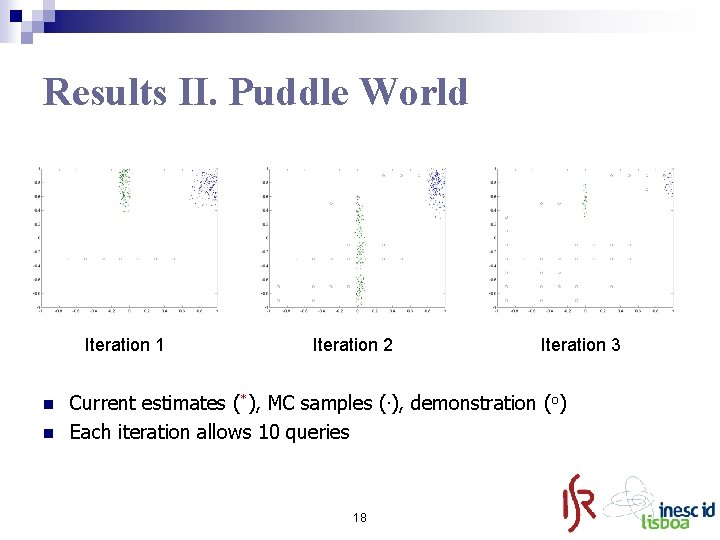

Results II. Puddle World (figure panels: Iteration 1, Iteration 2, Iteration 3)

- Current estimates (*), MC samples (.), demonstration (o)
- Each iteration allows 10 queries

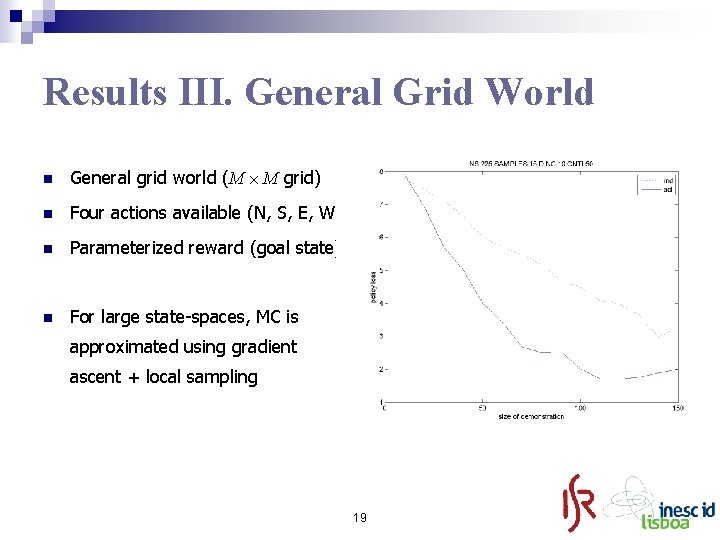

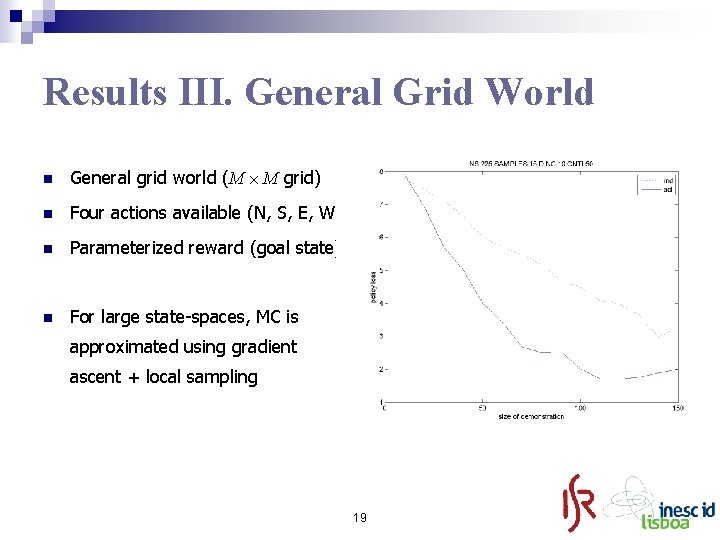

Results III. General Grid World

- General grid world ($M \times M$ grid)
- Four actions available (N, S, E, W)
- Parameterized reward (goal state)
- For large state-spaces, MC is approximated using gradient ascent + local sampling

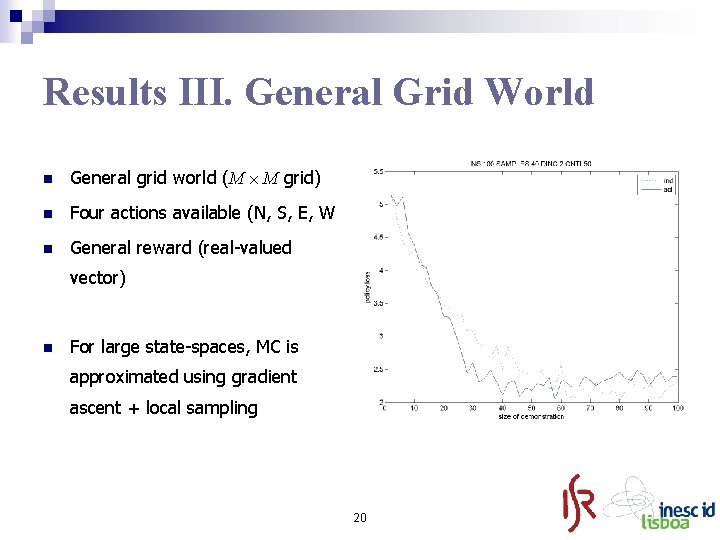

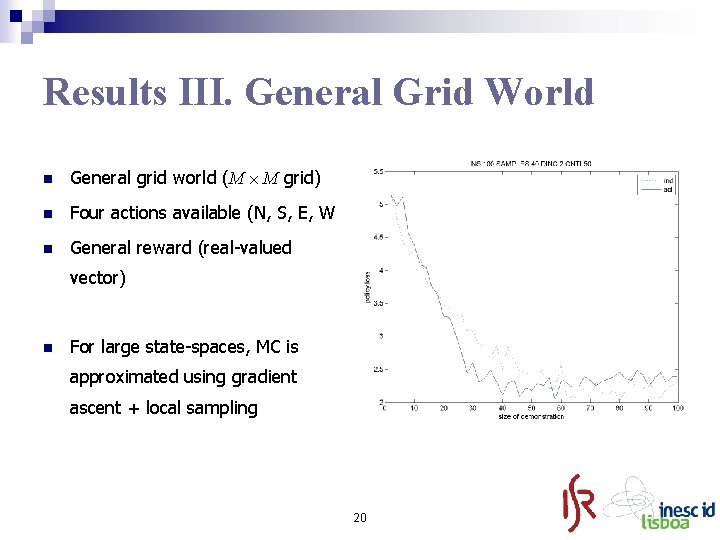

Results III. General Grid World

- General grid world ($M \times M$ grid)
- Four actions available (N, S, E, W)
- General reward (real-valued vector)
- For large state-spaces, MC is approximated using gradient ascent + local sampling

Conclusions

- Experimental results show that active sampling in IRL can help decrease the number of demonstrated samples
- Active sampling in IRL translates reward uncertainty into policy uncertainty
- Prior knowledge (about reward parameterization) impacts the usefulness of active IRL
- Experimental results indicate that active is not worse than random
- We’re currently studying theoretical properties of Active IRL

Thank you.