SESSION CODE WSV 302 R 2 Wenming Ye

- Slides: 77

SESSION CODE: WSV302 R2. Wen-ming Ye, Technical Evangelist (HPC), Microsoft Corporation

Session Objectives: Key Takeaways Yes, a USB key takeaway for staying

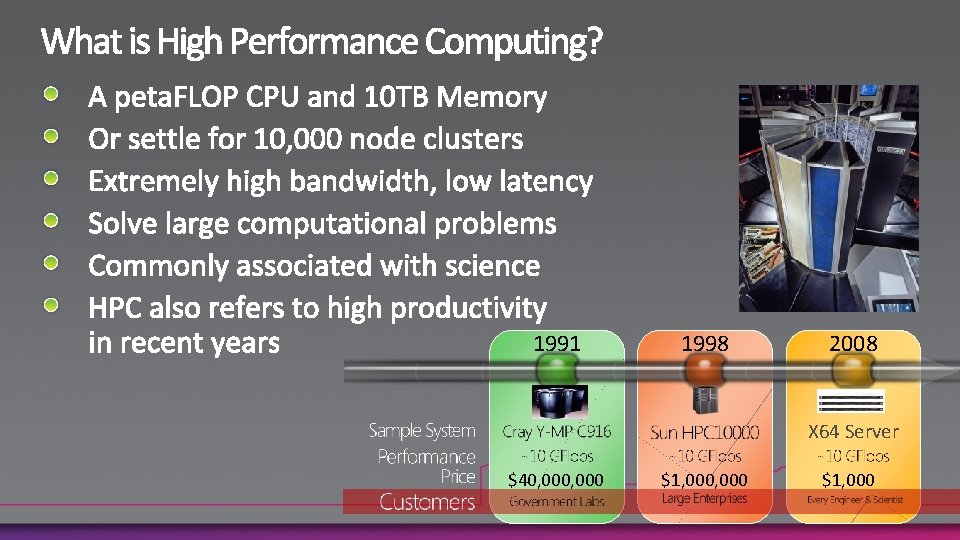

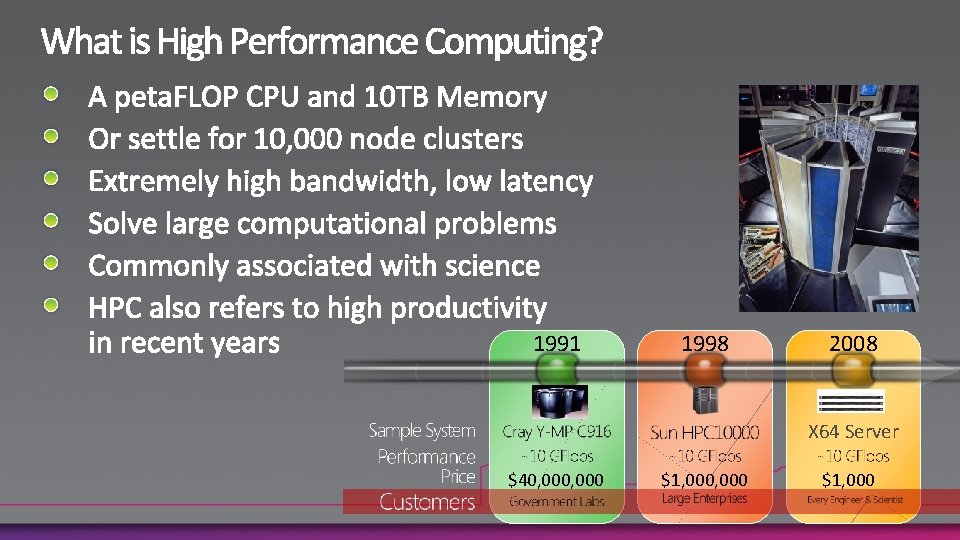

1991 / 1998 / 2008; x64 Server; $40,000; $1,000

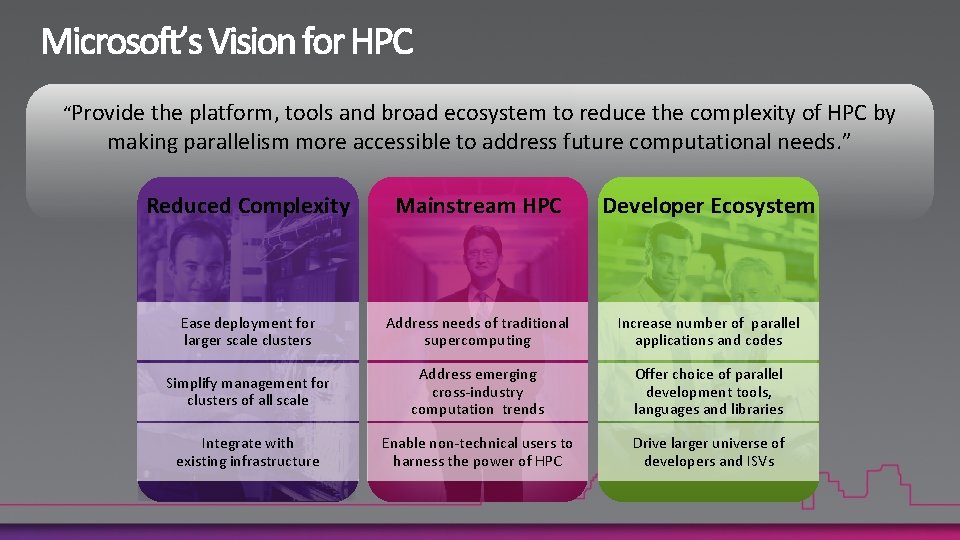

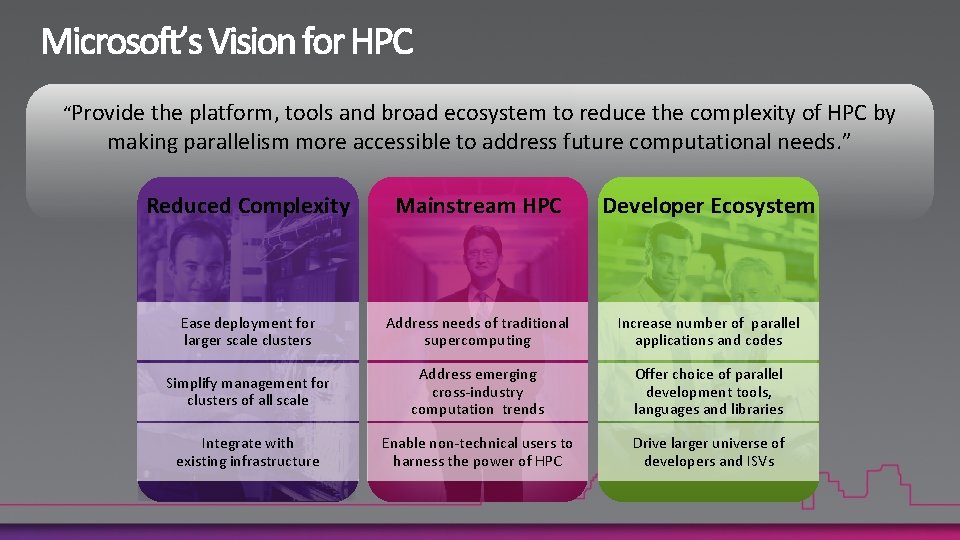

“Provide the platform, tools and broad ecosystem to reduce the complexity of HPC by making parallelism more accessible to address future computational needs.”

Reduced Complexity:
- Ease deployment for larger scale clusters
- Simplify management for clusters of all scale
- Integrate with existing infrastructure

Mainstream HPC:
- Address needs of traditional supercomputing
- Address emerging cross-industry computation trends
- Enable non-technical users to harness the power of HPC

Developer Ecosystem:
- Increase number of parallel applications and codes
- Offer choice of parallel development tools, languages and libraries
- Drive larger universe of developers and ISVs

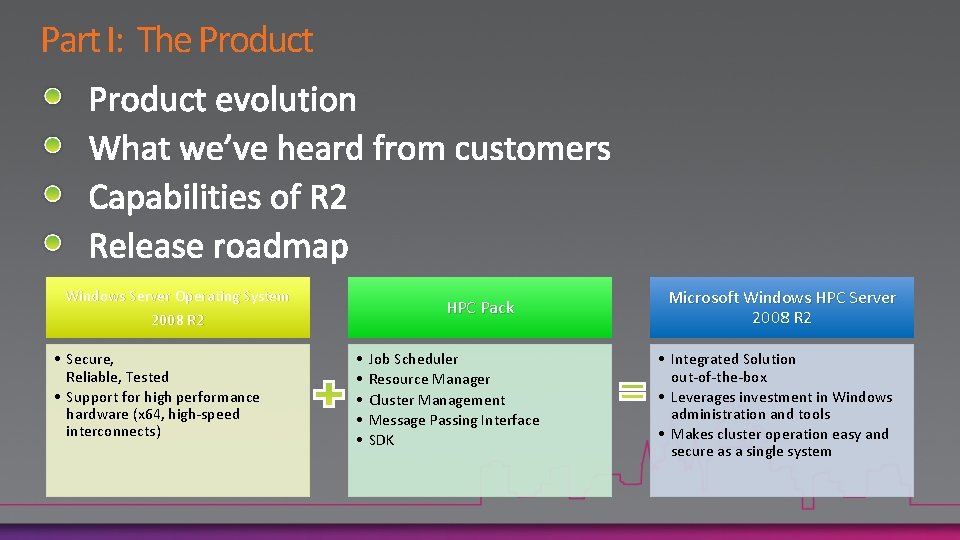

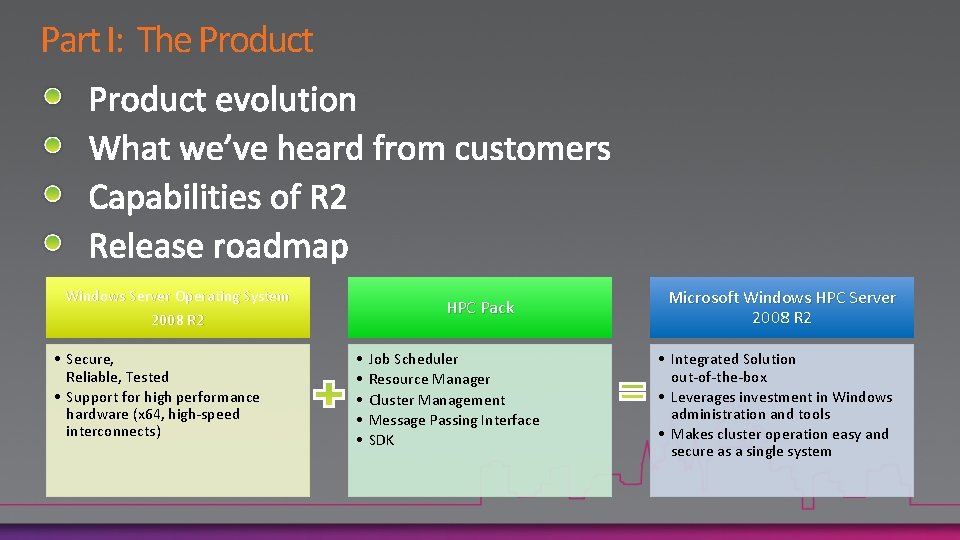

Part I: The Product

Windows Server 2008 R2 Operating System:
- Secure, Reliable, Tested
- Support for high performance hardware (x64, high-speed interconnects)

HPC Pack:
- Job Scheduler
- Resource Manager
- Cluster Management
- Message Passing Interface
- SDK

Microsoft Windows HPC Server 2008 R2:
- Integrated Solution out-of-the-box
- Leverages investment in Windows administration and tools
- Makes cluster operation easy and secure as a single system

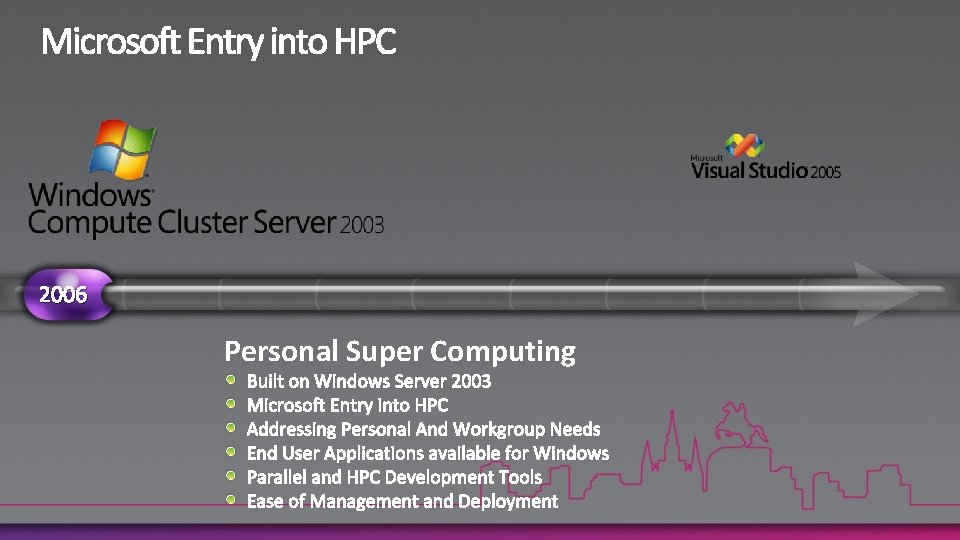

Product Evolution (photo: http://www.flickr.com/photos/drachenspinne/212500261/)

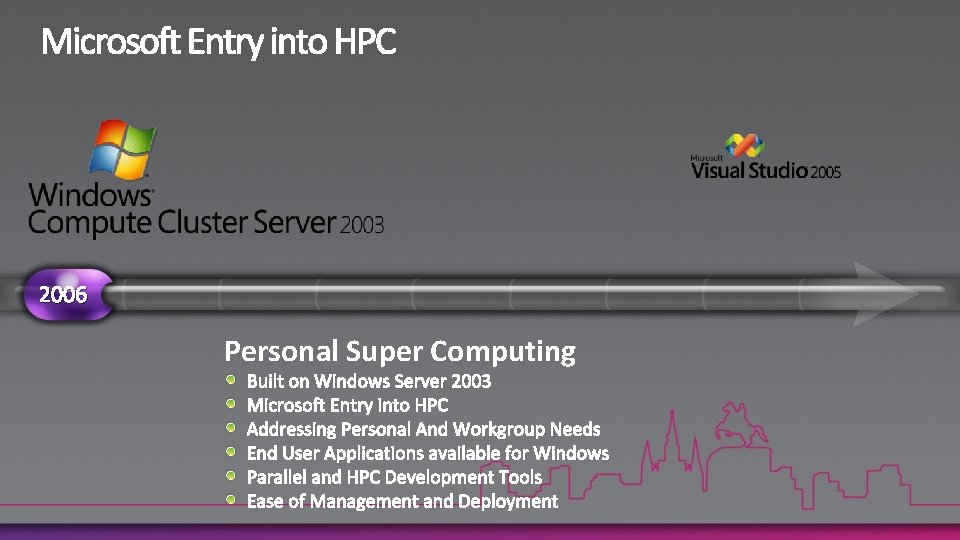

2006: Personal Super Computing

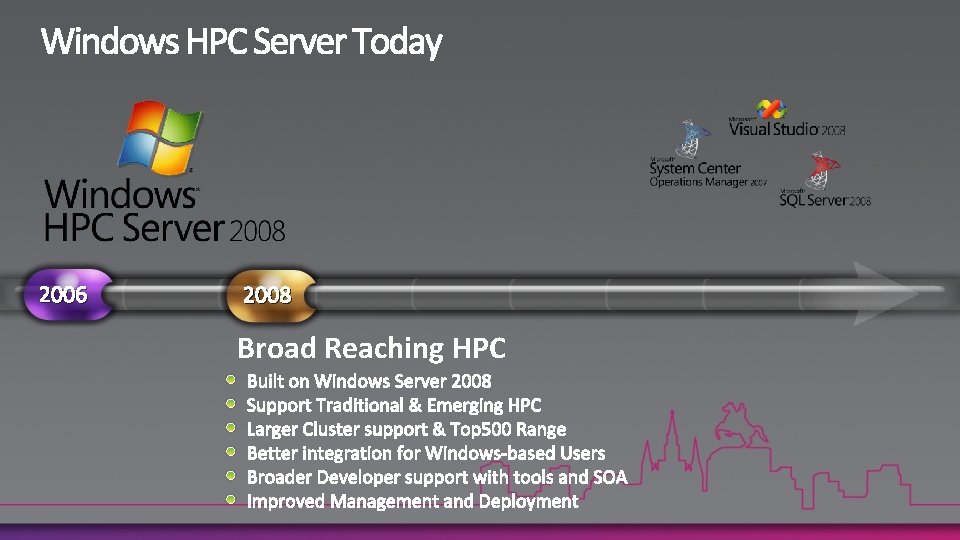

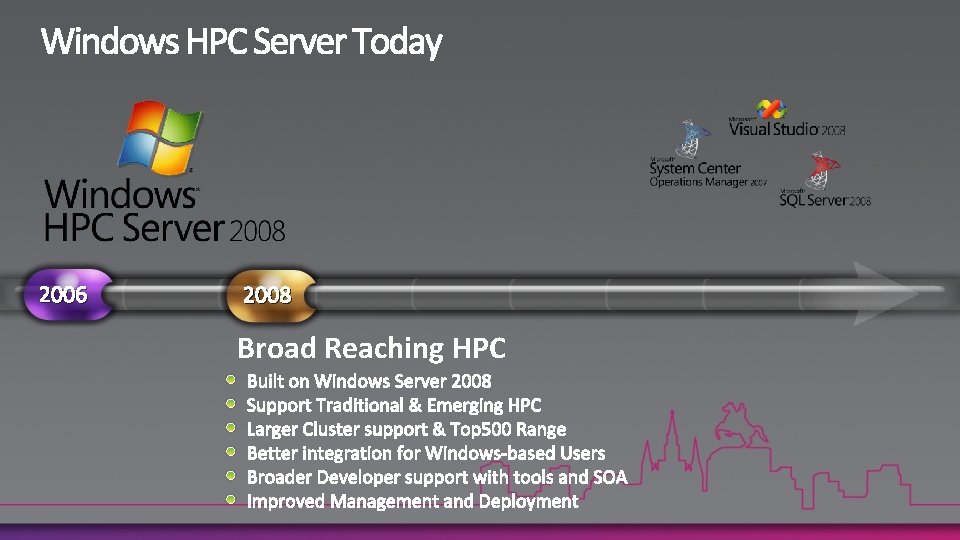

2008: Broad Reaching HPC

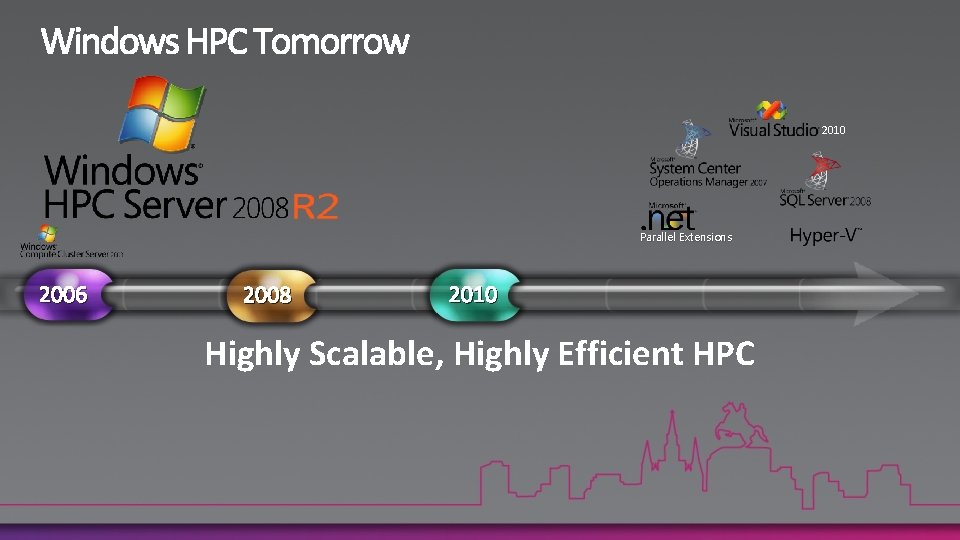

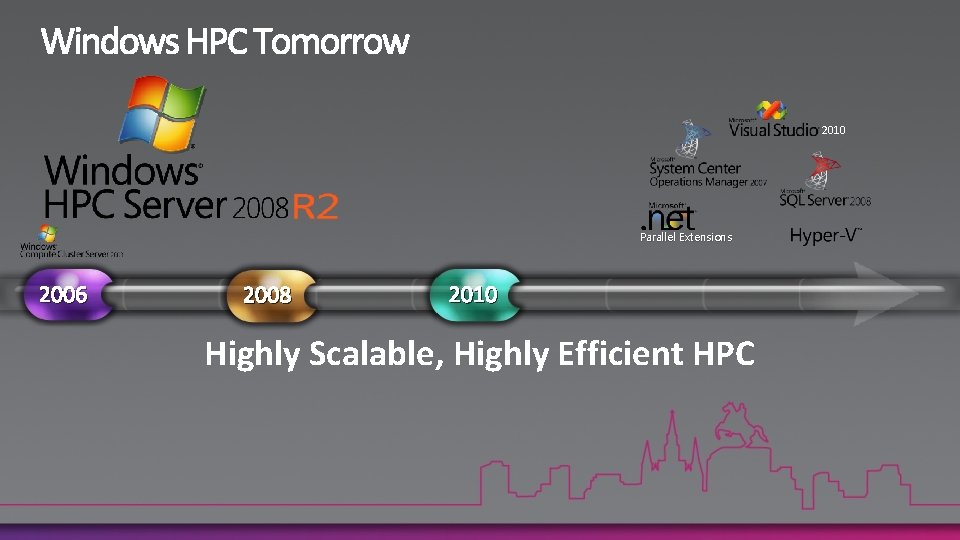

2010: Highly Scalable, Highly Efficient HPC (Parallel Extensions)

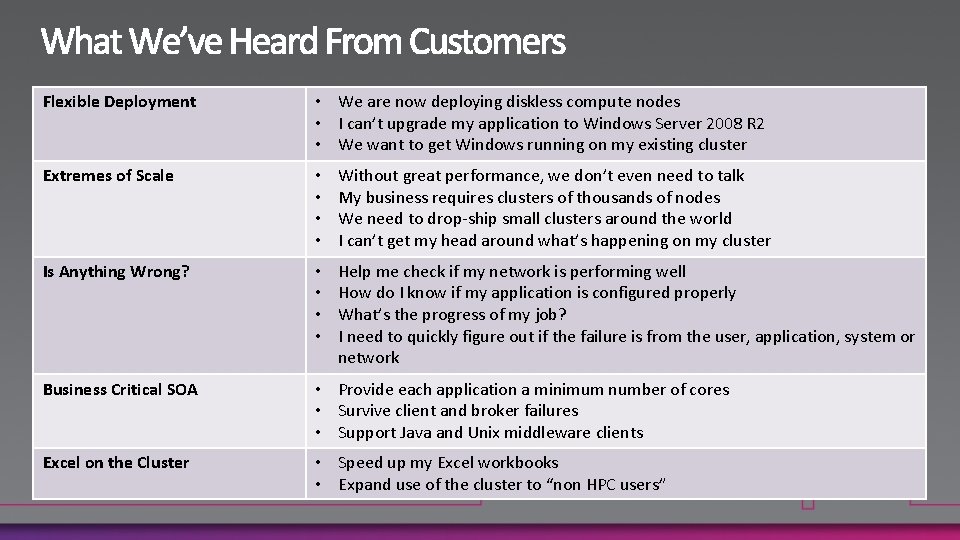

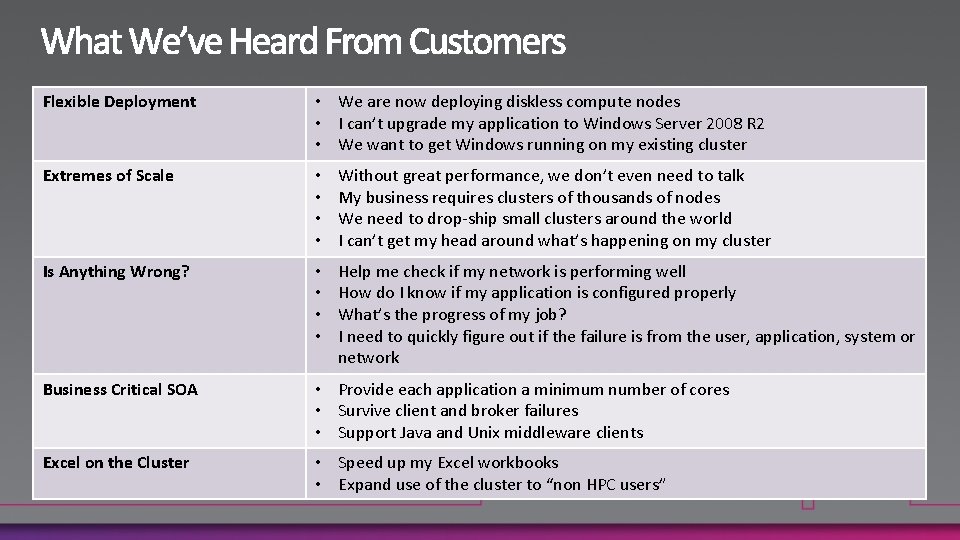

Flexible Deployment:
- We are now deploying diskless compute nodes
- I can’t upgrade my application to Windows Server 2008 R2
- We want to get Windows running on my existing cluster

Extremes of Scale:
- Without great performance, we don’t even need to talk
- My business requires clusters of thousands of nodes
- We need to drop-ship small clusters around the world
- I can’t get my head around what’s happening on my cluster

Is Anything Wrong?
- Help me check if my network is performing well
- How do I know if my application is configured properly
- What’s the progress of my job?
- I need to quickly figure out if the failure is from the user, application, system or network

Business Critical SOA:
- Provide each application a minimum number of cores
- Survive client and broker failures
- Support Java and Unix middleware clients

Excel on the Cluster:
- Speed up my Excel workbooks
- Expand use of the cluster to “non HPC users”

Flexible Deployment (photo: http://www.flickr.com/photos/carowallis1/620967948/)

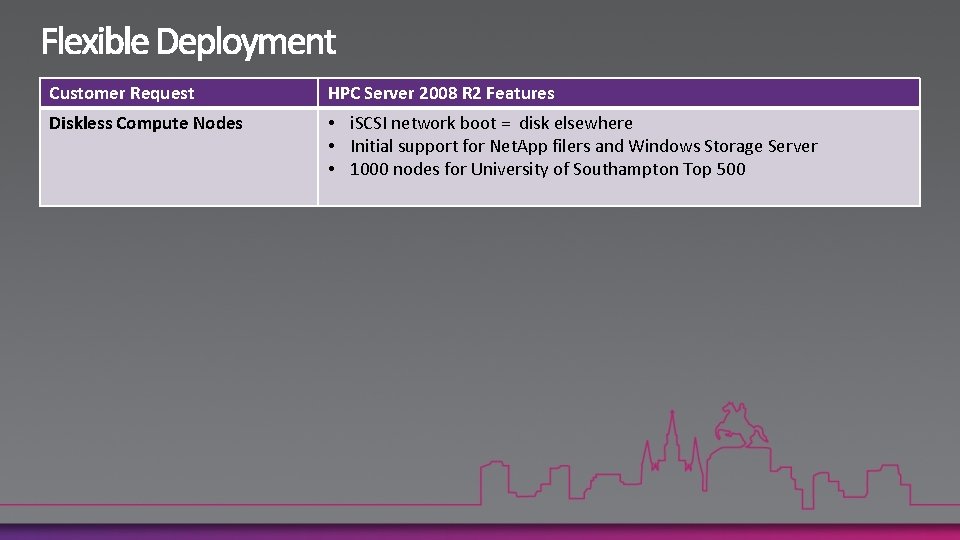

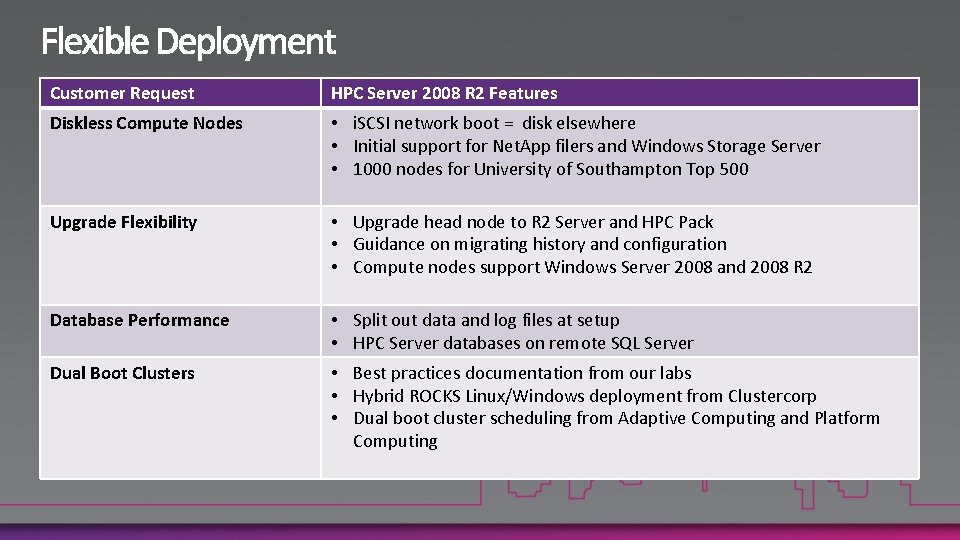

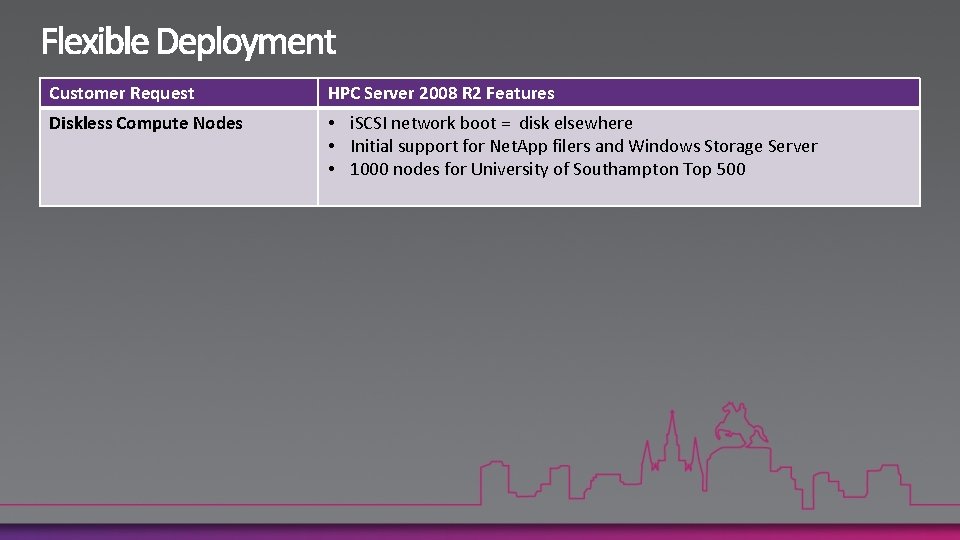

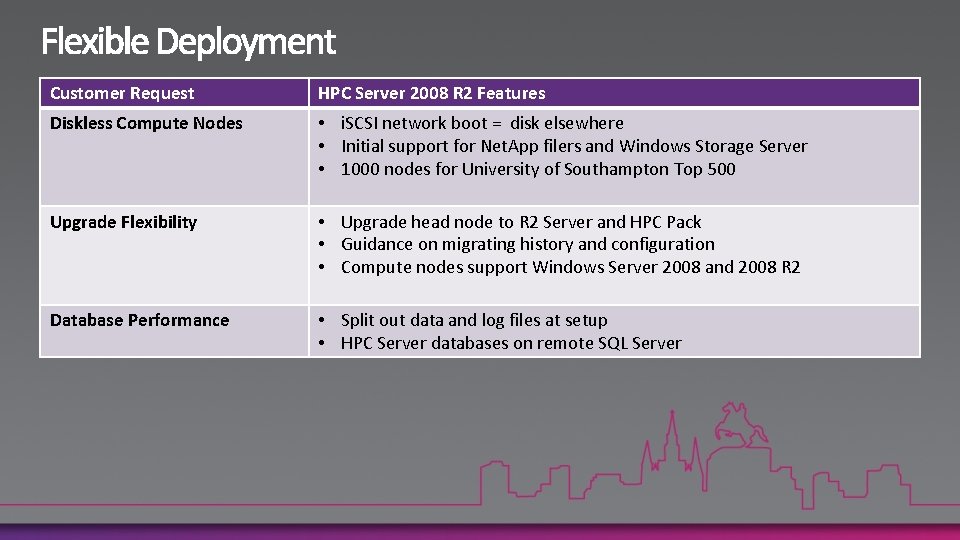

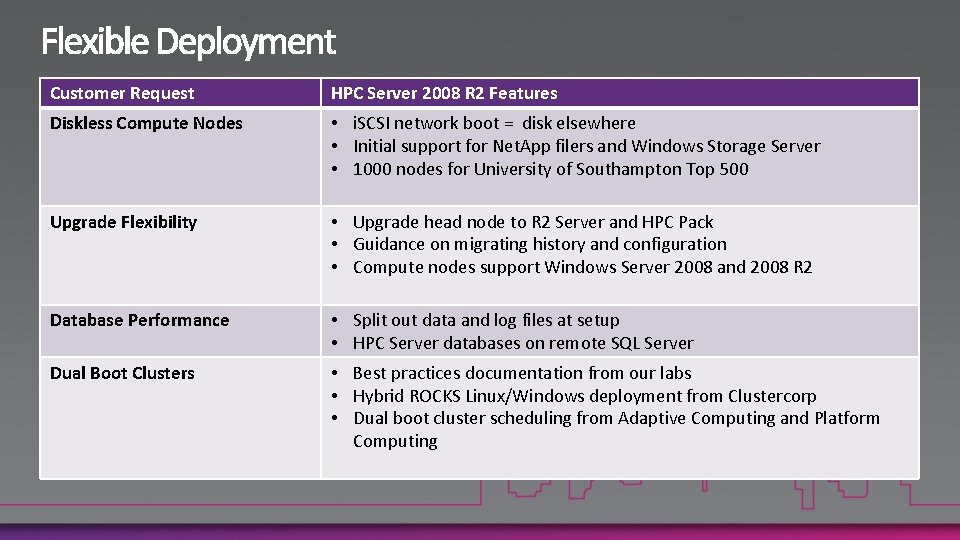

Customer Request: Diskless Compute Nodes

HPC Server 2008 R2 Features:
- iSCSI network boot = disk elsewhere
- Initial support for NetApp filers and Windows Storage Server
- 1000 nodes for University of Southampton Top 500

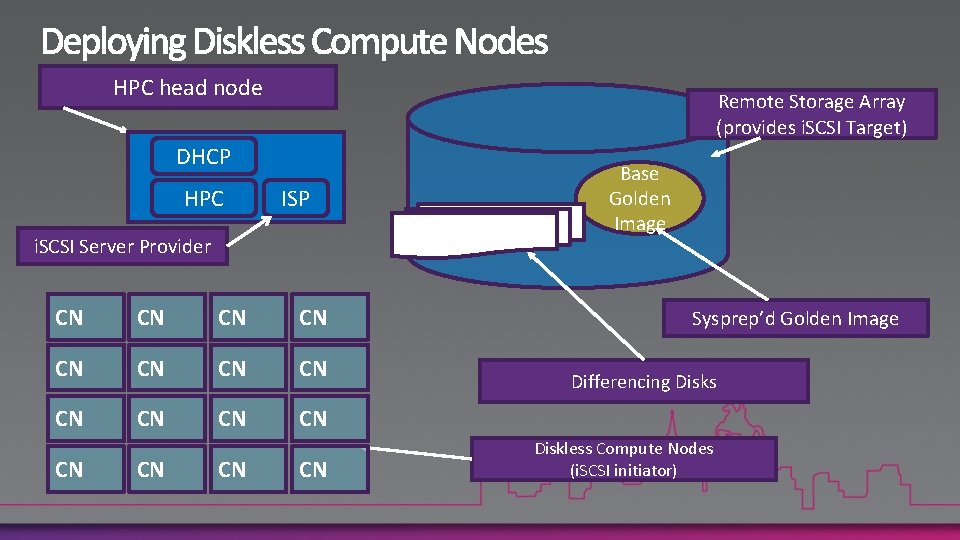

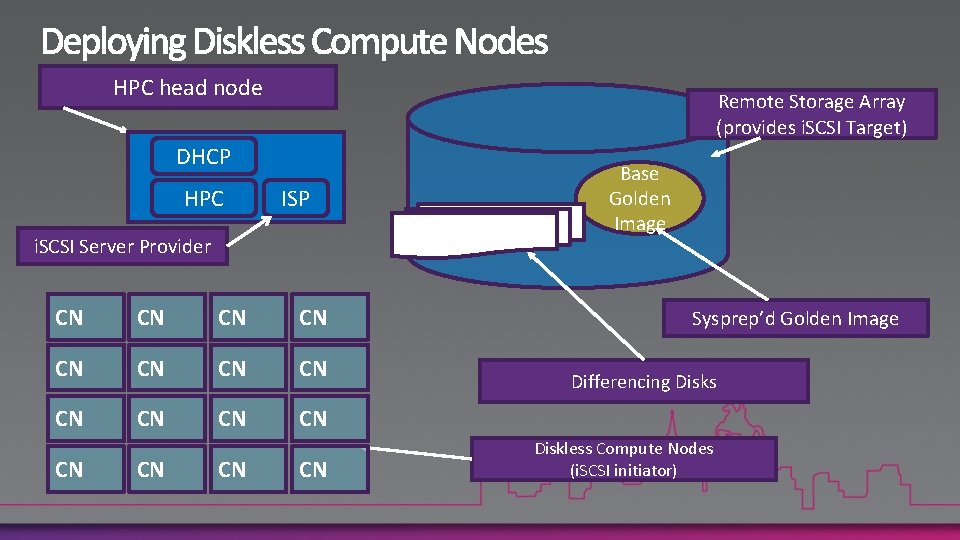

[Diagram labels: HPC head node (DHCP, HPC ISP); Remote Storage Array (provides iSCSI Target; iSCSI Server Provider); Base Golden Image; Sysprep’d Golden Image; Differencing Disks; Diskless Compute Nodes (CN, iSCSI initiator)]
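The diagram pairs one shared golden image with a differencing disk per compute node. A minimal Python sketch of that copy-on-write idea follows; the class names and the block-level granularity are illustrative assumptions, not HPC Pack or iSCSI target APIs.

```python
class GoldenImage:
    """Read-only base image shared by every compute node (illustrative)."""

    def __init__(self, blocks):
        self._blocks = dict(blocks)

    def read(self, block_no):
        return self._blocks.get(block_no, b"")


class DifferencingDisk:
    """Per-node overlay: writes land here, untouched blocks come from the base."""

    def __init__(self, base):
        self._base = base
        self._delta = {}

    def write(self, block_no, data):
        self._delta[block_no] = data  # the base image is never modified

    def read(self, block_no):
        if block_no in self._delta:
            return self._delta[block_no]
        return self._base.read(block_no)


base = GoldenImage({0: b"bootloader", 1: b"os-files"})
cn1, cn2 = DifferencingDisk(base), DifferencingDisk(base)
cn1.write(1, b"os-files+cn1-hostname")  # only CN1 sees this change
```

Each node stores only its own changes, which is why a single golden image can back many diskless nodes.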

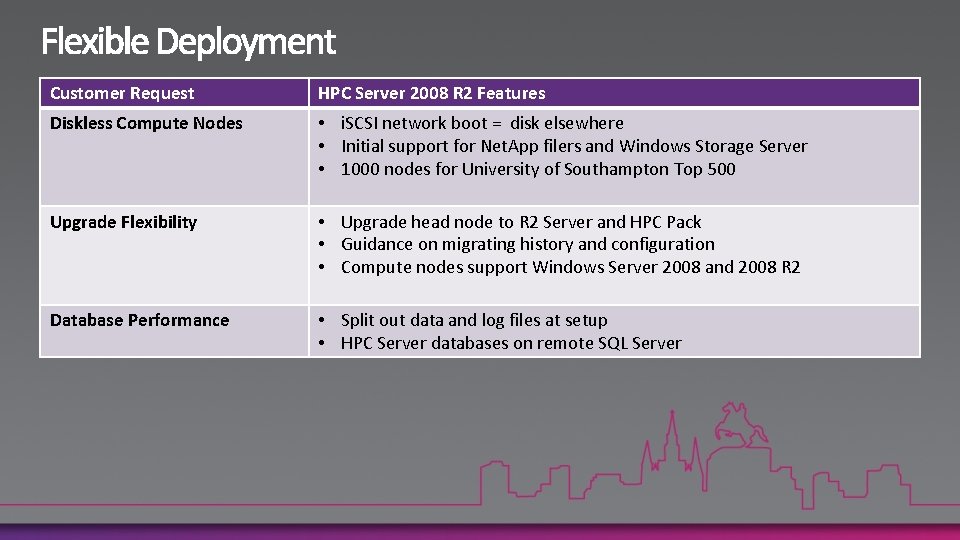

Customer Request: Upgrade Flexibility

HPC Server 2008 R2 Features:
- Upgrade head node to R2 Server and HPC Pack
- Guidance on migrating history and configuration
- Compute nodes support Windows Server 2008 and 2008 R2

Customer Request: Database Performance

HPC Server 2008 R2 Features:
- Split out data and log files at setup
- HPC Server databases on remote SQL Server

Customer Request: Dual Boot Clusters

HPC Server 2008 R2 Features:
- Best practices documentation from our labs
- Hybrid ROCKS Linux/Windows deployment from Clustercorp
- Dual boot cluster scheduling from Adaptive Computing and Platform Computing

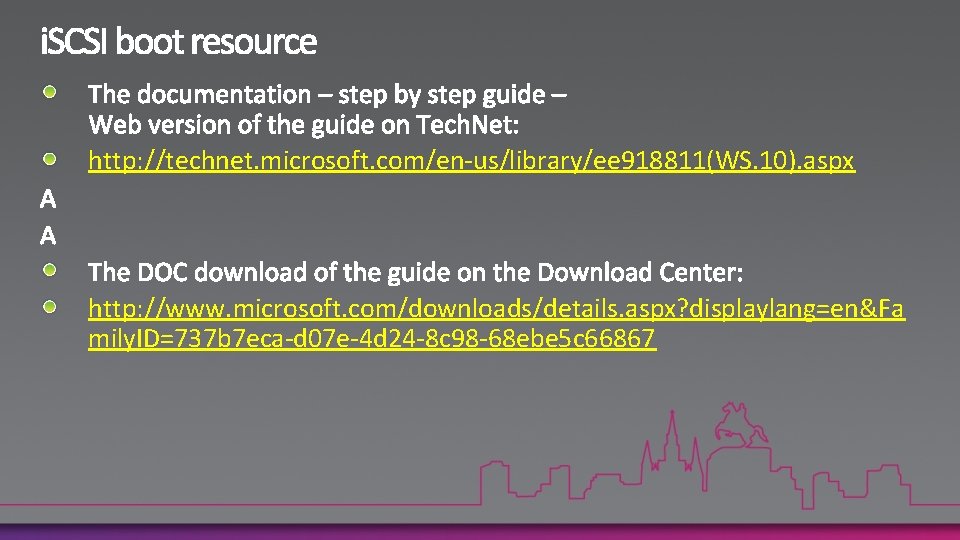

http://technet.microsoft.com/en-us/library/ee918811(WS.10).aspx

http://www.microsoft.com/downloads/details.aspx?displaylang=en&FamilyID=737b7eca-d07e-4d24-8c98-68ebe5c66867

Extremes of Scale (photo: http://www.flickr.com/photos/chrisdag/3706446455/in/set-72157600938800182/)

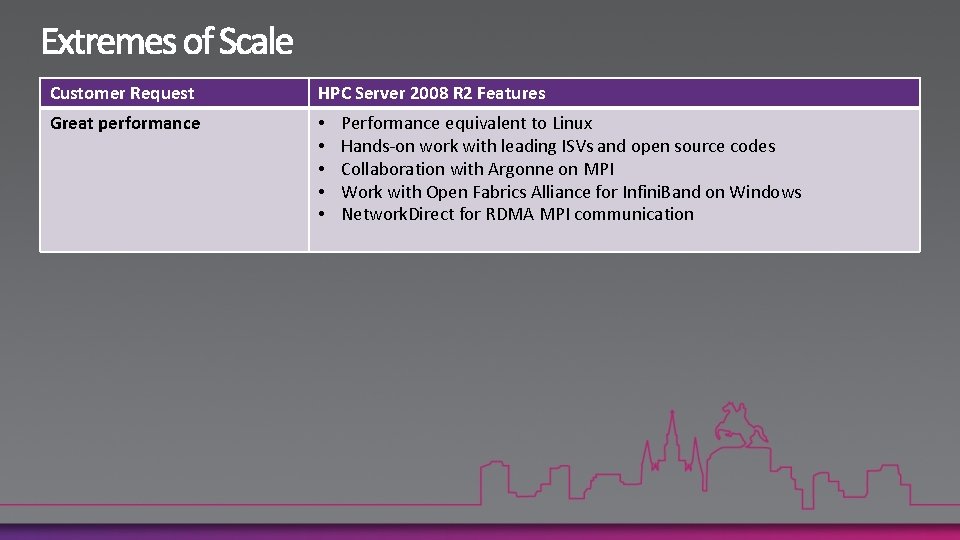

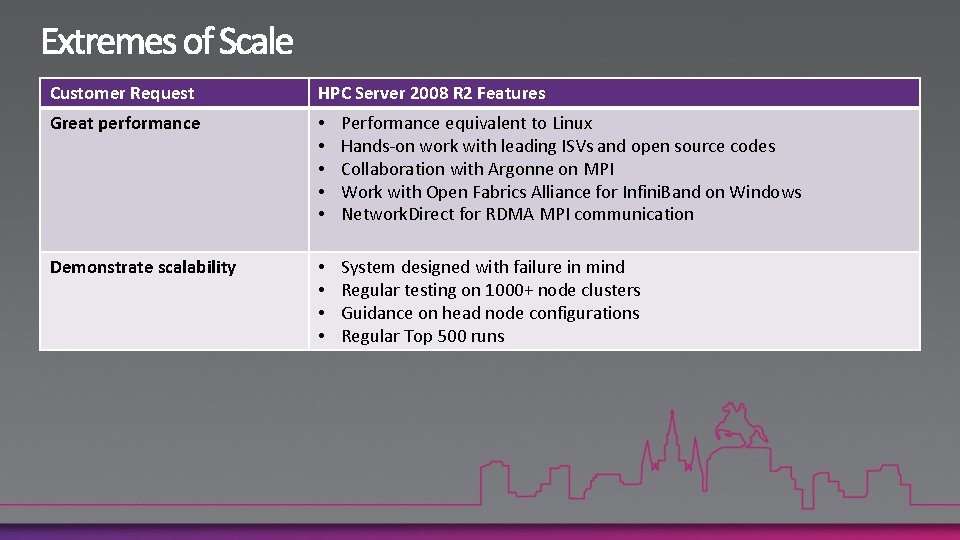

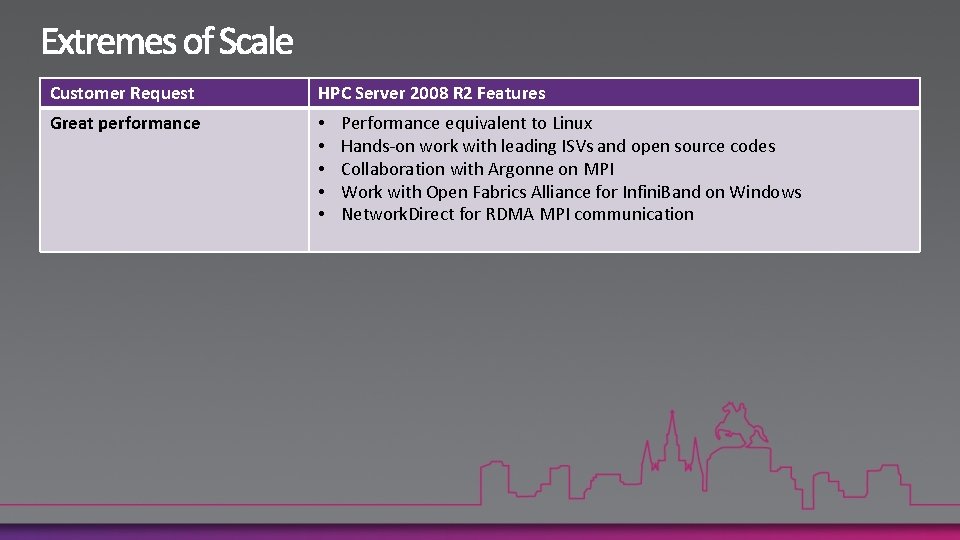

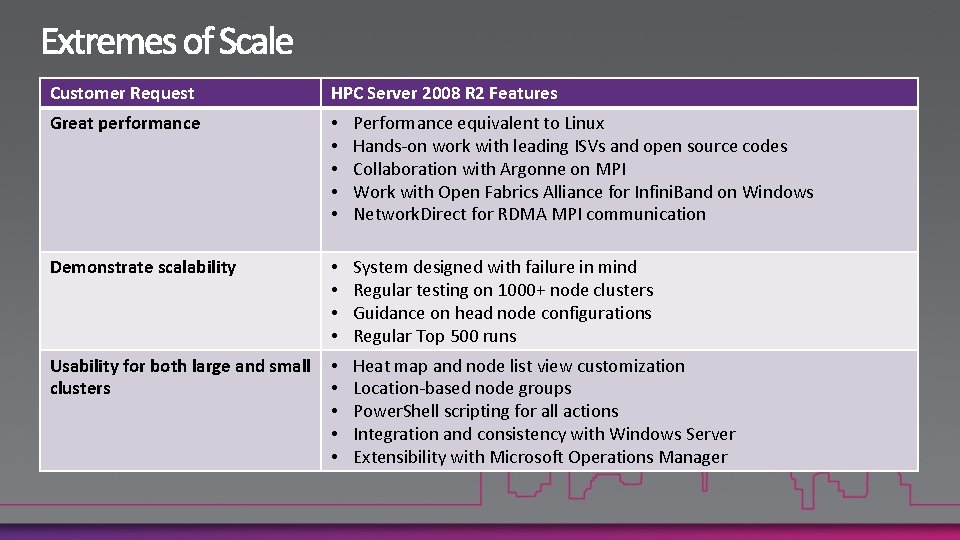

Customer Request: Great performance

HPC Server 2008 R2 Features:
- Performance equivalent to Linux
- Hands-on work with leading ISVs and open source codes
- Collaboration with Argonne on MPI
- Work with OpenFabrics Alliance for InfiniBand on Windows
- NetworkDirect for RDMA MPI communication

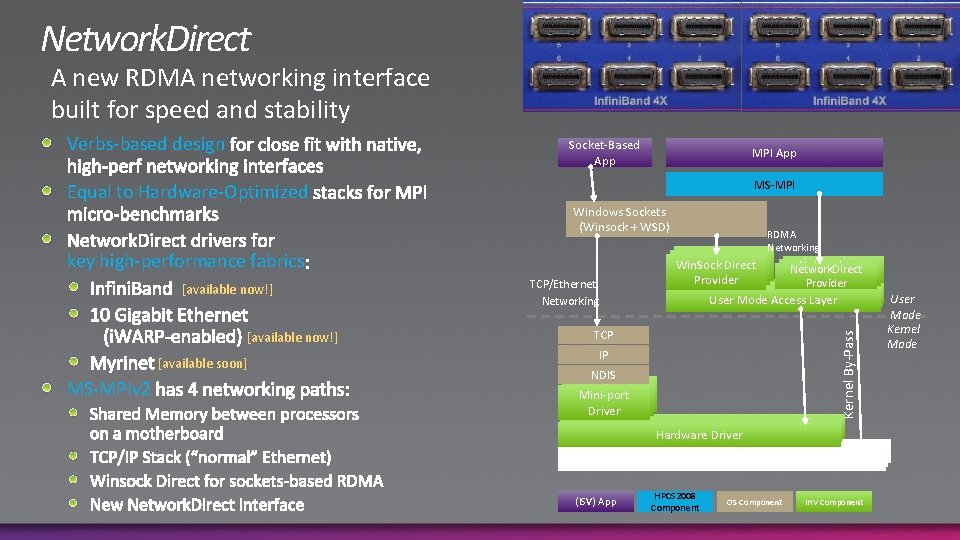

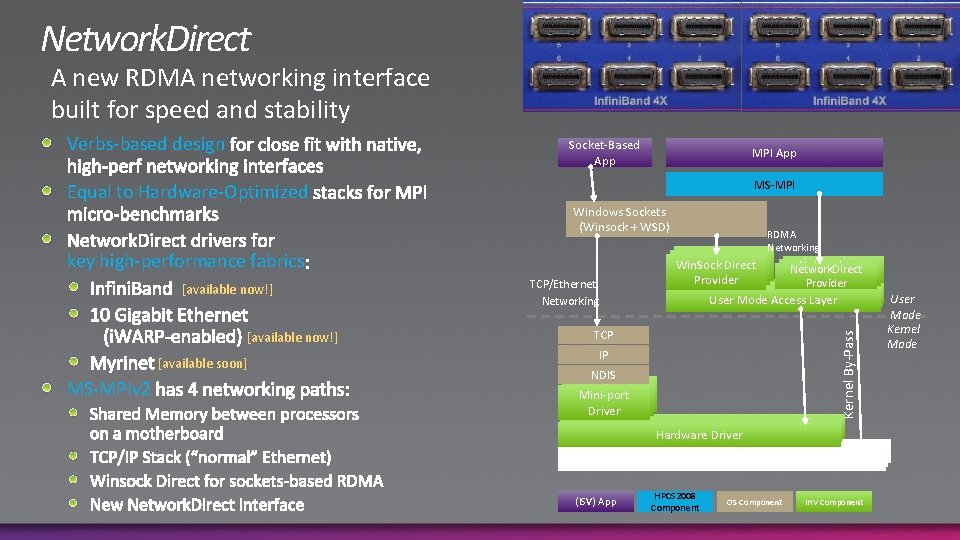

NetworkDirect: a new RDMA networking interface built for speed and stability

[Architecture diagram labels: Socket-Based App; MPI App; MS-MPIv2; Windows Sockets (Winsock + WSD); TCP/Ethernet Networking; RDMA Networking; WinSock Direct Provider; NetworkDirect Provider; User Mode Access Layer; TCP; IP; NDIS; Mini-port Driver; Hardware Driver; Networking Hardware; Kernel By-Pass; User Mode / Kernel Mode. Legend: (ISV) App; HPCS 2008 Component; OS Component; IHV Component. Other slide fragments: Verbs-based design; Equal to Hardware-Optimized; key high-performance fabrics; [available now!]; [available soon]]

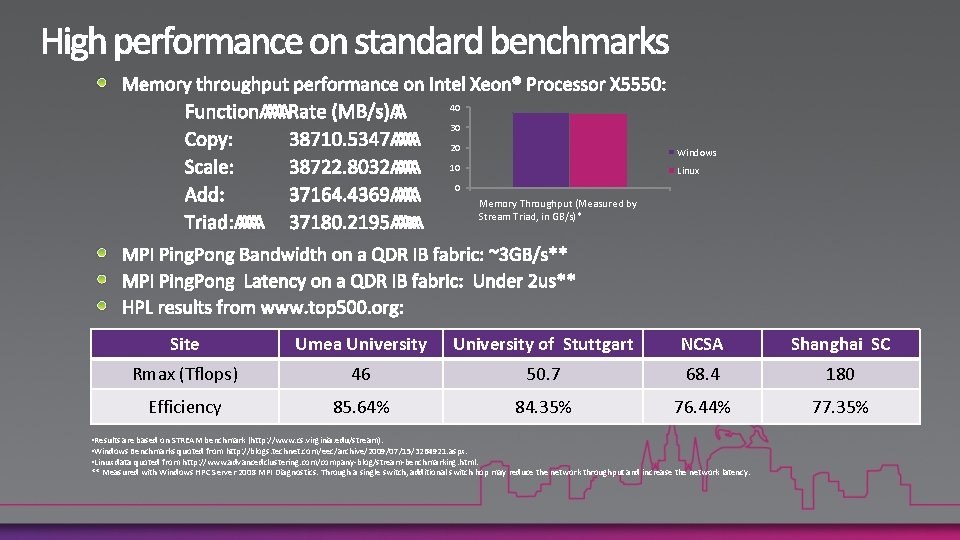

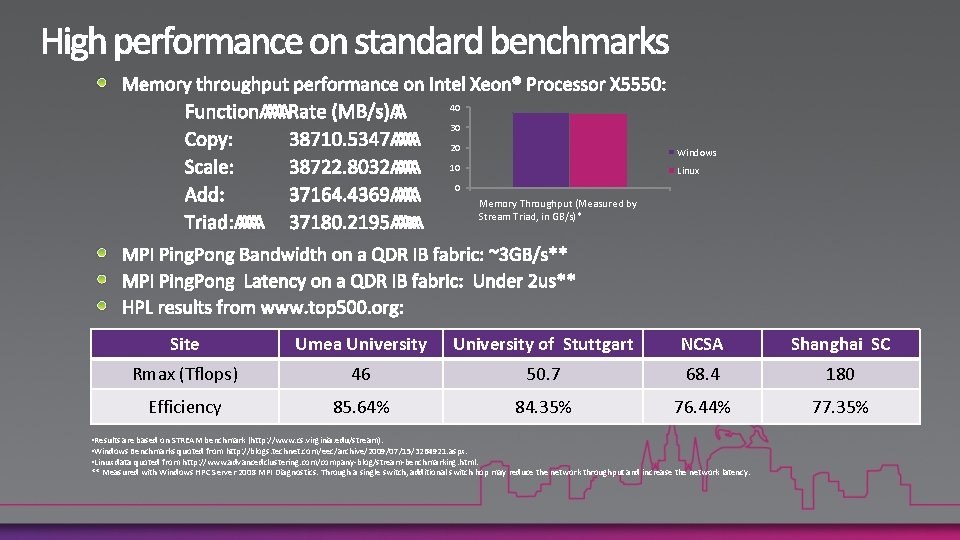

[Chart: Memory Throughput (measured by STREAM Triad, in GB/s)*; series: Windows, Linux; axis 0 to 40]

| Site | Rmax (Tflops) | Efficiency |
|---|---|---|
| Umea | 46 | 85.64% |
| University of Stuttgart | 50.7 | 84.35% |
| NCSA | 68.4 | 76.44% |
| Shanghai SC | 180 | 77.35% |

\* Results are based on the STREAM benchmark (http://www.cs.virginia.edu/stream). Windows benchmarks quoted from http://blogs.technet.com/eec/archive/2009/07/15/3264921.aspx. Linux data quoted from http://www.advancedclustering.com/company-blog/stream-benchmarking.html.

\*\* Measured with Windows HPC Server 2008 MPI Diagnostics. Through a single switch; an additional switch hop may reduce the network throughput and increase the network latency.
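The Rmax and Efficiency figures quoted above imply a theoretical peak for each site. The following Python sketch works that out, assuming the Efficiency column is Rmax divided by Rpeak, as in Top 500 listings; the function name is illustrative.

```python
# (Rmax in Tflops, efficiency as a fraction), copied from the slide.
results = {
    "Umea": (46.0, 0.8564),
    "University of Stuttgart": (50.7, 0.8435),
    "NCSA": (68.4, 0.7644),
    "Shanghai SC": (180.0, 0.7735),
}


def implied_rpeak(rmax_tflops, efficiency):
    """Assumes efficiency = Rmax / Rpeak, so Rpeak = Rmax / efficiency."""
    return rmax_tflops / efficiency


for site, (rmax, eff) in results.items():
    print(f"{site}: implied Rpeak of about {implied_rpeak(rmax, eff):.1f} Tflops")
```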

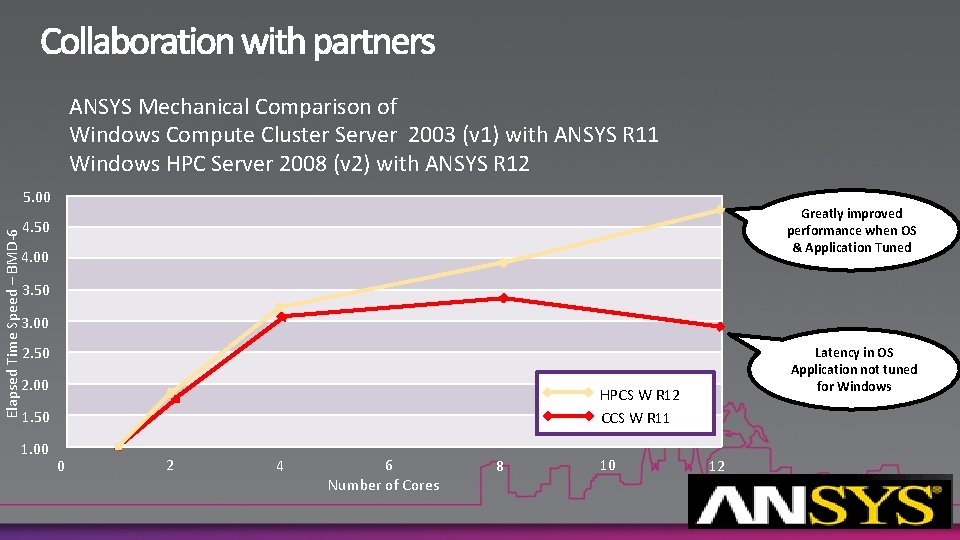

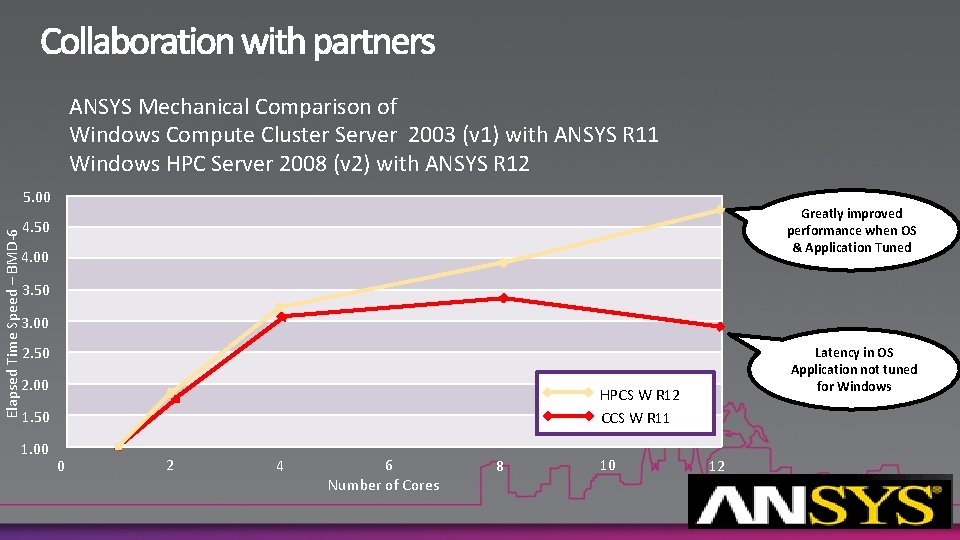

ANSYS Mechanical: comparison of Windows Compute Cluster Server 2003 (v1) with ANSYS R11 and Windows HPC Server 2008 (v2) with ANSYS R12

[Chart: Elapsed Time Speed, BMD-6, versus Number of Cores (0 to 12); series: HPCS with R12, CCS with R11. Annotations: “Greatly improved performance when OS & Application Tuned”; “Latency in OS”; “Application not tuned for Windows”]

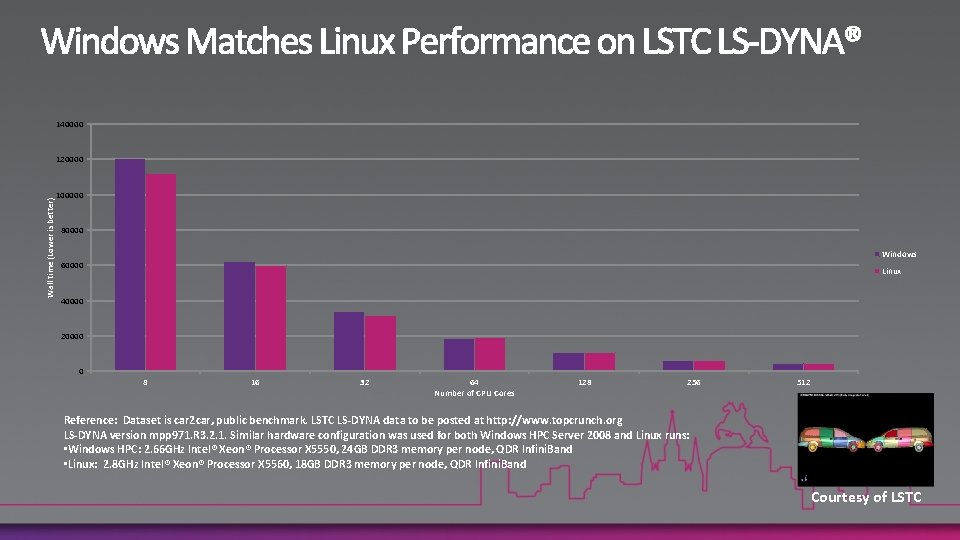

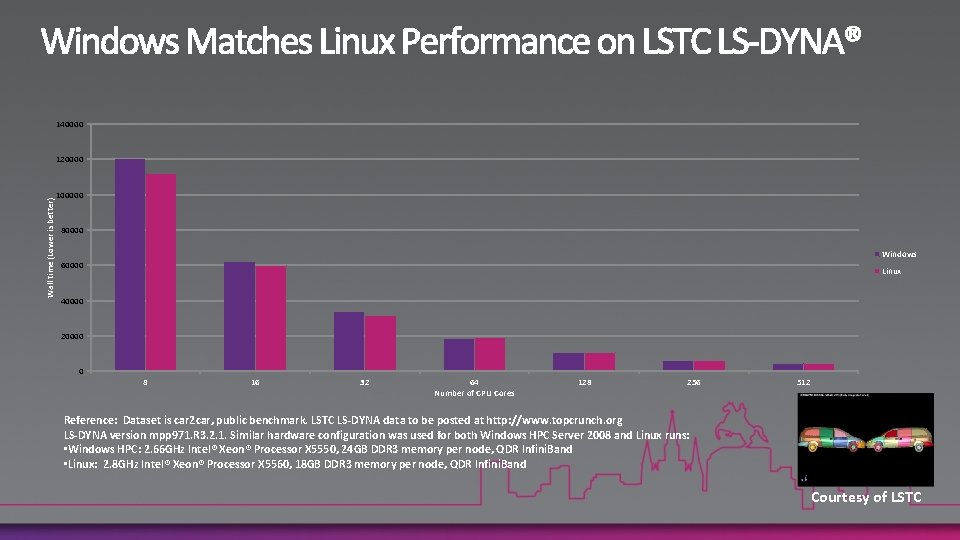

[Chart: Wall time (lower is better) versus Number of CPU Cores (8, 16, 32, 64, 128, 256, 512); series: Windows, Linux]

Reference: Dataset is car2car, a public benchmark. LSTC LS-DYNA data to be posted at http://www.topcrunch.org. LS-DYNA version mpp971.R3.2.1. Similar hardware configuration was used for both Windows HPC Server 2008 and Linux runs:
- Windows HPC: 2.66 GHz Intel® Xeon® Processor X5550, 24 GB DDR3 memory per node, QDR InfiniBand
- Linux: 2.8 GHz Intel® Xeon® Processor X5560, 18 GB DDR3 memory per node, QDR InfiniBand

Courtesy of LSTC

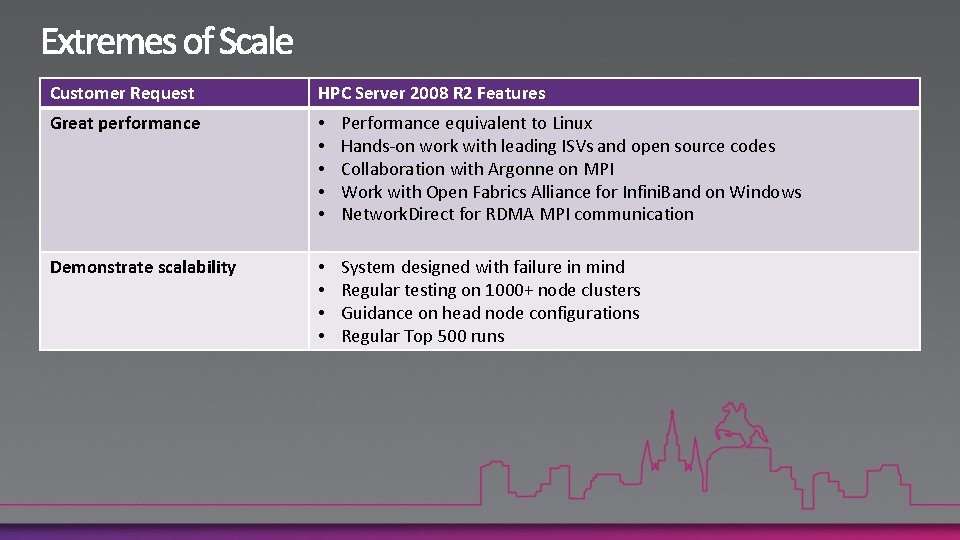

Customer Request: Demonstrate scalability

HPC Server 2008 R2 Features:
- System designed with failure in mind
- Regular testing on 1000+ node clusters
- Guidance on head node configurations
- Regular Top 500 runs

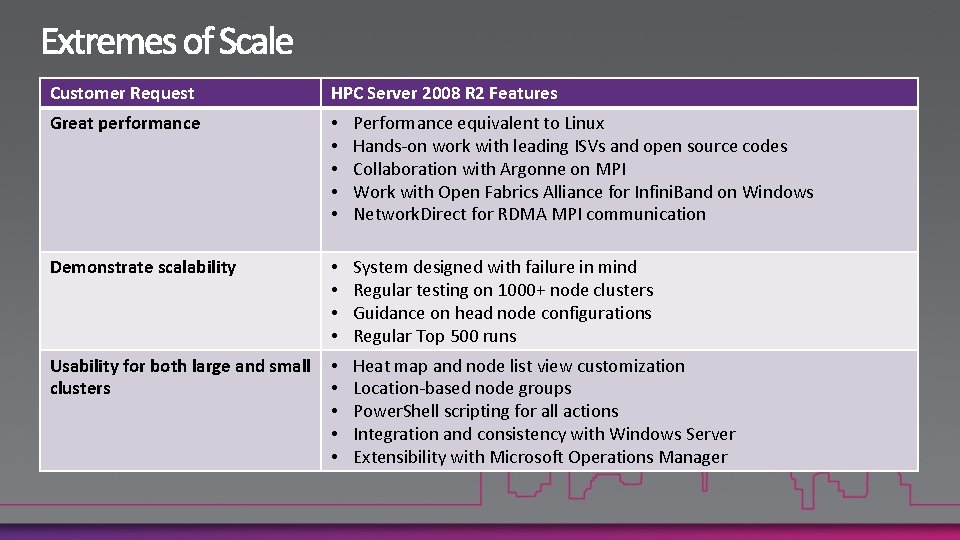

Customer Request: Usability for both large and small clusters

HPC Server 2008 R2 Features:
- Heat map and node list view customization
- Location-based node groups
- PowerShell scripting for all actions
- Integration and consistency with Windows Server
- Extensibility with Microsoft Operations Manager

Is Anything Wrong? (photo: http://www.flickr.com/photos/mandyxclear/3461234232)

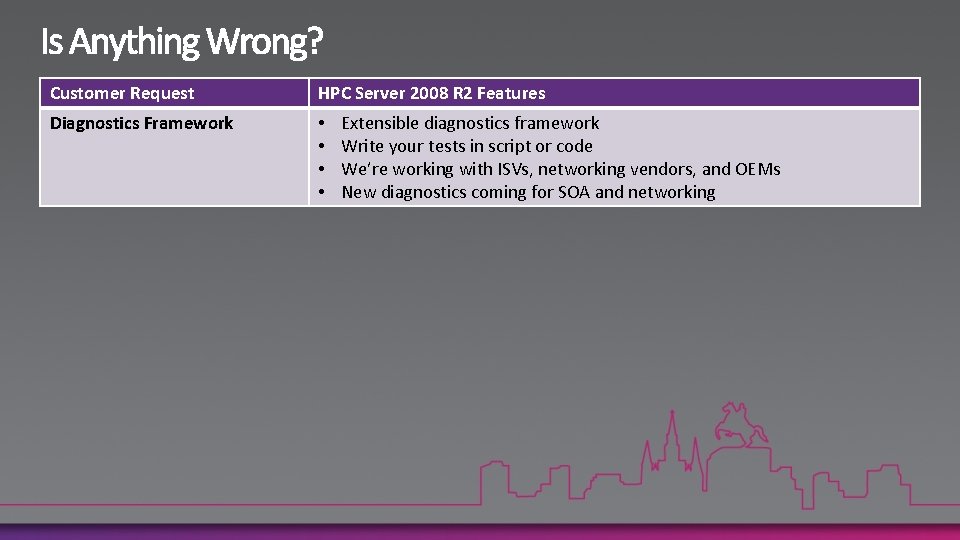

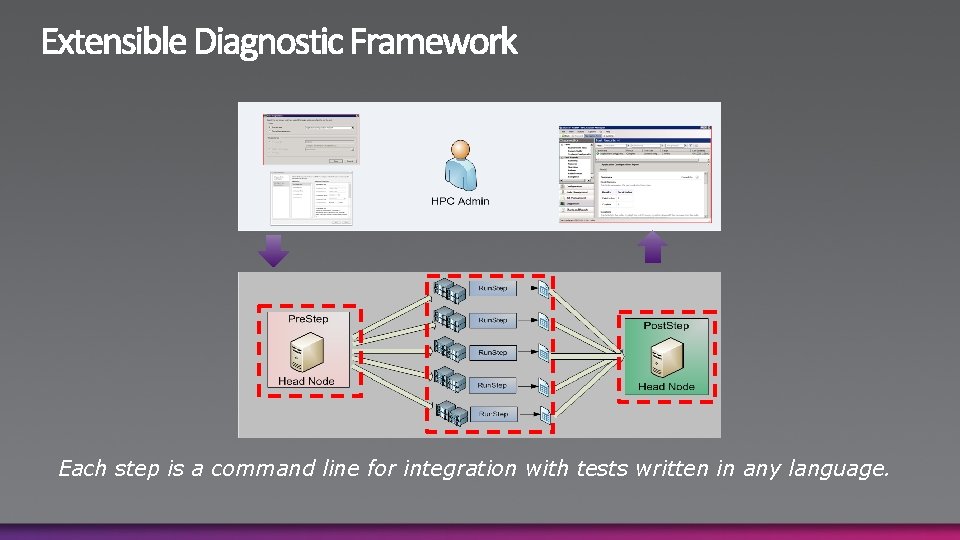

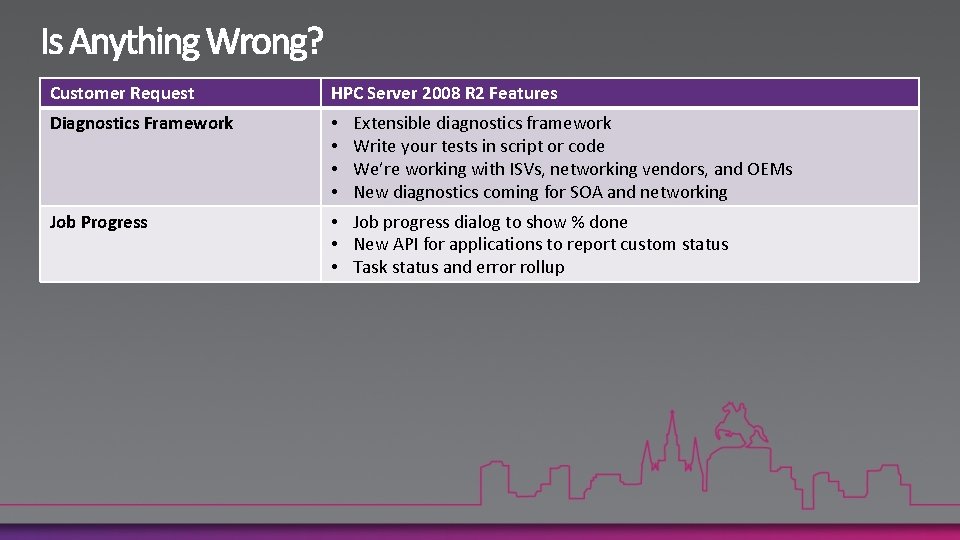

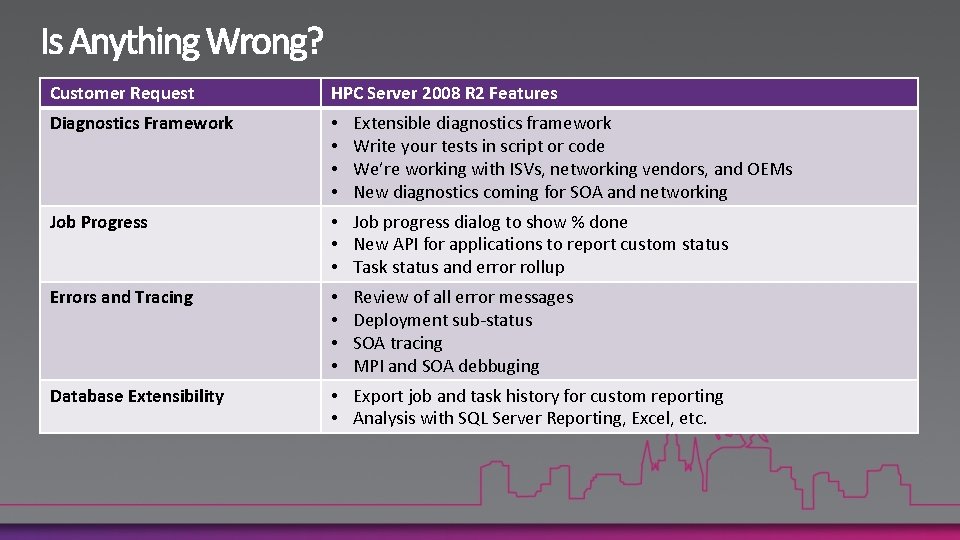

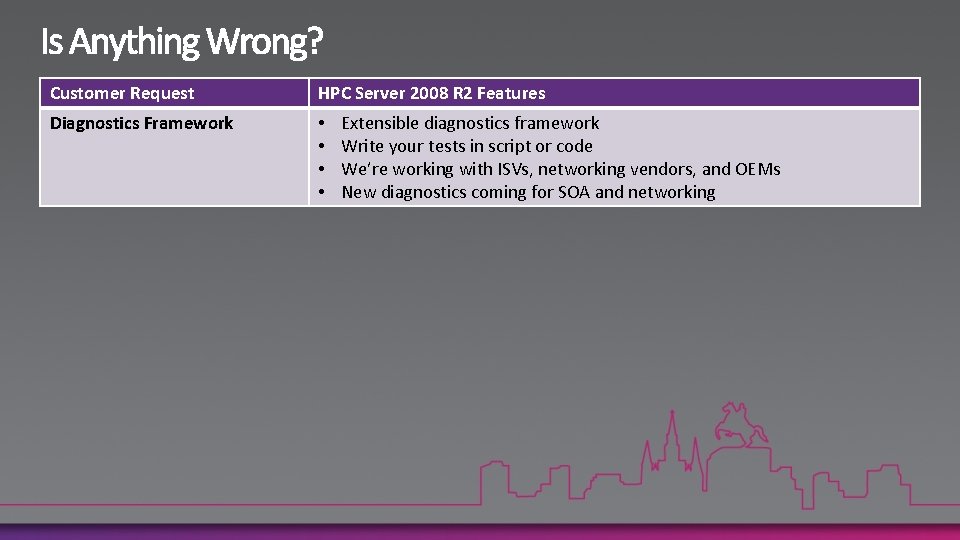

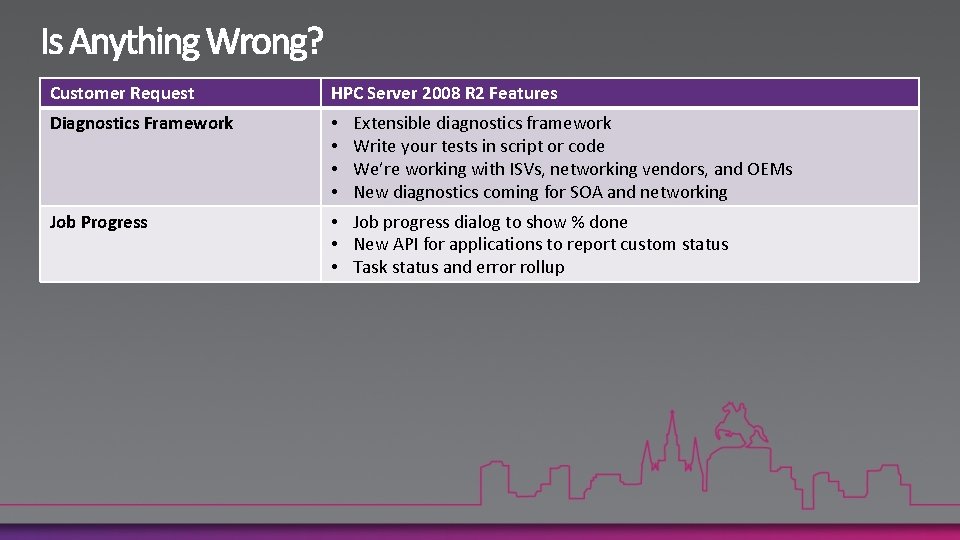

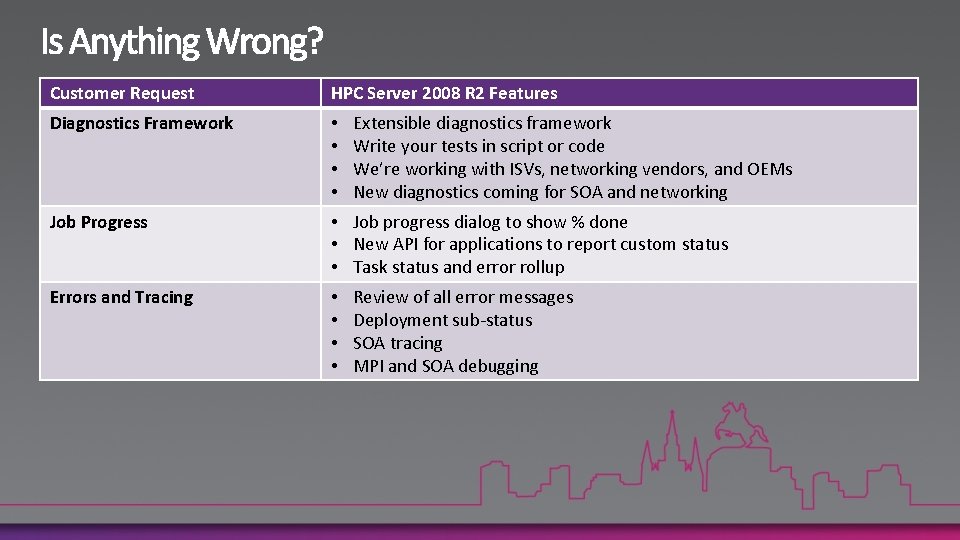

Customer Request: Diagnostics Framework

HPC Server 2008 R2 Features:
- Extensible diagnostics framework
- Write your tests in script or code
- We’re working with ISVs, networking vendors, and OEMs
- New diagnostics coming for SOA and networking

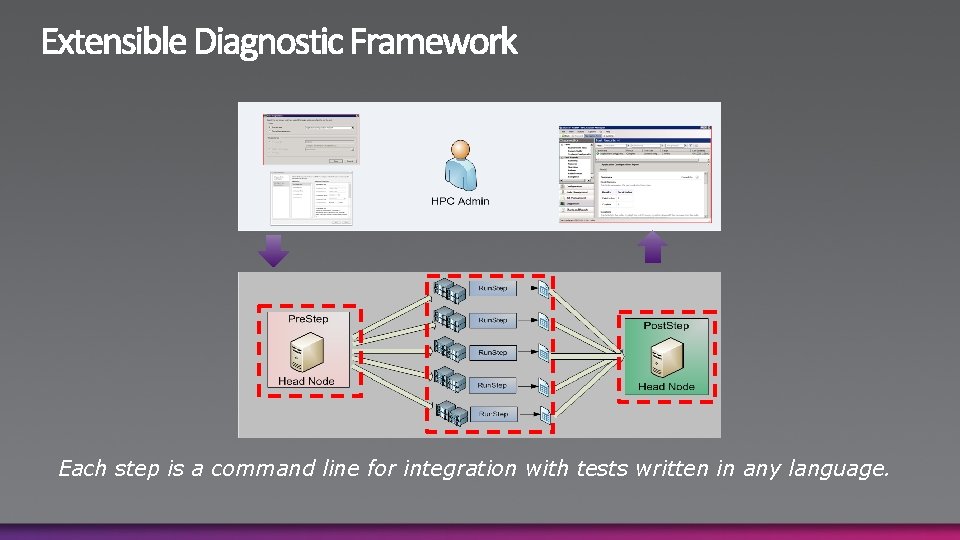

Each step is a command line for integration with tests written in any language.
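Because each step is just a command line, a test can be driven by anything that can launch a process and read its exit code. A minimal Python sketch of such a driver follows; the step names and the exit-code convention (zero means pass) are assumptions for illustration, not the HPC diagnostics framework's actual schema.

```python
import subprocess
import sys


def run_steps(steps):
    """Run each (name, argv) step in order; a nonzero exit code marks failure."""
    results = []
    for name, argv in steps:
        completed = subprocess.run(argv, capture_output=True, text=True)
        results.append((name, completed.returncode == 0, completed.stdout.strip()))
    return results


# Hypothetical steps; each could equally be a script, a batch file, or a binary.
steps = [
    ("prepare", [sys.executable, "-c", "print('nodes ready')"]),
    ("run", [sys.executable, "-c", "import sys; sys.exit(0)"]),
    ("report", [sys.executable, "-c",
                "import sys; print('latency too high'); sys.exit(1)"]),
]
results = run_steps(steps)
for name, passed, output in results:
    print(name, "PASS" if passed else "FAIL", output)
```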

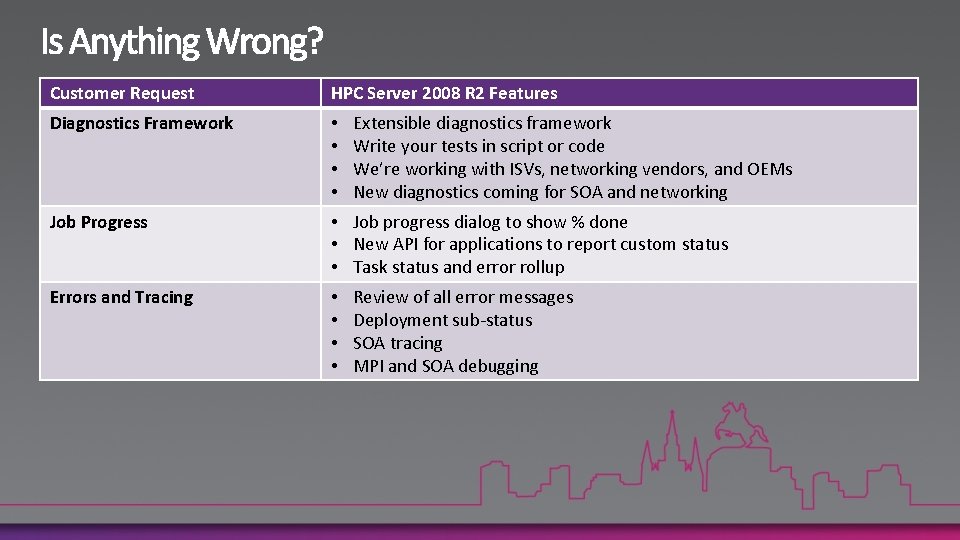

Customer Request: Job Progress

HPC Server 2008 R2 Features:
- Job progress dialog to show % done
- New API for applications to report custom status
- Task status and error rollup

Customer Request: Errors and Tracing

HPC Server 2008 R2 Features:
- Review of all error messages
- Deployment sub-status
- SOA tracing
- MPI and SOA debugging

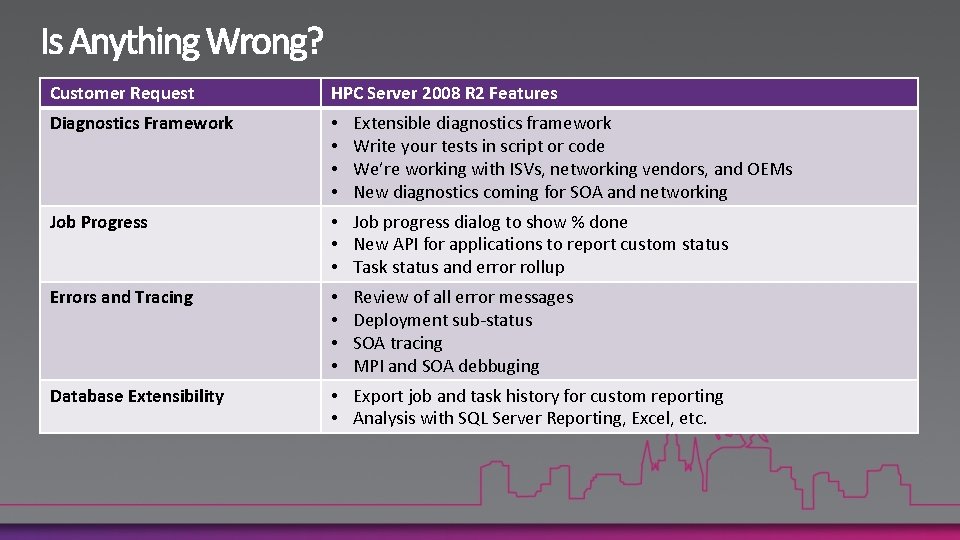

Customer Request: Database Extensibility

HPC Server 2008 R2 Features:
- Export job and task history for custom reporting
- Analysis with SQL Server Reporting, Excel, etc.

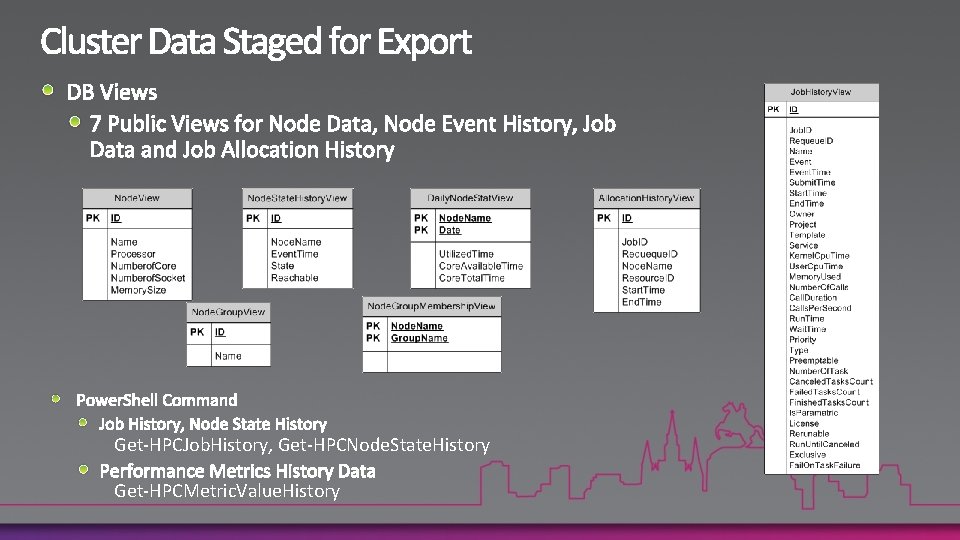

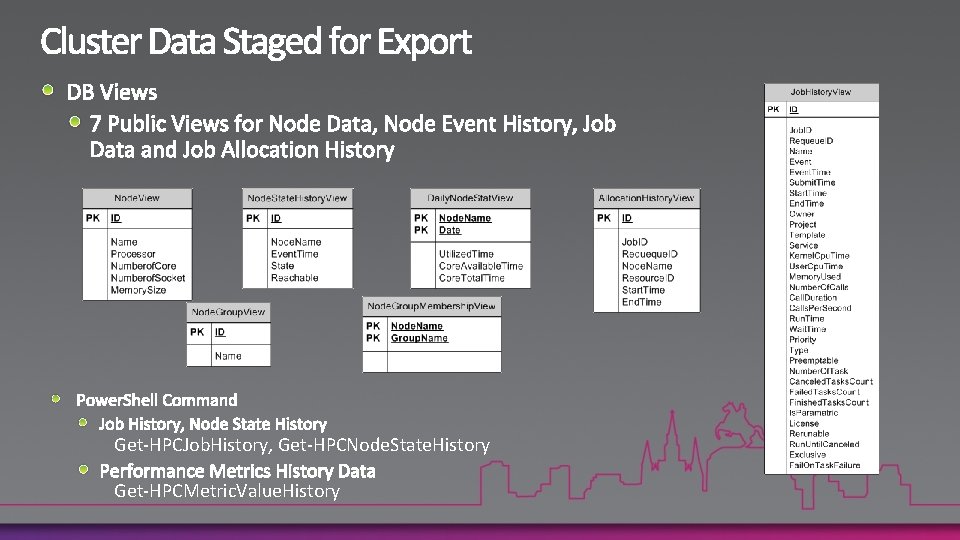

Get-HPCJobHistory, Get-HPCNodeStateHistory, Get-HPCMetricValueHistory
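Once job history has been exported, custom reporting can be done with ordinary tools. The Python sketch below tallies job states per owner from a CSV export; the column names (`JobId`, `Owner`, `State`) and the sample rows are made up for illustration and are not the cmdlets' documented output format.

```python
import csv
import io
from collections import Counter

# Hypothetical export; a real export may use different columns.
SAMPLE = """JobId,Owner,State
1,alice,Finished
2,bob,Failed
3,alice,Finished
4,bob,Finished
"""


def state_counts_by_owner(csv_text):
    """Return {owner: Counter({state: count})} for the exported rows."""
    counts = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        counts.setdefault(row["Owner"], Counter())[row["State"]] += 1
    return counts


counts = state_counts_by_owner(SAMPLE)
for owner, states in sorted(counts.items()):
    print(owner, dict(states))
```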

Business Critical SOA (photo: http://www.flickr.com/photos/richardsummers/269503660/)

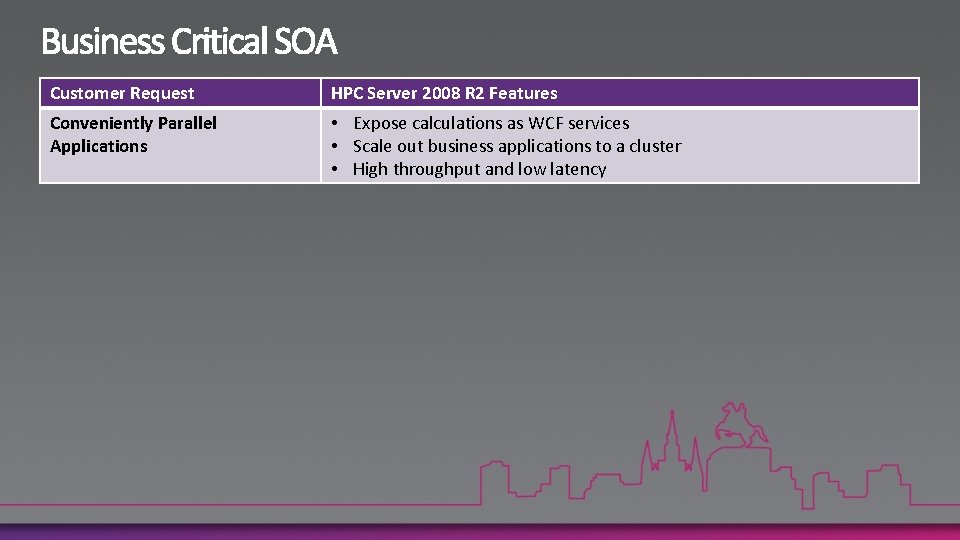

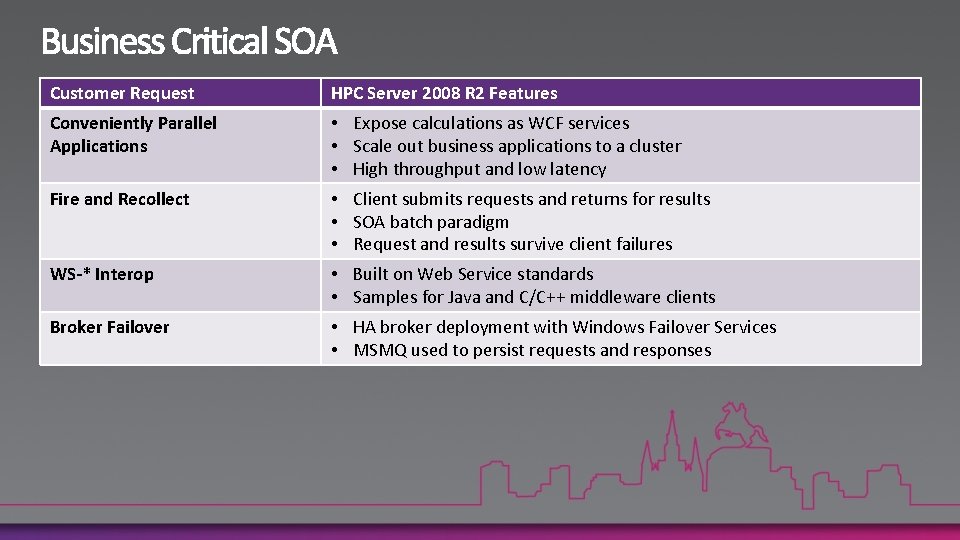

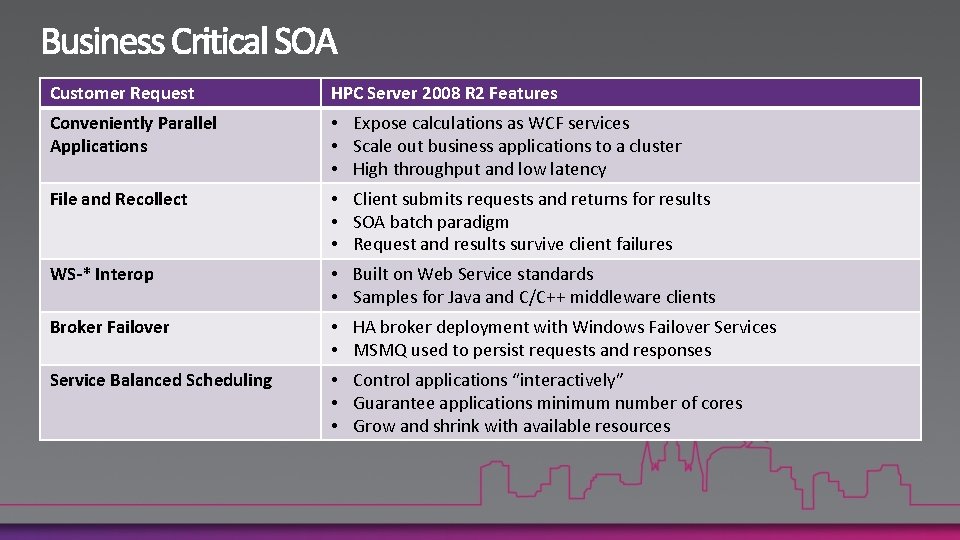

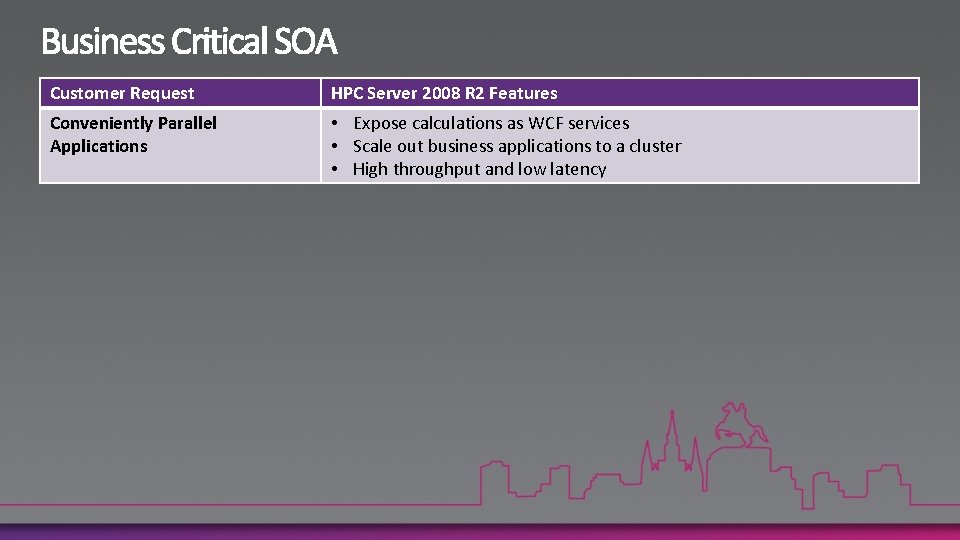

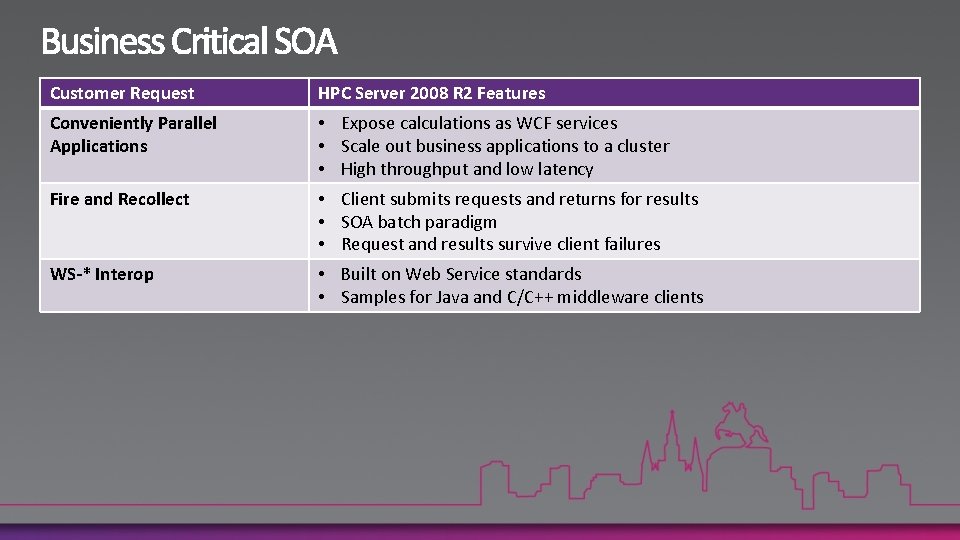

Customer Request: Conveniently Parallel Applications

HPC Server 2008 R2 Features:
- Expose calculations as WCF services
- Scale out business applications to a cluster
- High throughput and low latency

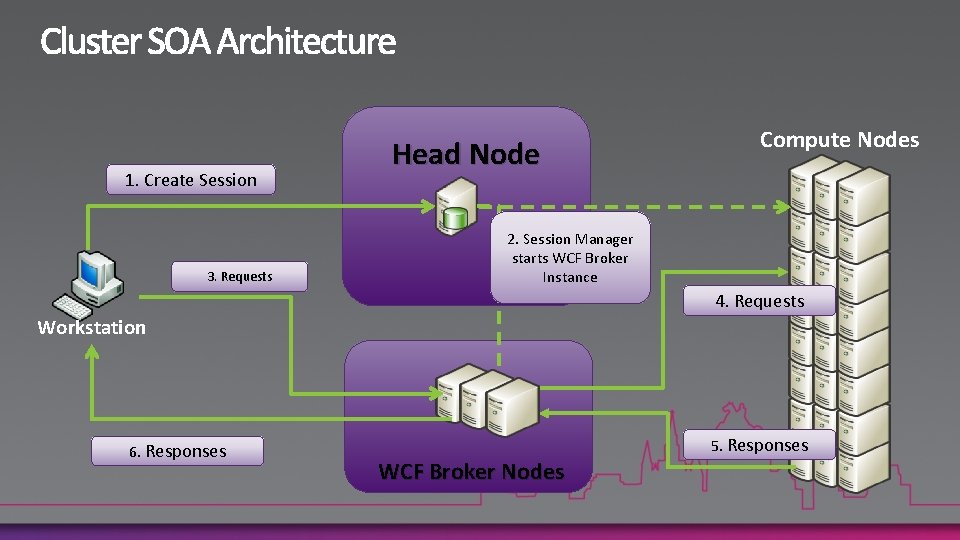

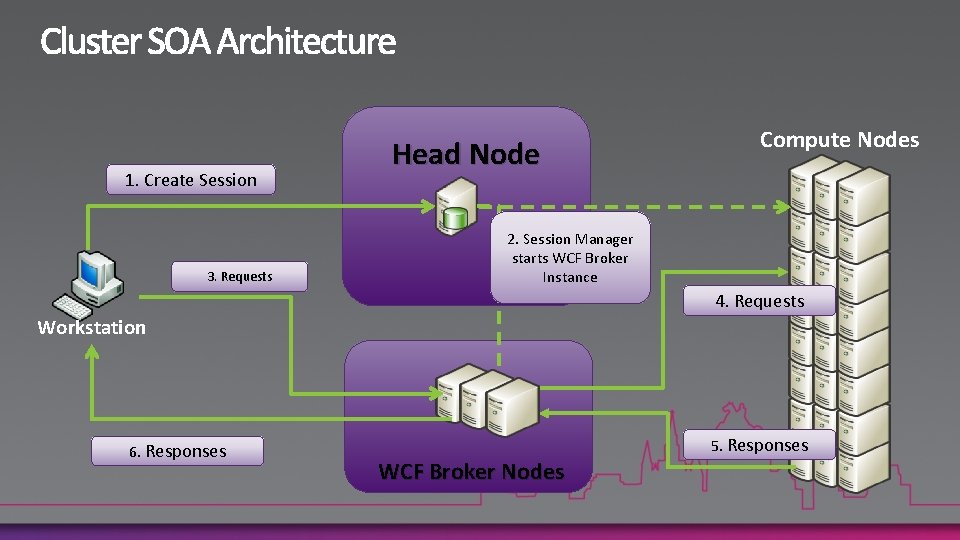

[Diagram components: Workstation, Head Node, WCF Broker Nodes, Compute Nodes]

1. Create Session
2. Session Manager starts WCF Broker Instance
3. Requests
4. Requests
5. Responses
6. Responses
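The six numbered steps can be sketched in Python as a session that owns a broker and fans requests out to workers. This is a stand-in under stated assumptions: a thread pool plays the broker and compute nodes, and `Session`, `send_requests`, and `get_responses` are hypothetical names, not the HPC SOA client API.

```python
from concurrent.futures import ThreadPoolExecutor


def price(request):
    """Stand-in for a service operation hosted on a compute node."""
    return request["spot"] * request["quantity"]


class Session:
    """Hypothetical session: creating it starts a broker (steps 1 and 2)."""

    def __init__(self, service, workers):
        self._service = service
        self._broker = ThreadPoolExecutor(max_workers=workers)

    def send_requests(self, requests):
        # Steps 3 and 4: client to broker, broker to compute nodes.
        return [self._broker.submit(self._service, r) for r in requests]

    def get_responses(self, pending):
        # Steps 5 and 6: compute nodes to broker, broker to client.
        return [p.result() for p in pending]

    def close(self):
        self._broker.shutdown()


session = Session(price, workers=4)
pending = session.send_requests(
    [{"spot": 10.0, "quantity": q} for q in range(1, 4)])
responses = session.get_responses(pending)
session.close()
```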

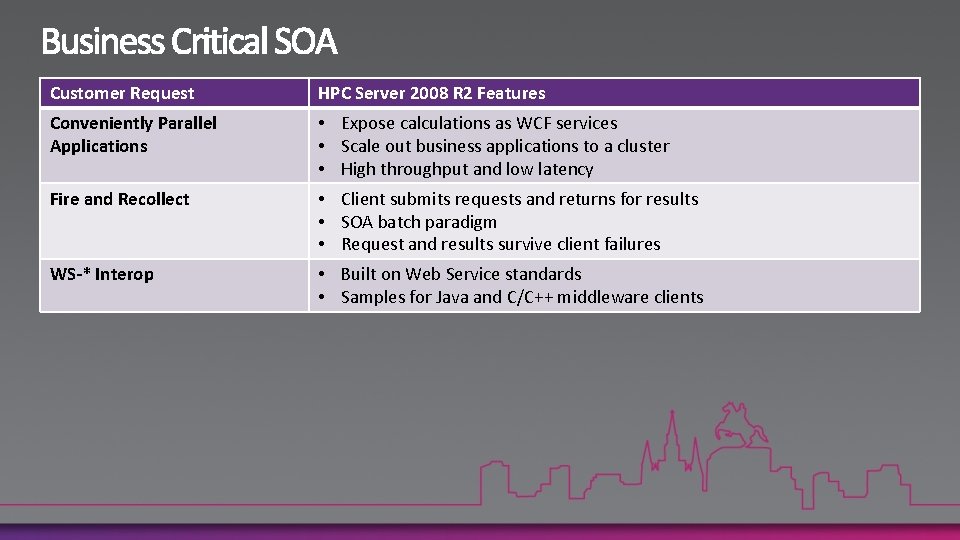

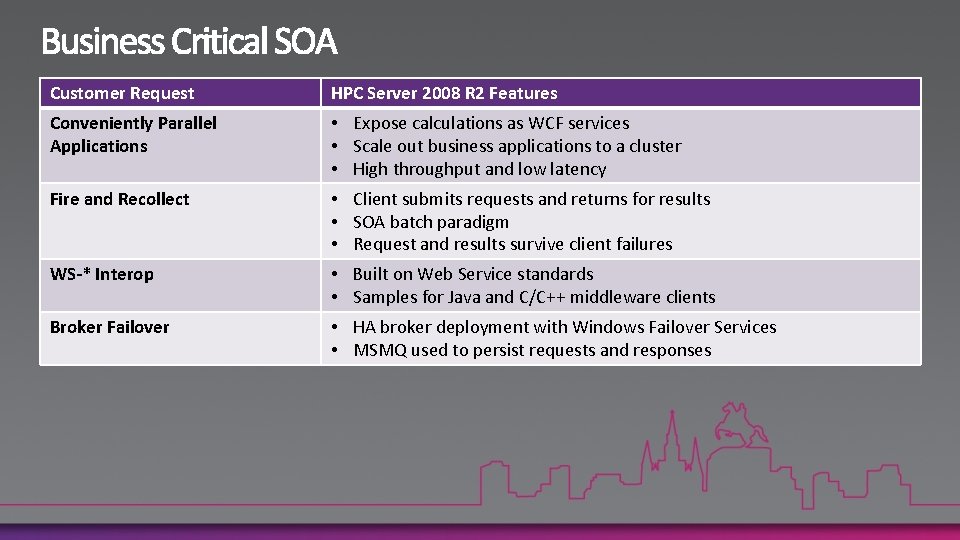

Customer Request: Fire and Recollect

HPC Server 2008 R2 Features:
- Client submits requests and returns for results
- SOA batch paradigm
- Request and results survive client failures

Customer Request: WS-* Interop

HPC Server 2008 R2 Features:
- Built on Web Service standards
- Samples for Java and C/C++ middleware clients
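Fire and recollect depends on requests and responses being stored somewhere that outlives the client. The Python sketch below shows the pattern with SQLite standing in for the broker's persistence layer; the `DurableSession` class and its method names are hypothetical, and nothing here reflects how the product stores its data.

```python
import os
import sqlite3
import tempfile


class DurableSession:
    """Hypothetical durable store keyed by session id (SQLite as a stand-in)."""

    def __init__(self, path):
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS work "
            "(session TEXT, request INTEGER, response INTEGER)")

    def fire(self, session_id, requests):
        self._db.executemany(
            "INSERT INTO work VALUES (?, ?, NULL)",
            [(session_id, r) for r in requests])
        self._db.commit()

    def process_pending(self, service):
        rows = self._db.execute(
            "SELECT rowid, request FROM work WHERE response IS NULL").fetchall()
        for rowid, request in rows:
            self._db.execute(
                "UPDATE work SET response = ? WHERE rowid = ?",
                (service(request), rowid))
        self._db.commit()

    def recollect(self, session_id):
        rows = self._db.execute(
            "SELECT response FROM work WHERE session = ? ORDER BY rowid",
            (session_id,))
        return [row[0] for row in rows]

    def close(self):
        self._db.close()


path = os.path.join(tempfile.mkdtemp(), "broker.db")

client = DurableSession(path)
client.fire("session-42", [1, 2, 3])
client.close()  # the client goes away after submitting

cluster = DurableSession(path)
cluster.process_pending(lambda x: x * x)  # work proceeds without the client
cluster.close()

returning_client = DurableSession(path)
results = returning_client.recollect("session-42")  # reattach by session id
returning_client.close()
```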

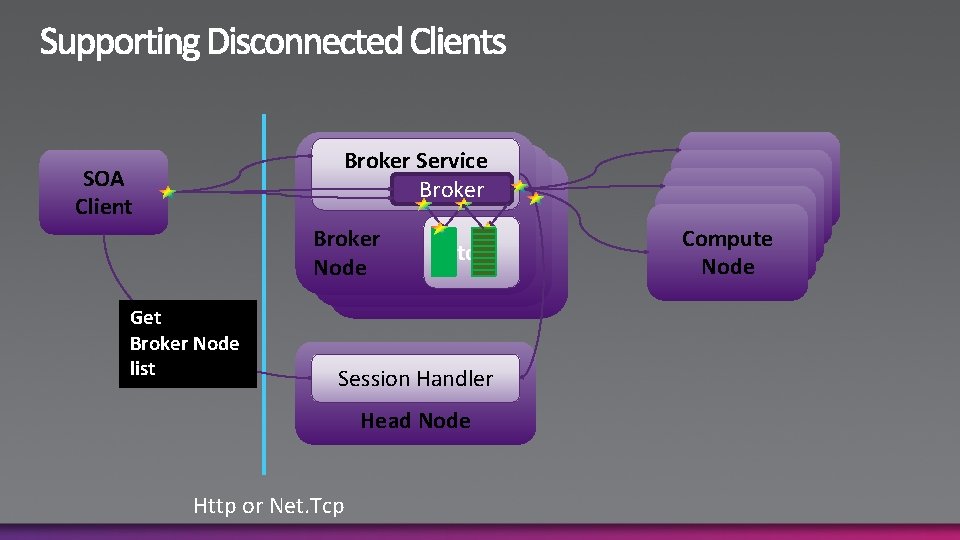

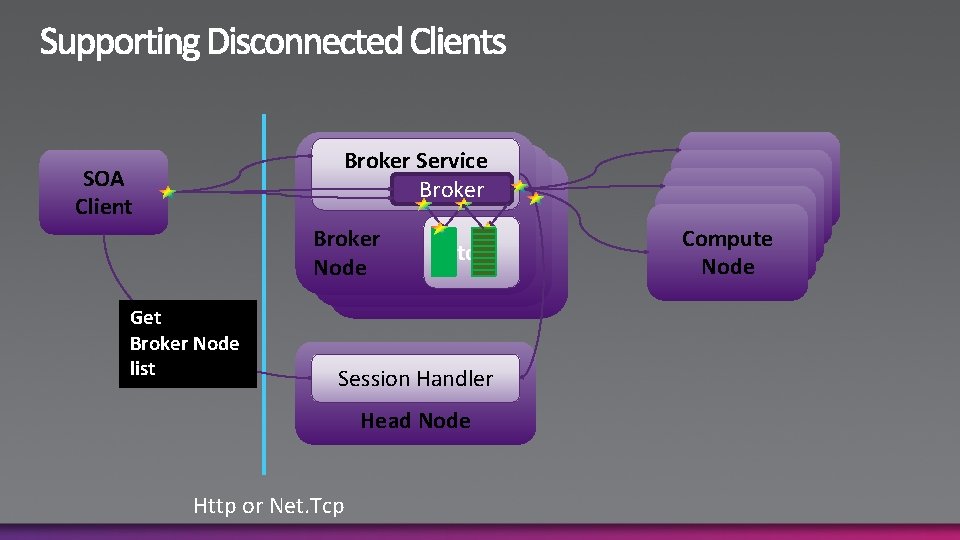

[Diagram labels: SOA Client; Head Node; Broker Node; Broker Service; Broker; Compute Node; Get Broker Node list; Store Session Handler; Http or NetTcp]

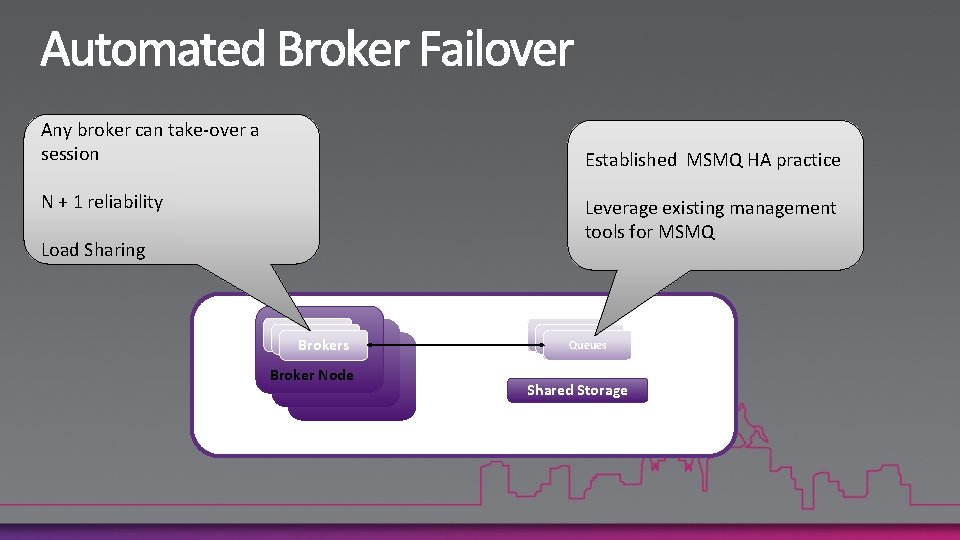

Customer Request: Broker Failover

HPC Server 2008 R2 Features:
- HA broker deployment with Windows Failover Services
- MSMQ used to persist requests and responses

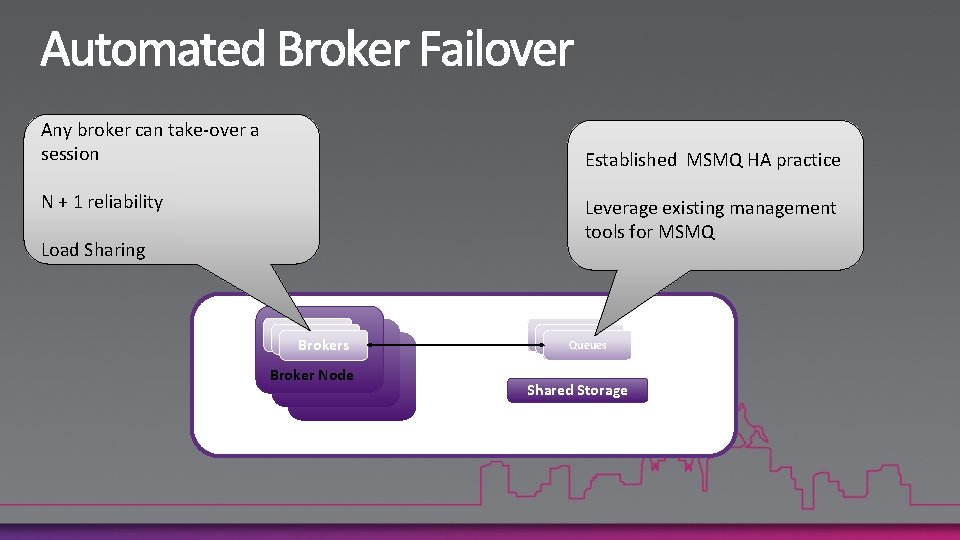

- Any broker can take over a session
- Established MSMQ HA practice
- N + 1 reliability
- Leverage existing management tools for MSMQ

[Diagram labels: Load Sharing Brokers; Broker Node; Stateless Broker Nodes; MSCS Storage Queues; Shared Storage; MSCS Cluster]

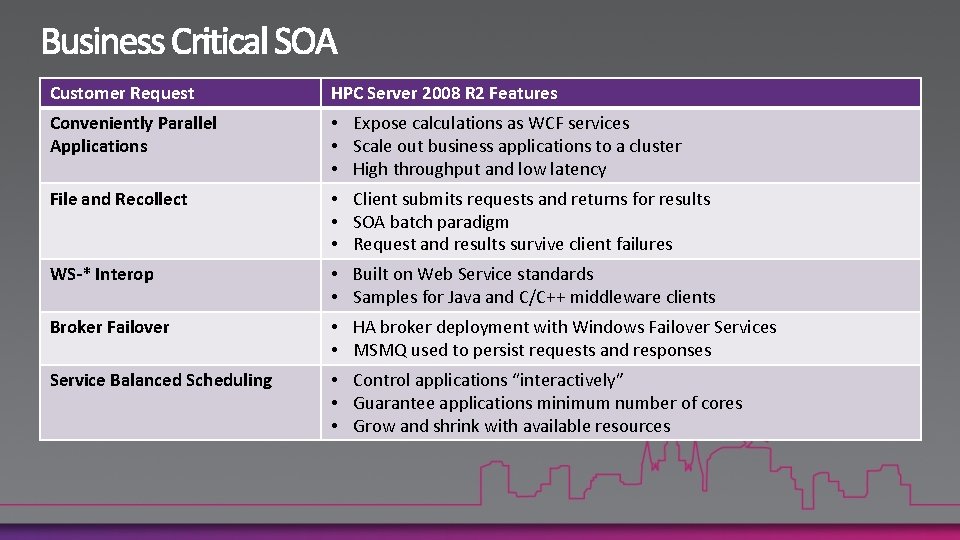

Customer Request: Service Balanced Scheduling

HPC Server 2008 R2 Features:
- Control applications “interactively”
- Guarantee applications minimum number of cores
- Grow and shrink with available resources

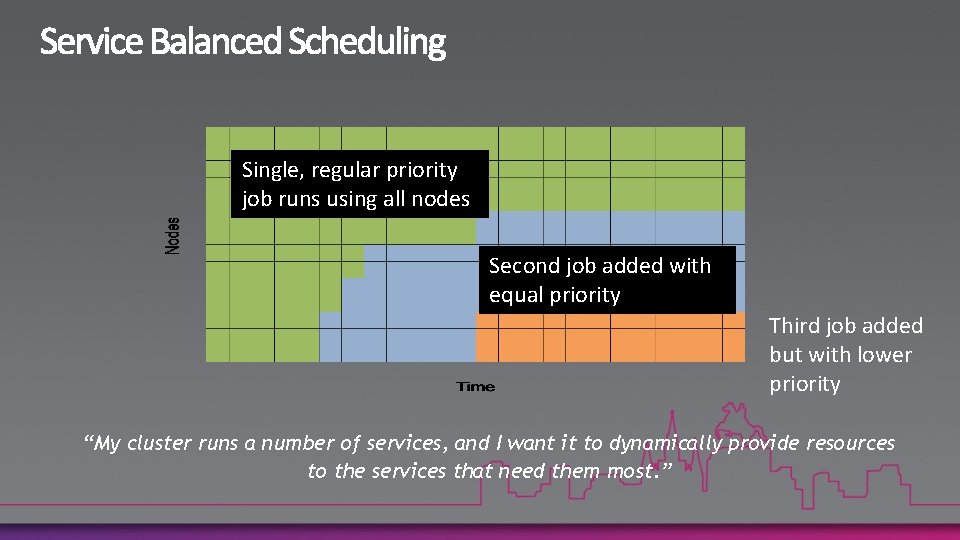

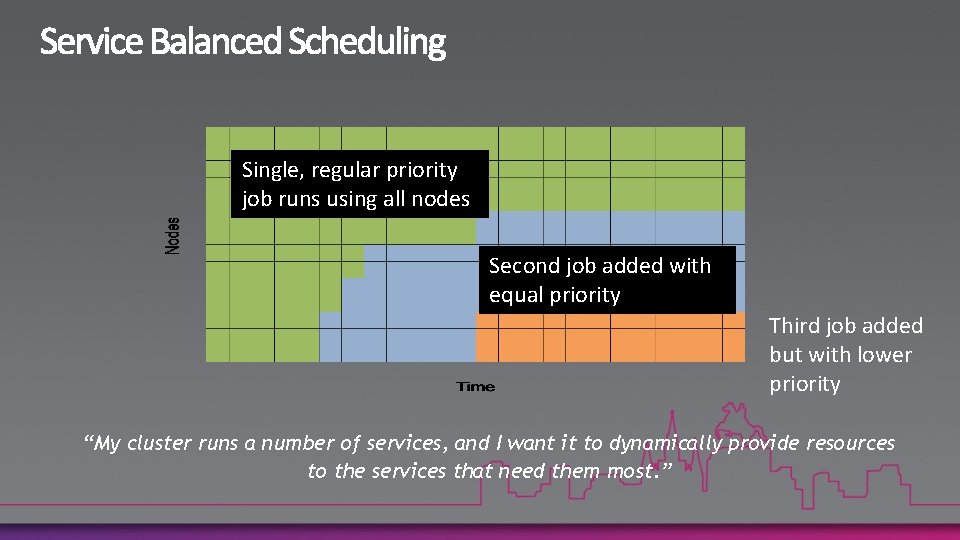

- Single, regular priority job runs using all nodes
- Second job added with equal priority
- Third job added but with lower priority

“My cluster runs a number of services, and I want it to dynamically provide resources to the services that need them most.”
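The three stages in this sequence can be approximated with a simple allocation rule: give every job its guaranteed minimum, then hand out spare cores in proportion to priority. The Python sketch below is one way to do that; the weighting scheme and the function name are assumptions for illustration, not the scheduler's actual algorithm.

```python
def balance(total_cores, jobs):
    """jobs maps name -> (min_cores, priority_weight); returns name -> cores."""
    alloc = {name: min_cores for name, (min_cores, _) in jobs.items()}
    spare = total_cores - sum(alloc.values())
    if spare < 0:
        raise ValueError("guaranteed minimums exceed the cluster size")
    # Give each spare core to the job furthest below its weighted share.
    for _ in range(spare):
        name = min(alloc, key=lambda n: alloc[n] / jobs[n][1])
        alloc[name] += 1
    return alloc


one_job = balance(16, {"A": (1, 2)})
two_jobs = balance(16, {"A": (1, 2), "B": (1, 2)})
three_jobs = balance(16, {"A": (1, 2), "B": (1, 2), "C": (1, 1)})
print(one_job, two_jobs, three_jobs)
```

Re-running the function whenever a job arrives or finishes gives the grow-and-shrink behavior: allocations change, but no job drops below its minimum.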

Excel on the Cluster (photo: http://www.flickr.com/photos/mvjaf/2209185558/)

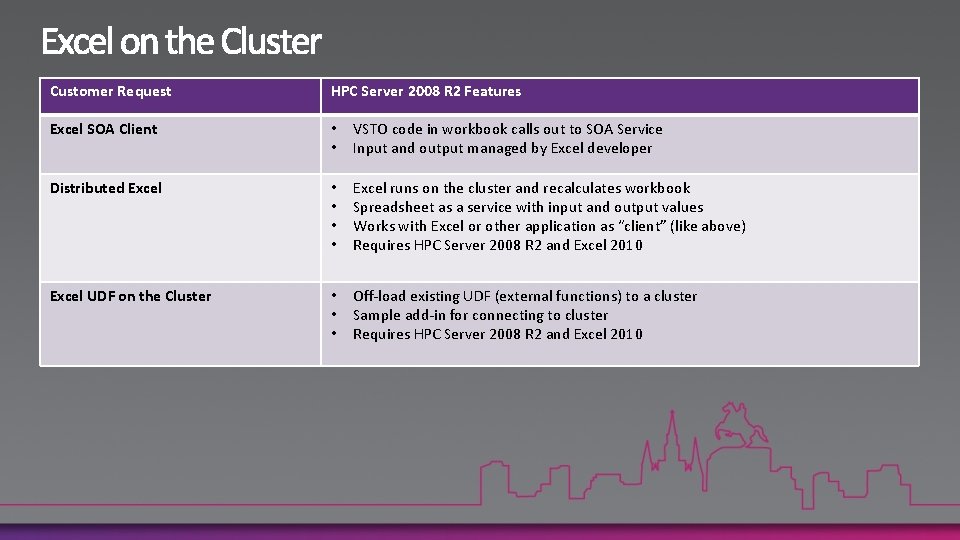

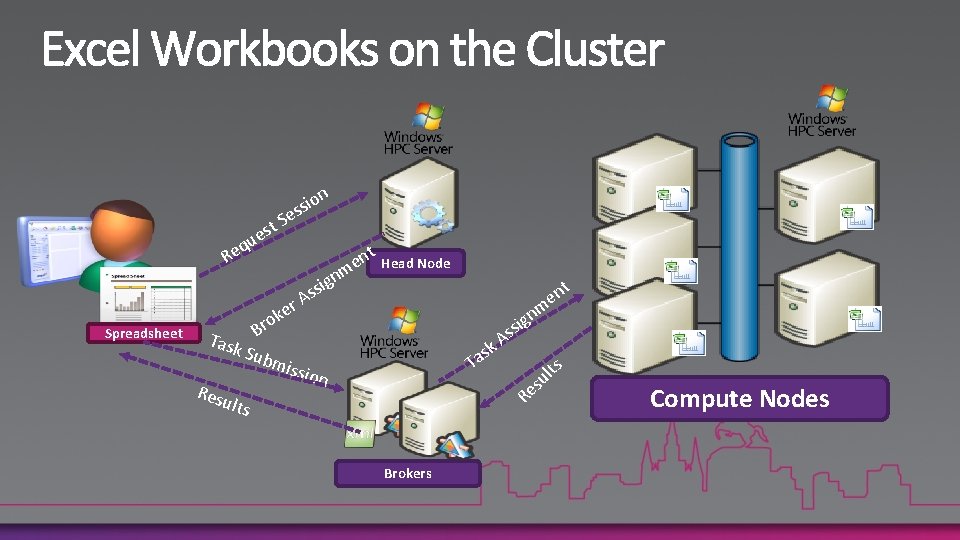

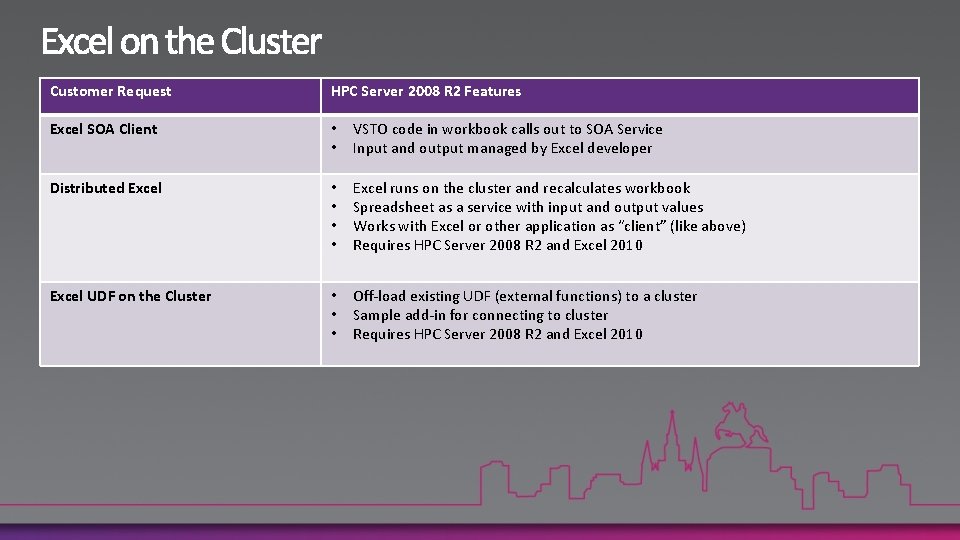

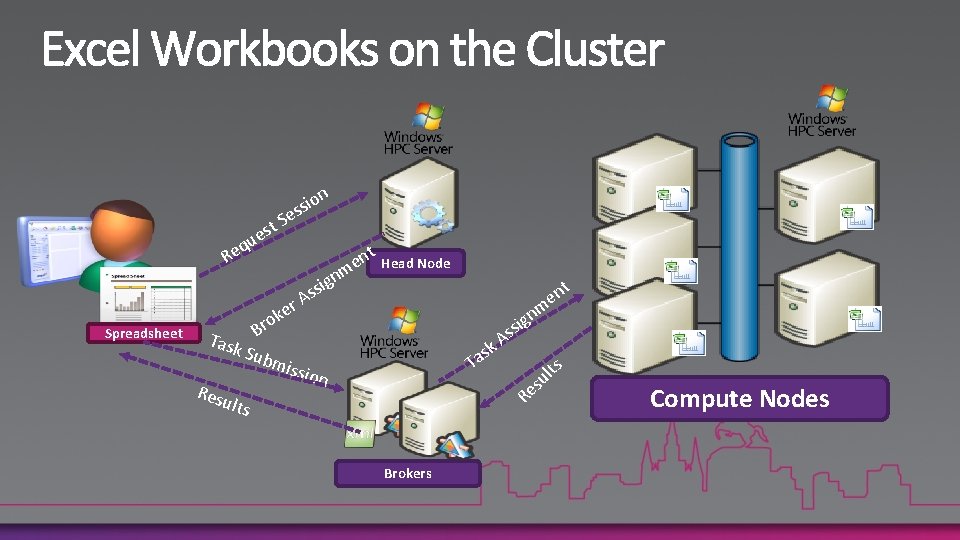

Customer Request: Excel SOA Client

HPC Server 2008 R2 Features:
- VSTO code in workbook calls out to SOA Service
- Input and output managed by Excel developer

Customer Request: Distributed Excel

HPC Server 2008 R2 Features:
- Excel runs on the cluster and recalculates workbook
- Spreadsheet as a service with input and output values
- Works with Excel or other application as “client” (like above)
- Requires HPC Server 2008 R2 and Excel 2010

Customer Request: Excel UDF on the Cluster

HPC Server 2008 R2 Features:
- Off-load existing UDF (external functions) to a cluster
- Sample add-in for connecting to cluster
- Requires HPC Server 2008 R2 and Excel 2010

[Diagram components: Spreadsheet, Head Node, Brokers, Compute Nodes; flow labels: Session Request, Broker Assignment, Task Submission, Task Assignment, Results]

Full Throttle Ahead (photo: http://www.flickr.com/photos/30046478@N08/3562725745/)

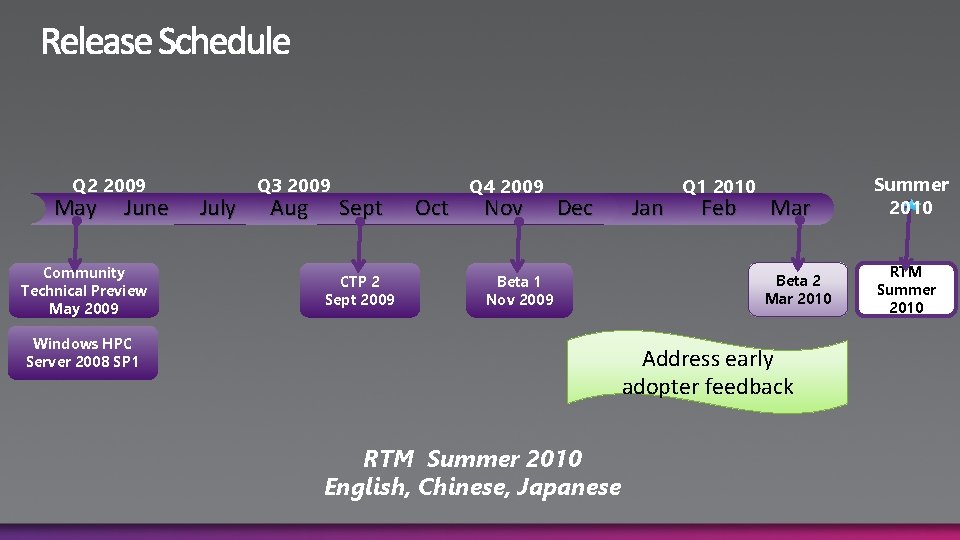

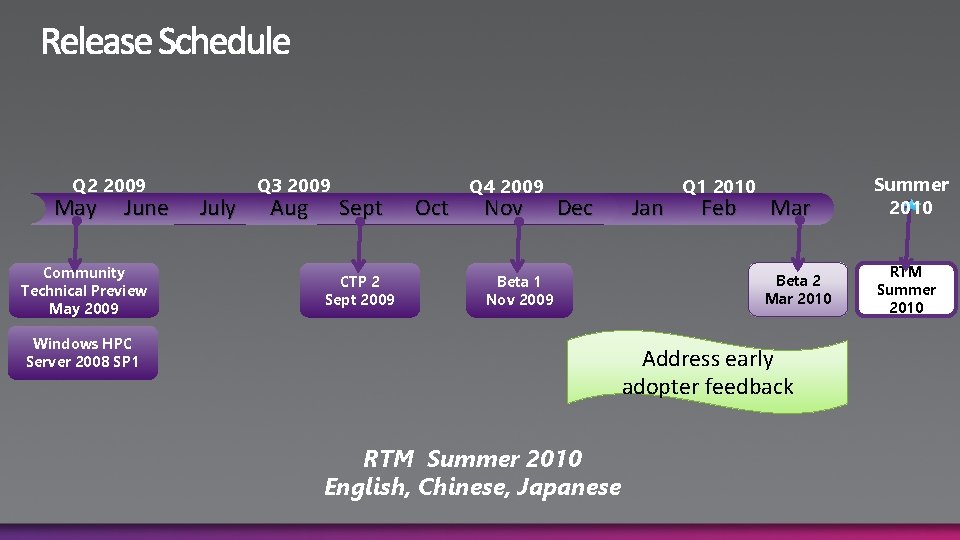

- Q2 2009: Community Technical Preview (May 2009)
- Q3 2009: CTP 2 (Sept 2009)
- Q4 2009: Beta 1 (Nov 2009)
- Windows HPC Server 2008 SP1
- Q1 2010: Beta 2 (Mar 2010), address early adopter feedback
- Summer 2010: RTM (English, Chinese, Japanese)

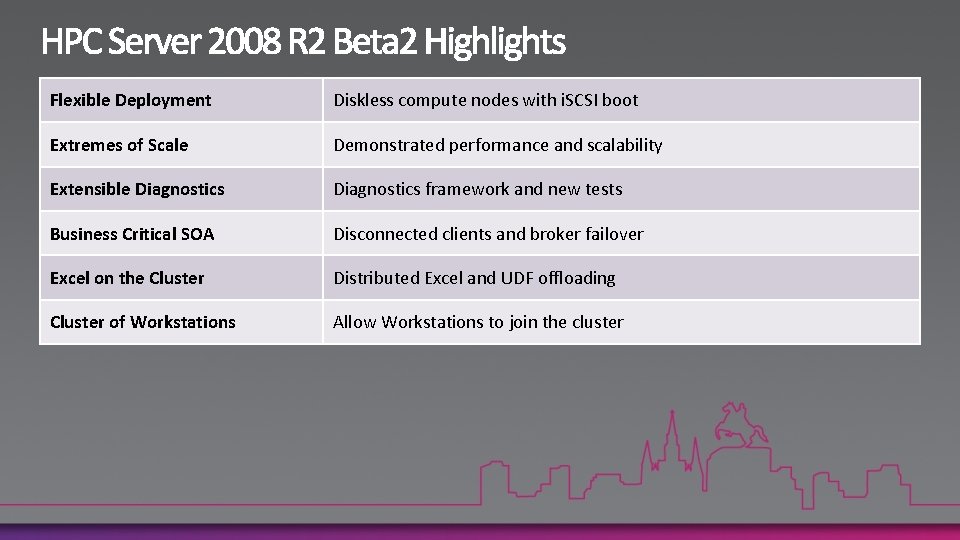

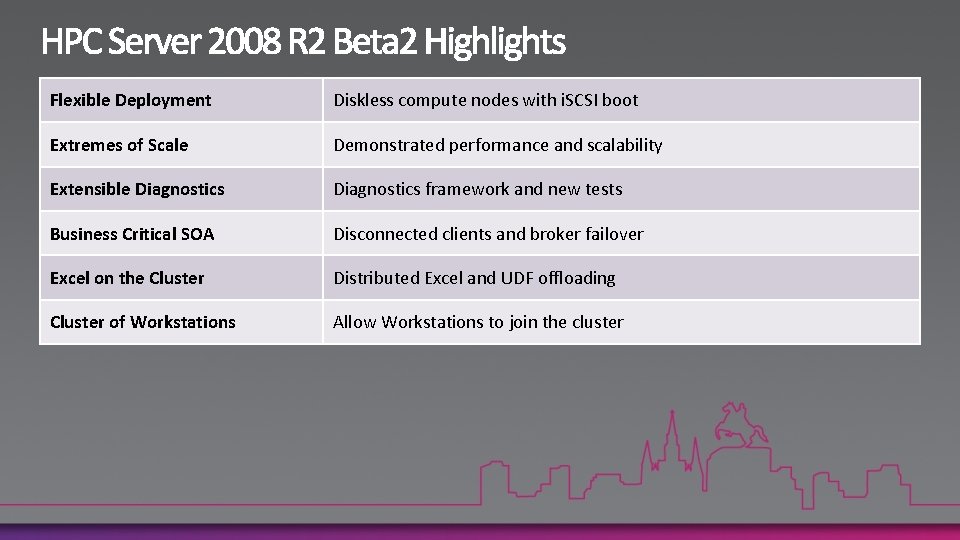

- Flexible Deployment: Diskless compute nodes with iSCSI boot
- Extremes of Scale: Demonstrated performance and scalability
- Extensible Diagnostics: framework and new tests
- Business Critical SOA: Disconnected clients and broker failover
- Excel on the Cluster: Distributed Excel and UDF offloading
- Cluster of Workstations: Allow workstations to join the cluster

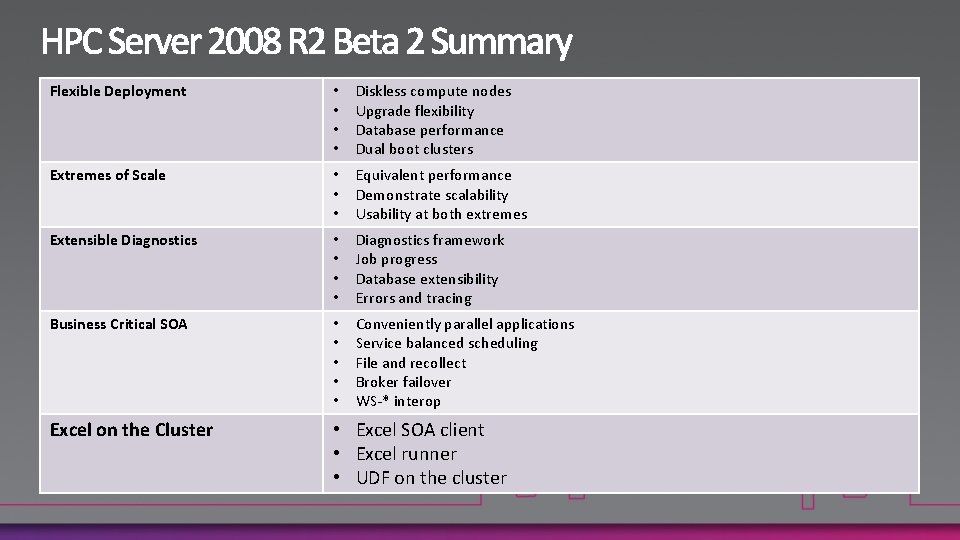

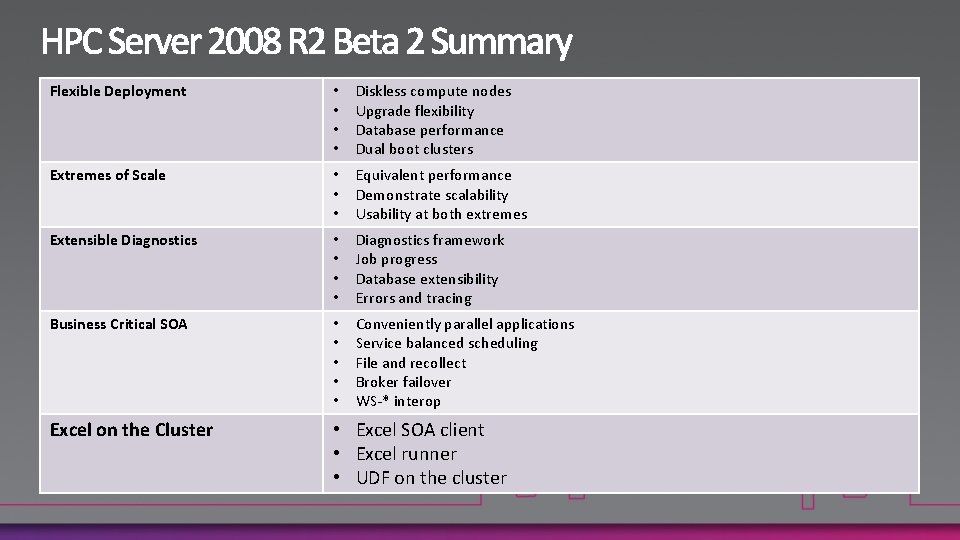

Flexible Deployment:
- Diskless compute nodes
- Upgrade flexibility
- Database performance
- Dual boot clusters

Extremes of Scale:
- Equivalent performance
- Demonstrate scalability
- Usability at both extremes

Extensible Diagnostics:
- Diagnostics framework
- Job progress
- Database extensibility
- Errors and tracing

Business Critical SOA:
- Conveniently parallel applications
- Service balanced scheduling
- Fire and recollect
- Broker failover
- WS-* interop

Excel on the Cluster:
- Excel SOA client
- Excel runner
- UDF on the cluster

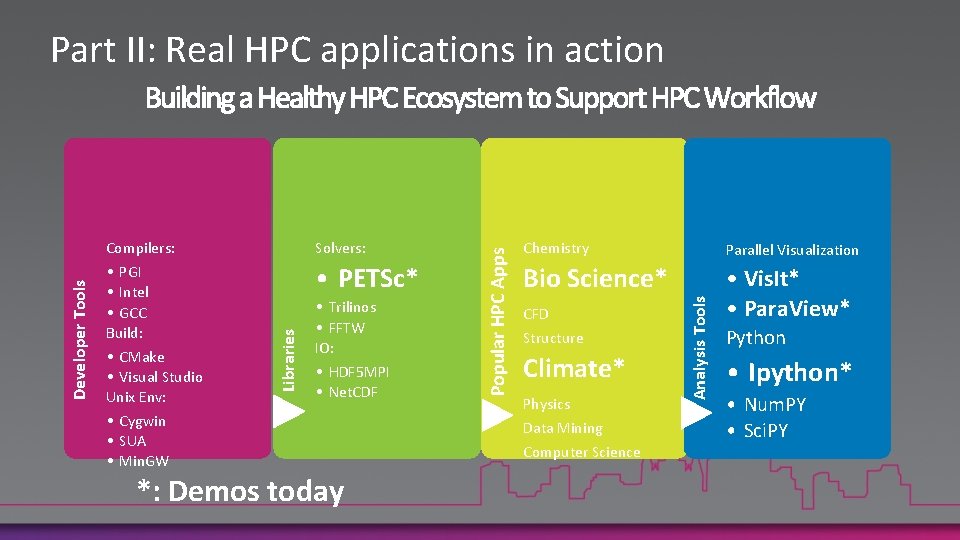

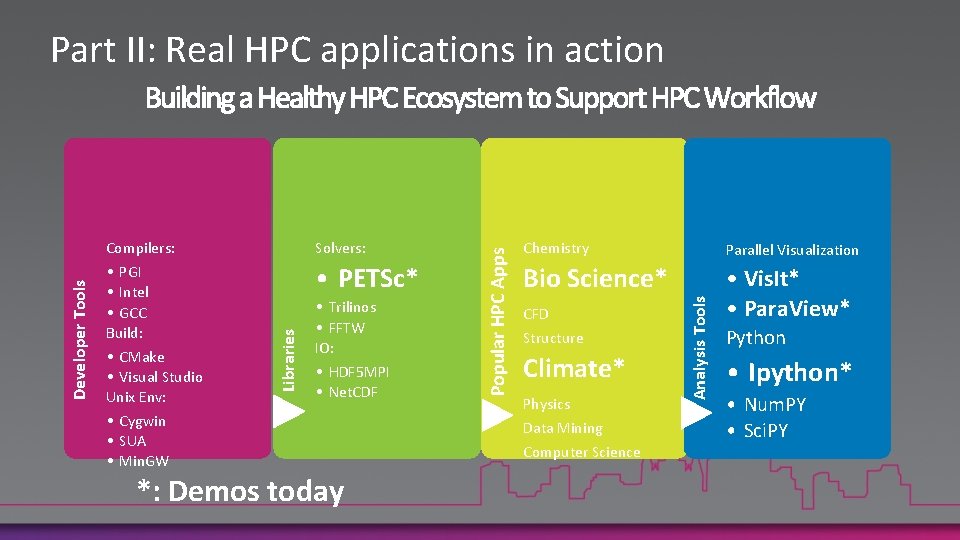

Part II: Real HPC applications in action (*: Demos today)

Popular HPC Apps: Chemistry, Bio Science*, CFD, Structure, Climate*, Physics, Data Mining, Computer Science

Parallel Visualization:
- VisIt*
- ParaView*

Libraries:
- Solvers: PETSc*, Trilinos, FFTW
- IO: HDF5, NetCDF
- MPI

Developer Tools:
- Compilers: PGI, Intel, GCC
- Build: CMake, Visual Studio
- Unix Env: Cygwin, SUA, MinGW

Analysis Tools:
- Python: IPython*, NumPy, SciPy

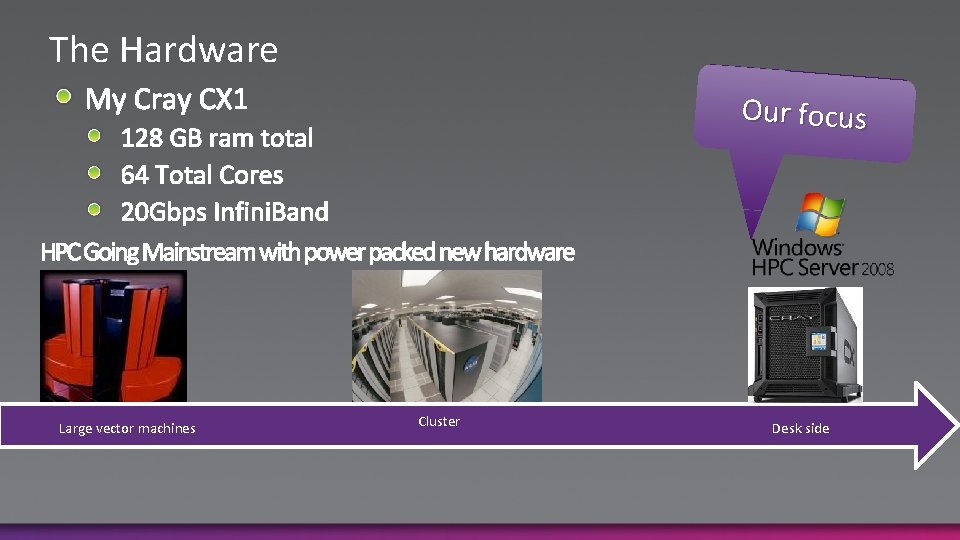

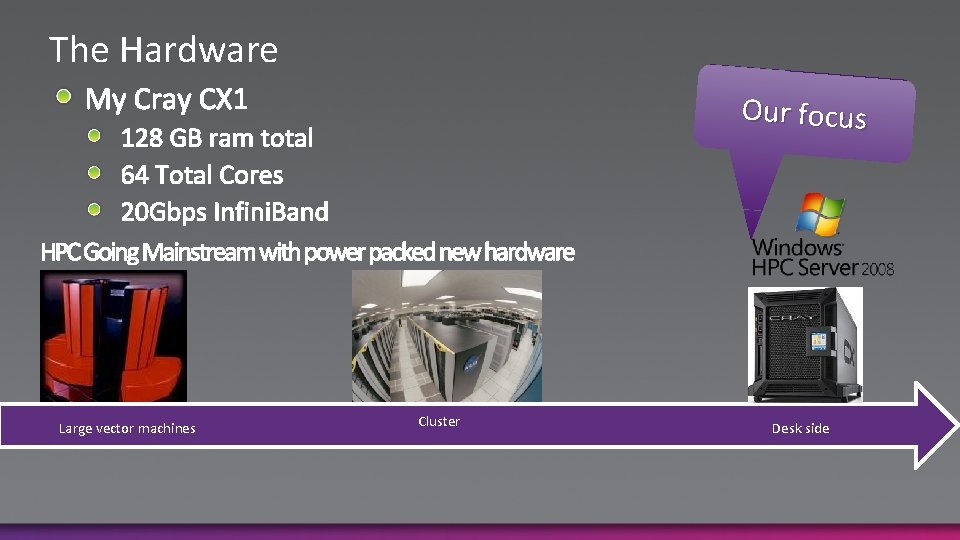

The Hardware Our focus Large vector machines Cluster Desk side

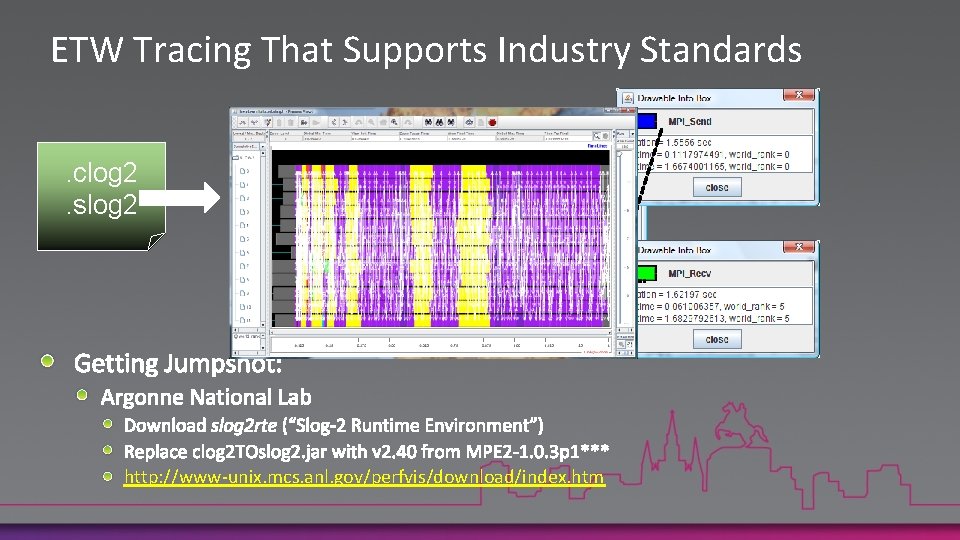

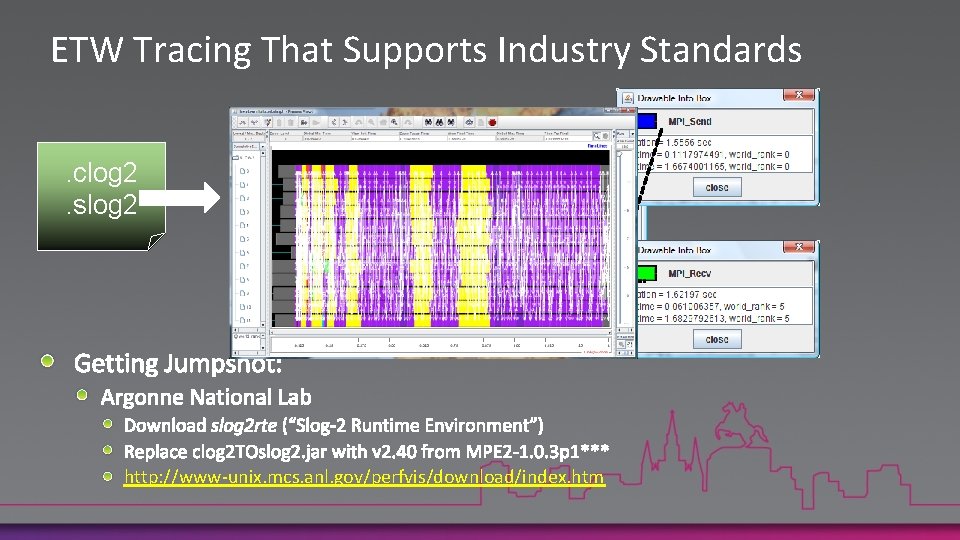

ETW Tracing That Supports Industry Standards: .clog2, .slog2

http://www-unix.mcs.anl.gov/perfvis/download/index.htm

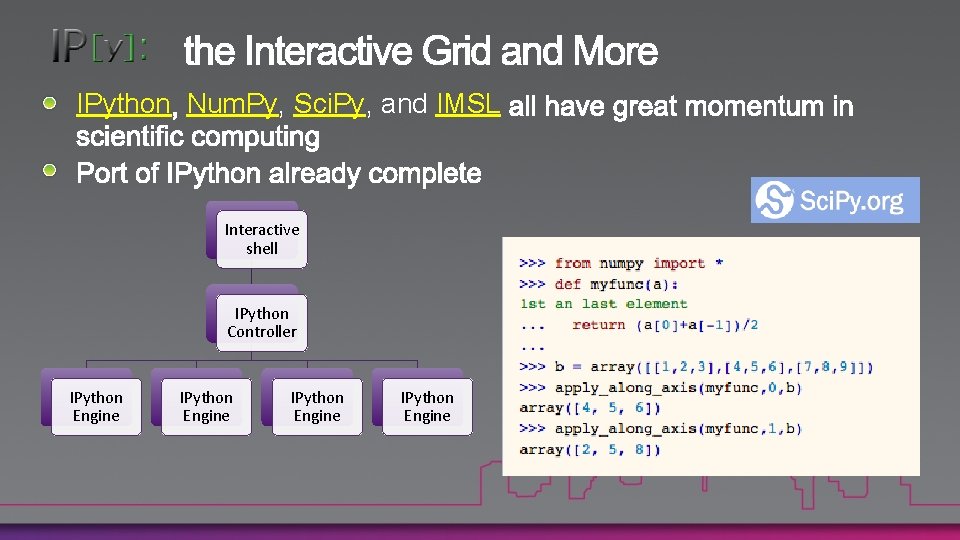

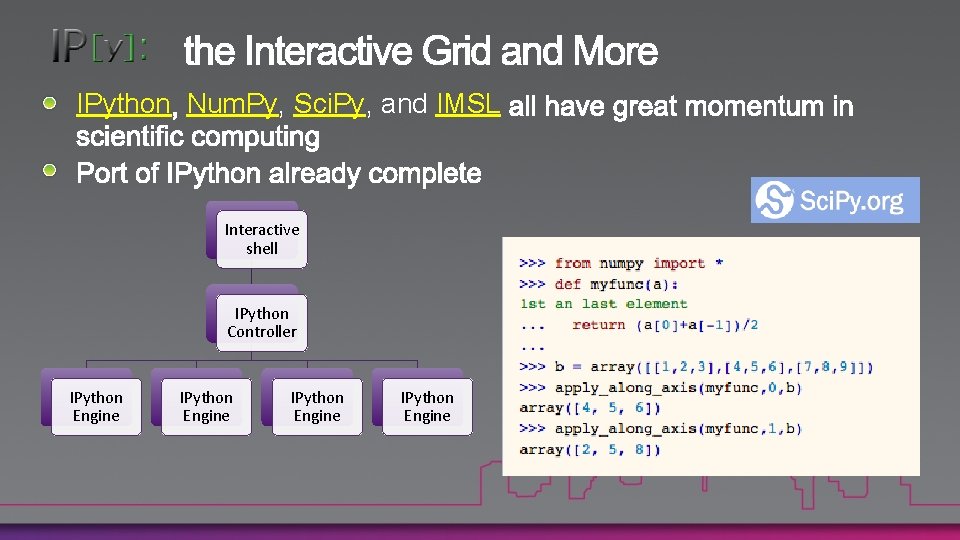

IPython, NumPy, SciPy, and IMSL

[Diagram labels: Interactive shell; IPython Controller; IPython Engine]

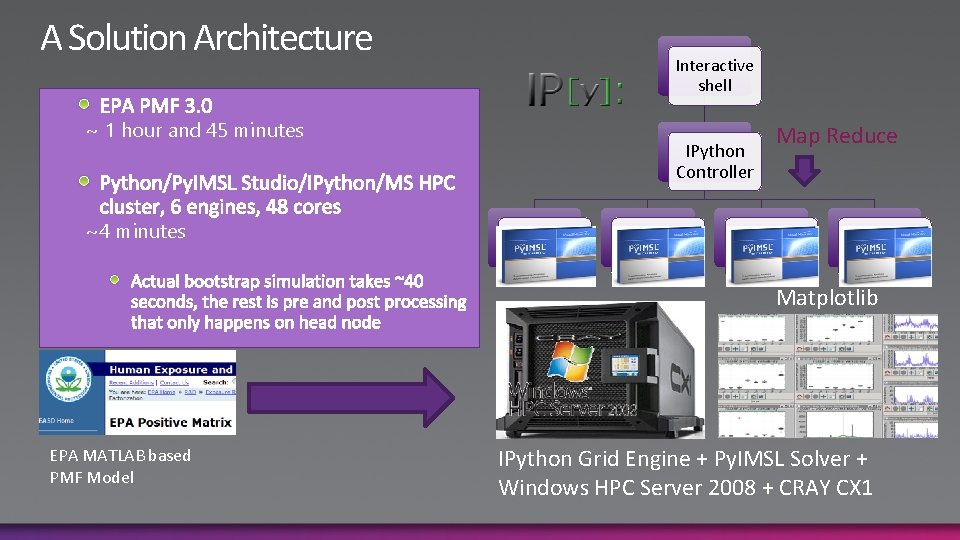

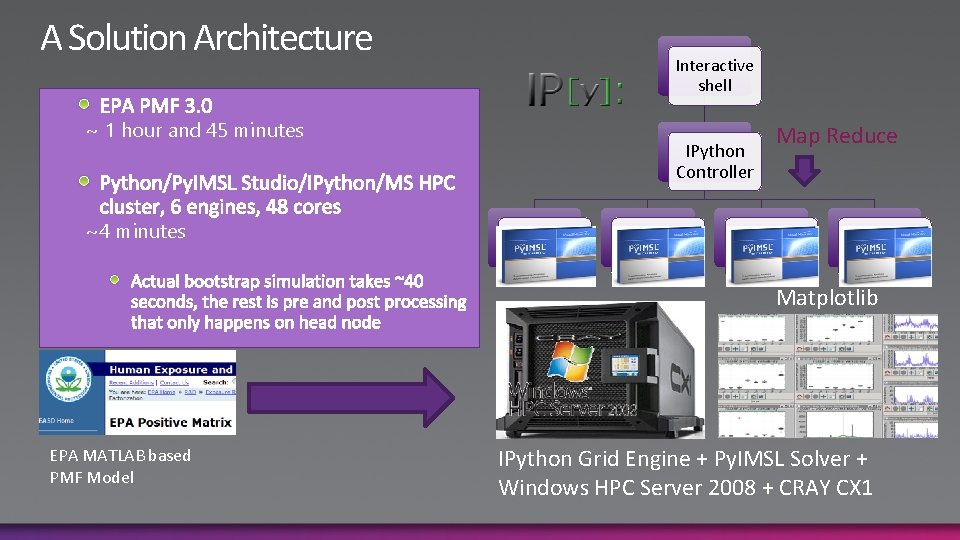

A Solution Architecture

[Diagram labels: Interactive shell; IPython Controller; IPython Engine (Map); IPython Engine (Reduce); Matplotlib. Run times shown: ~1 hour and 45 minutes and ~4 minutes]

EPA MATLAB based PMF Model

IPython Grid Engine + PyIMSL Solver + Windows HPC Server 2008 + CRAY CX1
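The two run times on this slide, about 105 minutes and about 4 minutes, differ by a factor of roughly 26. The map and reduce roles in the architecture can be sketched in plain Python as below; a thread pool stands in for the IPython engines, and `fit_one` is a toy placeholder rather than the PMF model or the PyIMSL solver.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def fit_one(seed):
    """Toy stand-in for one independent model run; returns (seed, score)."""
    score = (seed * 37) % 11
    return seed, score


def best(a, b):
    """Reduce step: keep the run with the lower score."""
    return a if a[1] <= b[1] else b


# Map: independent runs are farmed out to the workers ("engines").
with ThreadPoolExecutor(max_workers=4) as engines:
    runs = list(engines.map(fit_one, range(8)))

# Reduce: the controller combines the per-run results into one answer.
winner = reduce(best, runs)
print(winner)
```

The pattern fits workloads where runs do not depend on each other, so adding engines shortens wall time until the reduce step or scheduling overhead dominates.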

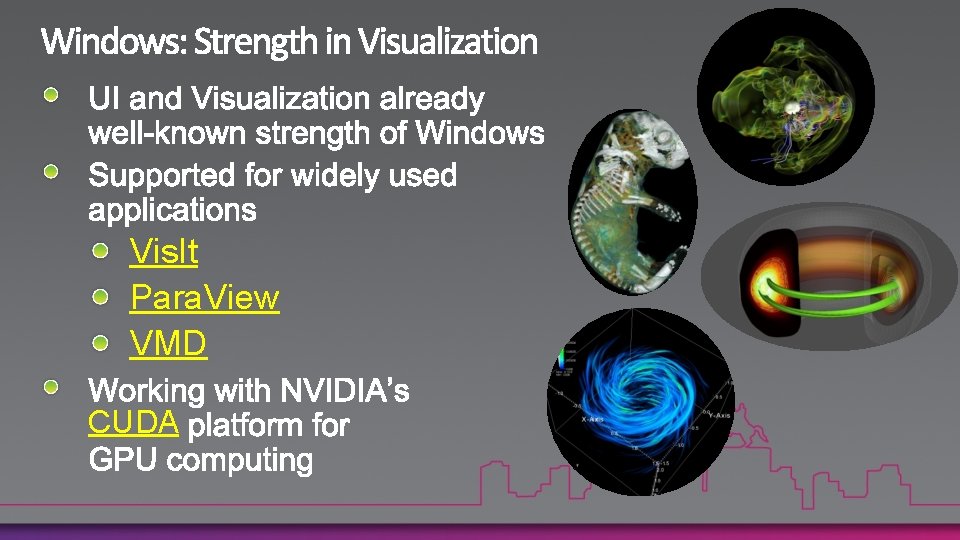

VisIt, ParaView, VMD, CUDA

Developer Benefits of Windows HPC:
- Easier binary distribution
- Better tools, first class developer support
- More potential new users and developers

- http://www.microsoft.com/hpc
- http://blogs.msdn.com/hpctrekker
- http://channel9.msdn.com/shows/The+HPC+Show
- http://www.kitware.com/
- http://technet.microsoft.com/en-us/hpc/default.aspx

- www.microsoft.com/teched
- www.microsoft.com/learning
- http://microsoft.com/technet
- http://microsoft.com/msdn


THANKS!