Privacy-Preserving Classification. Kamalika Chaudhuri, Claire Monteleoni, Anand Sarwate

- Slides: 56

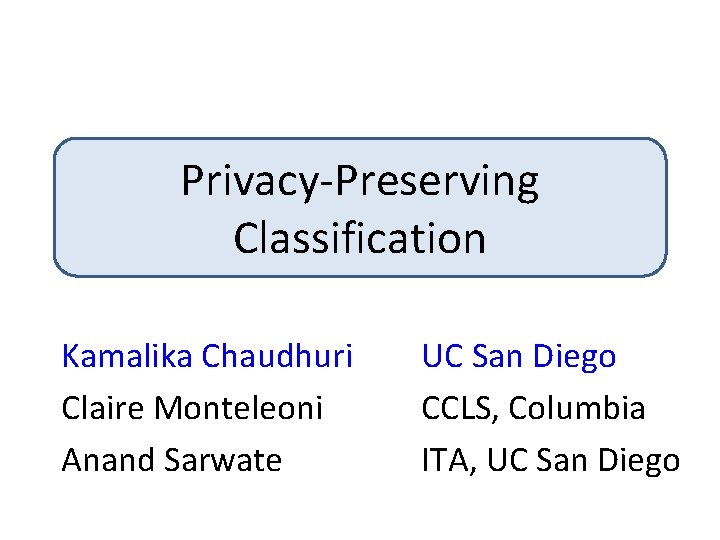

Privacy-Preserving Classification

Kamalika Chaudhuri (UC San Diego), Claire Monteleoni (CCLS, Columbia), Anand Sarwate (ITA, UC San Diego)

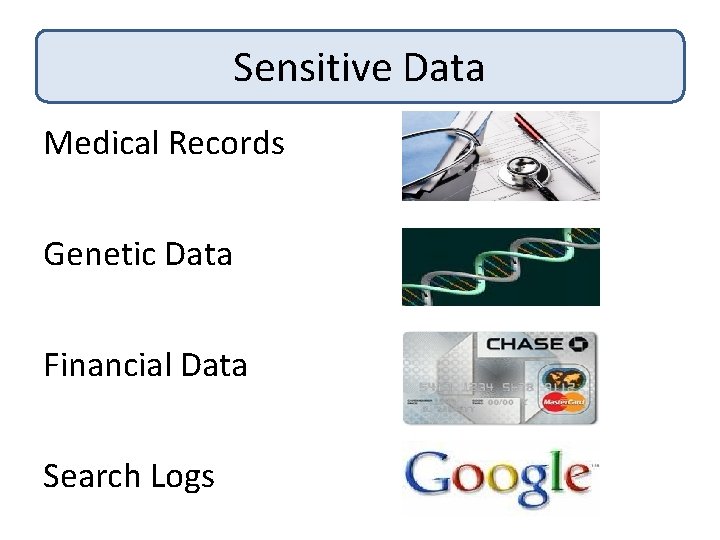

Sensitive Data: medical records, genetic data, financial data, search logs

How to learn from sensitive data while preserving privacy?

A Learning Problem: Flu Test
- Predicts flu or not, based on symptoms
- Trained on sensitive patient data

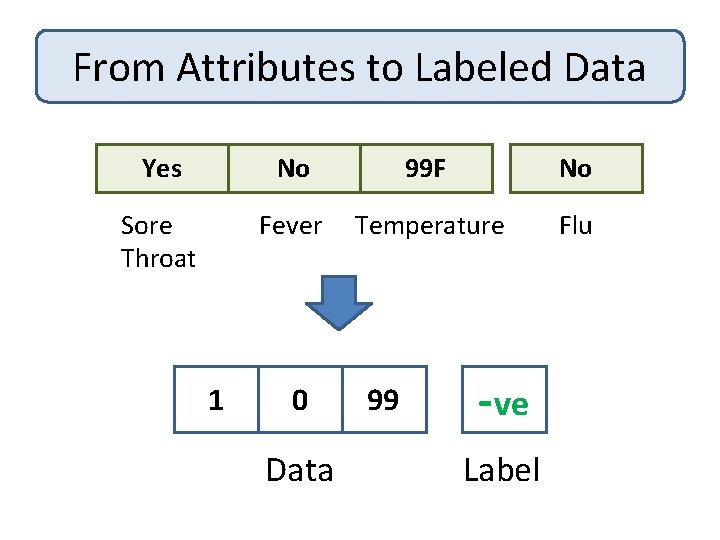

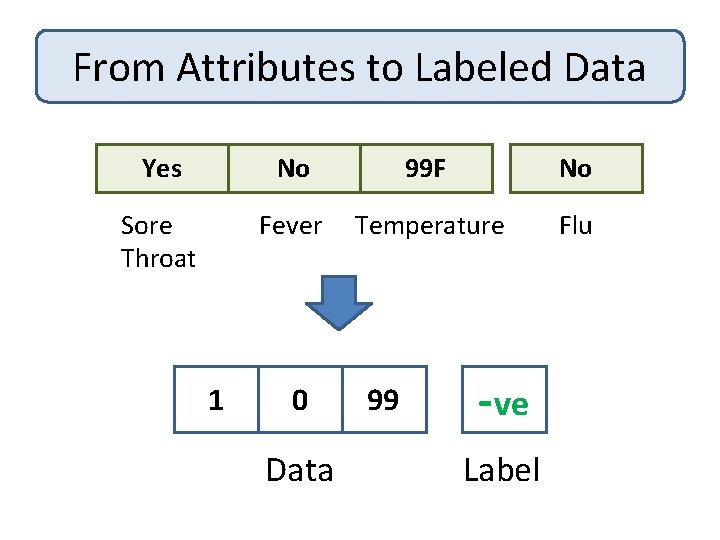

From Attributes to Labeled Data

| Sore Throat | Fever | Temperature | Flu |
|---|---|---|---|
| Yes | No | 99 F | No |

Data: (1, 0, 99). Label: -ve.
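The encoding on this slide can be sketched in a few lines of Python. This is only an illustration: the function name `encode_patient` and the choice of +1/-1 labels are assumptions, not something the slides specify beyond the single example row.

```python
def encode_patient(sore_throat, fever, temperature_f, has_flu):
    """Map yes/no attributes to 1/0 and the flu outcome to a +1/-1 label.

    Hypothetical helper for illustration; the slide only shows one row.
    """
    features = [
        1.0 if sore_throat else 0.0,
        1.0 if fever else 0.0,
        float(temperature_f),
    ]
    label = 1 if has_flu else -1
    return features, label


# The row from the slide: sore throat = Yes, fever = No, 99 F, flu = No.
features, label = encode_patient(True, False, 99.0, False)
```

Each patient becomes one labeled example (x, y), which is the form the learner consumes in the rest of the talk.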

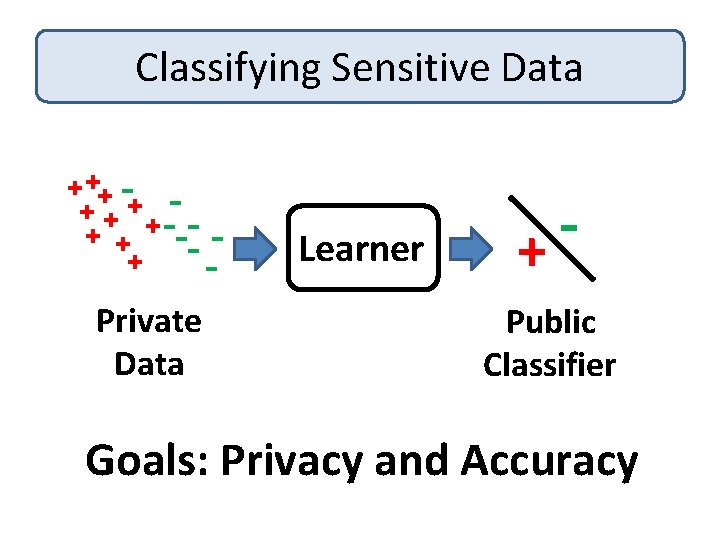

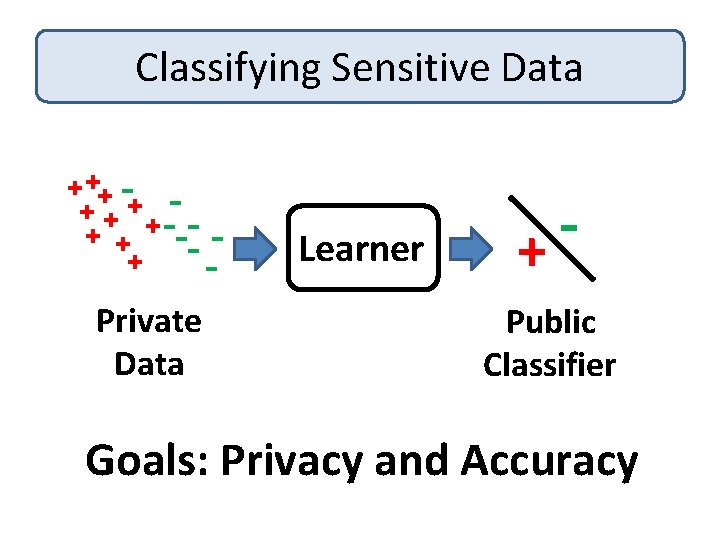

Classifying Sensitive Data: Private Data → Learner → Public Classifier. Goals: Privacy and Accuracy

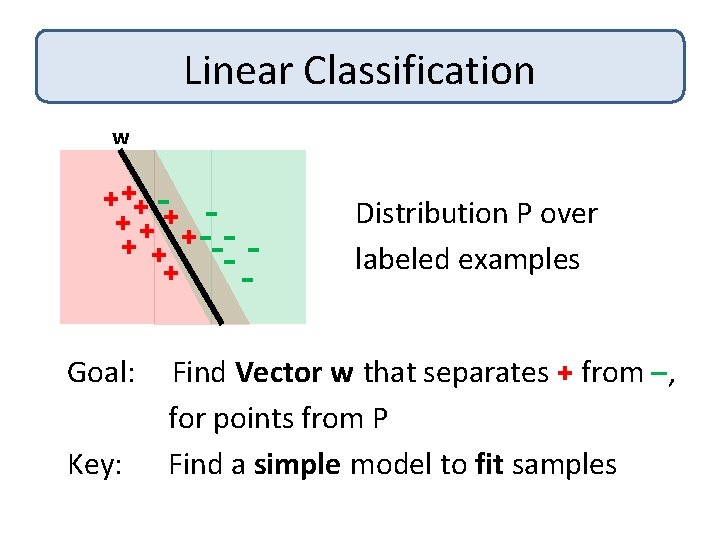

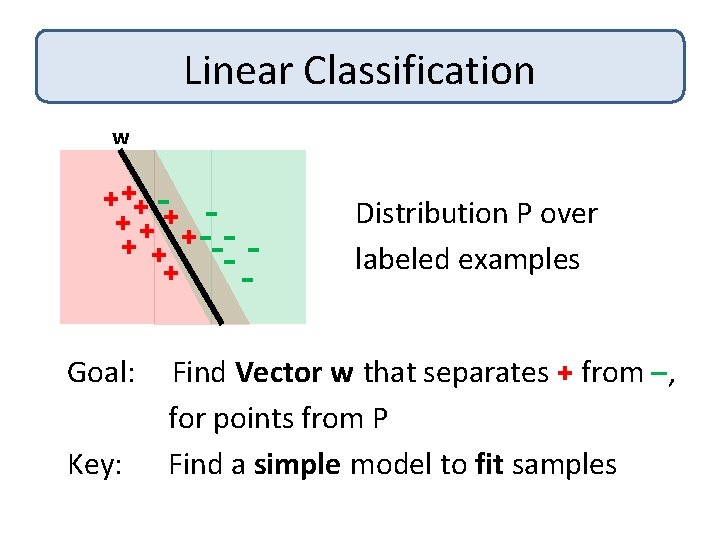

Linear Classification
- Distribution $P$ over labeled examples
- Goal: Find a vector $w$ that separates + from −, for points from $P$
- Key: Find a simple model to fit samples

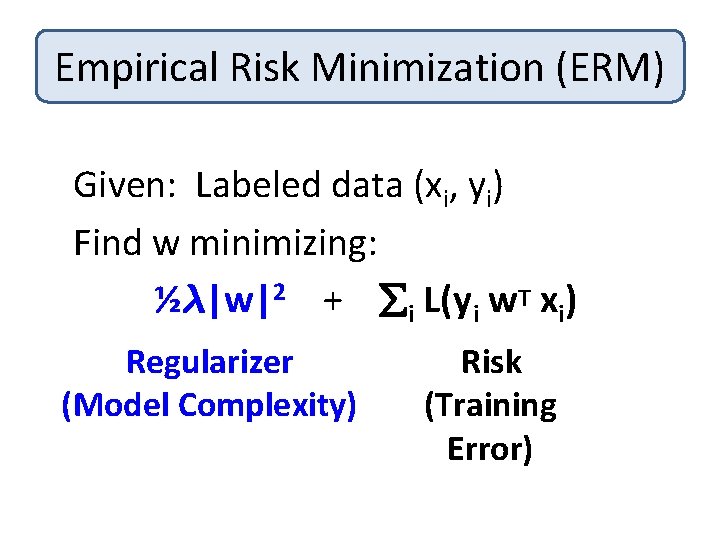

Empirical Risk Minimization (ERM)

Given: Labeled data $(x_i, y_i)$

Find $w$ minimizing: $\frac{1}{2}\lambda |w|^2 + \sum_i L(y_i w^T x_i)$

The first term is the regularizer (model complexity); the second is the risk (training error).

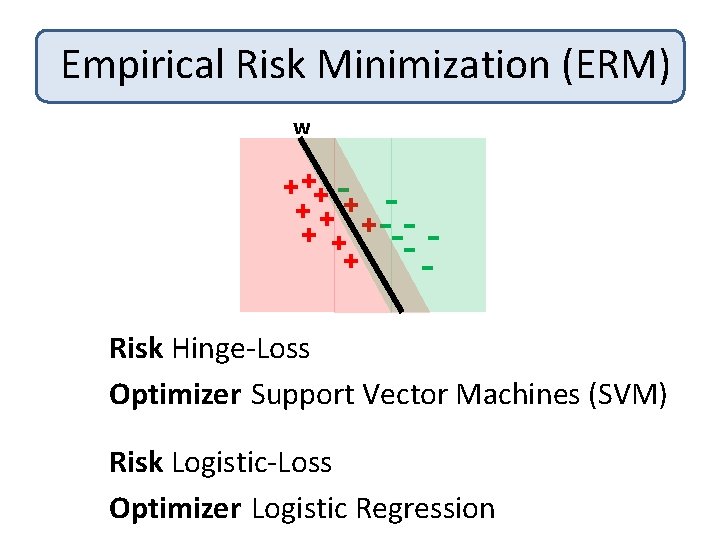

Empirical Risk Minimization (ERM)

Given: Labeled data $(x_1, y_1), \ldots, (x_n, y_n)$

Find: Vector $w$ that minimizes $\frac{1}{2}\lambda |w|^2 + \sum_i L(y_i w^T x_i)$ (regularizer + risk)

| Risk | Optimizer |
|---|---|
| Hinge loss | Support Vector Machines (SVM) |
| Logistic loss | Logistic Regression |
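As a rough illustration of the objective on this slide, the sketch below evaluates regularizer + risk for both losses in plain Python. The function names and the toy data are assumptions, and the risk is averaged over the n examples, which is a scaling choice the extracted slide text does not make explicit.

```python
import math


def logistic_loss(z):
    # log(1 + exp(-z)), written to avoid overflow for large |z|.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


def hinge_loss(z):
    return max(0.0, 1.0 - z)


def erm_objective(w, X, y, lam, loss):
    """0.5 * lam * |w|^2 plus the average loss of the margins y_i * w.x_i."""
    regularizer = 0.5 * lam * sum(wj * wj for wj in w)
    margins = [yi * sum(wj * xj for wj, xj in zip(w, xi)) for xi, yi in zip(X, y)]
    risk = sum(loss(m) for m in margins) / len(margins)
    return regularizer + risk


# Toy data (hypothetical), evaluated at w = 0.
X = [[1.0, 2.0], [-1.0, -0.5], [0.5, -1.5]]
y = [1, -1, -1]
w0 = [0.0, 0.0]
obj_logistic = erm_objective(w0, X, y, lam=0.1, loss=logistic_loss)
obj_hinge = erm_objective(w0, X, y, lam=0.1, loss=hinge_loss)
```

Swapping the `loss` argument is the only difference between the SVM-style and the logistic-regression-style objective here.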

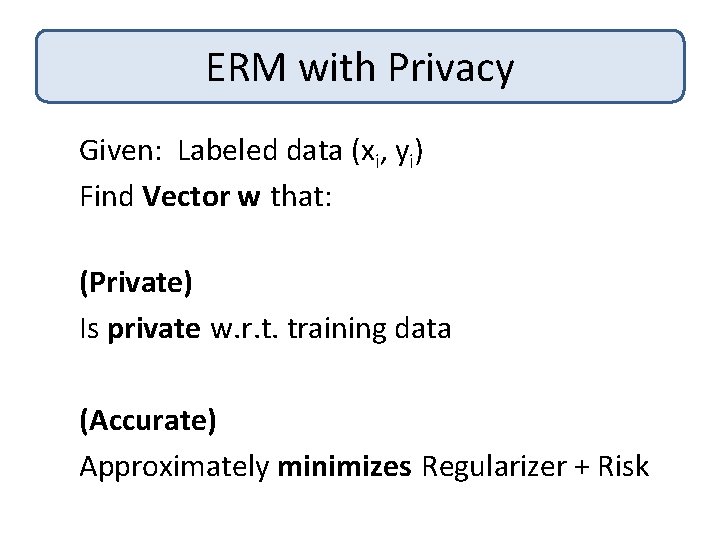

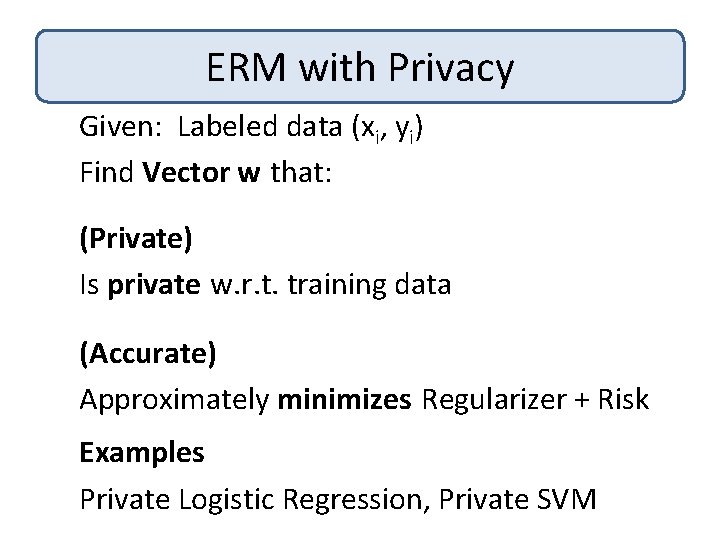

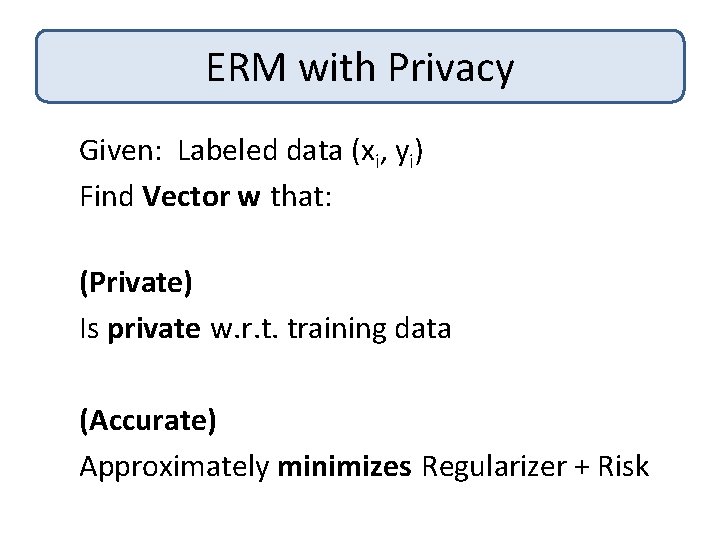

ERM with Privacy

Given: Labeled data $(x_i, y_i)$

Find a vector $w$ that:
- (Private) Is private w.r.t. the training data
- (Accurate) Approximately minimizes Regularizer + Risk

Talk Outline
1. Privacy-preserving Classification
2. How to define Privacy?

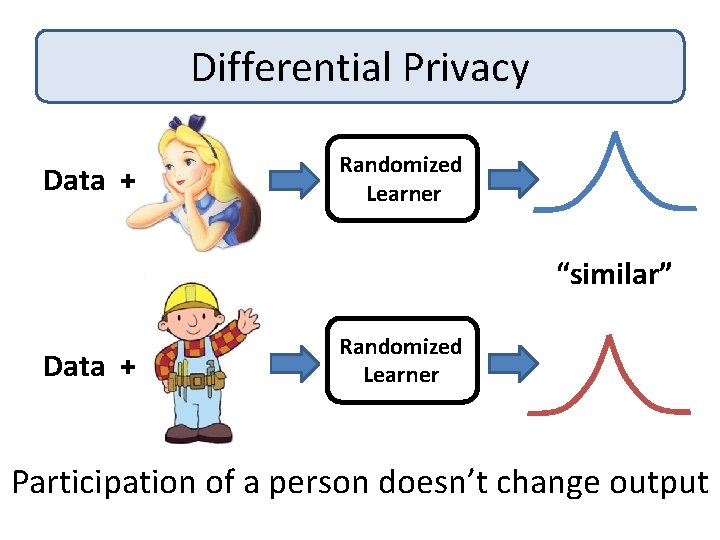

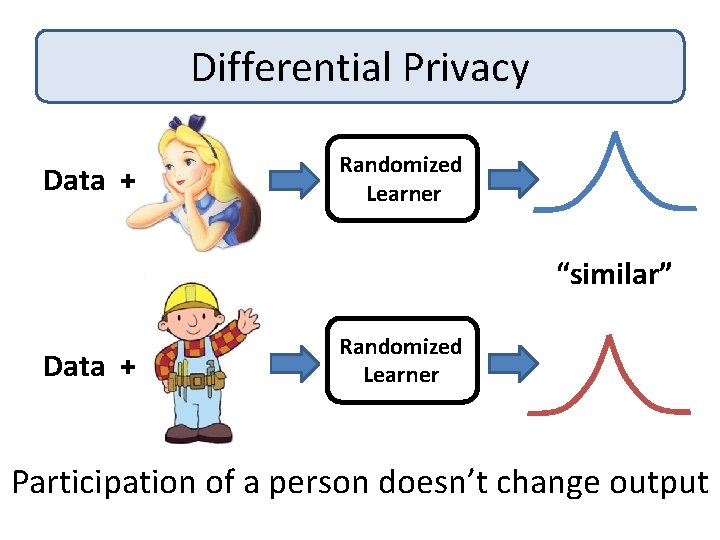

Differential Privacy. [Figure: two datasets that differ in one person are each fed to the randomized learner and produce "similar" outputs.] Participation of a person doesn't change the output.

Differential Privacy: Attacker's View. [Figure: prior knowledge plus a classifier trained on the data leads to a conclusion; prior knowledge plus a classifier trained on the neighboring data leads to the same conclusion.]

![Differential Privacy](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-14.jpg)

Differential Privacy

For all $D_1$, $D_2$ that differ by one person's value: if $A$ is an $\epsilon$-private randomized algorithm and $h$ denotes density, then for all $t$,

$h[A(D_1) = t] \le (1 + \epsilon)\, h[A(D_2) = t]$
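To make the density-ratio condition concrete, here is a small sketch that checks it numerically for a noisy count. It uses Laplace noise, which is a swapped-in textbook mechanism and not the algorithm of this talk, and it compares against the commonly used bound $e^{\epsilon}$, which is close to the slide's $(1 + \epsilon)$ when $\epsilon$ is small. All names and numbers are illustrative assumptions.

```python
import math


def laplace_density(t, center, scale):
    return math.exp(-abs(t - center) / scale) / (2.0 * scale)


def max_density_ratio(f1, f2, epsilon, grid):
    """Largest ratio h[A(D1) = t] / h[A(D2) = t] over a grid of outputs t.

    A(D) = f(D) + Laplace noise with scale 1/epsilon, assuming f changes
    by at most 1 when one person's value changes.
    """
    scale = 1.0 / epsilon
    return max(
        laplace_density(t, f1, scale) / laplace_density(t, f2, scale)
        for t in grid
    )


epsilon = 0.1
grid = [i / 10.0 for i in range(-200, 400)]
# Hypothetical counts on two datasets that differ in one person.
ratio = max_density_ratio(10.0, 11.0, epsilon, grid)
```

The ratio never exceeds $e^{\epsilon}$ however the output $t$ is chosen, which is the sense in which one person's participation barely changes what an observer sees.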

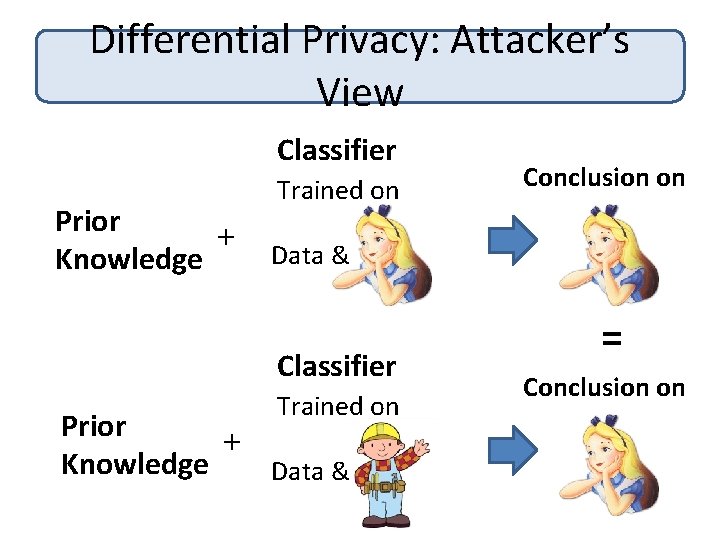

Differential Privacy: Facts
1. Provably strong notion of privacy: an adversary who knows all values in $D$ except one cannot gain confidence on the last value from $A(D)$
2. Good private approximations for many functions, e.g. mean, histograms, contingency tables, ...

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy

ERM with Privacy

Given: Labeled data $(x_i, y_i)$

Find a vector $w$ that:
- (Private) Is private w.r.t. the training data
- (Accurate) Approximately minimizes Regularizer + Risk

Examples: Private Logistic Regression, Private SVM

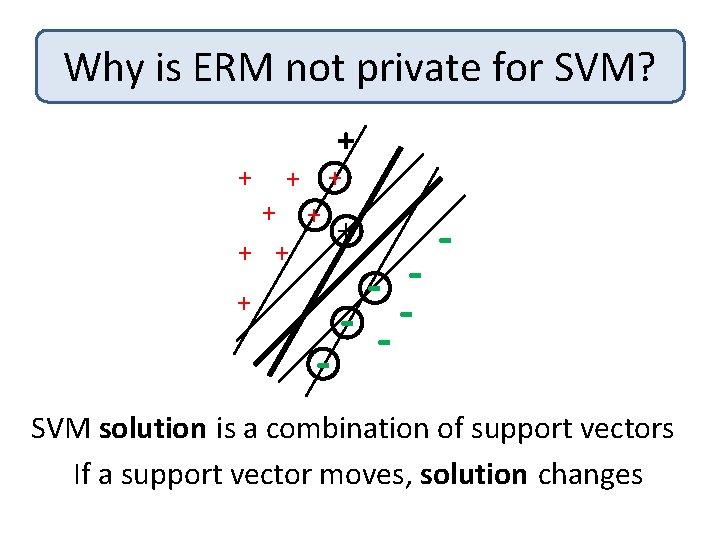

Why is ERM not private for SVM?
- The SVM solution is a combination of support vectors
- If a support vector moves, the solution changes

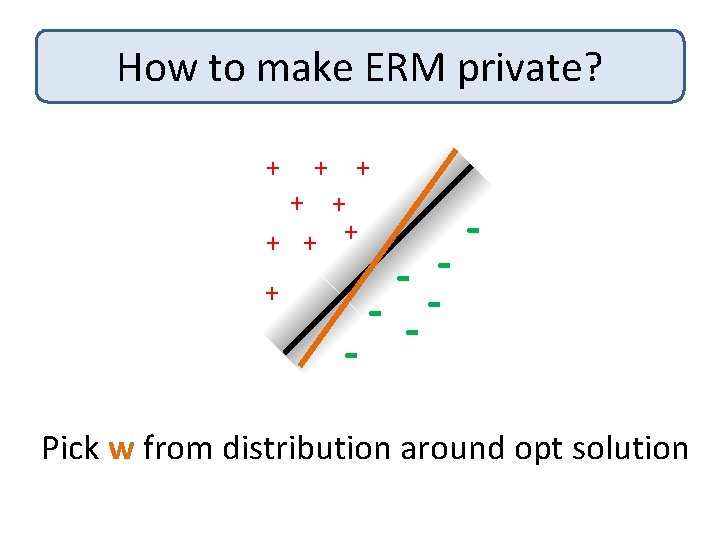

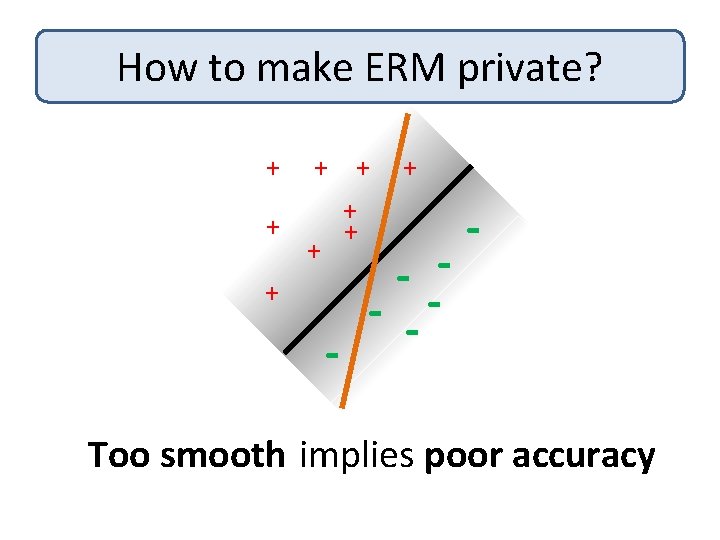

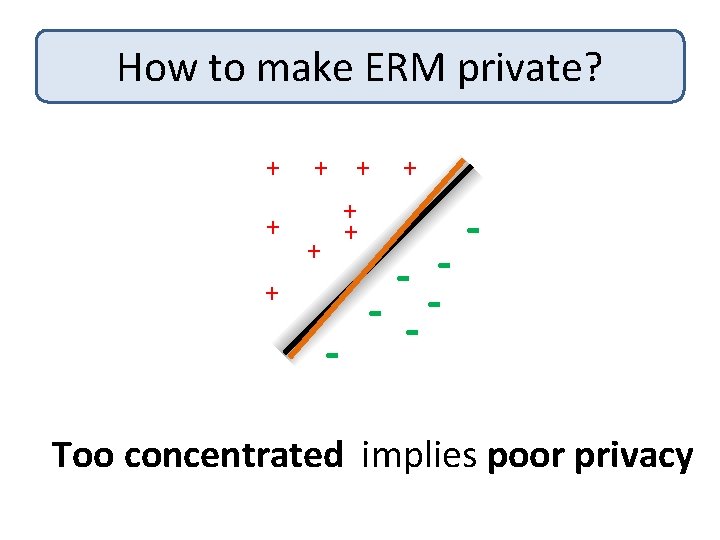

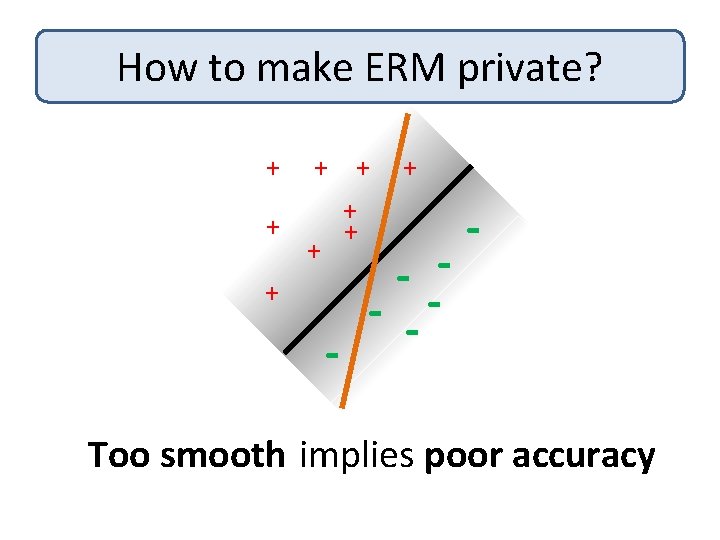

How to make ERM private? + + + + + - - -- - Pick w from distribution around opt solution

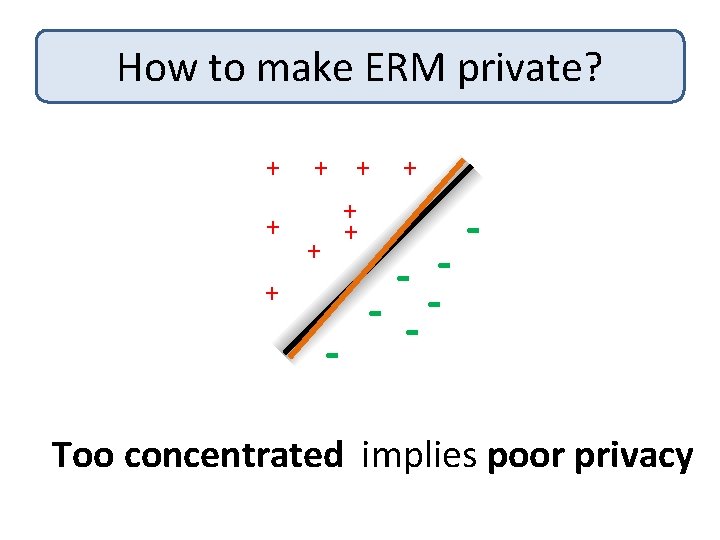

How to make ERM private? + + + + + - - -- - Too concentrated implies poor privacy

How to make ERM private? + + + + + - - -- - Too smooth implies poor accuracy

Pick distribution that gives privacy and accuracy

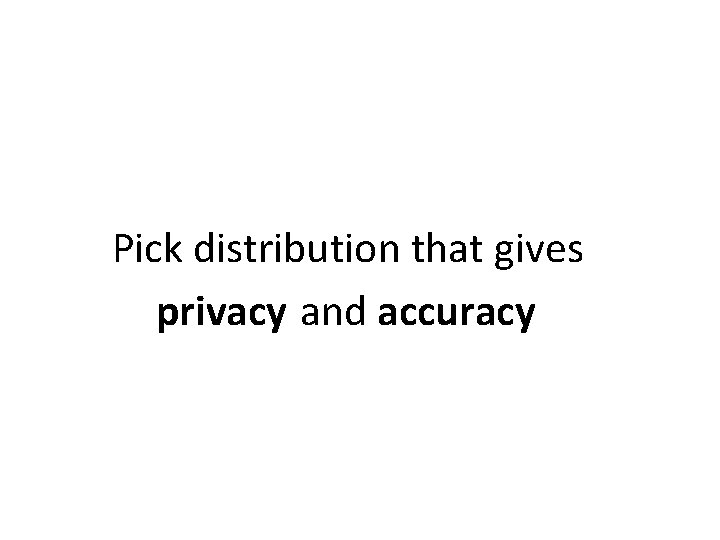

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy
4. Algorithm

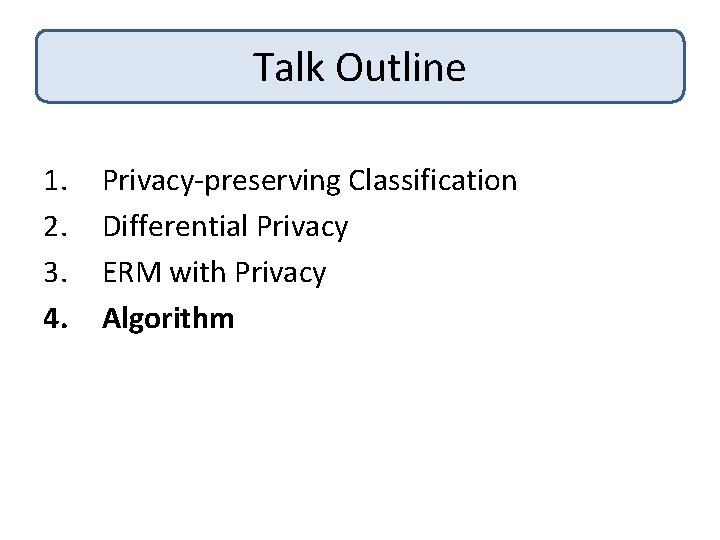

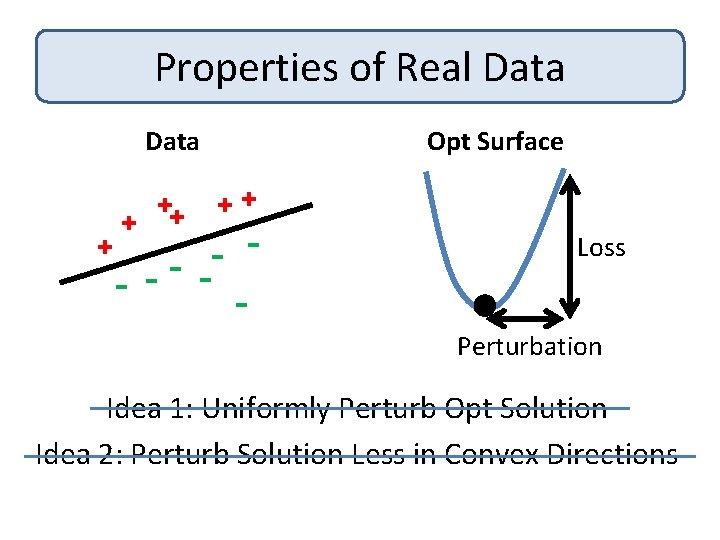

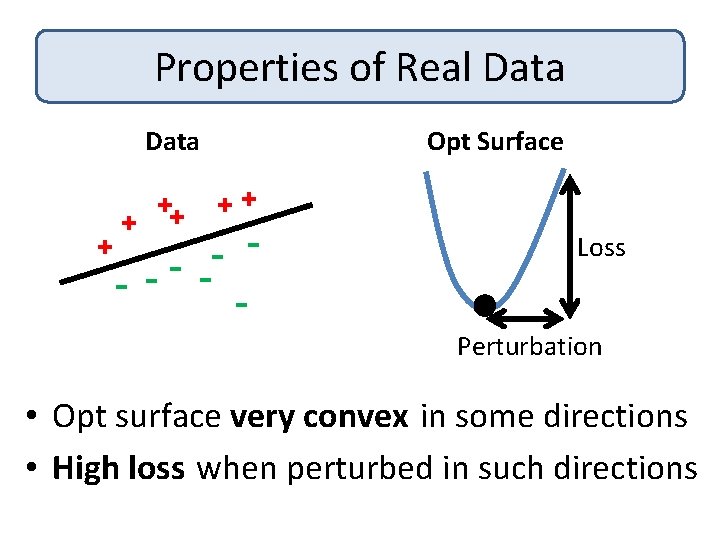

Properties of Real Data + ++ + Opt Surface ++ - Loss Perturbation • Opt surface very convex in some directions • High loss when perturbed in such directions

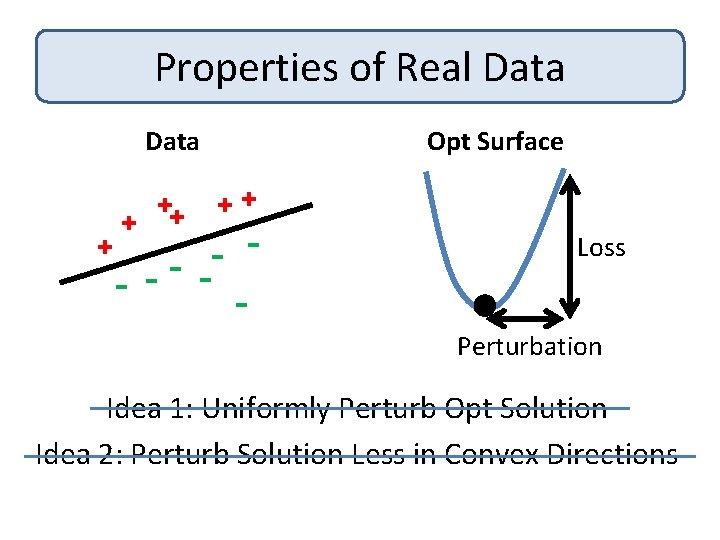

Properties of Real Data + ++ + Opt Surface ++ - Loss Perturbation Idea 1: Uniformly Perturb Opt Solution Idea 2: Perturb Solution Less in Convex Directions

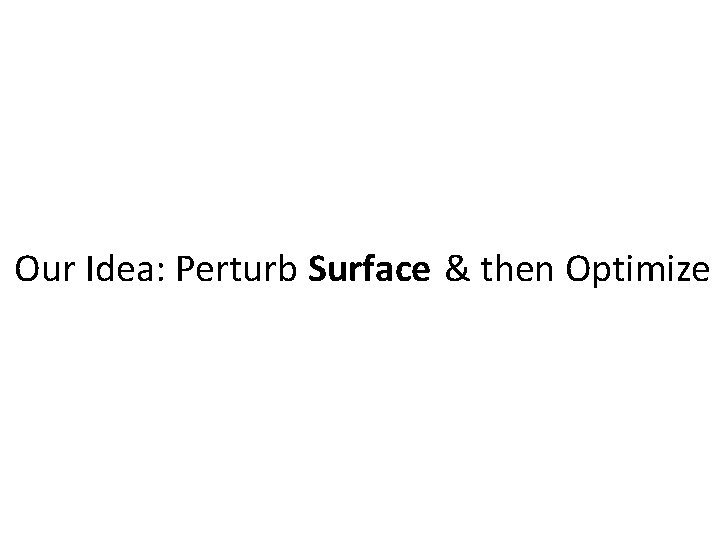

Our Idea: Perturb Surface & then Optimize

Algorithm

Given: Labeled data $(x_i, y_i)$

Find $w$ minimizing: $\frac{1}{2}\lambda |w|^2 + \sum_i L(y_i w^T x_i) + \frac{1}{n} b^T w$

The three terms are the regularizer (model complexity), the risk (training error), and the perturbation (privacy).

Algorithm: Perturbation

$b$ is drawn from:
- Magnitude: $|b| \sim \Gamma(d, 1/\epsilon)$
- Direction: uniform
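The perturb-then-optimize recipe above can be sketched as follows for logistic loss. This is a minimal illustration under stated assumptions, not the authors' implementation: the risk is averaged over n examples, each $|x_i| \le 1$, $\Gamma(d, 1/\epsilon)$ is read as shape $d$ and scale $1/\epsilon$, and plain gradient descent stands in for an exact minimizer (the privacy argument in the talk is about the exact minimizer). The function names and toy data are hypothetical, and the constants follow the slide rather than the published papers.

```python
import math
import random


def sample_perturbation(d, epsilon, rng):
    """Draw b with |b| ~ Gamma(shape=d, scale=1/epsilon) and a uniform direction."""
    magnitude = rng.gammavariate(d, 1.0 / epsilon)
    # A normalized standard Gaussian vector is uniform on the unit sphere.
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    g_norm = math.sqrt(sum(v * v for v in g))
    return [magnitude * v / g_norm for v in g]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def perturbed_gradient(w, X, y, lam, b):
    """Gradient of 0.5*lam*|w|^2 + (1/n) sum_i log(1+exp(-y_i w.x_i)) + b.w/n."""
    n = len(X)
    grad = [lam * wj + bj / n for wj, bj in zip(w, b)]
    for xi, yi in zip(X, y):
        margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
        coeff = -yi * sigmoid(-margin) / n
        for j, xj in enumerate(xi):
            grad[j] += coeff * xj
    return grad


def private_logistic_regression(X, y, lam, epsilon, rng, steps=2000, lr=0.1):
    """Sample b once, then minimize the perturbed objective by gradient descent."""
    d = len(X[0])
    b = sample_perturbation(d, epsilon, rng)
    w = [0.0] * d
    for _ in range(steps):
        grad = perturbed_gradient(w, X, y, lam, b)
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w, b


rng = random.Random(0)
X = [[0.6, 0.8], [-0.6, -0.8], [0.8, -0.6], [-1.0, 0.0]]  # each |x_i| <= 1
y = [1, -1, 1, -1]
w_private, b = private_logistic_regression(X, y, lam=0.5, epsilon=1.0, rng=rng)
final_grad = perturbed_gradient(w_private, X, y, lam=0.5, b=b)
```

The only change relative to ordinary regularized logistic regression is the extra linear term $b^T w / n$, so any convex solver that handles the non-private objective can be reused.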

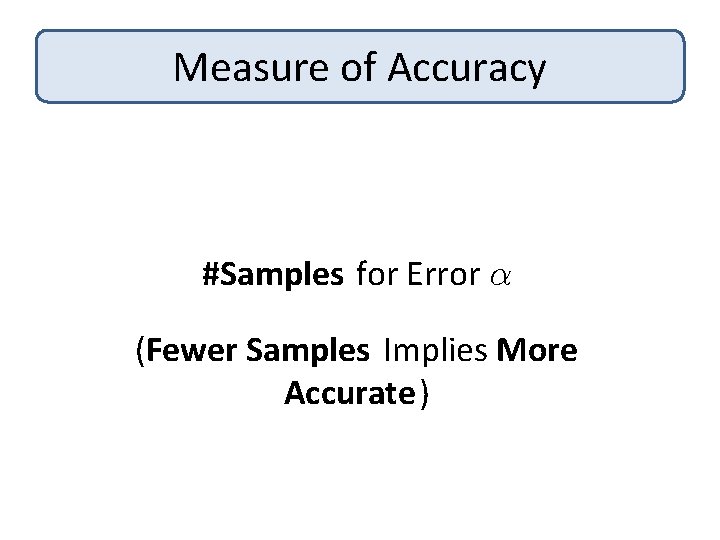

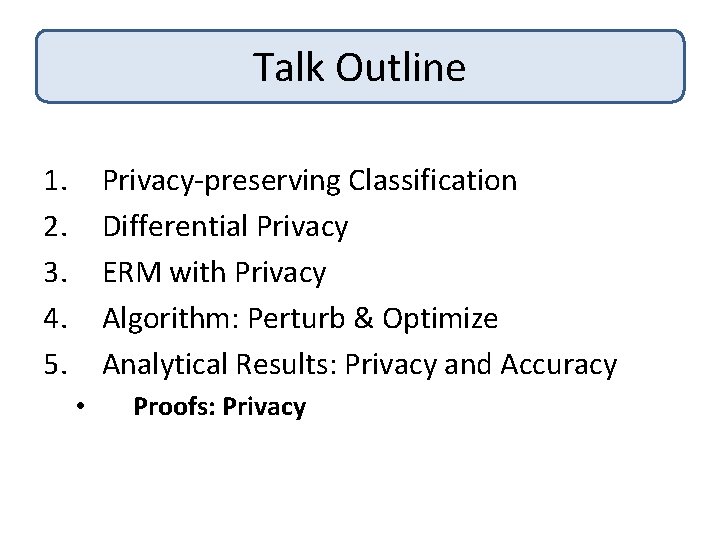

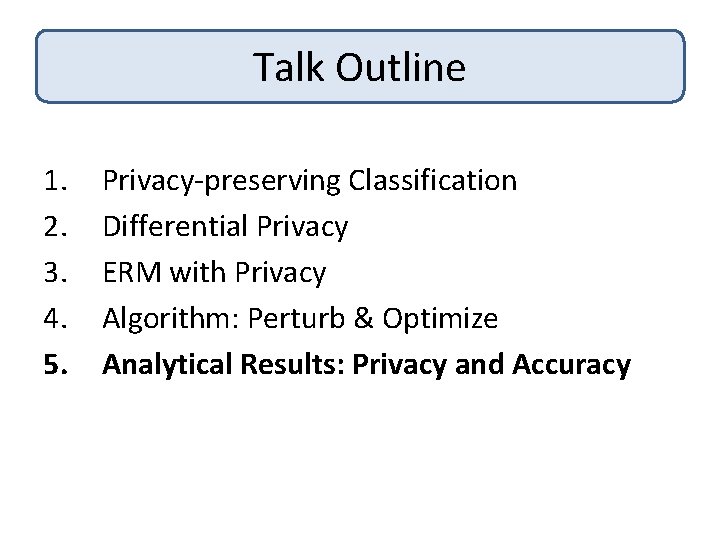

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy
4. Algorithm: Perturb & Optimize
5. Analytical Results: Privacy and Accuracy

![Privacy Guarantees](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-30.jpg)

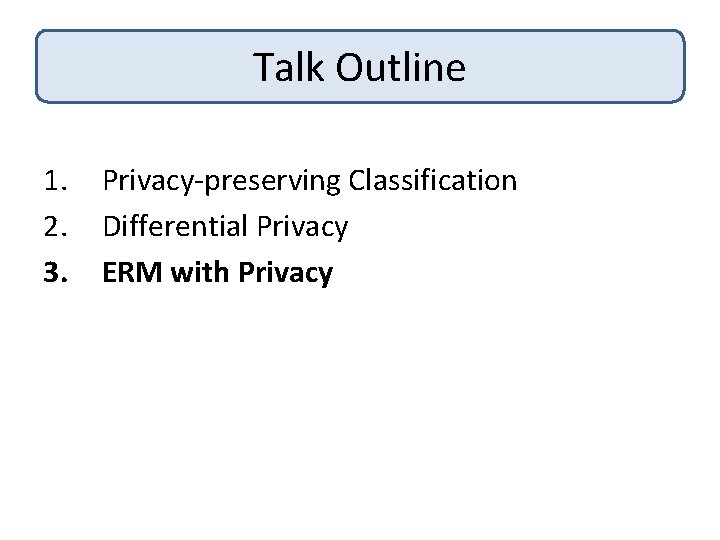

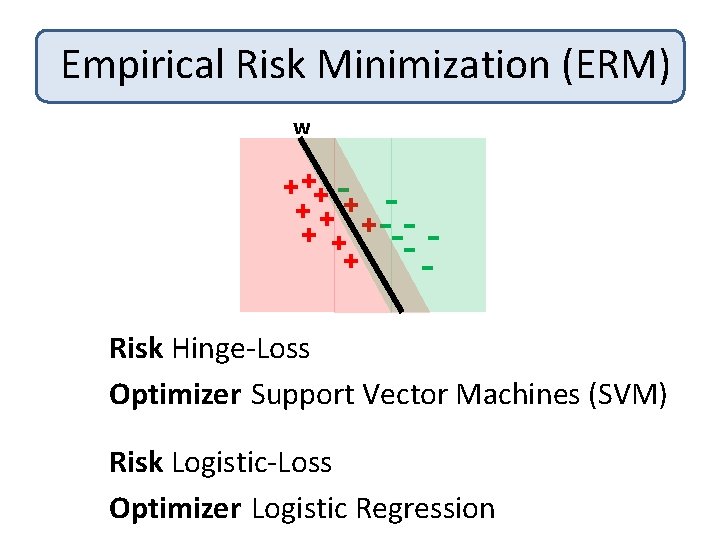

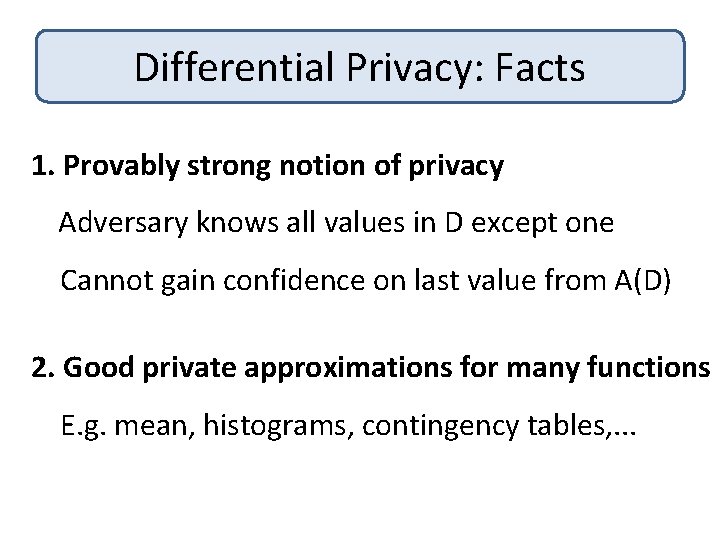

Privacy Guarantees

Theorem [CM 08, SCM 09]: If
1. $L$ is convex and differentiable, and
2. for any $w$ and any $D_1$, $D_2$ differing in one value, $|\nabla L(D_1, w) - \nabla L(D_2, w)| \le 1/n$,

then our algorithm is $\epsilon$-differentially private.

- $L$ = logistic loss: Private Logistic Regression
- $L$ = Huber loss: Private SVM (the hinge loss is non-differentiable)
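The slide does not spell out the Huber loss. A common smoothed hinge of this kind, written here from memory as an assumption rather than as the authors' exact definition, replaces the kink of the hinge at margin 1 with a quadratic piece of half-width `h`:

```python
def huber_hinge(z, h=0.5):
    """Smoothed hinge: 0 above 1+h, linear below 1-h, quadratic in between.

    The parameter h and its default value are illustrative assumptions.
    """
    if z > 1.0 + h:
        return 0.0
    if z < 1.0 - h:
        return 1.0 - z
    return (1.0 + h - z) ** 2 / (4.0 * h)


def huber_hinge_derivative(z, h=0.5):
    """Derivative of huber_hinge; continuous everywhere, unlike the hinge's."""
    if z > 1.0 + h:
        return 0.0
    if z < 1.0 - h:
        return -1.0
    return -(1.0 + h - z) / (2.0 * h)


loss_far = huber_hinge(2.0)        # well-classified point: zero loss
loss_wrong = huber_hinge(0.0)      # matches the hinge value 1 - z
loss_edge = huber_hinge(0.5)       # boundary of the quadratic piece
slope_edge = huber_hinge_derivative(0.5)
```

Because the value and the slope agree where the pieces meet, the loss is convex and differentiable, which is what condition 1 of the theorem asks for.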

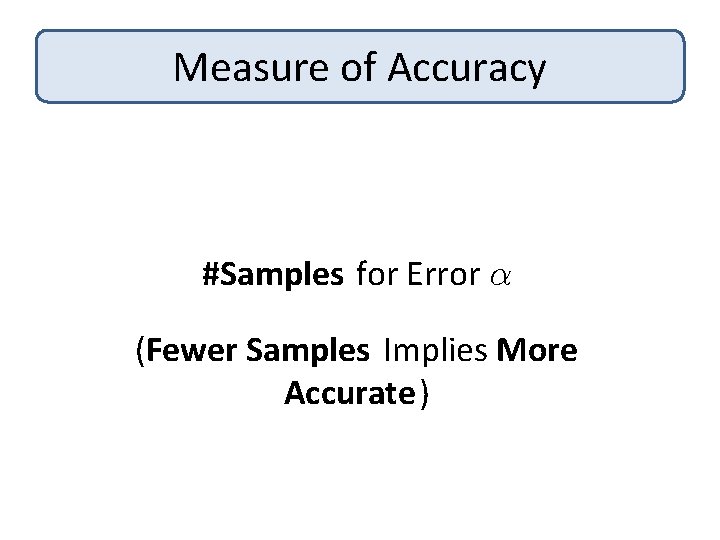

Measure of Accuracy: number of samples needed for error $\alpha$ (fewer samples implies more accurate)

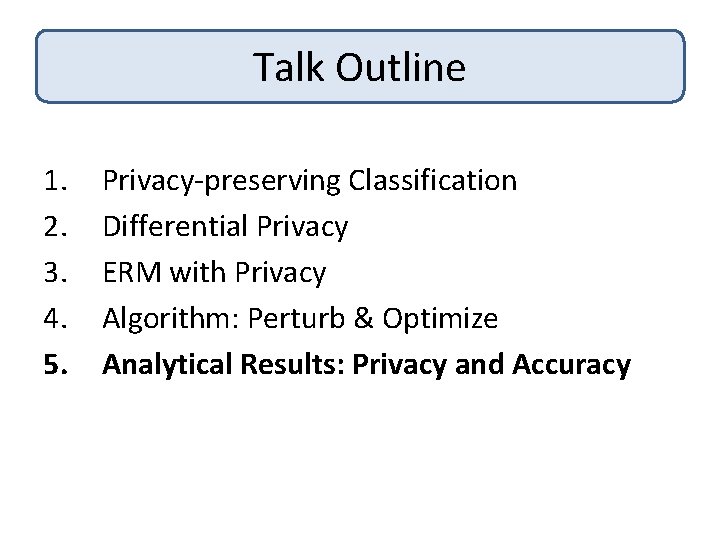

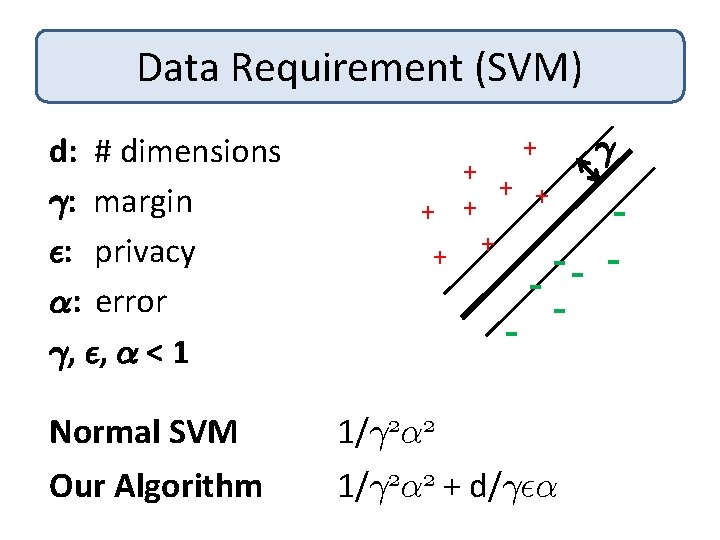

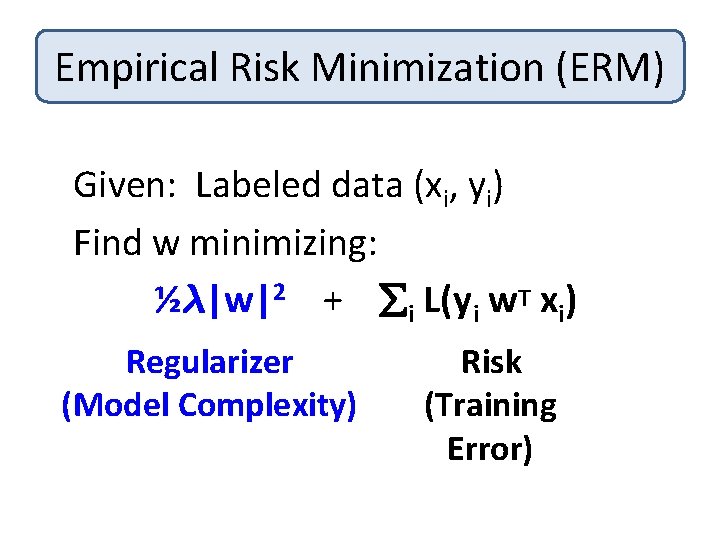

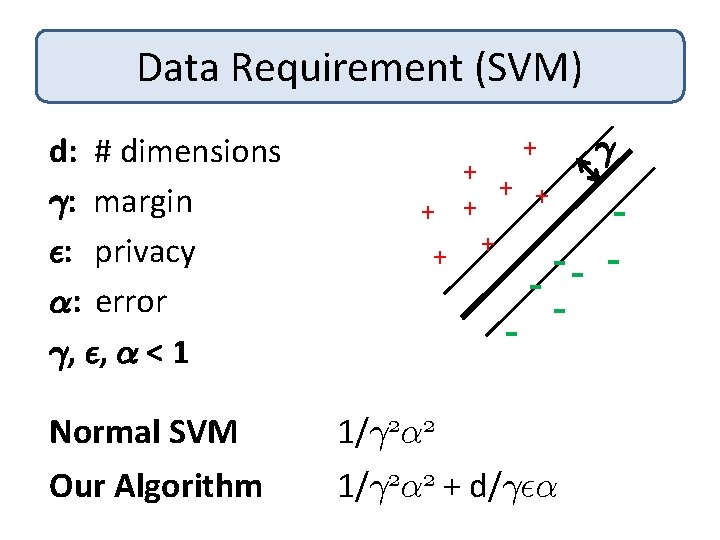

Data Requirement (SVM)

Notation: $d$: number of dimensions; $\gamma$: margin; $\epsilon$: privacy; $\alpha$: error; with $\gamma, \epsilon, \alpha < 1$.

| Normal SVM | Our Algorithm |
|---|---|
| $1/(\gamma^2 \alpha^2)$ | $1/(\gamma^2 \alpha^2) + d/(\gamma \epsilon \alpha)$ |

![Previous Work](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-33.jpg)

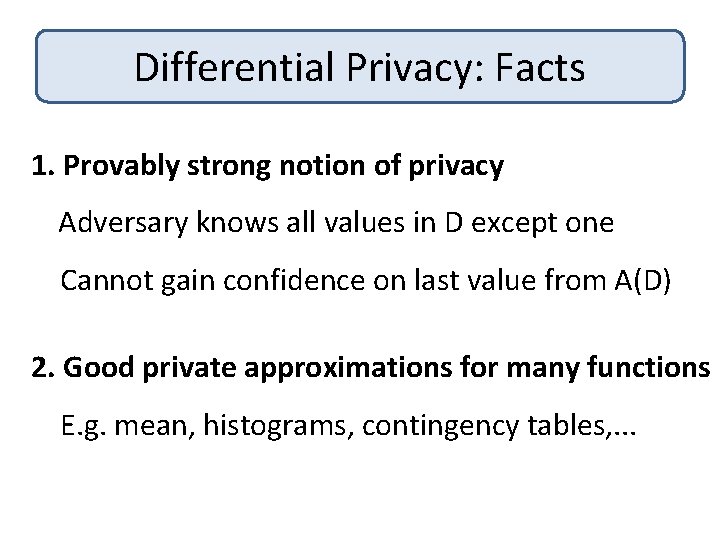

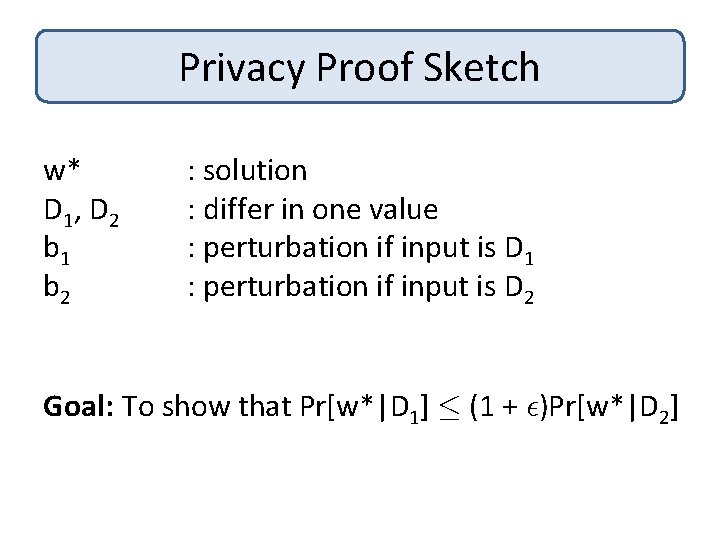

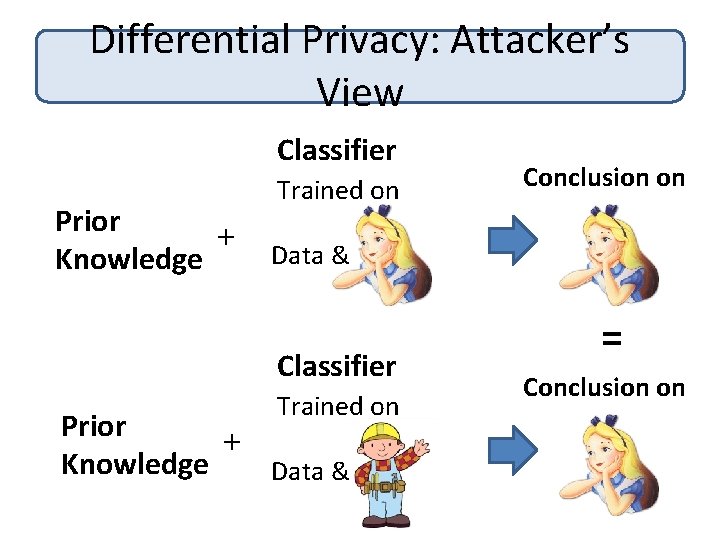

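Previous Work

| Algorithm | Data | Running Time |
|---|---|---|
| [BLR 08], [KL+08] | $d^2/(\alpha^3 \epsilon)$ | Exp(d) |
| Recipe of [DMNS 06] | $d/(\gamma^2 \epsilon \alpha^{1.5})$ | Efficient |
| [CM 08], [SCM 09] | $d/(\gamma \epsilon \alpha)$ | Efficient |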
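To get a feel for how these sample requirements compare, the sketch below plugs in one set of numbers. The formulas are a reading of the slide text, in which the exponents were partly scrambled by extraction, so they should be treated as assumptions; constants are ignored, and the parameter values are arbitrary.

```python
def samples_normal_svm(gamma, alpha):
    return 1.0 / (gamma ** 2 * alpha ** 2)


def samples_objective_perturbation(d, gamma, epsilon, alpha):
    # Non-private term plus the privacy term d / (gamma * epsilon * alpha).
    return samples_normal_svm(gamma, alpha) + d / (gamma * epsilon * alpha)


def samples_dmns_recipe(d, gamma, epsilon, alpha):
    return d / (gamma ** 2 * epsilon * alpha ** 1.5)


def samples_blr_kl(d, epsilon, alpha):
    return d ** 2 / (alpha ** 3 * epsilon)


# Arbitrary illustrative setting: 100 dimensions, all other parameters 0.1.
d, gamma, epsilon, alpha = 100, 0.1, 0.1, 0.1
n_svm = samples_normal_svm(gamma, alpha)
n_ours = samples_objective_perturbation(d, gamma, epsilon, alpha)
n_dmns = samples_dmns_recipe(d, gamma, epsilon, alpha)
n_blr = samples_blr_kl(d, epsilon, alpha)
```

For this setting the ordering is normal SVM, then the perturb-and-optimize algorithm, then the [DMNS 06] recipe, then [BLR 08]/[KL+08], each larger than the one before.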

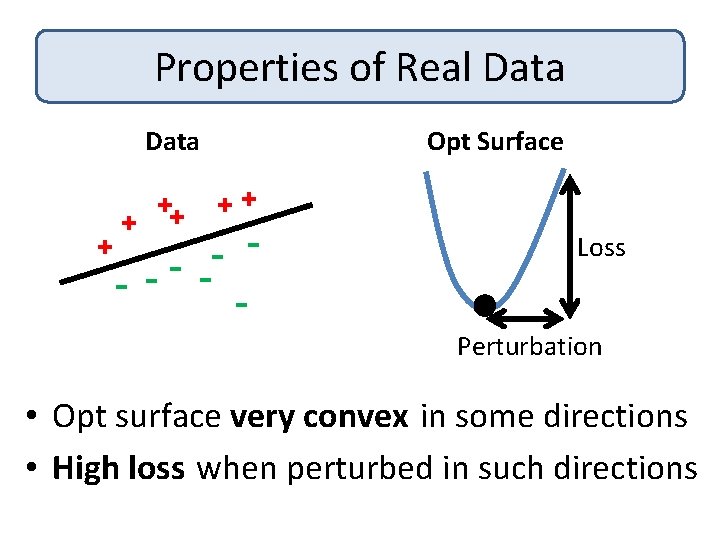

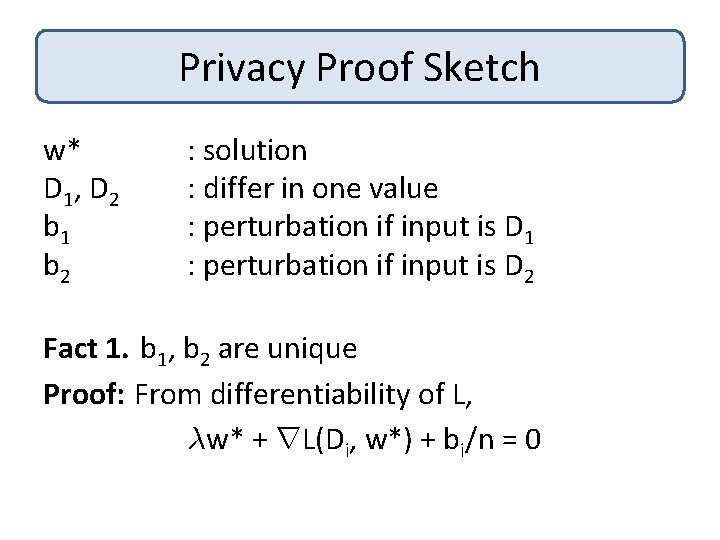

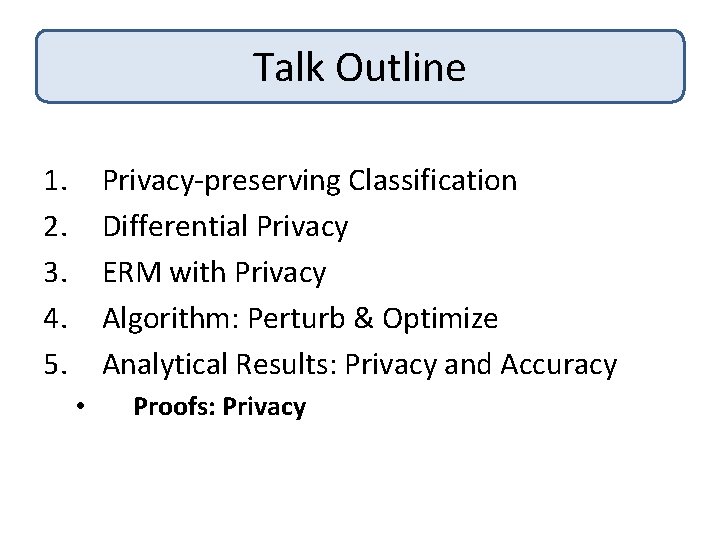

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy
4. Algorithm: Perturb & Optimize
5. Analytical Results: Privacy and Accuracy
   - Proofs: Privacy

![Privacy Guarantees](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-35.jpg)

Privacy Guarantees

Theorem [CM 08, SCM 09]: If
1. $L$ is convex and differentiable, and
2. for any $w$ and any $D_1$, $D_2$ differing in one value, $|\nabla L(D_1, w) - \nabla L(D_2, w)| \le 1/n$,

then our algorithm is $\epsilon$-differentially private.

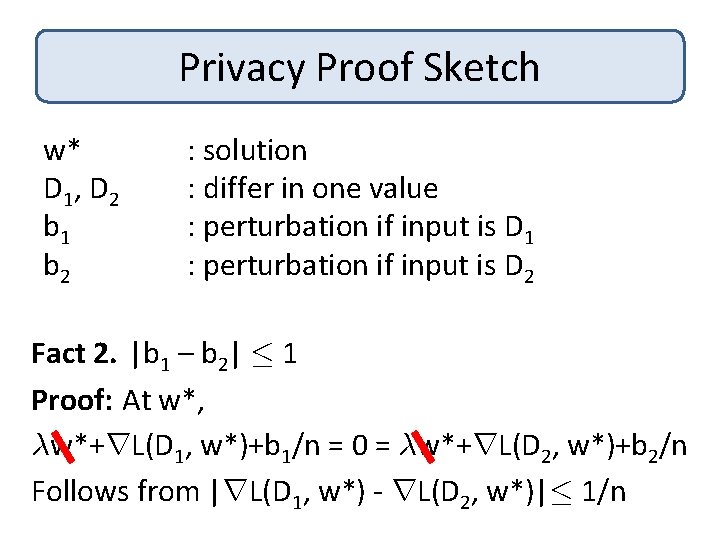

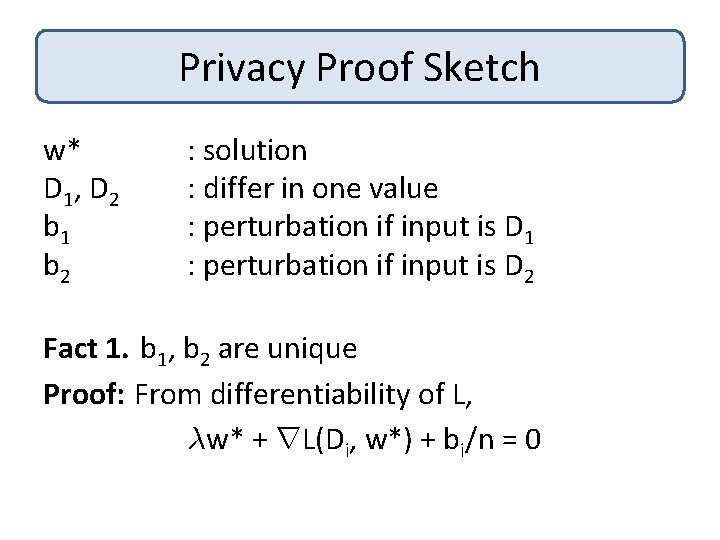

Privacy Proof Sketch

Notation:
- $w^*$: solution
- $D_1, D_2$: differ in one value
- $b_1$: perturbation if input is $D_1$
- $b_2$: perturbation if input is $D_2$

Goal: To show that $\Pr[w^* \mid D_1] \le (1 + \epsilon) \Pr[w^* \mid D_2]$

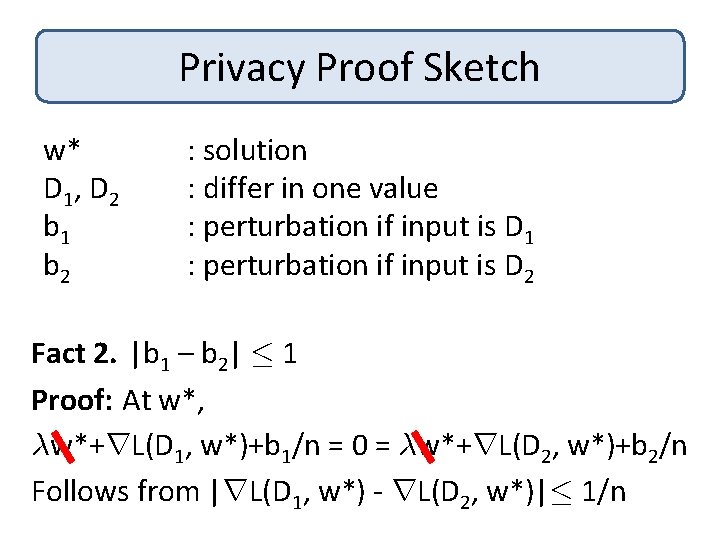

Privacy Proof Sketch

Fact 1. $b_1$, $b_2$ are unique. Proof: From differentiability of $L$, $\lambda w^* + \nabla L(D_i, w^*) + b_i/n = 0$.

Privacy Proof Sketch

Fact 2. $|b_1 - b_2| \le 1$. Proof: At $w^*$, $\lambda w^* + \nabla L(D_1, w^*) + b_1/n = 0 = \lambda w^* + \nabla L(D_2, w^*) + b_2/n$. The bound follows from $|\nabla L(D_1, w^*) - \nabla L(D_2, w^*)| \le 1/n$.

Privacy Proof Sketch

- Fact 1. $b_1$, $b_2$ are unique
- Fact 2. $|b_1 - b_2| \le 1$
- From Fact 2 and a property of $\Gamma$: $\Pr[b_1] \le (1 + \epsilon) \Pr[b_2]$
- From Fact 1 and uniqueness of $w^*$: $\Pr[w^* \mid D_1] \le (1 + \epsilon) \Pr[w^* \mid D_2]$
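The "property of $\Gamma$" step can be spelled out as follows. This is a sketch under an assumption: a magnitude drawn from $\Gamma(d, 1/\epsilon)$ with a uniform direction corresponds to a density $h(b) \propto e^{-\epsilon |b|}$ on $\mathbb{R}^d$. The first inequality is the triangle inequality and the second is Fact 2. Reading the slide's $(1 + \epsilon)$ as the small-$\epsilon$ approximation of $e^{\epsilon}$ is an interpretation, not something the slides state.

```latex
\begin{align*}
\frac{h(b_1)}{h(b_2)}
  &= \frac{e^{-\epsilon |b_1|}}{e^{-\epsilon |b_2|}}
   = e^{\epsilon (|b_2| - |b_1|)} \\
  &\le e^{\epsilon |b_1 - b_2|}
   \le e^{\epsilon}
   \approx 1 + \epsilon \quad \text{for small } \epsilon .
\end{align*}
```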

![Privacy Guarantees](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-40.jpg)

Privacy Guarantees

Theorem [CM 08, SCM 09]: If
1. $L$ is convex and differentiable, and
2. for any $w$ and any $D_1$, $D_2$ differing in one value, $|\nabla L(D_1, w) - \nabla L(D_2, w)| \le 1/n$,

then our algorithm is $\epsilon$-differentially private.

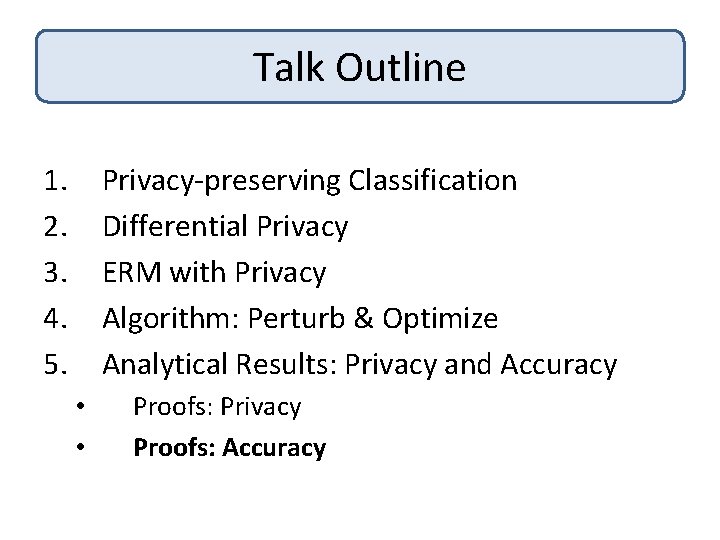

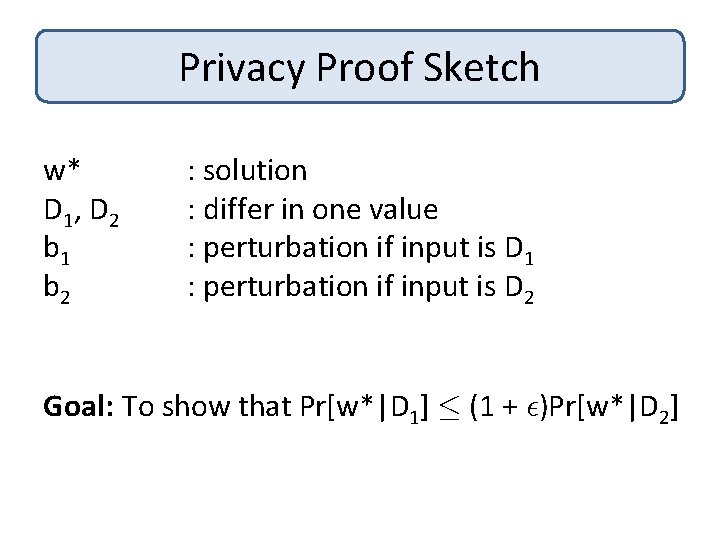

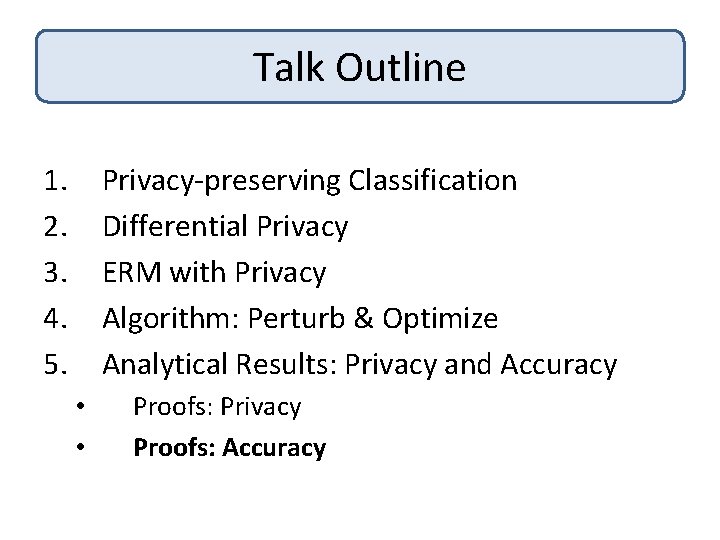

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy
4. Algorithm: Perturb & Optimize
5. Analytical Results: Privacy and Accuracy
   - Proofs: Privacy
   - Proofs: Accuracy

![Accuracy: Proof Sketch](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-42.jpg)

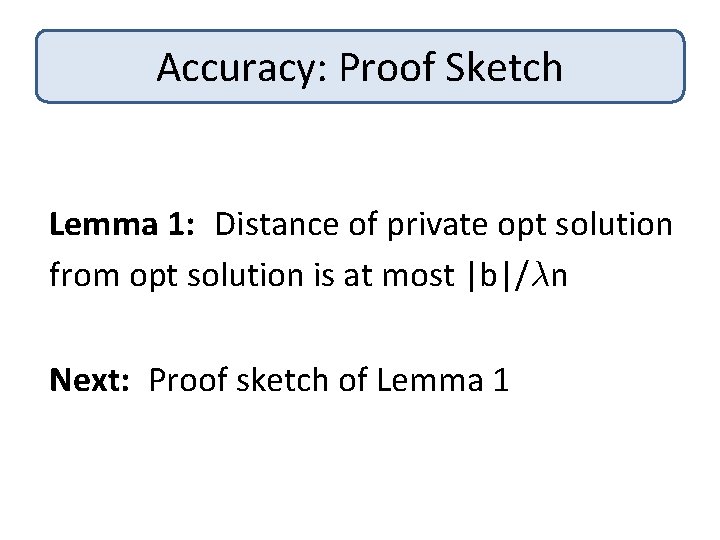

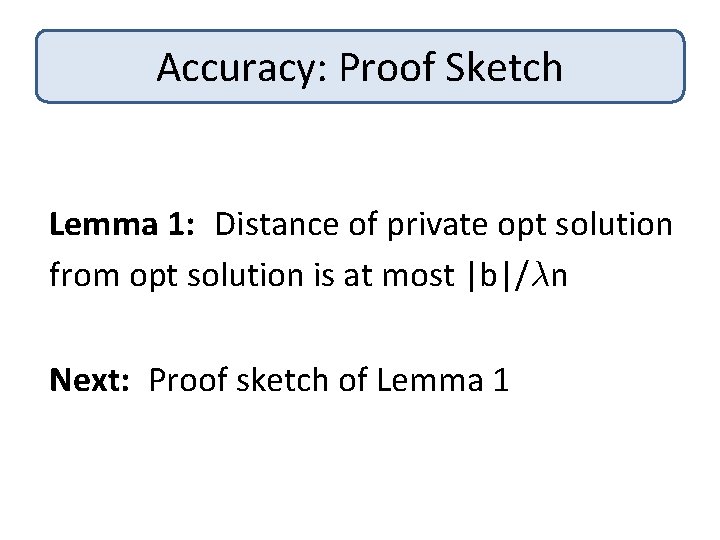

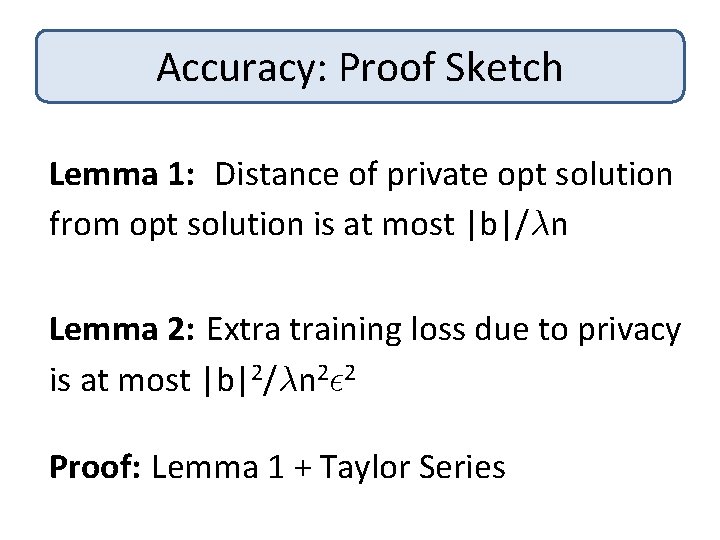

Accuracy: Proof Sketch

Theorem [CM 08, SCM 09]: The number of samples needed for error $\alpha$ is $1/(\gamma^2 \alpha^2) + d/(\gamma \epsilon \alpha)$.

Lemma 1: The distance of the private opt solution from the opt solution is at most $|b|/(\lambda n)$.

Lemma 2: The extra training loss due to privacy is at most $|b|^2/(\lambda n^2 \epsilon^2)$.

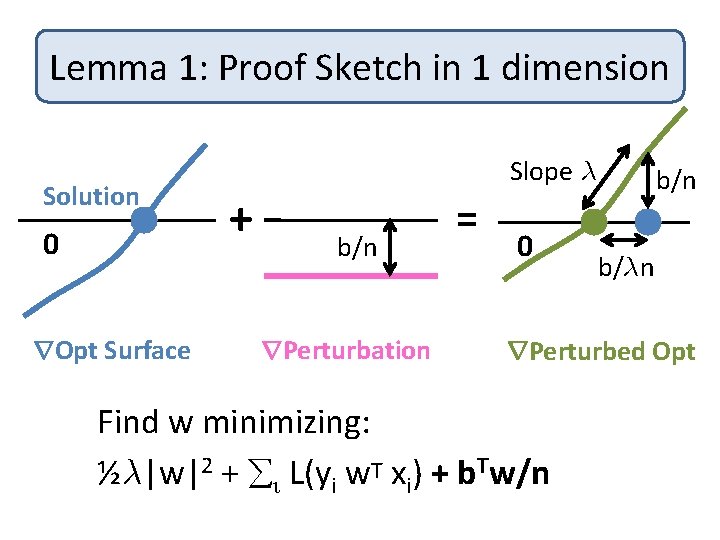

Accuracy: Proof Sketch

Lemma 1: The distance of the private opt solution from the opt solution is at most $|b|/(\lambda n)$.

Next: Proof sketch of Lemma 1

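Lemma 1: Proof Sketch in 1 dimension

[Figure: $\nabla$(Opt Surface), with slope $\lambda$, crosses 0 at the solution; adding $\nabla$(Perturbation) $= b/n$ gives $\nabla$(Perturbed Opt), whose zero crossing is shifted by $b/(\lambda n)$.]

Find $w$ minimizing: $\frac{1}{2}\lambda |w|^2 + \sum_i L(y_i w^T x_i) + b^T w / n$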
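The one-dimensional picture can be checked numerically. The sketch below minimizes the objective with and without the perturbation and compares the shift of the minimizer against $|b|/(\lambda n)$. It assumes logistic loss and a risk averaged over n examples, and it finds each minimizer by bisection on the slope, which is increasing because the objective is strictly convex. The data and parameter values are made up for illustration.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def objective_slope(w, xs, ys, lam, b):
    """Derivative of 0.5*lam*w^2 + (1/n) sum_i log(1+exp(-y_i x_i w)) + b*w/n."""
    n = len(xs)
    data_term = sum(-y * x * sigmoid(-y * x * w) for x, y in zip(xs, ys)) / n
    return lam * w + data_term + b / n


def minimize_1d(xs, ys, lam, b, lo=-100.0, hi=100.0, iters=200):
    """Bisection on the slope; the bracket is wide enough for the toy data below."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if objective_slope(mid, xs, ys, lam, b) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


xs = [0.9, -0.4, 0.7, -0.8]
ys = [1, -1, 1, -1]
lam, b = 0.5, 3.0
w_opt = minimize_1d(xs, ys, lam, 0.0)   # non-private solution
w_priv = minimize_1d(xs, ys, lam, b)    # solution of the perturbed problem
bound = abs(b) / (lam * len(xs))
```

Since the slope of the unperturbed objective grows at least as fast as $\lambda w$, shifting it by $b/n$ moves its zero by at most $|b|/(\lambda n)$, which is what the comparison of `abs(w_priv - w_opt)` with `bound` illustrates.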

Accuracy: Proof Sketch

Lemma 1: The distance of the private opt solution from the opt solution is at most $|b|/(\lambda n)$.

Lemma 2: The extra training loss due to privacy is at most $|b|^2/(\lambda n^2 \epsilon^2)$. Proof: Lemma 1 + Taylor series.

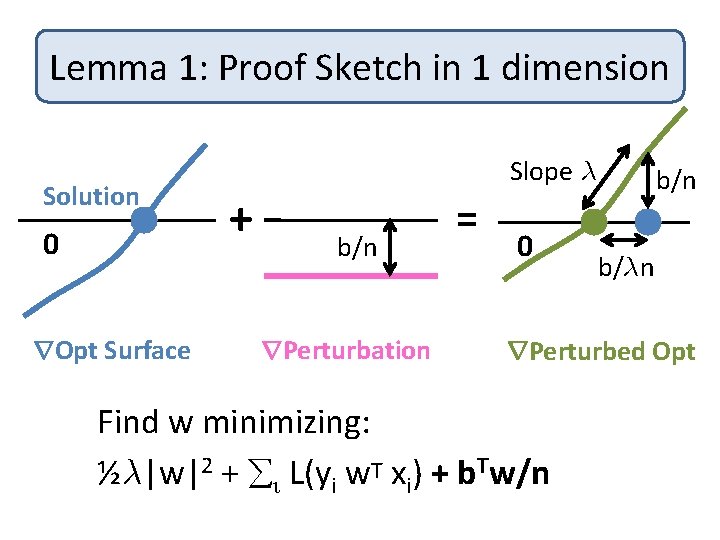

![Accuracy Guarantees](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-46.jpg)

Accuracy Guarantees

Theorem [CM 08, SCM 09]: The number of samples needed for error $\alpha$ is $1/(\gamma^2 \alpha^2) + d/(\gamma \epsilon \alpha)$.

Proof: Lemma 2 and techniques of [SSS 08]

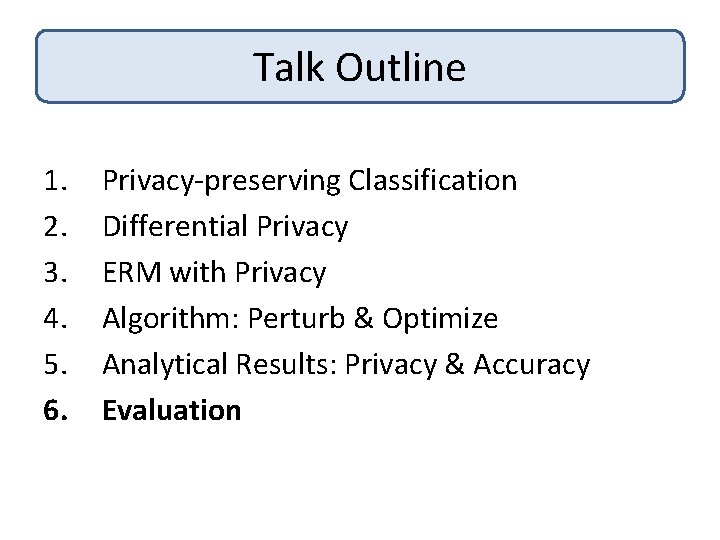

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy
4. Algorithm: Perturb & Optimize
5. Analytical Results: Privacy & Accuracy
6. Evaluation

Experiments

UCI Adult: Census/Income Data
- Demographic data of size 47K
- 105 dimensions (after preprocessing)

Task: Predict if income is above/below 50K

![Privacy-Accuracy Tradeoff](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-49.jpg)

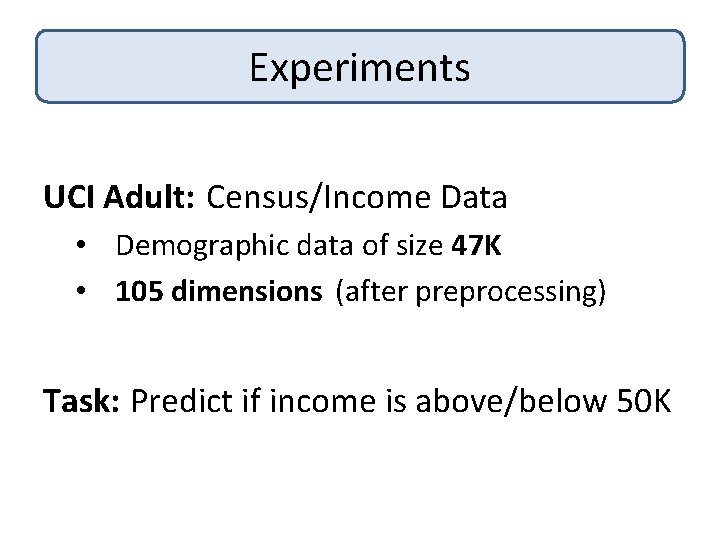

Privacy-Accuracy Tradeoff. [Plot: error versus privacy level $\epsilon$ for Our Algorithm, [DMNS 06], Chance, and Normal SVM.] Smaller $\epsilon$, more privacy.

Experiments

KDDCup 99: Intrusion Detection Data
- 50K network connections
- 119 dimensions (after preprocessing)

Task: Predict if a connection is malicious or not

![Privacy-Accuracy Tradeoff](https://slidetodoc.com/presentation_image_h2/0ff0247e7f9dafbced8a61869b4b863f/image-51.jpg)

Privacy-Accuracy Tradeoff. [Plot: error versus privacy level $\epsilon$ for Our Algorithm, [DMNS 06], and Normal SVM.] Smaller $\epsilon$, more privacy.

Talk Outline
1. Privacy-preserving Classification
2. Differential Privacy
3. ERM with Privacy
4. Algorithm: Perturb & Optimize
5. Analytical Results: Privacy & Accuracy
6. Evaluation: On Adult & KDDCup datasets

Future Work
1. Can we reduce the price of privacy? Find a linear classification algorithm that is:
   - $\epsilon$-private
   - Computationally efficient
   - Requires fewer samples
2. Can we lower-bound the sample requirement for differentially private classification?

References
- Privacy-preserving Logistic Regression, K. Chaudhuri, C. Monteleoni, NIPS 2008
- Differentially-Private Support Vector Machines, A. Sarwate, K. Chaudhuri, C. Monteleoni, in submission, available from arXiv

Questions?
