Static Analysis part 2 Claire Le Goues 2015




2015 (c) C. Le Goues

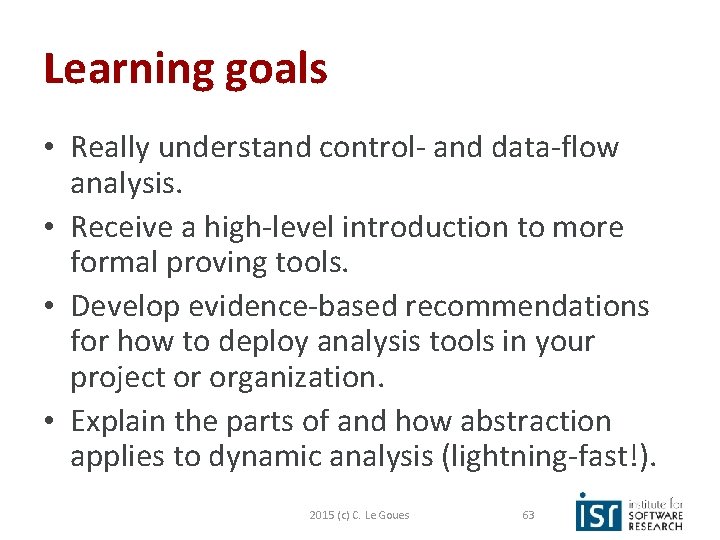

Learning goals

- Really understand control- and data-flow analysis.
- Receive a high-level introduction to more formal proving tools.
- Develop evidence-based recommendations for how to deploy analysis tools in your project or organization.
- Explain how abstraction applies to dynamic analysis (lightning-fast!).

Two fundamental concepts

- Abstraction
  - Elide details of a specific implementation.
  - Capture semantically relevant details; ignore the rest.
- Programs as data
  - Programs are just trees/graphs!
  - …and CS has lots of ways to analyze trees/graphs.

What is Static Analysis?

- Systematic examination of an abstraction of a program's state space.
- Abstraction: don't track everything! (Tracking everything is normal interpretation.)
- Systematic: ensure everything is checked in the same way.

Compare to testing, inspection

- Why might it be hard to test/inspect for:
  - Array bounds errors?
  - Forgetting to re-enable interrupts?
  - Race conditions?

Compare to testing, inspection

- Array bounds, interrupts
  - Testing
    - Errors typically on uncommon paths or uncommon input
    - Difficult to exercise these paths
  - Inspection
    - Non-local and thus easy to miss
      - Array allocation vs. index expression
      - Disable interrupts vs. return statement
- Finding race conditions
  - Testing
    - Cannot force all interleavings
  - Inspection
    - Too many interleavings to consider
    - Check rules like "lock protects x" instead
      - But checking is non-local and thus easy to miss a case

Defects Static Analysis can Catch

- Defects that result from inconsistently following simple, mechanical design rules.
  - Security: buffer overruns, improperly validated input.
  - Memory safety: null dereference, uninitialized data.
  - Resource leaks: memory, OS resources.
  - API protocols: device drivers; real-time libraries; GUI frameworks.
  - Exceptions: arithmetic/library/user-defined.
  - Encapsulation: accessing internal data, calling private functions.
  - Data races: two threads access the same data without synchronization.
- Key: check compliance to simple, mechanical design rules.

The Bad News: Rice's Theorem

"Any nontrivial property about the language recognized by a Turing machine is undecidable." (Henry Gordon Rice, 1953)

Every static analysis is therefore necessarily incomplete, unsound, or not guaranteed to terminate (or several of these).

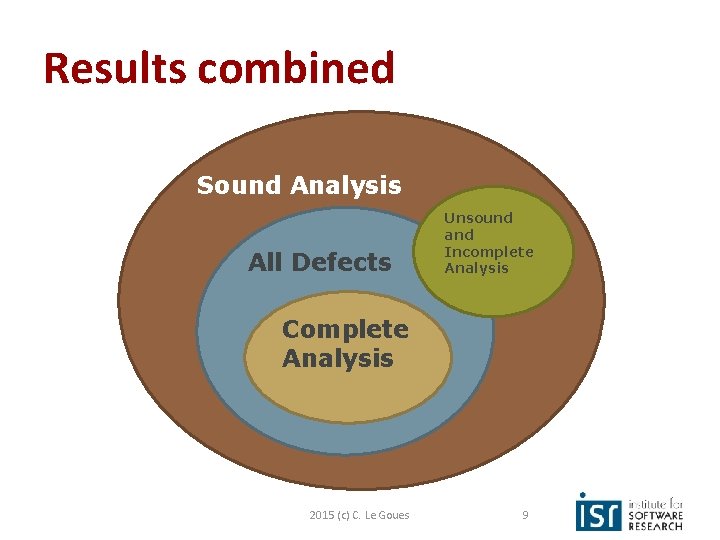

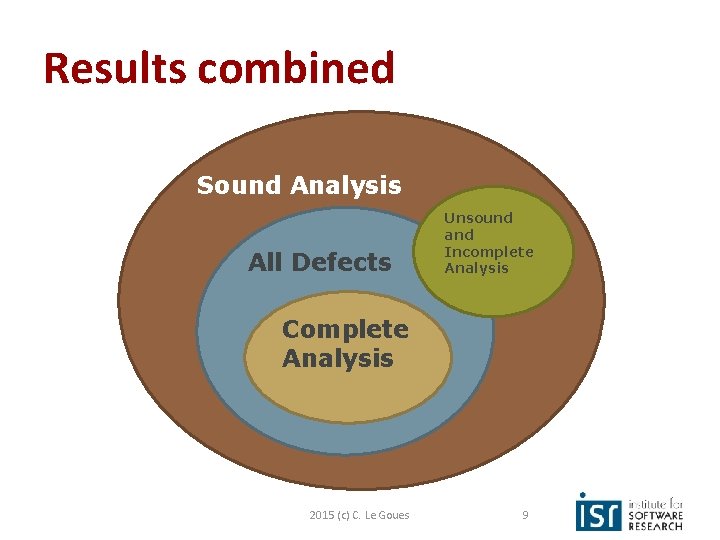

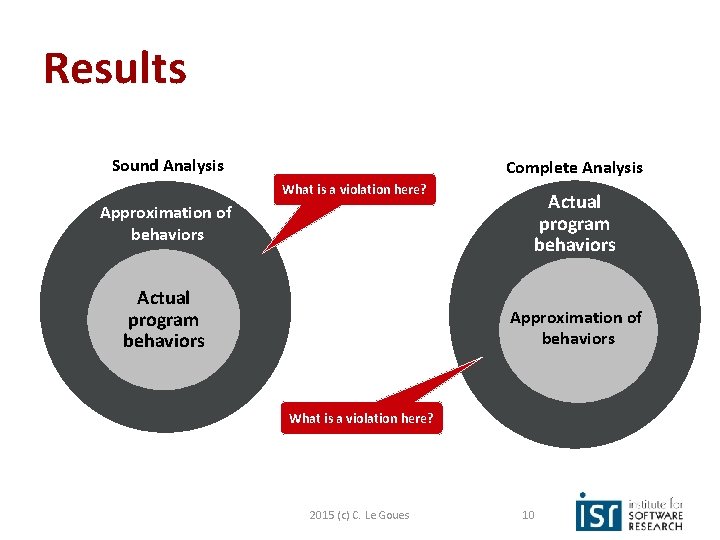

Results combined

Figure: diagram relating "All Defects" to the defects reported by a "Sound Analysis", a "Complete Analysis", and an "Unsound and Incomplete Analysis".

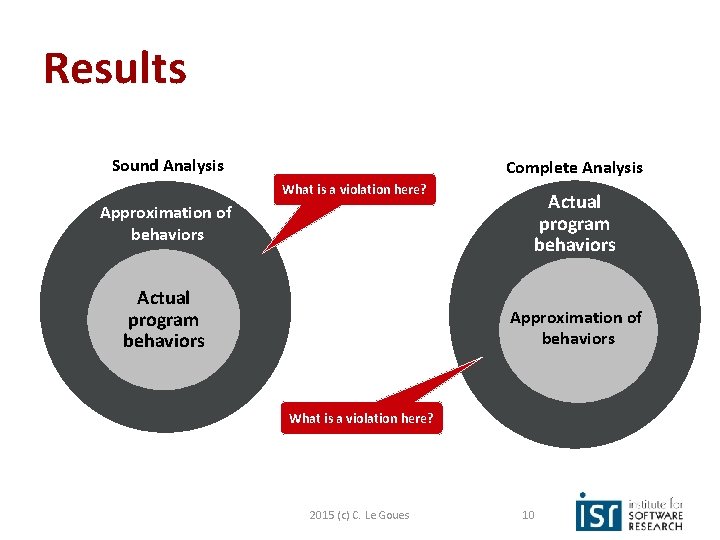

Results

Figure: two panels, "Sound Analysis" and "Complete Analysis", each comparing the "Actual program behaviors" with an "Approximation of behaviors" and asking "What is a violation here?"

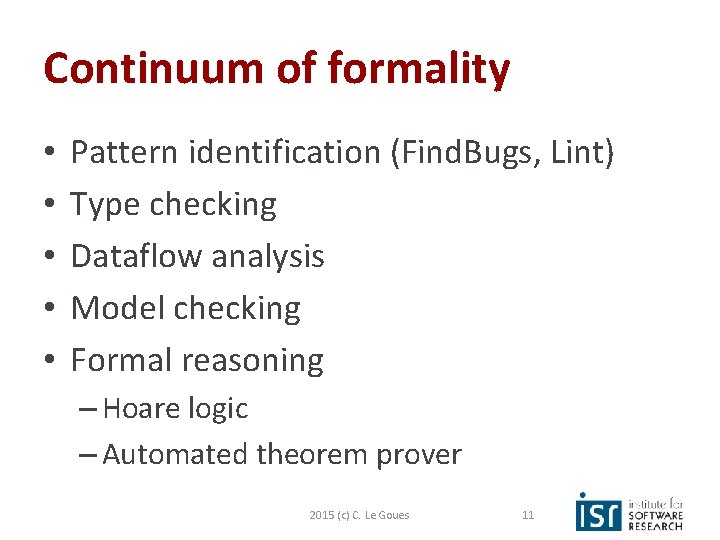

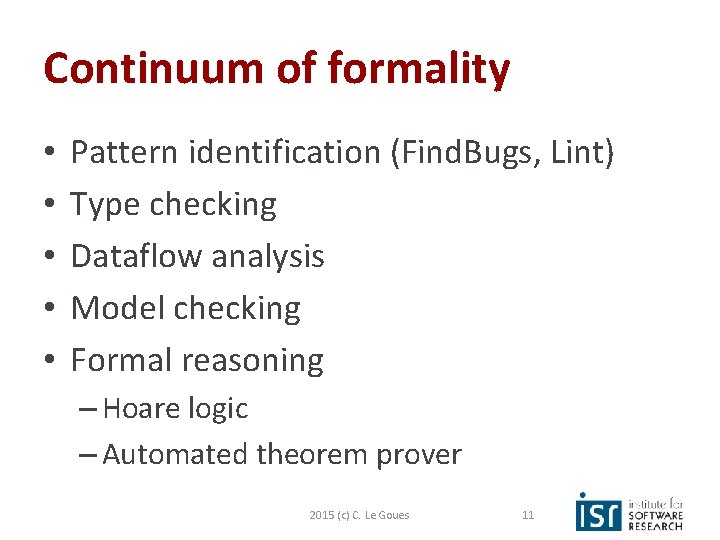

Continuum of formality

- Pattern identification (FindBugs, Lint)
- Type checking
- Dataflow analysis
- Model checking
- Formal reasoning
  - Hoare logic
  - Automated theorem prover

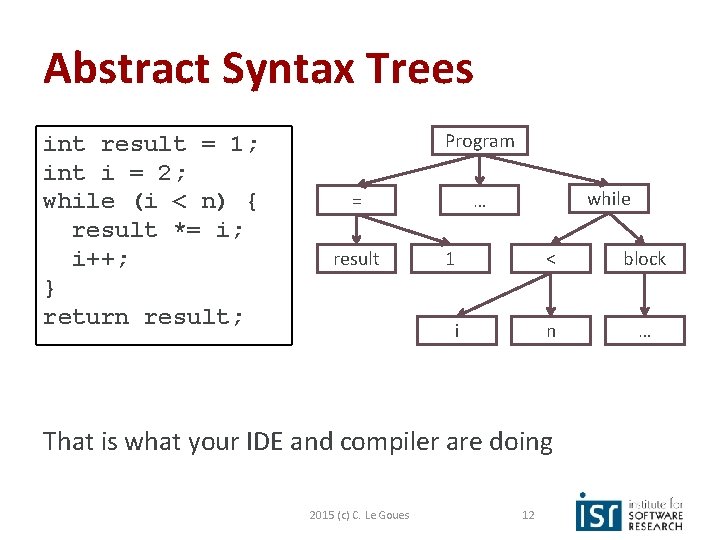

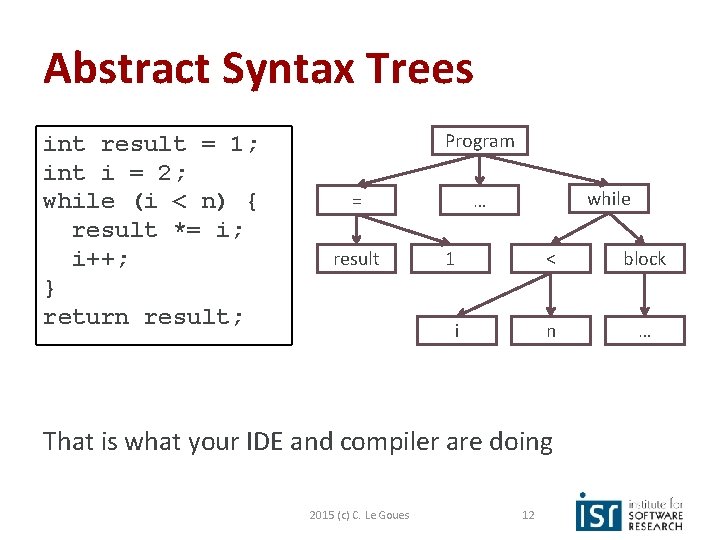

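Abstract Syntax Trees

```java
int result = 1;
int i = 2;
while (i < n) {
    result *= i;
    i++;
}
return result;
```

Figure: the AST for this code, rooted at Program; its children include `=` (with children result and 1) and `while` (with children `<`, whose children are i and n, and the loop block).

That is what your IDE and compiler are doing.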
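To make "programs are just trees" concrete, here is a small Java sketch. The node types (`Num`, `Var`, `BinOp`, `Decl`, `Assign`, `While`, `Return`, `Program`) are hypothetical, not the API of any real compiler or IDE. The sketch builds the tree for the loop above and runs two recursive traversals over it, which is the basic shape of any AST-based analysis. It assumes Java 16+ (records and `instanceof` patterns).

```java
import java.util.List;
import java.util.Set;

// Hypothetical AST node types for a tiny imperative language (illustration only).
interface Node {}
record Num(int value) implements Node {}
record Var(String name) implements Node {}
record BinOp(String op, Node left, Node right) implements Node {}
record Decl(String name, Node init) implements Node {}                // int name = init;
record Assign(String name, String op, Node value) implements Node {}  // e.g. result *= i
record While(Node cond, List<Node> body) implements Node {}
record Return(Node value) implements Node {}
record Program(List<Node> stmts) implements Node {}

class AstDemo {
    // Tree for: int result = 1; int i = 2; while (i < n) { result *= i; i++; } return result;
    static Program factorial() {
        return new Program(List.of(
            new Decl("result", new Num(1)),
            new Decl("i", new Num(2)),
            new While(new BinOp("<", new Var("i"), new Var("n")), List.of(
                new Assign("result", "*=", new Var("i")),
                new Assign("i", "+=", new Num(1)))),      // i++ written as i += 1
            new Return(new Var("result"))));
    }

    // Prints the tree as an S-expression: one recursive case per node type.
    static String show(Node n) {
        if (n instanceof Num num) return Integer.toString(num.value());
        if (n instanceof Var v) return v.name();
        if (n instanceof BinOp b) return "(" + b.op() + " " + show(b.left()) + " " + show(b.right()) + ")";
        if (n instanceof Decl d) return "(decl " + d.name() + " " + show(d.init()) + ")";
        if (n instanceof Assign a) return "(" + a.op() + " " + a.name() + " " + show(a.value()) + ")";
        if (n instanceof While w) return "(while " + show(w.cond()) + " " + showAll("block", w.body()) + ")";
        if (n instanceof Return r) return "(return " + show(r.value()) + ")";
        if (n instanceof Program p) return showAll("program", p.stmts());
        throw new IllegalArgumentException("unknown node: " + n);
    }

    static String showAll(String tag, List<Node> nodes) {
        StringBuilder sb = new StringBuilder("(" + tag);
        for (Node s : nodes) sb.append(' ').append(show(s));
        return sb.append(')').toString();
    }

    // A tiny analysis over the same tree: which variables are ever read?
    static void varsRead(Node n, Set<String> out) {
        if (n instanceof Var v) out.add(v.name());
        else if (n instanceof BinOp b) { varsRead(b.left(), out); varsRead(b.right(), out); }
        else if (n instanceof Decl d) varsRead(d.init(), out);
        else if (n instanceof Assign a) { out.add(a.name()); varsRead(a.value(), out); } // compound ops read their target
        else if (n instanceof While w) { varsRead(w.cond(), out); w.body().forEach(s -> varsRead(s, out)); }
        else if (n instanceof Return r) varsRead(r.value(), out);
        else if (n instanceof Program p) p.stmts().forEach(s -> varsRead(s, out));
    }
}
```

`AstDemo.show(AstDemo.factorial())` yields `(program (decl result 1) (decl i 2) (while (< i n) (block (*= result i) (+= i 1))) (return result))`, and `varsRead` finds `i`, `n`, and `result`.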

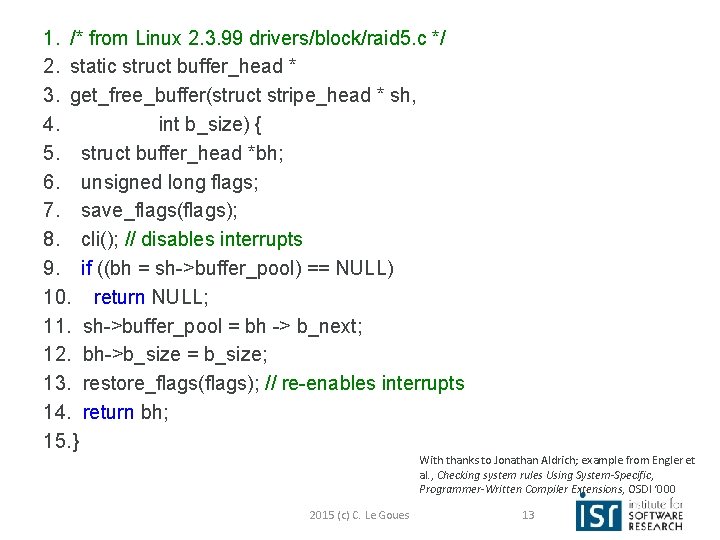

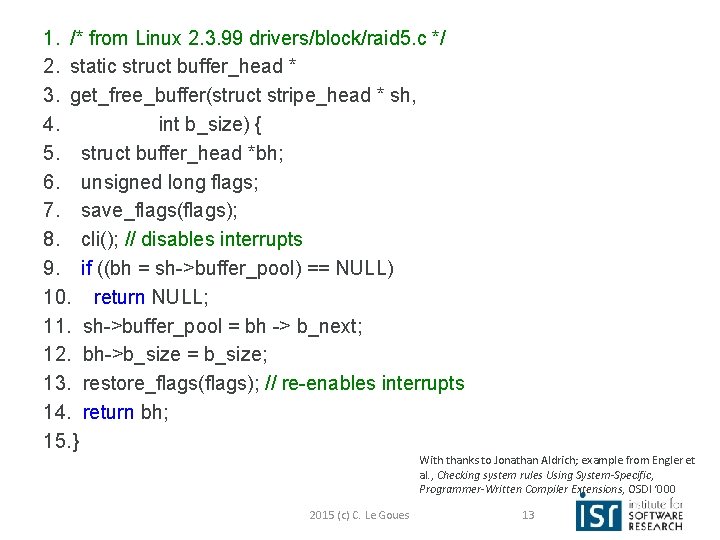

```c
/* from Linux 2.3.99 drivers/block/raid5.c */
static struct buffer_head *
get_free_buffer(struct stripe_head *sh, int b_size) {
    struct buffer_head *bh;
    unsigned long flags;

    save_flags(flags);
    cli();                        // disables interrupts
    if ((bh = sh->buffer_pool) == NULL)
        return NULL;              // bug: returns with interrupts still disabled
    sh->buffer_pool = bh->b_next;
    bh->b_size = b_size;
    restore_flags(flags);         // re-enables interrupts
    return bh;
}
```

With thanks to Jonathan Aldrich; example from Engler et al., Checking System Rules Using System-Specific, Programmer-Written Compiler Extensions, OSDI 2000.

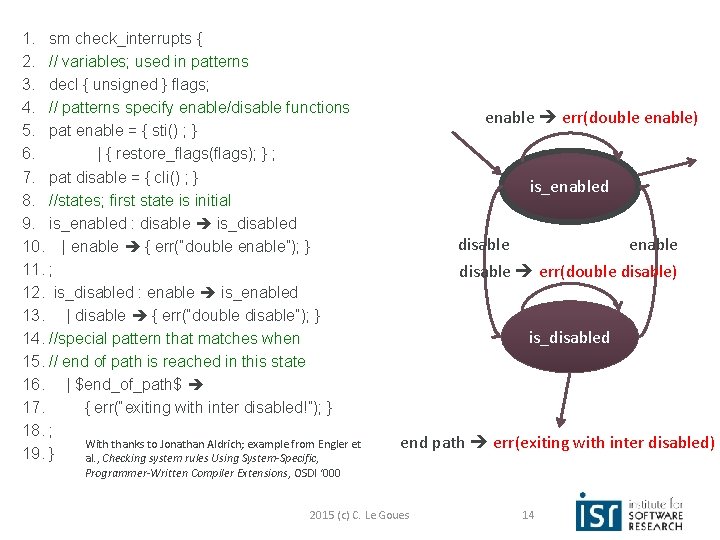

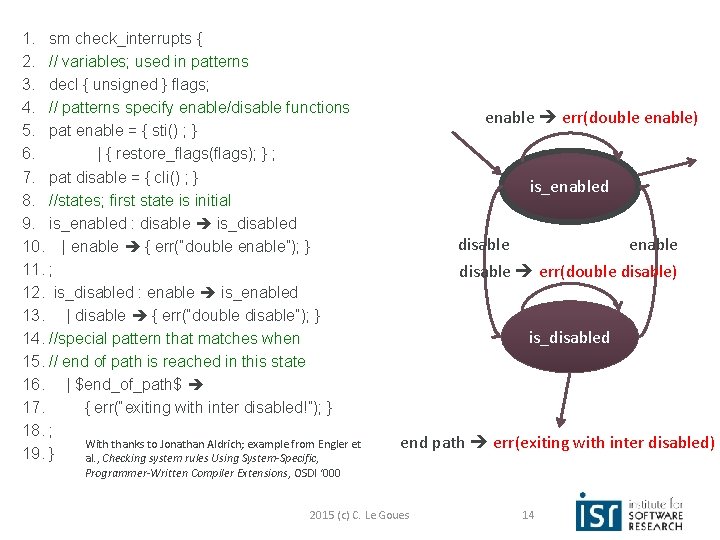

```
sm check_interrupts {
    // variables; used in patterns
    decl { unsigned } flags;

    // patterns specify enable/disable functions
    pat enable = { sti(); }
               | { restore_flags(flags); } ;
    pat disable = { cli(); } ;

    // states; first state is initial
    is_enabled : disable ==> is_disabled
               | enable ==> { err("double enable"); }
               ;
    is_disabled : enable ==> is_enabled
                | disable ==> { err("double disable"); }
                // special pattern that matches when
                // end of path is reached in this state
                | $end_of_path$ ==> { err("exiting with inter disabled!"); }
                ;
}
```

Figure: state diagram with states is_enabled and is_disabled. disable moves is_enabled to is_disabled and enable moves back; a repeated enable reports err(double enable), a repeated disable reports err(double disable), and reaching the end of a path in is_disabled reports err(exiting with inter disabled).

With thanks to Jonathan Aldrich; example from Engler et al., Checking System Rules Using System-Specific, Programmer-Written Compiler Extensions, OSDI 2000.
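For readers unfamiliar with metal, the checker's behavior can be mimicked in plain Java. This is a hypothetical re-implementation of the same two states and three error rules, run over one path given as a list of events. It is not Engler et al.'s tool, and it leaves out the pattern matching that maps source statements such as `cli()` to events.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical Java version of the check_interrupts state machine (not the metal tool).
class InterruptChecker {
    enum Event { DISABLE, ENABLE, END_OF_PATH }   // cli(); sti()/restore_flags(); end of a path
    enum State { IS_ENABLED, IS_DISABLED }

    // Runs the state machine over one path and returns the errors it reports.
    static List<String> check(List<Event> path) {
        List<String> errors = new ArrayList<>();
        State state = State.IS_ENABLED;           // first state is initial
        for (Event e : path) {
            switch (state) {
                case IS_ENABLED:
                    if (e == Event.DISABLE) state = State.IS_DISABLED;
                    else if (e == Event.ENABLE) errors.add("double enable");
                    break;                        // END_OF_PATH while enabled is fine
                case IS_DISABLED:
                    if (e == Event.ENABLE) state = State.IS_ENABLED;
                    else if (e == Event.DISABLE) errors.add("double disable");
                    else errors.add("exiting with inter disabled!");   // END_OF_PATH
                    break;
            }
        }
        return errors;
    }
}
```

The early `return NULL` path in `get_free_buffer` corresponds to `[DISABLE, END_OF_PATH]`, for which `check` reports `exiting with inter disabled!`; the normal path `[DISABLE, ENABLE, END_OF_PATH]` reports nothing.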

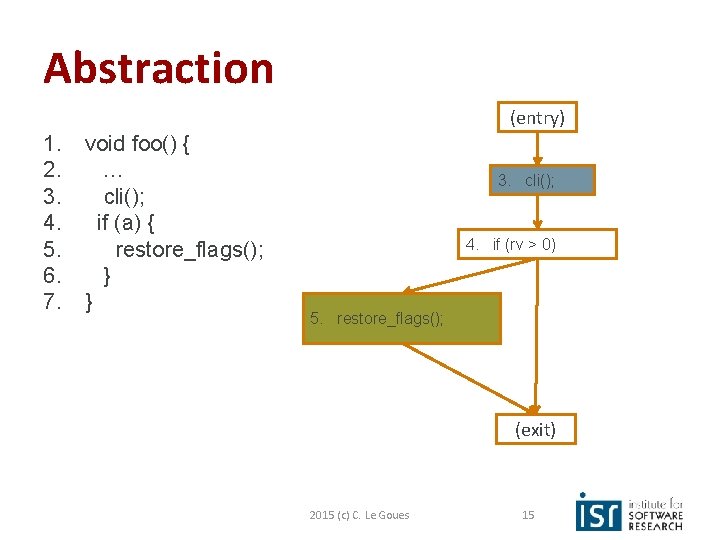

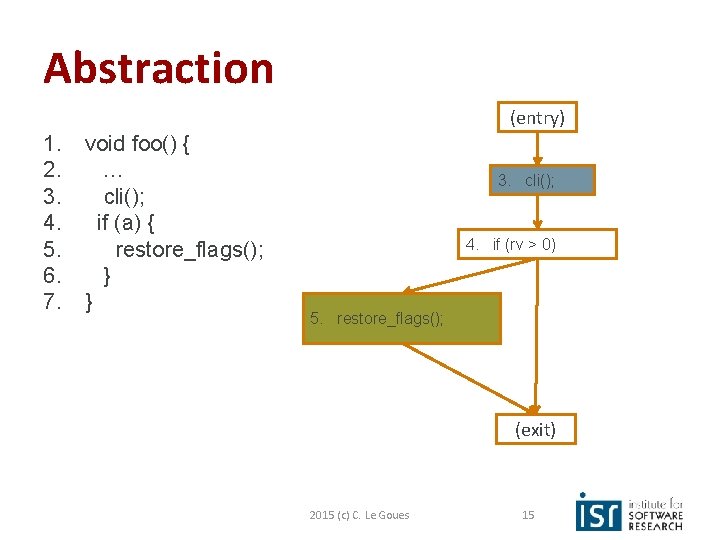

Abstraction

```c
void foo() {             // 1
    …                    // 2
    cli();               // 3
    if (a) {             // 4
        restore_flags(); // 5
    }                    // 6
}                        // 7
```

Figure: the abstracted control-flow graph, with nodes (entry), 3. cli();, 4. if (rv > 0), 5. restore_flags();, and (exit).
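The point of the abstraction is that the checker only needs the statements it cares about and the edges between them. A minimal Java sketch, assuming the control-flow graph is written out by hand as an adjacency map (a real tool would build it from the AST): it enumerates every entry-to-exit path, tracks whether interrupts are enabled along each one, and keeps the paths that exit with them disabled. The names are hypothetical, and because the code and the graph disagree on the condition (`a` vs. `rv > 0`), node 4 is just labeled `4: if`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: path-by-path checking over the abstracted CFG of foo().
class PathExplorer {
    // Successor lists; node 4 has a true edge (to 5) and a false edge (straight to exit).
    static final Map<String, List<String>> CFG = Map.of(
        "entry", List.of("3: cli()"),
        "3: cli()", List.of("4: if"),
        "4: if", List.of("5: restore_flags()", "exit"),
        "5: restore_flags()", List.of("exit"),
        "exit", List.of());

    // Enumerates every entry-to-exit path by DFS (this CFG is acyclic, so it terminates).
    static List<List<String>> paths(String node, List<String> prefix) {
        List<String> path = new ArrayList<>(prefix);
        path.add(node);
        if (node.equals("exit")) return List.of(path);
        List<List<String>> result = new ArrayList<>();
        for (String succ : CFG.get(node)) result.addAll(paths(succ, path));
        return result;
    }

    // Returns the paths on which interrupts are still disabled when they reach exit.
    static List<List<String>> badPaths() {
        List<List<String>> bad = new ArrayList<>();
        for (List<String> p : paths("entry", List.of())) {
            boolean enabled = true;
            for (String n : p) {
                if (n.contains("cli()")) enabled = false;
                if (n.contains("restore_flags()")) enabled = true;
            }
            if (!enabled) bad.add(p);
        }
        return bad;
    }
}
```

`badPaths()` returns the single path `[entry, 3: cli(), 4: if, exit]`, where the condition is false and `restore_flags()` is skipped. Enumerating paths is exponential in the number of branches; the dataflow analysis that follows avoids this by merging abstract states where paths join.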

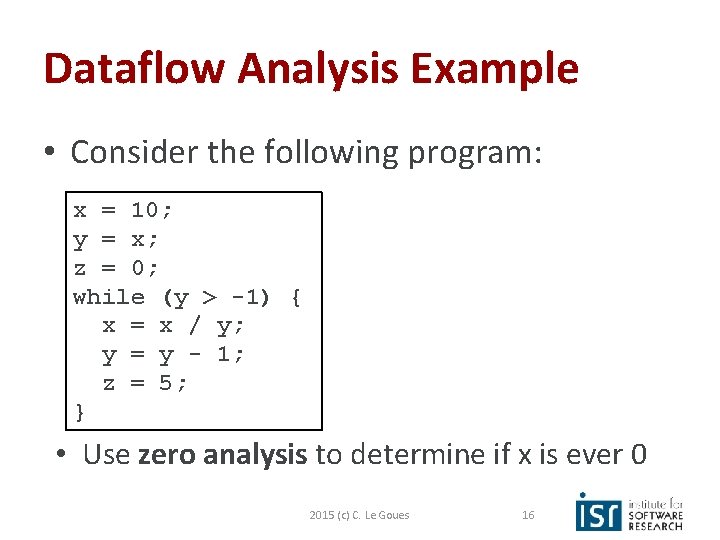

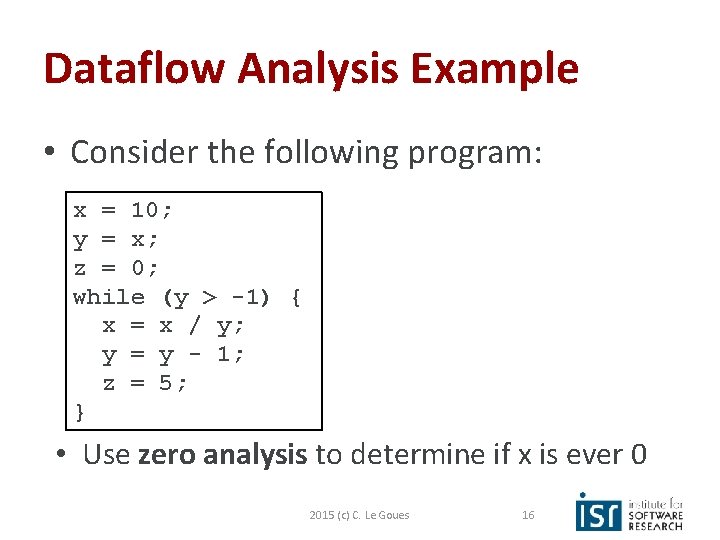

Dataflow Analysis Example

- Consider the following program:

```java
x = 10;
y = x;
z = 0;
while (y > -1) {
    x = x / y;
    y = y - 1;
    z = 5;
}
```

- Use zero analysis to determine if x is ever 0.

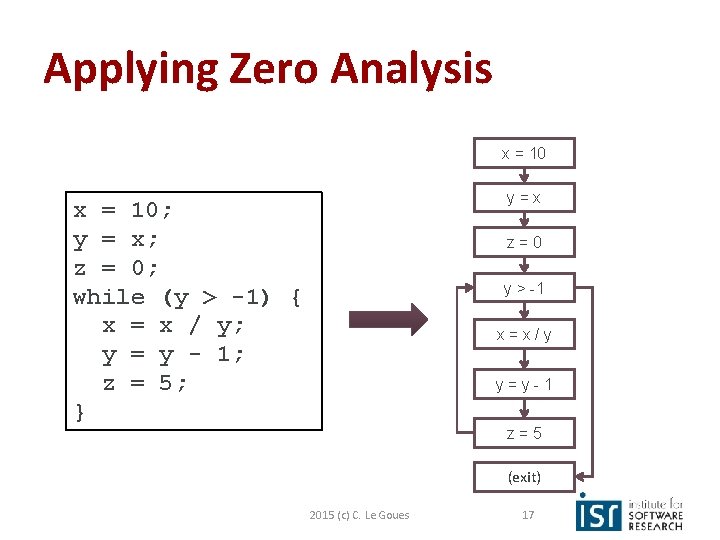

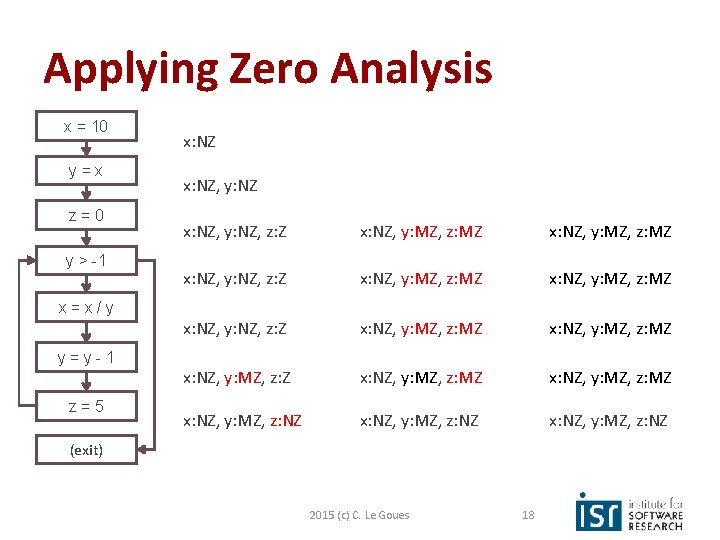

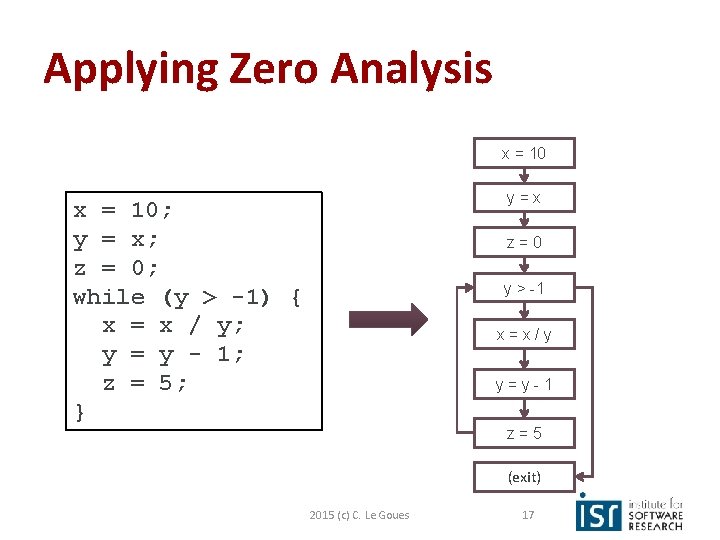

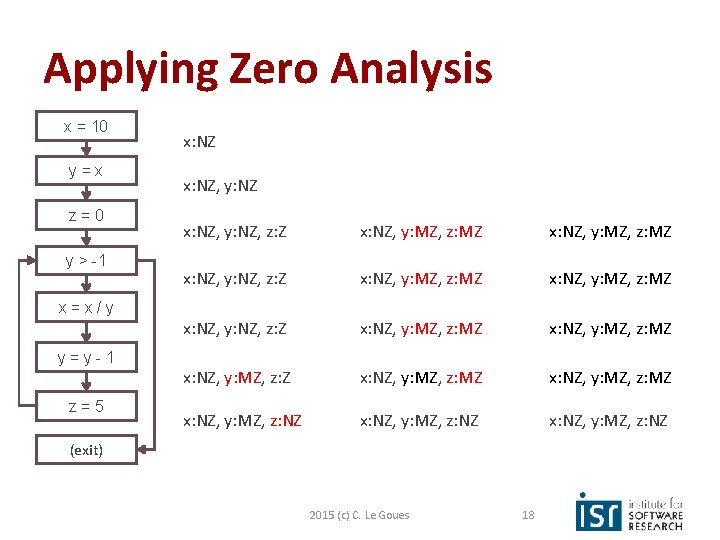

Applying Zero Analysis

Figure: control-flow graph of the program: x = 10 → y = x → z = 0 → y > -1. The true edge of y > -1 goes through x = x / y → y = y - 1 → z = 5 and back to y > -1; the false edge goes to (exit).

Applying Zero Analysis

Figure: the same control-flow graph annotated with abstract states (Z = zero, NZ = not zero, MZ = maybe zero), including x: NZ, y: NZ, z: Z; x: NZ, y: MZ, z: MZ; and x: NZ, y: MZ, z: NZ.

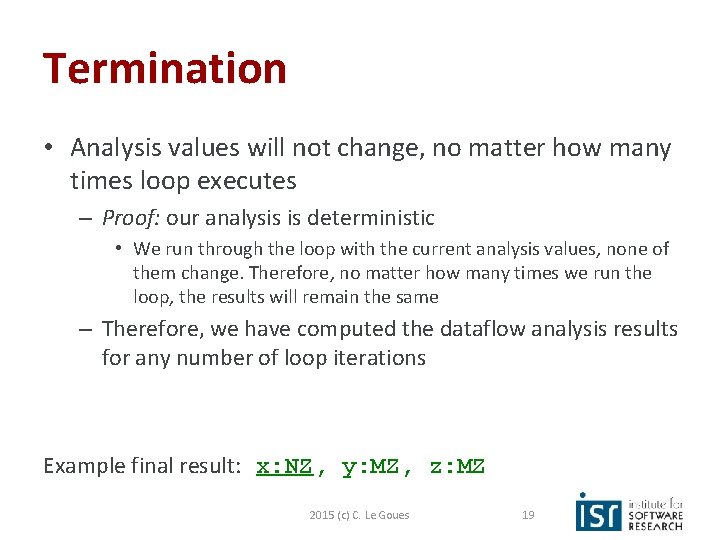

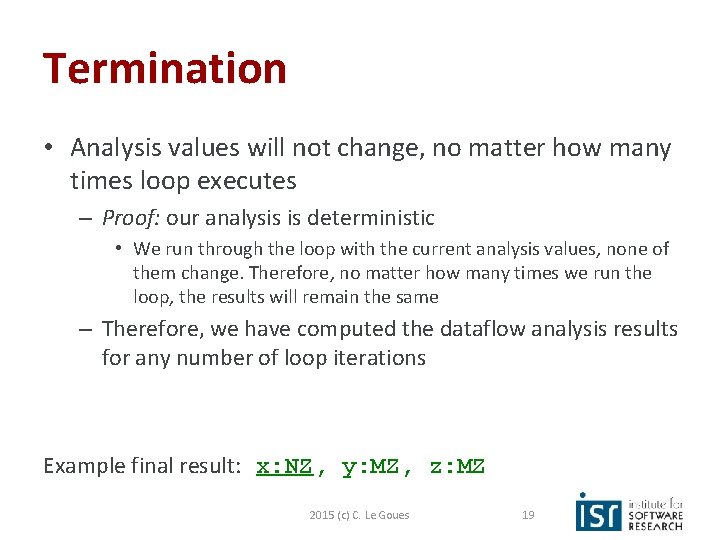

Termination

- Analysis values will not change, no matter how many times the loop executes
  - Proof: our analysis is deterministic
    - We run through the loop with the current analysis values, and none of them change. Therefore, no matter how many times we run the loop, the results will remain the same.
  - Therefore, we have computed the dataflow analysis results for any number of loop iterations.
- Example final result: x: NZ, y: MZ, z: MZ
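The fixpoint computation can be written out in a few lines of Java. This is a hypothetical sketch with transfer functions hand-coded for the example's statements, not a general dataflow framework. One deliberate difference from the slides: it uses a sound rule for integer division, where `x / y` with x not zero may still be 0 (for example `1 / 9 == 0`), so it reports x as MZ at the loop head rather than the slides' NZ. It also records whether the divisor y might be zero at `x = x / y`.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of zero analysis for the loop example (hand-coded transfer functions).
class ZeroAnalysis {
    enum Zero { Z, NZ, MZ }   // zero, not zero, maybe zero

    record Result(Map<String, Zero> loopHead, boolean possibleDivByZero) {}

    // Join at a control-flow merge: equal values stay, differing values become MZ.
    static Zero join(Zero a, Zero b) { return a == b ? a : Zero.MZ; }

    static Map<String, Zero> join(Map<String, Zero> a, Map<String, Zero> b) {
        Map<String, Zero> out = new HashMap<>();
        for (String v : a.keySet()) out.put(v, join(a.get(v), b.get(v)));
        return out;
    }

    // Integer division: 0 / y is 0; otherwise truncation (1 / 9 == 0) means the result may be 0.
    static Zero div(Zero x, Zero y) { return x == Zero.Z ? Zero.Z : Zero.MZ; }

    // Subtracting 1: 0 - 1 is not zero; a non-zero value minus 1 may be zero.
    static Zero minusOne(Zero y) { return y == Zero.Z ? Zero.NZ : Zero.MZ; }

    static Result analyze() {
        Map<String, Zero> entry = new HashMap<>();
        entry.put("x", Zero.NZ);   // x = 10;
        entry.put("y", Zero.NZ);   // y = x;
        entry.put("z", Zero.Z);    // z = 0;

        Map<String, Zero> head = entry;   // abstract state at the loop test y > -1
        boolean divWarning = false;
        while (true) {
            Map<String, Zero> s = new HashMap<>(head);      // y > -1 says nothing about zero-ness
            if (s.get("y") != Zero.NZ) divWarning = true;   // divisor might be 0
            s.put("x", div(s.get("x"), s.get("y")));        // x = x / y;
            s.put("y", minusOne(s.get("y")));               // y = y - 1;
            s.put("z", Zero.NZ);                            // z = 5;
            Map<String, Zero> next = join(entry, s);        // merge loop entry with the back edge
            if (next.equals(head)) return new Result(head, divWarning);   // fixpoint reached
            head = next;
        }
    }
}
```

`analyze()` reaches its fixpoint on the second pass with x, y, and z all MZ, and flags the division. That warning is genuine: y counts down to 0 while `y > -1` still holds, so the loop eventually evaluates `x / 0`.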

Abstraction at Work

- The number of possible states is gigantic
  - n 32-bit variables result in $2^{32n}$ states
    - For 3 variables: $2^{32 \cdot 3} = 2^{96}$
  - With loops, states can change indefinitely
- Zero analysis narrows the state space
  - Zero or not zero (2 bits per variable)
  - $2^{2 \cdot 3} = 2^{6}$
  - When this limited space is explored, we are done
    - Extrapolate over all loop iterations

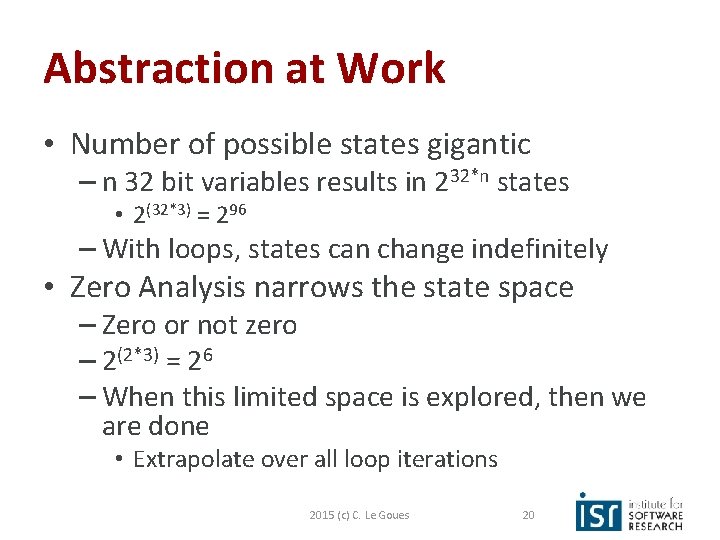

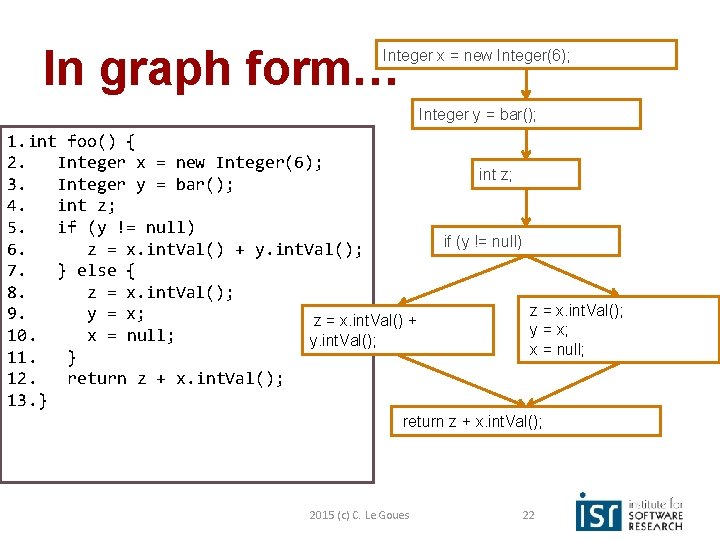

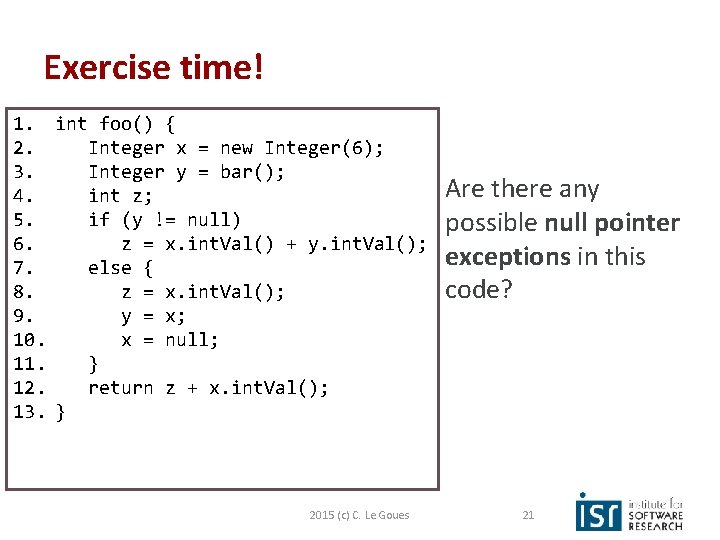

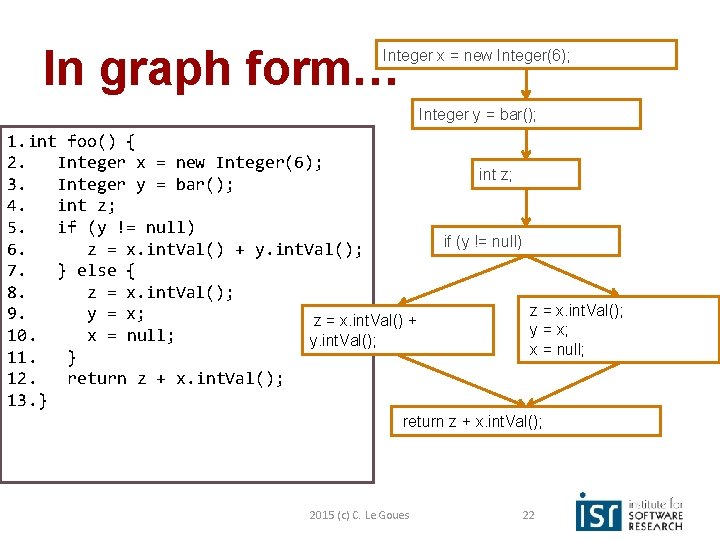

Exercise time!

```java
int foo() {
    Integer x = new Integer(6);
    Integer y = bar();
    int z;
    if (y != null)
        z = x.intValue() + y.intValue();
    else {
        z = x.intValue();
        y = x;
        x = null;
    }
    return z + x.intValue();
}
```

Are there any possible null pointer exceptions in this code?

In graph form…

Figure: control-flow graph of foo(): `Integer x = new Integer(6);` → `Integer y = bar();` → `int z;` → `if (y != null)`. The true branch is `z = x.intValue() + y.intValue();`, the false branch is `z = x.intValue(); y = x; x = null;`, and both branches join at `return z + x.intValue();`.

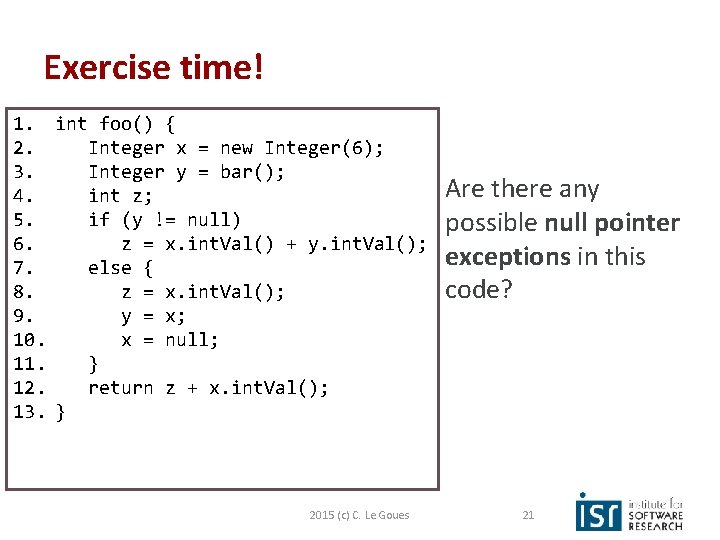

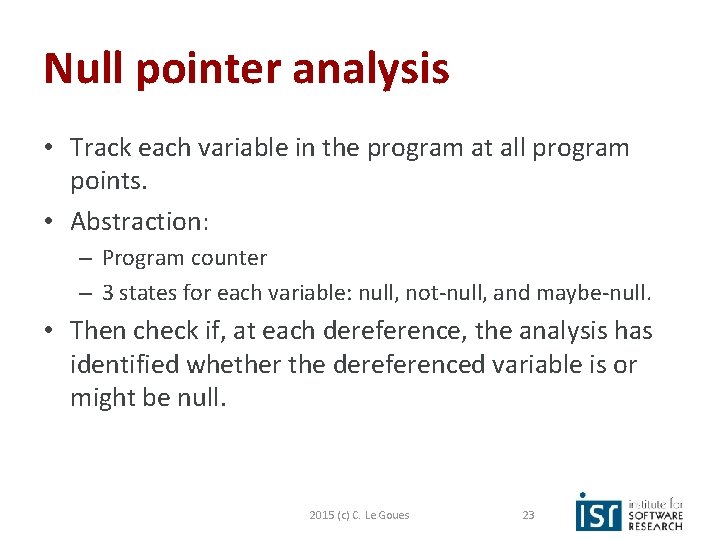

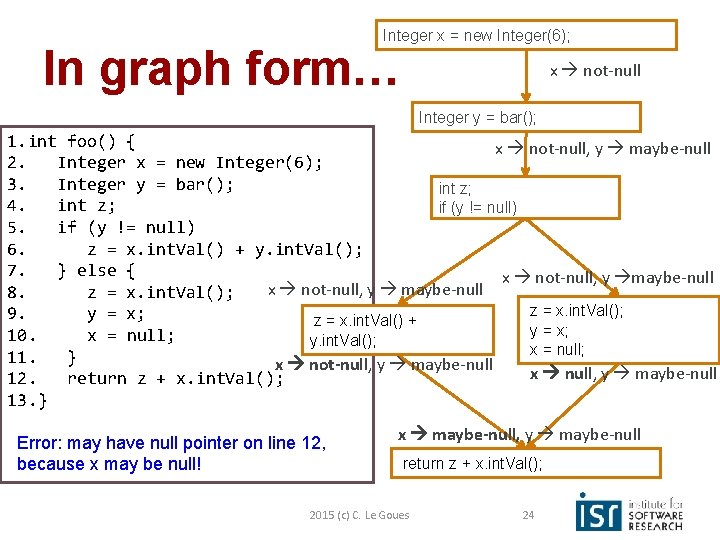

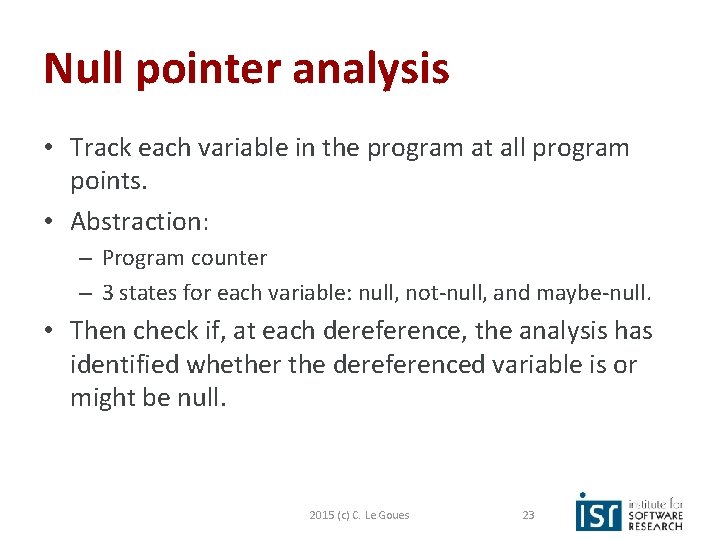

Null pointer analysis

- Track each variable in the program at all program points.
- Abstraction:
  - Program counter
  - 3 states for each variable: null, not-null, and maybe-null.
- Then, at each dereference, check whether the analysis has determined that the dereferenced variable is or might be null.
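A minimal Java sketch of this analysis on `foo()` from the exercise. It hand-translates each statement into an update of a variable-to-state map rather than parsing the code, and all names are hypothetical. It also refines y using the branch condition `y != null` (not-null on the true branch, null on the false one), which keeps it from warning about `y.intValue()` on line 6.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the null-pointer analysis applied to foo(); statements hand-translated.
class NullAnalysis {
    enum Nullness { NULL, NOT_NULL, MAYBE_NULL }

    // Join at a control-flow merge: equal values stay, differing values become MAYBE_NULL.
    static Nullness join(Nullness a, Nullness b) { return a == b ? a : Nullness.MAYBE_NULL; }

    static Map<String, Nullness> join(Map<String, Nullness> a, Map<String, Nullness> b) {
        Map<String, Nullness> out = new TreeMap<>();
        for (String v : a.keySet()) out.put(v, join(a.get(v), b.get(v)));
        return out;
    }

    // At a dereference, warn unless the variable is known to be not-null.
    static void deref(Map<String, Nullness> s, String v, int line, List<String> warnings) {
        if (s.get(v) != Nullness.NOT_NULL) warnings.add("line " + line + ": " + v + " may be null");
    }

    static List<String> analyzeFoo() {
        List<String> warnings = new ArrayList<>();
        Map<String, Nullness> s = new TreeMap<>();
        s.put("x", Nullness.NOT_NULL);     // line 2: x = new Integer(6)
        s.put("y", Nullness.MAYBE_NULL);   // line 3: y = bar(), result unknown

        // Line 5: if (y != null). Each branch refines y using the condition.
        Map<String, Nullness> thenS = new TreeMap<>(s);
        thenS.put("y", Nullness.NOT_NULL);
        deref(thenS, "x", 6, warnings);    // line 6: x.intValue() + y.intValue()
        deref(thenS, "y", 6, warnings);

        Map<String, Nullness> elseS = new TreeMap<>(s);
        elseS.put("y", Nullness.NULL);
        deref(elseS, "x", 8, warnings);    // line 8: z = x.intValue()
        elseS.put("y", elseS.get("x"));    // line 9: y = x
        elseS.put("x", Nullness.NULL);     // line 10: x = null

        Map<String, Nullness> merged = join(thenS, elseS);   // branches meet before line 12
        deref(merged, "x", 12, warnings);  // line 12: return z + x.intValue()
        return warnings;
    }
}
```

`analyzeFoo()` returns a single warning, `line 12: x may be null`: x is not-null on one incoming branch and null on the other, so the join makes it maybe-null.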

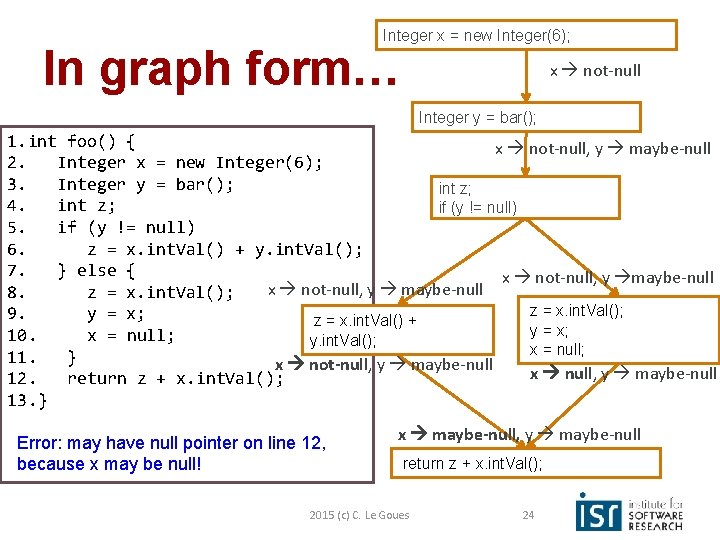

In graph form…

Figure: the control-flow graph of foo() annotated with analysis results: x not-null after `Integer x = new Integer(6);`; x not-null, y maybe-null after `Integer y = bar();` and at `if (y != null)`; x not-null, y maybe-null at the end of the true branch; x null, y maybe-null at the end of the false branch (after `x = null;`); and x maybe-null, y maybe-null at `return z + x.intValue();`.

Error: may have null pointer on line 12, because x may be null!


Model Checking

- Build a model of a program and exhaustively evaluate that model against a specification
  - Check that properties hold
  - Produce counterexamples
- A common form of model checking uses temporal logic formulas to describe properties
- Especially good for finding concurrency issues
- Subject of Thursday's lecture!
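The core of explicit-state model checking fits in a short Java sketch: enumerate every reachable state of a model, check a property, and return the schedule that breaks it. The model is hypothetical (two threads each running `tmp = counter; counter = tmp + 1;`), it is not SPIN or Promela, and the property is a simple check on final states (`counter == 2`) rather than a temporal logic formula.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: exhaustive exploration of all interleavings of a two-thread model.
class TinyModelChecker {
    // pcN: 0 = about to read counter, 1 = about to write tmpN + 1, 2 = done.
    record State(int pc0, int pc1, int tmp0, int tmp1, int counter) {}

    // Executes one step of thread t.
    static State step(State s, int t) {
        if (t == 0) {
            return s.pc0() == 0 ? new State(1, s.pc1(), s.counter(), s.tmp1(), s.counter())
                                : new State(2, s.pc1(), s.tmp0(), s.tmp1(), s.tmp0() + 1);
        }
        return s.pc1() == 0 ? new State(s.pc0(), 1, s.tmp0(), s.counter(), s.counter())
                            : new State(s.pc0(), 2, s.tmp0(), s.tmp1(), s.tmp1() + 1);
    }

    // Returns the thread schedule of the first final state violating counter == 2, or null.
    static List<Integer> findCounterexample() {
        return dfs(new State(0, 0, 0, 0, 0), new ArrayList<>(), new HashSet<>());
    }

    static List<Integer> dfs(State s, List<Integer> trace, Set<State> seen) {
        if (!seen.add(s)) return null;                  // state already explored
        if (s.pc0() == 2 && s.pc1() == 2)               // both threads finished: check property
            return s.counter() == 2 ? null : new ArrayList<>(trace);
        for (int t = 0; t < 2; t++) {
            int pc = (t == 0) ? s.pc0() : s.pc1();
            if (pc == 2) continue;                      // thread t has finished
            trace.add(t);
            List<Integer> cex = dfs(step(s, t), trace, seen);
            trace.remove(trace.size() - 1);             // backtrack
            if (cex != null) return cex;
        }
        return null;
    }
}
```

`findCounterexample()` returns `[0, 1, 0, 1]`: thread 0 reads, thread 1 reads, and both write back 1. This lost update is exactly the kind of rare interleaving that testing struggles to force.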

Tools: SPIN Model Checker

- Simple Promela INterpreter
- Well-established and freely available model checker
  - Model processes
  - Verify linear temporal logic properties

```
active proctype Hello() {
    printf("Hello World\n");
}
```

- We'll spend considerable time with SPIN later

http://spinroot.com/

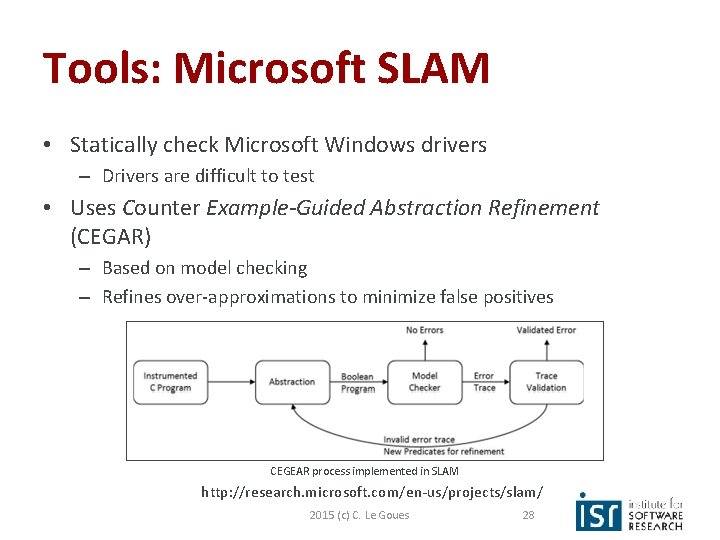

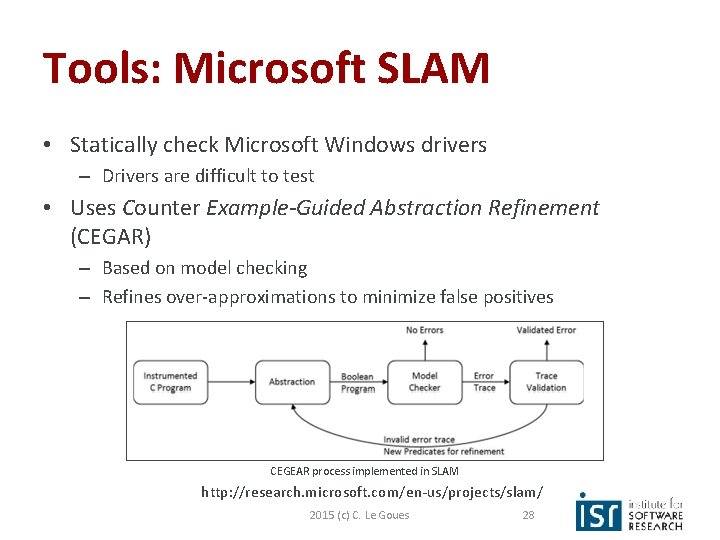

Tools: Microsoft SLAM

- Statically checks Microsoft Windows drivers
  - Drivers are difficult to test
- Uses Counterexample-Guided Abstraction Refinement (CEGAR)
  - Based on model checking
  - Refines over-approximations to minimize false positives

Figure: CEGAR process implemented in SLAM

http://research.microsoft.com/en-us/projects/slam/

Formal Reasoning: Hoare Logic

- Set of tools for reasoning about the correctness of a computer program
  - Uses pre- and postconditions
- The Hoare triple: {P} S {Q}
  - P and Q are predicates
  - S is the program
  - If we start in a state where P is true and execute S, then, if S terminates, it terminates in a state where Q is true (partial correctness; total correctness additionally requires that S terminate)

Hoare Triples

- {x = y} x := x + 3 {x = y + 3}
- {x > -1} x := x * 2 + 3 {x > 1}
- {true} x := 5 {x = 5}
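The triples above can be checked mechanically with the assignment axiom of Hoare logic: {P} x := E {Q} holds when P is (or implies) Q with E substituted for x, written Q[E/x]. A short derivation for each, assuming x and y range over integers or reals:

$$
\begin{aligned}
&\text{Axiom:} && \{\,Q[E/x]\,\}\ x := E\ \{\,Q\,\} \\
&\text{1:} && (x = y + 3)[(x + 3)/x] \;=\; (x + 3 = y + 3) \;\iff\; (x = y) \\
&\text{2:} && (x > 1)[(x \cdot 2 + 3)/x] \;=\; (x \cdot 2 + 3 > 1) \;\iff\; (x > -1) \\
&\text{3:} && (x = 5)[5/x] \;=\; (5 = 5) \;\iff\; \text{true}
\end{aligned}
$$

In each case the precondition on the slide is exactly the substituted postcondition, so each triple is an instance of the axiom.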

Hoare Logic

- Hoare logic defines inference rules for program constructs
  - Assignment
  - Conditionals
    - {P} if x > 0 then y := z else y := -z {y > 5}
  - Loops
    - {P} while B do S {Q}
- Using these rules, we can reason about program correctness if we can come up with pre- and postconditions.
- Common technology in safety-critical systems programming, such as code written in SPARK Ada, avionics systems, etc.
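The slide leaves P open in the conditional example. One way to find it (a sketch, assuming integer variables) is to push the postcondition through each branch with the assignment axiom and combine the results with the rule for conditionals:

$$
\frac{\{P \wedge B\}\ S_1\ \{Q\} \qquad \{P \wedge \neg B\}\ S_2\ \{Q\}}{\{P\}\ \text{if } B \text{ then } S_1 \text{ else } S_2\ \{Q\}}
$$

$$
\begin{aligned}
\text{then branch: } & (y > 5)[z/y] \;=\; (z > 5) \\
\text{else branch: } & (y > 5)[-z/y] \;=\; (-z > 5) \;\iff\; (z < -5) \\
P \;=\; & (x > 0 \Rightarrow z > 5) \;\wedge\; (x \le 0 \Rightarrow z < -5)
\end{aligned}
$$

With this P, P ∧ (x > 0) gives z > 5, which makes y > 5 after y := z, and P ∧ ¬(x > 0) gives z < -5, which makes y > 5 after y := -z.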

Tools: ESC/Java

- Extended Static Checking for Java
  - Uses the Simplify theorem prover to evaluate each routine in a program
  - Embedded assertions/annotations
    - Checker-readable comments
    - Based on the Java Modeling Language (JML)

```java
/*@ requires i > 0; @*/
public int div(int i, int j) {
    return j / i;
}
```

C. Flanagan, K. R. M. Leino, M. Lillibridge, G. Nelson, J. B. Saxe and R. Stata. Extended static checking for Java.

Annotation Benefits

- Annotations express design intent
  - How you intended to achieve a particular quality attribute
    - e.g., never writing more than N elements to this array
- As you add more annotations, you find more errors
  - Some annotations are already built in to Java libraries

Upshot: analysis as approximation

- Analysis must approximate in practice
  - False positives: may report errors where there are really none
  - False negatives: may not report errors that really exist
  - All analysis tools have either false negatives or false positives
- Approximation strategy
  - Find a pattern P for correct code
    - which is feasible to check (analysis terminates quickly),
    - which covers most correct code in practice (low false positives),
    - which implies no errors (no false negatives)
- Analysis can be pretty good in practice
  - Many tools have low false positive/negative rates
  - A sound tool has no false negatives
    - Never misses an error in a category that it checks

Pseudo-summary: analysis is attribute-specific

- Analysis is specific to:
  - A quality attribute (e.g., race condition, buffer overflow, use after free)
  - A pattern for verifying that attribute (e.g., protect each shared piece of data with a lock, Presburger arithmetic decision procedure for array indexes, only one variable points to each memory location)
- Analysis is inappropriate for some attributes.
- For every technique, think about: what's being abstracted, what data is being lost, and where the error can come from!
- And maybe: how can we make it better?

Why do Static Analysis?

Quality assurance at Microsoft

- Original process: manual code inspection
  - Effective when system and team are small
  - Too many paths to consider as system grew
- Early 1990s: add massive system and unit testing
  - Tests took weeks to run
    - Diversity of platforms and configurations
    - Sheer volume of tests
  - Inefficient detection of common patterns, security holes
    - Non-local, intermittent, uncommon path bugs
  - Was treading water in Windows Vista development
- Early 2000s: add static analysis

Impact at Microsoft

- Thousands of bugs caught monthly
- Significant observed quality improvements
  - e.g., buffer overruns latent in codebases
- Widespread developer acceptance
  - Check-in gates
  - Writing specifications

Ebay: Prior Evaluations

- Individual teams tried tools
  - On snapshots
  - No tool customization
  - Overall negative results
  - Developers were not impressed: many minor issues (2 checkers reported half the issues, all irrelevant for Ebay)
- Would this change when integrated into the process, i.e., with incremental checking?
- Which bugs to look at?

Jaspan, Ciera, I. Chen, and Anoop Sharma. "Understanding the value of program analysis tools." Companion to the 22nd ACM SIGPLAN conference on Object-oriented programming systems and applications companion. ACM, 2007.

Ebay: Goals

- Find defects earlier in the lifecycle
  - Allow quality engineers to focus on different issues
- Find defects that are difficult to find through other QA techniques
  - Security, performance, concurrency
- As early as feasible: run on developer machines and in nightly builds
- No resources to build own tool
  - But a few people for a dedicated team (customization, policies, creating project-specific analyses, etc.) were possible
- Continuous evaluation

Ebay: Customization

- Customization dropped false positives from 50% to 10%
- Separate checkers evaluated separately
  - By number of issues
  - By severity as judged by developers; iteratively with several groups
- Some low-priority checkers (e.g., dead store to local) were assigned high priority
  - Performance impact is important for Ebay

Ebay: Enforcement policy

- High priority: all these issues must be fixed (e.g., null pointer exceptions)
  - Potentially very costly given the huge existing code base
- Medium priority: may not be added to the code base. Old issues won't be fixed unless refactored anyway (e.g., high cyclomatic complexity)
- Low priority: at most X issues may be added between releases (usually stylistic)
- Tossed: turned off entirely
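A policy like this is typically enforced as a check-in or release gate. A hypothetical Java sketch: it takes open-issue counts per priority for the current build and for the last release, plus the low-priority allowance X, and lists the violations. None of these names come from FindBugs or from the Ebay study.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical release gate for the four-tier policy (names and inputs are illustrative).
class EnforcementGate {
    enum Priority { HIGH, MEDIUM, LOW, TOSSED }

    // now / lastRelease: open issue counts per priority; maxNewLow plays the role of X.
    // Compares totals only, so fixing an old issue offsets adding a new one;
    // a real gate would match individual warnings between runs.
    static List<String> violations(Map<Priority, Integer> now,
                                   Map<Priority, Integer> lastRelease,
                                   int maxNewLow) {
        List<String> out = new ArrayList<>();
        int high = now.getOrDefault(Priority.HIGH, 0);
        if (high > 0) out.add(high + " high-priority issue(s) must be fixed");
        int newMedium = now.getOrDefault(Priority.MEDIUM, 0) - lastRelease.getOrDefault(Priority.MEDIUM, 0);
        if (newMedium > 0) out.add(newMedium + " new medium-priority issue(s) added");
        int newLow = now.getOrDefault(Priority.LOW, 0) - lastRelease.getOrDefault(Priority.LOW, 0);
        if (newLow > maxNewLow) out.add(newLow + " new low-priority issue(s), limit is " + maxNewLow);
        // TOSSED checkers are turned off, so their counts are never consulted.
        return out;
    }
}
```

The design choice mirrors the slide: old medium-priority issues are tolerated (only growth is rejected), while any high-priority issue blocks the release regardless of age.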

Ebay: Cost estimation

- Free tool
- 2 developers full time for customization and extension
- A typical tester at Ebay finds 10 bugs/week, 10% of them high priority
- Sample bugs found with FindBugs for a comparison

Aside: Cost/benefit analysis

- Cost/benefit tradeoff
  - Benefit: How valuable is the bug?
    - How much does it cost if not found?
    - How expensive is it to find using testing/inspection?
  - Cost: How much did the analysis cost?
    - Effort spent running the analysis and interpreting results (includes false positives)
    - Effort spent finding remaining bugs (for unsound analysis)
- Rule of thumb
  - For critical bugs that testing/inspection can't find, a sound analysis is worth it, as long as the false positive rate is acceptable.
  - For other bugs, maximize engineer productivity.

Ebay: Combining tools

- Program analysis coverage
  - Performance: high importance
  - Security: high
  - Global quality: high
  - Local quality: medium
  - API/framework compliance: medium
  - Concurrency: low
  - Style and readability: low
- Select appropriate tools and detectors

Ebay: Enforcement

- Enforcement at dev/QA handoff:
  - Developers run FindBugs on their desktops
  - QA runs FindBugs on receipt of code, posts results, and requires high-priority fixes.

Ebay: Continuous evaluation

- Gather data on detected bugs and false positives
- Present to developers, make case for tool

Incremental introduction

- Begin with early adopters in a small team
- Use these as champions in the organization
- Support team: answer questions, help with tool.

![Empirical results: Nortel study (Zheng et al. 2006), 3 C/C++ projects](https://slidetodoc.com/presentation_image/37c841b0a5ed315367060cefad2e6a94/image-49.jpg)

Empirical results

- Nortel study [Zheng et al. 2006]
  - 3 C/C++ projects
  - 3 million LOC total
  - Early generation static analysis tools
- Conclusions
  - Cost per fault of static analysis 61-72% compared to inspections
  - Effectively finds assignment, checking faults
  - Can be used to find potential security vulnerabilities

![More empirical results: InfoSys study (Chaturvedi 2005), 5 projects](https://slidetodoc.com/presentation_image/37c841b0a5ed315367060cefad2e6a94/image-50.jpg)

More empirical results

- InfoSys study [Chaturvedi 2005]
  - 5 projects
  - Average 700 function points each
  - Compare inspection with and without static analysis
- Conclusions
  - Higher productivity
  - Fewer defects

Question time!

HOW IS TEST SUITE COVERAGE COMPUTED?

Dynamic analysis

- Learn about a program's properties by executing it.
- How can we learn about properties that are more interesting than "did this test pass" (e.g., memory use)?
- Short answer: examine program state throughout execution by gathering additional information.

Common dynamic analysis

- Coverage
- Performance
- Memory usage
- Security properties
- Concurrency errors
- Invariant detection

Collecting execution info

- Instrument at compile time
  - e.g., Aspects, logging
- Run on a specialized VM, like Valgrind
- Instrument or monitor at runtime
  - Also requires a special VM
  - e.g., VisualVM (hooks into the JVM using debug symbols to profile/monitor)
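Coverage, the question posed earlier, is a simple example of compile-time instrumentation. This hypothetical Java sketch shows by hand what a coverage tool inserts automatically: a probe before each statement that records its id, and coverage computed as the ratio of probes hit to probes inserted. The class and method names are illustrative, not any real tool's API.

```java
import java.util.BitSet;

// Hypothetical sketch of statement coverage via hand-inserted probes.
class Coverage {
    static final int TOTAL_PROBES = 3;
    static final BitSet hits = new BitSet(TOTAL_PROBES);

    static void hit(int id) { hits.set(id); }   // probe: record that statement `id` ran
    static void reset() { hits.clear(); }
    static double percent() { return 100.0 * hits.cardinality() / TOTAL_PROBES; }

    // Original:  int abs(int n) { if (n < 0) return -n; return n; }
    static int abs(int n) {
        hit(0);            // the if statement
        if (n < 0) {
            hit(1);        // return -n
            return -n;
        }
        hit(2);            // return n
        return n;
    }
}
```

After `abs(5)` only probes 0 and 2 have fired (2 of 3 statements); adding a test with a negative input such as `abs(-3)` brings statement coverage to 100%.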

Quick note

- Some of those require a static preprocessing step, such as inserting logging statements!
- It's still a dynamic analysis, because you run the program, collect the info, and learn from that information.

Parts of a dynamic analysis

1. Property of interest.
2. Information related to the property of interest.
3. Mechanism for collecting that information from a program execution.
4. Test input data.
5. Mechanism for learning about the property of interest from the information you collected.

Abstraction

- How is abstraction relevant to static analysis, again?
- Dynamic analysis also requires abstraction.
- You're still focusing on a particular program property or type of information.
- You abstract parts of a trace of execution rather than the entire state space.

Challenges: Very input dependent

- Good if you have lots of tests! (System tests are best.)
- Are those tests indicative of common behavior?
  - Is that what you want?
- Can also use logs from live sessions (sometimes).
- Or specific inputs that replicate specific defect scenarios (like memory leaks).

Challenges: Heisenbuggy behavior

- Instrumentation and monitoring can change the behavior of a program.
  - e.g., slowdown, overhead
- Important question 1: can/should you deploy it live? Or just for debugging something specific?
- Important question 2: will the monitoring meaningfully change the program behavior with respect to the property you care about?

Too much data

- Logging events in large and/or long-running programs (even for just one property!) can result in HUGE amounts of data.
- How do you process it?
  - Common strategy: sampling.
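As one concrete sampling strategy (the slide does not name one), reservoir sampling keeps a uniform random sample of k events from a stream of unknown length, using memory proportional to k rather than to the length of the log. A minimal Java sketch of the classic "Algorithm R"; the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Random;

// Hypothetical sketch: reservoir sampling ("Algorithm R") over a stream of logged events.
class EventSampler {
    static <T> List<T> reservoir(Iterator<T> events, int k, Random rng) {
        List<T> sample = new ArrayList<>(k);
        int seen = 0;   // assumes fewer than 2^31 events
        while (events.hasNext()) {
            T e = events.next();
            seen++;
            if (sample.size() < k) {
                sample.add(e);                 // fill the reservoir with the first k events
            } else {
                int j = rng.nextInt(seen);     // uniform in [0, seen)
                if (j < k) sample.set(j, e);   // keep this event with probability k / seen
            }
        }
        return sample;
    }
}
```

Every event ends up in the sample with the same probability k / n, which keeps the sample unbiased; the trade-off is that rare events (often the interesting ones) may not be sampled at all.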

Benefits over static analysis

- Precise data for a specific run.
- No false positives or negatives on a given run.
- No confusion about which path was taken; it's clear where an error happened.
- Can (often) use on live code.
  - Very common for security and data gathering.

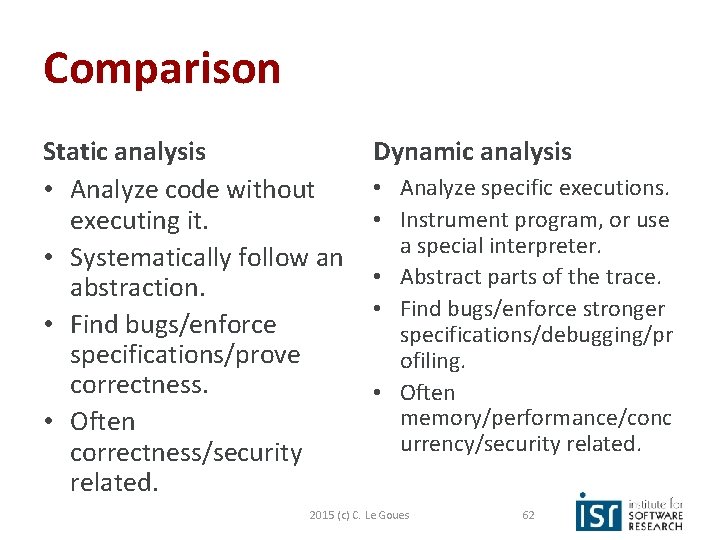

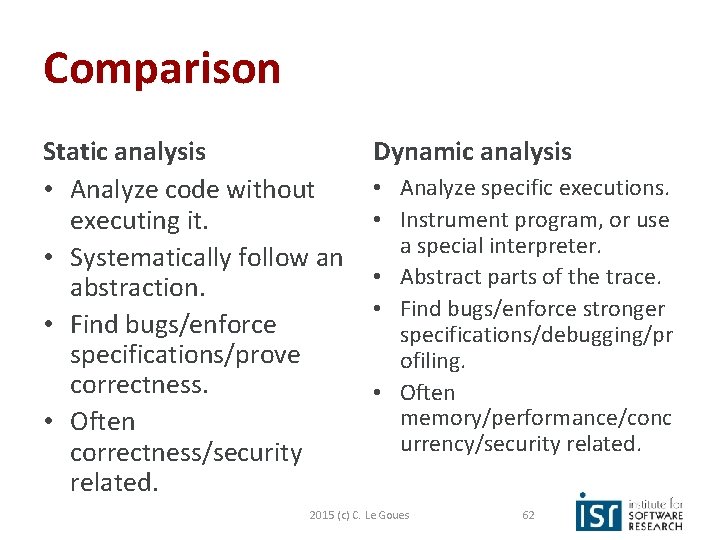

Comparison

Static analysis

- Analyze code without executing it.
- Systematically follow an abstraction.
- Find bugs/enforce specifications/prove correctness.
- Often correctness/security related.

Dynamic analysis

- Analyze specific executions.
- Instrument program, or use a special interpreter.
- Abstract parts of the trace.
- Find bugs/enforce stronger specifications/debugging/profiling.
- Often memory/performance/concurrency/security related.

Learning goals

- Really understand control- and data-flow analysis.
- Receive a high-level introduction to more formal proving tools.
- Develop evidence-based recommendations for how to deploy analysis tools in your project or organization.
- Explain the parts of dynamic analysis and how abstraction applies to it (lightning-fast!).