
Parsing with PCFG
Ling 571
Fei Xia
Week 3: 10/11-10/13/05

Outline
• Misc
• CYK algorithm
• Converting CFG into CNF
• PCFG
• Lexicalized PCFG

Misc
• Quiz 1: 15 pts, due 10/13
• Hw 2: 10 pts, due 10/13, ling580i_au05@u, ling580e_au05@u
• Treehouse weekly meeting:
  – Time: every Wed 2:30-3:30 pm; tomorrow is the 1st meeting
  – Location: EE1 025 (Campus map 12-N, south of MGH)
  – Mailing list: cl-announce@u
• Others:
  – Pongo policies
  – Machines: LLC, Parrington, Treehouse
  – Linux commands: ssh, sftp, …
  – Catalyst tools: ESubmit, EPost, …

CYK algorithm

Parsing algorithms
• Top-down
• Bottom-up
• Top-down with bottom-up filtering
• Earley algorithm
• CYK algorithm
• …

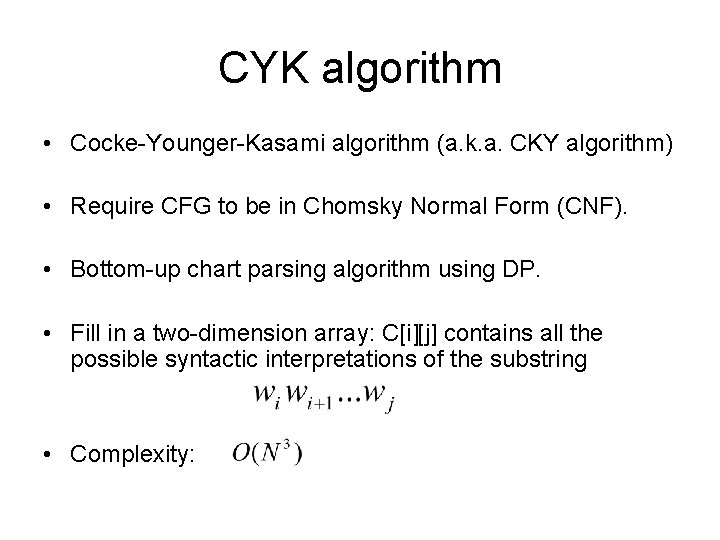

CYK algorithm
• Cocke-Younger-Kasami algorithm (a.k.a. CKY algorithm)
• Requires the CFG to be in Chomsky Normal Form (CNF).
• Bottom-up chart parsing algorithm using dynamic programming (DP).
• Fills in a two-dimensional array: C[i][j] contains all the possible syntactic interpretations of the substring w_i … w_j
• Complexity: O(N^3), where N is the sentence length

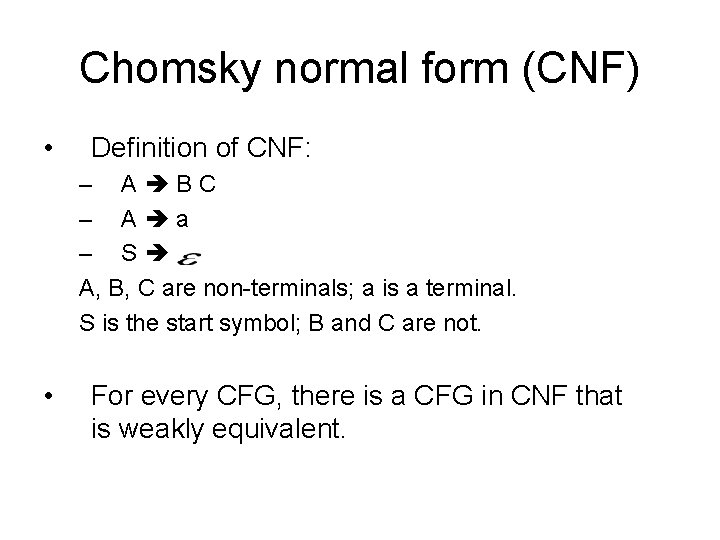

Chomsky normal form (CNF)
• Definition of CNF:
  – A → B C
  – A → a
  – S → ε
  A, B, C are non-terminals; a is a terminal. S is the start symbol; B and C are not.
• For every CFG, there is a CFG in CNF that is weakly equivalent.

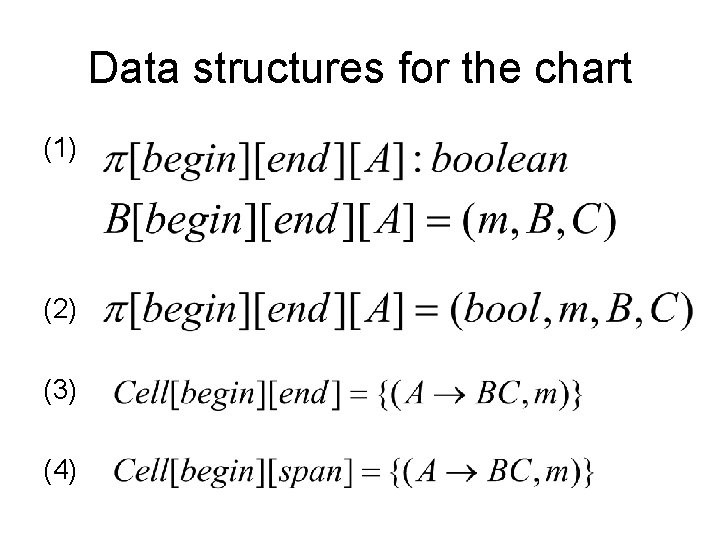

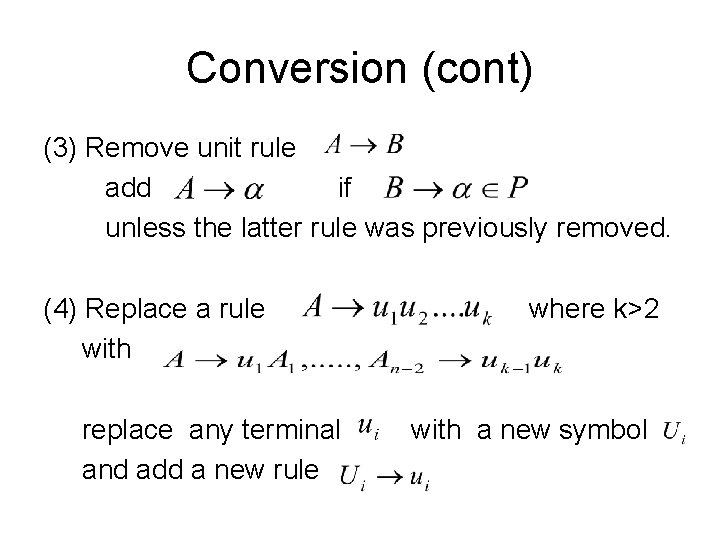

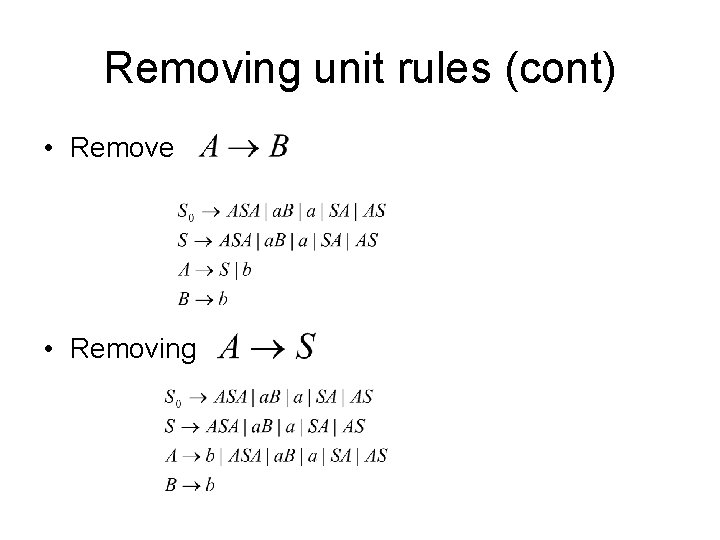

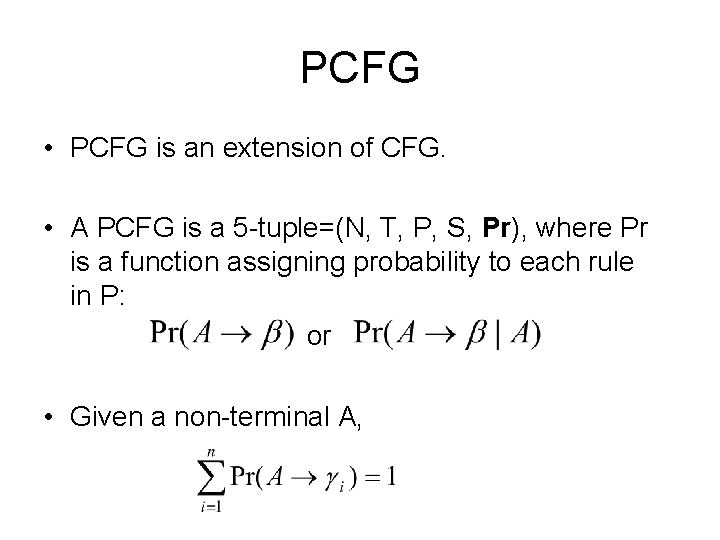

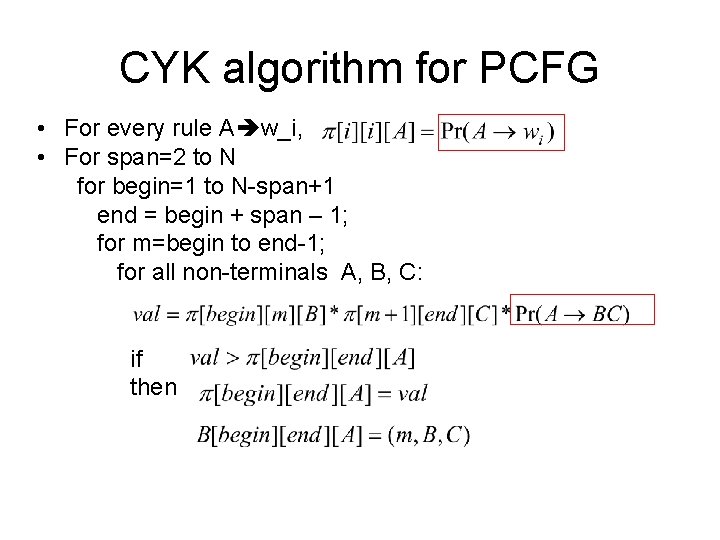

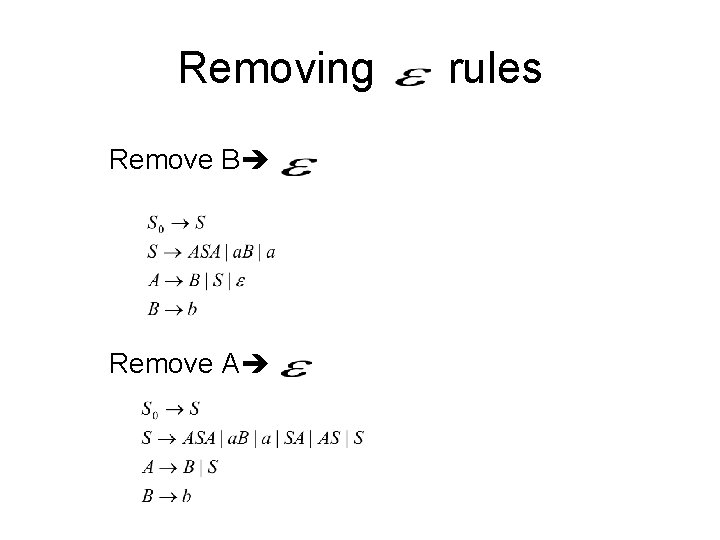

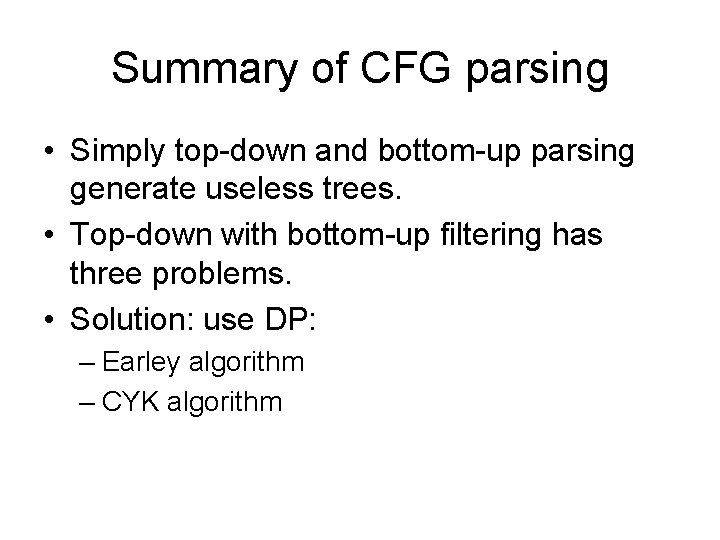

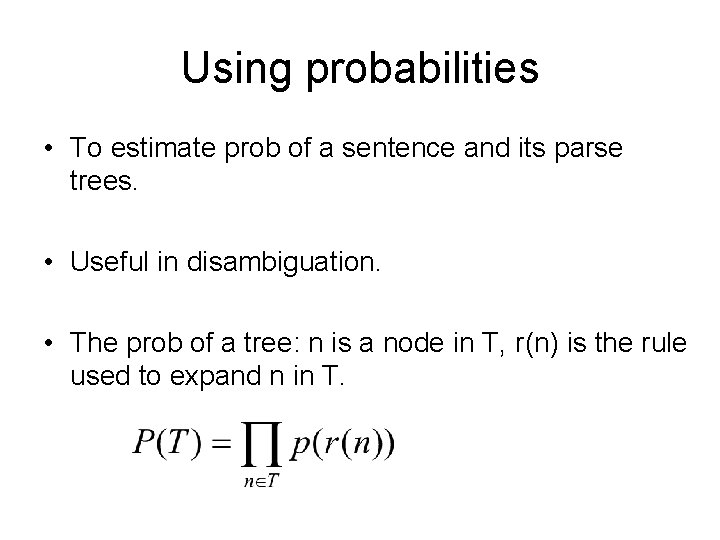

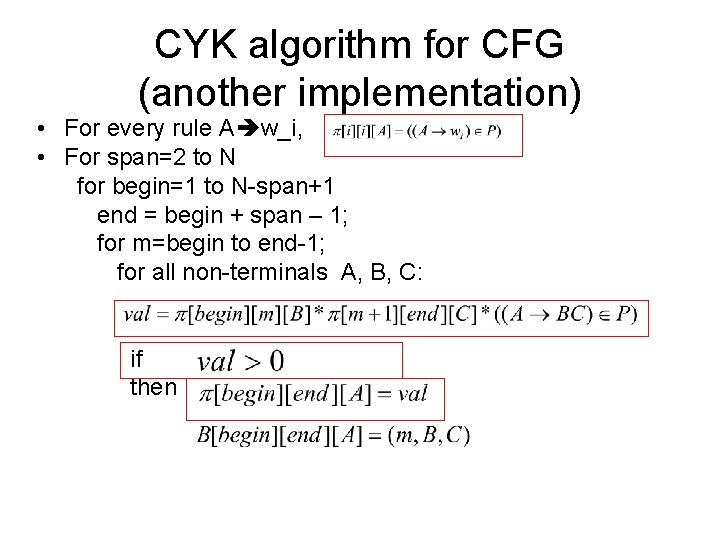

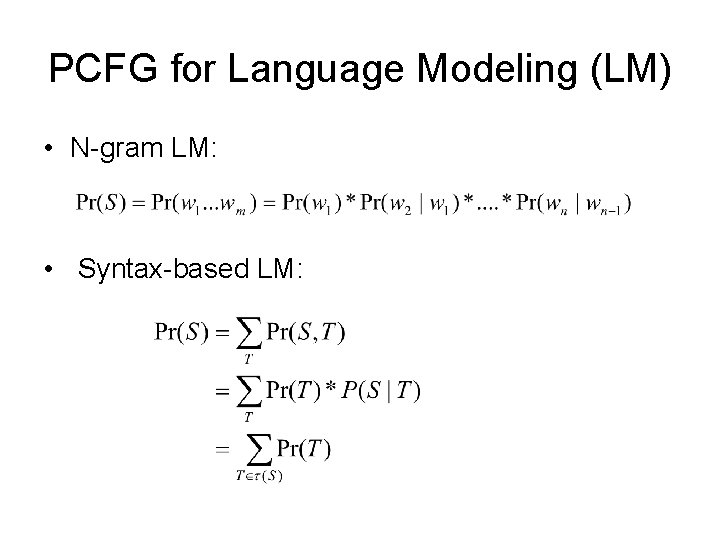

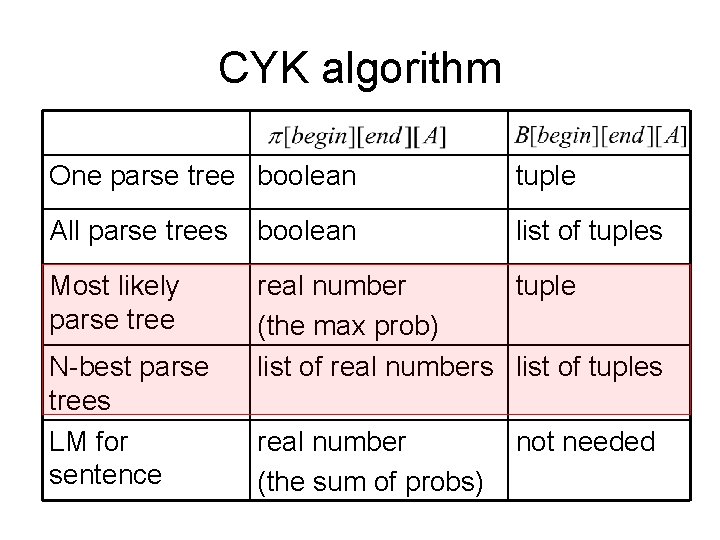

CYK algorithm
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          if … then …


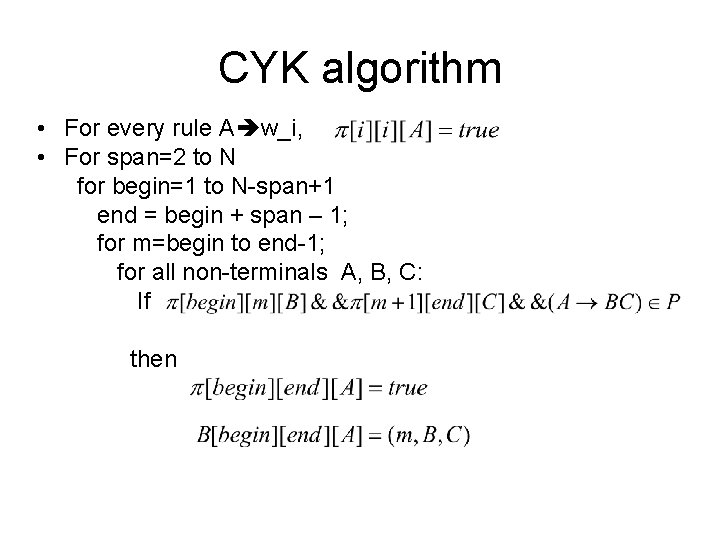

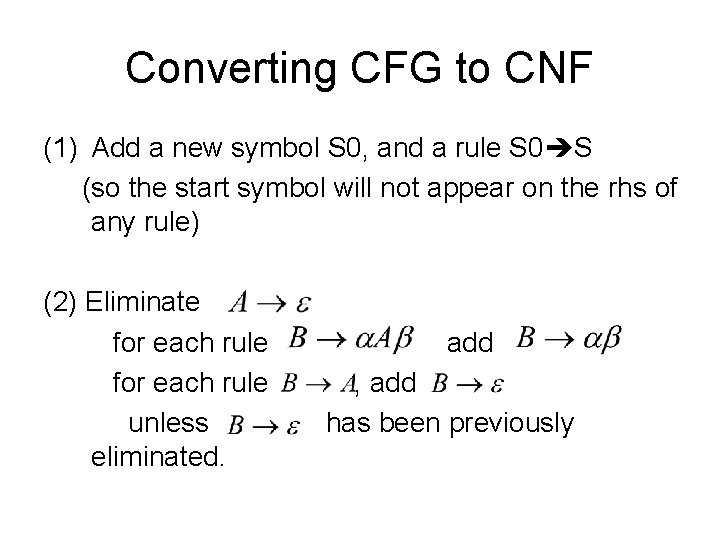

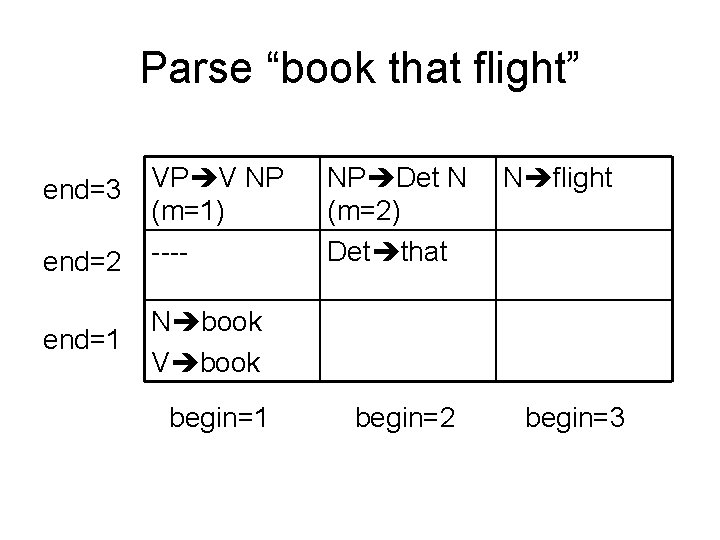

CYK algorithm (another way)
• For every rule A → w_i, add it to Cell[i][i]
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          if Cell[begin][m] contains B → …
          and Cell[m+1][end] contains C → …
          and A → B C is a rule in the grammar
          then add A → B C to Cell[begin][end] and remember m
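The Cell-based loop above can be sketched in Python roughly as follows. This is a minimal illustration, not the course's reference implementation: the function name `cyk_chart`, the dictionary-based chart keyed by `(begin, end)`, and the split of the grammar into `LEXICAL` and `BINARY` are all assumptions made for the example. The grammar is the small "book that flight" grammar used in the deck.

```python
from collections import defaultdict

# Hypothetical encoding of the deck's example grammar (already in CNF).
LEXICAL = {  # word -> non-terminals A with a rule A -> word
    "book": {"V", "N"}, "flight": {"N"}, "cards": {"N"},
    "that": {"Det"}, "the": {"Det"}, "with": {"P"},
}
BINARY = [  # (A, B, C) stands for the rule A -> B C
    ("VP", "V", "NP"), ("VP", "VP", "PP"),
    ("NP", "Det", "N"), ("NP", "NP", "PP"), ("PP", "P", "NP"),
]

def cyk_chart(words, lexical, binary):
    """Fill the chart; spans are 1-based and inclusive, as on the slide.

    chart[(begin, end)] maps a non-terminal A to its list of back-pointers:
    the word itself for A -> w_i, or (m, B, C) for A -> B C split at m.
    """
    n = len(words)
    chart = defaultdict(lambda: defaultdict(list))
    # For every rule A -> w_i, add it to Cell[i][i].
    for i, word in enumerate(words, start=1):
        for a in lexical.get(word, ()):
            chart[(i, i)][a].append(word)
    for span in range(2, n + 1):
        for begin in range(1, n - span + 2):
            end = begin + span - 1
            for m in range(begin, end):          # m = begin .. end-1
                for a, b, c in binary:
                    if b in chart[(begin, m)] and c in chart[(m + 1, end)]:
                        chart[(begin, end)][a].append((m, b, c))  # remember m
    return chart

chart = cyk_chart("book that flight".split(), LEXICAL, BINARY)
print(dict(chart[(1, 3)]))  # {'VP': [(1, 'V', 'NP')]}
```

Keeping a list of back-pointers per non-terminal means the same chart can later be used to enumerate all parse trees, not just to answer whether a parse exists.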

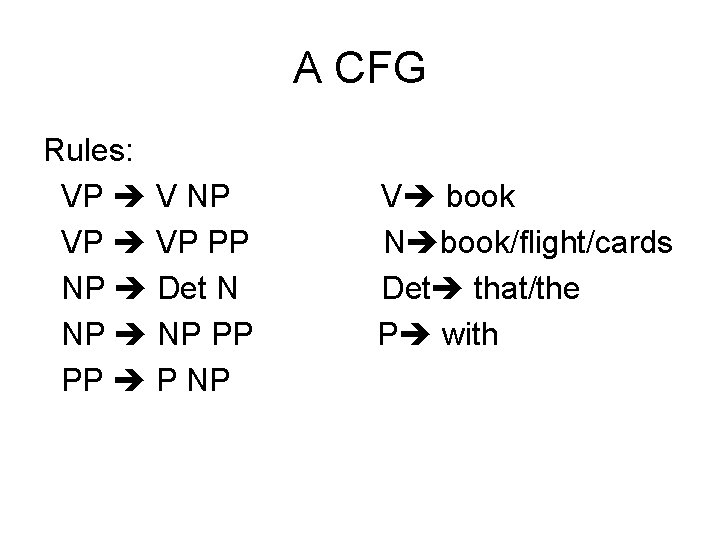

An example
Rules:
VP → V NP
VP → VP PP
NP → Det N
NP → NP PP
PP → P NP
V → book
N → book/flight/cards
Det → that/the
P → with

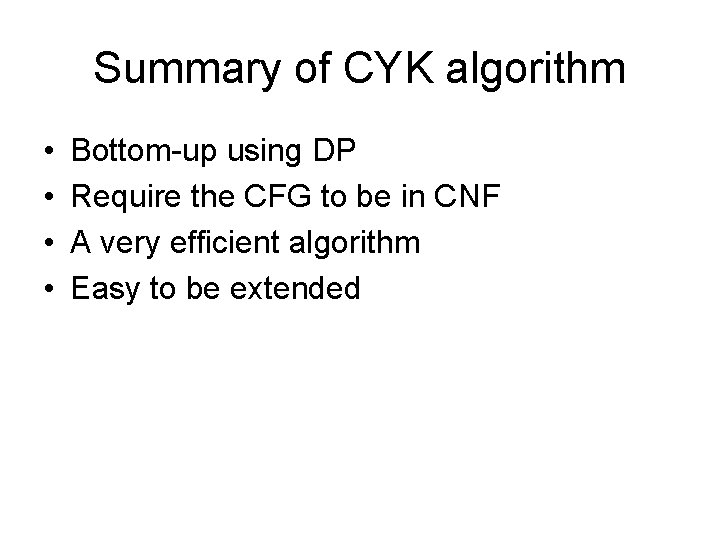

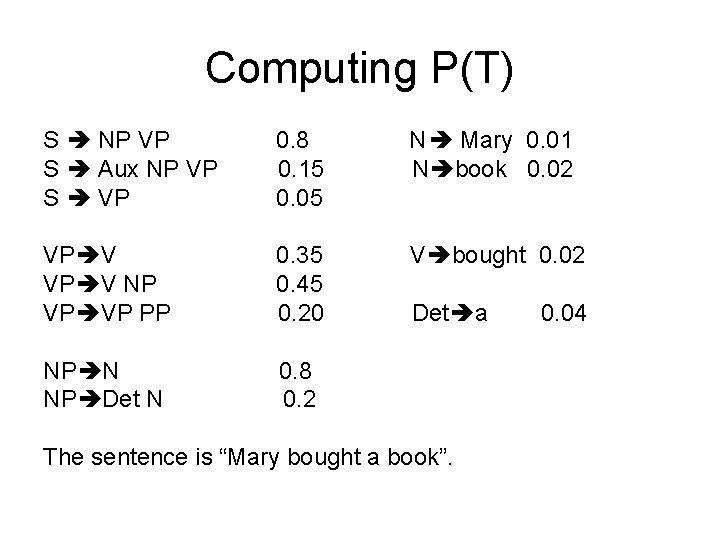


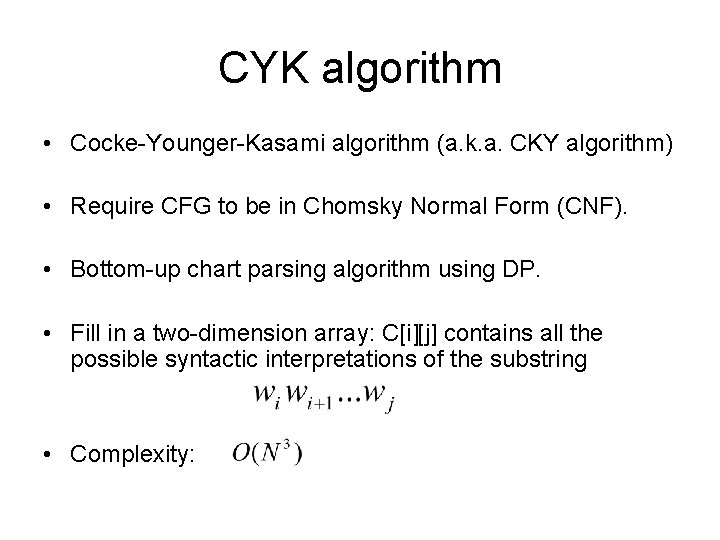

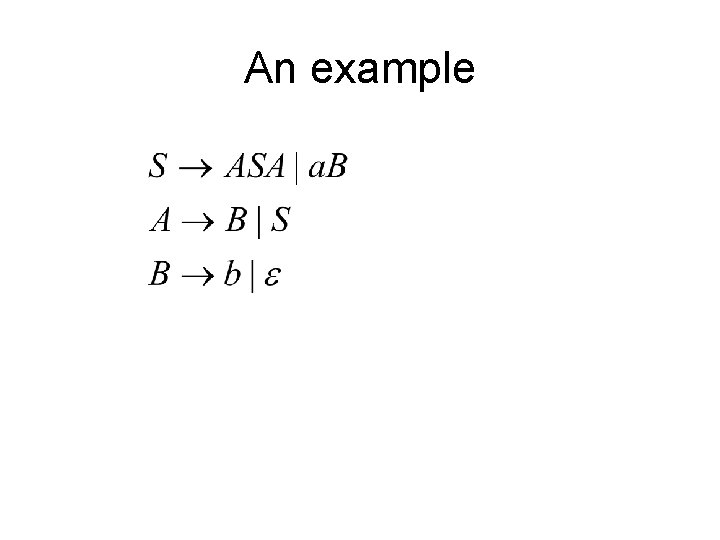

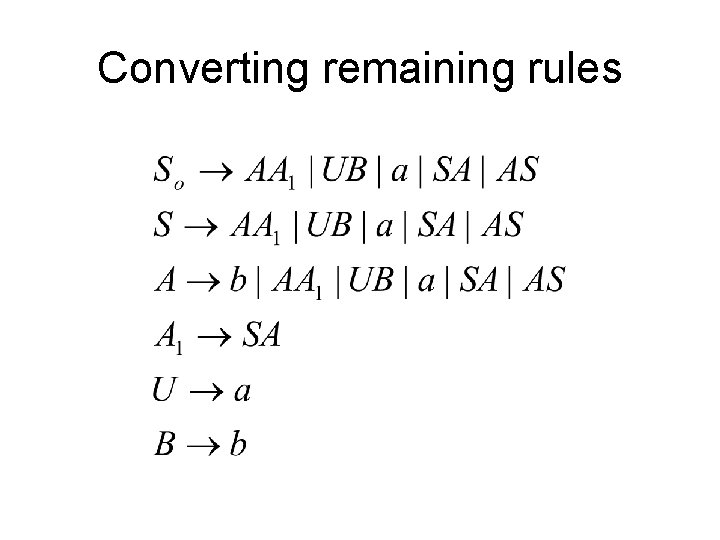

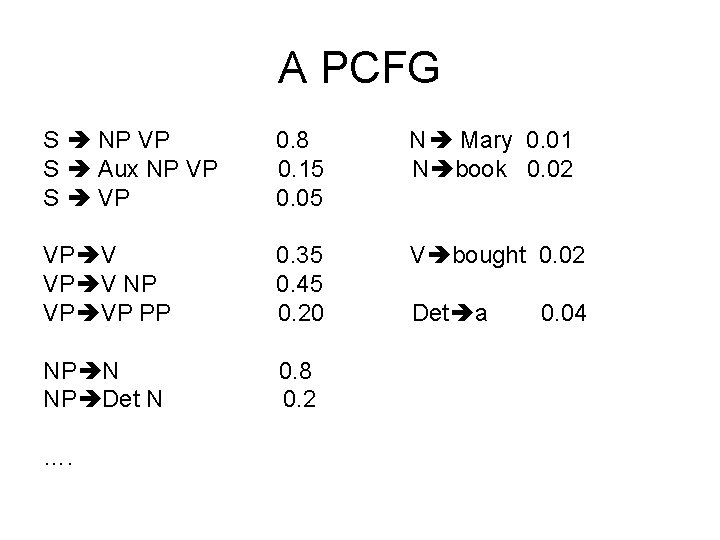

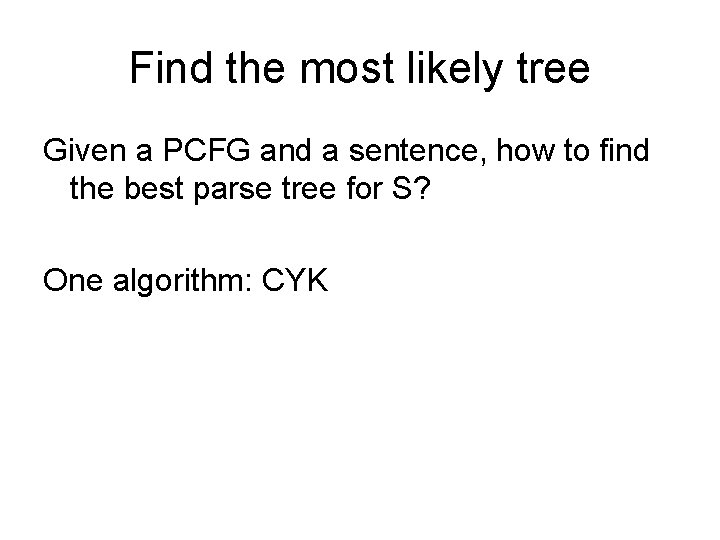

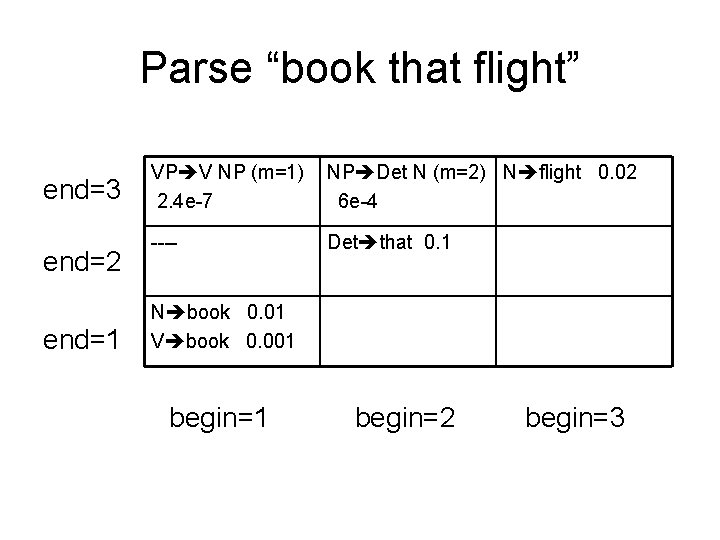

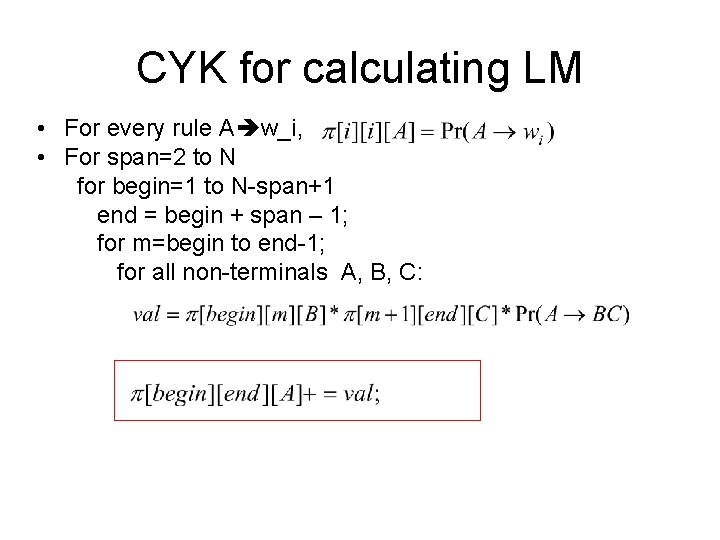

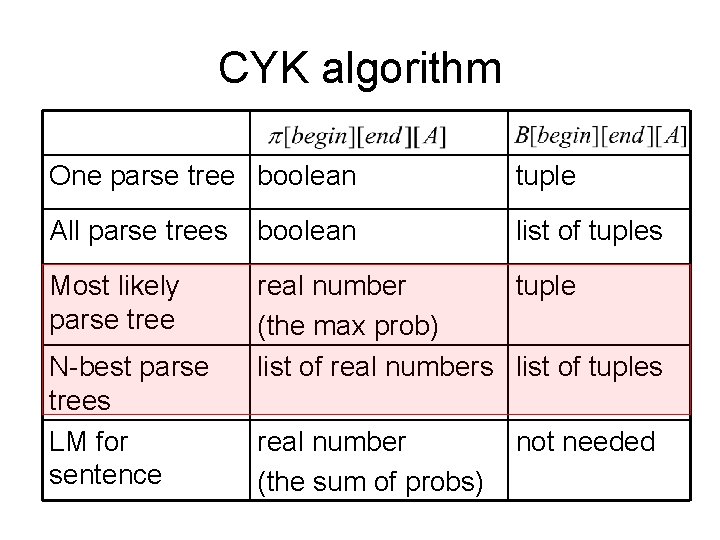

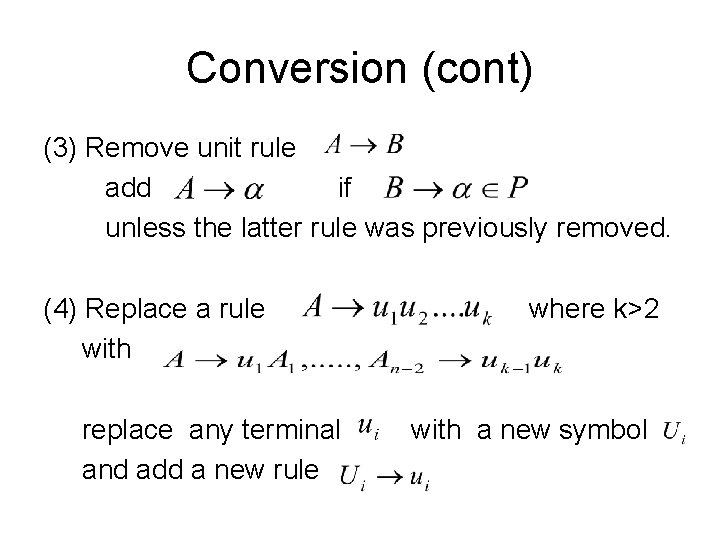

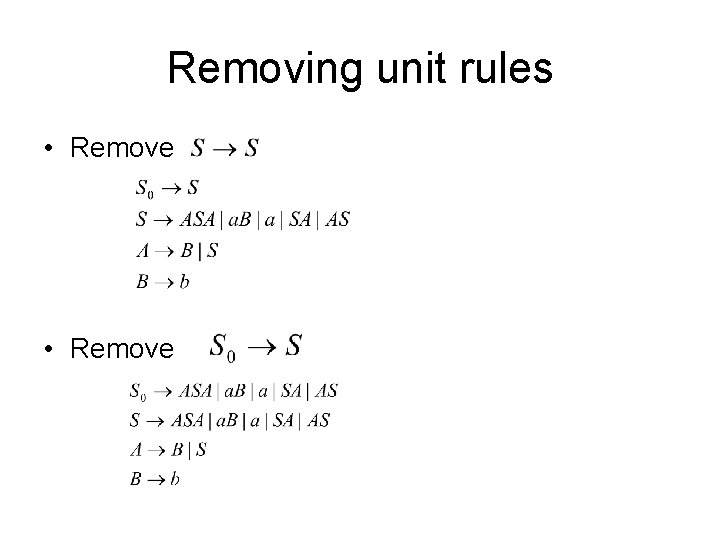

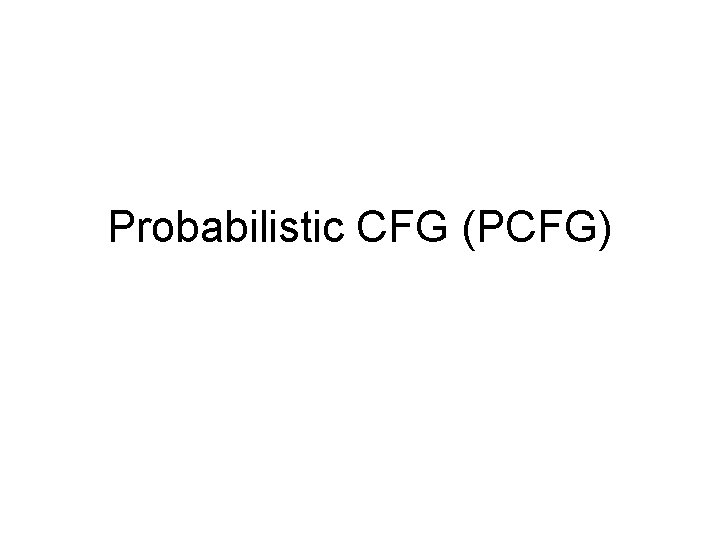

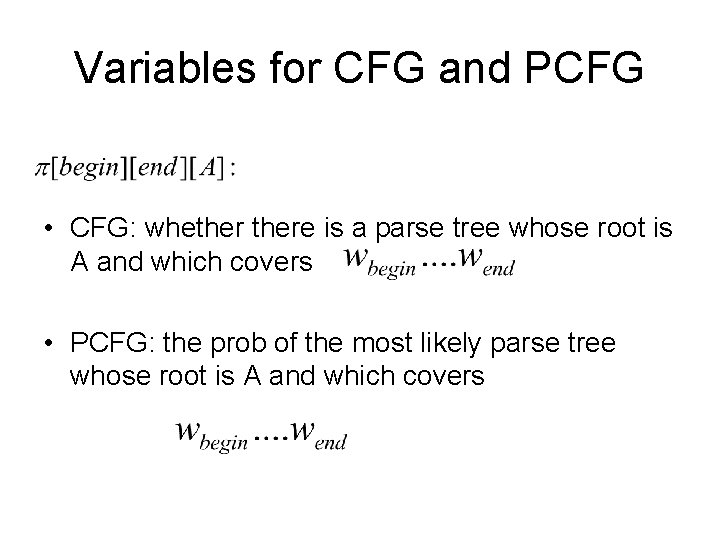

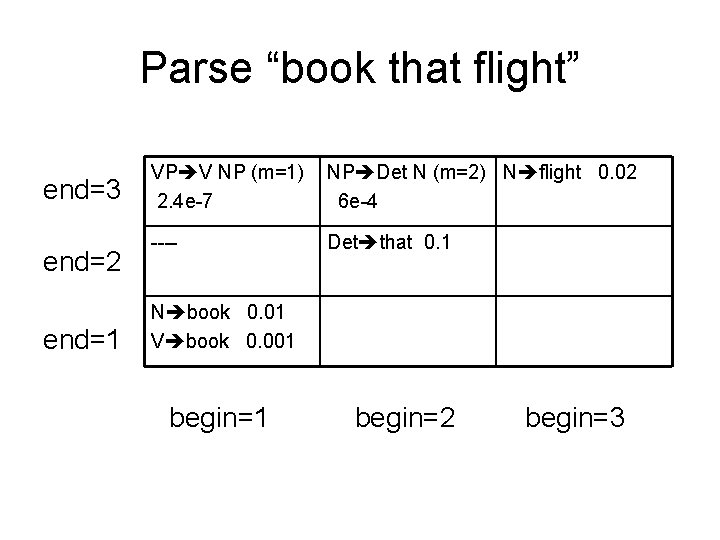

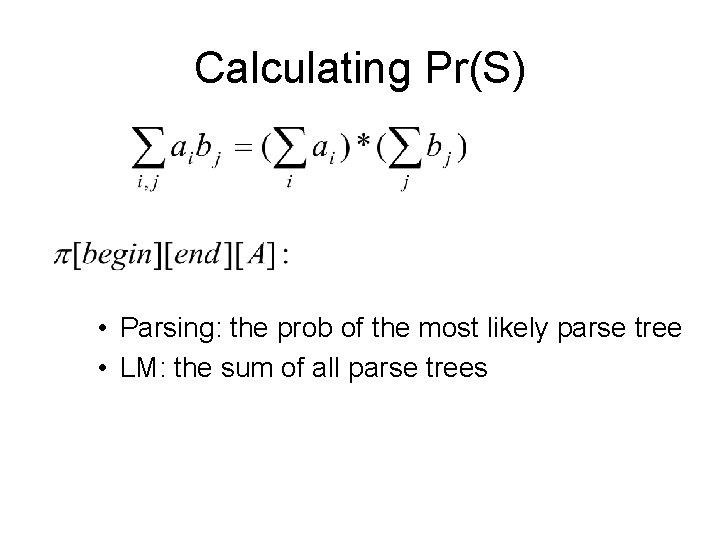

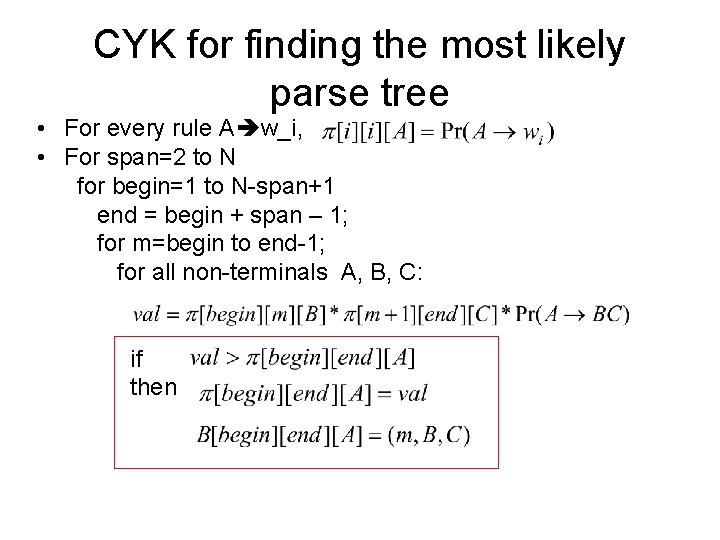

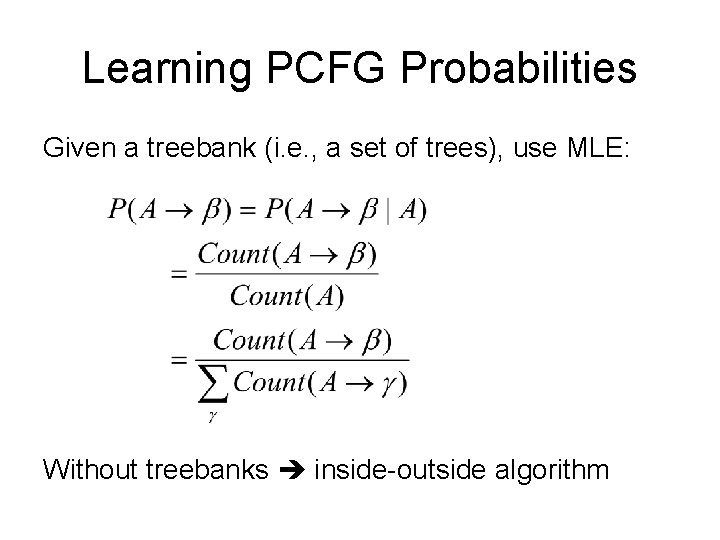

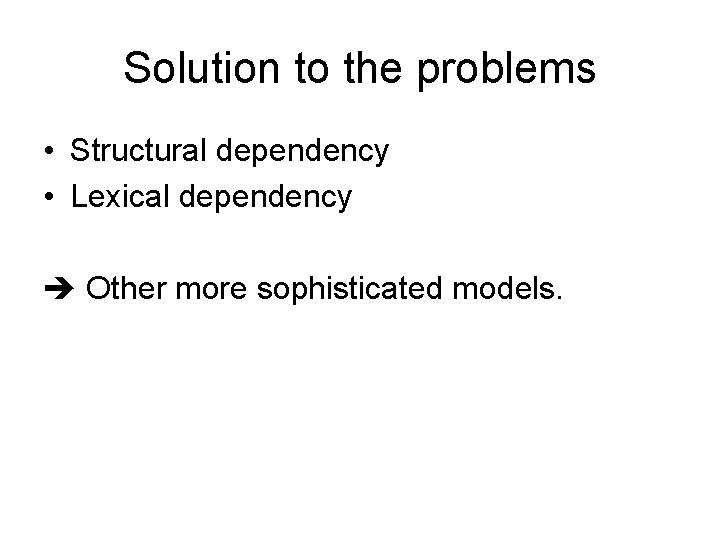

Parse “book that flight”: C1[begin][end]

| | begin=1 | begin=2 | begin=3 |
|---|---|---|---|
| end=3 | VP → V NP (m=1) | NP → Det N (m=2) | N → flight |
| end=2 | ---- | Det → that | |
| end=1 | N → book, V → book | | |

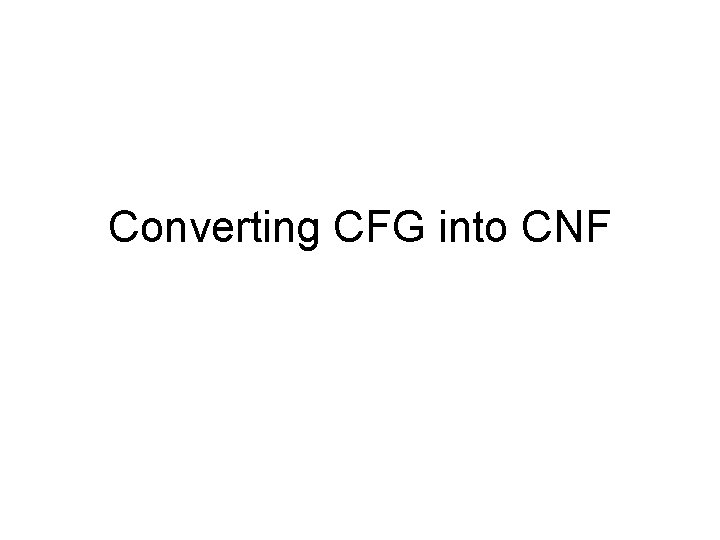

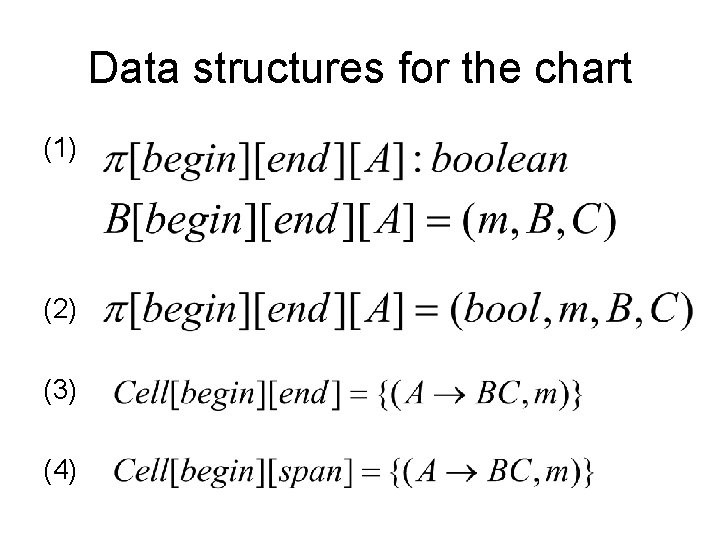

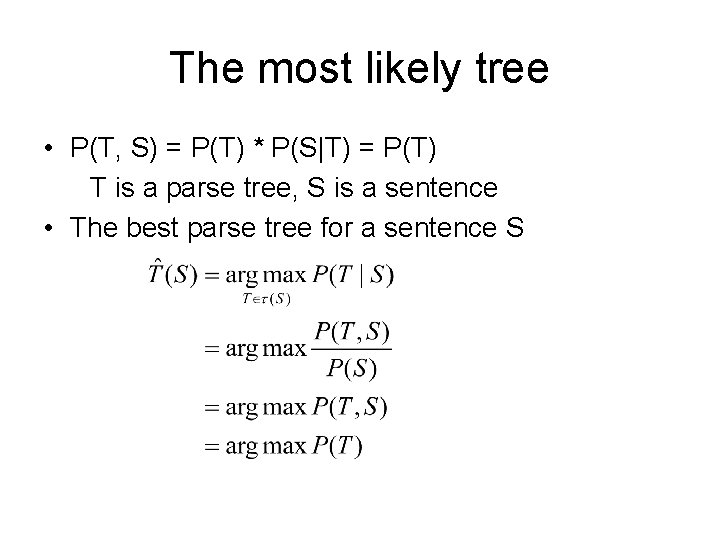


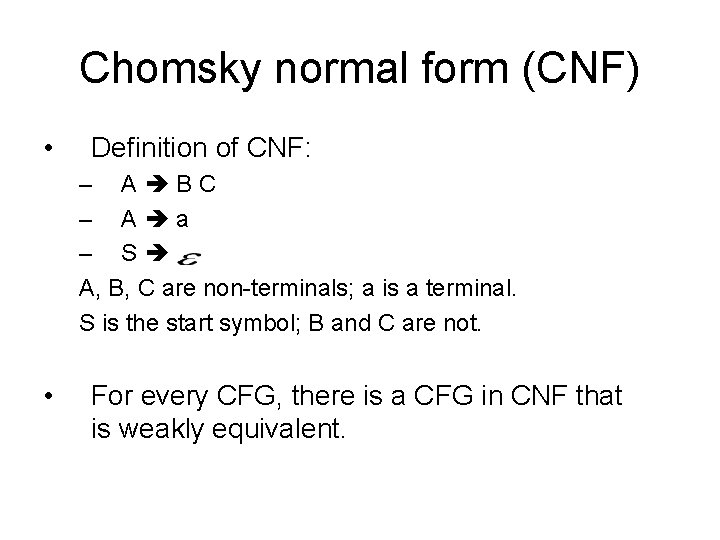

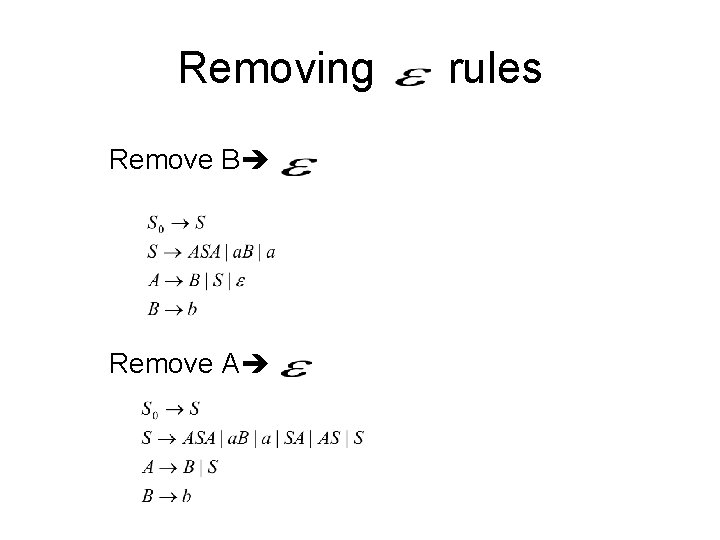

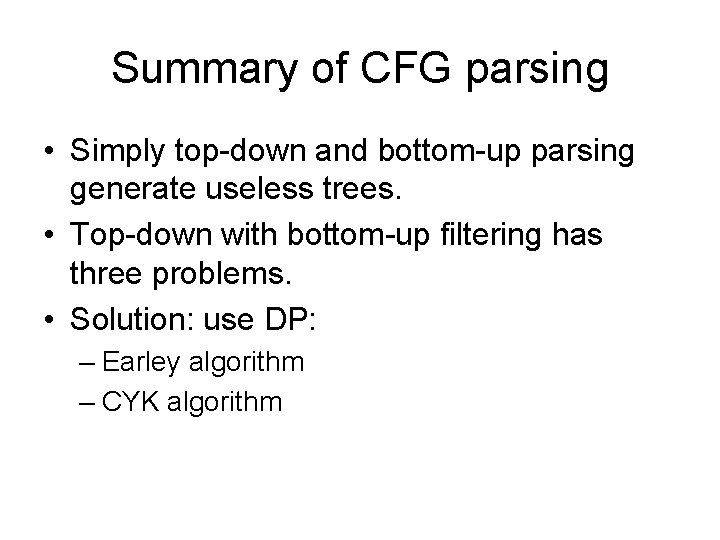

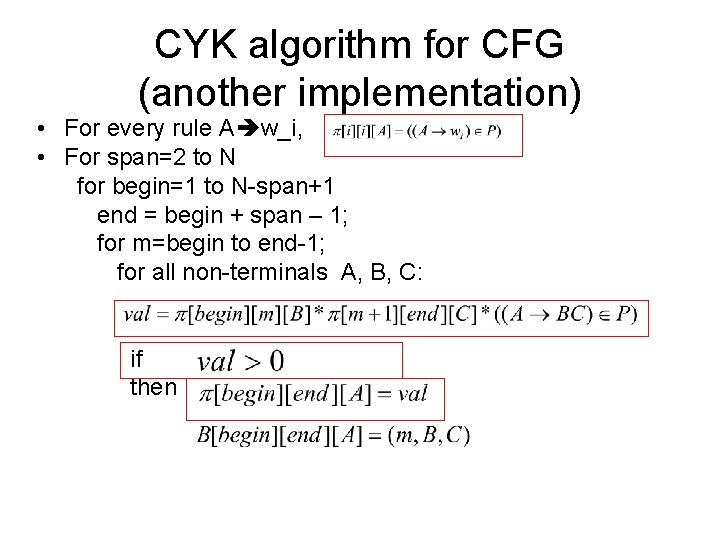

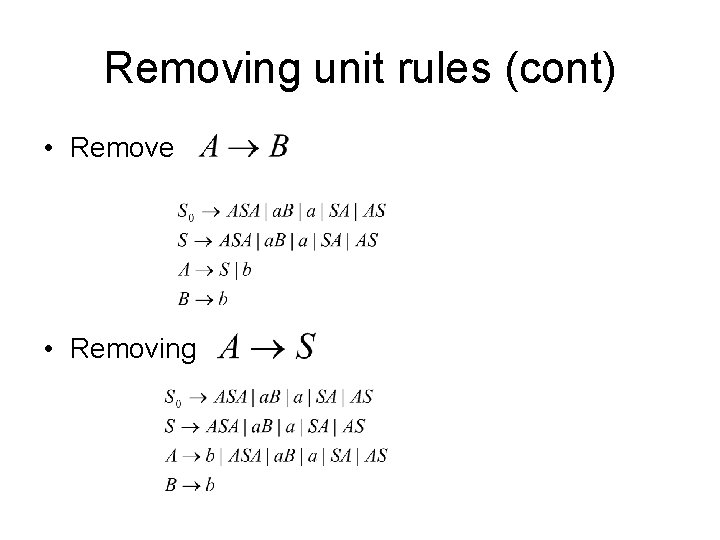

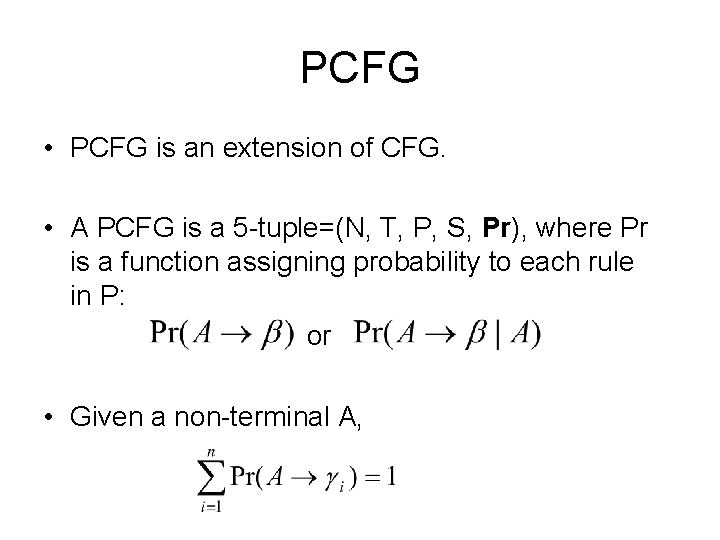

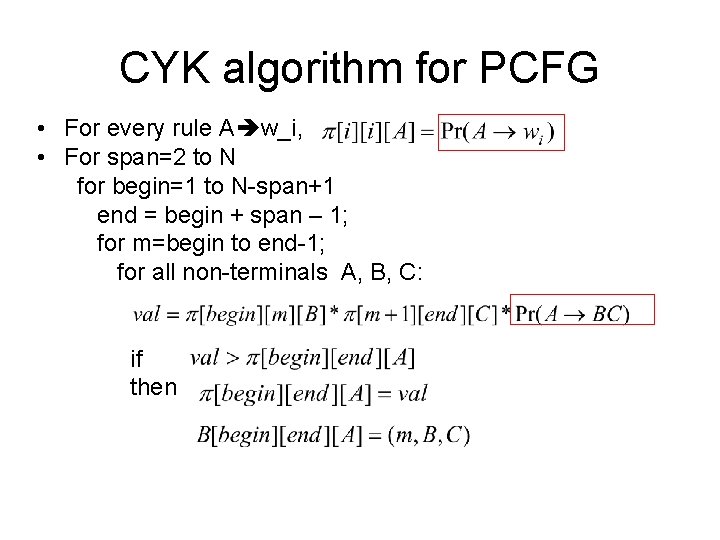

Parse “book that flight”: C2[begin][span]

| | begin=1 | begin=2 | begin=3 |
|---|---|---|---|
| span=3 | VP → V NP (m=1) | | |
| span=2 | ---- | NP → Det N (m=2) | |
| span=1 | N → book, V → book | Det → that | N → flight |

Data structures for the chart (1) (2) (3) (4)

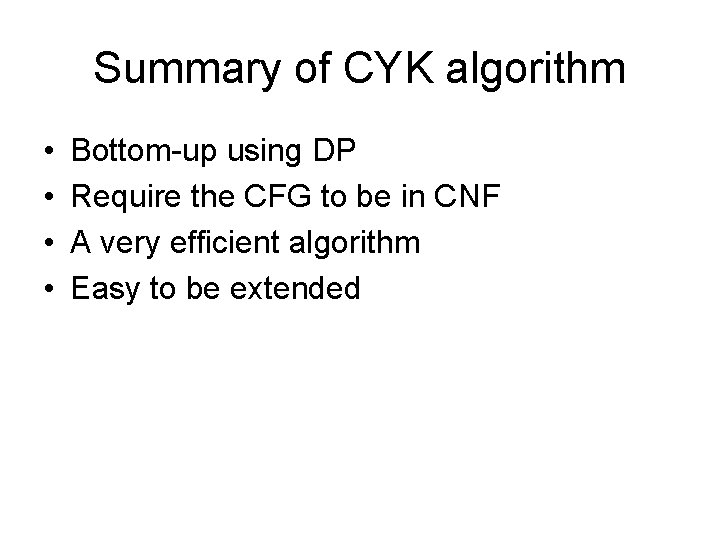

Summary of CYK algorithm
• Bottom-up using DP
• Requires the CFG to be in CNF
• A very efficient algorithm
• Easy to extend

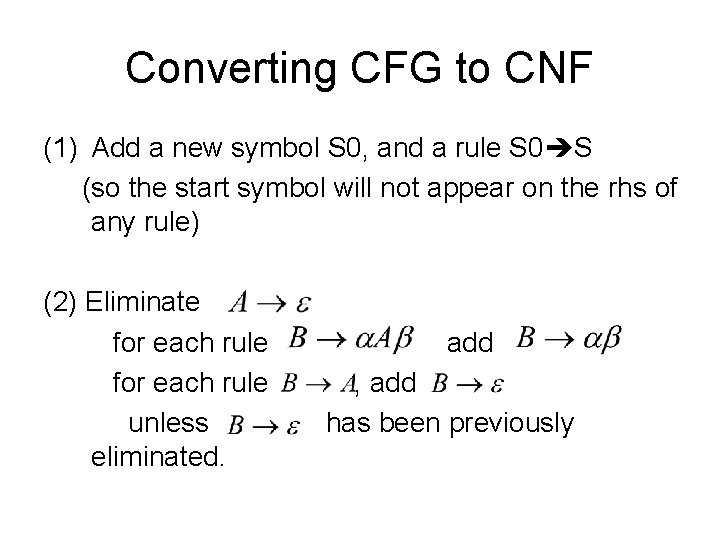

Converting CFG into CNF

Chomsky normal form (CNF)
• Definition of CNF:
  – A → B C,
  – A → a,
  – S → ε
  where A, B, C are non-terminals, a is a terminal, S is the start symbol, and B, C are not start symbols.
• For every CFG, there is a CFG in CNF that is weakly equivalent.

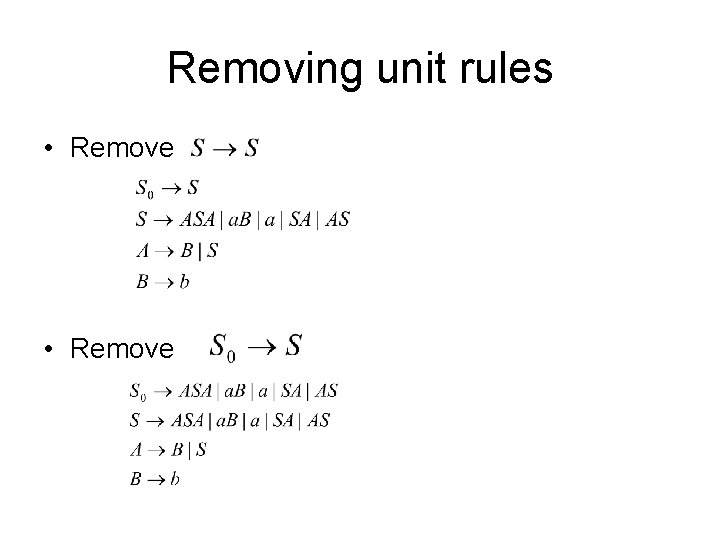

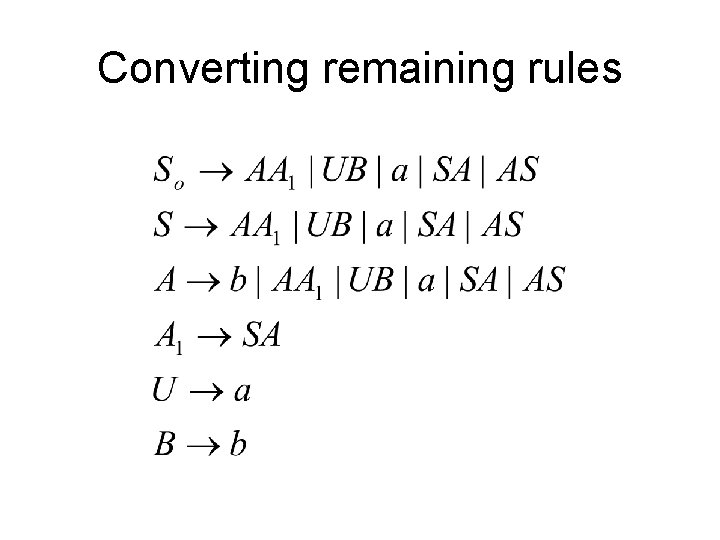

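Converting CFG to CNF
(1) Add a new symbol S0 and a rule S0 → S (so the start symbol will not appear on the rhs of any rule)
(2) Eliminate each ε-rule A → ε: for each rule R → u A v, add R → u v; for each rule R → A, add R → ε unless R → ε has been previously eliminated.

Conversion (cont)
(3) Remove each unit rule A → B: add A → u if B → u is a rule, unless the latter rule was previously removed.
(4) Replace a rule A → u_1 u_2 … u_k, where k>2, with A → u_1 A_1, A_1 → u_2 A_2, …, A_{k-2} → u_{k-1} u_k; replace any terminal u_i with a new symbol U_i and add a new rule U_i → u_i.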
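Step (4), splitting a rule whose right-hand side has more than two symbols, can be sketched as below. This is only an illustration of that single step under assumed conventions: rules are `(lhs, rhs_tuple)` pairs, and the helper names `binarize` and `make_fresh`, as well as the generated symbol names `X1`, `X2`, …, are hypothetical. The ε-rule, unit-rule, and terminal-replacement steps are not covered.

```python
import itertools

def make_fresh(prefix="X"):
    """Return a function that produces new, unused symbol names X1, X2, ..."""
    counter = itertools.count(1)
    return lambda: f"{prefix}{next(counter)}"

def binarize(lhs, rhs, fresh):
    """Split lhs -> u1 u2 ... uk (k > 2) into a chain of binary rules.

    Rules with k <= 2 are returned unchanged (as a one-element list).
    """
    rules = []
    current, symbols = lhs, list(rhs)
    while len(symbols) > 2:
        new_symbol = fresh()
        # current -> u_i NEW, and NEW will derive the remaining symbols
        rules.append((current, (symbols[0], new_symbol)))
        current, symbols = new_symbol, symbols[1:]
    rules.append((current, tuple(symbols)))
    return rules

# S -> Aux NP VP is one of the rules in the deck's PCFG example.
print(binarize("S", ("Aux", "NP", "VP"), make_fresh()))
# [('S', ('Aux', 'X1')), ('X1', ('NP', 'VP'))]
```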

An example

Adding

Removing Remove B Remove A rules

Removing unit rules • Remove

Removing unit rules (cont) • Remove • Removing

Converting remaining rules

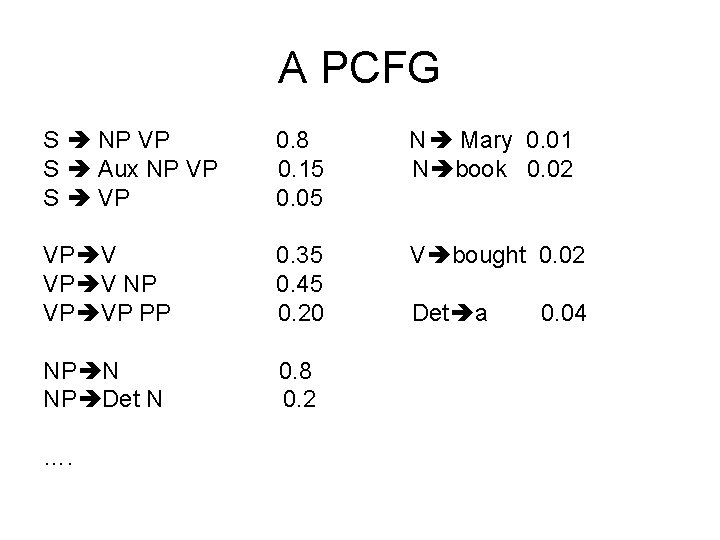

Summary of CFG parsing
• Simple top-down and bottom-up parsing generate useless trees.
• Top-down with bottom-up filtering has three problems.
• Solution: use DP:
  – Earley algorithm
  – CYK algorithm

Probabilistic CFG (PCFG)

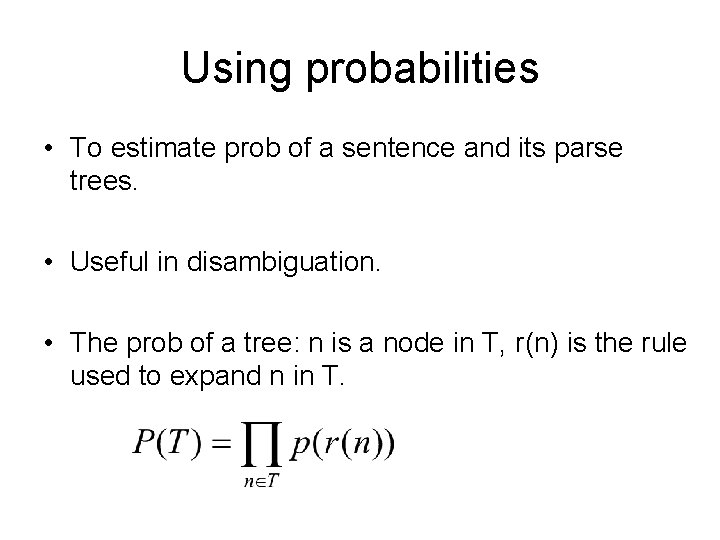

PCFG
• PCFG is an extension of CFG.
• A PCFG is a 5-tuple (N, T, P, S, Pr), where Pr is a function assigning a probability to each rule in P: $P(A \to \alpha)$ or $P(A \to \alpha \mid A)$
• Given a non-terminal A, $\sum_{\alpha} P(A \to \alpha) = 1$

A PCFG
S → NP VP 0.8
S → Aux NP VP 0.15
S → VP 0.05
VP → V NP, VP → VP PP (probabilities listed for the VP rules: 0.35, 0.45, 0.20)
NP → N 0.8
NP → Det N 0.2
N → Mary 0.01
N → book 0.02
V → bought 0.02
Det → a 0.04
…

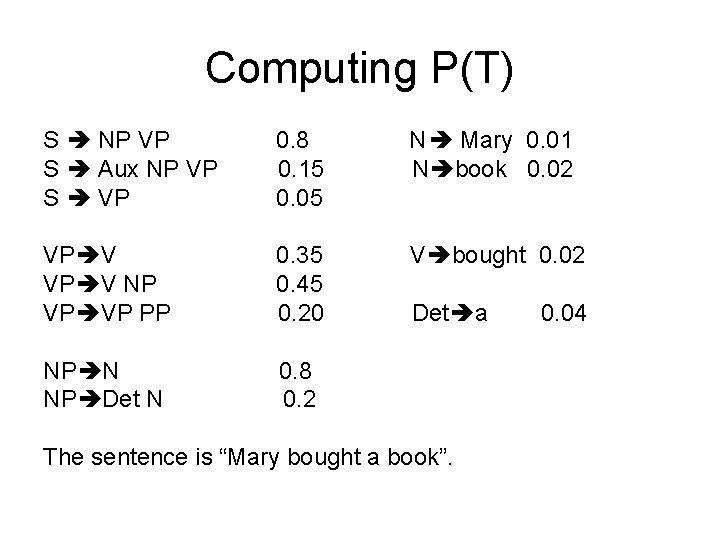

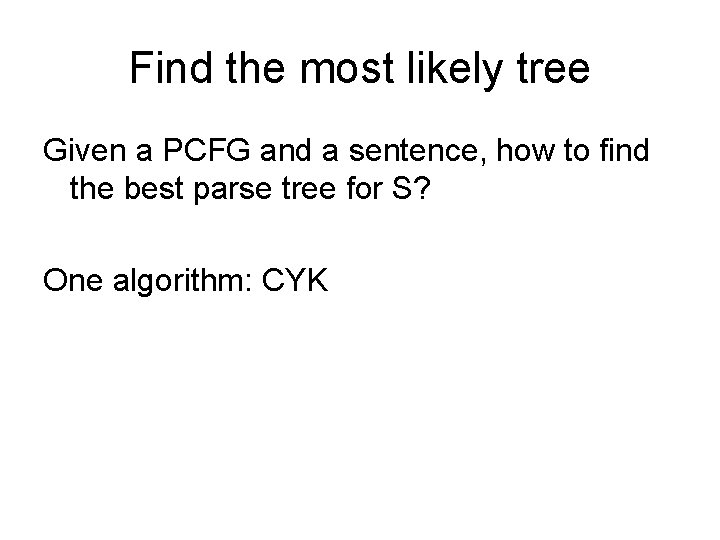

Using probabilities
• To estimate the prob of a sentence and its parse trees.
• Useful in disambiguation.
• The prob of a tree: $P(T) = \prod_{n \in T} P(r(n))$, where n is a node in T and r(n) is the rule used to expand n in T.
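As a small illustration of the tree probability as a product over rule applications, the sketch below multiplies the probabilities of the rules used at each node. The nested-tuple tree encoding and the function name `tree_prob` are assumptions made for this example; the rule probabilities are the ones the deck uses for its "book that flight" PCFG example.

```python
import math

# Rule probabilities from the deck's "book that flight" PCFG example,
# keyed by (lhs, rhs). Only the rules needed for this tree are listed.
RULE_PROB = {
    ("VP", ("V", "NP")): 0.4,
    ("NP", ("Det", "N")): 0.3,
    ("V", ("book",)): 0.001,
    ("Det", ("that",)): 0.1,
    ("N", ("flight",)): 0.02,
}

def tree_prob(tree, rule_prob):
    """P(T): product of P(r(n)) over all nodes n of the tree.

    A tree is (label, child, child, ...); a leaf child is a plain string.
    """
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    p = rule_prob[(label, rhs)]          # rule used to expand this node
    for child in children:
        if not isinstance(child, str):   # recurse into non-terminal children
            p *= tree_prob(child, rule_prob)
    return p

tree = ("VP", ("V", "book"), ("NP", ("Det", "that"), ("N", "flight")))
p = tree_prob(tree, RULE_PROB)
print(math.isclose(p, 2.4e-7))  # 0.4 * 0.001 * 0.3 * 0.1 * 0.02
```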

Computing P(T)
S → NP VP 0.8
S → Aux NP VP 0.15
S → VP 0.05
VP → V NP, VP → VP PP (probabilities listed for the VP rules: 0.35, 0.45, 0.20)
NP → N 0.8
NP → Det N 0.2
N → Mary 0.01
N → book 0.02
V → bought 0.02
Det → a 0.04
The sentence is “Mary bought a book”.

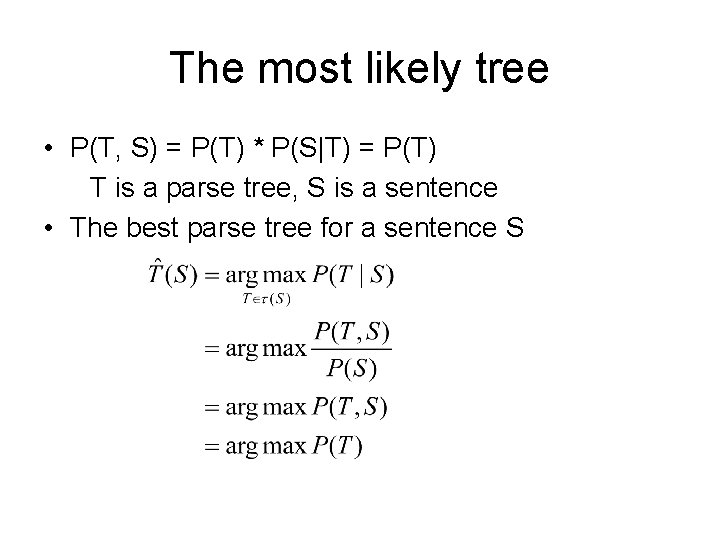

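The most likely tree
• P(T, S) = P(T) * P(S|T) = P(T), where T is a parse tree and S is a sentence (P(S|T) = 1 because T determines its own yield)
• The best parse tree for a sentence S: $\hat{T}(S) = \arg\max_{T} P(T \mid S) = \arg\max_{T} \frac{P(T, S)}{P(S)} = \arg\max_{T} P(T, S) = \arg\max_{T} P(T)$, where T ranges over the parse trees of S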

Find the most likely tree
Given a PCFG and a sentence S, how do we find the best parse tree for S?
One algorithm: CYK

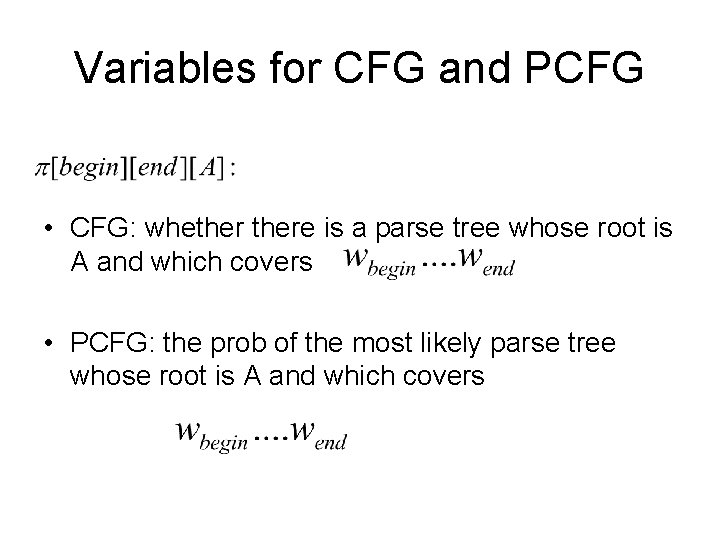

CYK algorithm for CFG
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          if … then …

CYK algorithm for CFG (another implementation)
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          if … then …

Variables for CFG and PCFG
• CFG: whether there is a parse tree whose root is A and which covers w_begin … w_end
• PCFG: the prob of the most likely parse tree whose root is A and which covers w_begin … w_end

CYK algorithm for PCFG
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          if … then …

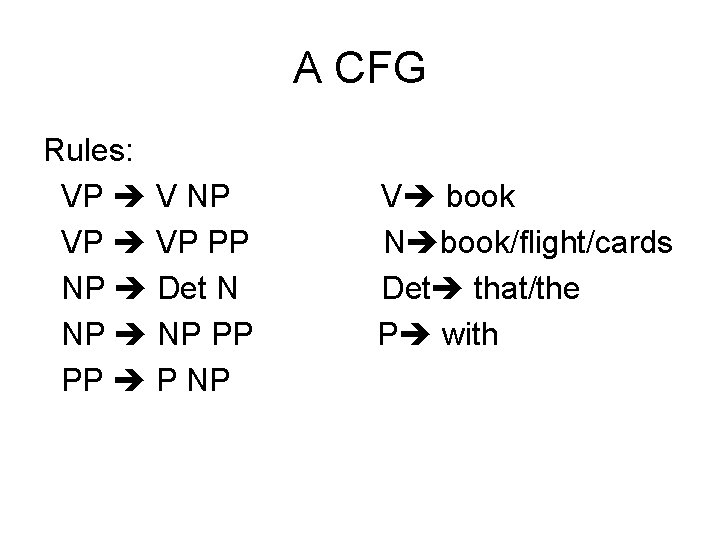

A CFG
Rules:
VP → V NP
VP → VP PP
NP → Det N
NP → NP PP
PP → P NP
V → book
N → book/flight/cards
Det → that/the
P → with

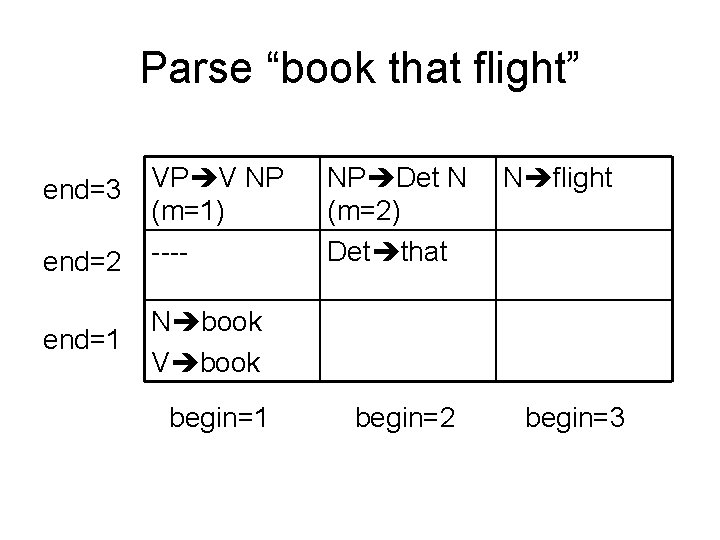

Parse “book that flight”

| | begin=1 | begin=2 | begin=3 |
|---|---|---|---|
| end=3 | VP → V NP (m=1) | NP → Det N (m=2) | N → flight |
| end=2 | ---- | Det → that | |
| end=1 | N → book, V → book | | |

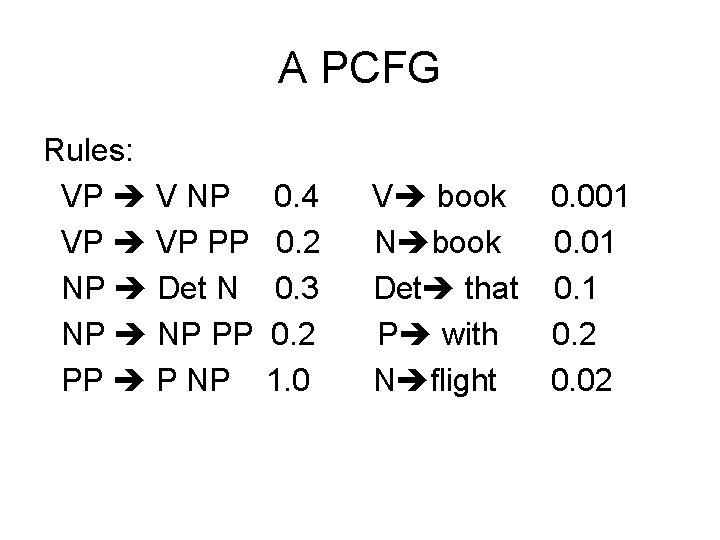

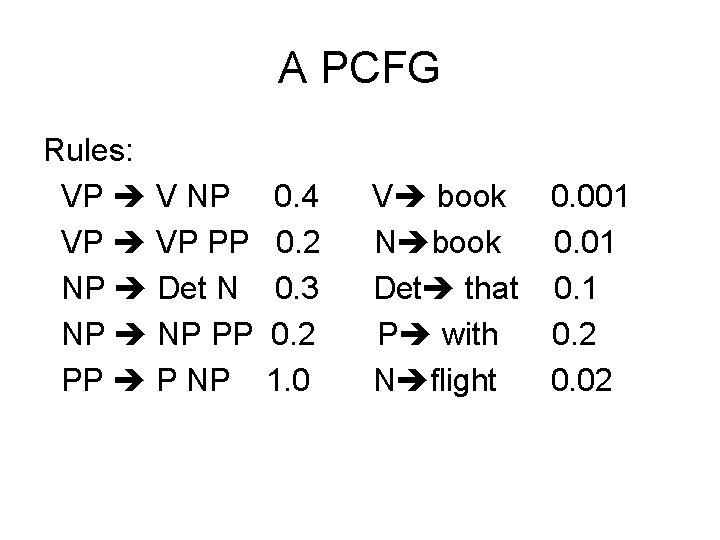

A PCFG
Rules:
VP → V NP 0.4
VP → VP PP 0.2
NP → Det N 0.3
NP → NP PP 0.2
PP → P NP 1.0
V → book 0.001
N → book 0.01
N → flight 0.02
Det → that 0.1
P → with 0.2

Parse “book that flight”

| | begin=1 | begin=2 | begin=3 |
|---|---|---|---|
| end=3 | VP → V NP (m=1) 2.4e-7 | NP → Det N (m=2) 6e-4 | N → flight 0.02 |
| end=2 | ---- | Det → that 0.1 | |
| end=1 | N → book 0.01, V → book 0.001 | | |
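The chart values above (6e-4 for the NP and 2.4e-7 for the VP) can be reproduced with a probabilistic CYK that keeps, for each span and non-terminal, the probability of the most likely subtree. The sketch below is one possible way to write it, not the slides' exact formulation: the names `viterbi_cyk`, `best`, and `back`, and the dictionary encodings of the grammar, are assumptions for this example.

```python
import math

# The deck's "book that flight" PCFG, encoded as two hypothetical tables.
LEX_PROB = {("V", "book"): 0.001, ("N", "book"): 0.01, ("N", "flight"): 0.02,
            ("Det", "that"): 0.1, ("P", "with"): 0.2}
BIN_PROB = {("VP", "V", "NP"): 0.4, ("VP", "VP", "PP"): 0.2,
            ("NP", "Det", "N"): 0.3, ("NP", "NP", "PP"): 0.2,
            ("PP", "P", "NP"): 1.0}

def viterbi_cyk(words, lex_prob, bin_prob):
    """Return (best, back), both keyed by (begin, end, A), 1-based inclusive.

    best holds the max probability of a tree rooted in A over the span;
    back holds the (m, B, C) choice that achieved it.
    """
    n = len(words)
    best, back = {}, {}
    for i, word in enumerate(words, start=1):
        for (a, w), p in lex_prob.items():
            if w == word:
                best[(i, i, a)] = p
    for span in range(2, n + 1):
        for begin in range(1, n - span + 2):
            end = begin + span - 1
            for m in range(begin, end):
                for (a, b, c), p in bin_prob.items():
                    left = best.get((begin, m, b))
                    right = best.get((m + 1, end, c))
                    if left is None or right is None:
                        continue
                    val = p * left * right
                    if val > best.get((begin, end, a), 0.0):  # keep the max
                        best[(begin, end, a)] = val
                        back[(begin, end, a)] = (m, b, c)
    return best, back

best, back = viterbi_cyk("book that flight".split(), LEX_PROB, BIN_PROB)
print(back[(1, 3, "VP")])                       # (1, 'V', 'NP')
print(math.isclose(best[(1, 3, "VP")], 2.4e-7)) # True
```

The best tree itself can be read off by following `back` from the root entry down to the single-word spans.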

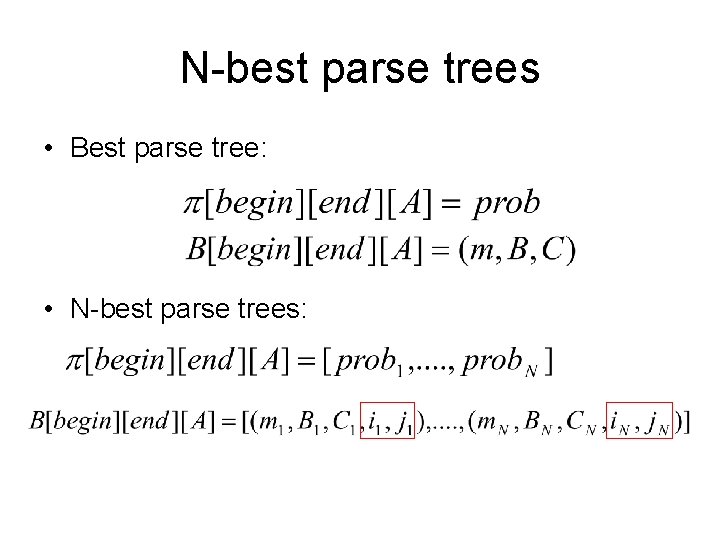

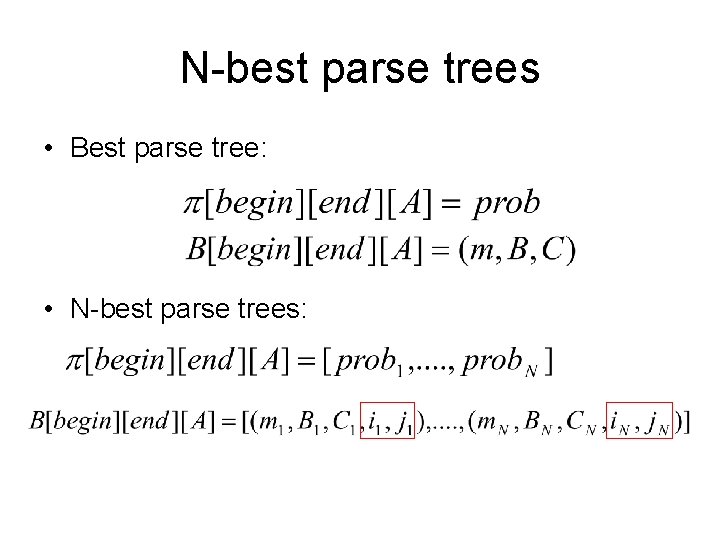

N-best parse trees • Best parse tree: • N-best parse trees:

CYK algorithm for N-best
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          for each …
            if val > one of the probs in …
            then remove the last element in … and insert val into the array;
                 remove the last element in B[begin][end][A] and insert (m, B, C, i, j) into B[begin][end][A].

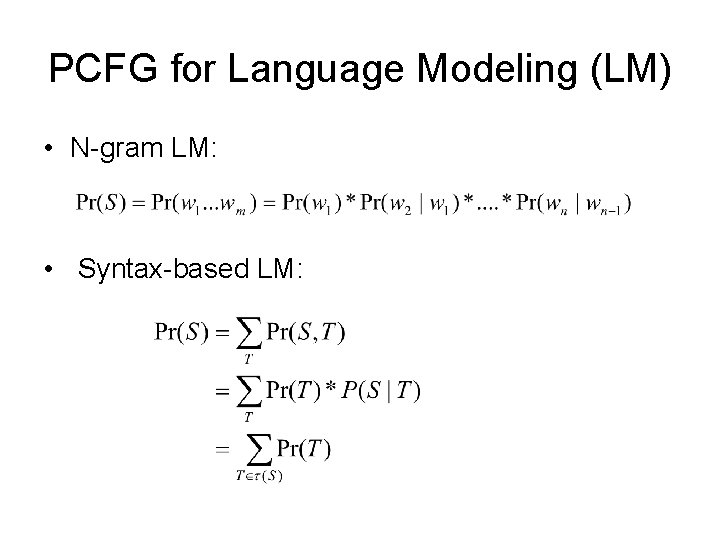

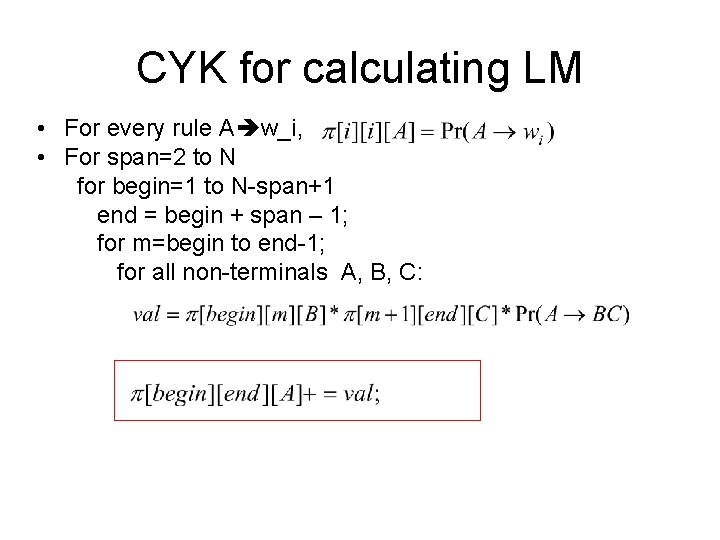

PCFG for Language Modeling (LM) • N-gram LM: • Syntax-based LM:

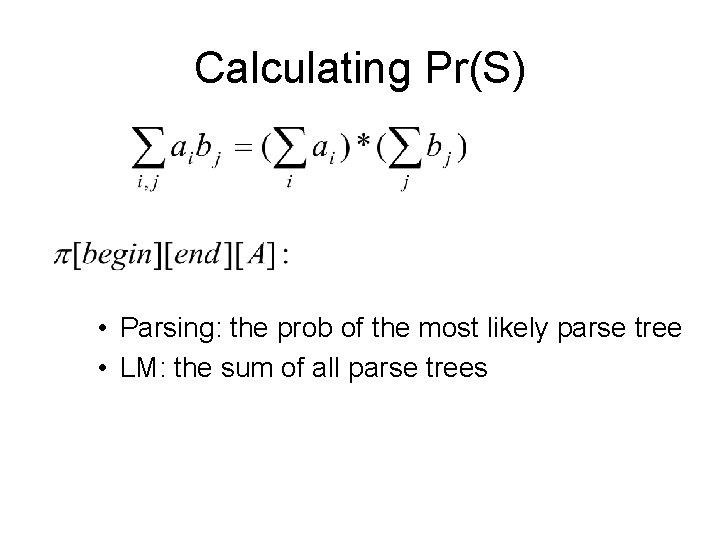

Calculating Pr(S)
• Parsing: the prob of the most likely parse tree
• LM: the sum of the probs of all parse trees

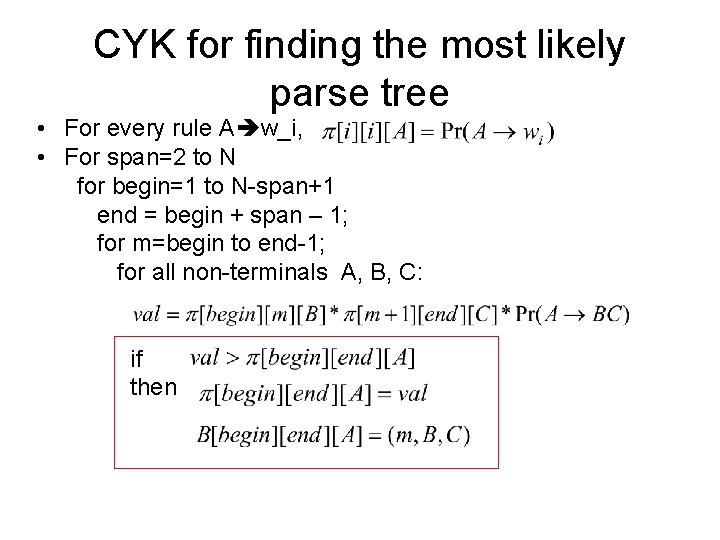

CYK for finding the most likely parse tree
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          if … then …

CYK for calculating LM
• For every rule A → w_i, …
• For span = 2 to N
    for begin = 1 to N-span+1
      end = begin + span - 1
      for m = begin to end-1
        for all non-terminals A, B, C:
          …
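For the LM case, the only change to the loop is that each chart entry accumulates a sum over all rule and split-point combinations instead of keeping the maximum, so no back-pointers are needed. A minimal sketch follows, under the same assumed grammar encoding as the "book that flight" PCFG; the function name `inside_probs` and the six-word test sentence are made up for illustration (the sentence is chosen only because it has two parses and uses words with listed probabilities).

```python
import math

LEX_PROB = {("V", "book"): 0.001, ("N", "book"): 0.01, ("N", "flight"): 0.02,
            ("Det", "that"): 0.1, ("P", "with"): 0.2}
BIN_PROB = {("VP", "V", "NP"): 0.4, ("VP", "VP", "PP"): 0.2,
            ("NP", "Det", "N"): 0.3, ("NP", "NP", "PP"): 0.2,
            ("PP", "P", "NP"): 1.0}

def inside_probs(words, lex_prob, bin_prob):
    """inside[(begin, end, A)] = total prob of all trees rooted in A
    that cover words begin..end (1-based, inclusive)."""
    n = len(words)
    inside = {}
    for i, word in enumerate(words, start=1):
        for (a, w), p in lex_prob.items():
            if w == word:
                inside[(i, i, a)] = p
    for span in range(2, n + 1):
        for begin in range(1, n - span + 2):
            end = begin + span - 1
            for m in range(begin, end):
                for (a, b, c), p in bin_prob.items():
                    left = inside.get((begin, m, b), 0.0)
                    right = inside.get((m + 1, end, c), 0.0)
                    if left and right:
                        key = (begin, end, a)
                        # Sum instead of max: every (rule, m) pair adds
                        # the probability mass of a distinct set of trees.
                        inside[key] = inside.get(key, 0.0) + p * left * right
    return inside

words = "book that flight with that flight".split()
inside = inside_probs(words, LEX_PROB, BIN_PROB)
total = inside[(1, len(words), "VP")]  # VP is the root of this toy grammar
print(math.isclose(total, 1.152e-11))  # two parses, 5.76e-12 each
```

In this example the PP can attach to the NP or to the VP; both trees happen to have probability 5.76e-12, so the sum is twice the value a max-based chart would hold for the same entry.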

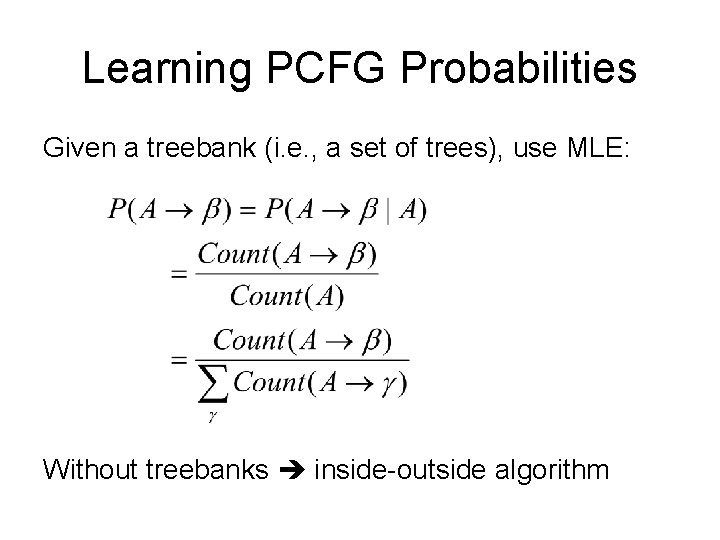

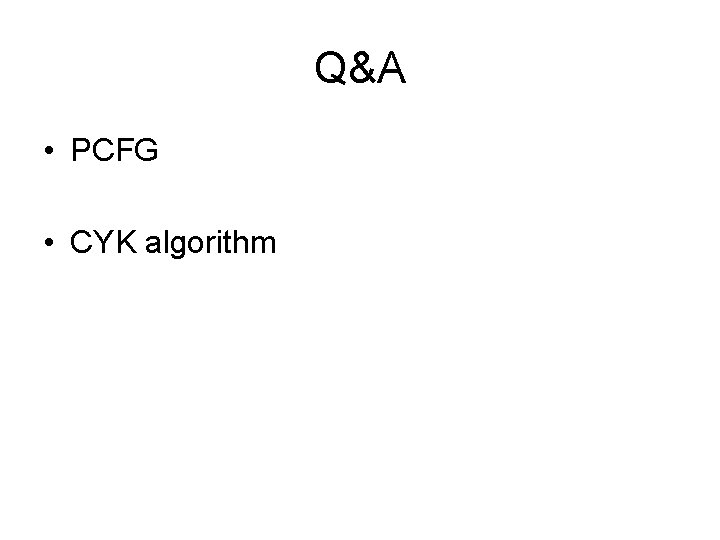

| CYK algorithm | Value stored per chart entry | Back-pointer |
|---|---|---|
| One parse tree | boolean | tuple |
| All parse trees | boolean | list of tuples |
| Most likely parse tree | real number (the max prob) | tuple |
| N-best parse trees | list of real numbers | list of tuples |
| LM for sentence | real number (the sum of probs) | not needed |

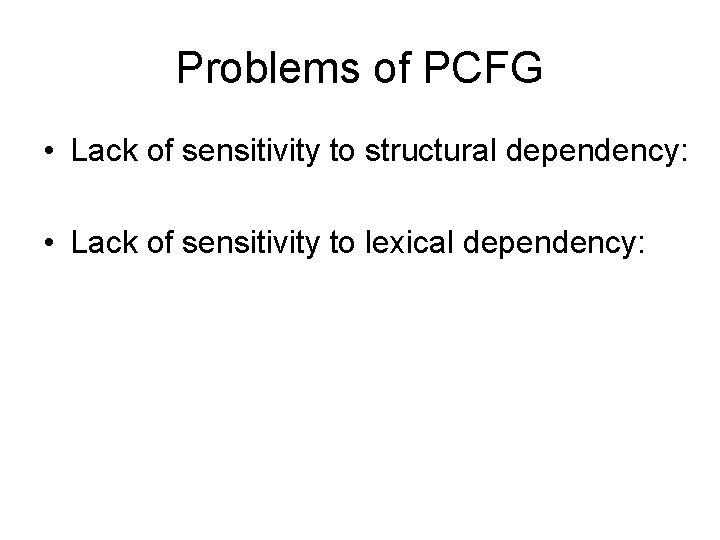

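Learning PCFG Probabilities
• Given a treebank (i.e., a set of trees), use MLE: $P(A \to \alpha) = \frac{Count(A \to \alpha)}{\sum_{\gamma} Count(A \to \gamma)} = \frac{Count(A \to \alpha)}{Count(A)}$
• Without treebanks: inside-outside algorithm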
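The treebank case amounts to relative-frequency counting: count how often each rule is used and divide by the count of its left-hand side. The sketch below assumes trees are nested tuples and uses a three-tree toy treebank invented for illustration; the names `count_rules` and `mle_pcfg` are hypothetical.

```python
import math
from collections import Counter

def count_rules(tree, counts):
    """Add one count for the rule used at every node of the tree."""
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    counts[(label, rhs)] += 1
    for child in children:
        if not isinstance(child, str):
            count_rules(child, counts)

def mle_pcfg(treebank):
    """P(A -> alpha) = Count(A -> alpha) / Count(A)."""
    rule_counts = Counter()
    for tree in treebank:
        count_rules(tree, rule_counts)
    lhs_counts = Counter()
    for (lhs, _rhs), k in rule_counts.items():
        lhs_counts[lhs] += k
    return {rule: k / lhs_counts[rule[0]] for rule, k in rule_counts.items()}

# Toy treebank (made up for this example).
treebank = [
    ("NP", ("Det", "that"), ("N", "flight")),
    ("NP", ("Det", "the"), ("N", "book")),
    ("NP", ("N", "cards")),
]
probs = mle_pcfg(treebank)
print(probs[("NP", ("Det", "N"))])  # 2 of the 3 NP expansions
```

By construction the estimated probabilities for each left-hand side sum to 1, which matches the PCFG normalization constraint.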

Q&A • PCFG • CYK algorithm

Problems of PCFG
• Lack of sensitivity to structural dependency
• Lack of sensitivity to lexical dependency

Structural Dependency
• Each PCFG rule is assumed to be independent of other rules.
• Observation: sometimes the choice of how a node expands depends on the location of the node in the parse tree.
  – NP → Pron depends on whether the NP is a subject or an object

Lexical Dependency
• Given P(NP → NP PP) > P(VP → VP PP), should a PP always be attached to an NP?
• Verbs such as “send”
• Preps such as “of”, “into”

Solution to the problems
• Structural dependency
• Lexical dependency
• Other more sophisticated models.

Lexicalized PCFG

Head and head child
• Each syntactic constituent is associated with a lexical head.
• Each context-free rule has a head child:
  – VP → V NP
  – NP → Det N
  – VP → VP PP
  – NP → NP PP
  – VP → to VP
  – VP → aux VP

Head propagation
• Lexical head propagates from head child to its parent.
• An example: “Mary bought a book in the store.”

Lexicalized PCFG
• Lexicalized rules:
  – VP(bought) → V(bought) NP 0.01
  – VP → V NP | 0.01 | 0 | bought
  – VP(bought) → V(bought) NP(book) 1.5e-7
  – VP → V NP | 1.5e-7 | 0 | bought book

Finding head in a parse tree
• Head propagation table: simple rules to find head child
• An example:
  – (VP left V/VP/Aux)
  – (PP left P)
  – (NP right N)
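One way to read a head propagation table entry such as (VP left V/VP/Aux) is: scan the children of a VP starting from the left and take the first child whose label is V, VP, or Aux as the head child. That reading, the fallback to the first or last child when nothing matches, and the names `HEAD_TABLE`, `head_child_index`, and `lexical_head` are assumptions made for this sketch; real head tables are usually more detailed.

```python
# Head propagation table from the slide: label -> (scan direction, candidates).
HEAD_TABLE = {
    "VP": ("left", ["V", "VP", "Aux"]),
    "PP": ("left", ["P"]),
    "NP": ("right", ["N"]),
}

def head_child_index(label, child_labels):
    """Index of the head child among child_labels for a node with this label."""
    direction, candidates = HEAD_TABLE[label]
    indices = list(range(len(child_labels)))
    if direction == "right":
        indices.reverse()
    for i in indices:
        if child_labels[i] in candidates:
            return i
    return indices[0]  # assumed fallback: first child in scan order

def lexical_head(tree):
    """Propagate the lexical head from the head child up to its parent."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return children[0]  # pre-terminal: the word is its own head
    idx = head_child_index(label, [child[0] for child in children])
    return lexical_head(children[idx])

# The VP of "Mary bought a book in the store", with the PP attached to the VP.
np_book = ("NP", ("Det", "a"), ("N", "book"))
pp = ("PP", ("P", "in"), ("NP", ("Det", "the"), ("N", "store")))
vp = ("VP", ("VP", ("V", "bought"), np_book), pp)
print(lexical_head(vp), lexical_head(np_book), lexical_head(pp))
# bought book in
```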

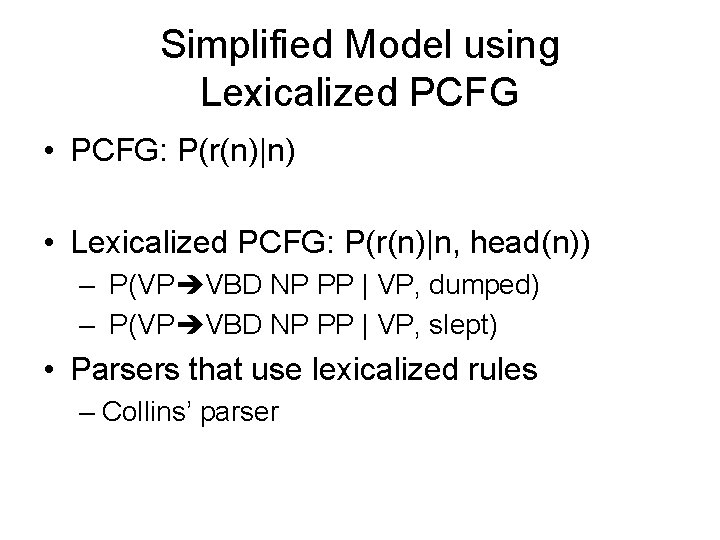

Simplified Model using Lexicalized PCFG
• PCFG: P(r(n) | n)
• Lexicalized PCFG: P(r(n) | n, head(n))
  – P(VP → VBD NP PP | VP, dumped)
  – P(VP → VBD NP PP | VP, slept)
• Parsers that use lexicalized rules
  – Collins’ parser
