Semisupervised learning and selftraining LING 572 Fei Xia

- Slides: 36

Semi-supervised learning and self-training LING 572 Fei Xia 02/14/06

Outline • Overview of Semi-supervised learning (SSL) • Self-training (a. k. a. Bootstrapping)

Additional Reference • Xiaojin Zhu (2006): Semi-supervised learning literature survey. • Olivier Chapelle et al. (2005): Semisupervised Learning. The MIT Press.

Overview of SSL

What is SSL? • Labeled data: – Ex: POS tagging: tagged sentences – Creating labeled data is difficult, expensive, and/or time-consuming. • Unlabeled data: – Ex: POS tagging: untagged sentences. – Obtaining unlabeled data is easier. • Goal: use both labeled and unlabeled data to improve the performance

• Learning – Supervised (labeled data only) – Semi-supervised (both labeled and unlabeled data) – Unsupervised (unlabeled data only) • Problems: – – Classification Regression Clustering … Focus on semi-supervised classification problem

A brief history of SSL • The idea of self-training appeared in the 1960 s. • SSL took off in the 1970 s. • The interest for SSL increased in the 1990 s, mostly due to applications in NLP.

Does SSL work? • Yes, under certain conditions. – Problem itself: the knowledge on p(x) carry information that is useful in the inference of p(y | x). – Algorithm: the modeling assumption fits well with the problem structure. • SSL will be most useful when there are far more unlabeled data than labeled data. • SSL could degrade the performance when mistakes reinforce themselves.

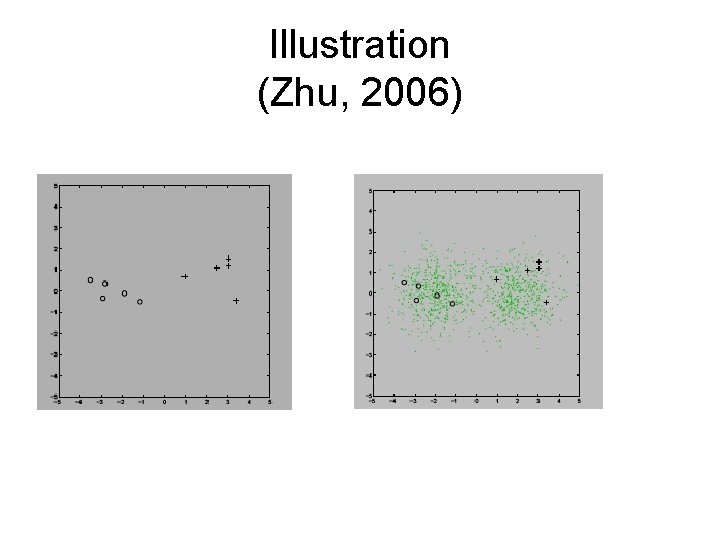

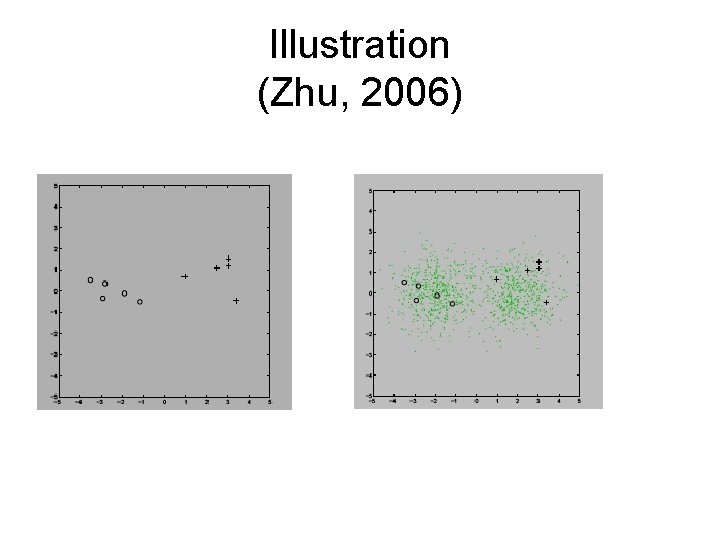

Illustration (Zhu, 2006)

Illustration (cont)

Assumptions • Smoothness (continuity) assumption: if two points x 1 and x 2 in a high-density region are close, then so should be the corresponding outputs y 1 and y 2. • Cluster assumption: If points are in the same cluster, they are likely to be of the same class. Low density separation: the decision boundary should lie in a low density region. • ….

SSL algorithms • Self-training • Co-training • Generative models: – Ex: EM with generative mixture models • Low Density Separations: – Ex: Transductive SVM • Graph-based models

Which SSL method should we use? • It depends. • Semi-supervised methods make strong model assumptions. Choose the ones whose assumptions fit the problem structure.

Semi-supervised and active learning • They address the same issue: labeled data are hard to get. • Semi-supervised: choose the unlabeled data to be added to the labeled data. • Active learning: choose the unlabeled data to be annotated.

Self-training

Basics of self-training • Probably the earliest SSL idea. • Also called self-teaching or bootstrapping. • Appeared in the 1960 s and 1970 s. • First well-known NLP paper: (Yarowsky, 1995)

Self-training algorithm • Let L be the set of labeled data, U be the set of unlabeled data. • Repeat – – – Train a classifier h with training data L Classify data in U with h Find a subset U’ of U with the most confident scores. L + U’ L U – U’ U

Case study: (Yarowsky, 1995)

Setting • Task: WSD – Ex: plant: living / factory • “Unsupervised”: just need a few seed collocations for each sense. • Learner: Decision list

Assumption #1: One sense per collocation • Nearby words provide strong and consistent clues to the sense of a target word. • The effect varies depending on the type of collocation – It is strongest for immediately adjacent collocations. • Assumption #1 Use collocations in the decision rules.

Assumption #2: One sense per discourse • The sense of a target word is highly consistent within any given document. • The assumption holds most of the time (99. 8% in their experiment) • Assumption #2 filter and augment the addition of unlabeled data.

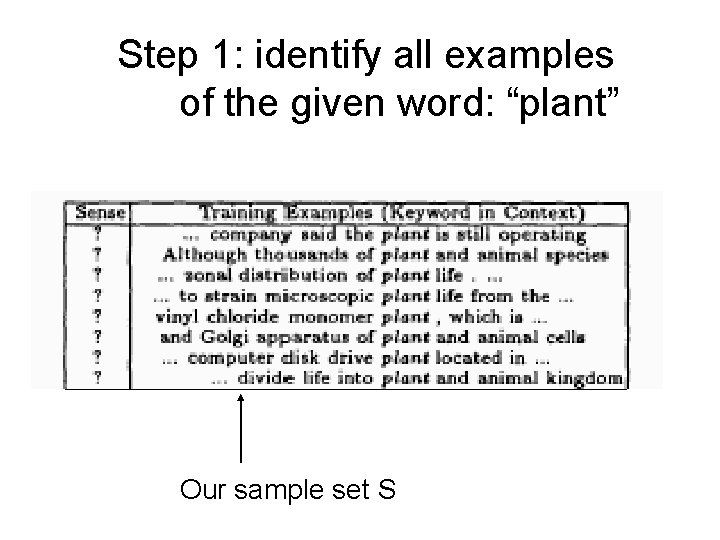

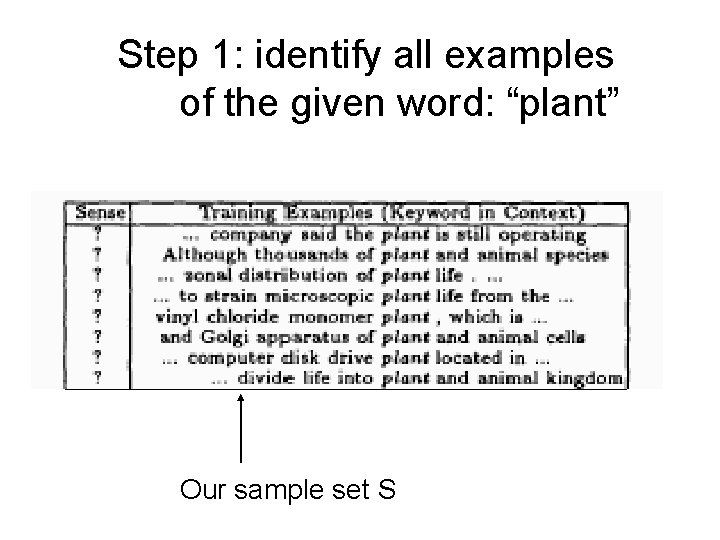

Step 1: identify all examples of the given word: “plant” Our sample set S

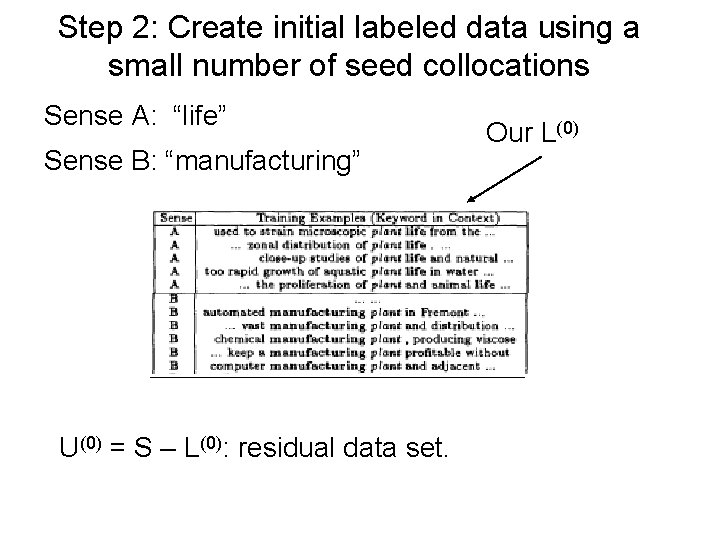

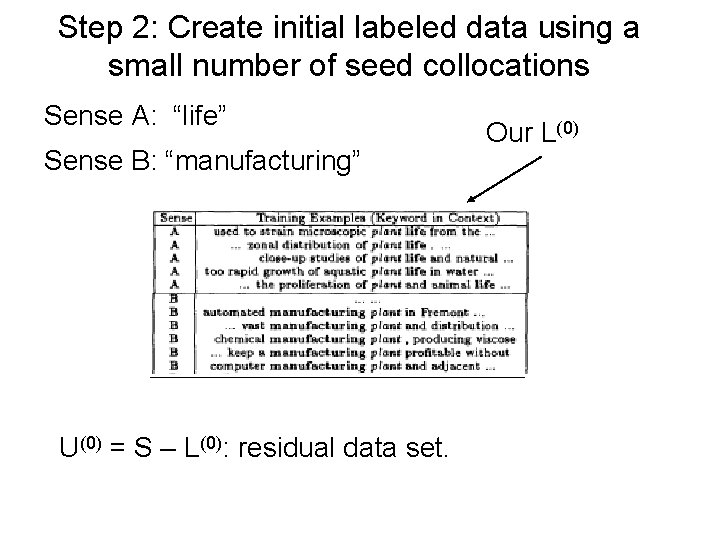

Step 2: Create initial labeled data using a small number of seed collocations Sense A: “life” Sense B: “manufacturing” U(0) = S – L(0): residual data set. Our L(0)

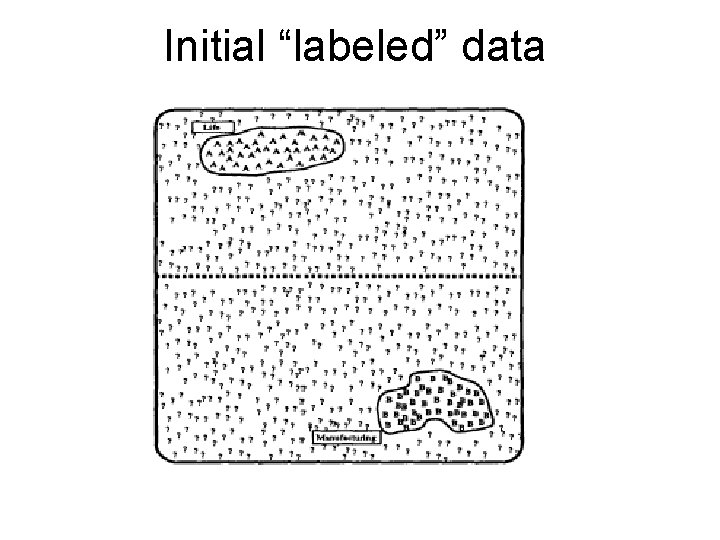

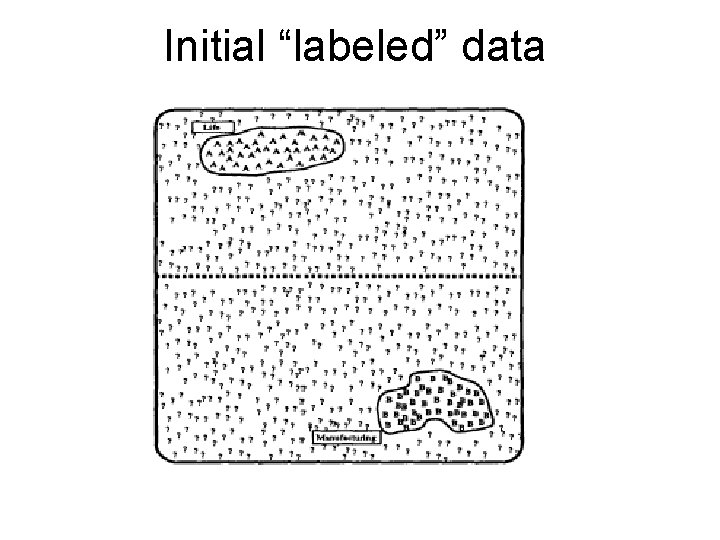

Initial “labeled” data

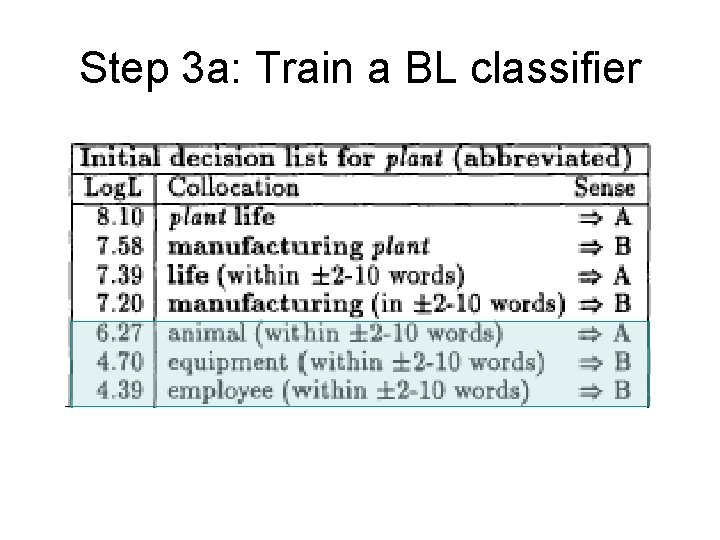

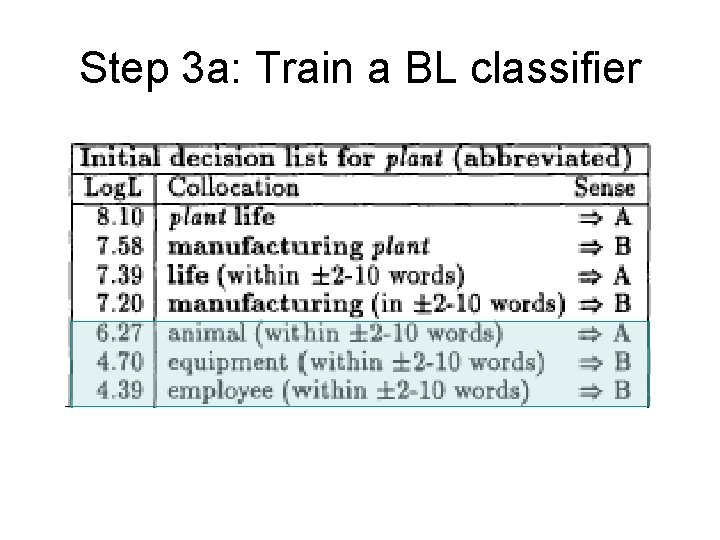

Step 3 a: Train a BL classifier

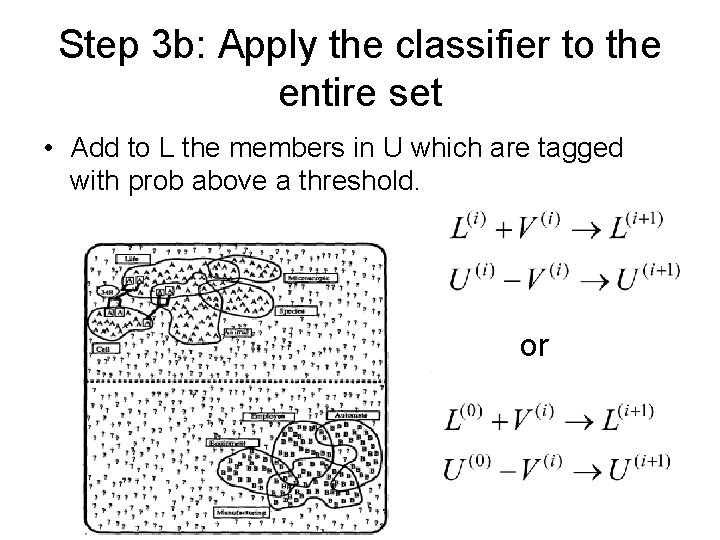

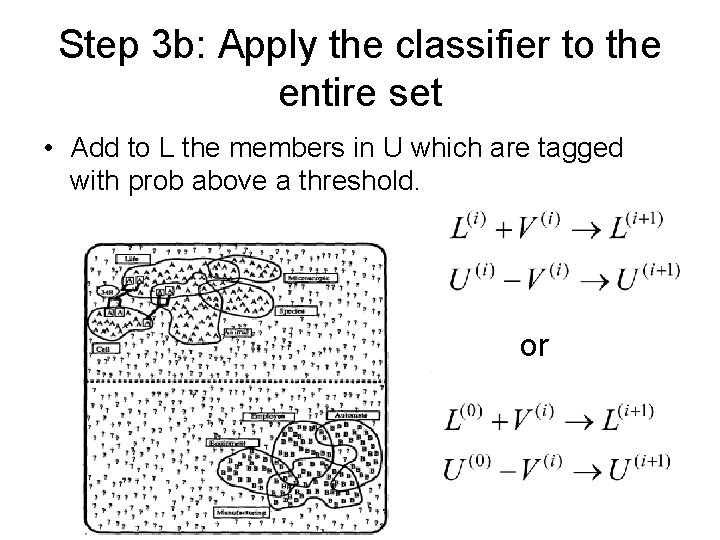

Step 3 b: Apply the classifier to the entire set • Add to L the members in U which are tagged with prob above a threshold. or

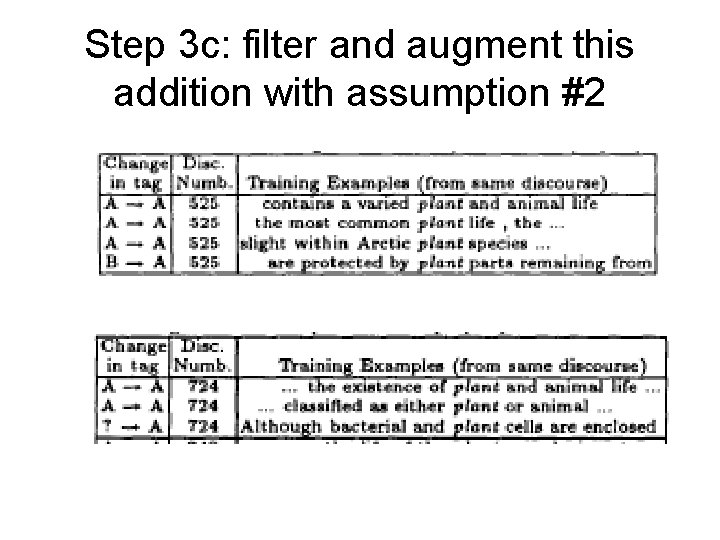

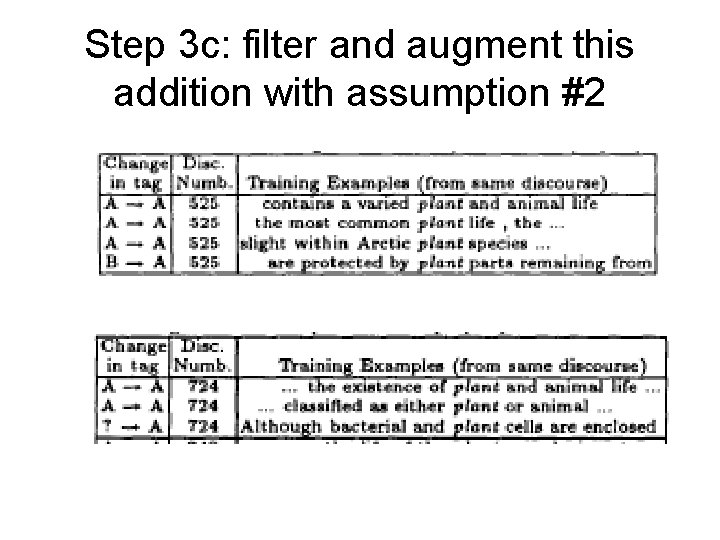

Step 3 c: filter and augment this addition with assumption #2

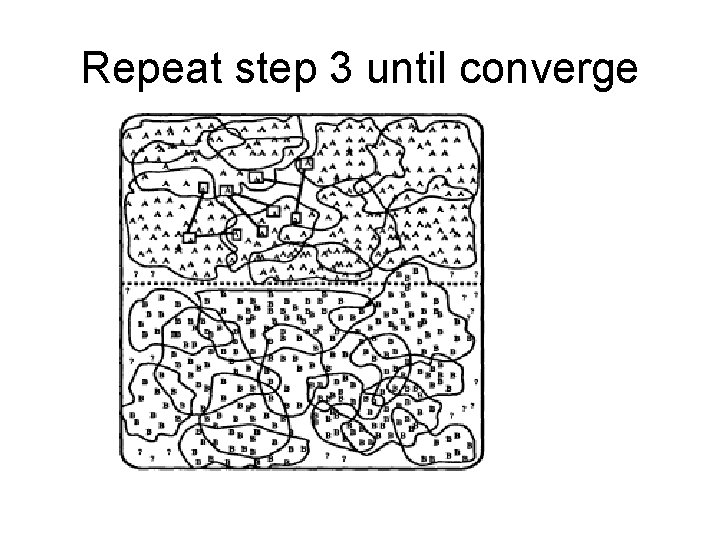

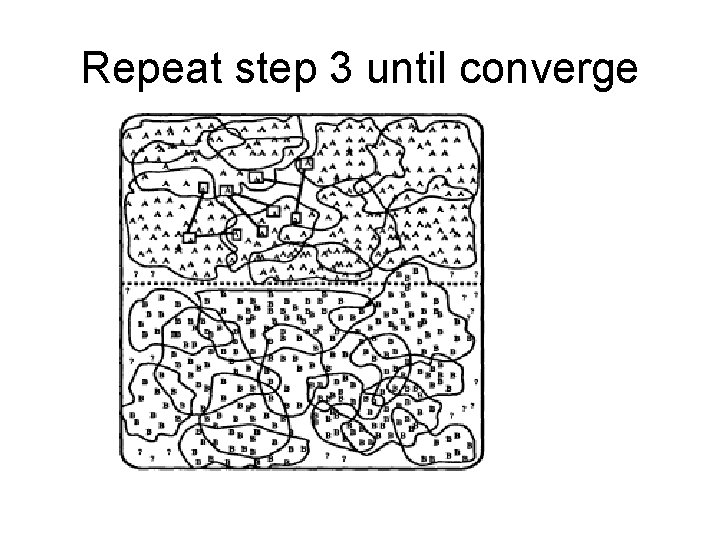

Repeat step 3 until converge

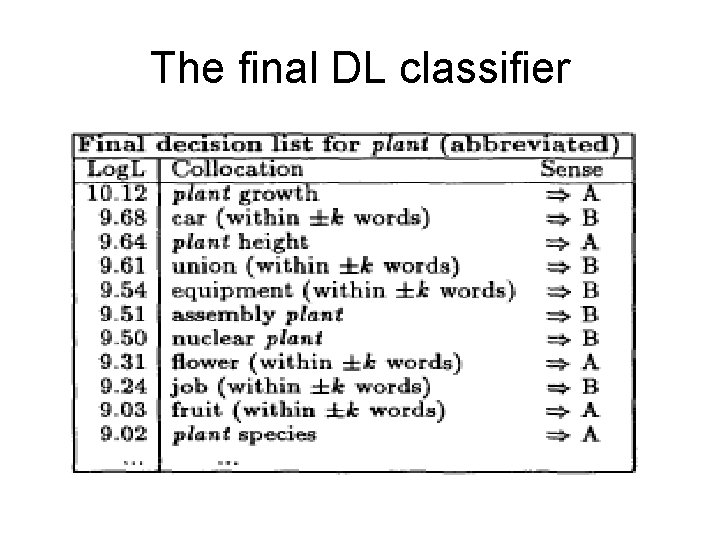

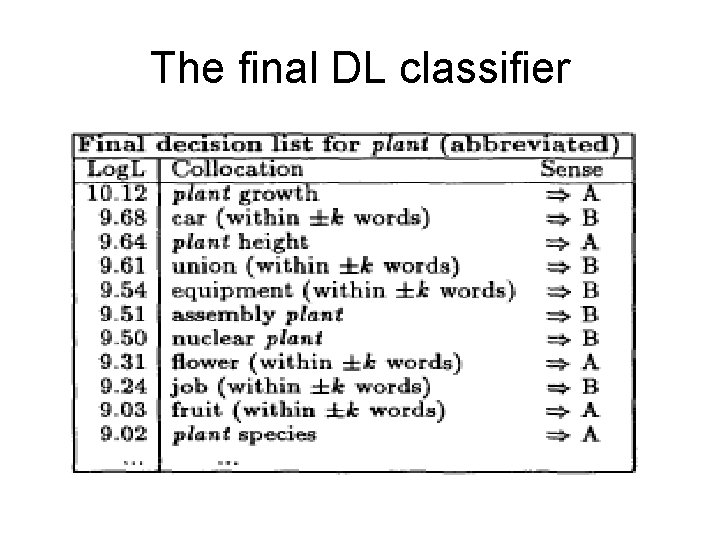

The final DL classifier

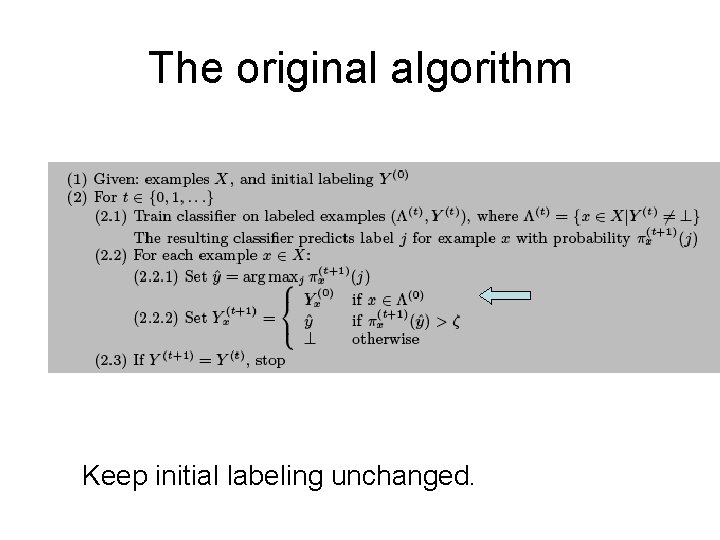

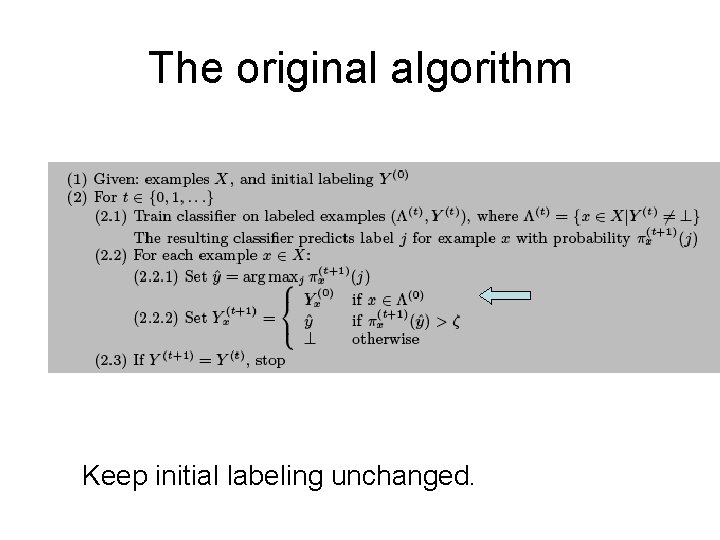

The original algorithm Keep initial labeling unchanged.

Options for obtaining the seeds • Use words in dictionary definitions • Use a single defining collocate for each sense: – Ex: “bird” and “machine” for the word “crane” • Label salient corpus collocates: – Collect frequent collocates – Manually check the collocates Getting the seeds is not hard.

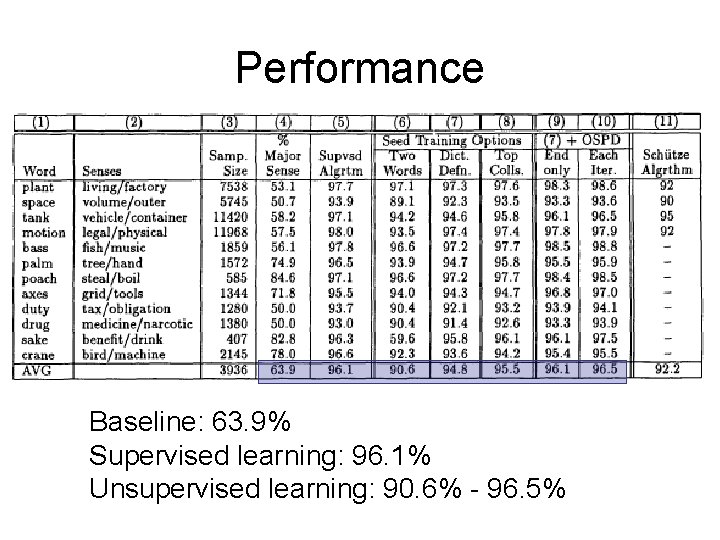

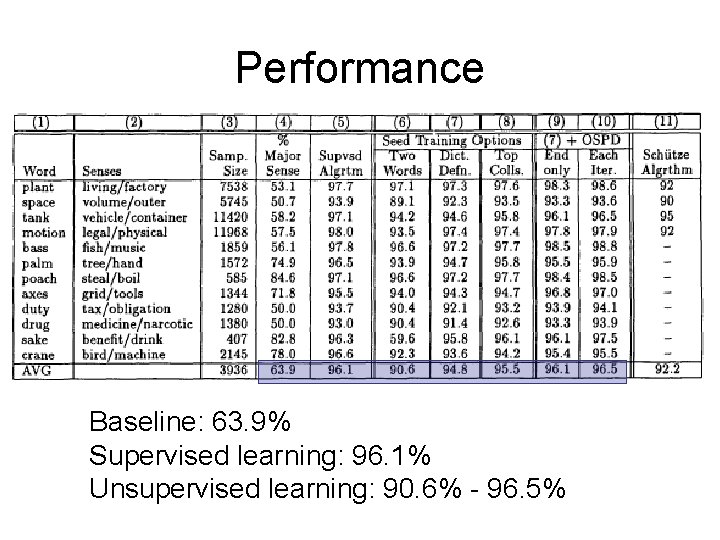

Performance Baseline: 63. 9% Supervised learning: 96. 1% Unsupervised learning: 90. 6% - 96. 5%

Why does it work? • (Steven Abney, 2004): “Understanding the Yarowsky Algorithm”

Summary of self-training • The algorithm is straightforward and intuitive. • It produces outstanding results. • Added unlabeled data pollute the original labeled data:

Additional slides

Papers on self-training • Yarowsky (1995): WSD • Riloff et al. (2003): identify subjective nouns • Maeireizo et al. (2004): classify dialogues as “emotional” or “non-emotional”.