Parsing with CFG Ling 571 Fei Xia Week


Parsing with CFG Ling 571 Fei Xia Week 2: 10/4 -10/6/05

Outline
• Parsing
• Grammar and language
• Parsing algorithms for CFG:
  – Top-down
  – Bottom-up
  – Top-down with bottom-up filter
  – Earley algorithm
  – CYK algorithm (will cover in Week 3)

Parsing

What is parsing?
Mapping a sentence to its parse tree(s).
Two kinds of parse trees:
• Phrase structure
• Dependency structure
Ex: book that flight

Good parsers
• Accuracy: handle ambiguity well
  – Precision, recall, F-measure
  – Percent of sentences correctly parsed
• Robustness: handle “ungrammatical” sentences or sentences out of domain
• Resources needed: treebanks, grammars
• Efficiency: the speed
• Richness: traces, functional tags, etc.

Types of parsers
What kind of parse trees?
• Phrase-structure parsers
• Dependency parsers
Use statistics?
• Statistical parsers
• Rule-based parsers

Types of parsers (cont)
Use grammars?
• Grammar-based parsers: CFG, HPSG, …
• Parsers that do not use grammars explicitly: Ratnaparkhi’s parser (1997)
Require treebanks?
• Supervised parsers
• Unsupervised parsers

Our focus
• Parsers:
  – Phrase-structure
  – Mainly statistical
  – Grammar-based: mainly CFG
  – Supervised
• Where grammars come from:
  – Built by hand
  – Extracted from treebanks
  – Induced from text

Grammar and language

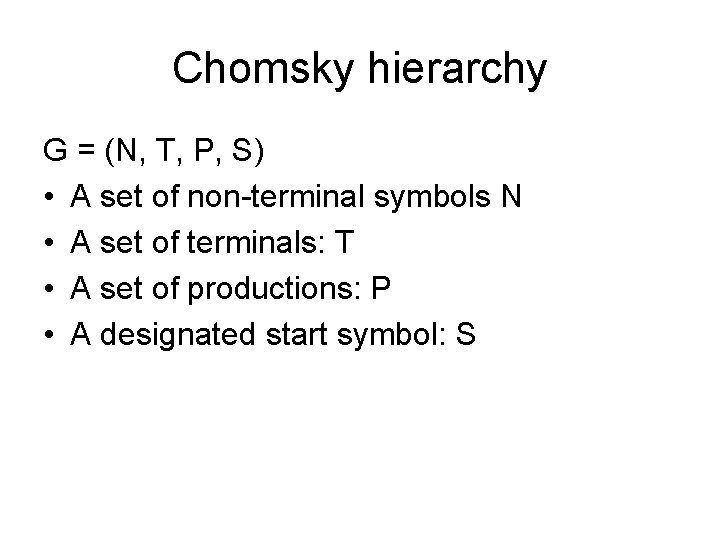

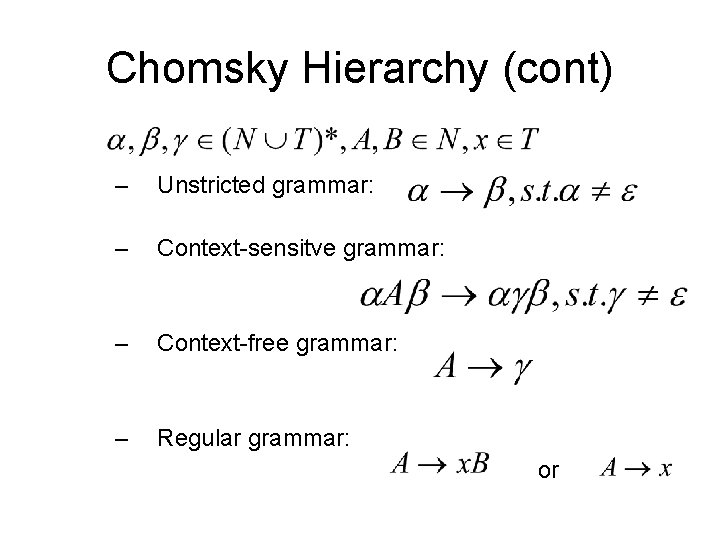

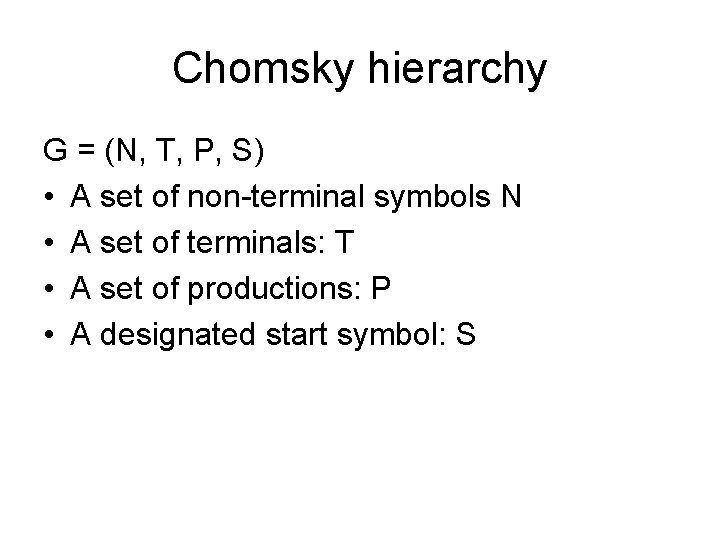

Chomsky hierarchy
G = (N, T, P, S)
• A set of non-terminal symbols: N
• A set of terminals: T
• A set of productions: P
• A designated start symbol: S

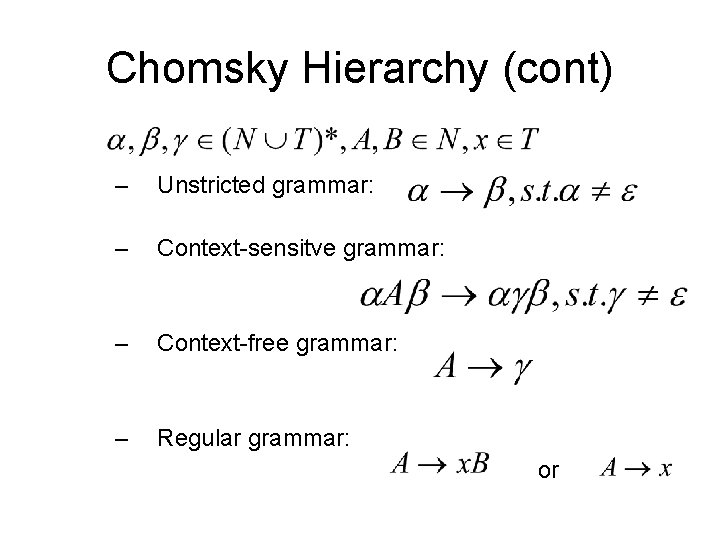

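Chomsky Hierarchy (cont)
– Unrestricted grammar: $\alpha \rightarrow \beta$
– Context-sensitive grammar: $\alpha A \beta \rightarrow \alpha \gamma \beta$, with $\gamma$ non-empty
– Context-free grammar: $A \rightarrow \gamma$
– Regular grammar: $A \rightarrow x B$ or $A \rightarrow x$
(where $A, B \in N$, $x \in T$, and $\alpha, \beta, \gamma$ are strings over $N \cup T$)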
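The four rule shapes can be made concrete with a small checker that reports which type a grammar G = (N, T, P, S) belongs to. This is a minimal Python sketch. It assumes the textbook rule forms: regular A -> x B or A -> x; context-free A -> γ; context-sensitive α A β -> α γ β with non-empty γ; anything else unrestricted. Each production is a pair of symbol tuples, and `rule_type` and `grammar_type` are hypothetical helper names.

```python
def rule_type(lhs, rhs, N):
    """Most restrictive Chomsky type (3, 2, 1 or 0) of one production lhs -> rhs.

    lhs and rhs are tuples of symbols; N is the set of non-terminals.
    Assumed forms: 3 = A -> x | A -> x B, 2 = A -> gamma,
    1 = alpha A beta -> alpha gamma beta (gamma non-empty), 0 = anything else.
    """
    single = len(lhs) == 1 and lhs[0] in N
    if single and (
        (len(rhs) == 1 and rhs[0] not in N)
        or (len(rhs) == 2 and rhs[0] not in N and rhs[1] in N)
    ):
        return 3  # right-linear: A -> x  or  A -> x B
    if single:
        return 2  # A -> gamma
    for i, sym in enumerate(lhs):
        if sym in N:
            alpha, beta = lhs[:i], lhs[i + 1:]
            # rhs must be alpha + gamma + beta with a non-empty gamma
            if (len(rhs) > len(alpha) + len(beta)
                    and rhs[:len(alpha)] == alpha
                    and rhs[len(rhs) - len(beta):] == beta):
                return 1
    return 0


def grammar_type(G):
    """A grammar is only as restricted as its least restricted rule."""
    N, T, P, S = G
    return min(rule_type(lhs, rhs, N) for lhs, rhs in P)
```

For example, an illustrative right-linear grammar {S -> aS, S -> bA, A -> cA, A -> c} is classified as type 3. Adding a single rule of the form S -> ab makes the check report type 2. This is a property of the grammar, not of the language it generates.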

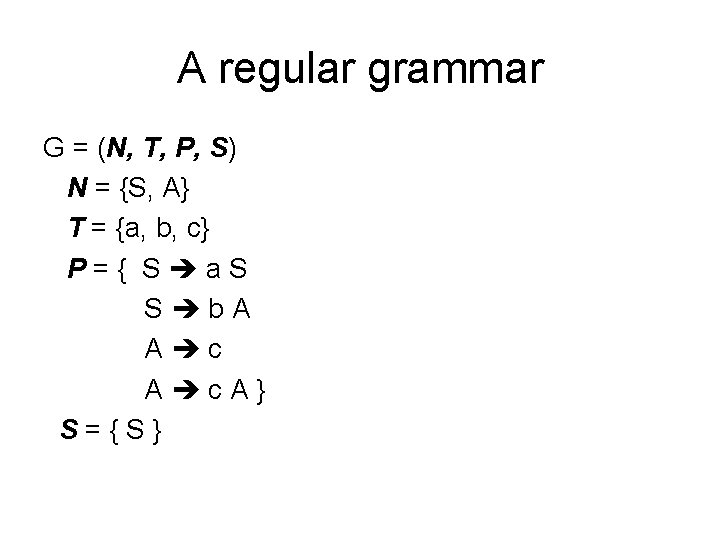

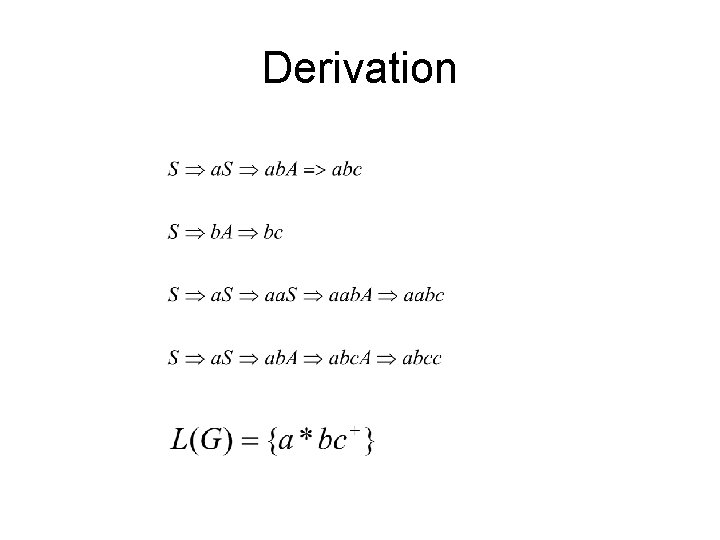

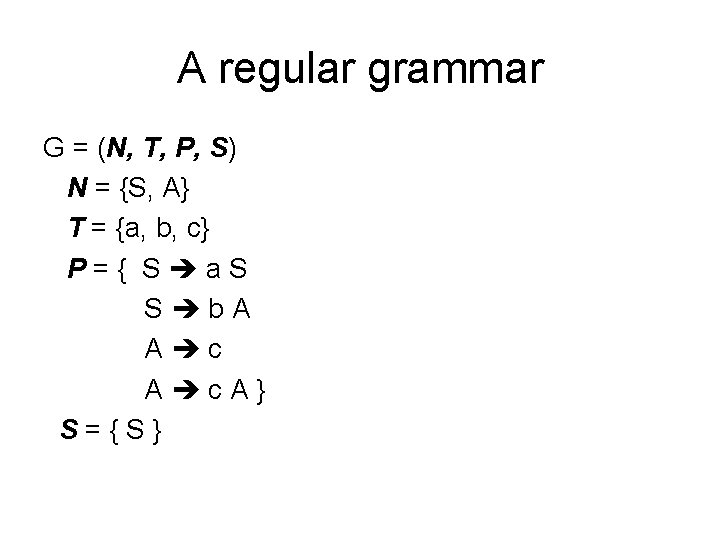

A regular grammar G = (N, T, P, S)
N = {S, A}
T = {a, b, c}
P = { S -> aS, S -> bA, A -> cA }
S = {S}

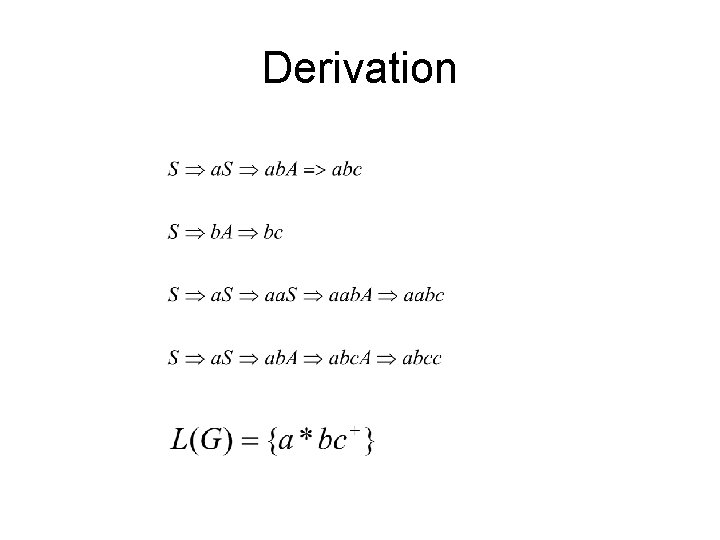

Derivation

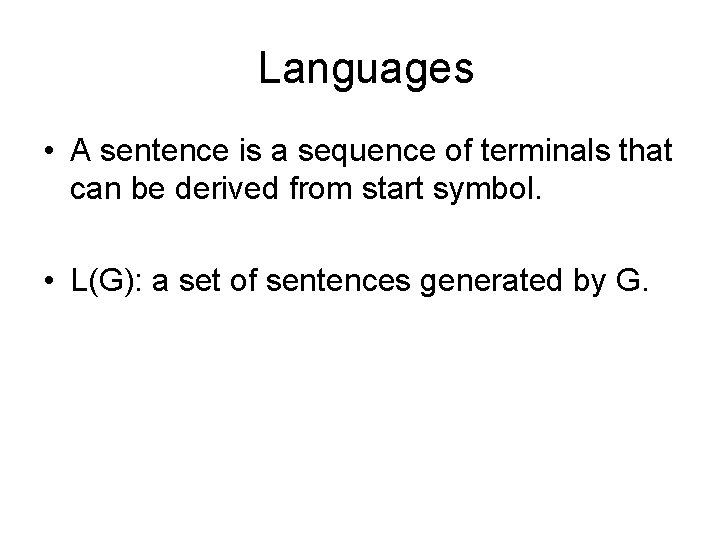

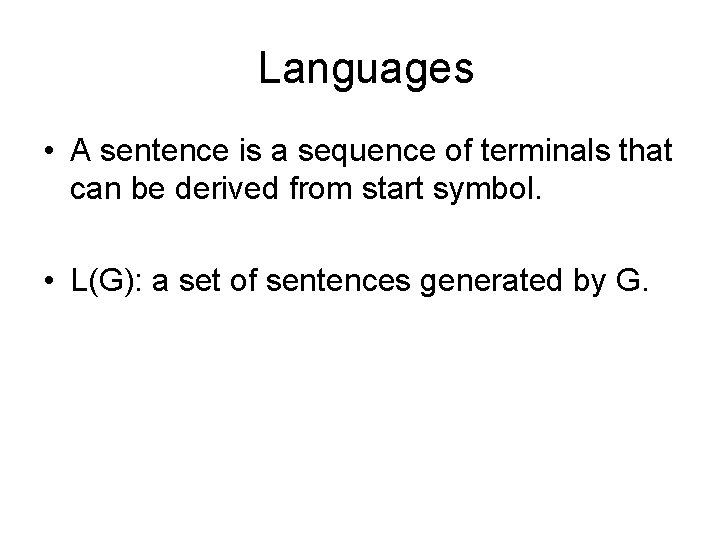

Languages
• A sentence is a sequence of terminals that can be derived from the start symbol.
• L(G): the set of sentences generated by G.

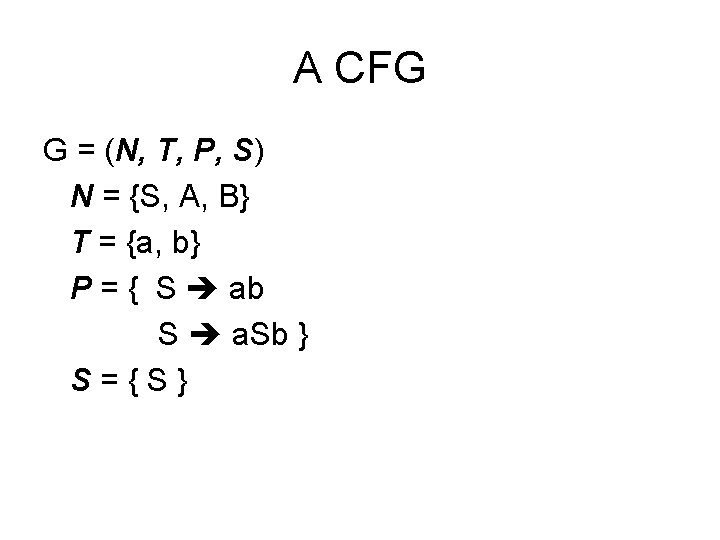

A CFG G = (N, T, P, S)
N = {S, A, B}
T = {a, b}
P = { S -> ab, S -> aSb }
S = {S}
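L(G) is defined through derivations, so a small breadth-first search over leftmost derivations can list the short sentences of a grammar. This is a minimal sketch. It assumes there are no empty (ε) productions, so a sentential form never gets shorter and forms longer than the bound can be pruned. `language_up_to` is a hypothetical helper name. It is applied to the CFG above, read as S -> ab, S -> aSb.

```python
from collections import deque


def language_up_to(productions, start, max_len):
    """All sentences of length <= max_len derivable from `start`.

    productions maps each non-terminal to a list of right-hand-side tuples;
    every other symbol is treated as a terminal. Assumes no empty productions.
    """
    nonterminals = set(productions)
    sentences, seen = set(), {(start,)}
    queue = deque([(start,)])
    while queue:
        form = queue.popleft()
        # position of the leftmost non-terminal, or None if the form is a sentence
        idx = next((i for i, sym in enumerate(form) if sym in nonterminals), None)
        if idx is None:
            sentences.add("".join(form))
            continue
        for rhs in productions[form[idx]]:
            new_form = form[:idx] + tuple(rhs) + form[idx + 1:]
            if len(new_form) <= max_len and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return sentences


P = {"S": [("a", "b"), ("a", "S", "b")]}
```

With a length bound of 6, `language_up_to(P, "S", 6)` returns {"ab", "aabb", "aaabbb"}. These are the first members of the language a^n b^n.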

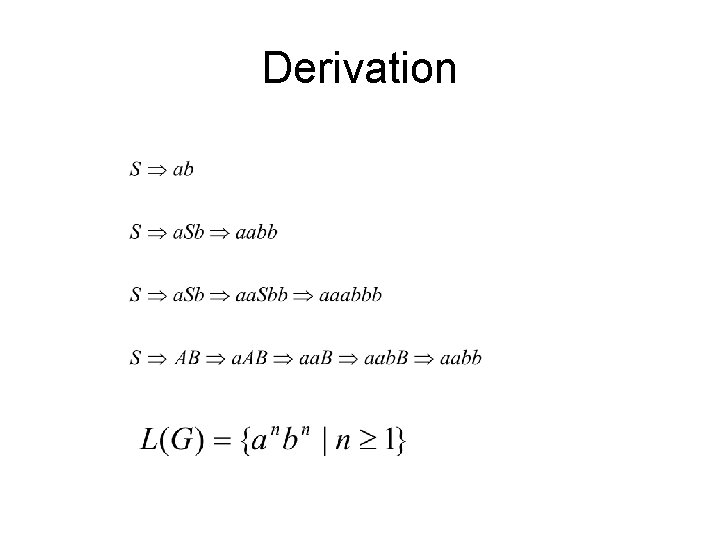

Derivation

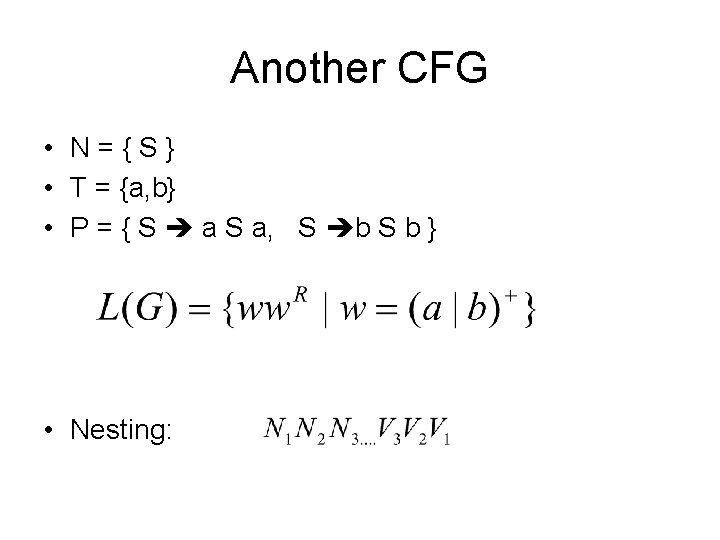

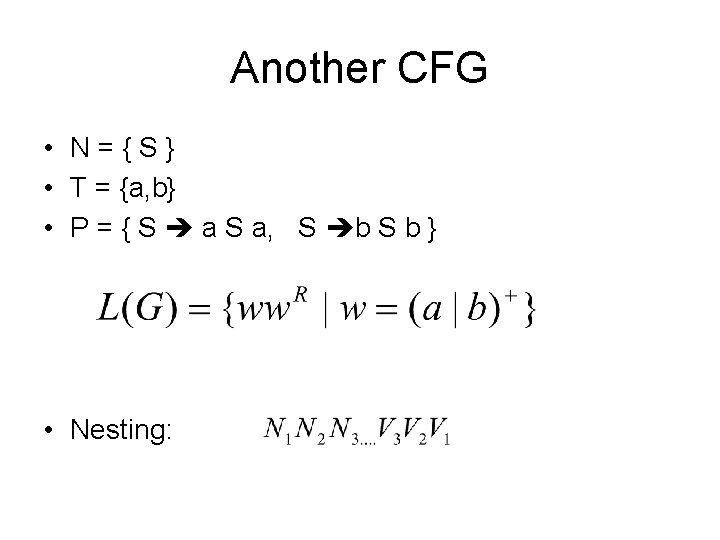

Another CFG
• N = {S}
• T = {a, b}
• P = { S -> aSa, S -> b }
• Nesting:

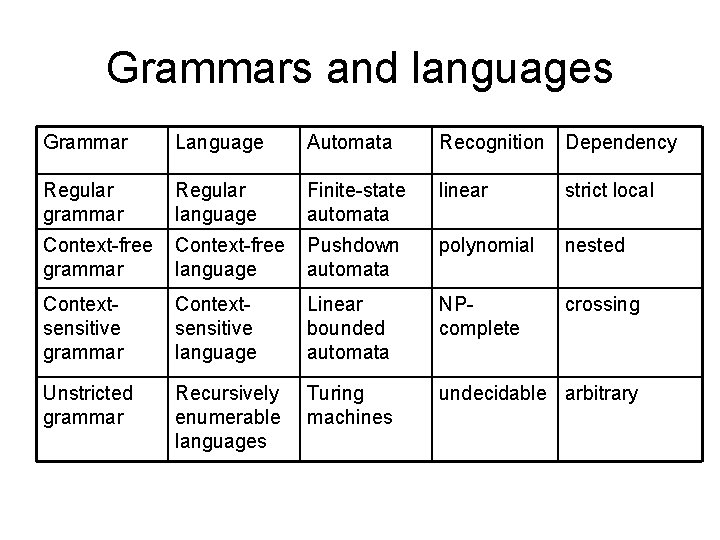

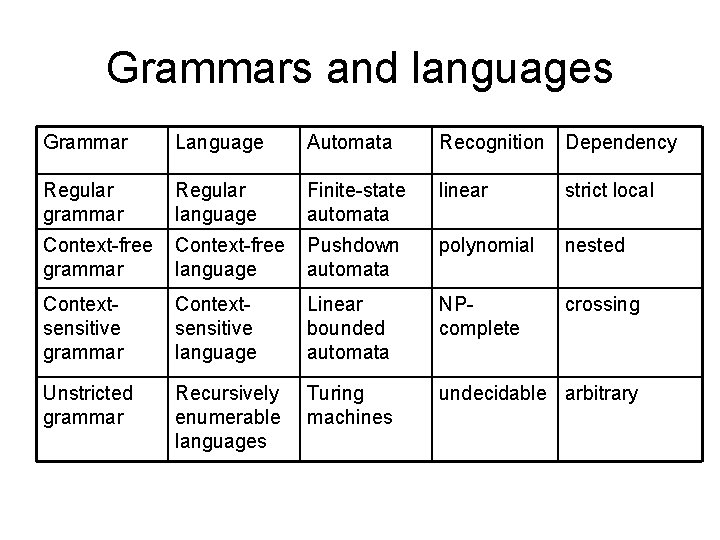

Grammars and languages

| Grammar | Language | Automata | Recognition complexity | Dependency |
|---|---|---|---|---|
| Regular grammar | Regular language | Finite-state automata | linear | strict local |
| Context-free grammar | Context-free language | Pushdown automata | polynomial | nested |
| Context-sensitive grammar | Context-sensitive language | Linear bounded automata | PSPACE-complete | crossing |
| Unrestricted grammar | Recursively enumerable languages | Turing machines | undecidable | arbitrary |

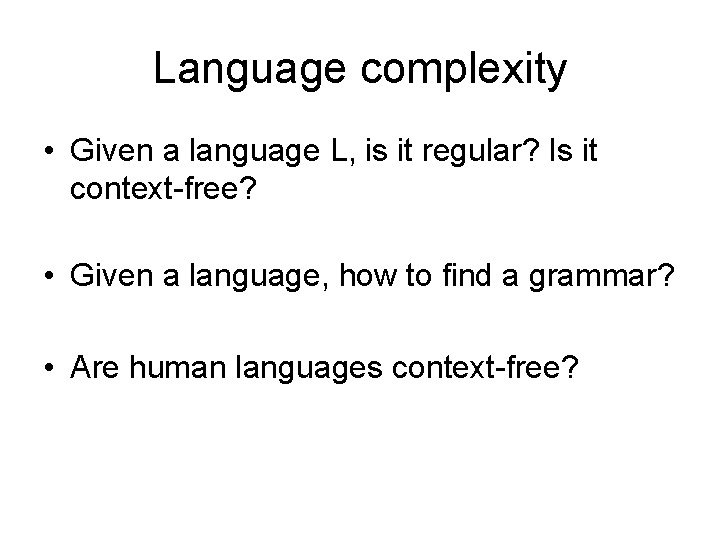

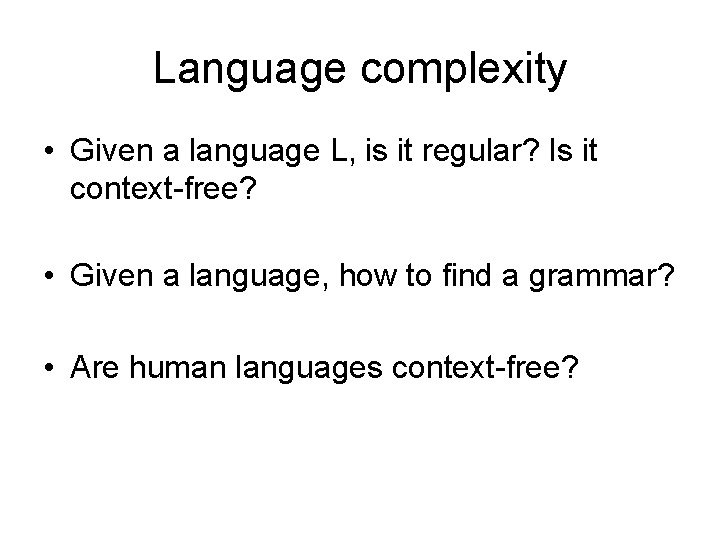

Language complexity
• Given a language L, is it regular? Is it context-free?
• Given a language, how do we find a grammar for it?
• Are human languages context-free?

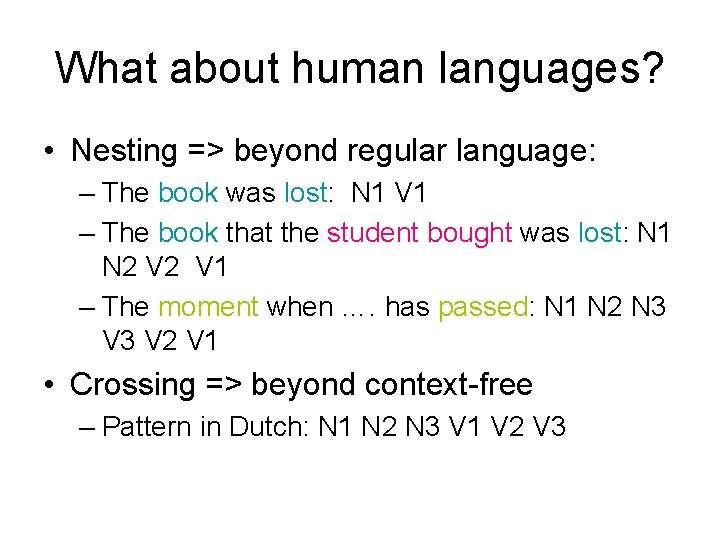

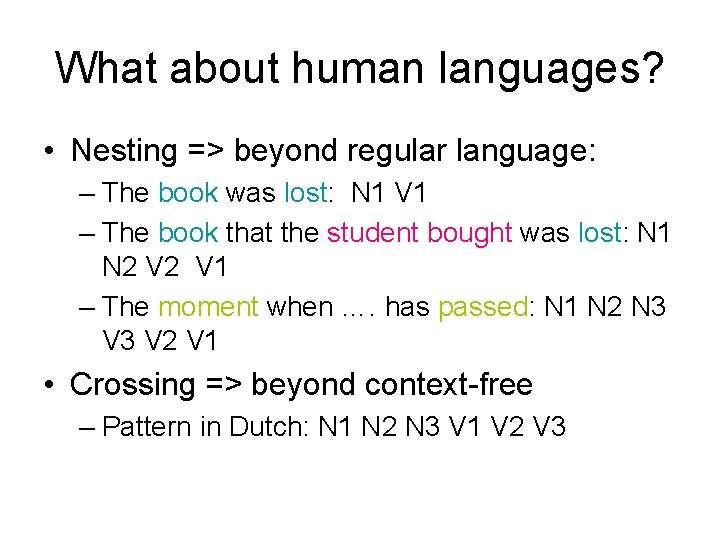

What about human languages?
• Nesting => beyond regular language:
  – The book was lost: N1 V1
  – The book that the student bought was lost: N1 N2 V2 V1
  – The moment when …. has passed: N1 N2 N3 V3 V2 V1
• Crossing => beyond context-free:
  – Pattern in Dutch: N1 N2 N3 V1 V2 V3
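The nesting/crossing distinction can be checked mechanically. Link each noun Nk to its verb Vk, then ask whether any two links cross. This is a minimal sketch. Patterns are written as token lists such as ["N1", "N2", "V2", "V1"], and `noun_verb_arcs`, `arcs_cross` and `classify` are hypothetical helper names.

```python
from itertools import combinations


def noun_verb_arcs(pattern):
    """Pair each Nk with Vk, returning (noun index, verb index) arcs."""
    position = {token: i for i, token in enumerate(pattern)}
    return [(position[f"N{k}"], position[f"V{k}"]) for k in range(1, len(pattern) // 2 + 1)]


def arcs_cross(a, b):
    """Two arcs with distinct endpoints cross iff exactly one end of b lies inside a."""
    (i, j), (k, l) = sorted(a), sorted(b)
    return (i < k < j) != (i < l < j)


def classify(arcs):
    if any(arcs_cross(a, b) for a, b in combinations(arcs, 2)):
        return "crossing"
    return "nested"
```

The fully nested pattern N1 N2 N3 V3 V2 V1 gives arcs (0, 5), (1, 4), (2, 3), and none of them cross. The Dutch pattern N1 N2 N3 V1 V2 V3 gives (0, 3), (1, 4), (2, 5), and these cross pairwise.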

Summary of Chomsky Hierarchy
• There are four types of grammars.
• Each type has its own generative power.
• Human language is not context-free.
• But in order to process human languages, we often use CFG as an approximation.

Other grammar formalisms
• Phrase structure based:
  – CFG-based grammars: HPSG, LFG
  – Tree grammars: TAG, D-grammar
• Dependency based:
  – Dependency grammars

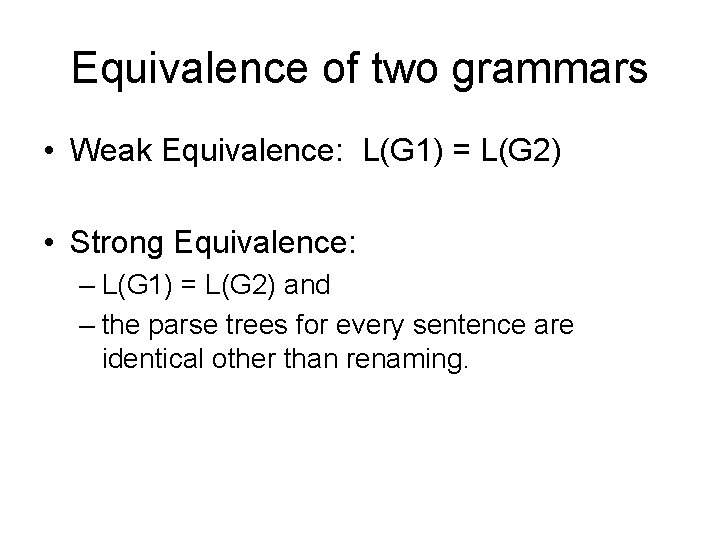

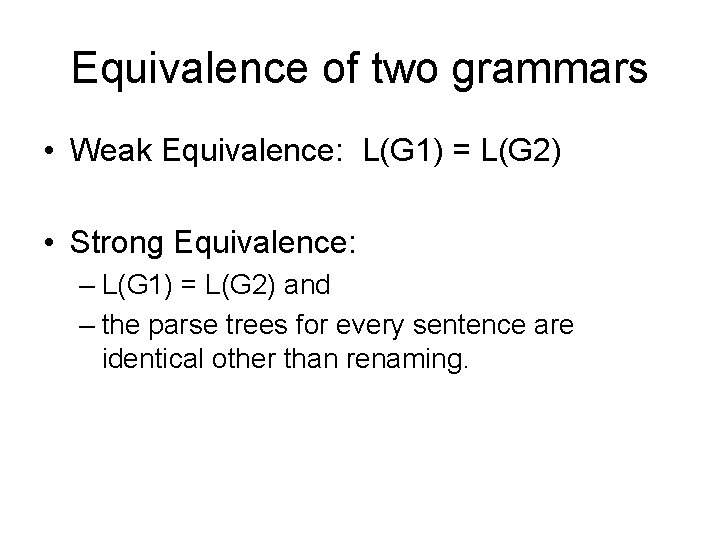

Equivalence of two grammars
• Weak equivalence: L(G1) = L(G2)
• Strong equivalence:
  – L(G1) = L(G2), and
  – the parse trees for every sentence are identical other than renaming.

Context-free grammar

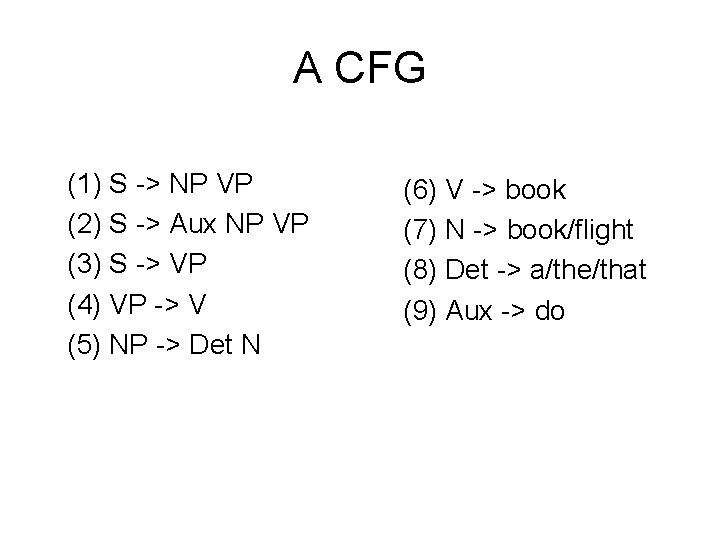

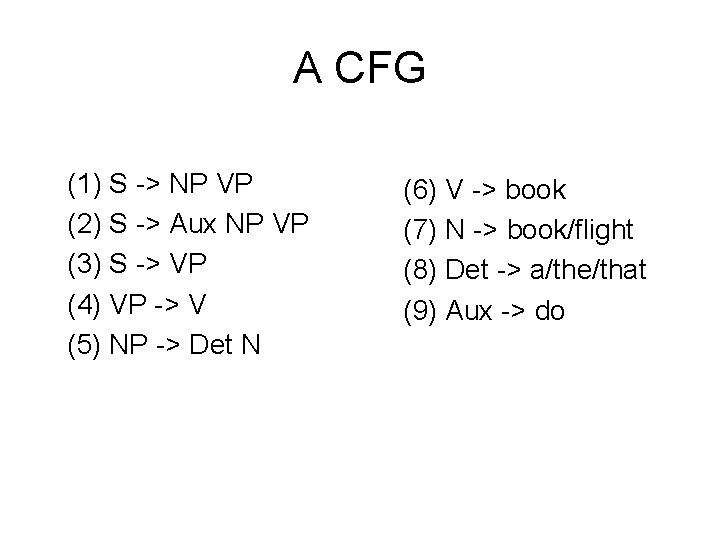

A CFG
(1) S -> NP VP
(2) S -> Aux NP VP
(3) S -> VP
(4) VP -> V
(5) NP -> Det N
(6) V -> book
(7) N -> book/flight
(8) Det -> a/the/that
(9) Aux -> do

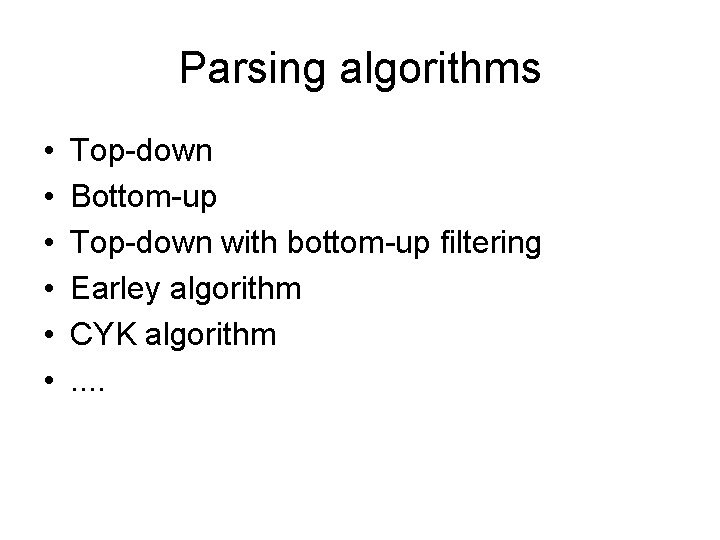

Parsing algorithms
• Top-down
• Bottom-up
• Top-down with bottom-up filtering
• Earley algorithm
• CYK algorithm

Top-down parsing
• Start from the start symbol and apply rules.
• Top-down, depth-first, left-to-right parsing.
• Never explores trees that do not result in S => goal-directed search.
• Wastes time on trees that do not match the input sentence.

An example • Book that flight
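Below is a minimal sketch of top-down, depth-first, left-to-right parsing with backtracking, written as Python generators. It encodes rules (1)-(9) of the toy CFG above. It also adds one ASSUMED rule, VP -> V NP, because with VP -> V alone "book that flight" has no parse. The function names are hypothetical.

```python
# Rules (1)-(9) of the toy CFG, plus VP -> V NP (an ASSUMED extra rule).
GRAMMAR = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "VP": [("V",), ("V", "NP")],
    "NP": [("Det", "N")],
}
LEXICON = {  # POS -> words
    "V": {"book"},
    "N": {"book", "flight"},
    "Det": {"a", "the", "that"},
    "Aux": {"do"},
}


def parse_symbol(symbol, words, pos):
    """Yield (tree, next_pos) for each way `symbol` can start at words[pos]."""
    if symbol in LEXICON:  # POS: try to match one input word
        if pos < len(words) and words[pos] in LEXICON[symbol]:
            yield (symbol, words[pos]), pos + 1
        return
    for rhs in GRAMMAR[symbol]:  # expand rules in order: depth-first
        for children, end in parse_seq(rhs, words, pos):
            yield (symbol, *children), end


def parse_seq(symbols, words, pos):
    """Yield (subtrees, next_pos) for a sequence of symbols, left to right."""
    if not symbols:
        yield [], pos
        return
    for first, mid in parse_symbol(symbols[0], words, pos):
        for rest, end in parse_seq(symbols[1:], words, mid):
            yield [first] + rest, end


def top_down_parse(sentence):
    words = sentence.lower().split()
    # keep only derivations of S that consume the whole input
    return [tree for tree, end in parse_symbol("S", words, 0) if end == len(words)]
```

`top_down_parse("Book that flight")` first tries S -> NP VP and then S -> Aux NP VP. Both fail on "book" before S -> VP succeeds. It returns one tree, `('S', ('VP', ('V', 'book'), ('NP', ('Det', 'that'), ('N', 'flight'))))`. On a left-recursive rule such as NP -> NP PP, this procedure would recurse forever.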

Bottom-up parsing
• Use the input to guide => data-driven search.
• Find rules whose right-hand sides match the current nodes.
• Wastes time on trees that don’t result in S.

The example (cont) • Book that flight
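The slides do not fix a particular bottom-up strategy. The sketch below uses one possible realization: an exhaustive shift-reduce search with backtracking, driven entirely by the input words. It reuses the toy CFG with the same ASSUMED extra rule VP -> V NP. `bottom_up_parse` is a hypothetical name, and the search terminates only because this grammar has no cycles of unit rules.

```python
# Toy CFG from the slides plus the ASSUMED rule VP -> V NP.
GRAMMAR = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "VP": [("V",), ("V", "NP")],
    "NP": [("Det", "N")],
}
LEXICON = {"V": {"book"}, "N": {"book", "flight"}, "Det": {"a", "the", "that"}, "Aux": {"do"}}
RULES = [(lhs, rhs) for lhs, alternatives in GRAMMAR.items() for rhs in alternatives]


def bottom_up_parse(sentence):
    words = sentence.lower().split()
    results = []

    def search(stack, i):
        # stack holds the partial trees built so far; tree[0] is its label
        if i == len(words) and len(stack) == 1 and stack[0][0] == "S":
            results.append(stack[0])
        # reduce: any rule whose right-hand side matches the top of the stack
        for lhs, rhs in RULES:
            k = len(rhs)
            if k <= len(stack) and tuple(t[0] for t in stack[-k:]) == rhs:
                search(stack[:-k] + [(lhs, *stack[-k:])], i)
        # shift: push the next word under every POS it can have
        if i < len(words):
            for pos, vocab in LEXICON.items():
                if words[i] in vocab:
                    search(stack + [(pos, words[i])], i + 1)

    search([], 0)
    return results
```

On "book that flight", this search returns the same single tree as the top-down parser. Along the way it builds dead ends such as reading "book" as N, or reducing "book" to S before "that flight" has been seen. That is the wasted work the slide points out.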

Top-down parsing with bottom-up look-ahead filtering
• Both top-down and bottom-up parsing generate too many useless trees.
• Combine the two to reduce over-generation.
• B is a left-corner of A if $A \Rightarrow^{*} B\,\alpha$ for some string $\alpha$.
• A left-corner table provides more efficient look-ahead:
  – Pre-compute all POS that can serve as the leftmost POS in the derivations of each non-terminal category.

The example • Book that flight
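The left-corner table can be pre-computed by fixed-point iteration over the rules. The filter then only lets the top-down parser expand a category if the next word has a POS in that category's table entry. This is a minimal sketch over the toy CFG with the ASSUMED rule VP -> V NP. It assumes no empty rules, so only the first right-hand-side symbol can be a left corner, and `left_corner_table` and `passes_filter` are hypothetical names.

```python
GRAMMAR = {
    "S": [("NP", "VP"), ("Aux", "NP", "VP"), ("VP",)],
    "VP": [("V",), ("V", "NP")],  # VP -> V NP is an assumed extra rule
    "NP": [("Det", "N")],
}
LEXICON = {"V": {"book"}, "N": {"book", "flight"}, "Det": {"a", "the", "that"}, "Aux": {"do"}}


def left_corner_table(grammar, lexicon):
    """Map each non-terminal to the POS tags that can start it."""
    table = {category: set() for category in grammar}
    changed = True
    while changed:  # repeat until no entry grows
        changed = False
        for lhs, alternatives in grammar.items():
            for rhs in alternatives:
                first = rhs[0]
                corners = {first} if first in lexicon else table[first]
                if not corners <= table[lhs]:
                    table[lhs] |= corners
                    changed = True
    return table


def passes_filter(category, word, table, lexicon):
    """Bottom-up filter: can `word` be the first word of a `category`?"""
    return any(word in lexicon[pos] for pos in table[category])


TABLE = left_corner_table(GRAMMAR, LEXICON)
```

For this grammar, `TABLE["S"]` is {"Det", "Aux", "V"} and `TABLE["NP"]` is {"Det"}. As a result, `passes_filter("NP", "book", TABLE, LEXICON)` is False. A filtered top-down parser therefore never tries S -> NP VP on "book that flight".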

Remaining problems
• Left-recursion: NP -> NP PP
• Ambiguity
• Repeated parsing of subtrees
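A non-terminal is left-recursive when it is a left corner of itself, and on such a rule a naive top-down parser loops. This can be detected with the transitive closure of the left-corner relation between non-terminals. This is a minimal sketch using the grammar of the Earley example later in these slides, with POS tags left out because they never appear on a left-hand side. It assumes no empty rules, and `left_recursive` is a hypothetical name.

```python
GRAMMAR = {
    "S": [("NP", "VP"), ("VP",)],
    "VP": [("V", "NP"), ("VP", "PP")],
    "NP": [("NP", "PP"), ("Det", "N"), ("N",)],
    "PP": [("P", "NP")],
}


def left_recursive(grammar):
    """Non-terminals A such that A =>+ A ... (A is a left corner of itself)."""
    # direct edges: A -> B ... gives the edge A -> B (non-terminals only)
    reach = {a: {rhs[0] for rhs in alts if rhs[0] in grammar} for a, alts in grammar.items()}
    changed = True
    while changed:  # transitive closure of the left-corner relation
        changed = False
        for a in grammar:
            extra = set().union(*(reach[b] for b in reach[a])) - reach[a]
            if extra:
                reach[a] |= extra
                changed = True
    return {a for a in grammar if a in reach[a]}
```

For this grammar the result is {"NP", "VP"}, coming from NP -> NP PP and VP -> VP PP.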

Dynamic programming (DP)
• DP:
  – Dividable: the optimal solution of a subproblem is part of the optimal solution of the whole problem.
  – Memoization: solve small problems only once and remember the answers.
• Example: $T(n) = T(n-1) + T(n-2)$
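The payoff of memoization on the example recurrence shows up in a call count. This is a minimal sketch. It assumes base cases T(0) = 0 and T(1) = 1, which the slide does not give, so T is the Fibonacci sequence. It uses the standard library's `functools.lru_cache` as the memo table.

```python
from functools import lru_cache

calls = {"naive": 0, "memo": 0}  # how many times each function body runs


def t_naive(n):
    """T(n) = T(n-1) + T(n-2), recomputing shared subproblems every time."""
    calls["naive"] += 1
    return n if n < 2 else t_naive(n - 1) + t_naive(n - 2)


@lru_cache(maxsize=None)
def t_memo(n):
    """Same recurrence, but each T(k) is computed once and then looked up."""
    calls["memo"] += 1
    return n if n < 2 else t_memo(n - 1) + t_memo(n - 2)
```

Both functions return 6765 for n = 20. `t_naive(20)` runs its body 21,891 times, while `t_memo(20)` runs it 21 times, once for each of T(0) through T(20). Chart parsers apply the same idea to sub-trees.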

Parsing with DP
• Three well-known CFG parsing algorithms:
  – Earley algorithm (1970)
  – Cocke-Younger-Kasami (CYK) (1960)
  – Graham-Harrison-Ruzzo (GHR) (1980)

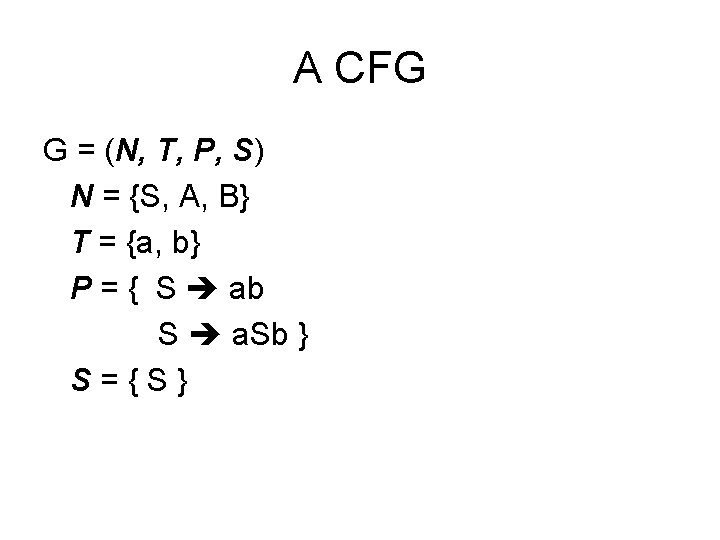

Earley algorithm
• Use DP to do top-down search.
• A single left-to-right pass fills out an array (called a chart) with N+1 entries, where N is the number of words in the input.
• An entry is a list of states: it represents all partial trees generated so far.

![A state contains A single dotted grammar rule i j i A state contains: – A single dotted grammar rule: – [i, j]: • i:](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-37.jpg)

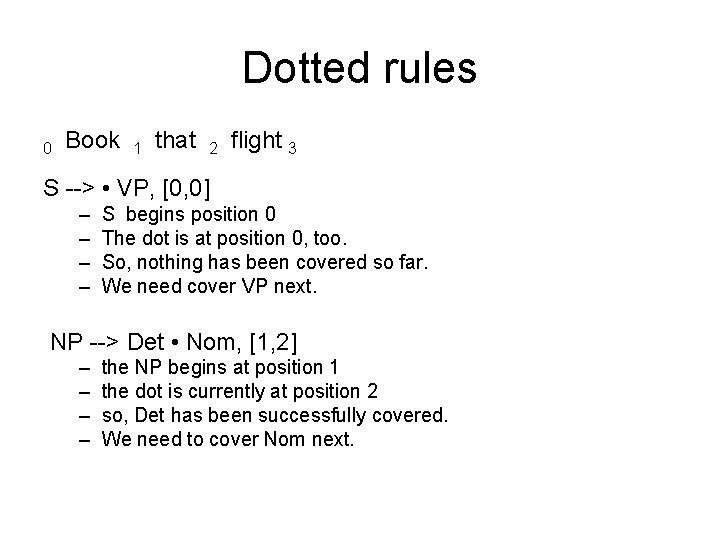

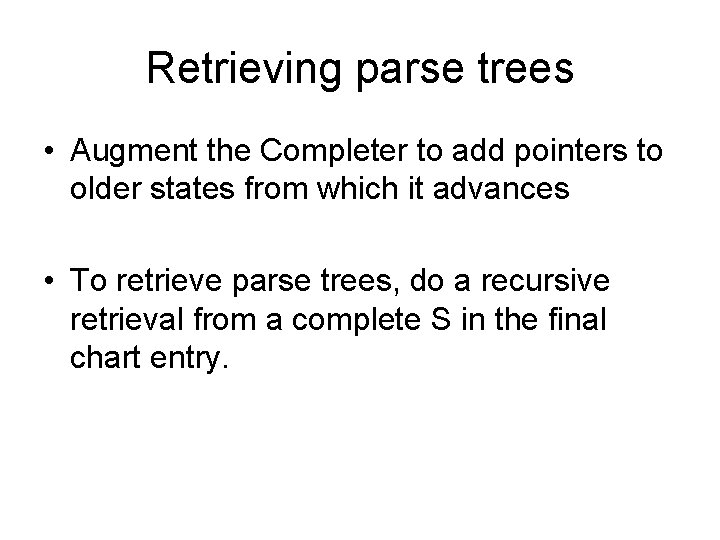

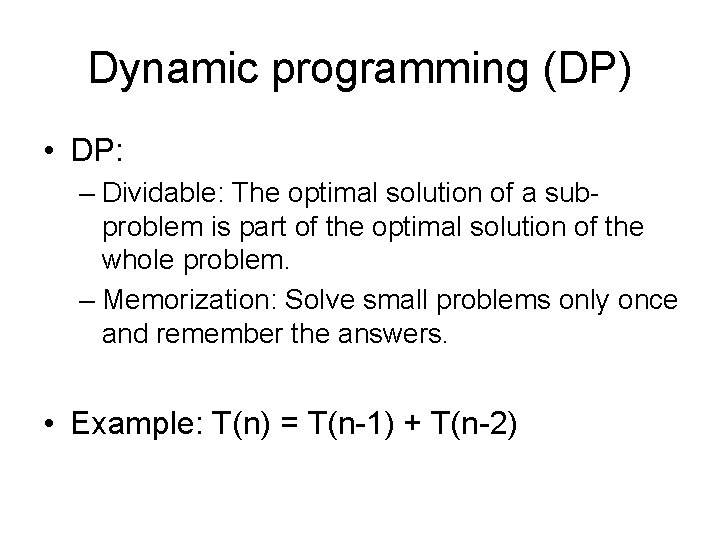

A state contains:
– A single dotted grammar rule
– [i, j]:
  • i: where the state begins w.r.t. the input
  • j: the position of the dot w.r.t. the input
In order to retrieve parse trees, we need to keep a list of pointers, which point to older states.

Dotted rules
0 Book 1 that 2 flight 3
• S --> • VP, [0, 0]
  – S begins at position 0.
  – The dot is at position 0, too. So nothing has been covered so far; we need to cover VP next.
• NP --> Det • Nom, [1, 2]
  – The NP begins at position 1.
  – The dot is currently at position 2.
  – So Det has been successfully covered; we need to cover Nom next.
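A state can be represented as a small immutable record holding the dotted rule and the span [i, j]. This is a minimal sketch. The `State` class and its fields `lhs`, `rhs`, `dot`, `start` and `end` are hypothetical names, and the pointer list needed for tree retrieval is left out.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class State:
    lhs: str
    rhs: tuple   # right-hand-side symbols
    dot: int     # how many right-hand-side symbols have been covered
    start: int   # i: where the state begins in the input
    end: int     # j: position of the dot in the input

    def next_symbol(self):
        """Symbol right after the dot, or None if the rule is complete."""
        return self.rhs[self.dot] if self.dot < len(self.rhs) else None

    def is_complete(self):
        return self.dot == len(self.rhs)

    def advance(self, new_end):
        """Move the dot over one symbol that ends at position new_end."""
        return replace(self, dot=self.dot + 1, end=new_end)

    def __str__(self):
        body = list(self.rhs)
        body.insert(self.dot, "•")
        return f"{self.lhs} --> {' '.join(body)} [{self.start}, {self.end}]"
```

For example, `str(State("NP", ("Det", "Nom"), 1, 1, 2))` gives `NP --> Det • Nom [1, 2]`, the second dotted rule above.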

![Parsing procedure From left to right for each entry charti apply one of three Parsing procedure From left to right, for each entry chart[i]: apply one of three](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-39.jpg)

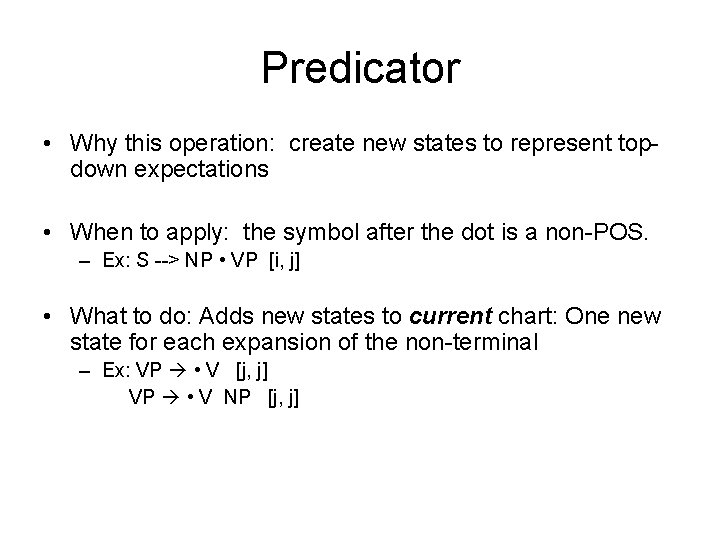

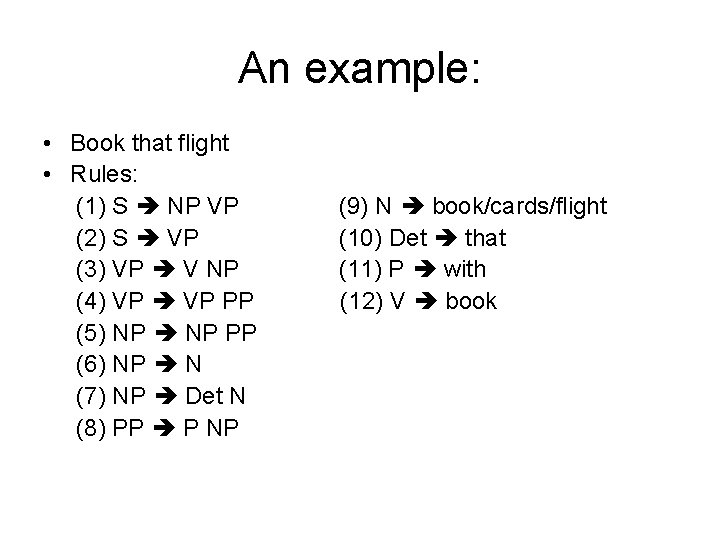

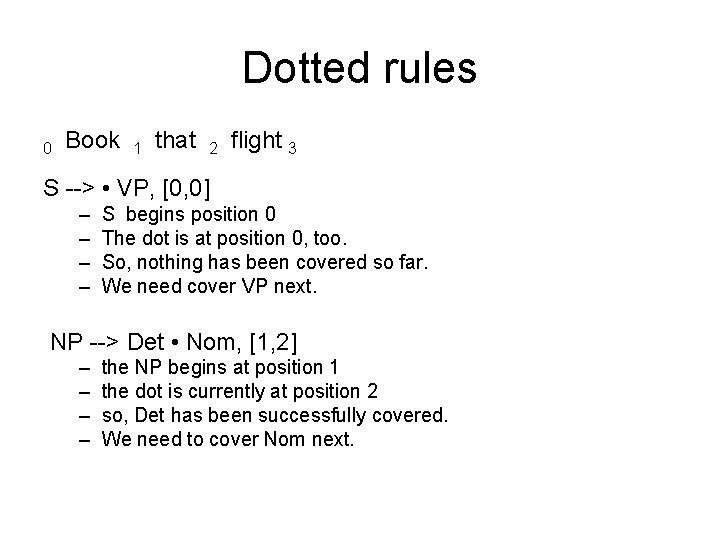

Parsing procedure
From left to right, for each entry chart[i], apply one of three operators to each state:
• predictor: predict the expansion
• scanner: match the input word with the POS after the dot
• completer: advance previously created states

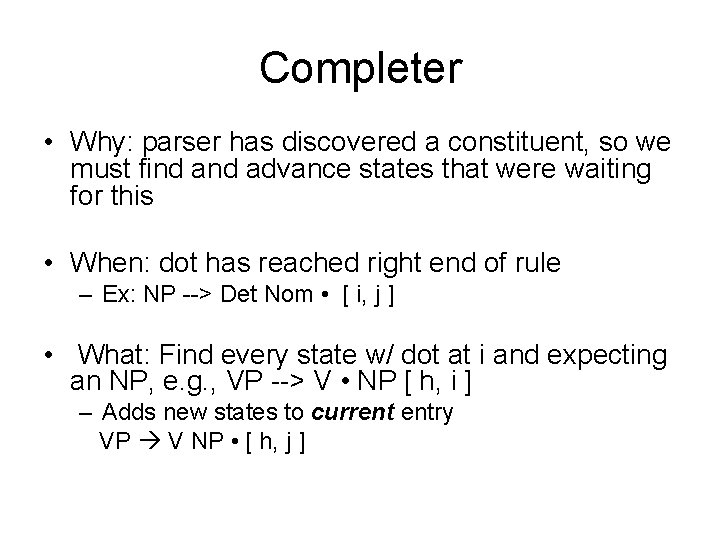

Predictor
• Why this operation: create new states to represent top-down expectations.
• When to apply: the symbol after the dot is a non-POS.
  – Ex: S --> NP • VP [i, j]
• What to do: add new states to the current chart entry, one new state for each expansion of the non-terminal.
  – Ex: VP --> • V [j, j], VP --> • V NP [j, j]

Scanner
• Why: match the input word with the POS in a rule.
• When: the symbol after the dot is a POS.
  – Ex: VP --> • V NP [i, j], word[j] = “book”
• What: if it matches, add a state to the next entry.
  – Ex: V --> book • [j, j+1]

Completer
• Why: the parser has discovered a constituent, so we must find and advance the states that were waiting for it.
• When: the dot has reached the right end of the rule.
  – Ex: NP --> Det Nom • [i, j]
• What: find every state with the dot at i that is expecting an NP, e.g., VP --> V • NP [h, i].
  – Add new states to the current entry: VP --> V NP • [h, j]
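The three operators combine into a short recognizer. This is a minimal Python sketch, not the slides' implementation. It encodes the grammar of the "book that flight" example given later in these slides, keeps POS tags in a separate lexicon, adds a dummy Start state, and assumes there are no empty rules. `earley_recognize` and its data layout are hypothetical.

```python
from collections import namedtuple

# Grammar of the "book that flight" example; POS tags live in LEXICON.
GRAMMAR = {
    "S": [("NP", "VP"), ("VP",)],
    "VP": [("V", "NP"), ("VP", "PP")],
    "NP": [("NP", "PP"), ("Det", "N"), ("N",)],
    "PP": [("P", "NP")],
}
LEXICON = {"N": {"book", "cards", "flight"}, "Det": {"that"}, "P": {"with"}, "V": {"book"}}

# A state's end position j is implicit: it is the index of the chart entry holding it.
State = namedtuple("State", "lhs rhs dot start")


def next_sym(state):
    return state.rhs[state.dot] if state.dot < len(state.rhs) else None


def earley_recognize(words, start="S"):
    n = len(words)
    chart = [[] for _ in range(n + 1)]

    def add(state, j):
        if state not in chart[j]:  # never add the same state twice
            chart[j].append(state)

    add(State("Start", (start,), 0, 0), 0)  # dummy Start --> • S [0, 0]
    for j in range(n + 1):
        k = 0
        while k < len(chart[j]):  # chart[j] grows while we walk it
            s = chart[j][k]
            sym = next_sym(s)
            if sym is None:  # completer (no empty rules, so s.start < j)
                for old in chart[s.start]:
                    if next_sym(old) == s.lhs:
                        add(old._replace(dot=old.dot + 1), j)
            elif sym in LEXICON:  # scanner: a POS follows the dot
                if j < n and words[j] in LEXICON[sym]:
                    add(State(sym, (words[j],), 1, j), j + 1)
            else:  # predictor: a non-POS follows the dot
                for rhs in GRAMMAR[sym]:
                    add(State(sym, rhs, 0, j), j)
            k += 1
    return State("Start", (start,), 1, 0) in chart[n], chart
```

On "book that flight" the four chart entries hold 8, 12, 2 and 8 states, in the same order as S0-S29 in the example charts, and the recognizer accepts.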

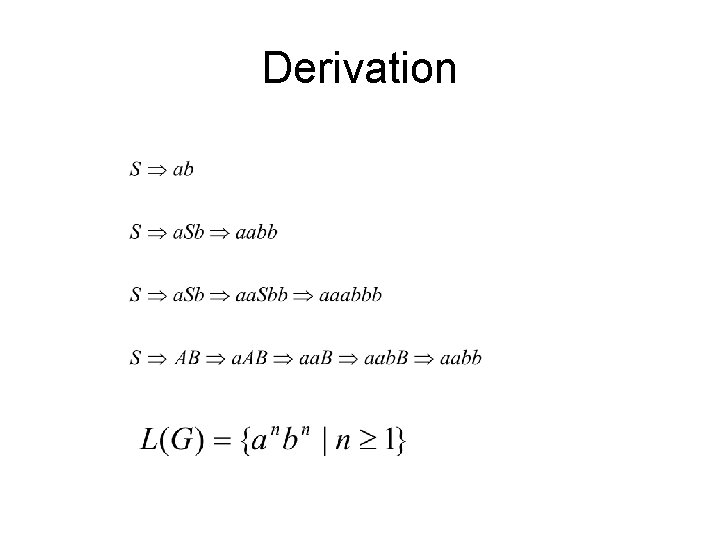

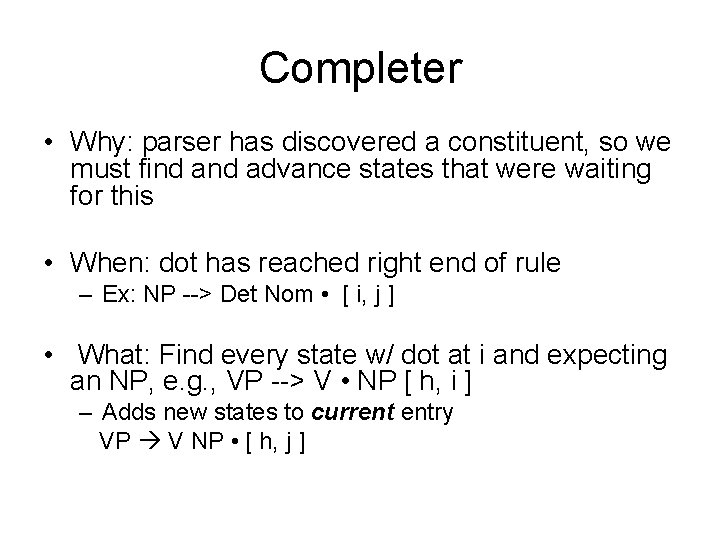

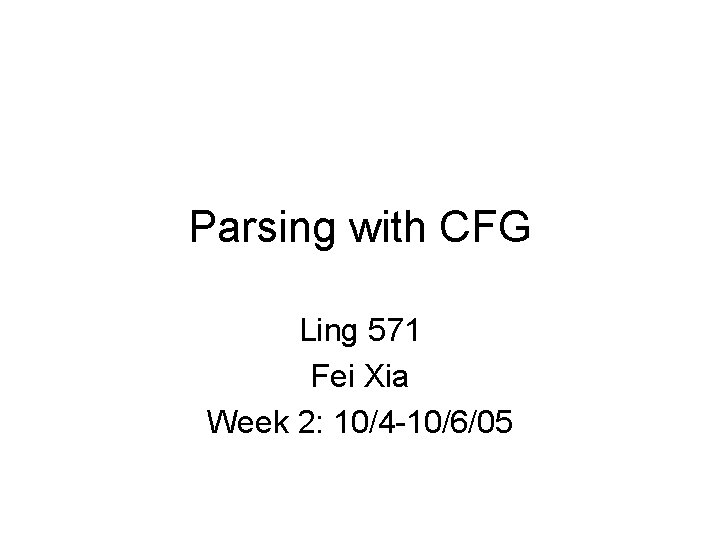

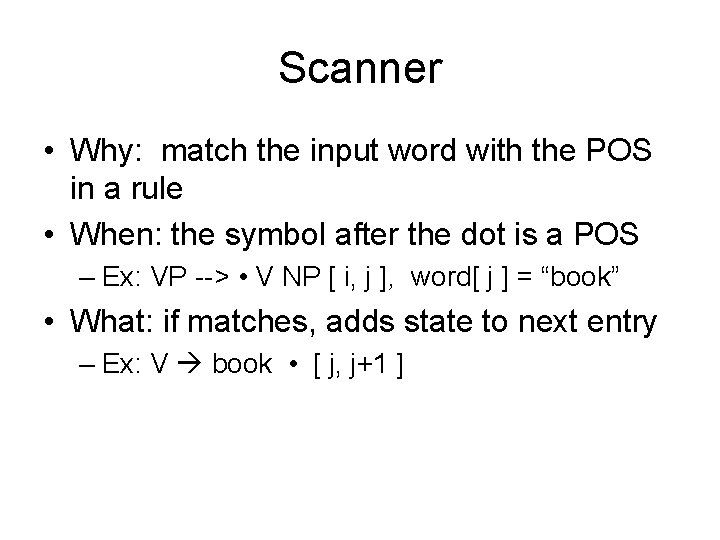

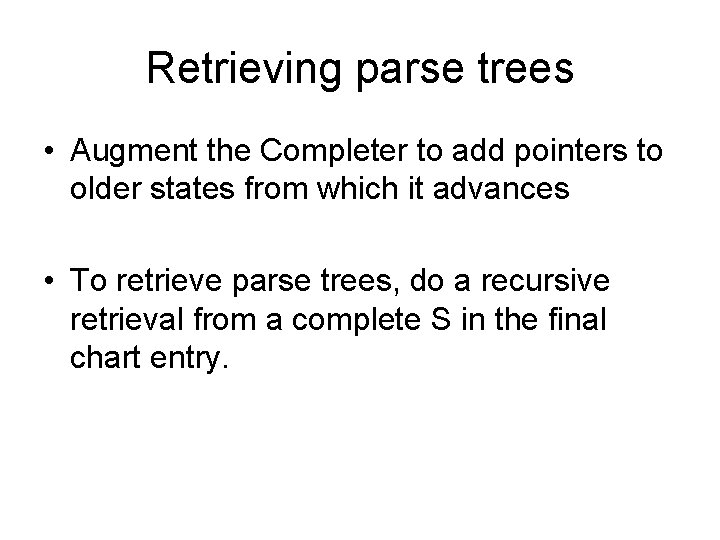

Retrieving parse trees
• Augment the Completer to add pointers to the older states from which it advances.
• To retrieve parse trees, do a recursive retrieval from a complete S in the final chart entry.
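Here is a self-contained sketch of an Earley parser whose Completer records pointers, followed by a recursive tree retrieval. One design choice is an assumption rather than the slides' method: pointers live in a side table keyed by (end position, state). That way a state reached in two ways (ambiguity) is stored once but keeps both pointer lists. `earley_parse` and the grammar encoding are hypothetical, and no empty rules are assumed.

```python
from collections import namedtuple
from itertools import product

GRAMMAR = {
    "S": [("NP", "VP"), ("VP",)],
    "VP": [("V", "NP"), ("VP", "PP")],
    "NP": [("NP", "PP"), ("Det", "N"), ("N",)],
    "PP": [("P", "NP")],
}
LEXICON = {"N": {"book", "cards", "flight"}, "Det": {"that"}, "P": {"with"}, "V": {"book"}}
State = namedtuple("State", "lhs rhs dot start")


def next_sym(state):
    return state.rhs[state.dot] if state.dot < len(state.rhs) else None


def earley_parse(words, start="S"):
    n = len(words)
    chart = [[] for _ in range(n + 1)]
    back = {}  # (end, state) -> set of pointer tuples; each pointer is (end, child state)

    def add(state, j, children=()):
        key = (j, state)
        if key not in back:  # new state: put it in the chart once
            back[key] = set()
            chart[j].append(state)
        back[key].add(children)  # but remember every way of reaching it

    add(State("Start", (start,), 0, 0), 0)
    for j in range(n + 1):
        k = 0
        while k < len(chart[j]):
            s = chart[j][k]
            sym = next_sym(s)
            if sym is None:  # completer: advance waiting states, pointing back to s
                for old in chart[s.start]:
                    if next_sym(old) == s.lhs:
                        new = old._replace(dot=old.dot + 1)
                        for kids in back[(s.start, old)]:
                            add(new, j, kids + ((j, s),))
            elif sym in LEXICON:  # scanner
                if j < n and words[j] in LEXICON[sym]:
                    add(State(sym, (words[j],), 1, j), j + 1)
            else:  # predictor
                for rhs in GRAMMAR[sym]:
                    add(State(sym, rhs, 0, j), j)
            k += 1

    def trees(j, state):
        if state.lhs in LEXICON:  # lexical state such as V --> book •
            return [(state.lhs, state.rhs[0])]
        result = []
        for kids in back[(j, state)]:
            for subtrees in product(*(trees(cj, cs) for cj, cs in kids)):
                result.append((state.lhs, *subtrees))
        return result

    final = State("Start", (start,), 1, 0)
    if (n, final) not in back:
        return []
    return [tree[1] for tree in trees(n, final)]  # drop the dummy Start node
```

On "book that flight" this returns the single tree [S [VP [V book] [NP [Det that] [N flight]]]]. On "book that flight with cards" it returns two trees, one with the PP attached to the NP and one with it attached to the VP. Their order is not fixed because pointers are kept in sets.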

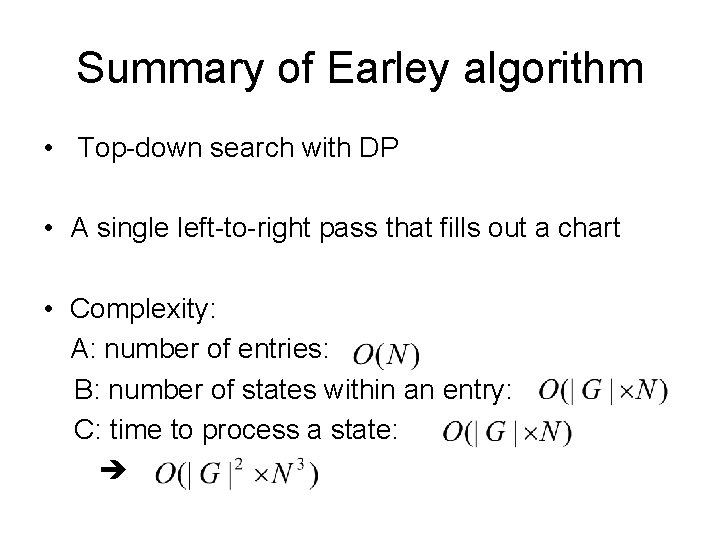

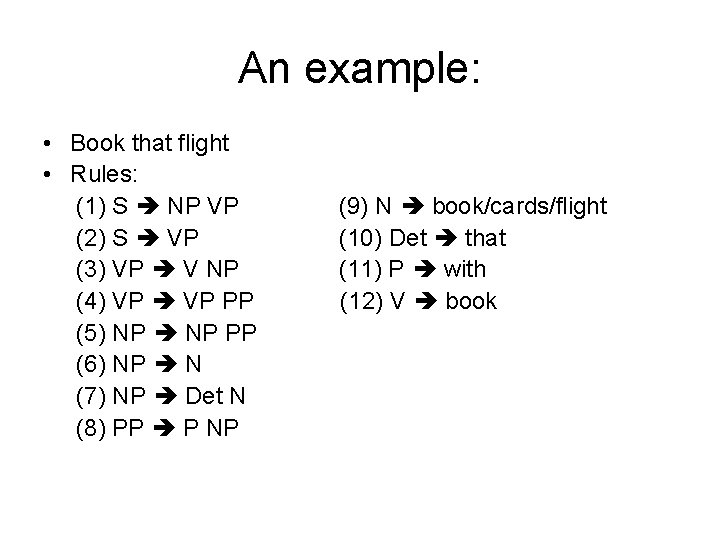

An example:
• Book that flight
• Rules:
(1) S -> NP VP
(2) S -> VP
(3) VP -> V NP
(4) VP -> VP PP
(5) NP -> NP PP
(6) NP -> N
(7) NP -> Det N
(8) PP -> P NP
(9) N -> book/cards/flight
(10) Det -> that
(11) P -> with
(12) V -> book

![Chart 0 word0book S 0 S 1 S 2 S 3 S 4 S Chart [0], word[0]=book S 0: S 1: S 2: S 3: S 4: S](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-45.jpg)

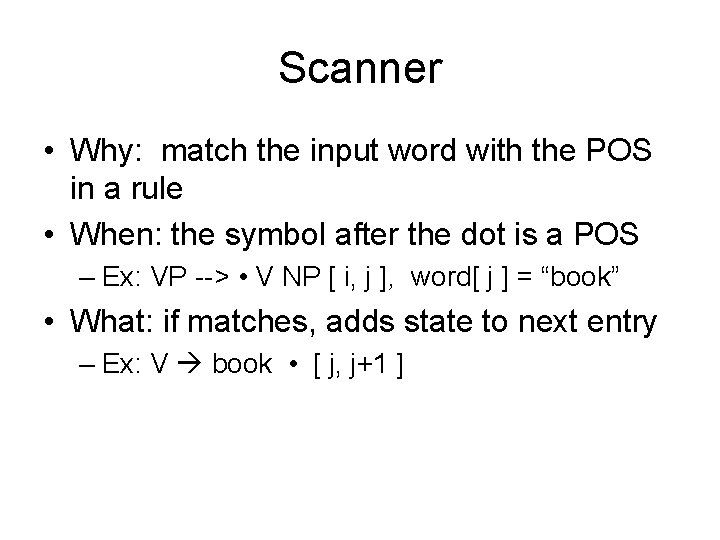

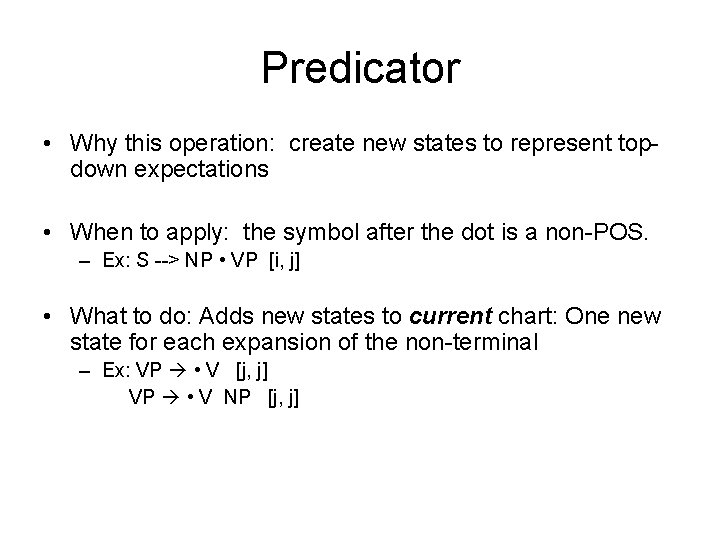

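Chart[0], word[0]=book

| State | Dotted rule | [i, j] | Created by |
|---|---|---|---|
| S0 | Start --> • S | [0, 0] | init |
| S1 | S --> • NP VP | [0, 0] | S0 pred |
| S2 | S --> • VP | [0, 0] | S0 pred |
| S3 | NP --> • NP PP | [0, 0] | S1 pred |
| S4 | NP --> • Det N | [0, 0] | S1 pred |
| S5 | NP --> • N | [0, 0] | S1 pred |
| S6 | VP --> • V NP | [0, 0] | S2 pred |
| S7 | VP --> • VP PP | [0, 0] | S2 pred |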

![Chart1 word1that S 8 N book S 9 V book S 10 NP N Chart[1], word[1]=that S 8: N book. S 9: V book. S 10: NP N.](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-46.jpg)

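Chart[1], word[1]=that

| State | Dotted rule | [i, j] | Created by |
|---|---|---|---|
| S8 | N --> book • | [0, 1] | S5 scan |
| S9 | V --> book • | [0, 1] | S6 scan |
| S10 | NP --> N • | [0, 1] | S8 comp [S8] |
| S11 | VP --> V • NP | [0, 1] | S9 comp [S9] |
| S12 | S --> NP • VP | [0, 1] | S10 comp [S10] |
| S13 | NP --> NP • PP | [0, 1] | S10 comp [S10] |
| S14 | NP --> • NP PP | [1, 1] | S11 pred |
| S15 | NP --> • Det N | [1, 1] | S11 pred |
| S16 | NP --> • N | [1, 1] | S11 pred |
| S17 | VP --> • V NP | [1, 1] | S12 pred |
| S18 | VP --> • VP PP | [1, 1] | S12 pred |
| S19 | PP --> • P NP | [1, 1] | S13 pred |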

![Chart2 word2flight S 20 Det that S 21 NP Det N 1 2 S Chart[2] word[2]=flight S 20: Det that. S 21: NP Det. N [1, 2] S](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-47.jpg)

Chart[2], word[2]=flight

| State | Dotted rule | [i, j] | Created by |
|---|---|---|---|
| S20 | Det --> that • | [1, 2] | S15 scan |
| S21 | NP --> Det • N | [1, 2] | S20 comp [S20] |

![Chart3 S 22 N flight 2 3 S 23 NP Det N 1 3 Chart[3] S 22: N flight. [2, 3] S 23: NP Det N. [1, 3]](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-48.jpg)

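Chart[3]

| State | Dotted rule | [i, j] | Created by |
|---|---|---|---|
| S22 | N --> flight • | [2, 3] | S21 scan |
| S23 | NP --> Det N • | [1, 3] | S22 comp [S20, S22] |
| S24 | VP --> V NP • | [0, 3] | S23 comp [S9, S23] |
| S25 | NP --> NP • PP | [1, 3] | S23 comp [S23] |
| S26 | S --> VP • | [0, 3] | S24 comp [S24] |
| S27 | VP --> VP • PP | [0, 3] | S24 comp [S24] |
| S28 | PP --> • P NP | [3, 3] | S25 pred |
| S29 | Start --> S • | [0, 3] | S26 comp [S26] |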

![Retrieving parse trees Start from chart3 look for start S 0 3 S 26 Retrieving parse trees Start from chart[3], look for start S. [0, 3] S 26](https://slidetodoc.com/presentation_image/3c85123f915fabd747e065b19a1c3eef/image-49.jpg)

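Retrieving parse trees
Start from chart[3] and look for Start --> S • [0, 3], then follow the pointers recursively:
• S26: S --> VP • [0, 3]
  – S24: VP --> V NP • [0, 3]
    – S9: V --> book • [0, 1]
    – S23: NP --> Det N • [1, 3]
      – S20: Det --> that • [1, 2]
      – S22: N --> flight • [2, 3]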

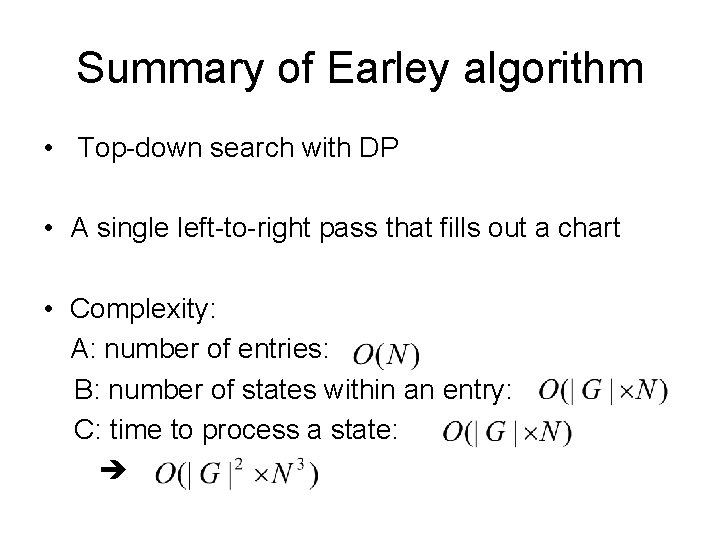

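Summary of Earley algorithm
• Top-down search with DP
• A single left-to-right pass that fills out a chart
• Complexity (n = number of input words, grammar size treated as a constant): A × B × C = $O(n^3)$
  – A: number of entries: $n + 1 = O(n)$
  – B: number of states within an entry: $O(n)$, since a state's start position can be any earlier position
  – C: time to process a state: $O(n)$, since the completer may scan a whole earlier entry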
