Decision tree LING 572 Fei Xia 1/10/06

Outline

- Basic concepts
- Main issues
- Advanced topics

Basic concepts

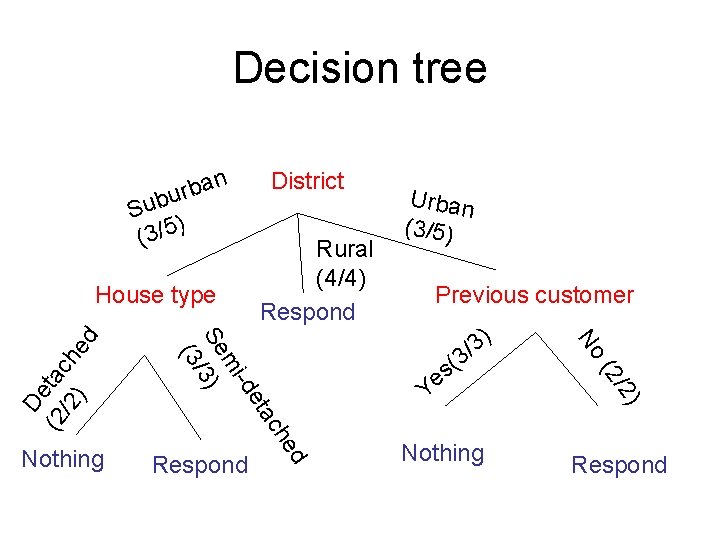

A classification problem

Table of training examples (row alignment lost in extraction). Columns and the values that appear:

- District: Suburban, Rural, Urban, …
- House type: Detached, Semidetached
- Income: High, Low
- Previous Customer: Yes, No
- Outcome: Nothing, Respond

Classification and estimation problems

- Given
  - a finite set of (input) attributes: features
    - Ex: District, House type, Income, Previous customer
  - a target attribute: the goal
    - Ex: Outcome: {Nothing, Respond}
  - training data: a set of classified examples in attribute-value representation
    - Ex: the previous table
- Predict the value of the goal given the values of the input attributes
  - The goal is a discrete variable → classification problem
  - The goal is a continuous variable → estimation problem

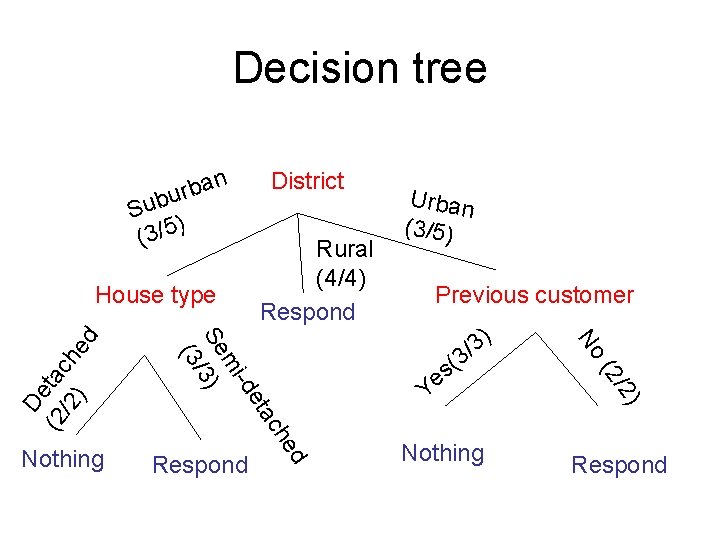

Decision tree

Figure: a decision tree with District at the root and branches Suburban (3/5), Rural (4/4) and Urban (3/5). Lower nodes test House type (Detached, Semi-detached) and Previous customer (Yes, No); the leaves are labeled Nothing or Respond.

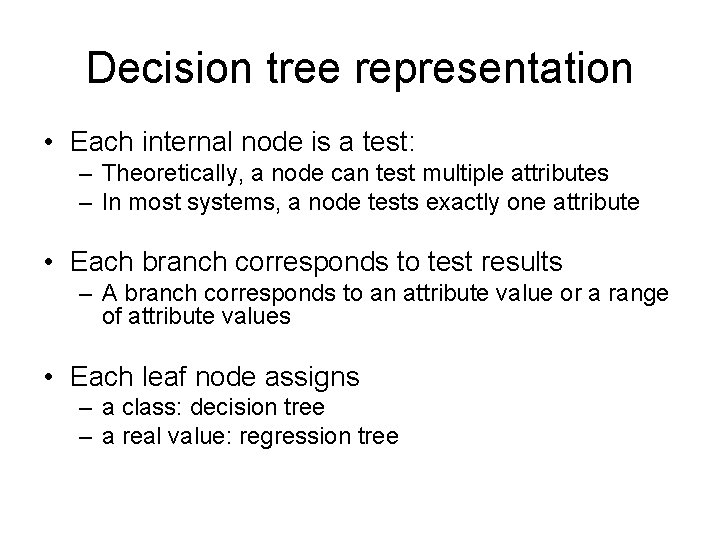

Decision tree representation

- Each internal node is a test:
  - Theoretically, a node can test multiple attributes
  - In most systems, a node tests exactly one attribute
- Each branch corresponds to a test result
  - A branch corresponds to an attribute value or a range of attribute values
- Each leaf node assigns
  - a class: decision tree
  - a real value: regression tree

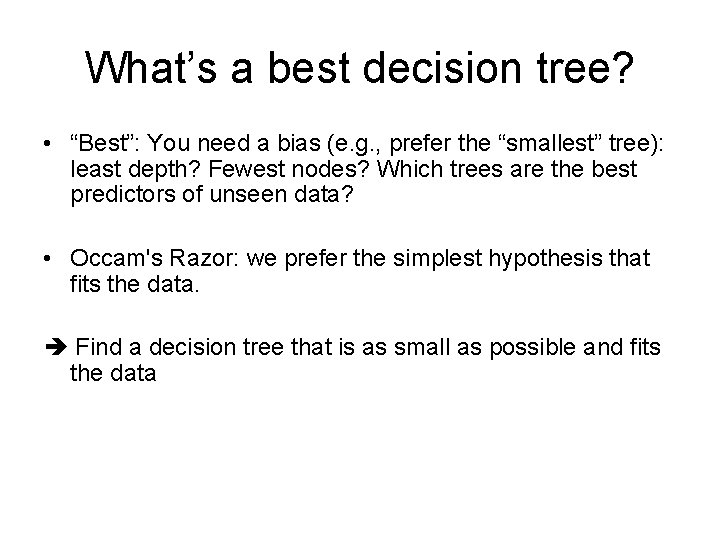

What’s a best decision tree?

- “Best”: you need a bias (e.g., prefer the “smallest” tree). Least depth? Fewest nodes? Which trees are the best predictors of unseen data?
- Occam's Razor: we prefer the simplest hypothesis that fits the data.

Find a decision tree that is as small as possible and fits the data.

Finding a smallest decision tree

- A decision tree can represent any discrete function of the inputs: $y = f(x_1, x_2, \ldots, x_n)$
- The space of decision trees is too big for a systematic search for a smallest decision tree.
- Solution: greedy algorithm

Basic algorithm: top-down induction

- Find the “best” decision attribute, A, and assign A as the decision attribute for the node
- For each value of A, create a new branch, and divide up the training examples
- Repeat the process until the gain is small enough
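The three steps above can be sketched roughly as follows. This is a minimal illustration, not the exact procedure of any particular system: it assumes information gain as the quality measure, examples stored as dictionaries, and a hypothetical `min_gain` threshold for the stopping test. The toy rows at the end are made up for the example.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Base-2 entropy of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def info_gain(rows, labels, attr):
    """Entropy of the node minus the weighted entropy of its children."""
    remainder = 0.0
    for value in set(row[attr] for row in rows):
        subset = [lab for row, lab in zip(rows, labels) if row[attr] == value]
        remainder += len(subset) / len(labels) * entropy(subset)
    return entropy(labels) - remainder


def build_tree(rows, labels, attrs, min_gain=1e-9):
    """Return a class label (leaf) or a tuple (attribute, {value: subtree})."""
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not attrs:
        return majority
    # Step 1: pick the attribute with the highest gain.
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))
    if info_gain(rows, labels, best) < min_gain:  # Step 3: gain is small enough
        return majority
    # Step 2: one branch per value, dividing up the training examples.
    branches = {}
    for value in set(row[best] for row in rows):
        keep = [i for i, row in enumerate(rows) if row[best] == value]
        branches[value] = build_tree(
            [rows[i] for i in keep],
            [labels[i] for i in keep],
            [a for a in attrs if a != best],
            min_gain,
        )
    return (best, branches)


def classify(tree, row, default=None):
    """Follow branches until a leaf; unseen values fall back to `default`."""
    while isinstance(tree, tuple):
        attr, branches = tree
        if row[attr] not in branches:
            return default
        tree = branches[row[attr]]
    return tree


# Made-up toy data using attribute names from the earlier slides.
rows = [
    {"District": "Rural", "Previous customer": "No"},
    {"District": "Rural", "Previous customer": "Yes"},
    {"District": "Urban", "Previous customer": "No"},
    {"District": "Urban", "Previous customer": "Yes"},
]
labels = ["Respond", "Respond", "Respond", "Nothing"]
tree = build_tree(rows, labels, ["District", "Previous customer"])
```

Because each split is chosen locally, the result is not guaranteed to be a smallest tree; it only reflects the greedy bias described above.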

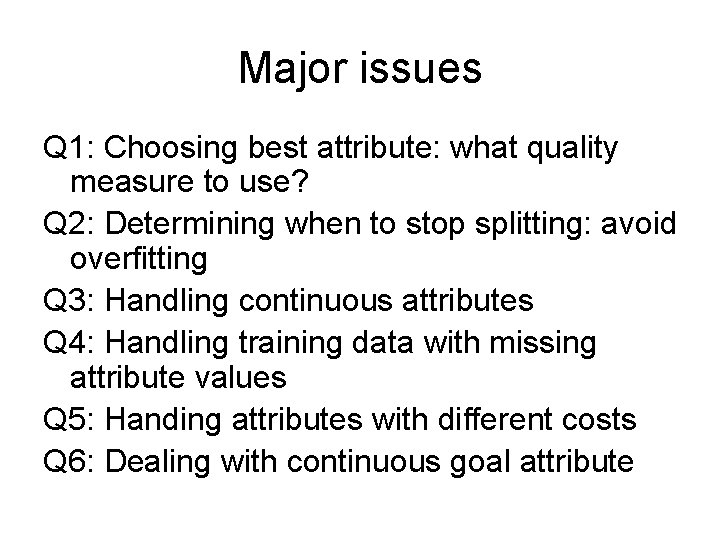

Major issues

Major issues

- Q1: Choosing the best attribute: what quality measure to use?
- Q2: Determining when to stop splitting: avoid overfitting
- Q3: Handling continuous attributes
- Q4: Handling training data with missing attribute values
- Q5: Handling attributes with different costs
- Q6: Dealing with a continuous goal attribute

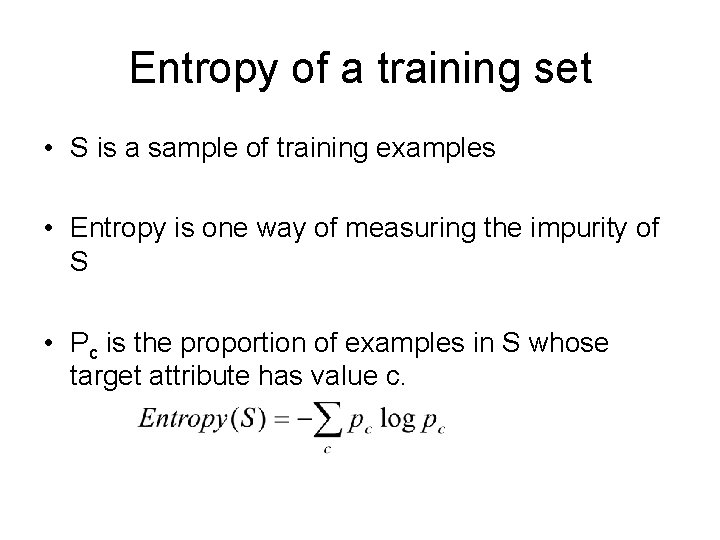

Q1: What quality measure?

- Information gain
- Gain Ratio
- …

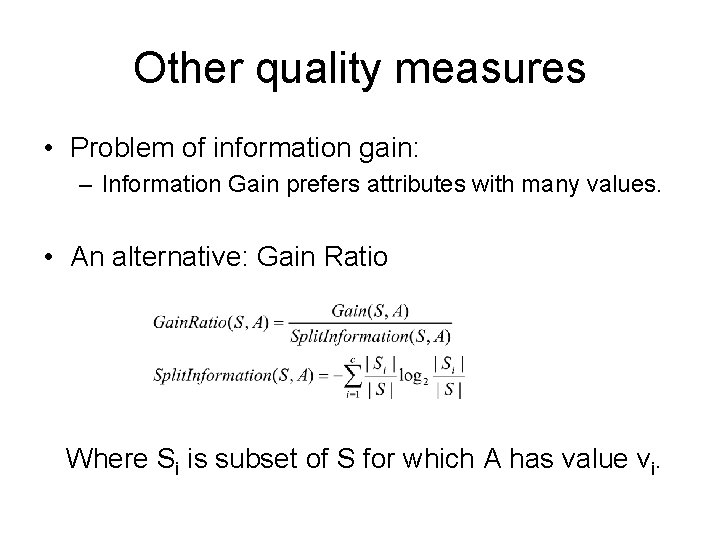

Entropy of a training set

- $S$ is a sample of training examples.
- Entropy is one way of measuring the impurity of $S$.
- $p_c$ is the proportion of examples in $S$ whose target attribute has value $c$.

$$Entropy(S) = -\sum_{c} p_c \log_2 p_c$$
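As a small sketch of the definition, the function below computes base-2 entropy from a list of class labels. The function name and the list-of-labels input are assumptions made for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Base-2 entropy: minus the sum over classes c of p_c * log2(p_c)."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


# A sample with 9 positive and 5 negative examples.
sample = ["+"] * 9 + ["-"] * 5
print(round(entropy(sample), 3))  # 0.94
```

A pure sample has entropy 0, and an evenly split two-class sample has entropy 1.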

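Information Gain

- Gain(S, A) = expected reduction in entropy due to sorting on A:

$$Gain(S, A) = Entropy(S) - \sum_{i} \frac{|S_i|}{|S|} Entropy(S_i)$$

  where $S_i$ is the subset of $S$ for which A has value $v_i$.
- Choose the A with the maximum information gain (equivalently, the A with the minimum average entropy).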

![An example: information gain for the Humidity split](https://slidetodoc.com/presentation_image/1c2d87943bb066e77931322c888b0248/image-16.jpg)

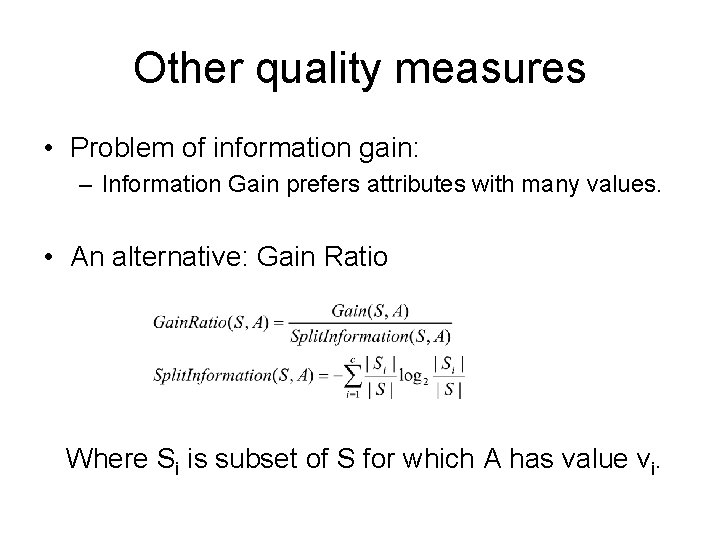

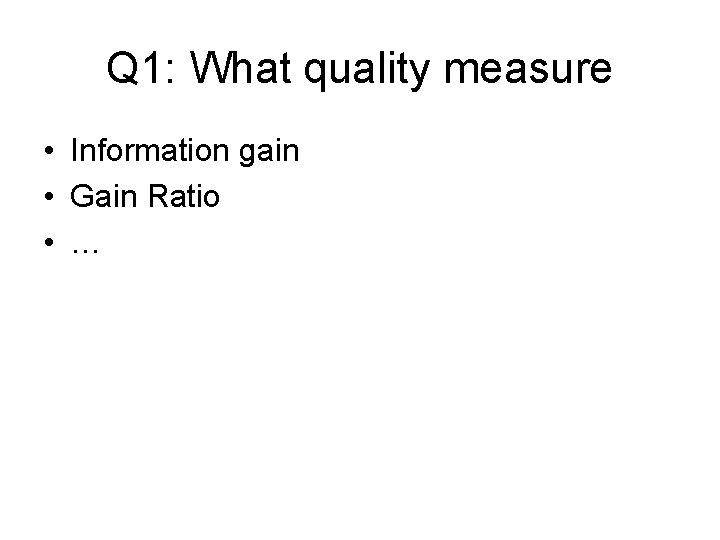

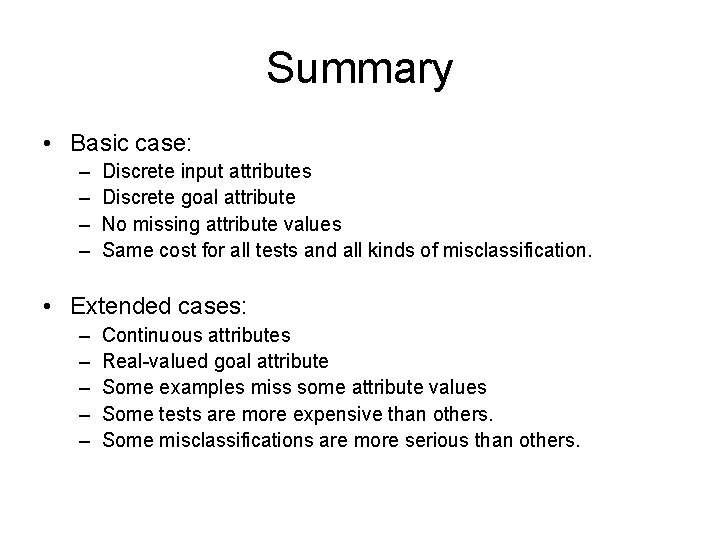

An example

S = [9+, 5-], E = 0.940, split on Humidity:

- High: [3+, 4-], E = 0.985
- Normal: [6+, 1-], E = 0.592

InfoGain(S, Humidity) = 0.940 - (7/14)*0.985 - (7/14)*0.592 = 0.151

S = [9+, 5-], E = 0.940, split on Wind:

- Weak: [6+, 2-], E = 0.811
- Strong: [3+, 3-], E = 1.00

InfoGain(S, Wind) = 0.940 - (8/14)*0.811 - (6/14)*1.0 = 0.048
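The arithmetic in this example can be checked with a short script. It is only a sketch: the helper names are hypothetical, and each node is represented as a (positive, negative) count pair. With unrounded entropies the Humidity gain comes out near 0.152; the slide's 0.151 results from using the entropies rounded to three decimals.

```python
from math import log2


def entropy(pos, neg):
    """Base-2 entropy of a node with `pos` positive and `neg` negative examples."""
    total = pos + neg
    return -sum(n / total * log2(n / total) for n in (pos, neg) if n)


def info_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    total = sum(parent)
    remainder = sum(sum(c) / total * entropy(*c) for c in children)
    return entropy(*parent) - remainder


humidity = info_gain((9, 5), [(3, 4), (6, 1)])  # High, Normal
wind = info_gain((9, 5), [(6, 2), (3, 3)])      # Weak, Strong
print(f"Humidity: {humidity:.4f}, Wind: {wind:.4f}")
```

Humidity has the larger gain, so it would be preferred over Wind at this node.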

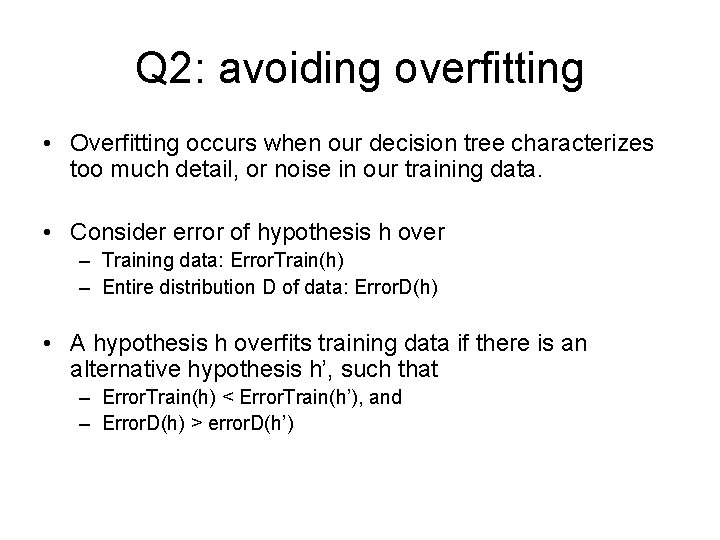

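Other quality measures

- Problem of information gain:
  - Information Gain prefers attributes with many values.
- An alternative: Gain Ratio

$$GainRatio(S, A) = \frac{Gain(S, A)}{SplitInfo(S, A)}, \qquad SplitInfo(S, A) = -\sum_{i} \frac{|S_i|}{|S|} \log_2 \frac{|S_i|}{|S|}$$

where $S_i$ is the subset of $S$ for which A has value $v_i$.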
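To illustrate why gain ratio counteracts the preference for many-valued attributes, the sketch below compares a two-way split with a hypothetical identifier-like attribute that puts each of 14 examples in its own branch. The function names and the count-tuple representation are assumptions for this example; gain ratio is taken to be gain divided by the entropy of the branch sizes.

```python
from math import log2


def entropy_from_counts(counts):
    """Base-2 entropy of a distribution given as raw counts."""
    total = sum(counts)
    return -sum(n / total * log2(n / total) for n in counts if n)


def gain_and_ratio(parent_counts, child_counts):
    """Return (information gain, gain ratio) for one candidate split."""
    total = sum(parent_counts)
    sizes = [sum(c) for c in child_counts]
    gain = entropy_from_counts(parent_counts) - sum(
        s / total * entropy_from_counts(c) for s, c in zip(sizes, child_counts)
    )
    split_info = entropy_from_counts(sizes)  # entropy of the branch sizes
    ratio = gain / split_info if split_info > 0 else 0.0
    return gain, ratio


# Two-way split of S = [9+, 5-] into [3+, 4-] and [6+, 1-].
two_way = gain_and_ratio((9, 5), [(3, 4), (6, 1)])
# Identifier-like attribute: every example ends up alone in its branch.
id_like = gain_and_ratio((9, 5), [(1, 0)] * 9 + [(0, 1)] * 5)
print(two_way, id_like)
```

The identifier-like attribute has the maximum possible gain, but its large split information pulls its gain ratio down considerably.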

Q2: Avoiding overfitting

- Overfitting occurs when our decision tree characterizes too much detail, or noise, in our training data.
- Consider the error of hypothesis $h$ over
  - the training data: $Error_{train}(h)$
  - the entire distribution $D$ of data: $Error_{D}(h)$
- A hypothesis $h$ overfits the training data if there is an alternative hypothesis $h'$ such that
  - $Error_{train}(h) < Error_{train}(h')$, and
  - $Error_{D}(h) > Error_{D}(h')$

How to avoid overfitting

- Stop growing the tree earlier
  - Ex: InfoGain < threshold
  - Ex: Number of examples in a node < threshold
  - …
- Grow the full tree, then post-prune

In practice, the latter works better than the former.

Post-pruning

- Split the data into a training set and a validation set
- Do until further pruning is harmful:
  - Evaluate the impact on the validation set of pruning each possible node (plus those below it)
  - Greedily remove the ones that don’t improve the performance on the validation set

Produces a smaller tree with the best performance measure.
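The pruning loop might look roughly like the sketch below. It is one possible reading of the procedure, with validation accuracy as the performance measure; the `Node` class, which stores the majority training label at every node so that a pruned node can act as a leaf, is an assumption made for illustration.

```python
class Node:
    def __init__(self, label, attr=None, branches=None):
        self.label = label              # majority training class at this node
        self.attr = attr                # attribute tested here (None for a leaf)
        self.branches = branches or {}  # attribute value -> child Node


def predict(node, row):
    """Descend while the node is internal and the row's value has a branch."""
    while node.attr is not None and row[node.attr] in node.branches:
        node = node.branches[row[node.attr]]
    return node.label


def accuracy(tree, rows, labels):
    return sum(predict(tree, r) == y for r, y in zip(rows, labels)) / len(labels)


def internal_nodes(node):
    """Yield all internal nodes in pre-order."""
    if node.attr is not None:
        yield node
        for child in node.branches.values():
            yield from internal_nodes(child)


def prune(tree, val_rows, val_labels):
    """Repeatedly replace by a leaf the node whose removal gives the best
    validation accuracy; stop when every removal would lower accuracy."""
    while True:
        best_node, best_acc = None, accuracy(tree, val_rows, val_labels)
        for node in list(internal_nodes(tree)):
            saved = node.attr, node.branches
            node.attr, node.branches = None, {}   # tentatively prune
            acc = accuracy(tree, val_rows, val_labels)
            node.attr, node.branches = saved      # undo
            if acc >= best_acc:                   # ties favour the smaller tree
                best_node, best_acc = node, acc
        if best_node is None:
            return tree
        best_node.attr, best_node.branches = None, {}


# Hypothetical tree: the test on "b" fits noise in the training data.
inner = Node("y", "b", {0: Node("x"), 1: Node("y")})
tree = Node("x", "a", {0: Node("x"), 1: inner})
val_rows = [{"a": 0, "b": 0}, {"a": 1, "b": 0}, {"a": 1, "b": 1}]
val_labels = ["x", "y", "y"]
pruned = prune(tree, val_rows, val_labels)
```

In this toy case the inner test on `b` is removed because doing so raises validation accuracy, while the root test on `a` survives because removing it would lower accuracy.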

Performance measure

- Accuracy:
  - on validation data
  - K-fold cross validation
- Misclassification cost: sometimes more accuracy is desired for some classes than others.
- MDL: size(tree) + errors(tree)

Rule post-pruning

- Convert the tree to an equivalent set of rules
- Prune each rule independently of the others
- Sort the final rules into the desired sequence for use
- Perhaps the most frequently used method (e.g., C4.5)

Q3: Handling numeric attributes

- Continuous attribute → discrete attribute
- Example
  - Original attribute: Temperature = 82.5
  - New attribute: (Temperature > 72.3) = t, f

Question: how to choose thresholds?

Choosing thresholds for a continuous attribute

- Sort the examples according to the continuous attribute.
- Identify adjacent examples that differ in their target classification → a set of candidate thresholds
- Choose the candidate with the highest information gain.
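A rough sketch of this procedure follows. The slide does not say where exactly between two adjacent examples a candidate threshold lies; taking the midpoint is an assumption here, and the temperature values are made up for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def candidate_thresholds(values, labels):
    """Midpoints between adjacent sorted examples whose classes differ."""
    pairs = sorted(zip(values, labels))
    return [
        (a + b) / 2
        for (a, la), (b, lb) in zip(pairs, pairs[1:])
        if la != lb and a != b
    ]


def best_threshold(values, labels):
    """Candidate threshold with the highest information gain.

    Raises ValueError if there is no candidate (e.g. all labels are equal).
    """
    def gain(t):
        below = [lab for v, lab in zip(values, labels) if v <= t]
        above = [lab for v, lab in zip(values, labels) if v > t]
        n = len(labels)
        return (entropy(labels)
                - len(below) / n * entropy(below)
                - len(above) / n * entropy(above))

    return max(candidate_thresholds(values, labels), key=gain)


temperature = [40, 48, 60, 72, 80, 90]
play = ["No", "No", "Yes", "Yes", "Yes", "No"]
print(candidate_thresholds(temperature, play))  # [54.0, 85.0]
print(best_threshold(temperature, play))        # 54.0
```

Only class boundaries are considered, so the number of candidates is usually much smaller than the number of distinct attribute values.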

Q4: Unknown attribute values

Three options:

- Assume an attribute can take the value “blank”.
- Assign the most common value of A among the training data at node n that have the same target class.
- Assign probability $p_i$ to each possible value $v_i$ of A
  - Assign a fraction ($p_i$) of the example to each descendant in the tree
  - This method is used in C4.5.
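The fractional-count option can be sketched as below. This is an illustration under assumptions, not C4.5's actual code: examples are (row, label, weight) triples, a missing value is represented by `None`, and $p_i$ is estimated from the examples at the node whose value is known.

```python
from collections import Counter


def split_with_missing(examples, attr):
    """Split weighted examples on `attr`, spreading missing values fractionally.

    examples: list of (row, label, weight); row[attr] is None when unknown.
    Returns {value: [(row, label, weight), ...]}.
    """
    known = Counter()
    for row, _, weight in examples:
        if row[attr] is not None:
            known[row[attr]] += weight
    total = sum(known.values())

    branches = {value: [] for value in known}
    for row, label, weight in examples:
        if row[attr] is not None:
            branches[row[attr]].append((row, label, weight))
        else:
            # Send a fraction p_i of this example down every branch.
            for value, count in known.items():
                branches[value].append((row, label, weight * count / total))
    return branches


examples = [
    ({"Wind": "Weak"}, "+", 1.0),
    ({"Wind": "Weak"}, "+", 1.0),
    ({"Wind": "Weak"}, "-", 1.0),
    ({"Wind": "Strong"}, "-", 1.0),
    ({"Wind": None}, "+", 1.0),
]
branches = split_with_missing(examples, "Wind")
weights = {v: sum(w for _, _, w in exs) for v, exs in branches.items()}
print(weights)  # {'Weak': 3.75, 'Strong': 1.25}
```

The total weight is preserved, so entropy and gain can be computed from weighted counts in the child nodes.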

Q5: Attributes with cost

- Consider medical diagnosis: each test (e.g., a blood test) has a cost
- Question: how to learn a consistent tree with low expected cost?
- One approach: replace gain by
  - $\frac{Gain^2(S, A)}{Cost(A)}$ (Tan and Schlimmer, 1990)

Q6: Dealing with a continuous goal attribute

Regression tree

- A variant of decision trees
- Estimation problem: approximate real-valued functions, e.g., the crime rate
- A leaf node is marked with a real value or a linear function, e.g., the mean of the target values of the examples at the node.
- Measure of impurity: e.g., variance, standard deviation, …
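One way to picture a regression-tree split is the sketch below, which picks the threshold on a single numeric attribute that minimizes the size-weighted variance of the two children and labels each child with the mean of its targets. The function names and data are hypothetical, and real systems differ in details.

```python
def variance(ys):
    """Population variance of a list of target values."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys) / len(ys)


def best_split(xs, ys):
    """Return (threshold, left_mean, right_mean) minimizing weighted variance,
    or None if all attribute values are identical."""
    n = len(ys)
    best = None
    for t in sorted(set(xs))[:-1]:  # the largest value would leave one side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = len(left) / n * variance(left) + len(right) / n * variance(right)
        if best is None or score < best[0]:
            best = (score, t, sum(left) / len(left), sum(right) / len(right))
    return best[1:] if best else None


xs = [1, 2, 3, 10, 11, 12]
ys = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
threshold, left_mean, right_mean = best_split(xs, ys)
print(threshold, left_mean, right_mean)
```

Here the split separates the low targets from the high ones, and each leaf would predict the mean of its side.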

Summary of major issues

- Q1: Choosing the best attribute: different quality measures.
- Q2: Determining when to stop splitting: stop earlier or post-prune.
- Q3: Handling continuous attributes: find the breakpoints.

Summary of major issues (cont)

- Q4: Handling training data with missing attribute values: blank value, most common value, or fractional count.
- Q5: Handling attributes with different costs: use a quality measure that includes the cost factors.
- Q6: Dealing with a continuous goal attribute: various ways of building regression trees.

Common algorithms

- ID3
- C4.5
- CART

ID3

- Proposed by Quinlan (so is C4.5)
- Can handle basic cases: discrete attributes, no missing information, etc.
- Information gain as quality measure

C4.5

- An extension of ID3:
  - Several quality measures
  - Incomplete information (missing attribute values)
  - Numerical (continuous) attributes
  - Pruning of decision trees
  - Rule derivation
  - Random mode and batch mode

CART

- CART (classification and regression tree)
- Proposed by Breiman et al. (1984)
- Constant numerical values in leaves
- Variance as measure of impurity

Strengths of decision tree methods

- Ability to generate understandable rules
- Ease of calculation at classification time
- Ability to handle both continuous and categorical variables
- Ability to clearly indicate the best attributes

Weaknesses of decision tree methods

- Greedy algorithm: no global optimization
- Error-prone with too many classes: the number of training examples per node shrinks quickly in a tree with many levels or branches.
- Expensive to train: sorting, combination of attributes, calculating quality measures, etc.
- Trouble with non-rectangular regions: a tree partitions the decision space into rectangular boxes, which may not correspond well to the actual distribution of records.

Advanced topics

Combining multiple models

- The inherent instability of top-down decision tree induction: different training datasets from a given problem domain will produce quite different trees.
- Techniques:
  - Bagging
  - Boosting

Bagging

- Introduced by Breiman
- It first creates multiple decision trees, each trained on a different training set drawn by sampling the original data with replacement (bootstrap samples).
- Then it combines the trees' predictions, for example by majority vote.
- This addresses some of the problems inherent in regular ID3, such as its instability.
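A minimal sketch of bagging, assuming bootstrap resampling and majority voting. The `train` argument is any function that builds a classifier from a sample; `train_lookup` below is a hypothetical stand-in for a decision tree learner that keeps the example short, and the attribute name `x` is made up.

```python
import random
from collections import Counter


def bootstrap(rows, labels, rng):
    """Draw len(rows) examples with replacement."""
    idx = [rng.randrange(len(rows)) for _ in rows]
    return [rows[i] for i in idx], [labels[i] for i in idx]


def bagging(train, rows, labels, n_models=25, seed=0):
    """Train one model per bootstrap sample; predict by majority vote.

    `train(rows, labels)` must return a function mapping a row to a label.
    """
    rng = random.Random(seed)
    models = [train(*bootstrap(rows, labels, rng)) for _ in range(n_models)]

    def predict(row):
        votes = Counter(model(row) for model in models)
        return votes.most_common(1)[0][0]

    return predict


def train_lookup(rows, labels):
    """Stand-in base learner: majority label per value of attribute 'x',
    falling back to the overall majority for unseen values."""
    overall = Counter(labels).most_common(1)[0][0]
    by_value = {}
    for row, label in zip(rows, labels):
        by_value.setdefault(row["x"], Counter())[label] += 1
    table = {v: c.most_common(1)[0][0] for v, c in by_value.items()}
    return lambda row: table.get(row["x"], overall)


rows = [{"x": 0}] * 5 + [{"x": 1}] * 5
labels = ["a"] * 5 + ["b"] * 5
predict = bagging(train_lookup, rows, labels)
print(predict({"x": 0}), predict({"x": 1}))
```

Individual models may differ from sample to sample, but the vote averages out much of that variation.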

Boosting

- Introduced by Freund and Schapire
- It builds trees in sequence and assigns higher weights to the training instances that the current trees classify incorrectly.
- These weights refocus the algorithm on the hard instances, and the final prediction weights each hypothesis by how well it performs.

Summary

- Basic case:
  - Discrete input attributes
  - Discrete goal attribute
  - No missing attribute values
  - Same cost for all tests and all kinds of misclassification.
- Extended cases:
  - Continuous attributes
  - Real-valued goal attribute
  - Some examples miss some attribute values
  - Some tests are more expensive than others.
  - Some misclassifications are more serious than others.

Summary (cont)

- Basic algorithm:
  - greedy algorithm
  - top-down induction
  - bias for small trees
- Major issues:

Uncovered issues

- Incremental decision tree induction?
- How can a decision relate to other decisions? What's the order of making the decisions? (e.g., POS tagging)
- What's the difference between a decision tree and a decision list?