Dialogue Ling 571 Fei Xia Week 8: 11/15/05



Outline • Properties of dialogues • Dialogue acts • Dialogue manager

Properties of dialogue • Turn-taking: • Grounding: • Implicature

Turn-taking
• Overlapped speech: less than 5%.
• Little silence between turns either: the next speaker begins motor planning before the previous speaker finishes.
• Turn-taking rule:
  – If the current speaker selects A, A must speak.
  – If the current speaker does not select the next speaker, any speaker can speak.
  – If no one else takes the next turn, the current speaker may take the next turn.
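The three turn-taking rules can be read as an ordered decision procedure. The sketch below is only an illustration: the function name `next_speaker`, the `volunteers` list, and the "first volunteer wins" tie-break are assumptions, not part of the slides.

```python
from typing import Optional, Sequence


def next_speaker(current: str,
                 selected: Optional[str],
                 volunteers: Sequence[str]) -> str:
    """Apply the three turn-taking rules in order and return who speaks next."""
    # Rule 1: if the current speaker selects someone, that person must speak.
    if selected is not None:
        return selected
    # Rule 2: otherwise any other participant may take the turn.
    # Assumption of this sketch: the first volunteer in the list wins.
    for person in volunteers:
        if person != current:
            return person
    # Rule 3: if no one else takes the turn, the current speaker may continue.
    return current


print(next_speaker("A", "B", ["C"]))        # rule 1 applies
print(next_speaker("A", None, ["C", "B"]))  # rule 2 applies
print(next_speaker("A", None, []))          # rule 3 applies
```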

Turn-taking (cont)
• Adjacency pairs: two-part structures
  – Question / Answer
  – Greeting / Greeting
  – Compliment / Downplayer
  – Request / Grant
• Significant silence: silence that follows the first part of an adjacency pair.

Turn and utterance • A single utterance may span several turns: – A: I am leaving tomorrow – B: really? – A: right after my class. • A turn may contain several utterances – A: Do you think it will work? Will John get mad at me? What if Mary does not like it? … – B: Slow down.

Grounding
• Speaker and hearer must establish common ground.
• Examples:
  – Acknowledgement: yeah, that’s great
  – Demonstration: rephrasing or collaboratively completing A’s utterance
  – Request for repair: Huh? What?
  – …

Conversational Implicature
• Example:
  – A: What day in May did you want to travel?
  – B: I need to be there for a meeting that is from the 12th to the 15th.
• Speakers expect hearers to draw certain inferences.
• (Grice, 1975) What enables hearers to draw these inferences is that conversation is guided by a set of maxims.

Grice’s four maxims • Maxim of Quantity: Be exactly as informative as is required: – Make your contribution as informative as is required (for the current purposes of the exchange). – Don’t make your contribution more informative than is required. – Ex: There are three flights today.

Grice’s maxims (cont)
• Maxim of Quality: try to make your contribution one that is true:
  – Do not say what you believe to be false.
  – Do not say that for which you lack adequate evidence.
• Maxim of Relevance: be relevant.
• Maxim of Manner: be perspicuous:
  – Avoid obscurity of expression
  – Avoid ambiguity
  – Be brief
  – Be orderly

Grice’s maxims (cont)
• Maxim of Quantity
• Maxim of Quality
• Maxim of Relevance
• Maxim of Manner
• Do people actually follow the maxims?

Properties of dialogue
• Turn-taking:
  – Overlapping speech
  – Little silence
  – Turn-taking rules
  – Utterance segmentation
• Grounding: acknowledgement, request for repair
• Implicature: four maxims
• Case study: Instant messaging

Instant messaging
• Turn-taking:
  – More overlapping speech
  – Longer silence
  – Turn-taking rules
  – More unsynchronized exchange
  – One more level: utterance, turn, message
• Grounding is more important.
• Implicature: four maxims
• Discourse structure: a tree?

Outline • Properties of dialogues • Dialogue acts • Dialogue manager

Dialogue acts
• Austin (1962): three kinds of acts
  – Locutionary act: the act of uttering a string of words
  – Illocutionary act (a.k.a. speech act): the act that the speaker performs in the utterance
  – Perlocutionary act: production of effects by means of the utterance
• Searle (1975): five classes of speech acts

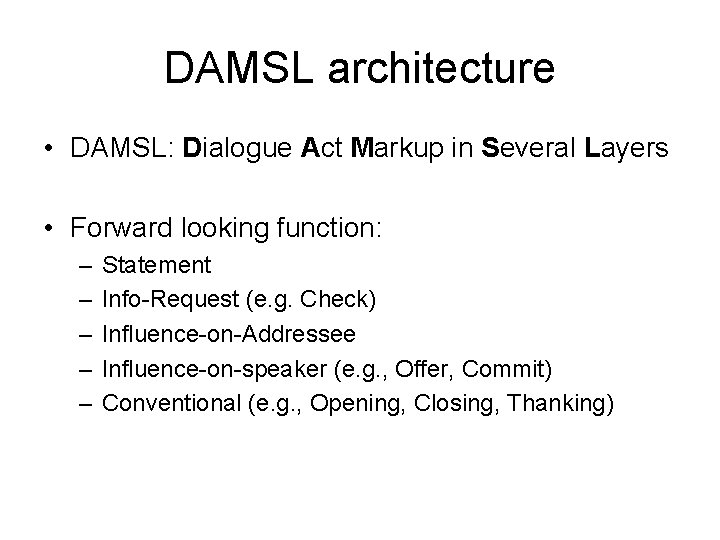

DAMSL architecture
• DAMSL: Dialogue Act Markup in Several Layers
• Forward looking function:
  – Statement
  – Info-Request (e.g., Check)
  – Influence-on-Addressee
  – Influence-on-Speaker (e.g., Offer, Commit)
  – Conventional (e.g., Opening, Closing, Thanking)

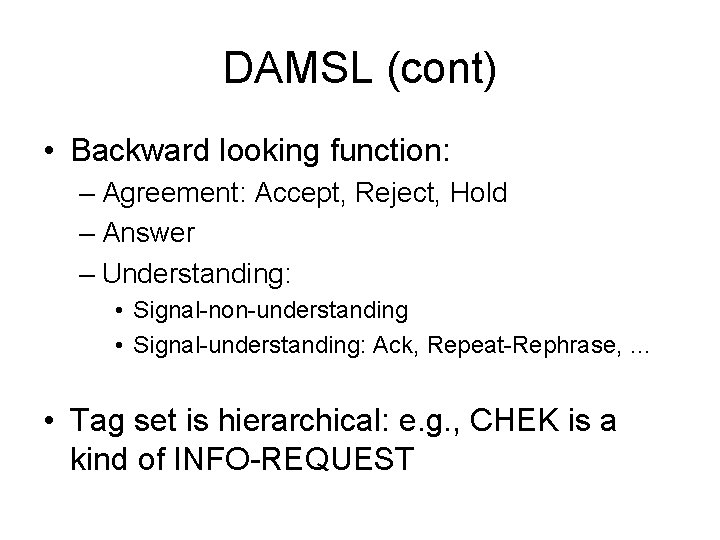

DAMSL (cont)
• Backward looking function:
  – Agreement: Accept, Reject, Hold
  – Answer
  – Understanding:
    • Signal-non-understanding
    • Signal-understanding: Ack, Repeat-Rephrase, …
• Tag set is hierarchical: e.g., CHECK is a kind of INFO-REQUEST
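One way to picture the hierarchical tag set is as a table of parent links that can be walked upward. This is a minimal sketch covering only tags named on these slides; the `PARENT` table and the `is_a` helper are illustrative names, not part of the DAMSL specification.

```python
from typing import Optional

# Child -> parent links for the tags mentioned on the slides (not the full tag set).
PARENT = {
    "Check": "Info-Request",
    "Accept": "Agreement",
    "Reject": "Agreement",
    "Hold": "Agreement",
    "Signal-non-understanding": "Understanding",
    "Signal-understanding": "Understanding",
    "Ack": "Signal-understanding",
    "Repeat-Rephrase": "Signal-understanding",
}


def is_a(tag: str, ancestor: str) -> bool:
    """Return True if `tag` equals `ancestor` or lies below it in the hierarchy."""
    current: Optional[str] = tag
    while current is not None:
        if current == ancestor:
            return True
        current = PARENT.get(current)  # None once we reach a top-level tag
    return False


print(is_a("Check", "Info-Request"))  # Check is a kind of Info-Request
print(is_a("Ack", "Understanding"))   # Ack -> Signal-understanding -> Understanding
```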

![An example](https://slidetodoc.com/presentation_image_h2/e42804897c8248448bf38d3e829fb328/image-18.jpg)

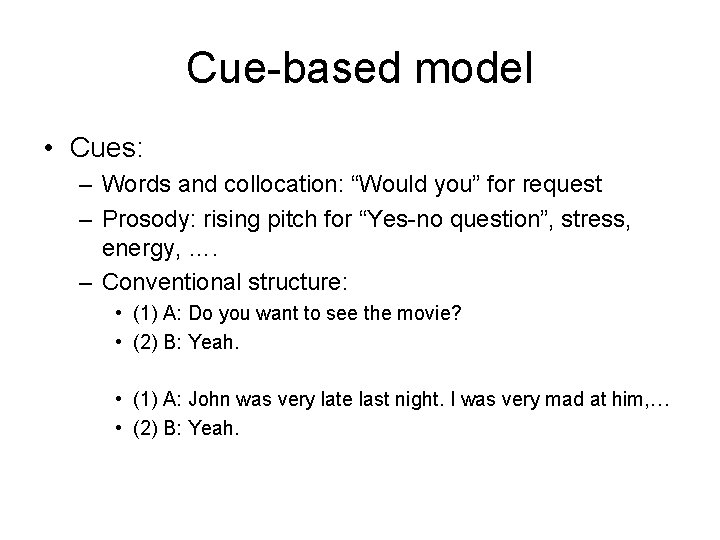

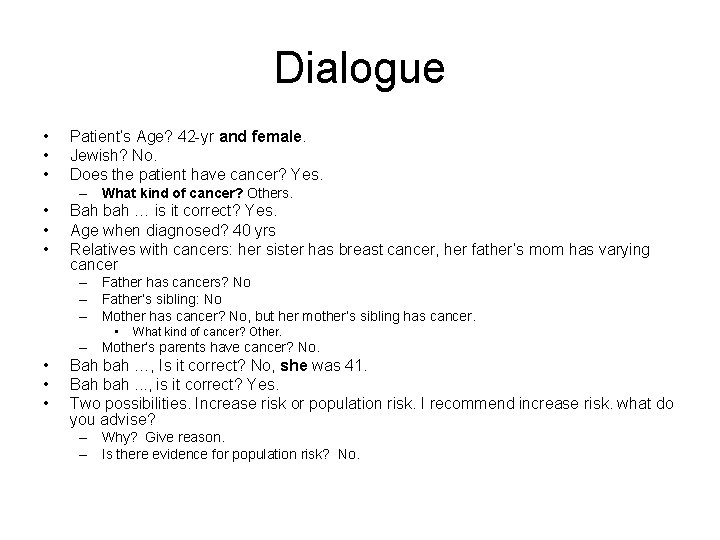

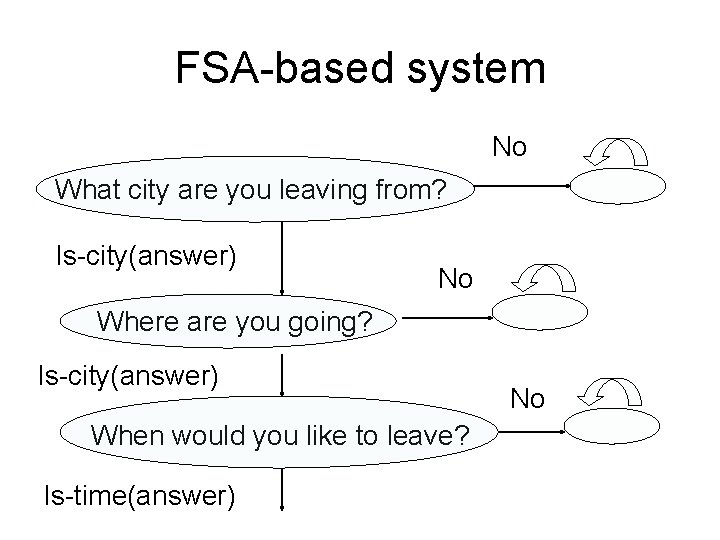

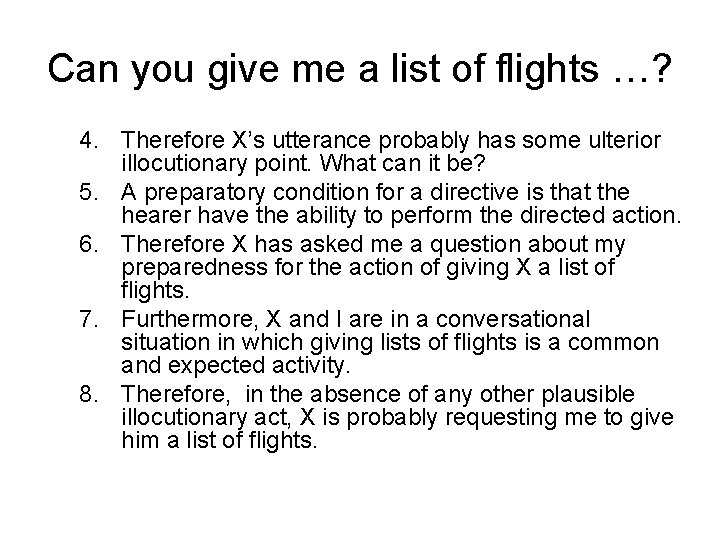

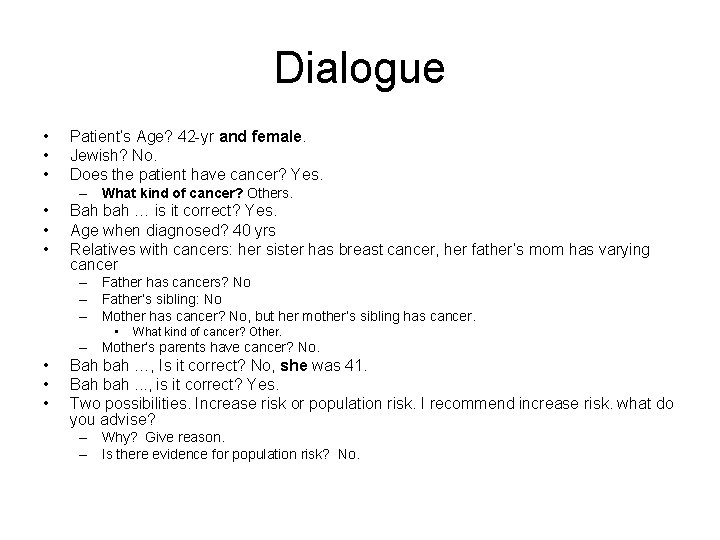

An example
• [assert] C: I need to travel in May.
• [info-req, ack] A: And, what day in May do you want to travel?
• [assert, answer] C: I need to be there for a meeting on the 15th.
• [info-req, ack] A: And you are flying into what city?
• [assert, answer] C: Seattle.

How to identify these acts? • Examples: – Can you give me a list of flights from A to B? – It’s hot in here. • Two models: – Inference model: symbolic approach – Cue model: statistical approach

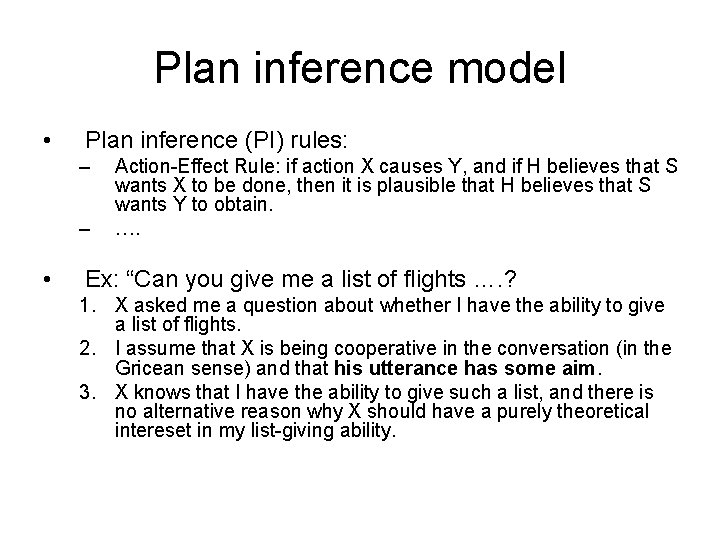

Plan inference model
• Plan inference (PI) rules:
  – Action-Effect Rule: if action X causes Y, and if H believes that S wants X to be done, then it is plausible that H believes that S wants Y to obtain.
  – …
• Ex: “Can you give me a list of flights …?”
  1. X asked me a question about whether I have the ability to give a list of flights.
  2. I assume that X is being cooperative in the conversation (in the Gricean sense) and that his utterance has some aim.
  3. X knows that I have the ability to give such a list, and there is no alternative reason why X should have a purely theoretical interest in my list-giving ability.

Can you give me a list of flights …?
  4. Therefore X’s utterance probably has some ulterior illocutionary point. What can it be?
  5. A preparatory condition for a directive is that the hearer have the ability to perform the directed action.
  6. Therefore X has asked me a question about my preparedness for the action of giving X a list of flights.
  7. Furthermore, X and I are in a conversational situation in which giving lists of flights is a common and expected activity.
  8. Therefore, in the absence of any other plausible illocutionary act, X is probably requesting me to give him a list of flights.

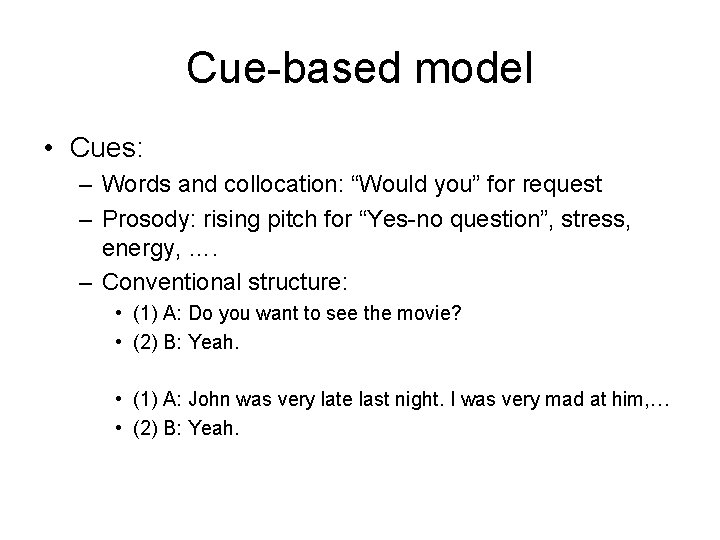

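Cue-based model
• Cues:
  – Words and collocation: “Would you” for request
  – Prosody: rising pitch for “Yes-no question”, stress, energy, …
  – Conventional structure: the same word can realize different acts depending on what precedes it.
    • After a yes-no question, “Yeah” is an answer:
      (1) A: Do you want to see the movie?
      (2) B: Yeah.
    • After a statement, “Yeah” is an acknowledgement:
      (1) A: John was very late last night. I was very mad at him, …
      (2) B: Yeah.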
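A toy rule-based tagger shows how lexical cues and conventional structure can combine. It is only a sketch: a real cue-based model is statistical and also uses prosody, which plain text cannot carry. The function name `classify`, the cue lists beyond “Would you”, and the act labels are assumptions made for illustration.

```python
from typing import Optional

# "would you" comes from the slide; the other cues are assumptions of this sketch.
REQUEST_CUES = ("would you", "can you", "could you")
YES_NO_STARTERS = {"do", "does", "did", "is", "are", "will"}
BACKCHANNELS = {"yeah", "yes", "ok", "uh-huh"}


def classify(utterance: str, prev_act: Optional[str] = None) -> str:
    """Guess a dialogue act from word cues and the previous act."""
    text = utterance.strip().lower()
    bare = text.strip(" .!?,")
    words = text.split()
    first = words[0] if words else ""

    # Lexical cue: certain openers conventionally signal a request.
    if text.startswith(REQUEST_CUES):
        return "request"
    # Conventional structure: "yeah" answers a yes-no question,
    # but merely acknowledges anything else.
    if bare in BACKCHANNELS:
        return "answer" if prev_act == "yes-no-question" else "ack"
    # A question mark stands in (crudely) for rising pitch.
    if text.endswith("?"):
        return "yes-no-question" if first in YES_NO_STARTERS else "info-request"
    return "statement"


act1 = classify("Do you want to see the movie?")
print(act1, classify("Yeah.", act1))
act2 = classify("John was very late last night.")
print(act2, classify("Yeah.", act2))
```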

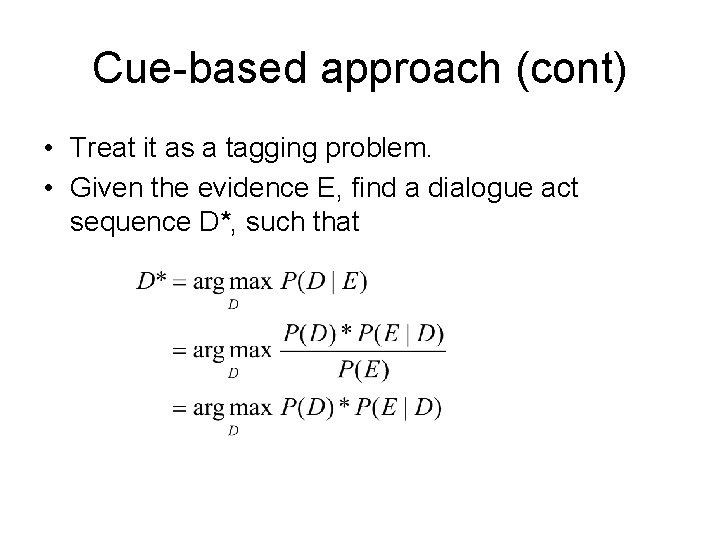

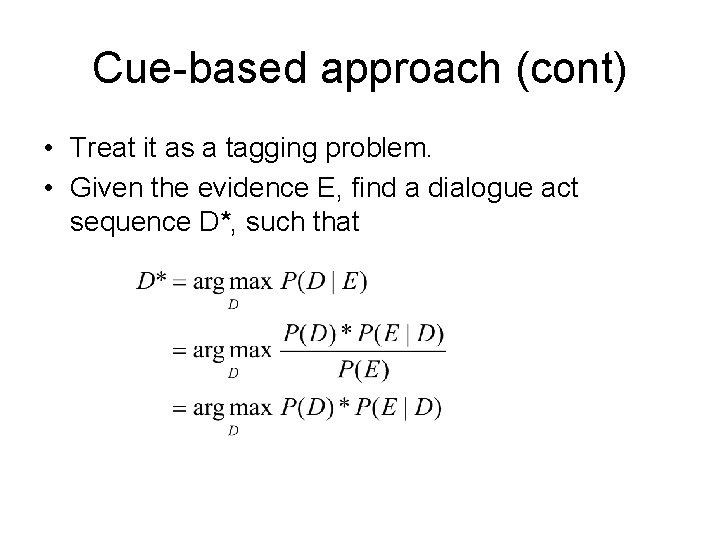

Cue-based approach (cont) • Treat it as a tagging problem. • Given the evidence E, find a dialogue act sequence D*, such that
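The formula itself appears only as an image on the original slide. A common way to write this tagging objective, given here as an assumption rather than a transcription of the slide, applies Bayes' rule to the posterior over act sequences:

```latex
D^{*} = \arg\max_{D} P(D \mid E)
      = \arg\max_{D} \frac{P(D)\, P(E \mid D)}{P(E)}
      = \arg\max_{D} P(D)\, P(E \mid D)
```

Under this reading, P(D) is a prior over dialogue act sequences and P(E | D) scores the observed cues (words, prosody) given the acts; P(E) can be dropped because it does not depend on D.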

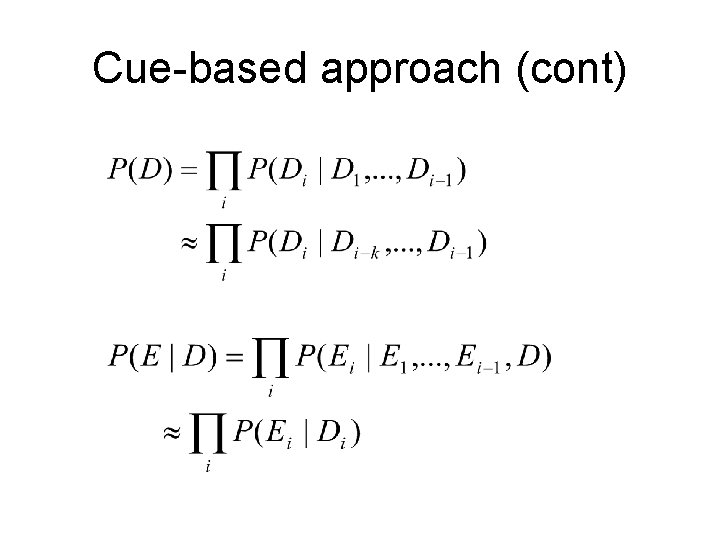

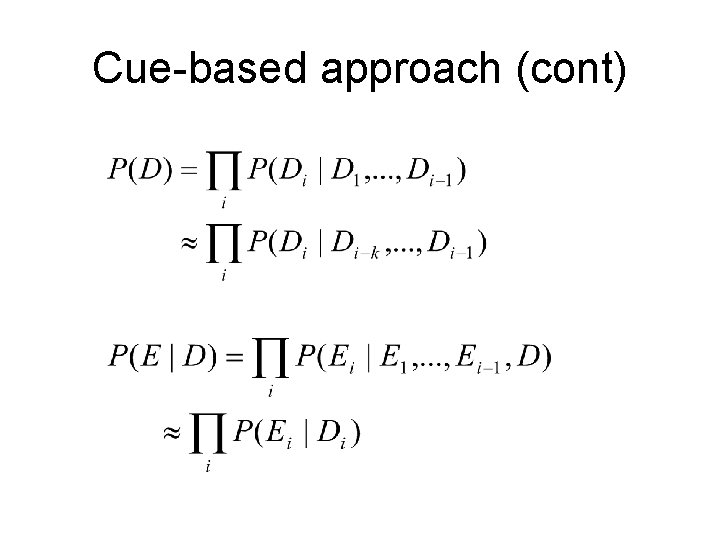

Cue-based approach (cont)

Outline • Properties of dialogues • Dialogue acts • Dialogue manager

Dialogue Manager

Where are dialogue systems used?
• Banks, utility companies, …
• Airline travel information systems
• Restaurant guides
• Telephone interfaces to emails or calendars
• Financial services: buy/sell stocks
• Technology: Apple, Dell, Microsoft, …

What does a dialogue system do?
• “Understand” the human’s utterance:
  – Reference resolution
  – Dialogue act
  – Human’s intention
• Decide what to do: the intended dialogue act
• Produce the utterance

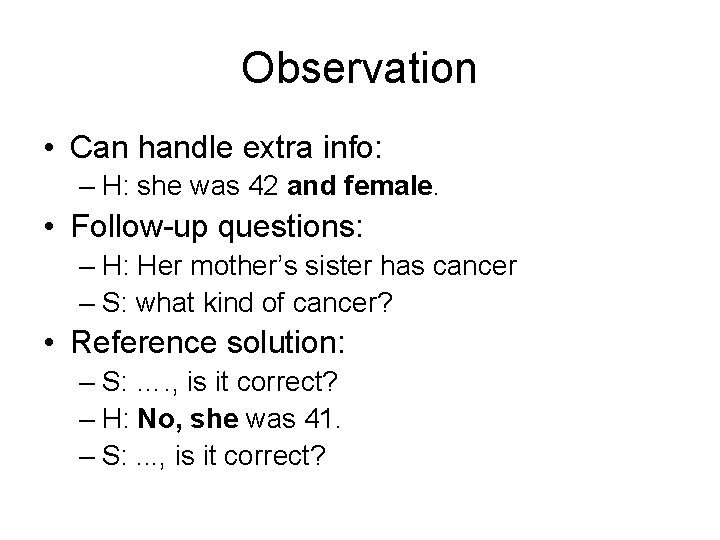

A dialogue system • Homey: Home monitoring through intelligent dialog system • By Advanced Computation Lab (ACL), part of Cancer Research UK, Europe's largest independent cancer research organization. • Demo: local-demo

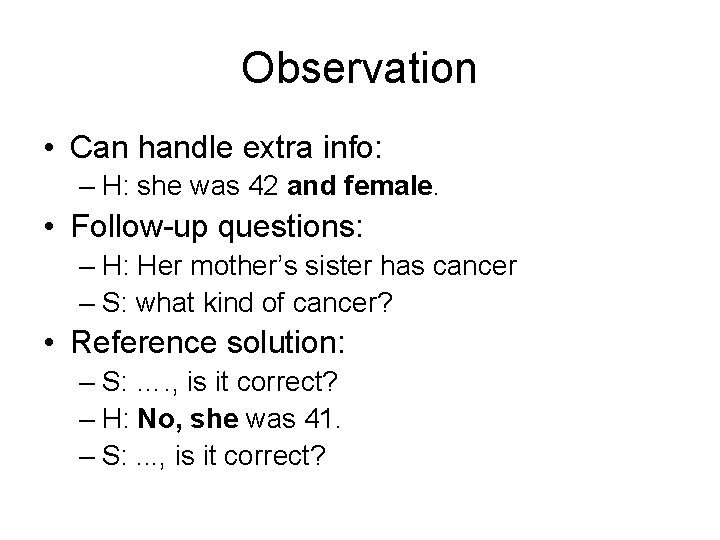

Dialogue
• Patient’s age? 42 years old and female.
• Jewish? No.
• Does the patient have cancer? Yes.
  – What kind of cancer? Other.
• Blah blah …, is it correct? Yes.
• Age when diagnosed? 40 years.
• Relatives with cancer: her sister has breast cancer, her father’s mom has varying cancer.
  – Father has cancer? No.
  – Father’s siblings? No.
  – Mother has cancer? No, but her mother’s sibling has cancer.
    • What kind of cancer? Other.
  – Mother’s parents have cancer? No.
• Blah blah …, is it correct? No, she was 41.
• Blah blah …, is it correct? Yes.
• Two possibilities: increased risk or population risk. I recommend increased risk. What do you advise?
  – Why? Give reason.
  – Is there evidence for population risk? No.

Observation
• Can handle extra info:
  – H: She was 42 and female.
• Follow-up questions:
  – H: Her mother’s sister has cancer.
  – S: What kind of cancer?
• Reference resolution:
  – S: …, is it correct?
  – H: No, she was 41.
  – S: …, is it correct?

Observation (cont)
• Explicit batch confirmation and correction afterwards
• Mixed initiative:
  – S: I recommend … What do you advise?
  – H: Why do you recommend that?
  – S: Because …
• Inference:
  – 42 is under 45
  – father’s mother is one of father’s parents

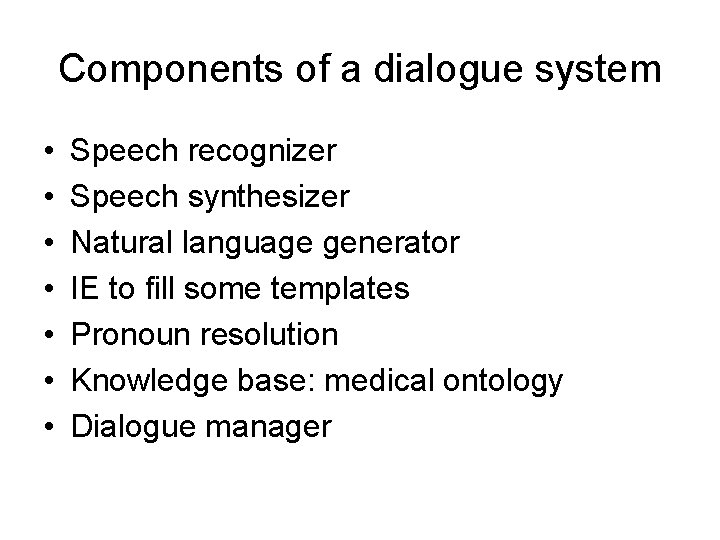

Components of a dialogue system
• Speech recognizer
• Speech synthesizer
• Natural language generator
• Information extraction (IE) to fill some templates
• Pronoun resolution
• Knowledge base: medical ontology
• Dialogue manager

Dialog management
• Goal: determine how to respond to the user utterance:
  – Answer user question
  – Solicit information
  – Confirm/clarify user utterances
  – Notify invalid answer
  – Suggest alternatives
• Interface between user/language processing components and system knowledge base

Initiative strategies • System-initiative: system always has control • User-initiative: user always has control • Mixed-initiative: control switches between user and system.

Confirmation strategies
• Human: I want to go to Seattle.
• Explicit: System: Did you say you want to go to Seattle?
• Implicit: System: What time do you want to leave for Seattle?
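The difference between the two strategies is where the recognized value appears: explicit confirmation spends a whole turn checking it, while implicit confirmation folds it into the next question so the user can still object. A minimal sketch, with a hypothetical `confirmation_prompt` helper and wording adapted from the example above:

```python
def confirmation_prompt(destination: str, strategy: str) -> str:
    """Build the system prompt that confirms a recognized destination."""
    if strategy == "explicit":
        # Dedicated yes/no turn: costs a turn but is easy to correct.
        return f"Did you say you want to go to {destination}?"
    if strategy == "implicit":
        # Embed the value in the next question; the dialogue keeps moving.
        return f"What time do you want to leave for {destination}?"
    raise ValueError(f"unknown strategy: {strategy!r}")


print(confirmation_prompt("Seattle", "explicit"))
print(confirmation_prompt("Seattle", "implicit"))
```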

An example • Human: I want to go to Pittsburgh in May. • Dialogue manager: – Turn holder: system – Intended speech acts: Req-info, Ack, Clarify – Discourse goal: get-travel-info, create-travelplan • System: And, what day in May did you want to travel?

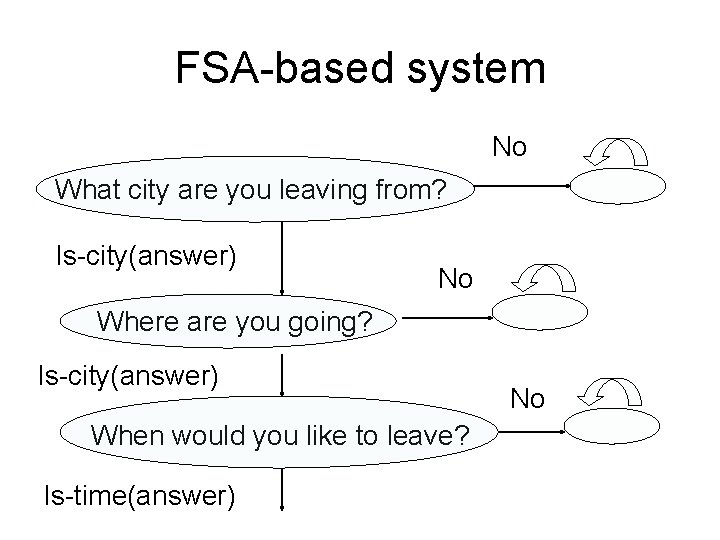

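FSA-based system
• State 1: ask “What city are you leaving from?” and test Is-city(answer). On No, ask again; otherwise go to state 2.
• State 2: ask “Where are you going?” and test Is-city(answer). On No, ask again; otherwise go to state 3.
• State 3: ask “When would you like to leave?” and test Is-time(answer). On No, ask again.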
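The finite-state design can be sketched as a list of states, each with a question and a validity test, where a failed test loops back to the same state. The city list, the time pattern, and the `run_dialogue` driver below are toy assumptions; only the three questions and the Is-city / Is-time tests come from the slide.

```python
import re

CITIES = {"seattle", "pittsburgh", "boston"}  # toy gazetteer (assumption)


def is_city(answer: str) -> bool:
    return answer.strip().lower() in CITIES


def is_time(answer: str) -> bool:
    # Accepts forms like "9 am" or "10:30pm" (assumed format for this sketch).
    return re.fullmatch(r"\d{1,2}(:\d{2})?\s*(am|pm)", answer.strip().lower()) is not None


# Each state: (slot name, system question, validity test).
STATES = [
    ("from_city", "What city are you leaving from?", is_city),
    ("to_city", "Where are you going?", is_city),
    ("dep_time", "When would you like to leave?", is_time),
]


def run_dialogue(answers):
    """Feed scripted user answers through the FSA; return (filled slots, prompts asked)."""
    answers = iter(answers)
    filled, prompts = {}, []
    state = 0
    while state < len(STATES):
        name, question, valid = STATES[state]
        prompts.append(question)
        answer = next(answers, None)
        if answer is None:  # user script ran out
            break
        if valid(answer):
            filled[name] = answer
            state += 1
        # Otherwise the "No" arc keeps us in the same state and we re-ask.
    return filled, prompts


filled, prompts = run_dialogue(["Mars", "Seattle", "Pittsburgh", "9 am"])
print(filled)
print(len(prompts), "prompts asked")
```

The system keeps full control of the conversation here: the user can only answer the current question, which is what makes this a system-initiative design.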

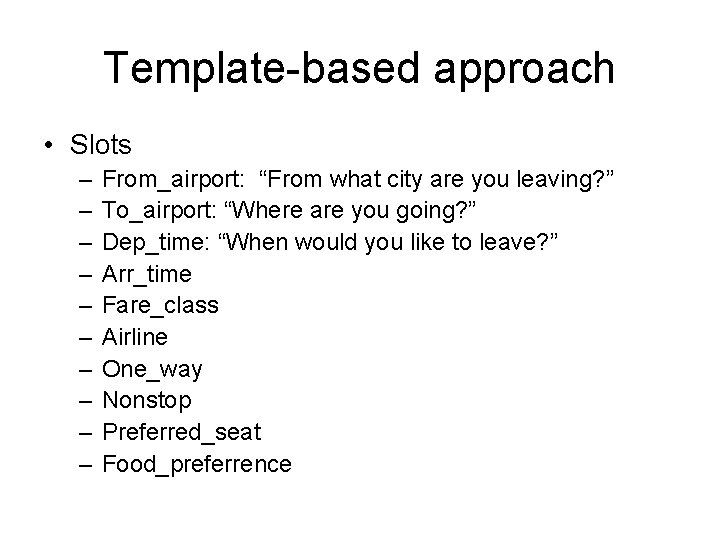

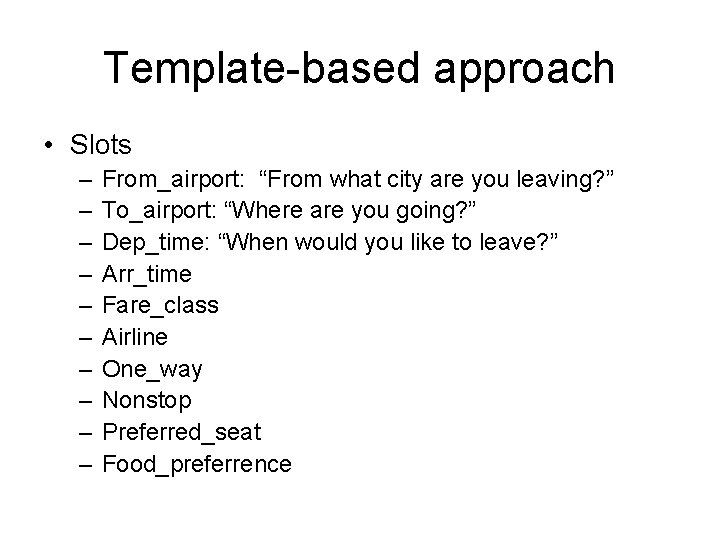

Template-based approach
• Slots
  – From_airport: “From what city are you leaving?”
  – To_airport: “Where are you going?”
  – Dep_time: “When would you like to leave?”
  – Arr_time
  – Fare_class
  – Airline
  – One_way
  – Nonstop
  – Preferred_seat
  – Food_preference
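In a template (frame) design the manager does not follow a fixed path; it asks about whichever prompted slot is still empty, so the user may supply values in any order. A minimal sketch using the three slots that have prompts on the slide; `next_prompt` and the dictionary layout are illustrative assumptions, and slot values are assumed to come from an upstream extraction step.

```python
from typing import Dict, Optional

# Slots with prompts, in the order the system would ask about them.
PROMPTS = {
    "From_airport": "From what city are you leaving?",
    "To_airport": "Where are you going?",
    "Dep_time": "When would you like to leave?",
}


def next_prompt(frame: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the question for the first unfilled slot, or None if the frame is full."""
    for slot, question in PROMPTS.items():
        if frame.get(slot) is None:
            return question
    return None


# The user volunteered the destination first; the system asks only for what is missing.
frame: Dict[str, Optional[str]] = {"To_airport": "Seattle"}
print(next_prompt(frame))
frame["From_airport"] = "Pittsburgh"
print(next_prompt(frame))
```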

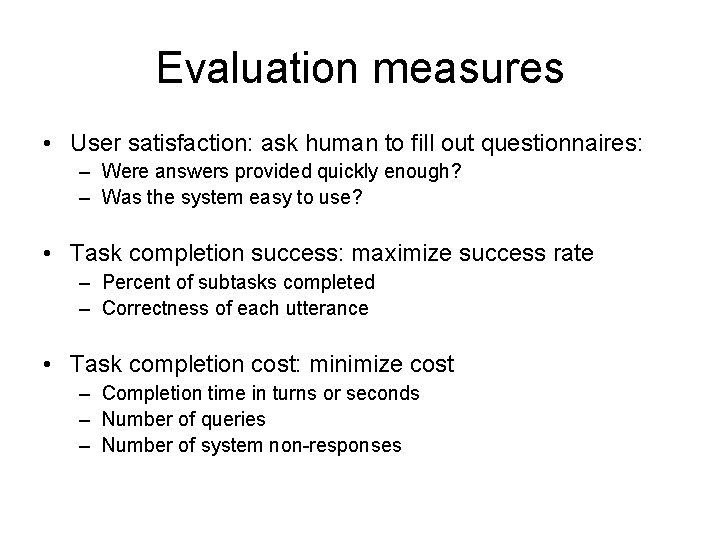

Evaluation measures • User satisfaction: ask human to fill out questionnaires: – Were answers provided quickly enough? – Was the system easy to use? • Task completion success: maximize success rate – Percent of subtasks completed – Correctness of each utterance • Task completion cost: minimize cost – Completion time in turns or seconds – Number of queries – Number of system non-responses
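The success and cost measures can be computed directly from dialogue logs. The sketch below assumes a hypothetical log format (one dictionary per dialogue with `subtasks_done`, `subtasks_total`, and `turns`); the field names and the numbers are illustrative, not data from any real system.

```python
def summarize(logs):
    """Average subtask completion rate and completion cost in turns over dialogues."""
    n = len(logs)
    success_rate = sum(d["subtasks_done"] / d["subtasks_total"] for d in logs) / n
    mean_turns = sum(d["turns"] for d in logs) / n
    return {"success_rate": success_rate, "mean_turns": mean_turns}


# Two made-up dialogues: one fully successful, one half-completed and longer.
logs = [
    {"subtasks_done": 2, "subtasks_total": 2, "turns": 6},
    {"subtasks_done": 1, "subtasks_total": 2, "turns": 10},
]
print(summarize(logs))
```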

How good are automated systems?
• An article in the Seattle Times (11/6/05): “How to outsmart automated phone systems?”
• Shortcuts and tips:
  – Go to the website or use “live-chat”.
  – Sometimes it is faster to not respond when prompted by an automated phone system, or to pretend you’re calling from a rotary-dial phone.

Summary of Dialogue
• Properties of dialogues:
  – Turn-taking
  – Grounding
  – Implicatures
• Dialogue acts
  – Types of dialogue acts
  – Identifying dialogue acts: inference-based, cue-based
• Dialogue manager
