Network Modeling for Psychological and Attitudinal Data 11012018

- Slides: 73

Network Modeling for Psychological (and Attitudinal) Data
11/01/2018 – 12/01/2018, Oldenburg
Adela Isvoranu & Pia Tio
http://www.adelaisvoranu.com/Oldenburg2018

Workshop Overview
• Thursday January 11
  • Morning
    • Introduction & Theoretical Foundation of Network Analysis
    • Drawing networks in R (part of practical)
  • Afternoon
    • Network Estimation (Markov Random Fields: Gaussian Graphical Model & Ising Model)
• Friday January 12
  • Morning
    • Stability, Replicability & Challenges of Network Modeling
  • Afternoon
    • Advanced Methods (Network Comparison Test, Mixed Graphical Models, Latent Variable Network Modeling)

Morning Session Overview
Markov Random Fields II: stability, replicability, challenges
• Part 1. Centrality
• Part 2. Stability
  • Edge Weights Stability
  • Centrality Stability
• Part 3. Replicability
• Part 4. Challenges
Practical Session

Centrality – Refresher!
• Node strength: how strongly a node is directly connected
  • A central railway station is one with many railways running through it
• Closeness: how strongly a node is indirectly connected
  • A central railway station is one located in the center of the country, close to all destinations
• Betweenness: how well one node connects other nodes
  • A central railway station is an important transit station
• Often similar results, but not necessarily
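To make the first two definitions concrete, here is a minimal Python sketch (not the qgraph implementation) that computes strength and closeness on a toy weighted network. The 3-node chain, the helper names, and the choice of edge length = 1 / |weight| are assumptions made for illustration; betweenness is left out to keep the example short.

```python
import math

def strength(weights):
    """Sum of absolute edge weights attached to each node."""
    n = len(weights)
    return [sum(abs(weights[i][j]) for j in range(n) if j != i) for i in range(n)]

def closeness(weights):
    """Inverse of the summed shortest-path distances from each node."""
    n = len(weights)
    # Assumption: edge length = 1 / |weight|, so strong edges are short.
    dist = [[0.0 if i == j
             else (1 / abs(weights[i][j]) if weights[i][j] != 0 else math.inf)
             for j in range(n)] for i in range(n)]
    # Floyd-Warshall all-pairs shortest paths.
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
    return [1 / sum(dist[i][j] for j in range(n) if j != i) for i in range(n)]

# Hypothetical 3-node chain A - B - C with two edges of weight 0.5.
W = [[0.0, 0.5, 0.0],
     [0.5, 0.0, 0.5],
     [0.0, 0.5, 0.0]]

node_strength = strength(W)
node_closeness = closeness(W)
print("strength: ", node_strength)
print("closeness:", node_closeness)
```

In this toy chain the middle node comes out highest on both indices, which matches the railway picture: it has the most track attached and is nearest to everything else.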

Centrality – Refresher!
• Plot all three centrality indices for our GGM via the qgraph function centralityPlot()
• This provides standardized centrality estimates (mean=0, SD=1)

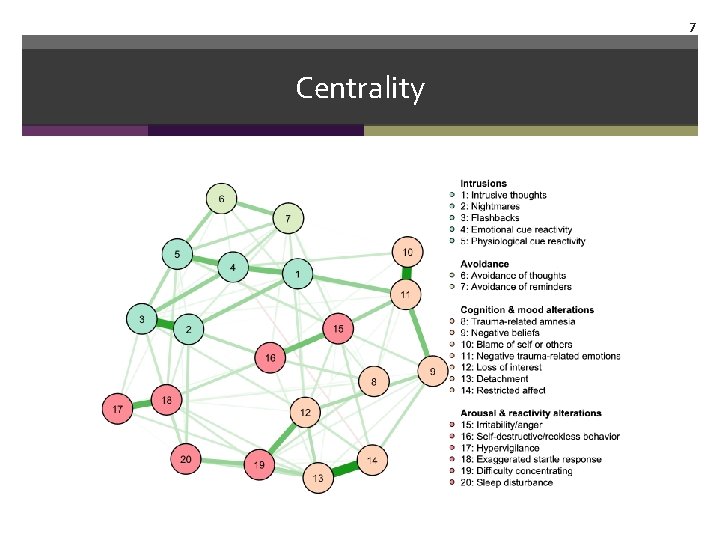

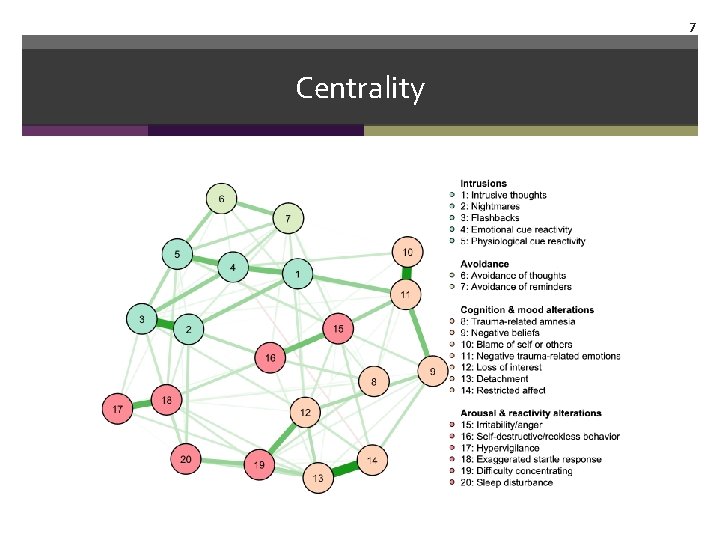

7 Centrality

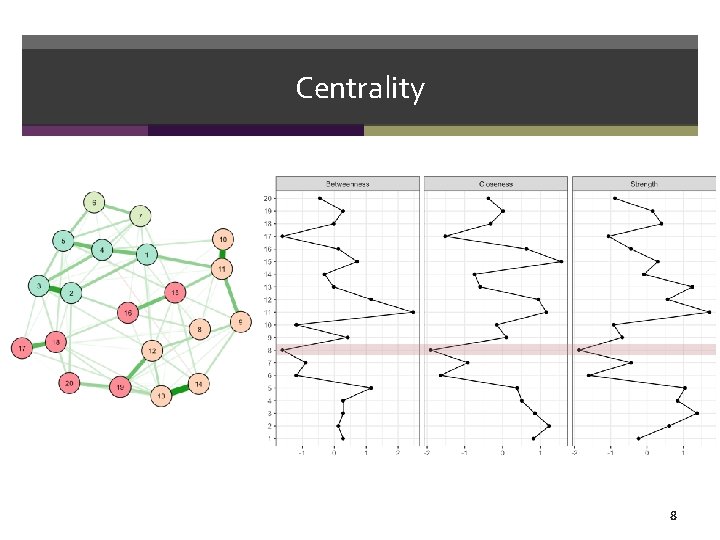

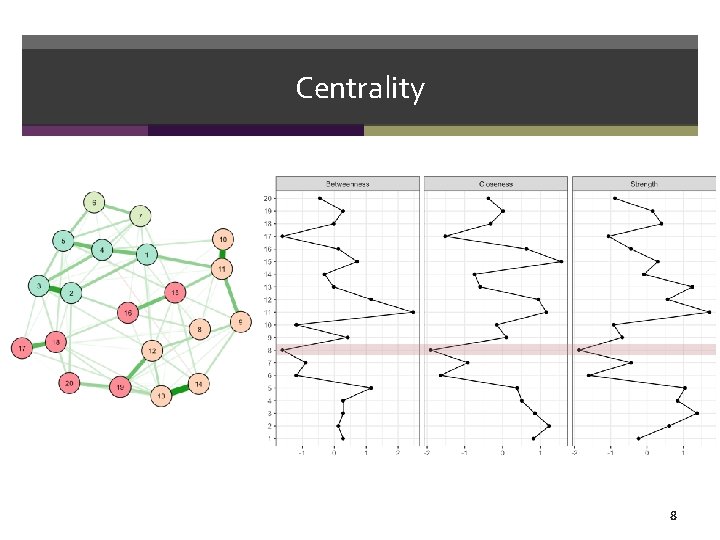

Centrality 8

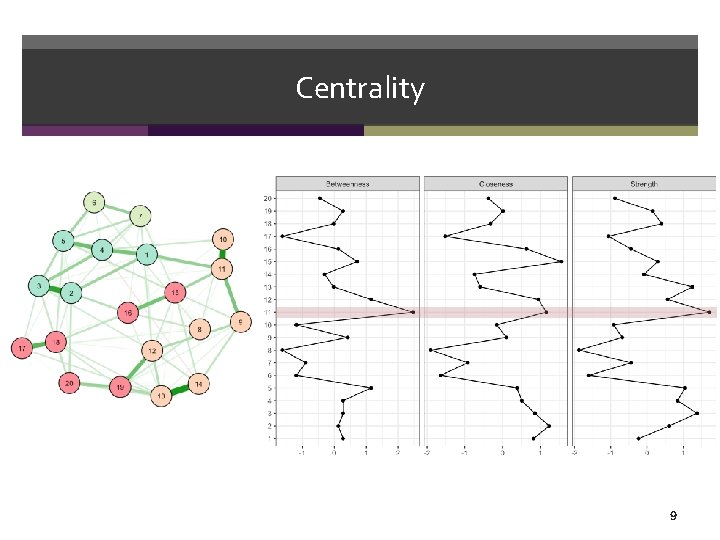

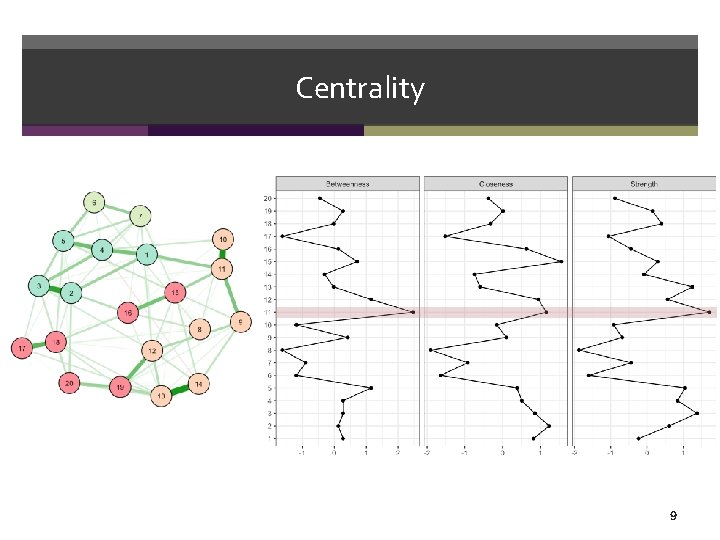

Centrality 9

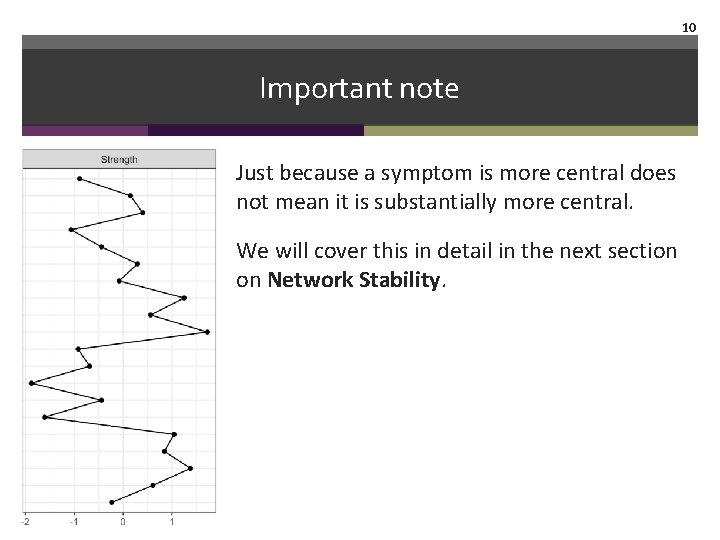

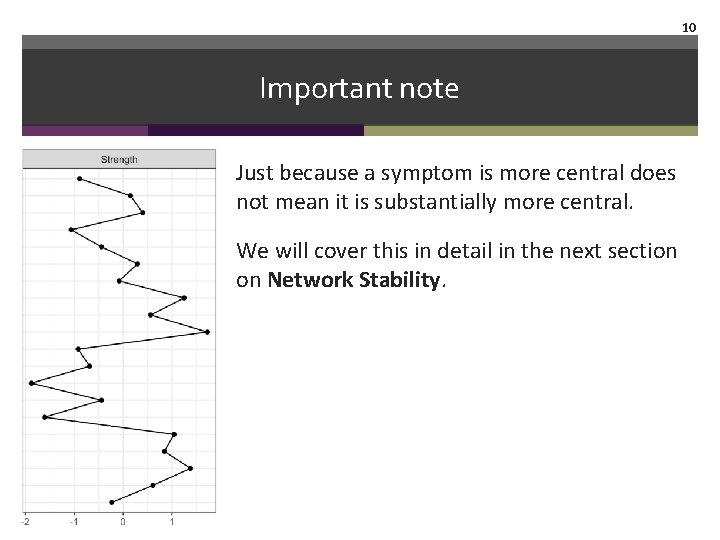

Important note
Just because a symptom is more central does not mean it is substantially more central. We will cover this in detail in the next section on Network Stability.

Stability & power
• Are men taller than women? Measuring 10 men and 10 women leaves a lot of uncertainty, while measuring 1000 makes your parameter estimate more precise, which shows up as a narrower confidence interval.
• We would call such a result accurate, and if it were part of a statistical model, we would call the model “stable”.
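The height example can be simulated in a few lines. The sketch below is only an illustration: the population means and standard deviation are made-up values, `mean_diff_ci` is a hypothetical helper, the sample size is taken per group, and the interval uses a simple normal approximation.

```python
import math
import random
import statistics

def mean_diff_ci(n, seed=1):
    """95% normal-approximation CI for the male-minus-female height difference."""
    rng = random.Random(seed)
    # Hypothetical population values (cm), chosen only for illustration.
    men = [rng.gauss(178, 7) for _ in range(n)]
    women = [rng.gauss(165, 7) for _ in range(n)]
    diff = statistics.mean(men) - statistics.mean(women)
    se = math.sqrt(statistics.variance(men) / n + statistics.variance(women) / n)
    return diff - 1.96 * se, diff + 1.96 * se

small = mean_diff_ci(10)
large = mean_diff_ci(1000)
width_small = small[1] - small[0]
width_large = large[1] - large[0]
print(f"n=10:   CI width = {width_small:.2f} cm")
print(f"n=1000: CI width = {width_large:.2f} cm")
```

The interval for the larger sample is much narrower, which is the sense in which the estimate is "stable".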

Network stability
The two most common stability / accuracy questions in network psychometrics:
1. Stability of edge weights
2. Stability of centrality indices

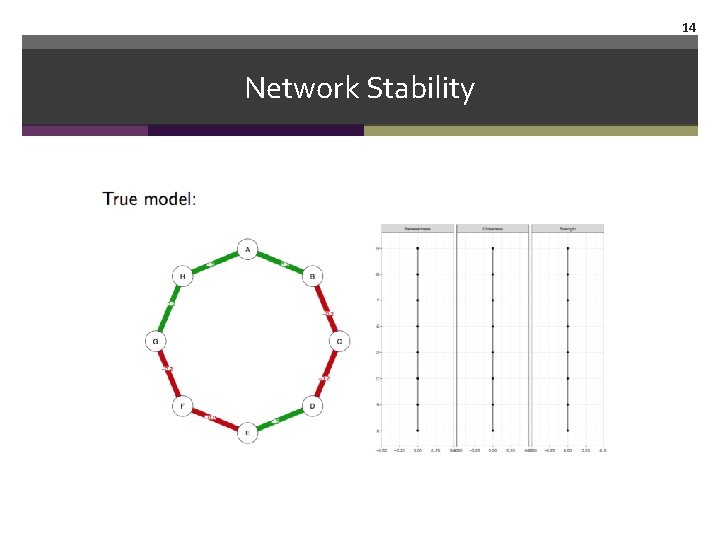

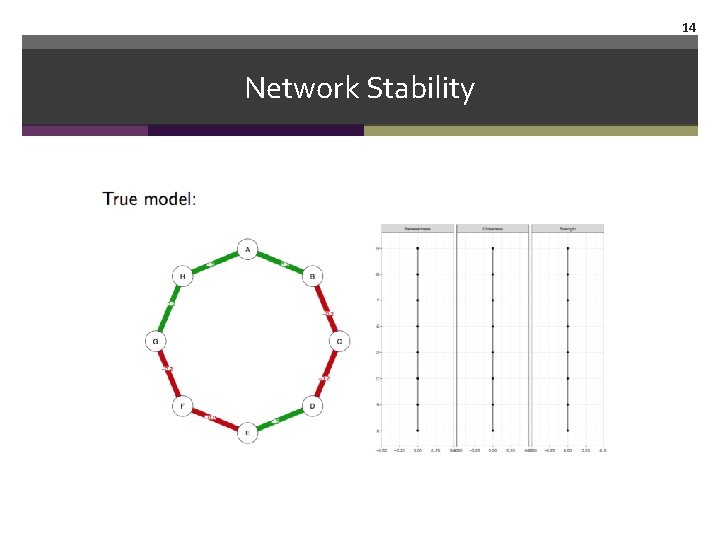

Network Stability

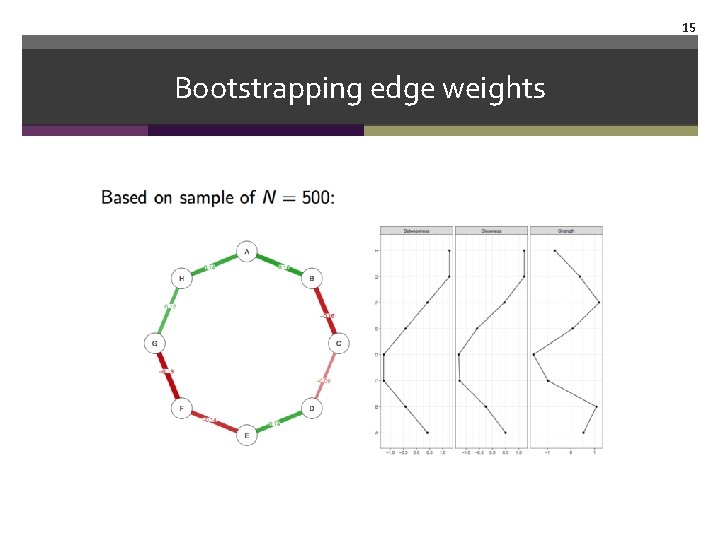

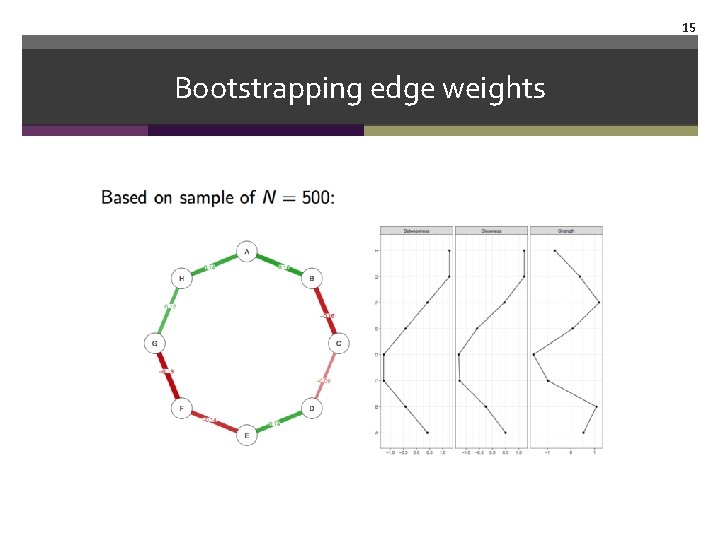

Bootstrapping edge weights

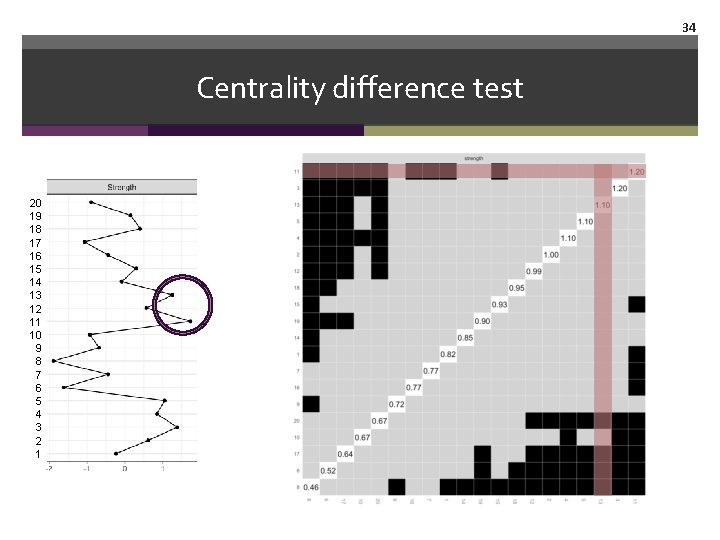

Network stability
1. Is edge 13–14 meaningfully larger than edge 14–9?
2. Is node 11 substantially more central than node 13?

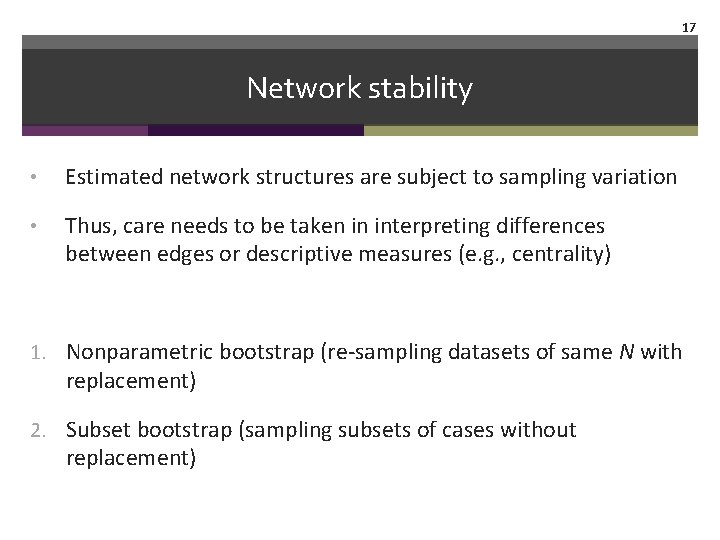

Network stability
• Estimated network structures are subject to sampling variation
• Thus, care needs to be taken in interpreting differences between edges or descriptive measures (e.g., centrality)
1. Nonparametric bootstrap (re-sampling datasets of the same N with replacement)
2. Subset bootstrap (sampling subsets of cases without replacement)

R-package bootnet

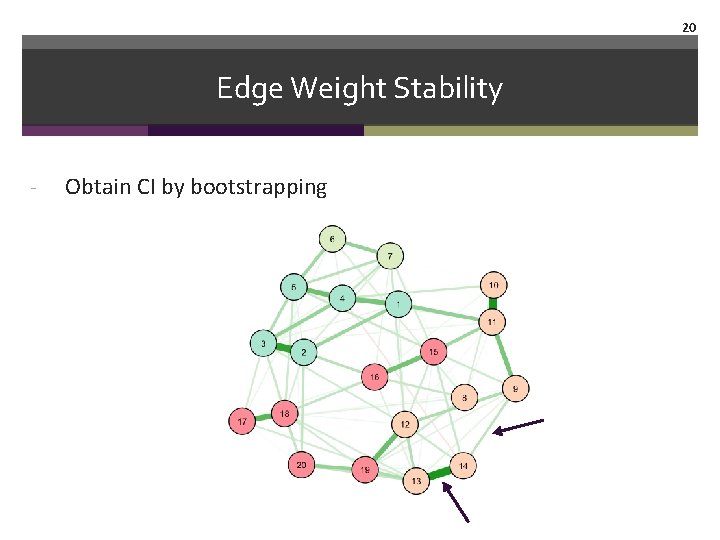

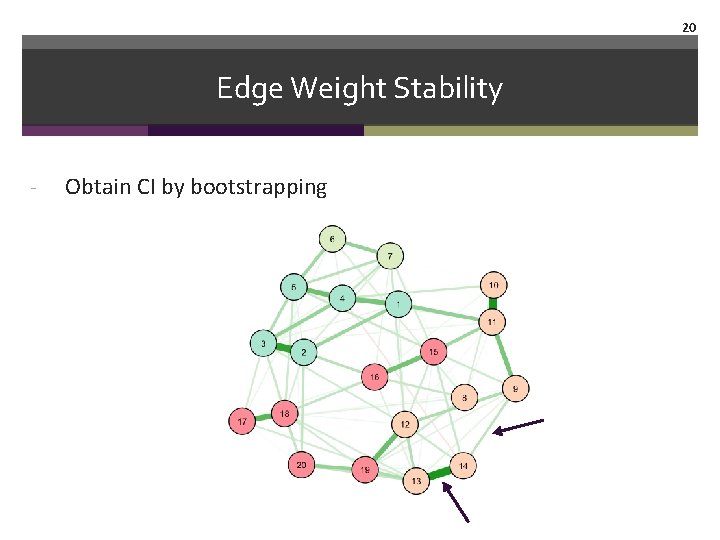

Edge Weight Stability: obtain CIs by bootstrapping

Non-parametric bootstrap
• The non-parametric bootstrap is a well-known data-driven approach to investigate sampling variation
1. Compute some statistic from your data (e.g., an edge weight)
2. Generate a new dataset by sampling cases from your original data with replacement, and recompute the statistic
3. Repeat this many times and use the new datasets to estimate a range of the statistic
4. Use these ranges to draw confidence intervals
• The bootstrap samples can also be used to test for differences between parameters
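The four steps above can be sketched in plain Python. This is an illustration of the resampling logic only, not what bootnet does internally: the statistic here is a simple Pearson correlation standing in for an edge weight, the data are simulated, and `pearson` and `bootstrap_ci` are hypothetical helper names.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def bootstrap_ci(rows, n_boots=1000, alpha=0.05, seed=1):
    """Percentile bootstrap CI for the correlation of the two columns in rows."""
    rng = random.Random(seed)
    n = len(rows)
    stats = []
    for _ in range(n_boots):
        # Step 2: resample cases (rows) with replacement, same N as the data.
        sample = [rows[rng.randrange(n)] for _ in range(n)]
        stats.append(pearson([r[0] for r in sample], [r[1] for r in sample]))
    # Steps 3-4: the spread of the bootstrapped statistic gives the interval.
    stats.sort()
    return stats[int(alpha / 2 * n_boots)], stats[int((1 - alpha / 2) * n_boots) - 1]

# Simulated data for 200 people on two correlated variables (illustration only).
data_rng = random.Random(42)
rows = []
for _ in range(200):
    x = data_rng.gauss(0, 1)
    rows.append((x, 0.5 * x + data_rng.gauss(0, 1)))

estimate = pearson([r[0] for r in rows], [r[1] for r in rows])  # Step 1
lo, hi = bootstrap_ci(rows)
print(f"estimate = {estimate:.2f}, 95% bootstrap CI = [{lo:.2f}, {hi:.2f}]")
```

Resampling whole rows rather than individual values matters: it keeps each person's scores together, so the dependence between variables is preserved in every bootstrap dataset.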

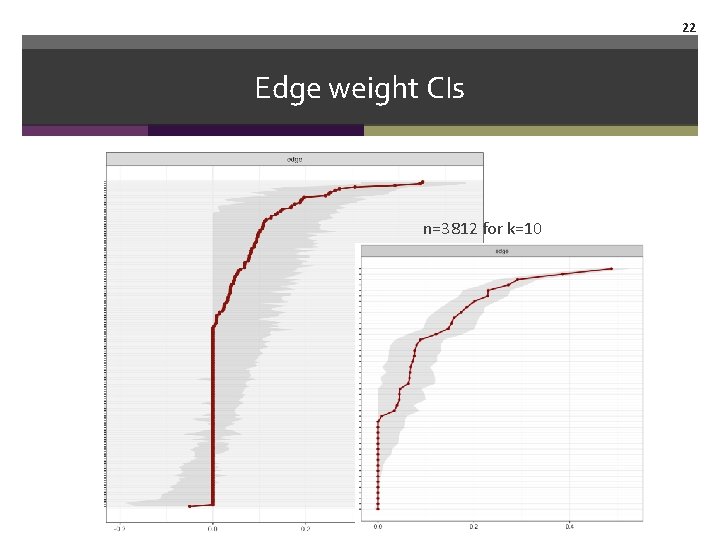

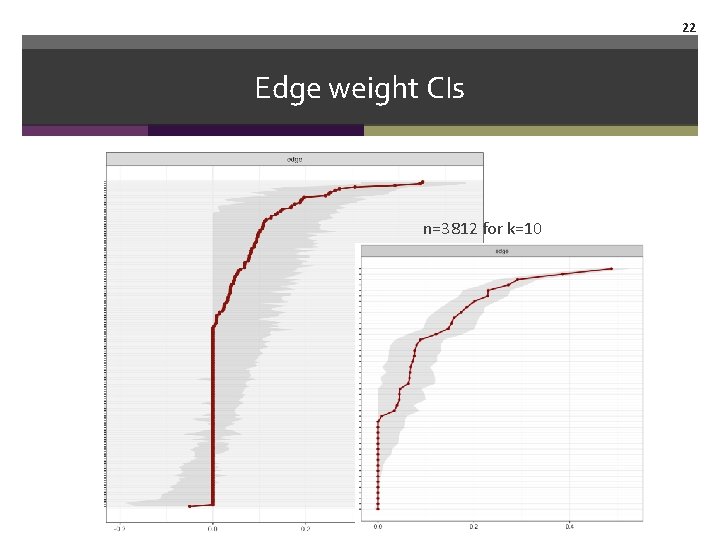

Edge weight CIs n=3812 for k=10

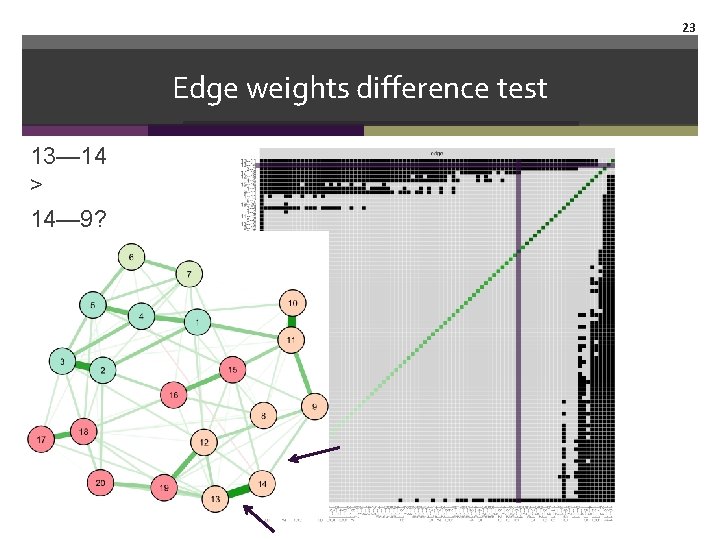

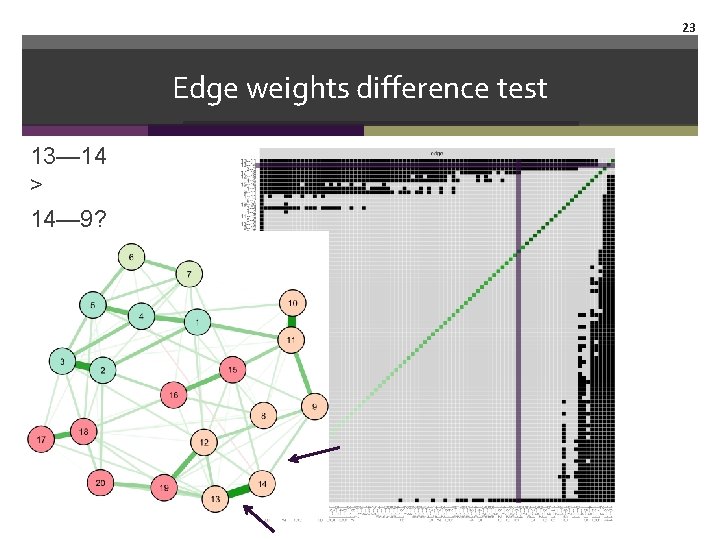

Edge weights difference test: is 13–14 > 14–9?
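One common way to run such a test is to bootstrap the difference between the two edge weights and check whether the resulting interval contains zero. The sketch below shows that logic under simplifying assumptions: plain correlations stand in for edge weights, the three variables are simulated, and `bootstrap_difference_ci` is a hypothetical helper rather than a bootnet function.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def bootstrap_difference_ci(rows, n_boots=1000, alpha=0.05, seed=1):
    """Percentile CI for cor(a, b) - cor(b, c) across bootstrap samples."""
    rng = random.Random(seed)
    n = len(rows)
    diffs = []
    for _ in range(n_boots):
        sample = [rows[rng.randrange(n)] for _ in range(n)]
        a, b, c = zip(*sample)
        # Both "edges" are computed on the same resampled dataset.
        diffs.append(pearson(a, b) - pearson(b, c))
    diffs.sort()
    return diffs[int(alpha / 2 * n_boots)], diffs[int((1 - alpha / 2) * n_boots) - 1]

# Simulated data: a-b is a strong association, b-c a weak one (illustration only).
data_rng = random.Random(42)
rows = []
for _ in range(300):
    b = data_rng.gauss(0, 1)
    a = 0.8 * b + data_rng.gauss(0, 0.6)
    c = 0.2 * b + data_rng.gauss(0, 1)
    rows.append((a, b, c))

lo, hi = bootstrap_difference_ci(rows)
differs = not (lo <= 0 <= hi)
print(f"95% CI of the difference: [{lo:.2f}, {hi:.2f}]; differs: {differs}")
```

If the interval excludes zero, the two edges are treated as different in size; if it includes zero, the data do not support ranking one above the other.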

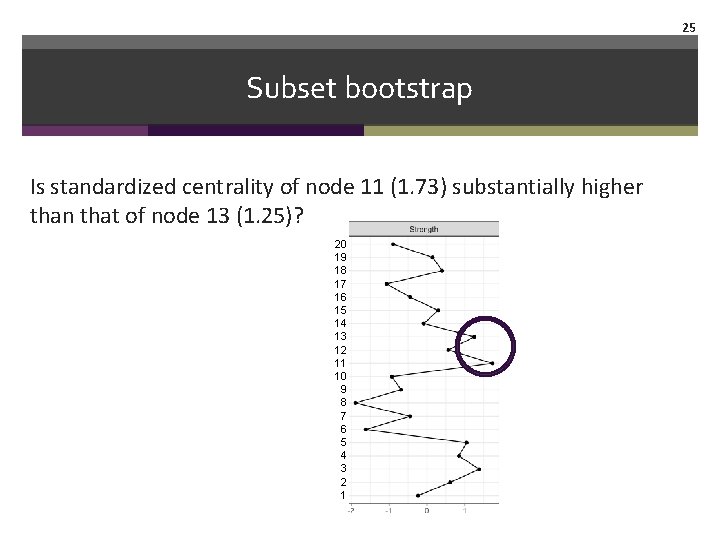

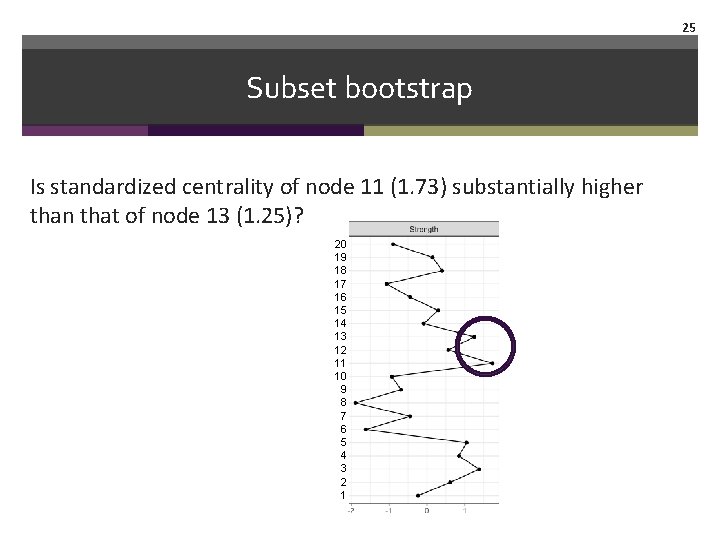

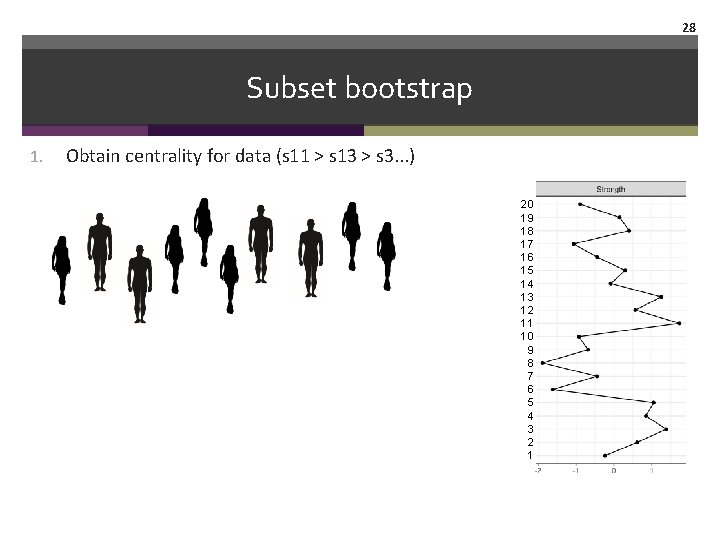

Subset bootstrap
Is the standardized centrality of node 11 (1.73) substantially higher than that of node 13 (1.25)?

Subset bootstrap
• Unfortunately, bootstrapping CIs around centrality estimates is not possible
• Solution: trick from Costenbader, E., & Valente, T. W. (2003). DOI: 10.1016/S0378-8733(03)00012-1

Subset bootstrap
• Case-dropping bootstrap:
  • Drop x% of the cases (people) at random
  • Compute a network and derive centrality indices
  • Correlate obtained centrality indices with the original centrality indices
• Ideally, we would want centrality to remain comparable to the original network even after dropping many cases from the dataset!
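The case-dropping procedure can be sketched as follows. This is a simplified illustration, not the bootnet implementation: the "network" is just the matrix of absolute correlations, strength is the only centrality index, the data are simulated from a one-factor model with made-up loadings, and all function names are hypothetical.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def strength(rows):
    """Node strength in a stand-in network of absolute correlations."""
    p = len(rows[0])
    cols = [[r[j] for r in rows] for j in range(p)]
    return [sum(abs(pearson(cols[i], cols[j])) for j in range(p) if j != i)
            for i in range(p)]

def case_drop_stability(rows, drop_fracs=(0.1, 0.3, 0.5), n_boots=50, seed=1):
    """Mean correlation between original and subset strength per drop fraction."""
    rng = random.Random(seed)
    original = strength(rows)
    result = {}
    for frac in drop_fracs:
        keep = round(len(rows) * (1 - frac))
        cors = []
        for _ in range(n_boots):
            subset = rng.sample(rows, keep)  # drop cases, no replacement
            cors.append(pearson(original, strength(subset)))
        result[frac] = sum(cors) / len(cors)
    return result

# Simulated data: 500 people, 6 variables with different (made-up) loadings.
data_rng = random.Random(7)
loadings = [0.9, 0.8, 0.6, 0.4, 0.2, 0.1]
rows = []
for _ in range(500):
    f = data_rng.gauss(0, 1)
    rows.append(tuple(l * f + math.sqrt(1 - l * l) * data_rng.gauss(0, 1)
                      for l in loadings))

stability = case_drop_stability(rows)
for frac, cor in stability.items():
    print(f"dropped {frac:.0%}: mean correlation with original = {cor:.2f}")
```

A correlation that stays high as more cases are dropped suggests the centrality ordering does not hinge on a particular subset of people.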

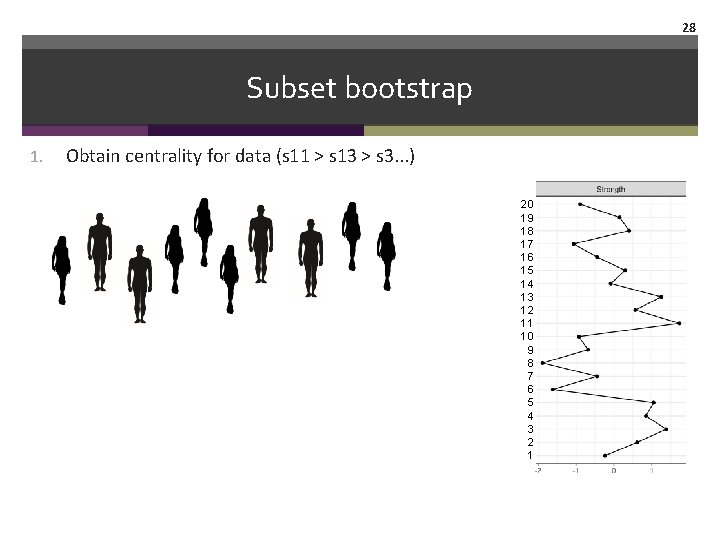

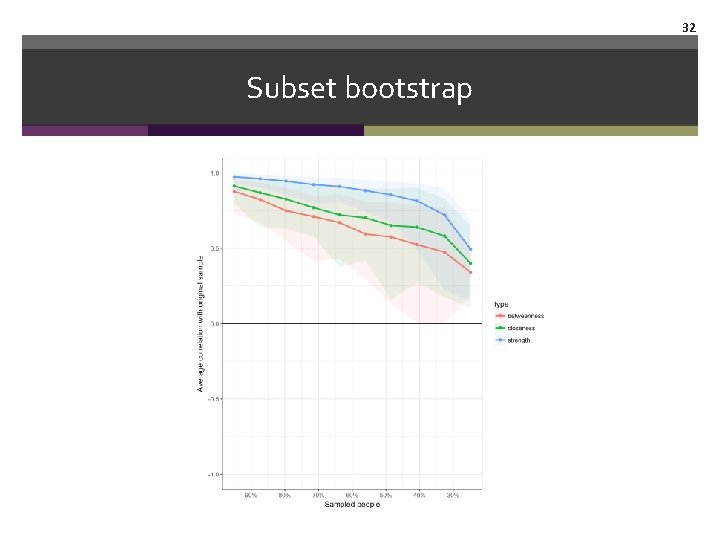

Subset bootstrap
1. Obtain centrality for data (s11 > s13 > s3 ...)
2. Subset data by dropping 10% of the people
3. Obtain centrality for -10% subset (s13 > s11 ...)
4. Subset data by dropping 20% of the people
5. Obtain centrality for -20% subset (s16 > s13 > s3 ...)
6. ...

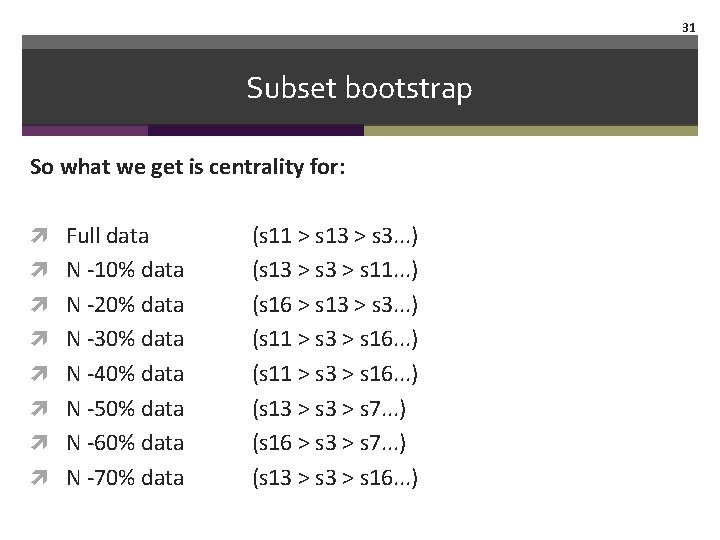

Subset bootstrap
So what we get is centrality for:
• Full data: (s11 > s13 > s3 ...)
• N -10% data: (s13 > s11 ...)
• N -20% data: (s16 > s13 > s3 ...)
• N -30% data: (s11 > s3 > s16 ...)
• N -40% data: (s13 > s7 ...)
• N -50% data: (s16 > s3 > s7 ...)
• N -60% data: (s13 > s16 ...)
• N -70% data

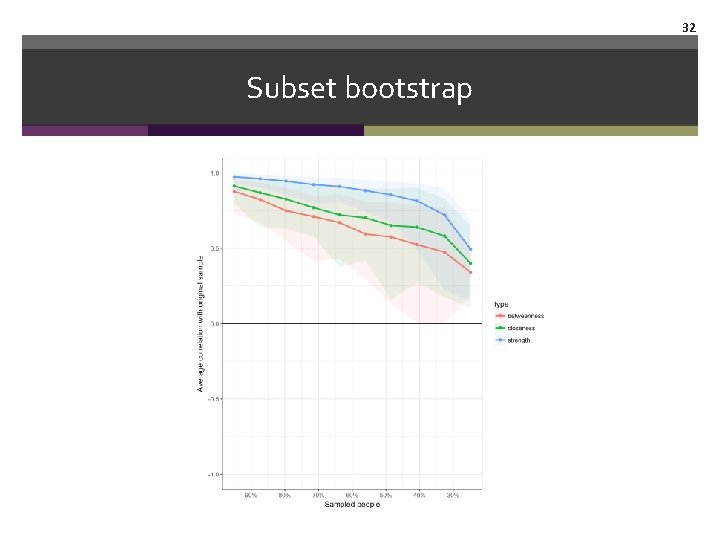

Subset bootstrap

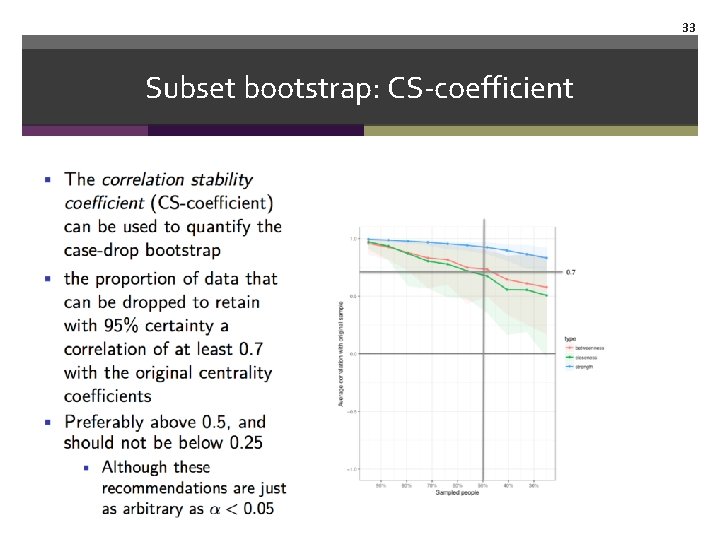

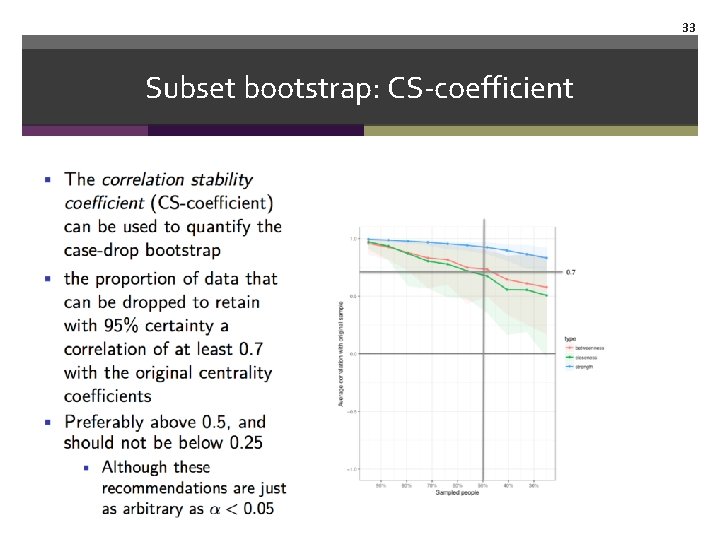

Subset bootstrap: CS-coefficient

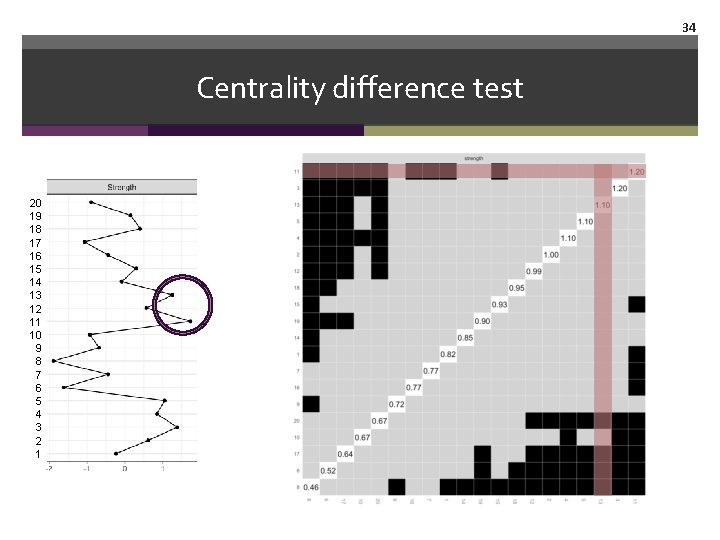

Centrality difference test

Standardized vs unstandardized
• centrality() gives unstandardized results; to standardize, use e.g. scale(centrality(n1)$InDegree)
• centralityPlot() automatically uses standardized results
• bootnet() graphs give unstandardized results
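Standardizing here means converting raw centrality values to z-scores (mean 0, SD 1), which is what R's scale() does by default with the sample standard deviation. A minimal Python sketch of the same arithmetic, with made-up strength values and a hypothetical `standardize` helper:

```python
import statistics

def standardize(values):
    """Convert raw values to z-scores using the sample SD (n - 1 denominator)."""
    m = statistics.mean(values)
    s = statistics.stdev(values)
    return [(v - m) / s for v in values]

# Hypothetical raw strength values for four nodes.
raw_strength = [1.2, 0.8, 1.5, 0.5]
z_strength = standardize(raw_strength)
print([round(z, 2) for z in z_strength])
```

Standardizing changes the scale but not the ordering of nodes, so values read off a standardized plot cannot be compared directly with unstandardized ones.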

Take home message
• For most statistical parameters or test statistics, it is very useful to understand how precisely they are estimated
• There are different ways to do that; one way is to bootstrap confidence intervals around the point estimates
• Investigating the stability of network parameters like edge weights will help us to understand the stability of network models
• Crucial to understand replicability

Bootnet
• We know that network parameters can be estimated accurately in large samples.
• We do not know if networks replicate in different samples (model stability is necessary but not sufficient for replicability)

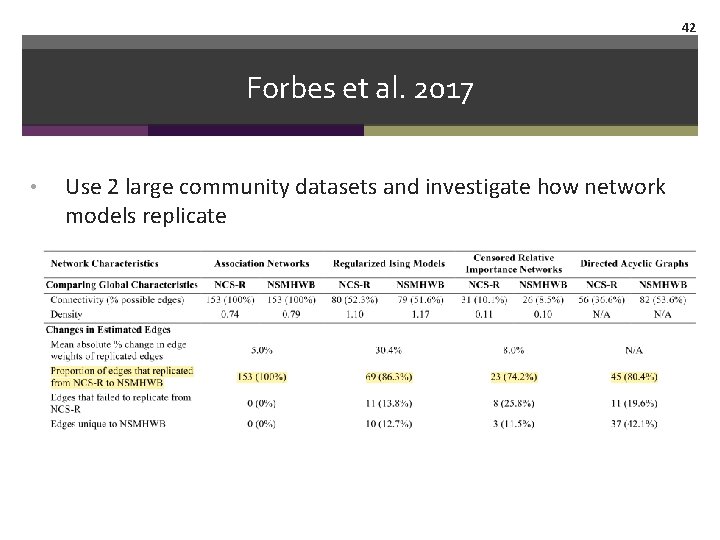

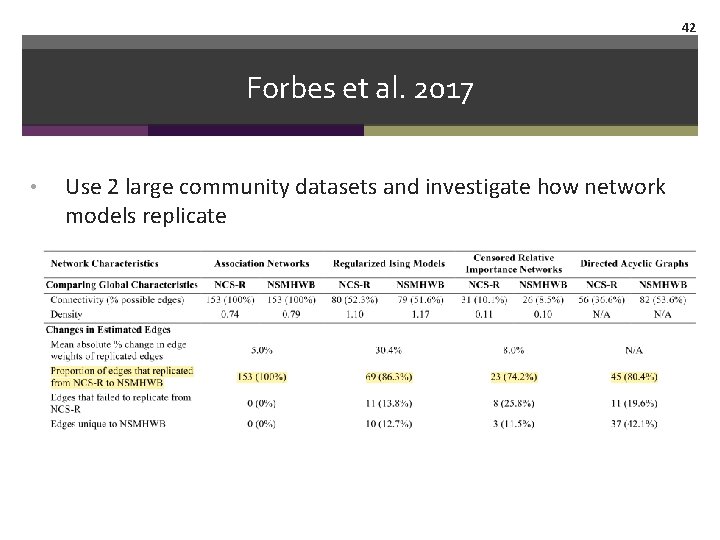

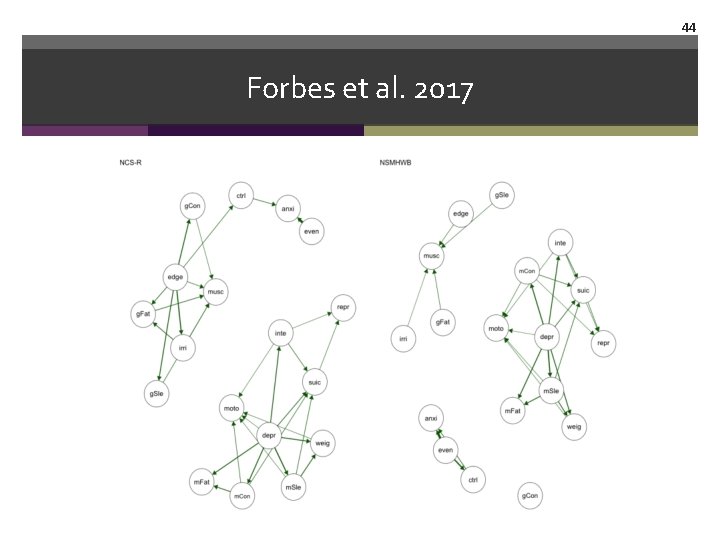

Forbes et al. 2017
• Use 2 large community datasets and investigate how network models replicate

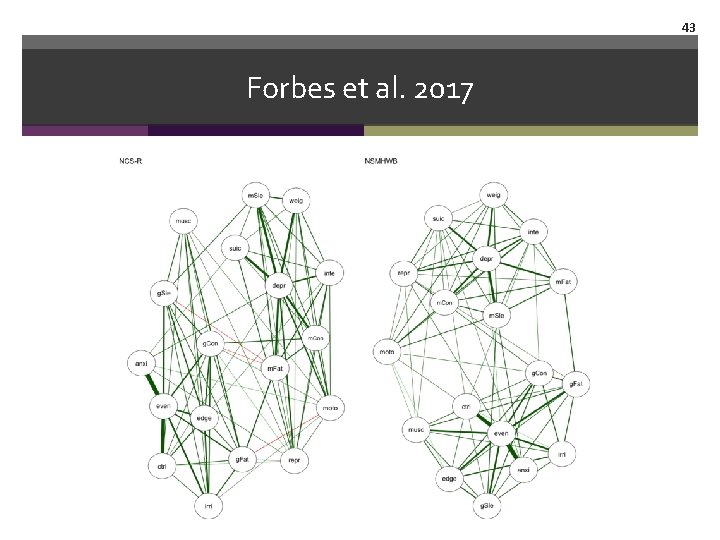

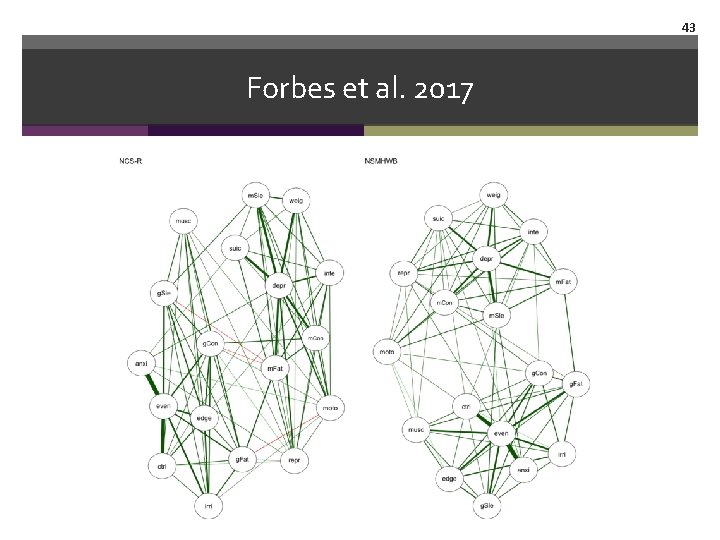

Forbes et al. 2017

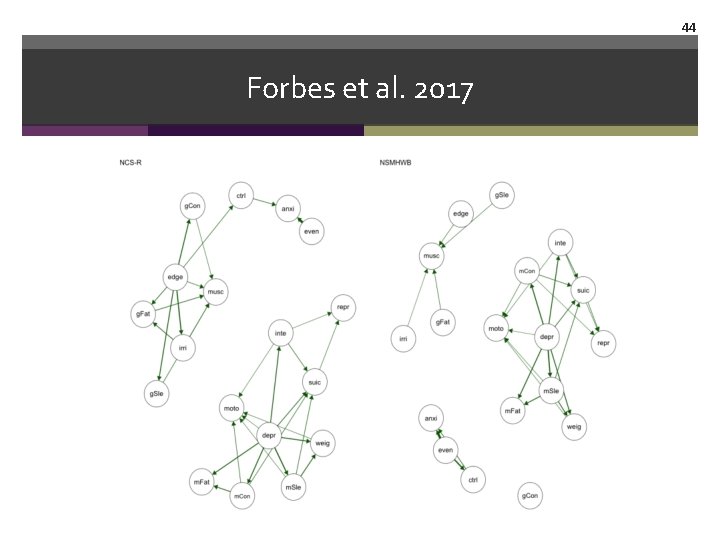

Forbes et al. 2017
• “psychopathology networks have limited replicability” and “limited utility”
• “popular network analysis methods produce unreliable results”
• “novel results originating from psychopathology networks should be held to higher standards of evidence before they are ready for dissemination or implementation in the field”

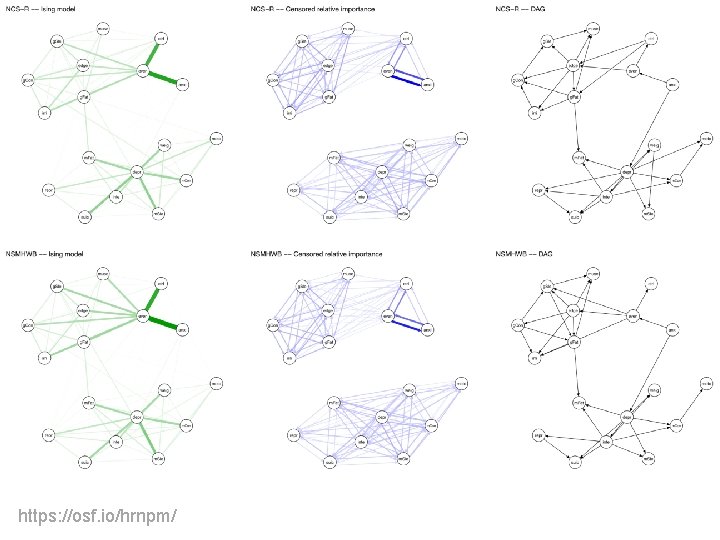

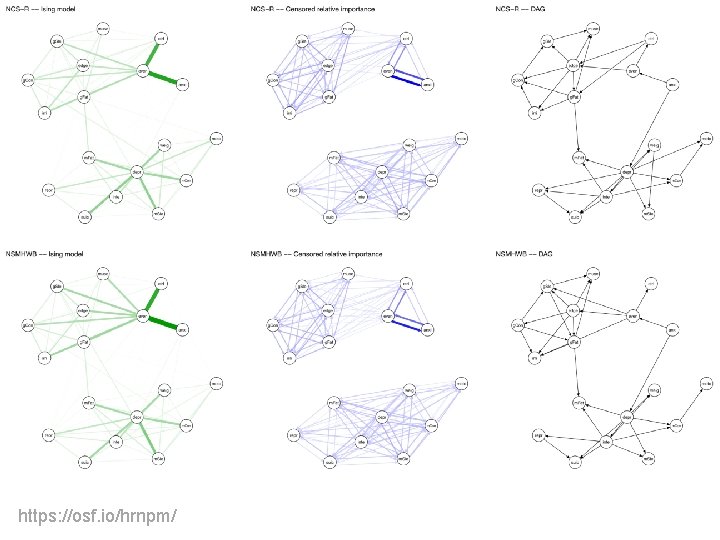

Borsboom et al. reanalysis 2017
1. Networks across the two datasets replicate very well in Forbes et al., and even better (around 0.95) if you correct some of the mistakes they made (e.g., they made computation errors, used metrics to assess replicability that also make factor models replicate terribly, ignored the issue of skip questions that biases replicability, and misunderstood shared variance).
2. NetworkComparisonTest revealed no statistical differences between global network structure and individual edges
3. Concluding that “regression analysis is flawed” because you obtain 2 different regression coefficients in 2 different datasets would be pretty stunning.
https://osf.io/hrnpm/

2016: Replicability of networks
No single published paper investigated replicability in 7+ years of network literature.

Replicability of PTSD networks
1. >10 papers published 2015-2017, but networks fitted to only 1 dataset each
  • Replicability and generalizability unknown
2. Networks fitted to primarily small datasets
  • Potential power issues
3. Networks fitted on community or subclinical data
  • Network structure of clinical data unknown (arguably the one we are interested in)

2017: Replicability of PTSD networks
1. >10 papers published 2015-2017, but networks fitted to only 1 dataset each -> 4 datasets
  • Replicability and generalizability unknown
2. Networks fitted to primarily small datasets -> total N = 2,782
  • Potential power issues
3. Networks fitted on community or subclinical data
  • Network structure of clinical data unknown (arguably the one we are interested in) -> Traumatized patients receiving treatment

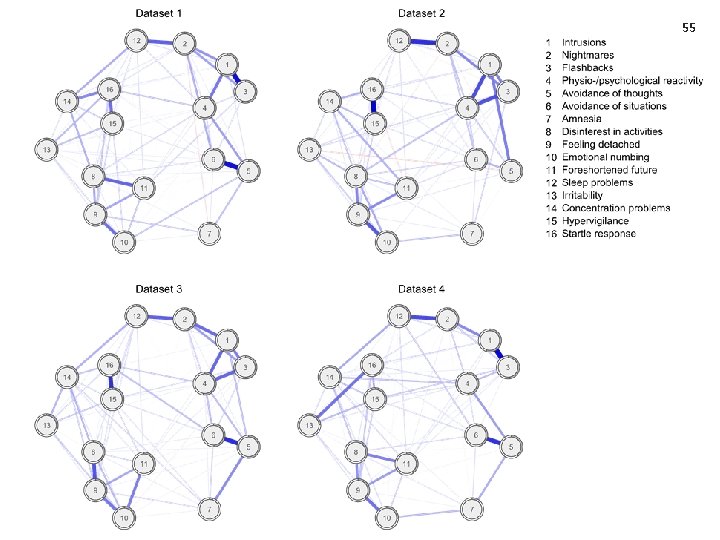

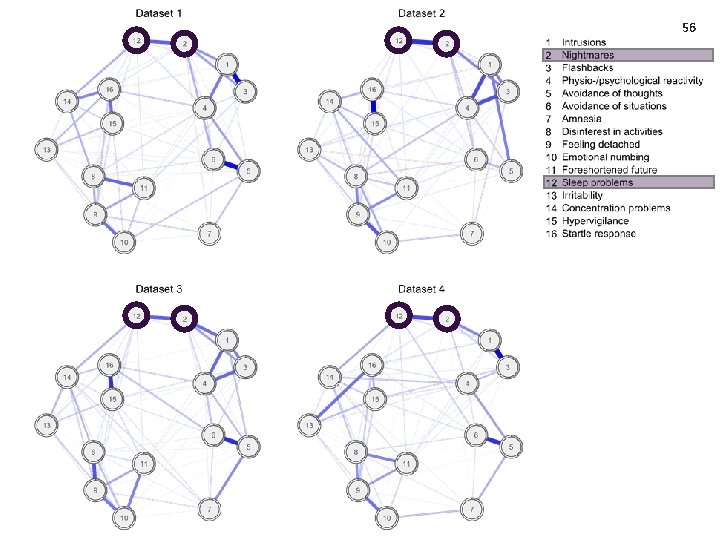

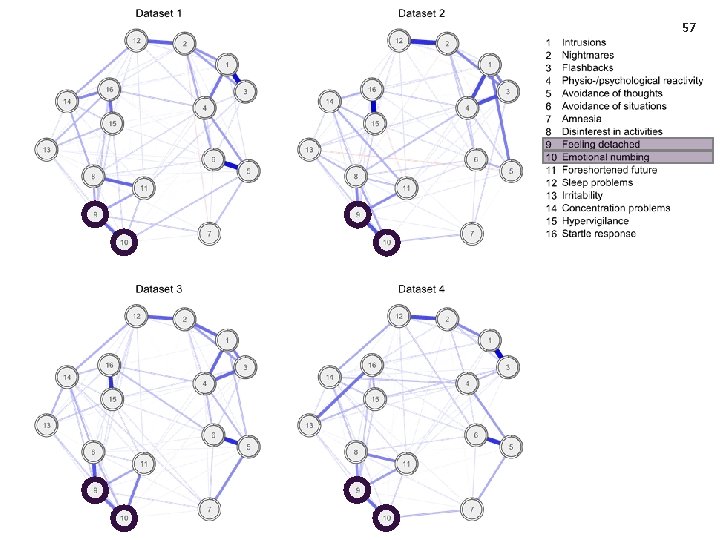

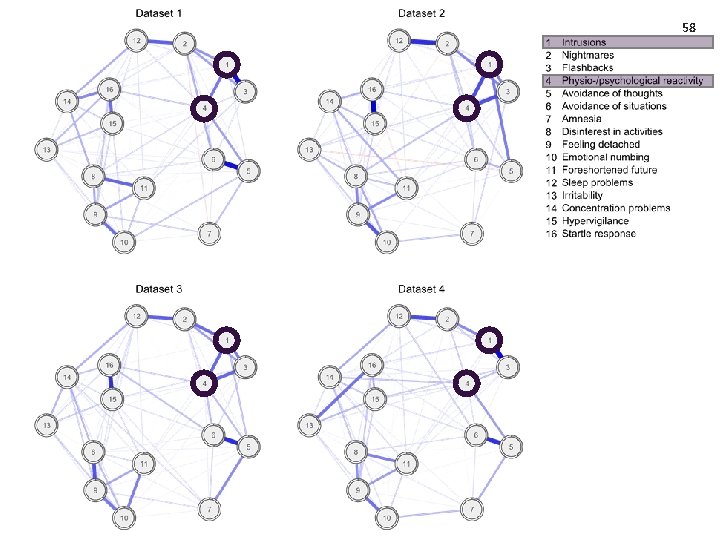

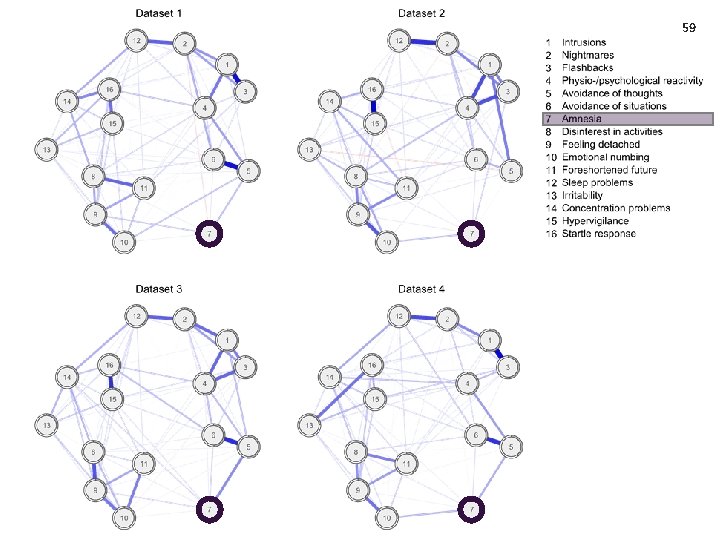

Networks

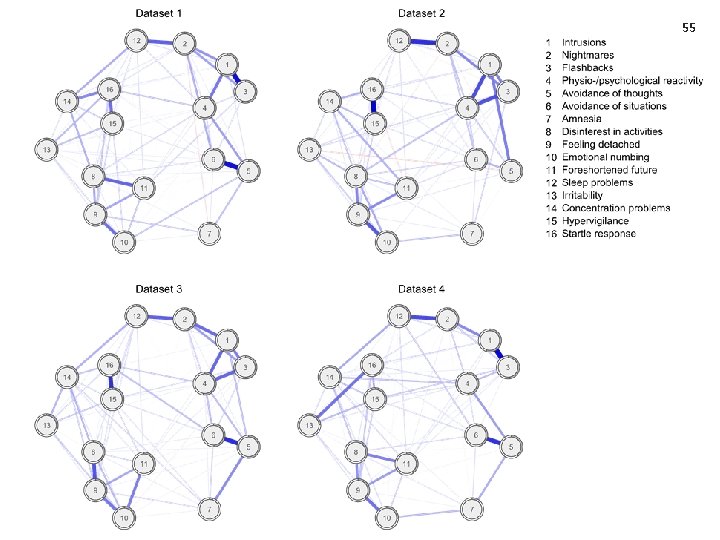

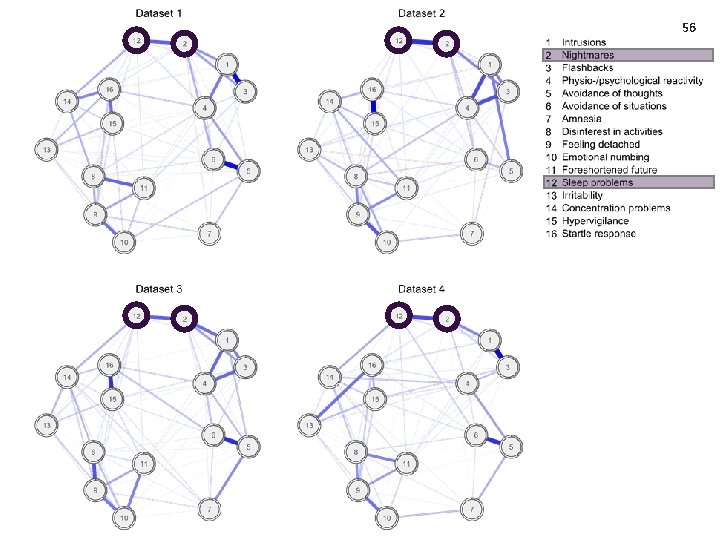

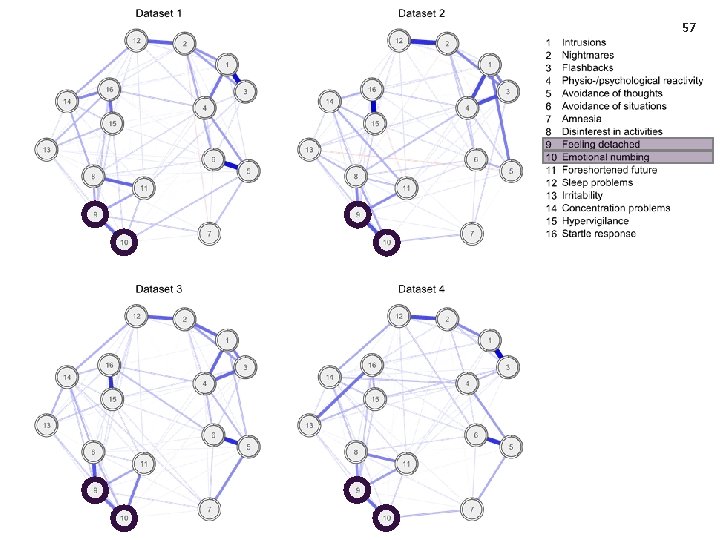

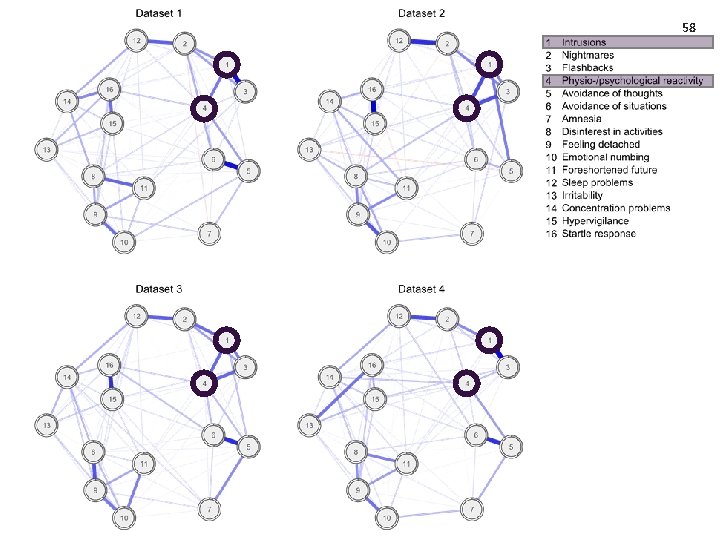

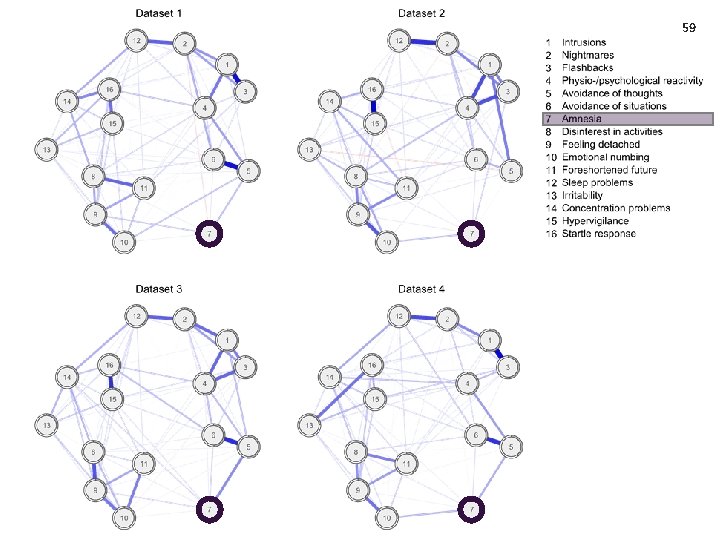

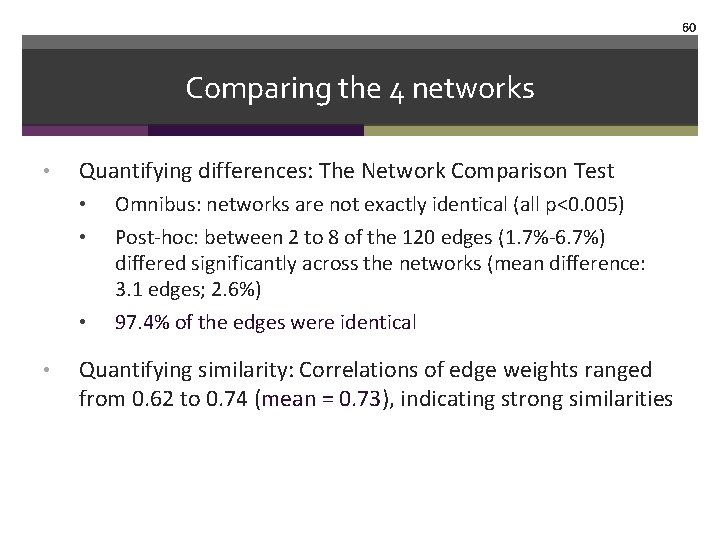

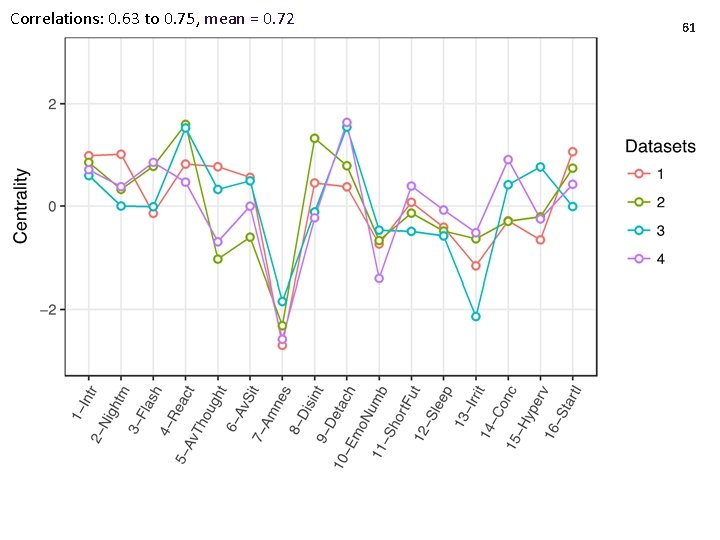

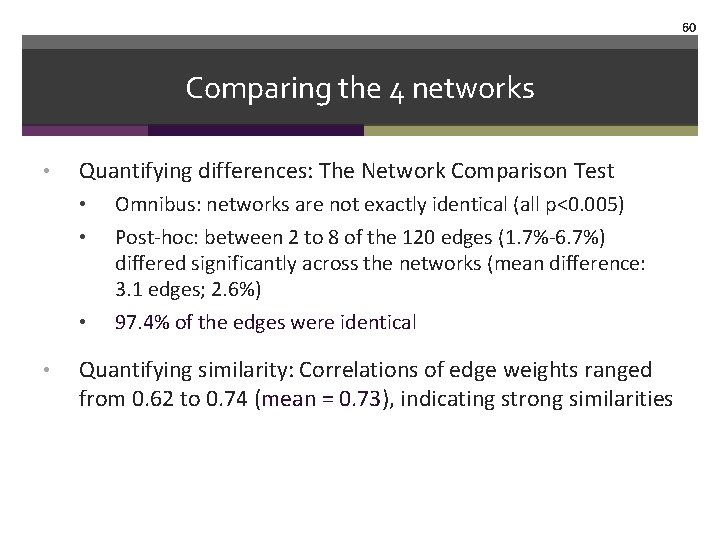

Comparing the 4 networks
• Quantifying differences: The Network Comparison Test
  • Omnibus: networks are not exactly identical (all p < 0.005)
  • Post-hoc: between 2 and 8 of the 120 edges (1.7%-6.7%) differed significantly across the networks (mean difference: 3.1 edges; 2.6%)
  • 97.4% of the edges did not differ significantly
• Quantifying similarity: Correlations of edge weights ranged from 0.62 to 0.74 (mean = 0.73), indicating strong similarities
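The percentages and the similarity measure above are simple to reproduce. The sketch below checks the edge-count arithmetic and shows how two networks' edge weights can be correlated. Two things are assumptions: the node count of 16 is inferred from the 120 possible edges and is not stated on the slide, and the two 4-node weight matrices are invented purely to demonstrate the computation (plain Pearson correlation is used here).

```python
import math

def n_possible_edges(p):
    """Number of unique undirected edges among p nodes."""
    return p * (p - 1) // 2

def pct_of_edges(k, total):
    """Share of edges as a percentage, rounded to one decimal."""
    return round(100 * k / total, 1)

def upper_triangle(m):
    """Unique edge weights of a symmetric weight matrix, row by row."""
    n = len(m)
    return [m[i][j] for i in range(n) for j in range(i + 1, n)]

def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

total = n_possible_edges(16)  # assumption: 16 nodes, which yields 120 edges
print(total, pct_of_edges(2, total), pct_of_edges(8, total), pct_of_edges(3.1, total))

# Hypothetical edge-weight matrices for two small networks (illustration only).
net_a = [[0, .30, .10, 0], [.30, 0, .20, .10], [.10, .20, 0, .40], [0, .10, .40, 0]]
net_b = [[0, .25, .15, 0], [.25, 0, .10, .20], [.15, .10, 0, .35], [0, .20, .35, 0]]
similarity = pearson(upper_triangle(net_a), upper_triangle(net_b))
print(f"edge-weight correlation: {similarity:.2f}")
```

Only the upper triangle is used because each undirected edge appears twice in a symmetric matrix; counting both copies would not change the correlation but would double the apparent number of edges.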

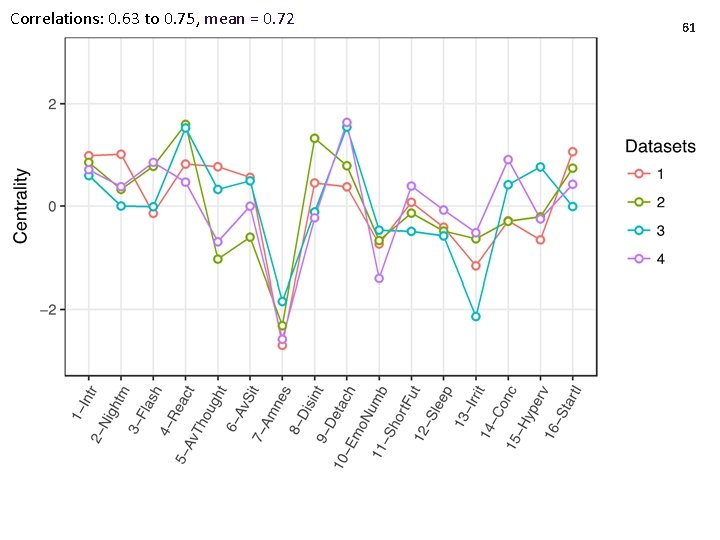

Correlations: 0.63 to 0.75, mean = 0.72

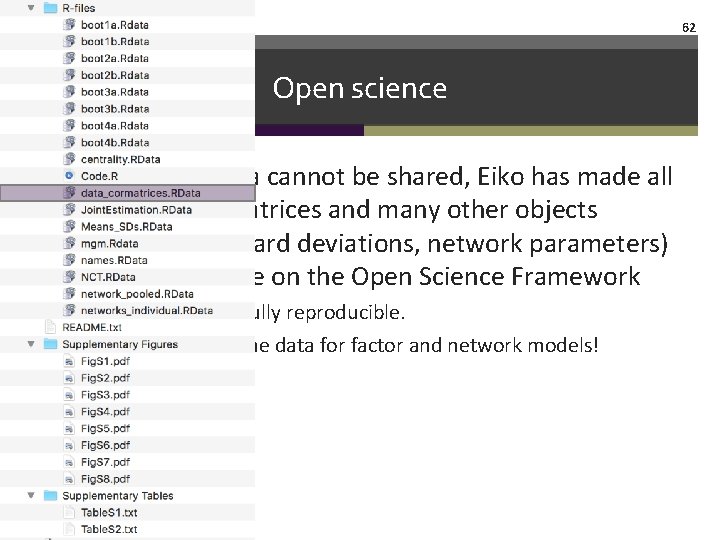

Open science
• While the data cannot be shared, Eiko has made all correlation matrices and many other objects (means, standard deviations, network parameters) freely available on the Open Science Framework
  • The paper is fully reproducible.
  • You can use the data for factor and network models!

https://osf.io/2t7qp/

Challenges
https://osf.io/vqsa4/

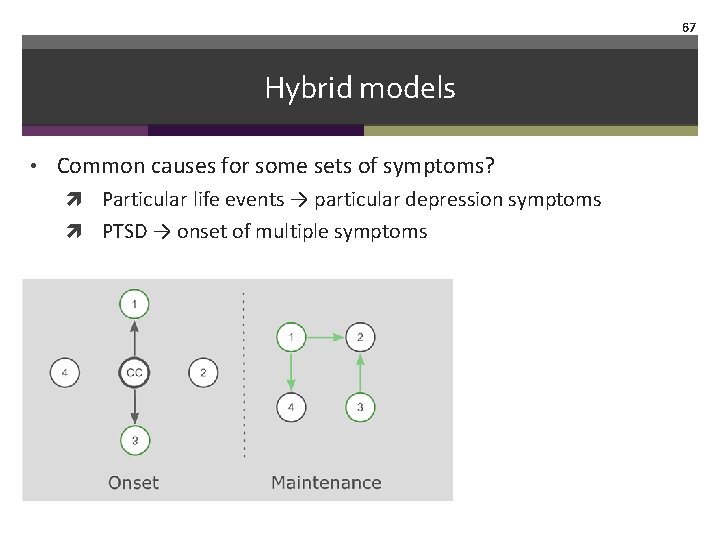

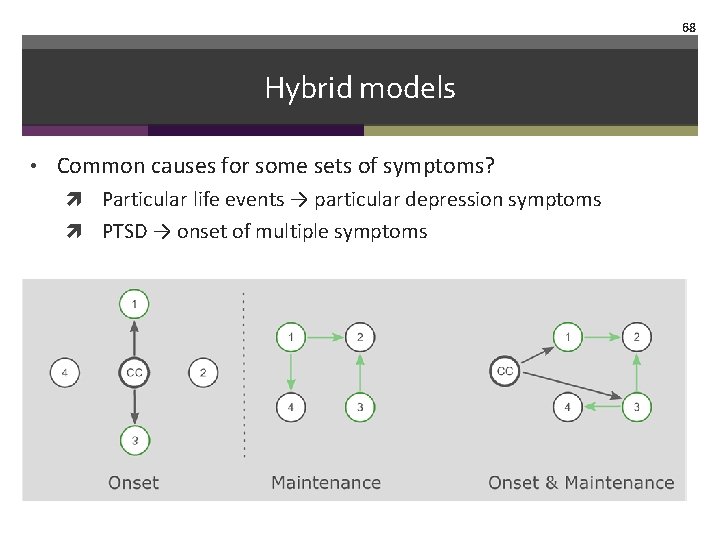

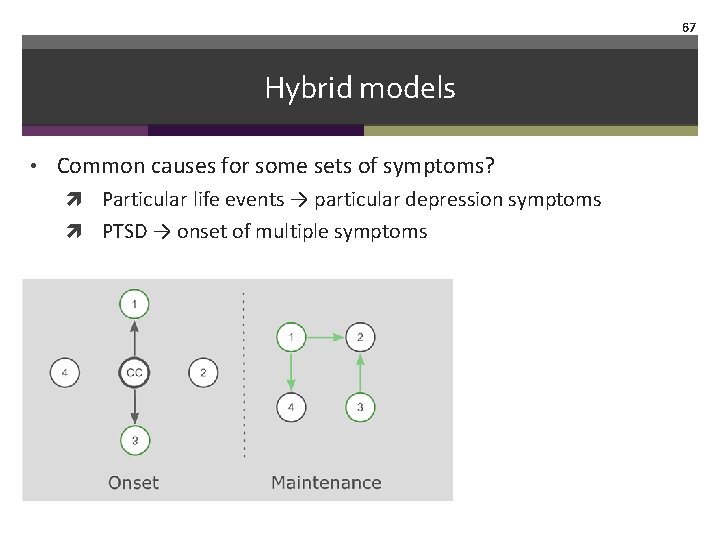

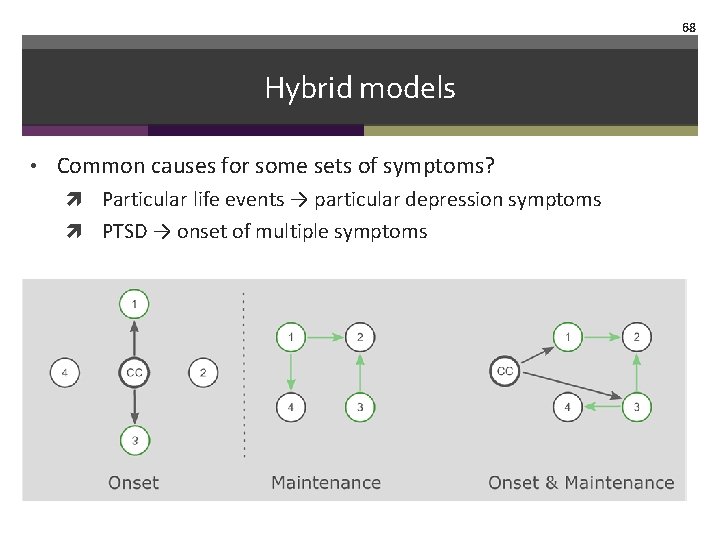

Hybrid models
• Common causes for some sets of symptoms?
  • Particular life events → particular depression symptoms
  • PTSD → onset of multiple symptoms

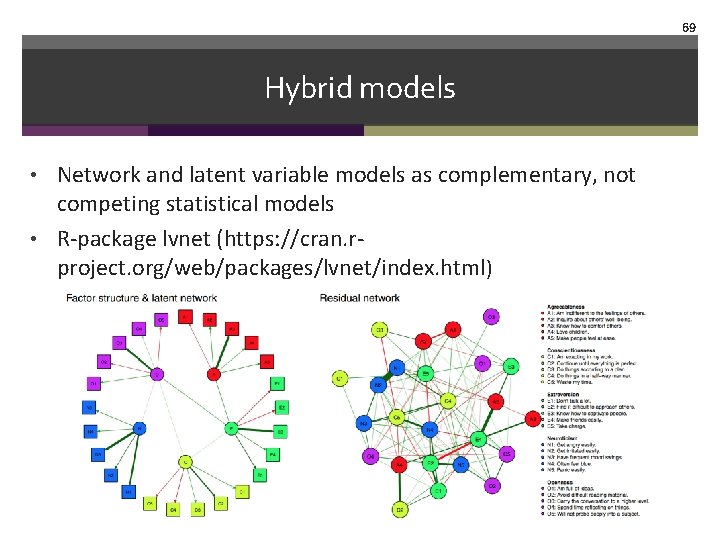

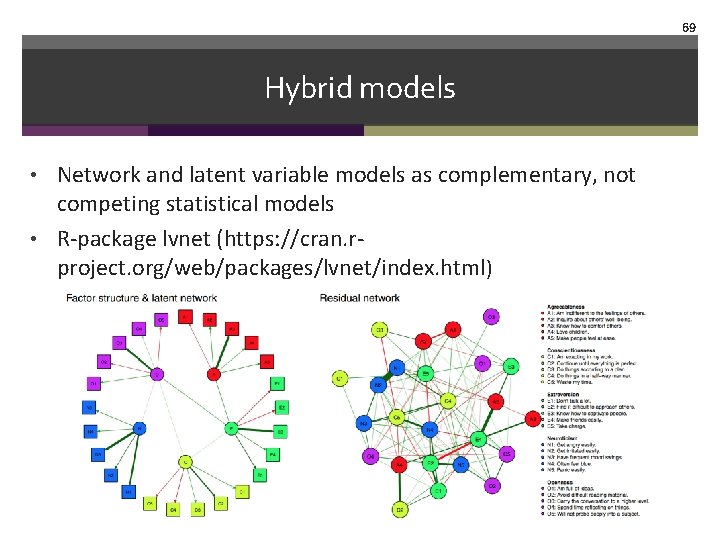

Hybrid models
• Network and latent variable models as complementary, not competing statistical models
• R-package lvnet (https://cran.r-project.org/web/packages/lvnet/index.html)

What variables to include
• Some questionnaires seem to measure the same variable multiple times
  • CES-D: ‘sad mood’, ‘depressed mood’, ‘feeling blue’
DOI 10.17605/OSF.IO/BNEKP

2 hot topics
• “Causality” and anything related to it; it’s helpful to start reading up on Granger causality and move on from there.
• Between subjects (cross-sectional) vs within subjects (time series)

Random topics
• Missing data

Practical Time!
http://www.adelaisvoranu.com/Oldenburg2018