Generative Adversarial Network (GAN), MING-HSIEN LAI, 2017/06/05

- Slides: 58

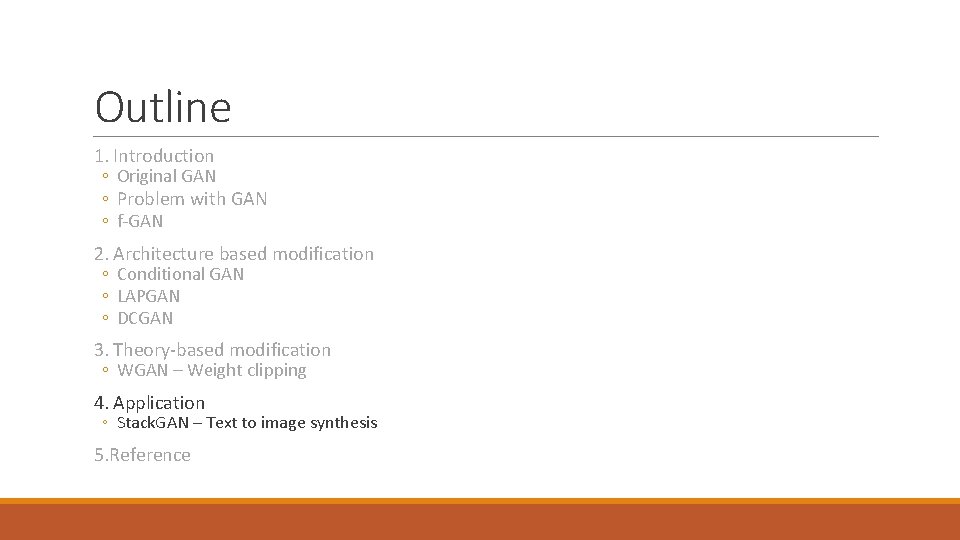

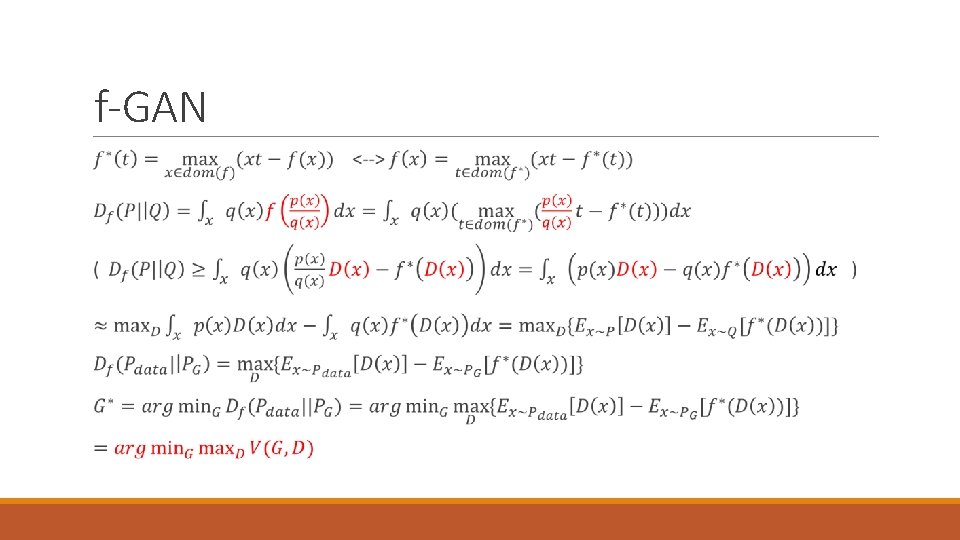

Outline

1. Introduction
   - Original GAN
   - Problem with GAN
   - f-GAN
2. Architecture-based modification
   - Conditional GAN
   - LAPGAN
   - DCGAN
3. Theory-based modification
   - WGAN: weight clipping
4. Application
   - StackGAN: text-to-image synthesis
5. Reference

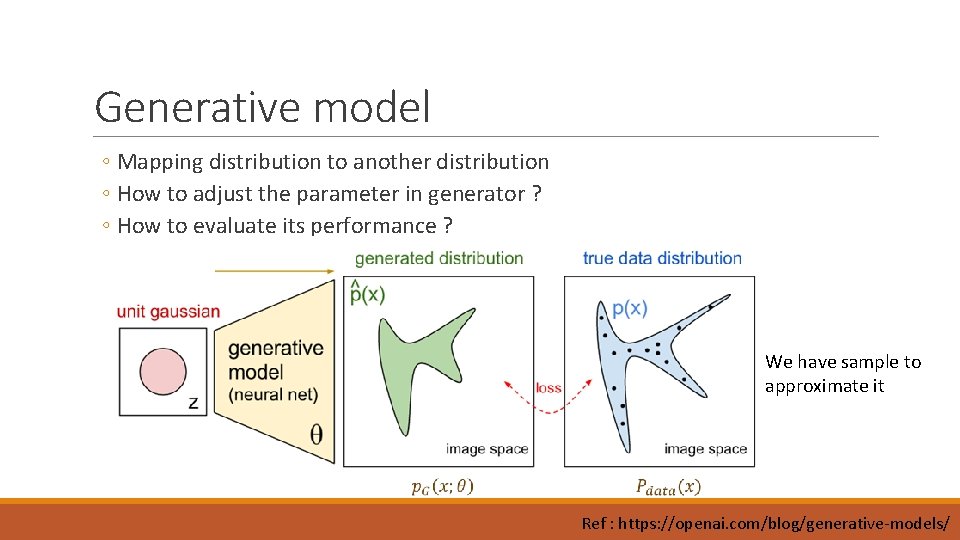

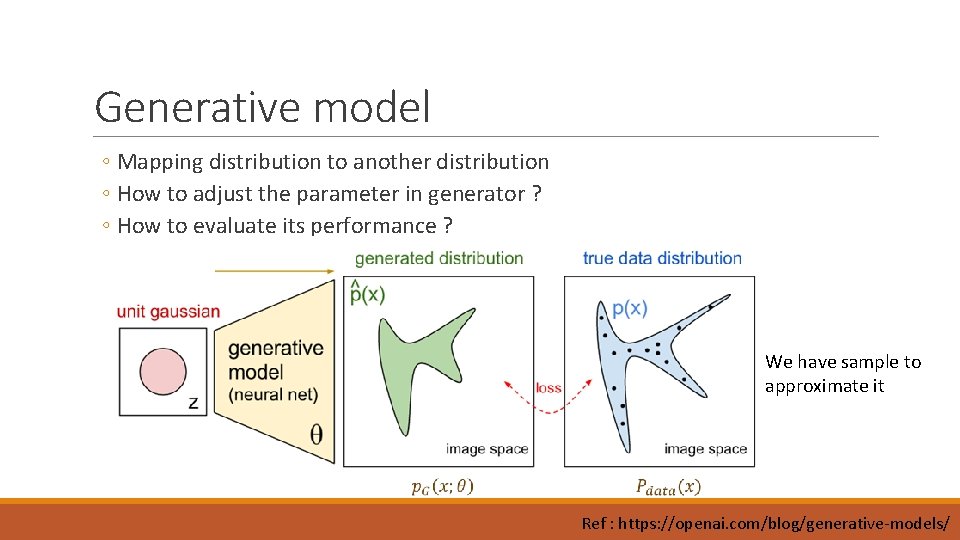

Generative model

- Maps one distribution to another distribution.
- How do we adjust the parameters of the generator?
- How do we evaluate its performance? We only have samples with which to approximate the target distribution.

Ref: https://openai.com/blog/generative-models/

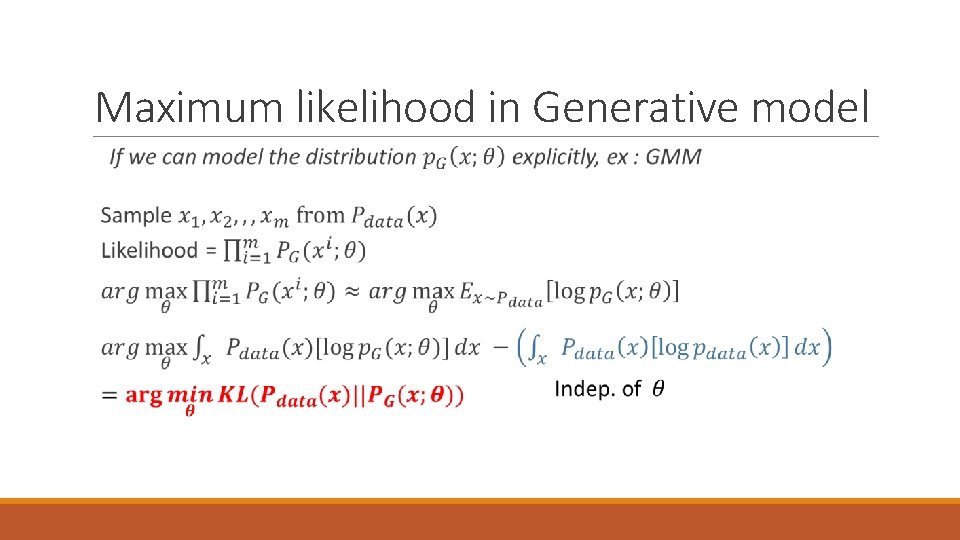

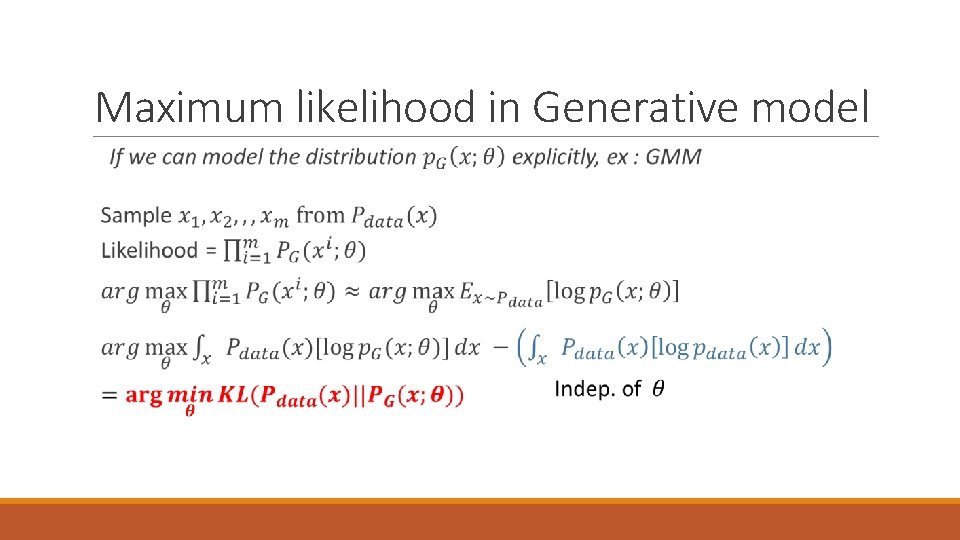

Maximum likelihood in Generative model

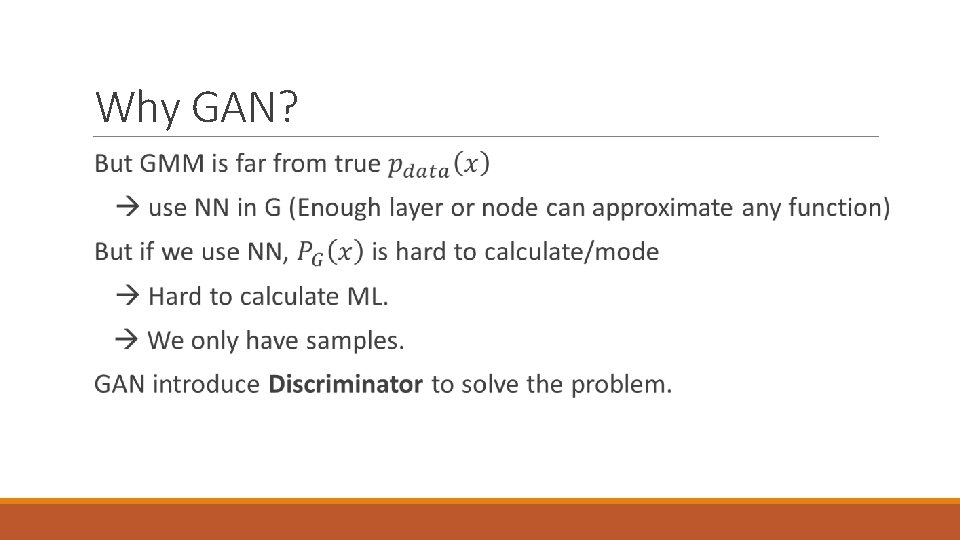

Why GAN?

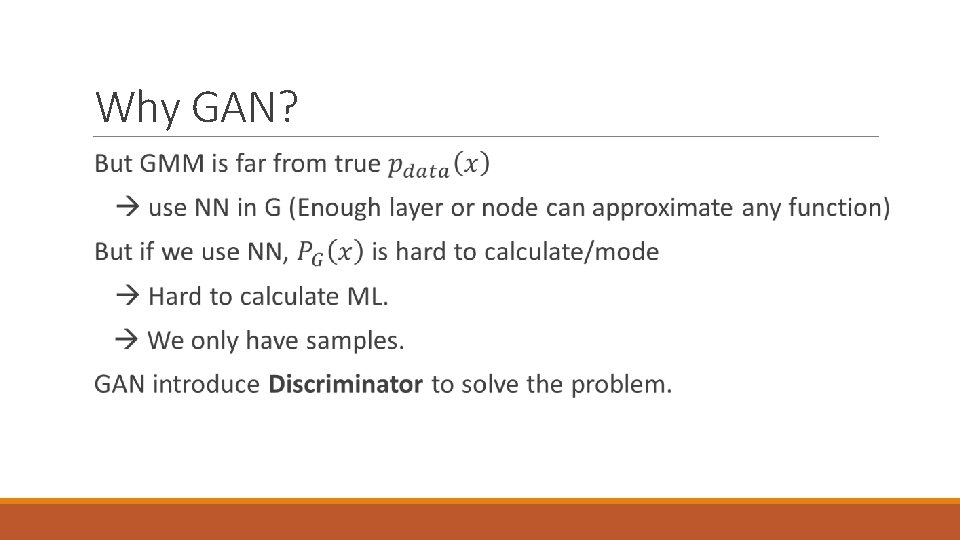

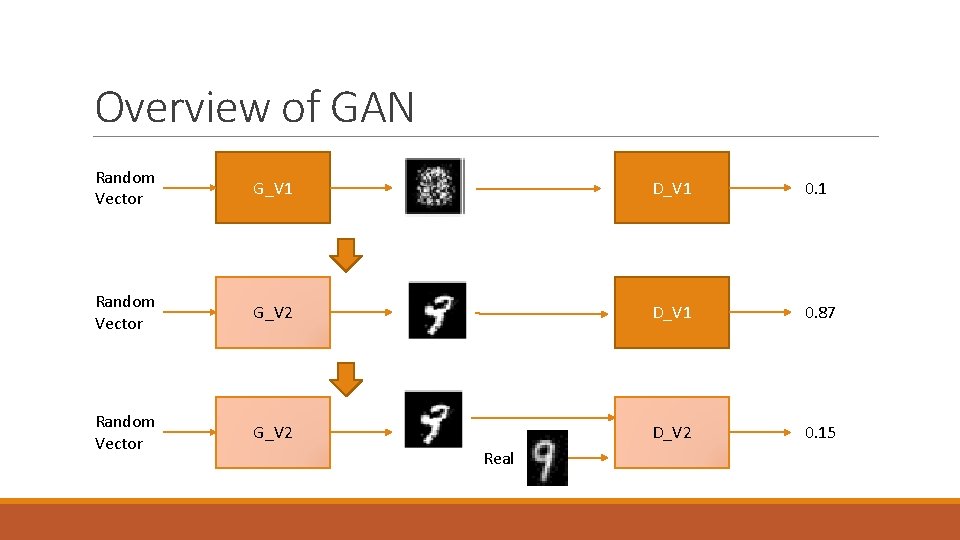

Overview of GAN (figure: random vector → G → D → score)

- G_V1 → D_V1: 0.1
- G_V2 → D_V1: 0.87
- G_V2 → D_V2: 0.15
- D is also shown real images.

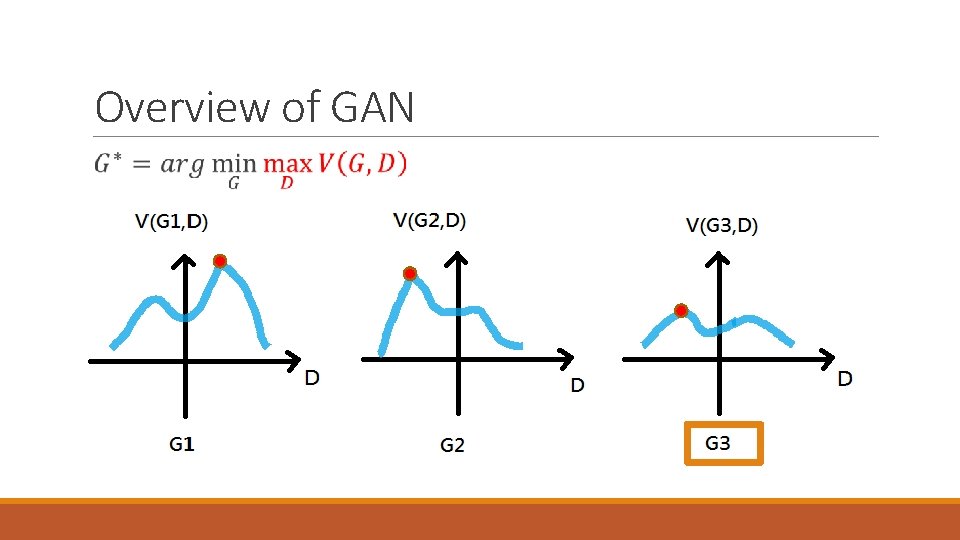

Overview of GAN

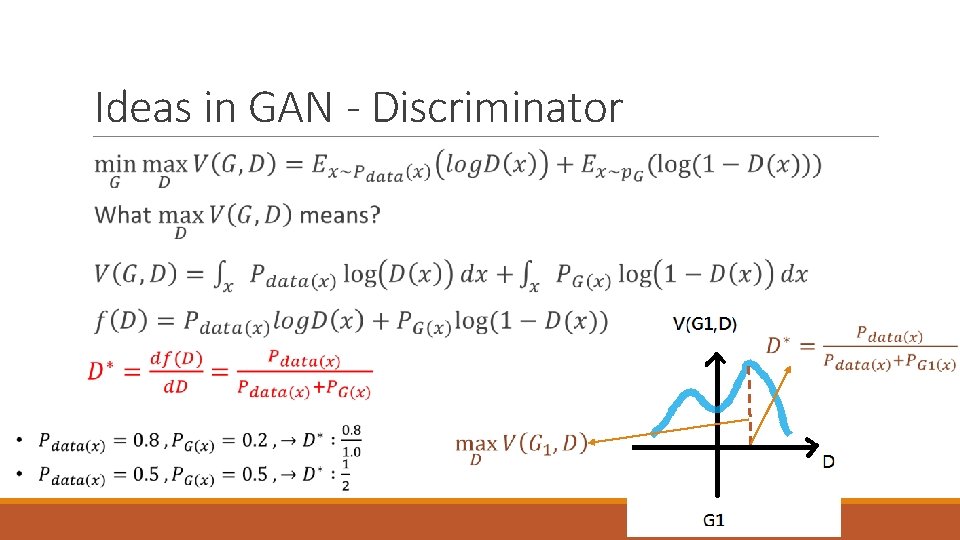

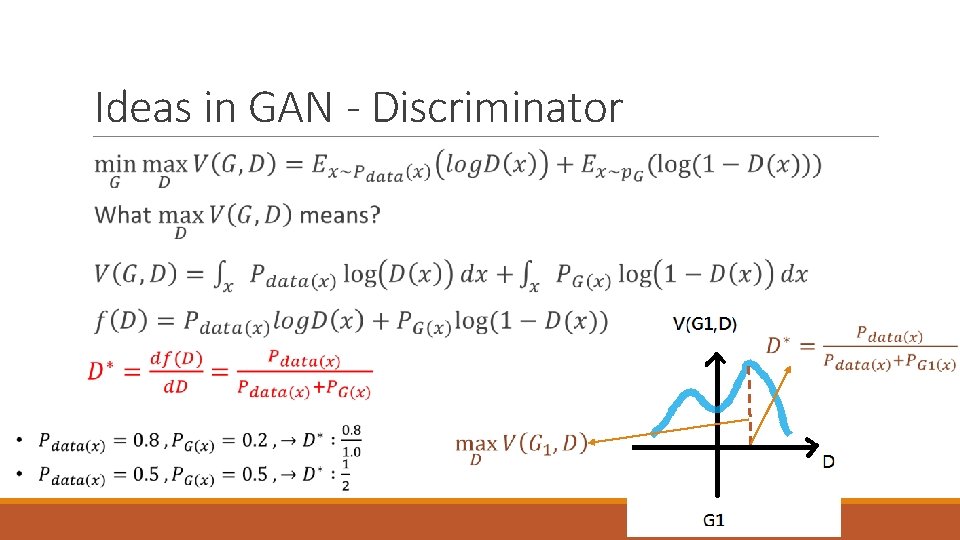

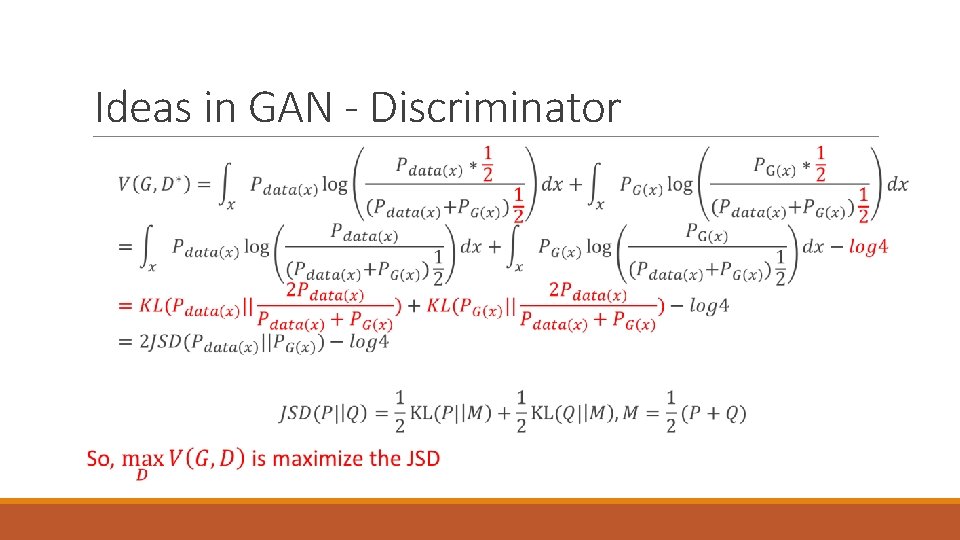

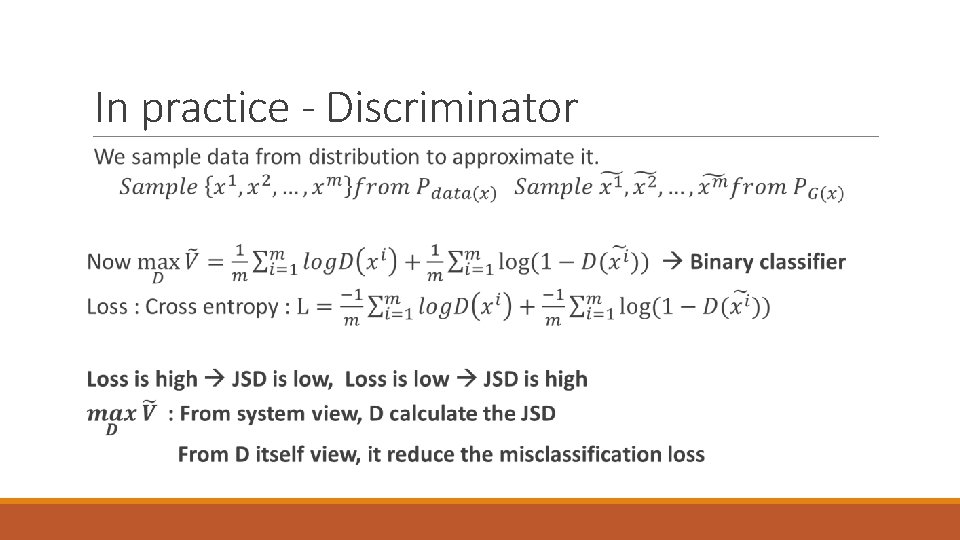

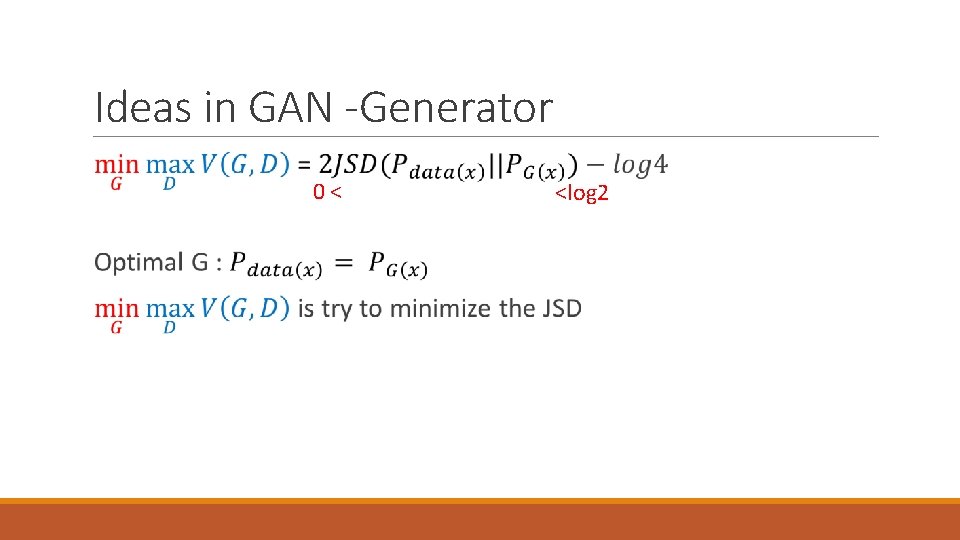

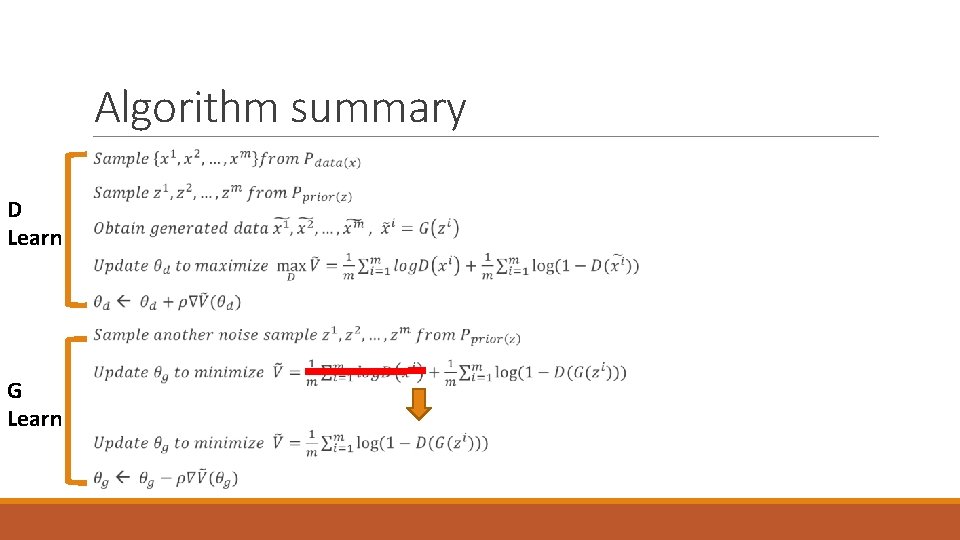

Ideas in GAN - Discriminator

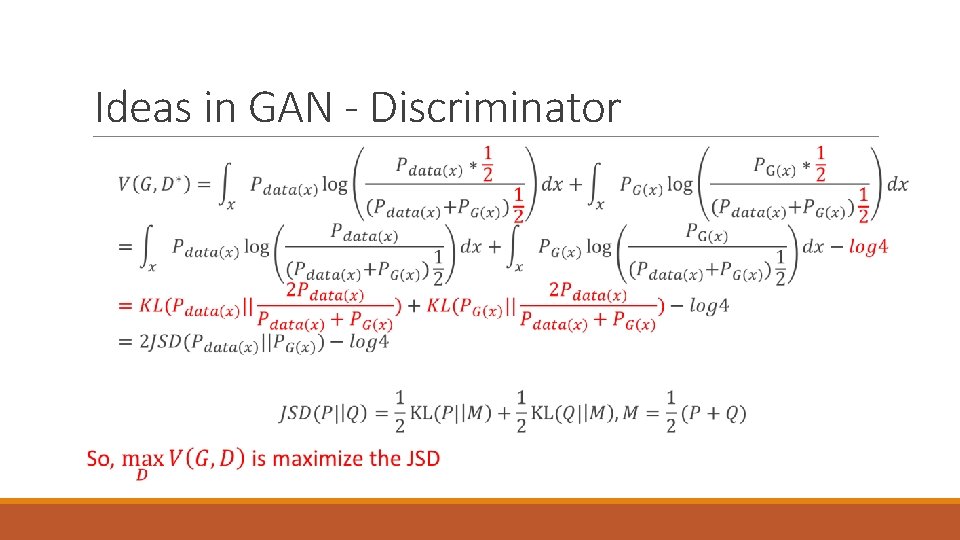

In practice - Discriminator

Ideas in GAN - Generator: $0 < \mathrm{JSD} < \log 2$

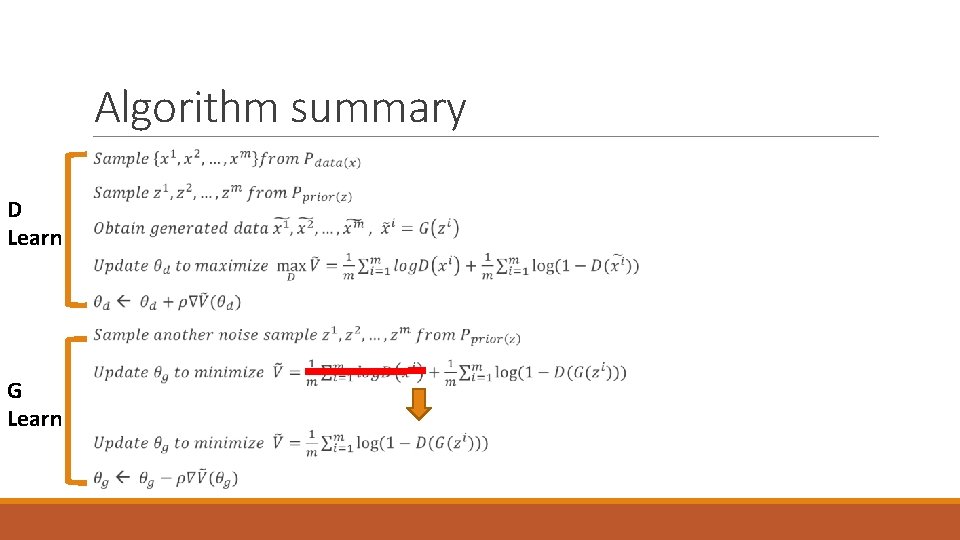

Algorithm summary: alternate between a step in which D learns and a step in which G learns.
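The alternating schedule can be sketched with a toy one-dimensional GAN. Everything below is an illustrative assumption rather than the slide's implementation: a linear generator `G(z) = a*z + b`, a logistic discriminator `D(x) = sigmoid(w*x + c)`, hand-derived gradients, the non-saturating generator loss, and the hypothetical names `train_toy_gan`, `k_d`, and `lr`.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def train_toy_gan(steps=200, k_d=1, lr=0.05, seed=0):
    """Alternate k_d discriminator updates with one generator update."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters (assumed linear G)
    w, c = 0.1, 0.0   # discriminator parameters (assumed logistic D)
    for _step in range(steps):
        # D learns: minimize -[log D(x_real) + log(1 - D(G(z)))]
        for _d_step in range(k_d):
            x_real = rng.gauss(3.0, 0.5)          # toy "real" data
            x_fake = a * rng.gauss(0.0, 1.0) + b  # generator sample
            d_real = sigmoid(w * x_real + c)
            d_fake = sigmoid(w * x_fake + c)
            grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
            grad_c = (d_real - 1.0) + d_fake
            w -= lr * grad_w
            c -= lr * grad_c
        # G learns: minimize -log D(G(z)) with D held fixed
        z = rng.gauss(0.0, 1.0)
        d_fake = sigmoid(w * (a * z + b) + c)
        grad_a = (d_fake - 1.0) * w * z
        grad_b = (d_fake - 1.0) * w
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, w, c

params = train_toy_gan()
print(params)
```

The point of the sketch is only the loop structure: D is updated on real and generated samples, then G is updated through the fixed D.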

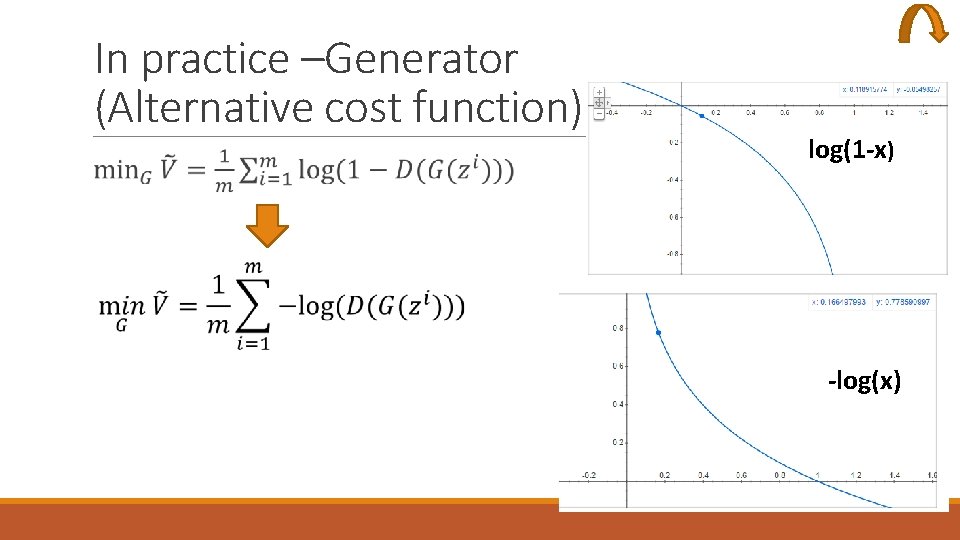

In practice - Generator (alternative cost function): replace $\log(1 - x)$ with $-\log(x)$, where $x = D(G(z))$.
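A small numeric sketch of why the alternative cost is used, assuming the discriminator output is `x = sigmoid(s)` for a logit `s` (the logit parameterization and the function names are assumptions for illustration). It compares the gradient of each cost with respect to `s`.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def grad_original(s):
    """d/ds of log(1 - sigmoid(s)), the original generator cost."""
    return -sigmoid(s)

def grad_alternative(s):
    """d/ds of -log(sigmoid(s)), the alternative generator cost."""
    return sigmoid(s) - 1.0

for s in (-6.0, -2.0, 0.0, 2.0):
    x = sigmoid(s)
    print(f"x={x:.4f}  original={grad_original(s):+.4f}  "
          f"alternative={grad_alternative(s):+.4f}")
```

When D confidently rejects a generated sample (x close to 0), the original cost gives a gradient near zero, while the alternative cost gives a gradient near -1.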

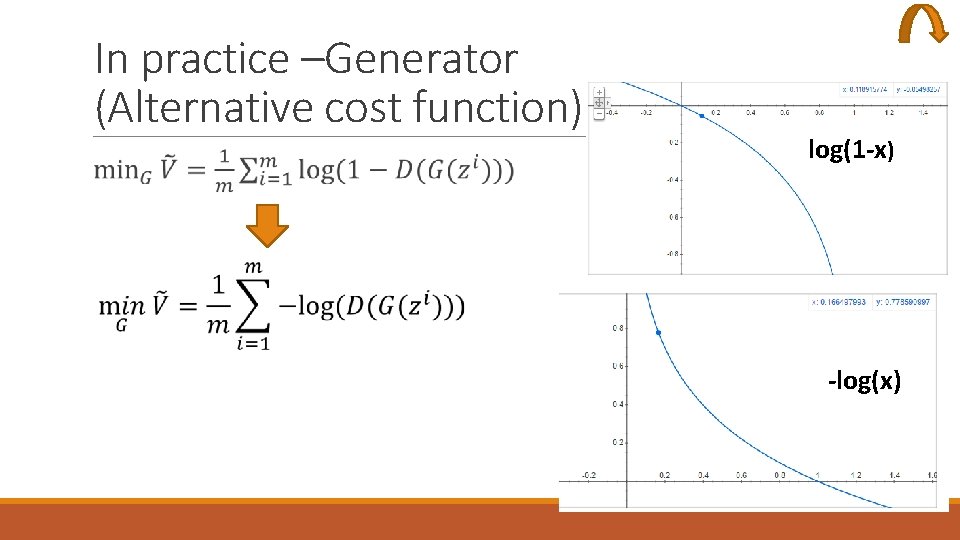

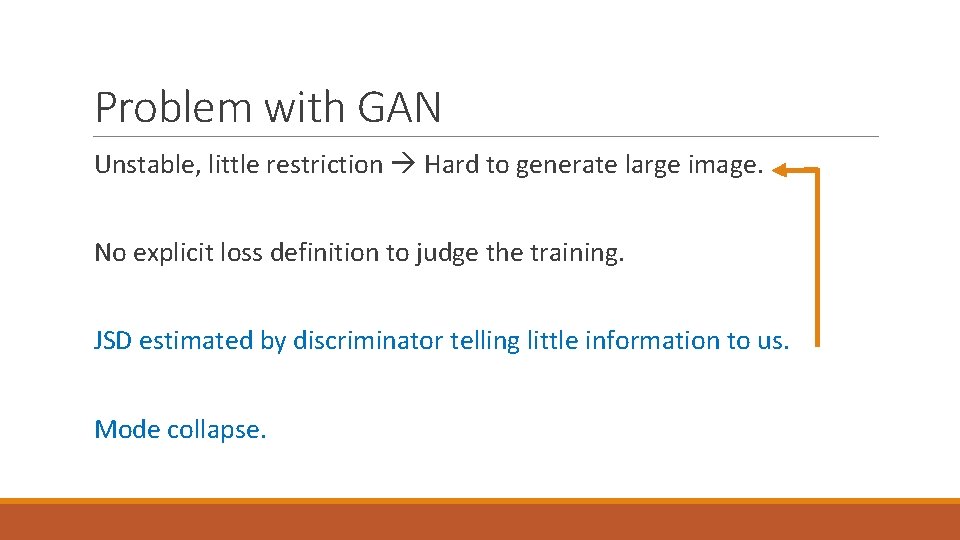

Problem with GAN

- Unstable training, with few restrictions on the model.
- Hard to generate large images.
- No explicit loss definition by which to judge training.
- The JSD estimated by the discriminator gives us little information.
- Mode collapse.

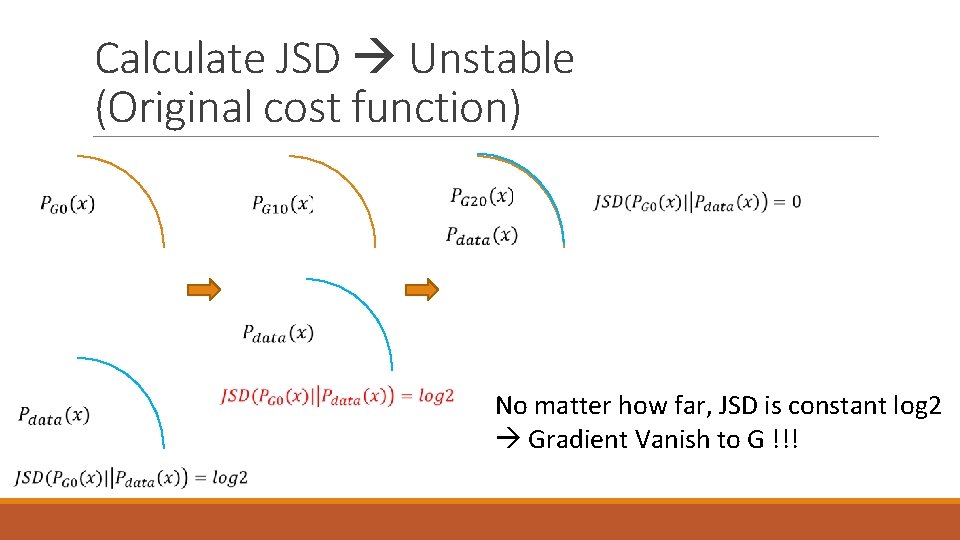

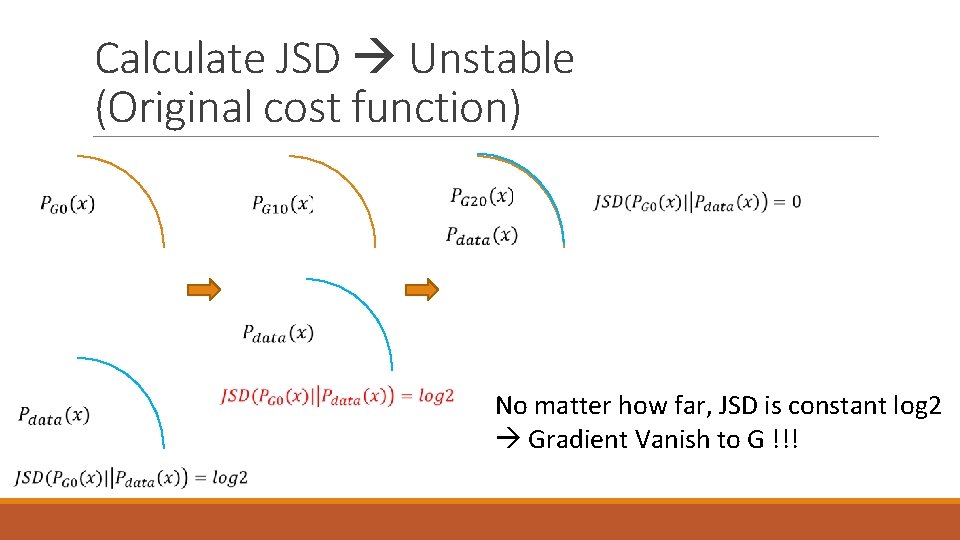

Calculate JSD Unstable (Original cost function)

Calculate JSD: unstable (original cost function). When the two distributions do not overlap, the JSD is the constant $\log 2$ no matter how far apart they are, so the gradient passed to G vanishes.
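A minimal check of the constant-JSD claim, assuming discrete distributions on a small grid and using point masses as the non-overlapping example (the helper names are hypothetical).

```python
import math

def kl(p, q):
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def jsd(p, q):
    """Jensen-Shannon divergence with mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def point_mass(index, size=10):
    return [1.0 if i == index else 0.0 for i in range(size)]

p = point_mass(0)
for shift in (1, 5, 9):
    print(shift, jsd(p, point_mass(shift)), math.log(2))
```

The value does not change with the shift, so it carries no information about how far the generated distribution is from the data.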

![Calculate JSD Unstable Original cost function What Overlap can be neglected means Ref3 Ex Calculate JSD Unstable (Original cost function) What Overlap can be “neglected” means? Ref[3] Ex](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-18.jpg)

Calculate JSD: unstable (original cost function). What does "the overlap can be neglected" mean? Ref[3]. Example: dim(z) = 100 and dim(x) = 900 (a 30×30 image). G(z) is then at most a 100-dimensional manifold in a 900-dimensional space, so it cannot fill that space. If the two distributions do overlap, the overlap has measure zero and can be neglected. (For example, in 2-D space two curves may intersect at a point, but a point is 0-dimensional, so its length measure is zero.)

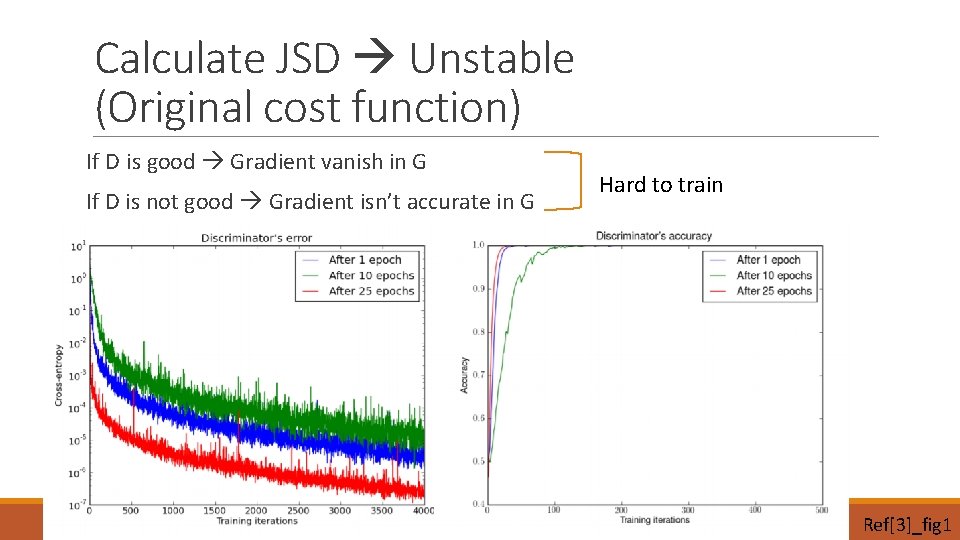

Calculate JSD: unstable (original cost function)

- If D is good, the gradient to G vanishes.
- If D is not good, the gradient to G is inaccurate.
- Either way, training is hard.

Ref[3]_fig 1

![Mode collapse Alternative cost function Ref1fig 24 Ref1fig 23 Mode collapse (Alternative cost function) Ref[1]_fig 24 Ref[1]_fig 23](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-20.jpg)

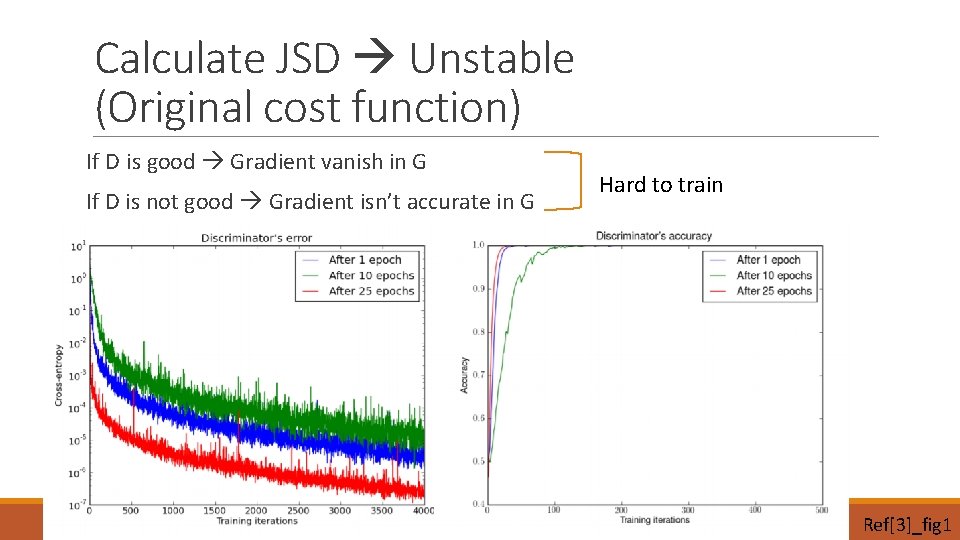

Mode collapse (Alternative cost function) Ref[1]_fig 24 Ref[1]_fig 23

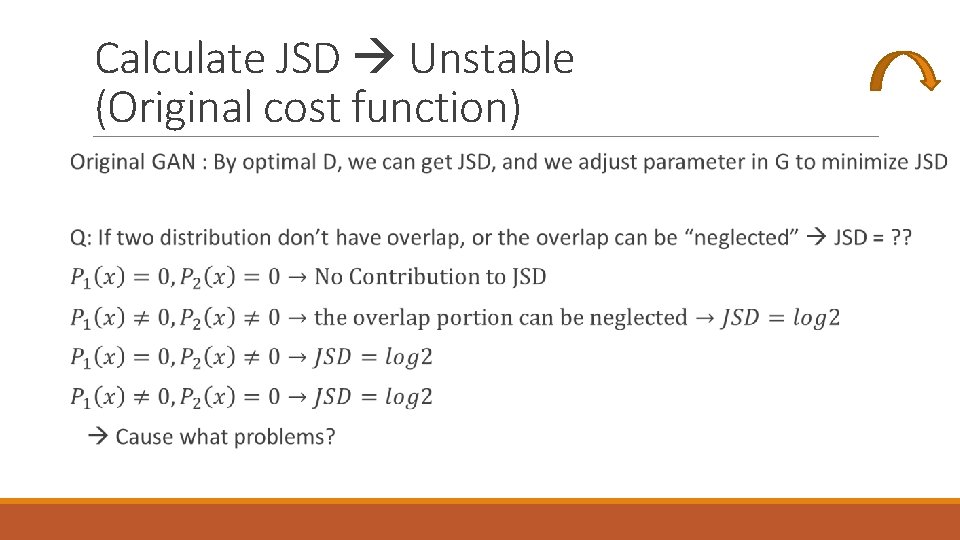

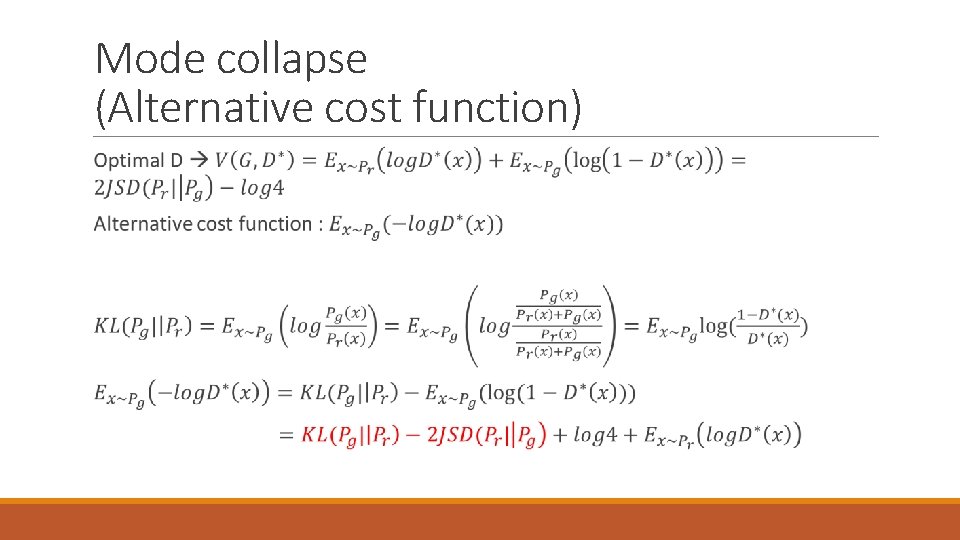

Mode collapse (Alternative cost function)

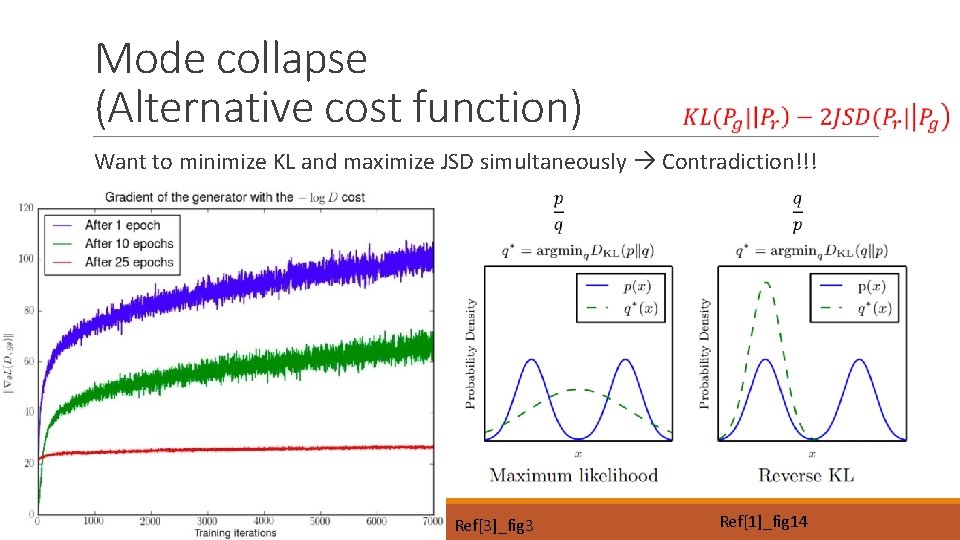

Mode collapse (alternative cost function): the generator is asked to minimize the KL divergence and maximize the JSD at the same time, which is a contradiction. Ref[3]_fig 3, Ref[1]_fig 14
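The contradiction can be written as one equation. This is a sketch of the result in Ref[3], in notation assumed here: $D^*$ is the optimal discriminator for the current generator parameters $\theta_0$, $P_r$ is the data distribution, and $P_{g_\theta}$ is the generator distribution.

$$
\mathbb{E}_{z}\left[-\nabla_\theta \log D^*(g_\theta(z))\right]\Big|_{\theta=\theta_0}
= \nabla_\theta \left[ KL(P_{g_\theta} \,\|\, P_r) - 2\, JSD(P_{g_\theta} \,\|\, P_r) \right]\Big|_{\theta=\theta_0}
$$

The KL term enters with a plus sign and the JSD term with a minus sign, so a gradient step pulls the two distributions together under one measure and pushes them apart under the other.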

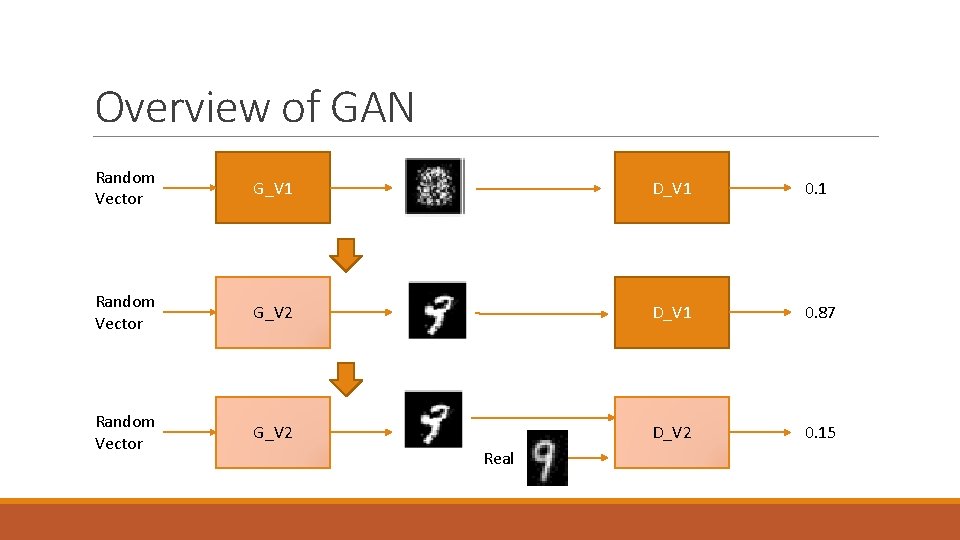

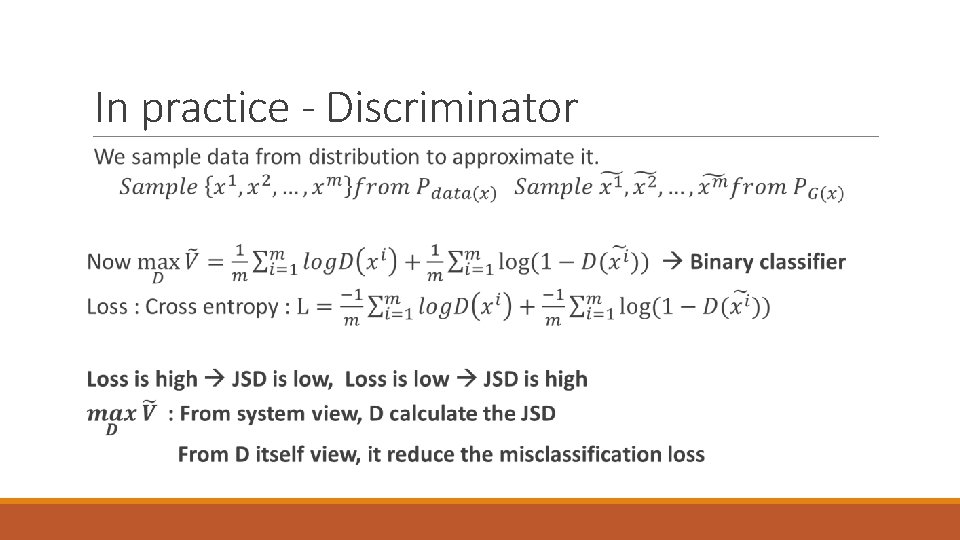

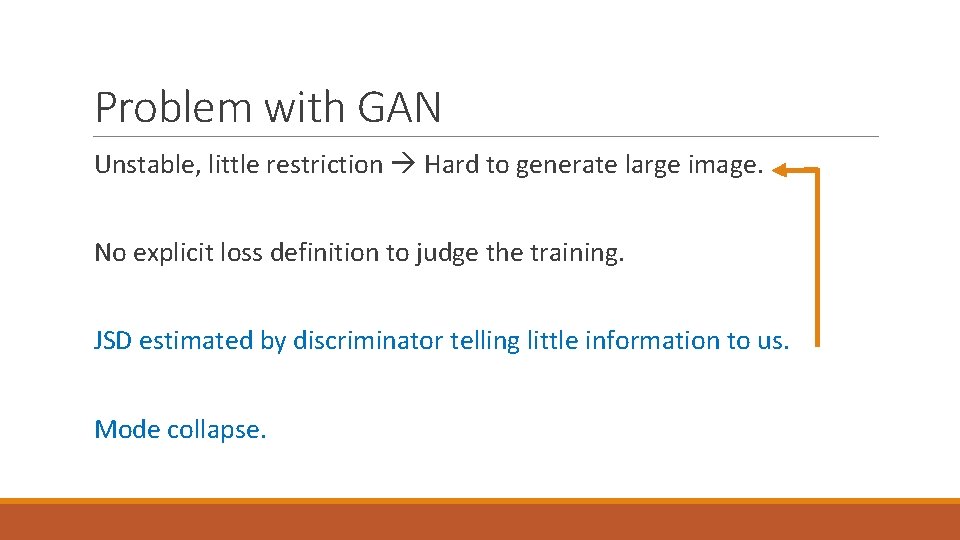

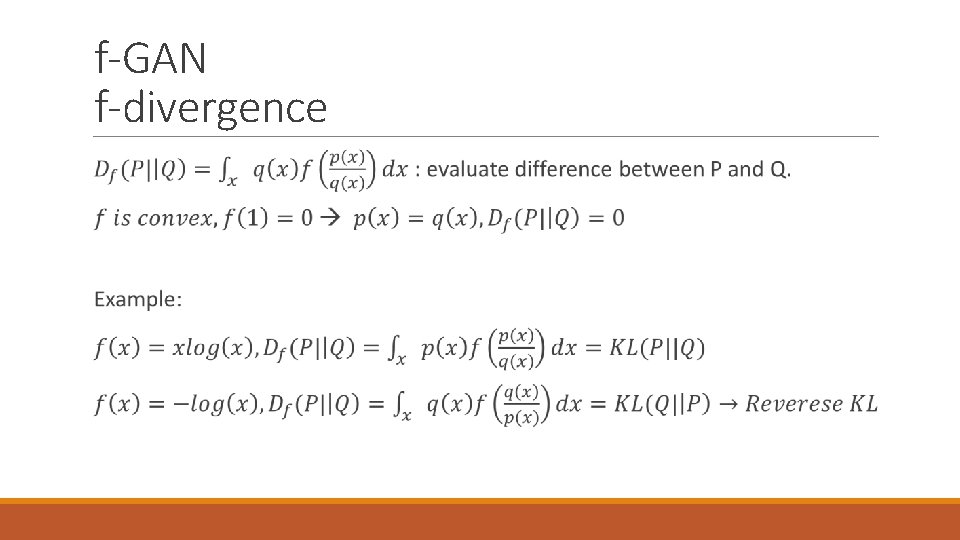

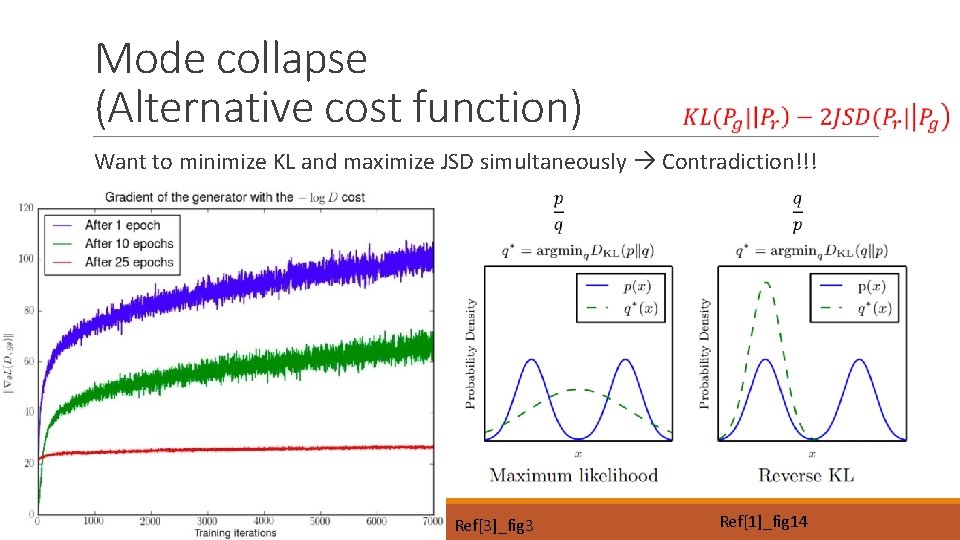

f-GAN f-divergence
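As a concrete reading of the f-divergence, here is a minimal sketch for discrete distributions, using the standard definition $D_f(P \| Q) = \sum_x q(x)\, f(p(x)/q(x))$ with $f$ convex and $f(1) = 0$. The two generator functions shown (KL and total variation) are standard choices; the function names and example distributions are assumptions for illustration.

```python
import math

def f_divergence(p, q, f):
    """D_f(P || Q) = sum_x q(x) * f(p(x) / q(x)); assumes q(x) > 0 everywhere."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))

def f_kl(t):
    """f(t) = t log t gives the KL divergence."""
    return t * math.log(t) if t > 0 else 0.0

def f_total_variation(t):
    """f(t) = |t - 1| / 2 gives the total variation distance."""
    return 0.5 * abs(t - 1.0)

p = [0.5, 0.3, 0.2]
q = [0.25, 0.25, 0.5]
print("KL:", f_divergence(p, q, f_kl))
print("TV:", f_divergence(p, q, f_total_variation))
```

Swapping `f` changes which divergence is measured without changing the rest of the computation, which is the idea f-GAN builds on.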

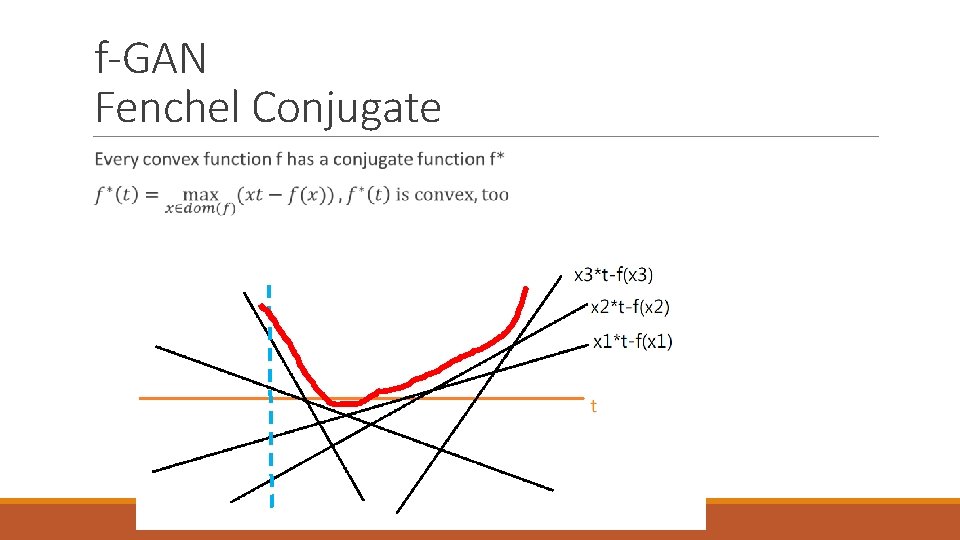

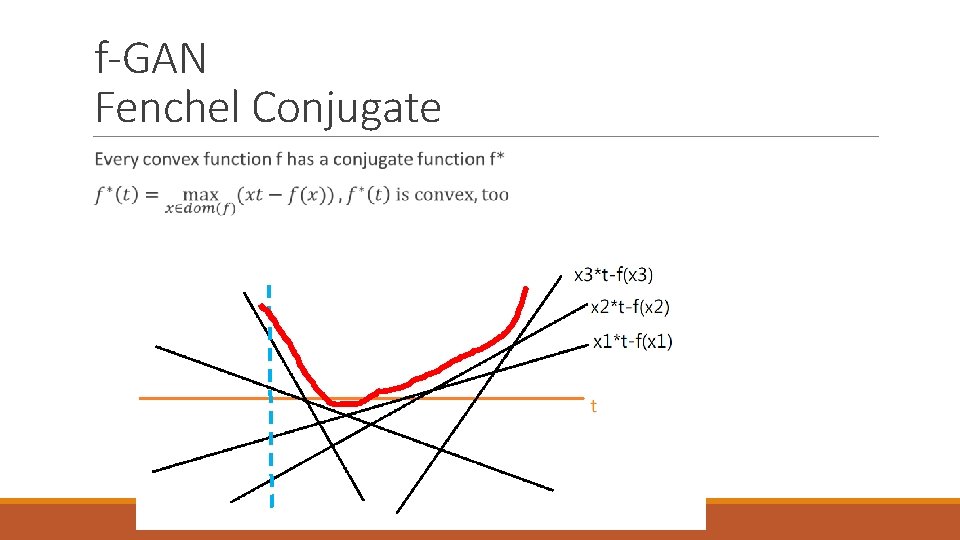

f-GAN Fenchel Conjugate
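A numeric sketch of the Fenchel conjugate $f^*(t) = \sup_u \,(u t - f(u))$, approximated by a grid search over $u > 0$. For the KL generator $f(u) = u \log u$ the closed form is $f^*(t) = e^{t-1}$, which the grid search should reproduce. The grid range `u_max` and resolution `n` are arbitrary assumptions.

```python
import math

def f_kl(u):
    return u * math.log(u)

def conjugate(f, t, u_max=20.0, n=20000):
    """Approximate f*(t) = sup_u (u*t - f(u)) on a uniform grid over (0, u_max]."""
    best = -math.inf
    for i in range(1, n + 1):
        u = u_max * i / n
        best = max(best, u * t - f(u))
    return best

for t in (-1.0, 0.0, 1.0, 2.0):
    print(t, conjugate(f_kl, t), math.exp(t - 1.0))
```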

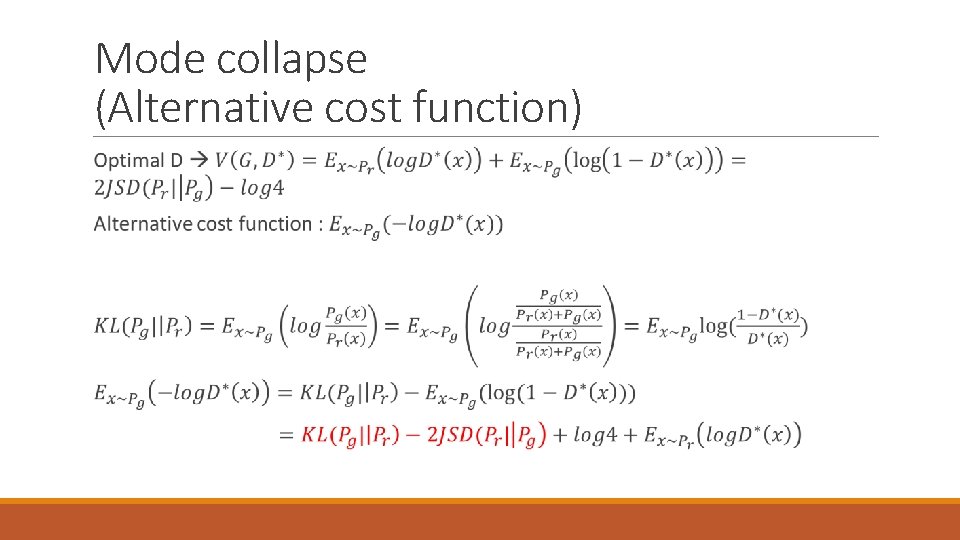

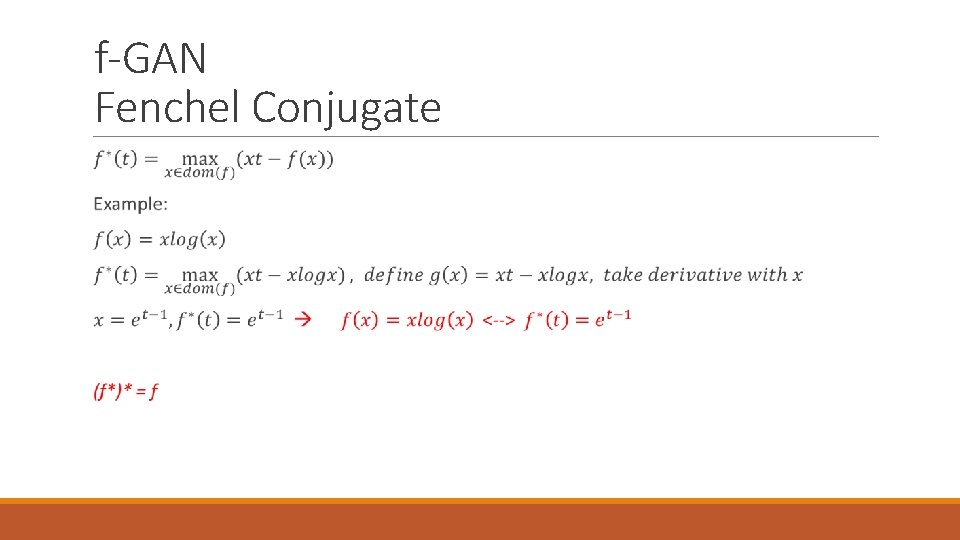

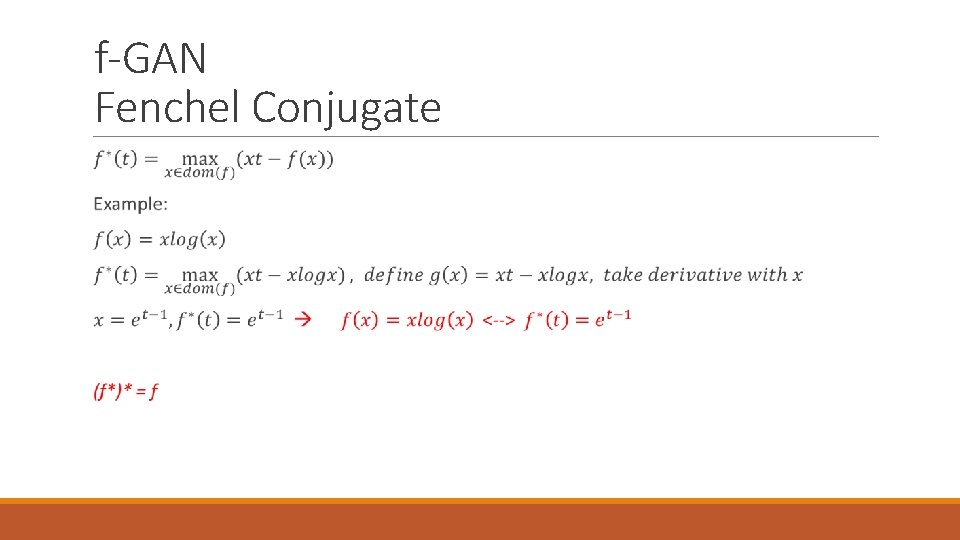

f-GAN

![fGAN Ref10Tabel 1 f-GAN Ref[10]_Tabel 1](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-27.jpg)

f-GAN Ref[10]_Table 1

![Conditional GAN Ref4 fig 1 Conditional GAN Ref[4]: fig 1](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-29.jpg)

Conditional GAN Ref[4]: fig 1

![Conditional GAN Ex Condition on 1 ofk vector Ref4fig 2 Conditional GAN Ex: Condition on 1 -of-k vector Ref[4]_fig 2](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-30.jpg)

Conditional GAN example: condition on a 1-of-k vector. Ref[4]_fig 2
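Conditioning on a 1-of-k vector can be sketched as concatenating a one-hot label to the network input. The sizes used here (100-dimensional noise, k = 10 classes) and the helper names are assumptions for illustration; in Ref[4] the condition is given to both G and D.

```python
import random

def one_hot(label, k):
    """1-of-k encoding: a length-k vector with a single 1 at position `label`."""
    return [1.0 if i == label else 0.0 for i in range(k)]

def generator_input(z, label, k):
    """Concatenate the noise vector z with the one-hot condition."""
    return z + one_hot(label, k)

def discriminator_input(x, label, k):
    """The discriminator sees the sample together with the same condition."""
    return x + one_hot(label, k)

rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(100)]
g_in = generator_input(z, label=3, k=10)
print(len(g_in), g_in[100:])
```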

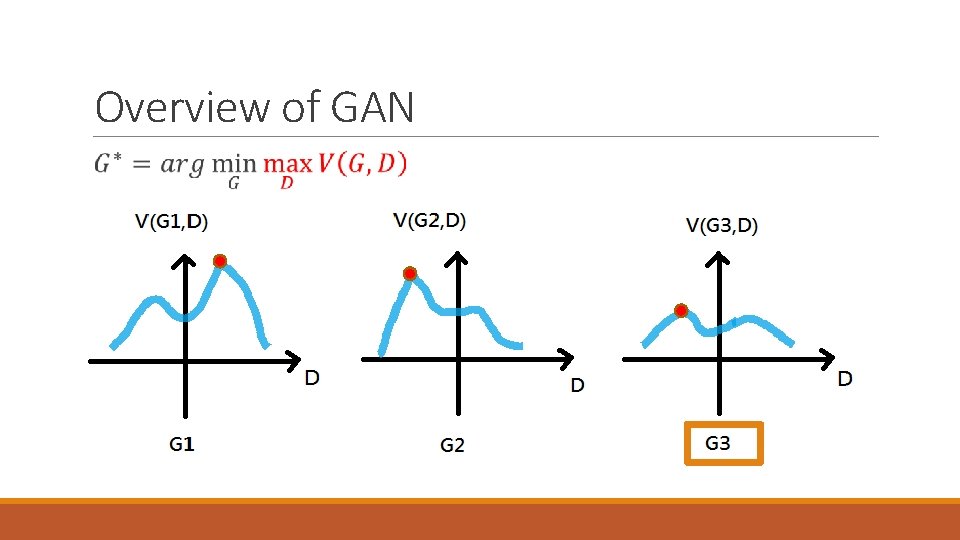

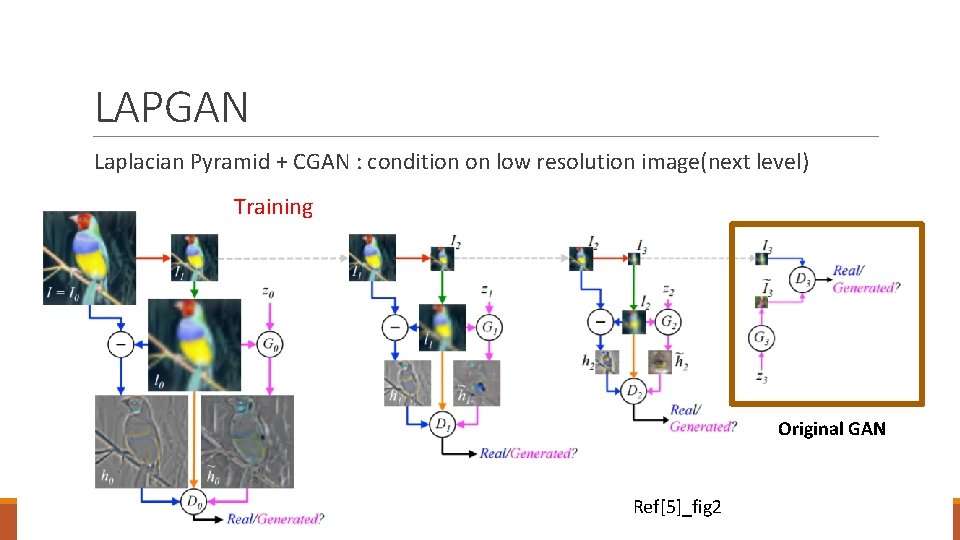

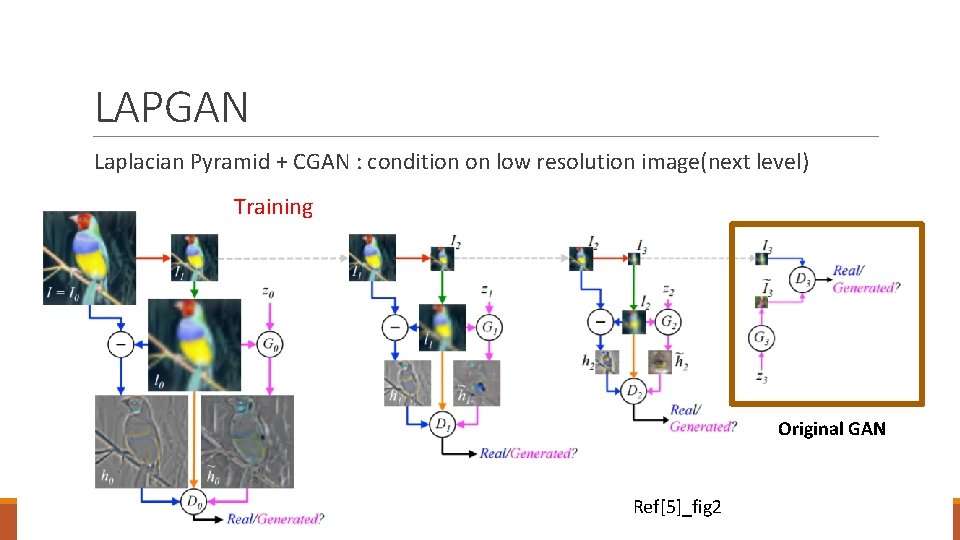

LAPGAN: Laplacian pyramid + CGAN. Each level's GAN is conditioned on the low-resolution image from the next (coarser) level; the coarsest level uses an original, unconditional GAN. Training: Ref[5]_fig 2

![LAPGAN Ref6fig 1 LAPGAN Ref[6]_fig 1](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-32.jpg)

LAPGAN Ref[6]_fig 1

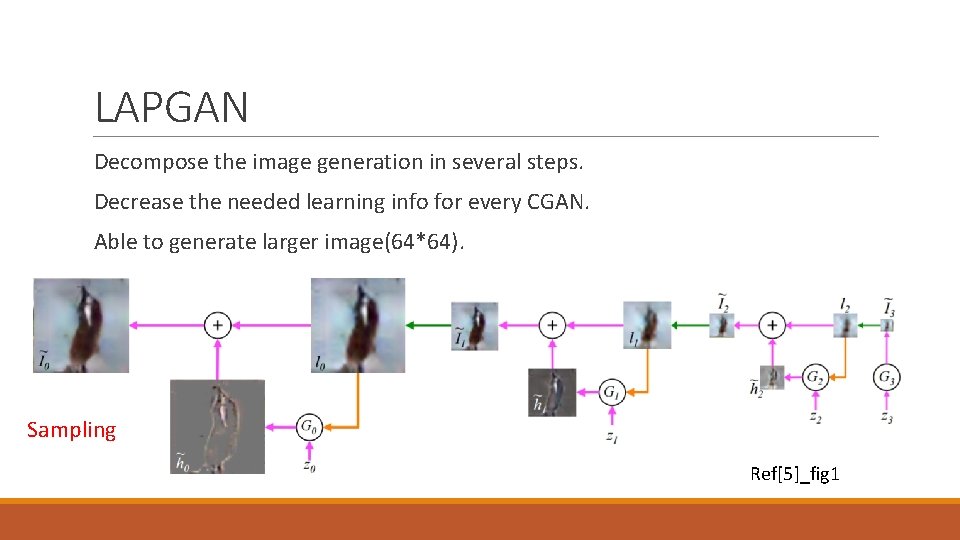

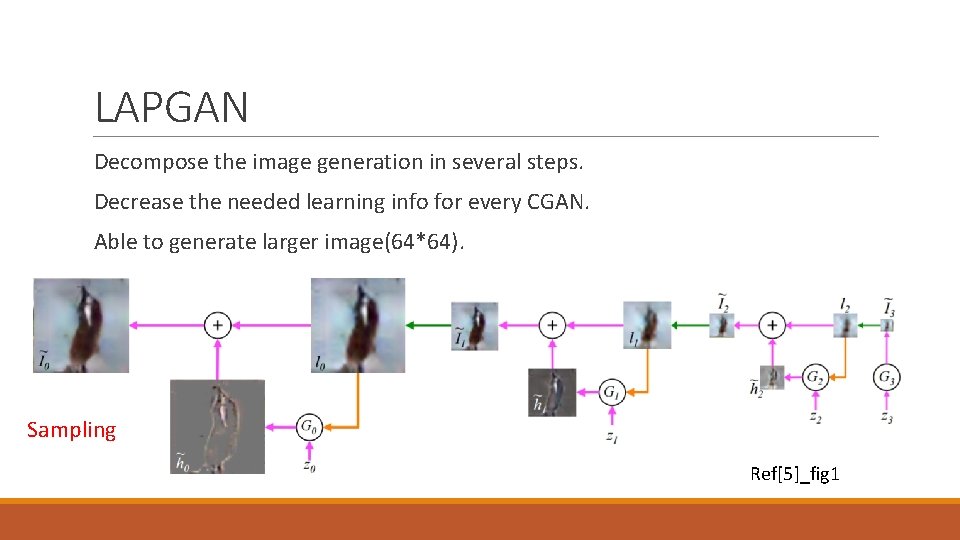

LAPGAN

- Decomposes image generation into several steps.
- Reduces the amount of information each CGAN needs to learn.
- Able to generate larger images (64×64).

Sampling: Ref[5]_fig 1
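The pyramid that LAPGAN builds on can be sketched in a few lines. This is a simplified illustration, not the procedure of Ref[5]: downsampling is assumed to be 2×2 averaging and upsampling to be pixel repetition, and images are plain nested lists. In LAPGAN the residual at each level would be produced by a CGAN conditioned on the upsampled coarse image; here the residuals come from a real image so that the reconstruction can be checked.

```python
def downsample(img):
    """Halve the resolution by averaging each 2x2 block (assumed filter)."""
    h, w = len(img), len(img[0])
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
             for c in range(0, w, 2)] for r in range(0, h, 2)]

def upsample(img):
    """Double the resolution by repeating each pixel (assumed filter)."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def combine(a, b, sign):
    """Element-wise a + sign * b for two images of equal size."""
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def build_pyramid(img, levels):
    """Return [h_0, ..., h_{levels-1}, coarsest], h_k = I_k - upsample(I_{k+1})."""
    pyramid, current = [], img
    for _ in range(levels):
        low = downsample(current)
        pyramid.append(combine(current, upsample(low), -1.0))
        current = low
    pyramid.append(current)
    return pyramid

def reconstruct(pyramid):
    """Coarse to fine: upsample, then add the residual of that level."""
    current = pyramid[-1]
    for residual in reversed(pyramid[:-1]):
        current = combine(upsample(current), residual, 1.0)
    return current

image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
pyr = build_pyramid(image, levels=2)
restored = reconstruct(pyr)
print([len(level) for level in pyr])
```

Each level only has to account for the detail missing from the coarser image, which is the sense in which every CGAN has less to learn.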

![LAPGAN Ref5fig 4 LAPGAN Ref[5]_fig 4](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-34.jpg)

LAPGAN Ref[5]_fig 4

![LAPGAN Ref5fig 5 LAPGAN Ref[5]_fig 5](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-35.jpg)

LAPGAN Ref[5]_fig 5

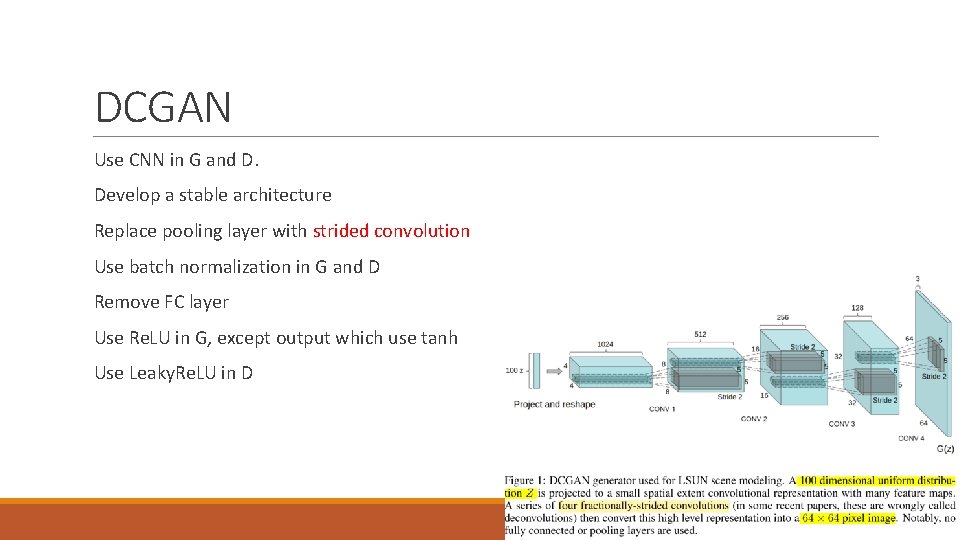

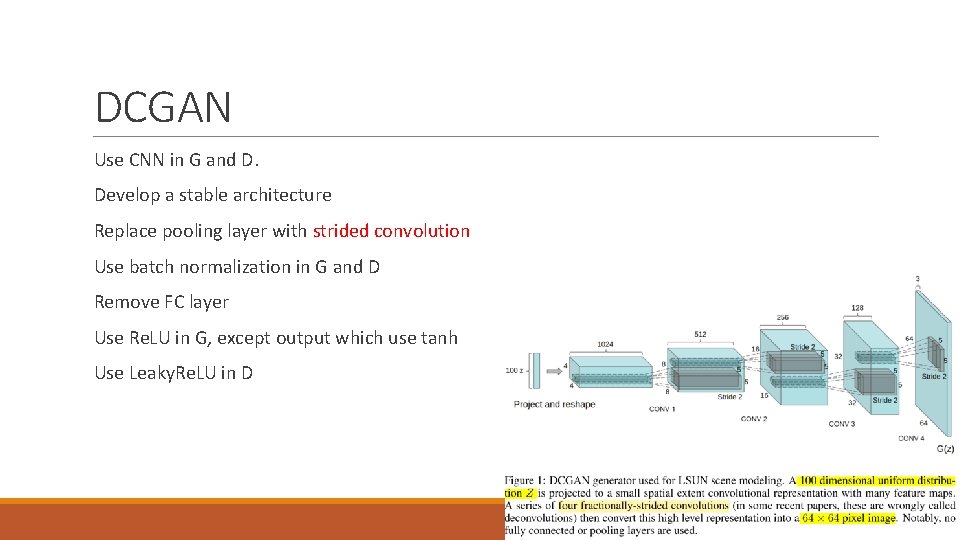

DCGAN

- Use CNNs in G and D.
- Develop a stable architecture:
  - Replace pooling layers with strided convolutions.
  - Use batch normalization in G and D.
  - Remove FC layers.
  - Use ReLU in G, except for the output, which uses tanh.
  - Use LeakyReLU in D.

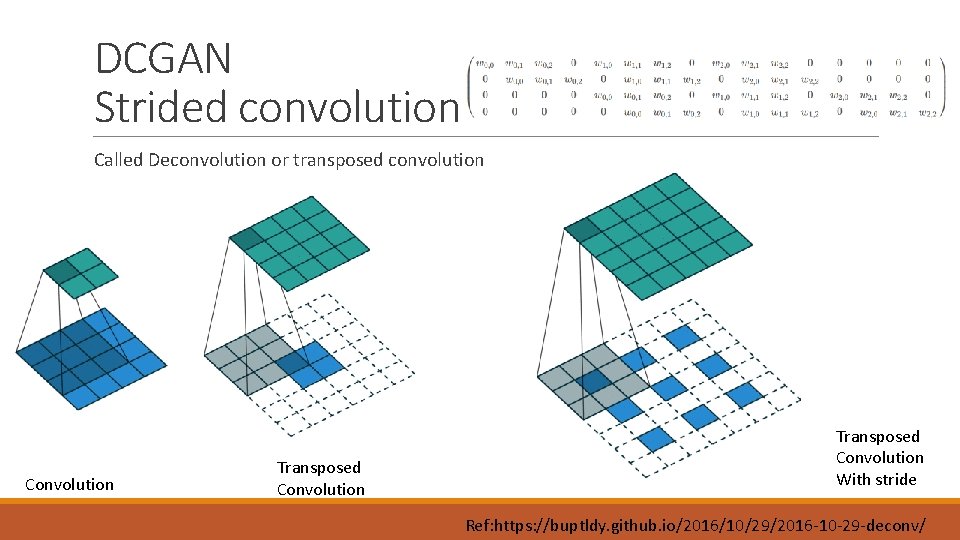

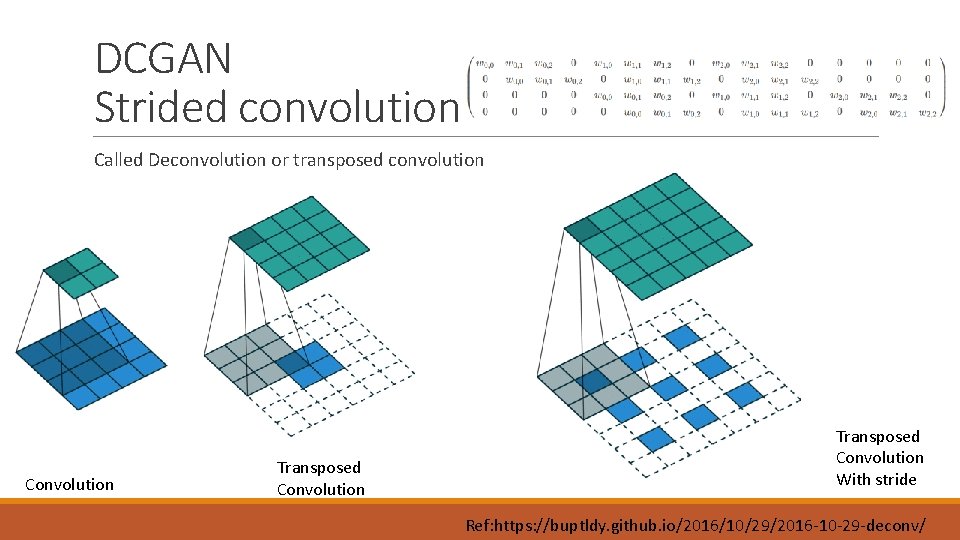

DCGAN: strided convolution. The upsampling variant is also called deconvolution or transposed convolution. Figure panels: convolution; transposed convolution; transposed convolution with stride. Ref: https://buptldy.github.io/2016/10/29/2016-10-29-deconv/
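A one-dimensional sketch of how a strided convolution shrinks a signal and a transposed convolution with the same stride restores the original length. The 1-D setting, the absence of padding, and the function names are simplifying assumptions; real DCGAN layers are 2-D and learned.

```python
def conv_1d(x, kernel, stride):
    """Strided convolution, no padding: output length (n - k) // stride + 1."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return [sum(x[i * stride + j] * kernel[j] for j in range(k))
            for i in range(n_out)]

def conv_transpose_1d(x, kernel, stride):
    """Transposed convolution, no padding: output length (n - 1) * stride + k."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, xi in enumerate(x):
        for j, kj in enumerate(kernel):
            # Each input value scatters a scaled copy of the kernel.
            out[i * stride + j] += xi * kj
    return out

small = [1.0, 2.0]
up = conv_transpose_1d(small, [1.0, 1.0, 1.0], stride=2)
down = conv_1d(up, [1.0, 1.0, 1.0], stride=2)
print(up, len(down))
```

The transposed convolution recovers the shape of the input to the matching convolution, not its values, which is why "deconvolution" is a loose name for it.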

![DCGAN Ref2 fig 2 fig 7 DCGAN Ref[2] fig 2, fig 7](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-38.jpg)

DCGAN Ref[2] fig 2, fig 7

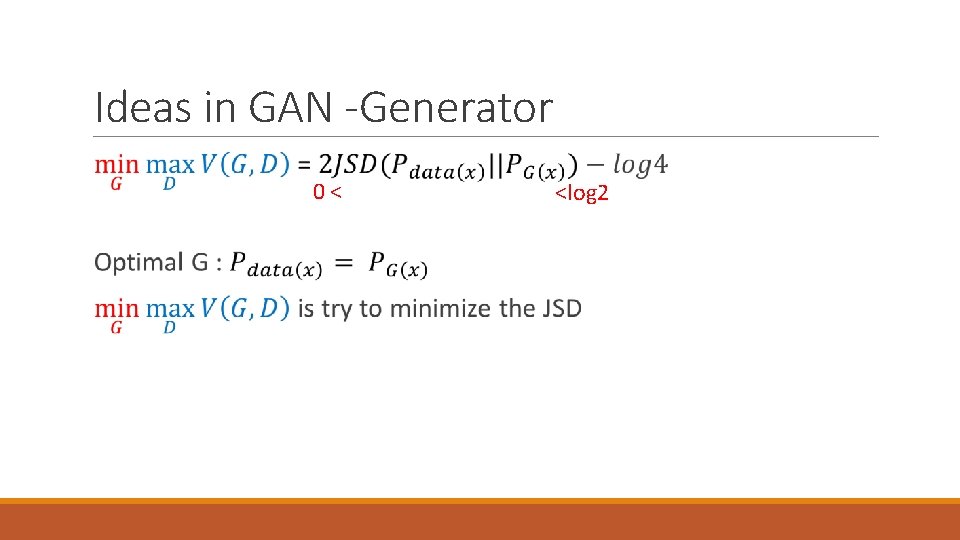

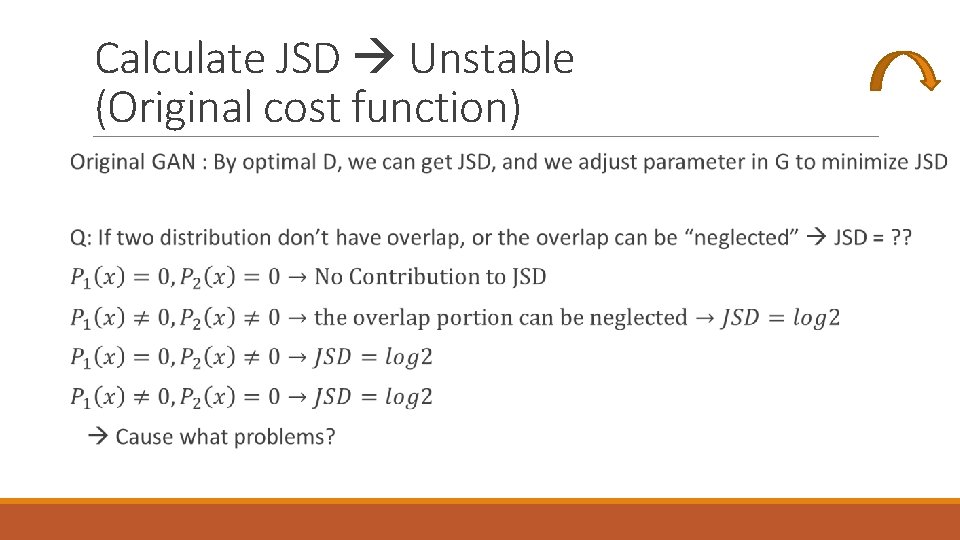

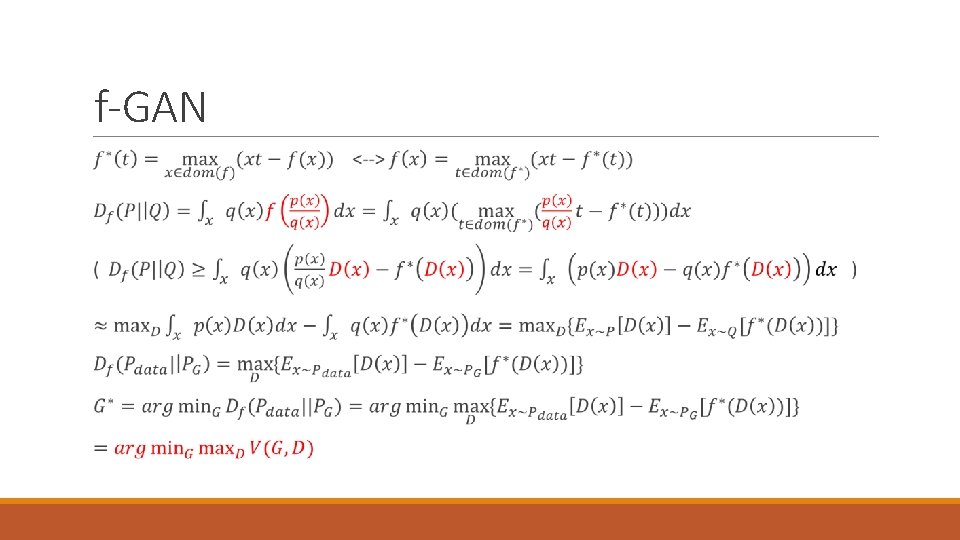

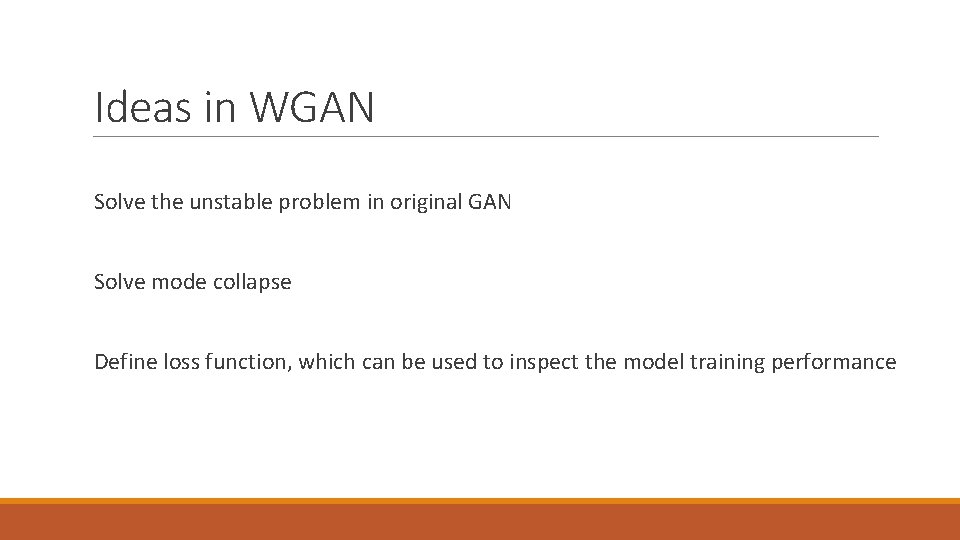

Ideas in WGAN

- Solve the instability of the original GAN.
- Solve mode collapse.
- Define a loss function that can be used to monitor training progress.

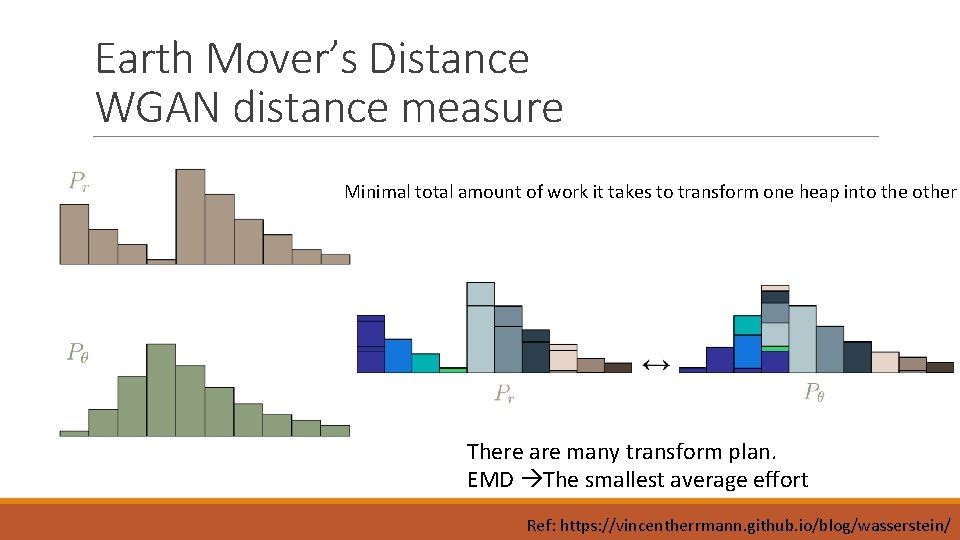

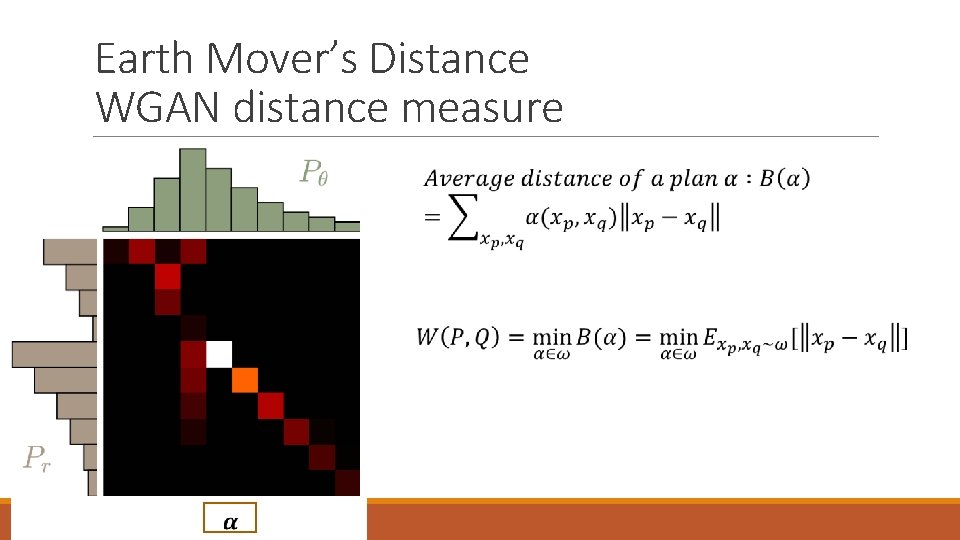

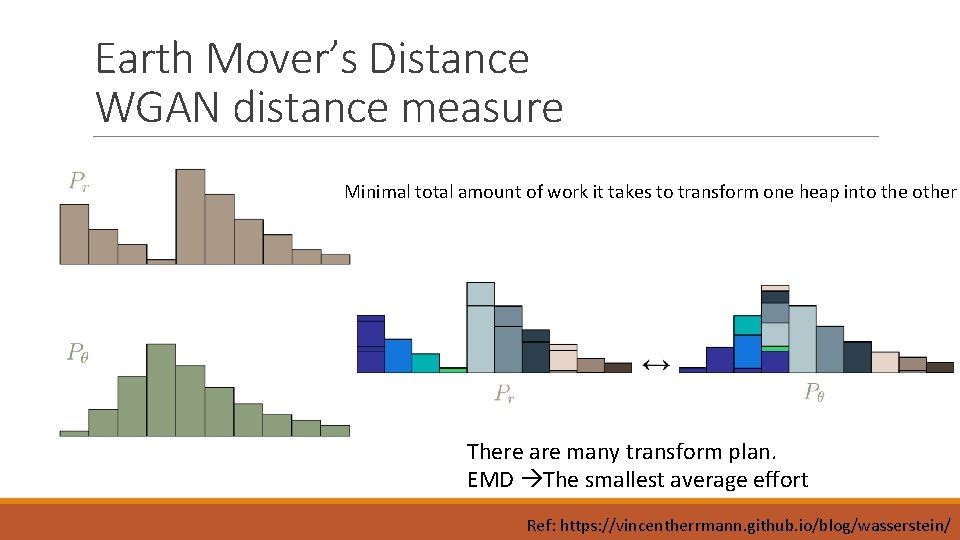

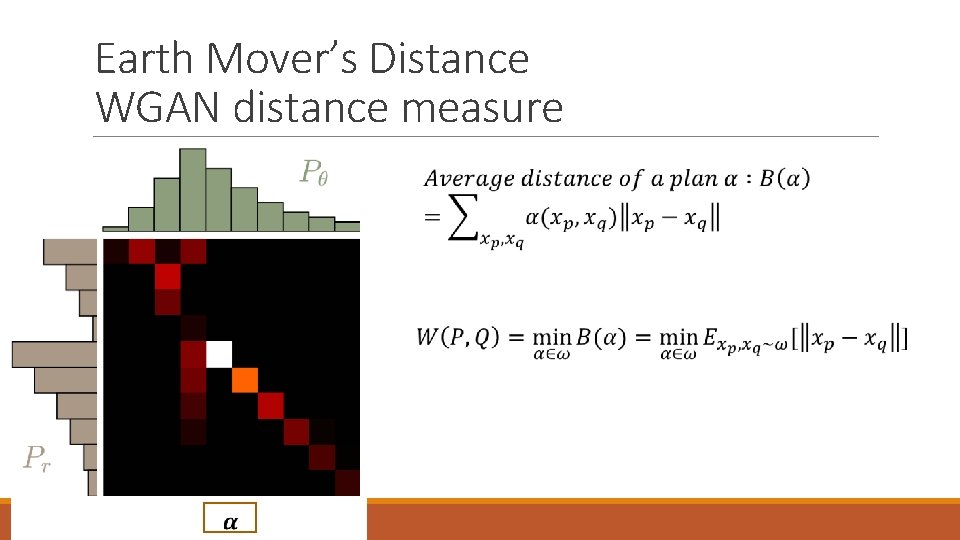

Earth Mover's Distance (EMD): the distance measure used in WGAN. It is the minimal total amount of work needed to transform one heap into the other. There are many possible transport plans; the EMD is the one with the smallest average effort. Ref: https://vincentherrmann.github.io/blog/wasserstein/
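For one-dimensional discrete distributions on equally spaced bins, the minimal transport cost has a simple closed form: the sum of absolute differences between the two cumulative distributions. The sketch below assumes unit spacing between bins and uses point masses as the example; the helper names are hypothetical.

```python
from itertools import accumulate

def emd_1d(p, q):
    """EMD between two histograms on the same unit-spaced 1-D grid."""
    return sum(abs(a - b) for a, b in zip(accumulate(p), accumulate(q)))

def point_mass(index, size=10):
    return [1.0 if i == index else 0.0 for i in range(size)]

p = point_mass(0)
for shift in (1, 5, 9):
    print(shift, emd_1d(p, point_mass(shift)))
```

Unlike a divergence that saturates when the supports do not overlap, this distance grows with the shift, so it still tells the generator which way to move.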

Earth Mover's Distance: WGAN distance measure

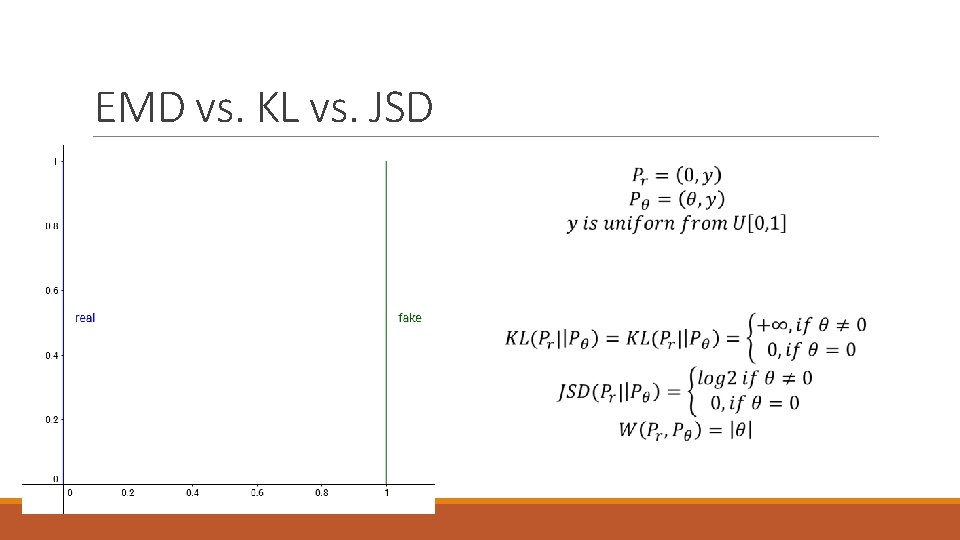

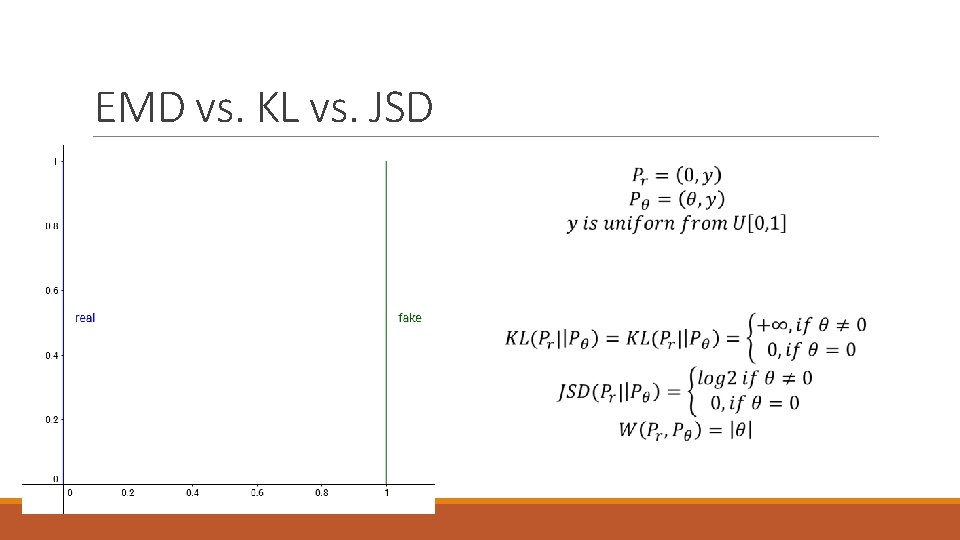

EMD vs. KL vs. JSD

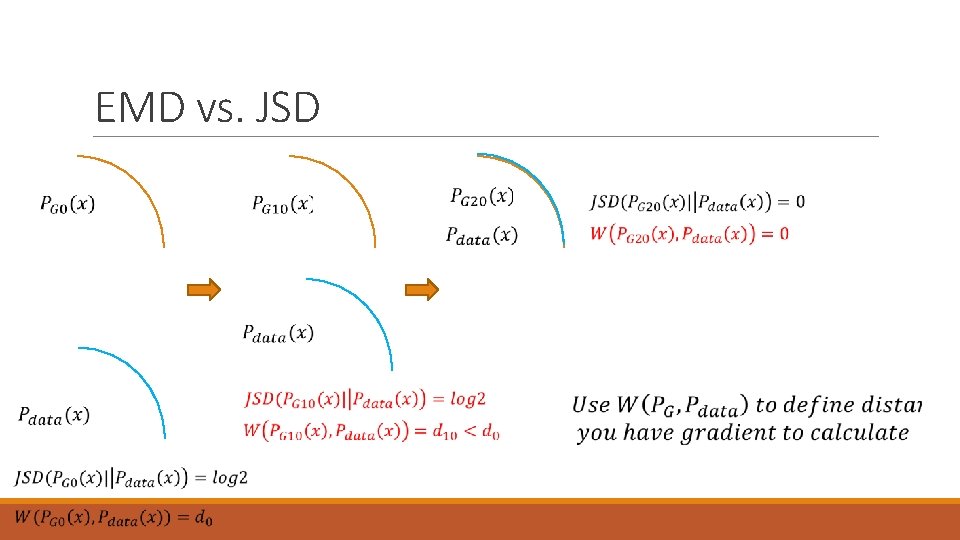

EMD vs. JSD

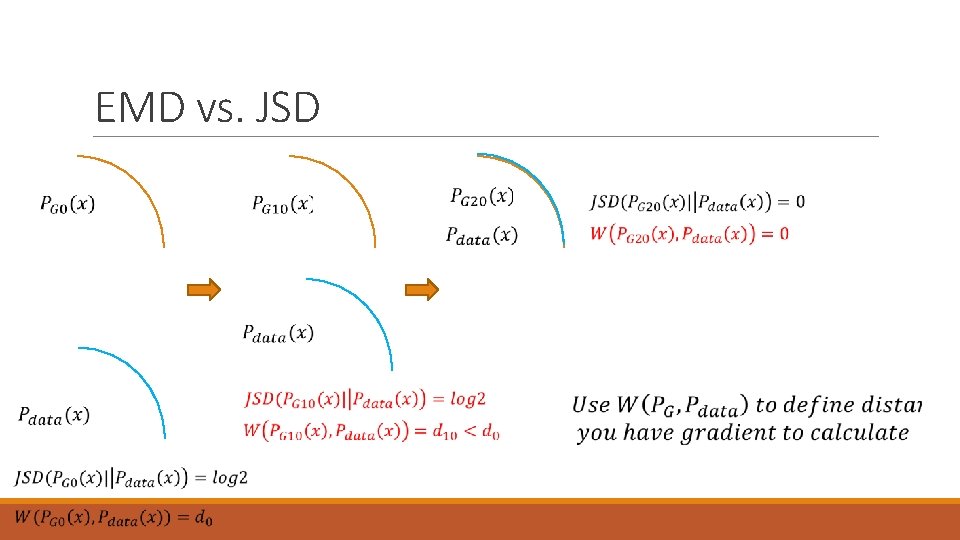

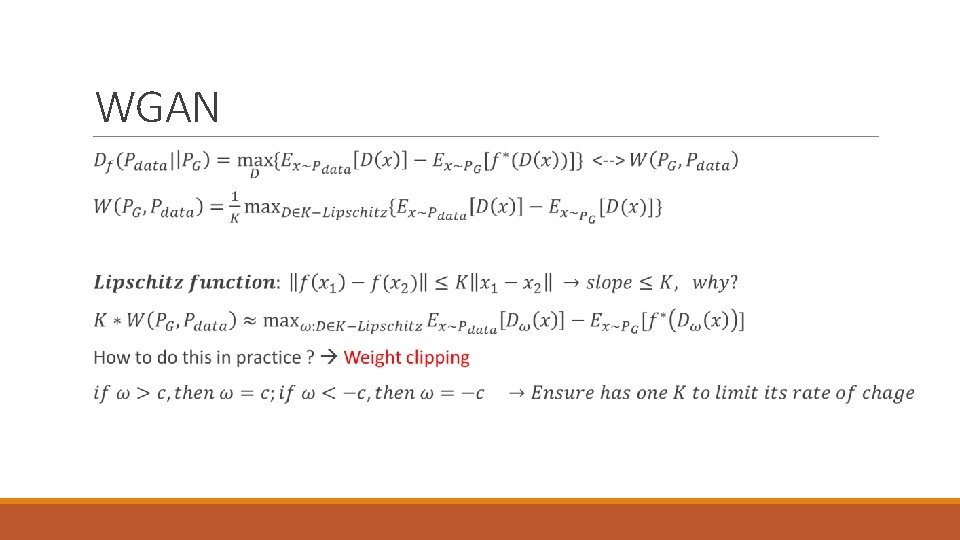

WGAN

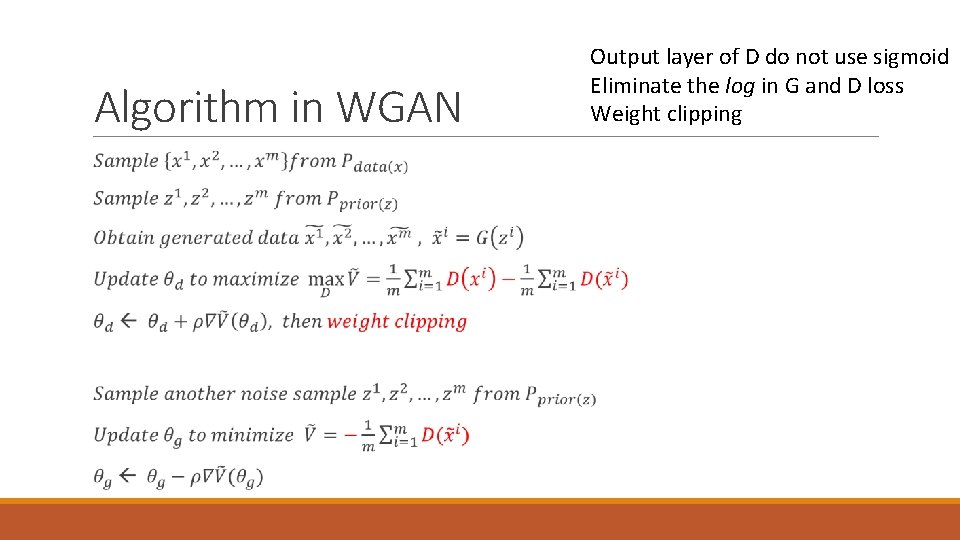

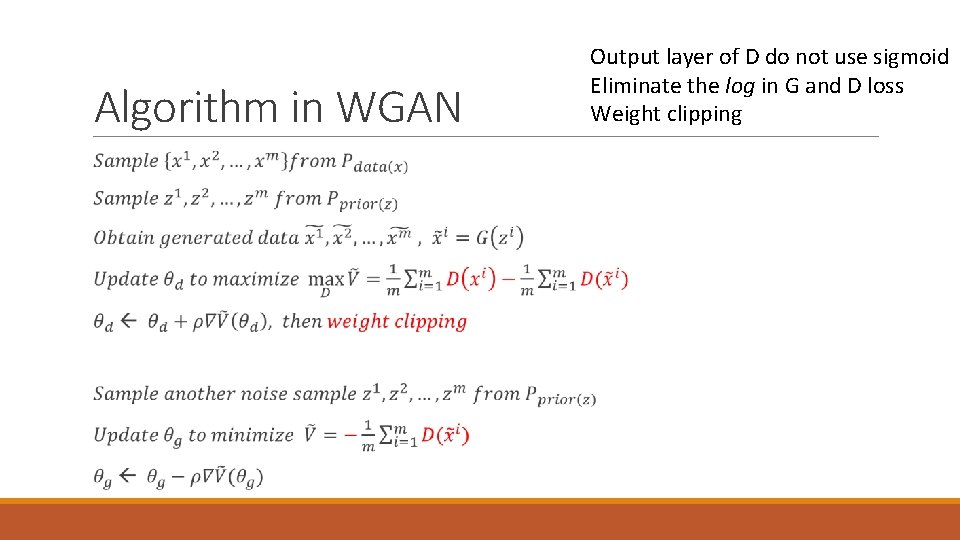

Algorithm in WGAN

- The output layer of D does not use a sigmoid.
- Remove the log from the G and D losses.
- Apply weight clipping.
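The three changes can be sketched with a toy critic. The assumptions here are loud ones: a scalar linear critic `f(x) = w*x + b`, a plain gradient step instead of the optimizer used in Ref[7], and hypothetical names and values for `lr` and the clipping threshold `c`.

```python
def mean(values):
    return sum(values) / len(values)

def critic(x, w, b):
    """Linear critic with a raw (unbounded) output: no sigmoid."""
    return w * x + b

def critic_loss(real, fake, w, b):
    """No log: mean critic score on fake minus mean score on real."""
    return mean([critic(x, w, b) for x in fake]) - mean([critic(x, w, b) for x in real])

def generator_loss(fake, w, b):
    """No log: the generator tries to raise the critic score of its samples."""
    return -mean([critic(x, w, b) for x in fake])

def clip(value, c):
    return max(-c, min(c, value))

def critic_step(real, fake, w, b, lr=0.05, c=0.01):
    """One gradient step on the critic loss, then clip weights to [-c, c]."""
    grad_w = mean(fake) - mean(real)  # d(critic_loss)/dw for the linear critic
    grad_b = 0.0                      # b cancels in the difference of means
    return clip(w - lr * grad_w, c), clip(b - lr * grad_b, c)

real = [2.5, 3.0, 3.5]
fake = [-0.5, 0.0, 0.5]
w, b = critic_step(real, fake, w=0.0, b=0.0)
print(w, b, critic_loss(real, fake, w, b))
```

Clipping keeps the critic's weights in a bounded box, which is the slide's way of limiting how steep the critic can become.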

![WGAN WGAN Ref7fig 5 6 7 DCGANGenerator WGAN DCGANGenerator Eliminate BN Same filter number WGAN W-GAN Ref[7]_fig 5, 6, 7 DCGAN-Generator W-GAN DCGAN-Generator Eliminate BN Same filter number](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-47.jpg)

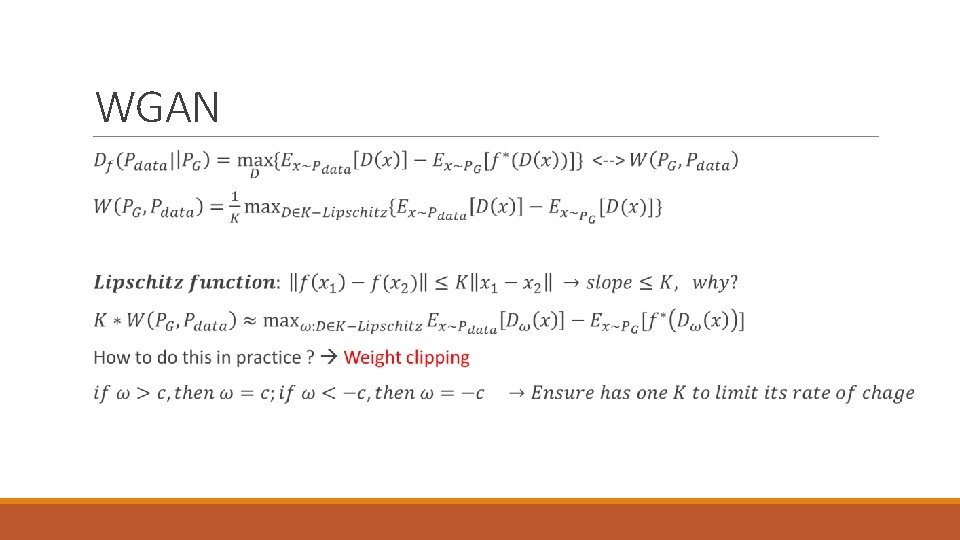

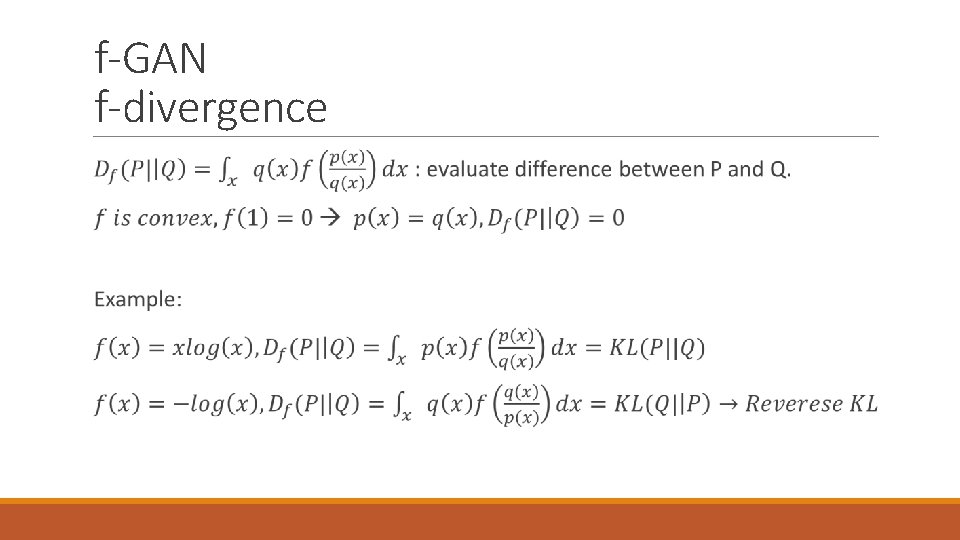

WGAN vs. GAN samples, Ref[7]_fig 5, 6, 7: with a DCGAN generator; with a DCGAN generator without batch normalization and with the same number of filters in every layer; with an MLP generator, where the GAN shows mode collapse.

![WGAN Wasserstein distance JSD Ref7fig 3 4 WGAN Wasserstein distance JSD Ref[7]_fig 3, 4](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-48.jpg)

WGAN: Wasserstein distance vs. JSD, Ref[7]_fig 3, 4

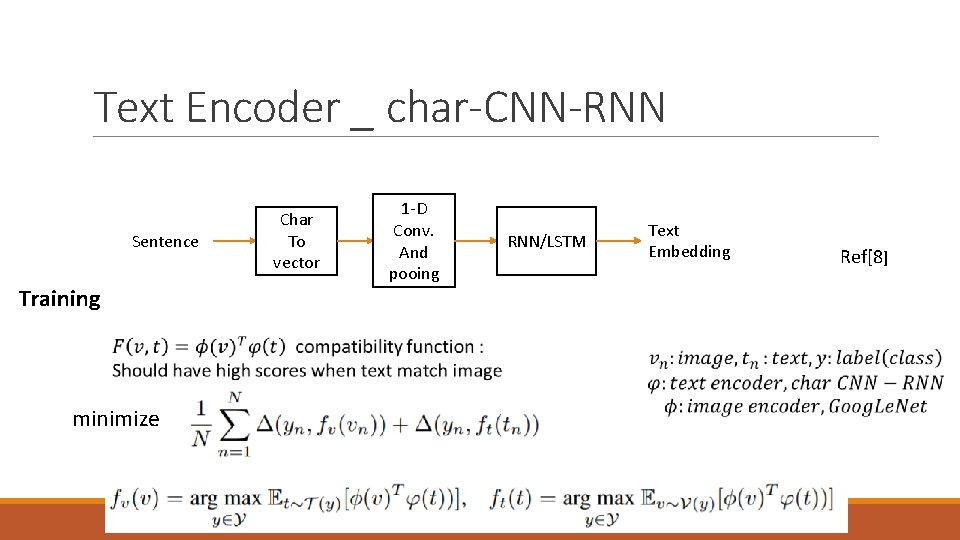

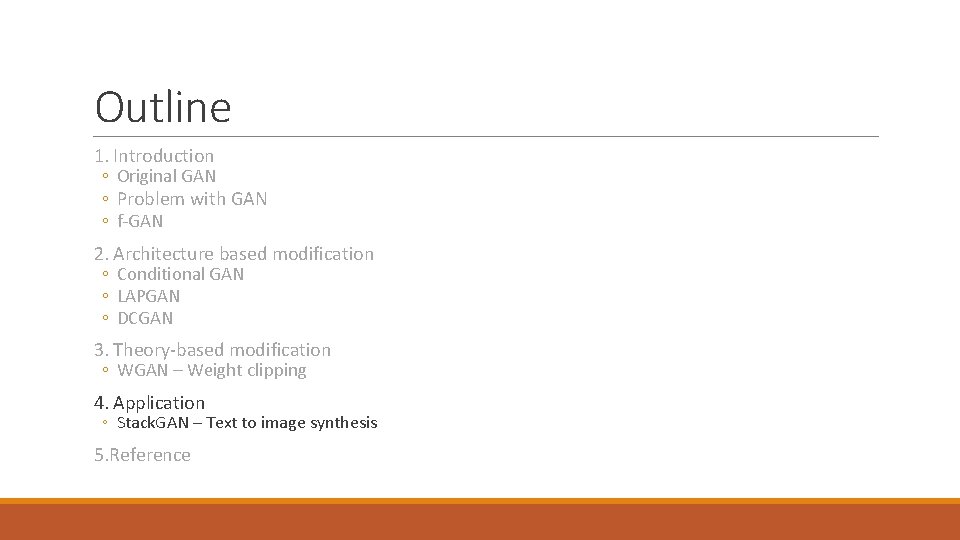

Text encoder: char-CNN-RNN (Ref[8]). Pipeline: sentence → characters to vectors → 1-D convolution and pooling → RNN/LSTM → text embedding. Training minimizes the objective shown in the slide image.

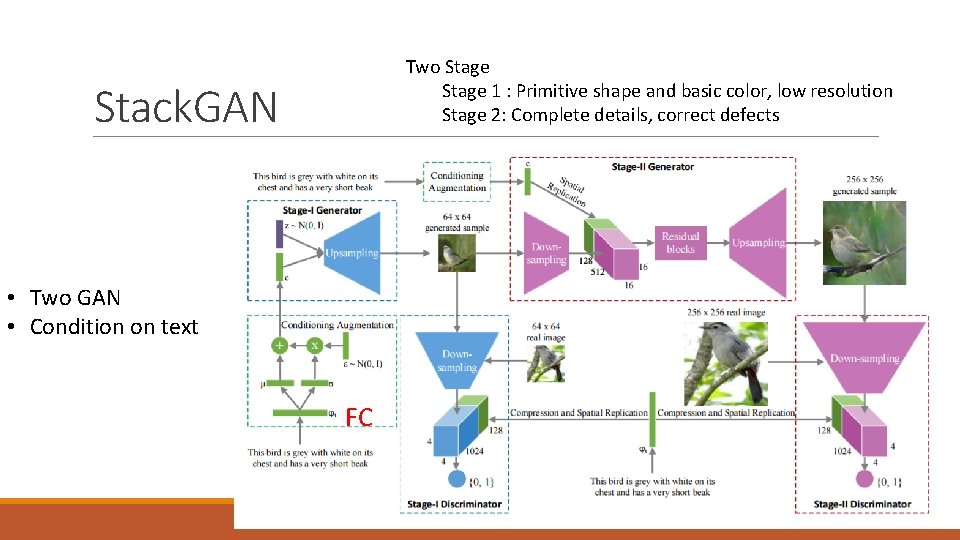

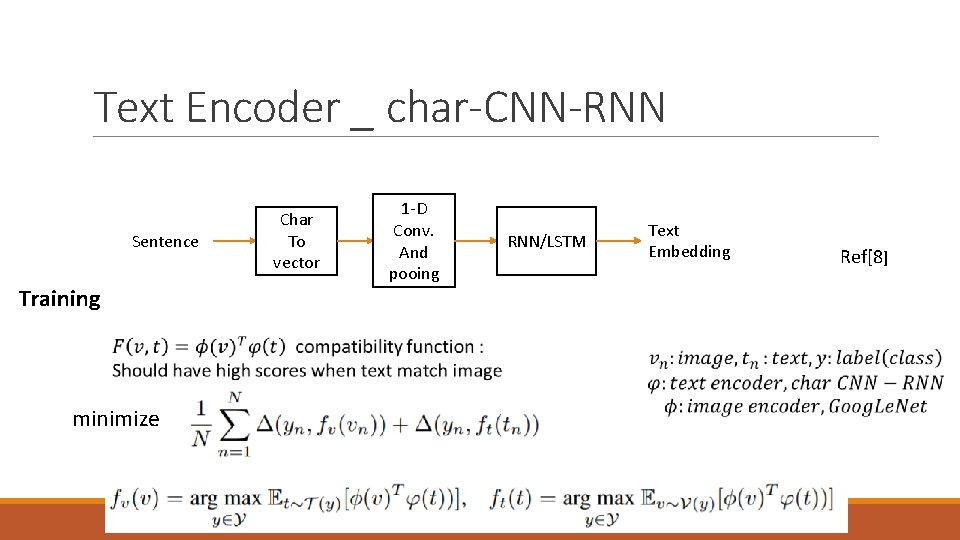

StackGAN

- Two GANs, each conditioned on the text.
- Stage 1: primitive shape and basic colors, low resolution.
- Stage 2: completes details and corrects defects.

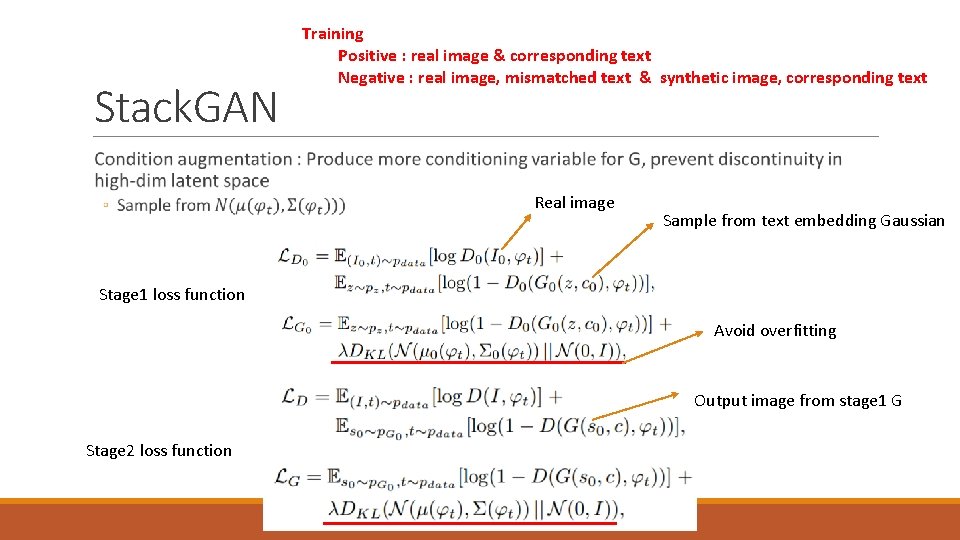

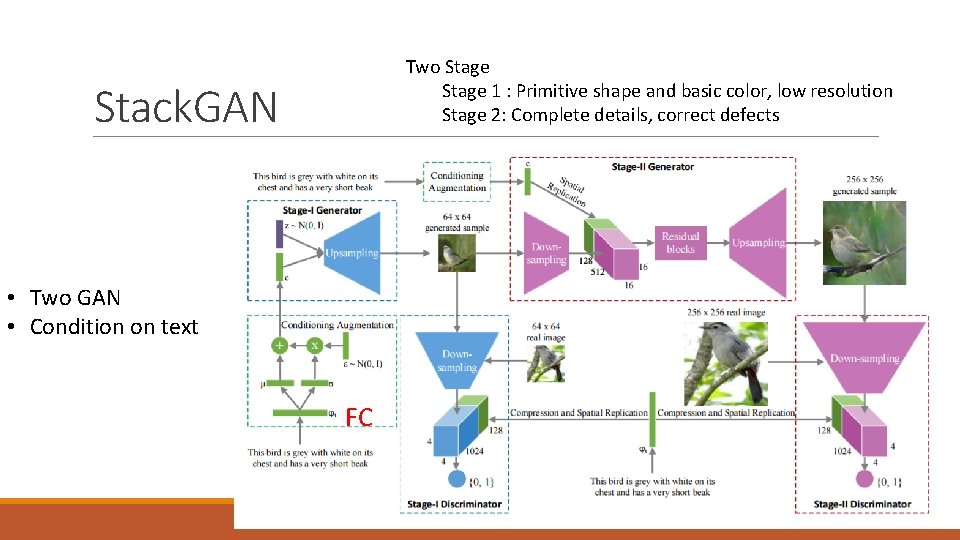

StackGAN training

- Positive pairs: real image with the corresponding text.
- Negative pairs: real image with mismatched text, and synthetic image with the corresponding text.
- Stage 1 loss function: uses the real image and a conditioning variable sampled from a Gaussian built from the text embedding; a regularization term avoids overfitting.
- Stage 2 loss function: uses the output image from the stage 1 G.
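The Gaussian sampling step and the overfitting regularizer can be sketched as follows. This assumes a diagonal Gaussian whose mean `mu` and log-variance `log_var` would come from the text embedding, a reparameterized sample, and a KL term toward the standard normal as the regularizer; the function names and the toy values are illustrative only.

```python
import math
import random

def sample_condition(mu, log_var, rng):
    """Reparameterized sample c = mu + sigma * eps, with eps ~ N(0, I)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), used as a regularization term."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu = [0.5, -0.2, 0.0]        # toy values standing in for the text-derived mean
log_var = [0.0, -1.0, 0.3]   # toy values standing in for the log-variance
c_hat = sample_condition(mu, log_var, rng)
print(c_hat, kl_to_standard_normal(mu, log_var))
```

Sampling the condition rather than using the embedding directly gives the generator slightly different conditions for the same sentence, and the KL term keeps that Gaussian from collapsing.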

![Stack GAN Ref9 fig 3 Stack. GAN Ref[9] fig 3](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-53.jpg)

StackGAN Ref[9] fig 3

![Stack GAN Ref9 fig 4 Stack. GAN Ref[9] fig 4](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-54.jpg)

StackGAN Ref[9] fig 4

![Stack GAN Ref9 fig 5 Stack. GAN Ref[9] fig 5](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-55.jpg)

StackGAN Ref[9] fig 5

![Reference 1 NIPS 2016 Tutorial Generative Adversarial Networks Ian Goodfellow 2 Radford A Reference [1] NIPS 2016 Tutorial: Generative Adversarial Networks Ian Goodfellow [2] Radford, A. ,](https://slidetodoc.com/presentation_image_h2/680ea0aa5f864ff2a7ccbf92dfa1e323/image-57.jpg)

Reference

- [1] Ian Goodfellow. NIPS 2016 Tutorial: Generative Adversarial Networks.
- [2] Radford, A., Metz, L., and Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434.
- [3] Martin Arjovsky and Léon Bottou. Towards principled methods for training generative adversarial networks. In International Conference on Learning Representations, 2017. Under review.
- [4] M. Mirza and S. Osindero. Conditional generative adversarial nets. CoRR, abs/1411.1784, 2014.
- [5] E. Denton, S. Chintala, A. Szlam, and R. Fergus. Deep generative image models using a Laplacian pyramid of adversarial networks.
- [6] E. Denton, S. Chintala, A. Szlam, and R. Fergus. Deep generative image models using a Laplacian pyramid of adversarial networks: supplementary material.
- [7] Arjovsky, Chintala, Bottou. Wasserstein GAN.
- [8] Scott Reed, Zeynep Akata, Bernt Schiele, Honglak Lee. Learning deep representations of fine-grained visual descriptions.
- [9] Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaolei Huang, Xiaogang Wang, Dimitris Metaxas. StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks.
- [10] Sebastian Nowozin, Botond Cseke, Ryota Tomioka. f-GAN: Training generative neural samplers using variational divergence minimization.

Reference

- Lecture videos by Prof. Hung-yi Lee, National Taiwan University: https://www.youtube.com/watch?v=0CKeqXl5IY0&t=6180s and https://www.youtube.com/watch?v=KSN4QYgAtao
- https://zhuanlan.zhihu.com/p/25071913 ("The Astonishing Wasserstein GAN")
- http://www.alexirpan.com/2017/02/22/wasserstein-gan.html