
Attacks Which Do Not Kill Training Make Adversarial Learning Stronger (ICML 2020)

![Background – PGD Attack [1]](https://slidetodoc.com/presentation_image_h/ee6cfda1f33d5d7c0ae834e057055e5e/image-2.jpg)

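Background – PGD Attack [1]

- The number of iterations K is fixed in advance. Each iteration takes a signed-gradient ascent step on the loss and projects the result back onto the ε-ball around the natural input.

[1] Towards Deep Learning Models Resistant to Adversarial Attacks. ICLR 2018.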
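The fixed-iteration PGD loop described above can be sketched in a few lines. This is a minimal illustration, not the reference implementation of [1]. It assumes a binary linear logistic model (so the input gradient has a closed form), a single example with label in {-1, +1}, an L∞ threat model, and no random start (Madry et al. start from a random point in the ε-ball). The names `pgd_linf`, `eps`, `alpha` and `num_steps` are our own.

```python
import numpy as np


def logistic_loss_grad_x(x, y, w, b):
    """Gradient of log(1 + exp(-y * (w.x + b))) with respect to the input x."""
    margin = y * (x @ w + b)
    # d/dx log(1 + exp(-m)) = -sigmoid(-m) * dm/dx, with dm/dx = y * w
    # and sigmoid(-m) = 1 / (1 + exp(m)).
    return (-y / (1.0 + np.exp(margin))) * w


def pgd_linf(x0, y, w, b, eps=0.3, alpha=0.1, num_steps=10):
    """PGD with a fixed number of iterations inside the L-inf ball of radius eps."""
    x = x0.copy()
    for _ in range(num_steps):
        g = logistic_loss_grad_x(x, y, w, b)
        x = x + alpha * np.sign(g)            # signed-gradient ascent step on the loss
        x = np.clip(x, x0 - eps, x0 + eps)    # projection back onto B_eps[x0]
    return x
```

With `w = [1, -2]`, `b = 0`, `x0 = [0.5, 0.1]` and `y = 1`, the point starts correctly classified (margin 0.3) and PGD ends at the corner `[0.2, 0.4]` of the ε-ball, where the margin is -0.6. Note that all `num_steps` iterations run even after the point is already misclassified.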

![Background – Adversarial Training (Madry [1])](https://slidetodoc.com/presentation_image_h/ee6cfda1f33d5d7c0ae834e057055e5e/image-3.jpg)

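Background – Adversarial Training (Madry [1])

- Easy to understand: put adversarial examples into the training set and train the model on them.
- In mathematical terms, this is a min-max problem. The inner maximization finds the worst-case perturbation of each input, and the outer minimization fits the model to those perturbed inputs.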
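For reference, the min-max objective of Madry et al. [1] is commonly written as follows. The notation (model f_θ, loss ℓ, n training pairs (x_i, y_i), ball B_ε) is ours, and the L∞ ball is one common choice of threat model. In practice, PGD approximates the inner maximization and SGD performs the outer minimization on the resulting adversarial examples.

$$
\min_{\theta}\; \frac{1}{n}\sum_{i=1}^{n}\; \max_{\tilde{x}\in\mathcal{B}_{\epsilon}[x_i]} \ell\big(f_{\theta}(\tilde{x}),\, y_i\big),
\qquad
\mathcal{B}_{\epsilon}[x] = \{\, x' : \|x' - x\|_{\infty} \le \epsilon \,\}
$$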

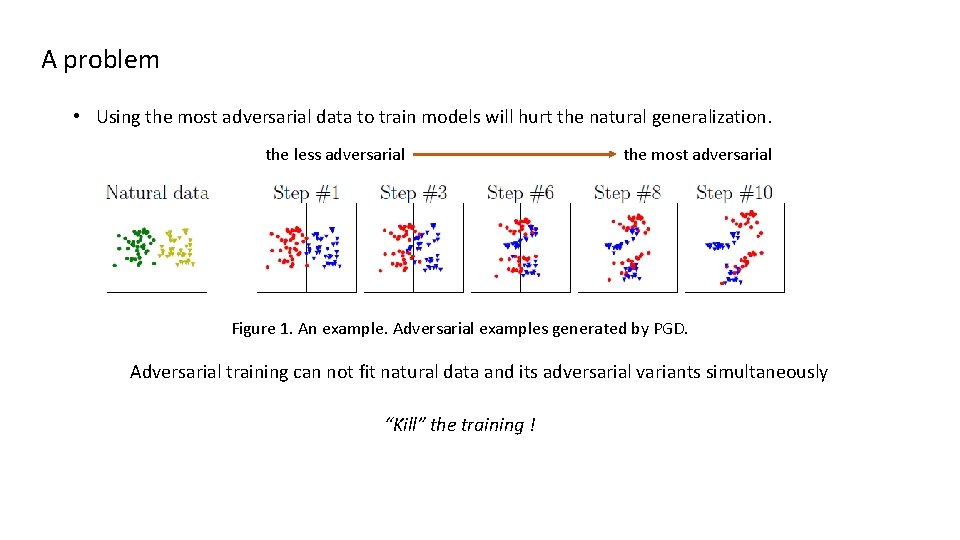

A problem

- Training models on the most adversarial data hurts natural generalization.
- Adversarial training cannot fit natural data and its adversarial variants simultaneously: the most adversarial data “kill” the training!

Figure 1. An example: adversarial examples generated by PGD, ranging from the less adversarial to the most adversarial.

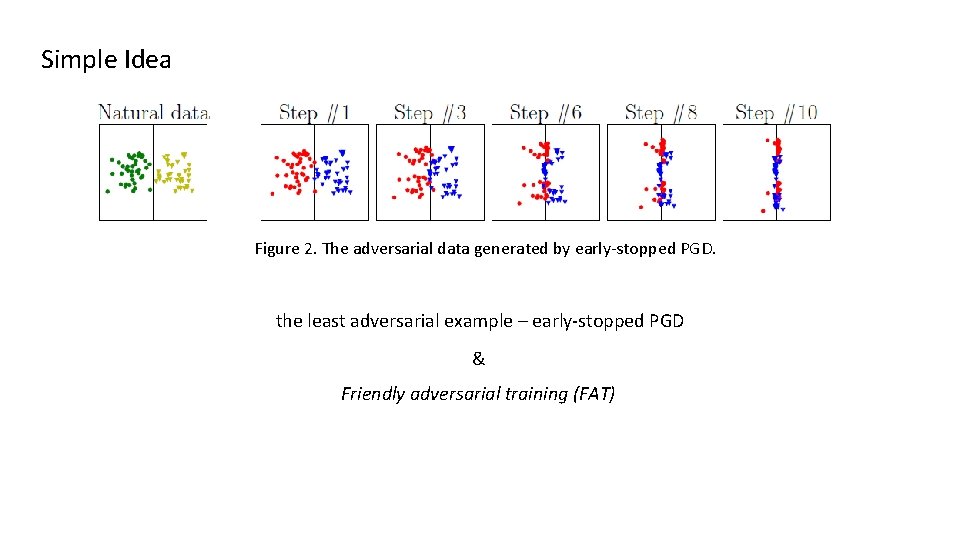

Simple Idea

How to solve this problem? Stop the attack earlier, at whichever step you want!

Figure 2. The adversarial data generated by early-stopped PGD.

Simple Idea (continued)

Use the least adversarial example, obtained with early-stopped PGD, and train on it: Friendly Adversarial Training (FAT).

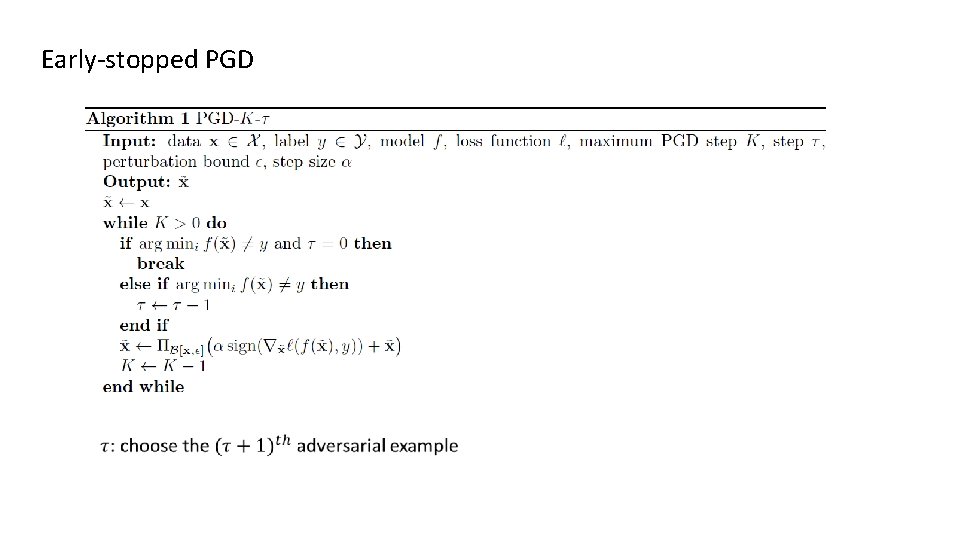

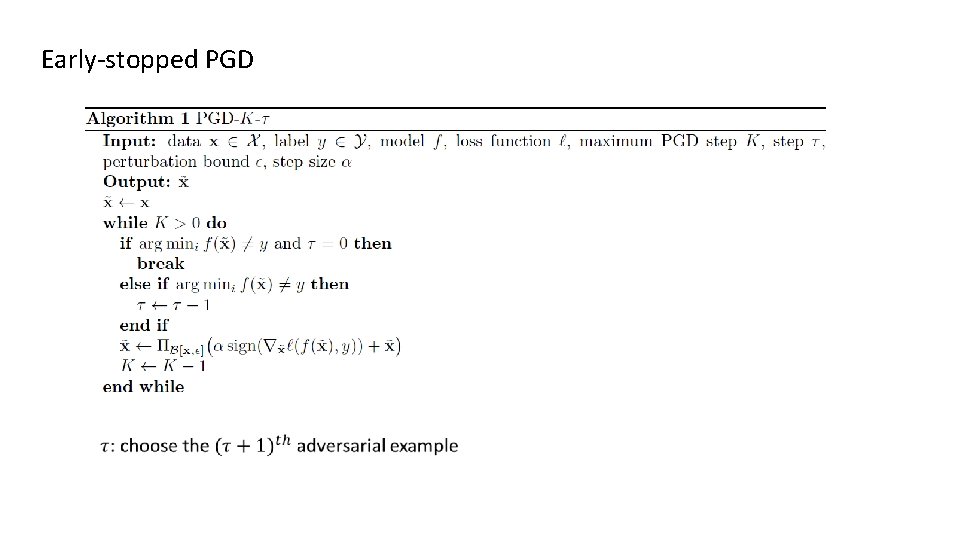

Early-stopped PGD

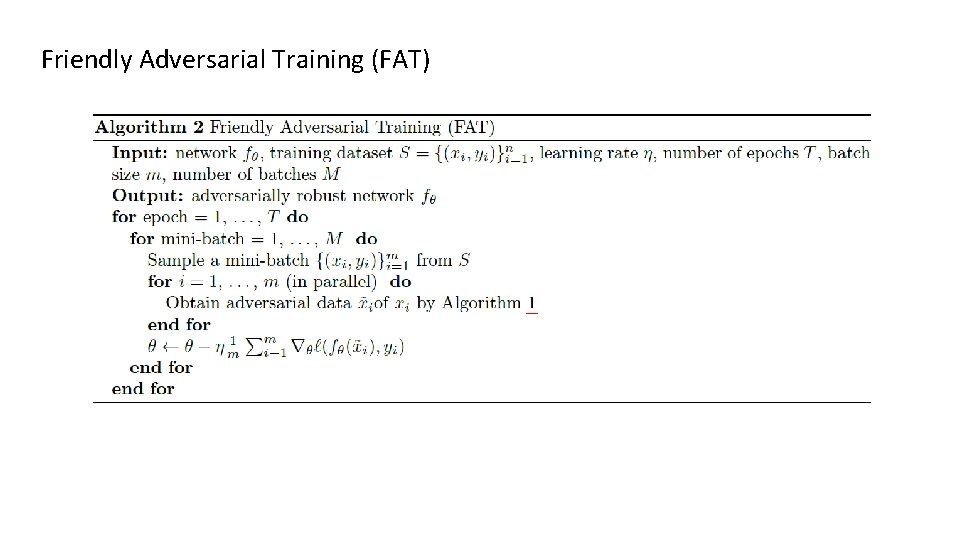

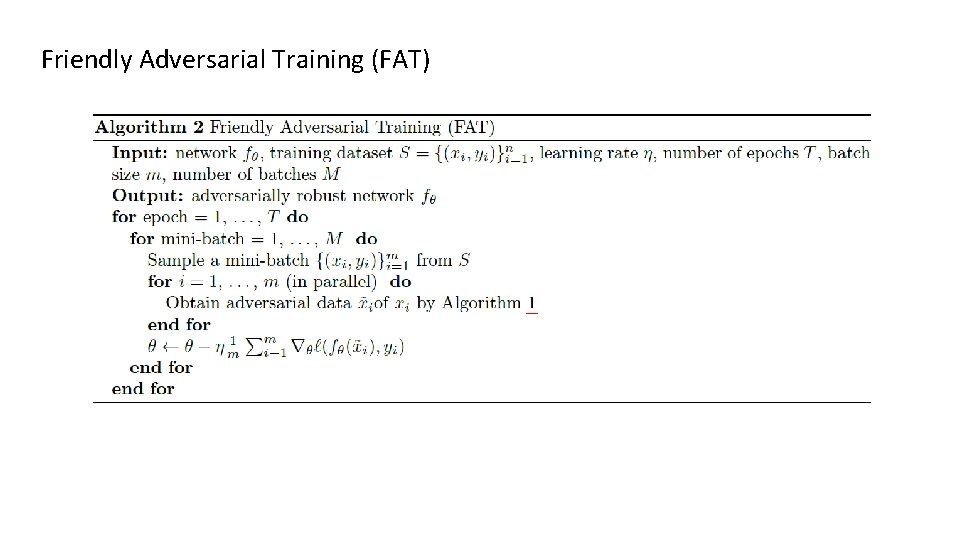
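Early stopping changes only the exit condition of the PGD loop. The slide shows the algorithm as an image, so the sketch below encodes our reading of the rule, which is an assumption. The loop keeps iterating until the example is misclassified, then allows at most `tau` further steps, and never exceeds `max_steps`. The sketch assumes a binary linear logistic model with a single example and a label in {-1, +1}. The names `early_stopped_pgd`, `tau` and `max_steps` are hypothetical. Setting `tau` very large recovers plain fixed-iteration PGD.

```python
import numpy as np


def loss_grad_x(x, y, w, b):
    """Gradient of the logistic loss log(1 + exp(-y (w.x + b))) w.r.t. x."""
    return (-y / (1.0 + np.exp(y * (x @ w + b)))) * w


def early_stopped_pgd(x0, y, w, b, eps=0.3, alpha=0.1, max_steps=10, tau=0):
    """PGD on the L-inf ball that stops `tau` steps after the first misclassification.

    Returns (x_adv, steps_used). The stopping rule is an assumed reading of
    early-stopped PGD, not a verified copy of the paper's algorithm.
    """
    x = x0.copy()
    budget = tau          # extra steps still allowed once x is misclassified
    steps = 0
    while steps < max_steps:
        if y * (x @ w + b) <= 0:      # x is already misclassified
            if budget == 0:
                break                 # stop: x is "friendly" adversarial data
            budget -= 1
        g = loss_grad_x(x, y, w, b)
        x = np.clip(x + alpha * np.sign(g), x0 - eps, x0 + eps)
        steps += 1
    return x, steps
```

For example, with `w = [1, -2]`, `b = 0`, `x0 = [0.5, 0.05]` and `y = 1`, the point becomes misclassified after two steps. With `tau=0` the attack stops there (2 gradient steps), while a very large `tau` spends all 10 steps. These saved gradient computations are one plausible source of the efficiency claimed in the conclusion.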

Friendly Adversarial Training (FAT)

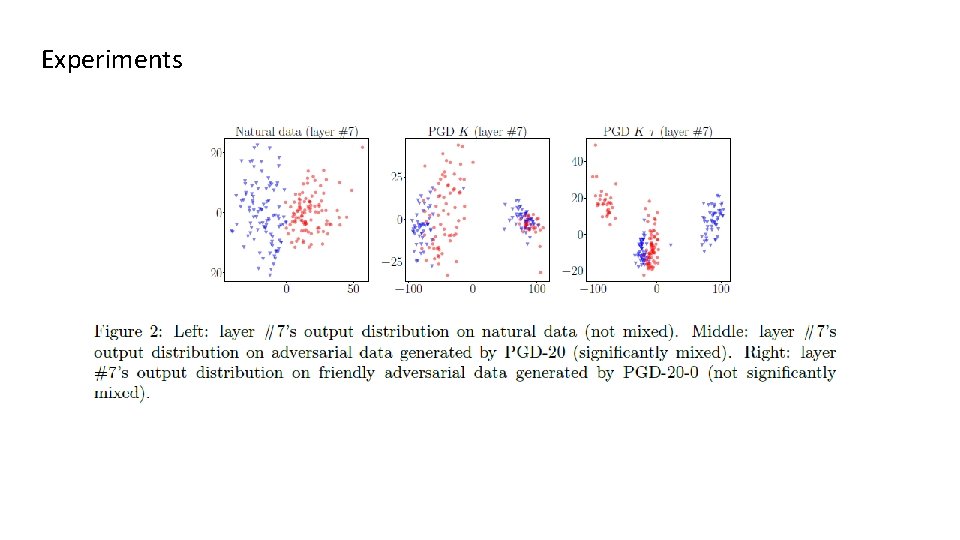

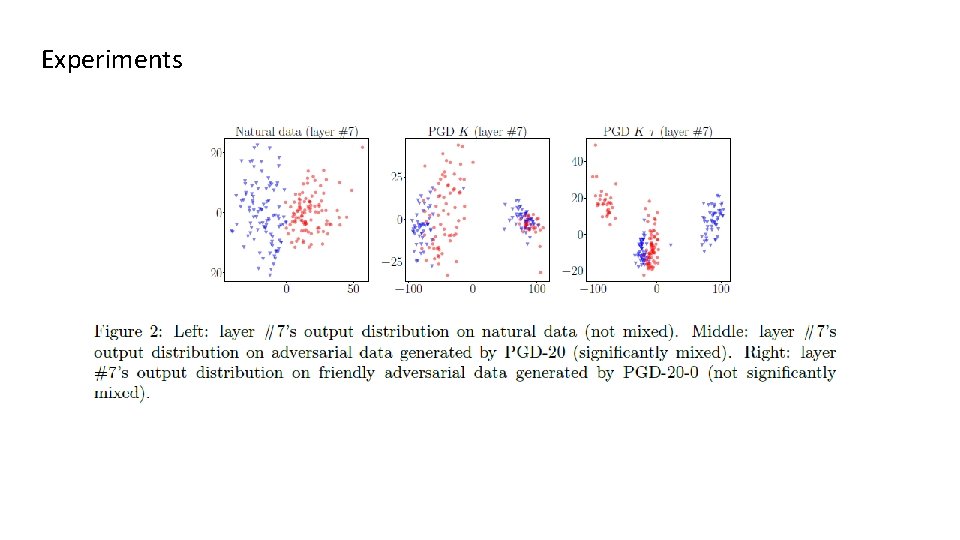
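FAT replaces the inner maximization of standard adversarial training with early-stopped PGD. The following toy sketch shows that structure, not the paper's training recipe. It assumes a linear logistic model, full-batch gradient descent, a fixed `tau`, and hyperparameter values chosen only for illustration. All function and parameter names (`fat_train`, `tau`, `lr`, and so on) are hypothetical.

```python
import numpy as np


def loss_grad_x(x, y, w, b):
    # d/dx of the logistic loss log(1 + exp(-y (w.x + b)))
    return (-y / (1.0 + np.exp(y * (x @ w + b)))) * w


def early_stopped_pgd(x0, y, w, b, eps, alpha, max_steps, tau):
    # Stop `tau` steps after the first misclassification (assumed rule).
    x, budget = x0.copy(), tau
    for _ in range(max_steps):
        if y * (x @ w + b) <= 0:
            if budget == 0:
                break
            budget -= 1
        x = np.clip(x + alpha * np.sign(loss_grad_x(x, y, w, b)), x0 - eps, x0 + eps)
    return x


def fat_train(X, Y, eps=0.1, alpha=0.03, max_steps=10, tau=0, lr=0.1, epochs=20):
    """Friendly-adversarial-training sketch for a linear logistic model."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        # 1) Craft friendly adversarial data against the current model.
        X_adv = np.stack([early_stopped_pgd(x, y, w, b, eps, alpha, max_steps, tau)
                          for x, y in zip(X, Y)])
        # 2) One full-batch gradient step on the logistic loss over X_adv.
        s = -Y / (1.0 + np.exp(Y * (X_adv @ w + b)))   # per-example dloss/d(w.x + b)
        w -= lr * (s[:, None] * X_adv).mean(axis=0)
        b -= lr * s.mean()
    return w, b


# Usage on two synthetic Gaussian blobs centred at (1, 1) and (-1, -1).
rng = np.random.default_rng(0)
Y = np.where(rng.random(200) < 0.5, -1.0, 1.0)
X = Y[:, None] * np.ones(2) + 0.3 * rng.standard_normal((200, 2))
w, b = fat_train(X, Y)
```

One design note: letting `tau` grow across epochs would move training from friendly toward more adversarial data. This sketch keeps `tau` fixed for simplicity.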

Experiments

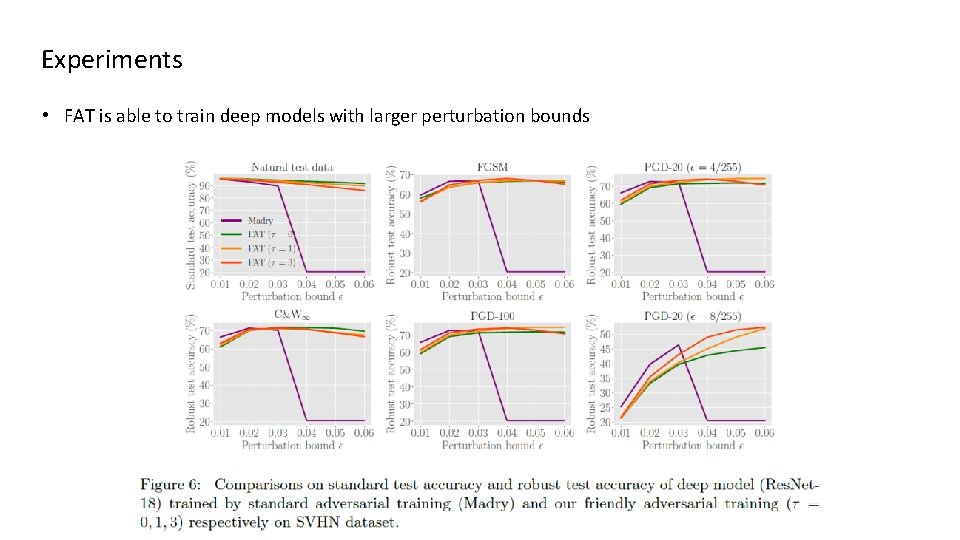

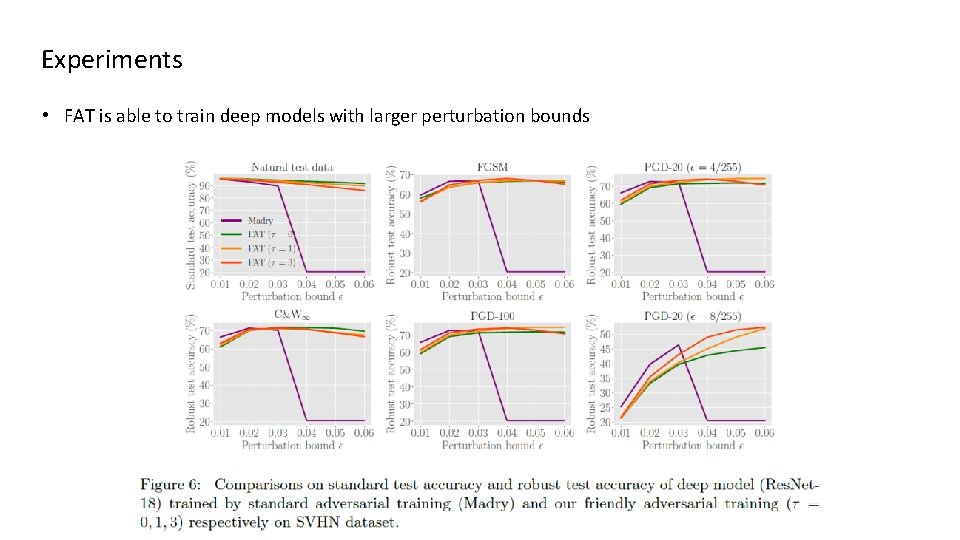

Experiments

- FAT can train deep models under larger perturbation bounds ε.

![Experiments – Madry vs. CAT [2] vs. DAT [3]](https://slidetodoc.com/presentation_image_h/ee6cfda1f33d5d7c0ae834e057055e5e/image-11.jpg)

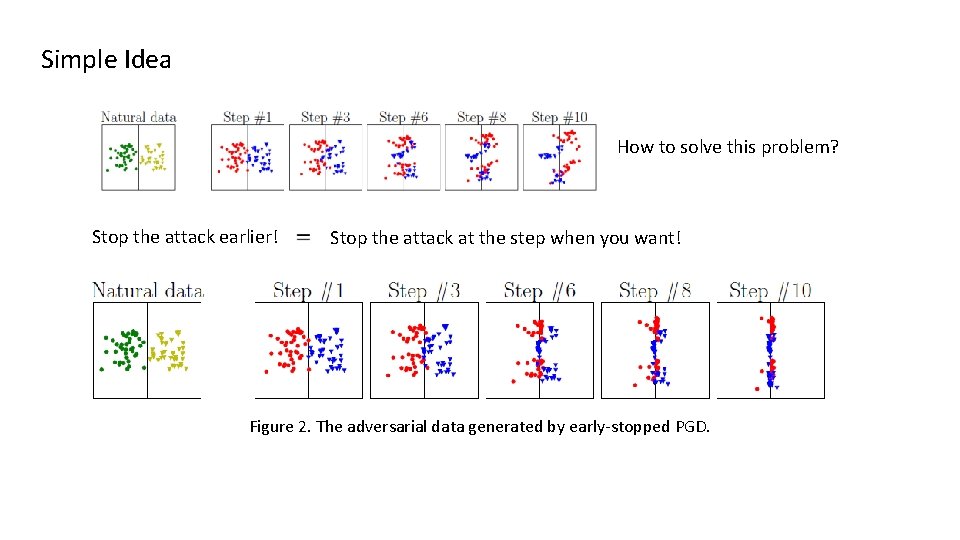

Experiments

Madry: Standard Adversarial Training; CAT [2]: Curriculum Adversarial Training; DAT [3]: Dynamic Adversarial Training.

CAT & DAT

- The model is first trained with a weak attack.
- Once the model achieves high accuracy against attacks of a given strength, the attack strength is increased.

[2] Curriculum Adversarial Training. IJCAI 2018.

[3] On the Convergence and Robustness of Adversarial Training. ICML 2019.
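The curriculum shared by CAT and DAT reduces to a small scheduling rule. The sketch below measures attack strength as the number of PGD steps, in the spirit of CAT's hardness measure. It uses a hypothetical accuracy threshold of 0.8 and a hypothetical cap of 10 steps. It illustrates only the idea on this slide, not the full procedure of either paper. The function names are our own.

```python
def next_num_steps(num_steps, robust_acc, threshold=0.8, max_steps=10):
    """Curriculum rule: strengthen the attack only once the model copes with it.

    num_steps:  current attack strength (number of PGD steps, a hypothetical choice)
    robust_acc: model accuracy against attacks of the current strength
    """
    if robust_acc >= threshold and num_steps < max_steps:
        return num_steps + 1
    return num_steps


def schedule(robust_accs, start=0, **kwargs):
    """Replay the rule over a sequence of per-epoch robust accuracies.

    Returns the attack strength used in each epoch.
    """
    k, history = start, []
    for acc in robust_accs:
        history.append(k)                  # strength used during this epoch
        k = next_num_steps(k, acc, **kwargs)
    return history
```

For example, `schedule([0.5, 0.85, 0.6, 0.9, 0.95])` returns `[0, 0, 1, 1, 2]`. The attack becomes stronger only after an epoch in which the model reaches the threshold.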

![Experiments – Madry vs. CAT [2] vs. DAT [3]](https://slidetodoc.com/presentation_image_h/ee6cfda1f33d5d7c0ae834e057055e5e/image-12.jpg)

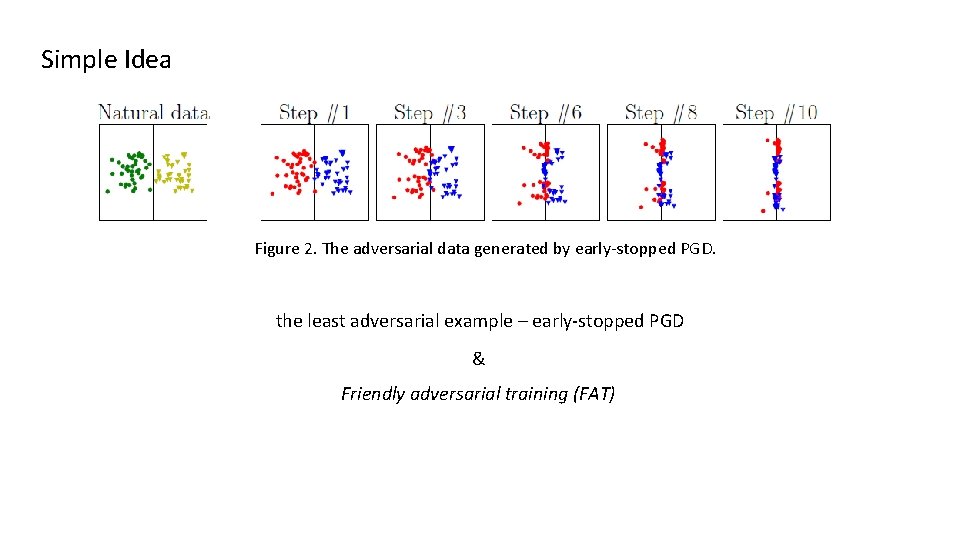

Experiments

The hardness measure

- CAT: the number of PGD steps K.
- DAT: a proposed measure, the first-order stationary condition (FOSC).
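For an L∞ ball of radius ε around the natural input x^0, FOSC has a closed form: c(x^k) = ε‖∇ℓ(x^k)‖₁ − ⟨x^k − x^0, ∇ℓ(x^k)⟩. Our statement of it follows from maximizing the linearized loss over the ball. The sketch below evaluates this value from a precomputed loss gradient. The function name and argument layout are our own, and the gradient is assumed to come from whatever model is being trained. Smaller values mean x^k is closer to a stationary point of the inner maximization, that is, a stronger adversarial example. This is why FOSC can serve as a hardness measure.

```python
import numpy as np


def fosc_linf(x_k, x0, grad, eps):
    """FOSC value of x_k for the L-inf ball of radius eps around x0.

    grad is the loss gradient with respect to the input, evaluated at x_k.
    c(x_k) = eps * ||grad||_1 - <x_k - x0, grad>; it is >= 0 for any x_k
    inside the ball, and 0 at a first-order stationary point.
    """
    grad = np.ravel(grad)
    delta = np.ravel(x_k - x0)
    return eps * np.abs(grad).sum() - delta @ grad
```

For `grad = [1, -2, 0.5]` and `eps = 0.1`, the natural input x^0 gives 0.35. The corner x^0 + ε·sign(grad) gives 0, the maximizer of the linearized loss over the ball.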

Conclusion

- FAT is computationally efficient, because early-stopped PGD needs no more gradient steps than full PGD. It also helps overcome the problem of cross-over mixture.

Comments

- Pro: simple idea and nice performance.
- Open question: how about using adversarial examples generated by other early-stopped attack methods?