SWE 681 ISA 681 Secure Software Design Programming

- Slides: 59

SWE 681 / ISA 681 Secure Software Design & Programming
Lecture 10: Miscellaneous
Dr. David A. Wheeler
2020-01-03

Outline
• Artificial Intelligence / Machine Learning & security
• Science of Security
• Malicious tools / trusting trust attack
  – Countering using diverse double-compiling (DDC)
• Are you in control? (e.g., baseband processors)
• Vulnerability disclosure

Some portions © Institute for Defense Analyses (the OSS and open proofs sections), used by permission. This material is not intended to endorse particular suppliers or products.

Artificial Intelligence (AI) & Machine Learning (ML)
• Artificial intelligence: “when a machine mimics “cognitive” functions that humans associate with other human minds, such as learning and problem solving.”
• Machine learning: “a subset of artificial intelligence in the field of computer science that often uses statistical techniques to give computers the ability to “learn” (i.e., progressively improve performance on a specific task) with data, without being explicitly programmed.”
  – May be supervised (presented inputs & desired outputs such as labels) or unsupervised (“find structures in input”)
  – Often used for classification problems (“what is this?”); other applications include clustering (“what are like each other?”) & dimension reduction (“simplify original input”)

Sources: https://en.wikipedia.org/w/index.php?title=Artificial_intelligence&oldid=854123634 & https://en.wikipedia.org/w/index.php?title=Machine_learning&oldid=853415971

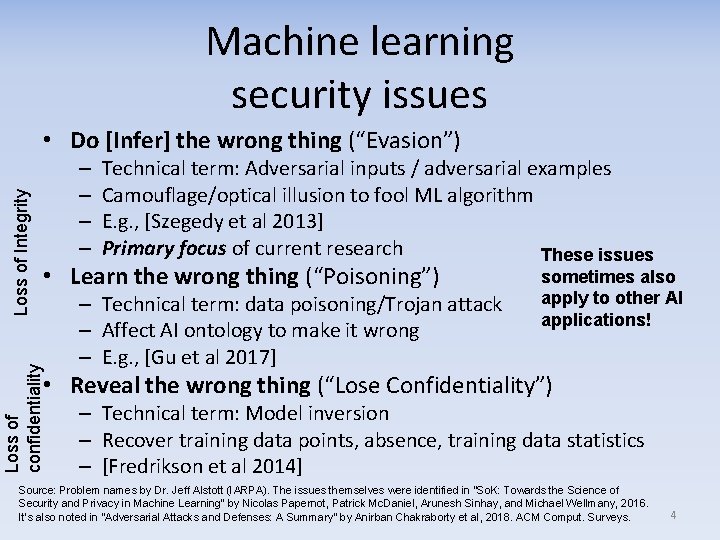

Machine learning security issues
• Learn the wrong thing (“Poisoning”) [loss of integrity]
  – Technical term: data poisoning/Trojan attack
  – Affect AI ontology to make it wrong
  – E.g., [Gu et al 2017]
• Do [Infer] the wrong thing (“Evasion”) [loss of integrity]
  – Technical term: Adversarial inputs / adversarial examples
  – Camouflage/optical illusion to fool ML algorithm
  – E.g., [Szegedy et al 2013]
  – Primary focus of current research
• Reveal the wrong thing (“Lose Confidentiality”) [loss of confidentiality]
  – Technical term: Model inversion
  – Recover training data points, absence, training data statistics
  – [Fredrikson et al 2014]
• These issues sometimes also apply to other AI applications!

Source: Problem names by Dr. Jeff Alstott (IARPA). The issues themselves were identified in “SoK: Towards the Science of Security and Privacy in Machine Learning” by Nicolas Papernot, Patrick McDaniel, Arunesh Sinha, and Michael Wellman, 2016. They are also noted in “Adversarial Attacks and Defences: A Survey” by Anirban Chakraborty et al, 2018.

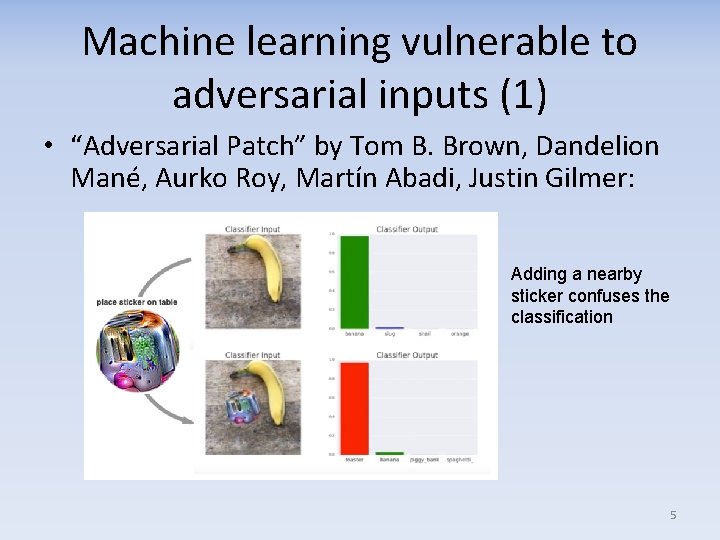

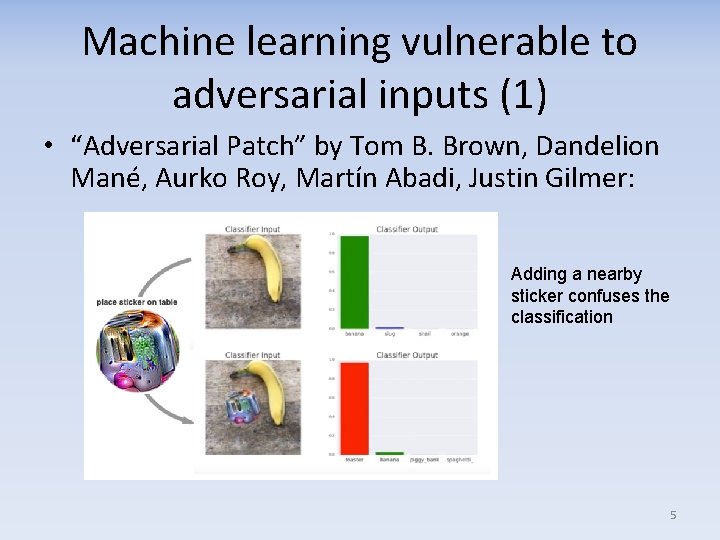

Machine learning vulnerable to adversarial inputs (1)
• “Adversarial Patch” by Tom B. Brown, Dandelion Mané, Aurko Roy, Martín Abadi, Justin Gilmer: Adding a nearby sticker confuses the classification

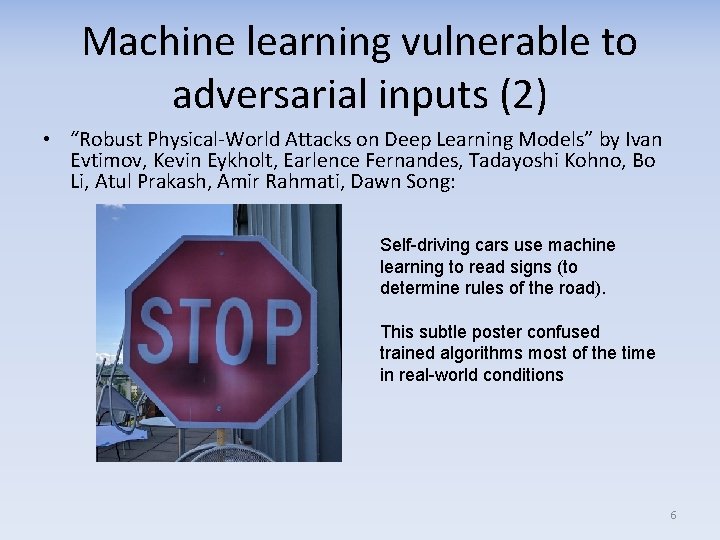

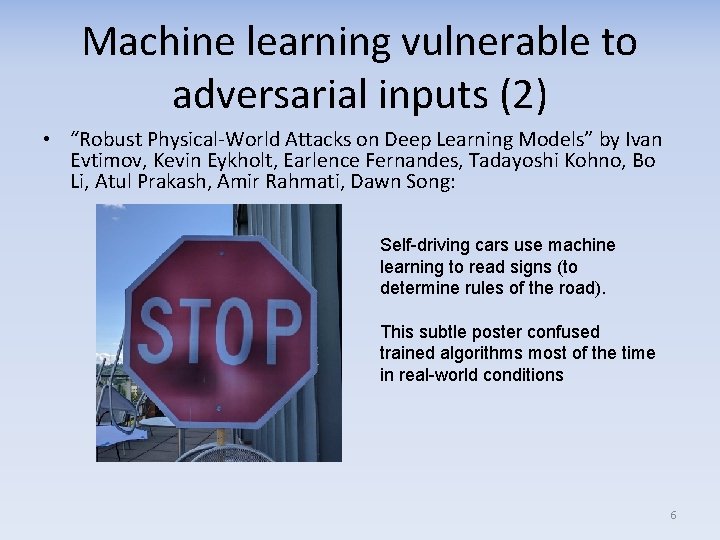

Machine learning vulnerable to adversarial inputs (2)
• “Robust Physical-World Attacks on Deep Learning Models” by Ivan Evtimov, Kevin Eykholt, Earlence Fernandes, Tadayoshi Kohno, Bo Li, Atul Prakash, Amir Rahmati, Dawn Song: Self-driving cars use machine learning to read signs (to determine rules of the road). This subtle poster confused trained algorithms most of the time in real-world conditions

Machine learning vulnerable to adversarial inputs (3)
• In 2019, Tencent’s Keen Security Lab was able to cause Tesla autopilot to move one lane to the left (the wrong lane) without warning
  – With just 3 stickers & no cue to driver
  – When no other lines were present for car to follow
  – Also able to fool car into believing that a line wasn’t present when it was
    • That was much more obvious because Tesla’s neural network was more robust when lines are present
    • That robustness simplified fooling the system when no lines were present
  – Not necessarily “into oncoming traffic” (not proven) but worrisome
  – Need to use multiple input sources (car in front, oncoming, …)
  – Tencent is a Chinese multinational developing its own self-driving cars
    • Provides incentive to investigate its competitors
• When using machine learning, must consider & counter adversaries if they can exist in the environment

Source: “Three Small Stickers in Intersection Cause Tesla Autopilot to Swerve Into Wrong Lane: Security researchers from Tencent have demonstrated a way to use physical attacks to spoof Tesla's autopilot” by Evan Ackerman, 1 Apr 2019, IEEE Spectrum, https://spectrum.ieee.org/cars-that-think/transportation/self-driving/three-small-stickers-on-road-can-steer-tesla-autopilot-into-oncoming-lane

Countering attacks
• Countering adversarial inputs (evasion) is an area of significant active research
  – As of 2018, over 100 papers/year
• Countering other attacks has received less attention
  – But work is ongoing
  – To counter poisoning during training, think about using trustworthy training data, sanitizing against malicious & biased data, and applying “reject on negative impact”
• We don’t have good techniques yet for countering attacks
  – I’ve tried to summarize a little
  – “On Evaluating Adversarial Robustness…” by Carlini et al discusses how to evaluate approaches (many proposals don’t consider serious attack)
  – Keep watching for improvements in this area!

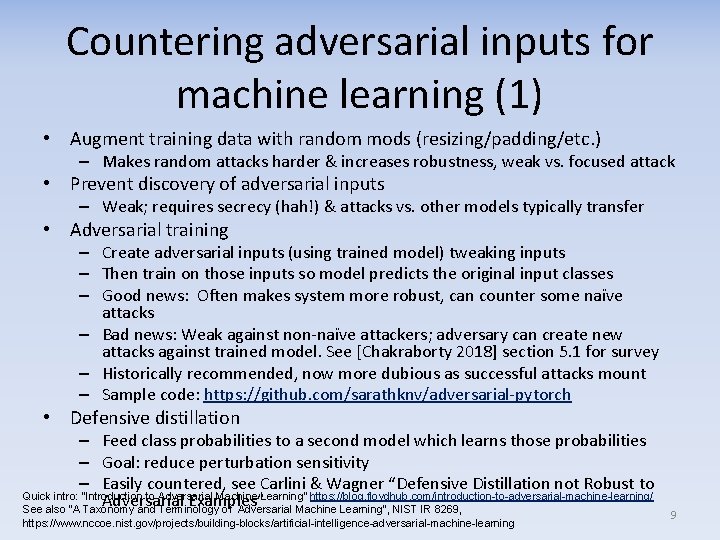

Countering adversarial inputs for machine learning (1)
• Augment training data with random mods (resizing/padding/etc.)
  – Makes random attacks harder & increases robustness, weak vs. focused attack
• Prevent discovery of adversarial inputs
  – Weak; requires secrecy (hah!) & attacks vs. other models typically transfer
• Adversarial training
  – Create adversarial inputs by tweaking inputs (using trained model)
  – Then train on those inputs so model predicts the original input classes
  – Good news: Often makes system more robust, can counter some naïve attacks
  – Bad news: Weak against non-naïve attackers; adversary can create new attacks against trained model. See [Chakraborty 2018] section 5.1 for survey
  – Historically recommended, now more dubious as successful attacks mount
  – Sample code: https://github.com/sarathknv/adversarial-pytorch
• Defensive distillation
  – Feed class probabilities to a second model which learns those probabilities
  – Goal: reduce perturbation sensitivity
  – Easily countered, see Carlini & Wagner “Defensive Distillation not Robust to Adversarial Examples”

Quick intro: “Introduction to Adversarial Machine Learning” https://blog.floydhub.com/introduction-to-adversarial-machine-learning/
See also “A Taxonomy and Terminology of Adversarial Machine Learning”, NIST IR 8269, https://www.nccoe.nist.gov/projects/building-blocks/artificial-intelligence-adversarial-machine-learning
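The two adversarial-training steps above (create adversarial inputs from a trained model, then train on them with the original labels) can be sketched in a few lines. This is a minimal illustration only. It assumes a tiny logistic-regression model with made-up weights, and it uses the fast gradient sign method as one common way to create the perturbed inputs; the slides do not name a specific method. The names `predict`, `fgsm`, and `augment_with_adversarial` are hypothetical.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Shift each input feature by eps in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def augment_with_adversarial(w, b, data, eps):
    """Adversarial-training data step: add perturbed inputs with ORIGINAL labels."""
    return data + [(fgsm(w, b, x, y, eps), y) for x, y in data]

# Hypothetical trained weights and one correctly classified input (assumptions).
w, b = [2.0, -3.0, 1.0], 0.1
x, y = [0.5, -0.2, 0.3], 1
x_adv = fgsm(w, b, x, y, eps=0.5)
print(predict(w, b, x) > 0.5, predict(w, b, x_adv) > 0.5)
```

Retraining on the augmented set is omitted here. As the slide notes, an adversary can generate fresh adversarial inputs against the retrained model, so this raises the bar only against naïve attacks.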

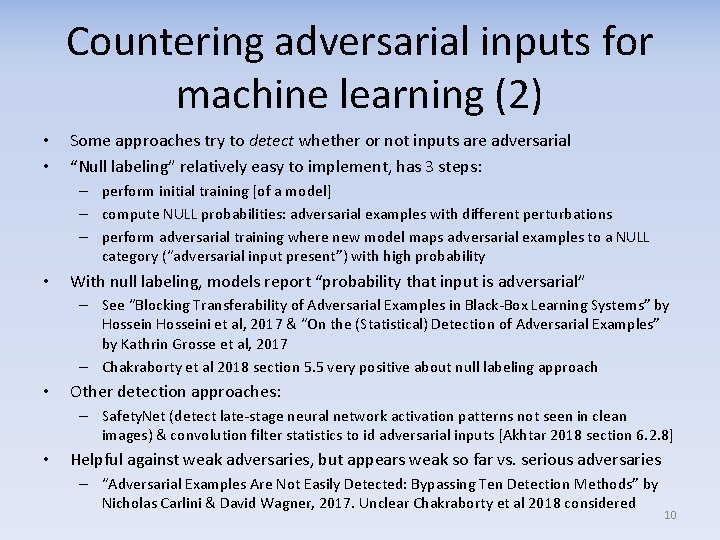

Countering adversarial inputs for machine learning (2)
• Some approaches try to detect whether or not inputs are adversarial
• “Null labeling” relatively easy to implement, has 3 steps:
  – perform initial training [of a model]
  – compute NULL probabilities: adversarial examples with different perturbations
  – perform adversarial training where new model maps adversarial examples to a NULL category (“adversarial input present”) with high probability
• With null labeling, models report “probability that input is adversarial”
  – See “Blocking Transferability of Adversarial Examples in Black-Box Learning Systems” by Hosseini et al, 2017 & “On the (Statistical) Detection of Adversarial Examples” by Kathrin Grosse et al, 2017
  – Chakraborty et al 2018 section 5.5 very positive about null labeling approach
• Other detection approaches:
  – SafetyNet (detect late-stage neural network activation patterns not seen in clean images) & convolution filter statistics to identify adversarial inputs [Akhtar 2018 section 6.2.8]
• Helpful against weak adversaries, but appears weak so far vs. serious adversaries
  – “Adversarial Examples Are Not Easily Detected: Bypassing Ten Detection Methods” by Nicholas Carlini & David Wagner, 2017. It is unclear whether Chakraborty et al 2018 considered this

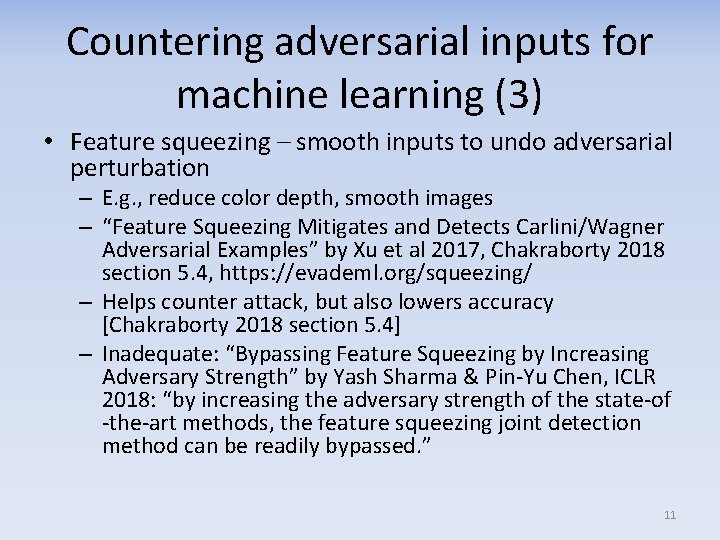

Countering adversarial inputs for machine learning (3)
• Feature squeezing – smooth inputs to undo adversarial perturbation
  – E.g., reduce color depth, smooth images
  – “Feature Squeezing Mitigates and Detects Carlini/Wagner Adversarial Examples” by Xu et al 2017, Chakraborty 2018 section 5.4, https://evademl.org/squeezing/
  – Helps counter attack, but also lowers accuracy [Chakraborty 2018 section 5.4]
  – Inadequate: “Bypassing Feature Squeezing by Increasing Adversary Strength” by Yash Sharma & Pin-Yu Chen, ICLR 2018: “by increasing the adversary strength of the state-of-the-art methods, the feature squeezing joint detection method can be readily bypassed.”
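As a rough illustration of the “reduce color depth” idea above, the sketch below quantizes pixel values and flags an input when squeezing changes a model's output by more than a threshold. The function names, the toy model, and the threshold are all assumptions made for illustration; they are not taken from the cited paper's code.

```python
def squeeze_bit_depth(pixels, bits):
    """Quantize pixel values in [0.0, 1.0] to 2**bits levels."""
    levels = (1 << bits) - 1
    return [round(p * levels) / levels for p in pixels]

def l1_distance(a, b):
    """Sum of absolute differences between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

def looks_adversarial(model, pixels, bits, threshold):
    """Flag an input if squeezing it changes the model's output too much."""
    original = model(pixels)
    squeezed = model(squeeze_bit_depth(pixels, bits))
    return l1_distance(original, squeezed) > threshold

def toy_model(pixels):
    """Hypothetical stand-in classifier: two 'class scores' from mean brightness."""
    mean = sum(pixels) / len(pixels)
    return [mean, 1.0 - mean]

print(squeeze_bit_depth([0.0, 0.49, 0.51, 1.0], bits=1))
print(looks_adversarial(toy_model, [0.4, 0.4], bits=1, threshold=0.5))
```

The slide's caveats still apply: squeezing lowers accuracy on clean inputs, and a stronger adversary can craft inputs that survive the squeezing step.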

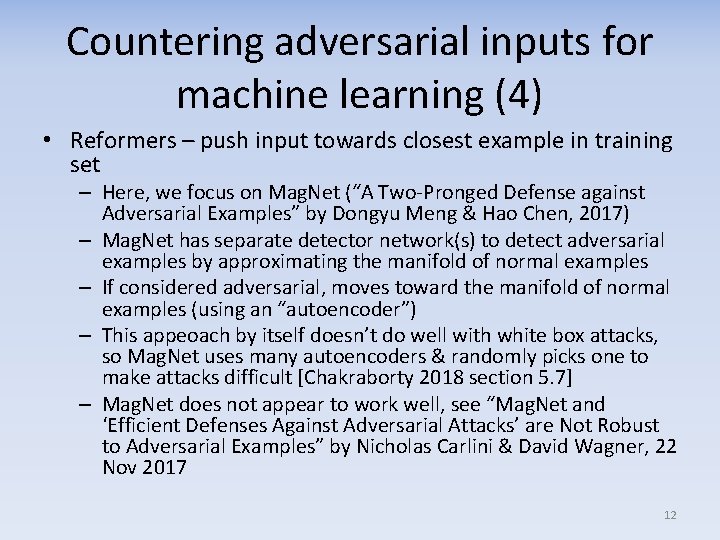

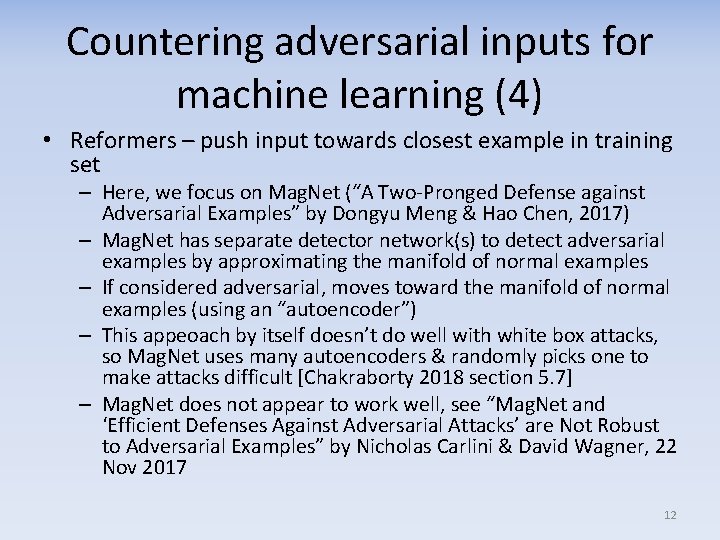

Countering adversarial inputs for machine learning (4)
• Reformers – push input towards closest example in training set
  – Here, we focus on MagNet (“A Two-Pronged Defense against Adversarial Examples” by Dongyu Meng & Hao Chen, 2017)
  – MagNet has separate detector network(s) to detect adversarial examples by approximating the manifold of normal examples
  – If considered adversarial, moves toward the manifold of normal examples (using an “autoencoder”)
  – This approach by itself doesn’t do well with white box attacks, so MagNet uses many autoencoders & randomly picks one to make attacks difficult [Chakraborty 2018 section 5.7]
  – MagNet does not appear to work well, see “MagNet and ‘Efficient Defenses Against Adversarial Attacks’ are Not Robust to Adversarial Examples” by Nicholas Carlini & David Wagner, 22 Nov 2017

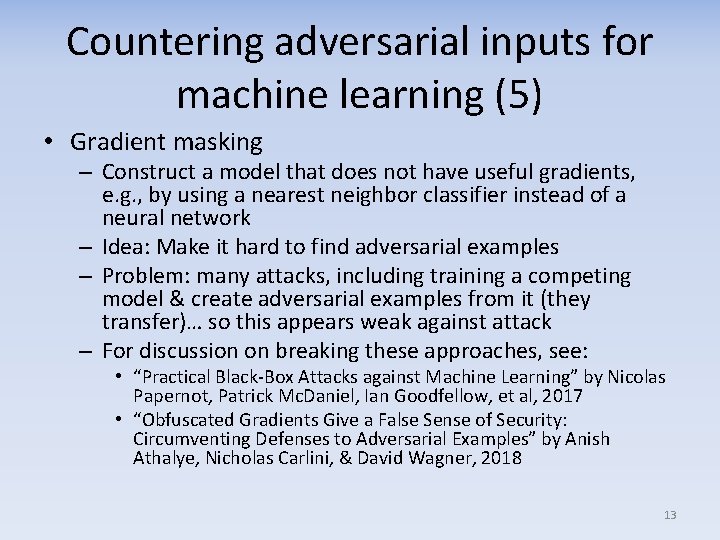

Countering adversarial inputs for machine learning (5)
• Gradient masking
  – Construct a model that does not have useful gradients, e.g., by using a nearest neighbor classifier instead of a neural network
  – Idea: Make it hard to find adversarial examples
  – Problem: many attacks remain, including training a competing model & creating adversarial examples from it (they transfer)… so this appears weak against attack
  – For discussion on breaking these approaches, see:
    • “Practical Black-Box Attacks against Machine Learning” by Nicolas Papernot, Patrick McDaniel, Ian Goodfellow, et al, 2017
    • “Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples” by Anish Athalye, Nicholas Carlini, & David Wagner, 2018

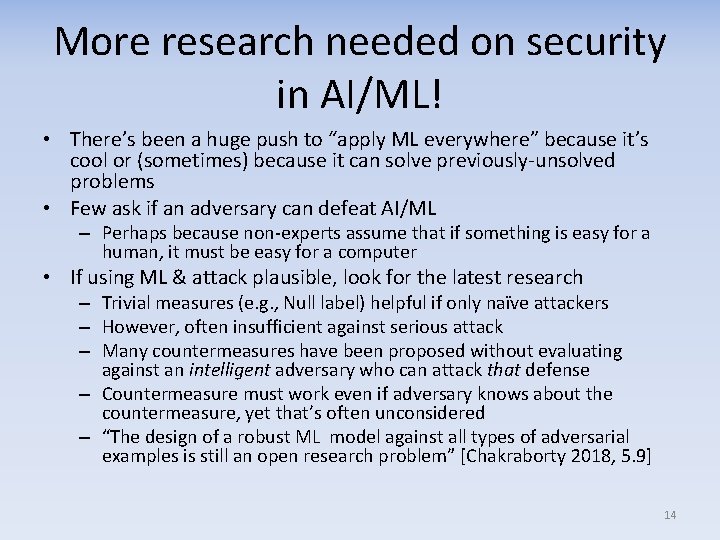

More research needed on security in AI/ML!
• There’s been a huge push to “apply ML everywhere” because it’s cool or (sometimes) because it can solve previously-unsolved problems
• Few ask if an adversary can defeat AI/ML
  – Perhaps because non-experts assume that if something is easy for a human, it must be easy for a computer
• If using ML & attack plausible, look for the latest research
  – Trivial measures (e.g., null labeling) helpful if only naïve attackers
  – However, often insufficient against serious attack
  – Many countermeasures have been proposed without evaluating against an intelligent adversary who can attack that defense
  – Countermeasure must work even if adversary knows about the countermeasure, yet that’s often unconsidered
  – “The design of a robust ML model against all types of adversarial examples is still an open research problem” [Chakraborty 2018, 5.9]

Some useful sources on countering attacks on AI/ML systems
• N. Akhtar and A. Mian, "Threat of adversarial attacks on deep learning in computer vision: A survey," IEEE Access, vol. 6, pp. 14410-14430, 2018.
• B. Biggio and F. Roli, "Wild patterns: Ten years after the rise of adversarial machine learning," Pattern Recognition, vol. 84, pp. 317-331, 2018.
• A. Chakraborty, M. Alam, V. Dey, A. Chattopadhyay and D. Mukhopadhyay, "Adversarial Attacks and Defences: A Survey," 2018.
• Q. Liu, P. Li, W. Zhao, W. Cai, S. Yu and V. C. M. Leung, "A survey on security threats and defensive techniques of machine learning: A data driven view," IEEE Access, vol. 6, pp. 12103-12117, 2018.
• N. Papernot, P. McDaniel, A. Sinha and M. P. Wellman, "SoK: Security and privacy in machine learning," in 2018 IEEE European Symposium on Security and Privacy (EuroS&P), 2018.
• Ian Goodfellow, Patrick McDaniel, and Nicolas Papernot, “Making Machine Learning Robust against Adversarial Inputs”, Communications of the ACM, July 2018, Vol 61, No. 7.
• “Adversarial Risk and the Dangers of Evaluating Against Weak Attacks”, Jonathan Uesato, Brendan O’Donoghue, Aaron van den Oord, Pushmeet Kohli, 2018
• “A Taxonomy and Terminology of Adversarial Machine Learning”, NIST IR 8269, https://csrc.nist.gov/publications/detail/nistir/8269/draft and https://www.nccoe.nist.gov/projects/building-blocks/artificial-intelligence-adversarial-machine-learning
• Xiaoyong Yuan, Pan He, Qile Zhu, and Xiaolin Li, “Adversarial Examples: Attacks and Defenses for Deep Learning”, IEEE Transactions on Neural Networks and Learning Systems, Vol. 30, No. 9, September 2019
• “Feature Squeezing Mitigates and Detects Carlini/Wagner Adversarial Examples” by Weilin Xu, David Evans, Yanjun Qi, 2017
• (Quick intro) “Introduction to Adversarial Machine Learning” https://blog.floydhub.com/introduction-to-adversarial-machine-learning/
• Nicholas Carlini, Anish Athalye, Nicolas Papernot, et al., “On Evaluating Adversarial Robustness”, 20 Feb 2019 (discusses how defenses should be evaluated & common mistakes in doing so)
• “EvadeML” (Machine Learning & Security Research groups at UVA): https://evademl.org/
• “Solving the challenge of securing AI and machine learning systems” by Valecia Maclin (also points to other papers, esp. “Failure Modes in ML”) - https://blogs.microsoft.com/on-the-issues/2019/12/06/ai-machine-learning-security/
• “Security and Privacy of Machine Learning” course, https://secml.github.io/

Science of Security

Situation
• Lots is unknown in security
  – Modern interest in “Science of Security”
  – E.g., “Hot Topics in the Science of Security” (HoTSOS) conference
• Confusion over the terms engineering, science, & math

Software development is a kind of engineering
• Don’t confuse these (my definitions):
  – Mathematics: Rigorous deduction of conclusions from assumptions (not necessarily real world)
  – Science: Induction of general principles, rigorously tested via experiments and observations
  – Engineering: Developing systems that solve human problems within constraints and using available knowledge [and in practice, handling constraints requires trade-offs]
• Developing software to solve human problems is fundamentally engineering
• Engineering depends on knowledge (including science, mathematics, rules of thumb, etc.)

Source: “If It Works, It’s Legacy: Analysis of Legacy Code (Keynote for Analysis of Legacy Code Session, Sound Static Analysis for Security Conference)” by David A. Wheeler, June 2018

Science of Security paper by Herley & Oorschot
• Next slides summarize Herley & Oorschot:
  – “SoK: Science, Security, and the Elusive Goal of Security as a Scientific Pursuit”, Cormac Herley & P. C. van Oorschot, 2017 IEEE Symposium on Security and Privacy (SP), May 2017, https://www.microsoft.com/en-us/research/wp-content/uploads/2017/03/scienceAndSecuritySoK.pdf
• Important paper on “Science of Security”

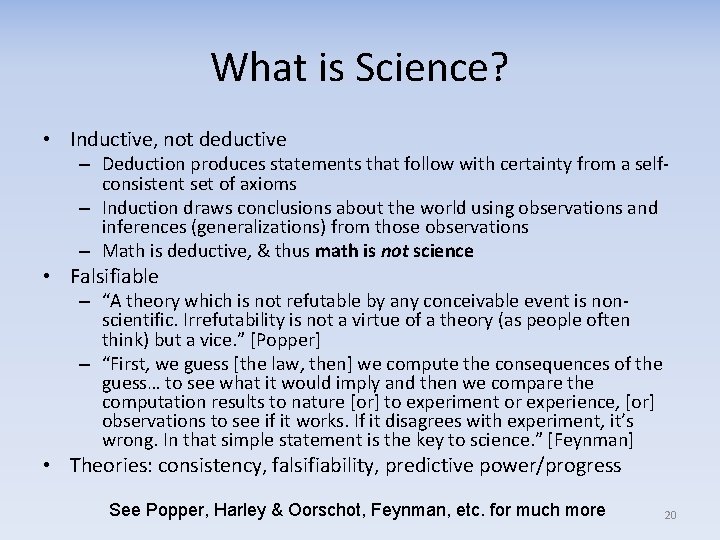

What is Science?
• Inductive, not deductive
  – Deduction produces statements that follow with certainty from a self-consistent set of axioms
  – Induction draws conclusions about the world using observations and inferences (generalizations) from those observations
  – Math is deductive, & thus math is not science
• Falsifiable
  – “A theory which is not refutable by any conceivable event is nonscientific. Irrefutability is not a virtue of a theory (as people often think) but a vice.” [Popper]
  – “First, we guess [the law, then] we compute the consequences of the guess… to see what it would imply and then we compare the computation results to nature [or] to experiment or experience, [or] observations to see if it works. If it disagrees with experiment, it’s wrong. In that simple statement is the key to science.” [Feynman]
• Theories: consistency, falsifiability, predictive power/progress

See Popper, Herley & Oorschot, Feynman, etc. for much more

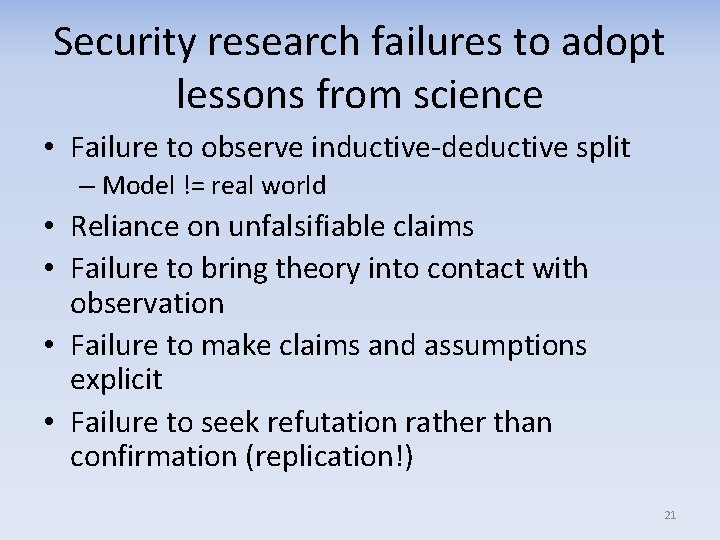

Security research failures to adopt lessons from science
• Failure to observe inductive-deductive split
  – Model != real world
• Reliance on unfalsifiable claims
• Failure to bring theory into contact with observation
• Failure to make claims and assumptions explicit
• Failure to seek refutation rather than confirmation (replication!)

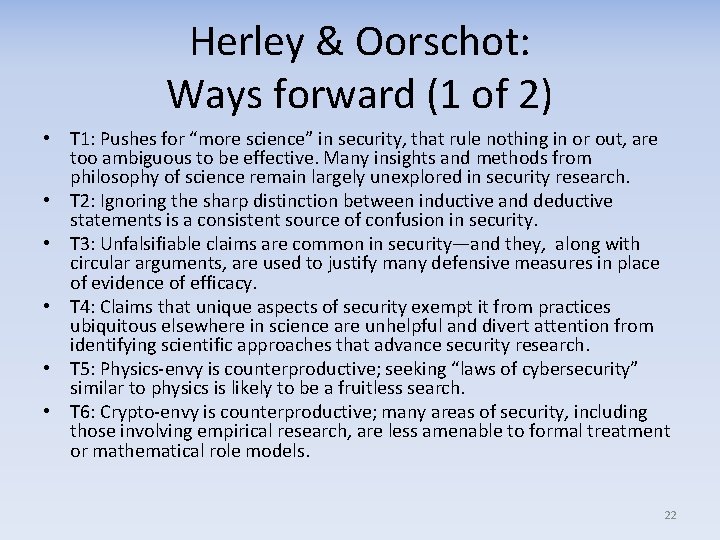

Herley & Oorschot: Ways forward (1 of 2)
• T1: Pushes for “more science” in security, that rule nothing in or out, are too ambiguous to be effective. Many insights and methods from philosophy of science remain largely unexplored in security research.
• T2: Ignoring the sharp distinction between inductive and deductive statements is a consistent source of confusion in security.
• T3: Unfalsifiable claims are common in security – and they, along with circular arguments, are used to justify many defensive measures in place of evidence of efficacy.
• T4: Claims that unique aspects of security exempt it from practices ubiquitous elsewhere in science are unhelpful and divert attention from identifying scientific approaches that advance security research.
• T5: Physics-envy is counterproductive; seeking “laws of cybersecurity” similar to physics is likely to be a fruitless search.
• T6: Crypto-envy is counterproductive; many areas of security, including those involving empirical research, are less amenable to formal treatment or mathematical role models.

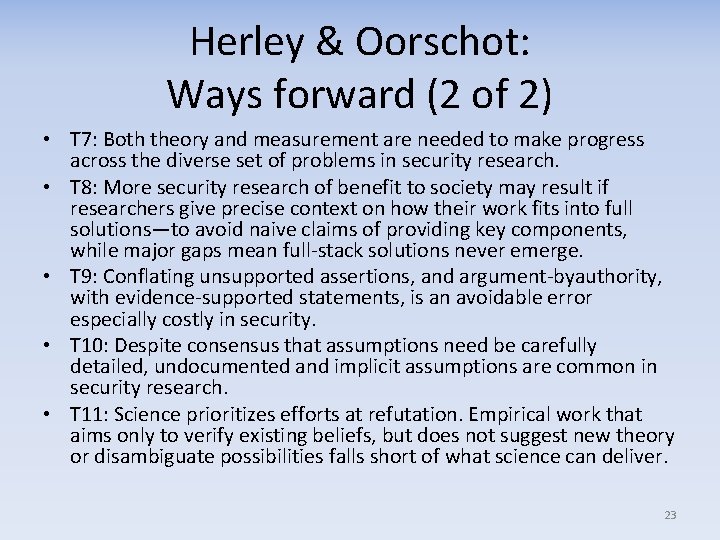

Herley & Oorschot: Ways forward (2 of 2)
• T7: Both theory and measurement are needed to make progress across the diverse set of problems in security research.
• T8: More security research of benefit to society may result if researchers give precise context on how their work fits into full solutions – to avoid naive claims of providing key components, while major gaps mean full-stack solutions never emerge.
• T9: Conflating unsupported assertions, and argument-by-authority, with evidence-supported statements, is an avoidable error especially costly in security.
• T10: Despite consensus that assumptions need be carefully detailed, undocumented and implicit assumptions are common in security research.
• T11: Science prioritizes efforts at refutation. Empirical work that aims only to verify existing beliefs, but does not suggest new theory or disambiguate possibilities falls short of what science can deliver.

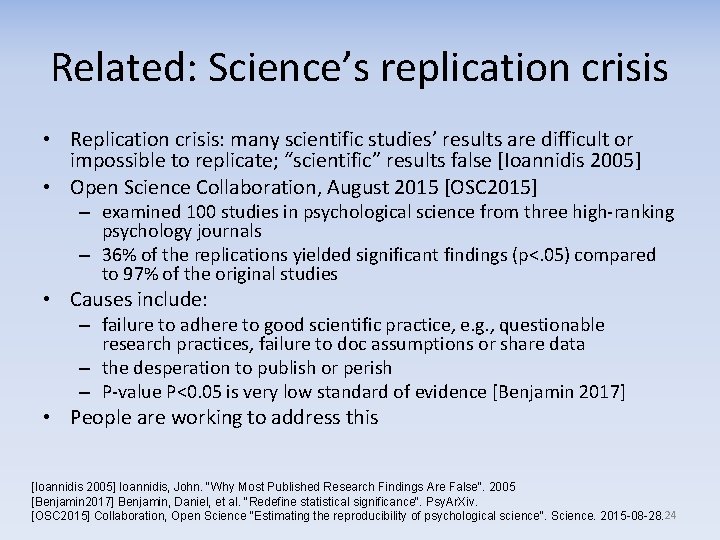

Related: Science’s replication crisis
• Replication crisis: many scientific studies’ results are difficult or impossible to replicate; many “scientific” results are false [Ioannidis 2005]
• Open Science Collaboration, August 2015 [OSC 2015]
  – examined 100 studies in psychological science from three high-ranking psychology journals
  – 36% of the replications yielded significant findings (p < .05) compared to 97% of the original studies
• Causes include:
  – failure to adhere to good scientific practice, e.g., questionable research practices, failure to document assumptions or share data
  – the desperation to publish or perish
  – P-value P < 0.05 is a very low standard of evidence [Benjamin 2017]
• People are working to address this

[Ioannidis 2005] Ioannidis, John. "Why Most Published Research Findings Are False". 2005
[Benjamin 2017] Benjamin, Daniel, et al. "Redefine statistical significance". PsyArXiv.
[OSC 2015] Open Science Collaboration. "Estimating the reproducibility of psychological science". Science. 2015-08-28.
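To see why P < 0.05 alone can be a weak standard of evidence, a standard Bayes' rule calculation helps: the chance that a “significant” finding reflects a real effect depends heavily on how plausible the hypothesis was beforehand. The sketch below is only an illustration; the prior probabilities and the 80% statistical power are assumed values, not figures from the cited papers.

```python
def prob_finding_is_true(prior, power, alpha):
    """P(effect is real | test was significant), by Bayes' rule.

    prior: probability the hypothesis is true before the study (assumed)
    power: probability of a significant result when the effect is real
    alpha: significance threshold (false-positive rate when no effect exists)
    """
    true_positives = power * prior
    false_positives = alpha * (1.0 - prior)
    return true_positives / (true_positives + false_positives)

for prior in (0.5, 0.1, 0.01):
    print(prior, round(prob_finding_is_true(prior, power=0.8, alpha=0.05), 3))
```

Under these assumptions, the lower the prior plausibility of the hypothesis, the larger the share of significant results that are false positives, even though every one of them passed the same P < 0.05 test.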

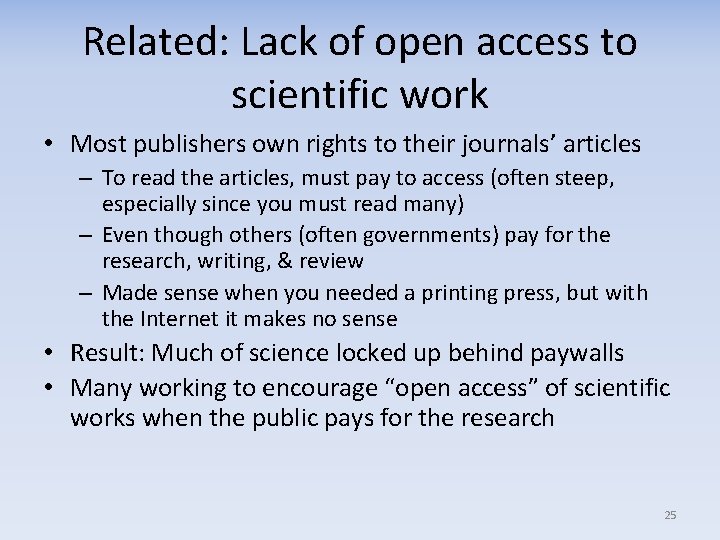

Related: Lack of open access to scientific work
• Most publishers own rights to their journals’ articles
  – To read the articles, must pay to access (often steep, especially since you must read many)
  – Even though others (often governments) pay for the research, writing, & review
  – Made sense when you needed a printing press, but with the Internet it makes no sense
• Result: Much of science locked up behind paywalls
• Many working to encourage “open access” of scientific works when the public pays for the research

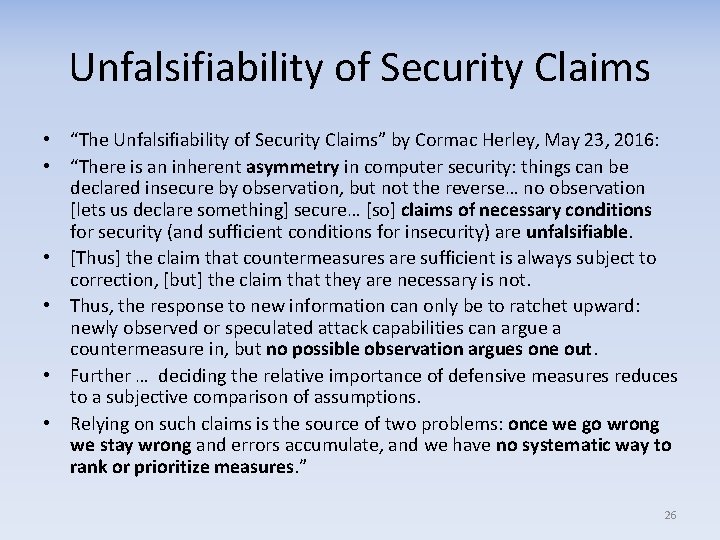

Unfalsifiability of Security Claims
• “The Unfalsifiability of Security Claims” by Cormac Herley, May 23, 2016:
• “There is an inherent asymmetry in computer security: things can be declared insecure by observation, but not the reverse… no observation [lets us declare something] secure… [so] claims of necessary conditions for security (and sufficient conditions for insecurity) are unfalsifiable.
• [Thus] the claim that countermeasures are sufficient is always subject to correction, [but] the claim that they are necessary is not.
• Thus, the response to new information can only be to ratchet upward: newly observed or speculated attack capabilities can argue a countermeasure in, but no possible observation argues one out.
• Further … deciding the relative importance of defensive measures reduces to a subjective comparison of assumptions.
• Relying on such claims is the source of two problems: once we go wrong we stay wrong and errors accumulate, and we have no systematic way to rank or prioritize measures.”

Countering trusting trust through diverse double-compiling (DDC)

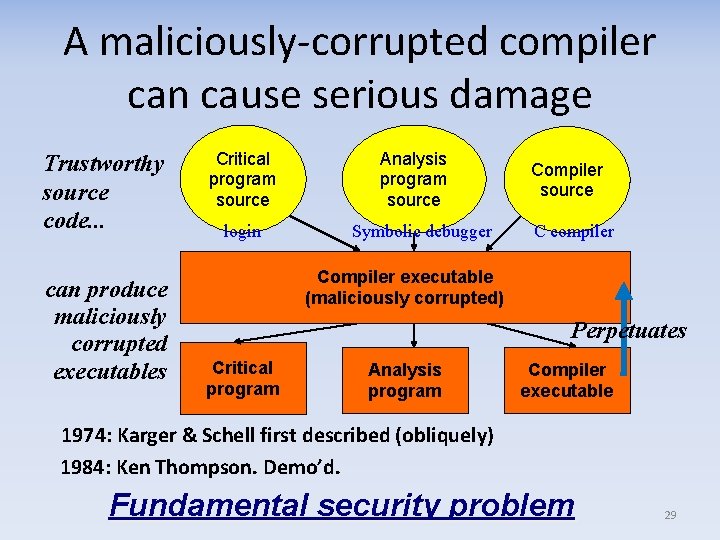

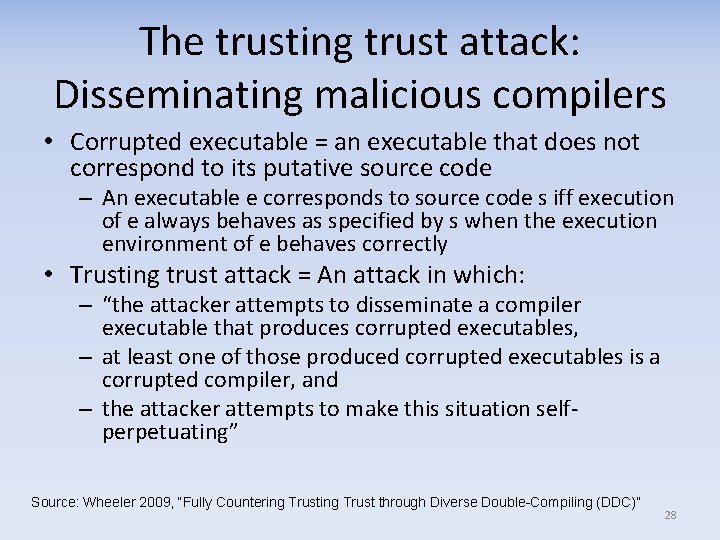

The trusting trust attack: Disseminating malicious compilers
• Corrupted executable = an executable that does not correspond to its putative source code
  – An executable e corresponds to source code s iff execution of e always behaves as specified by s when the execution environment of e behaves correctly
• Trusting trust attack = An attack in which:
  – “the attacker attempts to disseminate a compiler executable that produces corrupted executables,
  – at least one of those produced corrupted executables is a corrupted compiler, and
  – the attacker attempts to make this situation self-perpetuating”

Source: Wheeler 2009, “Fully Countering Trusting Trust through Diverse Double-Compiling (DDC)”

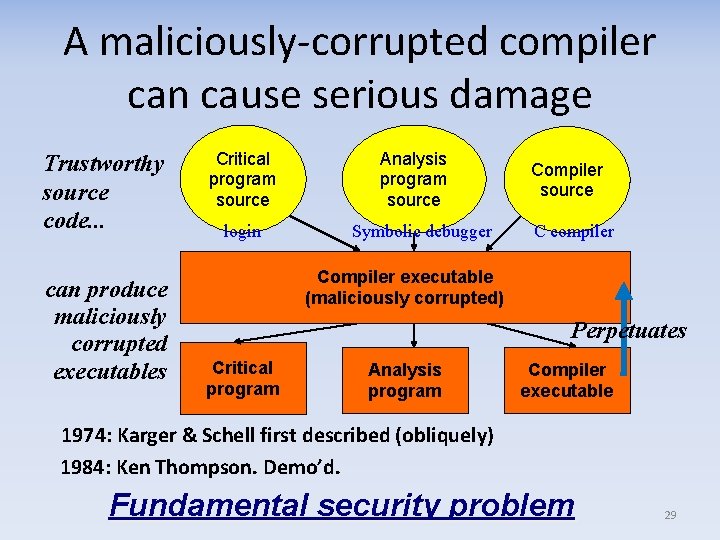

A maliciously-corrupted compiler can cause serious damage
Trustworthy source code... can produce maliciously corrupted executables
[Figure: critical program source (login), analysis program source (symbolic debugger), and compiler source (C compiler) are each compiled by a maliciously corrupted compiler executable, producing a critical program, an analysis program, and a compiler executable; the corrupted compiler executable perpetuates itself.]
• 1974: Karger & Schell first described (obliquely)
• 1984: Ken Thompson. Demo’d. Fundamental security problem

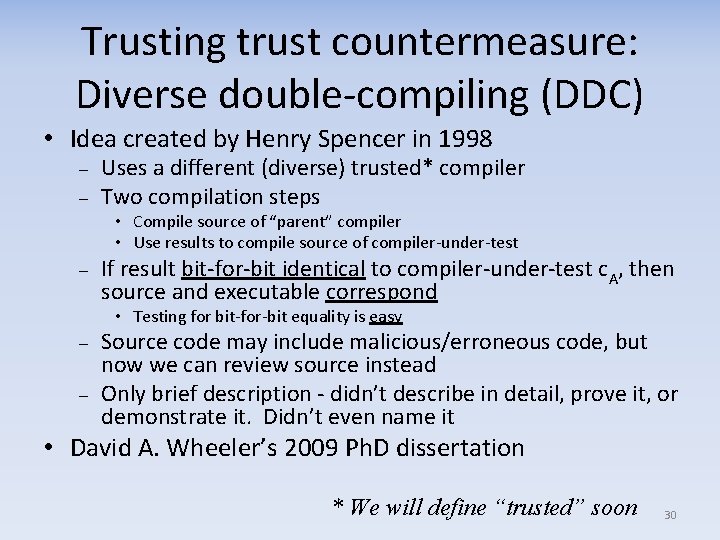

Trusting trust countermeasure: Diverse double-compiling (DDC)
• Idea created by Henry Spencer in 1998
  – Uses a different (diverse) trusted* compiler
  – Two compilation steps
    • Compile source of “parent” compiler
    • Use results to compile source of compiler-under-test
  – If result bit-for-bit identical to compiler-under-test cA, then source and executable correspond
    • Testing for bit-for-bit equality is easy
  – Source code may include malicious/erroneous code, but now we can review source instead
  – Only brief description - didn’t describe in detail, prove it, or demonstrate it. Didn’t even name it
• David A. Wheeler’s 2009 PhD dissertation

* We will define “trusted” soon
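The two compilation steps and the final comparison can be sketched as a toy simulation. Everything in it is an assumption made for illustration: an “executable” is modeled as a bit pattern plus the code-generation style it applies when run as a compiler, compiling is modeled as hashing, and the names `Executable`, `run_compiler`, and `ddc` are hypothetical. It is not how a real compiler works; it only mirrors the logic of the check.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class Executable:
    image: bytes             # bit pattern; this is what DDC compares
    codegen: str             # code-generation style applied when run as a compiler
    malicious: bool = False  # True if it carries a self-perpetuating trojan

def codegen_of(source: str) -> str:
    """Code-generation style that a compiler source text specifies (toy format)."""
    return source.split("codegen=")[1] if "codegen=" in source else ""

def run_compiler(compiler: Executable, source: str) -> Executable:
    """Toy compilation: output bits depend on the compiler's codegen and the source."""
    infect = compiler.malicious and "codegen=" in source  # trojan targets compilers
    payload = compiler.codegen + "|" + source + ("|trojan" if infect else "")
    image = hashlib.sha256(payload.encode()).digest()
    return Executable(image, codegen_of(source), infect)

def ddc(c_trusted: Executable, s_parent: str, s_a: str, c_a: Executable) -> bool:
    stage1 = run_compiler(c_trusted, s_parent)  # trusted compiler builds the parent
    stage2 = run_compiler(stage1, s_a)          # result builds the compiler under test
    return stage2.image == c_a.image            # bit-for-bit comparison

s_a = s_parent = "compiler codegen=A"           # self-parented compiler source
c_trusted = Executable(b"different vendor", "T")

def claimed_origin(grandparent: Executable) -> Executable:
    """How the supplier says the compiler under test was built."""
    c_parent = run_compiler(grandparent, s_parent)
    return run_compiler(c_parent, s_a)

clean = claimed_origin(Executable(b"old build", "A"))
trojaned = claimed_origin(Executable(b"old build", "A", malicious=True))
print(ddc(c_trusted, s_parent, s_a, clean), ddc(c_trusted, s_parent, s_a, trojaned))
```

In this model the trusted compiler's own output (stage 1) has different bits from the real parent, because the two use different code generation, yet stage 2 still matches the clean compiler under test because both were produced by executables that behave as the same source specifies.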

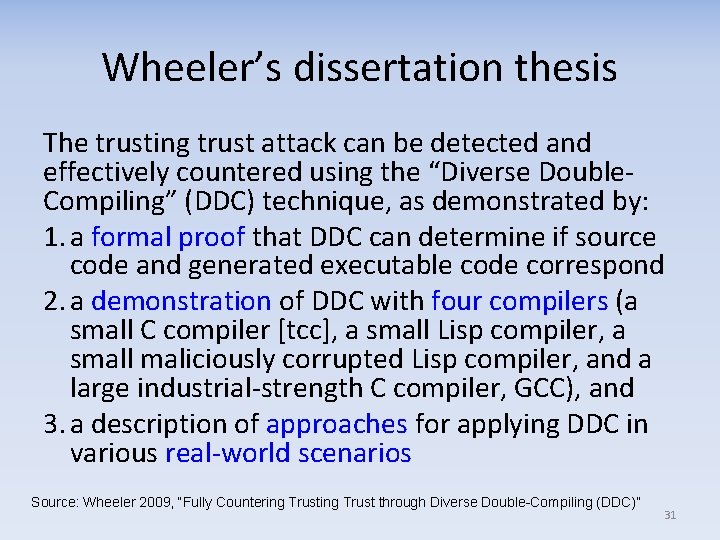

Wheeler’s dissertation thesis
The trusting trust attack can be detected and effectively countered using the “Diverse Double-Compiling” (DDC) technique, as demonstrated by:
1. a formal proof that DDC can determine if source code and generated executable code correspond
2. a demonstration of DDC with four compilers (a small C compiler [tcc], a small Lisp compiler, a small maliciously corrupted Lisp compiler, and a large industrial-strength C compiler, GCC), and
3. a description of approaches for applying DDC in various real-world scenarios

Source: Wheeler 2009, “Fully Countering Trusting Trust through Diverse Double-Compiling (DDC)”

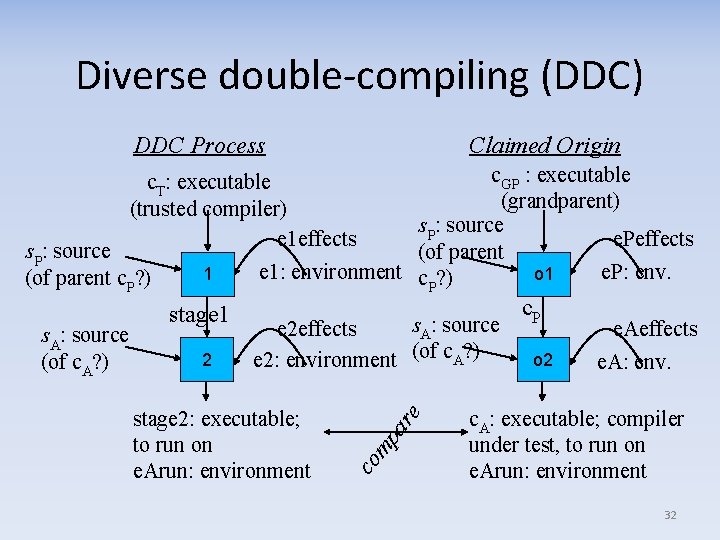

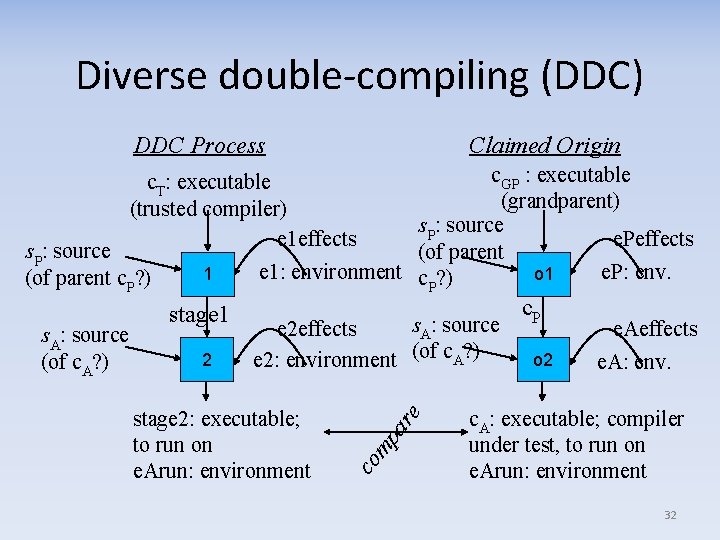

Diverse double-compiling (DDC)
[Figure: two parallel build chains. DDC process: the trusted compiler cT compiles sP (source of the parent) in environment e1 to produce stage1; stage1 compiles sA (source of cA?) in environment e2 to produce stage2, an executable to run on environment eArun. Claimed origin: the grandparent cGP compiles sP (source of parent cP?) in environment eP to produce cP; cP compiles sA in environment eA to produce cA, the compiler under test, to run on environment eArun. stage2 and cA are then compared.]

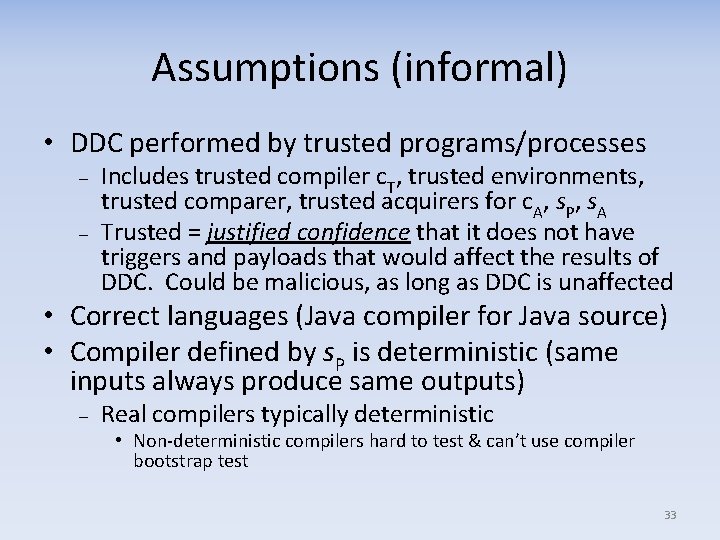

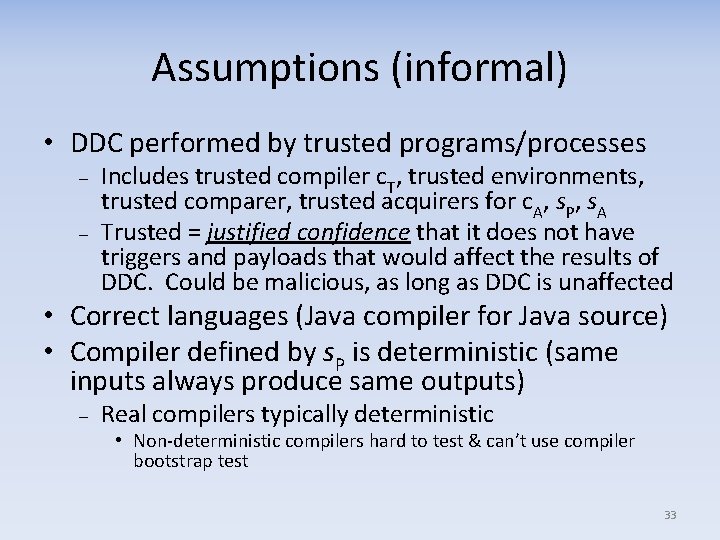

Assumptions (informal)
• DDC performed by trusted programs/processes
  – Includes trusted compiler cT, trusted environments, trusted comparer, trusted acquirers for cA, sP, sA
  – Trusted = justified confidence that it does not have triggers and payloads that would affect the results of DDC. Could be malicious, as long as DDC is unaffected
• Correct languages (Java compiler for Java source)
• Compiler defined by sP is deterministic (same inputs always produce same outputs)
  – Real compilers typically deterministic
    • Non-deterministic compilers hard to test & can’t use compiler bootstrap test

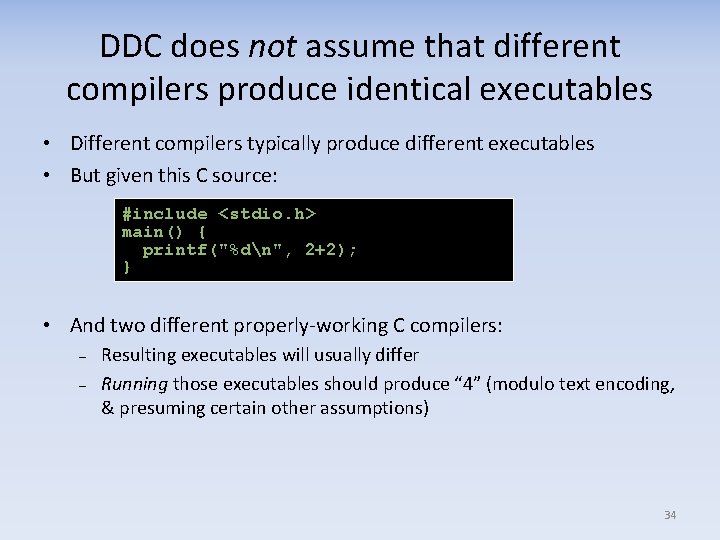

DDC does not assume that different compilers produce identical executables
• Different compilers typically produce different executables
• But given this C source:
  #include <stdio.h>
  main() { printf("%d\n", 2+2); }
• And two different properly-working C compilers:
  – Resulting executables will usually differ
  – Running those executables should produce “4” (modulo text encoding, & presuming certain other assumptions)

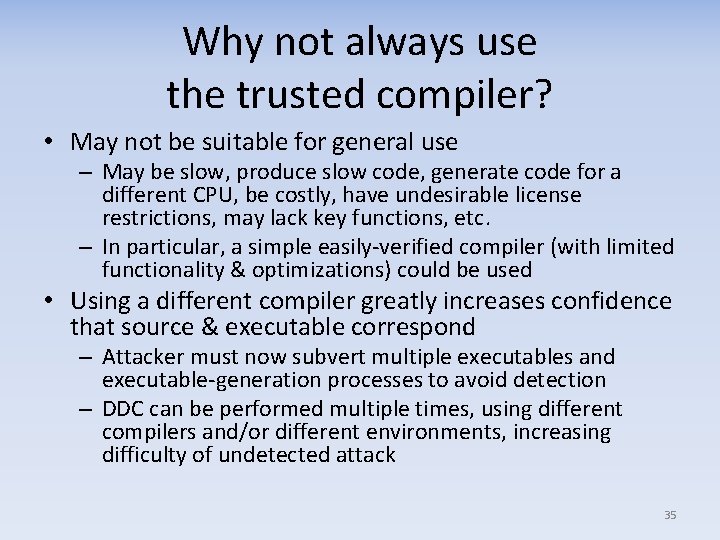

Why not always use the trusted compiler?
• May not be suitable for general use
  – May be slow, produce slow code, generate code for a different CPU, be costly, have undesirable license restrictions, may lack key functions, etc.
  – In particular, a simple easily-verified compiler (with limited functionality & optimizations) could be used
• Using a different compiler greatly increases confidence that source & executable correspond
  – Attacker must now subvert multiple executables and executable-generation processes to avoid detection
  – DDC can be performed multiple times, using different compilers and/or different environments, increasing difficulty of undetected attack

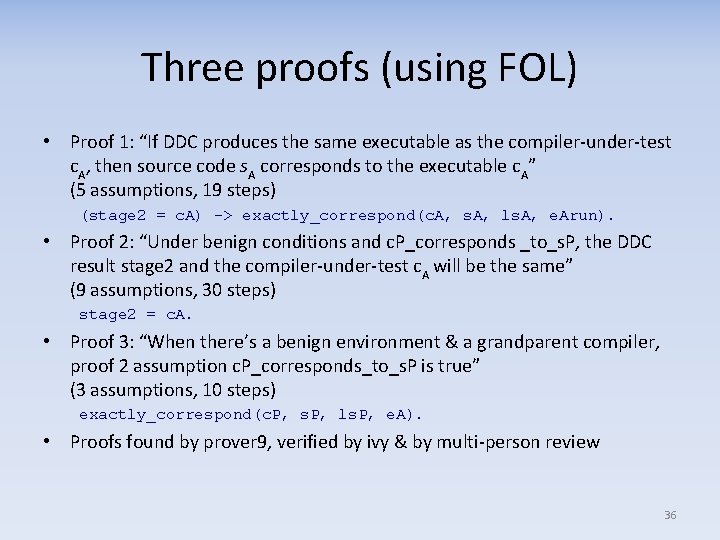

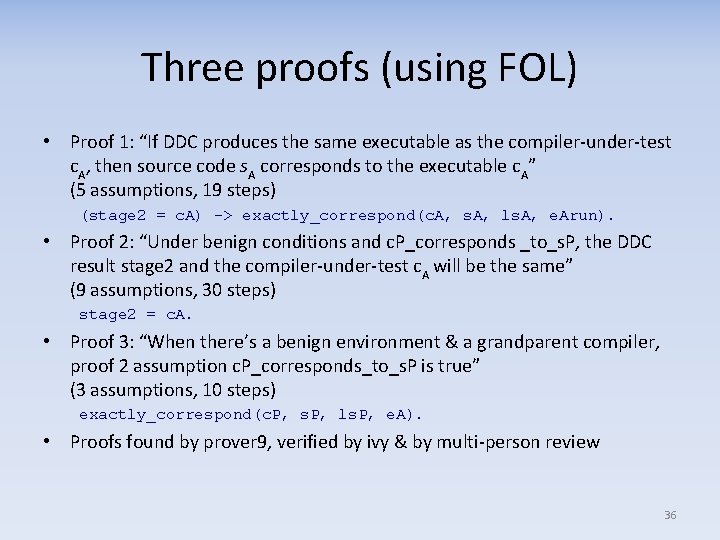

Three proofs (using FOL)
• Proof 1: “If DDC produces the same executable as the compiler-under-test cA, then source code sA corresponds to the executable cA” (5 assumptions, 19 steps)
  (stage2 = cA) -> exactly_correspond(cA, sA, lsA, eArun).
• Proof 2: “Under benign conditions and cP_corresponds_to_sP, the DDC result stage2 and the compiler-under-test cA will be the same” (9 assumptions, 30 steps)
  stage2 = cA.
• Proof 3: “When there’s a benign environment & a grandparent compiler, proof 2 assumption cP_corresponds_to_sP is true” (3 assumptions, 10 steps)
  exactly_correspond(cP, sP, lsP, eA).
• Proofs found by prover9, verified by ivy & by multi-person review

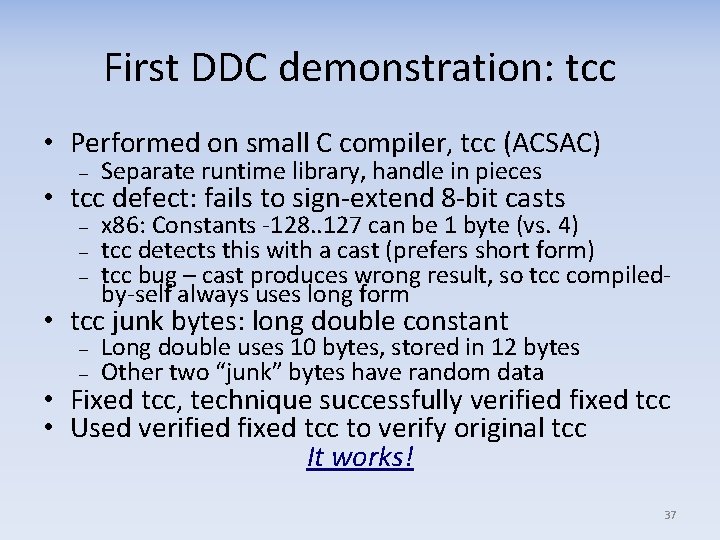

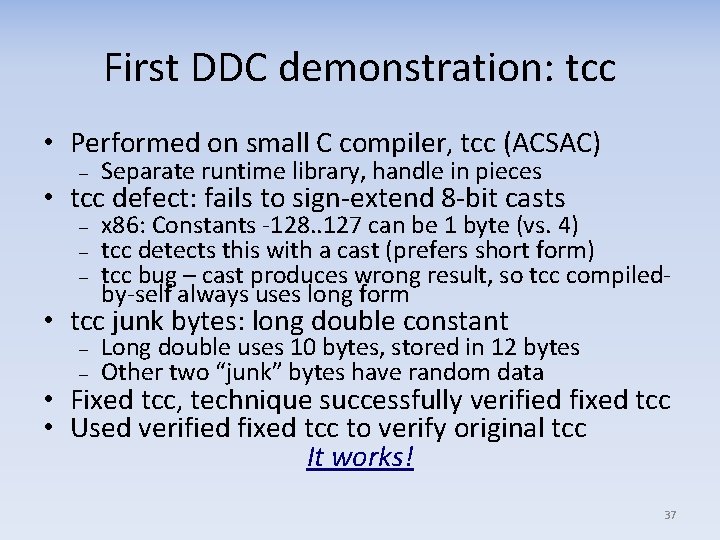

First DDC demonstration: tcc
• Performed on small C compiler, tcc (ACSAC)
  – Separate runtime library, handle in pieces
• tcc defect: fails to sign-extend 8-bit casts
  – x86: Constants -128..127 can be 1 byte (vs. 4)
  – tcc detects this with a cast (prefers short form)
  – tcc bug – cast produces wrong result, so tcc compiled-by-self always uses long form
• tcc junk bytes: long double constant
  – Long double uses 10 bytes, stored in 12 bytes
  – Other two “junk” bytes have random data
• Fixed tcc, technique successfully verified fixed tcc
• Used verified fixed tcc to verify original tcc

It works!
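The short-form check described above can be modeled as follows. This is a simplified illustration, not tcc's actual code: the function names are hypothetical, and the buggy cast shown is just one way a missing sign extension could behave. It only demonstrates how a defective 8-bit cast changes which encoding gets chosen, so that a compiler built by its buggy self emits different bytes than the same source built by a correct compiler.

```python
def cast_int8(value):
    """Correct C-style cast to a signed 8-bit value (sign-extends)."""
    low = value & 0xFF
    return low - 0x100 if low & 0x80 else low

def cast_int8_buggy(value):
    """Illustrative defect: keeps the low 8 bits but fails to sign-extend."""
    return value & 0xFF

def encoded_size(value, cast=cast_int8):
    """Bytes used for an x86 immediate: 1 if it survives the 8-bit cast, else 4."""
    return 1 if cast(value) == value else 4

for constant in (-128, -1, 127, 128):
    print(constant, encoded_size(constant), encoded_size(constant, cast_int8_buggy))
```

In this toy model the defect affects negative constants only; the slide reports that in the real tcc the self-compiled executable always used the long form. Either way, the output bytes depend on which compiler built the compiler, which is exactly the kind of difference a bit-for-bit comparison exposes.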

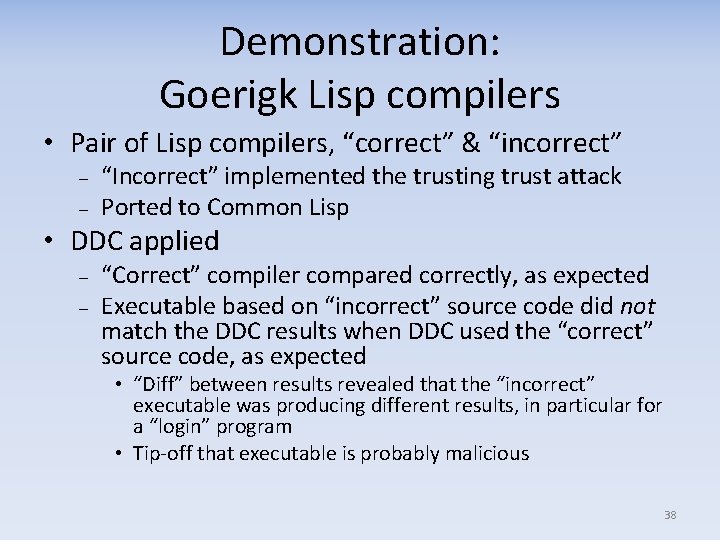

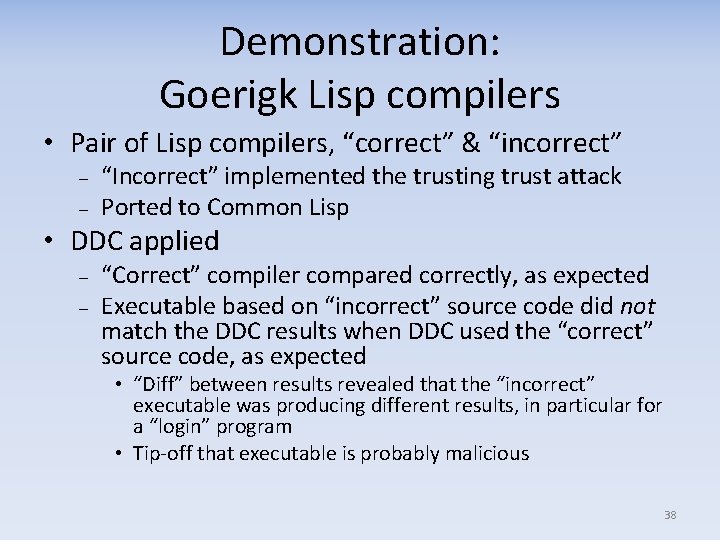

Demonstration: Goerigk Lisp compilers
• Pair of Lisp compilers, “correct” & “incorrect”
  – “Incorrect” implemented the trusting trust attack
  – Ported to Common Lisp
• DDC applied
  – “Correct” compiler compared correctly, as expected
  – Executable based on “incorrect” source code did not match the DDC results when DDC used the “correct” source code, as expected
• “Diff” between results revealed that the “incorrect” executable was producing different results, in particular for a “login” program
  – Tip-off that executable is probably malicious

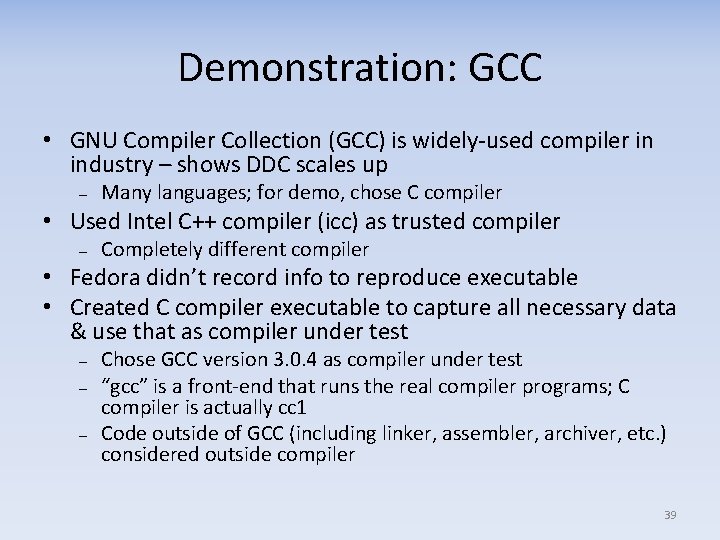

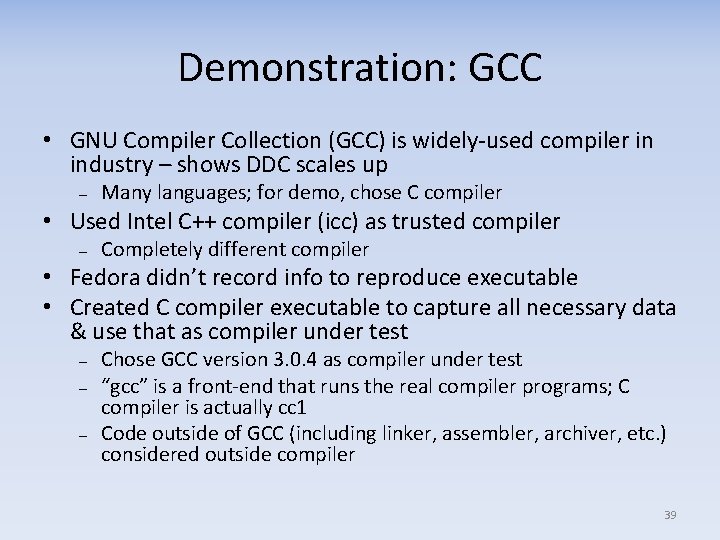

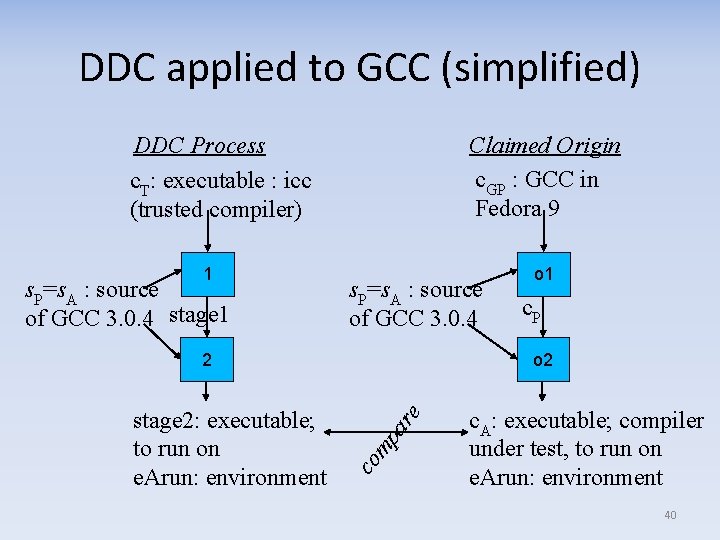

Demonstration: GCC
• GNU Compiler Collection (GCC) is widely-used compiler in industry – shows DDC scales up
  – Many languages; for demo, chose C compiler
• Used Intel C++ compiler (icc) as trusted compiler
  – Completely different compiler
• Fedora didn’t record info to reproduce executable
• Created C compiler executable to capture all necessary data & use that as compiler under test
  – Chose GCC version 3.0.4 as compiler under test
  – “gcc” is a front-end that runs the real compiler programs; C compiler is actually cc1
  – Code outside of GCC (including linker, assembler, archiver, etc.) considered outside compiler

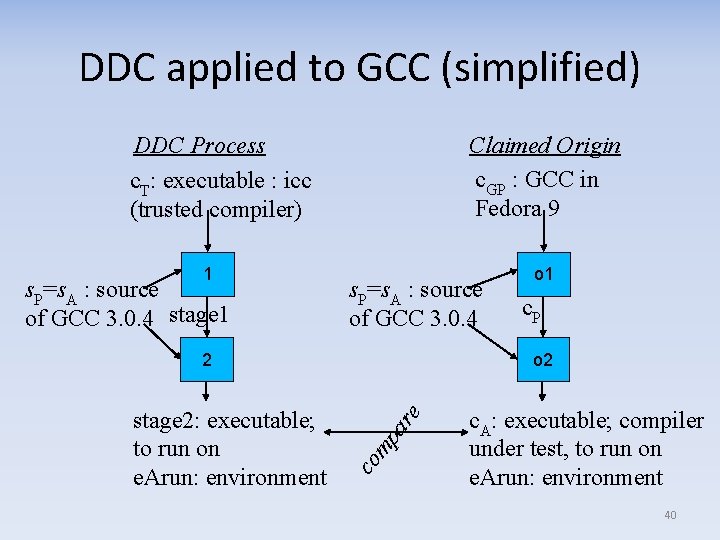

DDC applied to GCC (simplified)
[Figure: DDC process: the trusted compiler cT (icc) compiles sP = sA (source of GCC 3.0.4) to produce stage1, which compiles the same source to produce stage2, an executable to run on environment eArun. Claimed origin: cGP (GCC in Fedora 9) compiles sP = sA (source of GCC 3.0.4) to produce cP, which compiles it again to produce cA, the compiler under test, to run on environment eArun. stage2 and cA are compared.]

DDC applied to GCC (continued)
• Challenges:
  – “Master result” pathname embedded in executable (so made sure it was the same)
  – Tool semantic change (“tail +16c”)
  – GCC did not fully rebuild when using its build process (libiberty library not rebuilt)
    • This took time to trace back & determine cause
• Once corrected, DDC produced bit-for-bit equal results as expected
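The final bit-for-bit check is the simplest part of DDC to automate. A minimal sketch using only the Python standard library follows; the helper names are hypothetical, and the file paths passed in would be the stage 2 result and the compiler under test.

```python
import filecmp
import hashlib

def same_bits(path_a, path_b):
    """True only if both files have identical contents (not just matching metadata)."""
    return filecmp.cmp(path_a, path_b, shallow=False)

def sha256_of(path):
    """Digest that can be recorded with a build so later comparisons are cheap."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Passing `shallow=False` matters here: the default shallow comparison can treat files as equal when only their size and modification time match. As the challenges above show, a mismatch does not by itself prove malice; embedded pathnames or an incomplete rebuild can also change the bits, so differences need to be traced to their cause.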

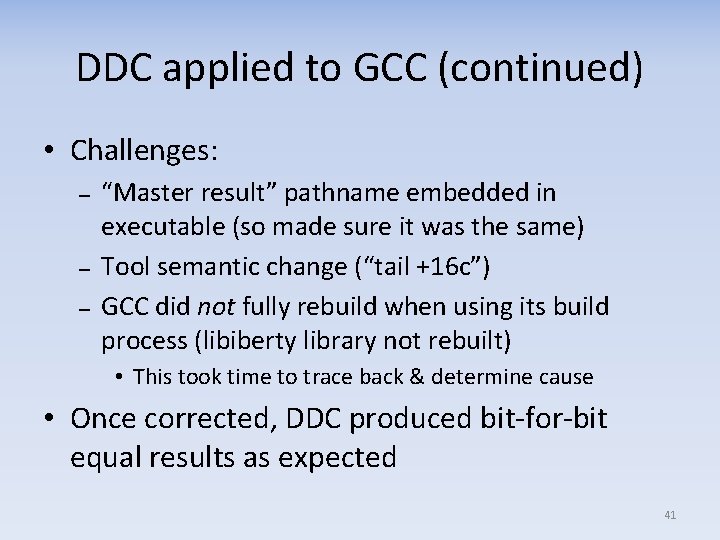

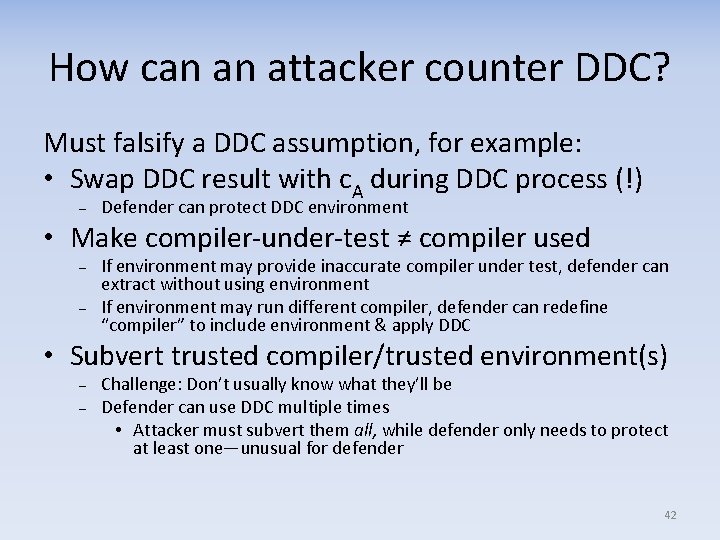

How can an attacker counter DDC?
Must falsify a DDC assumption, for example:
• Swap DDC result with cA during DDC process (!)
  – Defender can protect DDC environment
• Make compiler-under-test ≠ compiler used
  – If environment may provide inaccurate compiler under test, defender can extract without using environment
  – If environment may run different compiler, defender can redefine “compiler” to include environment & apply DDC
• Subvert trusted compiler/trusted environment(s)
  – Challenge: Don’t usually know what they’ll be
  – Defender can use DDC multiple times
    • Attacker must subvert them all, while defender only needs to protect at least one; an unusual advantage for the defender

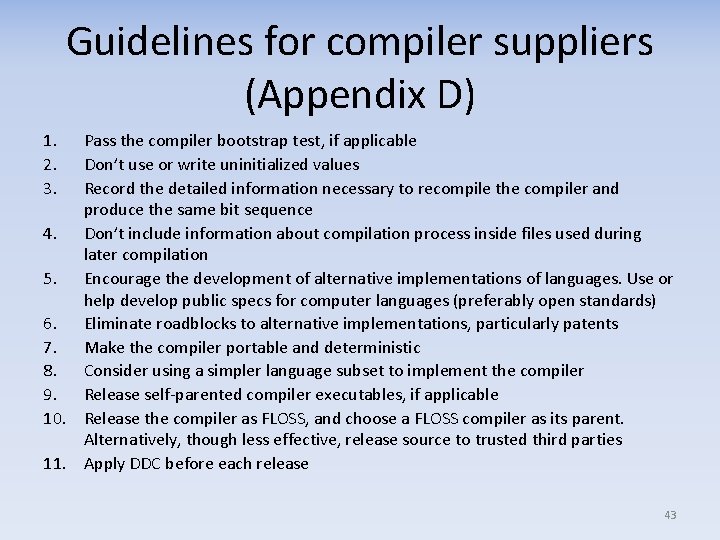

Guidelines for compiler suppliers (Appendix D)
1. Pass the compiler bootstrap test, if applicable
2. Don’t use or write uninitialized values
3. Record the detailed information necessary to recompile the compiler and produce the same bit sequence
4. Don’t include information about compilation process inside files used during later compilation
5. Encourage the development of alternative implementations of languages. Use or help develop public specs for computer languages (preferably open standards)
6. Eliminate roadblocks to alternative implementations, particularly patents
7. Make the compiler portable and deterministic
8. Consider using a simpler language subset to implement the compiler
9. Release self-parented compiler executables, if applicable
10. Release the compiler as FLOSS, and choose a FLOSS compiler as its parent. Alternatively, though less effective, release source to trusted third parties
11. Apply DDC before each release

Are you in control?
• More generally, your applications are controlled by the OS, CPU, peripherals…
  – Can you trust them?
• What controls the OS, CPU, peripherals…?
  – Can you trust them?
• Worrying trend: Users often cannot control their own computers
  – Computer users often controlled by others instead

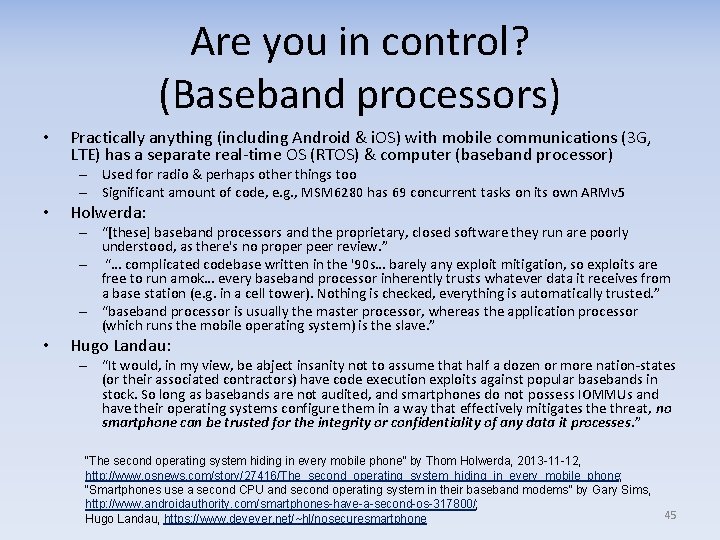

Are you in control? (Baseband processors)
• Practically anything (including Android & iOS) with mobile communications (3G, LTE) has a separate real-time OS (RTOS) & computer (baseband processor)
  – Used for radio & perhaps other things too
  – Significant amount of code, e.g., MSM6280 has 69 concurrent tasks on its own ARMv5
• Holwerda:
  – “[these] baseband processors and the proprietary, closed software they run are poorly understood, as there's no proper peer review.”
  – “… complicated codebase written in the '90s… barely any exploit mitigation, so exploits are free to run amok… every baseband processor inherently trusts whatever data it receives from a base station (e.g. in a cell tower). Nothing is checked, everything is automatically trusted.”
  – “baseband processor is usually the master processor, whereas the application processor (which runs the mobile operating system) is the slave.”
• Hugo Landau:
  – “It would, in my view, be abject insanity not to assume that half a dozen or more nation-states (or their associated contractors) have code execution exploits against popular basebands in stock. So long as basebands are not audited, and smartphones do not possess IOMMUs and have their operating systems configure them in a way that effectively mitigates the threat, no smartphone can be trusted for the integrity or confidentiality of any data it processes.”

Sources: “The second operating system hiding in every mobile phone” by Thom Holwerda, 2013-11-12, http://www.osnews.com/story/27416/The_second_operating_system_hiding_in_every_mobile_phone; “Smartphones use a second CPU and second operating system in their baseband modems” by Gary Sims, http://www.androidauthority.com/smartphones-have-a-second-os-317800/; Hugo Landau, https://www.devever.net/~hl/nosecuresmartphone

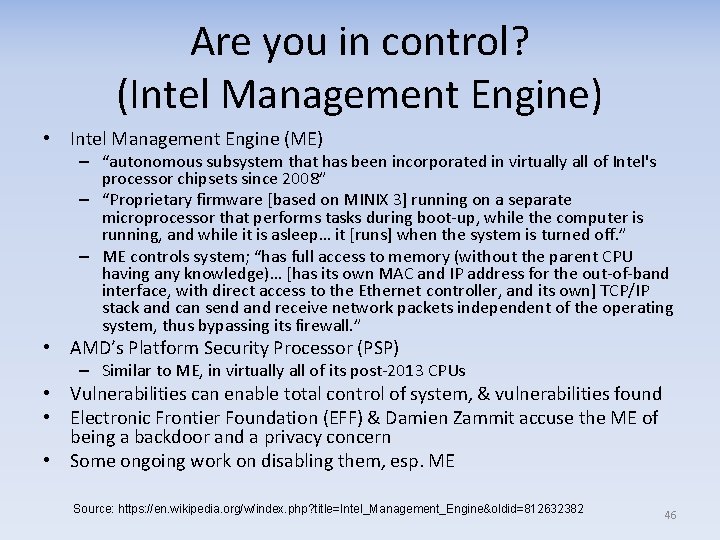

Are you in control? (Intel Management Engine) • Intel Management Engine (ME) – “autonomous subsystem that has been incorporated in virtually all of Intel's processor chipsets since 2008” – “Proprietary firmware [based on MINIX 3] running on a separate microprocessor that performs tasks during boot-up, while the computer is running, and while it is asleep… it [runs] when the system is turned off.” – ME controls system; “has full access to memory (without the parent CPU having any knowledge)… [has its own MAC and IP address for the out-of-band interface, with direct access to the Ethernet controller, and its own] TCP/IP stack and can send and receive network packets independent of the operating system, thus bypassing its firewall.” • AMD’s Platform Security Processor (PSP) – Similar to ME, in virtually all of its post-2013 CPUs • Vulnerabilities in these subsystems can enable total control of the system, & such vulnerabilities have been found • Electronic Frontier Foundation (EFF) & Damien Zammit accuse the ME of being a backdoor and a privacy concern • Some ongoing work on disabling them, esp. ME Source: https://en.wikipedia.org/w/index.php?title=Intel_Management_Engine&oldid=812632382 46

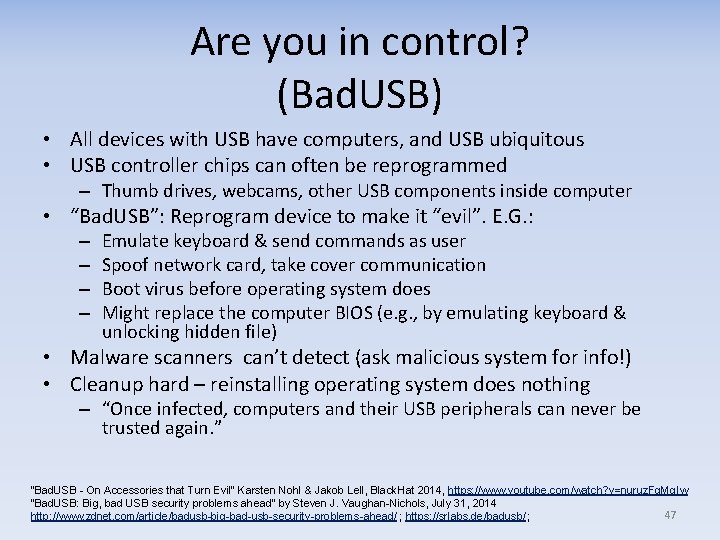

Are you in control? (BadUSB) • All devices with USB have computers, and USB is ubiquitous • USB controller chips can often be reprogrammed – Thumb drives, webcams, other USB components inside computer • “BadUSB”: Reprogram device to make it “evil”. E.g.: – Emulate keyboard & send commands as user – Spoof network card, take over communication – Boot virus before operating system does – Might replace the computer BIOS (e.g., by emulating keyboard & unlocking hidden file) • Malware scanners can’t detect it (they ask the malicious system for info!) • Cleanup hard – reinstalling operating system does nothing – “Once infected, computers and their USB peripherals can never be trusted again.” Sources: “BadUSB - On Accessories that Turn Evil” by Karsten Nohl & Jakob Lell, Black Hat 2014, https://www.youtube.com/watch?v=nuruzFqMgIw; “BadUSB: Big, bad USB security problems ahead” by Steven J. Vaughan-Nichols, July 31, 2014, http://www.zdnet.com/article/badusb-big-bad-usb-security-problems-ahead/; https://srlabs.de/badusb/ 47

Vulnerability Disclosure 48

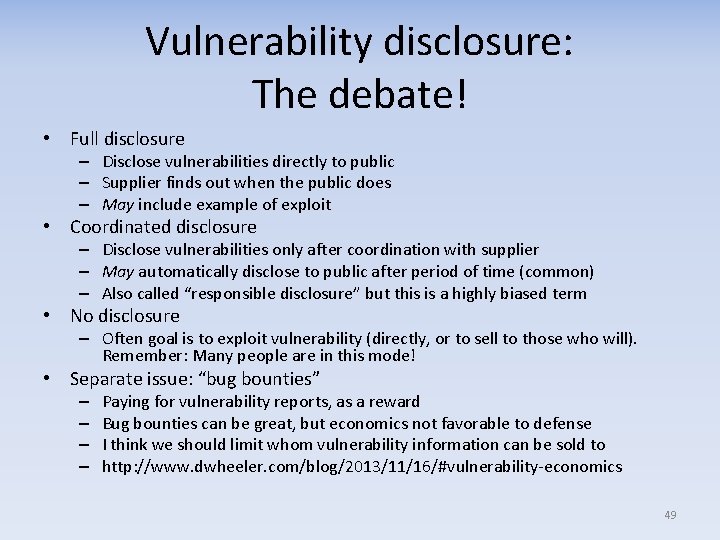

Vulnerability disclosure: The debate! • Full disclosure – Disclose vulnerabilities directly to public – Supplier finds out when the public does – May include example of exploit • Coordinated disclosure – Disclose vulnerabilities only after coordination with supplier – May automatically disclose to public after period of time (common) – Also called “responsible disclosure” but this is a highly biased term • No disclosure – Often goal is to exploit vulnerability (directly, or to sell to those who will). Remember: Many people are in this mode! • Separate issue: “bug bounties” – Paying for vulnerability reports, as a reward – Bug bounties can be great, but economics not favorable to defense – I think we should limit whom vulnerability information can be sold to http://www.dwheeler.com/blog/2013/11/16/#vulnerability-economics 49

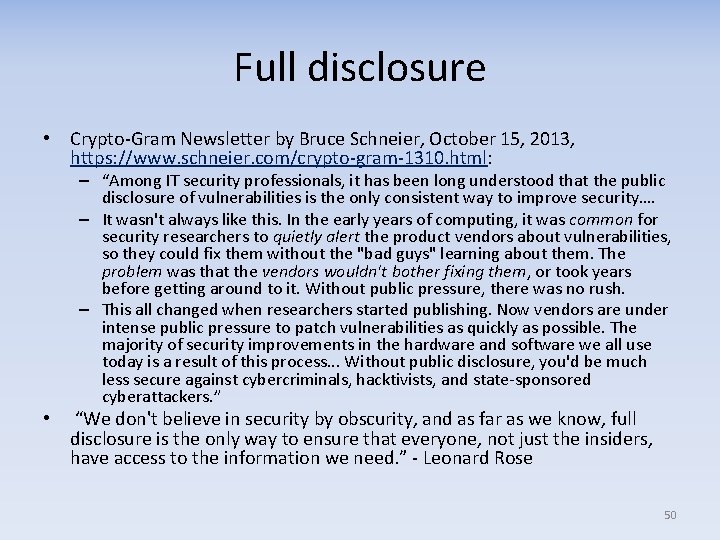

Full disclosure • Crypto-Gram Newsletter by Bruce Schneier, October 15, 2013, https://www.schneier.com/crypto-gram-1310.html: – “Among IT security professionals, it has been long understood that the public disclosure of vulnerabilities is the only consistent way to improve security…. – It wasn't always like this. In the early years of computing, it was common for security researchers to quietly alert the product vendors about vulnerabilities, so they could fix them without the "bad guys" learning about them. The problem was that the vendors wouldn't bother fixing them, or took years before getting around to it. Without public pressure, there was no rush. – This all changed when researchers started publishing. Now vendors are under intense public pressure to patch vulnerabilities as quickly as possible. The majority of security improvements in the hardware and software we all use today is a result of this process... Without public disclosure, you'd be much less secure against cybercriminals, hacktivists, and state-sponsored cyberattackers.” • “We don't believe in security by obscurity, and as far as we know, full disclosure is the only way to ensure that everyone, not just the insiders, have access to the information we need.” - Leonard Rose 50

Coordinated disclosure • Originally called “responsible disclosure” – “It’s Time to End Information Anarchy” by Scott Culp, October 2001 – Term still used, but it is extremely biased (“framing”) – Microsoft now calls it “coordinated disclosure” – I recommend that term instead • Notion: Tell supplier first, disclose after supplier releases a fix – That way, attackers who don’t know about it can’t exploit information • Microsoft has a coordinated disclosure policy with no time limit as long as the supplier keeps yakking – http://www.theregister.co.uk/Print/2011/04/19/microsoft_vulnerability_disclosure_policy/ • But many suppliers never fix non-public vulnerabilities & just stall – Incorrectly assumes attackers are not already exploiting vulnerability (when they are, nondisclosure puts public at greater risk) 51

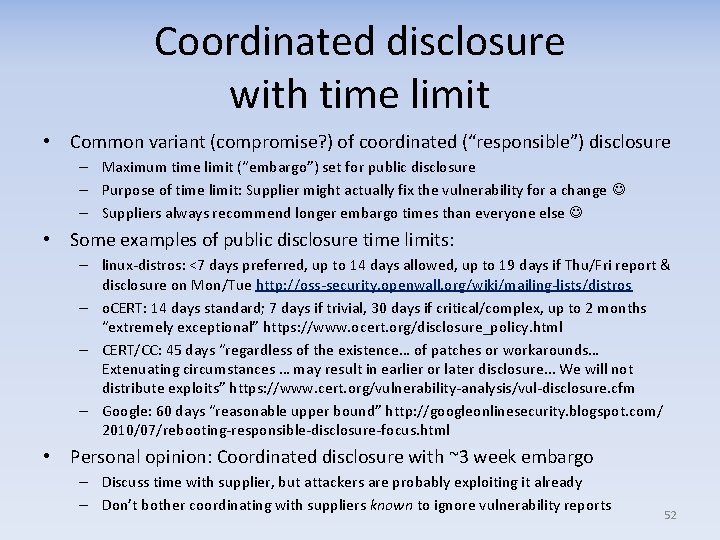

Coordinated disclosure with time limit • Common variant (compromise?) of coordinated (“responsible”) disclosure – Maximum time limit (“embargo”) set for public disclosure – Purpose of time limit: Supplier might actually fix the vulnerability for a change – Suppliers always recommend longer embargo times than everyone else • Some examples of public disclosure time limits: – linux-distros: <7 days preferred, up to 14 days allowed, up to 19 days if Thu/Fri report & disclosure on Mon/Tue http://oss-security.openwall.org/wiki/mailing-lists/distros – oCERT: 14 days standard; 7 days if trivial, 30 days if critical/complex, up to 2 months “extremely exceptional” https://www.ocert.org/disclosure_policy.html – CERT/CC: 45 days “regardless of the existence… of patches or workarounds… Extenuating circumstances … may result in earlier or later disclosure... We will not distribute exploits” https://www.cert.org/vulnerability-analysis/vul-disclosure.cfm – Google: 60 days “reasonable upper bound” http://googleonlinesecurity.blogspot.com/2010/07/rebooting-responsible-disclosure-focus.html • Personal opinion: Coordinated disclosure with ~3 week embargo – Discuss time with supplier, but attackers are probably exploiting it already – Don’t bother coordinating with suppliers known to ignore vulnerability reports 52
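The embargo arithmetic on this slide can be made concrete with a short sketch. The day counts are the maximums quoted in the examples above; the function names and the dictionary keys (including the `course-suggestion` label for the ~3 week opinion) are illustrative assumptions, not part of any of these organizations' tooling.

```python
from datetime import date, timedelta

# Maximum embargo lengths in days, taken from the slide's examples.
# The keys are illustrative labels chosen for this sketch.
EMBARGO_DAYS = {
    "linux-distros": 14,      # up to 14 days allowed (<7 preferred)
    "oCERT": 14,              # standard case; 7 if trivial, 30 if complex
    "CERT/CC": 45,
    "Google": 60,             # "reasonable upper bound"
    "course-suggestion": 21,  # the ~3 week personal opinion
}


def disclosure_deadline(reported: date, policy: str) -> date:
    """Return the latest public-disclosure date under the given policy."""
    return reported + timedelta(days=EMBARGO_DAYS[policy])


def days_remaining(reported: date, policy: str, today: date) -> int:
    """Days left before the embargo expires (negative if already expired)."""
    return (disclosure_deadline(reported, policy) - today).days
```

For a report received on 2020-01-03, `disclosure_deadline(date(2020, 1, 3), "CERT/CC")` gives 2020-02-17. Real policies include judgment calls (for example, the linux-distros adjustment for Thu/Fri reports) that this sketch deliberately ignores.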

Disclosure: More information • “Full Disclosure” by Bruce Schneier (November 15, 2001) https://www.schneier.com/crypto-gram-0111.html#1 • “Full Disclosure and Why Vendors Hate it” by Jonathan Zdziarski (May 1, 2008) http://www.zdziarski.com/blog/?p=47 • “Software Vulnerabilities: Full-, Responsible-, and Non-Disclosure” by Andrew Cencini, Kevin Yu, Tony Chan • “How long should security embargos be?”, Jake Edge, https://lwn.net/Articles/479936/ • “Vulnerability disclosure publications and discussion tracking” https://www.ee.oulu.fi/research/ouspg/Disclosure_tracking 53

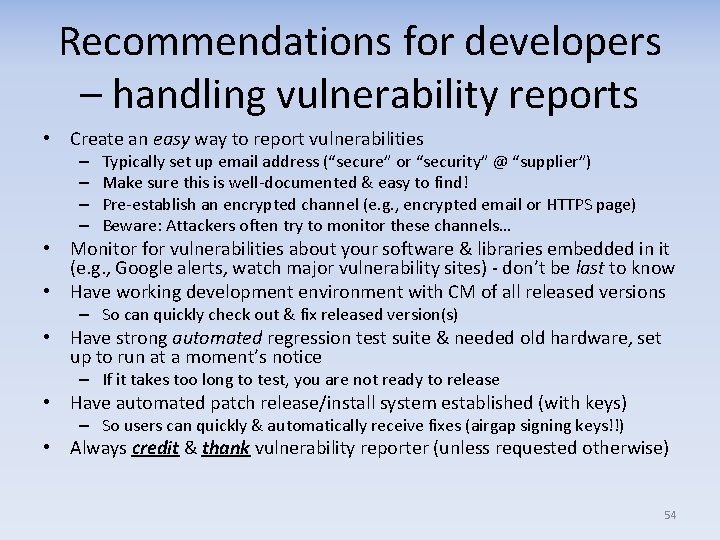

Recommendations for developers – handling vulnerability reports • Create an easy way to report vulnerabilities – Typically set up email address (“secure” or “security” @ “supplier”) – Make sure this is well-documented & easy to find! – Pre-establish an encrypted channel (e.g., encrypted email or HTTPS page) – Beware: Attackers often try to monitor these channels… • Monitor for vulnerabilities about your software & libraries embedded in it (e.g., Google alerts, watch major vulnerability sites) - don’t be last to know • Have working development environment with CM of all released versions – So can quickly check out & fix released version(s) • Have strong automated regression test suite & needed old hardware, set up to run at a moment’s notice – If it takes too long to test, you are not ready to release • Have automated patch release/install system established (with keys) – So users can quickly & automatically receive fixes (airgap signing keys!!) • Always credit & thank vulnerability reporter (unless requested otherwise) 54
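As one hedged illustration of the "monitor your embedded libraries" recommendation, the sketch below compares the versions of bundled libraries against a list of advisories. Everything in it is an assumption made for illustration: the library names, the advisory identifiers, and the simple dotted-integer version format are hypothetical, and a real setup would pull advisories from a vulnerability feed and use a proper version parser.

```python
from typing import NamedTuple


class Advisory(NamedTuple):
    ident: str     # hypothetical advisory identifier
    package: str   # affected library name
    fixed_in: str  # first version that contains the fix


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a simple dotted version such as '1.2.10' into (1, 2, 10)."""
    return tuple(int(part) for part in text.split("."))


def find_vulnerable(bundled: dict[str, str],
                    advisories: list[Advisory]) -> list[str]:
    """Return one report line per bundled library older than its fix."""
    findings = []
    for adv in advisories:
        have = bundled.get(adv.package)
        if have is not None and parse_version(have) < parse_version(adv.fixed_in):
            findings.append(
                f"{adv.ident}: {adv.package} {have} "
                f"is older than fixed version {adv.fixed_in}"
            )
    return findings


# Hypothetical data for illustration only.
BUNDLED = {"libexample": "1.2.9", "libother": "3.0.1"}
ADVISORIES = [
    Advisory("EXAMPLE-2020-0001", "libexample", "1.2.10"),
    Advisory("EXAMPLE-2020-0002", "libother", "3.0.0"),
]
```

Running `find_vulnerable(BUNDLED, ADVISORIES)` flags only `libexample`, since the bundled `libother` already includes its fix. Comparing versions as integer tuples avoids the string-comparison trap where "1.2.9" sorts after "1.2.10".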

We’ve covered… • Formal methods – General approaches & specific tools available – How to apply them varies by purpose • Open proofs • Malicious tools / trusting trust attack – Countering using diverse double-compiling (DDC) • Vulnerability disclosure 55


Backups 57

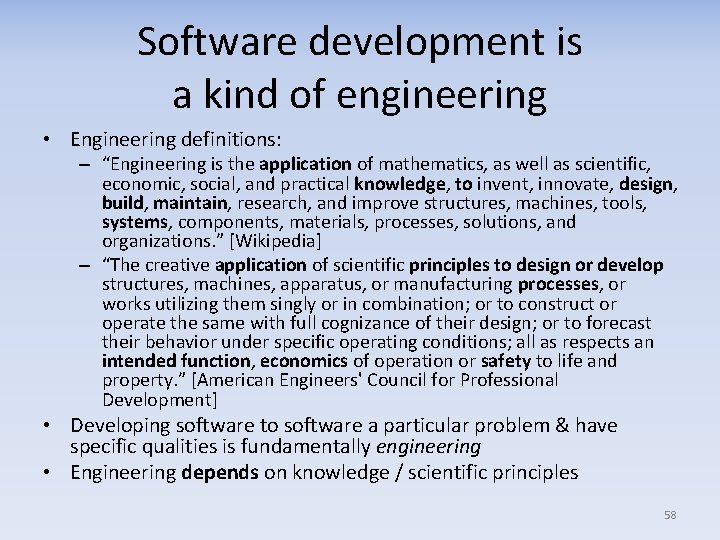

Software development is a kind of engineering • Engineering definitions: – “Engineering is the application of mathematics, as well as scientific, economic, social, and practical knowledge, to invent, innovate, design, build, maintain, research, and improve structures, machines, tools, systems, components, materials, processes, solutions, and organizations.” [Wikipedia] – “The creative application of scientific principles to design or develop structures, machines, apparatus, or manufacturing processes, or works utilizing them singly or in combination; or to construct or operate the same with full cognizance of their design; or to forecast their behavior under specific operating conditions; all as respects an intended function, economics of operation or safety to life and property.” [American Engineers' Council for Professional Development] • Developing software to solve a particular problem & have specific qualities is fundamentally engineering • Engineering depends on knowledge / scientific principles 58

Released under CC BY-SA 3.0 • This presentation is released under the Creative Commons Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license • You are free: – to Share: to copy, distribute and transmit the work – to Remix: to adapt the work – to make commercial use of the work • Under the following conditions: – Attribution: You must attribute the work in the manner specified by the author or licensor (but not in any way that suggests that they endorse you or your use of the work) – Share Alike: If you alter, transform, or build upon this work, you may distribute the resulting work only under the same or similar license to this one • These conditions can be waived by permission from the copyright holder – dwheeler at dwheeler dot com • Details at: http://creativecommons.org/licenses/by-sa/3.0/ • Attribute as “David A. Wheeler and the Institute for Defense Analyses” 59