SVSGAN: Singing Voice Separation via Generative Adversarial Network

- Slides: 14

SVSGAN: Singing Voice Separation via Generative Adversarial Network

Presenter: Zhe-Cheng Fan

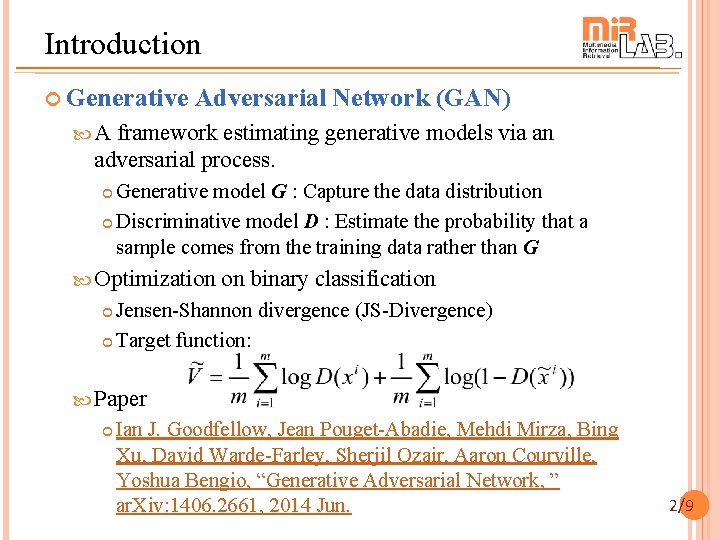

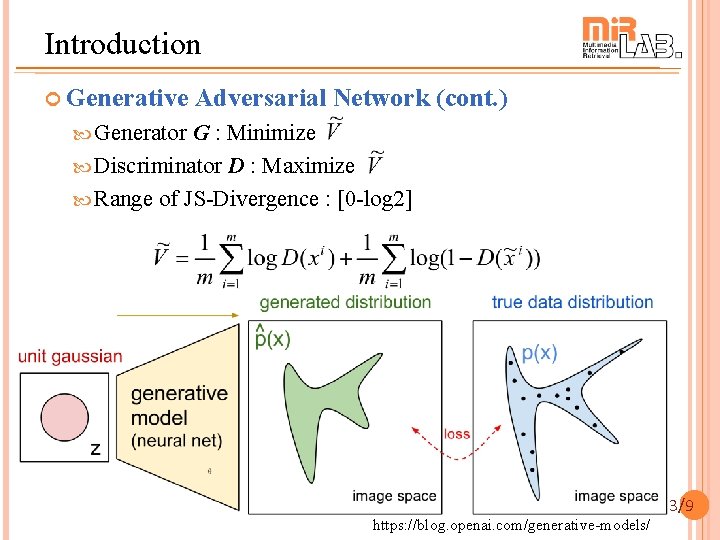

Introduction: Generative Adversarial Network (GAN)

- A framework for estimating generative models via an adversarial process.
- Generative model G: captures the data distribution.
- Discriminative model D: estimates the probability that a sample comes from the training data rather than from G.
- Optimization on binary classification.
- Jensen-Shannon divergence (JS divergence).
- Target function: the minimax value function V(D, G) of the paper cited below.
- Paper: Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio, "Generative Adversarial Nets," arXiv:1406.2661, June 2014.
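For reference, the objective from the cited Goodfellow et al. paper can be written as follows. The symbols follow that paper rather than these slides: there, z is a noise vector drawn from a prior p_z, whereas later slides use z for the mixture spectrogram. The second line is the paper's result for an optimal discriminator, which is where the JS divergence enters.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]

% With the optimal discriminator D^*, the generator's criterion becomes
C(G) = \max_D V(D, G) = -\log 4 + 2 \,\mathrm{JSD}\big(p_{\mathrm{data}} \,\|\, p_g\big)
```

Because the JS divergence is non-negative and zero only when the two distributions coincide, C(G) reaches its minimum of -log 4 exactly when the generator's distribution p_g matches the data distribution.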

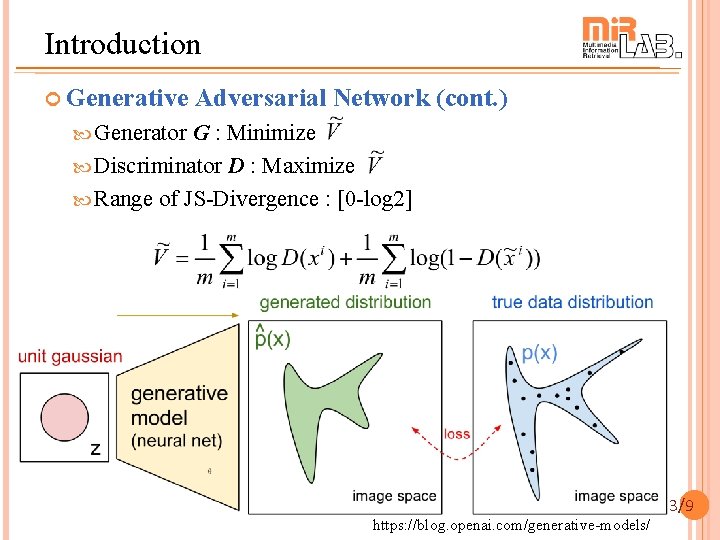

Introduction: Generative Adversarial Network (cont.)

- Generator G: minimizes the target function.
- Discriminator D: maximizes the target function.
- Range of the JS divergence: [0, log 2].
- Source: https://blog.openai.com/generative-models/
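The stated range of the JS divergence can be checked numerically on small discrete distributions. The sketch below is illustrative only; the helper names `kl_divergence` and `js_divergence` are not from the slides, and natural logarithms are assumed.

```python
import numpy as np

def kl_divergence(p, q):
    """KL(p || q) in nats; terms where p == 0 contribute nothing."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    support = p > 0
    return float(np.sum(p[support] * np.log(p[support] / q[support])))

def js_divergence(p, q):
    """Jensen-Shannon divergence between two discrete distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    m = 0.5 * (p + q)  # mixture distribution
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

identical = js_divergence([0.5, 0.5], [0.5, 0.5])  # lower bound: 0
disjoint = js_divergence([1.0, 0.0], [0.0, 1.0])   # upper bound: log 2
print(identical, disjoint, np.log(2))
```

Identical distributions give the lower bound and distributions with disjoint support give the upper bound, log 2.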

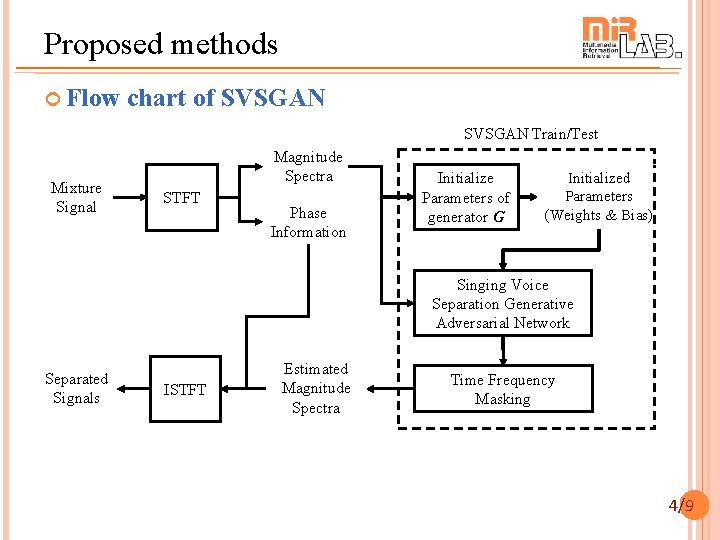

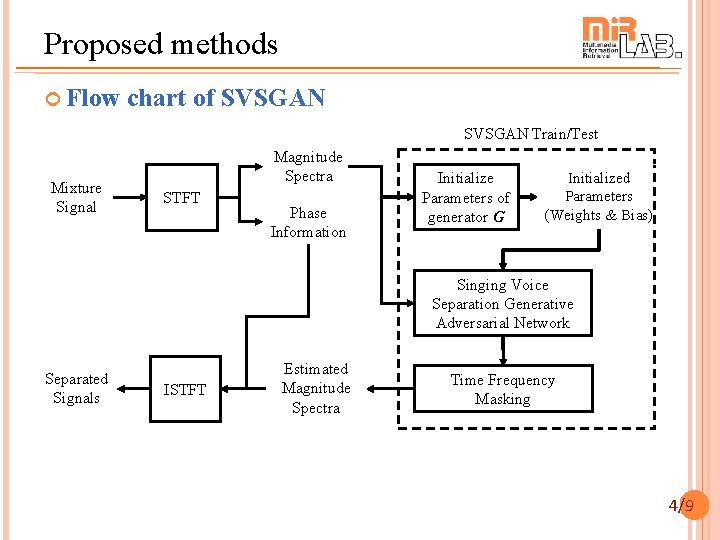

Proposed methods: Flow chart of SVSGAN

- Train/test mixture signal → STFT → magnitude spectra and phase information.
- Initialize the parameters (weights and biases) of generator G.
- Singing voice separation generative adversarial network, starting from the initialized parameters.
- Time-frequency masking → estimated magnitude spectra.
- Estimated magnitude spectra + phase information → ISTFT → separated signals.
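The signal path in the flow chart can be sketched in plain NumPy. This is a minimal illustration under stated assumptions: a Hann window, no padding, and a hypothetical `toy_separator` standing in for the trained generator G. None of the function names come from the slides.

```python
import numpy as np

WIN, HOP = 1024, 256  # STFT window and hop size quoted in the slides

def stft(x, win=WIN, hop=HOP):
    """Hann-windowed STFT, shape (frames, win // 2 + 1). No padding (assumption)."""
    window = np.hanning(win)
    n_frames = 1 + (len(x) - win) // hop
    frames = np.stack([x[i * hop:i * hop + win] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)

def istft(spec, win=WIN, hop=HOP):
    """Weighted overlap-add inverse of `stft`."""
    window = np.hanning(win)
    n_frames = spec.shape[0]
    out = np.zeros(win + hop * (n_frames - 1))
    norm = np.zeros_like(out)
    frames = np.fft.irfft(spec, n=win, axis=1)
    for i in range(n_frames):
        out[i * hop:i * hop + win] += frames[i] * window
        norm[i * hop:i * hop + win] += window ** 2
    return out / np.maximum(norm, 1e-8)

def toy_separator(mag):
    """Hypothetical stand-in for generator G: a fixed frequency-dependent split."""
    weights = np.linspace(0.2, 0.8, mag.shape[1])
    return mag * weights, mag * (1.0 - weights)

def separate(mixture):
    spec = stft(mixture)
    mag, phase = np.abs(spec), np.angle(spec)        # magnitude spectra + phase
    voice_raw, bgm_raw = toy_separator(mag)          # the network would go here
    mask = voice_raw / (voice_raw + bgm_raw + 1e-8)  # time-frequency masking
    voice_mag, bgm_mag = mask * mag, (1.0 - mask) * mag
    # Reuse the mixture phase for both sources before inverting.
    voice = istft(voice_mag * np.exp(1j * phase))
    bgm = istft(bgm_mag * np.exp(1j * phase))
    return voice, bgm

rng = np.random.default_rng(0)
mixture = rng.standard_normal(22050)
voice, bgm = separate(mixture)
```

Because the two masked spectra sum to the mixture spectrum and share its phase, the two separated signals add back up to the mixture, apart from the first and last few samples where the window is close to zero.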

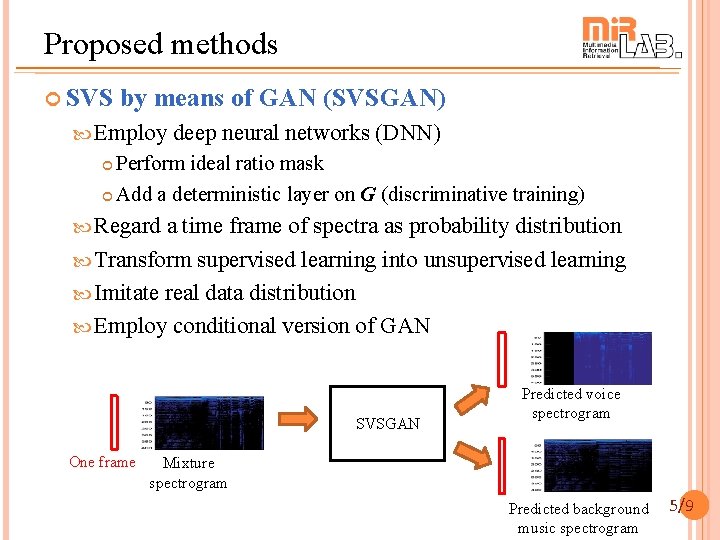

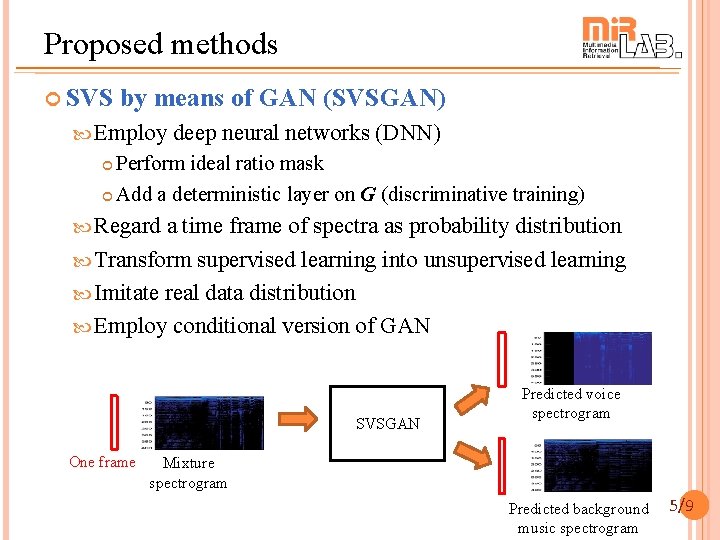

Proposed methods: SVS by means of GAN (SVSGAN)

- Employ deep neural networks (DNNs).
- Apply an ideal ratio mask.
- Add a deterministic layer on G (discriminative training).
- Regard each time frame of the spectra as a probability distribution.
- Transform supervised learning into unsupervised learning.
- Imitate the real data distribution.
- Employ the conditional version of GAN.
- Diagram: one frame of the mixture spectrogram enters SVSGAN, which outputs the predicted voice spectrogram and the predicted background music spectrogram.

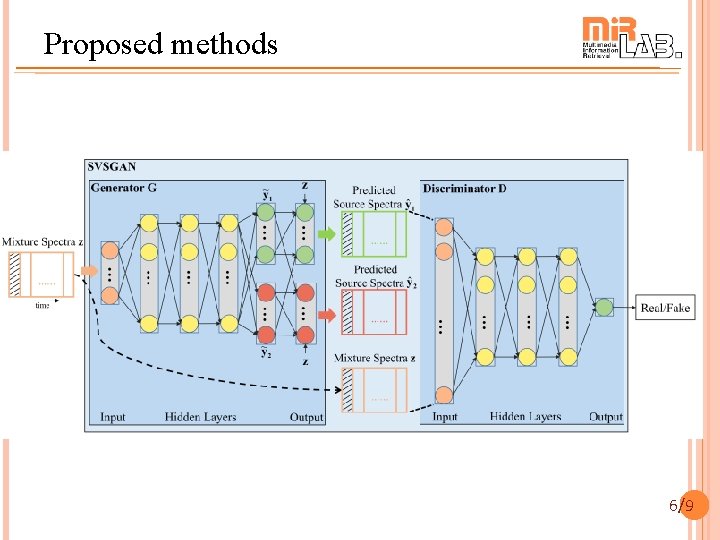

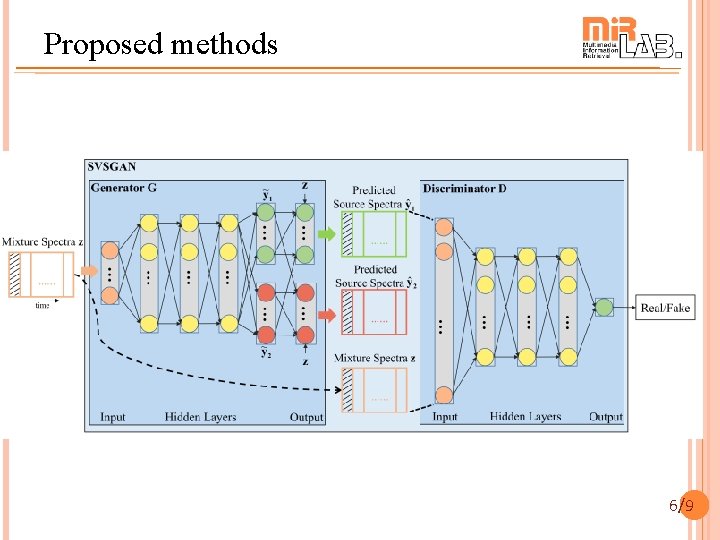

Proposed methods

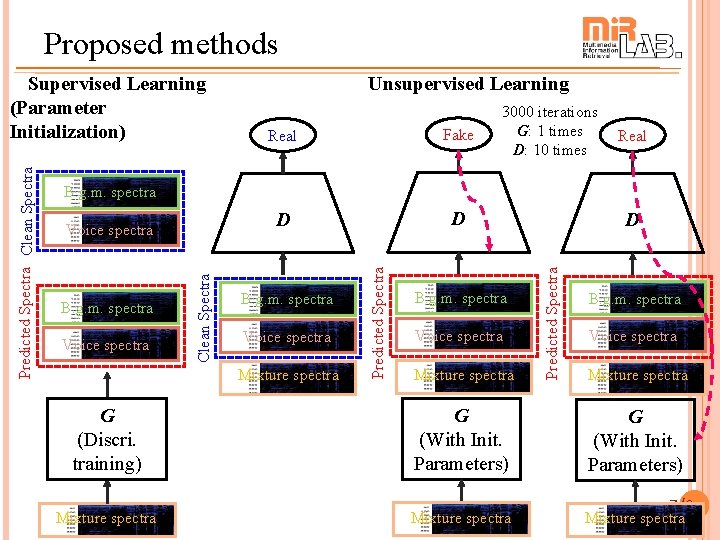

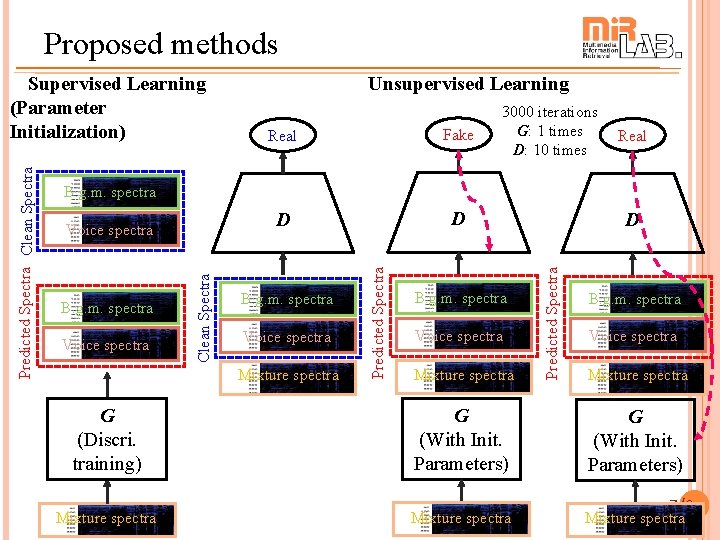

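Proposed methods

- Supervised learning (parameter initialization): G, with discriminative training, takes the mixture spectra as input and predicts the voice spectra and background music (BGM) spectra; the predicted spectra are compared with the clean spectra.
- Unsupervised learning: G starts from the initialized parameters and takes the mixture spectra as input. D receives either a real input (clean voice spectra, clean BGM spectra, mixture spectra) or a fake input (predicted voice spectra, predicted BGM spectra, mixture spectra) and decides real or fake.
- Schedule: 3000 iterations, with G updated 1 time and D updated 10 times.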
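One way to read the schedule is that every iteration performs 10 discriminator updates followed by 1 generator update. That reading is an assumption, as is the order of the updates. The sketch below shows only the loop structure; `update_d` and `update_g` are hypothetical callbacks that would hold the actual gradient steps.

```python
def train_adversarial(n_iterations, update_d, update_g, d_steps=10, g_steps=1):
    """Alternate D and G updates; per-iteration counts are an assumed reading."""
    for iteration in range(n_iterations):
        for _ in range(d_steps):
            update_d(iteration)  # D learns to tell real from fake inputs
        for _ in range(g_steps):
            update_g(iteration)  # G learns to fool the current D

# Usage with counting stubs in place of real gradient steps.
counts = {"d": 0, "g": 0}

def count_d(iteration):
    counts["d"] += 1

def count_g(iteration):
    counts["g"] += 1

train_adversarial(3000, count_d, count_g)
print(counts)
```

Updating D more often than G keeps the discriminator close to optimal for the current generator, which is the regime in which the GAN objective behaves like a divergence between the real and generated distributions.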

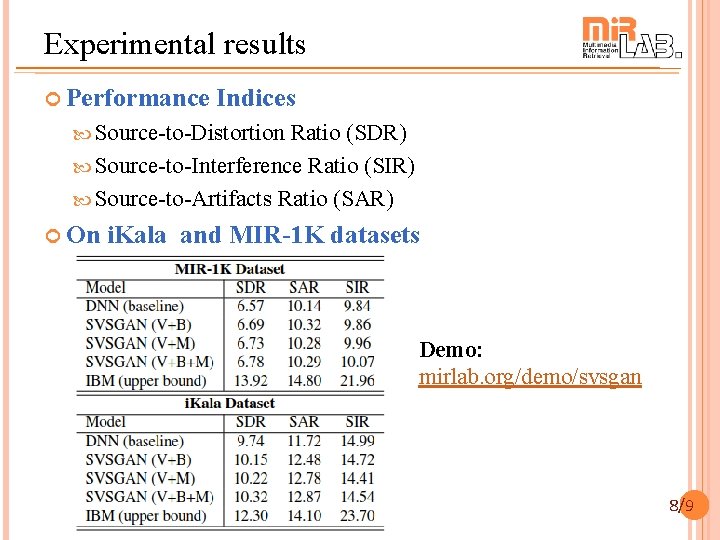

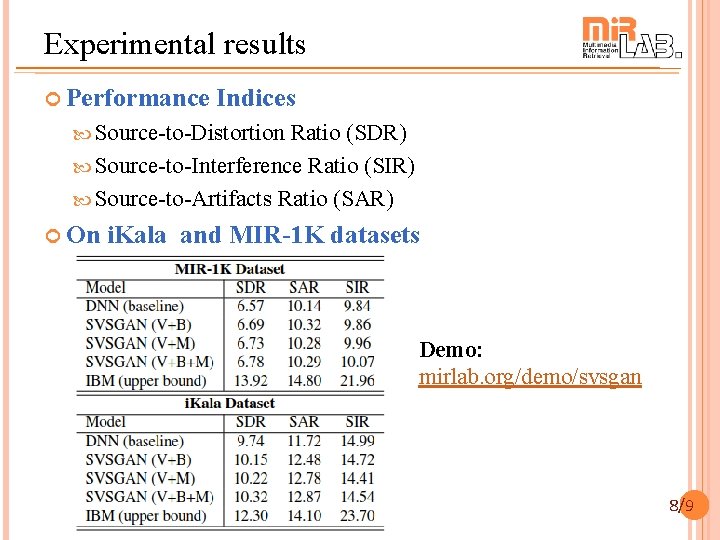

Experimental results

- Performance indices:
  - Source-to-Distortion Ratio (SDR)
  - Source-to-Interference Ratio (SIR)
  - Source-to-Artifacts Ratio (SAR)
- Evaluated on the iKala and MIR-1K datasets.
- Demo: mirlab.org/demo/svsgan
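To make the three indices concrete, here is a simplified sketch that decomposes an estimate into target, interference, and artifact components, allowing only a time-invariant gain on each true source. The function name `bss_eval_gain_only` is hypothetical. Standard BSS Eval toolkits allow more general distortions, so their numbers will generally differ from this sketch.

```python
import numpy as np

def bss_eval_gain_only(estimate, sources, target_index):
    """Return (SDR, SIR, SAR) in dB under a gain-only distortion model."""
    S = np.asarray(sources, dtype=float)  # shape (n_sources, n_samples)
    est = np.asarray(estimate, dtype=float)
    s = S[target_index]

    # Target component: projection of the estimate onto the true target source.
    s_target = (est @ s) / (s @ s) * s
    # Projection onto the span of all true sources (least squares).
    coeffs, *_ = np.linalg.lstsq(S.T, est, rcond=None)
    projection = S.T @ coeffs
    e_interf = projection - s_target  # leakage from the other sources
    e_artif = est - projection        # everything not explained by any source

    def ratio_db(num, den):
        return 10.0 * np.log10(np.sum(num ** 2) / np.sum(den ** 2))

    sdr = ratio_db(s_target, e_interf + e_artif)
    sir = ratio_db(s_target, e_interf)
    sar = ratio_db(s_target + e_interf, e_artif)
    return sdr, sir, sar

# Toy example: two orthogonal sources, 10% leakage, and a little noise.
rng = np.random.default_rng(0)
voice = np.tile([1.0, 0.0], 500)
bgm = np.tile([0.0, 1.0], 500)
estimate = voice + 0.1 * bgm + 0.01 * rng.standard_normal(1000)
sdr, sir, sar = bss_eval_gain_only(estimate, [voice, bgm], target_index=0)
print(sdr, sir, sar)
```

In this decomposition the artifact term is orthogonal to every true source, so SDR can never exceed SIR: SDR penalizes interference and artifacts together, while SIR penalizes interference alone.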

Thanks

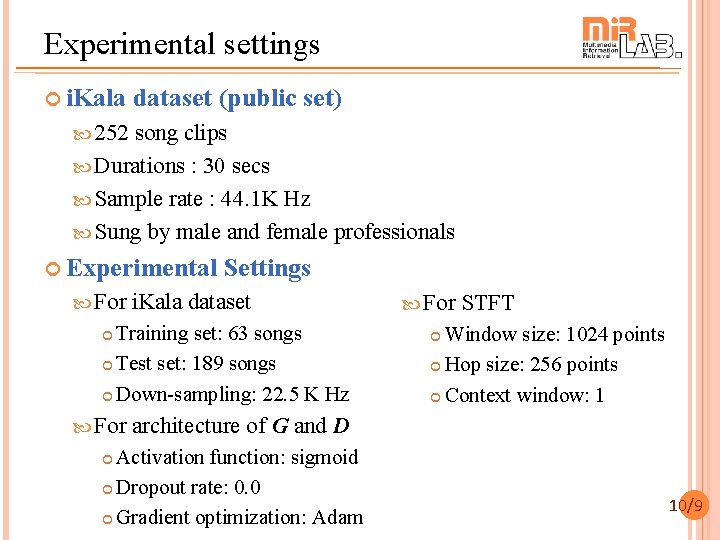

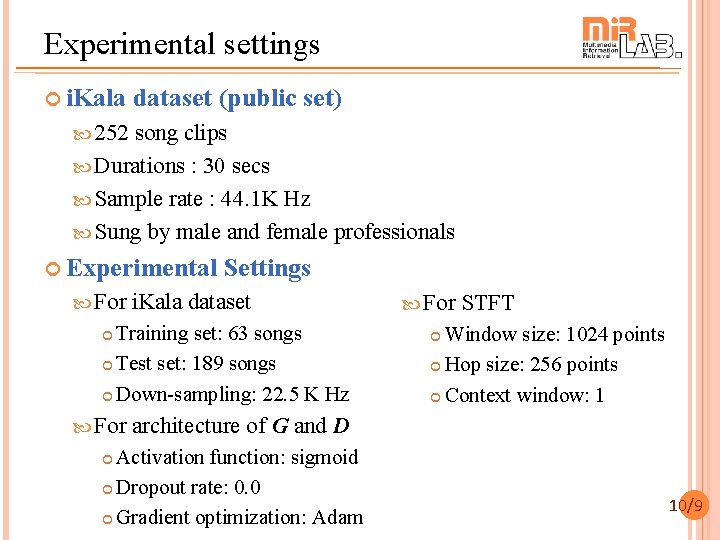

Experimental settings

- iKala dataset (public set)
  - 252 song clips
  - Duration: 30 seconds each
  - Sample rate: 44.1 kHz
  - Sung by male and female professionals
- Settings for the iKala dataset
  - Training set: 63 songs
  - Test set: 189 songs
  - Down-sampling: 22.5 kHz
- STFT
  - Window size: 1024 points
  - Hop size: 256 points
  - Context window: 1
- Architecture of G and D
  - Activation function: sigmoid
  - Dropout rate: 0.0
  - Gradient optimization: Adam
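The STFT settings determine the feature size used by the networks: a 1024-point window yields 1024 / 2 + 1 = 513 frequency bins per frame, which matches the 513-node layers on the architecture slides. A small sketch of that arithmetic follows; the helper name `stft_shape` is hypothetical, and it assumes no padding at the clip edges.

```python
def stft_shape(n_samples, win=1024, hop=256):
    """Return (frames, bins) for an STFT without edge padding (an assumption)."""
    bins = win // 2 + 1                    # one-sided spectrum of a real signal
    frames = 1 + (n_samples - win) // hop  # full windows that fit in the clip
    return frames, bins

clip_samples = int(30 * 22_500)  # 30-second clip at the down-sampled rate on the slide
frames, bins = stft_shape(clip_samples)
print(frames, bins)
```

With a context window of 1, each training example is a single one of these 513-dimensional frames.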

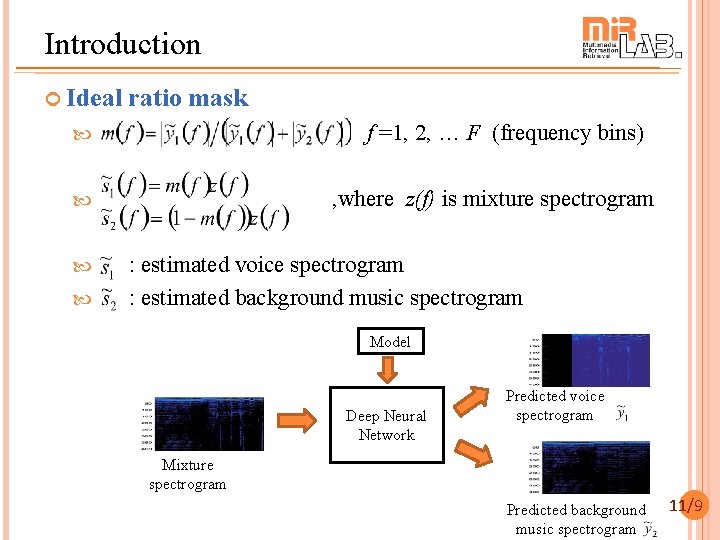

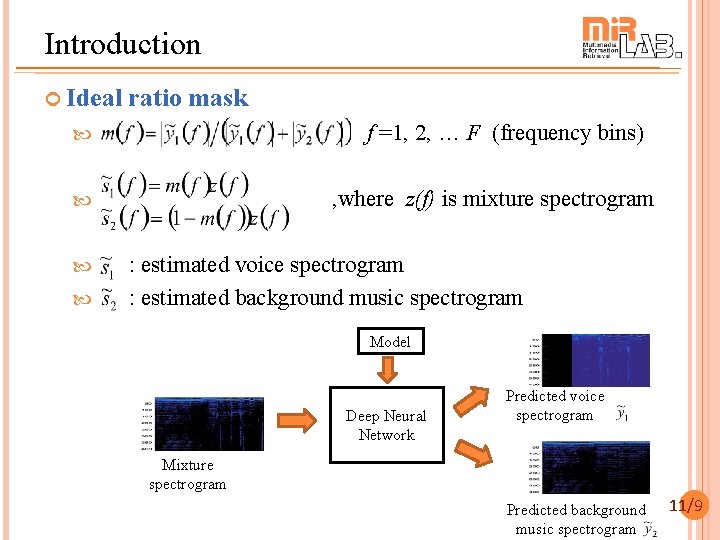

Introduction: Ideal ratio mask

- The mask is applied at each frequency bin f = 1, 2, …, F.
- z(f) is the mixture spectrogram; the two masked outputs are the estimated voice spectrogram and the estimated background music spectrogram.
- Model: a deep neural network takes the mixture spectrogram as input and outputs the predicted voice spectrogram and the predicted background music spectrogram.
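A common way to write this kind of ratio masking is given below. Apart from z(f), which the slide defines, the symbols are assumptions: \hat{y}_1 and \hat{y}_2 denote the network's raw predictions for voice and background music, and \tilde{y}_1 and \tilde{y}_2 the masked estimates.

```latex
\tilde{y}_1(f) = \frac{|\hat{y}_1(f)|}{|\hat{y}_1(f)| + |\hat{y}_2(f)|}\, z(f),
\qquad
\tilde{y}_2(f) = \frac{|\hat{y}_2(f)|}{|\hat{y}_1(f)| + |\hat{y}_2(f)|}\, z(f),
\qquad f = 1, 2, \dots, F
```

The two mask values sum to one at every bin, so the estimates satisfy \tilde{y}_1(f) + \tilde{y}_2(f) = z(f): the masking step redistributes the mixture energy between the two sources without creating or losing any.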

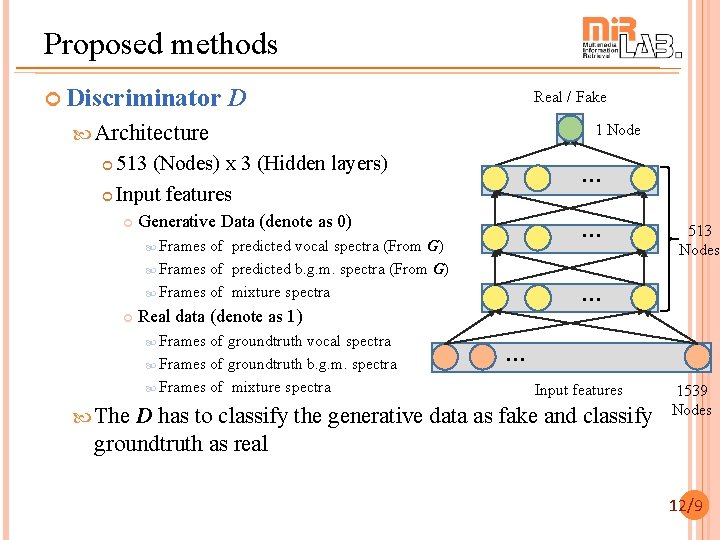

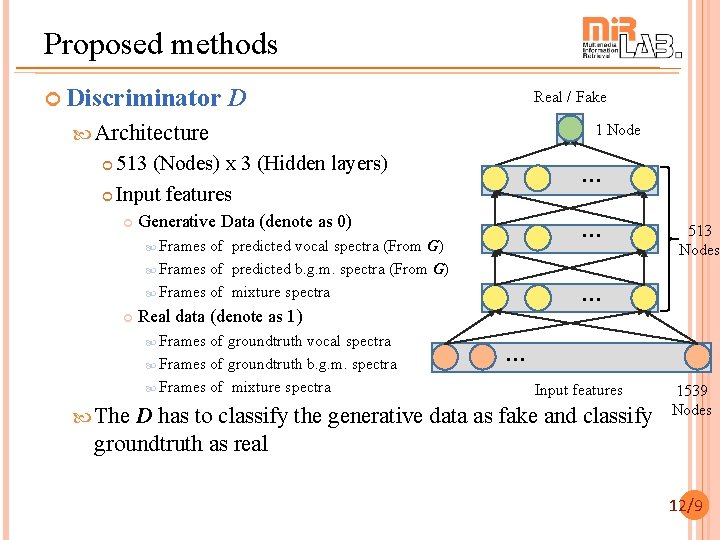

Proposed methods: Discriminator D

- Task: D has to classify the generated data as fake and the ground truth as real.
- Architecture: 1539 input nodes, 3 hidden layers of 513 nodes each, and 1 output node (real/fake).
- Generated data (labeled 0): frames of predicted vocal spectra (from G), frames of predicted background music (BGM) spectra (from G), and frames of mixture spectra.
- Real data (labeled 1): frames of ground-truth vocal spectra, frames of ground-truth BGM spectra, and frames of mixture spectra.
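The layer sizes above can be sketched as a plain NumPy forward pass. The 1539-dimensional input is the concatenation of three 513-bin frames (voice, BGM, mixture), which is what makes D conditional on the mixture. The weights are random placeholders, and the initialization scale and function names are assumptions rather than details from the slides.

```python
import numpy as np

N_BINS = 513  # frequency bins per frame

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_discriminator(rng, n_in=3 * N_BINS, n_hidden=N_BINS, n_layers=3):
    """Random placeholder weights: 1539 -> 513 -> 513 -> 513 -> 1."""
    sizes = [n_in] + [n_hidden] * n_layers + [1]
    return [(rng.normal(0.0, 0.01, (a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def discriminator(params, voice, bgm, mixture):
    """Return the probability that each (voice, bgm, mixture) triple is real."""
    h = np.concatenate([voice, bgm, mixture], axis=-1)  # (batch, 1539)
    for weights, bias in params:
        h = sigmoid(h @ weights + bias)
    return h[..., 0]

rng = np.random.default_rng(0)
params = init_discriminator(rng)
batch = 4
voice, bgm, mixture = (rng.random((batch, N_BINS)) for _ in range(3))
p_real = discriminator(params, voice, bgm, mixture)
print(p_real.shape)
```

During training, triples built from ground-truth spectra would carry the label 1 and triples built from G's predictions the label 0.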

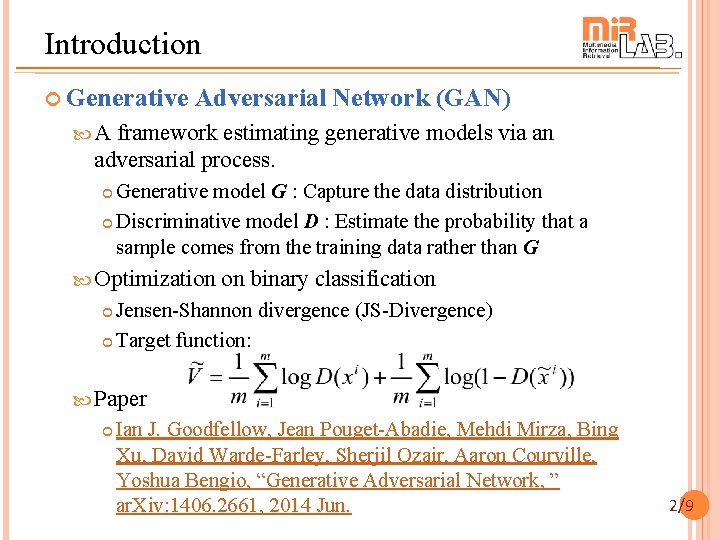

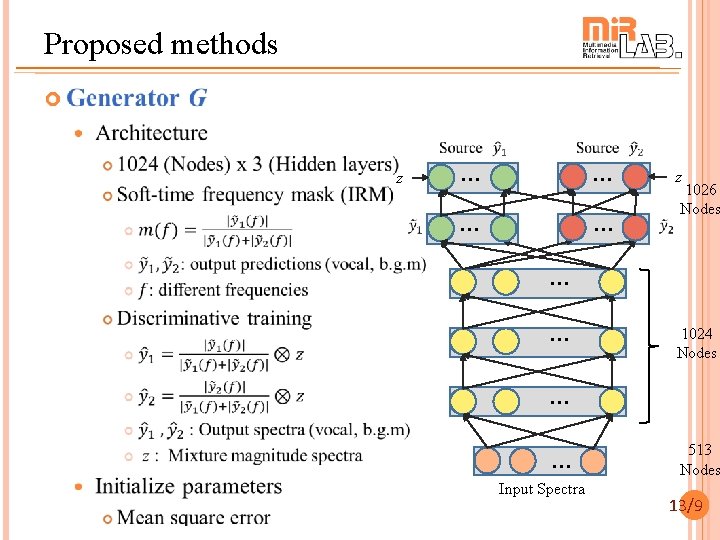

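Proposed methods

- Network diagram: input spectra z (513 nodes), hidden layers (1024 nodes each), and an output layer of 1026 nodes (2 × 513); z also appears at the output side of the diagram.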
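Assuming this diagram shows generator G, its forward pass might look like the sketch below: a 513-bin mixture frame passes through hidden layers of 1024 nodes to a 1026-node output, which is split into two 513-bin halves and combined with the input z in a masking layer. The number of hidden layers (3 here), the sigmoid output activation, and all function names are assumptions, and the weights are random placeholders.

```python
import numpy as np

N_BINS = 513

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_generator(rng, n_in=N_BINS, n_hidden=1024, n_layers=3, n_out=2 * N_BINS):
    """Random placeholder weights; the hidden-layer count is an assumption."""
    sizes = [n_in] + [n_hidden] * n_layers + [n_out]
    return [(rng.normal(0.0, 0.01, (a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def generator(params, z):
    """Map mixture frames z (batch, 513) to masked voice and BGM frames."""
    h = z
    for weights, bias in params:
        h = sigmoid(h @ weights + bias)
    voice_raw, bgm_raw = h[..., :N_BINS], h[..., N_BINS:]  # split the 1026 outputs
    total = voice_raw + bgm_raw + 1e-8
    # Masking layer: rescale the mixture by each source's share of the output.
    return voice_raw / total * z, bgm_raw / total * z

rng = np.random.default_rng(0)
params = init_generator(rng)
z = rng.random((4, N_BINS))
voice, bgm = generator(params, z)
print(voice.shape, bgm.shape)
```

Because the masking layer is part of the network, the two outputs always add up to the input frame, and gradients flow through the mask during training.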

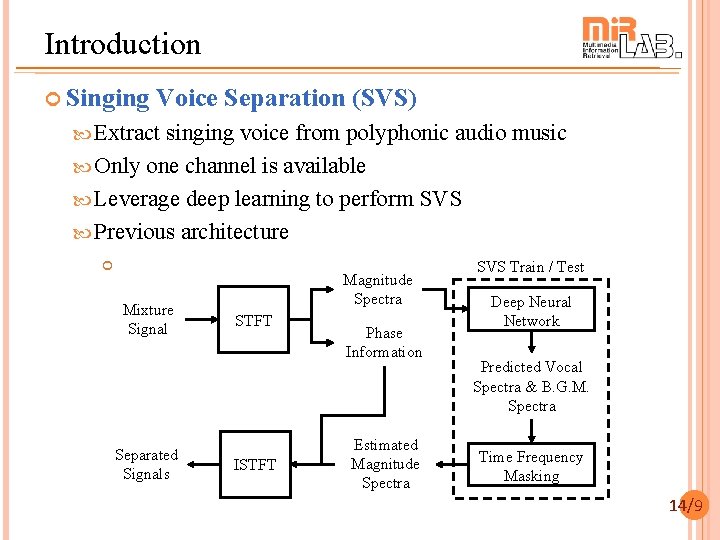

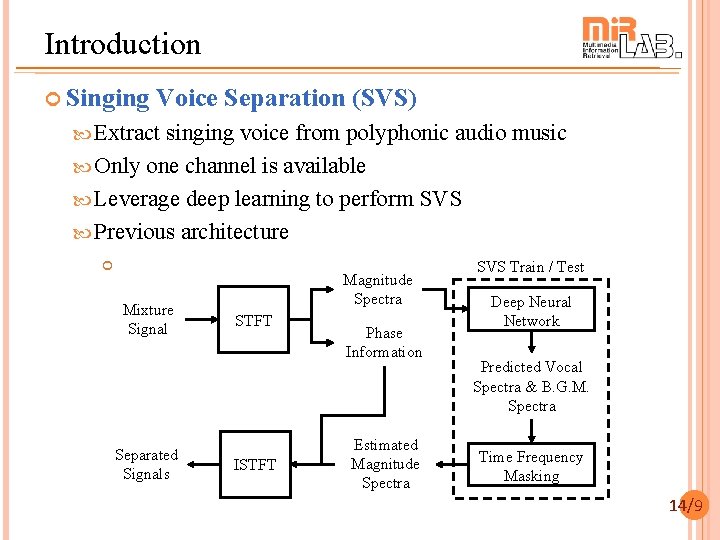

Introduction: Singing Voice Separation (SVS)

- Extract the singing voice from polyphonic music audio.
- Only one channel is available.
- Leverage deep learning to perform SVS.
- Previous architecture (train/test):
  - Mixture signal → STFT → magnitude spectra and phase information.
  - Magnitude spectra → deep neural network → predicted vocal spectra and background music (BGM) spectra.
  - Predicted spectra → time-frequency masking → estimated magnitude spectra.
  - Estimated magnitude spectra + phase information → ISTFT → separated signals.