GENERATIVE ADVERSARIAL NETWORKS IN TMVA
ANUSHREE RANKAWAT

- Slides: 17

GENERATIVE ADVERSARIAL NETWORKS IN TMVA
• Current Status of TMVA GAN
• Results obtained while training TMVA GAN
• Future Work

CURRENT STATUS OF TMVA GAN MODULE
• Input, Batch and Network Layouts parsed for both generator and discriminator networks.
• Designed the MethodGAN module to fit the TMVA framework.
• GAN framework developed entirely for TMVA.
• MNIST data modified for the MethodGAN module.
• MethodGAN tested and running on MNIST data.
• Unit tests developed and passing for MethodGAN.
• Integration tests developed and working for MethodGAN.

PARSING LAYOUTS FOR GENERATOR AND DISCRIMINATOR NETWORKS
• Layout="CONV|12|2|2|1|1|TANH, MAXPOOL|6|6|1|1, RESHAPE|1|1|9408|FLAT, DENSE|512|TANH … ## CONV|12|2|2|1|1|TANH, MAXPOOL|6|6|1|1, RESHAPE|1|1|9408|FLAT, DENSE|512|TANH …"
• The generator and discriminator model layouts are separated by ‘##’.
• The two layouts could instead be passed as separate options if that proves more intuitive.
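The separator convention above can be illustrated with a small Python sketch. This is not the TMVA parser; the function name `parse_gan_layout` and the shortened layout string are hypothetical, and the only assumptions are the ones on the slide: `##` splits the two networks, commas split layers, and `|` splits a layer's fields.

```python
def parse_gan_layout(layout):
    """Split a combined layout string into generator and discriminator layers.

    Hypothetical helper for illustration only; each layer is returned as a
    list of its '|'-separated fields, kept as strings.
    """
    parts = layout.split("##")
    if len(parts) != 2:
        raise ValueError("expected exactly one '##' separator")

    def parse_network(spec):
        layers = []
        for layer in spec.split(","):
            fields = [field.strip() for field in layer.split("|")]
            if fields[0]:  # skip empty entries such as a trailing comma
                layers.append(fields)
        return layers

    return parse_network(parts[0]), parse_network(parts[1])


# Shortened, illustrative layout built from layer tokens used on the slide.
layout = "CONV|12|2|2|1|1|TANH, MAXPOOL|6|6|1|1 ## DENSE|512|TANH"
generator, discriminator = parse_gan_layout(layout)
```

Keeping both networks in one string means a single option carries the whole model, at the cost of an extra split step before each network is built.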

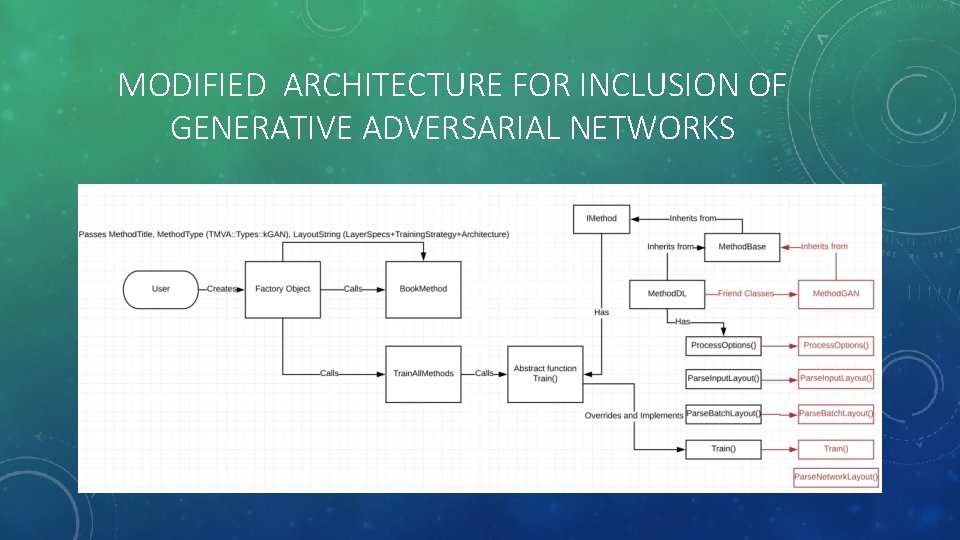

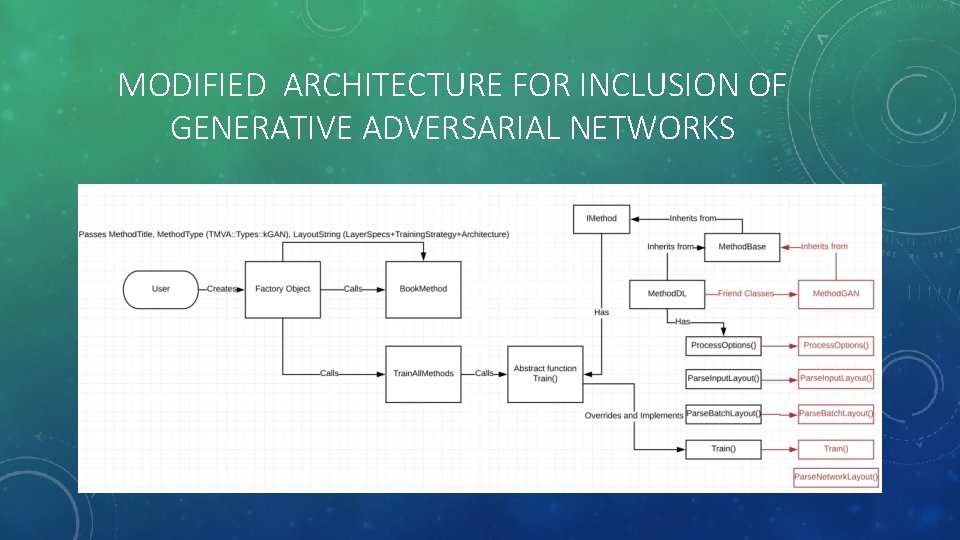

MODIFIED ARCHITECTURE FOR INCLUSION OF GENERATIVE ADVERSARIAL NETWORKS

ISSUES HANDLED WHILE IMPLEMENTING METHODGAN
• Issues with MNIST data creation.
• Issues with the combined network (layers of both the generator and the discriminator network).
• Issues with getting predicted data from the generator once noisy data is fed in.
• Issues with creating a TensorDataLoader for the generator output, so that it can be fed into the discriminator to predict whether it is real or fake.
• Working around the supervised learning framework to create an unsupervised learning based framework.

MNIST DATA CREATION RELATED ISSUES
• Creating a ROOT based input file for image data is not the most natural way to work with images.
• An MNIST ROOT file that stores each image as a single array does not work with MethodGAN.
• Arrays cannot be loaded as a single variable in DataLoader, and accessing each pixel value is difficult.
• SOLUTION: An MNIST ROOT file in which each pixel is a branch of the ROOT tree, with one DataLoader variable added per pixel.
• Certain pixels were zero in every image of the dataset, which caused issues while creating histograms.
• SOLUTION: Add small random noise to a pixel if its value is zero.
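The two solutions above can be sketched in plain Python. This sketch does not write a ROOT file; it only shows how an image might be flattened into one named value per pixel with zero pixels nudged by a small random amount. The branch names (`pixel0`, `pixel1`, ...) and the noise scale are assumptions, not taken from the slides.

```python
import random


def image_to_branches(image, noise_scale=1e-6, rng=None):
    """Flatten a 2D image into one named value per pixel.

    Zero-valued pixels receive a small positive random offset so that no
    pixel is constant across the whole dataset. Branch names and the
    noise scale are illustrative assumptions.
    """
    rng = rng or random.Random()
    row = {}
    index = 0
    for line in image:
        for value in line:
            value = float(value)
            if value == 0.0:
                # rng.random() lies in [0, 1), so the offset lies in (0, noise_scale].
                value = noise_scale * (1.0 - rng.random())
            row[f"pixel{index}"] = value
            index += 1
    return row


# A 2x2 toy "image"; a real MNIST image would give 784 entries.
branches = image_to_branches([[0, 128], [255, 0]], rng=random.Random(0))
```

Each key would correspond to one branch of the tree and one variable registered with the DataLoader.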

COMBINED NETWORK RELATED ISSUES
• The generator needs to be trained on the loss given by the discriminator on the generated data.
• SOLUTION: Created a network with the generator layers followed by the discriminator layers.
• Training of the discriminator layers is turned off in this combined network.
• The loss is backpropagated and only the generator layers are trained.
• When the discriminator network is trained again, its training is turned back on.
• Added a LayerType variable in GeneralLayer that records the type of each layer added (e.g., CONV, MAXPOOL, DENSE). This helps in adding the layers of the generator and discriminator networks to a new combined network.
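The freeze-and-unfreeze pattern described above can be sketched with a toy data structure. This is not the TMVA implementation: the `Layer` class, its `trainable` flag, and the helper names are hypothetical, and no weights or gradients are modelled. It only shows which layers an update step would touch in each phase.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    layer_type: str          # e.g. "DENSE"; plays the role of the LayerType idea
    width: int
    trainable: bool = True   # hypothetical flag, not a TMVA member


def set_trainable(layers, flag):
    """Turn training on or off for every layer in the list."""
    for layer in layers:
        layer.trainable = flag


def combine(generator, discriminator):
    """Generator layers followed by the same discriminator layer objects."""
    return list(generator) + list(discriminator)


def layers_to_update(network):
    """Layers an optimizer step would modify."""
    return [layer for layer in network if layer.trainable]


generator = [Layer("DENSE", 256), Layer("DENSE", 784)]
discriminator = [Layer("DENSE", 128), Layer("DENSE", 1)]
combined = combine(generator, discriminator)

# Generator phase: discriminator layers are frozen inside the combined network.
set_trainable(discriminator, False)
generator_step = layers_to_update(combined)

# Discriminator phase: training is switched back on for its own layers.
set_trainable(discriminator, True)
discriminator_step = layers_to_update(discriminator)
```

Sharing the layer objects between the discriminator and the combined network, rather than copying them, is what lets the generator see the discriminator's current state in each phase.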

ISSUES RELATED TO GETTING PREDICTED DATA FROM GENERATOR ONCE NOISY DATA IS FED IN
• Given noisy matrices as input to the generator network, the predicted data needs to be formatted as a TensorDataLoader so that it can be used as input to the discriminator.
• This is needed for training the discriminator on fake data.
• A mismatch was observed between the argument type expected by TensorDataLoader and the type used by the Prediction function.
• SOLUTION: The generator network calls the Prediction function on a tensor of type Matrix_t, which is the type that function requires.
• The resulting tensor is then copied into the type needed for TensorDataLoader (TMatrixT<Double_t>).
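The data flow above (noise in, generator predictions out, predictions repackaged as discriminator input) can be sketched as follows. The function names are Python stand-ins chosen for illustration and do not reflect the TMVA signatures; the toy generator and the convention that label 0 marks fake samples are assumptions.

```python
import math
import random


def create_noisy_matrix(batch_size, n_features, rng):
    """Return batch_size rows of standard-normal noise (generator input)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(n_features)]
            for _ in range(batch_size)]


def create_discriminator_fake_data(generator, noise):
    """Run the generator on each noise row and label every output as fake."""
    samples = [generator(row) for row in noise]
    labels = [0.0] * len(samples)  # assumption: 0 marks "fake", 1 marks "real"
    return samples, labels


def toy_generator(row):
    # Stand-in for the trained generator: squashes noise into [-1, 1].
    return [math.tanh(x) for x in row]


rng = random.Random(42)
noise = create_noisy_matrix(batch_size=4, n_features=3, rng=rng)
fake_samples, fake_labels = create_discriminator_fake_data(toy_generator, noise)
```

In the C++ module the equivalent of `fake_samples` is what has to be copied from the prediction type into the type that the data loader accepts.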

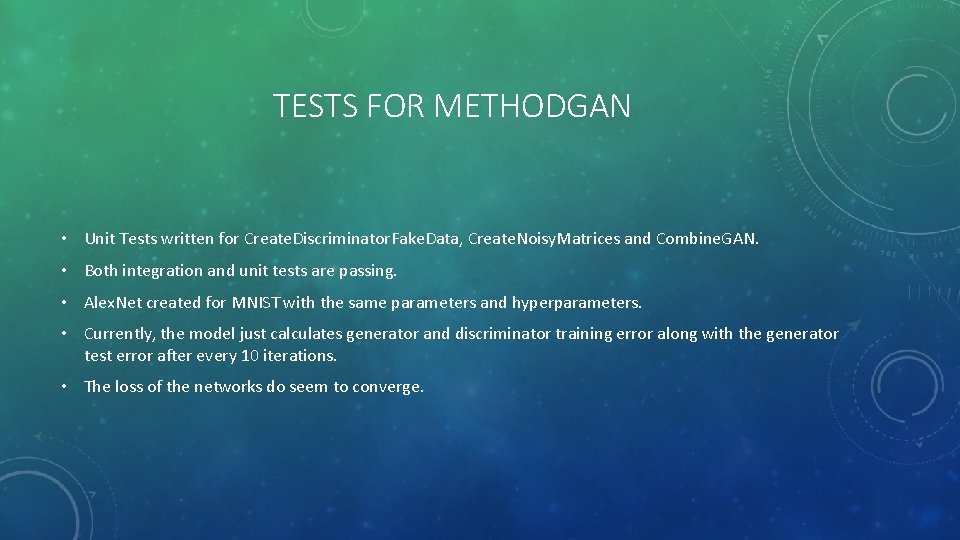

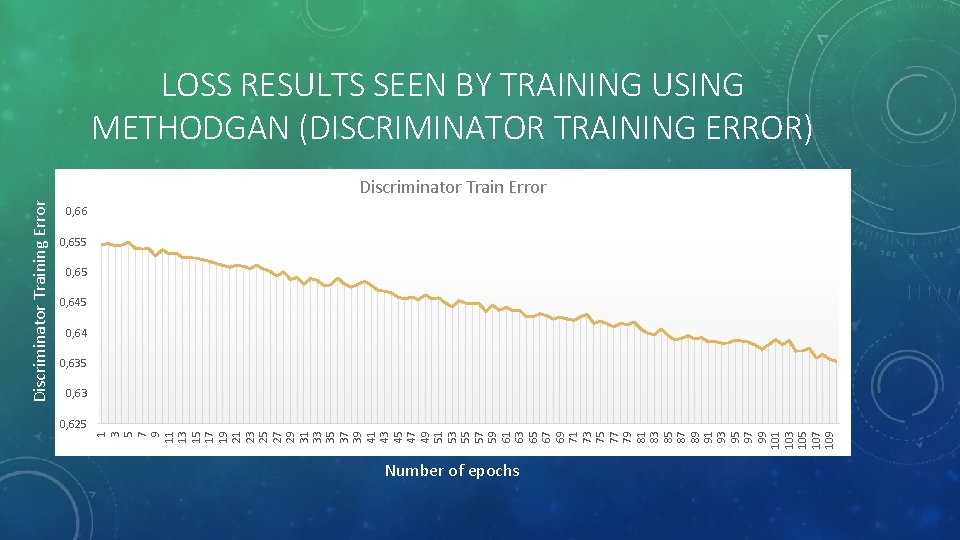

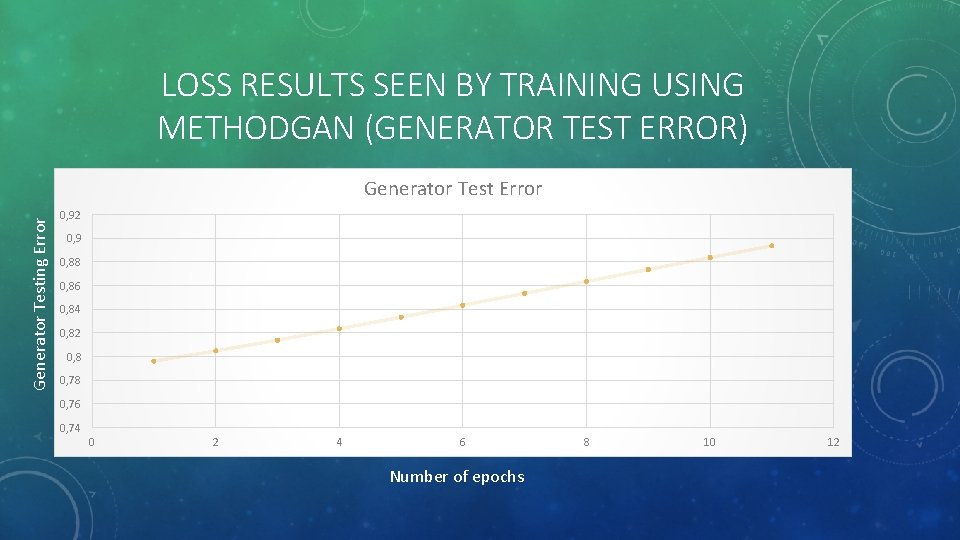

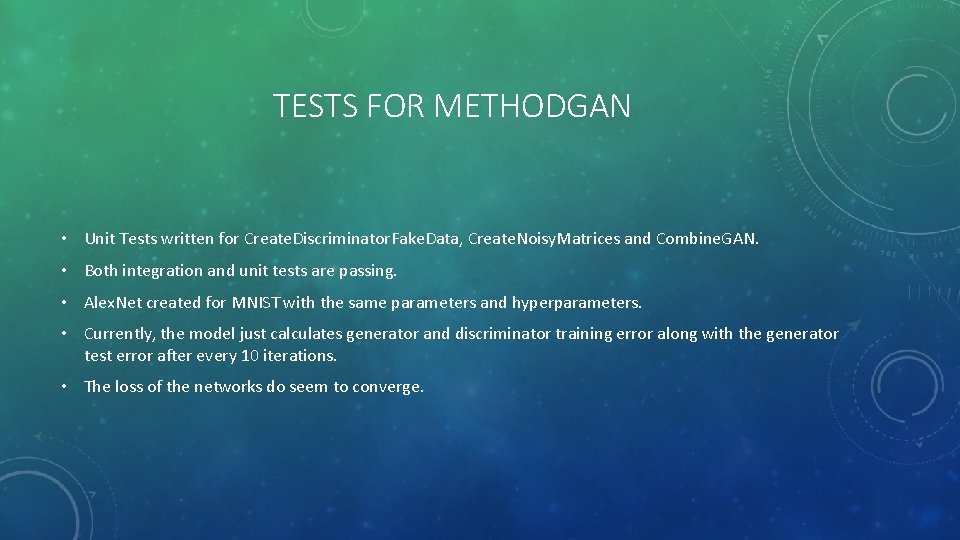

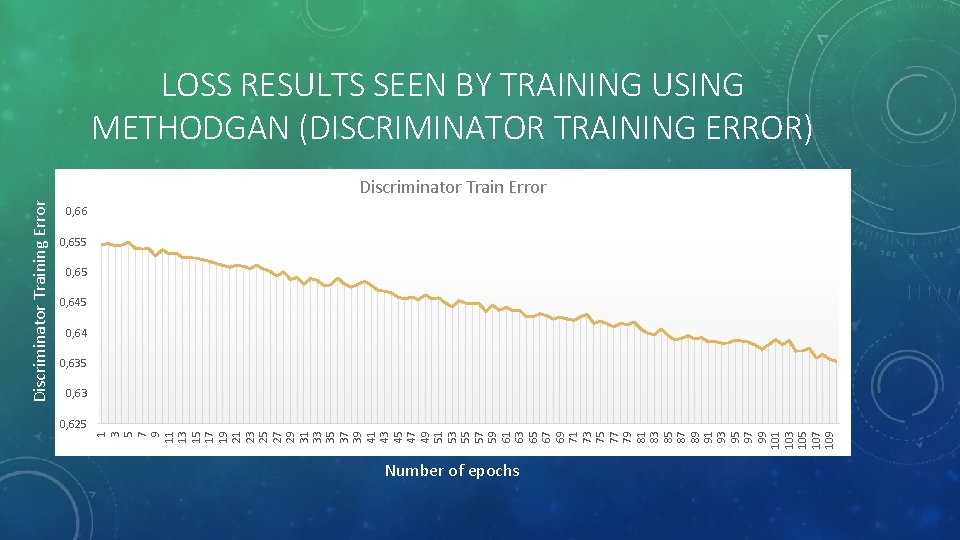

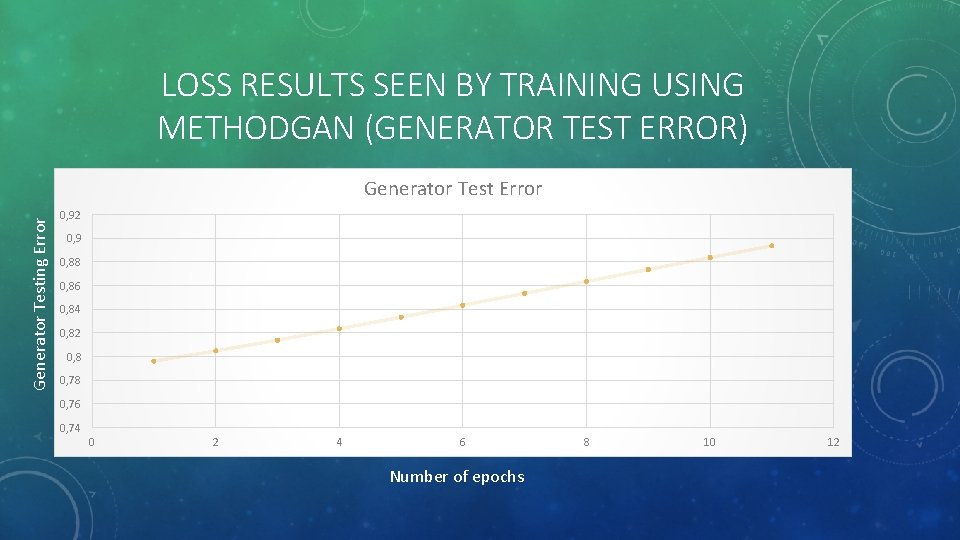

TESTS FOR METHODGAN
• Unit tests written for CreateDiscriminatorFakeData, CreateNoisyMatrices and CombineGAN.
• Both integration and unit tests are passing.
• AlexNet created for MNIST with the same parameters and hyperparameters.
• Currently, the model only calculates the generator and discriminator training errors, along with the generator test error, after every 10 iterations.
• The losses of both networks do appear to converge.

LOSS RESULTS SEEN BY TRAINING USING METHODGAN (GENERATOR TRAINING ERROR)
[Plot: Generator Train Error (y-axis, 0 to 0.8) versus Number of epochs (x-axis, 1 to 109)]

LOSS RESULTS SEEN BY TRAINING USING METHODGAN (DISCRIMINATOR TRAINING ERROR)
[Plot: Discriminator Train Error (y-axis, 0.625 to 0.66) versus Number of epochs (x-axis, 1 to 109)]

LOSS RESULTS SEEN BY TRAINING USING METHODGAN (GENERATOR TEST ERROR)
[Plot: Generator Test Error (y-axis, 0.74 to 0.92) versus Number of epochs (x-axis, 0 to 12)]

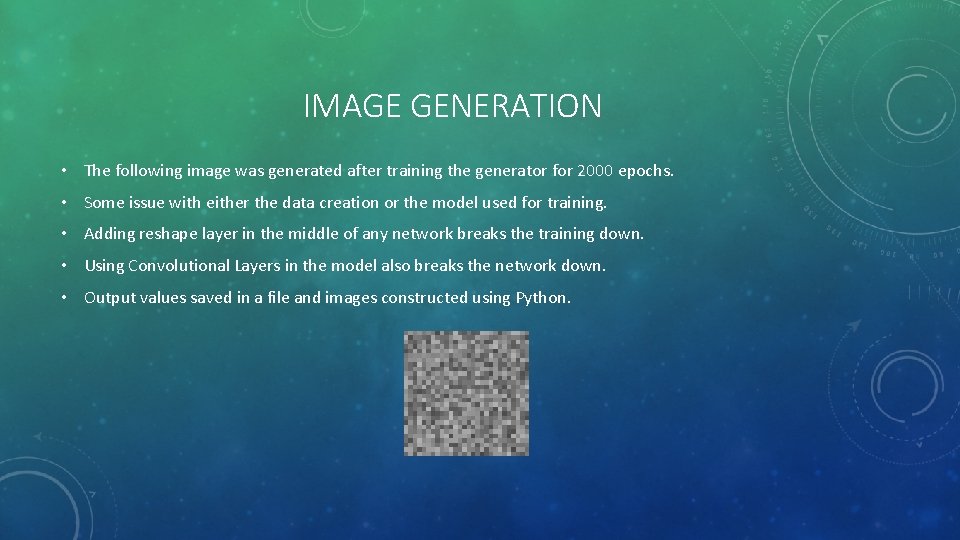

IMAGE GENERATION
• The image shown on this slide was generated after training the generator for 2000 epochs.
• There is an issue with either the data creation or the model used for training.
• Adding a reshape layer in the middle of any network breaks the training.
• Using convolutional layers in the model also breaks the network.
• Output values are saved in a file and images are constructed using Python.
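The last step, turning saved generator outputs into an image with Python, might look like the sketch below. The slides do not describe the file format or the script that was used, so everything here is an assumption: the outputs are taken to be a flat list of floats, and the image is written as a plain-text PGM (`P2`) so that only the standard library is needed.

```python
def values_to_pgm(values, width=28, height=28):
    """Rescale a flat list of floats to 0..255 and format it as a plain PGM image.

    The 28x28 default matches MNIST; the PGM output format is an
    illustrative choice, not the format used in the original work.
    """
    if len(values) != width * height:
        raise ValueError("number of values does not match width * height")
    lowest, highest = min(values), max(values)
    span = (highest - lowest) or 1.0  # avoid dividing by zero for constant images
    pixels = [round(255 * (v - lowest) / span) for v in values]
    lines = ["P2", f"{width} {height}", "255"]
    for r in range(height):
        row = pixels[r * width:(r + 1) * width]
        lines.append(" ".join(str(p) for p in row))
    return "\n".join(lines) + "\n"


# A 2x2 toy example; real generator output would hold 784 values per image.
pgm_text = values_to_pgm([0.0, 1.0, 0.2, 0.6], width=2, height=2)
```

Writing `pgm_text` to a file with a `.pgm` extension gives an image that most viewers can open, which makes it quick to check whether the generator output looks like a digit or like noise.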

CURRENT WORK GOING ON
• Preparing the code for a Pull Request as the final submission.
• Refactoring the Train function, which is currently too long to be comprehensible for future developers.
• Separating the GAN from the TMVAClassification tutorial and creating a separate tutorial file for MethodGAN.
• Adding comments to make the source code easier to use.
• Writing the blog post summarizing the design process and the implementation details.
• Pinpointing the glitch in the training/module that produces random noise as the generated image instead of an image resembling the real data.

FUTURE WORK
• Resolve the glitch in training that leaves the generated images not resembling real data.
• Fix the breakdown in training that occurs when a reshape layer is added in the middle of any network.
• Fix the breakdown caused by convolutional layers. Dense layers are currently used as a substitute until the issue is pinpointed and resolved.
• Benchmark MethodGAN against other standard implementations of Generative Adversarial Networks.
• Design a framework for unsupervised learning by creating an unsupervised DataLoader and enabling unsupervised data in the Factory method.
• Use GANs for HEP problems (for example, CaloGAN for generating EM calorimeter showers).

THANK YOU!
