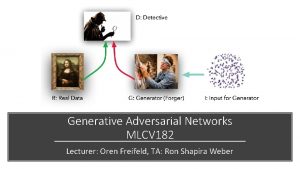



Introduction of Generative Adversarial Network (GAN) 李宏毅 Hung-yi Lee

Generative Adversarial Network (GAN)

- How to pronounce “GAN”? Ask “Ms. Google” (the Google voice).

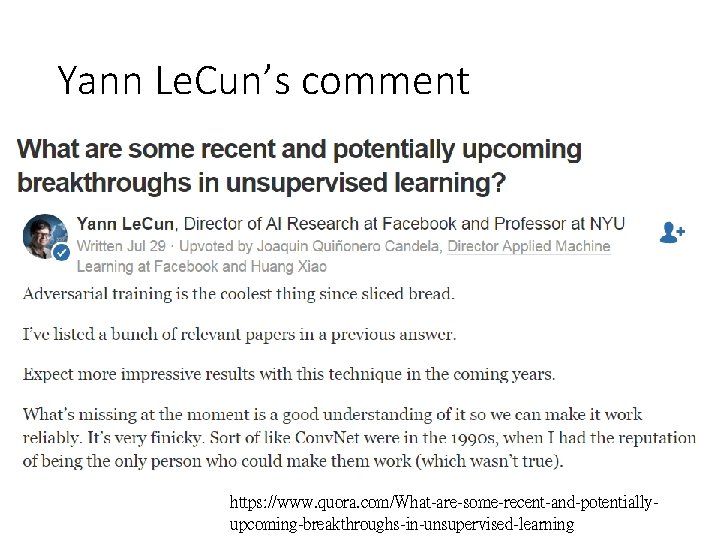

Yann LeCun’s comment: https://www.quora.com/What-are-some-recent-and-potentially-upcoming-breakthroughs-in-unsupervised-learning

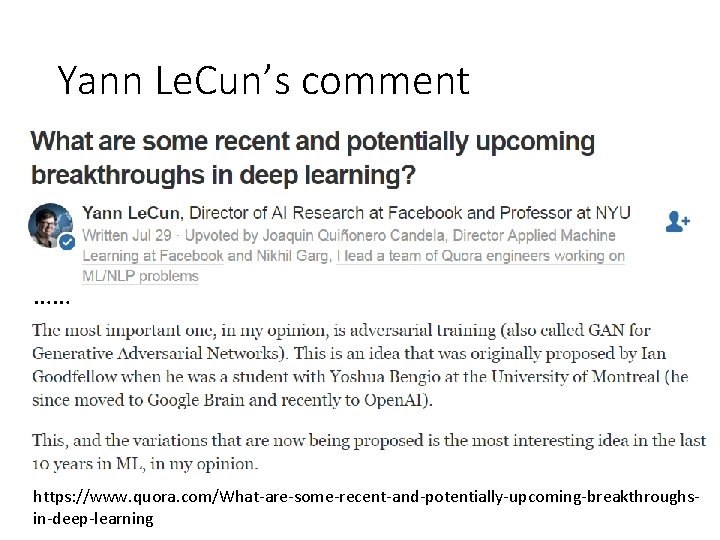

Yann LeCun’s comment …… https://www.quora.com/What-are-some-recent-and-potentially-upcoming-breakthroughs-in-deep-learning

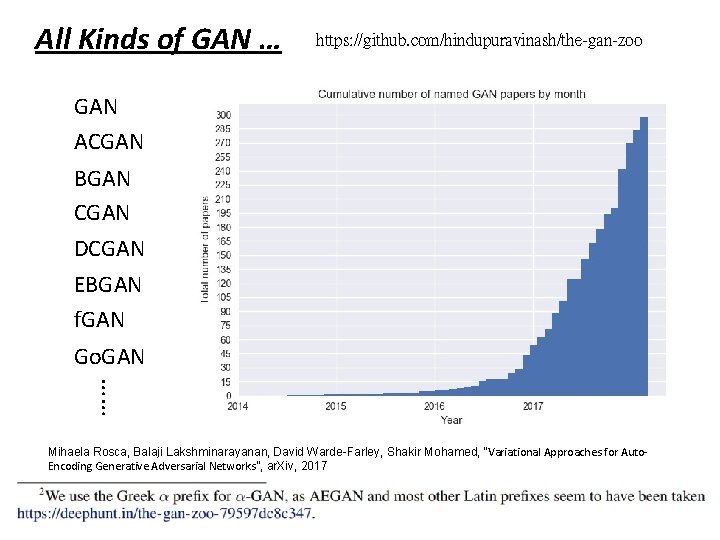

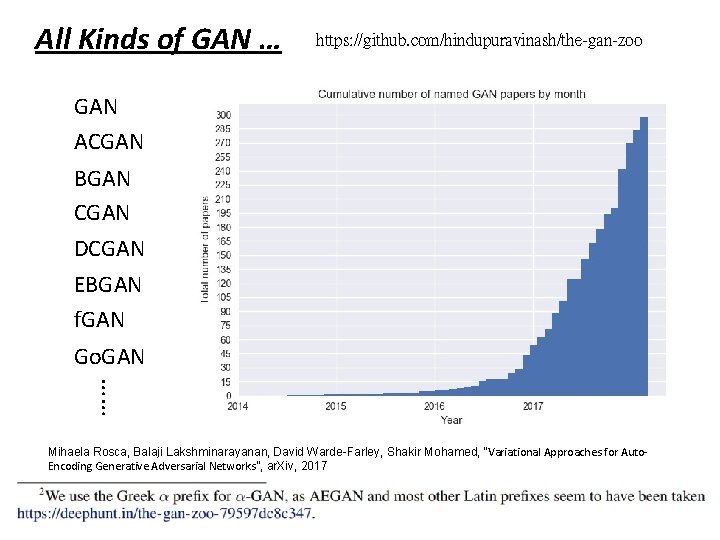

All Kinds of GAN … https://github.com/hindupuravinash/the-gan-zoo

GAN, ACGAN, BGAN, CGAN, DCGAN, EBGAN, fGAN, GoGAN, ……

Mihaela Rosca, Balaji Lakshminarayanan, David Warde-Farley, Shakir Mohamed, “Variational Approaches for Auto-Encoding Generative Adversarial Networks”, arXiv, 2017

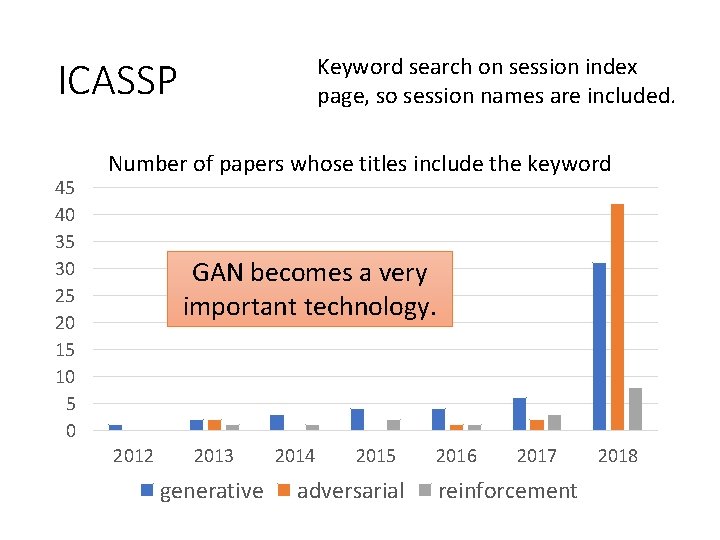

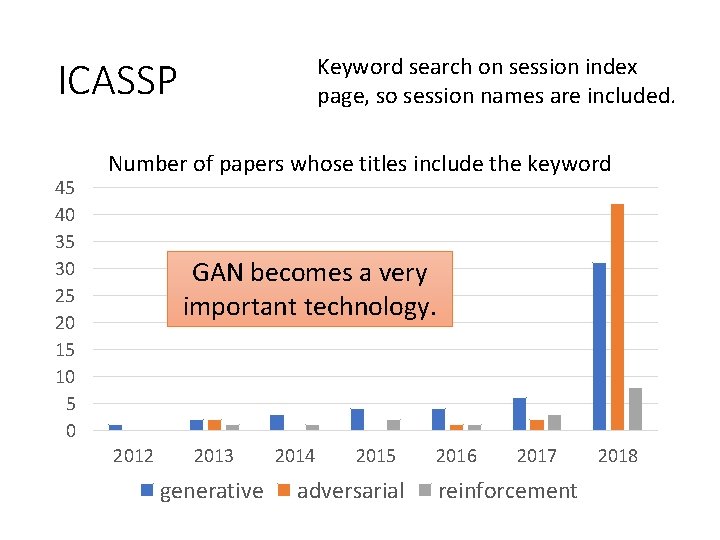

[Figure: number of ICASSP papers whose titles include the keywords “generative”, “adversarial”, and “reinforcement”, 2012 to 2018. The keyword search is on the session index page, so session names are included.]

GAN has become a very important technology.

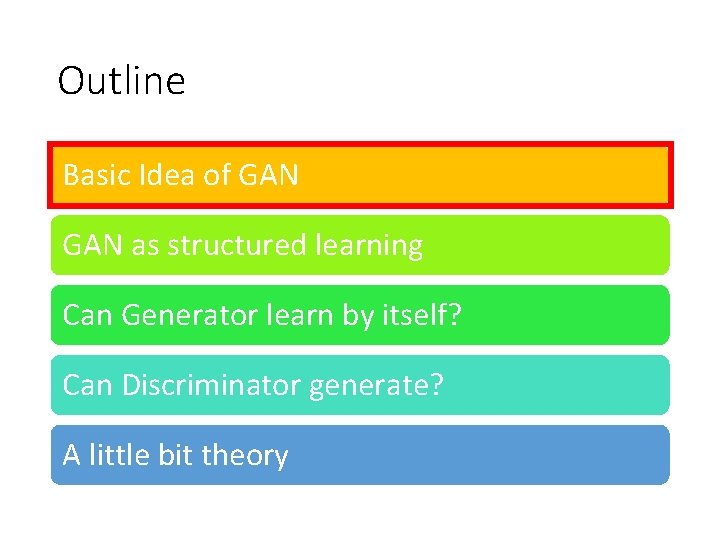

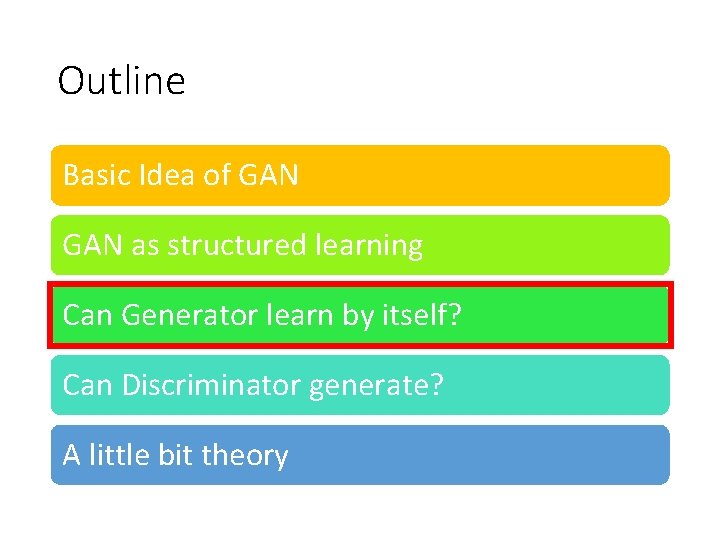

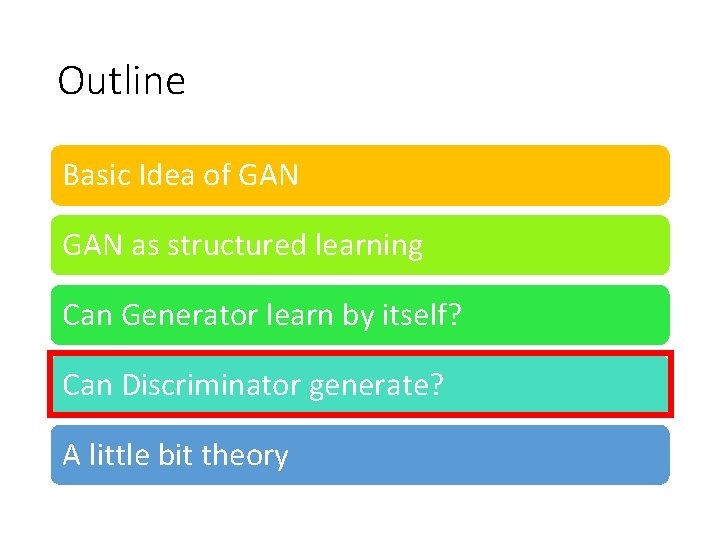

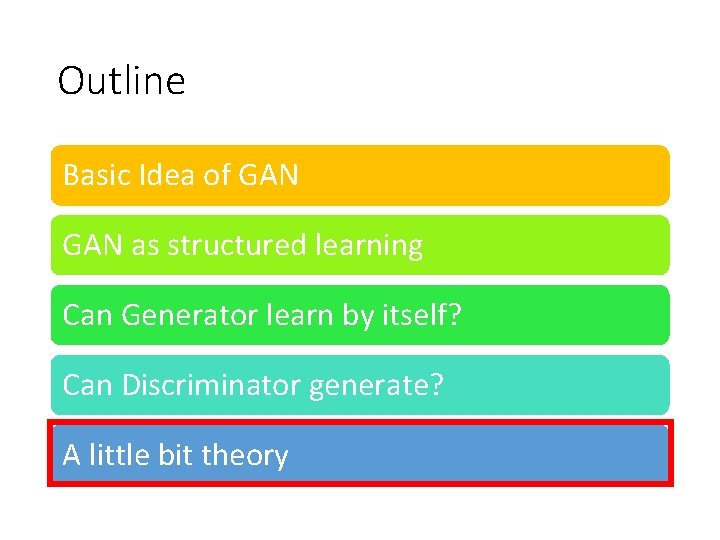

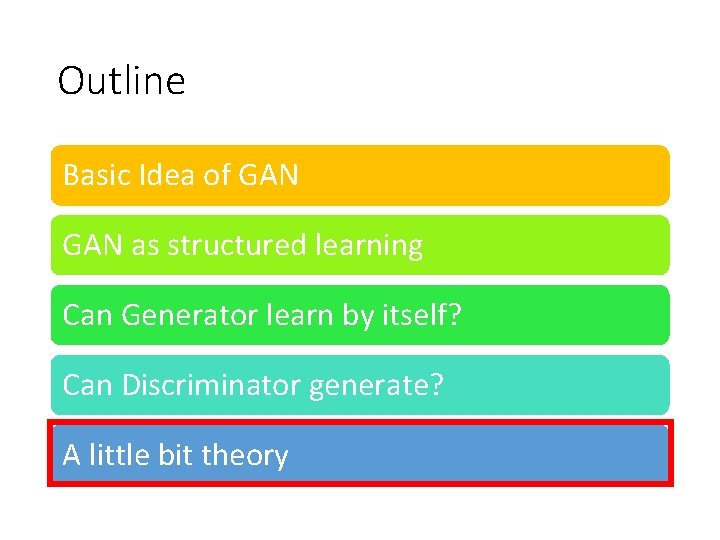

Outline

- Basic Idea of GAN
- GAN as structured learning
- Can Generator learn by itself?
- Can Discriminator generate?
- A little bit theory

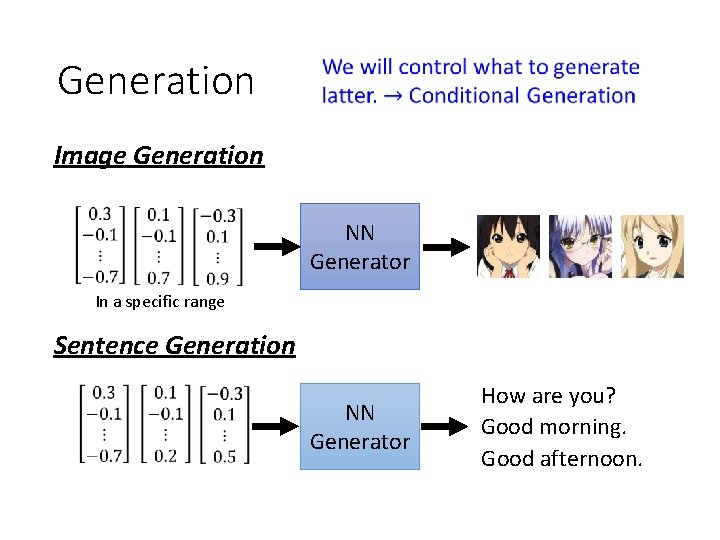

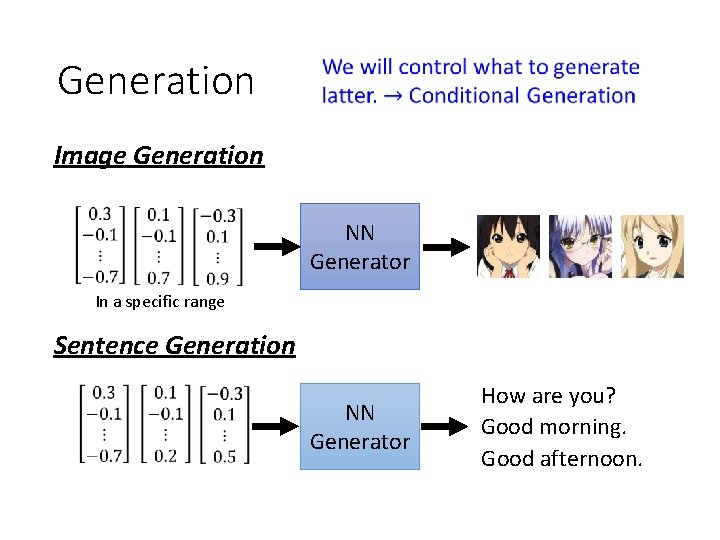

Generation

- Image Generation: a vector sampled in a specific range → NN Generator → image
- Sentence Generation: a vector sampled in a specific range → NN Generator → sentence (e.g., “How are you?”, “Good morning.”, “Good afternoon.”)

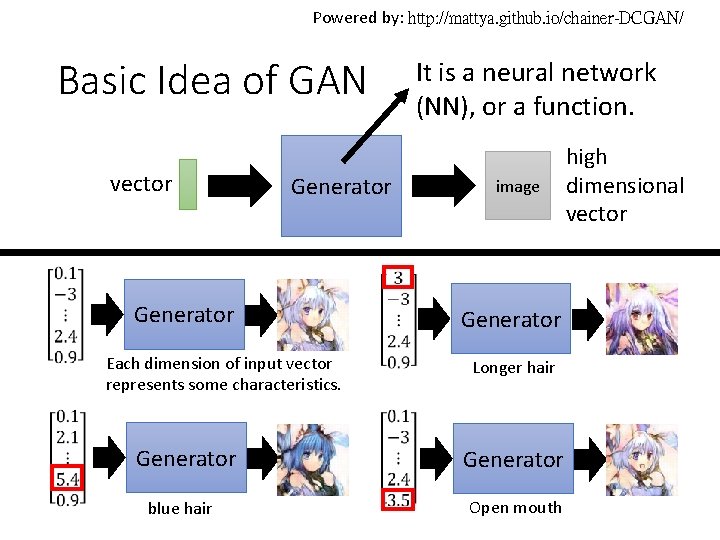

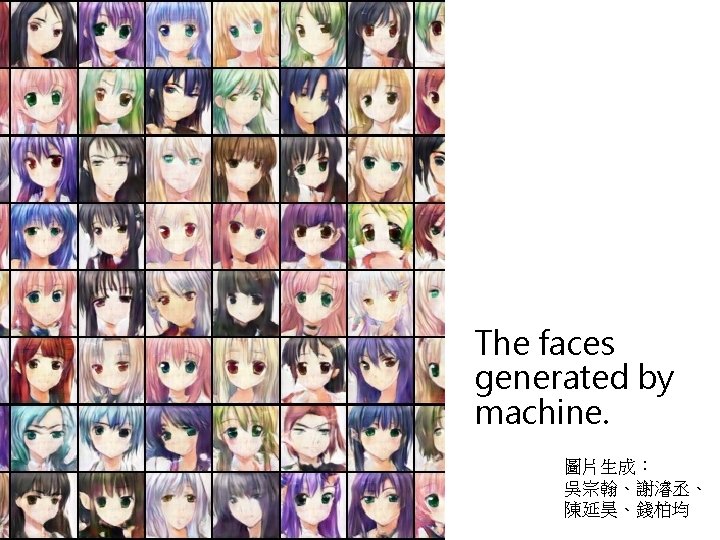

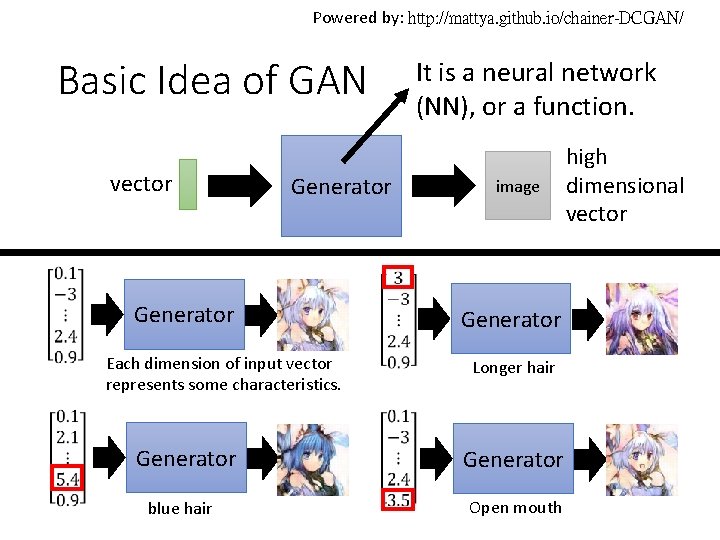

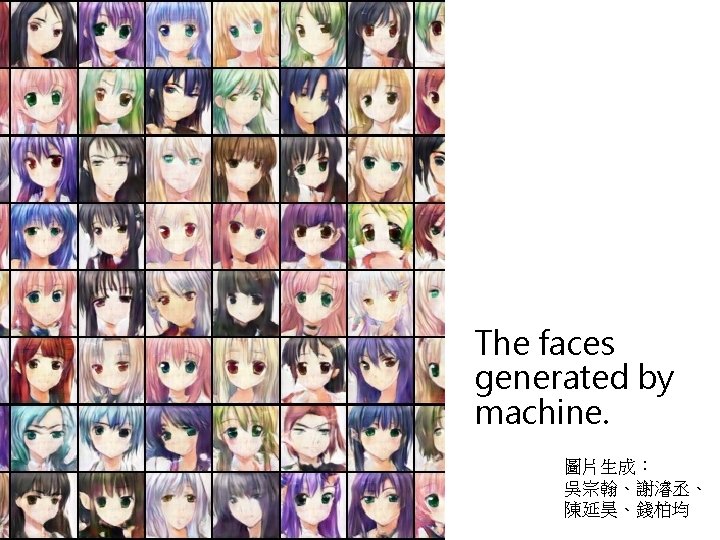

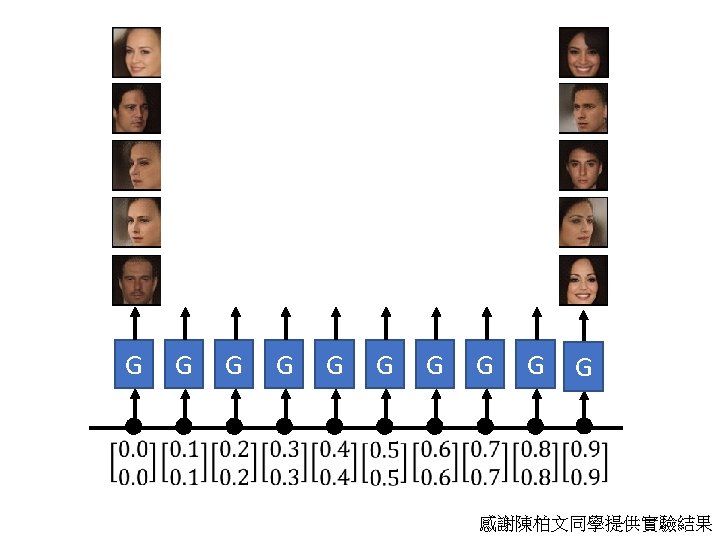

Basic Idea of GAN

- The generator is a neural network (NN), or a function: it takes a vector as input and outputs an image (a high-dimensional vector).
- Each dimension of the input vector represents some characteristic: changing one dimension may give longer hair, another blue hair, another an open mouth.

Powered by: http://mattya.github.io/chainer-DCGAN/
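As a minimal sketch of “a generator is a function from a vector to an image”, the code below replaces the deep network with a single random, untrained linear layer plus tanh (a hypothetical stand-in). It maps a 4-dimensional input vector to a 9-pixel “image” and shows that editing one input dimension changes the output.

```python
import math
import random


def make_generator(z_dim, img_dim, seed=0):
    """Toy generator: one linear layer followed by tanh.

    The random weights are hypothetical placeholders; a real GAN generator
    is a deep network whose weights are learned.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(z_dim)] for _ in range(img_dim)]

    def generate(z):
        # Each output "pixel" is tanh(w . z), so every value lies in (-1, 1).
        return [math.tanh(sum(w_j * z_j for w_j, z_j in zip(row, z))) for row in weights]

    return generate


G = make_generator(z_dim=4, img_dim=9)   # 4-D input vector -> 3x3 "image"
z = [0.1, -0.3, 0.5, 0.0]
image = G(z)

# Editing one input dimension changes the output. The lecture's claim is that,
# in a trained generator, such dimensions correspond to characteristics
# like hair length or hair colour; this random toy makes no such guarantee.
z_edited = list(z)
z_edited[0] += 1.0
image_edited = G(z_edited)
```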

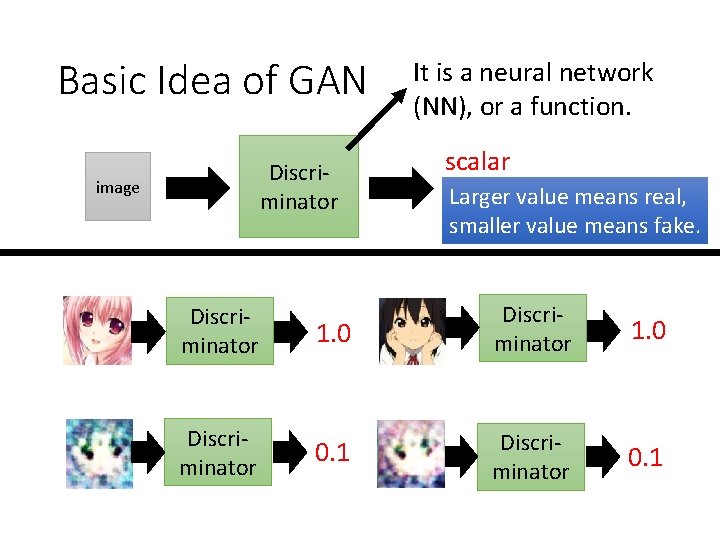

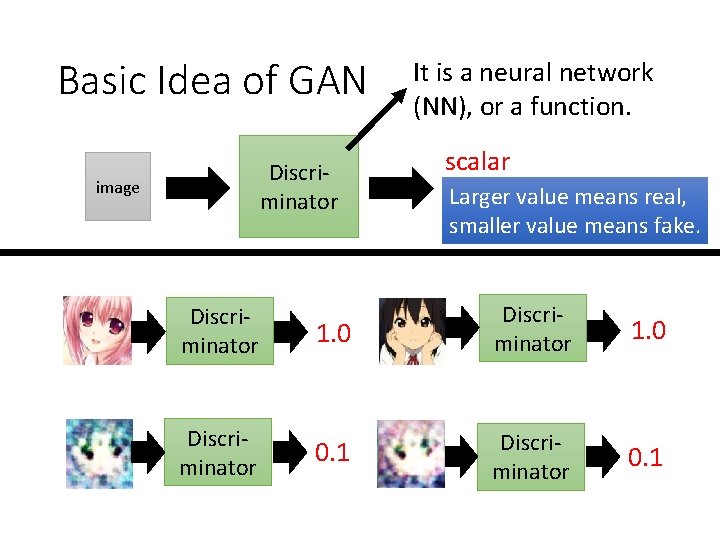

Basic Idea of GAN

- The discriminator is a neural network (NN), or a function: it takes an image as input and outputs a scalar.
- A larger value means real, a smaller value means fake (e.g., 1.0 for realistic images, 0.1 for poor ones).
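A matching toy discriminator can be sketched as a logistic scorer over a flattened image. The weights below are hypothetical and hand-picked rather than learned; the point is only the interface: image in, scalar in (0, 1) out, larger meaning “more real”.

```python
import math


def make_discriminator(weights, bias):
    """Toy discriminator: logistic score of a flattened image.

    The sigmoid squashes the score into (0, 1); larger means "more real".
    The weights are hypothetical, hand-picked for illustration.
    """
    def discriminate(image):
        score = sum(w * x for w, x in zip(weights, image)) + bias
        return 1.0 / (1.0 + math.exp(-score))

    return discriminate


D = make_discriminator(weights=[2.0, 2.0, 2.0, 2.0], bias=-4.0)
realistic = [1.0, 1.0, 1.0, 1.0]   # the pattern this toy D was built to like
poor = [0.0, 0.0, 0.0, 0.0]
print(round(D(realistic), 2), round(D(poor), 2))  # prints: 0.98 0.02
```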

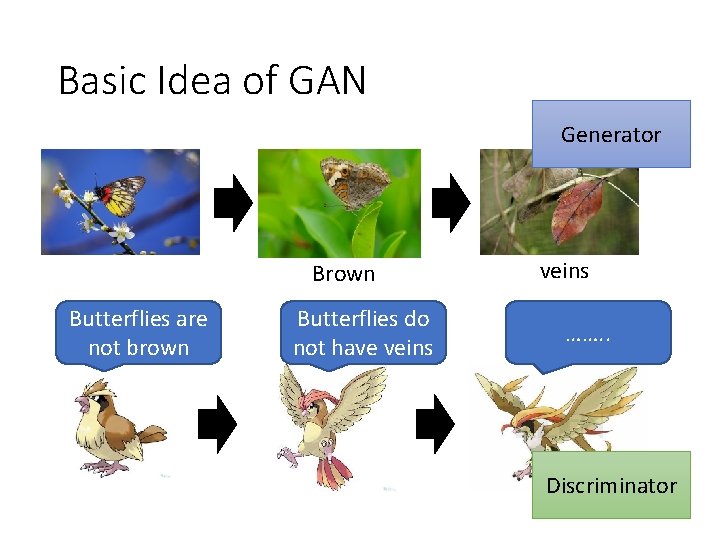

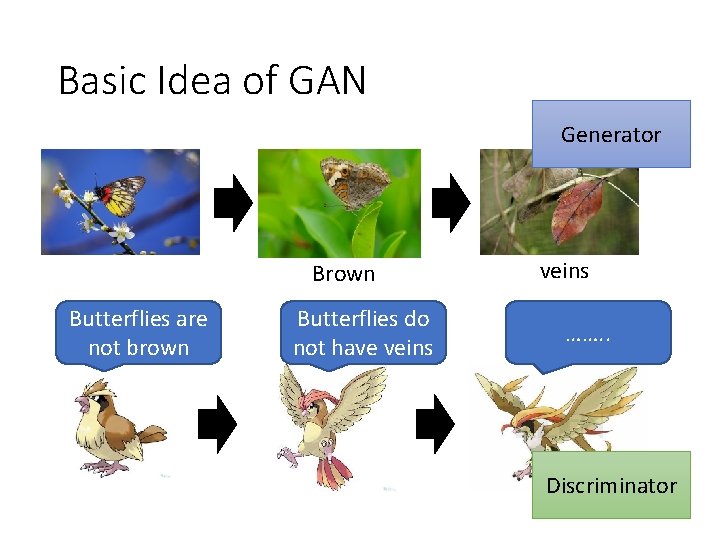

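Basic Idea of GAN

An analogy from evolution: the generator is like a butterfly, and the discriminator is like the predator that hunts it. The predator first relies on “butterflies are not brown”, so the butterflies evolve to be brown; then it relies on “butterflies do not have veins”, so they evolve veins ……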

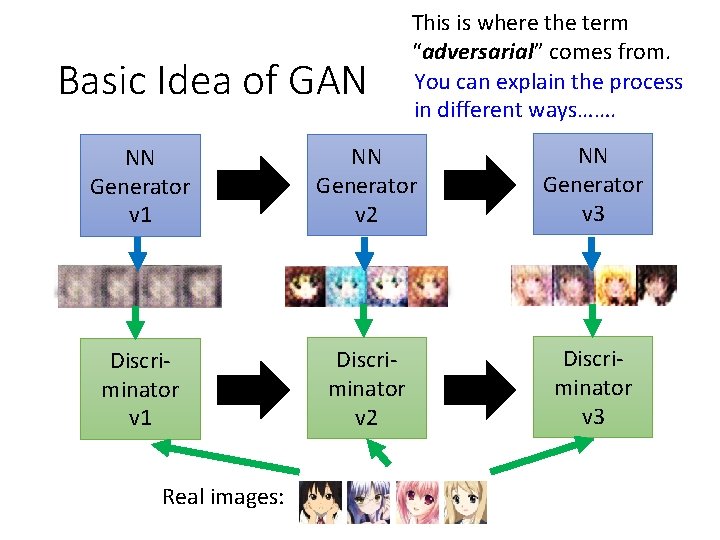

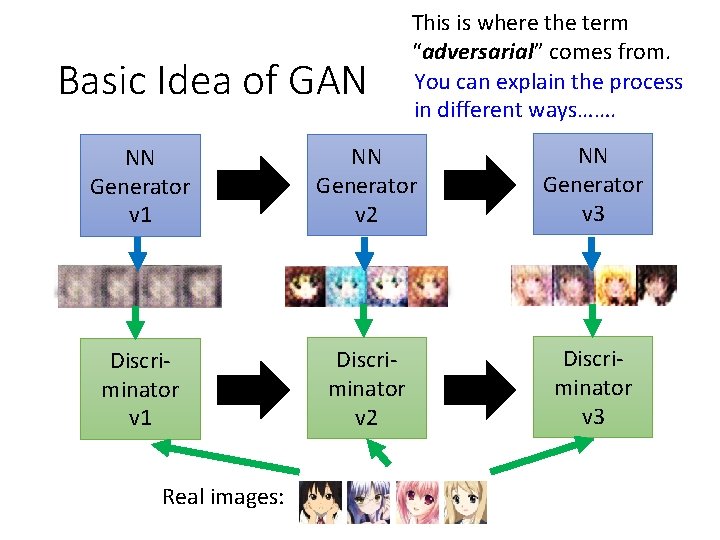

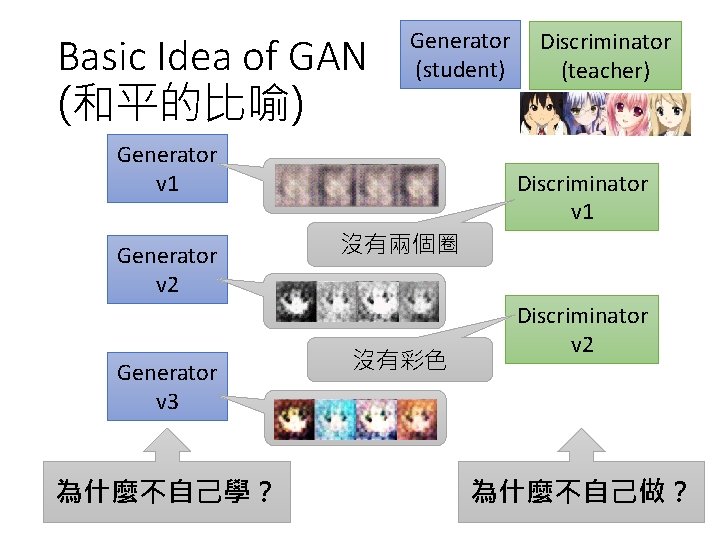

Basic Idea of GAN

NN Generator v1 → Discriminator v1 → NN Generator v2 → Discriminator v2 → NN Generator v3 → Discriminator v3 ……, where each discriminator compares the generator's outputs with real images.

This is where the term “adversarial” comes from. You can explain the process in different ways…….

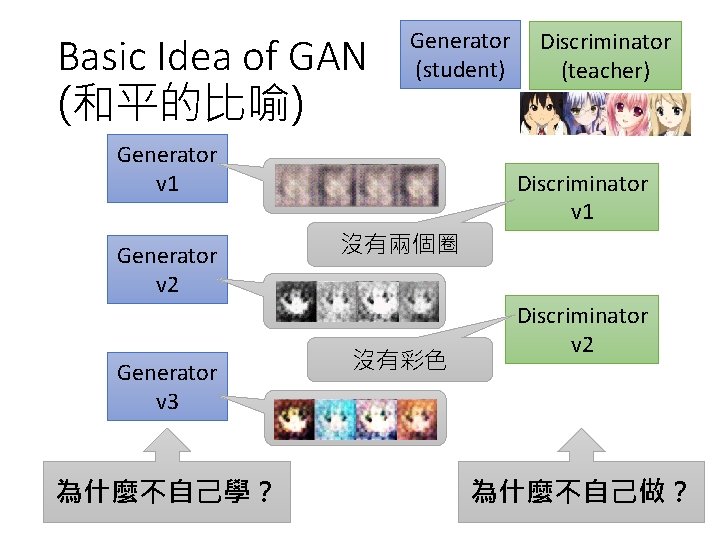

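Basic Idea of GAN (a peaceful analogy)

- Generator (student): Generator v1 → Generator v2 → Generator v3
- Discriminator (teacher): Discriminator v1 points out “no two circles”; Discriminator v2 points out “no color”
- Why doesn't the generator learn by itself? Why doesn't the discriminator do it by itself?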

Generator vs. Discriminator

- Written as “enemies”, read as “friends”.

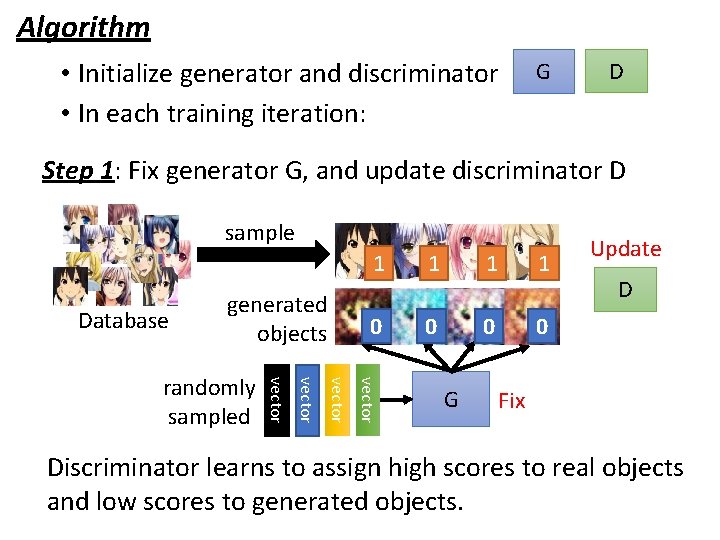

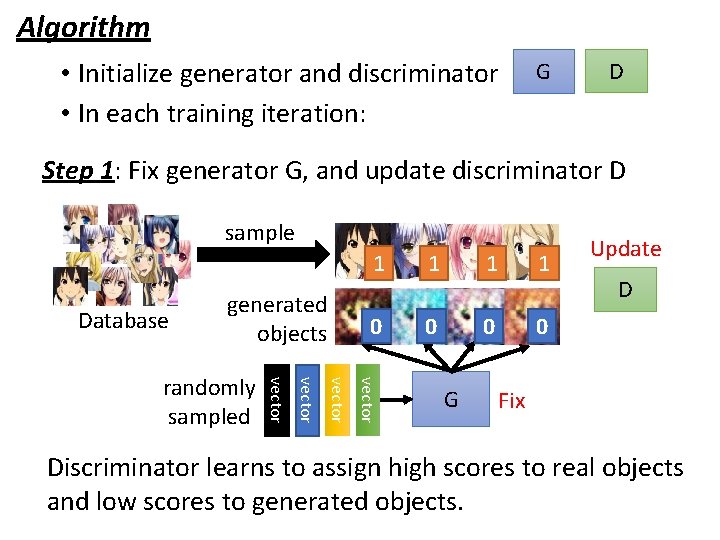

Algorithm

- Initialize generator G and discriminator D.
- In each training iteration:

Step 1: Fix generator G, and update discriminator D.

- Sample real objects from the database (target score 1).
- Feed randomly sampled vectors to the fixed G to get generated objects (target score 0).
- Update D: the discriminator learns to assign high scores to real objects and low scores to generated objects.
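Step 1 can be sketched on 1-D data. All of the following are hypothetical simplifications: real objects are numbers drawn from N(3, 0.5²), the fixed generator is G(z) = a·z + b with z ∼ N(0, 1), and D(x) = sigmoid(w·x + c). D is updated by gradient ascent on the average of log D(real) plus the average of log(1 − D(generated)), which is the binary-classification reading of “high scores for real, low scores for generated”.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def d_objective(w, c, real, fake):
    """Average log D(real) + average log(1 - D(fake)) for D(x) = sigmoid(w*x + c)."""
    v_real = sum(math.log(sigmoid(w * x + c)) for x in real) / len(real)
    v_fake = sum(math.log(1.0 - sigmoid(w * x + c)) for x in fake) / len(fake)
    return v_real + v_fake


def update_discriminator(w, c, real, fake, lr=0.1):
    """One gradient-ascent step on the discriminator objective (G is fixed)."""
    gw = gc = 0.0
    for x in real:                      # push D(x) toward 1 on real objects
        p = sigmoid(w * x + c)
        gw += (1.0 - p) * x / len(real)
        gc += (1.0 - p) / len(real)
    for x in fake:                      # push D(x) toward 0 on generated objects
        p = sigmoid(w * x + c)
        gw -= p * x / len(fake)
        gc -= p / len(fake)
    return w + lr * gw, c + lr * gc


rng = random.Random(0)
real = [rng.gauss(3.0, 0.5) for _ in range(64)]           # "database" samples
a, b = 1.0, 0.0                                            # fixed toy generator G(z) = a*z + b
fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(64)]    # generated objects

w, c = 0.0, 0.0
before = d_objective(w, c, real, fake)
for _ in range(50):
    w, c = update_discriminator(w, c, real, fake)
after = d_objective(w, c, real, fake)
```

The objective is concave in (w, c), so small gradient-ascent steps increase it monotonically; a deep discriminator gives no such guarantee.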

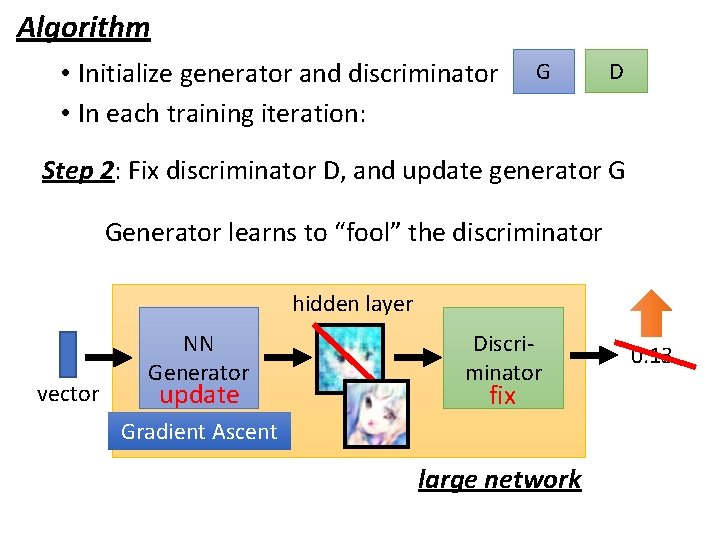

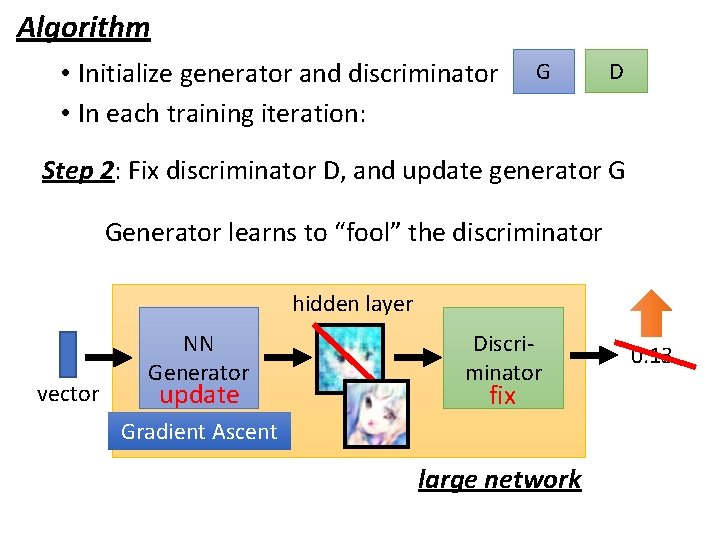

Step 2: Fix discriminator D, and update generator G.

- The generator learns to “fool” the discriminator.
- Think of generator and discriminator as one large network: vector → NN Generator → image (a hidden layer of the large network) → Discriminator → scalar (e.g., 0.13).
- Update the generator part by gradient ascent so that the output score becomes larger; keep the discriminator part fixed.
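Step 2 under the same kind of toy assumptions (all hypothetical): D(x) = sigmoid(w·x + c) is frozen, G(z) = a·z + b is updated by gradient ascent, and the gradient flows through the fixed discriminator into the generator, as if the two formed one large network. The objective used here is the average log D(G(z)), a common choice; the slide itself only says the score should go up.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def g_objective(a, b, w, c, zs):
    """Average log D(G(z)) with G(z) = a*z + b and a fixed D(x) = sigmoid(w*x + c)."""
    return sum(math.log(sigmoid(w * (a * z + b) + c)) for z in zs) / len(zs)


def update_generator(a, b, w, c, zs, lr=0.05):
    """One gradient-ascent step on the generator; D's parameters (w, c) stay fixed."""
    ga = gb = 0.0
    for z in zs:
        p = sigmoid(w * (a * z + b) + c)    # discriminator score of the generated object
        ga += (1.0 - p) * w * z / len(zs)   # chain rule through D into G
        gb += (1.0 - p) * w / len(zs)
    return a + lr * ga, b + lr * gb


rng = random.Random(1)
zs = [rng.gauss(0.0, 1.0) for _ in range(64)]   # randomly sampled input vectors
w, c = 2.0, -3.0    # a fixed toy discriminator that prefers larger x
a, b = 1.0, 0.0     # initial toy generator

before = g_objective(a, b, w, c, zs)
for _ in range(20):
    a, b = update_generator(a, b, w, c, zs)
after = g_objective(a, b, w, c, zs)
```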

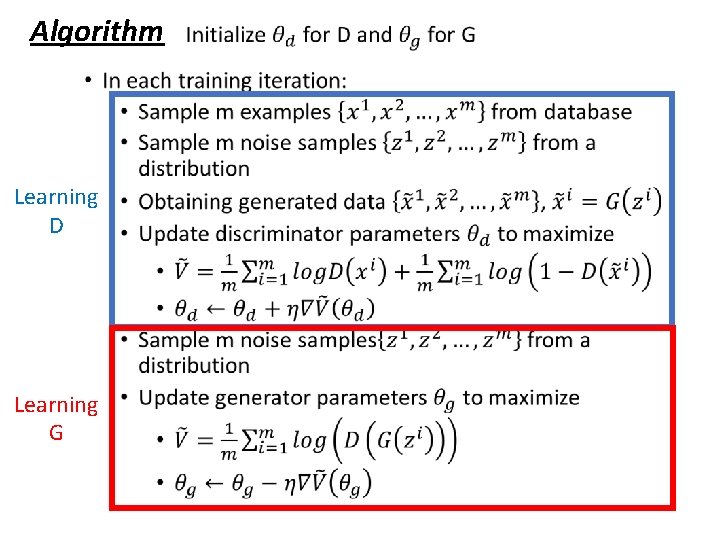

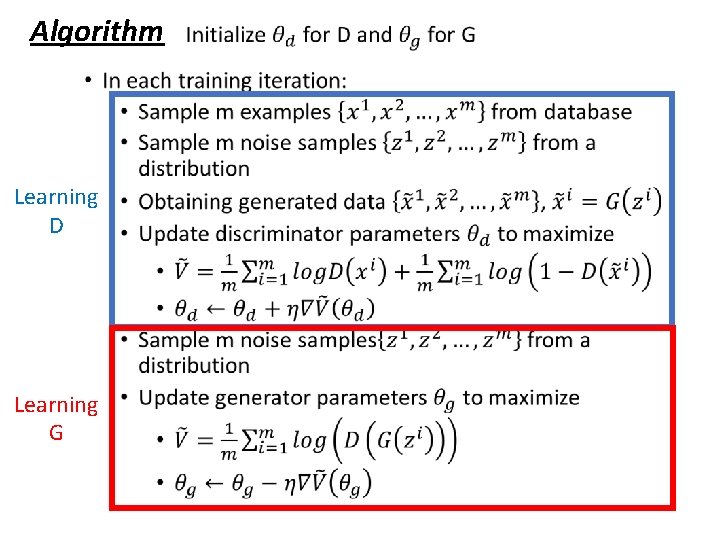

Algorithm

- Learning D
- Learning G
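The formulas for these two steps did not survive extraction. One standard form, following Goodfellow et al. (2014) with the commonly used log D(G(z)) objective for the generator, is written below for m real examples xⁱ and m noise vectors zⁱ; the step size η and the number of D updates per iteration are free choices, not values taken from the slides.

```latex
\begin{aligned}
&\textbf{Learning } D \text{ (e.g., repeated for a few steps):}\\
&\quad \text{sample } \{x^1,\dots,x^m\} \text{ from the database and } \{z^1,\dots,z^m\} \text{ from the prior},\quad \tilde{x}^i = G(z^i)\\
&\quad \tilde{V} = \frac{1}{m}\sum_{i=1}^{m}\log D(x^i) + \frac{1}{m}\sum_{i=1}^{m}\log\bigl(1 - D(\tilde{x}^i)\bigr),
\qquad \theta_d \leftarrow \theta_d + \eta\,\nabla_{\theta_d}\tilde{V}\\[4pt]
&\textbf{Learning } G \text{ (e.g., once):}\\
&\quad \text{sample } \{z^1,\dots,z^m\} \text{ from the prior}\\
&\quad \tilde{V} = \frac{1}{m}\sum_{i=1}^{m}\log D\bigl(G(z^i)\bigr),
\qquad \theta_g \leftarrow \theta_g + \eta\,\nabla_{\theta_g}\tilde{V}
\end{aligned}
```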

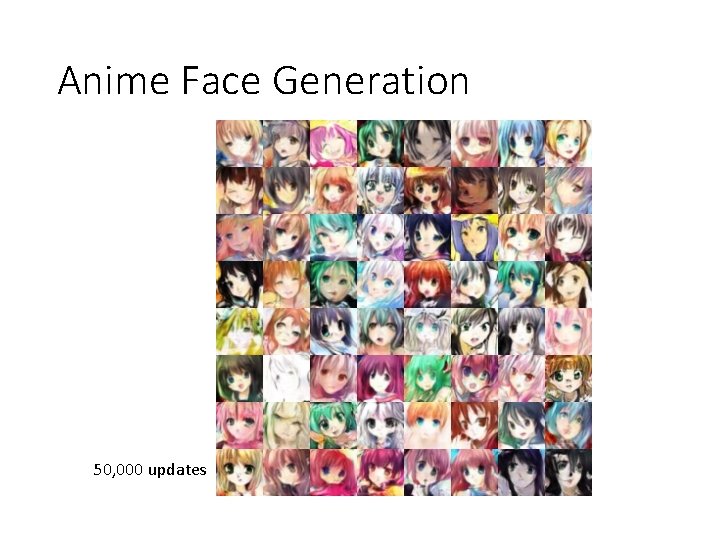

Anime Face Generation 100 updates

Source of training data: https://zhuanlan.zhihu.com/p/24767059

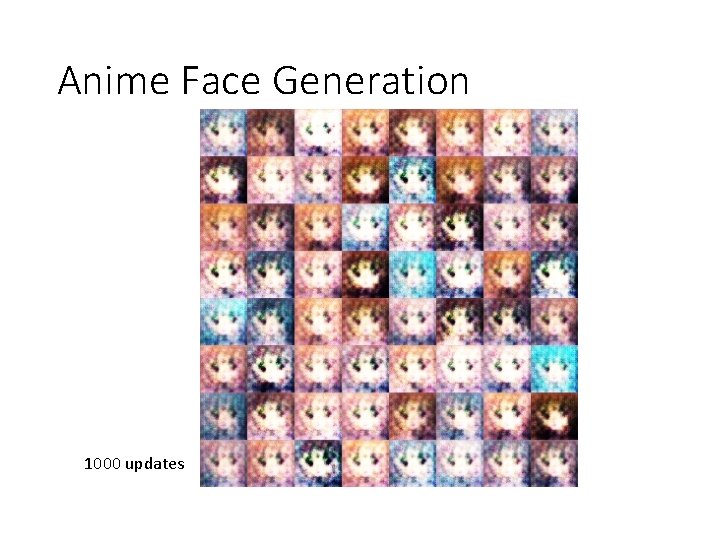

Anime Face Generation 1000 updates

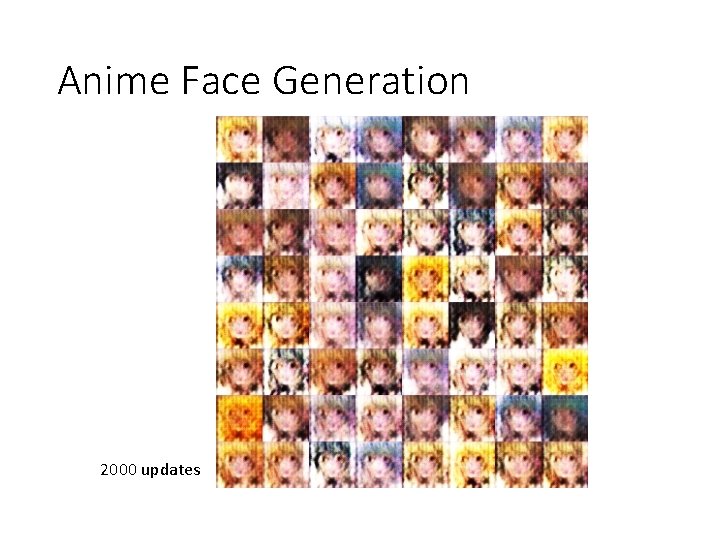

Anime Face Generation 2000 updates

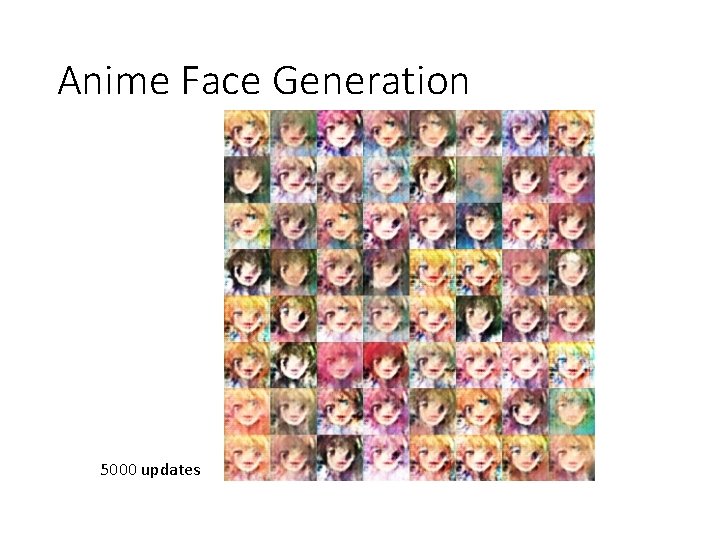

Anime Face Generation 5000 updates

Anime Face Generation 10,000 updates

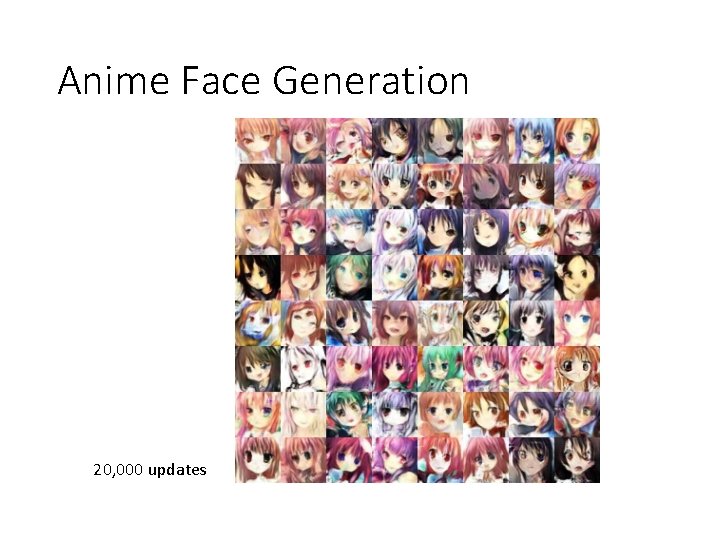

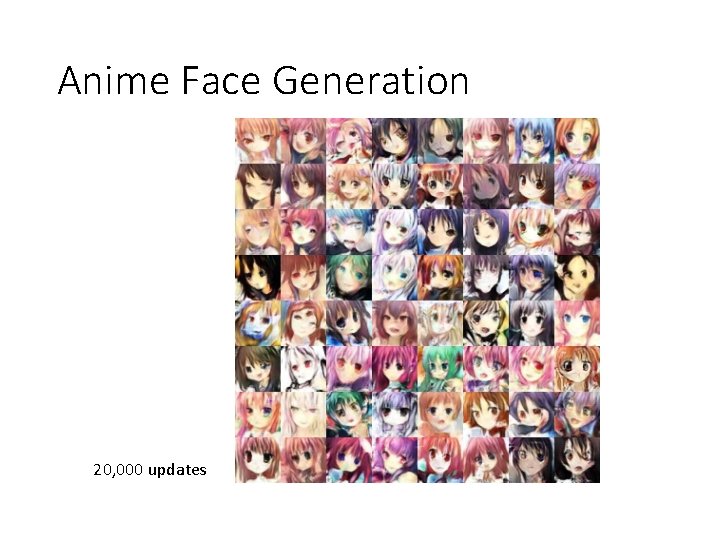

Anime Face Generation 20,000 updates

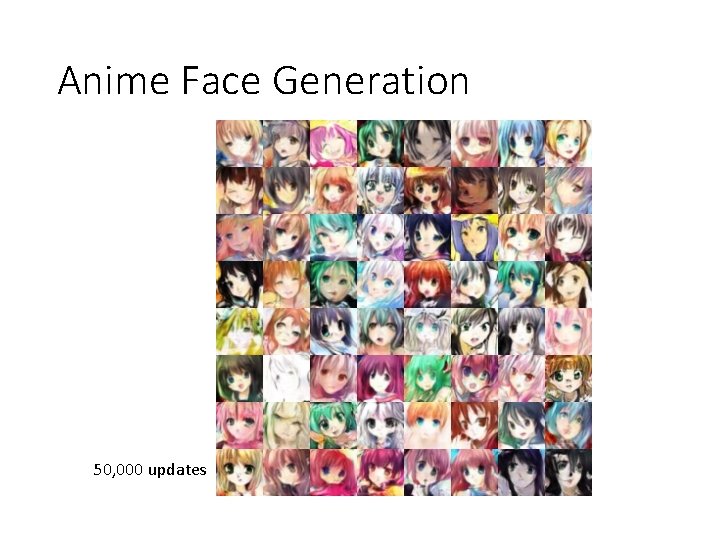

Anime Face Generation 50,000 updates


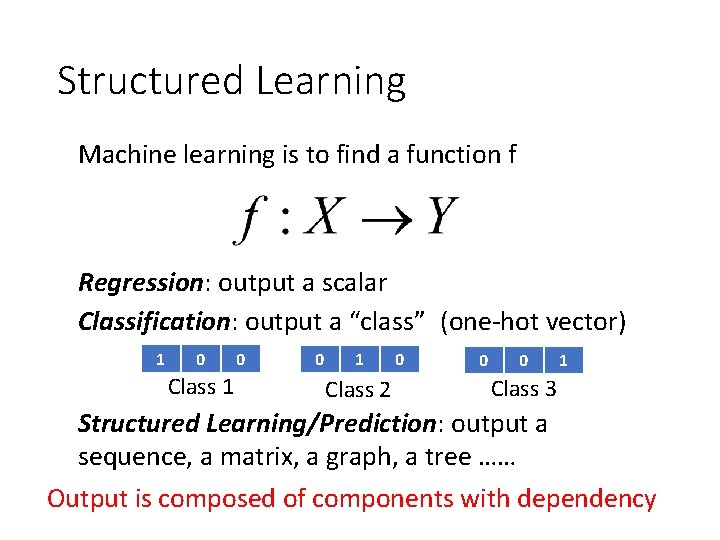

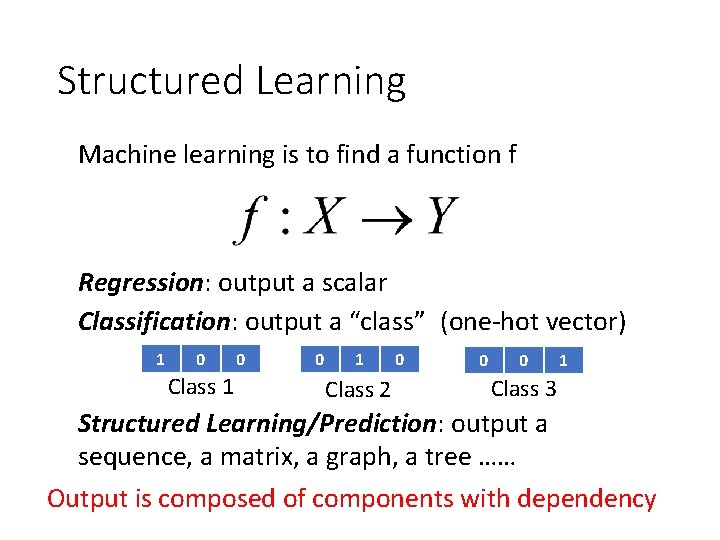

Structured Learning

Machine learning is to find a function f.

- Regression: output a scalar.
- Classification: output a “class” (one-hot vector), e.g., [1 0 0] for Class 1, [0 1 0] for Class 2, [0 0 1] for Class 3.
- Structured Learning/Prediction: output a sequence, a matrix, a graph, a tree ……
  - The output is composed of components with dependency.

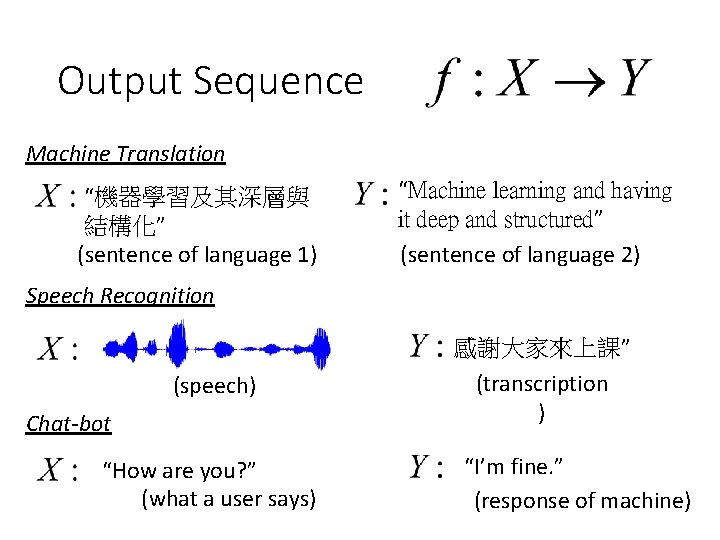

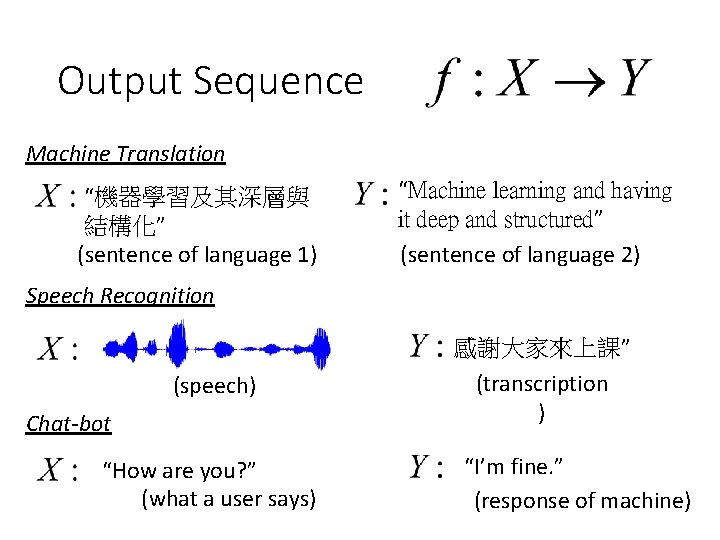

Output Sequence

- Machine Translation: “機器學習及其深層與結構化” (sentence of language 1) → “Machine learning and having it deep and structured” (sentence of language 2)
- Speech Recognition: (speech) → “感謝大家來上課” (transcription; “Thank you all for coming to class”)
- Chat-bot: “How are you?” (what a user says) → “I’m fine.” (response of machine)

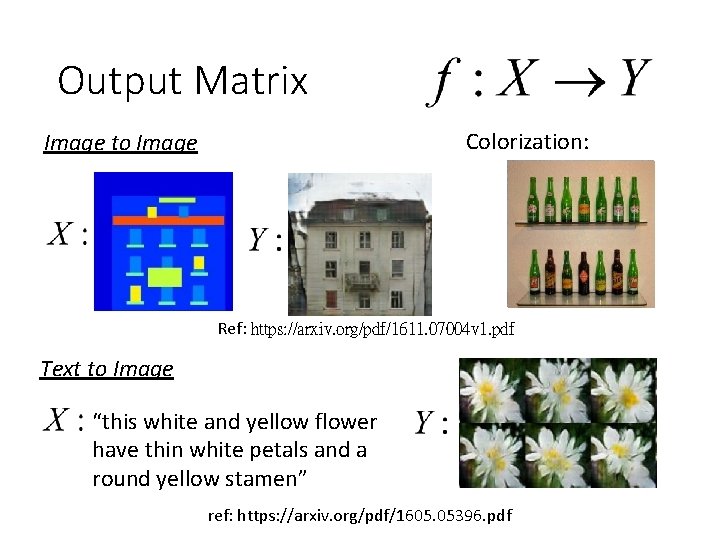

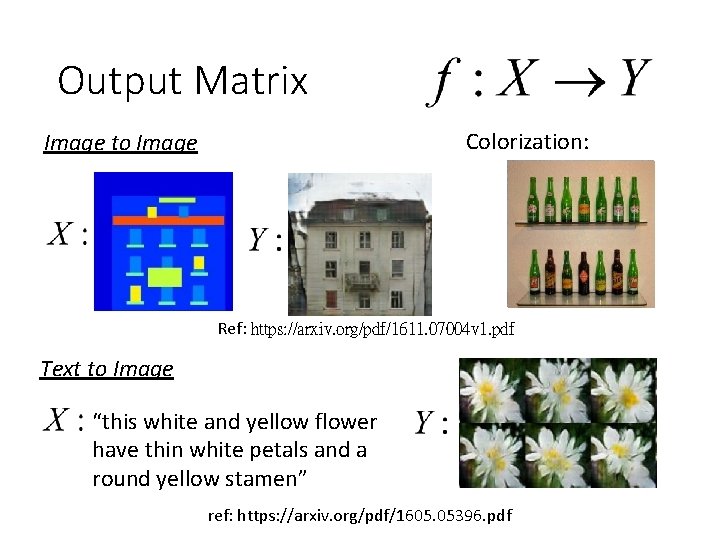

Output Matrix

- Image to Image (e.g., colorization). Ref: https://arxiv.org/pdf/1611.07004v1.pdf
- Text to Image: “this white and yellow flower have thin white petals and a round yellow stamen”. Ref: https://arxiv.org/pdf/1605.05396.pdf

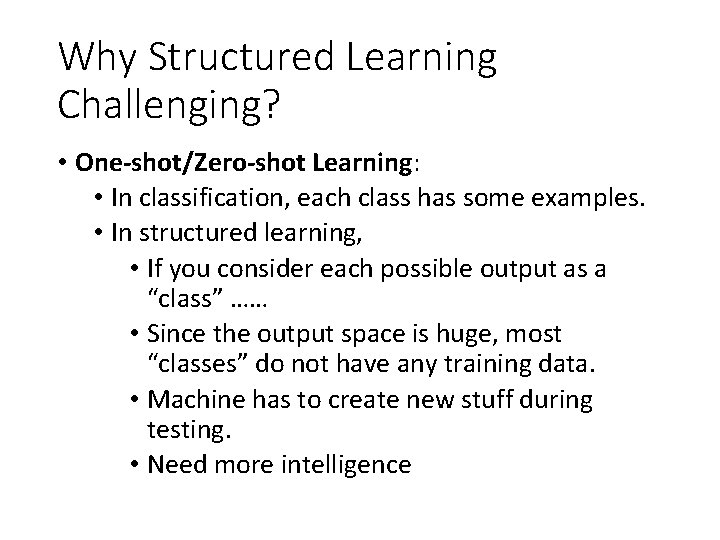

Why Is Structured Learning Challenging?

- One-shot/Zero-shot Learning:
  - In classification, each class has some examples.
  - In structured learning, if you consider each possible output as a “class” ……, then, since the output space is huge, most “classes” do not have any training data.
  - The machine has to create new stuff during testing.
  - This needs more intelligence.

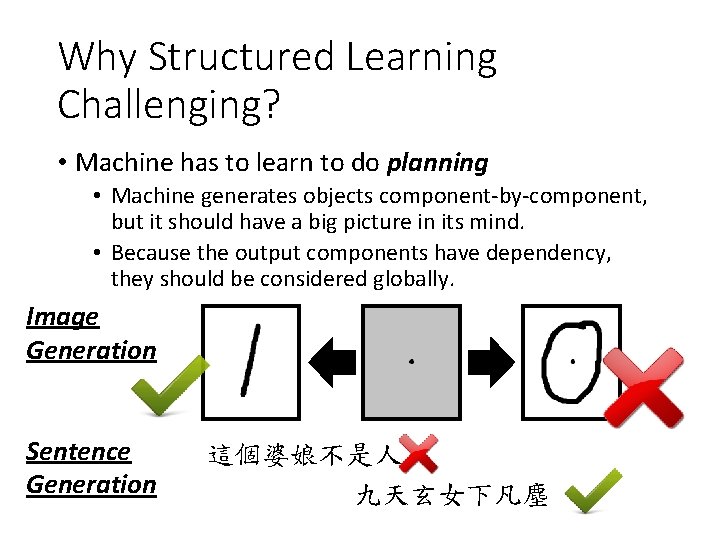

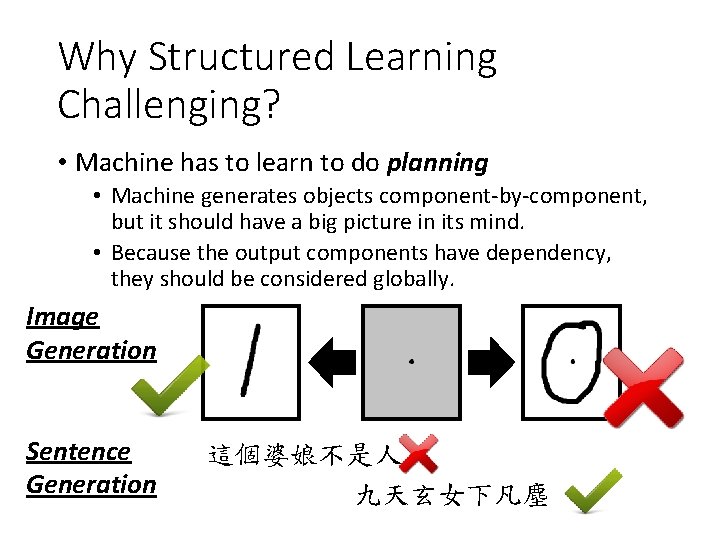

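Why Is Structured Learning Challenging?

- The machine has to learn to do planning.
  - It generates objects component-by-component, but it should have a big picture in its mind.
  - Because the output components have dependency, they should be considered globally.
- Examples: Image Generation; Sentence Generation, e.g., the couplet “這個婆娘不是人” (“This woman is not human”) / “九天玄女下凡塵” (“she is a goddess descended from the Ninth Heaven”). The first line alone reads as an insult; the second turns it into praise, so the whole sentence must be planned together.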

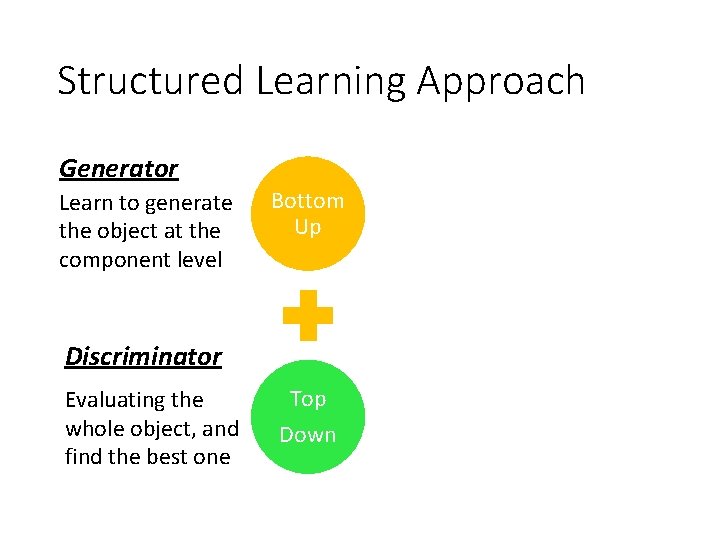

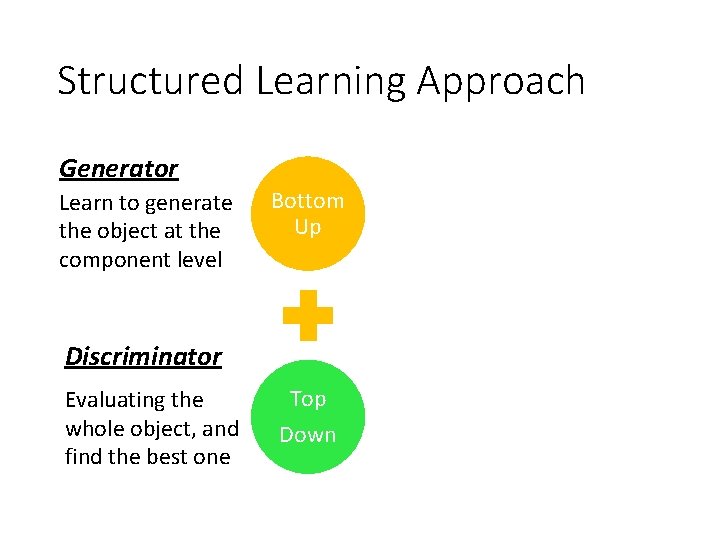

Structured Learning Approach

- Bottom Up: Generator, which learns to generate the object at the component level.
- Top Down: Discriminator, which evaluates the whole object and finds the best one.
- Generative Adversarial Network (GAN) combines the two.


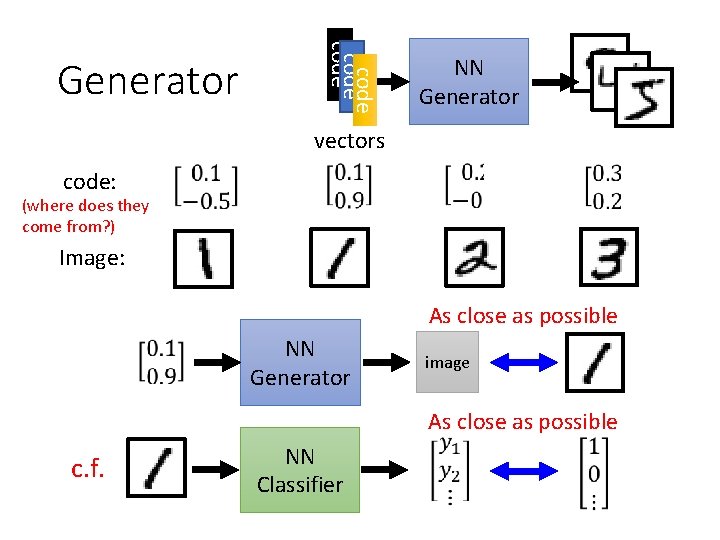

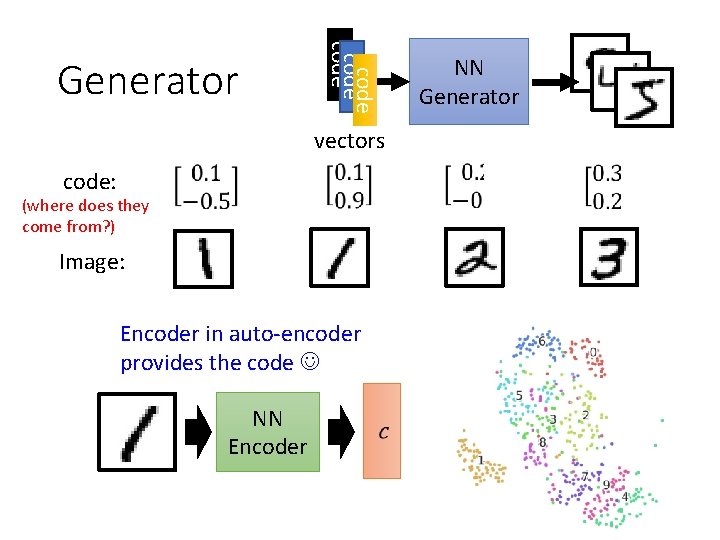

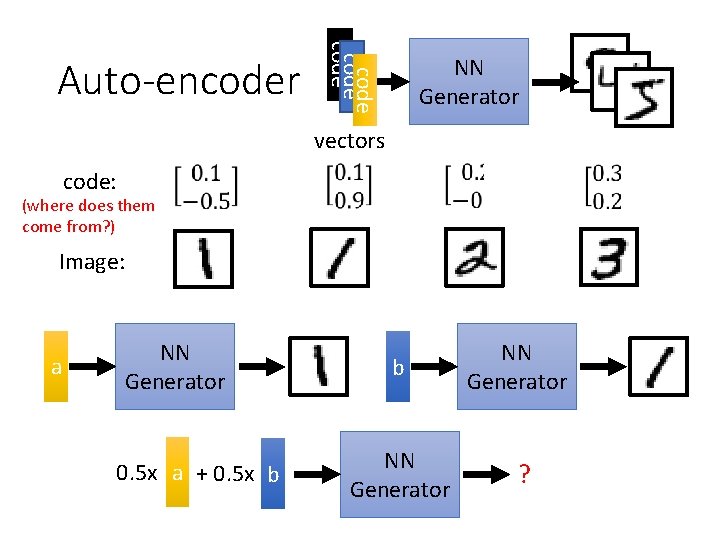

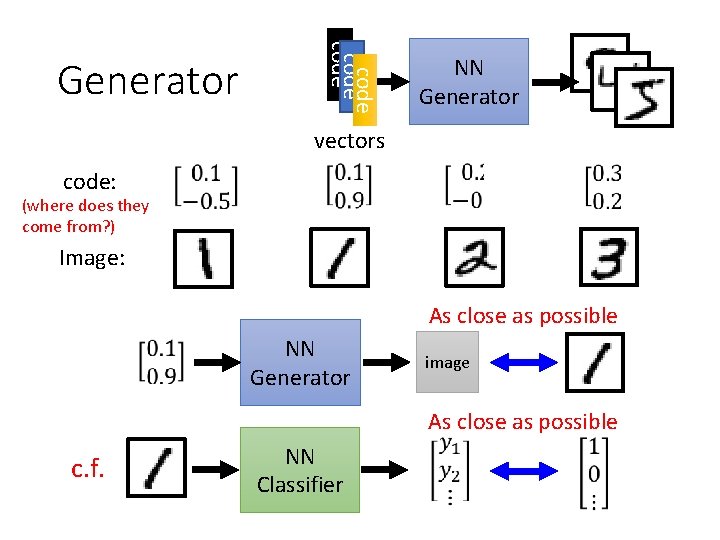

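Generator

- Train an NN Generator in a supervised way: pair each training image with a code vector (where do the codes come from?), and make the generator's output for that code as close as possible to the image.
- Compare with an NN Classifier, which is trained to make its output vector as close as possible to the target.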

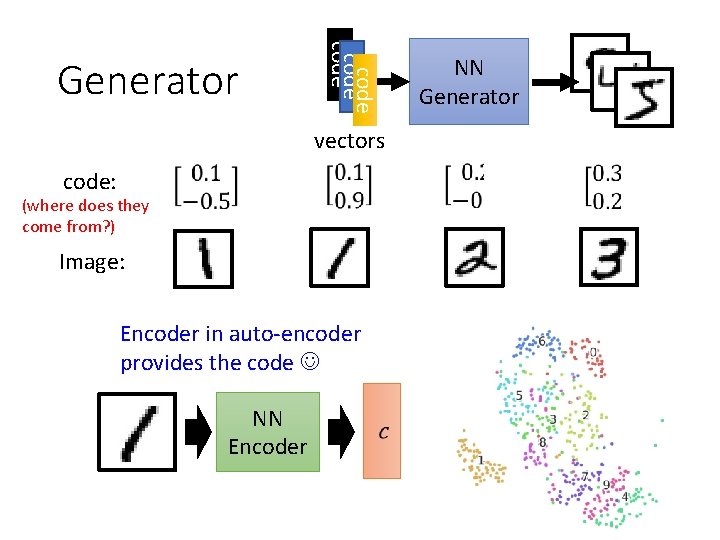

Generator

- Code vectors: where do they come from?
- The encoder in an auto-encoder provides the code: image → NN Encoder → code → NN Generator.

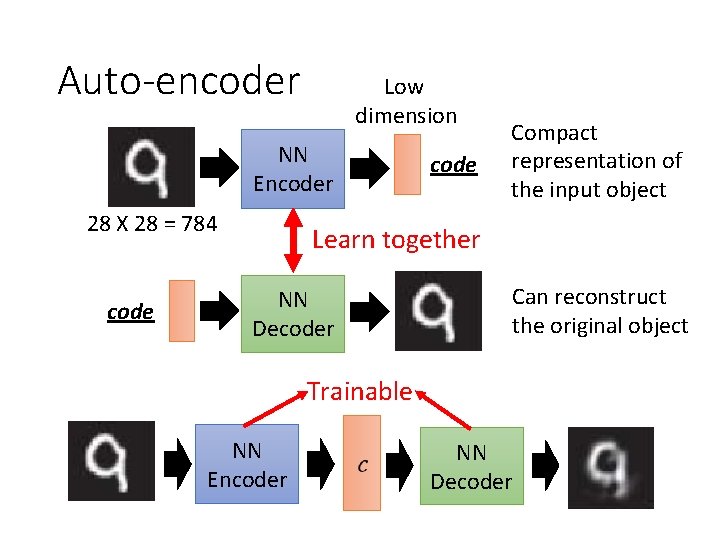

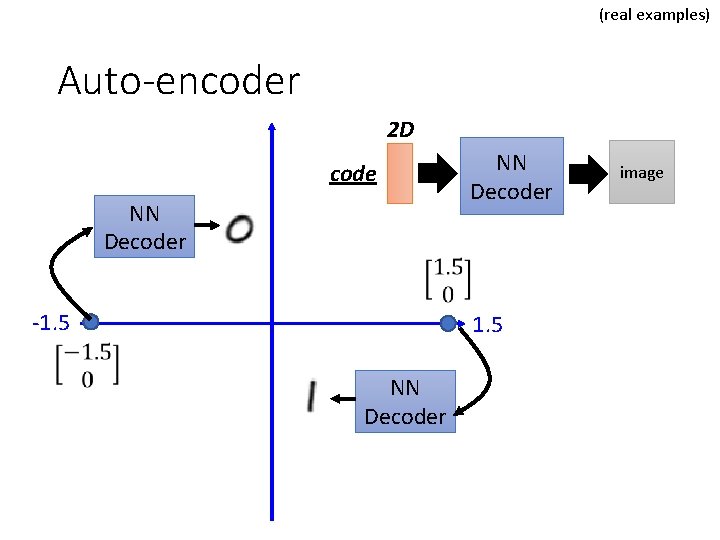

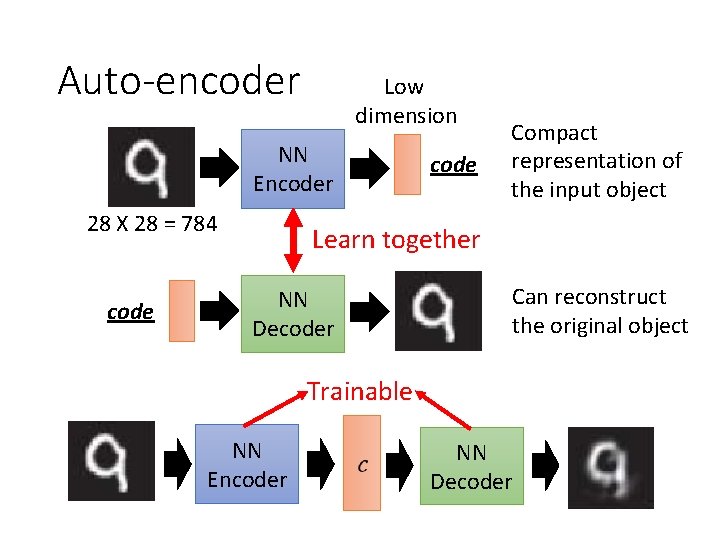

Auto-encoder

- NN Encoder: maps a 28 × 28 = 784-dimensional input to a low-dimensional code, a compact representation of the input object.
- NN Decoder: maps the code back and can reconstruct the original object.
- Both are trainable and learned together.
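A minimal numeric sketch of “learned together”, assuming a linear encoder and decoder, 2-D inputs instead of 784 pixels, and a 1-D code (all hypothetical simplifications). Because the toy inputs lie on the line x₂ = 2x₁, a single number suffices to reconstruct each one, and joint gradient descent on the squared reconstruction error finds such a code.

```python
import random


def encode(x, enc):
    # Linear encoder: 2-D input -> 1-D code.
    return enc[0] * x[0] + enc[1] * x[1]


def decode(code, dec):
    # Linear decoder: 1-D code -> 2-D reconstruction.
    return [dec[0] * code, dec[1] * code]


def recon_error(data, enc, dec):
    """Mean squared reconstruction error over the data set."""
    total = 0.0
    for x in data:
        y = decode(encode(x, enc), dec)
        total += (y[0] - x[0]) ** 2 + (y[1] - x[1]) ** 2
    return total / len(data)


def train_step(data, enc, dec, lr=0.05):
    """One gradient-descent step that updates encoder and decoder together."""
    g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
    for x in data:
        c = encode(x, enc)
        y = decode(c, dec)
        r = [y[0] - x[0], y[1] - x[1]]            # reconstruction residual
        for k in range(2):
            g_dec[k] += 2 * r[k] * c               # d loss / d dec_k
        dc = 2 * (r[0] * dec[0] + r[1] * dec[1])   # d loss / d code
        for j in range(2):
            g_enc[j] += dc * x[j]                  # chain rule into the encoder
    n = len(data)
    enc = [enc[j] - lr * g_enc[j] / n for j in range(2)]
    dec = [dec[k] - lr * g_dec[k] / n for k in range(2)]
    return enc, dec


rng = random.Random(0)
data = []
for _ in range(100):
    t = rng.uniform(-1.0, 1.0)
    data.append([t, 2.0 * t])                      # toy inputs on the line x2 = 2 * x1

enc, dec = [0.5, 0.1], [0.3, 0.2]
before = recon_error(data, enc, dec)
for _ in range(300):
    enc, dec = train_step(data, enc, dec)
after = recon_error(data, enc, dec)
```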

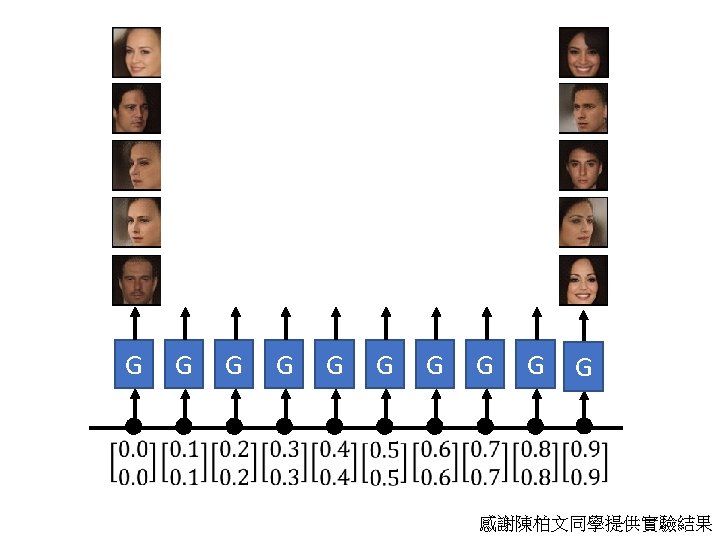

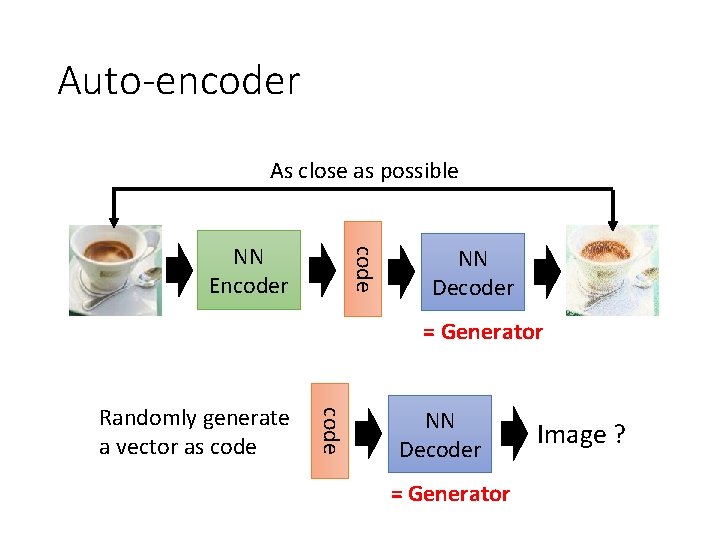

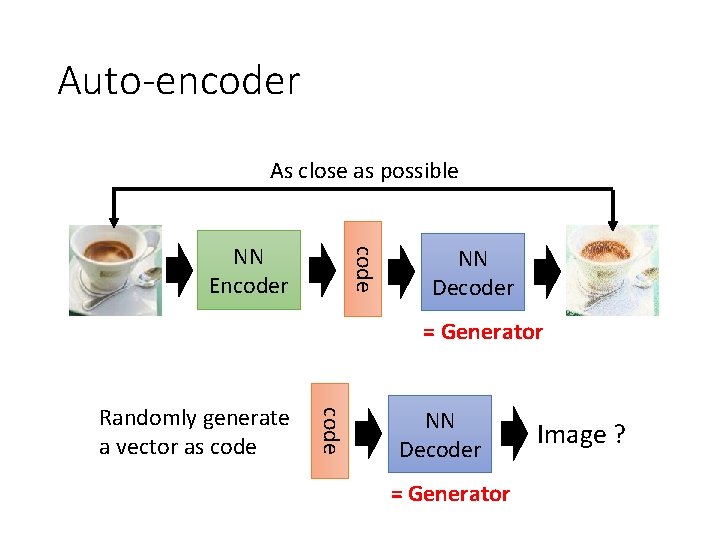

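Auto-encoder

- Train: input → NN Encoder → code → NN Decoder → output, with the output as close as possible to the input.
- Then NN Decoder = Generator: randomly generate a vector as the code and feed it to the NN Decoder. What image comes out?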

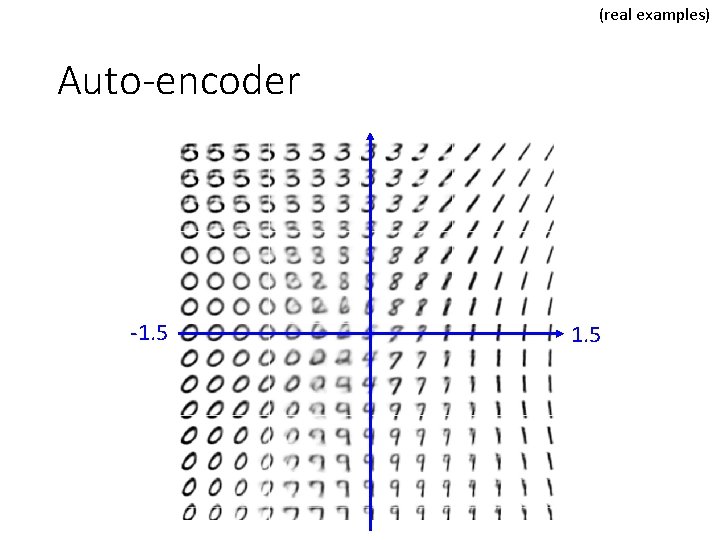

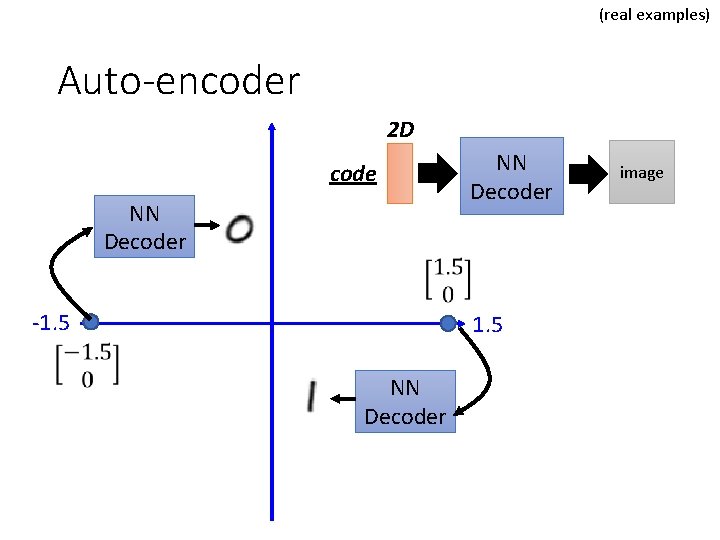

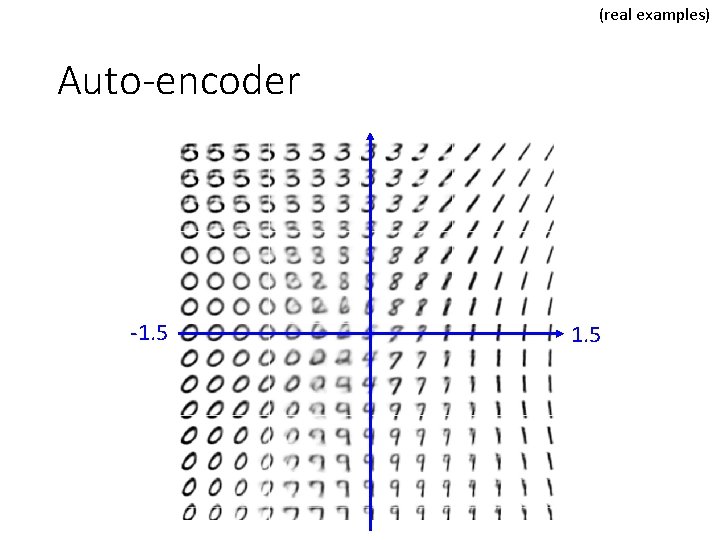

Auto-encoder (real examples): a 2-D code is fed to the NN Decoder to generate images; the figures sweep the 2-D code space (axis marked at −1.5).

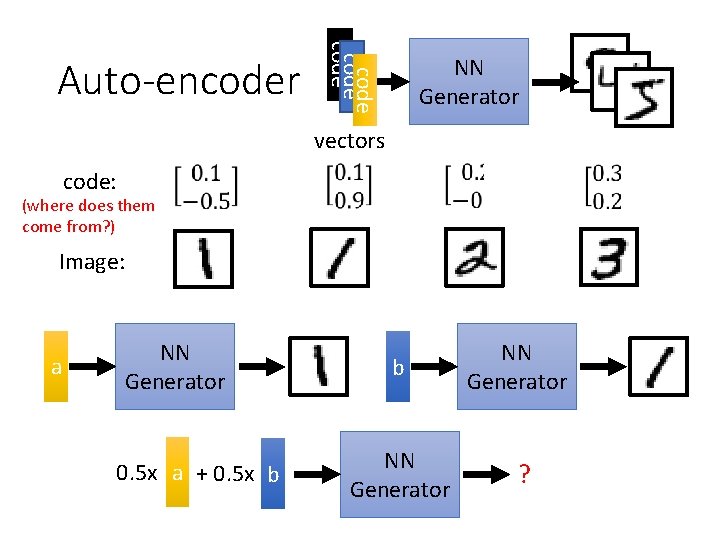

Auto-encoder

- Code a → NN Generator → one image; code b → NN Generator → another image.
- What does the code 0.5 × a + 0.5 × b generate?

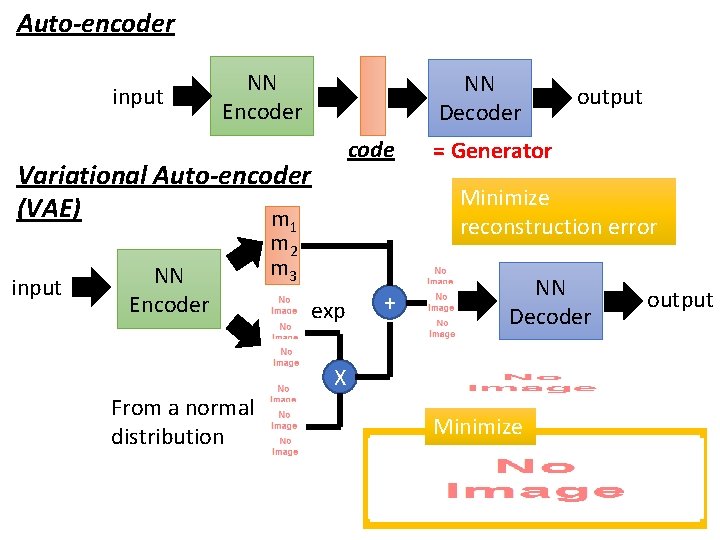

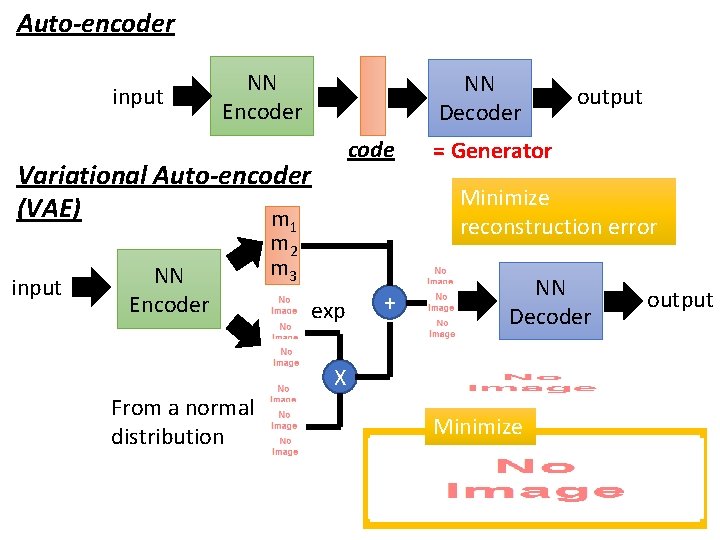

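Auto-encoder: input → NN Encoder → code → NN Decoder → output; minimize the reconstruction error between input and output.

Variational Auto-encoder (VAE): the NN Encoder outputs m = (m1, m2, m3) and σ = (σ1, σ2, σ3); e = (e1, e2, e3) is sampled from a normal distribution; the code is c_i = exp(σ_i) × e_i + m_i; the NN Decoder (= Generator) maps c to the output, and the reconstruction error between input and output is minimized.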
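The VAE sampling step can be sketched as follows, assuming σᵢ is interpreted as a log standard deviation so that cᵢ = exp(σᵢ)·eᵢ + mᵢ with eᵢ ∼ N(0, 1). The regularizer shown is the KL divergence from N(mᵢ, exp(2σᵢ)) to N(0, 1), which matches that interpretation; in a VAE it is minimized together with the reconstruction error. The function names are hypothetical.

```python
import math
import random


def vae_code(m, sigma, rng):
    """Sample c_i = exp(sigma_i) * e_i + m_i with e_i ~ N(0, 1).

    m and sigma are the encoder's two outputs; here sigma is treated as a
    log standard deviation (an assumption), so exp(sigma) is the std.
    """
    e = [rng.gauss(0.0, 1.0) for _ in m]
    c = [math.exp(s) * e_i + m_i for m_i, s, e_i in zip(m, sigma, e)]
    return c, e


def kl_to_standard_normal(m, sigma):
    """KL( N(m, exp(2 sigma)) || N(0, 1) ), summed over code dimensions.

    Minimised together with the reconstruction error so that codes stay
    close to a standard normal distribution.
    """
    return 0.5 * sum(math.exp(2 * s) - (1 + 2 * s) + m_i ** 2 for m_i, s in zip(m, sigma))


rng = random.Random(0)
code, noise = vae_code(m=[0.2, -0.1, 0.0], sigma=[-1.0, 0.0, 0.5], rng=rng)
penalty = kl_to_standard_normal([0.2, -0.1, 0.0], [-1.0, 0.0, 0.5])
```

Sampling through e (rather than sampling c directly) keeps c a differentiable function of m and σ, so the encoder can be trained by gradient descent.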

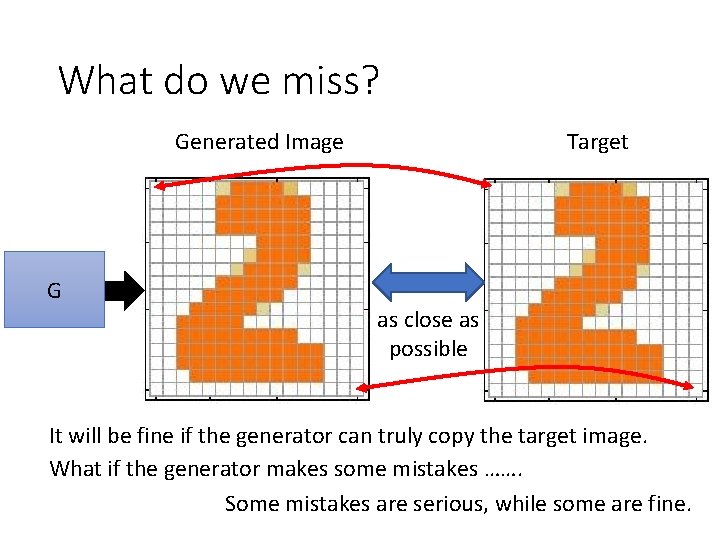

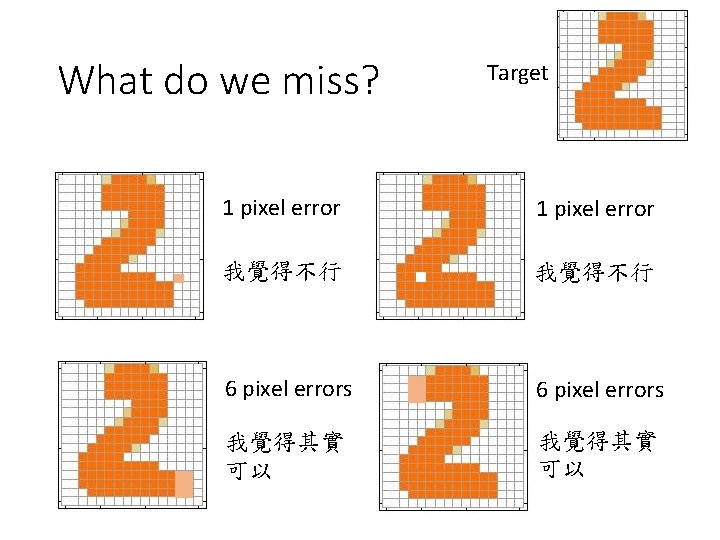

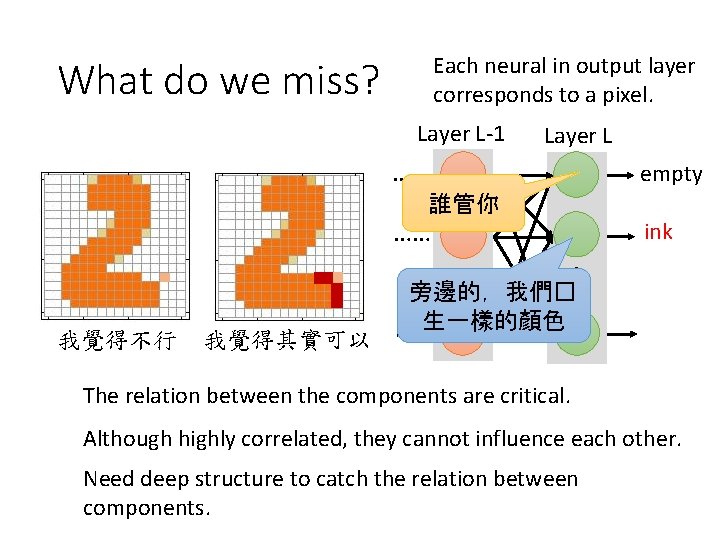

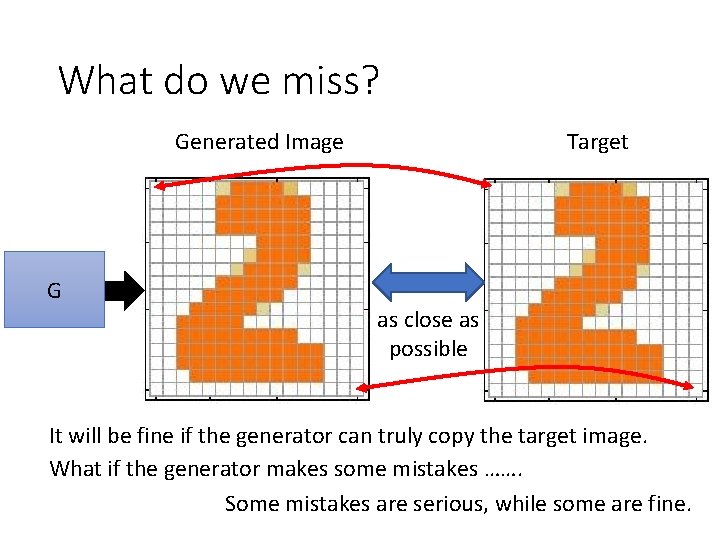

What do we miss?

- The image generated by G should be as close as possible to the target.
- It will be fine if the generator can truly copy the target image.
- What if the generator makes some mistakes ……? Some mistakes are serious, while some are fine.

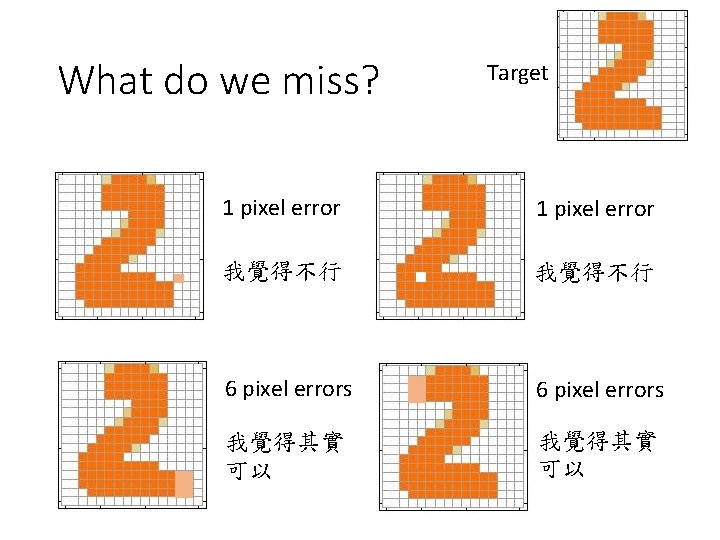

What do we miss?

- Target vs. an output with a 1-pixel error: “I don't think this works.”
- Target vs. an output with 6-pixel errors: “I actually think this is fine.”
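The problem can be made concrete with a hypothetical 6 × 5 binary image of the digit “1” (1 = ink). A pixel-wise loss such as the squared error only counts wrong pixels, so it rates the stray-dot image (1 error) as better than the thicker stroke (6 errors), even though a person would judge them the other way round.

```python
def blank(rows, cols):
    return [[0] * cols for _ in range(rows)]


def squared_error(a, b):
    """Pixel-wise squared error, the kind of loss an auto-encoder minimises."""
    return sum((x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


target = blank(6, 5)
for r in range(6):
    target[r][2] = 1              # a vertical stroke: the digit "1"

one_pixel = [row[:] for row in target]
one_pixel[0][0] = 1               # one stray dot far from the stroke

six_pixels = [row[:] for row in target]
for r in range(6):
    six_pixels[r][3] = 1          # the same stroke, just drawn thicker

print(squared_error(target, one_pixel), squared_error(target, six_pixels))  # prints: 1 6
```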

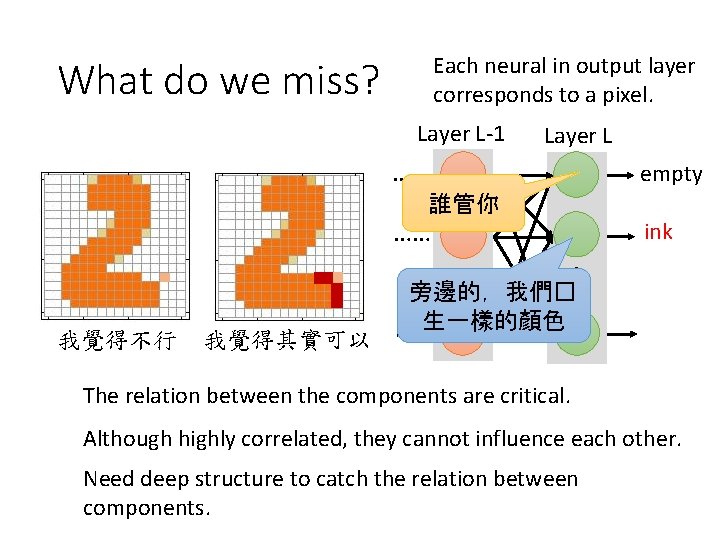

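What do we miss?

- Each neuron in the output layer (Layer L) corresponds to a pixel and is computed from Layer L−1.
- One output neuron says “ink”, its neighbor says “empty” (“Who cares about you?”); sometimes the result is fine (“I actually think it's OK”), sometimes not (“I don't think it works”). What we would want is “Neighbors, let's produce the same color.”
- The relation between the components is critical. Although highly correlated, the output neurons cannot influence each other.
- We need a deep structure to catch the relation between components.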

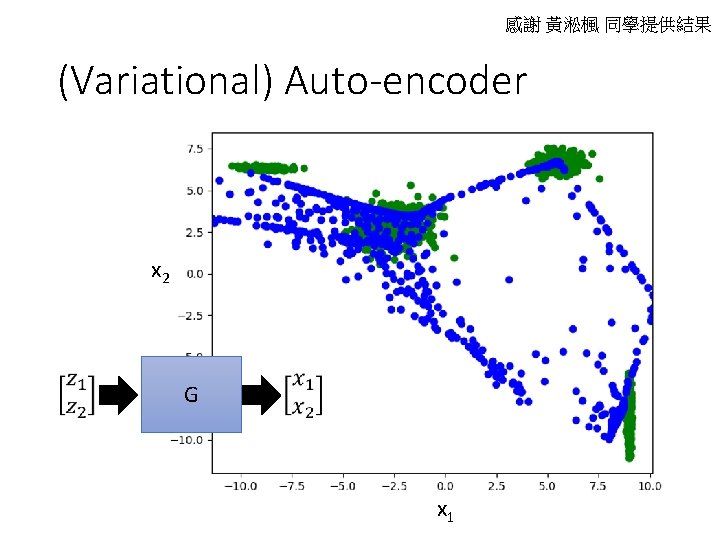

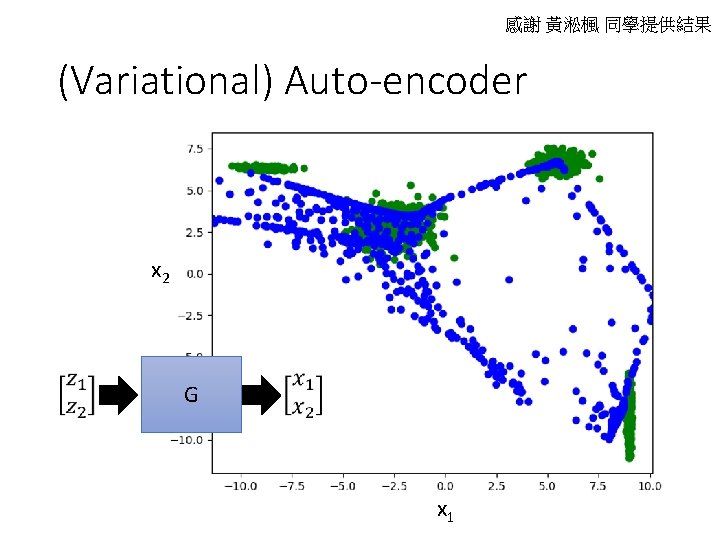

(Variational) Auto-encoder: results of G plotted in the (x1, x2) plane. Thanks to 黃淞楓 (a student) for providing the results.


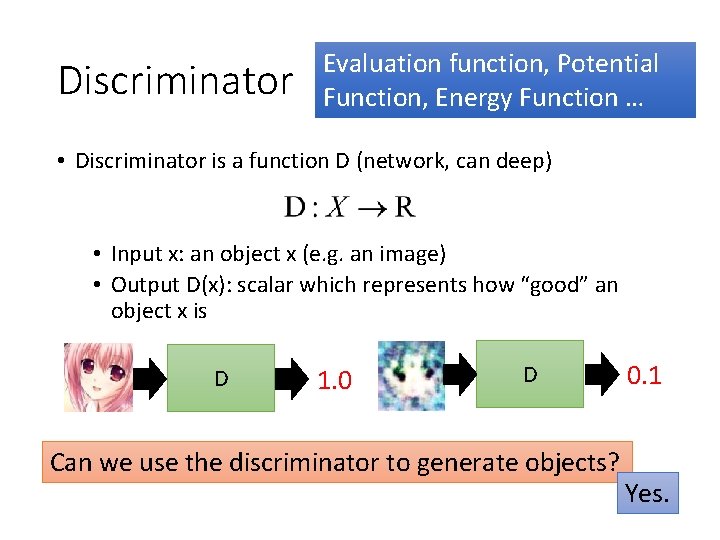

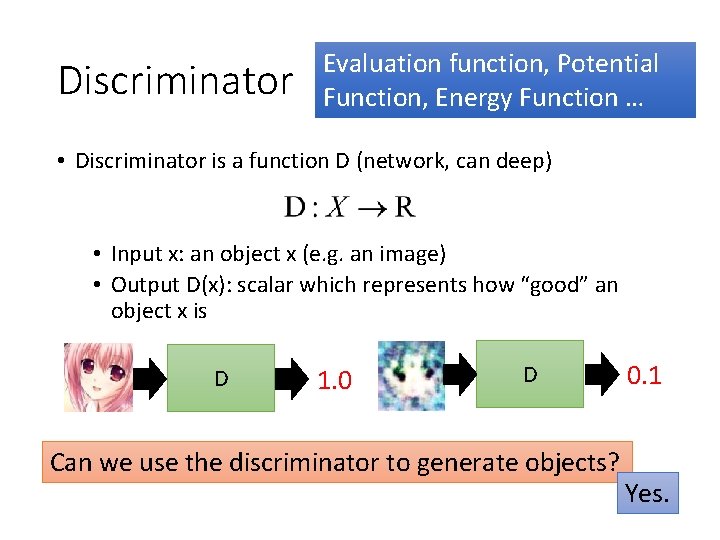

Discriminator

- Also known as: evaluation function, potential function, energy function, …
- The discriminator is a function D (a network, which can be deep):
  - Input x: an object x (e.g., an image)
  - Output D(x): a scalar that represents how “good” the object x is (e.g., 1.0 for a good image, 0.1 for a bad one)
- Can we use the discriminator to generate objects? Yes.

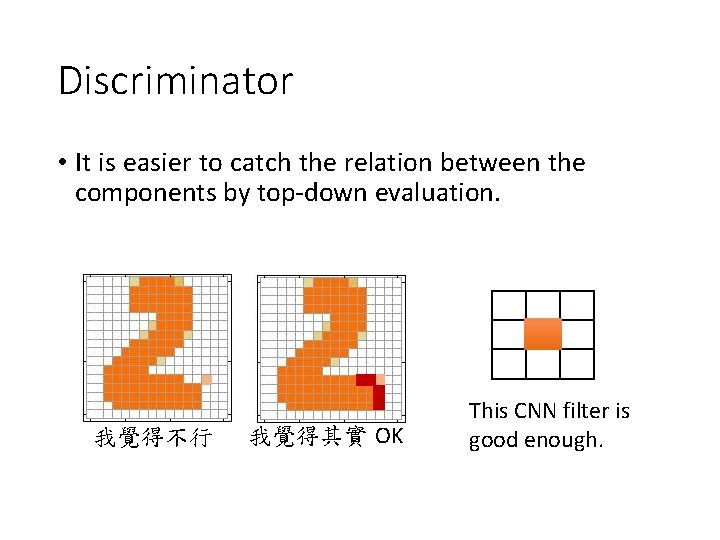

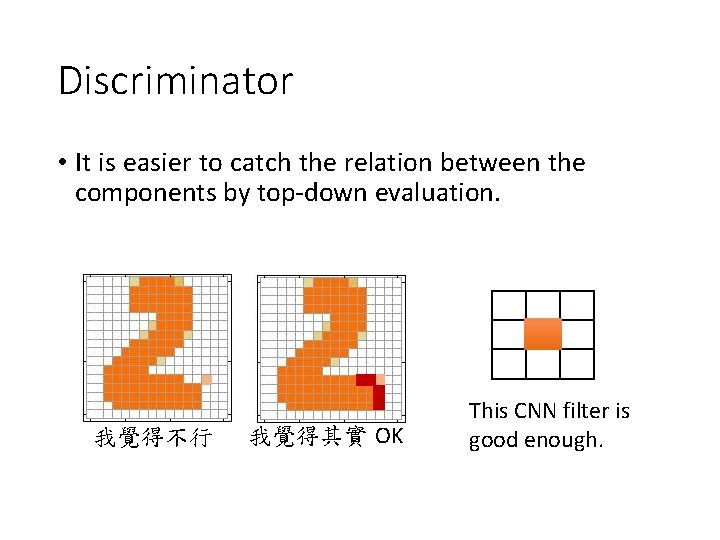

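Discriminator

- It is easier to catch the relation between the components by top-down evaluation: looking at the whole image, the 1-pixel-error output does not work, while the 6-pixel-error output is actually OK.
- This CNN filter is good enough.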
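The kind of filter this slide refers to can be sketched as a 3 × 3 kernel with +1 at the center and −1 on the 8 neighbors, so on a binary image its response is 1 exactly when an ink pixel has no inked neighbors. The scoring rule built on it (0.1 for a stray dot, 1.0 otherwise) is a hypothetical stand-in for a learned discriminator, tried on toy 6 × 5 images of a “1”.

```python
def filter_response(img, r, c):
    """3x3 filter: +1 on the centre pixel, -1 on its 8 neighbours (zero padding)."""
    total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if 0 <= rr < len(img) and 0 <= cc < len(img[0]):
                weight = 1 if dr == dc == 0 else -1
                total += weight * img[rr][cc]
    return total


def has_isolated_pixel(img):
    # For binary images the response is 1 only when the centre is ink and no neighbour is.
    return any(filter_response(img, r, c) == 1
               for r in range(len(img)) for c in range(len(img[0])))


def toy_discriminator(img):
    # Hypothetical scoring rule: a stray dot looks fake, anything else passes.
    return 0.1 if has_isolated_pixel(img) else 1.0


target = [[1 if c == 2 else 0 for c in range(5)] for _ in range(6)]          # a "1"
one_pixel = [row[:] for row in target]
one_pixel[0][0] = 1                                                           # stray dot
six_pixels = [[1 if c in (2, 3) else 0 for c in range(5)] for _ in range(6)]  # thicker stroke
```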

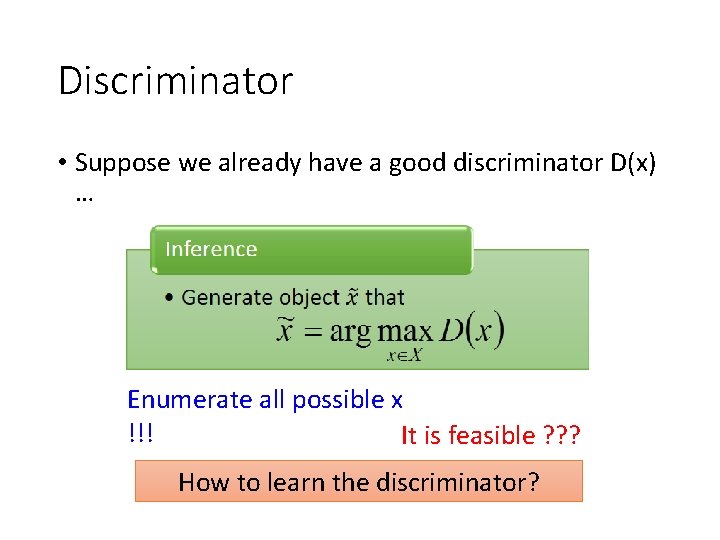

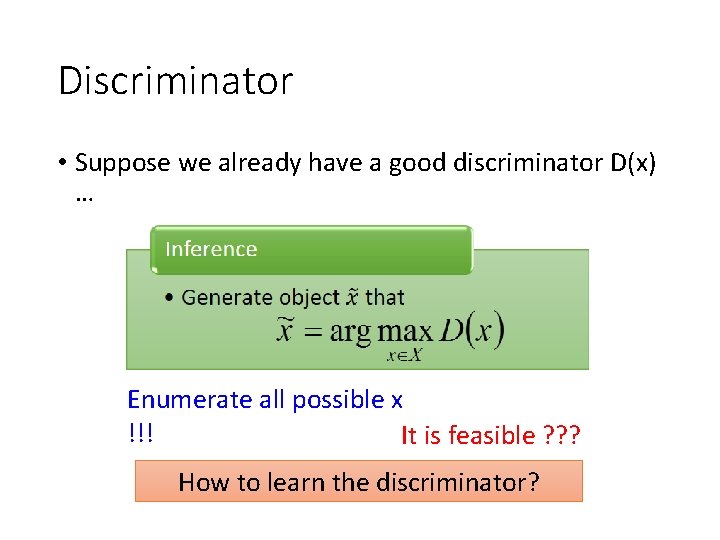

Discriminator

- Suppose we already have a good discriminator D(x) …
- Enumerate all possible x and pick x̃ = arg max D(x)!!! Is it feasible???
- How to learn the discriminator?
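Generating with a discriminator means solving x̃ = arg max D(x). The brute-force version is sketched below for a hypothetical discriminator over 3 × 3 binary images (2⁹ = 512 candidates). For a 28 × 28 binary image there would already be 2⁷⁸⁴ ≈ 10²³⁶ candidates, which is why enumeration is not feasible in general.

```python
from itertools import product

MIDDLE_COLUMN = {1, 4, 7}   # row-major indices of the middle column of a 3x3 image


def toy_d(x):
    """Hypothetical discriminator for 3x3 binary images (row-major tuple of 9 pixels):
    +1 for each inked pixel in the middle column, -1 for ink anywhere else."""
    return sum(1 if i in MIDDLE_COLUMN else -1 for i, v in enumerate(x) if v)


def generate_by_enumeration(d, n_pixels):
    """x_tilde = argmax_x D(x), found by scoring every binary image."""
    return max(product((0, 1), repeat=n_pixels), key=d)


best = generate_by_enumeration(toy_d, 9)   # 2**9 = 512 candidates
print(best)  # prints: (0, 1, 0, 0, 1, 0, 0, 1, 0)
```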

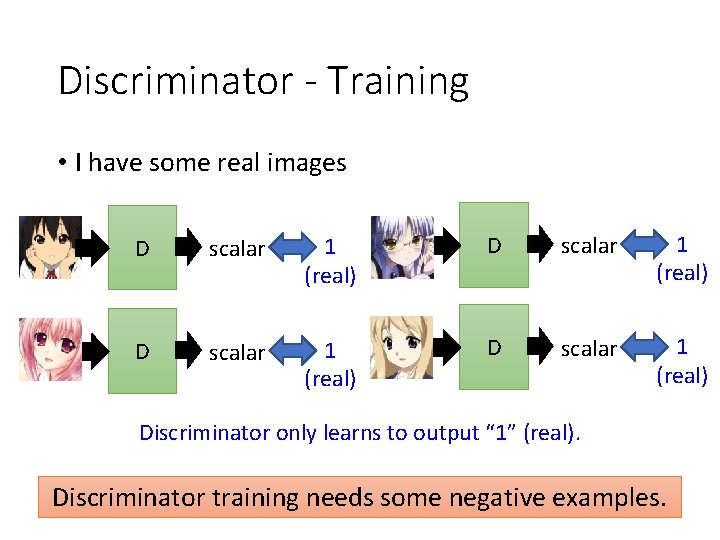

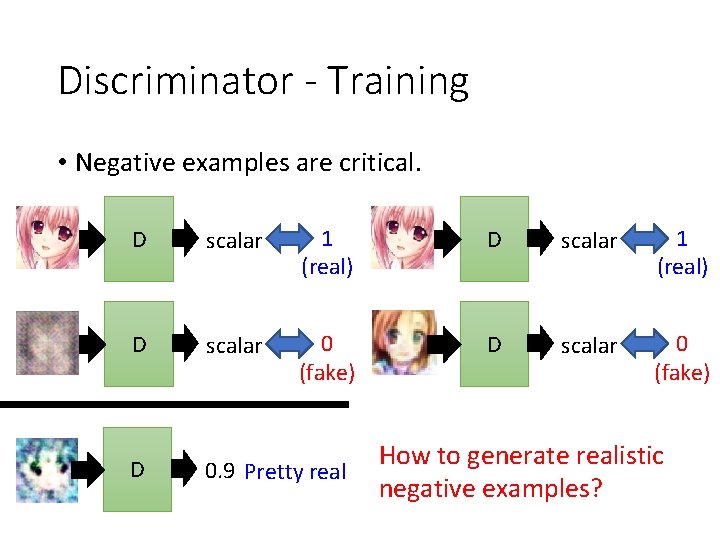

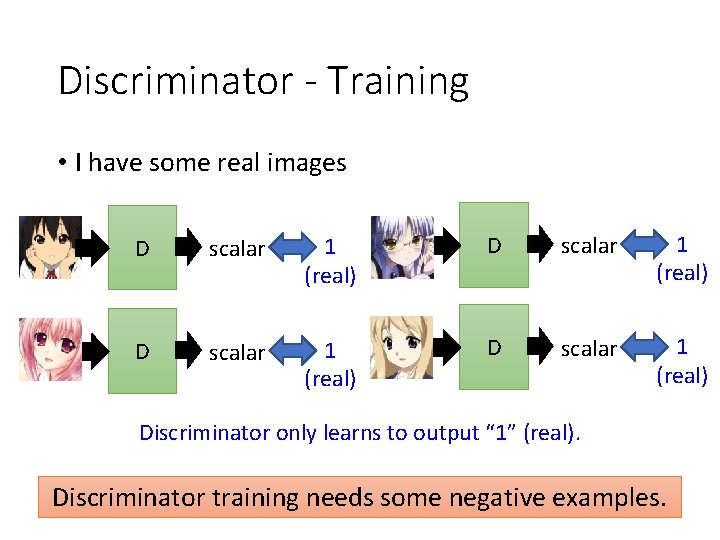

Discriminator - Training

- I have some real images: for each, D should output the scalar 1 (real).
- Trained on real images alone, the discriminator only learns to output “1” (real).
- Discriminator training needs some negative examples.

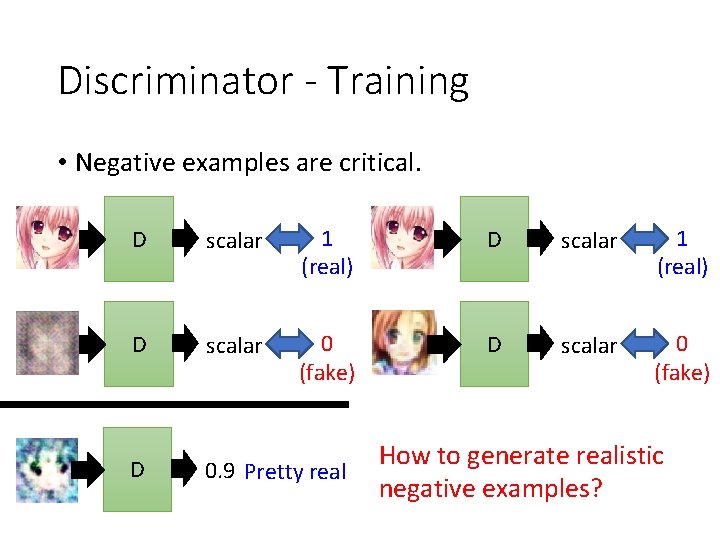

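Discriminator - Training

- Negative examples are critical: real images → D → 1 (real); negative images → D → 0 (fake).
- With poor negative examples, D may still give a flawed image a score like 0.9 (“pretty real”).
- How to generate realistic negative examples?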

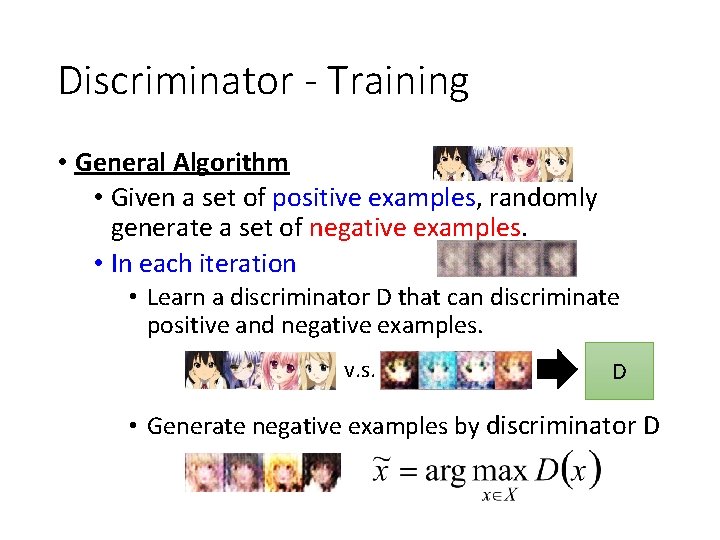

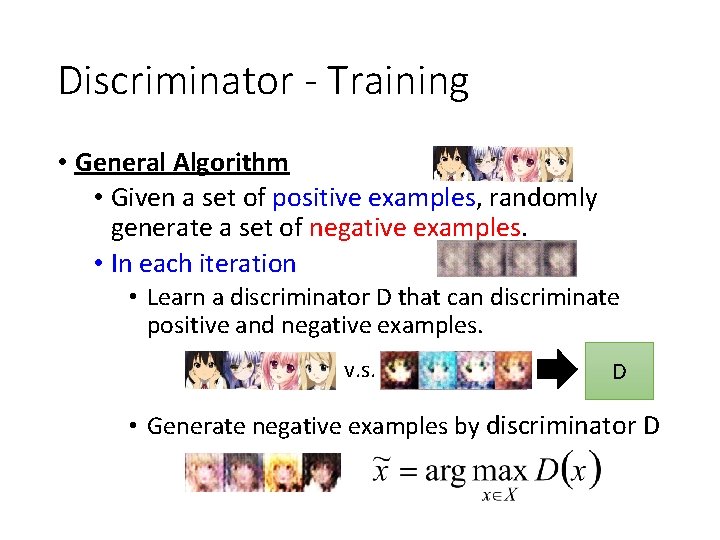

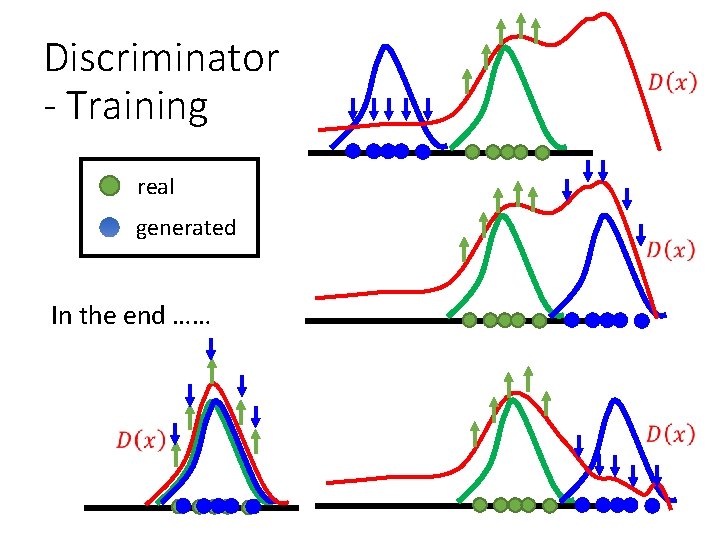

Discriminator - Training

- General Algorithm
  - Given a set of positive examples, randomly generate a set of negative examples.
  - In each iteration:
    - Learn a discriminator D that can discriminate positive and negative examples.
    - Generate negative examples by discriminator D: x̃ = arg max D(x).

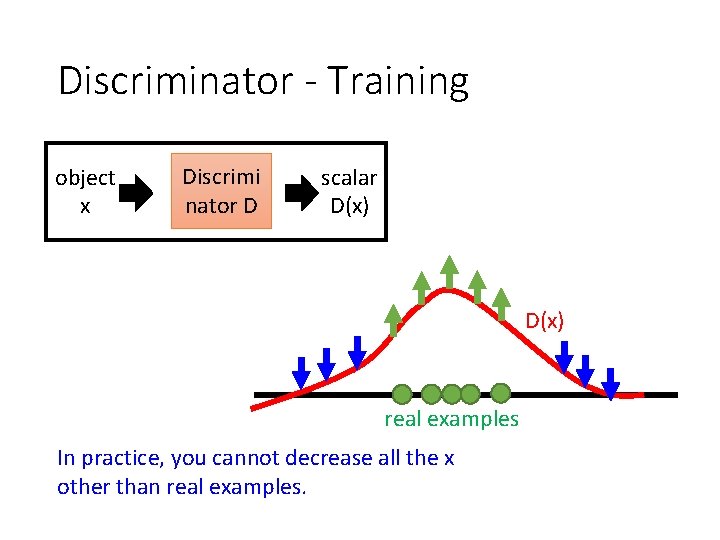

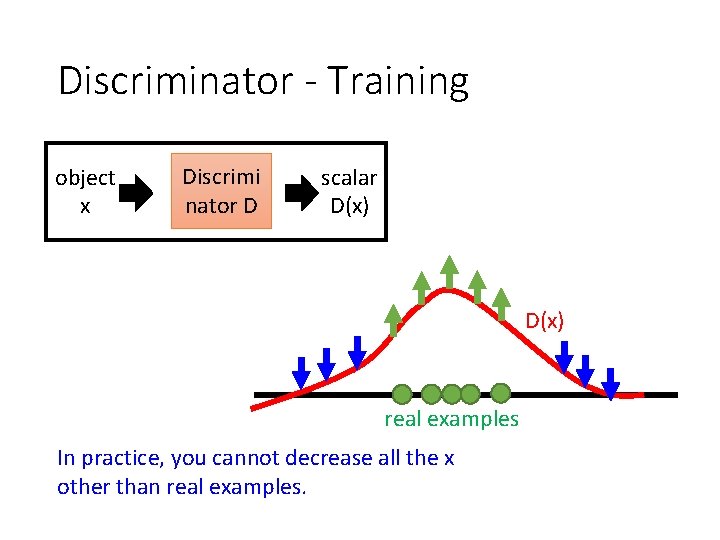

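Discriminator - Training

- The discriminator D takes an object x and outputs a scalar D(x); training should raise D(x) on real examples and lower it everywhere else.
- In practice, you cannot decrease D(x) for all the x other than the real examples.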

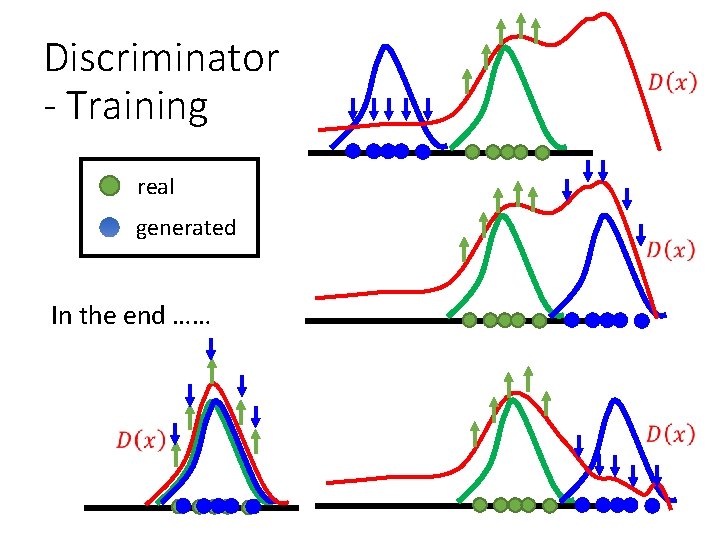

Discriminator - Training

[Figure: D(x) on real and generated examples over the training iterations] In the end ……

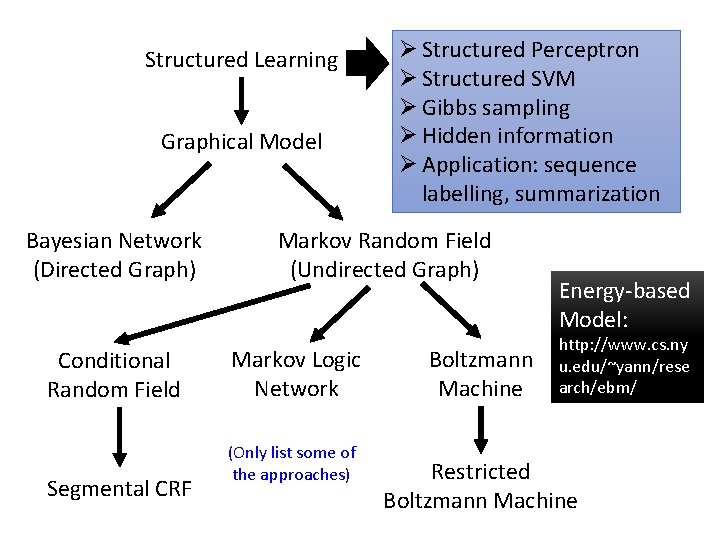

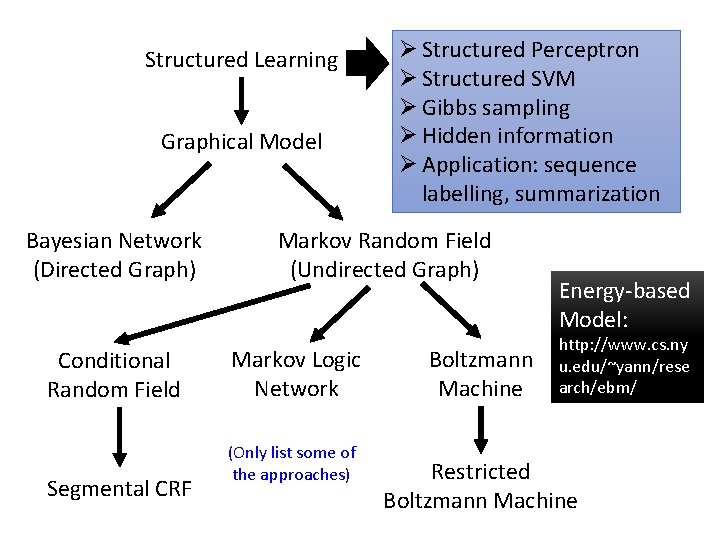

Structured Learning (only some of the approaches are listed)

- Graphical Model
  - Bayesian Network (Directed Graph)
  - Markov Random Field (Undirected Graph): Conditional Random Field, Segmental CRF, Markov Logic Network, Boltzmann Machine, Restricted Boltzmann Machine
- Structured Perceptron
- Structured SVM
- Gibbs sampling
- Hidden information
- Application: sequence labelling, summarization
- Energy-based Model: http://www.cs.nyu.edu/~yann/research/ebm/

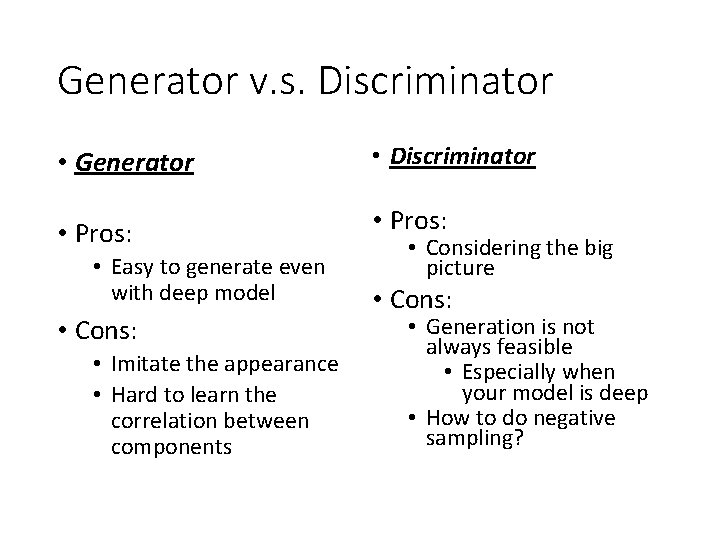

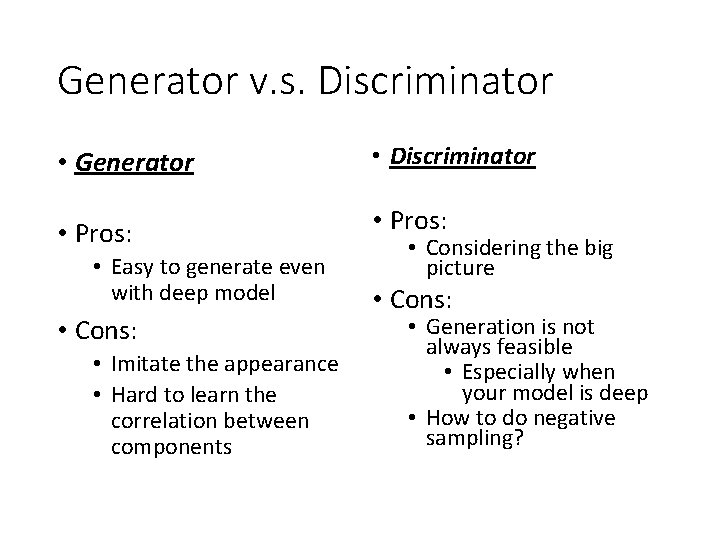

Generator vs. Discriminator

- Generator
  - Pros: easy to generate even with a deep model.
  - Cons: imitates the appearance; hard to learn the correlation between components.
- Discriminator
  - Pros: considers the big picture.
  - Cons: generation is not always feasible, especially when your model is deep; how to do negative sampling?

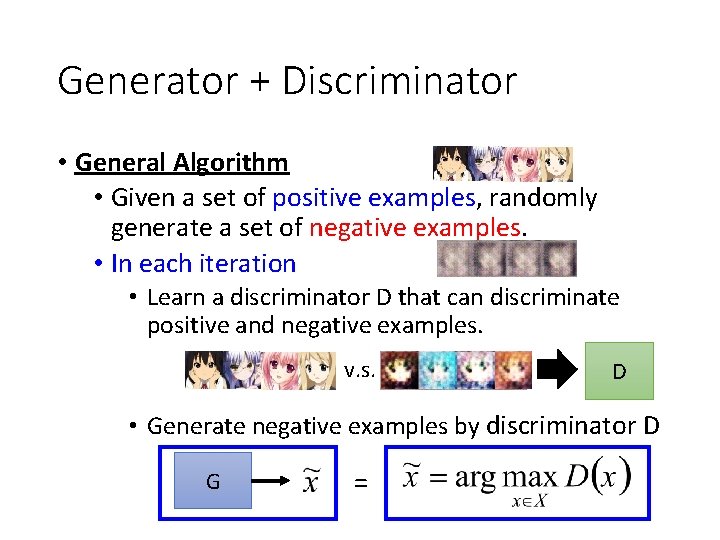

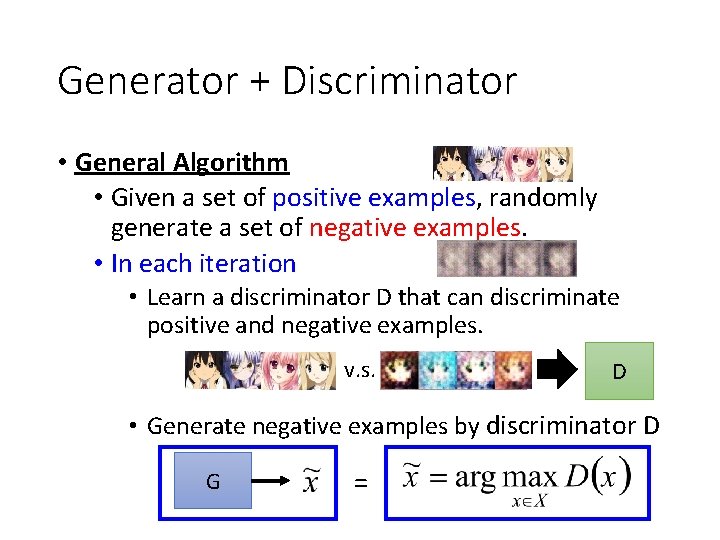

Generator + Discriminator

- General Algorithm
  - Given a set of positive examples, randomly generate a set of negative examples.
  - In each iteration:
    - Learn a discriminator D that can discriminate positive and negative examples.
    - Generate negative examples with the generator G instead of solving arg max D(x): G is trained so that G(z) = x̃ ≈ arg max D(x).

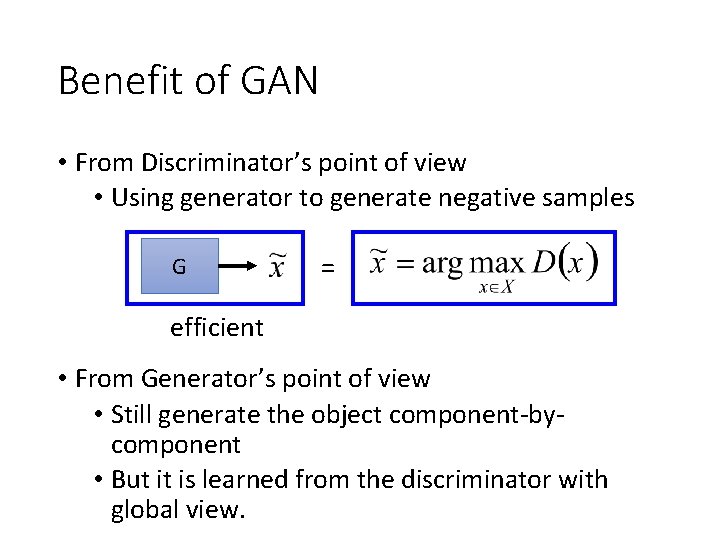

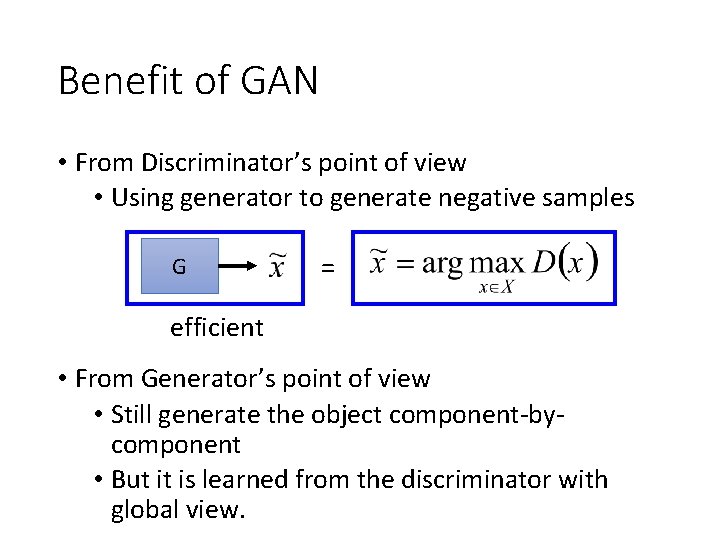

Benefit of GAN

- From the discriminator’s point of view: using a generator to generate negative samples (G(z) = x̃ ≈ arg max D(x)) is efficient.
- From the generator’s point of view: it still generates the object component-by-component, but it is learned from the discriminator, which has a global view.

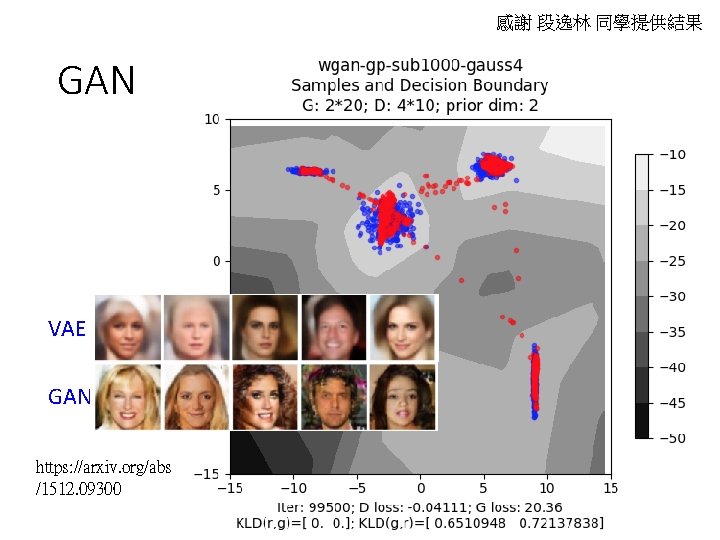

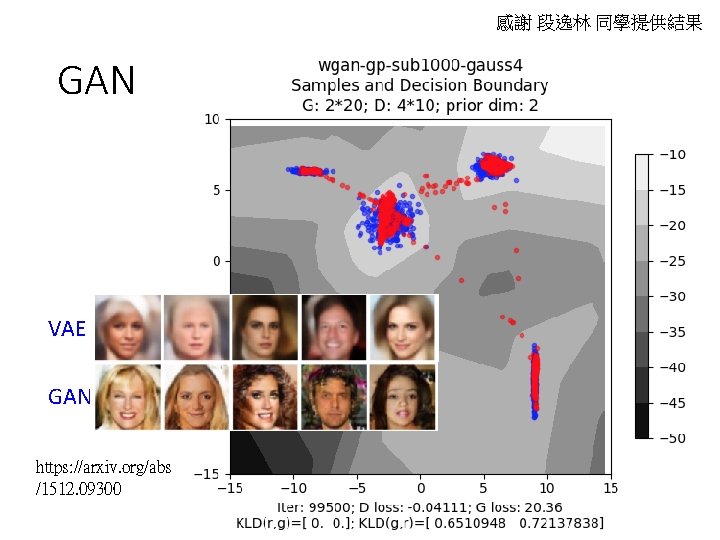

[Figure: generated samples, VAE vs. GAN] Thanks to 段逸林 (a student) for providing the results. Ref: https://arxiv.org/abs/1512.09300

![FID comparison (Mario Lucic, et al., arXiv, 2017); FID (Martin Heusel, et al., NIPS, 2017)](https://slidetodoc.com/presentation_image_h/1dc530723a0cb1cc4551881fd2cbb453/image-61.jpg)

[Mario Lucic, et al., arXiv, 2017] FID (Fréchet Inception Distance) [Martin Heusel, et al., NIPS, 2017]: smaller is better.
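For reference, FID fits one Gaussian to Inception-network features of real images and another to features of generated images, then computes ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_rΣ_g)^(1/2)). The sketch below is only the 1-D special case, where the formula reduces to (μ_r − μ_g)² + (σ_r − σ_g)². It works on raw numbers rather than Inception features and uses population standard deviations as a simplifying assumption.

```python
import statistics


def frechet_distance_1d(xs, ys):
    """Frechet distance between 1-D Gaussians fitted to two samples:
    (mu_x - mu_y)^2 + (sigma_x - sigma_y)^2."""
    mu_x, mu_y = statistics.mean(xs), statistics.mean(ys)
    sd_x, sd_y = statistics.pstdev(xs), statistics.pstdev(ys)
    return (mu_x - mu_y) ** 2 + (sd_x - sd_y) ** 2


real = [1.0, 2.0, 3.0, 4.0]
shifted = [3.0, 4.0, 5.0, 6.0]    # same spread, mean moved by 2
print(frechet_distance_1d(real, real), frechet_distance_1d(real, shifted))
```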

Next Time

- Preview:
  - https://youtu.be/0CKeqXl5IY0
  - https://youtu.be/KSN4QYgAtao


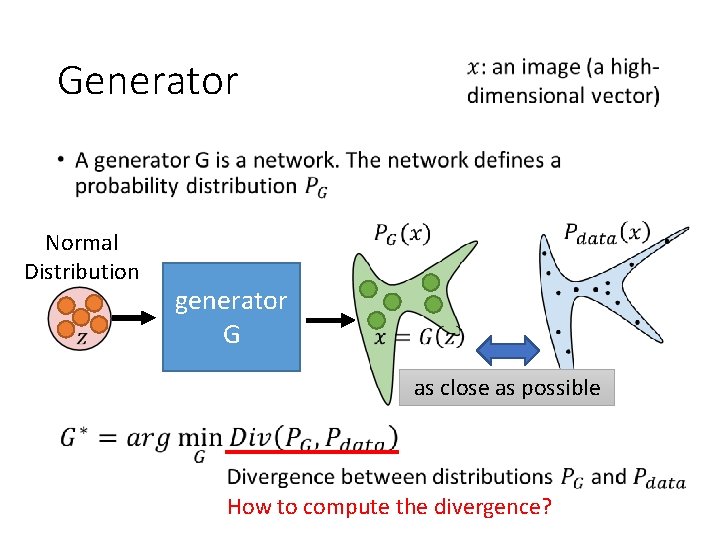

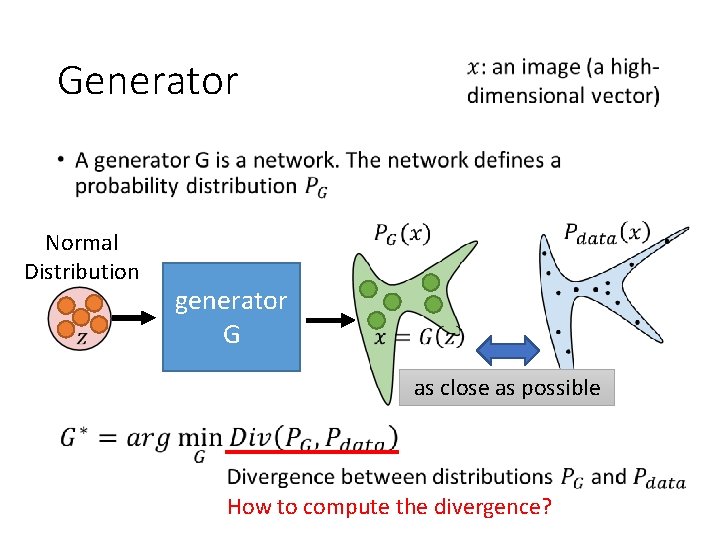

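Generator

- A generator G maps a vector z sampled from a normal distribution to x = G(z); the generated x follow a distribution P_G(x) that should be as close as possible to the data distribution P_data(x).
- G* = arg min_G Div(P_G, P_data)
- How to compute the divergence?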

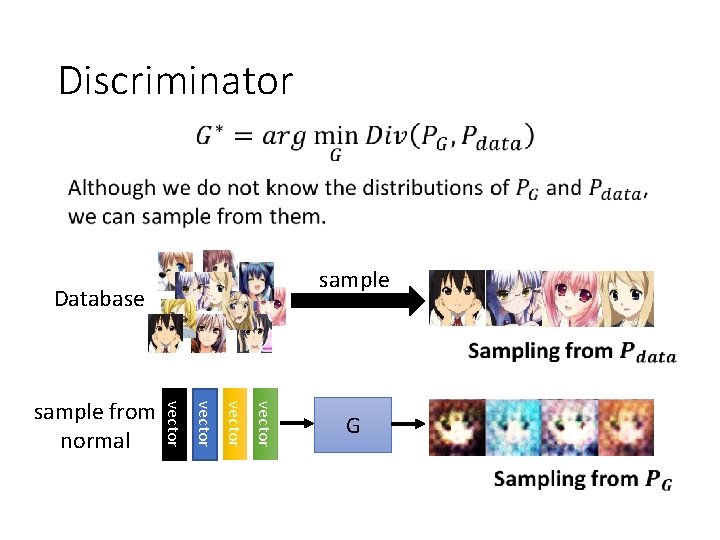

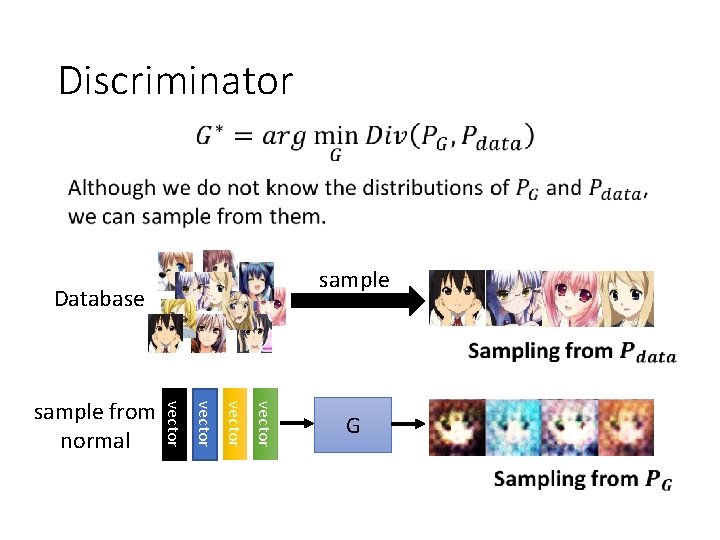

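Discriminator

- We do not know the formulas of P_G and P_data, but we can sample from them:
  - sample real objects from the database (P_data);
  - sample vectors from the normal distribution and feed them to G (P_G).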

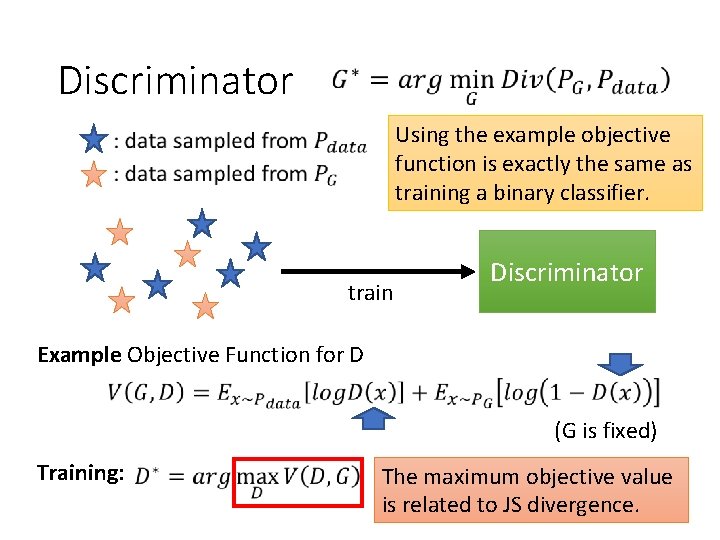

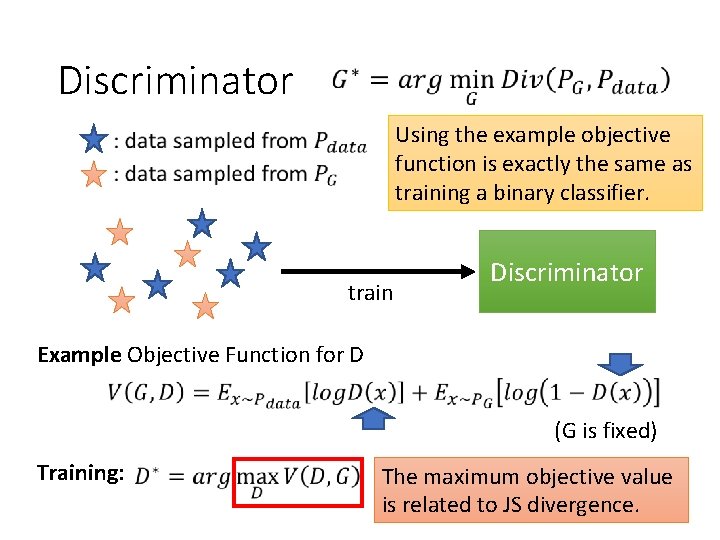

Discriminator

- Train the discriminator on the two sets of samples with an example objective function for D (G is fixed).
- Using the example objective function is exactly the same as training a binary classifier.
- The maximum objective value is related to JS divergence.
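The example objective function and its relation to JS divergence (Goodfellow et al., 2014) can be written out as follows. The optimal D* comes from maximizing P_data(x) log D + P_G(x) log(1 − D) separately for each x; its derivative P_data/D − P_G/(1 − D) vanishes at D = P_data(x) / (P_data(x) + P_G(x)).

```latex
\begin{aligned}
V(G,D) &= \mathbb{E}_{x\sim P_{data}}\bigl[\log D(x)\bigr] + \mathbb{E}_{x\sim P_G}\bigl[\log\bigl(1-D(x)\bigr)\bigr]\\
D^*(x) &= \frac{P_{data}(x)}{P_{data}(x)+P_G(x)}\\
\max_D V(G,D) &= V(G,D^*) = -2\log 2
  + \mathrm{KL}\Bigl(P_{data}\,\Big\|\,\tfrac{P_{data}+P_G}{2}\Bigr)
  + \mathrm{KL}\Bigl(P_G\,\Big\|\,\tfrac{P_{data}+P_G}{2}\Bigr)\\
&= -2\log 2 + 2\,\mathrm{JSD}\bigl(P_{data}\,\|\,P_G\bigr)
\end{aligned}
```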

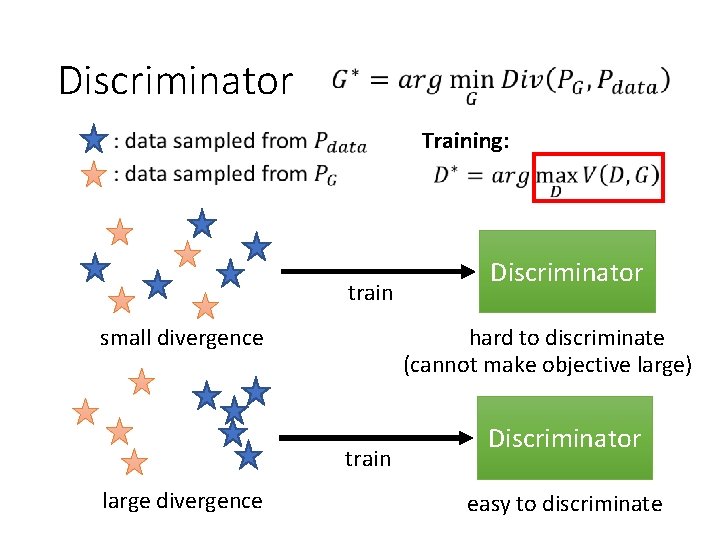

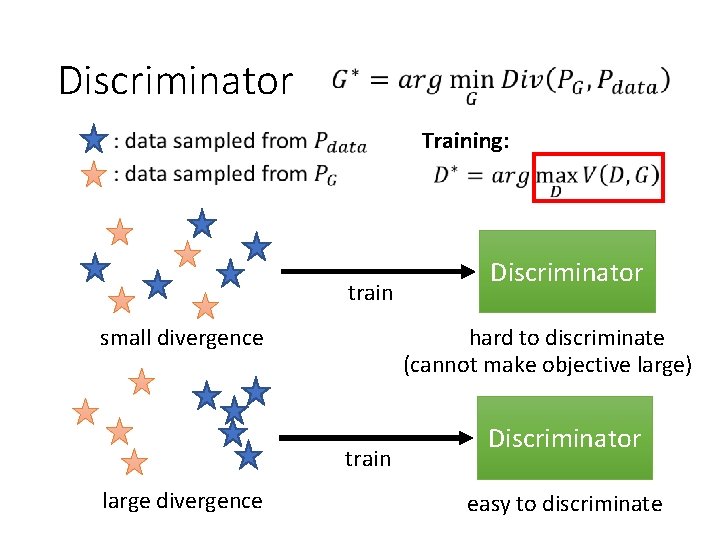

Discriminator

- Small divergence: real and generated data are hard to discriminate, so training cannot make the objective large.
- Large divergence: they are easy to discriminate, so the trained discriminator reaches a large objective.
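A numeric check of this picture on hypothetical discrete distributions: plugging the optimal discriminator D*(x) = P_data(x) / (P_data(x) + P_G(x)) into the objective gives −2 log 2 + 2·JSD, so a P_G close to P_data yields a small maximum, while a P_G with no overlap yields the largest possible value, 0.

```python
import math


def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jsd(p, q):
    """Jensen-Shannon divergence (natural log), so its maximum is log 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def max_objective(p_data, p_g):
    """V(G, D*) = sum_x P_data log D*(x) + P_G log(1 - D*(x)),
    with the optimal discriminator D*(x) = P_data(x) / (P_data(x) + P_G(x))."""
    v = 0.0
    for pd, pg in zip(p_data, p_g):
        if pd + pg == 0:
            continue                      # x never appears; it contributes nothing
        d_star = pd / (pd + pg)
        if pd > 0:
            v += pd * math.log(d_star)
        if pg > 0:
            v += pg * math.log(1.0 - d_star)
    return v


p_data = [0.5, 0.5, 0.0, 0.0]
close = [0.4, 0.6, 0.0, 0.0]     # hypothetical P_G near P_data
far = [0.0, 0.0, 0.5, 0.5]       # hypothetical P_G with no overlap
for p_g in (p_data, close, far):
    print(round(max_objective(p_data, p_g), 4), round(-2 * math.log(2) + 2 * jsd(p_data, p_g), 4))
```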

![The maximum objective value is related to JS divergence (Goodfellow, et al., NIPS, 2014)](https://slidetodoc.com/presentation_image_h/1dc530723a0cb1cc4551881fd2cbb453/image-68.jpg)

[Goodfellow, et al., NIPS, 2014] The maximum objective value is related to JS divergence, so the generator is trained to minimize that maximum.

- Initialize generator and discriminator.
- In each training iteration:
  - Step 1: Fix generator G, and update discriminator D.
  - Step 2: Fix discriminator D, and update generator G.

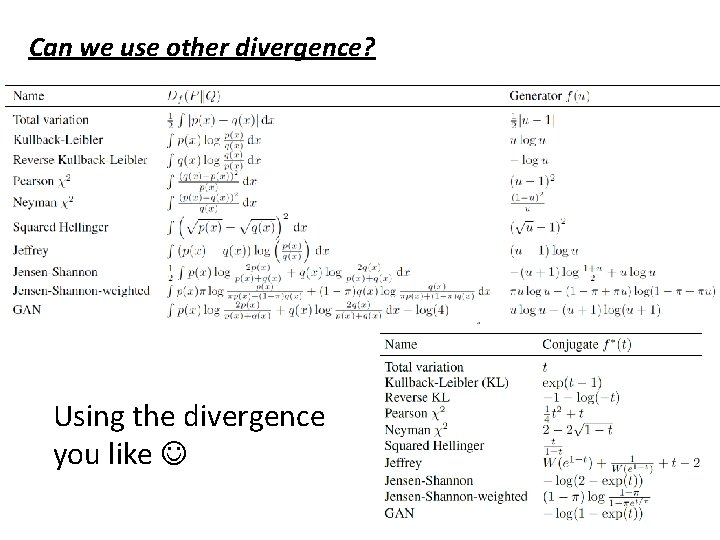

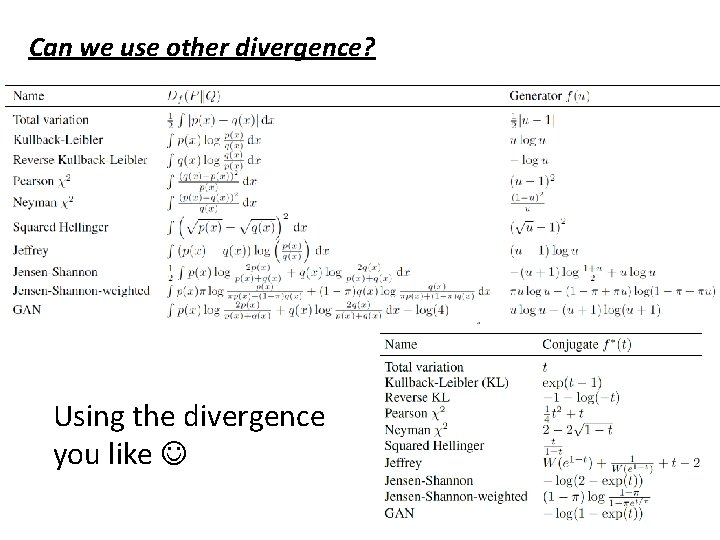

Can we use other divergences? Yes: use the divergence you like.
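One well-known family is the f-divergences, D_f(P‖Q) = Σₓ Q(x) f(P(x)/Q(x)) with convex f and f(1) = 0, which f-GAN (Nowozin et al., NIPS 2016) plugs into the GAN framework. The sketch below only evaluates a few of these divergences on hypothetical discrete distributions, assuming Q(x) > 0 and P(x) > 0 everywhere; it is not the f-GAN training objective itself.

```python
import math


def f_divergence(p, q, f):
    """D_f(P || Q) = sum_x Q(x) * f(P(x) / Q(x)); assumes Q(x) > 0 for every x."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))


# Generator functions f (convex, f(1) = 0); all arguments u are assumed positive.
F = {
    "KL": lambda u: u * math.log(u),           # gives KL(P || Q)
    "reverse KL": lambda u: -math.log(u),      # gives KL(Q || P)
    "Jensen-Shannon": lambda u: 0.5 * (u * math.log(2 * u / (1 + u)) + math.log(2 / (1 + u))),
}

p = [0.2, 0.5, 0.3]
q = [0.3, 0.3, 0.4]
divergences = {name: f_divergence(p, q, f) for name, f in F.items()}
```

Swapping f changes which mismatches between P and Q are penalized most, which is the design knob the slide's “use the divergence you like” refers to.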
