
FIL

Event-related fMRI

Rik Henson

With thanks to: Karl Friston, Oliver Josephs

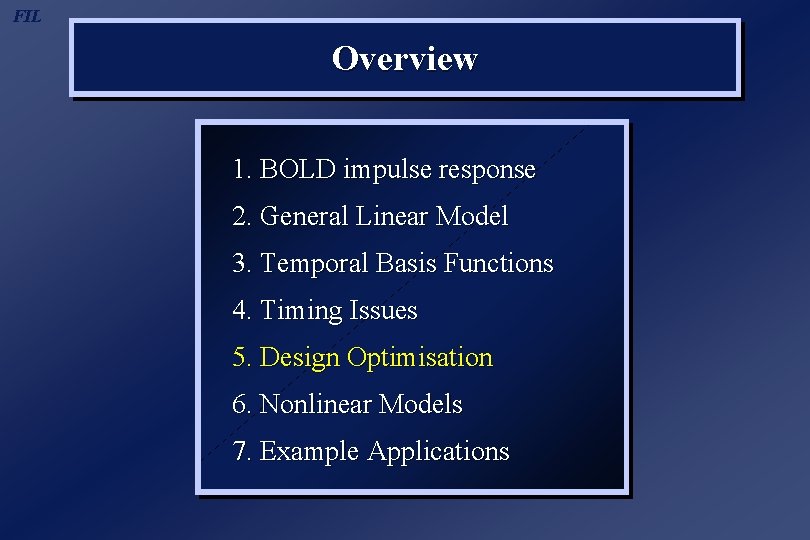

Overview

1. BOLD impulse response
2. General Linear Model
3. Temporal Basis Functions
4. Timing Issues
5. Design Optimisation
6. Nonlinear Models
7. Example Applications

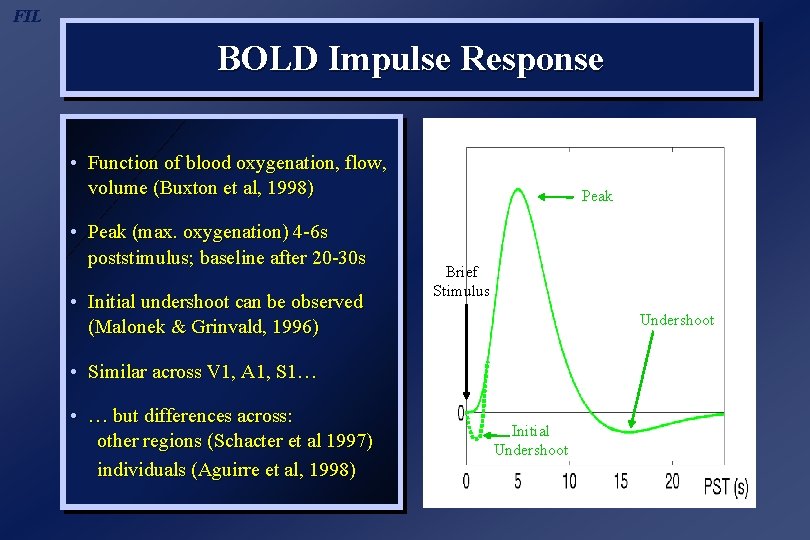

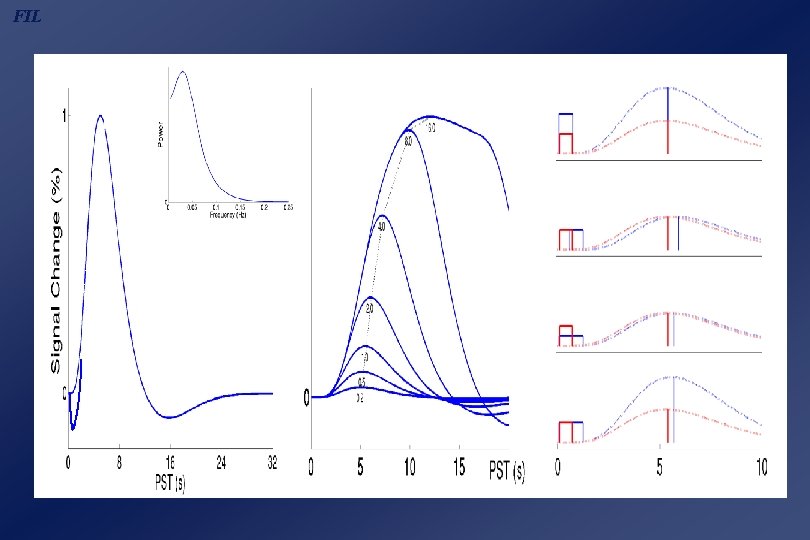

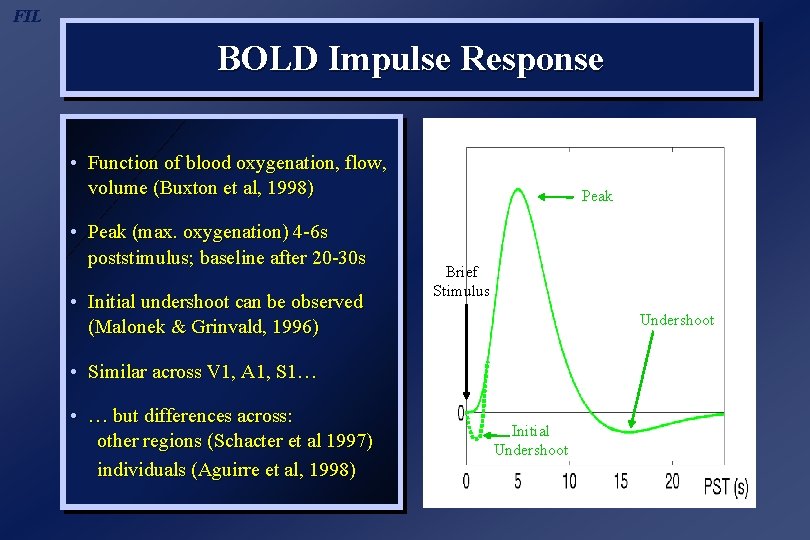

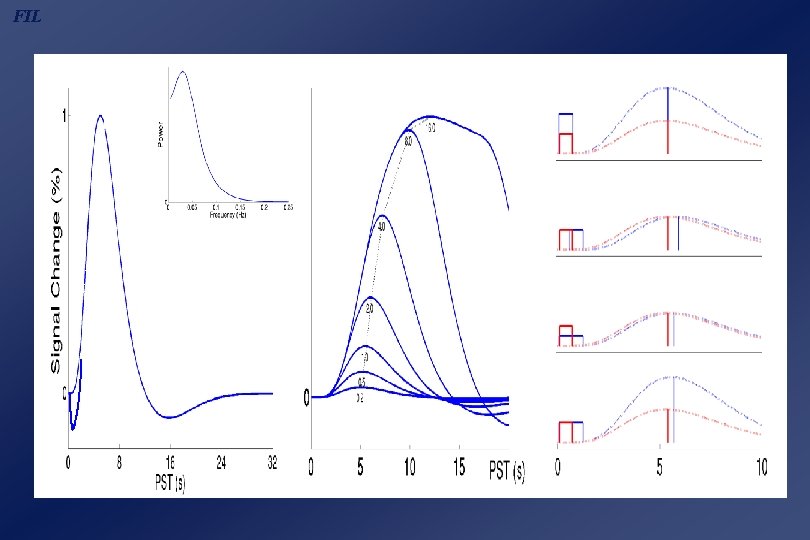

BOLD Impulse Response

- A function of blood oxygenation, flow and volume (Buxton et al., 1998)
- Peak (maximum oxygenation) 4-6 s post-stimulus; return to baseline after 20-30 s
- An initial undershoot can be observed (Malonek & Grinvald, 1996)
- Similar across V1, A1, S1…
- …but differs across other regions (Schacter et al., 1997) and across individuals (Aguirre et al., 1998)

[Figure: BOLD response to a brief stimulus, showing the initial undershoot, the peak and the undershoot]
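To make the shape concrete, here is a minimal Python sketch of a double-gamma impulse response. The parameters (a gamma density of shape 6 for the peak, minus 1/6 of a gamma density of shape 16 for the undershoot, both with a 1 s scale) are assumed SPM-style defaults rather than values taken from these slides, and the name `canonical_hrf` is illustrative. The sketch does not model the initial undershoot.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with the given shape parameter and a 1 s scale."""
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF (arbitrary units) at t seconds after a brief stimulus.

    Assumed SPM-style defaults: a gamma density (shape 6) for the peak minus
    1/6 of a gamma density (shape 16) for the later undershoot.
    """
    if t <= 0:
        return 0.0
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)


step = 0.1  # seconds between samples
times = [i * step for i in range(int(round(32 / step)) + 1)]
hrf = [canonical_hrf(t) for t in times]

peak_t = times[hrf.index(max(hrf))]
trough_t = times[hrf.index(min(hrf))]
print(f"peak at {peak_t:.1f} s, undershoot minimum at {trough_t:.1f} s")
```

With these parameters the peak falls inside the 4-6 s range quoted above, and the response has almost returned to zero by 32 s.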

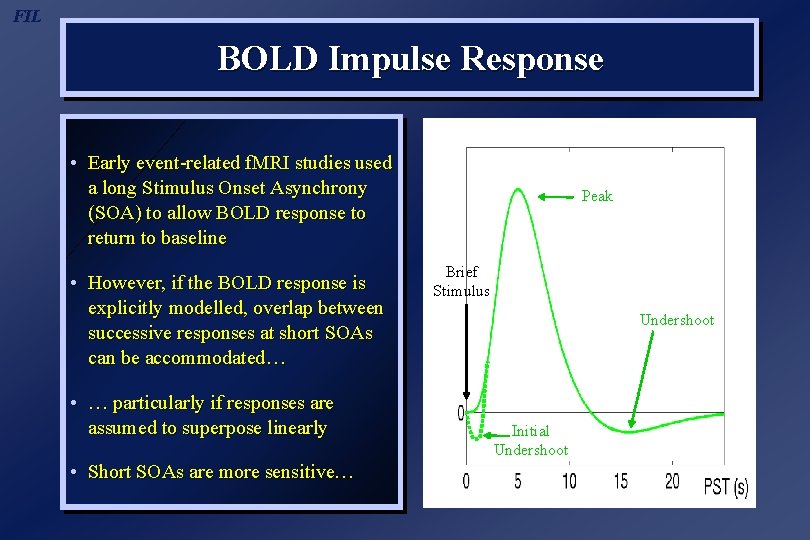

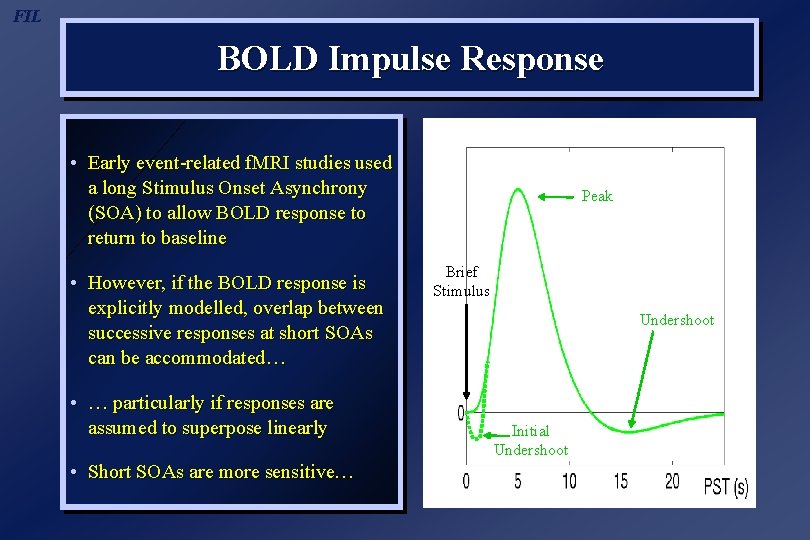

BOLD Impulse Response

- Early event-related fMRI studies used a long stimulus onset asynchrony (SOA) to allow the BOLD response to return to baseline
- However, if the BOLD response is explicitly modelled, overlap between successive responses at short SOAs can be accommodated…
- …particularly if responses are assumed to superpose linearly
- Short SOAs are more sensitive…

Overview: 2. General Linear Model

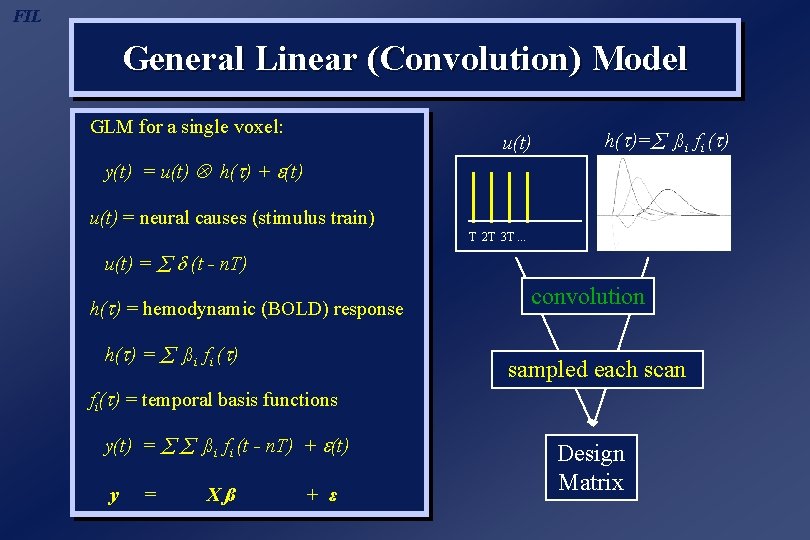

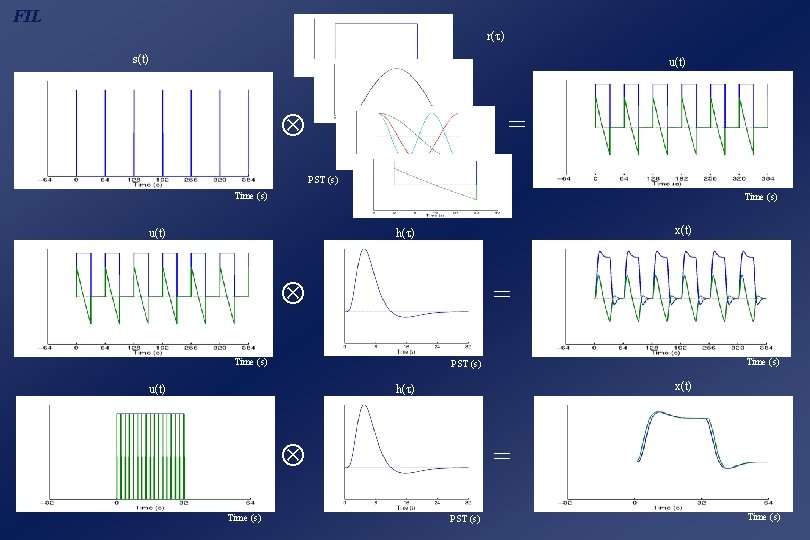

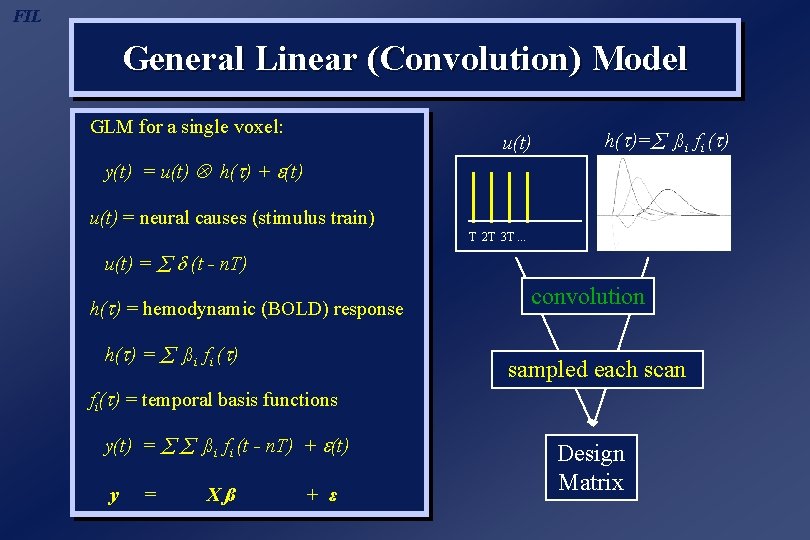

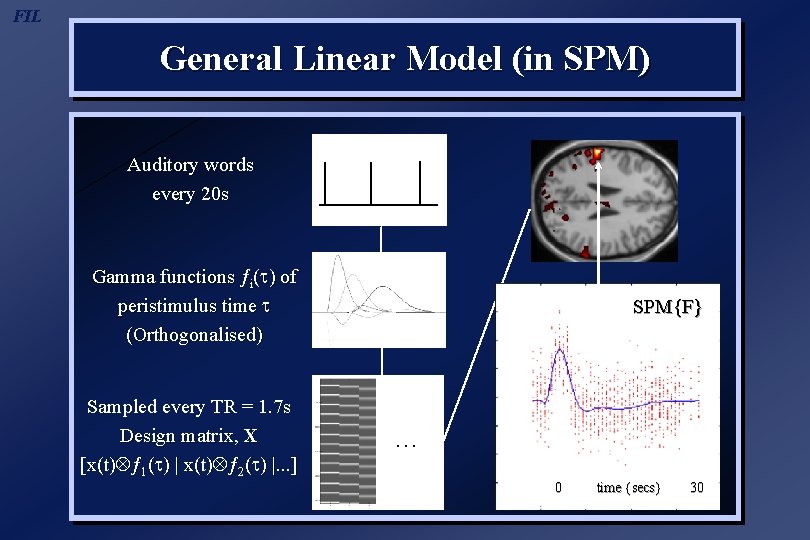

General Linear (Convolution) Model

GLM for a single voxel:

$$y(t) = u(t) \otimes h(t) + \varepsilon(t)$$

- $u(t)$ = neural causes (stimulus train), with events at $T, 2T, 3T, \dots$: $u(t) = \sum_n \delta(t - nT)$
- $h(t)$ = hemodynamic (BOLD) response: $h(t) = \sum_i \beta_i f_i(t)$
- $f_i(t)$ = temporal basis functions

Substituting, and sampling the convolution at each scan:

$$y(t) = \sum_i \sum_n \beta_i f_i(t - nT) + \varepsilon(t) \quad\Rightarrow\quad y = X\beta + \varepsilon$$

where $X$ is the design matrix.
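The convolution model can be sketched in a few lines of Python: a train of impulses u(t) is convolved with an assumed double-gamma h(t), sampled once per scan to give one column of X, and β is then recovered by ordinary least squares. The onsets, TR, noise level and "true" β values are all hypothetical, and only a single basis function plus a constant is used.

```python
import math
import random


def canonical_hrf(t):
    """Double-gamma HRF at t seconds (assumed SPM-style shapes 6 and 16, ratio 1/6)."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


step = 0.1     # fine time grid for the convolution (s)
TR = 2.0       # hypothetical repetition time (s)
n_scans = 120
onsets = [10.0, 30.0, 45.0, 80.0, 120.0, 150.0, 200.0]  # hypothetical event onsets (s)

# u(t): train of unit impulses (delta functions) on the fine grid
n_fine = int(round(n_scans * TR / step))
u = [0.0] * n_fine
for onset in onsets:
    u[int(round(onset / step))] = 1.0

# x(t) = u(t) convolved with h(t); the HRF is truncated at 32 s
h = [canonical_hrf(k * step) for k in range(int(round(32 / step)))]
x = [sum(u[n - k] * h[k] for k in range(min(n + 1, len(h)))) for n in range(n_fine)]

# Sample the convolved regressor once per scan: design matrix X = [x, 1]
stride = int(round(TR / step))
X = [[x[s * stride], 1.0] for s in range(n_scans)]

# Simulated voxel: y = 3.0 * x + 100.0 + Gaussian noise (all values hypothetical)
rng = random.Random(0)
y = [3.0 * xs + 100.0 * c + rng.gauss(0.0, 0.05) for xs, c in X]

# Ordinary least squares: solve the 2x2 normal equations (X^T X) beta = X^T y
sxx = sum(xs * xs for xs, _ in X)
sx = sum(xs for xs, _ in X)
n = float(n_scans)
sxy = sum(xs * yi for (xs, _), yi in zip(X, y))
sy = sum(y)
det = sxx * n - sx * sx
beta_hat = ((n * sxy - sx * sy) / det, (sxx * sy - sx * sxy) / det)
print(beta_hat)
```

With several basis functions, each $f_i$ would be convolved with $u(t)$ in the same way to give one column of $X$ per function.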

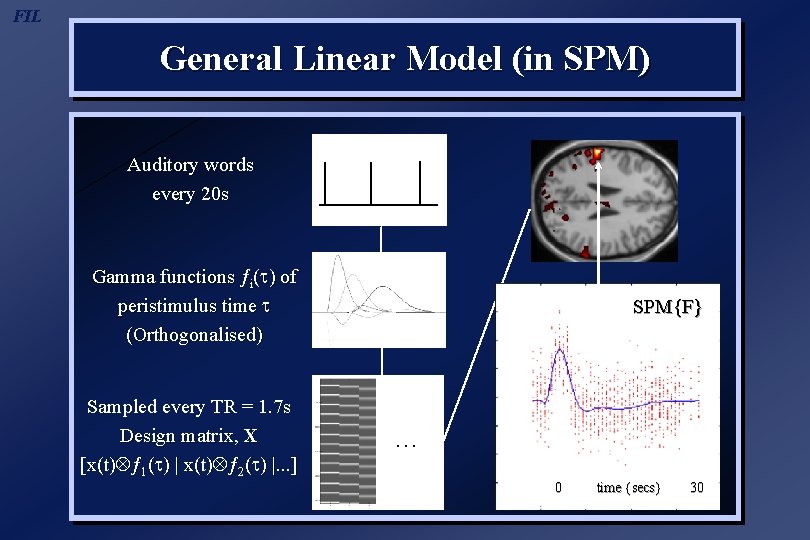

General Linear Model (in SPM)

- Auditory words every 20 s
- Gamma functions $f_i(\tau)$ of peristimulus time $\tau$ (orthogonalised)
- Sampled every TR = 1.7 s
- Design matrix: $X = [\, x(t) \otimes f_1(\tau) \mid x(t) \otimes f_2(\tau) \mid \dots ]$
- SPM{F}

[Figure: basis functions over peristimulus time, 0-30 s]

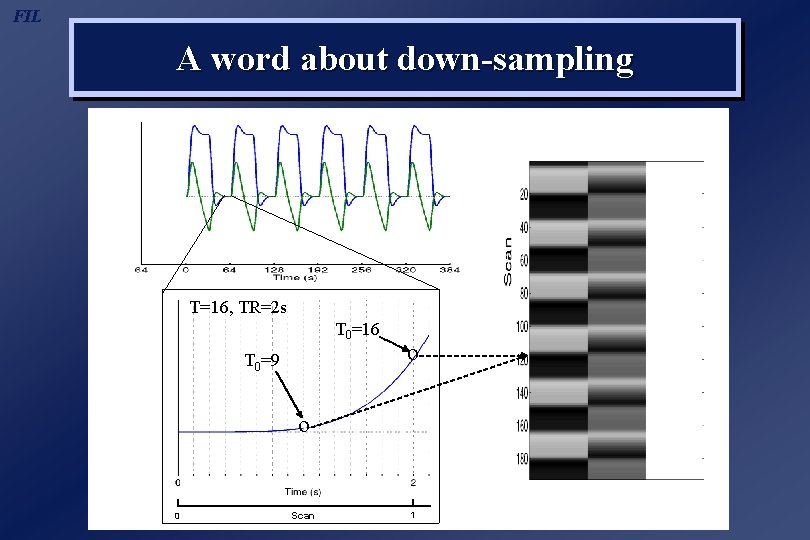

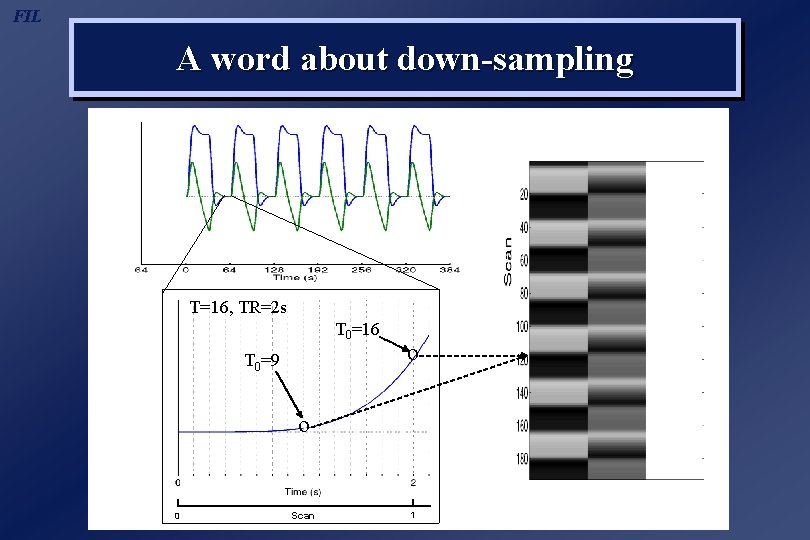

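A word about down-sampling

- The model is built on a finer time grid of T = 16 bins per scan (here TR = 2 s) and then sampled once per scan, at reference bin T0 (e.g., T0 = 16 or T0 = 9)

[Figure: time bins across scans 1-3, marking the samples taken at T0 = 16 and T0 = 9]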
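A small sketch of the down-sampling step, assuming the regressor is built on a grid of T = 16 bins per scan and that bin T0 (counted from 1, as on the slide) is the sample kept for each scan. How T0 maps onto the acquisition time of a particular slice is software-specific; the indexing and the helper `downsample` here are illustrative only.

```python
import math


def canonical_hrf(t):
    """Double-gamma HRF (assumed SPM-style shapes 6 and 16, ratio 1/6); 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


TR = 2.0        # seconds per scan
T = 16          # time bins per scan (microtime resolution)
bin_s = TR / T  # 0.125 s per bin
n_scans = 12

# Regressor for one event at time 0, built on the fine (microtime) grid
x_fine = [canonical_hrf(i * bin_s) for i in range(n_scans * T)]


def downsample(x_fine, T, T0):
    """Keep bin T0 (1-based) of each scan's block of T bins."""
    return [x_fine[scan * T + (T0 - 1)] for scan in range(len(x_fine) // T)]


x_t0_16 = downsample(x_fine, T, T0=16)  # last bin of each scan
x_t0_9 = downsample(x_fine, T, T0=9)    # mid-way through each scan
offset = (16 - 9) * bin_s
print(f"the two choices of T0 sample the model {offset:.3f} s apart")
# prints: the two choices of T0 sample the model 0.875 s apart
```

The two regressors differ only in where, within each TR, the same underlying model is read off, which is why the choice should match the time at which the slice of interest was acquired.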

Overview: 3. Temporal Basis Functions

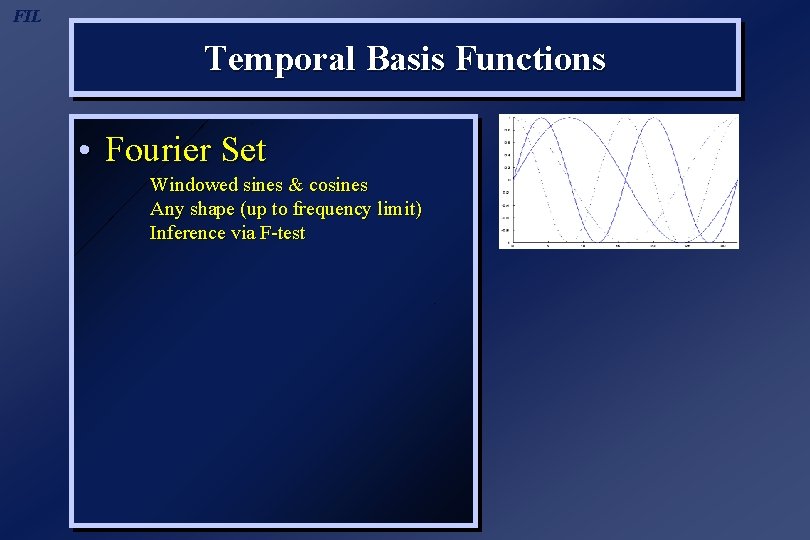

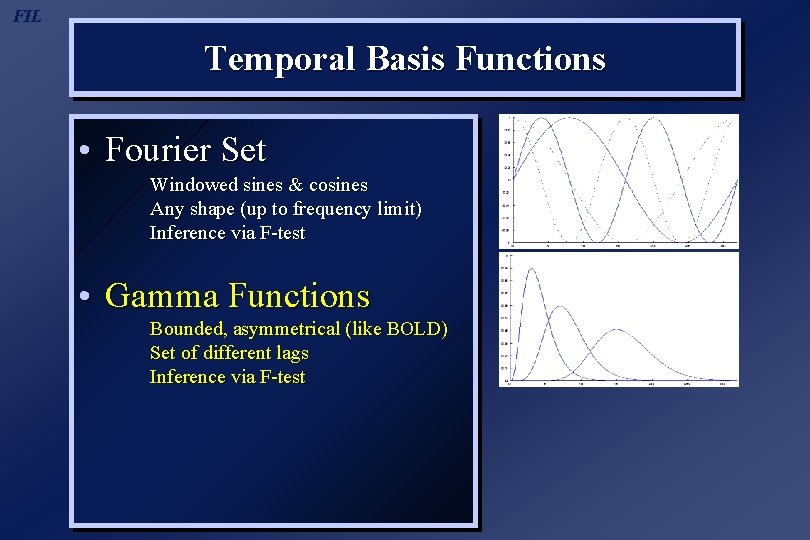

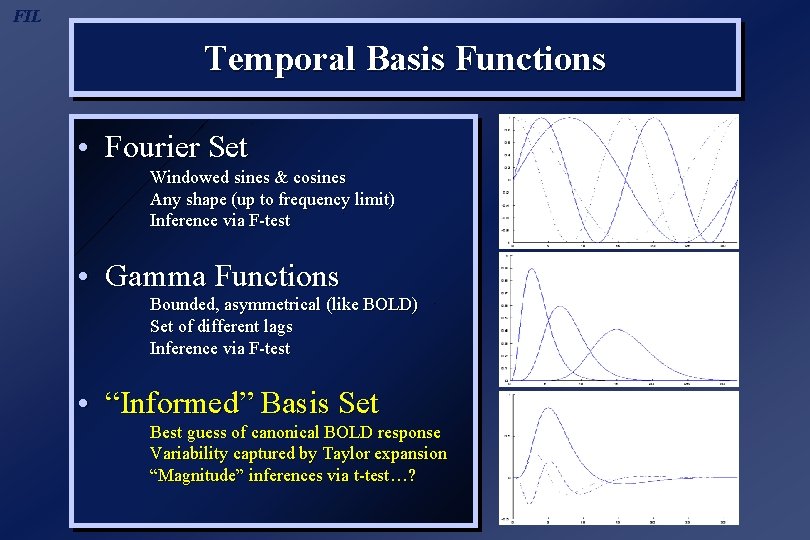

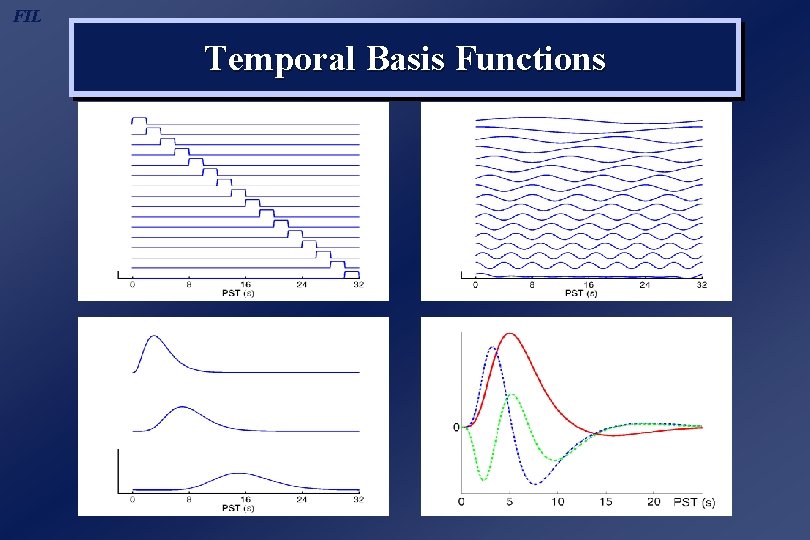

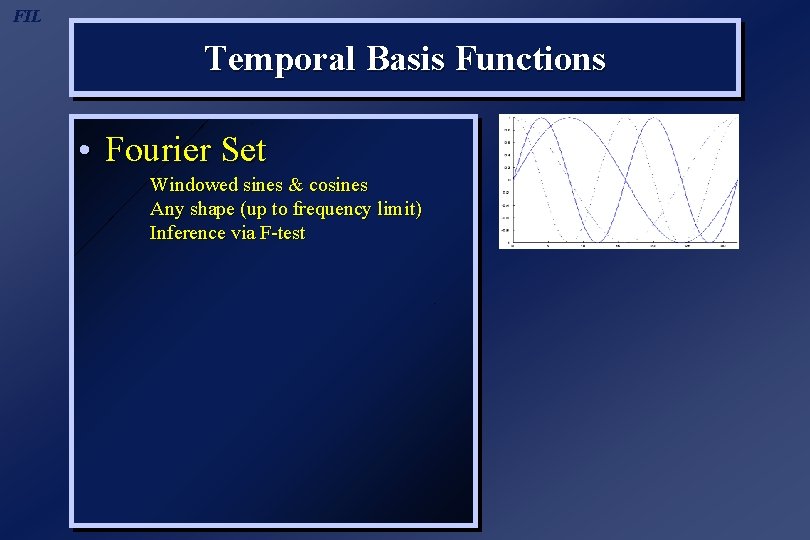

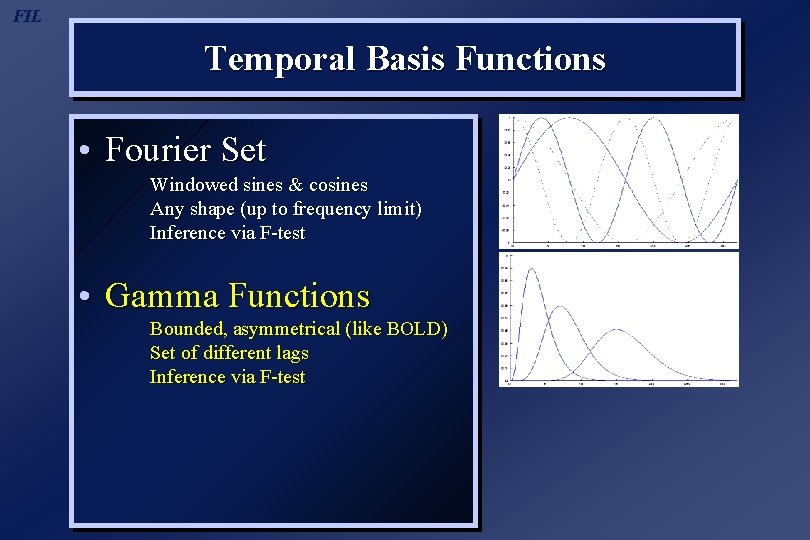

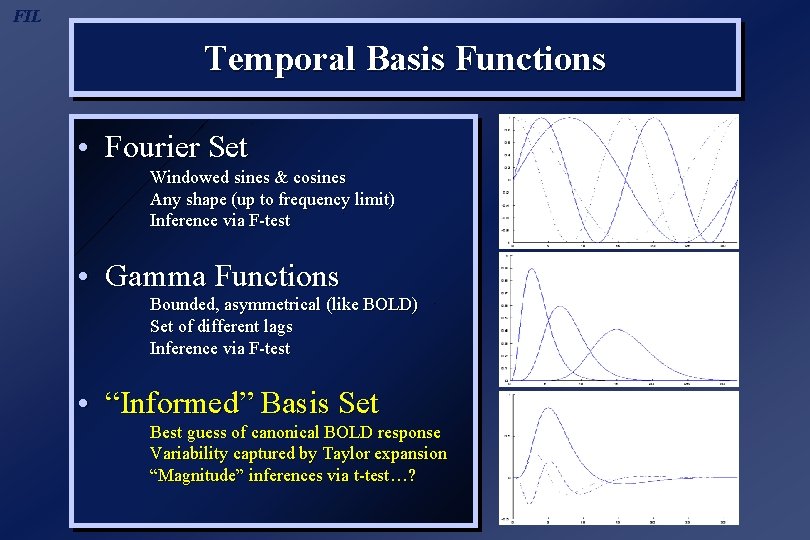

Temporal Basis Functions

- Fourier set: windowed sines and cosines
  - Any shape (up to frequency limit)
  - Inference via F-test

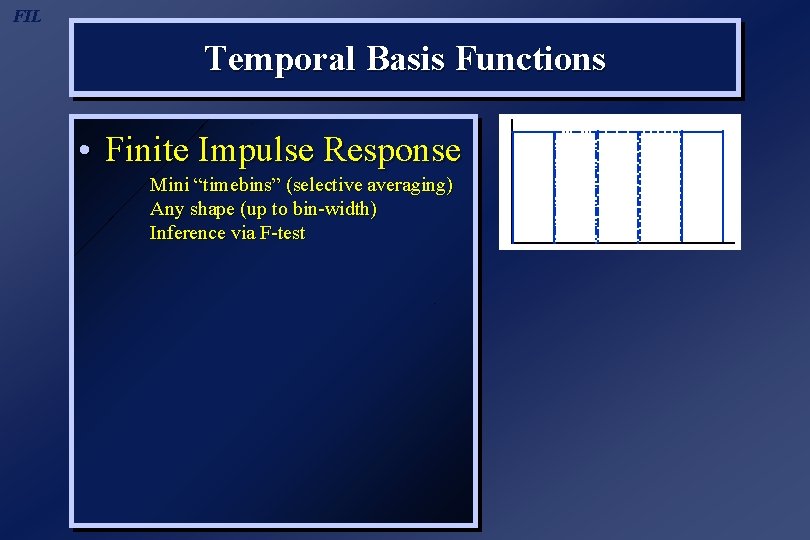

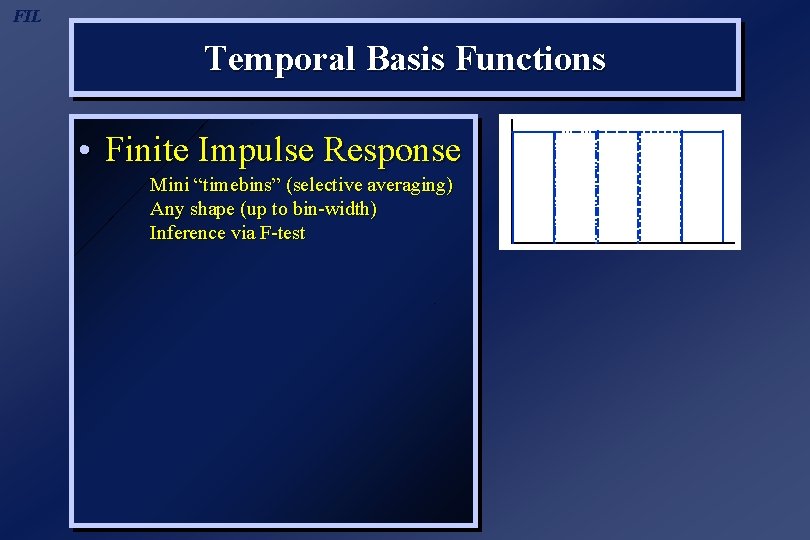

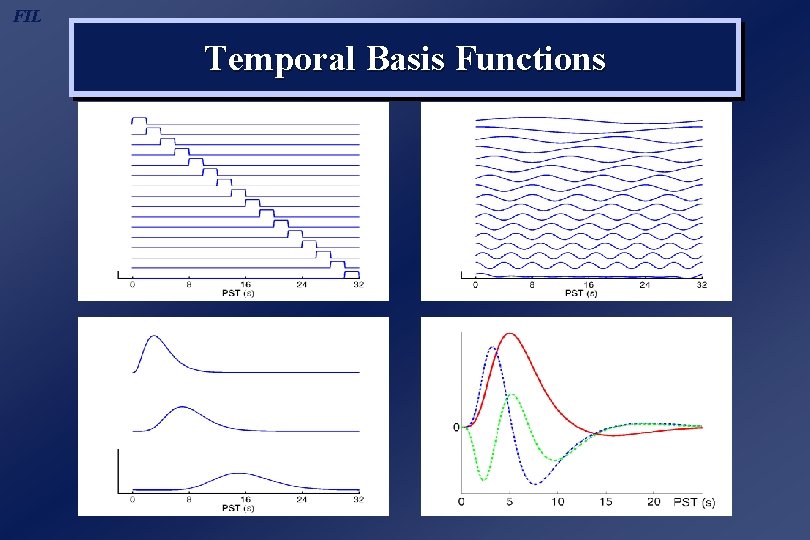

Temporal Basis Functions

- Finite Impulse Response (FIR): mini "time bins" (selective averaging)
  - Any shape (up to bin width)
  - Inference via F-test
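An FIR basis set amounts to a design matrix with one indicator column per post-stimulus time bin. The hypothetical helper below assumes equal-width bins starting at each onset and lets overlapping events add (linear superposition); the onsets and sizes are invented for illustration.

```python
def fir_design(onsets, n_scans, TR, bin_width, n_bins):
    """FIR design matrix: one column per peristimulus time bin.

    Entry [scan][k] counts the events whose peristimulus time at that scan
    (scan time minus onset) falls in [k * bin_width, (k + 1) * bin_width).
    Overlapping events add, i.e. responses are assumed to superpose linearly.
    """
    X = [[0] * n_bins for _ in range(n_scans)]
    for scan in range(n_scans):
        for onset in onsets:
            pst = scan * TR - onset
            if pst >= 0:
                k = int(pst // bin_width)
                if k < n_bins:
                    X[scan][k] += 1
    return X


# Hypothetical example: TR = 2 s, 2 s bins covering 16 s of peristimulus time
X = fir_design(onsets=[0, 6, 20], n_scans=20, TR=2, bin_width=2, n_bins=8)
for row in X[:6]:
    print(row)
```

Each fitted parameter is then the mean response in one time bin, which is why FIR can capture any shape up to the bin width but needs an F-test across all bins for inference.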

Temporal Basis Functions

- Gamma functions: bounded, asymmetrical (like BOLD)
  - Set of different lags
  - Inference via F-test

Temporal Basis Functions

- "Informed" basis set: best guess of the canonical BOLD response
  - Variability captured by Taylor expansion
  - "Magnitude" inferences via t-test…?

Temporal Basis Functions

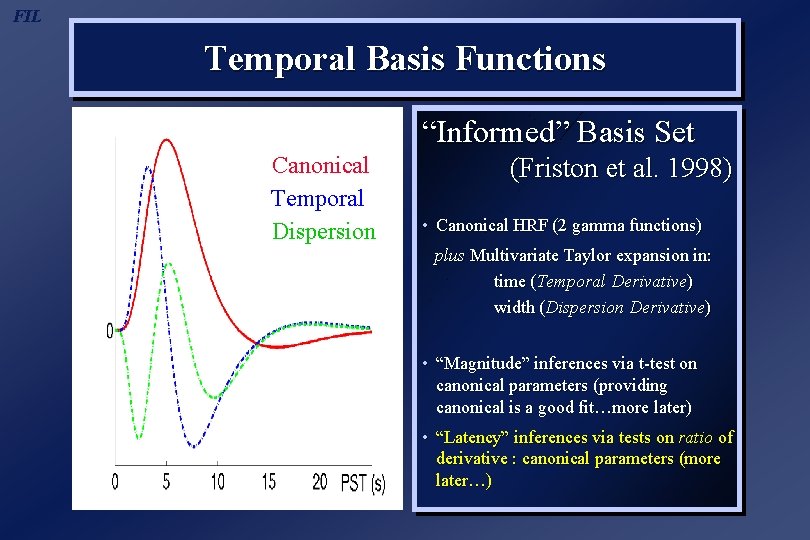

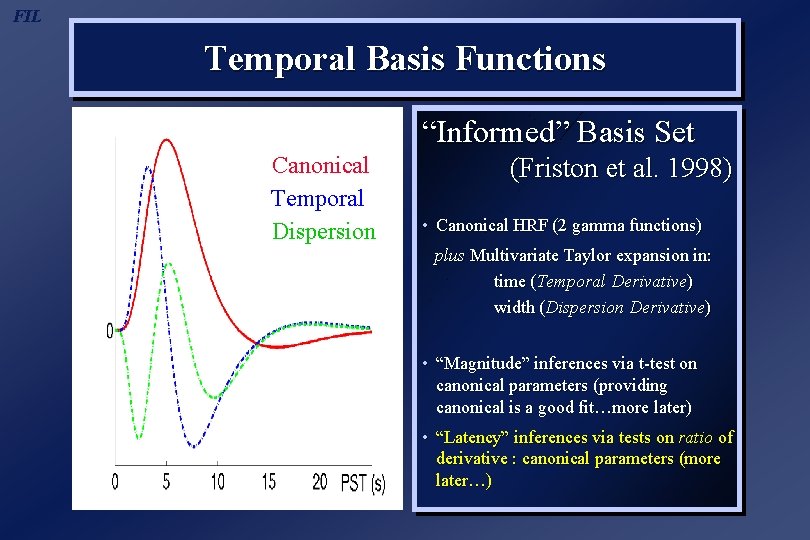

Temporal Basis Functions

"Informed" basis set (Friston et al., 1998)

- Canonical HRF (2 gamma functions), plus a multivariate Taylor expansion in:
  - time (temporal derivative)
  - width (dispersion derivative)
- "Magnitude" inferences via t-test on the canonical parameters (provided the canonical is a good fit… more later)
- "Latency" inferences via tests on the ratio of derivative : canonical parameters (more later…)

[Figure: canonical HRF, temporal derivative and dispersion derivative]

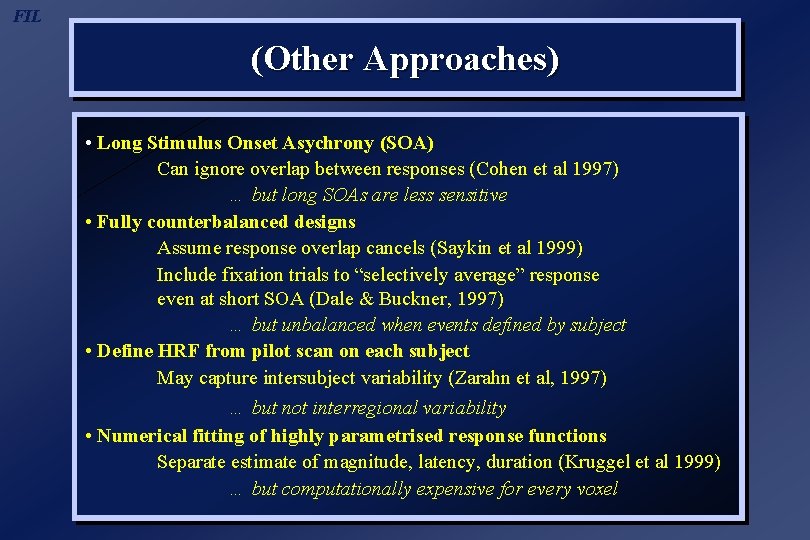

(Other Approaches)

- Long stimulus onset asynchrony (SOA)
  - Can ignore overlap between responses (Cohen et al., 1997)
  - …but long SOAs are less sensitive
- Fully counterbalanced designs
  - Assume response overlap cancels (Saykin et al., 1999)
  - Include fixation trials to "selectively average" the response even at short SOA (Dale & Buckner, 1997)
  - …but unbalanced when events are defined by the subject
- Define the HRF from a pilot scan on each subject
  - May capture intersubject variability (Zarahn et al., 1997)
  - …but not interregional variability
- Numerical fitting of highly parametrised response functions
  - Separate estimates of magnitude, latency and duration (Kruggel et al., 1999)
  - …but computationally expensive for every voxel

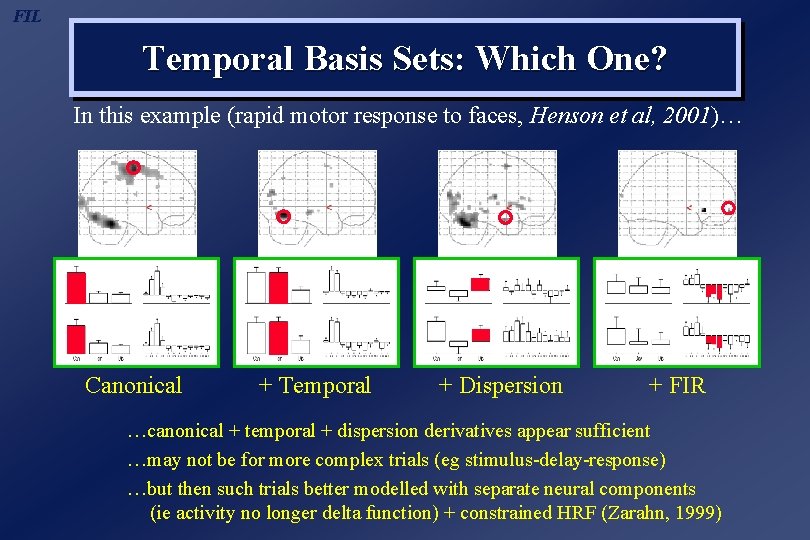

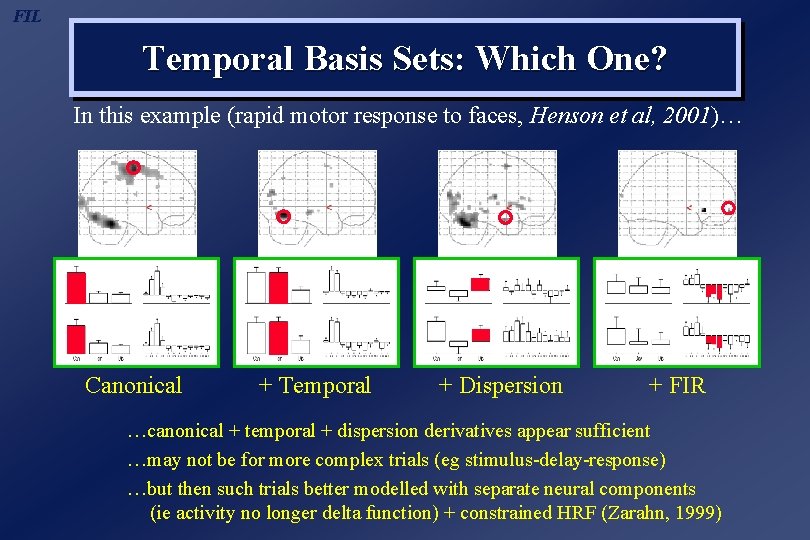

Temporal Basis Sets: Which One?

In this example (rapid motor response to faces, Henson et al., 2001)…

[Figure: fits with canonical, + temporal, + dispersion, and FIR basis sets]

- …canonical + temporal + dispersion derivatives appear sufficient
- …but may not be for more complex trials (e.g., stimulus-delay-response)
- …but then such trials are better modelled with separate neural components (i.e., activity no longer a delta function) + a constrained HRF (Zarahn, 1999)

Overview: 4. Timing Issues

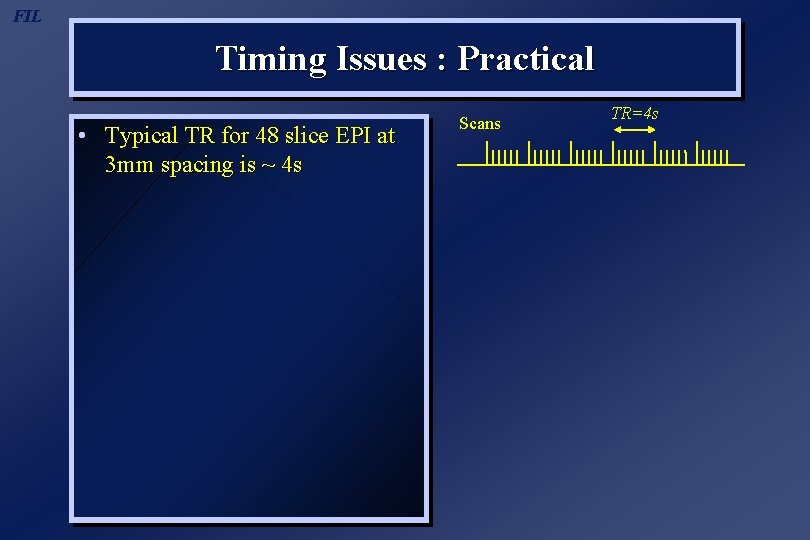

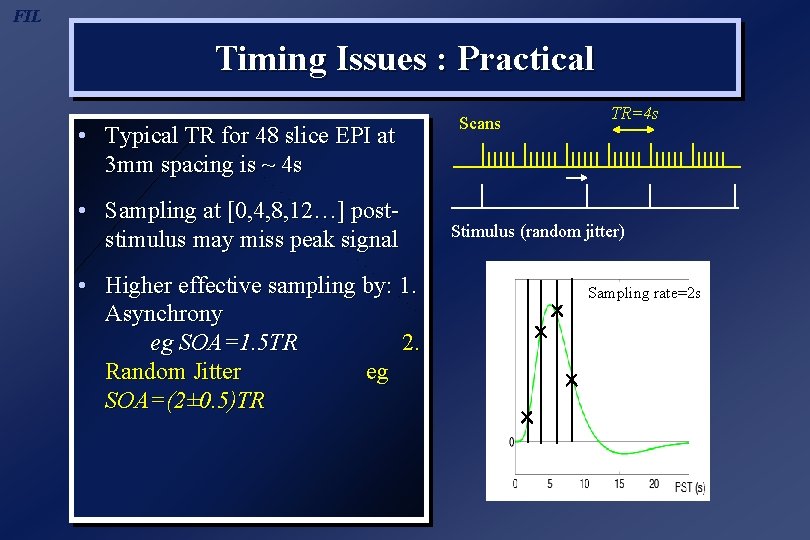

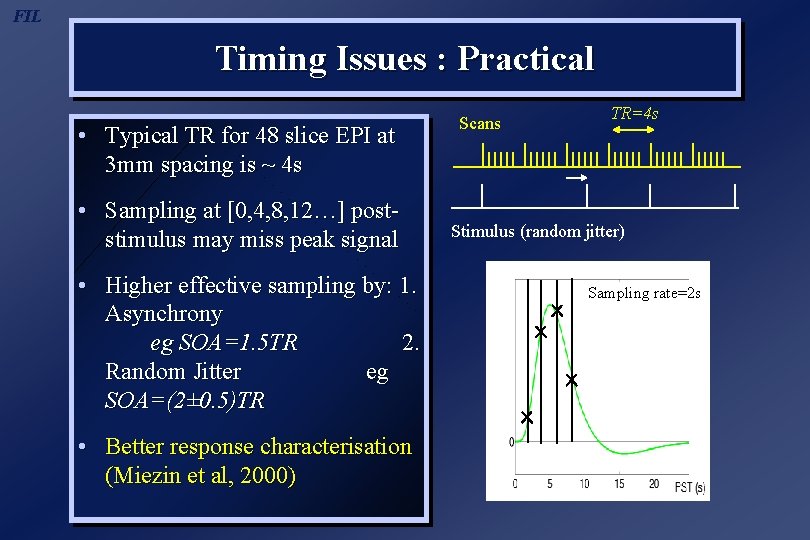

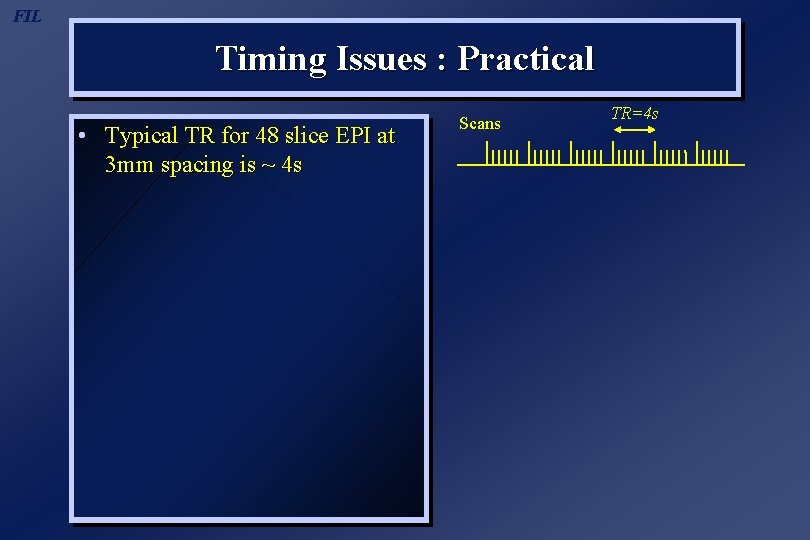

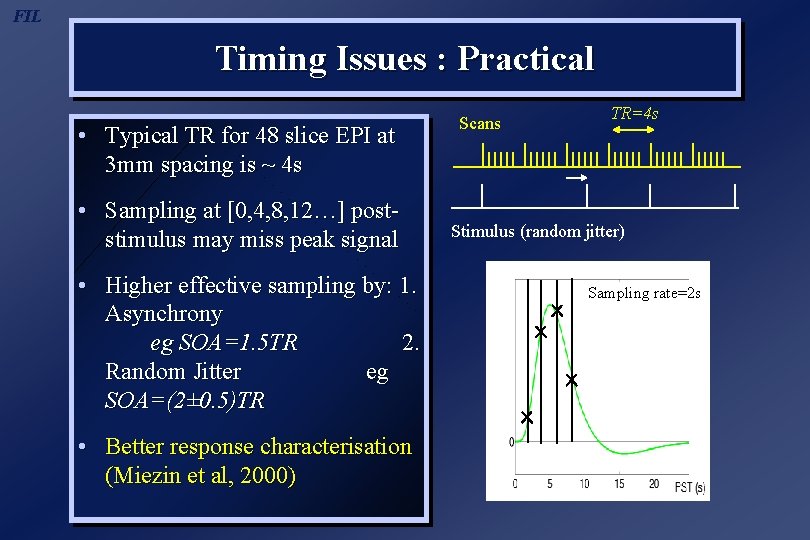

Timing Issues: Practical

- Typical TR for a 48-slice EPI at 3 mm spacing is ~4 s

[Figure: scans acquired every TR = 4 s]

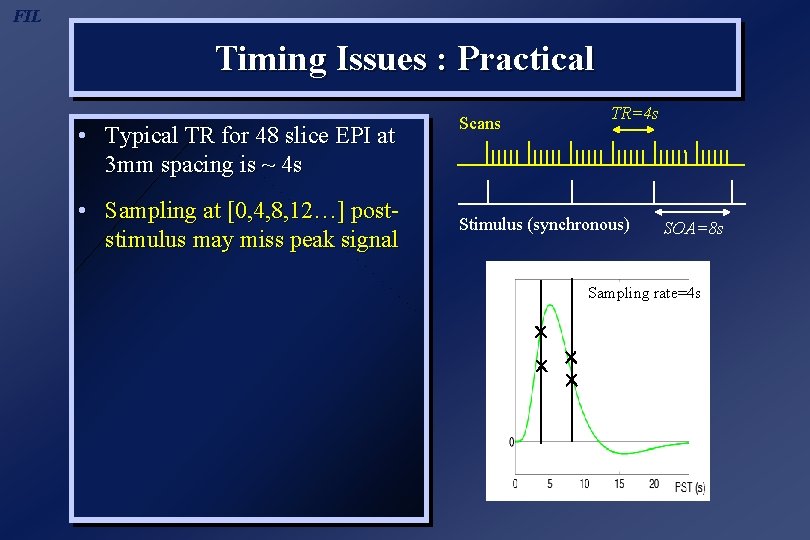

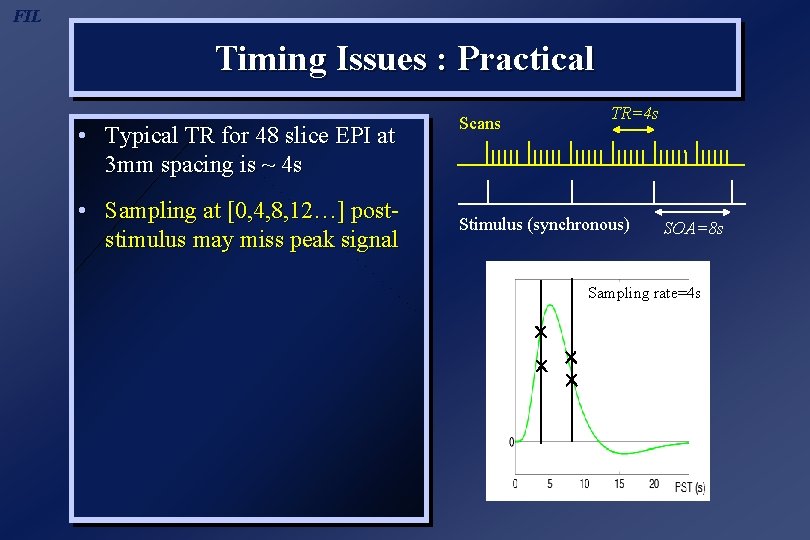

- Sampling at [0, 4, 8, 12, …] s post-stimulus may miss the peak signal

[Figure: synchronous stimulus, SOA = 8 s; effective sampling rate = 4 s]

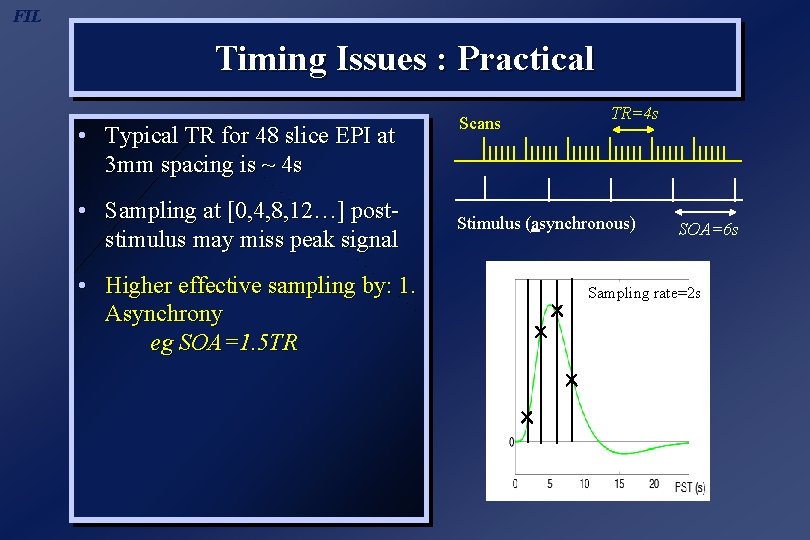

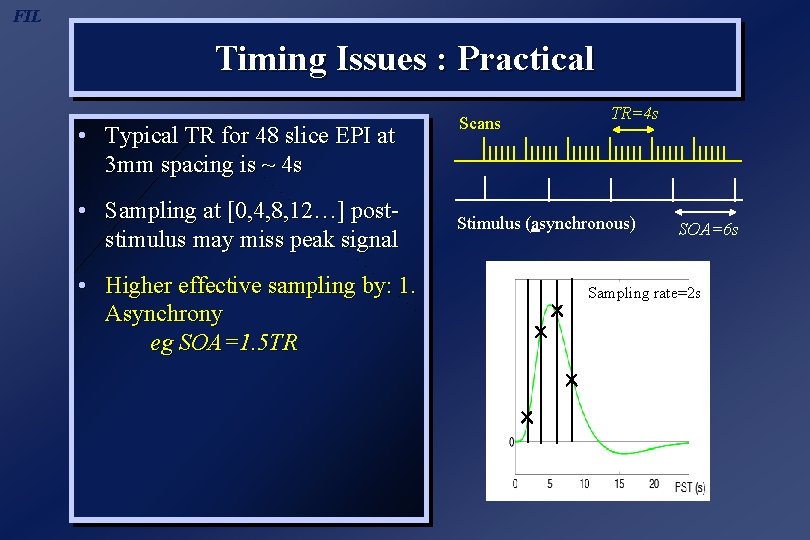

- Higher effective sampling by asynchrony, e.g., SOA = 1.5 TR

[Figure: asynchronous stimulus, SOA = 6 s; effective sampling rate = 2 s]

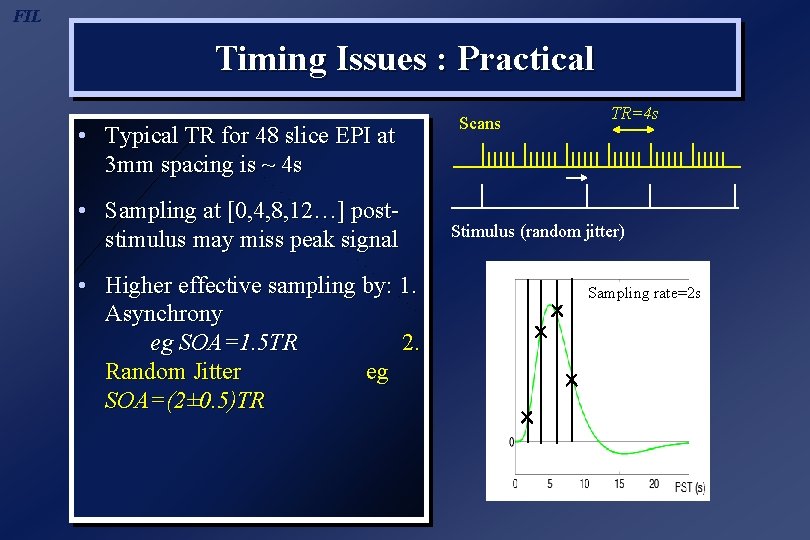

- Higher effective sampling by random jitter, e.g., SOA = (2 ± 0.5) TR

[Figure: randomly jittered stimulus; effective sampling rate = 2 s]

- Higher effective sampling gives better response characterisation (Miezin et al., 2000)
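The gain from asynchrony can be checked by listing the peristimulus times at which the scans sample the response, pooled over events. This sketch uses the slide's TR = 4 s with SOA = 8 s (synchronous) or 6 s (1.5 TR); the helper name and the 12 s window are arbitrary choices, and random jitter is not simulated.

```python
def peristimulus_times(TR, SOA, n_scans, window):
    """Distinct peristimulus times (s) sampled by the scans, pooled over all
    events, for a fixed SOA; only times within `window` s of an onset are kept."""
    scan_times = [n * TR for n in range(n_scans)]
    onsets = [m * SOA for m in range(n_scans * TR // SOA + 1)]
    return sorted({t - o for t in scan_times for o in onsets if 0 <= t - o < window})


synchronous = peristimulus_times(TR=4, SOA=8, n_scans=20, window=12)   # SOA = 2 TR
asynchronous = peristimulus_times(TR=4, SOA=6, n_scans=20, window=12)  # SOA = 1.5 TR
print(synchronous)   # [0, 4, 8]
print(asynchronous)  # [0, 2, 4, 6, 8, 10]
```

The synchronous design only ever samples the response every 4 s, whereas SOA = 1.5 TR interleaves samples from successive events to give an effective 2 s grid.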

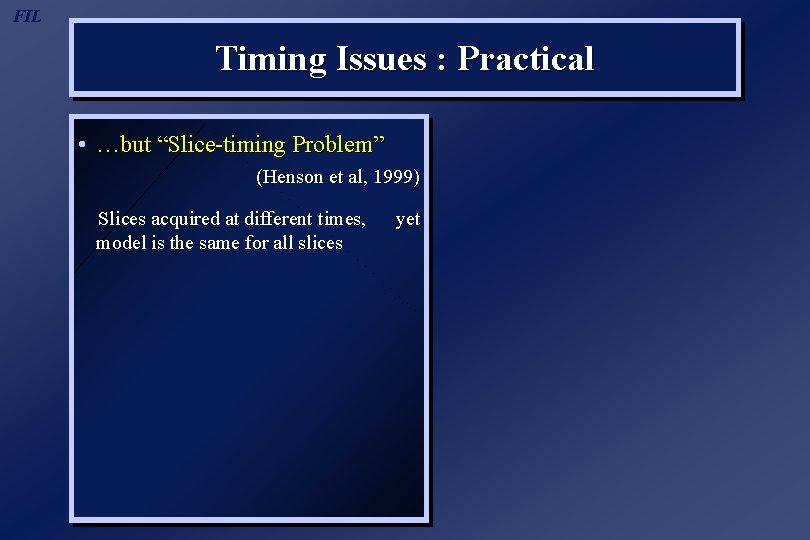

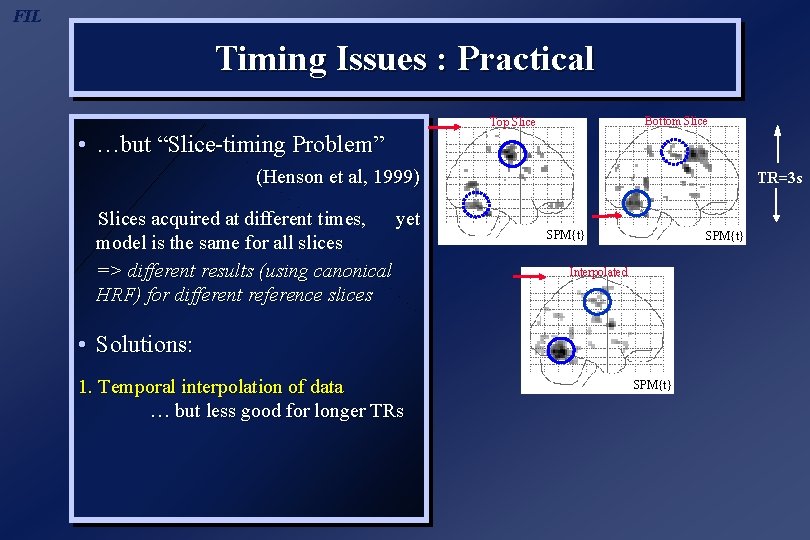

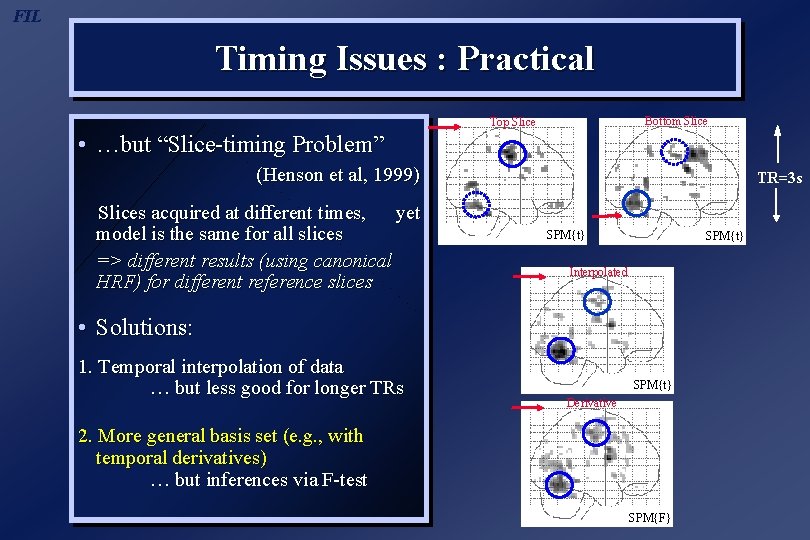

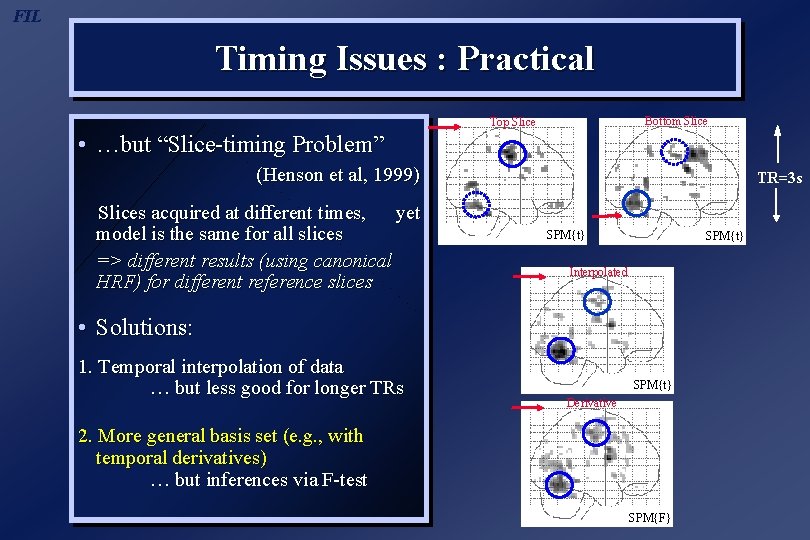

Timing Issues: Practical

- …but there is a "slice-timing problem" (Henson et al., 1999): slices are acquired at different times, yet the model is the same for all slices

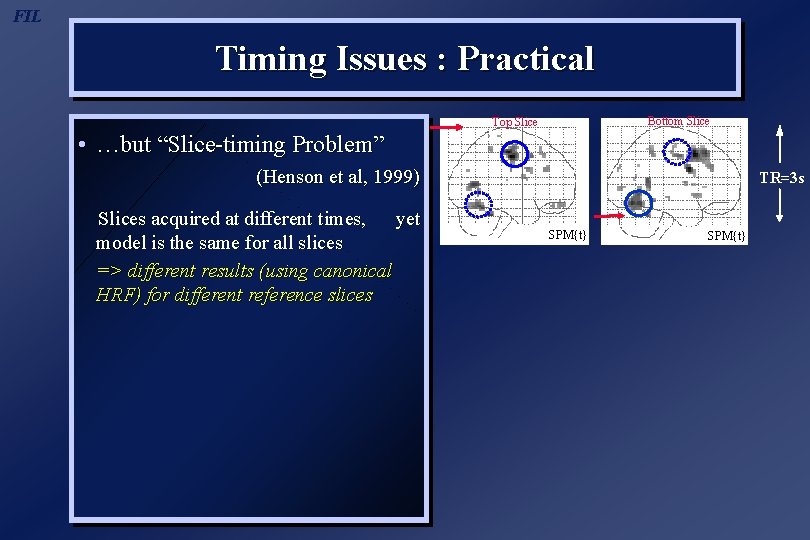

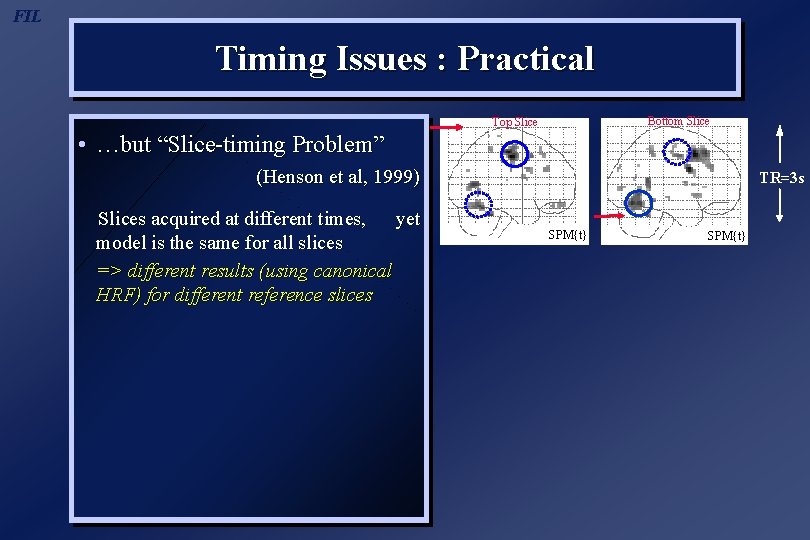

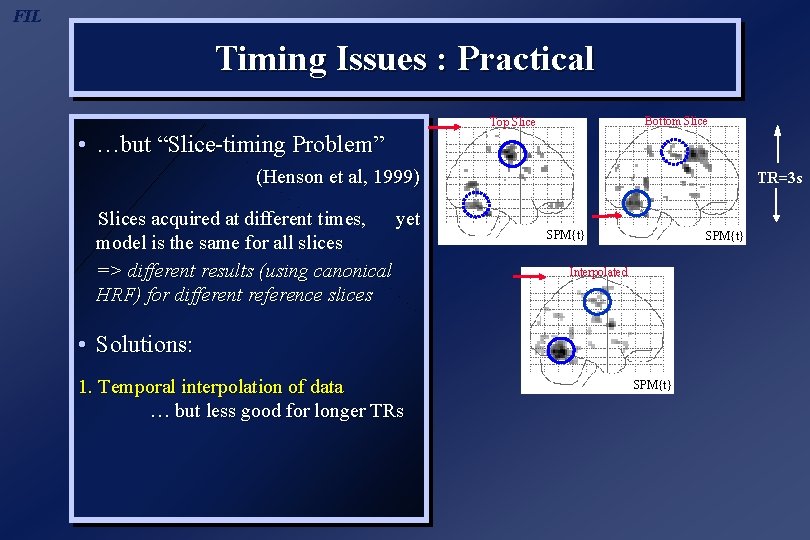

- => different results (using the canonical HRF) for different reference slices

[Figure: SPM{t} with the bottom vs the top slice as reference; TR = 3 s]

- Solution 1: temporal interpolation of the data …but less good for longer TRs

[Figure: SPM{t} after interpolation]

- Solution 2: a more general basis set (e.g., with temporal derivatives) …but inference is then via F-test

[Figure: SPM{F} with temporal derivative]

Overview: 5. Design Optimisation

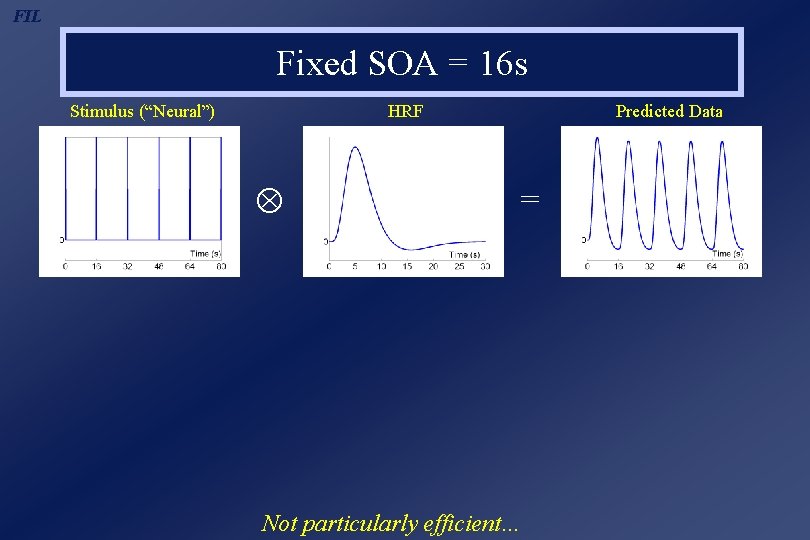

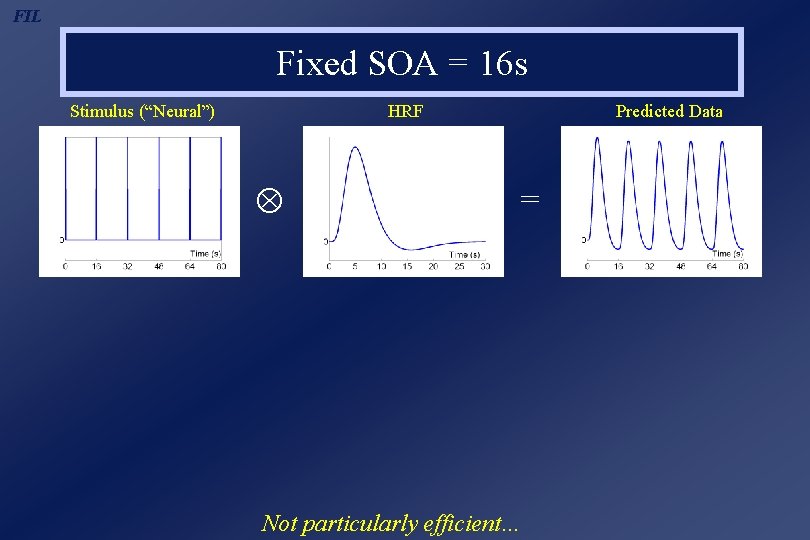

Fixed SOA = 16 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data]

Not particularly efficient…

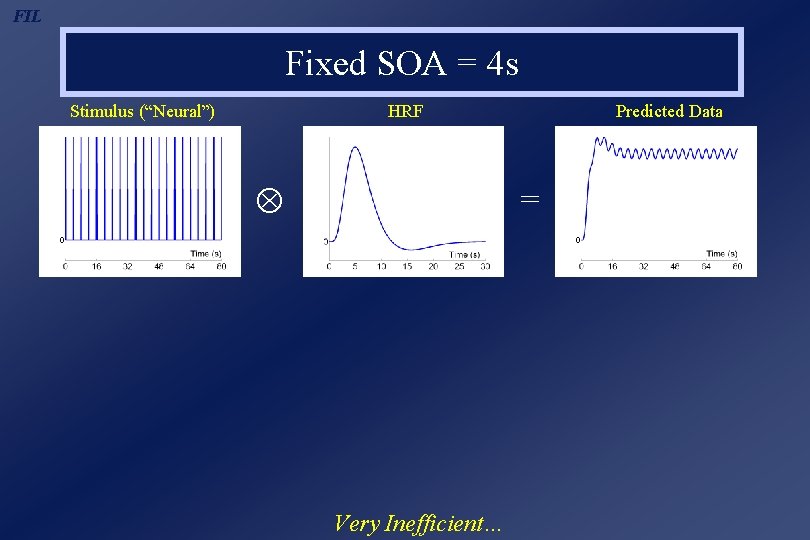

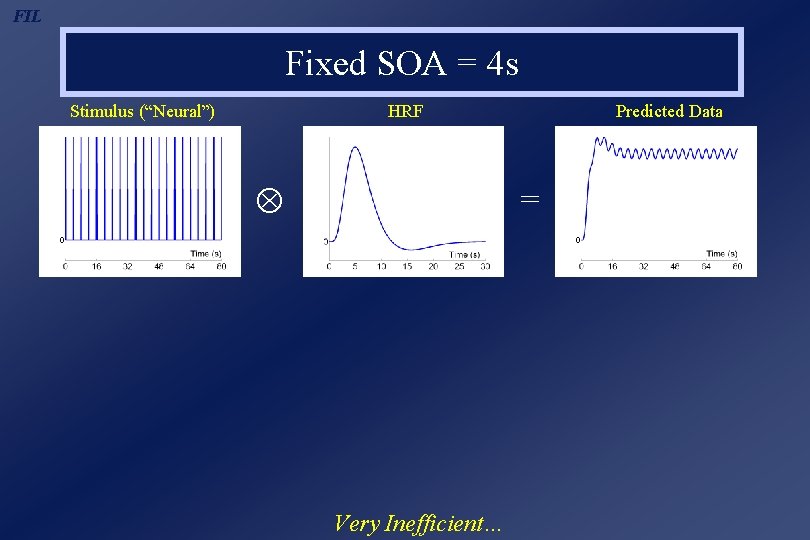

Fixed SOA = 4 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data]

Very inefficient…

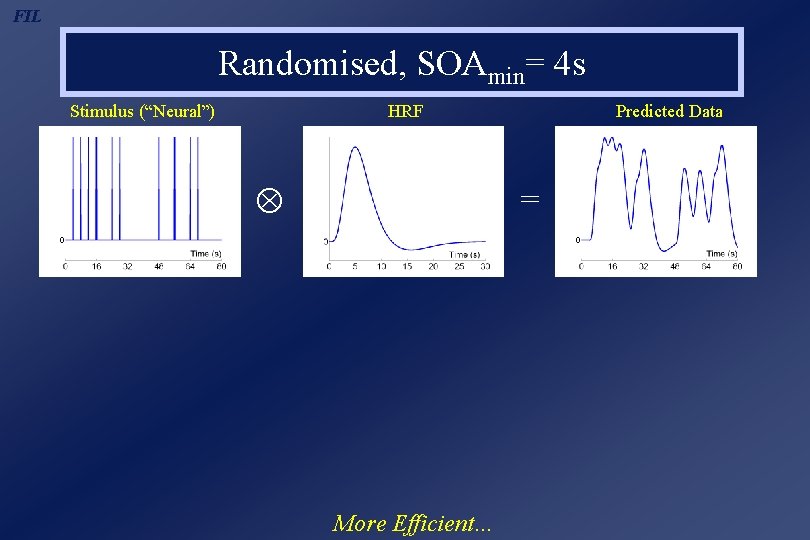

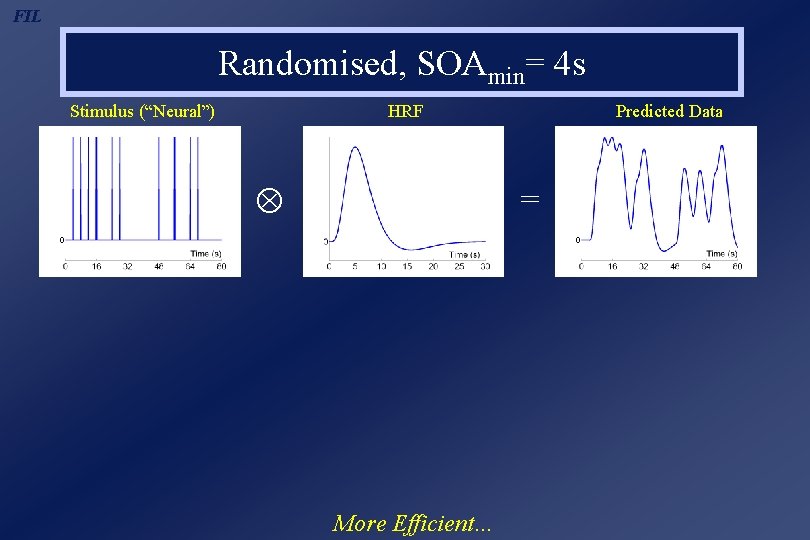

Randomised, SOAmin = 4 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data]

More efficient…

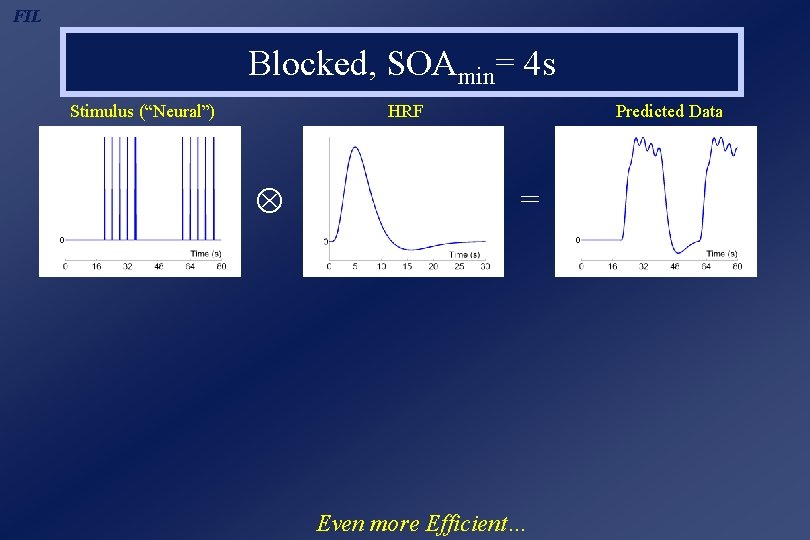

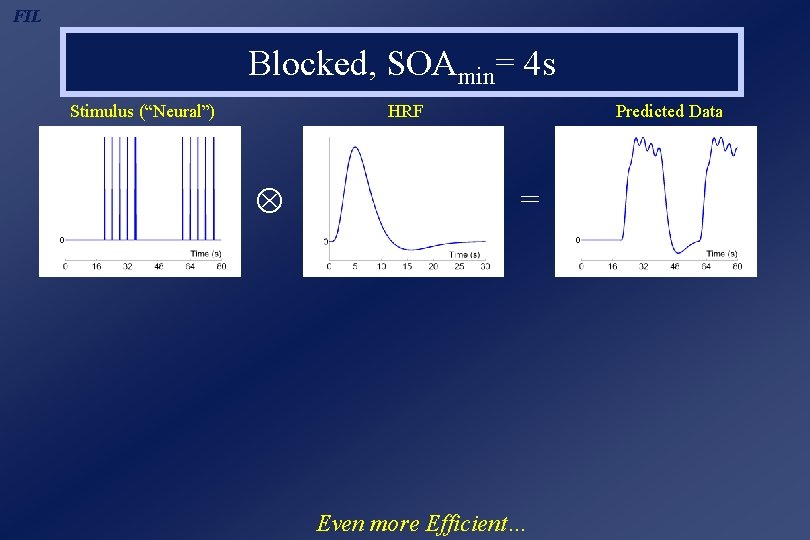

Blocked, SOAmin = 4 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data]

Even more efficient…

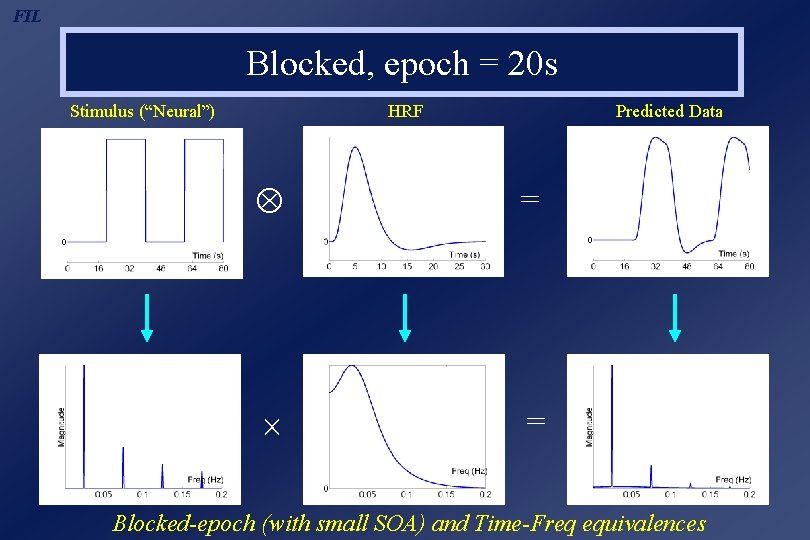

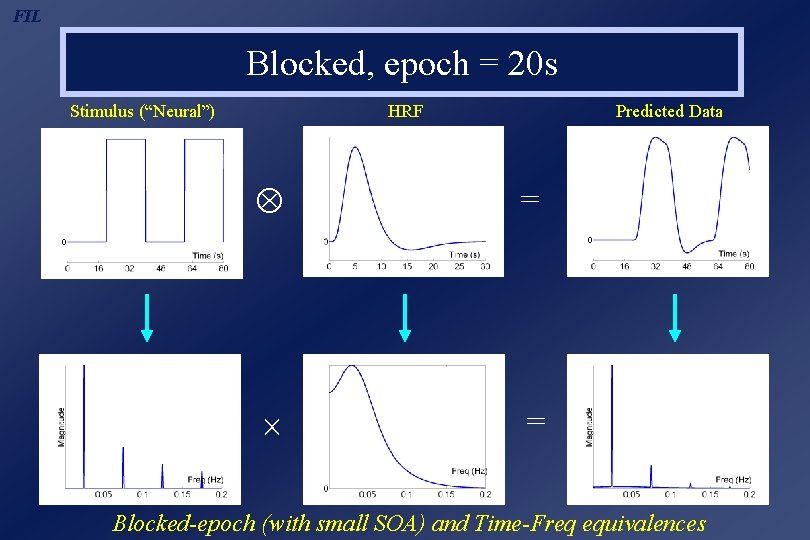

Blocked, epoch = 20 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data, in the time and frequency domains]

Blocked-epoch (with small SOA) and time-frequency equivalences

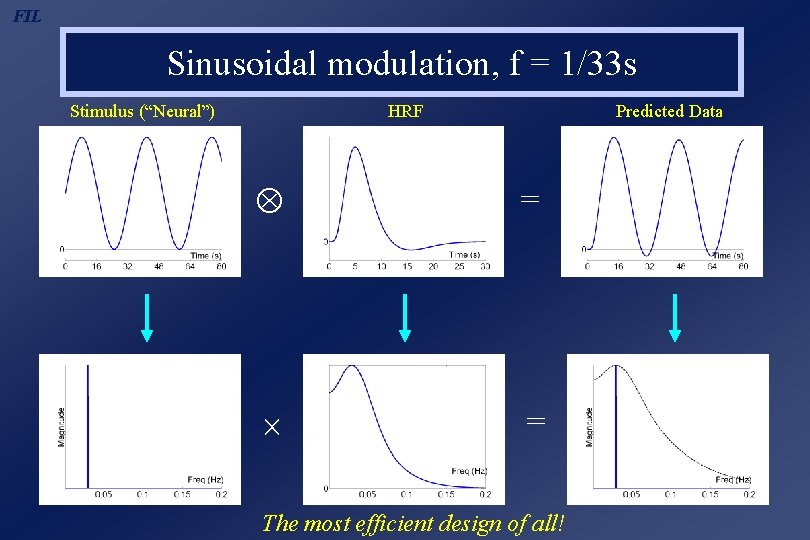

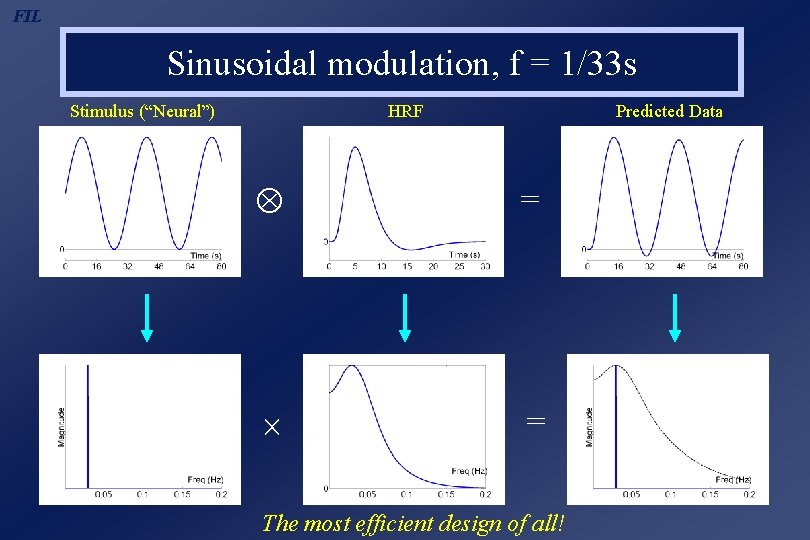

Sinusoidal modulation, f = 1/33 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data]

The most efficient design of all!
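Treating the HRF as a filter, the frequency it passes most strongly is where the magnitude of its Fourier transform peaks. A rough numerical check, using an assumed SPM-style double gamma (so the exact figure depends on that choice), puts the peak near 0.03 Hz, close to the 1/33 s modulation above. The transform is approximated by a plain Riemann sum.

```python
import cmath
import math


def canonical_hrf(t):
    """Double-gamma HRF (assumed SPM-style shapes 6 and 16, ratio 1/6); 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


step = 0.1
times = [i * step for i in range(int(round(64 / step)))]
h = [canonical_hrf(t) for t in times]


def gain(f):
    """|H(f)|: magnitude of the HRF's Fourier transform at f Hz (Riemann sum)."""
    return abs(sum(hk * cmath.exp(-2j * math.pi * f * t) for hk, t in zip(h, times)) * step)


freqs = [k / 1000 for k in range(1, 101)]  # 0.001 to 0.100 Hz
best = max(freqs, key=gain)
print(f"largest gain at {best:.3f} Hz (period ~{1 / best:.0f} s)")
```

Because the undershoot removes some of the lowest frequencies, the gain rises slightly from 0 Hz before falling off, so a slow sinusoid near the peak passes the most signal.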

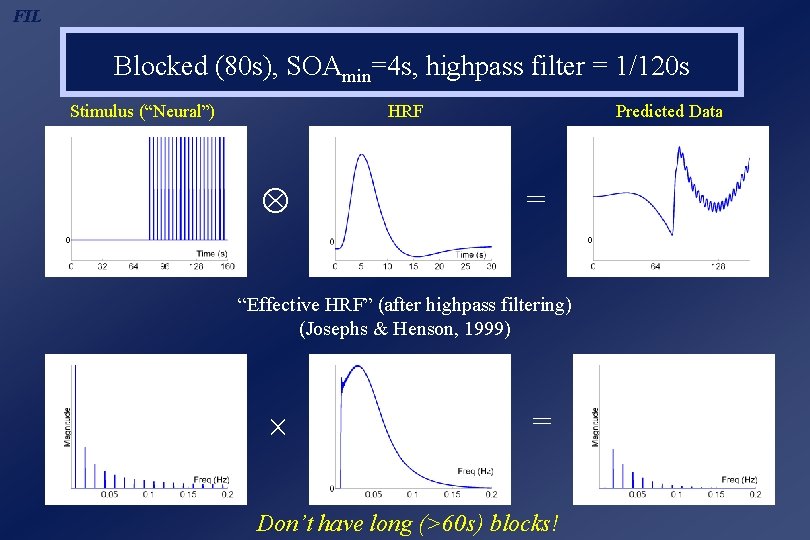

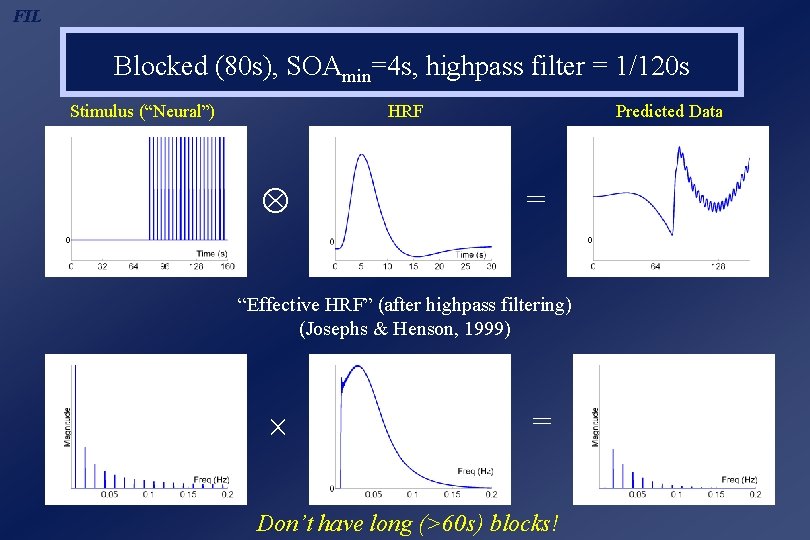

Blocked (80 s), SOAmin = 4 s, highpass filter = 1/120 s

[Figure: stimulus (“neural”) ⊗ "effective HRF" (after highpass filtering) = predicted data (Josephs & Henson, 1999)]

Don't have long (>60 s) blocks!
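The cost of long blocks can be illustrated by high-pass filtering block regressors and measuring how much of their variance survives. This sketch assumes a discrete cosine set as the high-pass filter (with the number of cosines set by one common rule), events every 4 s within blocks, TR = 2 s and an 800 s run; the helper names and the 20 s vs 80 s comparison are illustrative.

```python
import math


def canonical_hrf(t):
    """Double-gamma HRF (assumed SPM-style shapes 6 and 16, ratio 1/6); 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


def block_regressor(block_s, n_scans, TR, soa=4):
    """Events every `soa` s during alternating on/off blocks of `block_s` s,
    convolved with the HRF on a 1 s grid and sampled once per scan."""
    total = int(n_scans * TR)
    u = [1.0 if s % soa == 0 and (s // block_s) % 2 == 0 else 0.0 for s in range(total)]
    h = [canonical_hrf(k) for k in range(32)]
    x = [sum(u[s - k] * h[k] for k in range(min(s + 1, len(h)))) for s in range(total)]
    return [x[int(n * TR)] for n in range(n_scans)]


def highpass(x, TR, cutoff_s):
    """Regress out a discrete cosine (DCT-II) basis of low frequencies.

    Assumed convention: K = floor(2 * N * TR / cutoff_s) + 1 cosines,
    including the constant term.
    """
    N = len(x)
    K = int(2 * N * TR / cutoff_s) + 1
    resid = list(x)
    for k in range(K):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / N)
        basis = [scale * math.cos(math.pi * k * (2 * n + 1) / (2 * N)) for n in range(N)]
        coef = sum(b * r for b, r in zip(basis, resid))  # basis vectors are orthonormal
        resid = [r - coef * b for r, b in zip(resid, basis)]
    return resid


def retained_fraction(block_s, n_scans=400, TR=2.0, cutoff_s=120.0):
    """Share of the regressor's variance that survives high-pass filtering."""
    x = block_regressor(block_s, n_scans, TR)
    mean = sum(x) / len(x)
    total = sum((v - mean) ** 2 for v in x)
    kept = sum(v * v for v in highpass(x, TR, cutoff_s))
    return kept / total


for block_s in (20, 80):
    print(f"{block_s} s blocks: {retained_fraction(block_s):.2f} of variance retained")
```

The 80 s on/off cycle has a 160 s period, below the 1/120 s cut-off, so its dominant component is filtered out along with the drifts.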

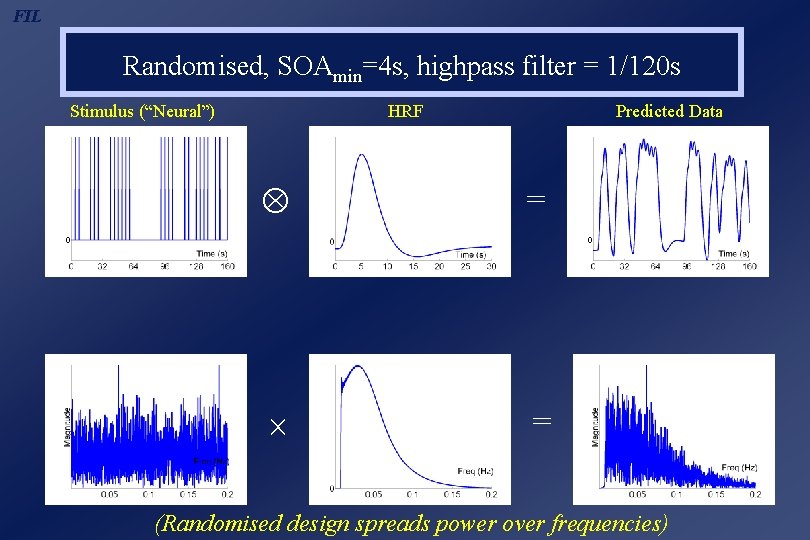

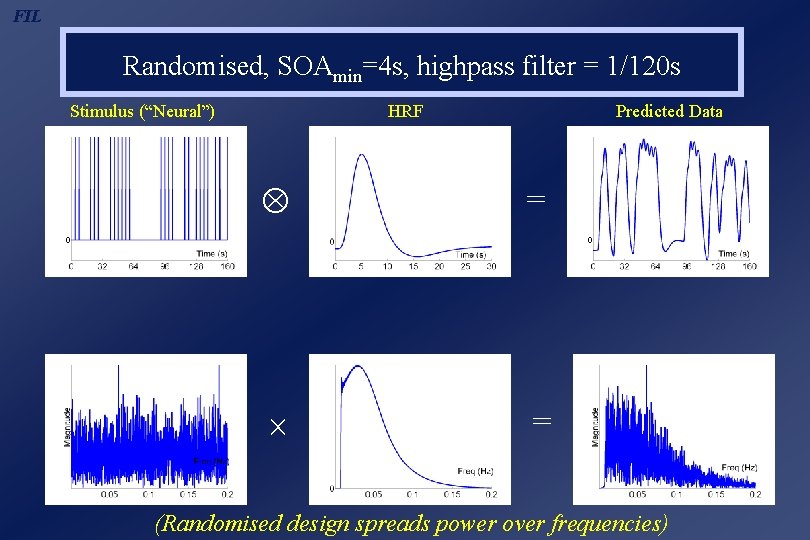

Randomised, SOAmin = 4 s, highpass filter = 1/120 s

[Figure: stimulus (“neural”) ⊗ HRF = predicted data]

(A randomised design spreads power over frequencies)

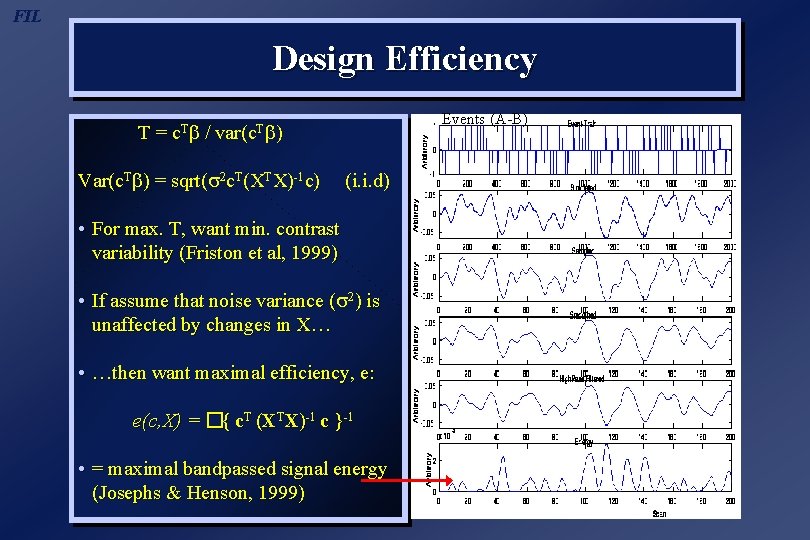

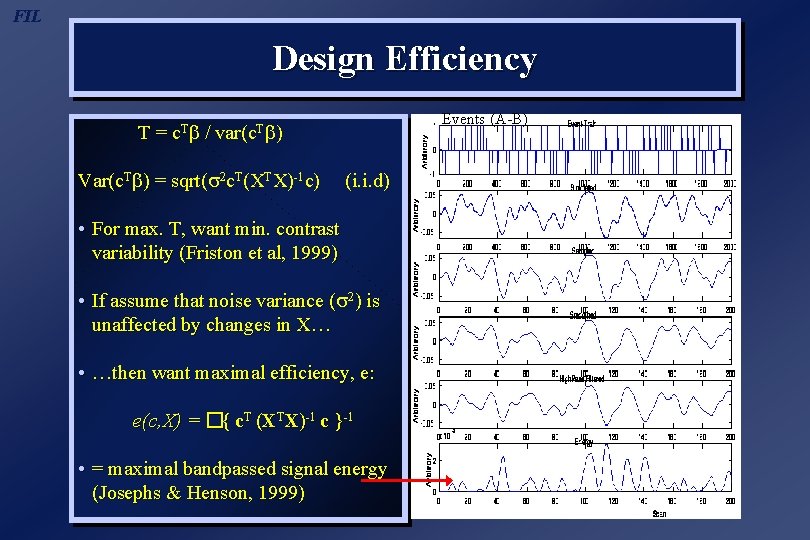

Design Efficiency

$$T = \frac{c^T \hat\beta}{\sqrt{\mathrm{var}(c^T \hat\beta)}}, \qquad \mathrm{var}(c^T \hat\beta) = \sigma^2\, c^T (X^T X)^{-1} c \quad \text{(i.i.d. errors)}$$

- For maximum T, we want minimum contrast variability (Friston et al., 1999)
- If we assume that the noise variance ($\sigma^2$) is unaffected by changes in $X$…
- …then we want maximal efficiency, $e$:

$$e(c, X) = \left\{ \sigma^2\, c^T (X^T X)^{-1} c \right\}^{-1}$$

- = maximal bandpassed signal energy (Josephs & Henson, 1999)

[Figure: events (A-B)]
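For a single regressor plus a constant, with contrast c = [1, 0] and σ² fixed at 1, e(c, X) reduces to the summed squared deviation of the regressor from its mean. The sketch below computes it for three of the designs shown earlier; the HRF parameters, run length, TR and random seed are assumptions, and no high-pass filter is applied.

```python
import math
import random


def canonical_hrf(t):
    """Double-gamma HRF (assumed SPM-style shapes 6 and 16, ratio 1/6); 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


def regressor(stimulus, tr_s):
    """Convolve a 1 s resolution stimulus train with the HRF; sample every tr_s seconds."""
    h = [canonical_hrf(k) for k in range(32)]
    x = [sum(stimulus[s - k] * h[k] for k in range(min(s + 1, len(h))))
         for s in range(len(stimulus))]
    return x[::tr_s]


def efficiency(x):
    """e = 1 / (c^T (X^T X)^-1 c) for X = [x, 1], c = [1, 0], taking sigma^2 = 1."""
    n = len(x)
    sxx = sum(v * v for v in x)
    sx = sum(x)
    det = sxx * n - sx * sx   # determinant of X^T X = [[sxx, sx], [sx, n]]
    c_inv_c = n / det         # top-left element of (X^T X)^-1
    return 1.0 / c_inv_c


duration, tr_s = 2000, 2  # seconds of scanning and TR (s); hypothetical
rng = random.Random(1)
designs = {
    "fixed SOA 16 s": [1.0 if s % 16 == 0 else 0.0 for s in range(duration)],
    "fixed SOA 4 s": [1.0 if s % 4 == 0 else 0.0 for s in range(duration)],
    # Randomised: an event with probability 0.5 in each 4 s slot (SOAmin = 4 s)
    "randomised, SOAmin 4 s": [1.0 if s % 4 == 0 and rng.random() < 0.5 else 0.0
                               for s in range(duration)],
}
effs = {name: efficiency(regressor(u, tr_s)) for name, u in designs.items()}
for name, e in effs.items():
    print(f"{name}: {e:.2f}")
```

The fixed 4 s design gives an almost constant regressor, which the constant column absorbs, so its efficiency is tiny; randomising the same 4 s slots restores variability that the HRF can pass.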

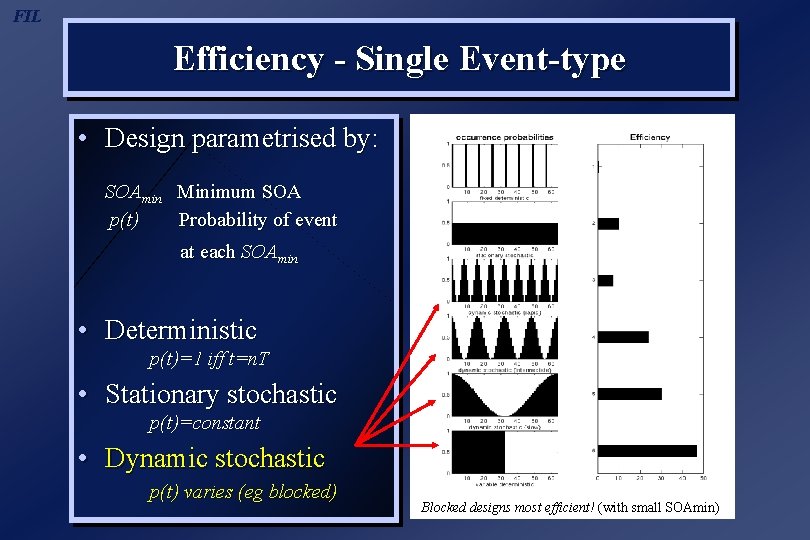

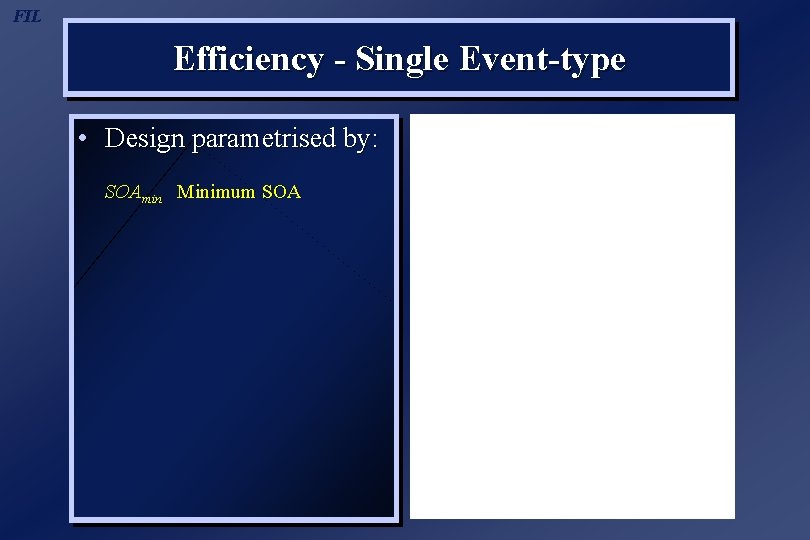

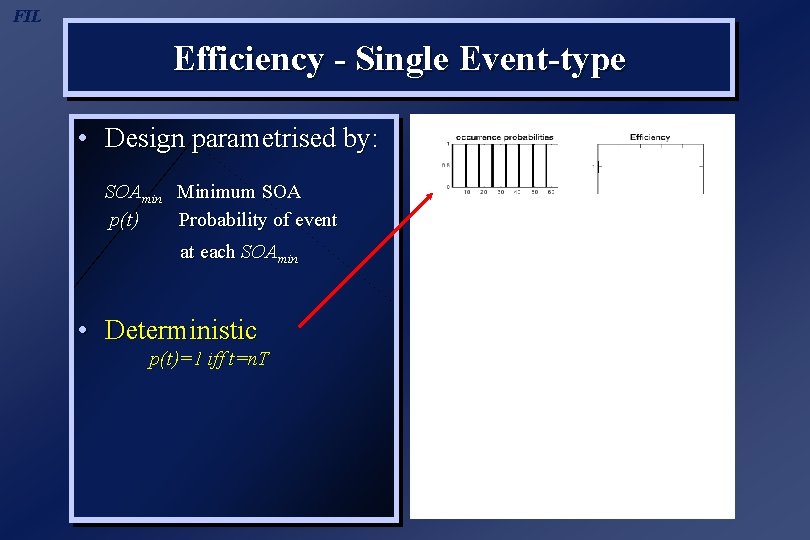

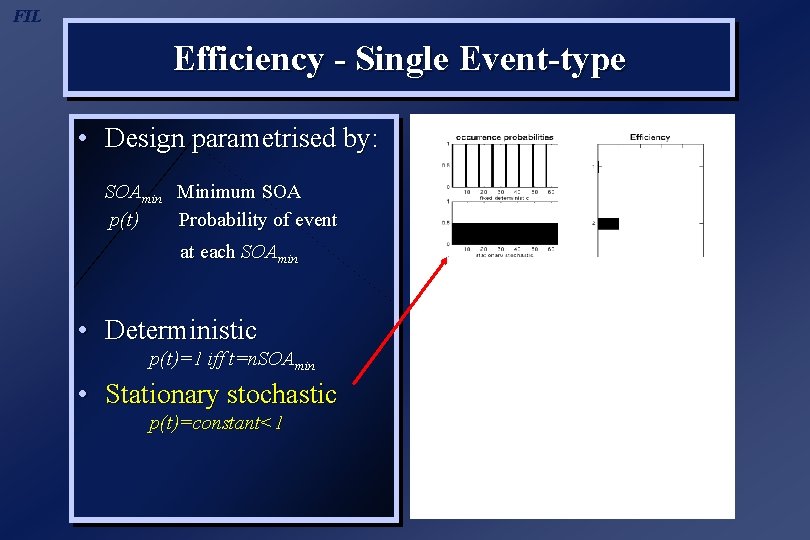

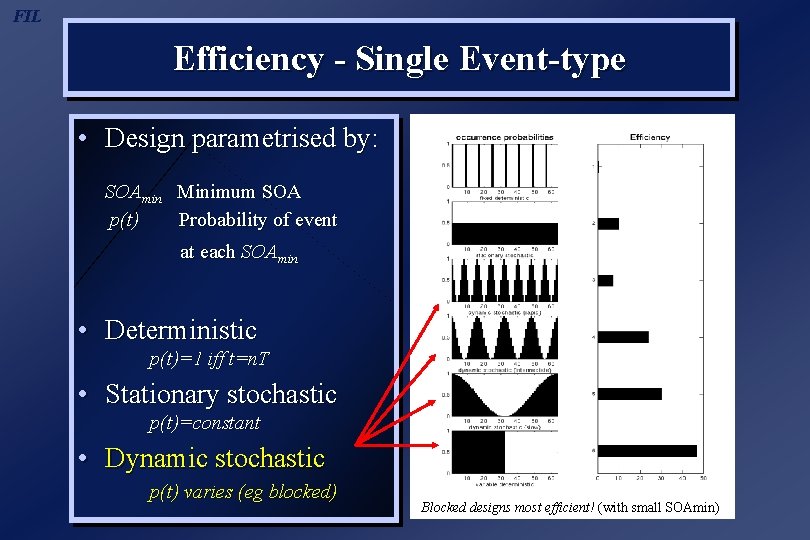



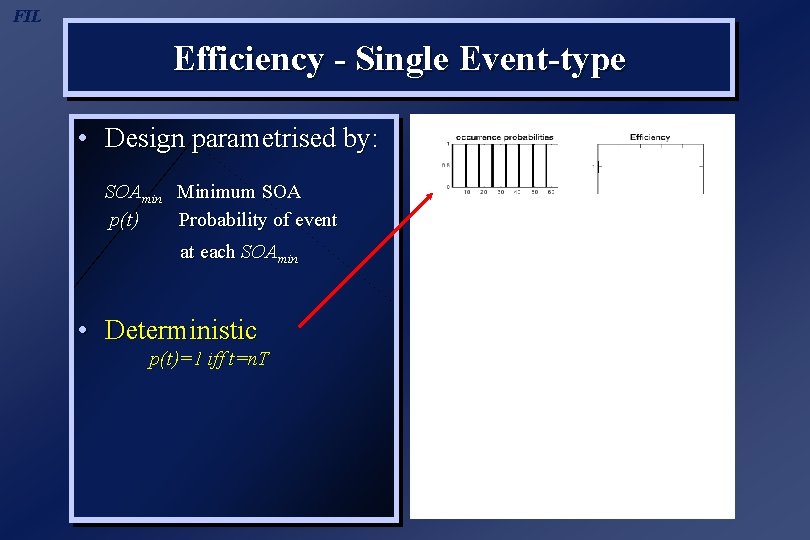

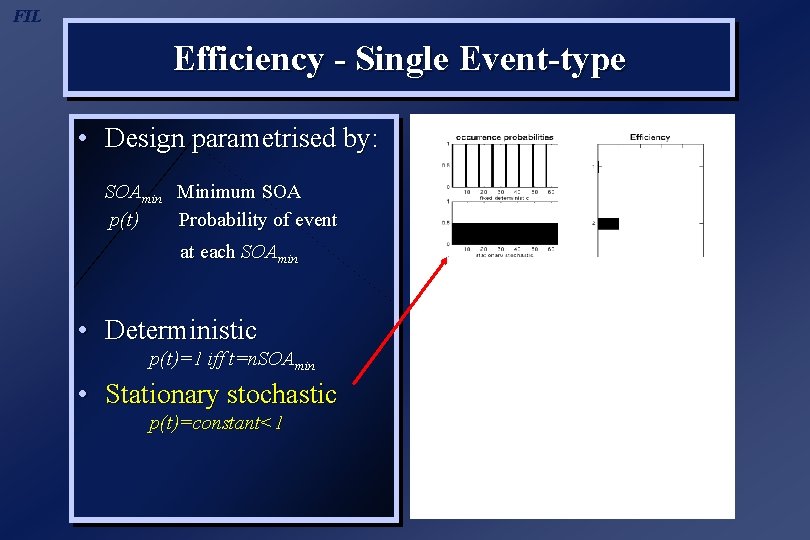



Efficiency - Single Event-type

- Design parametrised by:
  - SOAmin: minimum SOA
  - p(t): probability of an event at each SOAmin
- Deterministic: p(t) = 1 iff t = n·SOAmin
- Stationary stochastic: p(t) = constant < 1
- Dynamic stochastic: p(t) varies (e.g., blocked)

Blocked designs are most efficient! (with small SOAmin)
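The three classes of single event-type design differ only in p(t). A minimal generator, assuming one independent draw per SOAmin slot; the run length, block length and sinusoidal modulation are hypothetical examples of a dynamic p(t).

```python
import math
import random


def stimulus_train(p_of_t, n_slots, seed=0):
    """One entry per SOAmin slot: 1 if an event occurs there, drawn with probability p(t)."""
    rng = random.Random(seed)
    return [1 if rng.random() < p_of_t(t) else 0 for t in range(n_slots)]


n_slots = 200  # number of SOAmin slots in the run (hypothetical)

# Deterministic: p(t) = 1 in every slot, i.e. a fixed SOA equal to SOAmin
deterministic = stimulus_train(lambda t: 1.0, n_slots)
# Stationary stochastic: constant p(t) = 0.5
stationary = stimulus_train(lambda t: 0.5, n_slots)
# Dynamic stochastic, blocked: p(t) switches between 1 and 0 every 10 slots
blocked = stimulus_train(lambda t: 1.0 if (t // 10) % 2 == 0 else 0.0, n_slots)
# Dynamic stochastic, smoothly modulated: p(t) follows a sinusoid with a 20-slot period
modulated = stimulus_train(lambda t: 0.5 + 0.5 * math.sin(2 * math.pi * t / 20), n_slots)

print(sum(deterministic), sum(stationary), sum(blocked), sum(modulated))
```

A blocked design is simply the limiting case of a dynamic p(t) that swings between 0 and 1, which concentrates the stimulus power at a low frequency.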

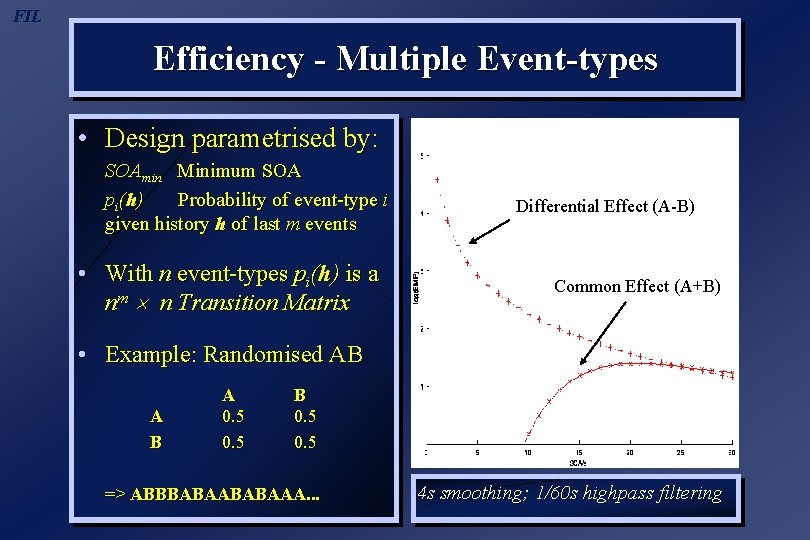

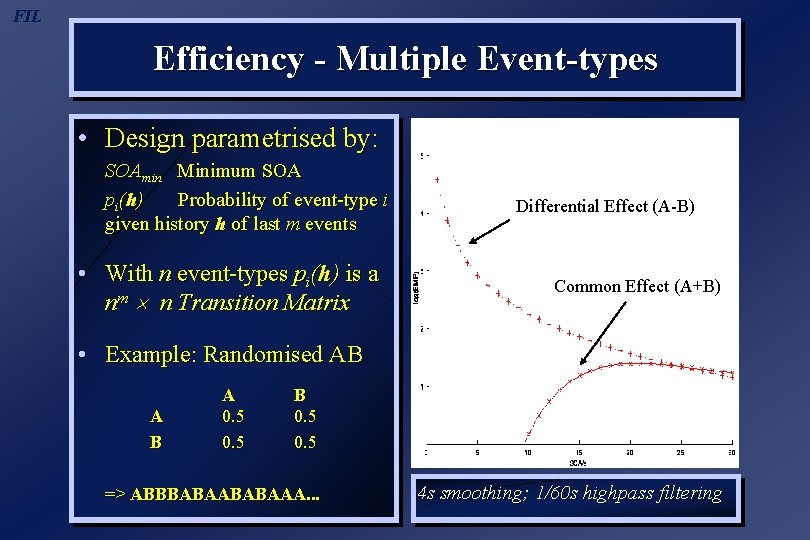

Efficiency - Multiple Event-types

- Design parametrised by:
  - SOAmin: minimum SOA
  - $p_i(h)$: probability of event-type $i$ given the history $h$ of the last $m$ events
- With $n$ event-types, $p_i(h)$ is an $n^m \times n$ transition matrix
- Example: Randomised AB, with p(A) = 0.5, p(B) = 0.5 => ABBBABAAA…

[Figure: efficiency for the differential effect (A-B) and the common effect (A+B); 4 s smoothing; 1/60 s highpass filtering]

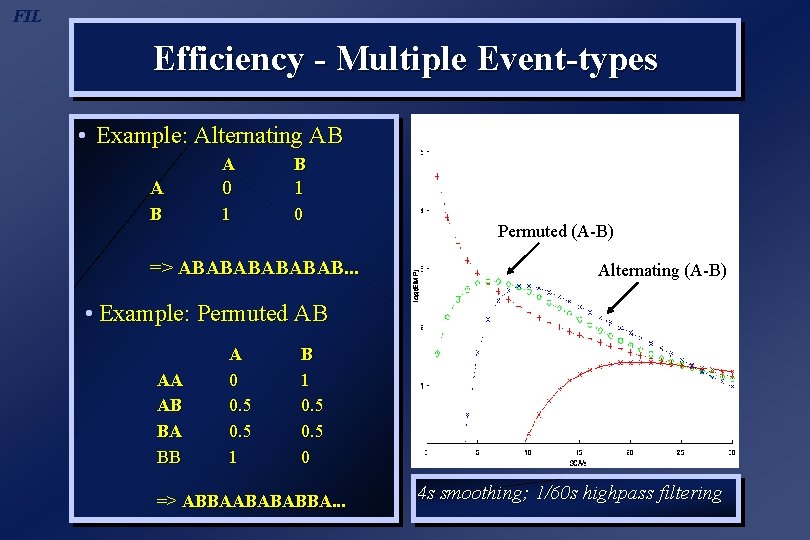

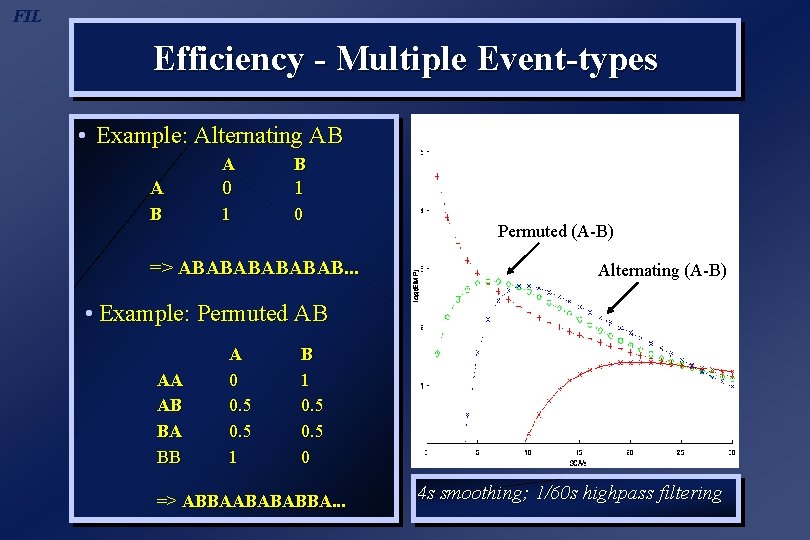

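Efficiency - Multiple Event-types

- Example: Alternating AB => ABABAB…

| Previous event | p(A) | p(B) |
|---|---|---|
| A | 0 | 1 |
| B | 1 | 0 |

- Example: Permuted AB => ABBAABABABBA…

| Previous two events | p(A) | p(B) |
|---|---|---|
| AA | 0 | 1 |
| AB | 0.5 | 0.5 |
| BA | 0.5 | 0.5 |
| BB | 1 | 0 |

[Figure: efficiency for permuted (A-B) and alternating (A-B) designs; 4 s smoothing; 1/60 s highpass filtering]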

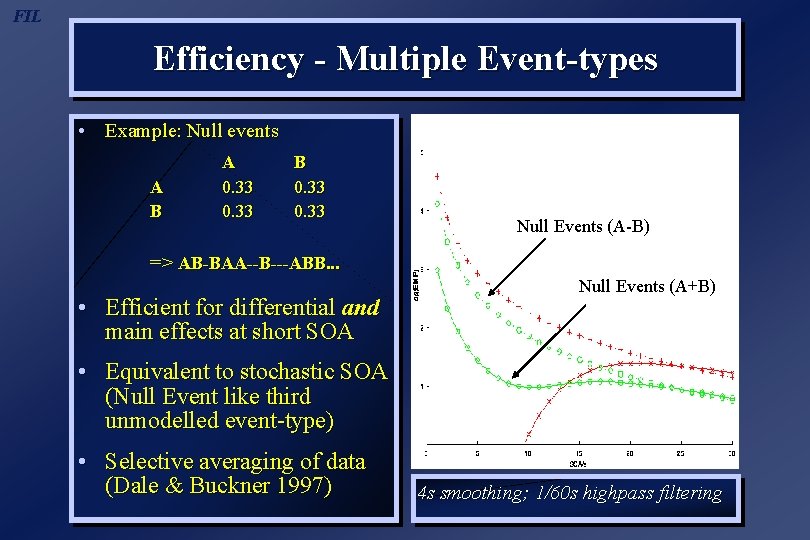

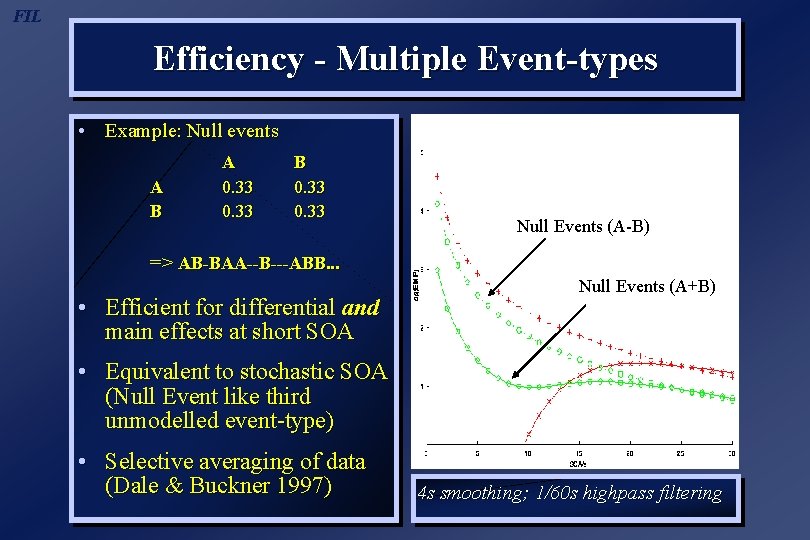

Efficiency - Multiple Event-types

- Example: Null events, with p(A) = 0.33, p(B) = 0.33 => AB-BAA--B---ABB…
- Efficient for differential and main effects at short SOA
- Equivalent to a stochastic SOA (the null event is like a third, unmodelled event-type)
- Selective averaging of data (Dale & Buckner, 1997)

[Figure: efficiency with null events for (A-B) and (A+B); 4 s smoothing; 1/60 s highpass filtering]
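The transition-matrix view of the designs above can be sketched as a generator whose next event depends on the last m events. The dictionaries below encode the randomised (m = 0), alternating (m = 1), permuted (m = 2) and null-event examples; the helper name is hypothetical, drawing the first m events uniformly is an assumption, and `-` stands for a null event.

```python
import random


def generate_sequence(transitions, m, length, start="", seed=0):
    """Draw an event sequence whose next event depends on the last m events.

    `transitions` maps each history string of length m to a dict
    {event_type: probability}, i.e. it encodes the n^m x n transition matrix.
    Until m events exist, event types are drawn uniformly (an assumption).
    """
    rng = random.Random(seed)
    types = sorted(next(iter(transitions.values())))
    seq = start
    while len(seq) < length:
        if len(seq) < m:
            seq += rng.choice(types)
            continue
        probs = transitions[seq[len(seq) - m:]]
        r, cumulative = rng.random(), 0.0
        chosen = types[-1]  # fallback in case of floating-point rounding
        for event in types:
            cumulative += probs[event]
            if r < cumulative:
                chosen = event
                break
        seq += chosen
    return seq


randomised = {"": {"A": 0.5, "B": 0.5}}
alternating = {"A": {"A": 0.0, "B": 1.0}, "B": {"A": 1.0, "B": 0.0}}
permuted = {
    "AA": {"A": 0.0, "B": 1.0},
    "AB": {"A": 0.5, "B": 0.5},
    "BA": {"A": 0.5, "B": 0.5},
    "BB": {"A": 1.0, "B": 0.0},
}
null_events = {"": {"A": 1 / 3, "B": 1 / 3, "-": 1 / 3}}

print(generate_sequence(randomised, 0, 20))
print(generate_sequence(alternating, 1, 20, start="A"))
print(generate_sequence(permuted, 2, 20))
print(generate_sequence(null_events, 0, 20))
```

In the permuted design the history AA forces B and BB forces A, so no event-type ever occurs three times in a row.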

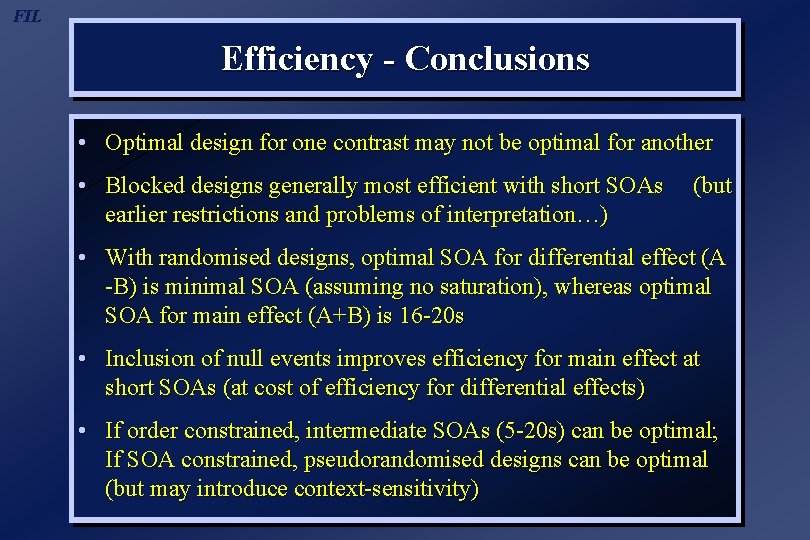

Efficiency - Conclusions

- The optimal design for one contrast may not be optimal for another
- Blocked designs are generally most efficient with short SOAs (but note the earlier restrictions and problems of interpretation…)
- With randomised designs, the optimal SOA for the differential effect (A-B) is the minimal SOA (assuming no saturation), whereas the optimal SOA for the main effect (A+B) is 16-20 s
- Inclusion of null events improves efficiency for the main effect at short SOAs (at the cost of efficiency for differential effects)
- If order is constrained, intermediate SOAs (5-20 s) can be optimal; if SOA is constrained, pseudorandomised designs can be optimal (but may introduce context-sensitivity)

Overview: 6. Nonlinear Models


Nonlinear Model

[Figure: input u(t) (stimulus function) → kernels (h), to be estimated → response y(t)]

Volterra series, a general nonlinear input-output model:

$$y(t) = \lambda_1[u(t)] + \lambda_2[u(t)] + \dots + \lambda_n[u(t)] + \dots$$

$$\lambda_n[u(t)] = \int \cdots \int h_n(t_1, \dots, t_n)\, u(t - t_1) \cdots u(t - t_n)\, dt_1 \cdots dt_n$$
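A discrete, second-order truncation of the Volterra series shows how a nonlinear kernel produces underadditivity. The second-order kernel here is hypothetical (negative and separable, h2(t1, t2) = -a·h1(t1)·h1(t2), with an assumed double-gamma h1) and chosen only to illustrate the effect; it is not the kernel estimated by Friston et al. (1997). With this kernel, two events 2 s apart give less total response than the sum of their separate responses, while events 60 s apart (beyond the 32 s kernel memory) add exactly.

```python
import math


def canonical_hrf(t):
    """Double-gamma HRF (assumed SPM-style shapes 6 and 16, ratio 1/6); 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


K = 32                                     # kernel memory (s), 1 s grid
h1 = [canonical_hrf(k) for k in range(K)]  # first-order kernel
a = 2.0                                    # hypothetical nonlinearity strength
# Hypothetical second-order kernel: h2(t1, t2) = -a * h1(t1) * h1(t2)
h2 = [[-a * h1[i] * h1[j] for j in range(K)] for i in range(K)]


def volterra(u):
    """Second-order discrete Volterra series: y(t) = lambda_1[u](t) + lambda_2[u](t)."""
    y = []
    for t in range(len(u)):
        past = [u[t - k] if t - k >= 0 else 0.0 for k in range(K)]  # u(t - k)
        lam1 = sum(h1[k] * past[k] for k in range(K))
        lam2 = sum(h2[i][j] * past[i] * past[j] for i in range(K) for j in range(K))
        y.append(lam1 + lam2)
    return y


def impulses(onsets, n):
    """Stimulus function with a unit impulse at each onset (in seconds)."""
    u = [0.0] * n
    for onset in onsets:
        u[onset] = 1.0
    return u


def additivity_error(soa, n=120):
    """Total response to a pair of events minus the sum of the separate responses."""
    pair = volterra(impulses([0, soa], n))
    single_1 = volterra(impulses([0], n))
    single_2 = volterra(impulses([soa], n))
    return sum(pair) - (sum(single_1) + sum(single_2))


for soa in (2, 60):
    print(f"SOA {soa} s: {additivity_error(soa):+.4f}")
```

A purely linear model (h2 = 0) would make the error zero at every SOA, which is why a significant second-order kernel is evidence of nonlinearity.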

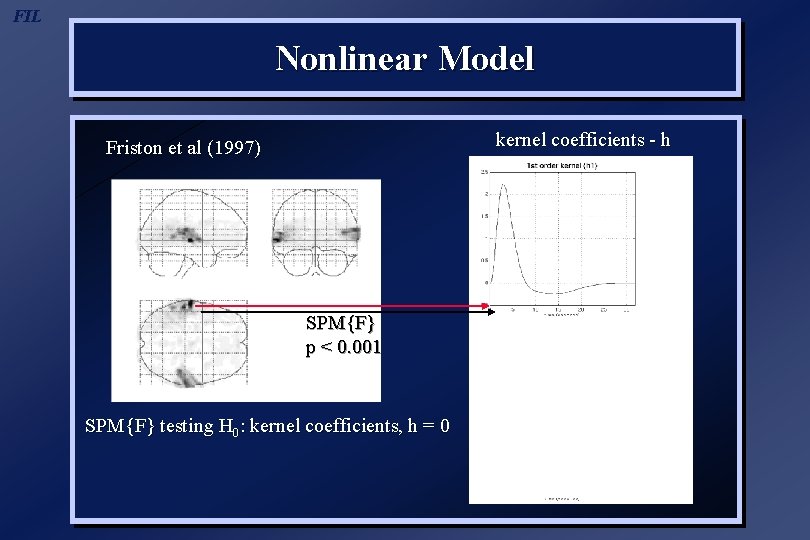

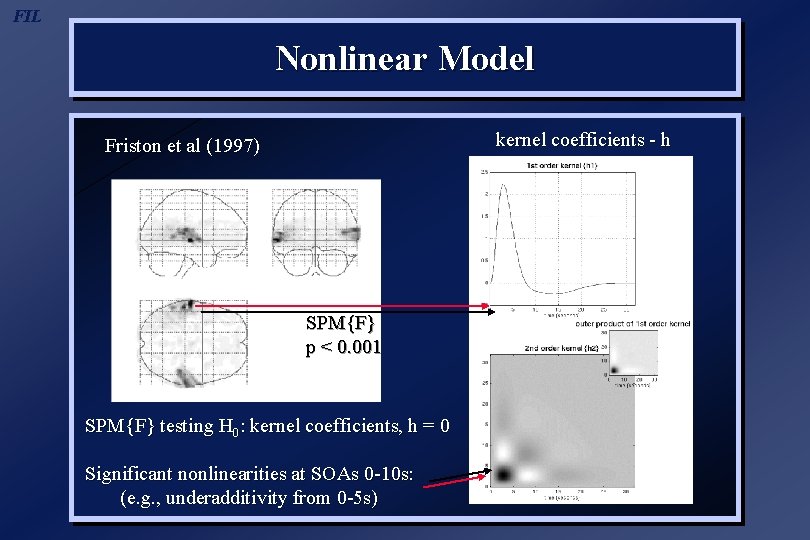

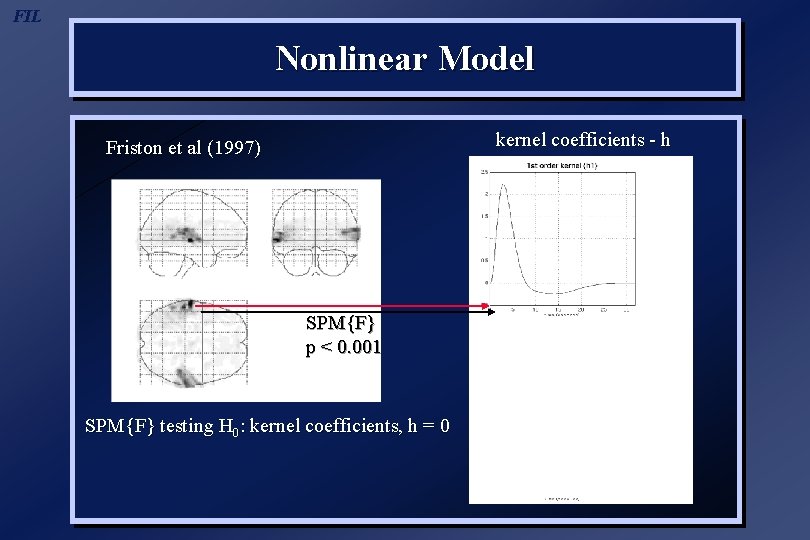

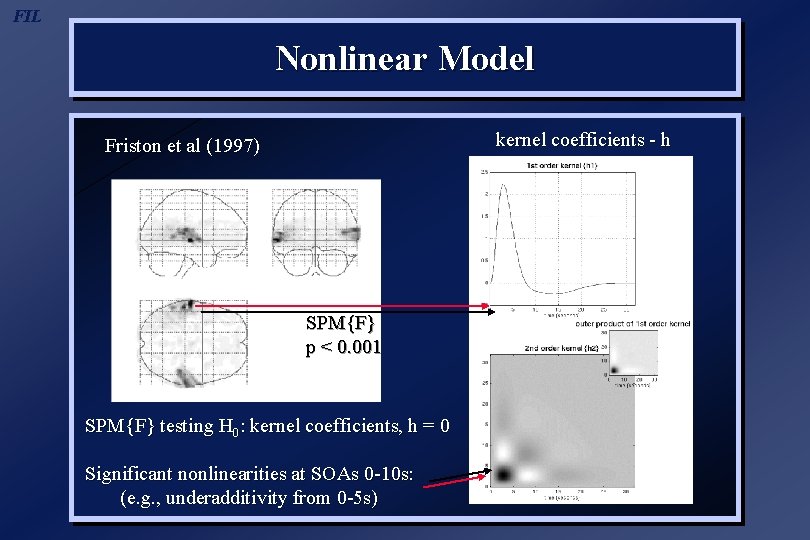

Nonlinear Model

Kernel coefficients, h (Friston et al., 1997)

- SPM{F} testing H0: kernel coefficients h = 0 (p < 0.001)

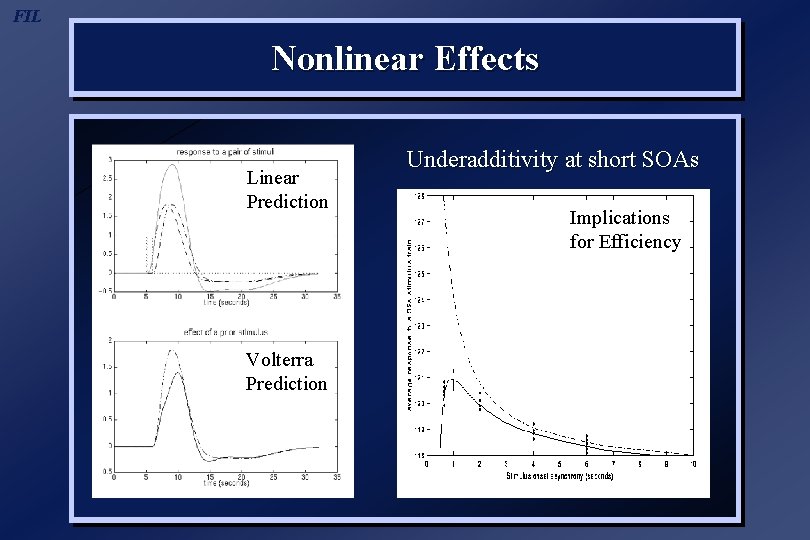

- Significant nonlinearities at SOAs of 0-10 s (e.g., underadditivity from 0-5 s)

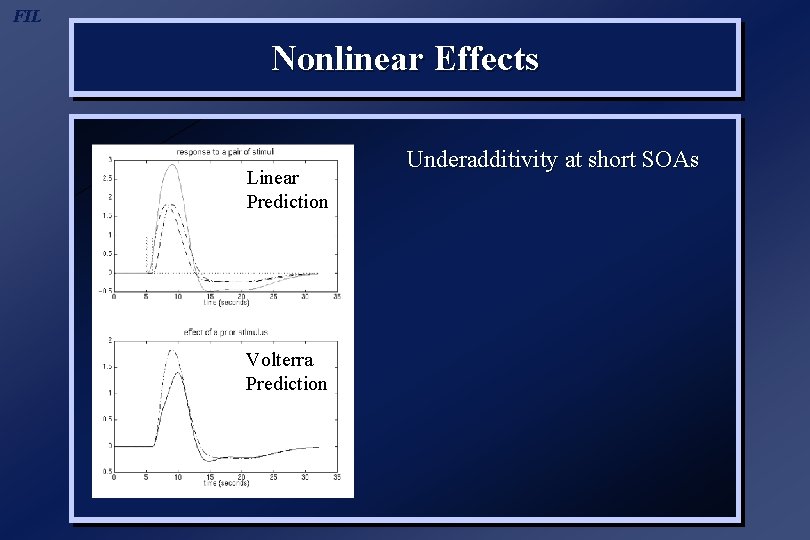

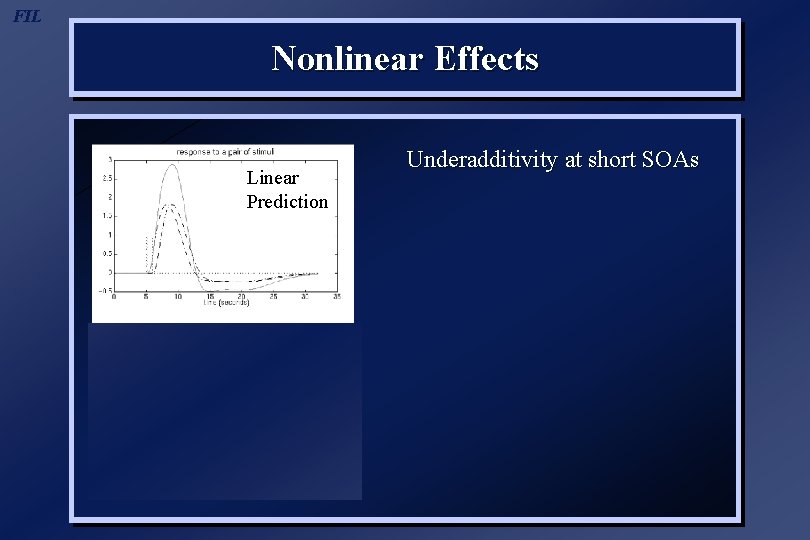

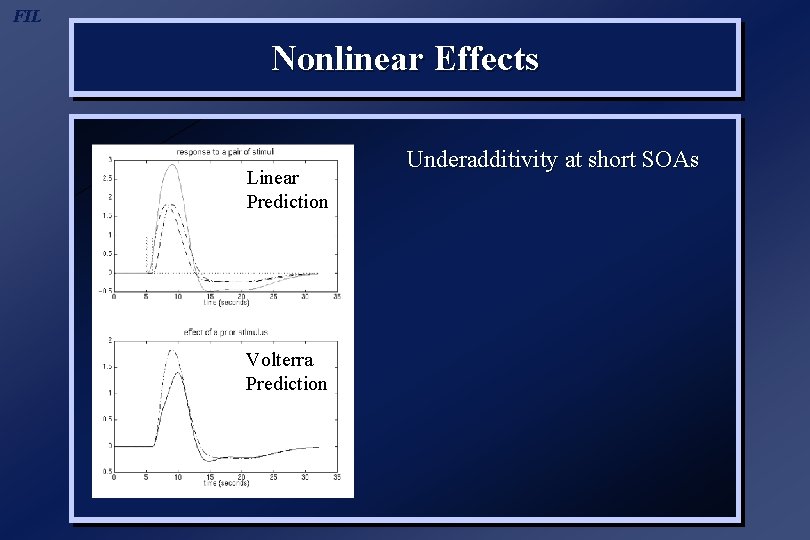

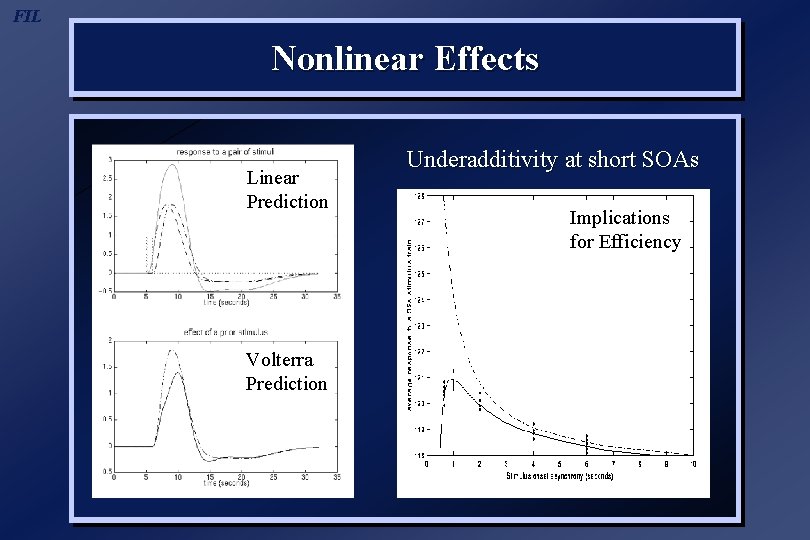

Nonlinear Effects

[Figure: linear prediction vs Volterra prediction]

Underadditivity at short SOAs


Implications for efficiency

Overview: 7. Example Applications

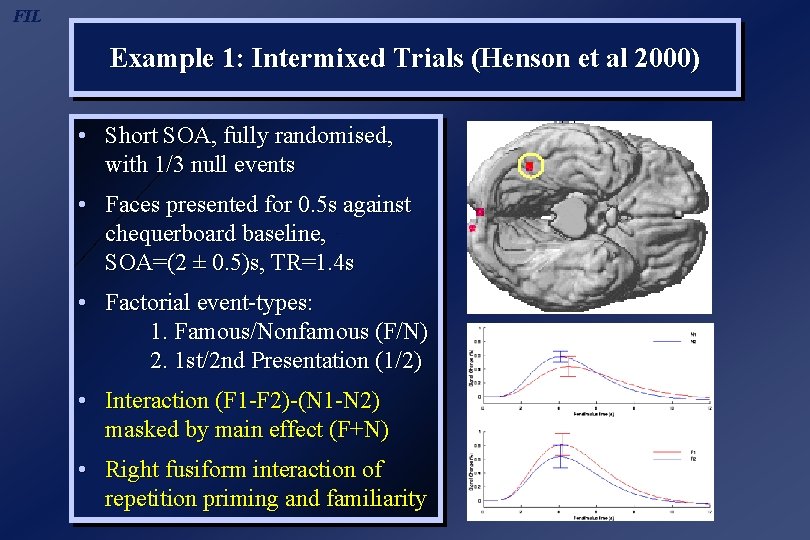

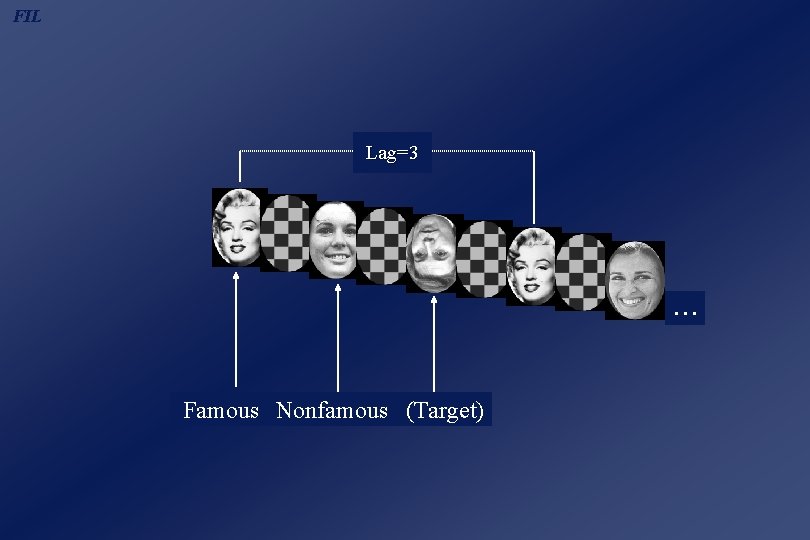

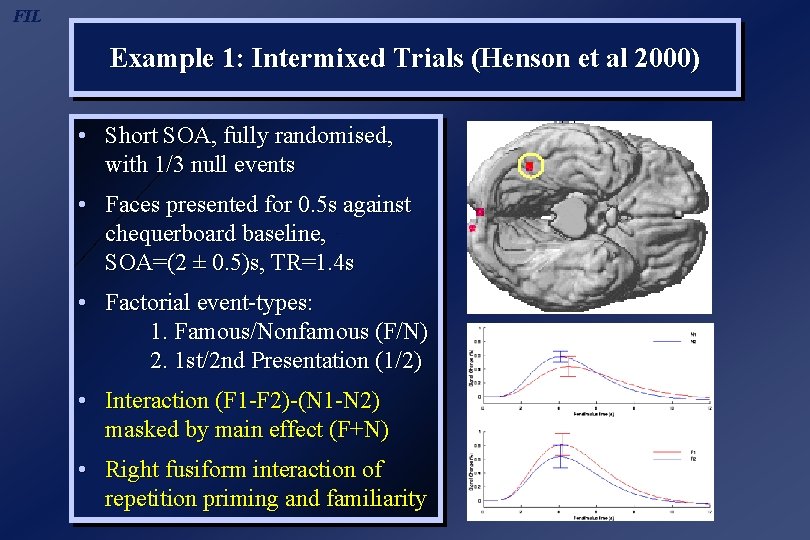

Example 1: Intermixed Trials (Henson et al., 2000)

- Short SOA, fully randomised, with 1/3 null events
- Faces presented for 0.5 s against a chequerboard baseline, SOA = (2 ± 0.5) s, TR = 1.4 s
- Factorial event-types:
  1. Famous/Nonfamous (F/N)
  2. 1st/2nd presentation (1/2)

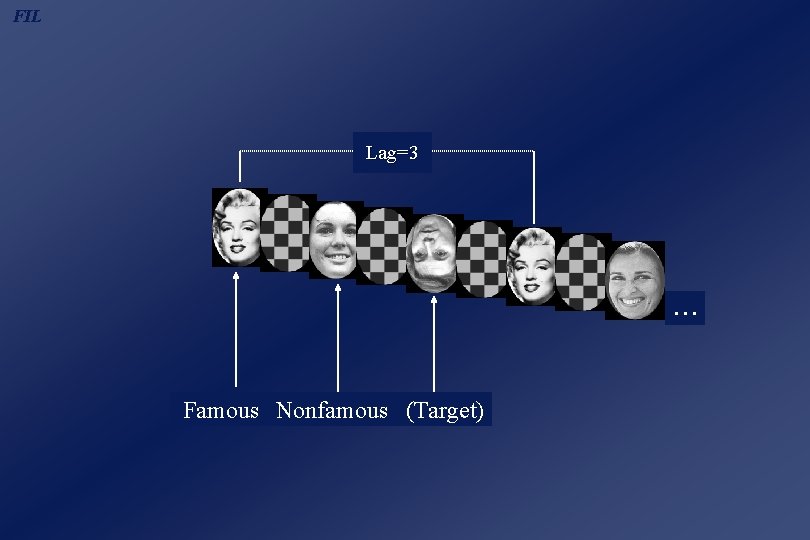

[Figure: example sequence of famous and nonfamous faces (lag = 3); target]

- Interaction (F1-F2)-(N1-N2), masked by the main effect (F+N)
- Right fusiform interaction of repetition priming and familiarity
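The factorial contrasts in this example can be written as weight vectors over the four event-types. The column order and parameter estimates below are hypothetical, and the masking step (keeping only voxels where the main effect is significant) is not shown.

```python
# Hypothetical column order of the design matrix: F1, F2, N1, N2
contrasts = {
    "main effect (F+N)": [1, 1, 1, 1],
    "familiarity (F-N)": [1, 1, -1, -1],
    "repetition (1-2)": [1, -1, 1, -1],
    "interaction (F1-F2)-(N1-N2)": [1, -1, -1, 1],
}


def dot(u, v):
    """Inner product, e.g. c^T beta for a contrast c and parameter estimates beta."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical parameter estimates (one per event-type), for illustration only
betas = [2.0, 1.2, 1.5, 1.4]

for name, c in contrasts.items():
    print(f"{name}: c^T beta = {dot(c, betas):+.2f}")

# The four contrasts are mutually orthogonal
names = list(contrasts)
print(all(dot(contrasts[p], contrasts[q]) == 0
          for i, p in enumerate(names) for q in names[i + 1:]))
```

Expanding (F1-F2)-(N1-N2) gives F1 - F2 - N1 + N2, hence the weights [1, -1, -1, 1].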

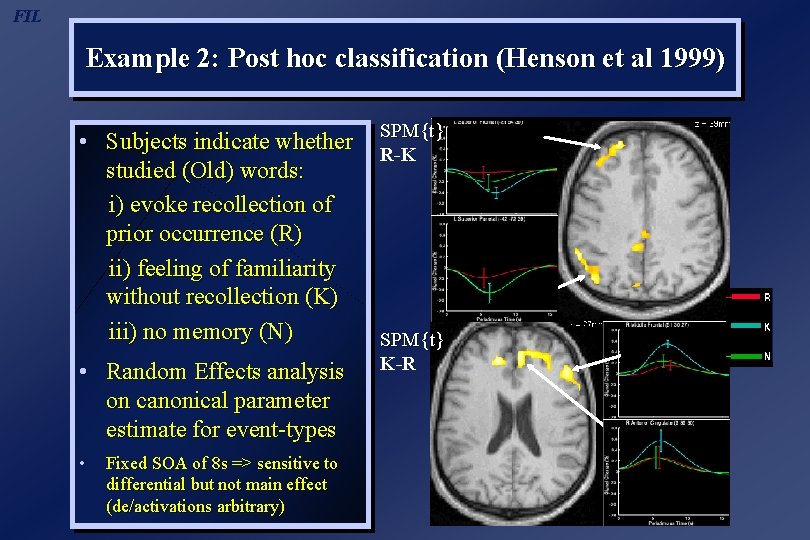

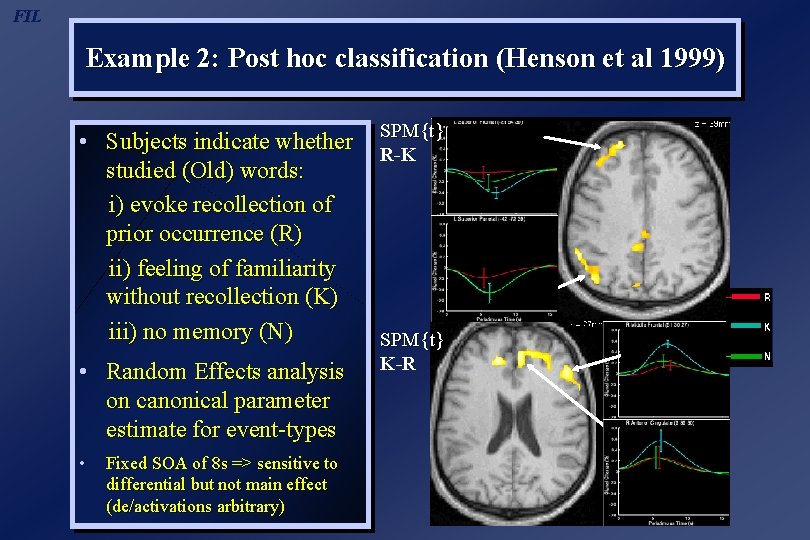

Example 2: Post hoc classification (Henson et al., 1999)

- Subjects indicate whether studied (Old) words:
  - i) evoke recollection of the prior occurrence (R)
  - ii) evoke a feeling of familiarity without recollection (K)
  - iii) evoke no memory (N)
- Random Effects analysis on the canonical parameter estimate for each event-type
- Fixed SOA of 8 s => sensitive to the differential but not the main effect (de/activations arbitrary)

[Figure: SPM{t} R-K and SPM{t} K-R]

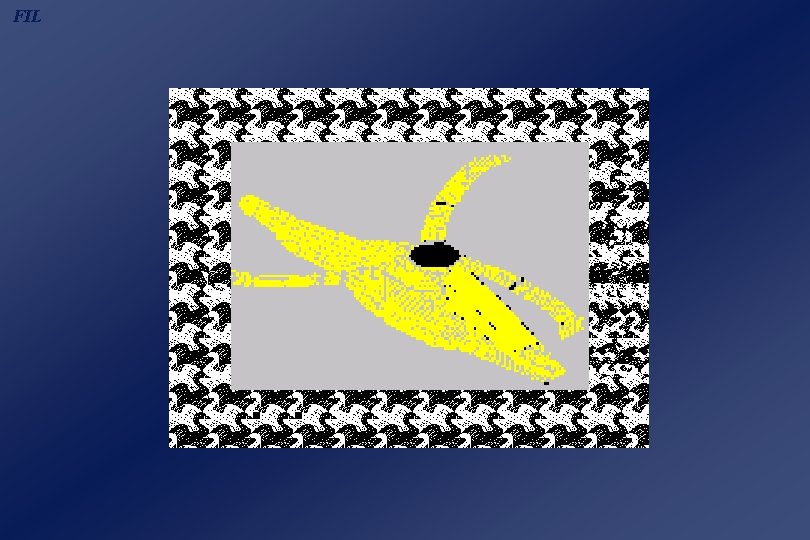

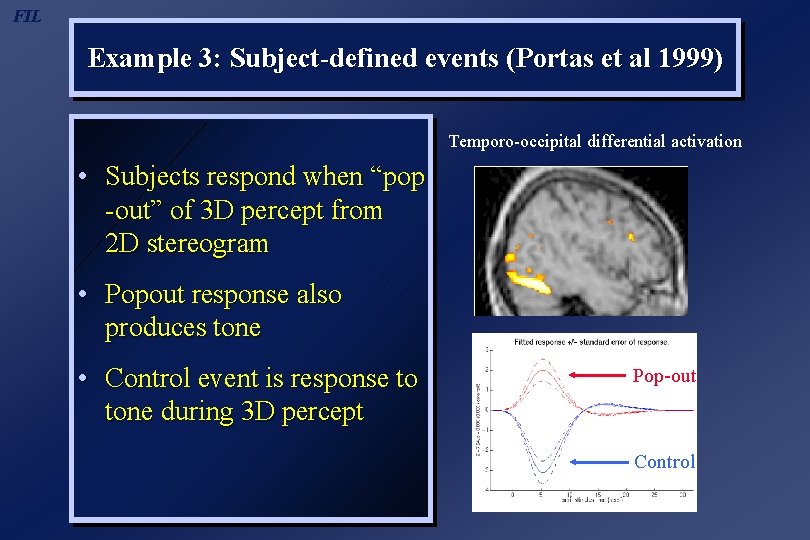

Example 3: Subject-defined events (Portas et al., 1999)

- Subjects respond at the "pop-out" of a 3D percept from a 2D stereogram


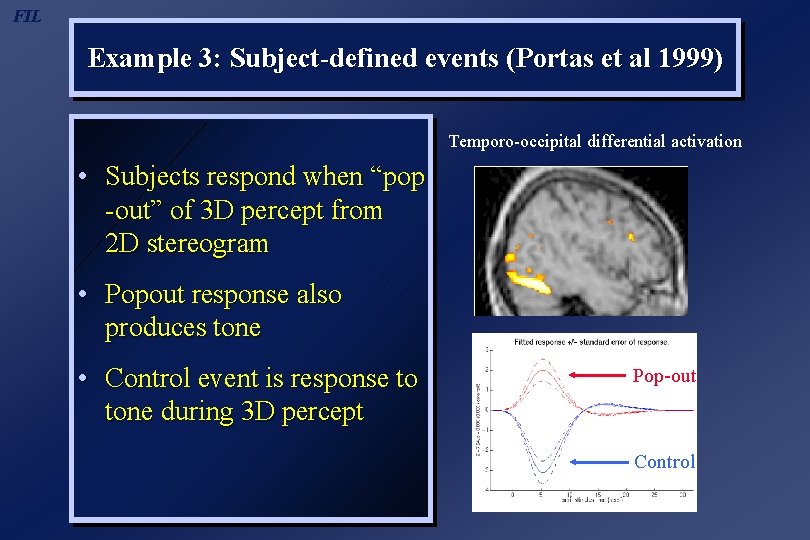

- The pop-out response also produces a tone
- The control event is a response to the tone during the 3D percept

[Figure: temporo-occipital differential activation, pop-out vs control]

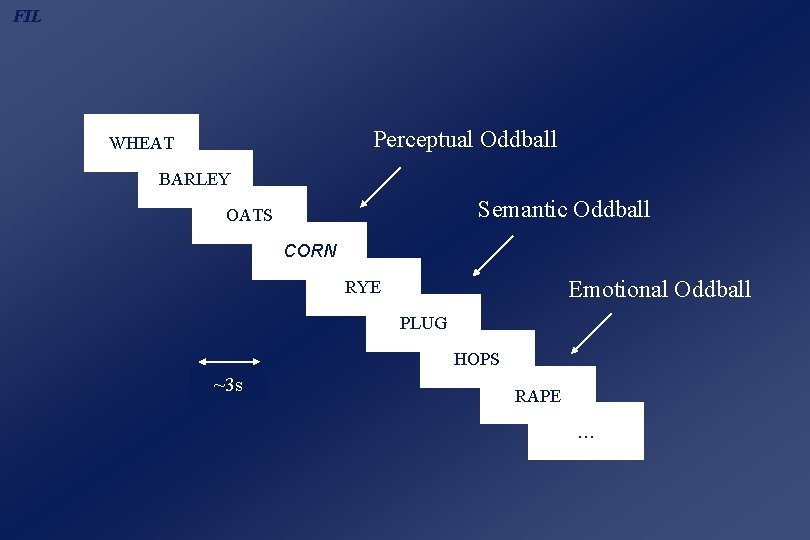

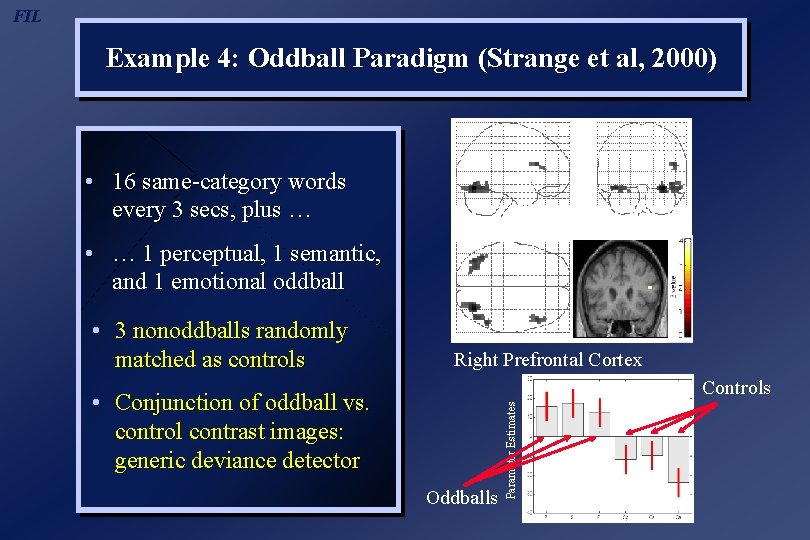

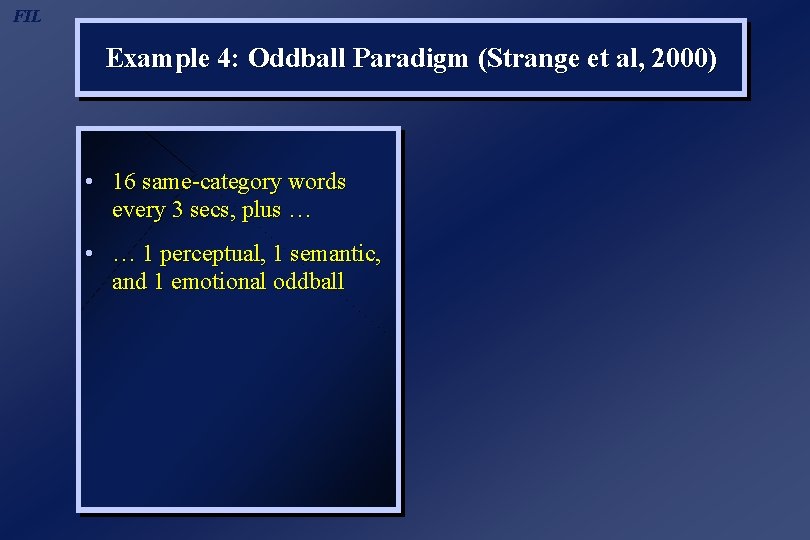

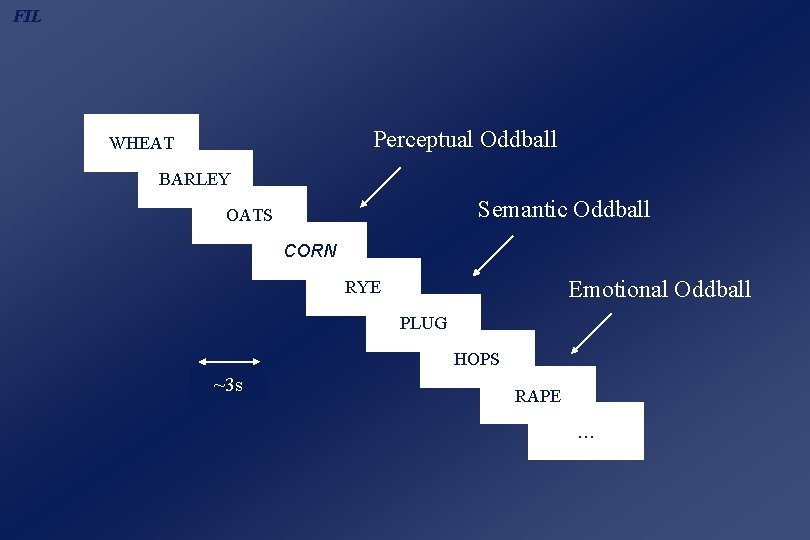

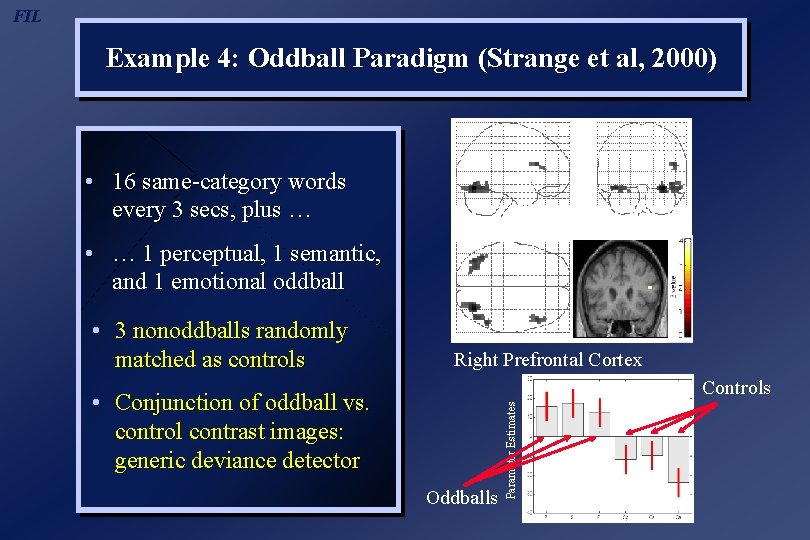

Example 4: Oddball Paradigm (Strange et al., 2000)

- 16 same-category words every 3 s, plus…
- …1 perceptual, 1 semantic and 1 emotional oddball

[Figure: example word stream, one word every ~3 s (WHEAT, BARLEY, OATS, CORN, RYE, PLUG, HOPS, RAPE, …), with the perceptual, semantic and emotional oddballs marked]

- 3 non-oddballs randomly matched as controls
- Conjunction of oddball vs control contrast images: a generic deviance detector

[Figure: right prefrontal cortex; parameter estimates for oddballs and controls]

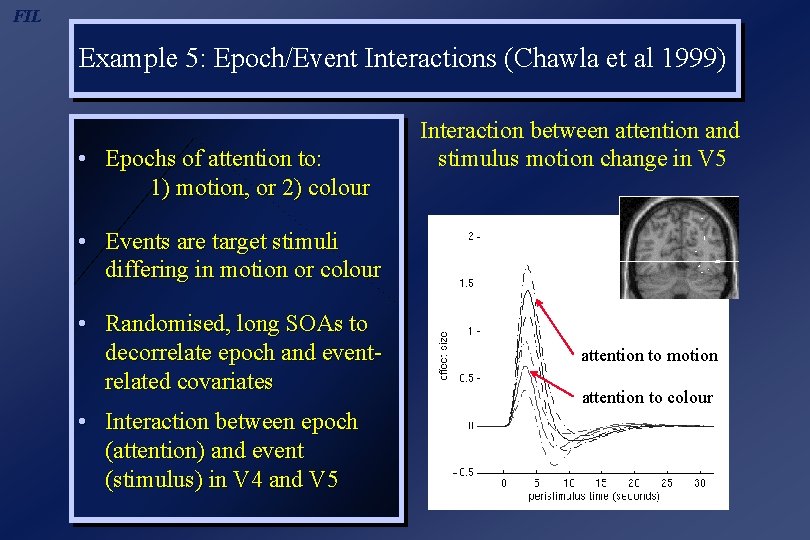

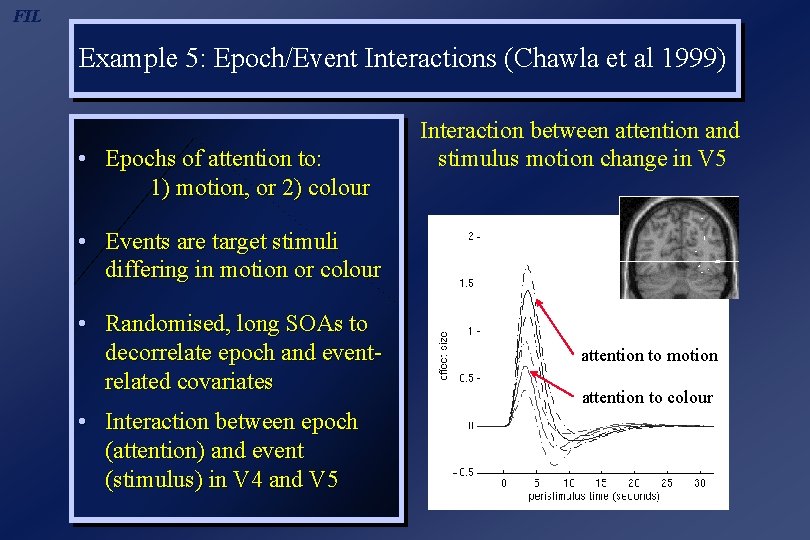

Example 5: Epoch/Event Interactions (Chawla et al., 1999)

- Epochs of attention to: 1) motion, or 2) colour
- Events are target stimuli differing in motion or colour
- Randomised, long SOAs to decorrelate epoch- and event-related covariates
- Interaction between epoch (attention) and event (stimulus) in V4 and V5

[Figure: interaction between attention and stimulus motion change in V5; attention to motion vs attention to colour]


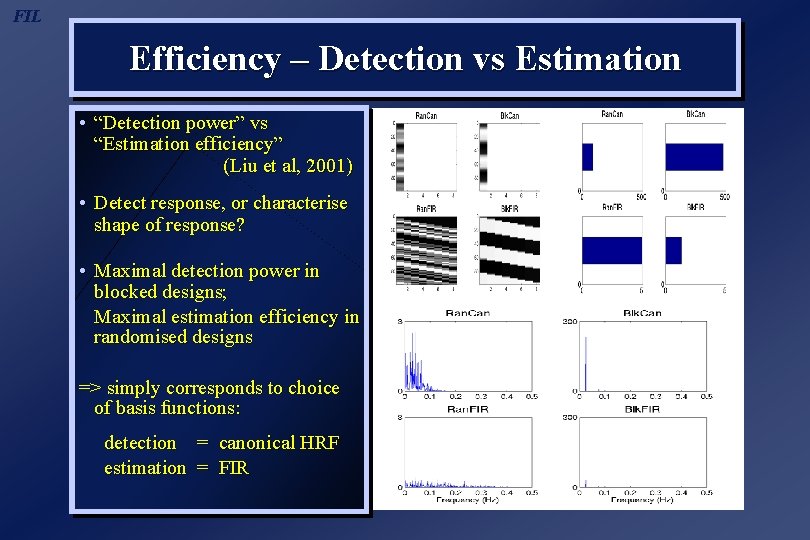

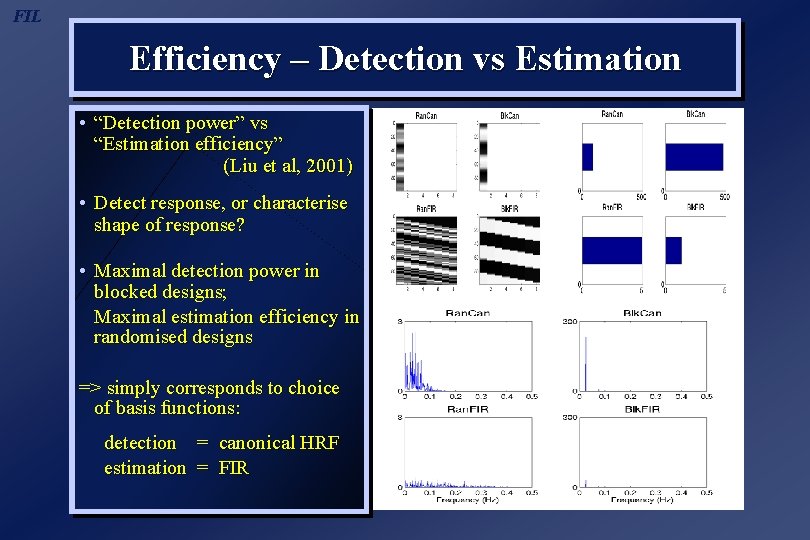

Efficiency - Detection vs Estimation

- "Detection power" vs "estimation efficiency" (Liu et al., 2001)
- Detect a response, or characterise the shape of the response?
- Maximal detection power in blocked designs; maximal estimation efficiency in randomised designs
  - => simply corresponds to the choice of basis functions: detection = canonical HRF; estimation = FIR

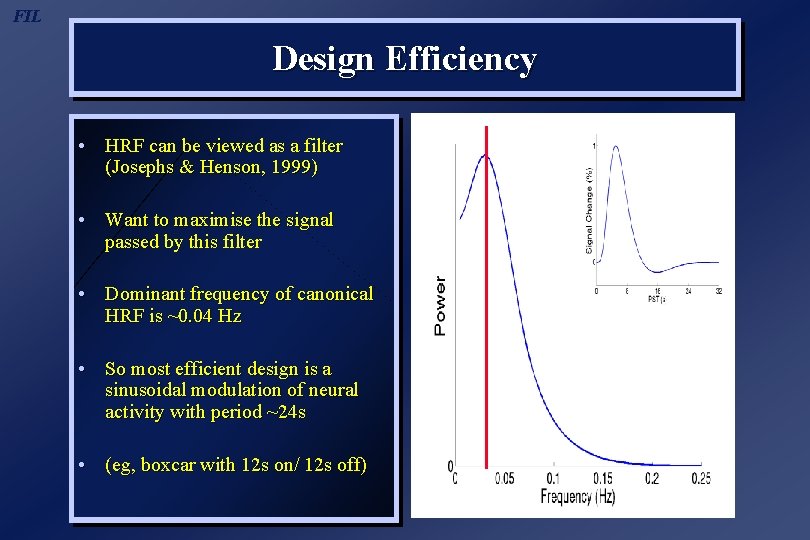

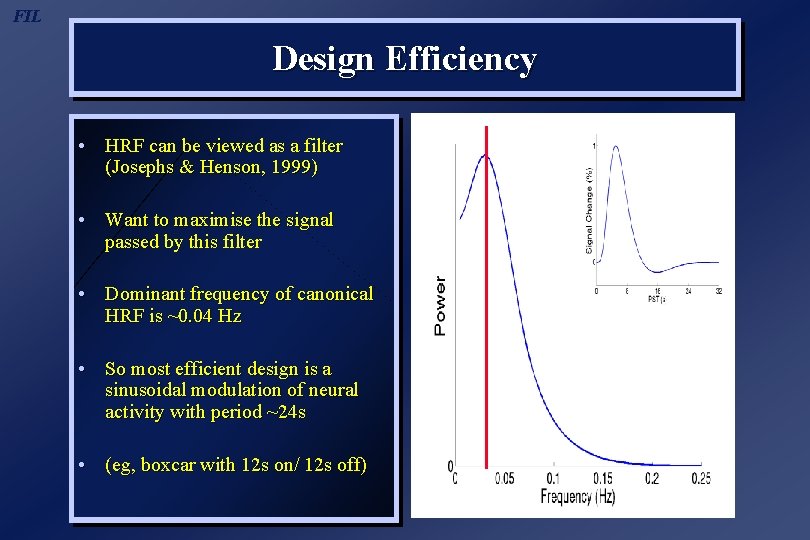

Design Efficiency

- The HRF can be viewed as a filter (Josephs & Henson, 1999)
- We want to maximise the signal passed by this filter
- The dominant frequency of the canonical HRF is ~0.04 Hz
- So the most efficient design is a sinusoidal modulation of neural activity with a period of ~24 s
- (e.g., a boxcar with 12 s on / 12 s off)

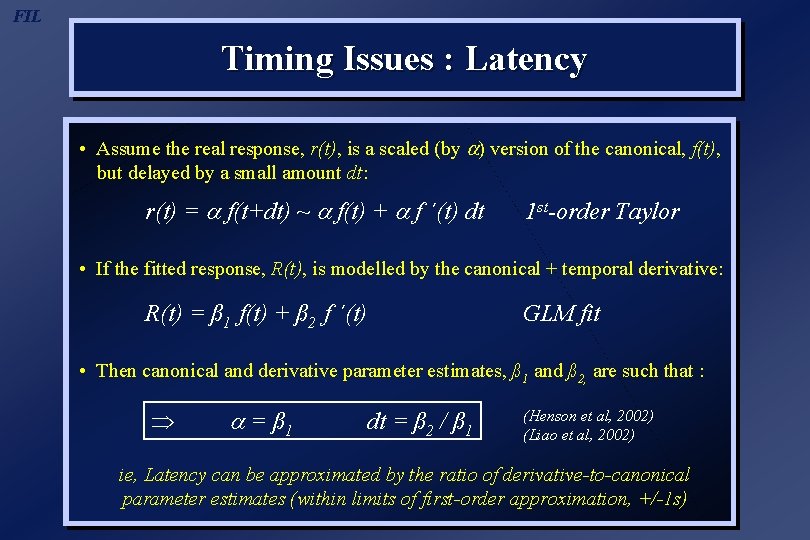

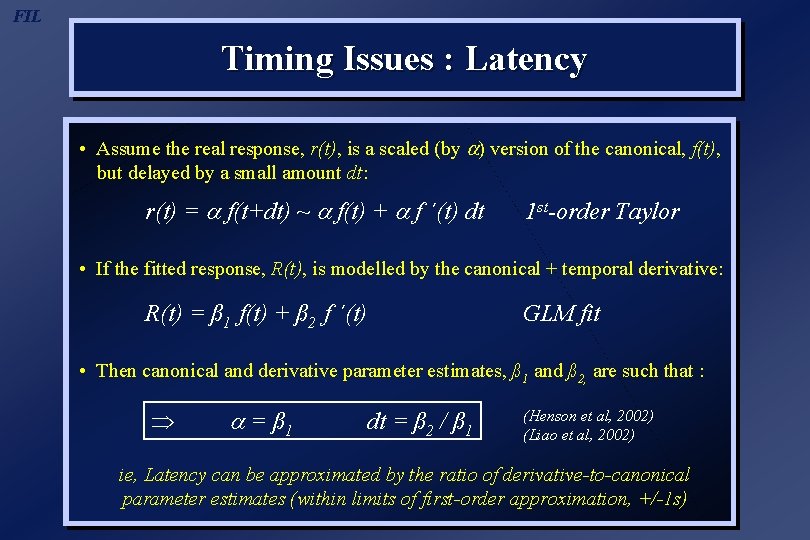

Timing Issues: Latency

- Assume the real response, $r(t)$, is a scaled (by $\alpha$) version of the canonical, $f(t)$, but shifted in time by a small amount $dt$ (with this sign convention, positive $dt$ means an earlier response):

$$r(t) = \alpha f(t + dt) \approx \alpha f(t) + \alpha f'(t)\, dt \qquad \text{(1st-order Taylor)}$$

- If the fitted response, $R(t)$, is modelled by the canonical + temporal derivative:

$$R(t) = \beta_1 f(t) + \beta_2 f'(t) \qquad \text{(GLM fit)}$$

- Then the canonical and derivative parameter estimates, $\beta_1$ and $\beta_2$, are such that:

$$\alpha = \beta_1, \qquad dt = \beta_2 / \beta_1$$

(Henson et al., 2002; Liao et al., 2002)

- i.e., latency can be approximated by the ratio of the derivative-to-canonical parameter estimates (within the limits of the first-order approximation, ±1 s)
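The derivative-ratio approximation can be checked numerically: generate responses r(t) = αf(t + dt) for a few known shifts, fit the canonical and its temporal derivative by least squares, and compare β2/β1 with dt. The double-gamma f(t), the central-difference derivative and α = 2 are assumptions of this sketch, not the exact functions used in the cited papers.

```python
import math


def canonical_hrf(t):
    """Double-gamma HRF (assumed SPM-style shapes 6 and 16, ratio 1/6); 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** 5 * math.exp(-t) / math.gamma(6)
            - t ** 15 * math.exp(-t) / math.gamma(16) / 6.0)


step = 0.1
times = [i * step for i in range(int(round(32 / step)))]
f = [canonical_hrf(t) for t in times]
eps = 0.01  # central-difference step for the temporal derivative f'(t)
fd = [(canonical_hrf(t + eps) - canonical_hrf(t - eps)) / (2 * eps) for t in times]


def fit(r):
    """Least-squares fit of R(t) = beta1 * f(t) + beta2 * f'(t) to r(t)."""
    s11 = sum(a * a for a in f)
    s22 = sum(b * b for b in fd)
    s12 = sum(a * b for a, b in zip(f, fd))
    s1r = sum(a * v for a, v in zip(f, r))
    s2r = sum(b * v for b, v in zip(fd, r))
    det = s11 * s22 - s12 * s12
    beta1 = (s22 * s1r - s12 * s2r) / det
    beta2 = (s11 * s2r - s12 * s1r) / det
    return beta1, beta2


alpha = 2.0  # hypothetical response scaling
for shift in (-1.0, -0.5, 0.5, 1.0):
    r = [alpha * canonical_hrf(t + shift) for t in times]  # r(t) = alpha * f(t + dt)
    beta1, beta2 = fit(r)
    print(f"dt = {shift:+.1f} s: beta2/beta1 = {beta2 / beta1:+.2f}, beta1 = {beta1:.2f}")
```

Because f and f' are nearly orthogonal, β1 stays close to α while β2 absorbs the shift; the approximation is only expected to hold for small shifts.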

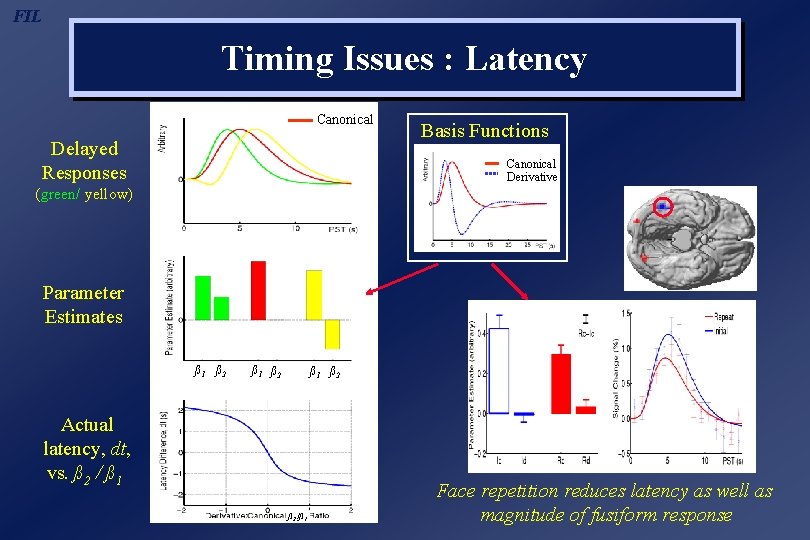

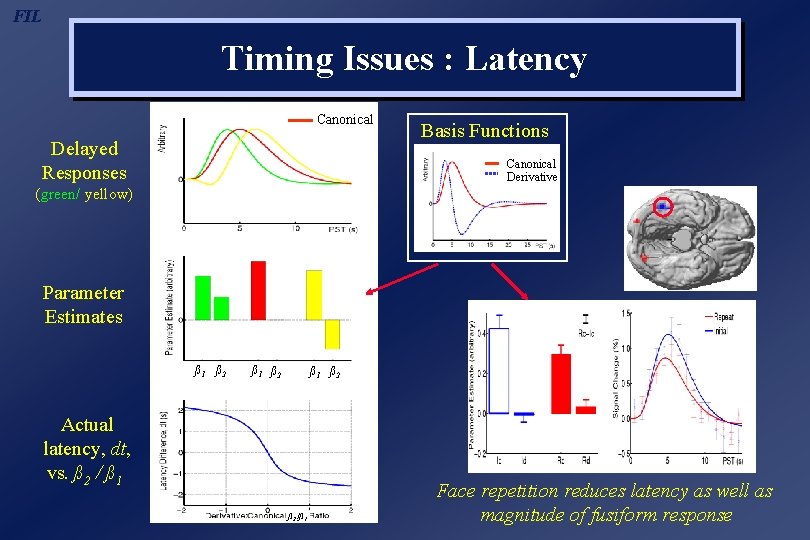

Timing Issues: Latency

[Figure: canonical and delayed responses (green/yellow); basis functions (canonical, derivative); parameter estimates β1, β2; actual latency dt vs β2/β1]

Face repetition reduces the latency as well as the magnitude of the fusiform response

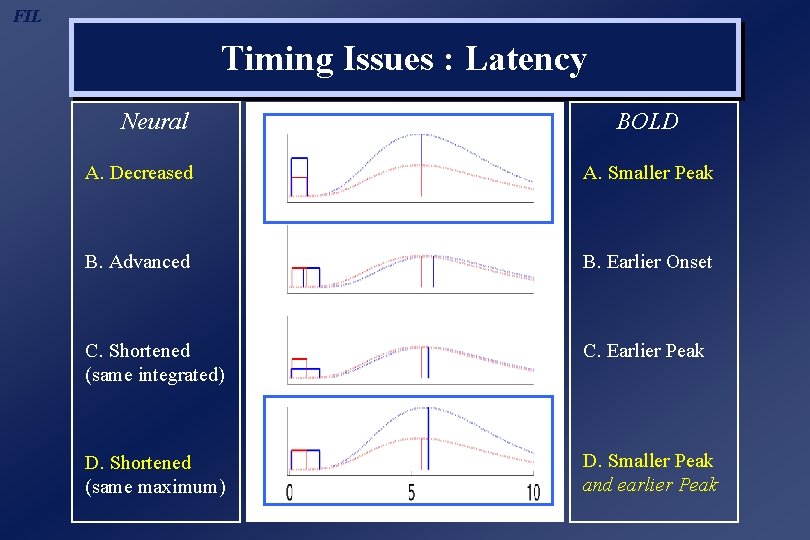

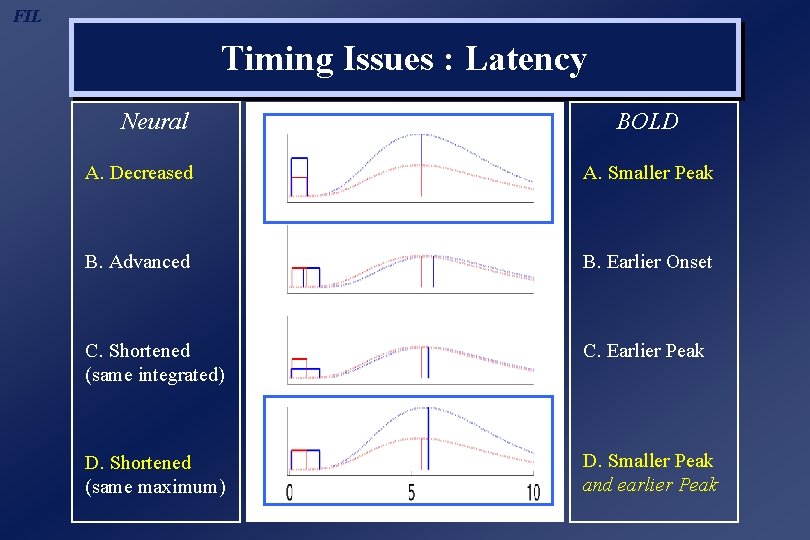

Timing Issues: Latency

| Neural | BOLD |
|---|---|
| A. Decreased | A. Smaller peak |
| B. Advanced | B. Earlier onset |
| C. Shortened (same integrated) | C. Earlier peak |
| D. Shortened (same maximum) | D. Smaller peak and earlier peak |

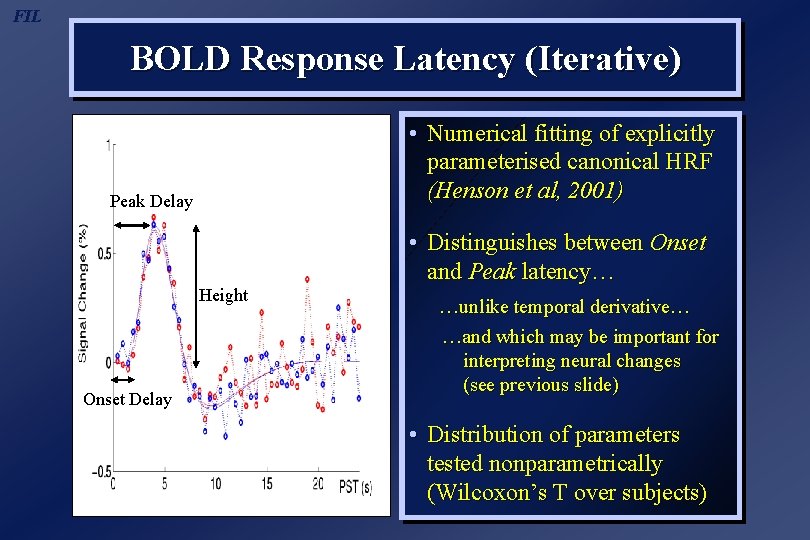

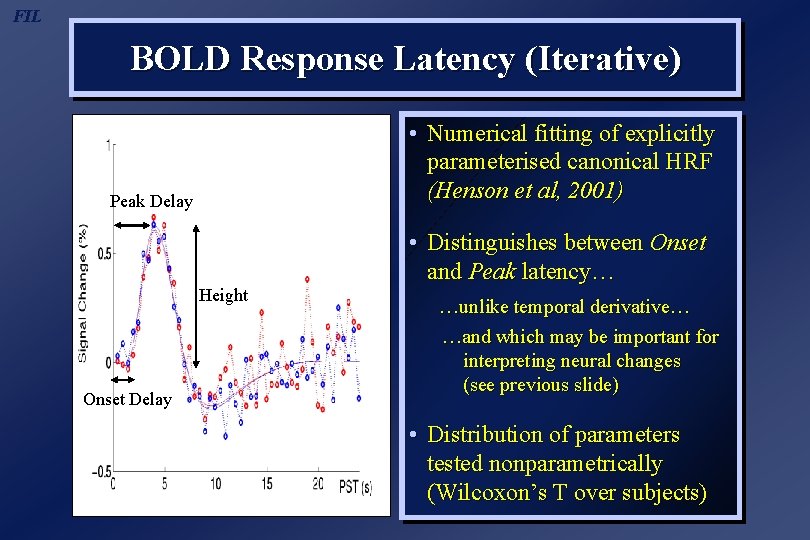

BOLD Response Latency (Iterative)

- Numerical fitting of an explicitly parameterised canonical HRF (Henson et al., 2001)
- Distinguishes between onset and peak latency…
  - …unlike the temporal derivative…
  - …and which may be important for interpreting neural changes (see previous slide)
- Distribution of parameters tested nonparametrically (Wilcoxon's T over subjects)

[Figure: parameterised HRF showing height, peak delay and onset delay]

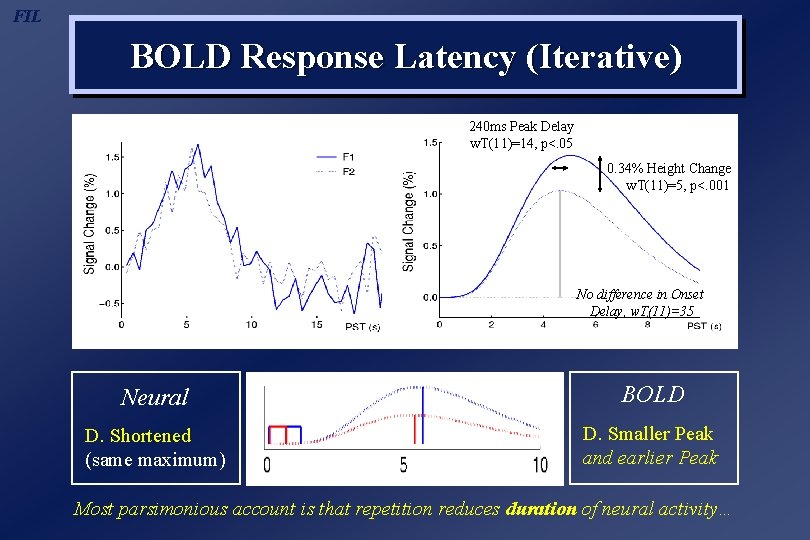

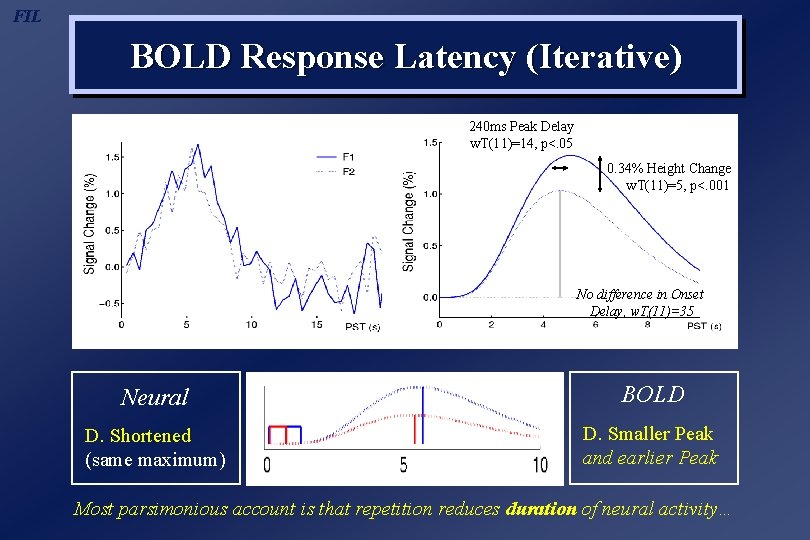

BOLD Response Latency (Iterative)

- Peak delay: 240 ms (wT(11) = 14, p < .05)
- Height change: 0.34% (wT(11) = 5, p < .001)
- Onset delay: no difference (wT(11) = 35)

[Figure: neural D. Shortened (same maximum) => BOLD D. Smaller peak and earlier peak]

The most parsimonious account is that repetition reduces the duration of neural activity…

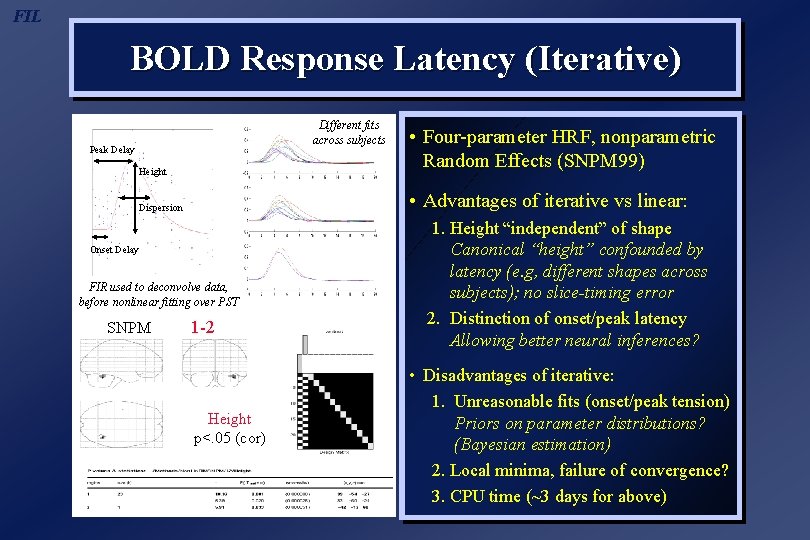

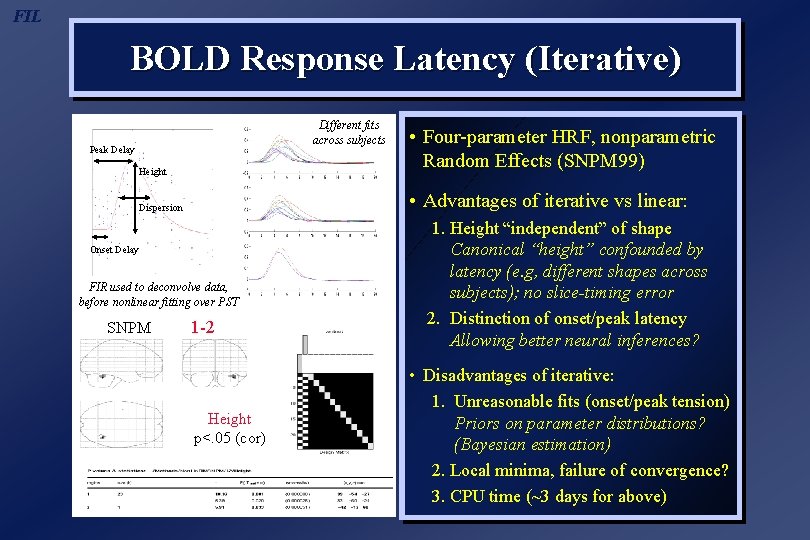

BOLD Response Latency (Iterative)

- Four-parameter HRF (height, peak delay, onset delay, dispersion), nonparametric Random Effects (SnPM99)
- FIR used to deconvolve the data, before nonlinear fitting over PST
- Advantages of iterative vs linear:
  1. Height "independent" of shape: canonical "height" is confounded by latency (e.g., different shapes across subjects); no slice-timing error
  2. Distinction of onset/peak latency: allowing better neural inferences?
- Disadvantages of iterative:
  1. Unreasonable fits (onset/peak tension): priors on parameter distributions? (Bayesian estimation)
  2. Local minima, failure of convergence?
  3. CPU time (~3 days for the above)

[Figure: different fits across subjects; SnPM for height (1-2), p < .05 (corrected)]

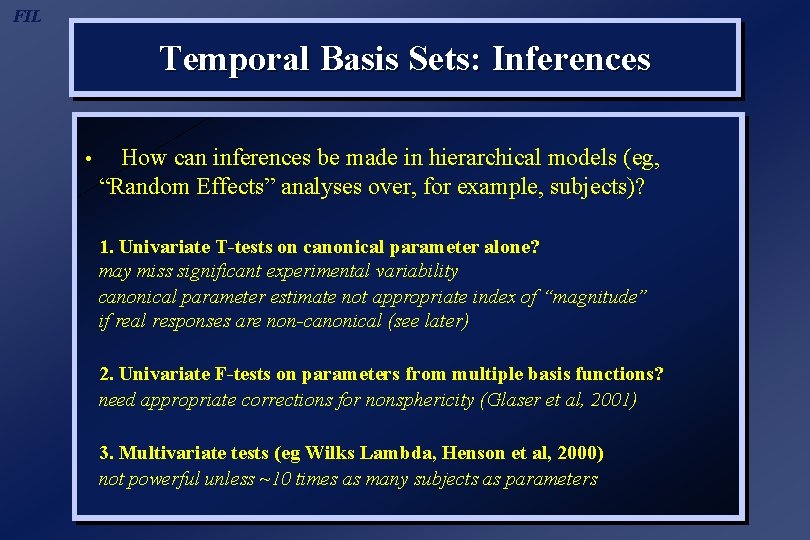

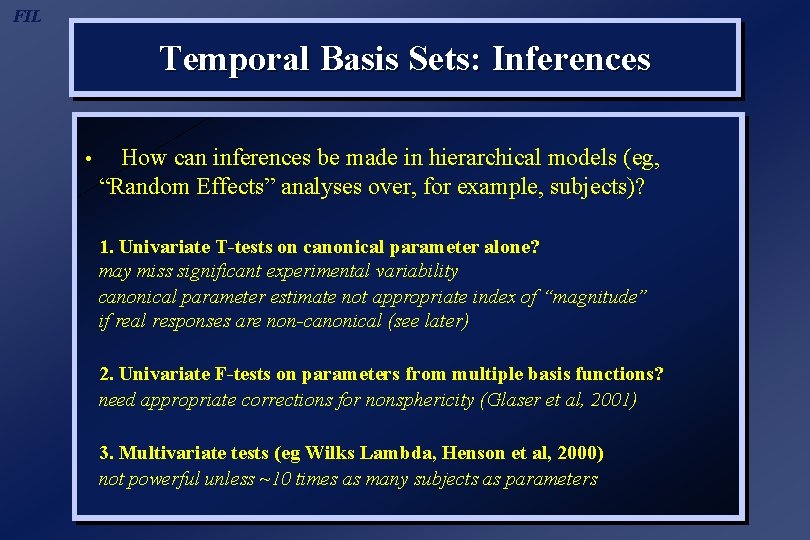

Temporal Basis Sets: Inferences

- How can inferences be made in hierarchical models (e.g., "Random Effects" analyses over subjects)?
  1. Univariate t-tests on the canonical parameter alone?
     - may miss significant experimental variability
     - the canonical parameter estimate is not an appropriate index of "magnitude" if real responses are non-canonical (see later)
  2. Univariate F-tests on parameters from multiple basis functions?
     - need appropriate corrections for nonsphericity (Glaser et al., 2001)
  3. Multivariate tests (e.g., Wilks' Lambda, Henson et al., 2000)?
     - not powerful unless there are ~10 times as many subjects as parameters

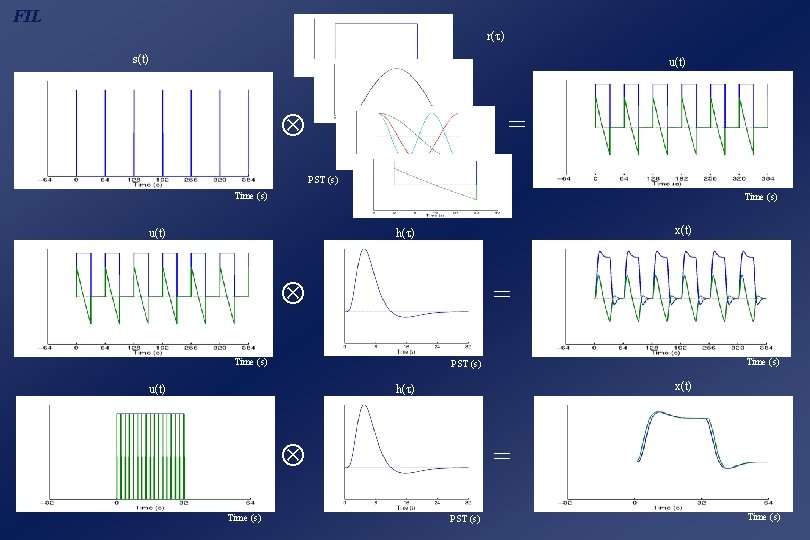

[Figure: convolution of a stimulus function u(t) with a response function h(τ) to give the predicted response x(t); axes: time (s) and peristimulus time, PST (s)]

[Figure: HRF panels A-C, with the peak, dispersion, undershoot and initial dip labelled]
