
- Slides: 90

Digital Image Processing Chapter 8: Image Compression 20 August 2013

Data vs Information

- Information = the matter (substance) being conveyed
- Data = the means by which information is conveyed

Image Compression: reducing the amount of data required to represent a digital image while keeping as much of the information as possible.

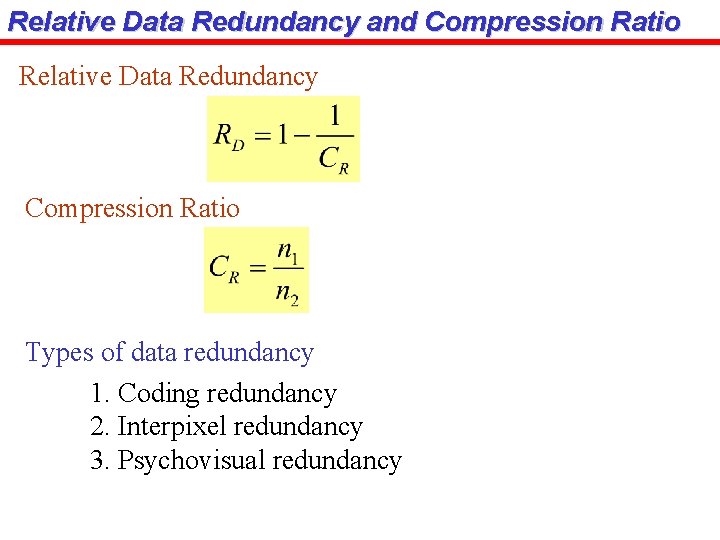

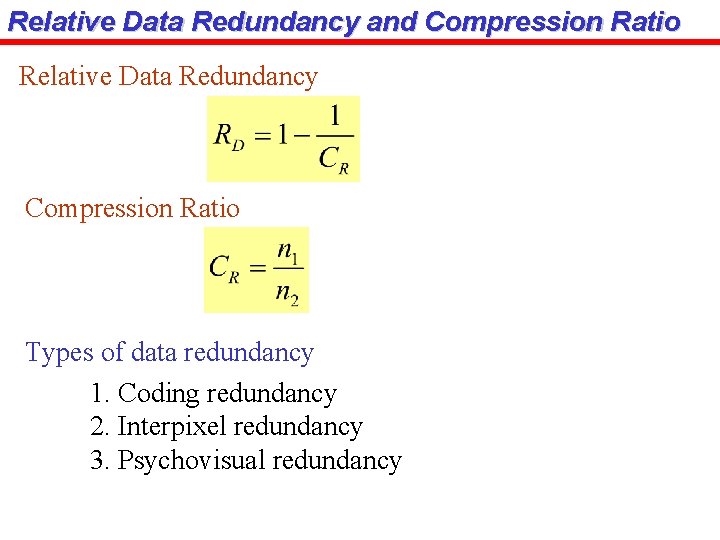

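Relative Data Redundancy and Compression Ratio

- Compression ratio: $C_R = n_1 / n_2$, where $n_1$ and $n_2$ are the numbers of information-carrying units (for example bits) in the original and the compressed data
- Relative data redundancy: $R_D = 1 - 1/C_R$

Types of data redundancy
1. Coding redundancy
2. Interpixel redundancy
3. Psychovisual redundancy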

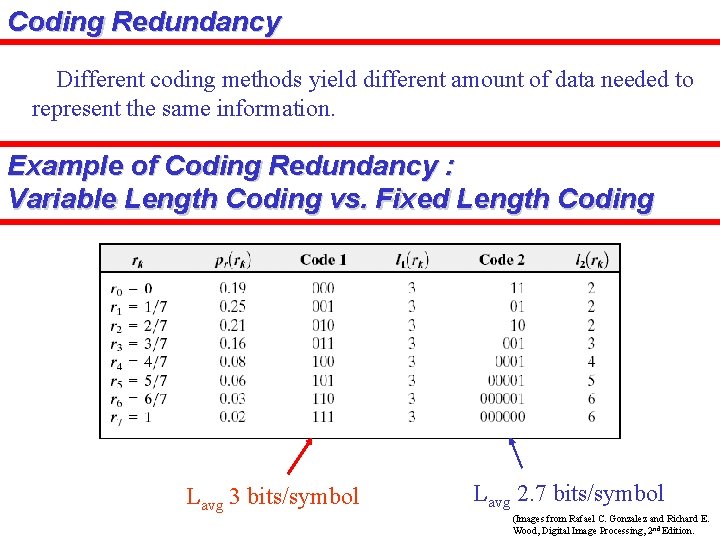

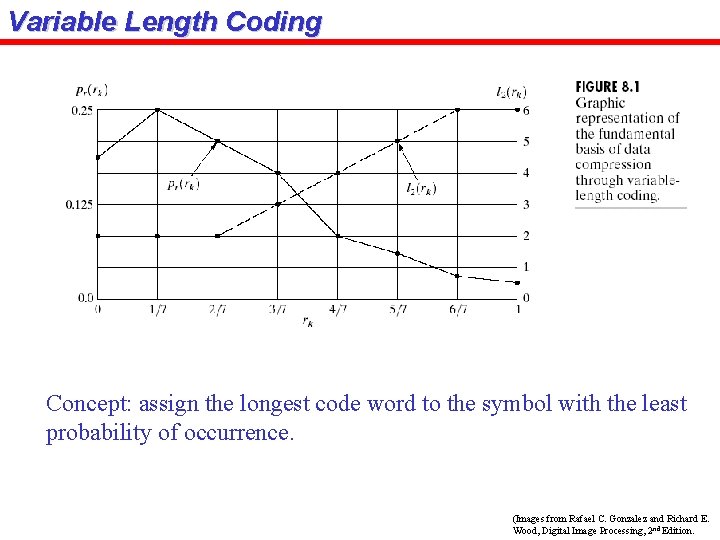

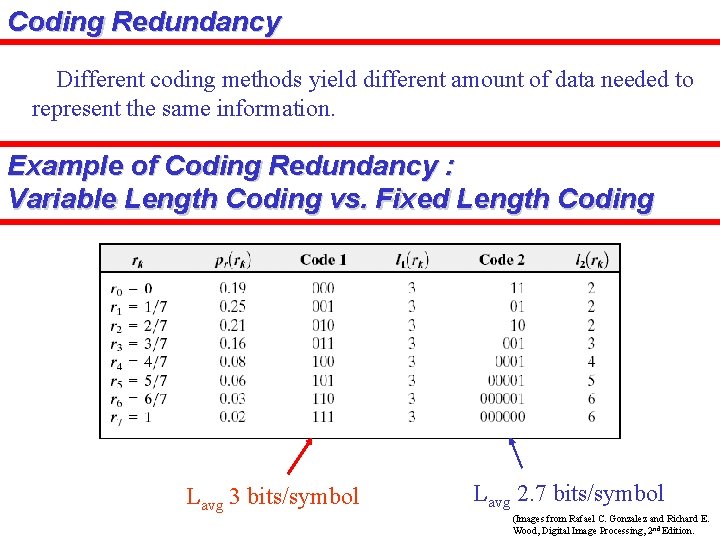

Coding Redundancy

Different coding methods require different amounts of data to represent the same information.

Example of coding redundancy: variable-length coding vs. fixed-length coding

- Fixed-length code: Lavg = 3 bits/symbol
- Variable-length code: Lavg = 2.7 bits/symbol

(Images from Rafael C. Gonzalez and Richard E. Woods, Digital Image Processing, 2nd Edition.)

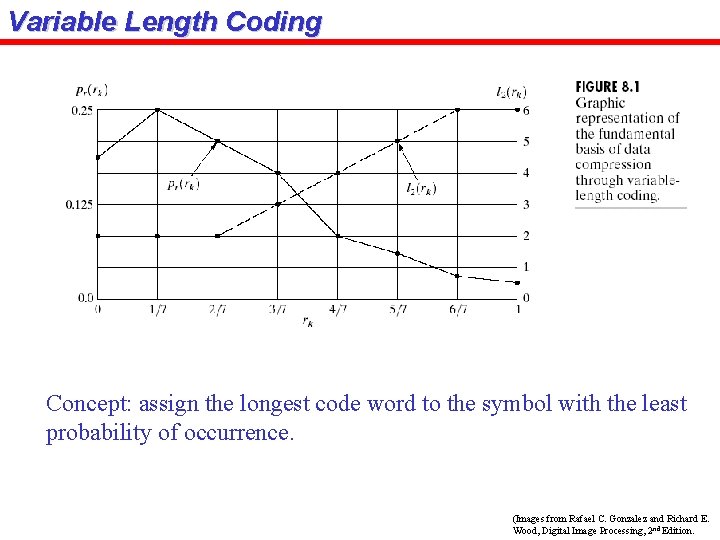

Variable Length Coding

Concept: assign shorter code words to more probable symbols, so that the longest code word goes to the symbol with the least probability of occurrence.
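The average code length behind the 3 vs. 2.7 bits/symbol comparison can be checked with a short sketch. The histogram and the code lengths below are assumptions chosen for illustration (the slide's table is only available as an image); they happen to reproduce the two averages quoted above.

```python
def average_code_length(probabilities, lengths):
    """Return L_avg = sum over symbols of p(symbol) * code length, in bits/symbol."""
    if len(probabilities) != len(lengths):
        raise ValueError("need exactly one code length per symbol")
    return sum(p * l for p, l in zip(probabilities, lengths))


# Assumed 8-level gray-scale histogram (illustrative values, sums to 1).
probs = [0.19, 0.25, 0.21, 0.16, 0.08, 0.06, 0.03, 0.02]

# Fixed-length code: every symbol uses 3 bits.
fixed_lengths = [3] * 8
# Assumed variable-length code: short words for probable symbols.
variable_lengths = [2, 2, 2, 3, 4, 5, 6, 6]

l_fixed = average_code_length(probs, fixed_lengths)
l_variable = average_code_length(probs, variable_lengths)
print(f"fixed: {l_fixed:.2f} bits/symbol, variable: {l_variable:.2f} bits/symbol")
```

The gain comes entirely from matching code word length to symbol probability; the information content of the image is unchanged.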

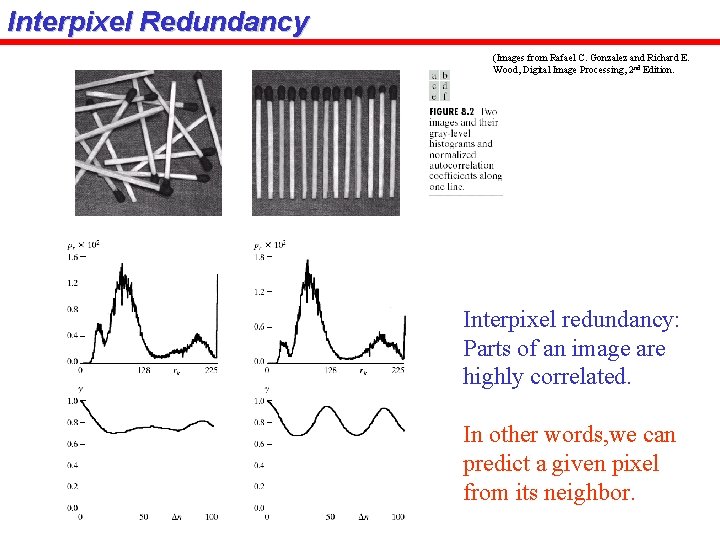

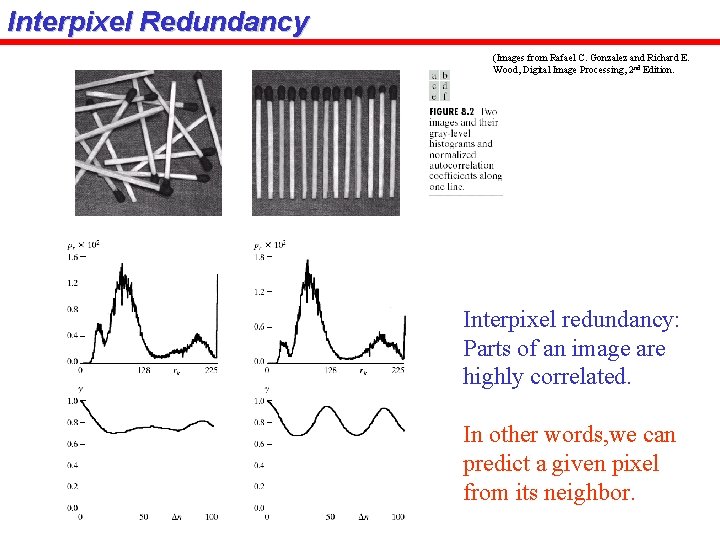

Interpixel Redundancy

Parts of an image are highly correlated. In other words, a given pixel can be predicted from its neighbors.

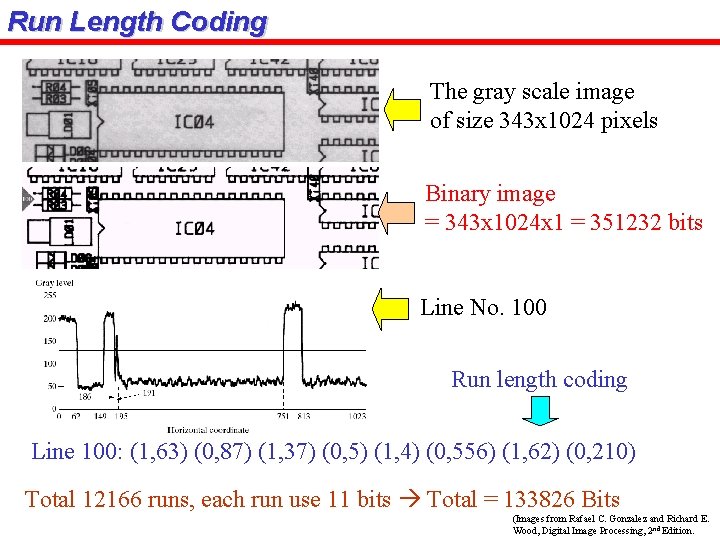

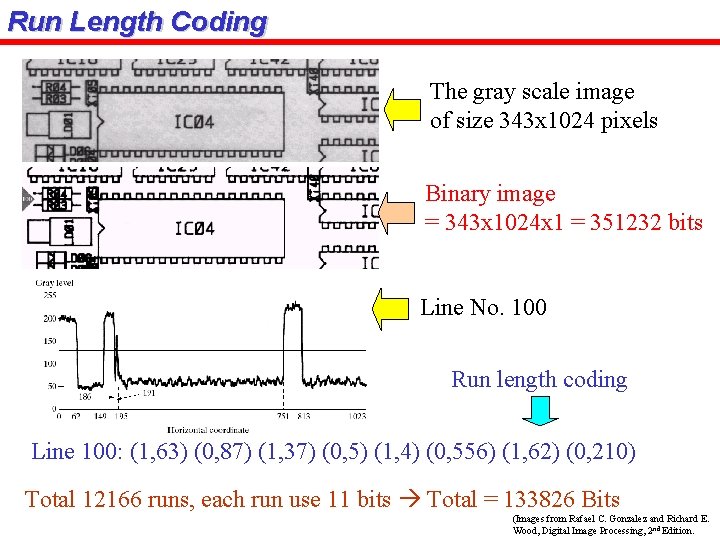

Run Length Coding

- Gray scale image of size 343 x 1024 pixels
- Binary image = 343 x 1024 x 1 = 351232 bits
- Run length coding of line no. 100: (1, 63) (0, 87) (1, 37) (0, 5) (1, 4) (0, 556) (1, 62) (0, 210)
- Total 12166 runs, each run uses 11 bits: total = 12166 x 11 = 133826 bits
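A minimal sketch of run length coding, using the runs listed for line 100 and the bit counts from this slide. The function names are hypothetical, and the compression ratio and relative redundancy use the standard definitions C_R = n1/n2 and R_D = 1 - 1/C_R, which is an assumption about what the formula images show.

```python
from itertools import groupby


def run_length_encode(row):
    """Encode a sequence of pixel values as (value, run length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(row)]


def run_length_decode(runs):
    """Expand (value, run length) pairs back into the pixel sequence."""
    row = []
    for value, length in runs:
        row.extend([value] * length)
    return row


# Runs quoted on the slide for line 100 of the binary image.
runs_line_100 = [(1, 63), (0, 87), (1, 37), (0, 5),
                 (1, 4), (0, 556), (1, 62), (0, 210)]
row = run_length_decode(runs_line_100)

original_bits = 343 * 1024 * 1      # binary image, 1 bit per pixel
compressed_bits = 12166 * 11        # 12166 runs, 11 bits per run
compression_ratio = original_bits / compressed_bits
relative_redundancy = 1 - 1 / compression_ratio
print(len(row), compressed_bits, round(compression_ratio, 2))
```

The decoded row has exactly 1024 pixels, which confirms that the eight runs cover one full image line.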

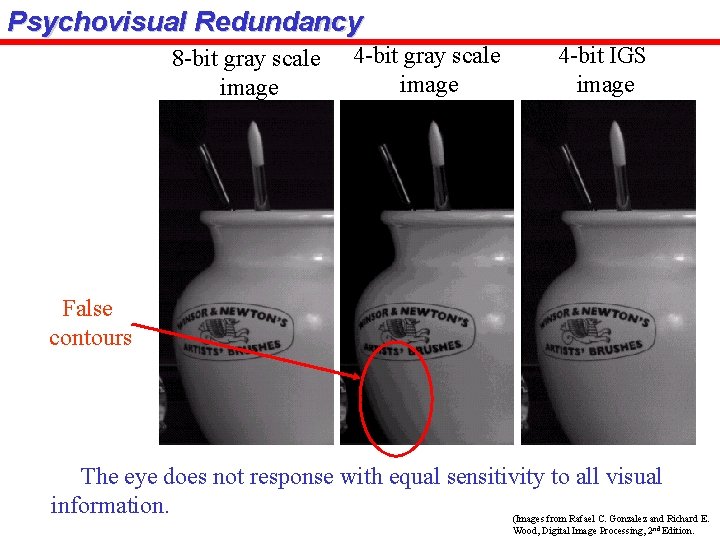

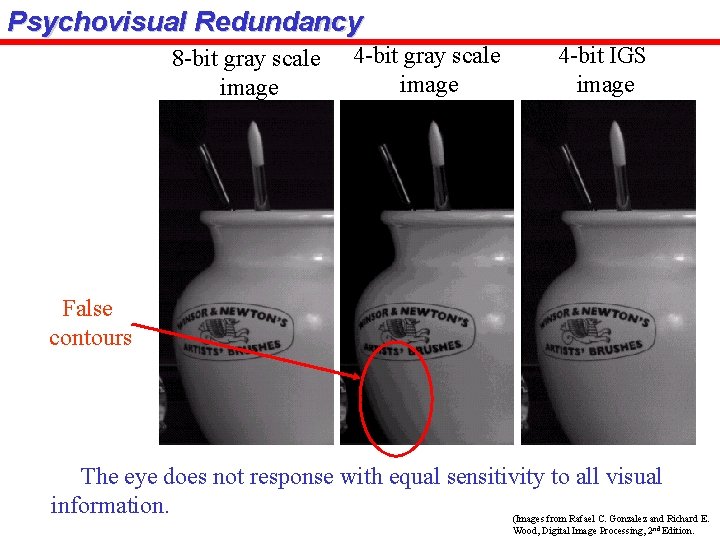

Psychovisual Redundancy

The eye does not respond with equal sensitivity to all visual information.

Figure labels: 8-bit gray scale image; 4-bit IGS image; false contours.

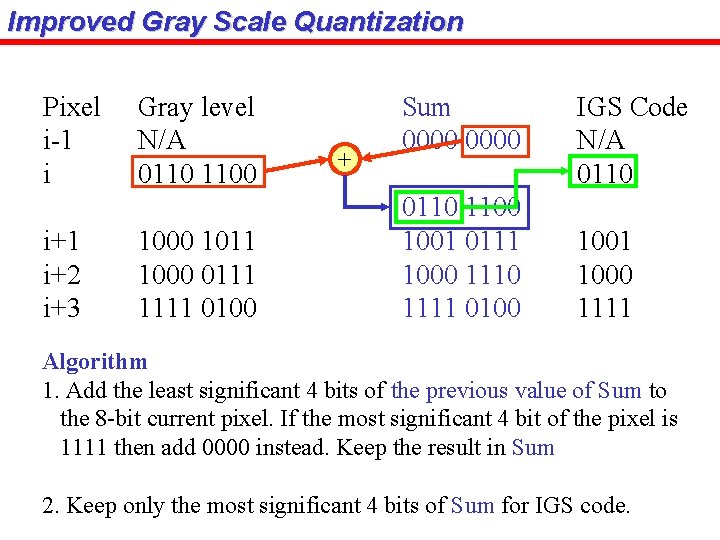

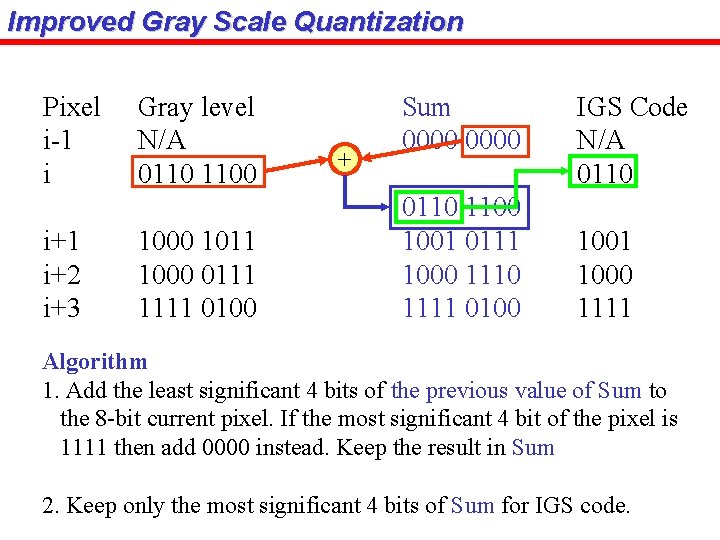

Improved Gray Scale Quantization

| Pixel | Gray level | Sum | IGS code |
|---|---|---|---|
| i-1 | N/A | 0000 0000 | N/A |
| i | 0110 1100 | 0110 1100 | 0110 |
| i+1 | 1000 1011 | 1001 0111 | 1001 |
| i+2 | 1000 0111 | 1000 1110 | 1000 |
| i+3 | 1111 0100 | 1111 0100 | 1111 |

Algorithm
1. Add the least significant 4 bits of the previous value of Sum to the 8-bit current pixel. If the most significant 4 bits of the pixel are 1111, add 0000 instead. Keep the result in Sum.
2. Keep only the most significant 4 bits of Sum as the IGS code.
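The two-step IGS algorithm can be sketched as follows. The function name is hypothetical, and the sample pixels are four 8-bit values chosen so that the resulting codes match the IGS codes listed on the slide (0110, 1001, 1000, 1111).

```python
def igs_quantize(pixels):
    """Quantize 8-bit pixels to 4-bit IGS codes.

    The low 4 bits of the previous sum are added to the current pixel,
    unless the pixel's high 4 bits are 1111 (which would overflow).
    """
    codes = []
    previous_sum = 0
    for pixel in pixels:
        if pixel & 0xF0 == 0xF0:
            current_sum = pixel                    # add 0000 instead
        else:
            current_sum = pixel + (previous_sum & 0x0F)
        codes.append(current_sum >> 4)             # keep the high 4 bits
        previous_sum = current_sum
    return codes


sample = [0b01101100, 0b10001011, 0b10000111, 0b11110100]
codes = igs_quantize(sample)
print([format(code, "04b") for code in codes])
```

Carrying the low-order bits forward acts like a small amount of pseudo-random noise, which breaks up the false contours that plain truncation to 4 bits would produce.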

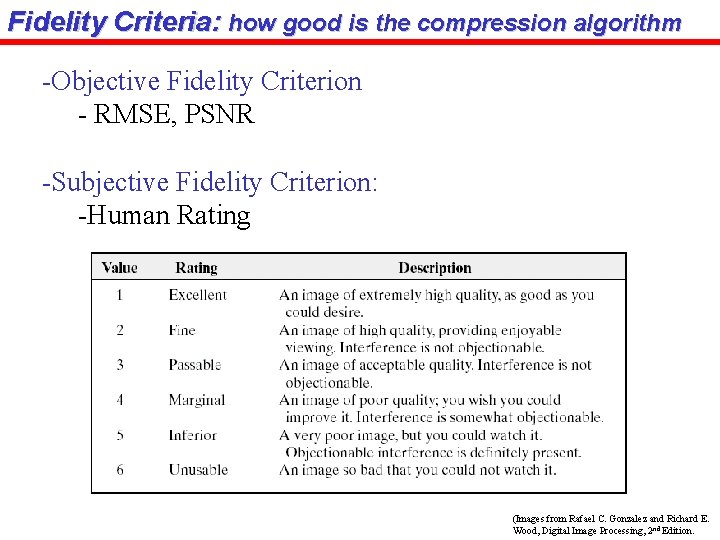

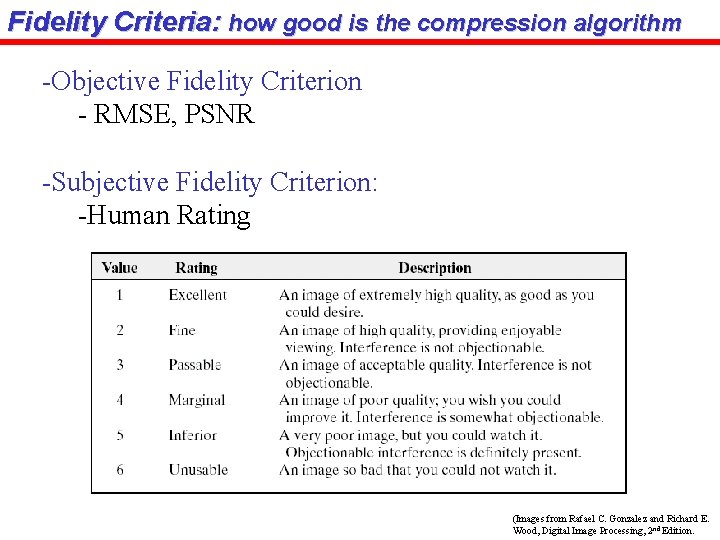

Fidelity Criteria: how good is the compression algorithm?

- Objective fidelity criteria: RMSE, PSNR
- Subjective fidelity criterion: human rating
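The two objective criteria can be sketched in plain Python. This assumes the usual definitions (root-mean-square error over all pixels, and PSNR = 20 log10(peak / RMSE) with a peak of 255 for 8-bit images); the function names and the tiny test images are illustrative only.

```python
import math


def rmse(original, decoded):
    """Root-mean-square error between two equally sized 2-D images."""
    total = 0.0
    count = 0
    for row_f, row_g in zip(original, decoded):
        for f, g in zip(row_f, row_g):
            total += (g - f) ** 2
            count += 1
    return math.sqrt(total / count)


def psnr(original, decoded, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = rmse(original, decoded)
    if error == 0:
        return math.inf
    return 20 * math.log10(peak / error)


f = [[0, 0], [0, 0]]
g = [[3, 4], [0, 0]]
print(rmse(f, g), round(psnr(f, g), 2))
```

A lower RMSE (higher PSNR) means the decompressed image is numerically closer to the original, which does not always agree with a human rating.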

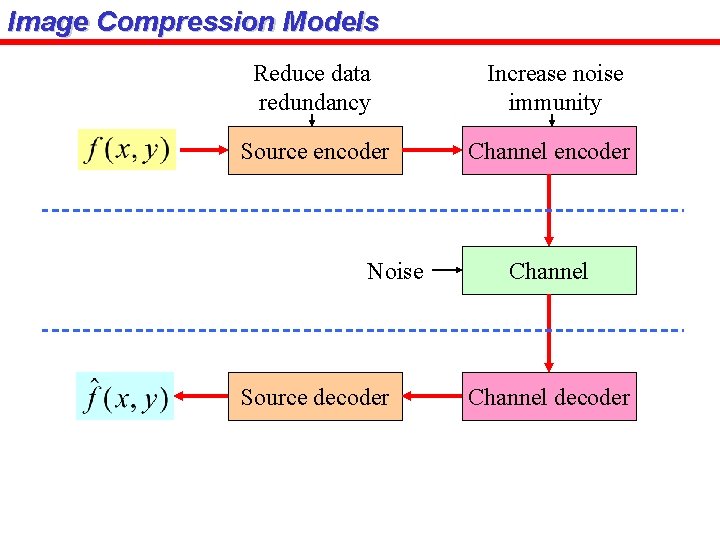

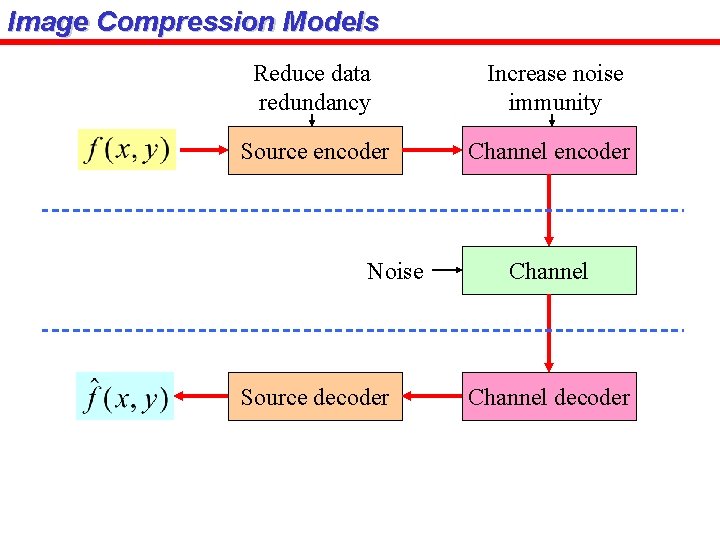

Image Compression Models

- Source encoder: reduces data redundancy
- Channel encoder: increases noise immunity
- Signal path: source encoder → channel encoder → channel (noise) → channel decoder → source decoder

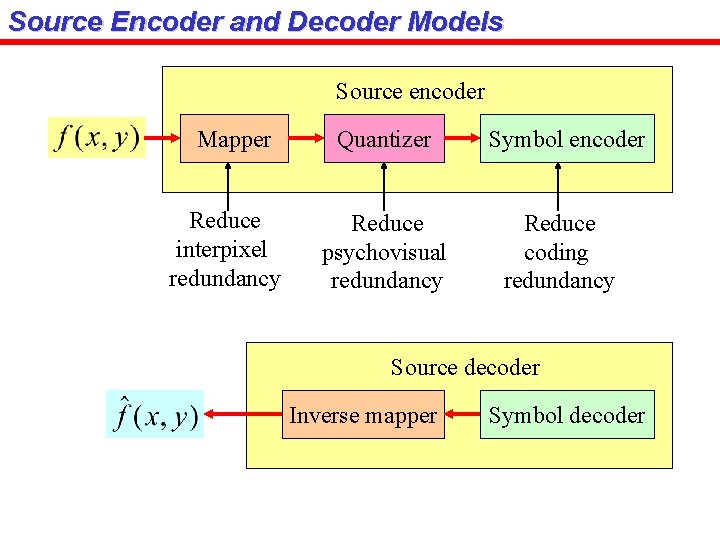

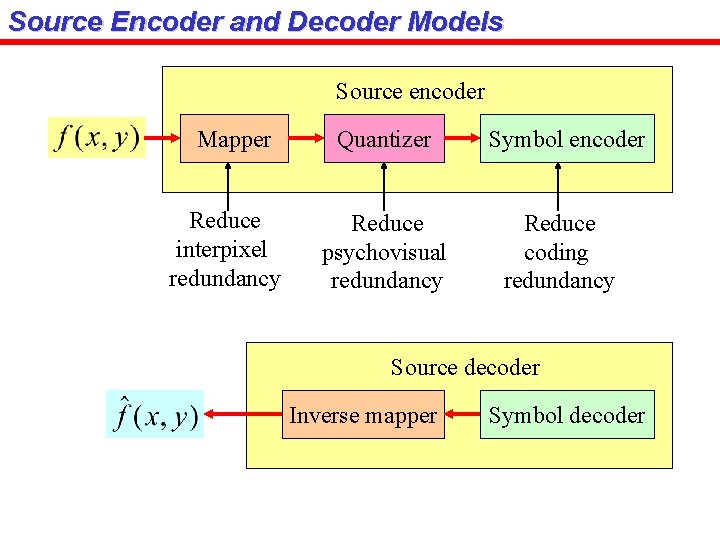

Source Encoder and Decoder Models

Source encoder
- Mapper: reduces interpixel redundancy
- Quantizer: reduces psychovisual redundancy
- Symbol encoder: reduces coding redundancy

Source decoder
- Symbol decoder
- Inverse mapper

Channel Encoder and Decoder - Hamming code, Turbo code, …

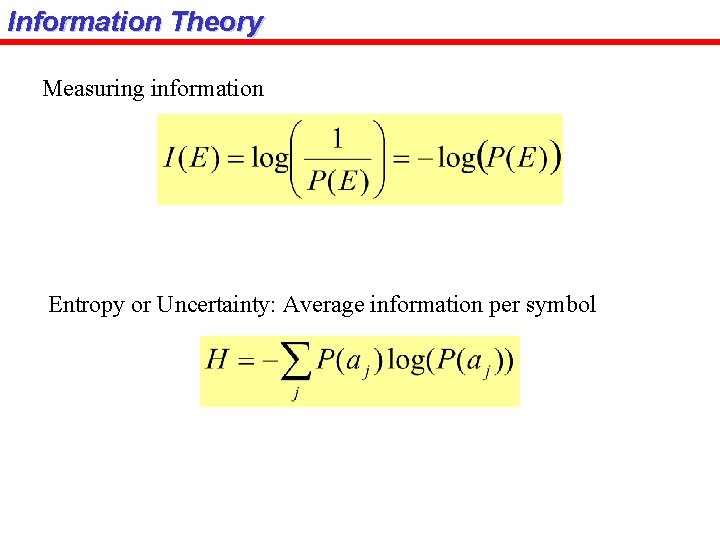

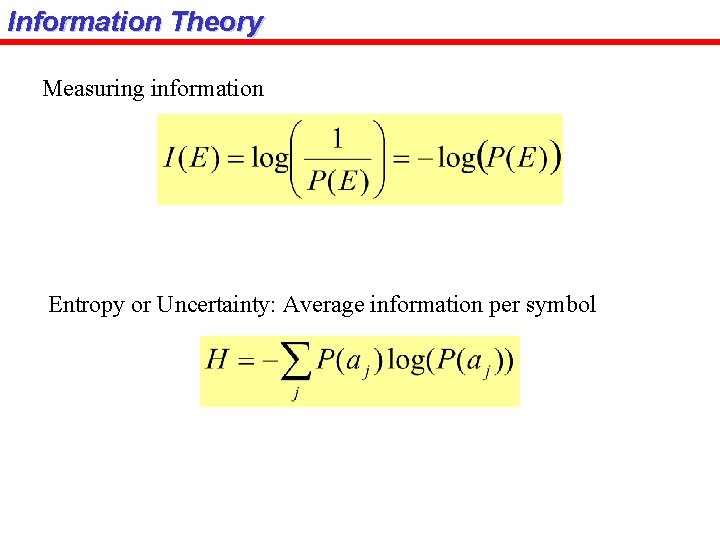

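Information Theory

- Measuring information: an event E with probability P(E) carries $I(E) = -\log_2 P(E)$ bits of information
- Entropy or uncertainty (average information per symbol): $H(\mathbf{z}) = -\sum_{j=1}^{J} P(a_j) \log_2 P(a_j)$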

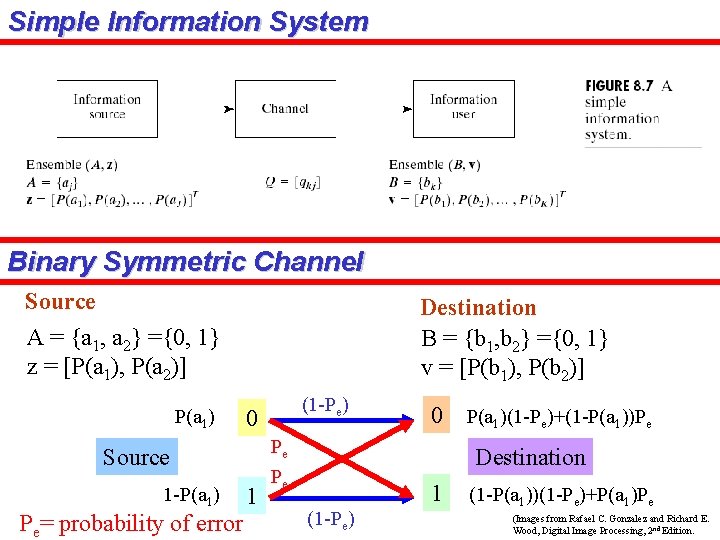

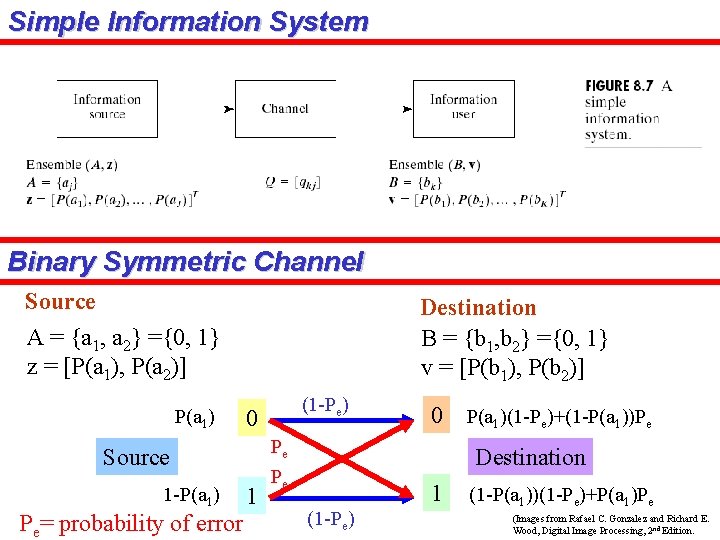

Simple Information System: Binary Symmetric Channel

- Source: $A = \{a_1, a_2\} = \{0, 1\}$, $\mathbf{z} = [P(a_1), P(a_2)]$
- Destination: $B = \{b_1, b_2\} = \{0, 1\}$, $\mathbf{v} = [P(b_1), P(b_2)]$
- $P_e$ = probability of error: each symbol is received correctly with probability $(1 - P_e)$ and flipped with probability $P_e$
- $P(b_1) = P(a_1)(1 - P_e) + (1 - P(a_1))P_e$
- $P(b_2) = (1 - P(a_1))(1 - P_e) + P(a_1)P_e$

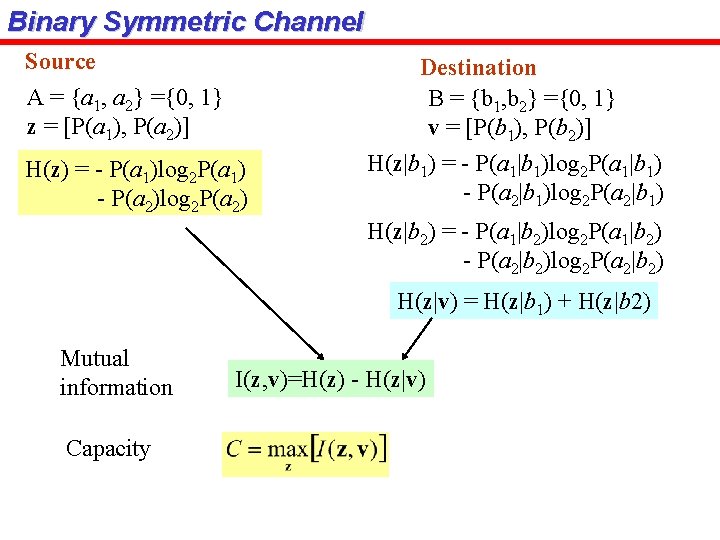

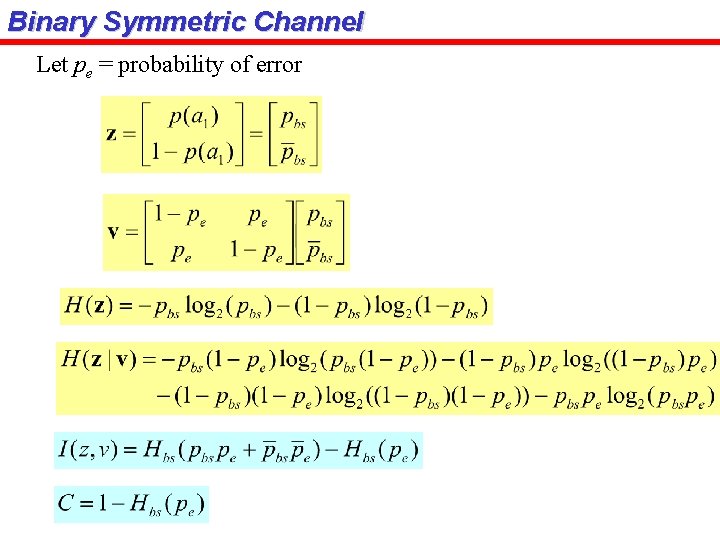

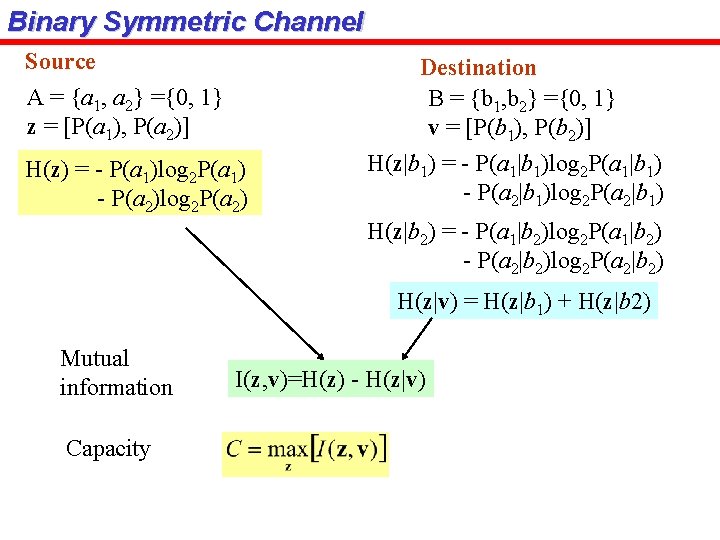

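Binary Symmetric Channel

Source: $A = \{a_1, a_2\} = \{0, 1\}$, $\mathbf{z} = [P(a_1), P(a_2)]$

$$H(\mathbf{z}) = -P(a_1)\log_2 P(a_1) - P(a_2)\log_2 P(a_2)$$

Destination: $B = \{b_1, b_2\} = \{0, 1\}$, $\mathbf{v} = [P(b_1), P(b_2)]$

$$H(\mathbf{z}|b_1) = -P(a_1|b_1)\log_2 P(a_1|b_1) - P(a_2|b_1)\log_2 P(a_2|b_1)$$

$$H(\mathbf{z}|b_2) = -P(a_1|b_2)\log_2 P(a_1|b_2) - P(a_2|b_2)\log_2 P(a_2|b_2)$$

The conditional entropy is the average of these two terms, weighted by the probability of each received symbol:

$$H(\mathbf{z}|\mathbf{v}) = P(b_1)\,H(\mathbf{z}|b_1) + P(b_2)\,H(\mathbf{z}|b_2)$$

Mutual information: $I(\mathbf{z}, \mathbf{v}) = H(\mathbf{z}) - H(\mathbf{z}|\mathbf{v})$

Capacity: the maximum of $I(\mathbf{z}, \mathbf{v})$ over all source distributions $\mathbf{z}$.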
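The mutual information of the binary symmetric channel can be evaluated numerically with a short sketch. It computes I(z, v) = H(z) - H(z|v), with H(z|v) taken as the average of H(z|b_k) weighted by P(b_k), and compares it with the well-known closed-form capacity of this channel, 1 - H_b(Pe). Function names are hypothetical.

```python
import math


def entropy(probabilities):
    """Entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def bsc_mutual_information(p_a1, pe):
    """I(z, v) = H(z) - H(z|v) for a binary symmetric channel."""
    p_a = [p_a1, 1 - p_a1]
    # q[k][j] = P(b_k | a_j): correct with 1 - pe, flipped with pe.
    q = [[1 - pe, pe], [pe, 1 - pe]]
    p_b = [sum(q[k][j] * p_a[j] for j in range(2)) for k in range(2)]
    h_z_given_v = 0.0
    for k in range(2):
        if p_b[k] == 0:
            continue
        posterior = [q[k][j] * p_a[j] / p_b[k] for j in range(2)]
        h_z_given_v += p_b[k] * entropy(posterior)
    return entropy(p_a) - h_z_given_v


def bsc_capacity(pe):
    """Closed-form capacity: reached with equiprobable source symbols."""
    return 1.0 - entropy([pe, 1 - pe])


print(round(bsc_mutual_information(0.5, 0.1), 4), round(bsc_capacity(0.1), 4))
```

With Pe = 0 the channel carries one full bit per symbol, and with Pe = 0.5 the output is independent of the input, so nothing gets through.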

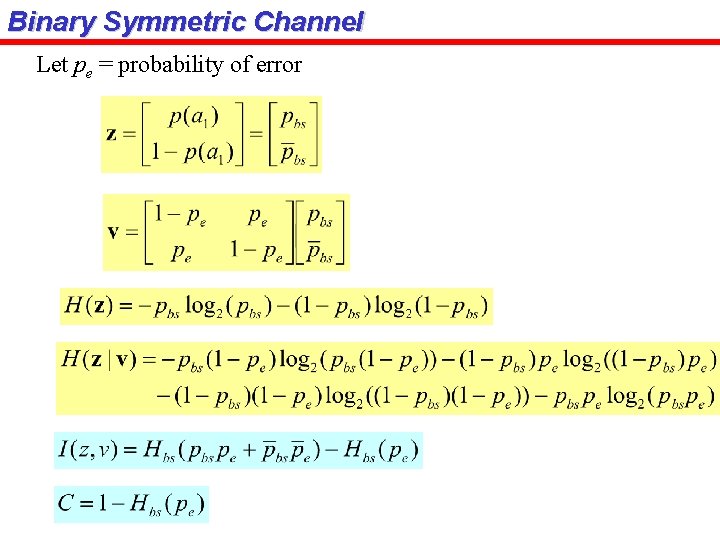

Binary Symmetric Channel Let pe = probability of error

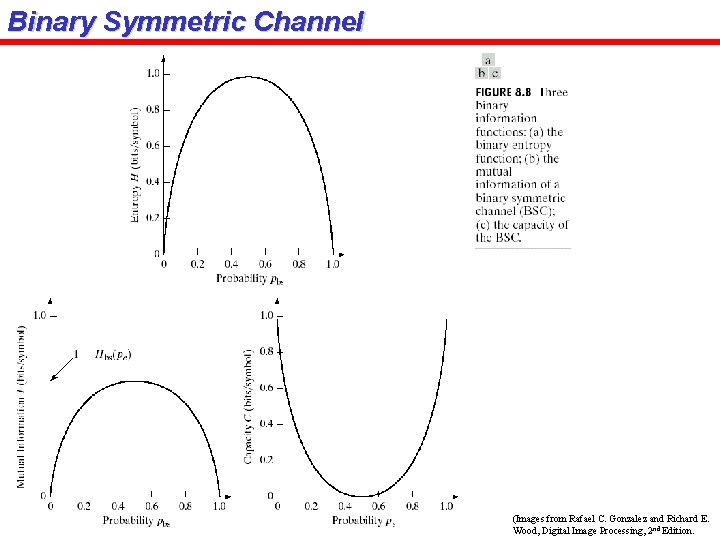

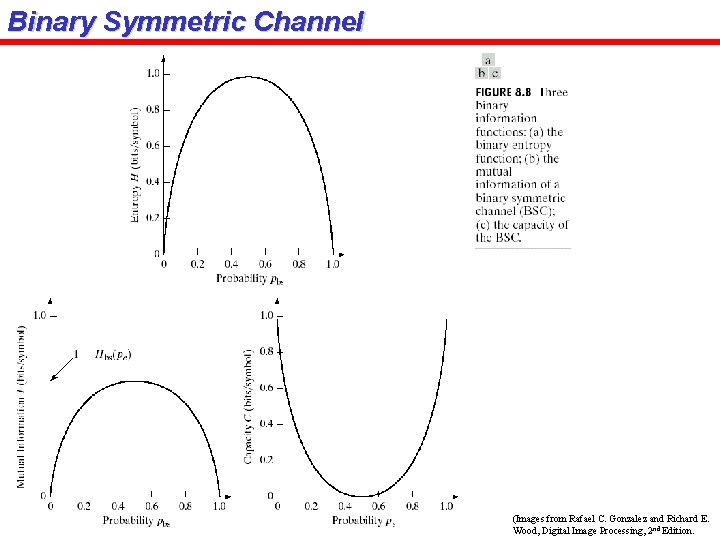

Binary Symmetric Channel

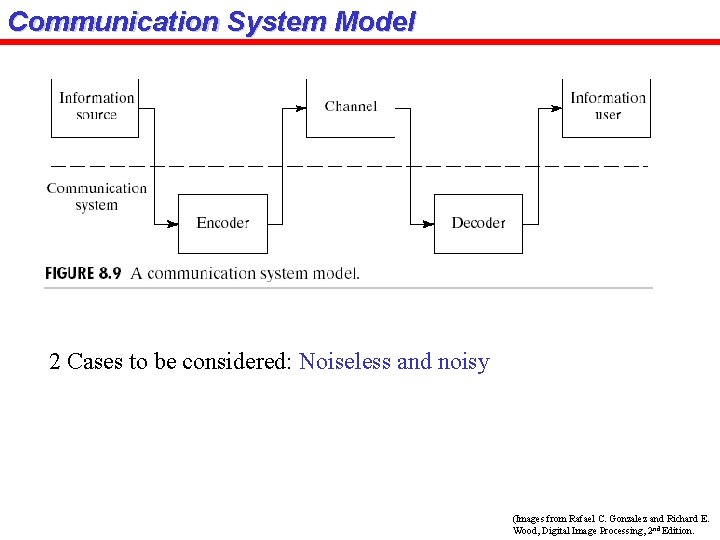

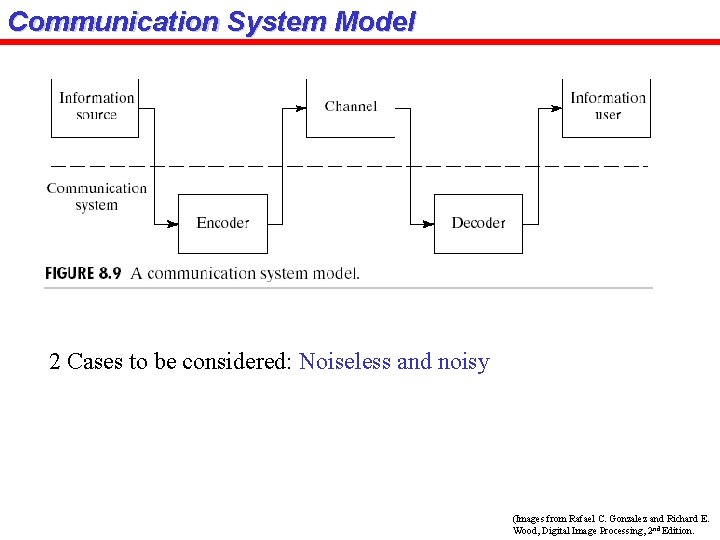

Communication System Model

Two cases to be considered: noiseless and noisy

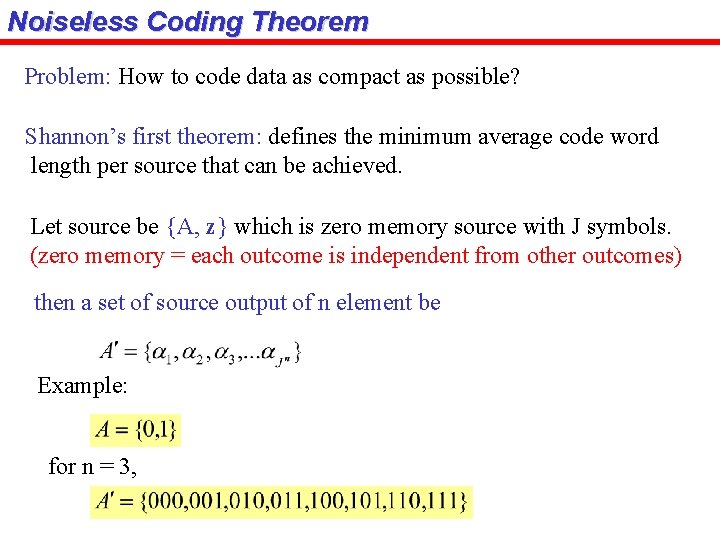

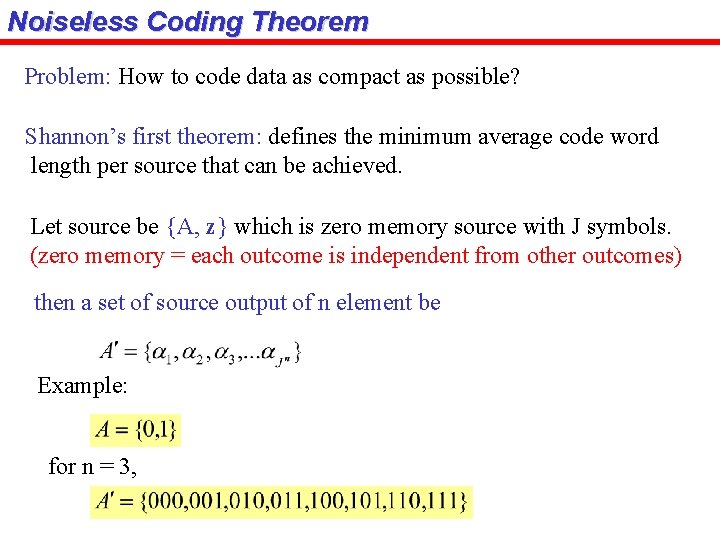

Noiseless Coding Theorem

Problem: how to code data as compactly as possible?

Shannon's first theorem defines the minimum average code word length per source symbol that can be achieved.

Let the source be {A, z}, a zero-memory source with J symbols (zero memory = each outcome is independent of the other outcomes). A set of source outputs of n elements is then defined as shown in the figure. Example: for n = 3,

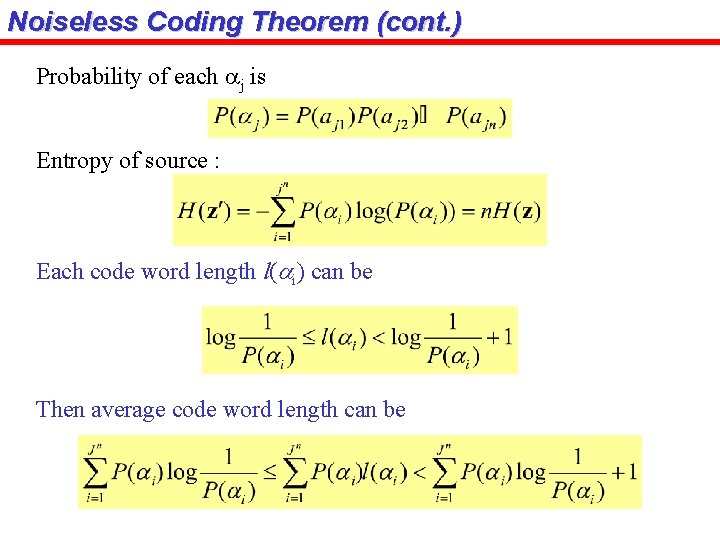

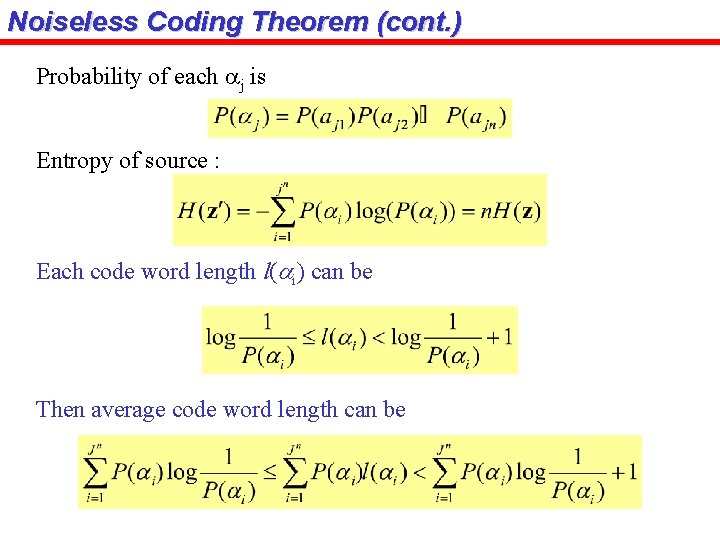

Noiseless Coding Theorem (cont.)

The figure gives, in order: the probability of each block of n source symbols, the entropy of the source, the range in which each code word length can be chosen, and the resulting average code word length.

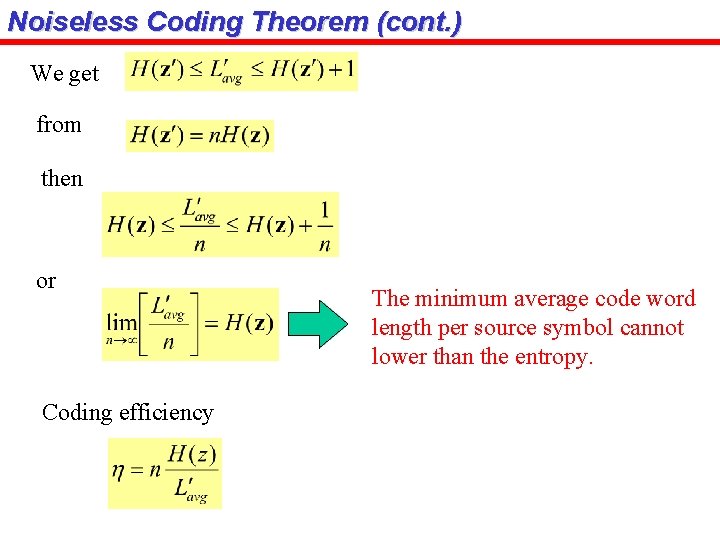

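Noiseless Coding Theorem (cont.)

Combining the bounds on the code word lengths with the definition of the average code word length gives the inequalities shown in the figures, from which the coding efficiency is defined.

The minimum average code word length per source symbol cannot be lower than the entropy.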
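For reference, the standard form of this derivation is sketched below. The notation ($\alpha_i$ for a block of $n$ source symbols, $\mathbf{z}'$ for the extended source, $L'_{avg}$ for its average code word length, $\eta$ for the efficiency) is an assumption, since the slide's equations are only available as images.

```latex
% Block probability for a zero-memory source
P(\alpha_i) = P(a_{j_1})\,P(a_{j_2}) \cdots P(a_{j_n}),
\qquad H(\mathbf{z}') = n\,H(\mathbf{z})

% Code word lengths can be chosen so that
\log_2 \frac{1}{P(\alpha_i)} \;\le\; l(\alpha_i) \;<\; \log_2 \frac{1}{P(\alpha_i)} + 1

% Averaging over all blocks, with L'_{avg} = \sum_i P(\alpha_i)\, l(\alpha_i)
H(\mathbf{z}') \;\le\; L'_{avg} \;<\; H(\mathbf{z}') + 1

% Dividing by n
H(\mathbf{z}) \;\le\; \frac{L'_{avg}}{n} \;<\; H(\mathbf{z}) + \frac{1}{n},
\qquad \lim_{n \to \infty} \frac{L'_{avg}}{n} = H(\mathbf{z})

% Coding efficiency
\eta = \frac{n\,H(\mathbf{z})}{L'_{avg}}
```

The gap between the average length per source symbol and the entropy shrinks as the block length n grows, which is why coding longer blocks approaches the entropy bound.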

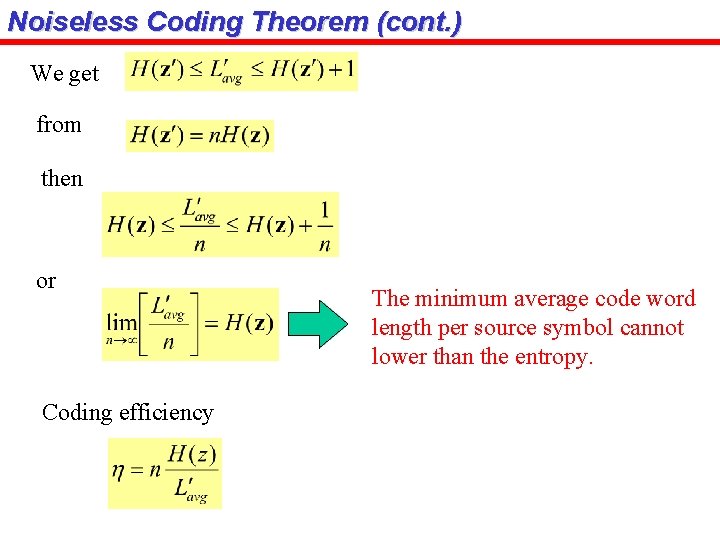

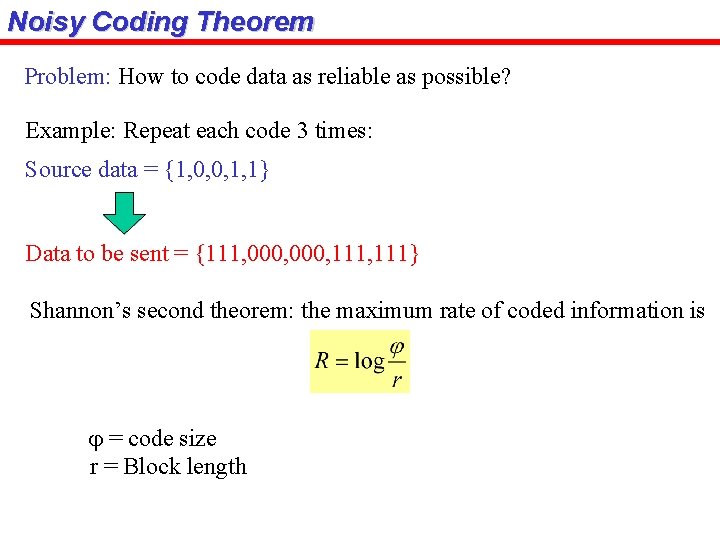

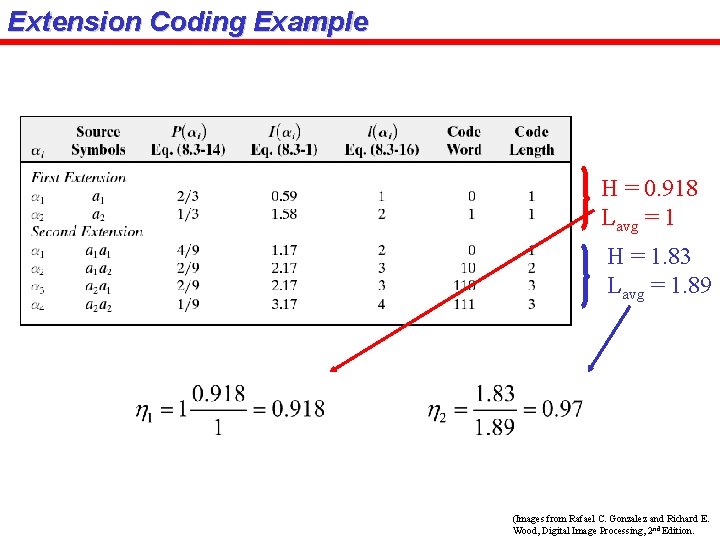

Extension Coding Example

- First extension: H = 0.918, Lavg = 1
- Second extension: H = 1.83, Lavg = 1.89
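The numbers on this slide can be reproduced with a small sketch. The two-symbol source with probabilities 2/3 and 1/3 and the code word lengths 1, 2, 3, 3 for the symbol pairs are assumptions (the slide's table is an image); they were chosen because they give the quoted entropies and average lengths.

```python
import math
from itertools import product


def entropy(probabilities):
    """Entropy in bits per symbol."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Assumed zero-memory source.
source = {"a1": 2 / 3, "a2": 1 / 3}
h_first = entropy(source.values())
l_avg_first = 1.0                      # one bit per symbol: a1 -> 0, a2 -> 1

# Second extension: every pair of symbols becomes one block symbol.
second = {x + y: source[x] * source[y] for x, y in product(source, repeat=2)}
h_second = entropy(second.values())

# Assumed code word lengths for the pairs (a valid prefix code: 0, 10, 110, 111).
pair_lengths = {"a1a1": 1, "a1a2": 2, "a2a1": 3, "a2a2": 3}
l_avg_second = sum(second[s] * pair_lengths[s] for s in second)

efficiency_first = h_first / l_avg_first
efficiency_second = h_second / l_avg_second
print(round(h_first, 3), round(h_second, 3), round(l_avg_second, 2))
print(round(efficiency_first, 3), round(efficiency_second, 3))
```

Coding pairs instead of single symbols raises the efficiency from about 0.92 to about 0.97 under these assumptions.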

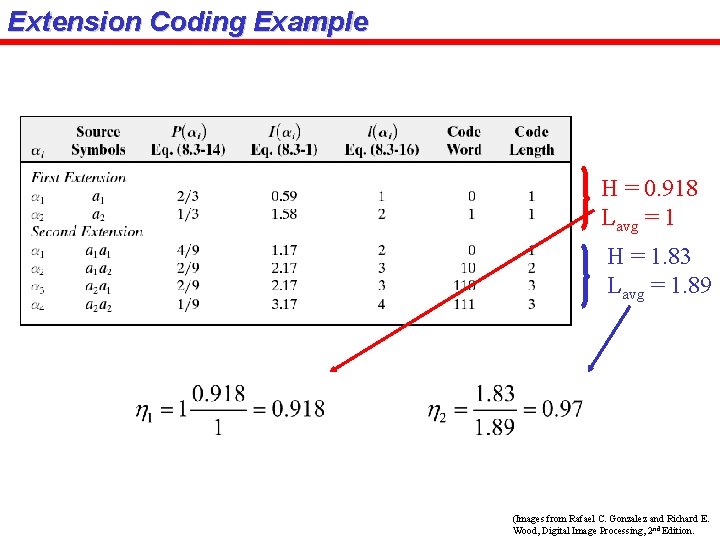

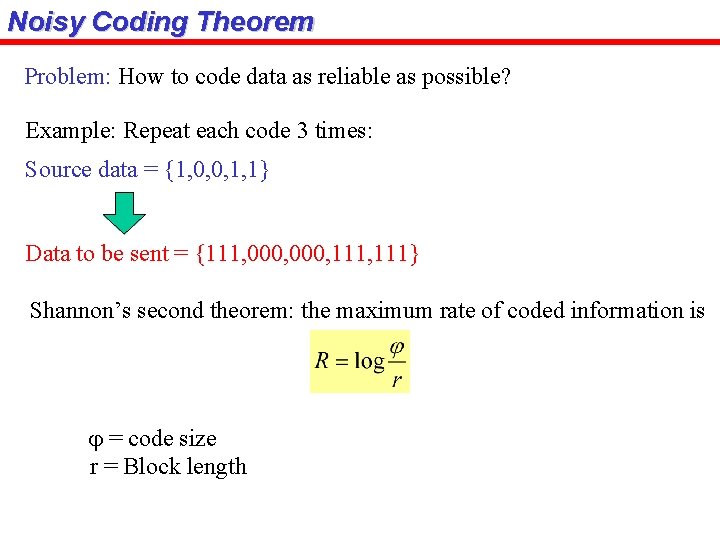

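Noisy Coding Theorem

Problem: how to code data as reliably as possible?

Example: repeat each code symbol 3 times.
- Source data = {1, 0, 0, 1, 1}
- Data to be sent = {111, 000, 000, 111, 111}

Shannon's second theorem concerns the rate of coded information, $R = \log \varphi / r$, where $\varphi$ = code size and r = block length: for any rate below the channel capacity, a code exists whose probability of decoding error is arbitrarily small.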
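The repeat-3-times example can be sketched with a majority-vote decoder. The decoder is an assumption (the slide only shows the encoding step), and the function names are hypothetical.

```python
def repeat_encode(bits, r=3):
    """Send every source bit r times."""
    return [bit for bit in bits for _ in range(r)]


def majority_decode(received, r=3):
    """Decode each block of r bits by majority vote."""
    if len(received) % r != 0:
        raise ValueError("received length must be a multiple of r")
    decoded = []
    for start in range(0, len(received), r):
        block = received[start:start + r]
        decoded.append(1 if 2 * sum(block) > r else 0)
    return decoded


source = [1, 0, 0, 1, 1]
sent = repeat_encode(source)

# Flip one bit in two different blocks to simulate channel noise.
corrupted = list(sent)
corrupted[1] ^= 1
corrupted[5] ^= 1
print(sent, majority_decode(corrupted))
```

Each block of 3 bits carries 1 source bit, so reliability is bought at a rate of 1/3; a single error per block is corrected, while two errors in the same block are not.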

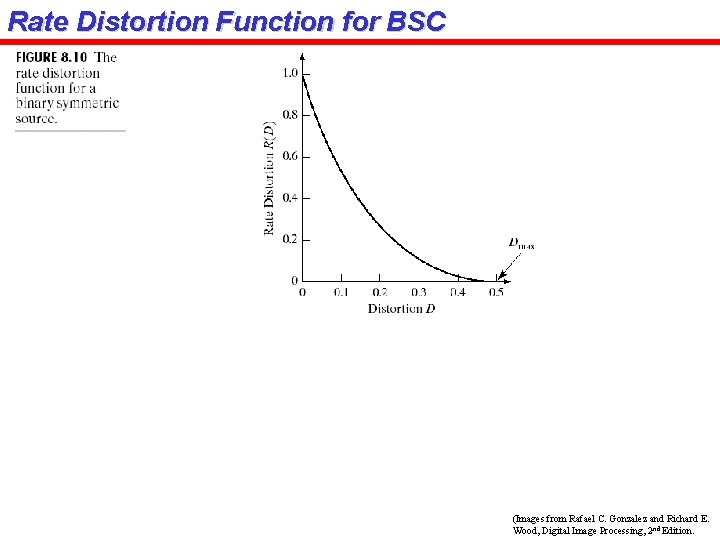

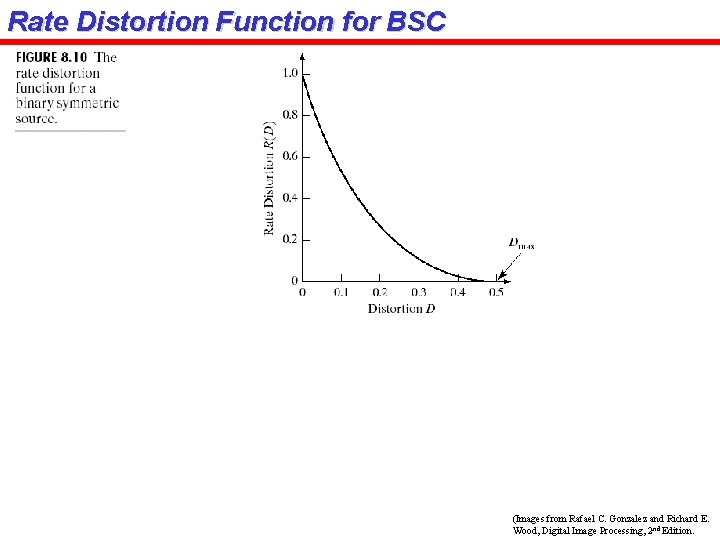

Rate Distortion Function for BSC

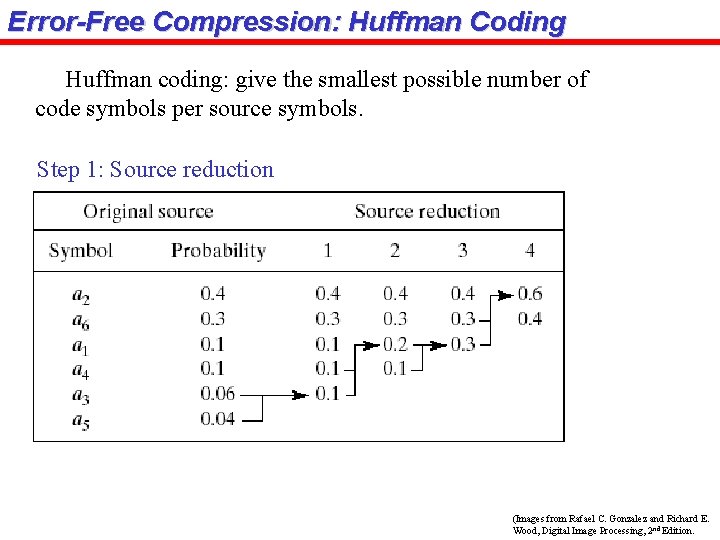

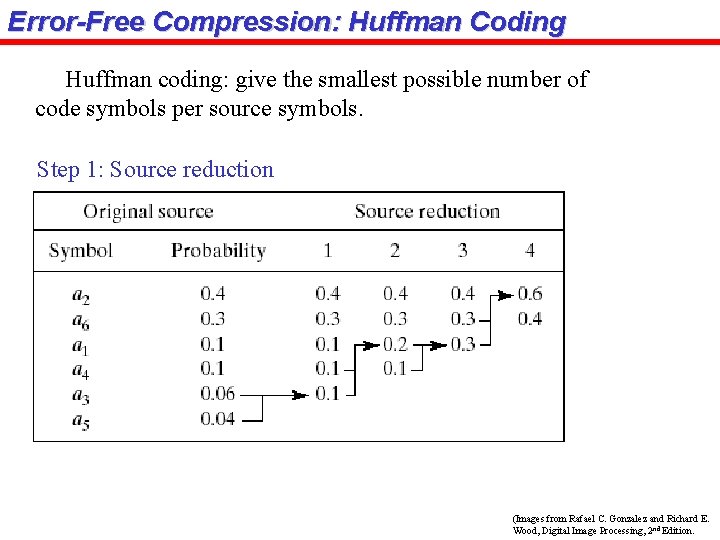

Error-Free Compression: Huffman Coding

Huffman coding gives the smallest possible number of code symbols per source symbol when the source symbols are coded one at a time.

Step 1: Source reduction

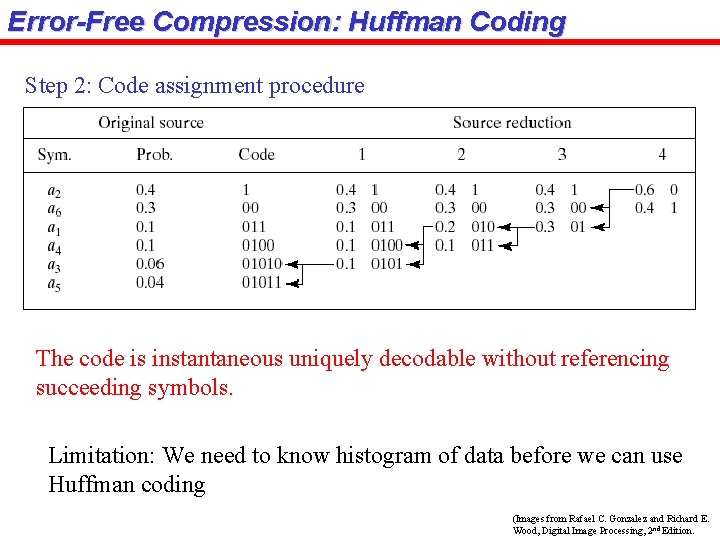

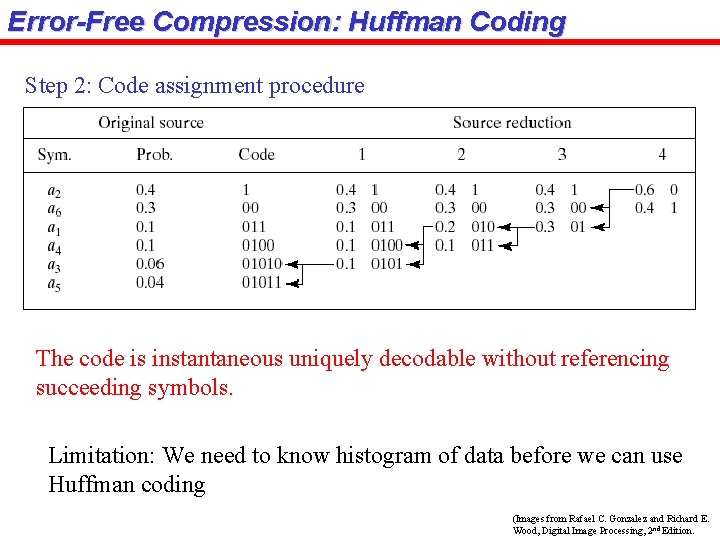

Error-Free Compression: Huffman Coding

Step 2: Code assignment procedure

The code is instantaneous and uniquely decodable: each code word can be decoded without referencing succeeding symbols.

Limitation: the histogram of the data must be known before Huffman coding can be used.
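Source reduction and code assignment can be sketched together with a priority queue: the two least probable entries are merged repeatedly, and a bit is prepended to every code word in each merged group. This is one common way to implement Huffman coding, not necessarily the tabular procedure drawn on the slides, and the six-symbol histogram is an assumed example.

```python
import heapq
from itertools import count


def huffman_code(probabilities):
    """Return {symbol: bit string} for a dict of symbol probabilities."""
    if len(probabilities) == 1:
        return {symbol: "0" for symbol in probabilities}
    tie_breaker = count()   # keeps heap entries comparable on equal weights
    heap = [(p, next(tie_breaker), {symbol: ""})
            for symbol, p in probabilities.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p0, _, group0 = heapq.heappop(heap)   # least probable group
        p1, _, group1 = heapq.heappop(heap)   # second least probable group
        merged = {s: "0" + code for s, code in group0.items()}
        merged.update({s: "1" + code for s, code in group1.items()})
        heapq.heappush(heap, (p0 + p1, next(tie_breaker), merged))
    return heap[0][2]


# Assumed histogram, for illustration only.
probs = {"a1": 0.1, "a2": 0.4, "a3": 0.06, "a4": 0.1, "a5": 0.04, "a6": 0.3}
code = huffman_code(probs)
l_avg = sum(probs[s] * len(code[s]) for s in probs)
print(code, round(l_avg, 2))
```

The exact bit patterns depend on how ties are broken, but the average code word length is the same for every valid Huffman code of a given histogram.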

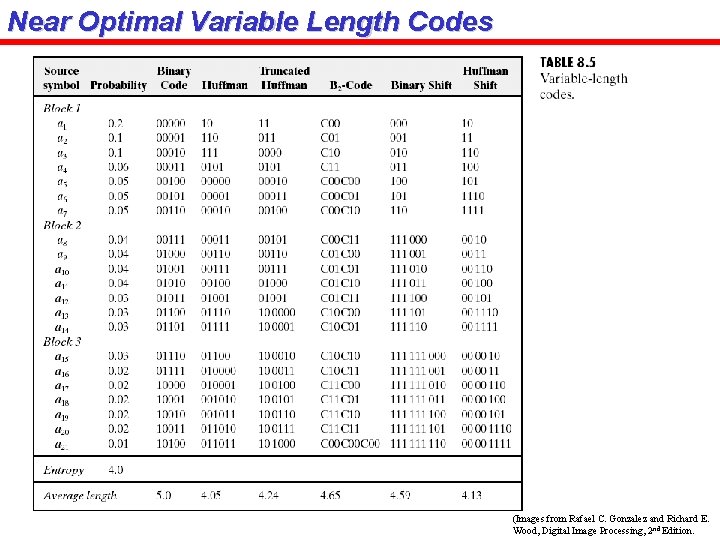

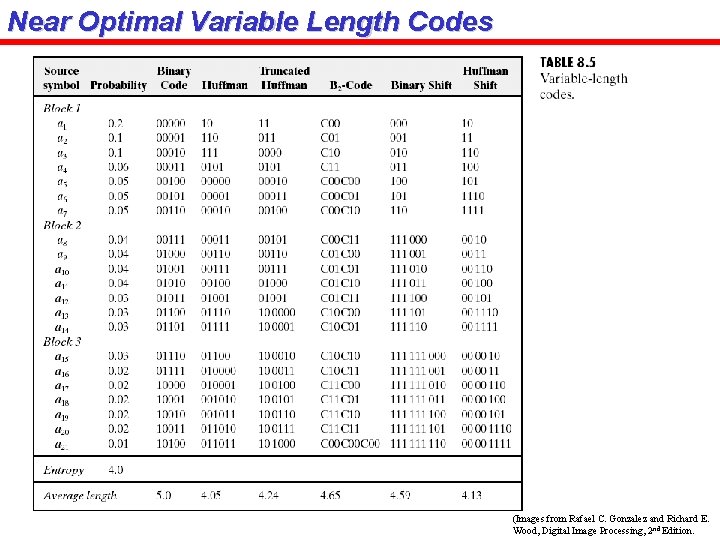

Near Optimal Variable Length Codes

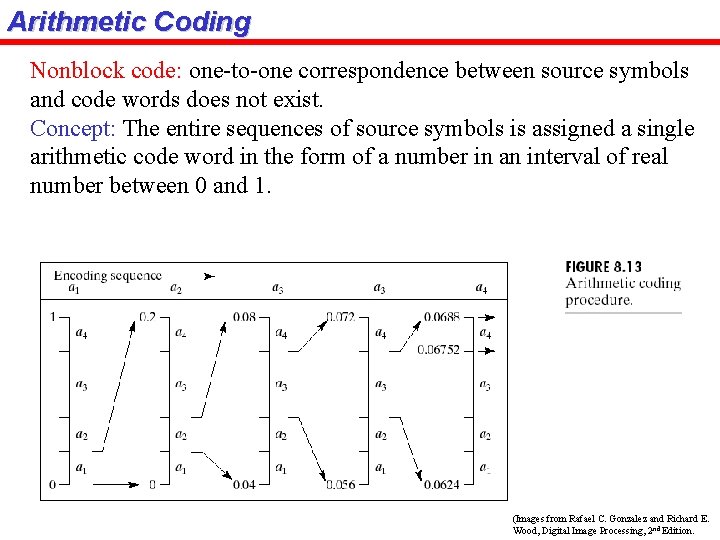

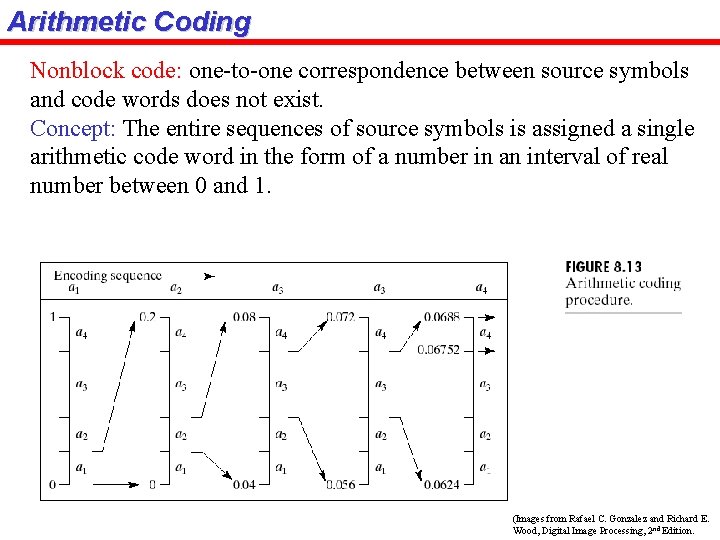

Arithmetic Coding

Nonblock code: a one-to-one correspondence between source symbols and code words does not exist.

Concept: an entire sequence of source symbols is assigned a single arithmetic code word in the form of a number in an interval of real numbers between 0 and 1.

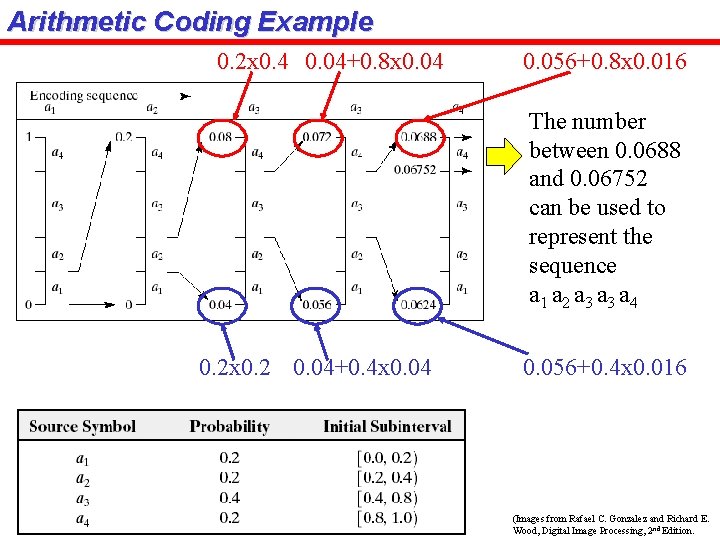

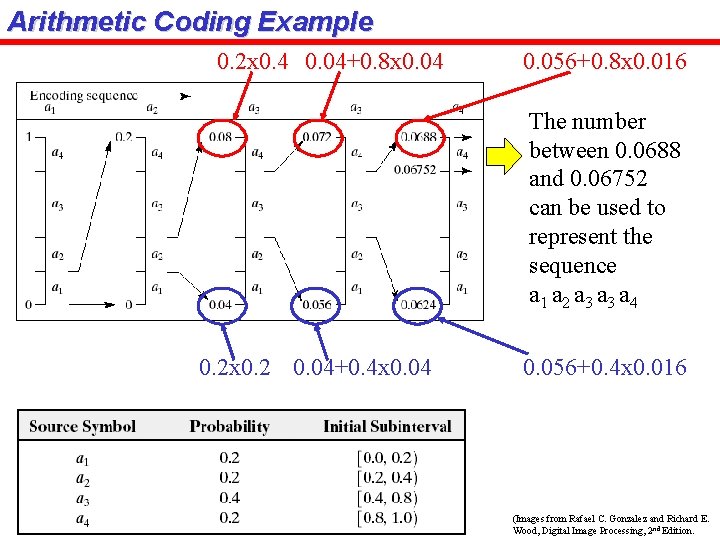

Arithmetic Coding Example

Interval limits after each symbol of the sequence a1 a2 a3 a3 a4:

- a1: [0, 0.2)
- a2: lower = 0.2 x 0.2 = 0.04, upper = 0.2 x 0.4 = 0.08
- a3: lower = 0.04 + 0.4 x 0.04 = 0.056, upper = 0.04 + 0.8 x 0.04 = 0.072
- a3: lower = 0.056 + 0.4 x 0.016 = 0.0624, upper = 0.056 + 0.8 x 0.016 = 0.0688
- a4: [0.06752, 0.0688)

Any number between 0.06752 and 0.0688 can be used to represent the sequence a1 a2 a3 a3 a4.
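The interval narrowing in this example can be sketched with exact fractions. The symbol sub-intervals (a1: [0, 0.2), a2: [0.2, 0.4), a3: [0.4, 0.8), a4: [0.8, 1)) and the five-symbol message a1 a2 a3 a3 a4 are assumptions inferred from the arithmetic shown on the slide; the function name is hypothetical.

```python
from fractions import Fraction


def arithmetic_interval(message, model):
    """Narrow [0, 1) once per symbol and return the final (low, high)."""
    low, high = Fraction(0), Fraction(1)
    for symbol in message:
        symbol_low, symbol_high = model[symbol]
        width = high - low
        # Both new limits are computed from the old lower limit.
        low, high = low + width * symbol_low, low + width * symbol_high
    return low, high


# Assumed source model: cumulative probability range of each symbol.
model = {
    "a1": (Fraction("0"), Fraction("0.2")),
    "a2": (Fraction("0.2"), Fraction("0.4")),
    "a3": (Fraction("0.4"), Fraction("0.8")),
    "a4": (Fraction("0.8"), Fraction("1")),
}

low, high = arithmetic_interval(["a1", "a2", "a3", "a3", "a4"], model)
print(float(low), float(high))
```

Exact fractions are used here only to keep the printed limits free of floating-point rounding; practical coders use finite-precision integer arithmetic with rescaling.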

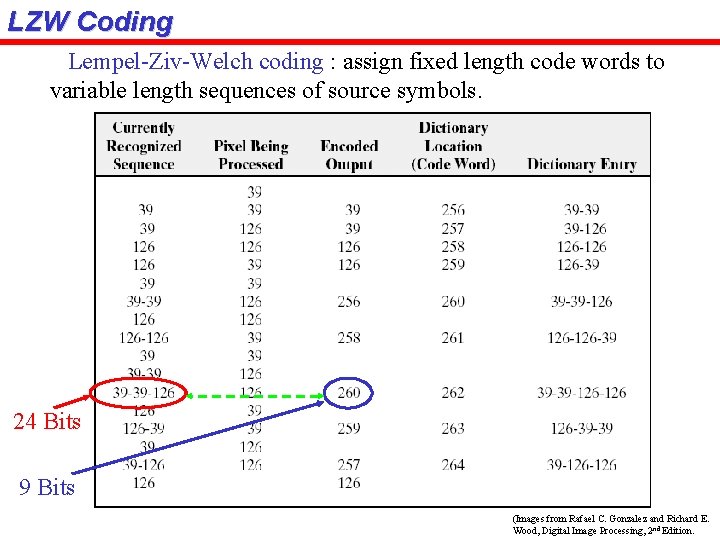

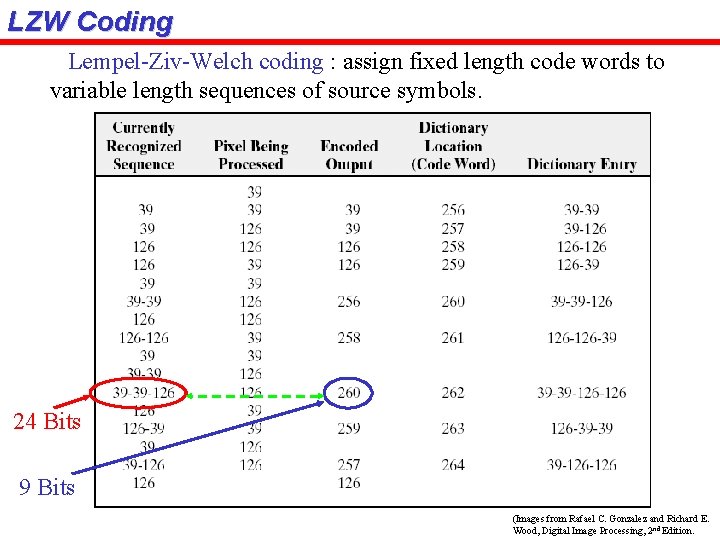

LZW Coding

Lempel-Ziv-Welch coding assigns fixed-length code words to variable-length sequences of source symbols.

Figure labels: 24 bits; 9 bits.

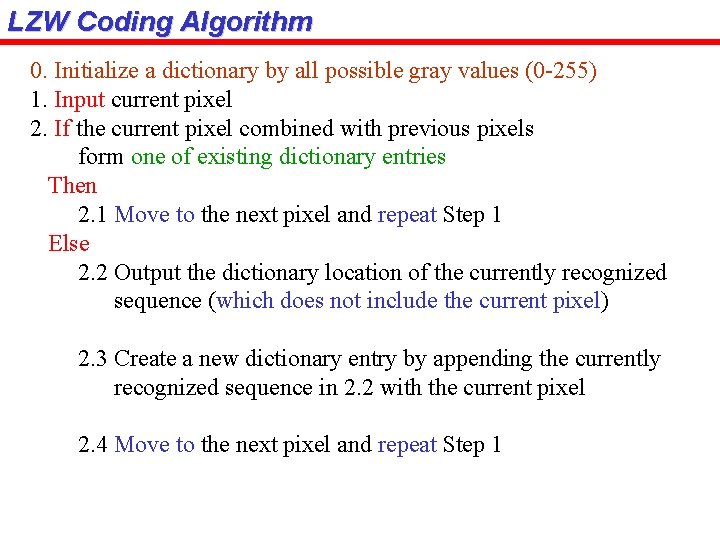

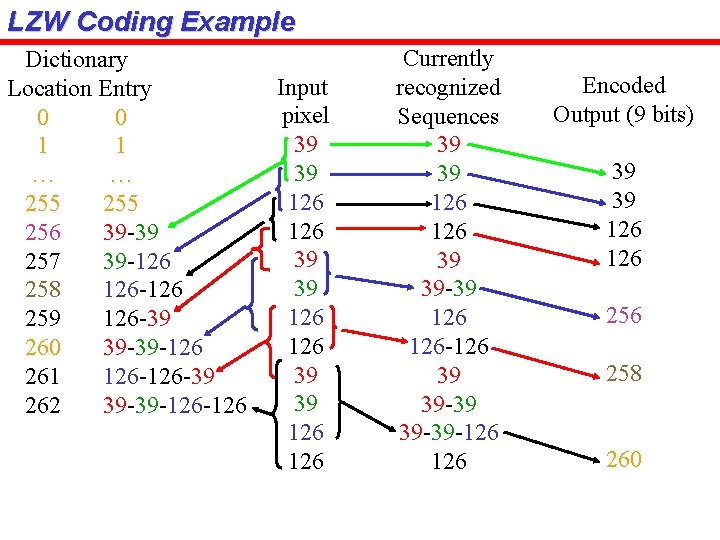

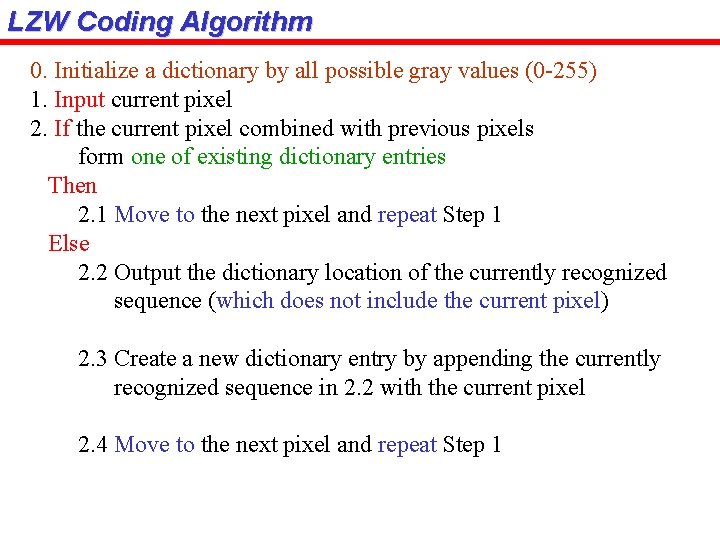

LZW Coding Algorithm

0. Initialize a dictionary with all possible gray values (0-255)
1. Input the current pixel
2. If the current pixel combined with the previous pixels forms one of the existing dictionary entries, then
   2.1 Move to the next pixel and repeat Step 1
   Else
   2.2 Output the dictionary location of the currently recognized sequence (which does not include the current pixel)
   2.3 Create a new dictionary entry by appending the current pixel to the currently recognized sequence from 2.2
   2.4 Move to the next pixel and repeat Step 1

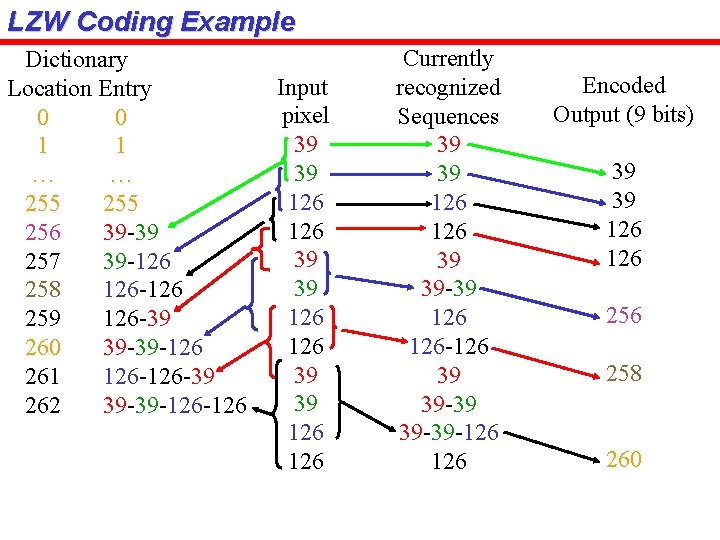

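LZW Coding Example

Dictionary locations 0 to 255 hold the gray levels 0 to 255; new entries start at location 256. Each encoded output uses 9 bits.

| Currently recognized sequence | Input pixel | Encoded output (9 bits) | New dictionary location | New dictionary entry |
|---|---|---|---|---|
| | 39 | | | |
| 39 | 39 | 39 | 256 | 39-39 |
| 39 | 126 | 39 | 257 | 39-126 |
| 126 | 126 | 126 | 258 | 126-126 |
| 126 | 39 | 126 | 259 | 126-39 |
| 39 | 39 | | | |
| 39-39 | 126 | 256 | 260 | 39-39-126 |
| 126 | 126 | | | |
| 126-126 | 39 | 258 | 261 | 126-126-39 |
| 39 | 39 | | | |
| 39-39 | 126 | | | |
| 39-39-126 | 126 | 260 | 262 | 39-39-126-126 |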
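A minimal sketch of the LZW encoder described in the algorithm slide. The input is assumed to be a 4 x 4 image whose rows are all 39 39 126 126, scanned row by row, which is consistent with the dictionary entries listed in the example; the function name is hypothetical.

```python
def lzw_encode(pixels, num_levels=256):
    """Return (output codes, final dictionary) for a pixel sequence."""
    # Step 0: one dictionary entry per possible gray value.
    dictionary = {(level,): level for level in range(num_levels)}
    next_location = num_levels
    recognized = ()
    output = []
    for pixel in pixels:
        candidate = recognized + (pixel,)
        if candidate in dictionary:
            recognized = candidate          # keep extending the sequence
        else:
            output.append(dictionary[recognized])
            dictionary[candidate] = next_location
            next_location += 1
            recognized = (pixel,)
    if recognized:
        output.append(dictionary[recognized])   # flush the last sequence
    return output, dictionary


image_row_scan = [39, 39, 126, 126] * 4
codes, dictionary = lzw_encode(image_row_scan)
print(codes)
print(len(image_row_scan) * 8, "bits before,", len(codes) * 9, "bits after")
```

The dictionary never has to be transmitted: a decoder can rebuild it from the code stream by following the same rules.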

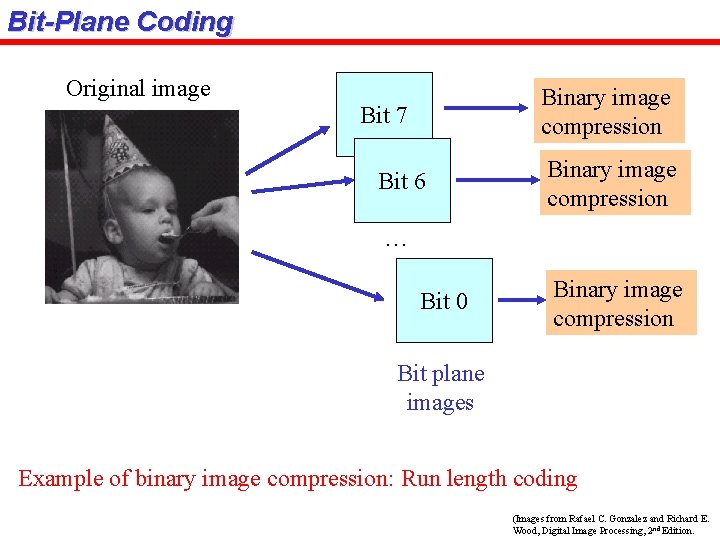

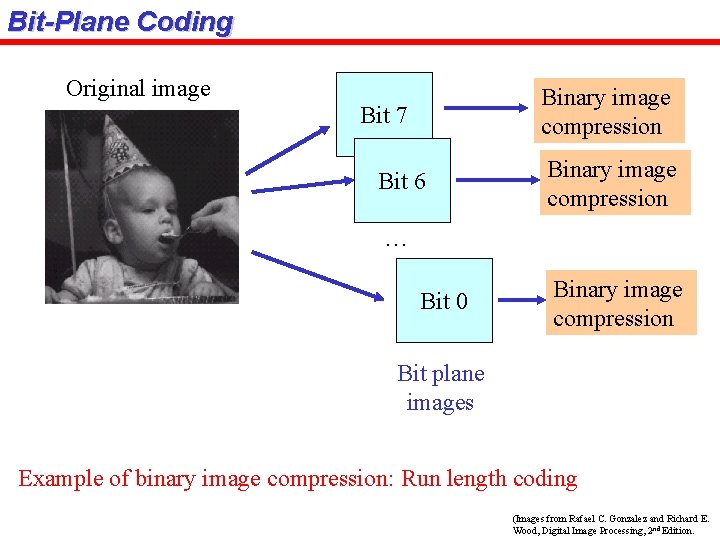

Bit-Plane Coding

The original image is split into bit plane images (bit 7, bit 6, …, bit 0), and each bit plane is compressed separately as a binary image.

Example of binary image compression: run length coding

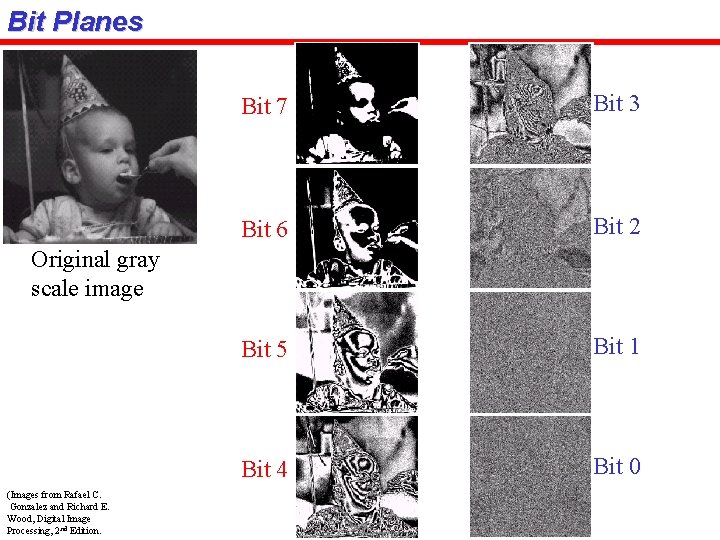

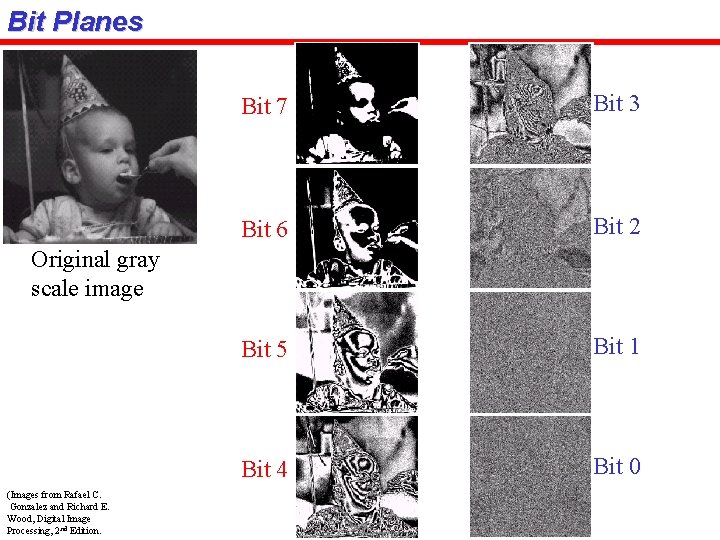

Bit Planes

Figure panels: original gray scale image and its eight bit planes, bit 7 to bit 0.

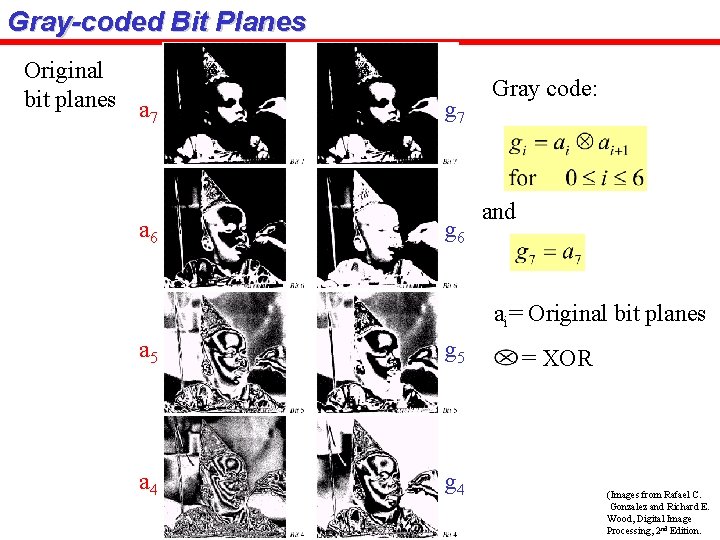

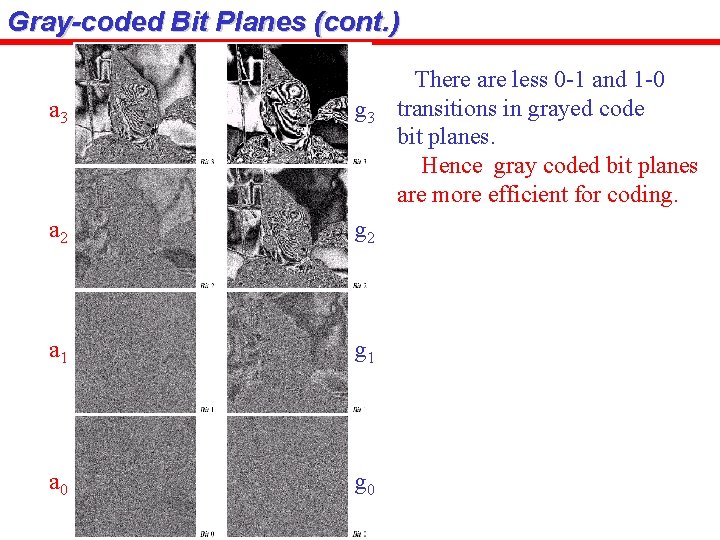

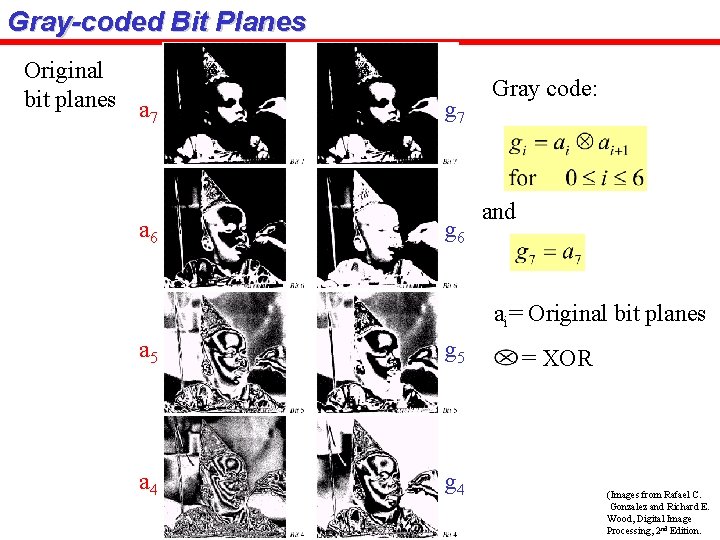

Gray-coded Bit Planes

Gray code: $g_i = a_i \oplus a_{i+1}$ for $0 \le i \le m-2$, and $g_{m-1} = a_{m-1}$, where $a_i$ = original bit planes and $\oplus$ = XOR.

Figure panels: original bit planes a7 to a4 beside Gray-coded bit planes g7 to g4.

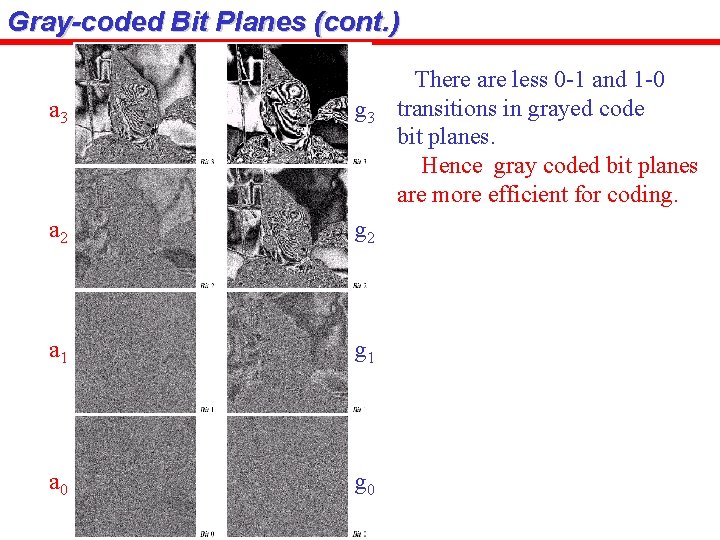

Gray-coded Bit Planes (cont.)

Figure panels: original bit planes a3 to a0 beside Gray-coded bit planes g3 to g0.

There are fewer 0-1 and 1-0 transitions in Gray-coded bit planes. Hence Gray-coded bit planes are more efficient for coding.
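The effect of Gray coding on bit-plane transitions can be illustrated with a small sketch. The helper names and the one-row test image are assumptions; the gray levels 127 and 128 are chosen because they differ in every bit of their binary representation.

```python
def to_gray(value):
    """Gray code of an integer: bit i becomes a_i XOR a_(i+1)."""
    return value ^ (value >> 1)


def bit_plane(image, k):
    """Extract bit k of every pixel as a binary image."""
    return [[(pixel >> k) & 1 for pixel in row] for row in image]


def transitions(plane):
    """Count 0-1 and 1-0 transitions along the rows of a binary image."""
    return sum(a != b for row in plane for a, b in zip(row, row[1:]))


image = [[127, 128, 127, 128]]
gray_image = [[to_gray(pixel) for pixel in row] for row in image]

binary_total = sum(transitions(bit_plane(image, k)) for k in range(8))
gray_total = sum(transitions(bit_plane(gray_image, k)) for k in range(8))
print(binary_total, gray_total)
```

A small change in gray level flips all eight binary bit planes here but only one Gray-coded plane, which leaves longer runs for a run length coder.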

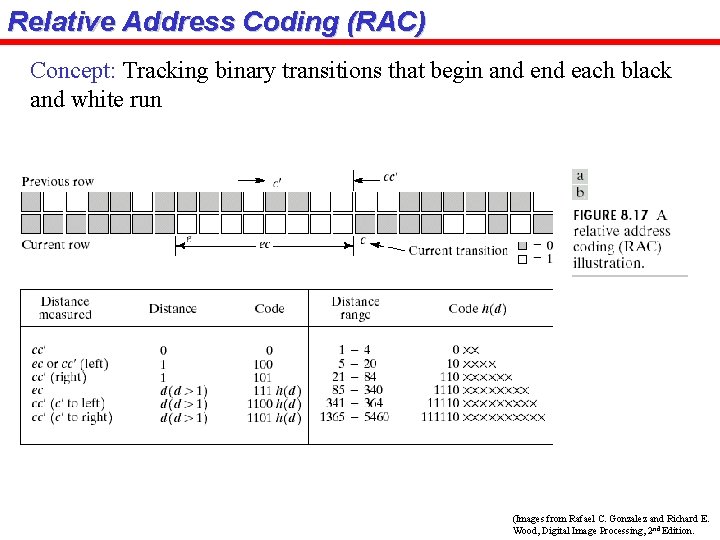

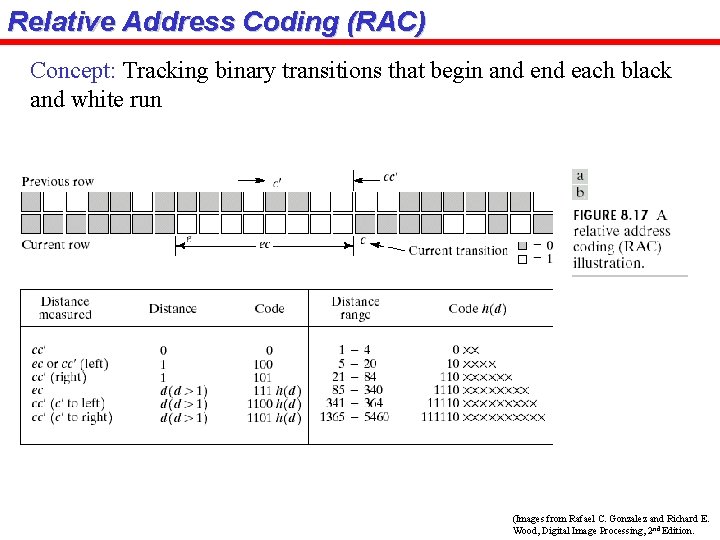

Relative Address Coding (RAC)

Concept: tracking the binary transitions that begin and end each black and white run

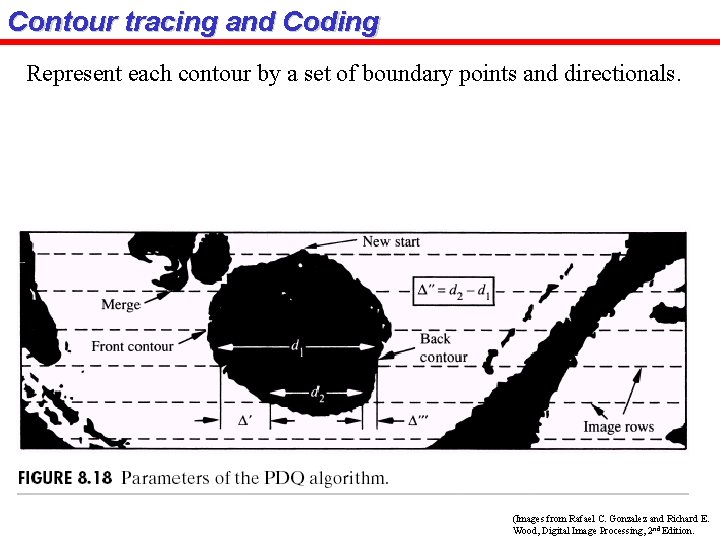

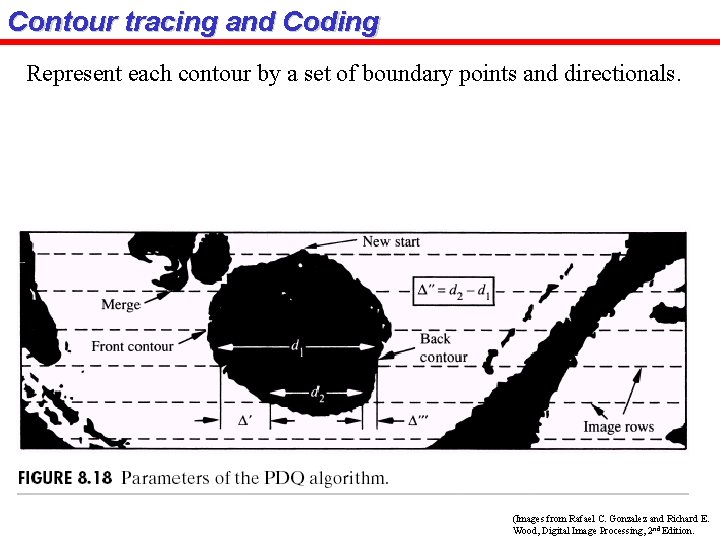

Contour Tracing and Coding

Represent each contour by a set of boundary points and directionals.

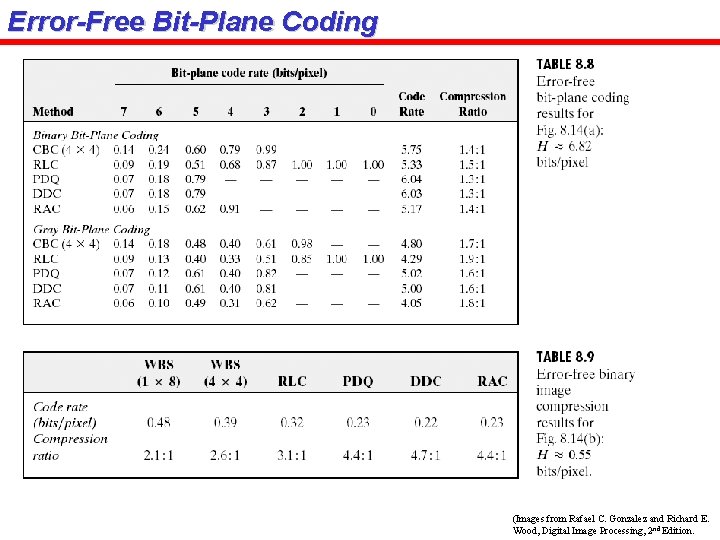

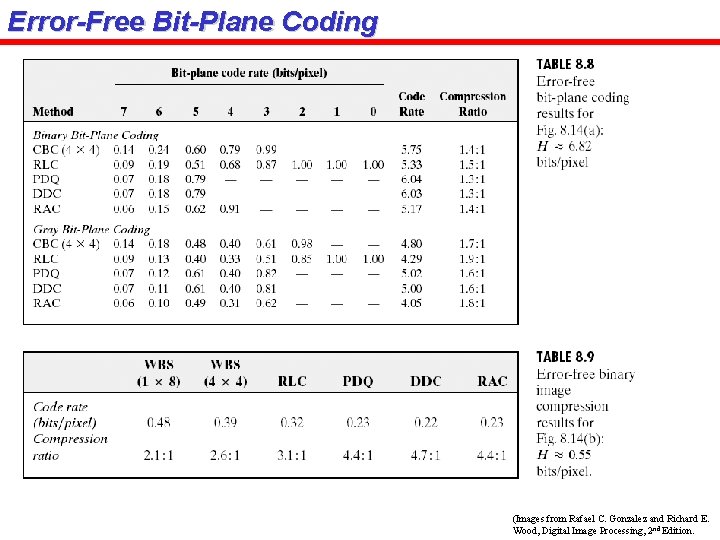

Error-Free Bit-Plane Coding

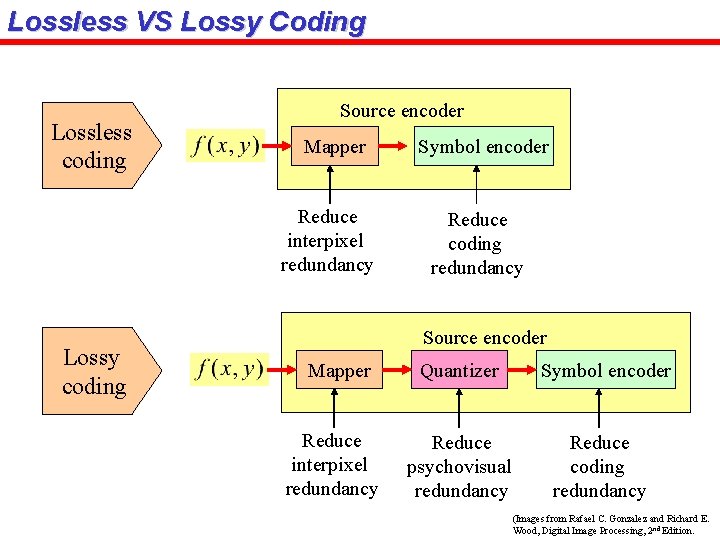

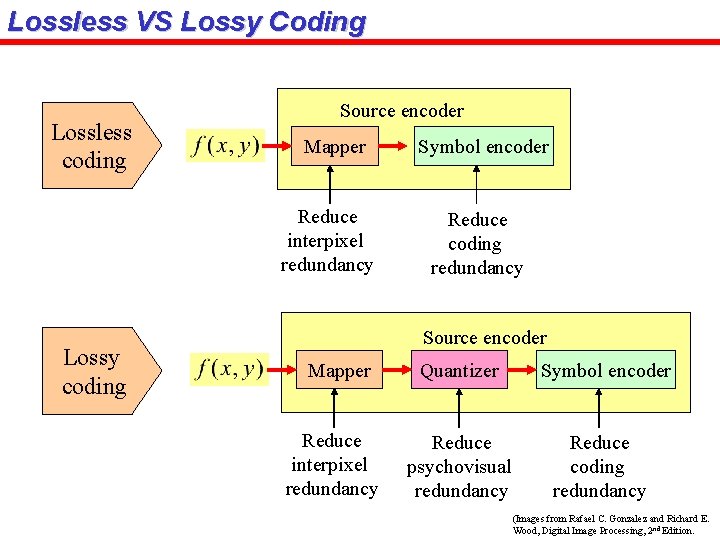

Lossless vs. Lossy Coding

Lossless coding, source encoder
- Mapper: reduces interpixel redundancy
- Symbol encoder: reduces coding redundancy

Lossy coding, source encoder
- Mapper: reduces interpixel redundancy
- Quantizer: reduces psychovisual redundancy
- Symbol encoder: reduces coding redundancy

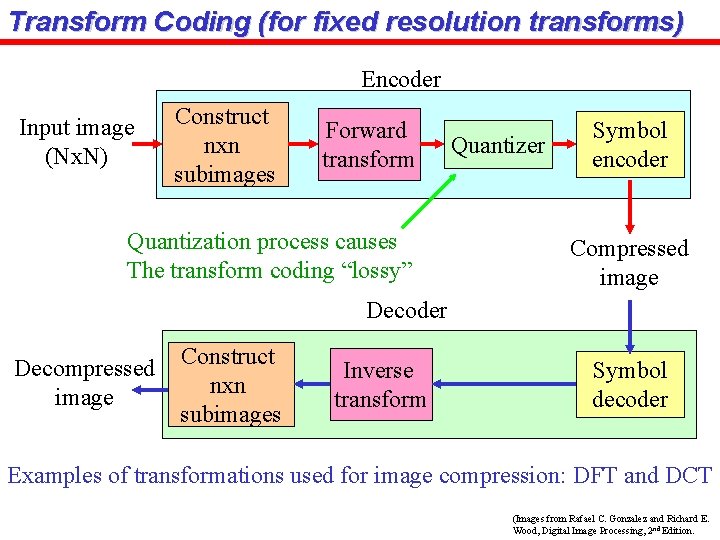

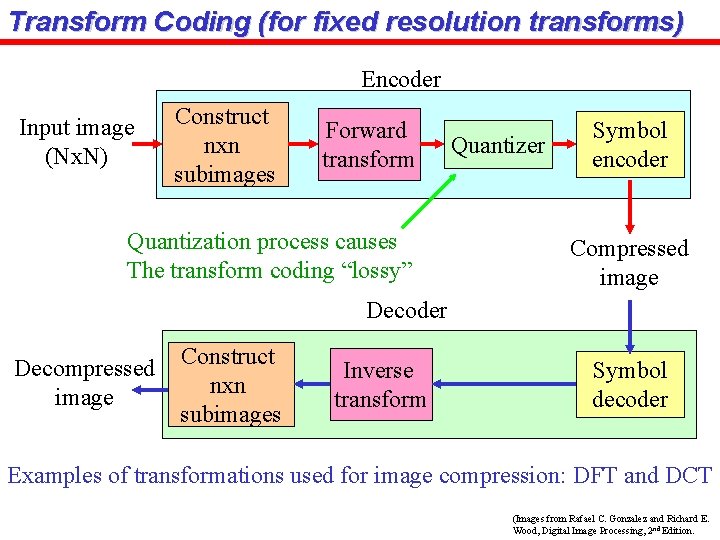

Transform Coding (for fixed resolution transforms)

- Encoder: input image (N x N) → construct n x n subimages → forward transform → quantizer → symbol encoder → compressed image
- Decoder: compressed image → symbol decoder → inverse transform → reassemble the n x n subimages → decompressed image

The quantization process is what makes transform coding lossy.

Examples of transformations used for image compression: DFT and DCT

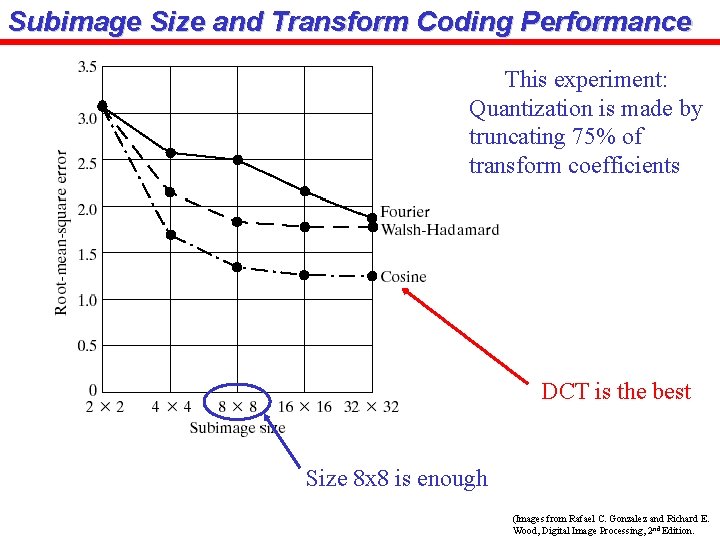

Transform Coding (for fixed resolution transforms)

Three parameters that affect transform coding performance:
1. Type of transformation
2. Size of subimage
3. Quantization algorithm

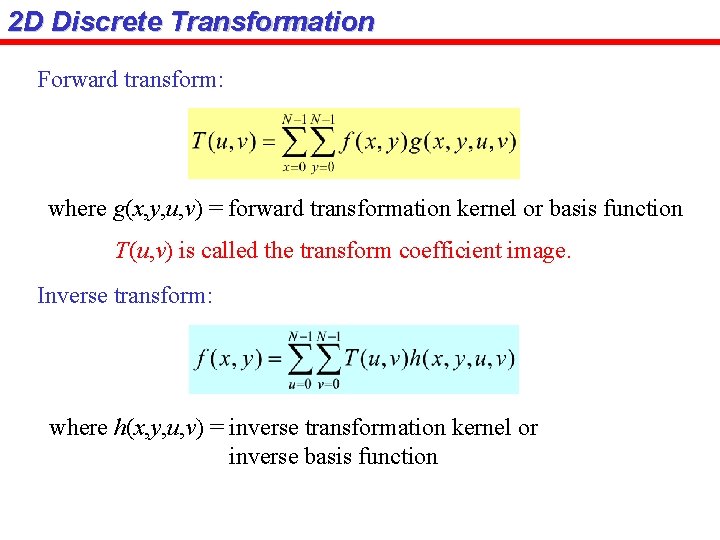

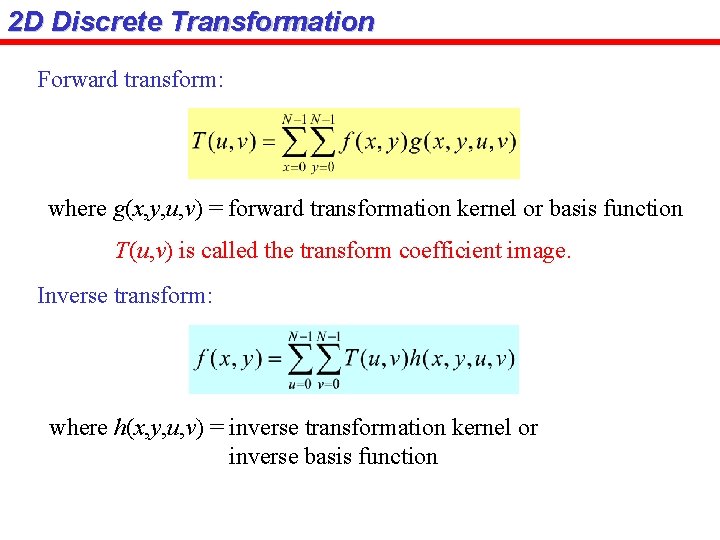

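2 D Discrete Transformation

Forward transform:

$$T(u, v) = \sum_{x=0}^{N-1} \sum_{y=0}^{N-1} f(x, y)\, g(x, y, u, v)$$

where g(x, y, u, v) = forward transformation kernel or basis function. T(u, v) is called the transform coefficient image.

Inverse transform:

$$f(x, y) = \sum_{u=0}^{N-1} \sum_{v=0}^{N-1} T(u, v)\, h(x, y, u, v)$$

where h(x, y, u, v) = inverse transformation kernel or inverse basis function.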

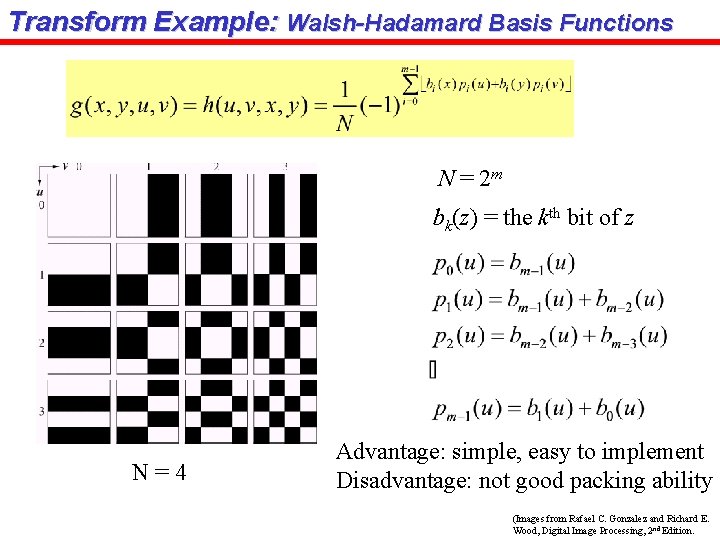

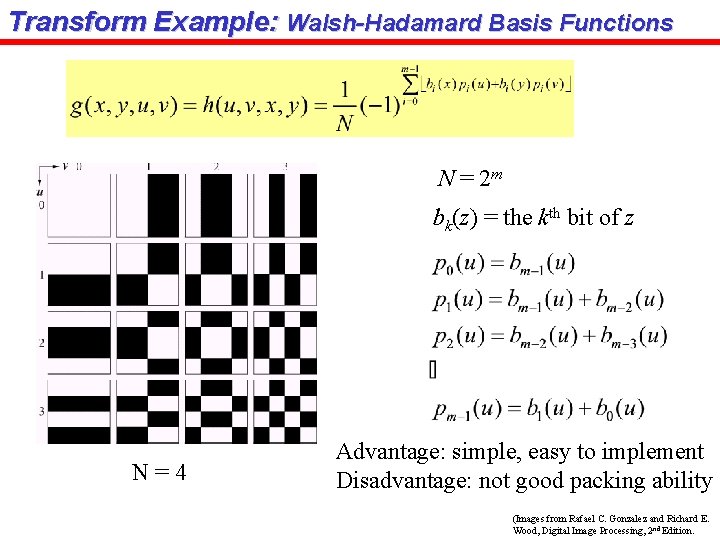

Transform Example: Walsh-Hadamard Basis Functions

- $N = 2^m$
- $b_k(z)$ = the kth bit of z
- Figure: basis functions for N = 4
- Advantage: simple, easy to implement
- Disadvantage: poor energy packing ability

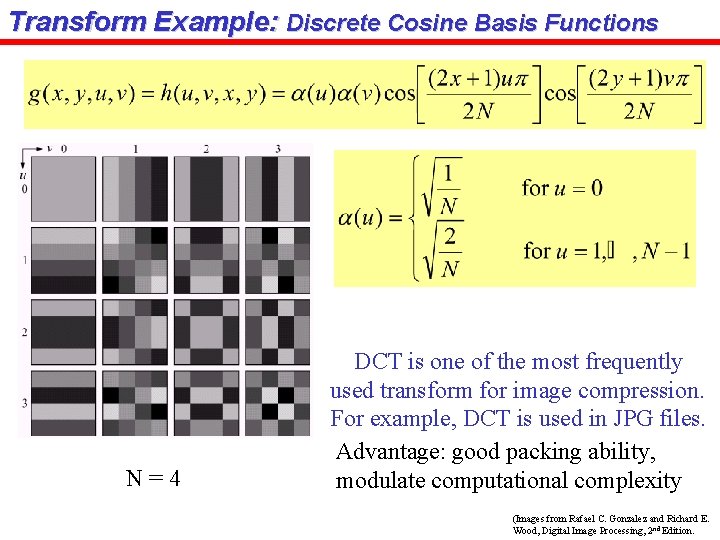

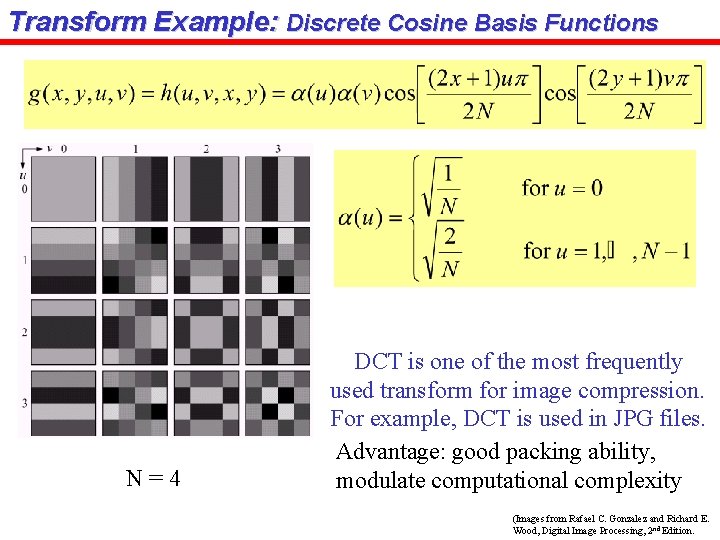

Transform Example: Discrete Cosine Basis Functions

Figure: basis functions for N = 4.

The DCT is one of the most frequently used transforms for image compression. For example, the DCT is used in JPEG files.

Advantage: good packing ability, moderate computational complexity
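A plain-Python sketch of a separable 2-D DCT and its inverse for a small square block. It assumes the orthonormal DCT-II (scale factor sqrt(1/N) for the first basis function and sqrt(2/N) for the others); the helper names are hypothetical and no attempt is made at speed.

```python
import math


def dct_matrix(n):
    """Rows are the n orthonormal 1-D DCT-II basis functions."""
    matrix = []
    for u in range(n):
        alpha = math.sqrt((1.0 if u == 0 else 2.0) / n)
        matrix.append([alpha * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                       for x in range(n)])
    return matrix


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(column) for column in zip(*a)]


def dct2(block):
    """Forward transform: T = C f C^T."""
    c = dct_matrix(len(block))
    return matmul(matmul(c, block), transpose(c))


def idct2(coefficients):
    """Inverse transform: f = C^T T C."""
    c = dct_matrix(len(coefficients))
    return matmul(matmul(transpose(c), coefficients), c)


flat = [[10.0] * 4 for _ in range(4)]
print(round(dct2(flat)[0][0], 6))   # all energy of a flat block goes to T(0, 0)
```

For a constant block every coefficient except T(0, 0) is zero, which is the "packing" property that makes truncating coefficients cheap in terms of error.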

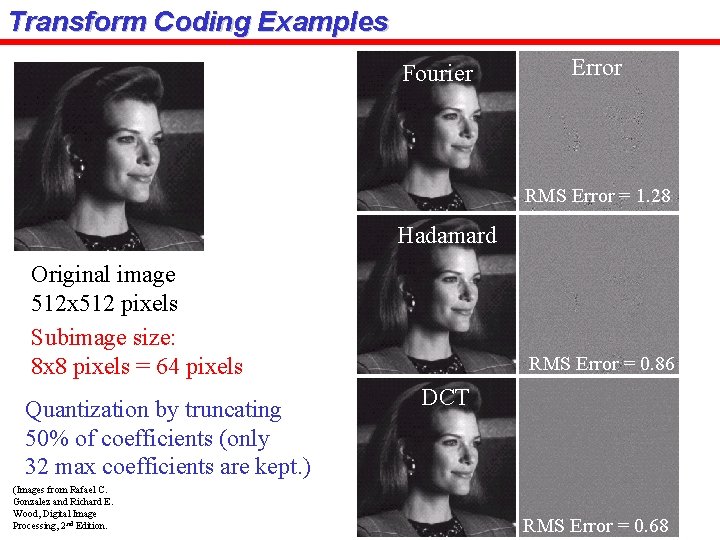

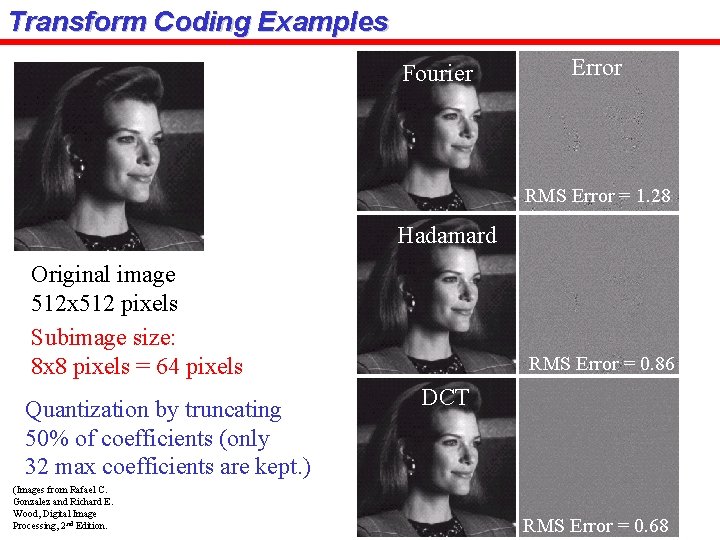

Transform Coding Examples

- Original image: 512 x 512 pixels
- Subimage size: 8 x 8 pixels = 64 pixels
- Quantization by truncating 50% of the coefficients (only the 32 largest coefficients are kept)

RMS error of the error images:
- Fourier: 1.28
- Hadamard: 0.86
- DCT: 0.68

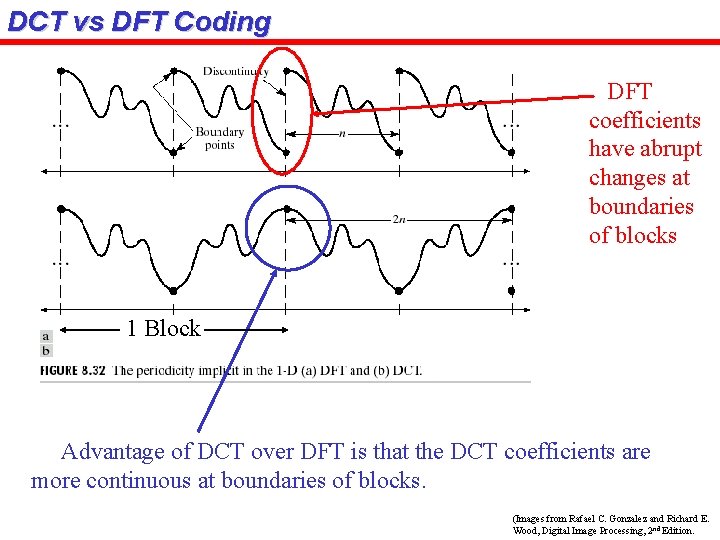

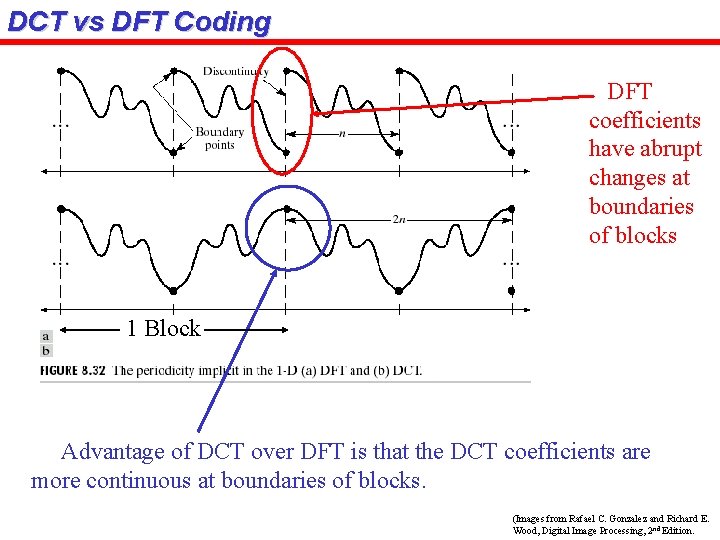

DCT vs DFT Coding

The DFT implicitly treats each block as periodic, which produces abrupt changes at the boundaries of blocks.

The advantage of the DCT over the DFT is that its implicit extension of each block is more continuous at the block boundaries.

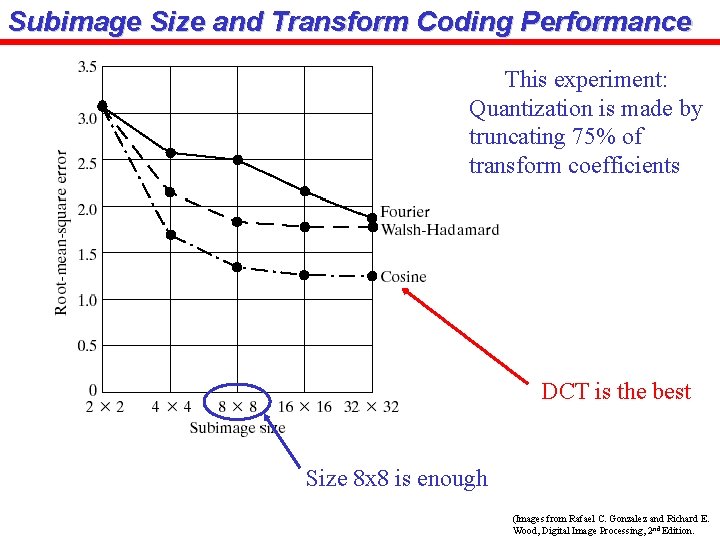

Subimage Size and Transform Coding Performance

In this experiment, quantization is done by truncating 75% of the transform coefficients.

- The DCT gives the lowest error
- A subimage size of 8 x 8 is enough

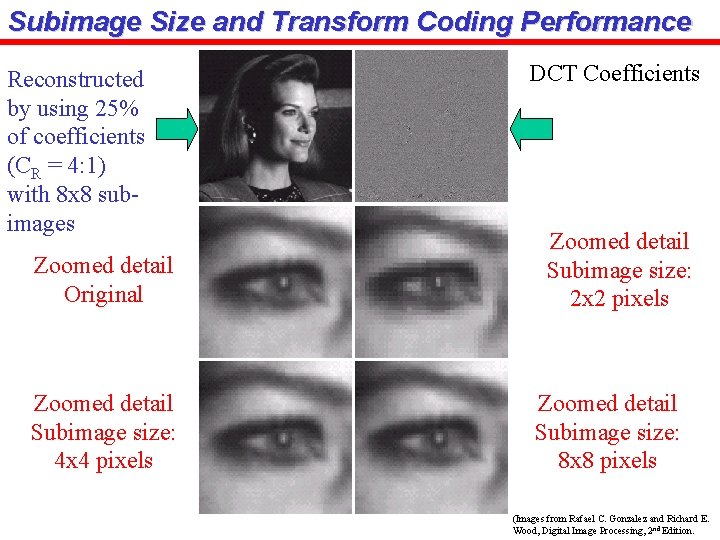

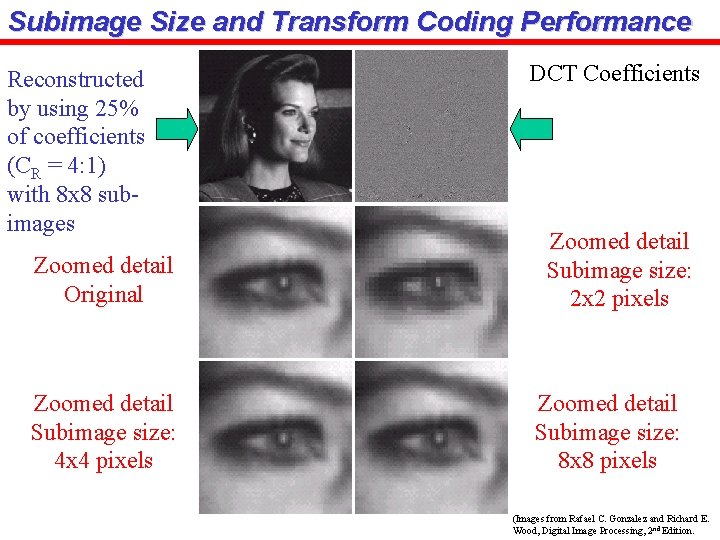

Subimage Size and Transform Coding Performance

Reconstruction using 25% of the DCT coefficients (CR = 4:1).

Figure panels: image reconstructed with 8 x 8 subimages; zoomed details of the original and of reconstructions with subimage sizes of 2 x 2, 4 x 4, and 8 x 8 pixels.

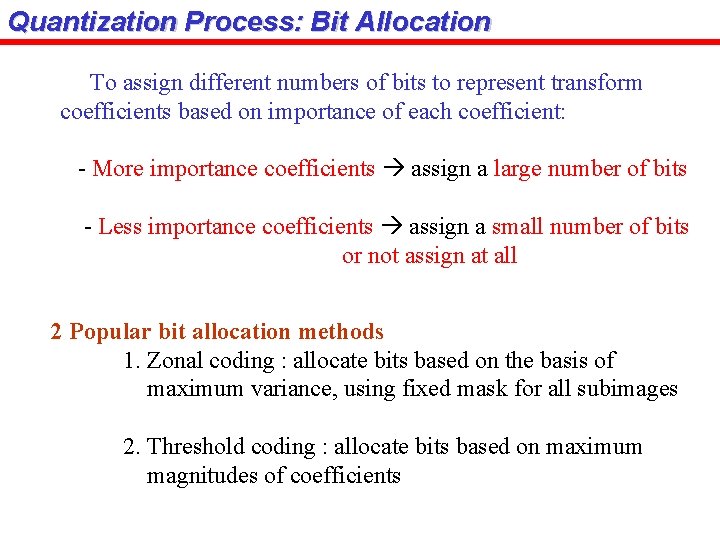

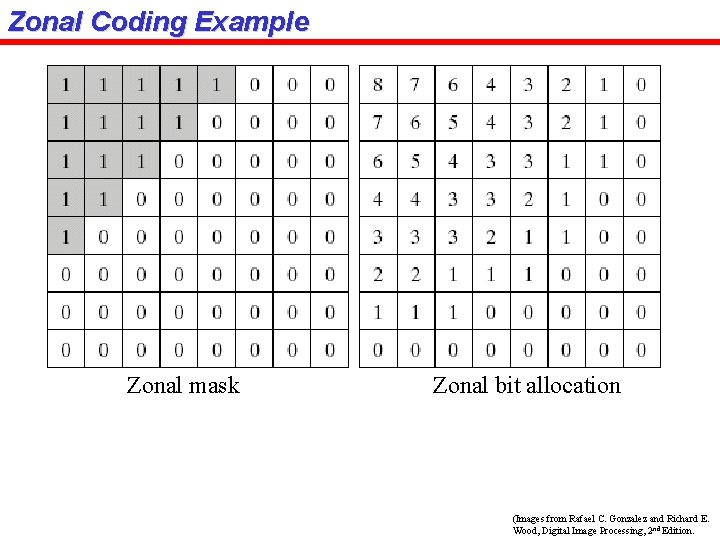

Quantization Process: Bit Allocation

Bit allocation assigns different numbers of bits to the transform coefficients according to the importance of each coefficient:
- More important coefficients are assigned a large number of bits
- Less important coefficients are assigned a small number of bits, or none at all

Two popular bit allocation methods
1. Zonal coding: allocates bits on the basis of maximum variance, using a fixed mask for all subimages
2. Threshold coding: allocates bits on the basis of the maximum magnitudes of the coefficients

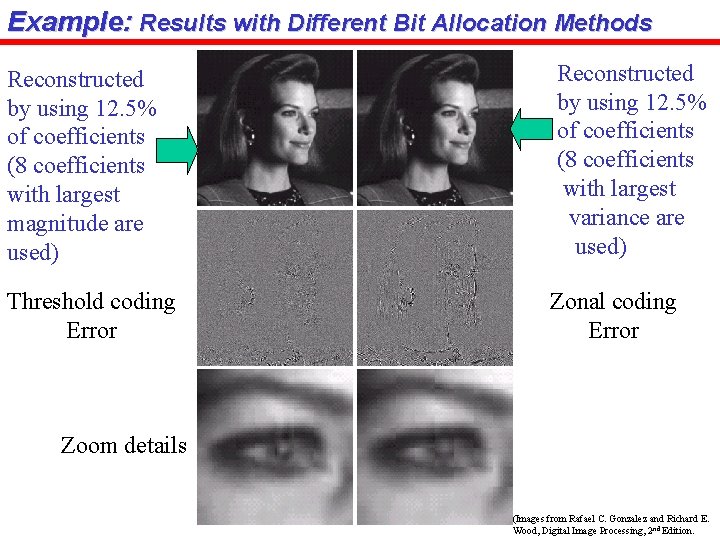

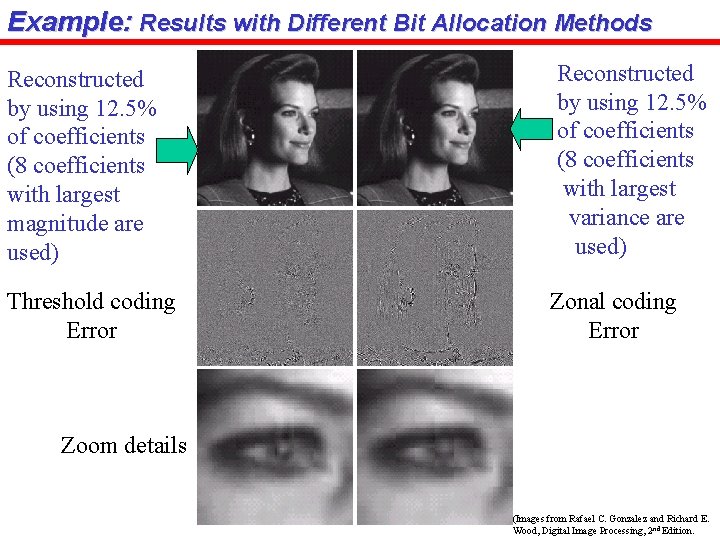

Example: Results with Different Bit Allocation Methods

- Threshold coding: reconstructed using 12.5% of the coefficients (the 8 coefficients with the largest magnitude are used)
- Zonal coding: reconstructed using 12.5% of the coefficients (the 8 coefficients with the largest variance are used)

Figure panels: reconstructed image, error image, and zoomed details for each method.

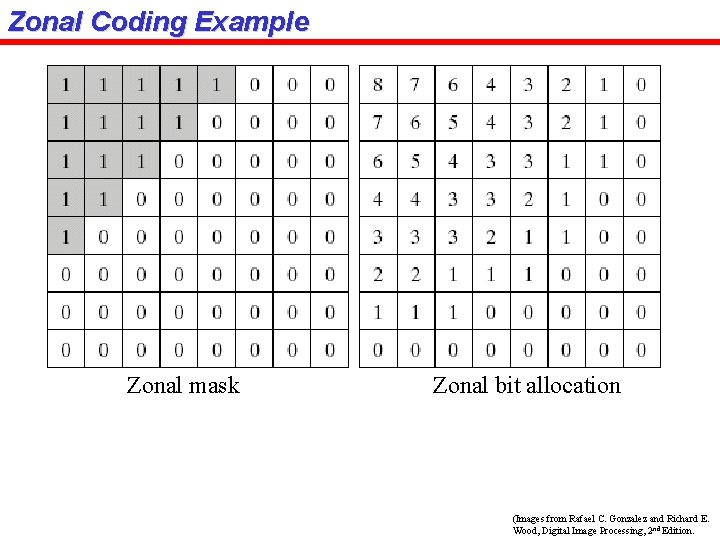

Zonal Coding Example

Figure panels: zonal mask; zonal bit allocation.

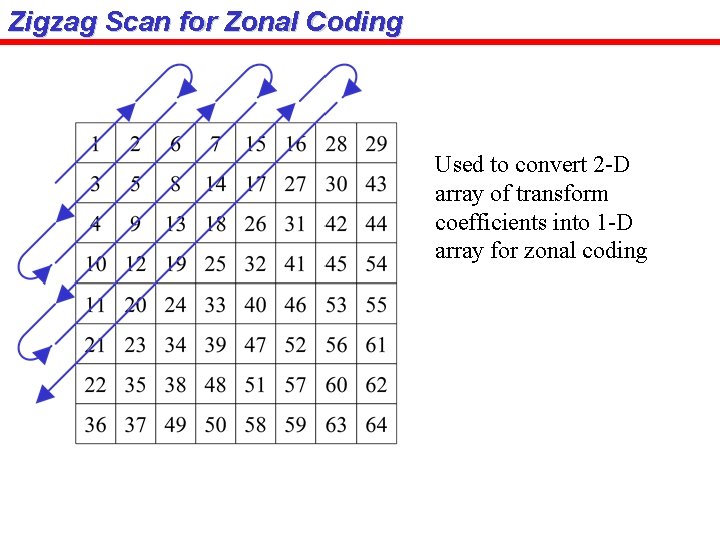

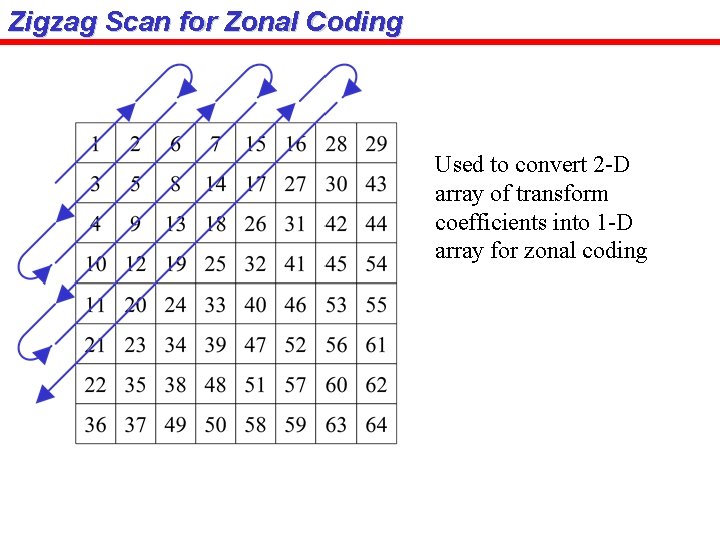

Zigzag Scan for Zonal Coding

Used to convert the 2-D array of transform coefficients into a 1-D array for zonal coding
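A zigzag scan can be sketched by sorting the coefficient positions by anti-diagonal and alternating the direction on each diagonal. The traversal direction below follows the JPEG convention (first step to the right), which is an assumption since the slide's figure is not reproduced in the text.

```python
def zigzag_order(n):
    """Return the (row, column) visiting order for an n x n block."""
    cells = [(r, c) for r in range(n) for c in range(n)]

    def key(cell):
        r, c = cell
        diagonal = r + c
        # Odd diagonals run downward (row increasing), even ones upward.
        return (diagonal, r if diagonal % 2 else -r)

    return sorted(cells, key=key)


def zigzag_scan(block):
    """Flatten a square 2-D block into a 1-D list in zigzag order."""
    return [block[r][c] for r, c in zigzag_order(len(block))]


block = [[1, 2, 6],
         [3, 5, 7],
         [4, 8, 9]]
print(zigzag_scan(block))
```

The scan places low-frequency coefficients first, so the coefficients that are usually discarded end up grouped at the tail of the 1-D array.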

Threshold Coding Example

Figure panels: threshold mask; thresholded coefficient ordering.

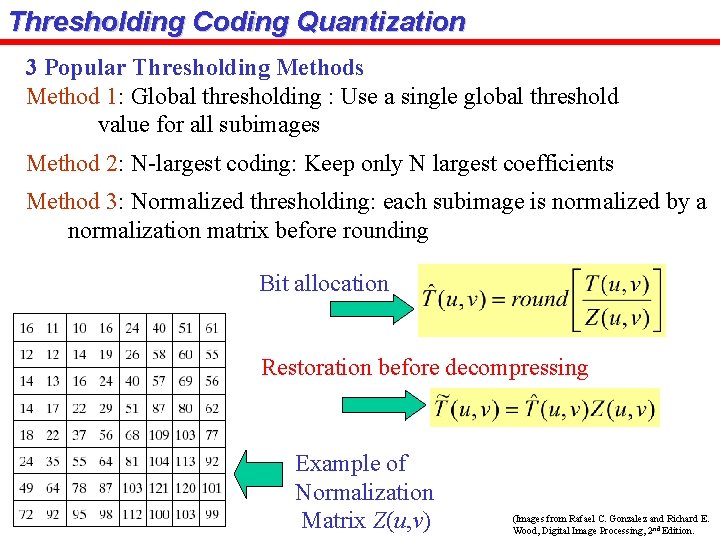

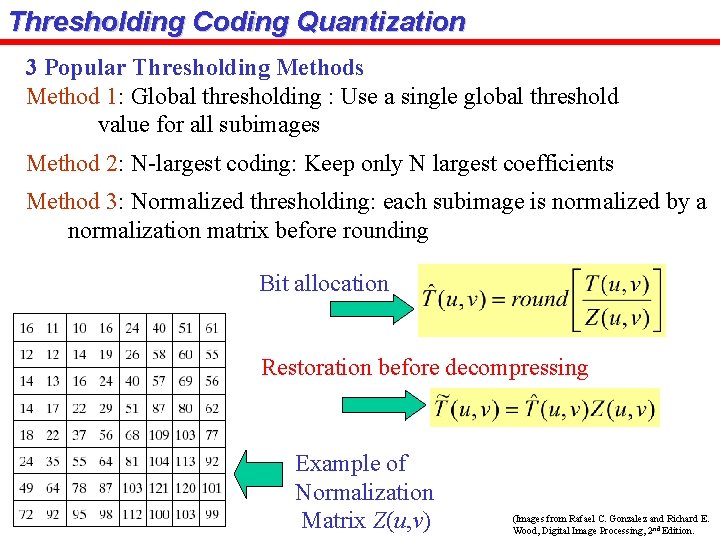

Threshold Coding Quantization

Three popular thresholding methods
- Method 1, global thresholding: use a single global threshold value for all subimages
- Method 2, N-largest coding: keep only the N largest coefficients
- Method 3, normalized thresholding: each subimage is normalized by a normalization matrix Z(u, v) before rounding

For normalized thresholding:
- Bit allocation: $\hat{T}(u, v) = \mathrm{round}\left[ T(u, v) / Z(u, v) \right]$
- Restoration before decompressing: $\dot{T}(u, v) = \hat{T}(u, v)\, Z(u, v)$

Figure: example of a normalization matrix Z(u, v).
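N-largest coding and normalized thresholding can be sketched as follows. The coefficient values and the 2 x 2 normalization matrix are made-up numbers for illustration, the function names are hypothetical, and rounding is done to the nearest integer with halves rounded up, which is an assumption about the rounding rule.

```python
import math


def keep_n_largest(coefficients, n):
    """N-largest coding: zero all but the n largest-magnitude coefficients."""
    ranked = sorted(((abs(value), r, c)
                     for r, row in enumerate(coefficients)
                     for c, value in enumerate(row)), reverse=True)
    kept = {(r, c) for _, r, c in ranked[:n]}
    return [[value if (r, c) in kept else 0.0
             for c, value in enumerate(row)]
            for r, row in enumerate(coefficients)]


def normalize_and_round(coefficients, z):
    """Normalized thresholding: divide by Z(u, v), then round."""
    return [[math.floor(t / q + 0.5) for t, q in zip(row_t, row_z)]
            for row_t, row_z in zip(coefficients, z)]


def restore(quantized, z):
    """Restoration before the inverse transform: multiply back by Z(u, v)."""
    return [[t * q for t, q in zip(row_t, row_z)]
            for row_t, row_z in zip(quantized, z)]


t = [[-415.4, 30.2], [5.1, -2.9]]   # illustrative transform coefficients
z = [[16, 11], [12, 12]]            # illustrative normalization matrix

quantized = normalize_and_round(t, z)
print(keep_n_largest(t, 2), quantized, restore(quantized, z))
```

Small coefficients round to zero after normalization, so the matrix Z(u, v) acts as a per-coefficient threshold and step size at the same time.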

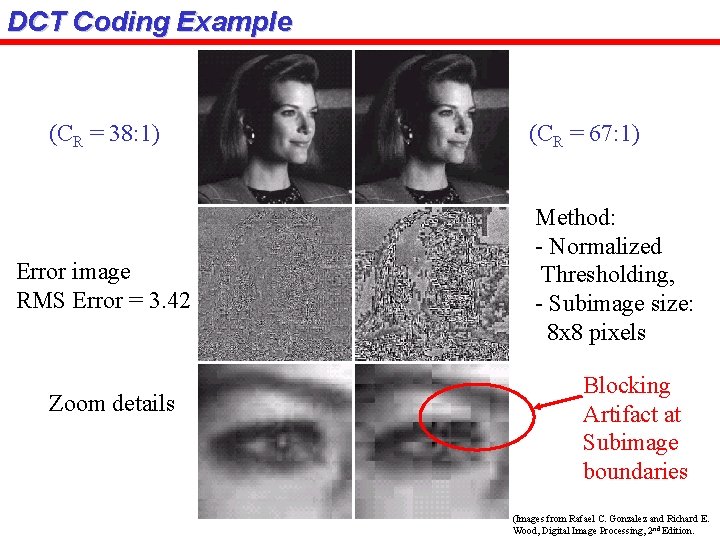

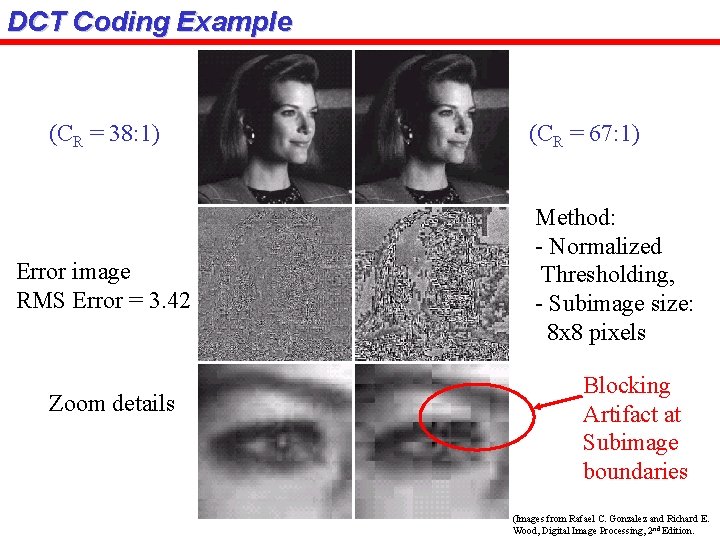

DCT Coding Example

- Method: normalized thresholding, subimage size 8 x 8 pixels
- Results shown at CR = 38:1 (error image RMS error = 3.42) and at CR = 67:1, with zoomed details
- Blocking artifacts appear at subimage boundaries

Zonal Coding vs. Threshold Coding

- At the same compression ratio, threshold coding gives smaller distortion than zonal coding (threshold coding keeps the largest coefficients, while zonal coding does not guarantee this)
- However, the zonal coding algorithm is simpler than that of threshold coding

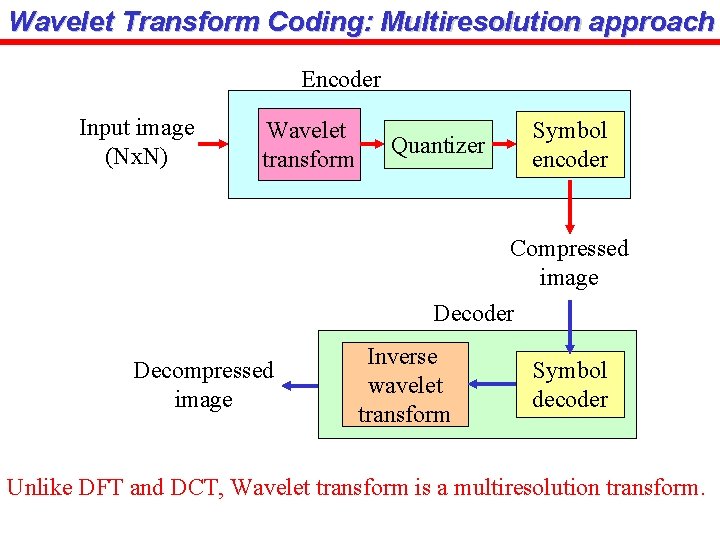

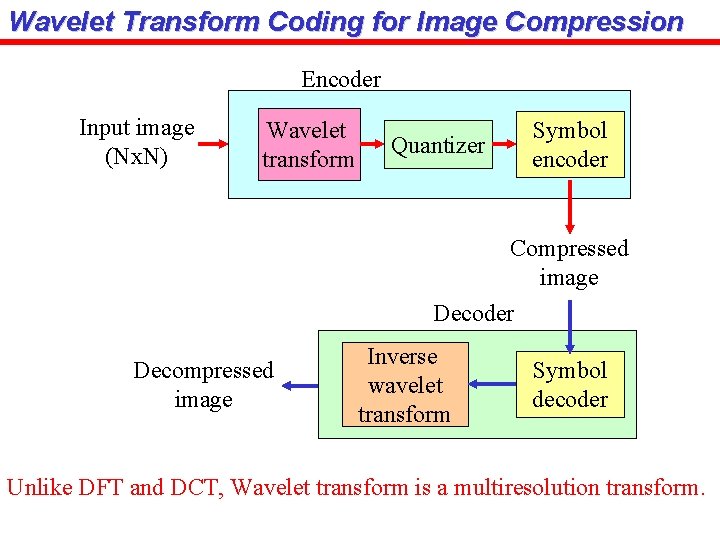

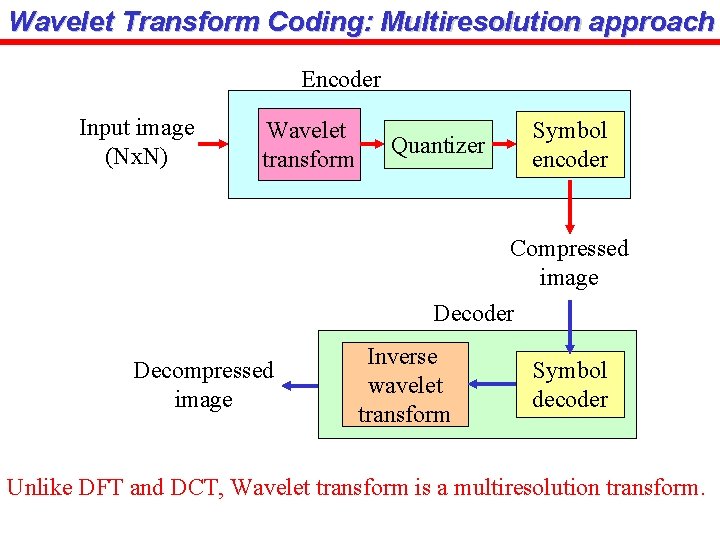

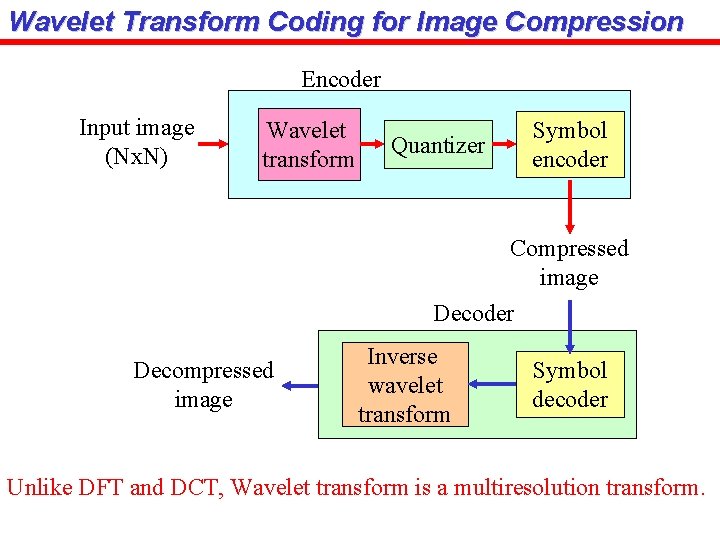

Wavelet Transform Coding: Multiresolution approach

- Encoder: input image (N x N) → wavelet transform → quantizer → symbol encoder → compressed image
- Decoder: compressed image → symbol decoder → inverse wavelet transform → decompressed image

Unlike the DFT and DCT, the wavelet transform is a multiresolution transform.

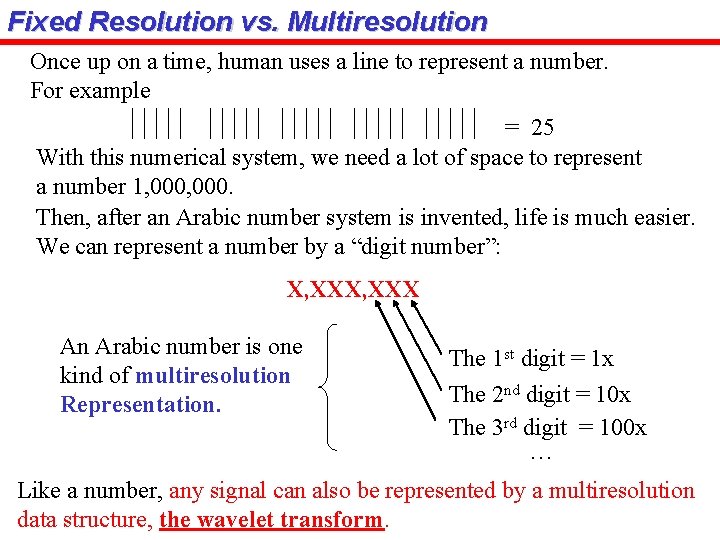

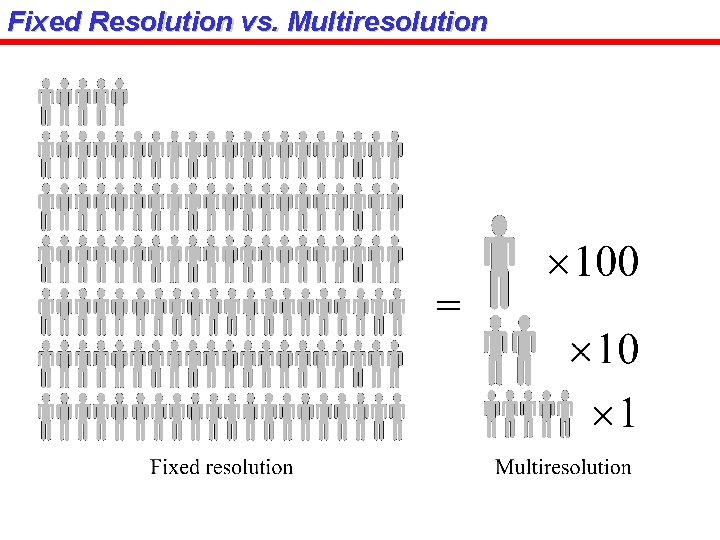

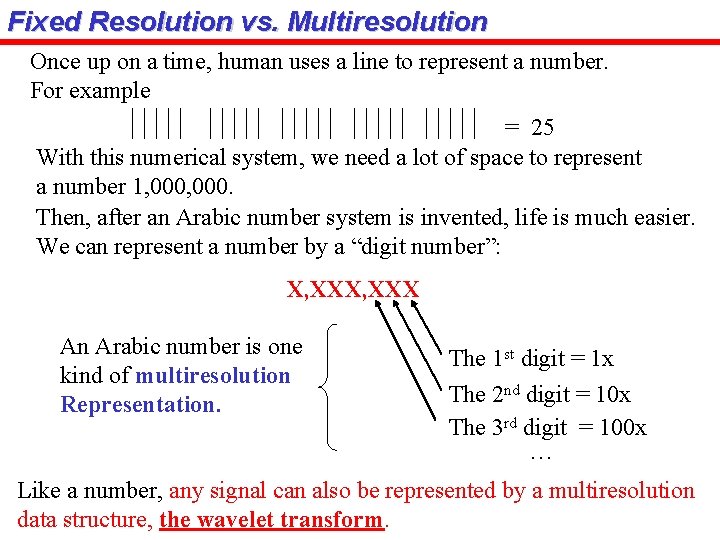

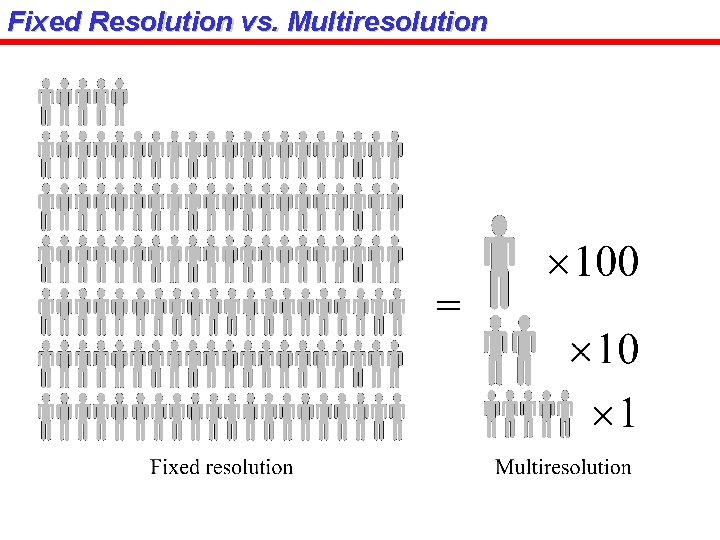

Fixed Resolution vs. Multiresolution

Once upon a time, humans used lines (tally marks) to represent a number, for example 25 marks for the number 25. With this numerical system, a lot of space is needed to represent a number such as 1,000.

After the Arabic number system was invented, life became much easier. A number can be represented by digits: X,XXX

An Arabic number is one kind of multiresolution representation:
- The 1st digit = 1x
- The 2nd digit = 10x
- The 3rd digit = 100x
- …

Like a number, any signal can also be represented by a multiresolution data structure, the wavelet transform.

Fixed Resolution vs. Multiresolution

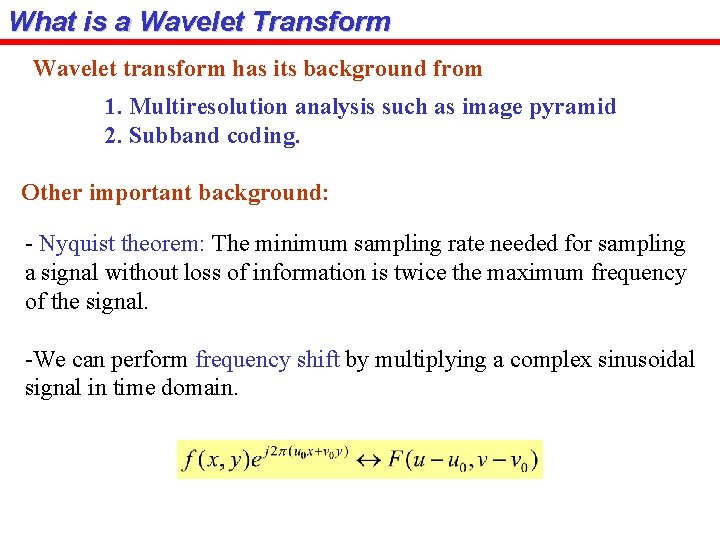

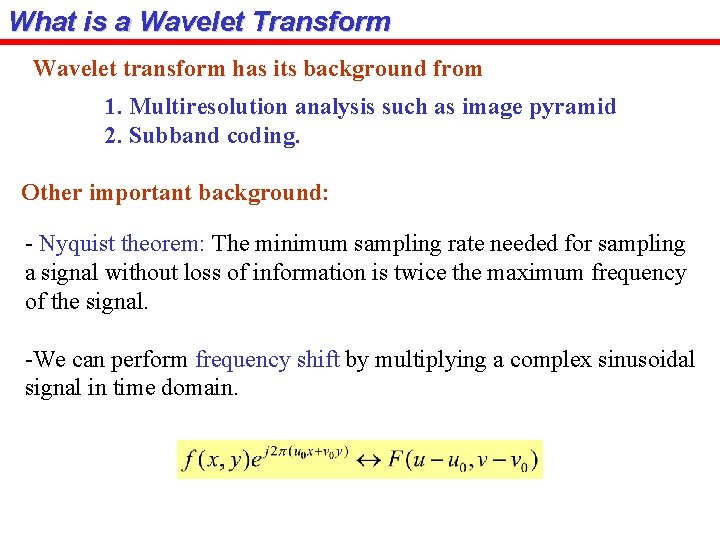

What is a Wavelet Transform

The wavelet transform has its background in
1. Multiresolution analysis, such as the image pyramid
2. Subband coding

Other important background:
- Nyquist theorem: the minimum sampling rate needed to sample a signal without loss of information is twice the maximum frequency of the signal
- A frequency shift can be performed by multiplying the signal by a complex sinusoid in the time domain

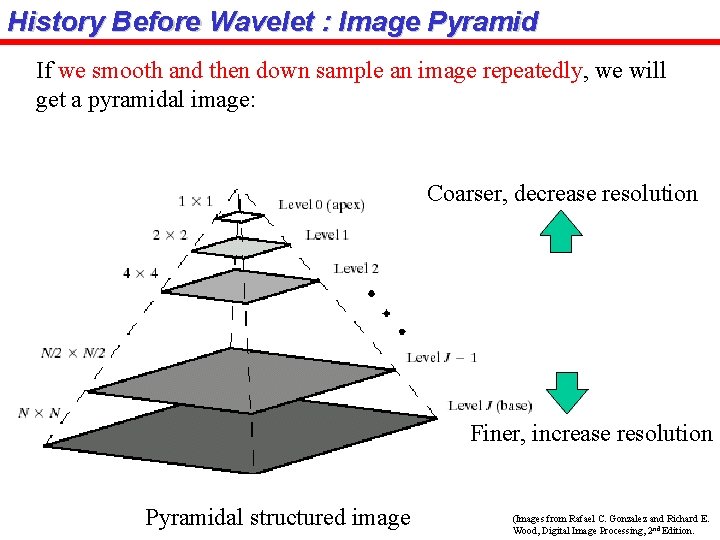

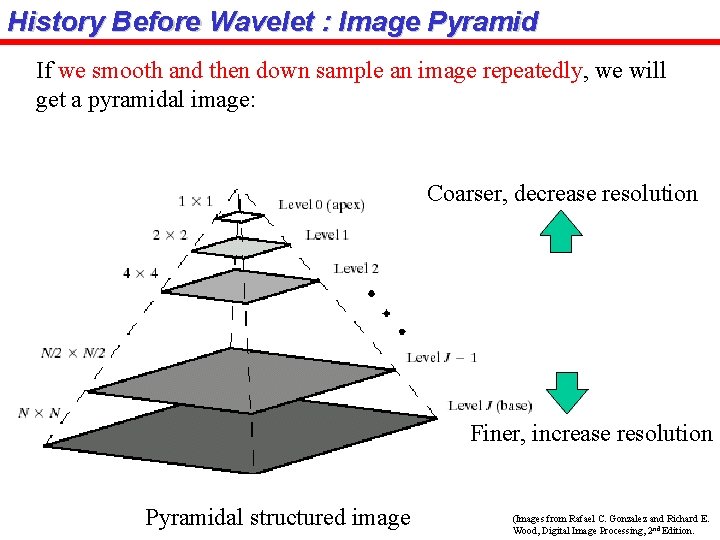

History Before Wavelet: Image Pyramid

If we smooth and then down sample an image repeatedly, we get a pyramidal structured image: moving up the pyramid gives coarser images with decreasing resolution, and moving down gives finer images with increasing resolution.

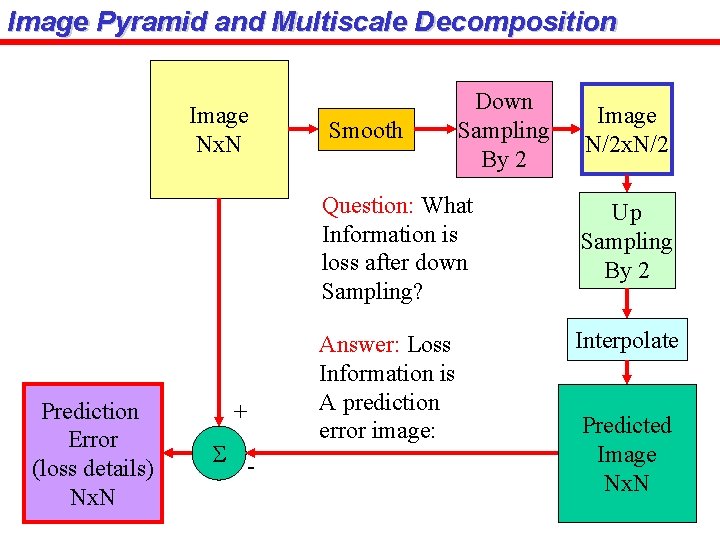

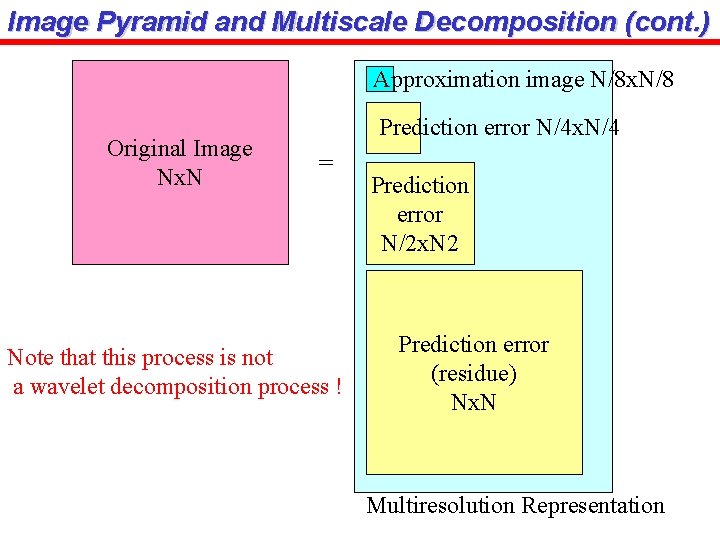

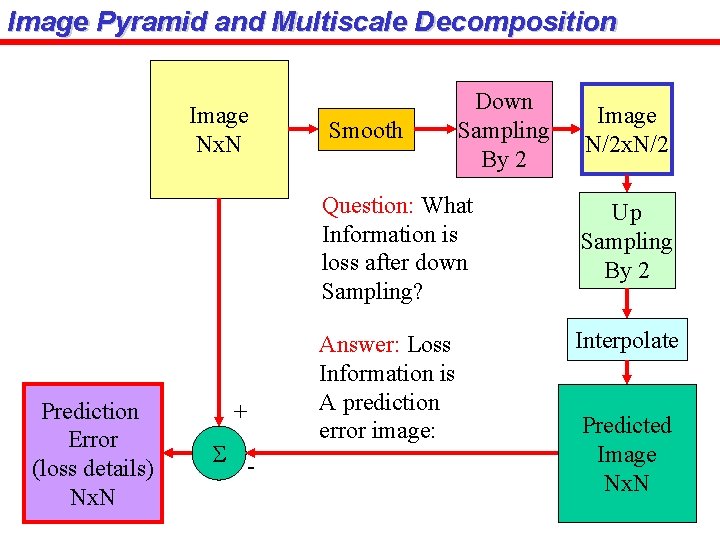

Image Pyramid and Multiscale Decomposition

Question: what information is lost after down sampling?

Answer: the lost information is a prediction error image, obtained as follows:
1. Smooth the image (N x N) and down sample by 2 to get an image of N/2 x N/2
2. Up sample by 2 and interpolate to get the predicted image (N x N)
3. Subtract the predicted image from the original image to get the prediction error (lost details), N x N

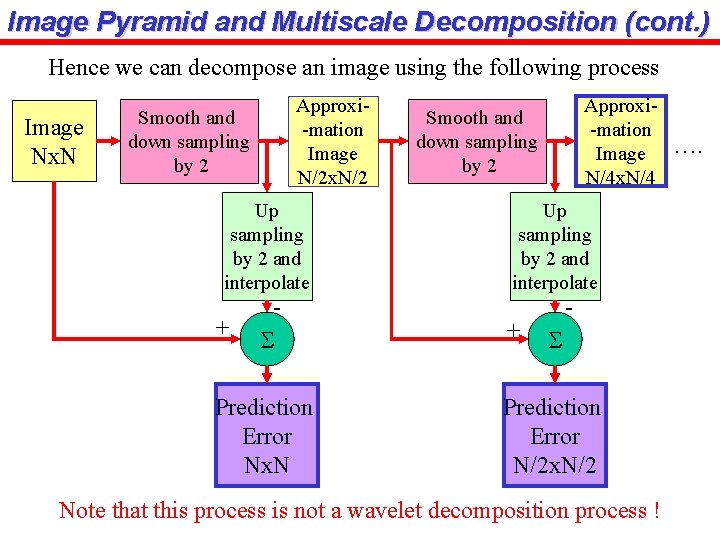

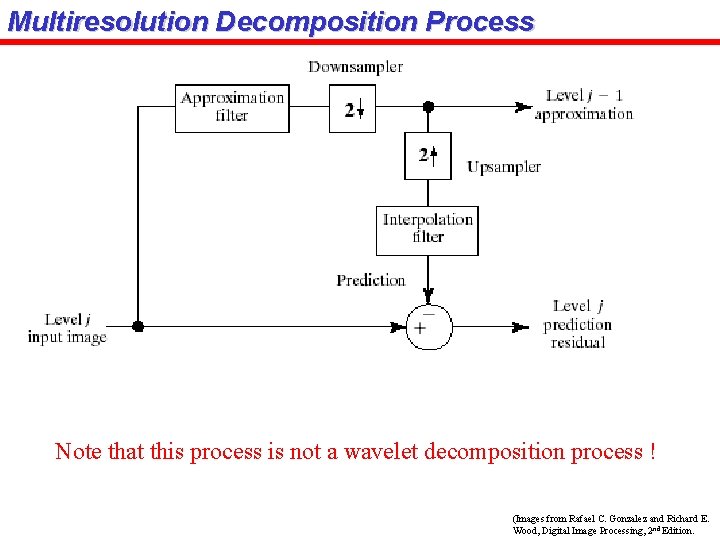

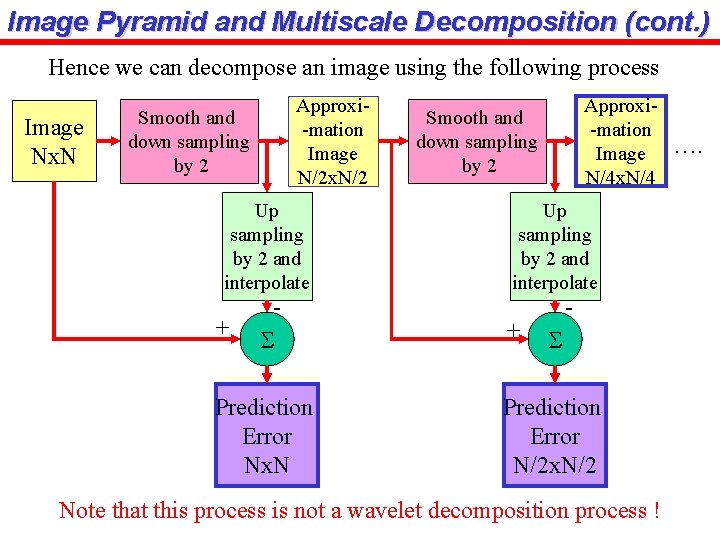

Image Pyramid and Multiscale Decomposition (cont.)

Hence we can decompose an image using the following process:
1. Image (N x N): smooth and down sample by 2 to get the approximation image (N/2 x N/2); up sample by 2, interpolate, and subtract from the image to get the prediction error (N x N)
2. Approximation image (N/2 x N/2): smooth and down sample by 2 to get the approximation image (N/4 x N/4); up sample by 2, interpolate, and subtract to get the prediction error (N/2 x N/2)
3. Continue in the same way for further levels

Note that this process is not a wavelet decomposition process!
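The decomposition process above can be sketched on a small image stored as a list of rows. Two simplifications are assumed for brevity: "smooth and down sample" is a 2 x 2 block mean, and "up sample and interpolate" is pixel replication. Function names are hypothetical.

```python
def reduce_by_2(image):
    """Smooth and down sample: mean of each 2 x 2 block."""
    return [[(image[y][x] + image[y][x + 1]
              + image[y + 1][x] + image[y + 1][x + 1]) / 4
             for x in range(0, len(image[0]), 2)]
            for y in range(0, len(image), 2)]


def expand_by_2(image):
    """Up sample and interpolate: repeat every pixel in a 2 x 2 block."""
    expanded = []
    for row in image:
        wide = [value for value in row for _ in range(2)]
        expanded.append(wide)
        expanded.append(list(wide))
    return expanded


def build_pyramid(image, levels):
    """Return the coarsest approximation and the prediction errors."""
    errors = []
    approximation = image
    for _ in range(levels):
        smaller = reduce_by_2(approximation)
        predicted = expand_by_2(smaller)
        errors.append([[a - p for a, p in zip(row_a, row_p)]
                       for row_a, row_p in zip(approximation, predicted)])
        approximation = smaller
    return approximation, errors


def reconstruct(approximation, errors):
    """Invert the pyramid: predict, then add back each prediction error."""
    image = approximation
    for error in reversed(errors):
        predicted = expand_by_2(image)
        image = [[p + e for p, e in zip(row_p, row_e)]
                 for row_p, row_e in zip(predicted, error)]
    return image


image = [[1, 3, 5, 7], [1, 5, 5, 9], [2, 4, 6, 8], [2, 4, 8, 8]]
approximation, errors = build_pyramid(image, 2)
print(approximation, [len(e) for e in errors])
```

The pyramid stores 16 + 4 + 1 values for a 16-pixel image, so it is redundant; a wavelet decomposition, by contrast, keeps exactly as many coefficients as there are pixels.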

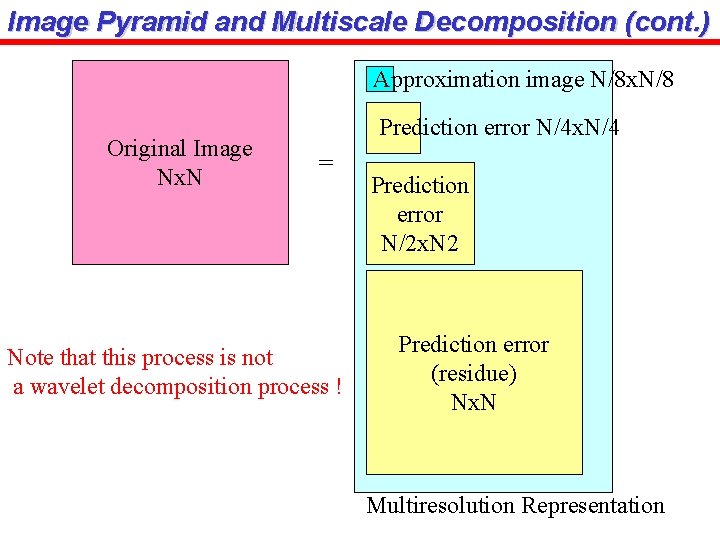

Image Pyramid and Multiscale Decomposition (cont.)

Multiresolution representation: original image (N x N) = approximation image (N/8 x N/8) + prediction error (N/4 x N/4) + prediction error (N/2 x N/2) + prediction error, or residue (N x N)

Note that this process is not a wavelet decomposition process!

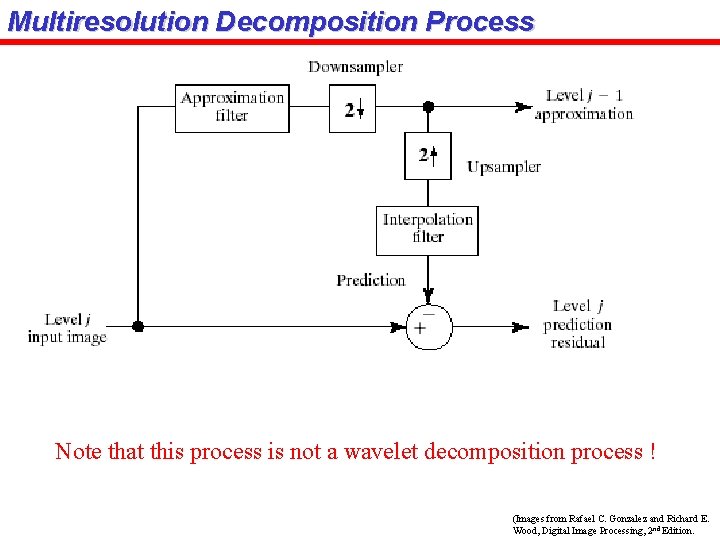

Multiresolution Decomposition Process

Note that this process is not a wavelet decomposition process!

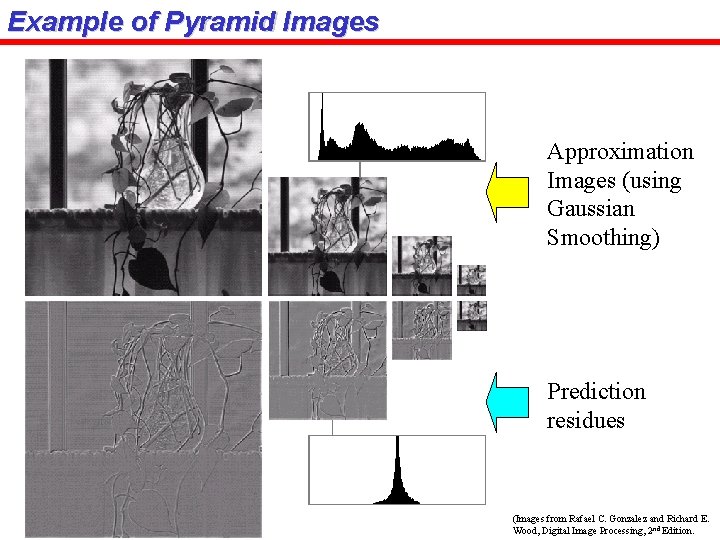

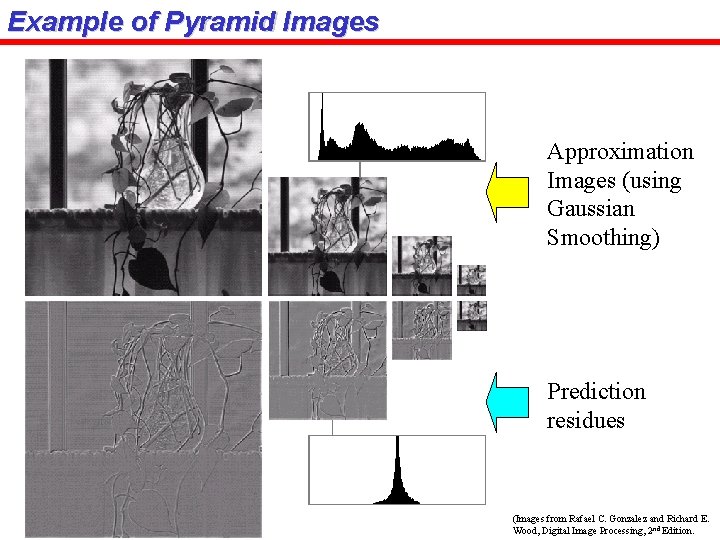

Example of Pyramid Images

Figure panels: approximation images (using Gaussian smoothing); prediction residues.

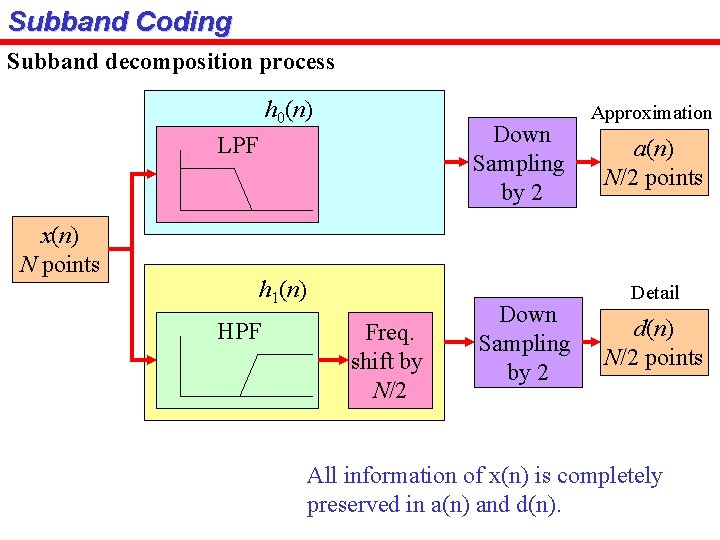

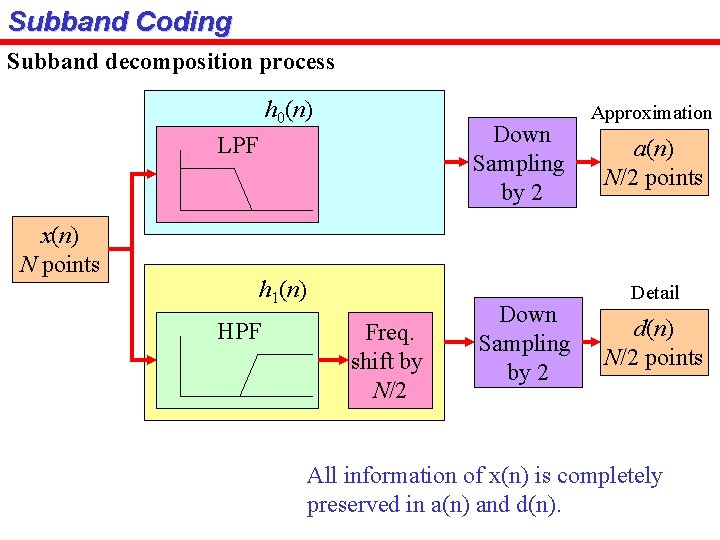

Subband Coding

Subband decomposition process, for an input x(n) of N points:
- Approximation branch: h0(n) (LPF) → down sampling by 2 → approximation a(n), N/2 points
- Detail branch: h1(n) (HPF) → frequency shift by N/2 → down sampling by 2 → detail d(n), N/2 points

All information of x(n) is completely preserved in a(n) and d(n).

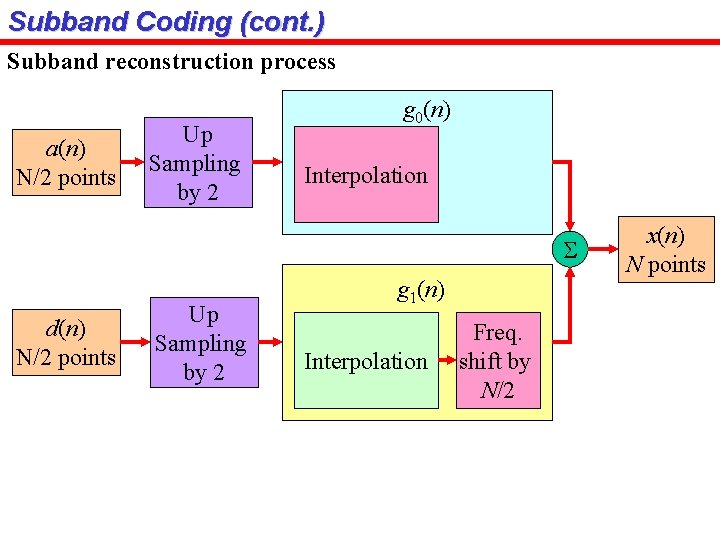

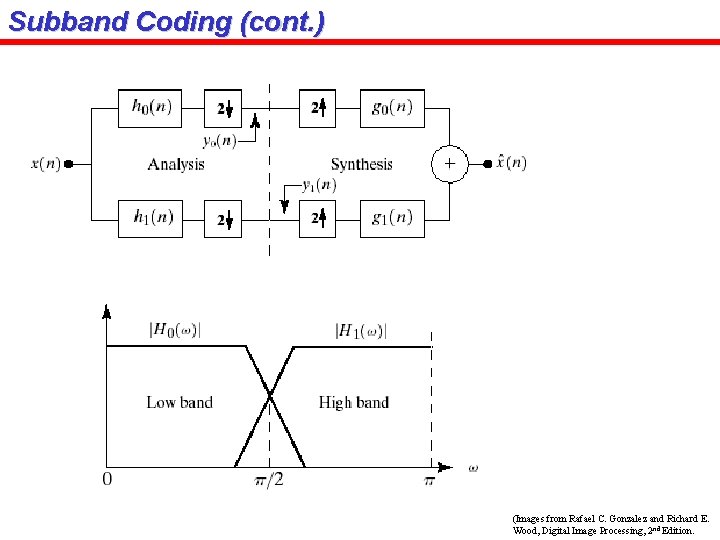

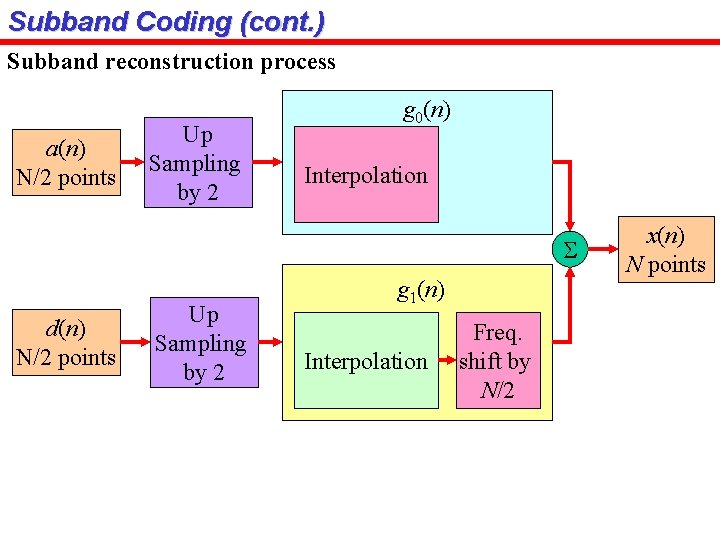

Subband Coding (cont.)

Subband reconstruction process:
- Approximation branch: a(n), N/2 points → up sampling by 2 → g0(n) (interpolation)
- Detail branch: d(n), N/2 points → up sampling by 2 → g1(n) (interpolation) → frequency shift by N/2
- The two branches are summed to give x(n), N points

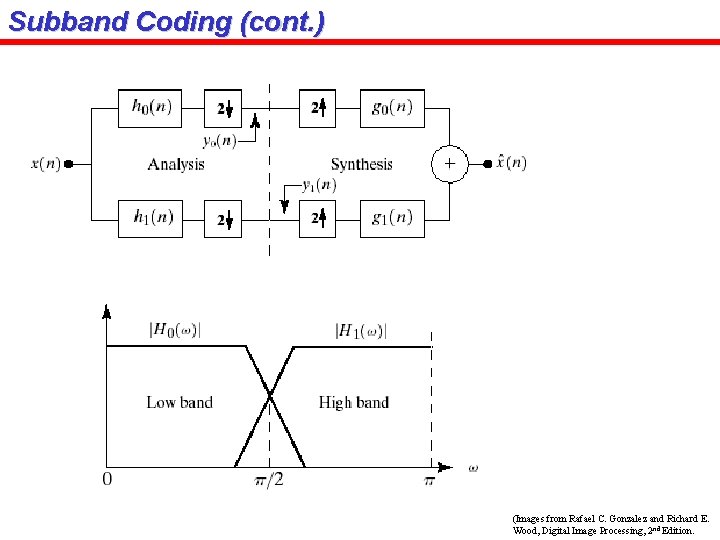

Subband Coding (cont.)

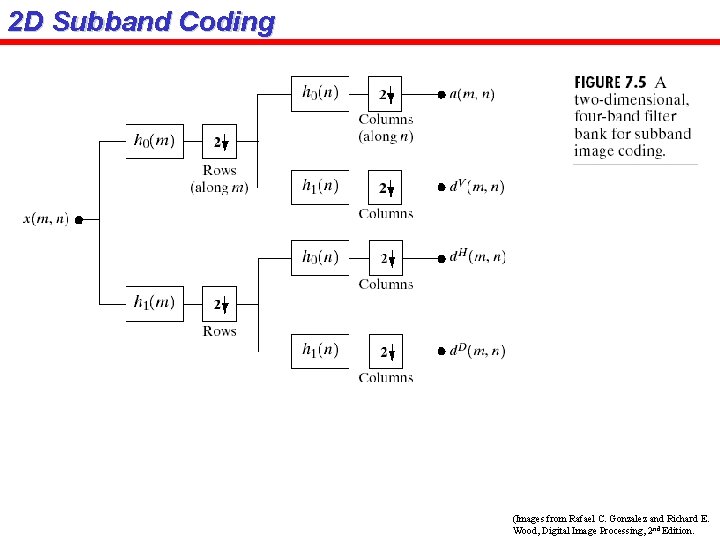

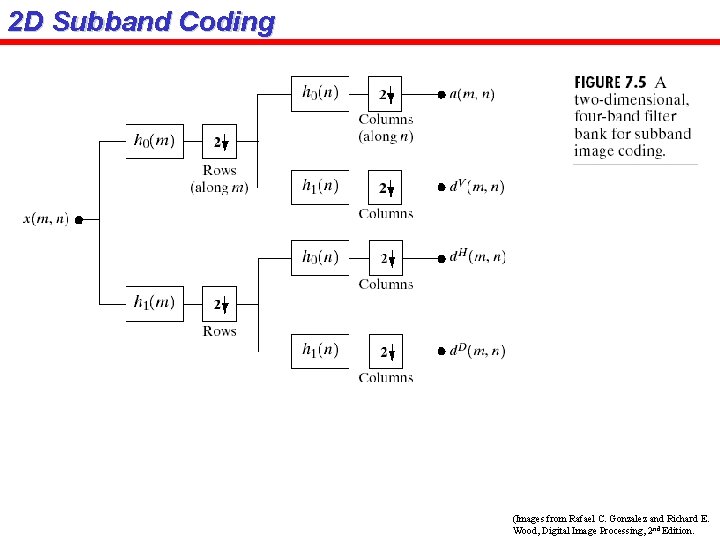

2 D Subband Coding

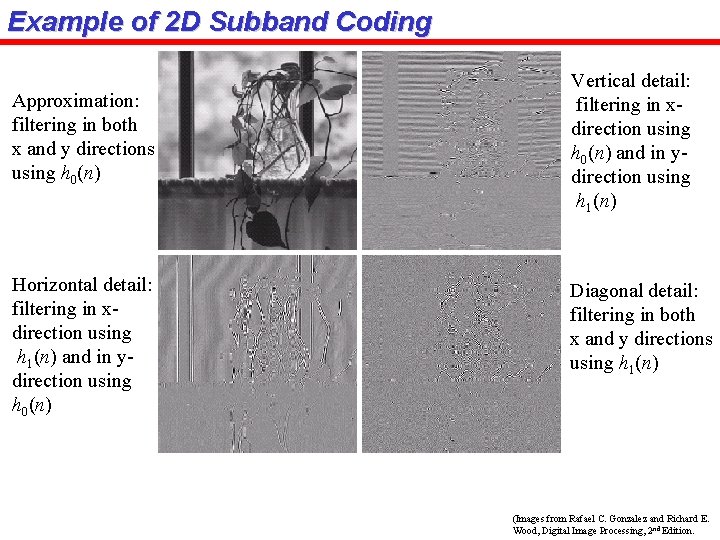

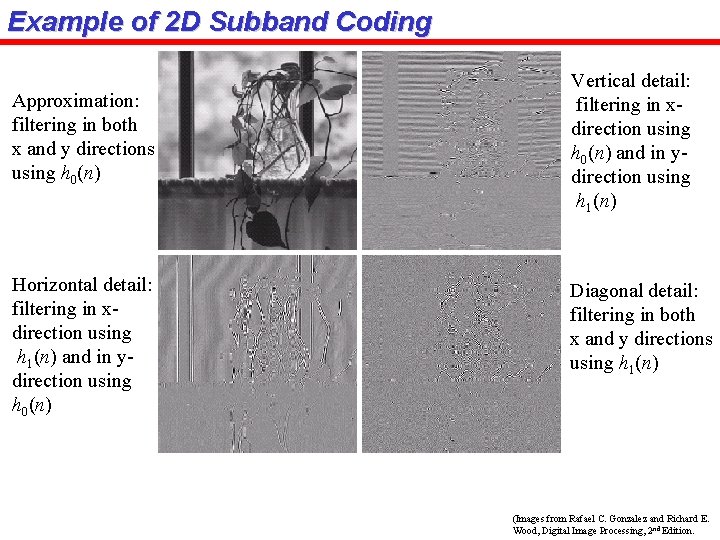

Example of 2 D Subband Coding

- Approximation: filtering in both x and y directions using h0(n)
- Horizontal detail: filtering in the x-direction using h1(n) and in the y-direction using h0(n)
- Vertical detail: filtering in the x-direction using h0(n) and in the y-direction using h1(n)
- Diagonal detail: filtering in both x and y directions using h1(n)

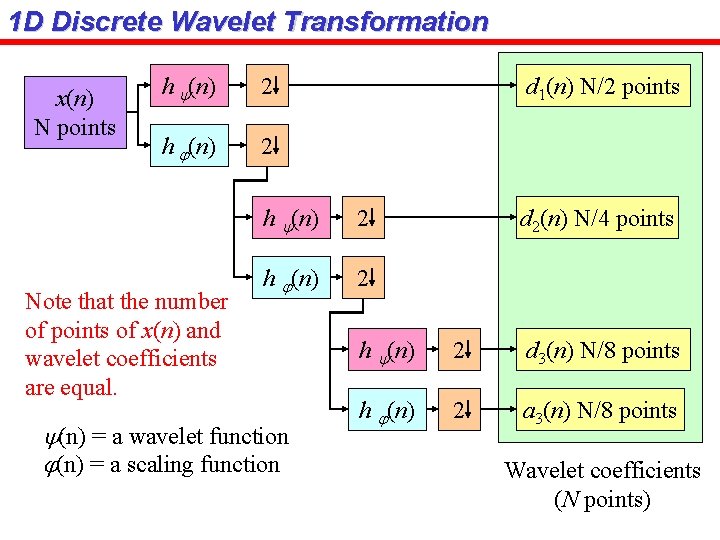

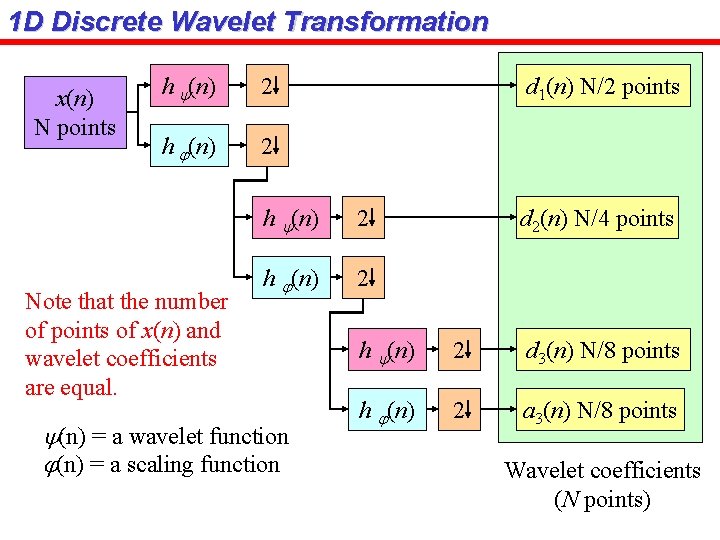

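1 D Discrete Wavelet Transformation

- Level 1: x(n), N points, is filtered by $h_\psi(n)$ and down sampled by 2 to give d1(n), N/2 points, and filtered by $h_\varphi(n)$ and down sampled by 2 to give the level-1 approximation
- Level 2: the level-1 approximation is split in the same way into d2(n), N/4 points, and the level-2 approximation
- Level 3: the level-2 approximation is split into d3(n), N/8 points, and a3(n), N/8 points
- Wavelet coefficients (N points): d1(n), d2(n), d3(n), a3(n)

$\psi(n)$ = a wavelet function, $\varphi(n)$ = a scaling function.

Note that the number of points of x(n) and the number of wavelet coefficients are equal.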
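A three-level 1-D decomposition can be sketched with the Haar wavelet, chosen here only because its filters are the shortest possible (an assumption; the slide does not fix a wavelet). The function names and the sample signal are illustrative.

```python
import math


def haar_step(signal):
    """One level: scaled pairwise sums (approximation) and differences (detail)."""
    scale = math.sqrt(2.0)
    approximation = [(signal[i] + signal[i + 1]) / scale
                     for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / scale
              for i in range(0, len(signal), 2)]
    return approximation, detail


def haar_dwt(signal, levels):
    """Return (a_levels, [d1, d2, ...]) for a signal of length k * 2**levels."""
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    details = []
    approximation = list(signal)
    for _ in range(levels):
        approximation, detail = haar_step(approximation)
        details.append(detail)
    return approximation, details


def haar_idwt(approximation, details):
    """Rebuild the signal from the coarsest approximation and all details."""
    scale = math.sqrt(2.0)
    signal = list(approximation)
    for detail in reversed(details):
        rebuilt = []
        for a, d in zip(signal, detail):
            rebuilt.append((a + d) / scale)
            rebuilt.append((a - d) / scale)
        signal = rebuilt
    return signal


x = [4, 6, 10, 12, 8, 6, 5, 5]
a3, (d1, d2, d3) = haar_dwt(x, 3)
print(len(d1), len(d2), len(d3), len(a3))
```

The coefficient counts N/2 + N/4 + N/8 + N/8 add up to N, matching the note that the number of wavelet coefficients equals the number of input points.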

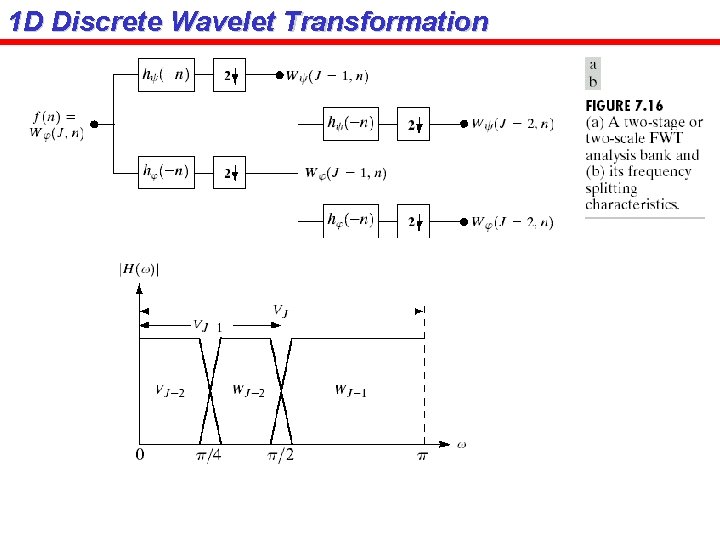

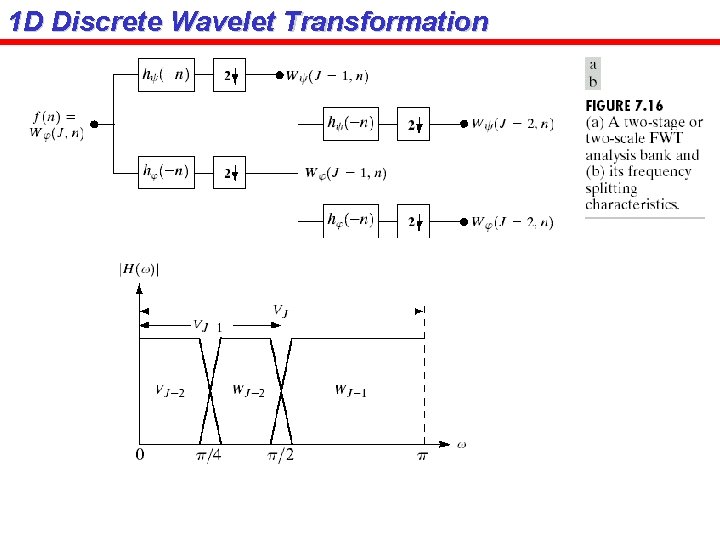

1 D Discrete Wavelet Transformation

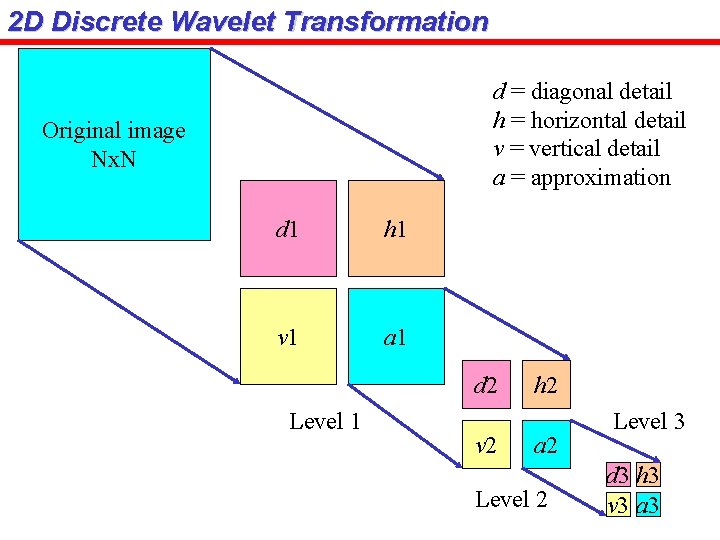

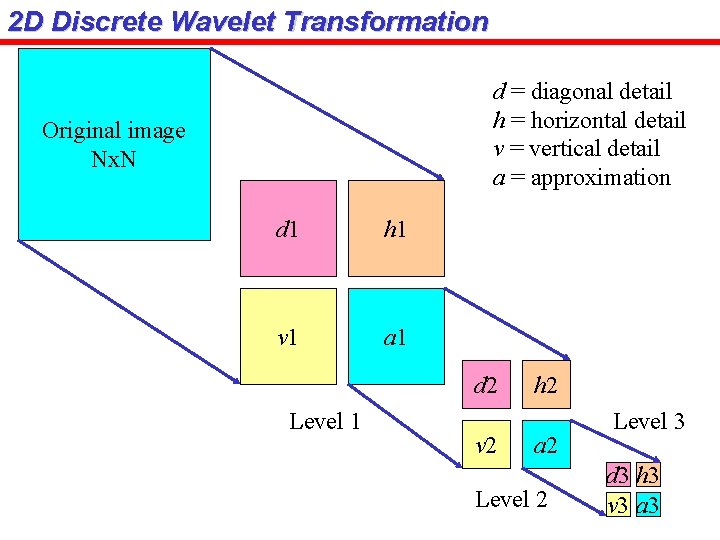

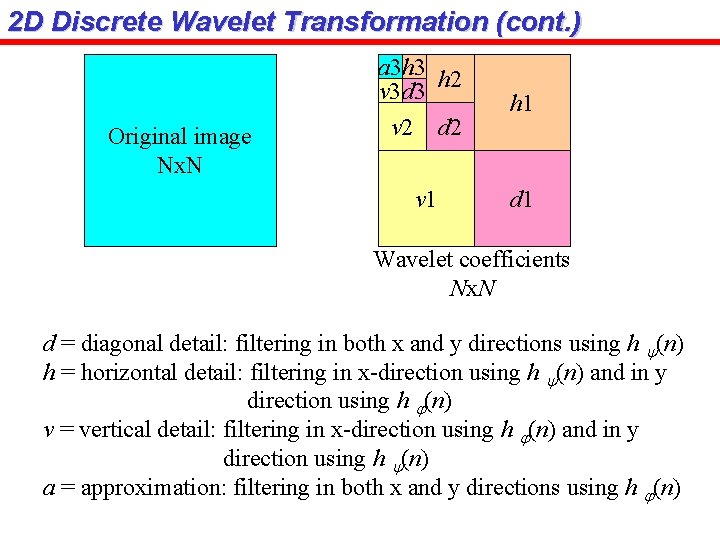

2 D Discrete Wavelet Transformation

d = diagonal detail, h = horizontal detail, v = vertical detail, a = approximation

Original image (N x N):
- Level 1: d1, h1, v1, a1
- Level 2 (decomposition of a1): d2, h2, v2, a2
- Level 3 (decomposition of a2): d3, h3, v3, a3

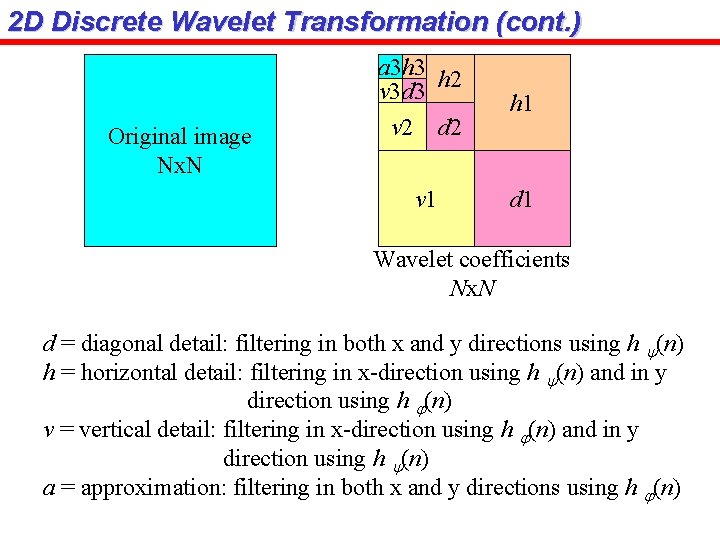

2 D Discrete Wavelet Transformation (cont.)

The original image (N x N) is represented by N x N wavelet coefficients: a3, h3, v3, d3, h2, v2, d2, h1, v1, d1.

- d = diagonal detail: filtering in both x and y directions using $h_\psi(n)$
- h = horizontal detail: filtering in the x-direction using $h_\psi(n)$ and in the y-direction using $h_\varphi(n)$
- v = vertical detail: filtering in the x-direction using $h_\varphi(n)$ and in the y-direction using $h_\psi(n)$
- a = approximation: filtering in both x and y directions using $h_\varphi(n)$

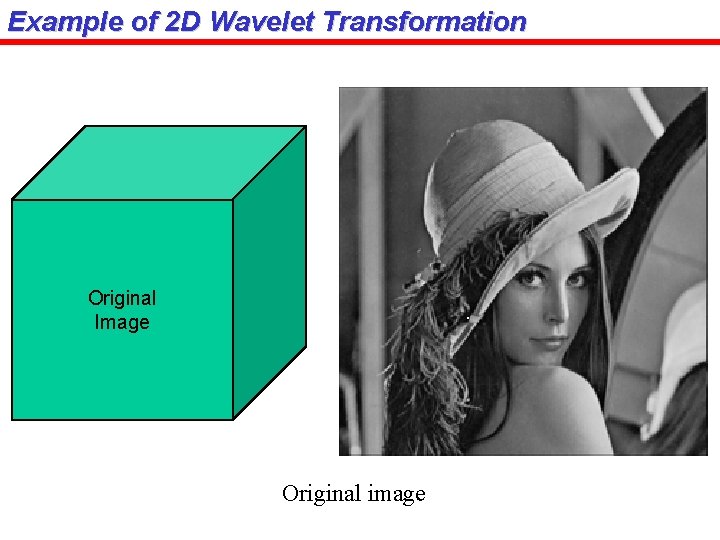

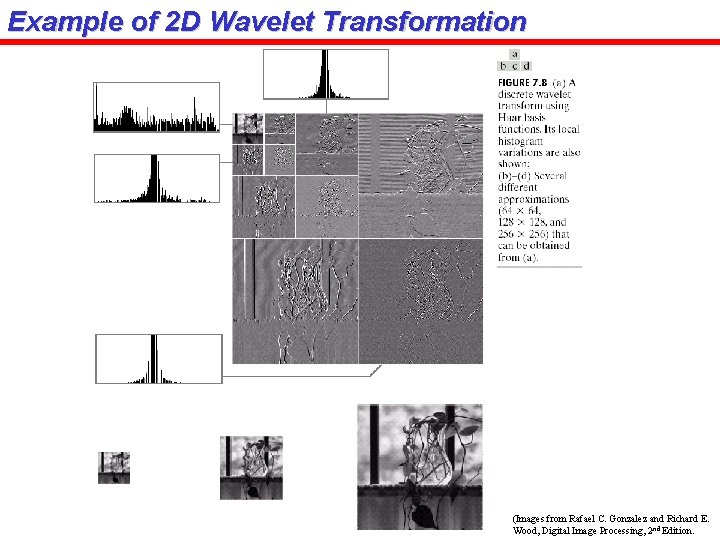

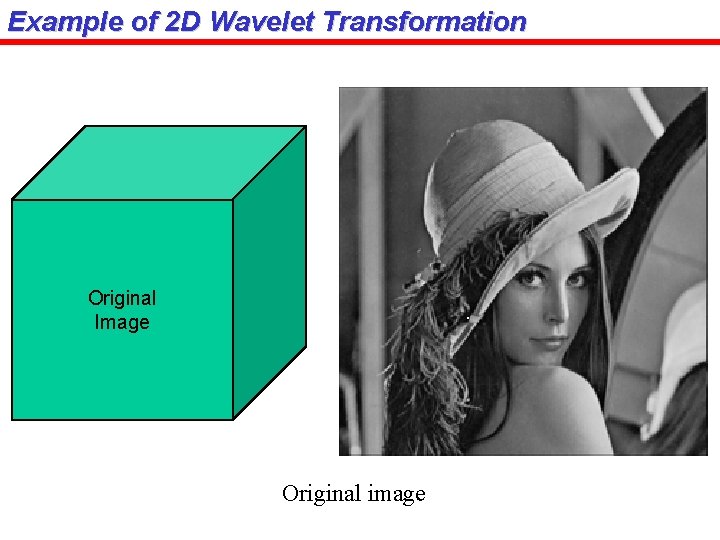

Example of 2 D Wavelet Transformation

Original image

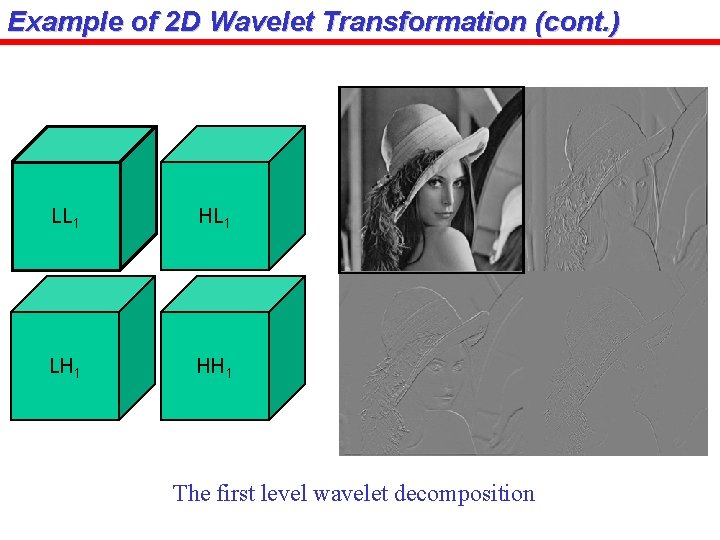

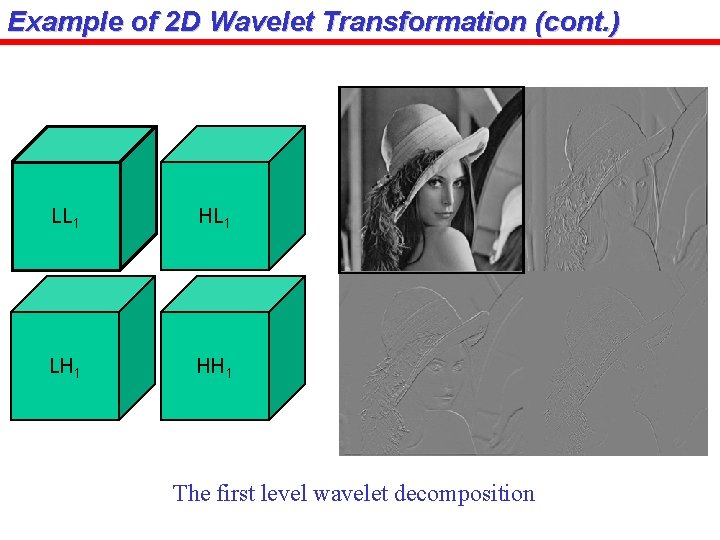

Example of 2 D Wavelet Transformation (cont.)

The first level wavelet decomposition: subbands LL1, HL1, LH1, HH1

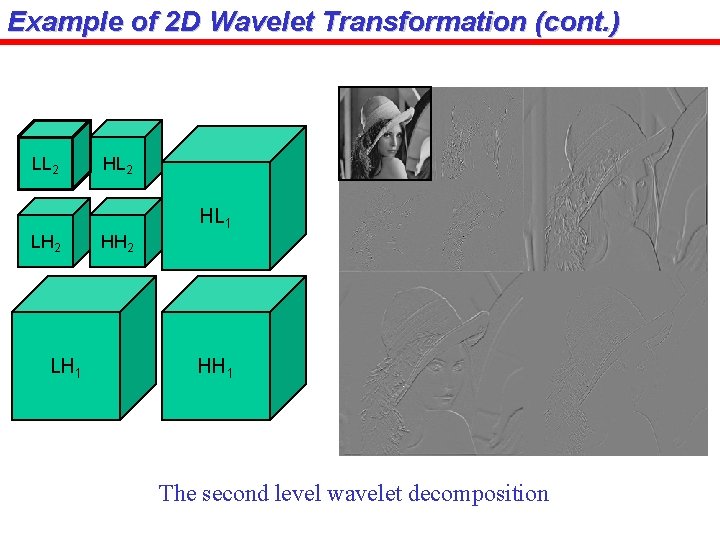

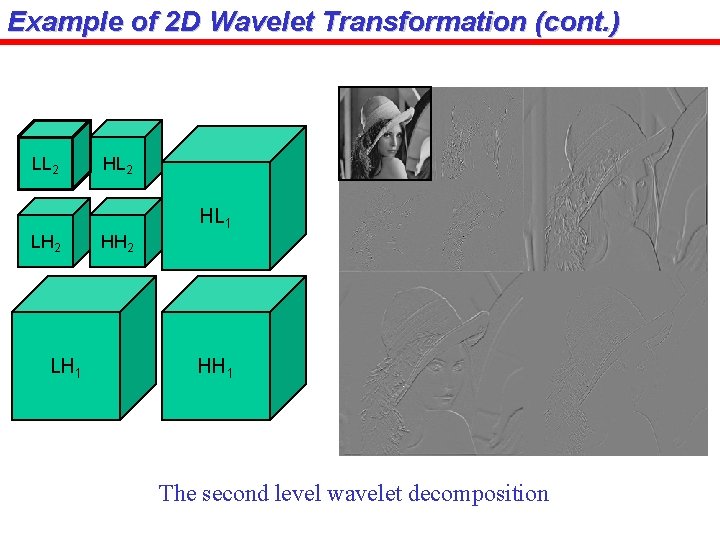

Example of 2 D Wavelet Transformation (cont.)

The second level wavelet decomposition: subbands LL2, HL2, LH2, HH2, HL1, LH1, HH1

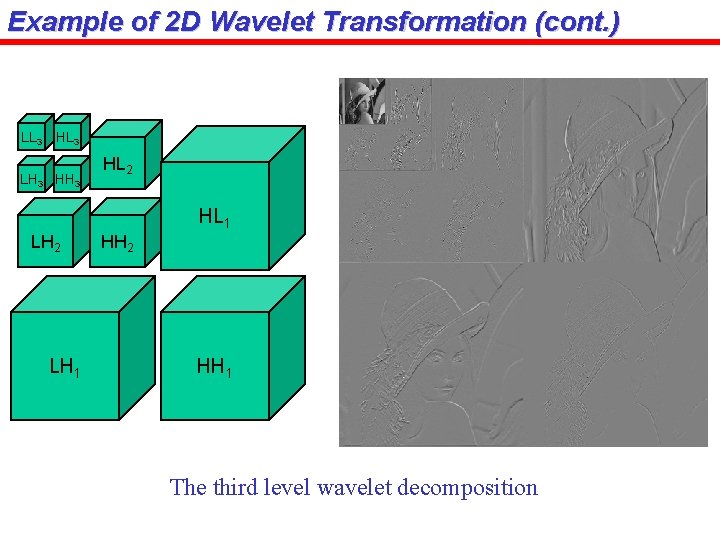

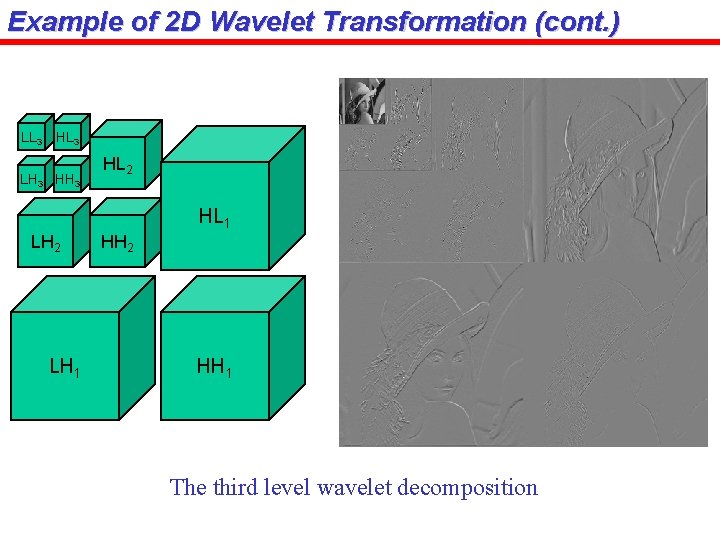

Example of 2 D Wavelet Transformation (cont.)

The third level wavelet decomposition: subbands LL3, HL3, LH3, HH3, HL2, LH2, HH2, HL1, LH1, HH1

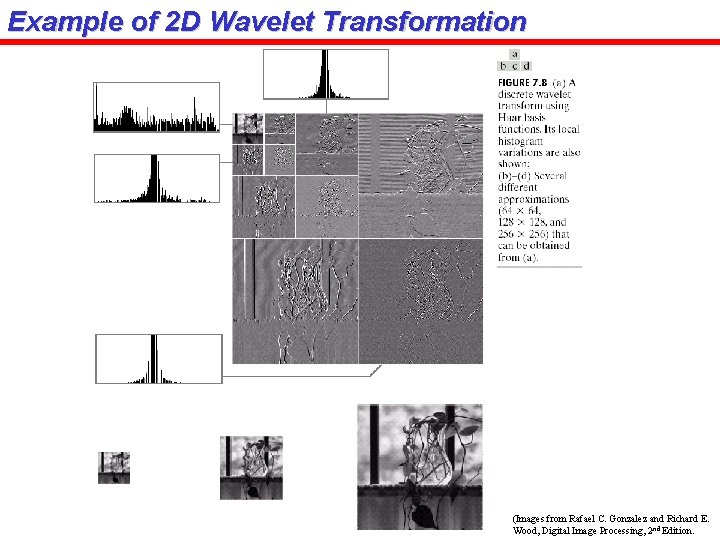

Example of 2 D Wavelet Transformation

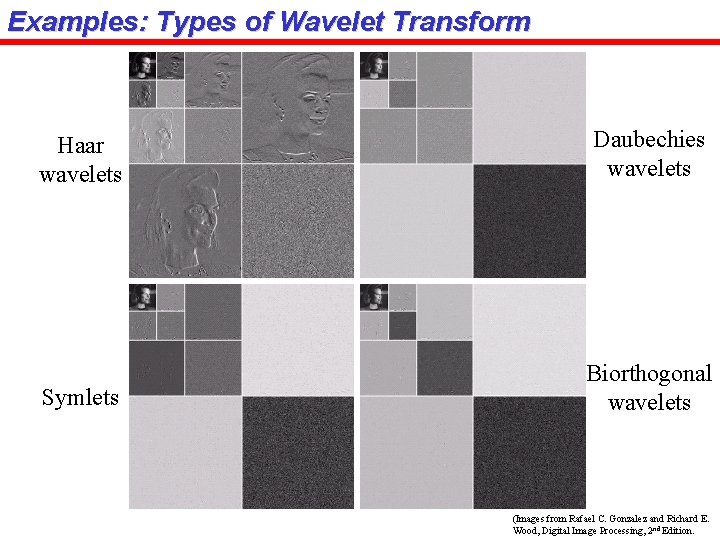

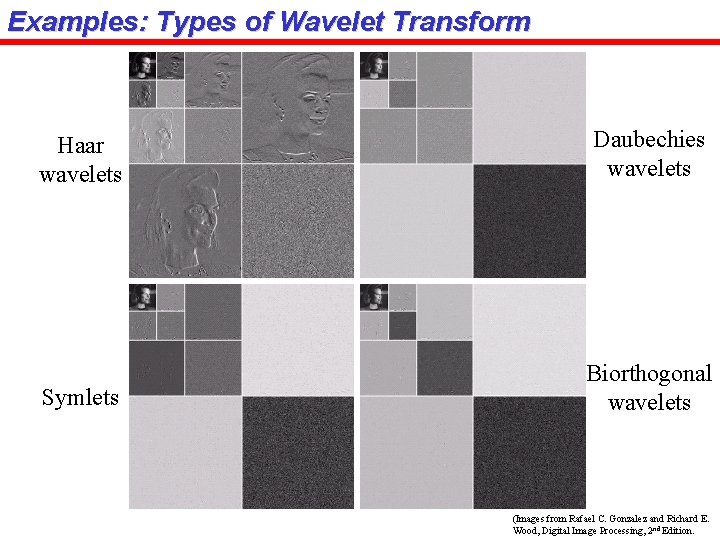

Examples: Types of Wavelet Transform

- Haar wavelets
- Daubechies wavelets
- Symlets
- Biorthogonal wavelets

Wavelet Transform Coding for Image Compression

- Encoder: input image (N x N) → wavelet transform → quantizer → symbol encoder → compressed image
- Decoder: compressed image → symbol decoder → inverse wavelet transform → decompressed image

Unlike the DFT and DCT, the wavelet transform is a multiresolution transform.

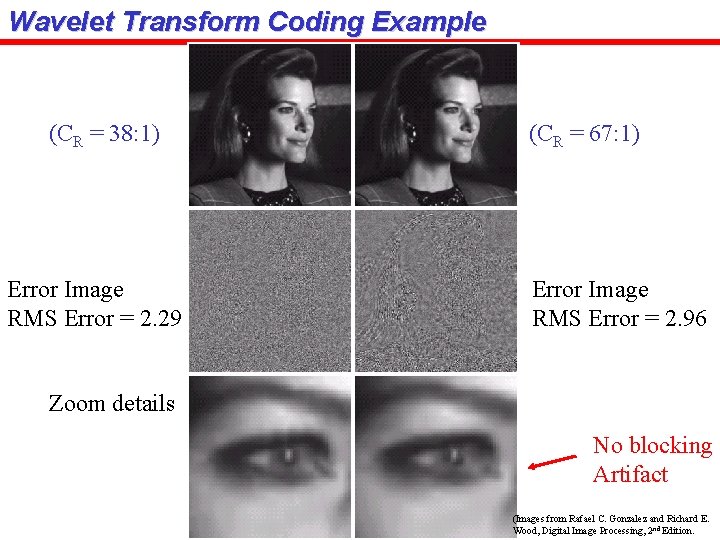

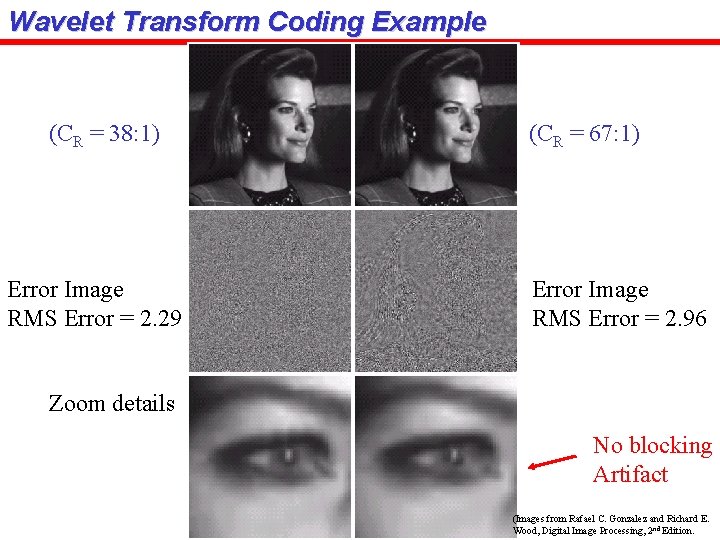

Wavelet Transform Coding Example

- CR = 38:1: error image RMS error = 2.29
- CR = 67:1: error image RMS error = 2.96
- Zoomed details show no blocking artifacts

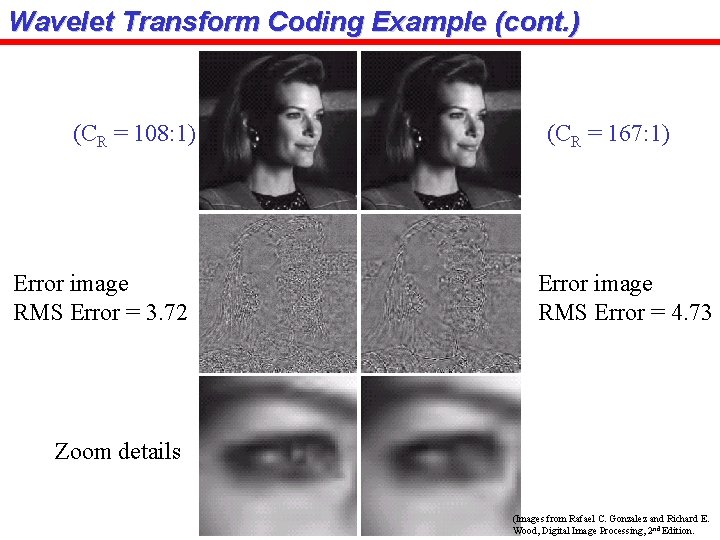

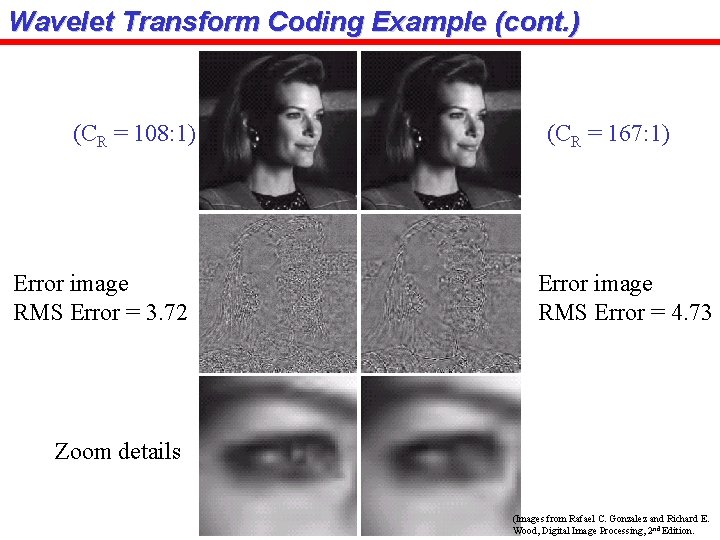

Wavelet Transform Coding Example (cont.)

- CR = 108:1: error image RMS error = 3.72
- CR = 167:1: error image RMS error = 4.73

Figure panels: reconstructed images, error images, and zoomed details.

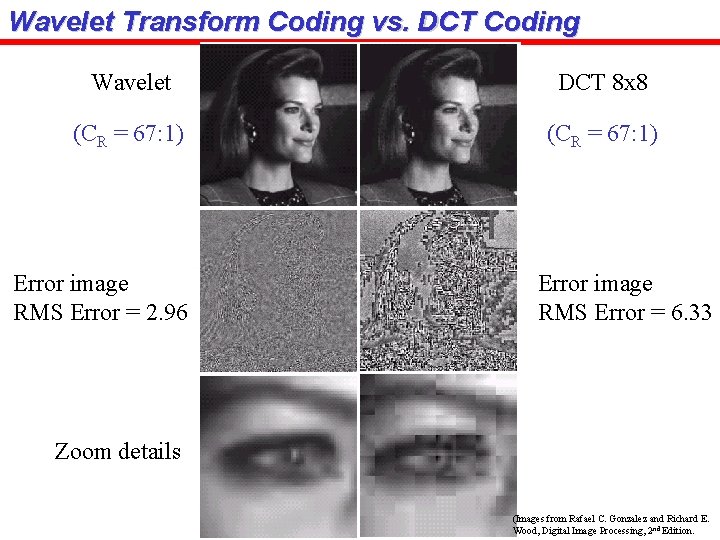

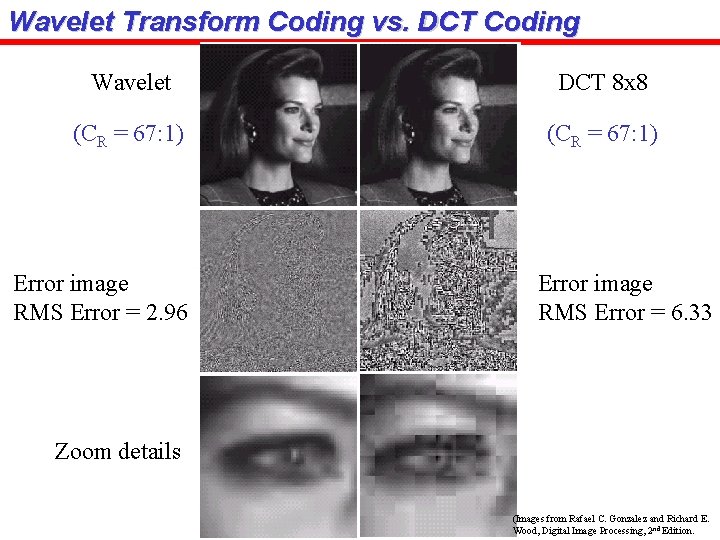

Wavelet Transform Coding vs. DCT Coding

At CR = 67:1:
- Wavelet: error image RMS error = 2.96
- DCT 8 x 8: error image RMS error = 6.33

Figure panels: error images and zoomed details for both methods.

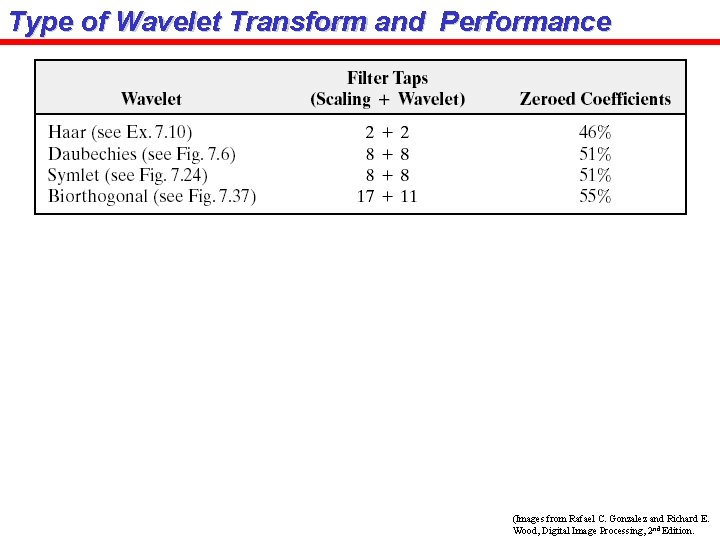

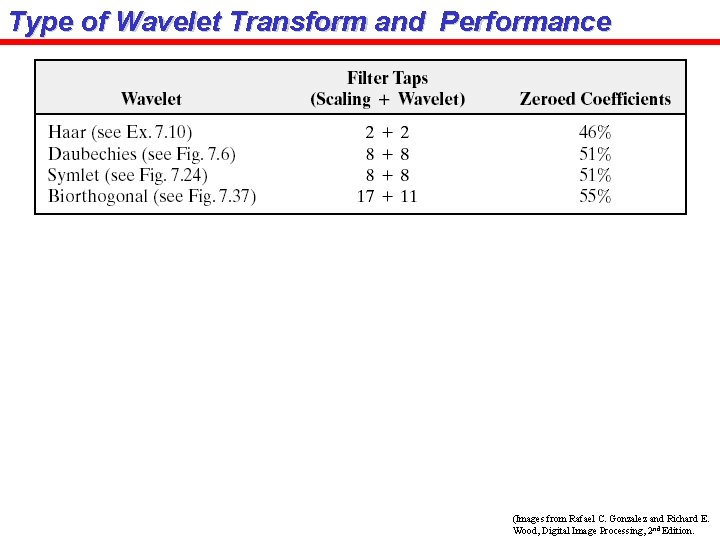

Type of Wavelet Transform and Performance

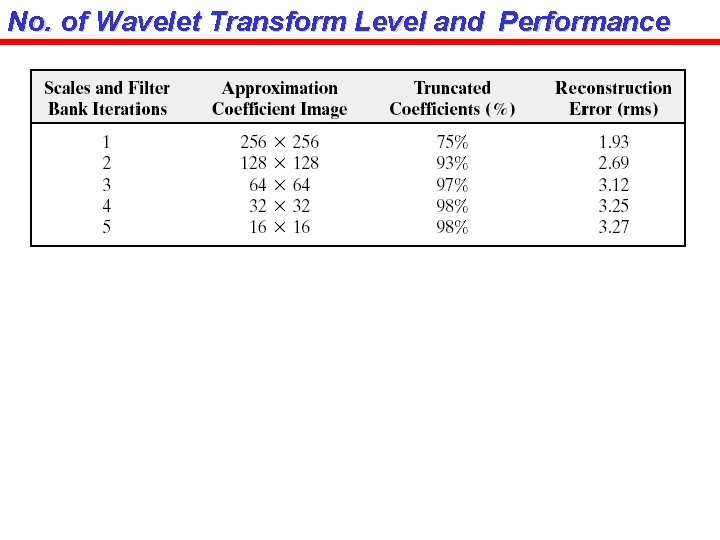

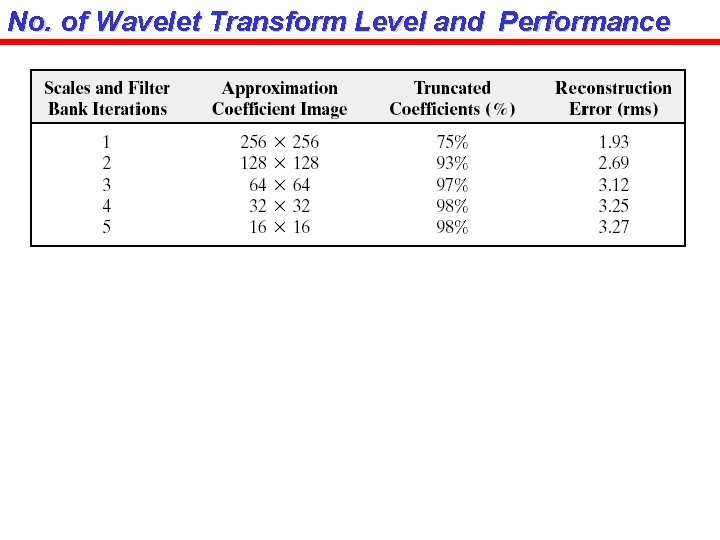

Number of Wavelet Transform Levels and Performance

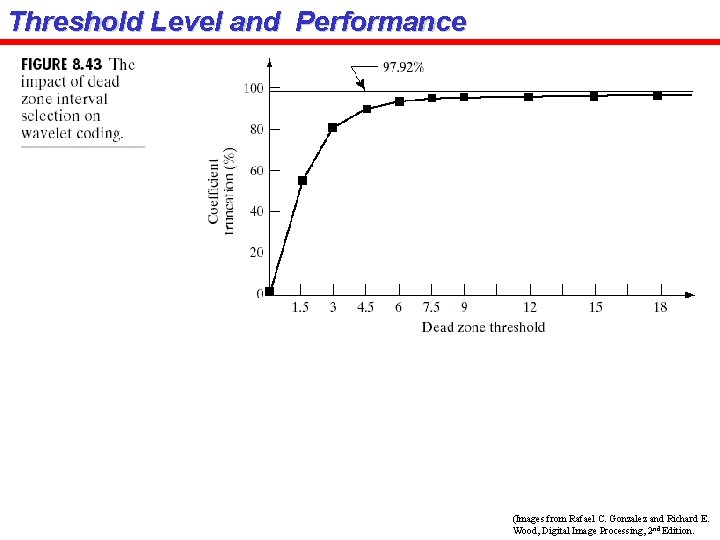

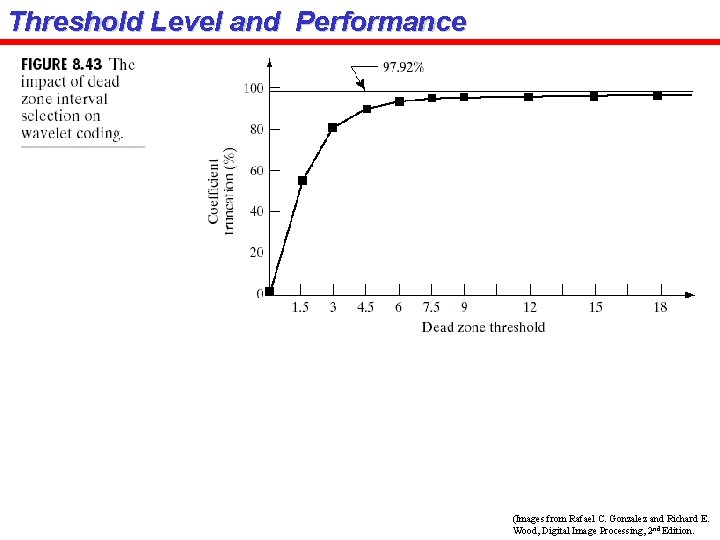

Threshold Level and Performance