Digital Image Processing Lecture 20 Image Compression May

- Slides: 19

Digital Image Processing, Lecture 20: Image Compression. May 16, 2005. Prof. Charlene Tsai

Before Lecture …

- Please return your mid-term exam.

Starting with Information Theory

- Data compression: the process of reducing the amount of data required to represent a given quantity of information.
- Data ≠ information: data convey the information, and various amounts of data can be used to represent the same amount of information. E.g. storytelling (Gonzalez pg 411).
- Data redundancy: our focus will be coding redundancy.

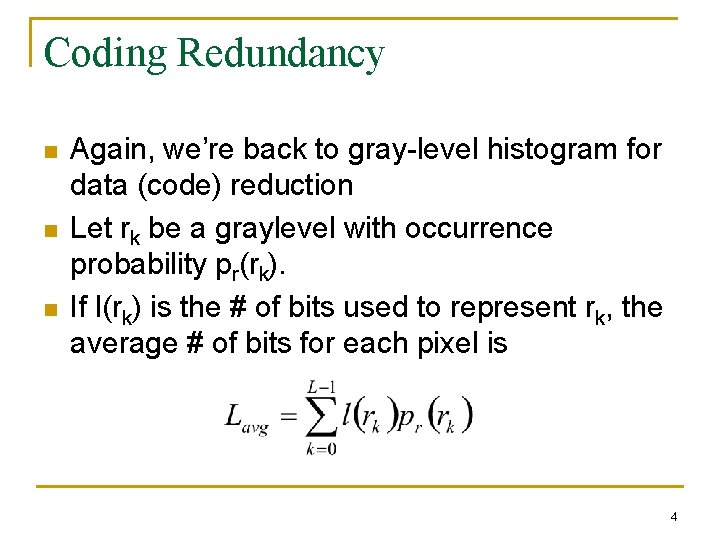

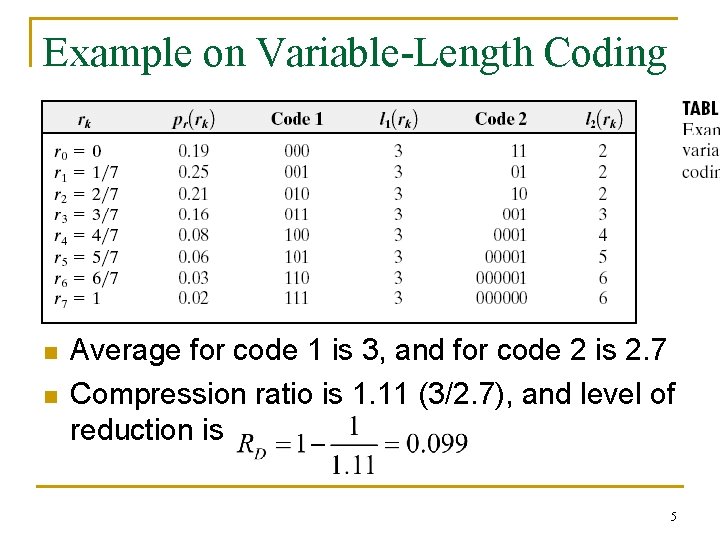

Coding Redundancy

- Again, we're back to the gray-level histogram for data (code) reduction.
- Let $r_k$ be a gray level with occurrence probability $p_r(r_k)$.
- If $l(r_k)$ is the number of bits used to represent $r_k$, the average number of bits per pixel is $L_{avg} = \sum_k l(r_k)\, p_r(r_k)$.

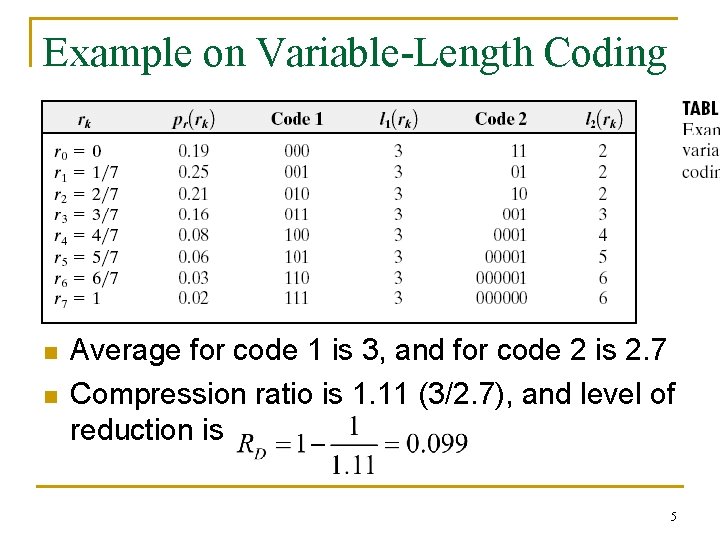

Example on Variable-Length Coding

- The average length is 3 bits for code 1 and 2.7 bits for code 2.
- The compression ratio is $C_R = 3/2.7 \approx 1.11$, and the level of reduction is $R_D = 1 - 1/C_R \approx 0.1$ (about 10%).

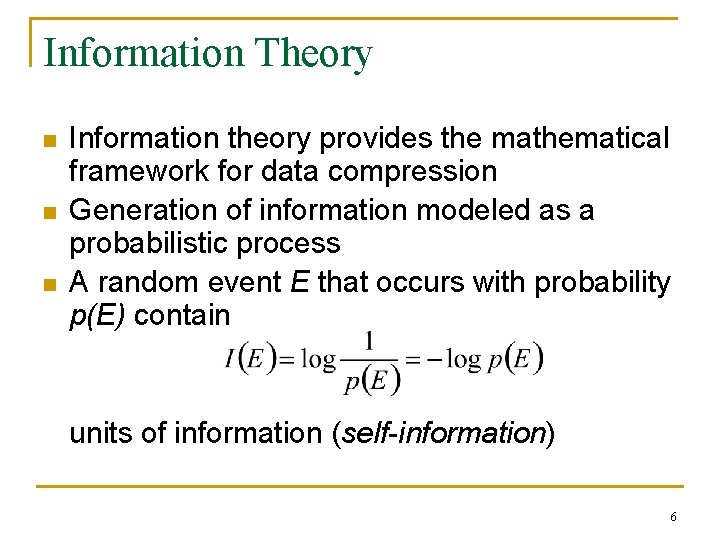

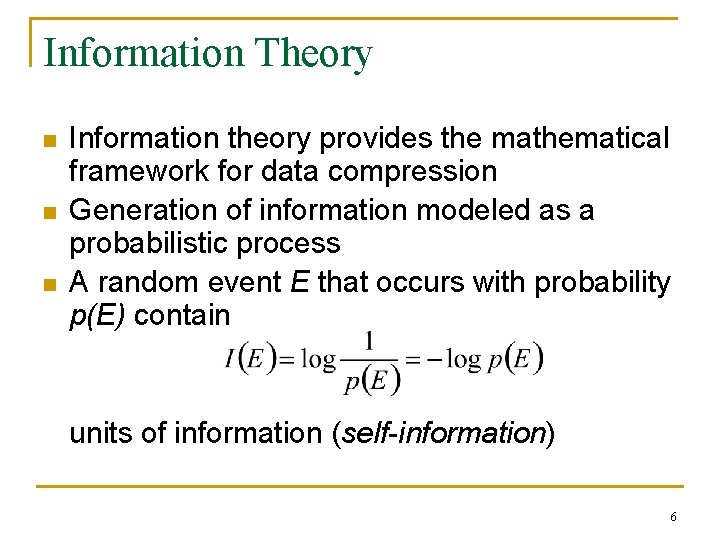

Information Theory

- Information theory provides the mathematical framework for data compression.
- The generation of information is modeled as a probabilistic process.
- A random event E that occurs with probability p(E) contains $I(E) = \log \frac{1}{p(E)} = -\log p(E)$ units of information (self-information).

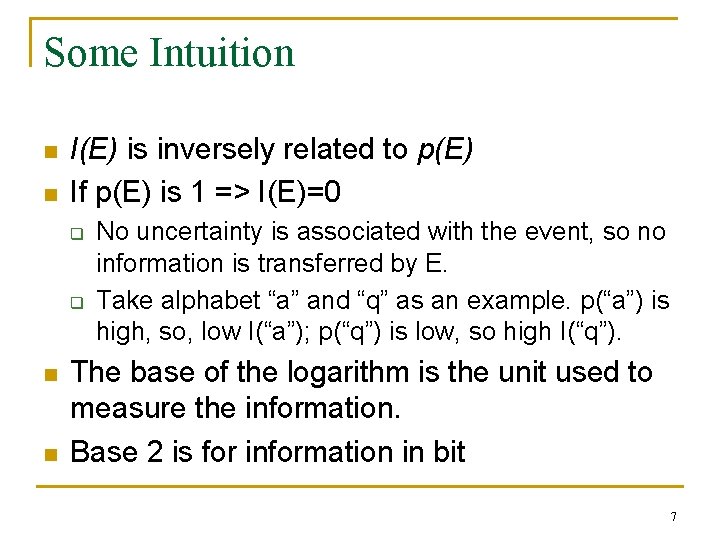

Some Intuition

- I(E) is inversely related to p(E).
- If p(E) is 1, then I(E) = 0.
  - No uncertainty is associated with the event, so no information is transferred by E.
  - Take the letters “a” and “q” as an example: p(“a”) is high, so I(“a”) is low; p(“q”) is low, so I(“q”) is high.
- The base of the logarithm determines the unit used to measure the information. Base 2 gives information in bits.

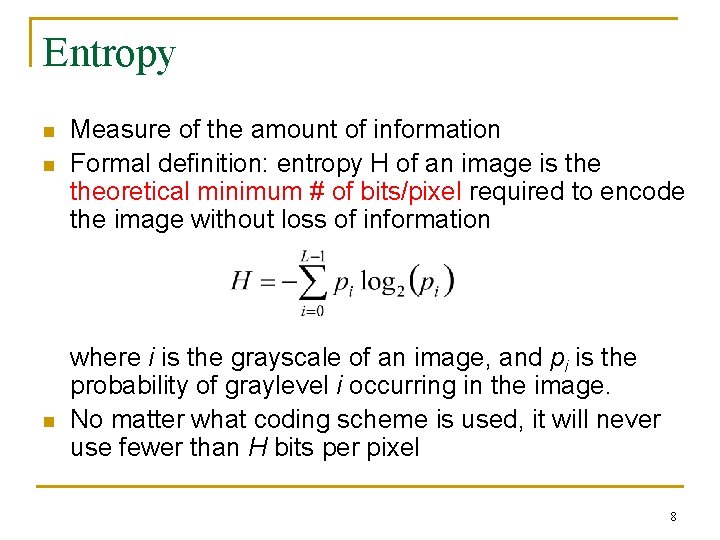

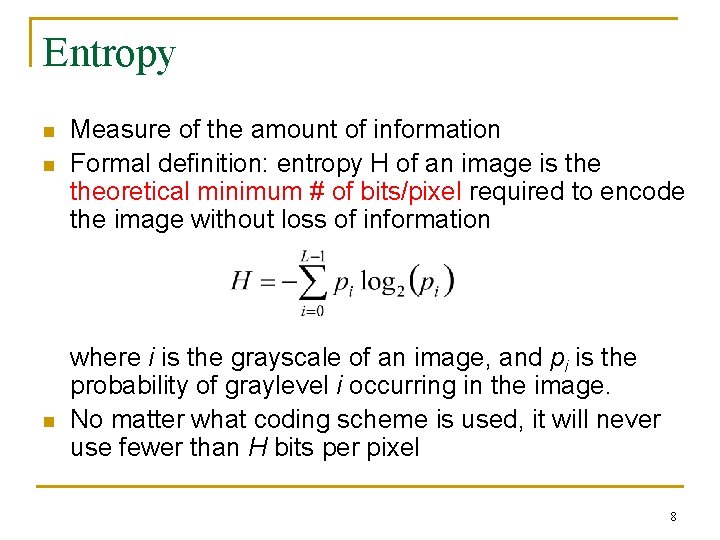
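A small sketch of self-information in bits, assuming base-2 logarithms. The function name and the sample probabilities are illustrative only.

```python
import math


def self_information_bits(p):
    """Self-information -log2(p) of an event with probability p, in bits."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return -math.log2(p)


# A certain event carries no information; rarer events carry more.
for p in (1.0, 0.5, 0.125):
    print(p, self_information_bits(p))
```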

Entropy

- Measure of the amount of information.
- Formal definition: the entropy H of an image is the theoretical minimum number of bits per pixel required to encode the image without loss of information, $H = -\sum_i p_i \log_2 p_i$, where i is a gray level of the image and $p_i$ is the probability of gray level i occurring in the image.
- No matter what coding scheme is used, it will never use fewer than H bits per pixel.

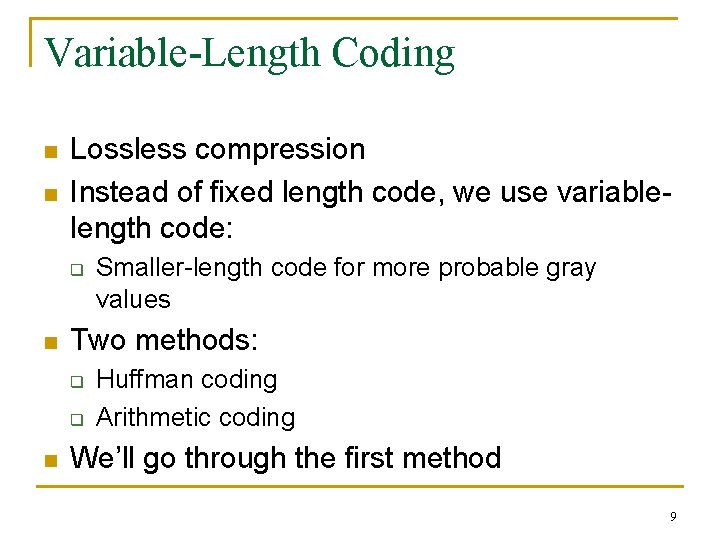
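As a rough illustration, the entropy can be estimated from the gray-level histogram of an image. This is a sketch: the helper name is made up, and the pixel list is a hypothetical example in which two gray levels occur equally often.

```python
import math
from collections import Counter


def image_entropy(pixels):
    """Estimate H = -sum(p_i * log2(p_i)) from the histogram of a pixel list."""
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical image: two gray levels, each covering half of the pixels.
pixels = [39] * 8 + [126] * 8
h = image_entropy(pixels)
print(h)
```

With two equally likely gray levels the estimate is 1 bit per pixel, far below the 8 bits of a fixed-length code.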

Variable-Length Coding

- Lossless compression.
- Instead of a fixed-length code, we use a variable-length code:
  - Shorter codes for more probable gray values.
- Two methods:
  - Huffman coding
  - Arithmetic coding
- We'll go through the first method.

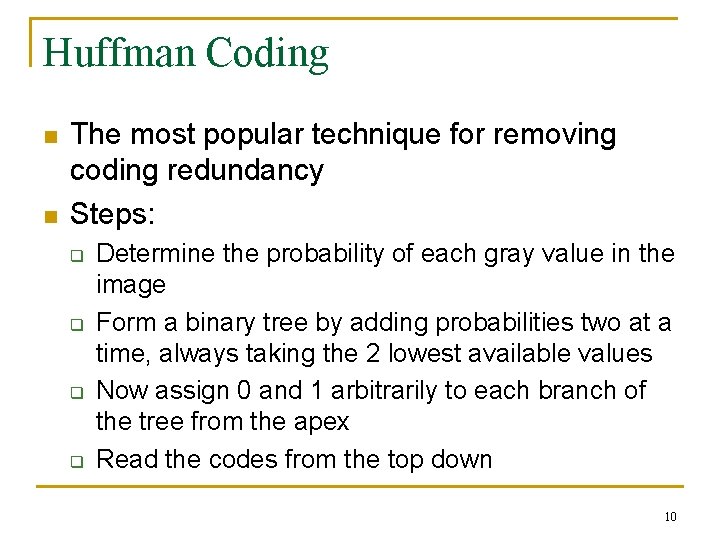

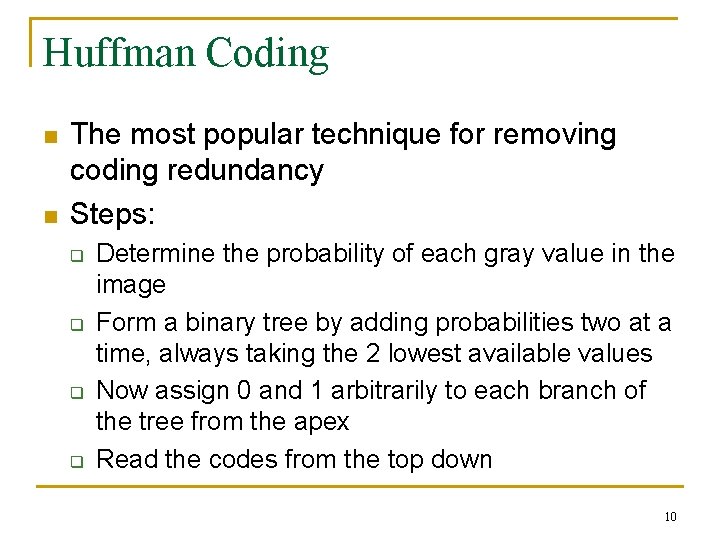

Huffman Coding

- The most popular technique for removing coding redundancy.
- Steps:
  - Determine the probability of each gray value in the image.
  - Form a binary tree by adding probabilities two at a time, always taking the 2 lowest available values.
  - Assign 0 and 1 arbitrarily to each branch of the tree from the apex.
  - Read the codes from the top down.
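The steps above can be sketched with a priority queue. This is one possible implementation, not necessarily the one used in the lecture. The function name and the four-symbol distribution are hypothetical, and because 0 and 1 are assigned arbitrarily, other valid codes with the same lengths exist.

```python
import heapq
from itertools import count


def huffman_code(probs):
    """Build a Huffman code for {symbol: probability}; returns {symbol: bits}."""
    tiebreak = count()  # keeps heap entries comparable when probabilities tie
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:  # a single symbol still needs one bit
        return {sym: "0" for sym in probs}
    while len(heap) > 1:
        # Always merge the two lowest available probabilities.
        p0, _, codes0 = heapq.heappop(heap)
        p1, _, codes1 = heapq.heappop(heap)
        # Prefix one branch with 0 and the other with 1 (an arbitrary choice).
        merged = {s: "0" + c for s, c in codes0.items()}
        merged.update({s: "1" + c for s, c in codes1.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]


# Hypothetical distribution, chosen only for illustration.
probs = {"a": 0.4, "b": 0.3, "c": 0.2, "d": 0.1}
code = huffman_code(probs)
avg_bits = sum(probs[s] * len(code[s]) for s in probs)
print(code, avg_bits)
```

Prepending a bit at each merge means the final string reads from the apex down to the leaf, which matches the last step of the list.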

Example in pg 397

- The average number of bits per pixel is 2.7.
  - Much better than 3, originally.
  - Theoretical minimum (entropy) is 2.7.

| Gray value | Huffman code |
|---|---|
| 0 | 00 |
| 1 | 10 |
| 2 | 01 |
| 3 | 110 |
| 4 | 1110 |
| 5 | 11110 |
| 6 | 111110 |
| 7 | 111111 |

- How to decode the string 1101111100111110?
- Huffman codes are uniquely decodable.

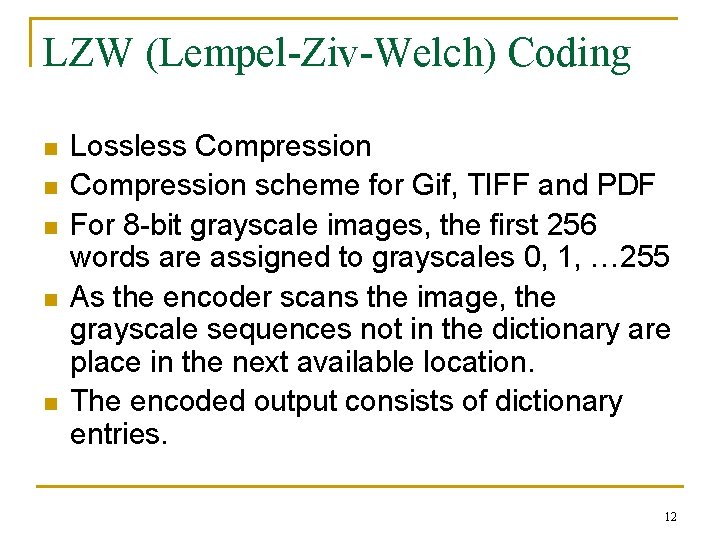

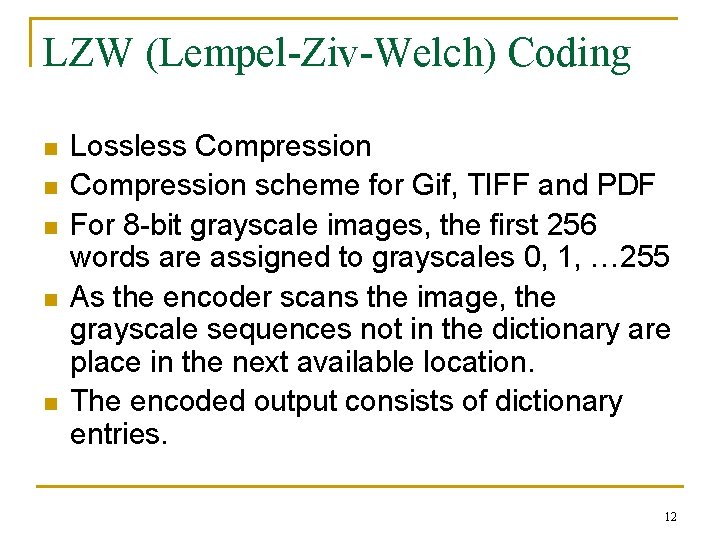

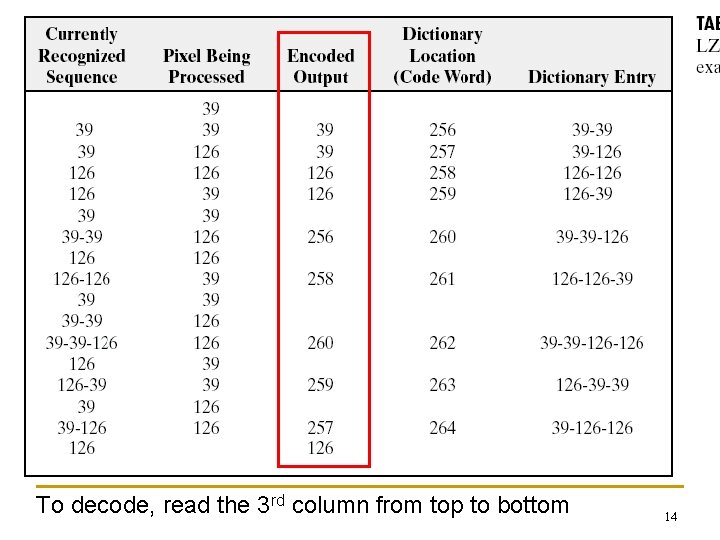
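Because no codeword in the table is a prefix of another, the string can be decoded greedily from left to right. The sketch below uses the code table from this slide. The function and variable names are illustrative.

```python
# Code table from the slide: gray value -> Huffman codeword.
HUFFMAN_TABLE = {
    0: "00", 1: "10", 2: "01", 3: "110",
    4: "1110", 5: "11110", 6: "111110", 7: "111111",
}


def huffman_decode(bits, table=HUFFMAN_TABLE):
    """Decode a bit string by emitting a symbol whenever a codeword is matched."""
    inverse = {codeword: symbol for symbol, codeword in table.items()}
    decoded, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:  # safe because the code is prefix-free
            decoded.append(inverse[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete codeword")
    return decoded


decoded = huffman_decode("1101111100111110")
print(decoded)  # [3, 6, 2, 5]
```

The string splits as 110 | 111110 | 01 | 11110, and no other split is possible.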

LZW (Lempel-Ziv-Welch) Coding

- Lossless compression scheme used in GIF, TIFF and PDF.
- For 8-bit grayscale images, the first 256 words of the dictionary are assigned to grayscales 0, 1, …, 255.
- As the encoder scans the image, grayscale sequences not in the dictionary are placed in the next available location.
- The encoded output consists of the dictionary locations of the recognized sequences.

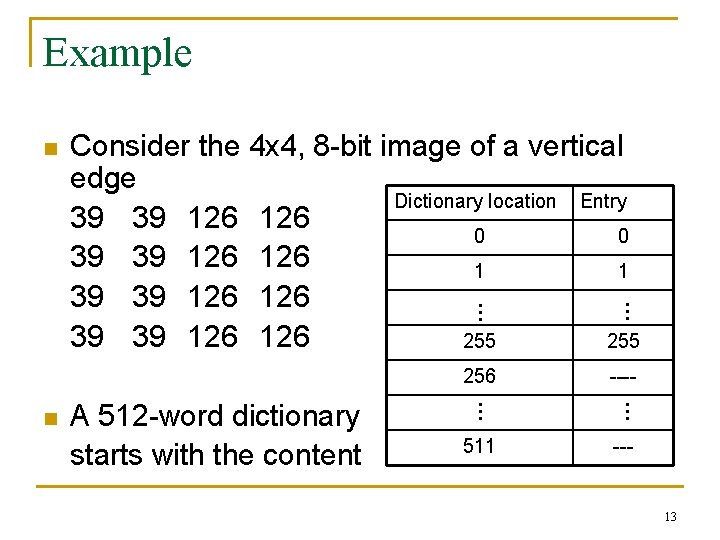

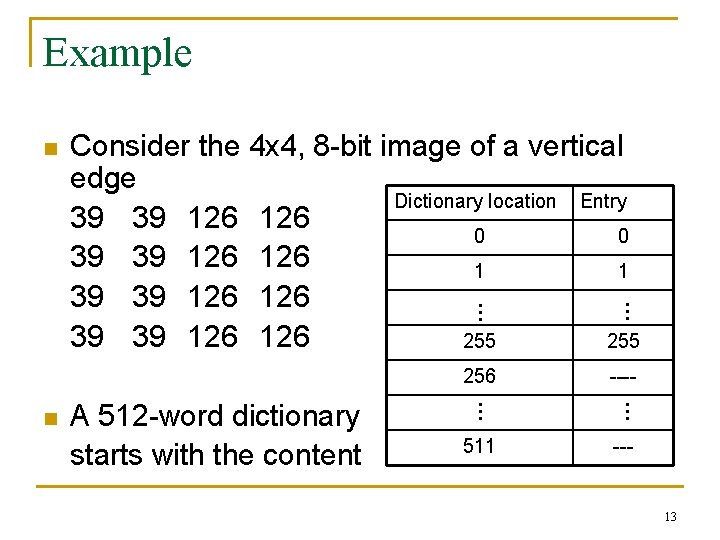

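Example

- A 512-word dictionary starts with the content

| Dictionary location | Entry |
|---|---|
| 0 | 0 |
| 1 | 1 |
| … | … |
| 255 | 255 |
| 256 | --- |
| … | … |
| 511 | --- |

- Consider the 4 x 4, 8-bit image of a vertical edge

$$\begin{bmatrix} 39 & 39 & 126 & 126 \\ 39 & 39 & 126 & 126 \\ 39 & 39 & 126 & 126 \\ 39 & 39 & 126 & 126 \end{bmatrix}$$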

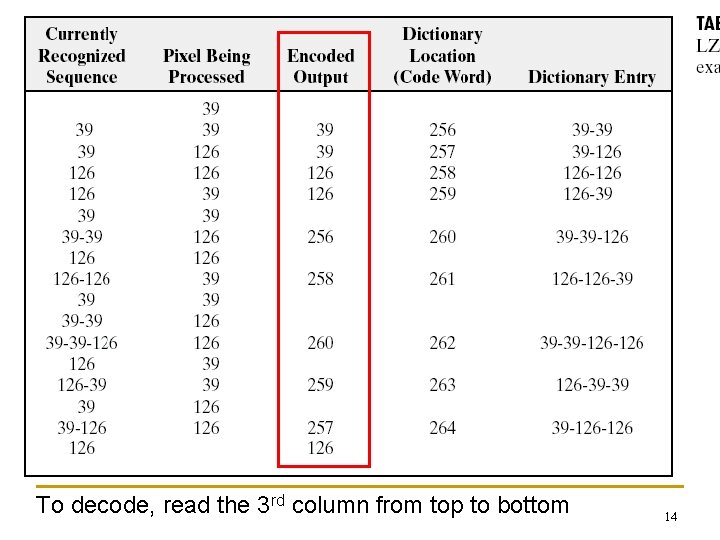

To decode, read the 3rd column from top to bottom.
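A minimal sketch of the LZW encoder for this example, assuming the image is scanned row by row and is the vertical edge with rows 39 39 126 126. The function name and the choice to stop adding entries once the 512-word dictionary is full are assumptions, since real formats handle a full dictionary in different ways.

```python
def lzw_encode(pixels, dict_size=512):
    """Encode a sequence of 8-bit gray levels into LZW dictionary locations."""
    # Locations 0..255 hold the single gray levels 0..255.
    dictionary = {(g,): g for g in range(256)}
    next_location = 256
    current = ()   # currently recognized sequence
    output = []
    for p in pixels:
        candidate = current + (p,)
        if candidate in dictionary:
            current = candidate          # keep extending the match
        else:
            output.append(dictionary[current])
            if next_location < dict_size:   # assumption: stop growing when full
                dictionary[candidate] = next_location
                next_location += 1
            current = (p,)
    if current:
        output.append(dictionary[current])  # flush the last match
    return output


image = [[39, 39, 126, 126] for _ in range(4)]
pixels = [p for row in image for p in row]
codes = lzw_encode(pixels)
print(codes)  # [39, 39, 126, 126, 256, 258, 260, 259, 257, 126]
```

The 16 pixels are represented by 10 codes. With a 512-word dictionary each code needs 9 bits, so the output takes 90 bits instead of 128.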

Run-Length Encoding (1D)

- Lossless compression.
- Encodes strings of 0s and 1s by the number of repetitions in each string.
- A standard in fax transmission.
- There are many versions of RLE.

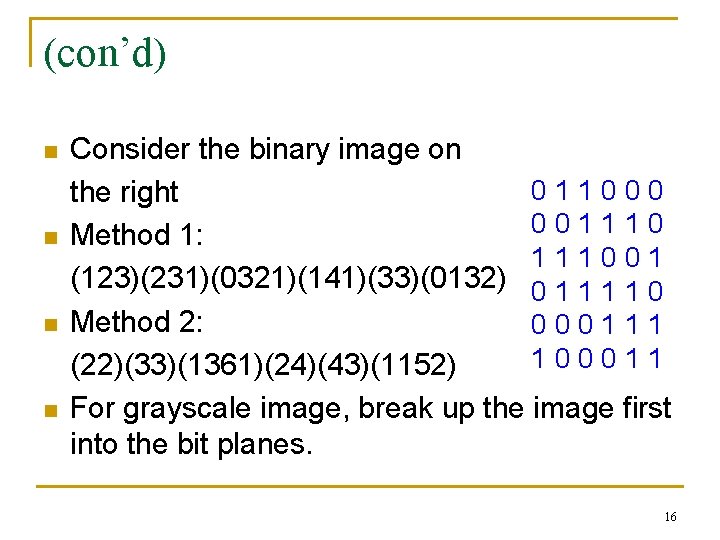

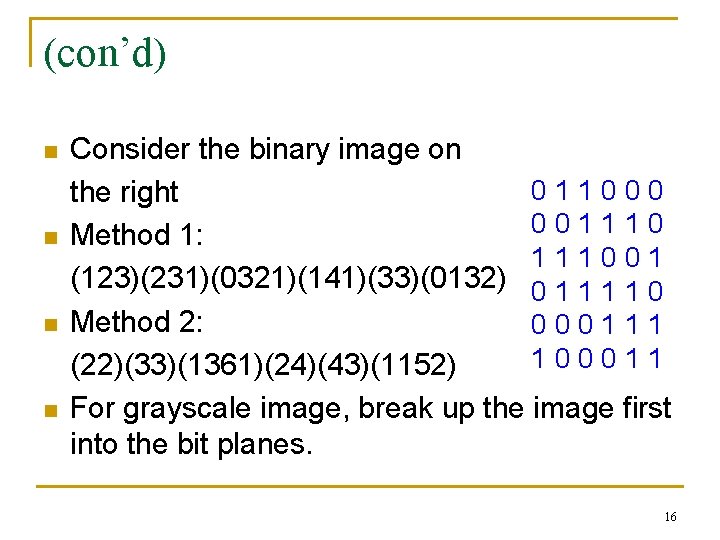

(cont'd)

- Consider the binary image with rows 011000, 001110, 111001, 011110, 000111, 100011.
- Method 1: (123)(231)(0321)(141)(33)(0132)
- Method 2: (22)(33)(1361)(24)(43)(1152)
- For a grayscale image, first break up the image into its bit planes.

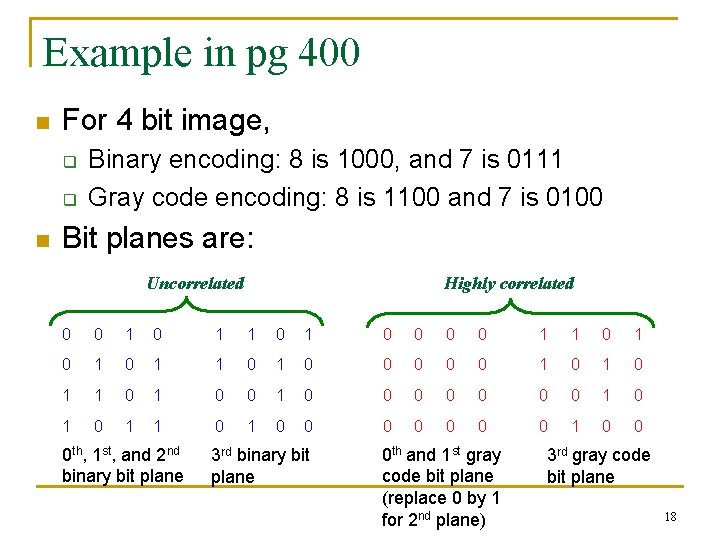
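Reading the two code strings against the image rows, Method 1 appears to list alternating run lengths per row, starting with the run of 0s (so a row that begins with 1 starts with a 0), and Method 2 appears to list, for each run of 1s, its 1-based start position and its length. The sketch below implements that reading. The function names are illustrative, and the fourth row is taken to be 011110, which is what the codes (141) and (24) imply.

```python
from itertools import groupby


def rle_alternating(row):
    """Method 1 (as inferred): alternating run lengths, starting with the 0s."""
    runs = [0] if row.startswith("1") else []  # empty leading run of 0s
    runs.extend(len(list(group)) for _, group in groupby(row))
    return runs


def rle_positions(row):
    """Method 2 (as inferred): (start, length) of each run of 1s, 1-based."""
    pairs, position = [], 1
    for bit, group in groupby(row):
        length = len(list(group))
        if bit == "1":
            pairs.append((position, length))
        position += length
    return pairs


rows = ["011000", "001110", "111001", "011110", "000111", "100011"]
method1 = [rle_alternating(r) for r in rows]
method2 = [rle_positions(r) for r in rows]
print(method1)
print(method2)
```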

Problem with grayscale RLE

- Long runs of very similar gray values would result in a very good compression rate for the code.
- This is not the case for a 4-bit image consisting of randomly distributed 7s and 8s.
- One solution is to use Gray codes. See pages 400-401 for an example.

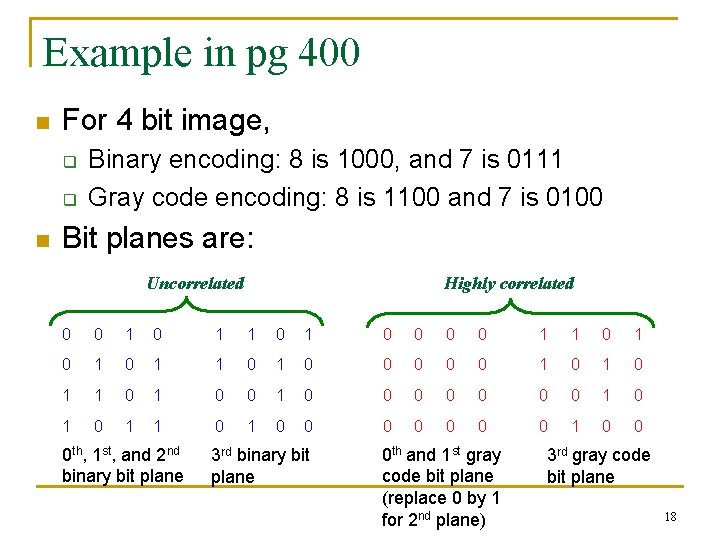

Example in pg 400

- For a 4-bit image:
  - Binary encoding: 8 is 1000, and 7 is 0111.
  - Gray code encoding: 8 is 1100, and 7 is 0100.
- Bit planes are (figure panels):
  - Uncorrelated: the 0th, 1st, and 2nd binary bit planes; the 3rd binary bit plane.
  - Highly correlated: the 0th and 1st Gray code bit planes (replace 0 by 1 for the 2nd plane); the 3rd Gray code bit plane.
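The Gray code values on this slide agree with the standard binary-reflected Gray code, g = b XOR (b >> 1). The sketch below assumes that construction and uses a hypothetical row of 7s and 8s. The helper names are illustrative.

```python
def binary_to_gray(n):
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def bit_plane(values, k):
    """Extract bit k (0 = least significant) from each value."""
    return [(v >> k) & 1 for v in values]


# Hypothetical run of pixels from a 4-bit image of 7s and 8s.
pixels = [7, 8, 8, 7, 8, 7, 7, 8]
gray = [binary_to_gray(v) for v in pixels]

print(format(binary_to_gray(7), "04b"), format(binary_to_gray(8), "04b"))
for k in range(4):
    print(k, bit_plane(pixels, k), bit_plane(gray, k))
```

In binary, 7 and 8 differ in every bit, so all four planes change wherever the pixel value changes. After Gray coding only the 3rd plane changes, and the lower three planes become constant and trivial to run-length encode.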

Summary

- Information theory
  - Measure of entropy, which is the theoretical minimum number of bits per pixel
- Lossless compression schemes
  - Huffman coding
  - LZW
  - Run-length encoding