Conventional DRAM Organization

- Slides: 64

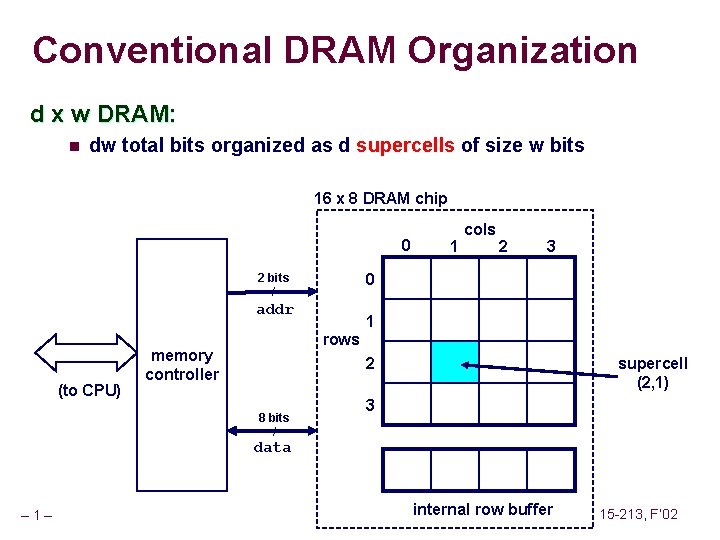

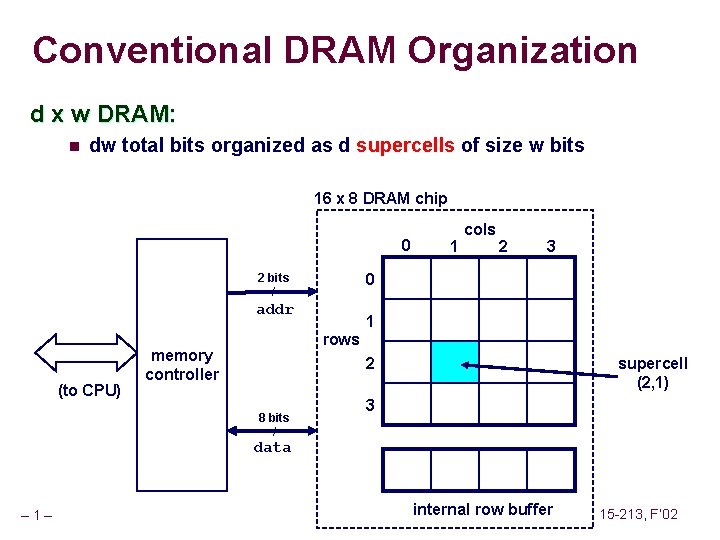

Conventional DRAM Organization

d x w DRAM:

- dw total bits organized as d supercells of size w bits

Figure: a 16 x 8 DRAM chip (4 rows x 4 cols of 8-bit supercells, plus an internal row buffer) connected to the memory controller by 2-bit addr lines and 8-bit data lines; the controller passes data on to the CPU. Supercell (2, 1) is highlighted.

15-213, F’02

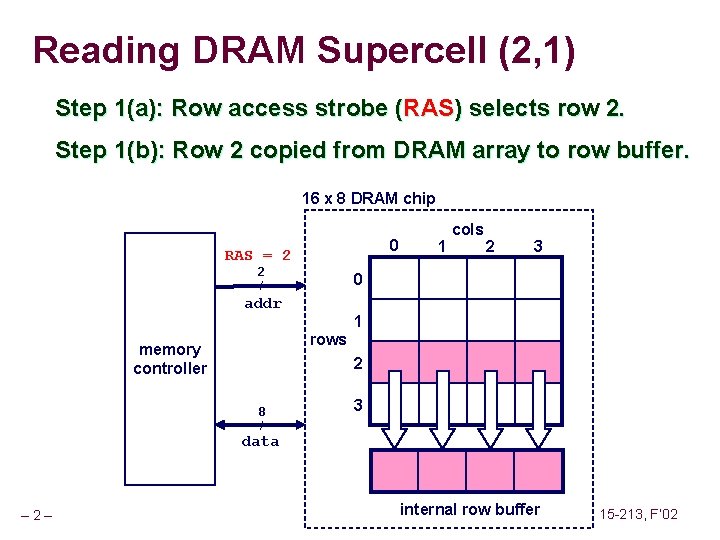

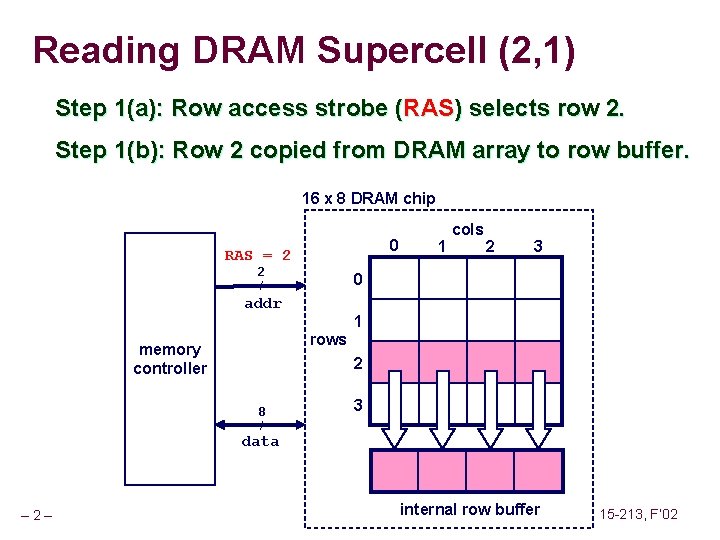

Reading DRAM Supercell (2, 1)

Step 1(a): Row access strobe (RAS) selects row 2.

Step 1(b): Row 2 is copied from the DRAM array to the internal row buffer.

Figure: the memory controller sends RAS = 2 over the 2-bit addr lines to the 16 x 8 DRAM chip.

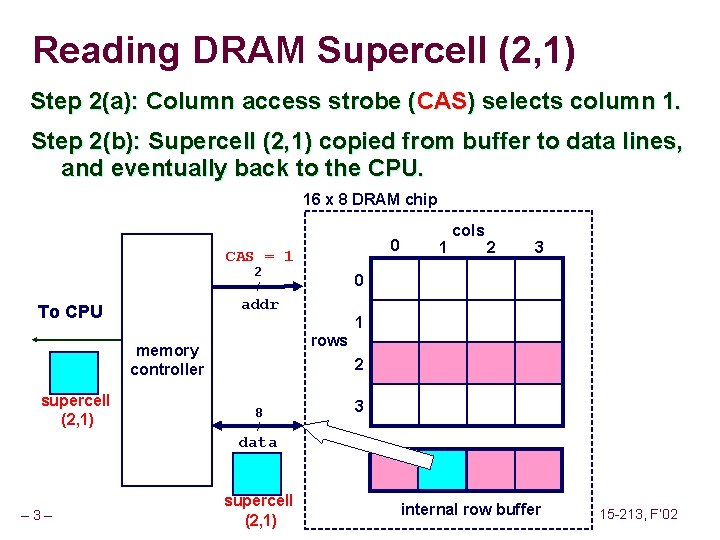

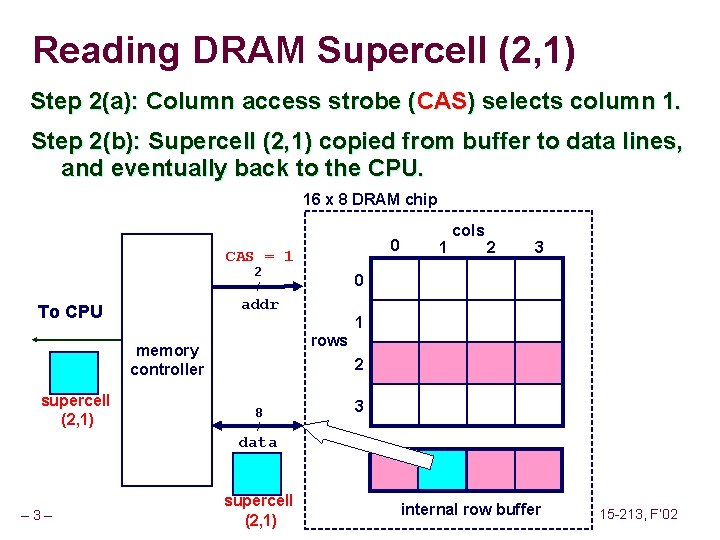

Reading DRAM Supercell (2, 1)

Step 2(a): Column access strobe (CAS) selects column 1.

Step 2(b): Supercell (2, 1) is copied from the row buffer to the data lines, and eventually back to the CPU.

Figure: the memory controller sends CAS = 1 over the addr lines; supercell (2, 1) leaves the internal row buffer over the 8-bit data lines toward the CPU.

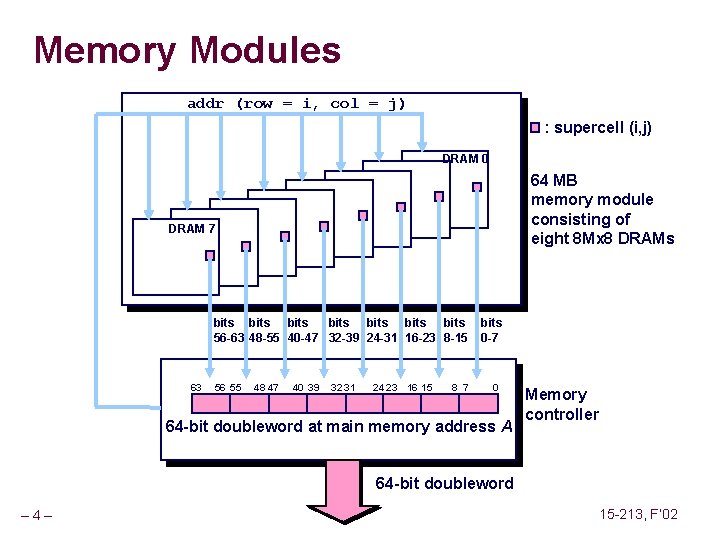

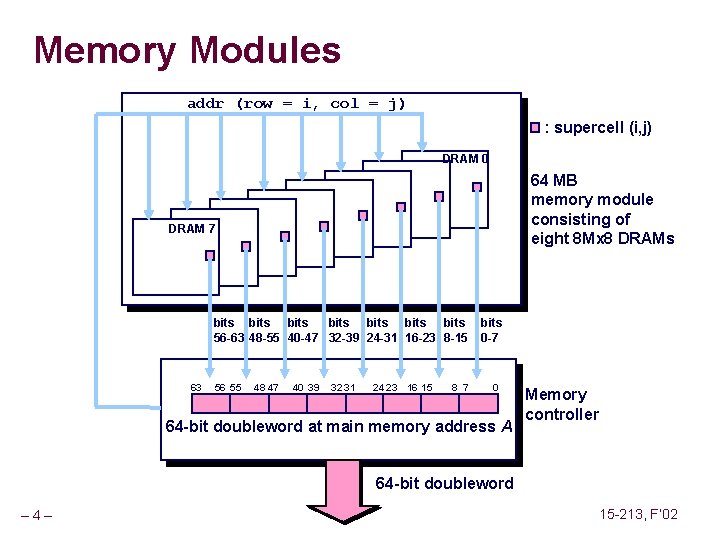

Memory Modules

Figure: a 64 MB memory module consisting of eight 8M x 8 DRAMs (DRAM 0 to DRAM 7). The memory controller sends addr (row = i, col = j) to every chip, and each chip returns its supercell (i, j): DRAM 0 supplies bits 0-7, DRAM 1 bits 8-15, and so on up to DRAM 7, which supplies bits 56-63. The controller assembles these into the 64-bit doubleword at main memory address A.

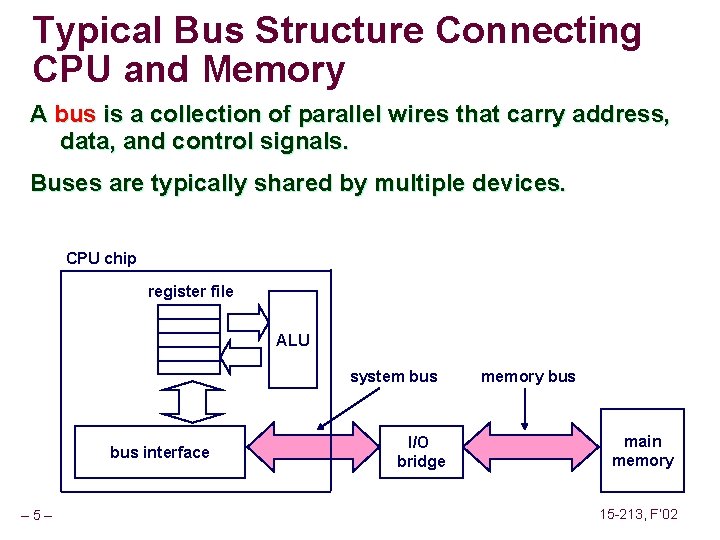

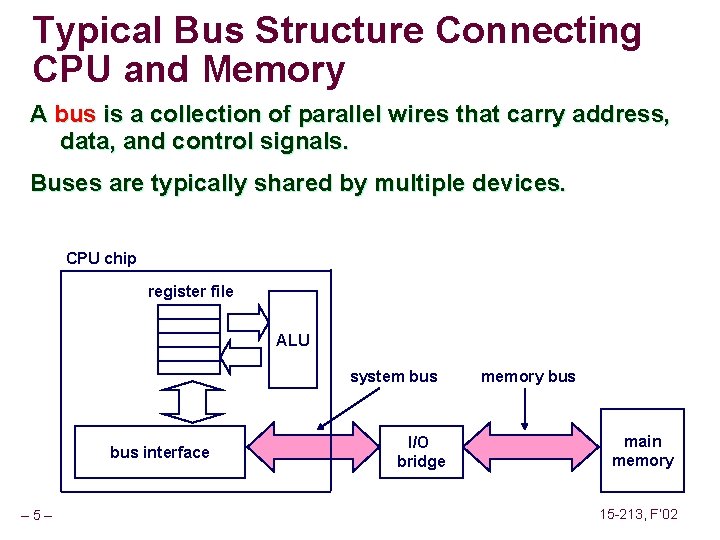

Typical Bus Structure Connecting CPU and Memory

- A bus is a collection of parallel wires that carry address, data, and control signals.
- Buses are typically shared by multiple devices.

Figure: the CPU chip (register file, ALU, bus interface) connects over the system bus to the I/O bridge, which connects over the memory bus to main memory.

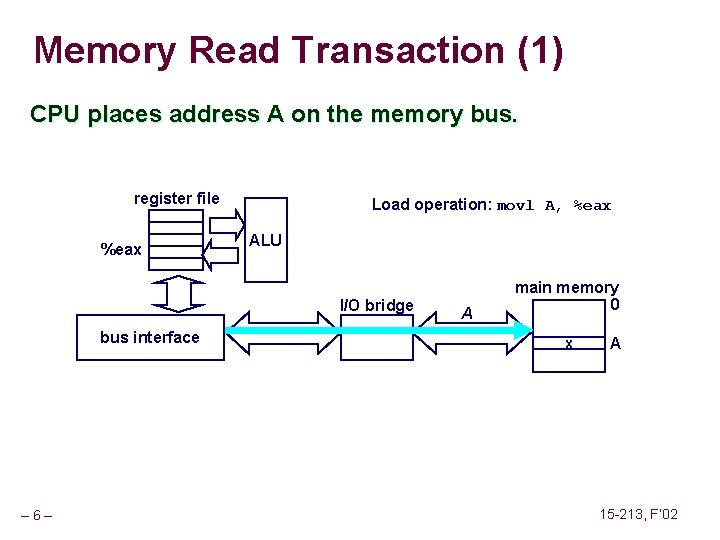

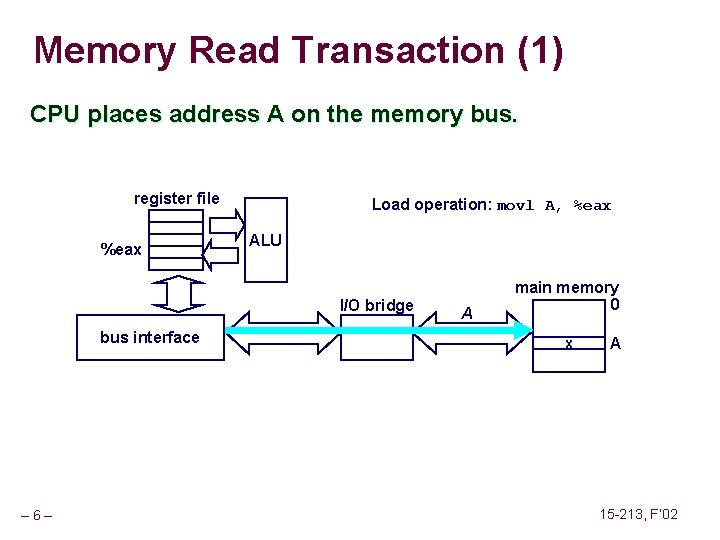

Memory Read Transaction (1)

CPU places address A on the memory bus.

Figure: load operation movl A, %eax; address A travels from the bus interface through the I/O bridge to main memory, which holds word x at address A.

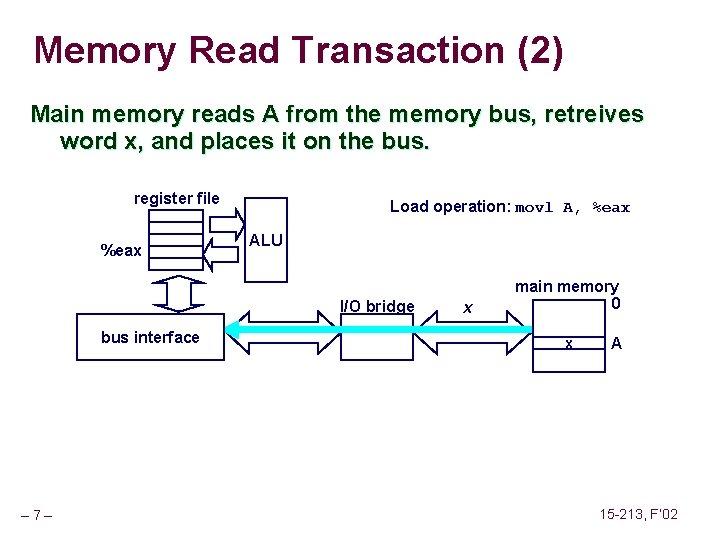

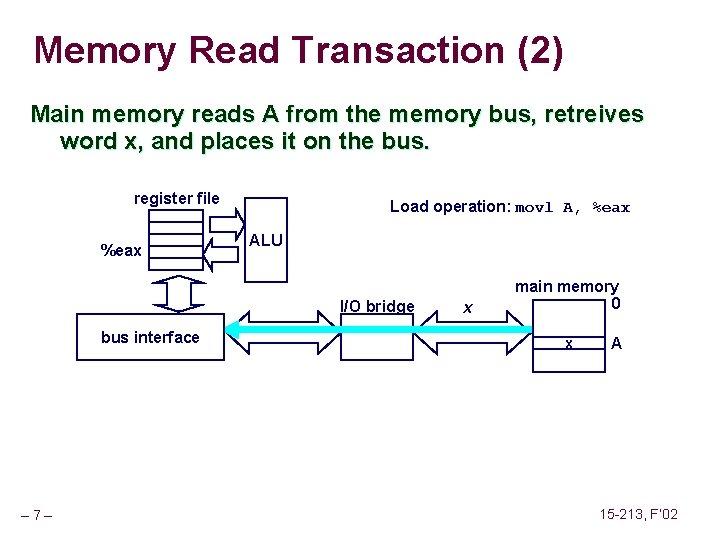

Memory Read Transaction (2)

Main memory reads A from the memory bus, retrieves word x, and places it on the bus.

Figure: load operation movl A, %eax; word x travels back through the I/O bridge toward the bus interface.

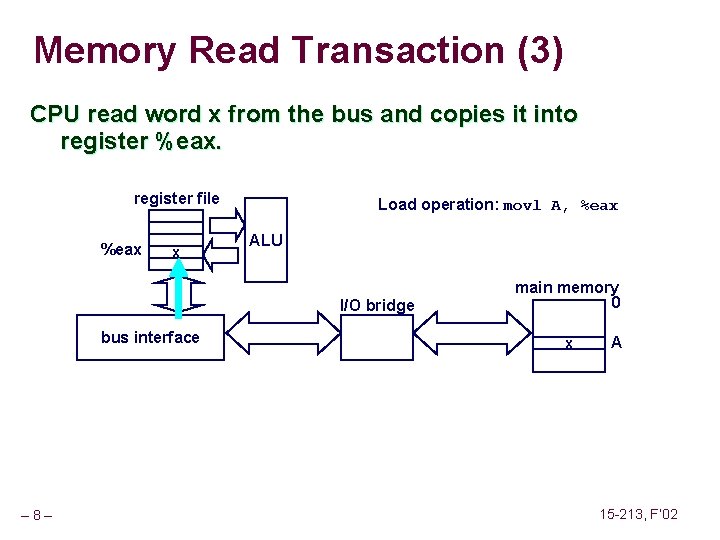

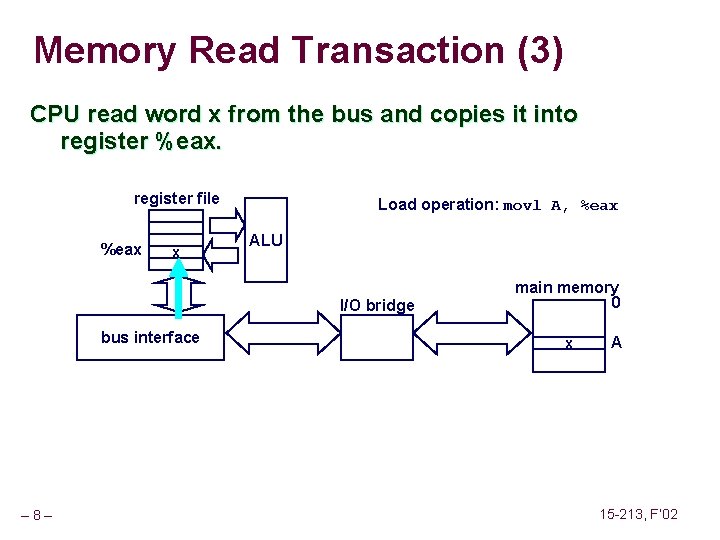

Memory Read Transaction (3)

CPU reads word x from the bus and copies it into register %eax.

Figure: load operation movl A, %eax; %eax now holds x.

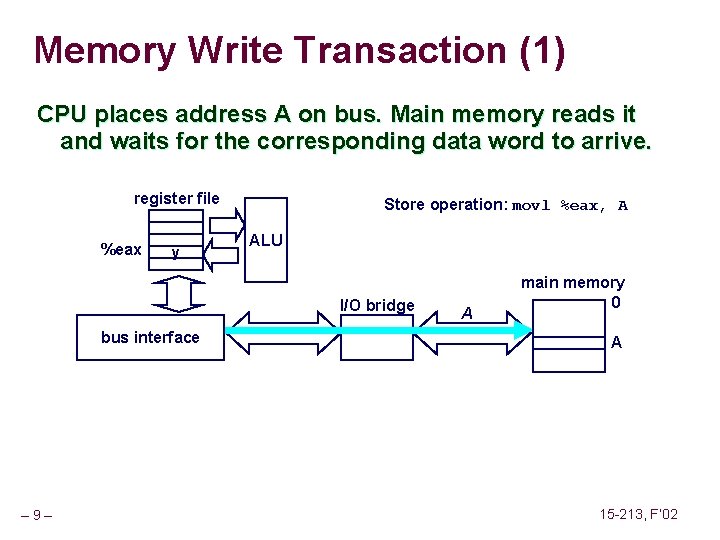

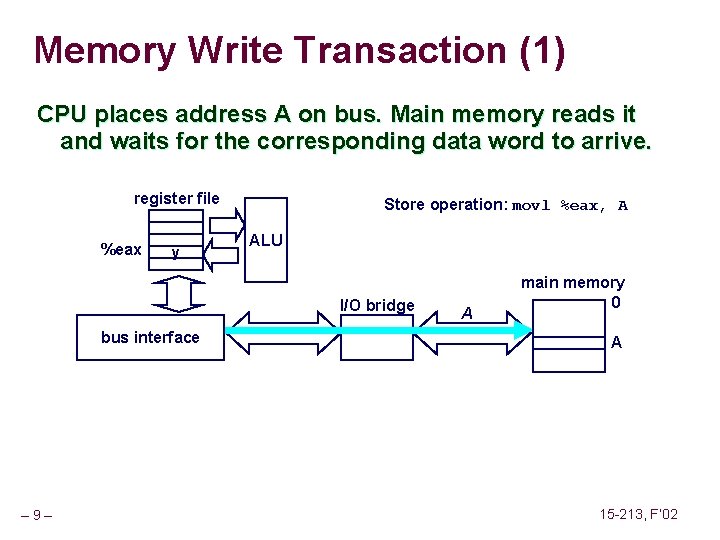

Memory Write Transaction (1)

CPU places address A on the bus. Main memory reads it and waits for the corresponding data word to arrive.

Figure: store operation movl %eax, A; %eax holds y, and address A travels to main memory.

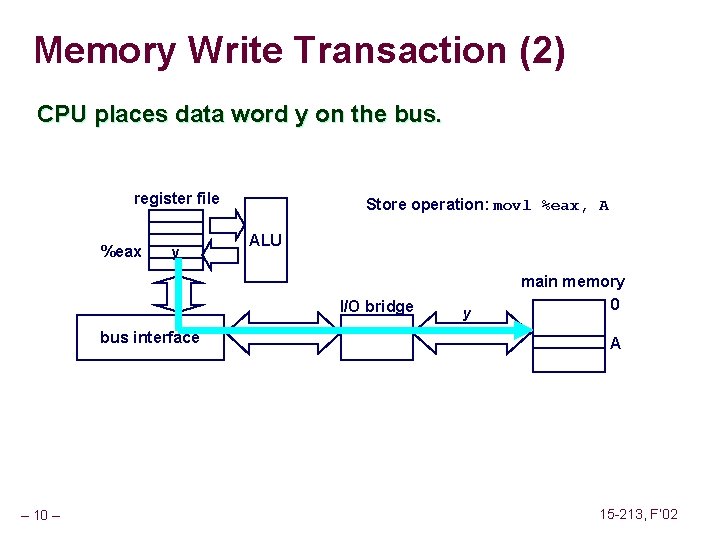

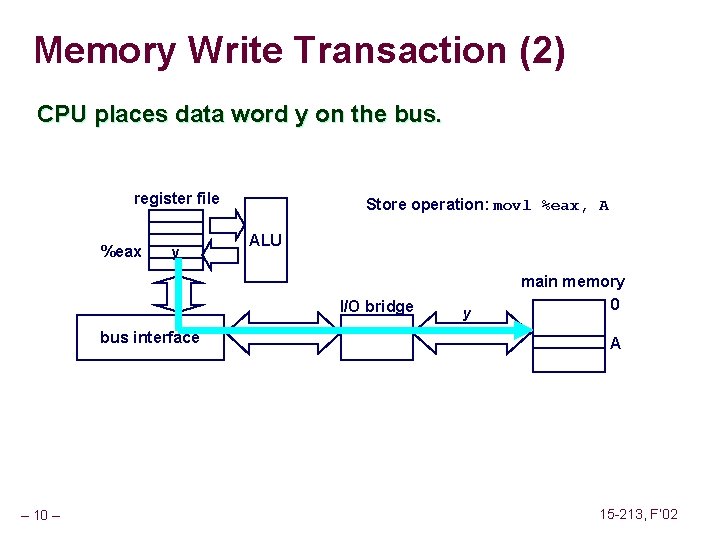

Memory Write Transaction (2)

CPU places data word y on the bus.

Figure: store operation movl %eax, A; y travels from the bus interface toward main memory.

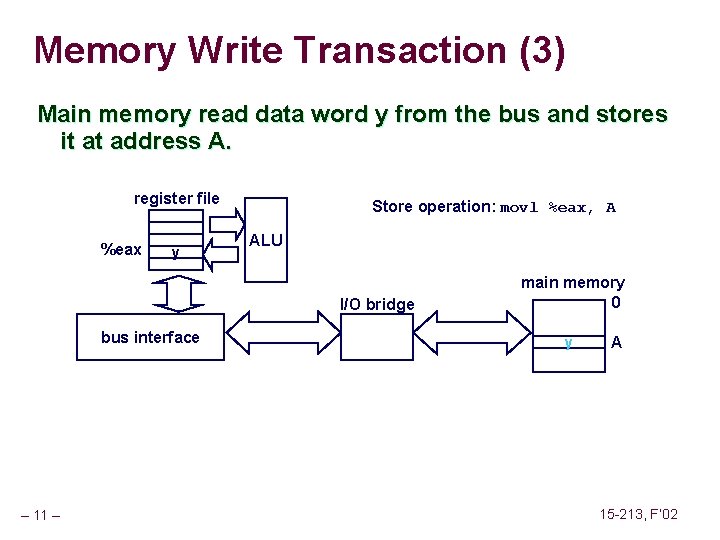

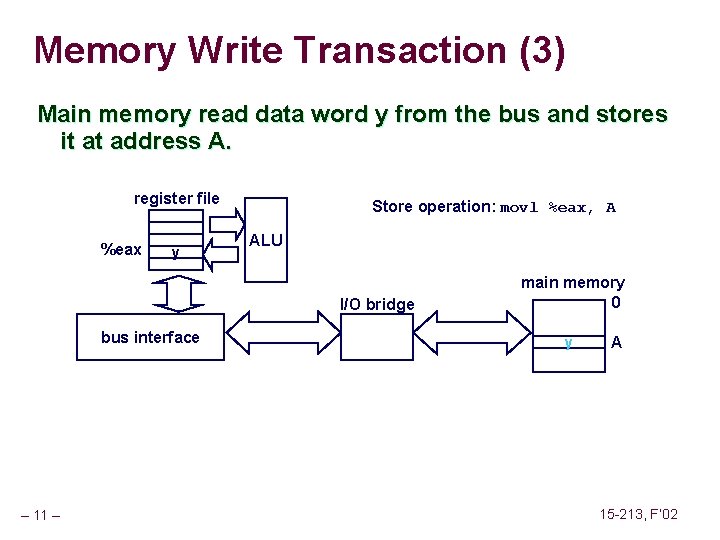

Memory Write Transaction (3)

Main memory reads data word y from the bus and stores it at address A.

Figure: store operation movl %eax, A; main memory now holds y at address A.

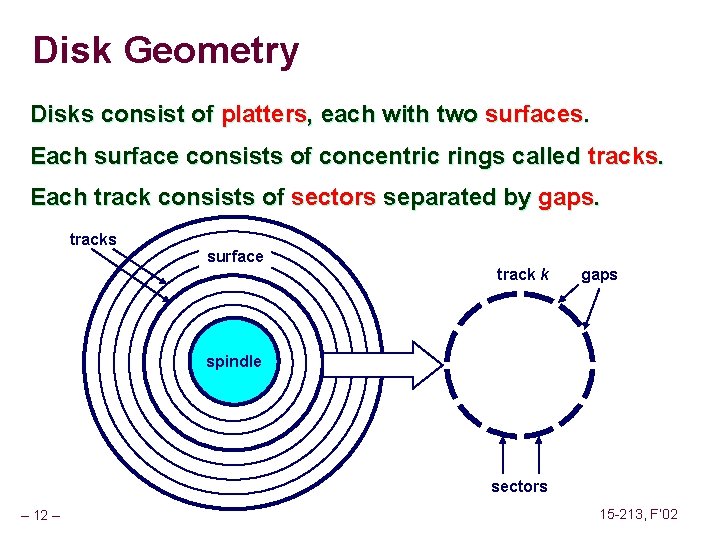

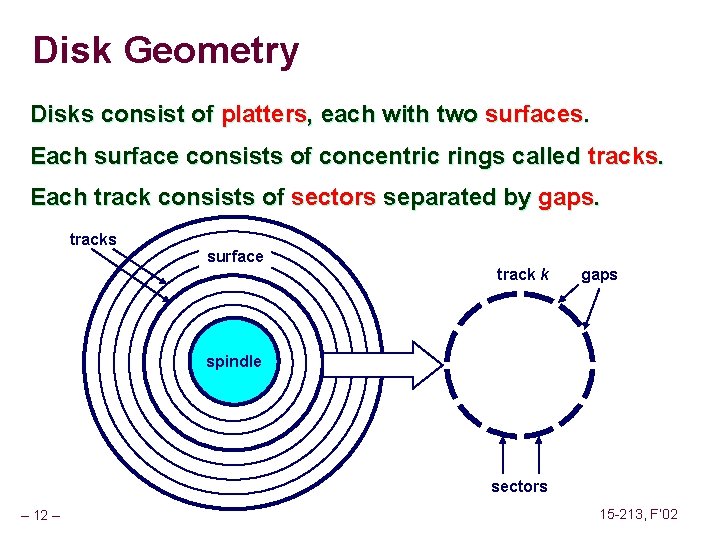

Disk Geometry

- Disks consist of platters, each with two surfaces.
- Each surface consists of concentric rings called tracks.
- Each track consists of sectors separated by gaps.

Figure: one surface around the spindle, with track k divided into sectors separated by gaps.

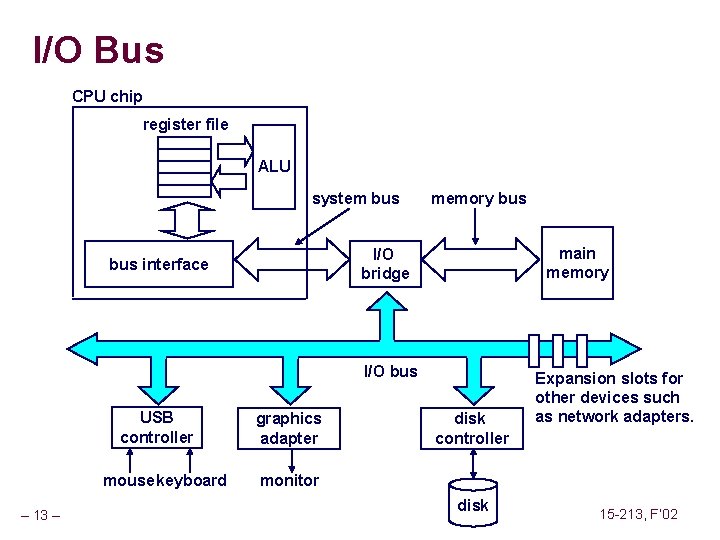

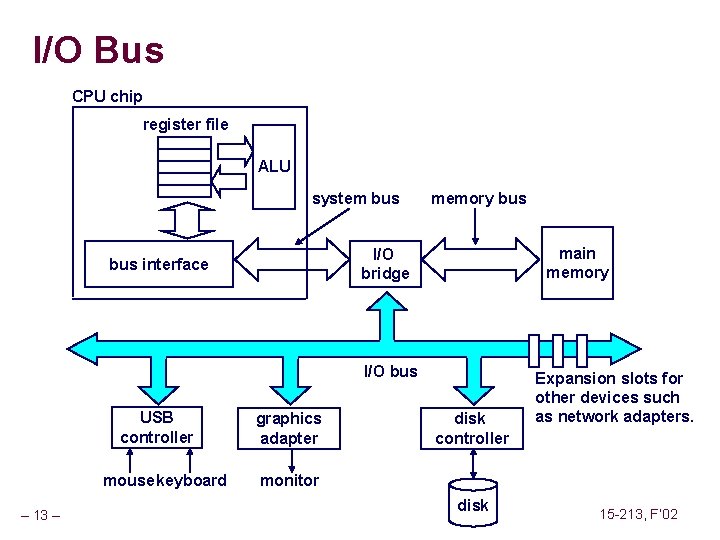

I/O Bus

Figure: the CPU chip (register file, ALU, bus interface) connects over the system bus to the I/O bridge, which links the memory bus (main memory) and the I/O bus. Attached to the I/O bus: a USB controller (mouse, keyboard), a graphics adapter (monitor), a disk controller (disk), and expansion slots for other devices such as network adapters.

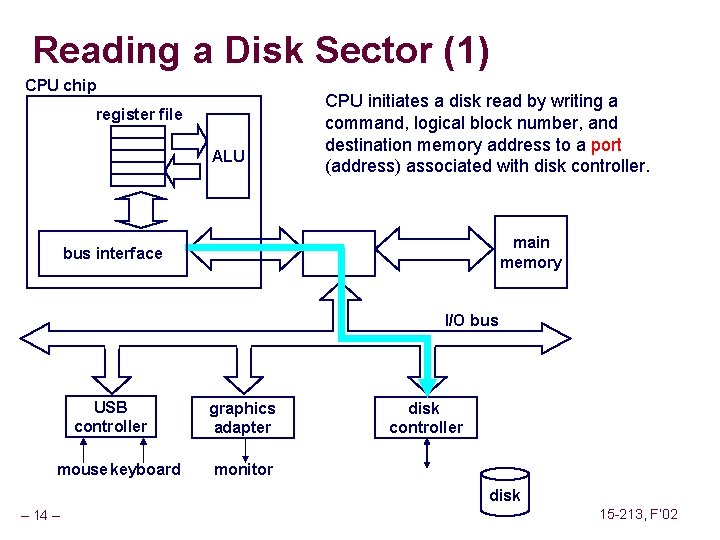

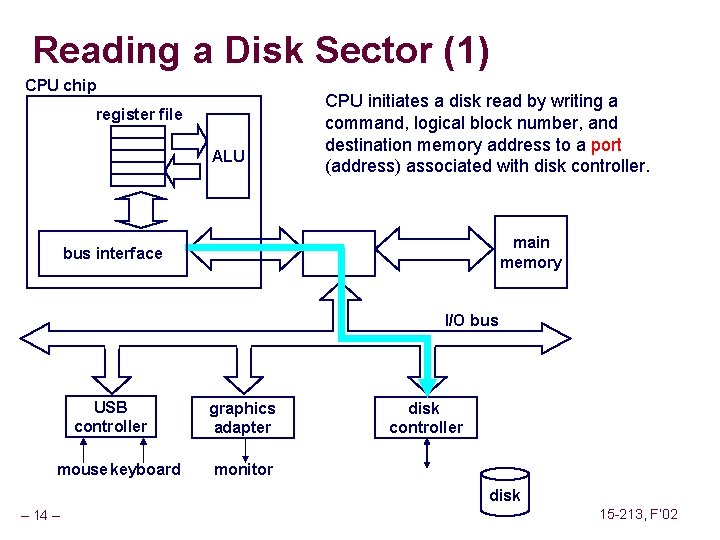

Reading a Disk Sector (1)

CPU initiates a disk read by writing a command, logical block number, and destination memory address to a port (address) associated with the disk controller.

Figure: I/O bus diagram; the CPU writes to the disk controller.

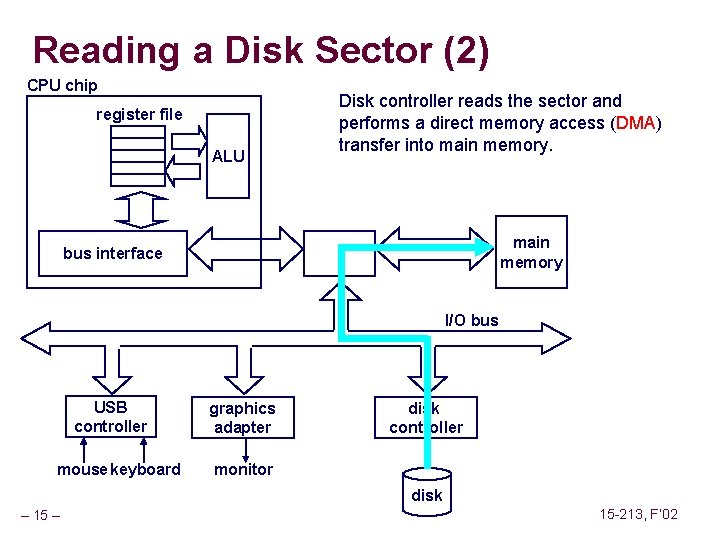

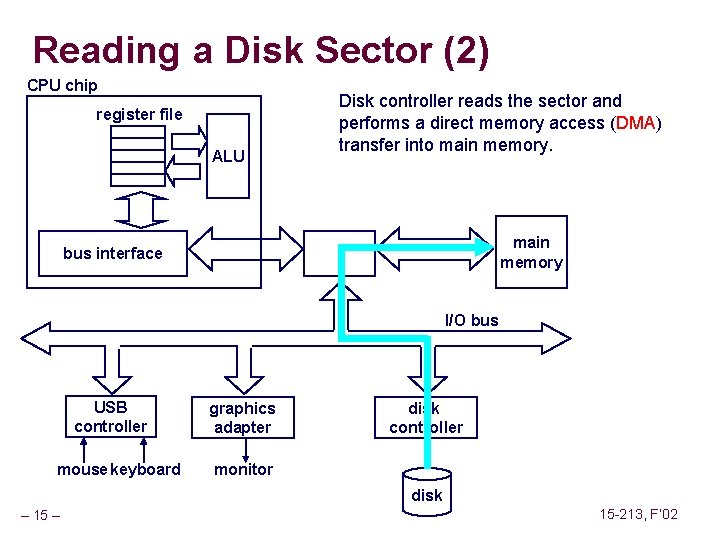

Reading a Disk Sector (2)

Disk controller reads the sector and performs a direct memory access (DMA) transfer into main memory.

Figure: I/O bus diagram showing the DMA transfer from the disk controller into main memory.

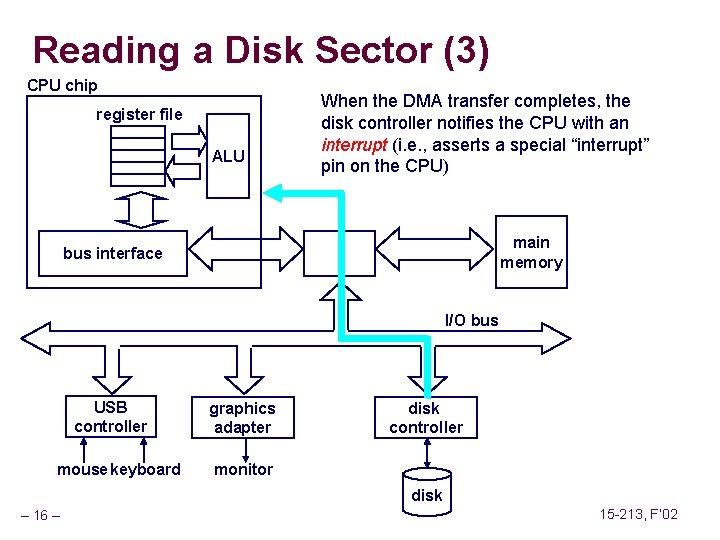

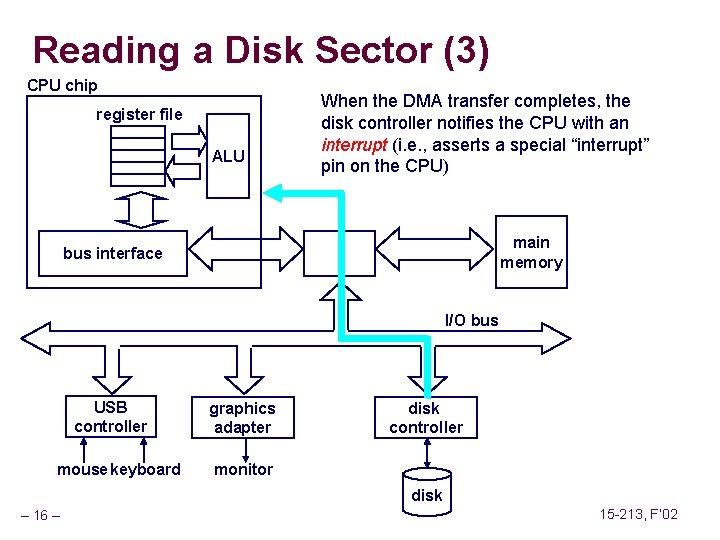

Reading a Disk Sector (3)

When the DMA transfer completes, the disk controller notifies the CPU with an interrupt (i.e., asserts a special “interrupt” pin on the CPU).

Figure: I/O bus diagram showing the interrupt from the disk controller to the CPU.

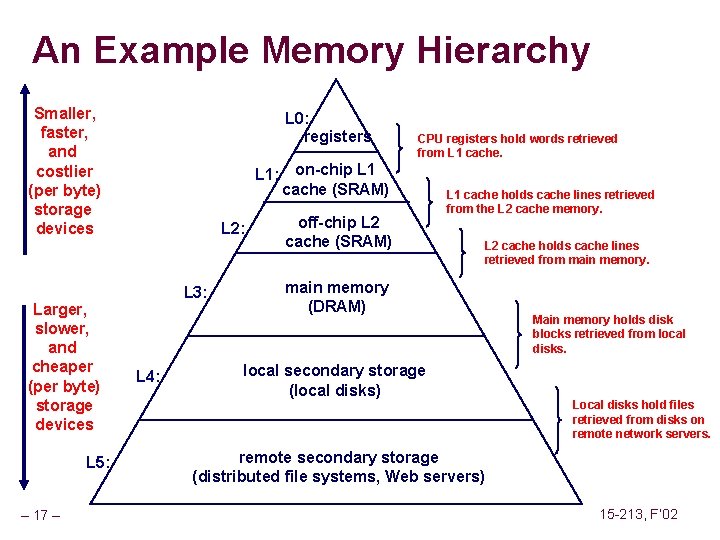

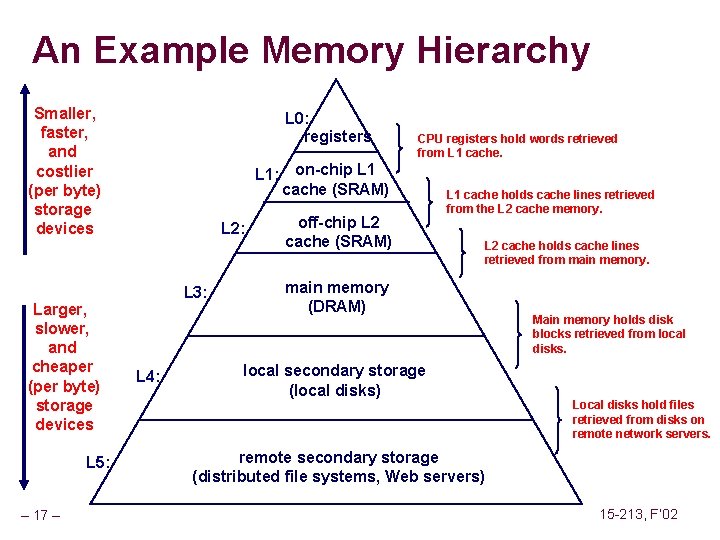

An Example Memory Hierarchy

Smaller, faster, and costlier (per byte) storage devices sit at the top; larger, slower, and cheaper (per byte) storage devices sit at the bottom.

- L0: registers. CPU registers hold words retrieved from the L1 cache.
- L1: on-chip L1 cache (SRAM). The L1 cache holds cache lines retrieved from the L2 cache memory.
- L2: off-chip L2 cache (SRAM). The L2 cache holds cache lines retrieved from main memory.
- L3: main memory (DRAM). Main memory holds disk blocks retrieved from local disks.
- L4: local secondary storage (local disks). Local disks hold files retrieved from disks on remote network servers.
- L5: remote secondary storage (distributed file systems, Web servers).

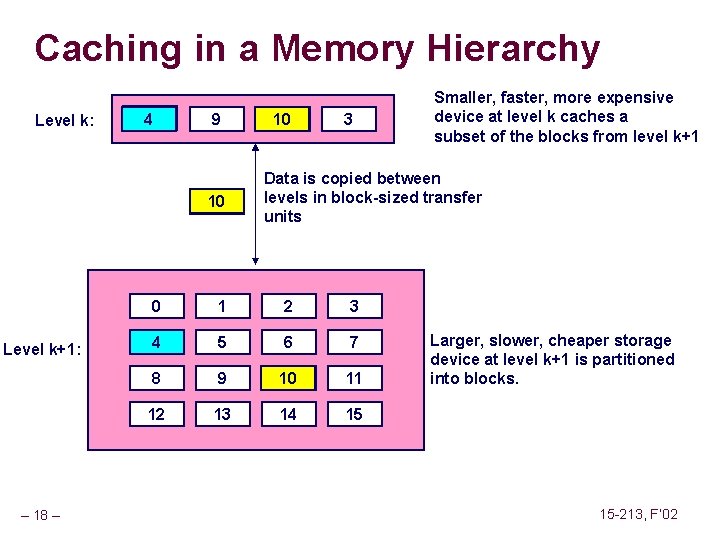

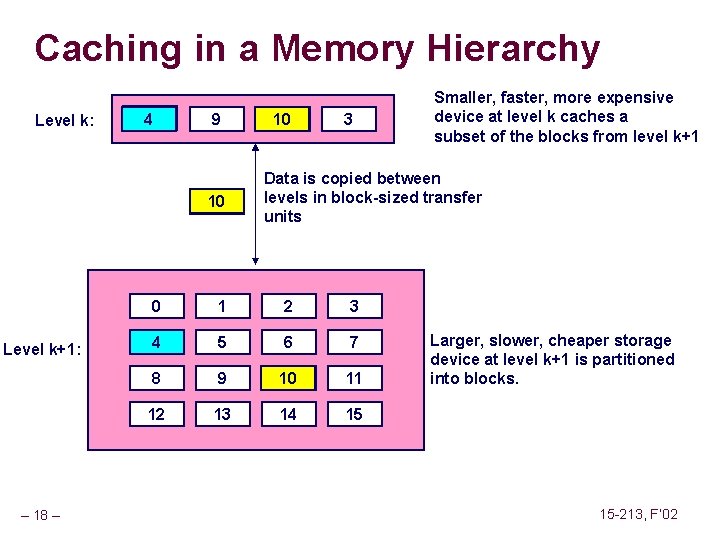

Caching in a Memory Hierarchy

- The smaller, faster, more expensive device at level k caches a subset of the blocks from level k+1.
- Data is copied between levels in block-sized transfer units.
- The larger, slower, cheaper storage device at level k+1 is partitioned into blocks.

Figure: level k+1 holds blocks 0-15; level k currently holds blocks 8, 9, 14, and 3; blocks 4 and 10 are shown being copied between the levels.

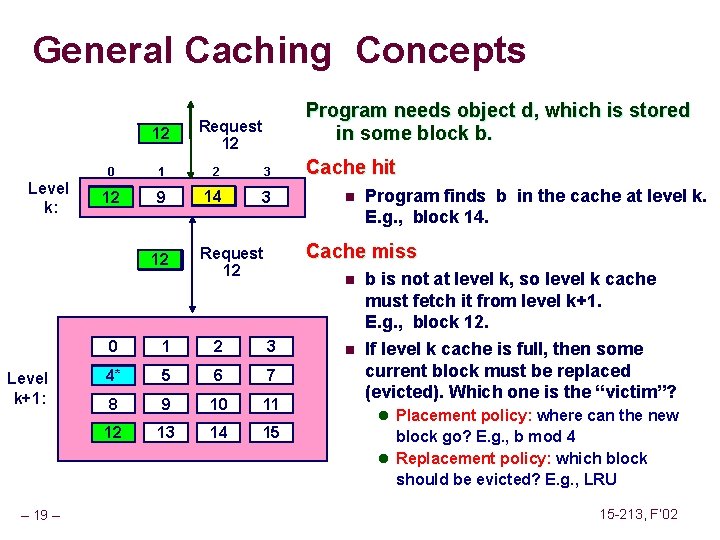

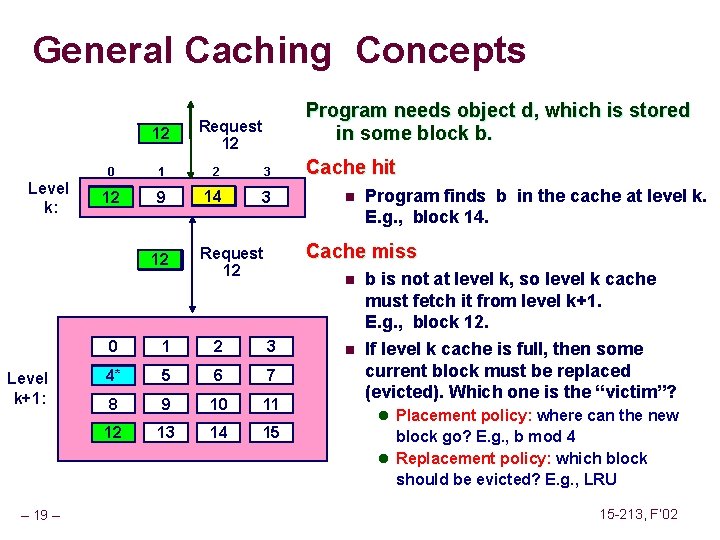

General Caching Concepts

Program needs object d, which is stored in some block b.

- Cache hit: program finds b in the cache at level k. E.g., block 14.
- Cache miss: b is not at level k, so the level k cache must fetch it from level k+1. E.g., block 12.
  - If the level k cache is full, then some current block must be replaced (evicted). Which one is the “victim”?
  - Placement policy: where can the new block go? E.g., b mod 4.
  - Replacement policy: which block should be evicted? E.g., LRU.

Figure: level k holds blocks 4\*, 9, 14, and 3; level k+1 holds blocks 0-15. A request for 14 hits; a request for 12 misses, and block 12 replaces block 4\* at level k.

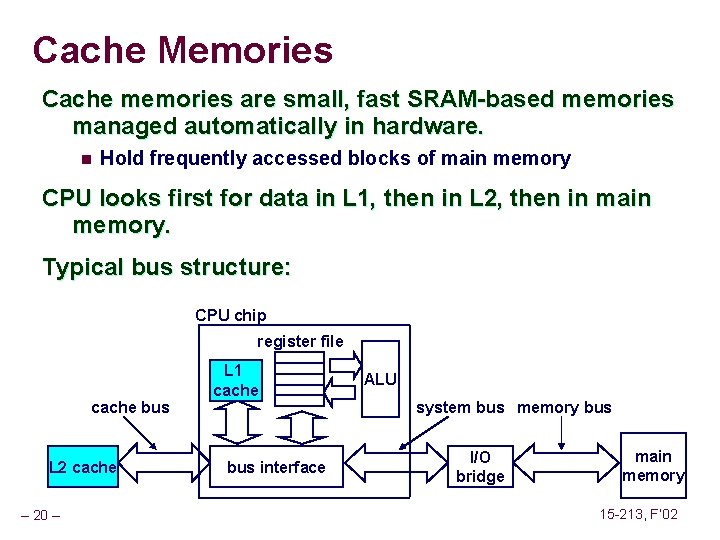

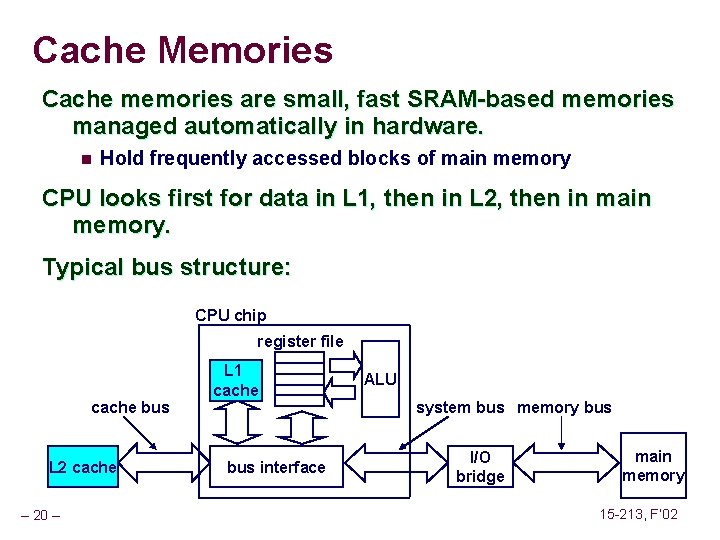

Cache Memories

Cache memories are small, fast SRAM-based memories managed automatically in hardware.

- Hold frequently accessed blocks of main memory

CPU looks first for data in L1, then in L2, then in main memory.

Figure (typical bus structure): the CPU chip contains the register file, ALU, L1 cache, and bus interface; a cache bus connects to the L2 cache; the system bus leads to the I/O bridge, and the memory bus to main memory.

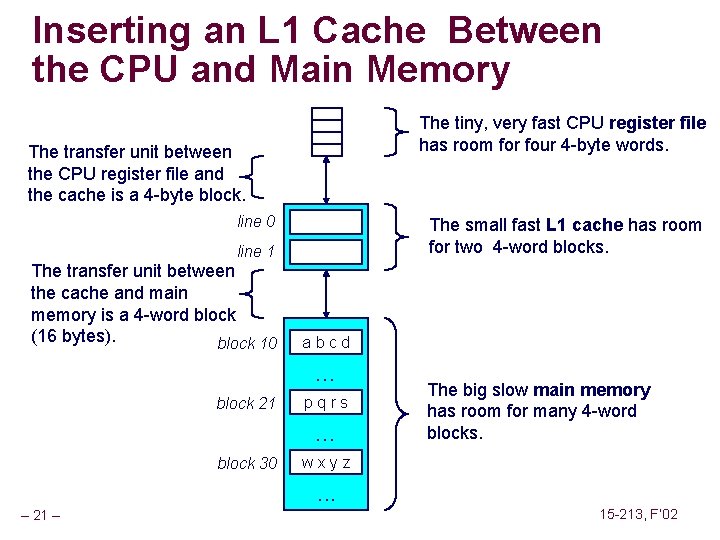

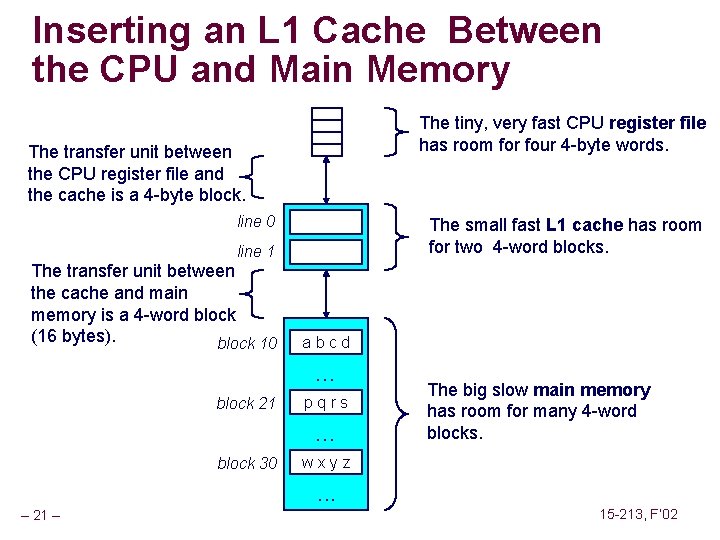

Inserting an L1 Cache Between the CPU and Main Memory

- The tiny, very fast CPU register file has room for four 4-byte words.
- The transfer unit between the CPU register file and the cache is a 4-byte block.
- The small, fast L1 cache has room for two 4-word blocks (line 0 and line 1).
- The transfer unit between the cache and main memory is a 4-word block (16 bytes).
- The big, slow main memory has room for many 4-word blocks (e.g., block 10 = abcd..., block 21 = pqrs..., block 30 = wxyz...).

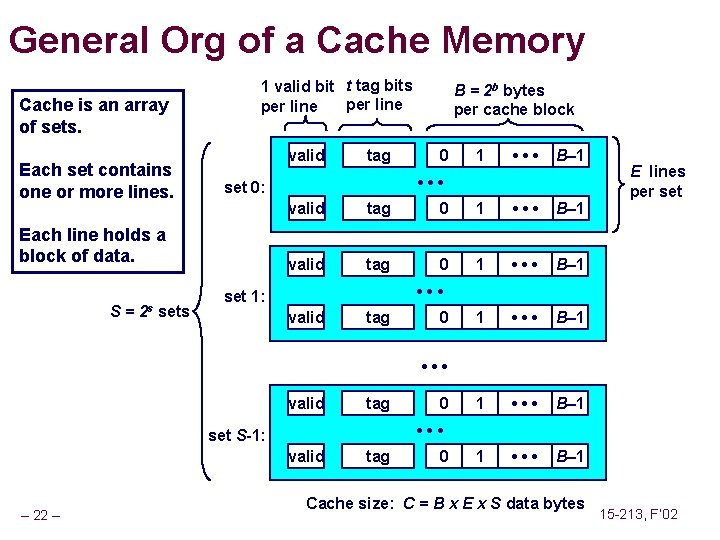

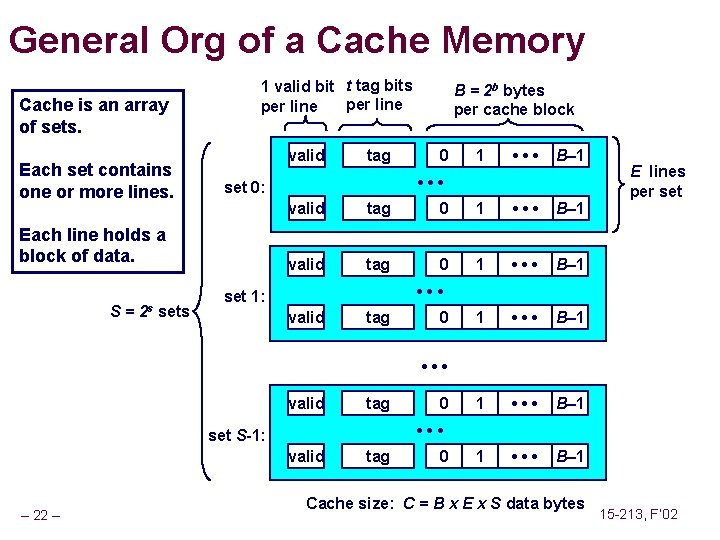

General Organization of a Cache Memory

A cache is an array of $S = 2^s$ sets. Each set contains one or more lines ($E$ lines per set). Each line holds a block of data ($B = 2^b$ bytes per cache block), 1 valid bit, and $t$ tag bits.

Cache size: $C = B \times E \times S$ data bytes.

Figure: sets 0 through $S-1$, each line drawn as valid | tag | bytes 0 ... $B-1$.

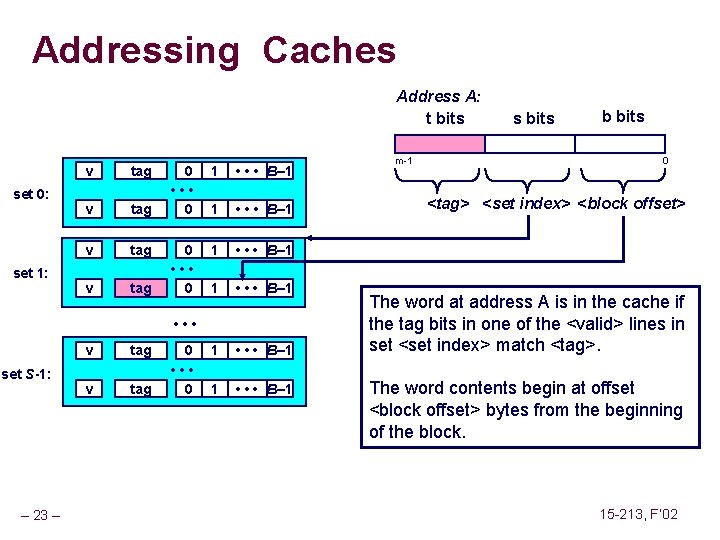

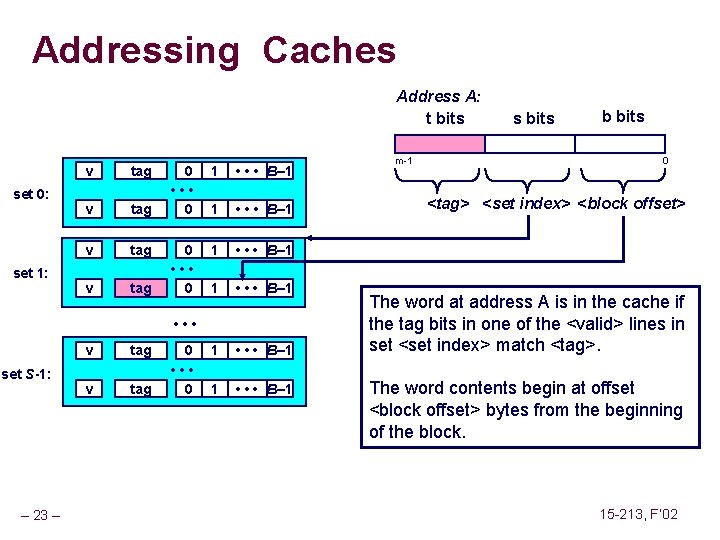

Addressing Caches

Address A (bits $m-1$ down to 0) is split, from most to least significant, into `<tag>` ($t$ bits), `<set index>` ($s$ bits), and `<block offset>` ($b$ bits).

- The word at address A is in the cache if the tag bits in one of the `<valid>` lines in set `<set index>` match `<tag>`.
- The word contents begin at offset `<block offset>` bytes from the beginning of the block.

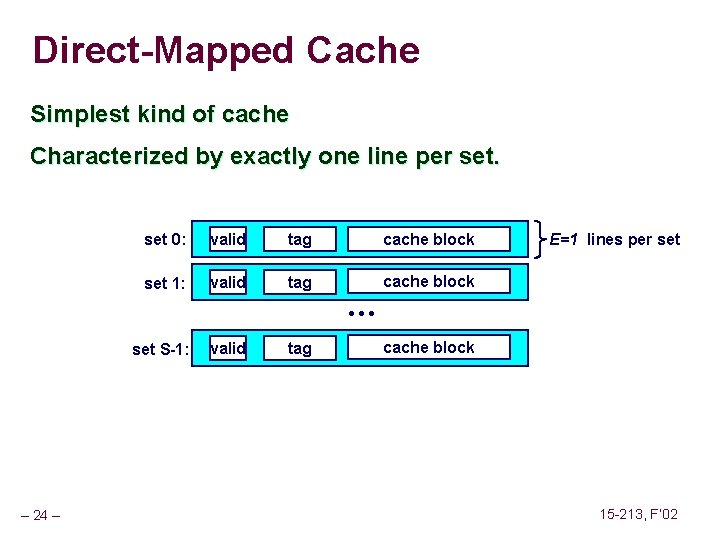

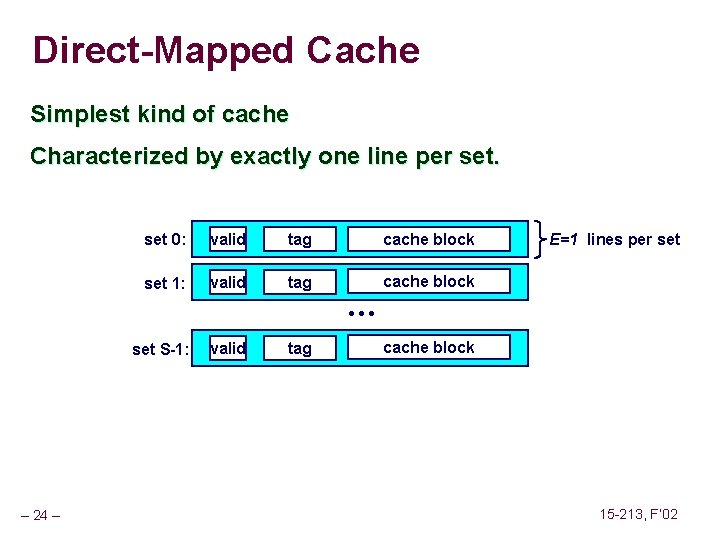

Direct-Mapped Cache

Simplest kind of cache

- Characterized by exactly one line per set ($E = 1$ lines per set).

Figure: sets 0 through $S-1$, each holding a single line (valid, tag, cache block).

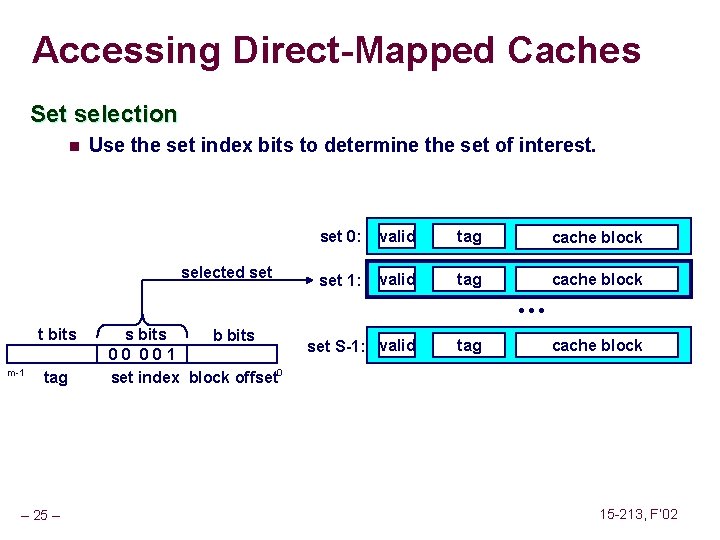

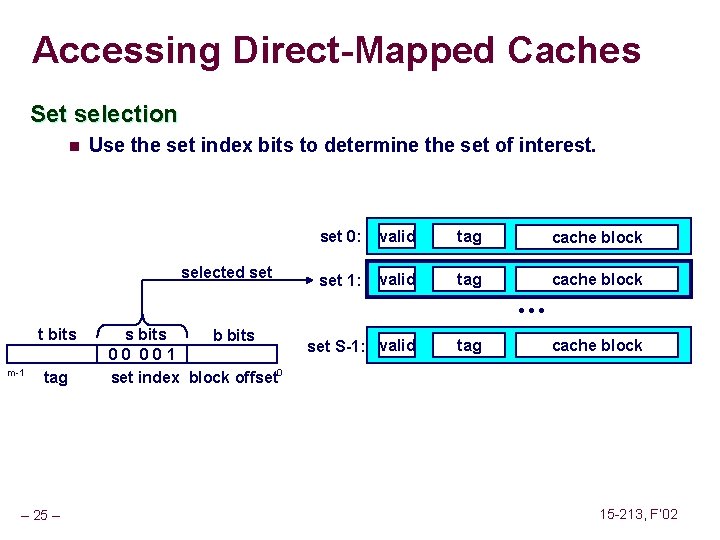

Accessing Direct-Mapped Caches

Set selection

- Use the set index bits to determine the set of interest.

Figure: the address (bits $m-1$ to 0) is divided into tag ($t$ bits), set index ($s$ bits), and block offset ($b$ bits); the set index bits pick the selected set among sets 0 through $S-1$.

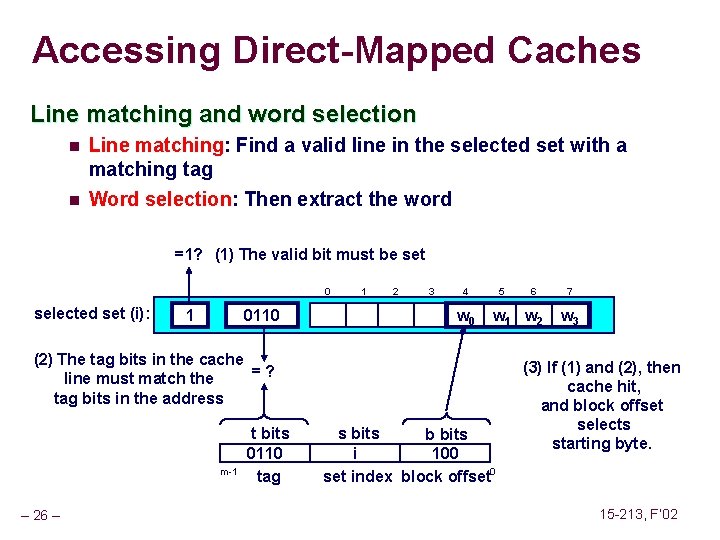

Accessing Direct-Mapped Caches

Line matching and word selection

- Line matching: find a valid line in the selected set with a matching tag.
- Word selection: then extract the word.

(1) The valid bit must be set.

(2) The tag bits in the cache line must match the tag bits in the address.

(3) If (1) and (2), then cache hit, and the block offset selects the starting byte.

Figure: selected set (i) holds a valid line with tag 0110 whose block bytes 4-7 contain w0-w3; the address has tag 0110, set index i, and block offset 100, so the word starting at byte 4 is selected.

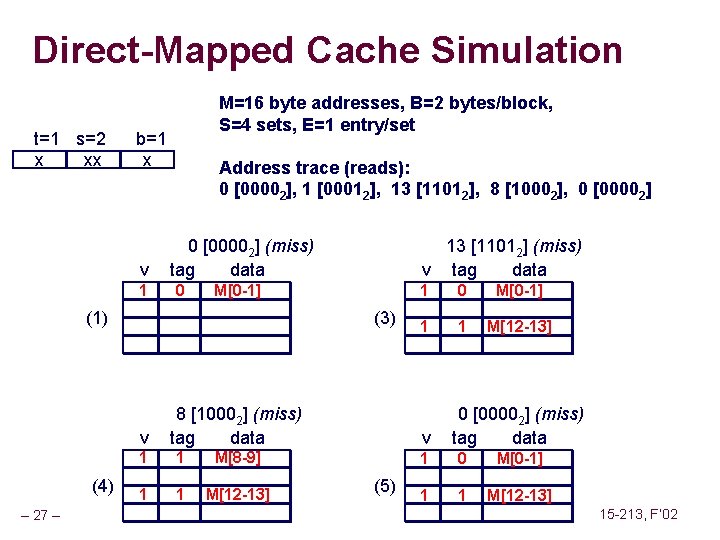

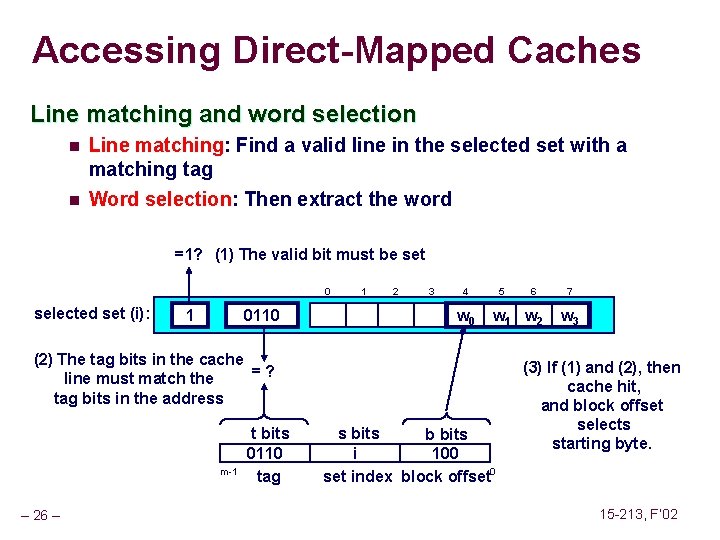

Direct-Mapped Cache Simulation

M = 16 byte addresses, B = 2 bytes/block, S = 4 sets, E = 1 entry/set, so each 4-bit address splits into $t = 1$ tag bit, $s = 2$ set index bits, and $b = 1$ offset bit.

Address trace (reads): 0 [$0000_2$], 1 [$0001_2$], 13 [$1101_2$], 8 [$1000_2$], 0 [$0000_2$]

| Step | Read | Result | Set 0 (v, tag, data) | Set 2 (v, tag, data) |
|---|---|---|---|---|
| (1) | 0 [$0000_2$] | miss | 1, 0, M[0-1] | 0 (invalid) |
| (2) | 1 [$0001_2$] | hit | 1, 0, M[0-1] | 0 (invalid) |
| (3) | 13 [$1101_2$] | miss | 1, 0, M[0-1] | 1, 1, M[12-13] |
| (4) | 8 [$1000_2$] | miss | 1, 1, M[8-9] | 1, 1, M[12-13] |
| (5) | 0 [$0000_2$] | miss | 1, 0, M[0-1] | 1, 1, M[12-13] |

Sets 1 and 3 stay invalid throughout.

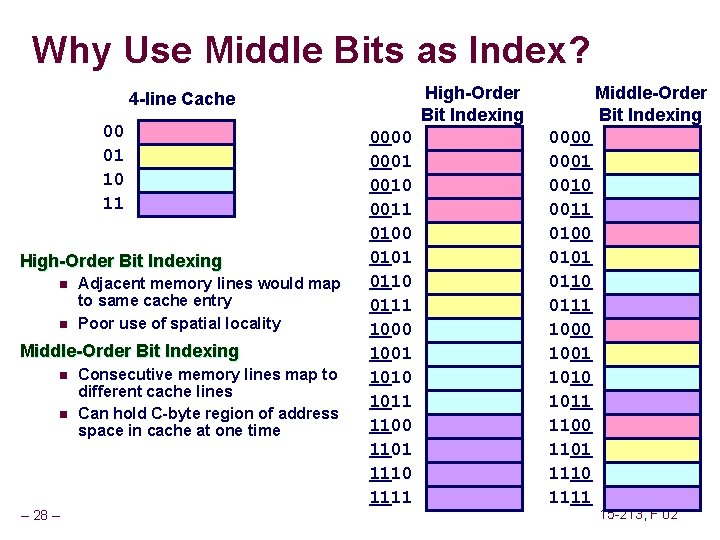

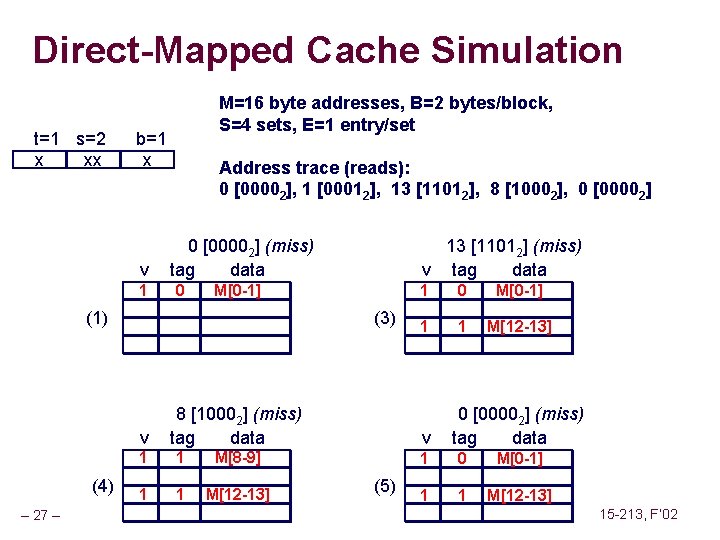

Why Use Middle Bits as Index?

High-order bit indexing

- Adjacent memory lines would map to the same cache entry.
- Poor use of spatial locality.

Middle-order bit indexing

- Consecutive memory lines map to different cache lines.
- Can hold a C-byte region of the address space in the cache at one time.

Figure: a 4-line cache (entries 00, 01, 10, 11) and 16 memory lines (0000-1111). With high-order indexing, lines 0000-0011 all map to entry 00, 0100-0111 to entry 01, and so on; with middle-order indexing, lines 0000, 0100, 1000, 1100 map to entry 00, lines 0001, 0101, 1001, 1101 to entry 01, and so on.

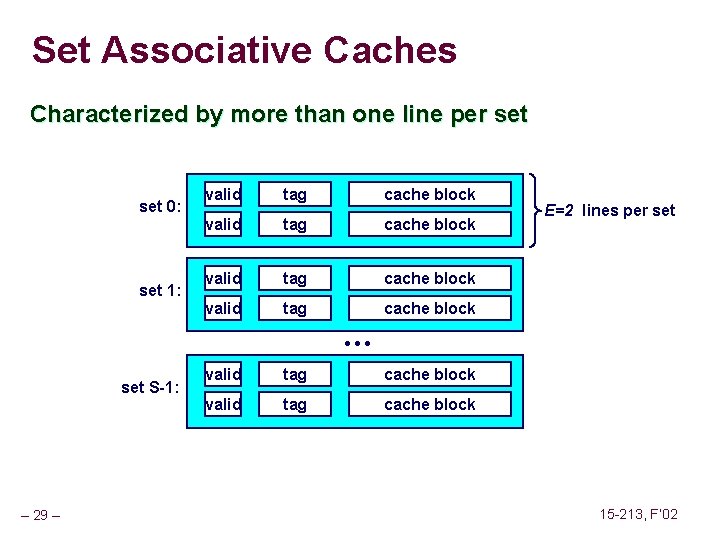

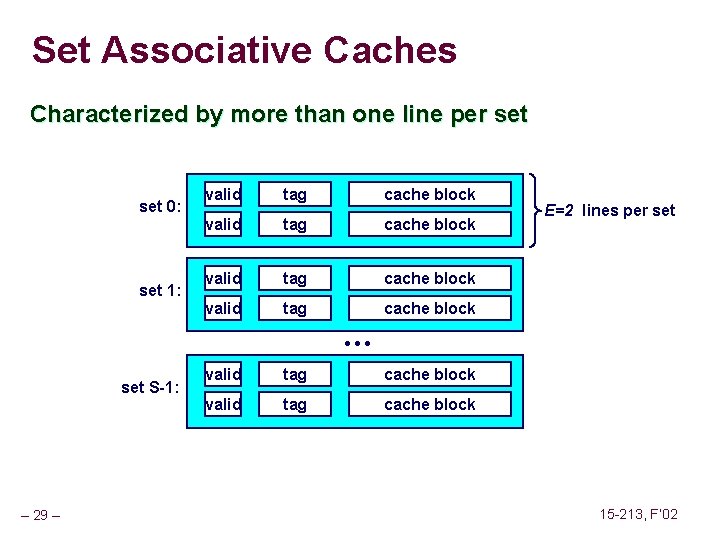

Set Associative Caches

- Characterized by more than one line per set.

Figure: sets 0 through $S-1$, each with $E = 2$ lines per set (valid, tag, cache block).

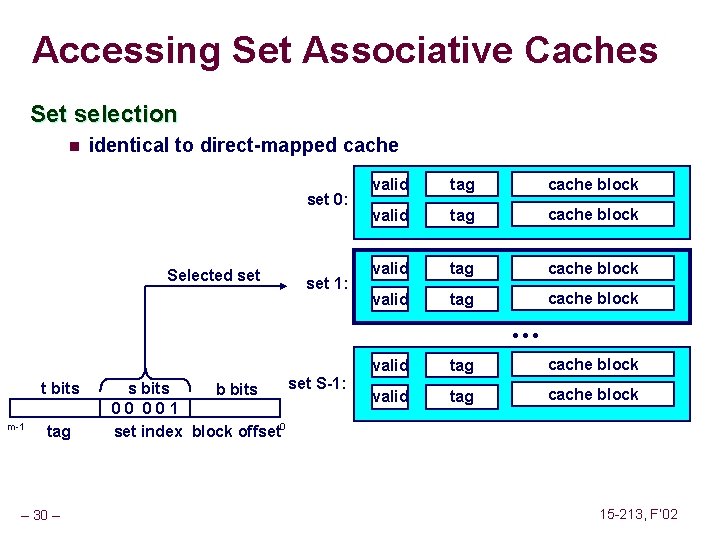

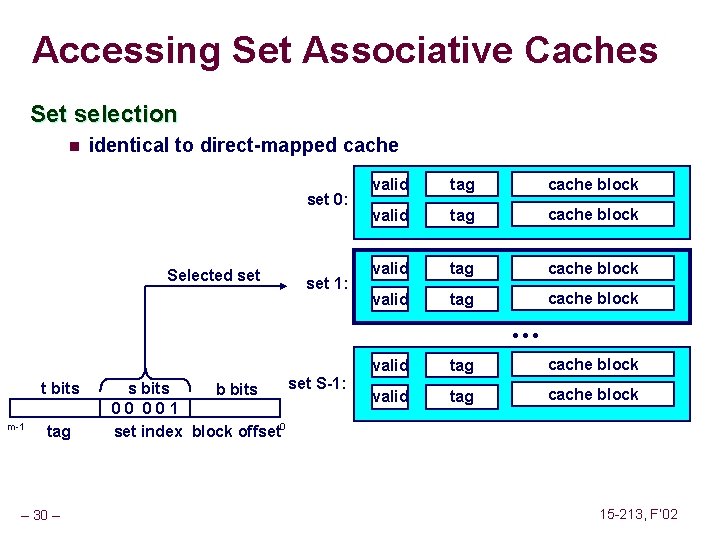

Accessing Set Associative Caches

Set selection

- Identical to direct-mapped cache.

Figure: the set index bits of the address (tag $t$ bits, set index $s$ bits, block offset $b$ bits) pick the selected set among sets 0 through $S-1$.

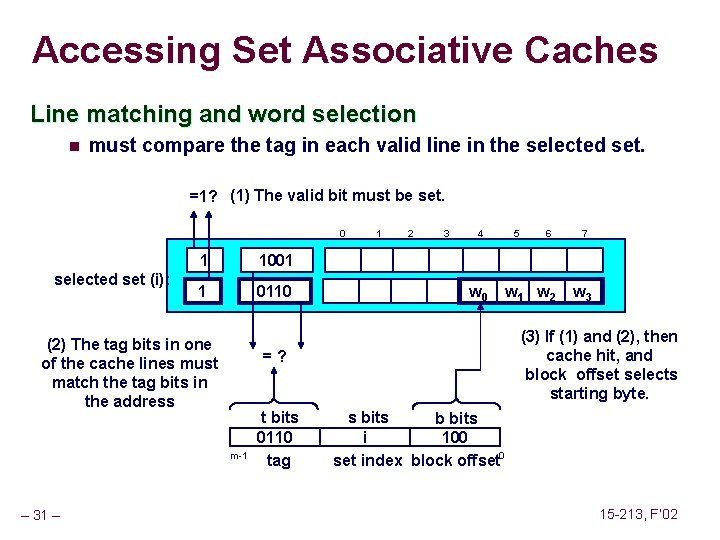

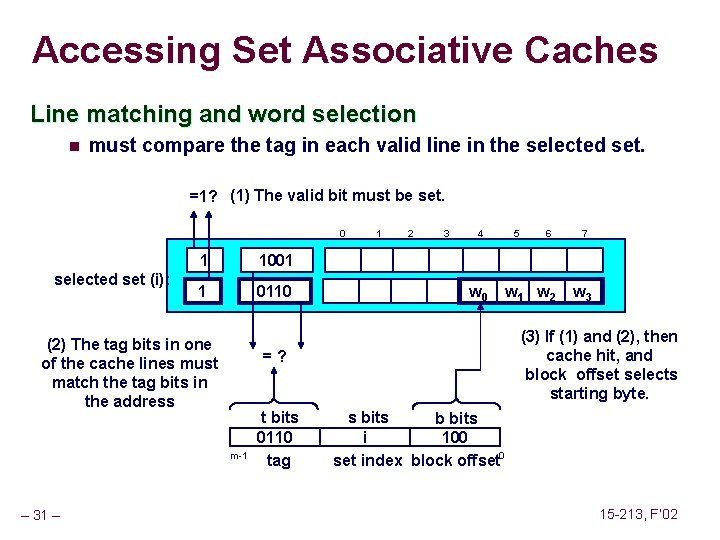

Accessing Set Associative Caches

Line matching and word selection

- Must compare the tag in each valid line in the selected set.

(1) The valid bit must be set.

(2) The tag bits in one of the cache lines must match the tag bits in the address.

(3) If (1) and (2), then cache hit, and the block offset selects the starting byte.

Figure: selected set (i) holds two valid lines with tags 1001 and 0110; the address has tag 0110, set index i, and block offset 100, so the second line matches and the word w0-w3 starting at byte 4 is selected.

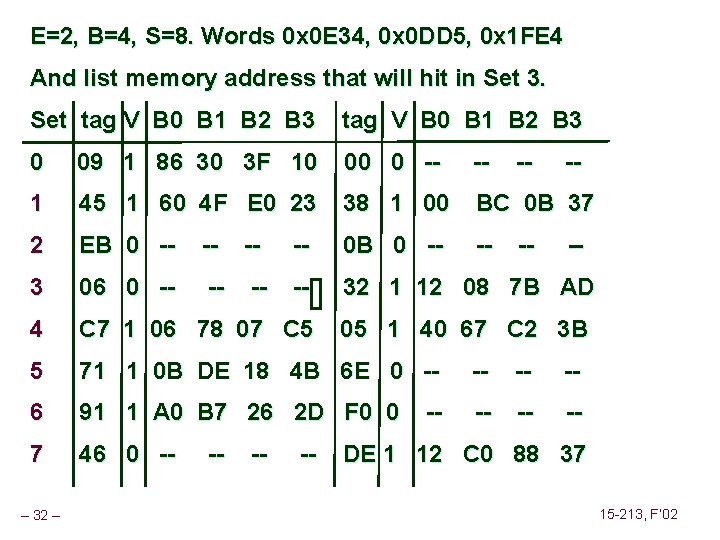

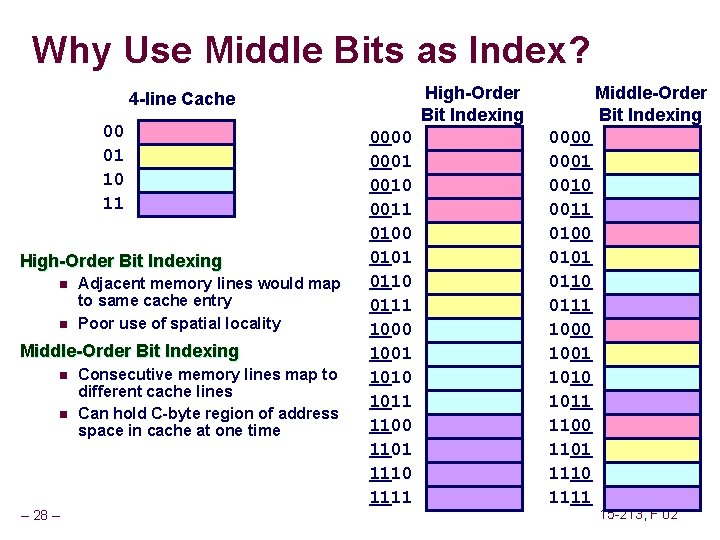

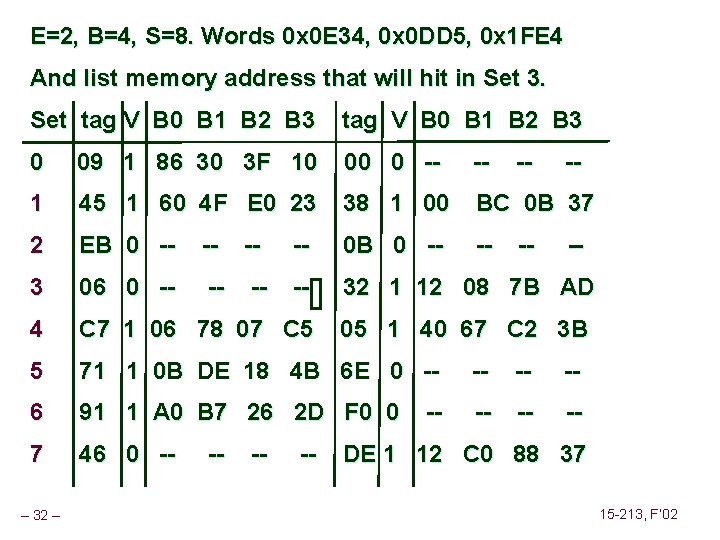

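Practice problem: a cache with E = 2, B = 4, S = 8. Determine whether reads of the words at 0x0E34, 0x0DD5, and 0x1FE4 hit, and list the memory addresses that will hit in set 3.

| Set | Tag | V | B0 | B1 | B2 | B3 | Tag | V | B0 | B1 | B2 | B3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 09 | 1 | 86 | 30 | 3F | 10 | 00 | 0 | – | – | – | – |
| 1 | 45 | 1 | 60 | 4F | E0 | 23 | 38 | 1 | 00 | BC | 0B | 37 |
| 2 | EB | 0 | – | – | – | – | 0B | 0 | – | – | – | – |
| 3 | 06 | 0 | – | – | – | – | 32 | 1 | 12 | 08 | 7B | AD |
| 4 | C7 | 1 | 06 | 78 | 07 | C5 | 05 | 1 | 40 | 67 | C2 | 3B |
| 5 | 71 | 1 | 0B | DE | 18 | 4B | 6E | 0 | – | – | – | – |
| 6 | 91 | 1 | A0 | B7 | 26 | 2D | F0 | 0 | – | – | – | – |
| 7 | 46 | 0 | – | – | – | – | DE | 1 | 12 | C0 | 88 | 37 |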

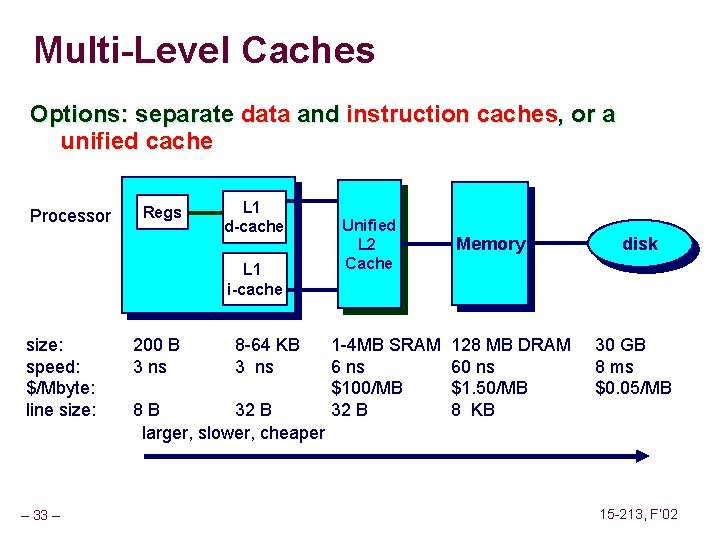

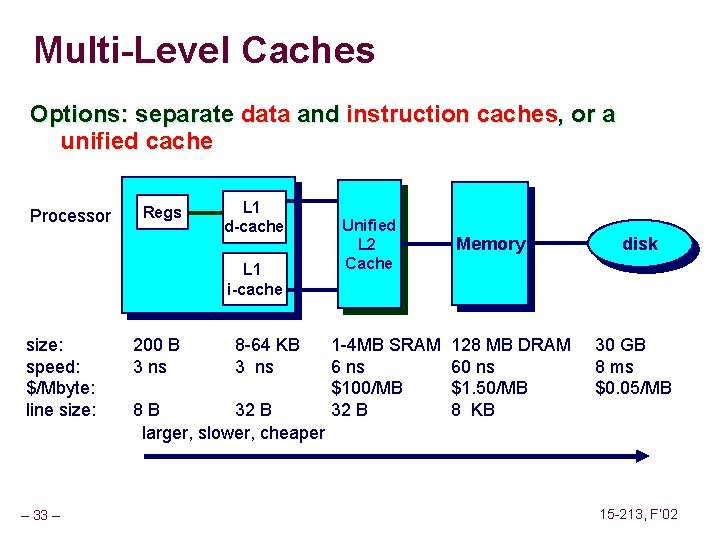

Multi-Level Caches

Options: separate data and instruction caches, or a unified cache.

| | Regs | L1 d-cache, L1 i-cache | Unified L2 cache | Memory | Disk |
|---|---|---|---|---|---|
| size | 200 B | 8-64 KB | 1-4 MB SRAM | 128 MB DRAM | 30 GB |
| speed | 3 ns | 3 ns | 6 ns | 60 ns | 8 ms |
| \$/Mbyte | | | \$100/MB | \$1.50/MB | \$0.05/MB |
| line size | 8 B | 32 B | 32 B | 8 KB | |

Moving right: larger, slower, cheaper.

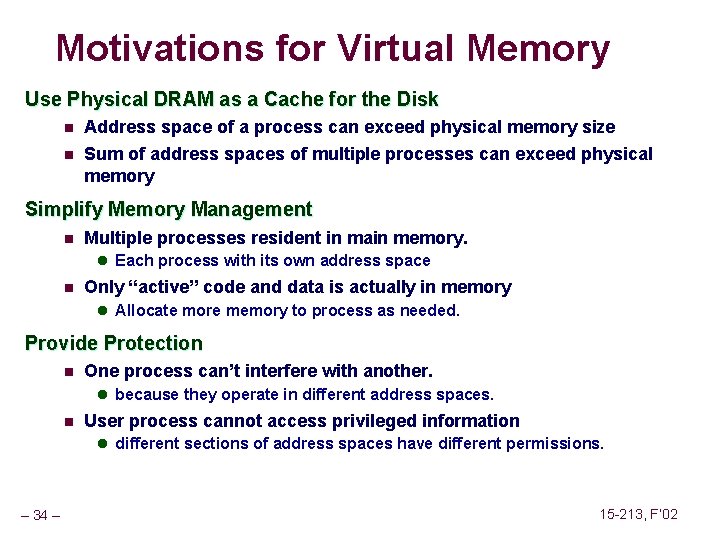

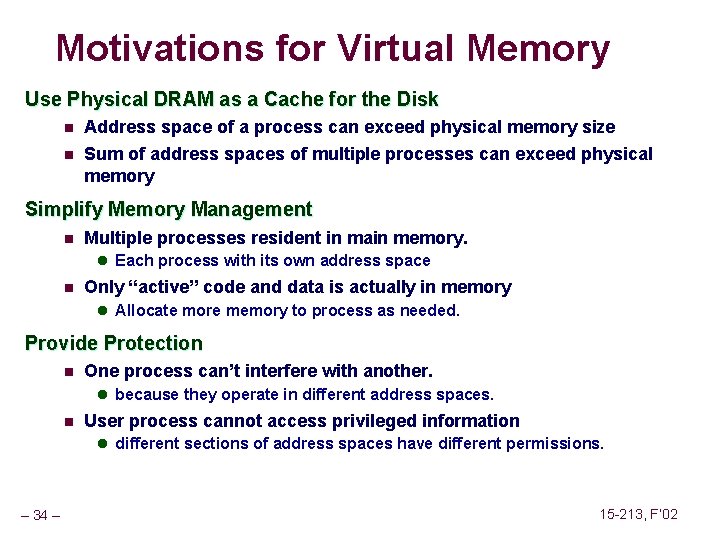

Motivations for Virtual Memory

Use physical DRAM as a cache for the disk

- Address space of a process can exceed physical memory size.
- Sum of address spaces of multiple processes can exceed physical memory.

Simplify memory management

- Multiple processes resident in main memory.
  - Each process with its own address space.
- Only “active” code and data is actually in memory.
  - Allocate more memory to a process as needed.

Provide protection

- One process can’t interfere with another,
  - because they operate in different address spaces.
- User process cannot access privileged information:
  - different sections of address spaces have different permissions.

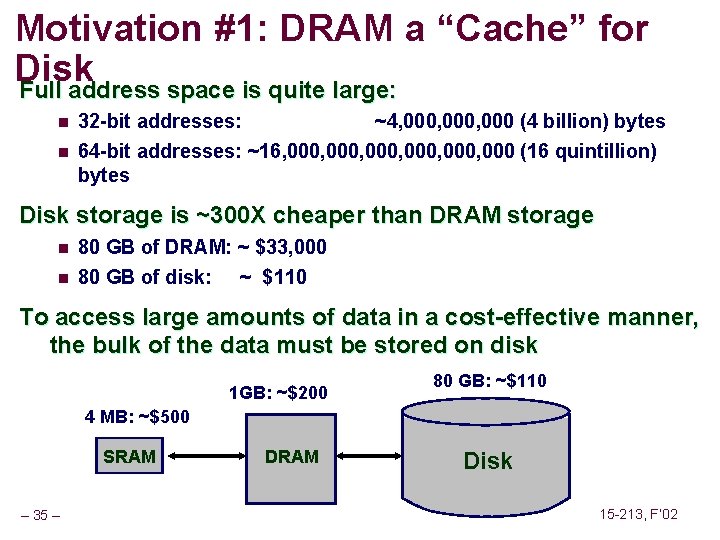

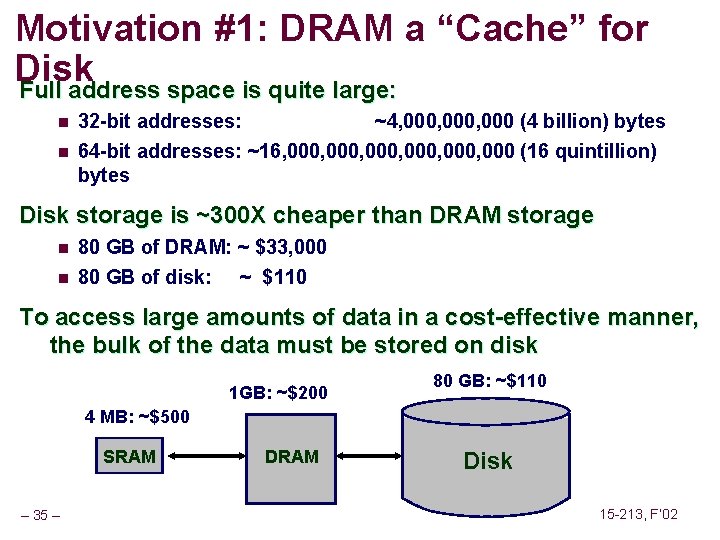

Motivation #1: DRAM a “Cache” for Disk

Full address space is quite large:

- 32-bit addresses: ~4,000,000,000 (4 billion) bytes
- 64-bit addresses: ~18,000,000,000,000,000,000 (18 quintillion, i.e., $16 \times 2^{60}$) bytes

Disk storage is ~300X cheaper than DRAM storage:

- 80 GB of DRAM: ~\$33,000
- 80 GB of disk: ~\$110

To access large amounts of data in a cost-effective manner, the bulk of the data must be stored on disk.

Figure: cost comparison. SRAM 4 MB: ~\$500; DRAM 1 GB: ~\$200; disk 80 GB: ~\$110.
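The address-space sizes and the cost ratio can be checked directly. Note that $2^{64}$ is sixteen binary "quintillions" ($16 \times 2^{60}$), which is about $1.8 \times 10^{19}$ in decimal terms:

$$2^{32} = 4{,}294{,}967{,}296 \approx 4 \times 10^{9} \text{ bytes}$$

$$2^{64} = 16 \times 2^{60} = 18{,}446{,}744{,}073{,}709{,}551{,}616 \approx 1.8 \times 10^{19} \text{ bytes}$$

$$\frac{\$33{,}000}{\$110} = 300$$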

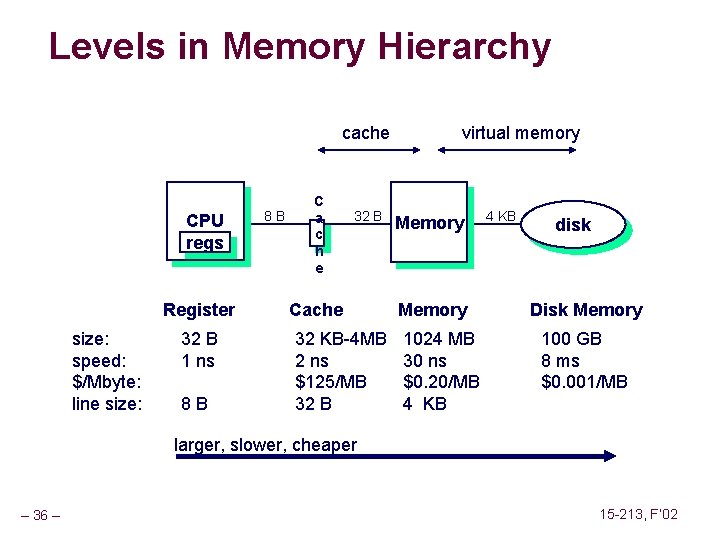

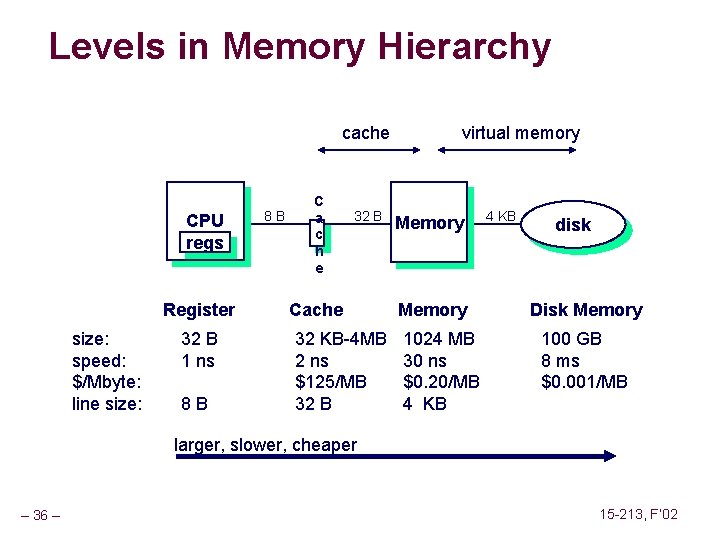

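Levels in Memory Hierarchy

| | Register | Cache | Memory | Disk memory |
|---|---|---|---|---|
| size | 32 B | 32 KB-4 MB | 1024 MB | 100 GB |
| speed | 1 ns | 2 ns | 30 ns | 8 ms |
| \$/Mbyte | | \$125/MB | \$0.20/MB | \$0.001/MB |
| line size | 8 B | 32 B | 4 KB | |

Transfers: CPU registers and cache exchange 8 B units; cache and memory exchange 32 B lines (“cache”); memory and disk exchange 4 KB pages (“virtual memory”). Moving right: larger, slower, cheaper.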

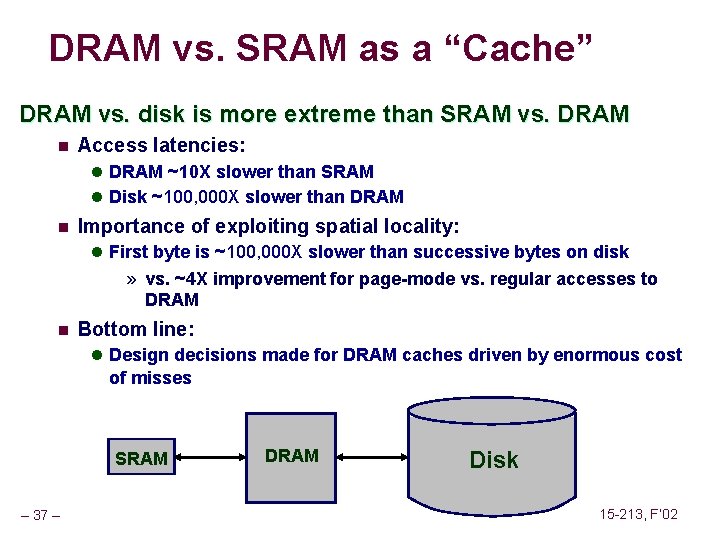

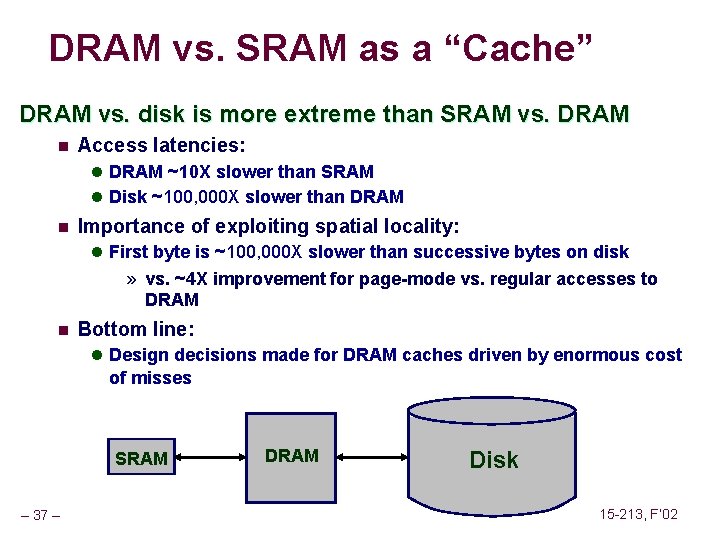

DRAM vs. SRAM as a “Cache”

DRAM vs. disk is more extreme than SRAM vs. DRAM

- Access latencies:
  - DRAM ~10X slower than SRAM
  - Disk ~100,000X slower than DRAM
- Importance of exploiting spatial locality:
  - First byte is ~100,000X slower than successive bytes on disk
    - vs. ~4X improvement for page-mode vs. regular accesses to DRAM
- Bottom line:
  - Design decisions made for DRAM caches are driven by the enormous cost of misses

Figure: SRAM, DRAM, Disk.

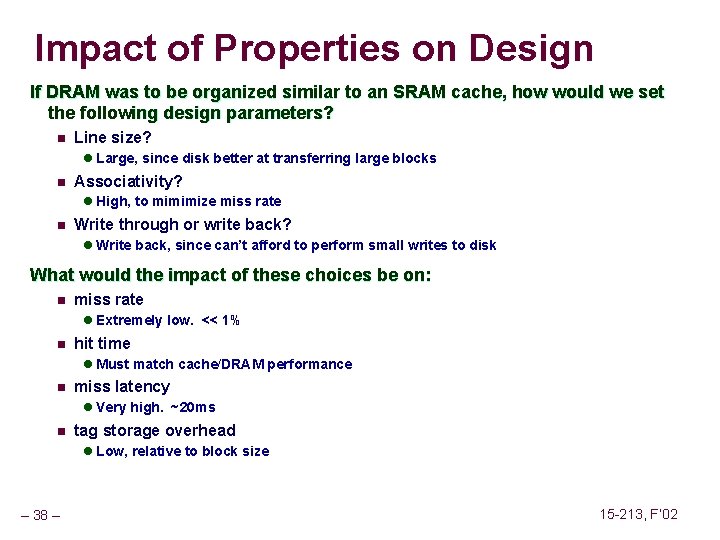

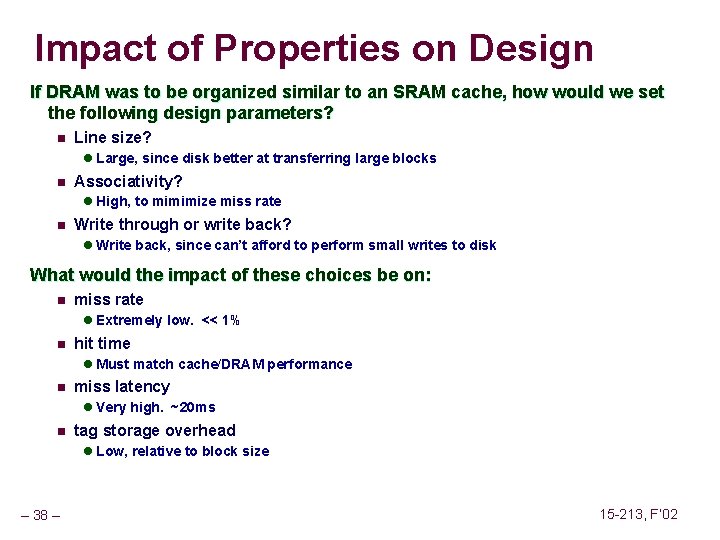

Impact of Properties on Design

If DRAM were to be organized similarly to an SRAM cache, how would we set the following design parameters?

- Line size?
  - Large, since disk is better at transferring large blocks
- Associativity?
  - High, to minimize miss rate
- Write through or write back?
  - Write back, since we can’t afford to perform small writes to disk

What would the impact of these choices be on:

- miss rate
  - Extremely low. << 1%
- hit time
  - Must match cache/DRAM performance
- miss latency
  - Very high. ~20 ms
- tag storage overhead
  - Low, relative to block size

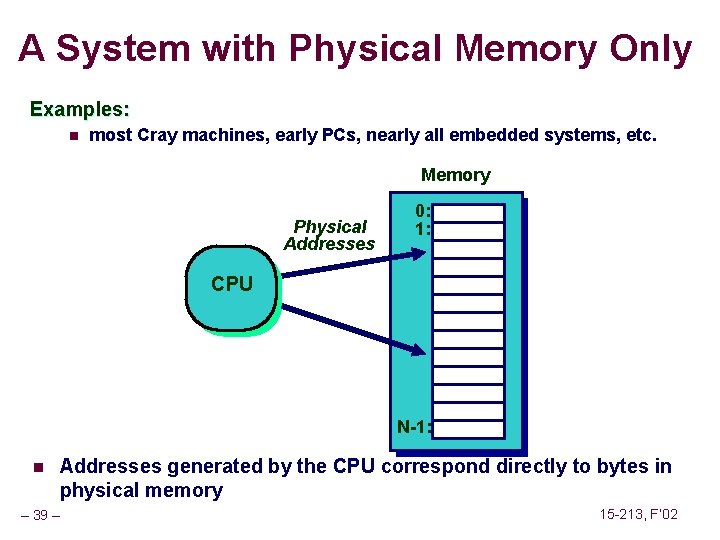

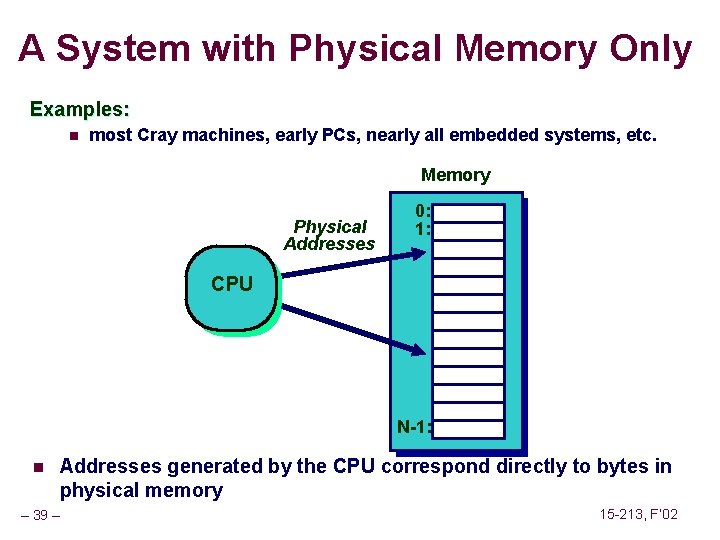

A System with Physical Memory Only

Examples:

- most Cray machines, early PCs, nearly all embedded systems, etc.

Addresses generated by the CPU correspond directly to bytes in physical memory.

Figure: the CPU issues physical addresses 0 to N-1 directly to memory.

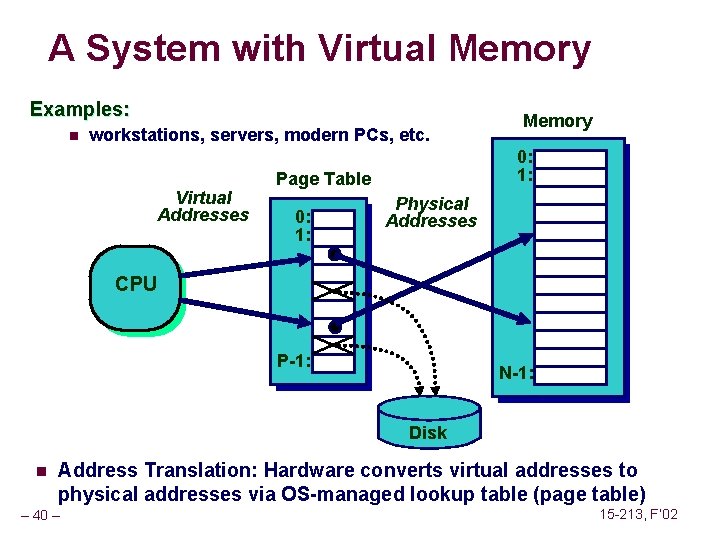

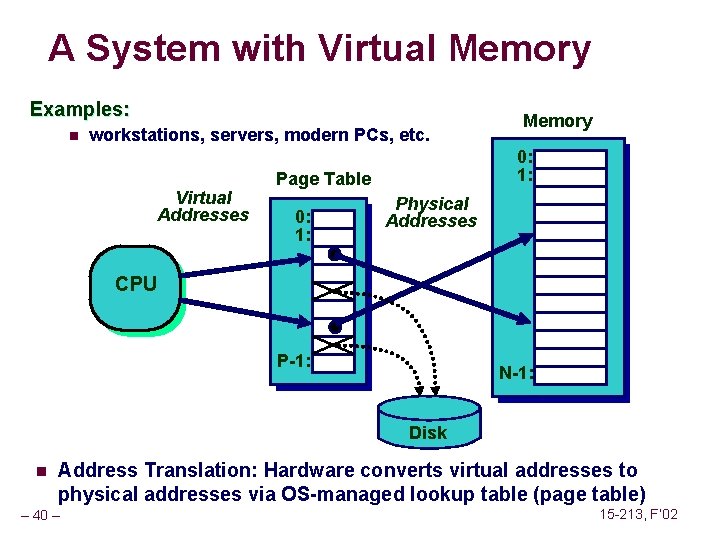

A System with Virtual Memory

Examples:

- workstations, servers, modern PCs, etc.

Address translation: hardware converts virtual addresses to physical addresses via an OS-managed lookup table (page table).

Figure: the CPU issues virtual addresses; the page table maps each one either to a physical address in memory or to a location on disk.

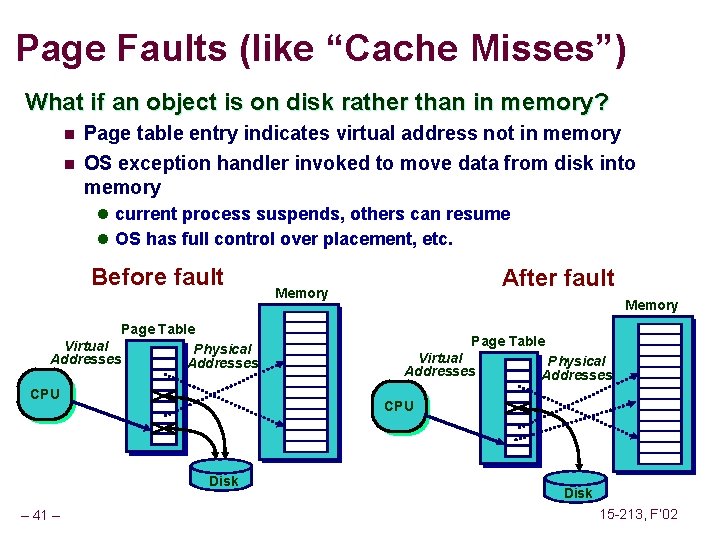

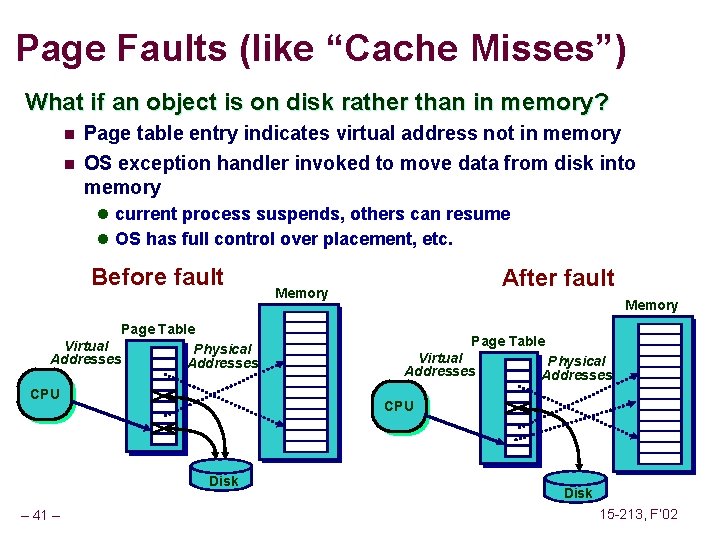

Page Faults (like “Cache Misses”)

What if an object is on disk rather than in memory?

- Page table entry indicates virtual address not in memory.
- OS exception handler invoked to move data from disk into memory:
  - current process suspends, others can resume
  - OS has full control over placement, etc.

Figure: before the fault, the page table entry for the virtual address points to disk; after the fault, the page has been copied into memory and the entry points to its physical address.

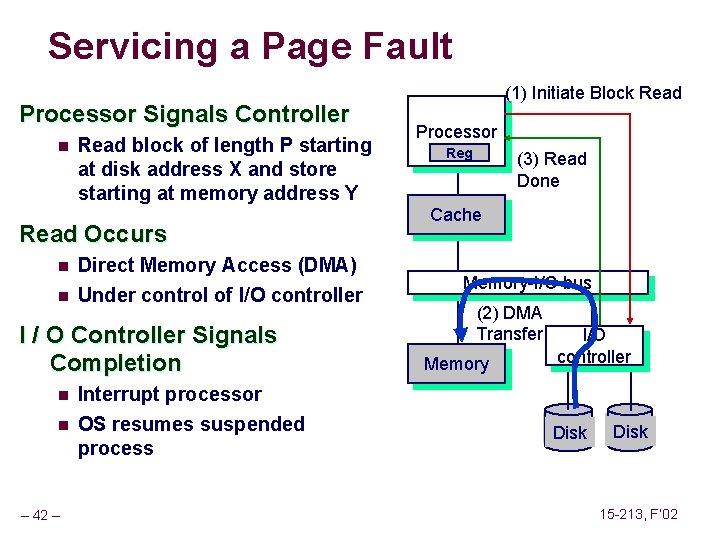

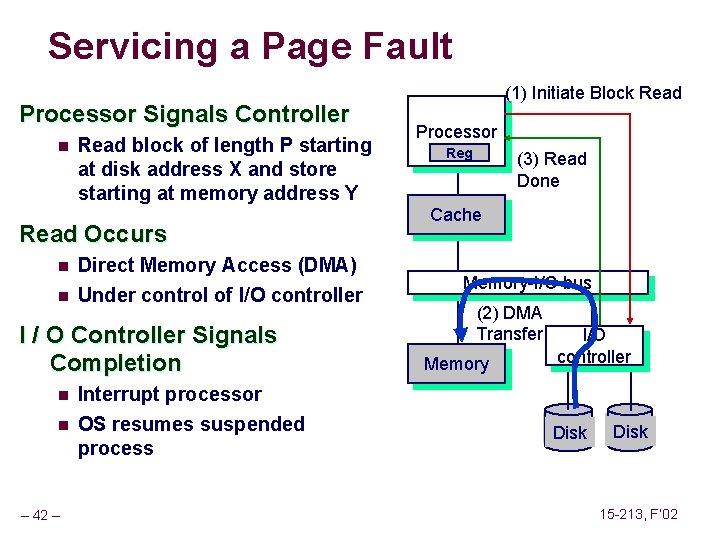

Servicing a Page Fault

(1) Processor signals controller

- Read block of length P starting at disk address X and store starting at memory address Y

(2) Read occurs

- Direct Memory Access (DMA)
- Under control of I/O controller

(3) I/O controller signals completion

- Interrupt processor
- OS resumes suspended process

Figure: processor (registers, cache), memory-I/O bus, memory, I/O controller, and disk, with arrows for (1) initiate block read, (2) DMA transfer, (3) read done.

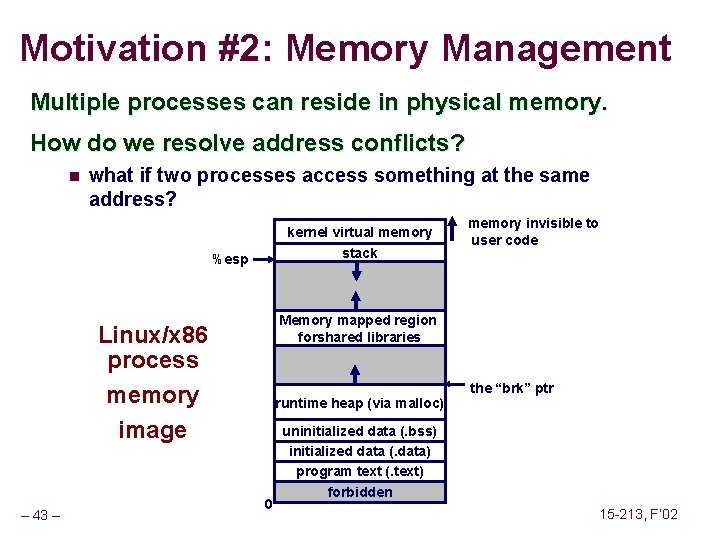

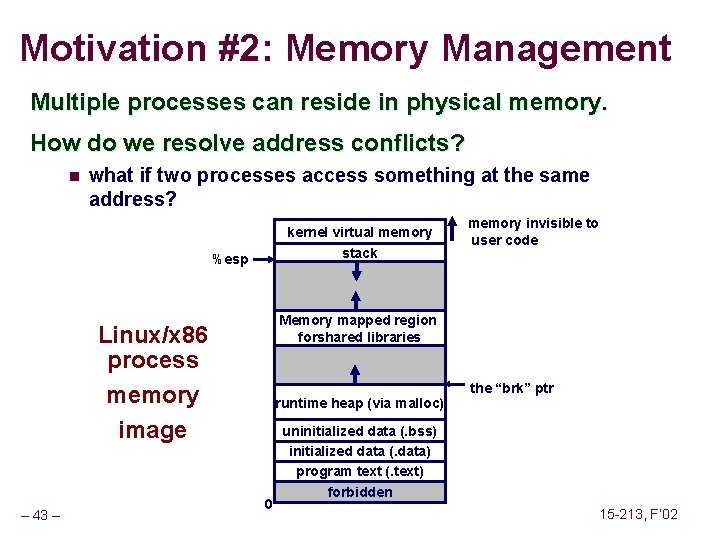

Motivation #2: Memory Management

Multiple processes can reside in physical memory. How do we resolve address conflicts?

- What if two processes access something at the same address?

Figure: Linux/x86 process memory image, from high to low addresses: kernel virtual memory (memory invisible to user code); stack (%esp); memory-mapped region for shared libraries; runtime heap (via malloc), bounded by the “brk” ptr; uninitialized data (.bss); initialized data (.data); program text (.text); forbidden region at address 0.

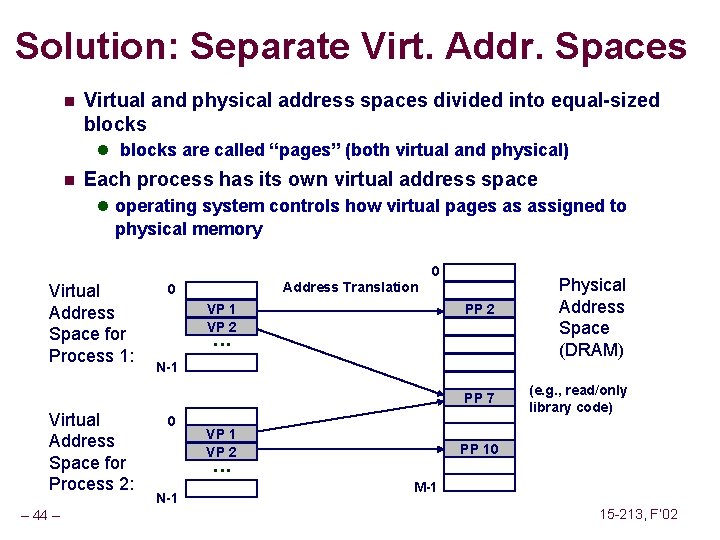

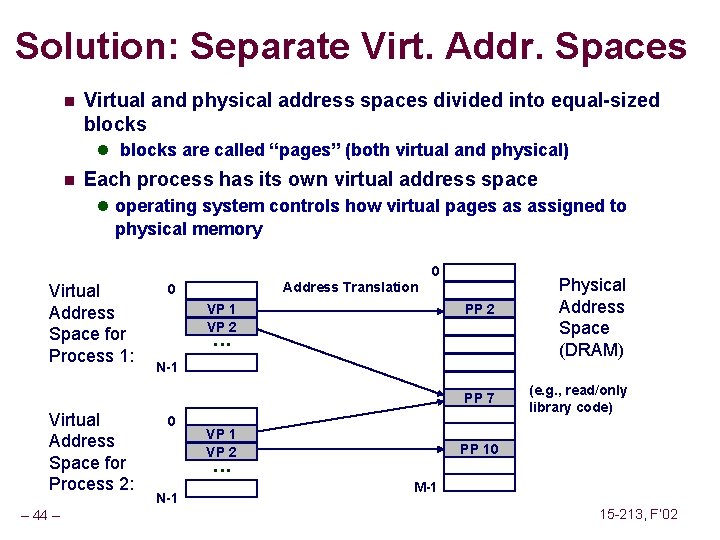

Solution: Separate Virtual Address Spaces

- Virtual and physical address spaces are divided into equal-sized blocks.
  - Blocks are called “pages” (both virtual and physical).
- Each process has its own virtual address space.
  - The operating system controls how virtual pages are assigned to physical memory.

Figure: address translation maps virtual pages VP 1 and VP 2 of process 1 and of process 2 (virtual addresses 0 to N-1) onto physical pages such as PP 2, PP 7, and PP 10 in DRAM (physical addresses 0 to M-1); one physical page can be shared by both processes (e.g., read-only library code).

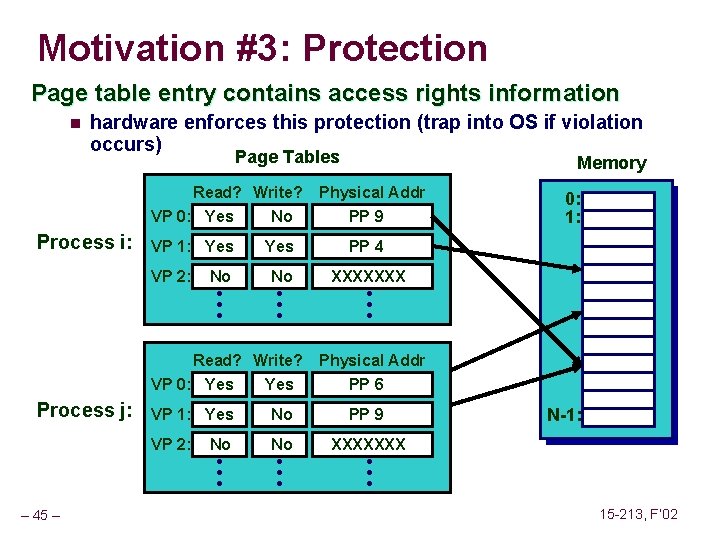

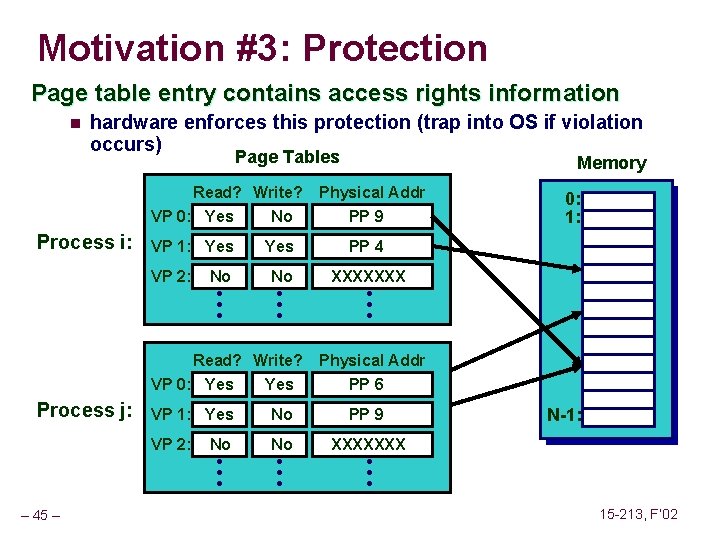

Motivation #3: Protection

Page table entry contains access rights information

- Hardware enforces this protection (trap into OS if violation occurs).

Figure: per-process page tables with Read?, Write?, and Physical Addr columns, pointing into memory (addresses 0 to N-1). Process i: VP 0 (read yes, write no) maps to PP 9; VP 1 (read yes) maps to PP 4; VP 2 (no access) has no physical page (XXXXXXX). Process j: VP 0 (read yes) maps to PP 6; VP 1 (read yes, write no) maps to PP 9; VP 2 (no access) has no physical page (XXXXXXX).

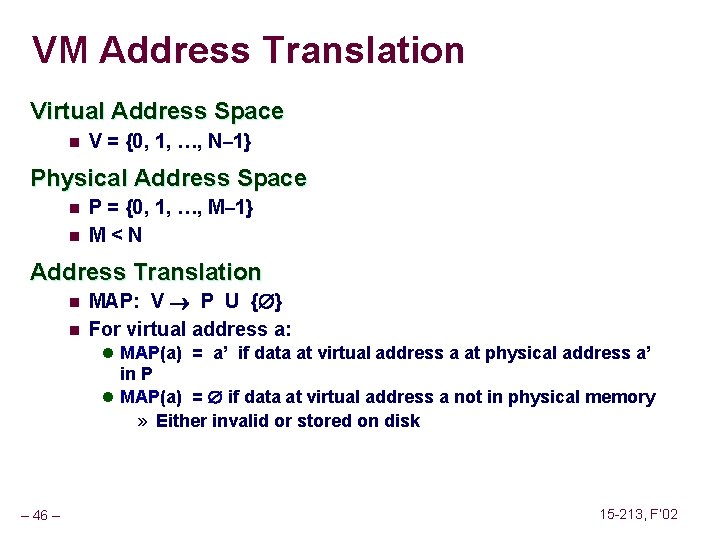

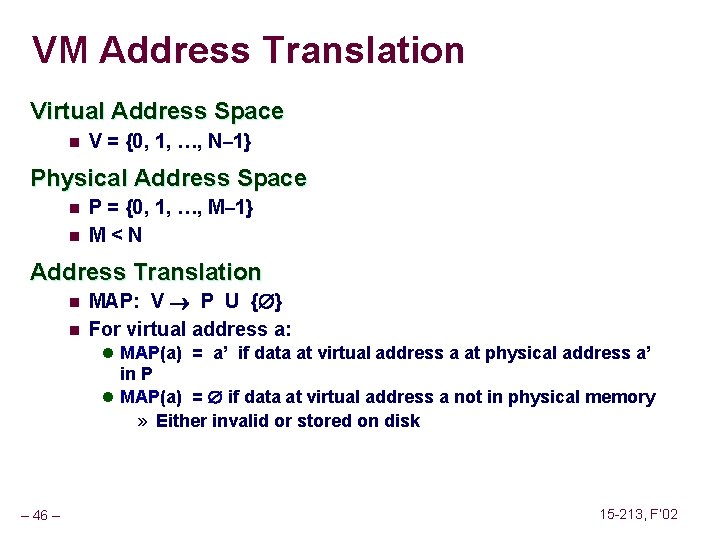

VM Address Translation

Virtual address space

- $V = \{0, 1, \ldots, N-1\}$

Physical address space

- $P = \{0, 1, \ldots, M-1\}$
- $M < N$

Address translation

- $\mathrm{MAP}: V \to P \cup \{\emptyset\}$
- For virtual address $a$:
  - $\mathrm{MAP}(a) = a'$ if data at virtual address $a$ is at physical address $a'$ in $P$
  - $\mathrm{MAP}(a) = \emptyset$ if data at virtual address $a$ is not in physical memory
    - either invalid or stored on disk

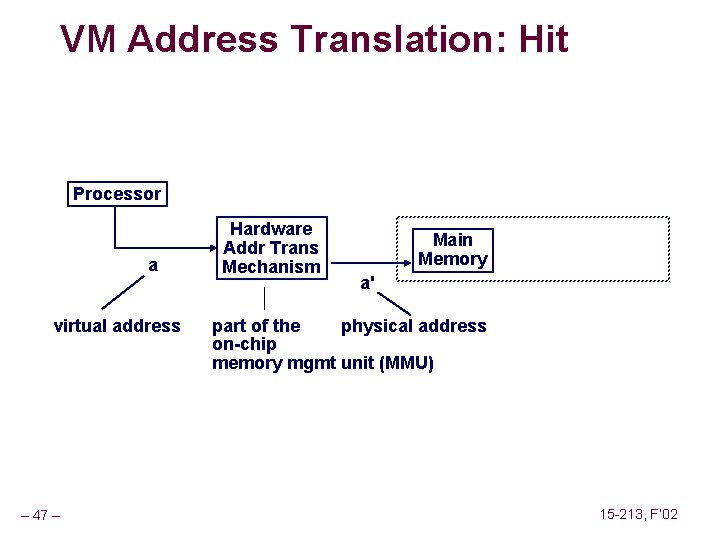

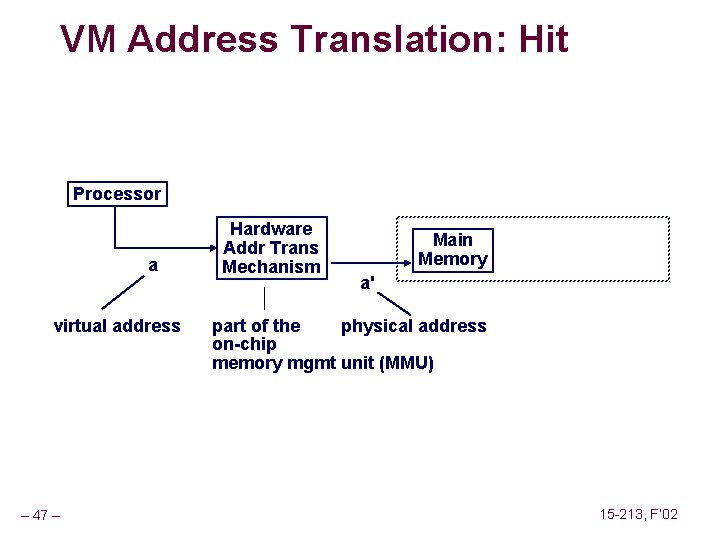

VM Address Translation: Hit

Figure: the processor sends virtual address a to the hardware address translation mechanism (part of the on-chip memory management unit, MMU), which sends physical address a' to main memory.

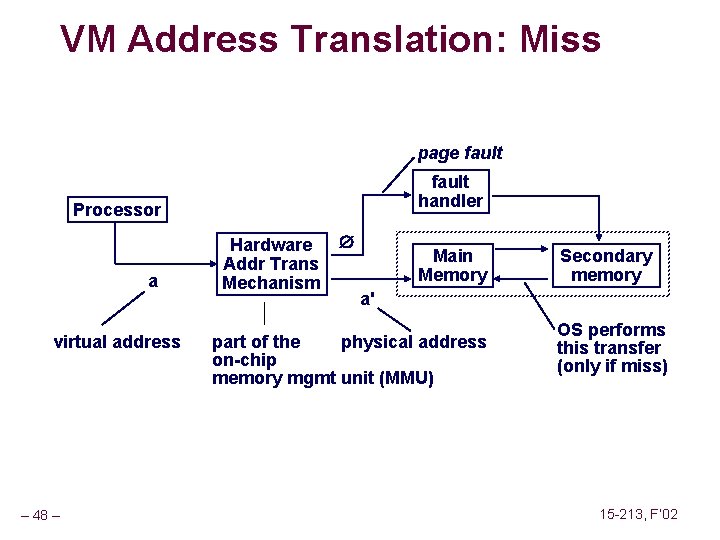

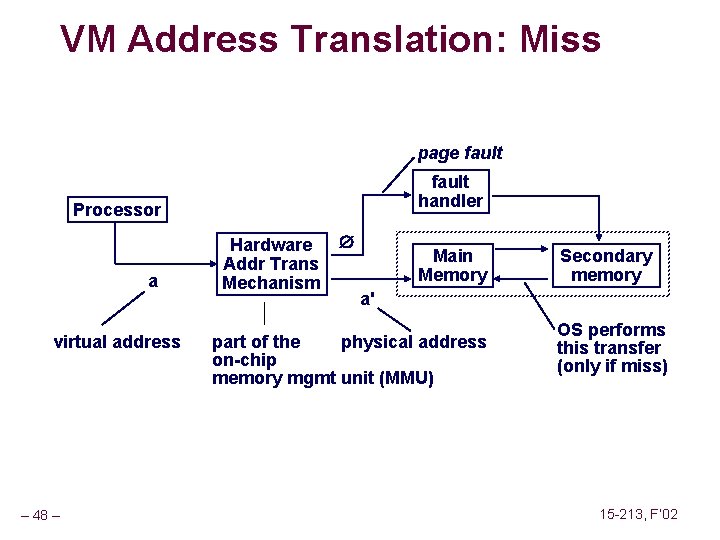

VM Address Translation: Miss

Figure: the processor sends virtual address a to the hardware address translation mechanism (part of the on-chip MMU); on a miss it raises a page fault, and the OS page fault handler transfers the page from secondary memory to main memory (only if miss). Translation then yields physical address a' for main memory.

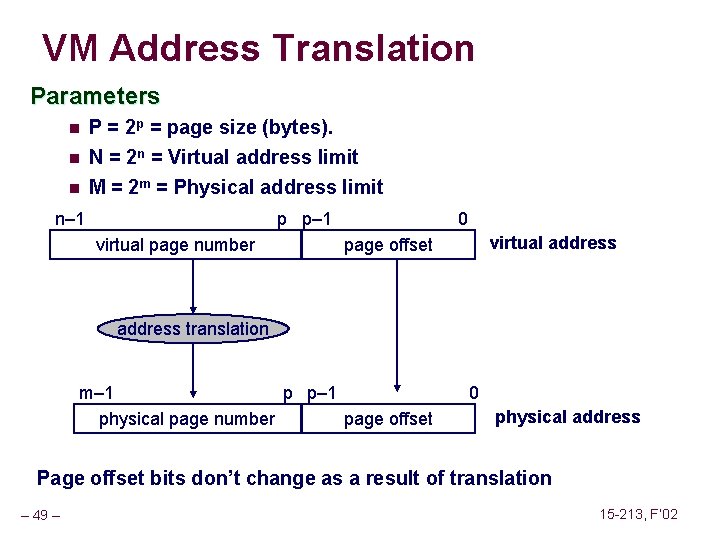

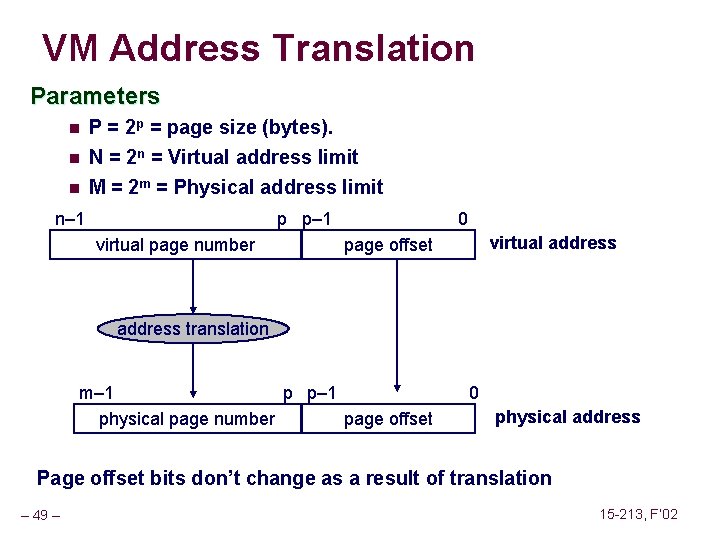

VM Address Translation

Parameters

- $P = 2^p$ = page size (bytes)
- $N = 2^n$ = virtual address limit
- $M = 2^m$ = physical address limit

Virtual address: virtual page number (bits $n-1$ to $p$) | page offset (bits $p-1$ to 0)

After address translation, physical address: physical page number (bits $m-1$ to $p$) | page offset (bits $p-1$ to 0)

Page offset bits don’t change as a result of translation.

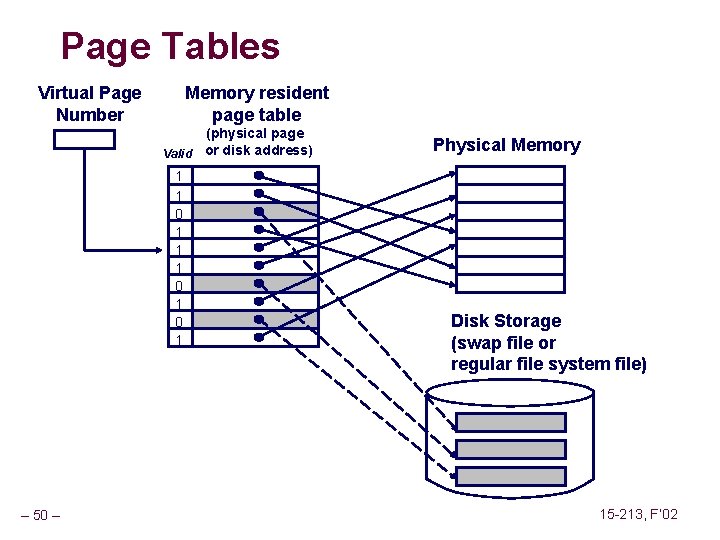

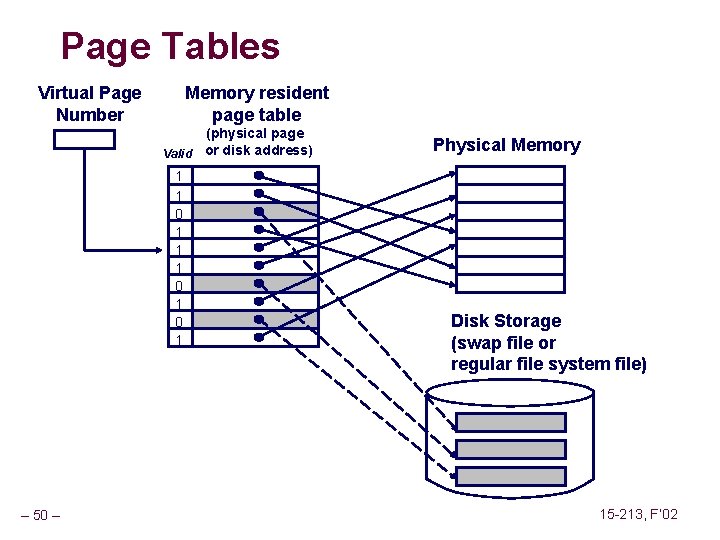

Page Tables

Figure: the virtual page number indexes a memory-resident page table whose entries hold a valid bit and a physical page number or disk address. Entries with valid = 1 point into physical memory; entries with valid = 0 point to disk storage (swap file or regular file system file).

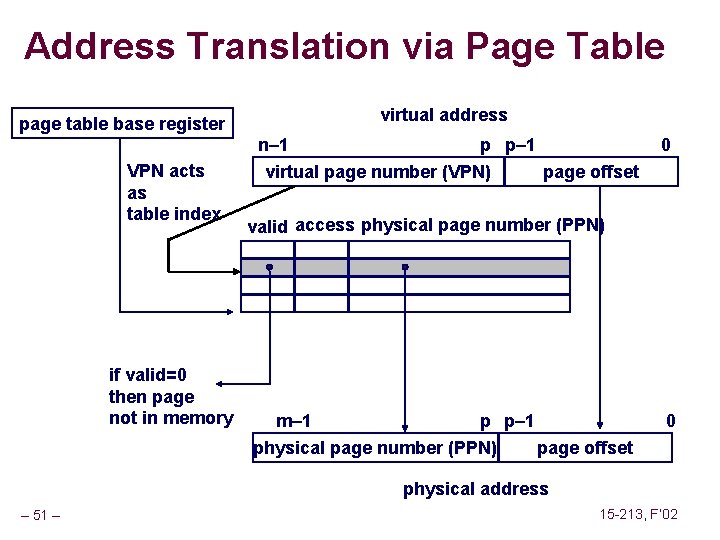

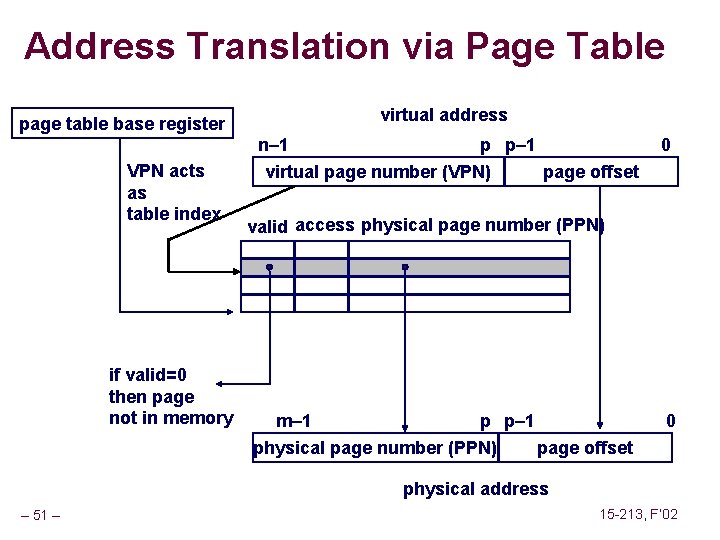

Address Translation via Page Table

- The page table base register locates the page table.
- The VPN acts as the table index.
- If valid = 0, then the page is not in memory.

Virtual address: virtual page number (VPN, bits $n-1$ to $p$) | page offset (bits $p-1$ to 0)

Page table entry: valid | access | physical page number (PPN)

Physical address: physical page number (PPN, bits $m-1$ to $p$) | page offset (bits $p-1$ to 0)

Page Table Operation

Translation

- Separate (set of) page table(s) per process
- VPN forms index into page table (points to a page table entry)

Page Table Operation

Computing physical address

- Page Table Entry (PTE) provides information about page
  - if (valid bit = 1) then the page is in memory.
    - Use physical page number (PPN) to construct address
  - if (valid bit = 0) then the page is on disk
    - Page fault

Page Table Operation

Checking protection

- Access rights field indicates allowable access
  - e.g., read-only, read-write, execute-only
  - typically support multiple protection modes (e.g., kernel vs. user)
- Protection violation fault if user doesn’t have necessary permission

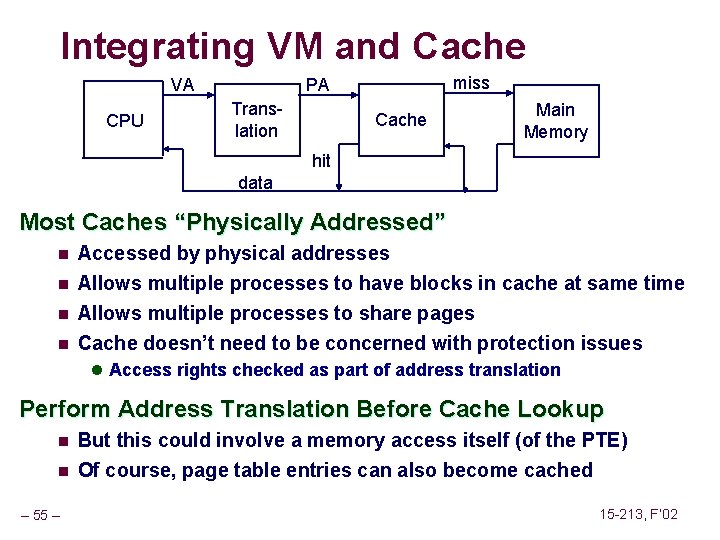

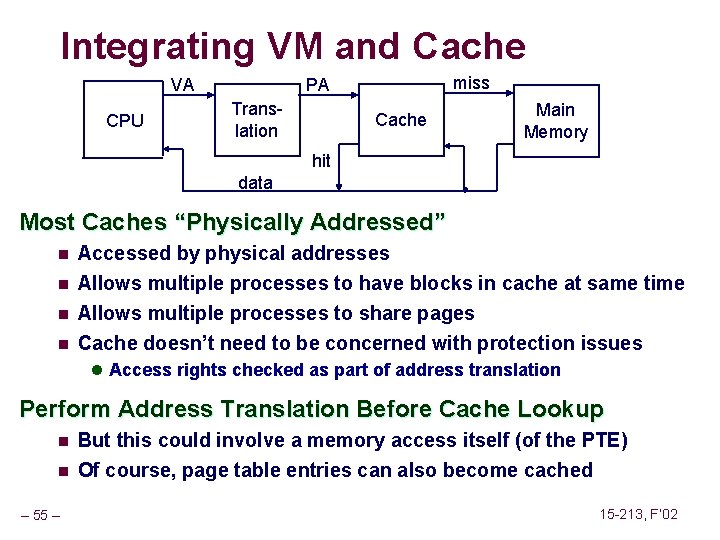

Integrating VM and Cache

Figure: the CPU sends a VA to translation; the resulting PA goes to the cache, which returns data on a hit and fetches from main memory on a miss.

Most caches are “physically addressed”

- Accessed by physical addresses
- Allows multiple processes to have blocks in cache at the same time
- Allows multiple processes to share pages
- Cache doesn’t need to be concerned with protection issues
  - Access rights checked as part of address translation

Perform address translation before cache lookup

- But this could involve a memory access itself (of the PTE)
- Of course, page table entries can also become cached

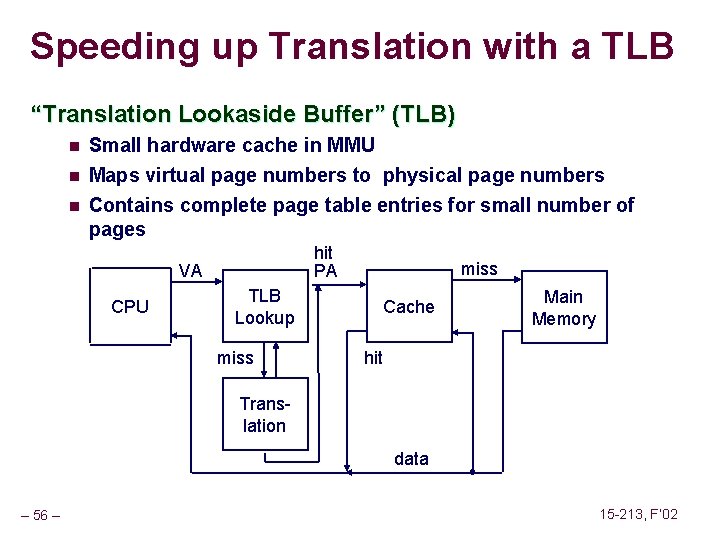

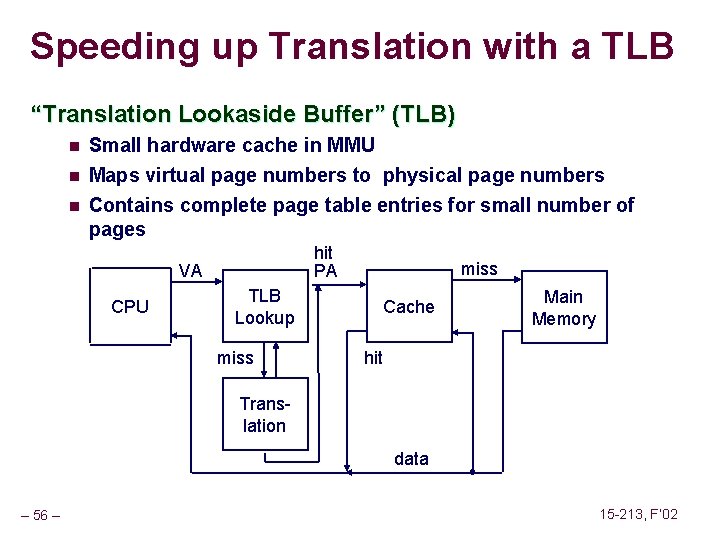

Speeding up Translation with a TLB

“Translation Lookaside Buffer” (TLB)

- Small hardware cache in MMU
- Maps virtual page numbers to physical page numbers
- Contains complete page table entries for a small number of pages

Figure: the CPU sends a VA to the TLB; on a TLB hit the PA goes straight to the cache, and on a TLB miss full translation runs first. The cache then returns data on a hit or fetches from main memory on a miss.

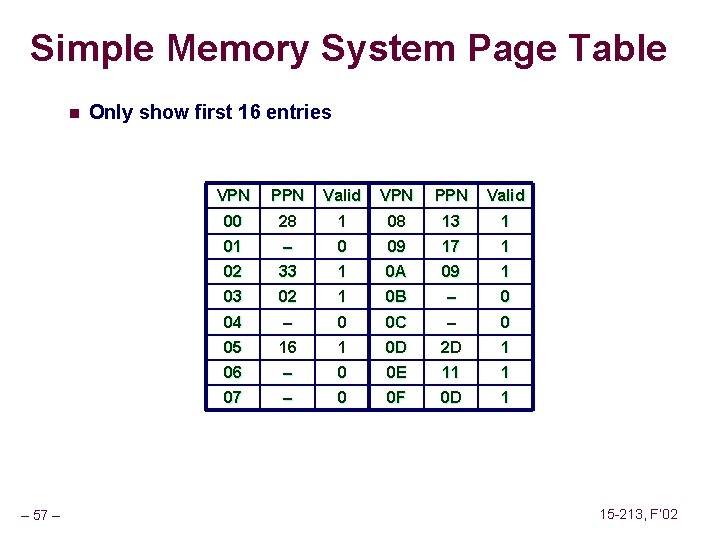

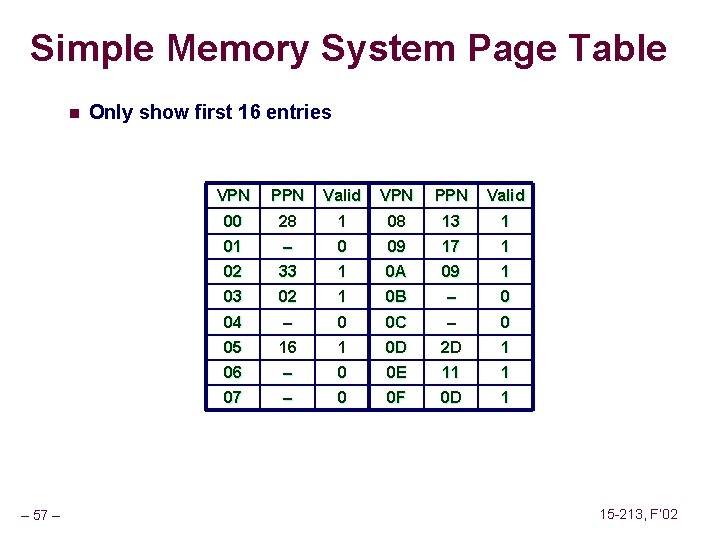

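Simple Memory System Page Table

- Only show first 16 entries

| VPN | PPN | Valid | VPN | PPN | Valid |
|---|---|---|---|---|---|
| 00 | 28 | 1 | 08 | 13 | 1 |
| 01 | – | 0 | 09 | 17 | 1 |
| 02 | 33 | 1 | 0A | 09 | 1 |
| 03 | 02 | 1 | 0B | – | 0 |
| 04 | – | 0 | 0C | – | 0 |
| 05 | 16 | 1 | 0D | 2D | 1 |
| 06 | – | 0 | 0E | 11 | 1 |
| 07 | – | 0 | 0F | 0D | 1 |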

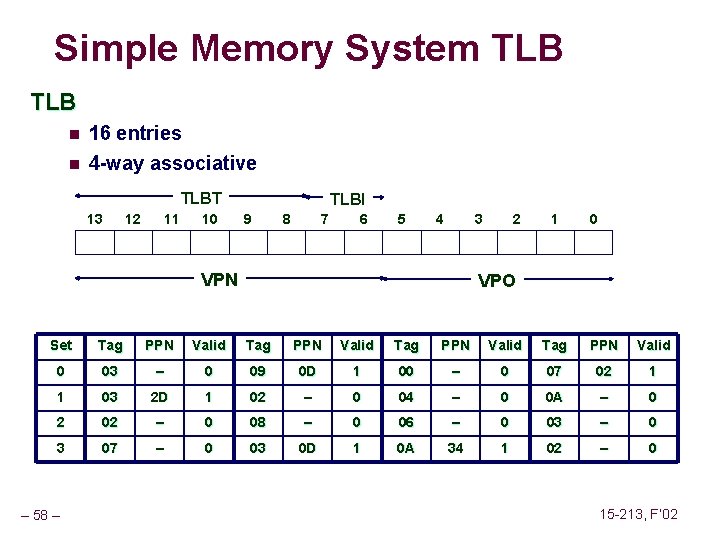

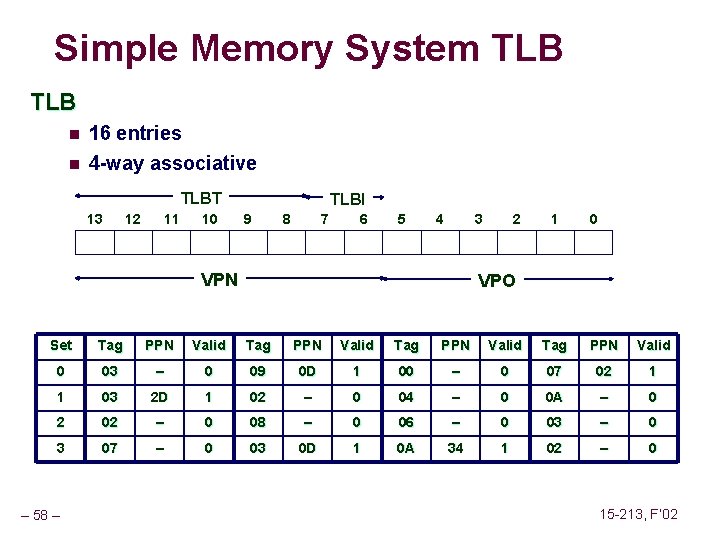

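Simple Memory System TLB

- 16 entries
- 4-way associative

The VPN (virtual address bits 13-6) splits into the TLB tag TLBT (bits 13-8) and the TLB index TLBI (bits 7-6); the VPO occupies bits 5-0.

| Set | Tag | PPN | Valid | Tag | PPN | Valid | Tag | PPN | Valid | Tag | PPN | Valid |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 03 | – | 0 | 09 | 0D | 1 | 00 | – | 0 | 07 | 02 | 1 |
| 1 | 03 | 2D | 1 | 02 | – | 0 | 04 | – | 0 | 0A | – | 0 |
| 2 | 02 | – | 0 | 08 | – | 0 | 06 | – | 0 | 03 | – | 0 |
| 3 | 07 | – | 0 | 03 | 0D | 1 | 0A | 34 | 1 | 02 | – | 0 |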

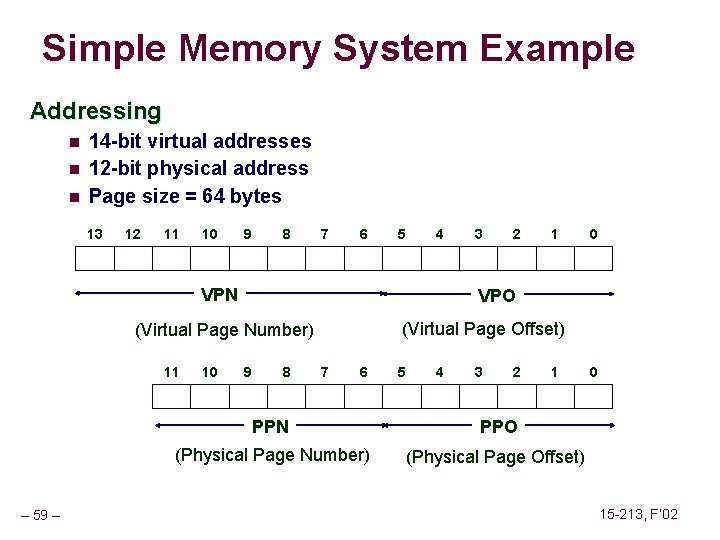

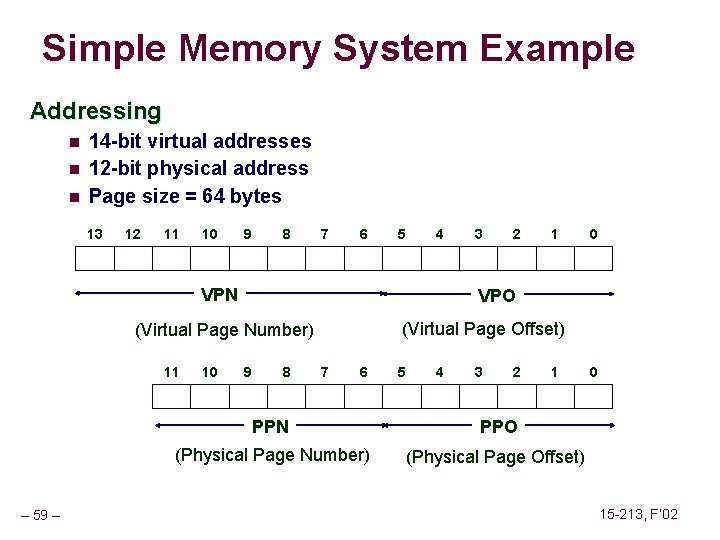

Simple Memory System Example

Addressing

- 14-bit virtual addresses
- 12-bit physical address
- Page size = 64 bytes

Virtual address: VPN (Virtual Page Number) = bits 13-6; VPO (Virtual Page Offset) = bits 5-0.

Physical address: PPN (Physical Page Number) = bits 11-6; PPO (Physical Page Offset) = bits 5-0.

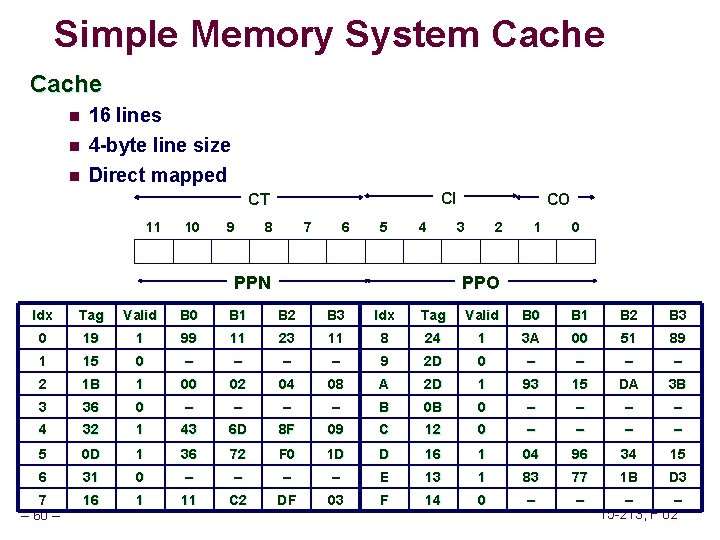

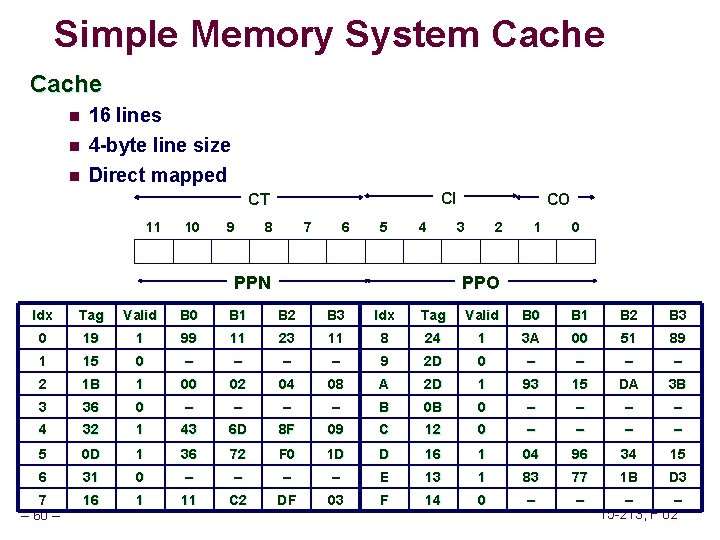

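Simple Memory System Cache

- 16 lines
- 4-byte line size
- Direct mapped

Physical address: CT (cache tag) = bits 11-6 (the PPN); CI (cache index) = bits 5-2; CO (cache offset) = bits 1-0. CI and CO together form the PPO.

| Idx | Tag | Valid | B0 | B1 | B2 | B3 | Idx | Tag | Valid | B0 | B1 | B2 | B3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 19 | 1 | 99 | 11 | 23 | 11 | 8 | 24 | 1 | 3A | 00 | 51 | 89 |
| 1 | 15 | 0 | – | – | – | – | 9 | 2D | 0 | – | – | – | – |
| 2 | 1B | 1 | 00 | 02 | 04 | 08 | A | 2D | 1 | 93 | 15 | DA | 3B |
| 3 | 36 | 0 | – | – | – | – | B | 0B | 0 | – | – | – | – |
| 4 | 32 | 1 | 43 | 6D | 8F | 09 | C | 12 | 0 | – | – | – | – |
| 5 | 0D | 1 | 36 | 72 | F0 | 1D | D | 16 | 1 | 04 | 96 | 34 | 15 |
| 6 | 31 | 0 | – | – | – | – | E | 13 | 1 | 83 | 77 | 1B | D3 |
| 7 | 16 | 1 | 11 | C2 | DF | 03 | F | 14 | 0 | – | – | – | – |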

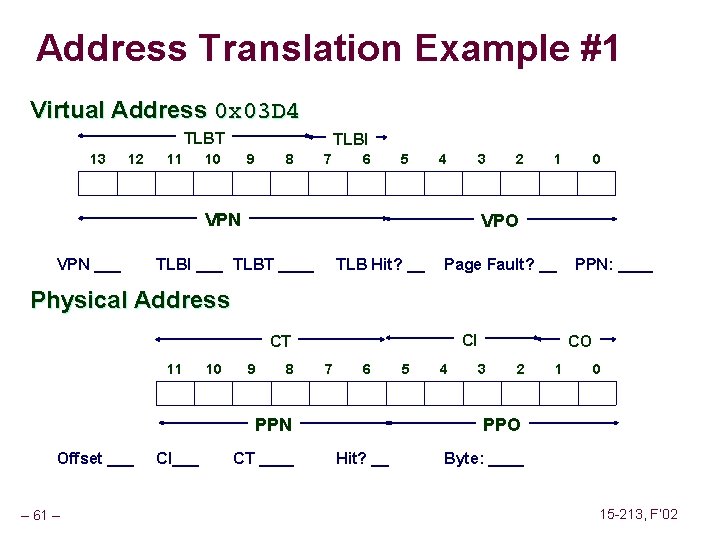

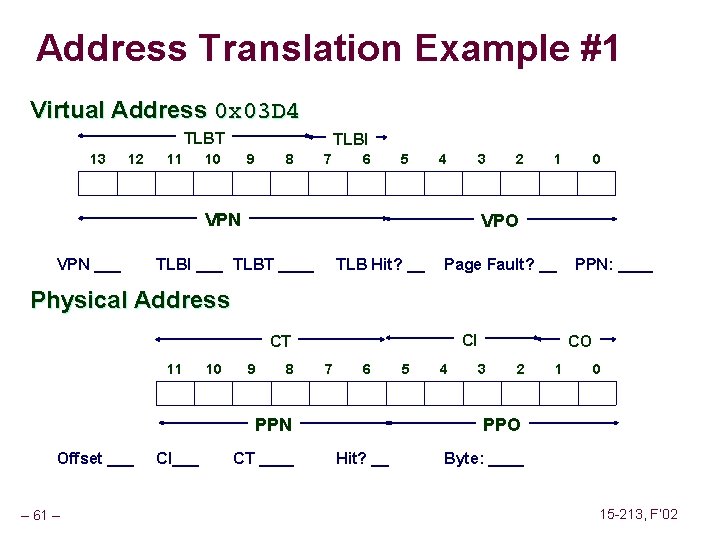

Address Translation Example #1

Virtual Address 0x03D4

Virtual address fields: TLBT = bits 13-8 and TLBI = bits 7-6 (together the VPN); VPO = bits 5-0.

VPN ___ TLBI ___ TLBT ____ TLB Hit? __ Page Fault? __ PPN: ____

Physical Address fields: CT = bits 11-6 (PPN); CI = bits 5-2 and CO = bits 1-0 (together the PPO).

Offset ___ CI ___ CT ____ Hit? __ Byte: ____

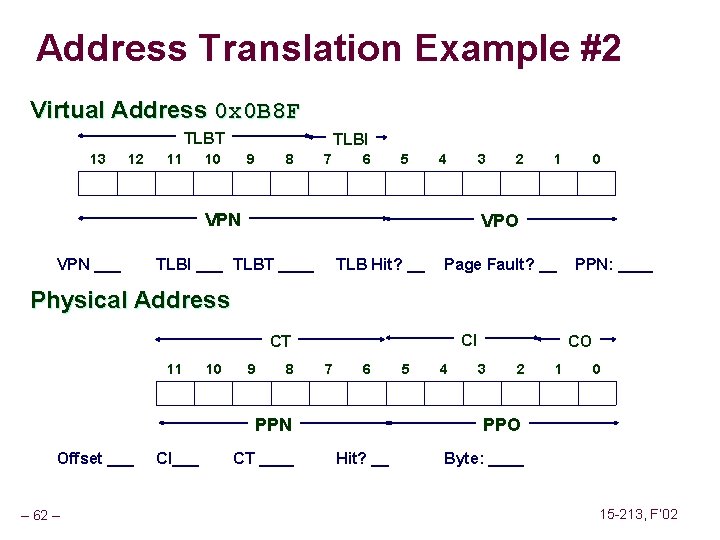

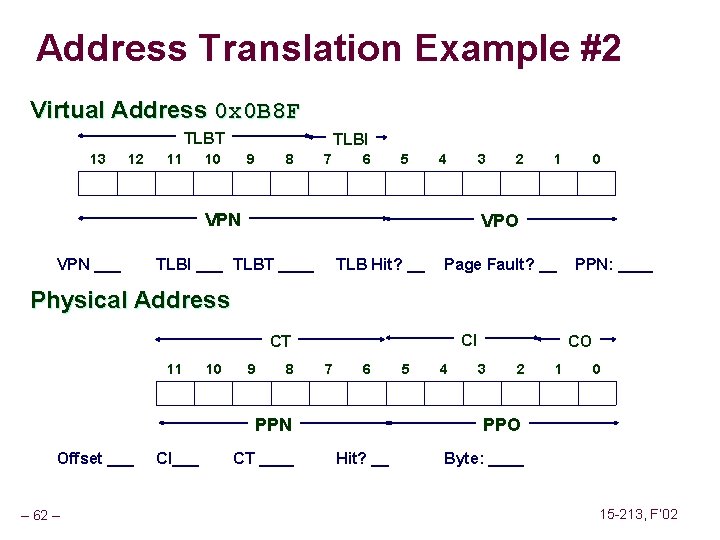

Address Translation Example #2

Virtual Address 0x0B8F

Virtual address fields: TLBT = bits 13-8 and TLBI = bits 7-6 (together the VPN); VPO = bits 5-0.

VPN ___ TLBI ___ TLBT ____ TLB Hit? __ Page Fault? __ PPN: ____

Physical Address fields: CT = bits 11-6 (PPN); CI = bits 5-2 and CO = bits 1-0 (together the PPO).

Offset ___ CI ___ CT ____ Hit? __ Byte: ____

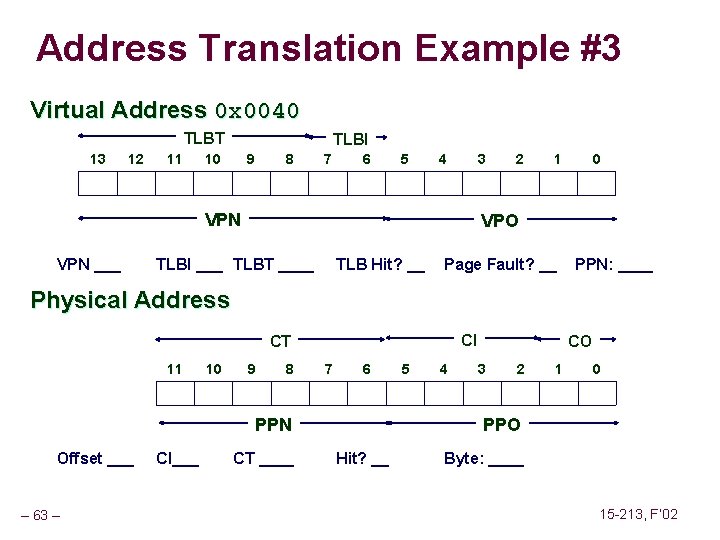

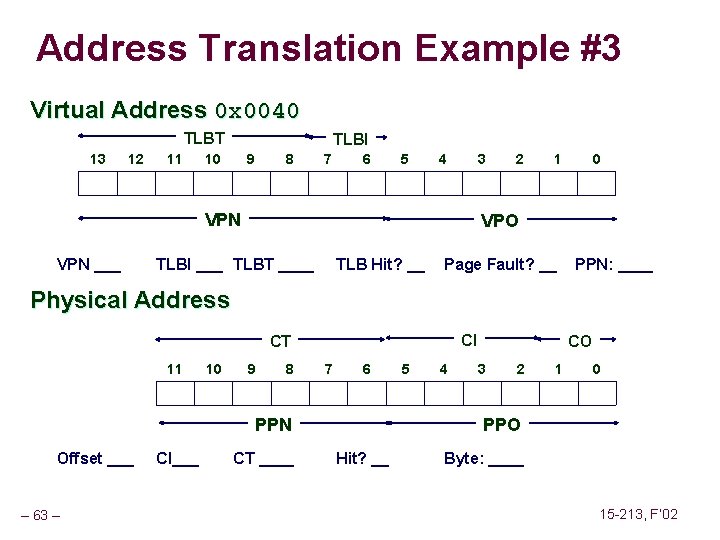

Address Translation Example #3

Virtual Address 0x0040

Virtual address fields: TLBT = bits 13-8 and TLBI = bits 7-6 (together the VPN); VPO = bits 5-0.

VPN ___ TLBI ___ TLBT ____ TLB Hit? __ Page Fault? __ PPN: ____

Physical Address fields: CT = bits 11-6 (PPN); CI = bits 5-2 and CO = bits 1-0 (together the PPO).

Offset ___ CI ___ CT ____ Hit? __ Byte: ____

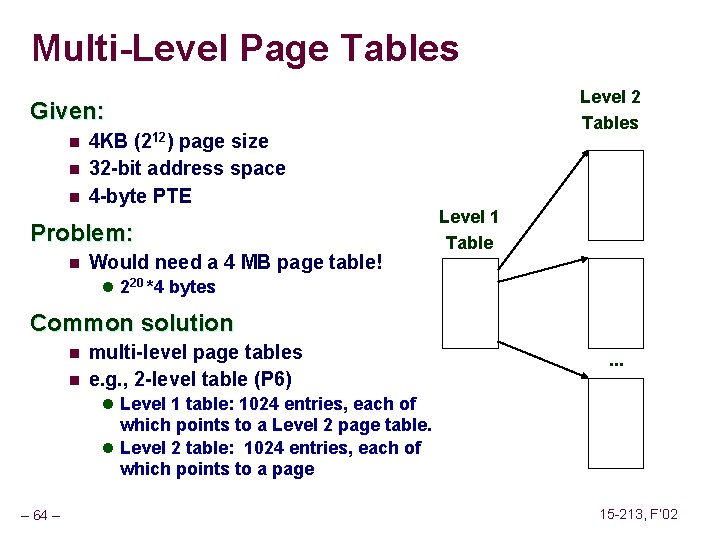

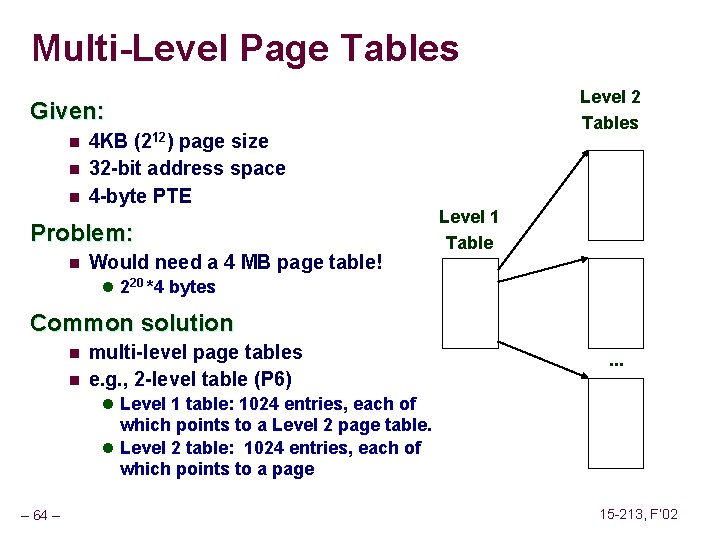

Multi-Level Page Tables

Given:

- 4 KB ($2^{12}$) page size
- 32-bit address space
- 4-byte PTE

Problem:

- Would need a 4 MB page table!
  - $2^{20} \times 4$ bytes

Common solution

- multi-level page tables
- e.g., 2-level table (P6)
  - Level 1 table: 1024 entries, each of which points to a Level 2 page table.
  - Level 2 table: 1024 entries, each of which points to a page.

Figure: one Level 1 table whose entries point to multiple Level 2 tables.