
- Slides: 52

Memory organization

• Three categories:
  – Internal processor memory
  – Main memory
  – Secondary memory
• Cache memory
• The choice of a memory device to build a memory system depends on its properties.
6/11/2021 2

Goal of memory organization
• To provide high average performance with low average cost per bit:
  – Hierarchy of different memory devices
  – Automatic storage-allocation methods
  – Virtual memory concepts
  – Efficient memory interface to provide a higher data-transfer rate

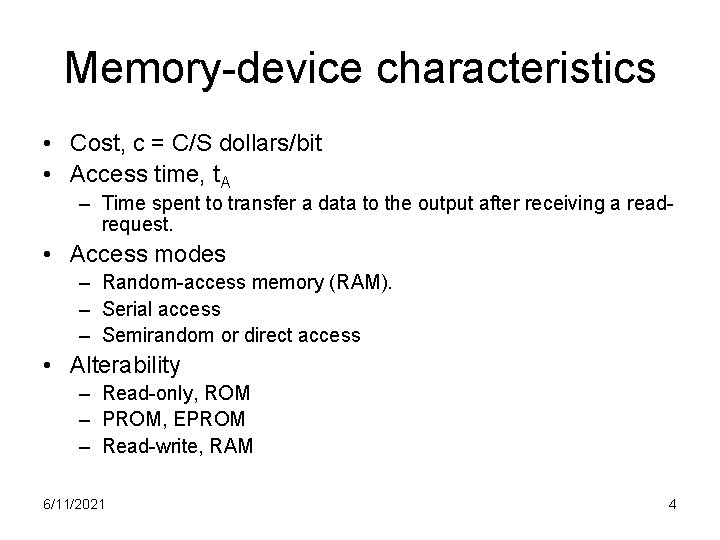

Memory-device characteristics
• Cost: $c = C/S$ dollars/bit, where $C$ is the total cost and $S$ the storage capacity in bits.
• Access time, $t_A$
  – Time taken to transfer data to the output after receiving a read request.
• Access modes
  – Random access (RAM)
  – Serial access
  – Semirandom or direct access
• Alterability
  – Read-only: ROM
  – PROM, EPROM
  – Read-write: RAM

• Permanence of storage
  – Destructive read-out
  – Non-destructive read-out
  – Dynamic storage, refreshing
  – Volatility

• Cycle time and data-transfer rate
  – Bandwidth, $b_M = w/t_M$
  – The access time $t_A$ may not be equal to the cycle time $t_M$.
• Physical characteristics
  – Physical size
  – Storage density
  – Energy consumption
  – Reliability: mean time to failure (MTTF)

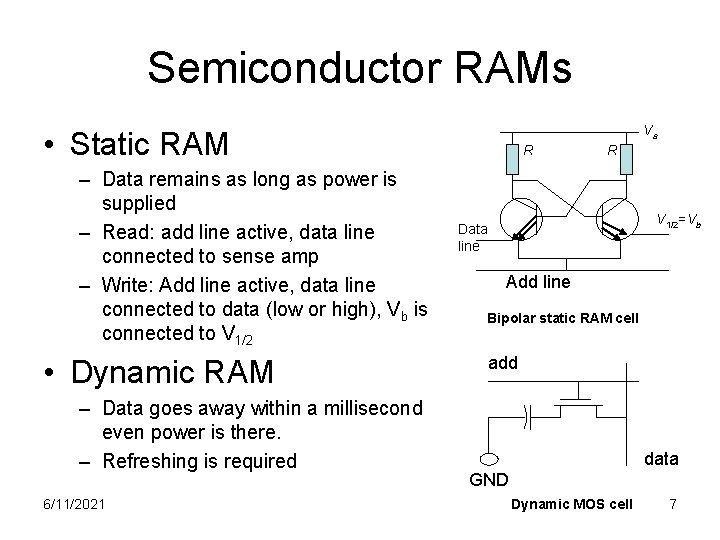

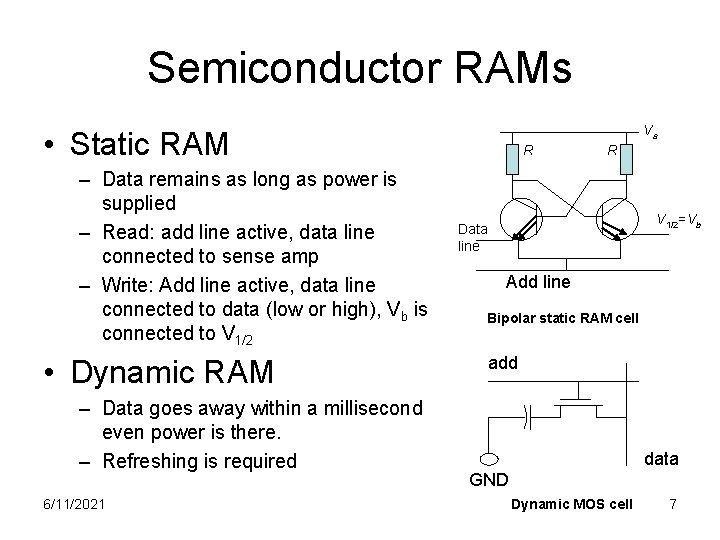

Semiconductor RAMs
• Static RAM
  – Data remains as long as power is supplied.
  – Read: address line active, data line connected to the sense amplifier.
  – Write: address line active, data line connected to the data (low or high), $V_b$ connected to $V_{1/2}$.
• Dynamic RAM
  – Data is lost within about a millisecond even when power is applied.
  – Refreshing is required.
Figure: bipolar static RAM cell (address line, data line, $V_a$, $V_b = V_{1/2}$) and dynamic MOS cell (address, data, GND).

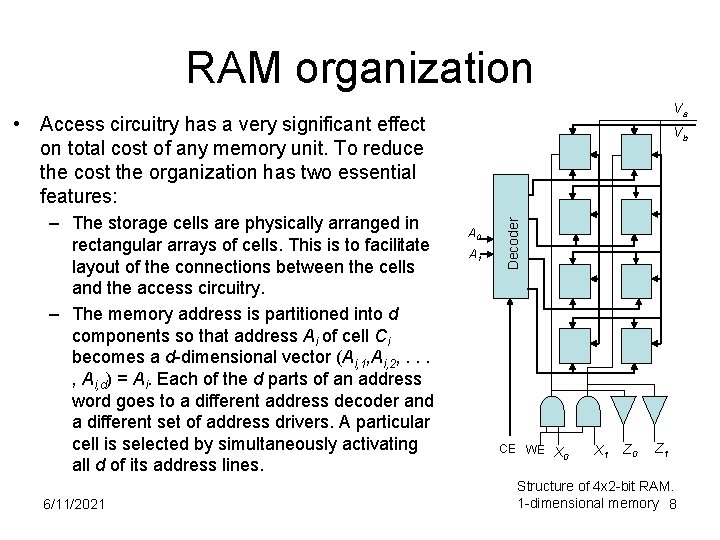

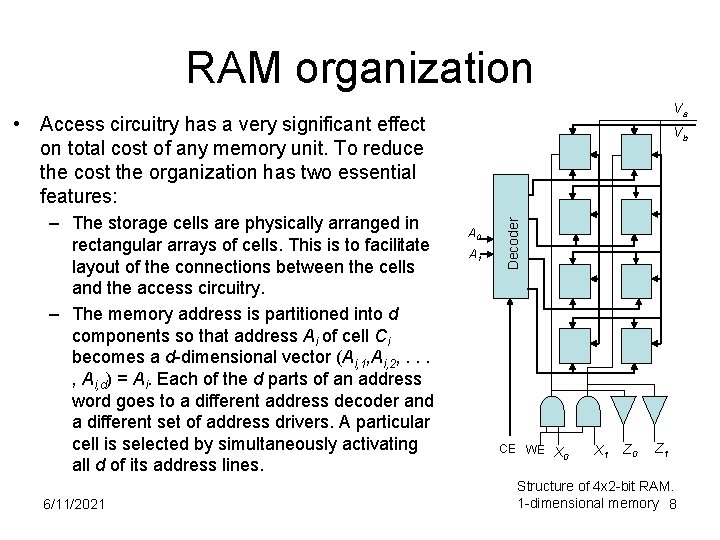

RAM organization
• Access circuitry has a very significant effect on the total cost of any memory unit. To reduce the cost, the organization has two essential features:
  – The storage cells are physically arranged in rectangular arrays. This facilitates layout of the connections between the cells and the access circuitry.
  – The memory address is partitioned into $d$ components so that the address $A_i$ of cell $C_i$ becomes a $d$-dimensional vector $(A_{i,1}, A_{i,2}, \ldots, A_{i,d}) = A_i$. Each of the $d$ parts of an address word goes to a different address decoder and a different set of address drivers. A particular cell is selected by simultaneously activating all $d$ of its address lines.
Figure: structure of a 4 x 2-bit RAM (1-dimensional memory) with address inputs $A_0$, $A_1$, a decoder, control inputs CE and WE, data inputs $X_0$, $X_1$ and data outputs $Z_0$, $Z_1$.

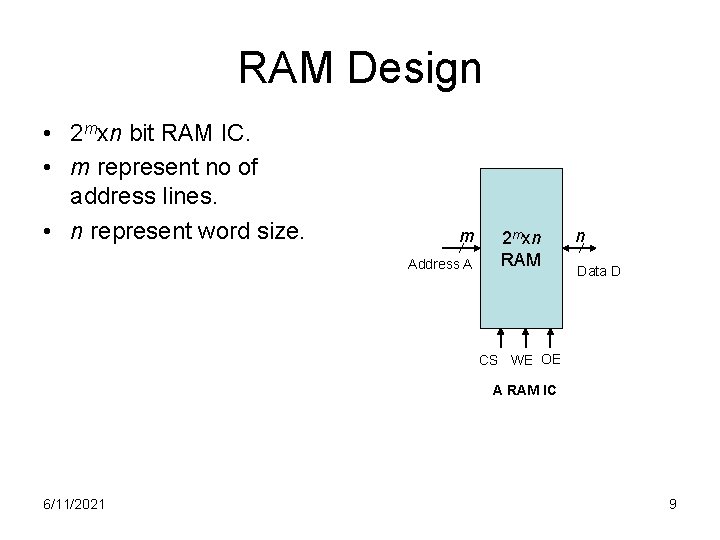

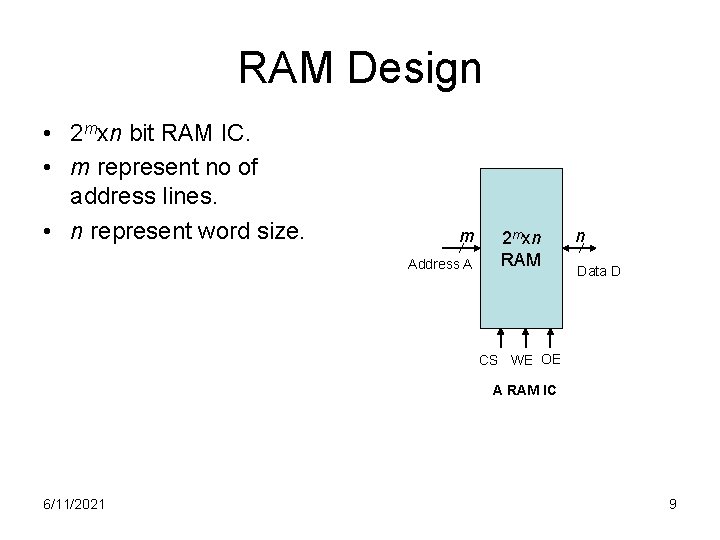

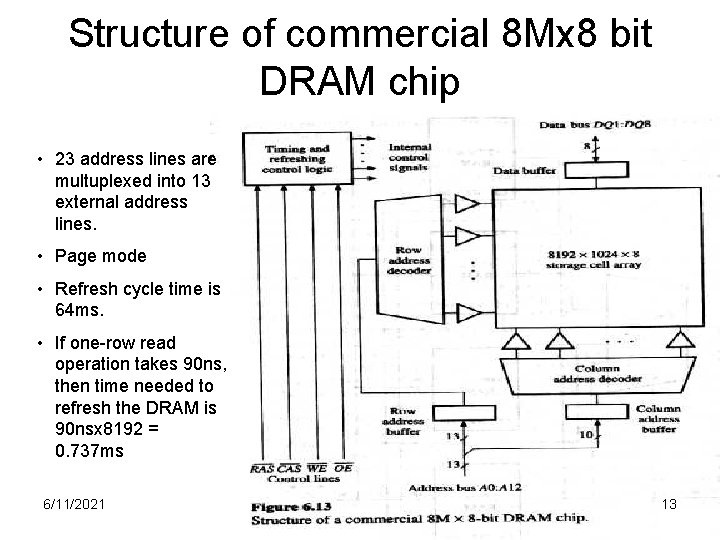

RAM design
• $2^m \times n$-bit RAM IC.
• $m$ is the number of address lines.
• $n$ is the word size.
Figure: a $2^m \times n$ RAM IC with an $m$-bit address bus A, an $n$-bit data bus D, and control inputs CS, WE and OE.

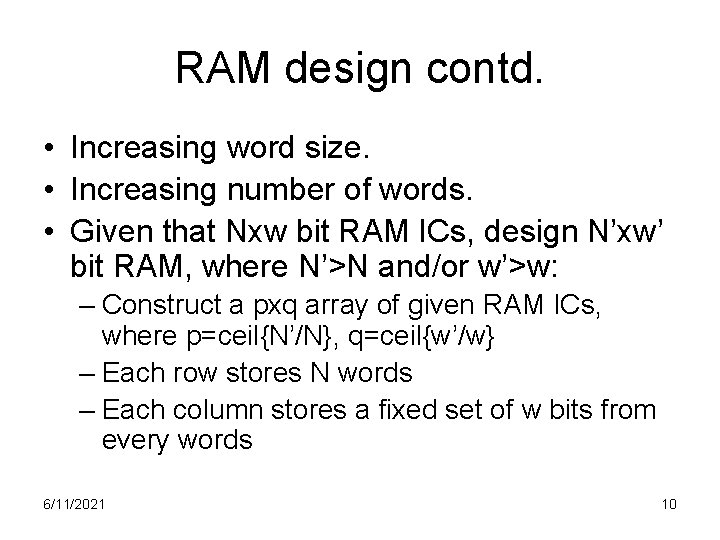

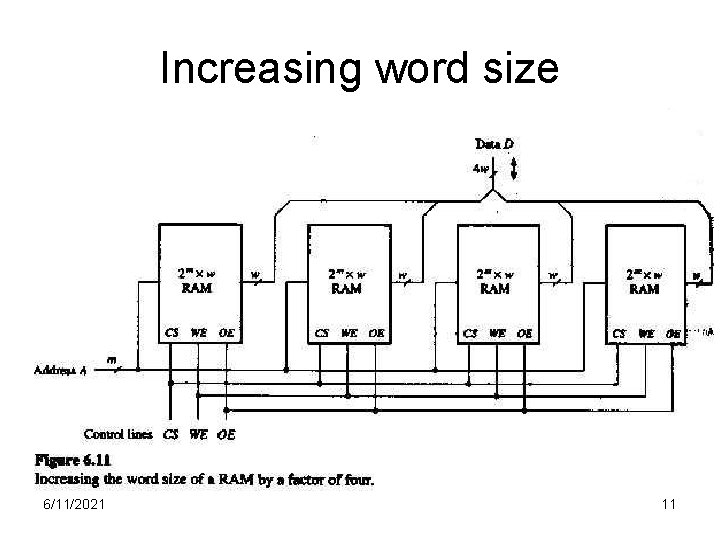

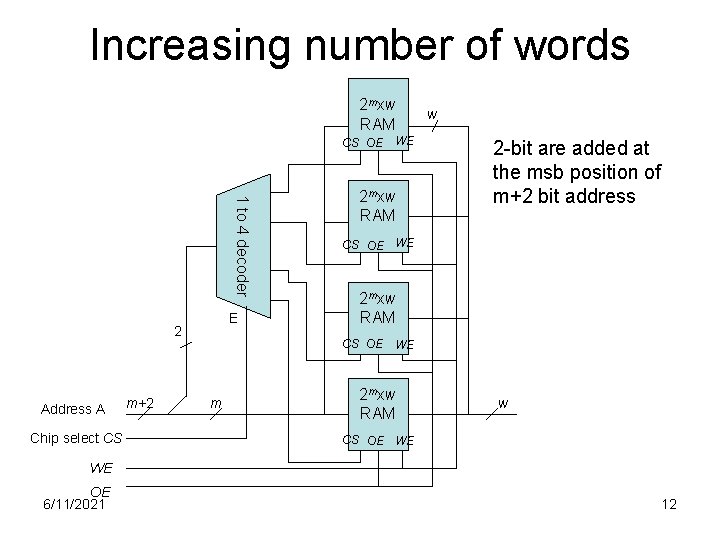

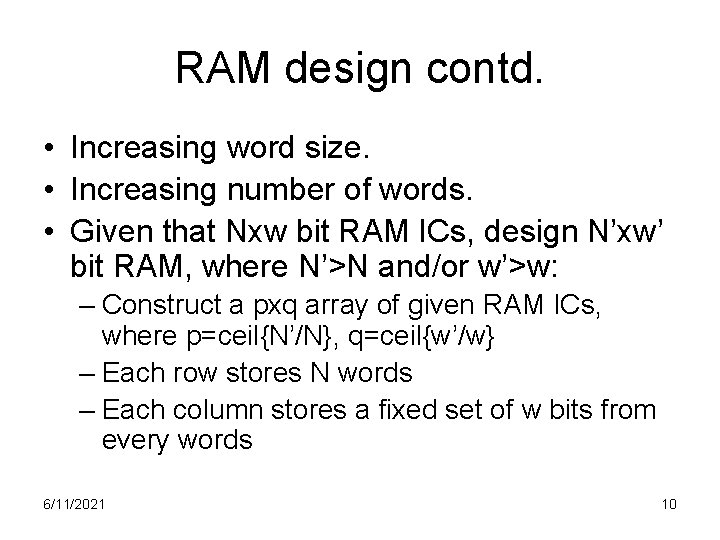

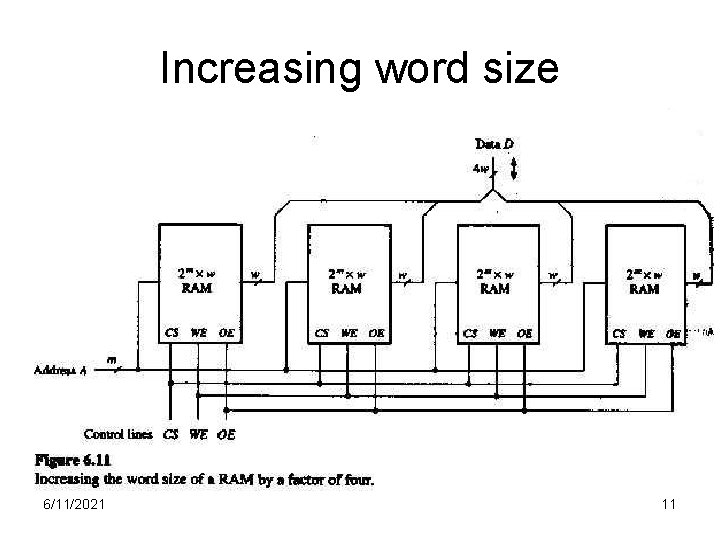

RAM design (contd.)
• Increasing word size.
• Increasing number of words.
• Given $N \times w$-bit RAM ICs, design an $N' \times w'$-bit RAM, where $N' > N$ and/or $w' > w$:
  – Construct a $p \times q$ array of the given RAM ICs, where $p = \lceil N'/N \rceil$ and $q = \lceil w'/w \rceil$.
  – Each row stores $N$ words.
  – Each column stores a fixed set of $w$ bits from every word.
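
The array dimensions above can be computed directly. The following is a minimal sketch; the function name and the 1K x 8 / 4K x 16 figures are illustrative assumptions, not values from the slides.

```python
def ram_array_shape(n_words, width, target_words, target_width):
    """Return (p, q) for building an N' x w' RAM from N x w ICs.

    p = ceil(N'/N) rows, each row storing N words.
    q = ceil(w'/w) columns, each column storing w bits of every word.
    """
    p = -(-target_words // n_words)   # integer ceiling division
    q = -(-target_width // width)
    return p, q


# Hypothetical example: a 4K x 16-bit RAM built from 1K x 8-bit ICs.
p, q = ram_array_shape(1024, 8, 4096, 16)
print(f"{p} rows x {q} columns = {p * q} ICs")
```

Integer ceiling division is used instead of floating-point division so that large capacities are handled exactly.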

Increasing word size

Increasing number of words
• Four $2^m \times w$ RAM ICs share the $m$-bit address, the $w$-bit data bus, and the WE and OE lines.
• 2 bits are added at the MSB position, giving an $(m+2)$-bit address A.
• A 1-to-4 decoder, enabled by the chip select CS, uses these 2 bits to drive the CS input of one RAM IC.
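
As a rough illustration of how the two added MSBs select one of the four ICs, the sketch below splits an (m+2)-bit address in software. The function name and the choice m = 10 are assumptions made for the example.

```python
def decode_address(address, m):
    """Split an (m+2)-bit address into (chip index, address within chip)."""
    chip = address >> m                 # the 2 MSBs feed the decoder
    within = address & ((1 << m) - 1)   # the low m bits go to every IC
    if chip > 3:
        raise ValueError("address does not fit in m+2 bits")
    return chip, within


# Hypothetical example with m = 10 (four 1K-word ICs).
print(decode_address(0b11_0000000101, 10))
```

Only the IC whose index matches the decoded MSBs sees an active CS, so the other three stay off the data bus.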

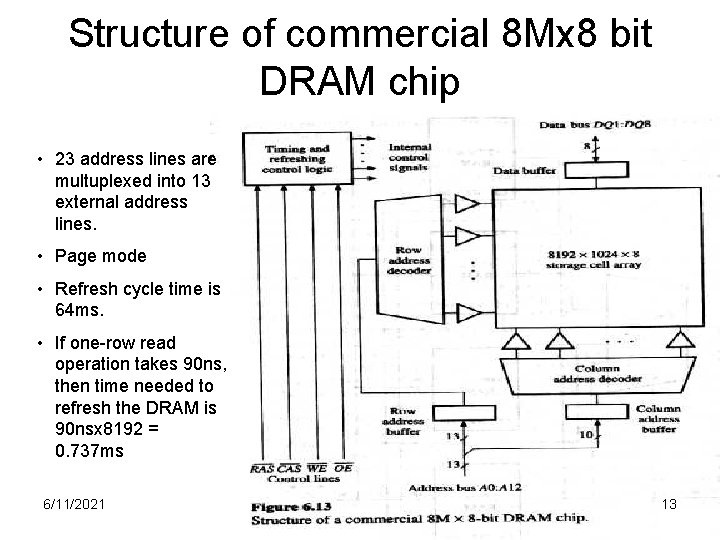

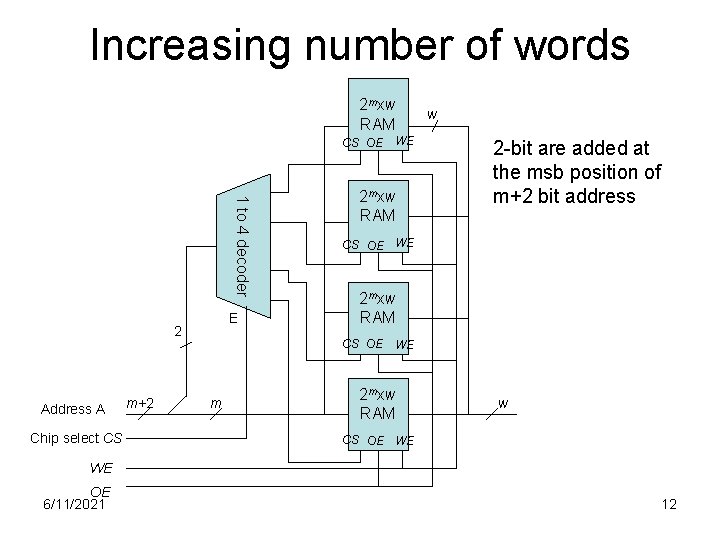

Structure of a commercial 8M x 8-bit DRAM chip
• 23 address lines are multiplexed onto 13 external address lines.
• Page mode
• Refresh cycle time is 64 ms.
• If a one-row read operation takes 90 ns, then the time needed to refresh the DRAM is 90 ns x 8192 = 0.737 ms.
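
The refresh arithmetic can be checked with a few lines. The fraction of time spent refreshing is a derived figure that the slide does not state, and the row count is taken to be $2^{13} = 8192$, matching the 13 external address lines.

```python
ROWS = 2 ** 13            # 8192 rows, one refreshed per row read
ROW_READ_NS = 90          # time for a one-row read, from the slide
REFRESH_PERIOD_MS = 64    # every row must be refreshed within this period

refresh_time_ms = ROWS * ROW_READ_NS * 1e-6          # ns -> ms
overhead = refresh_time_ms / REFRESH_PERIOD_MS       # derived, not in the slide

print(f"refresh time: {refresh_time_ms:.3f} ms")
print(f"fraction of time spent refreshing: {overhead:.2%}")
```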

Other semiconductor memories
• ROMs
  – ROM
  – PROM
  – EPROM: programmed randomly, erased in bulk off-line
  – EEPROM
• Flash memory
  – Reading is random but writing is in blocks.

Fast RAM interface
• If we need to supply a faster external processor with individually accessible $n$-bit words, there are two basic ways to increase the data-transfer rate across the memory's external interface by a factor of $S$:
  – Use a bigger memory word: design the RAM with an internal memory word size of $w = Sn$ bits, so that $Sn$ bits are accessed as one unit in one memory cycle time $T_M$.
  – Access more than one word at a time: partition the RAM into $S$ separate banks $M_0, M_1, \ldots, M_{S-1}$, each covering part of the memory address space and each provided with its own addressing circuitry. Fast circuits are needed inside the RAM to assemble and disassemble the words being accessed.

• Both require fast parallel-to-serial and serial-to-parallel circuits at the memory-processor interface.
• Normally the $S$ words produced or consumed by the processor have consecutive addresses. Their placement in physical memory uses the interleaving technique.

Address interleaving
• Let $X_h, X_{h+1}, \ldots$ be words that are expected to be accessed in sequence. They are normally placed in consecutive memory locations $A_i, A_{i+1}, \ldots$ in the RAM.
  – Assign $A_i$ to bank $M_j$ if $j = i \pmod{S}$.
  – If $S = 2^p$, then the least significant $p$ bits of a memory address immediately identify the memory bank to which it belongs.
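
A minimal sketch of the bank-assignment rule follows. The helper names and the use of the remaining high-order bits as the address within the bank are assumptions for illustration.

```python
def bank_of(address, num_banks):
    """Bank index j for address A_i: j = i mod S."""
    return address % num_banks


def split_address(address, p):
    """For S = 2**p banks, return (bank, address within the bank).

    The least significant p bits give the bank; the remaining
    high-order bits are assumed to address the word inside the bank.
    """
    bank = address & ((1 << p) - 1)
    within = address >> p
    return bank, within


# Consecutive addresses fall in consecutive banks (S = 4).
print([bank_of(a, 4) for a in range(8)])
print(split_address(13, 2))
```

Because consecutive addresses land in different banks, S sequential words can be accessed from S banks in parallel.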

Magnetic surface recording
• Surface of the magnetic medium: ferric oxide
• Suppose each track has a fixed capacity of $N$ words and rotates at $r$ revolutions/s. Let $n$ be the number of words per block; a block's data can be transferred in $n/(rN)$ s. The average latency is $1/(2r)$ s. If $t_s$ is the average seek time, then the time needed to access a block of data is $t_B = t_s + 1/(2r) + n/(rN)$.
• Magnetic disk drive
  – Platters, heads
  – Tracks
  – Sectors: sector header, inter-sector gap
  – Cylinders
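
The block access time $t_B = t_s + 1/(2r) + n/(rN)$ can be evaluated as below. The drive parameters are hypothetical values chosen for illustration, not figures from the slides.

```python
def block_access_time(t_seek, r, n, track_capacity):
    """Return t_B in seconds: seek time + average latency + transfer time."""
    latency = 1 / (2 * r)                 # half a revolution on average
    transfer = n / (r * track_capacity)   # fraction of a revolution needed for n words
    return t_seek + latency + transfer


# Hypothetical drive: 5 ms seek, 120 rev/s (7200 rpm),
# 100,000 words per track, 1,000-word blocks.
t_b = block_access_time(0.005, 120, 1000, 100_000)
print(f"t_B = {t_b * 1000:.2f} ms")
```

With these numbers the seek time and rotational latency dominate; the transfer itself contributes well under 0.1 ms.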

Magnetic tape
• Data is stored in longitudinal tracks. Older tapes had 9 parallel tracks; now about 80 tracks are used.
• A single head can read or write all tracks simultaneously.
• Along the tracks, data is stored in blocks. Large gaps are inserted between adjacent blocks so that the tape can be started and stopped between blocks.

Optical memories
• CD-ROMs
• Bits are stored in 0.1 µm wide pits and lands.
• Access time is about 100 ms; the data-transfer rate is 3.6 MB/s for a 24x drive (1x = 150 KB/s).

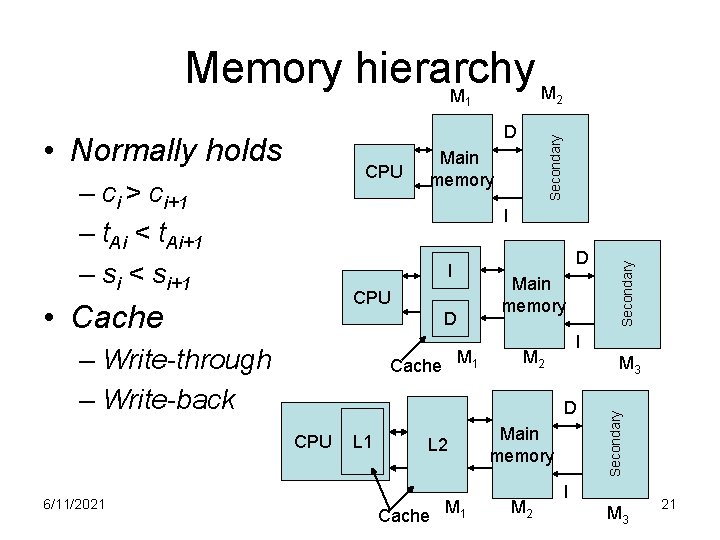

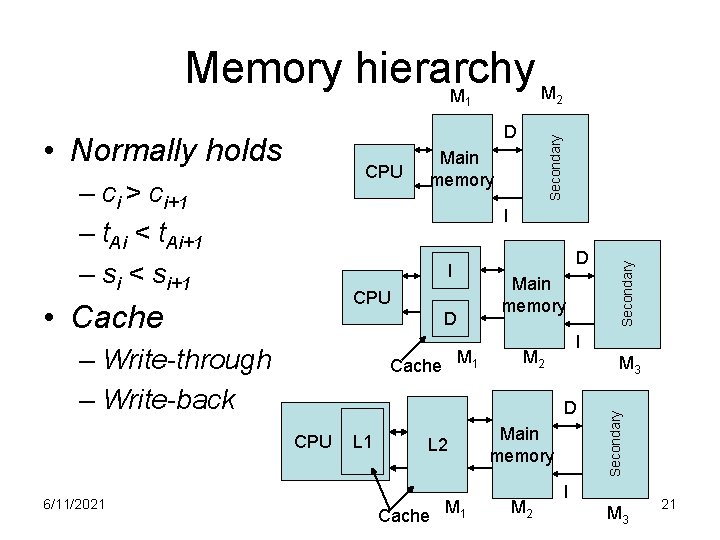

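Memory hierarchy
• For adjacent levels $M_i$ and $M_{i+1}$ the following normally hold:
  – $c_i > c_{i+1}$ (cost per bit)
  – $t_{A_i} < t_{A_{i+1}}$ (access time)
  – $S_i < S_{i+1}$ (storage capacity)
• Cache
  – Write-through
  – Write-back
Figure: two-level (CPU, main memory $M_1$, secondary $M_2$) and three-level (CPU, cache $M_1$, main memory $M_2$, secondary $M_3$) hierarchies, including a variant with split instruction (I) and data (D) caches and L1/L2 cache levels.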

Virtual memory
• A memory hierarchy comprising different memory devices appears to the user program as a single, large, directly addressable memory.
  – Automatic memory allocation and efficient sharing
  – Makes programs independent of main-memory space
  – Achieves relatively low cost per bit and low access time
• A memory location is addressed by a virtual address $V$, and it is necessary to map this address to the actual physical address $R$: $f: V \to R$.

Locality of reference
• Over the short term, the addresses generated by a program tend to be localized and are therefore predictable.
• Page mode: information is transferred between $M_i$ and $M_{i+1}$ as a block of consecutive words. This exploits spatial locality.
• Temporal locality: instructions in a loop are referenced with high frequency.

• Let instruction $I$ be stored at address $A$ and assigned to $M_i \ne M_1$.
• The next instruction is most likely to be at $A+1$.
• Information is paged, with pages $S_{p_i}$, $S_{p_{i-1}}$, $S_{p_{i-2}}$, etc.
• Transfer $S_{p_i}$ containing $I$ to $M_i$ from $M_{i+1}$.
• Transfer $S_{p_{i-1}}$ containing $I$ to $M_{i-1}$ from $M_i$.
• Transfer $S_{p_{i-2}}$ containing $I$ to $M_{i-2}$ from $M_{i-1}$.
• And so on.
• Transfer $S_{p_1}$ containing $I$ to $M_1$ from $M_2$.

Design objectives
• To achieve performance close to that of the fastest device $M_1$ and a cost per bit close to that of the cheapest device $M_n$.
• Performance depends on these factors:
  – Address-reference statistics
  – Access time of each level $M_i$ relative to the CPU
  – Storage capacity of each level
  – Page size of successive levels
  – Algorithm used to determine the page to be transferred

Cost and performance
• Consider a two-level memory hierarchy $(M_1, M_2)$. The average cost per bit of the system is $c = \dfrac{c_1 S_1 + c_2 S_2}{S_1 + S_2}$.
• Hit ratio, $H$
  – Probability that a logical address generated by the CPU refers to information stored in $M_1$.
  – $H = 1$ is desirable but difficult to achieve.
  – A set of representative programs is executed or simulated. If $N_1$ and $N_2$ are the numbers of address references satisfied by $M_1$ and $M_2$ respectively, then the hit ratio is $H = \dfrac{N_1}{N_1 + N_2}$.
  – $H$ is highly program dependent; $1 - H$ is called the miss ratio.
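
A small numeric sketch of the two quantities on this slide follows. It assumes the usual capacity-weighted definition of average cost, $c = (c_1 S_1 + c_2 S_2)/(S_1 + S_2)$, and the hit ratio $H = N_1/(N_1 + N_2)$; the sample costs, capacities and reference counts are hypothetical.

```python
def average_cost_per_bit(c1, s1, c2, s2):
    """Capacity-weighted average cost per bit of a two-level hierarchy."""
    return (c1 * s1 + c2 * s2) / (s1 + s2)


def hit_ratio(n1, n2):
    """Fraction of address references satisfied by M1."""
    return n1 / (n1 + n2)


# Hypothetical system: M1 is 100x more expensive per bit but 99x smaller than M2.
c = average_cost_per_bit(c1=1.0, s1=1, c2=0.01, s2=99)
h = hit_ratio(n1=950, n2=50)
print(f"c = {c:.4f} (close to c2 = 0.01), H = {h:.2f}, miss ratio = {1 - h:.2f}")
```

When $S_2 \gg S_1$, the average cost approaches $c_2$, which is the stated design goal.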

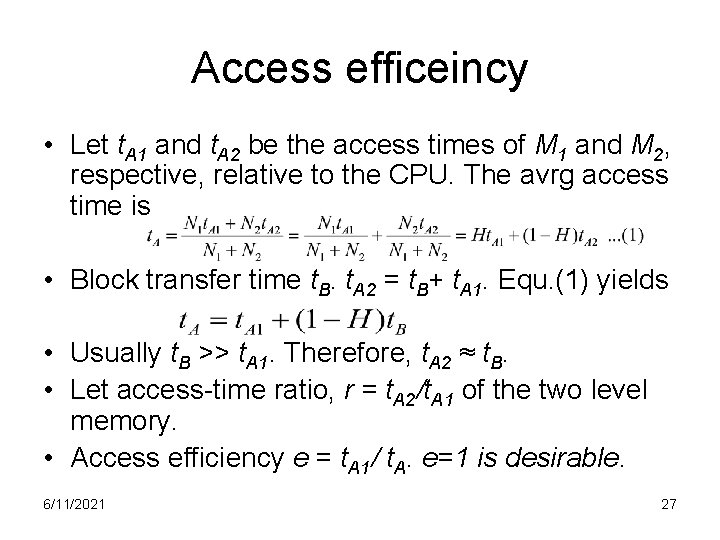

Access efficiency
• Let $t_{A_1}$ and $t_{A_2}$ be the access times of $M_1$ and $M_2$, respectively, relative to the CPU. The average access time is $t_A = H\,t_{A_1} + (1 - H)\,t_{A_2}$. (1)
• Let the block transfer time be $t_B$, so that $t_{A_2} = t_B + t_{A_1}$. Equation (1) yields $t_A = t_{A_1} + (1 - H)\,t_B$.
• Usually $t_B \gg t_{A_1}$; therefore $t_{A_2} \approx t_B$.
• Let $r = t_{A_2}/t_{A_1}$ be the access-time ratio of the two-level memory.
• Access efficiency $e = t_{A_1}/t_A = \dfrac{1}{r + (1 - r)H}$; $e = 1$ is desirable.
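
The sketch below evaluates the average access time and the access efficiency, assuming the standard two-level relation $t_A = H t_{A_1} + (1 - H) t_{A_2}$, from which $e = t_{A_1}/t_A = 1/(r + (1 - r)H)$ follows. The timing values are hypothetical.

```python
def average_access_time(h, t_a1, t_a2):
    """t_A = H * t_A1 + (1 - H) * t_A2."""
    return h * t_a1 + (1 - h) * t_a2


def access_efficiency(h, r):
    """e = t_A1 / t_A expressed through the access-time ratio r = t_A2 / t_A1."""
    return 1 / (r + (1 - r) * h)


# Hypothetical values: t_A1 = 10 ns, t_A2 = 110 ns, H = 0.9.
t_a = average_access_time(0.9, 10, 110)
e = access_efficiency(0.9, 110 / 10)
print(f"t_A = {t_a:.1f} ns, e = {e:.2f}")
```

Even with a 90% hit ratio, an access-time ratio of 11 halves the efficiency, which shows why $H$ must be very close to 1 when $r$ is large.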

Three questions for virtual memory
1. When should the swapping take place?
   1. Demand swapping
   2. Anticipatory swapping
      • Requires relatively long-range prediction, which is difficult
2. Where should the block being transferred into main memory be placed?
   1. Allocation policy
      • Preemptive
      • Non-preemptive
   2. Thrashing
3. How many words are transferred during each swap, i.e., what is the page size?

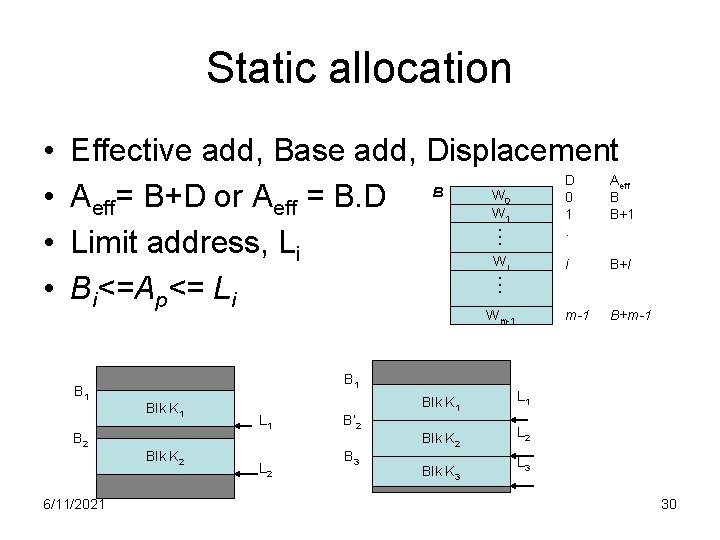

Address mapping
• Address mapping and translation is carried out at different stages in the life of a program:
  – By the programmer while writing the program
  – By the compiler during compilation
  – By the loader at initial program-load time
  – By run-time memory-management hardware and/or software
• Static allocation, dynamic allocation

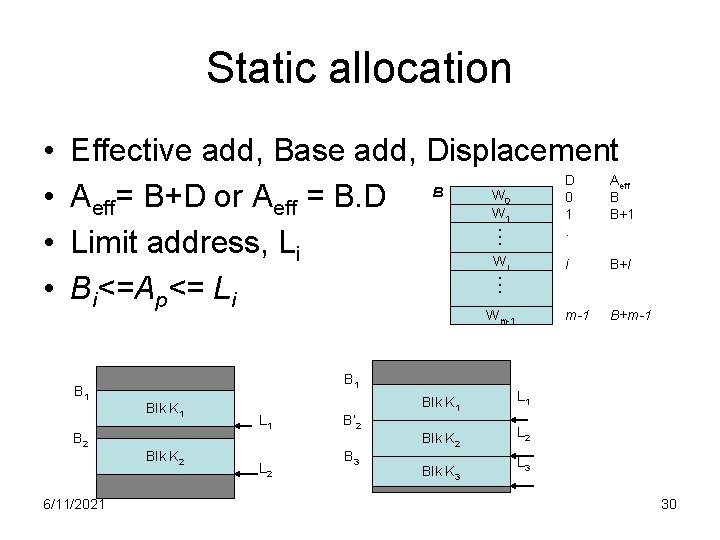

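Static allocation
• Effective address $A_{\text{eff}}$, base address $B$, displacement $D$: $A_{\text{eff}} = B + D$ or $A_{\text{eff}} = B.D$ ($B$ concatenated with $D$).
• Limit address $L_i$: $B_i \le A_{\text{eff}} \le L_i$.
Figure: an $m$-word block $W_0, W_1, \ldots, W_{m-1}$ stored at addresses $B, B+1, \ldots, B+m-1$; blocks $K_1, K_2, K_3$ with base addresses $B_1, B_2, B_3$ and limit addresses $L_1, L_2, L_3$, with block $K_2$ relocated to base $B'_2$.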
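
A minimal sketch of base-plus-displacement addressing with a limit check follows. The function name, the exception type and the sample addresses are assumptions for illustration.

```python
def effective_address(base, displacement, limit):
    """Return A_eff = B + D, enforcing B <= A_eff <= L for the block."""
    a_eff = base + displacement
    if not (base <= a_eff <= limit):
        raise ValueError(f"address {a_eff} outside block [{base}, {limit}]")
    return a_eff


# Hypothetical block of 100 words loaded at base address 1000.
print(effective_address(base=1000, displacement=20, limit=1099))
```

Relocating the block only requires changing the base (and limit) values; the displacements used by the program stay the same.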

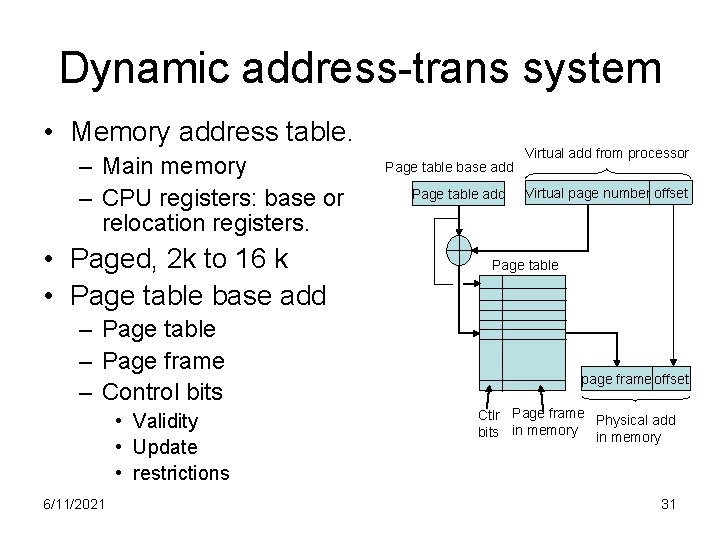

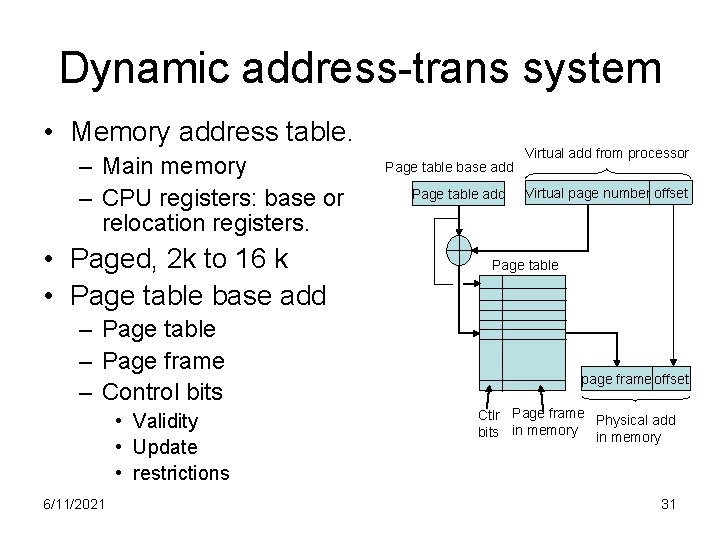

Dynamic address-translation system
• Memory address table, held in
  – Main memory
  – CPU registers: base or relocation registers
• Paged; page sizes from 2K to 16K
• Page table base address
  – Page table
  – Page frame
  – Control bits
    • Validity
    • Update
    • Restrictions
Figure: the virtual address from the processor is split into a virtual page number and an offset. The page table base address and the virtual page number give the page table address; the selected page table entry supplies the control bits and the page frame, which is combined with the offset to form the physical address in memory.

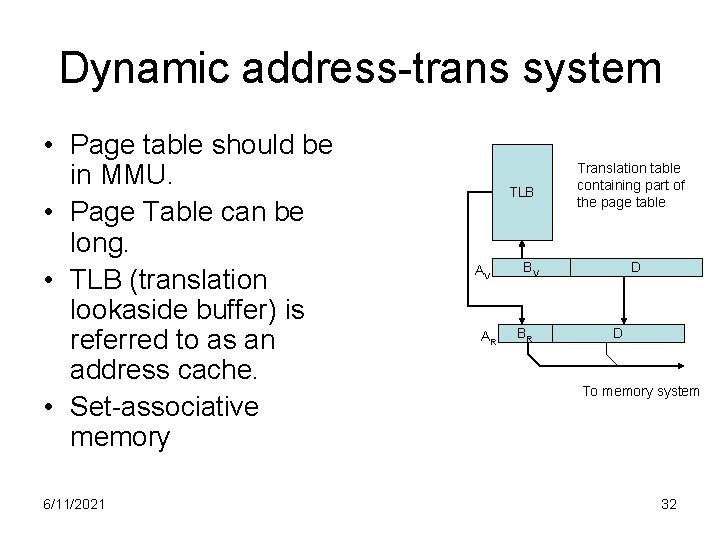

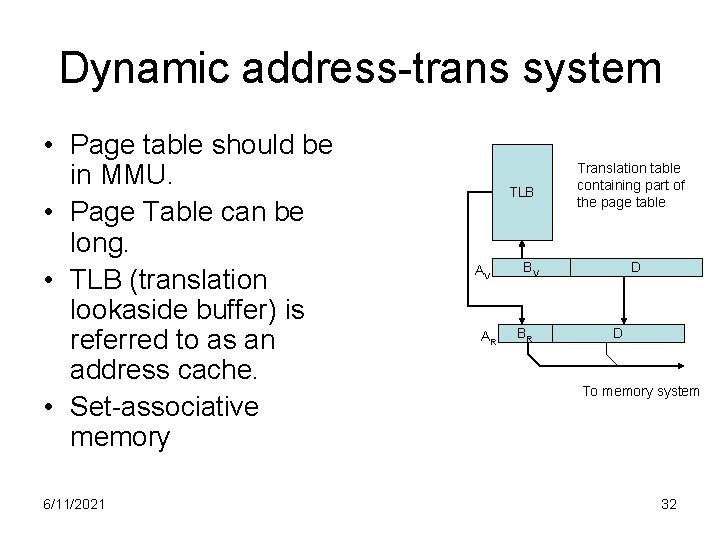

Dynamic address-translation system (contd.)
• The page table should be in the MMU.
• The page table can be long.
• The TLB (translation lookaside buffer) is referred to as an address cache.
• Set-associative memory
Figure: the TLB, a translation table containing part of the page table, maps virtual addresses $A_V$, $B_V$ to real addresses $A_R$, $B_R$; the displacement $D$ passes unchanged to the memory system.
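
The translation path described on these two slides can be sketched as follows. This is an illustration only: the 4K page size, the dictionary-based page table and TLB, and the entry fields are assumptions, and a real TLB has a bounded size and its own replacement policy.

```python
PAGE_BITS = 12  # assumed 4K pages (within the 2K to 16K range mentioned)


def translate(vaddr, page_table, tlb):
    """Map a virtual address to a physical address using a TLB in front of the page table."""
    vpn = vaddr >> PAGE_BITS                    # virtual page number
    offset = vaddr & ((1 << PAGE_BITS) - 1)     # passes through unchanged
    frame = tlb.get(vpn)
    if frame is None:                           # TLB miss: consult the page table
        entry = page_table.get(vpn)
        if entry is None or not entry["valid"]:
            raise LookupError(f"page fault on virtual page {vpn}")
        frame = entry["frame"]
        tlb[vpn] = frame                        # cache the translation
    return (frame << PAGE_BITS) | offset


# Hypothetical page table: virtual page 2 resides in page frame 7.
page_table = {2: {"valid": True, "frame": 7}}
tlb = {}
print(hex(translate(0x2ABC, page_table, tlb)))
```

After the first access the translation for page 2 is in the TLB, so later references to that page avoid the page-table lookup.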

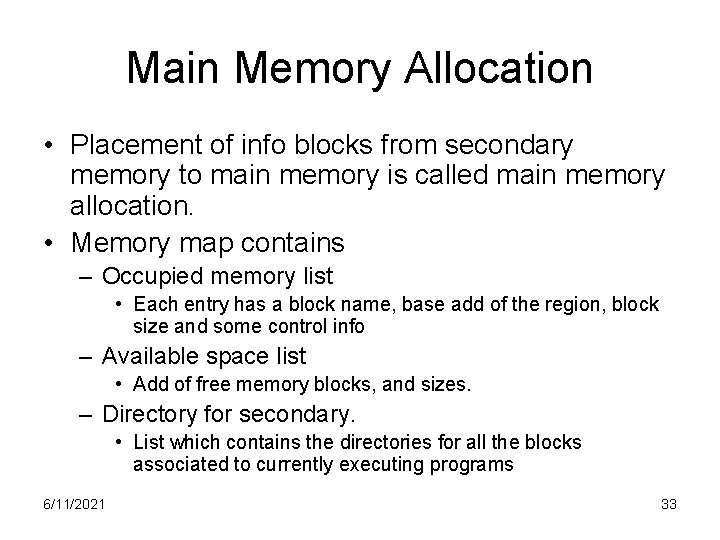

Main memory allocation
• Placement of information blocks from secondary memory into main memory is called main memory allocation.
• The memory map contains
  – Occupied memory list
    • Each entry has a block name, the base address of the region, the block size and some control information.
  – Available space list
    • Addresses of free memory blocks, and their sizes.
  – Directory for secondary memory
    • A list containing the directories for all the blocks associated with currently executing programs.

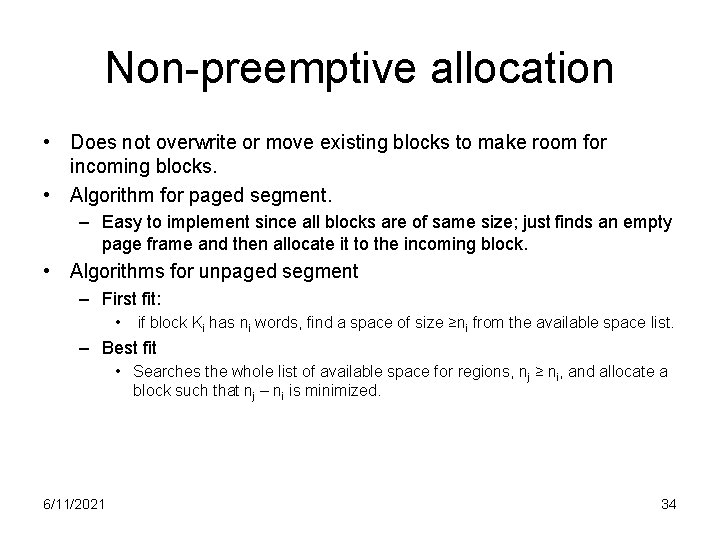

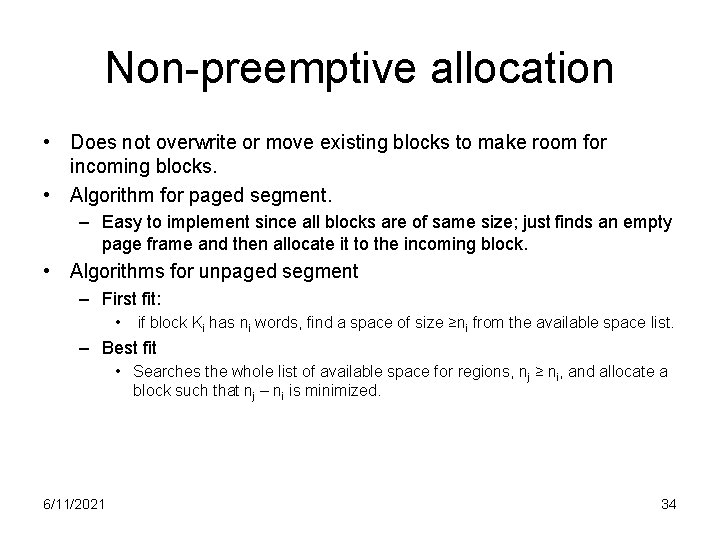

Non-preemptive allocation
• Does not overwrite or move existing blocks to make room for incoming blocks.
• Algorithm for paged segments
  – Easy to implement since all blocks are of the same size; it just finds an empty page frame and allocates it to the incoming block.
• Algorithms for unpaged segments
  – First fit
    • If block $K_i$ has $n_i$ words, find the first region of size $\ge n_i$ in the available space list.
  – Best fit
    • Search the whole available space list for regions with $n_j \ge n_i$, and allocate the region for which $n_j - n_i$ is minimized.

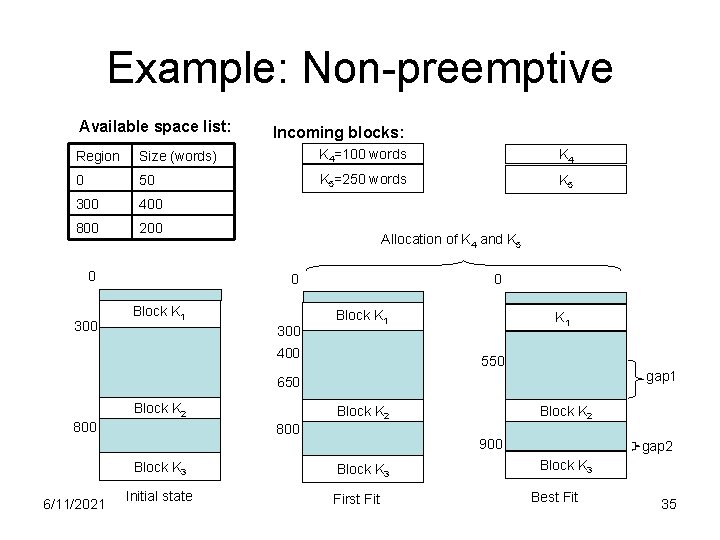

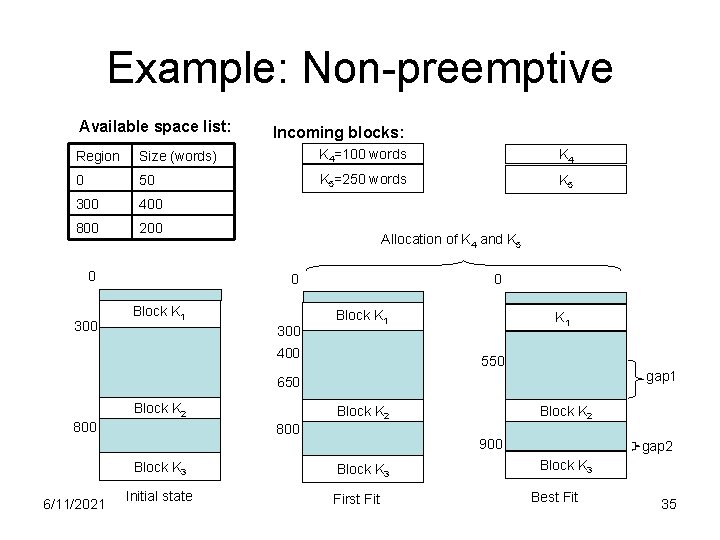

Example: non-preemptive allocation

Available space list:

| Region address | Size (words) |
|---|---|
| 0 | 50 |
| 300 | 400 |
| 800 | 200 |

Incoming blocks: $K_4$ = 100 words, $K_5$ = 250 words.

Allocation of $K_4$ and $K_5$:
• First fit: $K_4$ is placed at 300 and $K_5$ at 400 (ending at 650).
• Best fit: $K_4$ is placed at 800 (ending at 900) and $K_5$ at 300 (ending at 550).
Figure: memory maps for the initial state, first fit and best fit, showing blocks $K_1$, $K_2$, $K_3$ and the remaining gaps.
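
The example can be reproduced with a short sketch of first fit and best fit. The available space list (address 0 with 50 words, 300 with 400, 800 with 200) is read from the slide; the function names and the list-of-lists representation are assumptions.

```python
def allocate(free_list, size, policy):
    """Allocate `size` words from free_list (a list of [address, size]).

    Returns the base address of the allocated block, or None on overflow.
    """
    candidates = [region for region in free_list if region[1] >= size]
    if not candidates:
        return None
    if policy == "first":
        region = candidates[0]                                  # first region that fits
    else:
        region = min(candidates, key=lambda reg: reg[1] - size)  # least leftover space
    base = region[0]
    region[0] += size          # shrink the free region from the front
    region[1] -= size
    if region[1] == 0:
        free_list.remove(region)
    return base


def run(policy):
    free = [[0, 50], [300, 400], [800, 200]]
    return {name: allocate(free, size, policy)
            for name, size in [("K4", 100), ("K5", 250)]}


print("first fit:", run("first"))
print("best fit: ", run("best"))
```

First fit splits the 400-word region for both blocks, while best fit leaves smaller leftover gaps by placing $K_4$ in the 200-word region.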

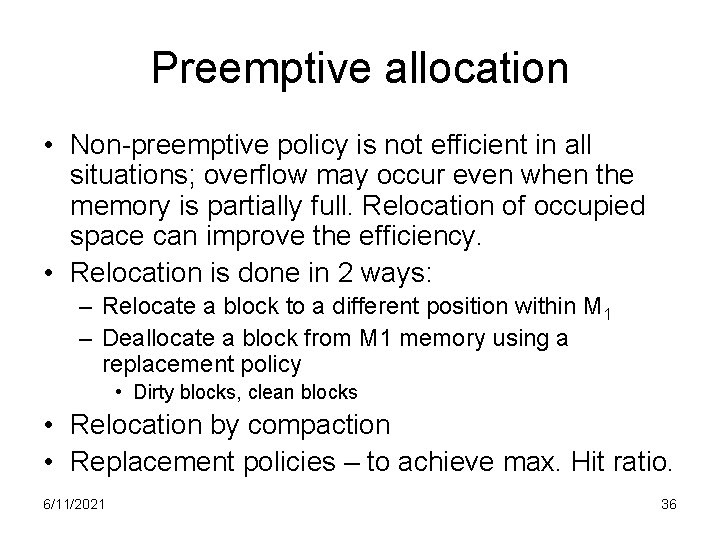

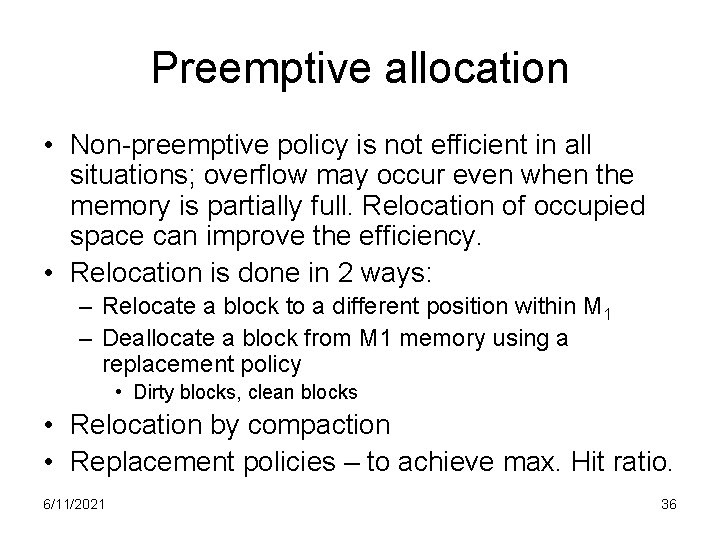

Preemptive allocation
• A non-preemptive policy is not efficient in all situations; overflow may occur even when the memory is only partially full. Relocation of occupied space can improve the efficiency.
• Relocation is done in two ways:
  – Relocate a block to a different position within $M_1$.
  – Deallocate a block from $M_1$ using a replacement policy.
• Dirty blocks, clean blocks
• Relocation by compaction
• Replacement policies: aim to achieve the maximum hit ratio.

• Optimal replacement policy
  – Find the block for replacement that has the minimum chance of being referenced next.
  – First, a simulation run generates the block address trace $S$. Then find $\max(t_j - t_i)$ over all locations and construct the optimal sequence $S_{OPT}$.
  – Can be extremely time-consuming.
• The two most common replacement policies:
  – FIFO (first-in first-out)
    • Simple, but a frequently used block (such as a loop) may be replaced because it is the oldest block.
  – LRU (least recently used)
    • Selects the block least recently accessed by the processor.
    • Avoids replacing frequently used blocks.
    • Slightly more difficult to implement.

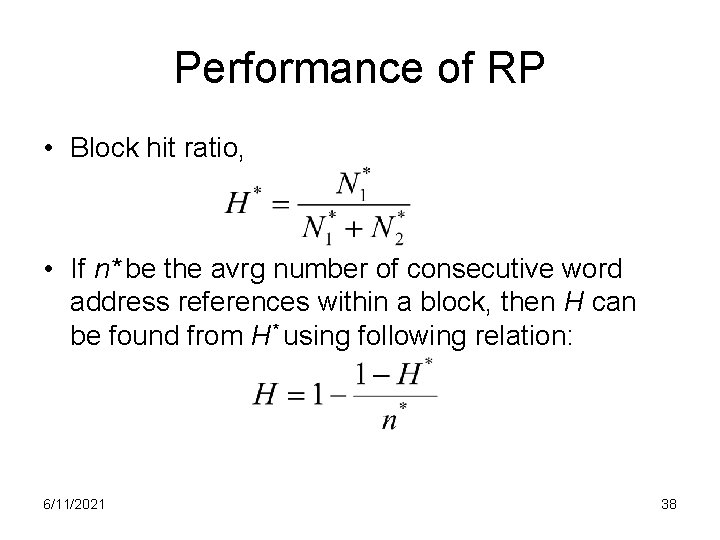

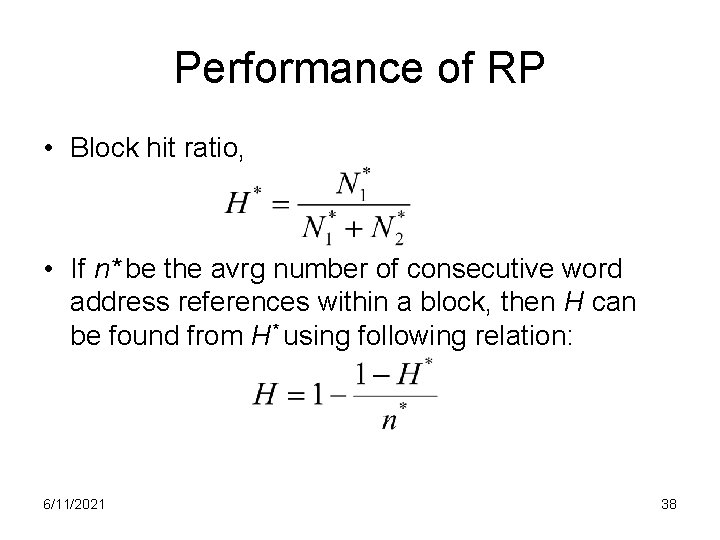

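Performance of replacement policies
• Block hit ratio, $H^* = \dfrac{N_1^*}{N_1^* + N_2^*}$, where $N_1^*$ and $N_2^*$ are the numbers of block references satisfied by $M_1$ and $M_2$.
• If $n^*$ is the average number of consecutive word address references within a block, then $H$ can be found from $H^*$ using the relation $H = 1 - \dfrac{1 - H^*}{n^*}$.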

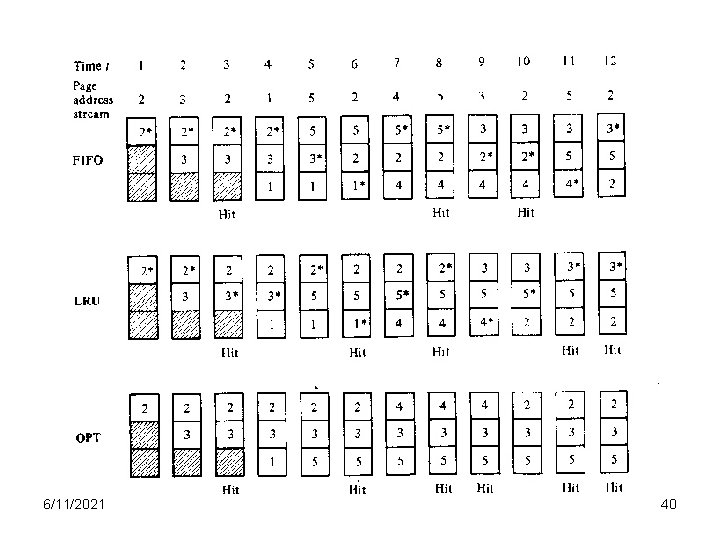

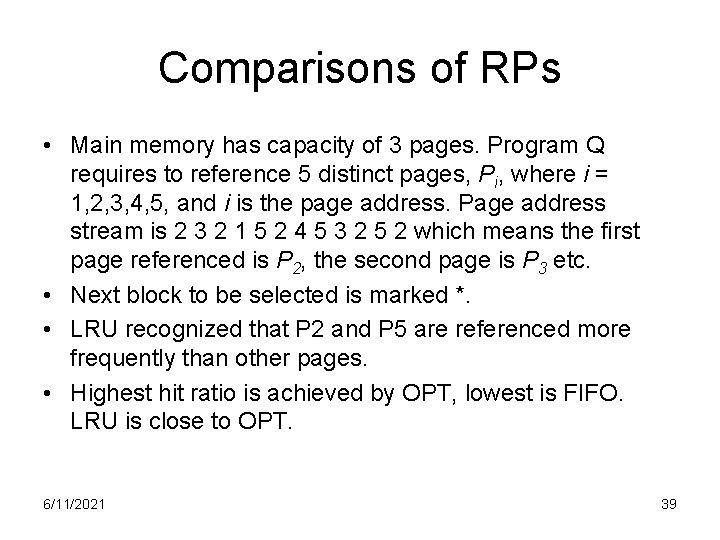

Comparison of replacement policies
• Main memory has a capacity of 3 pages. Program Q references 5 distinct pages $P_i$, where $i = 1, 2, 3, 4, 5$ is the page address. The page address stream is 2 3 2 1 5 2 4 5 3 2 5 2, which means the first page referenced is $P_2$, the second is $P_3$, etc.
• The next block to be replaced is marked *.
• LRU recognizes that $P_2$ and $P_5$ are referenced more frequently than the other pages.
• The highest hit ratio is achieved by OPT and the lowest by FIFO. LRU is close to OPT.
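
The comparison can be reproduced with a small simulation of the three policies on the page address stream above with a capacity of 3 pages. This is a sketch: the function name is an assumption, and OPT is implemented as "evict the page whose next reference is farthest in the future", with ties broken arbitrarily.

```python
def count_hits(stream, capacity, policy):
    """Count hits for policy in {"FIFO", "LRU", "OPT"}."""
    resident = []   # FIFO: oldest load first; LRU: least recently used first
    hits = 0
    for t, page in enumerate(stream):
        if page in resident:
            hits += 1
            if policy == "LRU":            # a hit refreshes recency under LRU only
                resident.remove(page)
                resident.append(page)
            continue
        if len(resident) == capacity:
            if policy == "OPT":
                future = stream[t + 1:]
                # Evict the page referenced farthest in the future (or never again).
                victim = max(resident,
                             key=lambda p: future.index(p) if p in future else len(future))
            else:
                victim = resident[0]
            resident.remove(victim)
        resident.append(page)
    return hits


stream = [2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2]
for policy in ("FIFO", "LRU", "OPT"):
    hits = count_hits(stream, 3, policy)
    print(f"{policy}: {hits} hits, hit ratio {hits / len(stream):.2f}")
```

On this stream FIFO scores 3 hits, LRU 5 and OPT 6 out of 12 references, which matches the ordering stated on the slide.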


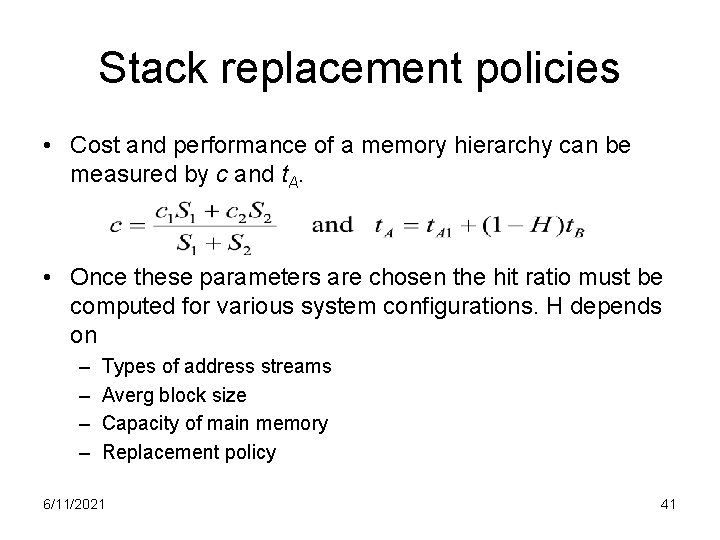

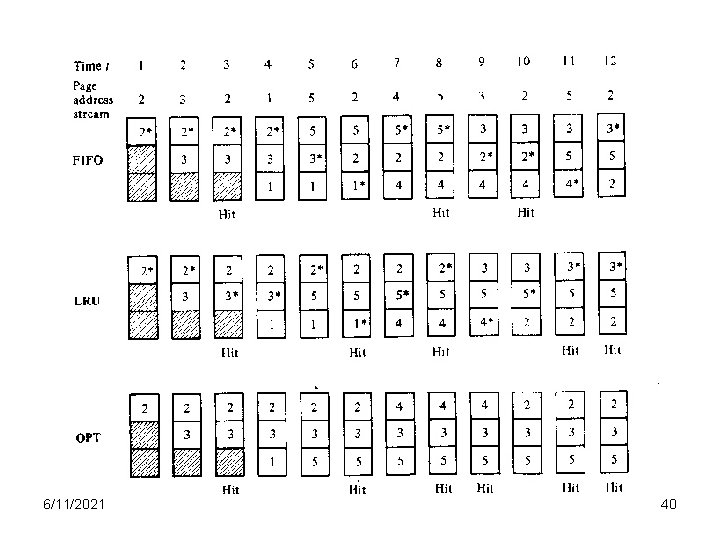

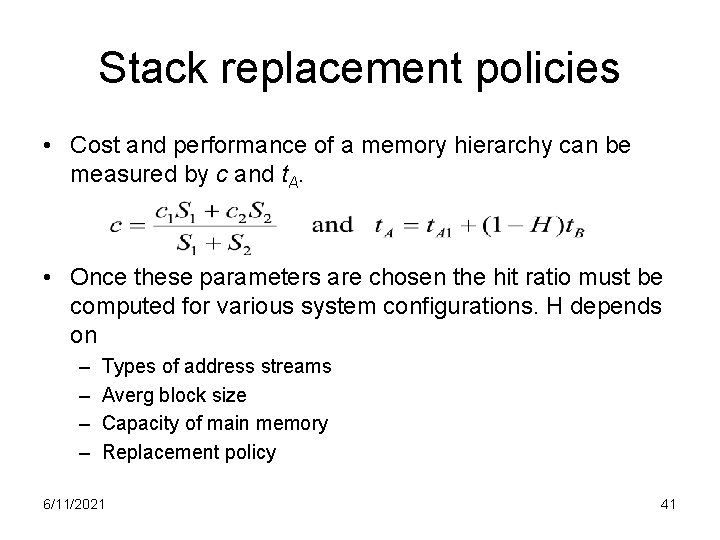

Stack replacement policies
• The cost and performance of a memory hierarchy can be measured by $c$ and $t_A$.
• Once these parameters are chosen, the hit ratio must be computed for various system configurations. $H$ depends on
  – Types of address streams
  – Average block size
  – Capacity of main memory
  – Replacement policy

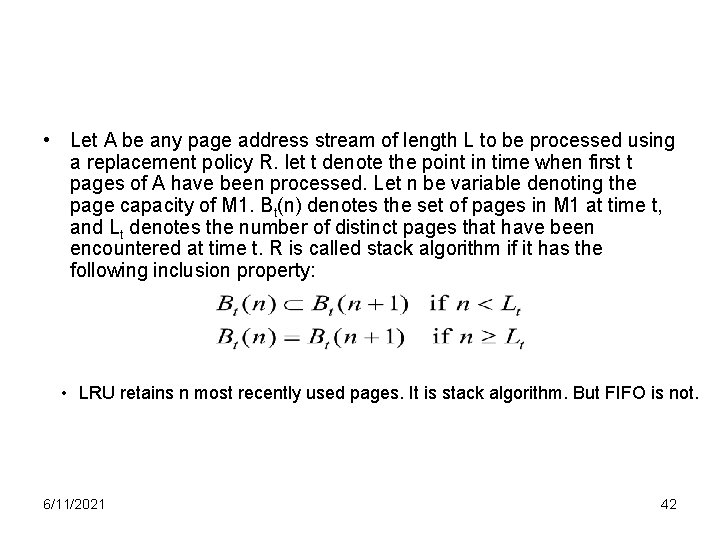

• Let $A$ be any page address stream of length $L$ to be processed using a replacement policy $R$. Let $t$ denote the point in time when the first $t$ pages of $A$ have been processed. Let $n$ be a variable denoting the page capacity of $M_1$. $B_t(n)$ denotes the set of pages in $M_1$ at time $t$, and $L_t$ denotes the number of distinct pages that have been encountered at time $t$. $R$ is called a stack algorithm if it has the following inclusion property: $B_t(n) \subset B_t(n+1)$ if $n < L_t$, and $B_t(n) = B_t(n+1)$ if $n \ge L_t$.
• LRU retains the $n$ most recently used pages, so it is a stack algorithm. FIFO is not.
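
The inclusion property can be checked empirically. The sketch below tests only the subset condition $B_t(n) \subseteq B_t(n+1)$ at every time $t$; passing on one stream is evidence, not a proof. The second stream is the classic textbook example of FIFO's anomaly and is not taken from the slides.

```python
def resident_sets(stream, capacity, policy):
    """Return B_t(n) for t = 1..L under FIFO or LRU with capacity n."""
    resident, history = [], []
    for page in stream:
        if page in resident:
            if policy == "LRU":
                resident.remove(page)
                resident.append(page)
        else:
            if len(resident) == capacity:
                resident.pop(0)           # oldest (FIFO) or least recently used (LRU)
            resident.append(page)
        history.append(set(resident))
    return history


def has_inclusion_property(stream, policy):
    """True if B_t(n) is a subset of B_t(n+1) for every t and every n."""
    for n in range(1, len(set(stream))):
        small = resident_sets(stream, n, policy)
        large = resident_sets(stream, n + 1, policy)
        if any(not s <= l for s, l in zip(small, large)):
            return False
    return True


print(has_inclusion_property([2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2], "LRU"))
print(has_inclusion_property([1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5], "FIFO"))
```

On the second stream FIFO with 4 page frames at one point holds {2, 3, 4, 5} while FIFO with 3 frames holds {1, 2, 5}, so adding capacity does not guarantee a superset of pages.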

Segments and pages
• Page: the basic unit of memory information for swapping purposes in a multilevel memory system.
• Page frame
• Segments: higher-level information blocks corresponding to logical entities, e.g., programs or data sets. A segment can be translated into one or more pages.
• Segment address, displacement. When a segment is not currently resident in the $M_1$ memory, it is transferred in its entirety from the secondary memory $M_2$.
• Segment table
• Main advantages:
  – Segments are independent and can be recompiled at any time without affecting others.
  – Efficient memory use, program-area protection.
  – Variable-length segments can easily be allocated in main memory with the help of paging.


Burroughs B6500 segmentation
• Each program has a segment called its program reference table (PRT), which serves as its segment table.
• Segment descriptor
• The Intel 80x86 and Pentium series have four 16-bit segment registers forming a segment table.

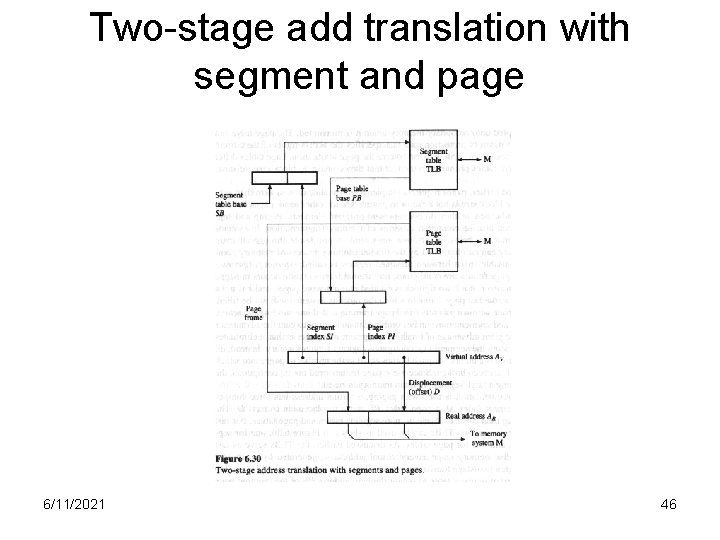

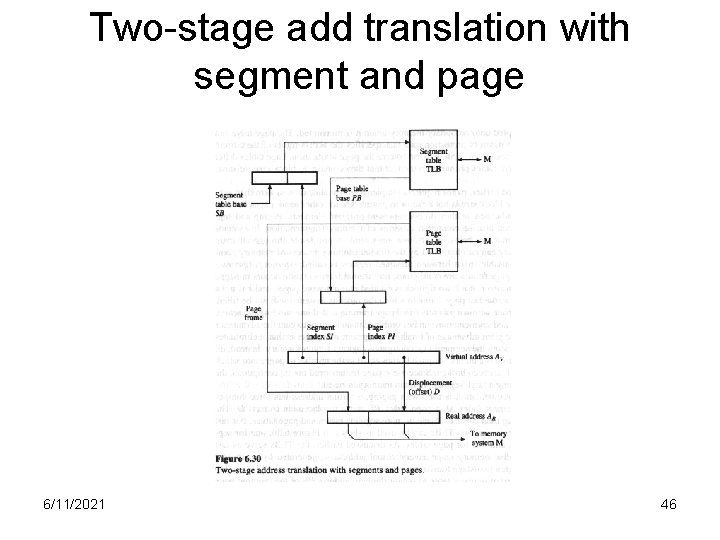

Two-stage address translation with segments and pages

Advantages of segmentation
• Segment boundaries correspond to natural program and data boundaries. Because of their logical independence, a program segment can be changed or recompiled at any time without affecting other segments.
• Access rights and the scopes of program variables are easy to implement.
• Stacks and queues are easy to implement, as a segment can be of variable length.

Pages
• Fixed-length block
• Page table
  – Page address, displacement
• Page fault
• External fragmentation
• Internal fragmentation
• Segments can be assigned to a noncontiguous area of memory by the use of paging.

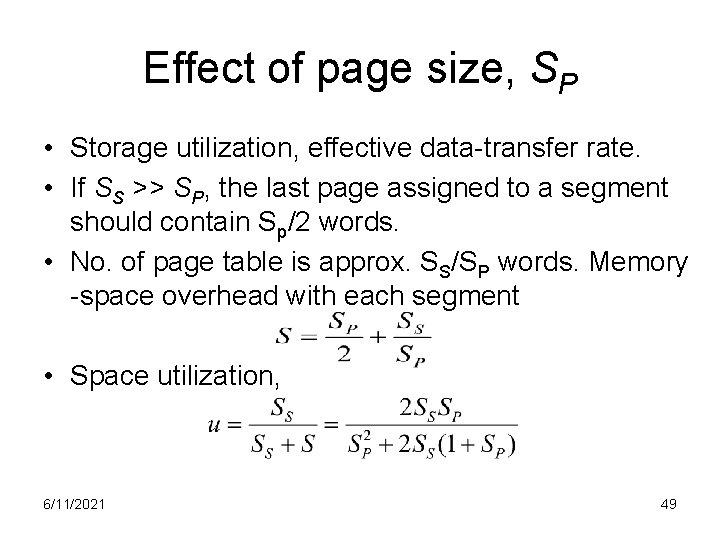

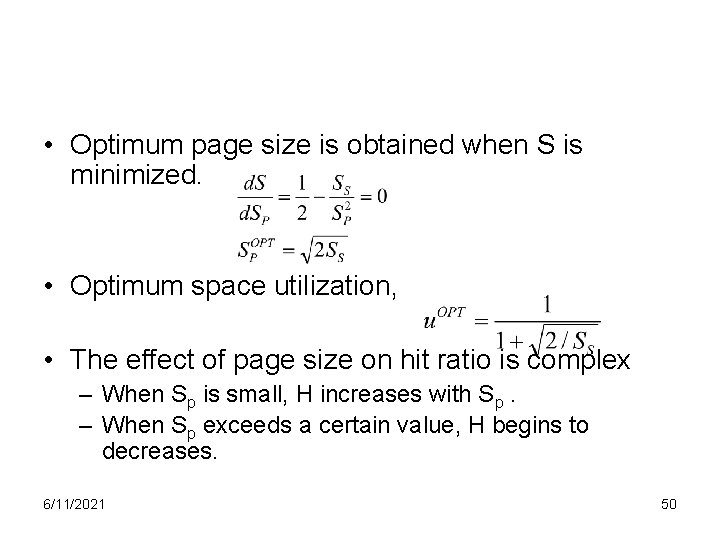

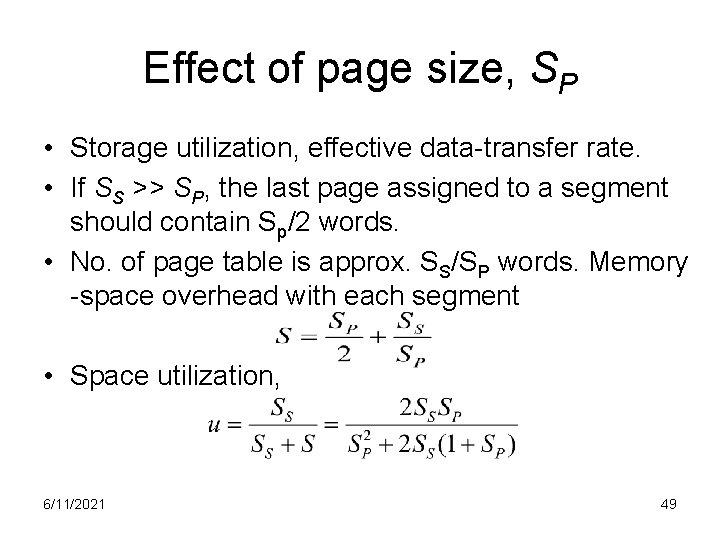

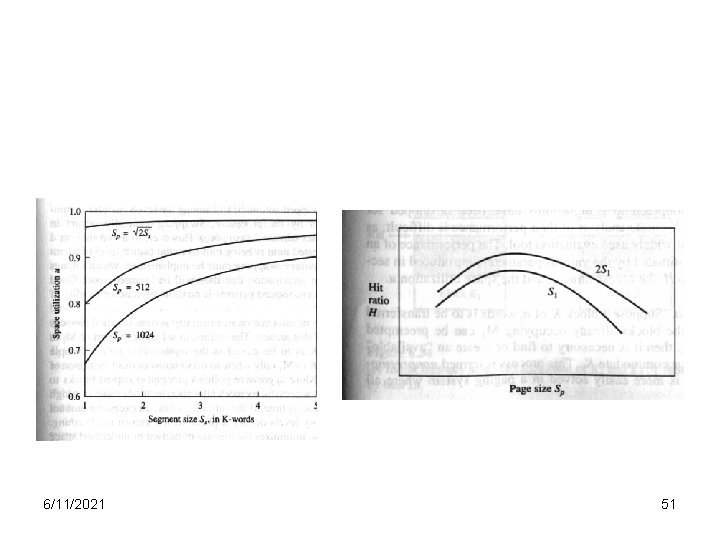

Effect of page size, $S_P$
• Storage utilization, effective data-transfer rate.
• If $S_S \gg S_P$, the last page assigned to a segment should contain about $S_P/2$ words on average.
• The size of the page table is approximately $S_S/S_P$ words. The memory-space overhead associated with each segment is $S = \dfrac{S_P}{2} + \dfrac{S_S}{S_P}$.
• Space utilization, $u = \dfrac{S_S}{S_S + S}$.

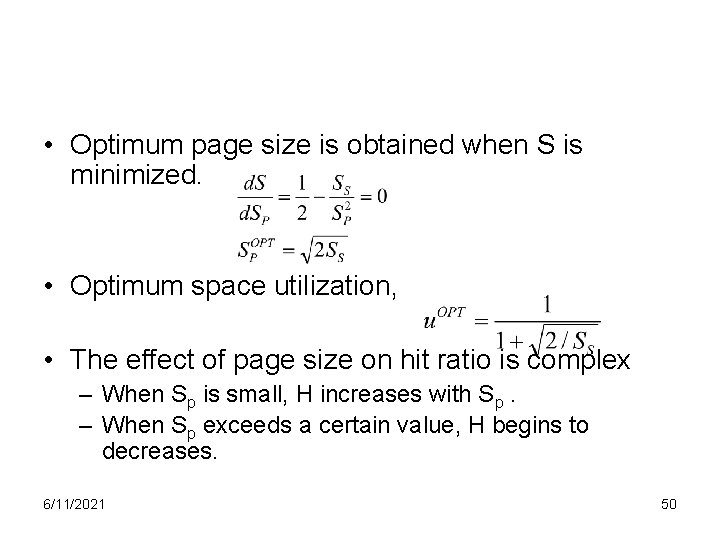

• The optimum page size is obtained when $S$ is minimized: $S_P^{OPT} = \sqrt{2 S_S}$.
• Optimum space utilization, $u^{OPT} = \dfrac{1}{1 + \sqrt{2/S_S}}$.
• The effect of page size on hit ratio is complex.
  – When $S_P$ is small, $H$ increases with $S_P$.
  – When $S_P$ exceeds a certain value, $H$ begins to decrease.
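
A short numeric check of the optimum follows. It assumes the per-segment overhead $S = S_P/2 + S_S/S_P$ (half a page of unused space plus one page-table word per page), for which the minimum lies at $S_P = \sqrt{2 S_S}$; the 5000-word segment size is hypothetical.

```python
import math


def overhead(s_s, s_p):
    """Assumed per-segment overhead S: unused half page plus page-table words."""
    return s_p / 2 + s_s / s_p


def utilization(s_s, s_p):
    """Space utilization u = S_S / (S_S + S)."""
    return s_s / (s_s + overhead(s_s, s_p))


def optimum_page_size(s_s):
    """Page size minimizing S: sqrt(2 * S_S)."""
    return math.sqrt(2 * s_s)


s_s = 5000                      # hypothetical average segment size in words
s_p = optimum_page_size(s_s)
print(f"optimum page size: {s_p:.0f} words, utilization: {utilization(s_s, s_p):.4f}")
for trial in (50, 100, 200):
    print(trial, overhead(s_s, trial))
```

Halving or doubling the page size away from the optimum raises the overhead from 100 to 125 words in this example, so the minimum is fairly flat.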


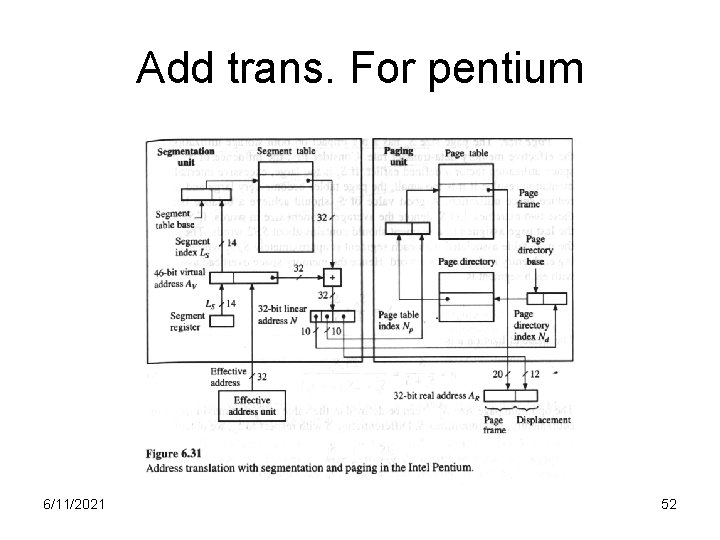

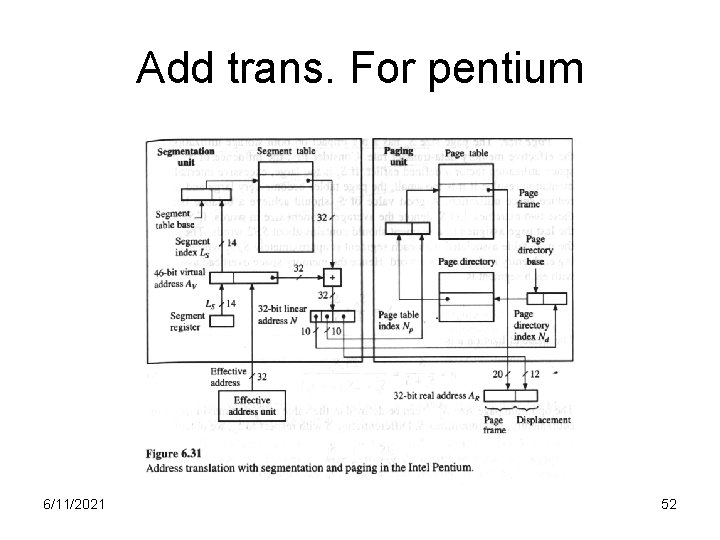

Address translation for the Pentium