DRAM Dynamic RAM Store their contents as charge

- Slides: 54

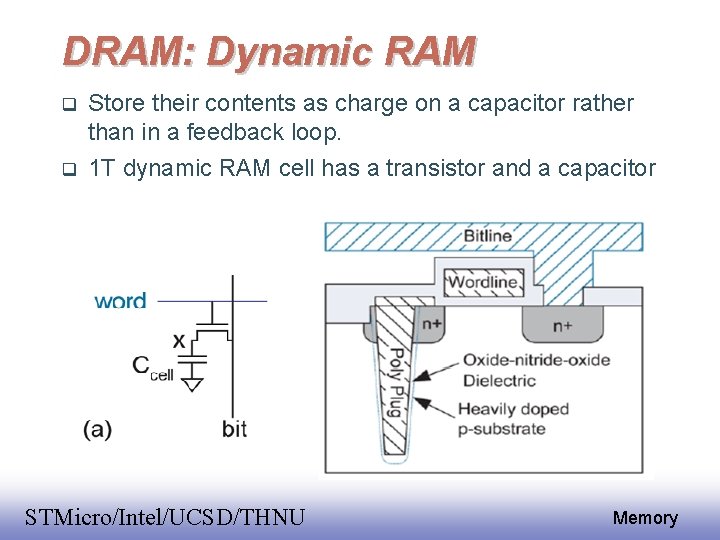

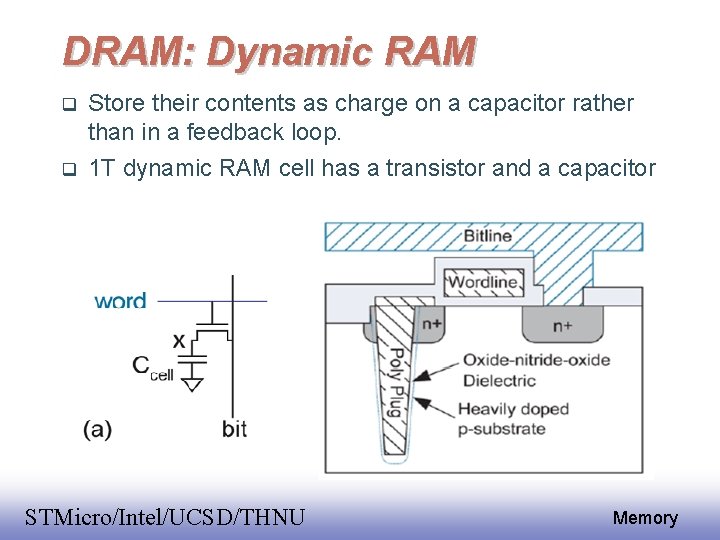

DRAM: Dynamic RAM

- DRAM cells store their contents as charge on a capacitor rather than in a feedback loop.
- A 1T dynamic RAM cell has one transistor and one capacitor.

EE 141 STMicro/Intel/UCSD/THNU: Memory

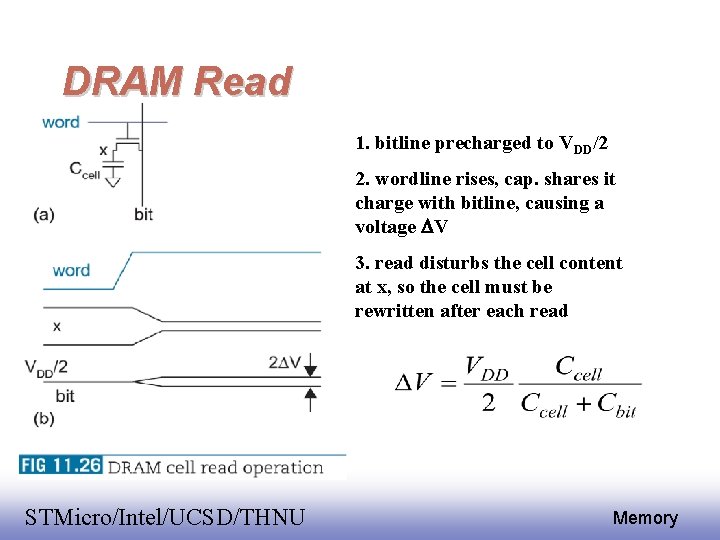

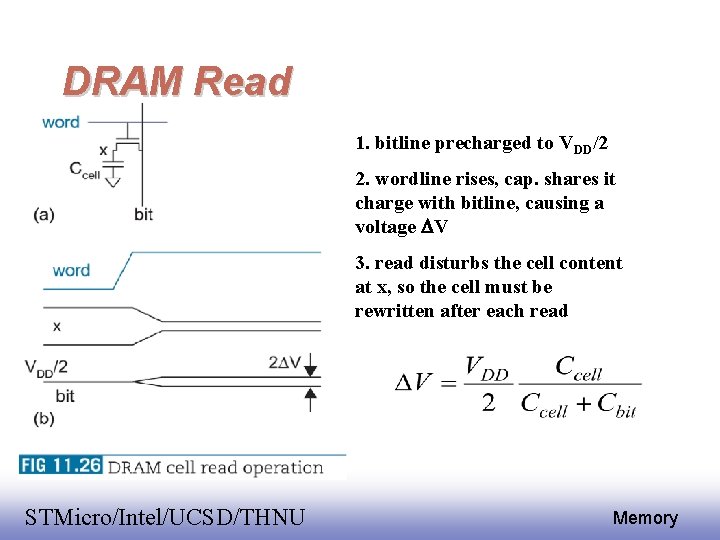

DRAM Read

1. The bitline is precharged to VDD/2.
2. The wordline rises and the cell capacitor shares its charge with the bitline, causing a small voltage change ΔV on the bitline.
3. The read disturbs the cell content at node x, so the cell must be rewritten after each read.

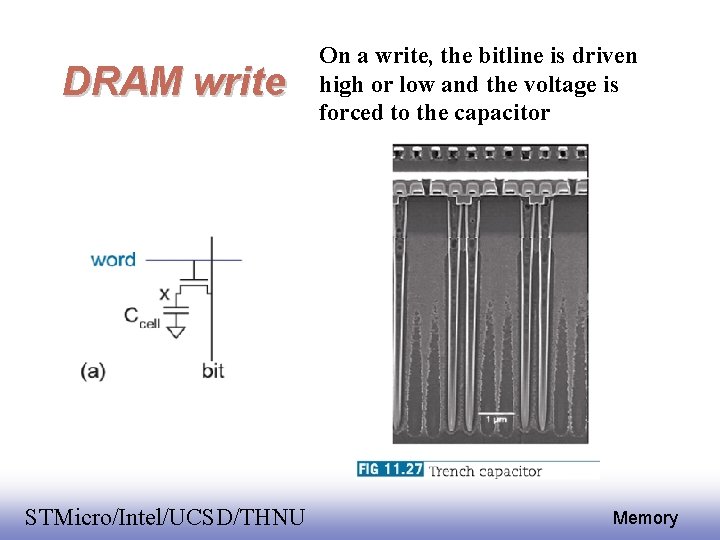

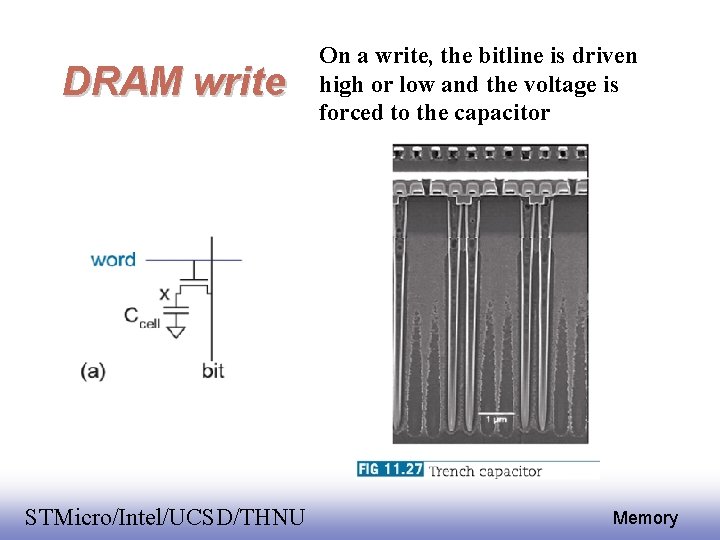

DRAM Write

On a write, the bitline is driven high or low and that voltage is forced onto the capacitor.

DRAM Array

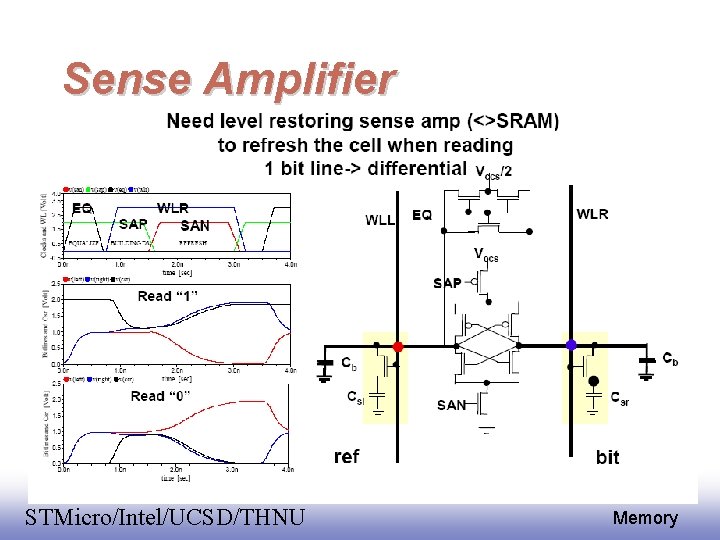

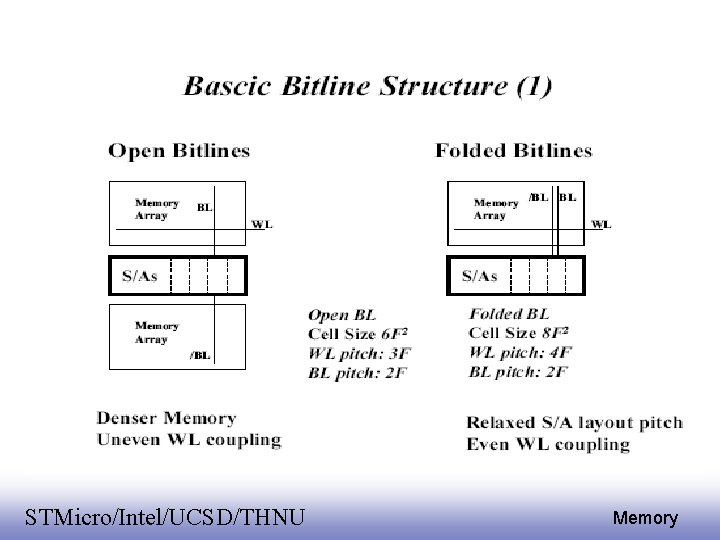

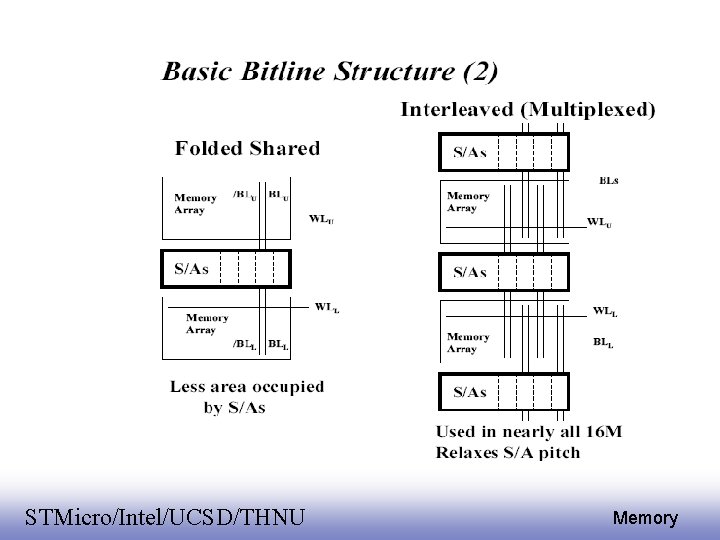

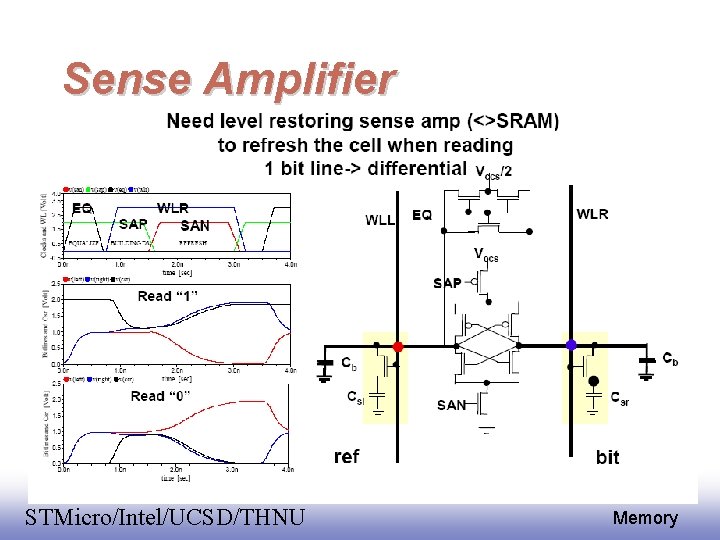

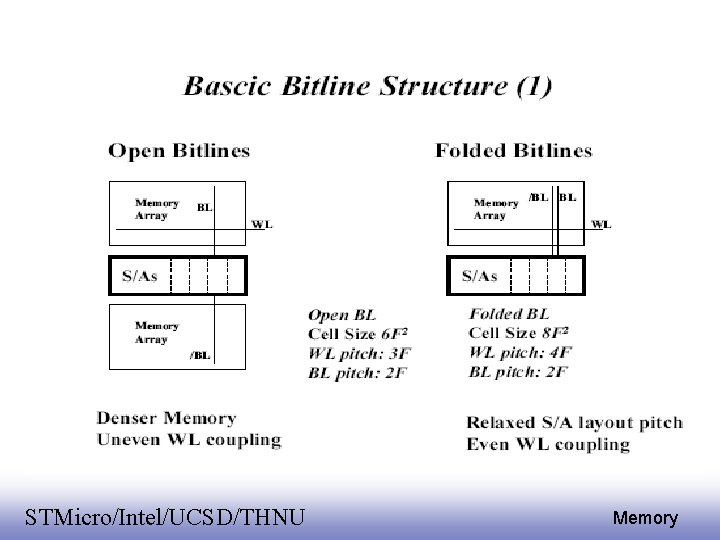

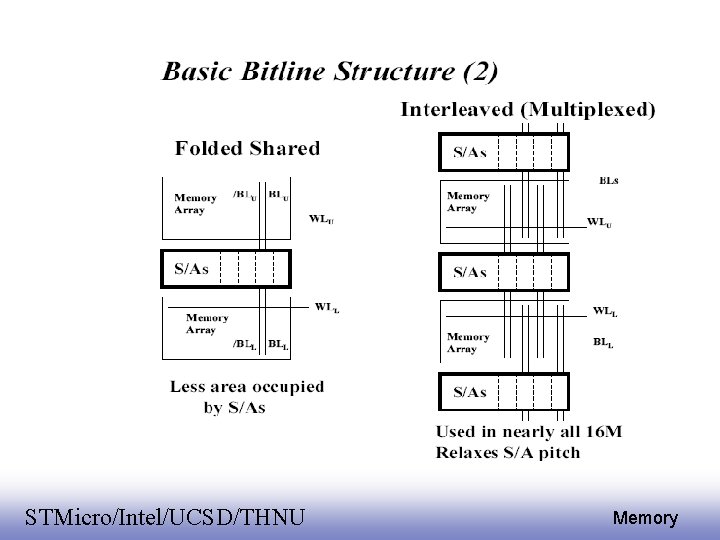

DRAM

- The bitline capacitance is an order of magnitude larger than the cell capacitance, so the voltage swing on the bitline is very small. A sense amplifier is used to detect it.
- Three bitline architectures (open, folded, and twisted) offer different compromises between noise and area.
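The size of the bitline swing follows from charge conservation when the cell capacitor is connected to the precharged bitline. The sketch below is only an illustration: the function name and the example values (2.5 V supply, 30 fF cell, 300 fF bitline) are assumptions chosen to match the order-of-magnitude ratio stated above, not figures from the slides.

```python
def bitline_swing(v_cell, vdd, c_cell, c_bitline):
    """Return the bitline voltage change after charge sharing.

    The bitline starts at vdd / 2 and the cell at v_cell; total charge is
    conserved when the access transistor connects the two capacitors.
    """
    v_precharge = vdd / 2
    total_charge = c_cell * v_cell + c_bitline * v_precharge
    v_final = total_charge / (c_cell + c_bitline)
    return v_final - v_precharge


# Assumed example values, not taken from the slides.
VDD = 2.5            # volts
C_CELL = 30e-15      # farads
C_BITLINE = 300e-15  # farads, ten times the cell capacitance

swing_one = bitline_swing(VDD, VDD, C_CELL, C_BITLINE)   # cell stores a 1
swing_zero = bitline_swing(0.0, VDD, C_CELL, C_BITLINE)  # cell stores a 0
print(f"stored 1: {swing_one * 1e3:+.1f} mV, stored 0: {swing_zero * 1e3:+.1f} mV")
```

With a 10:1 capacitance ratio the swing is only about one eleventh of VDD/2 in either direction, which is why the bitline cannot be read directly as a logic level.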

DRAM in a nutshell

- Based on capacitive (non-regenerative) storage
- Highest density (Gb/cm²)
- Large external memory (Gb) or embedded DRAM for image, graphics, multimedia…
- Needs periodic refresh -> overhead, slower

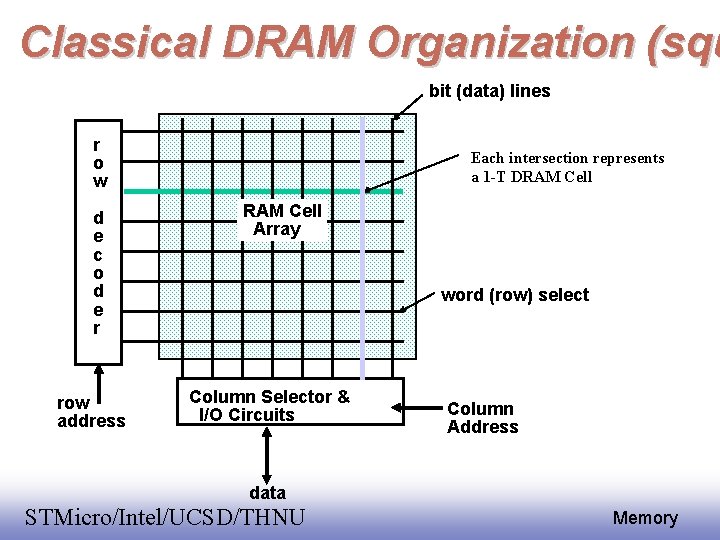

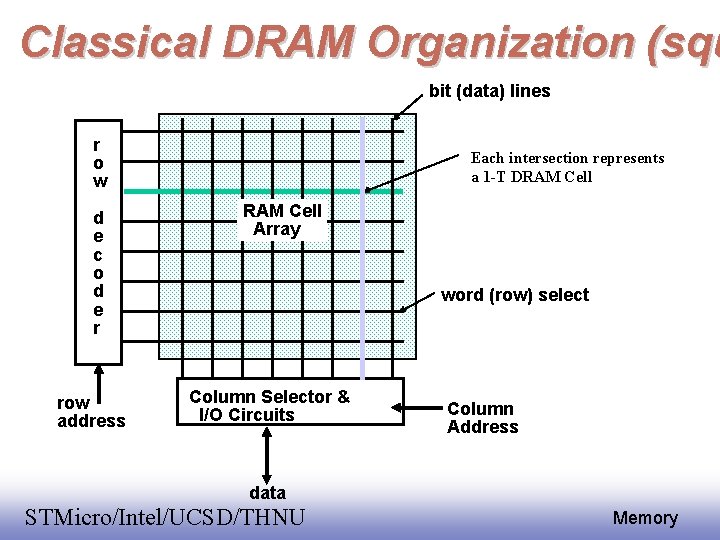

Classical DRAM Organization (squ

Figure labels: RAM cell array crossed by bit (data) lines and word (row) select lines; row decoder driven by the row address; column selector and I/O circuits driven by the column address; data. Each intersection represents a 1-T DRAM cell.

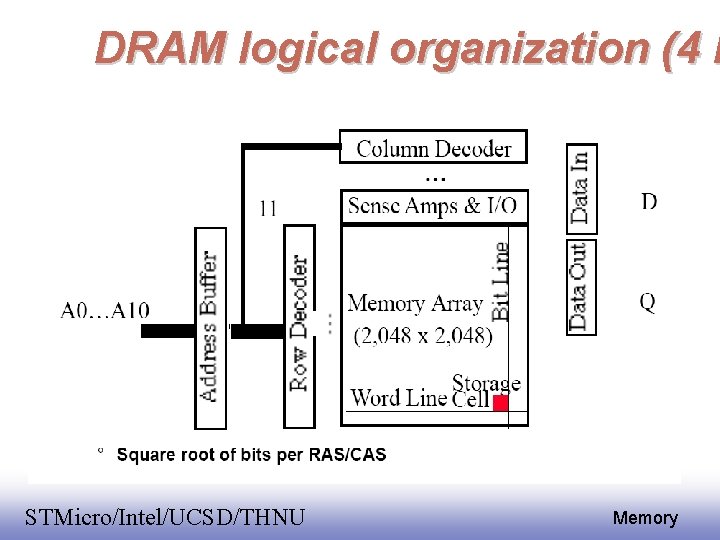

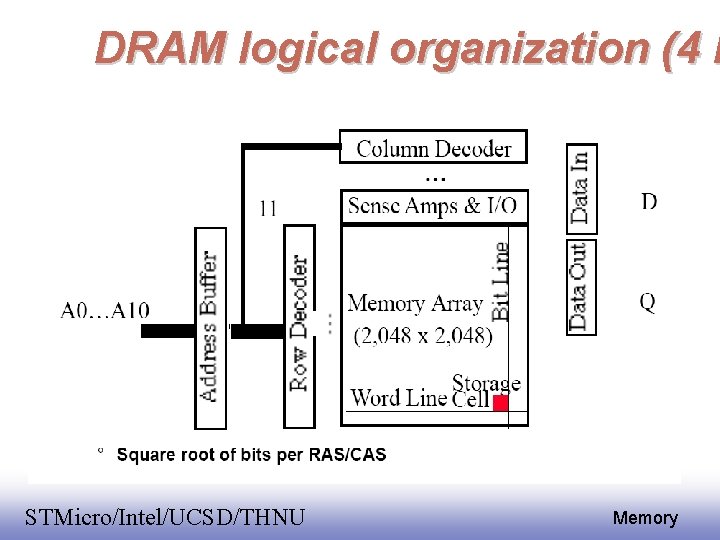

DRAM logical organization (4 M

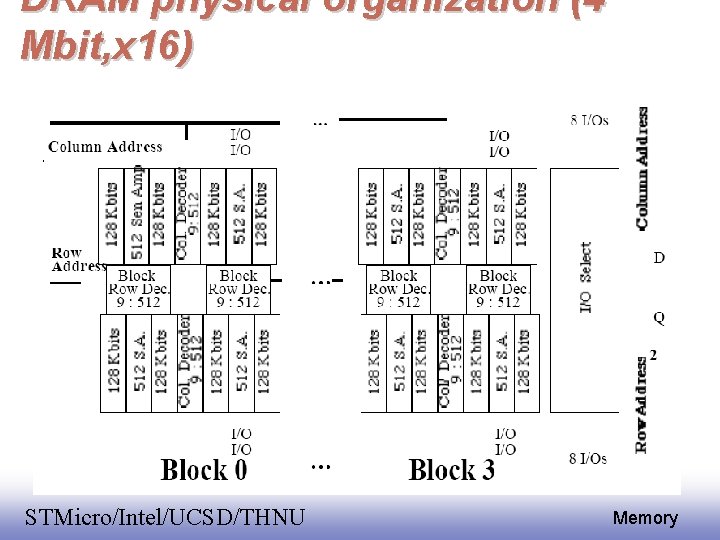

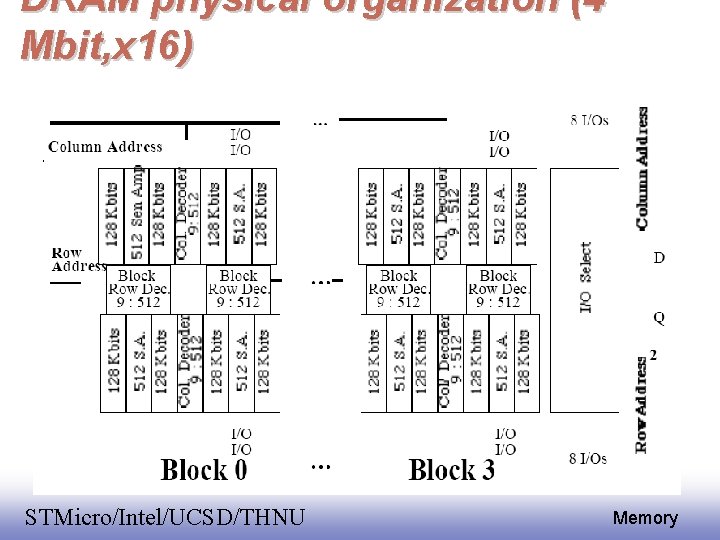

DRAM physical organization (4 Mbit, x16)

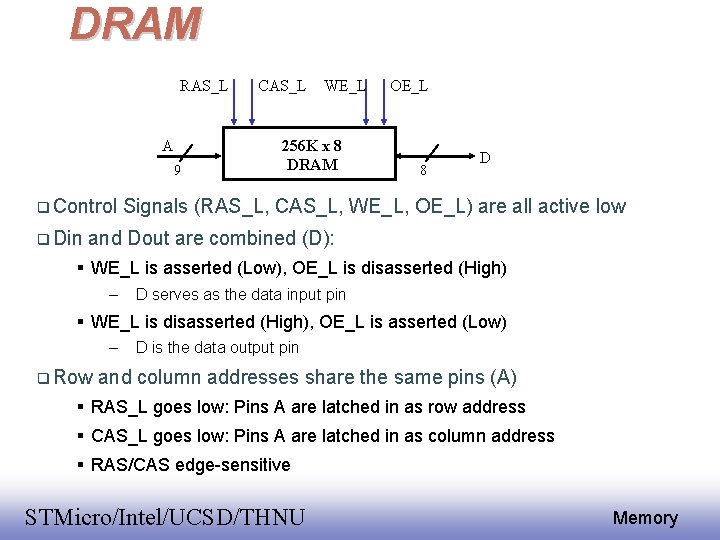

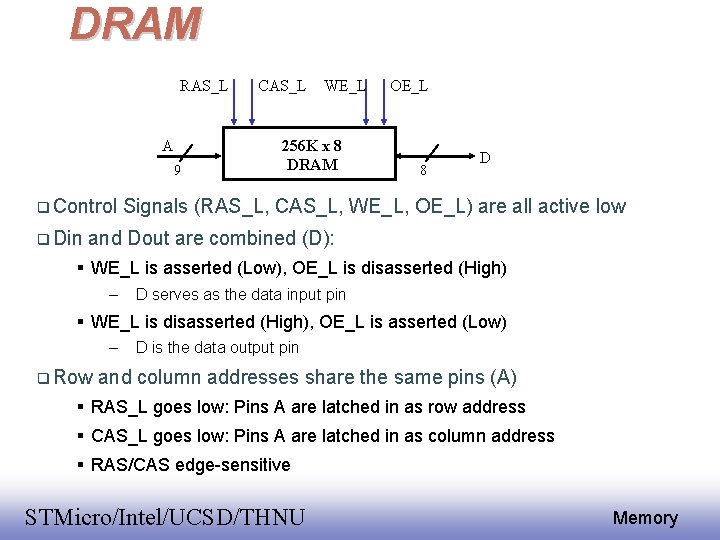

DRAM

Figure: 256K x 8 DRAM block with pins RAS_L, CAS_L, WE_L, OE_L, A (9 bits), and D (8 bits).

- Control signals (RAS_L, CAS_L, WE_L, OE_L) are all active low.
- Din and Dout are combined (D):
  - WE_L is asserted (low), OE_L is deasserted (high): D serves as the data input pin.
  - WE_L is deasserted (high), OE_L is asserted (low): D is the data output pin.
- Row and column addresses share the same pins (A):
  - RAS_L goes low: pins A are latched in as the row address.
  - CAS_L goes low: pins A are latched in as the column address.
  - RAS/CAS are edge-sensitive.
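Because the 256K x 8 part has 2^18 locations but only 9 address pins, a controller has to present each address in two halves. The sketch below shows one way to split it; the function name and the choice of sending the upper bits as the row are assumptions for illustration, and a real controller may map address bits differently.

```python
ADDRESS_PINS = 9  # the 256K x 8 part has 9 shared address pins


def split_address(address):
    """Split an 18-bit location address into (row, column) halves.

    Assumption: the upper 9 bits are sent first as the row address
    (latched on RAS_L) and the lower 9 bits second as the column address
    (latched on CAS_L).
    """
    if not 0 <= address < 1 << (2 * ADDRESS_PINS):
        raise ValueError("address out of range for a 256K-location part")
    row = address >> ADDRESS_PINS
    column = address & ((1 << ADDRESS_PINS) - 1)
    return row, column


row, column = split_address(0x2A5F3)
print(f"row = {row}, column = {column}")
```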

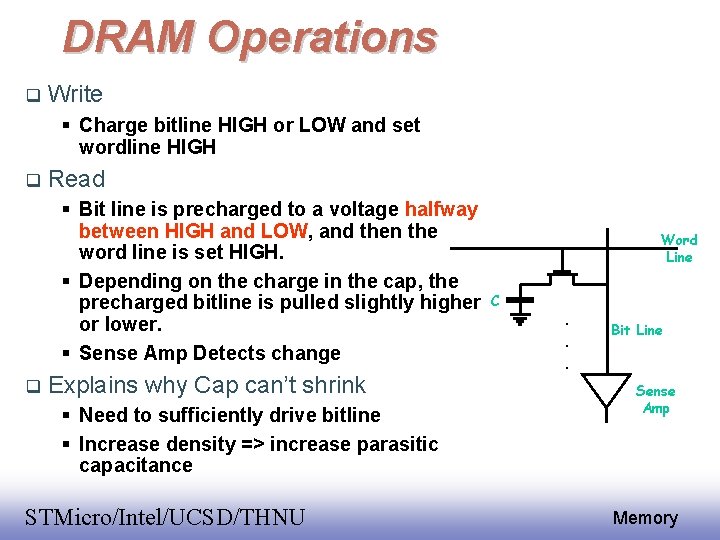

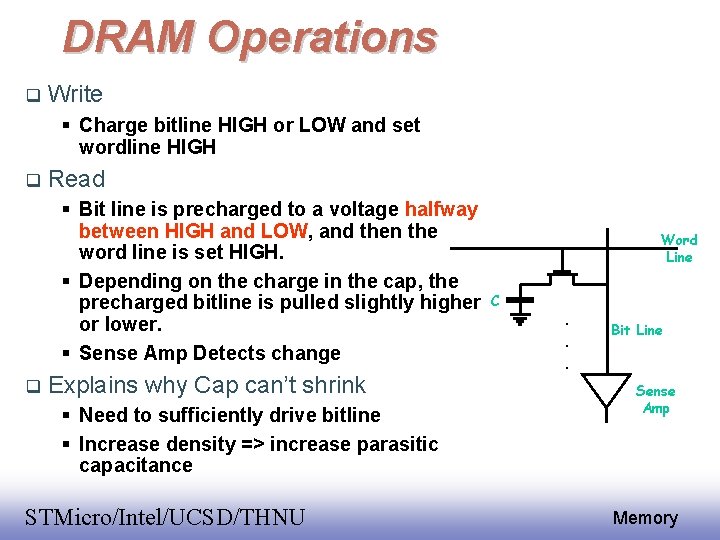

DRAM Operations

- Write: charge the bitline HIGH or LOW and set the wordline HIGH.
- Read:
  - The bitline is precharged to a voltage halfway between HIGH and LOW, and then the wordline is set HIGH.
  - Depending on the charge in the capacitor, the precharged bitline is pulled slightly higher or lower.
  - The sense amp detects the change.
- This explains why the capacitor can't shrink:
  - It needs to drive the bitline sufficiently.
  - Increasing density increases parasitic capacitance.

Figure labels: word line, cell capacitor C, bit line, sense amp.

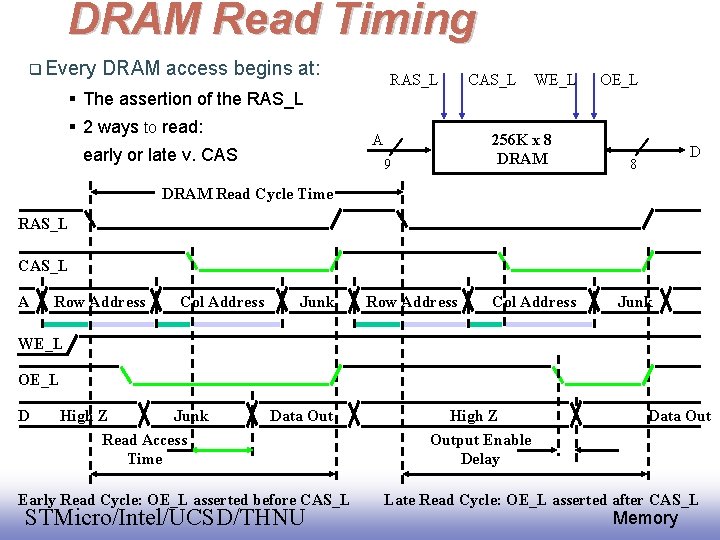

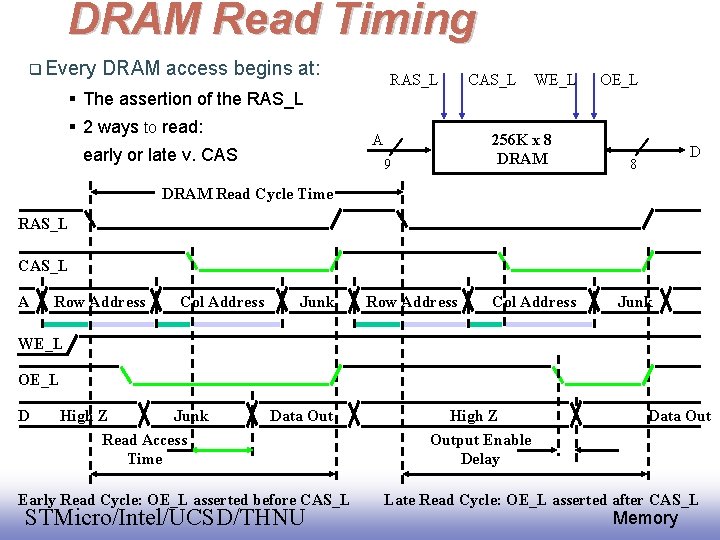

DRAM Read Timing

- Every DRAM access begins with the assertion of RAS_L.
- There are 2 ways to read: early or late relative to CAS.
  - Early read cycle: OE_L is asserted before CAS_L.
  - Late read cycle: OE_L is asserted after CAS_L.

Timing diagram (signals RAS_L, CAS_L, A, WE_L, OE_L, D of a 256K x 8 DRAM) over one DRAM read cycle time: A carries the row address and then the column address, with junk otherwise. D stays high-Z until data out appears, after the read access time in the early read cycle and after the output enable delay in the late read cycle.

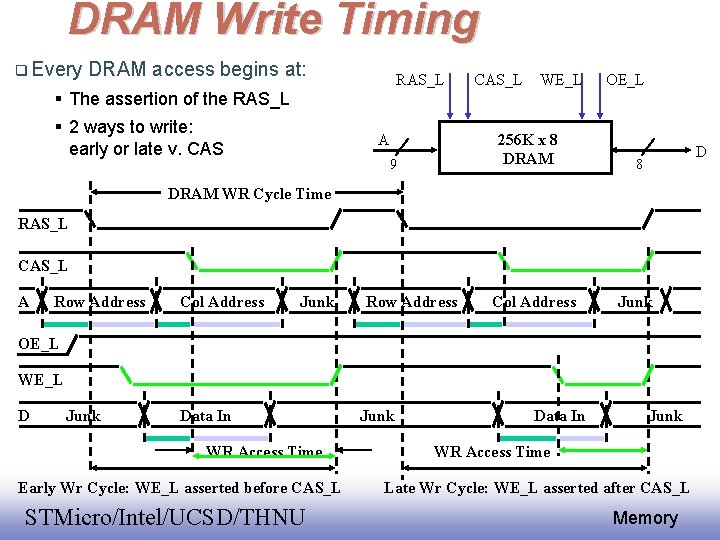

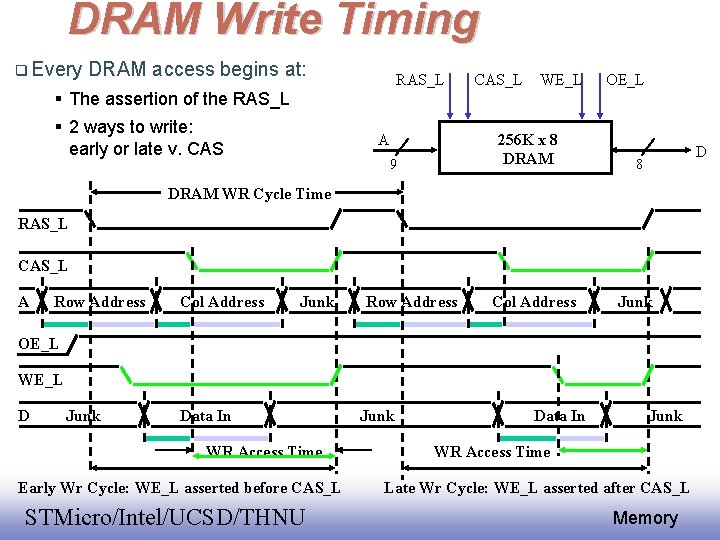

DRAM Write Timing

- Every DRAM access begins with the assertion of RAS_L.
- There are 2 ways to write: early or late relative to CAS.
  - Early write cycle: WE_L is asserted before CAS_L.
  - Late write cycle: WE_L is asserted after CAS_L.

Timing diagram (signals RAS_L, CAS_L, A, OE_L, WE_L, D of a 256K x 8 DRAM) over one DRAM write cycle time: A carries the row address and then the column address, with junk otherwise. D carries junk except while data in is valid, and the WR access time is marked for both the early and the late cycle.

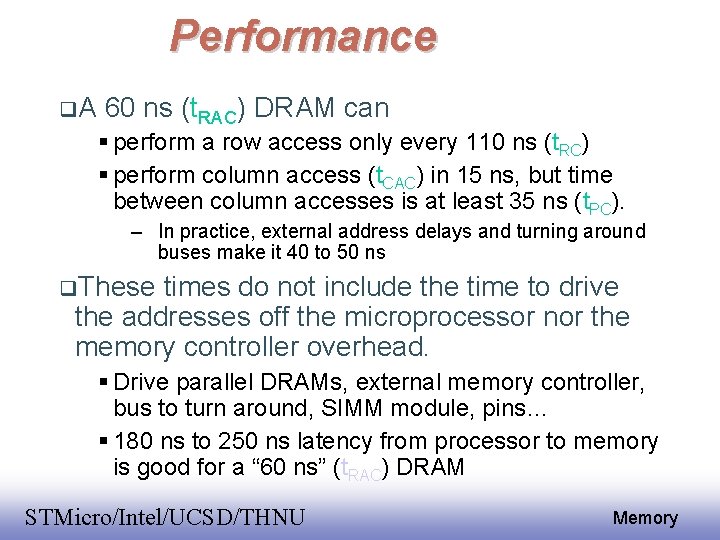

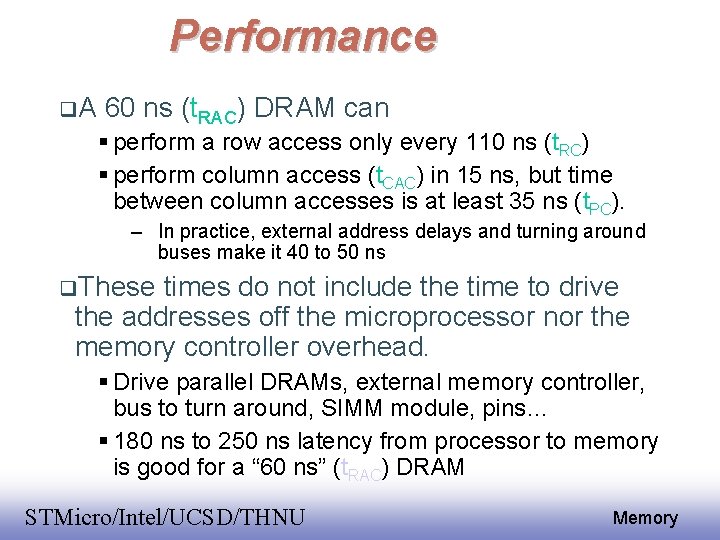

DRAM Performance

- A 60 ns (tRAC) DRAM can:
  - perform a row access only every 110 ns (tRC);
  - perform a column access (tCAC) in 15 ns, but the time between column accesses is at least 35 ns (tPC). In practice, external address delays and turning around buses make it 40 to 50 ns.
- These times do not include the time to drive the addresses off the microprocessor nor the memory controller overhead.
  - Driving parallel DRAMs, an external memory controller, bus turnaround, the SIMM module, pins…
  - 180 ns to 250 ns latency from processor to memory is good for a "60 ns" (tRAC) DRAM.

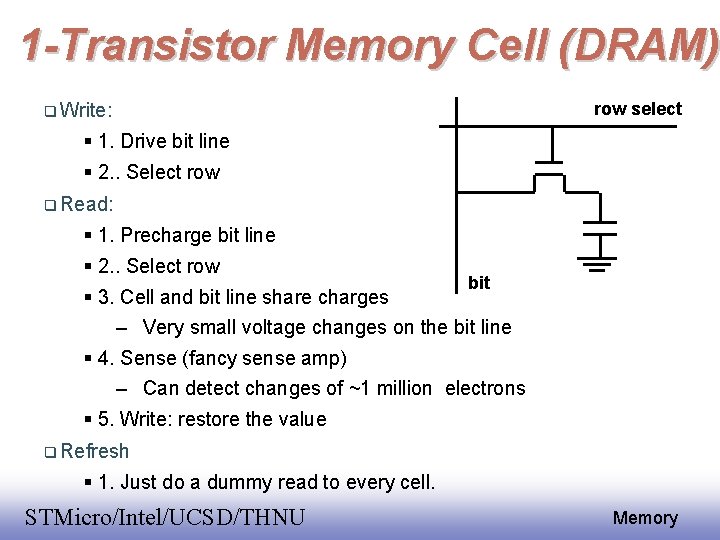

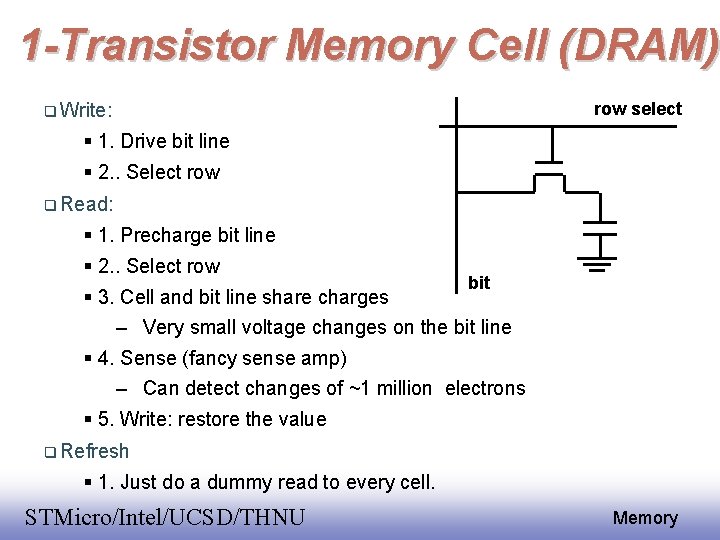

1-Transistor Memory Cell (DRAM)

Write:

1. Drive the bit line.
2. Select the row.

Read:

1. Precharge the bit line.
2. Select the row.
3. The cell and bit line share charges, giving very small voltage changes on the bit line.
4. Sense (fancy sense amp), which can detect changes of ~1 million electrons.
5. Write: restore the value.

Refresh:

1. Just do a dummy read to every cell.

Figure labels: row select, bit.

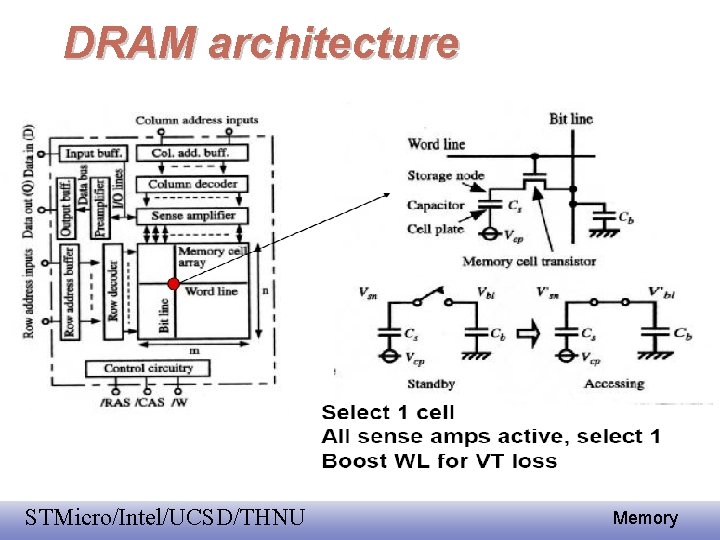

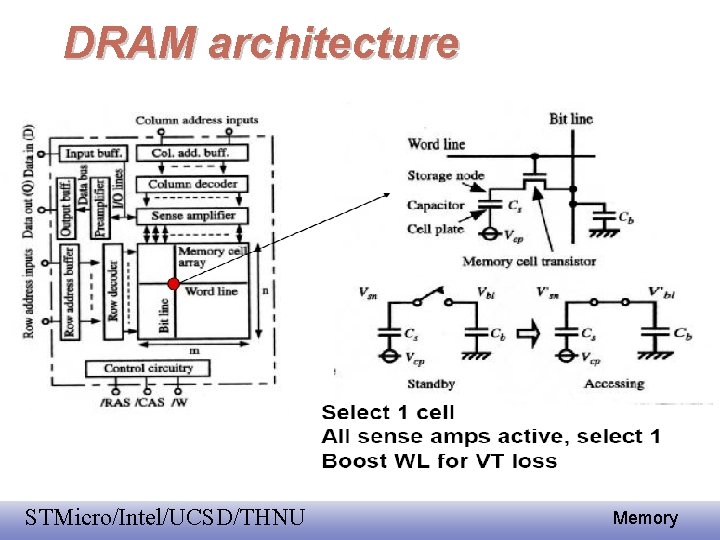

DRAM architecture

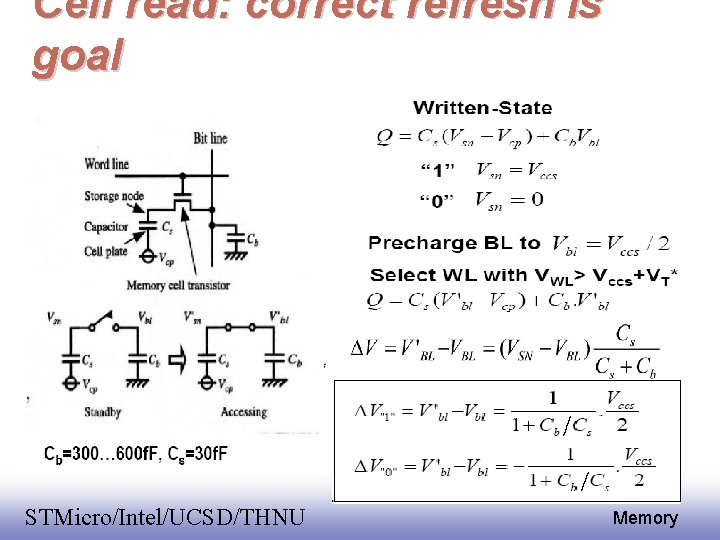

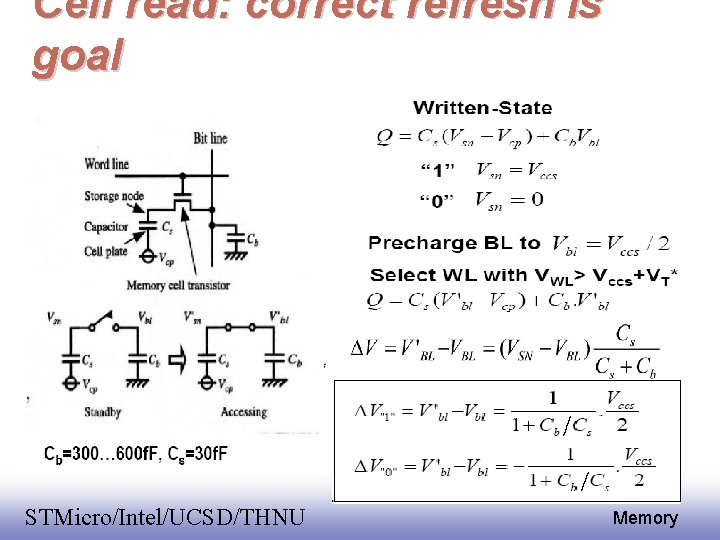

Cell read: correct refresh is goal

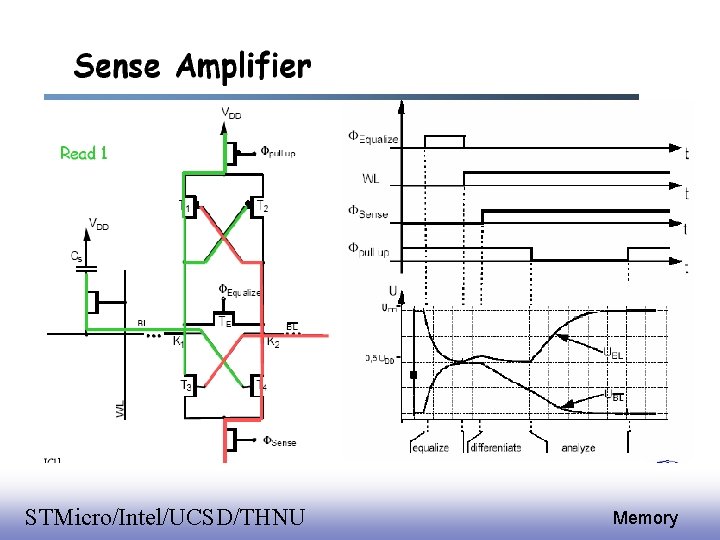

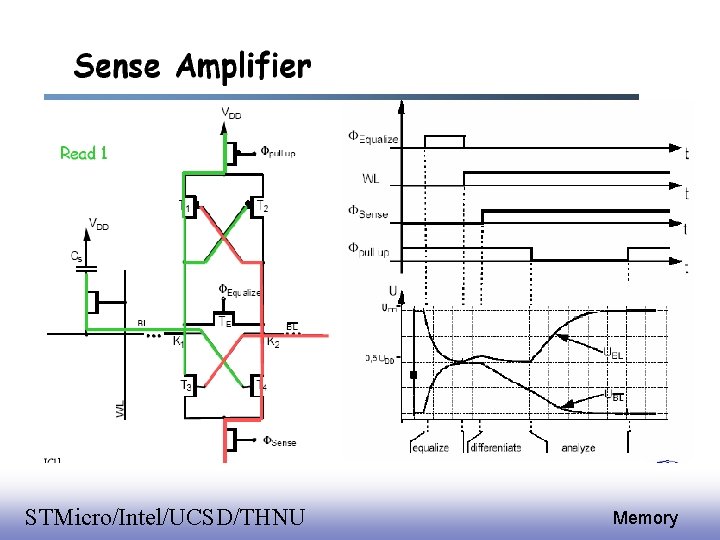

Sense Amplifier

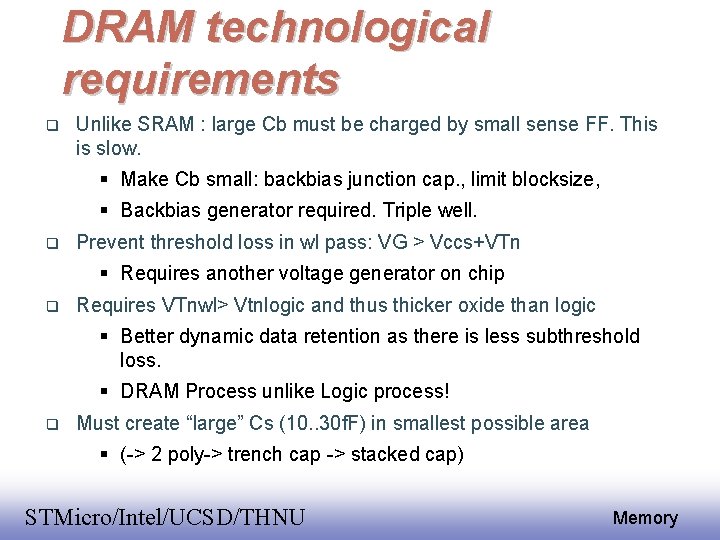

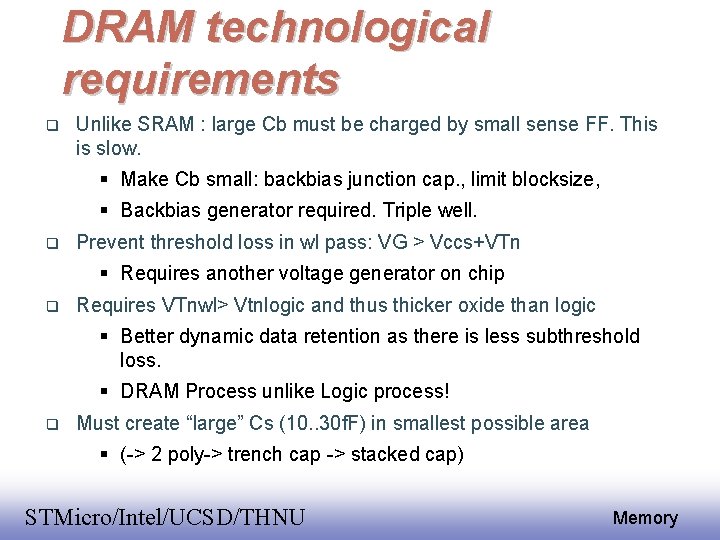

DRAM technological requirements

- Unlike SRAM: the large Cb must be charged by a small sense FF. This is slow.
  - Make Cb small: backbias the junction capacitance, limit the block size.
  - Backbias generator required. Triple well.
- Prevent threshold loss in the wordline pass transistor: VG > Vccs + VTn
  - Requires another voltage generator on chip.
- Requires VTn(wl) > VTn(logic) and thus thicker oxide than logic.
  - Better dynamic data retention as there is less subthreshold loss.
  - DRAM process unlike logic process!
- Must create "large" Cs (10 to 30 fF) in the smallest possible area (2 poly -> trench cap -> stacked cap).

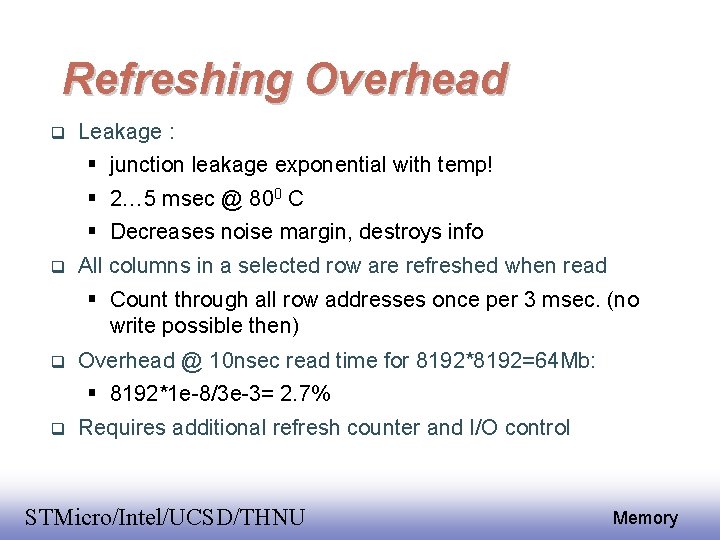

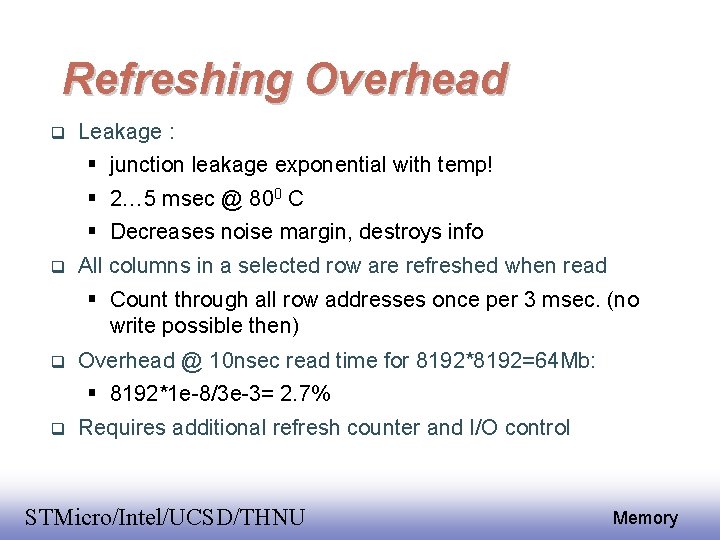

Refreshing Overhead

- Leakage:
  - Junction leakage is exponential with temperature!
  - 2 to 5 msec @ 80 °C
  - Decreases noise margin, destroys info
- All columns in a selected row are refreshed when read.
  - Count through all row addresses once per 3 msec (no write possible then).
- Overhead @ 10 nsec read time for 8192 * 8192 = 64 Mb: 8192 * 1e-8 / 3e-3 = 2.7%
- Requires an additional refresh counter and I/O control.
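The overhead figure on this slide is the time spent reading every row once, divided by the refresh period. A minimal sketch of that arithmetic, using the slide's own numbers (the function and parameter names are illustrative):

```python
def refresh_overhead(rows, row_read_time_s, refresh_period_s):
    """Fraction of time spent refreshing when every row is read once per period."""
    return rows * row_read_time_s / refresh_period_s


# 8192 rows, 10 ns per row read, all rows visited once every 3 ms.
overhead = refresh_overhead(rows=8192, row_read_time_s=10e-9, refresh_period_s=3e-3)
print(f"refresh overhead: {overhead:.1%}")
```

The overhead scales linearly with the number of rows and inversely with the refresh period, so a shorter retention time at high temperature raises it directly.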

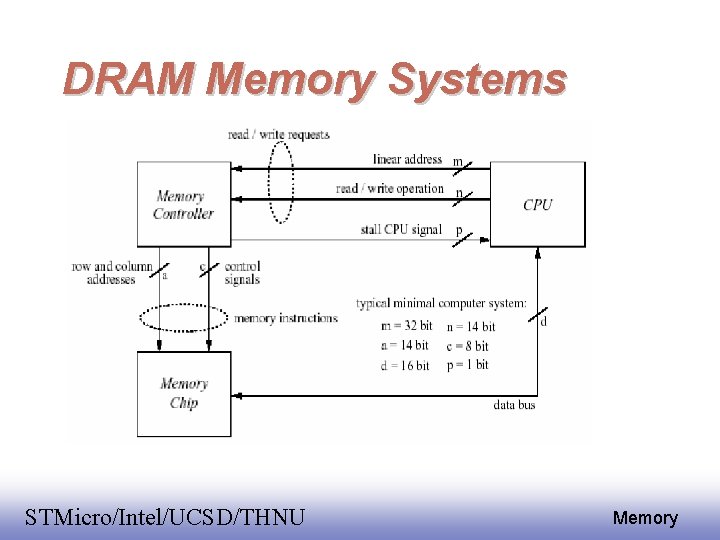

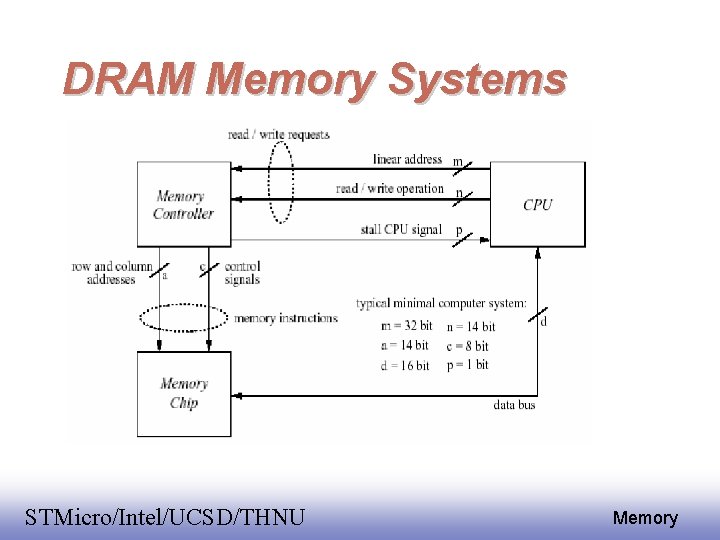

DRAM Memory Systems

Figure labels: n-bit address into the DRAM controller; n/2 multiplexed address lines to a DRAM 2^n x 1 chip; memory timing controller; bus drivers (width w).

Tc = Tcycle + Tcontroller + Tdriver

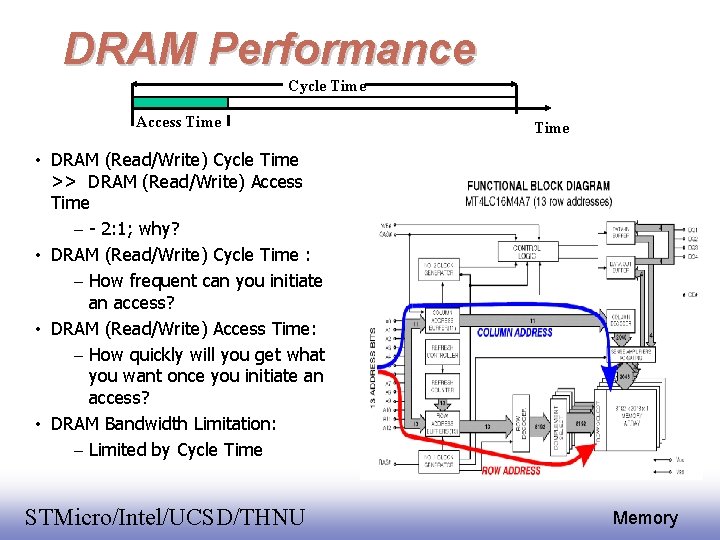

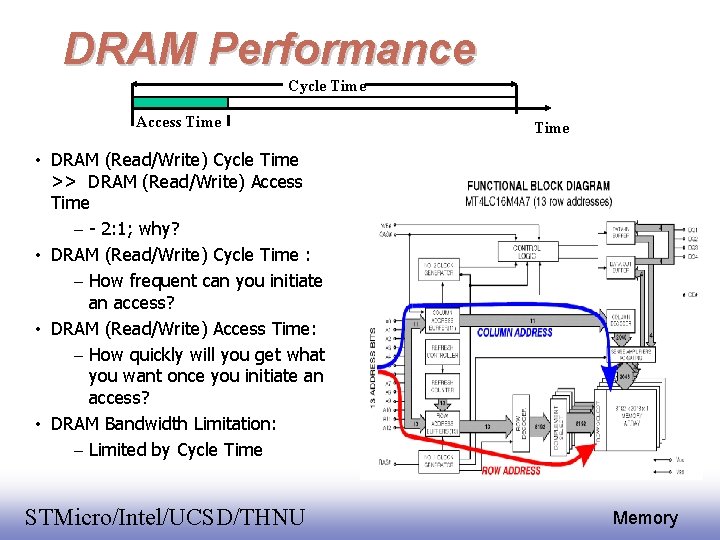

DRAM Performance

Figure labels: cycle time, access time.

- DRAM (read/write) cycle time >> DRAM (read/write) access time
  - 2:1; why?
- DRAM (read/write) cycle time:
  - How frequently can you initiate an access?
- DRAM (read/write) access time:
  - How quickly will you get what you want once you initiate an access?
- DRAM bandwidth limitation:
  - Limited by cycle time

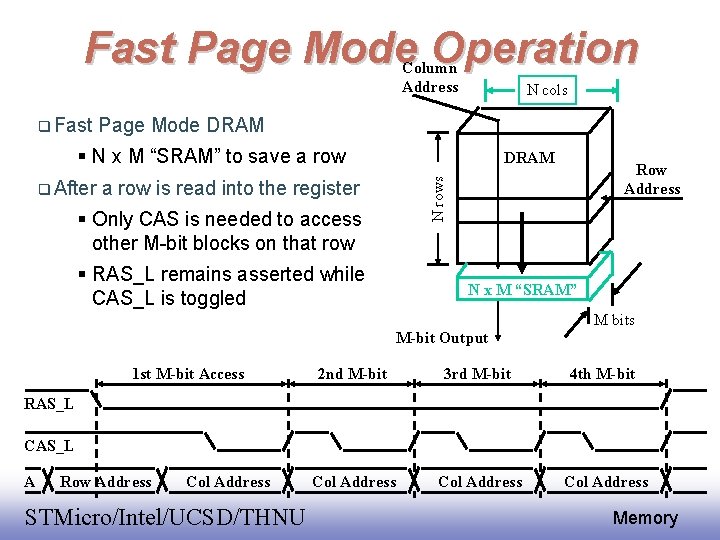

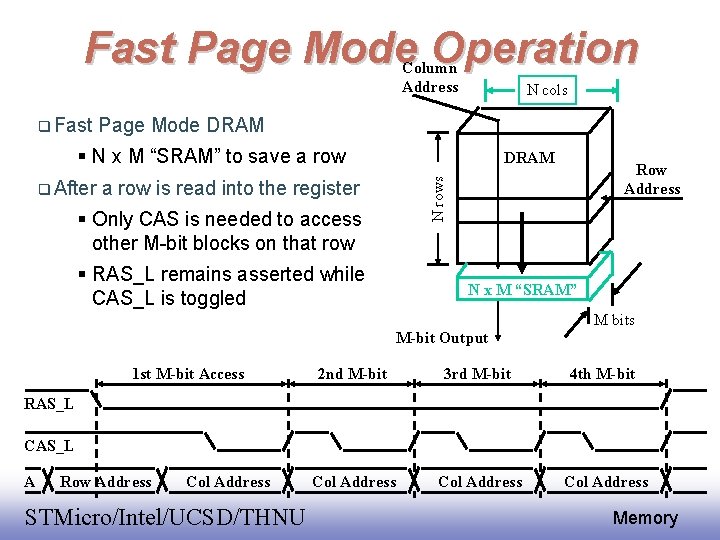

Fast Page Mode Operation

- Fast Page Mode DRAM: an N x M "SRAM" register saves a row.
- After a row is read into the register:
  - Only CAS is needed to access other M-bit blocks on that row.
  - RAS_L remains asserted while CAS_L is toggled.

Figure labels: DRAM array (N rows, N cols) addressed by the row address; N x M "SRAM" register addressed by the column address; M-bit output. Timing diagram: RAS_L, CAS_L, and A, which carries the row address followed by a column address for each of the 1st, 2nd, 3rd, and 4th M-bit accesses.

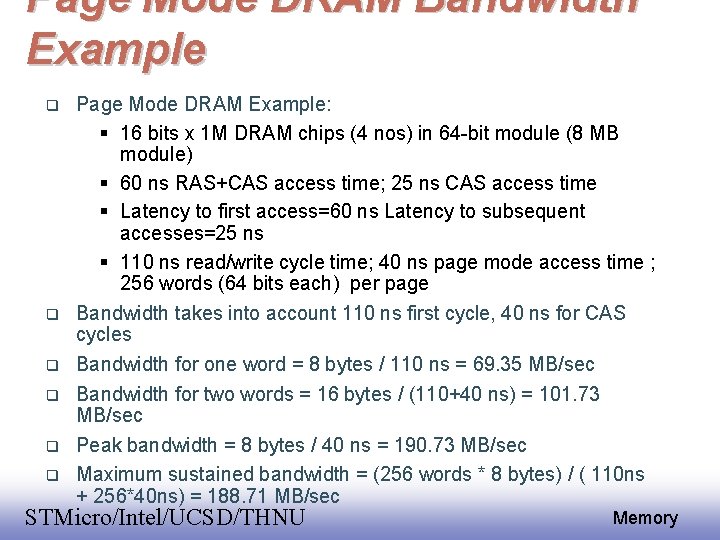

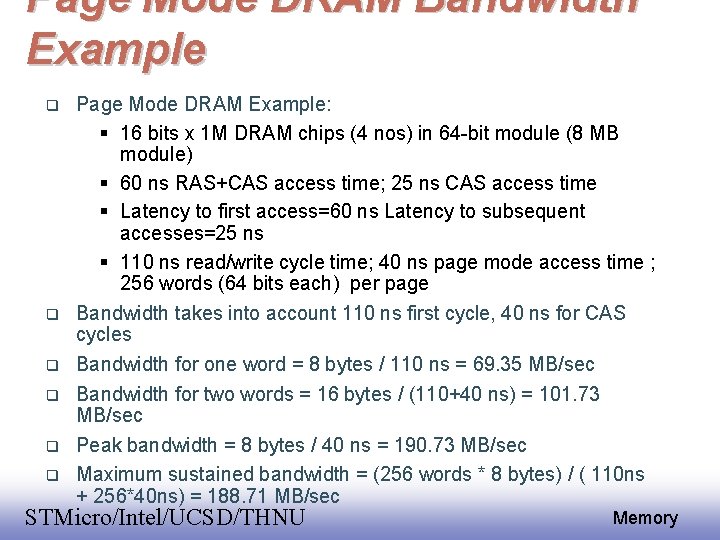

Page Mode DRAM Bandwidth Example

- Page Mode DRAM example:
  - 16 bits x 1M DRAM chips (4 chips) in a 64-bit module (8 MB module)
  - 60 ns RAS+CAS access time; 25 ns CAS access time
  - Latency to first access = 60 ns; latency to subsequent accesses = 25 ns
  - 110 ns read/write cycle time; 40 ns page mode access time; 256 words (64 bits each) per page
- Bandwidth takes into account the 110 ns first cycle and 40 ns for CAS cycles:
  - Bandwidth for one word = 8 bytes / 110 ns = 69.35 MB/sec
  - Bandwidth for two words = 16 bytes / (110 + 40 ns) = 101.73 MB/sec
  - Peak bandwidth = 8 bytes / 40 ns = 190.73 MB/sec
  - Maximum sustained bandwidth = (256 words * 8 bytes) / (110 ns + 256 * 40 ns) = 188.71 MB/sec
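The four bandwidth figures can be reproduced with a few lines of arithmetic. The sketch below uses the slide's timings; the helper name is illustrative, and it assumes 1 MB = 2^20 bytes, which is the convention under which the slide's numbers work out.

```python
BYTES_PER_MB = 2**20  # assumption: the slide's MB is 2^20 bytes
WORD_BYTES = 8        # one 64-bit word
FIRST_CYCLE_NS = 110  # read/write cycle time for the first access
PAGE_CYCLE_NS = 40    # page mode cycle for each further CAS access
WORDS_PER_PAGE = 256


def bandwidth_mb_per_s(n_bytes, time_ns):
    """Bytes transferred divided by elapsed time, in MB/sec."""
    return n_bytes / (time_ns * 1e-9) / BYTES_PER_MB


one_word = bandwidth_mb_per_s(WORD_BYTES, FIRST_CYCLE_NS)
two_words = bandwidth_mb_per_s(2 * WORD_BYTES, FIRST_CYCLE_NS + PAGE_CYCLE_NS)
peak = bandwidth_mb_per_s(WORD_BYTES, PAGE_CYCLE_NS)
# Same denominator as the slide: 110 ns plus 256 page cycles.
sustained = bandwidth_mb_per_s(
    WORDS_PER_PAGE * WORD_BYTES,
    FIRST_CYCLE_NS + WORDS_PER_PAGE * PAGE_CYCLE_NS,
)

for label, value in [("one word", one_word), ("two words", two_words),
                     ("peak", peak), ("sustained", sustained)]:
    print(f"{label}: {value:.2f} MB/sec")
```

The sustained figure approaches the peak because the 110 ns first cycle is amortised over a full 256-word page.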

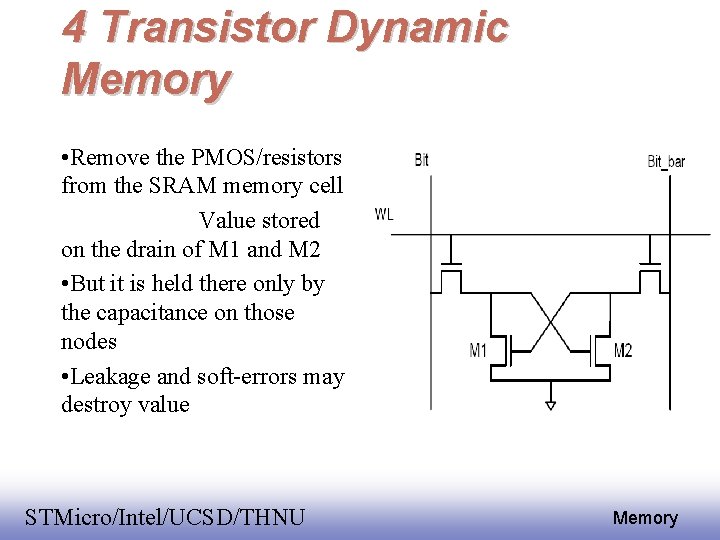

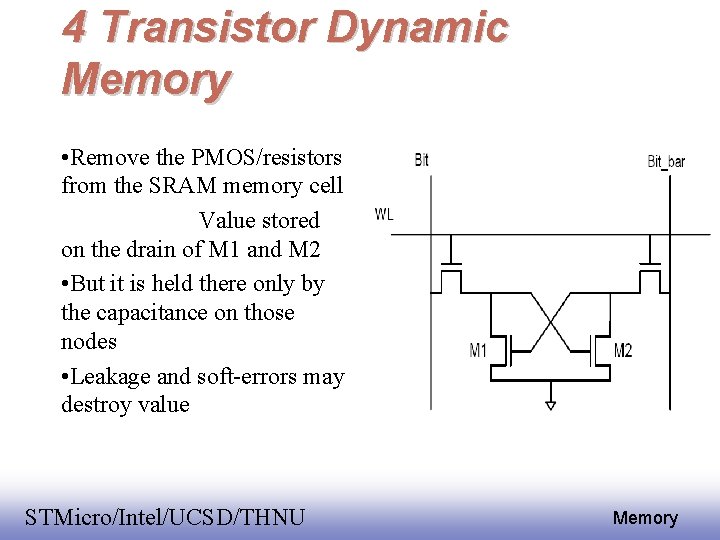

4 Transistor Dynamic Memory

- Remove the PMOS/resistors from the SRAM memory cell.
- The value is stored on the drains of M1 and M2.
- But it is held there only by the capacitance on those nodes.
- Leakage and soft errors may destroy the value.

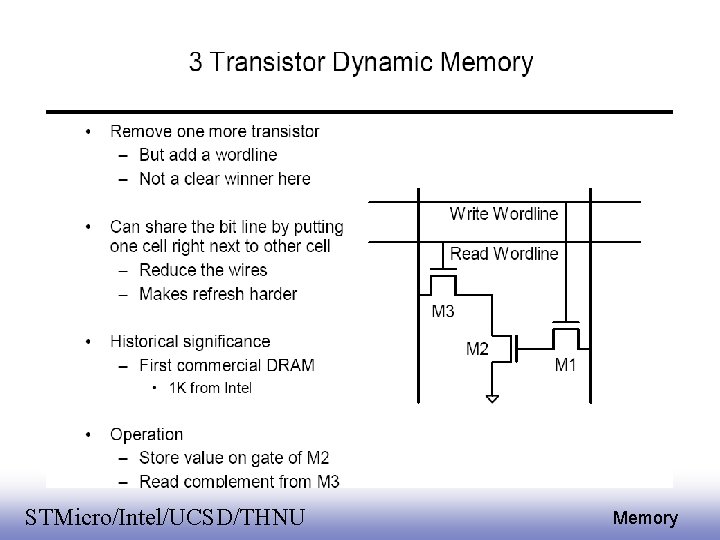

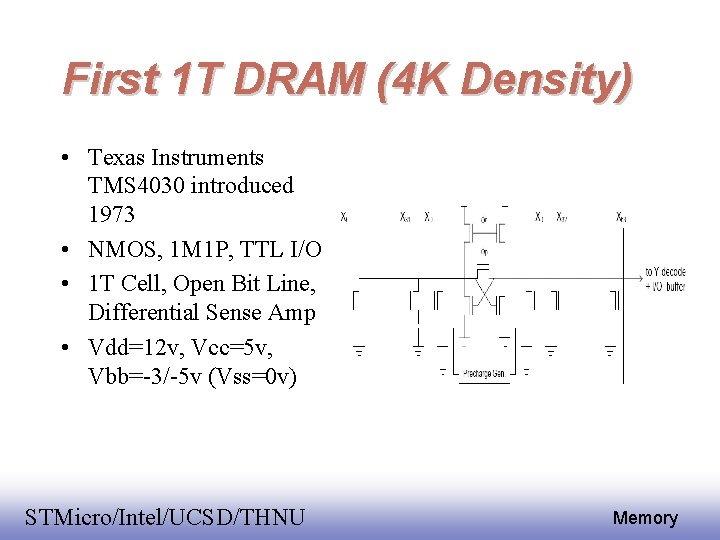

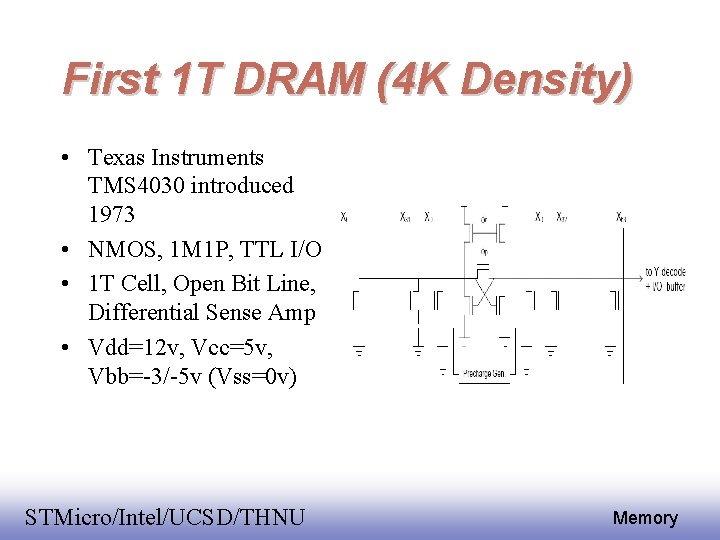

First 1T DRAM (4K Density)

- Texas Instruments TMS4030, introduced 1973
- NMOS, 1M1P, TTL I/O
- 1T cell, open bit line, differential sense amp
- Vdd = 12 V, Vcc = 5 V, Vbb = -3/-5 V (Vss = 0 V)

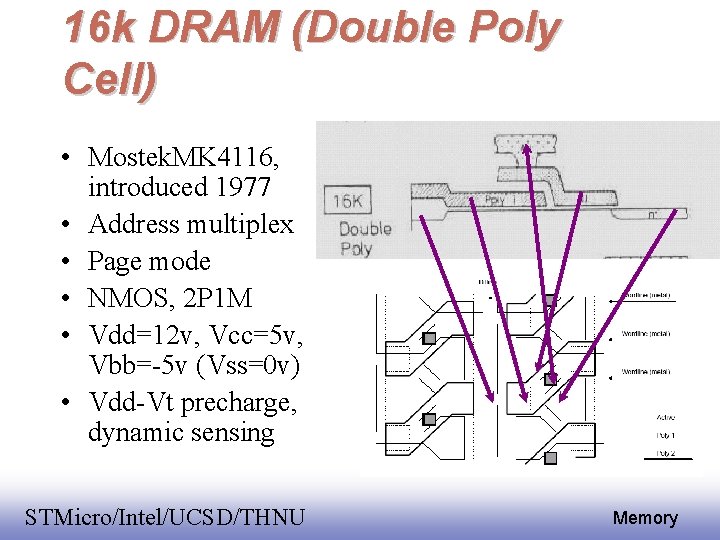

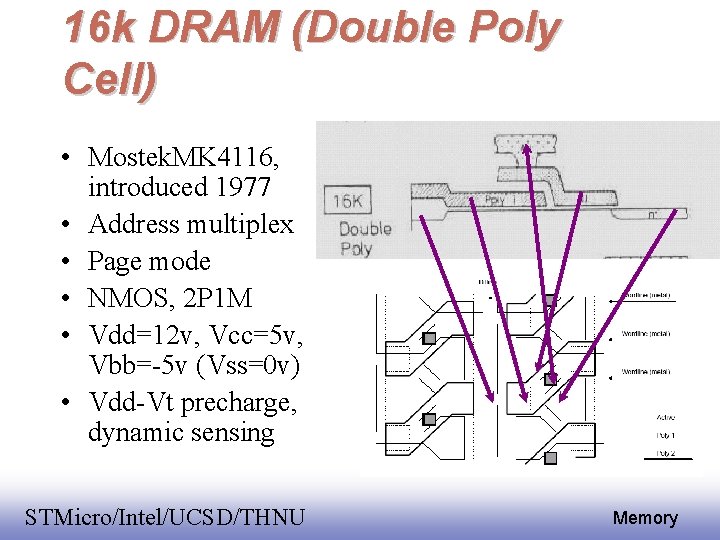

16K DRAM (Double Poly Cell)

- Mostek MK4116, introduced 1977
- Address multiplex
- Page mode
- NMOS, 2P1M
- Vdd = 12 V, Vcc = 5 V, Vbb = -5 V (Vss = 0 V)
- Vdd-Vt precharge, dynamic sensing

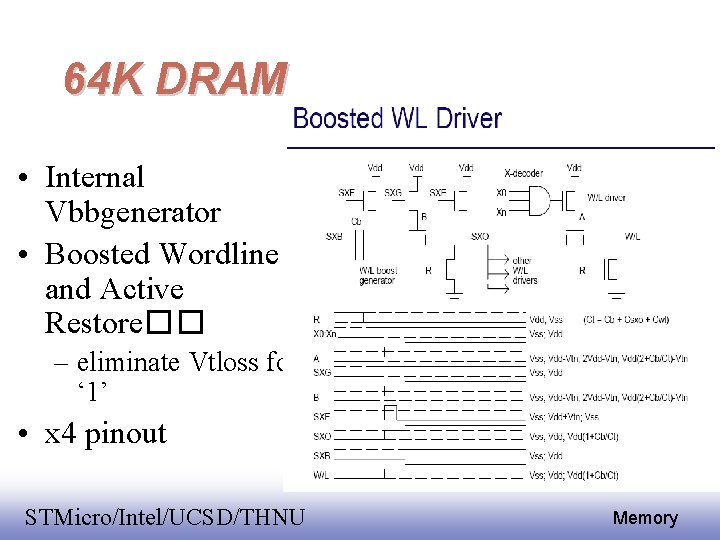

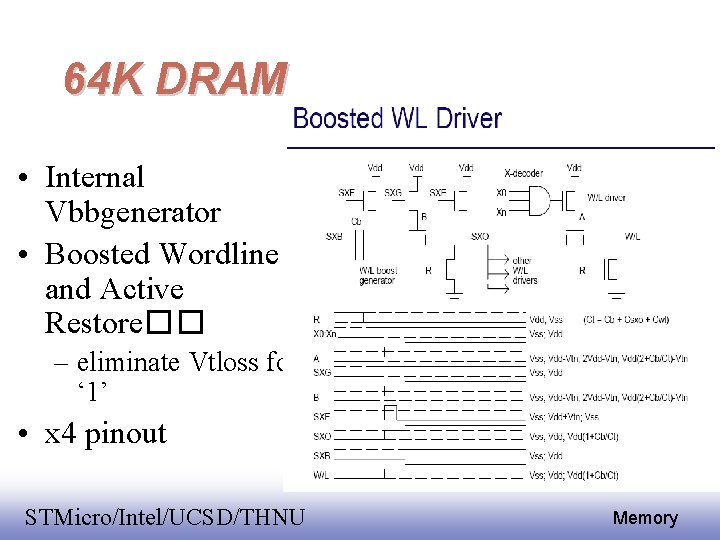

64K DRAM

- Internal Vbb generator
- Boosted wordline and active restore
  - Eliminate Vt loss for '1'
- x4 pinout

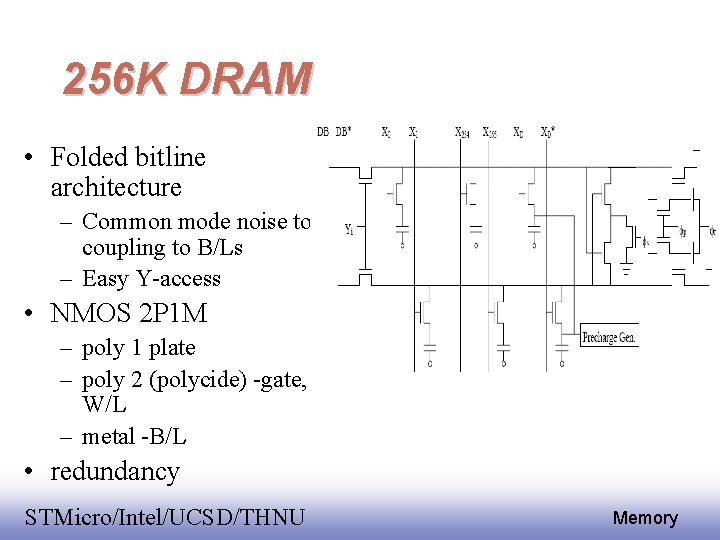

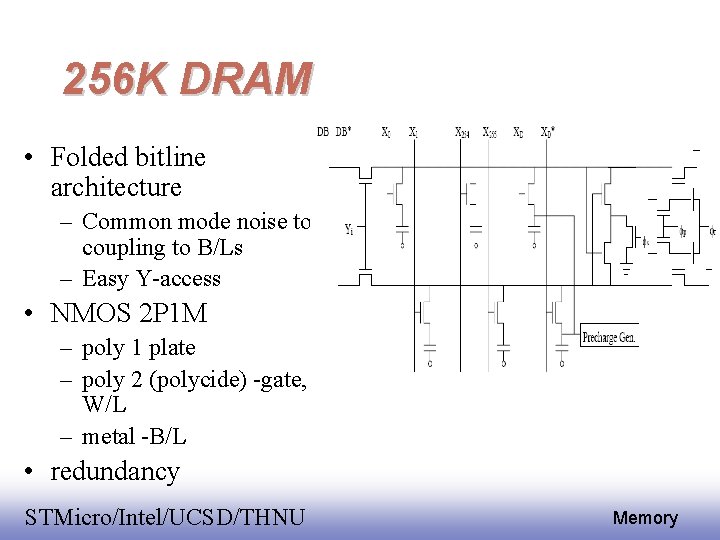

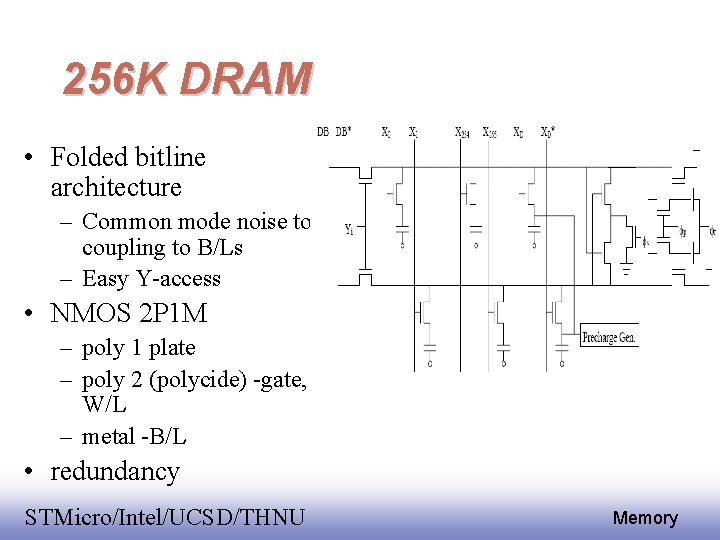

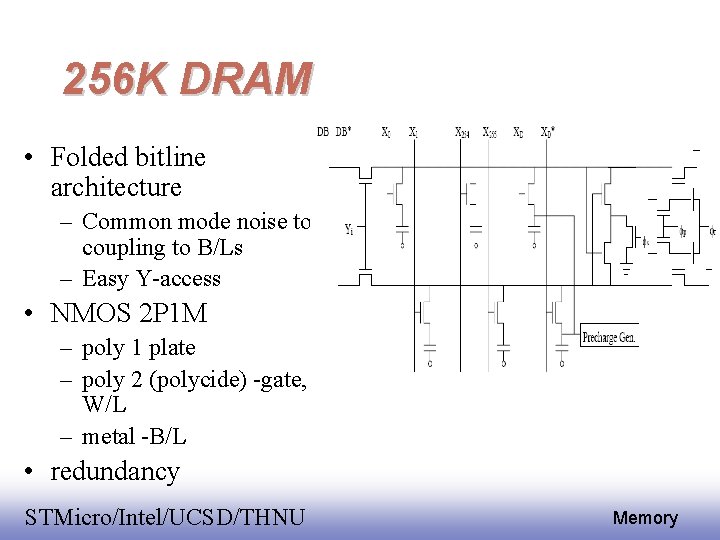

256K DRAM

- Folded bitline architecture
  - Noise coupling to the B/Ls is common mode
  - Easy Y-access
- NMOS 2P1M
  - poly 1: plate
  - poly 2 (polycide): gate, W/L
  - metal: B/L
- Redundancy

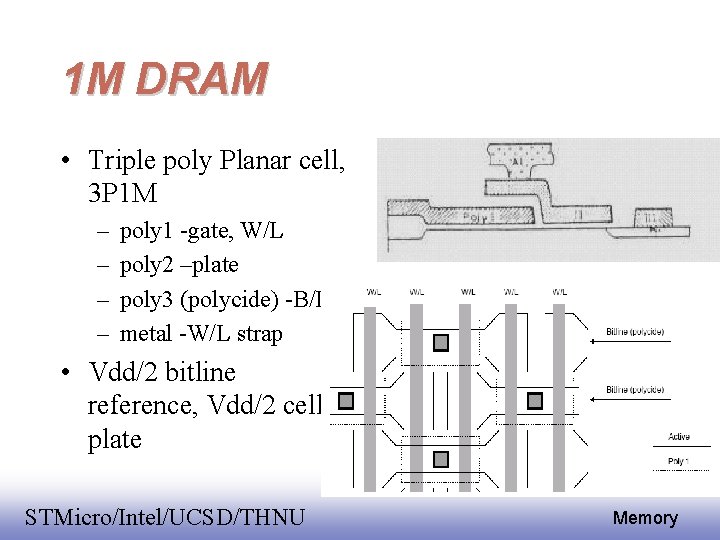

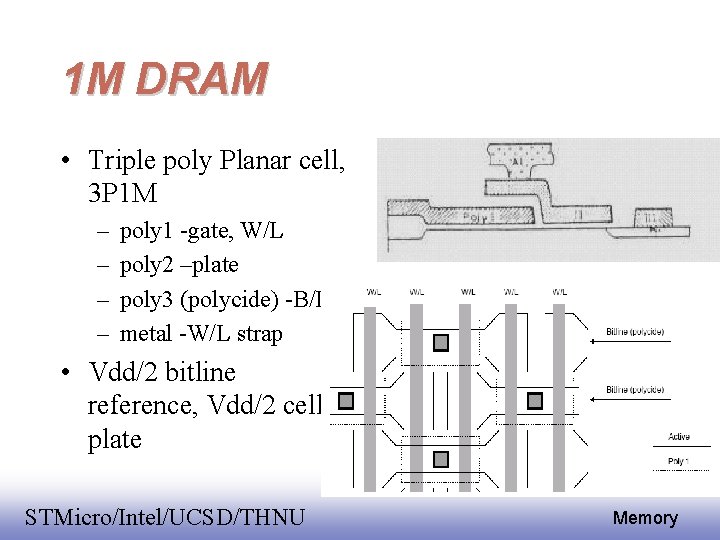

1M DRAM

- Triple poly planar cell, 3P1M
  - poly 1: gate, W/L
  - poly 2: plate
  - poly 3 (polycide): B/L
  - metal: W/L strap
- Vdd/2 bitline reference, Vdd/2 cell plate

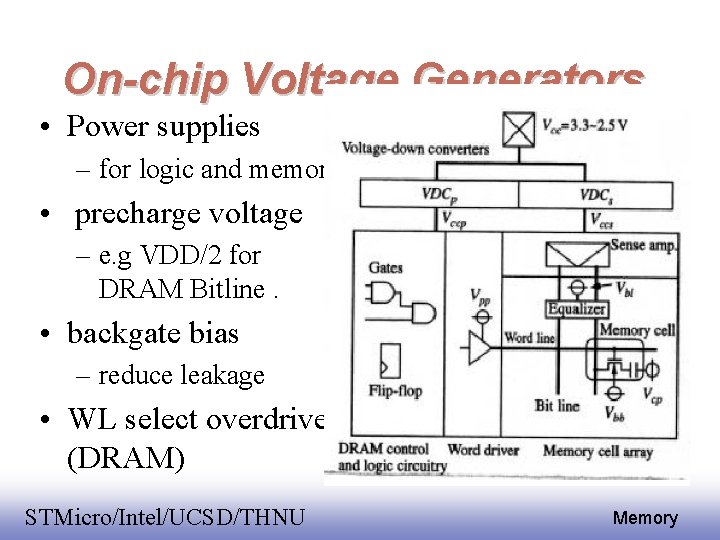

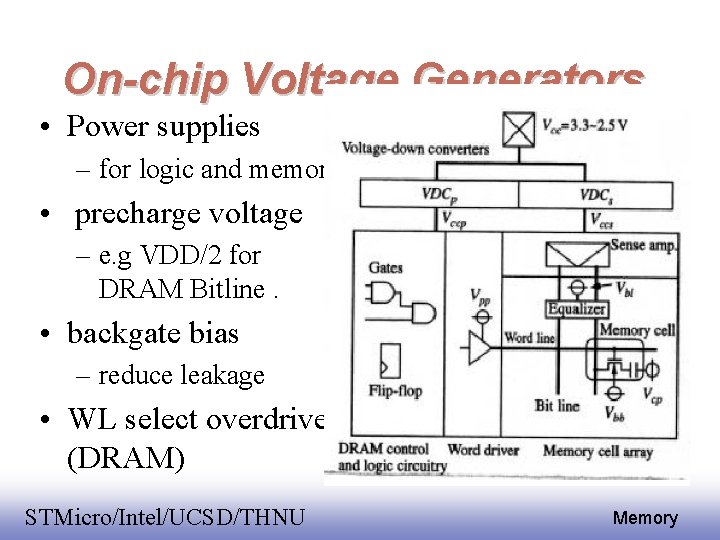

On-chip Voltage Generators

- Power supplies
  - For logic and memory
- Precharge voltage
  - e.g. VDD/2 for the DRAM bitline
- Backgate bias
  - Reduces leakage
- WL select overdrive (DRAM)

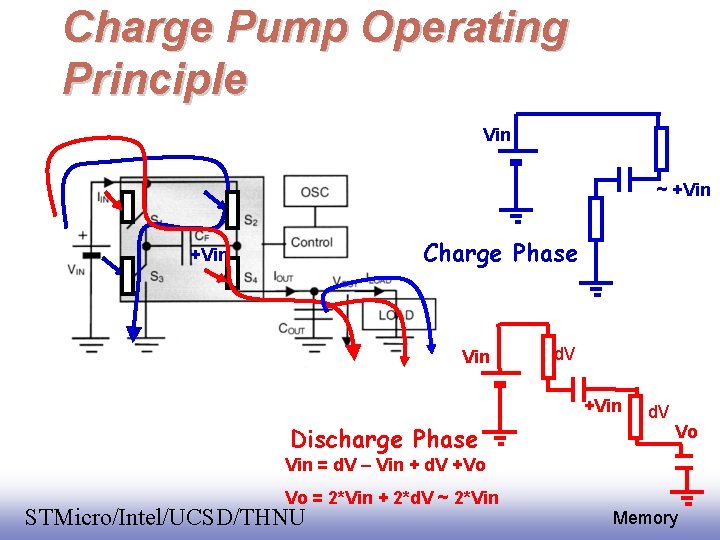

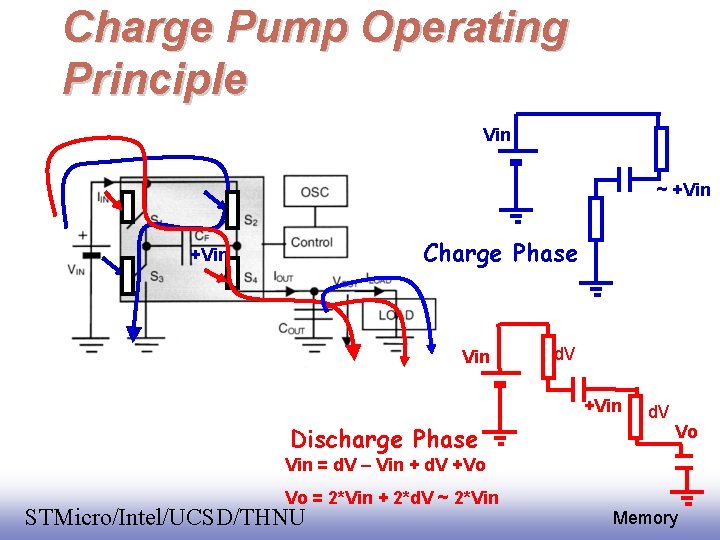

Charge Pump Operating Principle

Figure: charge phase and discharge phase of the pump, with the voltages Vin, dV, and Vo and their polarities labelled.

Vo = 2*Vin + 2*dV ~ 2*Vin

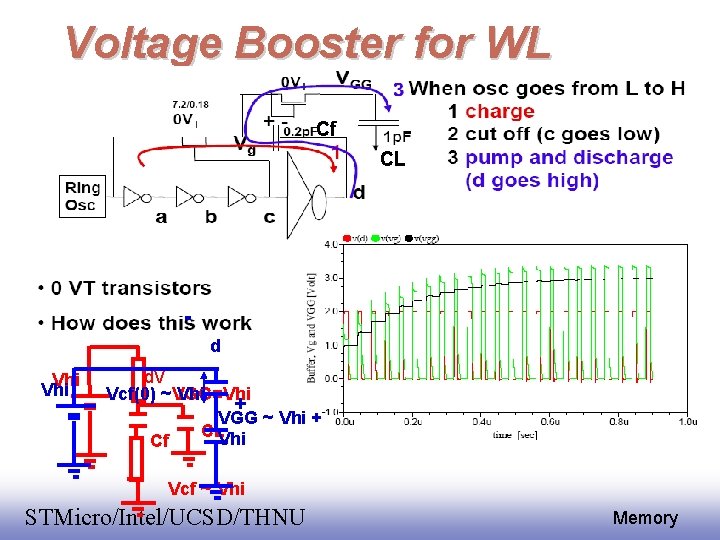

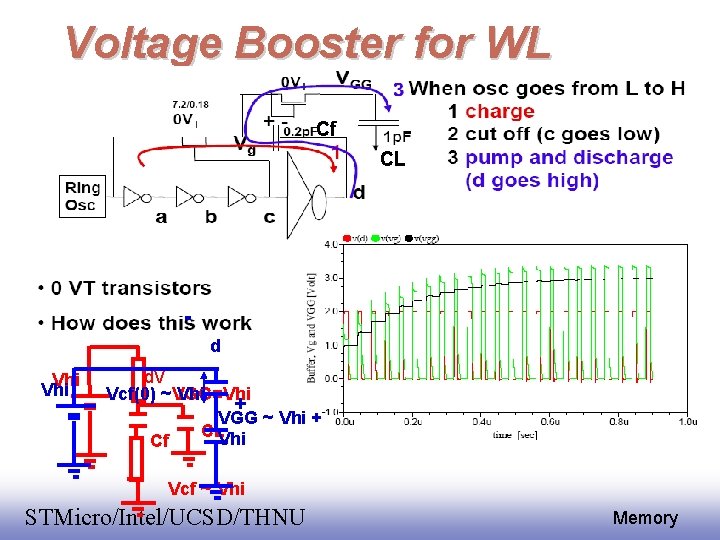

Voltage Booster for WL

Figure labels: Cf, CL, Vhi, dV, VGG; Vcf(0) ~ Vhi; Vcf ~ Vhi.

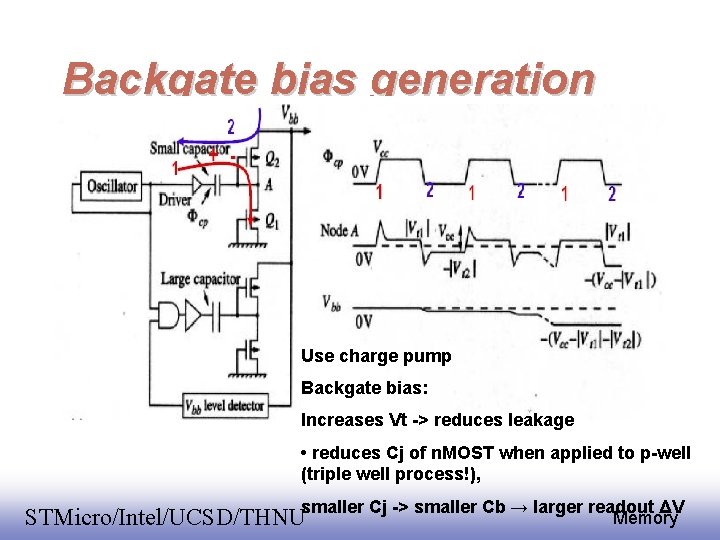

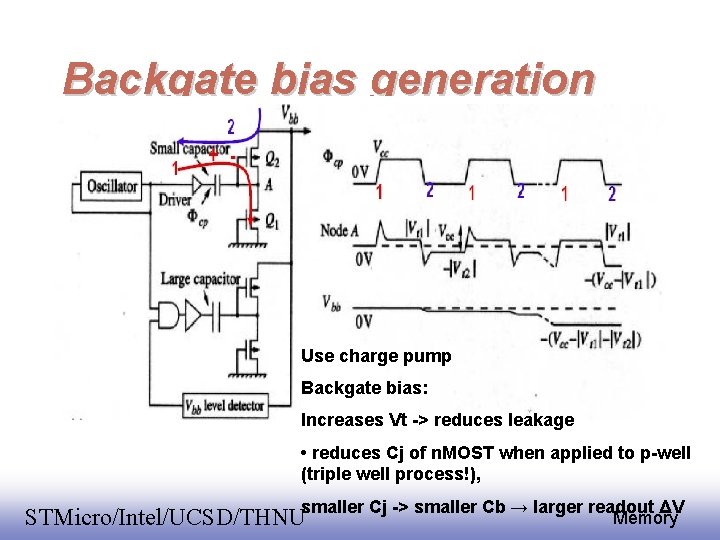

Backgate bias generation

- Use a charge pump.
- Backgate bias:
  - Increases Vt -> reduces leakage.
  - Reduces Cj of the nMOST when applied to the p-well (triple well process!); smaller Cj -> smaller Cb -> larger readout ΔV.

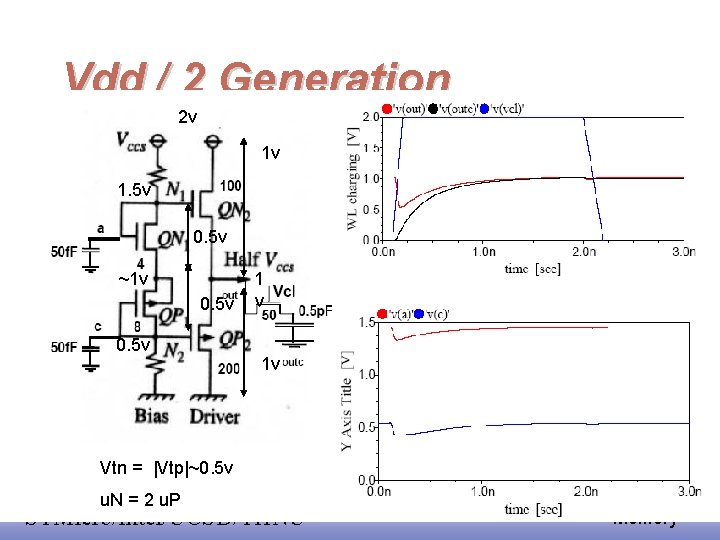

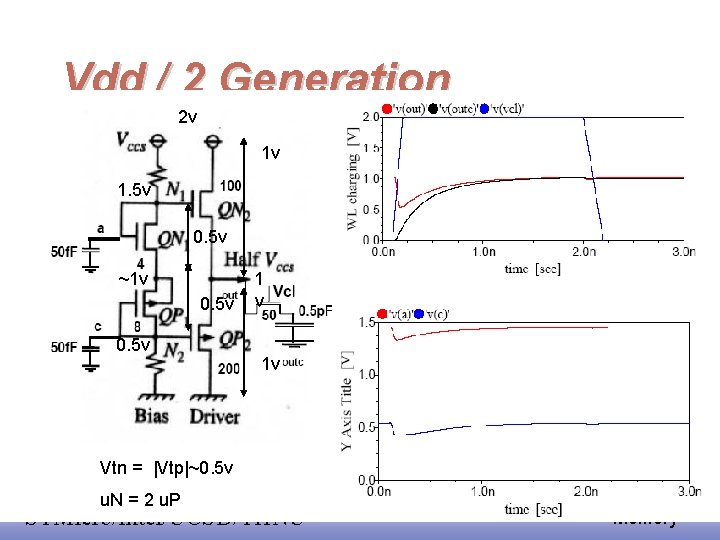

Vdd / 2 Generation

Figure: generator circuit annotated with node voltages (2 V, 1.5 V, 1 V, ~1 V, 0.5 V), with Vtn = |Vtp| ~ 0.5 V and uN = 2 uP.

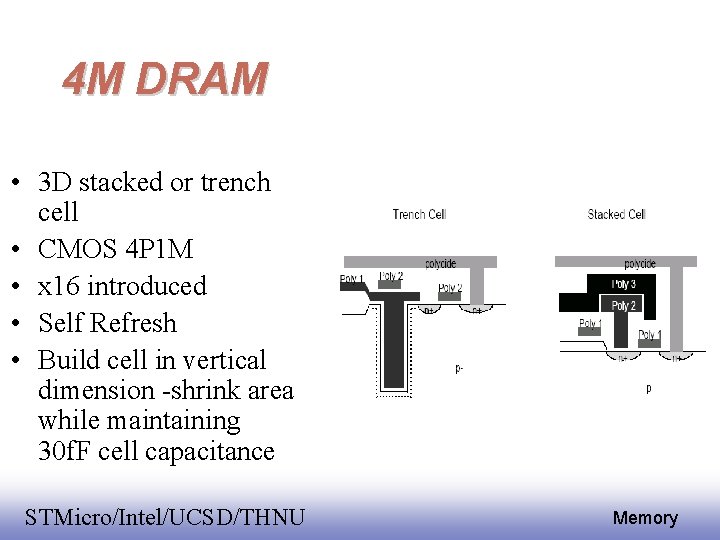

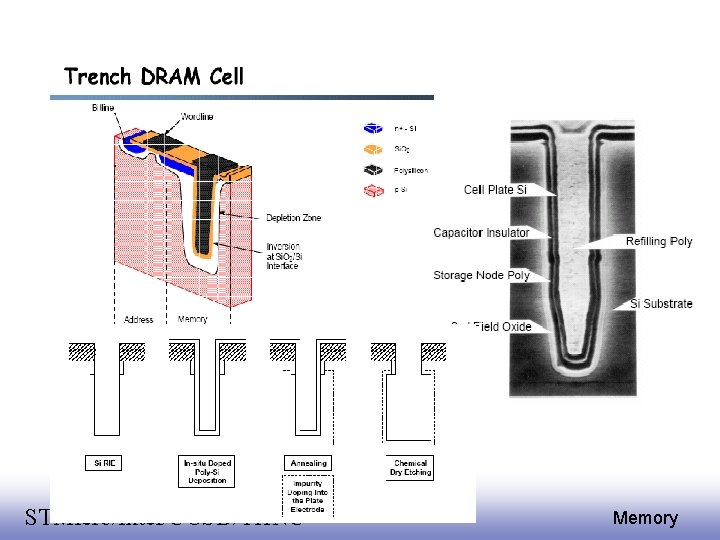

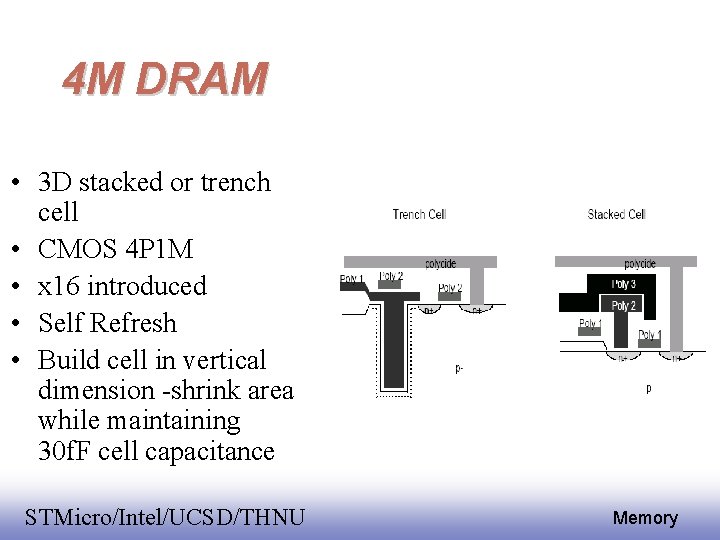

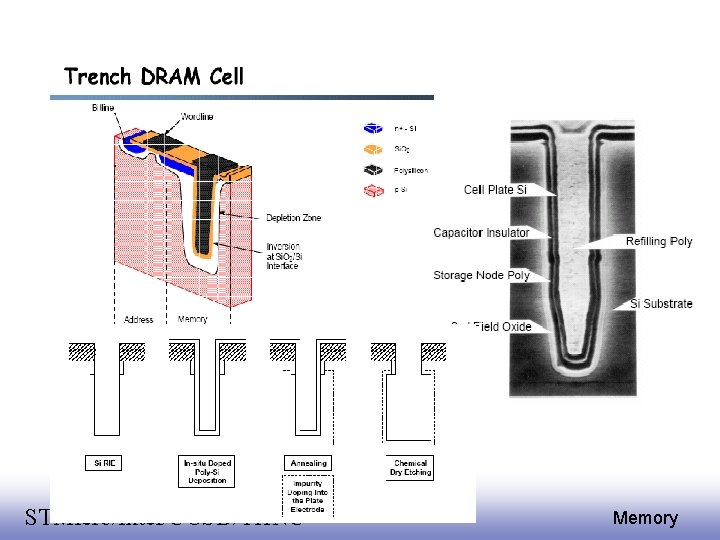

4M DRAM

- 3D stacked or trench cell
- CMOS 4P1M
- x16 introduced
- Self refresh
- Build the cell in the vertical dimension: shrink area while maintaining 30 fF cell capacitance

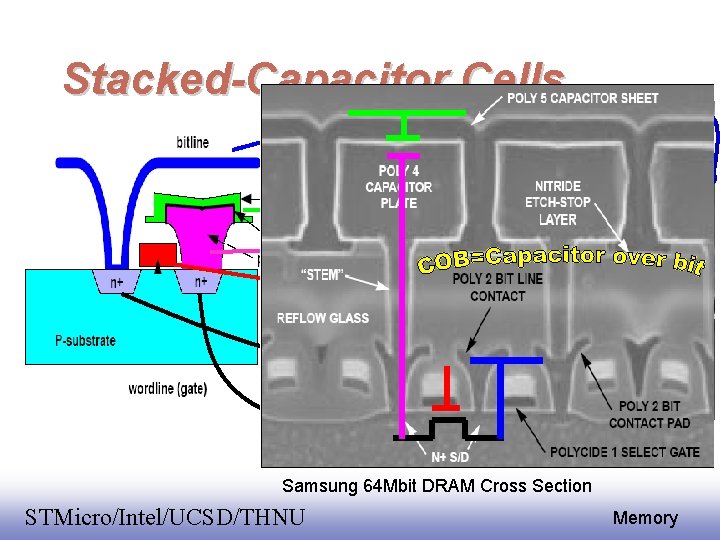

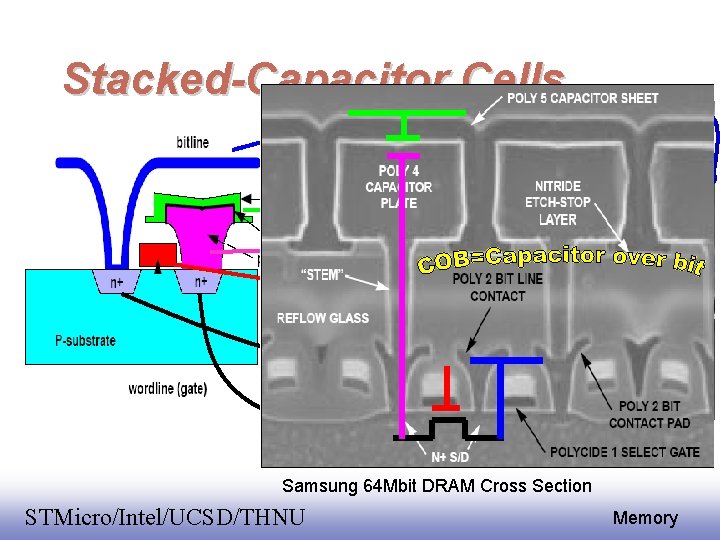

Stacked-Capacitor Cells

Figure panels: Hitachi 64 Mbit DRAM cross section; Samsung 64 Mbit DRAM cross section. Figure label: poly plate.

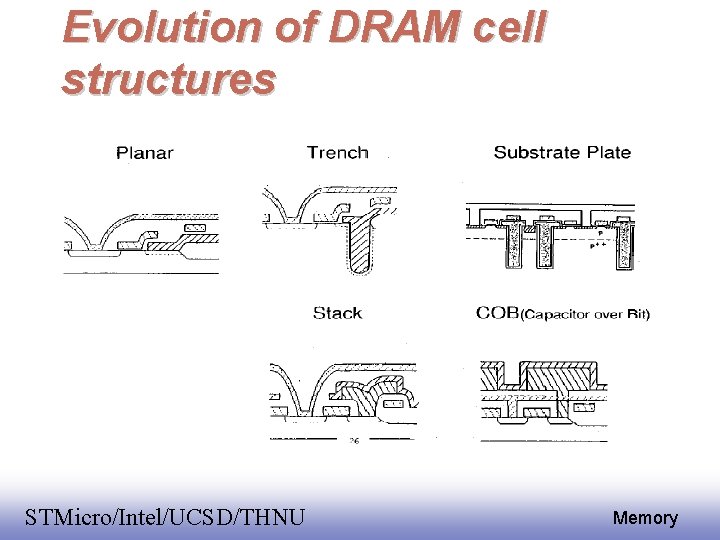

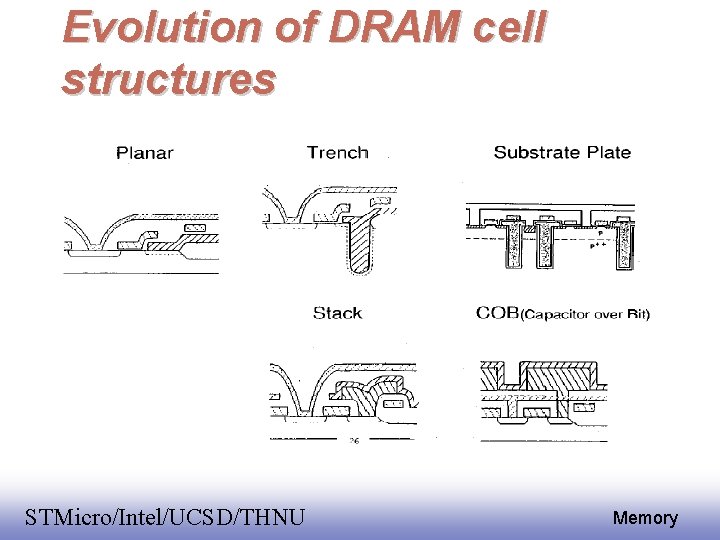

Evolution of DRAM cell structures

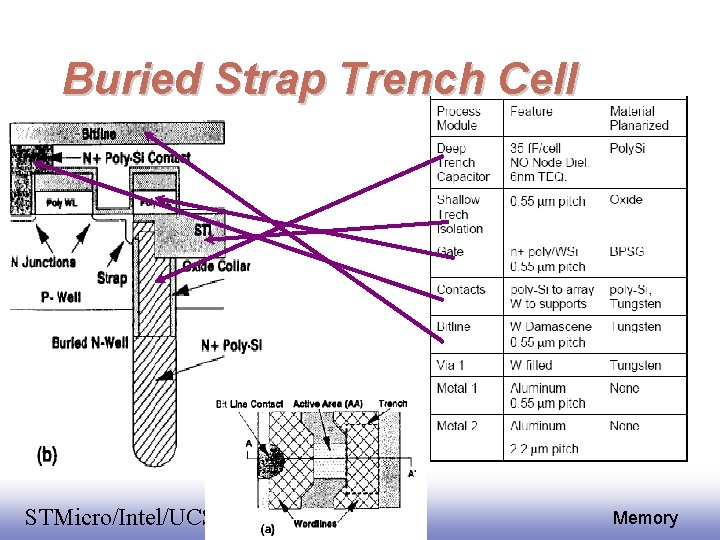

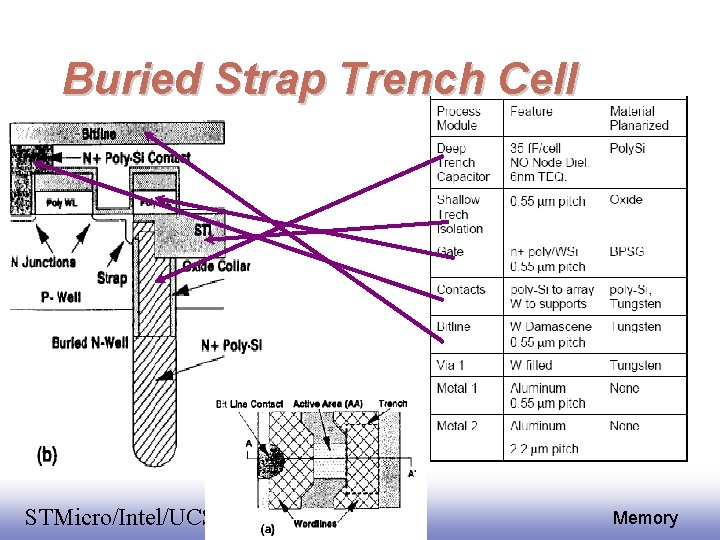

Buried Strap Trench Cell

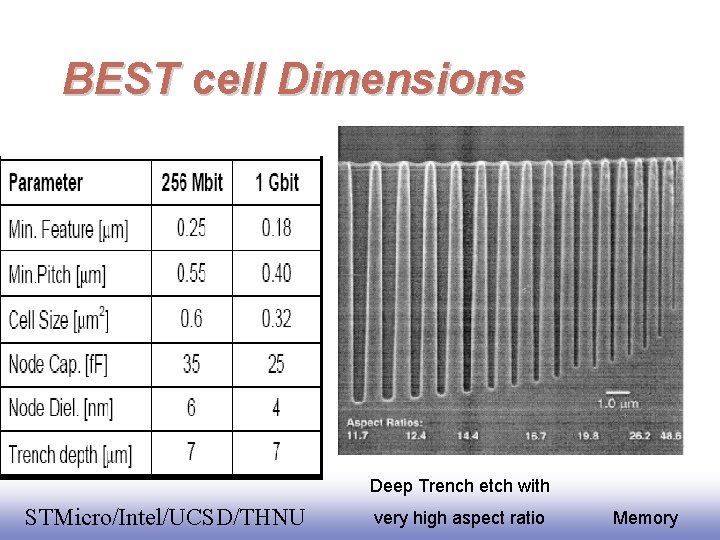

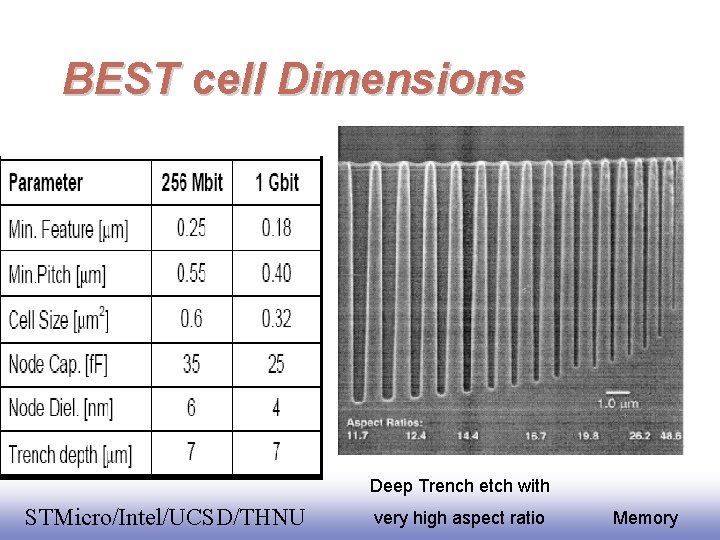

BEST cell Dimensions

Deep trench etch with very high aspect ratio.

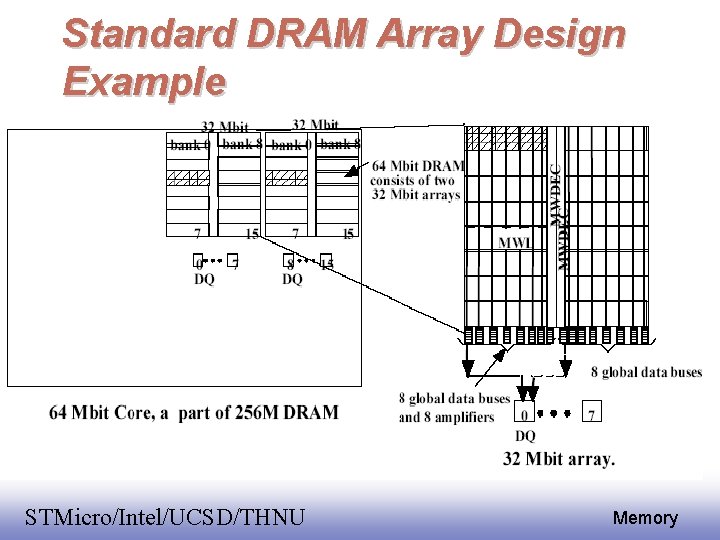

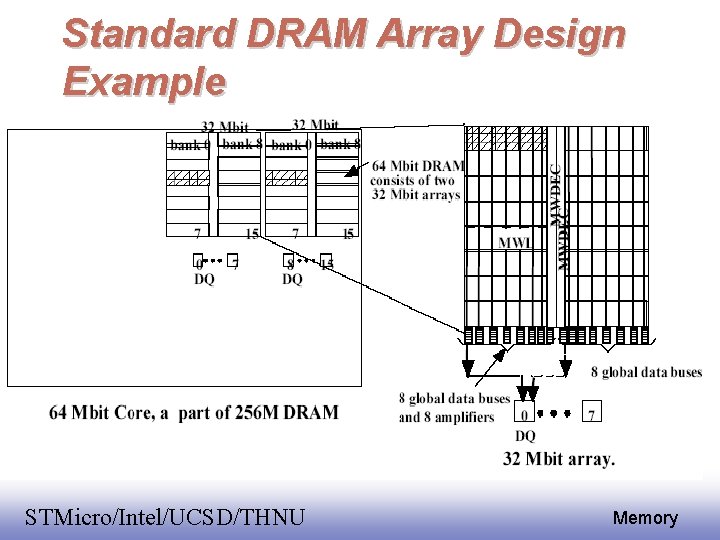

Standard DRAM Array Design Example

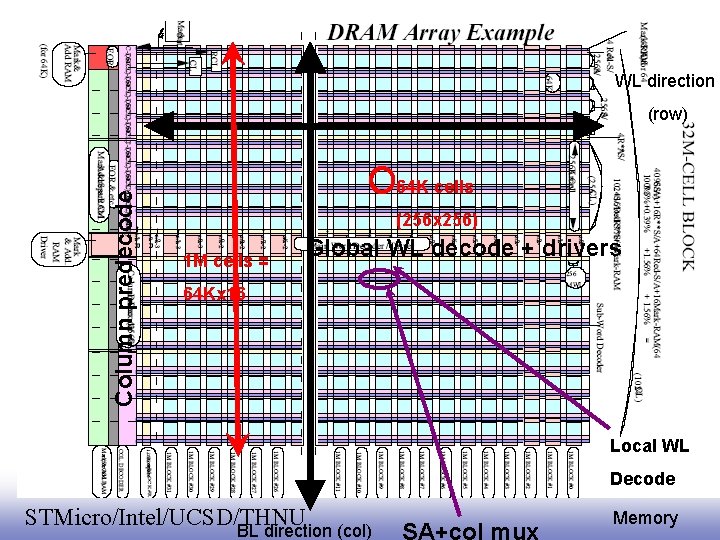

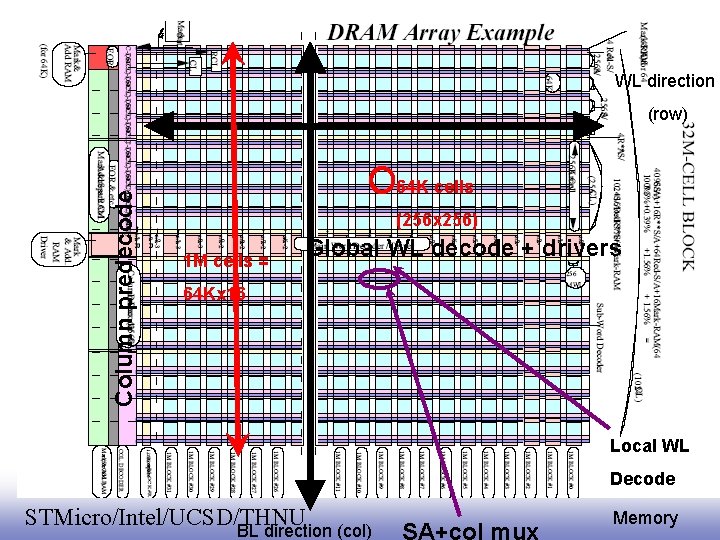

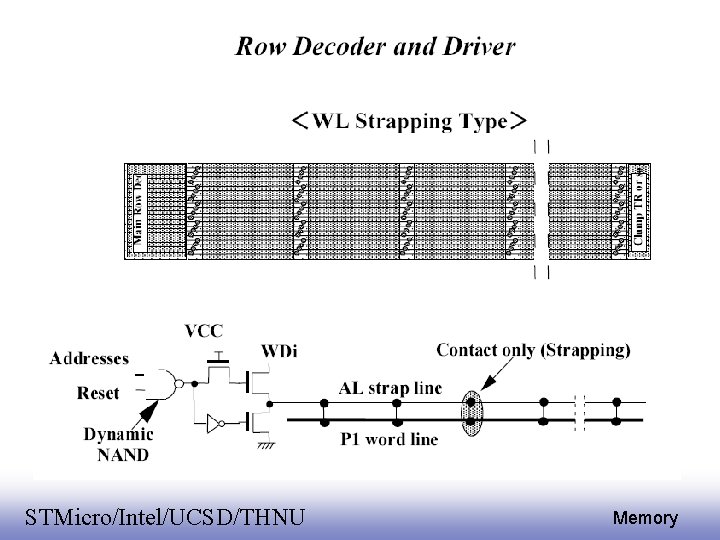

Figure labels: WL direction (row); BL direction (col); column predecode; global WL decode + drivers; local WL decode; 64K cells (256 x 256); 1M cells = 64K x 16.

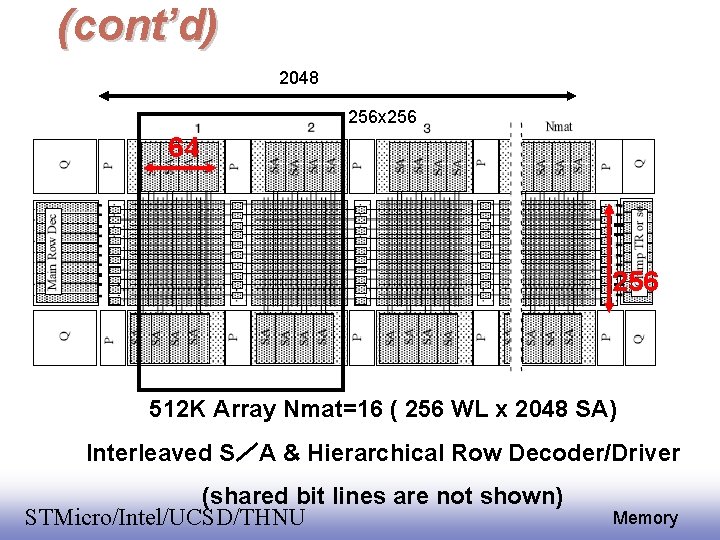

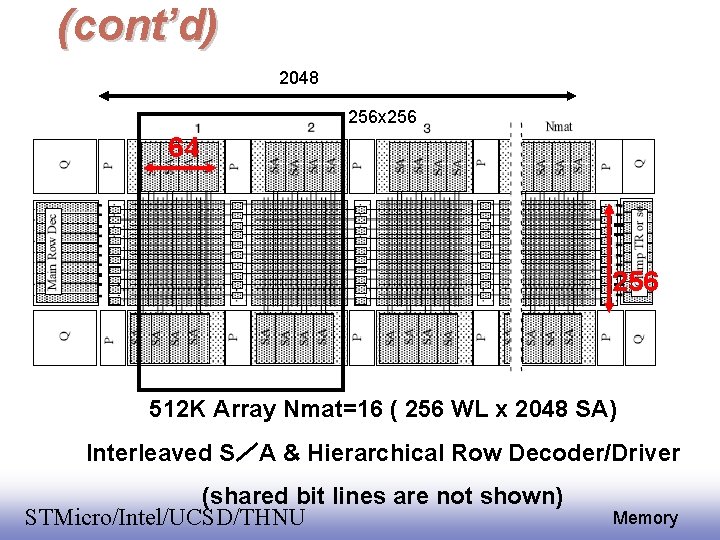

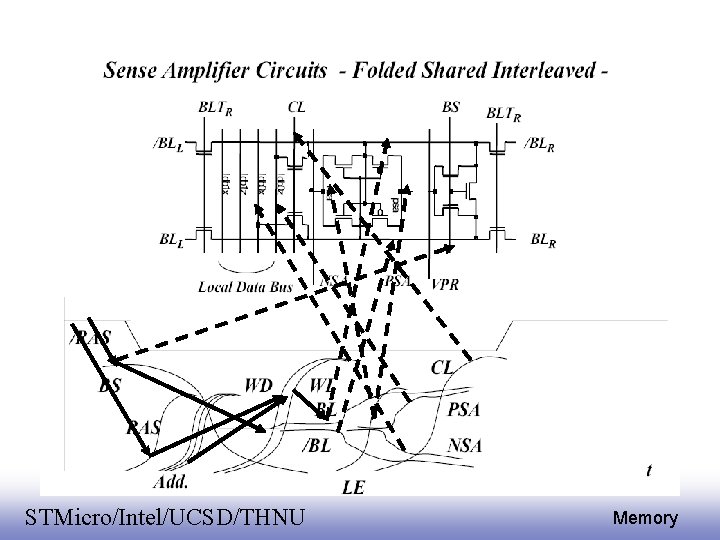

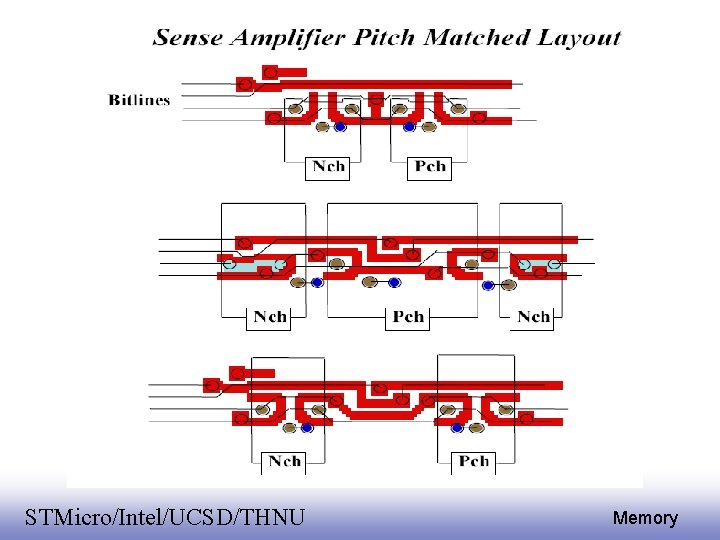

(cont'd)

Figure labels: 512K array, Nmat = 16 (256 WL x 2048 SA); 256 x 256 mats; dimensions 2048, 256, 64. Interleaved S/A and hierarchical row decoder/driver (shared bit lines are not shown).

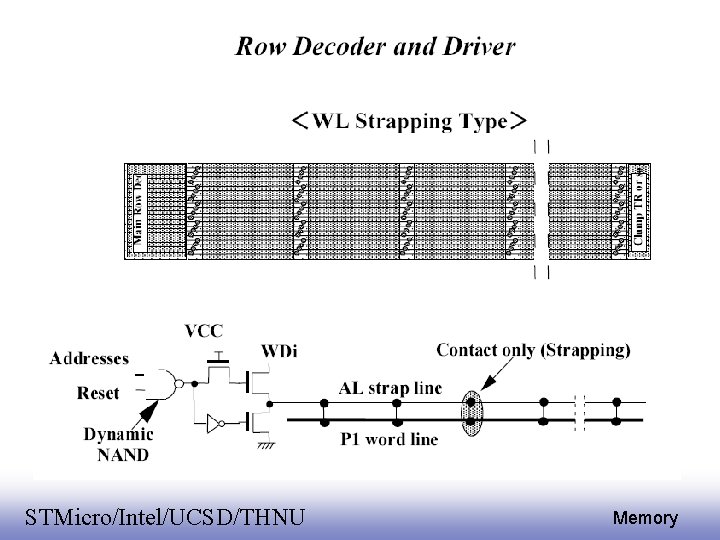

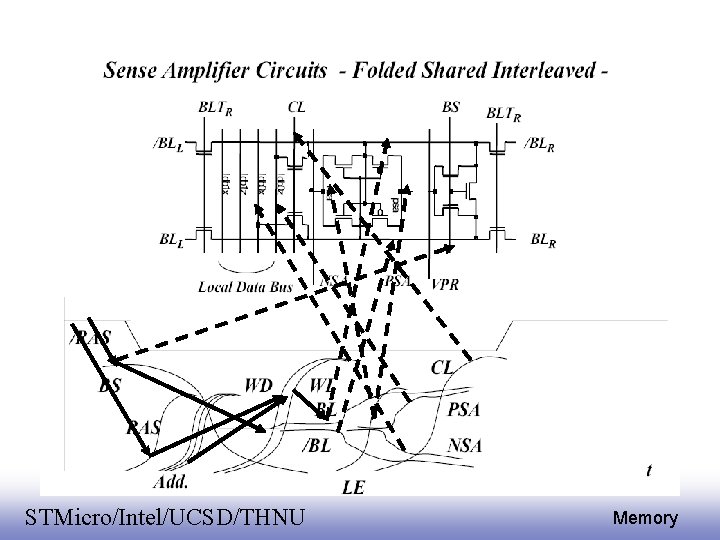

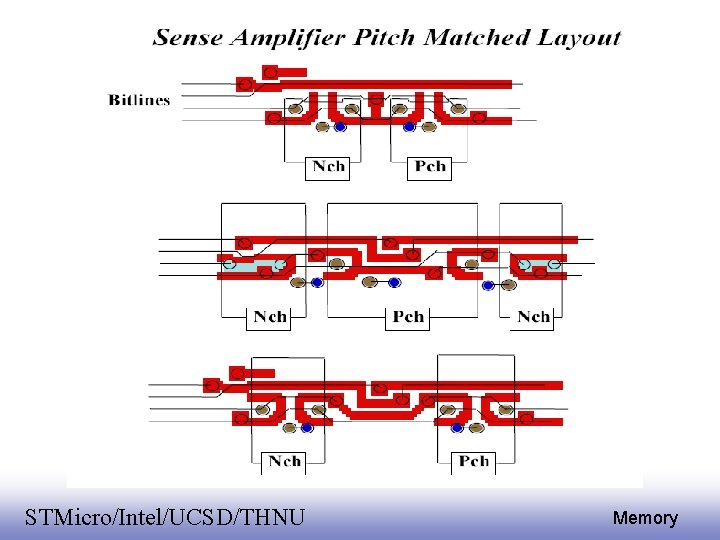

Standard DRAM Design Feature

- Heavy dependence on technology
- The row circuits are entirely different from SRAM.
- Almost always analogue circuit design
- CAD:
  - Spice-like circuit simulator
  - Fully handcrafted layout