AI Alchemy Encoder Generator and put them together

- Slides: 79

AI Alchemy: Encoder, Generator, and put them together 李宏毅 Hung-yi Lee

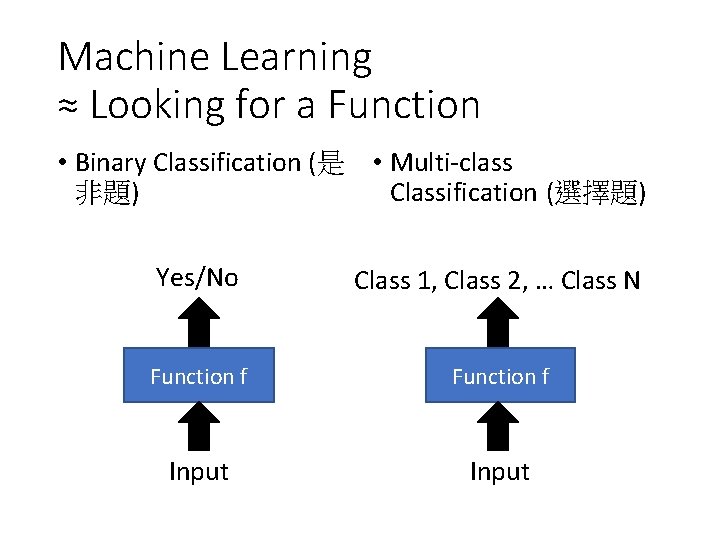

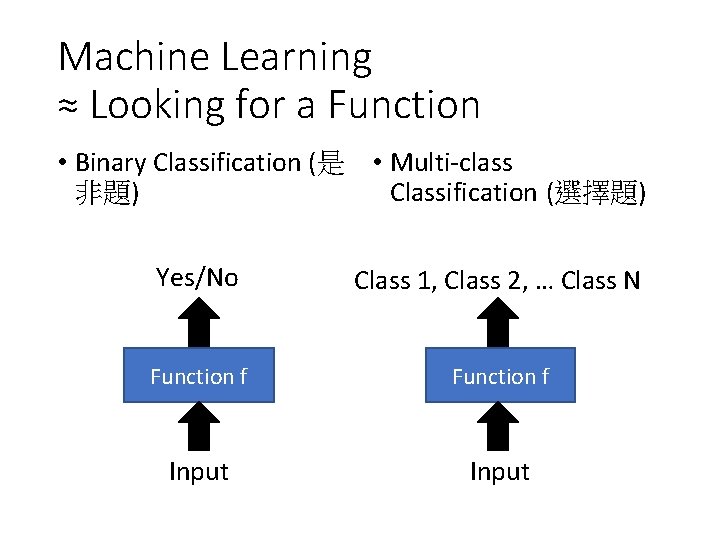

Machine Learning ≈ Looking for a Function • Binary Classification (是 • Multi-class 非題) Classification (選擇題) Yes/No Class 1, Class 2, … Class N Function f Input

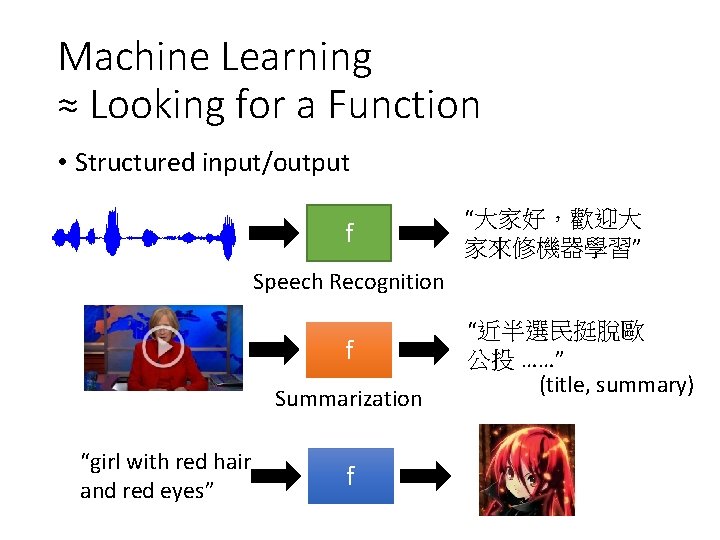

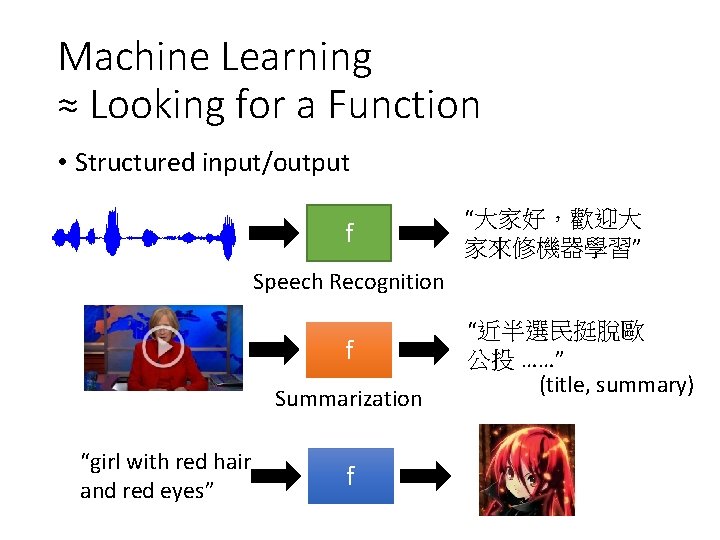

Machine Learning ≈ Looking for a Function • Structured input/output f “大家好,歡迎大 家來修機器學習” Speech Recognition f Summarization “girl with red hair and red eyes” f “近半選民挺脫歐 公投 ……” (title, summary)

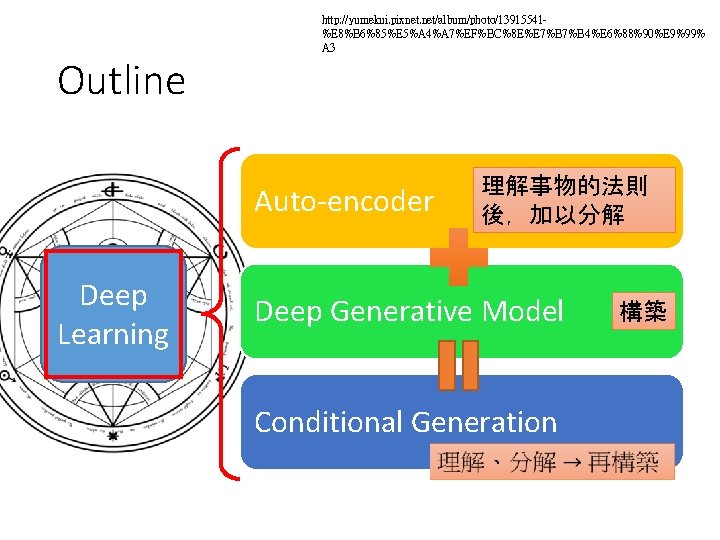

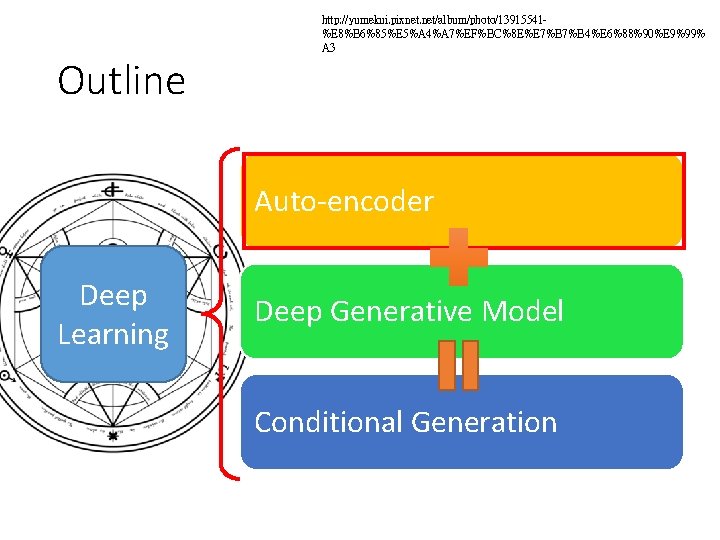

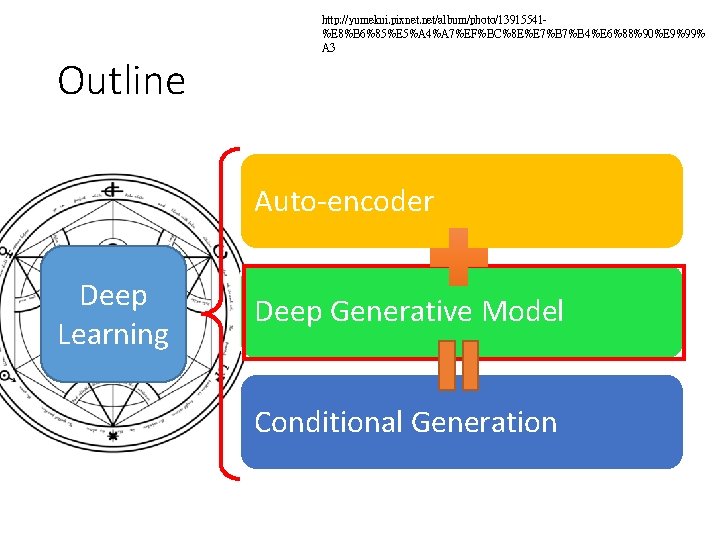

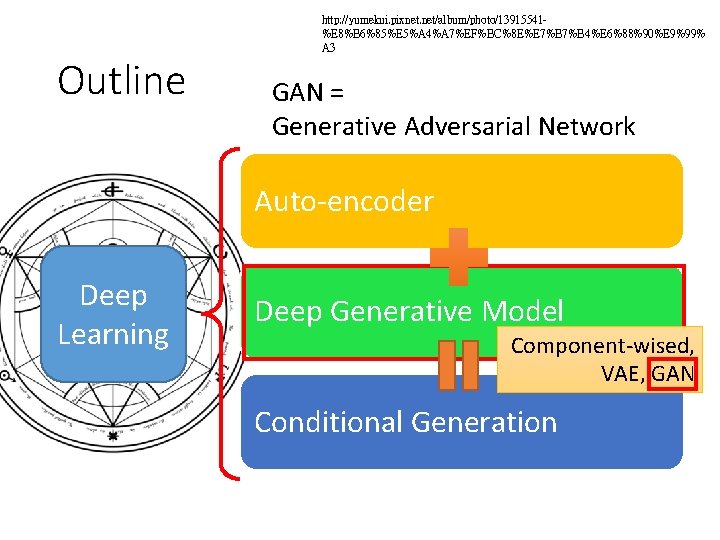

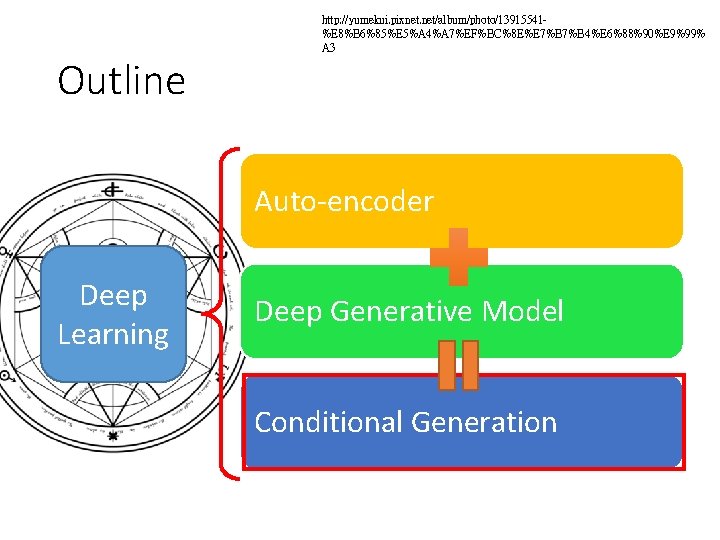

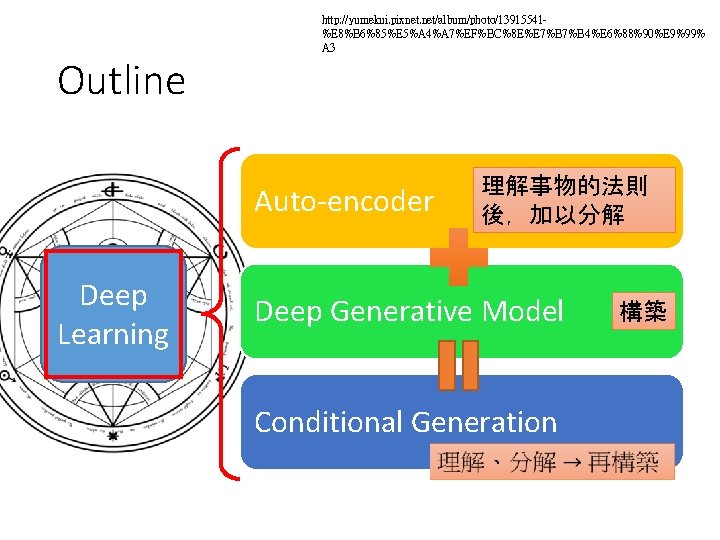

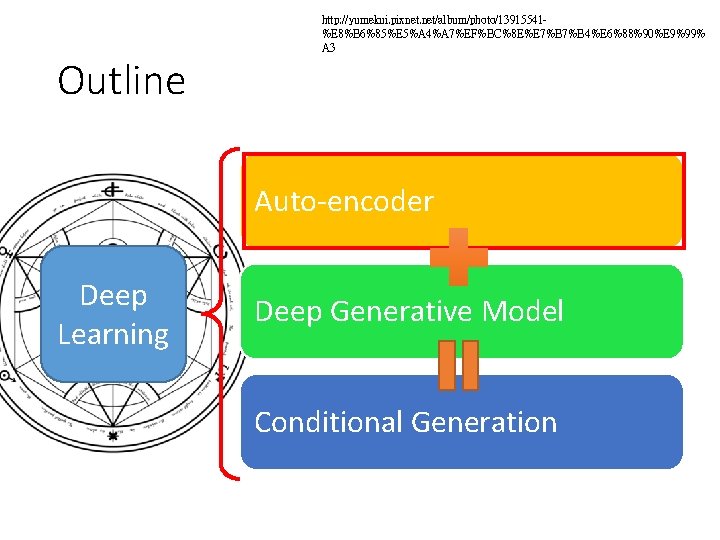

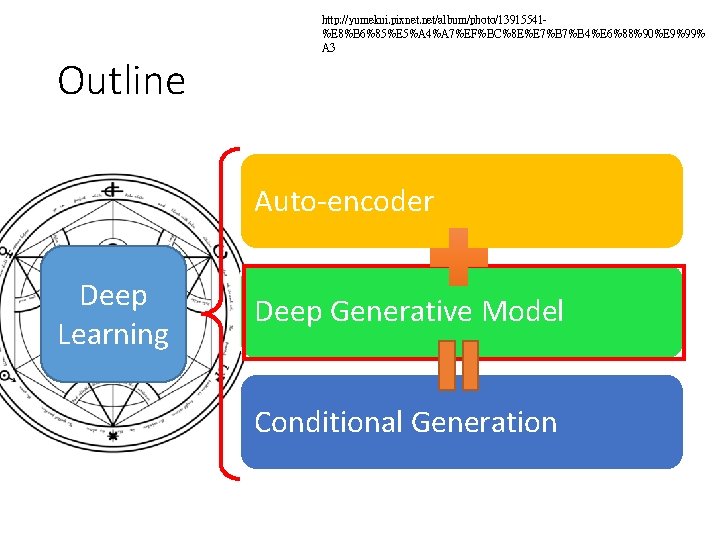

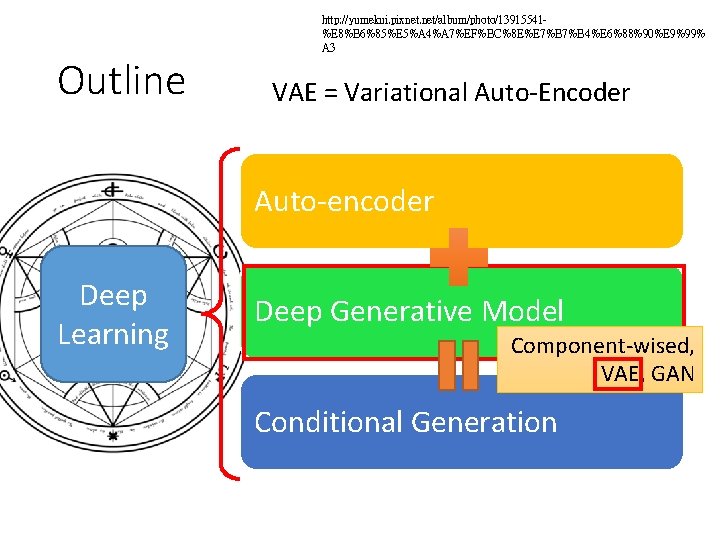

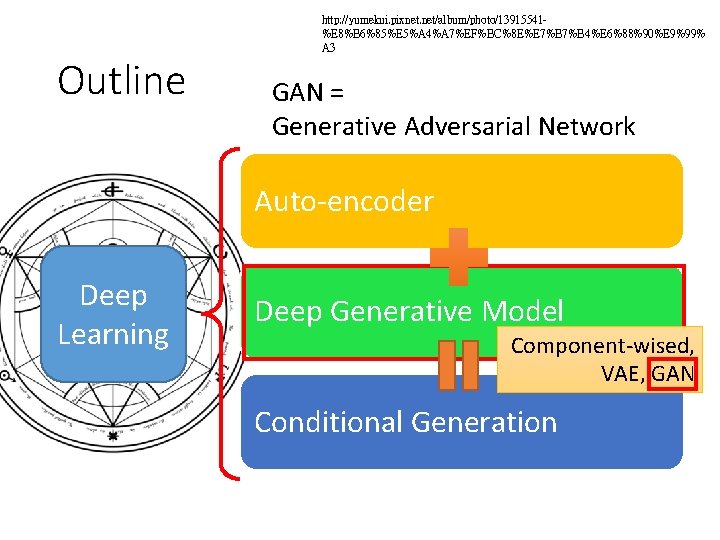

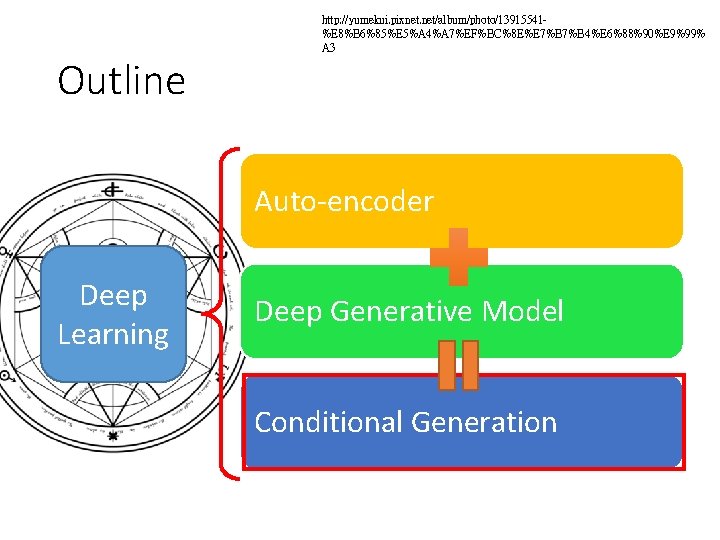

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 Auto-encoder Deep Learning 理解事物的法則 後,加以分解 Deep Generative Model Conditional Generation 構築

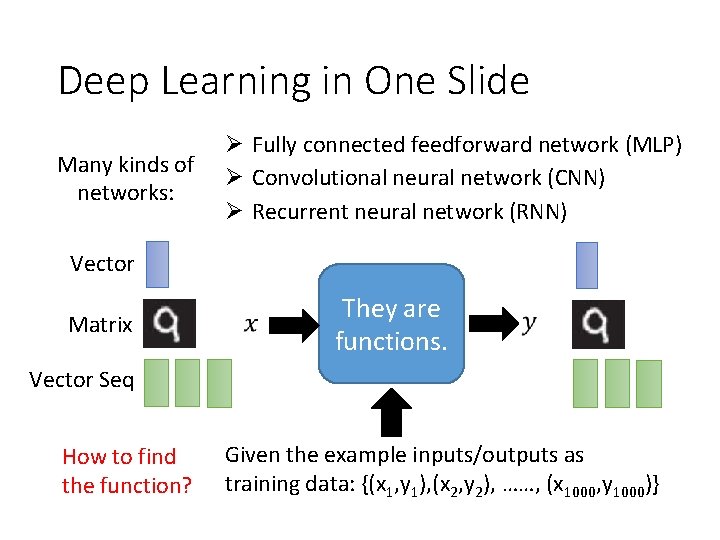

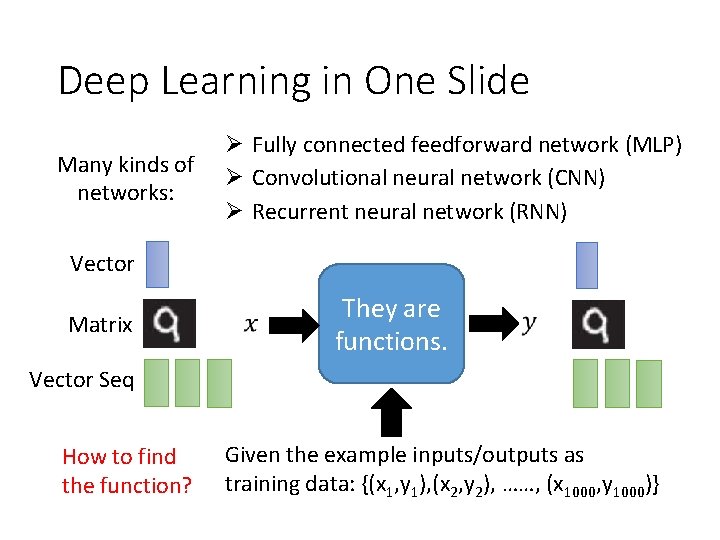

Deep Learning in One Slide Many kinds of networks: Ø Fully connected feedforward network (MLP) Ø Convolutional neural network (CNN) Ø Recurrent neural network (RNN) Vector Matrix They are functions. Vector Seq How to find the function? Given the example inputs/outputs as training data: {(x 1, y 1), (x 2, y 2), ……, (x 1000, y 1000)}

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 Auto-encoder Deep Learning Deep Generative Model Conditional Generation

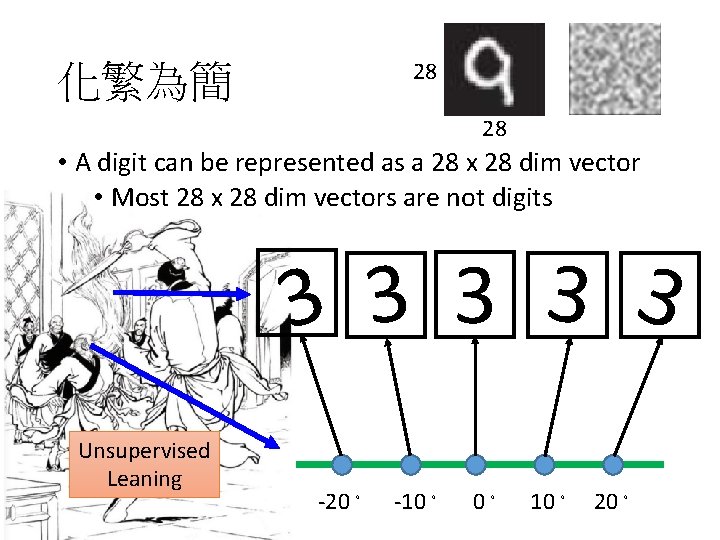

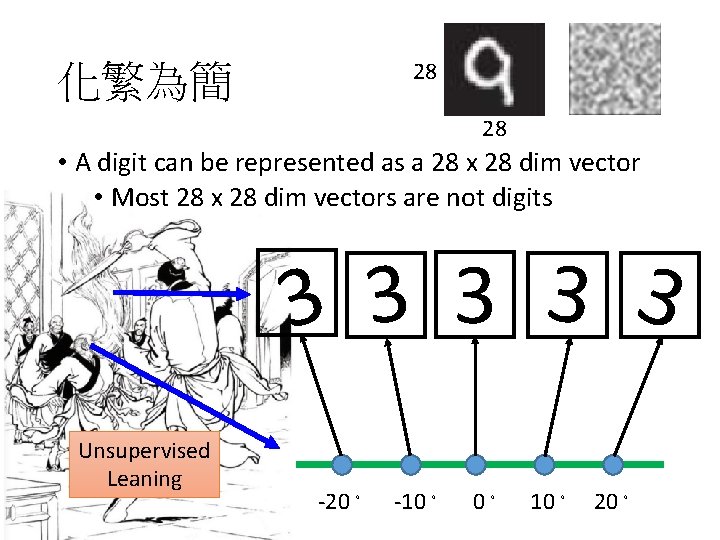

28 化繁為簡 28 • A digit can be represented as a 28 x 28 dim vector • Most 28 x 28 dim vectors are not digits 3 3 3 Unsupervised Leaning -20。 -10。 0。 10。 20。

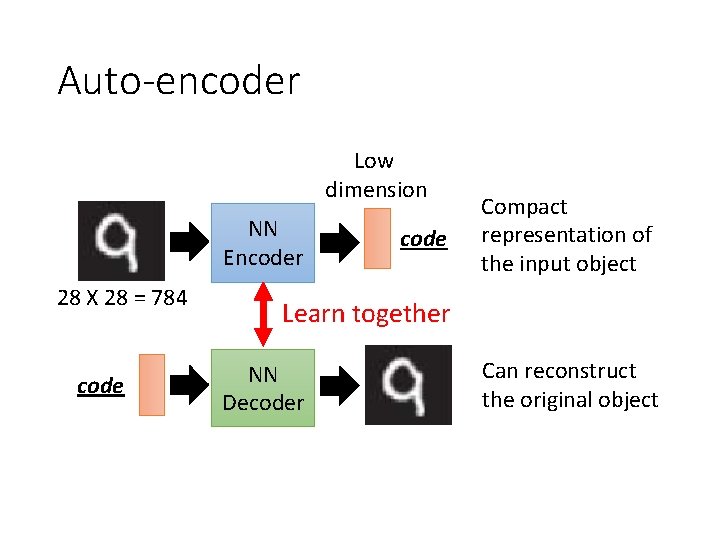

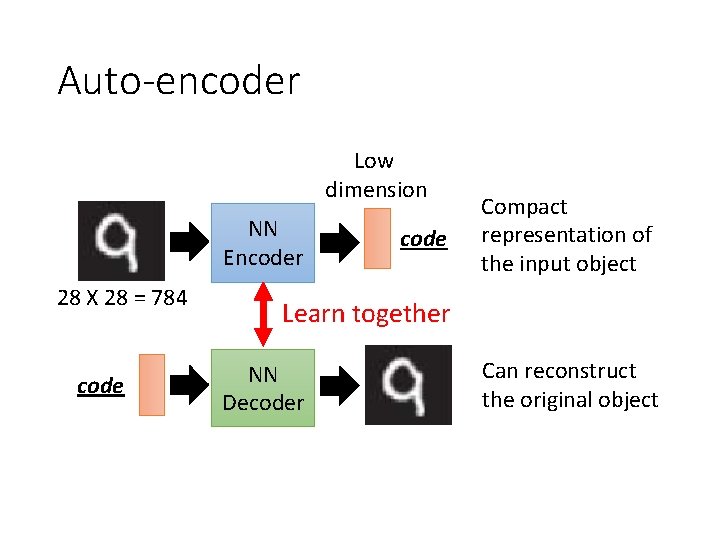

Auto-encoder Low dimension NN Encoder 28 X 28 = 784 code Compact representation of the input object Learn together NN Decoder Can reconstruct the original object

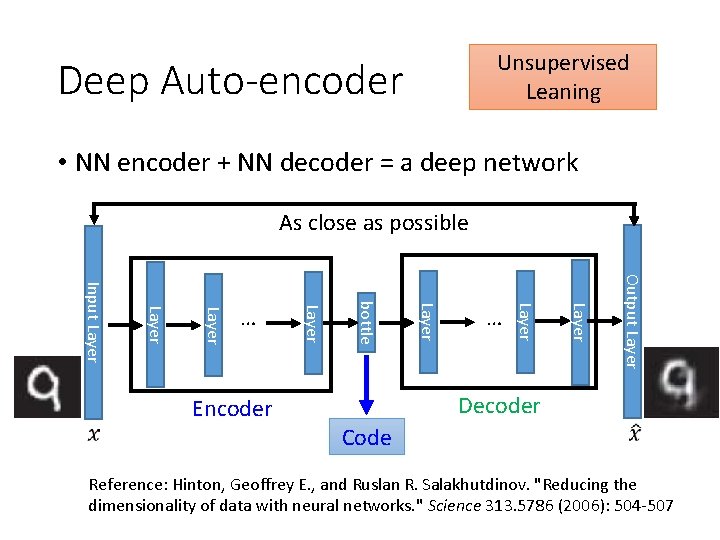

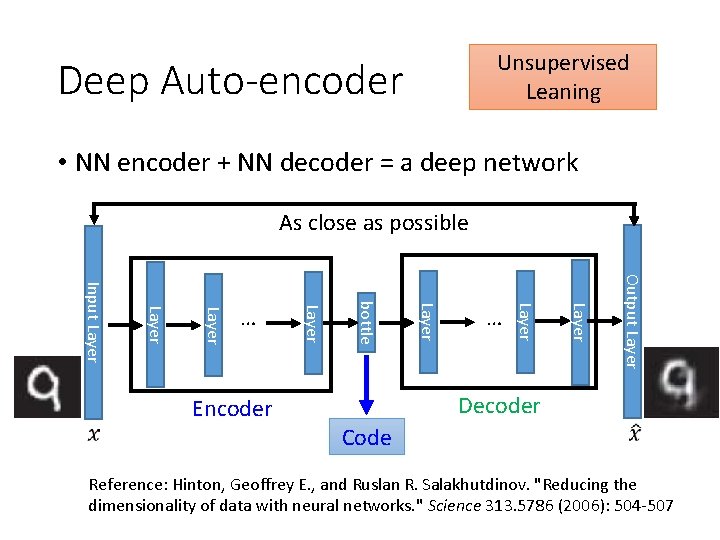

Unsupervised Leaning Deep Auto-encoder • NN encoder + NN decoder = a deep network As close as possible Output Layer … Layer bottle Encoder Layer Input Layer … Decoder Code Reference: Hinton, Geoffrey E. , and Ruslan R. Salakhutdinov. "Reducing the dimensionality of data with neural networks. " Science 313. 5786 (2006): 504 -507

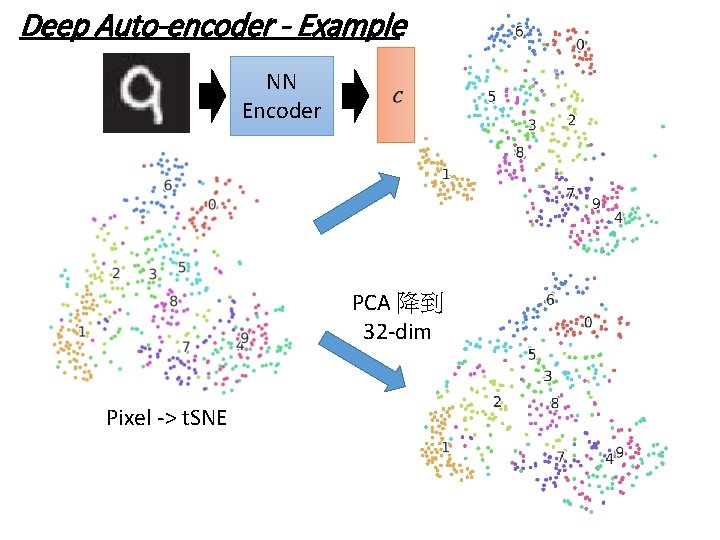

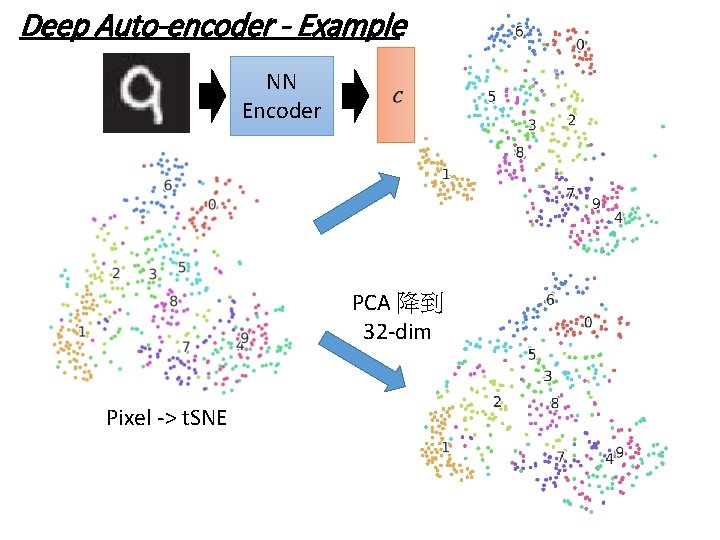

Deep Auto-encoder - Example NN Encoder PCA 降到 32 -dim Pixel -> t. SNE

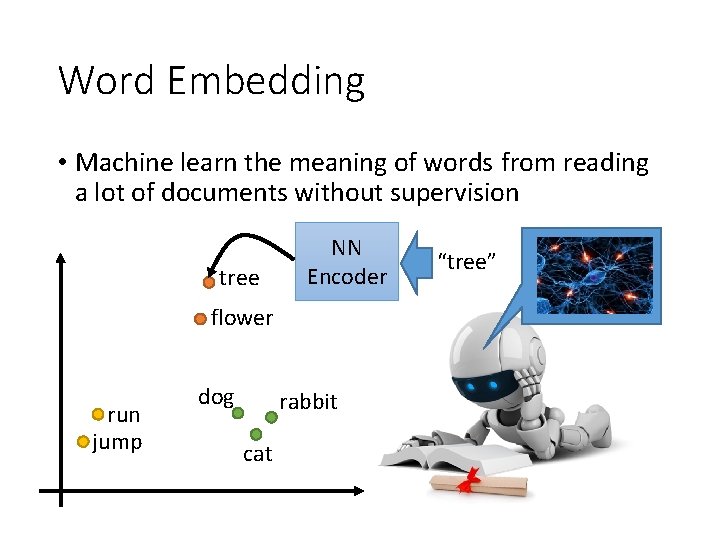

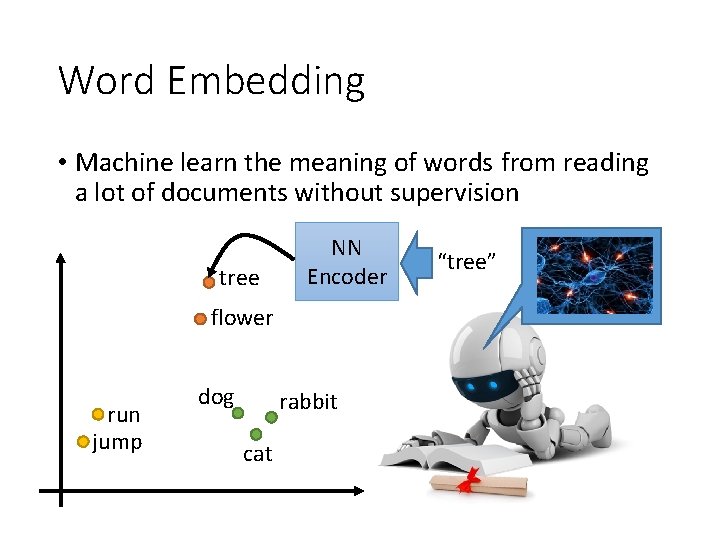

Word Embedding • Machine learn the meaning of words from reading a lot of documents without supervision tree NN Encoder flower run jump dog rabbit cat “tree”

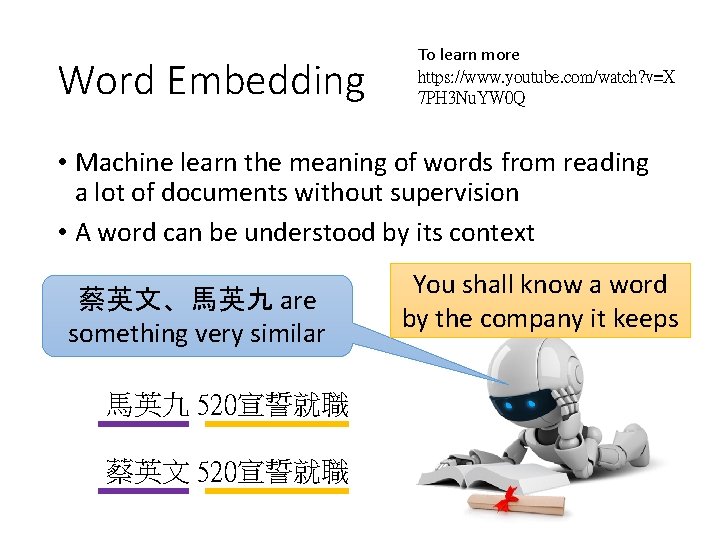

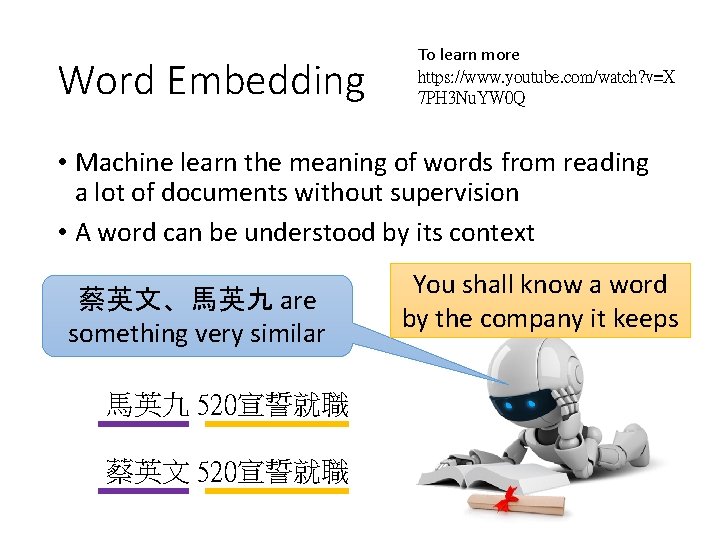

Word Embedding To learn more https: //www. youtube. com/watch? v=X 7 PH 3 Nu. YW 0 Q • Machine learn the meaning of words from reading a lot of documents without supervision • A word can be understood by its context 蔡英文、馬英九 are something very similar 馬英九 520宣誓就職 蔡英文 520宣誓就職 You shall know a word by the company it keeps

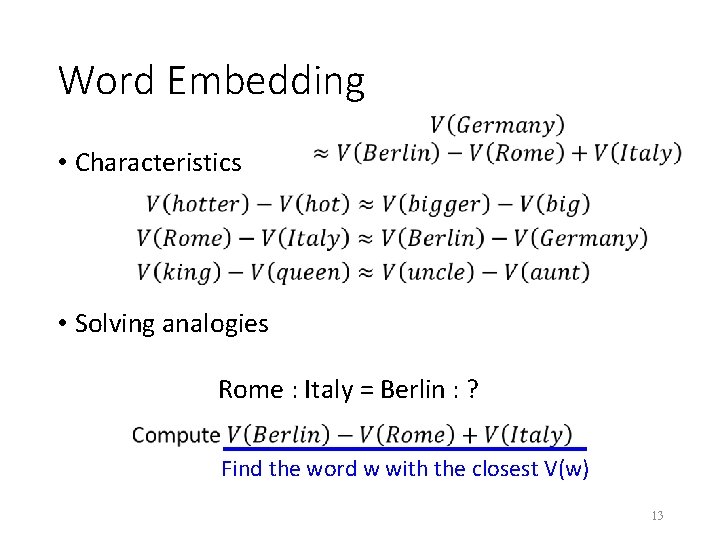

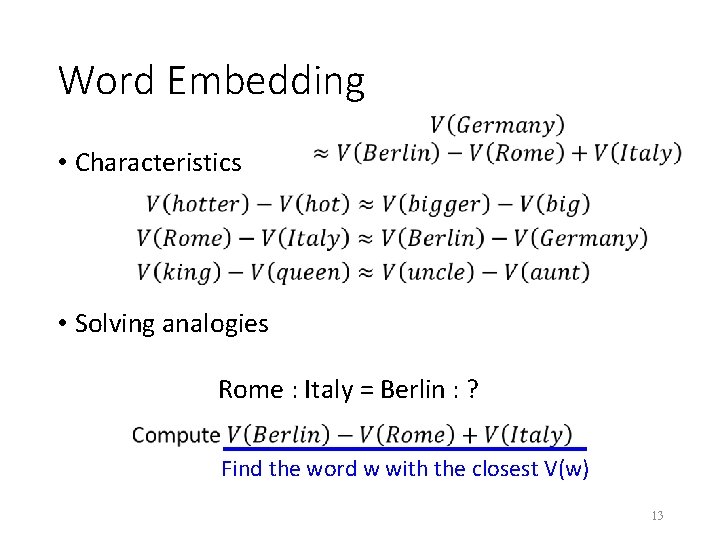

Word Embedding • Characteristics • Solving analogies Rome : Italy = Berlin : ? Find the word w with the closest V(w) 13

Word Embedding - Demo • Machine learn the meaning of words from reading a lot of documents without supervision

Word Embedding - Demo • Model used in demo is provided by 陳仰德 • Part of the project done by 陳仰德、林資偉 • TA: 劉元銘 • Training data is from PTT (collected by 葉青峰) 15

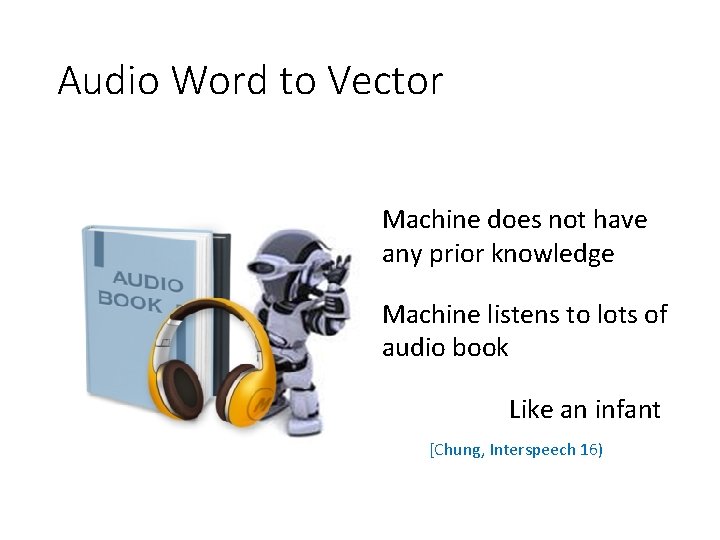

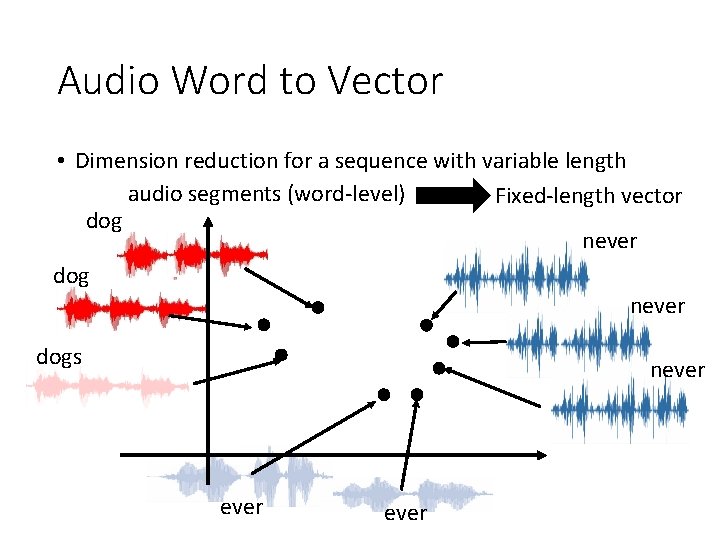

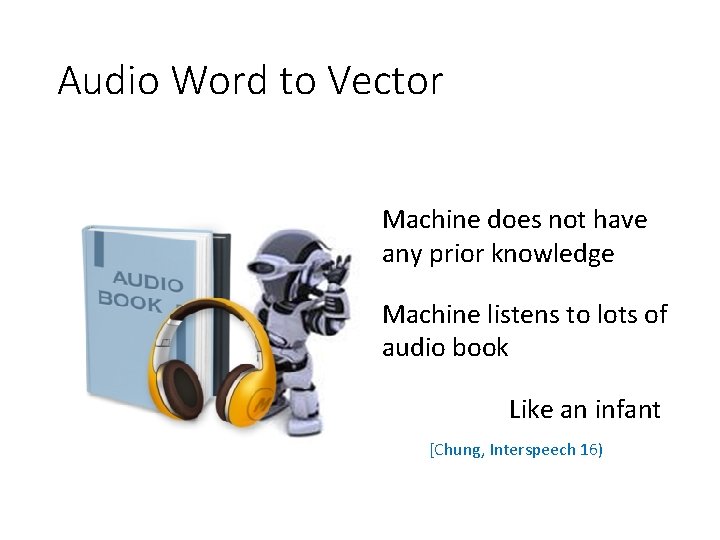

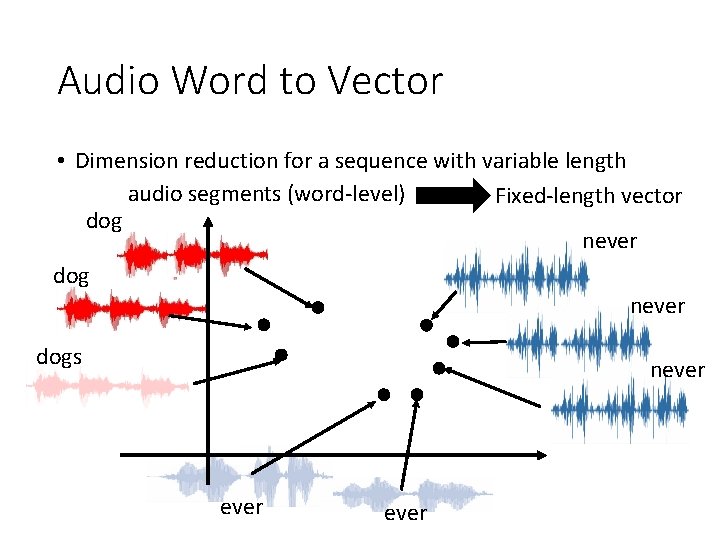

Audio Word to Vector Machine does not have any prior knowledge Machine listens to lots of audio book Like an infant [Chung, Interspeech 16)

Audio Word to Vector • Dimension reduction for a sequence with variable length audio segments (word-level) Fixed-length vector dog never dogs never

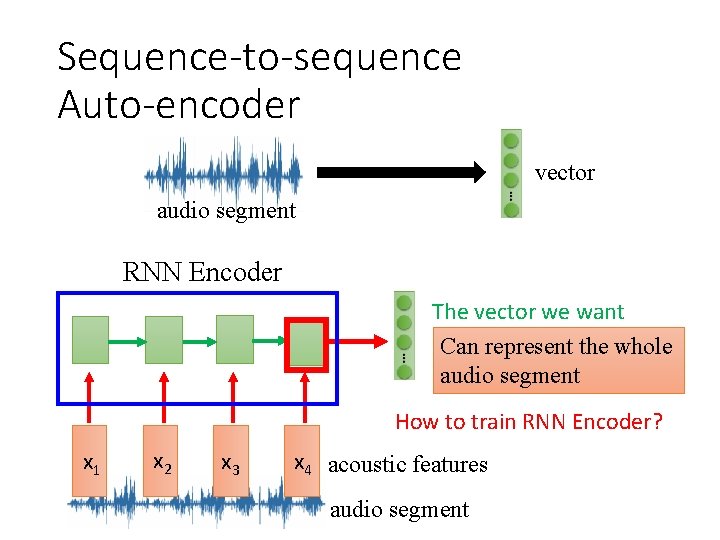

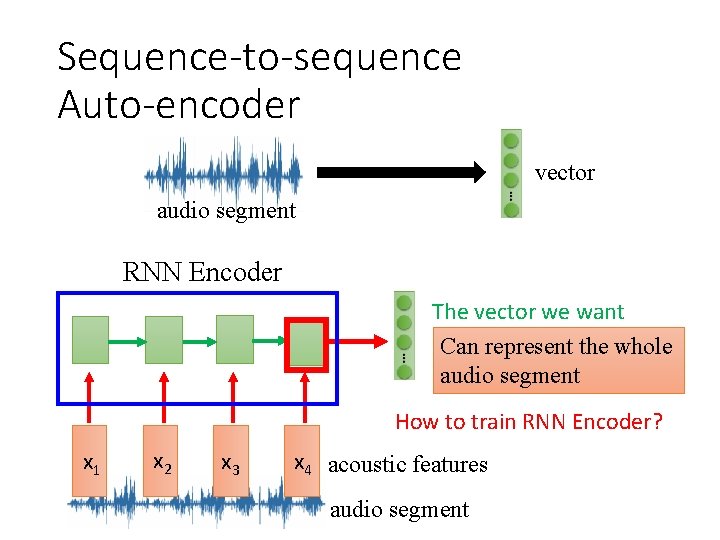

Sequence-to-sequence Auto-encoder vector audio segment RNN Encoder The vector we want Can represent the whole audio segment How to train RNN Encoder? x 1 x 2 x 3 x 4 acoustic features audio segment

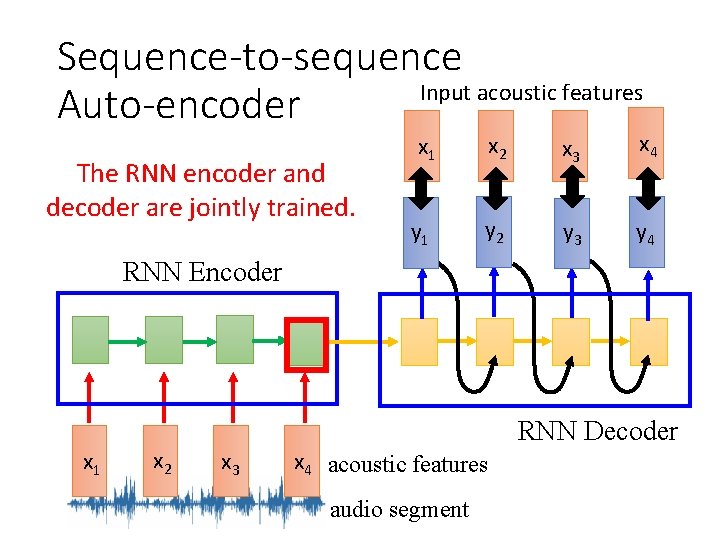

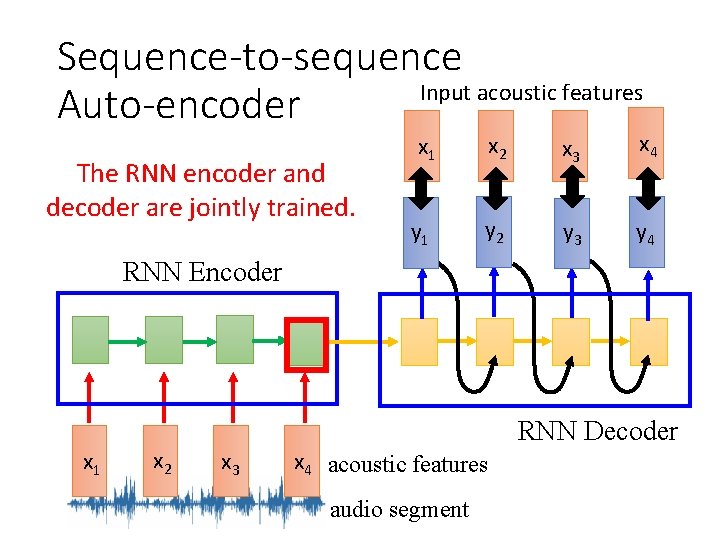

Sequence-to-sequence Input acoustic features Auto-encoder The RNN encoder and decoder are jointly trained. x 1 x 2 x 3 x 4 y 1 y 2 y 3 y 4 RNN Encoder x 1 x 2 x 3 x 4 acoustic features audio segment RNN Decoder

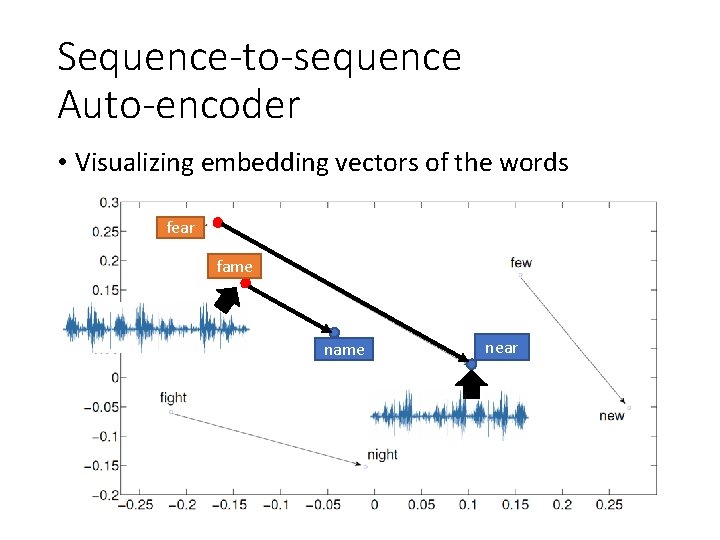

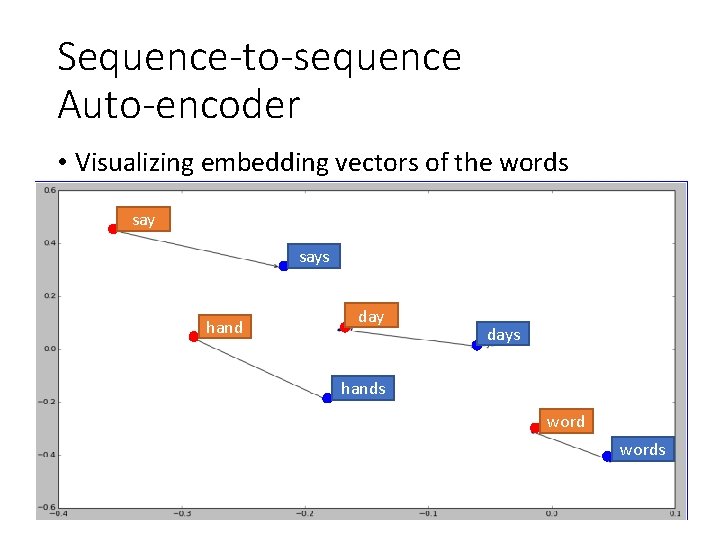

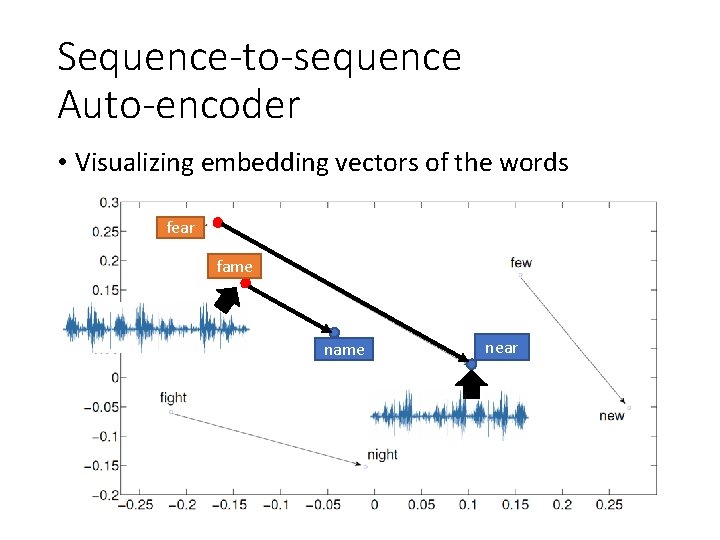

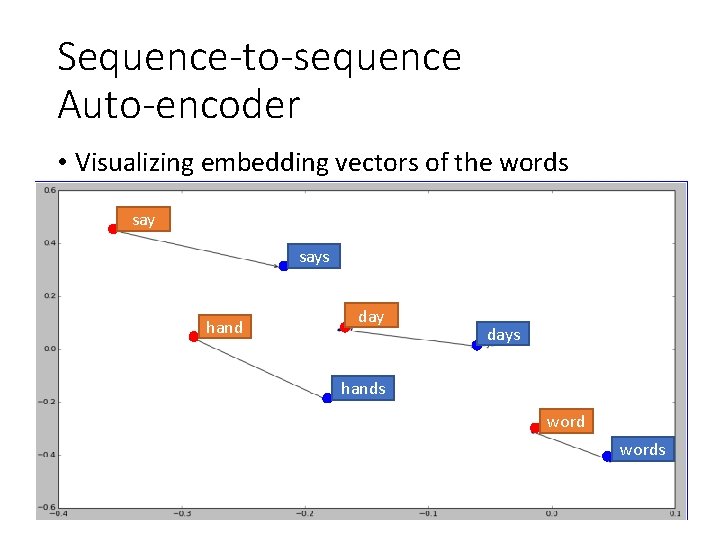

Sequence-to-sequence Auto-encoder • Visualizing embedding vectors of the words fear fame near

Sequence-to-sequence Auto-encoder • Visualizing embedding vectors of the words says hand days hands words

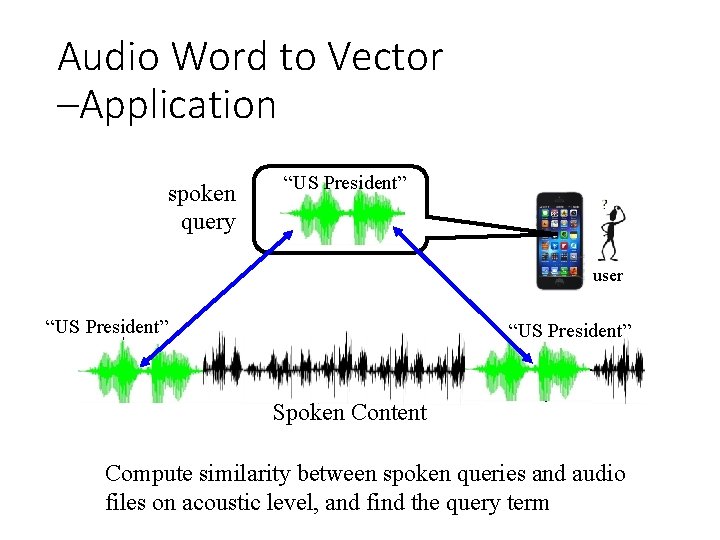

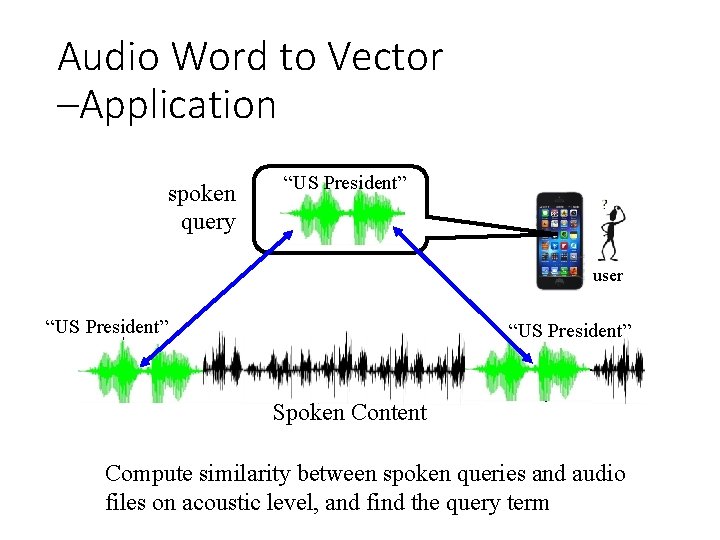

Audio Word to Vector –Application spoken query “US President” user “US President” Spoken Content Compute similarity between spoken queries and audio files on acoustic level, and find the query term

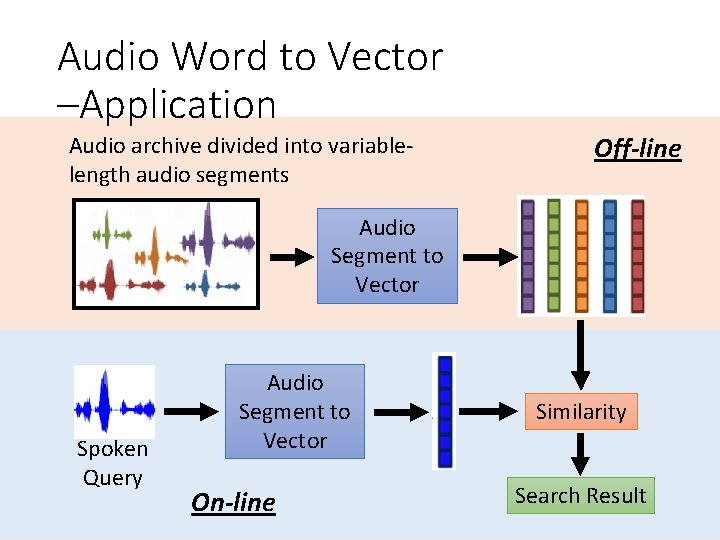

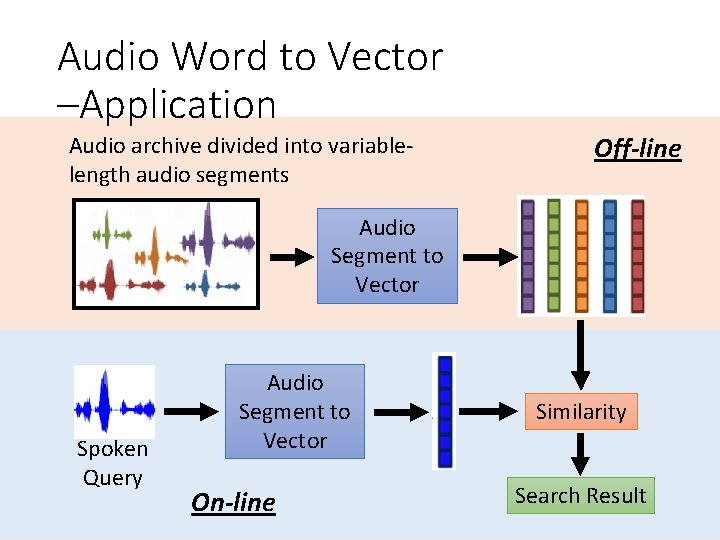

Audio Word to Vector –Application Audio archive divided into variablelength audio segments Off-line Audio Segment to Vector Spoken Query Audio Segment to Vector On-line Similarity Search Result

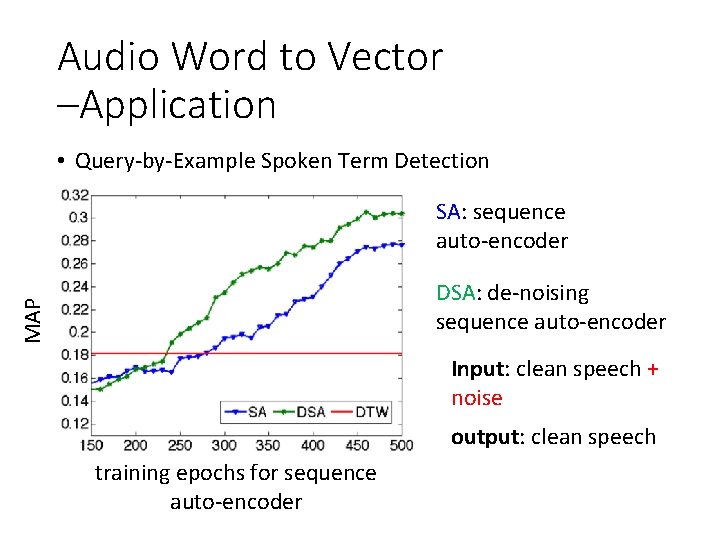

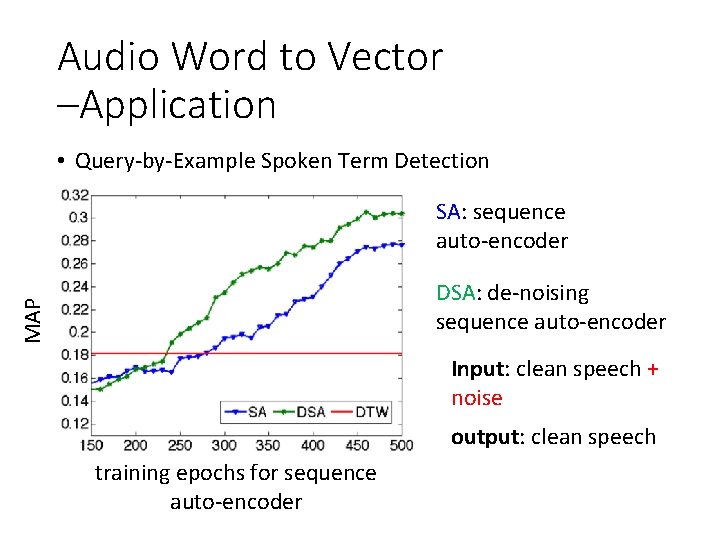

Audio Word to Vector –Application • Query-by-Example Spoken Term Detection SA: sequence auto-encoder MAP DSA: de-noising sequence auto-encoder Input: clean speech + noise output: clean speech training epochs for sequence auto-encoder

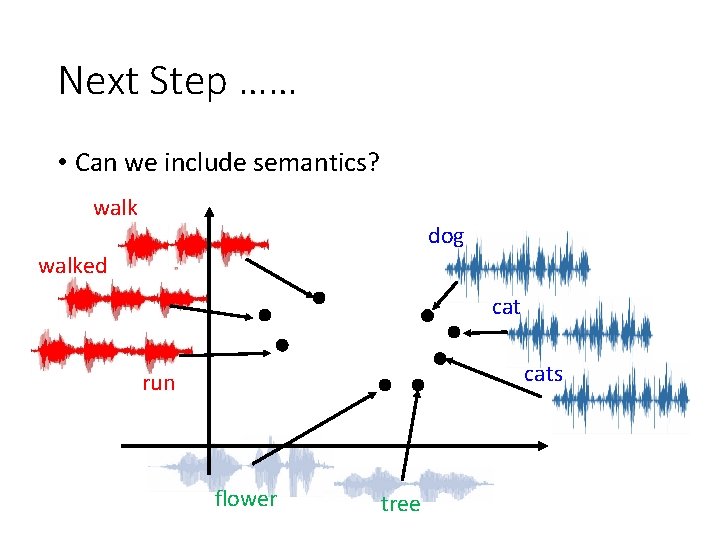

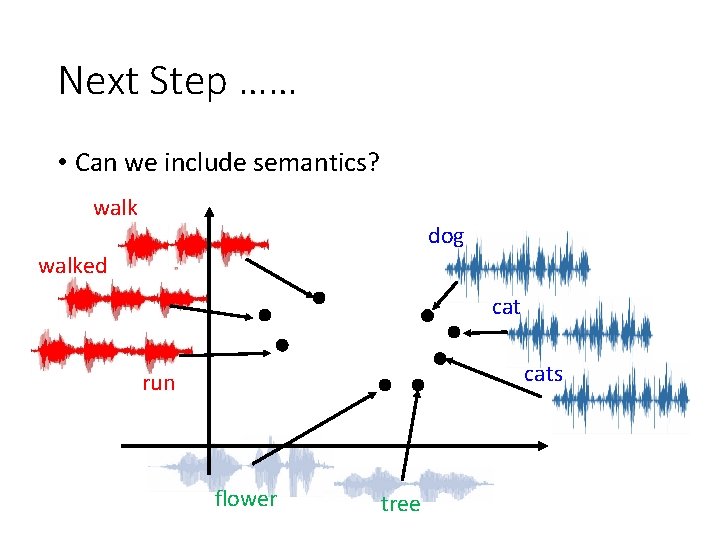

Next Step …… • Can we include semantics? walk dog walked cats run flower tree

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 Auto-encoder Deep Learning Deep Generative Model Conditional Generation

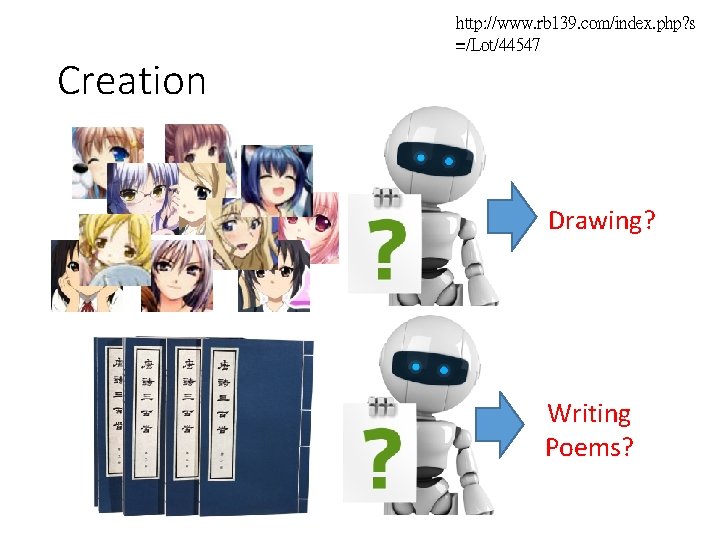

Creation http: //www. rb 139. com/index. php? s =/Lot/44547 Drawing? Writing Poems?

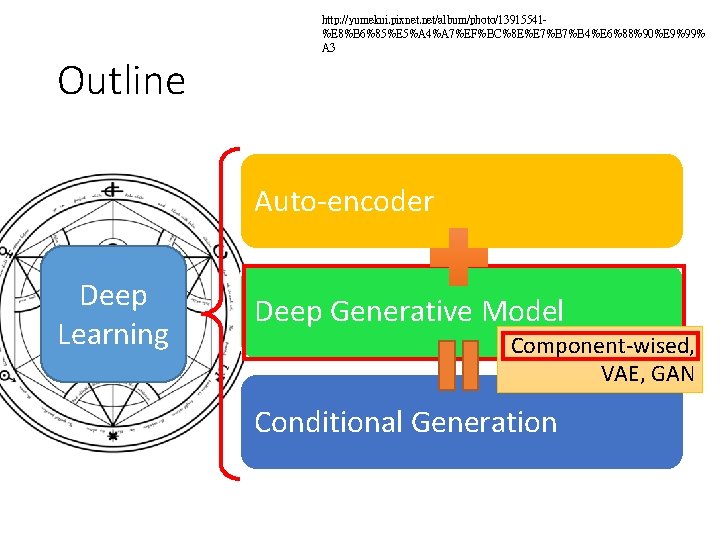

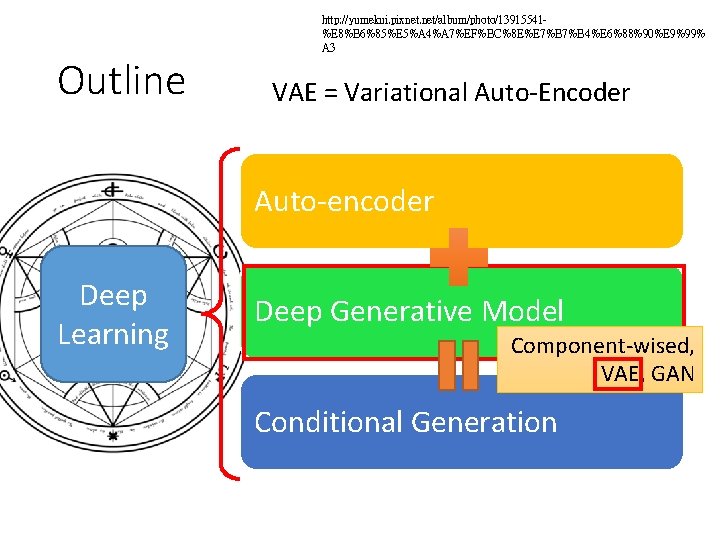

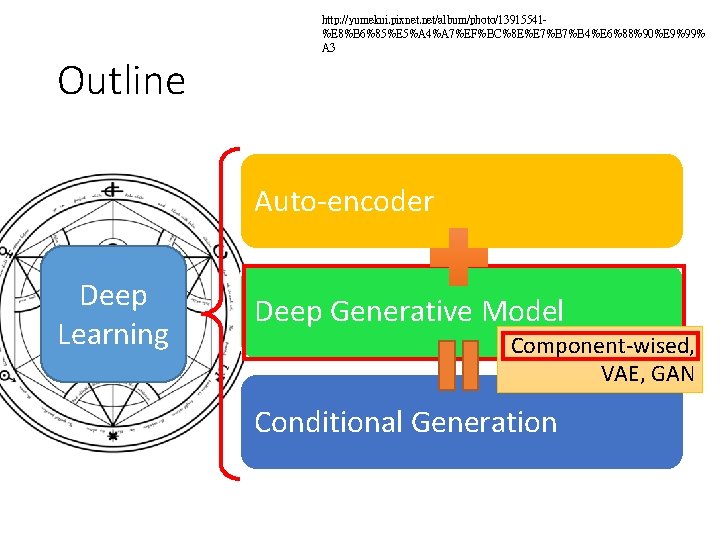

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 Auto-encoder Deep Learning Deep Generative Model Component-wised, VAE, GAN Conditional Generation

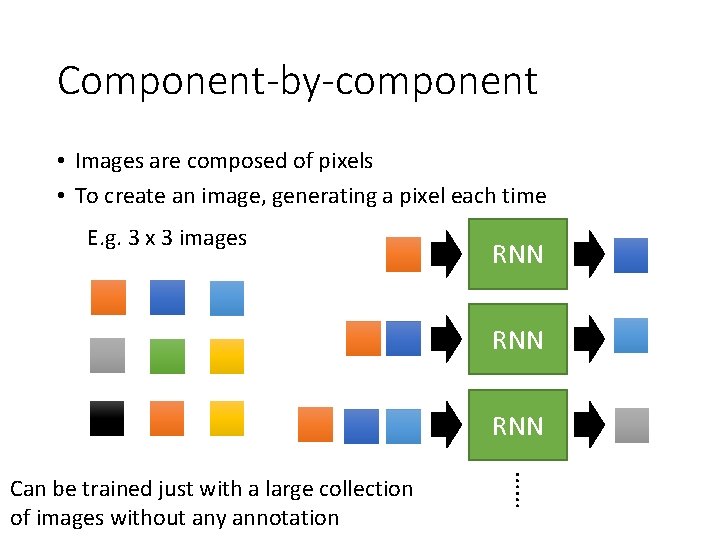

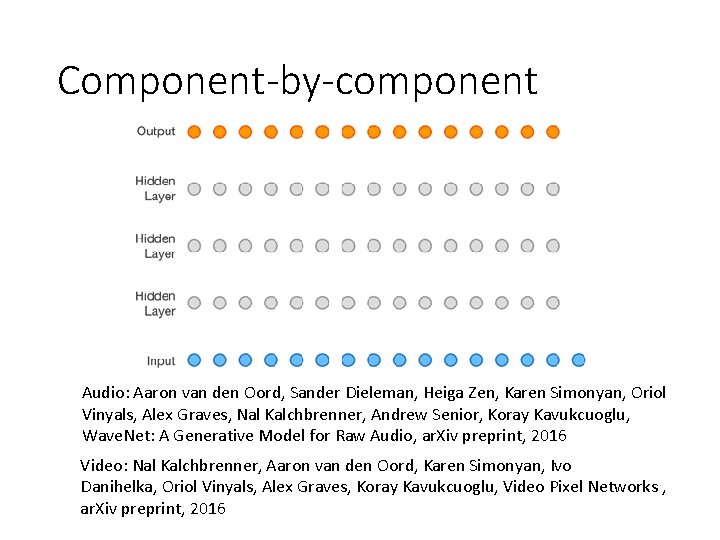

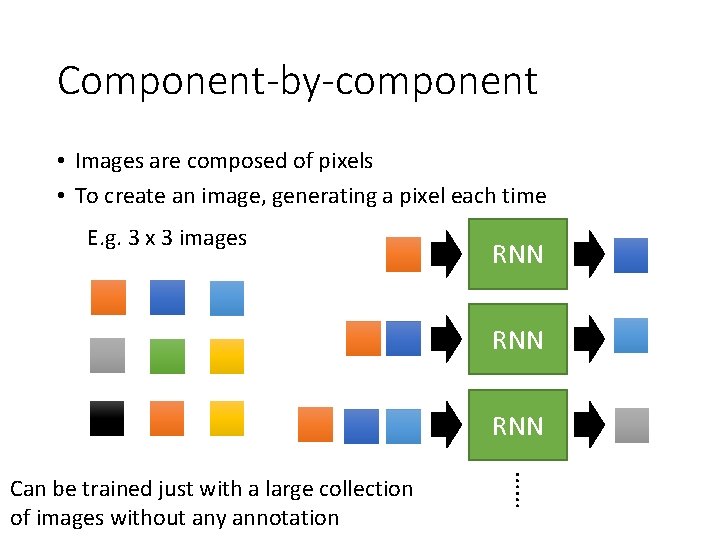

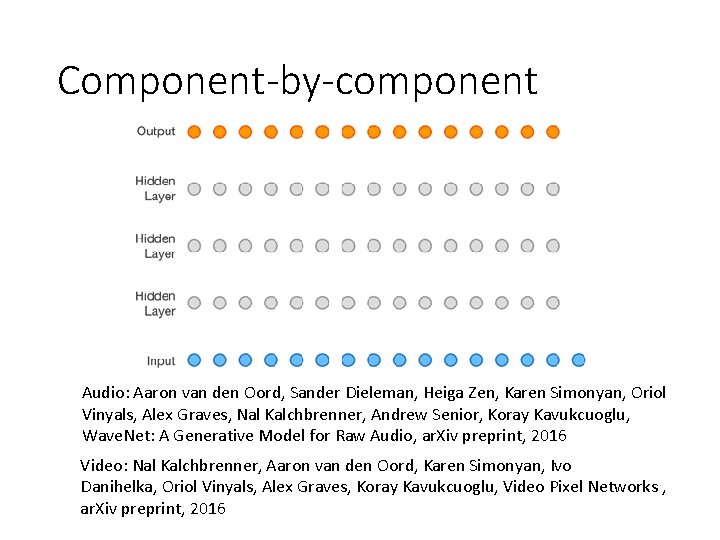

Component-by-component • Images are composed of pixels • To create an image, generating a pixel each time E. g. 3 x 3 images RNN RNN …… Can be trained just with a large collection of images without any annotation

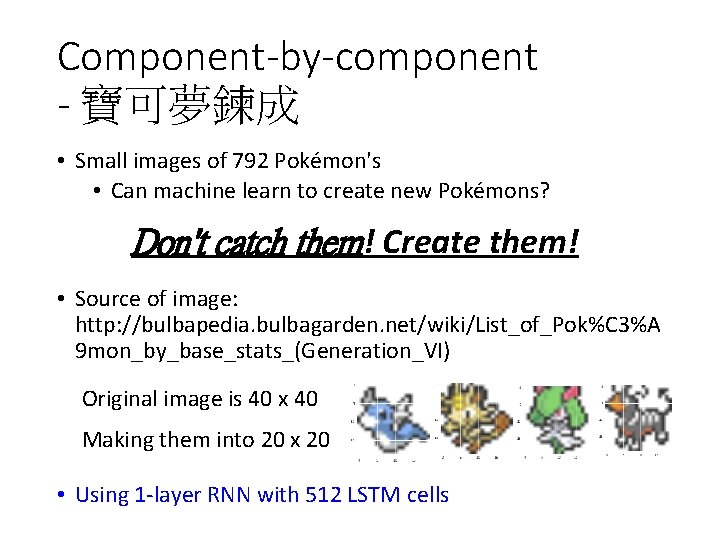

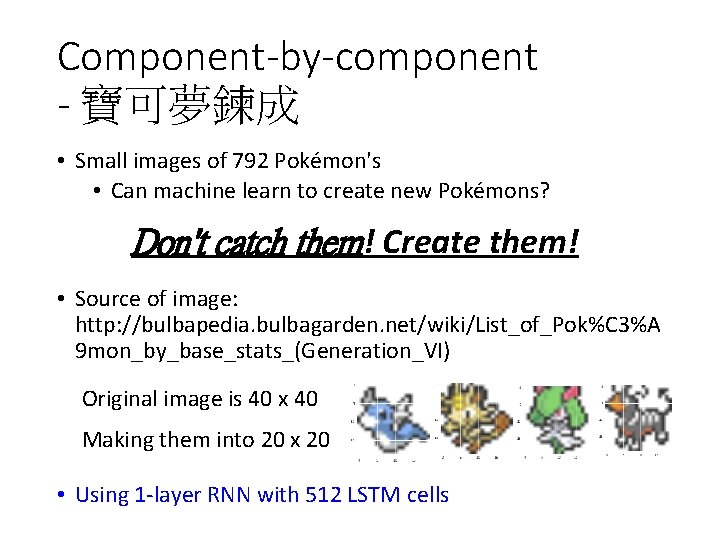

Component-by-component - 寶可夢鍊成 • Small images of 792 Pokémon's • Can machine learn to create new Pokémons? Don't catch them! Create them! • Source of image: http: //bulbapedia. bulbagarden. net/wiki/List_of_Pok%C 3%A 9 mon_by_base_stats_(Generation_VI) Original image is 40 x 40 Making them into 20 x 20 • Using 1 -layer RNN with 512 LSTM cells

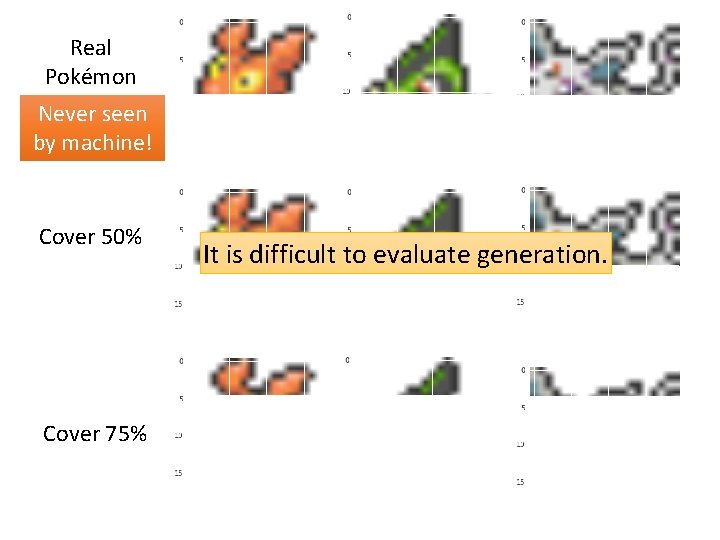

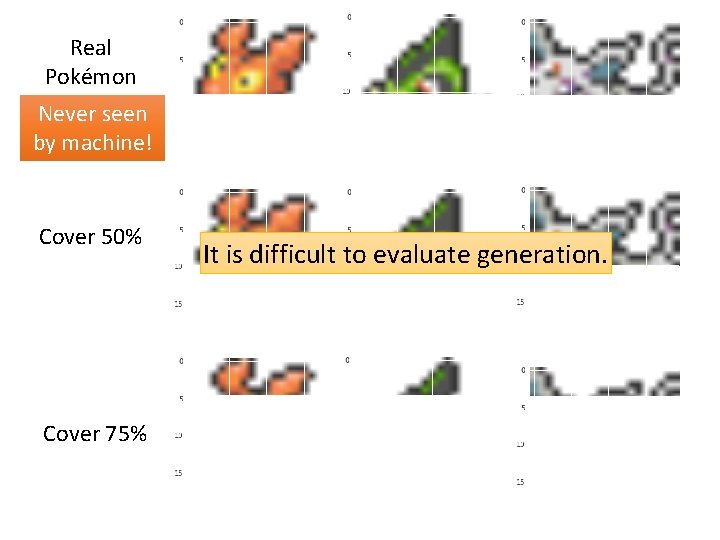

Real Pokémon Never seen by machine! Cover 50% Cover 75% It is difficult to evaluate generation.

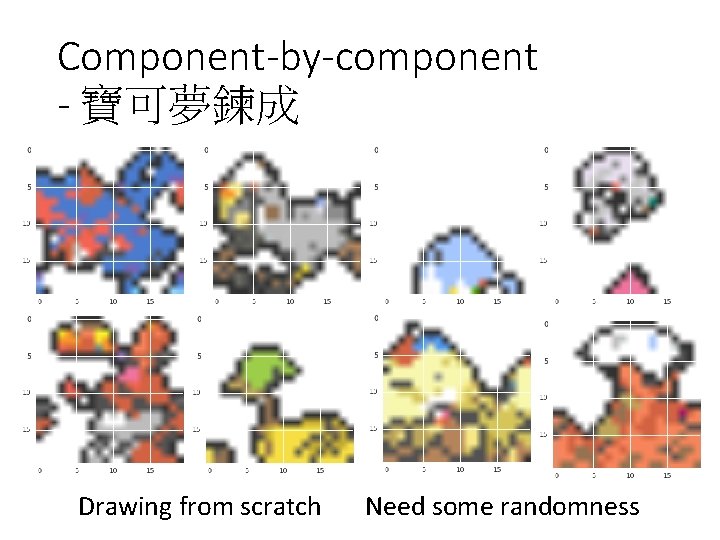

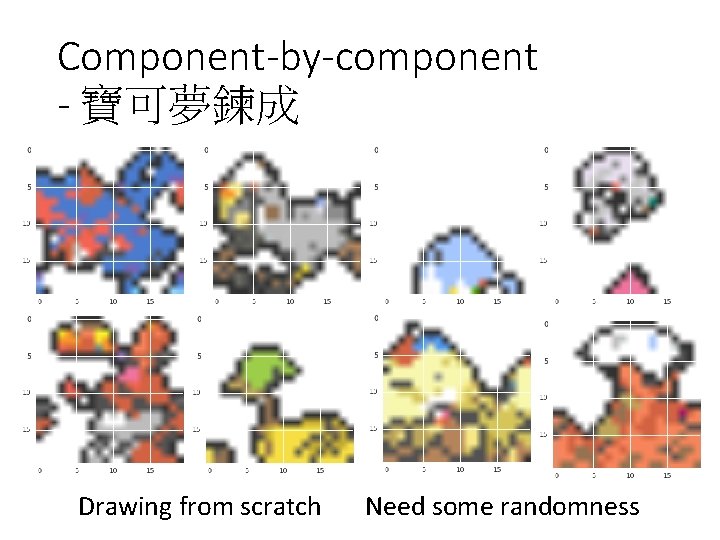

Component-by-component - 寶可夢鍊成 Drawing from scratch Need some randomness

Component-by-component Audio: Aaron van den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, Koray Kavukcuoglu, Wave. Net: A Generative Model for Raw Audio, ar. Xiv preprint, 2016 Video: Nal Kalchbrenner, Aaron van den Oord, Karen Simonyan, Ivo Danihelka, Oriol Vinyals, Alex Graves, Koray Kavukcuoglu, Video Pixel Networks , ar. Xiv preprint, 2016

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 VAE = Variational Auto-Encoder Auto-encoder Deep Learning Deep Generative Model Component-wised, VAE, GAN Conditional Generation

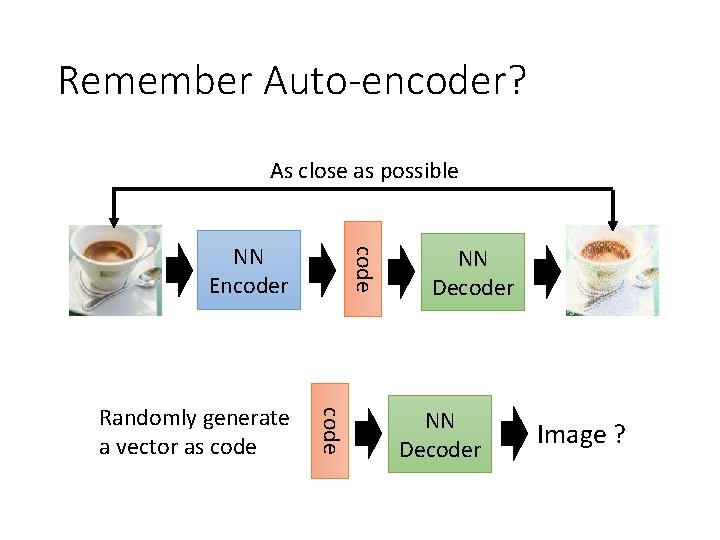

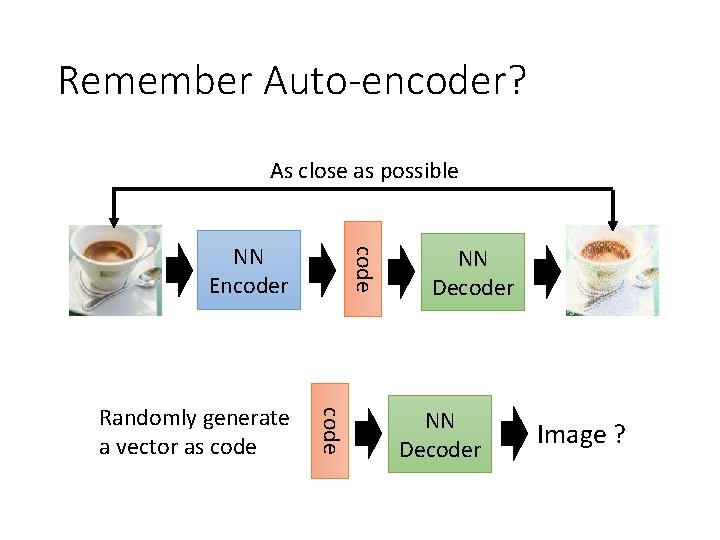

Remember Auto-encoder? As close as possible code Randomly generate a vector as code NN Encoder NN Decoder Image ?

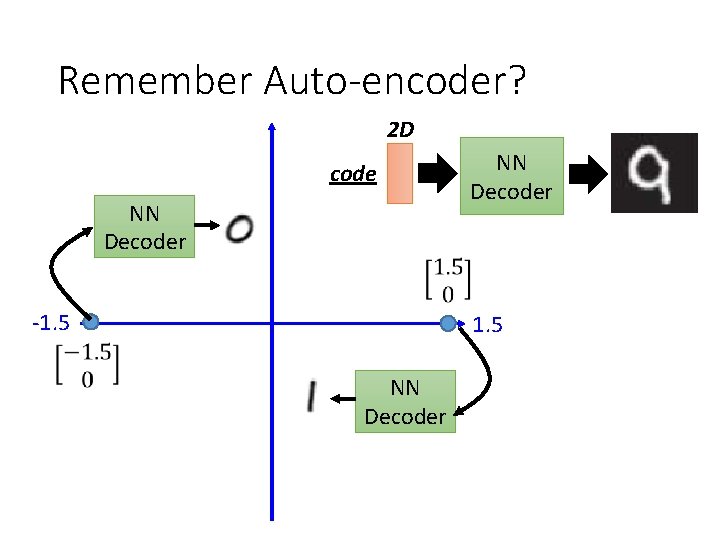

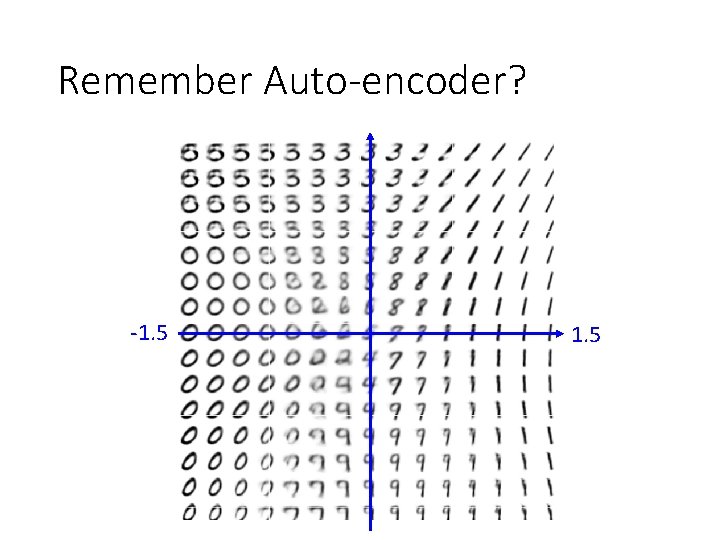

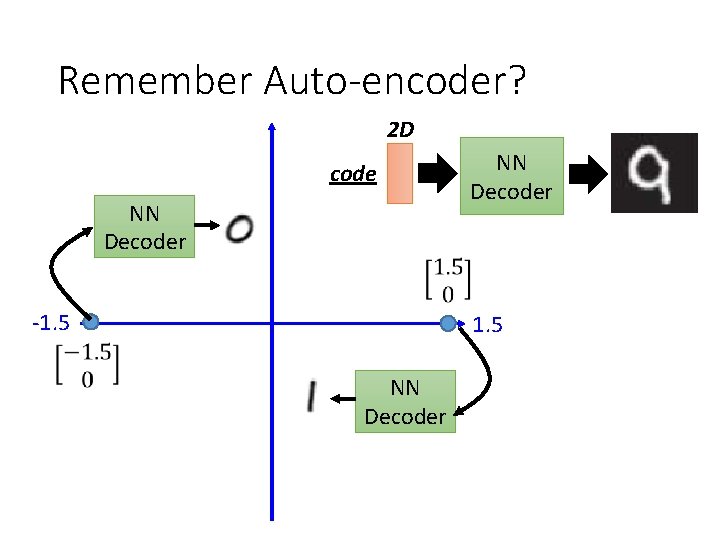

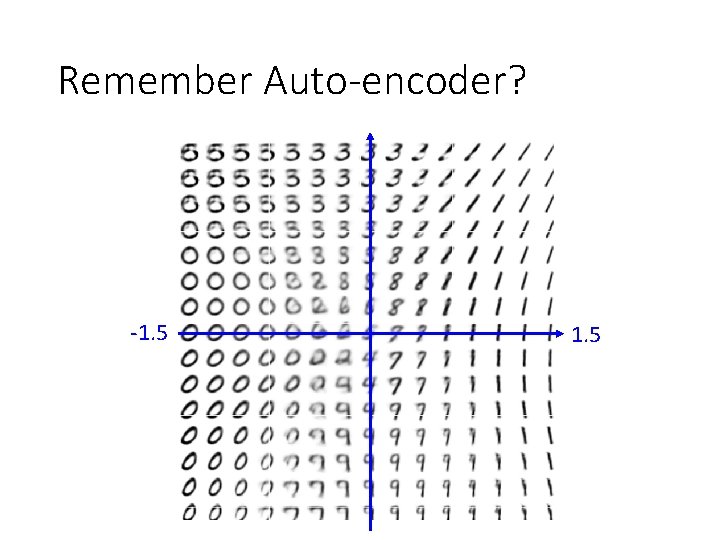

Remember Auto-encoder? 2 D code NN Decoder -1. 5 NN Decoder

Remember Auto-encoder? -1. 5

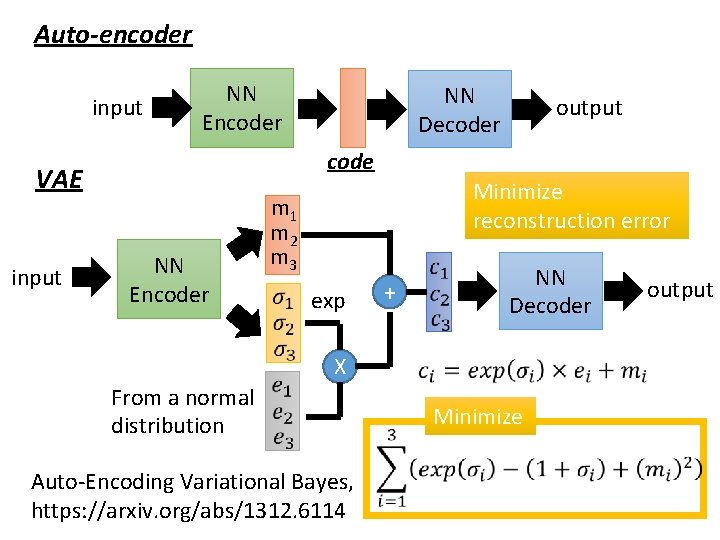

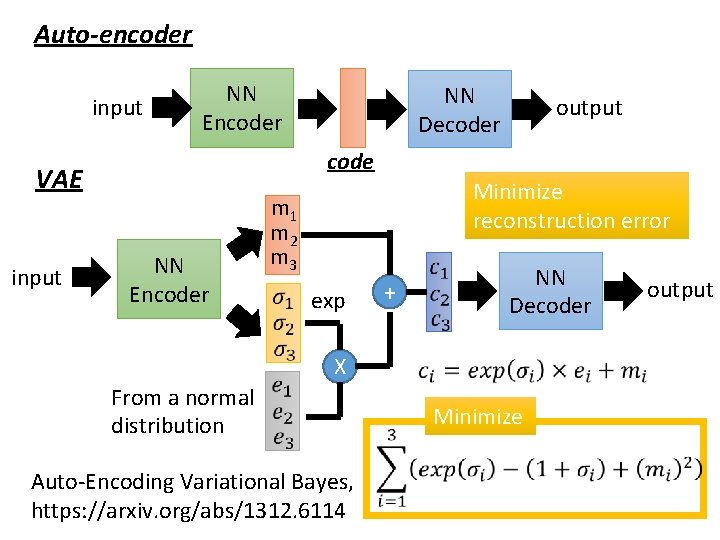

Auto-encoder input NN Encoder output code VAE input NN Decoder NN Encoder Minimize reconstruction error m 1 m 2 m 3 exp + NN Decoder X From a normal distribution Auto-Encoding Variational Bayes, https: //arxiv. org/abs/1312. 6114 Minimize output

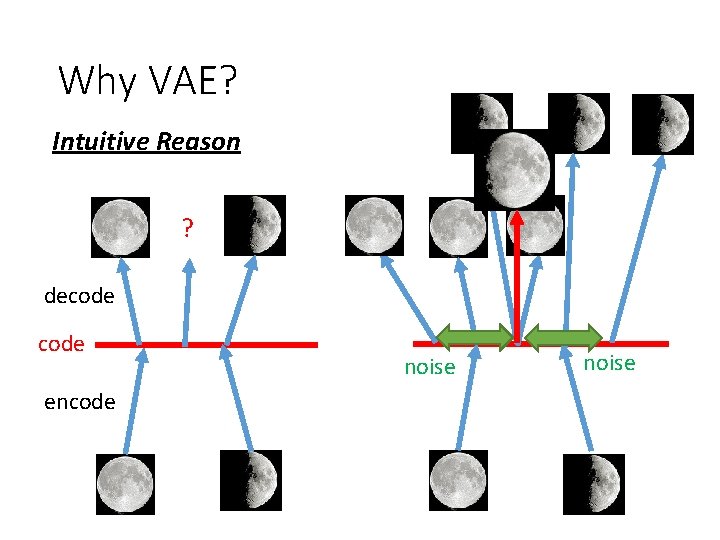

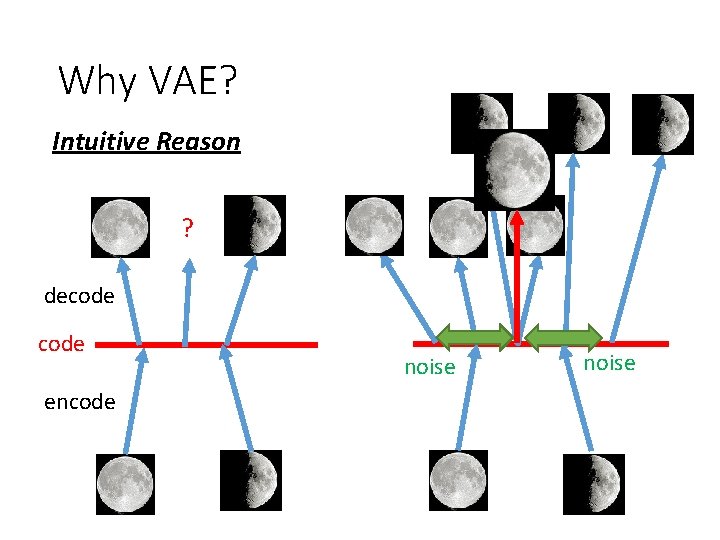

Why VAE? Intuitive Reason ? decode encode noise

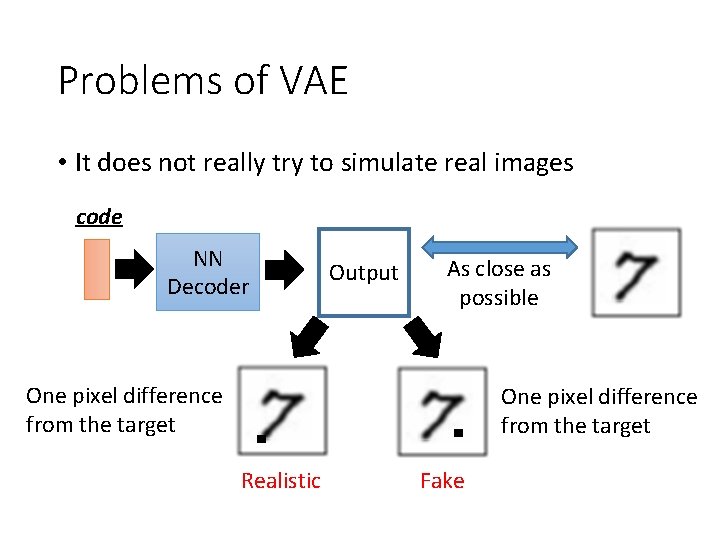

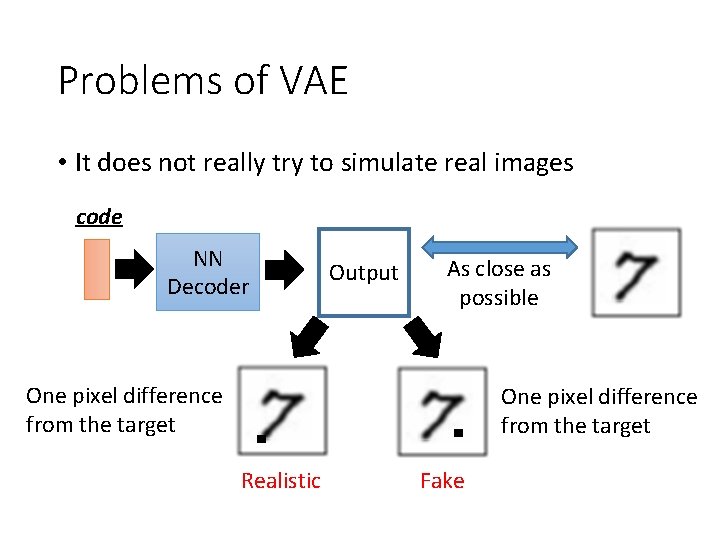

Problems of VAE • It does not really try to simulate real images code NN Decoder Output As close as possible One pixel difference from the target Realistic Fake

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 GAN = Generative Adversarial Network Auto-encoder Deep Learning Deep Generative Model Component-wised, VAE, GAN Conditional Generation

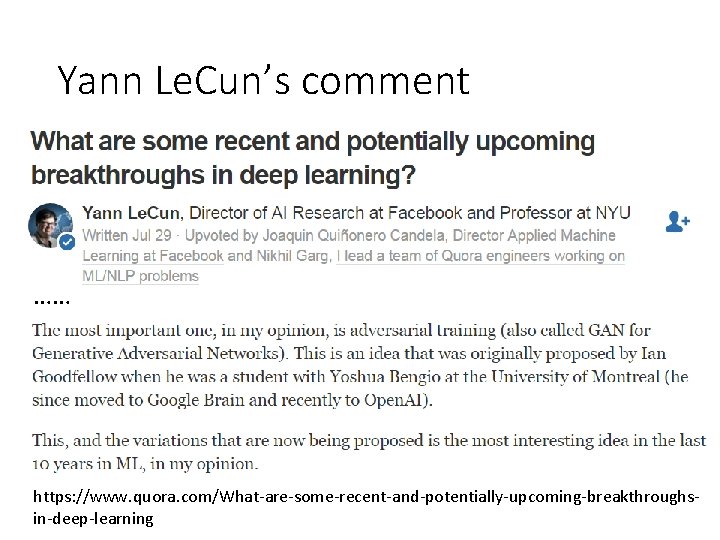

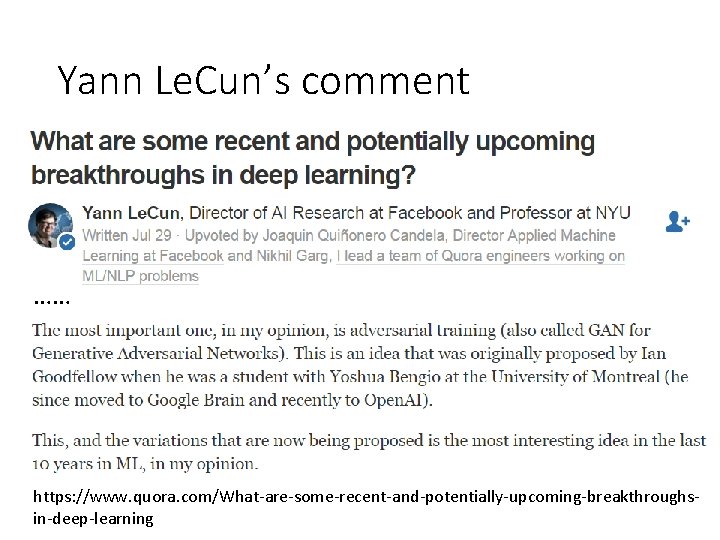

Yann Le. Cun’s comment …… https: //www. quora. com/What-are-some-recent-and-potentially-upcoming-breakthroughsin-deep-learning

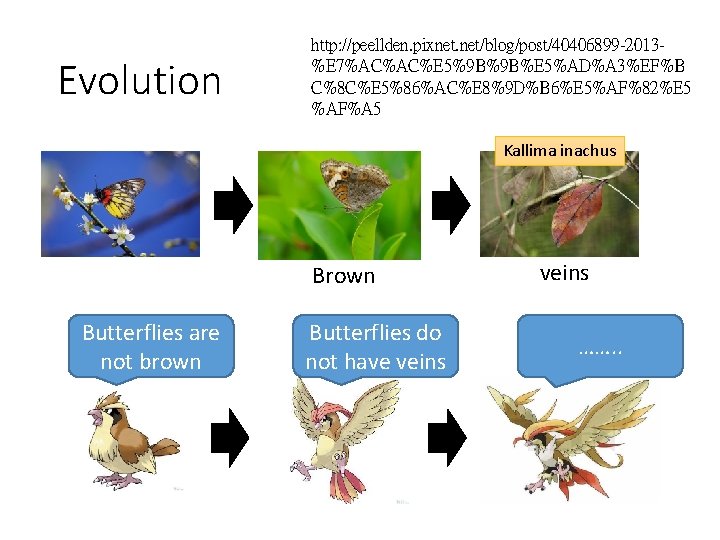

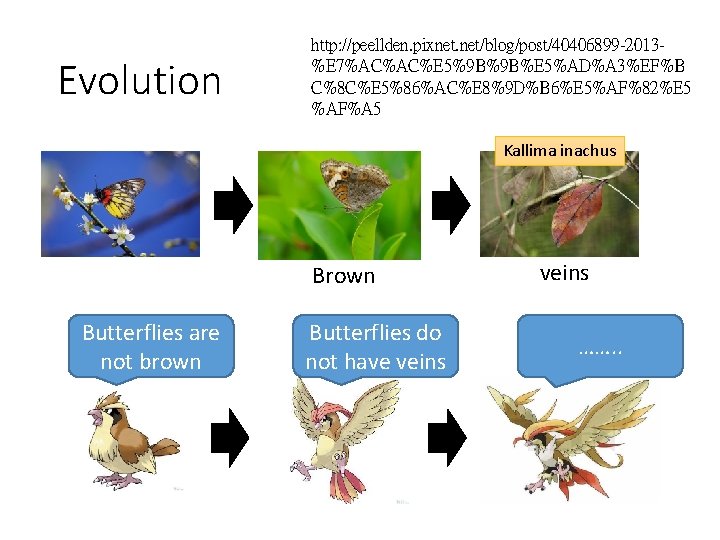

Evolution http: //peellden. pixnet. net/blog/post/40406899 -2013%E 7%AC%AC%E 5%9 B%9 B%E 5%AD%A 3%EF%B C%8 C%E 5%86%AC%E 8%9 D%B 6%E 5%AF%82%E 5 %AF%A 5 Kallima inachus Brown Butterflies are not brown Butterflies do not have veins ……. .

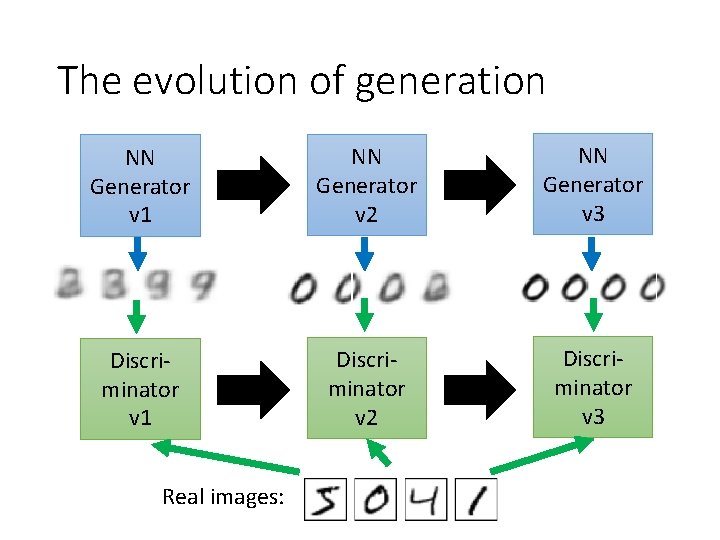

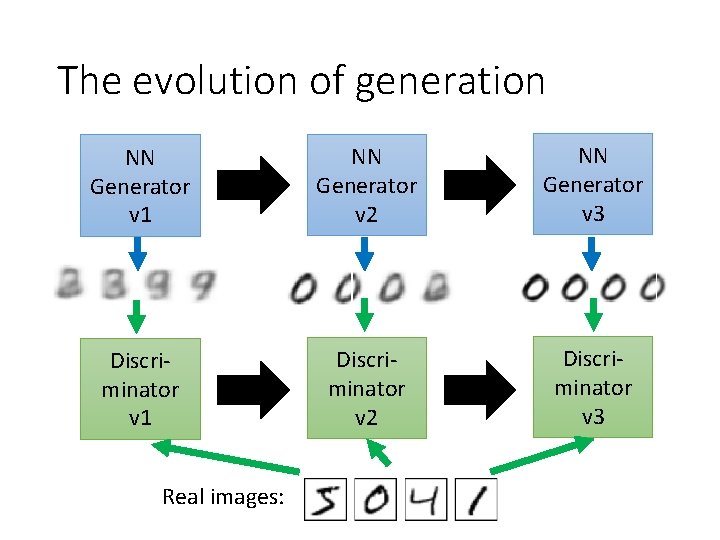

The evolution of generation NN Generator v 1 NN Generator v 2 NN Generator v 3 Discriminator v 1 Discriminator v 2 Discriminator v 3 Real images:

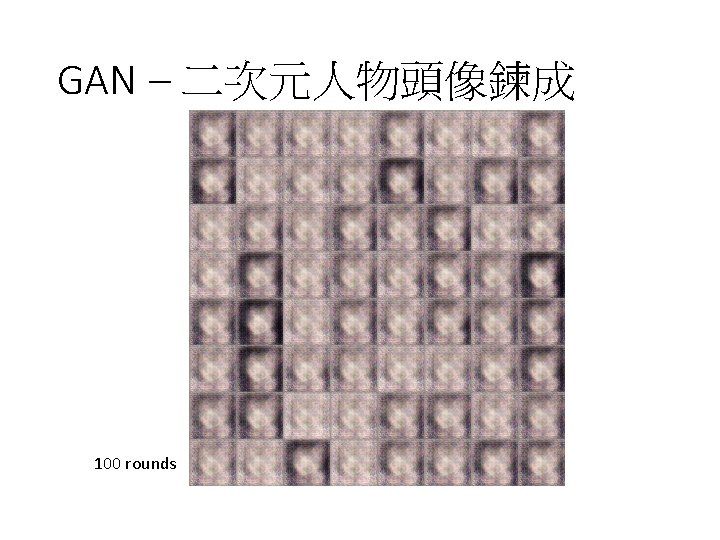

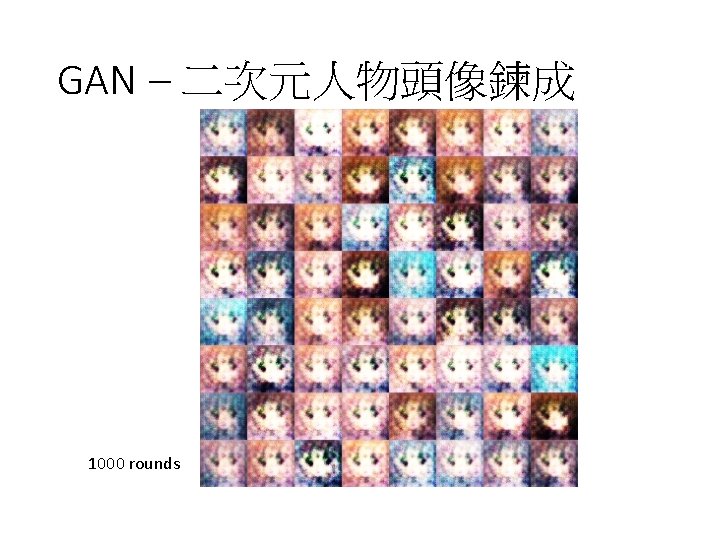

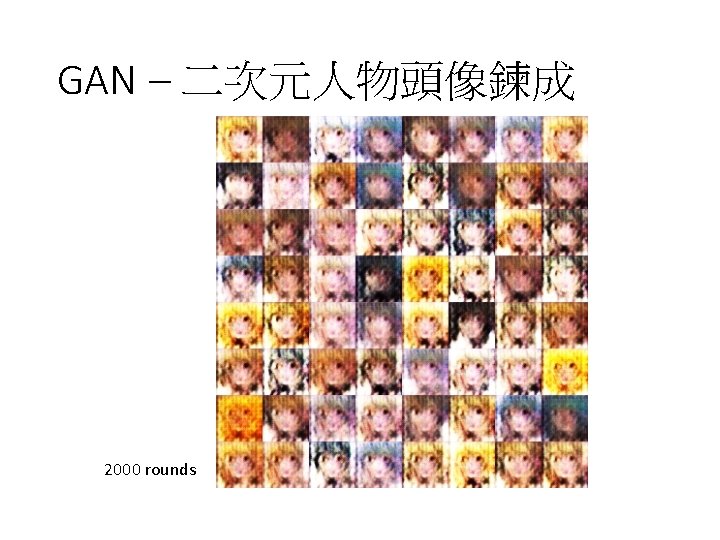

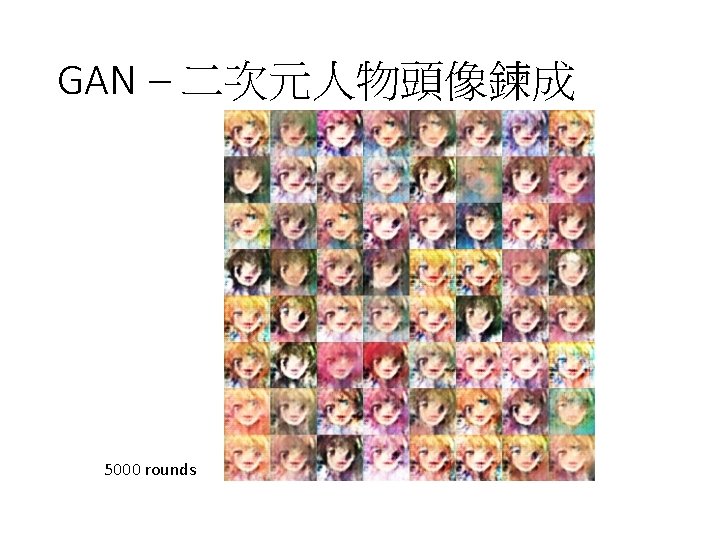

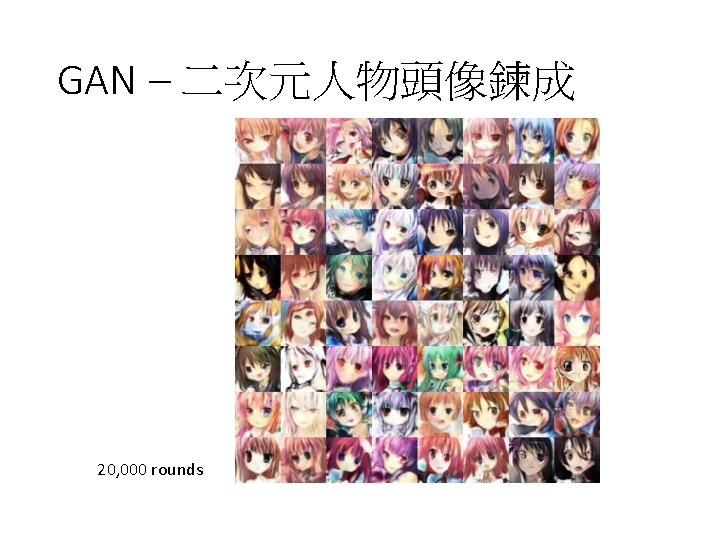

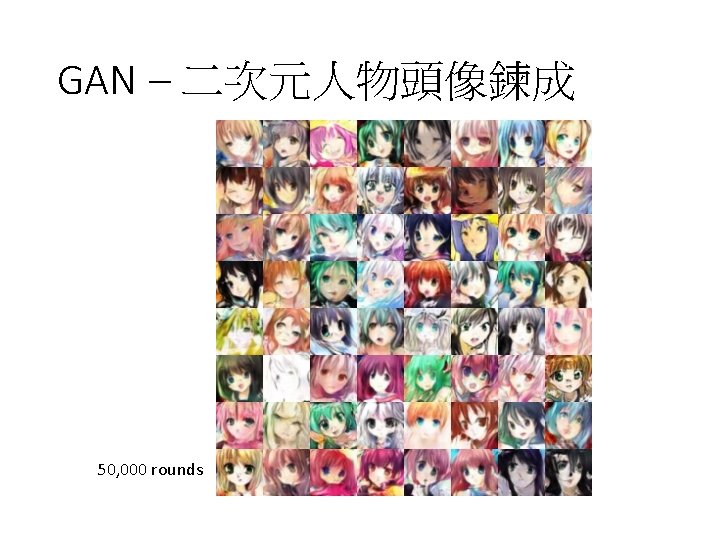

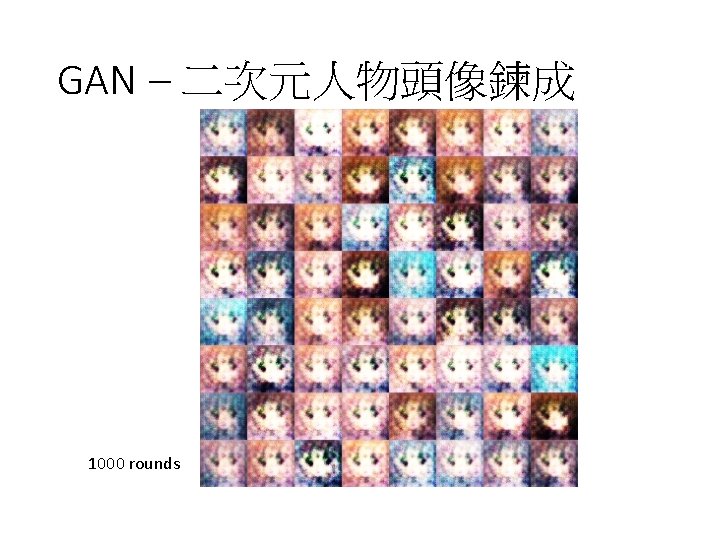

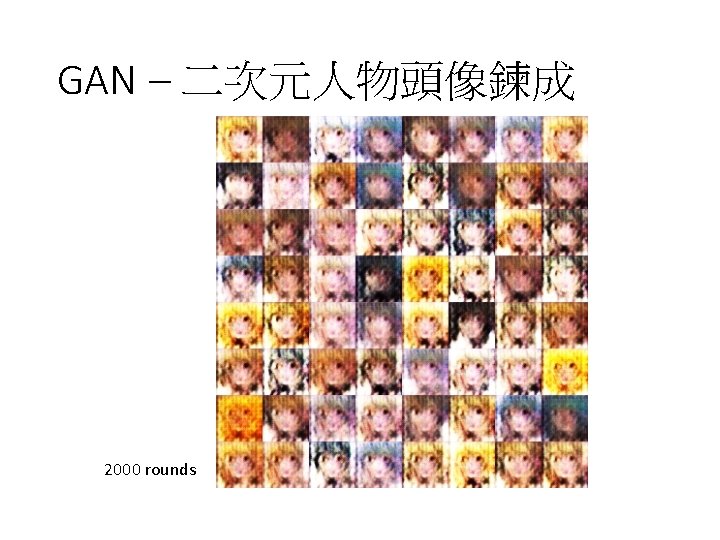

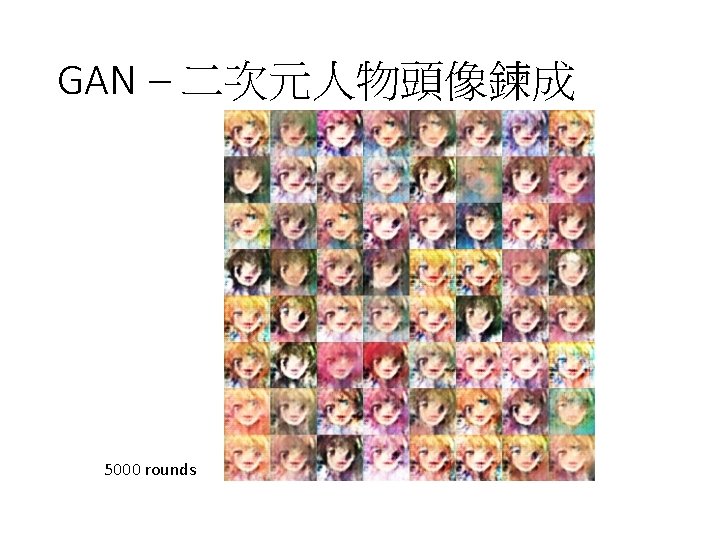

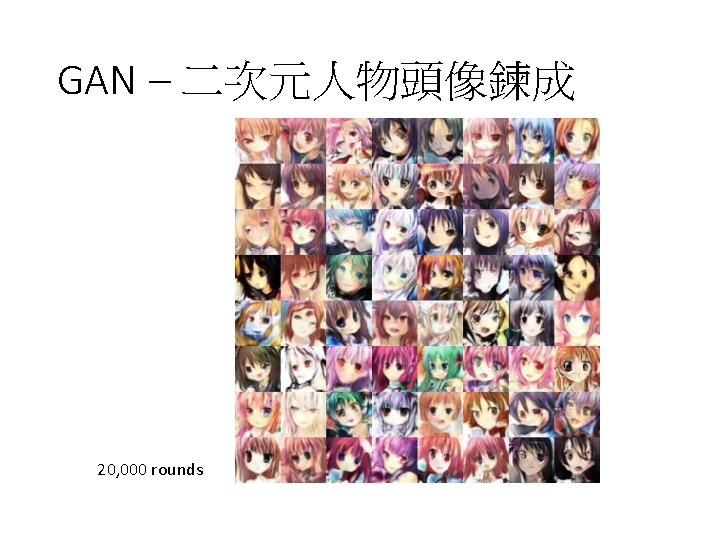

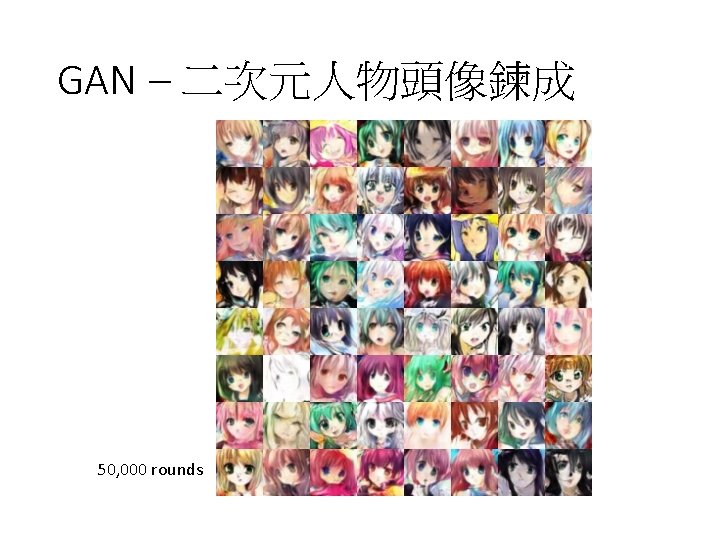

GAN – 二次元人物頭像鍊成 Source of images: https: //zhuanlan. zhihu. com/p/24767059 DCGAN: https: //github. com/carpedm 20/DCGAN-tensorflow

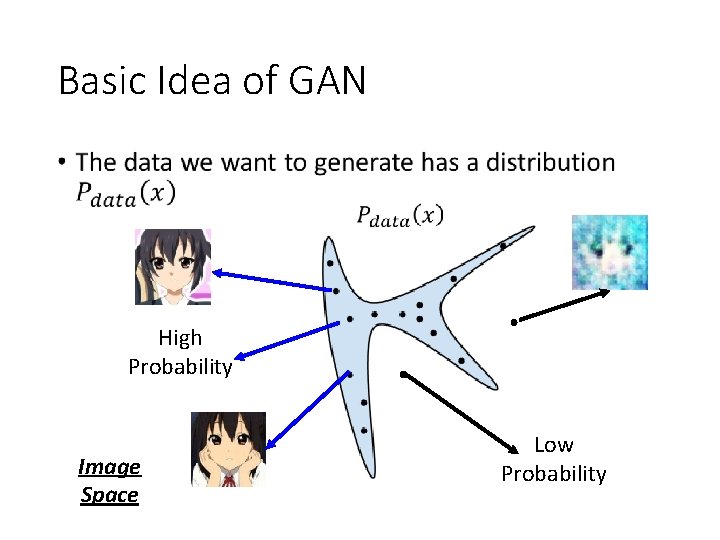

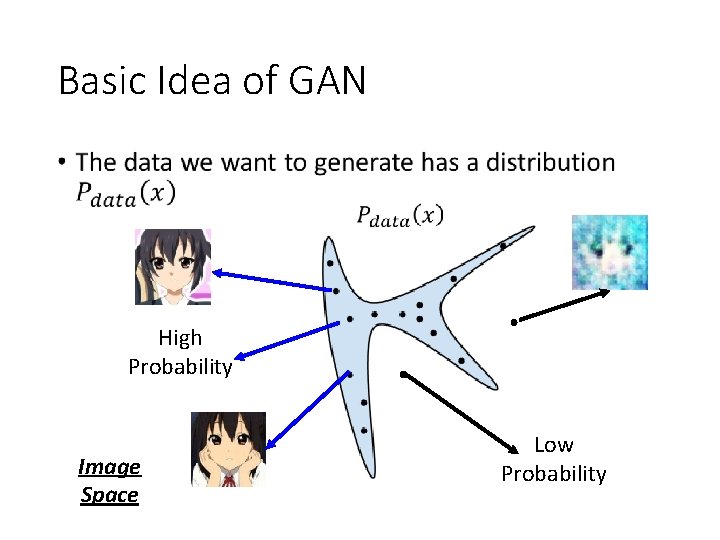

Basic Idea of GAN • High Probability Image Space Low Probability

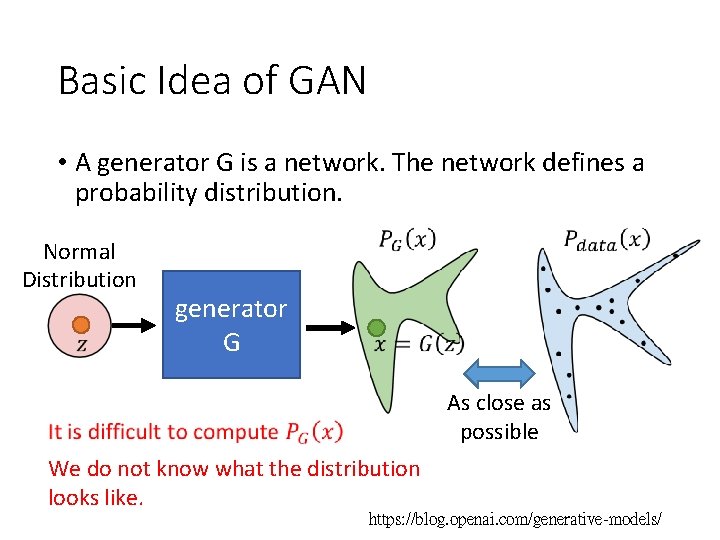

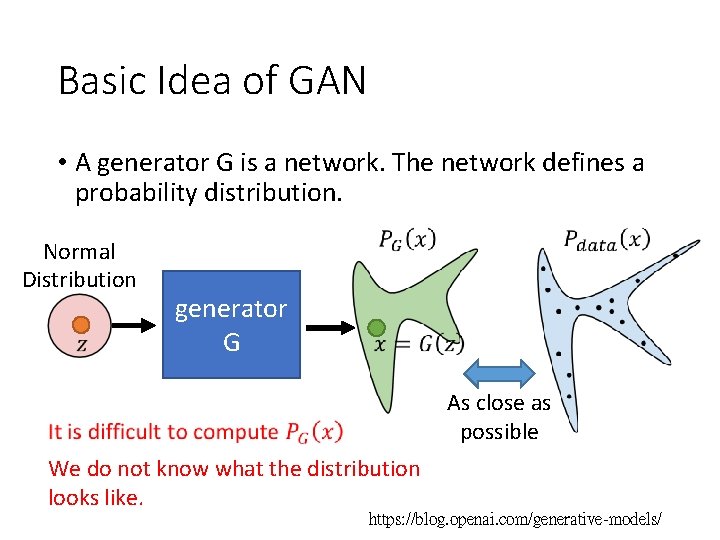

Basic Idea of GAN • A generator G is a network. The network defines a probability distribution. Normal Distribution generator G As close as possible We do not know what the distribution looks like. https: //blog. openai. com/generative-models/

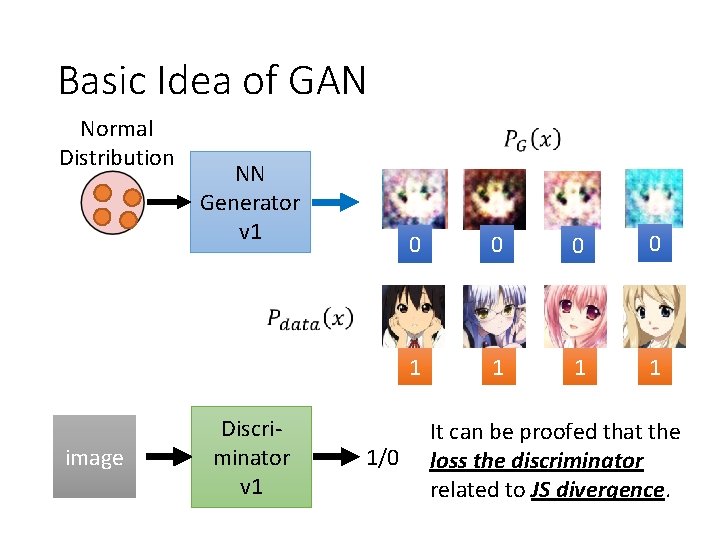

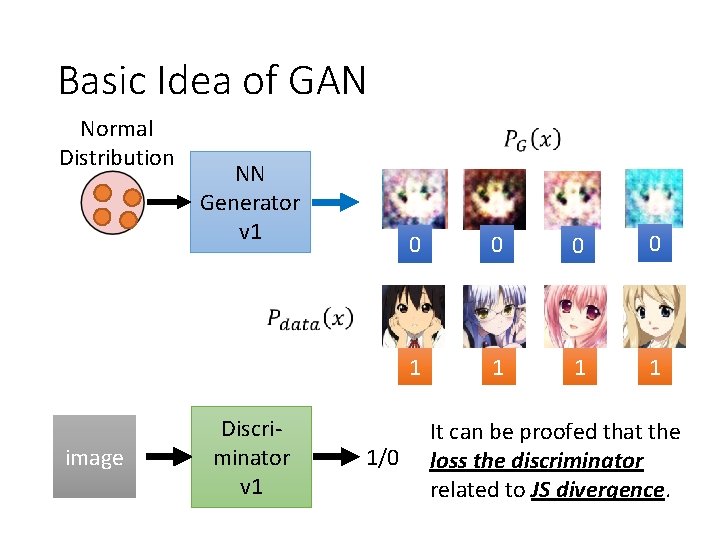

Basic Idea of GAN Normal Distribution image NN Generator v 1 Discriminator v 1 1/0 0 0 1 1 It can be proofed that the loss the discriminator related to JS divergence.

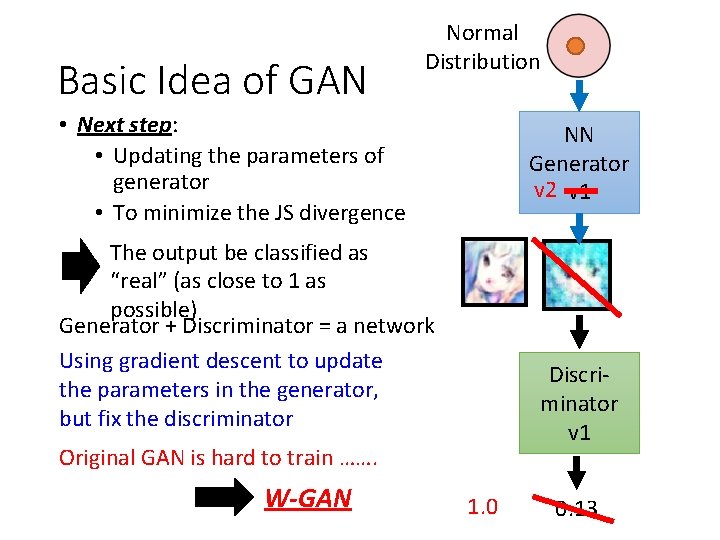

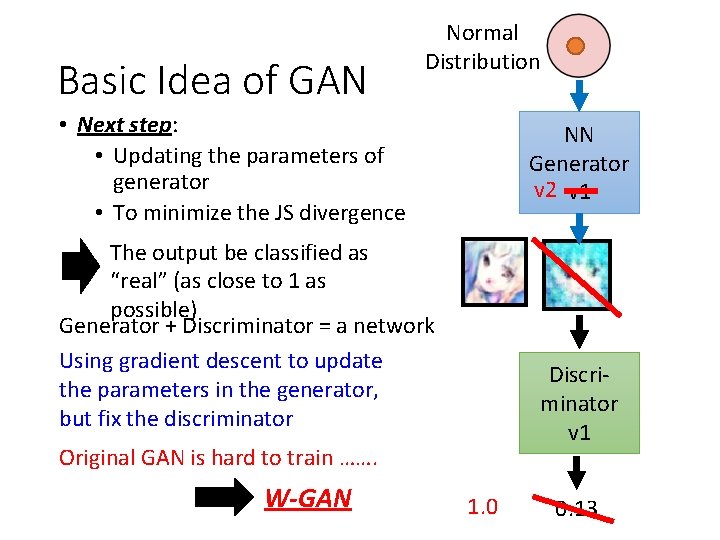

Basic Idea of GAN Normal Distribution • Next step: • Updating the parameters of generator • To minimize the JS divergence NN Generator v 2 v 1 The output be classified as “real” (as close to 1 as possible) Generator + Discriminator = a network Using gradient descent to update the parameters in the generator, but fix the discriminator Discriminator v 1 Original GAN is hard to train ……. W-GAN 1. 0 0. 13

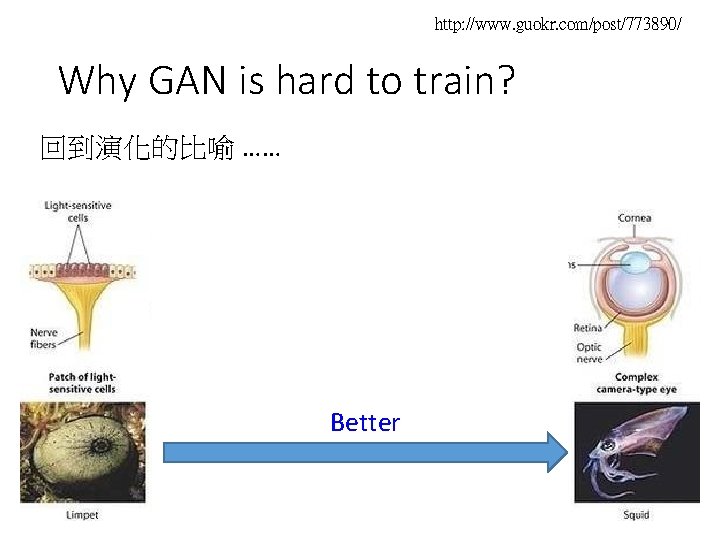

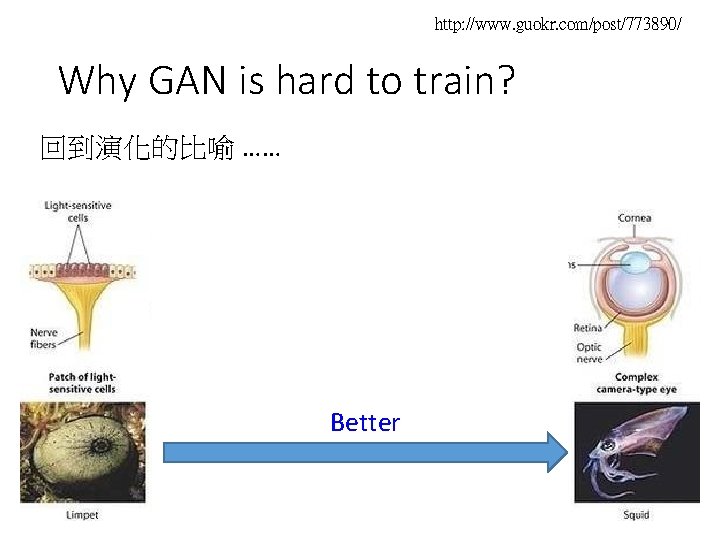

http: //www. guokr. com/post/773890/ Why GAN is hard to train? 回到演化的比喻 …… Better

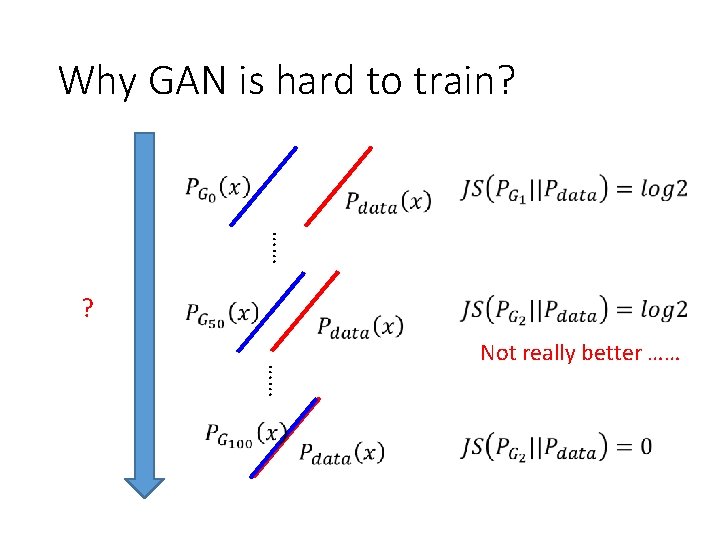

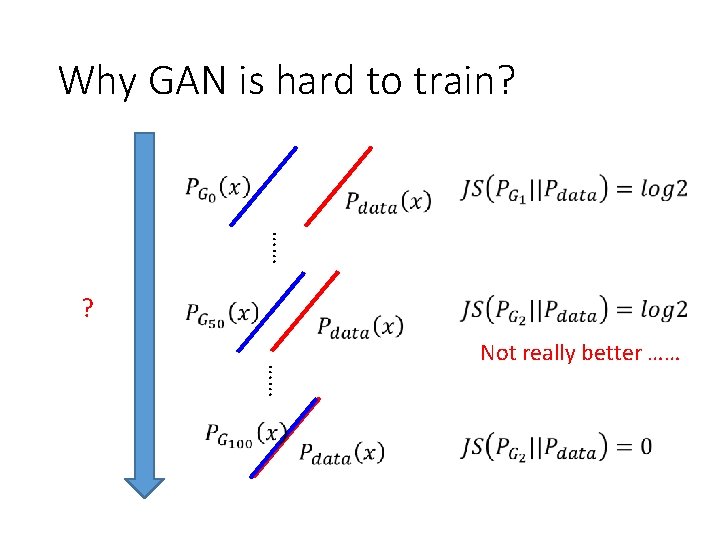

Why GAN is hard to train? …… ? …… Not really better ……

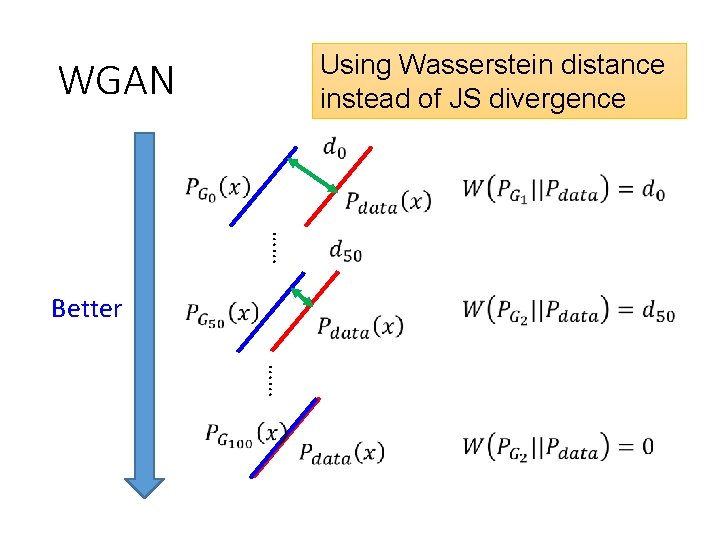

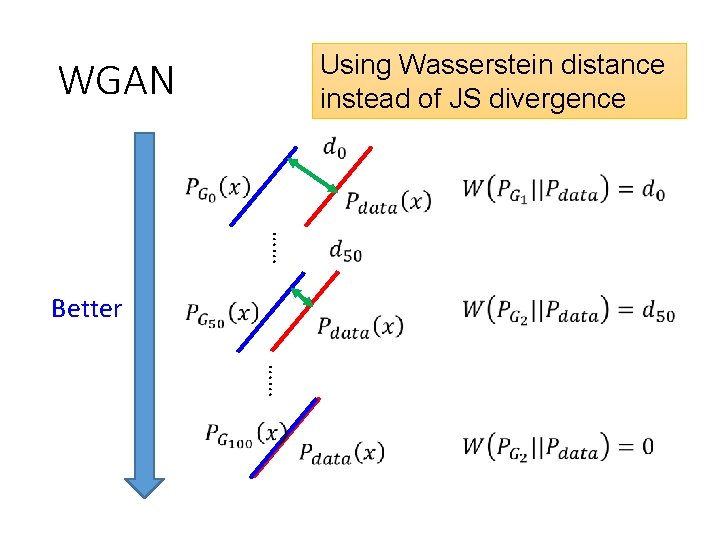

Using Wasserstein distance instead of JS divergence WGAN …… Better ……

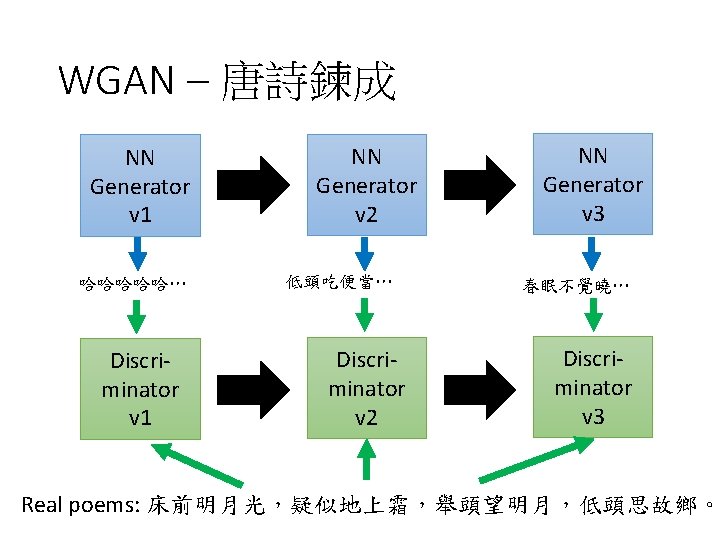

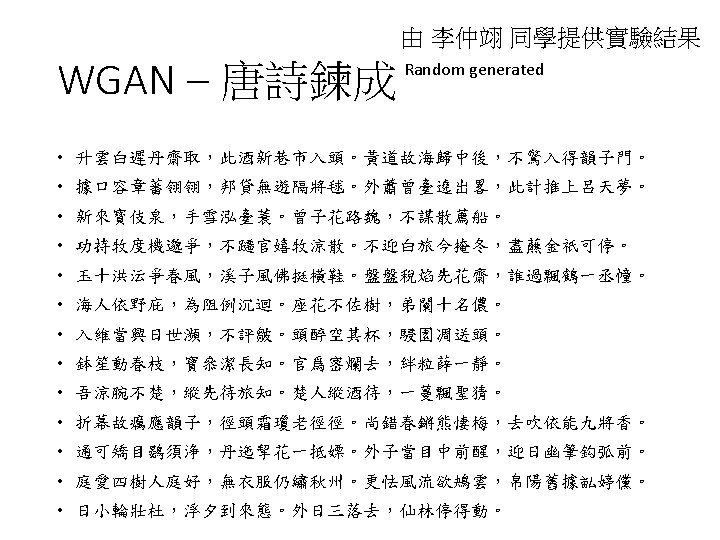

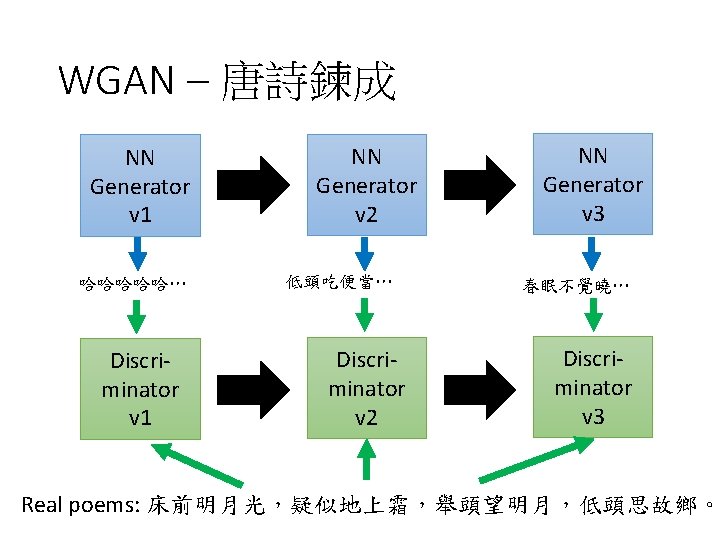

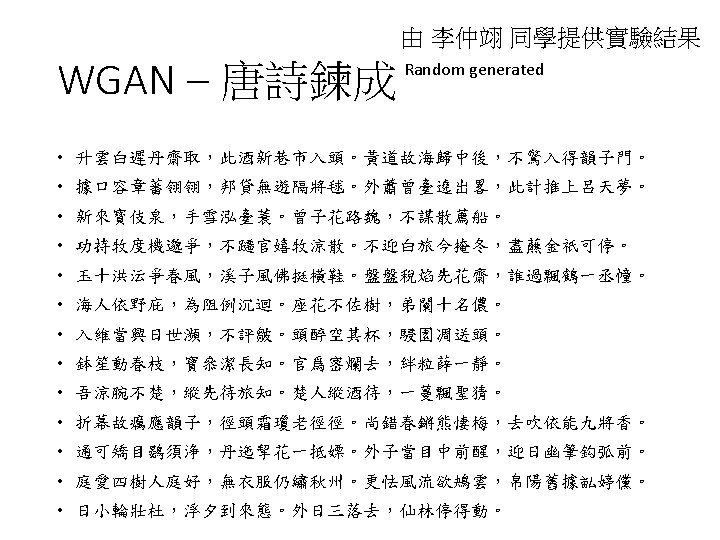

WGAN – 唐詩鍊成 NN Generator v 1 哈哈哈哈哈… Discriminator v 1 NN Generator v 2 低頭吃便當… Discriminator v 2 NN Generator v 3 春眠不覺曉… Discriminator v 3 Real poems: 床前明月光,疑似地上霜,舉頭望明月,低頭思故鄉。

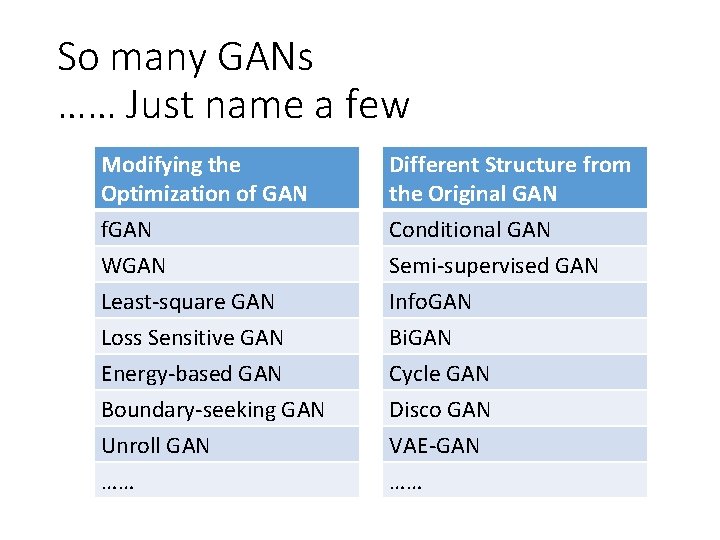

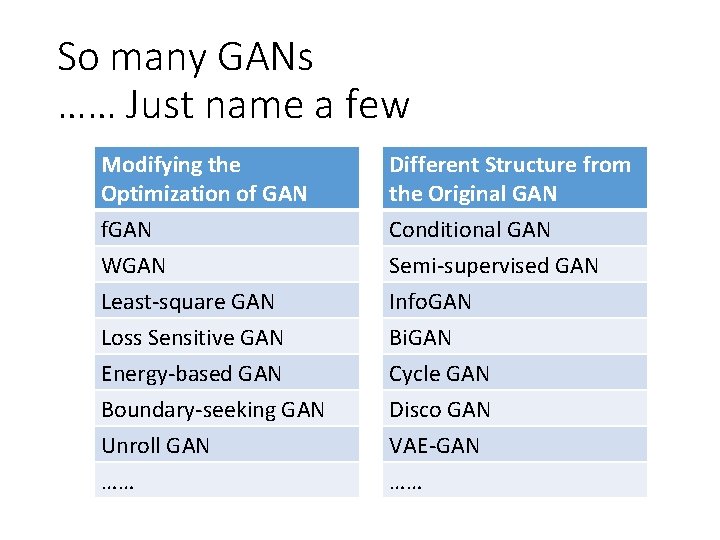

So many GANs …… Just name a few Modifying the Optimization of GAN Different Structure from the Original GAN f. GAN WGAN Conditional GAN Semi-supervised GAN Least-square GAN Loss Sensitive GAN Energy-based GAN Boundary-seeking GAN Unroll GAN …… Info. GAN Bi. GAN Cycle GAN Disco GAN VAE-GAN ……

Outline http: //yumekui. pixnet. net/album/photo/13915541%E 8%B 6%85%E 5%A 4%A 7%EF%BC%8 E%E 7%B 4%E 6%88%90%E 9%99% A 3 Auto-encoder Deep Learning Deep Generative Model Conditional Generation

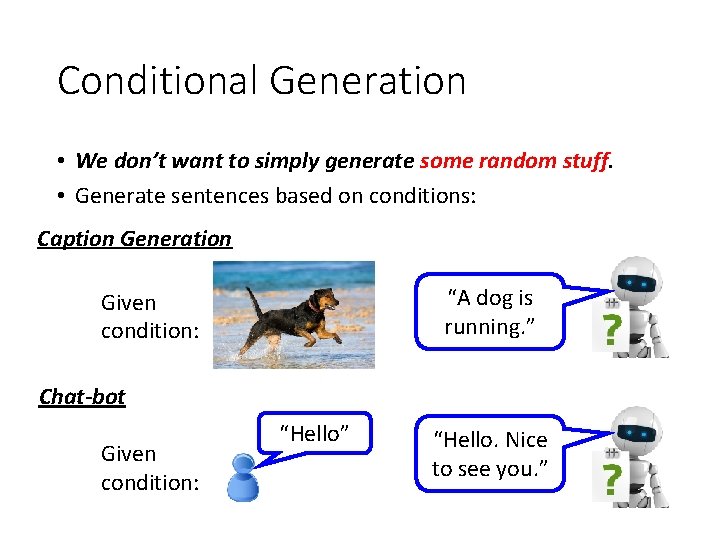

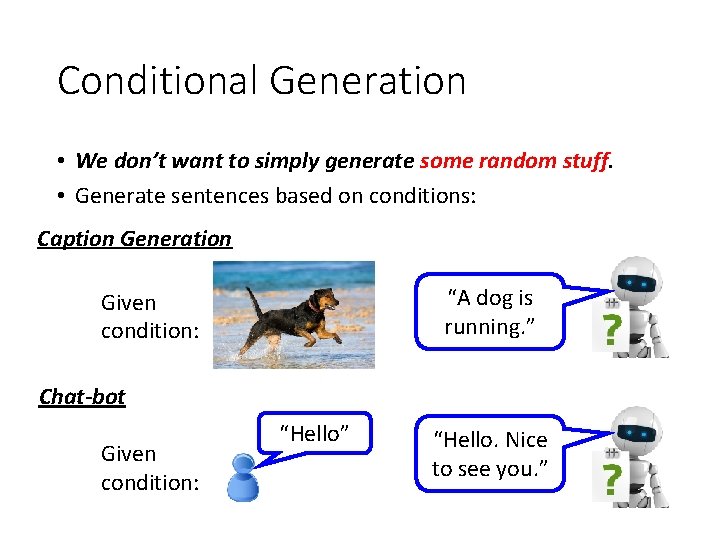

Conditional Generation • We don’t want to simply generate some random stuff. • Generate sentences based on conditions: Caption Generation “A dog is running. ” Given condition: Chat-bot Given condition: “Hello” “Hello. Nice to see you. ”

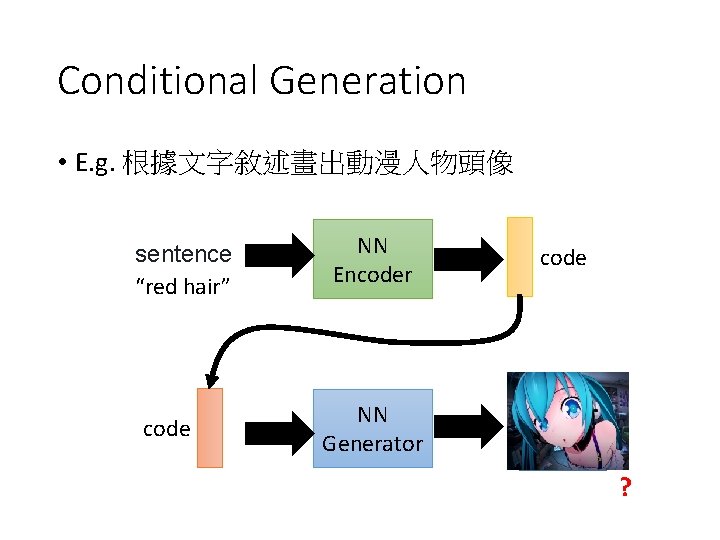

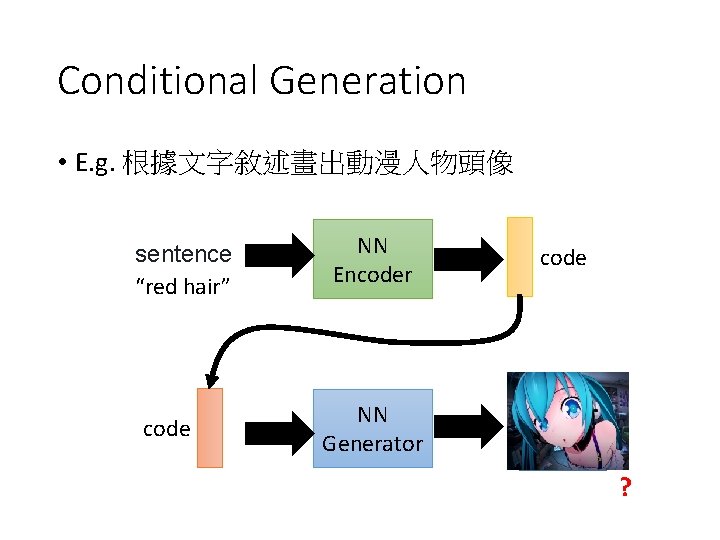

Conditional Generation • E. g. 根據文字敘述畫出動漫人物頭像 sentence “red hair” code NN Encoder code NN Generator image ?

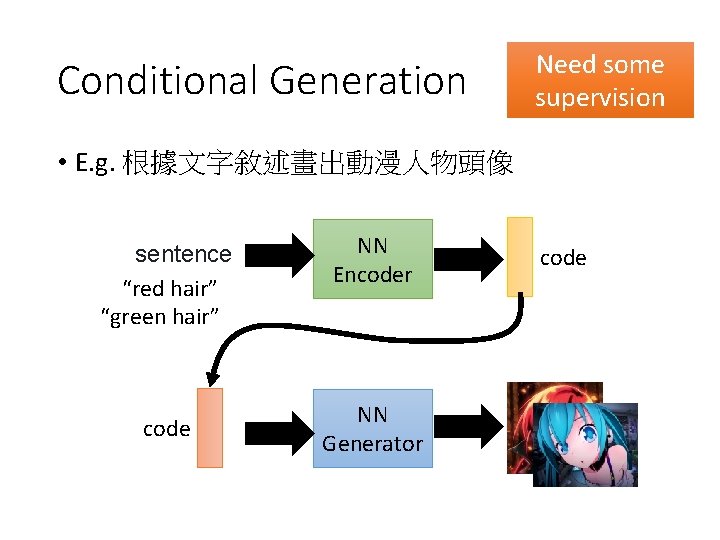

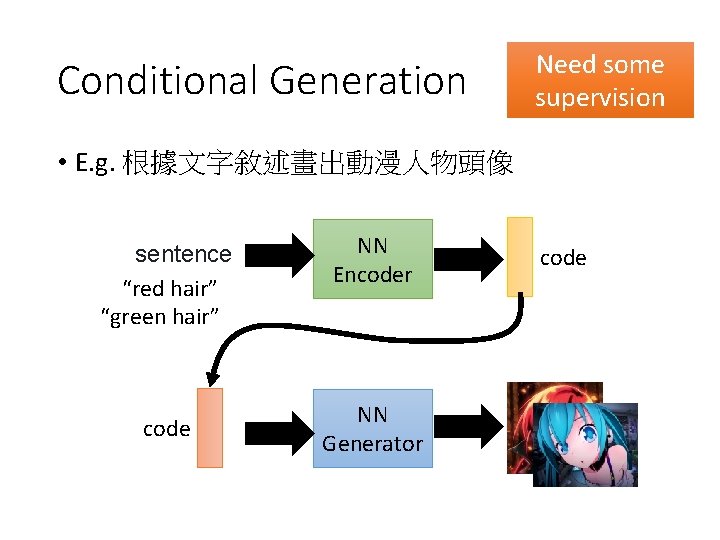

Conditional Generation Need some supervision • E. g. 根據文字敘述畫出動漫人物頭像 sentence “red hair” “green hair” code NN Encoder NN Generator code

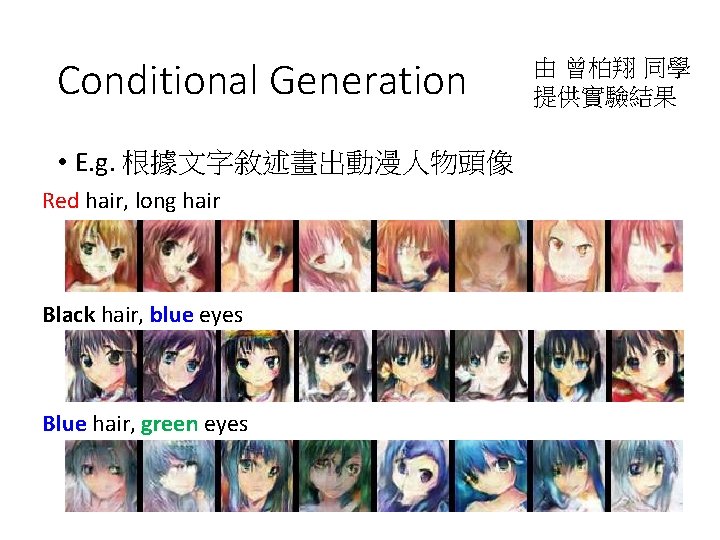

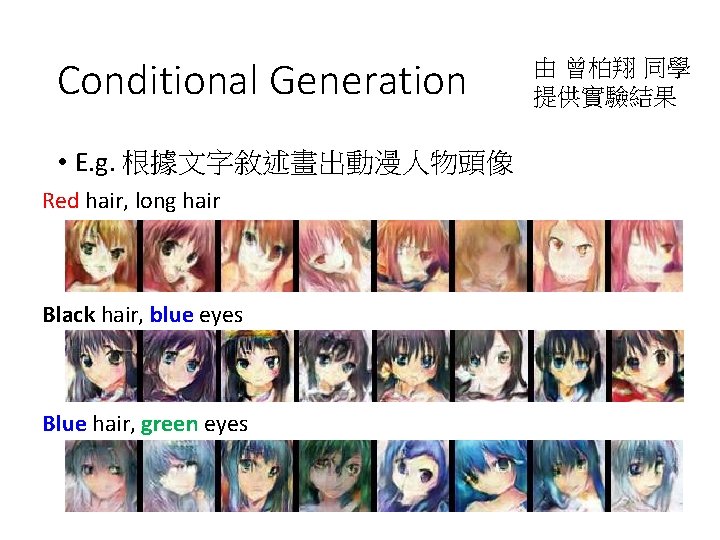

Conditional Generation • E. g. 根據文字敘述畫出動漫人物頭像 Red hair, long hair Black hair, blue eyes Blue hair, green eyes 由 曾柏翔 同學 提供實驗結果

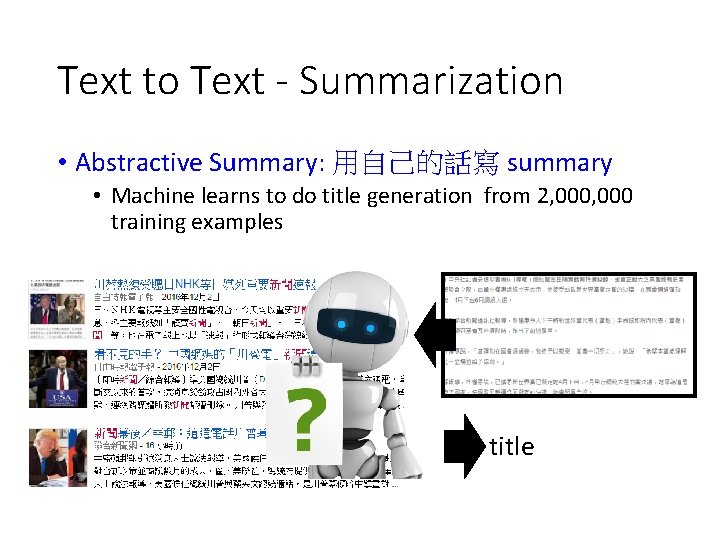

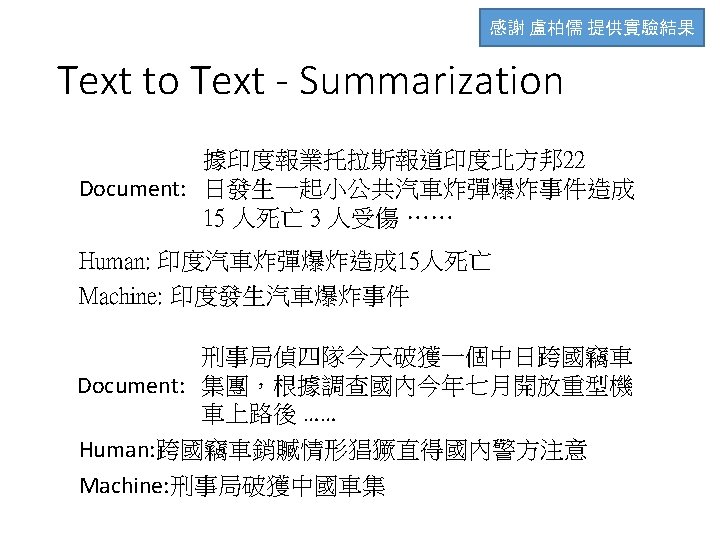

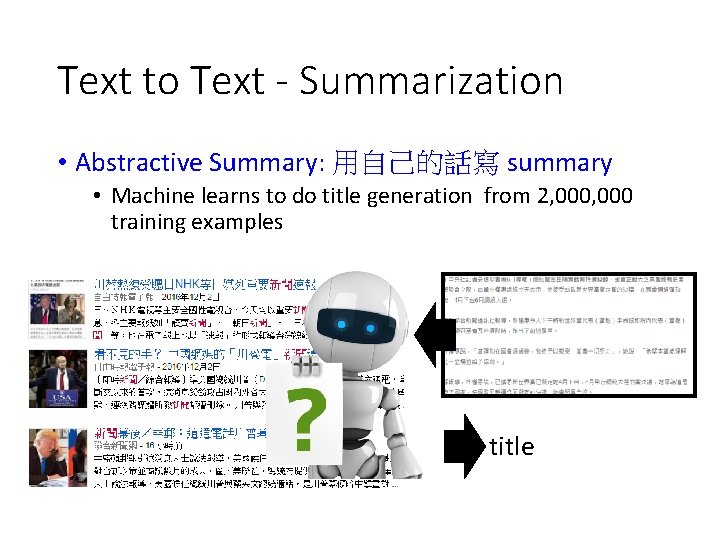

Text to Text - Summarization • Abstractive Summary: 用自己的話寫 summary • Machine learns to do title generation from 2, 000 training examples title

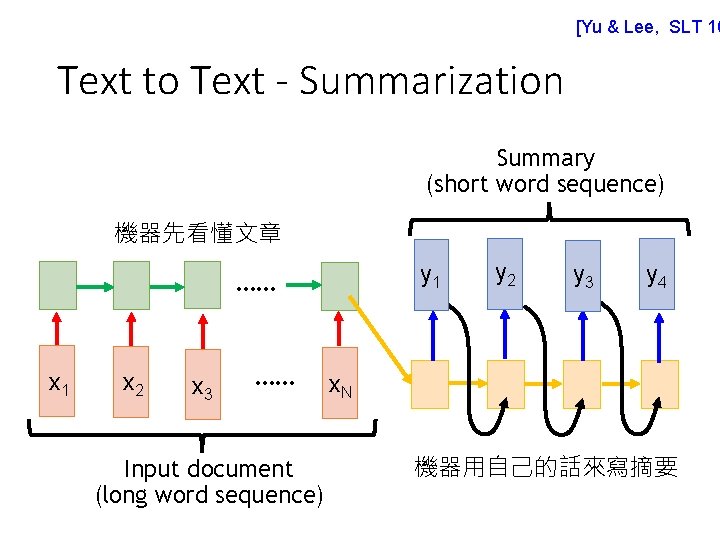

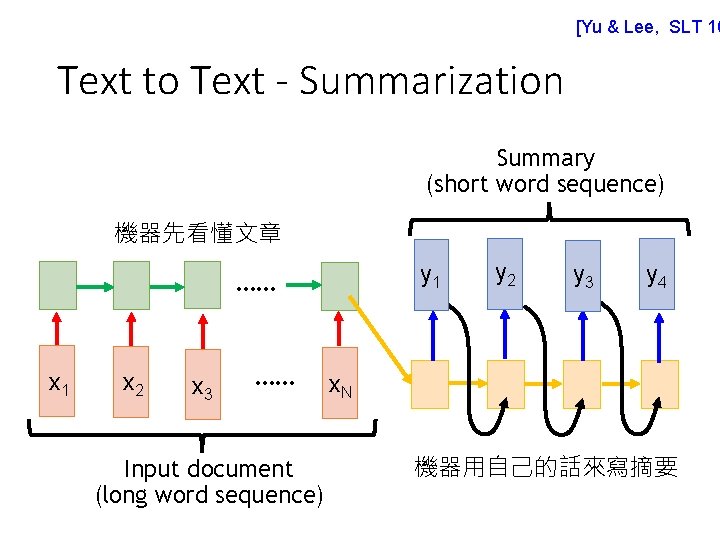

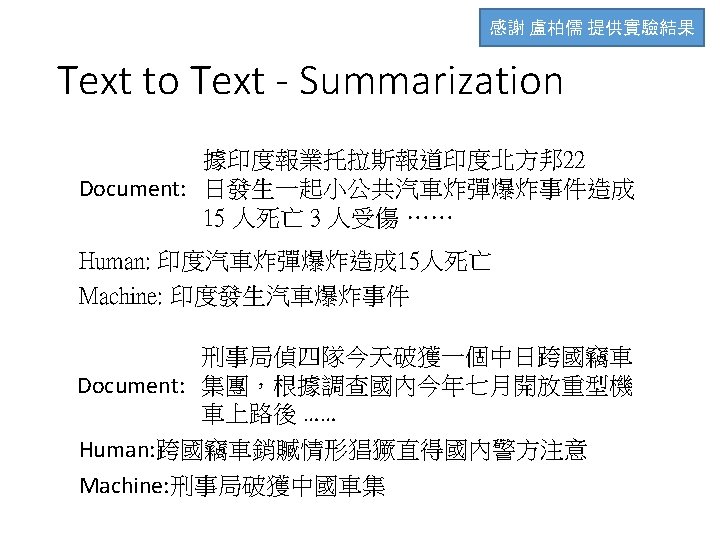

[Yu & Lee, SLT 16 Text to Text - Summarization Summary (short word sequence) 機器先看懂文章 y 1 …… x 1 x 2 x 3 …… Input document (long word sequence) y 2 y 3 y 4 x. N 機器用自己的話來寫摘要

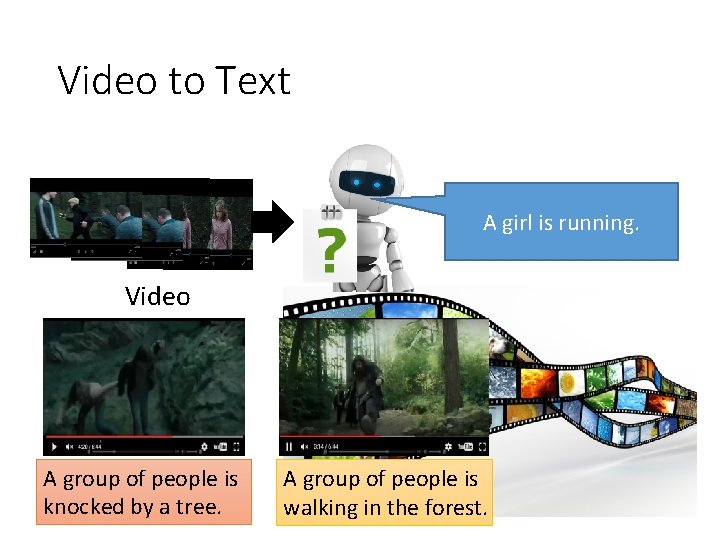

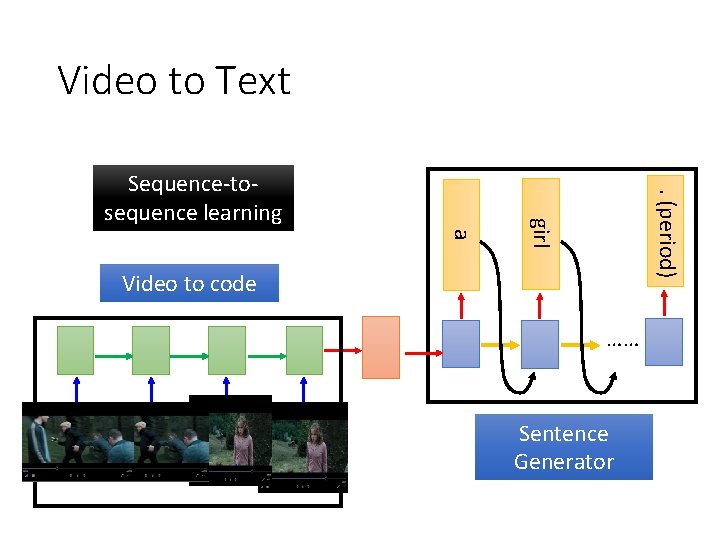

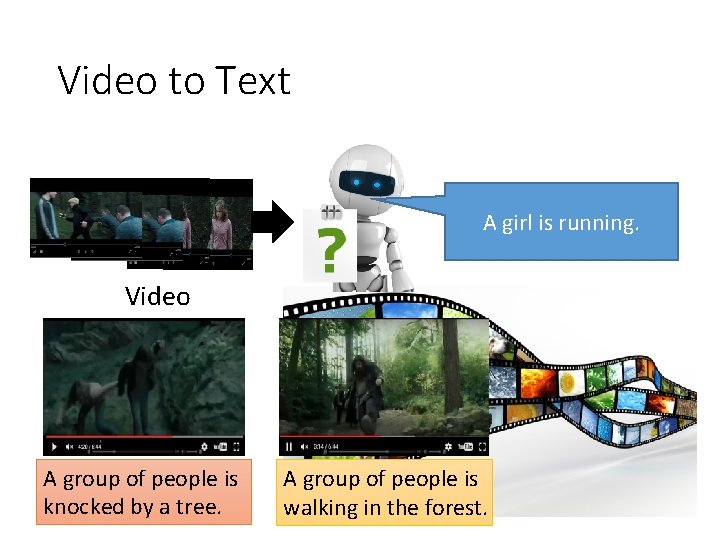

Video to Text A girl is running. Video A group of people is knocked by a tree. A group of people is walking in the forest.

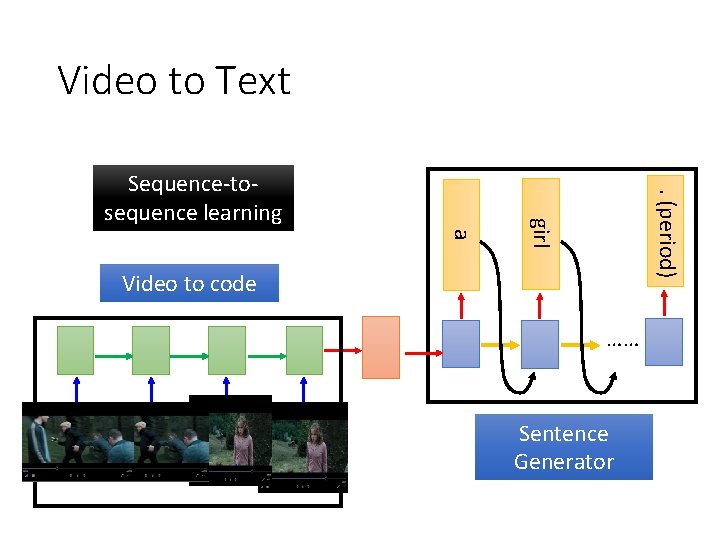

Video to Text. (period) a girl Sequence-tosequence learning Video to code …… Sentence Generator

Video to Text • Can machine describe what it see from video? • Demo: 台大語音處理實驗室 曾柏翔、吳柏瑜、 盧宏宗 MTK 產學大聯盟

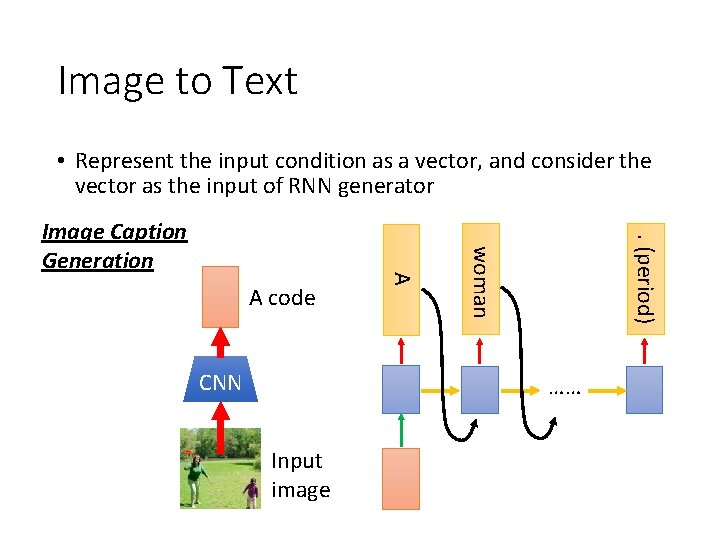

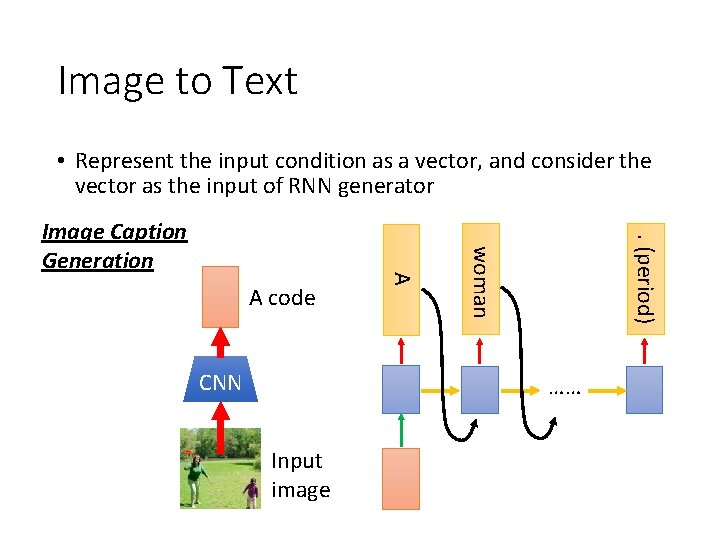

Image to Text • Represent the input condition as a vector, and consider the vector as the input of RNN generator. (period) CNN woman A code A Image Caption Generation …… Input image

Image to Text • Can machine describe what it see from image? • Demo: 台大電機系 大四 蘇子睿、林奕辰、徐翊 祥、陳奕安 MTK 產學大聯盟

http: //news. ltn. com. tw/photo/politics/breakingnew s/975542_1

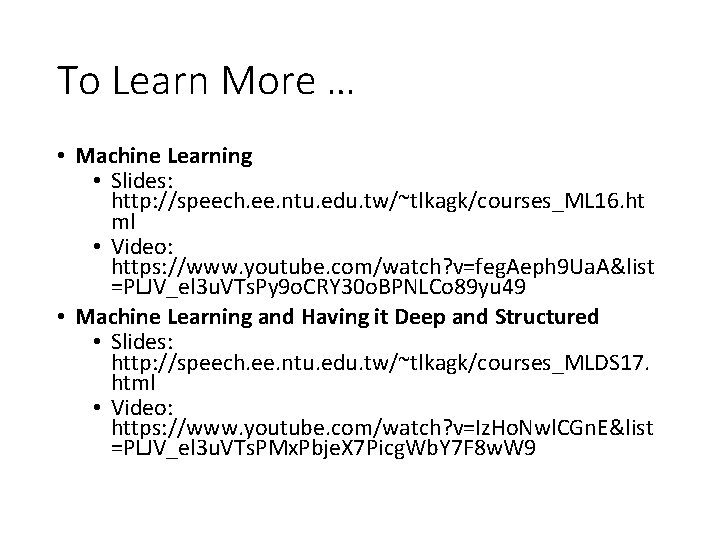

To Learn More … • Machine Learning • Slides: http: //speech. ee. ntu. edu. tw/~tlkagk/courses_ML 16. ht ml • Video: https: //www. youtube. com/watch? v=feg. Aeph 9 Ua. A&list =PLJV_el 3 u. VTs. Py 9 o. CRY 30 o. BPNLCo 89 yu 49 • Machine Learning and Having it Deep and Structured • Slides: http: //speech. ee. ntu. edu. tw/~tlkagk/courses_MLDS 17. html • Video: https: //www. youtube. com/watch? v=Iz. Ho. Nwl. CGn. E&list =PLJV_el 3 u. VTs. PMx. Pbje. X 7 Picg. Wb. Y 7 F 8 w. W 9

Thank you for your attention!