Agent definition

- Slides: 73

Agent definition

Dictionary definition: agent (ā′jənt), n.
1. something that produces or is capable of producing an effect: an active or efficient cause.
2. one who acts for or in the place of another by authority from him...
3. a means or instrument by which a guiding intelligence achieves a result.

Agent definition

Computer science definition: an agent is a computer system, situated in some environment, that is capable of flexible, autonomous action in order to meet its design objectives. This definition embraces three key concepts:
- situatedness: the agent receives sensory input from its environment and can perform actions that change the environment in some way;
- autonomy;
- flexibility.

Autonomy

Dictionary definitions:
- Autonomy: self-determined freedom, especially moral independence.
- Autonomous: self-governing, independent.

Agent definition:
- The system should be able to act without the direct intervention of humans (or other agents).
- The system should have control over its own actions and internal state.
- Example: autonomous navigation.
- The term is sometimes used in a stronger sense to mean systems that are capable of learning from experience.

Examples of existing situated, autonomous computer systems
- Any process control system: it monitors a real-world environment and performs actions to modify it as conditions change (typically in real time). Examples range from simple thermostats to very complex nuclear reactor control systems.
- Software daemons: they monitor a software environment and perform actions to modify it as conditions change. Example: the UNIX xbiff program, which monitors a user's incoming mail and displays an icon when new mail is detected.

However, these systems are not capable of flexible action in order to meet their design objectives.

Flexibility
- Pro-active: agents should not simply act in response to their environment; they should be able to exhibit opportunistic, goal-directed behaviour and take the initiative where appropriate.
- Social: agents should be able to interact, when appropriate, with other artificial agents and humans in order to complete their own problem solving and to help others with their activities.
- Responsive: agents should perceive their environment and respond in a timely fashion to changes that occur in it.

Agents may have other characteristics, e.g. mobility or adaptability, but those given here are the distinguishing features of an agent.

The road to intelligent agents

Agents have their roots in traditional AI, but they also build on contributions from other long-established fields:
- object-oriented programming;
- concurrent object-based systems;
- human-computer interface design.

Historically, AI researchers tended to focus on different components of intelligent behaviour, e.g. learning, reasoning, problem solving, vision understanding. The assumption seemed to be that progress was more likely to be made if these aspects of intelligent behaviour were studied in isolation.

AI development stages
- Phase 1: formal, structured problems with well-defined boundaries (block worlds, game playing, symbol manipulation, reasoning, theorem proving). Combining these into integrated AI systems was assumed to be straightforward.
- Phase 2: expert systems, building on domain-specific knowledge for specialist problems (rule-based systems).
- Phase 3: specialised areas such as vision, speech, natural language processing, robot control and data mining, mainly dealing with sensory data.

Intelligent agents are currently seen as the main integrating force.

Rational agents

The right action is the one that makes the agent most successful. This requires measures of success, e.g. pick up the most points, make the fewest moves, minimise power consumption.

Rationality depends on the performance measure, prior knowledge, the available actions and the event history. For each possible event sequence, a rational agent should select an action that is expected to maximise its performance measure, given the evidence provided by the event sequence and the built-in knowledge the agent has.

Important: rationality maximises expected performance, not actual performance (we cannot tell the future).
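To make "maximise expected performance" concrete, here is a minimal Python sketch. It assumes the agent's knowledge can be summarised as a hypothetical outcome model: for each action, a list of (probability, performance score) pairs. The action names and numbers are illustrative only.

```python
from typing import Dict, List, Tuple

# Hypothetical model: for each action, the (probability, performance score) outcomes
# the agent believes possible, given its percept sequence and built-in knowledge.
OutcomeModel = Dict[str, List[Tuple[float, float]]]


def expected_performance(outcomes: List[Tuple[float, float]]) -> float:
    """Probability-weighted average of the performance scores of an action."""
    return sum(p * score for p, score in outcomes)


def rational_action(model: OutcomeModel) -> str:
    """Select the action with the highest *expected* performance."""
    return max(model, key=lambda action: expected_performance(model[action]))


model: OutcomeModel = {
    "safe_route": [(1.0, 6.0)],                # always scores 6
    "risky_route": [(0.5, 10.0), (0.5, 0.0)],  # may score 10, but expected score is 5
}
print(rational_action(model))  # safe_route
```

Even on a day when the risky route would actually have scored 10, choosing the safe route was still the rational decision: the agent is judged on expected, not actual, performance.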

Rational agents should
- perform information gathering and exploration;
- learn from past events;
- be autonomous, which requires learning: start with built-in reflexes/knowledge and create new behaviour based on learnt experience.

How to design an intelligent agent?

An agent perceives its environment via sensors and acts in that environment with its effectors. Hence, an agent receives percepts one at a time and maps this percept sequence to actions (one action at a time).

Properties:
- autonomous;
- interacts with other agents as well as the environment;
- reactive to the environment;
- pro-active (goal-directed).

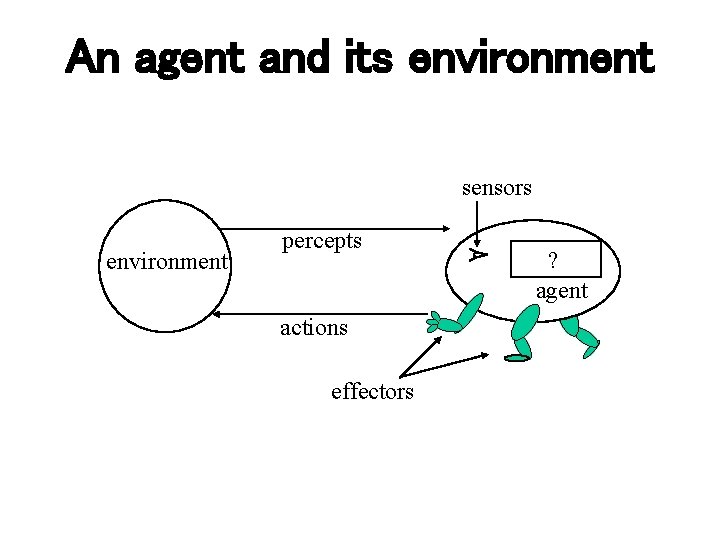

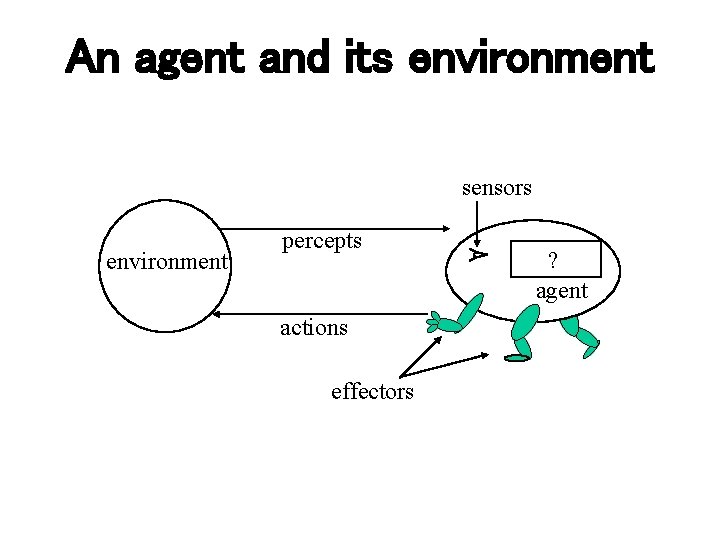

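Figure: An agent and its environment. The agent receives percepts from the environment through its sensors and acts on the environment through its effectors; the agent's internal mapping from percepts to actions is shown as "?".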

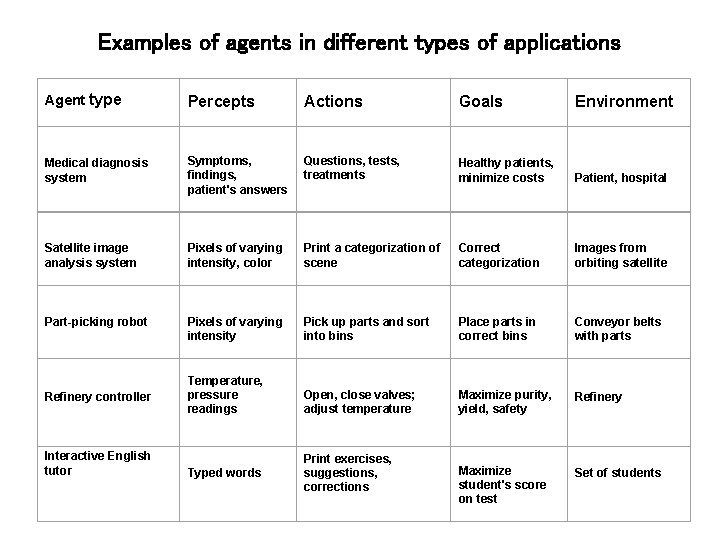

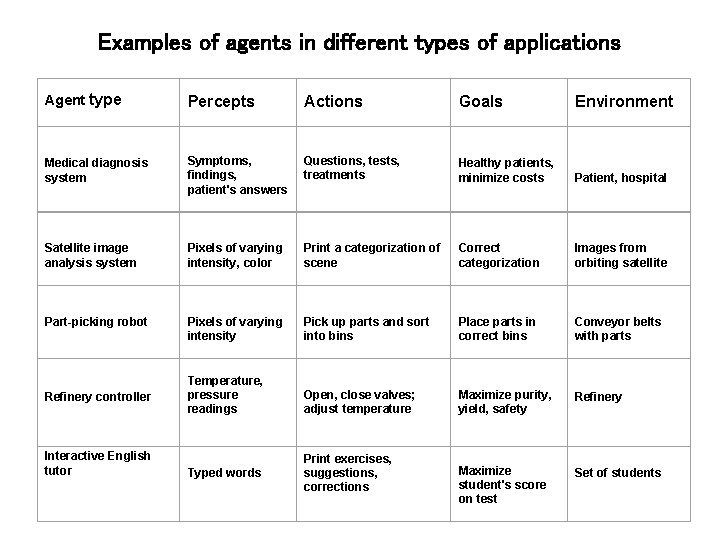

Examples of agents in different types of applications

| Agent type | Percepts | Actions | Goals | Environment |
|---|---|---|---|---|
| Medical diagnosis system | Symptoms, findings, patient's answers | Questions, tests, treatments | Healthy patients, minimize costs | Patient, hospital |
| Satellite image analysis system | Pixels of varying intensity, color | Print a categorization of scene | Correct categorization | Images from orbiting satellite |
| Part-picking robot | Pixels of varying intensity | Pick up parts and sort into bins | Place parts in correct bins | Conveyor belts with parts |
| Refinery controller | Temperature, pressure readings | Open, close valves; adjust temperature | Maximize purity, yield, safety | Refinery |
| Interactive English tutor | Typed words | Print exercises, suggestions, corrections | Maximize student's score on test | Set of students |

Characterizing Agents

An agent’s strategy is a mapping from percept sequences to actions. How do we encode an agent’s strategy? Either as a long list of what should be done for each possible percept sequence, or as a shorter specification (e.g. an algorithm).
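The two encodings can be contrasted in a few lines of Python. This is a sketch under assumptions: a hypothetical two-valued percept ("clean"/"dirty") and a hypothetical strategy of reacting only to the latest percept. The explicit table needs one entry per percept sequence, so its size grows exponentially with sequence length, while the algorithmic encoding stays a single line.

```python
from itertools import product
from typing import Dict, Tuple

PERCEPTS = ("clean", "dirty")  # hypothetical two-valued percept


def table_entries(num_percepts: int, max_len: int) -> int:
    """Number of percept sequences of length 1..max_len a complete table must cover."""
    return sum(num_percepts ** t for t in range(1, max_len + 1))


# Encoding 1: an explicit table with one entry per percept sequence (lengths 1 to 3 only).
TABLE: Dict[Tuple[str, ...], str] = {
    seq: ("suck" if seq[-1] == "dirty" else "move")
    for t in range(1, 4)
    for seq in product(PERCEPTS, repeat=t)
}


# Encoding 2: a short algorithm giving the same answers for sequences of any length.
def strategy(seq: Tuple[str, ...]) -> str:
    return "suck" if seq[-1] == "dirty" else "move"


print(len(TABLE), table_entries(2, 3), table_entries(2, 20))  # 14 14 2097150
```

With only two possible percepts, covering sequences up to length 20 already needs over two million table entries.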

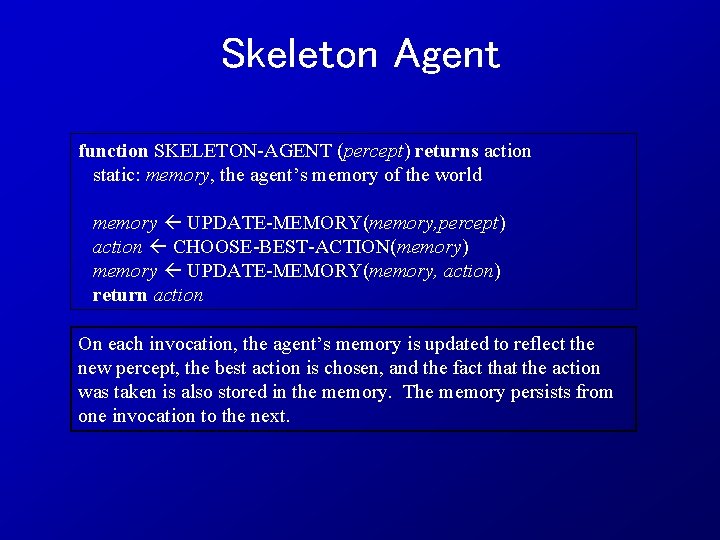

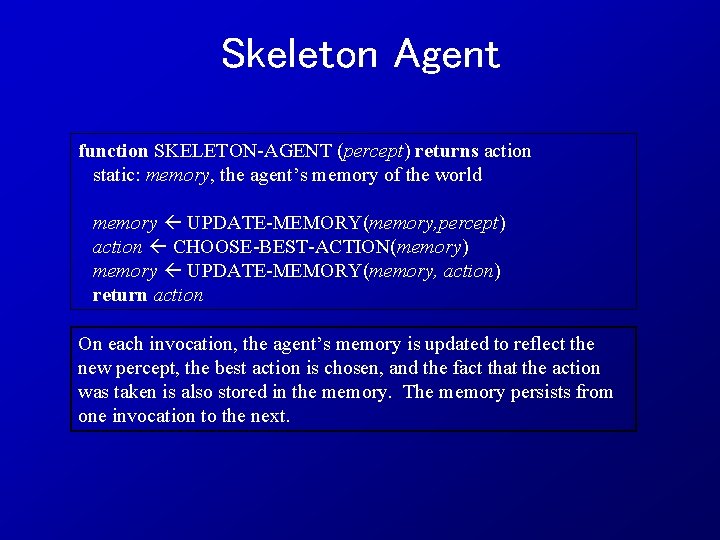

Skeleton Agent

function SKELETON-AGENT(percept) returns action
  static: memory, the agent’s memory of the world
  memory ← UPDATE-MEMORY(memory, percept)
  action ← CHOOSE-BEST-ACTION(memory)
  memory ← UPDATE-MEMORY(memory, action)
  return action

On each invocation, the agent’s memory is updated to reflect the new percept, the best action is chosen, and the fact that the action was taken is also stored in the memory. The memory persists from one invocation to the next.
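A minimal Python rendering of SKELETON-AGENT follows. The memory is assumed to be a plain list of ("percept", …) and ("action", …) entries, and CHOOSE-BEST-ACTION is passed in as a function; both are illustrative choices, not part of the original pseudocode.

```python
from typing import Any, Callable, List


class SkeletonAgent:
    """Python rendering of SKELETON-AGENT; the memory persists between calls."""

    def __init__(self, choose_best_action: Callable[[List[Any]], Any]) -> None:
        self.memory: List[Any] = []  # the agent's memory of the world
        self.choose_best_action = choose_best_action

    def update_memory(self, item: Any) -> None:
        # Assumed UPDATE-MEMORY: simply append the new item to a history list.
        self.memory.append(item)

    def __call__(self, percept: Any) -> Any:
        self.update_memory(("percept", percept))
        action = self.choose_best_action(self.memory)
        self.update_memory(("action", action))  # record that the action was taken
        return action


# Toy decision rule (hypothetical): act on the most recent percept stored in memory.
agent = SkeletonAgent(lambda memory: "respond-to-" + memory[-1][1])
print(agent("ping"), len(agent.memory))  # respond-to-ping 2
```

Keeping the agent as a callable object makes the "static" memory explicit: it lives on the instance, so it survives from one invocation to the next.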

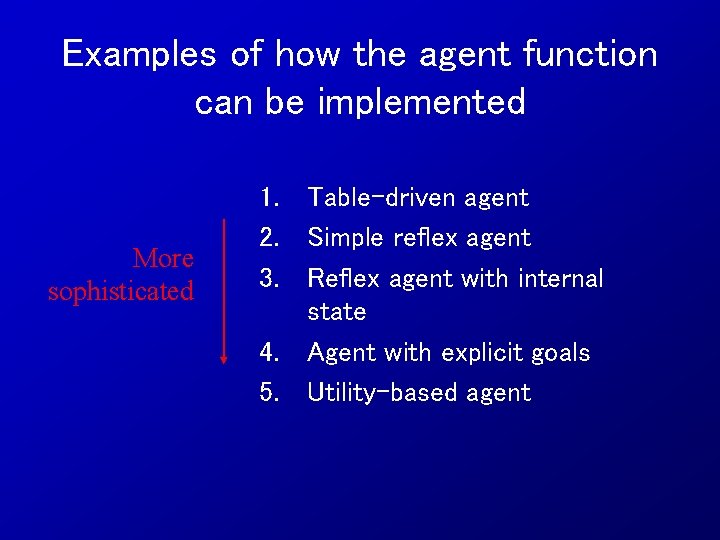

Examples of how the agent function can be implemented, in order of increasing sophistication:
1. Table-driven agent
2. Simple reflex agent
3. Reflex agent with internal state
4. Agent with explicit goals
5. Utility-based agent

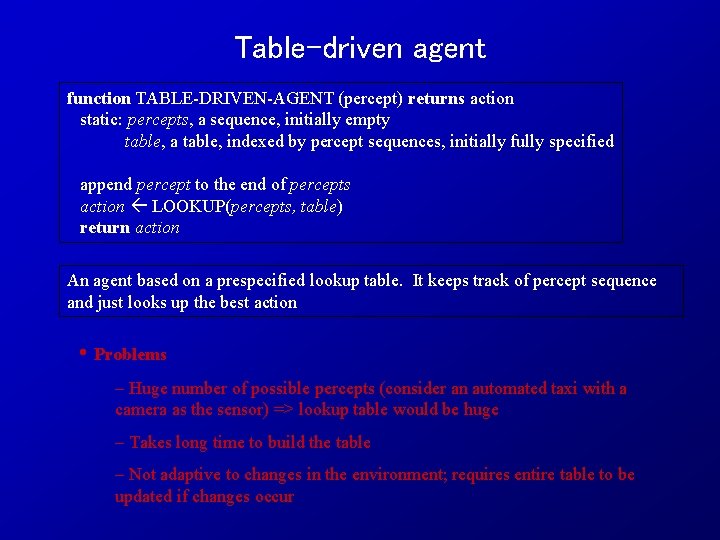

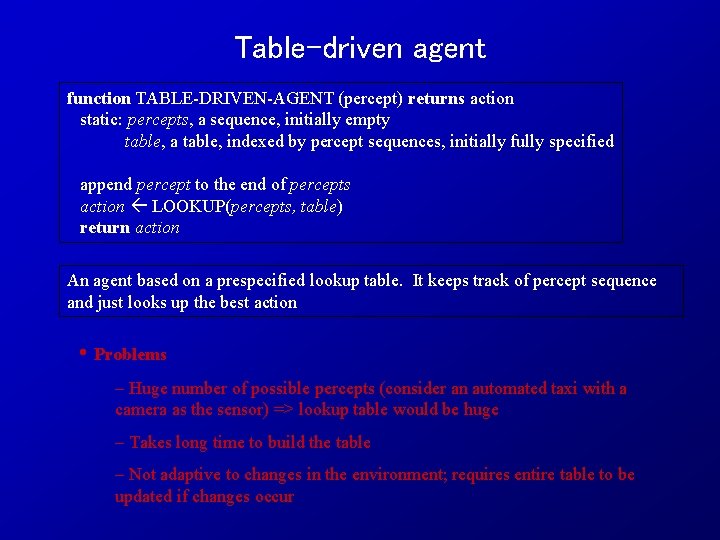

Table-driven agent

function TABLE-DRIVEN-AGENT(percept) returns action
  static: percepts, a sequence, initially empty
          table, a table indexed by percept sequences, initially fully specified
  append percept to the end of percepts
  action ← LOOKUP(percepts, table)
  return action

An agent based on a prespecified lookup table. It keeps track of the percept sequence and simply looks up the best action.

Problems:
- Huge number of possible percepts (consider an automated taxi with a camera as the sensor), so the lookup table would be huge.
- It takes a long time to build the table.
- Not adaptive to changes in the environment; the entire table must be updated if changes occur.
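The same pseudocode as a small Python closure, offered as a sketch. The traffic-light percepts and the table contents are hypothetical; a missing key raises `KeyError`, which mirrors the assumption that the table is "fully specified".

```python
from typing import Callable, Dict, Hashable, List, Tuple


def make_table_driven_agent(
    table: Dict[Tuple[Hashable, ...], Hashable]
) -> Callable[[Hashable], Hashable]:
    percepts: List[Hashable] = []  # static: the percept sequence, initially empty

    def agent(percept: Hashable) -> Hashable:
        percepts.append(percept)  # append percept to the end of percepts
        # LOOKUP(percepts, table): a KeyError here means the table was not fully specified.
        return table[tuple(percepts)]

    return agent


# Hypothetical table covering just two steps of a traffic-light world.
table = {
    ("red",): "stop",
    ("red", "green"): "go",
    ("red", "red"): "stop",
}
driver = make_table_driven_agent(table)
print(driver("red"), driver("green"))  # stop go
```

Note that the key is the whole percept history, which is exactly why the table explodes in size: a third step would already need entries for every length-3 sequence.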

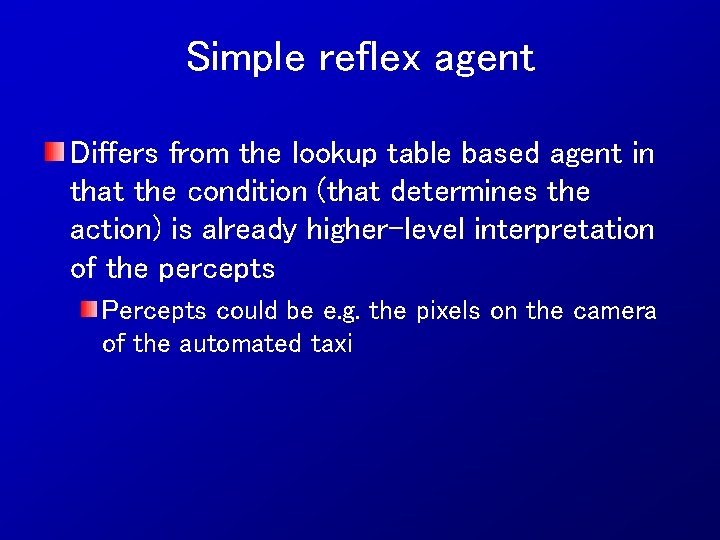

Simple reflex agent

Differs from the lookup-table-based agent in that the condition (that determines the action) is already a higher-level interpretation of the percepts. Percepts could be, e.g., the pixels from the camera of the automated taxi.

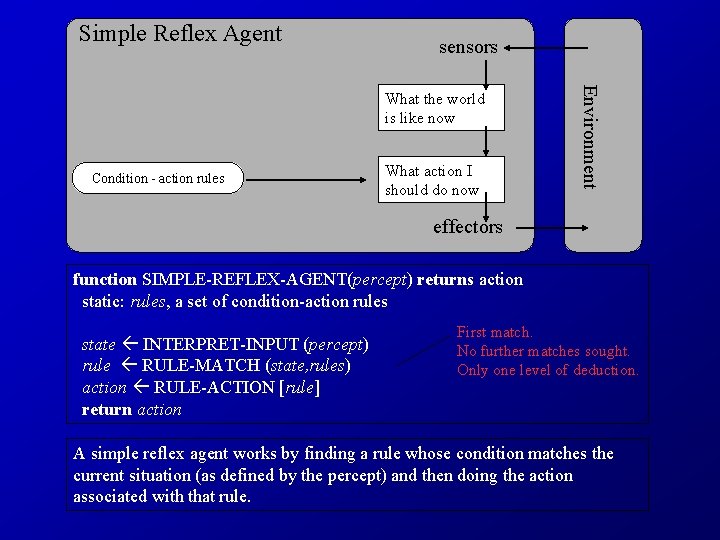

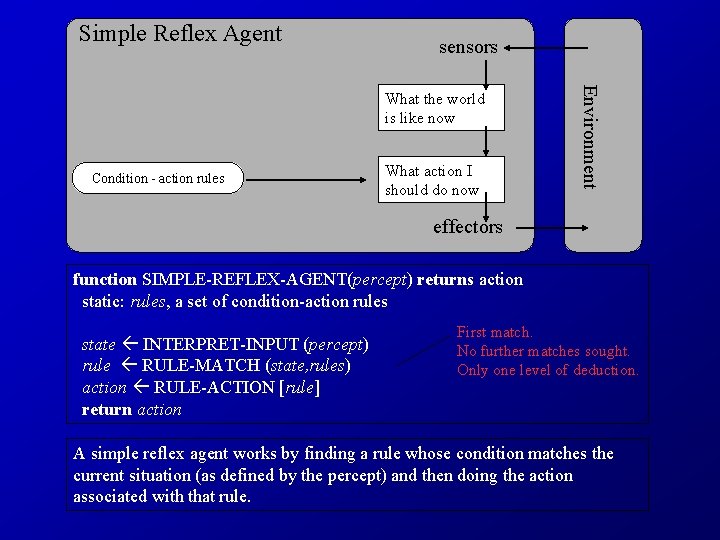

Simple Reflex Agent

Figure: Simple reflex agent. Sensors report what the world is like now; condition-action rules determine what action I should do now; effectors carry it out in the environment.

function SIMPLE-REFLEX-AGENT(percept) returns action
  static: rules, a set of condition-action rules
  state ← INTERPRET-INPUT(percept)
  rule ← RULE-MATCH(state, rules)
  action ← RULE-ACTION[rule]
  return action

First match. No further matches sought. Only one level of deduction.

A simple reflex agent works by finding a rule whose condition matches the current situation (as defined by the percept) and then doing the action associated with that rule.
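A sketch of SIMPLE-REFLEX-AGENT in Python, assuming that INTERPRET-INPUT is the identity (the percept is already a dictionary of high-level facts) and that rules are (condition, action) pairs checked in order. The rule set and the "no-op" default are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, bool]
Rule = Tuple[Callable[[State], bool], str]  # (condition, action)


def interpret_input(percept: State) -> State:
    # Assumed INTERPRET-INPUT: the percept is already a high-level state description.
    return percept


def make_simple_reflex_agent(rules: List[Rule]) -> Callable[[State], str]:
    def agent(percept: State) -> str:
        state = interpret_input(percept)
        for condition, action in rules:  # RULE-MATCH: the first matching rule wins
            if condition(state):
                return action  # RULE-ACTION[rule]
        return "no-op"  # hypothetical default when no rule matches
    return agent


rules: List[Rule] = [
    (lambda s: s.get("car_in_front_is_braking", False), "initiate_braking"),
    (lambda s: s.get("light_is_red", False), "stop"),
]
taxi = make_simple_reflex_agent(rules)
print(taxi({"car_in_front_is_braking": True, "light_is_red": True}))  # initiate_braking
```

Because the loop returns on the first match, rule order encodes priority, which is the "first match, no further matches sought" behaviour described above.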

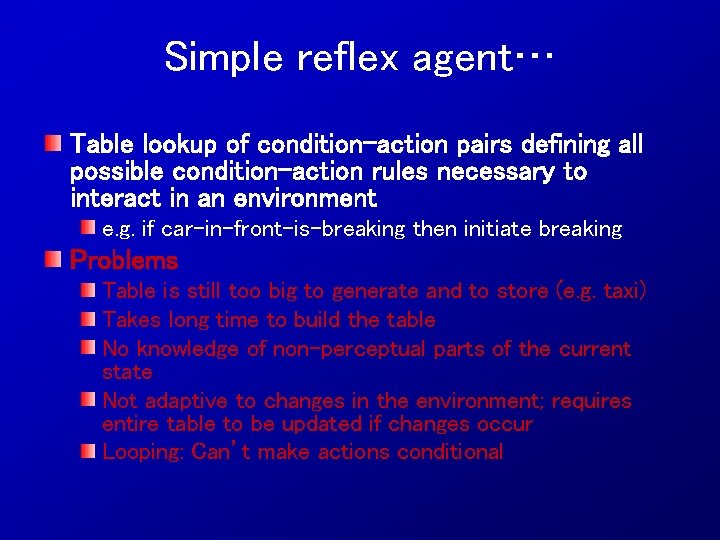

Simple reflex agent…

Table lookup of condition-action pairs defining all possible condition-action rules necessary to interact in an environment, e.g. if car-in-front-is-braking then initiate braking.

Problems:
- The table is still too big to generate and to store (e.g. taxi).
- It takes a long time to build the table.
- No knowledge of non-perceptual parts of the current state.
- Not adaptive to changes in the environment; the entire table must be updated if changes occur.
- Looping: can’t make actions conditional.

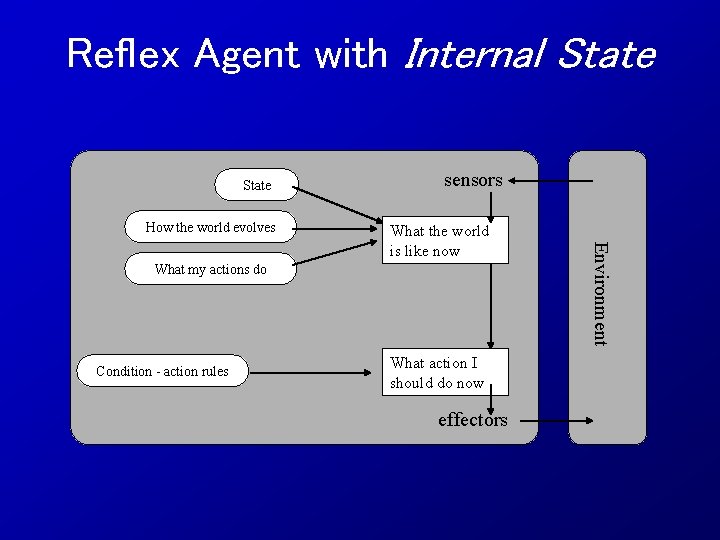

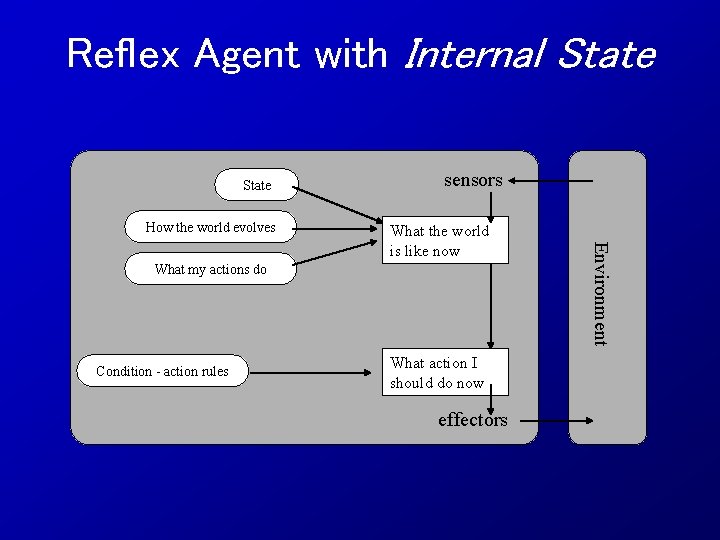

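Reflex Agent with Internal State

Figure: Reflex agent with internal state. Sensor input is combined with a stored state and with knowledge of how the world evolves and what my actions do, to estimate what the world is like now; condition-action rules then determine what action I should do now, which the effectors carry out in the environment.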

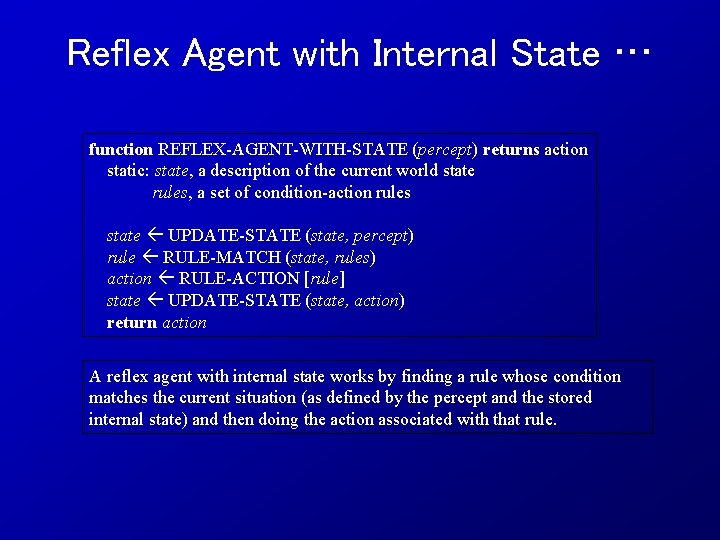

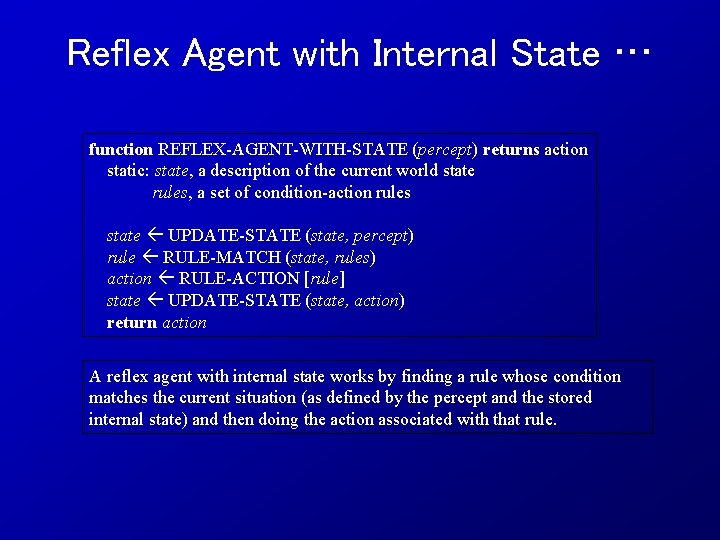

Reflex Agent with Internal State …

function REFLEX-AGENT-WITH-STATE(percept) returns action
  static: state, a description of the current world state
          rules, a set of condition-action rules
  state ← UPDATE-STATE(state, percept)
  rule ← RULE-MATCH(state, rules)
  action ← RULE-ACTION[rule]
  state ← UPDATE-STATE(state, action)
  return action

A reflex agent with internal state works by finding a rule whose condition matches the current situation (as defined by the percept and the stored internal state) and then doing the action associated with that rule.
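A minimal Python sketch of REFLEX-AGENT-WITH-STATE, using a hypothetical two-square vacuum world (squares "A" and "B", percept = (location, status)). The world, the rules and the action names are assumptions for illustration. The two UPDATE-STATE calls correspond to the two state updates in the pseudocode: one from the percept, one from a model of "what my actions do".

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "dirty")


class VacuumAgentWithState:
    """Hypothetical two-square vacuum world; the state remembers squares seen so far."""

    def __init__(self) -> None:
        self.state: Dict[str, Optional[str]] = {"A": None, "B": None, "at": None}

    def update_state_with_percept(self, percept: Percept) -> None:
        location, status = percept
        self.state["at"] = location
        self.state[location] = status

    def update_state_with_action(self, action: str) -> None:
        # Model of "what my actions do": sucking leaves the current square clean.
        if action == "suck":
            self.state[self.state["at"]] = "clean"

    def rule_match(self) -> str:
        at = self.state["at"]
        if self.state[at] == "dirty":
            return "suck"
        if self.state["A"] == "clean" and self.state["B"] == "clean":
            return "stop"  # only possible because we remember the other square
        return "right" if at == "A" else "left"

    def __call__(self, percept: Percept) -> str:
        self.update_state_with_percept(percept)
        action = self.rule_match()
        self.update_state_with_action(action)
        return action


agent = VacuumAgentWithState()
for p in [("A", "dirty"), ("A", "clean"), ("B", "clean")]:
    print(p, agent(p))
```

On the last percept, a simple reflex agent would only see that B is clean; the stored state is what lets this agent conclude that both squares are clean and stop.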

Reflex Agent with Internal State …
- Encode an “internal state” of the world to remember the past as contained in earlier percepts.
- This is needed because sensors do not usually give the entire state of the world at each input, so perception of the environment is captured over time.
- Requires the ability to represent change in the world, both with and without the agent's actions.

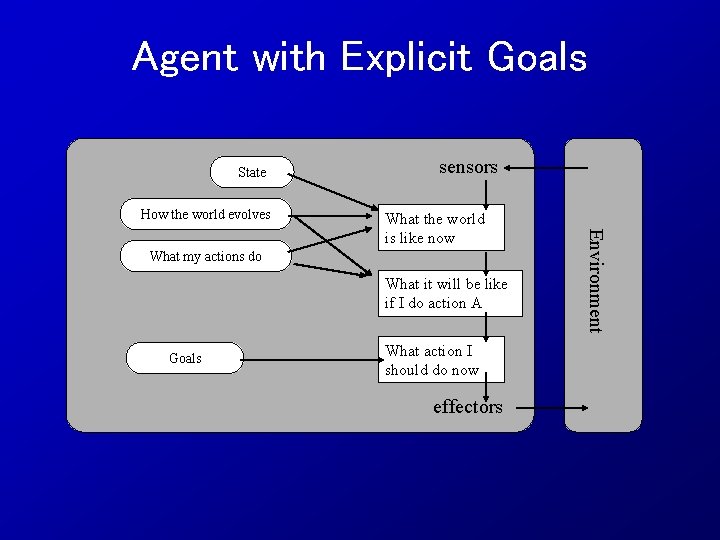

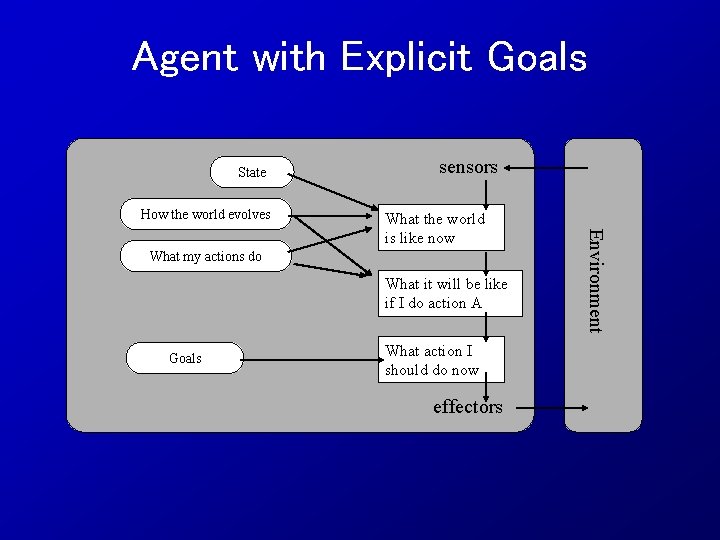

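Agent with Explicit Goals

Figure: Agent with explicit goals. The agent combines its state with knowledge of how the world evolves and what its actions do to estimate what the world is like now and what it will be like if it does action A; its goals then determine what action it should do now, which the effectors carry out in the environment.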

Agent with Explicit Goals
- Choose actions so as to achieve a (given or computed) goal, i.e. a description of desirable situations, e.g. where the taxi wants to go.
- Keeping track of the current state is often not enough: goals are needed to decide which situations are good.
- Deliberative instead of reactive.
- May have to consider long sequences of possible actions before deciding whether the goal is achieved; this involves considering the future, “what will happen if I do…?” (search and planning).
- More flexible than a reflex agent (e.g. when it starts to rain or there is a new destination). In a reflex agent, the entire database of rules would have to be rewritten.
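As a sketch of "search and planning" for a goal-based taxi, the code below uses breadth-first search over a small hypothetical road map; the place names and roads are invented for illustration, and BFS is just one simple choice of search algorithm. Changing the destination only changes an argument, whereas a reflex agent would need its rules rewritten.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical road map: which places are directly reachable from which.
ROADS: Dict[str, List[str]] = {
    "depot": ["market", "station"],
    "market": ["depot", "airport"],
    "station": ["depot", "harbour"],
    "harbour": ["station", "airport"],
    "airport": ["market", "harbour"],
}


def plan_route(start: str, goal: str, roads: Dict[str, List[str]]) -> Optional[List[str]]:
    """Breadth-first search: returns a shortest list of places from start to goal."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in roads.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None  # goal unreachable


def goal_based_taxi(location: str, destination: str) -> Optional[str]:
    """Return the next place to drive to, or None if already there or unreachable."""
    route = plan_route(location, destination, ROADS)
    return route[1] if route and len(route) > 1 else None


print(plan_route("depot", "airport", ROADS))  # ['depot', 'market', 'airport']
print(goal_based_taxi("depot", "harbour"))    # station
```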

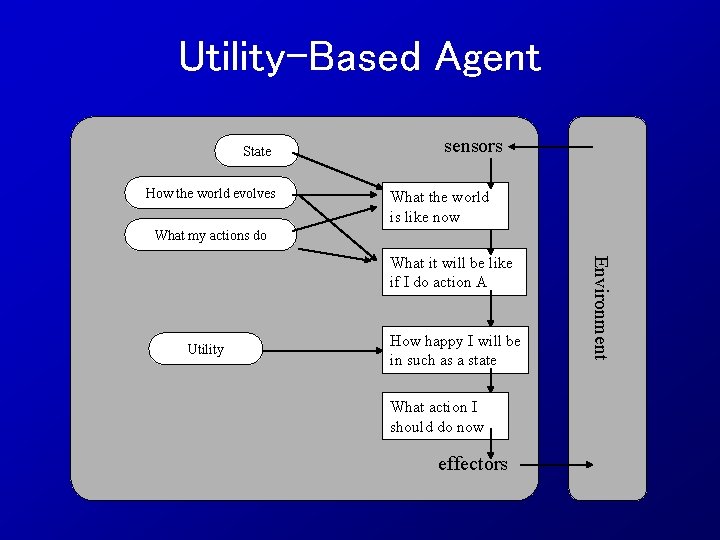

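Utility-Based Agent

Figure: Utility-based agent. As in the goal-based agent, the state and knowledge of how the world evolves and what my actions do are used to predict what it will be like if I do action A; a utility function (how happy I will be in such a state) then determines what action I should do now, which the effectors carry out in the environment.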

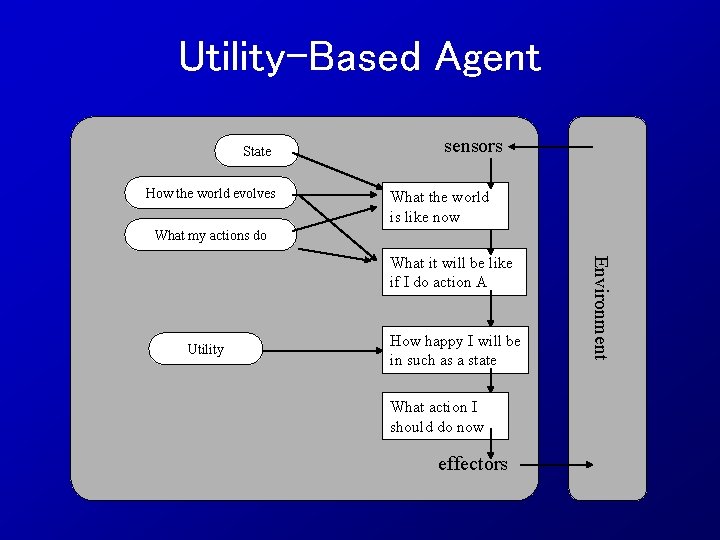

Utility-Based Agent
- When there are multiple possible alternatives, how do we decide which one is best?
- A goal specifies only a crude distinction between an unhappy and a happy state; often a more general performance measure is needed that describes a “degree of happiness”.
- Utility function $U: \text{State} \to \mathbb{R}$, giving a measure of success or happiness when at a given state.
- Allows decisions comparing:
  - the choice between conflicting goals;
  - the choice between likelihood of success and importance of a goal (if achievement is uncertain).
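A minimal sketch of a utility-based choice, assuming a hypothetical utility function that trades off two conflicting goals (journey time and risk) and assumed outcome probabilities for each route. The agent picks the action with the highest expected utility $\sum_{s} P(s \mid a)\, U(s)$; all numbers are illustrative.

```python
from typing import Dict, List, Tuple

State = Dict[str, float]  # hypothetical state features: minutes taken, risk level


def utility(state: State) -> float:
    """Hypothetical U: State -> R trading off two conflicting goals (speed vs safety)."""
    return 100.0 - state["minutes"] - 50.0 * state["risk"]


# For each action, the states it may lead to and their (assumed known) probabilities.
OUTCOMES: Dict[str, List[Tuple[float, State]]] = {
    "motorway": [(0.9, {"minutes": 20, "risk": 0.2}), (0.1, {"minutes": 60, "risk": 0.2})],
    "back_roads": [(1.0, {"minutes": 35, "risk": 0.0})],
}


def expected_utility(action: str) -> float:
    return sum(p * utility(s) for p, s in OUTCOMES[action])


def utility_based_choice() -> str:
    return max(OUTCOMES, key=expected_utility)


print(utility_based_choice())  # motorway (expected utility 66 vs 65)
```

A pure goal ("reach the airport") would rate both routes equally; the utility function is what lets the agent weigh speed against safety and a likely delay against a certain one.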

Characterizing Environments

Environments – Accessible vs. Inaccessible
- An accessible environment is one in which the agent can obtain complete, accurate, up-to-date information about the environment’s state.
- Most moderately complex environments (including, for example, the everyday physical world and the Internet) are inaccessible.
- The more accessible an environment is, the simpler it is to build agents to operate in it.

Environments – Deterministic vs. Non-deterministic
- A deterministic environment is one in which any action has a single guaranteed effect: there is no uncertainty about the state that will result from performing an action.
- Non-determinism may also be subjective, arising from limited memory, from an environment too complex to model directly (weather, dice), or from inaccessibility.
- The physical world can, to all intents and purposes, be regarded as non-deterministic.
- Non-deterministic environments present greater problems for the agent designer.

Environments – Episodic vs. Non-episodic
- The agent’s experience is divided into independent “episodes”, each consisting of the agent perceiving and then acting.
- The quality of an action depends only on the episode itself, because subsequent episodes do not depend on what actions occur in previous episodes. The agent does not need to think ahead.
- Episodic environments are simpler from the agent developer’s perspective because the agent can decide what action to perform based only on the current episode; it need not reason about the interactions between this and future episodes.

Environments – Static vs. Dynamic
- A static environment is one that can be assumed to remain unchanged except by the performance of actions by the agent.
- A dynamic environment is one that has other processes operating on it, and which therefore changes in ways beyond the agent’s control.
- Other processes can interfere with the agent’s actions (as in concurrent systems theory).
- The physical world is a highly dynamic environment.

Environments – Discrete vs. Continuous
- An environment is discrete if there is a fixed, finite number of actions and percepts in it.
- A chess game is an example of a discrete environment, and taxi driving is an example of a continuous one.
- Continuous environments have a certain level of mismatch with computer systems.
- Discrete environments could in principle be handled by a kind of “lookup table”.

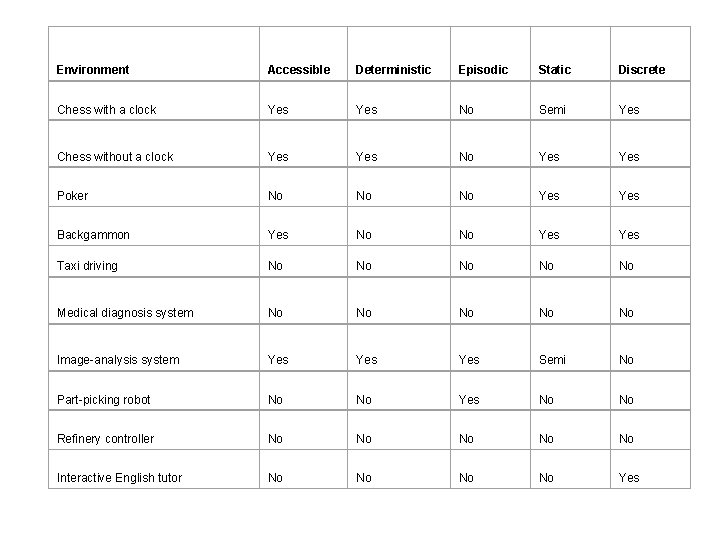

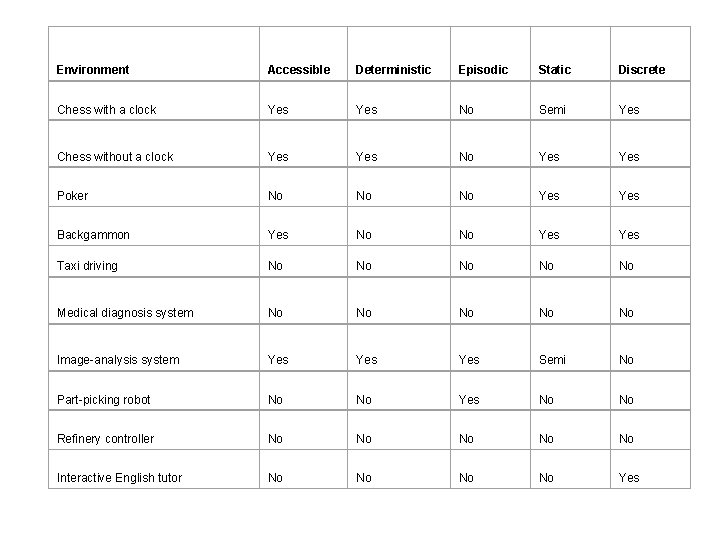

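| Environment | Accessible | Deterministic | Episodic | Static | Discrete |
|---|---|---|---|---|---|
| Chess with a clock | Yes | Yes | No | Semi | Yes |
| Chess without a clock | Yes | Yes | No | Yes | Yes |
| Poker | No | No | No | Yes | Yes |
| Backgammon | Yes | No | No | Yes | Yes |
| Taxi driving | No | No | No | No | No |
| Medical diagnosis system | No | No | No | No | No |
| Image-analysis system | Yes | Yes | Yes | Semi | No |
| Part-picking robot | No | No | Yes | No | No |
| Refinery controller | No | No | No | No | No |
| Interactive English tutor | No | No | No | No | Yes |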

Agents and Objects

Are agents just objects by another name? An object:
- encapsulates some state;
- communicates via message passing;
- has methods, corresponding to operations that may be performed on this state.

Agents and Objects

Main differences:
- Agents are autonomous: agents embody a stronger notion of autonomy than objects; in particular, they decide for themselves whether or not to perform an action on request from another agent.
- Agents are smart: they are capable of flexible (reactive, pro-active, social) behavior, and the standard object model has nothing to say about such types of behavior.
- Agents are active: a multi-agent system is inherently multi-threaded, in that each agent is assumed to have at least one thread of active control.
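The autonomy difference can be illustrated with a toy contrast, offered only as a sketch. Both classes and the toner-saving policy are hypothetical: the object performs the operation whenever its method is called, while the agent treats the call as a request and decides, based on its own state and goals, whether to comply.

```python
class Printer:
    """An object: any caller invoking print_page gets the operation performed."""

    def __init__(self) -> None:
        self.pages = 0

    def print_page(self) -> bool:
        self.pages += 1
        return True


class PrinterAgent:
    """An agent-style sketch: a request is something the agent decides about."""

    def __init__(self, toner: int) -> None:
        self.toner = toner
        self.pages = 0

    def request_print(self, requester: str) -> bool:
        if self.toner == 0:
            return False  # cannot act at all
        if self.toner < 3 and requester != "owner":
            return False  # declines, to save the remaining toner for its owner
        self.toner -= 1
        self.pages += 1
        return True


agent = PrinterAgent(toner=2)
print(Printer().print_page(), agent.request_print("guest"), agent.request_print("owner"))
# True False True
```

This captures only the "decides for itself" aspect; being smart (flexible behavior) and active (an own thread of control) are not modelled here.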

Agents as Intentional Systems

When explaining human activity, it is often useful to make statements such as the following:
- Janine took her umbrella because she believed it was going to rain.
- Michael worked hard because he wanted to possess a Ph.D.

These statements make use of a folk psychology, by which human behavior is predicted and explained through the attribution of attitudes, such as believing and wanting (as in the above examples), hoping, fearing, and so on. The attitudes employed in such folk psychological descriptions are called the intentional notions.

Agents as Intentional Systems

The philosopher Daniel Dennett coined the term intentional system to describe entities ‘whose behavior can be predicted by the method of attributing belief, desires and rational acumen’. Dennett identifies different ‘grades’ of intentional system:

‘A first-order intentional system has beliefs and desires (etc.) but no beliefs and desires about beliefs and desires. … A second-order intentional system is more sophisticated; it has beliefs and desires (and no doubt other intentional states) about beliefs and desires (and other intentional states), both those of others and its own’.

Agents as Intentional Systems

Is it legitimate or useful to attribute beliefs, desires, and so on, to computer systems?

Agents as Intentional Systems

McCarthy argued that there are occasions when the intentional stance is appropriate:

‘To ascribe beliefs, free will, intentions, consciousness, abilities, or wants to a machine is legitimate when such an ascription expresses the same information about the machine that it expresses about a person. It is useful when the ascription helps us understand the structure of the machine, its past or future behavior, or how to repair or improve it. It is perhaps never logically required even for humans, but expressing reasonably briefly what is actually known about the state of the machine in a particular situation may require mental qualities or qualities isomorphic to them. Theories of belief, knowledge and wanting can be constructed for machines in a simpler setting than for humans, and later applied to humans. Ascription of mental qualities is most straightforward for machines of known structure such as thermostats and computer operating systems, but is most useful when applied to entities whose structure is incompletely known’.

Agents as Intentional Systems

What objects can be described by the intentional stance? As it turns out, more or less anything can... Consider a light switch:

‘It is perfectly coherent to treat a light switch as a (very cooperative) agent with the capability of transmitting current at will, who invariably transmits current when it believes that we want it transmitted and not otherwise; flicking the switch is simply our way of communicating our desires’. (Yoav Shoham)

But most adults would find such a description absurd! Why is this?

Agents as Intentional Systems

The answer seems to be that while the intentional stance description is consistent, "... it does not buy us anything, since we essentially understand the mechanism sufficiently to have a simpler, mechanistic description of its behavior." (Yoav Shoham)
- Put crudely, the more we know about a system, the less we need to rely on animistic, intentional explanations of its behavior.
- But with very complex systems, a mechanistic explanation of its behavior may not be practicable.
- As computer systems become ever more complex, we need more powerful abstractions and metaphors to explain their operation, because low-level explanations become impractical. The intentional stance is such an abstraction.

Agents as Intentional Systems
- The intentional notions are thus abstraction tools, which provide us with a convenient and familiar way of describing, explaining, and predicting the behavior of complex systems.
- Remember: most important developments in computing are based on new abstractions: procedural abstraction, abstract data types, objects. Agents, and agents as intentional systems, represent a further, and increasingly powerful, abstraction.
- So agent theorists start from the (strong) view of agents as intentional systems: systems whose simplest consistent description requires the intentional stance.

Agents as Intentional Systems
- This intentional stance is an abstraction tool: a convenient way of talking about complex systems, which allows us to predict and explain their behavior without having to understand how the mechanism actually works.
- Much of computer science is concerned with looking for abstraction mechanisms (witness procedural abstraction, ADTs, objects, …).
- So why not use the intentional stance as an abstraction tool in computing, to explain, understand, and, crucially, program computer systems?
- This is an important argument in favor of agents.

Agents as Intentional Systems

Three other points in favor of this idea:
- Characterizing agents: it provides us with a familiar, non-technical way of understanding and explaining agents.
- Nested representations: it gives us the potential to specify systems that include representations of other systems. It is widely accepted that such nested representations are essential for agents that must cooperate with other agents.

Agents as Intentional Systems

Post-declarative systems: this view of agents leads to a kind of post-declarative programming.
- In procedural programming, we say exactly what a system should do.
- In declarative programming, we state something that we want to achieve, give the system general info about the relationships between objects, and let a built-in control mechanism (e.g., goal-directed theorem proving) figure out what to do.
- With agents, we give a very abstract specification of the system, and let the control mechanism figure out what to do, knowing that it will act in accordance with some built-in theory of agency (e.g., the well-known Cohen-Levesque model of intention).

Multiagent Systems
- A multiagent system is one that consists of a number of agents, which interact with one another.
- In the most general case, agents will be acting on behalf of users with different goals and motivations.
- To successfully interact, they will require the ability to cooperate, coordinate, and negotiate with each other, much as people do.

Agent Design vs Society Design

There are two basic questions we ask when discussing agents:
- How do we build agents capable of independent, autonomous action, so that they can successfully carry out tasks we delegate to them?
- How do we build agents that are capable of interacting (cooperating, coordinating, negotiating) with other agents in order to successfully carry out those delegated tasks, especially when the other agents cannot be assumed to share the same interests/goals?

The first problem is agent design (micro level); the second is society design (macro level).

Multiagent Systems

In Multiagent Systems, we address questions such as:
- How can cooperation emerge in societies of self-interested agents?
- What kinds of languages can agents use to communicate?
- How can self-interested agents recognize conflict, and how can they (nevertheless) reach agreement?
- How can autonomous agents coordinate their activities so as to cooperatively achieve goals?

Multiagent Systems

While these questions are all addressed in part by other disciplines (notably economics and the social sciences), what makes the multiagent systems field unique is that it emphasizes that the agents in question are computational, information-processing entities.

Multiagent Research Issues
- How do you state your preferences to your agent?
- How can your agent compare different deals from different vendors? What if there are many different parameters?
- What algorithms can your agent use to negotiate with other agents (to make sure you get a good deal)?

These issues aren’t frivolous: automated procurement could be used massively by (for example) government agencies.

Multiagents are Interdisciplinary

The field of Multiagent Systems is influenced and inspired by many other fields:
- economics;
- philosophy;
- game theory;
- logic;
- ecology;
- social sciences.

This can be both a strength (infusing well-founded methodologies into the field) and a weakness (there are many different views as to what the field is about). This has analogies with artificial intelligence itself.

“Risky Business”
- A Jeopardy-style trivia game.
- Players attempt to be the first to answer trivia questions in order to win virtual money and virtual prizes.
- Played in the Internet Relay Chat (IRC) world.
- Hosted by a computer agent named RobBot.
- Available 24/7.

What is IRC?

“IRC (Internet Relay Chat) is a virtual meeting place where people from all over the world can meet and talk; you'll find the whole diversity of human interests, ideas, and issues here, and you'll be able to participate in group discussions on one of the many thousands of IRC channels, on hundreds of IRC networks, or just talk in private to family or friends, wherever they are in the world.” (mIRC Homepage, http://www.mirc.co.uk/)

RobBot
- Has knowledge of:
  - IRC commands;
  - how the game is played;
  - rules and etiquette to maintain the channel;
  - techniques for self-preservation within the IRC environment.
- Human operators are capable of “correcting” the game.
- Keeps records of individual player statistics: number of games won, high scores.
- All knowledge must be input by a human operator.

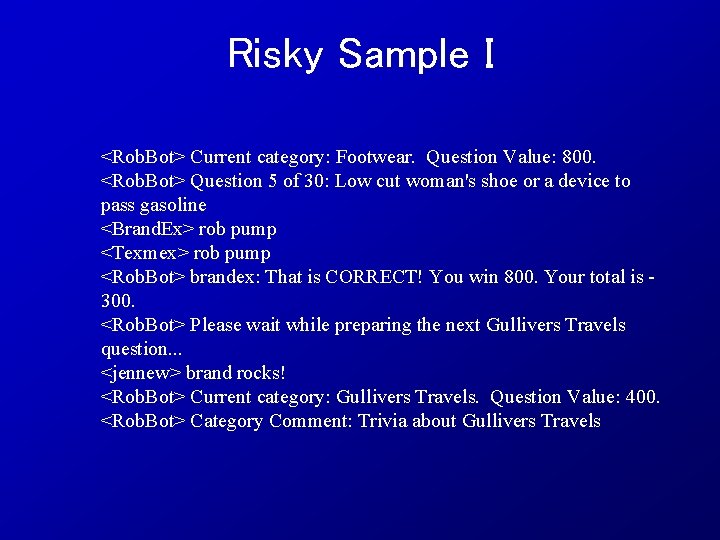

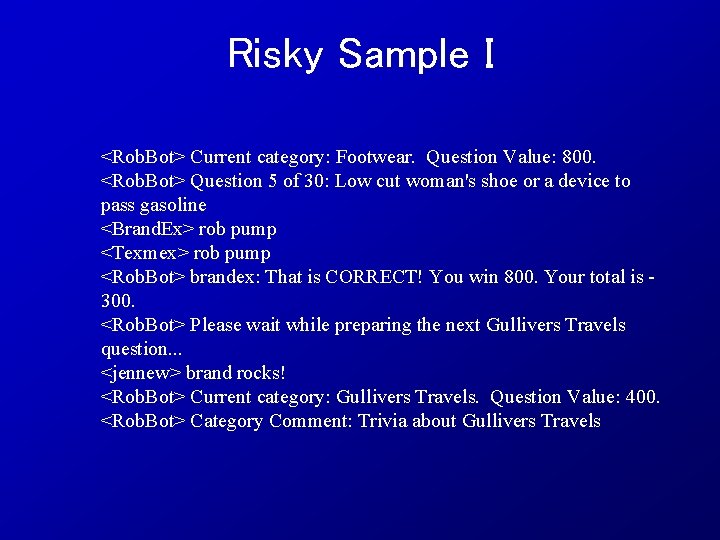

Risky Sample I

<RobBot> Current category: Footwear. Question Value: 800.
<RobBot> Question 5 of 30: Low cut woman's shoe or a device to pass gasoline
<BrandEx> rob pump
<Texmex> rob pump
<RobBot> brandex: That is CORRECT! You win 800. Your total is 300.
<RobBot> Please wait while preparing the next Gullivers Travels question...
<jennew> brand rocks!
<RobBot> Current category: Gullivers Travels. Question Value: 400.
<RobBot> Category Comment: Trivia about Gullivers Travels

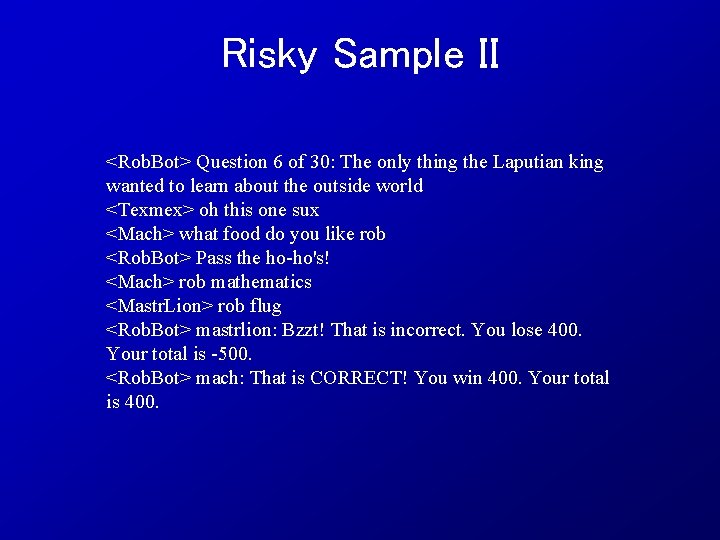

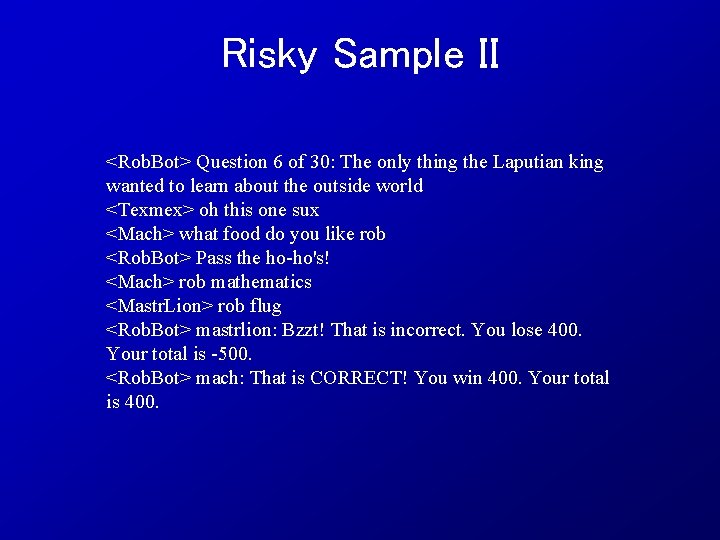

Risky Sample II

<RobBot> Question 6 of 30: The only thing the Laputian king wanted to learn about the outside world
<Texmex> oh this one sux
<Mach> what food do you like rob
<RobBot> Pass the ho-ho's!
<Mach> rob mathematics
<MastrLion> rob flug
<RobBot> mastrlion: Bzzt! That is incorrect. You lose 400. Your total is -500.
<RobBot> mach: That is CORRECT! You win 400. Your total is 400.

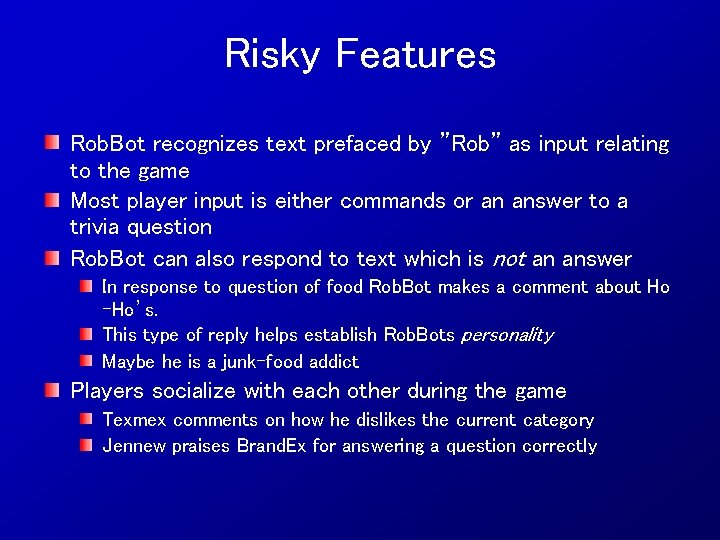

Risky Features
- RobBot recognizes text prefaced by “Rob” as input relating to the game.
- Most player input is either a command or an answer to a trivia question.
- RobBot can also respond to text that is not an answer: in response to the question about food, RobBot makes a comment about Ho-Ho’s. This type of reply helps establish RobBot’s personality (maybe he is a junk-food addict).
- Players socialize with each other during the game: Texmex comments on how he dislikes the current category, and jennew praises BrandEx for answering a question correctly.
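How RobBot actually parses input is not described here, so the following is only a sketch under an assumed convention: "rob" as the first word of a line (any case), optionally followed by "," or ":". The regular expression and function name are hypothetical.

```python
import re
from typing import Optional

# Assumed convention: "rob" as the first word (any case), optionally followed by "," or ":".
ADDRESSED = re.compile(r"^\s*rob\b[\s,:]*(?P<text>.*)$", re.IGNORECASE)


def addressed_text(line: str) -> Optional[str]:
    """Return the text after the 'rob' prefix, or None if the bot is not being addressed."""
    match = ADDRESSED.match(line)
    return match.group("text").strip() if match else None


for line in ["rob pump", "brand rocks!", "Rob: mathematics", "robert says hi"]:
    print(repr(line), "->", addressed_text(line))
```

The word boundary `\b` keeps "robert" from counting as the prefix. This prefix rule alone would not catch "what food do you like rob" from the sample log, so the real bot evidently also reacts to its name elsewhere in a line.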

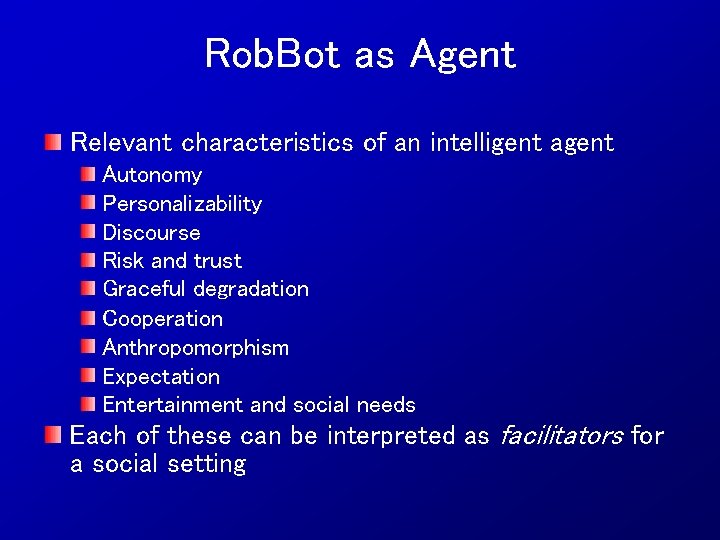

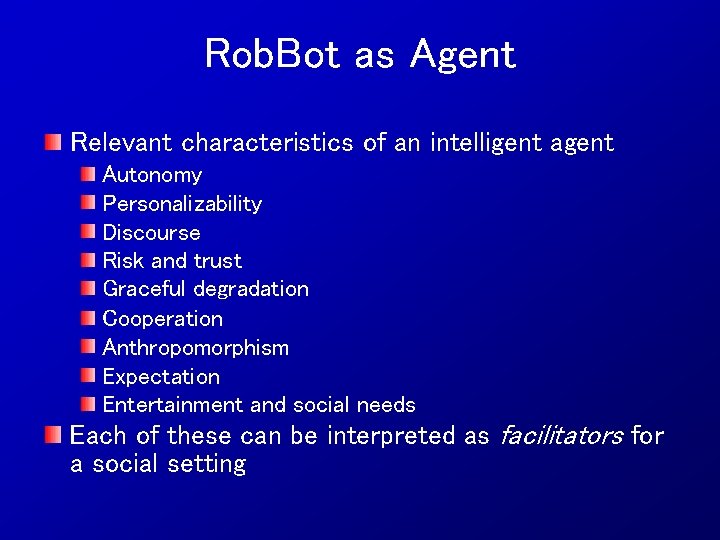

RobBot as Agent

Relevant characteristics of an intelligent agent:
- autonomy;
- personalizability;
- discourse;
- risk and trust;
- graceful degradation;
- cooperation;
- anthropomorphism;
- expectation;
- entertainment and social needs.

Each of these can be interpreted as a facilitator for a social setting.

RobBot as Agent: Autonomy
- RobBot hosts the game without human intervention.
- Despite serious neglect at times by its creators, the game has continued to run and flourish on its own.
- It must be able to run independently if it is to have any value in terms of entertainment or social interaction.
- If a human operator had to provide constant direction, RobBot would become a tool of the human rather than a separate entity.

RobBot as Agent: Dependence
- Some degree of dependence on humans is important from both a technical and a sociological viewpoint.
- Dependence invokes a sense of responsibility and power in the human operator.

RobBot as Agent: Personalizability
- RobBot maintains his own personality through the responses programmed into his lexicon.
- Life-like qualities help to provide an atmosphere that is conducive to socialization.
- He is capable of recording information about other users, such as the scores that players have obtained.
- Players’ scores and records are important measures of social status on the gaming channels.

RobBot as Agent: Risk and Trust, Graceful Degradation
- RobBot does make errors while conducting the game:
  - players may phrase an answer differently from the answer stored in the answer database;
  - spelling errors may be present in the answer database.
- Sometimes human operators are present to correct errors, but usually not.
- Sometimes players find the errors humorous; sometimes players band together to curse RobBot for his errors. Both cases encourage socialization among the players.
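One way to degrade more gracefully on differently phrased or misspelled answers is tolerant matching. The sketch below is not RobBot's method (the source does not say how it compares answers); it assumes a simple normalisation step plus Python's standard `difflib.SequenceMatcher` similarity ratio, with a hypothetical acceptance threshold of 0.8.

```python
import re
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lower-case, drop punctuation and a leading article, collapse whitespace."""
    text = re.sub(r"[^a-z0-9 ]", "", text.lower())
    text = re.sub(r"^(the|a|an) ", "", text)
    return " ".join(text.split())


def is_correct(guess: str, stored_answer: str, threshold: float = 0.8) -> bool:
    """Accept the guess if it is similar enough to the stored answer (assumed threshold)."""
    ratio = SequenceMatcher(None, normalize(guess), normalize(stored_answer)).ratio()
    return ratio >= threshold


print(is_correct("The Pump!", "pump"))           # True
print(is_correct("mathmatics", "mathematics"))   # True (misspelling tolerated)
print(is_correct("flug", "mathematics"))         # False
```

The threshold is a trade-off: set it too low and wrong answers get accepted, which would create a new kind of error for players to complain about.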

RobBot as Agent: Cooperation
- Player and agent cooperation is crucial to the game.
- RobBot does not question players, but provides feedback to verify that command requests are being processed.
- Even this primitive type of cooperation encourages socialization between players and RobBot.

RobBot as Agent: Anthropomorphism
- RobBot relies heavily on anthropomorphism to accomplish his tasks as a game show host.
- His main task is to provide entertainment as a game show host, not to pass the Turing Test!
- Technology based on keyword mappings and canned phrases gets RobBot remarkably far in fulfilling his main task.

RobBot as Social Engineer
- The agent supports the sociological features necessary for an ongoing social environment.
- As a core group of players engages in Risky Business, the network becomes meaningfully ritualized in its context.
- A subculture is formed that can be transmitted to new players.
- Components of a subculture include:
  - contextual stability;
  - shared language, history, and purpose.
- These lead to mutually shared norms (behavior patterns) and values (common goals).
- The agent’s main task is to support and encourage development of the subculture.

RobBot as Social Engineer: Autonomy

Autonomy permits:
- convenience;
- a stable structure: the rules basically stay the same from day to day and month to month, so the responses that exist to the game environment can be learned and become predictable.

A stable structure helps facilitate the development of a social history of the game-playing environment.

RobBot as Social Engineer: Personality and Anthropomorphism

These lead to the development of a shared language:
- The bot typically utters certain phrases, which participants use as symbols of events and concepts.
- The bot’s consistency of phrasing leads to players’ acceptance and standardization of language.
- When RobBot consistently expresses delight about chocolate via *choco*, all participants can use *choco* as a keyword for joy.

RobBot as Social Engineer: Personality and Anthropomorphism

Flattery:
- RobBot has a set of canned responses for some players when he sees their names, e.g., in response to “Do you know Cass?”: “Hey! Cass is a real cutie! Woohoo!”
- Players react strongly to these personalized messages, often inputting questions that make RobBot cycle repeatedly through a small set of responses.
- Flattery from a computer agent appears to have a similar effect to flattery coming from a real person.

RobBot as Social Engineer: Anthropomorphism

Impact of gender: changing the name from RobBot to ReneeBot and making minor changes in the vocabulary results in significant attitude changes towards the bot.
- RobBot is treated like a man: players joke with him about stereotypical male things, women flirt with him, and players can be brusque and treat him rudely.
- ReneeBot is treated differently: men flirt with her, and players treat her more politely.

RobBot as Social Engineer: Cooperation
- Helps shape the behavior patterns of players.
- Reinforces a definition of social order, e.g., swearing is frowned upon:
  - any player using certain utterances will get “<Player 1> This is a family channel! Be warned or I’ll have to call the bouncers!”;
  - persistence will get the player kicked off the channel.
- By contrast, in another game, Acro, the bot provides no guidance on coarse language:
  - as a result, coarse language is a common feature of the players' talk;
  - there was a morality play between certain players;
  - eventually, those who could not deal with occasional vulgarity stopped playing.
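The warn-then-kick behaviour can be sketched as a small per-player counter. Everything here is an assumption for illustration: the placeholder word list, the limit of two warnings before a kick, and the returned "KICK …" string, which merely stands in for issuing IRC's KICK command. Only the warning text comes from the slide.

```python
from collections import defaultdict
from typing import Dict, Optional, Set

BANNED_WORDS: Set[str] = {"darn", "heck"}  # hypothetical placeholder list
WARNING = "This is a family channel! Be warned or I'll have to call the bouncers!"


class ChannelModerator:
    """Warns a player for each offending message and kicks after too many warnings."""

    def __init__(self, max_warnings: int = 2) -> None:
        self.max_warnings = max_warnings
        self.warnings: Dict[str, int] = defaultdict(int)

    def on_message(self, player: str, text: str) -> Optional[str]:
        words = {w.strip(".,!?").lower() for w in text.split()}
        if not words & BANNED_WORDS:
            return None  # nothing to do for ordinary chatter
        self.warnings[player] += 1
        if self.warnings[player] > self.max_warnings:
            return f"KICK {player}"  # stands in for the bot issuing an IRC KICK
        return f"{player}: {WARNING}"


mod = ChannelModerator()
for msg in ["hello all", "heck!", "heck", "HECK"]:
    print(mod.on_message("Texmex", msg))
```

Counting per player is what turns a single reply into a stable, predictable norm: the same behavior always draws the same response, and persistence escalates.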

Bots – The Bigger Picture
- Many people who cross the boundary from the real world to the Internet world are not prepared to make too great a leap.
- As the numbers of such people increase, they acquire the power to demand familiar institutions from the real world that cater to the social animal: bars, neighborhoods, dating services, etc.

Bots – The Bigger Picture
- One role of AI in the cyberspace world is to provide a familiar interface to the participants.
- RobBot’s AI (minimal as it is) creates a persona to which people can relate. He is something understood and non-threatening, and he plays an essential part in acclimatizing people to the world of the Internet.
- With more and better AI, the line between user and software artifact would become more blurred.