Transport Layer (adapted from Computer Networking slides)


- Slides: 99


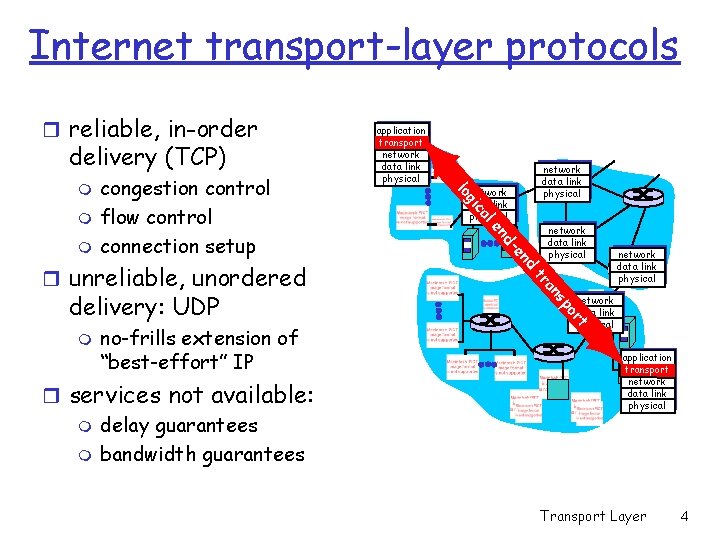

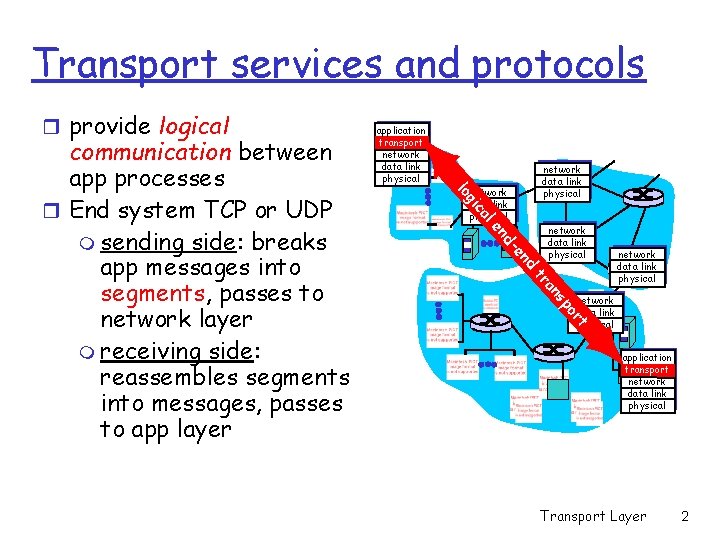

Transport services and protocols

- provide logical communication between app processes
- end systems run TCP or UDP
  - sending side: breaks app messages into segments, passes to network layer
  - receiving side: reassembles segments into messages, passes to app layer

[Figure: logical end-to-end transport between two hosts, each with an application / transport / network / data link / physical stack]

Transport vs. network layer

- network layer: logical communication between hosts
- transport layer: logical communication between processes
  - relies on and enhances network layer services

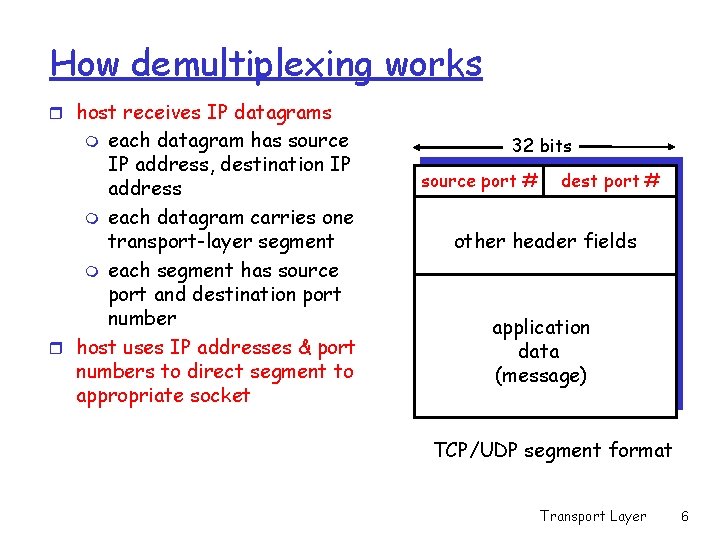

Internet transport-layer protocols

- reliable, in-order delivery (TCP)
  - congestion control
  - flow control
  - connection setup
- unreliable, unordered delivery: UDP
  - no-frills extension of “best-effort” IP
- services not available:
  - delay guarantees
  - bandwidth guarantees

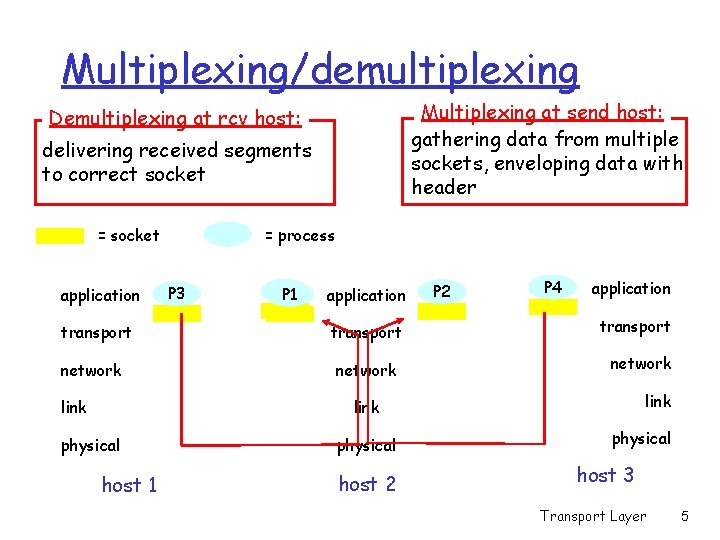

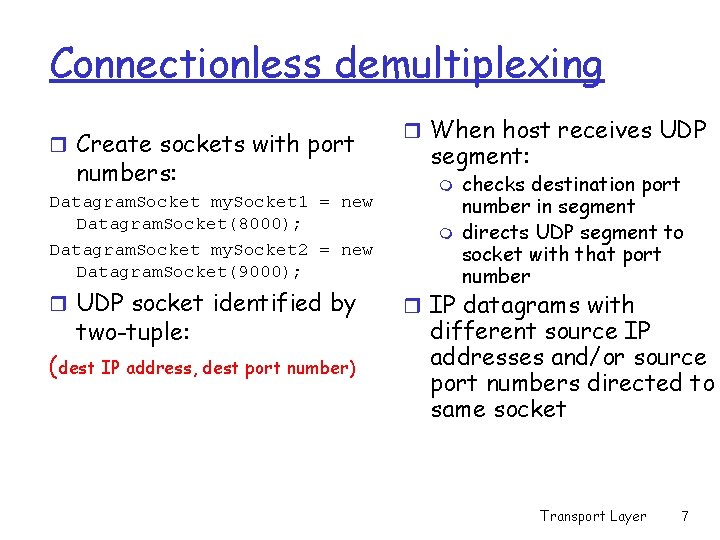

Multiplexing/demultiplexing

- Multiplexing at send host: gathering data from multiple sockets, enveloping data with header
- Demultiplexing at rcv host: delivering received segments to correct socket

[Figure: hosts 1, 2 and 3, each with an application / transport / network / link / physical stack; processes P1 to P4 attached to sockets]

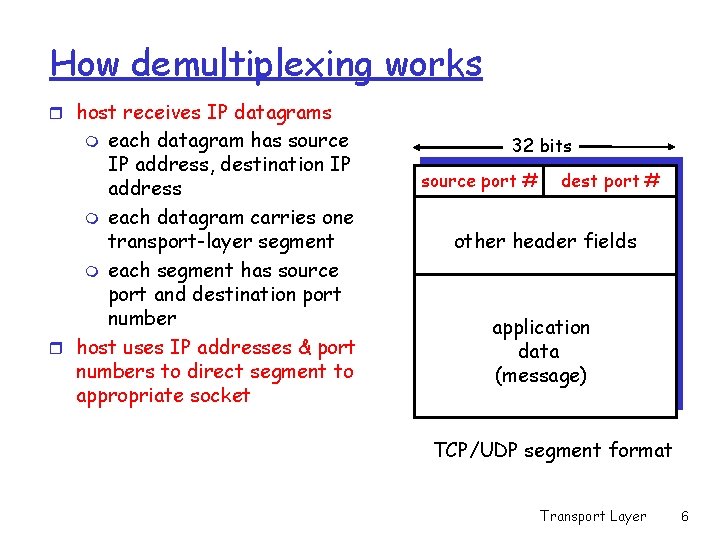

How demultiplexing works

- host receives IP datagrams
  - each datagram has source IP address, destination IP address
  - each datagram carries one transport-layer segment
  - each segment has source port and destination port number
- host uses IP addresses & port numbers to direct segment to appropriate socket

TCP/UDP segment format (32 bits wide): source port #, dest port #; other header fields; application data (message)

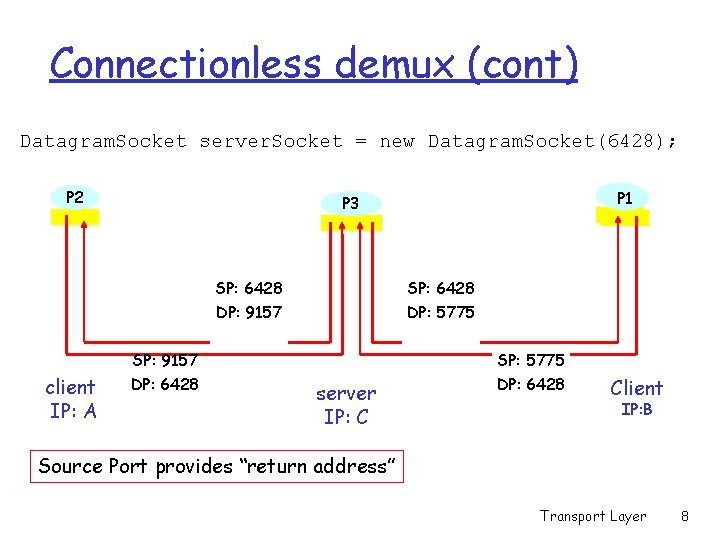

Connectionless demultiplexing

- Create sockets with port numbers:
  - `DatagramSocket mySocket1 = new DatagramSocket(8000);`
  - `DatagramSocket mySocket2 = new DatagramSocket(9000);`
- UDP socket identified by two-tuple: (dest IP address, dest port number)
- When host receives UDP segment:
  - checks destination port number in segment
  - directs UDP segment to socket with that port number
- IP datagrams with different source IP addresses and/or source port numbers are directed to the same socket
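The two-tuple rule can be illustrated with a minimal Python sketch. The `UdpDemux` class and its method names are hypothetical, and the sketch assumes a single host IP address, so only the destination port selects the socket.

```python
class UdpDemux:
    """Toy demultiplexer: destination port -> receive queue (hypothetical helper)."""

    def __init__(self):
        self.sockets = {}  # dest port -> list of (src_ip, src_port, payload)

    def bind(self, port):
        self.sockets[port] = []

    def deliver(self, src_ip, src_port, dst_port, payload):
        # Only the destination port picks the socket; the source address
        # is stored so the application has a "return address".
        queue = self.sockets.get(dst_port)
        if queue is None:
            return False  # no socket bound to this port
        queue.append((src_ip, src_port, payload))
        return True


demux = UdpDemux()
demux.bind(8000)
demux.bind(9000)
demux.deliver("A", 9157, 8000, b"hello")
demux.deliver("B", 5775, 8000, b"world")
```

Both segments land in the socket bound to port 8000 even though they come from different source addresses and ports.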

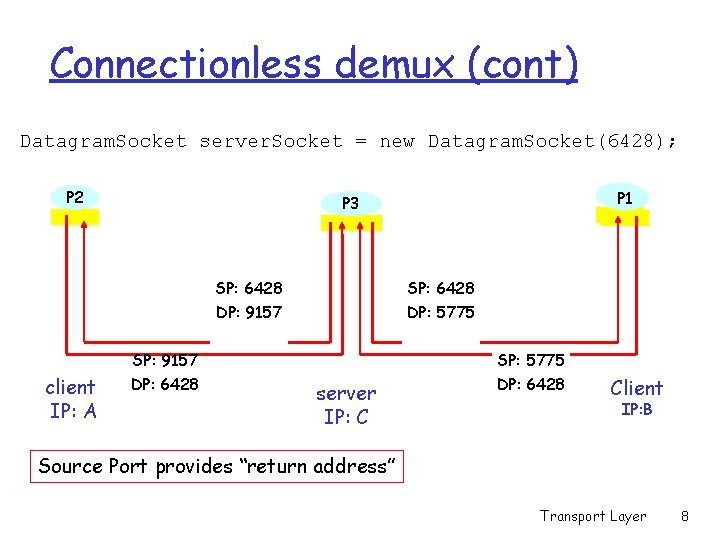

Connectionless demux (cont)

`DatagramSocket serverSocket = new DatagramSocket(6428);`

[Figure: client IP A sends SP: 9157, DP: 6428 and client IP B sends SP: 5775, DP: 6428 to server IP C; the server's replies carry SP: 6428 with DP: 9157 and DP: 5775 respectively]

Source port provides “return address”.

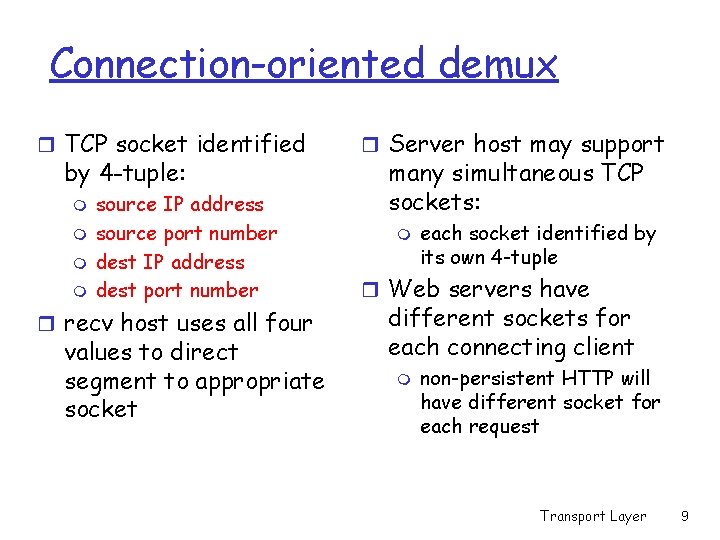

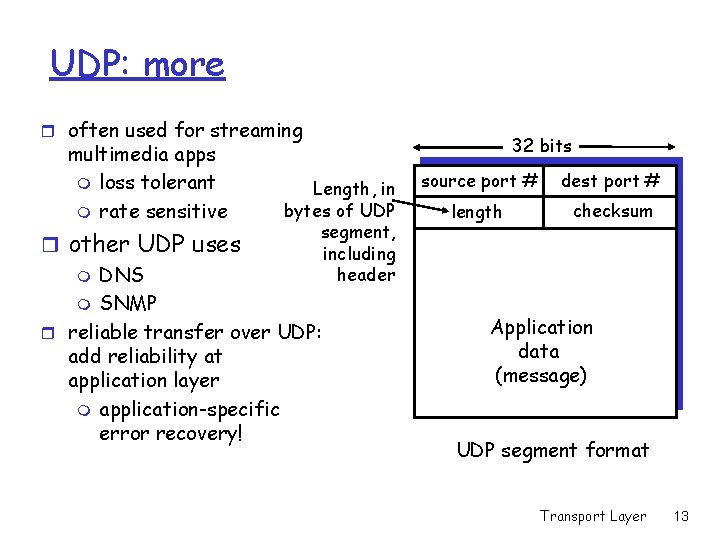

Connection-oriented demux

- TCP socket identified by 4-tuple:
  - source IP address
  - source port number
  - dest IP address
  - dest port number
- recv host uses all four values to direct segment to appropriate socket
- Server host may support many simultaneous TCP sockets:
  - each socket identified by its own 4-tuple
- Web servers have different sockets for each connecting client
  - non-persistent HTTP will have a different socket for each request
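As a rough sketch of 4-tuple demultiplexing, the hypothetical `TcpDemux` class below keys each connection on all four values. The addresses and ports are illustrative, chosen to mirror a web server on port 80.

```python
class TcpDemux:
    """Toy demultiplexer keyed on the full 4-tuple (hypothetical helper)."""

    def __init__(self):
        # (src_ip, src_port, dst_ip, dst_port) -> list of payloads
        self.connections = {}

    def open(self, src_ip, src_port, dst_ip, dst_port):
        self.connections[(src_ip, src_port, dst_ip, dst_port)] = []

    def deliver(self, src_ip, src_port, dst_ip, dst_port, payload):
        key = (src_ip, src_port, dst_ip, dst_port)
        if key not in self.connections:
            return False  # no established connection matches
        self.connections[key].append(payload)
        return True


tcp = TcpDemux()
for client_ip, client_port in [("A", 9157), ("B", 9157), ("B", 5775)]:
    tcp.open(client_ip, client_port, "C", 80)
tcp.deliver("A", 9157, "C", 80, b"GET /a")
tcp.deliver("B", 9157, "C", 80, b"GET /b")
```

Clients A and B both use source port 9157 and the same destination port 80, yet their segments reach different sockets because the source IP addresses differ.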

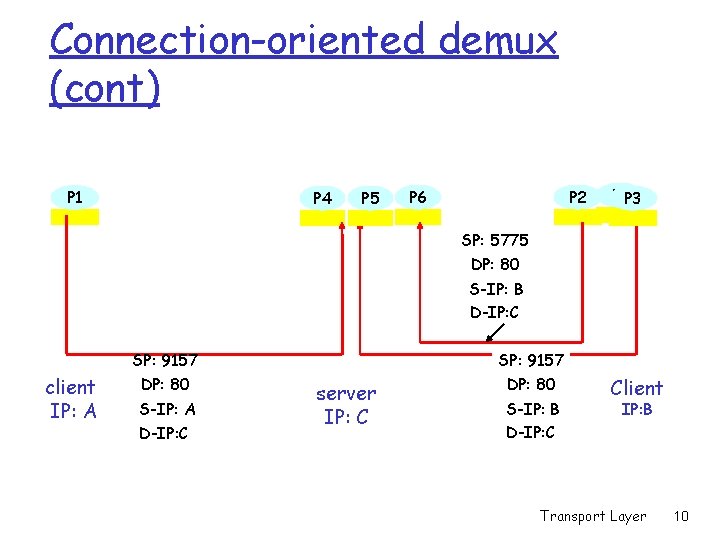

Connection-oriented demux (cont)

[Figure: three segments arrive at server IP C, all with DP: 80 and D-IP: C: (SP: 9157, S-IP: A) from client IP A, and (SP: 5775, S-IP: B) and (SP: 9157, S-IP: B) from client IP B; processes P1 to P6]

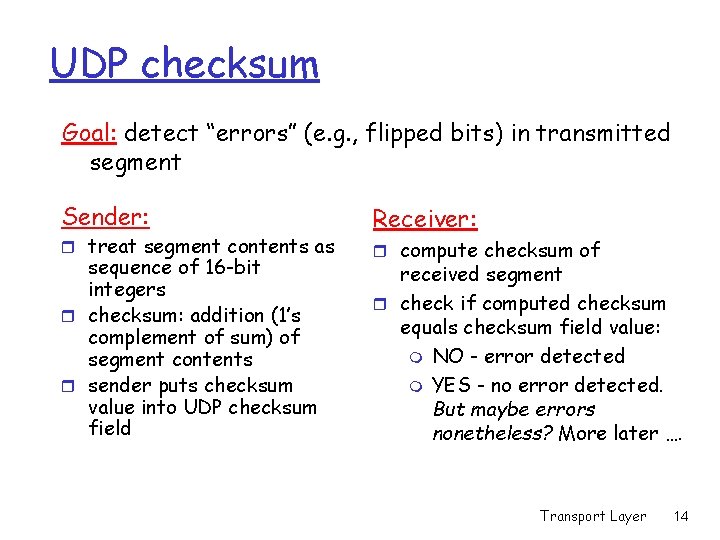

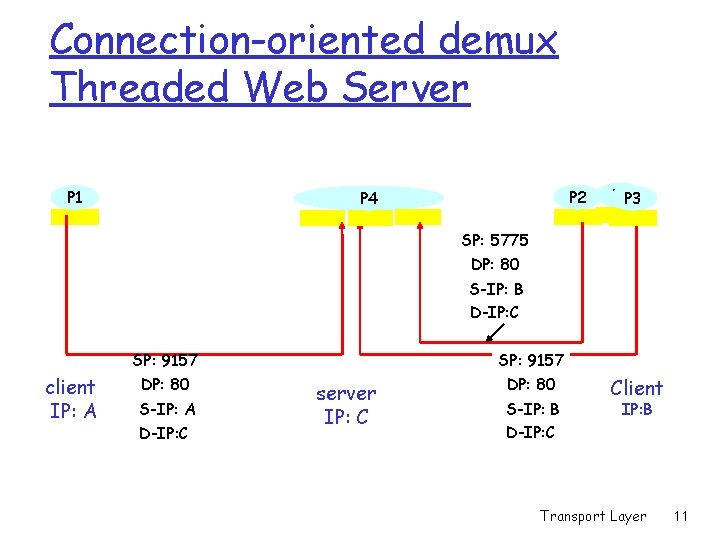

Connection-oriented demux: Threaded Web Server

[Figure: the same three segments (SP: 9157, S-IP: A), (SP: 5775, S-IP: B) and (SP: 9157, S-IP: B), all with DP: 80 and D-IP: C, arrive at server IP C; processes P1 to P4]

UDP: User Datagram Protocol [RFC 768]

- “no frills,” “bare bones” Internet transport protocol
- “best effort” service, UDP segments may be:
  - lost
  - delivered out of order to app
- connectionless:
  - no handshaking between UDP sender, receiver
  - each UDP segment handled independently of others

Why is there a UDP?

- no connection establishment (which can add delay)
- simple: no connection state at sender, receiver
- small segment header
- no congestion control: UDP can blast away as fast as desired

UDP: more

- often used for streaming multimedia apps
  - loss tolerant
  - rate sensitive
- other UDP uses
  - DNS
  - SNMP
- reliable transfer over UDP: add reliability at application layer
  - application-specific error recovery!

UDP segment format (32 bits wide): source port #, dest port #; length, checksum; application data (message). Length is in bytes of the UDP segment, including header.

UDP checksum

Goal: detect “errors” (e.g., flipped bits) in transmitted segment

Sender:

- treat segment contents as sequence of 16-bit integers
- checksum: addition (1’s complement of sum) of segment contents
- sender puts checksum value into UDP checksum field

Receiver:

- compute checksum of received segment
- check if computed checksum equals checksum field value:
  - NO: error detected
  - YES: no error detected. But maybe errors nonetheless? More later ….

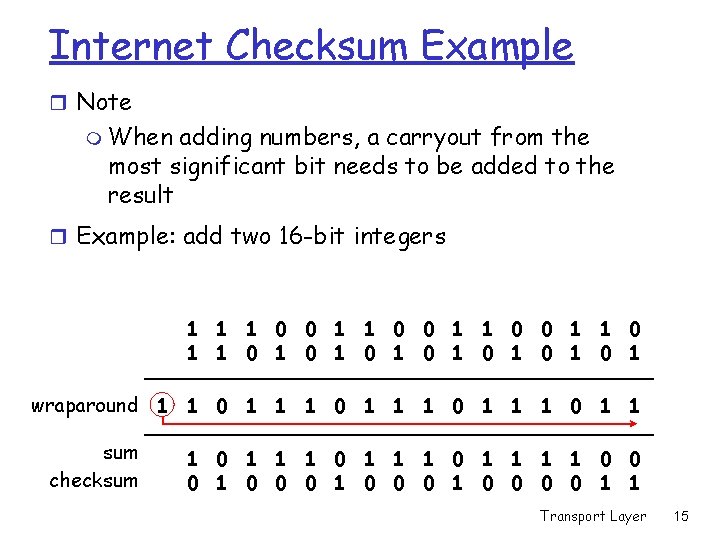

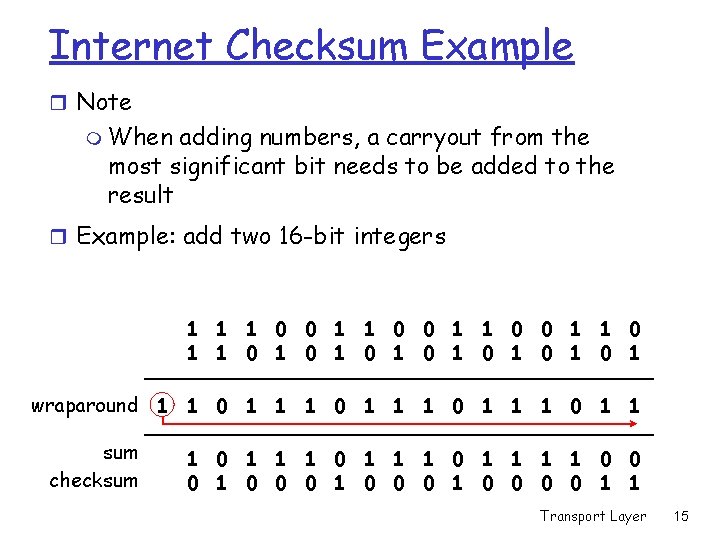

Internet Checksum Example

- Note: when adding numbers, a carryout from the most significant bit needs to be added to the result
- Example: add two 16-bit integers

[Figure: binary addition of two 16-bit integers, showing the wraparound carry, the resulting sum, and the checksum]
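The wraparound rule can be sketched in Python as follows. The two 16-bit words are illustrative values chosen for this sketch, and the function names are hypothetical.

```python
def ones_complement_sum16(words):
    """Add 16-bit words, folding any carry out of bit 15 back into the sum."""
    total = 0
    for w in words:
        total += w
        total = (total & 0xFFFF) + (total >> 16)  # wraparound carry
    return total


def internet_checksum(words):
    """Checksum is the 1's complement of the 1's complement sum."""
    return ~ones_complement_sum16(words) & 0xFFFF


words = [0xE666, 0xD555]            # illustrative 16-bit integers
checksum = internet_checksum(words)
# Receiver-side check: data plus checksum should sum to all ones.
verify = ones_complement_sum16(words + [checksum])
```

Here 0xE666 + 0xD555 = 0x1BBBB; the carry wraps around to give 0xBBBC, and its complement 0x4443 is the checksum.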

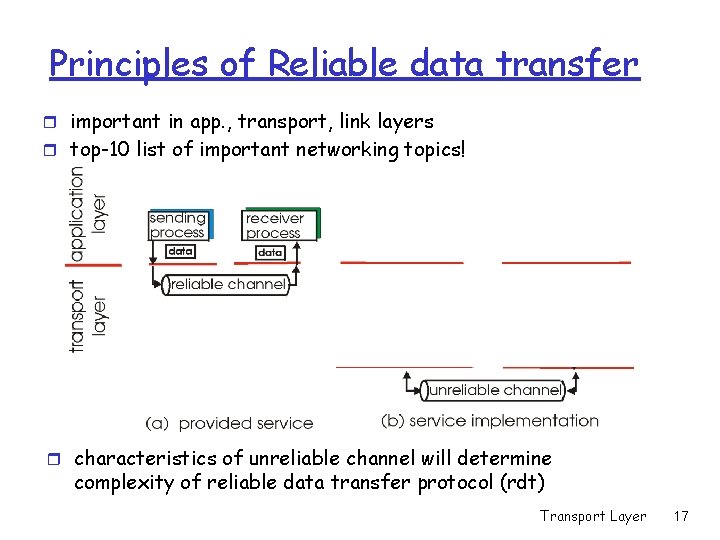

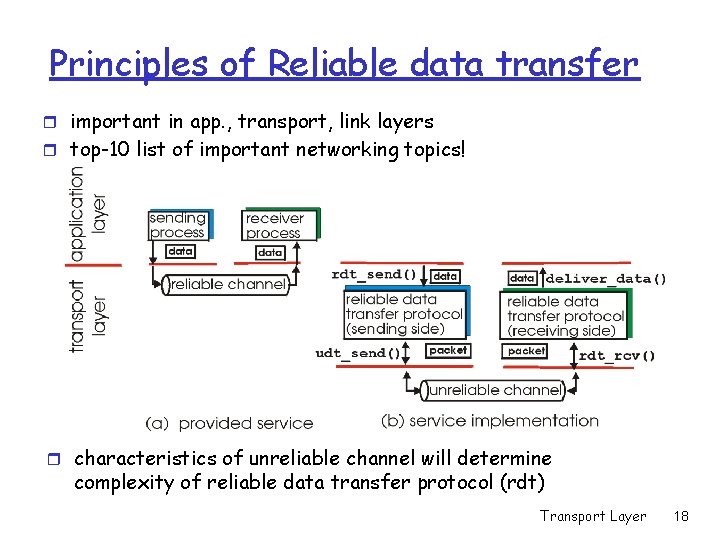

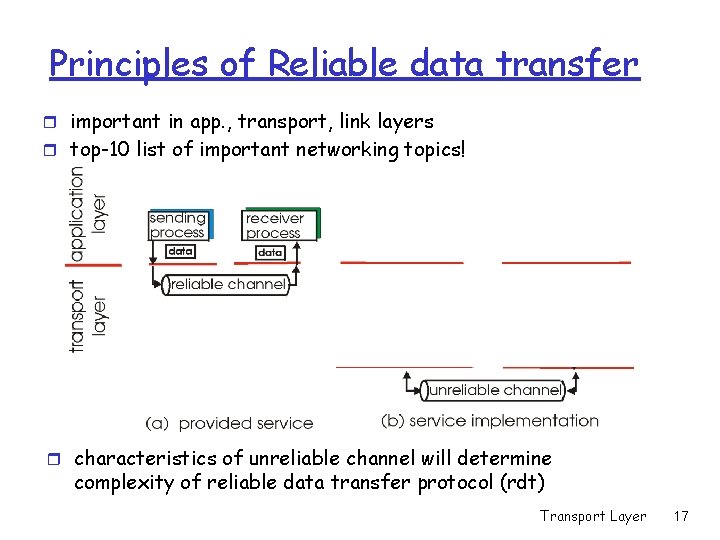

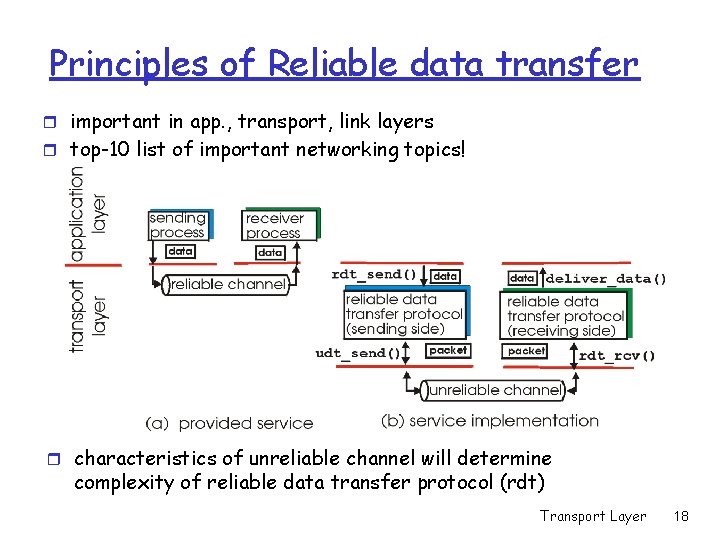

Principles of Reliable data transfer

- important in app., transport, link layers
- top-10 list of important networking topics!
- characteristics of unreliable channel will determine complexity of reliable data transfer protocol (rdt)


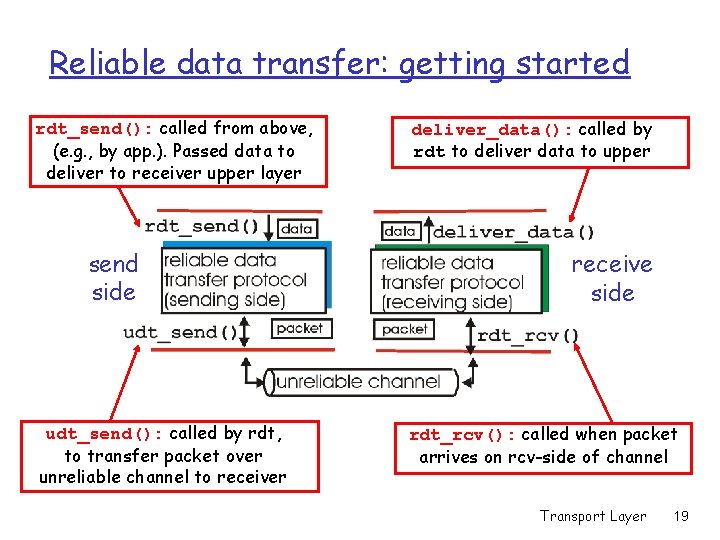

Reliable data transfer: getting started

Send side:

- `rdt_send()`: called from above (e.g., by app.). Passed data to deliver to receiver upper layer
- `udt_send()`: called by rdt, to transfer packet over unreliable channel to receiver

Receive side:

- `rdt_rcv()`: called when packet arrives on rcv-side of channel
- `deliver_data()`: called by rdt to deliver data to upper layer

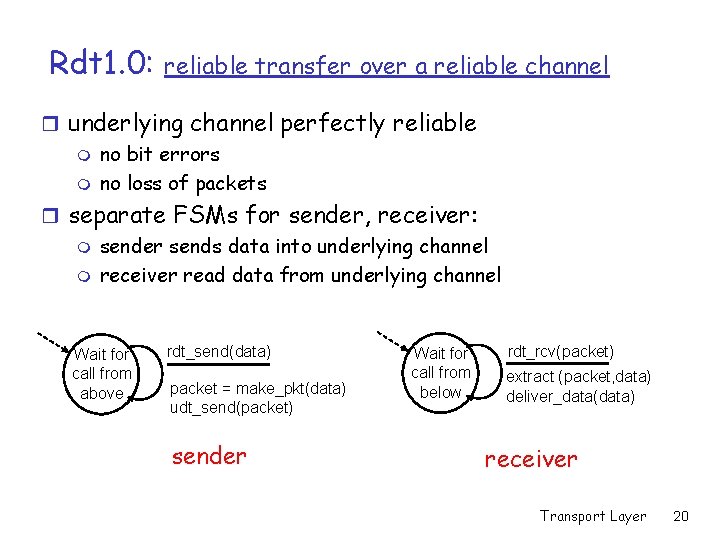

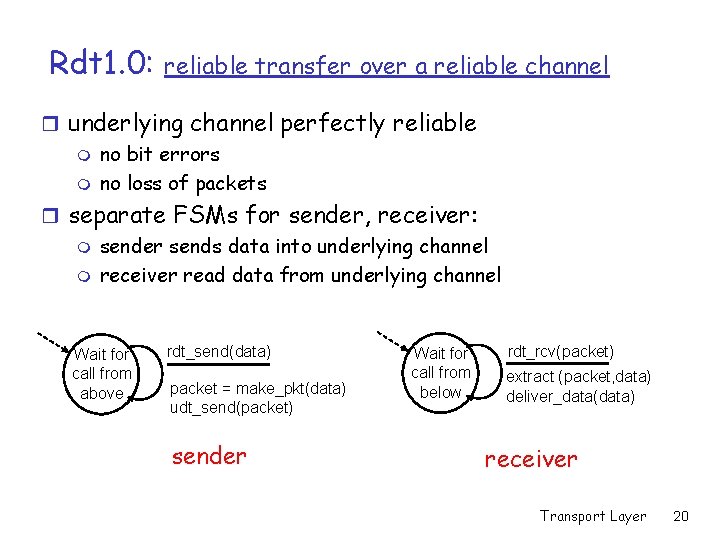

rdt 1.0: reliable transfer over a reliable channel

- underlying channel perfectly reliable
  - no bit errors
  - no loss of packets
- separate FSMs for sender, receiver:
  - sender sends data into underlying channel
  - receiver reads data from underlying channel

Sender FSM, state “Wait for call from above”:

- `rdt_send(data)`: `packet = make_pkt(data)`; `udt_send(packet)`

Receiver FSM, state “Wait for call from below”:

- `rdt_rcv(packet)`: `extract(packet, data)`; `deliver_data(data)`

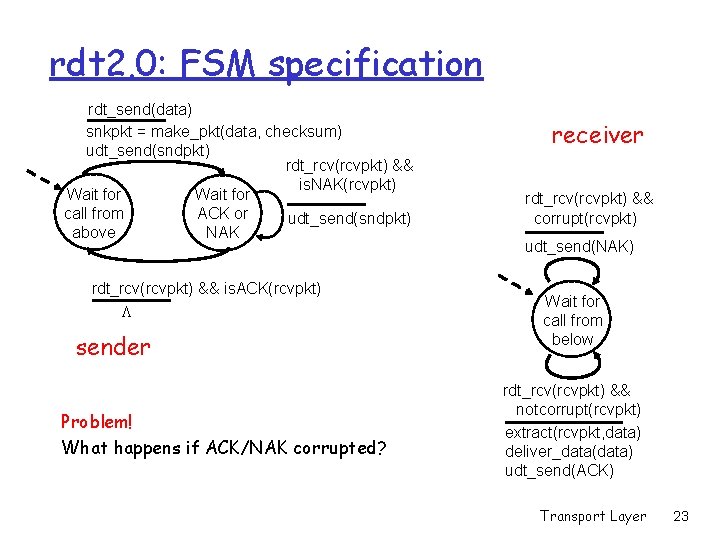

rdt 2.0: channel with bit errors

- underlying channel may flip bits in packet
  - checksum to detect bit errors
- the question: how to recover from errors:
  - acknowledgements (ACKs): receiver explicitly tells sender that pkt received OK
  - negative acknowledgements (NAKs): receiver explicitly tells sender that pkt had errors
  - sender retransmits pkt on receipt of NAK

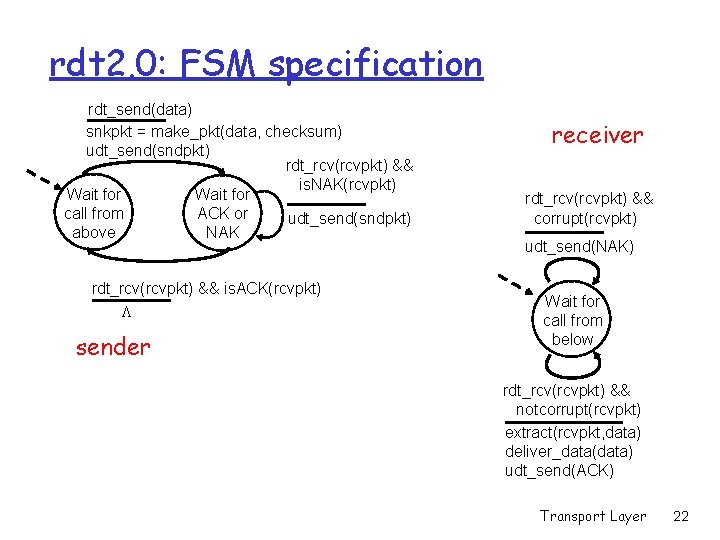

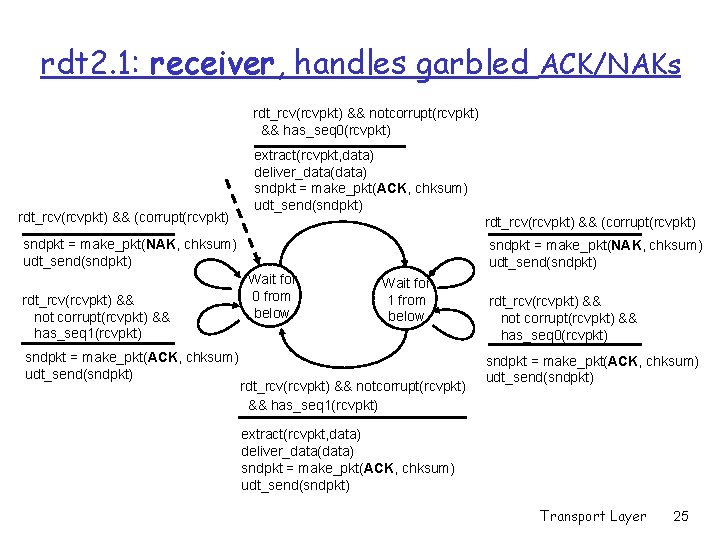

rdt 2.0: FSM specification

Sender:

- state “Wait for call from above”, on `rdt_send(data)`: `sndpkt = make_pkt(data, checksum)`; `udt_send(sndpkt)`; go to “Wait for ACK or NAK”
- state “Wait for ACK or NAK”, on `rdt_rcv(rcvpkt) && isNAK(rcvpkt)`: `udt_send(sndpkt)`
- state “Wait for ACK or NAK”, on `rdt_rcv(rcvpkt) && isACK(rcvpkt)`: Λ (no action); go to “Wait for call from above”

Receiver, state “Wait for call from below”:

- on `rdt_rcv(rcvpkt) && corrupt(rcvpkt)`: `udt_send(NAK)`
- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt)`: `extract(rcvpkt, data)`; `deliver_data(data)`; `udt_send(ACK)`

rdt 2.0: FSM specification (cont)

Problem! What happens if the ACK/NAK is corrupted? The rdt 2.0 FSM has no transition for that case.

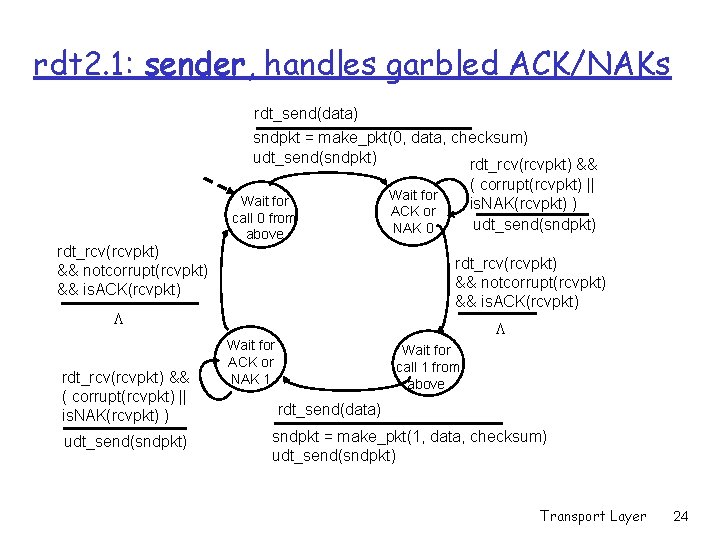

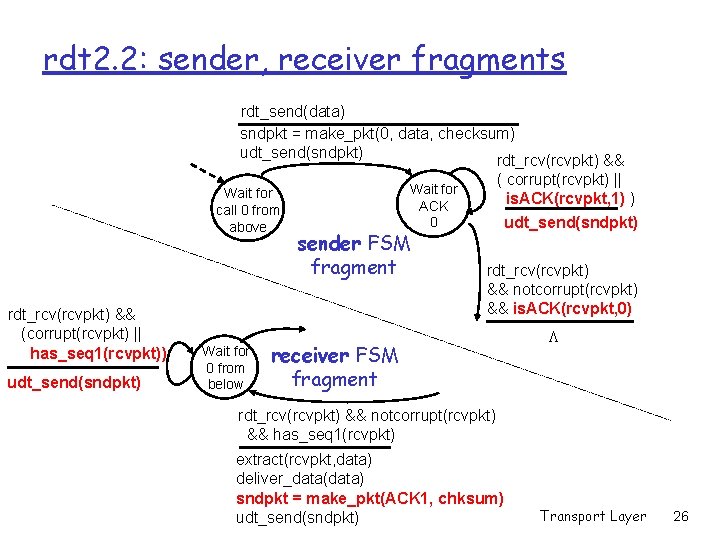

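rdt 2.1: sender, handles garbled ACK/NAKs

- state “Wait for call 0 from above”, on `rdt_send(data)`: `sndpkt = make_pkt(0, data, checksum)`; `udt_send(sndpkt)`; go to “Wait for ACK or NAK 0”
- state “Wait for ACK or NAK 0”:
  - on `rdt_rcv(rcvpkt) && (corrupt(rcvpkt) || isNAK(rcvpkt))`: `udt_send(sndpkt)`
  - on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt)`: Λ (no action); go to “Wait for call 1 from above”
- state “Wait for call 1 from above”, on `rdt_send(data)`: `sndpkt = make_pkt(1, data, checksum)`; `udt_send(sndpkt)`; go to “Wait for ACK or NAK 1”
- state “Wait for ACK or NAK 1”:
  - on `rdt_rcv(rcvpkt) && (corrupt(rcvpkt) || isNAK(rcvpkt))`: `udt_send(sndpkt)`
  - on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt)`: Λ (no action); go to “Wait for call 0 from above”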

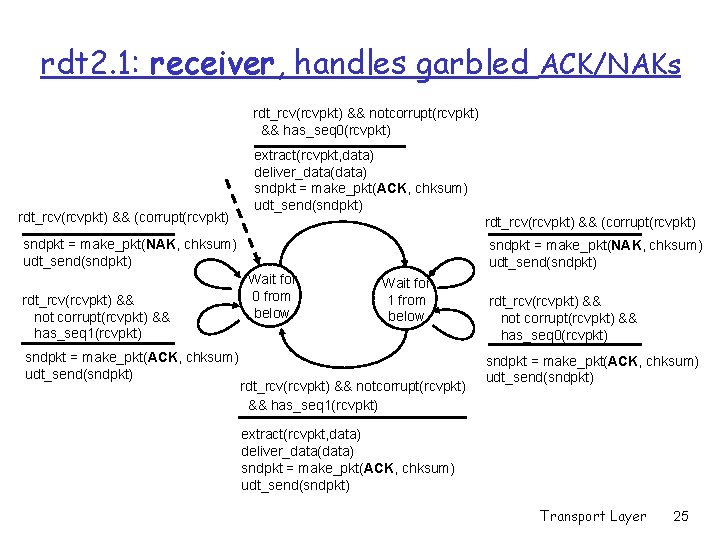

rdt 2.1: receiver, handles garbled ACK/NAKs

State “Wait for 0 from below”:

- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && has_seq0(rcvpkt)`: `extract(rcvpkt, data)`; `deliver_data(data)`; `sndpkt = make_pkt(ACK, chksum)`; `udt_send(sndpkt)`; go to “Wait for 1 from below”
- on `rdt_rcv(rcvpkt) && corrupt(rcvpkt)`: `sndpkt = make_pkt(NAK, chksum)`; `udt_send(sndpkt)`
- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && has_seq1(rcvpkt)`: `sndpkt = make_pkt(ACK, chksum)`; `udt_send(sndpkt)`

State “Wait for 1 from below”:

- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && has_seq1(rcvpkt)`: `extract(rcvpkt, data)`; `deliver_data(data)`; `sndpkt = make_pkt(ACK, chksum)`; `udt_send(sndpkt)`; go to “Wait for 0 from below”
- on `rdt_rcv(rcvpkt) && corrupt(rcvpkt)`: `sndpkt = make_pkt(NAK, chksum)`; `udt_send(sndpkt)`
- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && has_seq0(rcvpkt)`: `sndpkt = make_pkt(ACK, chksum)`; `udt_send(sndpkt)`

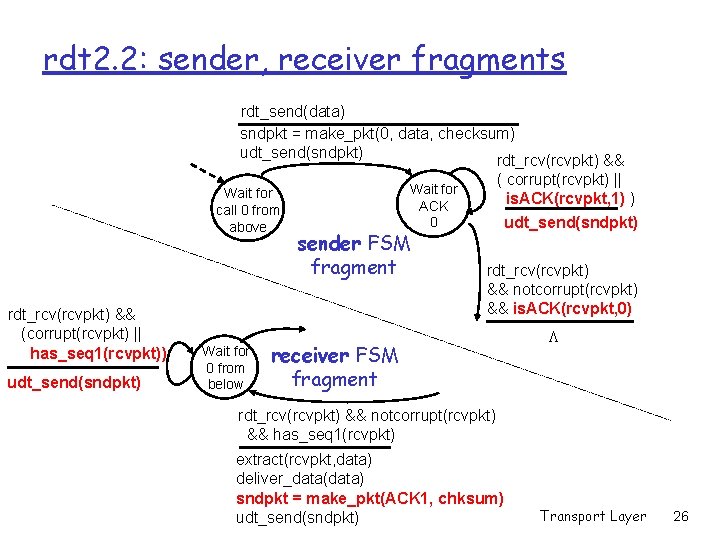

rdt 2.2: sender, receiver fragments

Sender FSM fragment:

- state “Wait for call 0 from above”, on `rdt_send(data)`: `sndpkt = make_pkt(0, data, checksum)`; `udt_send(sndpkt)`; go to “Wait for ACK 0”
- state “Wait for ACK 0”:
  - on `rdt_rcv(rcvpkt) && (corrupt(rcvpkt) || isACK(rcvpkt, 1))`: `udt_send(sndpkt)`
  - on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt, 0)`: Λ (no action)

Receiver FSM fragment:

- state “Wait for 0 from below”, on `rdt_rcv(rcvpkt) && (corrupt(rcvpkt) || has_seq1(rcvpkt))`: `udt_send(sndpkt)`
- transition into “Wait for 0 from below”, on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && has_seq1(rcvpkt)`: `extract(rcvpkt, data)`; `deliver_data(data)`; `sndpkt = make_pkt(ACK1, chksum)`; `udt_send(sndpkt)`

rdt 3.0: channels with errors and loss

New assumption: underlying channel can also lose packets (data or ACKs)

Approach: sender waits “reasonable” amount of time for ACK

- retransmits if no ACK received in this time
  - requires countdown timer
- if pkt (or ACK) just delayed (not lost): What then?

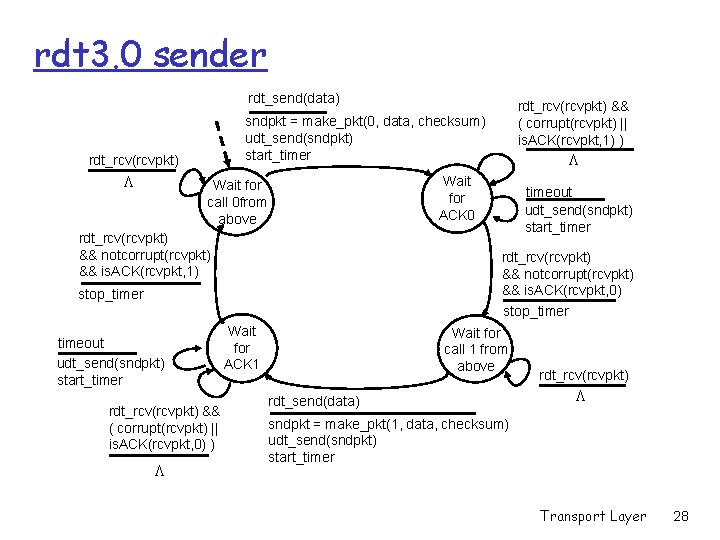

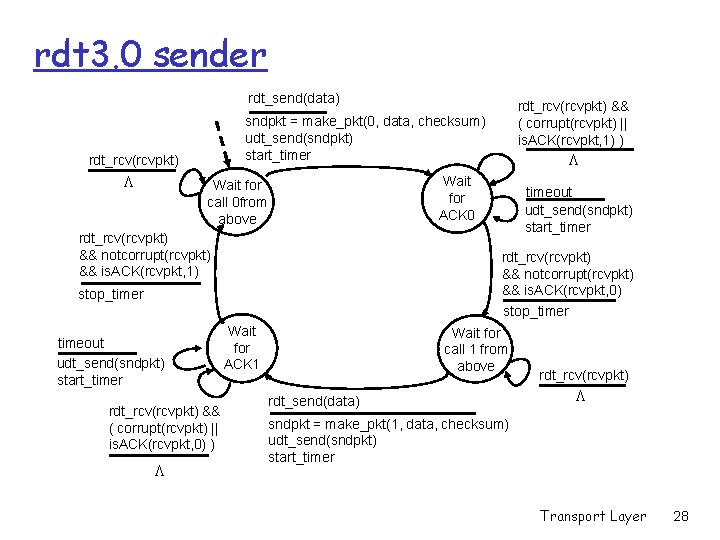

rdt 3.0 sender

- state “Wait for call 0 from above”:
  - on `rdt_send(data)`: `sndpkt = make_pkt(0, data, checksum)`; `udt_send(sndpkt)`; `start_timer`; go to “Wait for ACK 0”
  - on `rdt_rcv(rcvpkt)`: Λ (no action)
- state “Wait for ACK 0”:
  - on `rdt_rcv(rcvpkt) && (corrupt(rcvpkt) || isACK(rcvpkt, 1))`: Λ (no action)
  - on `timeout`: `udt_send(sndpkt)`; `start_timer`
  - on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt, 0)`: `stop_timer`; go to “Wait for call 1 from above”
- state “Wait for call 1 from above”:
  - on `rdt_send(data)`: `sndpkt = make_pkt(1, data, checksum)`; `udt_send(sndpkt)`; `start_timer`; go to “Wait for ACK 1”
  - on `rdt_rcv(rcvpkt)`: Λ (no action)
- state “Wait for ACK 1”:
  - on `rdt_rcv(rcvpkt) && (corrupt(rcvpkt) || isACK(rcvpkt, 0))`: Λ (no action)
  - on `timeout`: `udt_send(sndpkt)`; `start_timer`
  - on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt, 1)`: `stop_timer`; go to “Wait for call 0 from above”
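The alternating-bit behaviour of rdt 3.0 can be approximated with a small deterministic simulation. This is only a sketch under simplifying assumptions: the function name and the `lost_data` / `lost_acks` parameters are hypothetical, loss is scripted by transmission index rather than random, a lost packet or ACK is treated as an immediate timeout, and corruption is not modelled.

```python
def rdt3_transfer(messages, lost_data=(), lost_acks=()):
    """Stop-and-wait with a 1-bit sequence number over a scripted lossy channel."""
    delivered = []
    expected = 0   # receiver: sequence bit it is waiting for
    seq = 0        # sender: sequence bit of the current packet
    sends = 0      # number of udt_send() calls made by the sender
    for data in messages:
        acked = False
        while not acked:
            attempt = sends
            sends += 1
            if attempt in lost_data:
                continue              # data lost: timeout, retransmit
            if seq == expected:       # receiver sees a new packet
                delivered.append(data)
                expected ^= 1
            # otherwise it is a duplicate: not redelivered, but re-ACKed
            if attempt in lost_acks:
                continue              # ACK lost: timeout, retransmit
            acked = True
        seq ^= 1                      # alternate between 0 and 1
    return delivered, sends


delivered, sends = rdt3_transfer(["a", "b", "c"], lost_data={1}, lost_acks={3})
```

With one lost data packet and one lost ACK, the sender transmits five times, yet the receiver delivers each message exactly once because the duplicate carries a sequence bit it is no longer waiting for.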

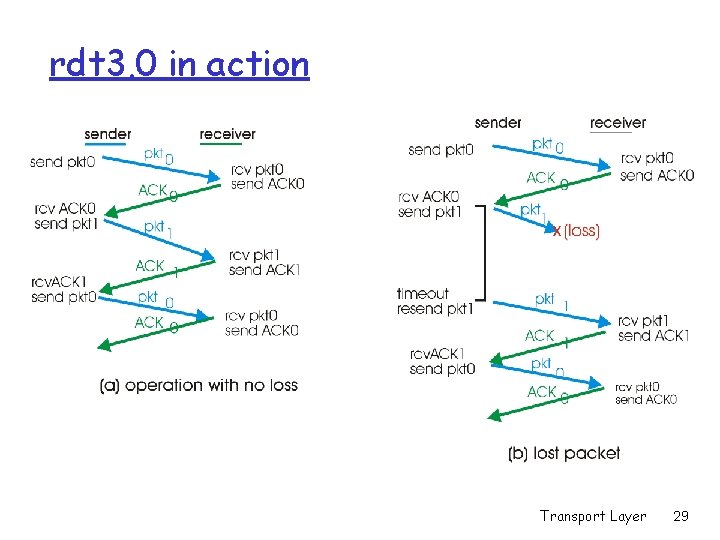

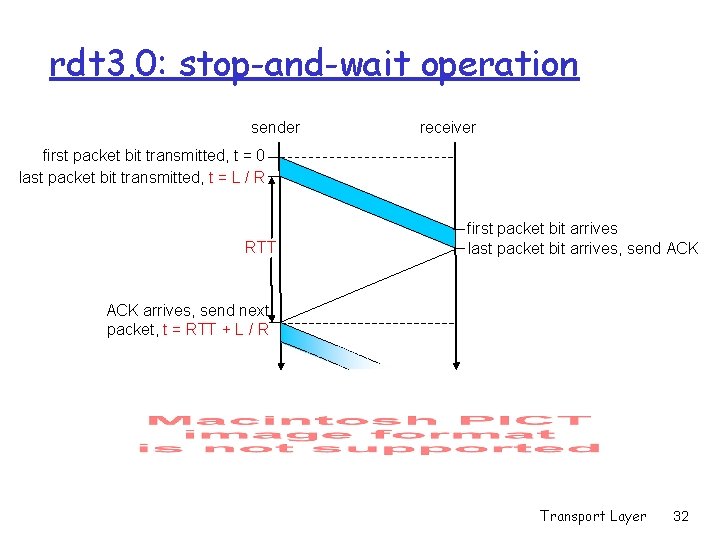

rdt 3.0 in action

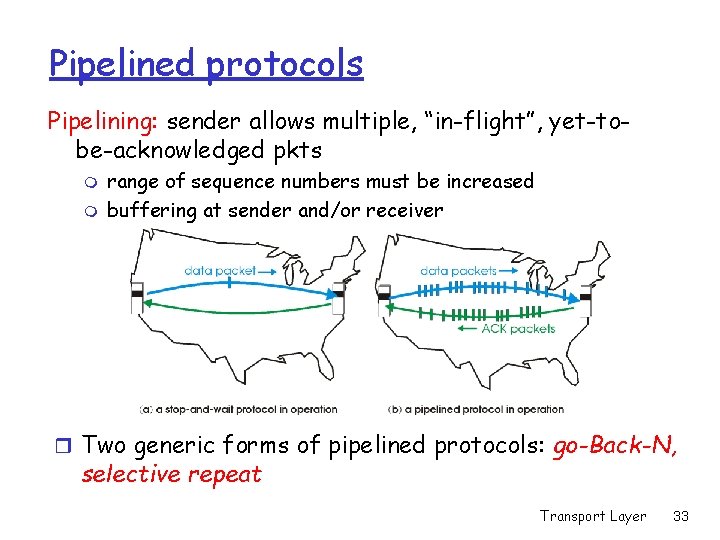

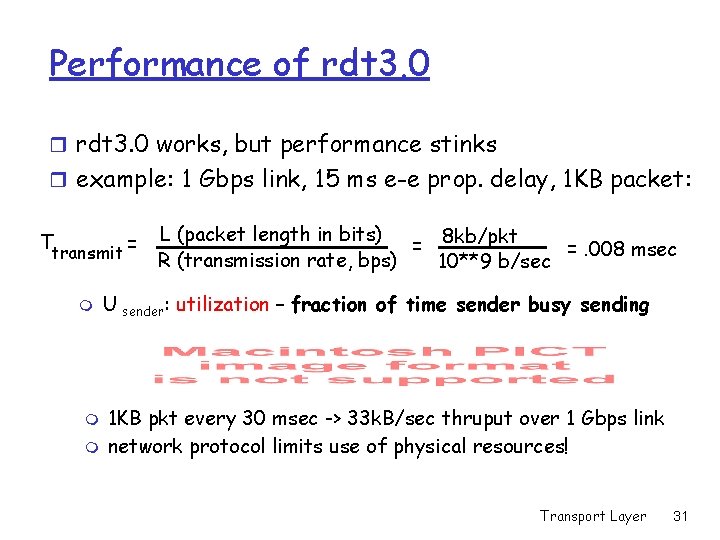

Performance of rdt 3.0

- rdt 3.0 works, but performance stinks
- example: 1 Gbps link, 15 ms e-e prop. delay, 1 KB packet:

$$T_{transmit} = \frac{L\ \text{(packet length in bits)}}{R\ \text{(transmission rate, bps)}} = \frac{8\ \text{kb/pkt}}{10^{9}\ \text{b/sec}} = 0.008\ \text{msec}$$

- U sender: utilization, the fraction of time the sender is busy sending
- 1 KB pkt every 30 msec -> 33 kB/sec thruput over 1 Gbps link
- network protocol limits use of physical resources!
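The numbers in this example can be checked with a few lines of Python. The sketch assumes the usual stop-and-wait utilization expression U = (L/R) / (RTT + L/R), takes RTT as twice the 15 ms propagation delay, and follows the slide in treating 1 KB as 8 kb; the variable names are illustrative.

```python
L_bits = 8_000        # 1 KB packet, counted as 8 kb as in the example
R_bps = 1e9           # 1 Gbps link
RTT_s = 2 * 0.015     # 15 ms propagation delay each way

t_transmit = L_bits / R_bps                       # seconds to push one packet
utilization = t_transmit / (RTT_s + t_transmit)   # fraction of time sender is busy
throughput_bytes_per_s = (L_bits / 8) / (RTT_s + t_transmit)
```

The sender is busy for roughly 0.027% of the time, which corresponds to about 33 kB/sec over a 1 Gbps link.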

rdt 3.0: stop-and-wait operation

[Figure: sender/receiver timing diagram]

- sender: first packet bit transmitted, t = 0
- sender: last packet bit transmitted, t = L / R
- receiver: first packet bit arrives
- receiver: last packet bit arrives, send ACK
- sender: ACK arrives, send next packet, t = RTT + L / R

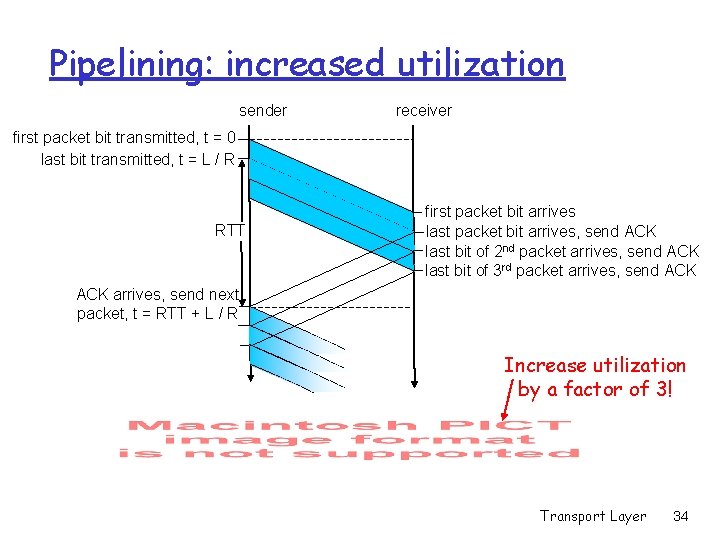

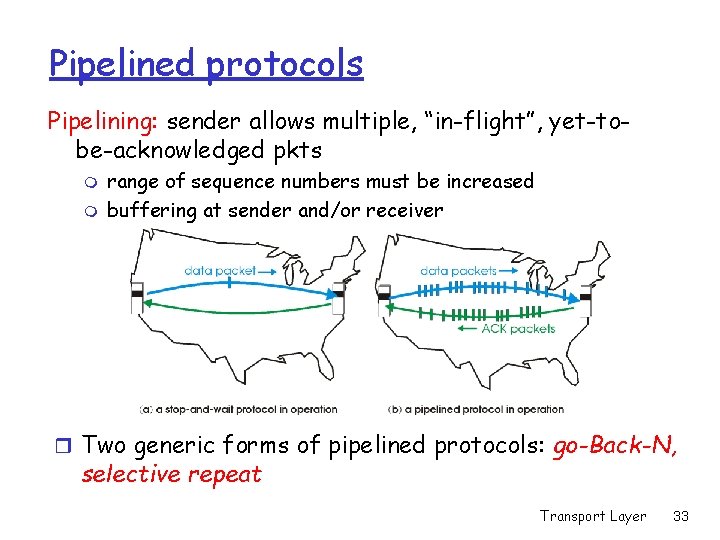

Pipelined protocols

Pipelining: sender allows multiple, “in-flight”, yet-to-be-acknowledged pkts

- range of sequence numbers must be increased
- buffering at sender and/or receiver

Two generic forms of pipelined protocols: Go-Back-N, selective repeat

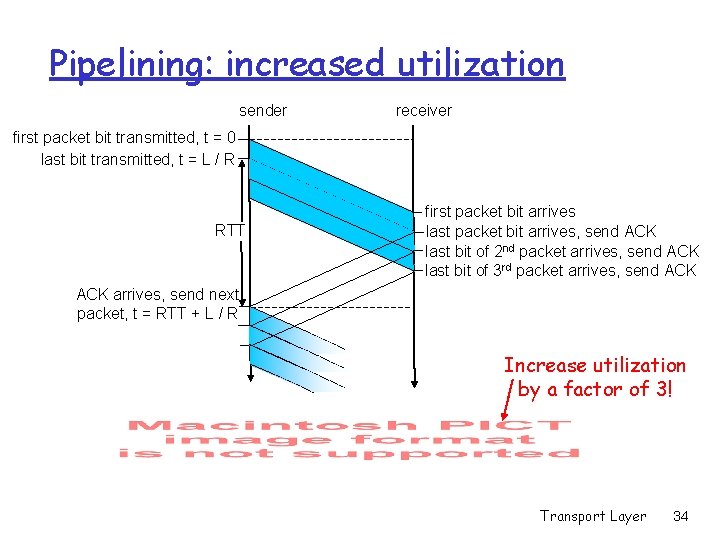

Pipelining: increased utilization

[Figure: sender/receiver timing diagram with three packets in flight]

- sender: first packet bit transmitted, t = 0
- sender: last bit transmitted, t = L / R
- receiver: first packet bit arrives
- receiver: last packet bit arrives, send ACK
- receiver: last bit of 2nd packet arrives, send ACK
- receiver: last bit of 3rd packet arrives, send ACK
- sender: ACK arrives, send next packet, t = RTT + L / R

Increase utilization by a factor of 3!
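A short sketch of why three in-flight packets triple the utilization. The helper name is hypothetical, and the link parameters are borrowed from the rdt 3.0 performance example (1 Gbps, 8 kb packets, 30 ms RTT); the formula assumes the sender transmits N packets back to back and then idles until the first ACK returns.

```python
def sender_utilization(n_in_flight, l_bits, r_bps, rtt_s):
    """Fraction of time the sender is busy when n packets are sent per RTT + L/R."""
    t_transmit = l_bits / r_bps
    return min(1.0, n_in_flight * t_transmit / (rtt_s + t_transmit))


u_stop_and_wait = sender_utilization(1, 8_000, 1e9, 0.030)
u_pipelined = sender_utilization(3, 8_000, 1e9, 0.030)
speedup = u_pipelined / u_stop_and_wait
```

The ratio stays at N until the window is large enough to keep the link continuously busy, at which point utilization is capped at 1.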

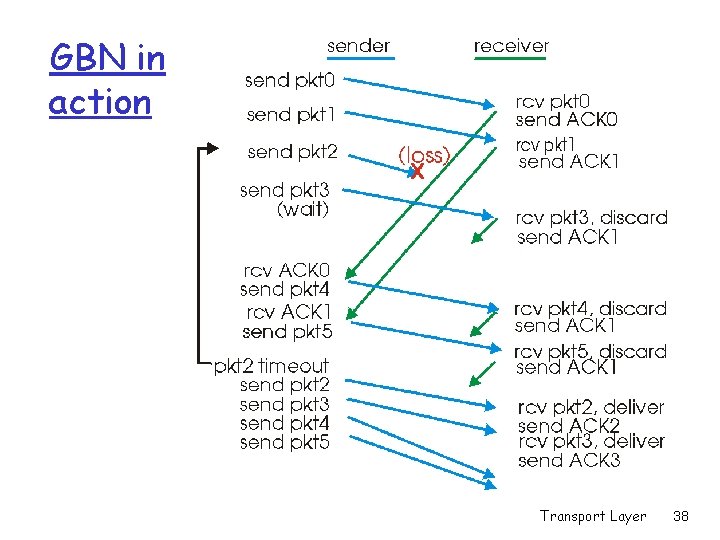

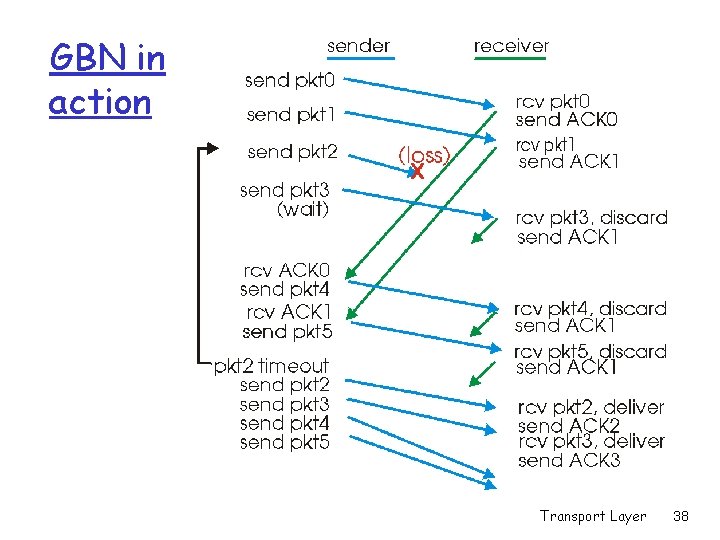

Go-Back-N

Sender:

- k-bit seq # in pkt header
- “window” of up to N consecutive unack’ed pkts allowed
- cumulative ACK, ACK(n): ACKs all pkts up to, including seq # n
  - may receive duplicate ACKs (see receiver)
- timer for each in-flight pkt
- timeout(n): retransmit pkt n and all higher seq # pkts in window

![GBN: sender extended FSM](https://slidetodoc.com/presentation_image_h/061a9590516264c7e9d9bf691f04afab/image-36.jpg)

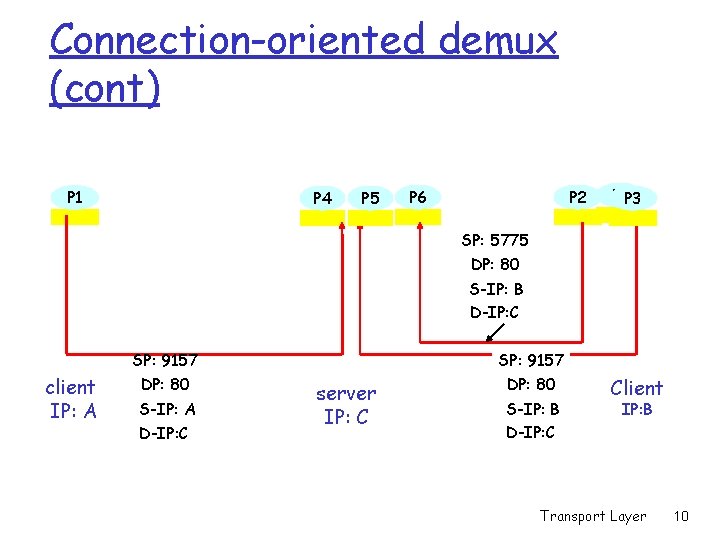

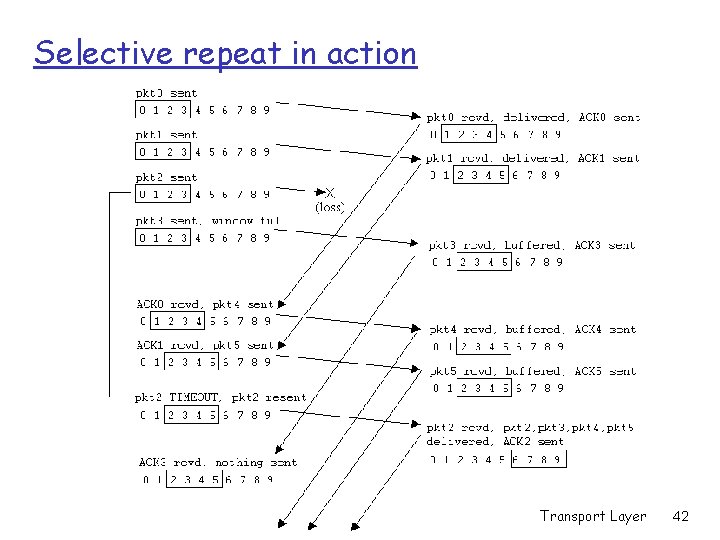

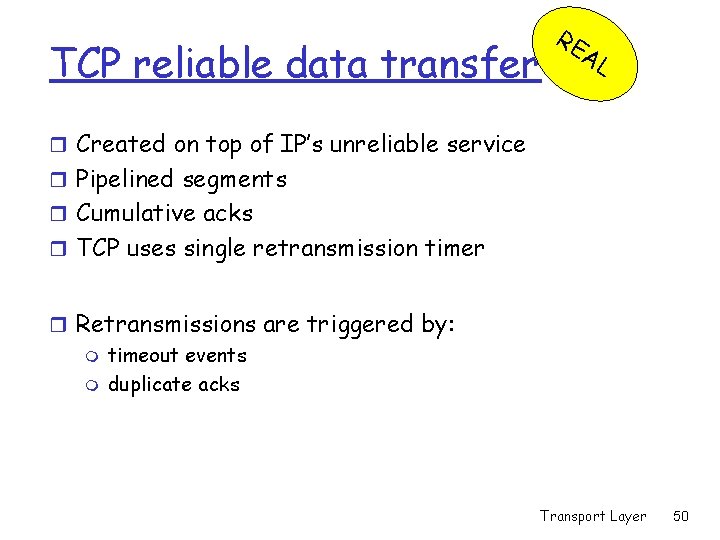

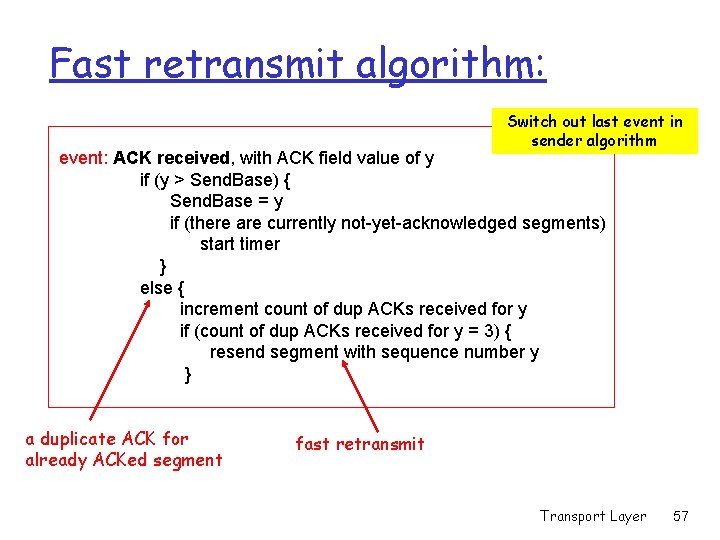

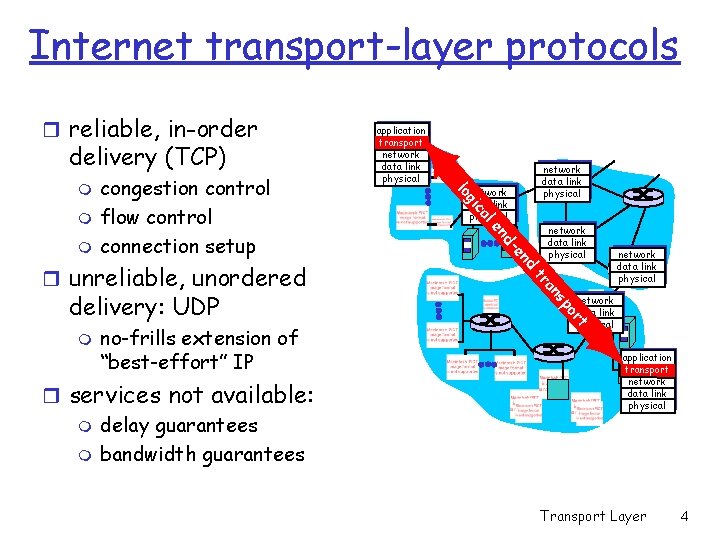

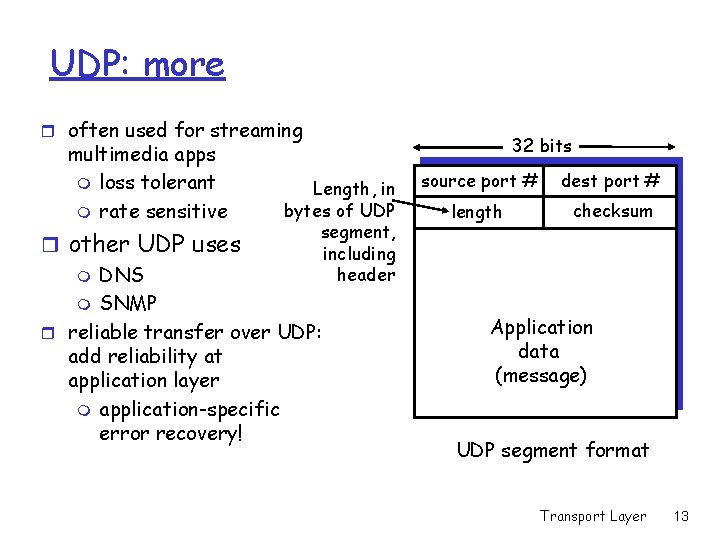

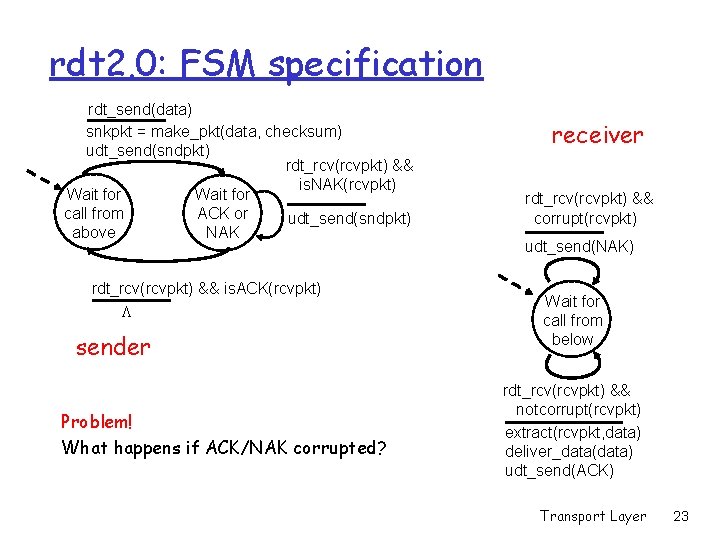

GBN: sender extended FSM

Single state “Wait”:

- initially: `base=1`; `nextseqnum=1`
- on `rdt_send(data)`: `if (nextseqnum < base+N) { sndpkt[nextseqnum] = make_pkt(nextseqnum, data, chksum); udt_send(sndpkt[nextseqnum]); if (base == nextseqnum) start_timer; nextseqnum++ } else refuse_data(data)`
- on `timeout`: `start_timer`; `udt_send(sndpkt[base])`; `udt_send(sndpkt[base+1])`; …; `udt_send(sndpkt[nextseqnum-1])`
- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt)`: `base = getacknum(rcvpkt)+1`; `if (base == nextseqnum) stop_timer else start_timer`
- on `rdt_rcv(rcvpkt) && corrupt(rcvpkt)`: Λ (no action)
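The sender FSM translates fairly directly into a small Python class. This is a sketch rather than a full implementation: the class name is hypothetical, `udt_send` is replaced by a log of sequence numbers, the timer is reduced to a boolean, and corrupt ACKs are not modelled.

```python
class GbnSender:
    """Go-Back-N sender following the extended FSM (simplified sketch)."""

    def __init__(self, window):
        self.N = window
        self.base = 1
        self.nextseqnum = 1
        self.sndpkt = {}             # seq # -> packet kept for retransmission
        self.timer_running = False
        self.sent = []               # log standing in for udt_send()

    def rdt_send(self, data):
        if self.nextseqnum < self.base + self.N:
            self.sndpkt[self.nextseqnum] = (self.nextseqnum, data)
            self.sent.append(self.nextseqnum)
            if self.base == self.nextseqnum:
                self.timer_running = True    # start_timer
            self.nextseqnum += 1
            return True
        return False                          # refuse_data: window is full

    def timeout(self):
        self.timer_running = True             # start_timer
        for seq in range(self.base, self.nextseqnum):
            self.sent.append(seq)             # resend every unACKed packet

    def rdt_rcv_ack(self, acknum):
        self.base = acknum + 1                # cumulative ACK
        self.timer_running = self.base != self.nextseqnum


s = GbnSender(window=4)
accepted = [s.rdt_send(d) for d in "abcde"]
s.rdt_rcv_ack(2)
s.timeout()
```

The fifth call is refused because the window of four is full; after ACK 2 the base slides to 3, and the timeout resends packets 3 and 4.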

GBN: receiver extended FSM

Single state “Wait”:

- initially: `expectedseqnum=1`; `sndpkt = make_pkt(expectedseqnum, ACK, chksum)`
- on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && hasseqnum(rcvpkt, expectedseqnum)`: `extract(rcvpkt, data)`; `deliver_data(data)`; `sndpkt = make_pkt(expectedseqnum, ACK, chksum)`; `udt_send(sndpkt)`; `expectedseqnum++`
- default: `udt_send(sndpkt)`

ACK-only: always send ACK for correctly-received pkt with highest in-order seq #

- may generate duplicate ACKs
- need only remember `expectedseqnum`

Out-of-order pkt:

- discard (don’t buffer) -> no receiver buffering!
- re-ACK pkt with highest in-order seq #

GBN in action

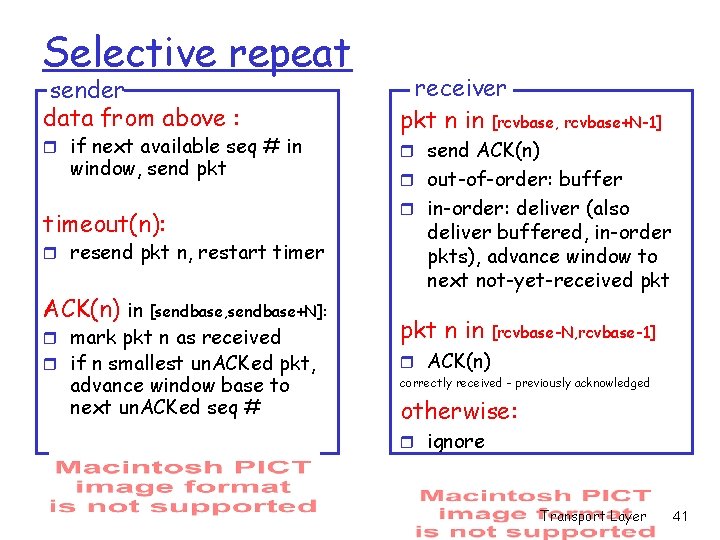

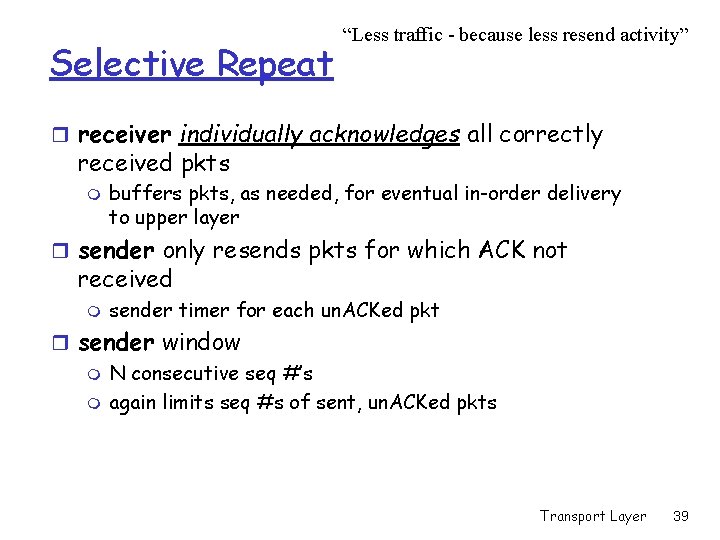

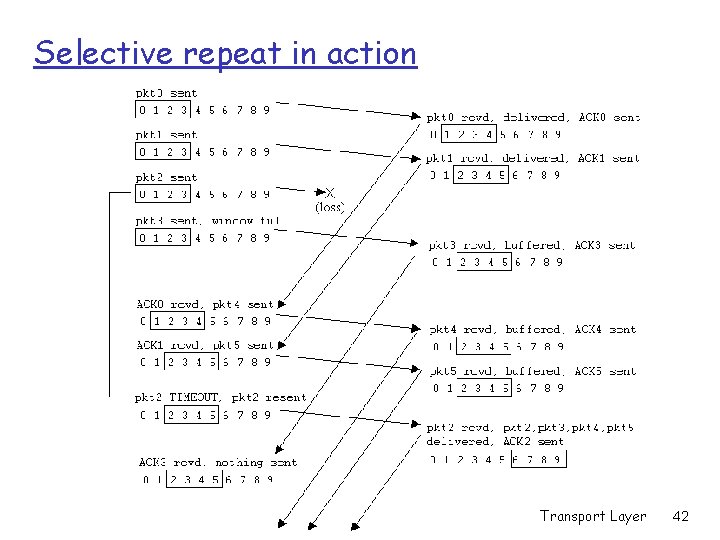

Selective Repeat

“Less traffic, because less resend activity”

- receiver individually acknowledges all correctly received pkts
  - buffers pkts, as needed, for eventual in-order delivery to upper layer
- sender only resends pkts for which ACK not received
  - sender timer for each unACKed pkt
- sender window
  - N consecutive seq #’s
  - again limits seq #s of sent, unACKed pkts

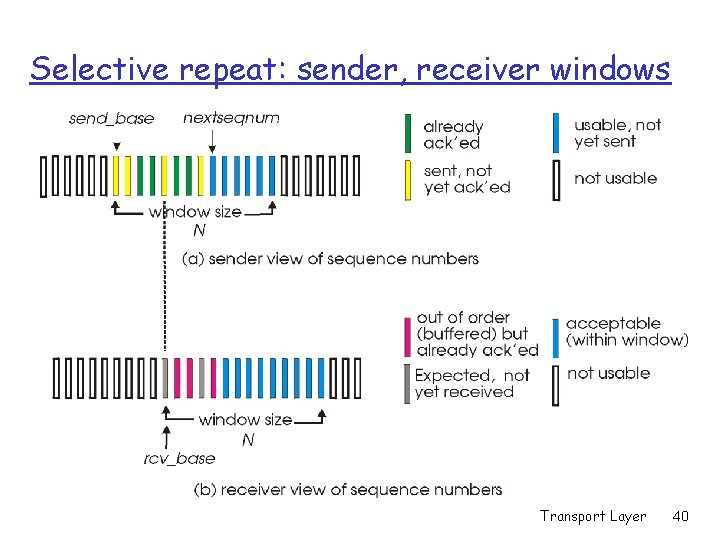

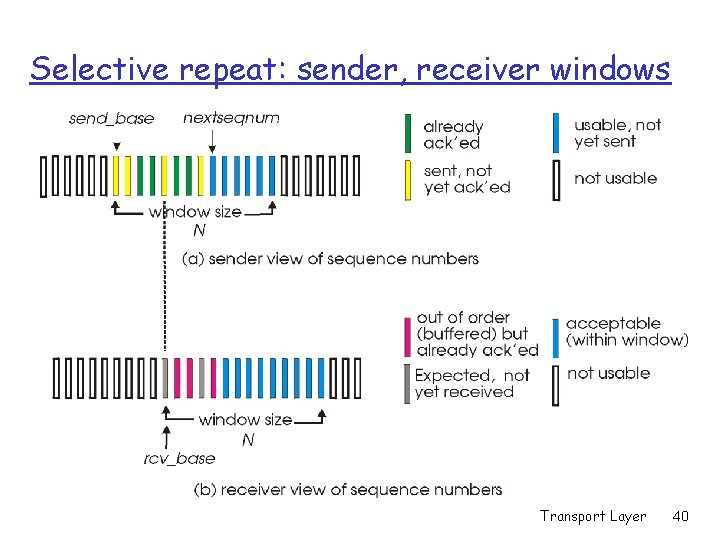

Selective repeat: sender, receiver windows

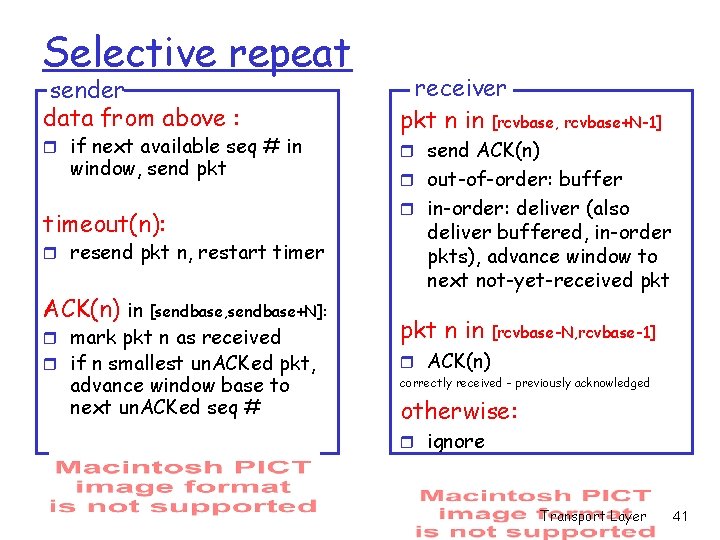

Selective repeat

Sender:

- data from above: if next available seq # in window, send pkt
- timeout(n): resend pkt n, restart timer
- ACK(n) in [sendbase, sendbase+N-1]:
  - mark pkt n as received
  - if n is the smallest unACKed pkt, advance window base to next unACKed seq #

Receiver:

- pkt n in [rcvbase, rcvbase+N-1]:
  - send ACK(n)
  - out-of-order: buffer
  - in-order: deliver (also deliver buffered, in-order pkts), advance window to next not-yet-received pkt
- pkt n in [rcvbase-N, rcvbase-1]:
  - ACK(n) (correctly received, previously acknowledged)
- otherwise: ignore
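The receiver rules can be sketched as a small class. The class name is hypothetical, and the sketch assumes unbounded sequence numbers (no wraparound) and no corruption.

```python
class SrReceiver:
    """Selective-repeat receiver following the three cases above (sketch)."""

    def __init__(self, window):
        self.N = window
        self.rcvbase = 0
        self.buffer = {}      # out-of-order packets awaiting delivery
        self.delivered = []   # data handed to the upper layer, in order

    def receive(self, n, data):
        """Return the ACK number to send, or None if the packet is ignored."""
        if self.rcvbase <= n <= self.rcvbase + self.N - 1:
            self.buffer[n] = data
            # Deliver any run of in-order packets and slide the window.
            while self.rcvbase in self.buffer:
                self.delivered.append(self.buffer.pop(self.rcvbase))
                self.rcvbase += 1
            return n
        if self.rcvbase - self.N <= n <= self.rcvbase - 1:
            return n          # already delivered: re-ACK so the sender can advance
        return None           # outside both ranges: ignore


r = SrReceiver(window=4)
arrivals = [(0, "p0"), (2, "p2"), (3, "p3"), (1, "p1"), (0, "p0"), (9, "p9")]
acks = [r.receive(n, d) for n, d in arrivals]
```

Packets 2 and 3 are buffered until packet 1 fills the gap; the duplicate of packet 0 is re-ACKed without being delivered again, and packet 9 is ignored.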

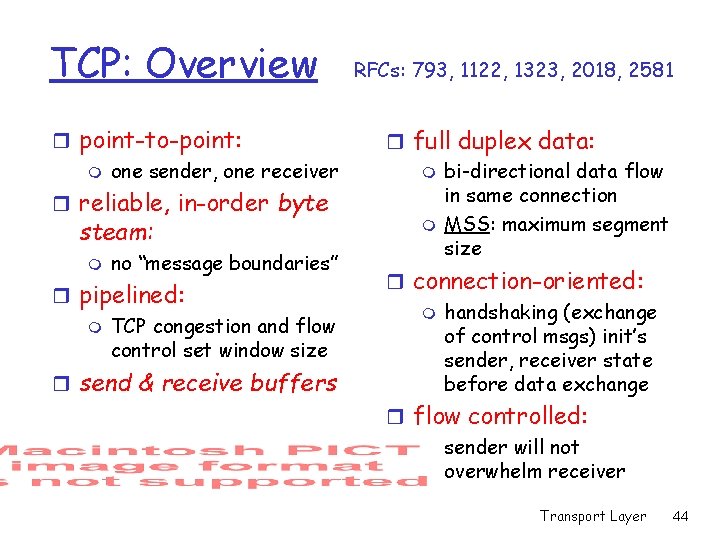

Selective repeat in action

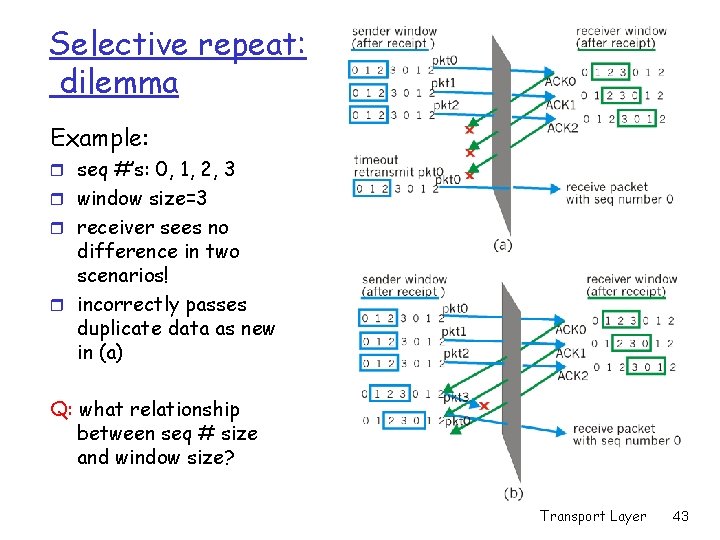

Selective repeat: dilemma

Example:

- seq #’s: 0, 1, 2, 3
- window size = 3
- receiver sees no difference in two scenarios!
- incorrectly passes duplicate data as new in (a)

Q: what relationship between seq # size and window size?

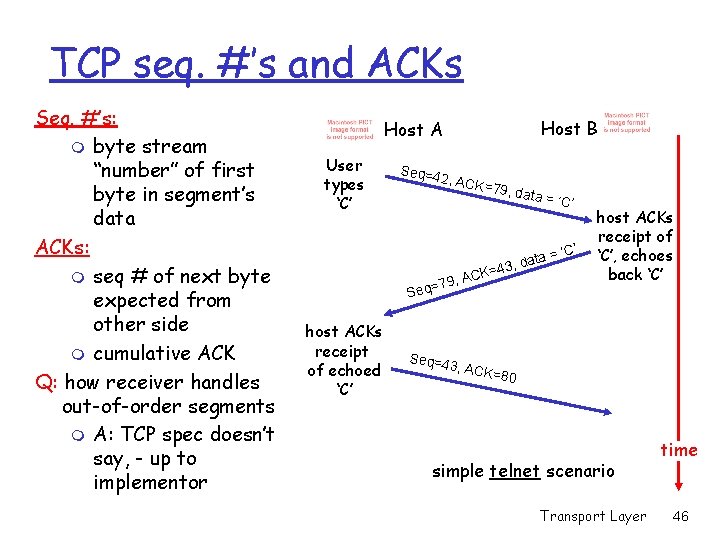

TCP: Overview (RFCs: 793, 1122, 1323, 2018, 2581)

- point-to-point:
  - one sender, one receiver
- reliable, in-order byte stream:
  - no “message boundaries”
- pipelined:
  - TCP congestion and flow control set window size
- send & receive buffers
- full duplex data:
  - bi-directional data flow in same connection
  - MSS: maximum segment size
- connection-oriented:
  - handshaking (exchange of control msgs) init’s sender, receiver state before data exchange
- flow controlled:
  - sender will not overwhelm receiver

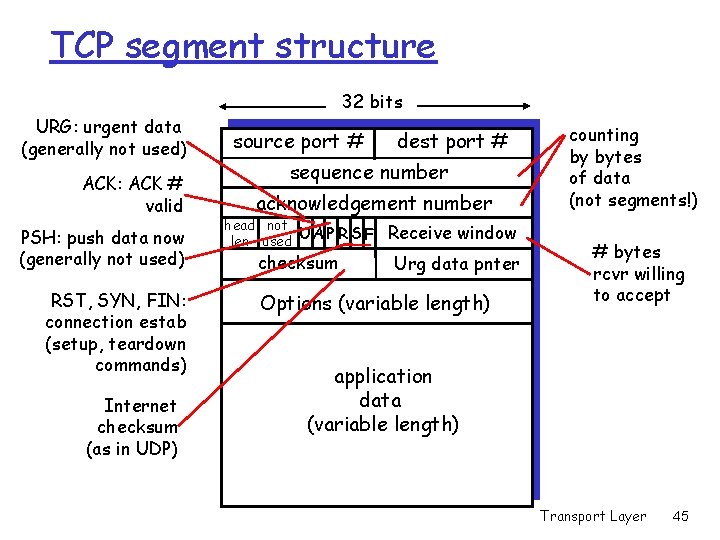

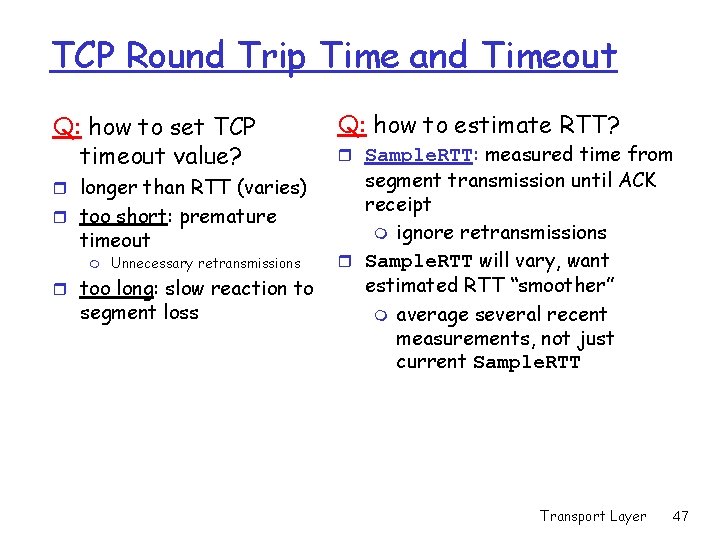

TCP segment structure

Fields (32 bits wide):

- source port #, dest port #
- sequence number
- acknowledgement number
- head len, not used, flag bits U A P R S F, receive window
- checksum, urg data pointer
- options (variable length)
- application data (variable length)

Notes:

- URG: urgent data (generally not used)
- ACK: ACK # valid
- PSH: push data now (generally not used)
- RST, SYN, FIN: connection estab (setup, teardown commands)
- checksum: Internet checksum (as in UDP)
- sequence and acknowledgement numbers: counting by bytes of data (not segments!)
- receive window: # bytes rcvr willing to accept

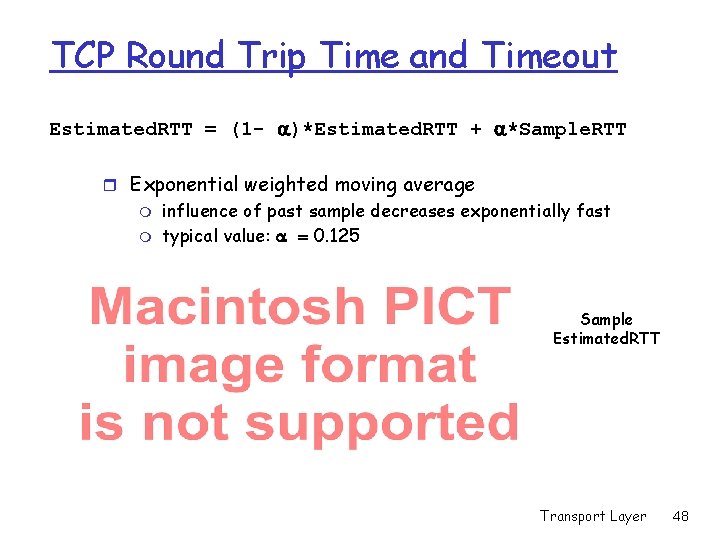

TCP seq. #’s and ACKs

- Seq. #’s:
  - byte stream “number” of first byte in segment’s data
- ACKs:
  - seq # of next byte expected from other side
  - cumulative ACK
- Q: how receiver handles out-of-order segments
  - A: TCP spec doesn’t say; up to implementor

Simple telnet scenario (Host A, Host B):

- User types ‘C’: Host A sends Seq=42, ACK=79, data = ‘C’
- Host B ACKs receipt of ‘C’, echoes back ‘C’: Seq=79, ACK=43, data = ‘C’
- Host A ACKs receipt of echoed ‘C’: Seq=43, ACK=80

TCP Round Trip Time and Timeout

Q: how to set TCP timeout value?

- longer than RTT (but RTT varies)
- too short: premature timeout
  - unnecessary retransmissions
- too long: slow reaction to segment loss

Q: how to estimate RTT?

- SampleRTT: measured time from segment transmission until ACK receipt
  - ignore retransmissions
- SampleRTT will vary, want estimated RTT “smoother”
  - average several recent measurements, not just current SampleRTT

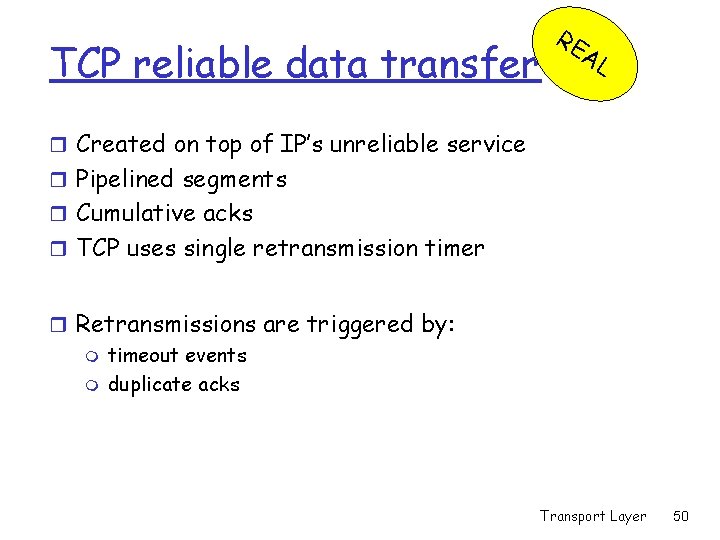

TCP Round Trip Time and Timeout

$$\text{EstimatedRTT} = (1 - \alpha) \cdot \text{EstimatedRTT} + \alpha \cdot \text{SampleRTT}$$

- exponential weighted moving average
- influence of past sample decreases exponentially fast
- typical value: $\alpha = 0.125$

[Figure: SampleRTT and EstimatedRTT plotted over time]

TCP Round Trip Time and Timeout

Setting the timeout

- EstimatedRTT plus “safety margin”
  - large variation in EstimatedRTT -> larger safety margin
- first estimate of how much SampleRTT deviates from EstimatedRTT:

$$\text{DevRTT} = (1 - \beta) \cdot \text{DevRTT} + \beta \cdot |\text{SampleRTT} - \text{EstimatedRTT}| \qquad (\text{typically } \beta = 0.25)$$

Then set timeout interval, with $4 \cdot \text{DevRTT}$ as the safety margin:

$$\text{TimeoutInterval} = \text{EstimatedRTT} + 4 \cdot \text{DevRTT}$$
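A minimal sketch of one update step, using the typical values α = 0.125 and β = 0.25. The function name and the sample numbers (in milliseconds) are illustrative, and the sketch assumes the deviation is computed against the freshly updated EstimatedRTT; real implementations may order the two updates differently.

```python
def update_rtt(estimated_rtt, dev_rtt, sample_rtt, alpha=0.125, beta=0.25):
    """Apply one SampleRTT measurement and return the new timer state."""
    estimated_rtt = (1 - alpha) * estimated_rtt + alpha * sample_rtt
    # Assumption: deviation is measured against the updated estimate.
    dev_rtt = (1 - beta) * dev_rtt + beta * abs(sample_rtt - estimated_rtt)
    timeout_interval = estimated_rtt + 4 * dev_rtt
    return estimated_rtt, dev_rtt, timeout_interval


est, dev, timeout = update_rtt(estimated_rtt=100.0, dev_rtt=10.0, sample_rtt=140.0)
```

A single slow sample of 140 ms moves the estimate only from 100 ms to 105 ms, but it raises the deviation enough to push the timeout out to 170 ms.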

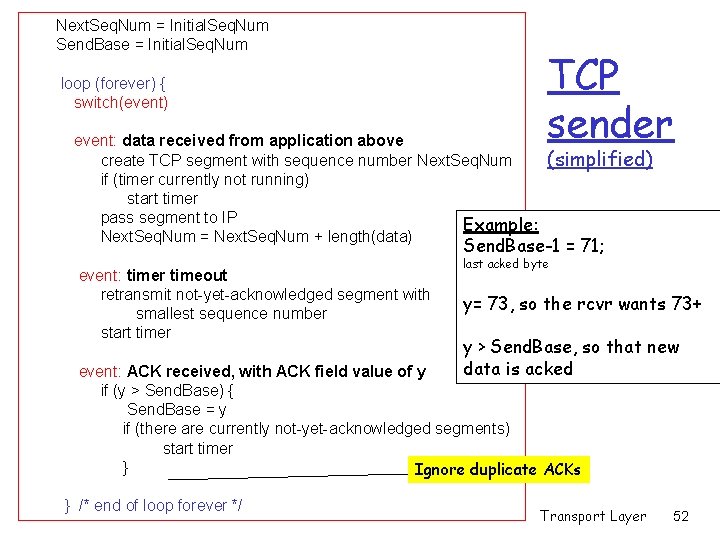

TCP reliable data transfer

- Created on top of IP’s unreliable service
- Pipelined segments
- Cumulative acks
- TCP uses single retransmission timer
- Retransmissions are triggered by:
  - timeout events
  - duplicate acks

TCP sender events

Data rcvd from app:

- create segment with seq #
- seq # is byte-stream number of first data byte in segment
- start timer if not already running (think of timer as for oldest unacked segment)
- expiration interval: TimeOutInterval

Timeout:

- retransmit segment that caused timeout
- restart timer

Ack rcvd:

- if it acknowledges previously unacked segments
  - update what is known to be acked
  - start timer if there are outstanding segments

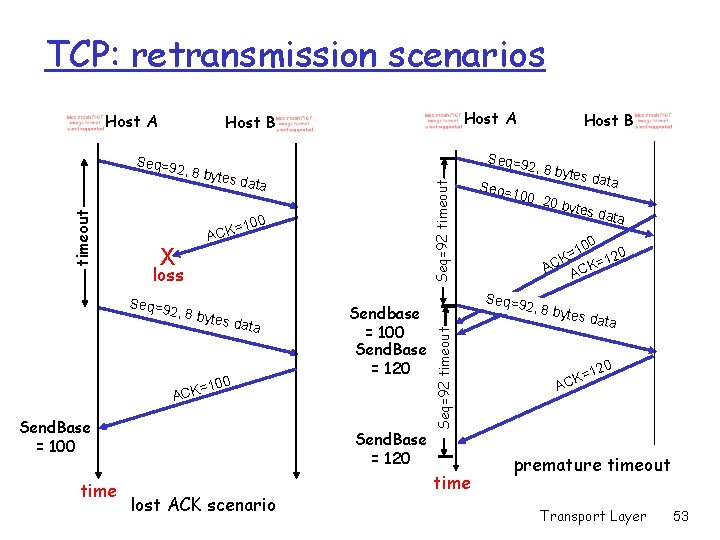

TCP sender (simplified)

    NextSeqNum = InitialSeqNum
    SendBase = InitialSeqNum

    loop (forever) {
      switch(event)

      event: data received from application above
        create TCP segment with sequence number NextSeqNum
        if (timer currently not running)
          start timer
        pass segment to IP
        NextSeqNum = NextSeqNum + length(data)

      event: timer timeout
        retransmit not-yet-acknowledged segment with smallest sequence number
        start timer

      event: ACK received, with ACK field value of y
        if (y > SendBase) {
          SendBase = y
          if (there are currently not-yet-acknowledged segments)
            start timer
        }
        /* Ignore duplicate ACKs */
    } /* end of loop forever */

Example: SendBase-1 = 71 is the last acked byte; y = 73, so the rcvr wants 73+; y > SendBase, so new data is acked.

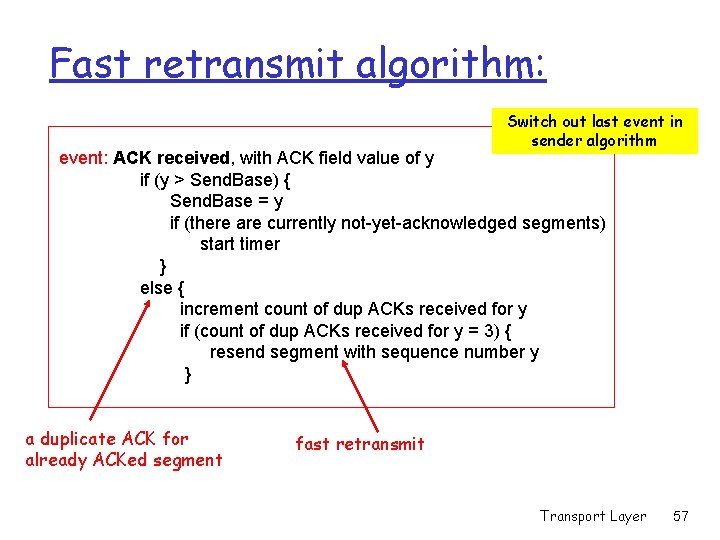

TCP: retransmission scenarios

Lost ACK scenario (Host A, Host B):

- Host A sends Seq=92, 8 bytes data
- Host B replies ACK=100, which is lost (X)
- Seq=92 timeout: Host A resends Seq=92, 8 bytes data
- Host B replies ACK=100; SendBase = 100

Premature timeout (Host A, Host B):

- Host A sends Seq=92, 8 bytes data, then Seq=100, 20 bytes data
- Host B replies ACK=100 and ACK=120
- Seq=92 timeout: Host A resends Seq=92, 8 bytes data
- SendBase = 100, then SendBase = 120; Host B replies ACK=120

TCP retransmission scenarios

Cumulative ACK scenario (Host A, Host B):

- Host A sends Seq=92, 8 bytes data, then Seq=100, 20 bytes data
- Host B's ACK=100 is lost (X)
- ACK=120 arrives before the timeout; SendBase = 120

![TCP ACK generation [RFC 1122, RFC 2581]](https://slidetodoc.com/presentation_image_h/061a9590516264c7e9d9bf691f04afab/image-55.jpg)

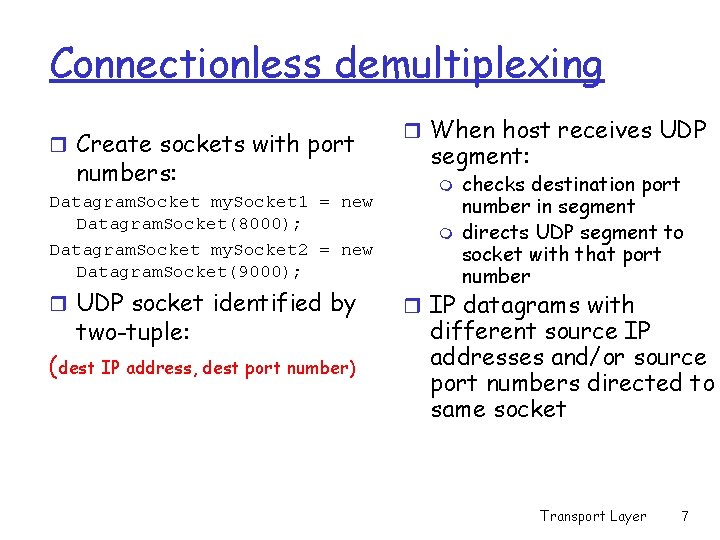

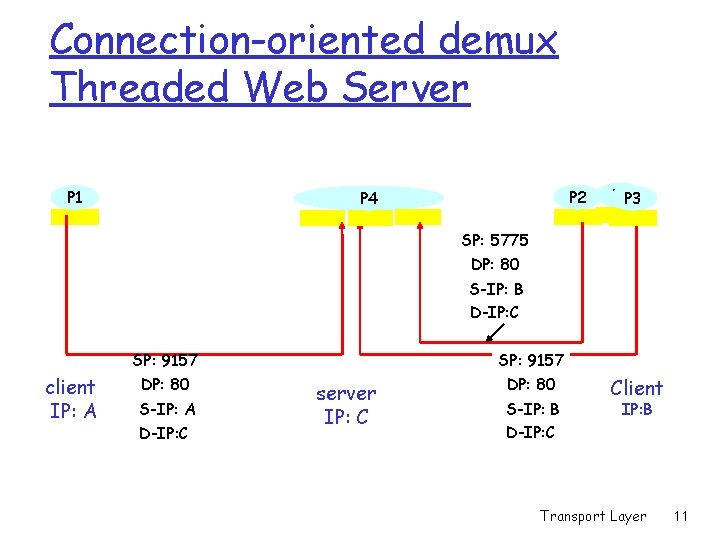

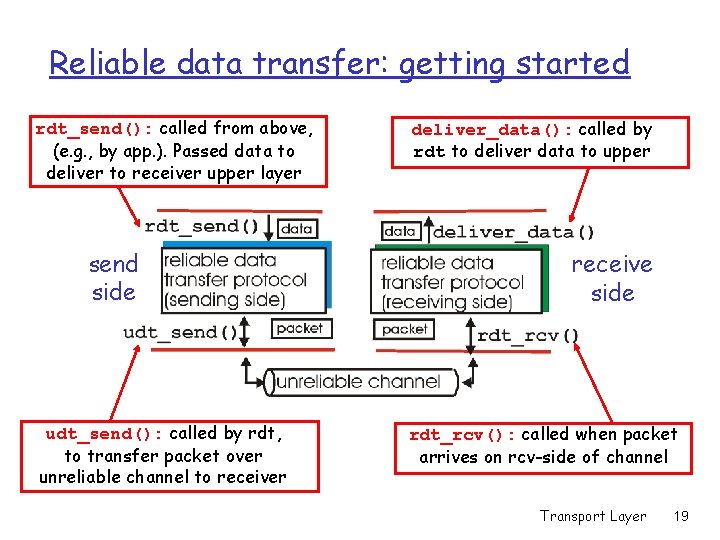

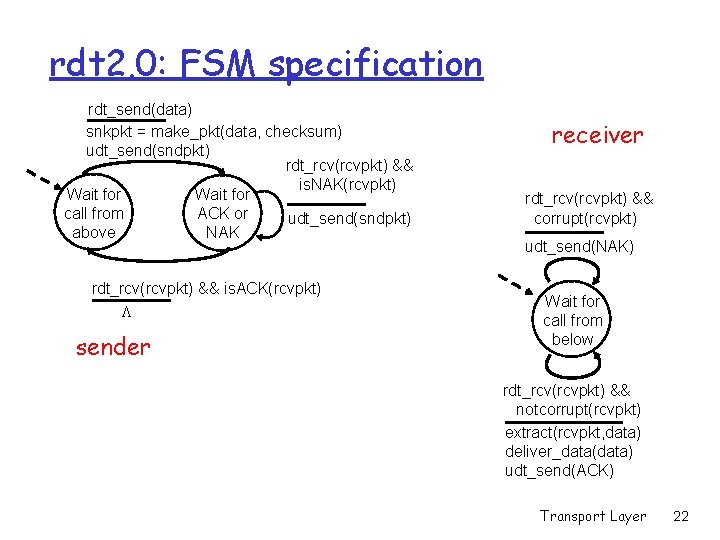

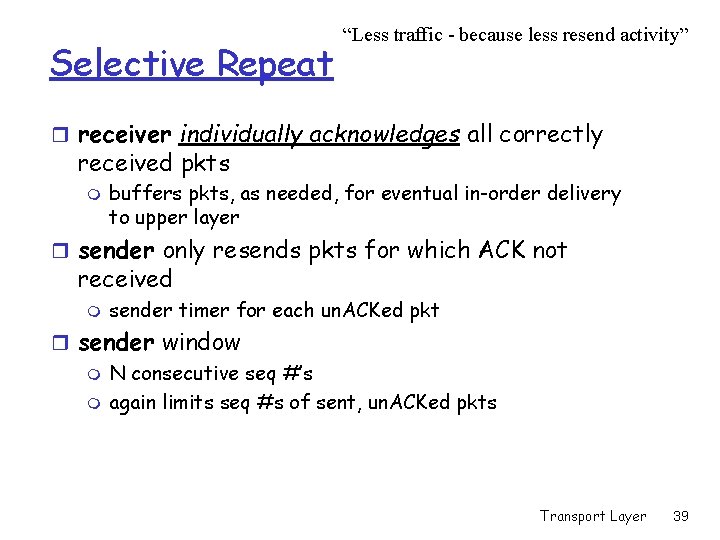

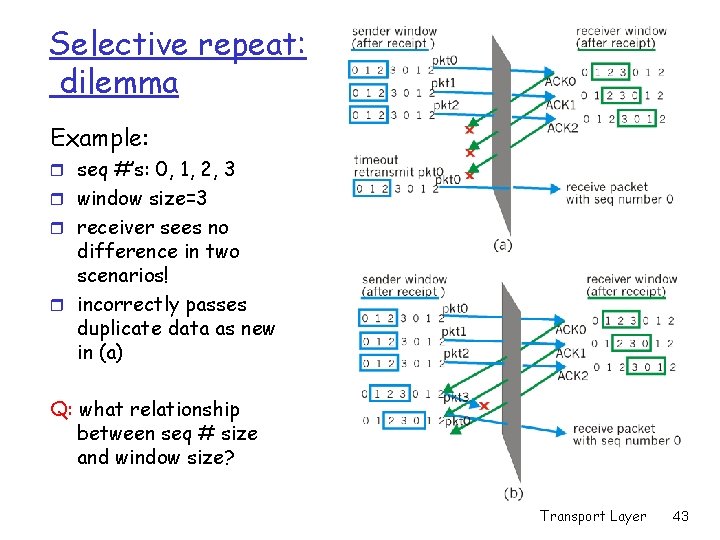

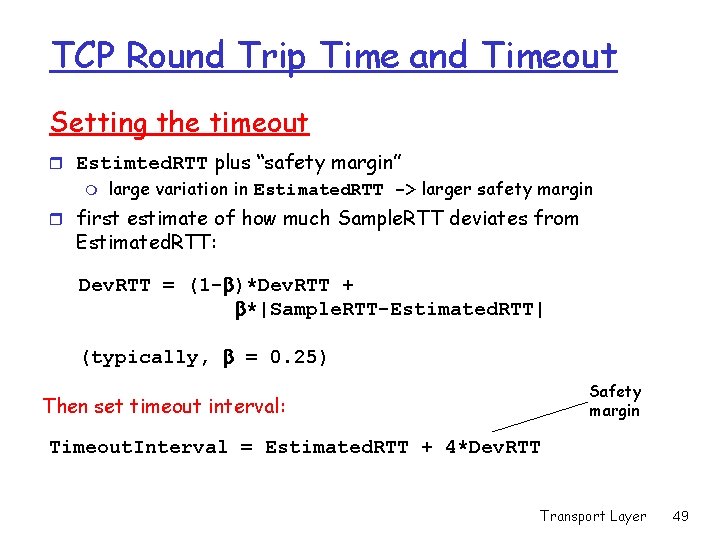

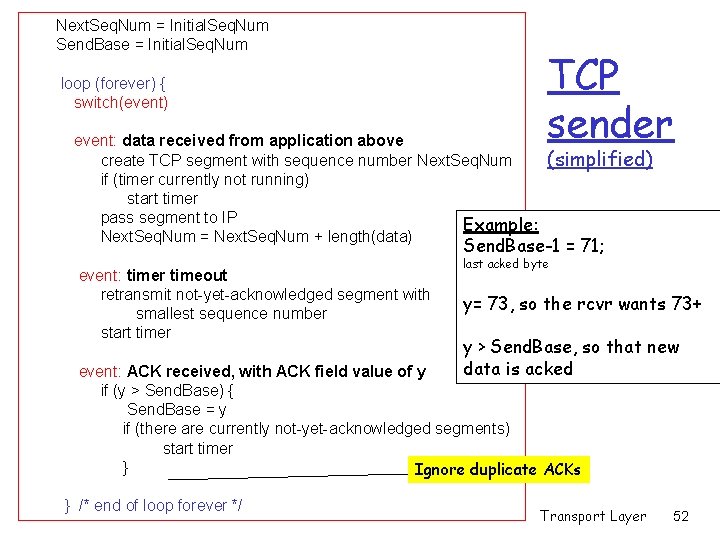

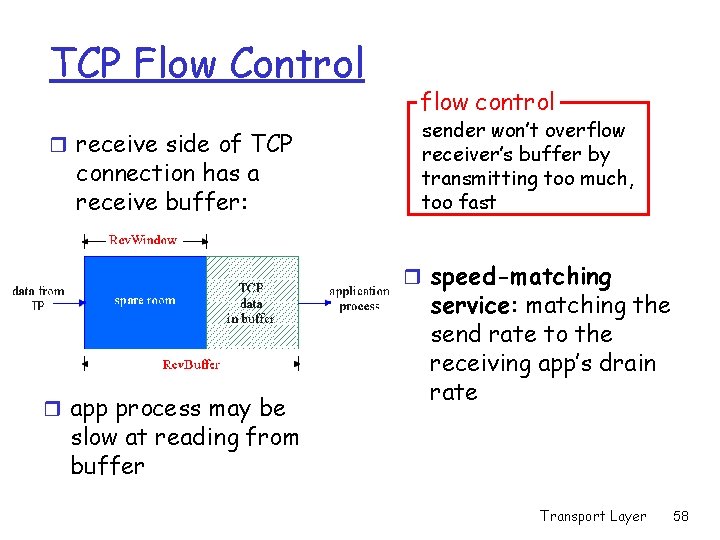

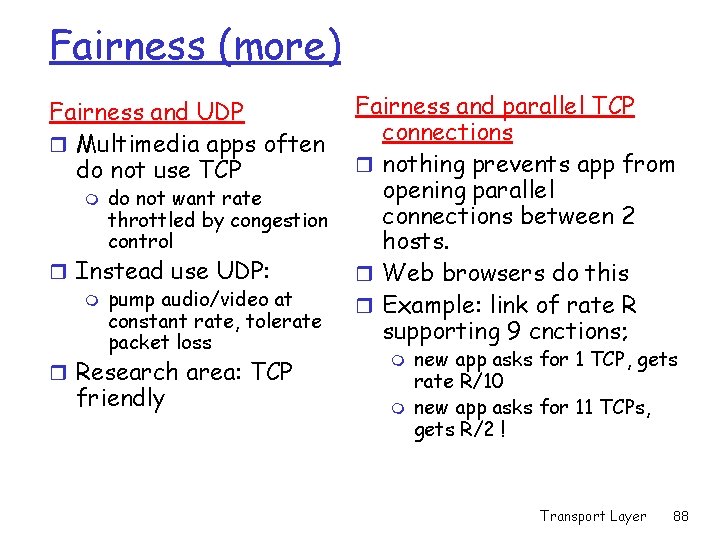

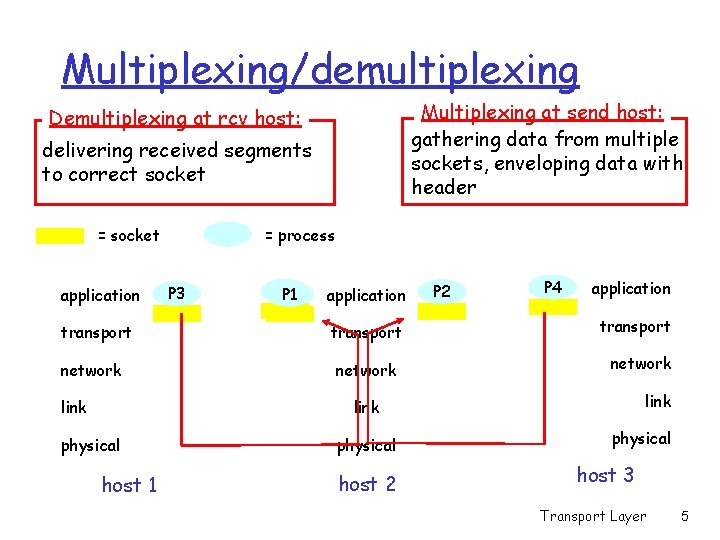

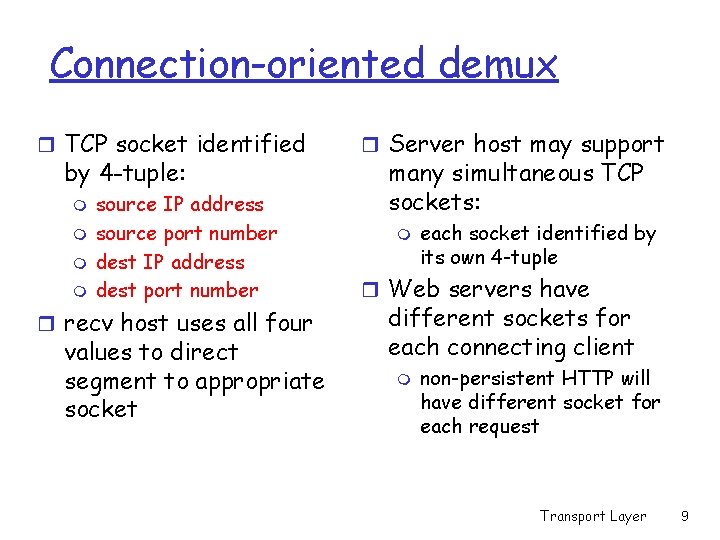

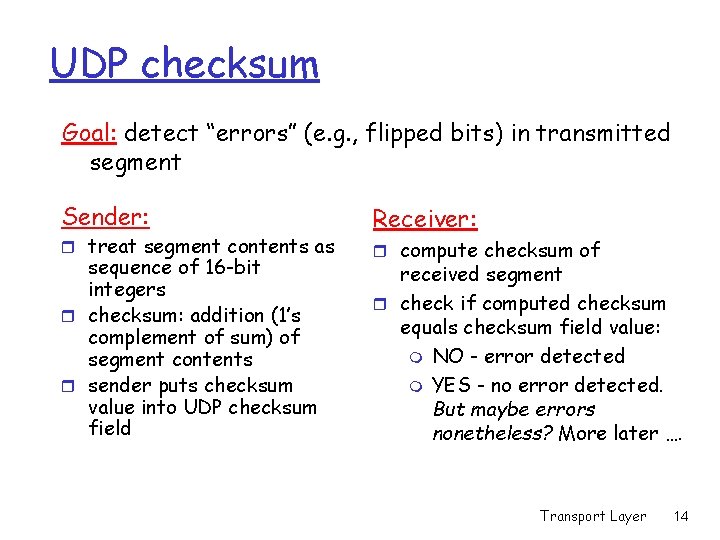

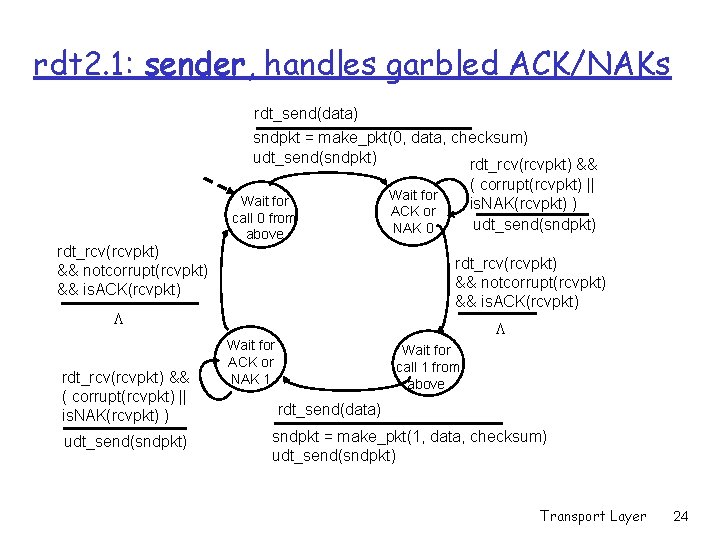

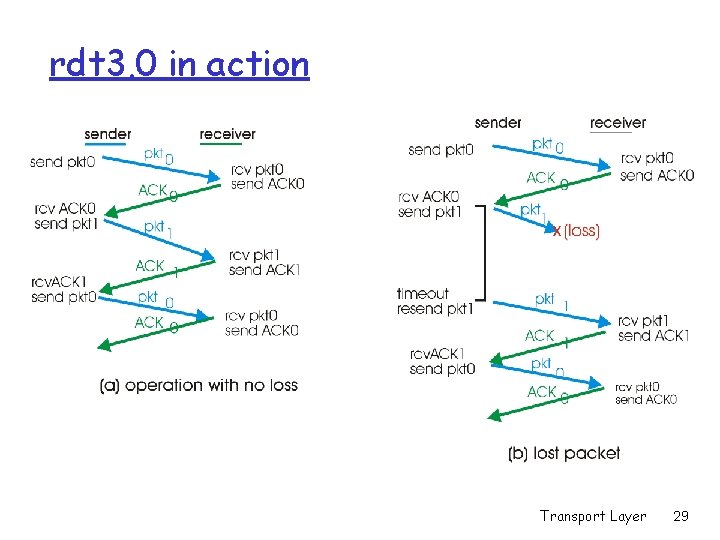

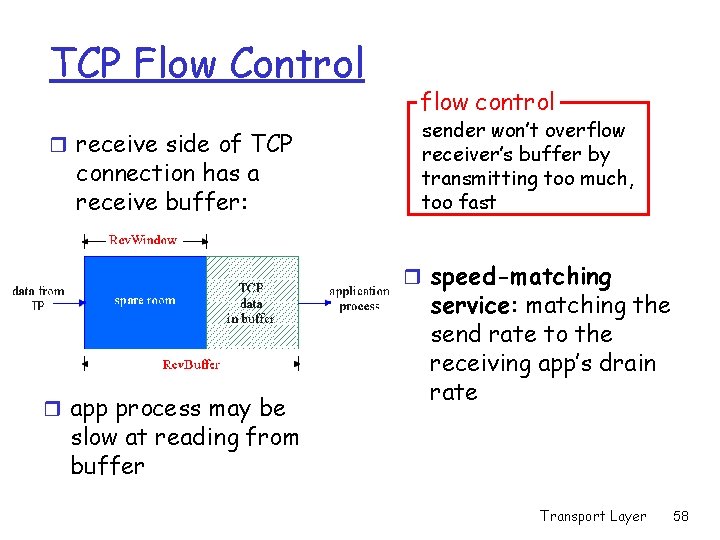

TCP ACK generation [RFC 1122, RFC 2581]

| Event at receiver | TCP receiver action |
| --- | --- |
| Arrival of in-order segment with expected seq #. All data up to expected seq # already ACKed | Delayed ACK. Wait up to 500 ms for next segment. If no next segment, send ACK |
| Arrival of in-order segment with expected seq #. One other segment has ACK pending | Immediately send single cumulative ACK, ACKing both in-order segments |
| Arrival of out-of-order segment with higher-than-expected seq #. Gap detected | Immediately send duplicate ACK, indicating seq # of next expected byte |
| Arrival of segment that partially or completely fills gap | Immediately send ACK, provided that segment starts at lower end of gap |

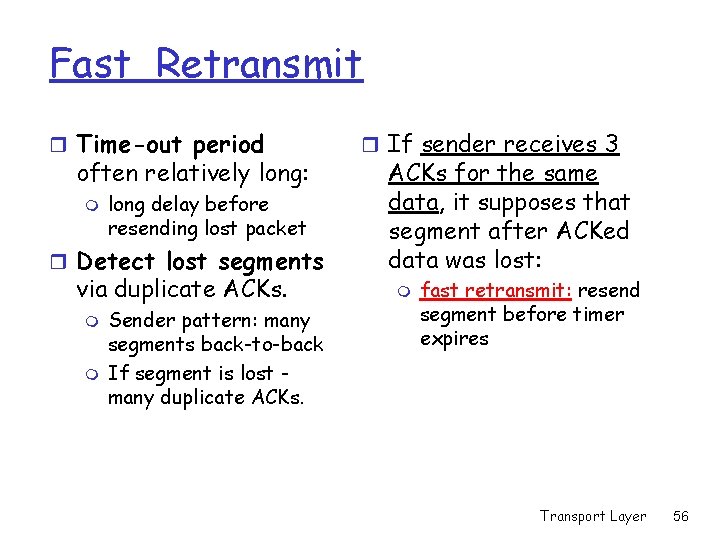

Fast Retransmit

- Time-out period often relatively long:
  - long delay before resending lost packet
- Detect lost segments via duplicate ACKs.
  - Sender often sends many segments back-to-back
  - If a segment is lost, there will likely be many duplicate ACKs.
- If sender receives 3 ACKs for the same data, it supposes that the segment after the ACKed data was lost:
  - fast retransmit: resend segment before timer expires

Fast retransmit algorithm

Switch out the last event in the sender algorithm:

    event: ACK received, with ACK field value of y
      if (y > SendBase) {
        SendBase = y
        if (there are currently not-yet-acknowledged segments)
          start timer
      }
      else {   /* a duplicate ACK for already ACKed segment */
        increment count of dup ACKs received for y
        if (count of dup ACKs received for y = 3) {
          resend segment with sequence number y   /* fast retransmit */
        }
      }
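The ACK-handling event above can be sketched in Python. The class name is hypothetical, the timer is left out, and retransmissions are recorded in a list rather than sent; the duplicate counter is reset whenever new data is ACKed, which is an assumption of this sketch.

```python
class FastRetransmitSender:
    """ACK handling with fast retransmit on the third duplicate ACK (sketch)."""

    def __init__(self, send_base):
        self.send_base = send_base
        self.dup_acks = 0
        self.retransmitted = []   # sequence numbers resent early

    def on_ack(self, y):
        if y > self.send_base:
            self.send_base = y    # new data acknowledged
            self.dup_acks = 0     # assumption: counter restarts for the new base
        else:
            self.dup_acks += 1    # duplicate ACK for already ACKed data
            if self.dup_acks == 3:
                self.retransmitted.append(y)   # resend segment starting at y


snd = FastRetransmitSender(send_base=92)
for ack in [100, 100, 100, 100, 120]:
    snd.on_ack(ack)
```

The first ACK=100 advances SendBase; the next three are duplicates, and the third triggers an early resend of the segment starting at byte 100.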

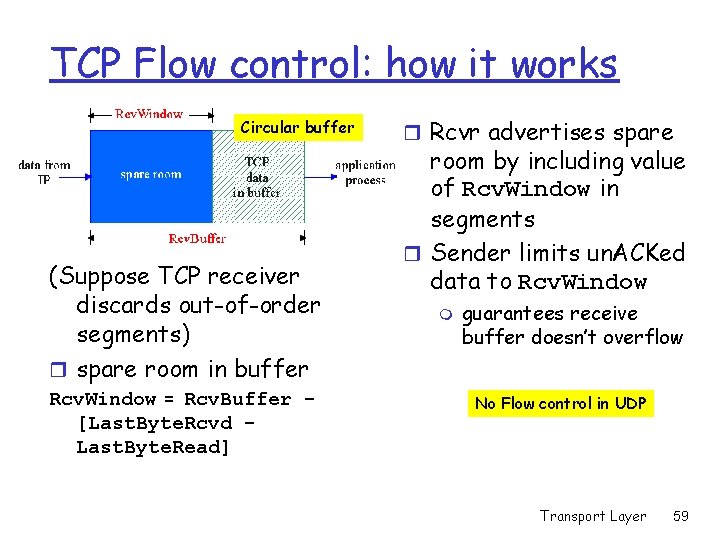

TCP Flow Control

- receive side of TCP connection has a receive buffer
- flow control: sender won’t overflow receiver’s buffer by transmitting too much, too fast
- speed-matching service: matching the send rate to the receiving app’s drain rate
- app process may be slow at reading from buffer

TCP Flow control: how it works

(Suppose TCP receiver discards out-of-order segments; the receive buffer is a circular buffer.)

- spare room in buffer = RcvWindow = RcvBuffer - [LastByteRcvd - LastByteRead]
- Rcvr advertises spare room by including value of RcvWindow in segments
- Sender limits unACKed data to RcvWindow
  - guarantees receive buffer doesn’t overflow

No flow control in UDP.
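The window arithmetic can be sketched in a few lines. The function names and byte counts are illustrative, and the sender-side check assumes the sender tracks LastByteSent and LastByteAcked.

```python
def rcv_window(rcv_buffer, last_byte_rcvd, last_byte_read):
    """Spare room the receiver advertises to the sender."""
    return rcv_buffer - (last_byte_rcvd - last_byte_read)


def sender_may_send(last_byte_sent, last_byte_acked, advertised_window, nbytes):
    """True if sending nbytes more keeps unACKed data within the advertised window."""
    return (last_byte_sent + nbytes) - last_byte_acked <= advertised_window


window = rcv_window(rcv_buffer=4096, last_byte_rcvd=3000, last_byte_read=1000)
ok_small = sender_may_send(5000, 4000, window, 1000)
ok_large = sender_may_send(5000, 4000, window, 1200)
```

With 2000 unread bytes in a 4096-byte buffer the receiver advertises 2096 bytes, so a sender with 1000 bytes outstanding may send another 1000 but not another 1200.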

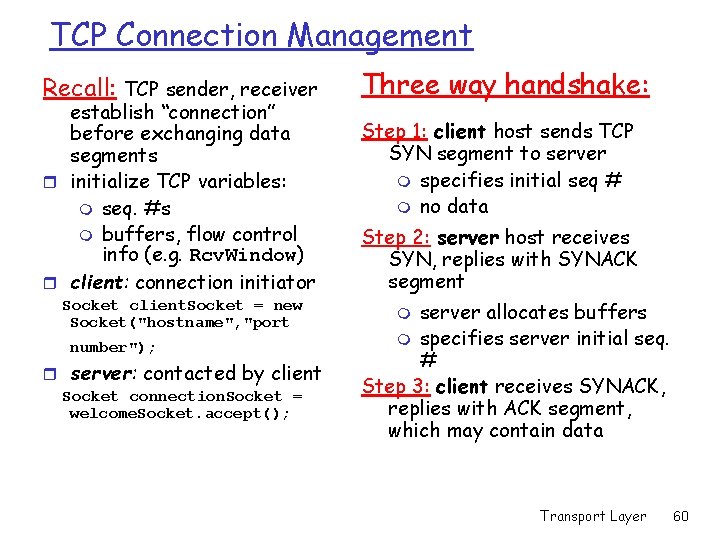

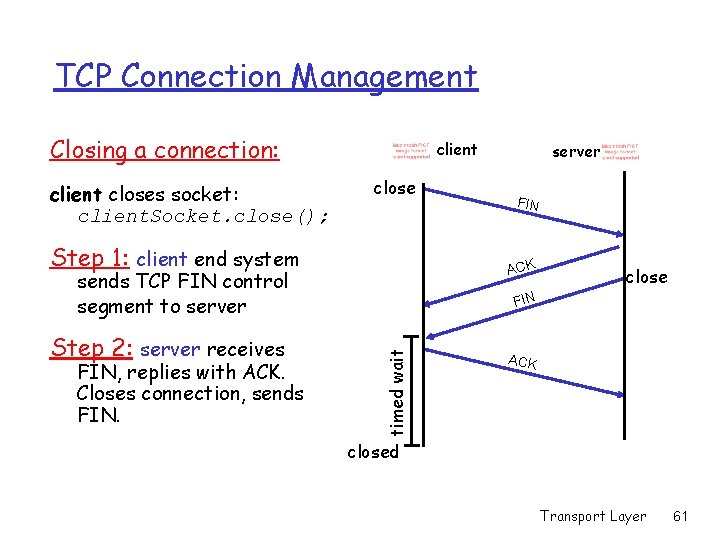

TCP Connection Management

Recall: TCP sender, receiver establish “connection” before exchanging data segments

- initialize TCP variables:
  - seq. #s
  - buffers, flow control info (e.g. RcvWindow)
- client: connection initiator
  - `Socket clientSocket = new Socket("hostname", "port number");`
- server: contacted by client
  - `Socket connectionSocket = welcomeSocket.accept();`

Three way handshake:

- Step 1: client host sends TCP SYN segment to server
  - specifies initial seq #
  - no data
- Step 2: server host receives SYN, replies with SYNACK segment
  - server allocates buffers
  - specifies server initial seq. #
- Step 3: client receives SYNACK, replies with ACK segment, which may contain data

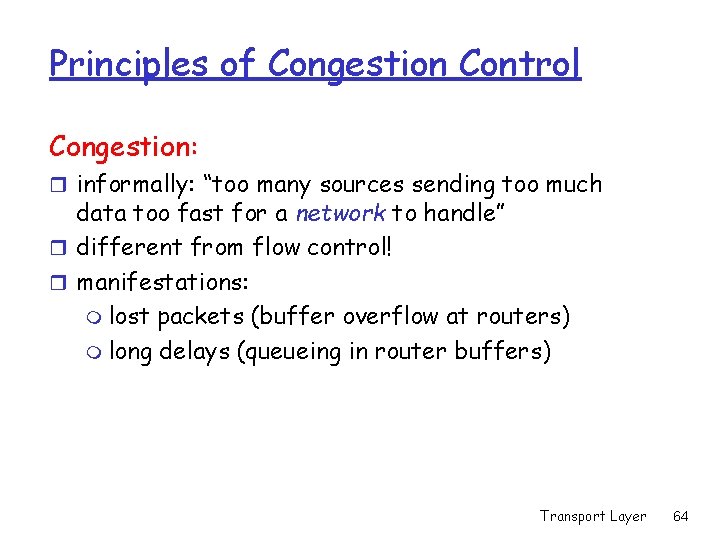

TCP Connection Management

Closing a connection: client closes socket: `clientSocket.close();`

- Step 1: client end system sends TCP FIN control segment to server
- Step 2: server receives FIN, replies with ACK. Closes connection, sends FIN.

[Figure: client/server timeline with FIN, ACK, FIN, ACK, the client's timed wait, and both sides closed]

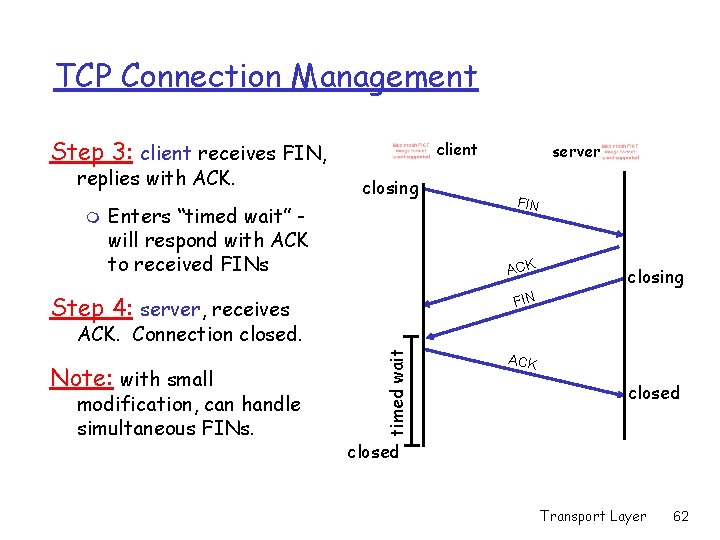

TCP Connection Management

- Step 3: client receives FIN, replies with ACK.
  - Enters “timed wait”: will respond with ACK to received FINs
- Step 4: server receives ACK. Connection closed.

Note: with small modification, can handle simultaneous FINs.

TCP Connection Management: TCP server lifecycle, TCP client lifecycle

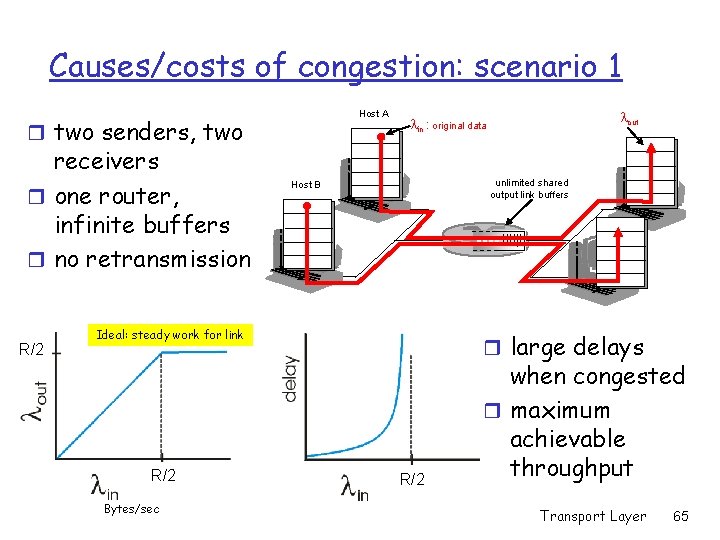

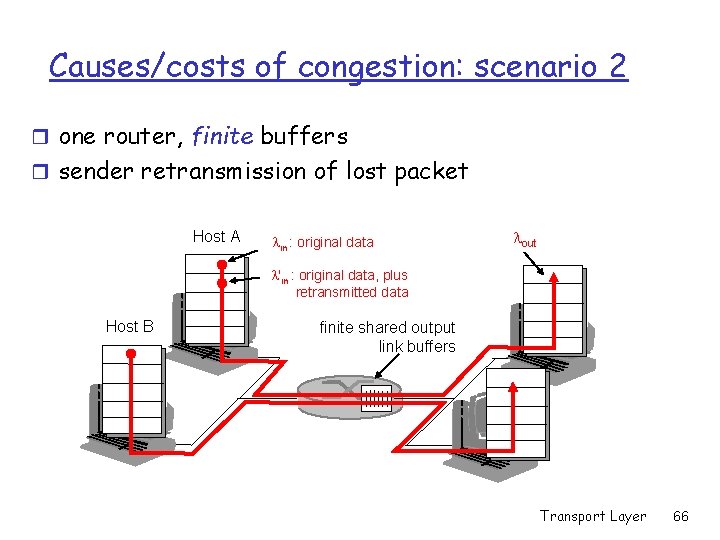

Principles of Congestion Control

Congestion:

- informally: “too many sources sending too much data too fast for a network to handle”
- different from flow control!
- manifestations:
  - lost packets (buffer overflow at routers)
  - long delays (queueing in router buffers)

Causes/costs of congestion: scenario 1

- two senders, two receivers
- one router, infinite buffers
- no retransmission
- $\lambda_{in}$: original data; $\lambda_{out}$: throughput at the receiver
- Ideal: steady work for link, R/2 Bytes/sec
- large delays when congested
- maximum achievable throughput

[Figure: Host A and Host B share a router with unlimited shared output link buffers; plots with axis limit R/2]

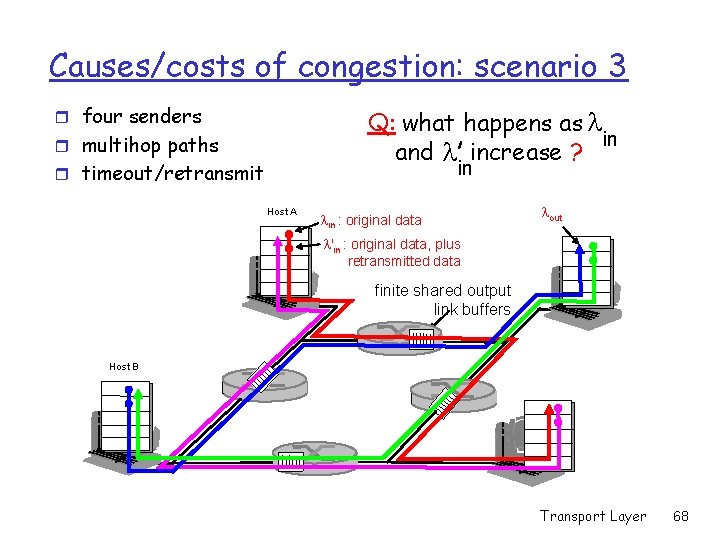

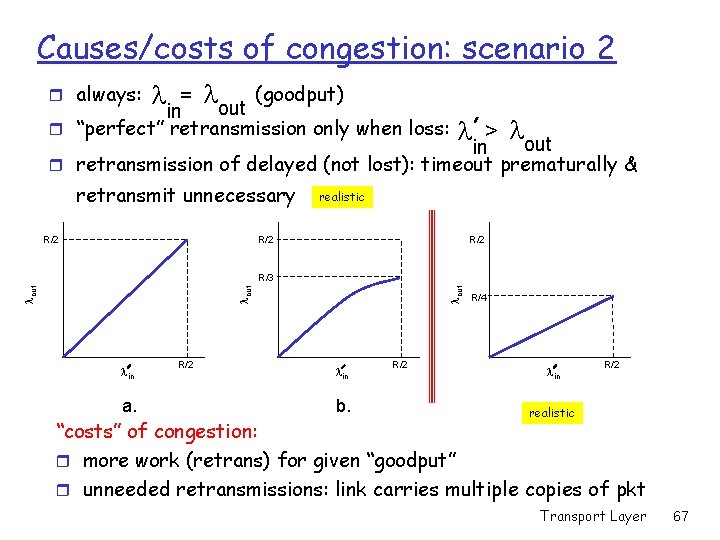

Causes/costs of congestion: scenario 2

- one router, finite buffers
- sender retransmission of lost packet
- $\lambda_{in}$: original data
- $\lambda'_{in}$: original data, plus retransmitted data

[Figure: Host A and Host B share a router with finite shared output link buffers]

Causes/costs of congestion: scenario 2

- always: $\lambda_{in} = \lambda_{out}$ (goodput)
- “perfect” retransmission only when loss: $\lambda'_{in} > \lambda_{out}$
- retransmission of delayed (not lost) packet: sender times out prematurely and retransmits unnecessarily

[Figure: three plots a, b and c of $\lambda_{out}$ against offered load, with axis limit R/2; plot b marks R/3 and plot c marks R/4]

Realistic “costs” of congestion:

- more work (retrans) for given “goodput”
- unneeded retransmissions: link carries multiple copies of pkt

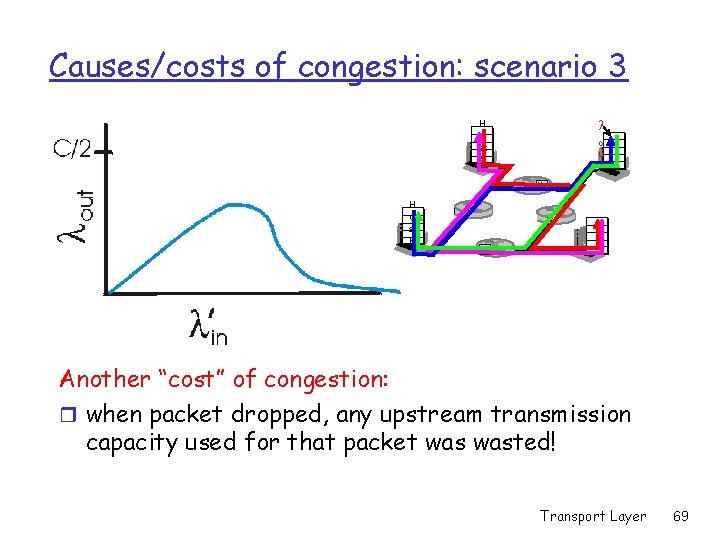

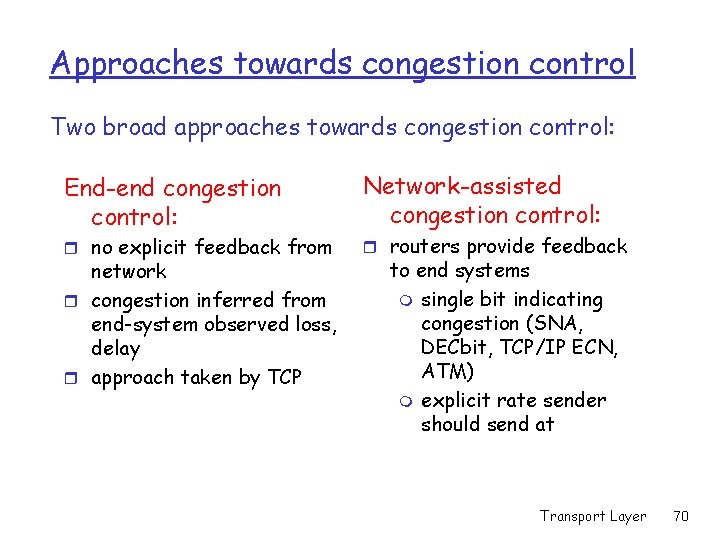

Causes/costs of congestion: scenario 3

- four senders
- multihop paths
- timeout/retransmit
- $\lambda_{in}$: original data
- $\lambda'_{in}$: original data, plus retransmitted data

Q: what happens as $\lambda_{in}$ and $\lambda'_{in}$ increase?

[Figure: Host A and Host B among hosts sharing routers with finite shared output link buffers]

Causes/costs of congestion: scenario 3

[Figure: Host A, Host B, $\lambda_{out}$]

Another “cost” of congestion:

- when packet dropped, any upstream transmission capacity used for that packet was wasted!

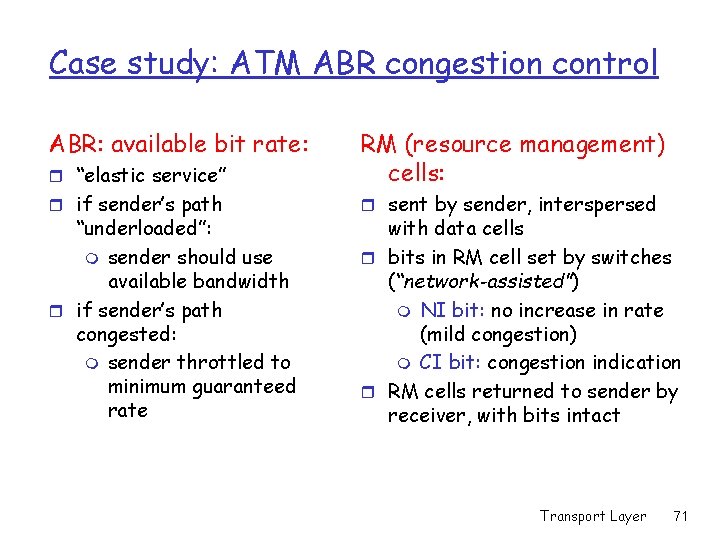

Approaches towards congestion control

Two broad approaches towards congestion control:

End-end congestion control:

- no explicit feedback from network
- congestion inferred from end-system observed loss, delay
- approach taken by TCP

Network-assisted congestion control:

- routers provide feedback to end systems
  - single bit indicating congestion (SNA, DECbit, TCP/IP ECN, ATM)
  - explicit rate sender should send at

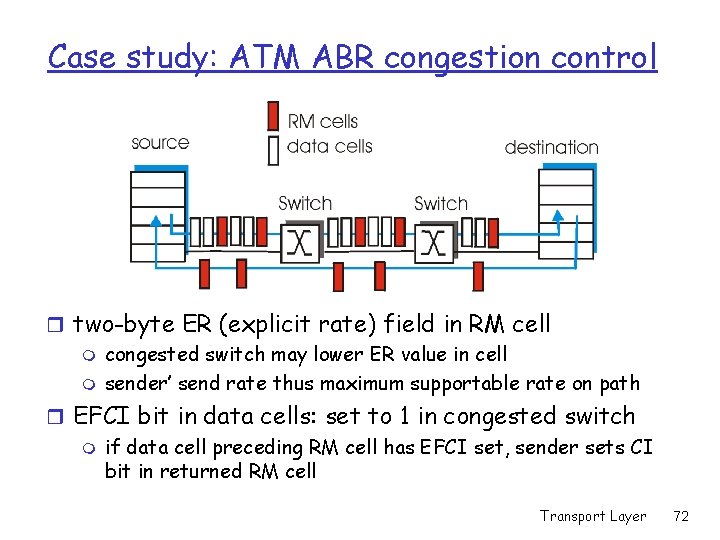

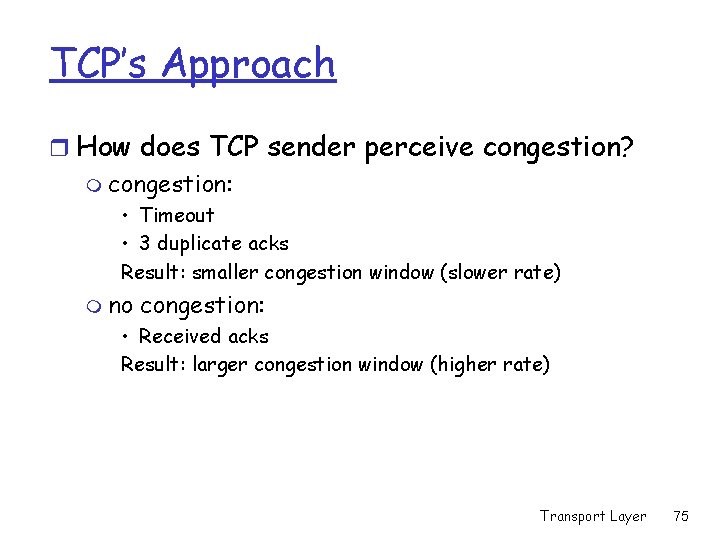

Case study: ATM ABR congestion control

ABR: available bit rate:

- “elastic service”
- if sender’s path “underloaded”:
  - sender should use available bandwidth
- if sender’s path congested:
  - sender throttled to minimum guaranteed rate

RM (resource management) cells:

- sent by sender, interspersed with data cells
- bits in RM cell set by switches (“network-assisted”)
  - NI bit: no increase in rate (mild congestion)
  - CI bit: congestion indication
- RM cells returned to sender by receiver, with bits intact

Case study: ATM ABR congestion control

- two-byte ER (explicit rate) field in RM cell
  - congested switch may lower ER value in cell
  - sender’s send rate thus maximum supportable rate on path
- EFCI bit in data cells: set to 1 in congested switch
  - if data cell preceding RM cell has EFCI set, sender sets CI bit in returned RM cell

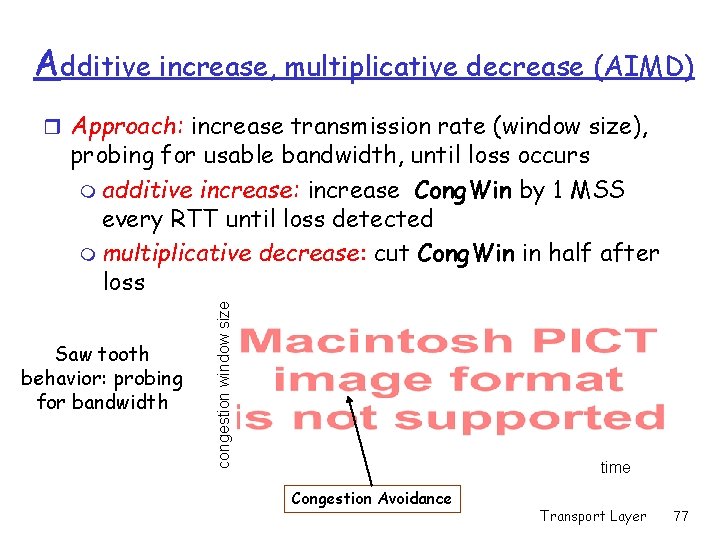

TCP’s Approach

- Sender regulates rate of transmission based on “perceived network congestion”
- Must consider:
  - How does TCP sender limit the rate?
  - How does TCP sender perceive congestion?
  - What algorithm should control rate based on perceived congestion?

Reno congestion control algorithm

TCP’s Approach

How does TCP sender limit the rate?

- Congestion window: constrains sender rate

$$\text{LastByteSent} - \text{LastByteAcked} \le \min\{\text{CongWin}, \text{RcvWindow}\}$$

The left-hand side is the unacknowledged data from the sender. CongWin regulates congestion:

$$\text{rate} = \frac{\text{CongWin}}{\text{RTT}}\ \text{Bytes/sec}$$

TCP’s Approach

How does TCP sender perceive congestion?

- congestion:
  - timeout
  - 3 duplicate acks
  - result: smaller congestion window (slower rate)
- no congestion:
  - received acks
  - result: larger congestion window (higher rate)

TCP’s Approach

TCP Congestion Control Algorithm: three mechanisms:

1. Additive-increase, multiplicative-decrease
2. Slow start
3. Reaction to timeout events

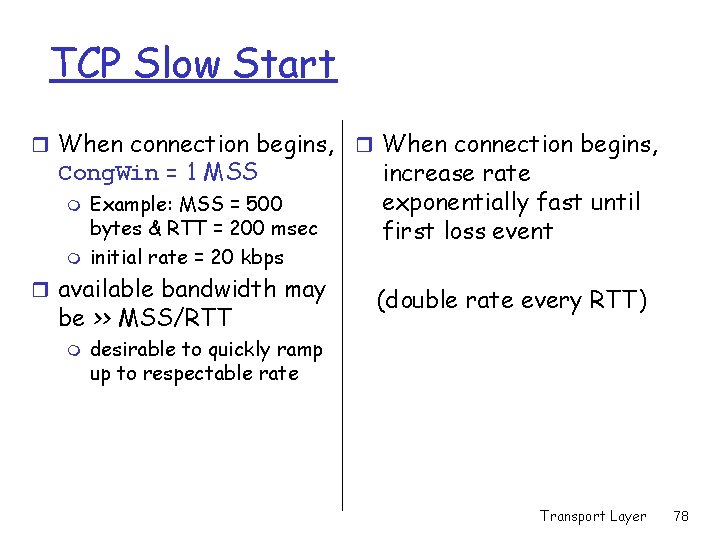

Additive increase, multiplicative decrease (AIMD): Congestion Avoidance

- Approach: increase transmission rate (window size), probing for usable bandwidth, until loss occurs
  - additive increase: increase CongWin by 1 MSS every RTT until loss detected
  - multiplicative decrease: cut CongWin in half after loss

[Figure: congestion window size against time; saw tooth behavior: probing for bandwidth]

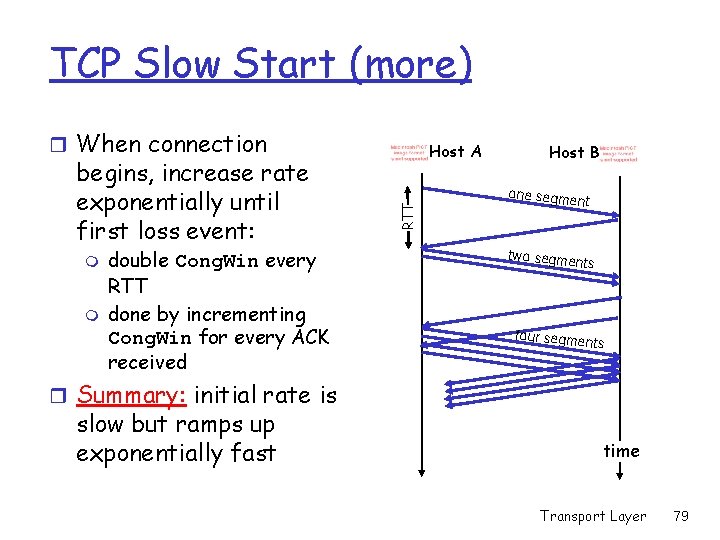

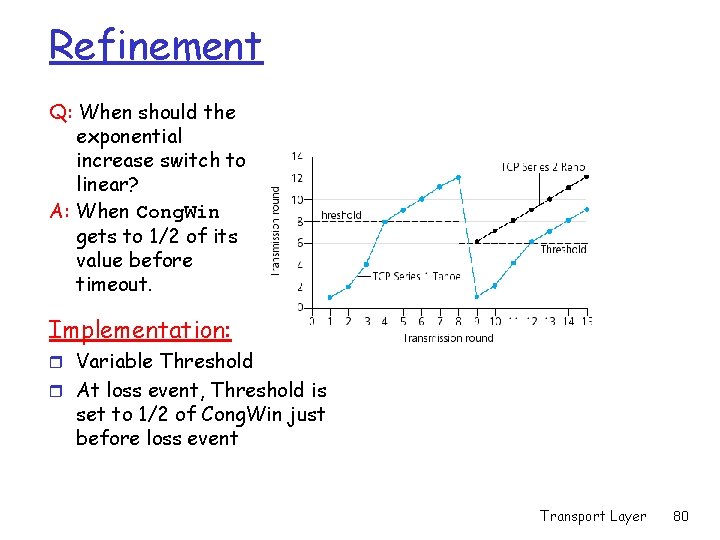

TCP Slow Start
- When connection begins, CongWin = 1 MSS
  - Example: MSS = 500 bytes & RTT = 200 msec
  - initial rate = 20 kbps
- available bandwidth may be >> MSS/RTT
  - desirable to quickly ramp up to respectable rate
- When connection begins, increase rate exponentially fast until first loss event (double rate every RTT)

TCP Slow Start (more)
- When connection begins, increase rate exponentially until first loss event:
  - double CongWin every RTT
  - done by incrementing CongWin for every ACK received
- Summary: initial rate is slow but ramps up exponentially fast

Figure: timeline between Host A and Host B, one RTT per round: one segment, then two segments, then four segments.
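The two slow-start claims can be checked numerically: the initial rate of one MSS per RTT, and the doubling that results from adding one MSS per ACK. This is a sketch that assumes no loss and one ACK per segment; the function names are illustrative.

```python
def initial_rate_bps(mss_bytes, rtt_ms):
    """Rate when exactly one MSS is sent per RTT, in bits per second."""
    return mss_bytes * 8 * 1000 / rtt_ms


def slow_start_windows(num_rtts, initial_segments=1):
    """CongWin (in segments) at the start of each RTT during slow start."""
    cong_win = initial_segments
    windows = []
    for _ in range(num_rtts):
        windows.append(cong_win)
        acks = cong_win            # assumption: every segment sent is ACKed
        for _ in range(acks):
            cong_win += 1          # CongWin grows by 1 MSS per ACK
    return windows


if __name__ == "__main__":
    print(initial_rate_bps(500, 200))   # MSS = 500 bytes, RTT = 200 msec
    print(slow_start_windows(4))
```

Because each of the w segments in a window produces one ACK, the per-ACK increment adds w segments per RTT, so the window doubles.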

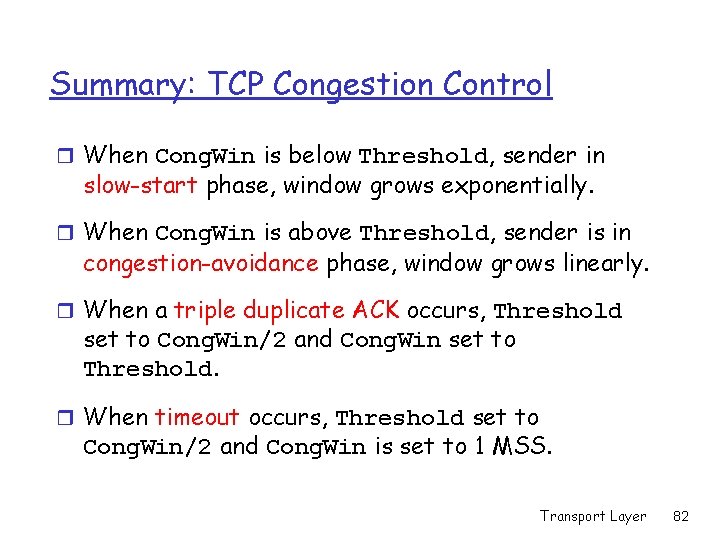

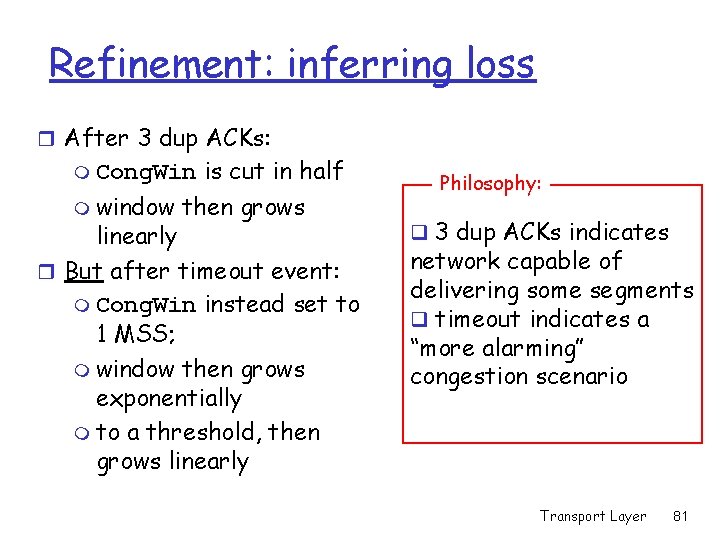

Refinement

Q: When should the exponential increase switch to linear?

A: When CongWin gets to 1/2 of its value before timeout.

Implementation:
- Variable Threshold
- At loss event, Threshold is set to 1/2 of CongWin just before loss event

Refinement: inferring loss
- After 3 dup ACKs:
  - CongWin is cut in half
  - window then grows linearly
- But after timeout event:
  - CongWin instead set to 1 MSS;
  - window then grows exponentially
  - to a threshold, then grows linearly

Philosophy:
- 3 dup ACKs indicate network capable of delivering some segments
- timeout indicates a “more alarming” congestion scenario

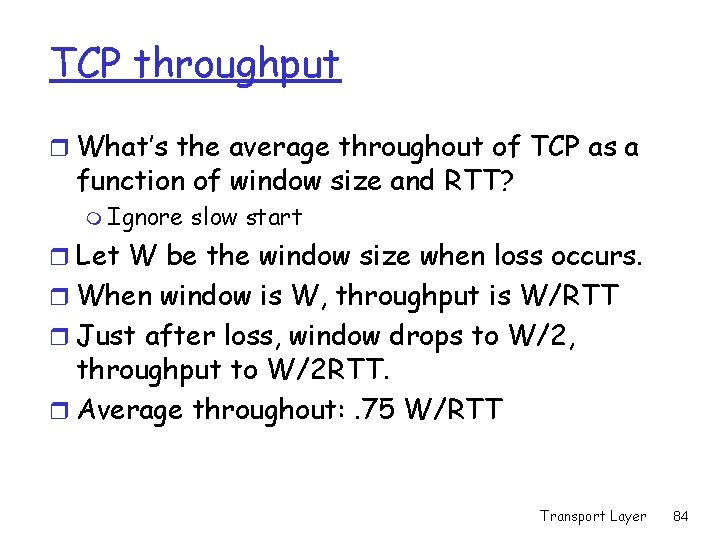

Summary: TCP Congestion Control
- When CongWin is below Threshold, sender is in slow-start phase, window grows exponentially.
- When CongWin is above Threshold, sender is in congestion-avoidance phase, window grows linearly.
- When a triple duplicate ACK occurs, Threshold set to CongWin/2 and CongWin set to Threshold.
- When timeout occurs, Threshold set to CongWin/2 and CongWin is set to 1 MSS.

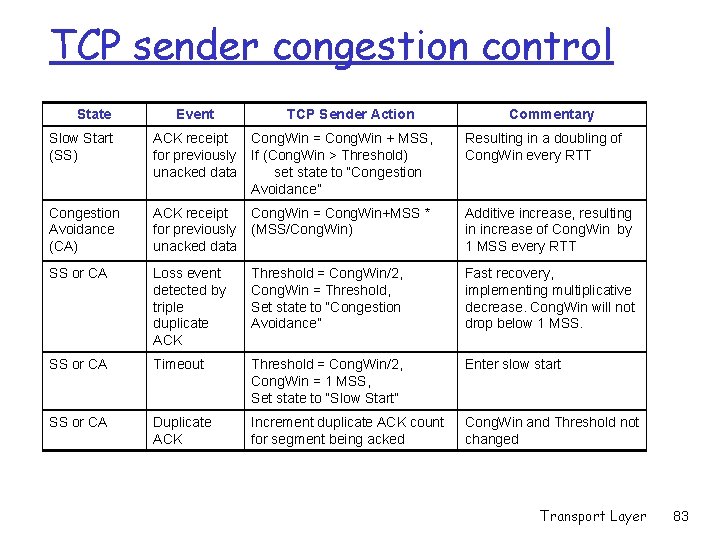

TCP sender congestion control

| State | Event | TCP Sender Action | Commentary |
| --- | --- | --- | --- |
| Slow Start (SS) | ACK receipt for previously unacked data | CongWin = CongWin + MSS; if (CongWin > Threshold) set state to “Congestion Avoidance” | Resulting in a doubling of CongWin every RTT |
| Congestion Avoidance (CA) | ACK receipt for previously unacked data | CongWin = CongWin + MSS * (MSS/CongWin) | Additive increase, resulting in increase of CongWin by 1 MSS every RTT |
| SS or CA | Loss event detected by triple duplicate ACK | Threshold = CongWin/2, CongWin = Threshold, set state to “Congestion Avoidance” | Fast recovery, implementing multiplicative decrease. CongWin will not drop below 1 MSS. |
| SS or CA | Timeout | Threshold = CongWin/2, CongWin = 1 MSS, set state to “Slow Start” | Enter slow start |
| SS or CA | Duplicate ACK | Increment duplicate ACK count for segment being acked | CongWin and Threshold not changed |
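The state/event table can be read as a small state machine. The sketch below is an illustration, not a real TCP implementation: the class name `RenoSender`, the initial Threshold value, and the choice to measure CongWin in units of MSS are all assumptions made for readability.

```python
class RenoSender:
    """Toy model of the sender table; CongWin and Threshold are in MSS."""

    def __init__(self, threshold=8.0):
        self.cong_win = 1.0          # connection starts at 1 MSS
        self.threshold = threshold   # assumed initial value
        self.state = "SS"            # "SS" = slow start, "CA" = congestion avoidance
        self.dup_acks = 0

    def on_new_ack(self):
        """ACK receipt for previously unacked data."""
        self.dup_acks = 0
        if self.state == "SS":
            self.cong_win += 1.0                  # +1 MSS per ACK: doubles per RTT
            if self.cong_win > self.threshold:
                self.state = "CA"
        else:
            self.cong_win += 1.0 / self.cong_win  # MSS * (MSS/CongWin): +1 MSS per RTT

    def on_dup_ack(self):
        """Duplicate ACK; the third one in a row counts as a loss event."""
        self.dup_acks += 1
        if self.dup_acks == 3:
            self.threshold = self.cong_win / 2
            self.cong_win = max(1.0, self.threshold)  # never below 1 MSS
            self.state = "CA"

    def on_timeout(self):
        self.threshold = self.cong_win / 2
        self.cong_win = 1.0
        self.state = "SS"
        self.dup_acks = 0
```

For example, starting from `RenoSender(threshold=4.0)`, four new ACKs raise CongWin to 5 MSS and switch the sender to congestion avoidance; three duplicate ACKs then halve the window, and a timeout resets it to 1 MSS and re-enters slow start.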

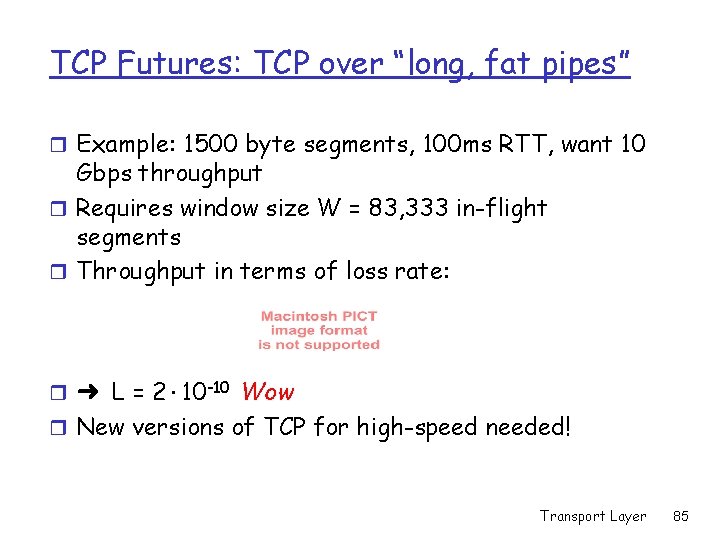

TCP throughput
- What’s the average throughput of TCP as a function of window size and RTT?
  - Ignore slow start
- Let W be the window size when loss occurs.
- When window is W, throughput is W/RTT
- Just after loss, window drops to W/2, throughput to W/(2·RTT).
- Average throughput: 0.75 W/RTT
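The 0.75 factor follows if the window is assumed to climb linearly from W/2 back to W between losses (the additive-increase sawtooth), so that the average throughput is the midpoint of the two extremes. A short worked version under that assumption:

```latex
\[
\text{average throughput}
  \approx \frac{1}{2}\left(\frac{W}{2\,\mathrm{RTT}} + \frac{W}{\mathrm{RTT}}\right)
  = \frac{3}{4}\,\frac{W}{\mathrm{RTT}}
\]
```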

TCP Futures: TCP over “long, fat pipes”
- Example: 1500 byte segments, 100 ms RTT, want 10 Gbps throughput
- Requires window size W = 83,333 in-flight segments
- Throughput in terms of loss rate L: $\dfrac{1.22 \cdot \text{MSS}}{\text{RTT}\,\sqrt{L}}$
- ➜ L = 2·10⁻¹⁰ Wow!
- New versions of TCP for high-speed needed!
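Both numbers in this example can be reproduced with a short calculation. The sketch assumes the commonly cited approximation throughput ≈ 1.22·MSS/(RTT·√L) for the loss-rate relation; the function names are illustrative.

```python
def required_window_segments(throughput_bps, rtt_s, mss_bytes):
    """Segments that must be in flight to sustain the target throughput."""
    return throughput_bps * rtt_s / (mss_bytes * 8)


def required_loss_rate(throughput_bps, rtt_s, mss_bytes):
    """Loss rate L solving throughput = 1.22 * MSS / (RTT * sqrt(L))."""
    mss_bits = mss_bytes * 8
    return (1.22 * mss_bits / (rtt_s * throughput_bps)) ** 2


if __name__ == "__main__":
    # 1500-byte segments, 100 ms RTT, 10 Gbps target
    print(round(required_window_segments(10e9, 0.1, 1500)))
    print(required_loss_rate(10e9, 0.1, 1500))
```

The second value comes out at roughly 2·10⁻¹⁰, i.e. about one loss per five billion segments, which is why the slide calls for new TCP versions on high-speed paths.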

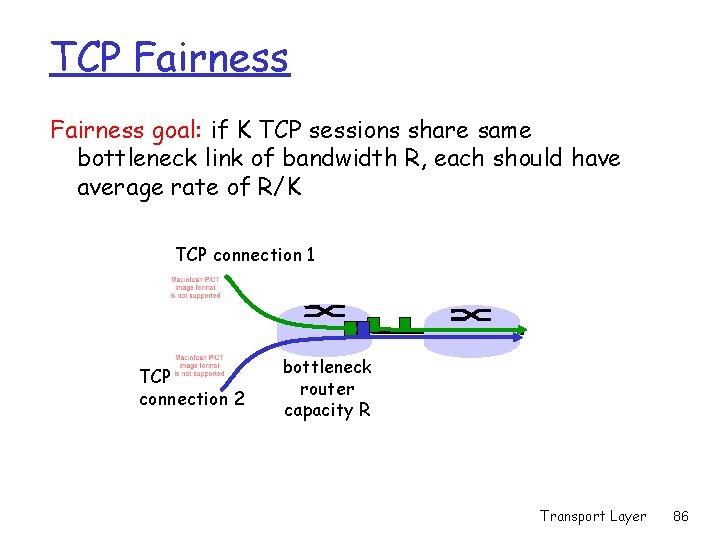

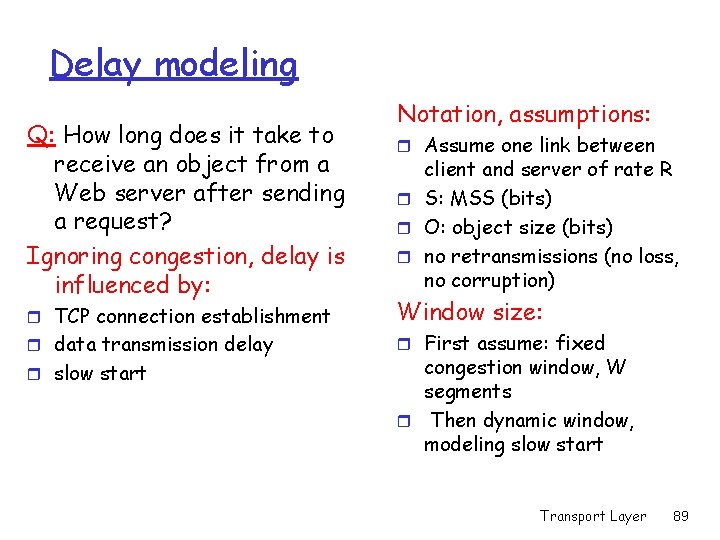

TCP Fairness

Fairness goal: if K TCP sessions share same bottleneck link of bandwidth R, each should have average rate of R/K

Figure: TCP connection 1 and TCP connection 2 sharing a bottleneck router of capacity R.

Why is TCP fair?

Two competing sessions:
- Additive increase gives slope of 1, as throughput increases
- Multiplicative decrease reduces throughput proportionally

Figure: Connection 2 throughput versus Connection 1 throughput (each axis up to R), showing the equal bandwidth share line; loss: decrease window by factor of 2; congestion avoidance: additive increase.

Fairness (more)

Fairness and UDP
- Multimedia apps often do not use TCP
  - do not want rate throttled by congestion control
- Instead use UDP:
  - pump audio/video at constant rate, tolerate packet loss
- Research area: TCP friendly

Fairness and parallel TCP connections
- nothing prevents app from opening parallel connections between 2 hosts.
- Web browsers do this
- Example: link of rate R supporting 9 connections;
  - new app asks for 1 TCP, gets rate R/10
  - new app asks for 11 TCPs, gets about R/2 (11 of the 20 connections)!
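The parallel-connection example is simple arithmetic once one assumes that the bottleneck rate R is split equally among all TCP connections, regardless of which application owns them. A sketch with a hypothetical helper name:

```python
from fractions import Fraction


def app_share(existing_connections, new_connections):
    """Fraction of link rate R obtained by the app opening the new connections."""
    total = existing_connections + new_connections
    return Fraction(new_connections, total)


if __name__ == "__main__":
    print(app_share(9, 1))    # one new connection among ten
    print(app_share(9, 11))   # eleven new connections among twenty
```

With 9 existing connections, one new connection receives 1/10 of R, while 11 new connections receive 11/20 of R, slightly more than half.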

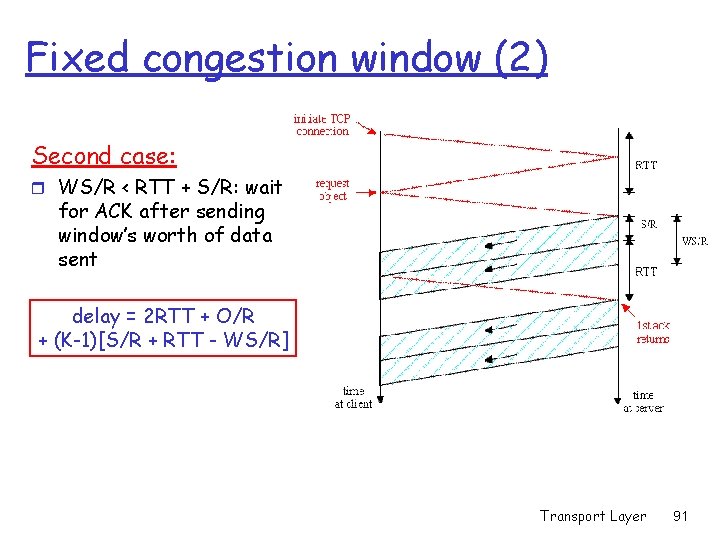

Delay modeling

Q: How long does it take to receive an object from a Web server after sending a request?

Ignoring congestion, delay is influenced by:
- TCP connection establishment
- data transmission delay
- slow start

Notation, assumptions:
- Assume one link between client and server of rate R
- S: MSS (bits)
- O: object size (bits)
- no retransmissions (no loss, no corruption)

Window size:
- First assume: fixed congestion window, W segments
- Then dynamic window, modeling slow start

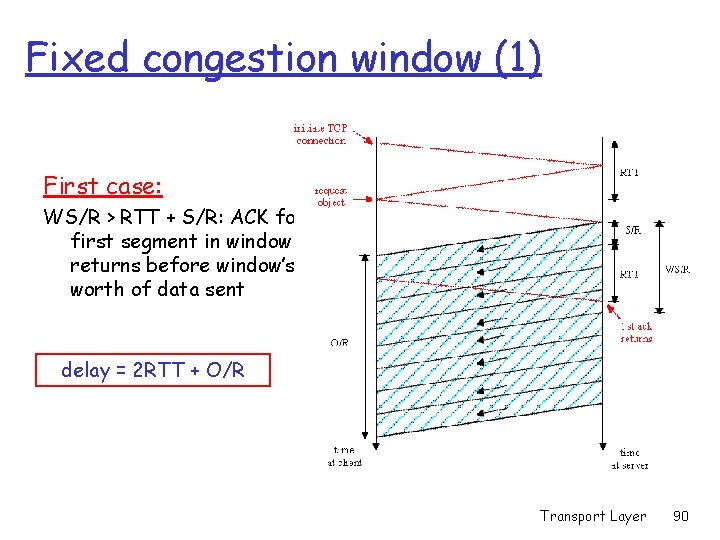

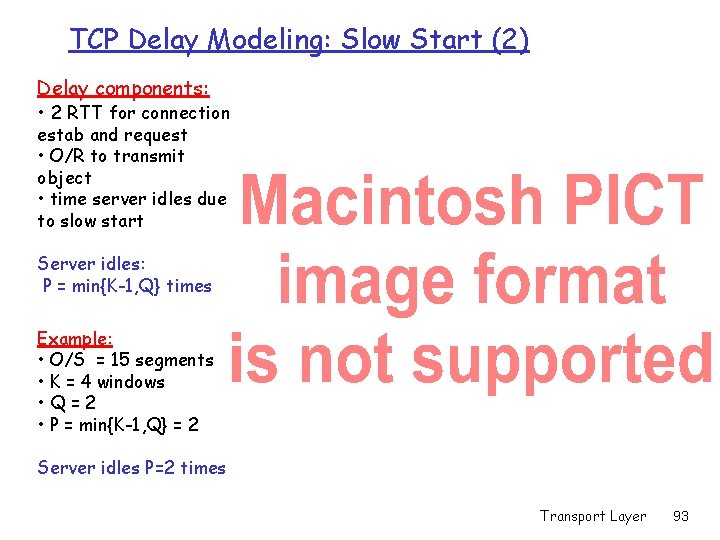

Fixed congestion window (1)

First case:
- WS/R > RTT + S/R: ACK for first segment in window returns before window’s worth of data sent

delay = 2 RTT + O/R

Fixed congestion window (2)

Second case:
- WS/R < RTT + S/R: sender must wait for ACK after sending window’s worth of data

delay = 2 RTT + O/R + (K-1)[S/R + RTT - WS/R], where K is the number of windows that cover the object
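The two fixed-window cases can be folded into one function. This is a sketch under the slide's assumptions (single link of rate R, no loss); it additionally assumes K = ⌈O/(W·S)⌉ for the number of windows covering the object and that O and S are integer bit counts. The function name is illustrative.

```python
def fixed_window_delay(O, S, R, W, rtt):
    """Delay to fetch an object of O bits with a fixed window of W segments.

    O, S in bits (integers), R in bits/sec, rtt in seconds.
    """
    K = -(-O // (W * S))                 # ceil(O / (W*S)): windows covering the object
    stall = S / R + rtt - W * S / R      # idle time per window, if positive
    delay = 2 * rtt + O / R              # connection setup + request, then transmission
    if stall > 0:
        # second case: the sender stalls after each of the first K-1 windows
        delay += (K - 1) * stall
    return delay


if __name__ == "__main__":
    print(fixed_window_delay(O=16000, S=1000, R=8000, W=2, rtt=0.5))  # stalls
    print(fixed_window_delay(O=16000, S=1000, R=8000, W=8, rtt=0.5))  # no stalls
```

With W = 2 the window drains before the first ACK returns, so seven stalls are added; with W = 8 the ACK arrives while the sender is still transmitting and the delay reduces to 2 RTT + O/R.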

TCP Delay Modeling: Slow Start (1)

Now suppose window grows according to slow start.

Will show that the delay for one object is:

$\text{delay} = 2\,\text{RTT} + O/R + P\,[\text{RTT} + S/R] - (2^{P} - 1)\,S/R$

where P is the number of times TCP idles at server:

$P = \min\{Q, K-1\}$

- where Q is the number of times the server idles if the object were of infinite size.
- and K is the number of windows that cover the object.

TCP Delay Modeling: Slow Start (2)

Delay components:
- 2 RTT for connection establishment and request
- O/R to transmit object
- time server idles due to slow start

Server idles: P = min{K-1, Q} times

Example:
- O/S = 15 segments
- K = 4 windows
- Q = 2
- P = min{K-1, Q} = 2

Server idles P = 2 times

TCP Delay Modeling (3) Transport Layer 94
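One way to arrive at the slow-start delay expression, sketched under the model's assumptions: the k-th window holds 2^(k-1) segments and takes 2^(k-1)·S/R to transmit, while the ACK for its first segment returns S/R + RTT after the window starts. The server idles for the positive part of the difference, and only the first P windows produce an idle period.

```latex
\begin{align*}
\text{idle time after window } k
  &= \left[\frac{S}{R} + \mathrm{RTT} - 2^{k-1}\frac{S}{R}\right]^{+} \\
\text{delay}
  &= 2\,\mathrm{RTT} + \frac{O}{R}
     + \sum_{k=1}^{P}\left[\frac{S}{R} + \mathrm{RTT} - 2^{k-1}\frac{S}{R}\right] \\
  &= 2\,\mathrm{RTT} + \frac{O}{R}
     + P\left[\mathrm{RTT} + \frac{S}{R}\right] - \left(2^{P} - 1\right)\frac{S}{R}
\end{align*}
```

The last step uses the geometric sum 2^0 + 2^1 + ... + 2^(P-1) = 2^P - 1.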

TCP Delay Modeling (4)

Recall K = number of windows that cover object

How do we calculate K?

$K = \min\{k : 2^{0} + 2^{1} + \dots + 2^{k-1} \ge O/S\} = \lceil \log_2(O/S + 1) \rceil$

Calculation of Q, number of idles for infinite-size object, is similar (see HW).
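The quantities K, Q and P can be computed directly from their definitions rather than from closed forms. The sketch below assumes slow-start windows of 1, 2, 4, ... segments, O > 0, and integer bit counts for O and S; it counts Q as the number of windows with a strictly positive idle time. The function name is illustrative.

```python
def slow_start_delay(O, S, R, rtt):
    """Return (K, Q, P, delay) for an object of O bits under slow start.

    O, S in bits (integers, O > 0), R in bits/sec, rtt in seconds.
    """
    segments = -(-O // S)                # ceil(O/S): segments in the object
    # K: smallest number of windows 1, 2, 4, ... that cover all segments
    K, covered = 0, 0
    while covered < segments:
        covered += 2 ** K
        K += 1
    # Q: windows after which the server would idle for an infinite object
    Q = 0
    while S / R + rtt - (2 ** Q) * S / R > 0:
        Q += 1
    P = min(K - 1, Q)                    # idle periods that actually occur
    delay = 2 * rtt + O / R + P * (rtt + S / R) - (2 ** P - 1) * S / R
    return K, Q, P, delay


if __name__ == "__main__":
    # 15 segments of 1024 bits over an 8192 bps link, RTT = 0.25 s
    print(slow_start_delay(O=15 * 1024, S=1024, R=8192, rtt=0.25))
```

These inputs reproduce the 15-segment example with K = 4, Q = 2 and P = 2; the link rate and RTT are values chosen here so that Q comes out as 2, not figures from the slides.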

HTTP Modeling
- Assume Web page consists of:
  - 1 base HTML page (of size O bits)
  - M images (each of size O bits)
- Non-persistent HTTP:
  - M+1 TCP connections in series
  - Response time = (M+1)O/R + (M+1)2 RTT + sum of idle times
- Persistent HTTP:
  - 2 RTT to request and receive base HTML file
  - 1 RTT to request and receive M images
  - Response time = (M+1)O/R + 3 RTT + sum of idle times
- Non-persistent HTTP with X parallel connections
  - Suppose M/X integer.
  - 1 TCP connection for base file
  - M/X sets of parallel connections for images.
  - Response time = (M+1)O/R + (M/X + 1)2 RTT + sum of idle times
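The three response-time formulas can be compared side by side. In this sketch the slow-start idle time is passed in as a single number (`idle`, defaulting to zero) rather than modeled, and the link rate used in the example is an assumed value; the function names are illustrative.

```python
def non_persistent(M, O, R, rtt, idle=0.0):
    """M+1 TCP connections in series."""
    return (M + 1) * O / R + (M + 1) * 2 * rtt + idle


def persistent(M, O, R, rtt, idle=0.0):
    """2 RTT for the base file, then 1 RTT for all M images."""
    return (M + 1) * O / R + 3 * rtt + idle


def non_persistent_parallel(M, O, R, rtt, X, idle=0.0):
    """Base file first, then M/X rounds of X parallel connections."""
    assert M % X == 0, "the model assumes M/X is an integer"
    return (M + 1) * O / R + (M // X + 1) * 2 * rtt + idle


if __name__ == "__main__":
    M, X = 10, 5
    O = 5 * 1000 * 8        # 5 Kbytes in bits
    R = 1_000_000           # assumed link rate: 1 Mbps
    rtt = 1.0               # seconds
    print(non_persistent(M, O, R, rtt))
    print(persistent(M, O, R, rtt))
    print(non_persistent_parallel(M, O, R, rtt, X))
```

With a 1 second RTT the RTT terms dominate: the serial non-persistent case pays 22 RTTs, the parallel case 6, and the persistent case 3.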

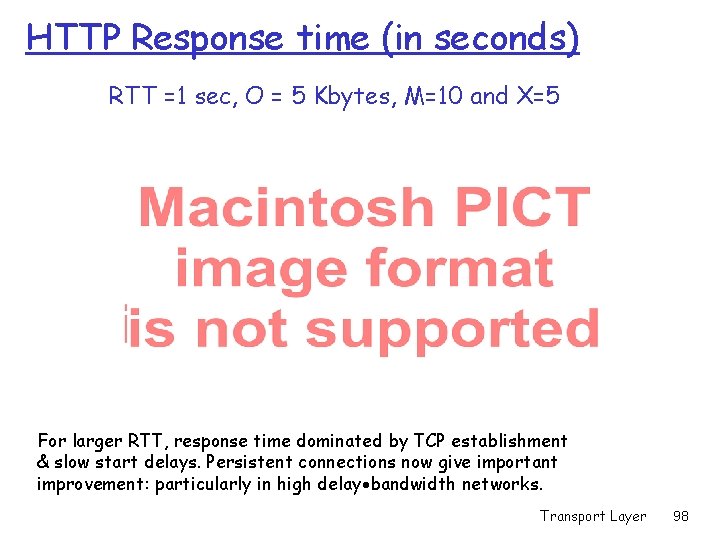

HTTP Response time (in seconds): RTT = 100 msec, O = 5 Kbytes, M = 10 and X = 5

For low bandwidth, connection and response time are dominated by transmission time. Persistent connections give only a minor improvement over parallel connections.

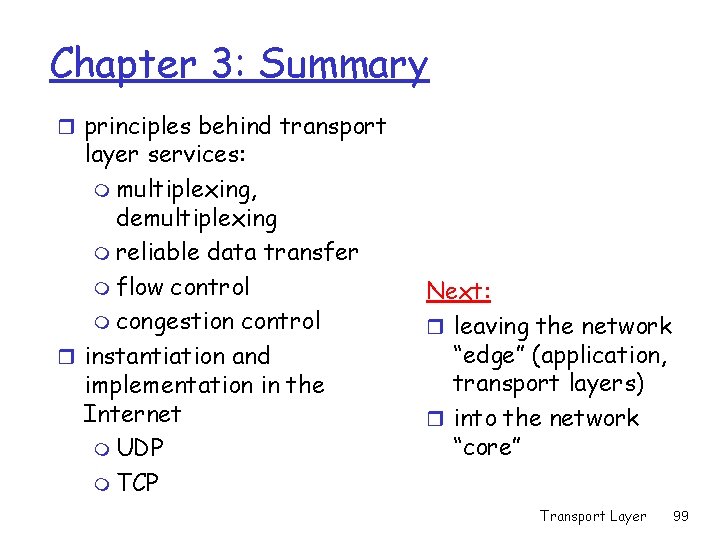

HTTP Response time (in seconds): RTT = 1 sec, O = 5 Kbytes, M = 10 and X = 5

For larger RTT, response time is dominated by TCP establishment and slow start delays. Persistent connections now give an important improvement, particularly in networks with a high delay-bandwidth product.

Chapter 3: Summary
- principles behind transport layer services:
  - multiplexing, demultiplexing
  - reliable data transfer
  - flow control
  - congestion control
- instantiation and implementation in the Internet
  - UDP
  - TCP

Next:
- leaving the network “edge” (application, transport layers)
- into the network “core”