Semantic Confidence Measurement for Spoken Dialog Systems

Semantic Confidence Measurement for Spoken Dialog Systems

Authors: R. Sarikaya, Y. Gao, M. Picheny, H. Erdogan

Reporter: CHEN TZAN HWEI, NTNU SPEECH LAB

![Reference](https://slidetodoc.com/presentation_image/4575e5558072323136cd39662db873fa/image-2.jpg)

Reference

- [1] R. Sarikaya, Y. Gao, M. Picheny, and H. Erdogan, “Semantic Confidence Measurement for Spoken Dialog Systems”, IEEE SAP 2005

11/3/2020 NTNU SPEECH LAB

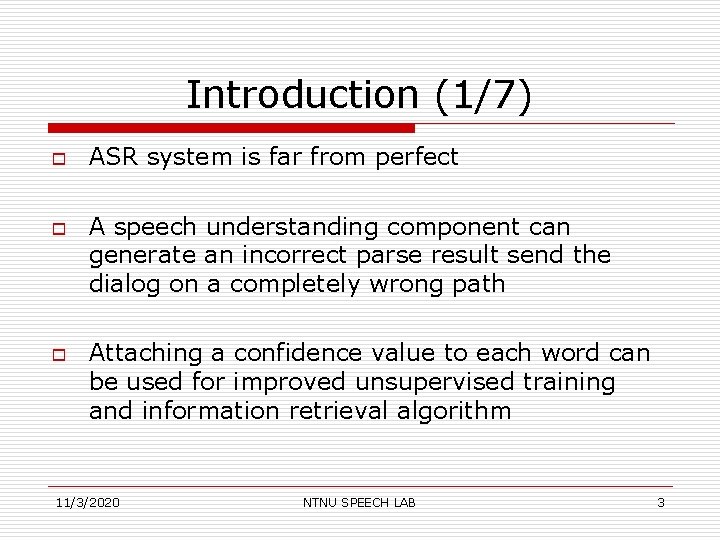

Introduction (1/7)

- ASR systems are far from perfect.
- A speech understanding component can generate an incorrect parse result and send the dialog down a completely wrong path.
- Attaching a confidence value to each word can improve unsupervised training and information retrieval algorithms.

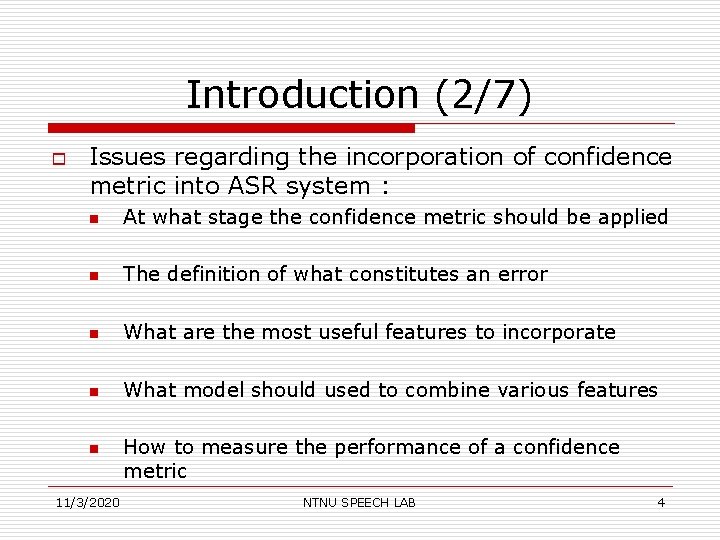

Introduction (2/7)

- Issues regarding the incorporation of a confidence metric into an ASR system:
  - At what stage the confidence metric should be applied
  - The definition of what constitutes an error
  - What the most useful features to incorporate are
  - What model should be used to combine the various features
  - How to measure the performance of a confidence metric

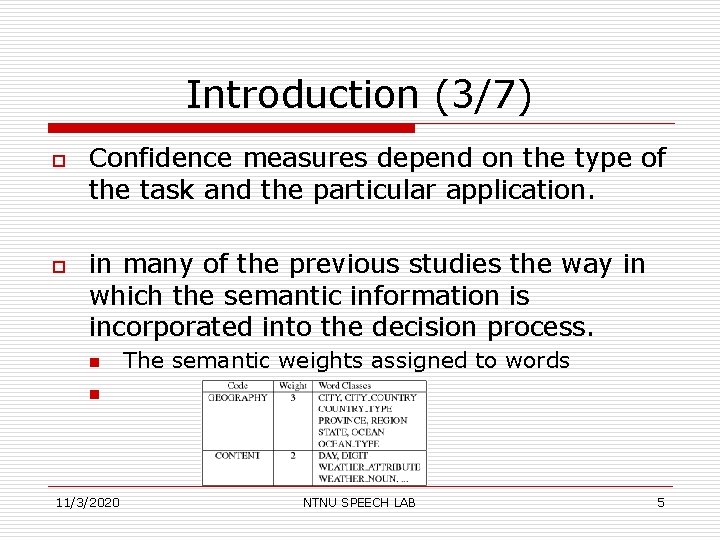

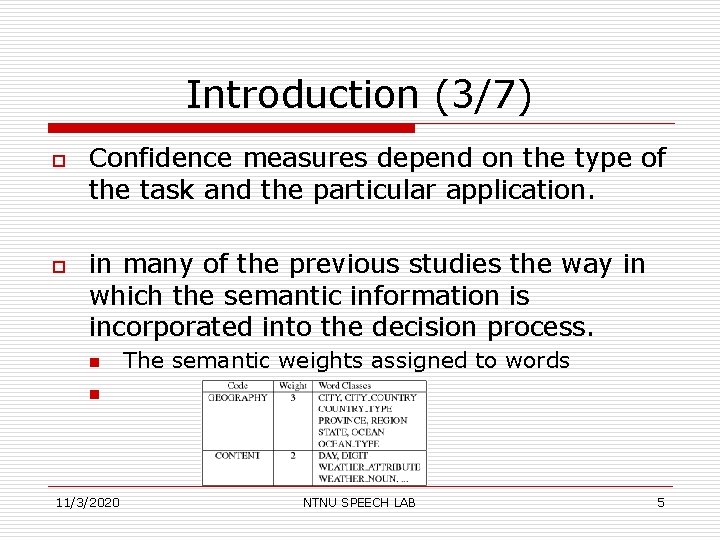

Introduction (3/7)

- Confidence measures depend on the type of the task and the particular application.
- Many of the previous studies are characterized by the way in which the semantic information is incorporated into the decision process:
  - The semantic weights assigned to words

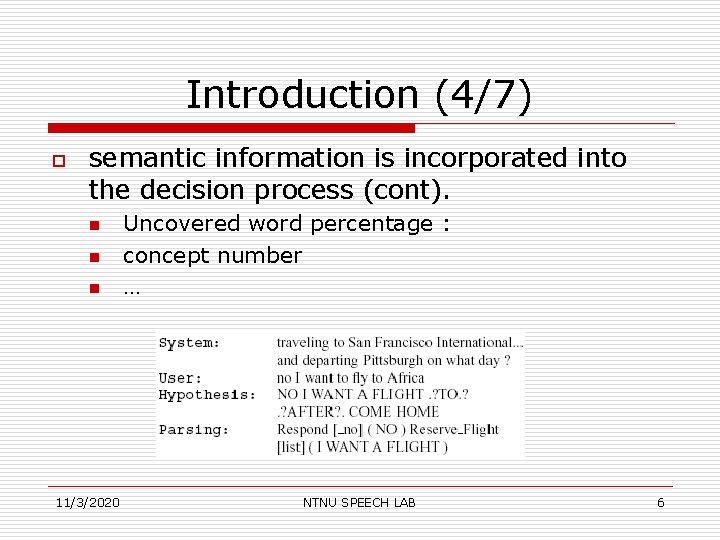

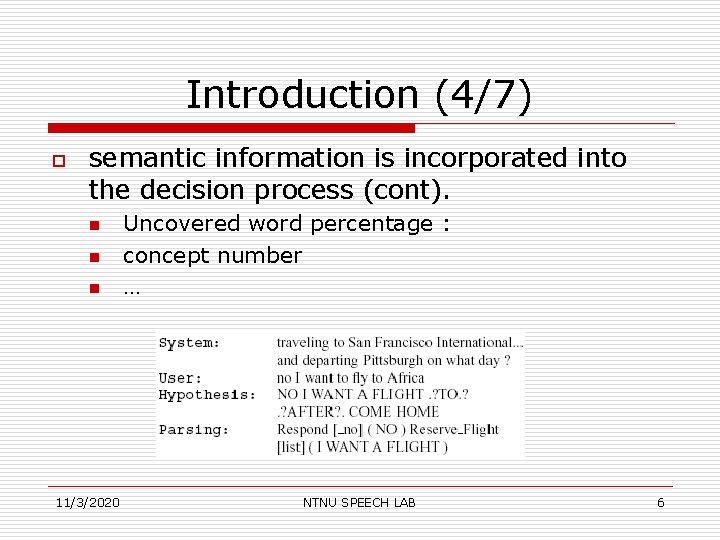

Introduction (4/7)

- Ways in which semantic information is incorporated into the decision process (cont.):
  - Uncovered word percentage: concept number …

Introduction (5/7)

- Recently, new approaches have attempted to tightly integrate the lexical and semantic content of the sentence.
- Decoupling the LM and AM, along with introducing LSA to find (dis)similar words in a sentence, has also been shown to improve over traditional features.

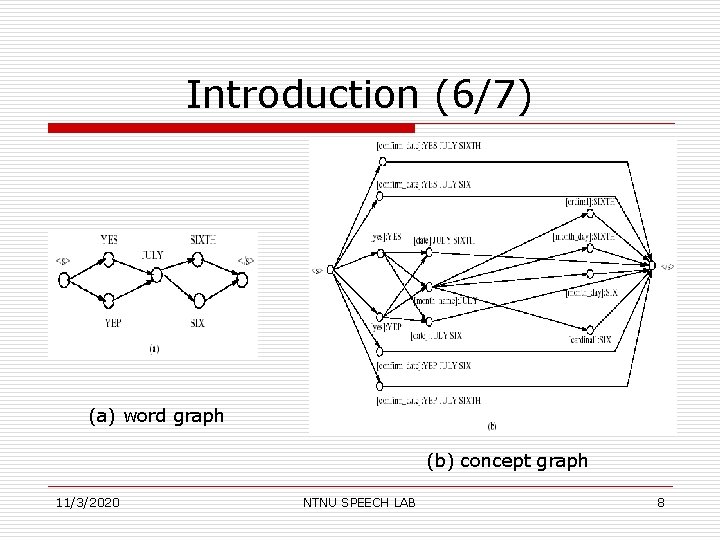

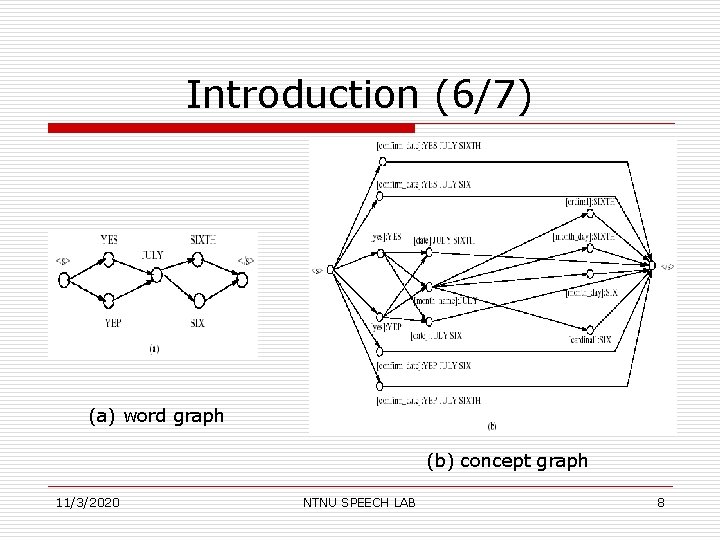

Introduction (6/7)

(a) word graph (b) concept graph

Introduction (7/7)

- In this paper, two methods are proposed to model semantic information in a sentence:
  - The first relies on the semantic classer/parse tree, where node and extension scores corresponding to tags and labels are used.
  - The second is based on maximum entropy modeling of the word sequence and the semantic parse tree.

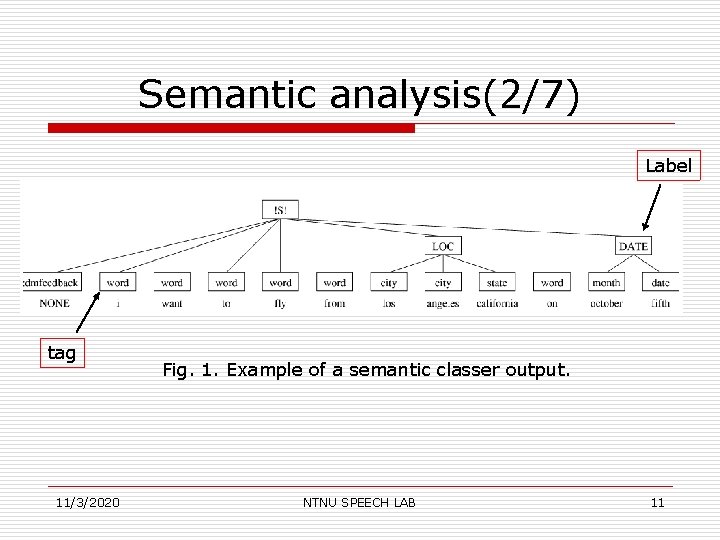

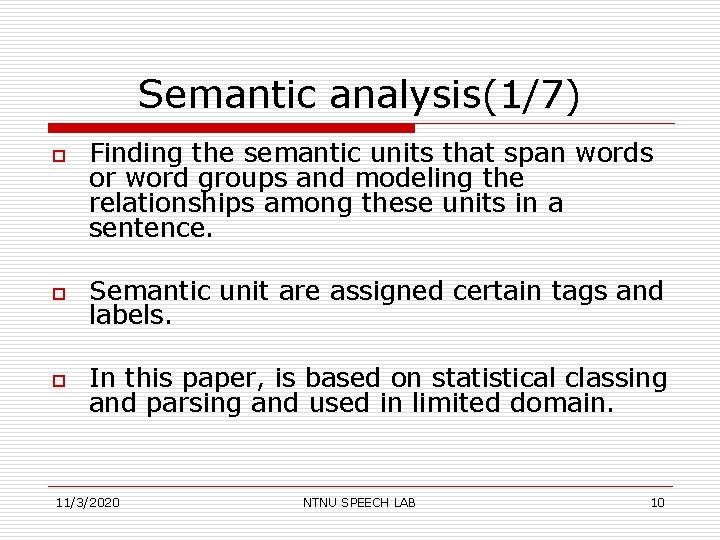

Semantic analysis (1/7)

- Semantic analysis finds the semantic units that span words or word groups and models the relationships among these units in a sentence.
- Semantic units are assigned certain tags and labels.
- In this paper, semantic analysis is based on statistical classing and parsing and is used in a limited domain.

Semantic analysis (2/7)

Fig. 1. Example of a semantic classer output. (Figure callouts: label, tag.)

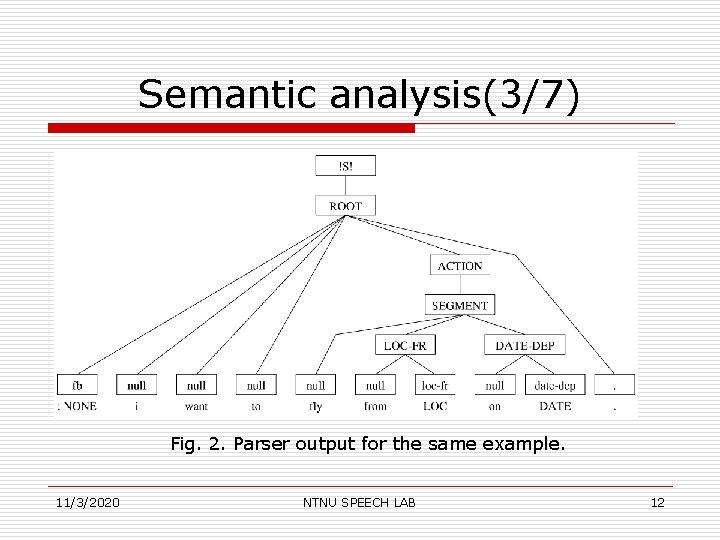

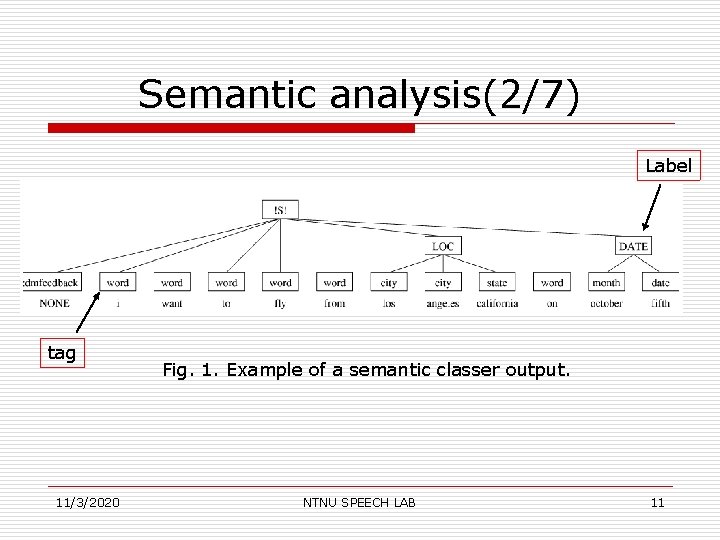

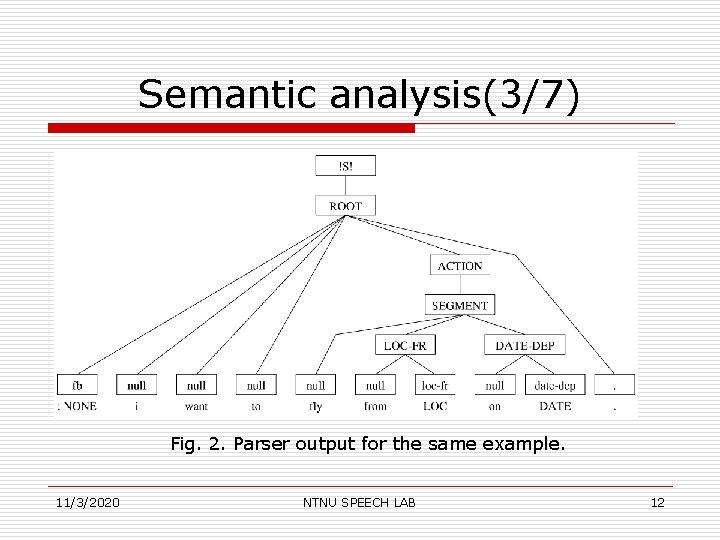

Semantic analysis (3/7)

Fig. 2. Parser output for the same example.

Semantic analysis (4/7)

- The parser works in a left-to-right, bottom-up fashion.
- The function of the classer is to group together the words that are part of a concept.
- The probability of a parse tree given the sentence is given below.

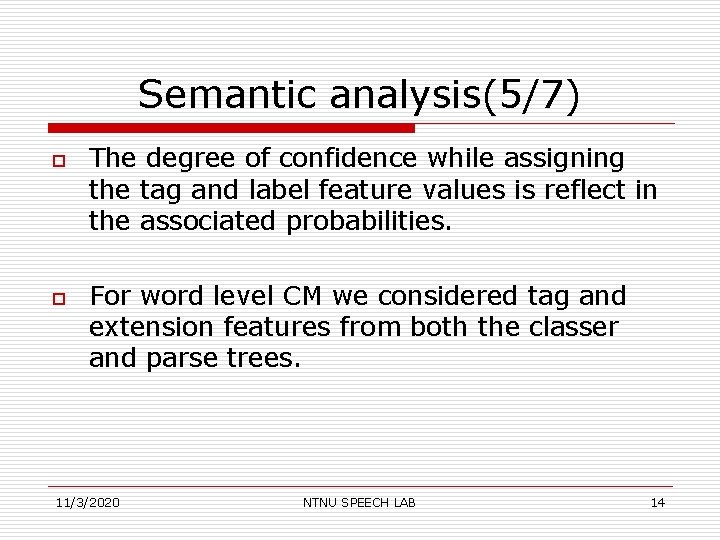

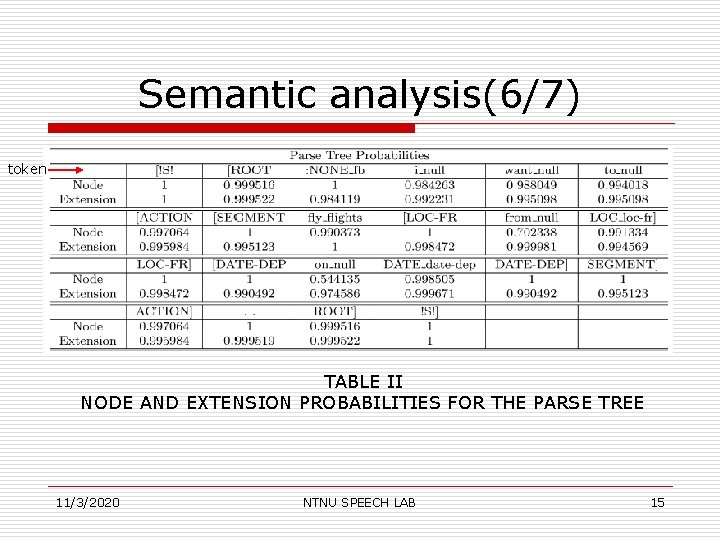

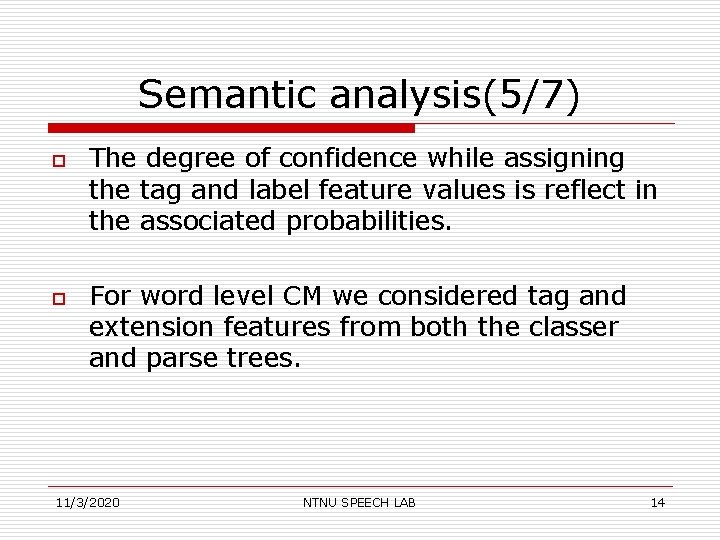

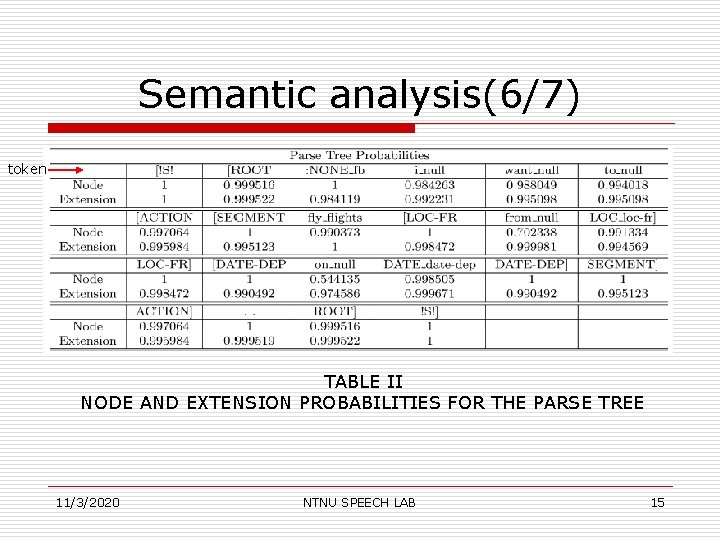

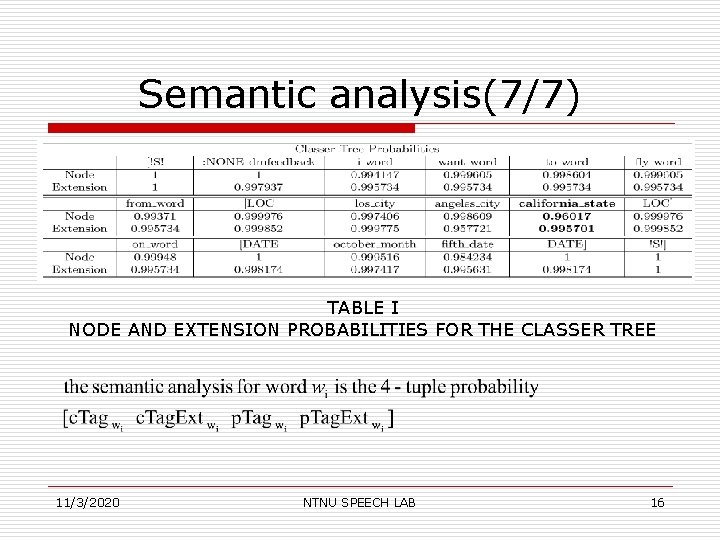

Semantic analysis (5/7)

- The degree of confidence in assigning the tag and label feature values is reflected in the associated probabilities.
- For word-level CM, we considered tag and extension features from both the classer and parse trees.

Semantic analysis (6/7)

TABLE II. NODE AND EXTENSION PROBABILITIES FOR THE PARSE TREE (table callout: token)

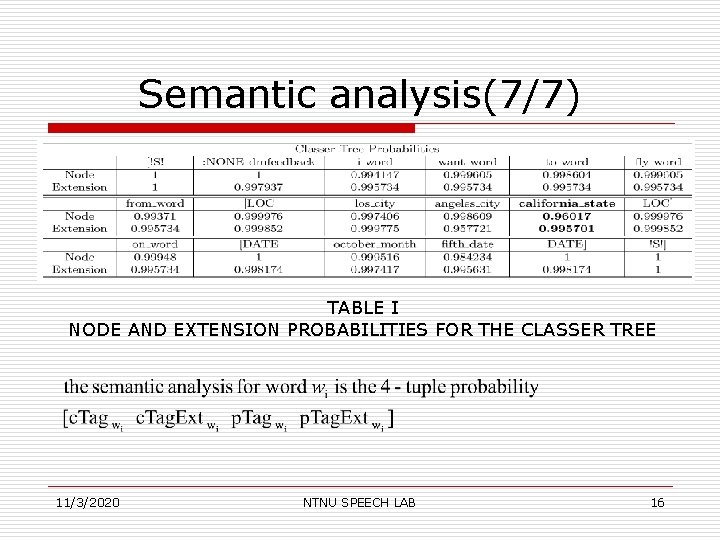

Semantic analysis (7/7)

TABLE I. NODE AND EXTENSION PROBABILITIES FOR THE CLASSER TREE

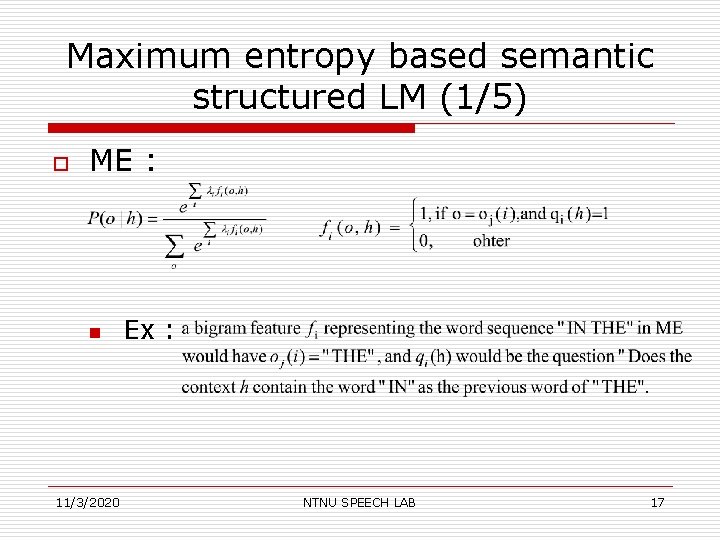

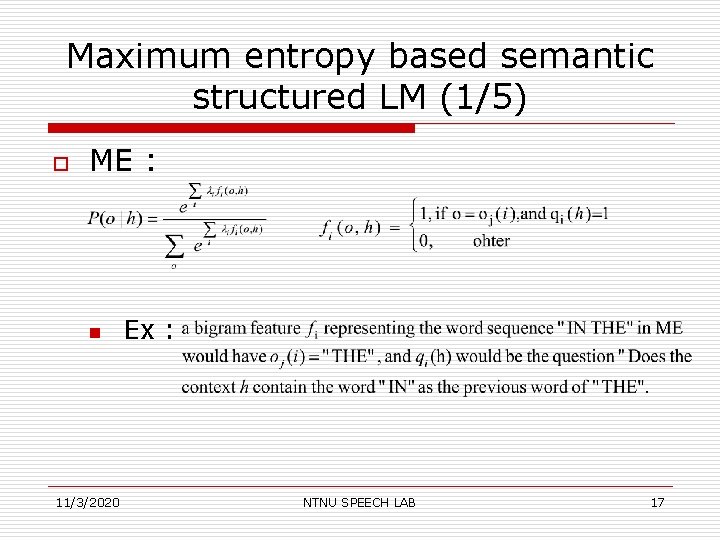

Maximum entropy based semantic structured LM (1/5)

- ME:
  - Ex:
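
The formulas on this slide did not survive extraction. As a reminder only, and assuming the standard conditional maximum entropy form rather than the slide's exact notation (the symbols $w$, $h$, $f_i$, $\lambda_i$, and $Z$ are the conventional ones, not taken from the slide), an ME language model predicts a word $w$ from its history $h$ roughly as follows:

```latex
P(w \mid h) = \frac{1}{Z(h)} \exp\Big(\sum_i \lambda_i \, f_i(h, w)\Big),
\qquad
Z(h) = \sum_{w'} \exp\Big(\sum_i \lambda_i \, f_i(h, w')\Big)
```

Each feature function $f_i$ answers one "question" about the history, and $\lambda_i$ is its learned weight; richer questions about the semantic structure simply add more feature functions.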

Maximum entropy based semantic structured LM (2/5)

- MELM1: the ME counterpart of an ordinary n-gram language model.
- MELM2: four more questions are used regarding the semantic structure of the sentence.
- MELM3: combines the semantic classer and parser and uses a full parse tree.

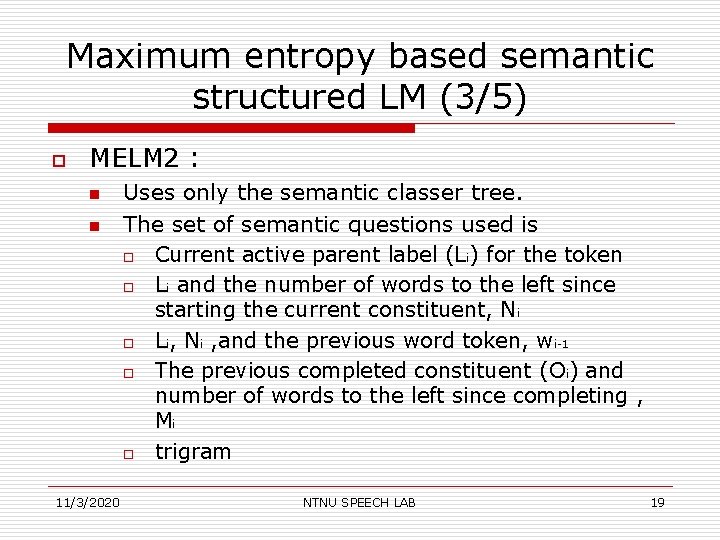

Maximum entropy based semantic structured LM (3/5)

- MELM2:
  - Uses only the semantic classer tree.
  - The set of semantic questions used is:
    - Current active parent label (L_i) for the token
    - L_i and the number of words to the left since starting the current constituent, N_i
    - L_i, N_i, and the previous word token, w_{i-1}
    - The previous completed constituent (O_i) and the number of words to the left since completing O_i, M_i
    - Trigram
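
The questions listed above can be illustrated with a minimal sketch. It assumes a flat classer output given as a list of segments, each a `(label, words)` pair with `label = None` for words outside any concept; the function name `melm2_questions`, the dictionary keys, the `<s>` padding symbol, and the `LOC` label are all hypothetical and not taken from the paper.

```python
from typing import Any, Dict, List, Optional, Tuple

# One classer segment: (label or None for unlabelled words, the words it spans).
Segment = Tuple[Optional[str], List[str]]


def melm2_questions(segments: List[Segment]) -> List[Dict[str, Any]]:
    """Collect, for every token, the values the MELM2-style questions ask about."""
    feats: List[Dict[str, Any]] = []
    words: List[str] = []             # words seen so far, left to right
    prev_done: Optional[str] = None   # O_i: last completed constituent label
    since_done = 0                    # M_i: words seen since O_i was completed
    for label, seg_words in segments:
        for n, word in enumerate(seg_words):
            feats.append({
                "word": word,
                "L": label,                                   # active parent label
                "N": n if label is not None else None,        # words since constituent start
                "prev_word": words[-1] if words else "<s>",   # w_{i-1}
                "O": prev_done,
                "M": since_done if prev_done is not None else 0,
                "trigram_history": tuple((["<s>", "<s>"] + words)[-2:]),
            })
            words.append(word)
            if prev_done is not None:
                since_done += 1
        if label is not None:
            # The constituent just ended, so it becomes the "previous completed" one.
            prev_done = label
            since_done = 0
    return feats


example = [(None, ["fly", "from"]), ("LOC", ["new", "york"]),
           (None, ["to"]), ("LOC", ["boston"])]
for row in melm2_questions(example):
    print(row)
```

In an actual ME model each of these values (alone or in the combinations listed on the slide) would be turned into binary feature functions; the sketch stops at extracting the raw values.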

Maximum entropy based semantic structured LM (4/5)

- MELM2 (cont.):
  - Is defined on the token sequence.
  - In a previous study, it was observed that the correctness of the current word has a significant effect on the correctness of the next word.
    - MELM2-ctx3 -> [t-1 wi t+1]
    - MELM3-ctx5 -> [t-2 t-1 wi t+1 t+2]
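
The ctx3 and ctx5 variants amount to looking at a symmetric window of neighbouring tokens around the current word. A minimal sketch of that windowing follows; the function name `context_window` and the choice of `None` as padding at sentence boundaries are assumptions made for illustration, not details from the paper.

```python
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def context_window(items: Sequence[T], i: int, ctx: int,
                   pad: Optional[T] = None) -> List[Optional[T]]:
    """Return the ctx items centred on position i, padding past the sentence ends."""
    if ctx < 1 or ctx % 2 != 1:
        raise ValueError("ctx must be a positive odd number, e.g. 3 or 5")
    half = ctx // 2
    return [items[j] if 0 <= j < len(items) else pad
            for j in range(i - half, i + half + 1)]


# Hypothetical per-token scores for a five-token utterance.
scores = [0.91, 0.40, 0.77, 0.85, 0.60]
print(context_window(scores, 2, 3))  # ctx3 around the third token
print(context_window(scores, 0, 5))  # ctx5 at the sentence start, padded on the left
```

The same helper works for tokens or for per-token scores, since it only indexes into the sequence.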

Maximum entropy based semantic structured LM (5/5)

- MELM3:
  - The set of semantic questions used is:
    - L_i and the number of words to the left since starting the current constituent, N_i
    - L_i and the current grandparent label, G_i
    - The previous completed constituent (O_i) and the number of words to the left since completing O_i, M_i
    - Trigram
  - We consider MELM3-ctx3 and MELM3-ctx5.

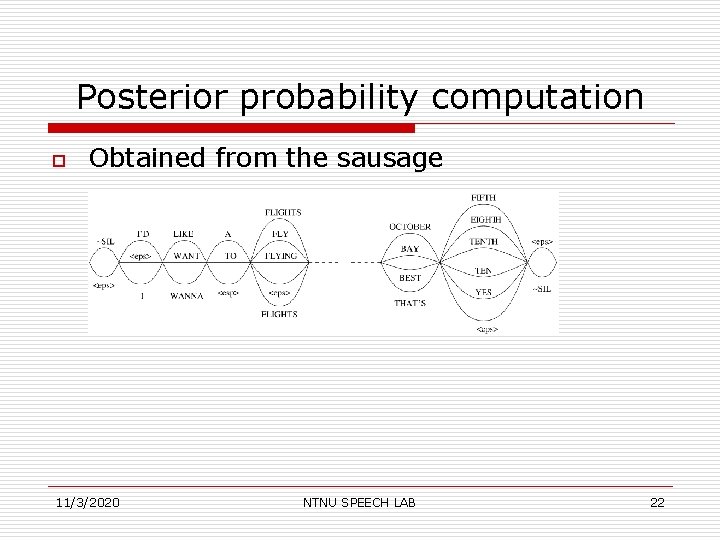

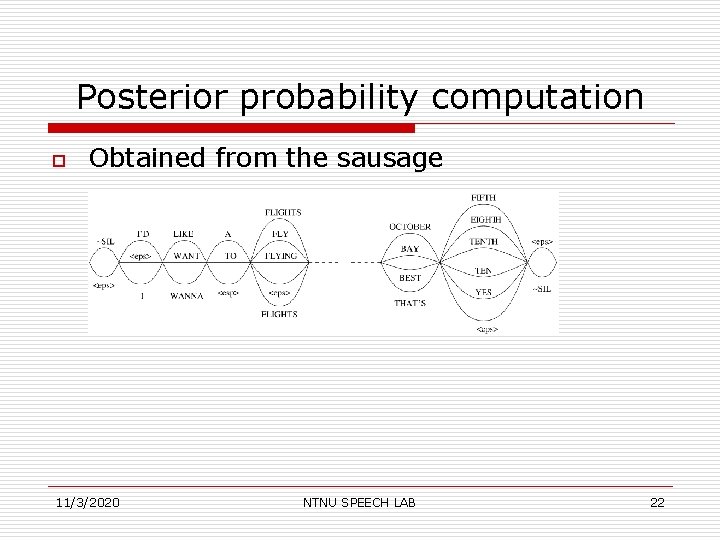

Posterior probability computation

- Obtained from the sausage (word confusion network)
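
As a rough illustration of reading word posteriors off a sausage, the sketch below assumes each bin is a dictionary mapping competing words to log-domain scores and normalizes within the bin. The data layout, the function names `bin_posteriors` and `best_path_with_posteriors`, and the example words are hypothetical; how the scores are accumulated from the lattice is outside the scope of this sketch.

```python
import math
from typing import Dict, List, Tuple


def bin_posteriors(log_scores: Dict[str, float]) -> Dict[str, float]:
    """Normalize the log scores of one sausage bin into posteriors that sum to 1."""
    top = max(log_scores.values())  # subtract the max for numerical stability
    total = sum(math.exp(s - top) for s in log_scores.values())
    return {w: math.exp(s - top) / total for w, s in log_scores.items()}


def best_path_with_posteriors(sausage: List[Dict[str, float]]) -> List[Tuple[str, float]]:
    """Pick the highest-posterior word in every bin, keeping its posterior."""
    path = []
    for bin_scores in sausage:
        post = bin_posteriors(bin_scores)
        word = max(post, key=post.get)
        path.append((word, post[word]))
    return path


sausage = [
    {"fly": math.log(9.0), "flight": math.log(1.0)},
    {"boston": math.log(3.0), "austin": math.log(1.0)},
]
print(best_path_with_posteriors(sausage))
```

The posterior attached to each chosen word is what a confidence classifier would consume as the baseline speech recognition feature.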

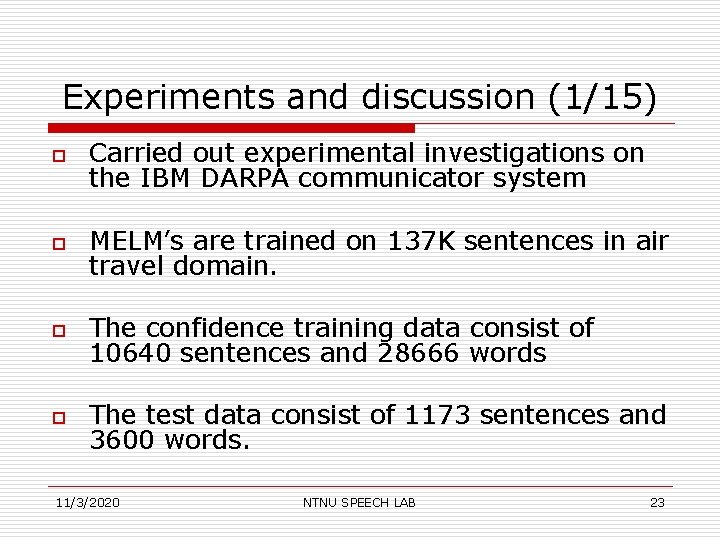

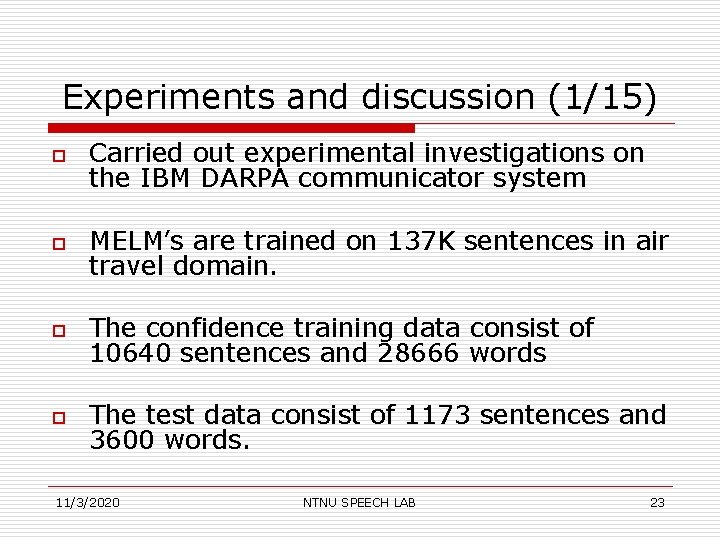

Experiments and discussion (1/15)

- Experimental investigations were carried out on the IBM DARPA Communicator system.
- The MELMs are trained on 137K sentences in the air travel domain.
- The confidence training data consist of 10640 sentences and 28666 words.
- The test data consist of 1173 sentences and 3600 words.

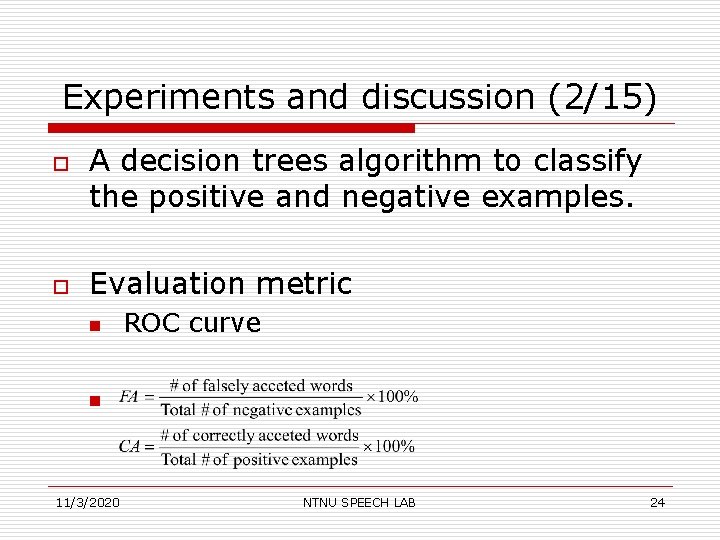

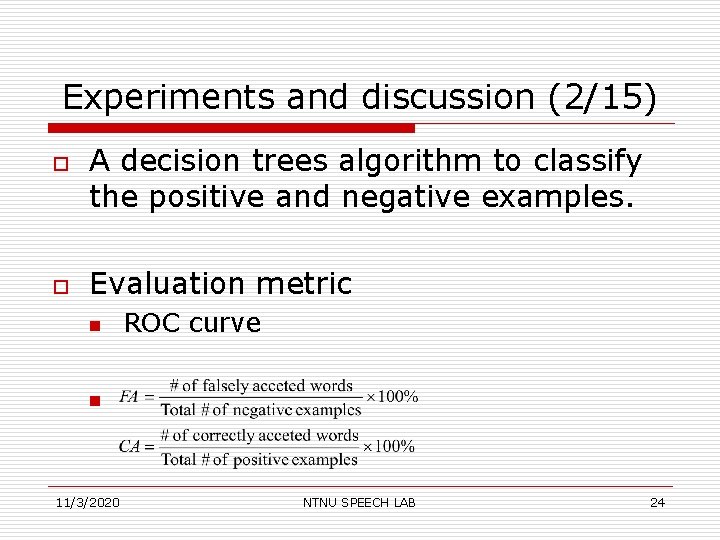

Experiments and discussion (2/15)

- A decision tree algorithm is used to classify the positive and negative examples.
- Evaluation metric:
  - ROC curve
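
To make the ROC evaluation concrete, here is a minimal sketch that sweeps a threshold over confidence scores and collects (false positive rate, true positive rate) points. It assumes label 1 marks a positive example and 0 a negative one, and it takes the scores as given rather than training a decision tree; the function names `roc_points` and `auc` and the toy data are illustrative only.

```python
from typing import List, Tuple


def roc_points(scores: List[float], labels: List[int]) -> List[Tuple[float, float]]:
    """Return ROC points (FPR, TPR) obtained by lowering the acceptance threshold."""
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative example")
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for idx, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last example of a group of tied scores.
        if idx + 1 == len(ranked) or ranked[idx + 1][0] != score:
            points.append((fp / neg, tp / pos))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


pts = roc_points([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
print(pts, auc(pts))
```

A curve that rises quickly toward the top-left corner means the confidence score separates positive from negative examples well at low false-alarm rates.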

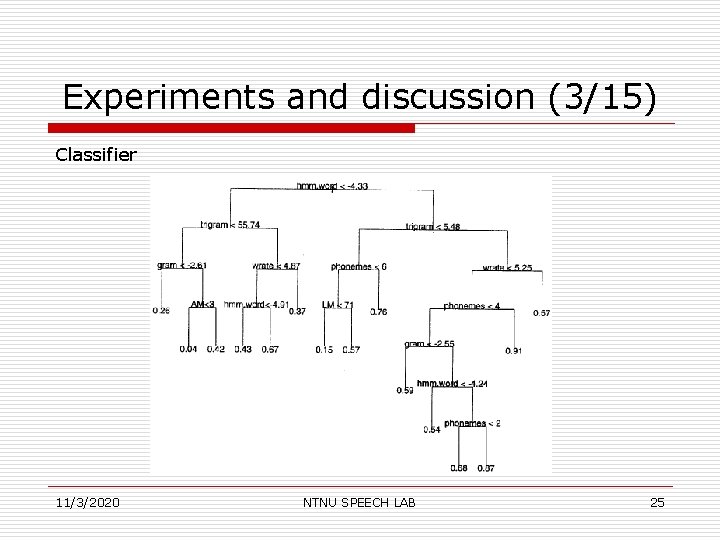

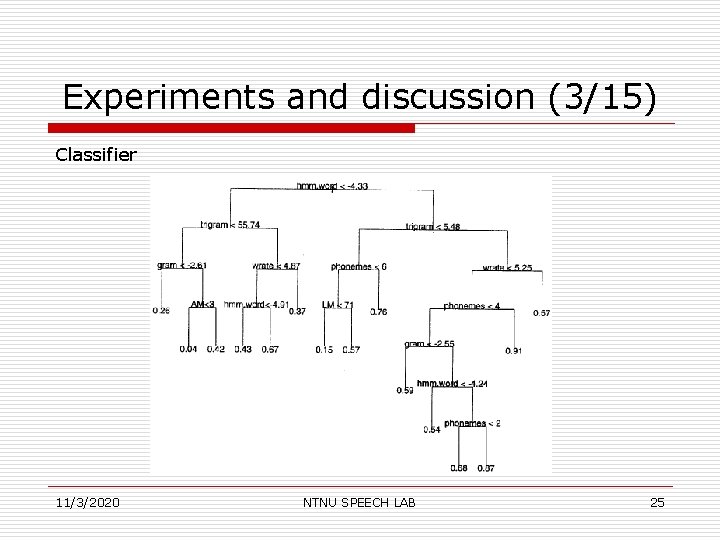

Experiments and discussion (3/15)

Classifier

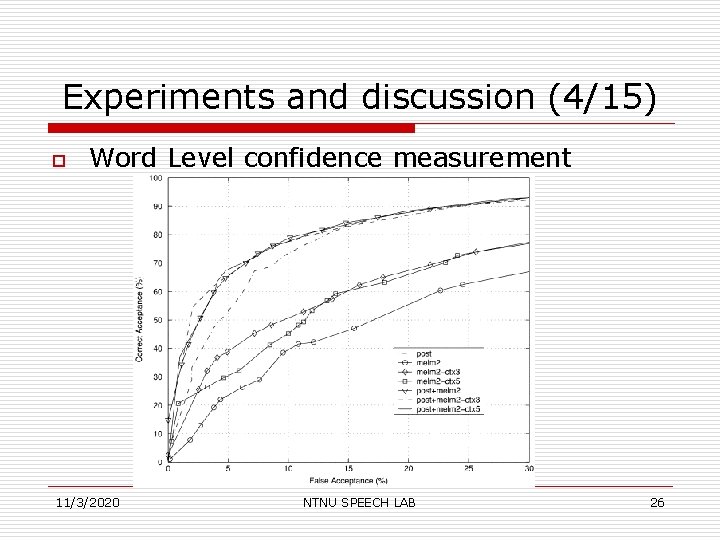

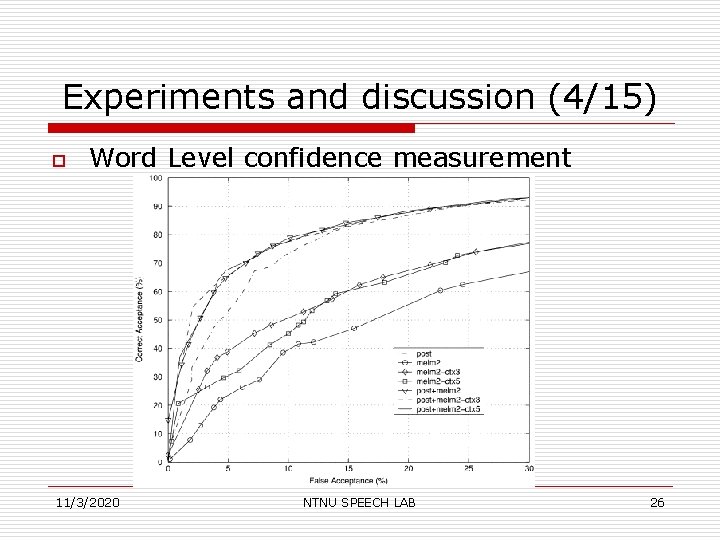

Experiments and discussion (4/15)

- Word-level confidence measurement

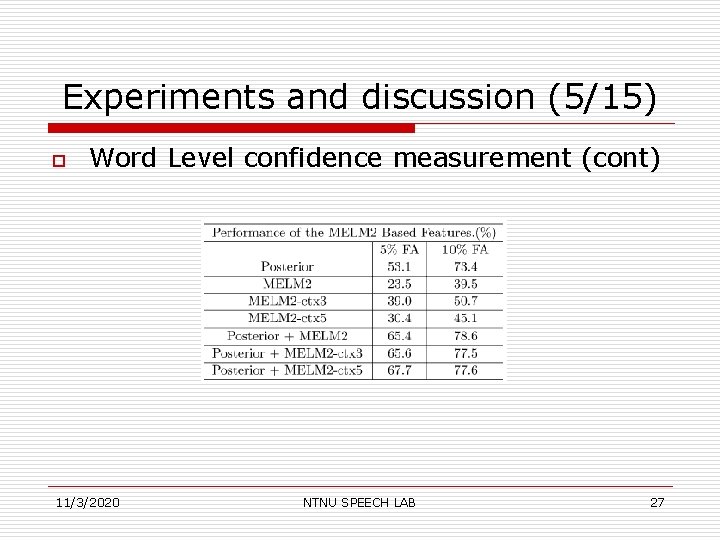

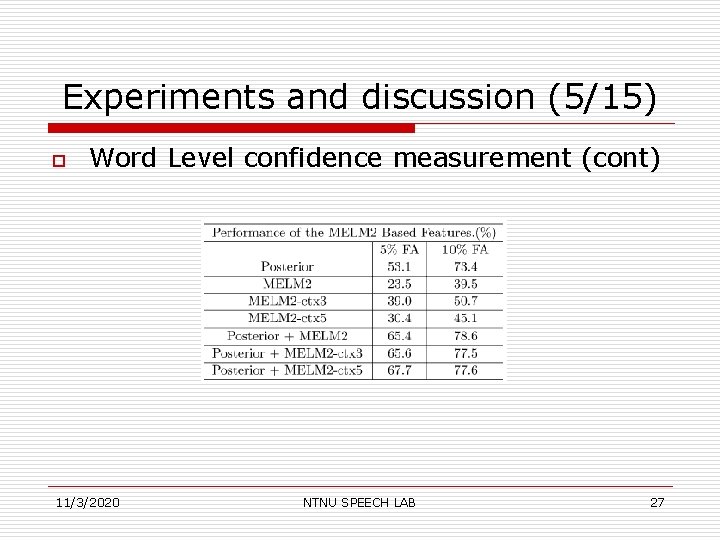

Experiments and discussion (5/15)

- Word-level confidence measurement (cont.)

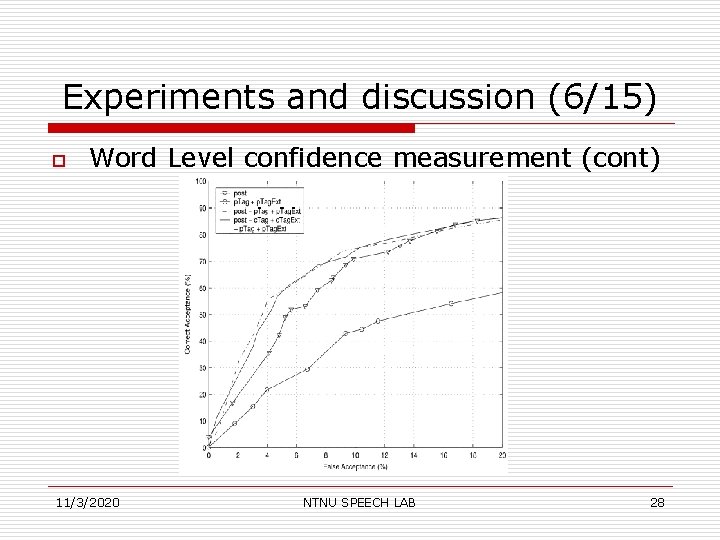

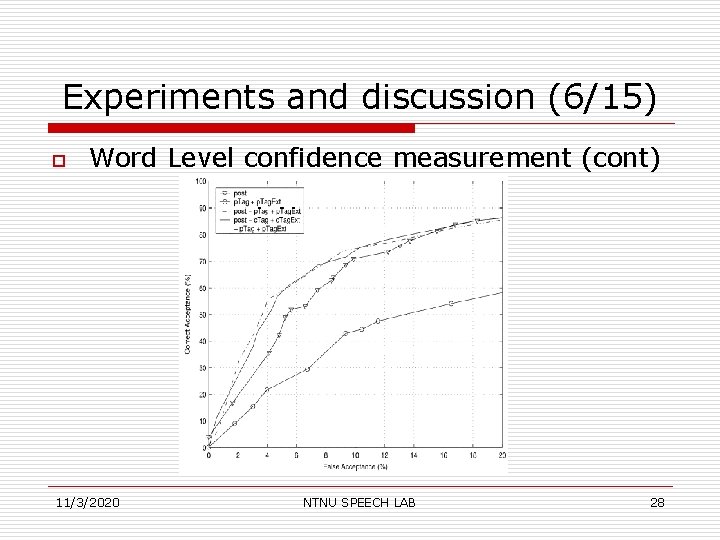

Experiments and discussion (6/15)

- Word-level confidence measurement (cont.)

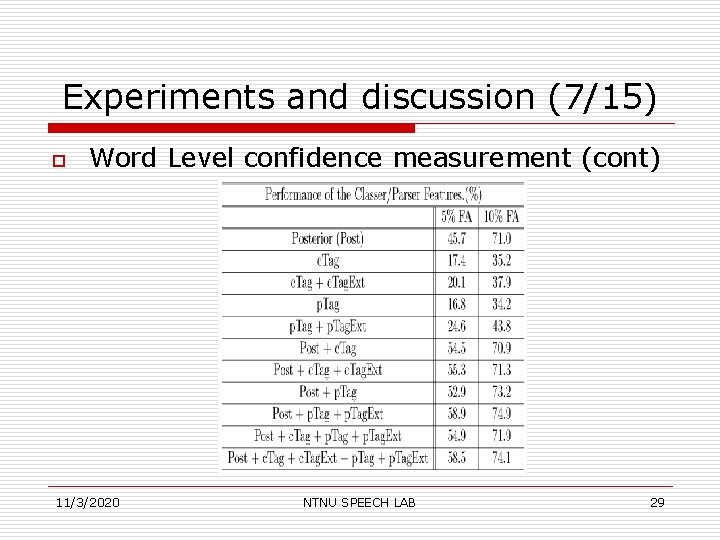

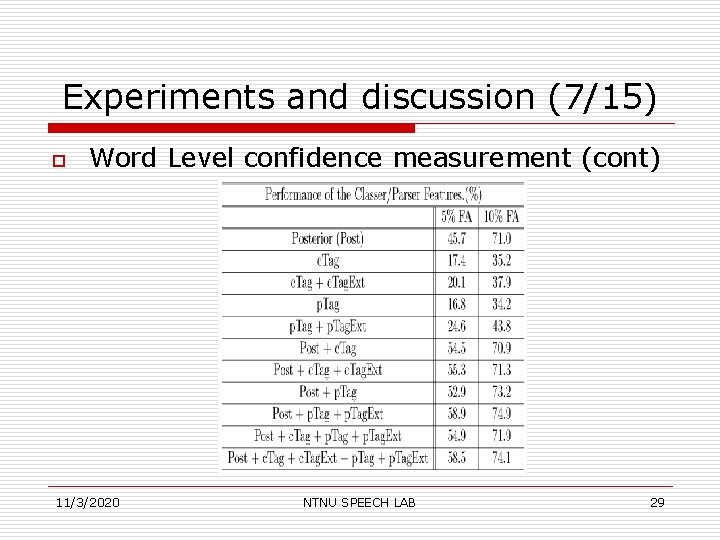

Experiments and discussion (7/15)

- Word-level confidence measurement (cont.)

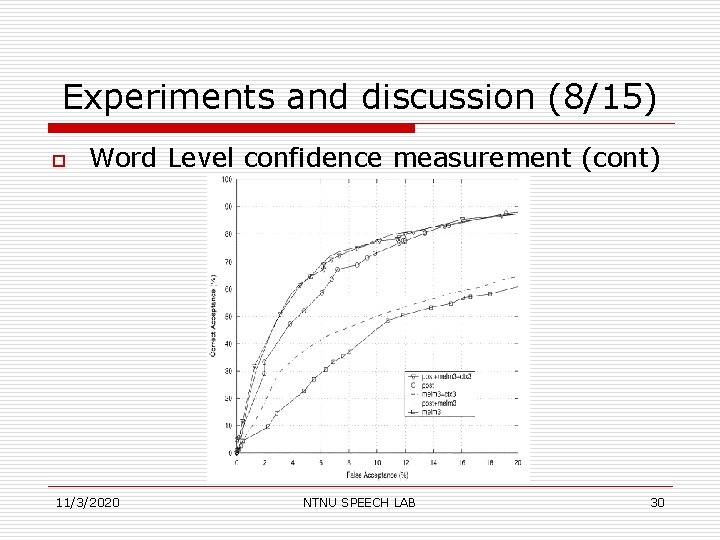

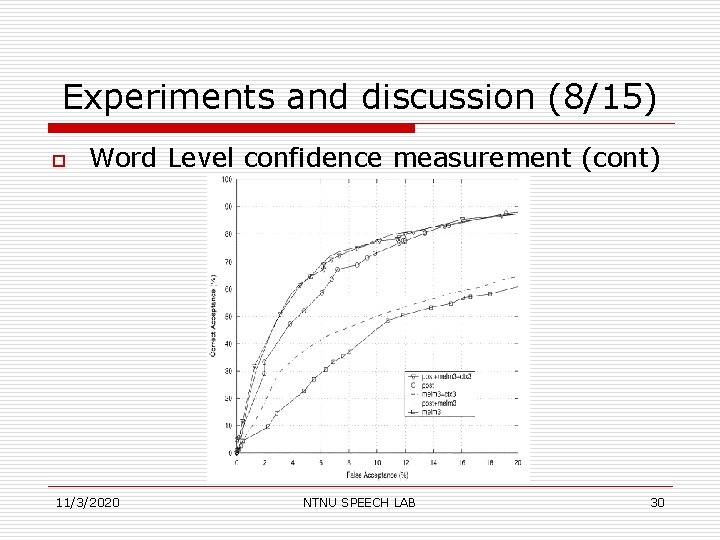

Experiments and discussion (8/15)

- Word-level confidence measurement (cont.)

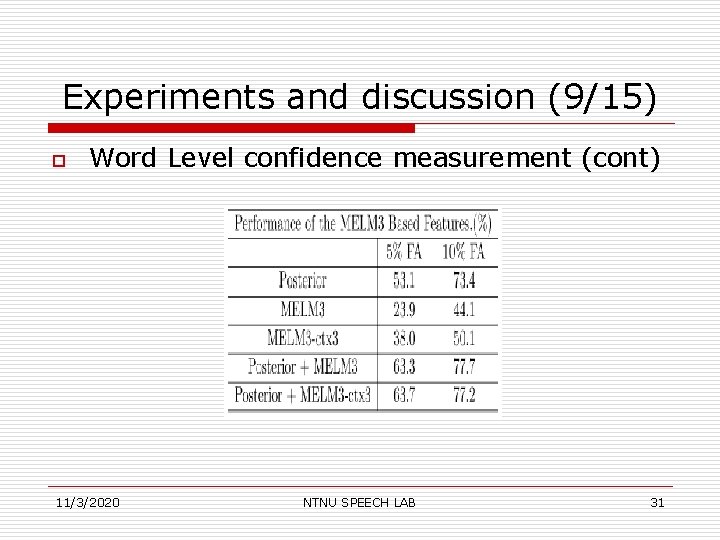

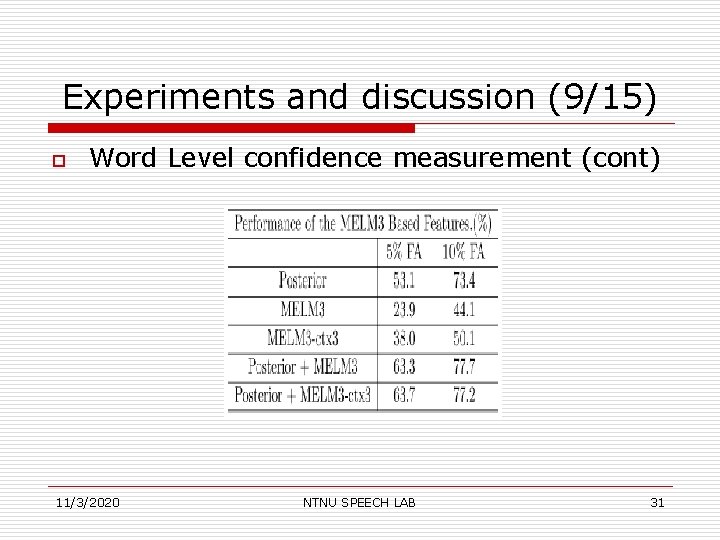

Experiments and discussion (9/15)

- Word-level confidence measurement (cont.)

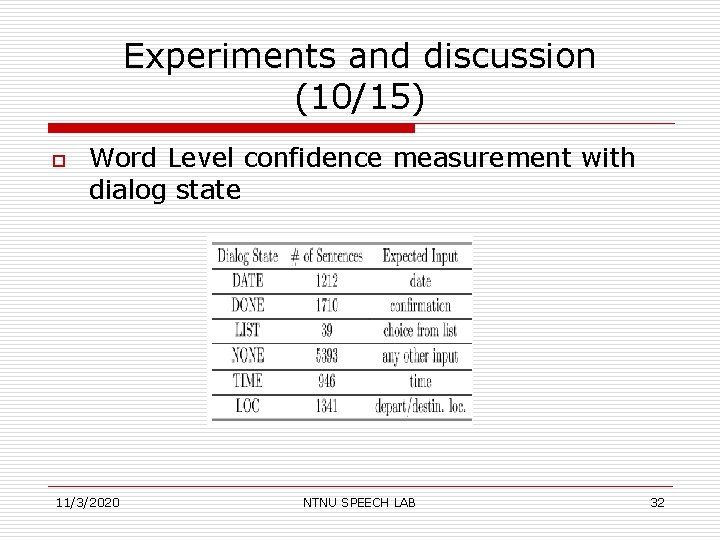

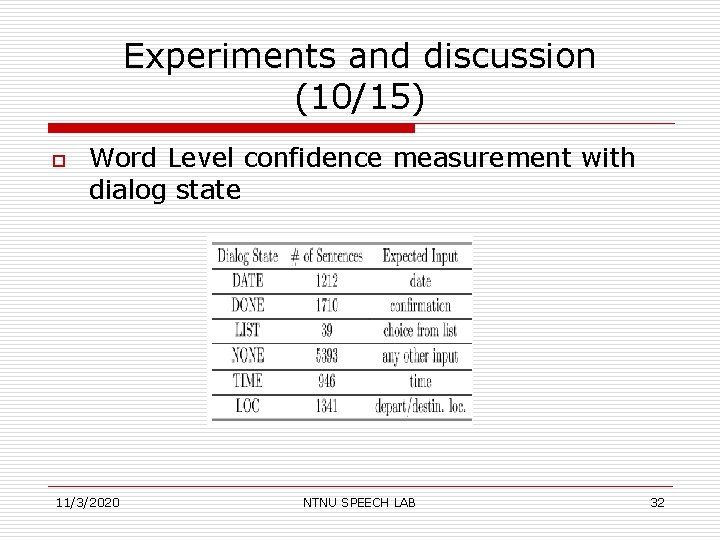

Experiments and discussion (10/15)

- Word-level confidence measurement with dialog state

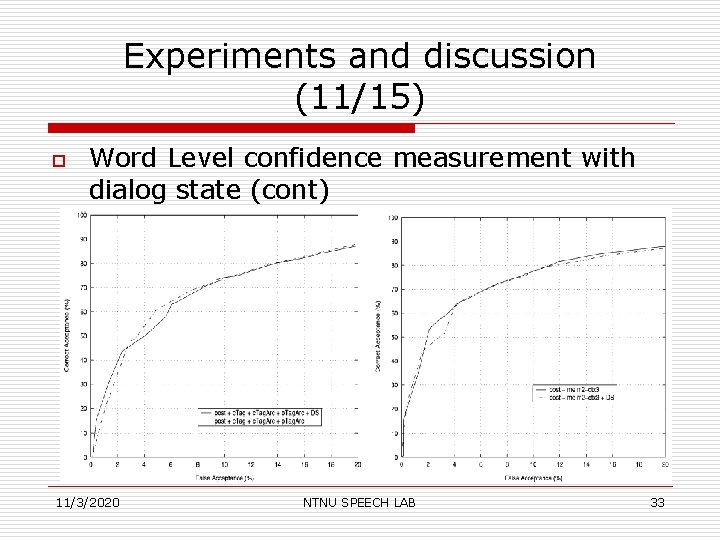

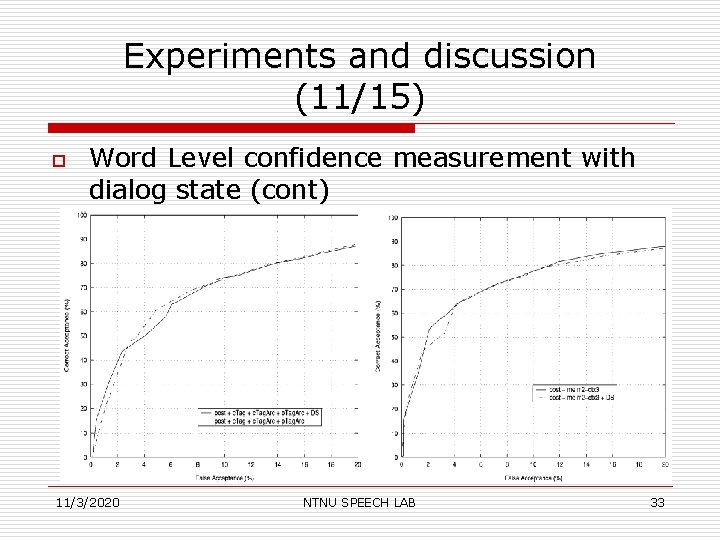

Experiments and discussion (11/15)

- Word-level confidence measurement with dialog state (cont.)

Experiments and discussion (12/15)

- Concept-level confidence measurement:
  - In a spoken dialog system, it is important whether the words that are part of a concept are recognized correctly.
  - A concept is defined as the combination of word(s) belonging to a label in a classer tree.
  - The classer covers 19 concepts.

Experiments and discussion (13/15)

- Concept-level confidence measurement (cont.):
  - The posterior probability of the concept
  - The concept score from the classer:
    - Classer: multiply all the probabilities between the begin-label and the end-label
    - Norm-classer: normalize the final overall probability by the number of words spanned by the classer concept
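
The two classer-based concept scores can be sketched as below. The product follows the slide directly. The slide does not say how the normalization by word count is carried out, so the sketch assumes a geometric-mean style normalization (taking the n-th root); plain division by the word count would be another reading. The function names and the example probabilities are hypothetical.

```python
import math
from typing import Sequence


def classer_score(probs: Sequence[float]) -> float:
    """Product of all classer probabilities between the begin-label and end-label."""
    return math.prod(probs)


def norm_classer_score(probs: Sequence[float], n_words: int) -> float:
    """Length-normalized concept score.

    ASSUMPTION: normalization is done as the n_words-th root of the product,
    so that long concepts are not penalized merely for spanning more words.
    """
    if n_words < 1:
        raise ValueError("a concept must span at least one word")
    return classer_score(probs) ** (1.0 / n_words)


# Hypothetical probabilities for a two-word concept such as "new york".
probs = [0.9, 0.8]
print(classer_score(probs), norm_classer_score(probs, n_words=2))
```

Without normalization, a concept spanning many words tends to receive a lower score than a one-word concept even when every individual decision is confident, which is the motivation for the normalized variant.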

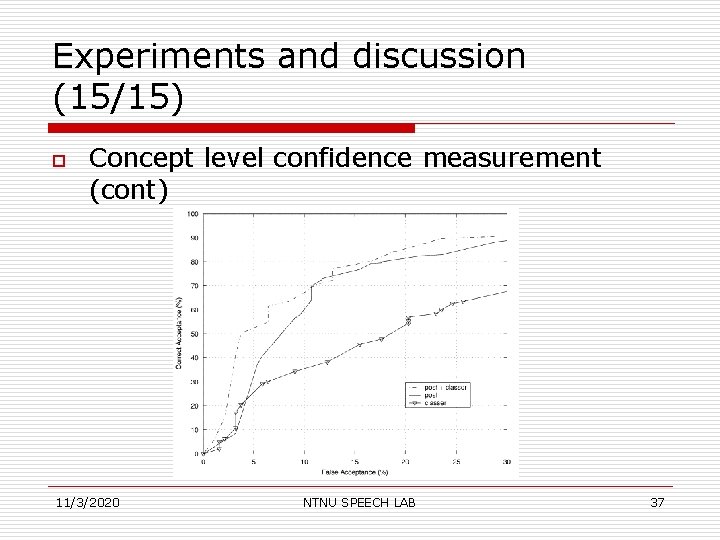

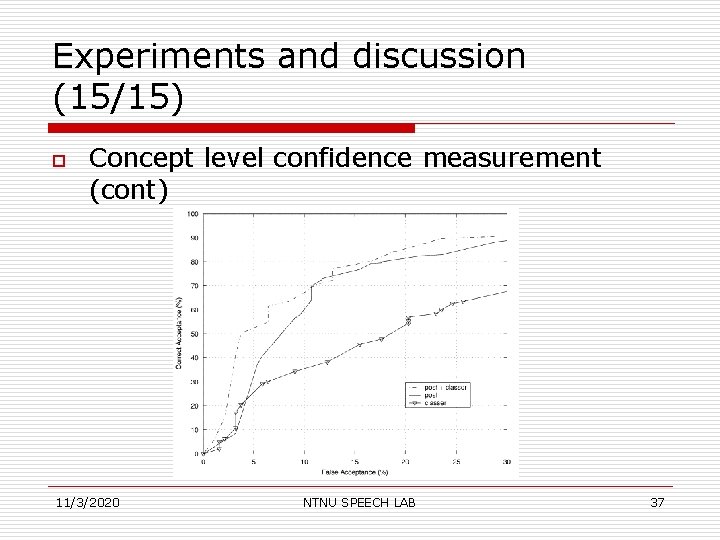

Experiments and discussion (14/15)

- Concept-level confidence measurement (cont.):
  - Extracting the concepts from the training data resulted in 6855 concepts; the test data yielded 919.

Experiments and discussion (15/15)

- Concept-level confidence measurement (cont.)

Conclusions

- The semantic features bring information complementary to the speech recognition information summarized by the posterior probability.
- In the future, we plan to apply the proposed methods to additional domains.