Stochastic Language Generation for Spoken Dialog Systems

- Slides: 21

Stochastic Language Generation for Spoken Dialog Systems

Alice Oh (aliceo@cs.cmu.edu), Alex I. Rudnicky (air@cs.cmu.edu), Kevin Lenzo (lenzo@cs.cmu.edu), School of Computer Science, Carnegie Mellon University

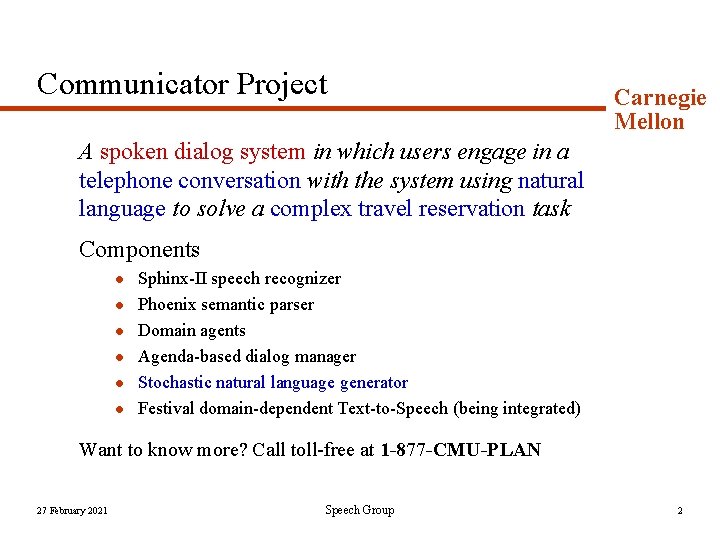

Communicator Project

- A spoken dialog system in which users hold a telephone conversation with the system, in natural language, to complete a complex travel reservation task
- Components:
  - Sphinx-II speech recognizer
  - Phoenix semantic parser
  - Domain agents
  - Agenda-based dialog manager
  - Stochastic natural language generator
  - Festival domain-dependent text-to-speech (being integrated)
- Want to know more? Call toll-free at 1-877-CMU-PLAN.

Carnegie Mellon, Speech Group, 27 February 2021 (slide 2)

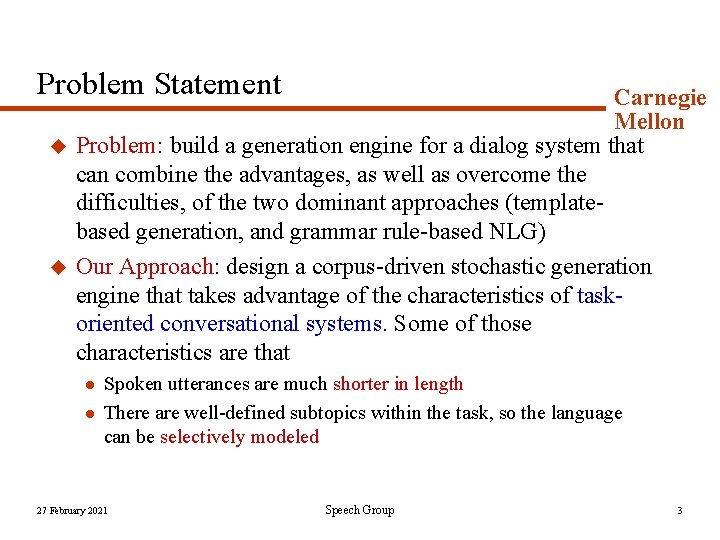

Problem Statement

- Problem: build a generation engine for a dialog system that combines the advantages, and overcomes the difficulties, of the two dominant approaches (template-based generation and grammar-rule-based NLG)
- Our approach: design a corpus-driven stochastic generation engine that takes advantage of the characteristics of task-oriented conversational systems. Among those characteristics:
  - Spoken utterances are much shorter than written sentences
  - The task has well-defined subtopics, so the language can be modeled selectively, one subtopic at a time

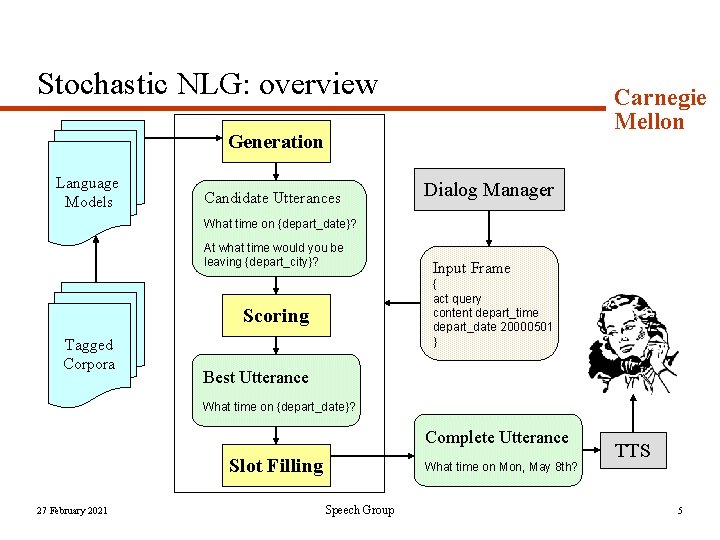

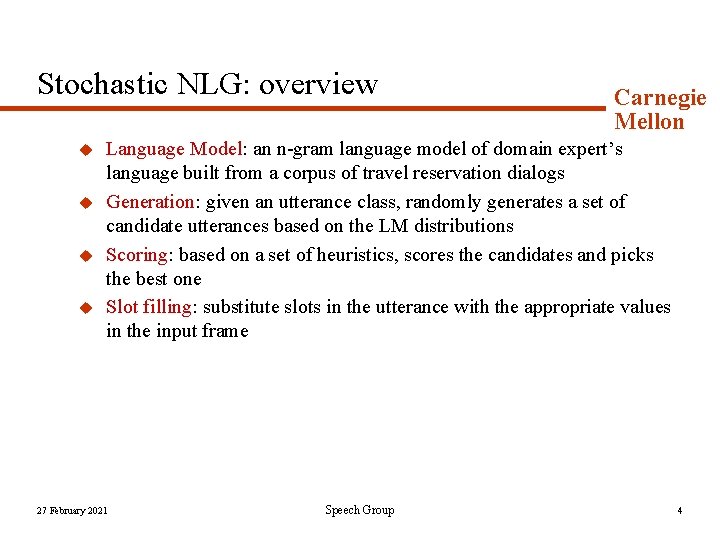

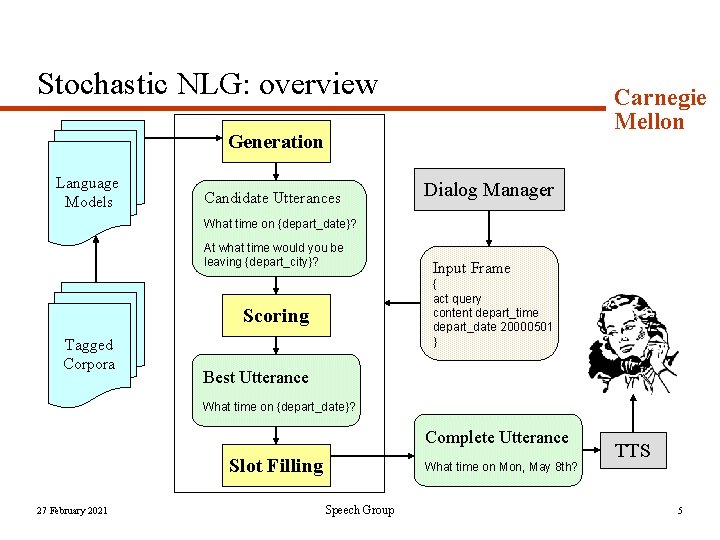

Stochastic NLG: Overview

- Language model: an n-gram language model of the domain expert's language, built from a corpus of travel reservation dialogs
- Generation: given an utterance class, randomly generate a set of candidate utterances from the LM distributions
- Scoring: score the candidates with a set of heuristics and pick the best one
- Slot filling: substitute the slots in the utterance with the appropriate values from the input frame
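The language-model and generation steps can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the system's implementation: it assumes a bigram model (the slides leave n open), whitespace tokenization with "?" as its own token, and one model per utterance class. The training utterances are the query_depart_time examples from the corpus example slide; `train_bigram_lm` and `sample_utterance` are hypothetical names.

```python
import random
from collections import Counter, defaultdict

BOS, EOS = "<s>", "</s>"

# Slot-tagged training utterances for one utterance class (query_depart_time).
QUERY_DEPART_TIME = [
    "what time do you want to depart {depart_city} ?",
    "what time on {depart_date} would you like to depart ?",
    "what time would you like to leave ?",
    "what time do you want to depart on {depart_date} ?",
]


def train_bigram_lm(utterances):
    """Count word-to-next-word transitions, with sentence boundary markers."""
    counts = defaultdict(Counter)
    for utt in utterances:
        tokens = [BOS] + utt.split() + [EOS]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def sample_utterance(counts, rng, max_len=30):
    """Random walk: pick each next word with probability proportional to its count."""
    words, prev = [], BOS
    for _ in range(max_len):
        successors = counts[prev]
        nxt = rng.choices(list(successors), weights=list(successors.values()))[0]
        if nxt == EOS:
            break
        words.append(nxt)
        prev = nxt
    return " ".join(words)


lm = train_bigram_lm(QUERY_DEPART_TIME)
rng = random.Random(0)  # fixed seed so runs are repeatable
candidates = {sample_utterance(lm, rng) for _ in range(10)}
for c in sorted(candidates):
    print(c)
```

Because each word depends only on its predecessor, the candidate set can include recombinations that never occur in the corpus; that is the intended source of variety, and the scoring step exists to filter out the bad ones.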

Stochastic NLG: Overview (data flow)

- Input frame from the dialog manager: { act query content depart_time depart_date 20000501 }
- Generation: language models built from tagged corpora produce candidate utterances, e.g. "What time on {depart_date}?" and "At what time would you be leaving {depart_city}?"
- Scoring: picks the best utterance, "What time on {depart_date}?"
- Slot filling: produces the complete utterance, "What time on Mon, May 8th?"
- TTS: the complete utterance is passed to text-to-speech
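The input frame in the diagram is written as a flat list of keys and values. A small parser makes that structure explicit. Both the format assumption (brace-delimited, whitespace-separated key/value pairs) and the rule that joins `act` and `content` into an utterance class tag such as query_depart_time are hypothetical, inferred from this single example and the tag names; `parse_frame` and `utterance_class` are illustrative names.

```python
def parse_frame(text):
    """Parse a flat "{ key value key value ... }" frame into a dict.

    Assumption: the frame is a brace-delimited list of whitespace-separated
    key/value pairs, as in the slide's example; real frames may be richer.
    """
    body = text.strip()
    if not (body.startswith("{") and body.endswith("}")):
        raise ValueError("frame must be enclosed in braces")
    tokens = body[1:-1].split()
    if len(tokens) % 2:
        raise ValueError(f"unpaired token in frame: {tokens}")
    return dict(zip(tokens[0::2], tokens[1::2]))


def utterance_class(frame):
    """Hypothetical mapping from speech act + content to a class tag."""
    return f"{frame['act']}_{frame['content']}"


frame = parse_frame("{ act query content depart_time depart_date 20000501 }")
print(frame)                   # {'act': 'query', 'content': 'depart_time', 'depart_date': '20000501'}
print(utterance_class(frame))  # query_depart_time
```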

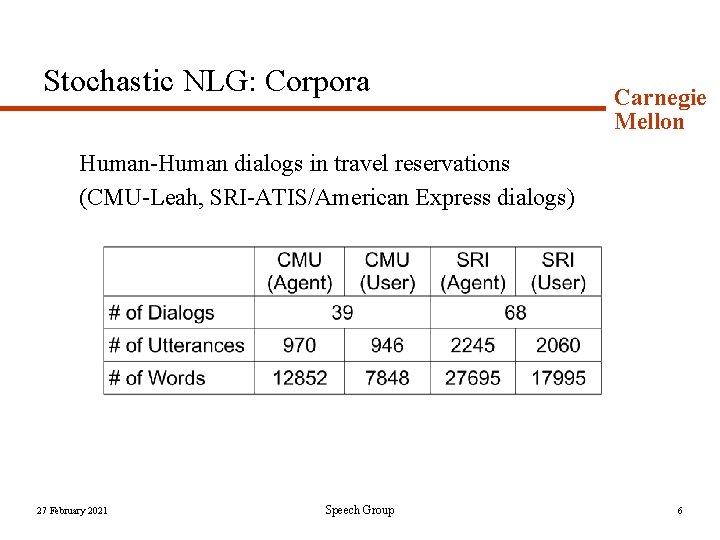

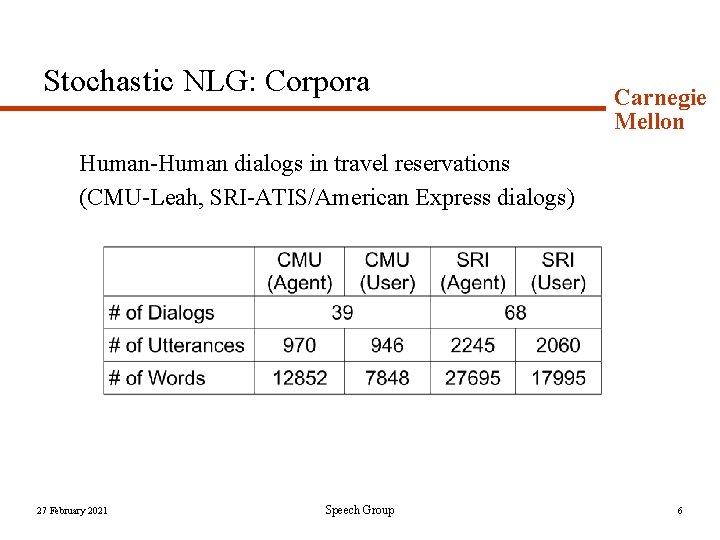

Stochastic NLG: Corpora

- Human-human dialogs in travel reservations (CMU-Leah, SRI-ATIS/American Express dialogs)

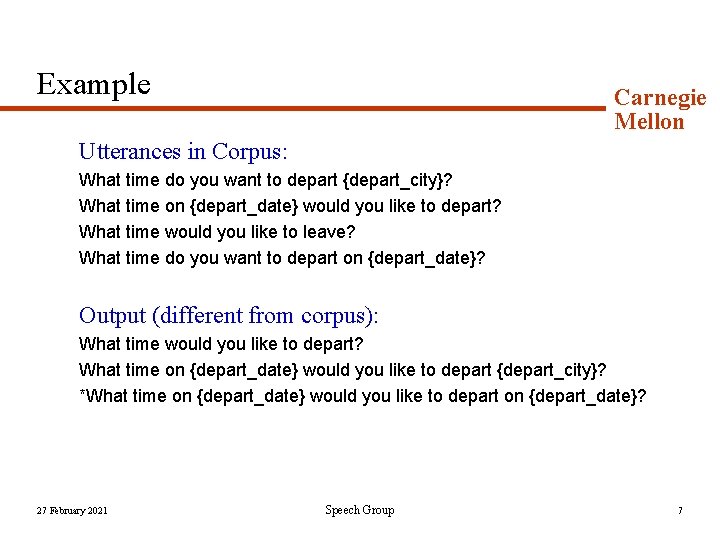

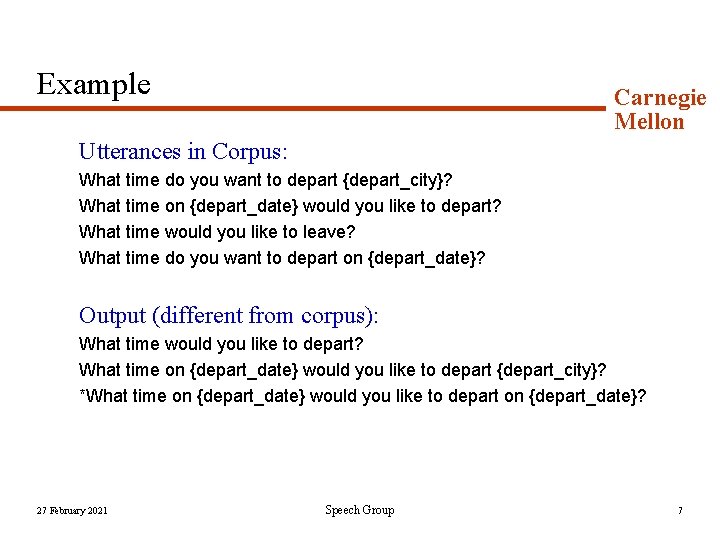

Example

Utterances in corpus:

- What time do you want to depart {depart_city}?
- What time on {depart_date} would you like to depart?
- What time would you like to leave?
- What time do you want to depart on {depart_date}?

Output (different from corpus):

- What time would you like to depart?
- What time on {depart_date} would you like to depart {depart_city}?
- *What time on {depart_date} would you like to depart on {depart_date}? (the asterisk marks an unacceptable output: the {depart_date} slot is repeated)
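Why can a model trained on these four utterances produce sentences that are not in the corpus? With a bigram model (an assumption; the slide does not give n), every adjacent word pair in an output only needs to occur somewhere in the corpus. The check below, a sketch using lowercased text with "?" as a separate token, confirms this for all three outputs, including the asterisked one.

```python
CORPUS = [
    "what time do you want to depart {depart_city} ?",
    "what time on {depart_date} would you like to depart ?",
    "what time would you like to leave ?",
    "what time do you want to depart on {depart_date} ?",
]
OUTPUTS = [
    "what time would you like to depart ?",
    "what time on {depart_date} would you like to depart {depart_city} ?",
    "what time on {depart_date} would you like to depart on {depart_date} ?",
]


def bigrams(utterance):
    """Set of adjacent token pairs, including sentence boundary markers."""
    tokens = ["<s>"] + utterance.split() + ["</s>"]
    return set(zip(tokens, tokens[1:]))


seen = set().union(*(bigrams(u) for u in CORPUS))
for out in OUTPUTS:
    novel = out not in CORPUS
    licensed = bigrams(out) <= seen  # every bigram occurs somewhere in the corpus
    print(f"novel={novel} licensed={licensed}: {out}")
# each line reports novel=True licensed=True
```

A sentence such as "what time would you like to arrive ?" is not licensed, because the pair "to arrive" never occurs in the corpus.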

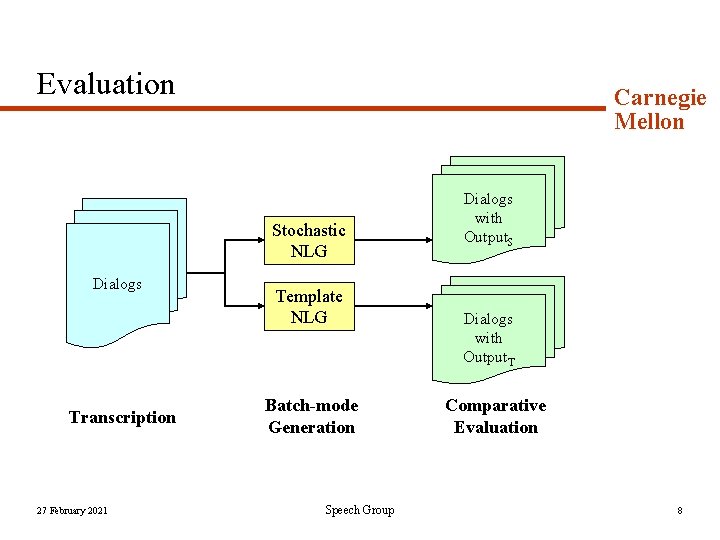

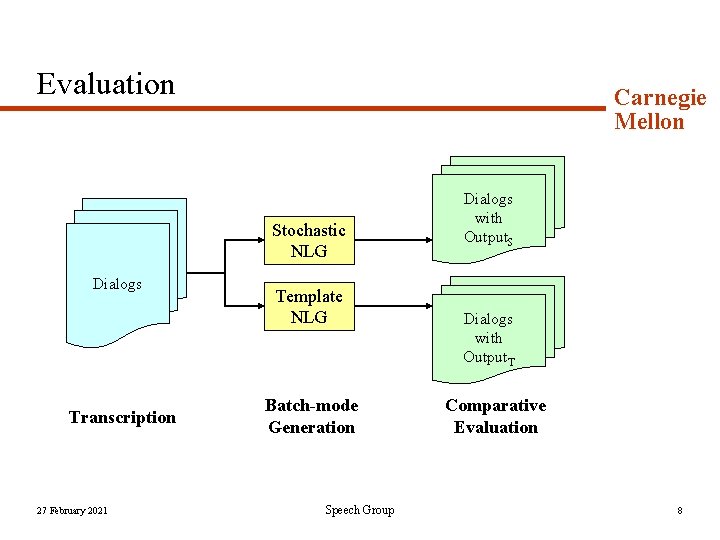

Evaluation

Dialogs → transcription → batch-mode generation with Stochastic NLG and with Template NLG → dialogs with Output.S and dialogs with Output.T → comparative evaluation

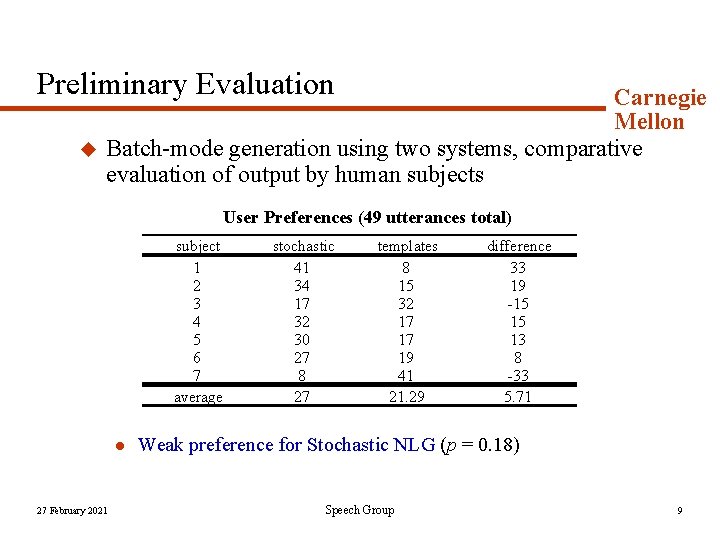

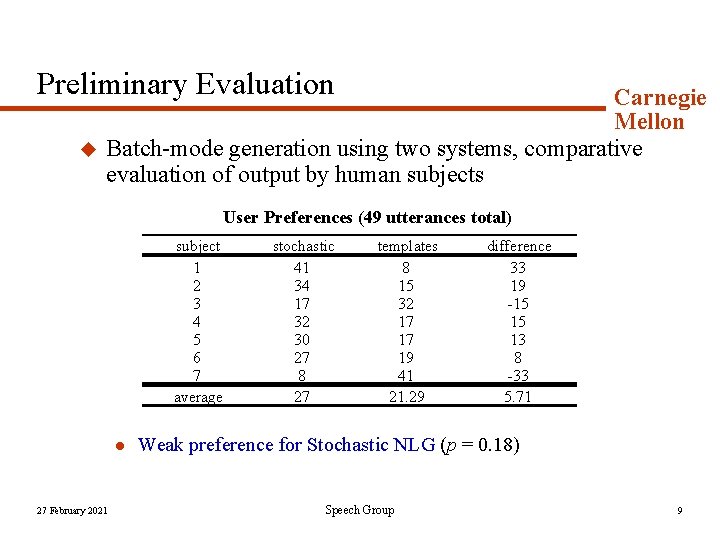

Preliminary Evaluation

- Batch-mode generation using the two systems; comparative evaluation of the output by human subjects
- User preferences (49 utterances total):

| Subject | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Average |
|---|---|---|---|---|---|---|---|---|
| Stochastic | 41 | 34 | 17 | 32 | 30 | 27 | 8 | 27 |
| Templates | 8 | 15 | 32 | 17 | 17 | 19 | 41 | 21.29 |
| Difference | 33 | 19 | -15 | 15 | 13 | 8 | -33 | 5.71 |

- Weak preference for Stochastic NLG (p = 0.18)
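The summary column of the table can be recomputed from the per-subject counts. This short script is illustrative (the counts are transcribed from the slide); it does not attempt to reproduce p = 0.18, because the slide does not say which test produced it.

```python
# Per-subject preference counts (out of 49 utterances), transcribed from the slide.
stochastic = [41, 34, 17, 32, 30, 27, 8]
templates = [8, 15, 32, 17, 17, 19, 41]

differences = [s - t for s, t in zip(stochastic, templates)]
n = len(stochastic)
avg_stochastic = sum(stochastic) / n
avg_templates = sum(templates) / n
avg_difference = sum(differences) / n

print(differences)  # [33, 19, -15, 15, 13, 8, -33]
print(round(avg_stochastic, 2), round(avg_templates, 2), round(avg_difference, 2))
# 27.0 21.29 5.71
```

For subjects 5 and 6 the two counts sum to 47 and 46 rather than 49; the slide does not say how the remaining utterances were counted.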

Stochastic NLG: Advantages

- Corpus-driven
- Easy to build (minimal knowledge engineering)
- Fast prototyping
- Minimal input (speech act, slot values)
- Natural output
- Leverages the data-collection and tagging effort

Open Issues

- How large a corpus do we need? How much of it needs manual tagging?
- How does the n in n-gram affect the output?
- What happens to the output when two different human speakers are modeled in one model?
- Can we replace “scoring” with a search algorithm?

Extra Slides

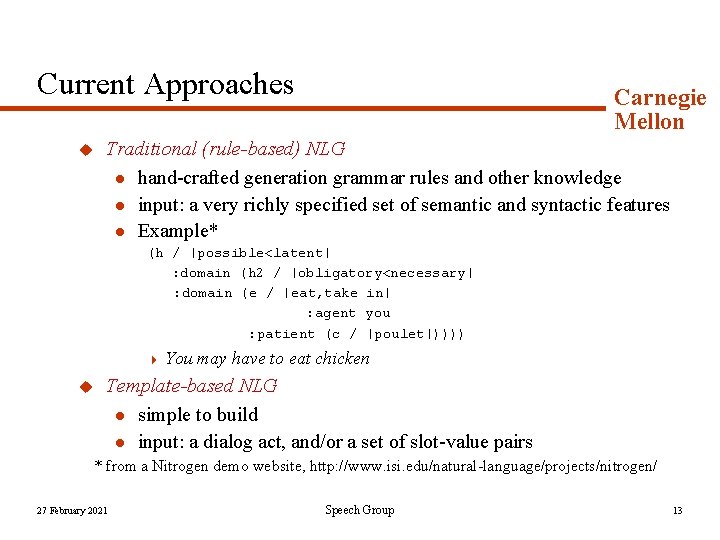

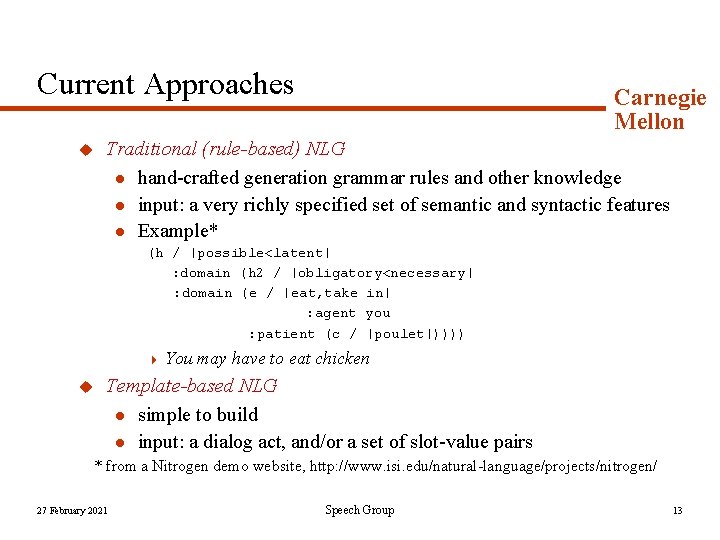

Current Approaches

- Traditional (rule-based) NLG
  - Hand-crafted generation grammar rules and other knowledge
  - Input: a very richly specified set of semantic and syntactic features
  - Example (from a Nitrogen demo website, http://www.isi.edu/natural-language/projects/nitrogen/): the input (h / |possible<latent| :domain (h2 / |obligatory<necessary| :domain (e / |eat, take in| :agent you :patient (c / |poulet|)))) generates "You may have to eat chicken"
- Template-based NLG
  - Simple to build
  - Input: a dialog act and/or a set of slot-value pairs

Stochastic NLG can also be thought of as a way to build templates automatically from a corpus. If n is set large enough, most utterances generated by the LM-based NLG will be exact duplicates of utterances in the corpus.
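The claim about large n can be checked on the toy corpus from the example slide. This sketch (hypothetical function names; lowercased text with "?" as a token) enumerates every sentence an n-gram model can generate, up to a length bound, and compares that set with the corpus. For these four utterances, a bigram model yields novel sentences, while n = 6 reproduces exactly the corpus.

```python
from collections import defaultdict

CORPUS = [
    "what time do you want to depart {depart_city} ?",
    "what time on {depart_date} would you like to depart ?",
    "what time would you like to leave ?",
    "what time do you want to depart on {depart_date} ?",
]


def train(corpus, n):
    """Map each (n-1)-token context to the set of tokens seen right after it."""
    nexts = defaultdict(set)
    for utt in corpus:
        tokens = ["<s>"] * (n - 1) + utt.split() + ["</s>"]
        for i in range(n - 1, len(tokens)):
            nexts[tuple(tokens[i - n + 1:i])].add(tokens[i])
    return nexts


def reachable(nexts, n, max_len=15):
    """Every sentence of at most max_len tokens the model can generate."""
    found = set()

    def walk(context, words):
        for w in nexts.get(context, ()):
            if w == "</s>":
                found.add(" ".join(words))
            elif len(words) < max_len:
                # Slide the context window forward by one token.
                walk((context + (w,))[1:], words + [w])

    walk(("<s>",) * (n - 1), [])
    return found


for n in (2, 6):
    novel = reachable(train(CORPUS, n), n) - set(CORPUS)
    print(f"n={n}: {len(novel)} novel sentence(s)")
```

The length bound is needed because the bigram model contains cycles (for example, depart → on → {depart_date} → would → you → like → to → depart) and could otherwise generate arbitrarily long sentences.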

Tagging

- CMU corpus tagged manually
- SRI corpus tagged semi-automatically, using trigram language models built from the CMU corpus
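The slides do not detail the semi-automatic tagging procedure. One common way to use class-specific trigram models for tagging, sketched here as an assumption, is to score a new utterance under each class's model and propose the class with the highest likelihood for a human to confirm. Add-one smoothing, the training sentences, and all identifiers (`ClassTrigramLM`, `tag`) are hypothetical.

```python
import math
from collections import Counter


def trigrams(utterance):
    """Padded trigrams of a whitespace-tokenized utterance."""
    tokens = ["<s>", "<s>"] + utterance.split() + ["</s>"]
    return [tuple(tokens[i:i + 3]) for i in range(len(tokens) - 2)]


class ClassTrigramLM:
    """Add-one-smoothed trigram model for one utterance class."""

    def __init__(self, utterances):
        self.tri, self.ctx, self.vocab = Counter(), Counter(), {"</s>"}
        for utt in utterances:
            self.vocab.update(utt.split())
            for a, b, c in trigrams(utt):
                self.tri[a, b, c] += 1
                self.ctx[a, b] += 1

    def logprob(self, utterance):
        v = len(self.vocab) + 1  # one extra entry stands in for unseen words
        return sum(
            math.log((self.tri[a, b, c] + 1) / (self.ctx[a, b] + v))
            for a, b, c in trigrams(utterance)
        )


def tag(utterance, models):
    """Propose the utterance class whose model gives the highest log-likelihood."""
    return max(models, key=lambda cls: models[cls].logprob(utterance))


# Hypothetical manually tagged training data, standing in for the CMU corpus.
models = {
    "query_depart_time": ClassTrigramLM([
        "what time do you want to depart {depart_city} ?",
        "what time would you like to leave ?",
    ]),
    "query_arrive_city": ClassTrigramLM([
        "where are you flying to ?",
        "what city are you going to ?",
    ]),
}
print(tag("what time would you like to depart ?", models))  # query_depart_time
print(tag("where are you going ?", models))                 # query_arrive_city
```

Each class model has its own vocabulary size, so unseen words are penalized slightly differently per class; a production tagger would more likely share one vocabulary and use a better smoothing method.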

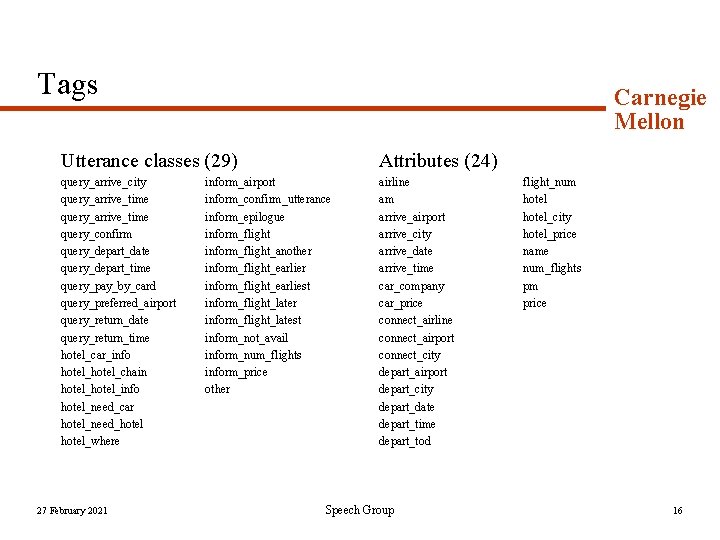

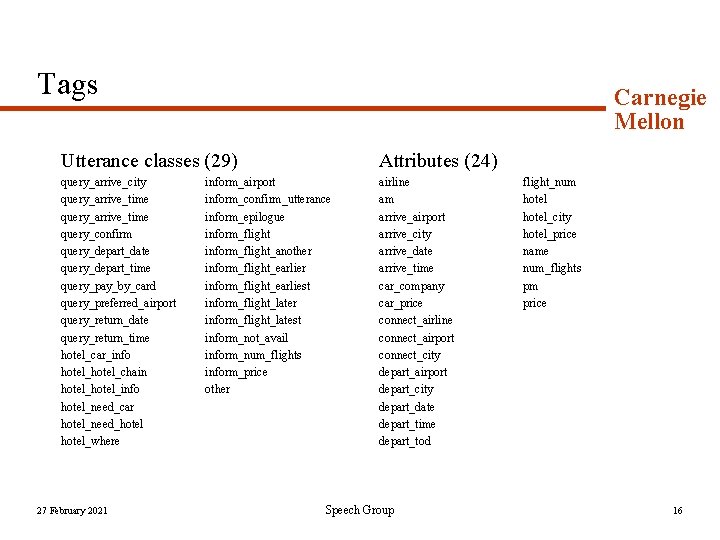

Tags

- Utterance classes (29): query_arrive_city, query_arrive_time, query_confirm, query_depart_date, query_depart_time, query_pay_by_card, query_preferred_airport, query_return_date, query_return_time, hotel_car_info, hotel_chain, hotel_info, hotel_need_car, hotel_need_hotel_where, inform_airport, inform_confirm_utterance, inform_epilogue, inform_flight_another, inform_flight_earliest, inform_flight_later, inform_flight_latest, inform_not_avail, inform_num_flights, inform_price, other
- Attributes (24): airline, am, arrive_airport, arrive_city, arrive_date, arrive_time, car_company, car_price, connect_airline, connect_airport, connect_city, depart_airport, depart_city, depart_date, depart_time, depart_tod, flight_num, hotel_city, hotel_price, name, num_flights, pm, price

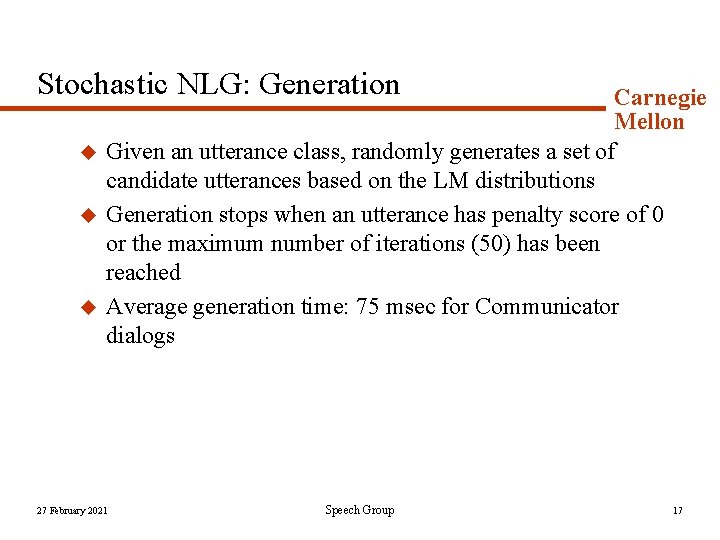

Stochastic NLG: Generation

- Given an utterance class, randomly generate a set of candidate utterances based on the LM distributions
- Generation stops when an utterance has a penalty score of 0 or the maximum number of iterations (50) has been reached
- Average generation time: 75 msec for Communicator dialogs
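The stopping rule can be written as a small loop. In this sketch the sampler and the penalty function are passed in as parameters (hypothetical interfaces; in the system they would be the LM sampler and the scoring heuristics), and the 50-iteration cap comes from the slide. `generate_best` and the toy stand-ins are illustrative names.

```python
import itertools
from typing import Callable, Optional, Tuple


def generate_best(
    sample: Callable[[], str],
    penalty: Callable[[str], float],
    max_iterations: int = 50,
) -> Tuple[Optional[str], float, int]:
    """Draw candidates until one has penalty 0 or max_iterations is reached.

    Returns the lowest-penalty candidate seen, its penalty, and the number of draws.
    """
    best, best_penalty, draws = None, float("inf"), 0
    while draws < max_iterations:
        candidate = sample()
        draws += 1
        score = penalty(candidate)
        if score < best_penalty:
            best, best_penalty = candidate, score
        if score == 0:
            break  # a perfect candidate: stop early
    return best, best_penalty, draws


# Toy stand-ins: a fixed cycle of candidates, and a penalty that only
# requires the {depart_date} slot to be mentioned.
def toy_penalty(utterance):
    return 0 if "{depart_date}" in utterance else 1


stream = itertools.cycle([
    "what time ?",
    "what time would you like to leave ?",
    "what time on {depart_date} ?",
])
print(generate_best(lambda: next(stream), toy_penalty))
# ('what time on {depart_date} ?', 0, 3)
```

If no candidate reaches 0 within the cap, the loop still returns the best one seen, which matches the slide's "pick the utterance with the lowest penalty".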

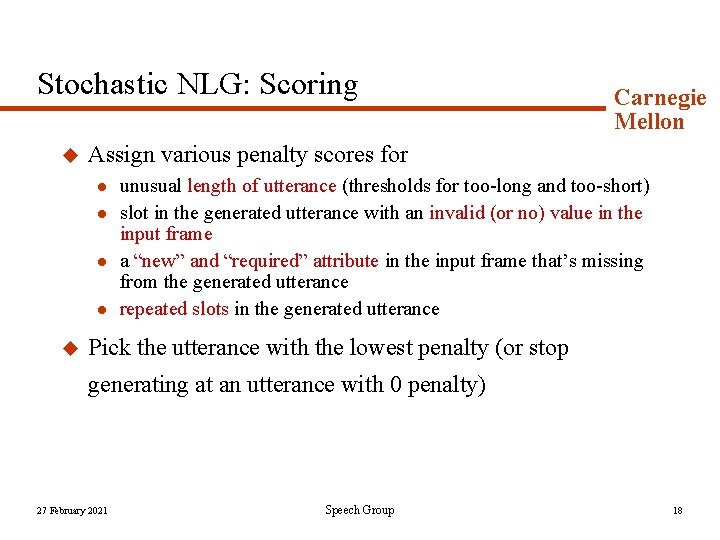

Stochastic NLG: Scoring

- Assign penalty scores for:
  - Unusual utterance length (thresholds for too long and too short)
  - A slot in the generated utterance with an invalid (or no) value in the input frame
  - A "new" and "required" attribute in the input frame that is missing from the generated utterance
  - Repeated slots in the generated utterance
- Pick the utterance with the lowest penalty (or stop generating at an utterance with 0 penalty)
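The four penalties can be combined into one scoring function. The thresholds and weights below are invented placeholders, since the slides give no values, and which attributes count as "new" and "required" is passed in by the caller rather than derived from the frame. `penalty`, `MIN_WORDS`, `MAX_WORDS`, and `WEIGHTS` are hypothetical names.

```python
import re
from collections import Counter

SLOT = re.compile(r"\{(\w+)\}")

# Hypothetical thresholds and weights; the slides do not give the values.
MIN_WORDS, MAX_WORDS = 4, 20
WEIGHTS = {"length": 1.0, "invalid_slot": 2.0, "missing_required": 2.0, "repeated_slot": 2.0}


def penalty(utterance, frame, new_required):
    """Sum of heuristic penalties; 0 means no heuristic objects to the utterance."""
    words = utterance.split()  # "?" counts as a token here
    slots = SLOT.findall(utterance)
    score = 0.0
    if not MIN_WORDS <= len(words) <= MAX_WORDS:
        score += WEIGHTS["length"]
    # Slots with no (or an empty) value in the input frame.
    score += WEIGHTS["invalid_slot"] * sum(1 for s in slots if not frame.get(s))
    # "New" and "required" attributes the utterance never mentions.
    score += WEIGHTS["missing_required"] * sum(1 for a in new_required if a not in slots)
    # Every occurrence of a slot beyond the first counts as a repeat.
    score += WEIGHTS["repeated_slot"] * sum(c - 1 for c in Counter(slots).values())
    return score


frame = {"act": "query", "content": "depart_time", "depart_date": "20000501"}
print(penalty("what time on {depart_date} ?", frame, ["depart_date"]))  # 0.0
print(penalty("what time on {depart_date} would you like to depart on {depart_date} ?",
              frame, ["depart_date"]))  # 2.0
```

The second call is the asterisked output from the example slide: it is penalized only for the repeated {depart_date} slot.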

Stochastic NLG: Slot Filling

- Substitute the slots in the utterance with the appropriate values from the input frame
- Example: "What time do you need to arrive in {arrive_city}?" becomes "What time do you need to arrive in New York?"
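Slot filling amounts to a regular-expression substitution over the {slot} markers. A minimal sketch, assuming slot names are word characters inside braces and that values are already formatted for speech (the date formatting seen in the overview example is not shown); `fill_slots` is a hypothetical name.

```python
import re

SLOT = re.compile(r"\{(\w+)\}")


def fill_slots(utterance, values):
    """Replace each {slot} with its value from the input frame."""
    def lookup(match):
        name = match.group(1)
        if name not in values:
            # Scoring should already have rejected such utterances.
            raise KeyError(f"no value for slot {name!r}")
        return str(values[name])

    return SLOT.sub(lookup, utterance)


print(fill_slots("What time do you need to arrive in {arrive_city}?",
                 {"arrive_city": "New York"}))
# What time do you need to arrive in New York?
```

`str.format_map` would also work for this example, but a dedicated regex leaves any other braces in the text untouched and gives a clearer error for a missing slot.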

Stochastic NLG: Shortcomings

- What sounds natural from a human speaker (imperfect grammar, intentional omission of words, etc.) may sound awkward, or wrong, coming from the system.
- It is difficult to define utterance boundaries and utterance classes; some utterances in the corpus combine more than one utterance class.
- Factors other than the utterance class (e.g., discourse history) may affect word choice.
- Some sophistication built into traditional NLG engines is not available (e.g., aggregation, anaphorization).

Evaluation

- Must be able to evaluate generation independently of the rest of the dialog system
- Comparative evaluation using dialog transcripts
  - Need more subjects
  - 8-10 dialogs; system output generated in batch mode by two different engines
- Evaluation of human travel agent utterances
  - Do users rate them well?
  - Is it good enough to model human utterances?