Prosodic Prediction from Text and Prosodic Recognition for

- Slides: 41

Prosodic Prediction from Text and Prosodic Recognition for Speech Corpora
Julia Hirschberg
CS 4706
12/1/2020

Today
• Motivation:
  – Why predict prosody from text?
  – Why recognize prosody in speech?
• Predicting prosodic events from text: prosodic assignment to text input for TTS
• Recognizing prosodic events: automatic analysis of TTS and other corpora

Assigning Prosodic Variation in TTS
A car bomb attack on a police station in the northern Iraqi city of Kirkuk early Monday killed four civilians and wounded 10 others U.S. military officials said. A leading Shiite member of Iraq's Governing Council on Sunday demanded no more "stalling" on arranging for elections to rule this country once the U.S.-led occupation ends June 30. Abdel Aziz al-Hakim a Shiite cleric and Governing Council member said the U.S.-run coalition should have begun planning for elections months ago.
-- Loquendo

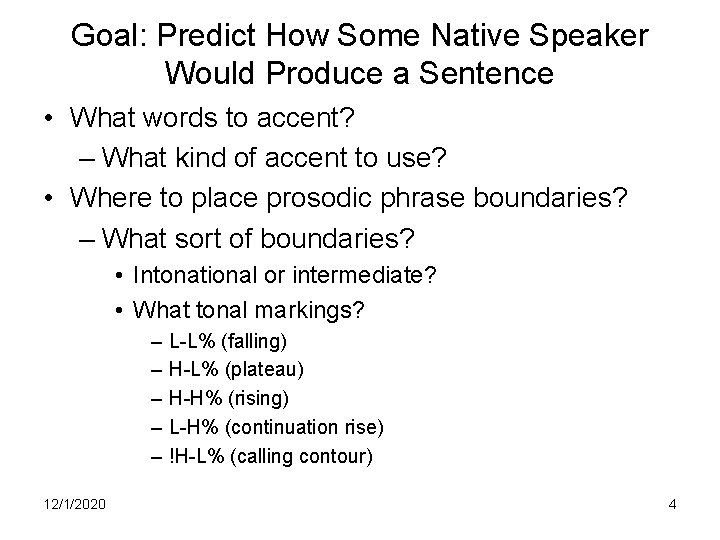

Goal: Predict How Some Native Speaker Would Produce a Sentence
• What words to accent?
  – What kind of accent to use?
• Where to place prosodic phrase boundaries?
  – What sort of boundaries?
    • Intonational or intermediate?
    • What tonal markings?
      – L-L% (falling)
      – H-L% (plateau)
      – H-H% (rising)
      – L-H% (continuation rise)
      – !H-L% (calling contour)

A Simple Approach
• Default solution
  – Accent:
    • Accent content words
    • Deaccent function words
    • Use only simple H* accents unless the input is a yes-no question, in which case use L*
  – Phrasing:
    • Place phrase boundaries at any non-sentence-final punctuation (e.g. , : ;) using L-H%
    • Use H-H% for yes-no questions
    • Otherwise use L-L% for sentence-final punctuation (! .)
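The default rules above can be sketched in a few lines of Python. This is only an illustration: the function-word list is a tiny hypothetical stand-in for a real part-of-speech tagger, the input is assumed to be pre-tokenized with punctuation as separate tokens, and the yes-no question flag is assumed to be supplied by the caller.

```python
# Hypothetical, deliberately tiny function-word list; a real system would
# decide content vs. function words from part-of-speech tags.
FUNCTION_WORDS = {"a", "an", "the", "of", "in", "on", "to", "and", "but", "or",
                  "is", "are", "was", "he", "she", "it", "they", "you", "do",
                  "did", "for"}
INTERNAL_PUNCT = {",", ":", ";"}
FINAL_PUNCT = {".", "!", "?"}


def assign_default_prosody(tokens, yes_no_question=False):
    """Return (word, accent, boundary) triples using the default rules.

    accent is "H*", "L*" or None; boundary is a tone label or None and is
    attached to the word that precedes the punctuation mark.
    """
    accent_type = "L*" if yes_no_question else "H*"
    labeled = []
    for tok in tokens:
        if tok in INTERNAL_PUNCT or tok in FINAL_PUNCT:
            if not labeled:
                continue  # punctuation with no preceding word: nothing to mark
            if tok in INTERNAL_PUNCT:
                boundary = "L-H%"
            else:
                boundary = "H-H%" if yes_no_question else "L-L%"
            word, accent, _ = labeled[-1]
            labeled[-1] = (word, accent, boundary)
        else:
            accent = None if tok.lower() in FUNCTION_WORDS else accent_type
            labeled.append((tok, accent, None))
    return labeled


statement = assign_default_prosody(["They", "live", "in", "Butte", ",", "Montana", "."])
question = assign_default_prosody(["Do", "you", "live", "there", "?"], yes_no_question=True)
```

Here `statement` marks "Butte" with an L-H% boundary and "Montana" with L-L%, while `question` ends in H-H% and uses L* on the content words.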

Problems
• Simple accent strategy is only acceptable ~70% of the time.
• Simple phrasing strategy is right ~80% of the time.
• So, in a 20-word sentence you will probably have about 6 accent errors and 4 phrasing errors: uh-oh!
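The arithmetic behind the 20-word estimate can be checked directly. The second function goes one step further and assumes that per-word decisions are independent, which is a simplification introduced here and not a claim from the slides.

```python
def expected_errors(n_words, accuracy):
    """Expected number of wrong decisions when each word is right with
    probability `accuracy`."""
    return n_words * (1.0 - accuracy)


def prob_all_correct(n_words, accuracy):
    """Chance that every decision in the sentence is right, ASSUMING the
    per-word decisions are independent (an illustrative simplification)."""
    return accuracy ** n_words


accent_errors = expected_errors(20, 0.70)    # about 6
phrasing_errors = expected_errors(20, 0.80)  # about 4
```

Under the independence assumption, the chance of getting every accent in a 20-word sentence right at 70% per-word accuracy is well below 1%.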

More Interesting Approaches
• Learn accent and phrasing assignment from large corpora: Machine Learning
• Problems:
  – There may be many acceptable ways to utter a given sentence: how do we choose which to train on?
  – Requires the hand labeling of large corpora in some accepted convention
  – ToBI is accepted and can be labeled reliably, but labeling takes a long time (about 60 times real time)

Statistical learning methods
• Classification and regression trees (CART), Rule induction (Ripper), Support Vector Machines, HMMs, Neural Nets
• Input: Vector of independent variables and one dependent (predicted) variable, e.g. ‘there’s a phrase boundary here’ or ‘there’s not’
    Feat1  Feat2  …  FeatN  DepVar
• Input from a hand-labeled dependent variable and automatically extracted independent variables
• Result can be integrated into TTS text processor

Where do we get the features: phrasing?
• Timing
  – Pause
  – Lengthening of final syllable of preceding word
• F0 changes: high and low
• Vocal fry/glottalization

What other features may be linked to phrasing?
• Syntactic information
  – Abney ’91 chunking major constituents
  – Steedman ’90, Oehrle ’91 CCGs …
  – Which ‘chunks’ tend to stick together?
  – Which ‘chunks’ tend to be separated intonationally?
    • Largest constituent dominating w(i) but not w(j)
        NP[The man in the moon] |? VP[looks down on you]
    • Smallest constituent dominating w(i), w(j)
        NP[The man PP[in |? moon]]
  – Part-of-speech of words around potential boundary site
        The/DET man/NN |? in/Prep moon/NN
• Sentence-level information
  – Length of sentence
  – Position in sentence

    This is a very |? very long sentence |? which thus might have a lot of phrase boundaries in |? it |? don’t you think?
    This |? isn’t.
• Orthographic information
  – They live in Butte, |? Montana, |? don’t they?
• Word co-occurrence information
    Vampire |? bat … powerful |? but benign …
• Are words on each side accented or not?
    The cat in |? the
• Where is the most recent previous phrase boundary?
    He asked for pills | but |?

Integrating More Syntactic Information
• Incremental improvements:
  – Adding higher-accuracy parsing (Koehn et al. ’00)
    • Collins or Charniak parsing in real time
• Different syntactic representations: relational grammar? Tree-Adjoining Grammar?
• Ranking vs. classification?

Where do we get the features: accent?
• F0 excursion
• Durational lengthening
• Voice quality
• Vowel quality
• Loudness

What phenomena are associated with accent?
• Word class: content vs. function words
• Information status:
  – Given/new: He likes dogs and dogs like him.
  – Topic/Focus: Dogs he likes.
  – Contrast: He likes dogs but not cats.
• Grammatical function
  – The dog ate his kibble.
• Surface position: Today George is hungry.

• Association with focus:
  – John only introduced Mary to Sue.
• Semantic parallelism
  – John likes beer but Mary prefers wine.
• How many of these are easy to compute automatically?

How can we approximate such information?
• POS window
• Position of candidate word in sentence
• Location of prior phrase boundary
• Pseudo-given/new
• Location of word in complex nominal and stress prediction for that nominal
    City hall, parking lot, city hall parking lot
• Word co-occurrence
    Blood vessel, blood orange
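A few of these approximations are simple to compute from tagged text. The sketch below builds one feature row per word with a three-tag POS window, relative sentence position, distance from the prior phrase boundary, and a pseudo-given/new flag. The `crude_stem` helper and the feature names are hypothetical choices made for illustration; "pseudo-given" is taken here to mean that the same crude stem has already occurred earlier in the text.

```python
def crude_stem(word):
    """Hypothetical stand-in for a stemmer: lowercase and drop a final 's'."""
    w = word.lower()
    return w[:-1] if len(w) > 3 and w.endswith("s") else w


def accent_feature_rows(tagged_words, boundary_after=()):
    """Build one feature dict per (word, pos) pair.

    boundary_after holds indices of words that are followed by a phrase
    boundary (assumed to be known or already predicted).
    """
    rows = []
    seen = set()
    last_boundary = -1
    n = len(tagged_words)
    boundaries = set(boundary_after)
    for i, (word, pos) in enumerate(tagged_words):
        stem = crude_stem(word)
        rows.append({
            "word": word,
            "pos_prev": tagged_words[i - 1][1] if i > 0 else "<s>",
            "pos": pos,
            "pos_next": tagged_words[i + 1][1] if i < n - 1 else "</s>",
            "position": i / (n - 1) if n > 1 else 0.0,
            "words_since_boundary": i - last_boundary - 1,
            "pseudo_given": stem in seen,
        })
        seen.add(stem)
        if i in boundaries:
            last_boundary = i
    return rows


sentence = [("He", "PRP"), ("likes", "VBZ"), ("dogs", "NNS"), ("and", "CC"),
            ("dogs", "NNS"), ("like", "VBP"), ("him", "PRP")]
rows = accent_feature_rows(sentence, boundary_after=(2,))
```

In this example the second "dogs" and "like" come out as pseudo-given, which is the kind of cue a learned accent predictor could use to deaccent repeated material.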

How do we evaluate the result of prosodic event assignment?
• How to define a Gold Standard?
  – Natural speech corpus
  – Multi-speaker/same text
  – Subjective judgments
• No simple mapping from text to prosody
  – Many variants can be acceptable
    The car was driven to the border last spring while its owner an elderly man was taking an extended vacation in the south of France.

How well can we do?
• Intrinsic evaluation
  – Phrasing prediction: 95-96% accuracy
  – Accent prediction: 80-85% accuracy
  – Where are the errors?
    • Information status
    • Preposition vs. particle
  – What about overall contours?
• Extrinsic evaluation

How can we automatically label large corpora for Unit Selection Synthesis?
• Train a supervised classifier on labeled data
• Classify our large unlabeled TTS corpus
• Evaluate on held out labeled data
• Hope
Interspeech 2011 Tutorial M1: More Than Words Can Say

The Standard Corpus-Based Approach
• Identify labeled training data
• Decide what to label: syllables or words
• Extract aggregate acoustic features based on the labeling region
• Train a supervised model
• Evaluate using cross-validation or a held-out test set.

Available ToBI Labeled Corpora
• Boston University Radio News Corpus
  – Available from LDC
  – 6 speakers (3 M, 3 F)
  – Professional News Readers (Broadcast News)
  – Reading the same news stories
• Boston Directions Corpus
  – Read and Spontaneous Speech
  – 4 non-professional speakers (3 M, 1 F)
  – Speakers perform a direction giving task (spontaneous)
  – ~2 weeks later, speakers read a transcript of their speech with disfluencies removed.
• Switchboard Corpus
  – Available from LDC
  – Spontaneous phone conversations
  – 543 speakers
  – Subset annotated with ToBI labels

Reciprosody
• Developing shared repository for prosodically annotated material.
• Free access for non-commercial research.
• Actively seeking contributors.
http://speech.cs.qc.cuny.edu/Reciprosody.html

Shape Modeling – Feature Generation
• Pitch Normalization
• TILT
• Tonal Center of Gravity
• Quantized Contour Modeling
• Contour smoothing
  – Piecewise linear fit
  – Bezier Curve Fitting
  – Momel Stylization
• Fujisaki model extraction

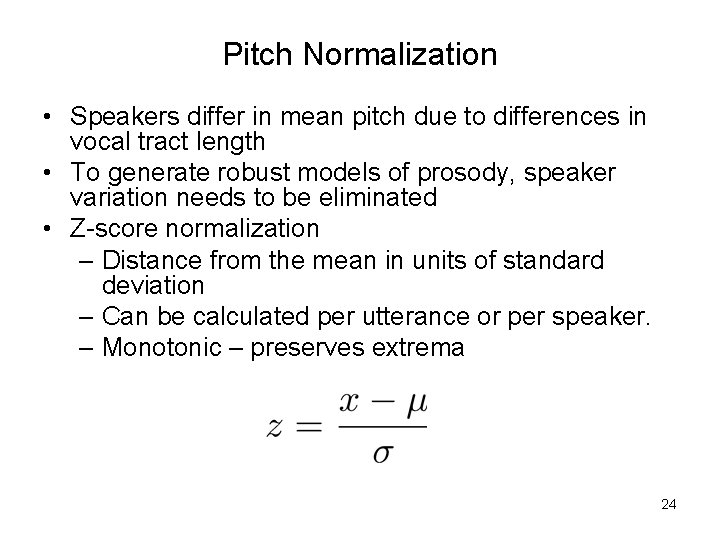

Pitch Normalization
• Speakers differ in mean pitch due to physiological differences such as vocal fold length and mass
• To generate robust models of prosody, speaker variation needs to be eliminated
• Z-score normalization
  – Distance from the mean in units of standard deviation
  – Can be calculated per utterance or per speaker.
  – Monotonic: preserves extrema
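Z-score normalization of an F0 track might look like the following sketch. It assumes that unvoiced frames are marked as `None` or `0` (a common but not universal convention) and that the caller passes in the frames of one utterance or of one speaker, depending on which scope is wanted.

```python
import statistics


def zscore_normalize(f0_values):
    """Z-score the voiced frames of an F0 track.

    Frames that are None or <= 0 are treated as unvoiced (an assumption
    about the pitch tracker's output) and are returned as None.
    """
    voiced = [f for f in f0_values if f is not None and f > 0]
    mean = statistics.fmean(voiced)
    sd = statistics.pstdev(voiced)
    if sd == 0:
        raise ValueError("F0 track is constant; z-score is undefined")
    return [None if (f is None or f <= 0) else (f - mean) / sd
            for f in f0_values]


z = zscore_normalize([100.0, 0.0, 120.0, 140.0])
```

Because the mapping is a positive linear rescaling, the highest and lowest frames of the original contour remain the highest and lowest after normalization.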

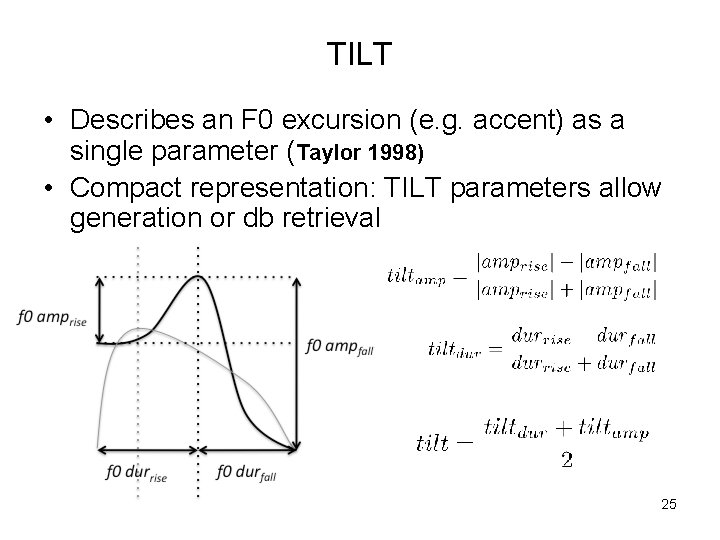

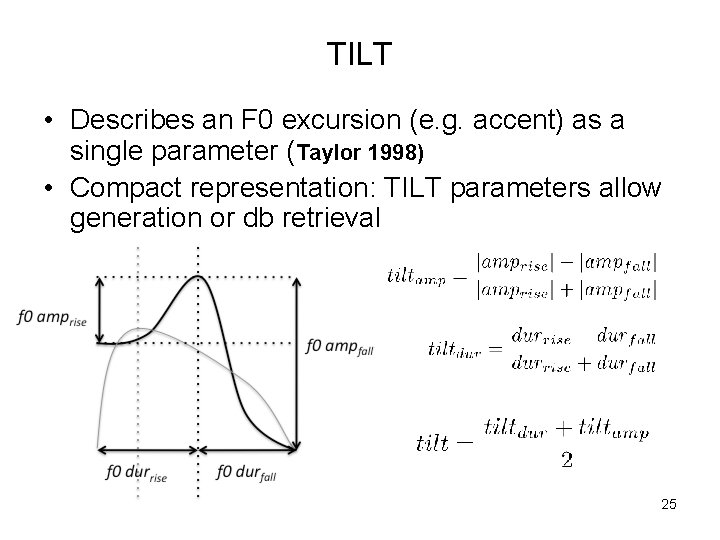

TILT
• Describes an F0 excursion (e.g. accent) as a single parameter (Taylor 1998)
• Compact representation: TILT parameters allow generation or database retrieval
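As a rough illustration, the tilt of a rise-fall event is commonly computed from the amplitudes and durations of its rise and fall parts, averaging an amplitude ratio and a duration ratio. The sketch below follows that commonly cited formulation and assumes the event region has already been located and that its F0 peak separates the rise from the fall; it is not taken from the slides.

```python
def tilt_parameters(times, f0):
    """Describe one F0 event by amplitude, duration and tilt.

    tilt is +1 for a pure rise, -1 for a pure fall and 0 for a
    symmetric rise-fall.
    """
    peak = max(range(len(f0)), key=f0.__getitem__)
    a_rise = abs(f0[peak] - f0[0])
    a_fall = abs(f0[peak] - f0[-1])
    d_rise = times[peak] - times[0]
    d_fall = times[-1] - times[peak]
    amp = a_rise + a_fall
    dur = d_rise + d_fall
    tilt_amp = (a_rise - a_fall) / amp if amp else 0.0
    tilt_dur = (d_rise - d_fall) / dur if dur else 0.0
    return {"amplitude": amp, "duration": dur,
            "tilt": 0.5 * (tilt_amp + tilt_dur)}


rise = tilt_parameters([0.0, 0.1, 0.2], [100.0, 110.0, 120.0])
fall = tilt_parameters([0.0, 0.1, 0.2], [120.0, 110.0, 100.0])
peak_in_middle = tilt_parameters([0.0, 0.1, 0.2], [100.0, 120.0, 100.0])
```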

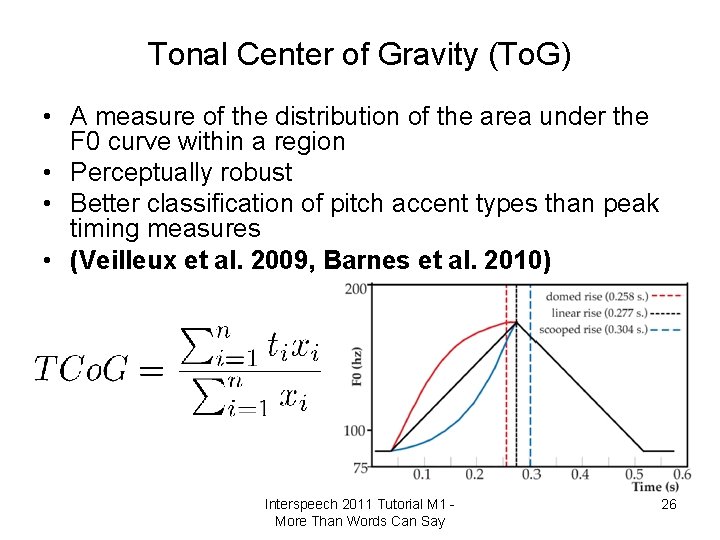

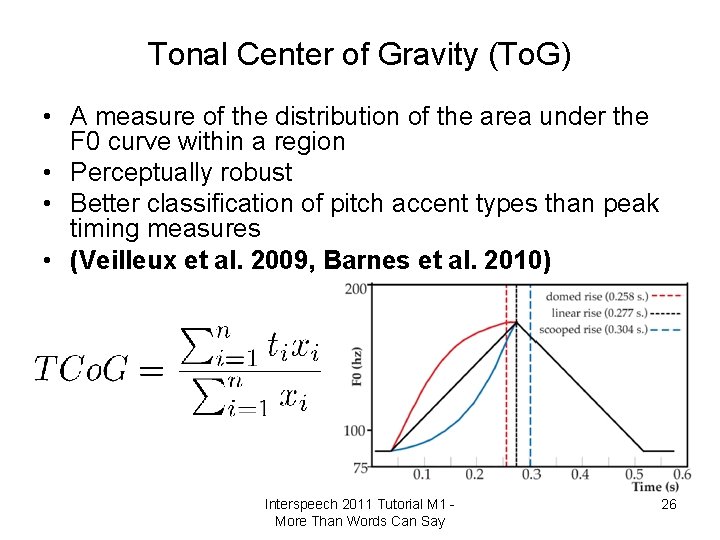

Tonal Center of Gravity (ToG)
• A measure of the distribution of the area under the F0 curve within a region
• Perceptually robust
• Better classification of pitch accent types than peak timing measures
• (Veilleux et al. 2009, Barnes et al. 2010)
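One way to read this measure is as an F0-weighted average of time over the region. The sketch below computes that weighted mean; the optional `floor` parameter, which subtracts a baseline before weighting, is an assumption added for illustration.

```python
def tonal_center_of_gravity(times, f0, floor=0.0):
    """Time coordinate of the center of the area under the F0 curve.

    Each frame's time is weighted by its F0 value above `floor`.
    """
    weights = [max(f - floor, 0.0) for f in f0]
    total = sum(weights)
    if total == 0:
        raise ValueError("no F0 mass above the floor in this region")
    return sum(t * w for t, w in zip(times, weights)) / total


symmetric = tonal_center_of_gravity([0.0, 1.0, 2.0], [100.0, 120.0, 100.0])
late_peak = tonal_center_of_gravity([0.0, 1.0, 2.0], [100.0, 100.0, 140.0])
```

A contour with more of its area late in the region yields a later center of gravity, even when the exact location of the peak is hard to pin down.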

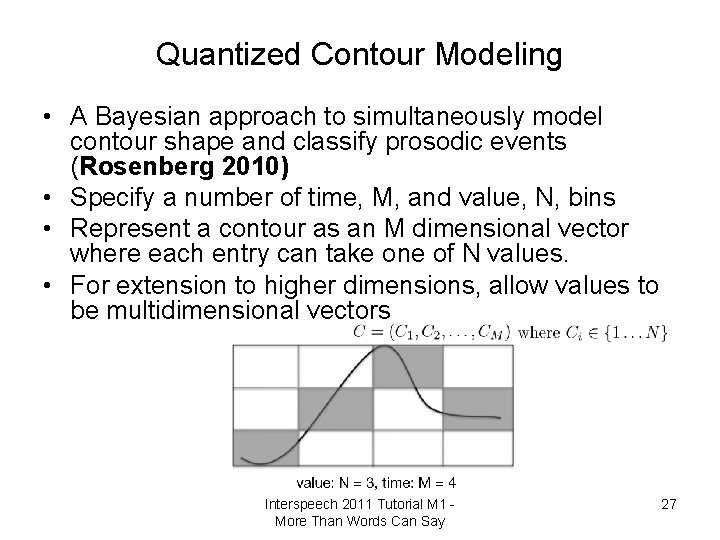

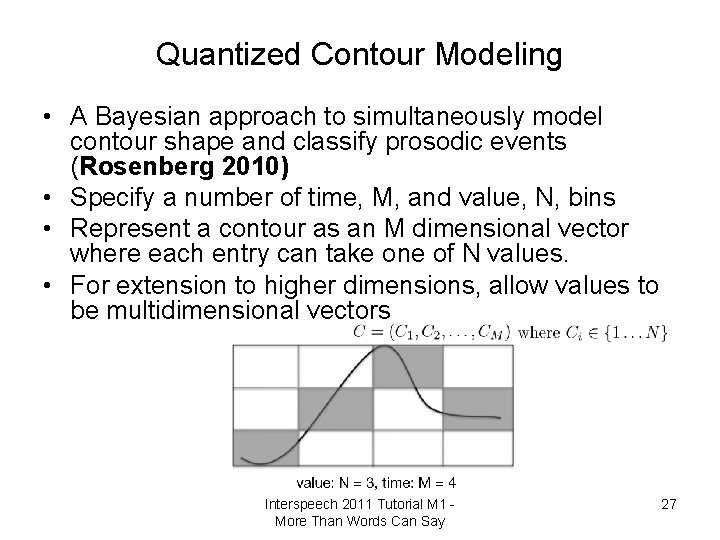

Quantized Contour Modeling
• A Bayesian approach to simultaneously model contour shape and classify prosodic events (Rosenberg 2010)
• Specify the number of time bins, M, and value bins, N
• Represent a contour as an M-dimensional vector where each entry can take one of N values.
• For extension to higher dimensions, allow values to be multidimensional vectors
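The representation step can be sketched as follows: split the contour into M equal time bins, average within each bin, and map each average to one of N value bins. This covers only the quantization, not the Bayesian model built on top of it, and the fixed `lo`/`hi` value range is an assumption of this sketch.

```python
def quantize_contour(values, m_bins, n_bins, lo, hi):
    """Return an M-element list whose entries are value-bin indices 0..N-1."""
    length = len(values)
    if length < m_bins:
        raise ValueError("need at least one sample per time bin")
    quantized = []
    for m in range(m_bins):
        start = m * length // m_bins
        end = (m + 1) * length // m_bins
        mean = sum(values[start:end]) / (end - start)
        frac = (mean - lo) / (hi - lo)
        # Clamp so values at or beyond the range edges fall in the end bins.
        quantized.append(min(n_bins - 1, max(0, int(frac * n_bins))))
    return quantized


shape = quantize_contour([0, 0, 5, 5, 10, 10], m_bins=3, n_bins=4, lo=0, hi=10)
```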

Pitch Contour Smoothing
• Pitch estimation can contain errors
  – Halving and doubling
  – Spurious pitch points
• Smoothing these outliers allows for more robust and reliable secondary features to be extracted from pitch contours.
  – Piecewise Linear Fit
  – Bezier Curve Fitting
  – Momel stylization

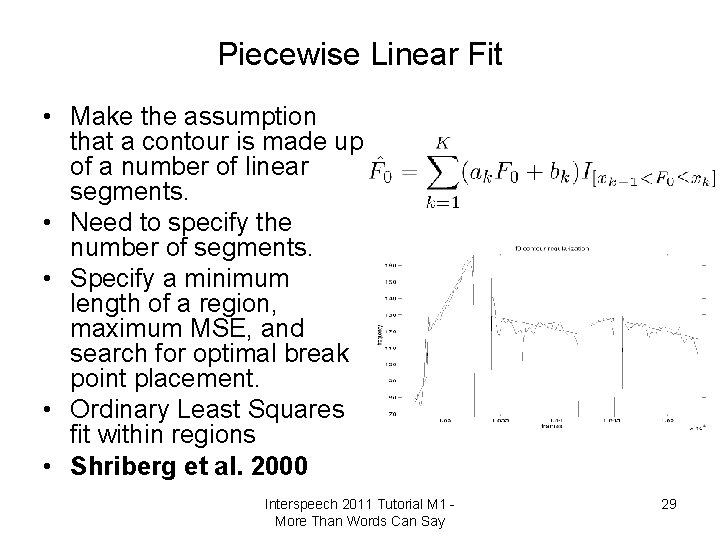

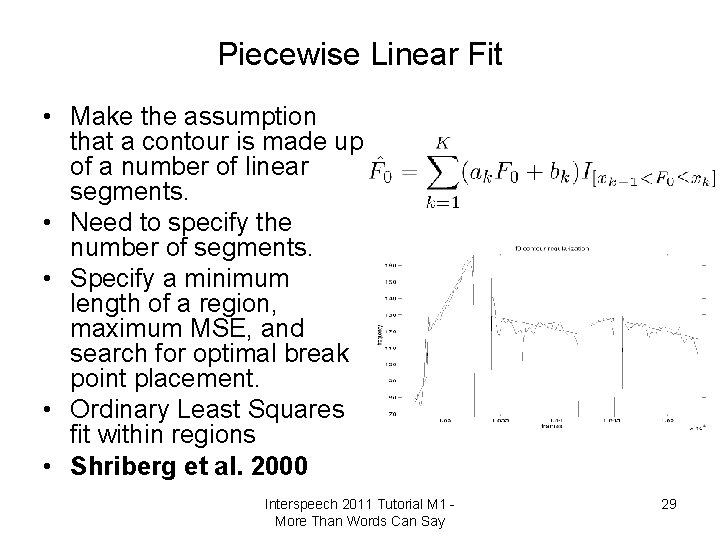

Piecewise Linear Fit
• Make the assumption that a contour is made up of a number of linear segments.
• Need to specify the number of segments.
• Specify a minimum length of a region, maximum MSE, and search for optimal break point placement.
• Ordinary Least Squares fit within regions
• Shriberg et al. 2000
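For the two-segment case, the break point search can be written as an exhaustive scan: fit an ordinary least squares line on each side of every candidate break and keep the split with the smallest total squared error. This is a minimal sketch under those assumptions; handling more segments or a maximum-MSE stopping rule is left out.

```python
def ols_line(xs, ys):
    """Ordinary least squares line; returns (slope, intercept, sse)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
             if sxx else 0.0)
    intercept = my - slope * mx
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return slope, intercept, sse


def best_two_segment_fit(xs, ys, min_len=2):
    """Return (total_sse, break_index, left_line, right_line).

    break_index is the index of the first point of the right segment;
    each line is a (slope, intercept) pair.
    """
    best = None
    for k in range(min_len, len(xs) - min_len + 1):
        left = ols_line(xs[:k], ys[:k])
        right = ols_line(xs[k:], ys[k:])
        total = left[2] + right[2]
        if best is None or total < best[0]:
            best = (total, k, left[:2], right[:2])
    if best is None:
        raise ValueError("contour too short for two segments")
    return best


xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [0, 1, 2, 3, 10, 9, 8, 7]
total_sse, break_index, left_line, right_line = best_two_segment_fit(xs, ys)
```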

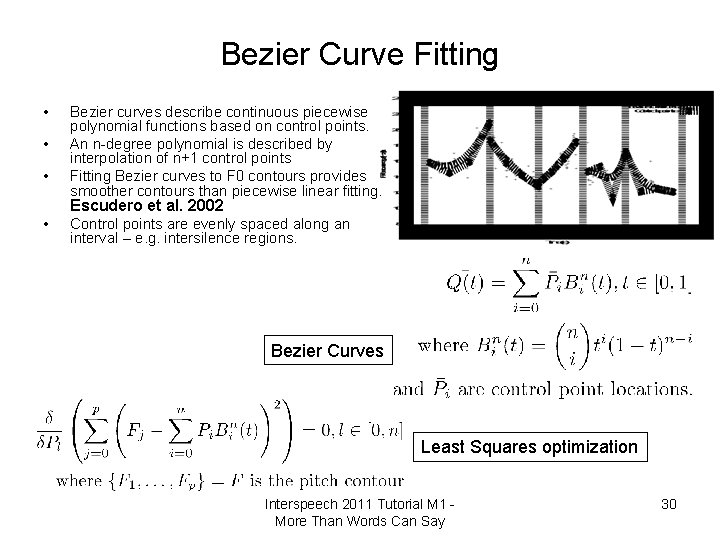

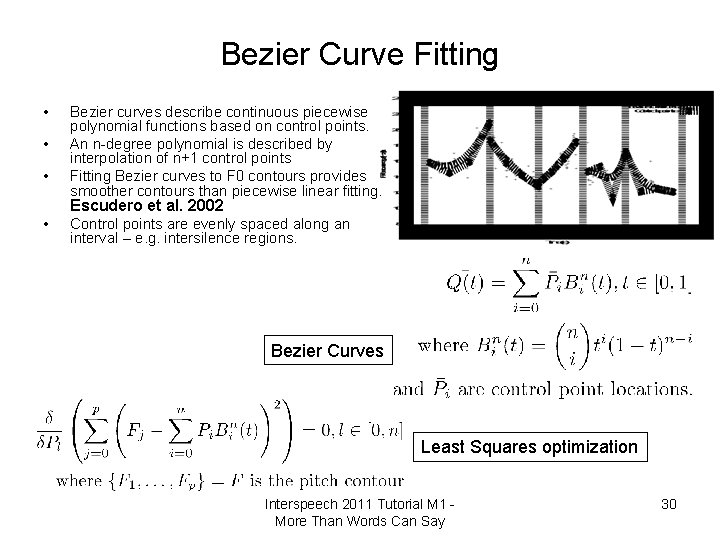

Bezier Curve Fitting
• Bezier curves describe continuous piecewise polynomial functions based on control points.
• An n-degree polynomial is described by interpolation of n+1 control points
• Fitting Bezier curves to F0 contours provides smoother contours than piecewise linear fitting. Escudero et al. 2002
• Control points are evenly spaced along an interval, e.g. intersilence regions.
• Bezier curves: least squares optimization
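To make the role of control points concrete, the sketch below evaluates a one-dimensional Bezier curve with de Casteljau's algorithm, treating the control points as F0 values evenly spaced in time. It shows only how a curve is generated from given control points; the least squares step that chooses control points to match an observed contour is not shown.

```python
def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] by repeated interpolation."""
    pts = list(control_points)
    while len(pts) > 1:
        pts = [(1 - t) * p + t * q for p, q in zip(pts, pts[1:])]
    return pts[0]


def bezier_contour(control_points, n_samples):
    """Sample the curve at n_samples evenly spaced parameter values."""
    if n_samples < 2:
        raise ValueError("need at least two samples")
    return [bezier_point(control_points, i / (n_samples - 1))
            for i in range(n_samples)]


# Three control points give a degree-2 (quadratic) curve.
contour = bezier_contour([100.0, 140.0, 100.0], 3)
```

The curve passes through the first and last control points but only approaches the middle one, which is why the fitted contour is smoother than the control polygon.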

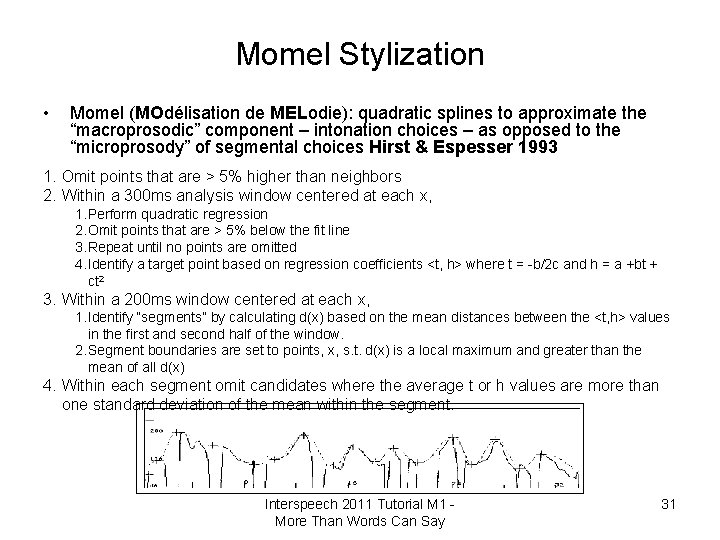

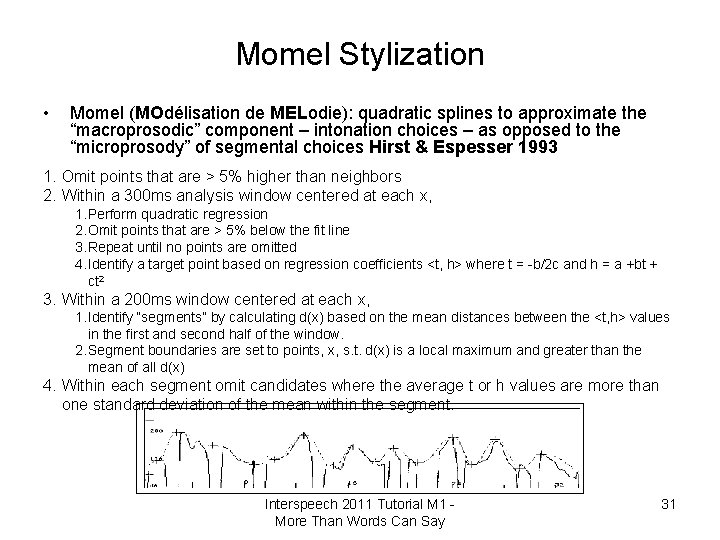

Momel Stylization
• Momel (MOdélisation de MELodie): quadratic splines to approximate the “macroprosodic” component (intonation choices) as opposed to the “microprosody” of segmental choices. Hirst & Espesser 1993
1. Omit points that are > 5% higher than neighbors
2. Within a 300 ms analysis window centered at each x,
   1. Perform quadratic regression
   2. Omit points that are > 5% below the fit line
   3. Repeat until no points are omitted
   4. Identify a target point <t, h> based on the regression coefficients, where t = -b/(2c) and h = a + bt + ct²
3. Within a 200 ms window centered at each x,
   1. Identify “segments” by calculating d(x) based on the mean distances between the <t, h> values in the first and second half of the window.
   2. Segment boundaries are set to points, x, s.t. d(x) is a local maximum and greater than the mean of all d(x)
4. Within each segment omit candidates where the average t or h values are more than one standard deviation from the mean within the segment.
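The target point in step 2.4 is the vertex of the fitted parabola. Assuming the regression has the form y = a + bx + cx², the sketch below computes <t, h> from the coefficients; the regression itself and the pruning loop are not shown.

```python
def momel_target(a, b, c):
    """Vertex <t, h> of y = a + b*x + c*x**2 (the fitted window parabola)."""
    if c == 0:
        raise ValueError("fit is linear; the parabola has no vertex")
    t = -b / (2 * c)
    h = a + b * t + c * t * t
    return t, h


# y = 200 - 50 * (x - 1)**2 expands to 150 + 100*x - 50*x**2
t, h = momel_target(150.0, 100.0, -50.0)
```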

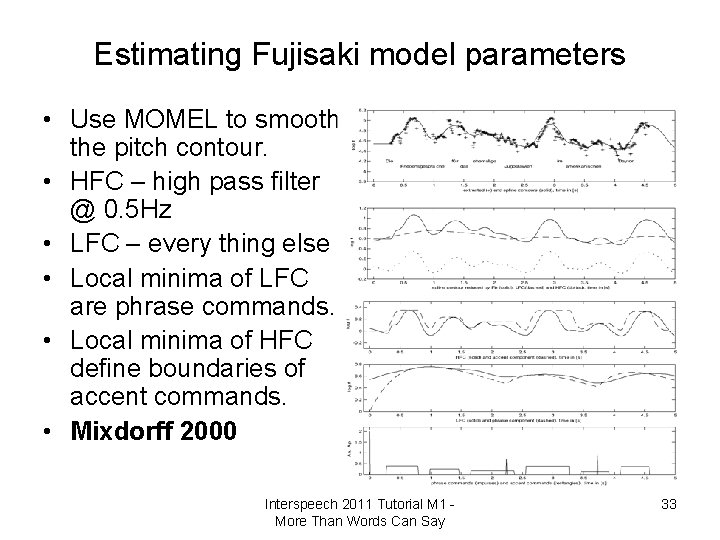

Fujisaki model
• Fujisaki model can be viewed as a parametric description of a contour, designed specifically for prosody.
• Many techniques for estimating Fujisaki parameters – phrase and accent commands. Pfitzinger et al. 2009

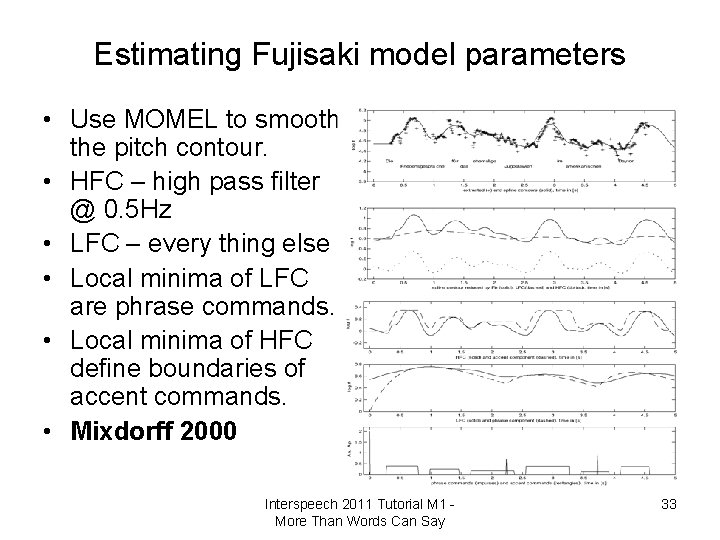

Estimating Fujisaki model parameters
• Use MOMEL to smooth the pitch contour.
• HFC – high pass filter @ 0.5 Hz
• LFC – everything else
• Local minima of LFC are phrase commands.
• Local minima of HFC define boundaries of accent commands.
• Mixdorff 2000

Normalization of Phone Durations
• Phones in stressed syllables have increased duration, as do preboundary phones. Wightman et al. 1991
• Duration is impacted by the inherent duration of a phone (Klatt 1975) as well as speaking rate and speaker idiosyncrasies.
• Wightman et al. 1991 use z-score normalization using mean values calculated per speaker and phone.
• Monkowski et al. 1995 note that phone durations are more log-normal than normally distributed, suggesting that z-score normalization of log durations is more appropriate
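Combining the two suggestions, a per-phone z-score of log durations could be sketched as below. The input is assumed to be the (phone, duration) tokens of a single speaker, so grouping by phone label gives per-speaker, per-phone statistics; phones seen only once get a score of 0, which is a choice made here for simplicity.

```python
import math
import statistics
from collections import defaultdict


def normalize_durations(tokens):
    """Z-score log durations within each phone class.

    tokens is a list of (phone_label, duration_in_seconds) for one speaker.
    """
    logs = defaultdict(list)
    for phone, dur in tokens:
        logs[phone].append(math.log(dur))
    stats = {p: (statistics.fmean(v), statistics.pstdev(v))
             for p, v in logs.items()}
    scores = []
    for phone, dur in tokens:
        mean, sd = stats[phone]
        scores.append((math.log(dur) - mean) / sd if sd > 0 else 0.0)
    return scores


scores = normalize_durations([("aa", 0.1), ("aa", 0.2), ("aa", 0.4), ("t", 0.05)])
```

In the log domain the three "aa" tokens are evenly spaced, so the middle one scores near 0 and the outer two are symmetric around it.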

Analyzing Rhythm
• Prosodic rhythm is difficult to analyze.
• Measures including nPVI, %V, and ΔC have been used to classify languages as stress- or syllable-timed, but have received little attention in prosodic analysis. Grabe & Low 2002
• %V – percentage of speech that is vocalic
• ΔV – variability (standard deviation) of vocalic interval durations
• ΔC – variability (standard deviation) of consonantal interval durations
• nPVI – ratio of the difference between consecutive durations to their average duration, using the duration of vocalic or intervocalic regions.
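These measures are short to compute once vocalic and consonantal interval durations are available. The sketch below uses the usual definitions, taking ΔV and ΔC as standard deviations of interval durations and scaling nPVI by 100; the segmentation into intervals is assumed to come from elsewhere.

```python
import statistics


def percent_v(vocalic, consonantal):
    """%V: share of total duration that is vocalic, in percent."""
    return 100.0 * sum(vocalic) / (sum(vocalic) + sum(consonantal))


def delta(durations):
    """Delta-V or Delta-C: standard deviation of interval durations."""
    return statistics.pstdev(durations)


def npvi(durations):
    """Normalized pairwise variability index over successive intervals."""
    terms = [abs(a - b) / ((a + b) / 2)
             for a, b in zip(durations, durations[1:])]
    if not terms:
        raise ValueError("need at least two intervals")
    return 100.0 * sum(terms) / len(terms)


even = npvi([0.1, 0.1, 0.1])         # perfectly regular intervals
alternating = npvi([0.1, 0.3, 0.1])  # strong long/short alternation
```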

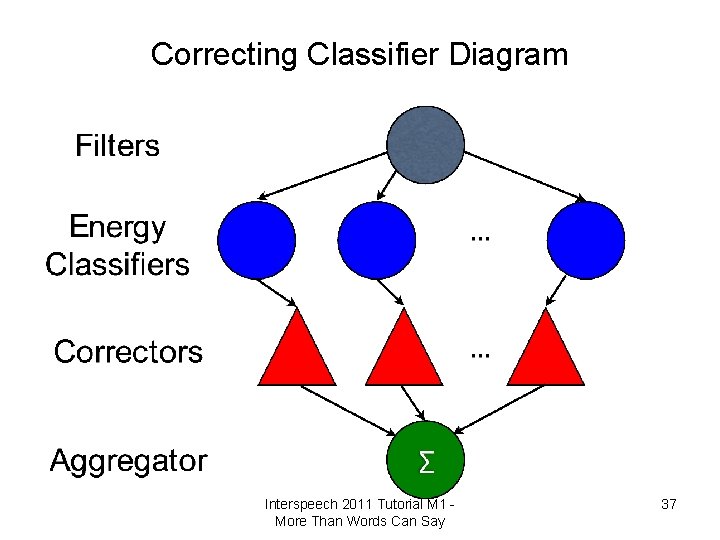

Classification Techniques
• Ensemble methods train a set, or ensemble, of independent models or classifiers and combine their results.
• Examples
  – Boosting/Bagging: train weak classifier for each feature and learn weighting of classifiers
  – Classifier Fusion: train models on different feature sets and merge based on confidence scores
  – Corrected Ensembles of Classifiers: train a classifier B to ‘correct’ results of each classifier A
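As one simple reading of confidence-based fusion, each classifier can vote for its label with a weight equal to its confidence, and the label with the largest total wins. This summing rule is an assumption chosen for illustration; other merging schemes are equally plausible.

```python
def fuse_by_confidence(predictions):
    """Pick the label with the highest summed confidence.

    predictions is a list of (label, confidence) pairs, one per classifier,
    e.g. classifiers trained on pitch, energy and duration features.
    """
    scores = {}
    for label, confidence in predictions:
        scores[label] = scores.get(label, 0.0) + confidence
    return max(scores, key=scores.get)


confident_minority = fuse_by_confidence(
    [("accent", 0.9), ("none", 0.6), ("none", 0.2)])
majority = fuse_by_confidence(
    [("accent", 0.55), ("none", 0.6), ("none", 0.5)])
```

Unlike plain majority voting, a single highly confident classifier can outweigh two hesitant ones, as in the first example.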

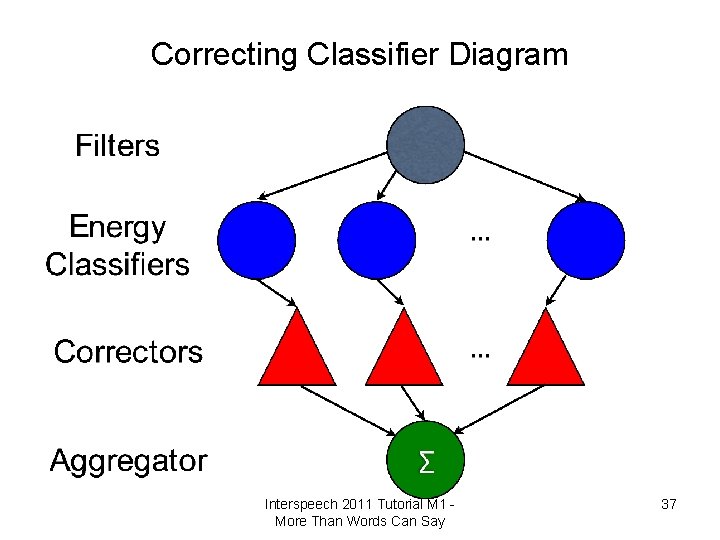

Correcting Classifier Diagram

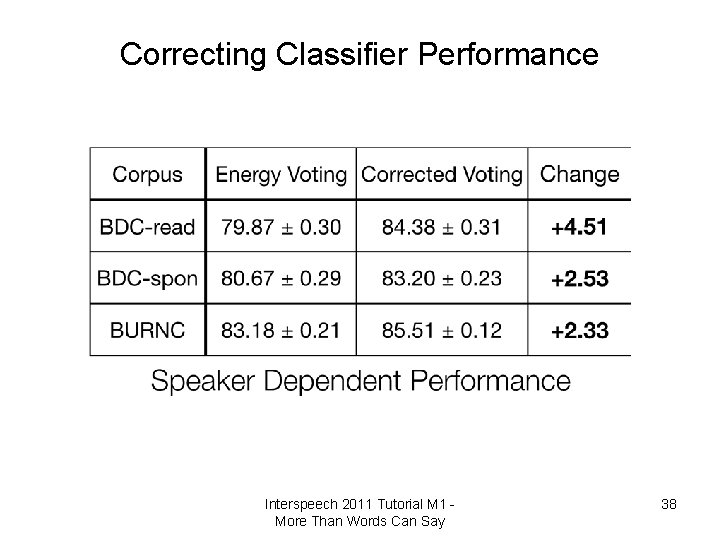

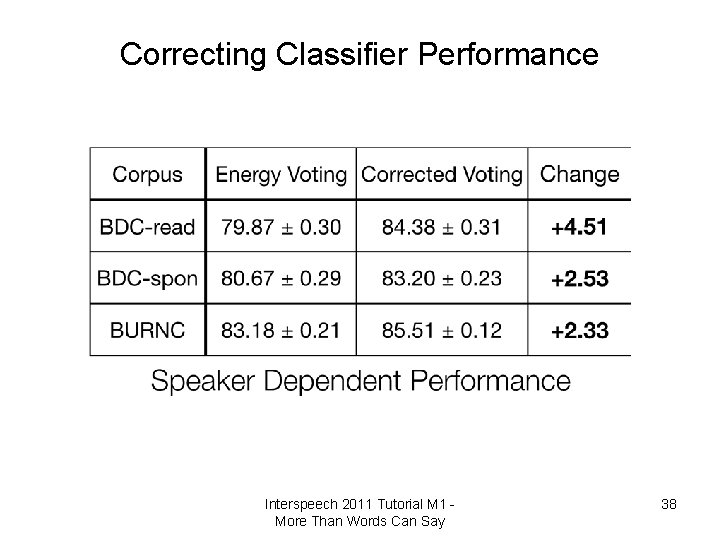

Correcting Classifier Performance

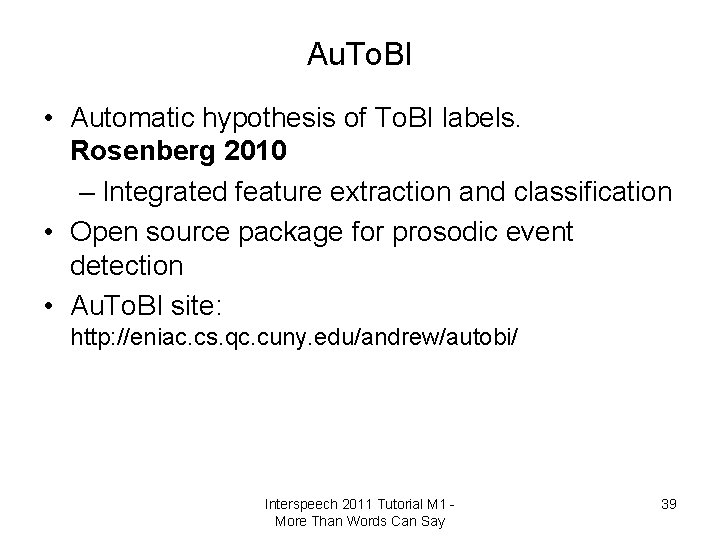

AuToBI
• Automatic hypothesis of ToBI labels. Rosenberg 2010
  – Integrated feature extraction and classification
• Open source package for prosodic event detection
• AuToBI site: http://eniac.cs.qc.cuny.edu/andrew/autobi/

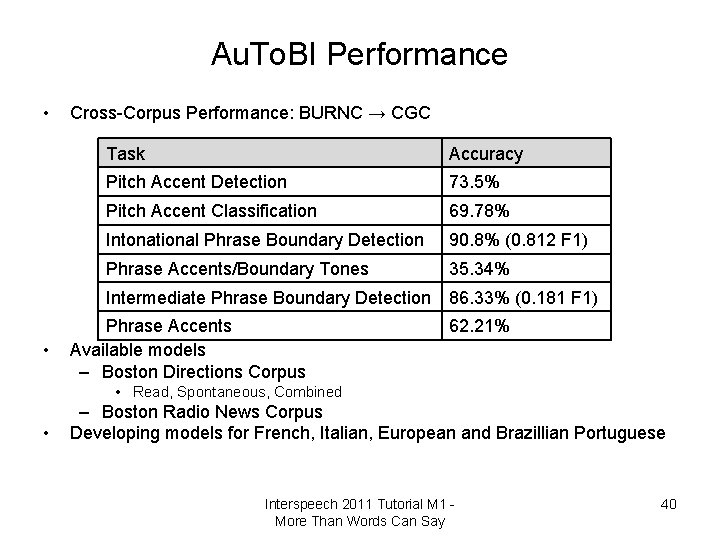

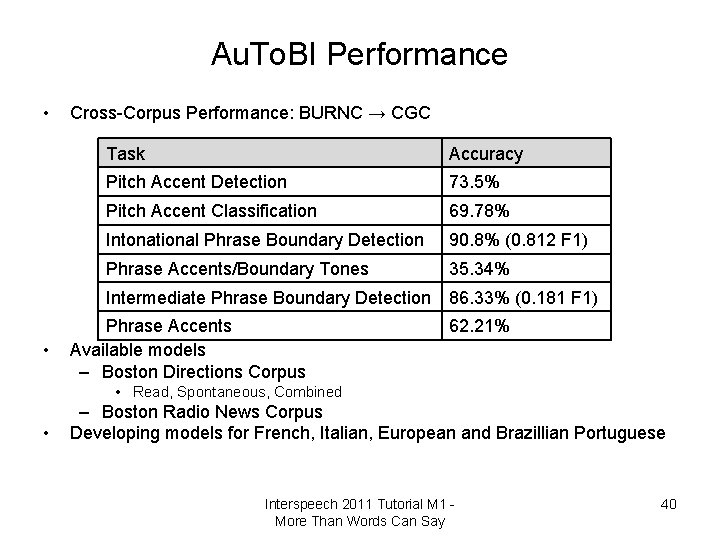

AuToBI Performance
• Cross-Corpus Performance: BURNC → CGC

| Task | Accuracy |
| --- | --- |
| Pitch Accent Detection | 73.5% |
| Pitch Accent Classification | 69.78% |
| Intonational Phrase Boundary Detection | 90.8% (0.812 F1) |
| Phrase Accents/Boundary Tones | 35.34% |
| Intermediate Phrase Boundary Detection | 86.33% (0.181 F1) |
| Phrase Accents | 62.21% |

• Available models
  – Boston Directions Corpus
    • Read, Spontaneous, Combined
  – Boston Radio News Corpus
• Developing models for French, Italian, European and Brazilian Portuguese
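High accuracy alongside a low F1, as reported for intermediate phrase boundary detection, is what one would expect when boundaries are rare and most of them are missed. The sketch below computes both measures on made-up labels to show the effect; the data are purely illustrative and are not the AuToBI results.

```python
def accuracy_and_f1(gold, predicted):
    """Return (accuracy, F1) for boolean labels, with True as the positive class."""
    tp = sum(1 for g, p in zip(gold, predicted) if g and p)
    fp = sum(1 for g, p in zip(gold, predicted) if not g and p)
    fn = sum(1 for g, p in zip(gold, predicted) if g and not p)
    correct = sum(1 for g, p in zip(gold, predicted) if g == p)
    accuracy = correct / len(gold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return accuracy, f1


# Illustrative data: 10 boundaries among 100 words, only 2 of them detected.
gold = [True] * 10 + [False] * 90
predicted = [True] * 2 + [False] * 98
accuracy, f1 = accuracy_and_f1(gold, predicted)
```

Here accuracy is 0.92 because the many non-boundary words are all labeled correctly, while F1 is only about 0.33 because recall on the boundaries themselves is 0.2.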

Next Class
• Information status: focus and given/new information