Modeling and Recognition of Phonetic and Prosodic Factors

- Slides: 1

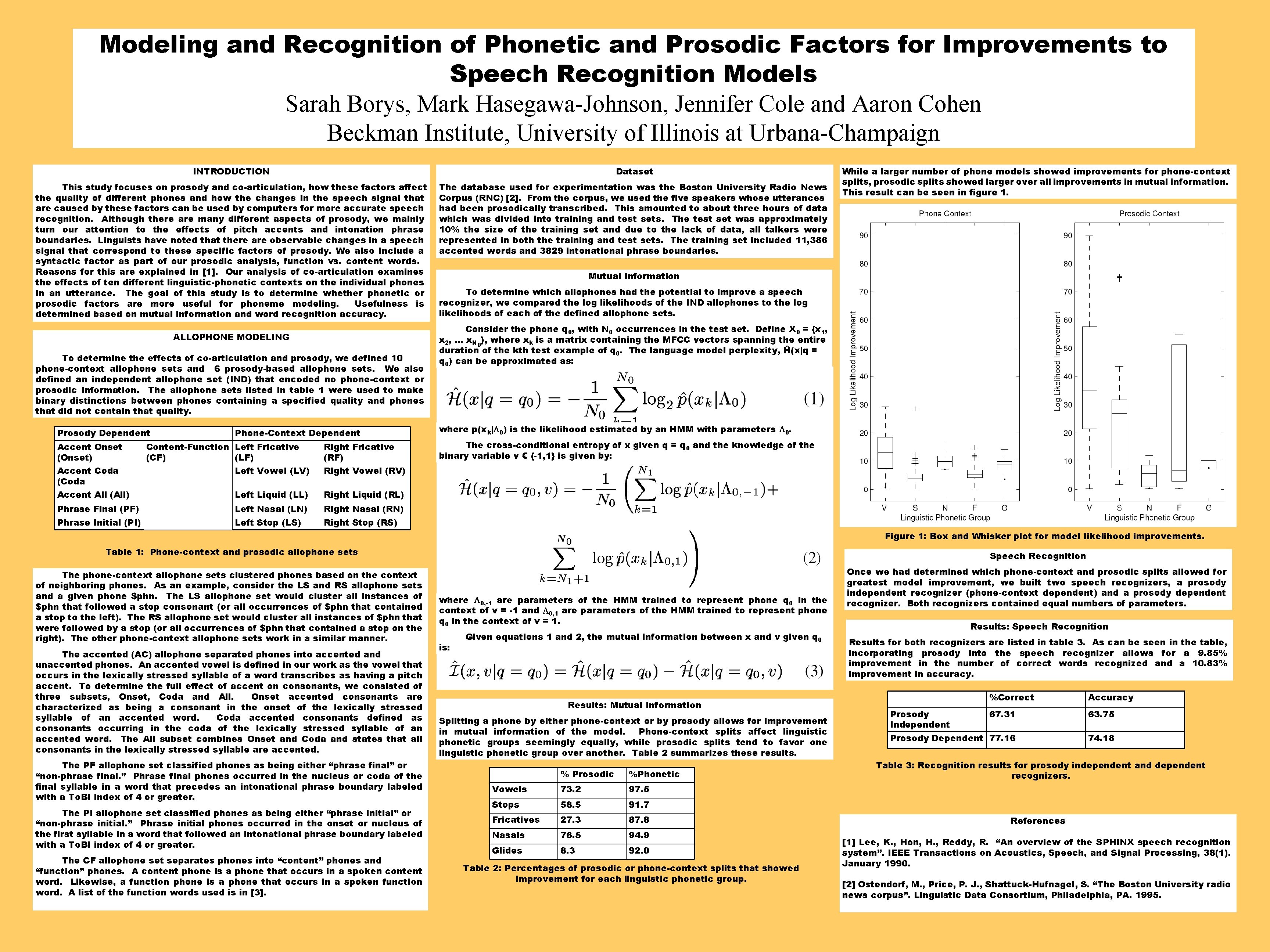

Modeling and Recognition of Phonetic and Prosodic Factors for Improvements to Speech Recognition Models

Sarah Borys, Mark Hasegawa-Johnson, Jennifer Cole and Aaron Cohen

Beckman Institute, University of Illinois at Urbana-Champaign

INTRODUCTION

This study focuses on prosody and co-articulation: how these factors affect the quality of different phones, and how the changes they cause in the speech signal can be used by computers for more accurate speech recognition. Although there are many different aspects of prosody, we mainly turn our attention to the effects of pitch accents and intonational phrase boundaries. Linguists have noted that there are observable changes in a speech signal that correspond to these specific factors of prosody. We also include a syntactic factor as part of our prosodic analysis: function vs. content words. Reasons for this are explained in [1]. Our analysis of co-articulation examines the effects of ten different linguistic-phonetic contexts on the individual phones in an utterance. The goal of this study is to determine whether phonetic or prosodic factors are more useful for phoneme modeling. Usefulness is determined based on mutual information and word recognition accuracy.

Dataset

The database used for experimentation was the Boston University Radio News Corpus (RNC) [2]. From the corpus, we used the five speakers whose utterances had been prosodically transcribed. This amounted to about three hours of data, which was divided into training and test sets. The test set was approximately 10% the size of the training set. Because of the limited amount of data, all talkers were represented in both the training and test sets. The training set included 11,386 accented words and 3,829 intonational phrase boundaries.

ALLOPHONE MODELING

To determine the effects of co-articulation and prosody, we defined 10 phone-context allophone sets and 6 prosody-based allophone sets.
We also defined an independent allophone set (IND) that encoded no phone-context or prosodic information. The allophone sets listed in table 1 were used to make binary distinctions between phones that had a specified quality and phones that did not.

| Prosody Dependent | Phone-Context Dependent (left context) | Phone-Context Dependent (right context) |
| --- | --- | --- |
| Accent Onset (Onset) | Left Fricative (LF) | Right Fricative (RF) |
| Accent Coda (Coda) | Left Vowel (LV) | Right Vowel (RV) |
| Accent All (All) | Left Liquid (LL) | Right Liquid (RL) |
| Phrase Final (PF) | Left Nasal (LN) | Right Nasal (RN) |
| Phrase Initial (PI) | Left Stop (LS) | Right Stop (RS) |
| Content-Function (CF) | | |

Table 1: Phone-context and prosodic allophone sets

The phone-context allophone sets clustered phones based on the context of neighboring phones. As an example, consider the LS and RS allophone sets and a given phone $phn. The LS allophone set would cluster all instances of $phn that followed a stop consonant (that is, all occurrences of $phn with a stop to the left). The RS allophone set would cluster all instances of $phn that were followed by a stop (that is, all occurrences of $phn with a stop to the right). The other phone-context allophone sets work in a similar manner.

The accented (AC) allophone set separated phones into accented and unaccented phones. An accented vowel is defined in our work as the vowel that occurs in the lexically stressed syllable of a word transcribed as having a pitch accent. To determine the full effect of accent on consonants, the accented allophone set for consonants consisted of three subsets: Onset, Coda and All. Onset accented consonants are consonants in the onset of the lexically stressed syllable of an accented word. Coda accented consonants are consonants in the coda of the lexically stressed syllable of an accented word. The All subset combines Onset and Coda and states that all consonants in the lexically stressed syllable are accented.

The PF allophone set classified phones as being either “phrase final” or “non-phrase final.” Phrase final phones occurred in the nucleus or coda of the final syllable of a word that preceded an intonational phrase boundary labeled with a ToBI index of 4 or greater. The PI allophone set classified phones as being either “phrase initial” or “non-phrase initial.” Phrase initial phones occurred in the onset or nucleus of the first syllable of a word that followed an intonational phrase boundary labeled with a ToBI index of 4 or greater.

The CF allophone set separated phones into “content” phones and “function” phones. A content phone is a phone that occurs in a spoken content word. Likewise, a function phone is a phone that occurs in a spoken function word. A list of the function words used is in [3].

Figure 1: Box-and-whisker plot of model likelihood improvements. While a larger number of phone models showed improvements for phone-context splits, prosodic splits showed larger overall improvements in mutual information.

Mutual Information

To determine which allophones had the potential to improve a speech recognizer, we compared the log likelihoods of the IND allophones to the log likelihoods of each of the defined allophone sets.

Consider the phone q_0, with N_0 occurrences in the test set. Define X_0 = {x_1, x_2, …, x_N0}, where x_k is a matrix containing the MFCC vectors spanning the entire duration of the kth test example of q_0. The conditional entropy of x given q = q_0, Ĥ(x | q = q_0), can be approximated (equation 1) from p(x_k | θ_0), the likelihood estimated by an HMM with parameters θ_0.

The cross-conditional entropy of x given q = q_0 and knowledge of the binary variable v ∈ {-1, 1} is approximated in the same way (equation 2), where θ_{0,-1} are the parameters of the HMM trained to represent phone q_0 in the context v = -1, and θ_{0,1} are the parameters of the HMM trained to represent phone q_0 in the context v = 1.
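The entropy comparison described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes each entropy is estimated as the average negative log likelihood per test token (a common estimator, assumed here rather than taken from the text), and the function names and toy log-likelihood values are hypothetical.

```python
from typing import Sequence


def entropy_estimate(log_likelihoods: Sequence[float]) -> float:
    """Average negative log likelihood per token (ASSUMED estimator).

    log_likelihoods[k] stands for log p(x_k | theta_0), the score of the
    k-th test token of phone q_0 under the context-independent (IND) HMM.
    """
    if not log_likelihoods:
        raise ValueError("need at least one test token")
    return -sum(log_likelihoods) / len(log_likelihoods)


def split_entropy_estimate(ll_neg: Sequence[float], ll_pos: Sequence[float]) -> float:
    """Same estimator, but each token is scored by the HMM matching its label v.

    ll_neg: log p(x_k | theta_{0,-1}) for tokens labeled v = -1
    ll_pos: log p(x_k | theta_{0,1})  for tokens labeled v = +1
    """
    n_tokens = len(ll_neg) + len(ll_pos)
    if n_tokens == 0:
        raise ValueError("need at least one test token")
    return -(sum(ll_neg) + sum(ll_pos)) / n_tokens


def mutual_information_estimate(
    ll_ind: Sequence[float], ll_neg: Sequence[float], ll_pos: Sequence[float]
) -> float:
    """Entropy without knowledge of v minus entropy with knowledge of v."""
    if len(ll_ind) != len(ll_neg) + len(ll_pos):
        raise ValueError("IND and split scores must cover the same tokens")
    return entropy_estimate(ll_ind) - split_entropy_estimate(ll_neg, ll_pos)


# Hypothetical log likelihoods for four test tokens of one phone.
ll_ind = [-10.0, -12.0, -11.0, -13.0]   # scored by the IND model
ll_neg = [-9.0, -11.0]                   # tokens with v = -1, split model
ll_pos = [-10.5, -12.5]                  # tokens with v = +1, split model

gain = mutual_information_estimate(ll_ind, ll_neg, ll_pos)
print(gain)  # 0.75
```

A positive value means the split models assign higher likelihood to the test tokens than the IND model does, which is the sense in which a split "shows improvement" in this sketch.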
Given equations 1 and 2, the mutual information between x and v given q_0 is Ĥ(x | q = q_0) from equation 1 minus the cross-conditional entropy from equation 2.

Results: Mutual Information

Splitting a phone by either phone context or prosody allows for improvement in the mutual information of the model. Phone-context splits affect the linguistic phonetic groups seemingly equally, while prosodic splits tend to favor one linguistic phonetic group over another. Table 2 summarizes these results.

| Phone group | % Prosodic | % Phonetic |
| --- | --- | --- |
| Vowels | 73.2 | 97.5 |
| Stops | 58.5 | 91.7 |
| Fricatives | 27.3 | 87.8 |
| Nasals | 76.5 | 94.9 |
| Glides | 8.3 | 92.0 |

Table 2: Percentages of prosodic or phone-context splits that showed improvement for each linguistic phonetic group.

Speech Recognition

Once we had determined which phone-context and prosodic splits allowed for the greatest model improvement, we built two speech recognizers: a prosody independent recognizer (phone-context dependent) and a prosody dependent recognizer. Both recognizers contained equal numbers of parameters.

Results: Speech Recognition

Results for both recognizers are listed in table 3. As can be seen in the table, incorporating prosody into the speech recognizer allows for a 9.85% improvement in the number of correct words recognized and a 10.83% improvement in accuracy.

| Recognizer | % Correct | Accuracy |
| --- | --- | --- |
| Prosody Independent | 67.31 | 63.75 |
| Prosody Dependent | 77.16 | 74.18 |

Table 3: Recognition results for prosody independent and dependent recognizers.

References

[1] Lee, K., Hon, H., Reddy, R. “An overview of the SPHINX speech recognition system”. IEEE Transactions on Acoustics, Speech, and Signal Processing, 38(1). January 1990.

[2] Ostendorf, M., Price, P. J., Shattuck-Hufnagel, S. “The Boston University radio news corpus”. Linguistic Data Consortium, Philadelphia, PA. 1995.