Parameter Learning from Stochastic Teachers and Stochastic Compulsive Liars


- Slides: 56

Parameter Learning from Stochastic Teachers and Stochastic Compulsive Liars. B. John Oommen, SCS, Carleton University. Plenary Talk: PRIS'04 (Porto). A joint work with G. Raghunath and B. Kuipers. 12/26/2021

Problem Statement: We are learning from a Stochastic Teacher or a Stochastic Liar. Do I go … ? Left: LIFE. Right: DEATH.

Problem Statement: Teacher / Liar, identity unknown (tells lies / tells truth). There is a Life Gate and a Death Gate. We have to ask only ONE question and go through the gate to LIFE.

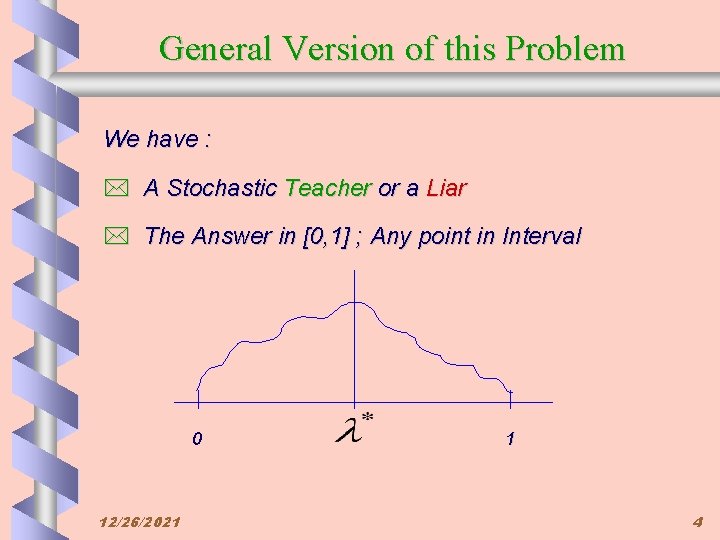

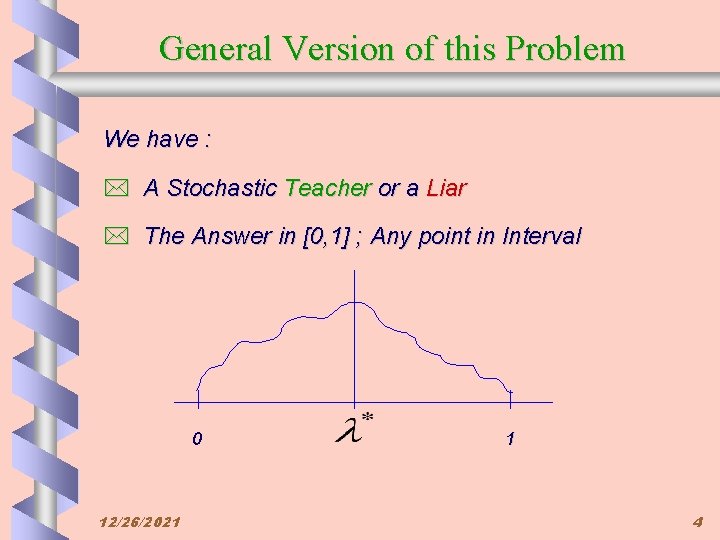

General Version of this Problem: We have a Stochastic Teacher or a Liar. The answer is in [0, 1]: any point in the interval.

General Version of this Problem. Question: Shall we go Left or Right? Teacher: Go Right with prob. p, Go Left with prob. 1 - p, where p > 0.5. Liar: does the same with p < 0.5.

Stochastic Teacher: We are on a line searching for a point, and we don't know how far we are from the point. Suppose first that we interact with a Deterministic Teacher, who charges us with how far we are from the point. If the points are integers, the problem can be solved in O(N) steps. Question: What shall we do if the Teacher is Stochastic?

![Model of Computation](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-7.jpg)

Model of Computation: We are searching for a point λ* ∈ [0, 1]. We interact with a Stochastic Teacher. β(n) is the response from the Environment: Move Left / Move Right. The response is stochastic, hence possibly erroneous.

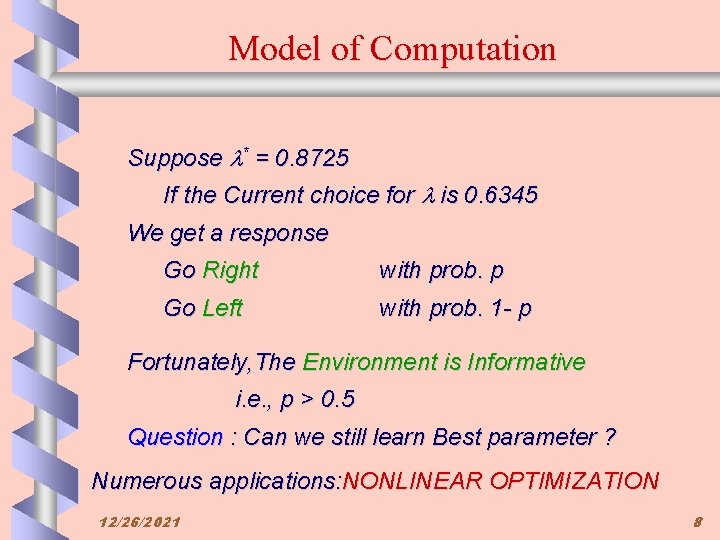

Model of Computation: Suppose λ* = 0.8725. If the current choice for λ is 0.6345, we get the response Go Right with prob. p and Go Left with prob. 1 - p. Fortunately, the Environment is informative, i.e., p > 0.5. Question: Can we still learn the best parameter? Numerous applications: NONLINEAR OPTIMIZATION.
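The stochastic response described on this slide can be sketched in a few lines of Python. This is only an illustration: the function name `teacher_response`, the string labels `"right"`/`"left"`, and the use of Python's `random` module are assumptions, not part of the talk.

```python
import random

def teacher_response(lam, lam_star, p, rng=random):
    """Return the advice ('right' or 'left') for the current guess lam.

    With probability p the advice points towards lam_star (truthful),
    otherwise it points away from it. p > 0.5 models a Stochastic Teacher,
    p < 0.5 a Stochastic Liar.
    """
    correct = "right" if lam < lam_star else "left"
    wrong = "left" if correct == "right" else "right"
    return correct if rng.random() < p else wrong

# Usage: the slide's numbers, with an informative environment (p = 0.8).
advice = teacher_response(0.6345, 0.8725, 0.8)
```

With p = 0.8 the call above returns `"right"` about 80% of the time, which is the sense in which the environment is informative.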

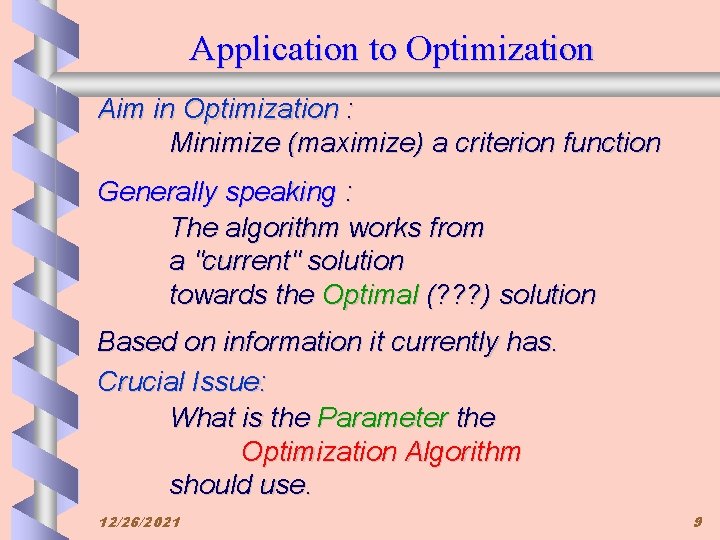

Application to Optimization: The aim in optimization is to minimize (maximize) a criterion function. Generally speaking, the algorithm works from a "current" solution towards the optimal (???) solution, based on the information it currently has. Crucial issue: what is the parameter the optimization algorithm should use?

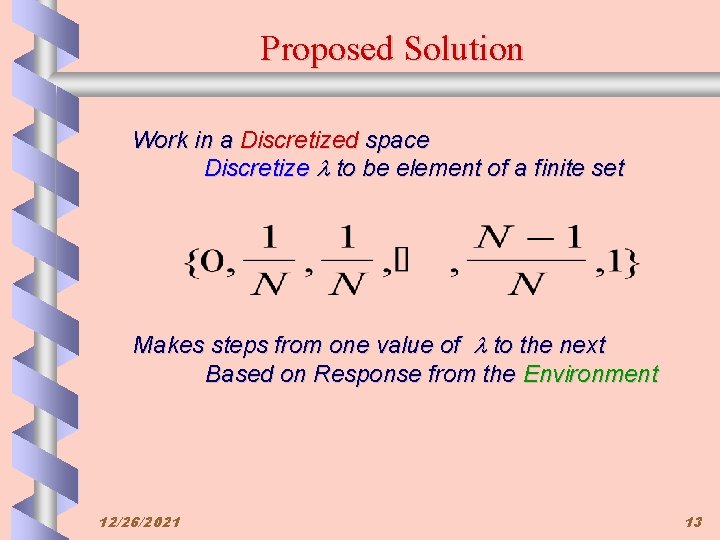

Application to Optimization: If the parameter is too small, the convergence is sluggish. If it is too large, the result is erroneous convergence or oscillations. In many cases the parameter is related to the second derivative, analogous to a "Newton's" method.

Application to Optimization: First relax the assumptions on λ. Normalize if the bounds on the parameter μ are known: λ = (μ - μ_min)/(μ_max - μ_min). Thus λ* ∈ [0, 1]. In the case of neural networks, the range of the parameter varies from 10^-3 to 10^3. Use a monotonic one-to-one mapping λ := A · log_b μ for some base b.
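The two mappings on this slide can be written out as a small sketch. The function names and the default values `A = 1.0`, `b = 10.0` are hypothetical choices for illustration; the slide only states the form of the maps.

```python
import math

def normalize(mu, mu_min, mu_max):
    """Linear map of mu in [mu_min, mu_max] onto lambda in [0, 1]."""
    return (mu - mu_min) / (mu_max - mu_min)

def denormalize(lam, mu_min, mu_max):
    """Inverse of normalize: recover mu from lambda."""
    return mu_min + lam * (mu_max - mu_min)

def log_map(mu, A=1.0, b=10.0):
    """Monotonic one-to-one map lambda := A * log_b(mu), for mu > 0."""
    return A * math.log(mu, b)

def inverse_log_map(lam, A=1.0, b=10.0):
    """Inverse of log_map: mu = b ** (lambda / A)."""
    return b ** (lam / A)

# Usage: a parameter spanning 1e-3 .. 1e3 maps to roughly -3 .. 3 when A = 1, b = 10.
low, high = log_map(1e-3), log_map(1e3)
```

Because both maps are monotonic and invertible, a value of λ learned in the transformed space can be mapped back to μ without ambiguity.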

Application to Optimization: Since λ converges arbitrarily close to λ*, μ converges arbitrarily close to μ*. We can learn the best parameter in neural networks.

Proposed Solution: Work in a discretized space. Discretize λ to be an element of a finite set. Make steps from one value of λ to the next, based on the response from the Environment.

Advantages of Discretizing in Learning: (i) Practical considerations: a random number generator typically has finite accuracy, so an action probability cannot be just any real number. (ii) The probability changes in jumps and not continuously, so convergence in "finite" time is possible. (iii) The proofs of ε-optimality are different: they use a discrete-state Markov chain.

Advantages of Discretizing in Learning: (iv) Rate of convergence: faster than continuous schemes, because a probability can be increased to unity directly rather than asymptotically. (v) Reduced time per iteration: addition is quicker than multiplication, and floating point numbers are not needed. Generally, discrete algorithms are superior in terms of both time and space.

The Learning Algorithm. Assume the current value for λ is λ(n). Then, in an internal state (i.e., 0 < λ(n) < 1):
- If E suggests increasing λ: λ(n+1) := λ(n) + 1/N
- Else {if E suggests decreasing λ}: λ(n+1) := λ(n) - 1/N

The Learning Algorithm. At the end states:
- If λ(n) = 1: if E suggests increasing λ, λ(n+1) := λ(n); else {if E suggests decreasing λ}, λ(n+1) := λ(n) - 1/N
- If λ(n) = 0: if E suggests decreasing λ, λ(n+1) := λ(n); else {if E suggests increasing λ}, λ(n+1) := λ(n) + 1/N
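The internal-state and end-state rules can be combined into a single update on an integer index. This is a minimal sketch, assuming the state is stored as an integer i in {0, ..., N} that stands for λ = i/N; the function name and the advice labels are hypothetical.

```python
def next_state(i, advice, N):
    """One step of the discretized learning rule.

    i      : current state index, 0 <= i <= N, representing lambda = i / N
    advice : 'increase' or 'decrease', as suggested by the Environment E
    N      : resolution (number of sub-intervals of [0, 1])
    """
    if advice == "increase":
        return min(i + 1, N)   # stay put at the end state lambda = 1
    return max(i - 1, 0)       # stay put at the end state lambda = 0

# Usage: with N = 8, state 3 (lambda = 3/8) moves to state 4 on 'increase'.
i = next_state(3, "increase", 8)
```

Working on the integer index rather than on λ itself keeps the update free of floating-point arithmetic, which matches the practical advantages claimed for discretization.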

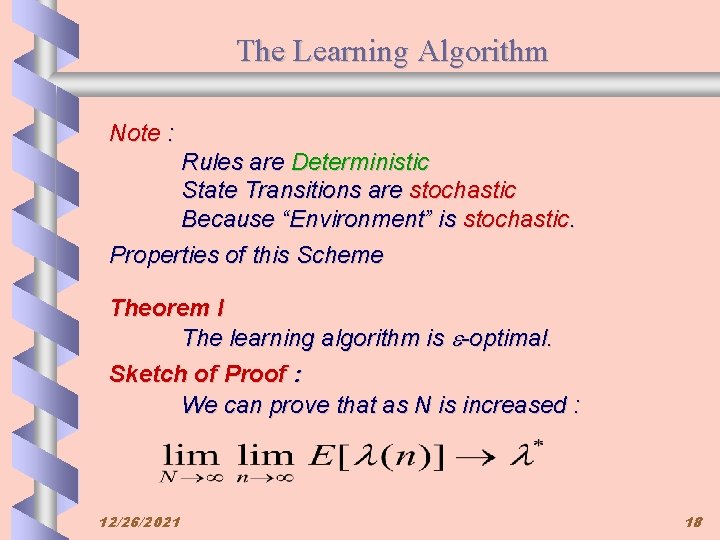

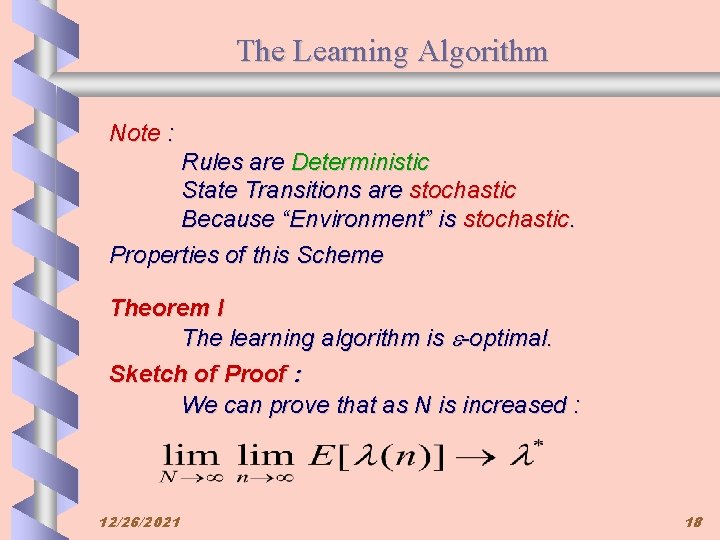

The Learning Algorithm. Note: the rules are deterministic; the state transitions are stochastic because the "Environment" is stochastic. Properties of this scheme: Theorem I: The learning algorithm is ε-optimal. Sketch of proof: We can prove that as N is increased:

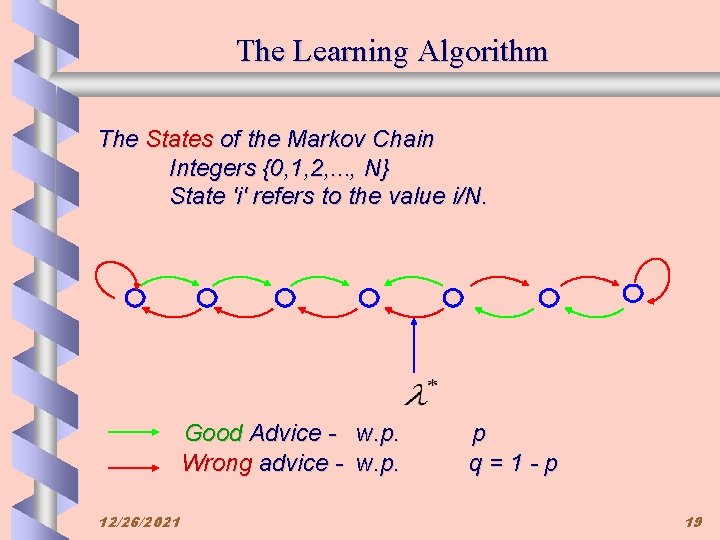

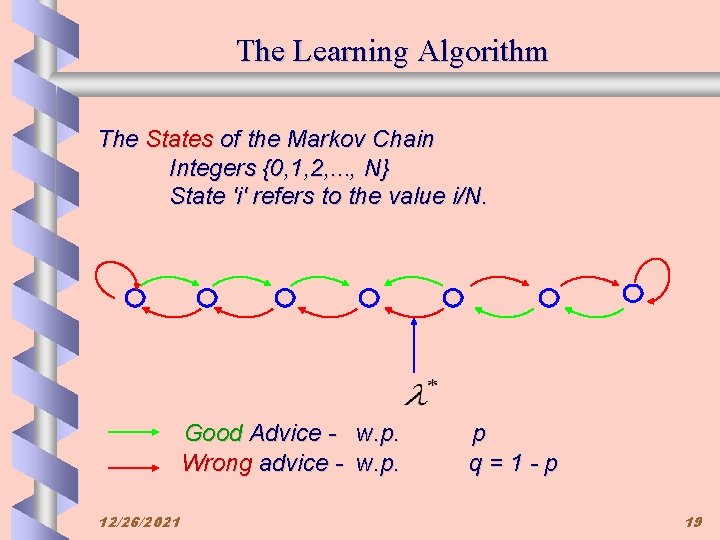

The Learning Algorithm. The states of the Markov chain are the integers {0, 1, 2, ..., N}. State 'i' refers to the value i/N. Good advice is given w.p. p; wrong advice is given w.p. q = 1 - p.

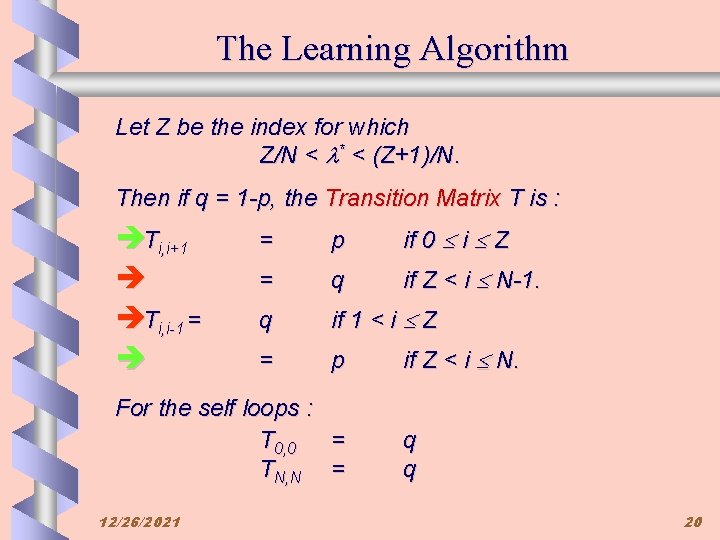

The Learning Algorithm. Let Z be the index for which Z/N < λ* < (Z+1)/N. Then, if q = 1 - p, the transition matrix T is:

$$T_{i,i+1} = \begin{cases} p & \text{if } 0 \le i \le Z \\ q & \text{if } Z < i \le N-1 \end{cases} \qquad T_{i,i-1} = \begin{cases} q & \text{if } 1 \le i \le Z \\ p & \text{if } Z < i \le N \end{cases}$$

For the self loops: $T_{0,0} = q$ and $T_{N,N} = q$.

The Markov Matrix. By a lengthy induction it can be proved that the stationary probabilities satisfy: $\pi_i = e \cdot \pi_{i-1}$ whenever $i \le Z$; $\pi_i = \pi_{i-1}/e$ whenever $i > Z+1$; and $\pi_{Z+1} = \pi_Z$, where $e = p/q > 1$.
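The geometric form of the stationary distribution can be checked numerically against the transition matrix. The sketch below builds T for given N, Z and p, builds the vector π from the geometric rule, and leaves it to the caller to confirm that πT = π. The function names are hypothetical, and the code assumes p > 0.5 and 0 ≤ Z < N.

```python
def transition_matrix(N, Z, p):
    """(N+1) x (N+1) transition matrix of the discretized random walk."""
    q = 1.0 - p
    T = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        # At or below Z the truthful advice is 'increase'; above Z it is 'decrease'.
        up, down = (p, q) if i <= Z else (q, p)
        if i < N:
            T[i][i + 1] = up
        else:
            T[i][i] += up      # self loop at state N
        if i > 0:
            T[i][i - 1] = down
        else:
            T[i][i] += down    # self loop at state 0
    return T

def stationary(N, Z, p):
    """Stationary vector from the geometric rule with e = p / q."""
    e = p / (1.0 - p)
    weights = [e ** i if i <= Z else e ** (2 * Z + 1 - i) for i in range(N + 1)]
    total = sum(weights)
    return [w / total for w in weights]

# Usage: N = 8, Z = 5 (lambda* = 0.65), p = 0.7.
pi = stationary(8, 5, 0.7)
T = transition_matrix(8, 5, 0.7)
residual = max(abs(sum(pi[i] * T[i][j] for i in range(9)) - pi[j]) for j in range(9))
```

If the geometric rule is right, `residual` is zero up to floating-point rounding, since the chain is a birth-death chain and the weights satisfy detailed balance.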

![The Learning Algorithm: concluding the proof](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-22.jpg)

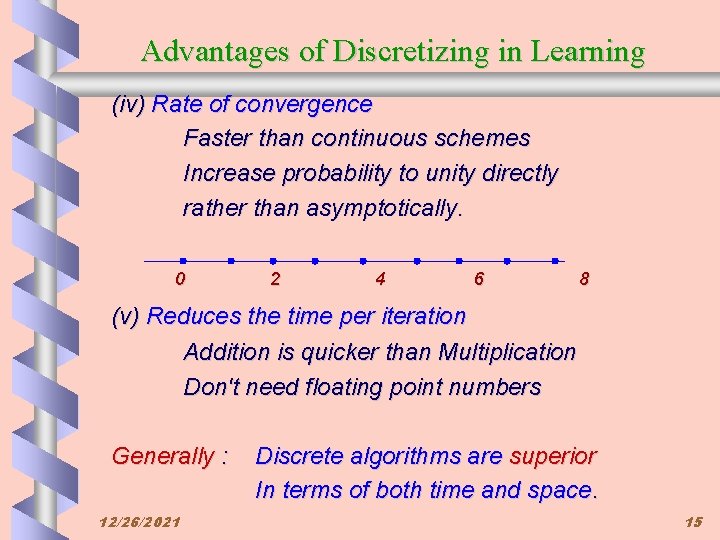

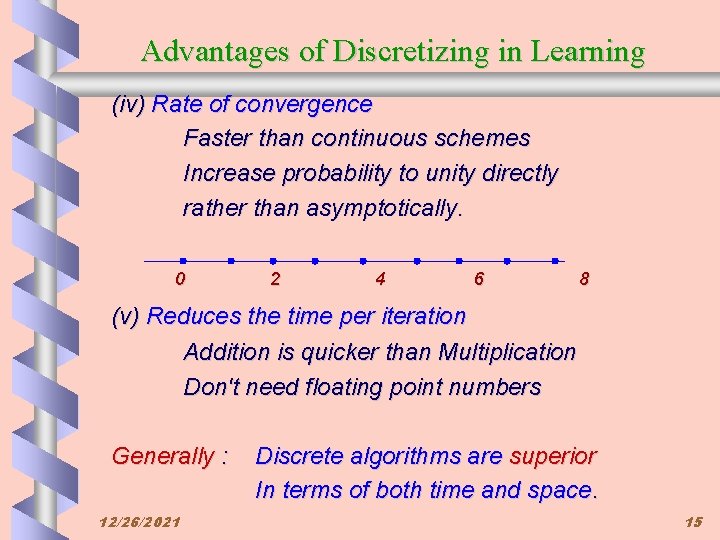

The Learning Algorithm. To conclude the proof, compute E[λ(∞)] as N → ∞. INCREASE / DECREASE: GEOMETRIC ...

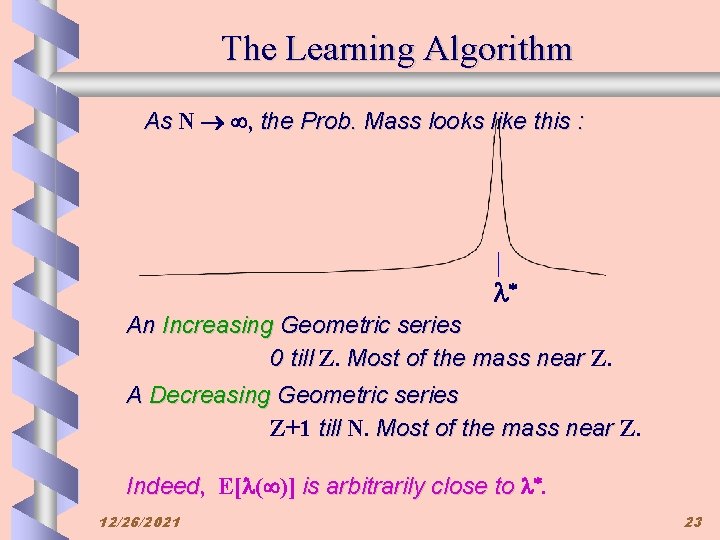

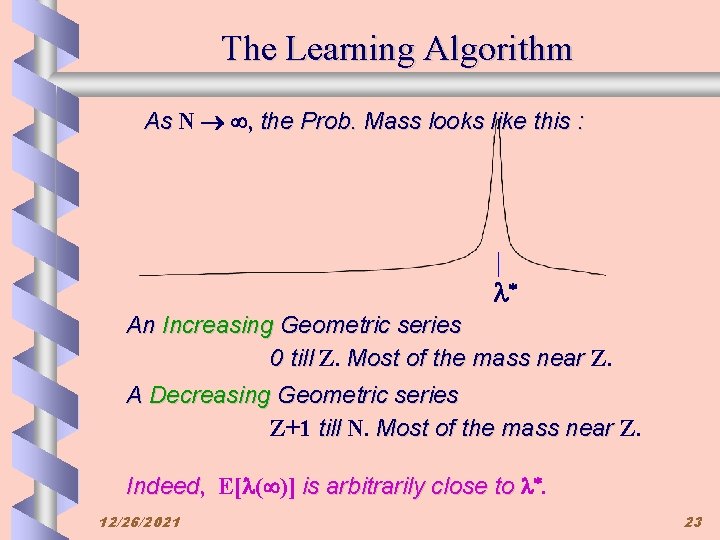

The Learning Algorithm. As N → ∞, the probability mass looks like this: an increasing geometric series from 0 till Z, with most of the mass near Z, and a decreasing geometric series from Z+1 till N, with most of the mass near Z. Indeed, E[λ(∞)] is arbitrarily close to λ*.
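The claim that E[λ(∞)] approaches λ* as the resolution grows can be illustrated by evaluating the expectation directly from the two geometric series. This is a sketch under stated assumptions: the function name is hypothetical, λ* is assumed not to fall exactly on a grid point, and the weights are rescaled by e^(-Z) only to avoid overflow for large N.

```python
import math

def expected_lambda(N, lam_star, p):
    """E[lambda(infinity)] for resolution N, using the geometric stationary weights."""
    Z = math.floor(lam_star * N)          # Z/N < lam_star < (Z+1)/N
    e = p / (1.0 - p)                     # e > 1 when p > 0.5
    # Weights peak at Z and Z+1 and decay geometrically on both sides.
    weights = [e ** (i - Z) if i <= Z else e ** (Z + 1 - i) for i in range(N + 1)]
    mean_index = sum(i * w for i, w in enumerate(weights)) / sum(weights)
    return mean_index / N

# Usage: the error shrinks as N grows, here with lambda* = 0.9123 and p = 0.7.
errors = [abs(expected_lambda(N, 0.9123, 0.7) - 0.9123) for N in (16, 64, 256, 1024)]
```

The residual error at a given N is bounded by the grid spacing plus the truncation of the series at the end states, so it is expected to be on the order of 1/N.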

![Example: partition of the interval [0, 1] into eight intervals](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-24.jpg)

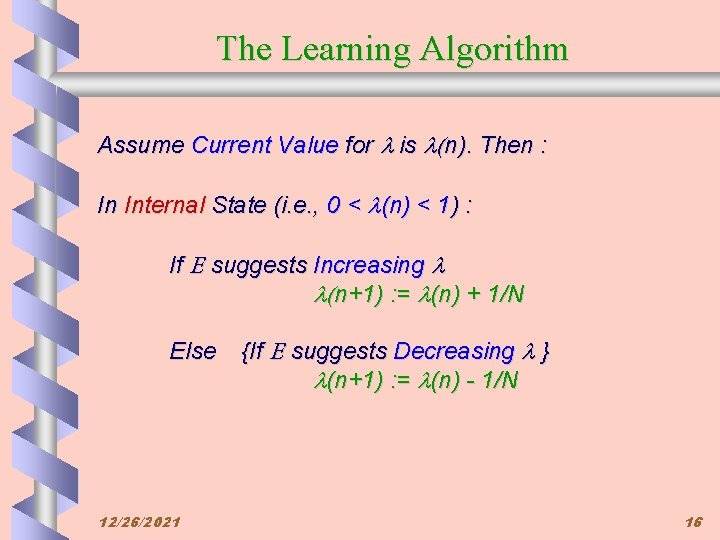

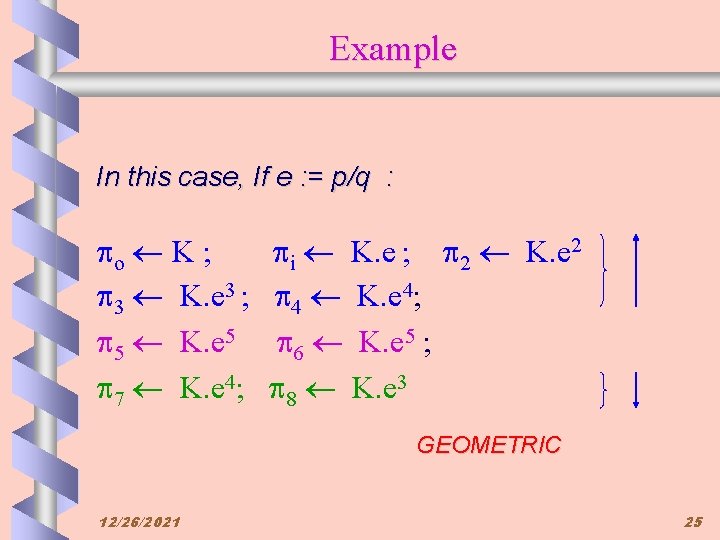

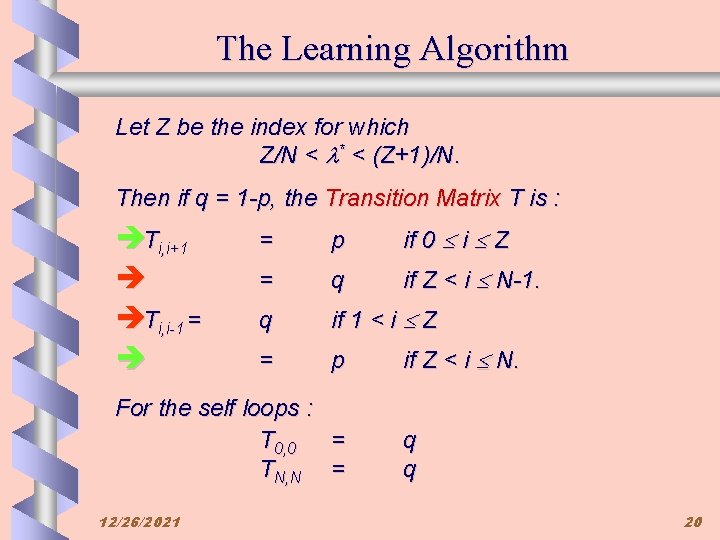

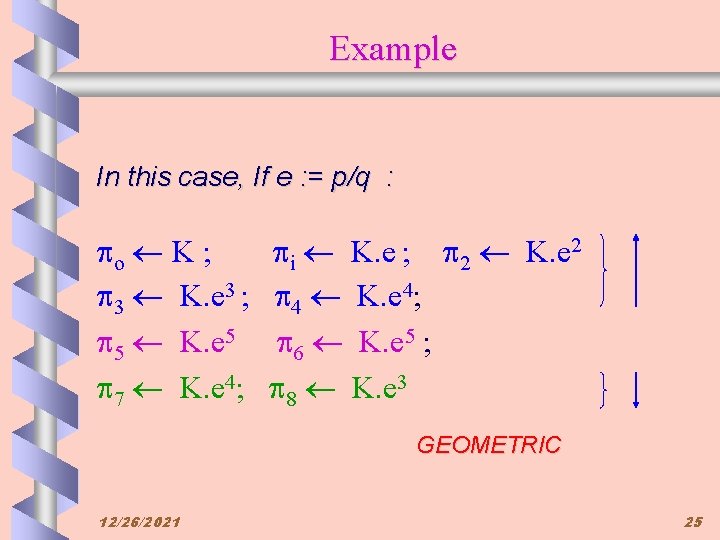

Example: Partition the interval [0, 1] into eight intervals, with grid points {0, 1/8, 2/8, …, 7/8, 1}. Suppose λ* is 0.65. (i) All transitions for {0, 1/8, 2/8, 3/8, 4/8, 5/8} are: increased with prob. p, decreased with prob. q. (ii) All transitions for {6/8, 7/8, 1} are: decreased with prob. p, increased with prob. q.

Example: In this case, if $e := p/q$, the stationary probabilities are GEOMETRIC:

$$\pi_0 = K,\ \pi_1 = K e,\ \pi_2 = K e^2,\ \pi_3 = K e^3,\ \pi_4 = K e^4,\ \pi_5 = K e^5,\ \pi_6 = K e^5,\ \pi_7 = K e^4,\ \pi_8 = K e^3$$

![Experimental Results, Table I](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-26.jpg)

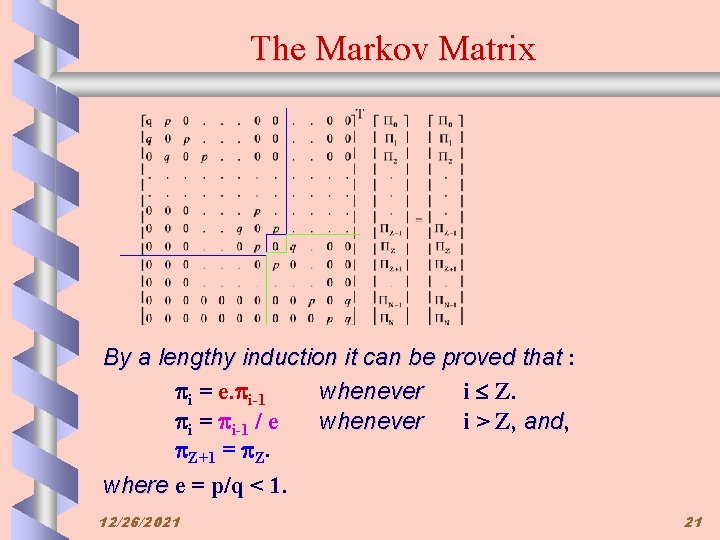

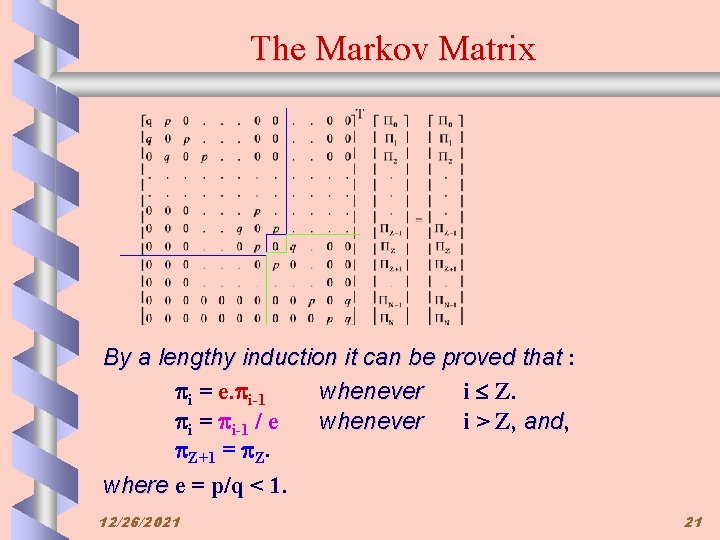

Experimental Results. Table I: True value of E[λ(∞)] for various p and various resolutions, N. λ* is 0.9123.

![Experimental Results, Figure I](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-27.jpg)

Experimental Results. Figure I: Plot of E[λ(∞)] with N.

![Experimental Results, Figure II](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-28.jpg)

Experimental Results. Figure II: Plot of E[λ(∞)] with p.

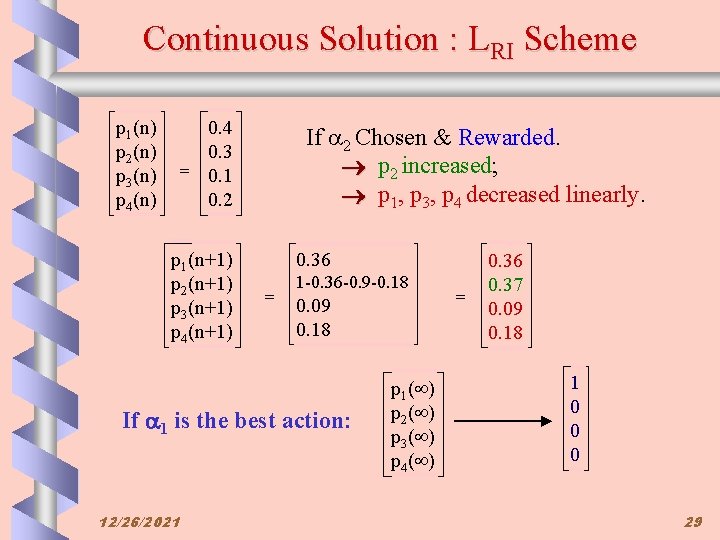

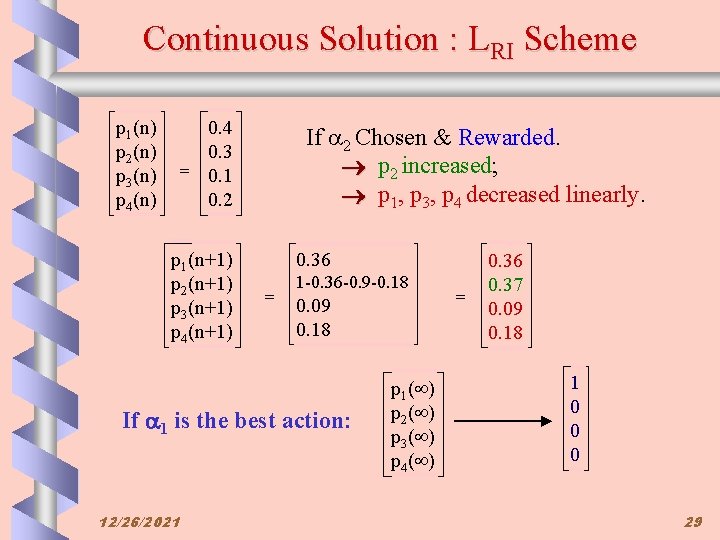

Continuous Solution: LRI Scheme. Suppose [p1(n), p2(n), p3(n), p4(n)] = [0.4, 0.3, 0.1, 0.2]. If action 2 is chosen and rewarded, p2 is increased and p1, p3, p4 are decreased linearly: [p1(n+1), p2(n+1), p3(n+1), p4(n+1)] = [0.36, 1 - 0.36 - 0.09 - 0.18, 0.09, 0.18] = [0.36, 0.37, 0.09, 0.18]. If action 1 is the best action: [p1(∞), p2(∞), p3(∞), p4(∞)] = [1, 0, 0, 0].
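The numbers on this slide are consistent with a Linear Reward-Inaction update in which every non-chosen probability is multiplied by 0.9. That factor is inferred from the slide's figures (0.4 → 0.36, 0.1 → 0.09, 0.2 → 0.18), not stated in the text, and the function name below is hypothetical.

```python
def lri_update(probs, chosen, rewarded, factor=0.9):
    """Linear Reward-Inaction update for a multi-action automaton.

    On reward, every non-chosen probability is scaled by `factor` and the
    chosen action absorbs the released mass. On penalty, nothing changes.
    """
    if not rewarded:
        return list(probs)                      # 'Inaction' on penalty
    new = [pr * factor for pr in probs]
    new[chosen] = 1.0 - sum(x for k, x in enumerate(new) if k != chosen)
    return new

# Usage: action 2 (index 1) chosen and rewarded, as on the slide.
updated = lri_update([0.4, 0.3, 0.1, 0.2], chosen=1, rewarded=True)
```

Up to floating-point rounding, `updated` is [0.36, 0.37, 0.09, 0.18], which matches the slide.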

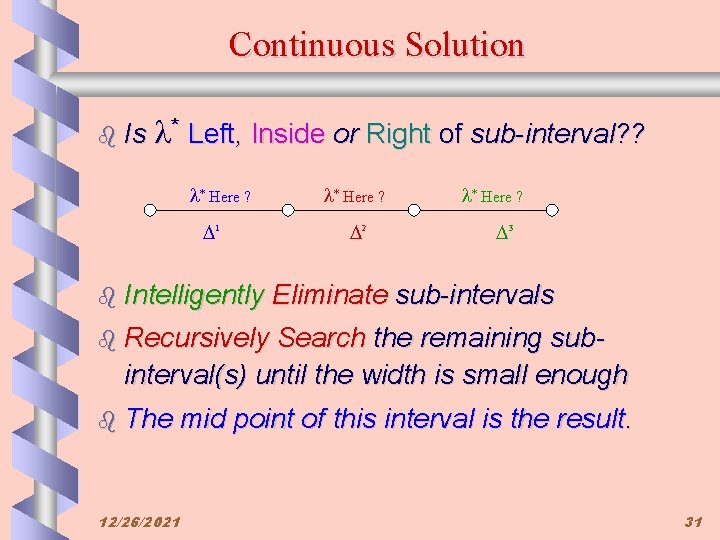

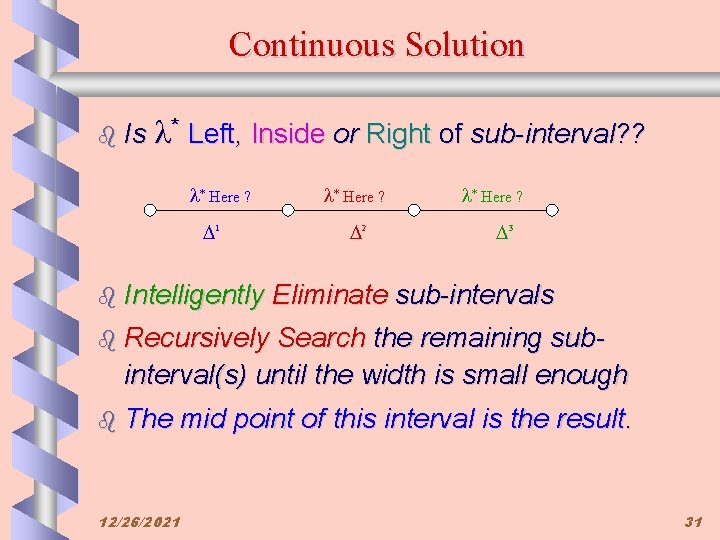

Continuous Solution
- Systematically explore the given interval
- Use the mid-point as the initial guess
- Partition the interval into 3 sub-intervals
- Use ε-optimal learning in each sub-interval: is λ* in Δ^1, Δ^2 or Δ^3?

Continuous Solution
- Is λ* Left, Inside or Right of each sub-interval?
- Intelligently eliminate sub-intervals
- Recursively search the remaining sub-interval(s) until the width is small enough
- The mid-point of this interval is the result

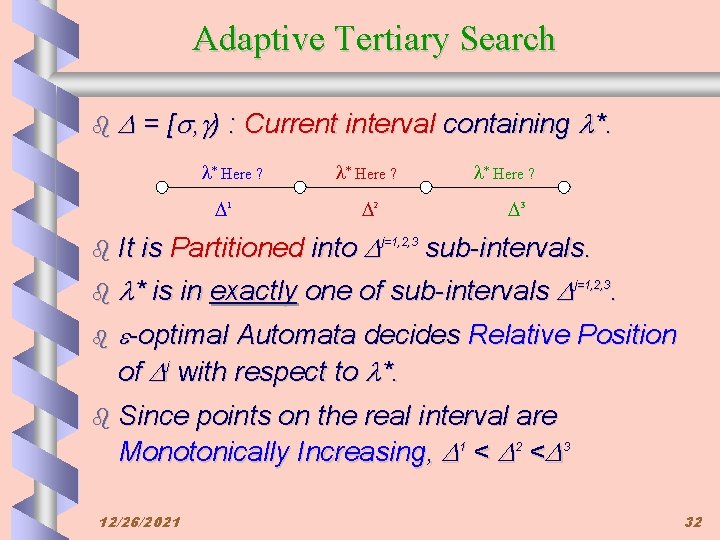

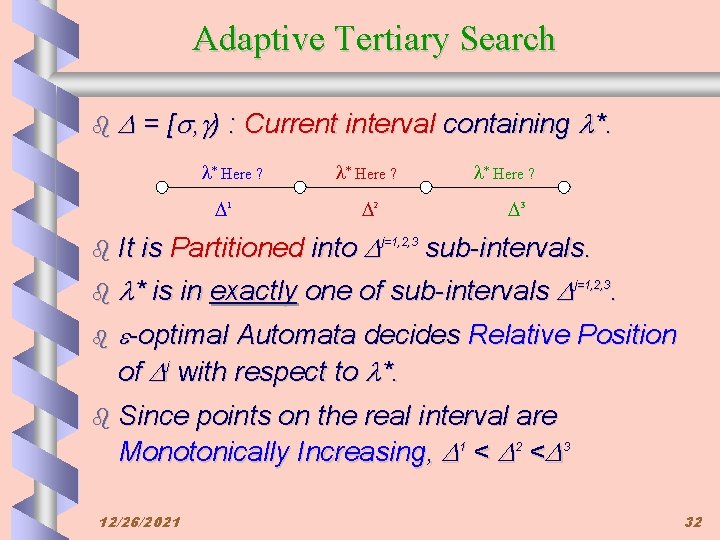

Adaptive Tertiary Search
- Δ is the current (half-open) interval containing λ*
- It is partitioned into sub-intervals Δ^j, j = 1, 2, 3
- λ* is in exactly one of the sub-intervals Δ^j
- ε-optimal automata decide the relative position of Δ^j with respect to λ*
- Since points on the real interval are monotonically increasing, Δ^1 < Δ^2 < Δ^3

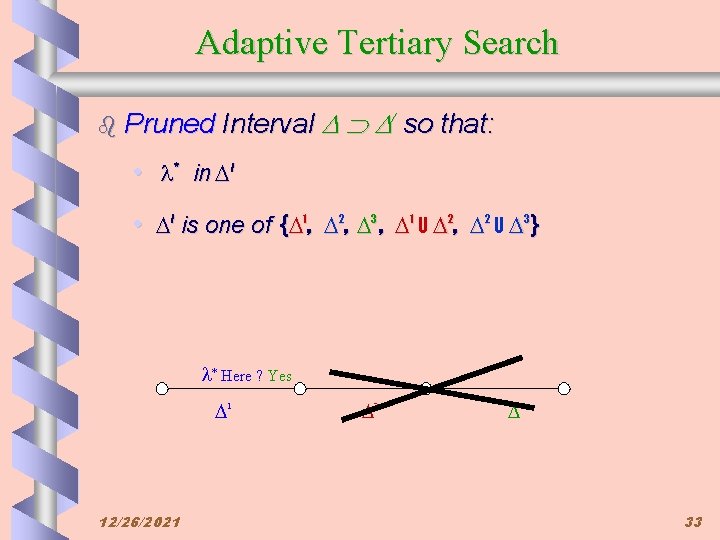

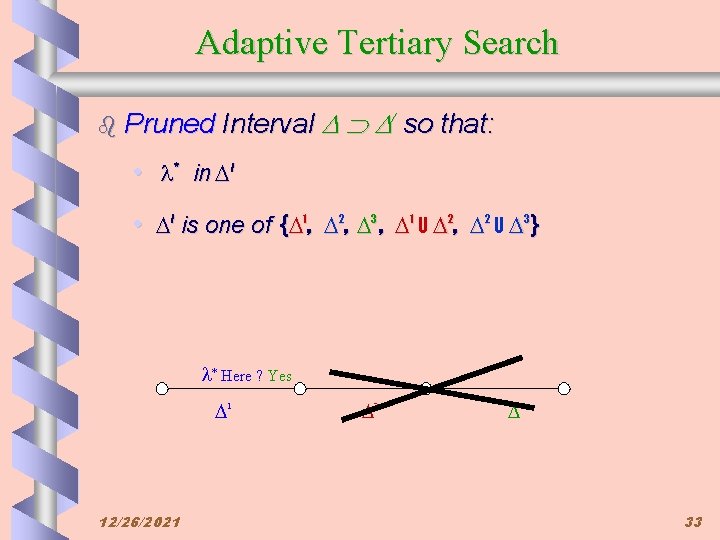

Adaptive Tertiary Search
- The pruned interval Δ' is chosen so that λ* ∈ Δ'
- Δ' is one of {Δ^1, Δ^2, Δ^3, Δ^1 ∪ Δ^2, Δ^2 ∪ Δ^3}

Adaptive Tertiary Search
- Because of the monotonicity of the intervals
- Because of the ε-optimality of the schemes
- These two constraints indicate that the search converges, and that it converges monotonically

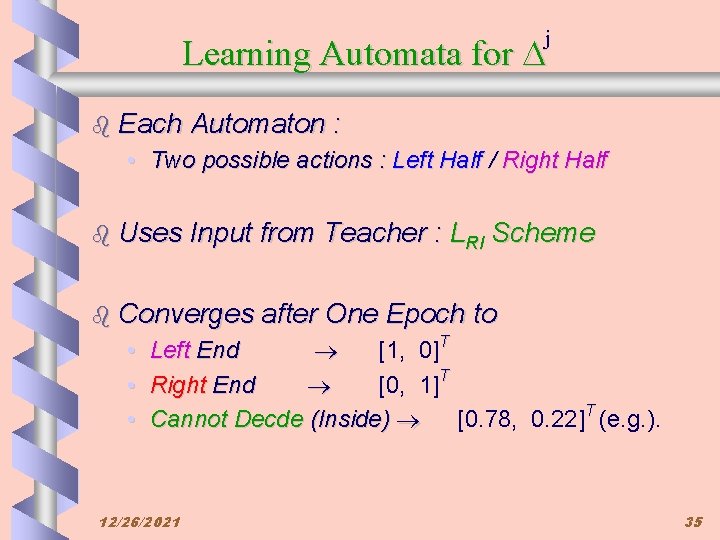

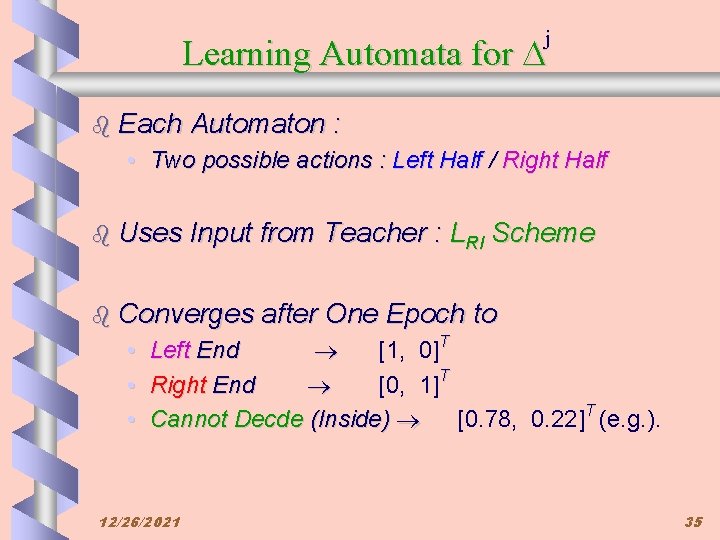

Learning Automata for Δ^j
- Each automaton has two possible actions: Left Half / Right Half
- Uses input from the Teacher: LRI scheme
- Converges after one epoch to: Left End [1, 0]^T, Right End [0, 1]^T, or Cannot Decide (Inside), e.g. [0.78, 0.22]^T

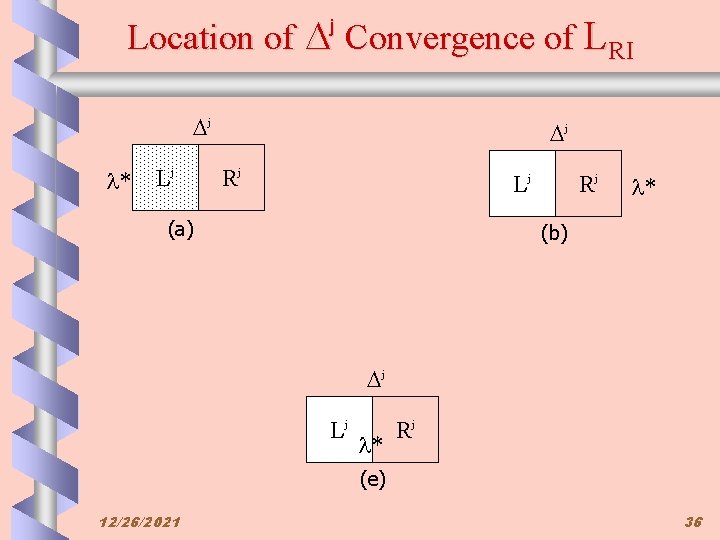

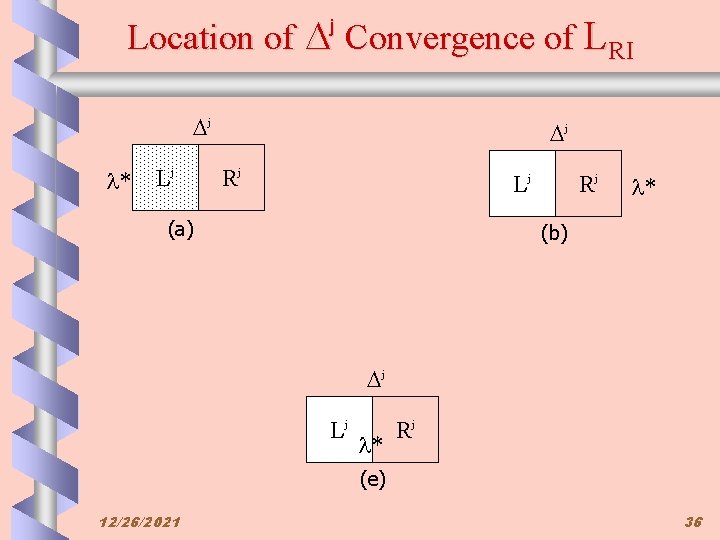

Location of Convergence of LRI. [Figure: panels (a), (b) and (e); labels λ*, Δ^j, L^j, R^j.]

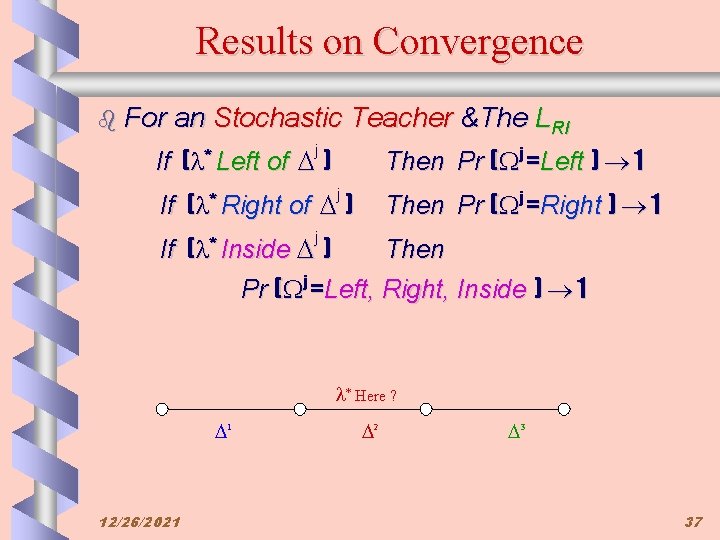

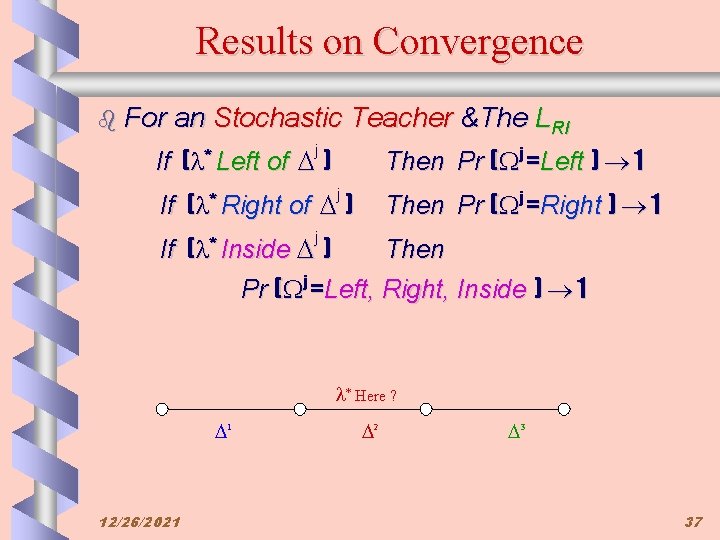

Results on Convergence. For a Stochastic Teacher and the LRI scheme:
- If λ* is Left of Δ^j, then Pr(decision for Δ^j = Left) → 1
- If λ* is Right of Δ^j, then Pr(decision for Δ^j = Right) → 1
- If λ* is Inside Δ^j, then Pr(decision for Δ^j = Left, Right or Inside) → 1

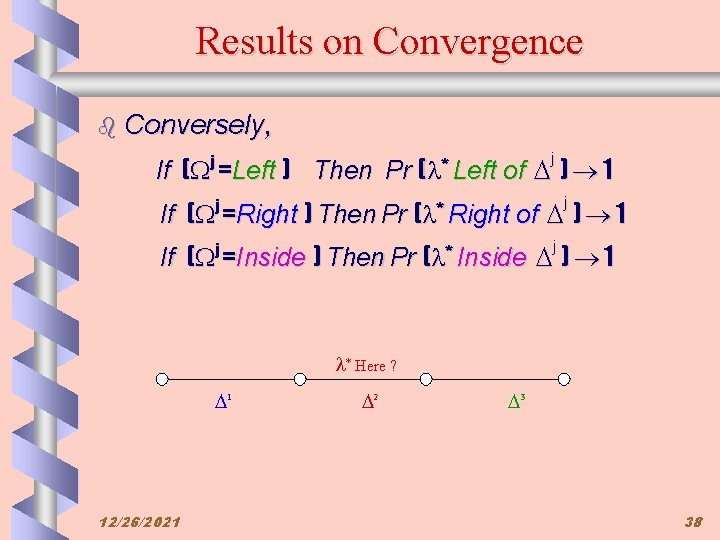

Results on Convergence. Conversely:
- If the decision for Δ^j is Left, then Pr(λ* Left of Δ^j) → 1
- If the decision for Δ^j is Right, then Pr(λ* Right of Δ^j) → 1
- If the decision for Δ^j is Inside, then Pr(λ* Inside Δ^j) → 1

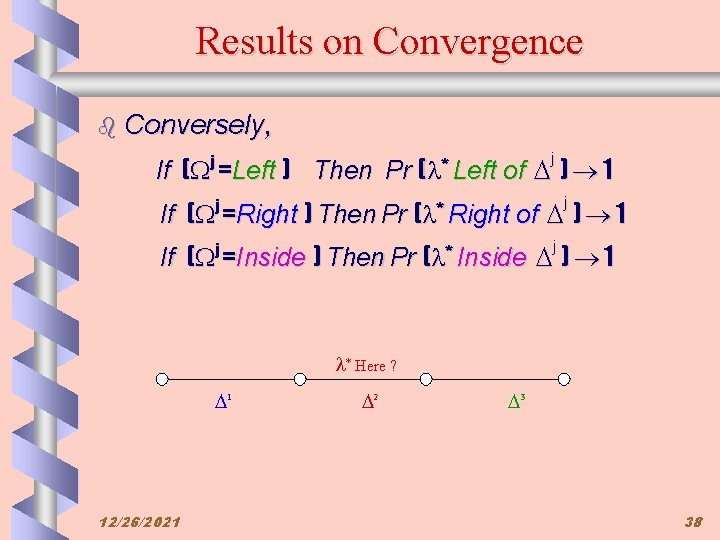

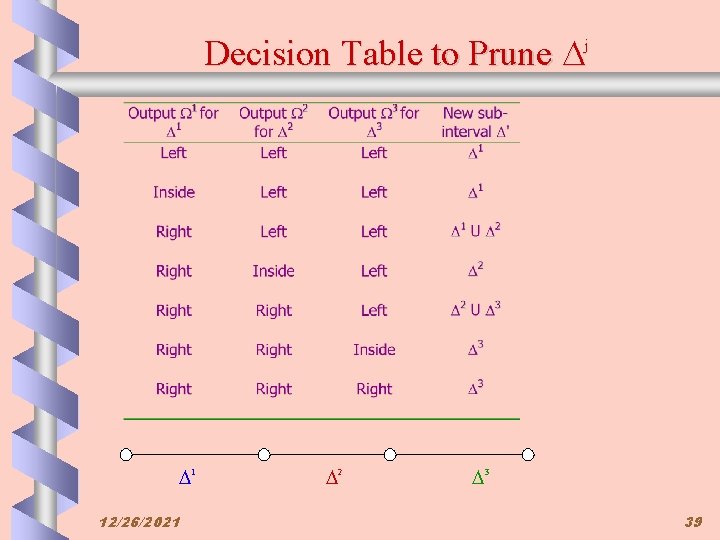

Decision Table to Prune Δ^j, with one column per sub-interval Δ^1, Δ^2, Δ^3.

Convergence: Stochastic Teachers
- In the previous decision table, only 7 out of 27 combinations are shown
- The rest are impossible
- The decision table to prune is complete
- Pr[λ* in the pruned interval] → 1
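The decision table itself is a figure and its rows are not reproduced in the slide text. The sketch below is therefore a reconstruction, derived only from the convergence results stated earlier (a sub-interval to the left of λ* reports Right, one to the right reports Left, and the one containing λ* may report anything). It yields exactly seven reachable combinations and only the five pruned intervals the slides list, but the specific rows, the names `PRUNE_TABLE` and `prune`, and the encoding are assumptions.

```python
# Keys: decisions for (sub-interval 1, 2, 3).
# Values: (first, last) boundaries, in thirds of the current interval, of the kept part.
PRUNE_TABLE = {
    ("Left", "Left", "Left"): (0, 1),       # keep sub-interval 1
    ("Inside", "Left", "Left"): (0, 1),     # keep sub-interval 1
    ("Right", "Left", "Left"): (0, 2),      # keep sub-intervals 1 and 2
    ("Right", "Inside", "Left"): (1, 2),    # keep sub-interval 2
    ("Right", "Right", "Left"): (1, 3),     # keep sub-intervals 2 and 3
    ("Right", "Right", "Inside"): (2, 3),   # keep sub-interval 3
    ("Right", "Right", "Right"): (2, 3),    # keep sub-interval 3
}

def prune(lo, hi, decisions):
    """Return the pruned interval (new_lo, new_hi) for the three decisions.

    Raises KeyError for any of the 20 combinations treated as impossible.
    """
    first, last = PRUNE_TABLE[tuple(decisions)]
    third = (hi - lo) / 3.0
    return lo + first * third, lo + last * third

# Usage: the middle automaton reports Inside, so only the middle third is kept.
new_lo, new_hi = prune(0.0, 3.0, ("Right", "Inside", "Left"))
```

Each call shrinks the interval to one third or two thirds of its width, which is what makes the recursive search terminate once the width is small enough.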

Consequences of Convergence. Consequence: the Dual Problem.
- If E is an Environment w.p. p, then its dual E' has p' = 1 - p
- The dual of a Stochastic Teacher (p > 0.5) is a Stochastic Liar (p' < 0.5)

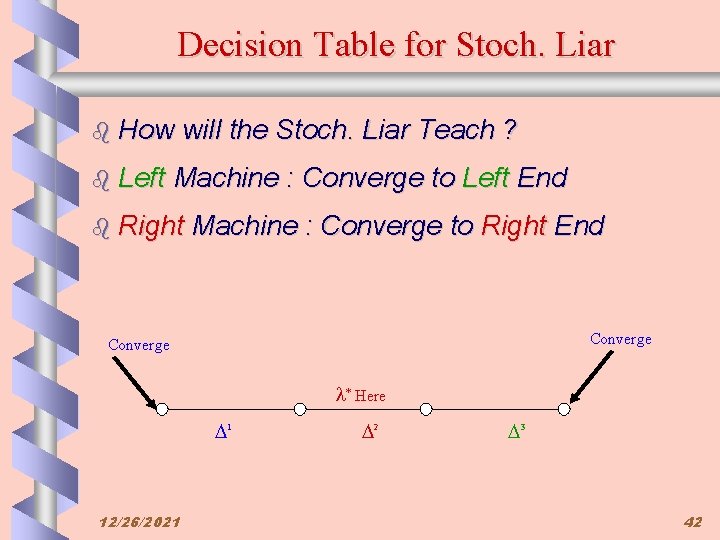

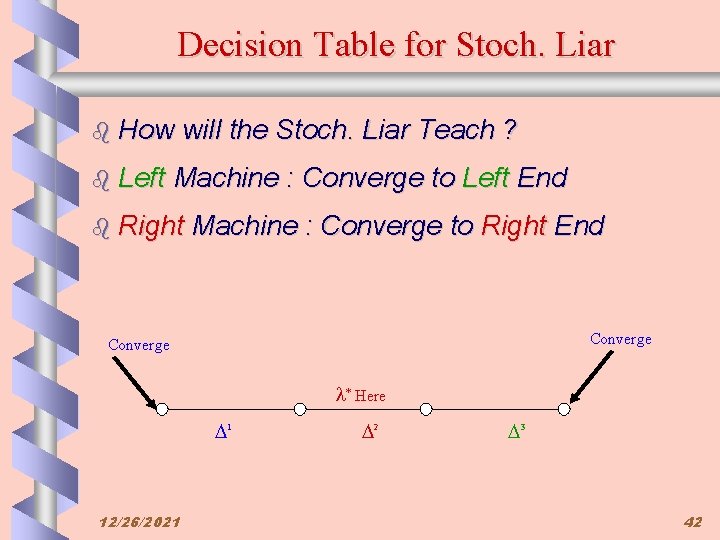

Decision Table for Stoch. Liar
- How will the Stoch. Liar teach?
- Left machine: converges to the Left End
- Right machine: converges to the Right End

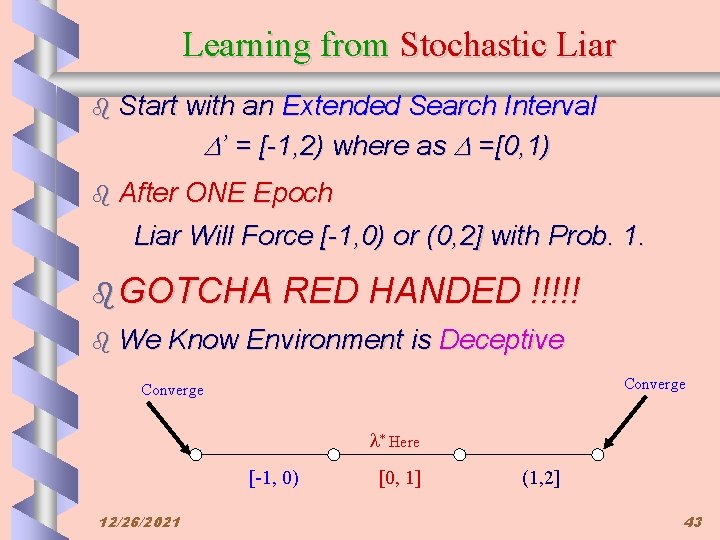

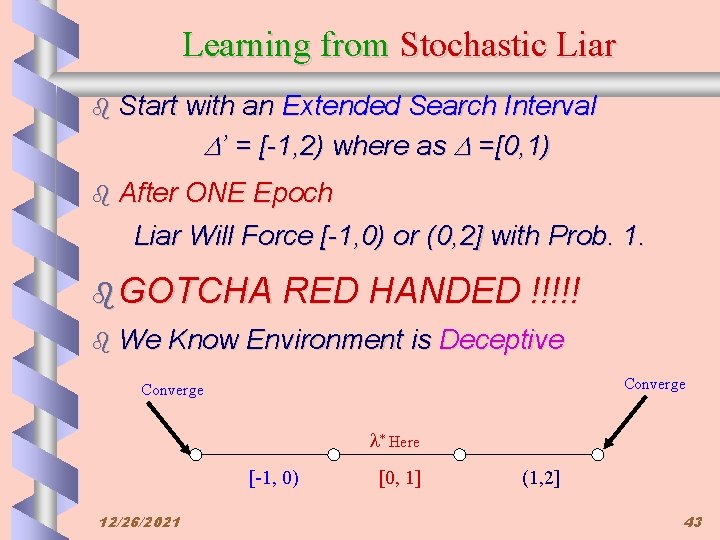

Learning from Stochastic Liar
- Start with an extended search interval Δ' = [-1, 2), whereas Δ = [0, 1)
- After ONE epoch the Liar will force convergence to [-1, 0) or (1, 2] with prob. 1
- GOTCHA, RED HANDED!!!!! We know the Environment is deceptive

Learning from Stochastic Liar
- We now KNOW the Environment is deceptive
- Use the original interval Δ = [0, 1)
- Treat Go Left as Go Right, and Go Right as Go Left !!!!
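The detection-and-inversion idea can be sketched as three small helpers. The names are hypothetical, and the detection test simply encodes the slide's observation that a liar drives the first epoch on the extended interval [-1, 2) into a part lying outside the original interval [0, 1).

```python
def dual_probability(p):
    """Probability of truthful advice in the dual environment: p' = 1 - p."""
    return 1.0 - p

def invert(advice):
    """Treat 'left' as 'right' and 'right' as 'left'."""
    return "right" if advice == "left" else "left"

def looks_deceptive(pruned_lo, pruned_hi):
    """True if the interval kept after the first epoch lies outside [0, 1)."""
    return pruned_hi <= 0.0 or pruned_lo >= 1.0

# Usage: the first epoch on [-1, 2) ended in [-1, 0), so every later
# response is inverted before it is fed to the learning scheme.
deceptive = looks_deceptive(-1.0, 0.0)
advice = invert("left") if deceptive else "left"
```

Inverting the responses of a liar with parameter p gives an environment that behaves like a teacher with parameter 1 - p, which is the dual relation stated in the talk.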

Experimental Results

Convergence of CPL-ATS

Observations on Results
- Convergence for p = 0.1 and p = 0.8 is almost identical, even though the former is a highly deceptive environment
- Even in the first epoch there is ONLY 9% error
- In two more epochs the error is within 1.5%
- For p nearer 0.5 the convergence is sluggish

Conclusions
- Can use a combination of LRI and pruning
- The scheme is ε-optimal
- Can be applied to Stochastic Teachers and Liars
- Can detect the nature of an unknown Environment
- Can learn the parameter correctly w.p. 1

THANK YOU VERY MUCH

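How to Simulate Environment. Our idea: analogous to the RPROP network.

$$\Delta_{ij}(t) = \begin{cases} \Delta_{ij}(t-1)\cdot\eta^{+} & \text{if the product of the two succeeding derivatives is } > 0 \\ \Delta_{ij}(t-1)\cdot\eta^{-} & \text{if the product of the two succeeding derivatives is } < 0 \\ \Delta_{ij}(t-1) & \text{otherwise} \end{cases}$$

where $\eta^{+}$ and $\eta^{-}$ are parameters of the scheme. Increments are influenced by the sign of two succeeding derivatives.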

![Experimental Results, Figure I](https://slidetodoc.com/presentation_image_h2/333a2deb32d8ff7430cf4a1f269c699e/image-50.jpg)

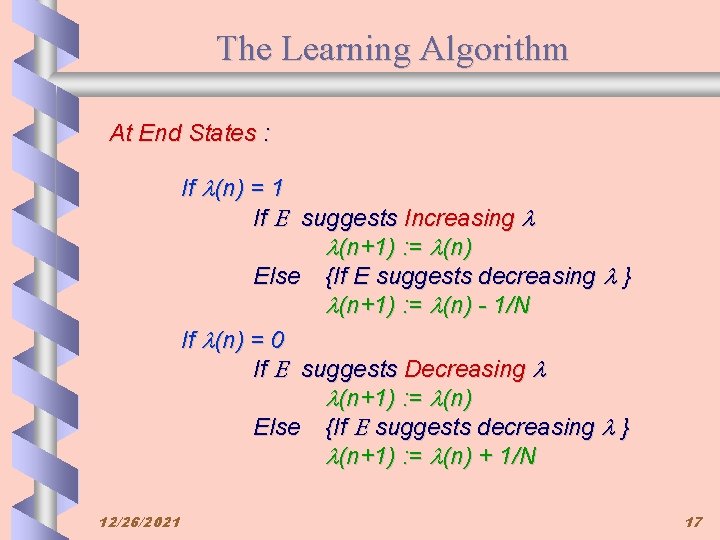

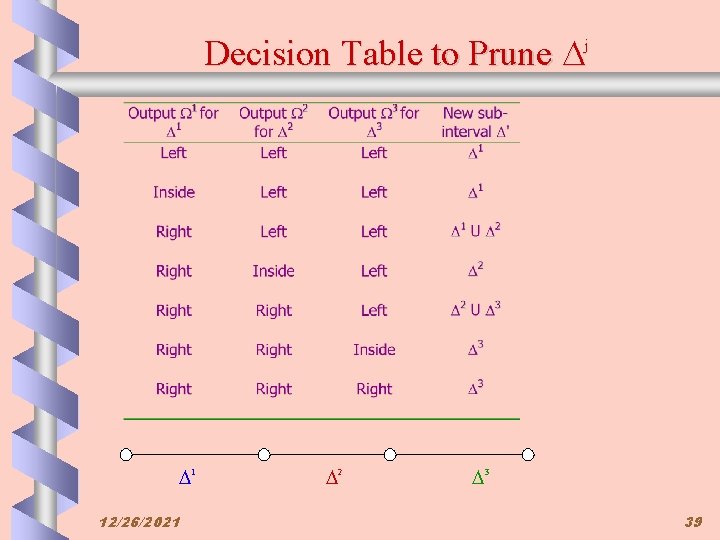

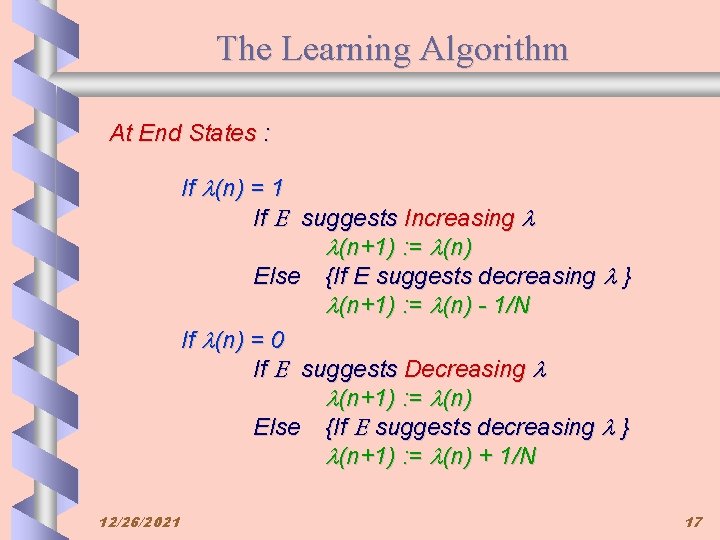

Experimental Results. Figure I: Plot of E[λ(∞)] with N.

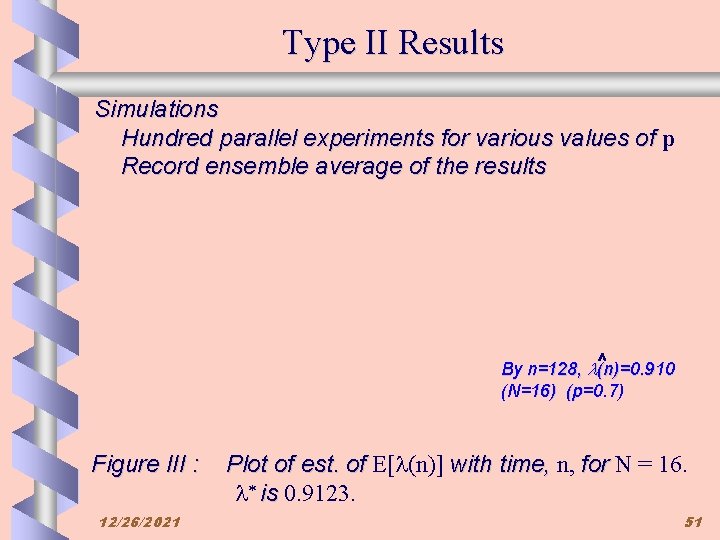

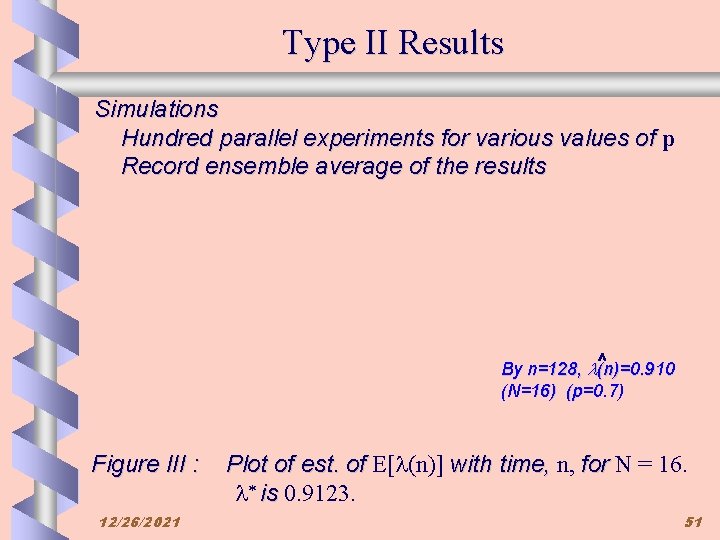

Type II Results: Simulations. A hundred parallel experiments were run for various values of p, and the ensemble average of the results was recorded. By n = 128, λ(n) = 0.910 (N = 16, p = 0.7). Figure III: Plot of the estimate of E[λ(n)] with time, n, for N = 16. λ* is 0.9123.
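An ensemble experiment of this kind can be sketched as follows. The starting state (the middle of the grid), the seed, and the function names are assumptions, since the slide does not give them, so the estimate produced here is not expected to reproduce the reported 0.910 exactly.

```python
import random

def run_once(lam_star, p, N, steps, rng):
    """Simulate one learner for `steps` iterations and return lambda(steps)."""
    i = N // 2                                   # assumed starting state
    for _ in range(steps):
        truthful_up = (i / N) < lam_star         # the correct advice at this state
        advice_up = truthful_up if rng.random() < p else not truthful_up
        i = min(i + 1, N) if advice_up else max(i - 1, 0)
    return i / N

def ensemble_average(lam_star, p, N, steps, runs=100, seed=0):
    """Average lambda(steps) over `runs` independent experiments."""
    rng = random.Random(seed)
    return sum(run_once(lam_star, p, N, steps, rng) for _ in range(runs)) / runs

# Usage: the slide's setting, N = 16, p = 0.7, n = 128, lambda* = 0.9123.
estimate = ensemble_average(0.9123, 0.7, 16, 128)
```

Averaging over many runs estimates E[λ(n)] at a finite time n, whereas the Type I figures in the talk report the stationary value E[λ(∞)].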

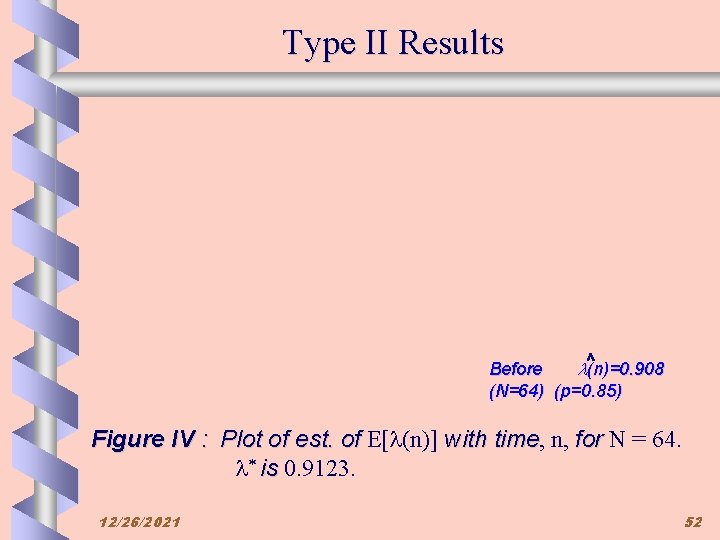

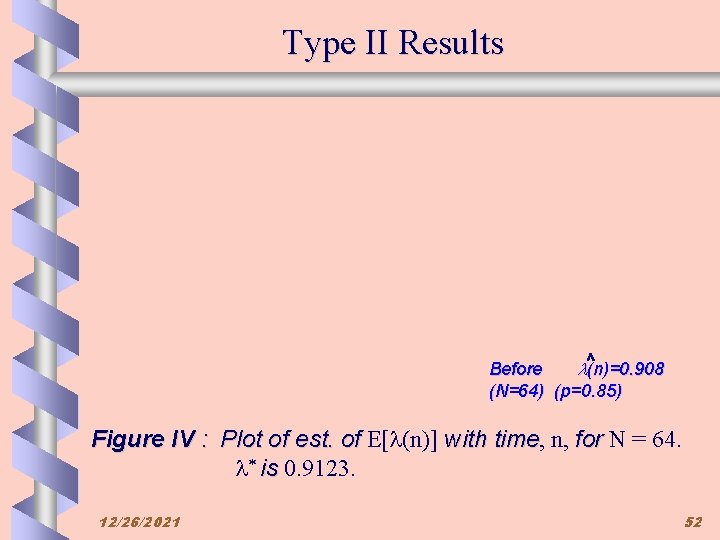

Type II Results. Before λ(n) = 0.908 (N = 64, p = 0.85). Figure IV: Plot of the estimate of E[λ(n)] with time, n, for N = 64. λ* is 0.9123.

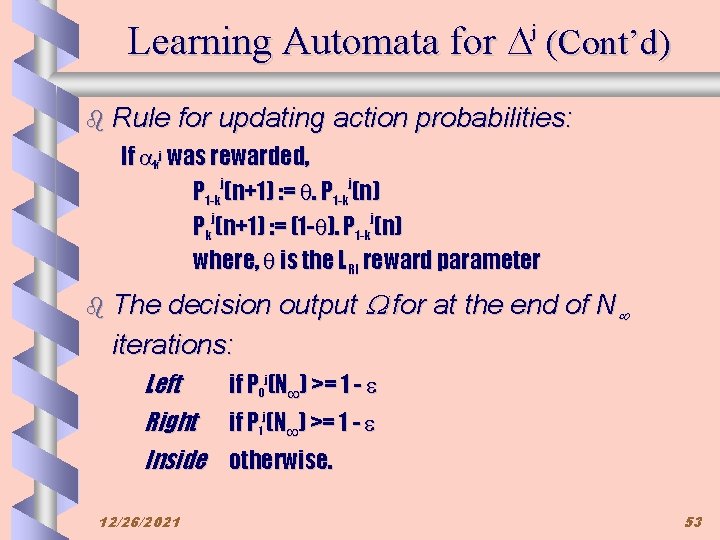

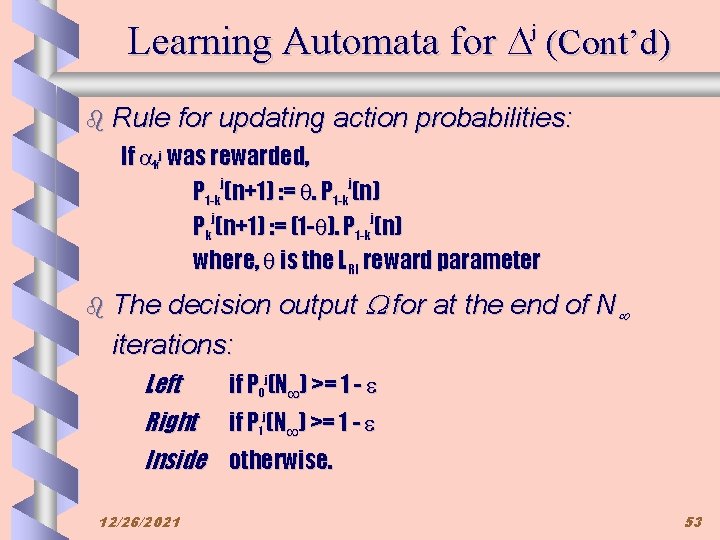

Learning Automata for Δ^j (Cont'd)
- Rule for updating action probabilities: if action k of automaton j was rewarded, then $P^j_{1-k}(n+1) := \lambda_R \cdot P^j_{1-k}(n)$ and $P^j_{k}(n+1) := 1 - \lambda_R \cdot P^j_{1-k}(n)$, where $\lambda_R$ is the LRI reward parameter
- The decision output for Δ^j at the end of $N_\infty$ iterations: Left if $P^j_0(N_\infty) \ge 1 - \epsilon$; Right if $P^j_1(N_\infty) \ge 1 - \epsilon$; Inside otherwise
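For a two-action automaton the update and the final decision fit in a few lines. This is a sketch under assumptions: the names `lam_r` (reward parameter) and `eps` (decision threshold) are hypothetical stand-ins for symbols that did not survive in the slide text, and the complementary probability is computed so that the two entries always sum to one.

```python
def lri_two_action_update(P, k, rewarded, lam_r):
    """Two-action Linear Reward-Inaction update.

    P        : [P0, P1], probabilities of choosing Left half / Right half
    k        : index (0 or 1) of the action that was chosen
    rewarded : True if the Environment rewarded the chosen action
    lam_r    : reward parameter, 0 < lam_r < 1
    """
    if not rewarded:
        return list(P)                 # no change on penalty
    new = list(P)
    new[1 - k] = lam_r * P[1 - k]      # shrink the other action
    new[k] = 1.0 - new[1 - k]          # chosen action takes the rest
    return new

def decide(P, eps):
    """Decision output of one automaton at the end of an epoch."""
    if P[0] >= 1.0 - eps:
        return "Left"
    if P[1] >= 1.0 - eps:
        return "Right"
    return "Inside"

# Usage: one rewarded choice of action 0, then the decision for an
# automaton that stalled at the example vector from the earlier slide.
P = lri_two_action_update([0.5, 0.5], 0, True, 0.8)
verdict = decide([0.78, 0.22], 0.05)
```

An automaton whose probability vector stalls away from both ends, such as [0.78, 0.22], is reported as Inside.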

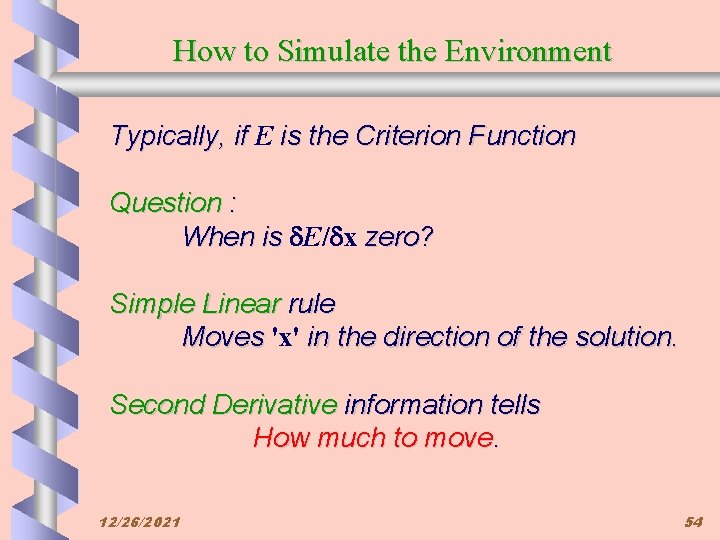

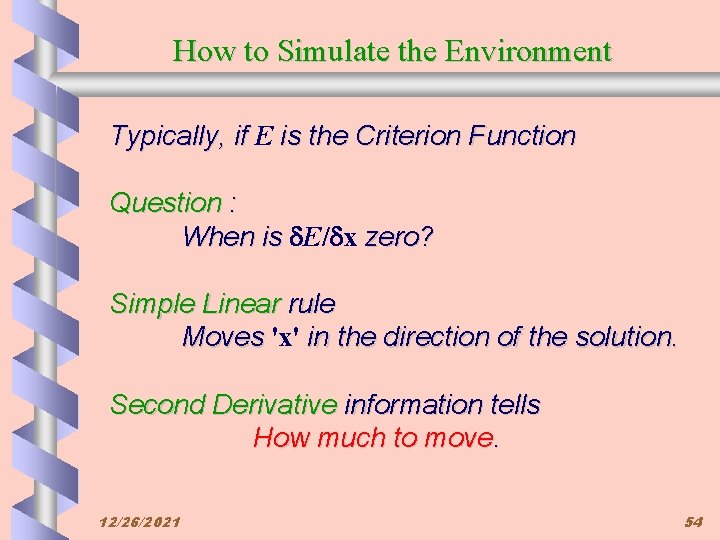

How to Simulate the Environment. Typically, if E is the criterion function, the question is: when is dE/dx zero? A simple linear rule moves 'x' in the direction of the solution. Second-derivative information tells how much to move.

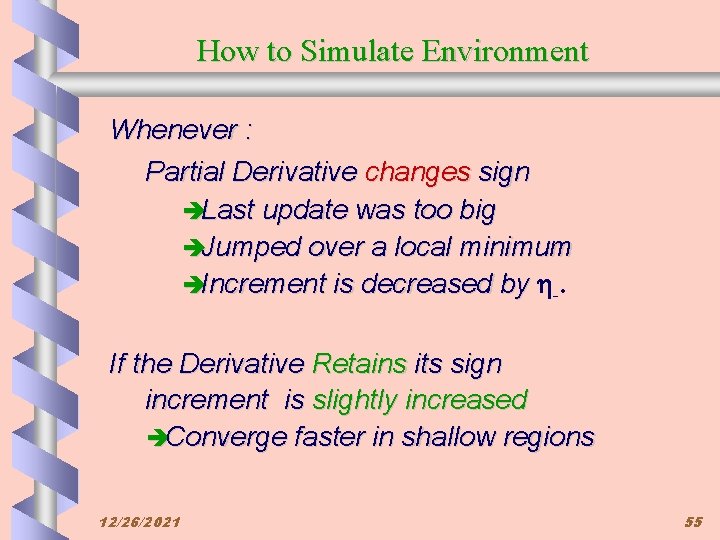

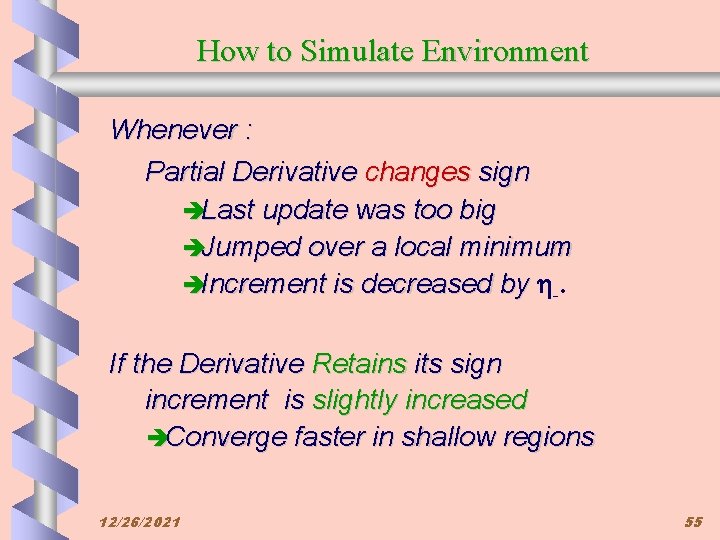

How to Simulate Environment. Whenever the partial derivative changes sign:
- The last update was too big
- It jumped over a local minimum
- The increment is decreased by η-

If the derivative retains its sign, the increment is slightly increased, so as to converge faster in shallow regions.
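The sign-based rule described here can be sketched as a step-size update. The function name is hypothetical, and the default factors 1.2 and 0.5 are values commonly used with RPROP, assumed here for illustration; the talk does not state which values were used.

```python
def rprop_step_size(prev_step, prev_grad, grad, eta_plus=1.2, eta_minus=0.5):
    """Adapt the increment from the signs of two succeeding derivatives.

    Same sign      -> increase the increment slightly (shallow region).
    Sign change    -> decrease it (the last update jumped over a minimum).
    Zero product   -> keep the previous increment.
    """
    product = prev_grad * grad
    if product > 0:
        return prev_step * eta_plus
    if product < 0:
        return prev_step * eta_minus
    return prev_step

# Usage: the derivative flipped sign, so the increment is reduced.
step = rprop_step_size(0.1, 1.0, -2.0)
```

Only the signs of the derivatives matter, not their magnitudes, which is what allows the scheme to mimic a second-order rule without evaluating second derivatives.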

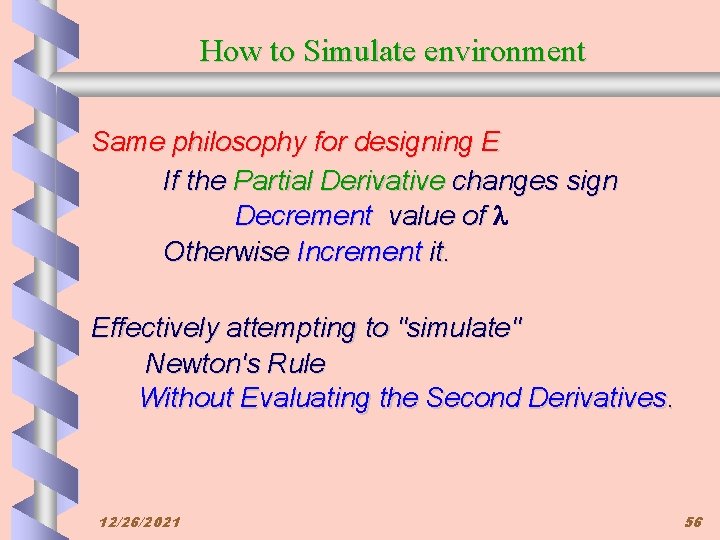

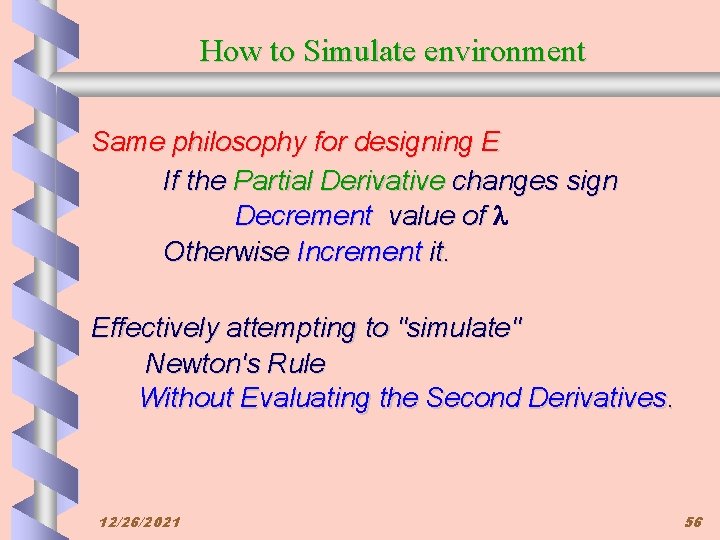

How to Simulate Environment. The same philosophy is used for designing E: if the partial derivative changes sign, decrement the value of λ; otherwise increment it. This is effectively attempting to "simulate" Newton's rule without evaluating the second derivatives.