Efficient Logistic Regression with Stochastic Gradient Descent

William Cohen

- Slides: 14

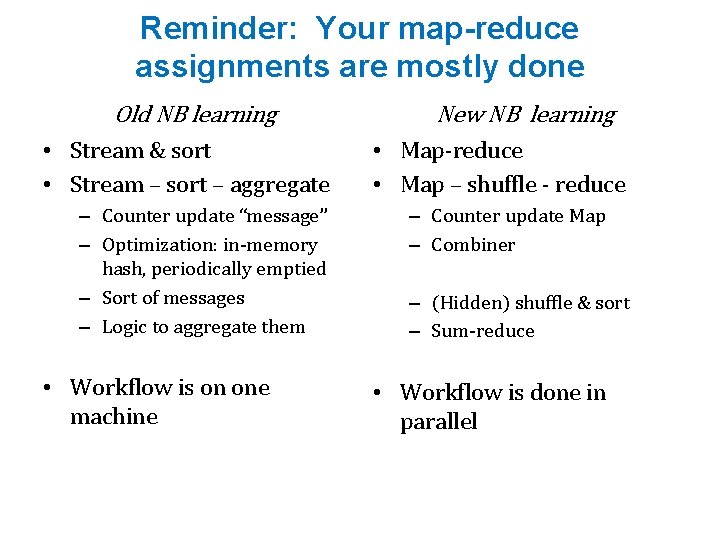

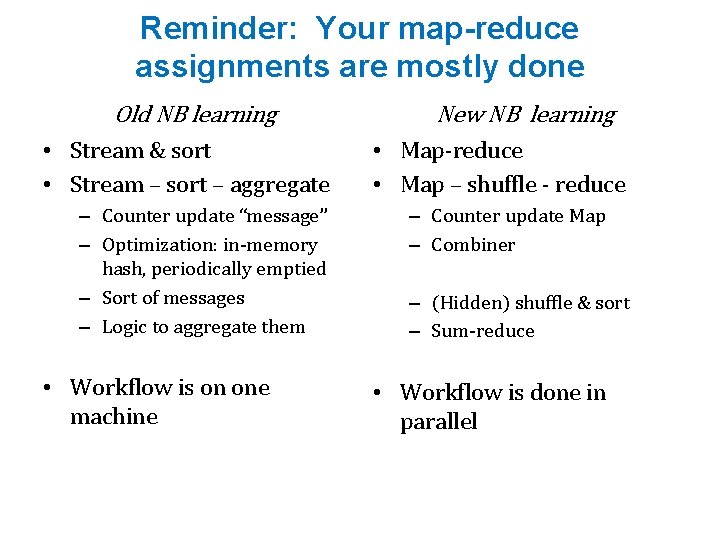

Reminder: Your map-reduce assignments are mostly done

Old NB learning

- Stream & sort
- Stream – sort – aggregate
  - Counter update “message”
  - Optimization: in-memory hash, periodically emptied
  - Sort of messages
  - Logic to aggregate them
- Workflow is on one machine

New NB learning

- Map-reduce
- Map – shuffle – reduce
  - Counter update Map
  - Combiner
  - (Hidden) shuffle & sort
  - Sum-reduce
- Workflow is done in parallel
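The map, combiner, shuffle-and-sort, and sum-reduce stages listed above can be illustrated with a small in-memory sketch. This is not the assignment code: the function names, the toy documents, and the `X=w^Y=label` message format (borrowed from the counts shown later in these slides) are assumptions made for illustration, and a real framework would run the mappers on separate machines.

```python
from collections import Counter
from itertools import groupby

def map_counter_updates(doc_label, words):
    """Map: emit one (event, 1) counter-update message per word."""
    for w in words:
        yield (f"X={w}^Y={doc_label}", 1)

def combine(messages):
    """Combiner: pre-sum messages in memory before they leave the mapper."""
    partial = Counter()
    for event, n in messages:
        partial[event] += n
    return list(partial.items())

def shuffle_and_sort(message_lists):
    """Stand-in for the framework's hidden shuffle & sort: merge, then sort by key."""
    merged = [m for msgs in message_lists for m in msgs]
    return sorted(merged, key=lambda kv: kv[0])

def sum_reduce(sorted_messages):
    """Sum-reduce: adjacent messages that share a key are added together."""
    for event, group in groupby(sorted_messages, key=lambda kv: kv[0]):
        yield event, sum(n for _, n in group)

# Two hypothetical training documents, each handled by its own "mapper".
docs = [("sports", ["goal", "team", "goal"]), ("worldNews", ["team", "vote"])]
combined = [combine(map_counter_updates(label, words)) for label, words in docs]
counts = dict(sum_reduce(shuffle_and_sort(combined)))
print(counts)
```

The combiner plays the role of the old in-memory hash: it shrinks the number of messages before the sort, and the reducer still produces the same totals without it.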

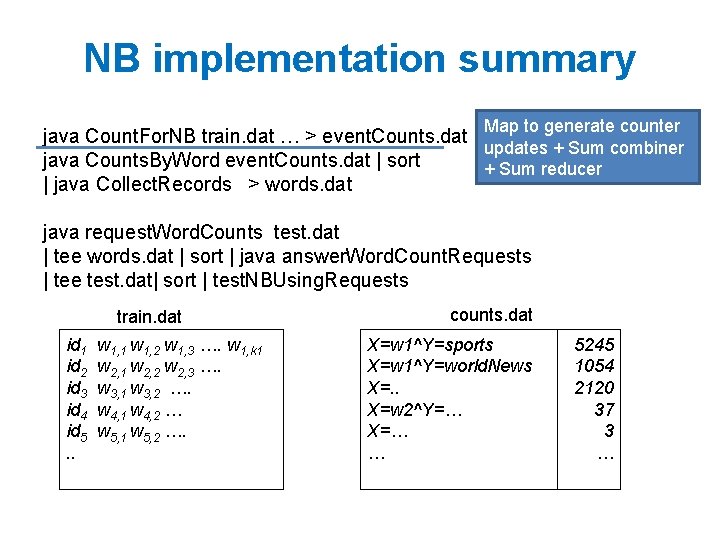

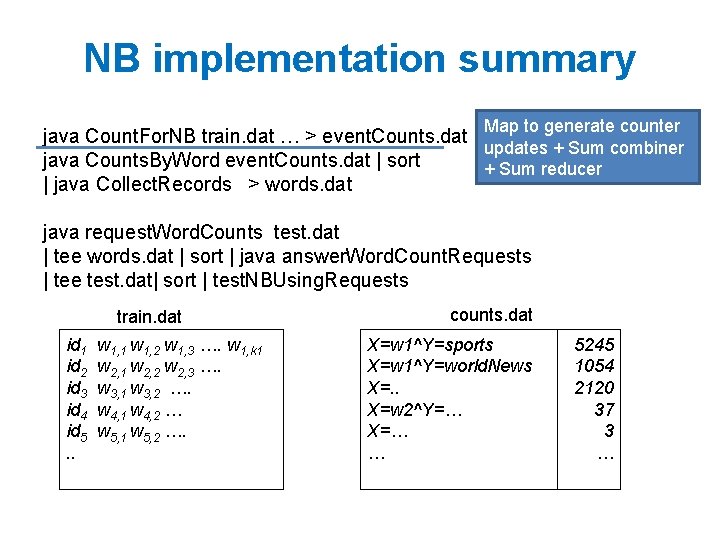

NB implementation summary

- `java CountForNB train.dat … > eventCounts.dat`
- `java CountsByWord eventCounts.dat | sort | java CollectRecords > words.dat`
- `java requestWordCounts test.dat | tee words.dat | sort | java answerWordCountRequests | tee test.dat | sort | testNBUsingRequests`

Annotation on the counting step: Map to generate counter updates + Sum combiner + Sum reducer

train.dat

| id | words |
|---|---|
| id1 | $w_{1,1}\ w_{1,2}\ w_{1,3} \dots w_{1,k_1}$ |
| id2 | $w_{2,1}\ w_{2,2}\ w_{2,3} \dots$ |
| id3 | $w_{3,1}\ w_{3,2} \dots$ |
| id4 | $w_{4,1}\ w_{4,2} \dots$ |
| id5 | $w_{5,1}\ w_{5,2} \dots$ |
| … | … |

counts.dat

| event | count |
|---|---|
| X=w1^Y=sports | 5245 |
| X=w1^Y=worldNews | 1054 |
| X=.. | 2120 |
| X=w2^Y=… | 37 |
| X=… | 3 |
| … | … |

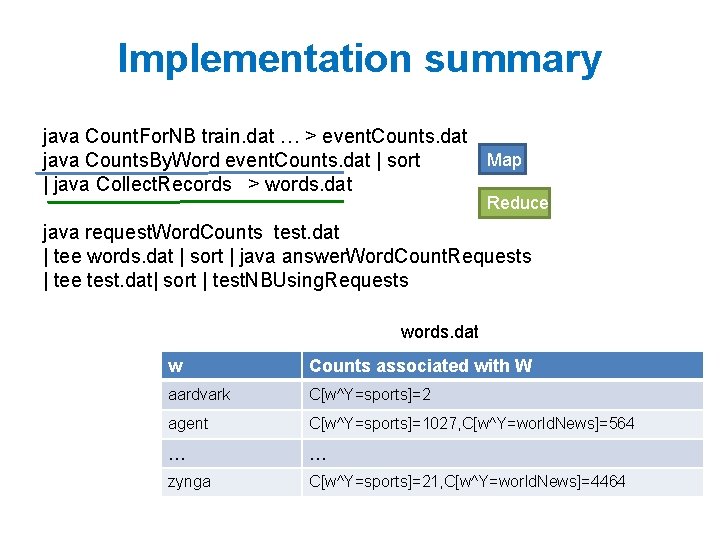

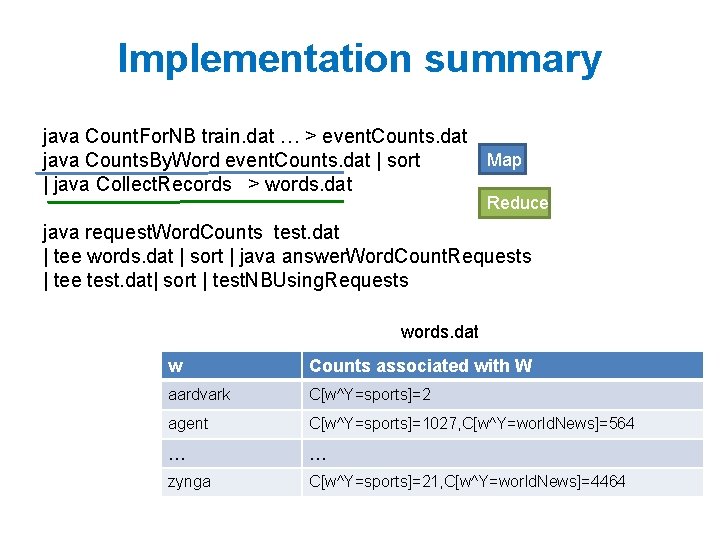

Implementation summary

- `java CountForNB train.dat … > eventCounts.dat`
- `java CountsByWord eventCounts.dat | sort | java CollectRecords > words.dat`
  - Map, then Reduce
- `java requestWordCounts test.dat | tee words.dat | sort | java answerWordCountRequests | tee test.dat | sort | testNBUsingRequests`

words.dat

| w | Counts associated with w |
|---|---|
| aardvark | C[w^Y=sports]=2 |
| agent | C[w^Y=sports]=1027, C[w^Y=worldNews]=564 |
| … | … |
| zynga | C[w^Y=sports]=21, C[w^Y=worldNews]=4464 |
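As a rough illustration of how per-event counts become one record per word, the sketch below re-keys `X=w^Y=label` counts by word and collects them. The function names mirror `CountsByWord` and `CollectRecords` only loosely; their actual implementations are not shown in the slides, so the parsing and data layout here are assumptions. It also assumes no word contains the `^` character.

```python
from collections import defaultdict

def counts_by_word(event_counts):
    """Map step (hypothetical): re-key each 'X=w^Y=label' count by its word w."""
    for event, n in event_counts.items():
        x_part, y_part = event.split("^")
        word = x_part[len("X="):]
        label = y_part[len("Y="):]
        yield word, (label, n)

def collect_records(word_keyed_pairs):
    """Reduce step (hypothetical): after sorting by word, gather all label counts."""
    records = defaultdict(dict)
    for word, (label, n) in sorted(word_keyed_pairs):
        records[word][label] = n
    return dict(records)

# Counts taken from the words.dat example on the slide.
event_counts = {
    "X=agent^Y=sports": 1027,
    "X=agent^Y=worldNews": 564,
    "X=aardvark^Y=sports": 2,
}
words = collect_records(counts_by_word(event_counts))
for w, by_label in words.items():
    print(w, ", ".join(f"C[w^Y={y}]={n}" for y, n in by_label.items()))
```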

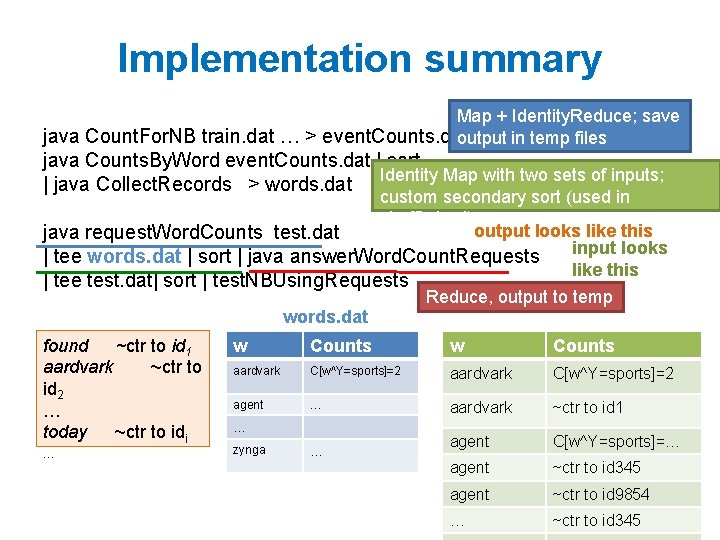

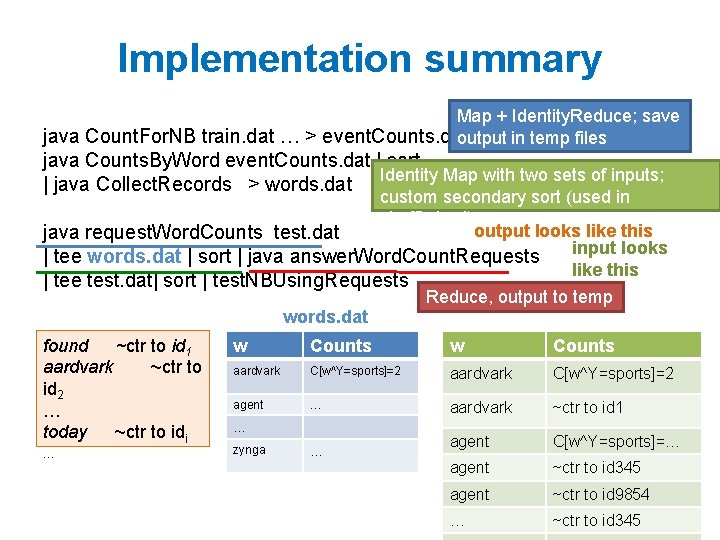

Implementation summary

- `java CountForNB train.dat … > eventCounts.dat`
- `java CountsByWord eventCounts.dat | sort | java CollectRecords > words.dat`
- `java requestWordCounts test.dat | tee words.dat | sort | java answerWordCountRequests | tee test.dat | sort | testNBUsingRequests`
  - Map + IdentityReduce; save output in temp files
  - Identity Map with two sets of inputs; custom secondary sort (used in shuffle/sort)
  - Reduce, output to temp

Output looks like this:

| Key | Value |
|---|---|
| found | ~ctr to id1 |
| aardvark | ~ctr to id2 |
| … | |
| today | ~ctr to idi |
| … | |

words.dat

| w | Counts |
|---|---|
| aardvark | C[w^Y=sports]=2 |
| agent | … |
| … | |
| zynga | … |

Input looks like this:

| Key | Value |
|---|---|
| aardvark | C[w^Y=sports]=2 |
| aardvark | ~ctr to id1 |
| agent | C[w^Y=sports]=… |
| agent | ~ctr to id345 |
| agent | ~ctr to id9854 |
| … | ~ctr to id345 |
| … | |
| zynga | … |

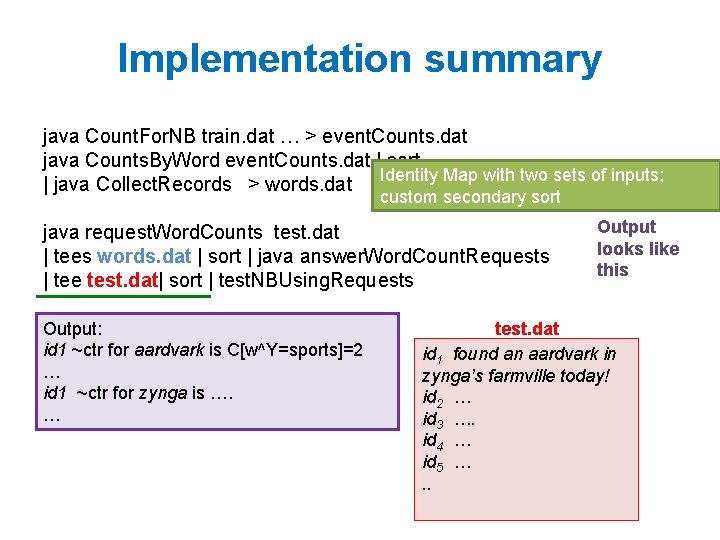

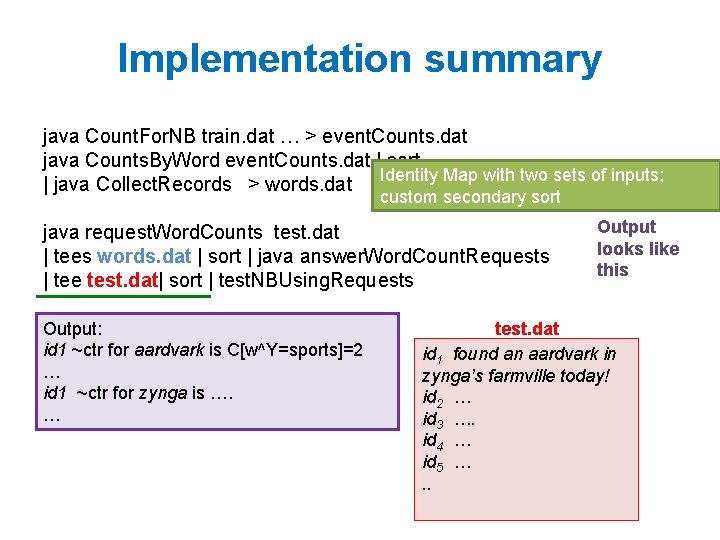

Implementation summary

- `java CountForNB train.dat … > eventCounts.dat`
- `java CountsByWord eventCounts.dat | sort | java CollectRecords > words.dat`
- `java requestWordCounts test.dat | tee words.dat | sort | java answerWordCountRequests | tee test.dat | sort | testNBUsingRequests`
  - Identity Map with two sets of inputs; custom secondary sort

Output looks like this:

- id1 ~ctr for aardvark is C[w^Y=sports]=2
- …
- id1 ~ctr for zynga is …
- …

test.dat

| id | text |
|---|---|
| id1 | found an aardvark in zynga’s farmville today! |
| id2 | … |
| id3 | … |
| id4 | … |
| id5 | … |
| … | |

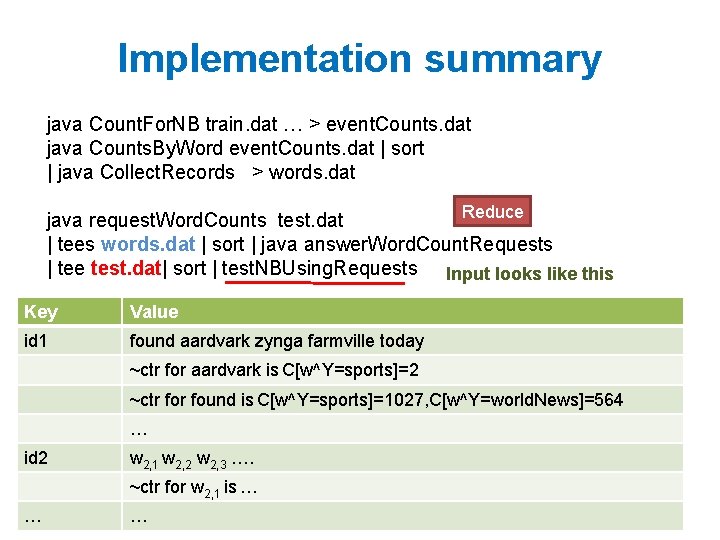

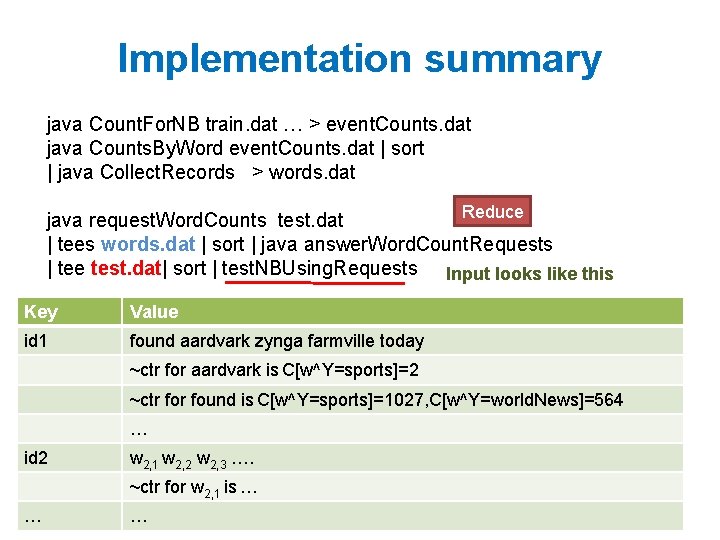

Implementation summary

- `java CountForNB train.dat … > eventCounts.dat`
- `java CountsByWord eventCounts.dat | sort | java CollectRecords > words.dat`
- `java requestWordCounts test.dat | tee words.dat | sort | java answerWordCountRequests | tee test.dat | sort | testNBUsingRequests`
  - Reduce

Input looks like this:

| Key | Value |
|---|---|
| id1 | found aardvark zynga farmville today |
| | ~ctr for aardvark is C[w^Y=sports]=2 |
| | ~ctr for found is C[w^Y=sports]=1027, C[w^Y=worldNews]=564 |
| | … |
| id2 | $w_{2,1}\ w_{2,2}\ w_{2,3} \dots$ |
| | ~ctr for $w_{2,1}$ is … |
| … | … |

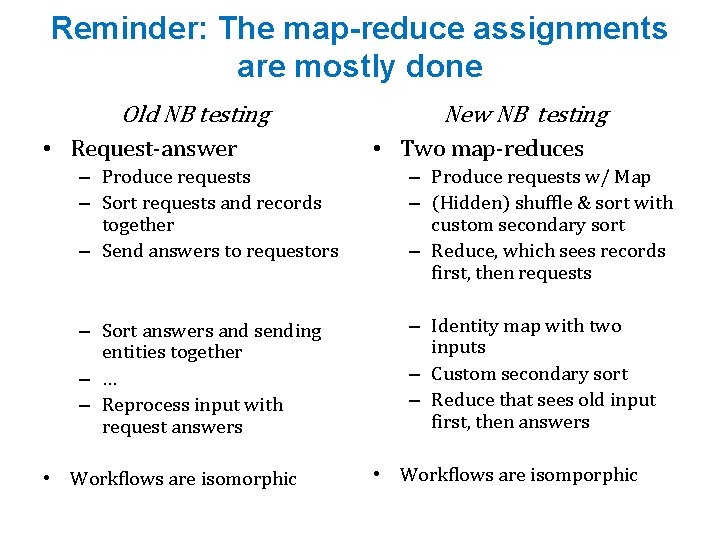

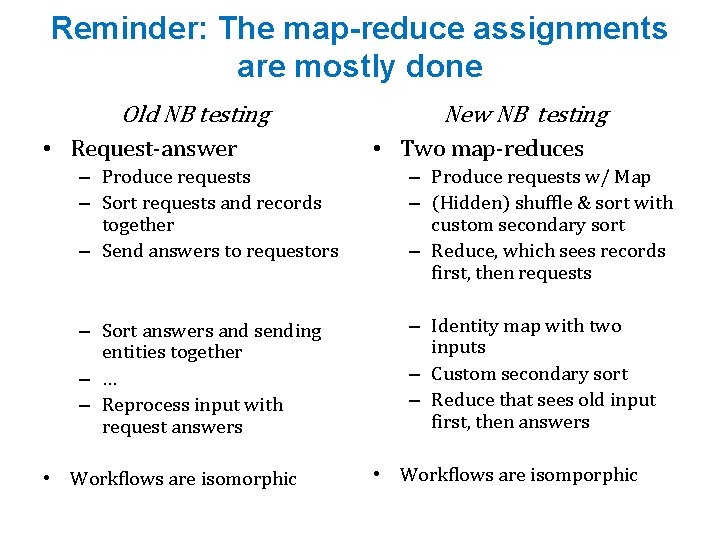

Reminder: The map-reduce assignments are mostly done

Old NB testing

- Request-answer
  - Produce requests
  - Sort requests and records together
  - Send answers to requestors
  - Sort answers and sending entities together
  - …
  - Reprocess input with request answers

New NB testing

- Two map-reduces
  - Produce requests w/ Map
  - (Hidden) shuffle & sort with custom secondary sort
  - Reduce, which sees records first, then requests
  - Identity map with two inputs
  - Custom secondary sort
  - Reduce that sees old input first, then answers

Workflows are isomorphic
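The first of the two map-reduces (produce requests, sort them together with the records, then answer) can be sketched in memory as below. The `RECORD`/`REQUEST` tags, the function names, and the tuple layout are hypothetical choices for illustration; the point is only that a secondary sort key makes each word's record arrive just before the requests for that word.

```python
RECORD, REQUEST = 0, 1  # secondary sort key: records sort before requests

def produce_requests(test_docs):
    """Map: for every distinct word in a test document, emit a request keyed by the word."""
    for doc_id, words in test_docs.items():
        for w in set(words):
            yield (w, REQUEST, doc_id)

def answer_requests(records, requests):
    """Reduce over the sorted stream; for each word the record is seen first."""
    stream = [(w, RECORD, counts) for w, counts in records.items()]
    stream += list(requests)
    # Primary key: the word. Secondary key: the tag, so records precede requests.
    stream.sort(key=lambda t: (t[0], t[1]))
    current_word, current_counts = None, None
    for word, tag, payload in stream:
        if tag == RECORD:
            current_word, current_counts = word, payload
        else:
            # A request for a word with no record gets an empty answer.
            counts = current_counts if word == current_word else {}
            yield (payload, word, counts)

# Hypothetical word records and test documents.
records = {"aardvark": {"sports": 2}, "agent": {"sports": 1027, "worldNews": 564}}
test_docs = {"id1": ["found", "aardvark"], "id2": ["agent", "aardvark"]}
answers = list(answer_requests(records, produce_requests(test_docs)))
for doc_id, word, counts in answers:
    print(doc_id, "~ctr for", word, "is", counts)
```

Because the reducer only has to remember the most recent record, it needs constant memory per key. The second map-reduce, which joins the answers back to the test documents, follows the same pattern with the document id as the key.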

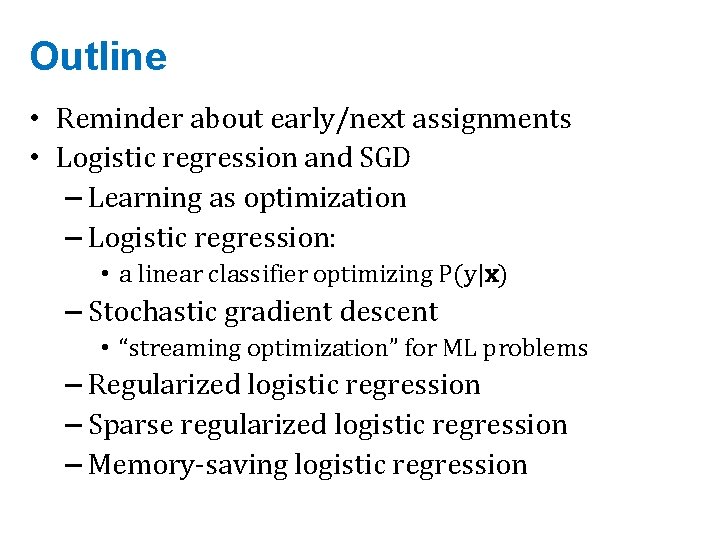

Outline

- Reminder about early/next assignments
- Logistic regression and SGD
  - Learning as optimization
  - Logistic regression:
    - a linear classifier optimizing P(y|x)
  - Stochastic gradient descent
    - “streaming optimization” for ML problems
  - Regularized logistic regression
  - Sparse regularized logistic regression
  - Memory-saving logistic regression

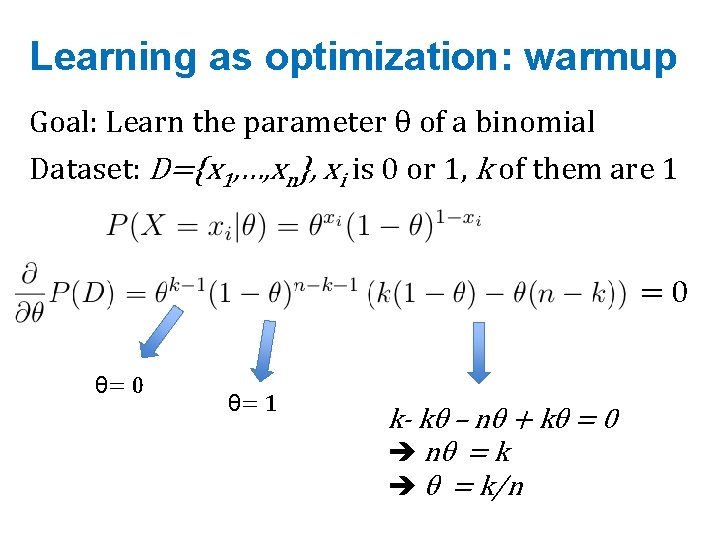

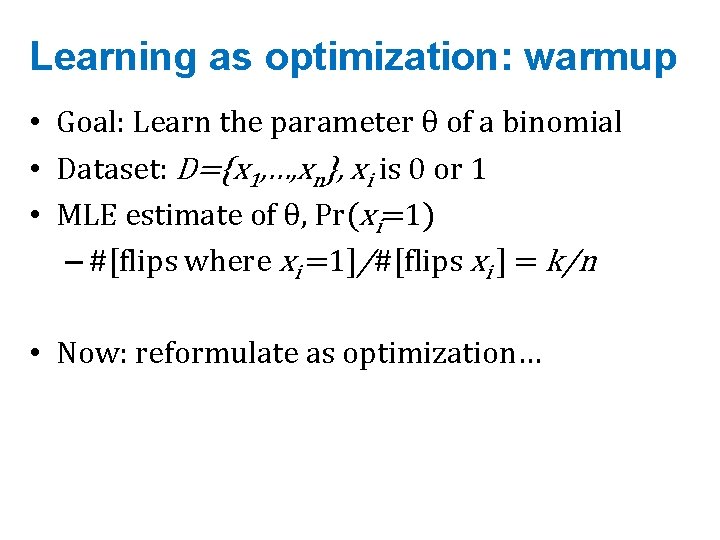

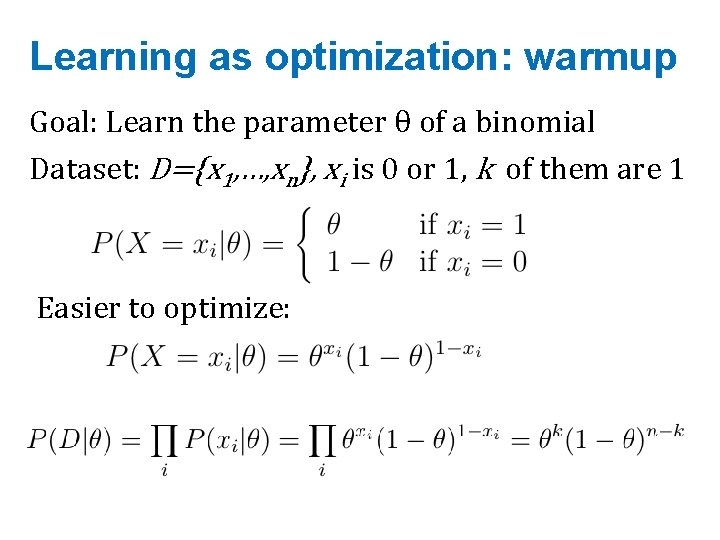

Learning as optimization: warmup

- Goal: Learn the parameter $\theta$ of a binomial
- Dataset: $D=\{x_1,\dots,x_n\}$, where each $x_i$ is 0 or 1
- MLE estimate of $\theta = \Pr(x_i=1)$:
  - #[flips where $x_i=1$] / #[flips $x_i$] $= k/n$
- Now: reformulate as optimization…

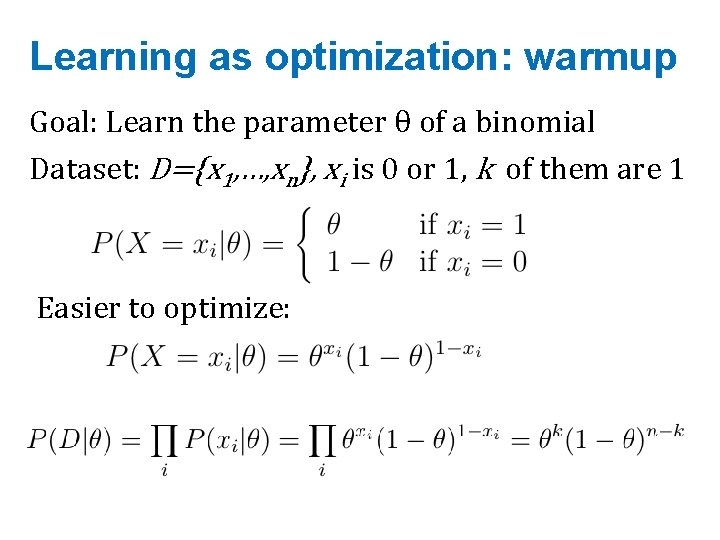

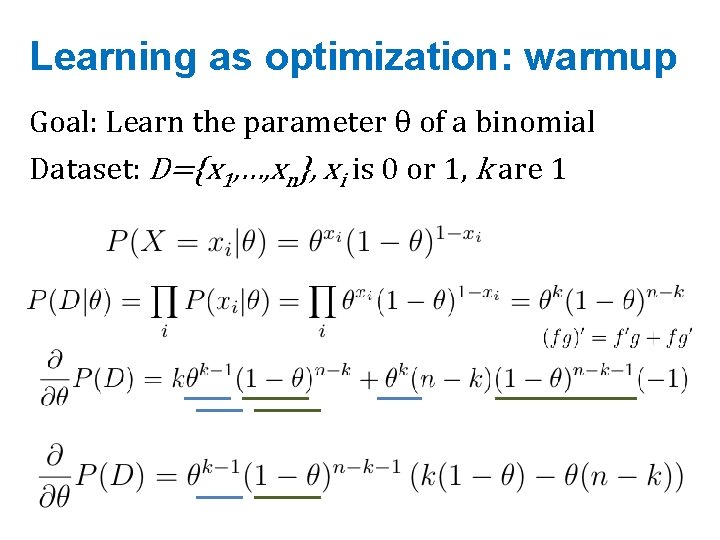

Learning as optimization: warmup

- Goal: Learn the parameter $\theta$ of a binomial
- Dataset: $D=\{x_1,\dots,x_n\}$, where each $x_i$ is 0 or 1 and $k$ of them are 1

Easier to optimize:

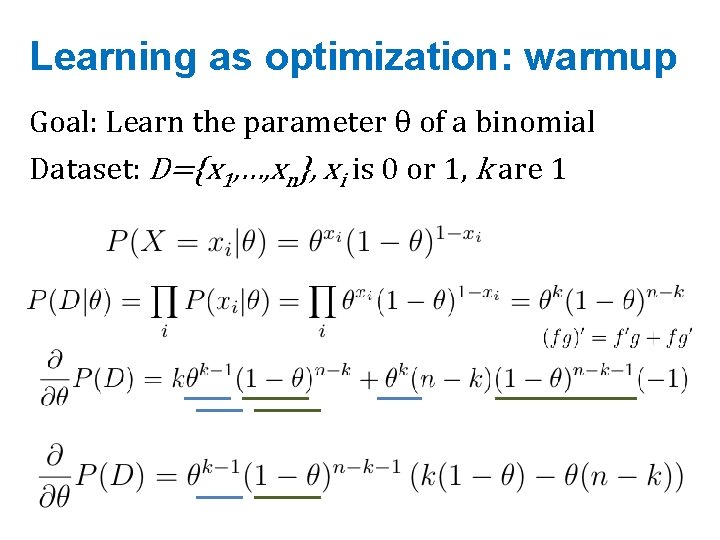

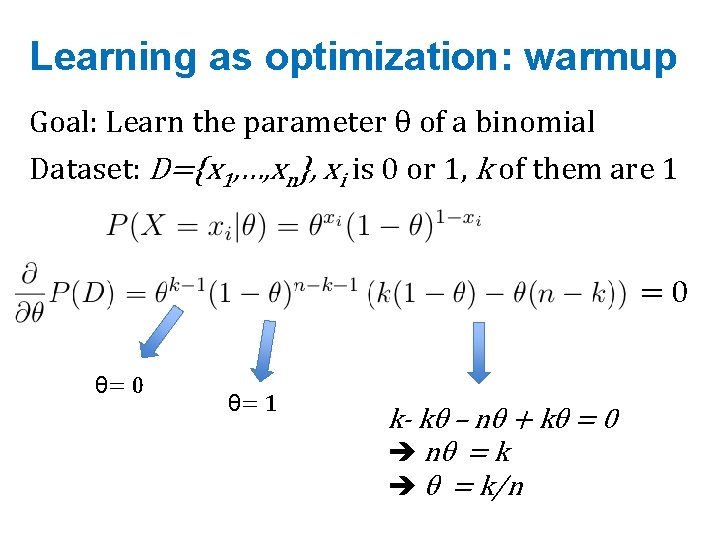
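For readers without the slide images, the likelihood and its logarithm for this setup can be written out as follows. This is the standard form for $k$ ones among $n$ independent flips, given here as a reconstruction from the slide's definitions rather than a transcription of the images.

$$
\Pr(D \mid \theta) = \theta^{k}(1-\theta)^{n-k}
$$

$$
\log \Pr(D \mid \theta) = k \log \theta + (n-k)\log(1-\theta)
$$

The logarithm is monotonic, so both expressions are maximized by the same $\theta$. The log form turns the product into a sum, which is easier to differentiate.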

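Learning as optimization: warmup

- Goal: Learn the parameter $\theta$ of a binomial
- Dataset: $D=\{x_1,\dots,x_n\}$, where each $x_i$ is 0 or 1 and $k$ of them are 1

Setting the derivative to zero gives $\theta = 0$, $\theta = 1$, or

$$
k - k\theta - n\theta + k\theta = 0 \;\Rightarrow\; n\theta = k \;\Rightarrow\; \theta = k/n
$$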
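The closed-form result $\theta = k/n$ can be checked numerically. The sketch below is a sanity check, not part of the lecture: it evaluates the log-likelihood $k\log\theta + (n-k)\log(1-\theta)$ on a grid and compares the best grid point with $k/n$. The values of `k`, `n`, and the grid resolution are arbitrary choices.

```python
import math

def log_likelihood(theta, k, n):
    """Log-likelihood of k ones among n independent Bernoulli(theta) flips."""
    return k * math.log(theta) + (n - k) * math.log(1.0 - theta)

def grid_argmax(k, n, steps=1000):
    """Return the grid point in (0, 1) with the highest log-likelihood."""
    # The open interval avoids log(0) at theta = 0 and theta = 1.
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=lambda t: log_likelihood(t, k, n))

k, n = 7, 20  # arbitrary example: 7 ones out of 20 flips
theta_hat = grid_argmax(k, n)
print("grid maximizer:", theta_hat, "closed form k/n:", k / n)
```

A grid search only works for a single parameter. When $\theta$ is a vector, differentiating and following the gradient is the practical route.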

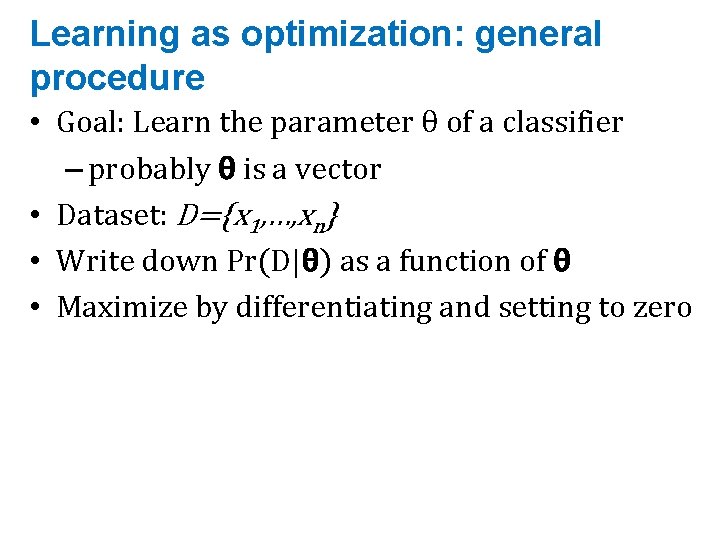

Learning as optimization: general procedure

- Goal: Learn the parameter $\theta$ of a classifier
  - probably $\theta$ is a vector
- Dataset: $D=\{x_1,\dots,x_n\}$
- Write down $\Pr(D \mid \theta)$ as a function of $\theta$
- Maximize by differentiating and setting to zero