Models of Grammar Learning CS 182 Lecture April

- Slides: 60

Models of Grammar Learning CS 182 Lecture April 26, 2007

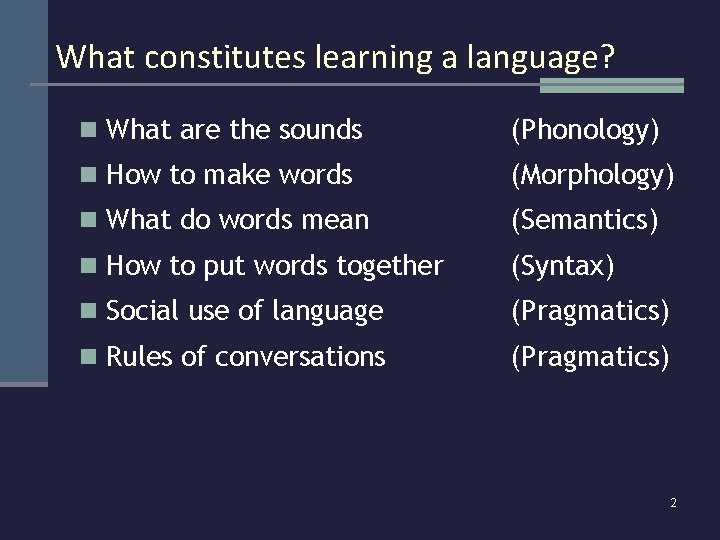

What constitutes learning a language?

- What are the sounds (Phonology)
- How to make words (Morphology)
- What do words mean (Semantics)
- How to put words together (Syntax)
- Social use of language (Pragmatics)
- Rules of conversations (Pragmatics)

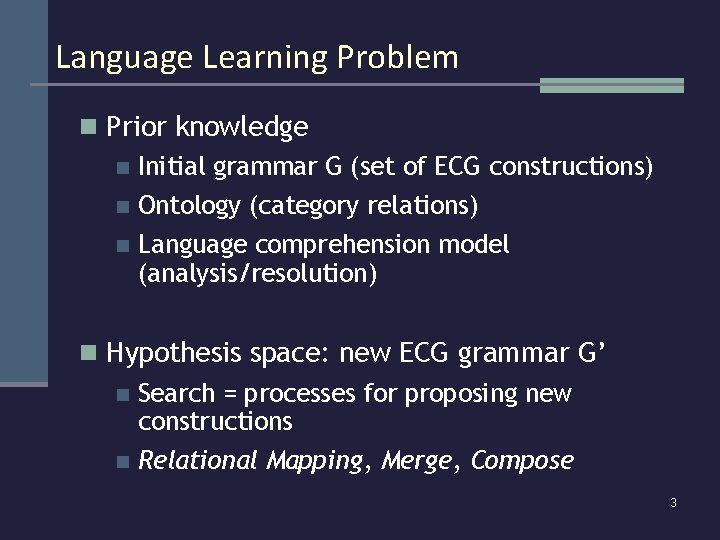

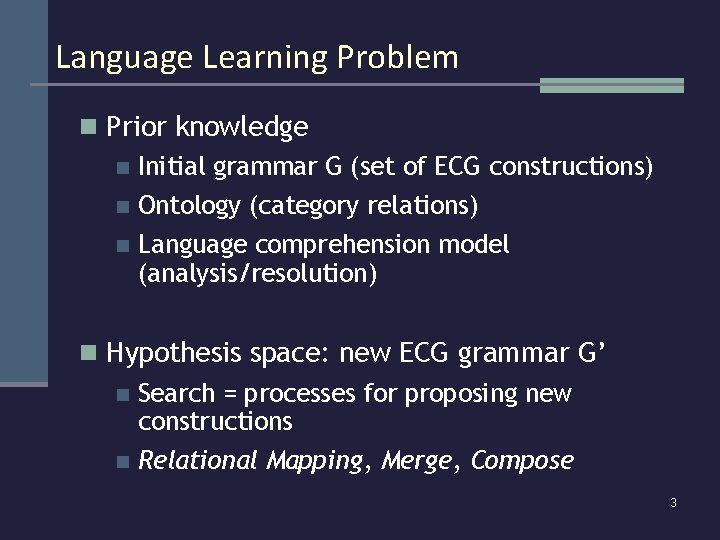

Language Learning Problem

- Prior knowledge
  - Initial grammar G (set of ECG constructions)
  - Ontology (category relations)
  - Language comprehension model (analysis/resolution)
- Hypothesis space: new ECG grammar G'
  - Search = processes for proposing new constructions
  - Relational Mapping, Merge, Compose

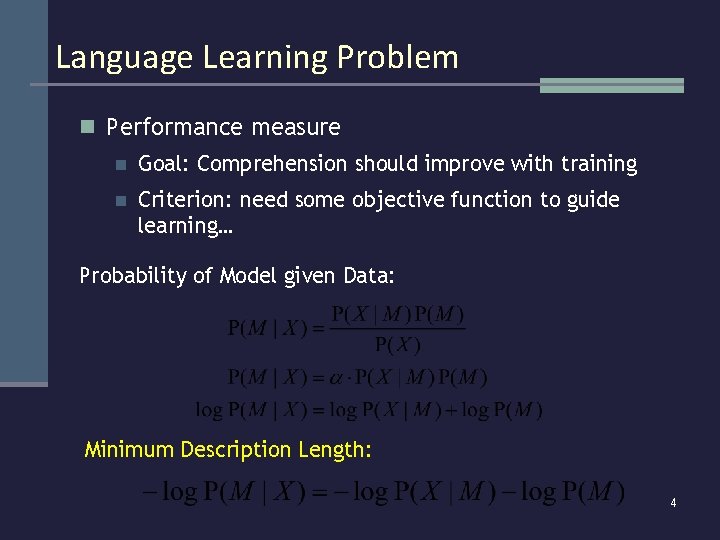

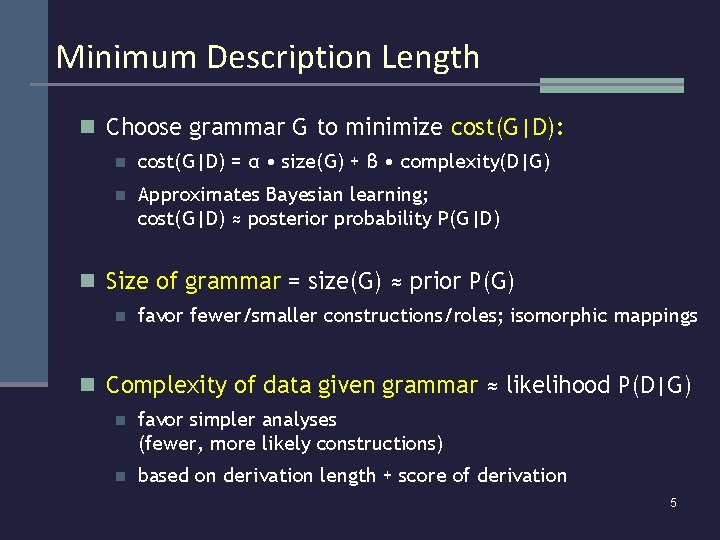

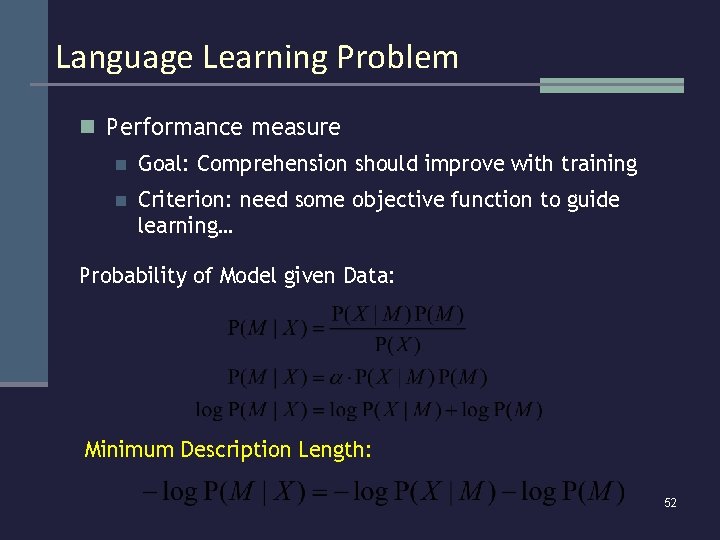

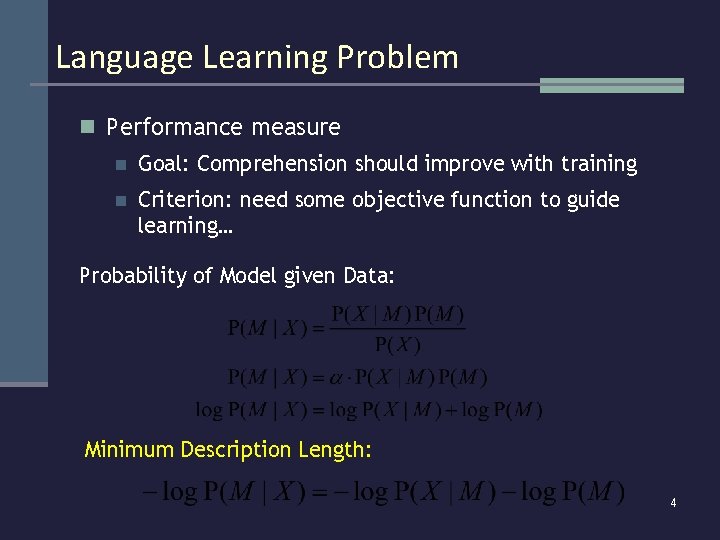

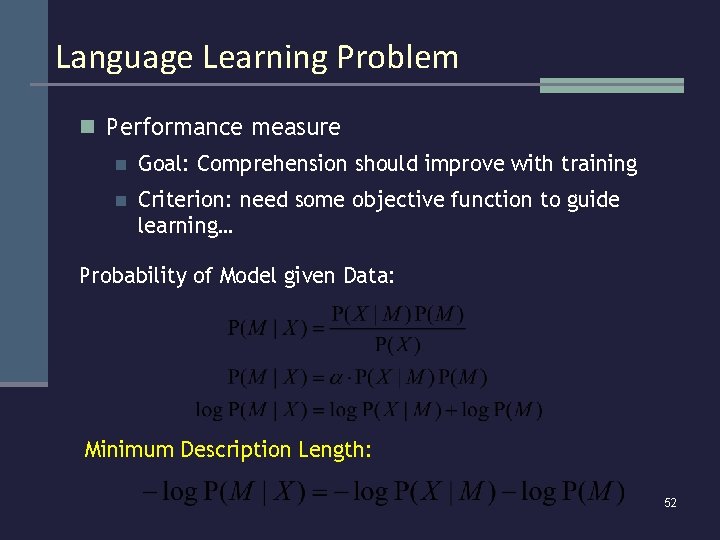

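Language Learning Problem

- Performance measure
  - Goal: Comprehension should improve with training
  - Criterion: need some objective function to guide learning…
    - Probability of Model given Data: $P(G \mid D) \propto P(D \mid G)\, P(G)$
    - Minimum Description Length: $\mathrm{cost}(G \mid D) = \alpha \cdot \mathrm{size}(G) + \beta \cdot \mathrm{complexity}(D \mid G)$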

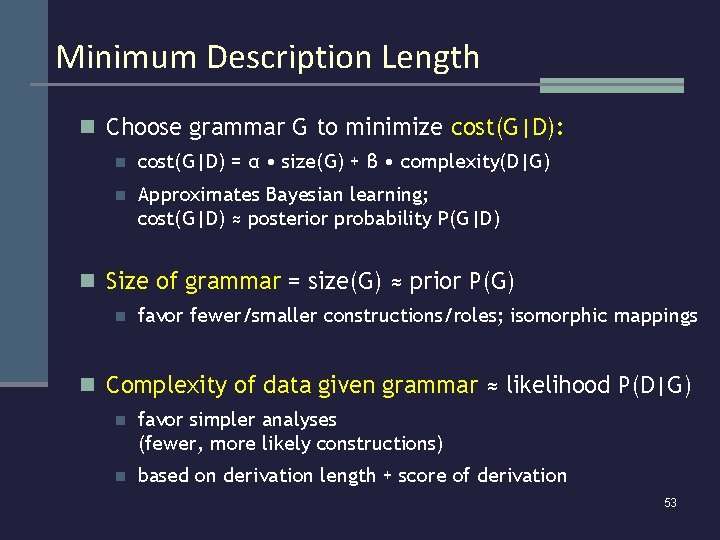

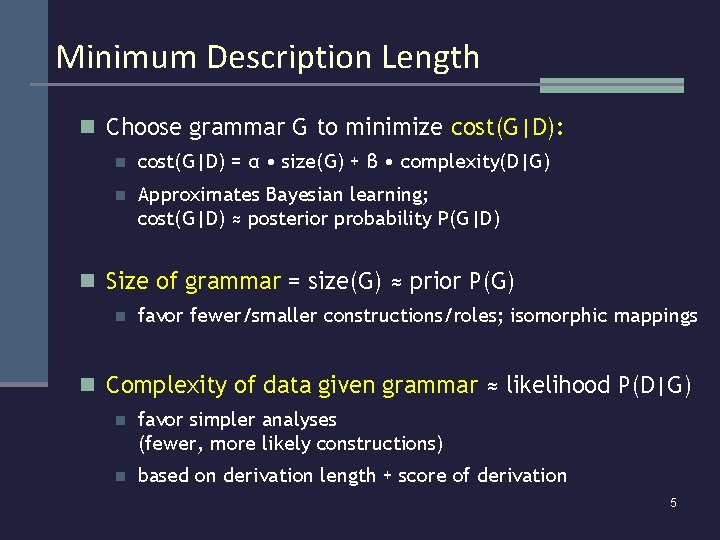

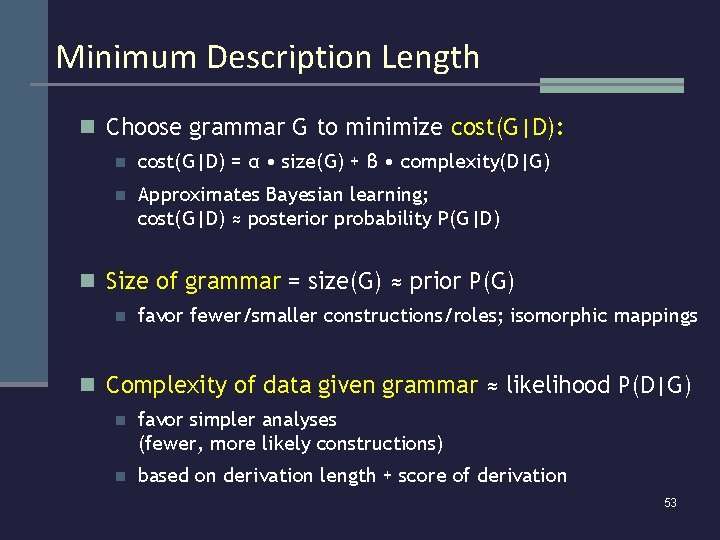

Minimum Description Length

- Choose grammar G to minimize cost(G|D):
  - cost(G|D) = α • size(G) + β • complexity(D|G)
  - Approximates Bayesian learning: minimizing cost(G|D) corresponds to maximizing the posterior probability P(G|D)
- Size of grammar = size(G), which plays the role of the prior P(G)
  - favor fewer/smaller constructions/roles; isomorphic mappings
- Complexity of data given grammar = complexity(D|G), which plays the role of the likelihood P(D|G)
  - favor simpler analyses (fewer, more likely constructions)
  - based on derivation length + score of derivation
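To make the trade-off concrete, here is a minimal sketch of choosing between candidate grammars by the cost above. The candidate names and their size and complexity numbers are hypothetical, chosen only for illustration. They are not results from the lecture.

```python
def cost(size_g, complexity_d_given_g, alpha=1.0, beta=1.0):
    """MDL cost of a grammar: alpha * size(G) + beta * complexity(D|G)."""
    return alpha * size_g + beta * complexity_d_given_g


def best_grammar(candidates, alpha=1.0, beta=1.0):
    """Return the name of the candidate grammar with the lowest cost.

    candidates maps a grammar name to a (size, complexity) pair.
    """
    return min(candidates, key=lambda name: cost(*candidates[name], alpha, beta))


# Hypothetical numbers: adding a construction makes the grammar larger
# but makes the data cheaper to analyze.
CANDIDATES = {
    "lexical_only": (30, 120),
    "with_throw_object": (39, 95),
}

if __name__ == "__main__":
    print(best_grammar(CANDIDATES))             # equal weights
    print(best_grammar(CANDIDATES, alpha=5.0))  # grammar size penalized more
```

Raising α relative to β makes the learner more conservative about adding constructions. Raising β makes it more eager to explain the data.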

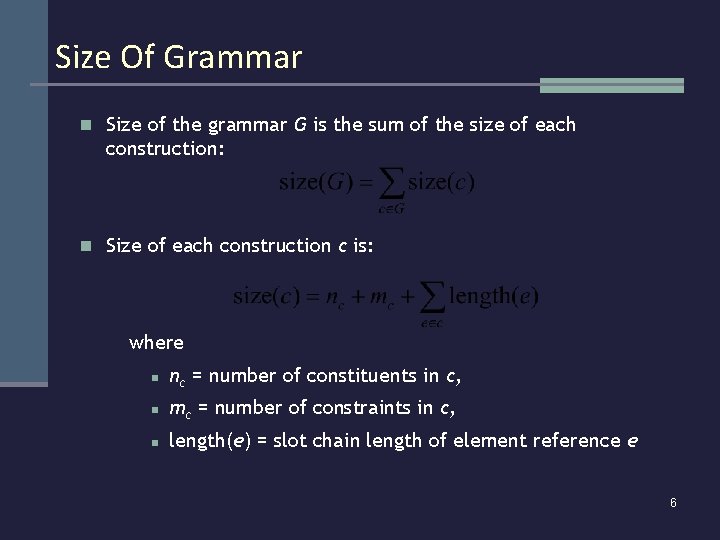

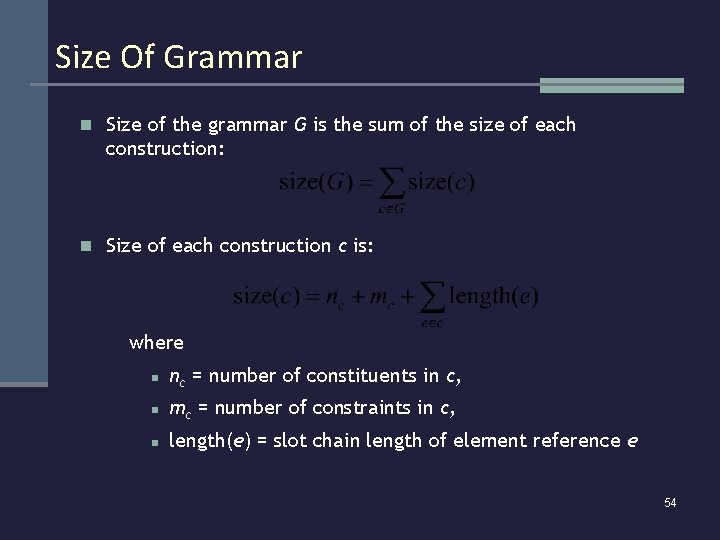

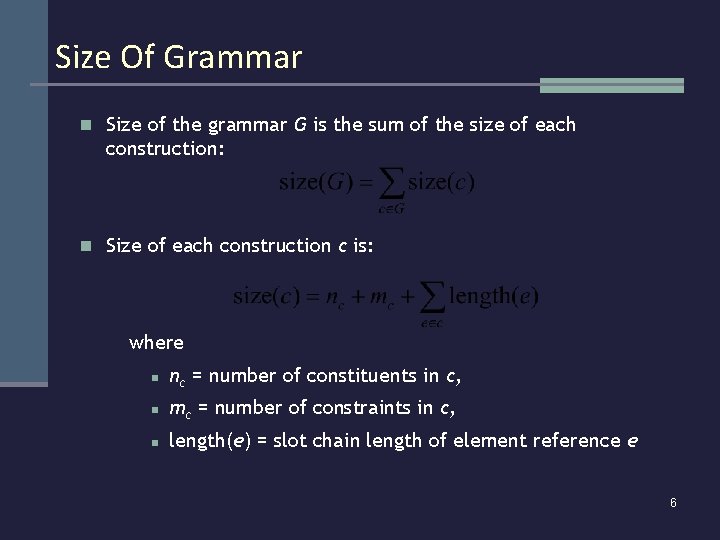

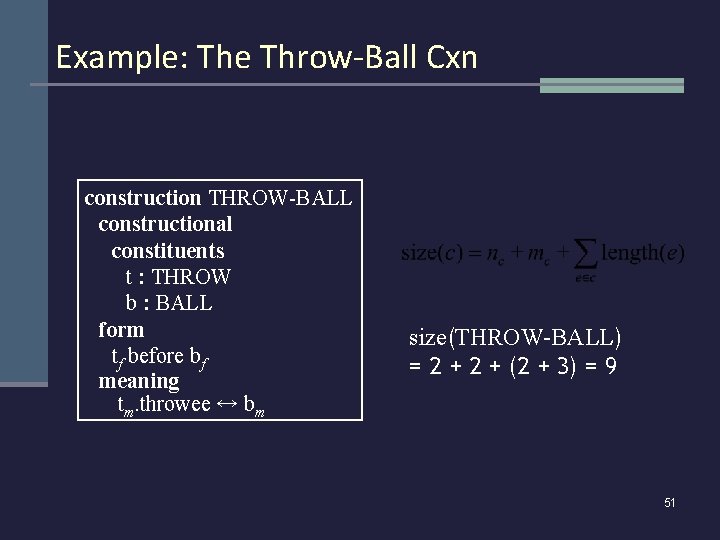

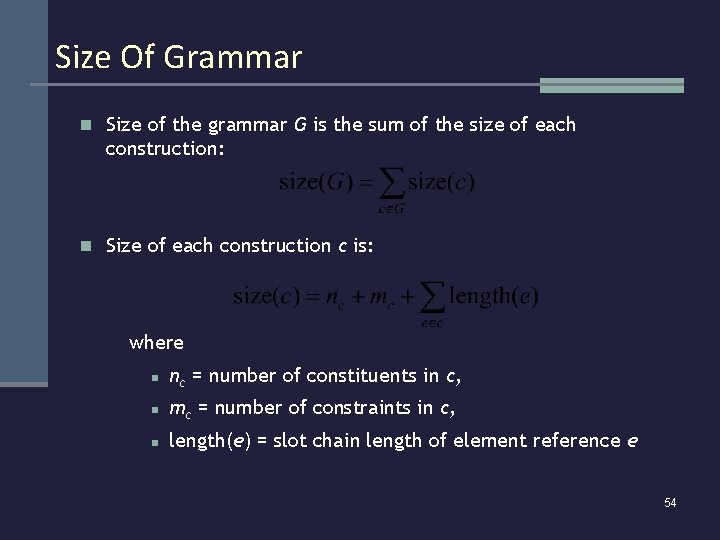

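Size Of Grammar

- Size of the grammar G is the sum of the size of each construction: $\mathrm{size}(G) = \sum_{c \in G} \mathrm{size}(c)$
- Size of each construction c is: $\mathrm{size}(c) = n_c + m_c + \sum_{e \in c} \mathrm{length}(e)$, where
  - $n_c$ = number of constituents in c,
  - $m_c$ = number of constraints in c,
  - $\mathrm{length}(e)$ = slot chain length of element reference e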

What do we know about language development? (focusing mainly on first language acquisition of English-speaking, normal population)

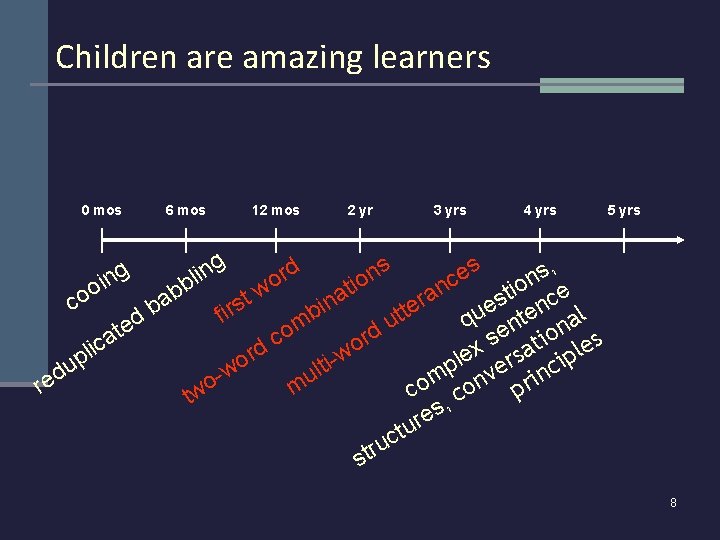

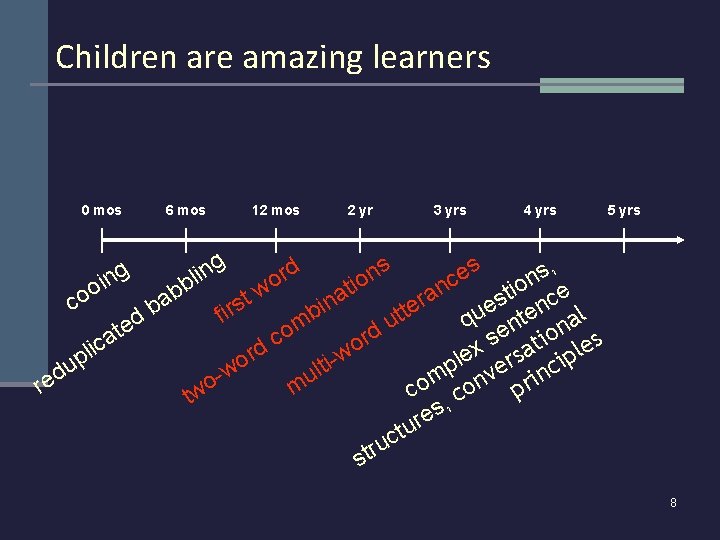

Children are amazing learners

[Figure: timeline of language development milestones, with ticks at 0 mos, 6 mos, 12 mos, 2 yr, 3 yrs, 4 yrs, 5 yrs]

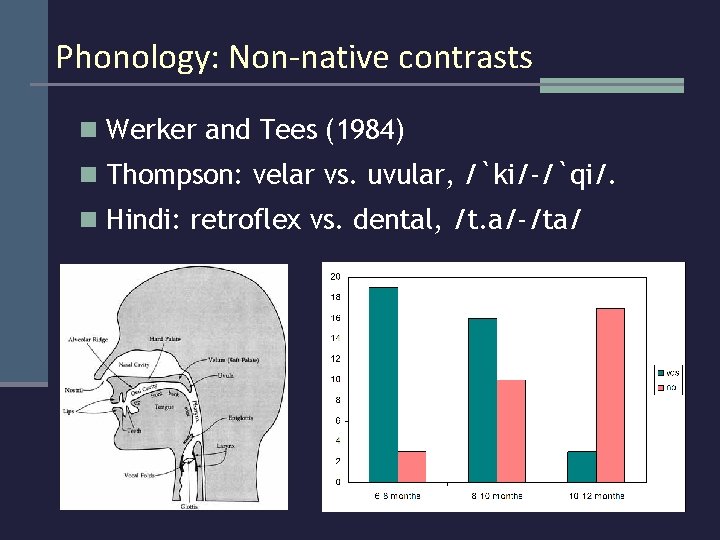

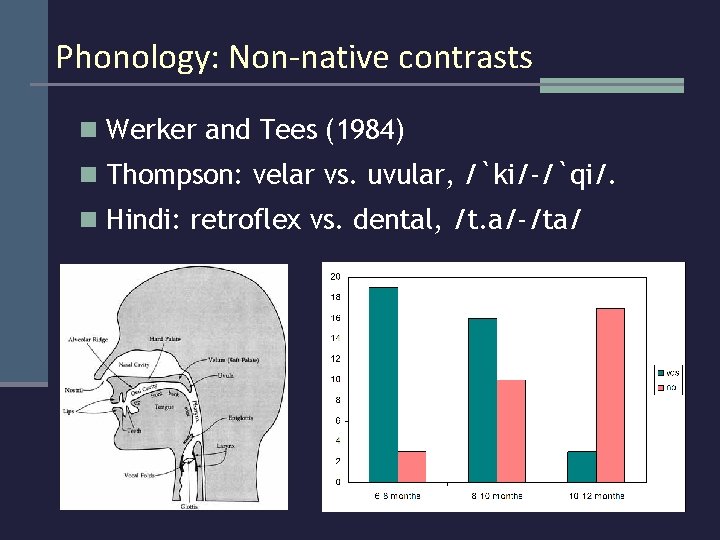

Phonology: Non-native contrasts

- Werker and Tees (1984)
- Thompson: velar vs. uvular, /`ki/-/`qi/
- Hindi: retroflex vs. dental, /ṭa/-/ta/

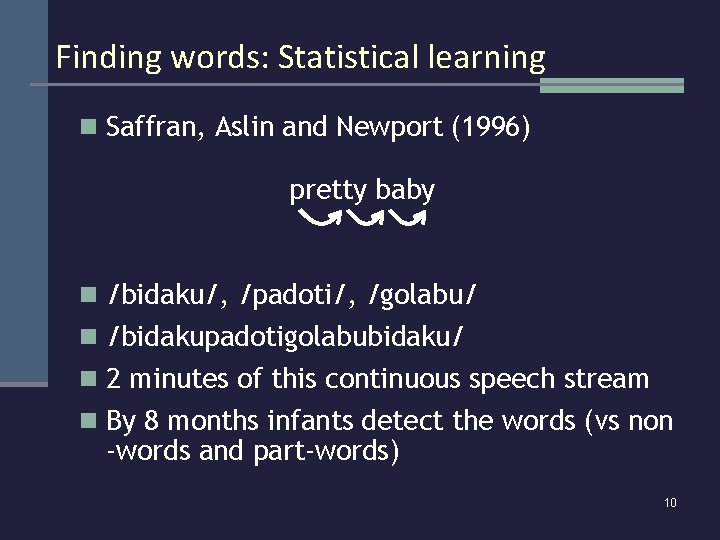

Finding words: Statistical learning

- Saffran, Aslin and Newport (1996)
- Example of the segmentation problem: "pretty baby"
- /bidaku/, /padoti/, /golabu/
- /bidakupadotigolabubidaku/
- 2 minutes of this continuous speech stream
- By 8 months infants detect the words (vs non-words and part-words)
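The statistical cue in such a stream can be sketched as syllable-to-syllable transitional probabilities, which are high inside words and lower across word boundaries. The code below is only an illustration under stated assumptions: the stream generator, the two-letter syllabification, and the fixed threshold are hypothetical choices, not the procedure or the model of Saffran et al.

```python
import random
from collections import Counter

WORDS = ["bidaku", "padoti", "golabu"]  # the nonsense words listed on the slide


def syllabify(word):
    """Split a word into two-letter (consonant + vowel) syllables."""
    return [word[i:i + 2] for i in range(0, len(word), 2)]


def make_stream(n_words, seed=0):
    """Concatenate randomly chosen words with no pauses and no immediate repeats."""
    rng = random.Random(seed)
    stream, prev = [], None
    for _ in range(n_words):
        word = rng.choice([w for w in WORDS if w != prev])
        stream.extend(syllabify(word))
        prev = word
    return stream


def transitional_probs(syllables):
    """Estimate P(next syllable | current syllable) from adjacent pairs."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


def segment(syllables, tps, threshold):
    """Place a word boundary wherever the transitional probability dips below threshold."""
    words, current = [], [syllables[0]]
    for a, b in zip(syllables, syllables[1:]):
        if tps[(a, b)] < threshold:
            words.append("".join(current))
            current = []
        current.append(b)
    words.append("".join(current))
    return words


if __name__ == "__main__":
    stream = make_stream(200)
    tps = transitional_probs(stream)
    print(sorted(set(segment(stream, tps, threshold=0.75))))
```

Because every syllable here belongs to exactly one word, within-word transitions are fully predictable, while the syllable after a word-final syllable varies.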

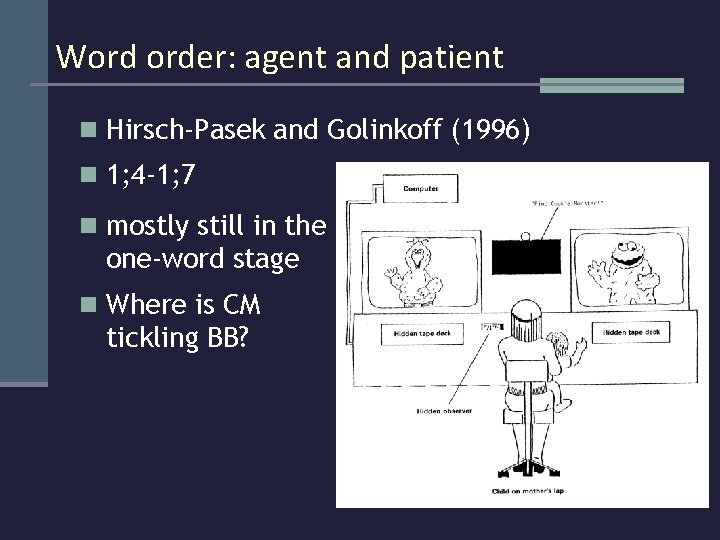

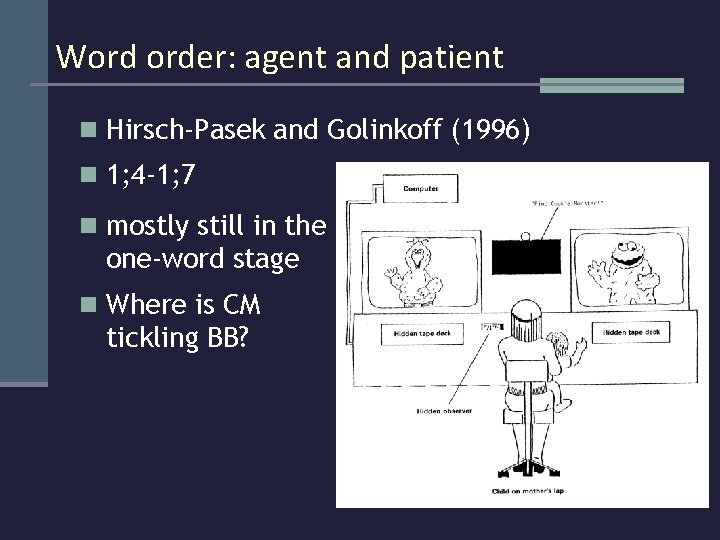

Word order: agent and patient

- Hirsh-Pasek and Golinkoff (1996)
- Ages 1;4 to 1;7
- mostly still in the one-word stage
- Where is CM tickling BB?

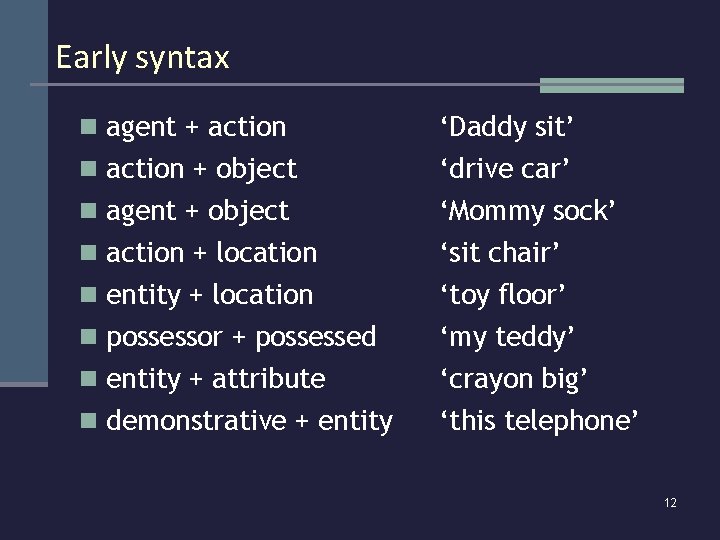

Early syntax

- agent + action: ‘Daddy sit’
- action + object: ‘drive car’
- agent + object: ‘Mommy sock’
- action + location: ‘sit chair’
- entity + location: ‘toy floor’
- possessor + possessed: ‘my teddy’
- entity + attribute: ‘crayon big’
- demonstrative + entity: ‘this telephone’

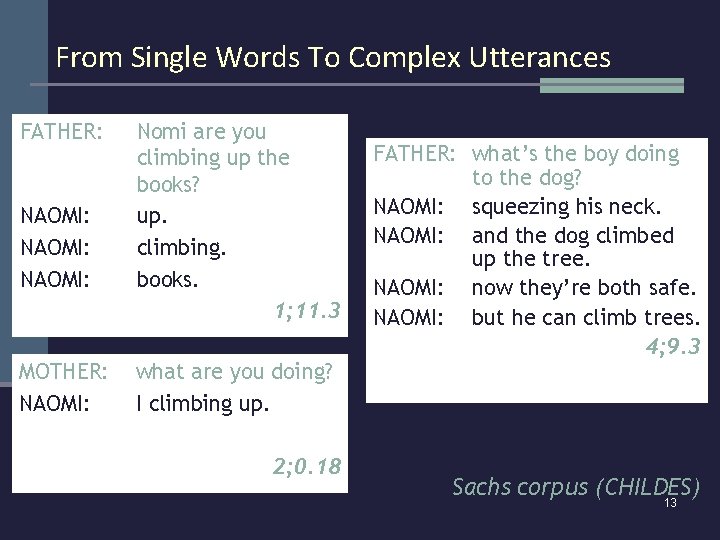

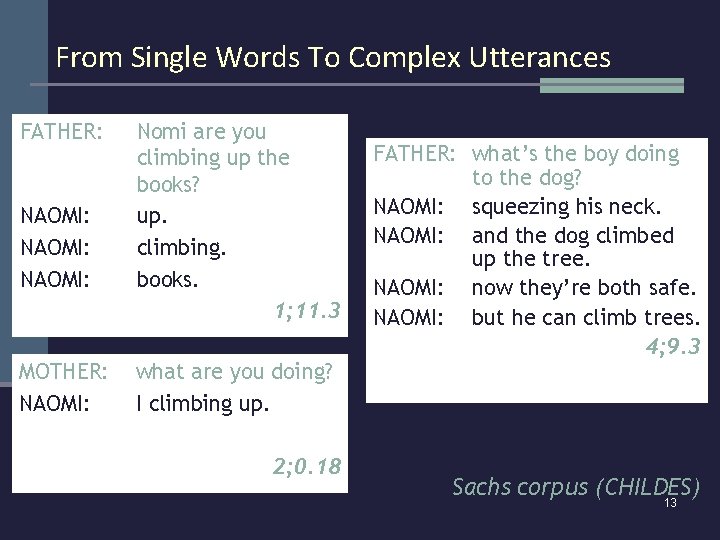

From Single Words To Complex Utterances

Age 1;11.3
- FATHER: Nomi are you climbing up the books?
- NAOMI: up. climbing. books.

Age 2;0.18
- MOTHER: what are you doing?
- NAOMI: I climbing up.
- MOTHER: you’re climbing up?

Age 4;9.3
- FATHER: what’s the boy doing to the dog?
- NAOMI: squeezing his neck.
- NAOMI: and the dog climbed up the tree.
- NAOMI: now they’re both safe.
- NAOMI: but he can climb trees.

Sachs corpus (CHILDES)

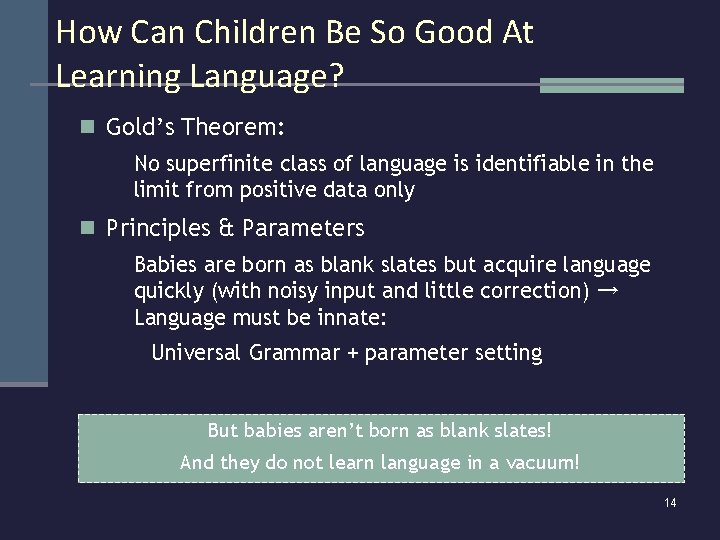

How Can Children Be So Good At Learning Language?

- Gold’s Theorem: No superfinite class of languages is identifiable in the limit from positive data only
- Principles & Parameters
  - Babies are born as blank slates but acquire language quickly (with noisy input and little correction) → Language must be innate: Universal Grammar + parameter setting

But babies aren’t born as blank slates! And they do not learn language in a vacuum!

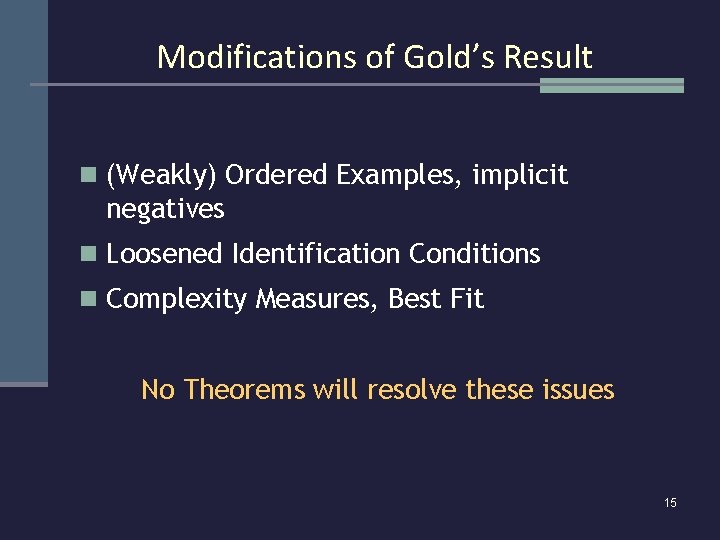

Modifications of Gold’s Result

- (Weakly) Ordered Examples, implicit negatives
- Loosened Identification Conditions
- Complexity Measures, Best Fit

No Theorems will resolve these issues

Modeling the acquisition of grammar: Theoretical assumptions

Language Acquisition

- Opulence of the substrate
  - Prelinguistic children already have rich sensorimotor representations and sophisticated social knowledge
  - intention inference, reference resolution
  - language-specific event conceptualizations
  - (Bloom 2000, Tomasello 1995, Bowerman & Choi, Slobin, et al.)
- Children are sensitive to statistical information
  - Phonological transitional probabilities
  - Even dependencies between non-adjacent items
  - (Saffran et al. 1996, Gomez 2002)

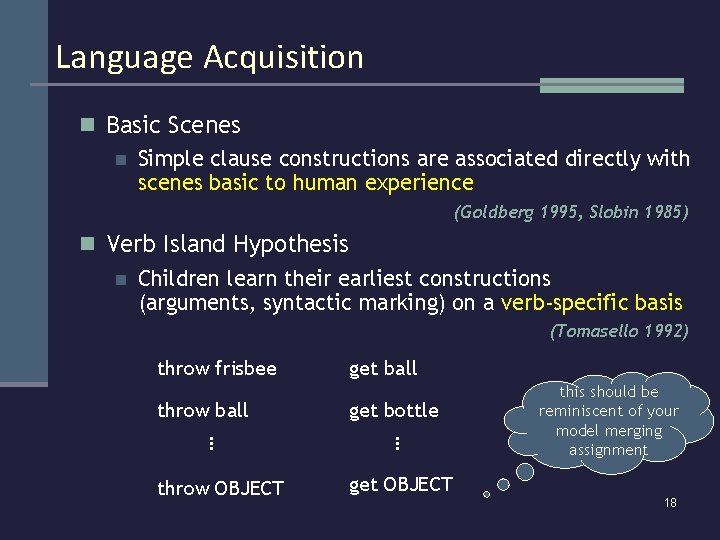

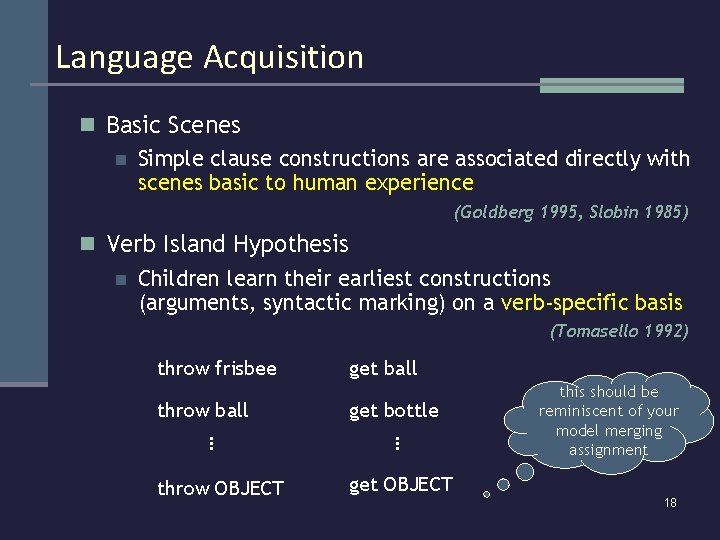

Language Acquisition

- Basic Scenes
  - Simple clause constructions are associated directly with scenes basic to human experience (Goldberg 1995, Slobin 1985)
- Verb Island Hypothesis
  - Children learn their earliest constructions (arguments, syntactic marking) on a verb-specific basis (Tomasello 1992)
  - throw frisbee, throw ball, … → throw OBJECT
  - get bottle, … → get OBJECT

This should be reminiscent of your model merging assignment.

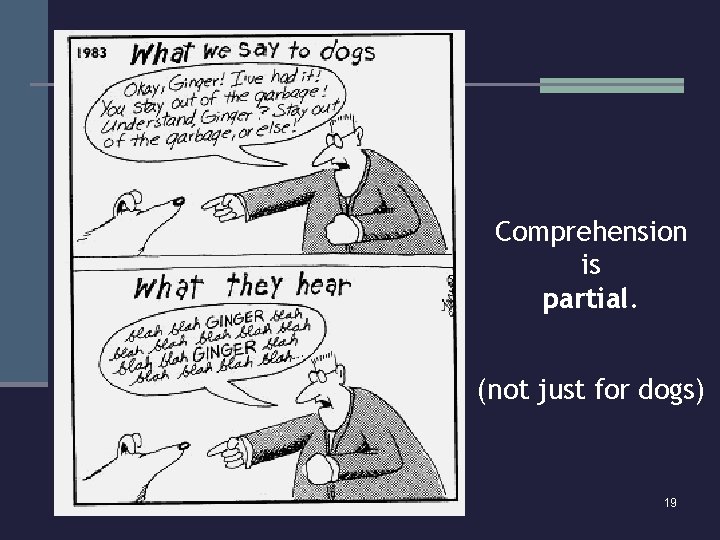

Comprehension is partial. (not just for dogs)

What children pick up from what they hear

- what did you throw it into?
- they’re throwing this in here.
- they’re throwing a ball.
- don’t throw it Nomi.
- well you really shouldn’t throw things Nomi you know.
- remember how we told you shouldn’t throw things.

Children use rich situational context / cues to fill in the gaps. They also have at their disposal embodied knowledge and statistical correlations (i.e. experience).

Language Learning Hypothesis

Children learn constructions that bridge the gap between what they know from language and what they know from the rest of cognition

Modeling the acquisition of (early) grammar: Comprehension-driven, usage-based

Natural Language Processing at Berkeley Dan Klein EECS Department UC Berkeley

NLP: Motivation

- It’d be great if machines could
  - Read text and understand it
  - Translate languages accurately
  - Help us manage, summarize, and aggregate information
  - Use speech as a UI
  - Talk to us / listen to us
- But they can’t
  - Language is complex
  - Language is ambiguous
  - Language is highly structured

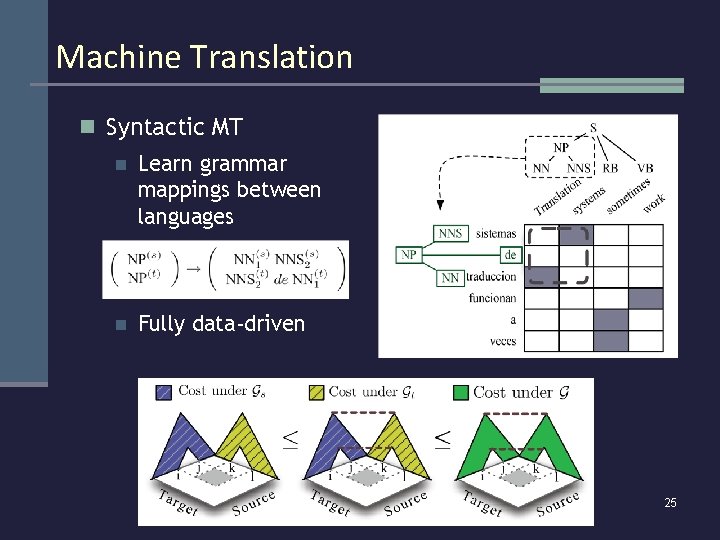

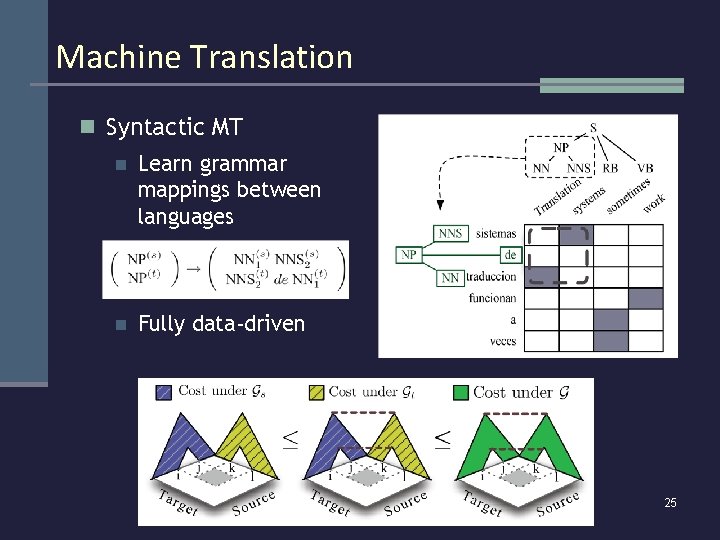

Machine Translation

- Syntactic MT
  - Learn grammar mappings between languages
  - Fully data-driven

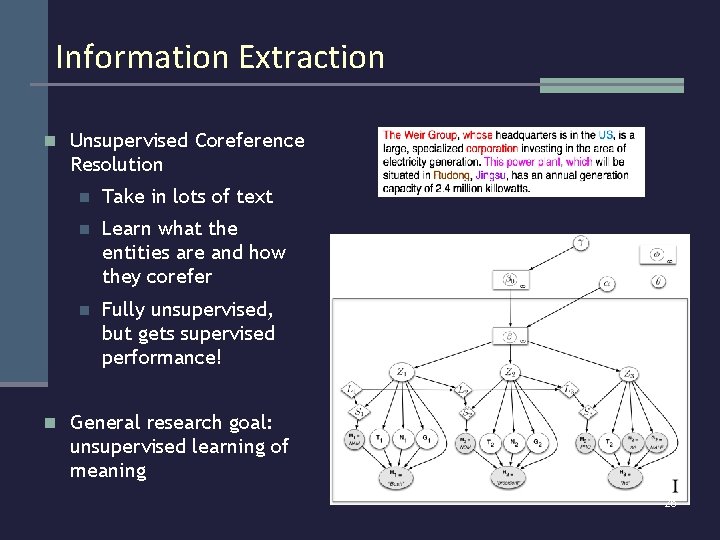

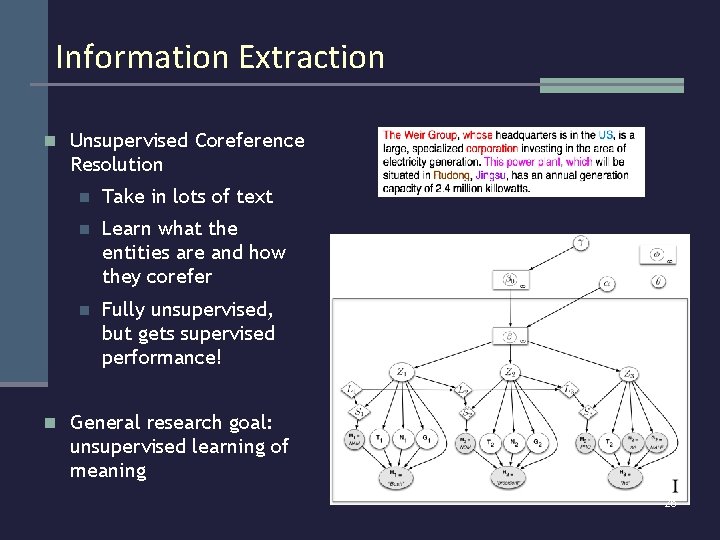

Information Extraction

- Unsupervised Coreference Resolution
  - Take in lots of text
  - Learn what the entities are and how they corefer
  - Fully unsupervised, but gets supervised performance!
- General research goal: unsupervised learning of meaning

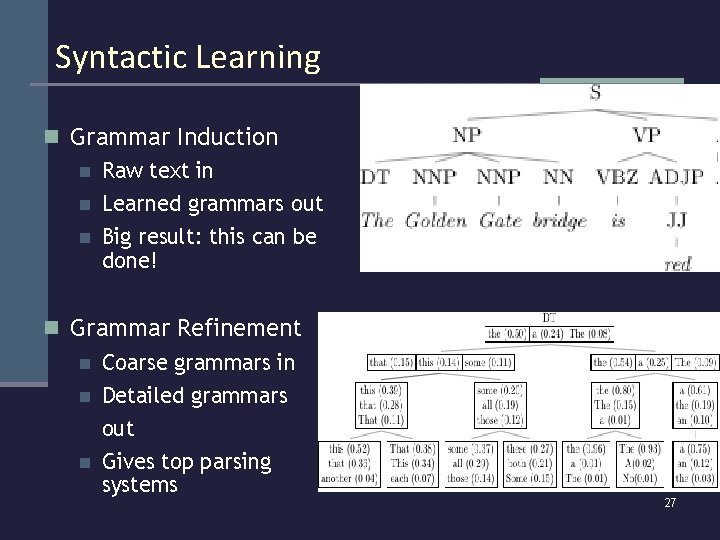

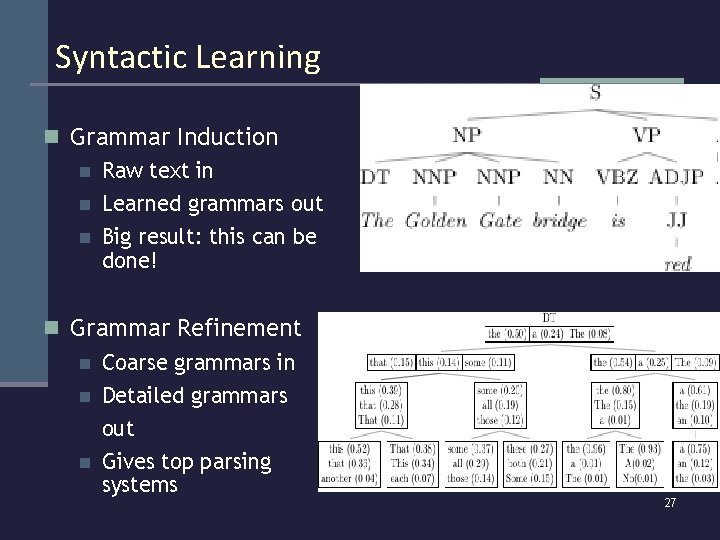

Syntactic Learning

- Grammar Induction
  - Raw text in
  - Learned grammars out
  - Big result: this can be done!
- Grammar Refinement
  - Coarse grammars in
  - Detailed grammars out
  - Gives top parsing systems

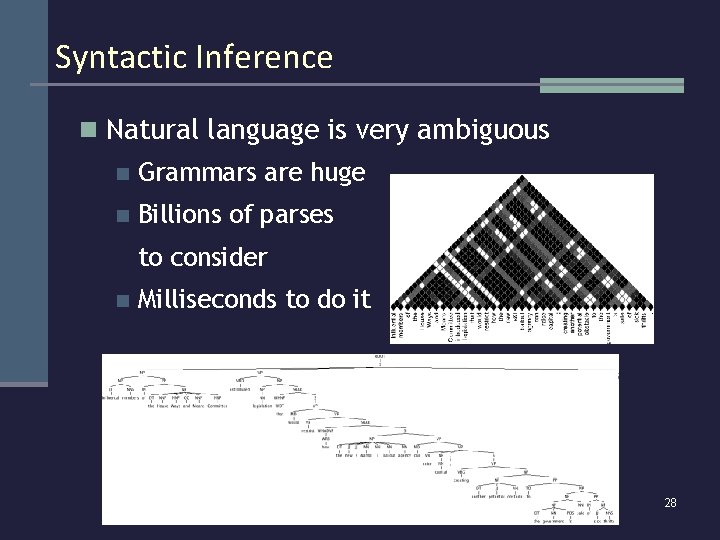

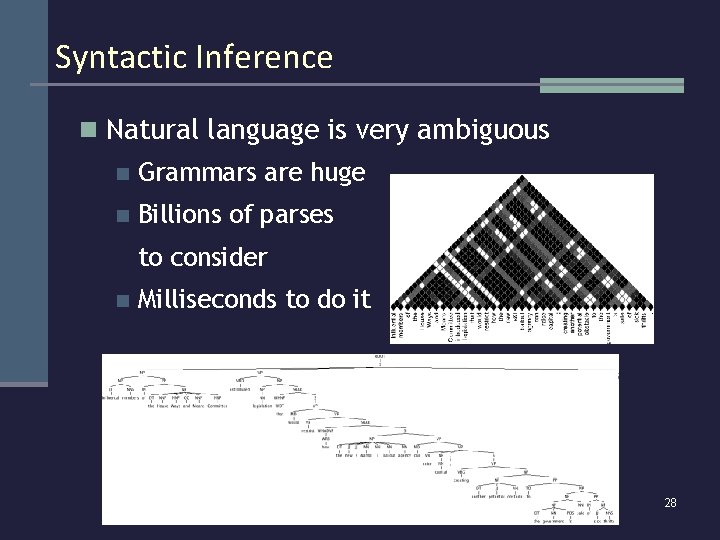

Syntactic Inference

- Natural language is very ambiguous
  - Grammars are huge
  - Billions of parses to consider
  - Milliseconds to do it

Example: "Influential members of the House Ways and Means Committee introduced legislation that would restrict how the new S&L bailout agency can raise capital, creating another potential obstacle to the government's sale of sick thrifts."

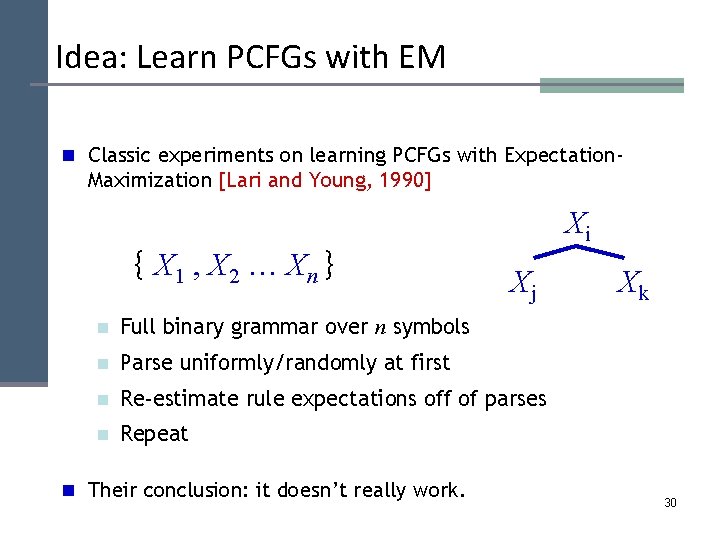

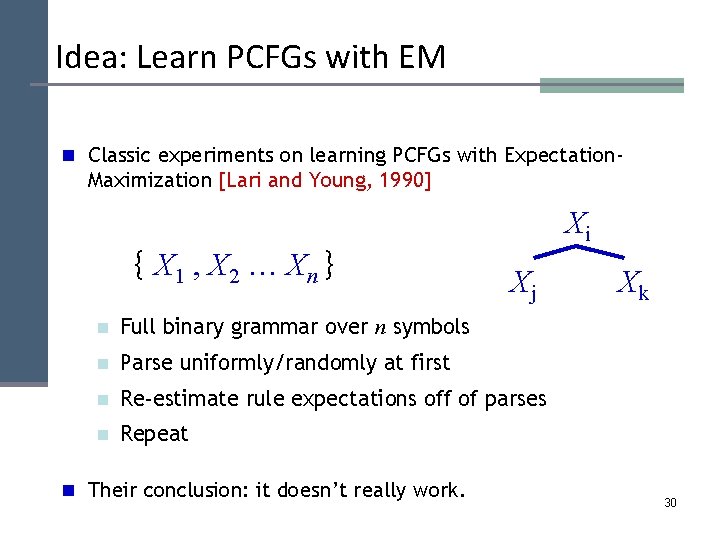

Idea: Learn PCFGs with EM

- Classic experiments on learning PCFGs with Expectation-Maximization [Lari and Young, 1990]
  - Full binary grammar over n symbols {X1, X2, …, Xn}, with rules of the form Xi → Xj Xk
  - Parse uniformly/randomly at first
  - Re-estimate rule expectations off of parses
  - Repeat
- Their conclusion: it doesn’t really work.

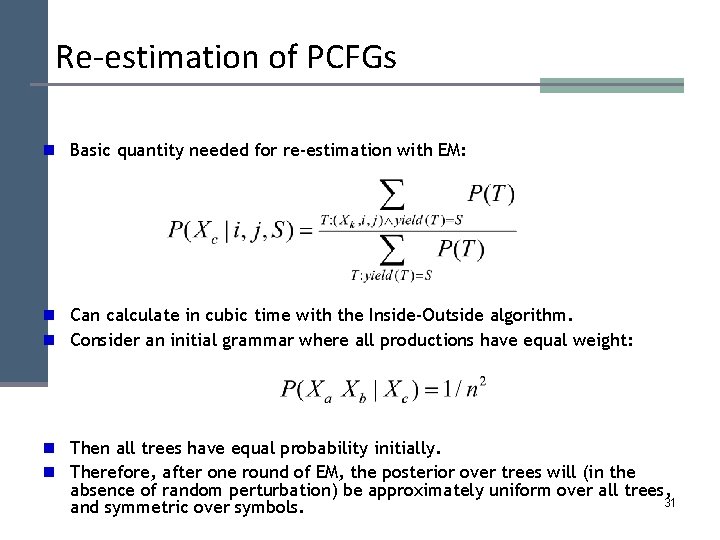

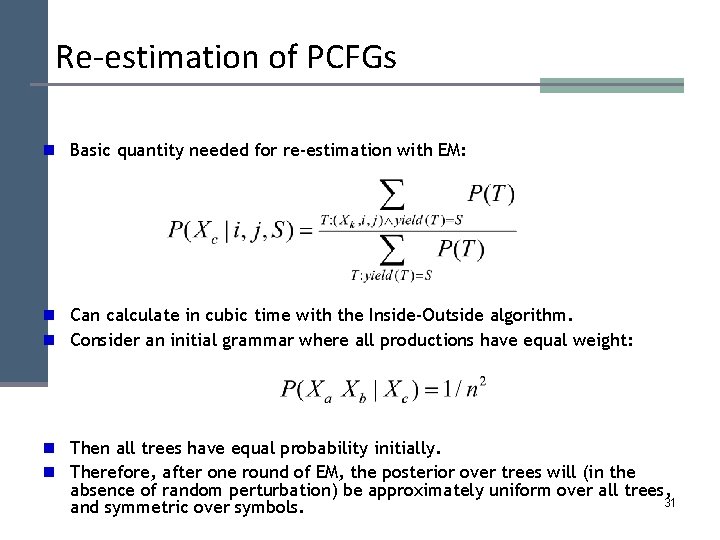

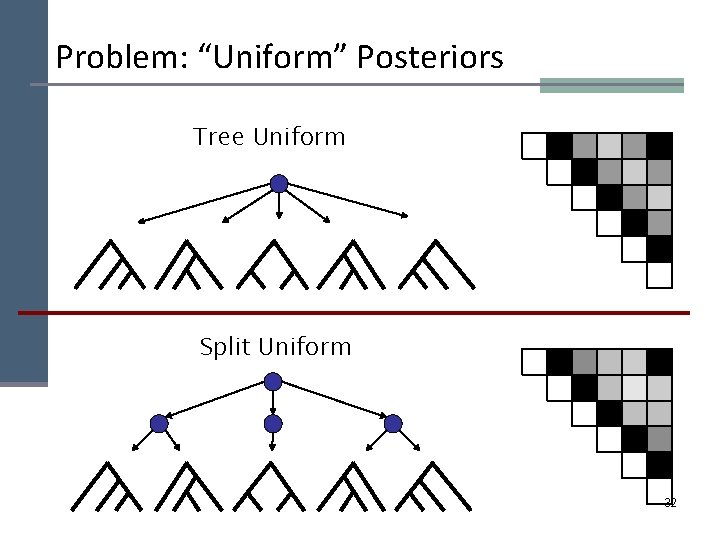

Re-estimation of PCFGs

- Basic quantity needed for re-estimation with EM:
  - Can calculate in cubic time with the Inside-Outside algorithm.
- Consider an initial grammar where all productions have equal weight:
  - Then all trees have equal probability initially.
  - Therefore, after one round of EM, the posterior over trees will (in the absence of random perturbation) be approximately uniform over all trees, and symmetric over symbols.
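The equal-probability claim can be checked by brute force on a tiny case. The sketch below makes several assumptions that are not from the slides: any symbol may be the root, every symbol can rewrite to any pair of symbols or to any vocabulary word, and all of a symbol's rules share one probability. It enumerates trees directly rather than running Inside-Outside, which is only feasible for very short sentences.

```python
from fractions import Fraction


def labeled_trees(i, j, symbols):
    """Yield every labeled binary tree over word positions i .. j-1."""
    if j - i == 1:
        for a in symbols:
            yield (a, i)  # lexical rule: a -> word at position i
        return
    for k in range(i + 1, j):  # split point between the two children
        lefts = list(labeled_trees(i, k, symbols))
        rights = list(labeled_trees(k, j, symbols))
        for a in symbols:
            for left in lefts:
                for right in rights:
                    yield (a, left, right)  # binary rule: a -> left right


def tree_probability(tree, rule_prob):
    """Product of rule probabilities when every rule has the same probability."""
    if len(tree) == 2:
        return rule_prob
    _, left, right = tree
    return (rule_prob
            * tree_probability(left, rule_prob)
            * tree_probability(right, rule_prob))


def posterior_over_trees(n_words, n_symbols, vocab_size):
    """Posterior over all labeled trees of one sentence under uniform rule weights."""
    symbols = [f"X{k + 1}" for k in range(n_symbols)]
    # Each symbol has n_symbols**2 binary rules plus vocab_size lexical rules.
    rule_prob = Fraction(1, n_symbols ** 2 + vocab_size)
    trees = list(labeled_trees(0, n_words, symbols))
    joint = [tree_probability(t, rule_prob) for t in trees]
    total = sum(joint)
    return trees, [p / total for p in joint]


if __name__ == "__main__":
    trees, posterior = posterior_over_trees(n_words=3, n_symbols=2, vocab_size=3)
    print(len(trees), set(posterior))
```

Every tree over the same sentence uses the same number of binary and lexical rules, so with equal rule weights no bracketing or labeling is preferred. EM therefore has nothing to break the symmetry with.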

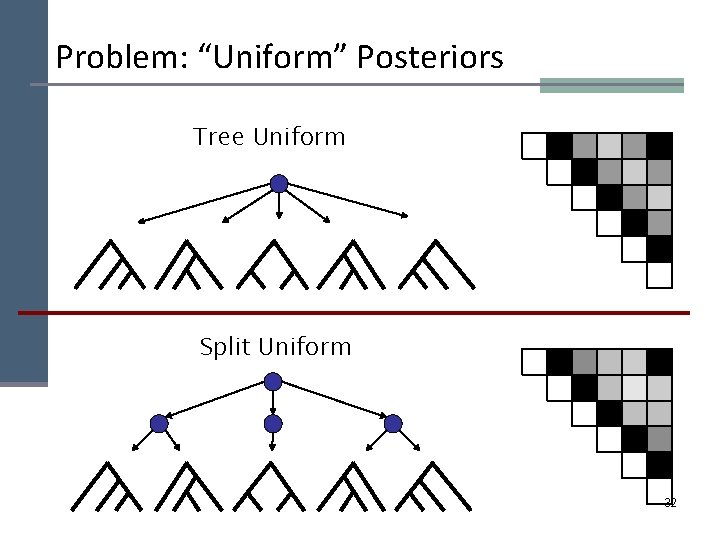

Problem: “Uniform” Posteriors

[Figure: two panels, "Tree Uniform" and "Split Uniform"]

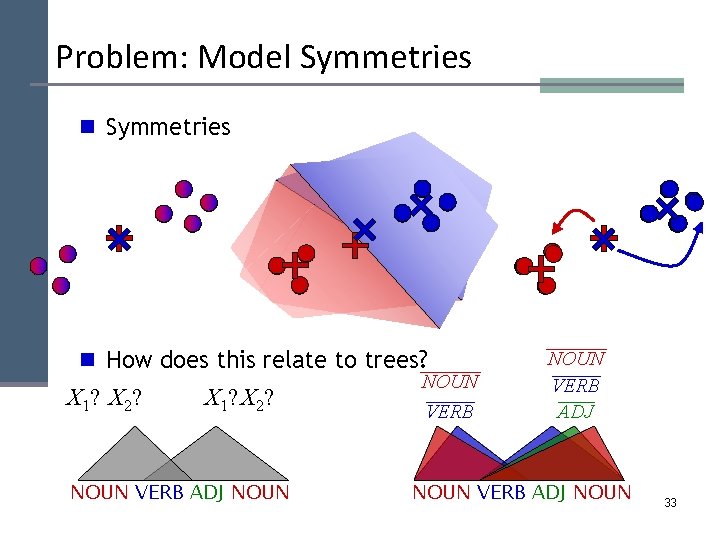

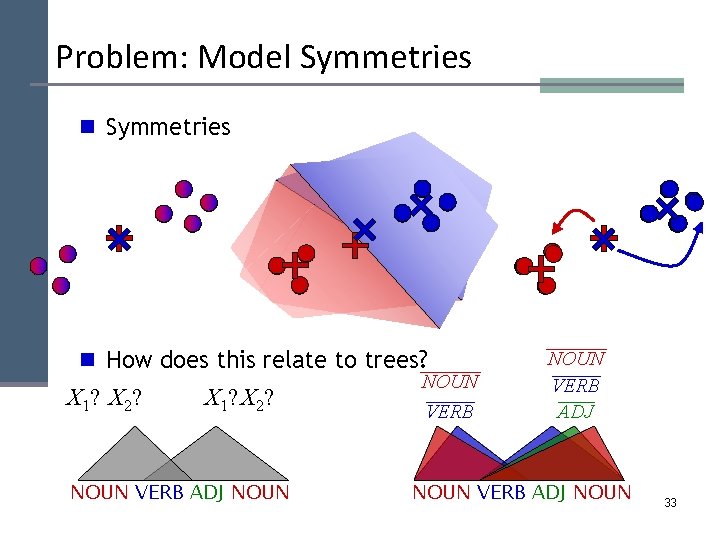

Problem: Model Symmetries

- How does this relate to trees?

[Figure: induced symbols X1? and X2? over the tag sequence NOUN VERB ADJ NOUN VERB ADJ NOUN]

Overview: NLP at UCB

- Lots of research and resources:
  - Dan Klein: Statistical NLP / ML
  - Marti Hearst: Stat NLP / HCI
  - Jerry Feldman: Language and Mind
  - Michael Jordan: Statistical Methods / ML
  - Tom Griffiths: Statistical Learning / Psychology
  - ICSI Speech and AI groups (Morgan, Stolcke, Shriberg, Narayanan…)
  - Great linguistics and stats departments!
- No better place to solve the hard NLP problems!

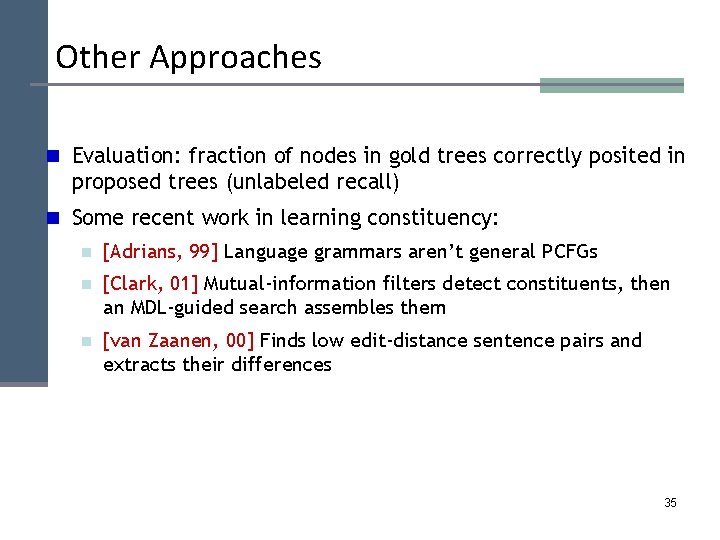

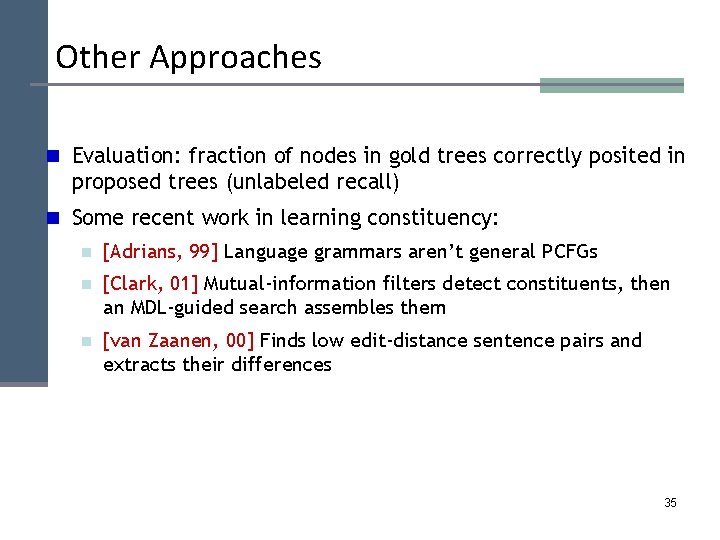

Other Approaches

- Evaluation: fraction of nodes in gold trees correctly posited in proposed trees (unlabeled recall)
- Some recent work in learning constituency:
  - [Adriaans, 99] Language grammars aren’t general PCFGs
  - [Clark, 01] Mutual-information filters detect constituents, then an MDL-guided search assembles them
  - [van Zaanen, 00] Finds low edit-distance sentence pairs and extracts their differences

| System | Unlabeled recall |
| --- | --- |
| Adriaans, 1999 | 16.8 |
| Clark, 2001 | 34.6 |
| van Zaanen, 2000 | 35.6 |


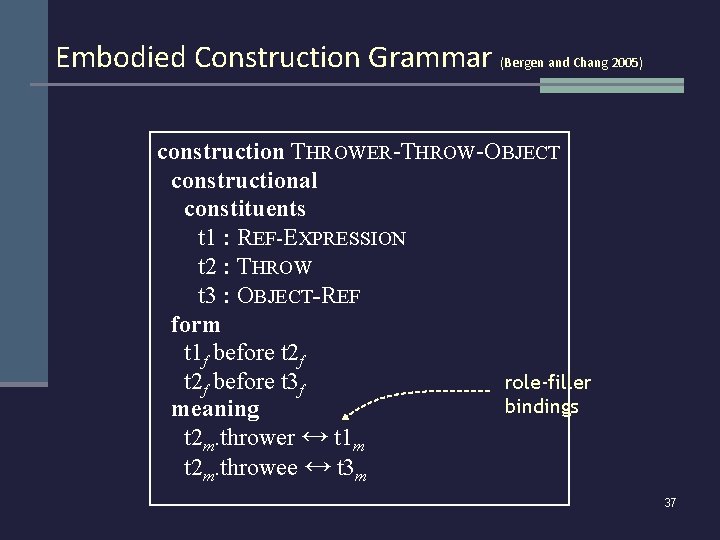

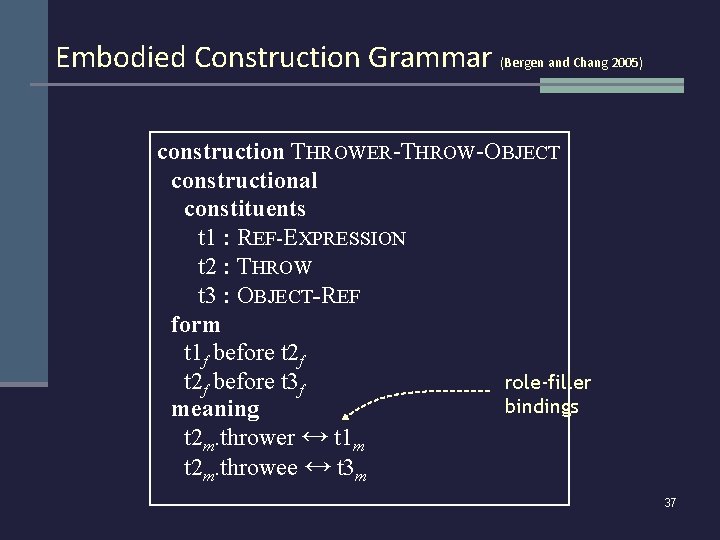

Embodied Construction Grammar (Bergen and Chang 2005)

construction THROWER-THROW-OBJECT
- constructional constituents
  - t1 : REF-EXPRESSION
  - t2 : THROW
  - t3 : OBJECT-REF
- form
  - t1f before t2f
  - t2f before t3f
- meaning (role-filler bindings)
  - t2m.thrower ↔ t1m
  - t2m.throwee ↔ t3m

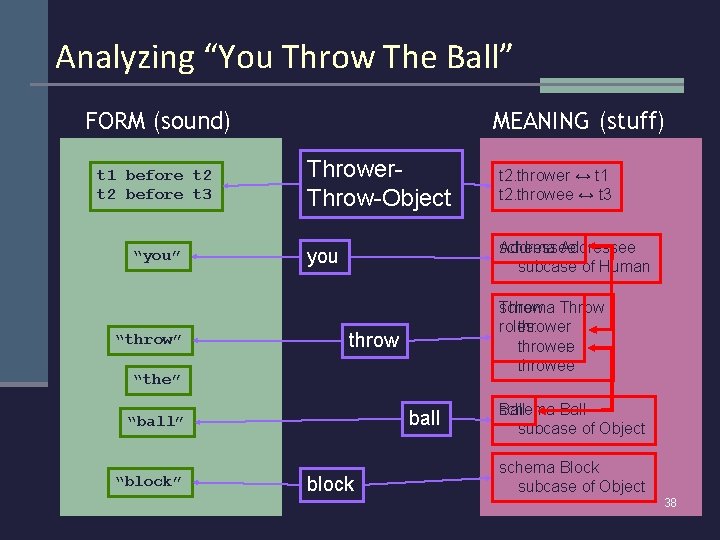

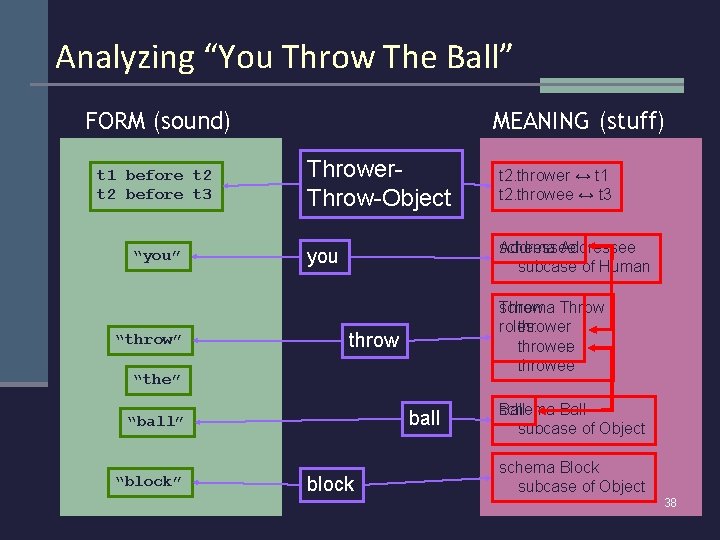

Analyzing “You Throw The Ball”

[Figure: analysis linking FORM (sound) to MEANING (stuff). Forms: “you”, “throw”, “the”, “ball”, “block”. The Thrower-Throw-Object construction imposes t1 before t2 before t3, t2.thrower ↔ t1, and t2.throwee ↔ t3. Schemas: Addressee (subcase of Human); Throw (roles: thrower, throwee); Ball (subcase of Object); Block (subcase of Object).]

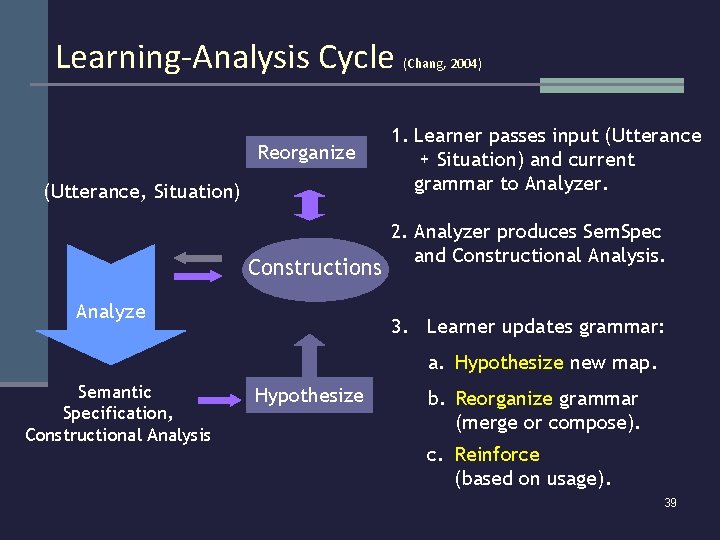

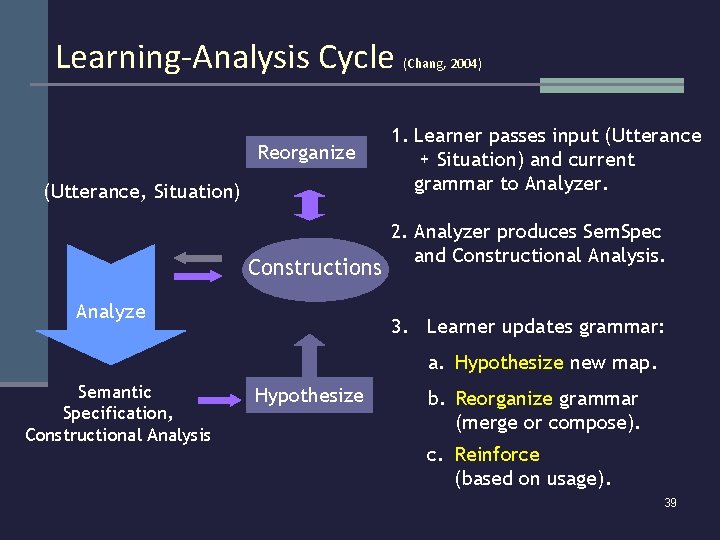

Learning-Analysis Cycle (Chang, 2004)

1. Learner passes input (Utterance + Situation) and current grammar to Analyzer.
2. Analyzer produces SemSpec (Semantic Specification) and Constructional Analysis.
3. Learner updates grammar:
   a. Hypothesize new map.
   b. Reorganize grammar (merge or compose).
   c. Reinforce (based on usage).

Hypothesizing a new construction through relational mapping

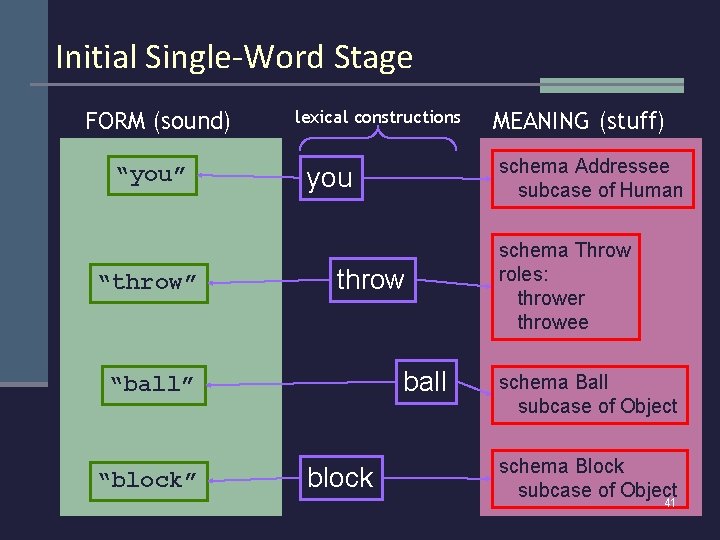

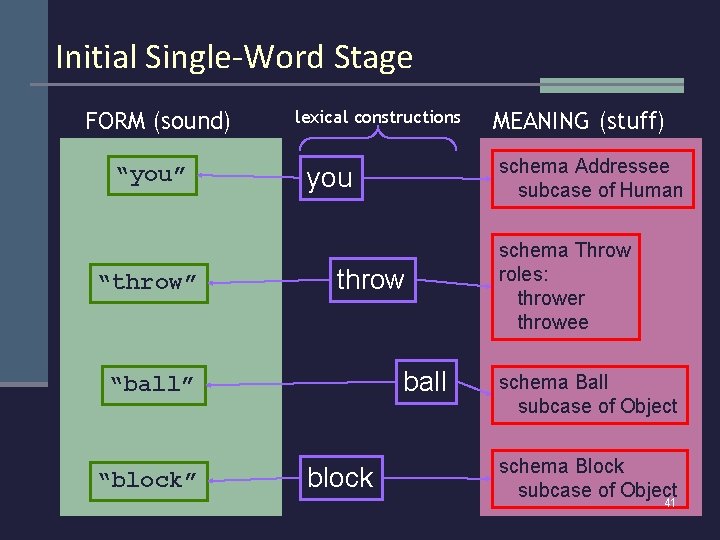

Initial Single-Word Stage

[Figure: lexical constructions pair each FORM (sound) with a MEANING (stuff): “you” ↔ schema Addressee (subcase of Human); “throw” ↔ schema Throw (roles: thrower, throwee); “ball” ↔ schema Ball (subcase of Object); “block” ↔ schema Block (subcase of Object).]

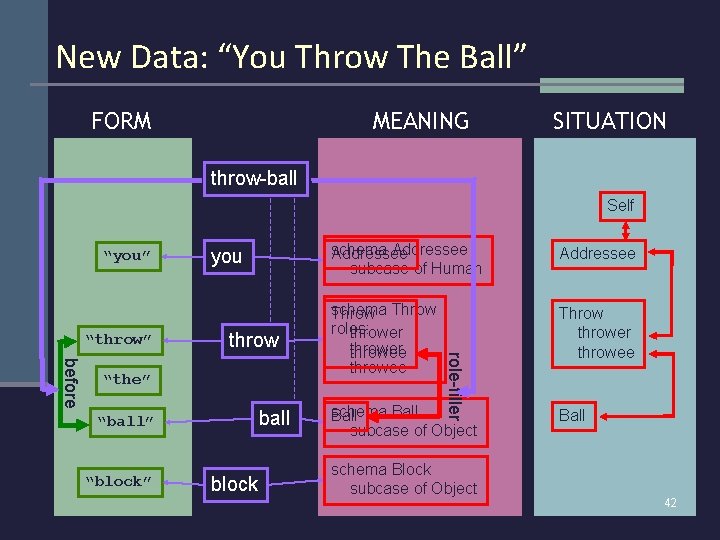

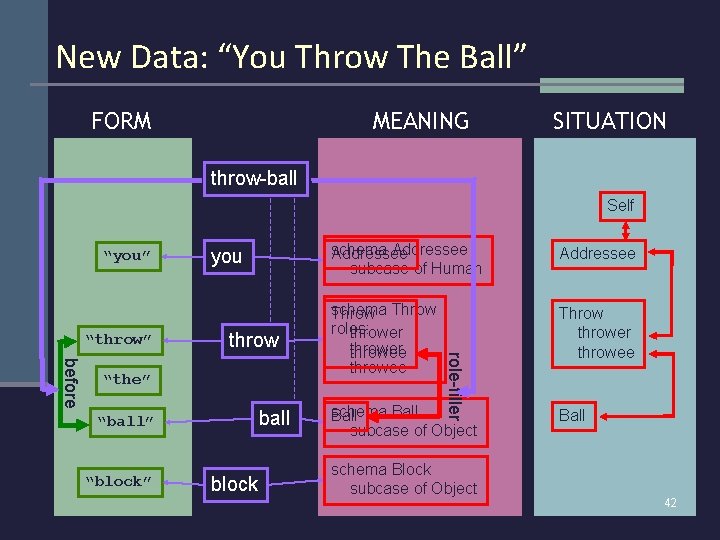

New Data: “You Throw The Ball”

[Figure: the FORM and MEANING columns of the single-word stage plus a SITUATION column. The utterance contains “you”, “throw”, “the”, “ball”; the situation (throw-ball) contains Self, Addressee, Ball, and a Throw with thrower and throwee roles. The figure marks a "before" relation in form and a "role-filler" relation in meaning.]

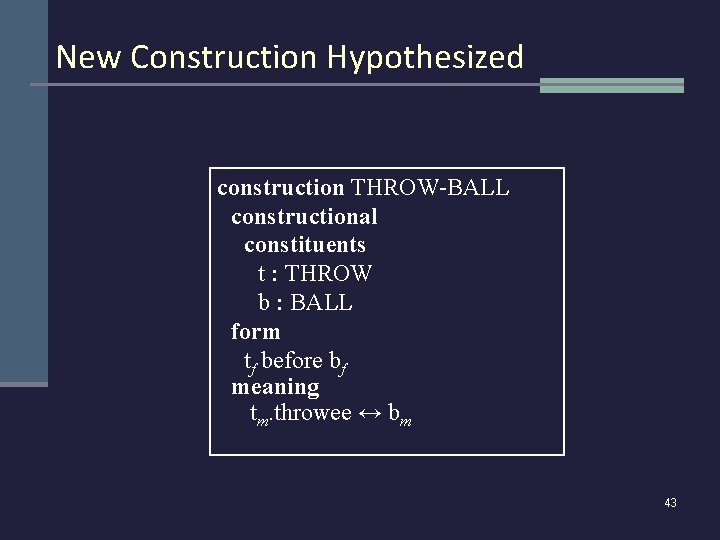

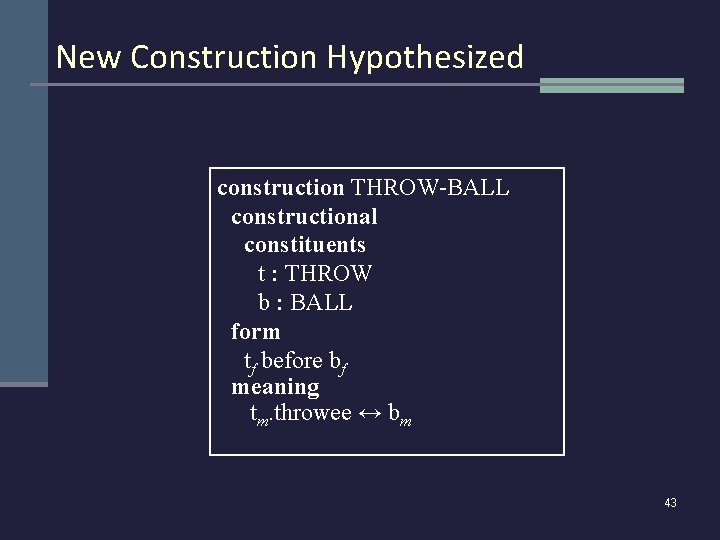

New Construction Hypothesized

construction THROW-BALL
- constructional constituents
  - t : THROW
  - b : BALL
- form
  - tf before bf
- meaning
  - tm.throwee ↔ bm
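The relational mapping step that yields THROW-BALL can be sketched as follows: for two recognized words, if the meaning of one fills a role of the other in the observed situation, propose a construction pairing their word order with that role binding. The data structures, instance names (`throw1`, `ball1`), and the reduced situation with a single throwee binding are hypothetical simplifications, not the lecture's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lexical:
    name: str      # lexical construction, e.g. "THROW"
    position: int  # index of the word in the utterance
    meaning: str   # schema instance the word was resolved to in the situation


def hypothesize(lexicals, situation_bindings):
    """Propose a construction for each pair (a, b) where b's meaning fills a role of a's.

    situation_bindings maps (schema instance, role) to the filler instance.
    """
    proposals = []
    for a in lexicals:
        for b in lexicals:
            if a is b:
                continue
            for (instance, role), filler in situation_bindings.items():
                if instance == a.meaning and filler == b.meaning:
                    first, second = sorted((a, b), key=lambda lex: lex.position)
                    proposals.append({
                        "name": f"{a.name}-{b.name}",
                        "constituents": [a.name, b.name],
                        "form": f"{first.name}.f before {second.name}.f",
                        "meaning": f"{a.name}.m.{role} <-> {b.name}.m",
                    })
    return proposals


# "you throw the ball": "the" is not yet known, so it contributes no lexical item.
UTTERANCE = [
    Lexical("YOU", 0, "addressee1"),
    Lexical("THROW", 1, "throw1"),
    Lexical("BALL", 3, "ball1"),
]
# Assumed situation: only the throwee binding is observed.
SITUATION = {("throw1", "throwee"): "ball1"}

if __name__ == "__main__":
    for proposal in hypothesize(UTTERANCE, SITUATION):
        print(proposal)
```

This covers only the first kind of meaning relation (one meaning fills a role of the other). Shared fillers and shared parent schemas would need additional cases.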

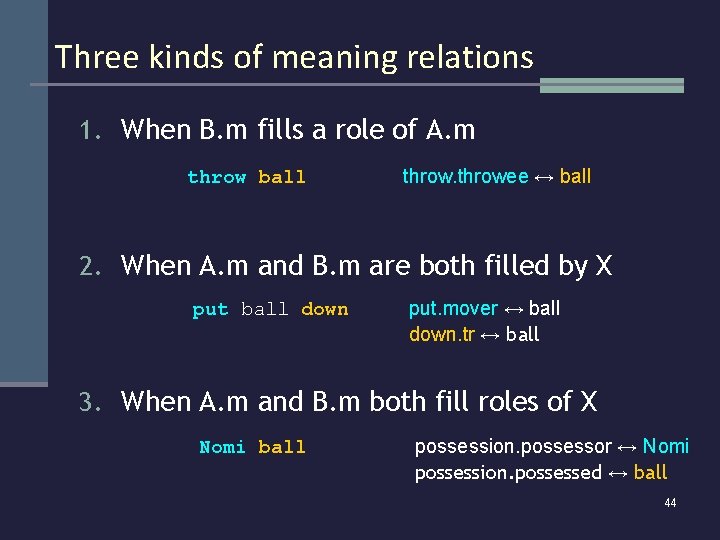

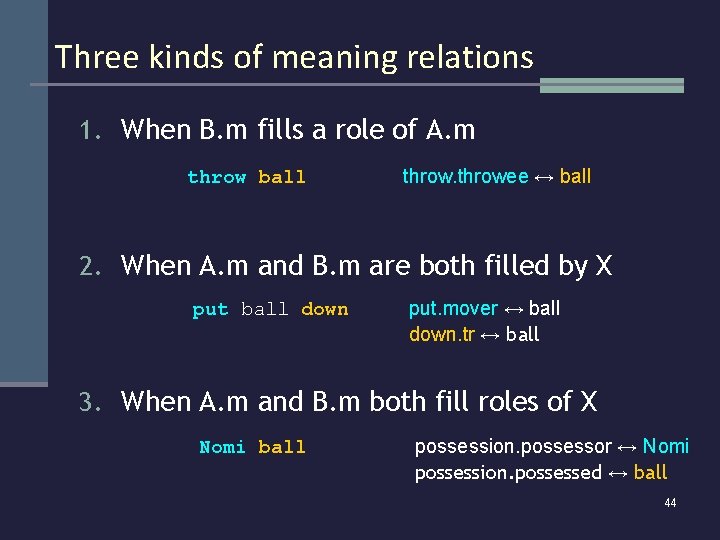

Three kinds of meaning relations

1. When B.m fills a role of A.m
   - throw ball: throwee ↔ ball
2. When A.m and B.m are both filled by X
   - put ball down: put.mover ↔ ball, down.tr ↔ ball
3. When A.m and B.m both fill roles of X
   - Nomi ball: possession.possessor ↔ Nomi, possession.possessed ↔ ball

Reorganizing the current grammar through merge and compose

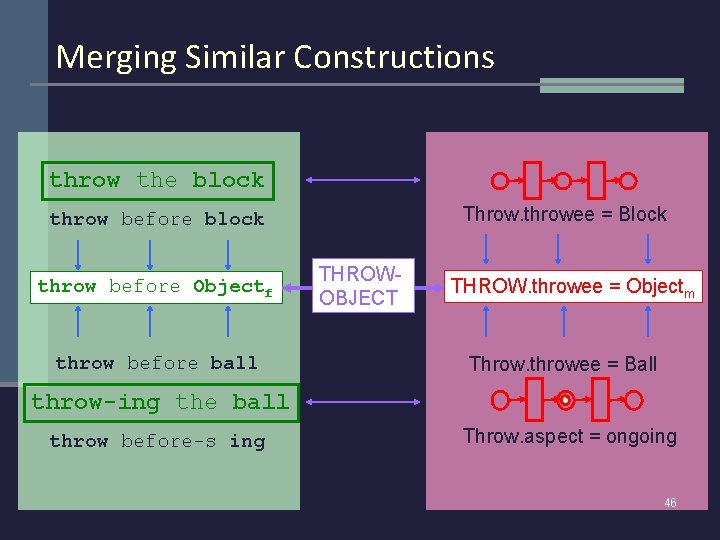

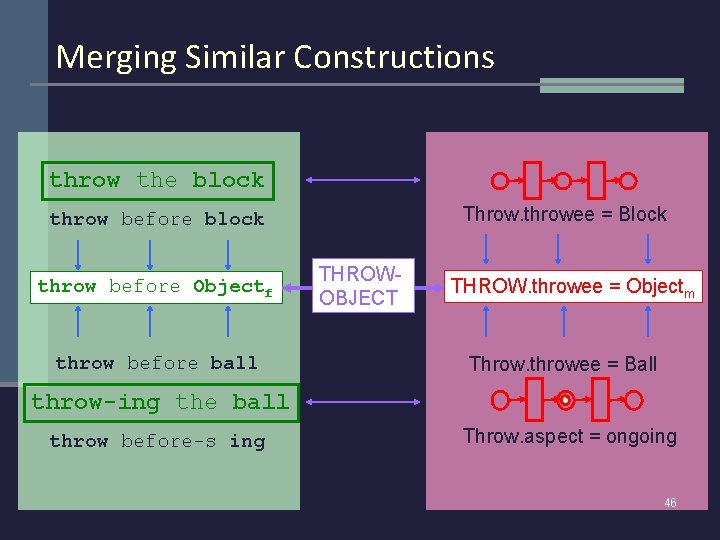

Merging Similar Constructions

[Figure: "throw the block" (throw before block; Throw.throwee = Block) and "throw the ball" (throw before ball; Throw.throwee = Ball) merge into THROW-OBJECT (throw before Objectf; THROW.throwee = Objectm). Also shown: "throw-ing the ball" (throw before-s ing; Throw.aspect = ongoing).]

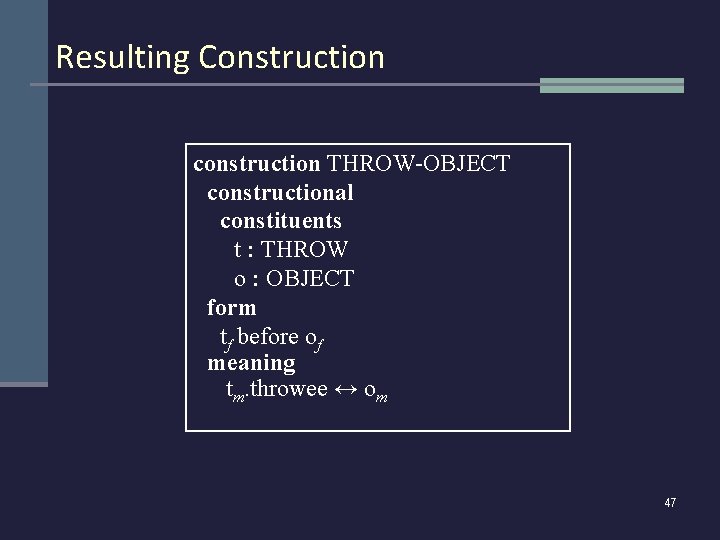

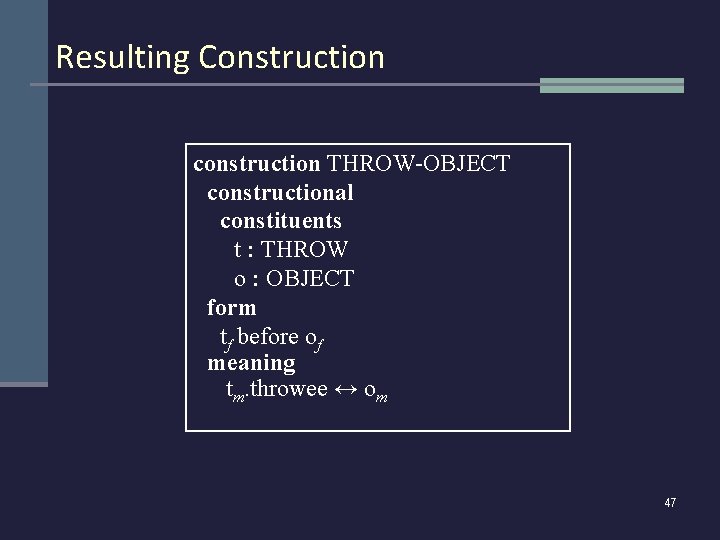

Resulting Construction

construction THROW-OBJECT
- constructional constituents
  - t : THROW
  - o : OBJECT
- form
  - tf before of
- meaning
  - tm.throwee ↔ om
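The merge that produces THROW-OBJECT can be illustrated by generalizing two constructions constituent by constituent to their closest common supertype in the ontology. This is a minimal sketch: the ontology contains only the subcase relations shown on the slides, and representing a construction as a tuple of constituent types is a hypothetical simplification that ignores form and meaning constraints.

```python
# Subcase relations from the slides: child type -> parent type.
ONTOLOGY = {"Ball": "Object", "Block": "Object", "Addressee": "Human"}


def ancestors(type_name):
    """Return the type followed by its supertypes, nearest first."""
    chain = [type_name]
    while type_name in ONTOLOGY:
        type_name = ONTOLOGY[type_name]
        chain.append(type_name)
    return chain


def common_supertype(a, b):
    """Closest type that subsumes both a and b, or None if there is none."""
    b_ancestors = set(ancestors(b))
    for candidate in ancestors(a):
        if candidate in b_ancestors:
            return candidate
    return None


def merge(cxn1, cxn2):
    """Generalize two constructions of equal arity, or return None if they do not align."""
    if len(cxn1) != len(cxn2):
        return None
    merged = []
    for type1, type2 in zip(cxn1, cxn2):
        general = common_supertype(type1, type2)
        if general is None:
            return None
        merged.append(general)
    return tuple(merged)


if __name__ == "__main__":
    print(merge(("Throw", "Ball"), ("Throw", "Block")))
    print(merge(("Throw", "Ball"), ("Throw", "Addressee")))
```

In the full learner a merge would be kept only if it lowers the overall cost. This sketch shows just the generalization step.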

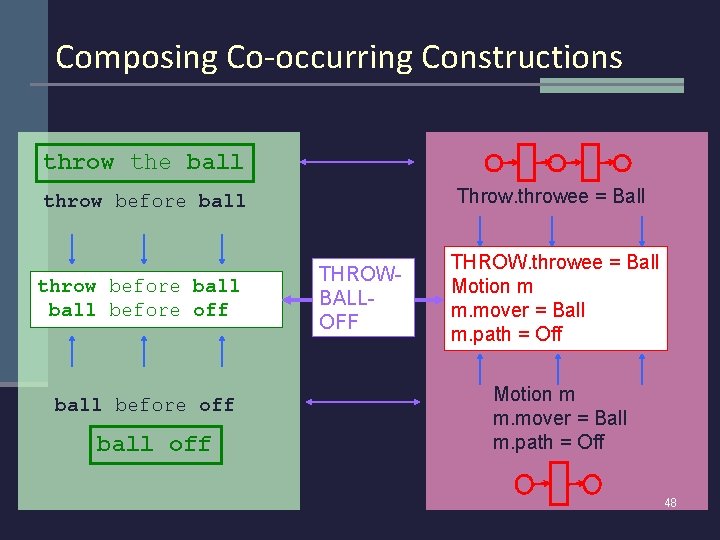

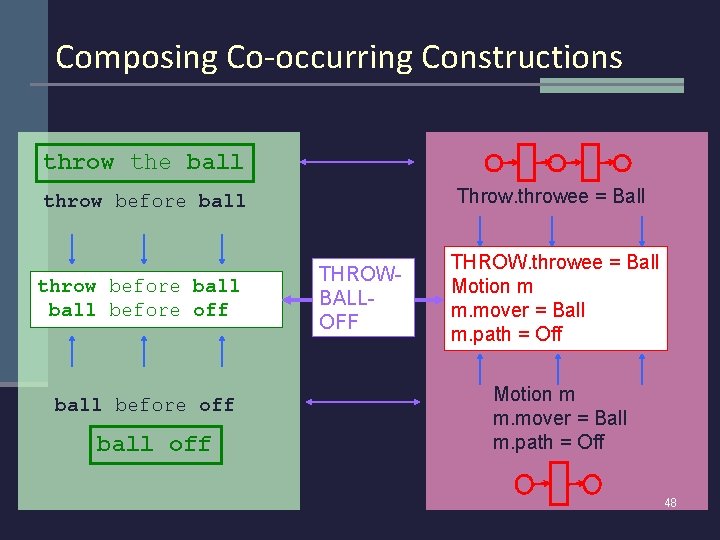

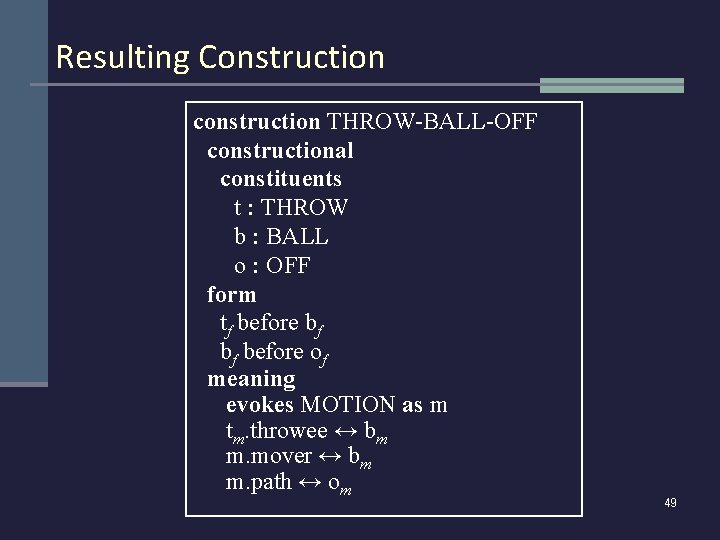

Composing Co-occurring Constructions

[Figure: "throw the ball" (throw before ball; Throw.throwee = Ball) and "ball off" (ball before off) compose into THROW-BALL-OFF: THROW.throwee = Ball; Motion m; m.mover = Ball; m.path = Off.]

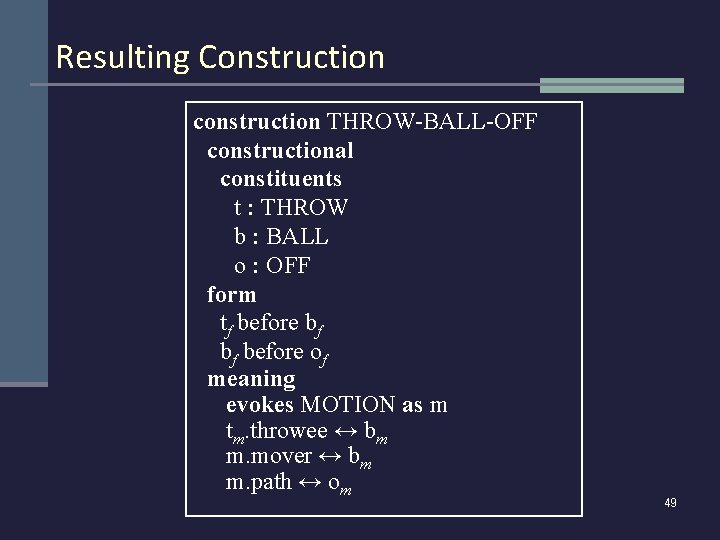

Resulting Construction

construction THROW-BALL-OFF
- constructional constituents
  - t : THROW
  - b : BALL
  - o : OFF
- form
  - tf before bf
  - bf before of
- meaning
  - evokes MOTION as m
  - tm.throwee ↔ bm
  - m.mover ↔ bm
  - m.path ↔ om

Precisely defining the learning algorithm

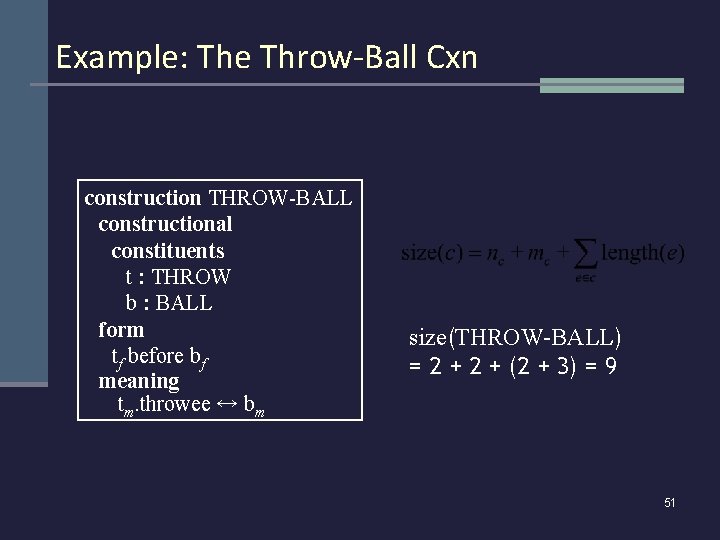

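Example: The Throw-Ball Cxn

construction THROW-BALL
- constructional constituents
  - t : THROW
  - b : BALL
- form
  - tf before bf
- meaning
  - tm.throwee ↔ bm

size(THROW-BALL) = 2 + 2 + (2 + 3) = 9, i.e. 2 constituents, 2 constraints, and slot chain lengths of 2 (tf, bf) and 3 (tm.throwee, bm)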
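The size computation for this example can be sketched in code, assuming size(c) = n_c + m_c + the sum of slot chain lengths over all element references, which reproduces the total of 9 given above. The `Construction` class and the convention that a reference's slot chain length is its number of dot-separated parts (so `tf` counts 1 and `tm.throwee` counts 2) are assumptions inferred from the example, not definitions taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class Construction:
    name: str
    constituents: list  # constituent names, e.g. ["t", "b"]
    constraints: list   # each constraint is a list of element references


def slot_chain_length(reference):
    """Number of slots in a reference such as 'tm.throwee' (assumed convention)."""
    return len(reference.split("."))


def construction_size(cxn):
    """n_c + m_c + total slot chain length of all element references."""
    n_c = len(cxn.constituents)
    m_c = len(cxn.constraints)
    reference_lengths = sum(
        slot_chain_length(ref) for constraint in cxn.constraints for ref in constraint
    )
    return n_c + m_c + reference_lengths


def grammar_size(grammar):
    """Size of a grammar: the sum of the sizes of its constructions."""
    return sum(construction_size(cxn) for cxn in grammar)


THROW_BALL = Construction(
    name="THROW-BALL",
    constituents=["t", "b"],
    constraints=[
        ["tf", "bf"],          # form: tf before bf
        ["tm.throwee", "bm"],  # meaning: tm.throwee <-> bm
    ],
)

if __name__ == "__main__":
    print(construction_size(THROW_BALL))
```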

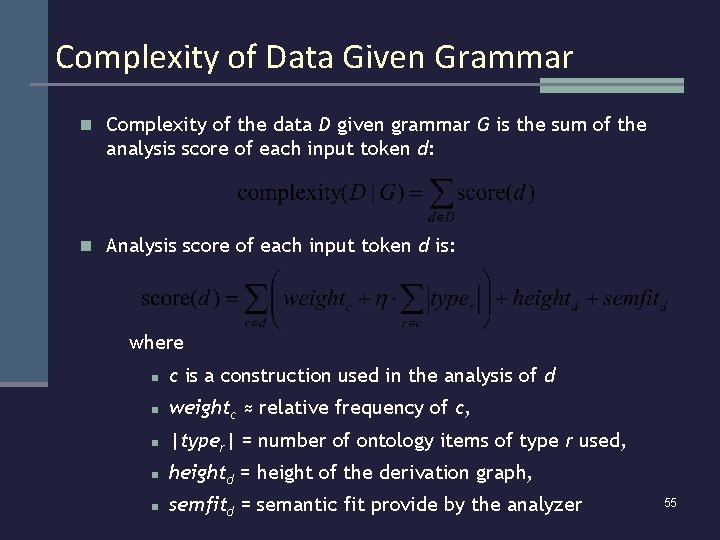

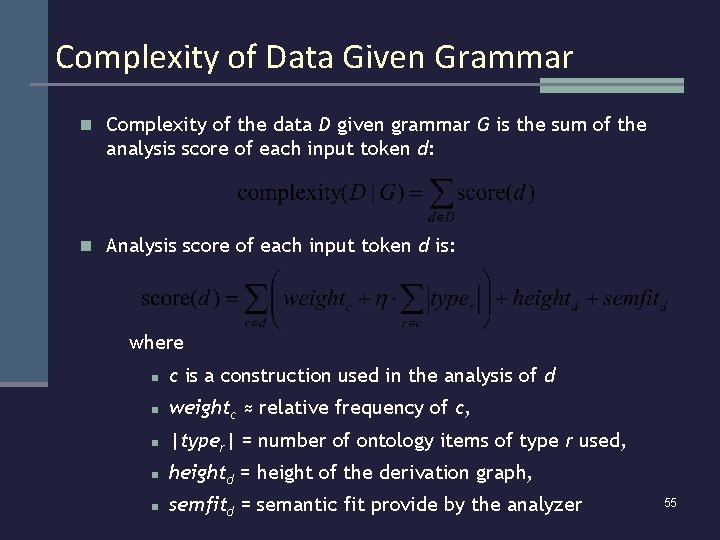

Complexity of Data Given Grammar

- Complexity of the data D given grammar G is the sum of the analysis score of each input token d: $\mathrm{complexity}(D \mid G) = \sum_{d \in D} \mathrm{score}(d)$
- The analysis score of each input token d is computed from:
  - c, a construction used in the analysis of d
  - weight_c ≈ relative frequency of c
  - |type_r| = number of ontology items of type r used
  - height_d = height of the derivation graph
  - semfit_d = semantic fit provided by the analyzer

Preliminary Results

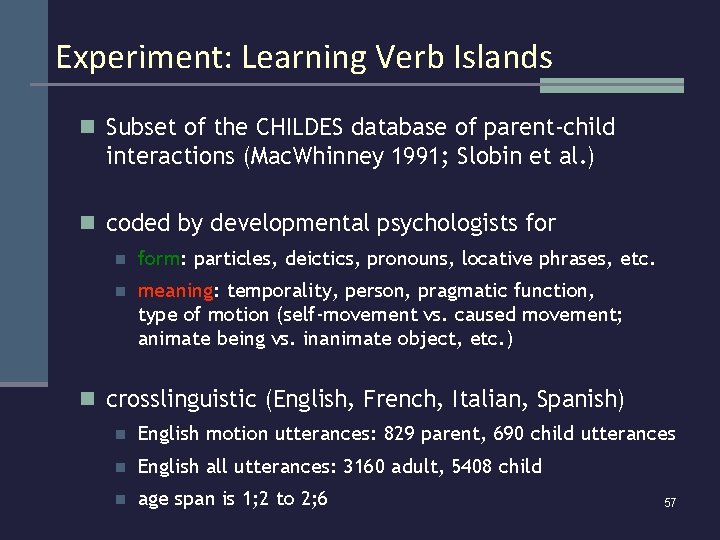

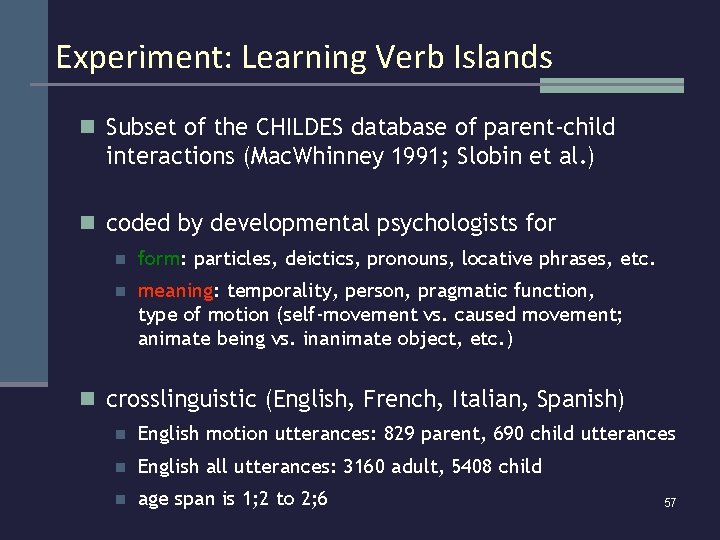

Experiment: Learning Verb Islands

- Subset of the CHILDES database of parent-child interactions (MacWhinney 1991; Slobin et al.)
  - coded by developmental psychologists for
    - form: particles, deictics, pronouns, locative phrases, etc.
    - meaning: temporality, person, pragmatic function, type of motion (self-movement vs. caused movement; animate being vs. inanimate object, etc.)
  - crosslinguistic (English, French, Italian, Spanish)
- English motion utterances: 829 parent, 690 child utterances
- English all utterances: 3160 adult, 5408 child
- age span is 1;2 to 2;6

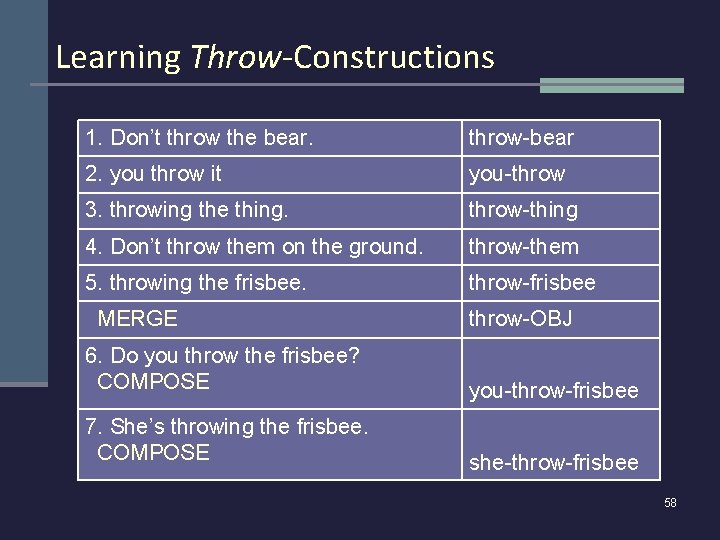

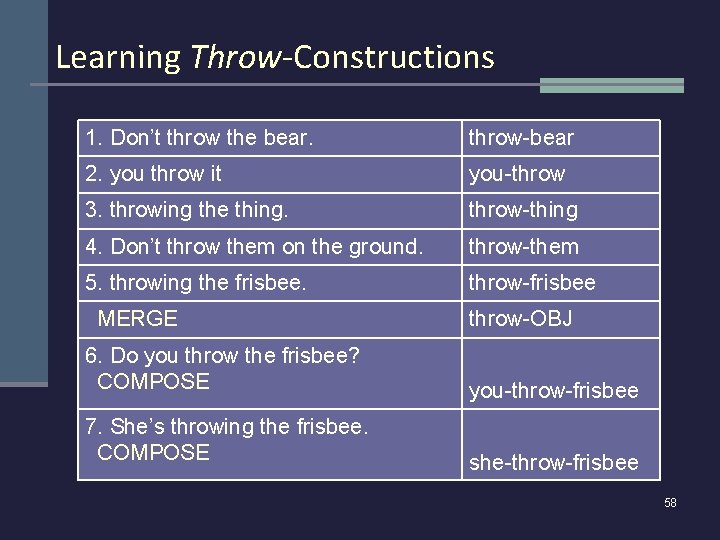

Learning Throw-Constructions

1. Don’t throw the bear. → throw-bear
2. you throw it → you-throw
3. throwing the thing. → throw-thing
4. Don’t throw them on the ground. → throw-them
5. throwing the frisbee. → throw-frisbee; MERGE → throw-OBJ
6. Do you throw the frisbee? → COMPOSE → you-throw-frisbee
7. She’s throwing the frisbee. → COMPOSE → she-throw-frisbee

Learning Results

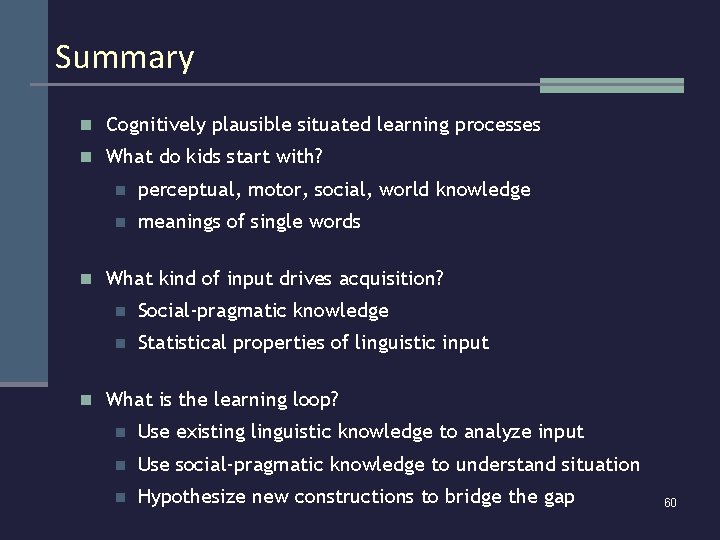

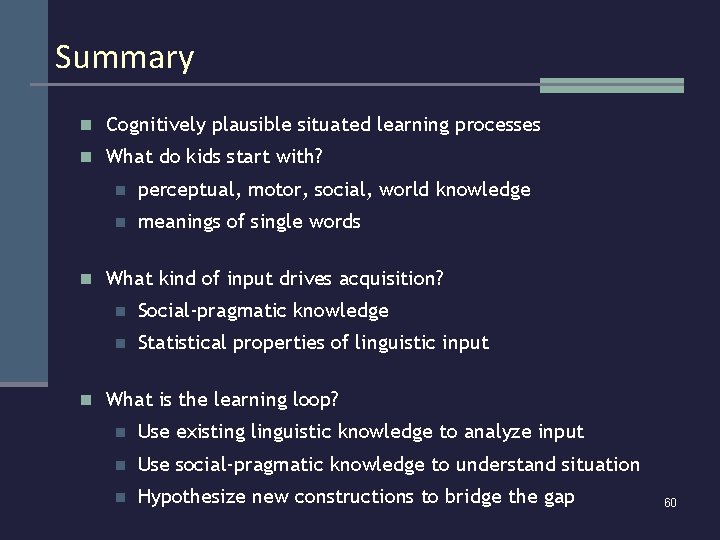

Summary

- Cognitively plausible situated learning processes
- What do kids start with?
  - perceptual, motor, social, world knowledge
  - meanings of single words
- What kind of input drives acquisition?
  - Social-pragmatic knowledge
  - Statistical properties of linguistic input
- What is the learning loop?
  - Use existing linguistic knowledge to analyze input
  - Use social-pragmatic knowledge to understand situation
  - Hypothesize new constructions to bridge the gap
