


Managing Morphologically Complex Languages in Information Retrieval

Kal Järvelin & Many Others, University of Tampere

1. Introduction

- Morphologically complex languages, unlike English or Chinese, have
  - rich inflectional and derivational morphology
  - rich compound formation
- U. Tampere experiences, 1998-2008:
  - monolingual IR
  - cross-language IR
  - focus: Finnish, Germanic languages, English

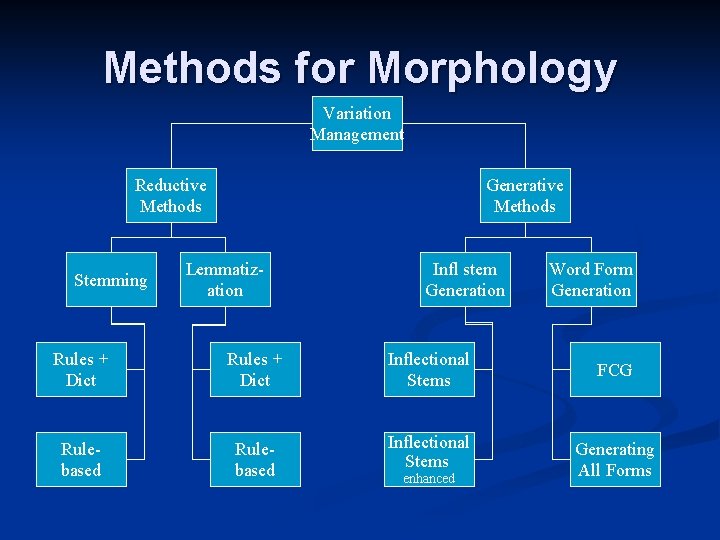

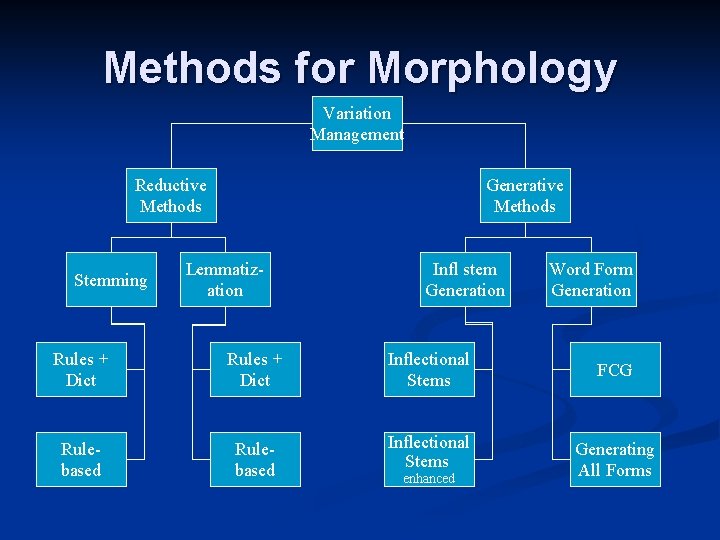

Methods for Morphology Variation Management

- Reductive methods
  - Stemming
  - Lemmatization
- Generative methods
  - Inflectional stem generation
    - Rules + dictionary: inflectional stems
    - Rule-based: inflectional stems, enhanced
  - Word form generation
    - FCG
    - Generating all forms

Agenda

1. Introduction
2. Reductive Methods
3. Compounds
4. Generative Methods
5. Query Structures
6. OOV Words
7. Conclusion

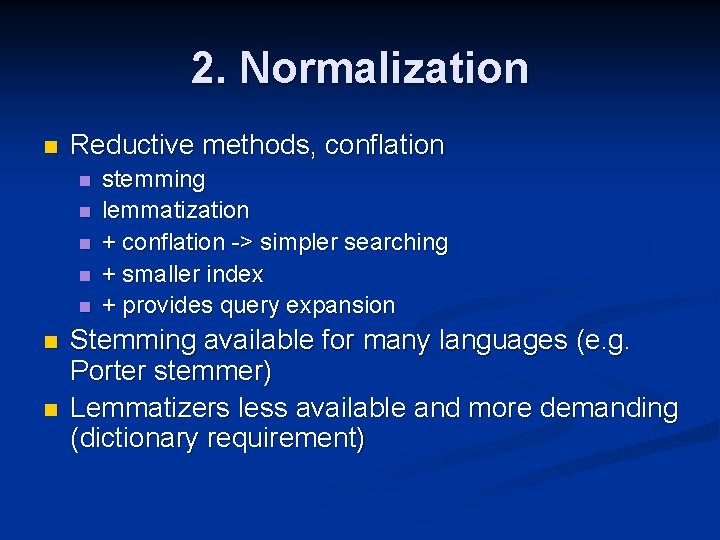

2. Normalization

- Reductive methods (conflation):
  - stemming
  - lemmatization
- Conflation:
  - simpler searching
  - smaller index
  - provides query expansion
- Stemming is available for many languages (e.g. the Porter stemmer)
- Lemmatizers are less available and more demanding (they require a dictionary)
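A minimal sketch of conflation, assuming a hypothetical four-rule suffix list (this is not the Porter algorithm or any Snowball stemmer): distinct inflected tokens collapse onto fewer index terms, which is where the smaller index and the implicit query expansion come from.

```python
# A toy suffix-stripping stemmer: NOT the Porter algorithm, just a few
# hypothetical English suffix rules to illustrate conflation.
SUFFIXES = ("ings", "ing", "es", "s")  # checked in this order, longest first


def toy_stem(word: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least 3 letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def vocabulary(tokens, normalize=lambda w: w):
    """Distinct index terms after lower-casing and optional normalization."""
    return {normalize(t.lower()) for t in tokens}


tokens = ["train", "trains", "training", "trainings", "book", "books", "booking"]
raw_vocab = vocabulary(tokens)
stemmed_vocab = vocabulary(tokens, toy_stem)
print(len(raw_vocab), len(stemmed_vocab))  # 7 2
print(sorted(stemmed_vocab))  # ['book', 'train']
```

Note that the same rules also merge *booking* with *book*, the kind of inflectional homography that later hurts precision in CLIR.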

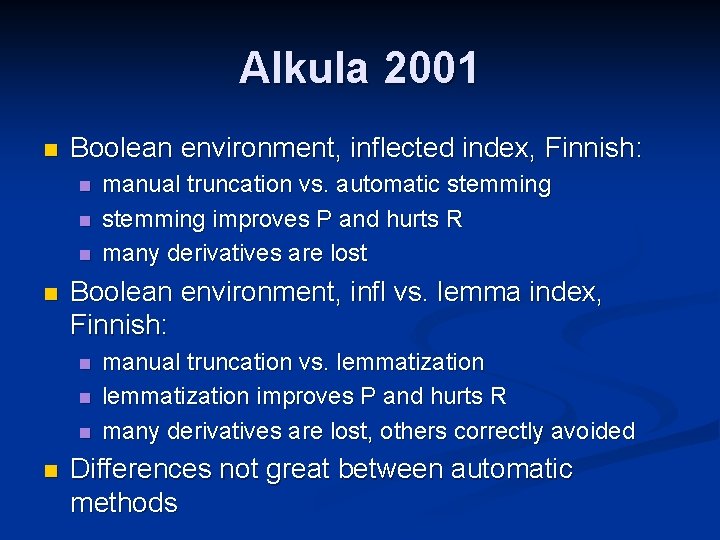

Alkula 2001

- Boolean environment, inflected index, Finnish:
  - manual truncation vs. automatic stemming: improves precision (P) and hurts recall (R)
  - many derivatives are lost
- Boolean environment, inflected vs. lemma index, Finnish:
  - manual truncation vs. lemmatization: improves P and hurts R
  - many derivatives are lost, others correctly avoided
- Differences between the automatic methods are not great

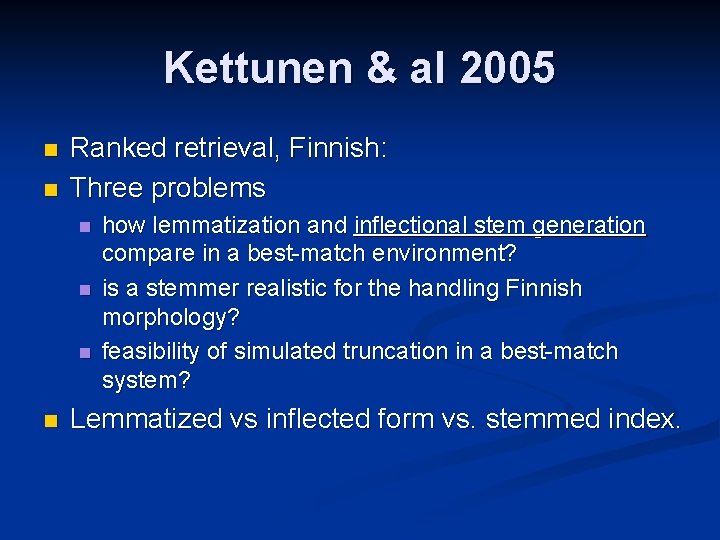

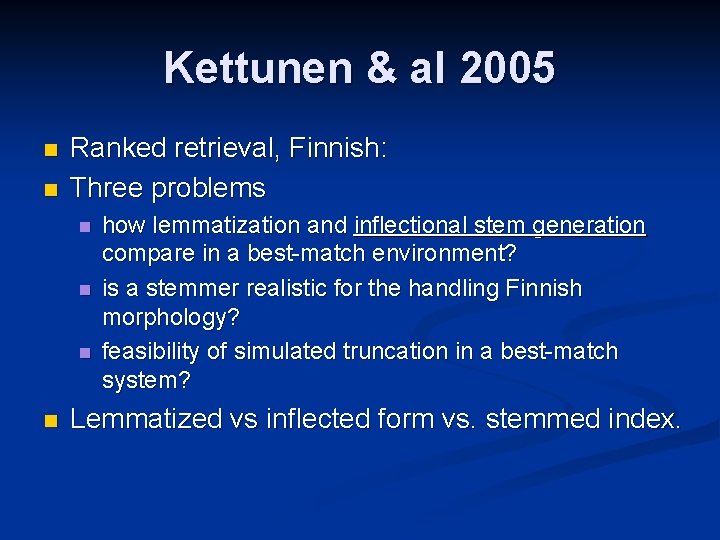

Kettunen & al. 2005

- Ranked retrieval, Finnish: three problems
  - How do lemmatization and inflectional stem generation compare in a best-match environment?
  - Is a stemmer realistic for handling Finnish morphology?
  - Is simulated truncation feasible in a best-match system?
- Lemmatized vs. inflected-form vs. stemmed index

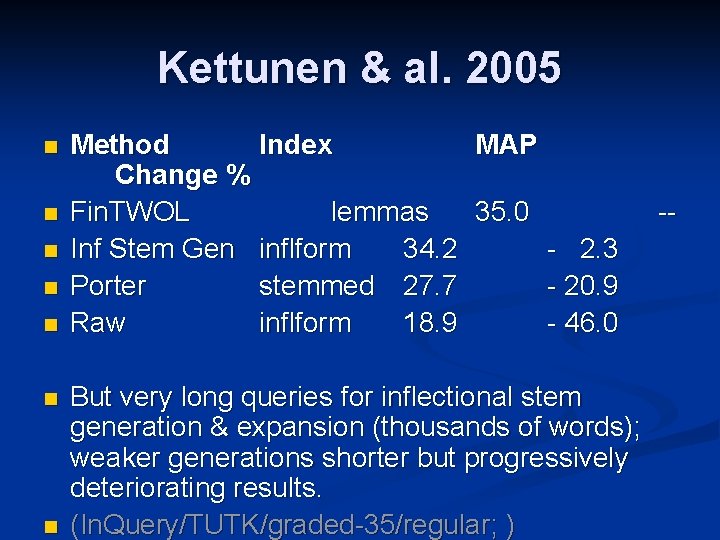

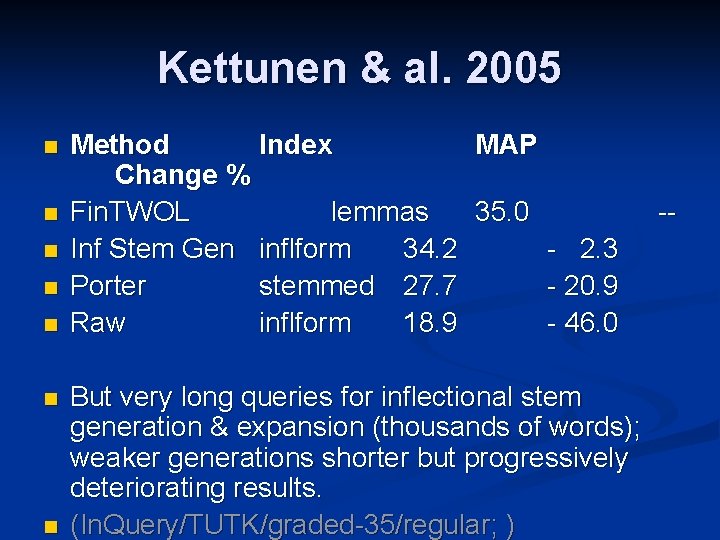

Kettunen & al. 2005

| Method | Index | MAP | Change % |
|---|---|---|---|
| Fin. TWOL | lemmas | 35.0 | baseline |
| Inflectional stem generation | inflected forms | 34.2 | -2.3 |
| Porter | stemmed | 27.7 | -20.9 |
| Raw | inflected forms | 18.9 | -46.0 |

But inflectional stem generation and expansion produce very long queries (thousands of words); weaker generations give shorter queries but progressively deteriorating results. (InQuery/TUTK/graded-35/regular)

Kettunen & al. 2005

Monolingual IR: Airio 2006 (InQuery/CLEF/TD/TWOL & Porter & Raw)

CLIR: Inflectional Morphology

- Natural language queries contain source keys in inflected form
- Dictionary headwords are in basic form (lemmas)
- The significance of the problem varies by language
- Stemming:
  - stem both the dictionary and the query words
  - but this may cause far too many translations
- In dictionary translation, stemming is best applied after translation

Lemmatization in CLIR

- Lemmatization gives easy access to dictionaries
  - but tokens may be ambiguous
  - dictionary translations are not always in basic form
- The lemmatizer's dictionary coverage matters:
  - insufficient coverage -> non-lemmatized source keys, OOVs
  - too broad coverage -> too many senses provided

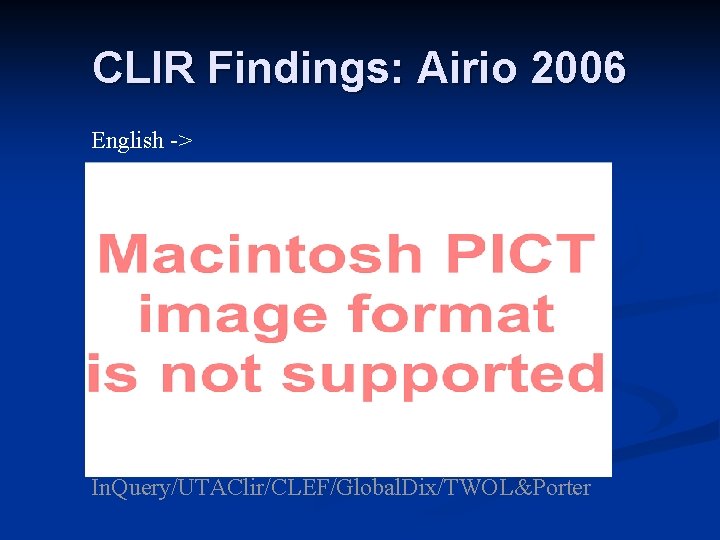

CLIR Findings: Airio 2006, English -> X (InQuery/UTAClir/CLEF/GlobalDix/TWOL & Porter)


3. Compounds

- Compound word types:
  - determinative: Weinkeller, vinkällare, lifejacket
  - copulative: schwarzweiss, svartvit, black-and-white
  - compositional: Stadtverwaltung, stadsförvaltning
  - non-compositional: Erdbeere, jordgubbe, strawberry

Compound Word Translation

- Not all compounds are in the dictionary:
  - some languages are very productive
  - small dictionaries: atomic words, old non-compositional compounds
  - large dictionaries: many compositional compounds added
- Compounds remove phrase identification problems, but cause translation and query formulation problems

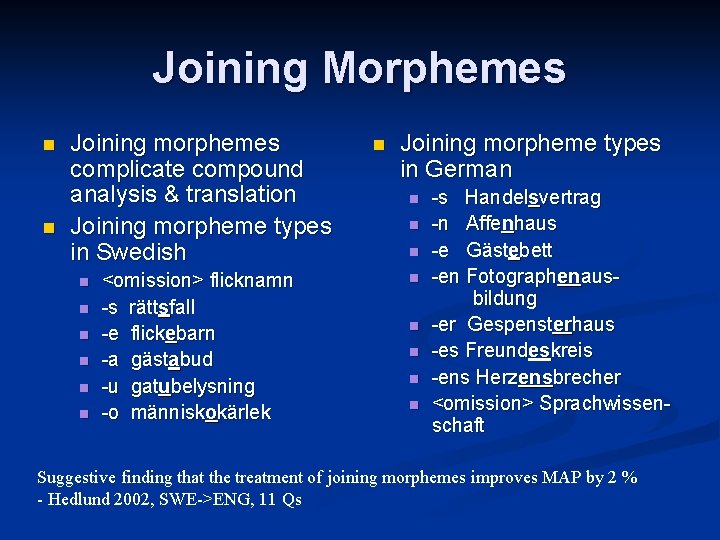

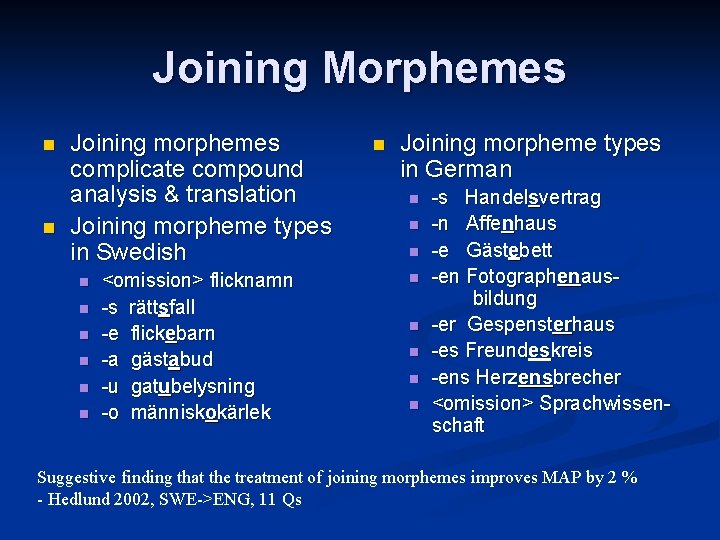

Joining Morphemes

- Joining morphemes complicate compound analysis & translation
- Joining morpheme types in Swedish:
  - (omission): flicknamn
  - -s: rättsfall
  - -e: flickebarn
  - -a: gästabud
  - -u: gatubelysning
  - -o: människokärlek
- Joining morpheme types in German:
  - -s: Handelsvertrag
  - -n: Affenhaus
  - -e: Gästebett
  - -en: Fotographenausbildung
  - -er: Gespensterhaus
  - -es: Freundeskreis
  - -ens: Herzensbrecher
  - (omission): Sprachwissenschaft
- Suggestive finding: treating joining morphemes improves MAP by 2% (Hedlund 2002, SWE -> ENG, 11 queries)
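The joining morphemes above can be handled with a small dictionary-based splitter. The sketch below assumes a hypothetical mini-lexicon and tries the German linking elements from the slide (plus omission of a final *-e*) whenever a candidate modifier is not itself a lexicon word. It does not handle umlaut plurals such as *Gästebett*, nor the Swedish linking vowels.

```python
# Hypothetical mini-lexicon of German lemmas (lower-cased).
LEXICON = {"handel", "vertrag", "affe", "haus", "freund", "kreis",
           "sprache", "wissenschaft", "herz", "brecher", "gespenst"}
# German joining morphemes from the slide, longest first.
LINKS = ("ens", "en", "er", "es", "e", "n", "s")


def modifier_lemma(part):
    """Map a compound modifier to a lexicon lemma, or return None."""
    if part in LEXICON:
        return part
    for link in LINKS:
        stem = part[: -len(link)]
        if part.endswith(link) and stem in LEXICON:
            return stem  # e.g. handels -> handel
    if part + "e" in LEXICON:  # omission, e.g. Sprache -> Sprach-
        return part + "e"
    return None


def split_compound(word, min_len=3):
    """Shortest-first split with backtracking; None if no full analysis."""
    w = word.lower()
    if w in LEXICON:
        return [w]
    for i in range(min_len, len(w) - min_len + 1):
        head = modifier_lemma(w[:i])
        if head is None:
            continue
        rest = split_compound(w[i:], min_len)
        if rest:
            return [head] + rest
    return None


for w in ["Handelsvertrag", "Affenhaus", "Freundeskreis",
          "Herzensbrecher", "Sprachwissenschaft"]:
    print(w, split_compound(w))
```

Each returned component can then be looked up in the dictionary separately, which is the split-and-translate fallback discussed for untranslatable compounds.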

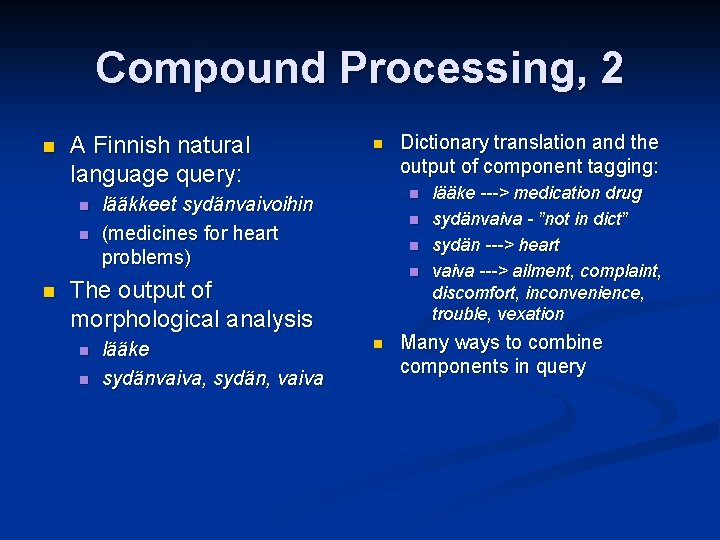

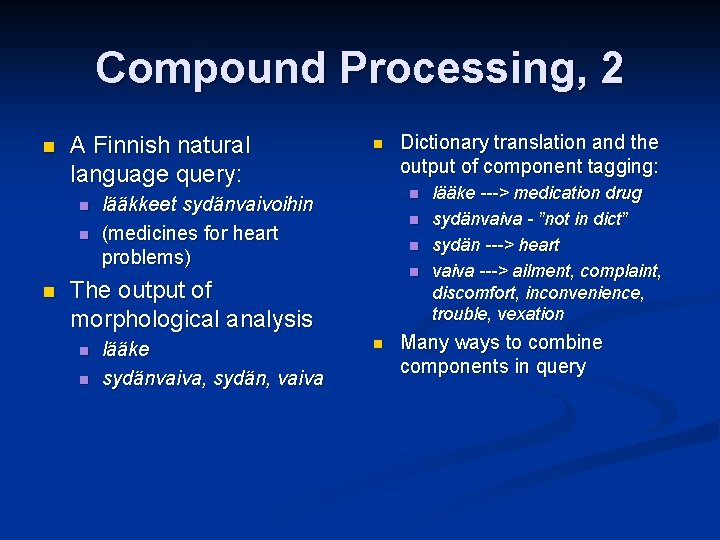

Compound Processing, 2

- A Finnish natural language query:
  - lääkkeet sydänvaivoihin (medicines for heart problems)
- The output of morphological analysis:
  - lääke
  - sydänvaiva, sydän, vaiva
- Dictionary translation and the output of component tagging:
  - lääke ---> medication, drug
  - sydänvaiva ---> "not in dict"
  - sydän ---> heart
  - vaiva ---> ailment, complaint, discomfort, inconvenience, trouble, vexation
- There are many ways to combine the components in the query

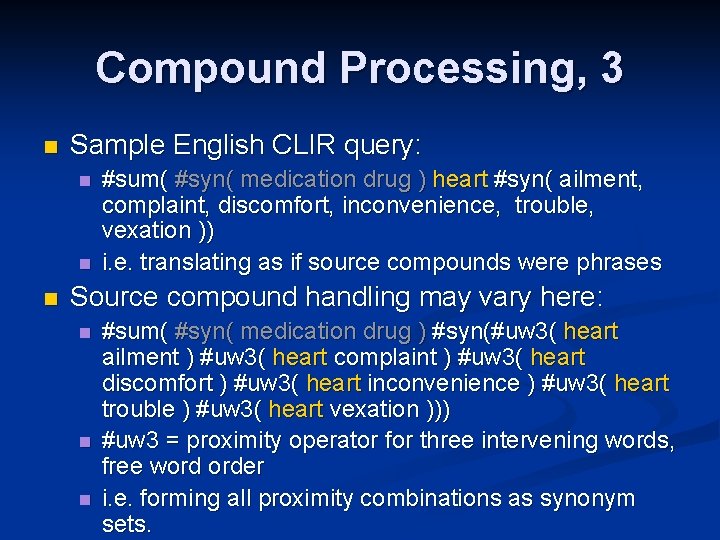

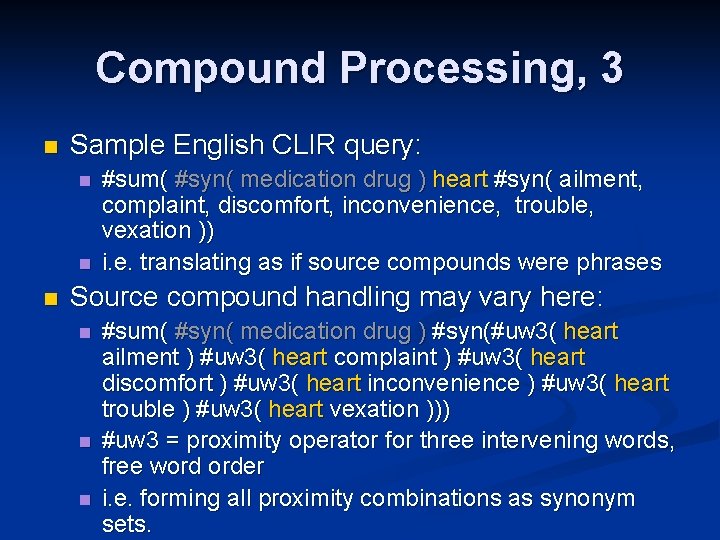

Compound Processing, 3

- Sample English CLIR query:
  - `#sum( #syn( medication drug ) heart #syn( ailment complaint discomfort inconvenience trouble vexation ) )`
  - i.e. translating as if source compounds were phrases
- Source compound handling may vary here:
  - `#sum( #syn( medication drug ) #syn( #uw3( heart ailment ) #uw3( heart complaint ) #uw3( heart discomfort ) #uw3( heart inconvenience ) #uw3( heart trouble ) #uw3( heart vexation ) ) )`
  - `#uw3` = proximity operator for three intervening words, free word order
  - i.e. forming all proximity combinations as synonym sets
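As a sketch of the two query forms, assuming the component translations are already known (here taken from the lääke / sydänvaiva example), the strings can be built mechanically; `itertools.product` yields every heart-x pairing for the proximity variant. The code only assembles InQuery-style query text (with space-separated #syn alternatives) and does not call any retrieval engine.

```python
from itertools import product


def syn(terms):
    """Wrap alternatives in the #syn operator, space-separated."""
    return "#syn( " + " ".join(terms) + " )"


def key(alternatives):
    """A single translation stays a bare term; several become a #syn set."""
    return alternatives[0] if len(alternatives) == 1 else syn(alternatives)


def as_phrase(components):
    """Translate a compound as if it were a phrase: one key per component."""
    return " ".join(key(alts) for alts in components)


def as_proximity(components, window=3):
    """Every cross-combination of component translations in #uwN, all in one #syn."""
    combos = product(*components)
    return syn([f"#uw{window}( " + " ".join(c) + " )" for c in combos])


medicine = ["medication", "drug"]
heart_problem = [["heart"],
                 ["ailment", "complaint", "discomfort",
                  "inconvenience", "trouble", "vexation"]]

q1 = f"#sum( {key(medicine)} {as_phrase(heart_problem)} )"
q2 = f"#sum( {key(medicine)} {as_proximity(heart_problem)} )"
print(q1)
print(q2)
```

The proximity variant grows multiplicatively with the number of translations per component, which is worth keeping in mind given that no clear benefit was observed from it.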

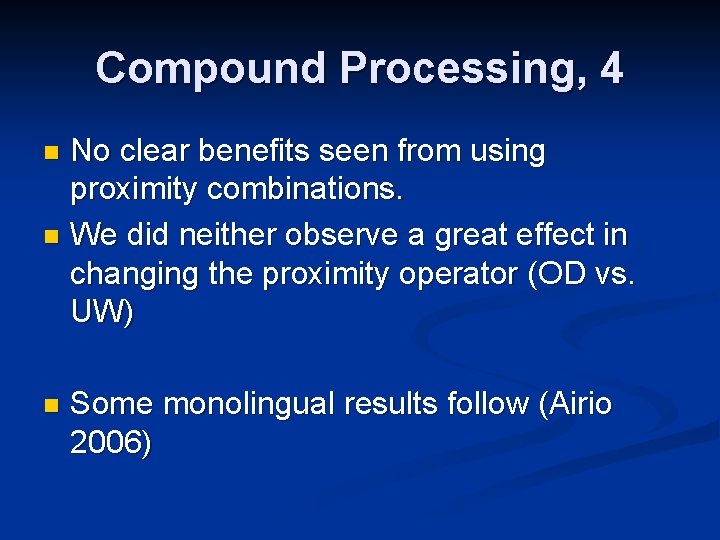

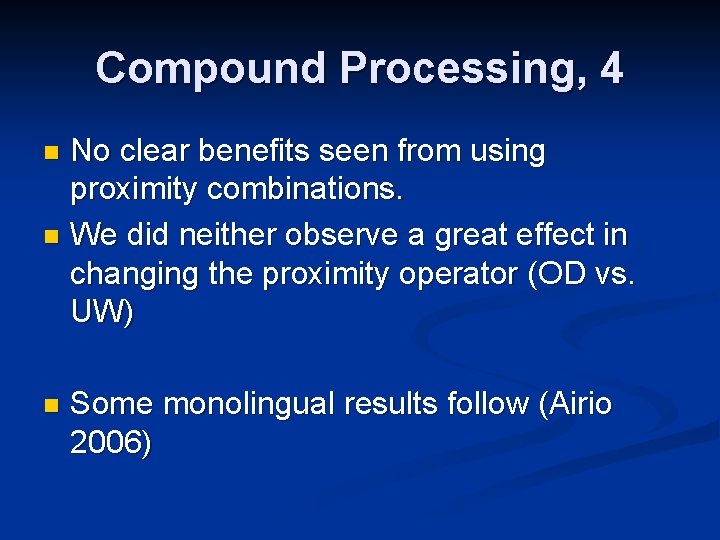

Compound Processing, 4

- No clear benefits were seen from using proximity combinations
- Nor did we observe a great effect from changing the proximity operator (OD vs. UW)
- Some monolingual results follow (Airio 2006)

InQuery/CLEF/Raw & TWOL & Porter

[Figure: results for English, Swedish and Finnish; morphological complexity increases from English to Finnish]

Hedlund 2002

- Compound translation as compounds: 47 German CLEF 2001 topics, English document collection
  - comprehensive dictionary (many compounds) vs. small dictionary (no compounds)
  - mean AP 34.7% vs. 30.4%
  - the dictionary matters...
- Alternative approach: if a compound is not translatable, split it and translate the components

CLEF Ger -> Eng (InQuery/UTAClir/CLEF/Duden/TWOL/UW 5+n)

| Run | Effectiveness |
|---|---|
| 1. Best manually translated | 0.4465 |
| 2. Large dictionary, no compound splitting | 0.3520 |
| 3. Limited dictionary, no compound splitting | 0.3057 |
| 4. Large dictionary & compound splitting | 0.3830 |
| 5. Limited dictionary & compound splitting | 0.3547 |

CLIR Findings: Airio 2006, English -> X (InQuery/UTAClir/CLEF/GlobalDix/TWOL & Porter)

[Figure panels: Eng -> Fin, Eng -> Ger, Eng -> Swe]


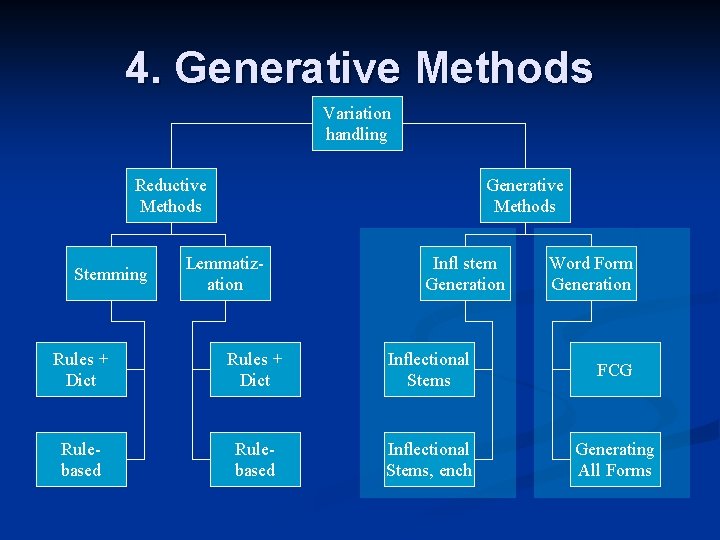

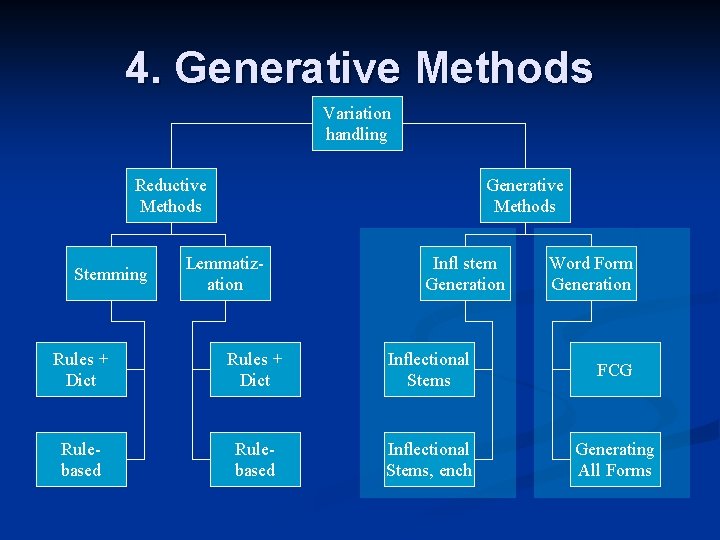

4. Generative Methods

Variation handling: reductive methods (stemming, lemmatization) vs. generative methods (inflectional stem generation: rules + dictionary, or rule-based enhanced; word form generation: FCG, or generating all forms)

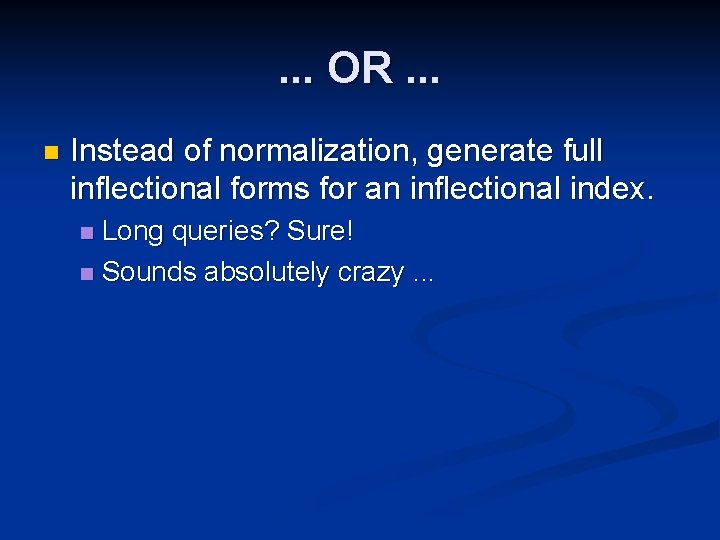

Generative Methods: Inflectional Stems

- Instead of normalization, generate inflectional stems for an inflectional index
- Then, using the stems, harvest full forms from the index
  - long queries...
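A minimal sketch of the harvesting step, assuming hypothetical inflectional stems for *lääke* (medicine) and a toy index vocabulary: every index term that begins with a generated stem is collected into one synonym set. A real generator would derive the stems from morphological rules and a dictionary.

```python
def harvest(stems, index_terms):
    """Return the index terms that start with any of the generated stems."""
    return sorted(t for t in index_terms if any(t.startswith(s) for s in stems))


# Hypothetical inflectional stems for Finnish 'lääke' (medicine).
stems = ["lääke", "lääkkee", "lääkkei"]
# Hypothetical vocabulary of an inflected-form index.
index_terms = ["lääke", "lääkettä", "lääkkeen", "lääkkeet", "lääkkeitä",
               "lääkkeisiin", "lääkäri", "sydän"]

forms = harvest(stems, index_terms)
query = "#syn( " + " ".join(forms) + " )"
print(query)
```

Plain prefix matching also collects any compound that starts with *lääke-*, which is one reason these expanded queries grow so long.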

... OR ...

- Instead of normalization, generate full inflectional forms for an inflectional index
- Long queries? Sure!
- Sounds absolutely crazy...

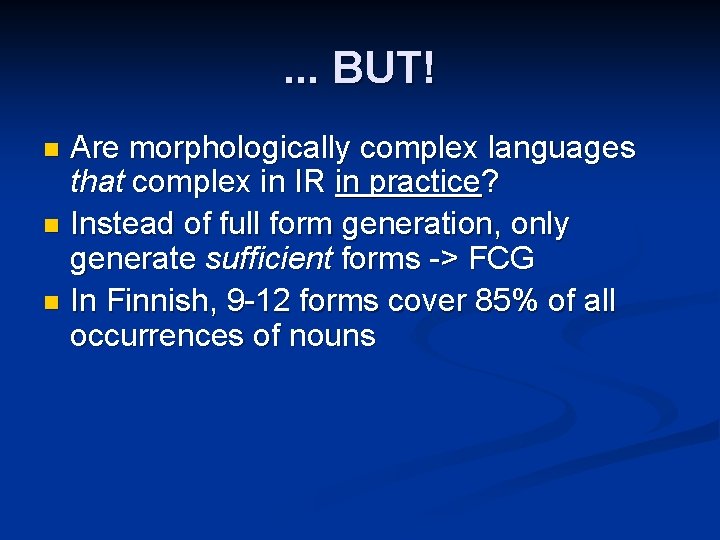

... BUT!

- Are morphologically complex languages really that complex in IR in practice?
- Instead of full form generation, generate only sufficient forms -> FCG (frequent case generation)
- In Finnish, 9-12 forms cover 85% of all occurrences of nouns
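The FCG idea can be sketched as choosing the most frequent forms until a coverage target is met. The counts below are made up for illustration (real ones would come from corpus statistics per case), and the 0.85 target echoes the 85% figure on the slide.

```python
def forms_for_coverage(freqs, target=0.85):
    """Take forms in descending frequency until their share reaches target."""
    total = sum(freqs.values())
    chosen, covered = [], 0
    for form, count in sorted(freqs.items(), key=lambda kv: kv[1], reverse=True):
        if covered / total >= target:
            break
        chosen.append(form)
        covered += count
    return chosen, covered / total


# Hypothetical occurrence counts for forms of Finnish 'talo' (house).
counts = {"talo": 30, "talon": 25, "taloa": 12, "talossa": 10, "talot": 9,
          "taloon": 5, "talosta": 4, "talolla": 2, "talojen": 2, "taloissa": 1}

chosen, coverage = forms_for_coverage(counts)
print(chosen, coverage)  # ['talo', 'talon', 'taloa', 'talossa', 'talot'] 0.86
```

The skewed distribution is what makes this work: a handful of forms covers most occurrences, so the query stays short.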

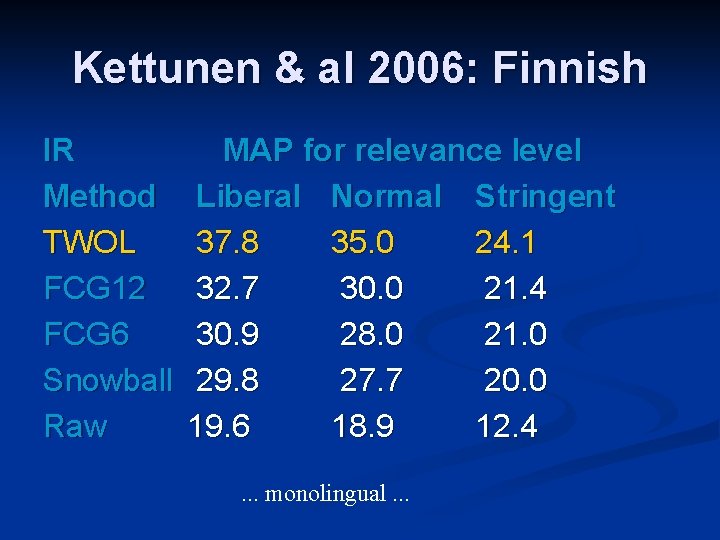

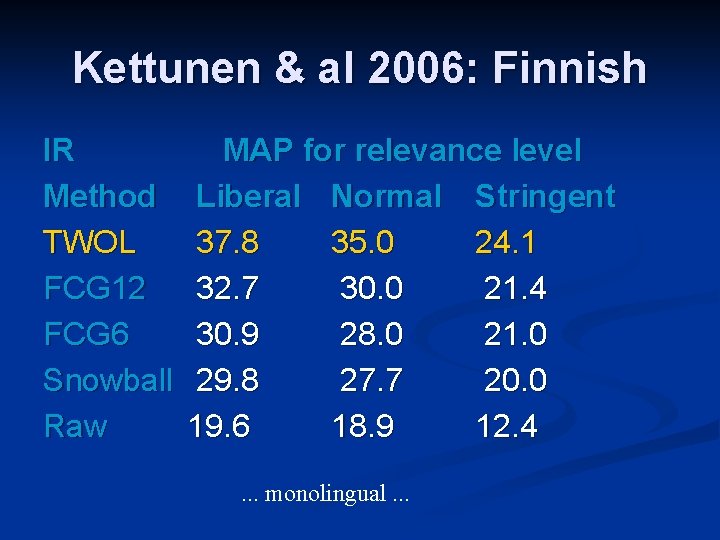

Kettunen & al. 2006: Finnish IR (monolingual)

| Method | MAP, liberal relevance | MAP, normal relevance | MAP, stringent relevance |
|---|---|---|---|
| TWOL | 37.8 | 35.0 | 24.1 |
| FCG 12 | 32.7 | 30.0 | 21.4 |
| FCG 6 | 30.9 | 28.0 | 21.0 |
| Snowball | 29.8 | 27.7 | 20.0 |
| Raw | 19.6 | 18.9 | 12.4 |

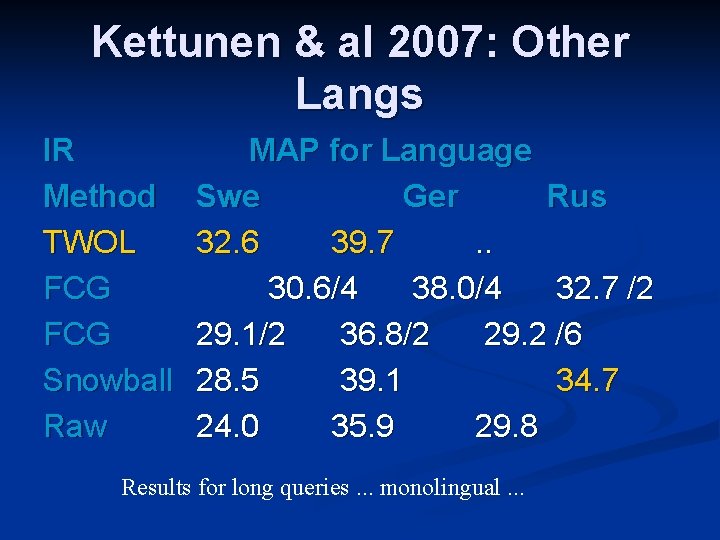

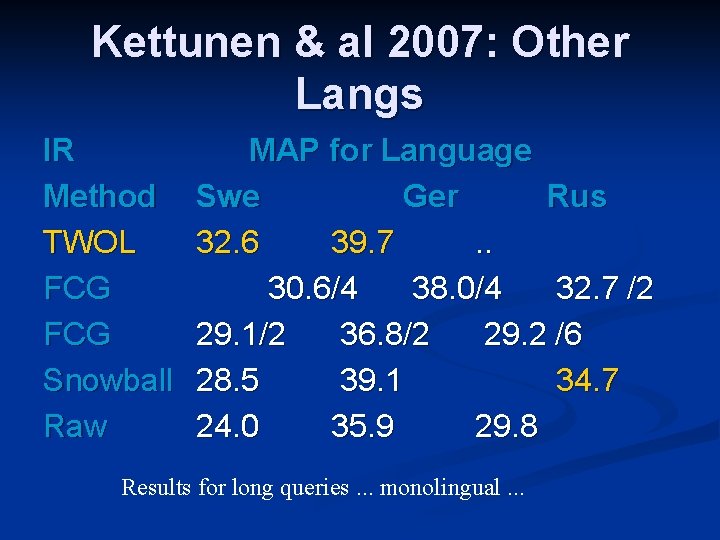

Kettunen & al. 2007: Other Languages IR (monolingual, results for long queries)

| Method | MAP, Swe | MAP, Ger | MAP, Rus |
|---|---|---|---|
| TWOL | 32.6 | 39.7 | .. |
| FCG | 30.6/4 | 38.0/4 | 32.7/2 |
| FCG | 29.1/2 | 36.8/2 | 29.2/6 |
| Snowball | 28.5 | 39.1 | 34.7 |
| Raw | 24.0 | 35.9 | 29.8 |

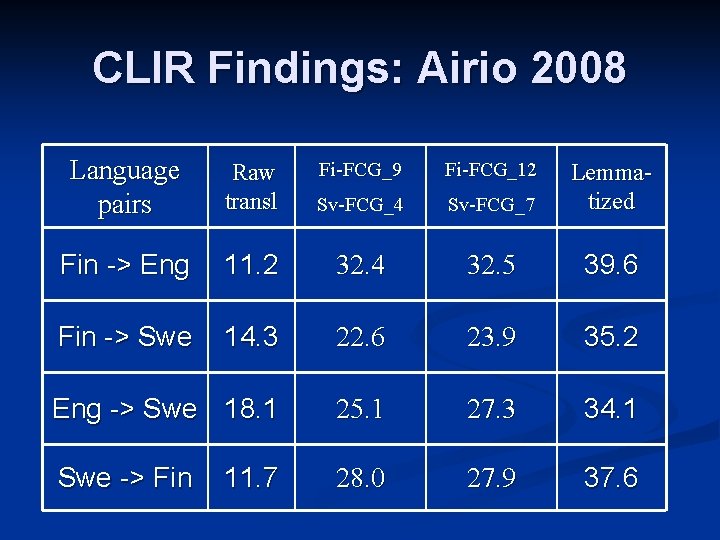

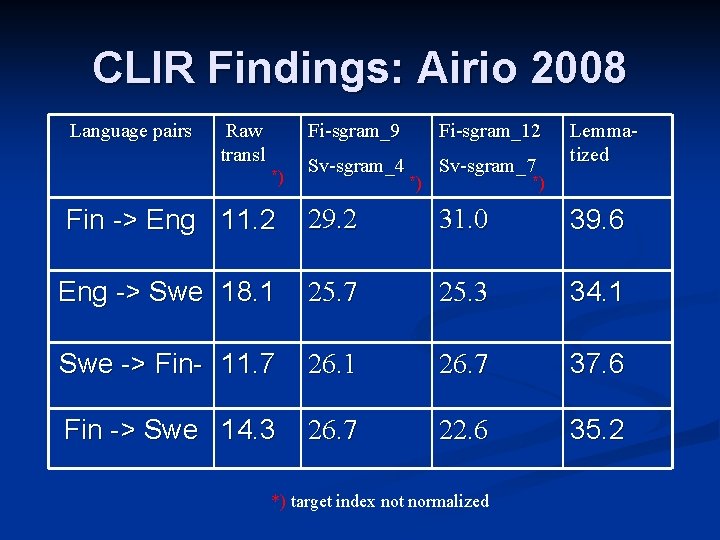

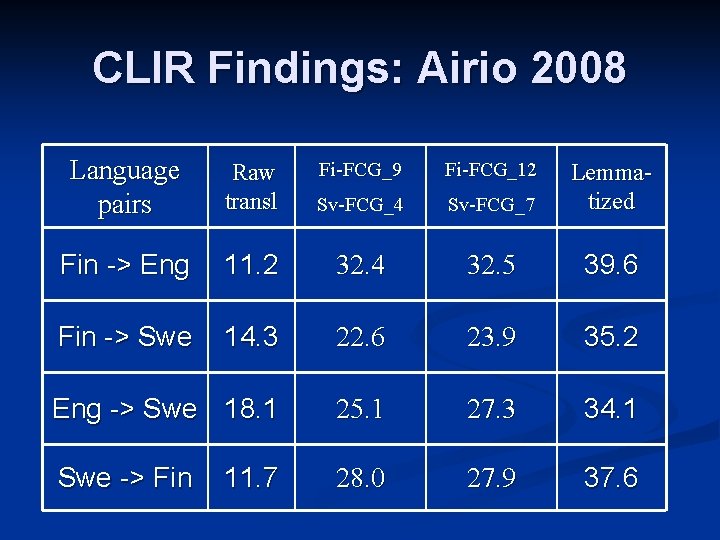

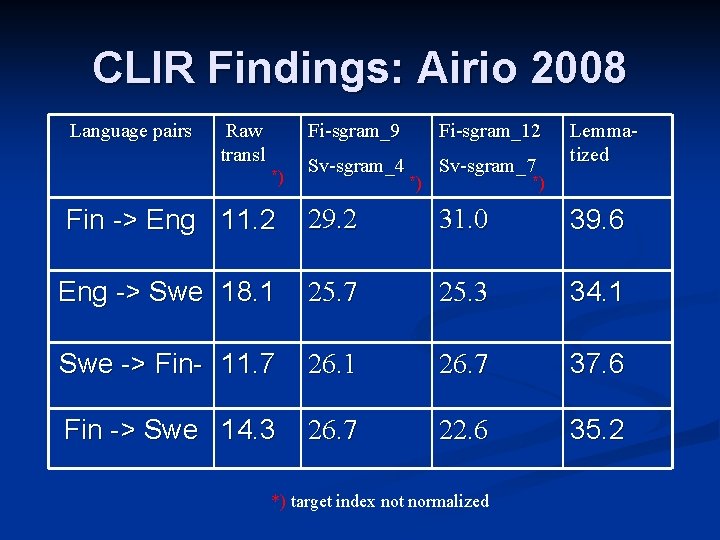

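CLIR Findings: Airio 2008

FCG runs on the slide: Fi-FCG_9, Fi-FCG_12, Sv-FCG_4, Sv-FCG_7 (two per language pair).

| Language pair | Raw translation | FCG run 1 | FCG run 2 | Lemmatized |
|---|---|---|---|---|
| Fin -> Eng | 11.2 | 32.4 | 32.5 | 39.6 |
| Fin -> Swe | 14.3 | 22.6 | 23.9 | 35.2 |
| Eng -> Swe | 18.1 | 25.1 | 27.3 | 34.1 |
| Swe -> Fin | 11.7 | 28.0 | 27.9 | 37.6 |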


5. Query Structures

- Translation ambiguity, such as...
  - Homonymy: homophony, homography
    - Examples: platform, bank, book
  - Inflectional homography
    - Examples: train, trains, training
    - Examples: book, books, booking
  - Polysemy
    - Examples: back, train
- ... is a problem in CLIR

Ambiguity Resolution

- Methods:
  - Part-of-speech tagging (e.g. Ballesteros & Croft '98)
  - Corpus-based methods (Ballesteros & Croft '96, '97; Chen & al. '99)
  - Query expansion
  - Collocations
  - Query structuring: the Pirkola Method (1998)

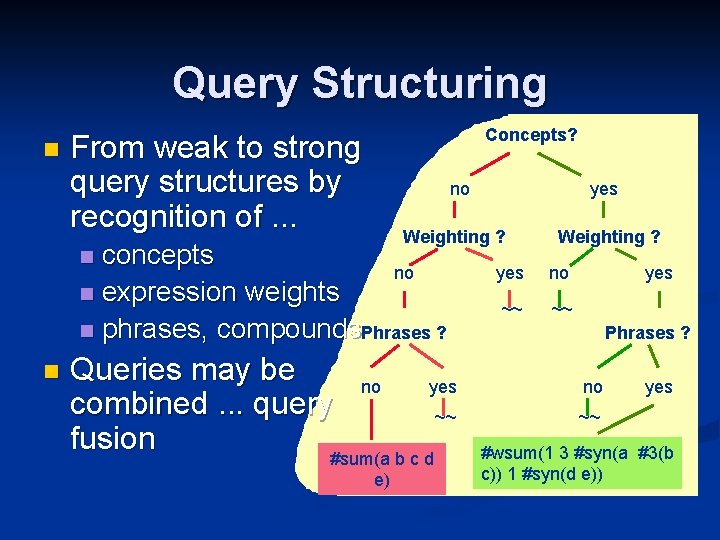

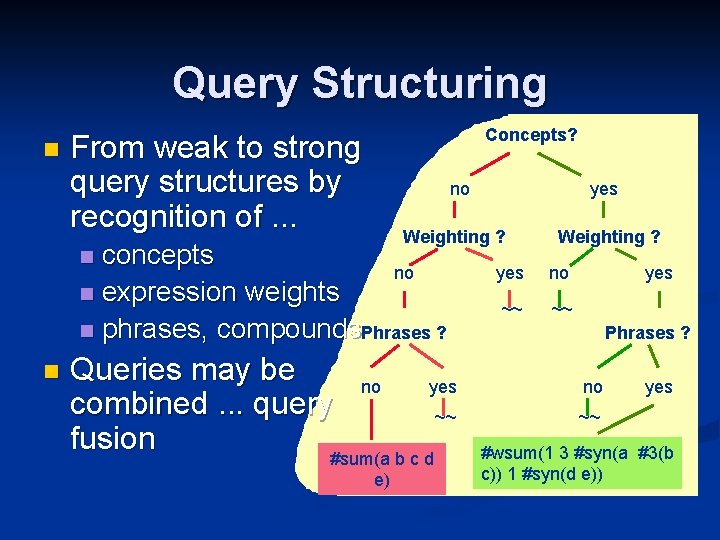

Query Structuring

- From weak to strong query structures, by recognition of:
  - concepts
  - expression weights
  - phrases, compounds
- A decision tree asks in turn: Concepts? Weighting? Phrases? (yes/no at each step)
  - weakest structure (no to all): `#sum(a b c d e)`
  - strongest structure (yes to all): `#wsum(1 3 #syn(a #3(b c)) 1 #syn(d e))`
- Queries may be combined: query fusion

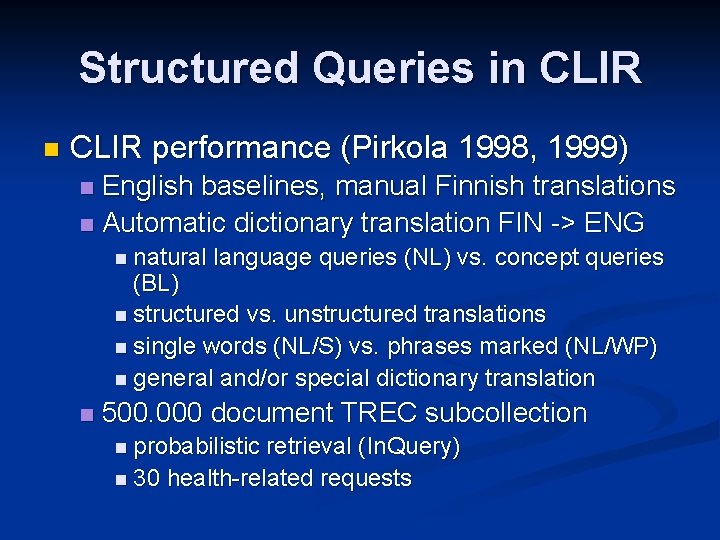

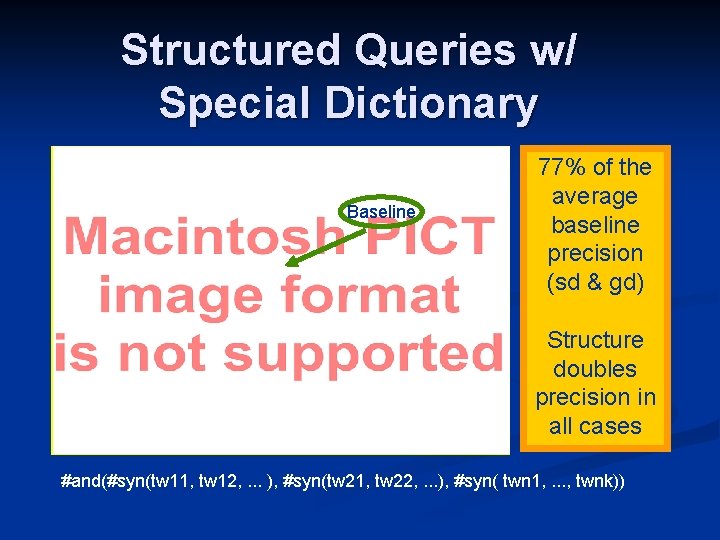

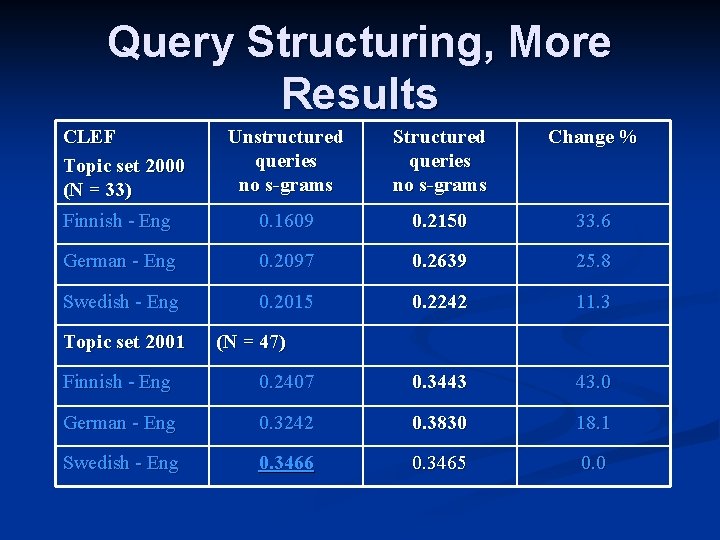

Structured Queries and CLIR Performance (Pirkola 1998, 1999)

- English baselines, manual Finnish translations
- Automatic dictionary translation FIN -> ENG:
  - natural language queries (NL) vs. concept queries (BL)
  - structured vs. unstructured translations
  - single words (NL/S) vs. phrases marked (NL/WP)
  - general and/or special dictionary translation
- 500,000-document TREC subcollection
- Probabilistic retrieval (InQuery)
- 30 health-related requests

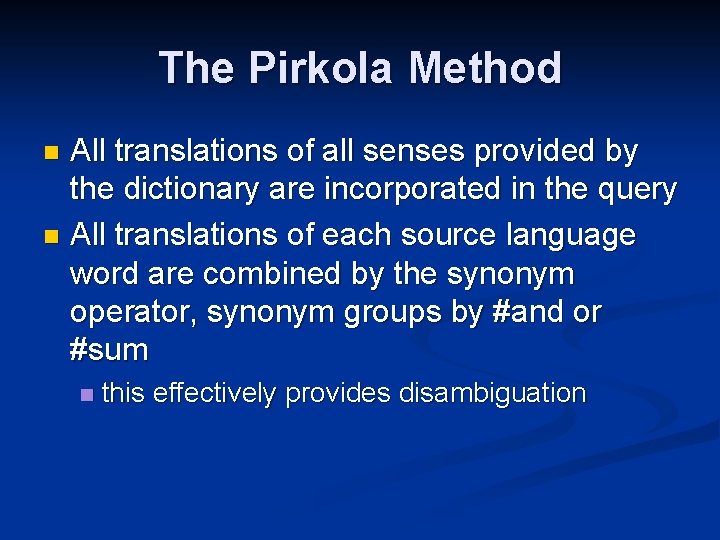

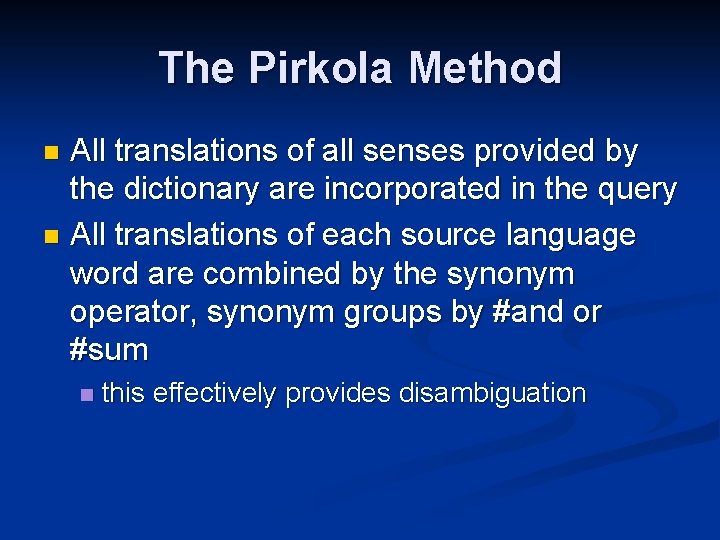

The Pirkola Method

- All translations of all senses provided by the dictionary are incorporated in the query
- All translations of each source language word are combined by the synonym operator; the synonym groups are combined by #and or #sum
  - this effectively provides disambiguation
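A sketch contrasting an unstructured query with the Pirkola structure, assuming the translation lists from the lääke sydänvaiva example (with the compound's components translated separately) and relying on Python dicts preserving insertion order. One common explanation of the disambiguation effect is that #syn pools all translations of one source word into a single key, so a word with many translations no longer dominates the query; the code itself only builds query strings.

```python
def unstructured(translations):
    """Flat #sum over every translation of every source word."""
    terms = [t for alts in translations.values() for t in alts]
    return "#sum( " + " ".join(terms) + " )"


def structured(translations, operator="#sum"):
    """Pirkola-style: one #syn per source word, groups joined by #sum or #and."""
    keys = [alts[0] if len(alts) == 1 else "#syn( " + " ".join(alts) + " )"
            for alts in translations.values()]
    return f"{operator}( " + " ".join(keys) + " )"


# Translations per source word, taken from the dictionary lookup example.
translations = {
    "lääke": ["medication", "drug"],
    "sydän": ["heart"],
    "vaiva": ["ailment", "complaint", "discomfort",
              "inconvenience", "trouble", "vexation"],
}
print(unstructured(translations))
print(structured(translations))
print(structured(translations, "#and"))
```

In the unstructured form *vaiva* contributes six of the nine keys; in the structured form every source word contributes exactly one.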

![An example: the Finnish natural language query lääke sydänvaiva (= medicine heart_problem)](https://slidetodoc.com/presentation_image_h2/2943e3ba6dbd29b1458be139017086f9/image-41.jpg)

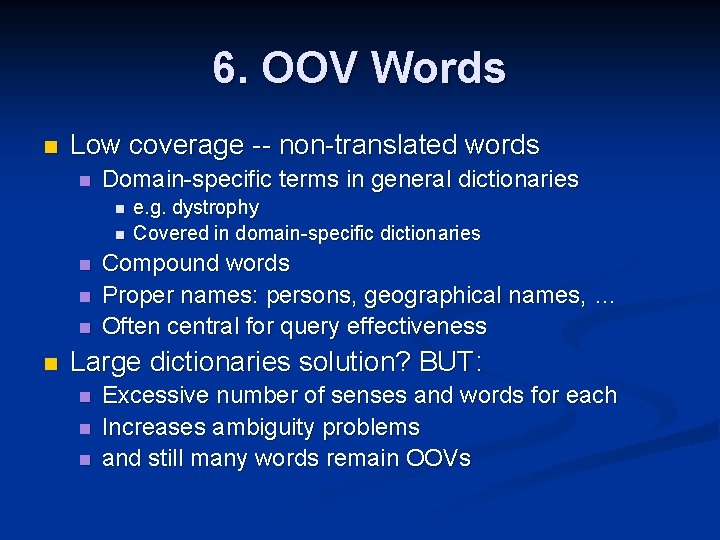

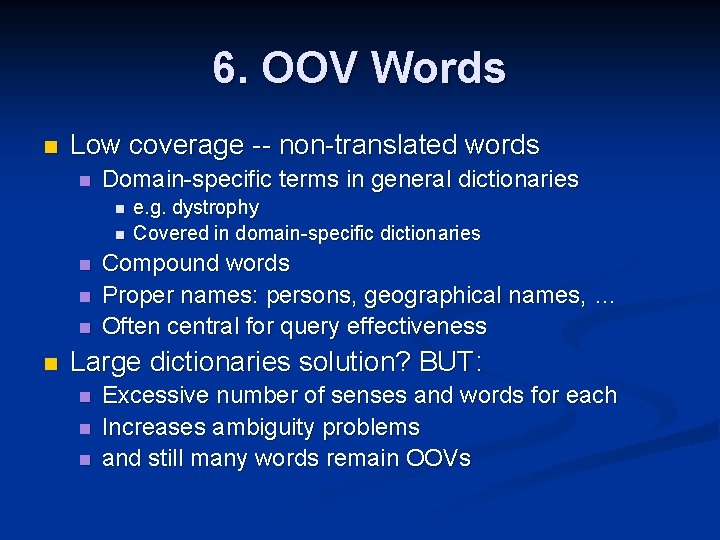

An Example

- Consider the Finnish natural language query: lääke sydänvaiva [= medicine heart_problem]
- Sample English CLIR query:
  - `#sum( #syn( medication drug ) heart #syn( ailment complaint discomfort inconvenience trouble vexation ) )`
  - each source word forms a synonym set

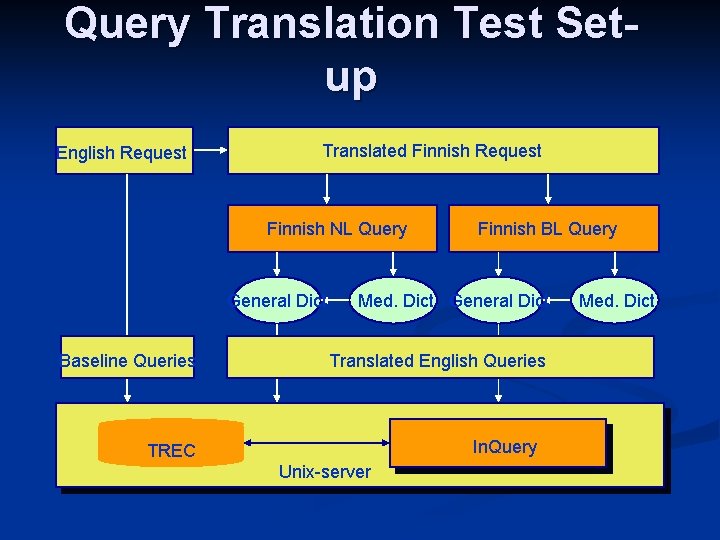

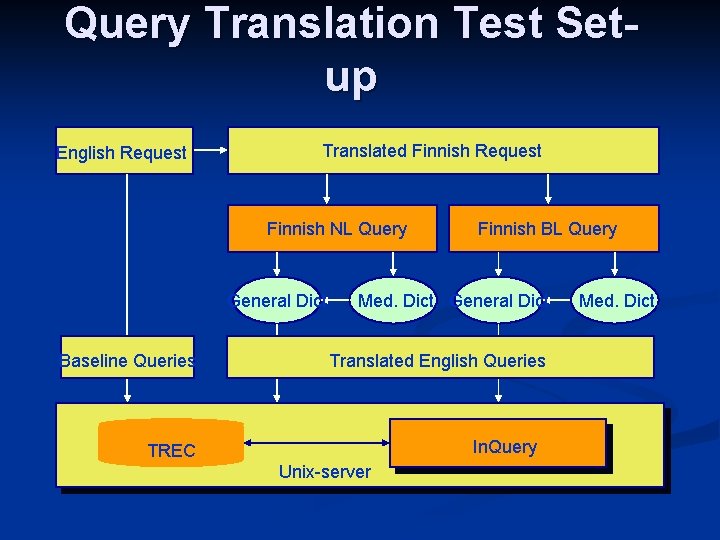

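Query Translation Test Setup

- English request -> English baseline queries
- English request -> translated Finnish request -> Finnish NL query and Finnish BL query
- Finnish queries -> translation with the general dictionary and/or the medical dictionary -> translated English queries
- All queries run with InQuery on the TREC collection (Unix server)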

Unstructured NL/S Queries

- Only 38% of the average baseline precision (sd & gd)
- `#sum(tw11, tw12, ..., tw21, tw22, ..., twn1, ..., twnk)`

Structured Queries with Special Dictionary

- 77% of the average baseline precision (sd & gd)
- Structure doubles precision in all cases
- `#and(#syn(tw11, tw12, ...), #syn(tw21, tw22, ...), #syn(twn1, ..., twnk))`

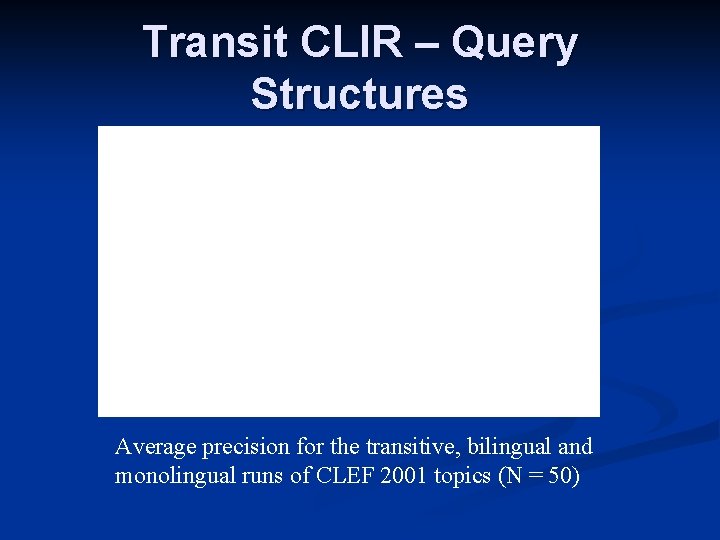

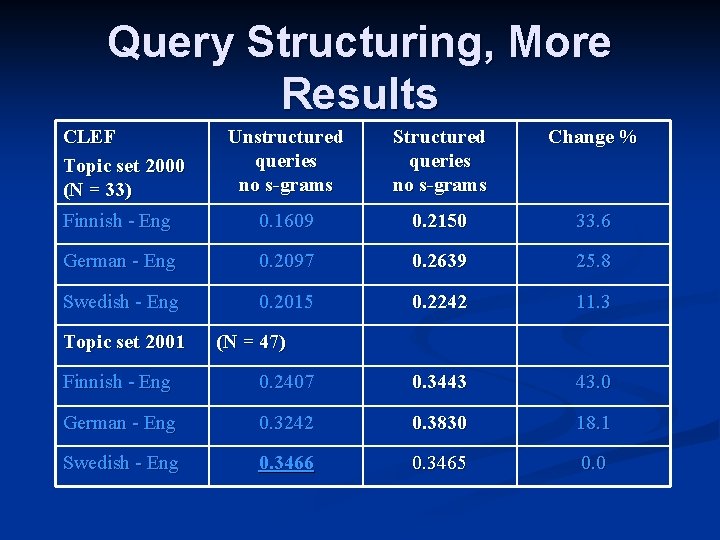

Query Structuring, More Results (CLEF)

| Topic set | Language pair | Unstructured queries, no s-grams | Structured queries, no s-grams | Change % |
|---|---|---|---|---|
| 2000 (N = 33) | Finnish - Eng | 0.1609 | 0.2150 | 33.6 |
| 2000 (N = 33) | German - Eng | 0.2097 | 0.2639 | 25.8 |
| 2000 (N = 33) | Swedish - Eng | 0.2015 | 0.2242 | 11.3 |
| 2001 (N = 47) | Finnish - Eng | 0.2407 | 0.3443 | 43.0 |
| 2001 (N = 47) | German - Eng | 0.3242 | 0.3830 | 18.1 |
| 2001 (N = 47) | Swedish - Eng | 0.3466 | 0.3465 | 0.0 |
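The Change % column is the relative change of the structured over the unstructured MAP. A quick check using the values from the table (the helper name `change_pct` is just illustrative):

```python
def change_pct(before, after):
    """Relative change from before to after, in percent."""
    return 100.0 * (after - before) / before


# (topic set, language pair, unstructured MAP, structured MAP) from the table
runs = [
    ("2000", "Finnish-Eng", 0.1609, 0.2150),
    ("2000", "German-Eng", 0.2097, 0.2639),
    ("2000", "Swedish-Eng", 0.2015, 0.2242),
    ("2001", "Finnish-Eng", 0.2407, 0.3443),
    ("2001", "German-Eng", 0.3242, 0.3830),
    ("2001", "Swedish-Eng", 0.3466, 0.3465),
]
changes = {(t, p): round(change_pct(u, s), 1) for t, p, u, s in runs}
for (topics, pair), pct in changes.items():
    print(topics, pair, pct)
```

The Swedish 2001 run is a tiny negative change that rounds to zero.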

Transitive CLIR: Query Structures

Average precision for the transitive, bilingual and monolingual runs of CLEF 2001 topics (N = 50)

Transitive CLIR Results, 2

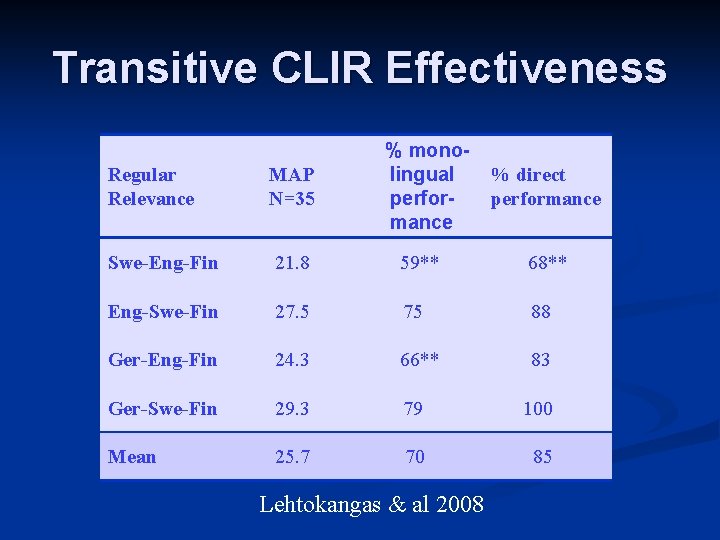

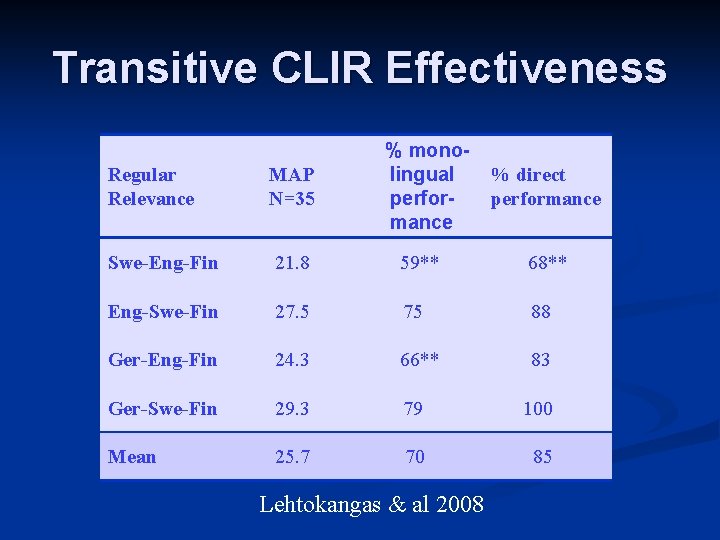

Transitive CLIR Effectiveness (Lehtokangas & al. 2008)

| Language chain | MAP (regular relevance, N = 35) | % of monolingual performance | % of direct performance |
|---|---|---|---|
| Swe-Eng-Fin | 21.8 | 59** | 68** |
| Eng-Swe-Fin | 27.5 | 75 | 88 |
| Ger-Eng-Fin | 24.3 | 66** | 83 |
| Ger-Swe-Fin | 29.3 | 79 | 100 |
| Mean | 25.7 | 70 | 85 |

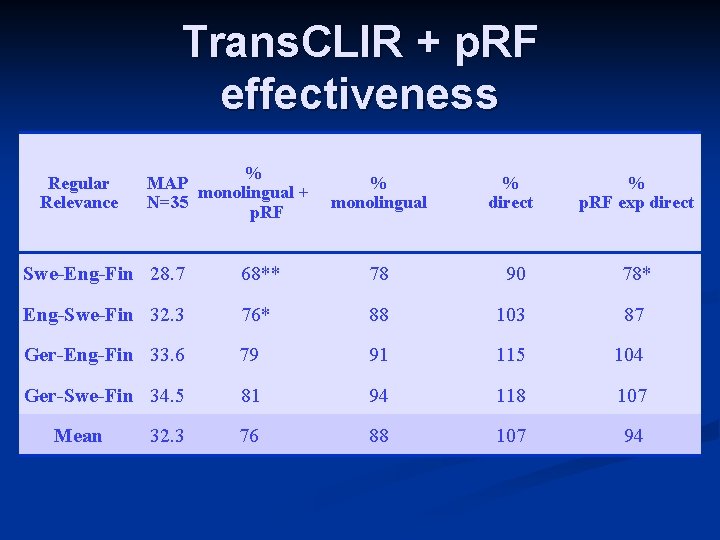

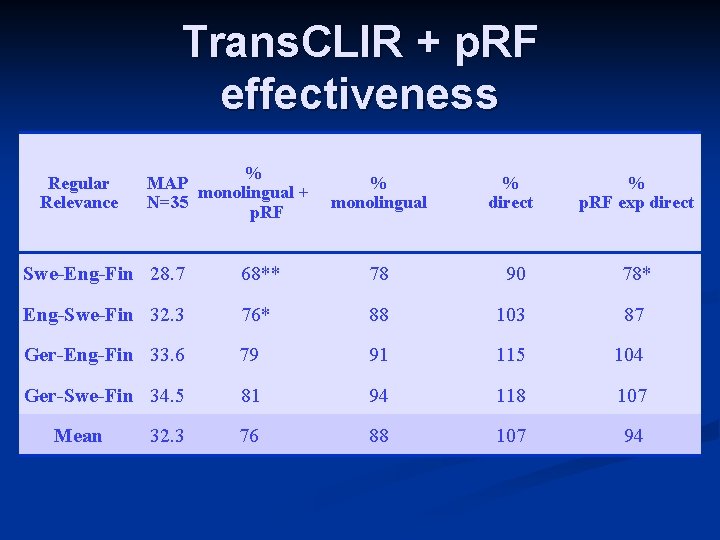

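Transitive CLIR + pRF Effectiveness (regular relevance, N = 35)

| Language chain | MAP | % of monolingual + pRF | % of monolingual | % of direct | % of pRF-expanded direct |
|---|---|---|---|---|---|
| Swe-Eng-Fin | 28.7 | 68** | 78 | 90 | 78* |
| Eng-Swe-Fin | 32.3 | 76* | 88 | 103 | 87 |
| Ger-Eng-Fin | 33.6 | 79 | 91 | 115 | 104 |
| Ger-Swe-Fin | 34.5 | 81 | 94 | 118 | 107 |
| Mean | 32.3 | 76 | 88 | 107 | 94 |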


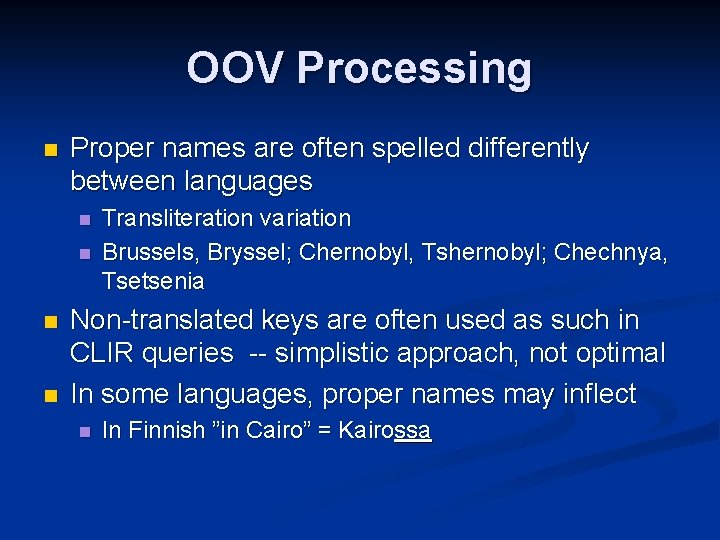

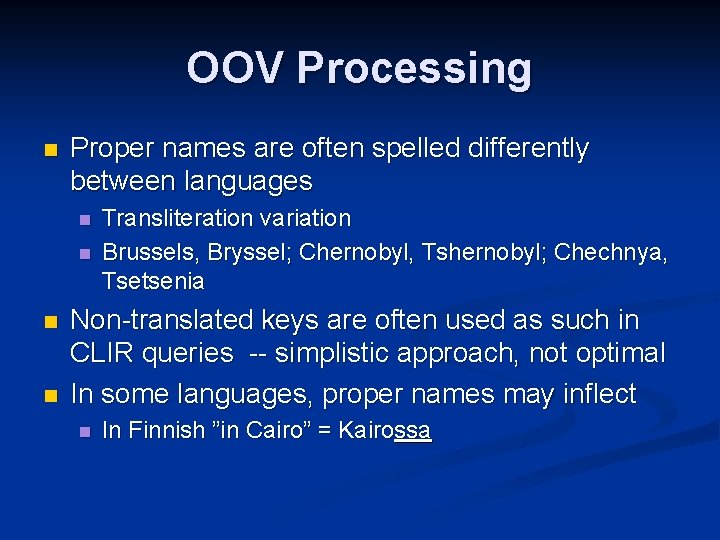

6. OOV Words

- Low coverage: non-translated words
  - Domain-specific terms in general dictionaries, e.g. dystrophy (covered in domain-specific dictionaries)
  - Compound words
  - Proper names: persons, geographical names, ...
- OOV words are often central for query effectiveness
- Are large dictionaries the solution? BUT:
  - an excessive number of senses and words for each entry
  - this increases ambiguity problems, and still many words remain OOVs

OOV Processing

- Proper names are often spelled differently between languages
  - Transliteration variation: Brussels, Bryssel; Chernobyl, Tshernobyl; Chechnya, Tsetsenia
- Non-translated keys are often used as such in CLIR queries: a simplistic approach, not optimal
- In some languages, proper names may inflect
  - In Finnish, "in Cairo" = Kairossa

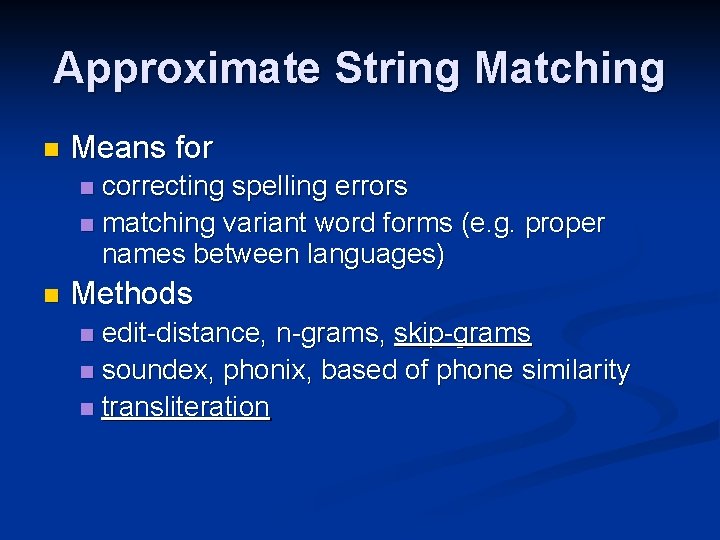

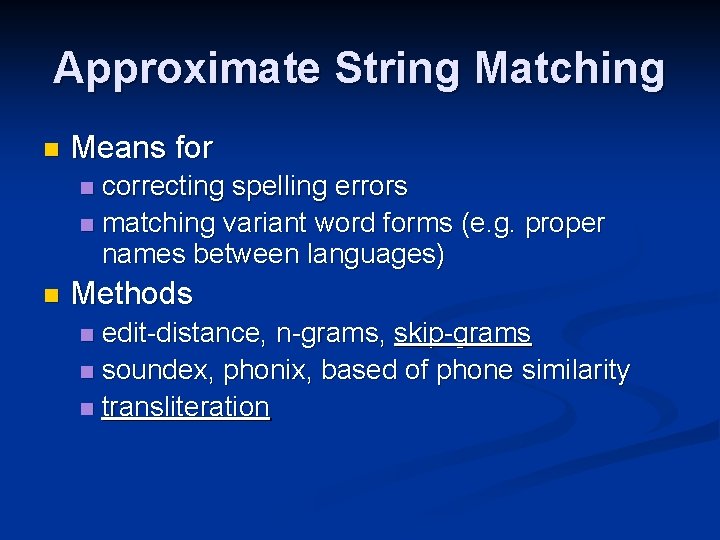

Approximate String Matching

- Means for:
  - correcting spelling errors
  - matching variant word forms (e.g. proper names between languages)
- Methods:
  - edit distance, n-grams, skip-grams
  - Soundex, Phonix: based on phonetic similarity
  - transliteration
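Of the methods listed, edit distance is the simplest to show. The sketch below is the standard Levenshtein dynamic program with unit costs for insertion, deletion and substitution, applied after lower-casing to transliteration variants named on the OOV slide; the unit costs are an assumption, since the slides do not fix a cost model.

```python
def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to prefixes of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute or match
        prev = curr
    return prev[-1]


for x, y in [("Chernobyl", "Tshernobyl"), ("Brussels", "Bryssel"),
             ("Kairossa", "Cairo")]:
    print(x, y, edit_distance(x.lower(), y.lower()))
```

The last pair shows why inflected proper names are hard for plain edit distance: the Finnish case ending alone costs three edits.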

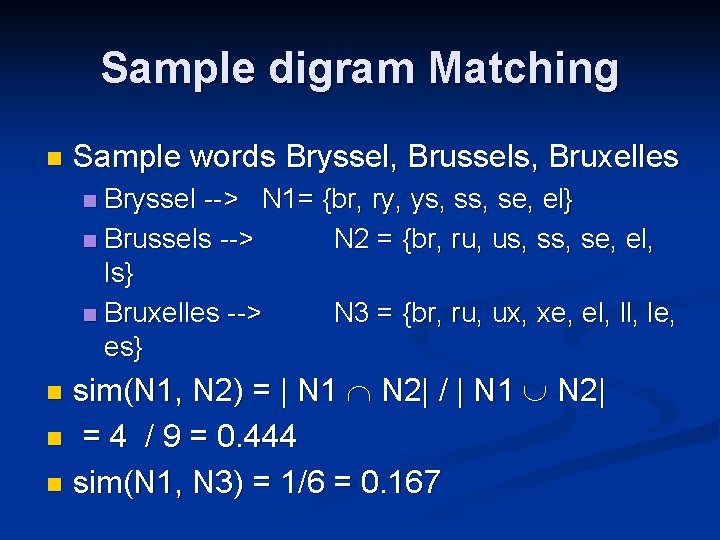

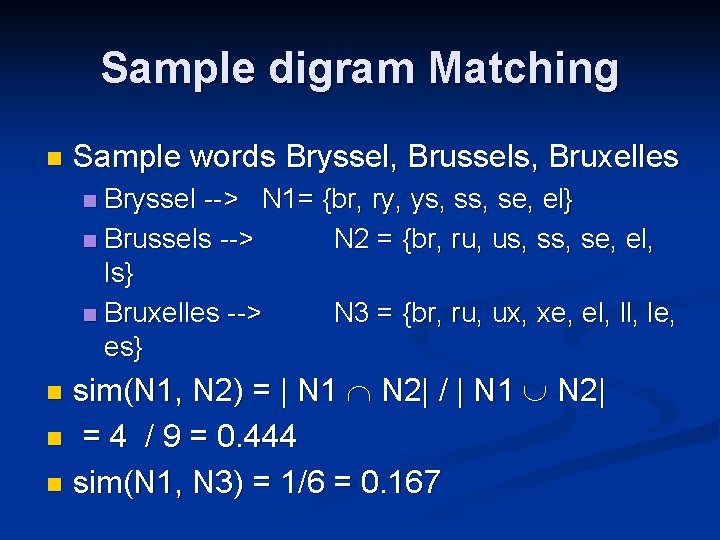

Sample Digram Matching

- Sample words: Bryssel, Brussels, Bruxelles
  - Bryssel --> N1 = {br, ry, ys, ss, se, el}
  - Brussels --> N2 = {br, ru, us, ss, se, el, ls}
  - Bruxelles --> N3 = {br, ru, ux, xe, el, ll, le, es}
- $\mathrm{sim}(N_1, N_2) = \frac{|N_1 \cap N_2|}{|N_1 \cup N_2|} = 4/9 = 0.444$
- $\mathrm{sim}(N_1, N_3) = \frac{|N_1 \cap N_3|}{|N_1 \cup N_3|} = 2/12 = 0.167$
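The digram similarity is the Jaccard coefficient over sets of adjacent character pairs. A minimal sketch, assuming lower-casing and no padding, reproduces the two values on the slide:

```python
def digrams(word):
    """Set of adjacent character pairs of the lower-cased word."""
    w = word.lower()
    return {w[i:i + 2] for i in range(len(w) - 1)}


def jaccard(a, b):
    """|A intersect B| / |A union B| over the two digram sets."""
    A, B = digrams(a), digrams(b)
    return len(A & B) / len(A | B)


print(round(jaccard("Bryssel", "Brussels"), 3))   # 0.444
print(round(jaccard("Bryssel", "Bruxelles"), 3))  # 0.167
```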

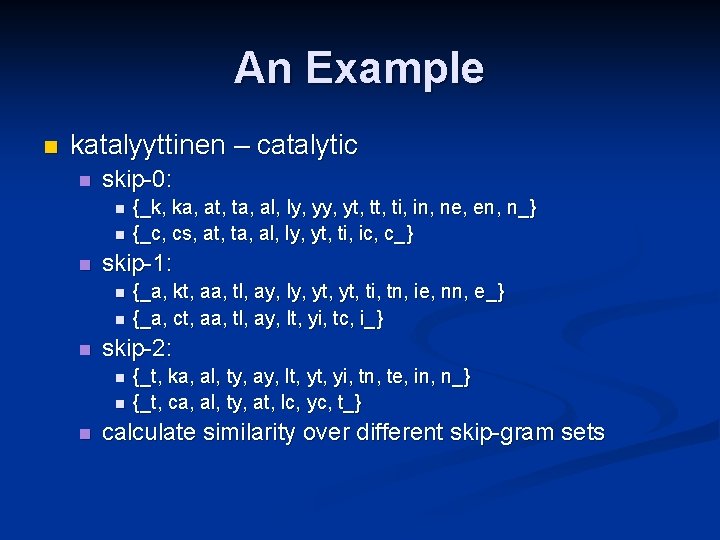

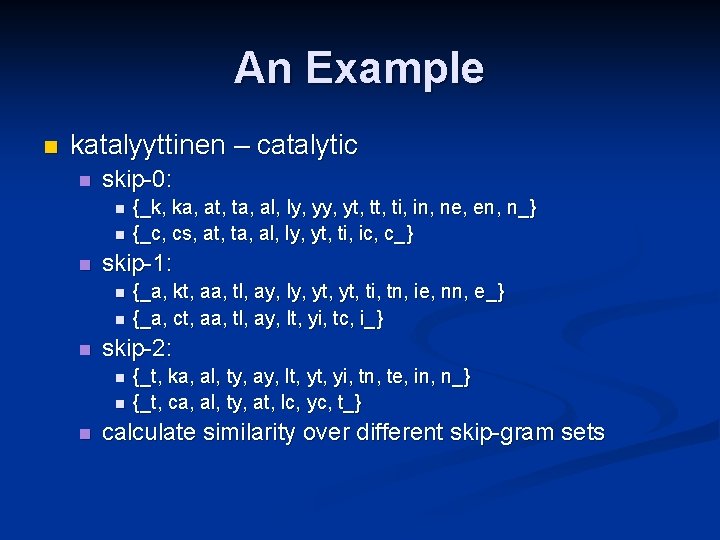

Skip-grams

- Generalizing n-gram matching
- The strings to be compared are split into substrings of length n
- Skipping characters is allowed
- Substrings are produced using various skip lengths
- n-grams remain a special case: no skips
- (Pirkola & Keskustalo & al. 2002)

An Example: katalyyttinen vs. catalytic

- skip-0:
  - {_k, ka, at, ta, al, ly, yy, yt, tt, ti, in, ne, en, n_}
  - {_c, ca, at, ta, al, ly, yt, ti, ic, c_}
- skip-1:
  - {_a, kt, aa, tl, ay, ly, yt, ti, tn, ie, nn, e_}
  - {_a, ct, aa, tl, ay, lt, yi, tc, i_}
- skip-2:
  - {_t, ka, al, ty, ay, lt, yt, yi, tn, te, in, n_}
  - {_t, ca, al, ty, at, li, yc, t_}
- Calculate similarity over the different skip-gram sets
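A sketch of skip-gram generation, assuming padding with `_` at both ends and a skip length *k* meaning the character pair (i, i + k + 1); with this convention the function reproduces the skip-1 sets of the katalyyttinen / catalytic example. How the per-skip similarities are combined is not specified on the slide, so the plain average in `skipgram_similarity` is a hypothetical choice.

```python
def skipgrams(word, skip, pad="_"):
    """Character pairs (i, i + skip + 1) over the padded, lower-cased word."""
    w = pad + word.lower() + pad
    gap = skip + 1
    return {w[i] + w[i + gap] for i in range(len(w) - gap)}


def jaccard(a, b):
    """Set overlap divided by set union."""
    return len(a & b) / len(a | b)


def skipgram_similarity(s, t, skips=(0, 1, 2)):
    """Hypothetical combination: mean Jaccard score over the skip lengths."""
    scores = [jaccard(skipgrams(s, k), skipgrams(t, k)) for k in skips]
    return sum(scores) / len(scores)


for k in (0, 1, 2):
    print(k, sorted(skipgrams("katalyyttinen", k)), sorted(skipgrams("catalytic", k)))
print(skipgram_similarity("katalyyttinen", "catalytic"))
```

With `skips=(0,)` the function falls back to ordinary padded digram matching, the "no skips" special case.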

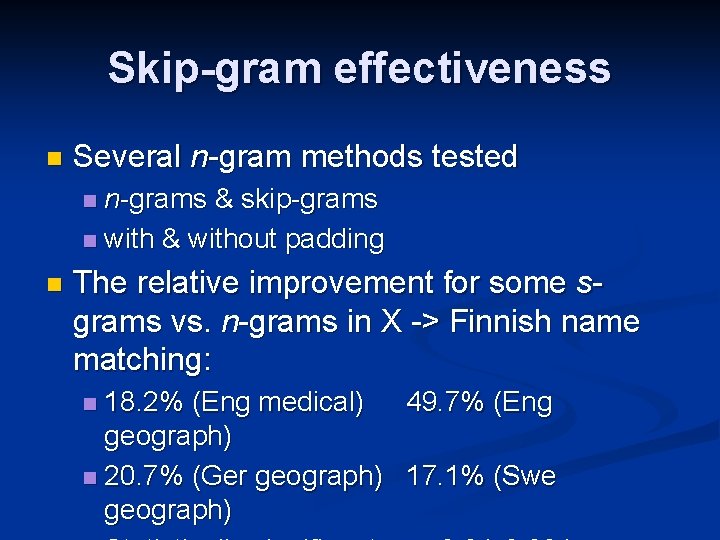

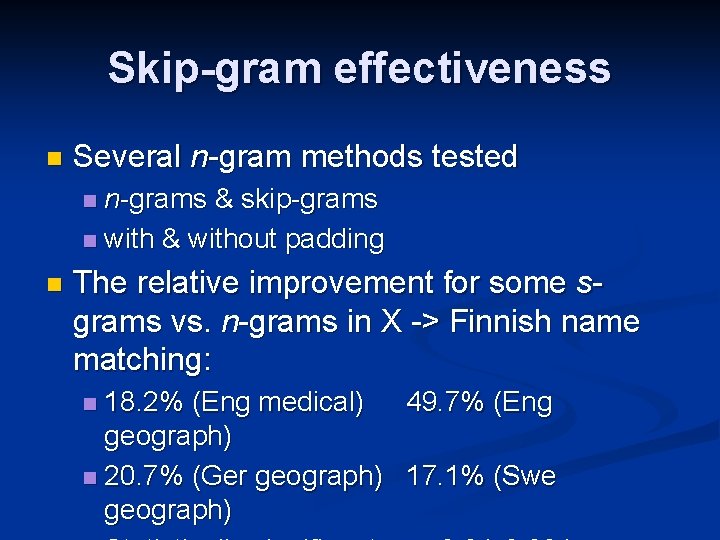

Skip-gram Effectiveness

- Several n-gram methods tested:
  - n-grams & skip-grams
  - with & without padding
- The relative improvement of some s-grams vs. n-grams in X -> Finnish name matching:
  - 18.2% (Eng medical), 49.7% (Eng geographical)
  - 20.7% (Ger geographical), 17.1% (Swe geographical)

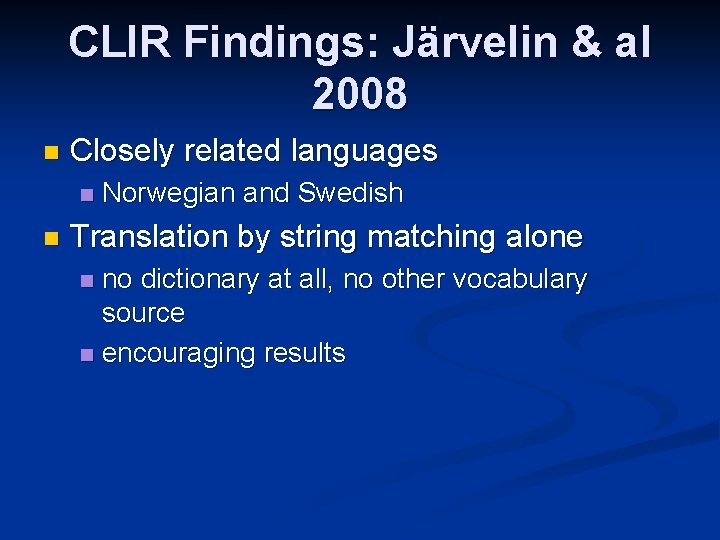

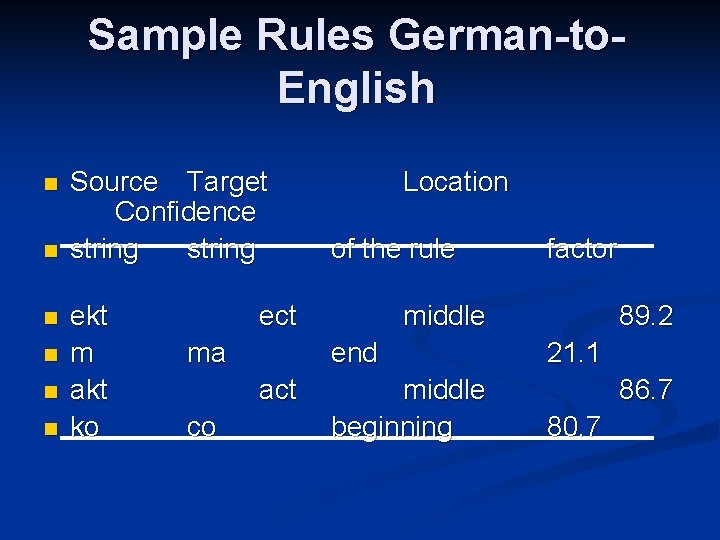

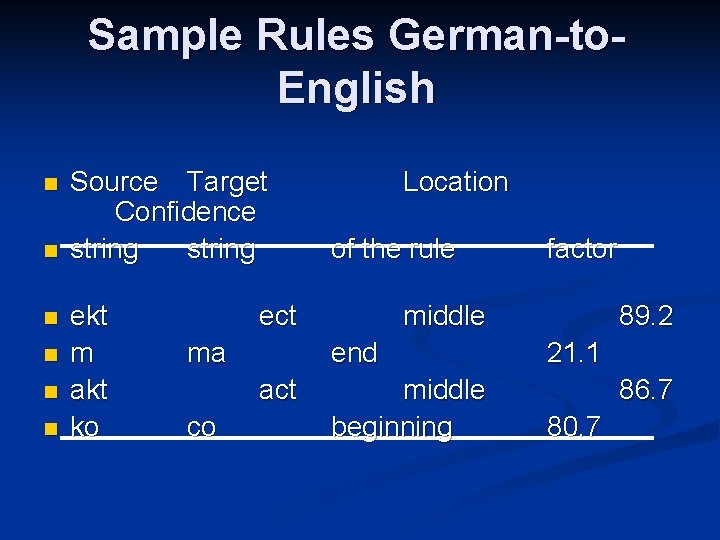

CLIR Findings: Järvelin & al. 2008

- Closely related languages: Norwegian and Swedish
- Translation by string matching alone:
  - no dictionary at all, no other vocabulary source
- Encouraging results
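Translation by string matching alone can be sketched as picking, for each source word, the target-index word with the highest n-gram similarity. The tiny Swedish vocabulary and the Norwegian query words below are illustrative assumptions, and digram Jaccard stands in for whichever matching method (n-grams or skip-grams) an actual system would use.

```python
def digrams(word):
    """Set of adjacent character pairs of the lower-cased word."""
    w = word.lower()
    return {w[i:i + 2] for i in range(len(w) - 1)}


def similarity(a, b):
    """Jaccard coefficient of the digram sets (0.0 if both are empty)."""
    A, B = digrams(a), digrams(b)
    return len(A & B) / len(A | B) if A | B else 0.0


def translate_by_matching(source_word, target_vocabulary):
    """Return the target word most similar to the source word."""
    return max(target_vocabulary, key=lambda t: similarity(source_word, t))


# Hypothetical Swedish index vocabulary and Norwegian query words.
swedish_index = ["skola", "kyrka", "hus", "bok", "sko"]
for norwegian in ["skole", "kirke", "hus"]:
    print(norwegian, "->", translate_by_matching(norwegian, swedish_index))
```

In practice the best match would be accepted only above some similarity threshold, or the top few matches kept as a synonym set.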

CLIR Findings: Järvelin & al 2008

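CLIR Findings: Airio 2008

Runs on the slide: Raw transl.\*, Fi-sgram_9, Fi-sgram_12, Sv-sgram_4, Sv-sgram_7\*, Lemmatized\* (two s-gram runs per language pair). \* target index not normalized.

| Language pair | Raw translation | s-gram run 1 | s-gram run 2 | Lemmatized |
|---|---|---|---|---|
| Fin -> Eng | 11.2 | 29.2 | 31.0 | 39.6 |
| Eng -> Swe | 18.1 | 25.7 | 25.3 | 34.1 |
| Swe -> Fin | 11.7 | 26.1 | 26.7 | 37.6 |
| Fin -> Swe | 14.3 | 26.7 | 22.6 | 35.2 |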

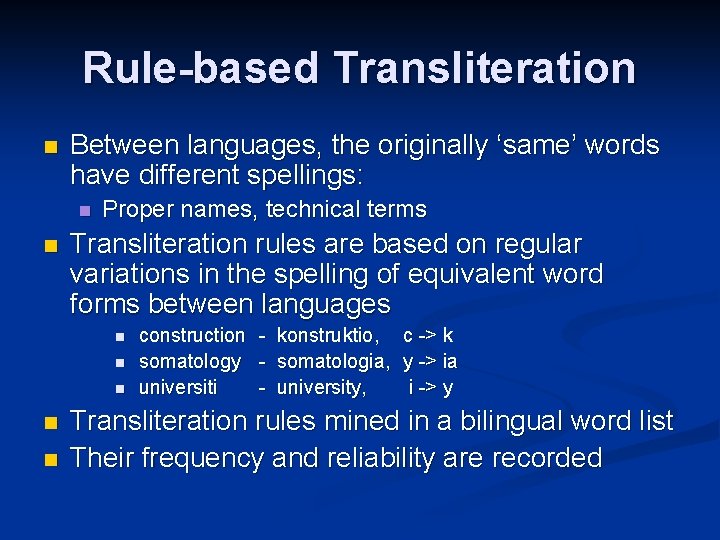

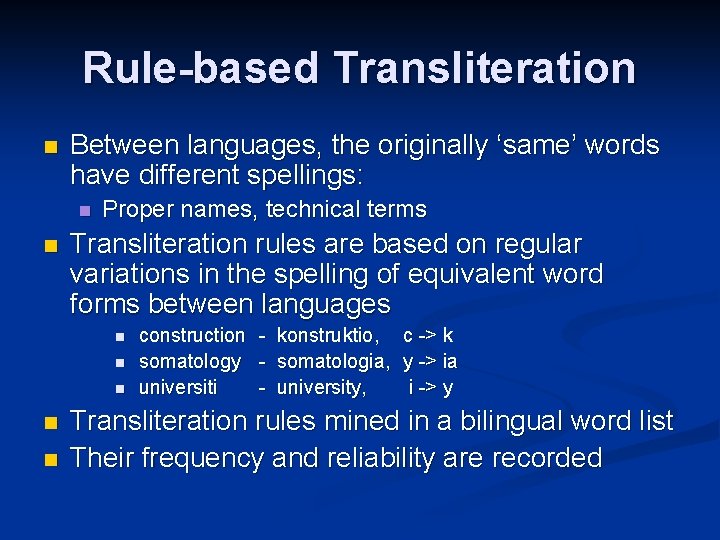

Rule-based Transliteration

- Between languages, originally 'same' words have different spellings:
  - proper names, technical terms
- Transliteration rules are based on regular variations in the spelling of equivalent word forms between languages:
  - construction - konstruktio: c -> k
  - somatology - somatologia: y -> ia
  - universiti - university: i -> y
- Transliteration rules are mined from a bilingual word list
- Their frequency and reliability are recorded

Sample Rules, German-to-English

| Source string | Target string | Location of the rule | Confidence factor |
|---|---|---|---|
| ekt | ect | middle | 89.2 |
| m | ma | end | 21.1 |
| akt | act | middle | 86.7 |
| ko | co | beginning | 80.7 |
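A minimal sketch of applying such rules, assuming that each rule may or may not fire (so candidate forms multiply), that *middle* means neither word-initial nor word-final, and that rules below a confidence cut-off are skipped. Only the four sample rules are used; the German input *Kollektor* is an illustrative choice, and a real TRT system would also rank candidates by rule confidence.

```python
# Sample German-to-English rules from the table:
# (source string, target string, location, confidence factor %)
RULES = [
    ("ekt", "ect", "middle", 89.2),
    ("m", "ma", "end", 21.1),
    ("akt", "act", "middle", 86.7),
    ("ko", "co", "beginning", 80.7),
]


def location_ok(word, start, end, location):
    """Check that word[start:end] sits where the rule allows it."""
    if location == "beginning":
        return start == 0
    if location == "end":
        return end == len(word)
    return start > 0 and end < len(word)  # middle


def apply_rule(word, src, tgt, location):
    """Rewrite every occurrence of src that sits at the rule's location."""
    out, i = [], 0
    while i < len(word):
        j = i + len(src)
        if word.startswith(src, i) and location_ok(word, i, j, location):
            out.append(tgt)
            i = j
        else:
            out.append(word[i])
            i += 1
    return "".join(out)


def trt_candidates(word, rules, min_cf=0.0):
    """Each rule optionally fires, so k applicable rules give up to 2**k forms."""
    candidates = {word.lower()}
    for src, tgt, location, cf in rules:
        if cf < min_cf:
            continue
        candidates |= {apply_rule(c, src, tgt, location) for c in candidates}
    return candidates


print(sorted(trt_candidates("Kollektor", RULES)))
# ['collector', 'collektor', 'kollector', 'kollektor']
```

Even four rules produce four candidates here; with thousands of mined rules the candidate set explodes, which is why a separate identification step against the target index is needed.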

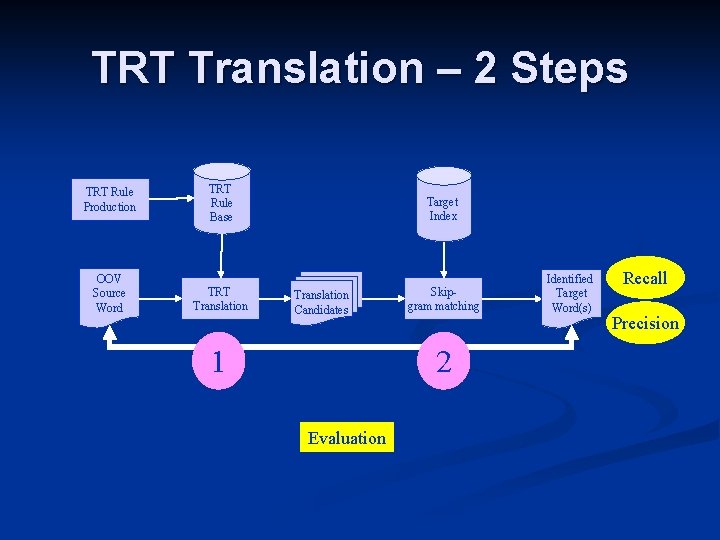

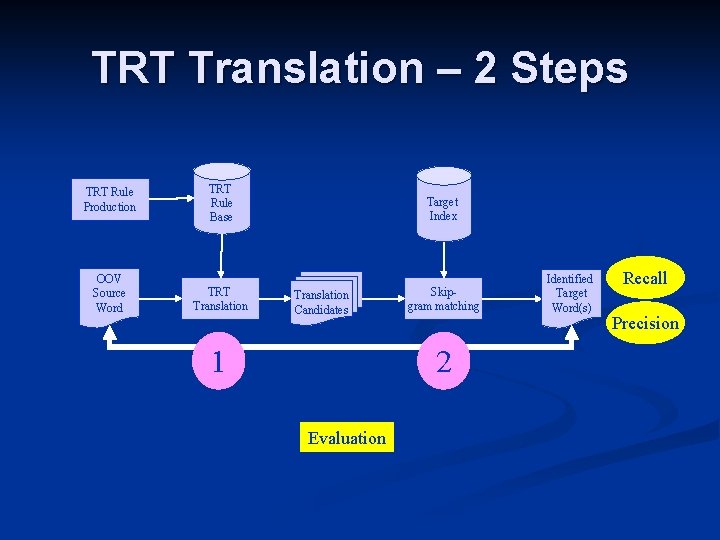

TRT Translation: 2 Steps

1. TRT translation: TRT rule production yields the TRT rule base, which turns an OOV source word into translation candidates
2. Skip-gram matching of the candidates against the target index yields the identified target word(s)

- Evaluation: recall, precision

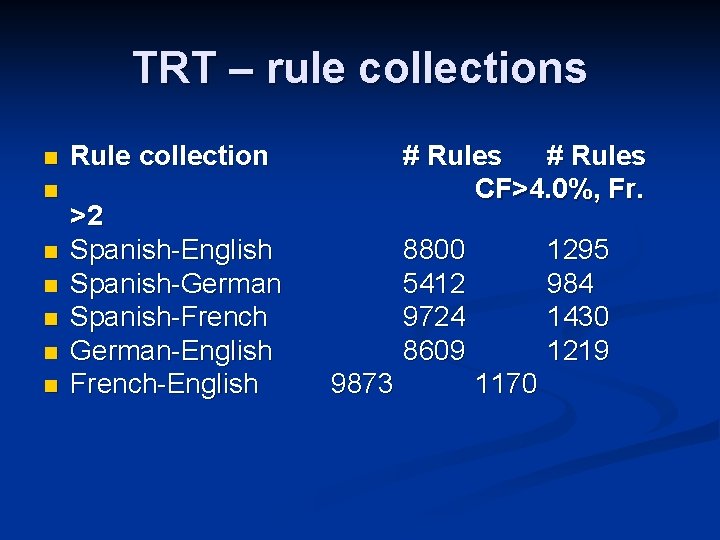

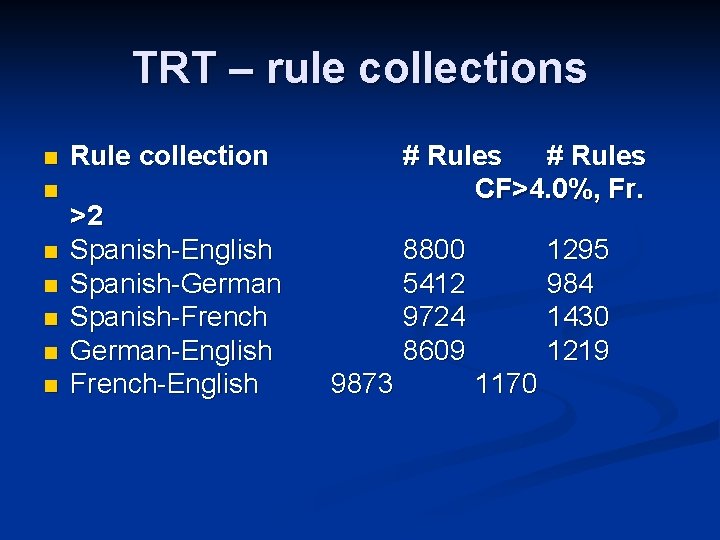

TRT Rule Collections

| Rule collection | # Rules | # Rules with CF > 4.0% and Fr. > 2 |
|---|---|---|
| Spanish-English | 8800 | 1295 |
| Spanish-German | 5412 | 984 |
| Spanish-French | 9724 | 1430 |
| German-English | 8609 | 1219 |
| French-English | 9873 | 1170 |

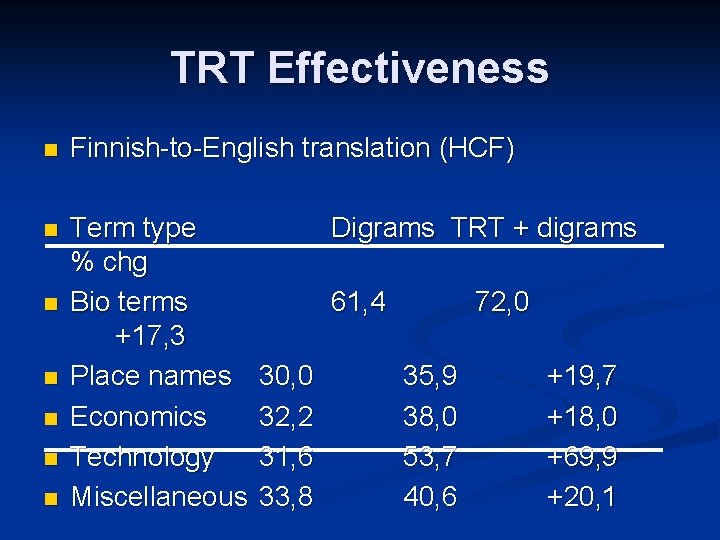

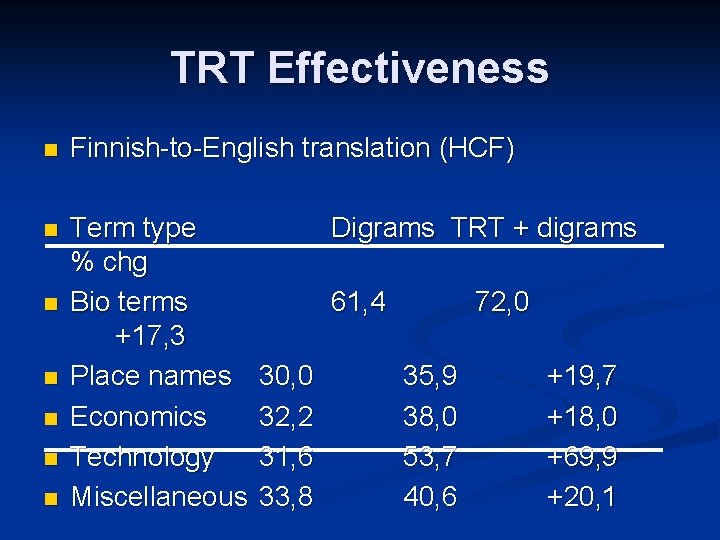

TRT Effectiveness: Finnish-to-English translation (HCF)

| Term type | Digrams | TRT + digrams | % chg |
|---|---|---|---|
| Bio terms | 61.4 | 72.0 | +17.3 |
| Place names | 30.0 | 35.9 | +19.7 |
| Economics | 32.2 | 38.0 | +18.0 |
| Technology | 31.6 | 53.7 | +69.9 |
| Miscellaneous | 33.8 | 40.6 | +20.1 |

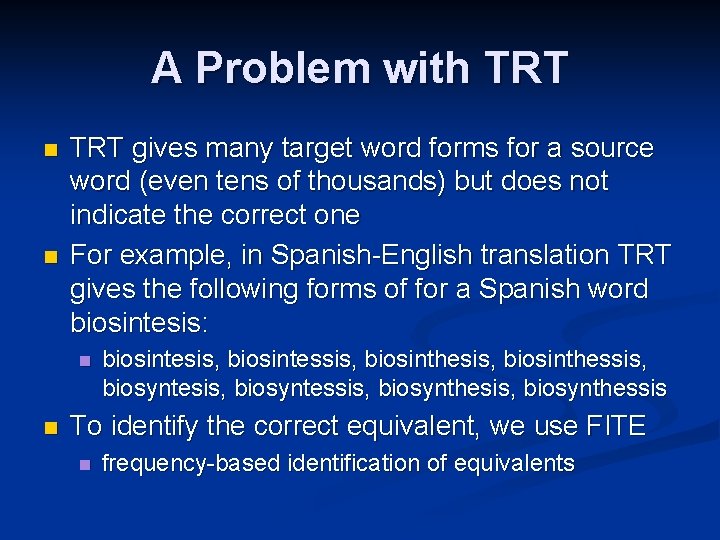

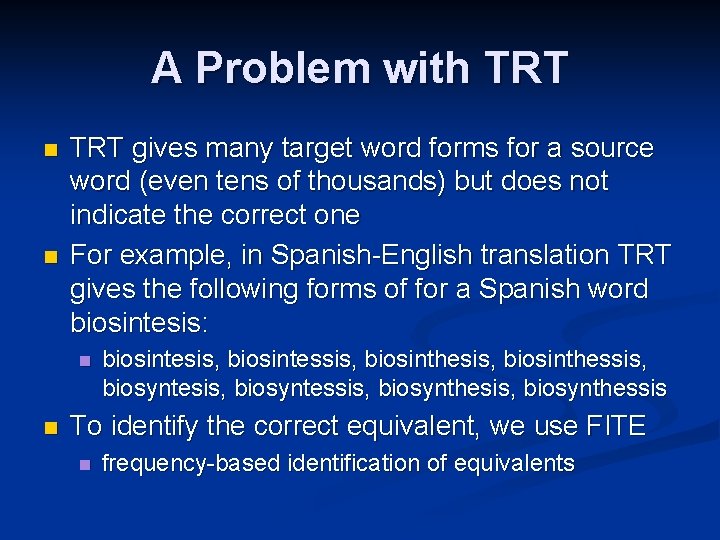

A Problem with TRT

- TRT gives many target word forms for a source word (even tens of thousands) but does not indicate the correct one
- For example, in Spanish-English translation TRT gives the following forms for the Spanish word biosintesis:
  - biosintesis, biosintessis, biosinthessis, biosyntessis, biosynthessis
- To identify the correct equivalent, we use FITE: frequency-based identification of equivalents

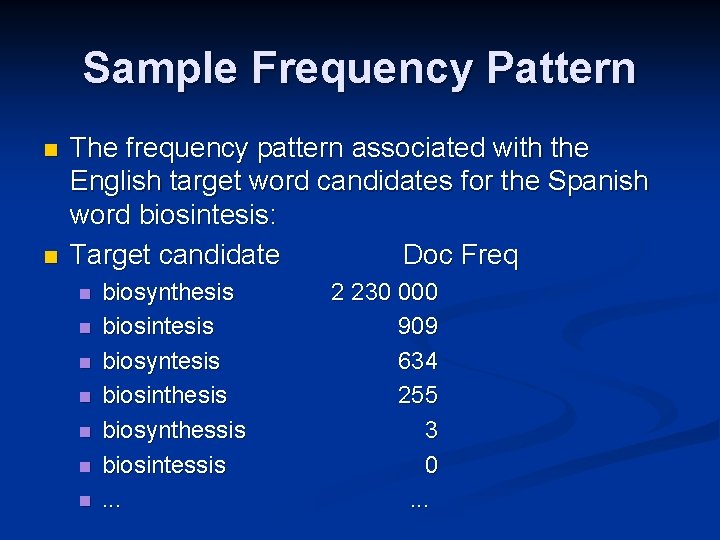

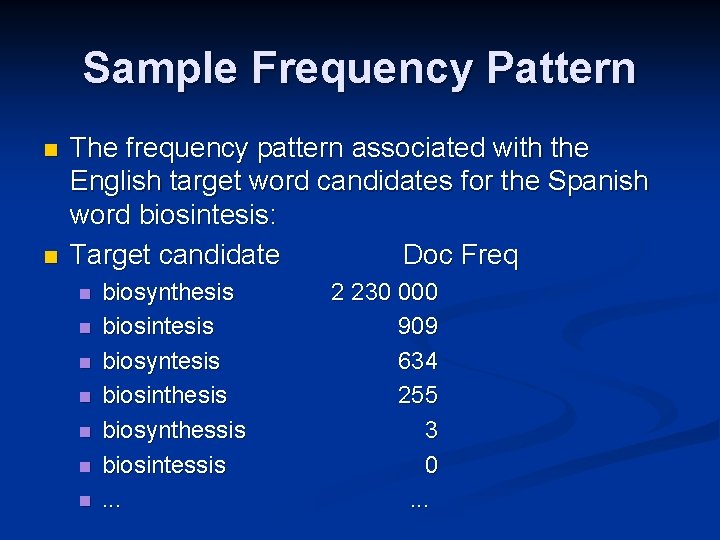

Sample Frequency Pattern

The frequency pattern associated with the English target word candidates for the Spanish word biosintesis:

| Target candidate | Doc freq |
|---|---|
| biosynthesis | 2,230,000 |
| biosintesis | 909 |
| biosyntesis | 634 |
| biosinthesis | 255 |
| biosynthessis | 3 |
| biosintessis | 0 |
| ... | ... |
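A simplified sketch of a frequency-based selection step in the spirit of FITE, assuming two hypothetical thresholds: the top candidate must be at least `min_ratio` times as frequent as the runner-up, and its length may differ from the source word by at most `max_len_diff` characters. The document frequencies are those in the table; the thresholds and the exact form of the checks are assumptions, not the published FITE parameters.

```python
def fite_select(source, candidates, doc_freq, min_ratio=10.0, max_len_diff=2):
    """Pick the dominant candidate by document frequency, or None."""
    ranked = sorted(candidates, key=lambda c: doc_freq.get(c, 0), reverse=True)
    if not ranked or doc_freq.get(ranked[0], 0) == 0:
        return None
    best = ranked[0]
    best_freq = doc_freq[best]
    runner_up = doc_freq.get(ranked[1], 0) if len(ranked) > 1 else 0
    # Frequency pattern (assumed form): the winner must clearly dominate.
    if runner_up and best_freq / runner_up < min_ratio:
        return None
    # Length difference between the source word and the chosen equivalent.
    if abs(len(best) - len(source)) > max_len_diff:
        return None
    return best


doc_freq = {"biosynthesis": 2_230_000, "biosintesis": 909, "biosyntesis": 634,
            "biosinthesis": 255, "biosynthessis": 3, "biosintessis": 0}
print(fite_select("biosintesis", list(doc_freq), doc_freq))  # biosynthesis
```

Returning `None` when no candidate dominates lets the caller fall back to keeping the source word as such in the query.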

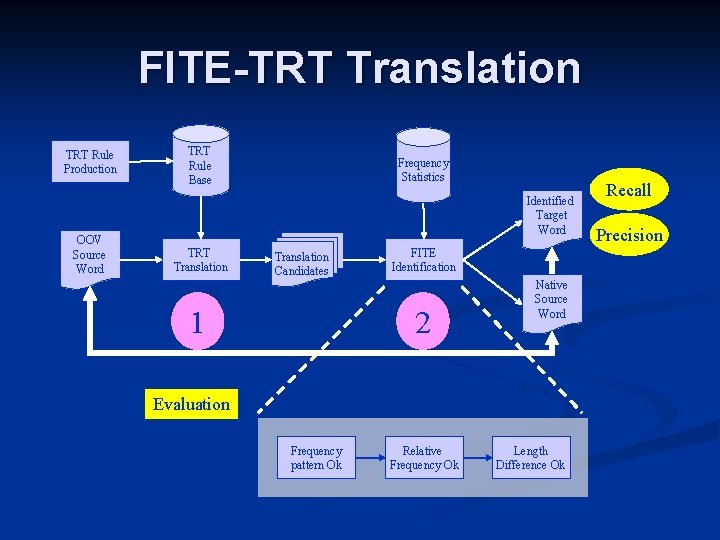

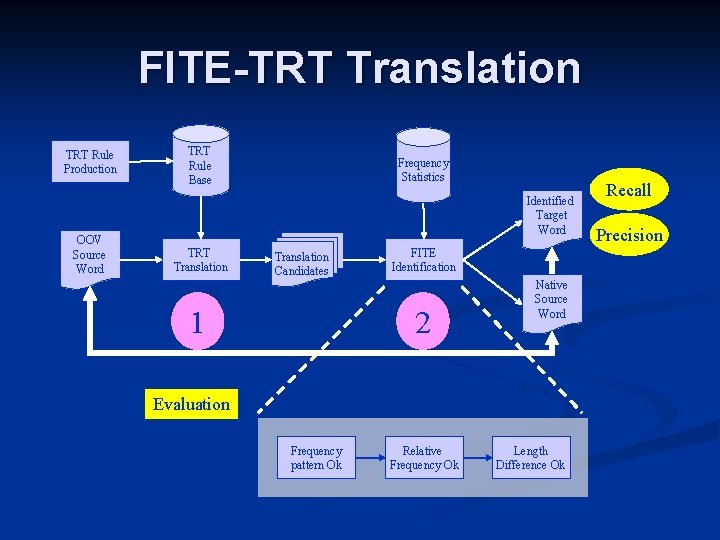

FITE-TRT Translation

1. TRT translation: TRT rule production yields the TRT rule base, which turns the OOV source word into translation candidates
2. FITE identification: using frequency statistics (and the native source word), a candidate is accepted when its frequency pattern, relative frequency and length difference are all OK, giving the identified target word

- Evaluation: recall, precision

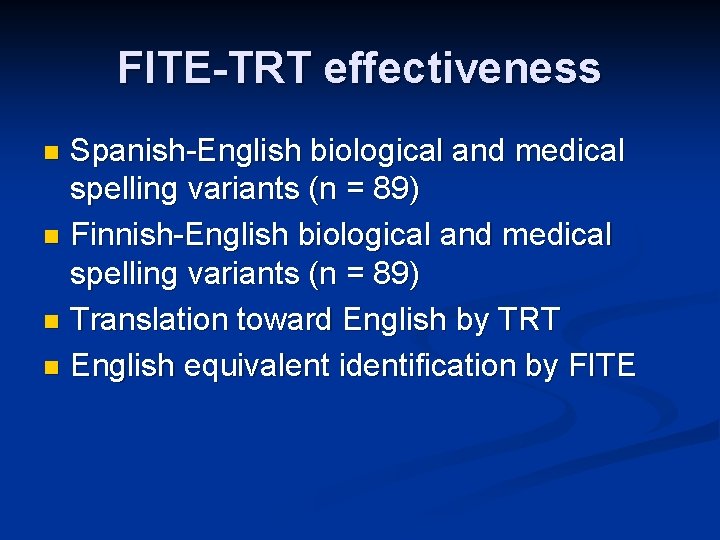

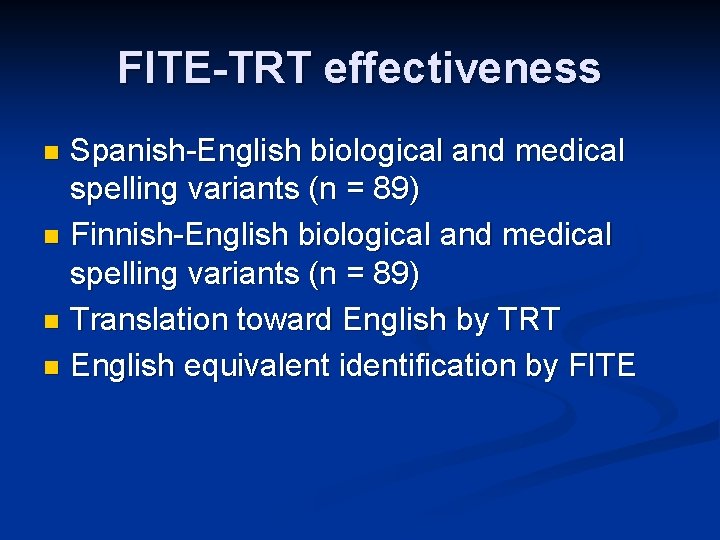

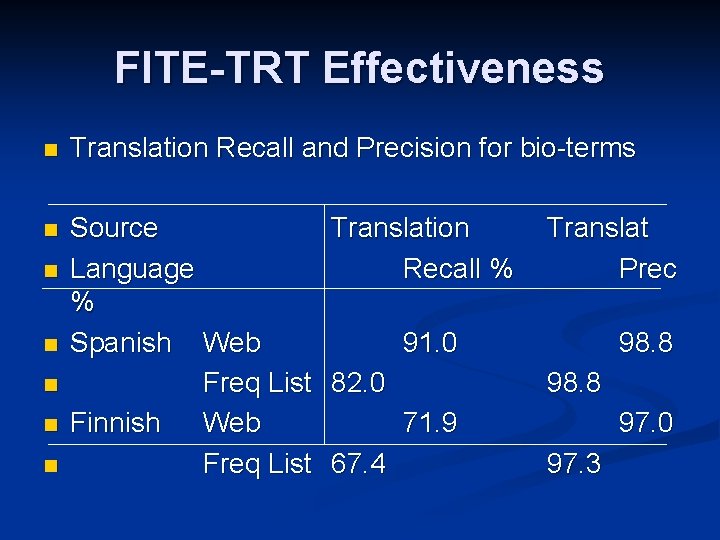

FITE-TRT Effectiveness

- Spanish-English biological and medical spelling variants (n = 89)
- Finnish-English biological and medical spelling variants (n = 89)
- Translation toward English by TRT
- English equivalent identification by FITE

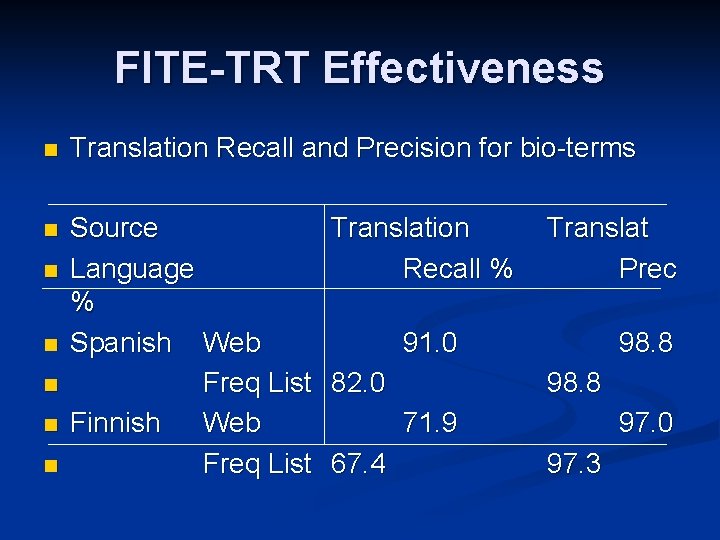

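FITE-TRT Effectiveness: translation recall and precision for bio-terms

| Source language | Frequency source | Translation recall % |
|---|---|---|
| Spanish | Web | 91.0 |
| Spanish | Freq. list | 82.0 |
| Finnish | Web | 71.9 |
| Finnish | Freq. list | 67.4 |

Translation precision % (three values given on the slide): 98.8, 97.0, 97.3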

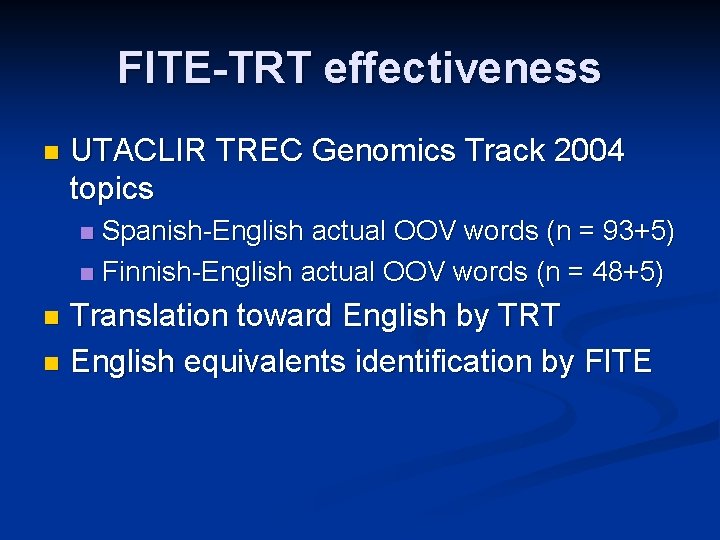

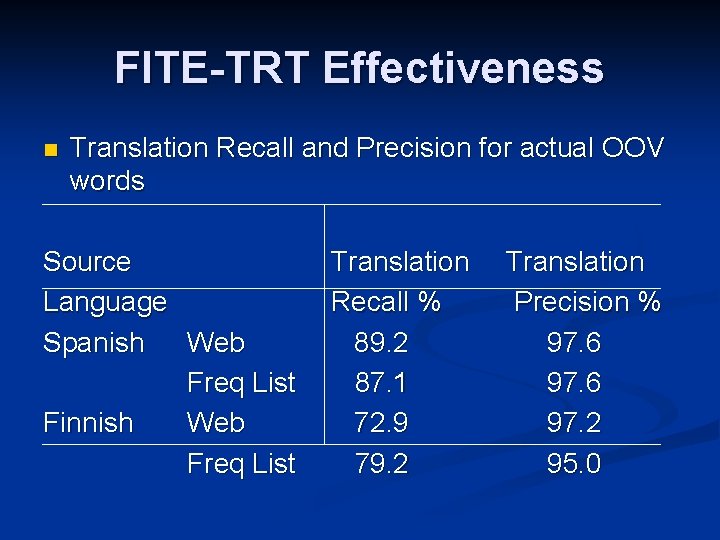

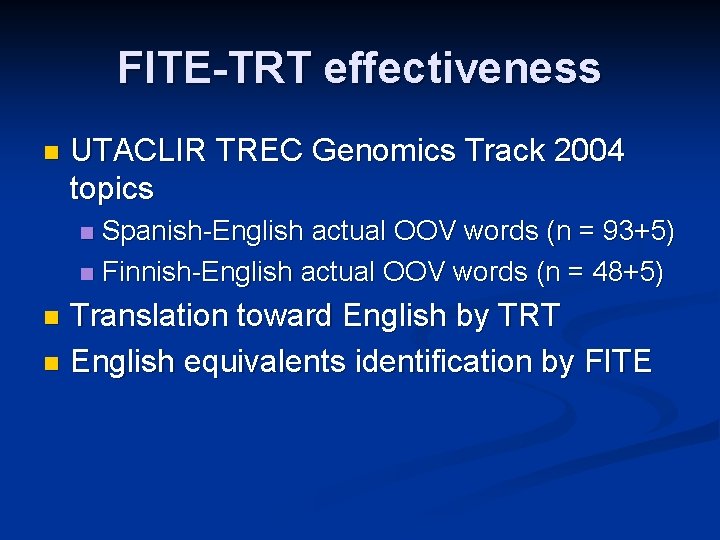

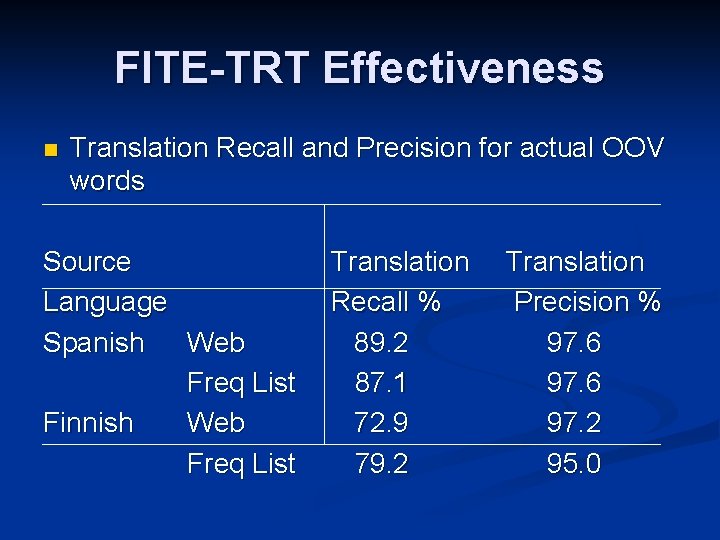

FITE-TRT Effectiveness

- UTACLIR, TREC Genomics Track 2004 topics
- Spanish-English actual OOV words (n = 93+5)
- Finnish-English actual OOV words (n = 48+5)
- Translation toward English by TRT
- English equivalent identification by FITE

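FITE-TRT Effectiveness: translation recall and precision for actual OOV words

| Source language | Frequency source | Translation recall % |
|---|---|---|
| Spanish | Web | 89.2 |
| Spanish | Freq. list | 87.1 |
| Finnish | Web | 72.9 |
| Finnish | Freq. list | 79.2 |

Translation precision % (three values given on the slide): 97.6, 97.2, 95.0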

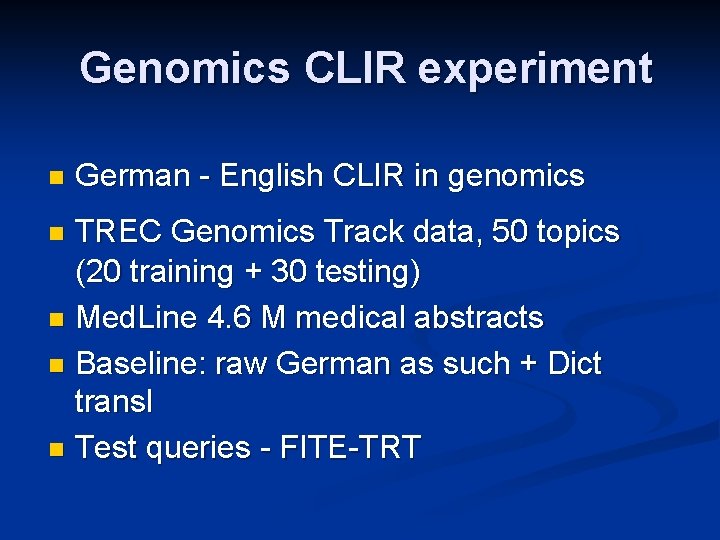

Genomics CLIR Experiment

- German-English CLIR in genomics
- TREC Genomics Track data, 50 topics (20 training + 30 testing)
- MEDLINE: 4.6 M medical abstracts
- Baseline: raw German as such + dictionary translation
- Test queries: FITE-TRT

Genomics CLIR experiment


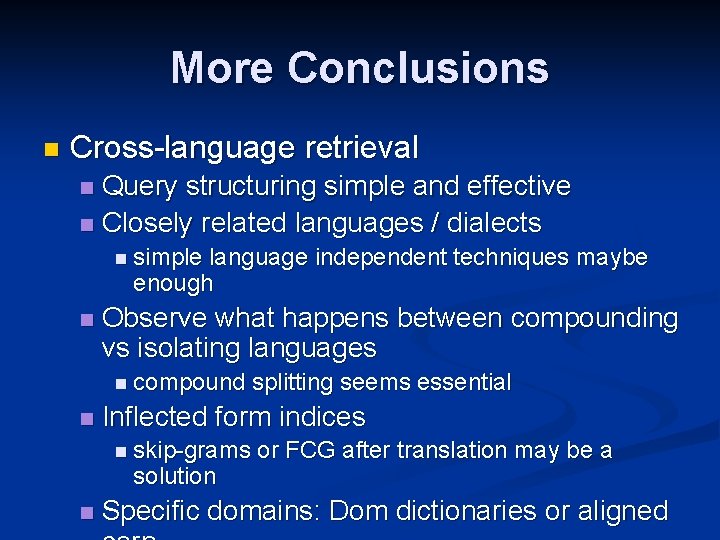

7. Conclusions

- Monolingual retrieval:
  - morphological complexity
  - who owns the index?
  - reductive and generative approaches
  - skewed distributions: surprisingly little may be enough
  - compound handling is perhaps not critical in monolingual retrieval

More Conclusions

- Cross-language retrieval:
  - query structuring: simple and effective
  - closely related languages / dialects: simple language-independent techniques may be enough
  - observe what happens between compounding vs. isolating languages: compound splitting seems essential
  - inflected-form indices: FCG or skip-grams after translation may be a solution
  - specific domains: domain dictionaries or aligned ...

Thanks!