Information Retrieval

- Slides: 47

Information Retrieval 2020/9/29 1

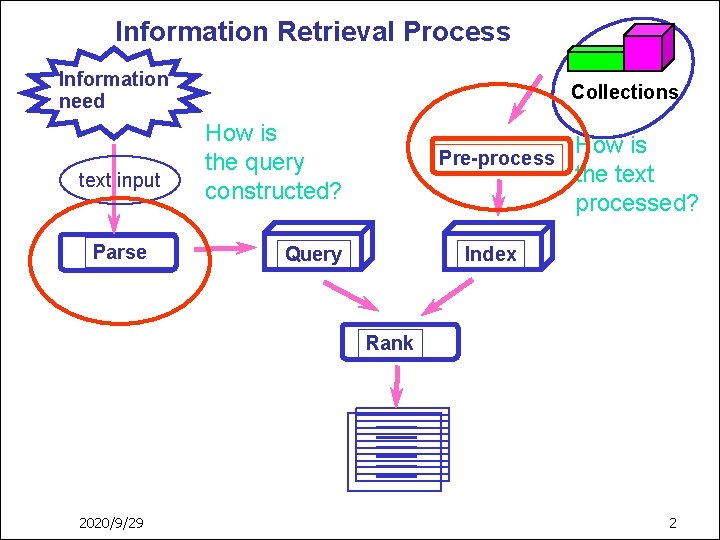

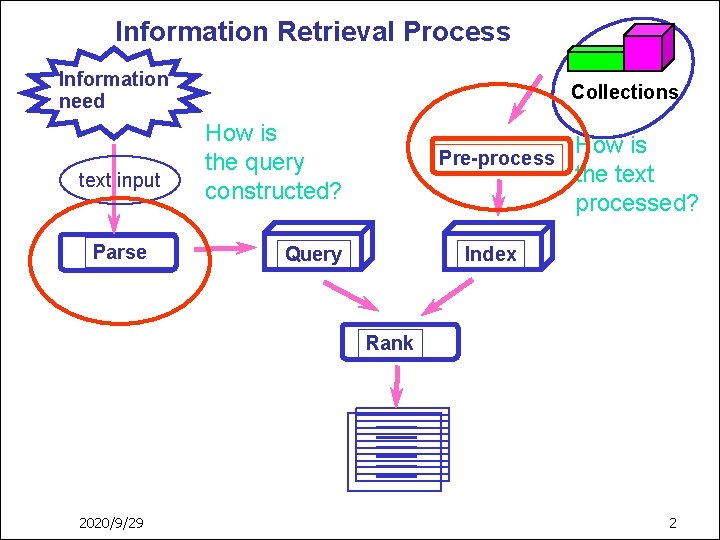

Information Retrieval Process

- Information need: parsed into a query. How is the query constructed?
- Collections (text input): pre-processed into an index. How is the text processed?
- The query is ranked against the index.

Example: Information Needs

- Sometimes very specific
  - <title> Falkland petroleum exploration
  - <desc> Description: What information is available on petroleum exploration in the South Atlantic near the Falkland Islands?
  - <narr> Narrative: Any document discussing petroleum exploration in the South Atlantic near the Falkland Islands is considered relevant. Documents discussing petroleum exploration in continental South America are not relevant.
- Sometimes very vague
  - I am going to Kyoto, Japan for a conference in two months. What should I know?

Relevance

- In what ways can a document be relevant to a query?
  - Answer precise question precisely.
  - Partially answer question.
  - Suggest a source for more information.
  - Give background information.
  - Remind the user of other knowledge.
  - Others...

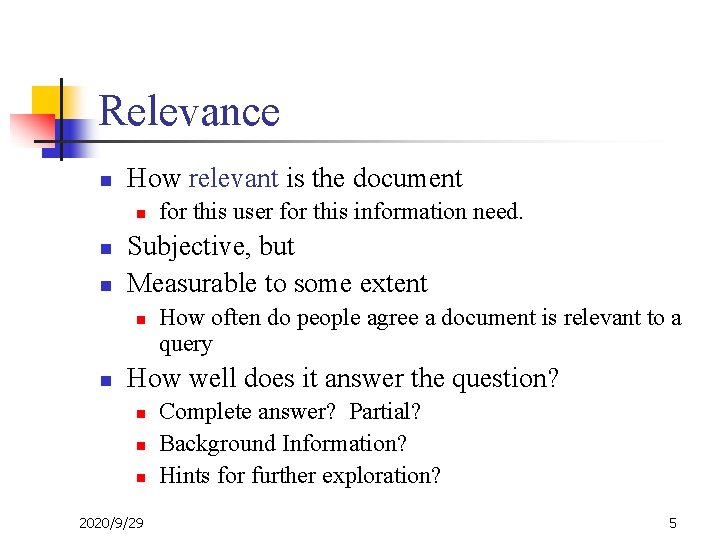

Relevance

- How relevant is the document
  - for this user
  - for this information need.
- Subjective, but measurable to some extent
  - How often do people agree a document is relevant to a query?
- How well does it answer the question?
  - Complete answer?
  - Partial?
  - Background information?
  - Hints for further exploration?

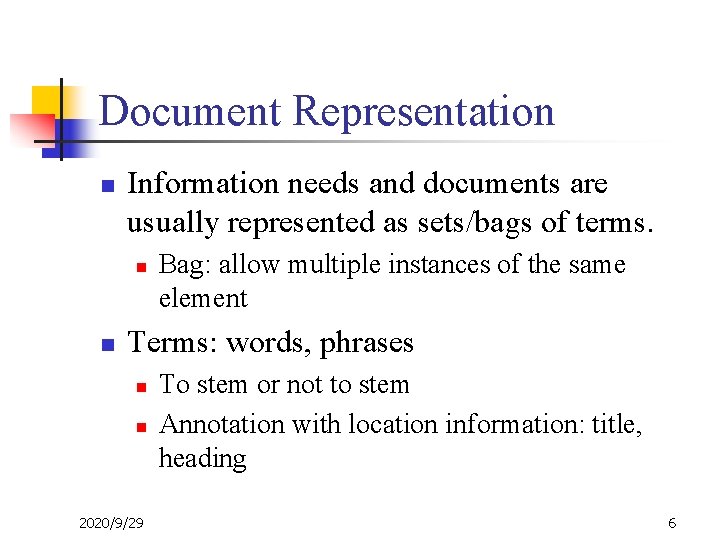

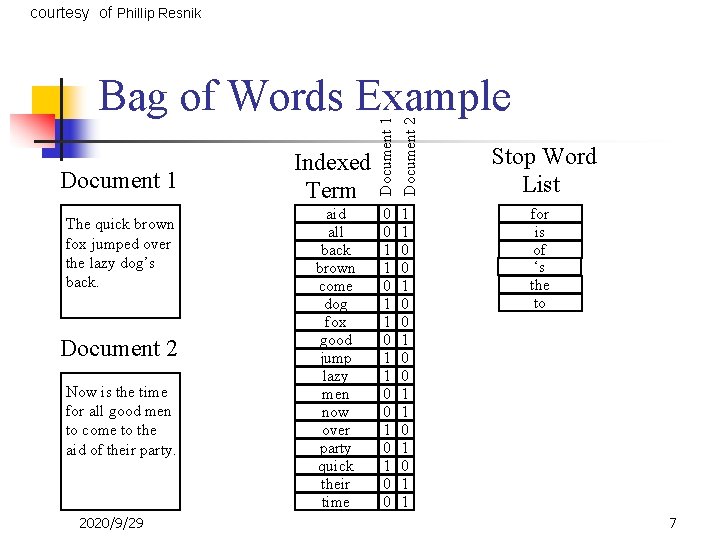

Document Representation

- Information needs and documents are usually represented as sets/bags of terms.
  - Bag: allows multiple instances of the same element
  - Terms: words, phrases
- To stem or not to stem
- Annotation with location information: title, heading

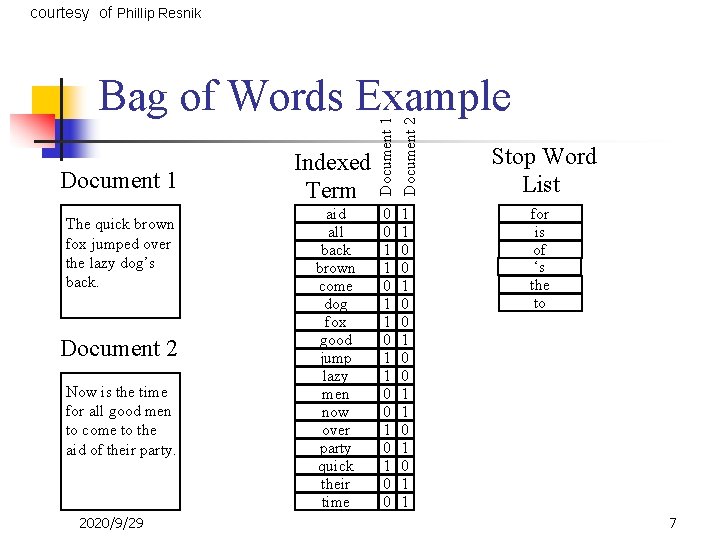

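Bag of Words Example (courtesy of Phillip Resnik)

- Document 1: The quick brown fox jumped over the lazy dog’s back.
- Document 2: Now is the time for all good men to come to the aid of their party.
- Stop Word List: for, is, of, ‘s, the, to

| Indexed Term | Document 1 | Document 2 |
| --- | --- | --- |
| aid | 0 | 1 |
| all | 0 | 1 |
| back | 1 | 0 |
| brown | 1 | 0 |
| come | 0 | 1 |
| dog | 1 | 0 |
| fox | 1 | 0 |
| good | 0 | 1 |
| jump | 1 | 0 |
| lazy | 1 | 0 |
| men | 0 | 1 |
| now | 0 | 1 |
| over | 1 | 0 |
| party | 0 | 1 |
| quick | 1 | 0 |
| their | 0 | 1 |
| time | 0 | 1 |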
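The bag-of-words example can be reproduced with a short sketch. The tokenizer and the `crude_stem` helper are hypothetical stand-ins (a real system would use a proper stemmer); only the two documents and the stop word list come from the slide.

```python
import re
from collections import Counter

STOP_WORDS = {"for", "is", "of", "'s", "the", "to"}  # stop word list from the slide

def crude_stem(token):
    # Hypothetical stand-in for a real stemmer: only strips a trailing "ed".
    return token[:-2] if token.endswith("ed") and len(token) > 4 else token

def bag_of_words(text):
    # Lowercase, normalize the curly apostrophe, and split "'s" off as its own token.
    text = text.lower().replace("\u2019", "'")
    tokens = re.findall(r"'s|[a-z]+", text)
    return Counter(crude_stem(t) for t in tokens if t not in STOP_WORDS)

doc1 = "The quick brown fox jumped over the lazy dog\u2019s back."
doc2 = "Now is the time for all good men to come to the aid of their party."
bag1, bag2 = bag_of_words(doc1), bag_of_words(doc2)

# The indexed terms are the union of the terms seen in either document.
vocabulary = sorted(set(bag1) | set(bag2))
for term in vocabulary:
    print(f"{term:6} {bag1[term]} {bag2[term]}")
```

Because each bag is a `Counter`, a term missing from a document simply counts as 0, which is what the 0/1 columns of the table record.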

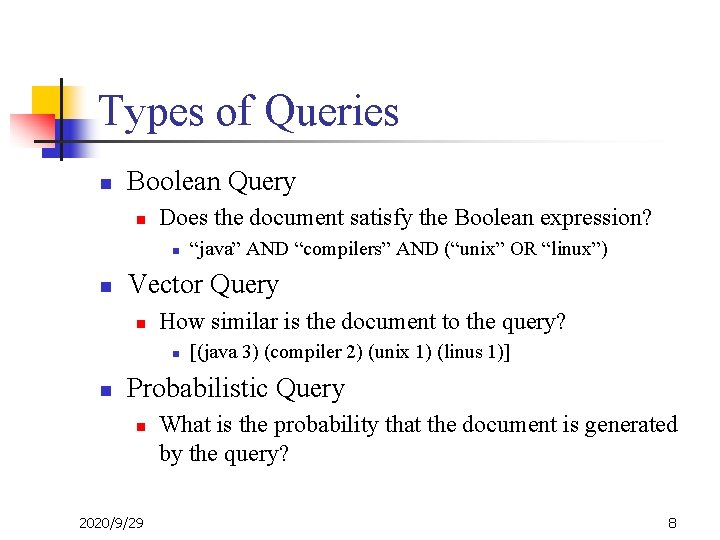

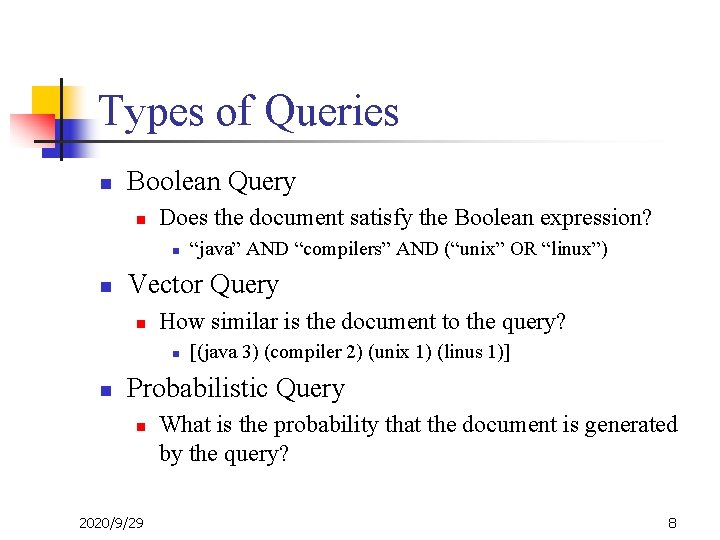

Types of Queries

- Boolean Query
  - Does the document satisfy the Boolean expression?
  - “java” AND “compilers” AND (“unix” OR “linux”)
- Vector Query
  - How similar is the document to the query?
  - [(java 3) (compiler 2) (unix 1) (linux 1)]
- Probabilistic Query
  - What is the probability that the document is generated by the query?
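A Boolean query maps naturally onto set operations over posting lists. The sketch below evaluates the slide's example query; the four toy documents and the `postings` helper are made up for illustration.

```python
# Toy collection (hypothetical): document id -> text.
docs = {
    1: "java compilers on unix",
    2: "java compilers for windows",
    3: "linux java compilers and tools",
    4: "unix shell scripting",
}

# Inverted index: term -> set of ids of the documents containing it.
index = {}
for doc_id, text in docs.items():
    for term in set(text.split()):
        index.setdefault(term, set()).add(doc_id)

def postings(term):
    # A term that never occurs matches no document.
    return index.get(term, set())

# "java" AND "compilers" AND ("unix" OR "linux")
result = postings("java") & postings("compilers") & (postings("unix") | postings("linux"))
print(sorted(result))
```

AND becomes set intersection and OR becomes set union, so a document is either in the result or not; nothing in the result says which match is better.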

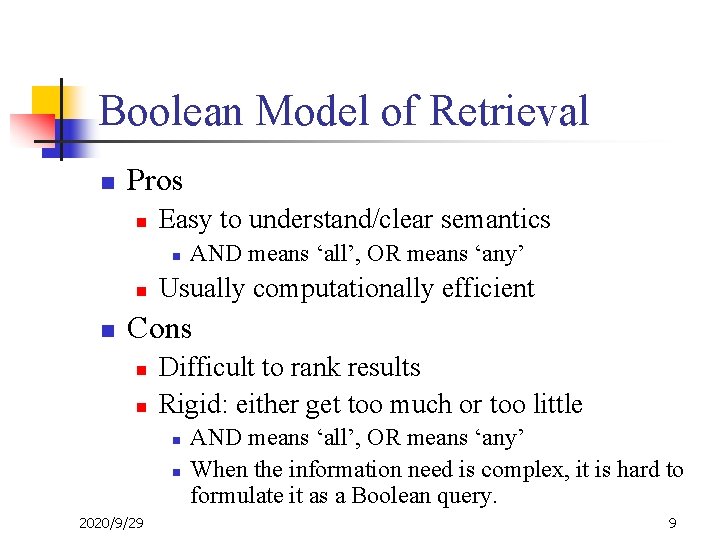

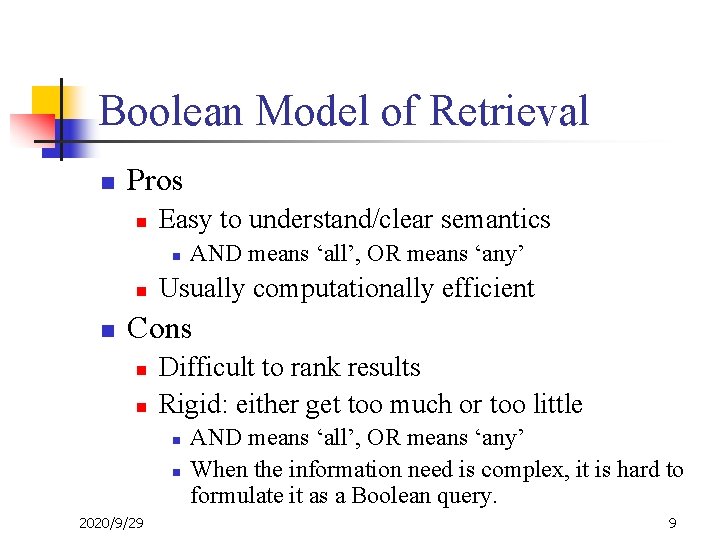

Boolean Model of Retrieval

- Pros
  - Easy to understand/clear semantics
    - AND means ‘all’, OR means ‘any’
  - Usually computationally efficient
- Cons
  - Difficult to rank results
  - Rigid: either get too much or too little
    - AND means ‘all’, OR means ‘any’
  - When the information need is complex, it is hard to formulate it as a Boolean query.

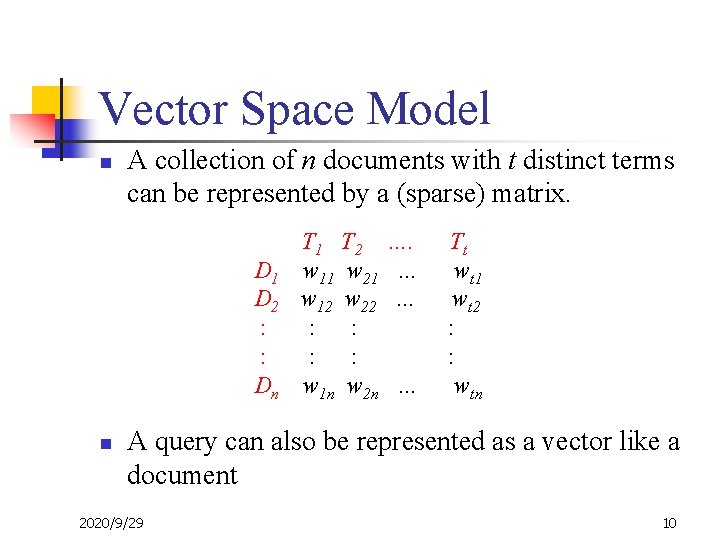

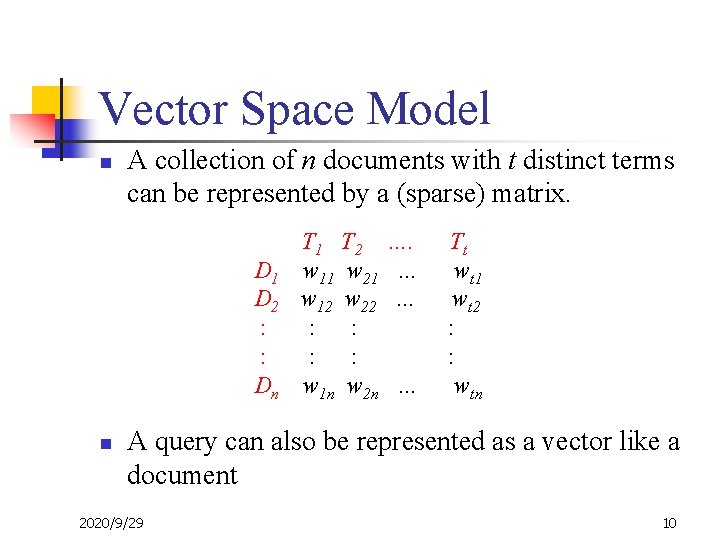

Vector Space Model

- A collection of n documents with t distinct terms can be represented by a (sparse) matrix.

|    | T1  | T2  | … | Tt  |
| --- | --- | --- | --- | --- |
| D1 | w11 | w21 | … | wt1 |
| D2 | w12 | w22 | … | wt2 |
| :  | :   | :   |   | :   |
| Dn | w1n | w2n | … | wtn |

- A query can also be represented as a vector, like a document.

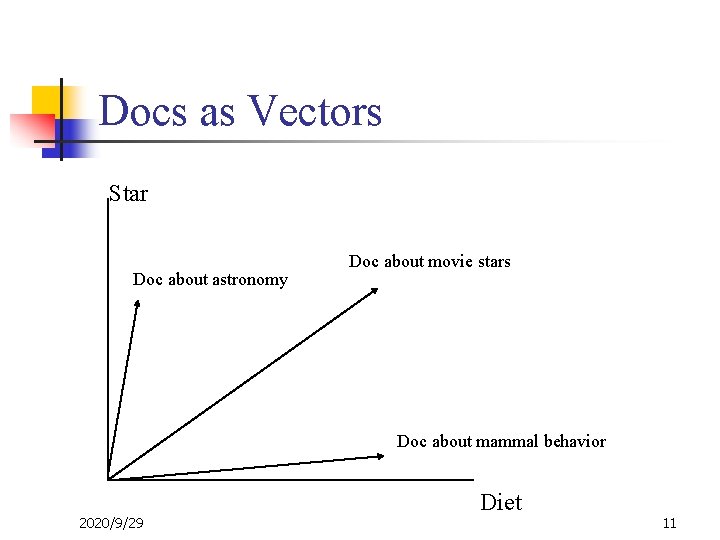

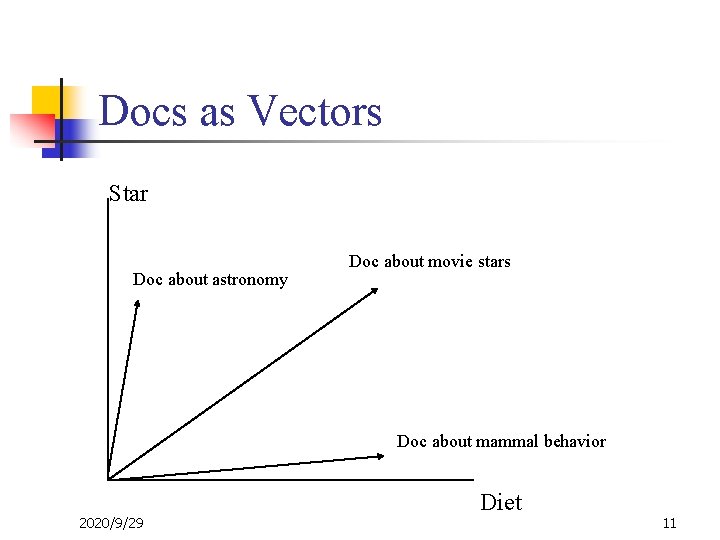

Docs as Vectors

[Figure: three documents drawn as vectors against the term axes Star and Diet: a doc about astronomy, a doc about movie stars, and a doc about mammal behavior.]

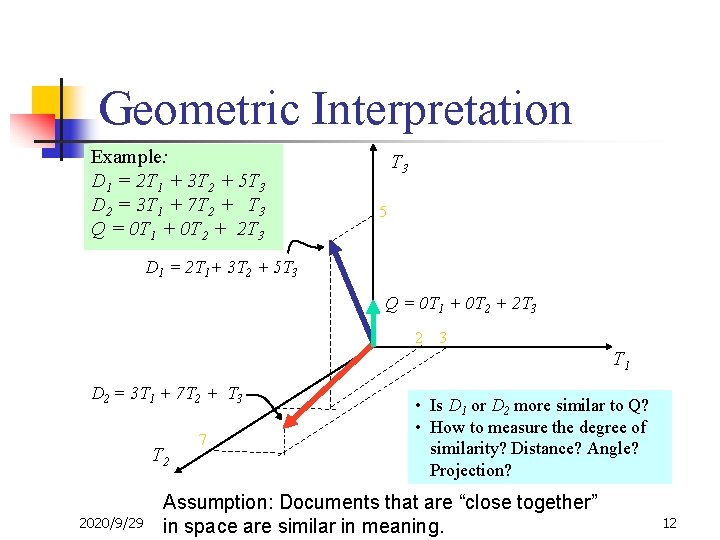

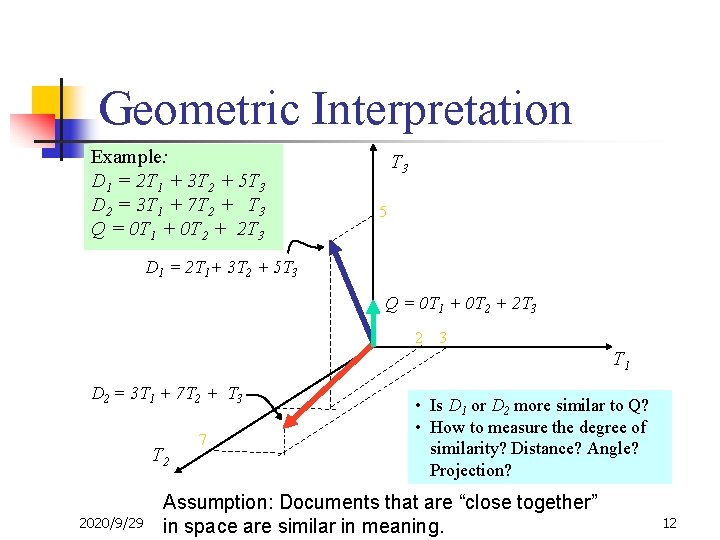

Geometric Interpretation

Example:

- D1 = 2T1 + 3T2 + 5T3
- D2 = 3T1 + 7T2 + T3
- Q = 0T1 + 0T2 + 2T3

[Figure: D1, D2 and Q drawn as vectors in the space spanned by the term axes T1, T2 and T3.]

- Is D1 or D2 more similar to Q?
- How to measure the degree of similarity? Distance? Angle? Projection?

Assumption: Documents that are “close together” in space are similar in meaning.
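One way to answer the question for this example is to measure the angle. The sketch below computes the cosine of the angle between each document vector and Q; choosing cosine here is an assumption, since the slide leaves the measure open.

```python
import math

def cosine(u, v):
    # Cosine of the angle between two vectors: dot product over the product of norms.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Coordinates are the weights on (T1, T2, T3) from the example.
D1, D2, Q = (2, 3, 5), (3, 7, 1), (0, 0, 2)

sim_d1 = cosine(D1, Q)  # about 0.81
sim_d2 = cosine(D2, Q)  # about 0.13
print(round(sim_d1, 2), round(sim_d2, 2))
```

Under this measure D1 is closer to Q than D2, because most of D1's weight lies on T3, the only term in the query.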

Term Weights

- The weight wij reflects the importance of the term Ti in document Dj.
- Intuitions:
  - A term that appears in many documents is not important: e.g., the, going, come, …
  - If a term is frequent in a document, it is probably important in that document.

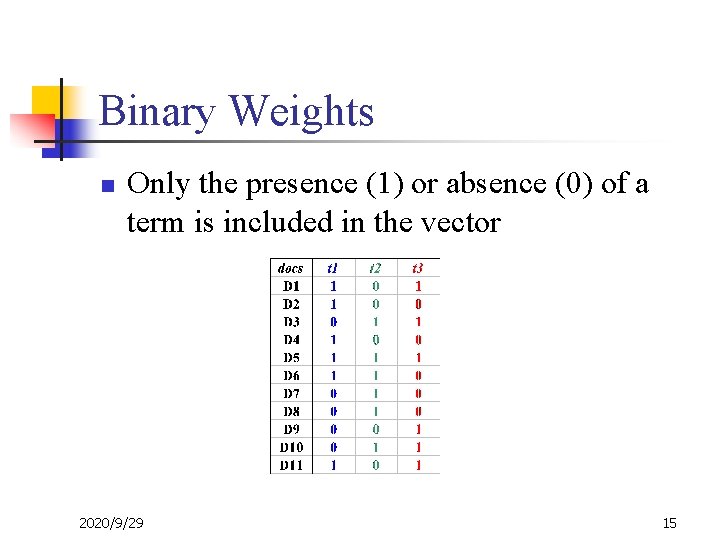

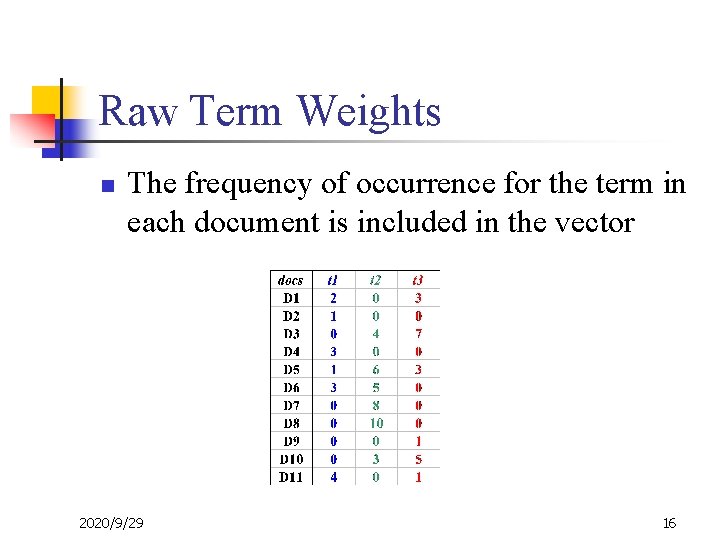

Assigning Weights to Terms

- Binary Weights
- Raw term frequency
- tf x idf
  - Recall the Zipf distribution
  - Want to weight terms highly if they are
    - frequent in relevant documents … BUT
    - infrequent in the collection as a whole
- Pointwise Mutual Information

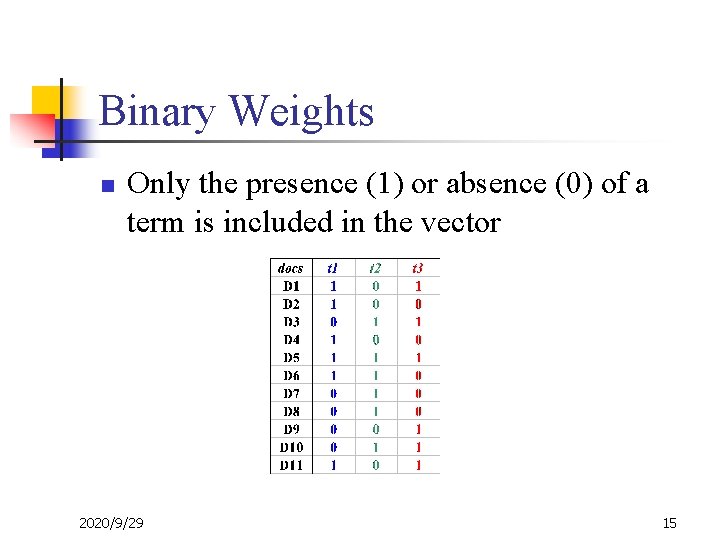

Binary Weights

- Only the presence (1) or absence (0) of a term is included in the vector

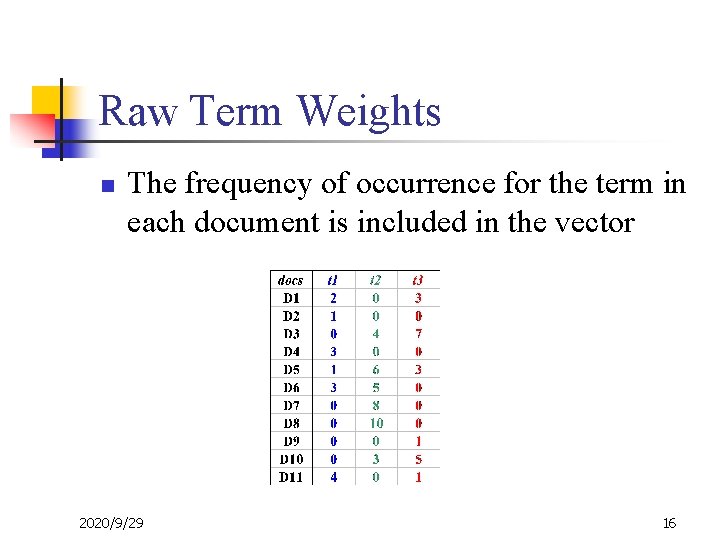

Raw Term Weights

- The frequency of occurrence for the term in each document is included in the vector

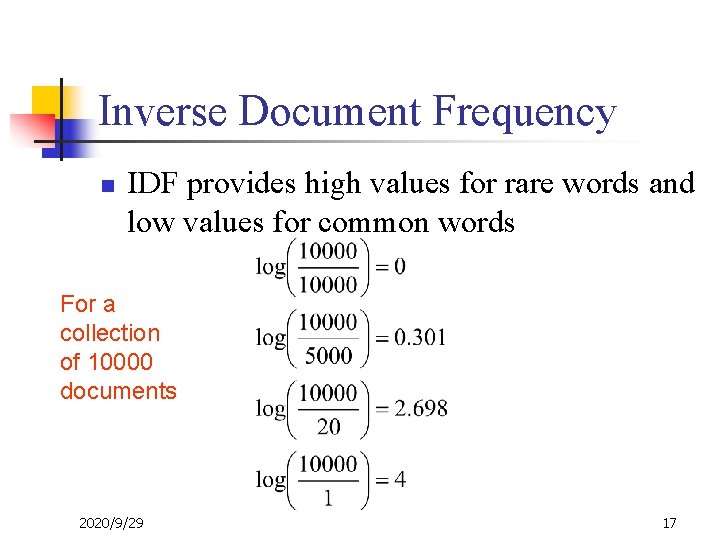

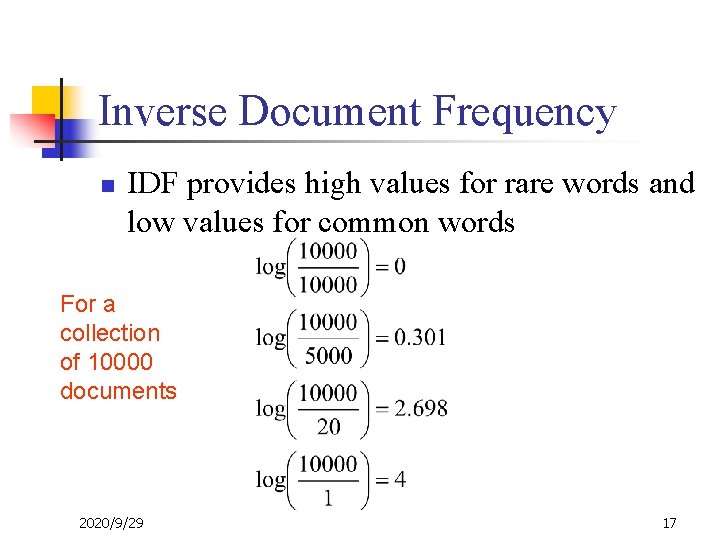

Inverse Document Frequency

- IDF provides high values for rare words and low values for common words
- For a collection of 10000 documents
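The slide's own formula is in an image and is not recoverable here. As a sketch, the code below assumes the common definition idf = log10(N / df), where N is the collection size and df is the number of documents containing the term; the document frequencies in the loop are illustrative.

```python
import math

def idf(num_docs, doc_freq):
    # Assumed definition: log10 of (collection size / document frequency).
    return math.log10(num_docs / doc_freq)

N = 10_000  # collection size from the slide
for df in (1, 20, 5_000, 10_000):
    print(df, round(idf(N, df), 3))
```

A term that occurs in every document gets idf 0, while a term that occurs in a single document gets the maximum value for this collection.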

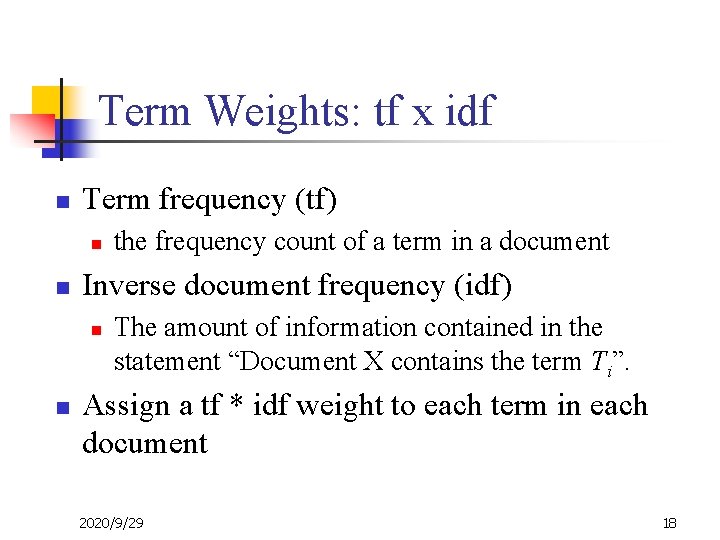

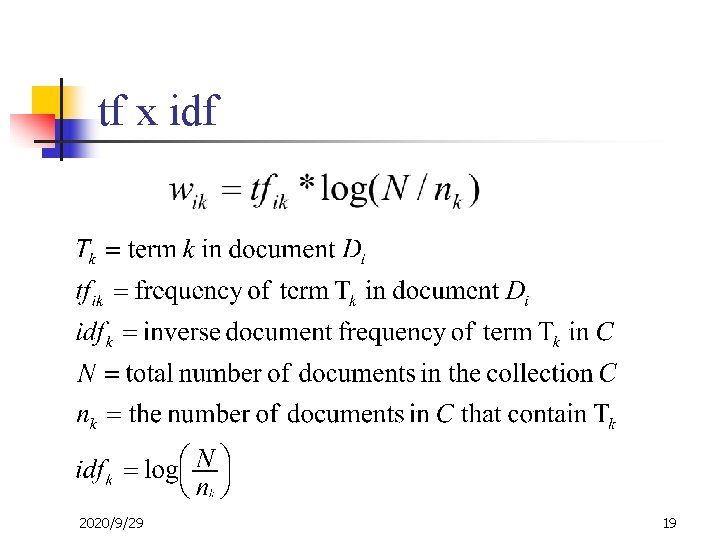

Term Weights: tf x idf

- Term frequency (tf)
  - the frequency count of a term in a document
- Inverse document frequency (idf)
  - The amount of information contained in the statement “Document X contains the term Ti”.
- Assign a tf * idf weight to each term in each document
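A minimal sketch of assigning tf * idf weights, assuming raw counts for tf and log10(N / df) for idf (other variants are common). The three toy documents are hypothetical.

```python
import math
from collections import Counter

def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n = len(docs)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)  # raw term frequency within this document
        weights.append({t: tf[t] * math.log10(n / df[t]) for t in tf})
    return weights

docs = [["star", "star", "galaxy"], ["star", "movie"], ["diet", "mammal", "diet"]]
w = tf_idf(docs)
print(w[0])
```

In the first document "star" occurs twice but also appears in another document, so it ends up with a lower weight than "galaxy", which occurs once but only in that document.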

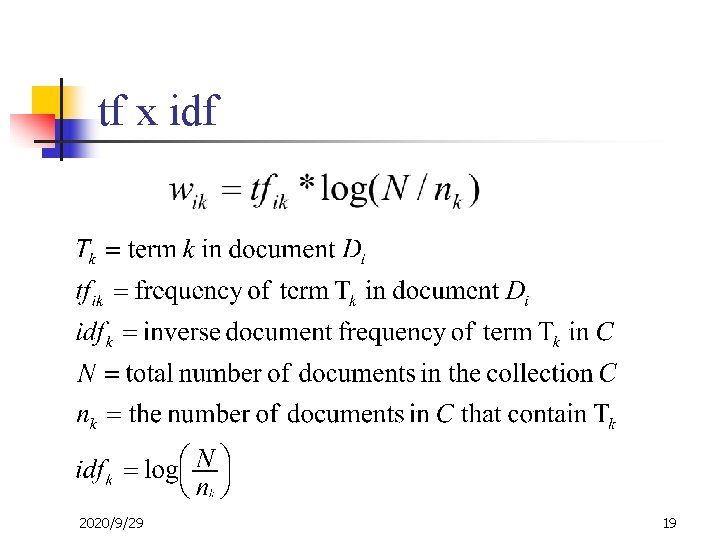

tf x idf

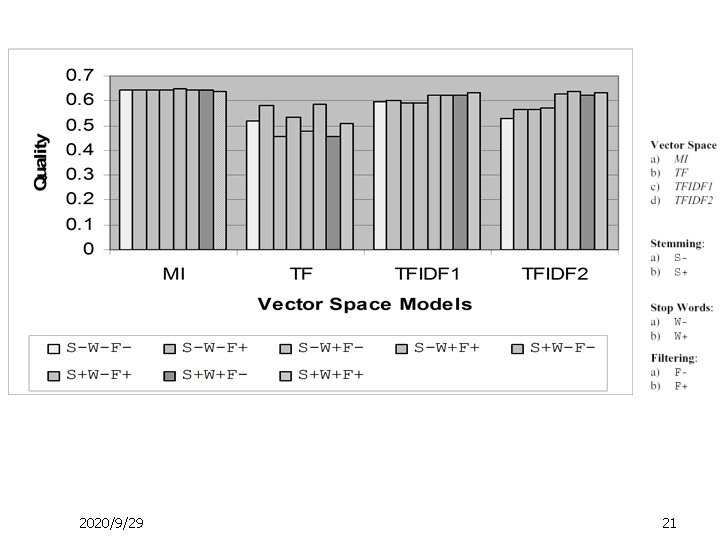

Term Weights: Pointwise Mutual Information

- Pointwise Mutual Information measures the strength of association between two elements (a document and a term).
  - Observed frequency vs. expected frequency
- MI weight is insensitive to stemming and the use of stop word list [Pantel and Lin 02]
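As a sketch of "observed vs. expected frequency", the code below uses the standard definition PMI(d, t) = log(P(d, t) / (P(d) P(t))), with probabilities estimated from raw counts. The counts are made up, and the slide's exact formula (in an image) may differ in detail.

```python
import math

def pmi_weights(counts):
    """counts[d][t] = frequency of term t in document d."""
    total = sum(c for row in counts.values() for c in row.values())
    doc_tot = {d: sum(row.values()) for d, row in counts.items()}
    term_tot = {}
    for row in counts.values():
        for t, c in row.items():
            term_tot[t] = term_tot.get(t, 0) + c
    # Observed joint probability divided by the product of the marginals.
    return {
        d: {t: math.log((c / total) / ((doc_tot[d] / total) * (term_tot[t] / total)))
            for t, c in row.items() if c > 0}
        for d, row in counts.items()
    }

counts = {"d1": {"star": 3, "the": 5}, "d2": {"diet": 2, "the": 6}}
pmi = pmi_weights(counts)
print(pmi["d1"])
```

A term spread evenly across documents, such as "the", gets a weight near or below zero without any stop word list, while a term concentrated in one document gets a positive weight.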

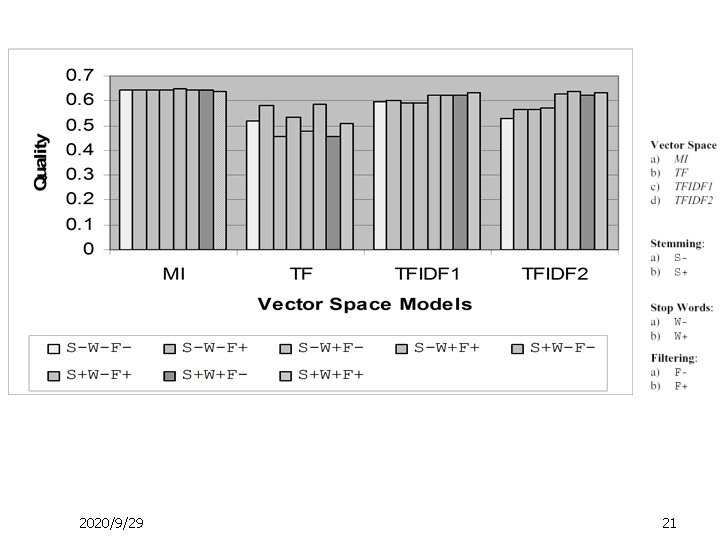


What Else can be Terms?

- Letter n-grams
- Phrases
- Relations
- Semantic categories

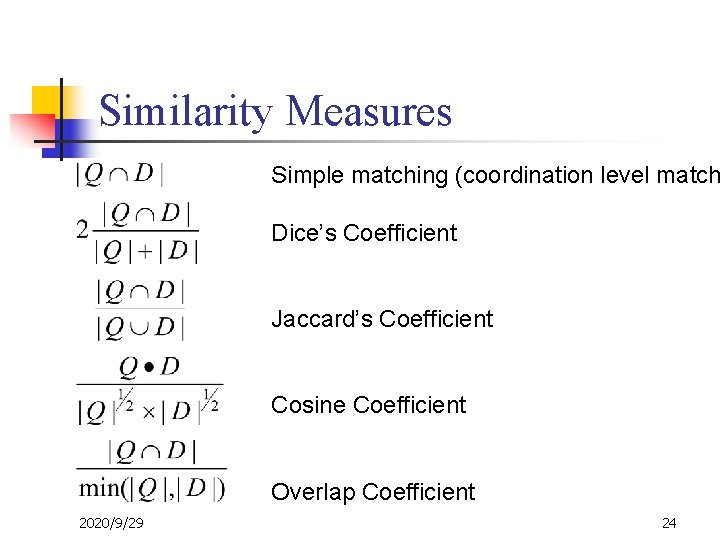

Similarity Measure

- Define a similarity measure between a query and a document
  - Cosine
  - Dice
- Return the documents that are the most similar to the query

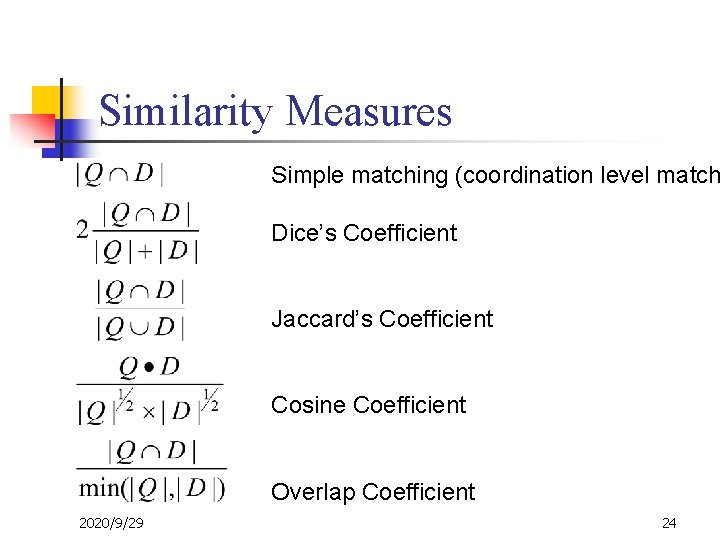

Similarity Measures

- Simple matching (coordination level match)
- Dice’s Coefficient
- Jaccard’s Coefficient
- Cosine Coefficient
- Overlap Coefficient
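The slide's formulas are in an image. As a sketch, the code below uses the usual set-based (binary weight) forms of these five coefficients; the weighted-vector forms differ, and the query and document sets are illustrative.

```python
import math

def similarities(x, y):
    # x and y are non-empty collections of terms, treated as sets.
    x, y = set(x), set(y)
    common = len(x & y)
    return {
        "simple_matching": common,
        "dice": 2 * common / (len(x) + len(y)),
        "jaccard": common / len(x | y),
        "cosine": common / math.sqrt(len(x) * len(y)),
        "overlap": common / min(len(x), len(y)),
    }

query = {"java", "compiler", "unix"}
doc = {"java", "compiler", "linux", "tutorial"}
scores = similarities(query, doc)
print(scores)
```

All five start from the size of the intersection and differ only in how they normalize it by the sizes of the two sets.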

Implementation of VS Model

- Does the query vector need to be compared with EVERY document vector?
- Does the query vector need to be compared with the vectors of documents that contain any of query terms?
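One common answer is an inverted index: only documents that share at least one term with the query are ever touched. The sketch below accumulates dot-product scores term by term; the names `build_index` and `score` and the toy weights are hypothetical.

```python
from collections import defaultdict

def build_index(doc_vectors):
    """doc_vectors: {doc_id: {term: weight}} -> {term: [(doc_id, weight), ...]}"""
    index = defaultdict(list)
    for doc_id, vec in doc_vectors.items():
        for term, w in vec.items():
            index[term].append((doc_id, w))
    return index

def score(query_vec, index):
    # Walk only the posting lists of the query terms and accumulate dot products.
    scores = defaultdict(float)
    for term, qw in query_vec.items():
        for doc_id, dw in index.get(term, []):
            scores[doc_id] += qw * dw
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

doc_vectors = {
    "D1": {"java": 2.0, "unix": 1.0},
    "D2": {"diet": 2.0, "mammal": 1.0},
    "D3": {"java": 1.0, "compiler": 2.0},
}
ranked = score({"java": 1.0, "compiler": 1.0}, build_index(doc_vectors))
print(ranked)
```

D2 shares no term with the query, so it never receives a score and never appears in the ranking.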

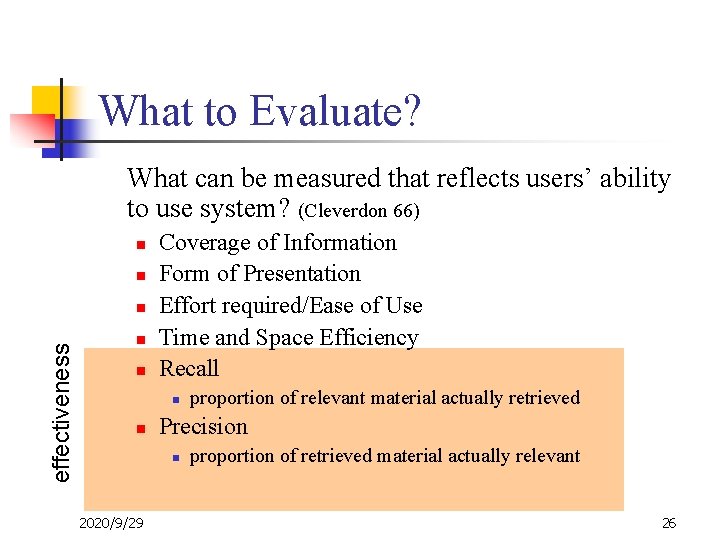

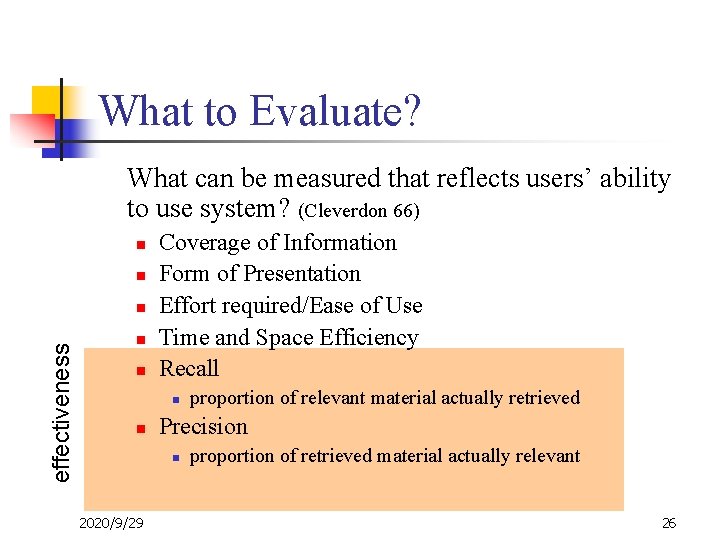

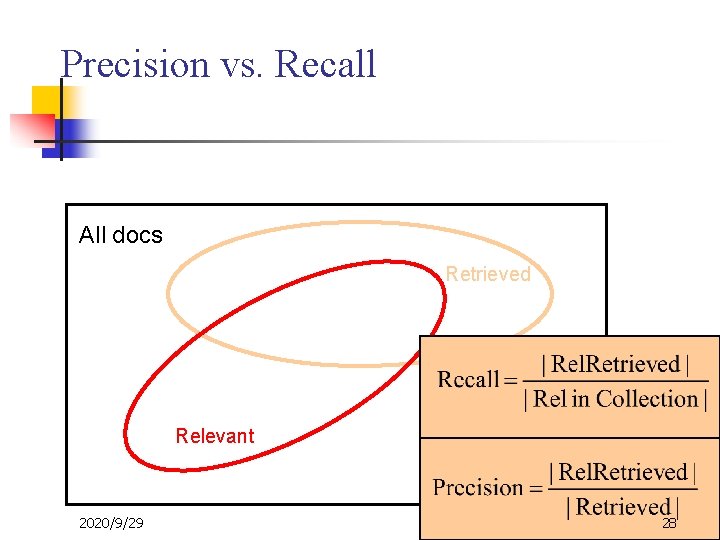

What to Evaluate?

What can be measured that reflects users’ ability to use system? (Cleverdon 66)

- Coverage of Information
- Form of Presentation
- Effort required/Ease of Use
- Time and Space Efficiency
- Effectiveness
  - Recall: proportion of relevant material actually retrieved
  - Precision: proportion of retrieved material actually relevant
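The two effectiveness measures follow directly from their definitions. A minimal sketch with made-up document ids:

```python
def precision_recall(retrieved, relevant):
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant documents that were retrieved
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

p, r = precision_recall(
    retrieved={"d1", "d2", "d3", "d4"},
    relevant={"d2", "d4", "d7"},
)
print(p, r)
```

Here two of the four retrieved documents are relevant (precision 0.5) and two of the three relevant documents were retrieved (recall about 0.67). Returning 0.0 for an empty set is a convention chosen for this sketch.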

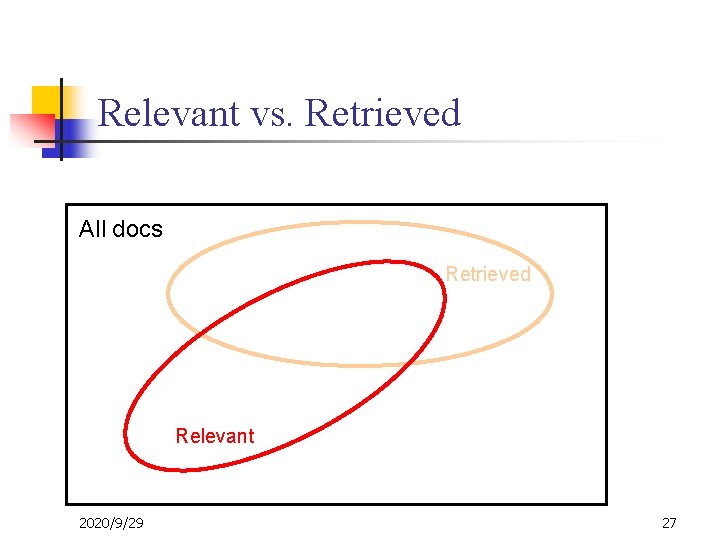

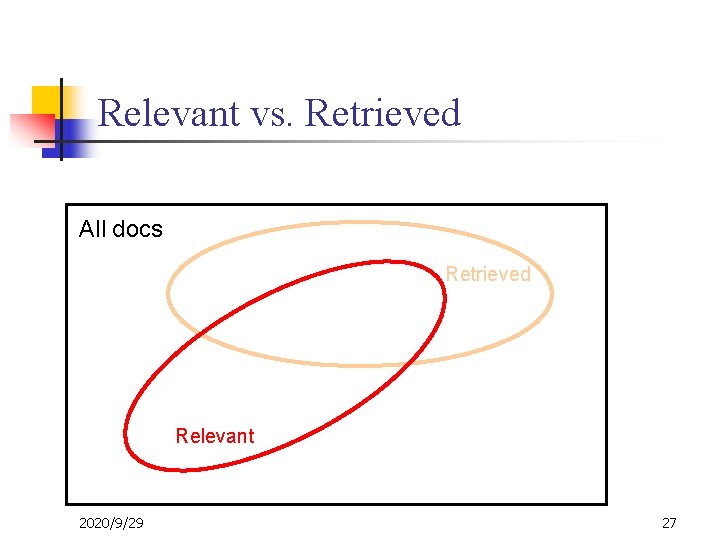

Relevant vs. Retrieved

[Figure: Venn diagram of the Retrieved and Relevant sets within All docs.]

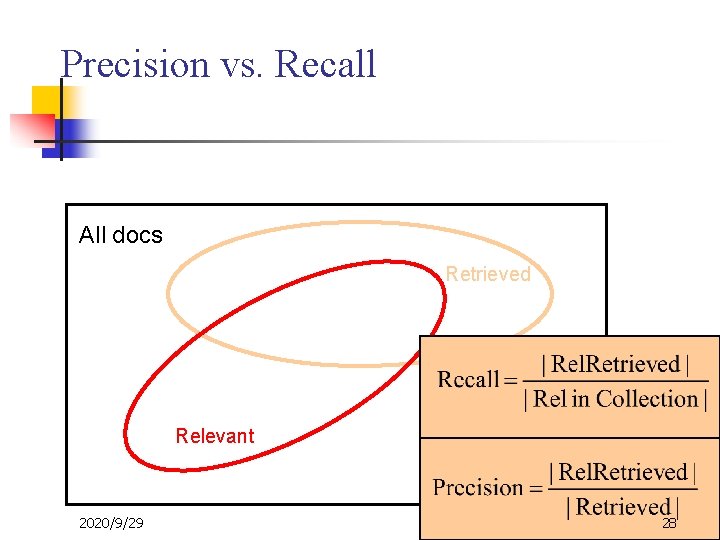

Precision vs. Recall

[Figure: Venn diagram of the Retrieved and Relevant sets within All docs.]

Why Precision and Recall?

Get as much good stuff as possible while at the same time getting as little junk as possible.

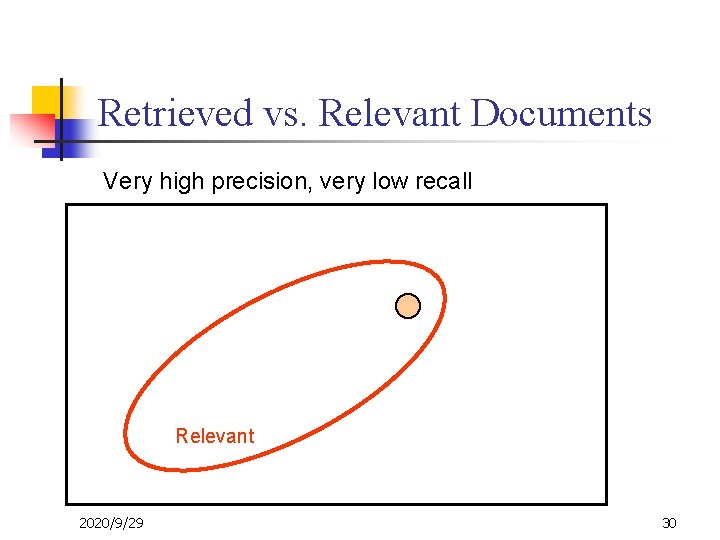

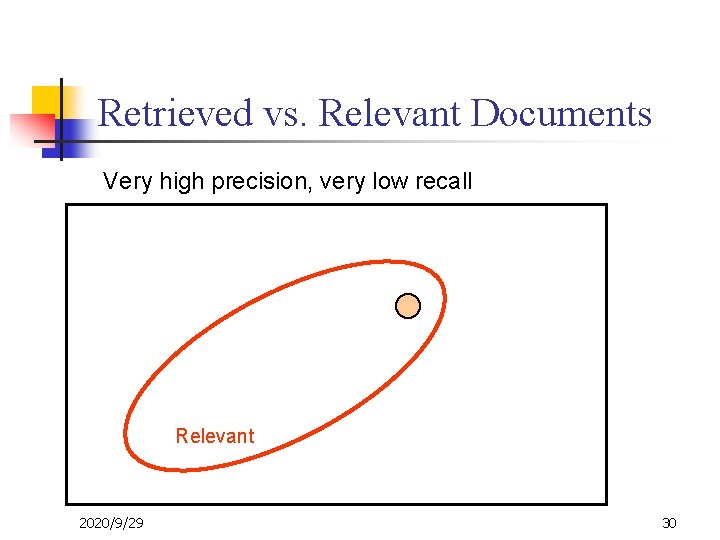

Retrieved vs. Relevant Documents

Very high precision, very low recall

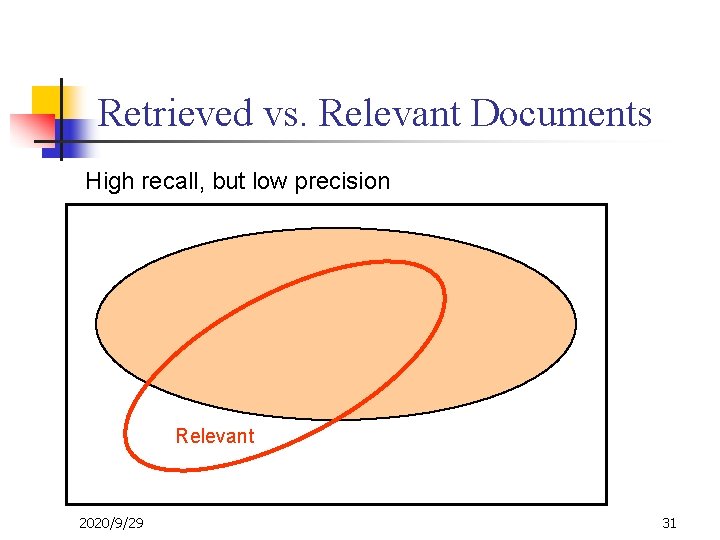

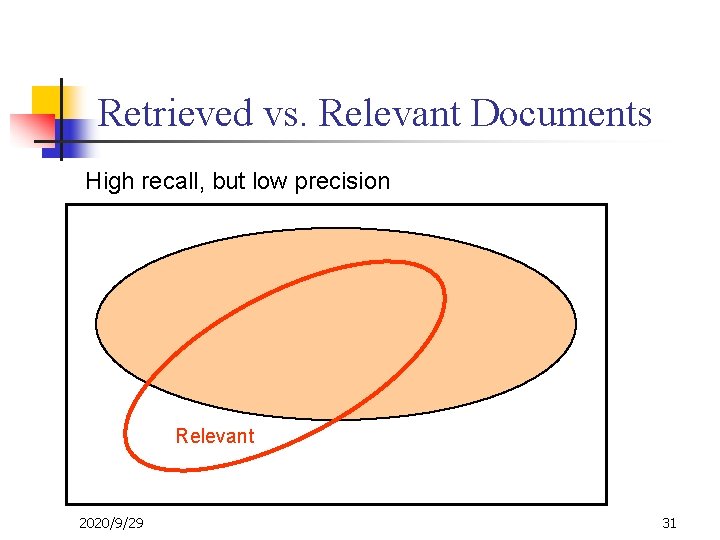

Retrieved vs. Relevant Documents

High recall, but low precision

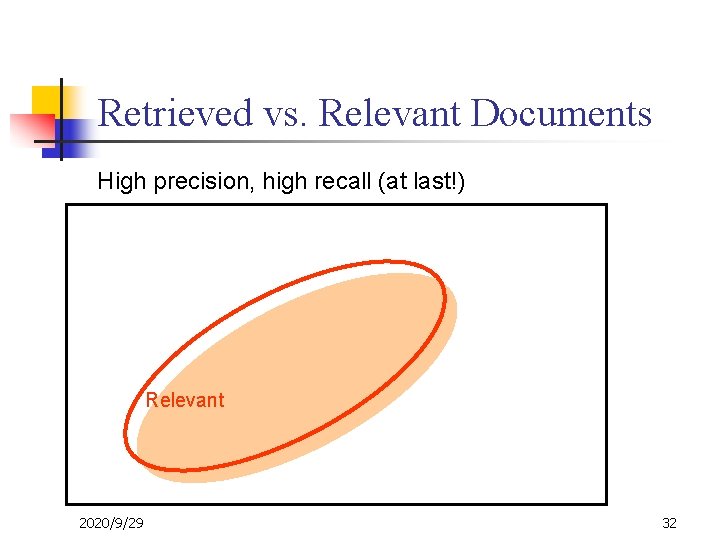

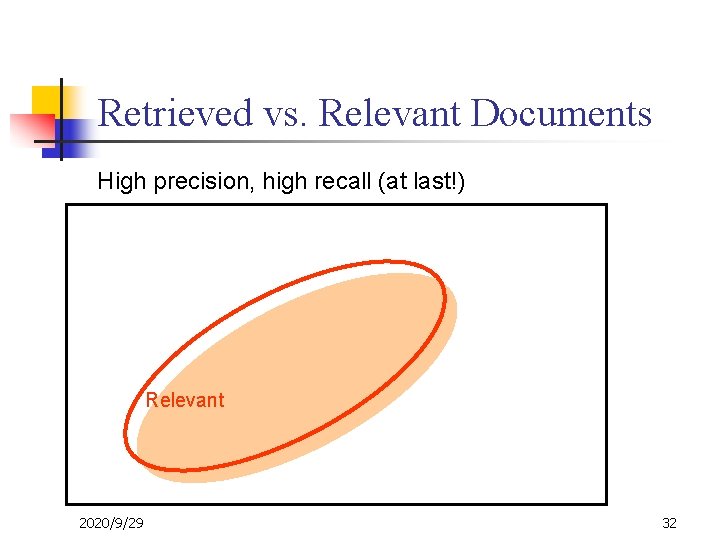

Retrieved vs. Relevant Documents

High precision, high recall (at last!)

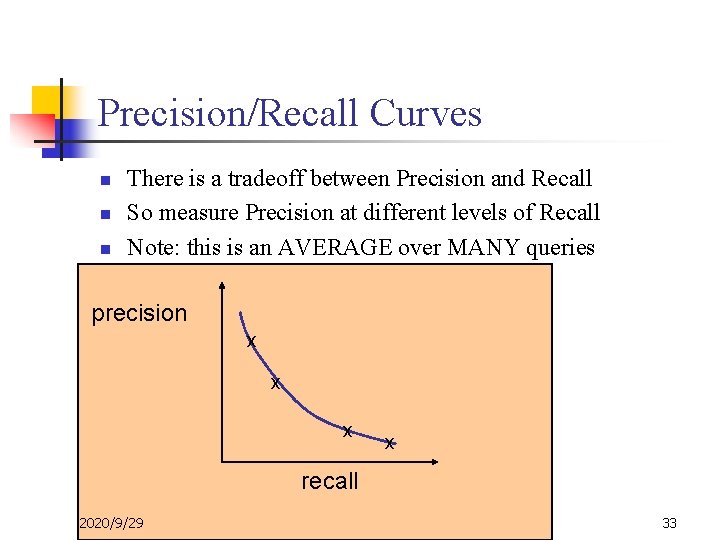

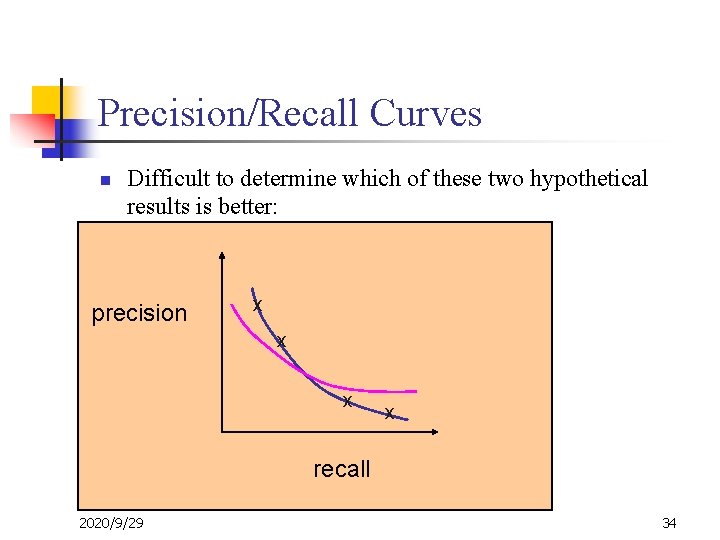

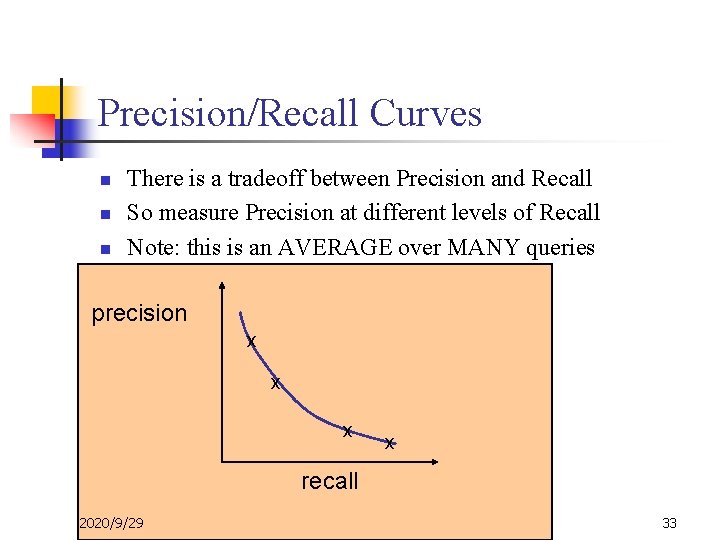

Precision/Recall Curves

- There is a tradeoff between Precision and Recall
- So measure Precision at different levels of Recall
- Note: this is an AVERAGE over MANY queries

[Figure: precision plotted against recall.]

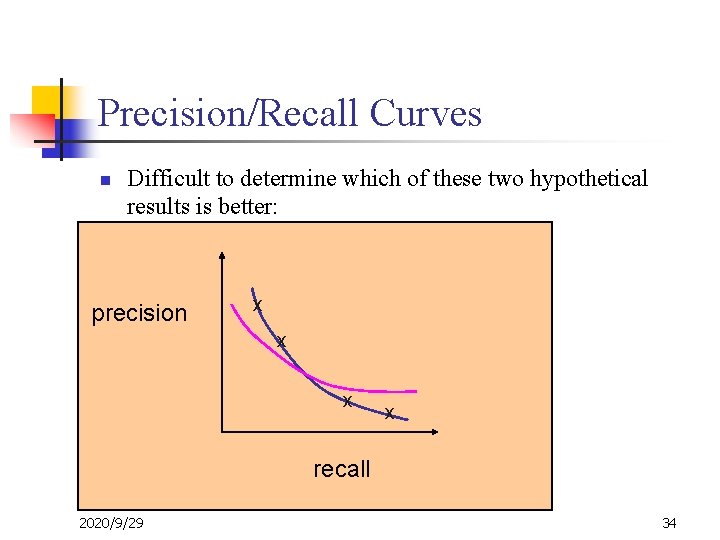

Precision/Recall Curves

- Difficult to determine which of these two hypothetical results is better:

[Figure: two hypothetical results plotted as precision against recall.]

Average Precision

- IR systems typically output a ranked list of documents
- For each relevant document, compute the precision up to that point
- Average over all precision values computed this way.
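A minimal sketch of this procedure. It averages over the relevant documents that actually appear in the ranked list, as the bullets describe; some definitions divide by the total number of relevant documents instead. The ranked list is illustrative.

```python
def average_precision(ranked, relevant):
    relevant = set(relevant)
    hits, precisions = 0, []
    for rank, doc in enumerate(ranked, start=1):
        if doc in relevant:
            hits += 1
            precisions.append(hits / rank)  # precision up to this relevant document
    return sum(precisions) / len(precisions) if precisions else 0.0

ranked = ["d3", "d1", "d7", "d2", "d9"]
ap = average_precision(ranked, relevant={"d3", "d7", "d9"})
print(round(ap, 4))
```

The relevant documents sit at ranks 1, 3 and 5, giving precisions 1/1, 2/3 and 3/5, whose mean is about 0.756.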

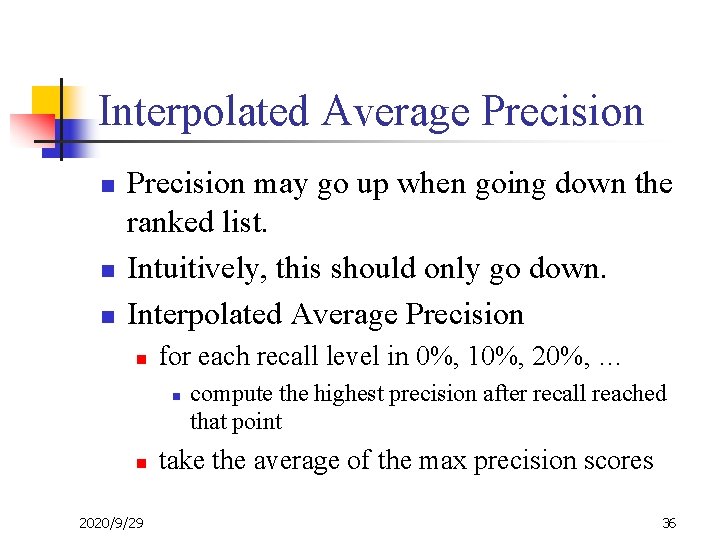

Interpolated Average Precision

- Precision may go up when going down the ranked list. Intuitively, this should only go down.
- Interpolated Average Precision
  - for each recall level in 0%, 10%, 20%, …
    - compute the highest precision after recall reached that point
  - take the average of the max precision scores
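The procedure can be sketched as follows, assuming 11 recall levels (0%, 10%, ..., 100%) and a precision of 0 for recall levels the ranked list never reaches. Both choices are assumptions of this sketch.

```python
def interpolated_average_precision(ranked, relevant, levels=11):
    relevant = set(relevant)
    points, hits = [], 0  # (recall, precision) after each relevant hit
    for rank, doc in enumerate(ranked, start=1):
        if doc in relevant:
            hits += 1
            points.append((hits / len(relevant), hits / rank))
    maxima = []
    for i in range(levels):
        level = i / (levels - 1)
        # Highest precision at any point where recall has reached this level.
        candidates = [p for r, p in points if r >= level]
        maxima.append(max(candidates) if candidates else 0.0)
    return sum(maxima) / levels

iap = interpolated_average_precision(["d1", "d2", "d3", "d4"], relevant={"d2", "d3"})
print(round(iap, 4))
```

In this example precision rises from 1/2 at rank 2 to 2/3 at rank 3, so every recall level is credited with 2/3, and the interpolated curve never increases as recall grows.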

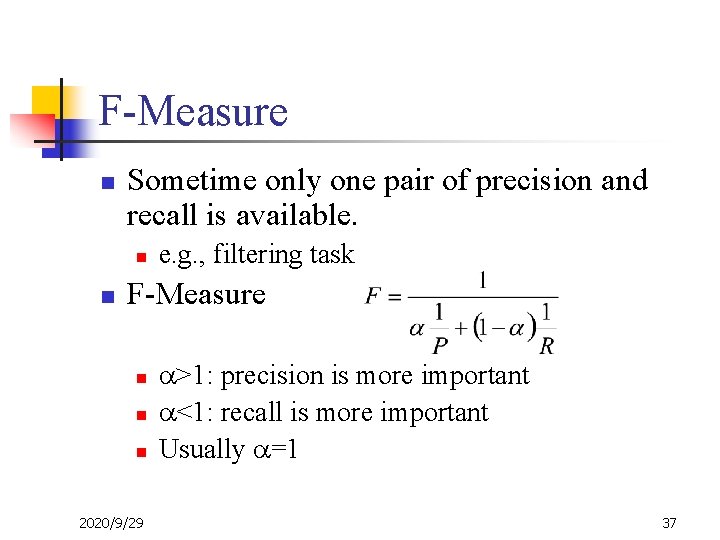

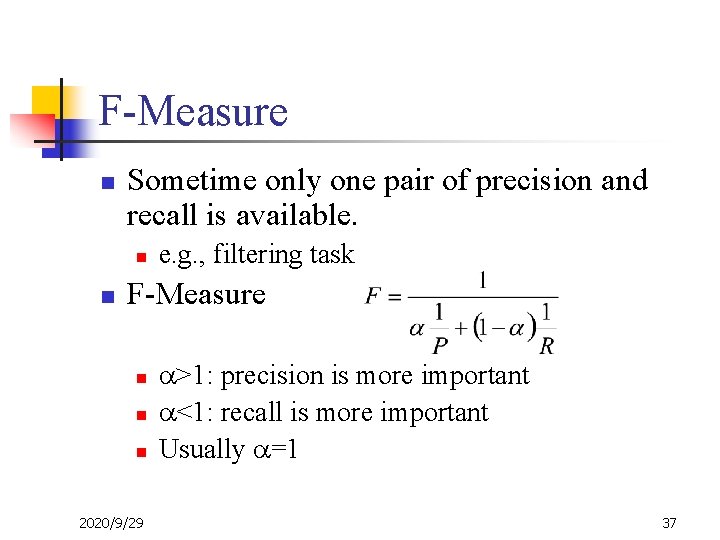

F-Measure

- Sometimes only one pair of precision and recall is available.
  - e.g., filtering task
- F-Measure
  - >1: precision is more important
  - <1: recall is more important
  - Usually =1
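The slide's formula is in an image and cannot be recovered. The sketch below uses one weighted harmonic mean that matches the bullets, F = (1 + b) P R / (P + b R), where the parameter name `b` is hypothetical. Note that the widely used F-beta convention is parameterized the other way round (there, beta > 1 favors recall).

```python
def f_measure(precision, recall, b=1.0):
    # Weighted harmonic mean; larger b moves F toward precision (assumed form).
    if precision == 0 and recall == 0:
        return 0.0
    return (1 + b) * precision * recall / (precision + b * recall)

balanced = f_measure(0.9, 0.3)               # b = 1: 2PR / (P + R)
favor_precision = f_measure(0.9, 0.3, b=4)   # pulled toward P = 0.9
favor_recall = f_measure(0.9, 0.3, b=0.25)   # pulled toward R = 0.3
print(balanced, favor_precision, favor_recall)
```

With b = 1 this reduces to the familiar harmonic mean 2PR / (P + R).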

Text Categorization

- Goal: classify documents into predefined categories
- Approaches:
  - Naïve Bayes
  - Nearest Neighbor
  - SVM

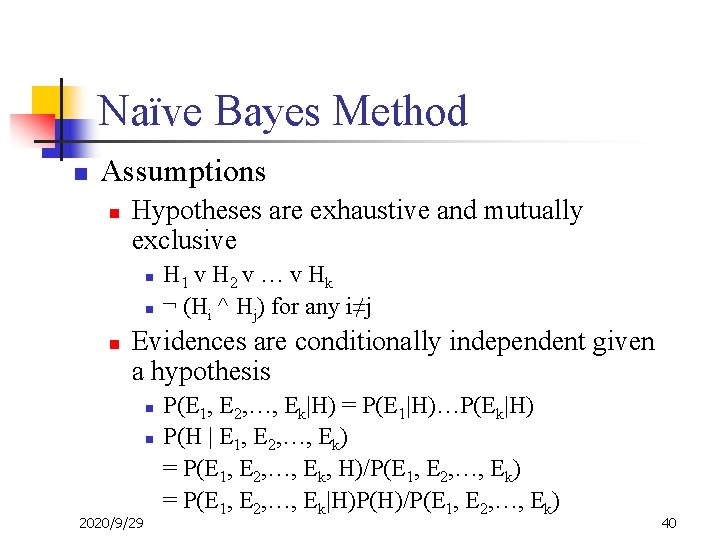

Naïve Bayes Method

- Knowledge Base contains
  - A set of hypotheses
  - A set of evidences
  - Probability of an evidence given a hypothesis
- Given
  - A subset of the evidences known to be present in a situation
- Find
  - the hypothesis with the highest posterior probability: P(H|E1, E2, …, Ek).
  - The probability itself does not matter so much.

Naïve Bayes Method

- Assumptions
  - Hypotheses are exhaustive and mutually exclusive
    - H1 v H2 v … v Hk
    - ¬(Hi ^ Hj) for any i≠j
  - Evidences are conditionally independent given a hypothesis
    - P(E1, E2, …, Ek|H) = P(E1|H)…P(Ek|H)
- P(H|E1, E2, …, Ek) = P(E1, E2, …, Ek, H)/P(E1, E2, …, Ek) = P(E1, E2, …, Ek|H)P(H)/P(E1, E2, …, Ek)

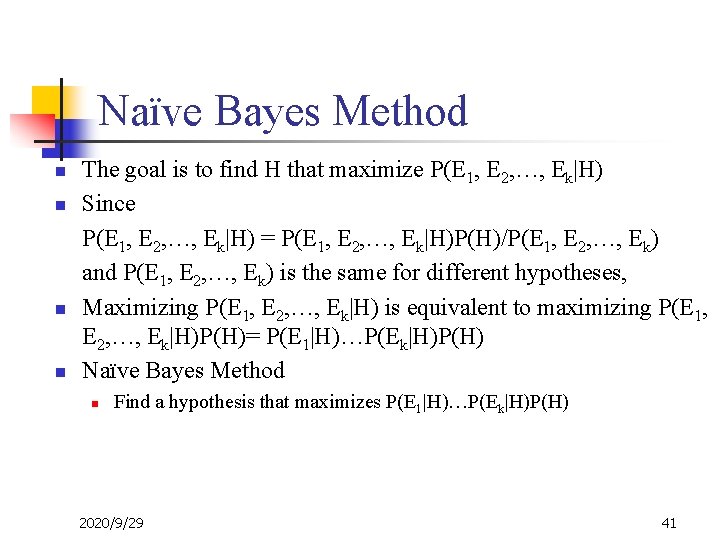

Naïve Bayes Method

- The goal is to find H that maximizes P(H|E1, E2, …, Ek)
- Since P(H|E1, E2, …, Ek) = P(E1, E2, …, Ek|H)P(H)/P(E1, E2, …, Ek) and P(E1, E2, …, Ek) is the same for different hypotheses,
- maximizing P(H|E1, E2, …, Ek) is equivalent to maximizing P(E1, E2, …, Ek|H)P(H) = P(E1|H)…P(Ek|H)P(H)
- Naïve Bayes Method
  - Find a hypothesis that maximizes P(E1|H)…P(Ek|H)P(H)

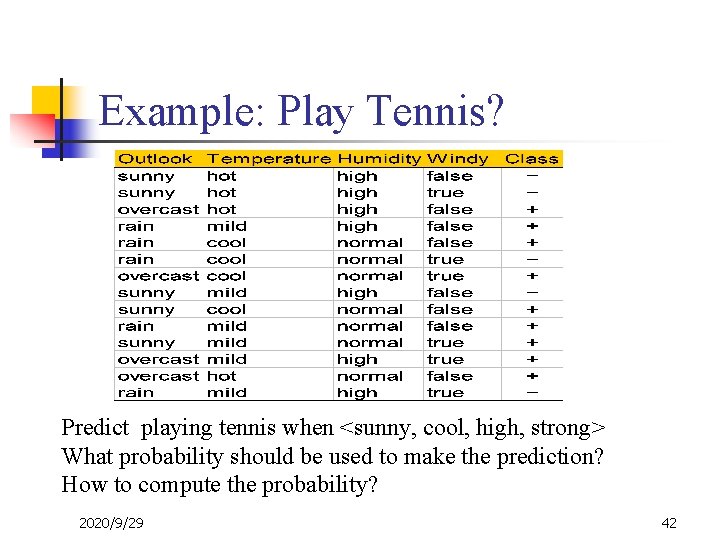

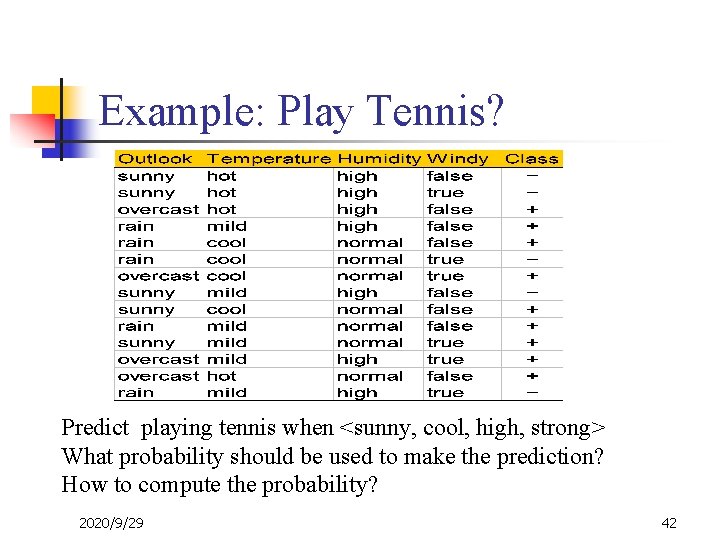

Example: Play Tennis?

- Predict playing tennis when <sunny, cool, high, strong>
- What probability should be used to make the prediction?
- How to compute the probability?

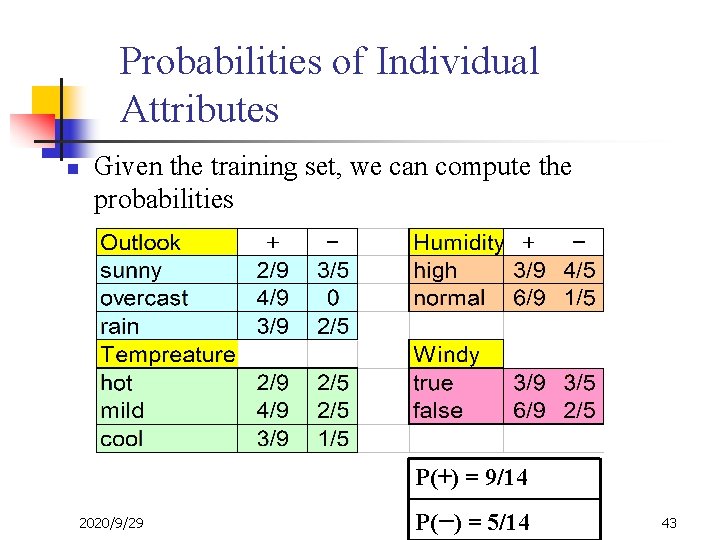

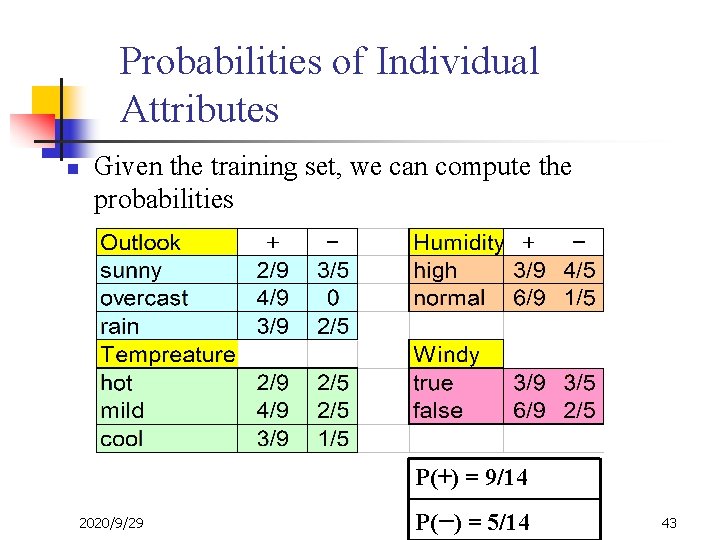

Probabilities of Individual Attributes

- Given the training set, we can compute the probabilities
- P(+) = 9/14
- P(−) = 5/14

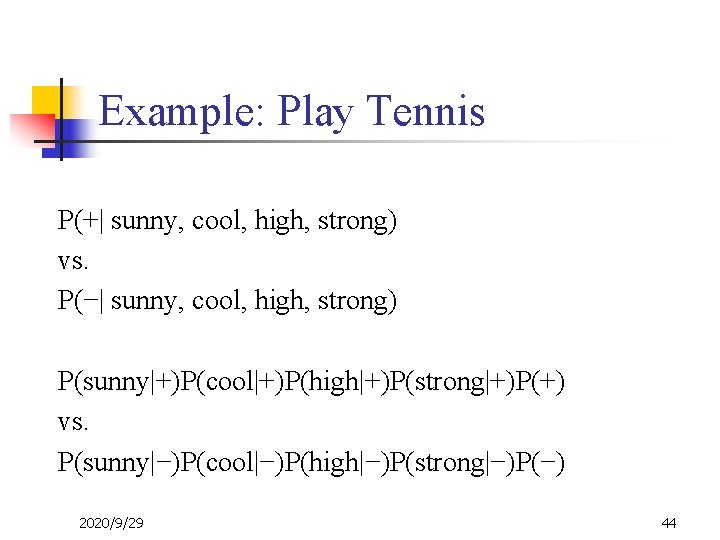

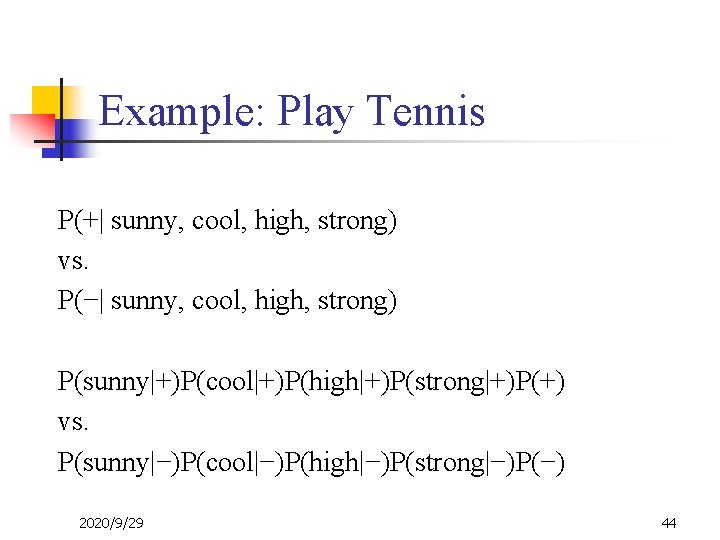

Example: Play Tennis

- P(+| sunny, cool, high, strong) vs. P(−| sunny, cool, high, strong)
- P(sunny|+)P(cool|+)P(high|+)P(strong|+)P(+) vs. P(sunny|−)P(cool|−)P(high|−)P(strong|−)P(−)
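The comparison can be worked through in code. The training table on the slides is an image, so the sketch below assumes the widely used 14-row PlayTennis data set, which matches the priors 9/14 and 5/14; treat the rows as an assumption rather than the slide's exact data.

```python
from fractions import Fraction

# Assumed training data: (outlook, temperature, humidity, wind, play).
DATA = [
    ("sunny", "hot", "high", "weak", "-"),
    ("sunny", "hot", "high", "strong", "-"),
    ("overcast", "hot", "high", "weak", "+"),
    ("rain", "mild", "high", "weak", "+"),
    ("rain", "cool", "normal", "weak", "+"),
    ("rain", "cool", "normal", "strong", "-"),
    ("overcast", "cool", "normal", "strong", "+"),
    ("sunny", "mild", "high", "weak", "-"),
    ("sunny", "cool", "normal", "weak", "+"),
    ("rain", "mild", "normal", "weak", "+"),
    ("sunny", "mild", "normal", "strong", "+"),
    ("overcast", "mild", "high", "strong", "+"),
    ("overcast", "hot", "normal", "weak", "+"),
    ("rain", "mild", "high", "strong", "-"),
]

def score(instance, label):
    # P(label) times the product of P(attribute value | label), from counts.
    rows = [r for r in DATA if r[-1] == label]
    s = Fraction(len(rows), len(DATA))
    for i, value in enumerate(instance):
        s *= Fraction(sum(1 for r in rows if r[i] == value), len(rows))
    return s

query = ("sunny", "cool", "high", "strong")
pos, neg = score(query, "+"), score(query, "-")
prediction = "+" if pos > neg else "-"
print(float(pos), float(neg), prediction)
```

Under this data the score for "−" is larger, so the prediction is not to play. The two scores are unnormalized, which is fine because only their comparison matters.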

Application: Spam Detection

- Spam
  - Dear sir, We want to transfer to overseas ($ 126,000.00 USD) One hundred and Twenty six million United States Dollars) from a Bank in Africa, I want to ask you to quietly look for a reliable and honest person who will be capable and fit to provide either an existing ……
- Legitimate email
  - Ham: for lack of better name.

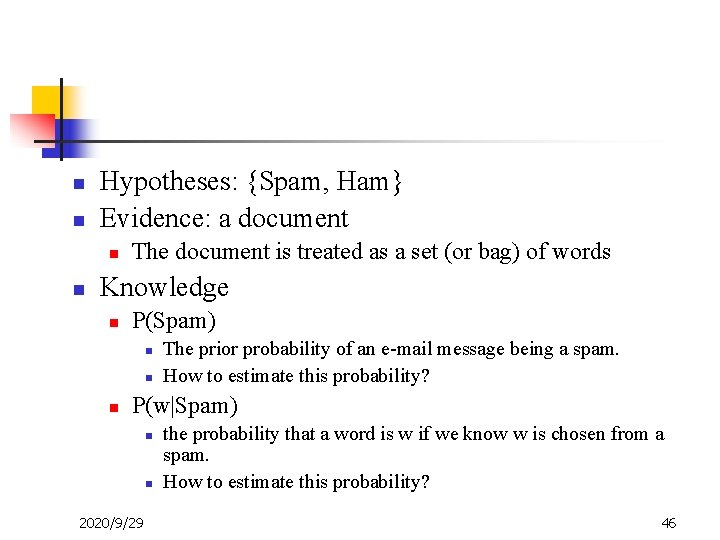

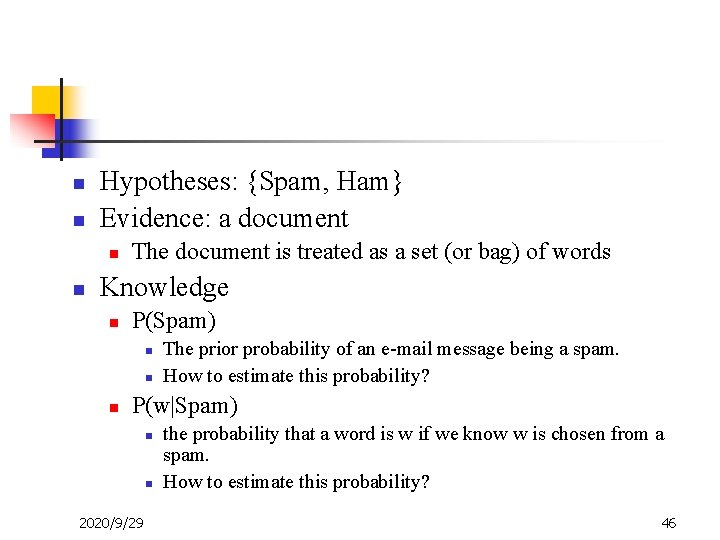

- Hypotheses: {Spam, Ham}
- Evidence: a document
  - The document is treated as a set (or bag) of words
- Knowledge
  - P(Spam)
    - The prior probability of an e-mail message being a spam.
    - How to estimate this probability?
  - P(w|Spam)
    - the probability that a word is w if we know w is chosen from a spam.
    - How to estimate this probability?
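One possible answer to both estimation questions is to count over labeled messages. The sketch below estimates P(Spam) as the fraction of spam messages and P(w|Spam) as a relative word frequency with add-one smoothing; the smoothing, the log-space scoring, and the four toy messages are assumptions of this sketch.

```python
import math
from collections import Counter

def train(messages):
    """messages: list of (label, list_of_words)."""
    label_counts = Counter(label for label, _ in messages)
    word_counts = {label: Counter() for label in label_counts}
    for label, words in messages:
        word_counts[label].update(words)
    vocab = {w for c in word_counts.values() for w in c}
    return label_counts, word_counts, vocab

def classify(words, model):
    label_counts, word_counts, vocab = model
    total = sum(label_counts.values())
    best, best_score = None, -math.inf
    for label, n in label_counts.items():
        # Add-one smoothing: every vocabulary word gets one extra count.
        denom = sum(word_counts[label].values()) + len(vocab)
        s = math.log(n / total)  # log prior
        for w in words:
            if w in vocab:  # ignore words never seen in training
                s += math.log((word_counts[label][w] + 1) / denom)
        if s > best_score:
            best, best_score = label, s
    return best

model = train([
    ("spam", ["transfer", "million", "dollars", "bank"]),
    ("spam", ["honest", "person", "transfer", "dollars"]),
    ("ham", ["meeting", "agenda", "for", "monday"]),
    ("ham", ["conference", "paper", "deadline", "monday"]),
])
print(classify(["transfer", "dollars", "bank"], model))
print(classify(["monday", "agenda"], model))
```

Summing logarithms instead of multiplying probabilities avoids numeric underflow on long messages and does not change which hypothesis wins.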

Other Text Categorization Algorithms

- Support Vector Machine
  - often has the best performance.
- K-Nearest Neighbor