Chapter 3 Retrieval Evaluation Modern Information Retrieval Ricardo

- Slides: 34

Chapter 3 Retrieval Evaluation Modern Information Retrieval Ricardo Baeza-Yates Berthier Ribeiro-Neto Hsu Yi-Chen, NCU MIS 88423043

Outline Introduction Retrieval Performance Evaluation n n Recall and precision Alternative measures Reference Collections n n n TREC Collection CACM&ISI Collection CF Collection Trends and Research Issues

Introduction Type of evaluation n n Functional analysis phase, and Error analysis phase Performance evaluation Performance of the IR system n Performance evaluation w Response time/space required n Retrieval performance evaluation w The evaluation of how precise is the answer set

Retrieval performance evaluation for IR system Goodness of retrieval strategy S = the similarity between Set of retrieval documents by S Set of relevant documents provided by specialists quantified by Evaluation measure

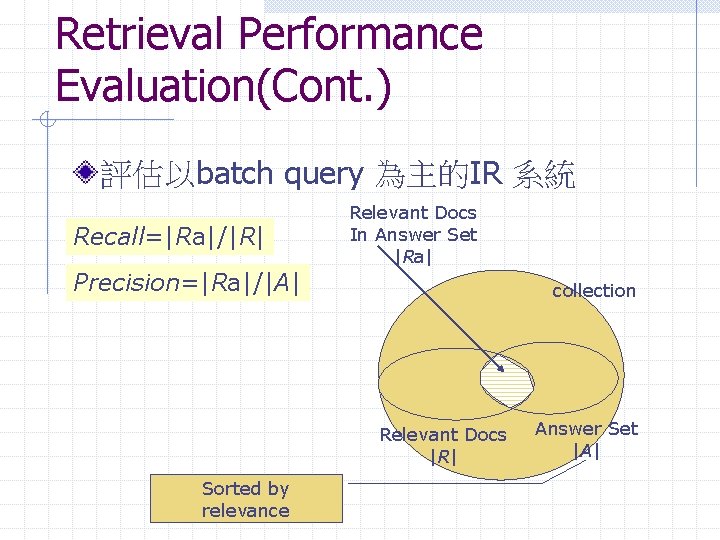

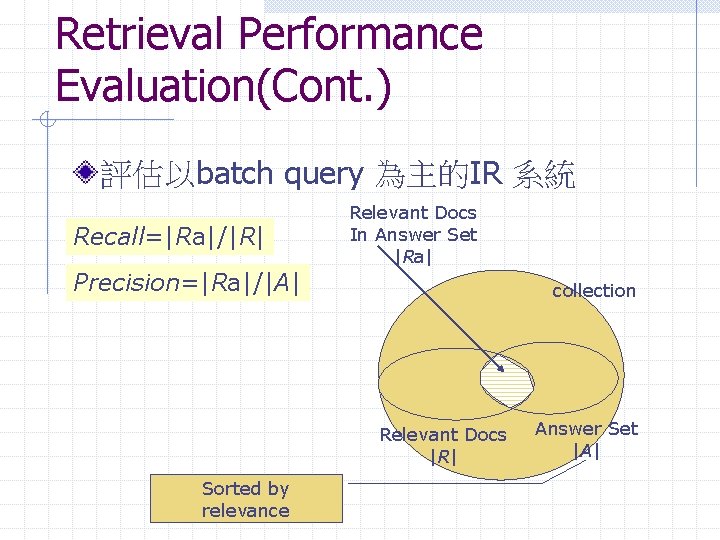

Retrieval Performance Evaluation(Cont. ) 評估以batch query 為主的IR 系統 Recall=|Ra|/|R| Relevant Docs In Answer Set |Ra| Precision=|Ra|/|A| collection Relevant Docs |R| Sorted by relevance Answer Set |A|

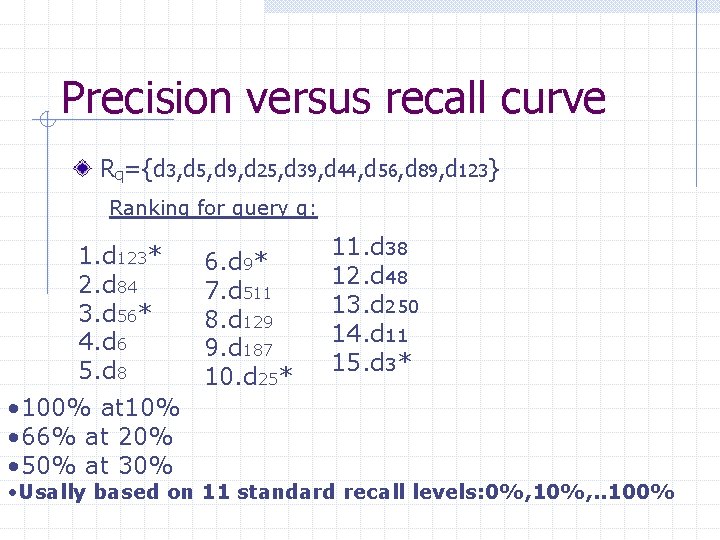

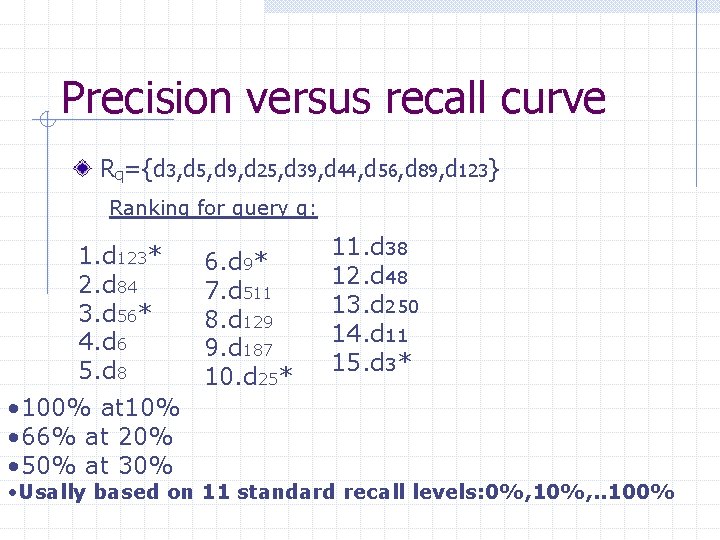

Precision versus recall curve Rq={d 3, d 5, d 9, d 25, d 39, d 44, d 56, d 89, d 123} Ranking for query q: 1. d 123* 2. d 84 3. d 56* 4. d 6 5. d 8 • 100% at 10% • 66% at 20% • 50% at 30% 6. d 9* 7. d 511 8. d 129 9. d 187 10. d 25* 11. d 38 12. d 48 13. d 250 14. d 11 15. d 3* • Usally based on 11 standard recall levels: 0%, 10%, . . 100%

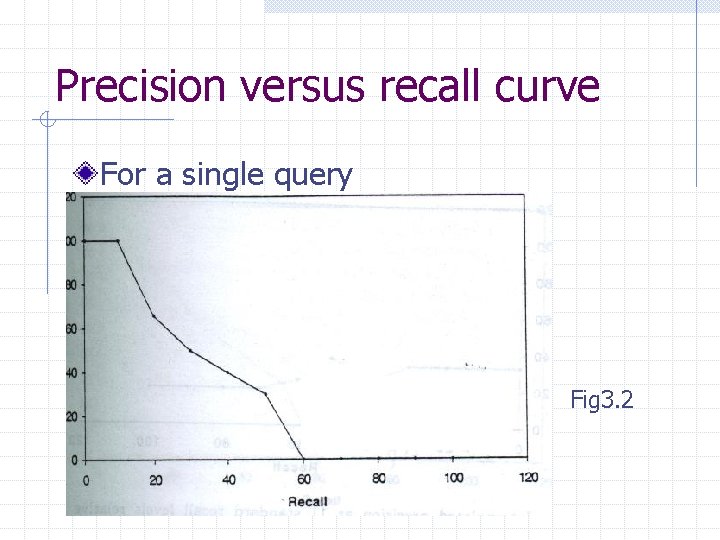

Precision versus recall curve For a single query Fig 3. 2

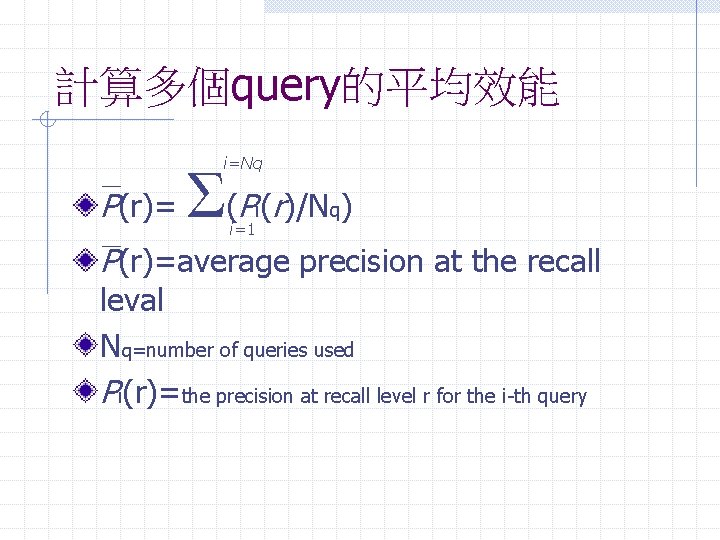

計算多個query的平均效能 i=Nq P(r)= Σ(P (r)/N ) i i=1 q P(r)=average precision at the recall leval Nq=number of queries used Pi(r)=the precision at recall level r for the i-th query

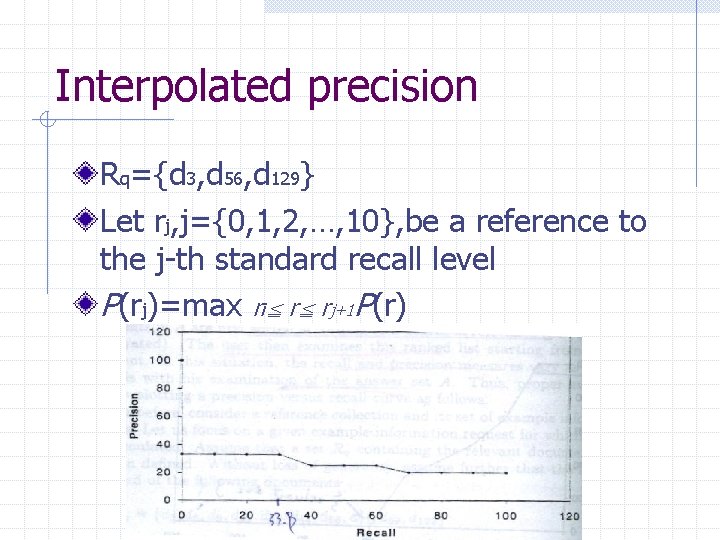

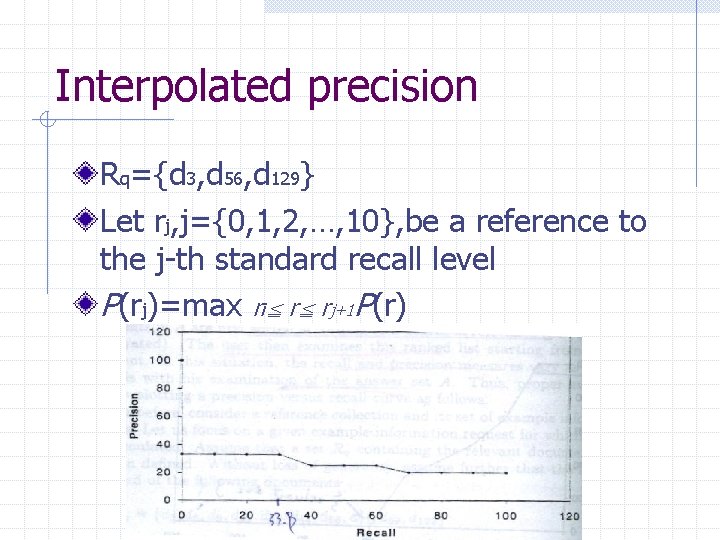

Interpolated precision Rq={d 3, d 56, d 129} Let rj, j={0, 1, 2, …, 10}, be a reference to the j-th standard recall level P(rj)=max ri≦ r≦ rj+1 P(r)

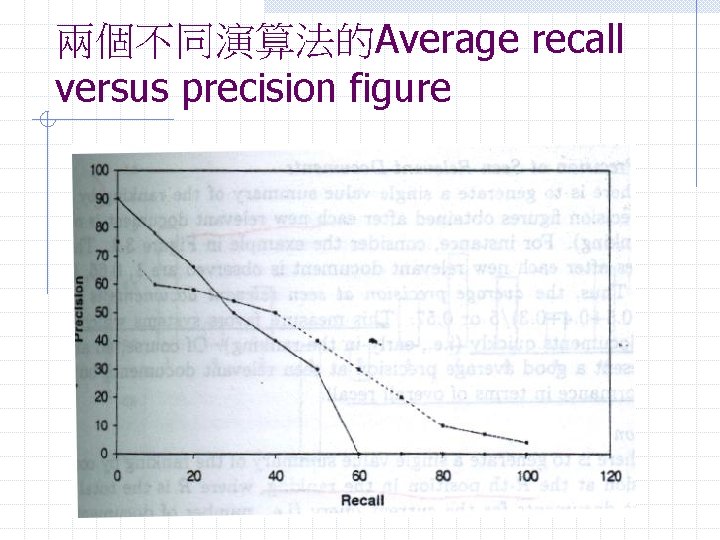

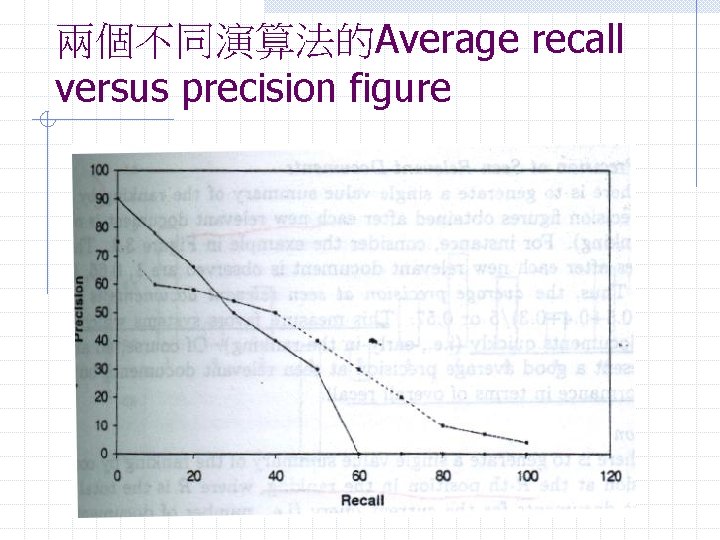

兩個不同演算法的Average recall versus precision figure

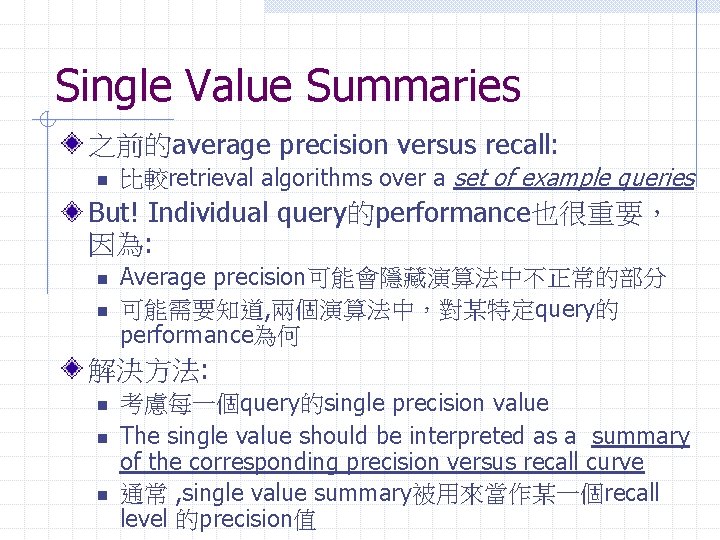

Single Value Summaries 之前的average precision versus recall: n 比較retrieval algorithms over a set of example queries But! Individual query的performance也很重要, 因為: n n Average precision可能會隱藏演算法中不正常的部分 可能需要知道, 兩個演算法中,對某特定query的 performance為何 解決方法: n n n 考慮每一個query的single precision value The single value should be interpreted as a summary of the corresponding precision versus recall curve 通常 , single value summary被用來當作某一個recall level 的precision值

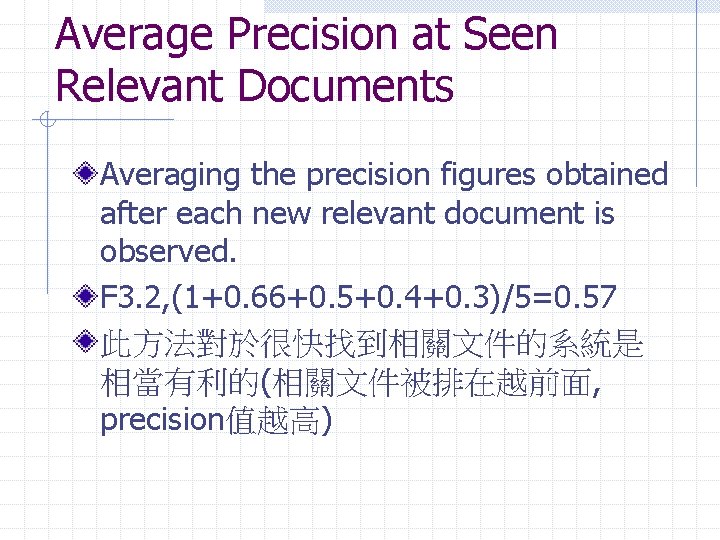

Average Precision at Seen Relevant Documents Averaging the precision figures obtained after each new relevant document is observed. F 3. 2, (1+0. 66+0. 5+0. 4+0. 3)/5=0. 57 此方法對於很快找到相關文件的系統是 相當有利的(相關文件被排在越前面, precision值越高)

R-Precision Computing the precision at the R-th position in the ranking(在R 篇文章中出現相關文章數 目的比例) R: the total number of relevant documents of the current query(total number in Rq) Fig 3. 2: R=10, value=0. 4 Fig 3. 3, R=3, value=0. 33 易於觀察每一個單一query的演算法 performance

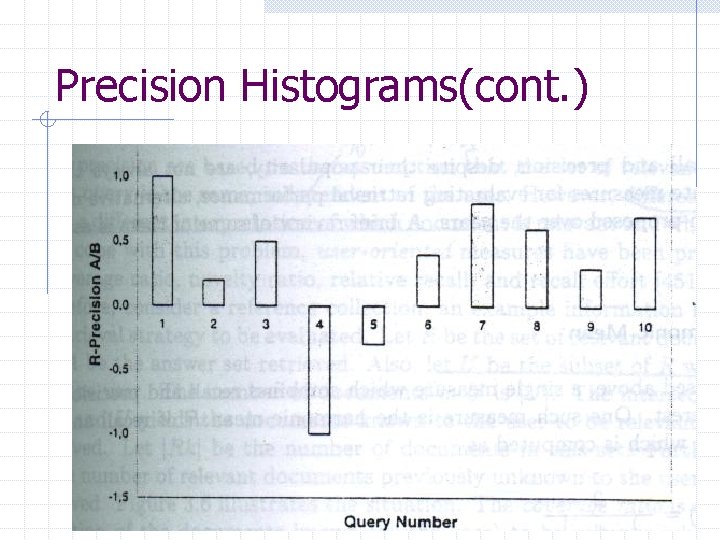

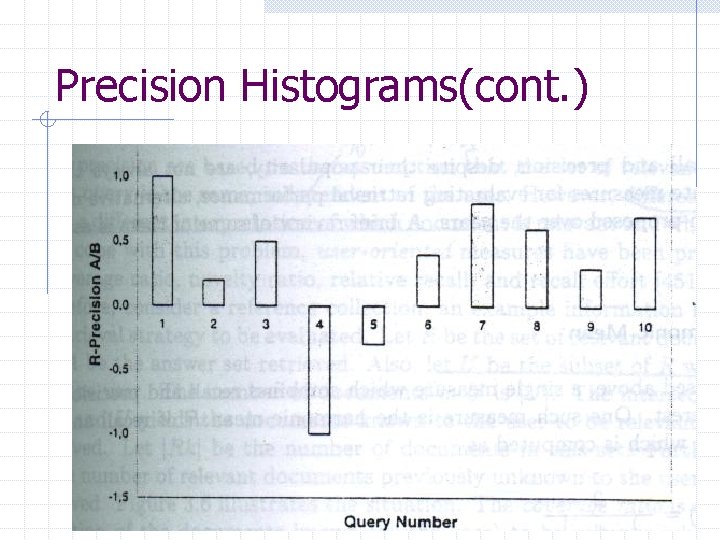

Precision Histograms 利用長條圖比較兩個query的R-precision 值 RPA/B(i )=RPA(i )-RPB(i ) RPA(i), RPB(i): R-precision value of A, B for i-th query Compare the retrieval performance history of two algorithms through visual inspection

Precision Histograms(cont. )

Summary Table Statistics 將所有query相關的single value summary 放在 table中 n n 如the number of queries , total number of documents retrieved by all queries, total number of relevant documents were effectively retrieved when all queries are considered Total number of relevant documents retrieved by all queries…

Precision and Recall 的適用性 Maximum recall值的產生,需要知道所有文件 相關的背景知識 Recall and precision是相對的測量方式,兩者 要合併使用比較適合。 Measures which quantify the informativeness of the retrieval process might now be more appropriate Recall and precision are easy to define when a linear ordering of the retrieved documents is enforced

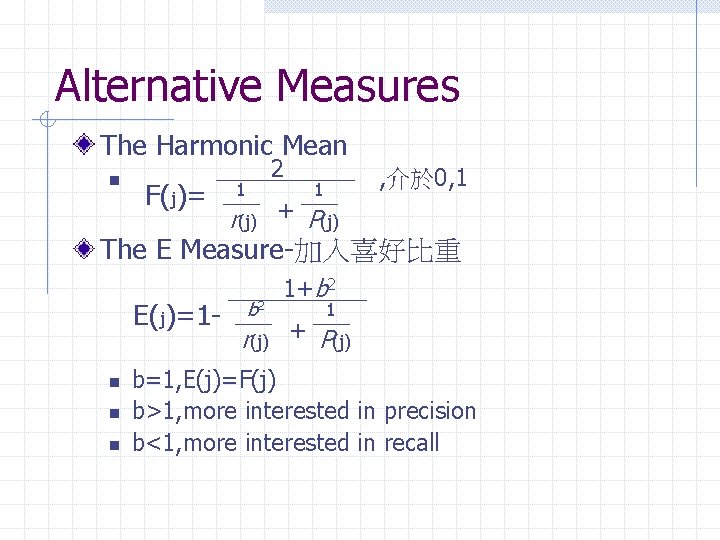

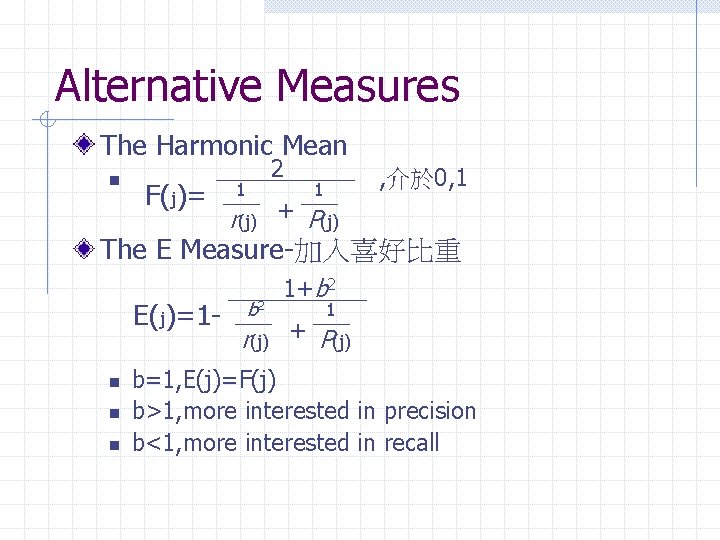

Alternative Measures The Harmonic Mean n F(j)= 2 1 , 介於 0, 1 1 r(j) + P(j) The E Measure-加入喜好比重 E(j)=1 n n n b 2 1+b 2 1 r(j) + P(j) b=1, E(j)=F(j) b>1, more interested in precision b<1, more interested in recall

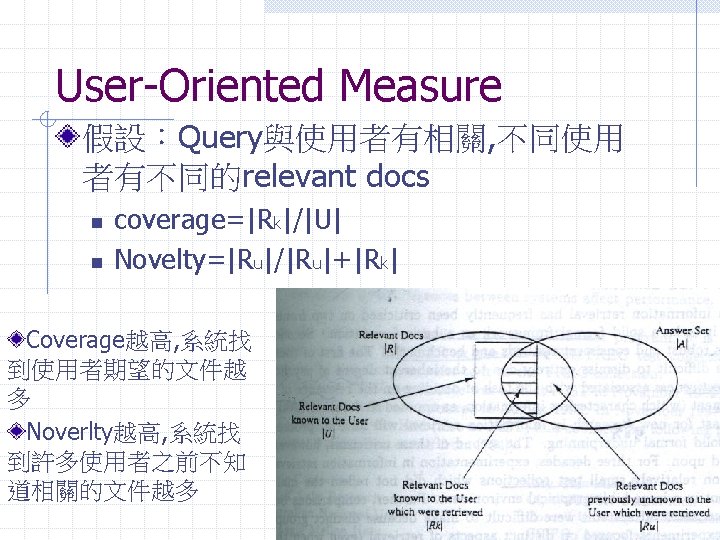

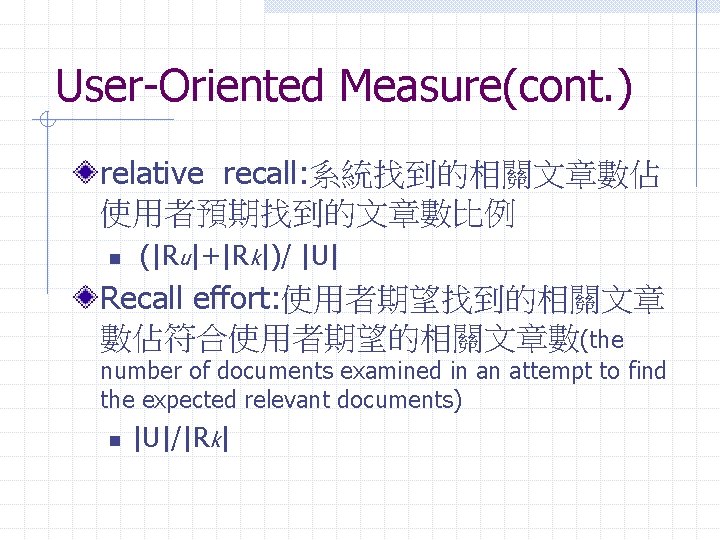

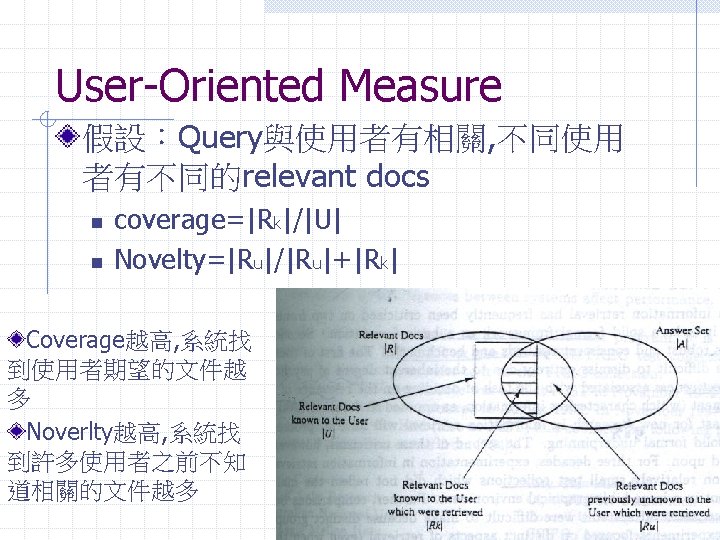

User-Oriented Measure(cont. ) relative recall: 系統找到的相關文章數佔 使用者預期找到的文章數比例 n (|Ru|+|Rk|)/ |U| Recall effort: 使用者期望找到的相關文章 數佔符合使用者期望的相關文章數(the number of documents examined in an attempt to find the expected relevant documents) n |U|/|Rk|

Reference Collection 用來作為評估IR系統reference test collections n n n TIPSTER/TREC: 量大,實驗用 CACM, ISI: 歷史意義 Cystic Fibrosis : small collections, relevant documents由專家研討後產生

IR system遇到的批評 Lacks a solid formal framework as a basic foundation n 無解!一個文件是否與查詢相關,是相當主觀的! Lacks robust and consistent testbeds and benchmarks n n 較早,發展實驗性質的小規模測試資料 1990後,TREC成立,蒐集上萬文件,提供給研究 團體作IR系統評量之用

TREC (Text REtrieval Conference) Initiated under the National Institute of Standards and Technology(NIST) Goals: n n n Providing a large test collection Uniform scoring procedures Forum 7 th TREC conference in 1998: n n Document collection: test collections, example information requests(topics), relevant docs The benchmarks tasks

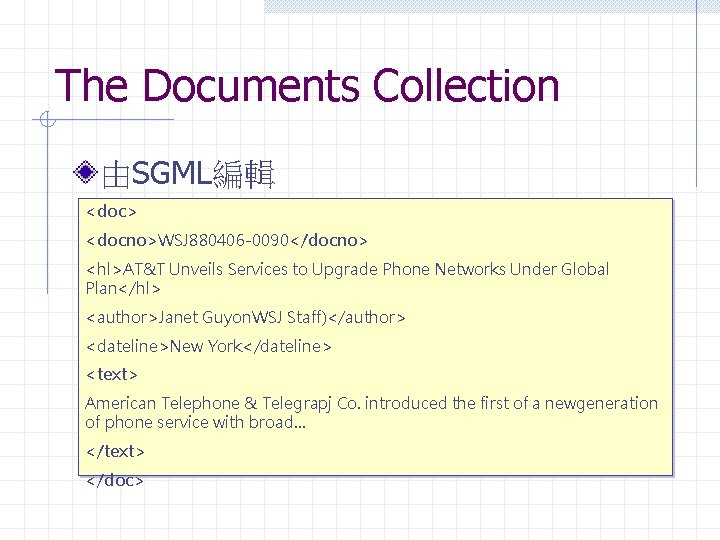

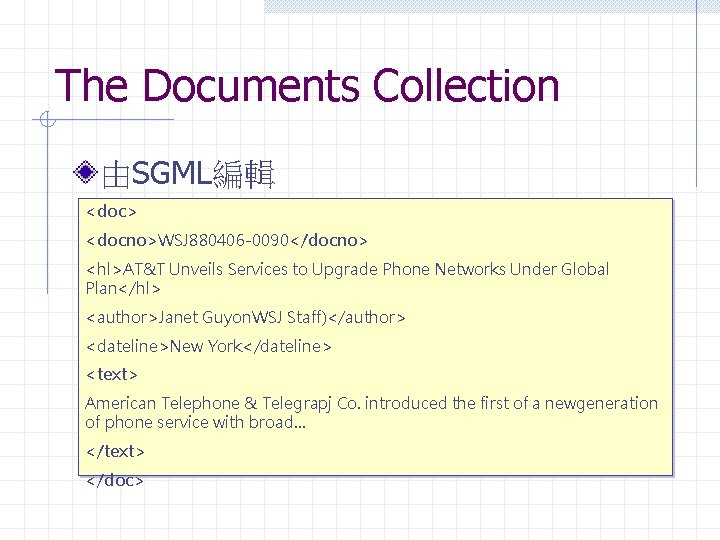

The Documents Collection 由SGML編輯 <doc> <docno>WSJ 880406 -0090</docno> <hl>AT&T Unveils Services to Upgrade Phone Networks Under Global Plan</hl> <author>Janet Guyon. WSJ Staff)</author> <dateline>New York</dateline> <text> American Telephone & Telegrapj Co. introduced the first of a newgeneration of phone service with broad… </text> </doc>

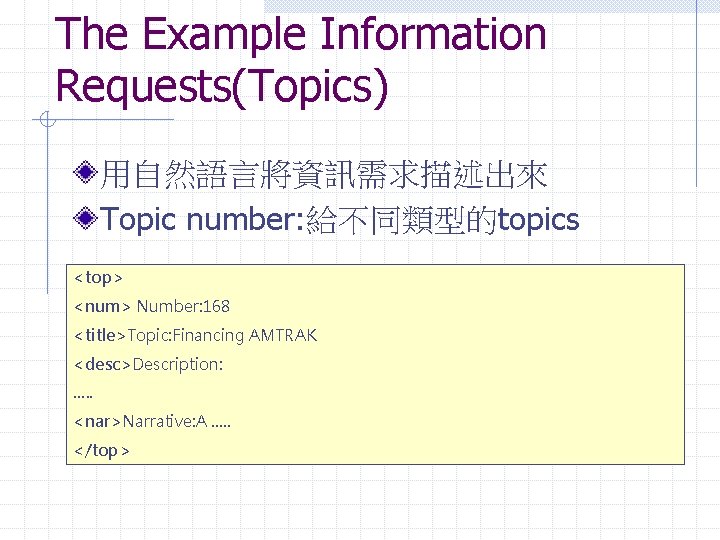

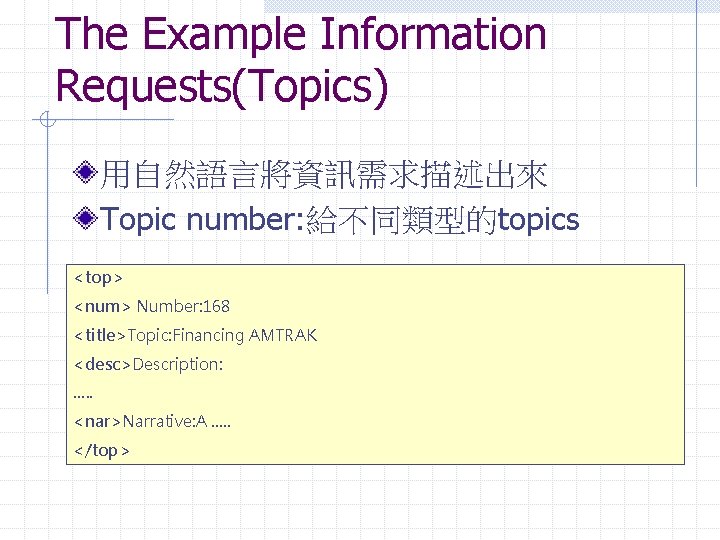

The Example Information Requests(Topics) 用自然語言將資訊需求描述出來 Topic number: 給不同類型的topics <top> <num> Number: 168 <title>Topic: Financing AMTRAK <desc>Description: …. . <nar>Narrative: A …. . </top>

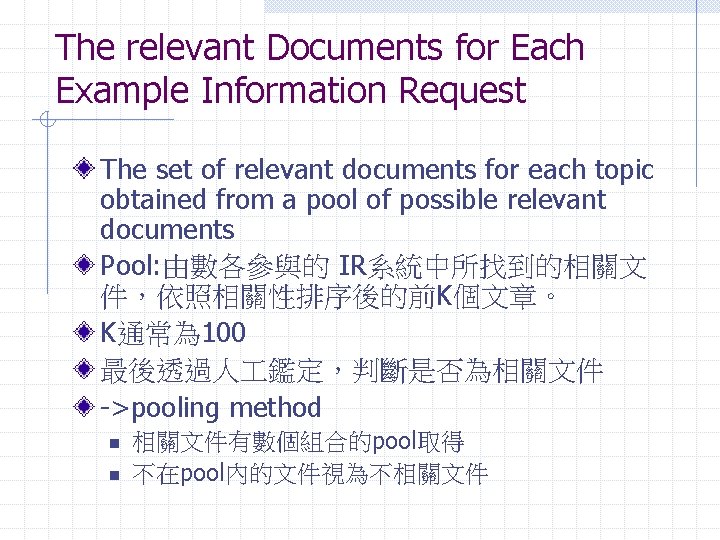

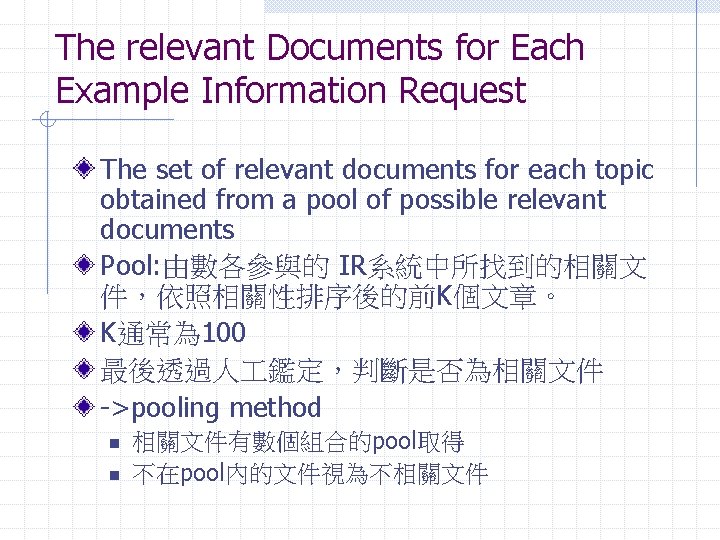

The relevant Documents for Each Example Information Request The set of relevant documents for each topic obtained from a pool of possible relevant documents Pool: 由數各參與的 IR系統中所找到的相關文 件,依照相關性排序後的前K個文章。 K通常為 100 最後透過人 鑑定,判斷是否為相關文件 ->pooling method n n 相關文件有數個組合的pool取得 不在pool內的文件視為不相關文件

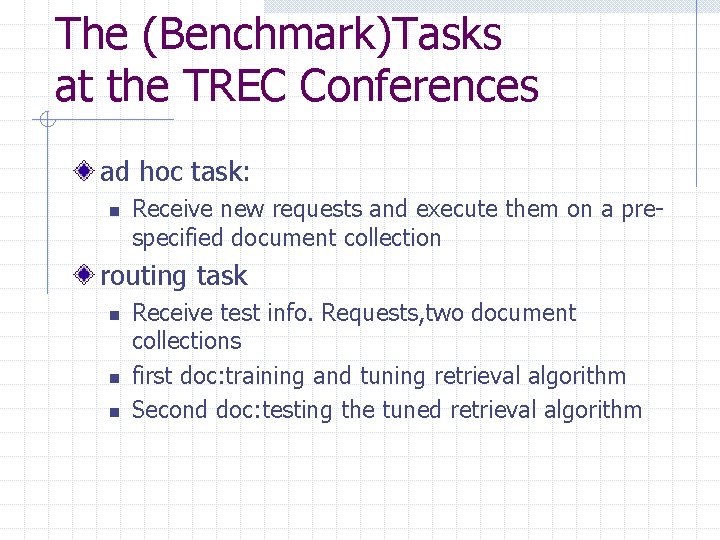

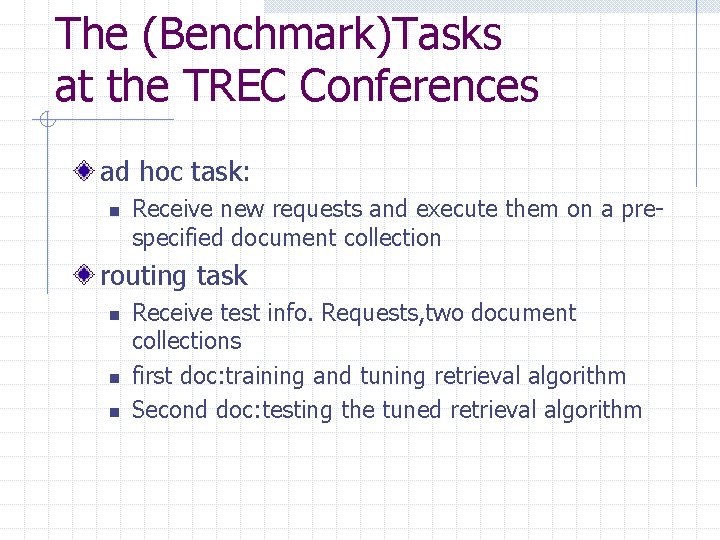

The (Benchmark)Tasks at the TREC Conferences ad hoc task: n Receive new requests and execute them on a prespecified document collection routing task n n n Receive test info. Requests, two document collections first doc: training and tuning retrieval algorithm Second doc: testing the tuned retrieval algorithm

Other tasks: *Chinese Filtering Interactive *NLP(natural language procedure) Cross languages High precision Spoken document retrieval Query Task(TREC-7)

Evaluation Measures at the TREC Conferences Summary table statistics Recall-precision Document level averages* Average precision histogram

The CACM Collection Small collections about computer science literature Text of doc structured subfields n n n word stems from the title and abstract sections Categories direct references between articles: a list of pairs of documents[da, db] Bibliographic coupling connections: a list of triples[ d 1, d 2, ncited] Number of co-citations for each pair of articles[d 1, d 2, nciting] A unique environment for testing retrieval algorithms which are based on information derived from cross-citing patterns

The ISI Collection ISI 的test collection是由之前在 ISI(Institute of Scientific Information) 的Small組合而成 這些文件大部分是由當初Small計畫中有 關cross-citation study中挑選出來 支持有關於terms和cross-citation patterns的相似性研究

The Cystic Fibrosis Collection 有關於“囊胞性纖維症”的文件 Topics和相關文件由具有此方面在臨床或 研究的專家所產生 Relevance scores n n n 0: non-relevance 1: marginal relevance 2: high relevance

Characteristics of CF collection Relevance score均由專家給定 Good number of information requests(relative to the collection size) n n The respective query vectors present overlap among themselves 利用之前的query增加檢索效率

Trends and Research Issues Interactive user interface n n 一般認為feedback的檢索可以改善效率 如何決定此情境下的評估方式(Evaluation measures)? 其它有別於precise, recall的評估方式研究