Information Retrieval (IR)

- Slides: 19

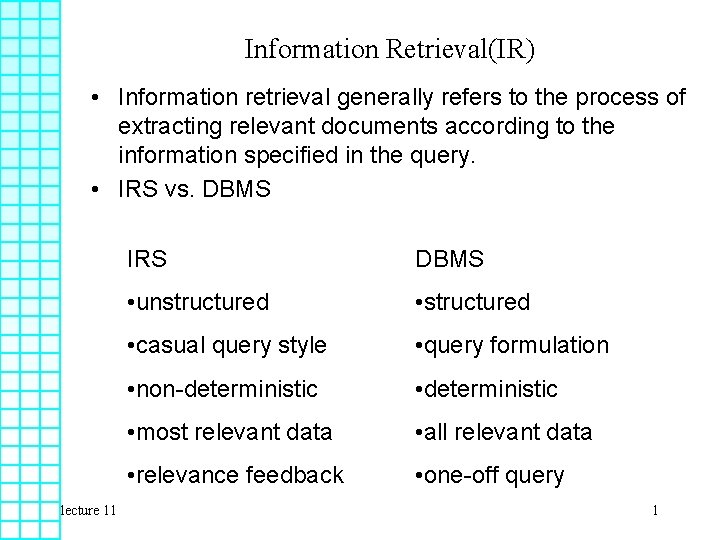

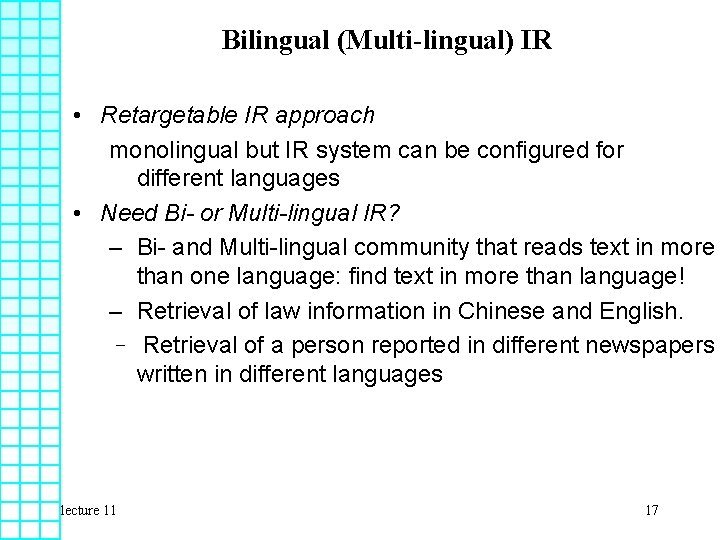

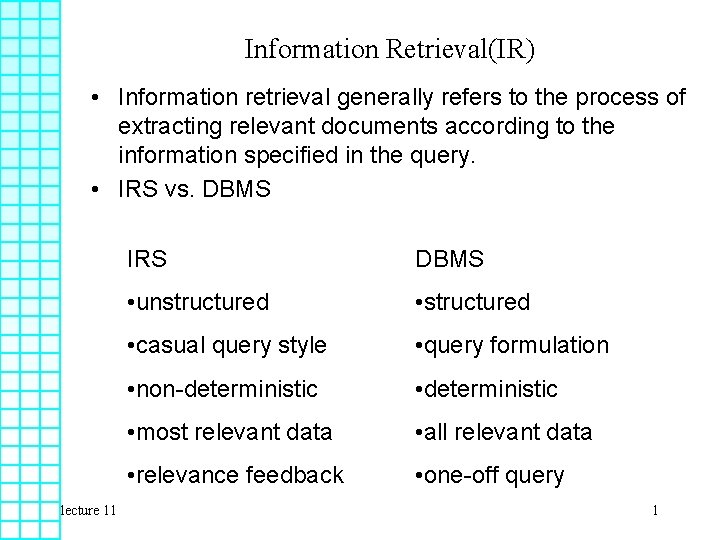

Information Retrieval (IR)

- Information retrieval generally refers to the process of extracting relevant documents according to the information specified in the query.
- IRS vs. DBMS:

| IRS | DBMS |
|---|---|
| unstructured | |
| casual query style | query formulation |
| non-deterministic | |
| most relevant data | all relevant data |
| relevance feedback | one-off query |

lecture 11

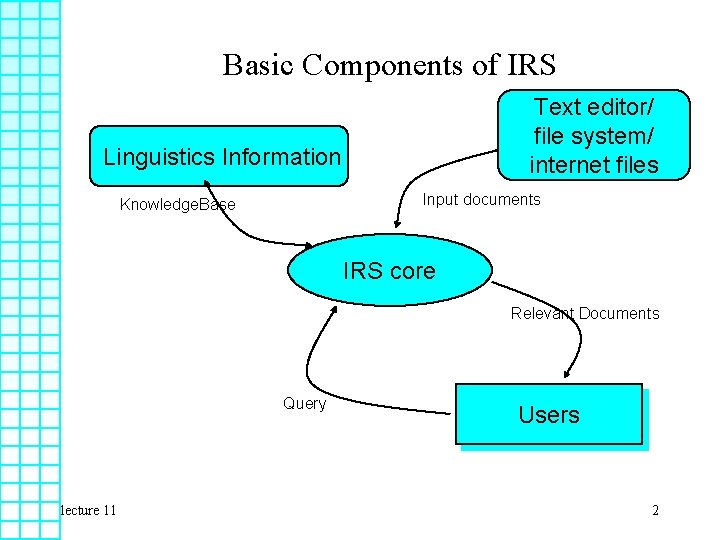

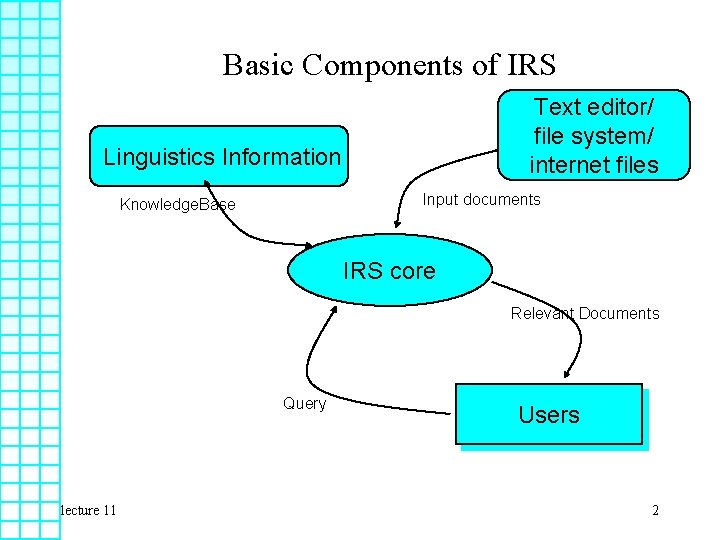

Figure: Basic components of an IRS. Elements shown: input documents (from a text editor, file system, or internet files), a linguistic information knowledge base, the IRS core, the query, users, and the relevant documents returned.

Information Retrieval

- The IR technology:
  - Knowledge base: dictionary and rules
  - Basic information representation model
  - Indexing of documents for retrieval
  - Relevance calculation
- Oriental languages vs. English in IR:
  - The main difference lies in what is considered useful information in each language.
  - Different NLP knowledge and variants of common methods need to be used.

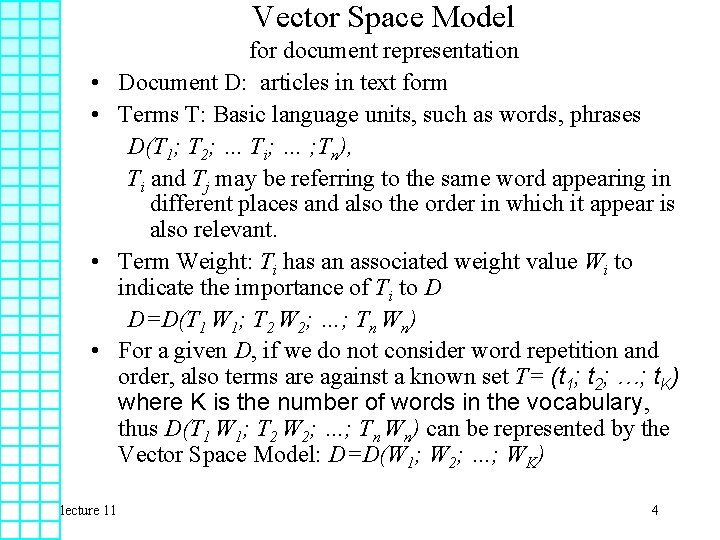

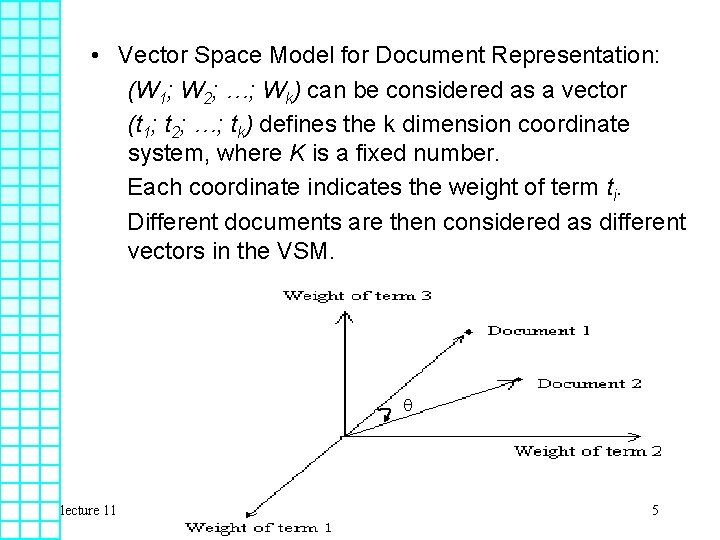

Vector Space Model for Document Representation

- Document $D$: an article in text form.
- Terms $T$: basic language units, such as words and phrases. A document is written $D(T_1; T_2; \ldots; T_i; \ldots; T_n)$, where $T_i$ and $T_j$ may be the same word appearing in different places, and the order in which terms appear is also relevant.
- Term weight: each $T_i$ has an associated weight $W_i$ indicating the importance of $T_i$ to $D$, giving $D = D(T_1 W_1; T_2 W_2; \ldots; T_n W_n)$.
- For a given $D$, if we ignore word repetition and order, and draw terms from a known vocabulary $T = (t_1, t_2, \ldots, t_K)$, where $K$ is the number of words in the vocabulary, then $D(T_1 W_1; T_2 W_2; \ldots; T_n W_n)$ can be represented in the Vector Space Model as $D = D(W_1, W_2, \ldots, W_K)$.

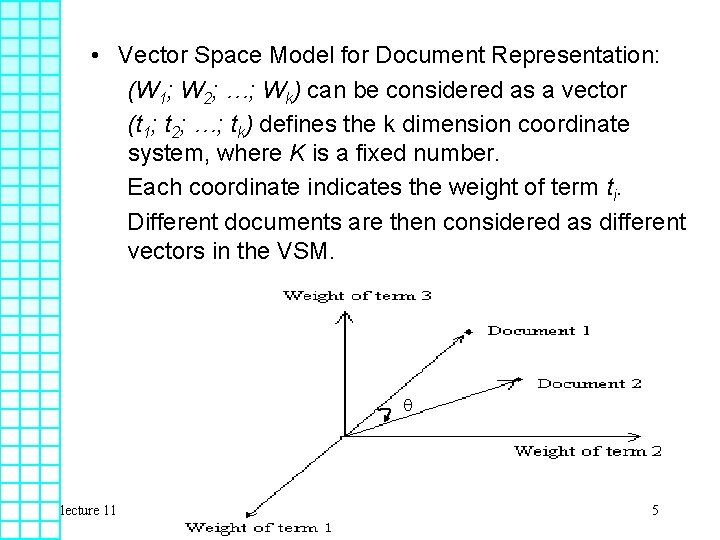

- Vector Space Model for document representation: $(W_1, W_2, \ldots, W_K)$ can be regarded as a vector in the $K$-dimensional coordinate system defined by $(t_1, t_2, \ldots, t_K)$, where $K$ is fixed. Each coordinate gives the weight of term $t_k$, and different documents are represented as different vectors in the VSM.
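A minimal Python sketch of this representation, assuming raw term counts as the weights (the slides do not fix a weighting scheme at this point) and a small hypothetical vocabulary. Word order is discarded; only how often each vocabulary term occurs matters.

```python
from collections import Counter

def to_vector(tokens, vocabulary):
    """Map a tokenized document onto the fixed vocabulary (t_1, ..., t_K).

    Assumption: the weight of each coordinate is the raw count of that term.
    Word order is discarded, and tokens outside the vocabulary are ignored.
    """
    counts = Counter(tokens)
    return [counts[t] for t in vocabulary]

vocabulary = ["retrieval", "index", "query", "document"]  # hypothetical, K = 4
d = to_vector("query the index to find a document about document retrieval".split(), vocabulary)
print(d)  # [1, 1, 1, 2]
```

Two documents built this way live in the same $K$-dimensional space, which is what makes the similarity functions on the following slides applicable.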

- Similarity: the degree of relevance between two documents.
- The degree of relevance of $D_1$ and $D_2$ can be described by a similarity function $\mathrm{Sim}(D_1, D_2)$, which measures how close the two document vectors are.
- There are many different definitions of $\mathrm{Sim}(D_1, D_2)$:
  - One simple definition (inner product): $\mathrm{Sim}(D_1, D_2) = \sum_{k=1}^{K} w_{1k} w_{2k}$
  - Example: $D_1 = (1, 0, 1, 1, 1)$, $D_2 = (0, 1, 0, 1, 0)$, so $\mathrm{Sim}(D_1, D_2) = 1 \times 0 + 0 \times 1 + 1 \times 0 + 1 \times 1 + 1 \times 0 = 1$.

  - Another definition (cosine): $\mathrm{Sim}(D_1, D_2) = \cos\theta = \dfrac{\sum_{k=1}^{K} w_{1k} w_{2k}}{\sqrt{\left(\sum_{k=1}^{K} w_{1k}^2\right)\left(\sum_{k=1}^{K} w_{2k}^2\right)}}$
- For information retrieval, $D_2$ can be a query $Q$.
- Suppose there are $I$ documents $D_i$, where $i = 1, \ldots, I$.
- Rank the documents by $\mathrm{Sim}(D_i, Q)$: the higher the value, the more relevant $D_i$ is to $Q$.
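The inner-product and cosine definitions, and ranking documents against a query $Q$, can be sketched in Python as follows. The document vectors and the query are toy values, not data from the lecture, and returning 0 for an all-zero vector is a choice made here to avoid division by zero.

```python
import math

def inner_product(d1, d2):
    """Sim(D1, D2) = sum over k of w1k * w2k."""
    if len(d1) != len(d2):
        raise ValueError("vectors must share the same K-dimensional term space")
    return sum(a * b for a, b in zip(d1, d2))

def cosine(d1, d2):
    """cos(theta): the inner product divided by the product of the vector lengths."""
    norm = math.sqrt(inner_product(d1, d1) * inner_product(d2, d2))
    return inner_product(d1, d2) / norm if norm else 0.0  # all-zero vector: treat as unrelated

def rank(documents, query, sim=cosine):
    """Return (doc_id, score) pairs from most to least relevant to the query."""
    scores = [(doc_id, sim(vec, query)) for doc_id, vec in documents.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

docs = {"D1": [1, 0, 1, 1, 1], "D2": [0, 1, 0, 1, 0], "D3": [0, 0, 1, 1, 0]}  # toy weights
q = [0, 0, 1, 1, 0]  # the query Q as a vector in the same term space

print(inner_product(docs["D1"], docs["D2"]))  # 1
for doc_id, score in rank(docs, q):
    print(doc_id, round(score, 3))
```

With the cosine, D3 (pointing in exactly the same direction as Q) ranks first with score 1.0, followed by D1 (about 0.707) and D2 (0.5). Passing `sim=inner_product` instead gives D1 and D3 a tie at 2, because the inner product does not account for vector length.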

Term Selection for Indexing

- $T$ can be regarded as the set of all terms that can be indexed:
  - Approach 1: simply use a word dictionary.
  - Approach 2: terms in a dictionary plus terms segmented dynamically, so $T$ is not necessarily static.
- Every document $D_i$ needs to be segmented.
  - The vocabulary for indexing is normally much smaller than the vocabulary of the documents.
- Not every word $T_k$ in $D_i$ that is in $T$ will be indexed.
  - A term $T_k$ in $D_i$ that is in $T$ but not indexed is considered to have weight $w_{ik} = 0$.
  - In other words, all indexed terms of $D_i$ have weights greater than zero.

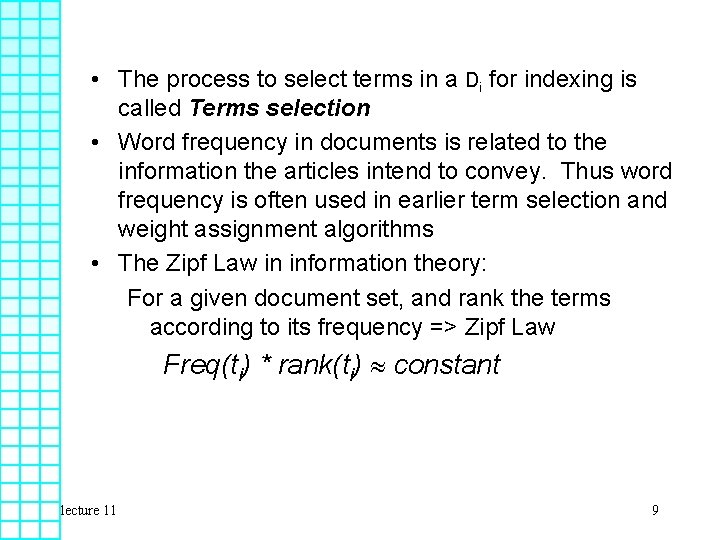

- The process of selecting the terms in $D_i$ to be indexed is called term selection.
- Word frequency in a document is related to the information the article intends to convey, so word frequency was often used in early term-selection and weight-assignment algorithms.
- Zipf's law: for a given document set, rank the terms by frequency; then $\mathrm{Freq}(t_i) \times \mathrm{rank}(t_i) \approx \text{constant}$.
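To see the rank-frequency product that Zipf's law describes, the sketch below counts terms, ranks them by descending frequency (ties broken alphabetically, an arbitrary choice made here), and reports $\mathrm{Freq} \times \mathrm{rank}$. The token list is made up; on a real corpus the product is only approximately constant.

```python
from collections import Counter

def rank_frequency(tokens):
    """Return (term, freq, rank, freq * rank) rows, most frequent term first.

    Ties in frequency are broken alphabetically so the ranking is deterministic.
    """
    counts = Counter(tokens)
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(term, freq, r, freq * r) for r, (term, freq) in enumerate(ordered, start=1)]

tokens = ("the " * 6 + "of " * 3 + "and " * 2 + "index").split()  # toy token list
for row in rank_frequency(tokens):
    print(row)
```

Here the products come out as 6, 6, 6 and 4: roughly constant, as the law predicts for the higher-ranked terms.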

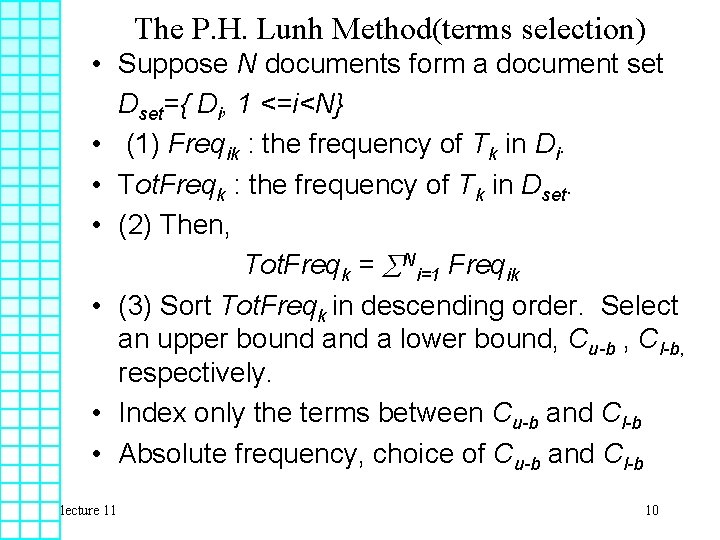

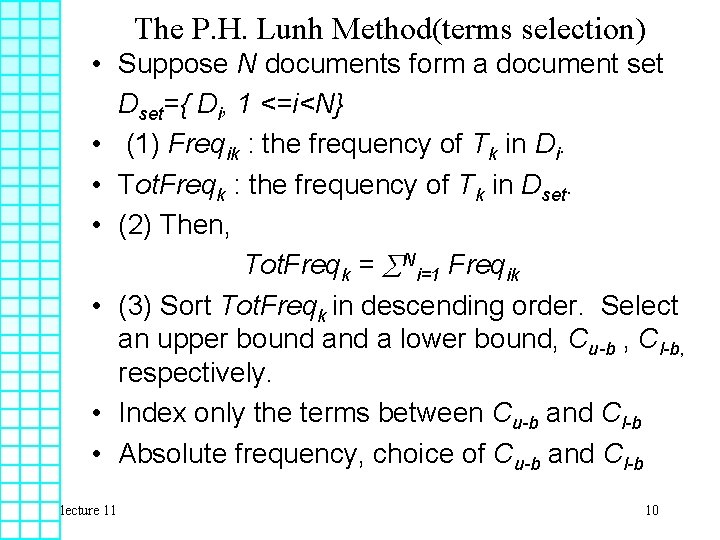

The H. P. Luhn Method (Term Selection)

- Suppose $N$ documents form a document set $D_{set} = \{D_i \mid 1 \le i \le N\}$.
- (1) $\mathrm{Freq}_{ik}$: the frequency of $T_k$ in $D_i$; $\mathrm{TotFreq}_k$: the frequency of $T_k$ in $D_{set}$.
- (2) Then $\mathrm{TotFreq}_k = \sum_{i=1}^{N} \mathrm{Freq}_{ik}$.
- (3) Sort the $\mathrm{TotFreq}_k$ in descending order, and select an upper bound $C_{ub}$ and a lower bound $C_{lb}$.
- Index only the terms whose total frequency lies between $C_{lb}$ and $C_{ub}$.
- Points to note: the method relies on absolute frequency, and its results depend on the choice of $C_{ub}$ and $C_{lb}$.
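A minimal sketch of these selection steps in Python. Each document is assumed to be already segmented into a list of terms, and treating the upper and lower cut-offs as inclusive bounds is an assumption, since the slide only says "between".

```python
from collections import Counter

def luhn_select(documents, lower, upper):
    """Luhn-style term selection over a document set.

    documents: list of token lists, one per document D_i.
    Computes TotFreq_k = sum_i Freq_ik, sorts terms by it in descending order,
    and keeps only terms with lower <= TotFreq_k <= upper (inclusive: an assumption).
    """
    tot_freq = Counter()
    for tokens in documents:
        tot_freq.update(Counter(tokens))  # add Freq_ik of this document to the running totals
    ranked = sorted(tot_freq.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, f in ranked if lower <= f <= upper]

docs = [["the", "index", "the", "query"], ["the", "query", "zipf"], ["the", "index", "query"]]
print(luhn_select(docs, lower=2, upper=3))  # ['query', 'index']
```

The very common "the" (total 4) is cut by the upper bound and the rare "zipf" (total 1) by the lower bound, which also illustrates the weakness discussed next: a rare term can be exactly the one that characterizes its document.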

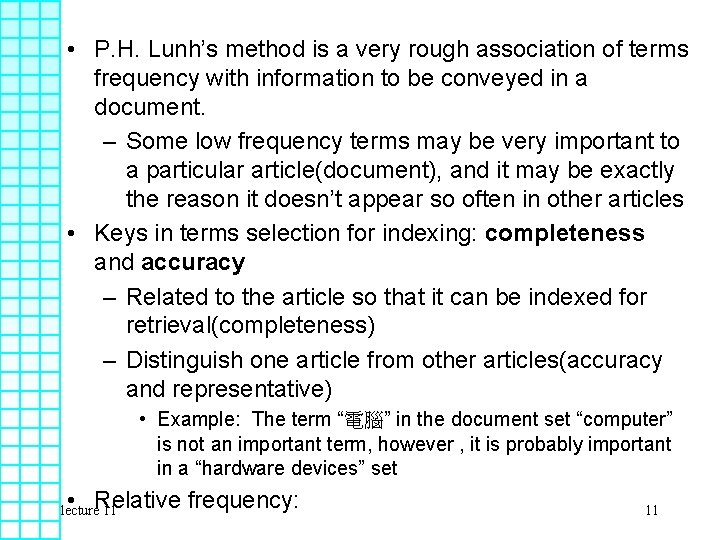

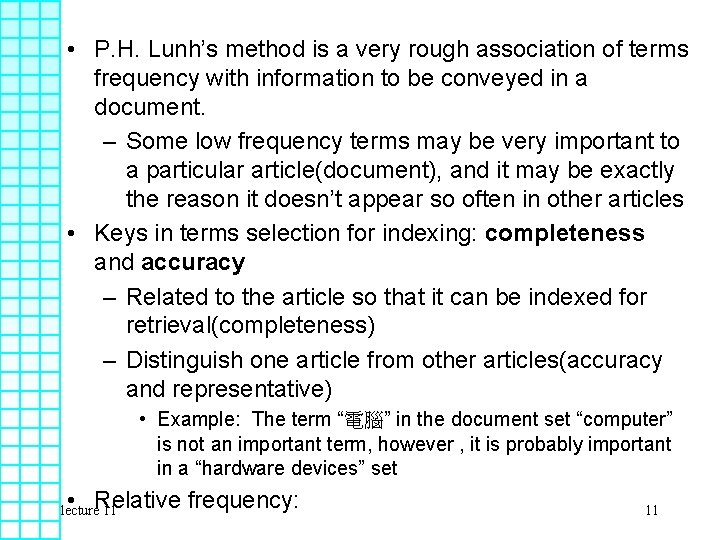

- Luhn's method is only a very rough association between term frequency and the information a document conveys.
  - Some low-frequency terms may be very important to a particular article (document), and that may be exactly why they rarely appear in other articles.
- The keys to term selection for indexing are completeness and accuracy:
  - the term is related to the article, so that the article can be indexed for retrieval (completeness);
  - the term distinguishes the article from other articles (accuracy, representativeness).
- Example: the term "電腦" ("computer") is not an important term in a document set about computers; however, it is probably important in a set about hardware devices.
- Relative frequency:

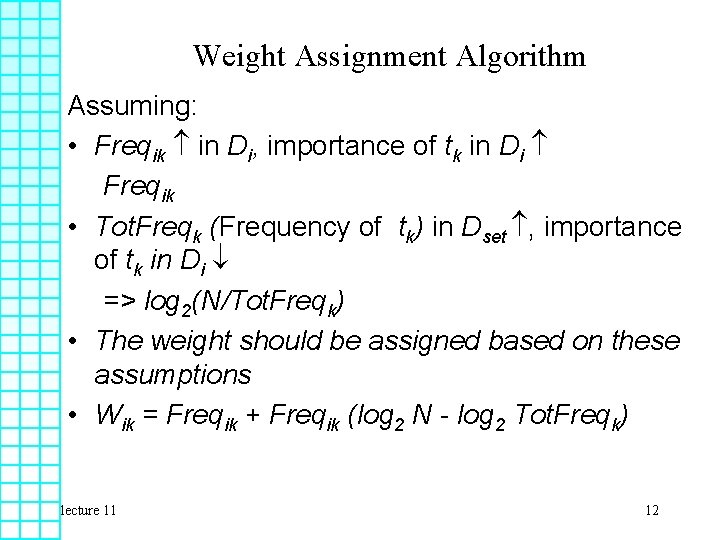

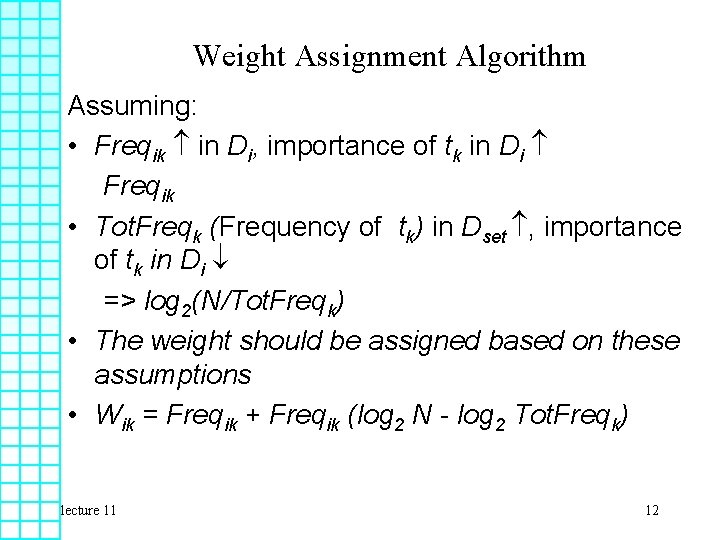

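Weight Assignment Algorithm

Assumptions:

- The importance of $t_k$ in $D_i$ is proportional to $\mathrm{Freq}_{ik}$, its frequency in $D_i$.
- Given $\mathrm{TotFreq}_k$, the frequency of $t_k$ in $D_{set}$, the importance of $t_k$ in $D_i$ is proportional to $\log_2(N / \mathrm{TotFreq}_k)$.
- The weight should be assigned based on these assumptions:
  $W_{ik} = \mathrm{Freq}_{ik} + \mathrm{Freq}_{ik}\,(\log_2 N - \log_2 \mathrm{TotFreq}_k)$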
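The weight formula translates directly into code. This sketch assumes $N \ge 1$ and $\mathrm{TotFreq}_k \ge 1$, since the logarithm is undefined otherwise. Because the formula equals $\mathrm{Freq}_{ik}\,(1 + \log_2(N/\mathrm{TotFreq}_k))$, a term that is rare across the set is boosted, while a term whose total frequency exceeds $N$ is weighted below its raw frequency.

```python
import math

def weight(freq_ik, tot_freq_k, n_docs):
    """W_ik = Freq_ik + Freq_ik * (log2 N - log2 TotFreq_k), following the slide.

    Assumes n_docs >= 1 and tot_freq_k >= 1 so both logarithms are defined.
    """
    return freq_ik + freq_ik * (math.log2(n_docs) - math.log2(tot_freq_k))

print(weight(2, 2, 4))  # 4.0: appears twice in D_i, twice in the whole set of 4 documents
print(weight(2, 8, 4))  # 0.0: same local frequency, but very common across the set
```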

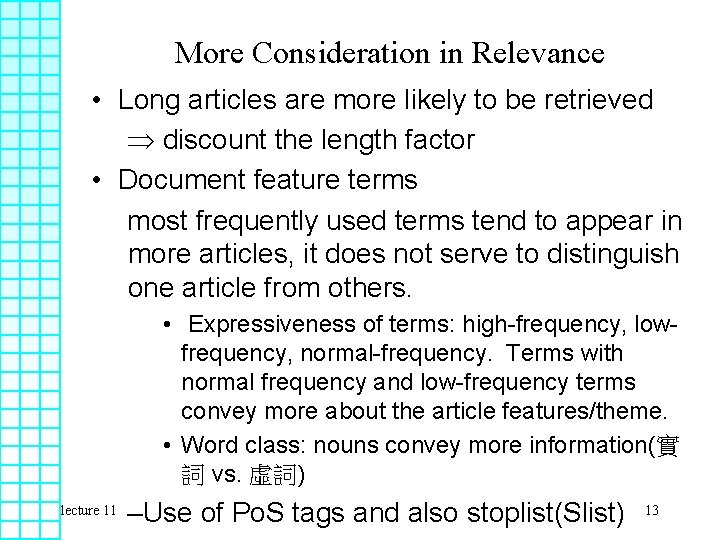

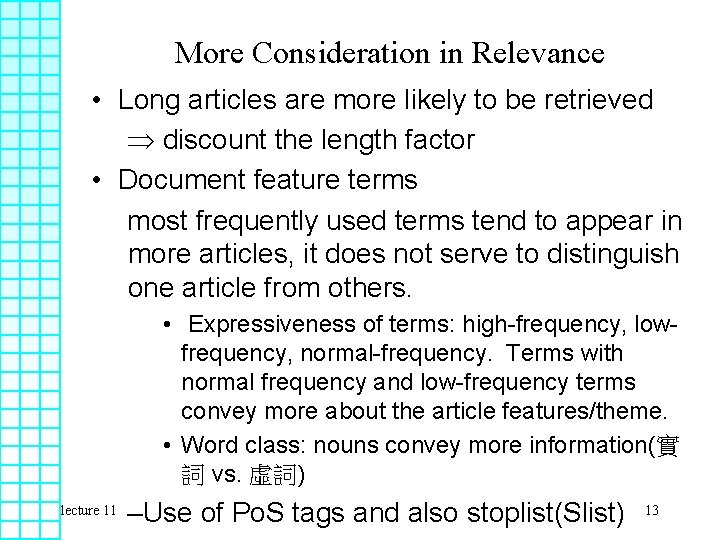

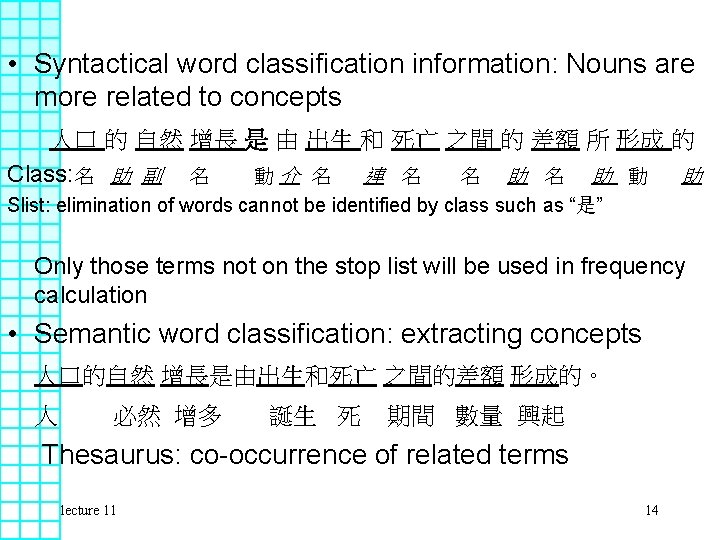

More Considerations in Relevance

- Long articles are more likely to be retrieved, so the length factor should be discounted.
- Document feature terms: the most frequently used terms tend to appear in many articles, so they do not help distinguish one article from others.
- Expressiveness of terms: terms may be high-frequency, normal-frequency, or low-frequency. Normal- and low-frequency terms convey more about an article's features or theme.
- Word class: nouns convey more information (實詞, content words, vs. 虛詞, function words).
  - Use POS tags and a stop list (Slist).
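One common way to discount the length factor (not necessarily the one the lecture intends) is to normalize by vector length, as the cosine measure does. The sketch below uses a toy document and simulates a longer article with the same content by doubling every term count: the raw inner product with the query doubles, while the cosine is unchanged.

```python
import math

def inner(d1, d2):
    """Raw inner product: grows with document length."""
    return sum(a * b for a, b in zip(d1, d2))

def cosine(d1, d2):
    """Inner product normalized by both vector lengths."""
    norm = math.sqrt(inner(d1, d1) * inner(d2, d2))
    return inner(d1, d2) / norm if norm else 0.0

doc = [1, 2, 0, 1]               # toy term counts
long_doc = [2 * w for w in doc]  # same content, twice as long: every count doubles
query = [1, 1, 0, 0]

print(inner(doc, query), inner(long_doc, query))                 # 3 6
print(math.isclose(cosine(doc, query), cosine(long_doc, query)))  # True
```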

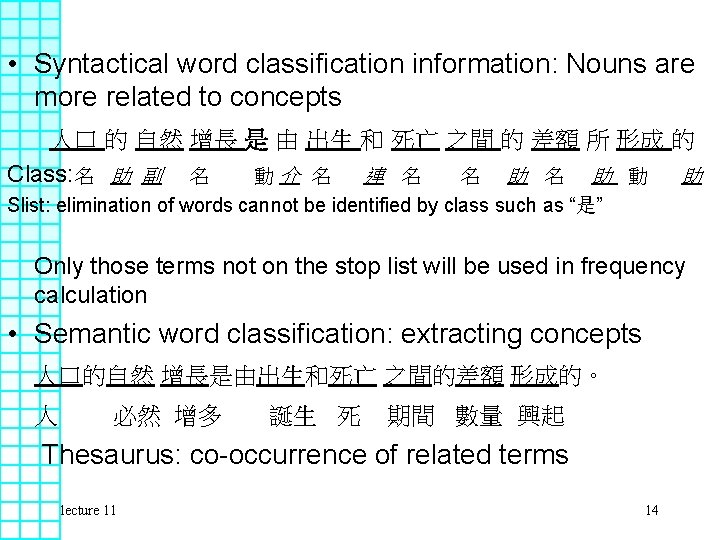

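- Syntactic word-class information: nouns are more closely related to concepts. Example sentence, segmented ("Natural population growth is formed by the difference between births and deaths"):
  - Sentence: 人口 的 自然 增長 是 由 出生 和 死亡 之間 的 差額 所 形成 的
  - Class: 名 助 副 名 動介 名 連 名 名 助 動 助 (名 noun, 助 particle, 副 adverb, 動 verb, 介 preposition, 連 conjunction)
  - Slist (stop list): eliminates words that cannot be filtered out by word class alone, such as "是" ("is"). Only terms not on the stop list are used in the frequency calculation.
- Semantic word classification: extracting concepts.
  - Sentence: 人口的自然增長是由出生和死亡之間的差額形成的。
  - Concepts: 人 (person), 必然 (inevitable), 增多 (increase), 誕生 (birth), 死 (death), 期間 (period), 數量 (quantity), 興起 (rise)
  - Thesaurus: co-occurrence of related terms.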
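The stop-list step can be sketched as a filter applied before counting. The token list is the segmented example sentence above; the stop list itself is hypothetical (the slides do not give one), chosen to hold the particles, preposition and conjunction in the sentence plus "是".

```python
from collections import Counter

# Segmented example sentence from the slide, with its word boundaries.
tokens = "人口 的 自然 增長 是 由 出生 和 死亡 之間 的 差額 所 形成 的".split()

# Hypothetical stop list: function words in this sentence plus "是",
# which cannot be removed by word class alone.
STOPLIST = {"的", "是", "由", "和", "所"}

def content_term_frequencies(tokens, stoplist):
    """Count only the terms that are not on the stop list."""
    return Counter(t for t in tokens if t not in stoplist)

freqs = content_term_frequencies(tokens, STOPLIST)
print(sorted(freqs))  # the 8 remaining content words, each with frequency 1
```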

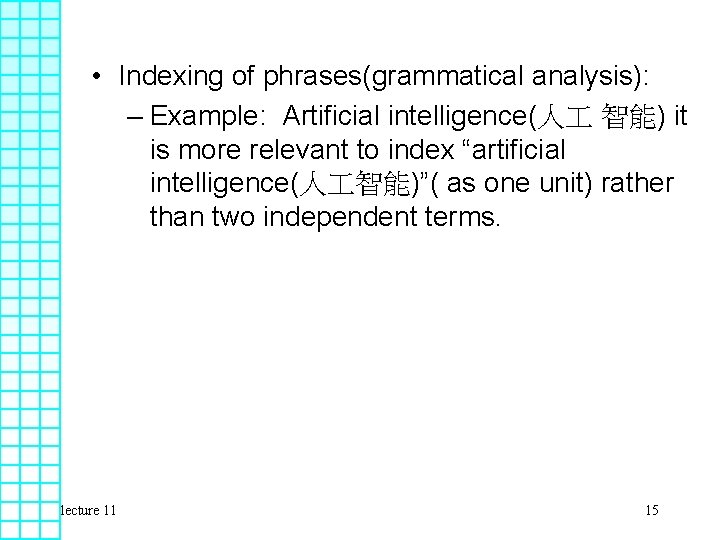

- Indexing of phrases (grammatical analysis):
  - Example: for "artificial intelligence" (人工智能), it is more relevant to index "artificial intelligence" (人工智能) as one unit than as two independent terms.
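A simple way to index a phrase as one unit is to merge known multi-word phrases after segmentation. The sketch below assumes a hand-made phrase dictionary and uses greedy longest match; the slide suggests identifying phrases by grammatical analysis, which this dictionary lookup does not attempt.

```python
def merge_phrases(tokens, phrases, max_len=3):
    """Greedy longest-match merge of known multi-word phrases into single index units.

    phrases: hypothetical phrase dictionary, a set of token tuples
             such as {("artificial", "intelligence")}.
    max_len: longest phrase length (in tokens) to look for.
    """
    out, i = [], 0
    while i < len(tokens):
        # Try the longest candidate first, down to two-token phrases.
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            if tuple(tokens[i:i + n]) in phrases:
                out.append(" ".join(tokens[i:i + n]))
                i += n
                break
        else:  # no phrase starts here: keep the single token
            out.append(tokens[i])
            i += 1
    return out

print(merge_phrases(["artificial", "intelligence", "research"], {("artificial", "intelligence")}))
# ['artificial intelligence', 'research']
```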

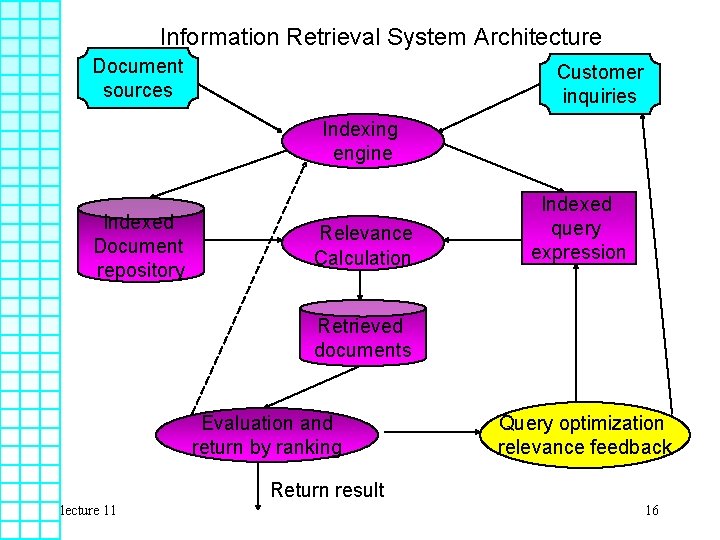

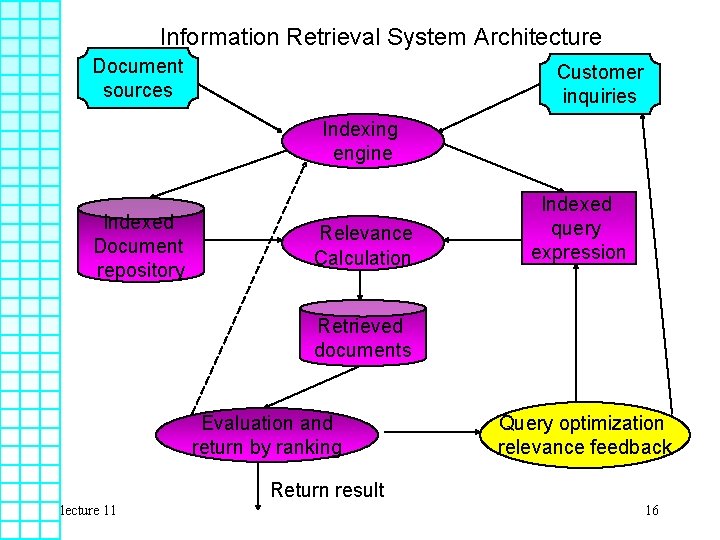

Figure: Information retrieval system architecture. Components shown: document sources, customer inquiries, indexing engine, indexed document repository, indexed query expression, relevance calculation, evaluation and return by ranking, retrieved documents, query optimization and relevance feedback, return result.

Bilingual (Multilingual) IR

- Retargetable IR approach: retrieval is monolingual, but the IR system can be configured for different languages.
- Why do we need bi- or multilingual IR?
  - Bilingual and multilingual communities read text in more than one language, so they need to find text in more than one language.
  - Retrieval of legal information in Chinese and English.
  - Retrieval of reports about a person in newspapers written in different languages.

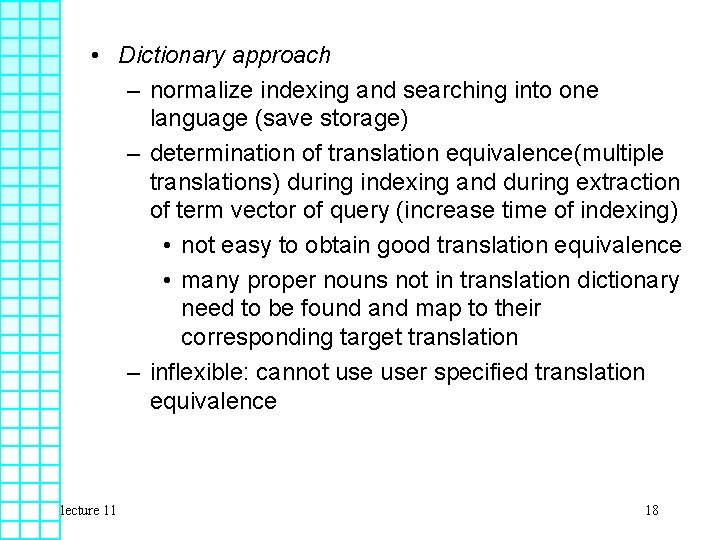

- Dictionary approach:
  - Normalizes indexing and searching into one language (saves storage).
  - Translation equivalents (possibly multiple translations) must be determined during indexing and when extracting the query's term vector (increases indexing time).
    - Good translation equivalents are not easy to obtain.
    - Many proper nouns are not in the translation dictionary; they must be found and mapped to their corresponding target translations.
  - Inflexible: cannot use user-specified translation equivalents.
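At query time, the dictionary approach maps each query term into the single index language. In this sketch every listed equivalent is kept, and terms missing from the dictionary (such as unknown proper nouns) are passed through unchanged; both the pass-through rule and the small Chinese-English dictionary are assumptions for illustration.

```python
def translate_query(terms, bilingual_dict):
    """Map query terms into the index language using a bilingual dictionary.

    All translation equivalents of a term are kept, since one source term may
    have several. Unknown terms pass through unchanged (an assumption here).
    """
    translated = []
    for term in terms:
        translated.extend(bilingual_dict.get(term, [term]))
    return translated

zh_en = {"電腦": ["computer"], "檢索": ["retrieval", "search"]}  # hypothetical toy dictionary
print(translate_query(["電腦", "檢索", "Luhn"], zh_en))
# ['computer', 'retrieval', 'search', 'Luhn']
```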

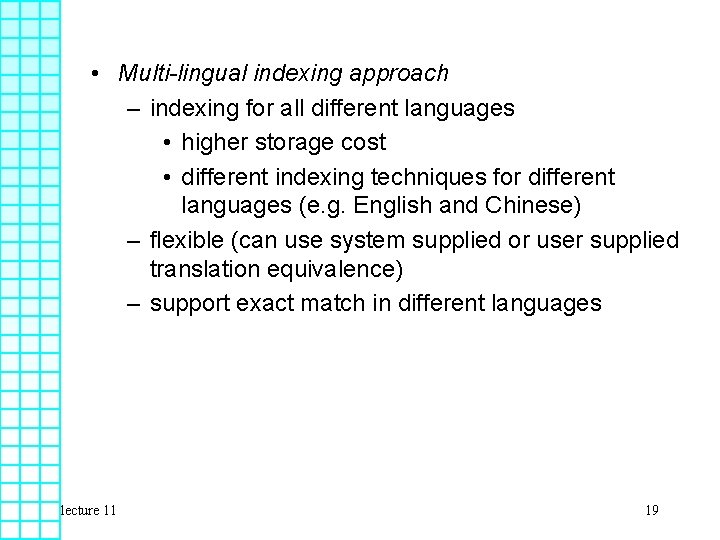

- Multilingual indexing approach:
  - Indexes all the different languages:
    - higher storage cost;
    - different indexing techniques for different languages (e.g., English and Chinese).
  - Flexible: can use system-supplied or user-supplied translation equivalents.
  - Supports exact match in different languages.