Precision and Recall: Information Retrieval Evaluation

- Slides: 10

Precision and Recall

Information Retrieval Evaluation

- In the information retrieval (search engine) community, system evaluation revolves around the notion of relevant and not relevant documents.
  - With respect to a given query, a document is given a binary classification as either relevant or not relevant.
  - An information retrieval system can be thought of as a two-class classifier that attempts to label documents as such.
- It retrieves the subset of documents that it believes to be relevant.
- To measure information retrieval effectiveness, we need:
  1. A test collection of documents
  2. A benchmark suite of queries
  3. A binary assessment of either relevant or not relevant for each query-document pair

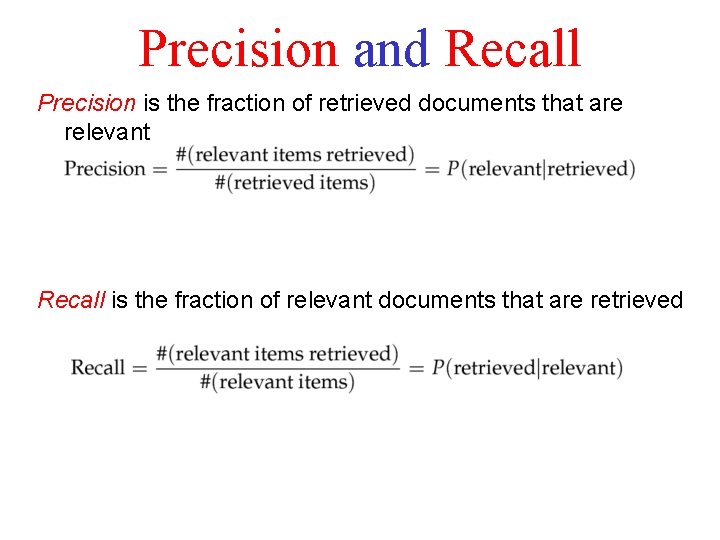

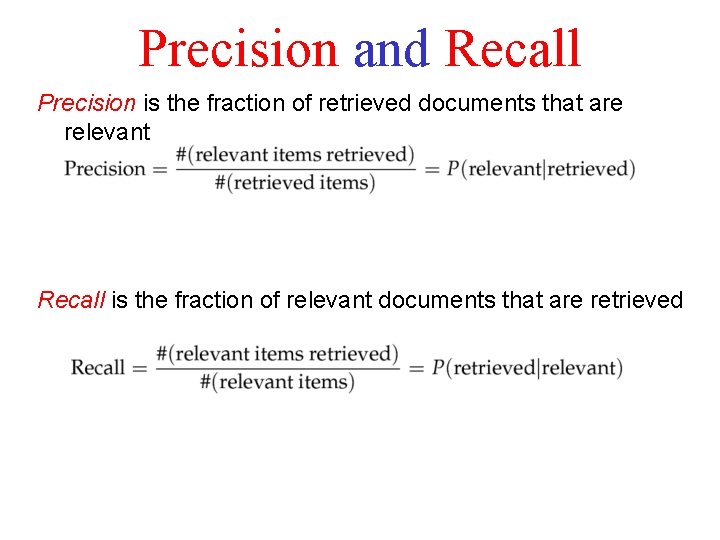

Precision and Recall

- Precision is the fraction of retrieved documents that are relevant.
- Recall is the fraction of relevant documents that are retrieved.
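As a minimal sketch of these two definitions, the snippet below computes precision and recall for a single query from two collections of document IDs. The function name `precision_recall` and the document IDs are made up for illustration, and returning 0.0 when a set is empty is an assumed convention rather than something the slides specify.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one query, given document-ID collections."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant documents that were retrieved
    # Assumed convention: an empty denominator yields 0.0 instead of an error.
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical query: 4 documents retrieved, 3 documents relevant, 2 in common.
retrieved = ["d1", "d2", "d3", "d4"]
relevant = ["d1", "d3", "d7"]
p, r = precision_recall(retrieved, relevant)
print(f"precision={p:.3f} recall={r:.3f}")
```

Here two of the four retrieved documents are relevant, and two of the three relevant documents were found.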

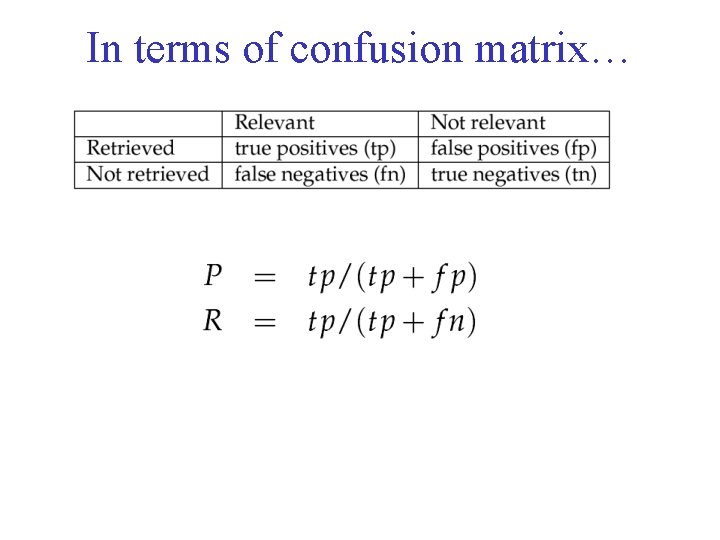

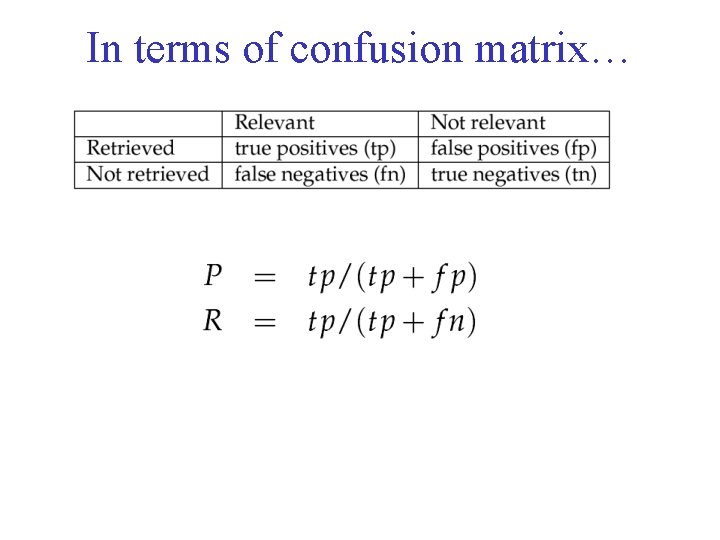

In terms of the confusion matrix…
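Written with the usual confusion-matrix counts, and assuming the standard reading of tp (relevant and retrieved), fp (not relevant but retrieved), and fn (relevant but not retrieved), the two measures can be stated as:

```latex
\[
P = \frac{tp}{tp + fp}, \qquad R = \frac{tp}{tp + fn}
\]
```

Neither expression involves tn, the count of not relevant documents that were correctly left out.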

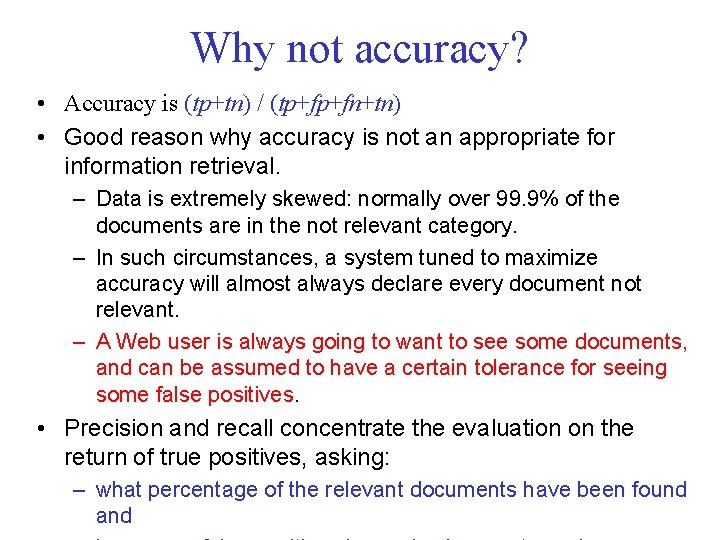

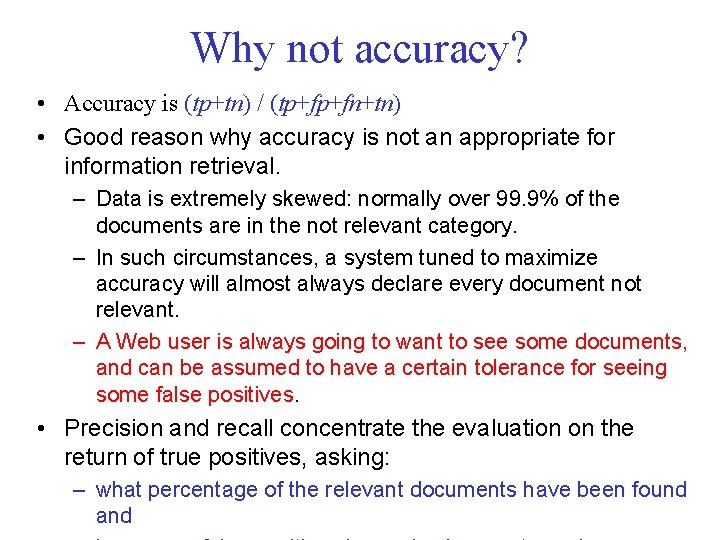

Why not accuracy?

- Accuracy is (tp + tn) / (tp + fp + fn + tn).
- There is a good reason why accuracy is not an appropriate measure for information retrieval.
  - The data is extremely skewed: normally over 99.9% of the documents are in the not relevant category.
  - In such circumstances, a system tuned to maximize accuracy will almost always declare every document not relevant.
  - A Web user is always going to want to see some documents, and can be assumed to have a certain tolerance for seeing some false positives.
- Precision and recall concentrate the evaluation on the return of true positives, asking:
  - what percentage of the relevant documents have been found and how many false positives have also been returned.
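The effect of skew is easy to see with made-up numbers. The sketch below assumes a hypothetical collection of 100,000 documents of which 100 are relevant, and compares a system that returns nothing with one that returns 200 documents, 80 of them relevant. The counts and the helper name `evaluate` are illustrative assumptions only.

```python
def evaluate(tp, fp, fn, tn):
    """Return (accuracy, precision, recall) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # Assumed convention: an empty denominator yields 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical system A: declares every document not relevant.
reject_all = evaluate(tp=0, fp=0, fn=100, tn=99_900)

# Hypothetical system B: returns 200 documents, 80 of which are relevant.
useful = evaluate(tp=80, fp=120, fn=20, tn=99_780)

for name, (acc, p, r) in [("reject all", reject_all), ("useful", useful)]:
    print(f"{name:>10}: accuracy={acc:.4f} precision={p:.2f} recall={r:.2f}")
```

Under these assumed counts the system that returns nothing scores the higher accuracy, even though it finds no relevant documents at all.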

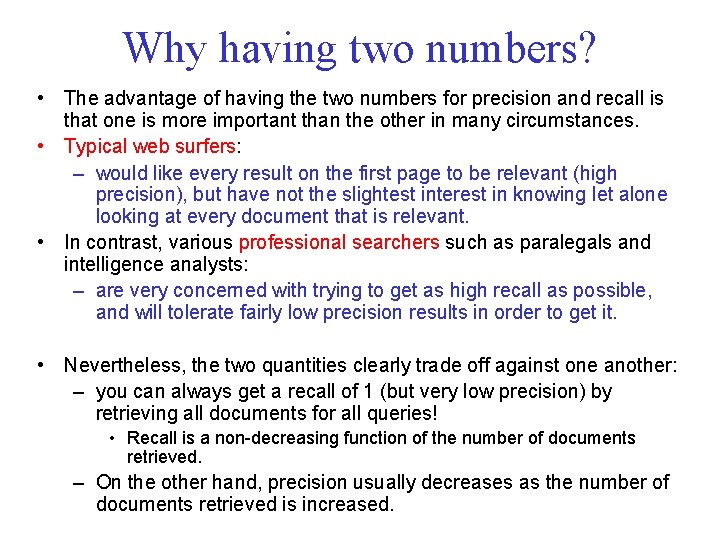

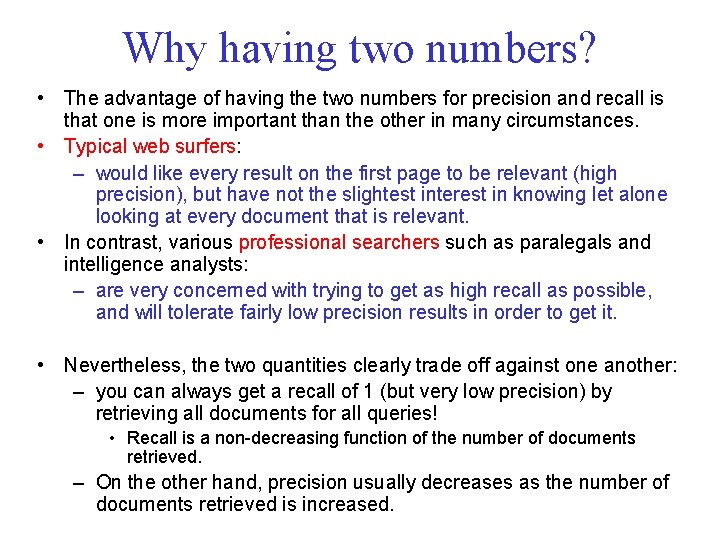

Why have two numbers?

- The advantage of having two numbers, one for precision and one for recall, is that one is more important than the other in many circumstances.
- Typical web surfers:
  - would like every result on the first page to be relevant (high precision), but have not the slightest interest in knowing about, let alone looking at, every document that is relevant.
- In contrast, various professional searchers such as paralegals and intelligence analysts:
  - are very concerned with getting as high a recall as possible, and will tolerate fairly low precision in order to get it.
- Nevertheless, the two quantities clearly trade off against one another:
  - you can always get a recall of 1 (but very low precision) by retrieving all documents for all queries!
- Recall is a non-decreasing function of the number of documents retrieved.
  - On the other hand, precision usually decreases as the number of documents retrieved increases.
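The trade-off can be traced by walking down a ranked result list and recomputing both measures at each cutoff. The following is a small sketch with an invented ranking; the function name `precision_recall_at_cutoffs` and the relevance judgments are assumptions for illustration.

```python
def precision_recall_at_cutoffs(ranking, num_relevant):
    """ranking: list of booleans, True when the document at that rank is relevant.

    Returns a list of (k, precision, recall) for k = 1 .. len(ranking).
    """
    points = []
    hits = 0
    for k, is_relevant in enumerate(ranking, start=1):
        hits += int(is_relevant)
        points.append((k, hits / k, hits / num_relevant))
    return points


# Hypothetical ranking of six results; the query has 3 relevant documents in total.
ranking = [True, False, True, False, False, True]
points = precision_recall_at_cutoffs(ranking, num_relevant=3)
for k, p, r in points:
    print(f"k={k}: precision={p:.2f} recall={r:.2f}")
```

In this toy ranking recall never goes down as k grows, while precision moves up and down and ends well below its starting value.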

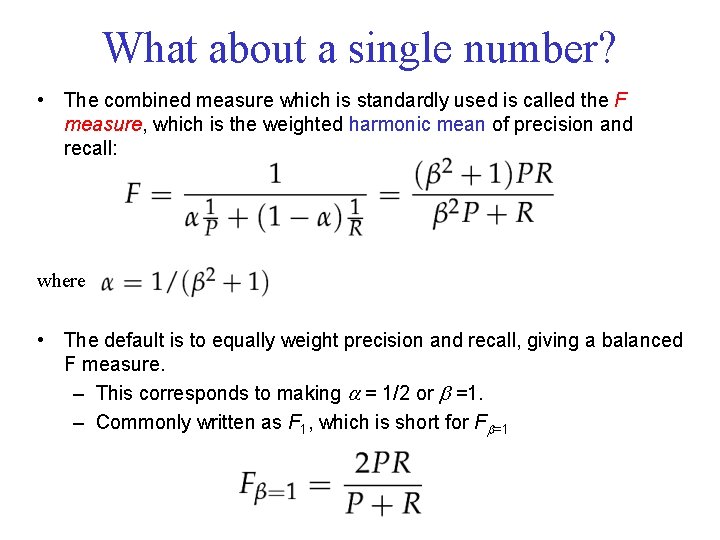

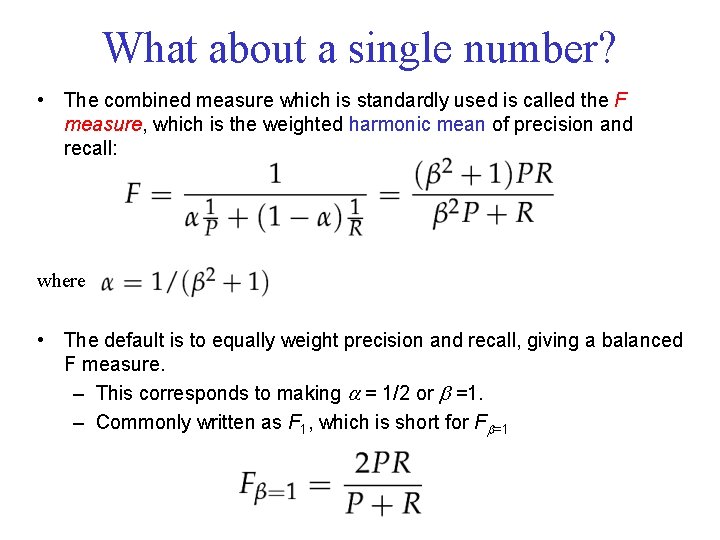

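What about a single number?

- The combined measure that is standardly used is the F measure, the weighted harmonic mean of precision $P$ and recall $R$:

  $$F = \frac{1}{\alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}} = \frac{(\beta^2 + 1) P R}{\beta^2 P + R}, \quad \text{where } \beta^2 = \frac{1 - \alpha}{\alpha}$$

- The default is to weight precision and recall equally, giving a balanced F measure.
  - This corresponds to making $\alpha = 1/2$ or $\beta = 1$.
  - It is commonly written as $F_1$, which is short for $F_{\beta=1}$.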
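A minimal sketch of the F measure, assuming the usual form F = (β² + 1)PR / (β²P + R) with β > 0. The function name `f_measure` and the handling of the case where both inputs are zero (returning 0.0) are assumptions for illustration.

```python
def f_measure(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (beta > 0).

    beta > 1 puts more weight on recall, beta < 1 on precision.
    """
    if precision == 0.0 and recall == 0.0:
        return 0.0  # assumed convention: avoid division by zero
    b2 = beta * beta
    return (b2 + 1) * precision * recall / (b2 * precision + recall)


# Hypothetical system with precision 0.5 and recall 0.8.
print(f"F1 = {f_measure(0.5, 0.8):.3f}")
print(f"F2 = {f_measure(0.5, 0.8, beta=2.0):.3f}")
```

With recall higher than precision, the recall-weighted F2 comes out above the balanced F1 for these inputs.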

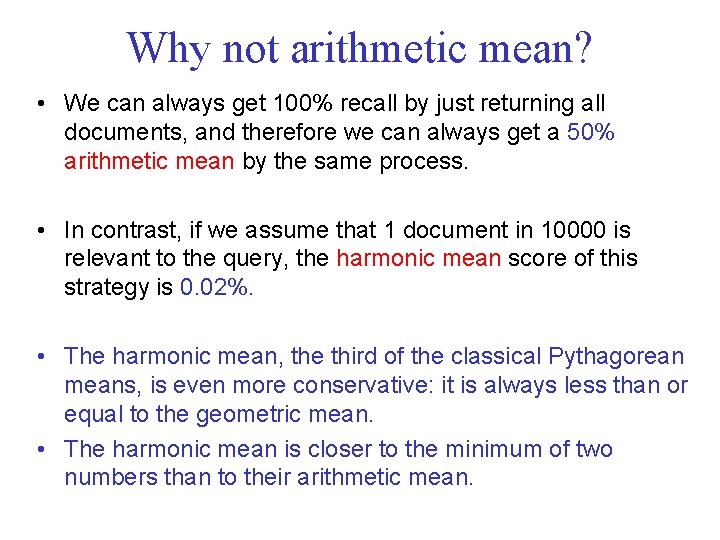

Why not arithmetic mean?

- We can always get 100% recall by just returning all documents, and therefore we can always get at least a 50% arithmetic mean by the same process.
- In contrast, if we assume that 1 document in 10,000 is relevant to the query, the harmonic mean score of this strategy is 0.02%.
- The harmonic mean, the third of the classical Pythagorean means, is the most conservative of them: it is always less than or equal to the geometric mean, which is in turn less than or equal to the arithmetic mean.
- When two numbers differ greatly, the harmonic mean is closer to their minimum than to their arithmetic mean.
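The figures for the return-everything strategy can be checked directly. This sketch uses the slide's assumption that 1 document in 10,000 is relevant, so returning the whole collection gives precision 1/10,000 and recall 1; the variable names are illustrative.

```python
# Return-everything strategy: every relevant document is found (recall = 1),
# but only 1 in 10,000 returned documents is relevant.
precision = 1 / 10_000
recall = 1.0

arithmetic = (precision + recall) / 2
harmonic = 2 * precision * recall / (precision + recall)

print(f"arithmetic mean: {arithmetic * 100:.3f}%")
print(f"harmonic mean:   {harmonic * 100:.3f}%")
```

The arithmetic mean stays just above 50%, while the harmonic mean collapses to roughly 0.02%, close to the smaller of the two inputs.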

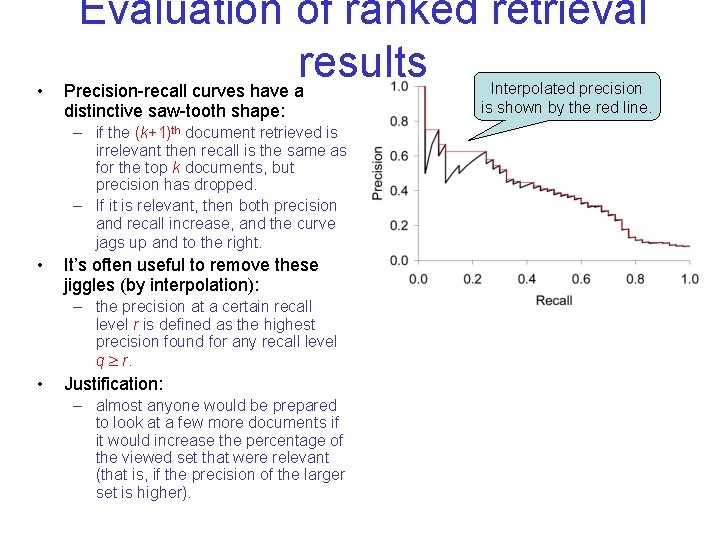

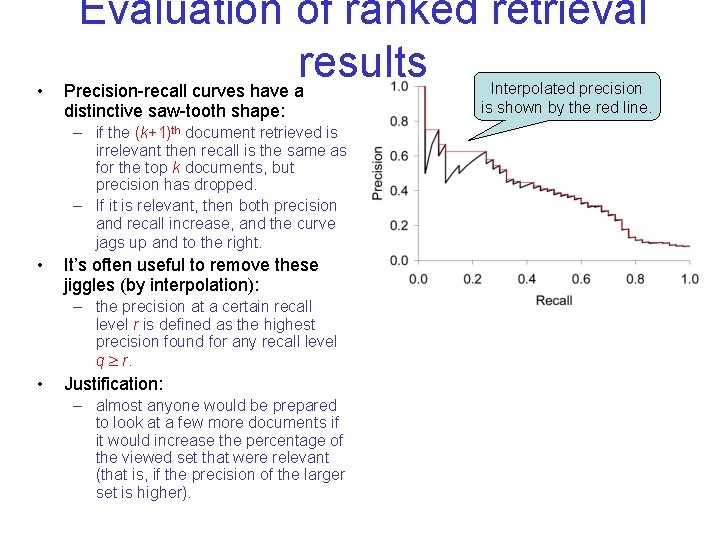

Evaluation of ranked retrieval results

- Precision-recall curves have a distinctive saw-tooth shape:
  - If the (k+1)th document retrieved is irrelevant, then recall is the same as for the top k documents, but precision has dropped.
  - If it is relevant, then both precision and recall increase, and the curve jags up and to the right.
- It is often useful to remove these jiggles by interpolation:
  - The interpolated precision at a certain recall level r is defined as the highest precision found for any recall level q ≥ r.
- Justification:
  - Almost anyone would be prepared to look at a few more documents if it would increase the percentage of the viewed set that were relevant (that is, if the precision of the larger set is higher).
- Interpolated precision is shown by the red line.
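Interpolation can be sketched in a few lines, reading the definition as "the highest precision at any recall level q ≥ r". The (recall, precision) points below are invented for a ranking of six documents with three relevant ones, and the function name `interpolated_precision` is an assumption for illustration.

```python
def interpolated_precision(points, r):
    """points: (recall, precision) pairs, one per rank position.

    Returns the highest precision among points whose recall is >= r,
    or 0.0 if no point reaches that recall level (assumed convention).
    """
    candidates = [p for q, p in points if q >= r]
    return max(candidates, default=0.0)


# Hypothetical saw-tooth curve: relevant documents at ranks 1, 3 and 6.
points = [
    (1 / 3, 1 / 1),
    (1 / 3, 1 / 2),
    (2 / 3, 2 / 3),
    (2 / 3, 2 / 4),
    (2 / 3, 2 / 5),
    (3 / 3, 3 / 6),
]

for r in (0.0, 0.5, 0.9, 1.0):
    print(f"recall level {r:.1f}: interpolated precision "
          f"{interpolated_precision(points, r):.3f}")
```

The interpolated values never increase as the recall level rises, which is what removes the saw-tooth jiggles.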

Precision at k

- The measures above consider precision at all recall levels.
- For many applications, what matters is rather how many good results there are on the first page or the first three pages.
- This leads to measures of precision at fixed low levels of retrieved results, such as 10 or 30 documents.
- This is referred to as “Precision at k”, for example “Precision at 10”.
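A minimal sketch of Precision at k follows. The function name `precision_at_k` and the document IDs are hypothetical, and dividing by k even when fewer than k documents were returned is one common convention assumed here, not something the slides specify.

```python
def precision_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the top-k ranked documents that are relevant."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    relevant = set(relevant_ids)
    top_k = ranked_ids[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    # Assumed convention: divide by k, not by the number actually returned.
    return hits / k


# Hypothetical ranked result list and relevance judgments.
ranked = ["d3", "d9", "d1", "d4", "d7"]
relevant = {"d1", "d3", "d5"}
print(f"P@3 = {precision_at_k(ranked, relevant, 3):.3f}")
print(f"P@5 = {precision_at_k(ranked, relevant, 5):.3f}")
```

Unlike recall, this measure does not need the total number of relevant documents for the query, only judgments for the top k results.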