Statistical Language Models for Information Retrieval Tutorial at


- Slides: 113

Statistical Language Models for Information Retrieval

Tutorial at NAACL HLT 2007, April 22, 2007

ChengXiang Zhai, Department of Computer Science, University of Illinois, Urbana-Champaign

http://www-faculty.cs.uiuc.edu/~czhai, czhai@cs.uiuc.edu

© ChengXiang Zhai, 2007

Goal of the Tutorial

- Introduce the emerging area of applying statistical language models (SLMs) to information retrieval (IR)
- Target audience:
  - IR practitioners who are interested in acquiring advanced modeling techniques
  - IR researchers who are looking for new research problems in IR models
  - SLM experts who are interested in applying SLMs to IR
- Accessible to anyone with basic knowledge of probability and statistics

Scope of the Tutorial

- What will be covered
  - Brief background on IR and SLMs
  - Review of recent applications of unigram SLMs in IR
  - Details of some specific methods that are either empirically effective or theoretically important
  - A framework for systematically exploring SLMs in IR
  - Outstanding research issues in applying SLMs to IR
- What will not be covered
  - Traditional IR methods: see any IR textbook, e.g., [Baeza-Yates & Ribeiro-Neto 99, Grossman & Frieder 04, Manning et al. 07]
  - Implementation of IR systems: see [Witten et al. 99]
  - Discussion of high-order or other complex SLMs: see [Manning & Schutze 99] and [Jelinek 98]
  - Application of SLMs in supervised learning (e.g., TDT, text categorization, …): see publications in Machine Learning, Information Retrieval, and Natural Language Processing

Tutorial Outline

1. Introduction
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

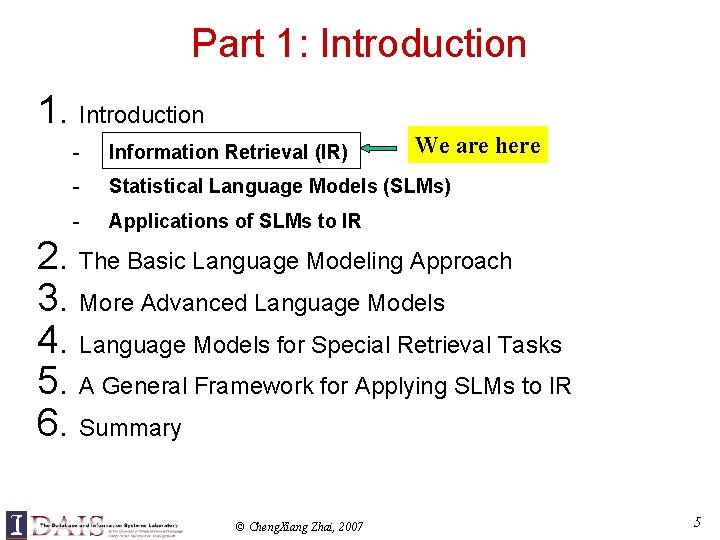

Part 1: Introduction

1. Introduction
   - Information Retrieval (IR) (we are here)
   - Statistical Language Models (SLMs)
   - Applications of SLMs to IR
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

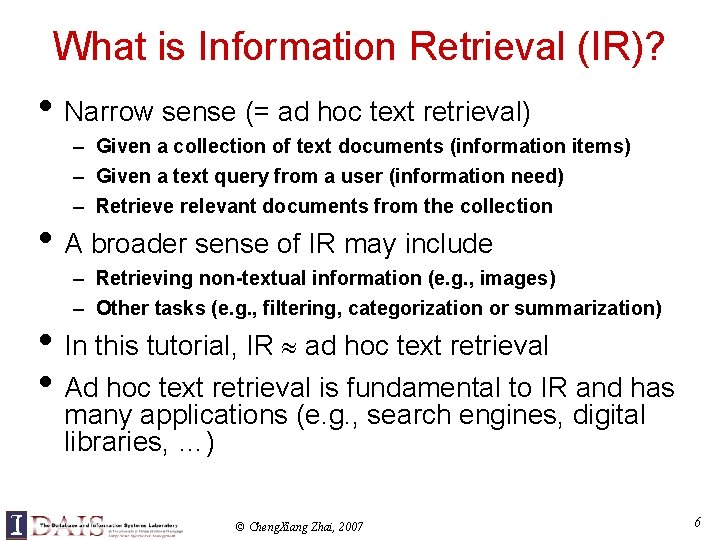

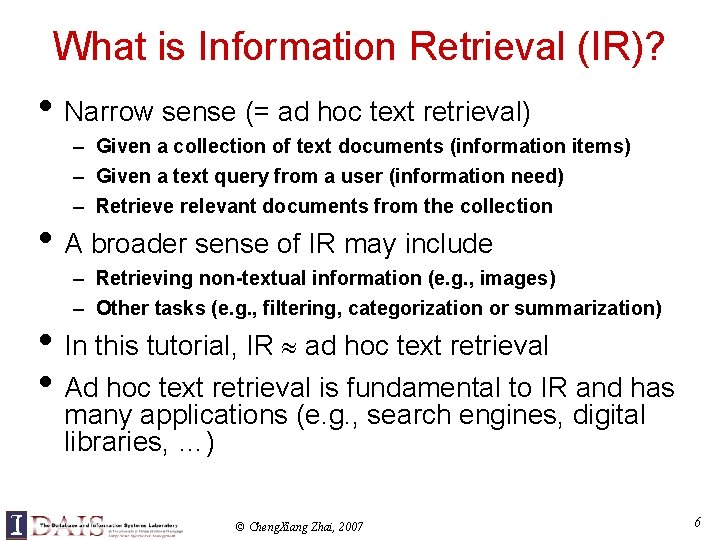

What is Information Retrieval (IR)?

- Narrow sense (= ad hoc text retrieval)
  - Given a collection of text documents (information items)
  - Given a text query from a user (information need)
  - Retrieve relevant documents from the collection
- A broader sense of IR may include
  - Retrieving non-textual information (e.g., images)
  - Other tasks (e.g., filtering, categorization, or summarization)
- In this tutorial, IR means ad hoc text retrieval
- Ad hoc text retrieval is fundamental to IR and has many applications (e.g., search engines, digital libraries, …)

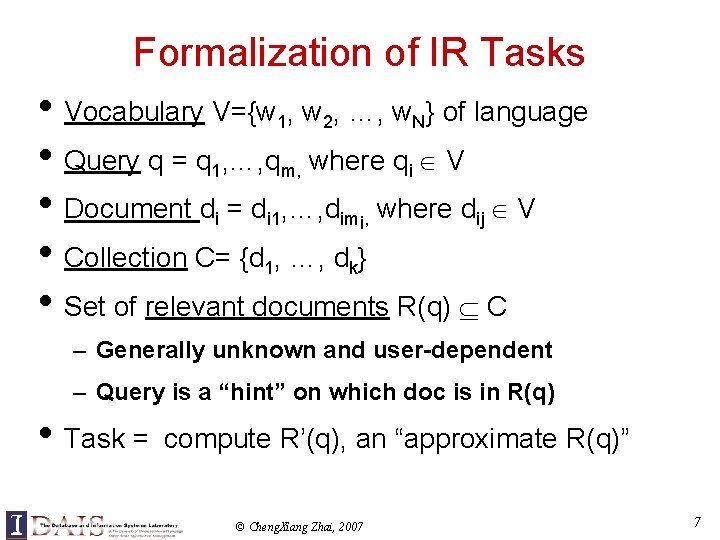

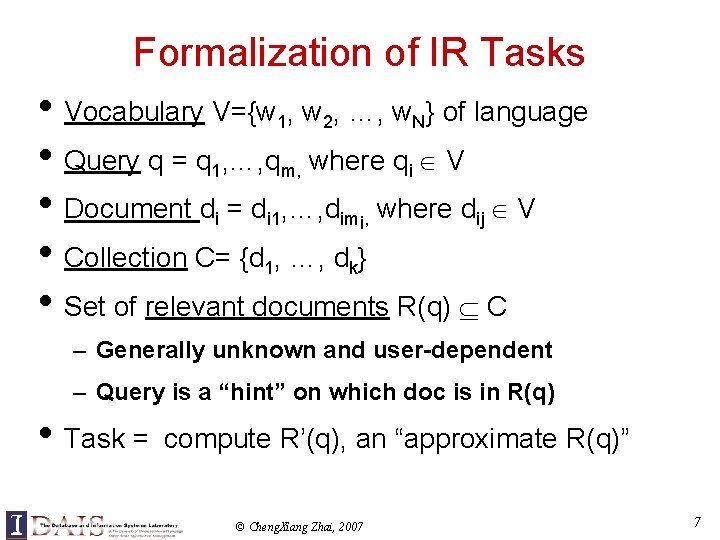

Formalization of IR Tasks

- Vocabulary $V = \{w_1, w_2, \ldots, w_N\}$ of a language
- Query $q = q_1, \ldots, q_m$, where $q_i \in V$
- Document $d_i = d_{i1}, \ldots, d_{i m_i}$, where $d_{ij} \in V$
- Collection $C = \{d_1, \ldots, d_k\}$
- Set of relevant documents $R(q) \subseteq C$
  - Generally unknown and user-dependent
  - The query is a "hint" on which docs are in $R(q)$
- Task = compute $R'(q)$, an approximation of $R(q)$

Computing R'(q): Doc Selection vs. Ranking

- Doc selection: $R'(q) = \{d \in C \mid f(d,q) = 1\}$, where $f(d,q) \in \{0, 1\}$ is an indicator function (classifier)
- Doc ranking: $R'(q) = \{d \in C \mid f(d,q) > \theta\}$, where $f(d,q)$ is a ranking function and $\theta$ is a cutoff implicitly set by the user

[Figure: the true R(q) compared with the R'(q) produced by doc selection and by doc ranking; relevant documents are marked + and non-relevant ones -.]

Example ranking from the figure (score, document, relevance mark where shown), with the cutoff at θ = 0.77: 0.98 d1 +, 0.95 d2 +, 0.83 d3, 0.80 d4 +, 0.76 d5, 0.56 d6, 0.34 d7, 0.21 d8 +, 0.21 d9 -.
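The difference between ranking and selection can be sketched in a few lines of Python. The scores and the cutoff 0.77 are the ones shown on the slide; the function names `rank` and `select` are illustrative only.

```python
# Scores from the slide's example ranking; theta is the cutoff shown there.
scores = {"d1": 0.98, "d2": 0.95, "d3": 0.83, "d4": 0.80, "d5": 0.76,
          "d6": 0.56, "d7": 0.34, "d8": 0.21, "d9": 0.21}


def rank(scores):
    """Return document ids sorted by decreasing score (ties keep input order)."""
    return sorted(scores, key=lambda d: -scores[d])


def select(scores, theta):
    """Return the ranked documents whose score exceeds the cutoff theta."""
    return [d for d in rank(scores) if scores[d] > theta]


ranking = rank(scores)                  # the full ranked list
retrieved = select(scores, theta=0.77)  # R'(q) for this particular cutoff
```

With a ranking function the cutoff becomes a separate, user-controlled choice: the same ranked list serves a user who stops after two documents and one who reads all nine.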

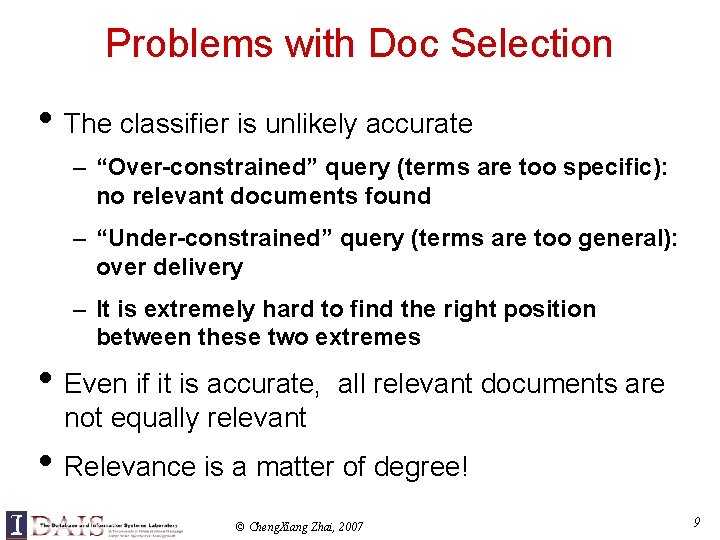

Problems with Doc Selection

- The classifier is unlikely to be accurate
  - "Over-constrained" query (terms are too specific): no relevant documents found
  - "Under-constrained" query (terms are too general): over-delivery
  - It is extremely hard to find the right position between these two extremes
- Even if it is accurate, not all relevant documents are equally relevant
- Relevance is a matter of degree!

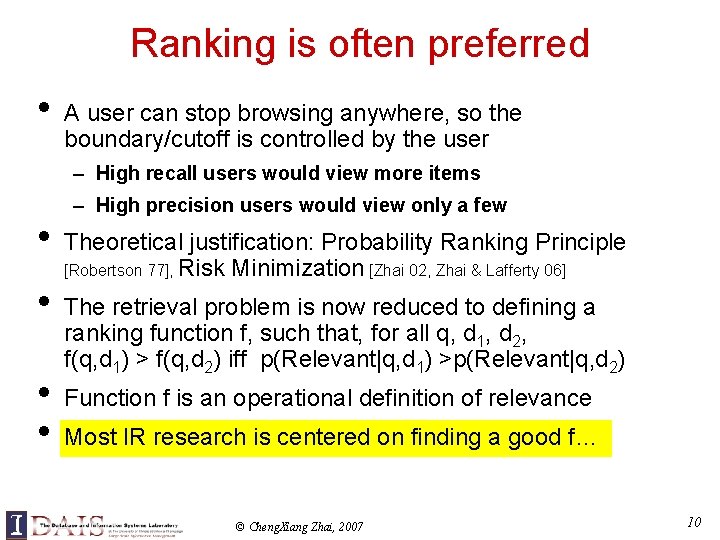

Ranking is often preferred

- A user can stop browsing anywhere, so the boundary/cutoff is controlled by the user
  - High recall users would view more items
  - High precision users would view only a few
- Theoretical justification: Probability Ranking Principle [Robertson 77], Risk Minimization [Zhai 02, Zhai & Lafferty 06]
- The retrieval problem is now reduced to defining a ranking function f such that, for all q, d1, d2, f(q, d1) > f(q, d2) iff p(Relevant|q, d1) > p(Relevant|q, d2)
- Function f is an operational definition of relevance
- Most IR research is centered on finding a good f…

![Two WellKnown Traditional Retrieval Formulas Singhal 01 Key retrieval heuristics TF Term Frequency IDF Two Well-Known Traditional Retrieval Formulas [Singhal 01] Key retrieval heuristics: TF (Term Frequency) IDF](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-11.jpg)

Two Well-Known Traditional Retrieval Formulas [Singhal 01]

- Key retrieval heuristics: TF (Term Frequency), IDF (Inverse Doc Freq.), and length normalization [Sparck Jones 72, Salton & Buckley 88, Singhal et al. 96, Robertson & Walker 94, Fang et al. 04]
- Other heuristics: stemming, stop word removal, phrases

Typo

Similar quantities will occur in the LMs…

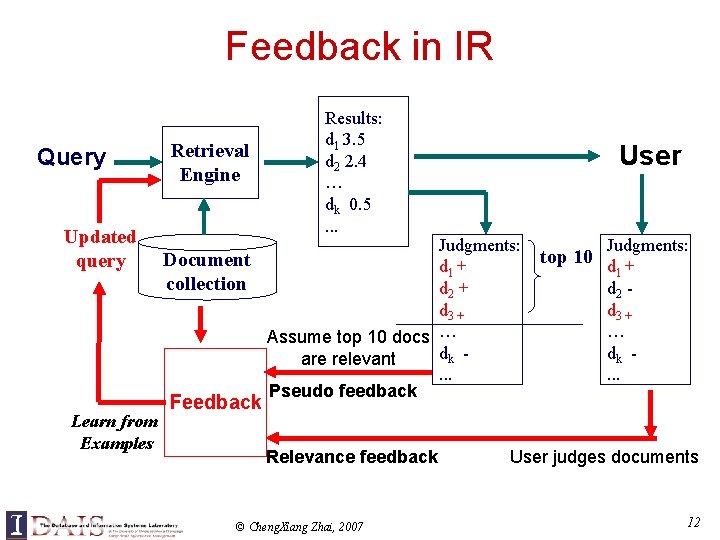

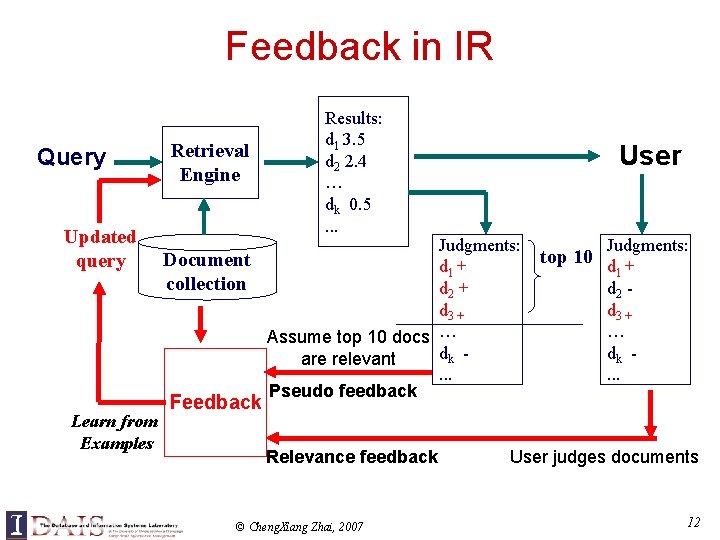

Feedback in IR

[Figure: the feedback loop. A query goes to the retrieval engine, which searches the document collection and returns ranked results (d1 3.5, d2 2.4, …, dk 0.5). Judgments on the results feed a "learn from examples" feedback step that produces an updated query.]

- Relevance feedback: the user judges documents
- Pseudo feedback: assume the top 10 docs are relevant

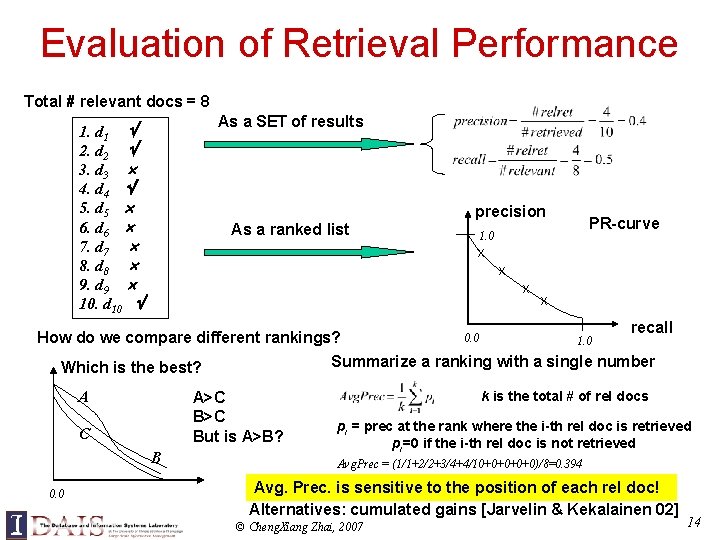

Feedback in IR (cont.)

- An essential component in any IR method
- Relevance feedback is always desirable, but a user may not be willing to provide explicit judgments
- Pseudo/automatic feedback is always possible, and often improves performance on average through
  - Exploiting word co-occurrences
  - Enriching a query with additional related words
  - Indirectly addressing issues such as ambiguous words and synonyms
- Implicit feedback is a good compromise

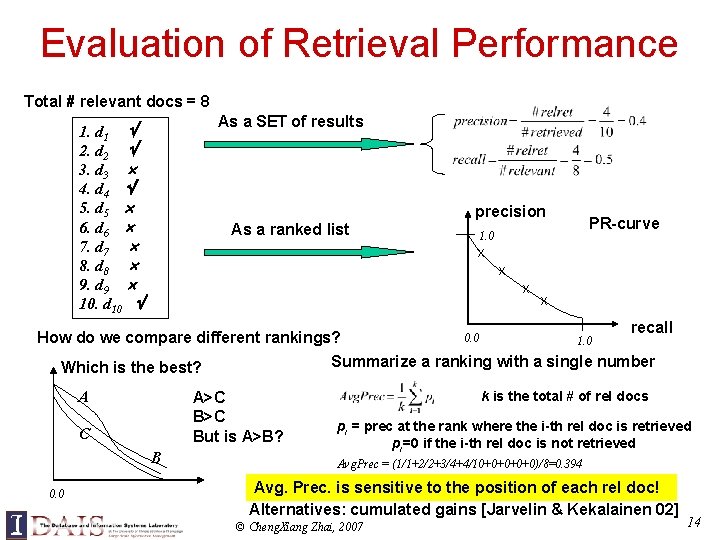

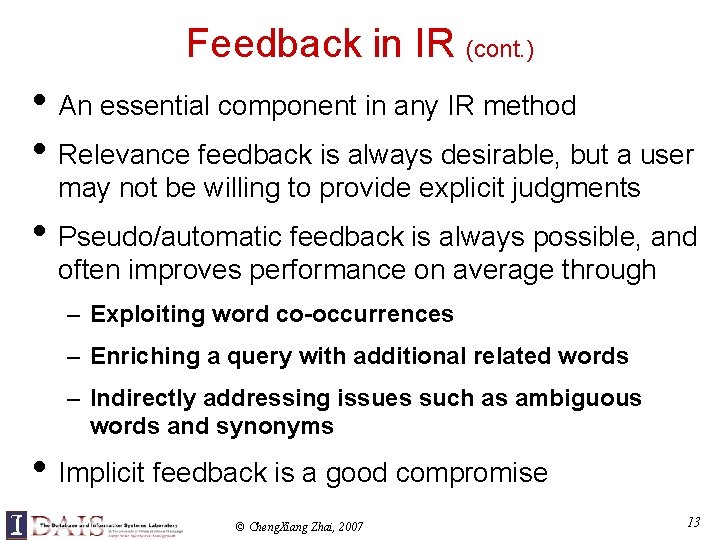

Evaluation of Retrieval Performance

- As a set of results: precision and recall
- As a ranked list: how do we compare different rankings?

[Figure: a ranked list d1, …, d10 with relevant documents marked x (total # relevant docs = 8), and precision-recall (PR) curves for three rankings A, B, and C, with both axes running from 0.0 to 1.0. A > C, but is A > B? Which is the best?]

- Summarize a ranking with a single number, average precision: $\text{AvgPrec} = \frac{1}{k} \sum_{i=1}^{k} p_i$
  - k is the total # of rel docs
  - $p_i$ = prec at the rank where the i-th rel doc is retrieved
  - $p_i = 0$ if the i-th rel doc is not retrieved
- Example: Avg. Prec = (1/1 + 2/2 + 3/4 + 4/10 + 0 + 0)/8 = 0.394
- Avg. Prec. is sensitive to the position of each rel doc!
- Alternatives: cumulated gains [Jarvelin & Kekalainen 02]
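The average precision arithmetic on this slide can be reproduced with a short sketch. It assumes, as the slide's fractions 1/1, 2/2, 3/4, and 4/10 imply, that the relevant documents sit at ranks 1, 2, 4, and 10; the function name is illustrative.

```python
def average_precision(relevance, total_relevant):
    """Average precision of a ranked list.

    relevance: 0/1 flags in rank order (1 = relevant document at that rank).
    total_relevant: number of relevant documents in the whole collection;
    relevant documents that are never retrieved contribute a precision of 0.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this relevant document
    return precision_sum / total_relevant


# Relevant documents at ranks 1, 2, 4, and 10 (assumed from the slide's fractions).
ranked_flags = [1, 1, 0, 1, 0, 0, 0, 0, 0, 1]
ap = average_precision(ranked_flags, total_relevant=8)  # (1 + 1 + 0.75 + 0.4) / 8
```

Dividing by the total number of relevant documents rather than by the number retrieved is what penalizes a ranking for the relevant documents it misses.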

Part 1: Introduction (cont.)

1. Introduction
   - Information Retrieval (IR)
   - Statistical Language Models (SLMs) (we are here)
   - Application of SLMs to IR
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

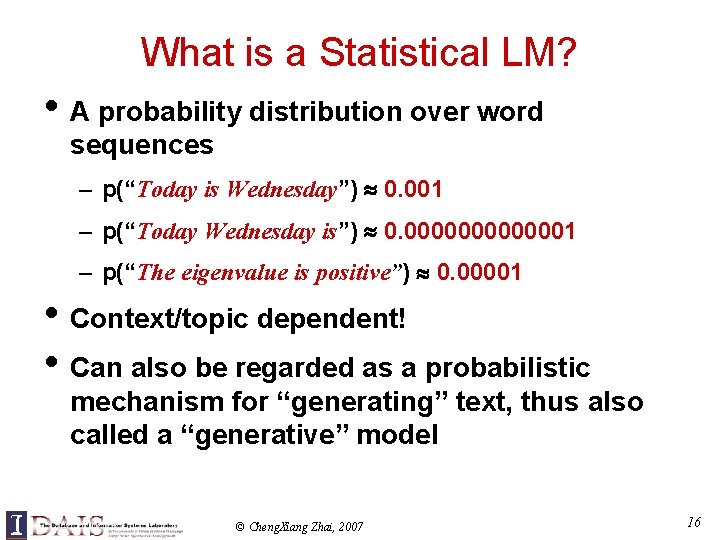

What is a Statistical LM?

- A probability distribution over word sequences
  - p("Today is Wednesday") ≈ 0.001
  - p("Today Wednesday is") ≈ 0.0000001
  - p("The eigenvalue is positive") ≈ 0.00001
- Context/topic dependent!
- Can also be regarded as a probabilistic mechanism for "generating" text, thus also called a "generative" model

Why is a LM Useful?

- Provides a principled way to quantify the uncertainties associated with natural language
- Allows us to answer questions like:
  - Given that we see "John" and "feels", how likely will we see "happy" as opposed to "habit" as the next word? (speech recognition)
  - Given that we observe "baseball" three times and "game" once in a news article, how likely is it about "sports"? (text categorization, information retrieval)
  - Given that a user is interested in sports news, how likely would the user use "baseball" in a query? (information retrieval)

![SourceChannel Framework Model of Communication System Shannon 48 Source X Transmitter encoder PX Source-Channel Framework (Model of Communication System [Shannon 48] ) Source X Transmitter (encoder) P(X)](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-18.jpg)

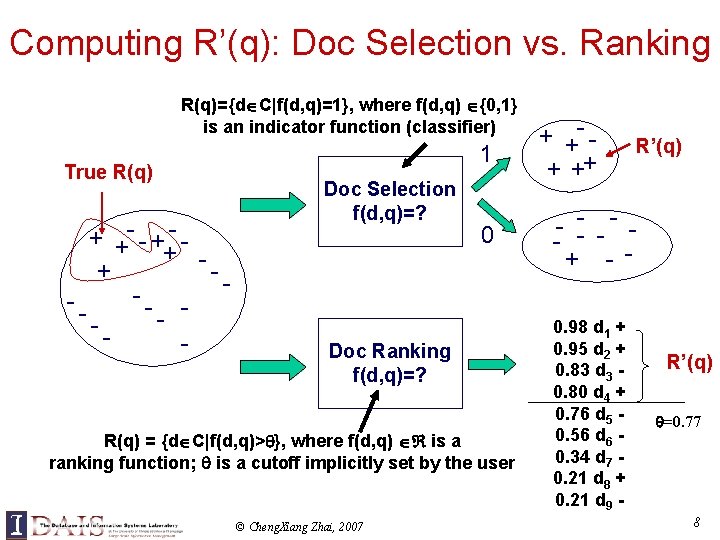

Source-Channel Framework (Model of Communication System [Shannon 48])

[Figure: Source → Transmitter (encoder) → Noisy Channel → Receiver (decoder) → Destination. The source emits X with probability P(X), the channel turns X into Y with probability P(Y|X), and the receiver must recover X' by asking P(X|Y) = ?]

By Bayes' rule, $\hat{X} = \arg\max_X p(X \mid Y) = \arg\max_X p(Y \mid X)\, p(X)$. When X is text, p(X) is a language model.

Many examples:

| Task | X | Y |
| --- | --- | --- |
| Speech recognition | Word sequence | Speech signal |
| Machine translation | English sentence | Chinese sentence |
| OCR error correction | Correct word | Erroneous word |
| Information retrieval | Document | Query |
| Summarization | Summary | Document |

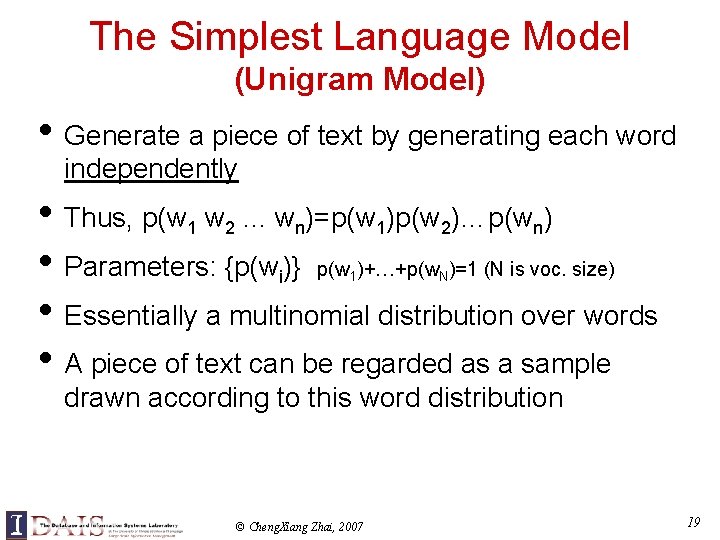

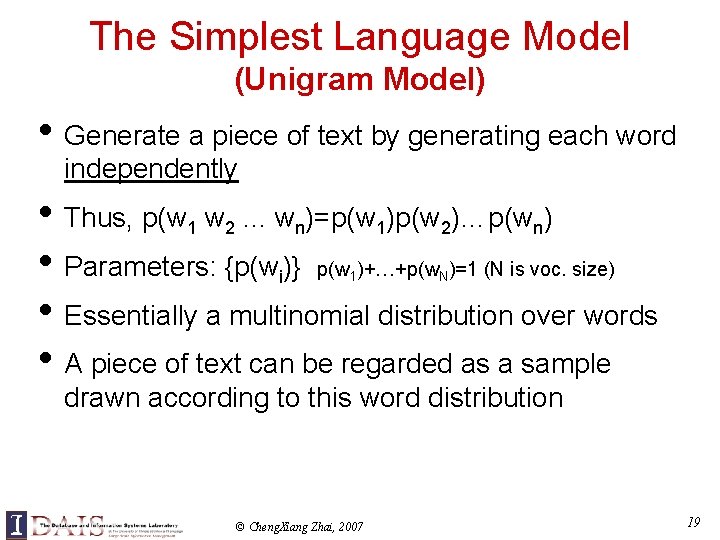

The Simplest Language Model (Unigram Model)

- Generate a piece of text by generating each word independently
- Thus, $p(w_1 w_2 \ldots w_n) = p(w_1)\, p(w_2) \cdots p(w_n)$
- Parameters: $\{p(w_i)\}$, with $p(w_1) + \cdots + p(w_N) = 1$ (N is voc. size)
- Essentially a multinomial distribution over words
- A piece of text can be regarded as a sample drawn according to this word distribution

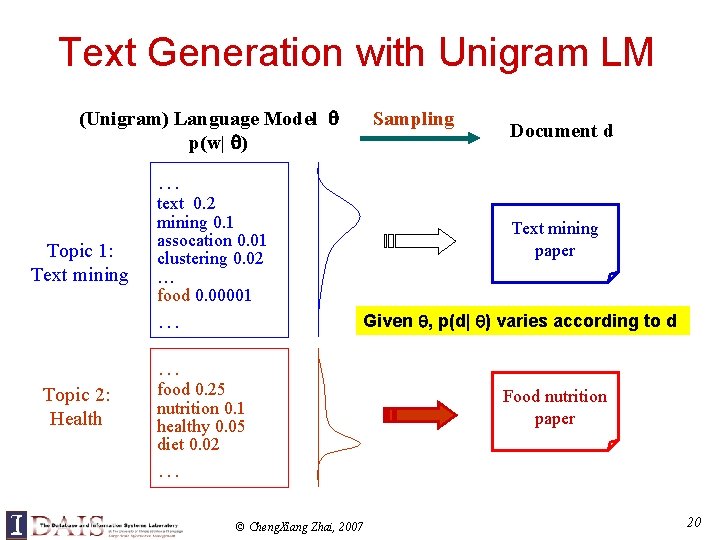

Text Generation with Unigram LM

A (unigram) language model $p(w \mid \theta)$ generates a document d by sampling.

| Topic | Word probabilities $p(w \mid \theta)$ | Sampled document d |
| --- | --- | --- |
| Topic 1: Text mining | text 0.2, mining 0.1, association 0.01, clustering 0.02, …, food 0.00001, … | Text mining paper |
| Topic 2: Health | food 0.25, nutrition 0.1, healthy 0.05, diet 0.02, … | Food nutrition paper |

Given $\theta$, $p(d \mid \theta)$ varies according to d.
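Sampling from a unigram model can be sketched as below. The word probabilities are the ones listed for Topic 1; the slide elides the rest of the vocabulary, so this sketch samples only from the listed words and lets `random.choices` renormalise the weights, which is an assumption made for illustration.

```python
import random

# Word probabilities listed on the slide for Topic 1 (the rest of the vocabulary is elided there).
topic_text_mining = {
    "text": 0.2,
    "mining": 0.1,
    "association": 0.01,
    "clustering": 0.02,
    "food": 0.00001,
}


def sample_document(model, length, seed=None):
    """Draw `length` words independently from the unigram model.

    random.choices treats the values as relative weights, so the model does
    not have to sum to one.
    """
    rng = random.Random(seed)
    words = list(model)
    weights = [model[w] for w in words]
    return rng.choices(words, weights=weights, k=length)


doc = sample_document(topic_text_mining, length=20, seed=0)
```

Because each word is drawn independently, word order in the sample carries no information; only the word counts do.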

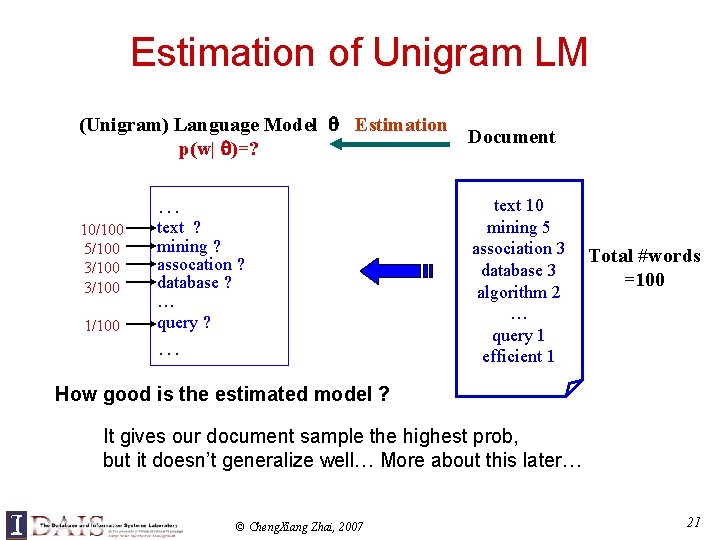

Estimation of Unigram LM

Given a document, estimate the (unigram) language model $p(w \mid \theta) = ?$

| Word | Count in document | Estimated $p(w \mid \theta)$ |
| --- | --- | --- |
| text | 10 | 10/100 |
| mining | 5 | 5/100 |
| association | 3 | 3/100 |
| database | 3 | 3/100 |
| algorithm | 2 | 2/100 |
| … | … | … |
| query | 1 | 1/100 |
| efficient | 1 | 1/100 |

Total #words = 100.

How good is the estimated model? It gives our document sample the highest prob, but it doesn't generalize well… More about this later…
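The relative-frequency estimate can be written as a small function. The counts and the total of 100 words are from the slide; the words the slide elides are simply left out of the dictionary, and the function name is illustrative.

```python
def mle_from_counts(counts, total):
    """Maximum likelihood unigram estimate: p(w) = c(w, d) / |d|."""
    return {word: count / total for word, count in counts.items()}


# Counts shown on the slide; the document has 100 words in total,
# and the remaining words are elided on the slide.
counts = {"text": 10, "mining": 5, "association": 3, "database": 3,
          "algorithm": 2, "query": 1, "efficient": 1}
model = mle_from_counts(counts, total=100)

p_text = model["text"]            # 10/100
p_food = model.get("food", 0.0)   # a word not in the document gets probability zero
```

The zero for any unseen word is the generalization problem the slide alludes to.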

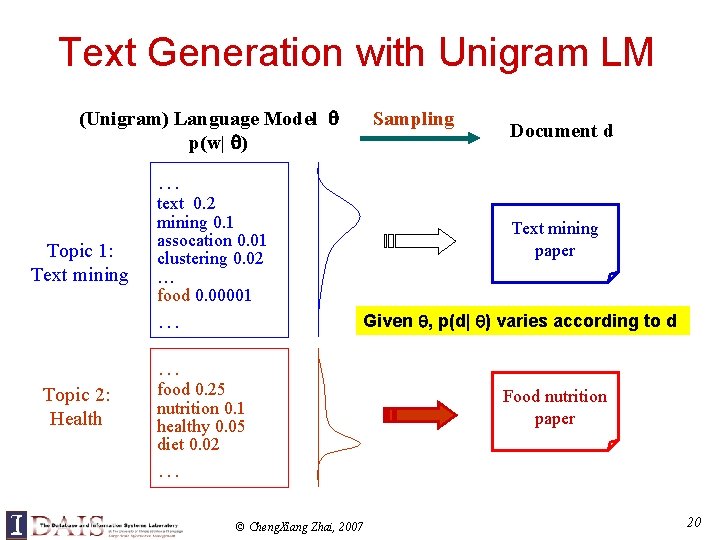

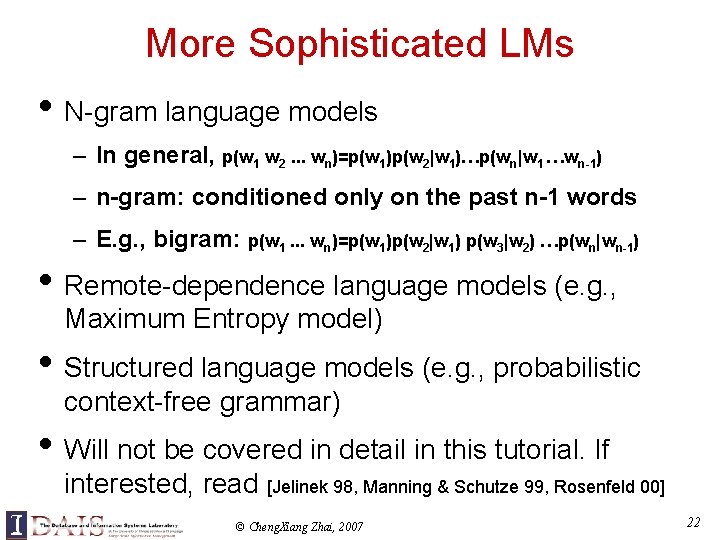

More Sophisticated LMs

- N-gram language models
  - In general, $p(w_1 w_2 \ldots w_n) = p(w_1)\, p(w_2 \mid w_1) \cdots p(w_n \mid w_1 \ldots w_{n-1})$
  - n-gram: conditioned only on the past n-1 words
  - E.g., bigram: $p(w_1 \ldots w_n) = p(w_1)\, p(w_2 \mid w_1)\, p(w_3 \mid w_2) \cdots p(w_n \mid w_{n-1})$
- Remote-dependence language models (e.g., Maximum Entropy model)
- Structured language models (e.g., probabilistic context-free grammar)
- Will not be covered in detail in this tutorial. If interested, read [Jelinek 98, Manning & Schutze 99, Rosenfeld 00]

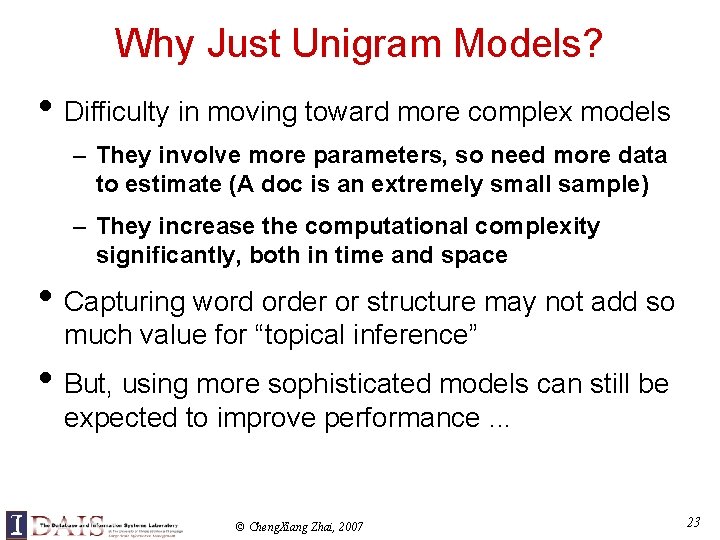

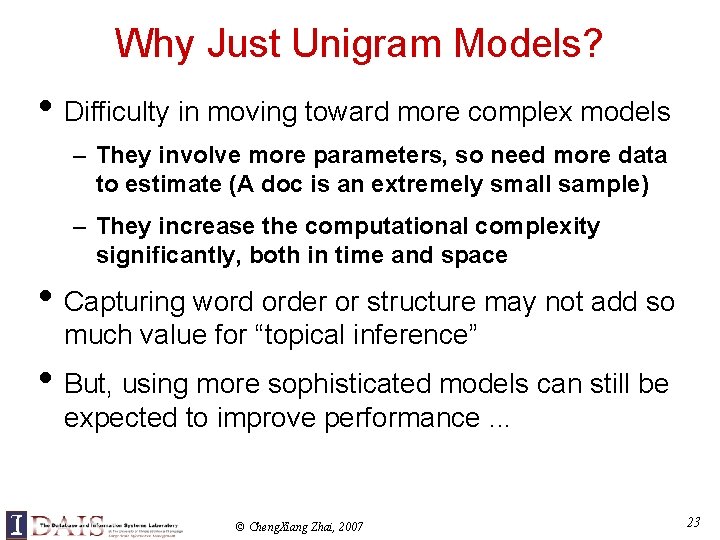

Why Just Unigram Models?

- Difficulty in moving toward more complex models
  - They involve more parameters, so need more data to estimate (a doc is an extremely small sample)
  - They increase the computational complexity significantly, both in time and space
- Capturing word order or structure may not add so much value for "topical inference"
- But using more sophisticated models can still be expected to improve performance…

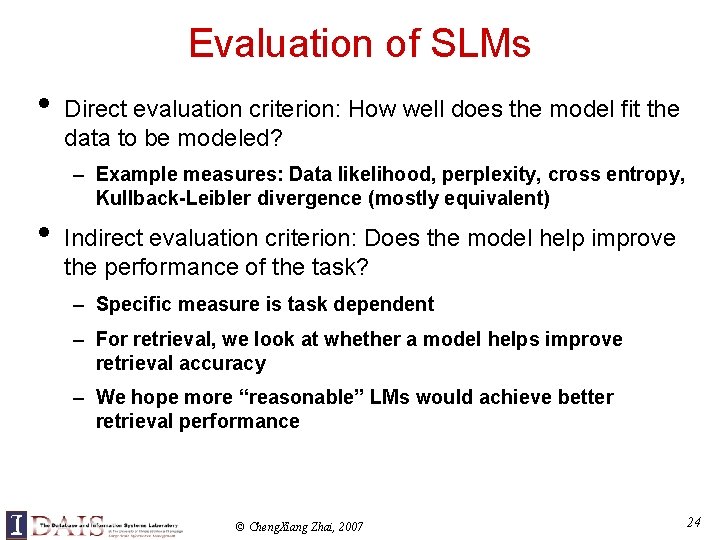

Evaluation of SLMs

- Direct evaluation criterion: how well does the model fit the data to be modeled?
  - Example measures: data likelihood, perplexity, cross entropy, Kullback-Leibler divergence (mostly equivalent)
- Indirect evaluation criterion: does the model help improve the performance of the task?
  - The specific measure is task dependent
  - For retrieval, we look at whether a model helps improve retrieval accuracy
  - We hope more "reasonable" LMs would achieve better retrieval performance
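As a rough illustration of the direct measures, the sketch below computes per-word cross entropy and perplexity of a unigram model on held-out text. The toy uniform model and the function names are assumptions made for the example.

```python
import math


def cross_entropy(model, tokens):
    """Average negative log2-probability per token under the model (in bits)."""
    return -sum(math.log2(model[w]) for w in tokens) / len(tokens)


def perplexity(model, tokens):
    """Perplexity is 2 raised to the per-token cross entropy."""
    return 2 ** cross_entropy(model, tokens)


# Toy example: a uniform model over four words has perplexity 4 on any text.
uniform = {"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}
held_out = ["a", "b", "c", "d", "a"]
ppl = perplexity(uniform, held_out)
```

Data likelihood, cross entropy, and perplexity are monotone transformations of one another for a fixed test text, which is why the slide calls them mostly equivalent.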

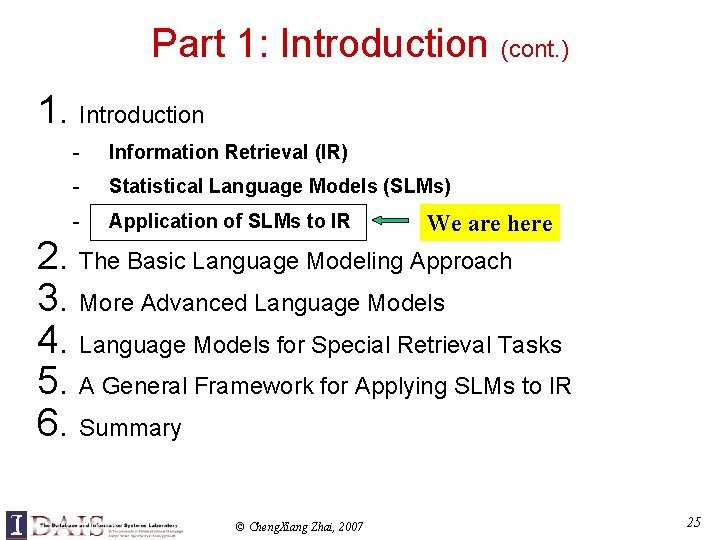

Part 1: Introduction (cont.)

1. Introduction
   - Information Retrieval (IR)
   - Statistical Language Models (SLMs)
   - Application of SLMs to IR (we are here)
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

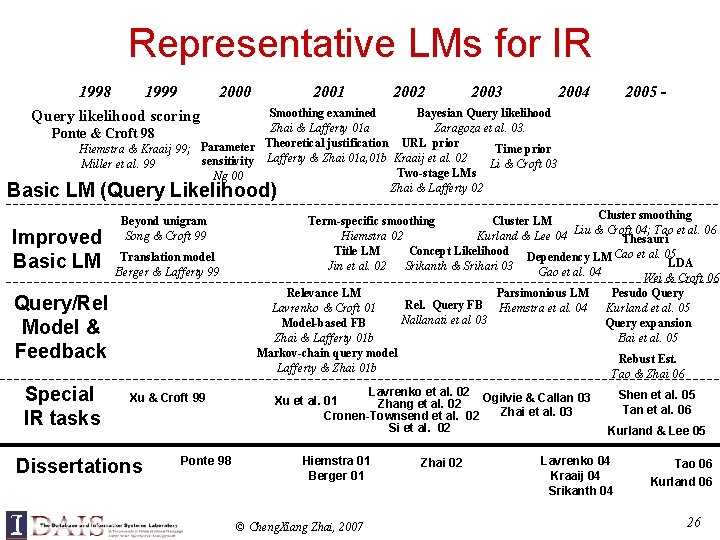

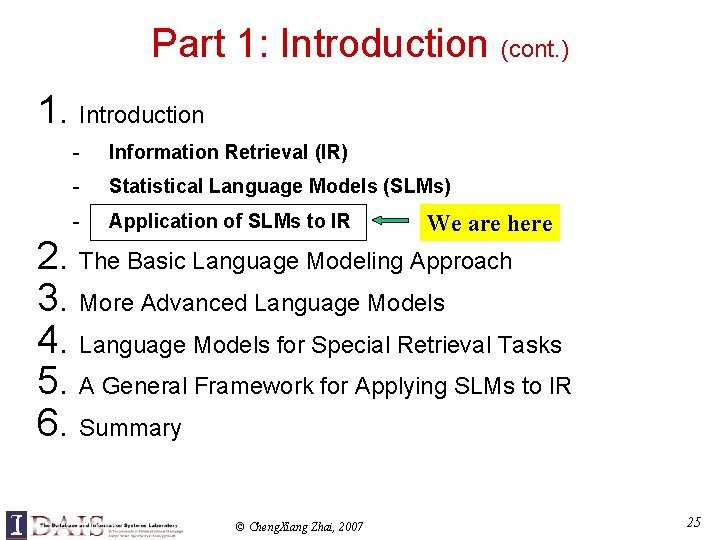

Representative LMs for IR

[Figure: timeline of representative work, 1998 to 2005, in six rows: Basic LM (Query Likelihood), Improved Basic LM, Beyond unigram, Query/Rel Model & Feedback, Special IR tasks, Dissertations.]

Topics labelled in the figure: query likelihood scoring, smoothing examined, Bayesian query likelihood, parameter sensitivity, theoretical justification, URL prior, time prior, two-stage LMs, cluster smoothing, term-specific smoothing, cluster LM, thesauri, title LM, concept likelihood, dependency LM, LDA, translation model, relevance LM, parsimonious LM, pseudo query, rel. query FB, model-based FB, query expansion, Markov-chain query model, robust est.

Work cited in the figure: Zhai & Lafferty 01a; Zaragoza et al. 03; Ponte & Croft 98; Hiemstra & Kraaij 99; Lafferty & Zhai 01a, 01b; Kraaij et al. 02; Miller et al. 99; Li & Croft 03; Ng 00; Zhai & Lafferty 02; Song & Croft 99; Berger & Lafferty 99; Xu & Croft 99; Ponte 98; Tao et al. 06; Hiemstra 02; Kurland & Lee 04; Liu & Croft 04; Cao et al. 05; Jin et al. 02; Srikanth & Srihari 03; Gao et al. 04; Wei & Croft 06; Hiemstra et al. 04; Lavrenko & Croft 01; Kurland et al. 05; Nallapati et al. 03; Zhai & Lafferty 01b; Bai et al. 05; Tao & Zhai 06; Lavrenko et al. 02; Ogilvie & Callan 03; Xu et al. 01; Zhang et al. 02; Zhai et al. 03; Cronen-Townsend et al. 02; Si et al. 02; Hiemstra 01; Berger 01; Zhai 02; Shen et al. 05; Tan et al. 06; Kurland & Lee 05; Lavrenko 04; Kraaij 04; Srikanth 04; Tao 06; Kurland 06.

![Ponte Crofts Pioneering Work Ponte Croft 98 Contribution 1 A Ponte & Croft’s Pioneering Work [Ponte & Croft 98] • Contribution 1: – A](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-27.jpg)

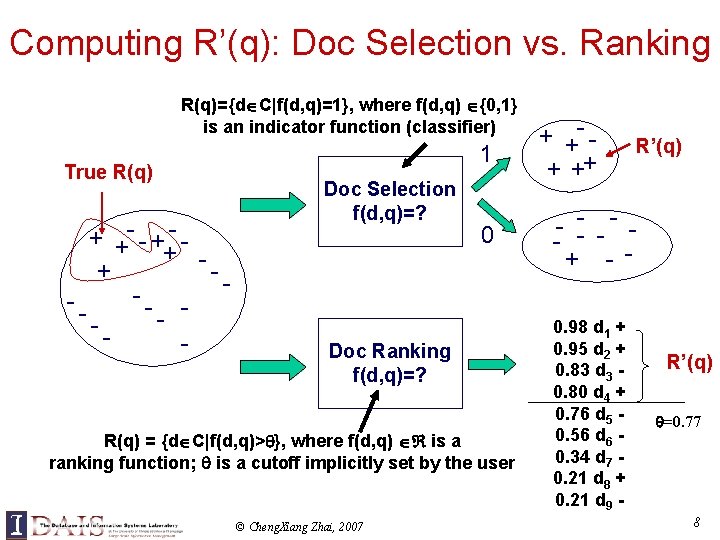

Ponte & Croft's Pioneering Work [Ponte & Croft 98]

- Contribution 1:
  - A new "query likelihood" scoring method: p(Q|D)
  - [Maron and Kuhns 60] had the idea of query likelihood, but didn't work out how to estimate p(Q|D)
- Contribution 2:
  - Connecting LMs with text representation and weighting in IR
  - [Wong & Yao 89] had the idea of representing text with a multinomial distribution (relative frequency), but didn't study the estimation problem
- Good performance is reported using the simple query likelihood method

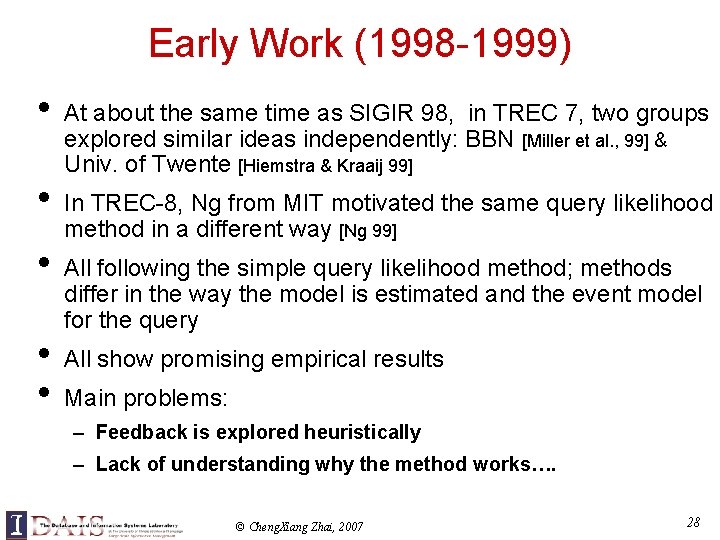

Early Work (1998-1999)

- At about the same time as SIGIR 98, in TREC-7, two groups explored similar ideas independently: BBN [Miller et al. 99] & Univ. of Twente [Hiemstra & Kraaij 99]
- In TREC-8, Ng from MIT motivated the same query likelihood method in a different way [Ng 99]
- All follow the simple query likelihood method; the methods differ in the way the model is estimated and in the event model for the query
- All show promising empirical results
- Main problems:
  - Feedback is explored heuristically
  - Lack of understanding of why the method works…

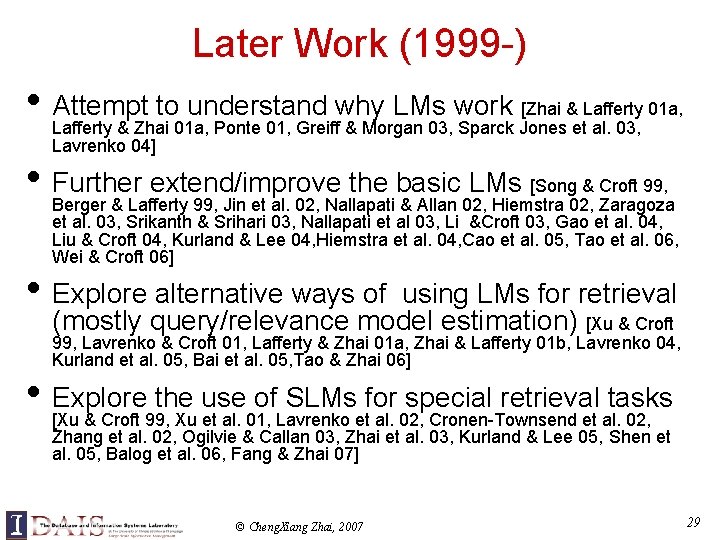

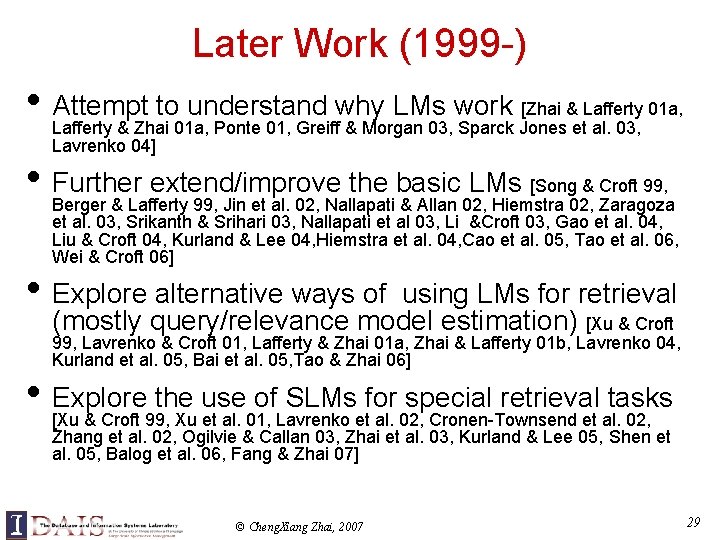

Later Work (1999-)

- Attempt to understand why LMs work [Zhai & Lafferty 01a, Lafferty & Zhai 01a, Ponte 01, Greiff & Morgan 03, Sparck Jones et al. 03, Lavrenko 04]
- Further extend/improve the basic LMs [Song & Croft 99, Berger & Lafferty 99, Jin et al. 02, Nallapati & Allan 02, Hiemstra 02, Zaragoza et al. 03, Srikanth & Srihari 03, Nallapati et al. 03, Li & Croft 03, Gao et al. 04, Liu & Croft 04, Kurland & Lee 04, Hiemstra et al. 04, Cao et al. 05, Tao et al. 06, Wei & Croft 06]
- Explore alternative ways of using LMs for retrieval (mostly query/relevance model estimation) [Xu & Croft 99, Lavrenko & Croft 01, Lafferty & Zhai 01a, Zhai & Lafferty 01b, Lavrenko 04, Kurland et al. 05, Bai et al. 05, Tao & Zhai 06]
- Explore the use of SLMs for special retrieval tasks [Xu & Croft 99, Xu et al. 01, Lavrenko et al. 02, Cronen-Townsend et al. 02, Zhang et al. 02, Ogilvie & Callan 03, Zhai et al. 03, Kurland & Lee 05, Shen et al. 05, Balog et al. 06, Fang & Zhai 07]

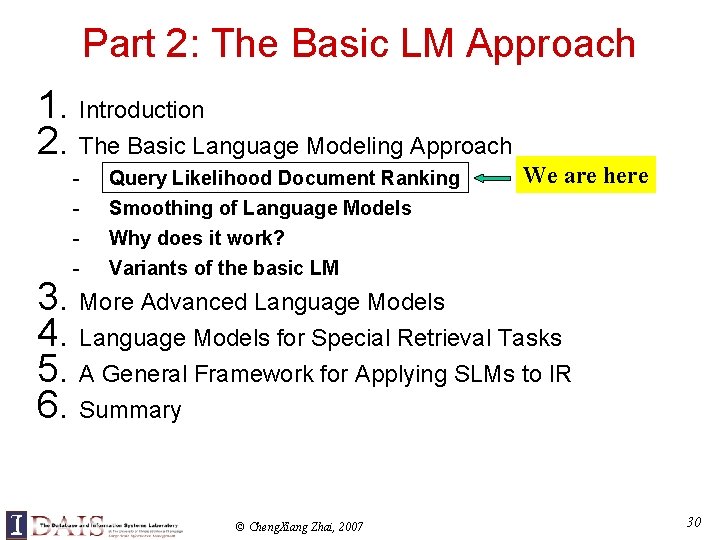

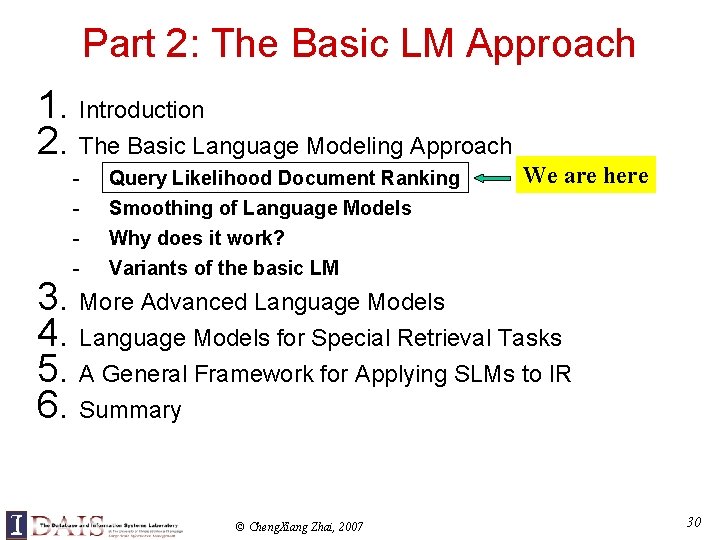

Part 2: The Basic LM Approach

1. Introduction
2. The Basic Language Modeling Approach
   - Query Likelihood Document Ranking (we are here)
   - Smoothing of Language Models
   - Why does it work?
   - Variants of the basic LM
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

![The Basic LM Approach Ponte Croft 98 Document Language Model Text mining The Basic LM Approach [Ponte & Croft 98] Document Language Model … Text mining](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-31.jpg)

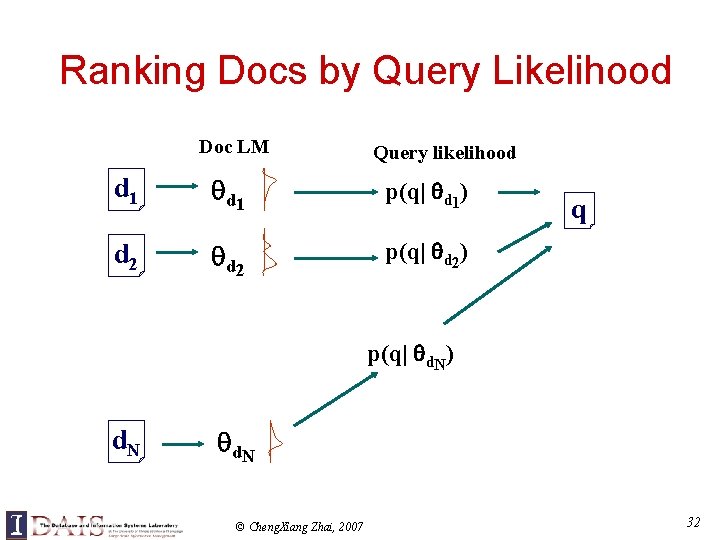

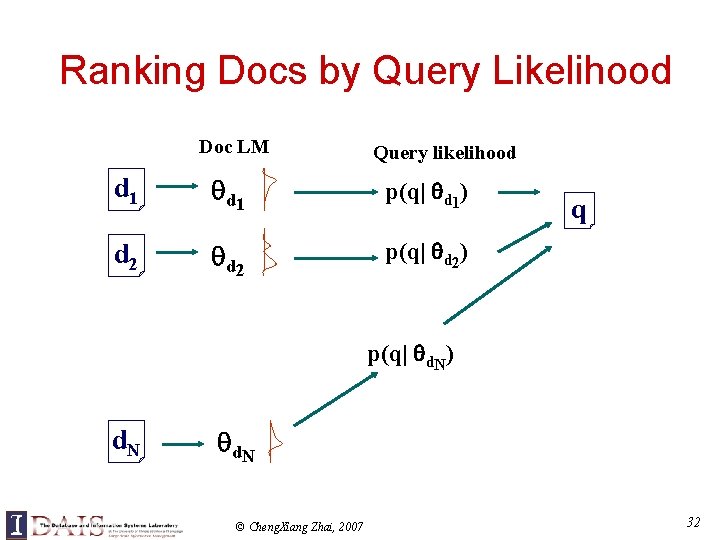

The Basic LM Approach [Ponte & Croft 98]

[Figure: each document has its own document language model with unknown word probabilities. Text mining paper: text ?, mining ?, association ?, clustering ?, …, food ?, … Food nutrition paper: food ?, nutrition ?, healthy ?, diet ?, …]

Query = "data mining algorithms". Which model would most likely have generated this query?

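Ranking Docs by Query Likelihood

| Doc | Doc LM | Query likelihood |
| --- | --- | --- |
| $d_1$ | $\theta_{d_1}$ | $p(q \mid \theta_{d_1})$ |
| $d_2$ | $\theta_{d_2}$ | $p(q \mid \theta_{d_2})$ |
| … | … | … |
| $d_N$ | $\theta_{d_N}$ | $p(q \mid \theta_{d_N})$ |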

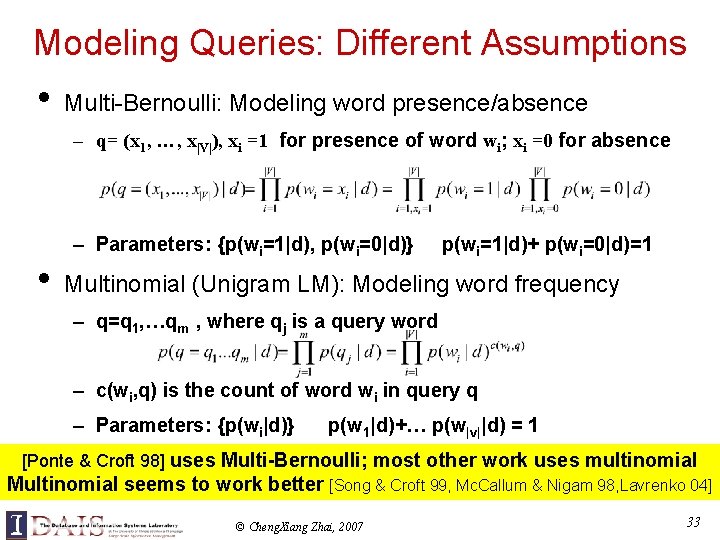

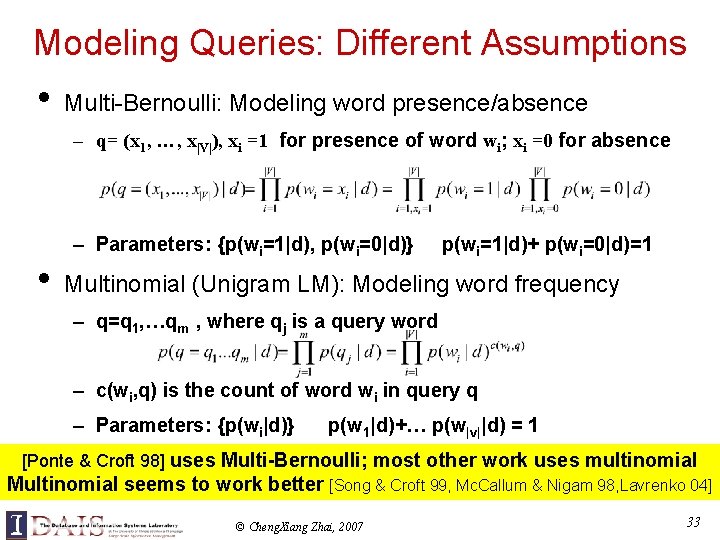

Modeling Queries: Different Assumptions

- Multi-Bernoulli: modeling word presence/absence
  - $q = (x_1, \ldots, x_{|V|})$, with $x_i = 1$ for presence of word $w_i$ and $x_i = 0$ for absence
  - Parameters: $\{p(w_i = 1 \mid d), p(w_i = 0 \mid d)\}$, with $p(w_i = 1 \mid d) + p(w_i = 0 \mid d) = 1$
- Multinomial (unigram LM): modeling word frequency
  - $q = q_1, \ldots, q_m$, where $q_j$ is a query word
  - $c(w_i, q)$ is the count of word $w_i$ in query q
  - Parameters: $\{p(w_i \mid d)\}$, with $p(w_1 \mid d) + \cdots + p(w_{|V|} \mid d) = 1$
- [Ponte & Croft 98] uses multi-Bernoulli; most other work uses multinomial
- Multinomial seems to work better [Song & Croft 99, McCallum & Nigam 98, Lavrenko 04]
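Under these two event models the query likelihood takes the following standard forms, restated here with the slide's symbols on the assumption that they match the formulas shown on the original slide:

$$p(q \mid d) = \prod_{i=1}^{|V|} p(w_i = x_i \mid d) = \prod_{i:\, x_i = 1} p(w_i = 1 \mid d) \prod_{i:\, x_i = 0} p(w_i = 0 \mid d) \quad \text{(multi-Bernoulli)}$$

$$p(q \mid d) = \prod_{j=1}^{m} p(q_j \mid d) = \prod_{i=1}^{|V|} p(w_i \mid d)^{c(w_i, q)} \quad \text{(multinomial)}$$

The multi-Bernoulli form also charges the document for every vocabulary word that is absent from the query, while the multinomial form only looks at the words the query contains and counts repeats.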

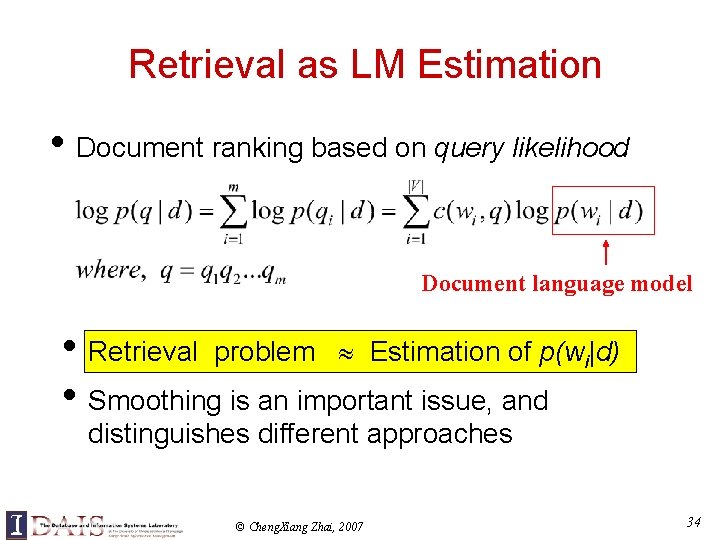

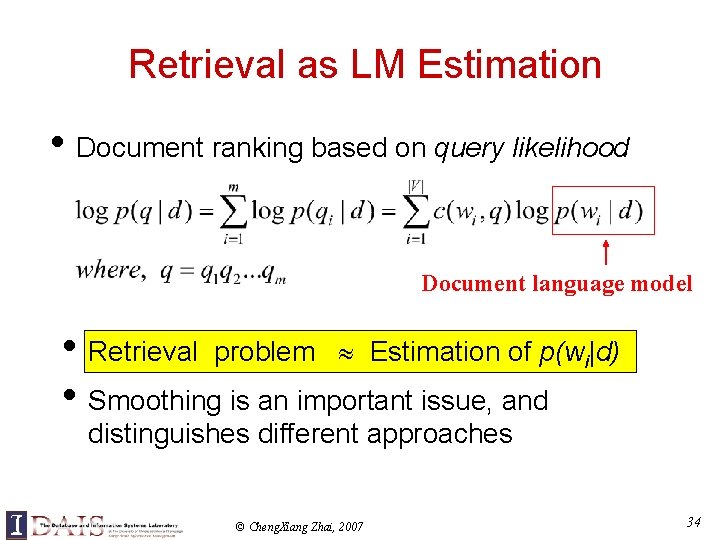

Retrieval as LM Estimation

- Document ranking based on query likelihood: $\log p(q \mid d) = \sum_{i=1}^{m} \log p(q_i \mid d) = \sum_{i=1}^{|V|} c(w_i, q) \log p(w_i \mid d)$, where $p(w_i \mid d)$ is the document language model
- Retrieval problem ≈ estimation of $p(w_i \mid d)$
- Smoothing is an important issue, and distinguishes different approaches

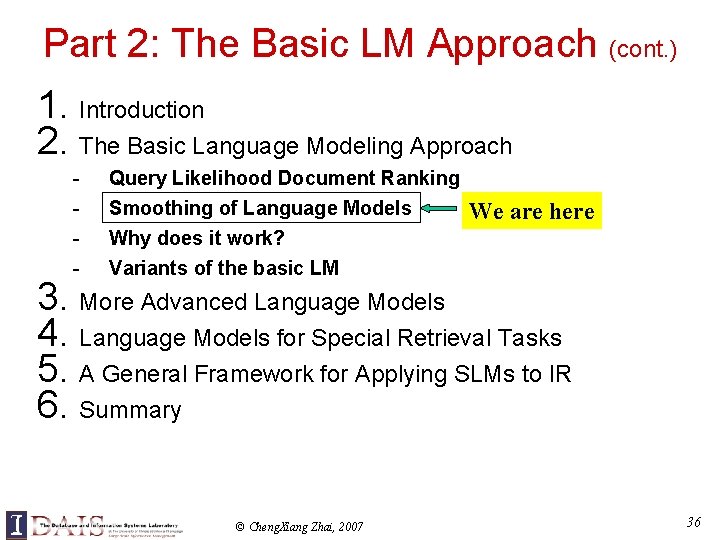

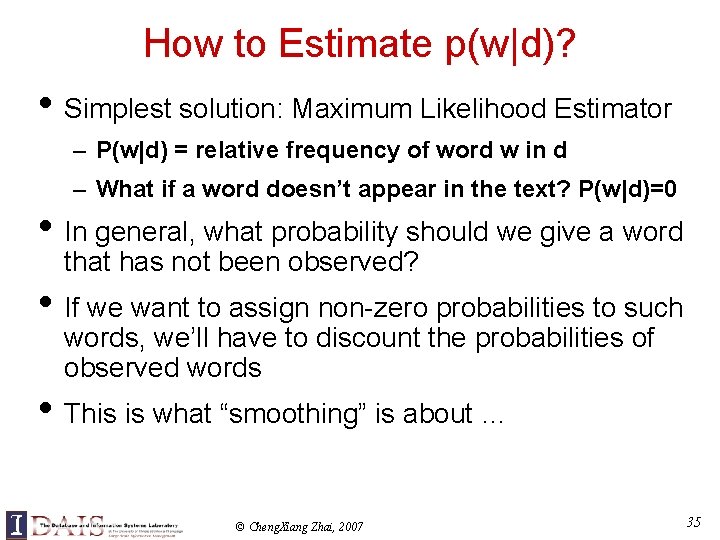

How to Estimate p(w|d)?

- Simplest solution: maximum likelihood estimator
  - p(w|d) = relative frequency of word w in d
  - What if a word doesn't appear in the text? Then p(w|d) = 0
- In general, what probability should we give a word that has not been observed?
- If we want to assign non-zero probabilities to such words, we'll have to discount the probabilities of observed words
- This is what "smoothing" is about…
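The zero-probability problem is easy to reproduce. The following sketch uses a made-up six-word document and the multinomial query likelihood; every name and the toy text are illustrative.

```python
from collections import Counter


def mle(tokens):
    """Maximum likelihood estimate: relative frequency of each word in the document."""
    counts = Counter(tokens)
    return {w: c / len(tokens) for w, c in counts.items()}


def query_likelihood(query, model):
    """Multinomial query likelihood: product of p(w|d) over the query words."""
    p = 1.0
    for w in query:
        p *= model.get(w, 0.0)  # an unseen word contributes probability zero
    return p


doc = "text mining algorithms for text data".split()  # toy document
model = mle(doc)

p_seen = query_likelihood(["text", "mining"], model)                  # (2/6) * (1/6)
p_unseen = query_likelihood(["data", "mining", "clustering"], model)  # zero
```

A single query word missing from the document wipes out the whole score, however well the other words match, which is why the unsmoothed estimate is unusable for ranking.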

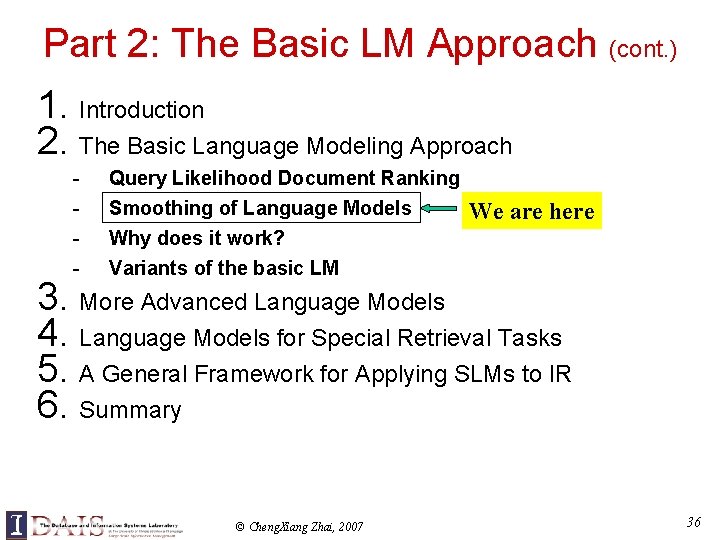

Part 2: The Basic LM Approach (cont.)

1. Introduction
2. The Basic Language Modeling Approach
   - Query Likelihood Document Ranking
   - Smoothing of Language Models (we are here)
   - Why does it work?
   - Variants of the basic LM
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

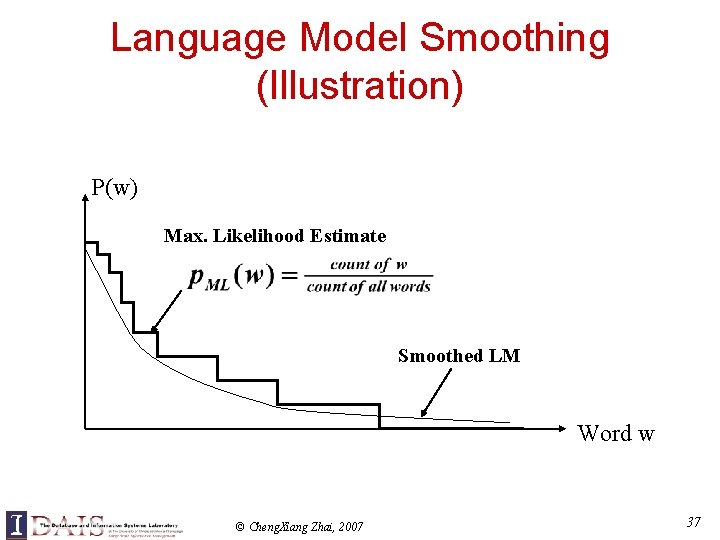

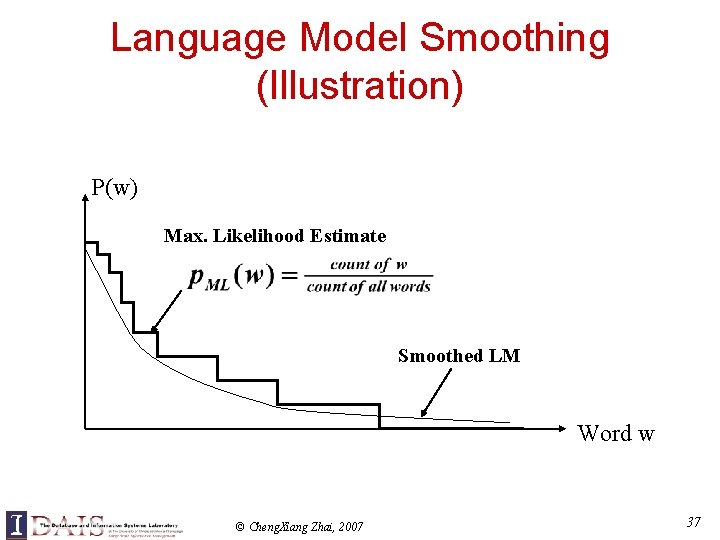

Language Model Smoothing (Illustration)

[Figure: P(w) plotted against word w, comparing the max. likelihood estimate with the smoothed LM.]

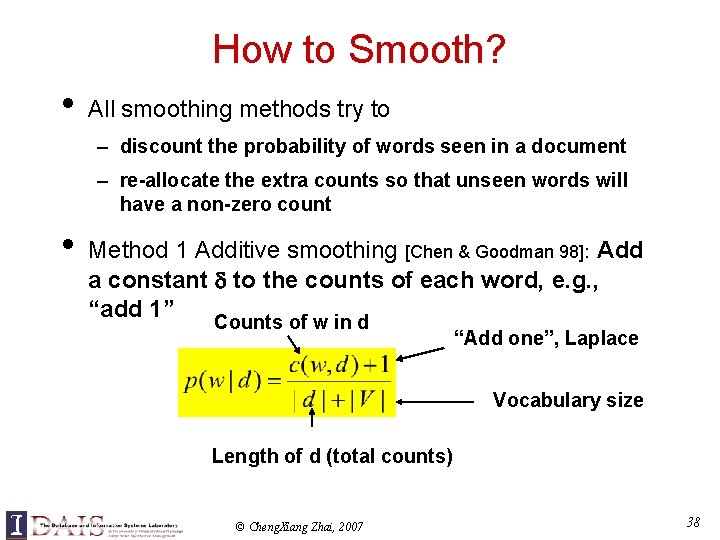

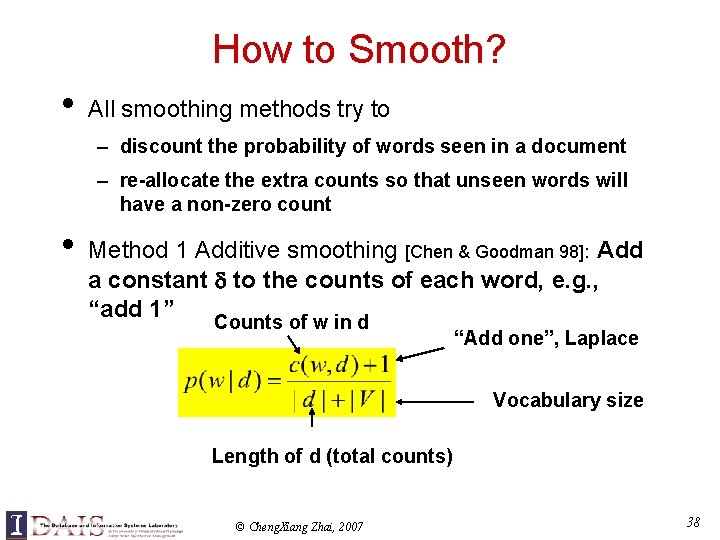

How to Smooth?

- All smoothing methods try to
  - discount the probability of words seen in a document
  - re-allocate the extra counts so that unseen words will have a non-zero count
- Method 1, additive smoothing [Chen & Goodman 98]: add a constant to the counts of each word, e.g., "add one" (Laplace):

$$p(w \mid d) = \frac{c(w,d) + 1}{|d| + |V|}$$

where $c(w,d)$ is the count of w in d, $|V|$ is the vocabulary size, and $|d|$ is the length of d (total counts).
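A minimal sketch of additive smoothing follows, written for a general constant `delta` so that `delta=1` gives the "add one" case. The toy counts, the four-word vocabulary, and the function name are assumptions for illustration.

```python
def additive_smoothing(counts, vocab, delta=1.0):
    """Add-delta smoothing: p(w|d) = (c(w,d) + delta) / (|d| + delta * |V|)."""
    doc_len = sum(counts.values())
    denom = doc_len + delta * len(vocab)
    return {w: (counts.get(w, 0) + delta) / denom for w in vocab}


# Toy document counts and vocabulary.
counts = {"text": 2, "mining": 1}
vocab = ["text", "mining", "food", "diet"]
smoothed = additive_smoothing(counts, vocab)  # text 3/7, mining 2/7, food 1/7, diet 1/7
```

Every unseen word receives the same probability here, which is the weakness that smoothing with a reference model addresses.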

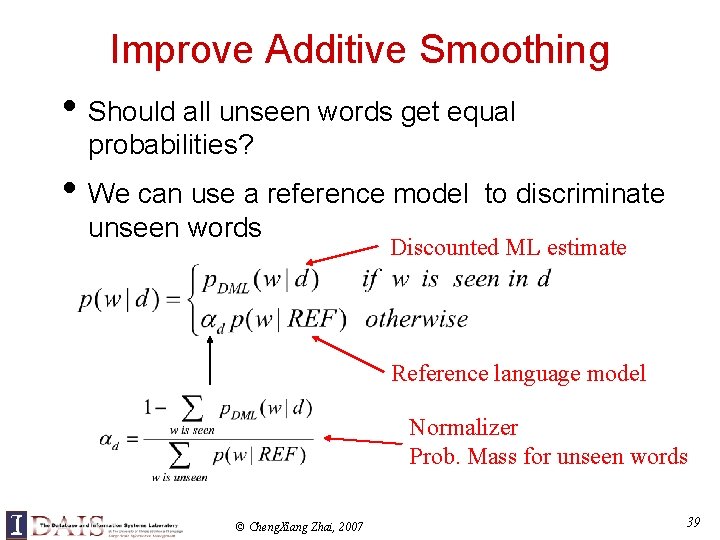

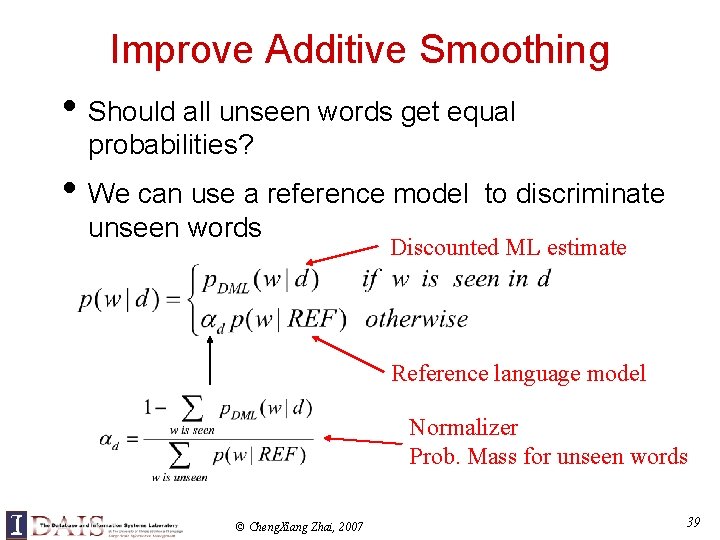

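Improve Additive Smoothing

- Should all unseen words get equal probabilities?
- We can use a reference model to discriminate unseen words:

$$p(w \mid d) = \begin{cases} p_{DML}(w \mid d) & \text{if } w \text{ is seen in } d \\ \alpha_d\, p(w \mid REF) & \text{otherwise} \end{cases} \qquad \alpha_d = \frac{1 - \sum_{w \text{ seen in } d} p_{DML}(w \mid d)}{\sum_{w \text{ unseen in } d} p(w \mid REF)}$$

- $p_{DML}(w \mid d)$ is the discounted ML estimate
- $p(w \mid REF)$ is the reference language model
- In $\alpha_d$, the numerator is the prob. mass for unseen words and the denominator is the normalizer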

![Other Smoothing Methods Method 2 Absolute discounting Ney et al 94 Subtract a Other Smoothing Methods • Method 2 Absolute discounting [Ney et al. 94]: Subtract a](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-40.jpg)

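Other Smoothing Methods

- Method 2, absolute discounting [Ney et al. 94]: subtract a constant $\delta$ from the counts of each word

$$p(w \mid d) = \frac{\max(c(w,d) - \delta, 0) + \delta\, |d|_u\, p(w \mid REF)}{|d|}$$

where $|d|_u$ is the # of unique words in d.

- Method 3, linear interpolation [Jelinek-Mercer 80]: "shrink" uniformly toward p(w|REF)

$$p(w \mid d) = (1 - \lambda)\, \frac{c(w,d)}{|d|} + \lambda\, p(w \mid REF)$$

where $c(w,d)/|d|$ is the ML estimate and $\lambda$ is the parameter.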
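Both methods can be sketched in a few lines. The code assumes the usual forms of the two estimators, a reference model `ref` that sums to one over the vocabulary, and document words that all appear in `ref`; the toy numbers and function names are illustrative.

```python
def jelinek_mercer(counts, ref, lam):
    """Linear interpolation: (1 - lam) * c(w,d)/|d| + lam * p(w|REF)."""
    doc_len = sum(counts.values())
    return {w: (1 - lam) * counts.get(w, 0) / doc_len + lam * ref[w] for w in ref}


def absolute_discounting(counts, ref, delta):
    """Absolute discounting: (max(c(w,d) - delta, 0) + delta * |d|_u * p(w|REF)) / |d|."""
    doc_len = sum(counts.values())
    unique = sum(1 for c in counts.values() if c > 0)  # |d|_u, the number of unique words in d
    return {w: (max(counts.get(w, 0) - delta, 0) + delta * unique * ref[w]) / doc_len
            for w in ref}


# Toy document counts and reference (collection) model.
counts = {"text": 2, "mining": 1}
ref = {"text": 0.4, "mining": 0.3, "food": 0.3}

p_jm = jelinek_mercer(counts, ref, lam=0.5)
p_ad = absolute_discounting(counts, ref, delta=0.5)
```

In both cases the unseen word "food" receives probability in proportion to the reference model, and the estimates still sum to one.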

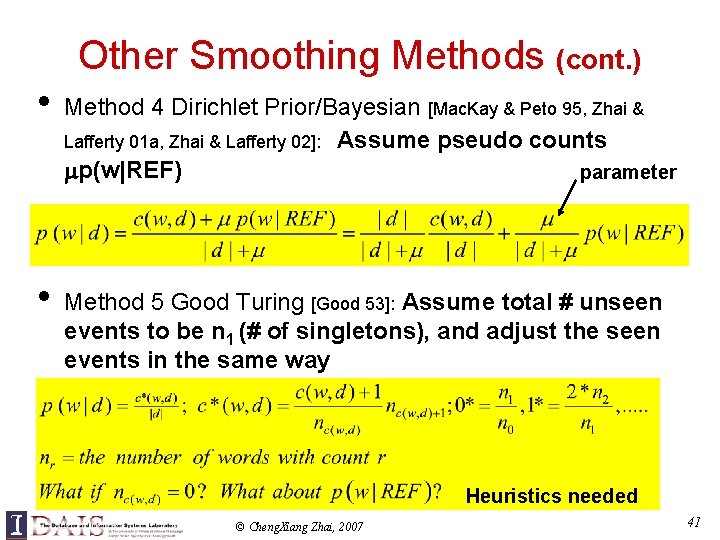

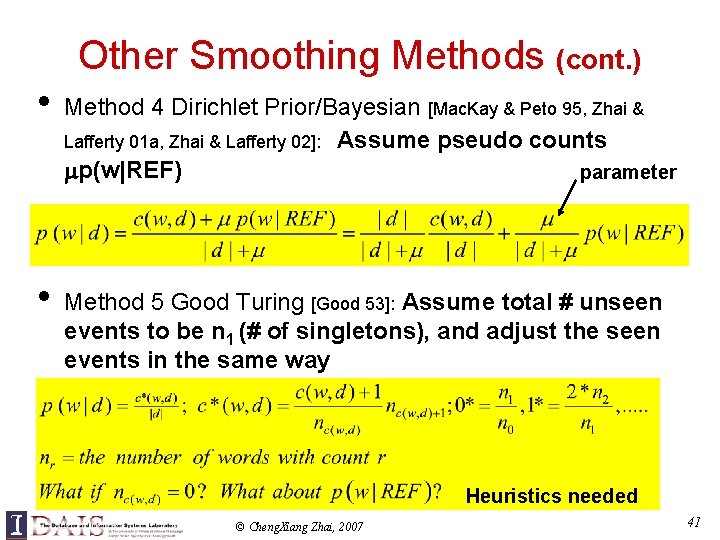

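Other Smoothing Methods (cont.)

- Method 4, Dirichlet prior/Bayesian [MacKay & Peto 95, Zhai & Lafferty 01a, Zhai & Lafferty 02]: assume pseudo counts $\mu\, p(w \mid REF)$

$$p(w \mid d) = \frac{c(w,d) + \mu\, p(w \mid REF)}{|d| + \mu} = \frac{|d|}{|d| + \mu}\, \frac{c(w,d)}{|d|} + \frac{\mu}{|d| + \mu}\, p(w \mid REF)$$

where $\mu$ is the parameter.

- Method 5, Good-Turing [Good 53]: assume the total # of unseen events to be $n_1$ (# of singletons), and adjust the seen events in the same way

$$p(w \mid d) = \frac{c^*(w,d)}{|d|}, \qquad c^*(w,d) = (r + 1)\, \frac{n_{r+1}}{n_r} \text{ with } r = c(w,d)$$

where $n_r$ is the # of words that occur r times. Heuristics needed.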
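Dirichlet prior smoothing is short enough to sketch directly. The code assumes a reference model `ref` that sums to one over the vocabulary; the toy counts and the value of `mu` are chosen only to make the arithmetic easy to follow.

```python
def dirichlet_prior(counts, ref, mu):
    """Dirichlet prior smoothing: (c(w,d) + mu * p(w|REF)) / (|d| + mu)."""
    doc_len = sum(counts.values())
    return {w: (counts.get(w, 0) + mu * ref[w]) / (doc_len + mu) for w in ref}


# Toy document counts and reference (collection) model.
counts = {"text": 2, "mining": 1}
ref = {"text": 0.4, "mining": 0.3, "food": 0.3}

p_dir = dirichlet_prior(counts, ref, mu=3)  # food: (0 + 0.9) / 6 = 0.15
```

The effective interpolation weight on the reference model is mu / (|d| + mu), so longer documents are smoothed less; practical values of `mu` are far larger than the toy value used here.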

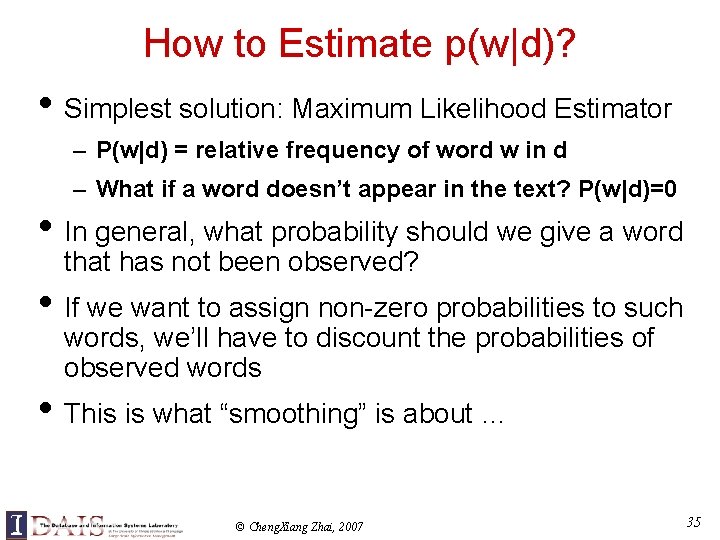

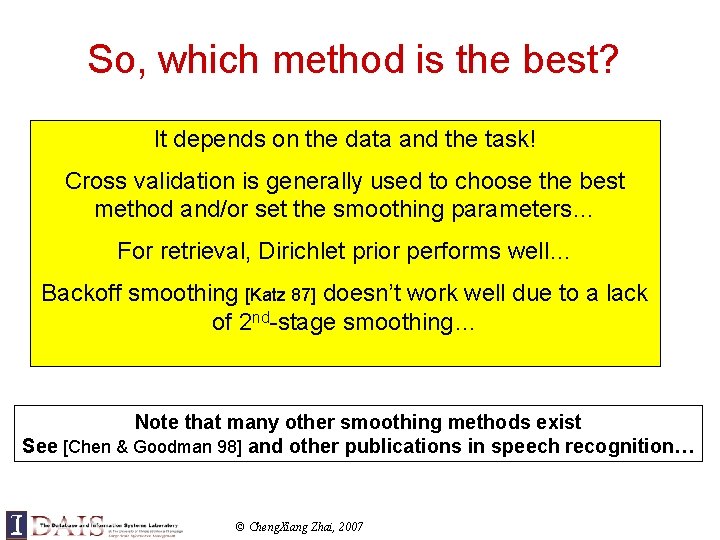

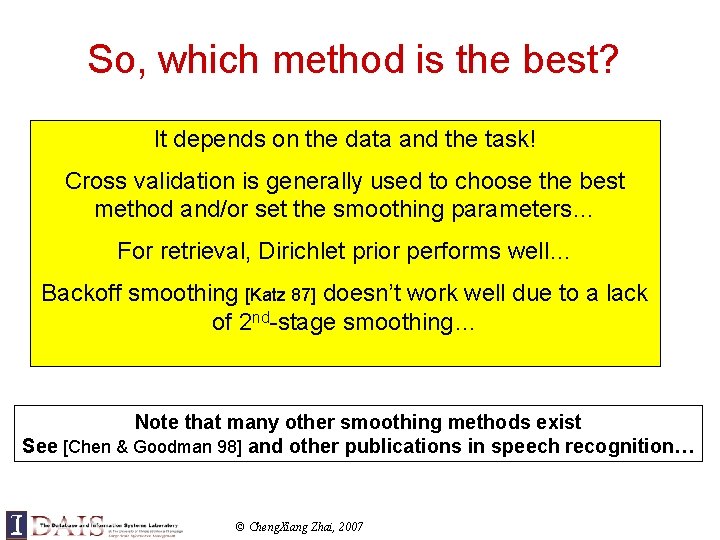

So, which method is the best?

- It depends on the data and the task!
- Cross validation is generally used to choose the best method and/or set the smoothing parameters…
- For retrieval, Dirichlet prior performs well…
- Backoff smoothing [Katz 87] doesn't work well due to a lack of 2nd-stage smoothing…
- Note that many other smoothing methods exist. See [Chen & Goodman 98] and other publications in speech recognition…

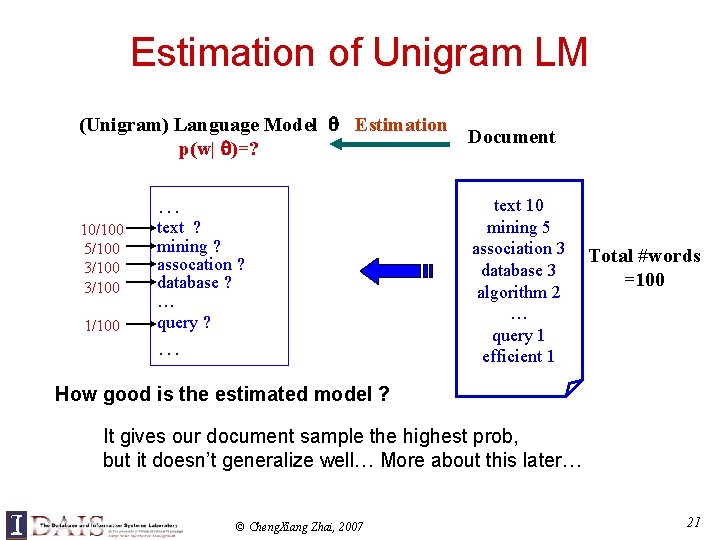

![Comparison of Three Methods Zhai Lafferty 01 a Comparison is performed on a Comparison of Three Methods [Zhai & Lafferty 01 a] Comparison is performed on a](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-43.jpg)

Comparison of Three Methods [Zhai & Lafferty 01a]

Comparison is performed on a variety of test collections.

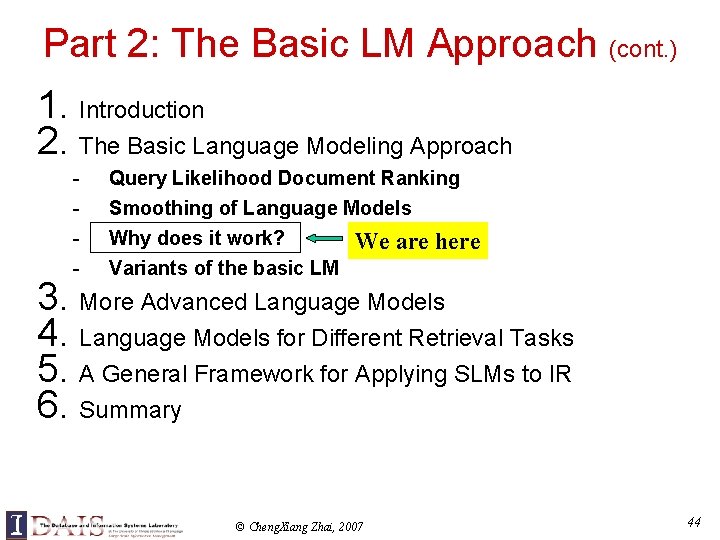

Part 2: The Basic LM Approach (cont.)

1. Introduction
2. The Basic Language Modeling Approach
   - Query Likelihood Document Ranking
   - Smoothing of Language Models
   - Why does it work? (we are here)
   - Variants of the basic LM
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

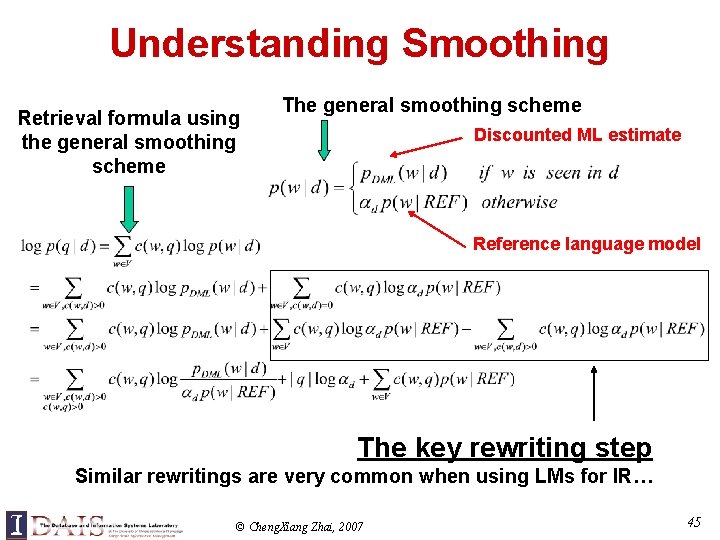

Understanding Smoothing (slide 45)
• The general smoothing scheme: a discounted ML estimate combined with a reference language model
• Retrieval formula using the general smoothing scheme
• The key rewriting step
Similar rewritings are very common when using LMs for IR…

![Smoothing TFIDF Weighting Zhai Lafferty 01 a Plug in the general Smoothing & TF-IDF Weighting [Zhai & Lafferty 01 a] • Plug in the general](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-46.jpg)

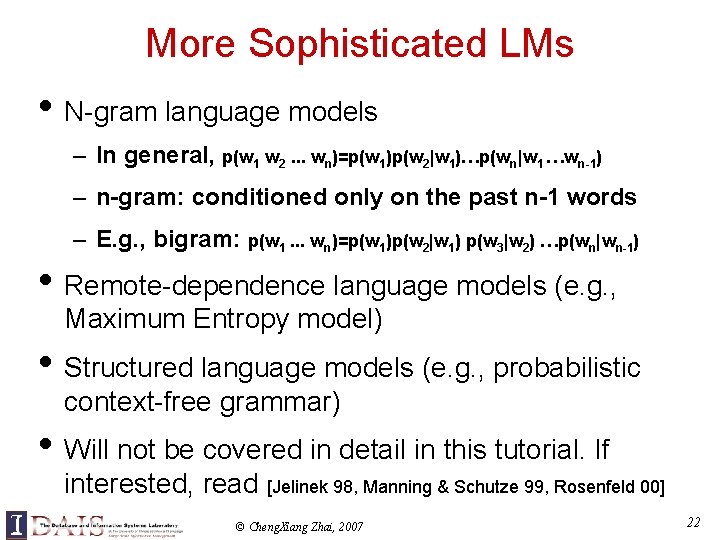

Smoothing & TF-IDF Weighting [Zhai & Lafferty 01a] (slide 46)
• Plugging the general smoothing scheme into the query likelihood retrieval formula, we obtain a score with these parts:
  – TF weighting, summed over words in both query and doc
  – IDF-like weighting
  – Doc length normalization (a long doc is expected to have a smaller α_d)
  – A term that can be ignored for ranking
• Smoothing with p(w|C) ≈ TF-IDF + length norm.: smoothing implements traditional retrieval heuristics
• LMs with simple smoothing can be computed as efficiently as traditional retrieval models
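The rewriting that this slide annotates can be written out as follows. The notation is partly an assumption, since the extracted text lost the symbols: p_s(w|d) is the discounted estimate for words seen in d, α_d is the document-dependent coefficient applied to unseen words, c(w,q) is the count of w in the query, and |q| is the query length.

```latex
p(w \mid d) =
\begin{cases}
  p_s(w \mid d)          & \text{if } c(w,d) > 0 \\
  \alpha_d \, p(w \mid C) & \text{otherwise}
\end{cases}

\log p(q \mid d)
  = \sum_{w:\, c(w,d) > 0} c(w,q) \log p_s(w \mid d)
  + \sum_{w:\, c(w,d) = 0} c(w,q) \log \alpha_d \, p(w \mid C)

  = \underbrace{\sum_{w:\, c(w,d) > 0} c(w,q)
      \log \frac{p_s(w \mid d)}{\alpha_d \, p(w \mid C)}}_{\text{TF and IDF-like weighting}}
  + \underbrace{|q| \log \alpha_d}_{\text{length normalization}}
  + \underbrace{\sum_{w} c(w,q) \log p(w \mid C)}_{\text{ignored for ranking}}
```

The second step adds and subtracts the unseen-word term for the seen words, so the last sum runs over all query words and no longer depends on the document. Only words shared by query and document contribute to the first sum, which is why scoring can use an inverted index just as traditional models do.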

![The DualRole of Smoothing Zhai Lafferty 02 long Verbose queries Keyword queries long The Dual-Role of Smoothing [Zhai & Lafferty 02] long Verbose queries Keyword queries long](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-47.jpg)

The Dual-Role of Smoothing [Zhai & Lafferty 02] (slide 47)
[Figure: smoothing sensitivity curves for verbose queries (long, short) and keyword queries (long, short)]
Why does query type affect smoothing sensitivity?

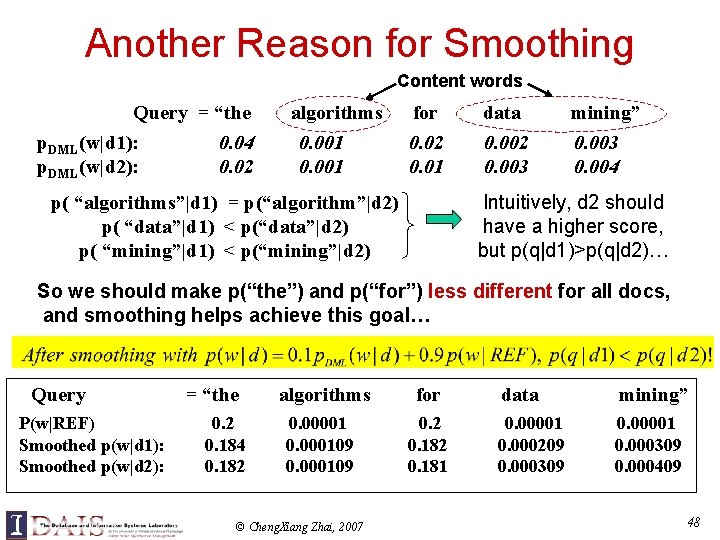

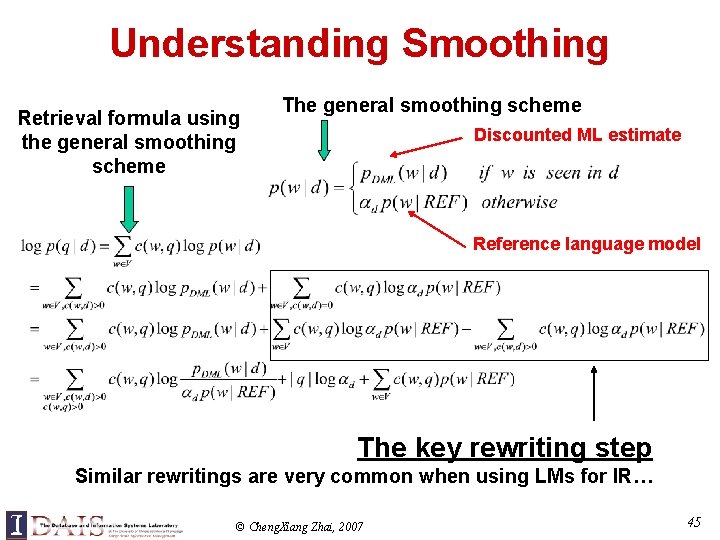

Another Reason for Smoothing (slide 48)

Query = “the algorithms for data mining”; the content words are “algorithms”, “data”, and “mining”.

| | the | algorithms | for | data | mining |
|---|---|---|---|---|---|
| p_DML(w\|d1) | 0.04 | 0.001 | 0.02 | 0.002 | 0.003 |
| p_DML(w\|d2) | 0.02 | 0.001 | 0.01 | 0.003 | 0.004 |

Intuitively, d2 should have a higher score, but p(q|d1) > p(q|d2)…
p(“algorithms”|d1) = p(“algorithms”|d2)
p(“data”|d1) < p(“data”|d2)
p(“mining”|d1) < p(“mining”|d2)

So we should make p(“the”) and p(“for”) less different for all docs, and smoothing helps achieve this goal…

| | the | algorithms | for | data | mining |
|---|---|---|---|---|---|
| P(w\|REF) | 0.2 | 0.00001 | 0.2 | 0.00001 | 0.00001 |
| Smoothed p(w\|d1) | 0.184 | 0.000109 | 0.182 | 0.000209 | 0.000309 |
| Smoothed p(w\|d2) | 0.182 | 0.000109 | 0.181 | 0.000309 | 0.000409 |
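The slide's numbers can be checked directly. The sketch below assumes the smoothed values come from linear interpolation with weight 0.9 on P(w|REF); the slide does not state the weight, but 0.9 reproduces every smoothed value it lists. Variable names are illustrative.

```python
import math

query = ["the", "algorithms", "for", "data", "mining"]
p_ml_d1 = dict(zip(query, [0.04, 0.001, 0.02, 0.002, 0.003]))
p_ml_d2 = dict(zip(query, [0.02, 0.001, 0.01, 0.003, 0.004]))
p_ref = dict(zip(query, [0.2, 0.00001, 0.2, 0.00001, 0.00001]))

LAMBDA = 0.9  # assumed weight on P(w|REF); inferred from the slide's smoothed values

def smooth(p_ml):
    """Interpolate the ML document model with the reference model."""
    return {w: (1 - LAMBDA) * p_ml[w] + LAMBDA * p_ref[w] for w in query}

def likelihood(model):
    """Query likelihood under a unigram model: product of the word probabilities."""
    return math.prod(model[w] for w in query)

smoothed_d1, smoothed_d2 = smooth(p_ml_d1), smooth(p_ml_d2)

# Before smoothing d1 wins because of "the" and "for"; after smoothing d2 wins.
unsmoothed_prefers_d1 = likelihood(p_ml_d1) > likelihood(p_ml_d2)
smoothed_prefers_d2 = likelihood(smoothed_d2) > likelihood(smoothed_d1)
```

Smoothing nearly equalizes the two documents on "the" and "for", so the content words "data" and "mining" decide the ranking.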

![Twostage Smoothing Zhai Lafferty 02 Stage2 Stage1 Explain noise in query Explain unseen Two-stage Smoothing [Zhai & Lafferty 02] Stage-2 Stage-1 -Explain noise in query -Explain unseen](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-49.jpg)

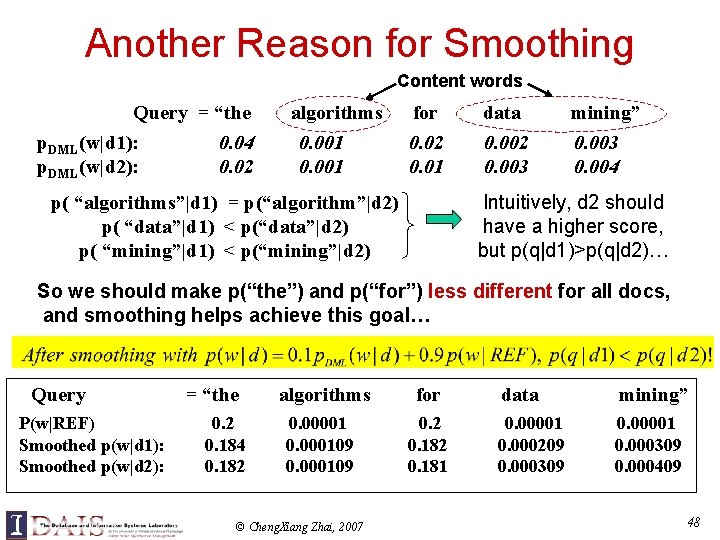

Two-stage Smoothing [Zhai & Lafferty 02] (slide 49)
• Stage 1: explain unseen words; Dirichlet prior (Bayesian), parameter μ
• Stage 2: explain noise in query; 2-component mixture, parameter λ

$$P(w|d) = (1-\lambda)\,\frac{c(w,d) + \mu\,p(w|C)}{|d| + \mu} + \lambda\,p(w|U)$$

Here p(w|C) is the collection LM and p(w|U) is the user background model, which can be approximated by p(w|C).
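As a sketch, two-stage smoothing can be read as a Dirichlet-smoothed document model (stage 1, parameter μ) mixed with a user background model (stage 2, parameter λ). The function name and the toy models are assumptions for illustration; the user background model is approximated by the collection model, as the slide suggests.

```python
def two_stage(word, doc_counts, doc_len, p_coll, p_user, mu, lam):
    """Stage 1: Dirichlet prior with the collection LM. Stage 2: mix with the user LM."""
    stage1 = (doc_counts.get(word, 0) + mu * p_coll[word]) / (doc_len + mu)
    return (1.0 - lam) * stage1 + lam * p_user[word]

# Hypothetical toy data.
doc_counts = {"data": 3, "mining": 1}
doc_len = 4
p_coll = {"data": 0.2, "mining": 0.1, "the": 0.7}
p_user = p_coll  # approximation: p(w|U) taken to be p(w|C)

p_data = two_stage("data", doc_counts, doc_len, p_coll, p_user, mu=4, lam=0.5)
model = {w: two_stage(w, doc_counts, doc_len, p_coll, p_user, mu=4, lam=0.5) for w in p_coll}
dirichlet_only = two_stage("data", doc_counts, doc_len, p_coll, p_user, mu=4, lam=0.0)
```

Setting `lam=0` recovers plain Dirichlet prior smoothing, which is one way to see the two stages as separable.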

![Estimating using leaveoneout Zhai Lafferty 02 w 1 Pw 1d w 1 loglikelihood Estimating using leave-one-out [Zhai & Lafferty 02] w 1 P(w 1|d- w 1) log-likelihood](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-50.jpg)

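Estimating μ using leave-one-out [Zhai & Lafferty 02] (slide 50)
• Leave each word occurrence out of its document in turn and compute P(w1|d − w1), P(w2|d − w2), …, P(wn|d − wn)
• The leave-one-out log-likelihood is built from these probabilities
• μ is set by the maximum likelihood estimator, computed with Newton’s method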

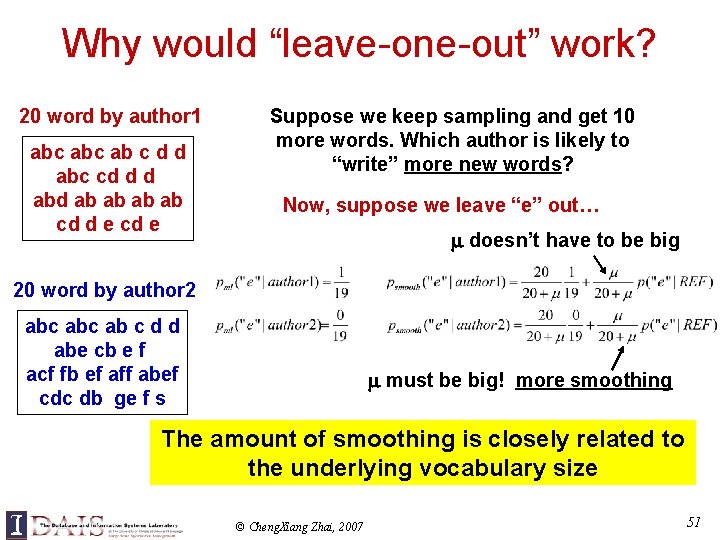

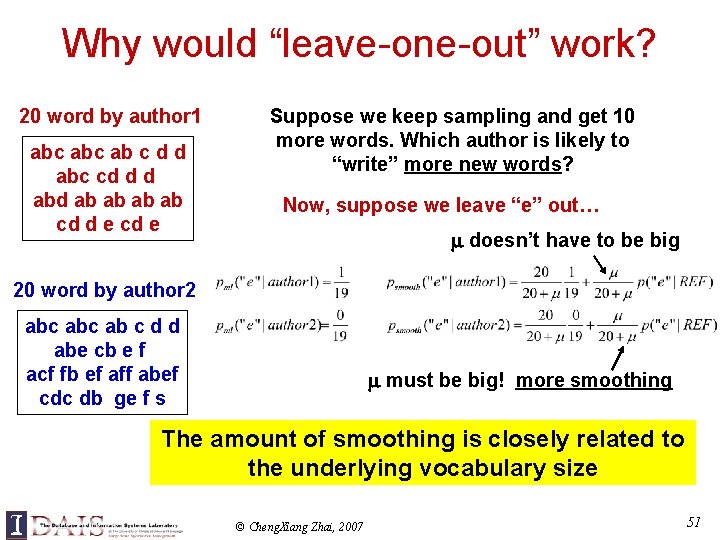

Why would “leave-one-out” work? (slide 51)
20 words by author 1: abc ab c d d abc cd d d ab ab cd d e cd e
20 words by author 2: abc ab c d d abe cb e f acf fb ef aff abef cdc db ge f s
Suppose we keep sampling and get 10 more words. Which author is likely to “write” more new words?
Now, suppose we leave “e” out… For author 1, μ doesn’t have to be big; for author 2, μ must be big (more smoothing).
The amount of smoothing is closely related to the underlying vocabulary size

![Estimating using Mixture Model Zhai Lafferty 02 Stage2 Stage1 d 1 Pwd 1 Estimating using Mixture Model [Zhai & Lafferty 02] Stage-2 Stage-1 d 1 P(w|d 1)](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-52.jpg)

Estimating λ using Mixture Model [Zhai & Lafferty 02] (slide 52)
• Stage 1: the document models P(w|d1), …, P(w|dN) are estimated in stage 1
• Stage 2: the query Q = q1…qm is explained by the mixtures (1 − λ)p(w|d1) + λp(w|U), …, (1 − λ)p(w|dN) + λp(w|U)
• λ is set by the maximum likelihood estimator, computed with the Expectation-Maximization (EM) algorithm

![Automatic 2 stage results Optimal 1 stage results Zhai Lafferty 02 Average precision Automatic 2 -stage results Optimal 1 -stage results [Zhai & Lafferty 02] Average precision](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-53.jpg)

Automatic 2-stage results vs. optimal 1-stage results [Zhai & Lafferty 02] (slide 53)
Average precision (3 DB’s + 4 query types, 150 topics); * indicates significant difference
Completely automatic tuning of parameters IS POSSIBLE!

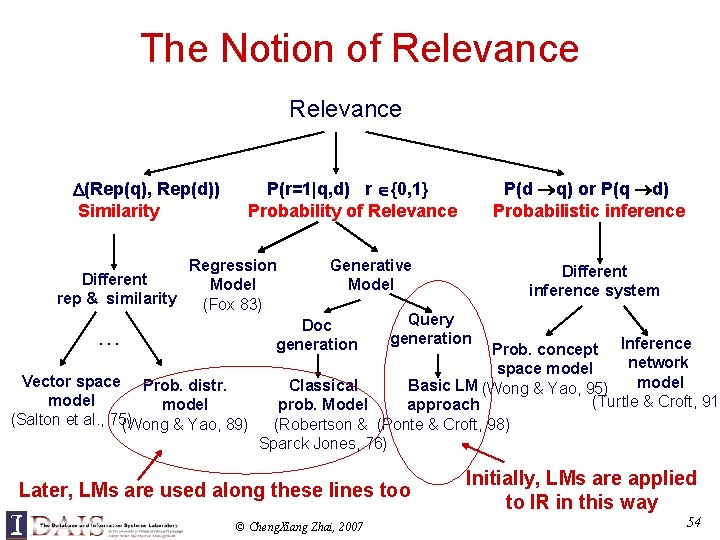

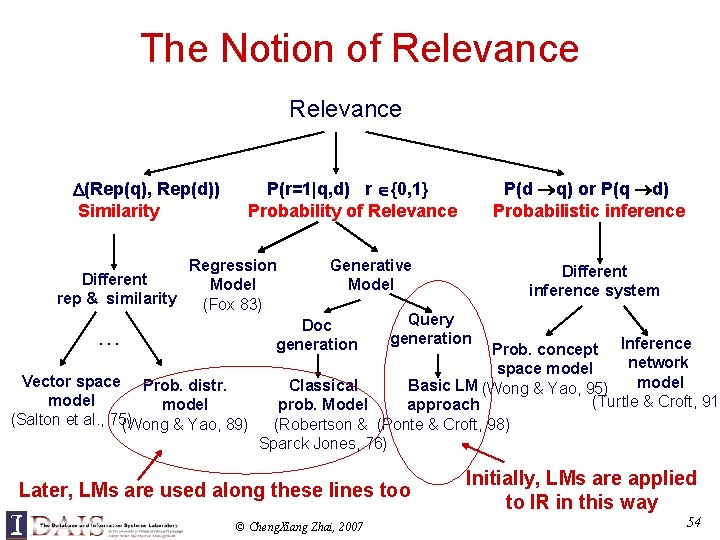

The Notion of Relevance (slide 54)
• Similarity of (Rep(q), Rep(d)): different rep & similarity
  – Vector space model (Salton et al., 75)
  – Prob. distr. model (Wong & Yao, 89)
  – …
• Probability of relevance P(r=1|q, d), r ∈ {0, 1}
  – Regression model (Fox 83)
  – Generative model
    • Doc generation: classical prob. model (Robertson & Sparck Jones, 76)
    • Query generation: basic LM approach (Ponte & Croft, 98)
• Probabilistic inference P(d→q) or P(q→d): different inference system
  – Prob. concept space model (Wong & Yao, 95)
  – Inference network model (Turtle & Croft, 91)
Initially, LMs are applied to IR through query generation. Later, LMs are used along the other lines too.

![Justification of Query Likelihood Lafferty Zhai 01 a The General Probabilistic Retrieval Justification of Query Likelihood [Lafferty & Zhai 01 a] • The General Probabilistic Retrieval](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-55.jpg)

Justification of Query Likelihood [Lafferty & Zhai 01a] (slide 55)
• The General Probabilistic Retrieval Model
  – Define P(Q, D|R)
  – Compute P(R|Q, D) using Bayes’ rule
  – Rank documents by O(R|Q, D); the factor that does not depend on D is ignored for ranking
• Special cases
  – Document “generation”: P(Q, D|R) = P(D|Q, R)P(Q|R)
  – Query “generation”: P(Q, D|R) = P(Q|D, R)P(D|R)
Doc generation leads to the classic Robertson-Sparck Jones model
Query generation leads to the query likelihood LM approach

![Query Generation Lafferty Zhai 01 a Query likelihood pq d Document prior Assuming Query Generation [Lafferty & Zhai 01 a] Query likelihood p(q| d) Document prior Assuming](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-56.jpg)

Query Generation [Lafferty & Zhai 01a] (slide 56)
• The ranking score factors into a query likelihood p(q|θ_d) and a document prior; assuming a uniform prior, documents are ranked by query likelihood alone
• Computing P(Q|D, R=1) generally involves two steps:
  (1) estimate a language model based on D
  (2) compute the query likelihood according to the estimated model
• P(Q|D) = P(Q|D, R=1)! This is the probability that a user who likes D would pose query Q, a relevance-based interpretation of the so-called “document language model”

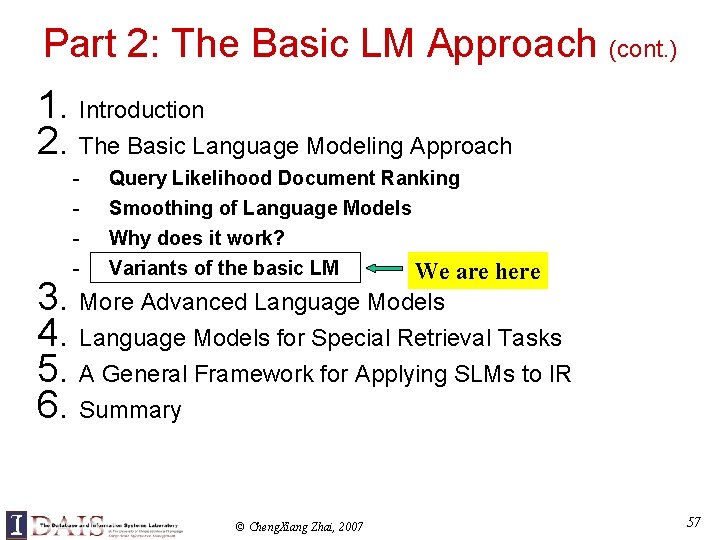

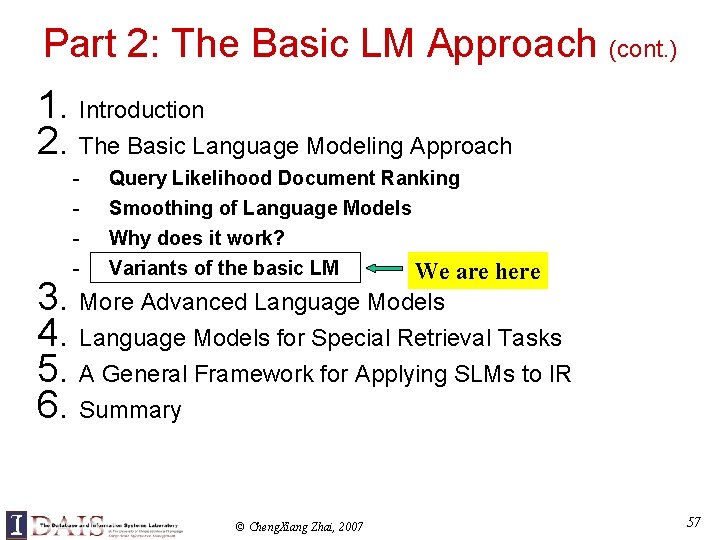

Part 2: The Basic LM Approach (cont.) (slide 57)
1. Introduction
2. The Basic Language Modeling Approach
  - Query Likelihood Document Ranking
  - Smoothing of Language Models
  - Why does it work?
  - Variants of the basic LM (we are here)
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

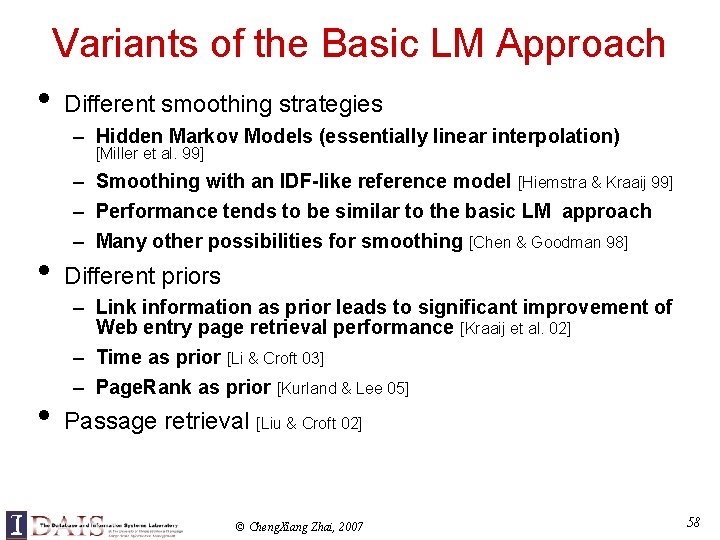

Variants of the Basic LM Approach (slide 58)
• Different smoothing strategies
  – Hidden Markov Models (essentially linear interpolation) [Miller et al. 99]
  – Smoothing with an IDF-like reference model [Hiemstra & Kraaij 99]
  – Performance tends to be similar to the basic LM approach
  – Many other possibilities for smoothing [Chen & Goodman 98]
• Different priors
  – Link information as prior leads to significant improvement of Web entry page retrieval performance [Kraaij et al. 02]
  – Time as prior [Li & Croft 03]
  – PageRank as prior [Kurland & Lee 05]
• Passage retrieval [Liu & Croft 02]

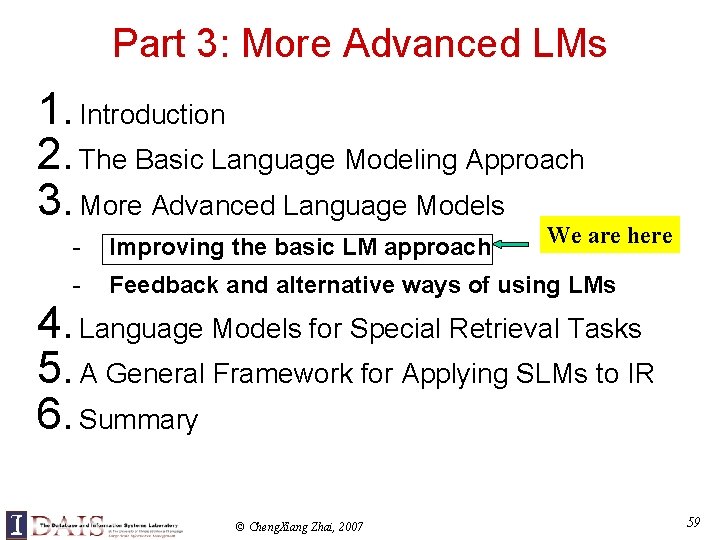

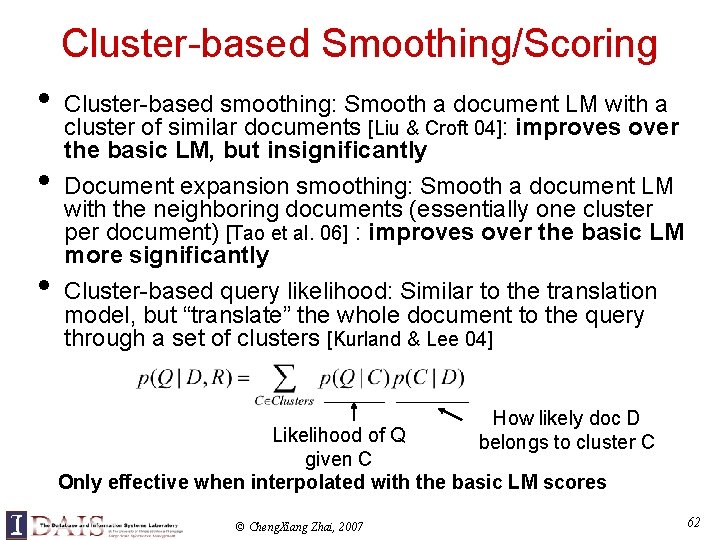

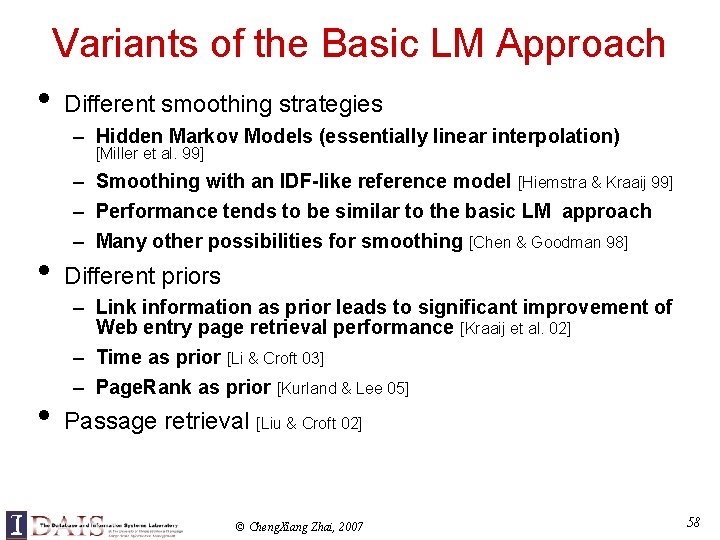

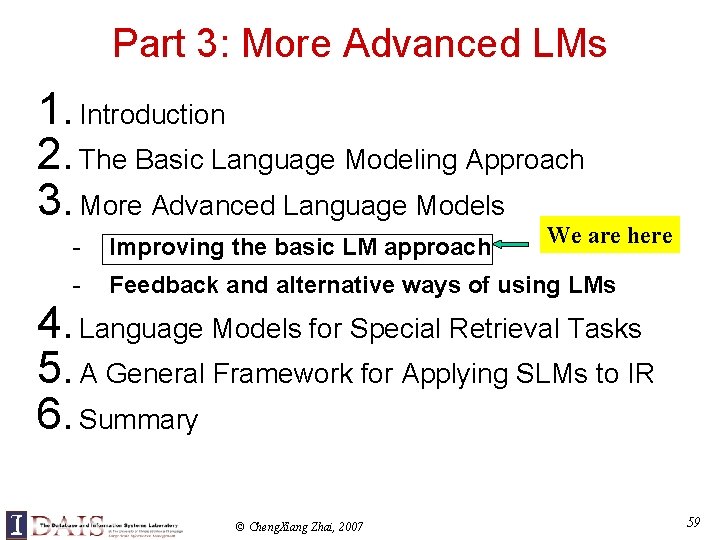

Part 3: More Advanced LMs (slide 59)
1. Introduction
2. The Basic Language Modeling Approach
3. More Advanced Language Models (we are here)
  - Improving the basic LM approach
  - Feedback and alternative ways of using LMs
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

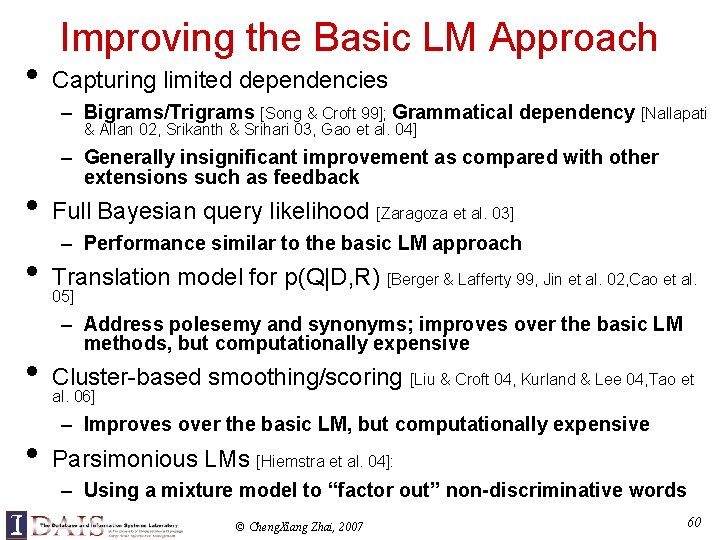

Improving the Basic LM Approach (slide 60)
• Capturing limited dependencies
  – Bigrams/trigrams [Song & Croft 99]; grammatical dependency [Nallapati & Allan 02, Srikanth & Srihari 03, Gao et al. 04]
  – Generally insignificant improvement as compared with other extensions such as feedback
• Full Bayesian query likelihood [Zaragoza et al. 03]
  – Performance similar to the basic LM approach
• Translation model for p(Q|D, R) [Berger & Lafferty 99, Jin et al. 02, Cao et al. 05]
  – Addresses polysemy and synonyms; improves over the basic LM methods, but computationally expensive
• Cluster-based smoothing/scoring [Liu & Croft 04, Kurland & Lee 04, Tao et al. 06]
  – Improves over the basic LM, but computationally expensive
• Parsimonious LMs [Hiemstra et al. 04]
  – Using a mixture model to “factor out” non-discriminative words

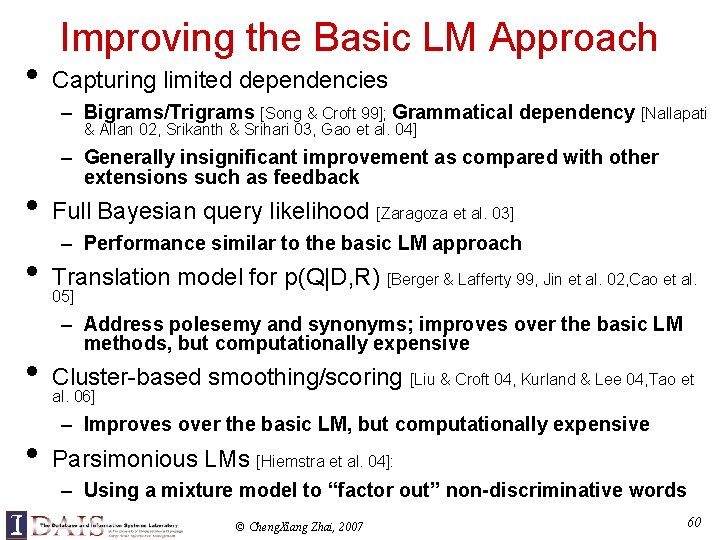

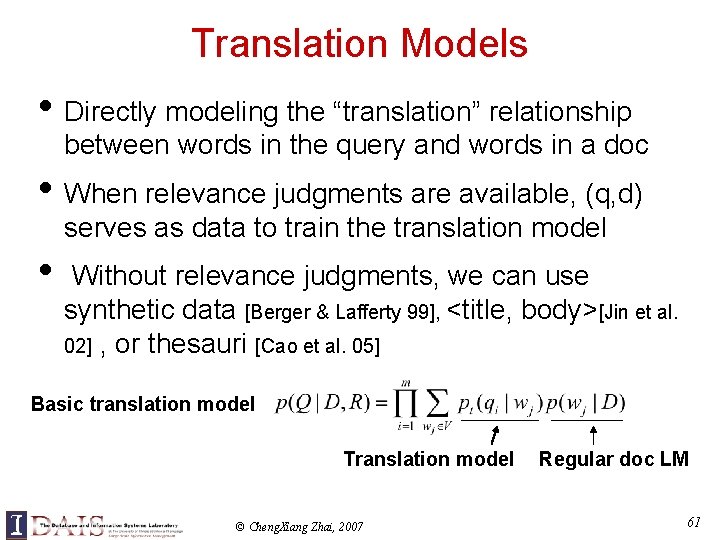

Translation Models (slide 61)
• Directly modeling the “translation” relationship between words in the query and words in a doc
• When relevance judgments are available, (q, d) serves as data to train the translation model
• Without relevance judgments, we can use synthetic data [Berger & Lafferty 99], <title, body> [Jin et al. 02], or thesauri [Cao et al. 05]
• Basic translation model: combines a translation model with the regular doc LM

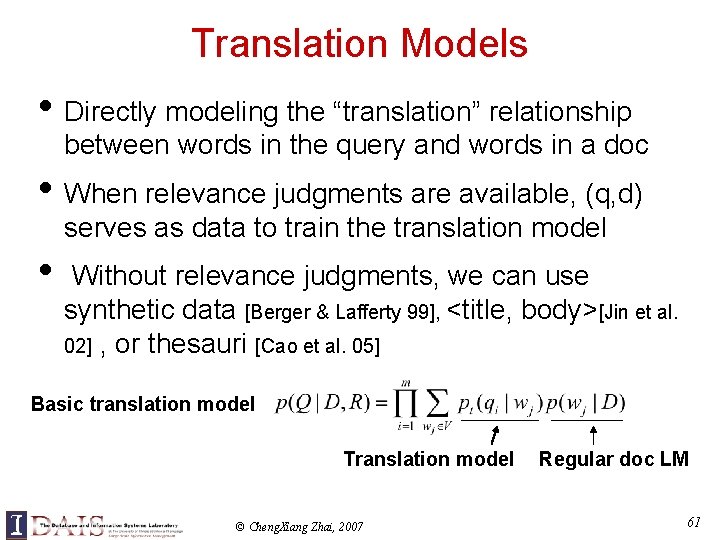

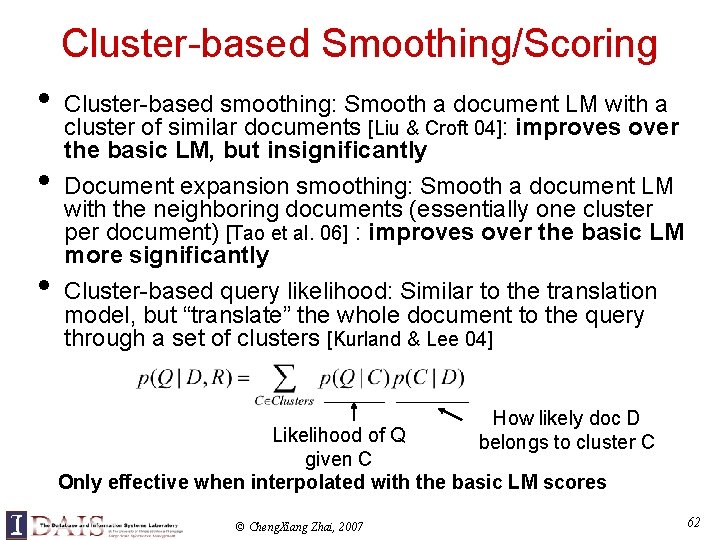

Cluster-based Smoothing/Scoring (slide 62)
• Cluster-based smoothing: Smooth a document LM with a cluster of similar documents [Liu & Croft 04]: improves over the basic LM, but insignificantly
• Document expansion smoothing: Smooth a document LM with the neighboring documents (essentially one cluster per document) [Tao et al. 06]: improves over the basic LM more significantly
• Cluster-based query likelihood: Similar to the translation model, but “translate” the whole document to the query through a set of clusters [Kurland & Lee 04]
  – The score combines the likelihood of Q given C with how likely doc D belongs to cluster C
  – Only effective when interpolated with the basic LM scores

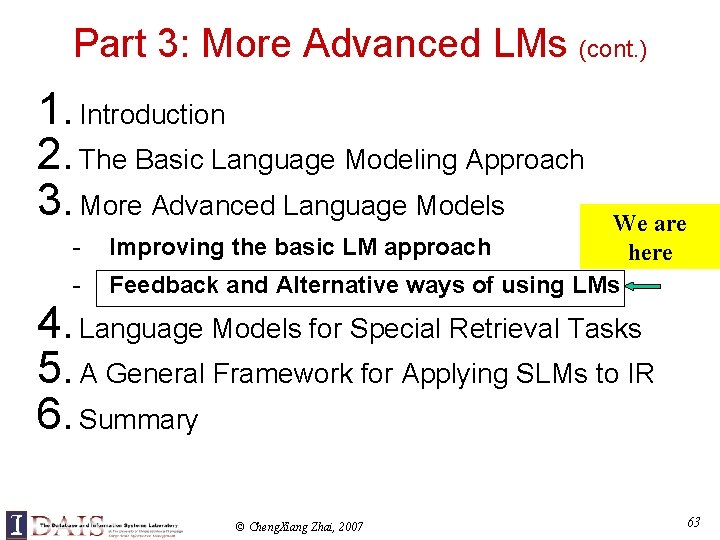

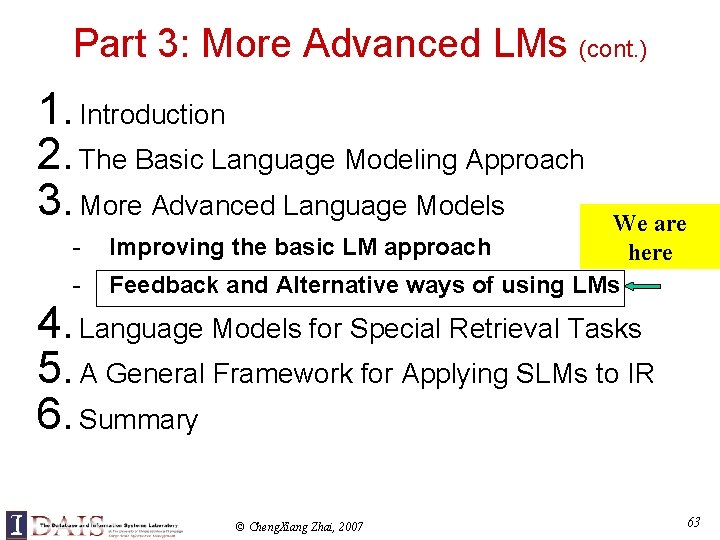

Part 3: More Advanced LMs (cont.) (slide 63)
1. Introduction
2. The Basic Language Modeling Approach
3. More Advanced Language Models
  - Improving the basic LM approach
  - Feedback and alternative ways of using LMs (we are here)
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary

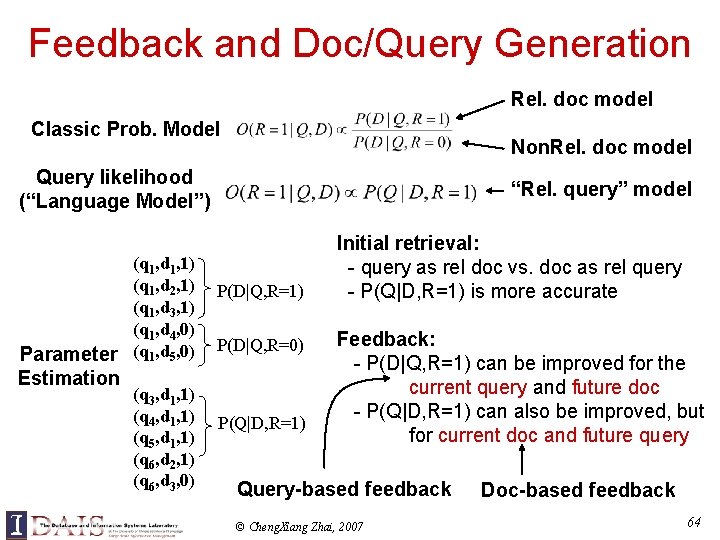

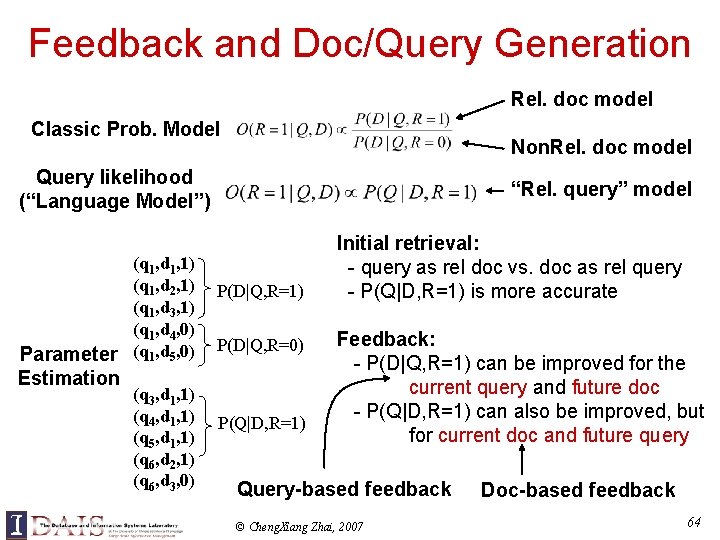

Feedback and Doc/Query Generation (slide 64)
• Training data for parameter estimation, as (query, doc, relevance) triples: (q1, d1, 1), (q1, d2, 1), (q1, d3, 1), (q1, d4, 0), (q1, d5, 0), (q3, d1, 1), (q4, d1, 1), (q5, d1, 1), (q6, d2, 1), (q6, d3, 0)
• Classic prob. model: rel. doc model P(D|Q, R=1) and non-rel. doc model P(D|Q, R=0); query-based feedback
• Query likelihood (“language model”): “rel. query” model P(Q|D, R=1); doc-based feedback
• Initial retrieval:
  - query as rel doc vs. doc as rel query
  - P(Q|D, R=1) is more accurate
• Feedback:
  - P(D|Q, R=1) can be improved for the current query and future doc
  - P(Q|D, R=1) can also be improved, but for current doc and future query

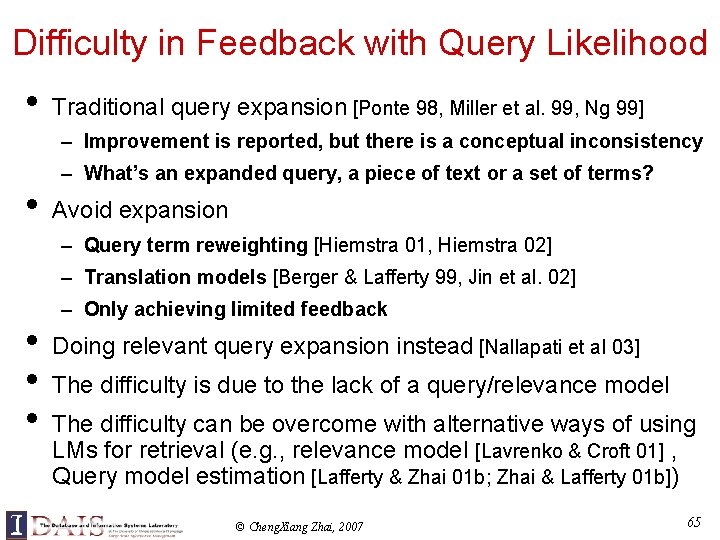

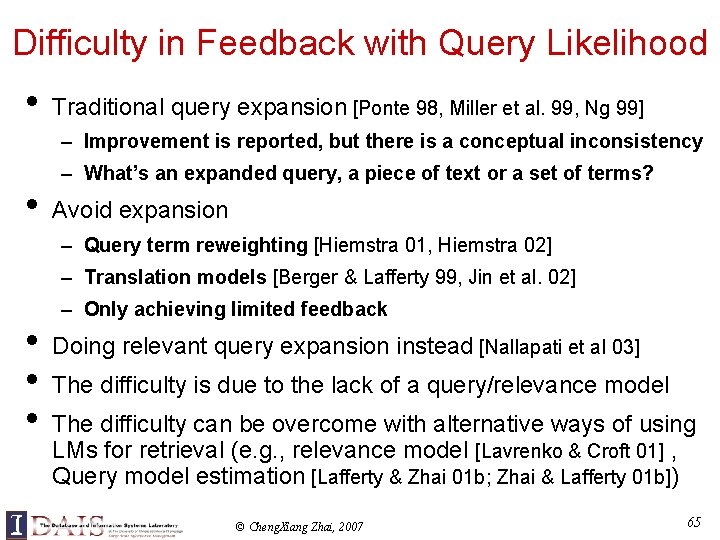

Difficulty in Feedback with Query Likelihood (slide 65)
• Traditional query expansion [Ponte 98, Miller et al. 99, Ng 99]
  – Improvement is reported, but there is a conceptual inconsistency
  – What’s an expanded query, a piece of text or a set of terms?
• Avoid expansion
  – Query term reweighting [Hiemstra 01, Hiemstra 02]
  – Translation models [Berger & Lafferty 99, Jin et al. 02]
  – Only achieving limited feedback
• Doing relevant query expansion instead [Nallapati et al 03]
• The difficulty is due to the lack of a query/relevance model
• The difficulty can be overcome with alternative ways of using LMs for retrieval (e.g., relevance model [Lavrenko & Croft 01], query model estimation [Lafferty & Zhai 01b; Zhai & Lafferty 01b])

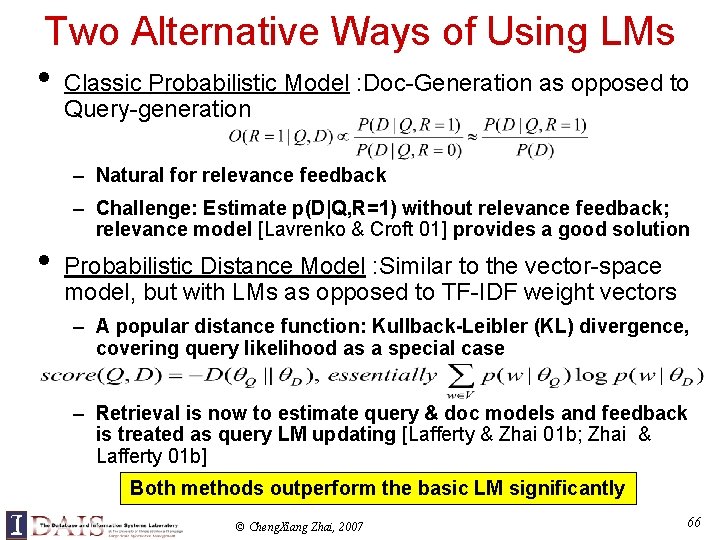

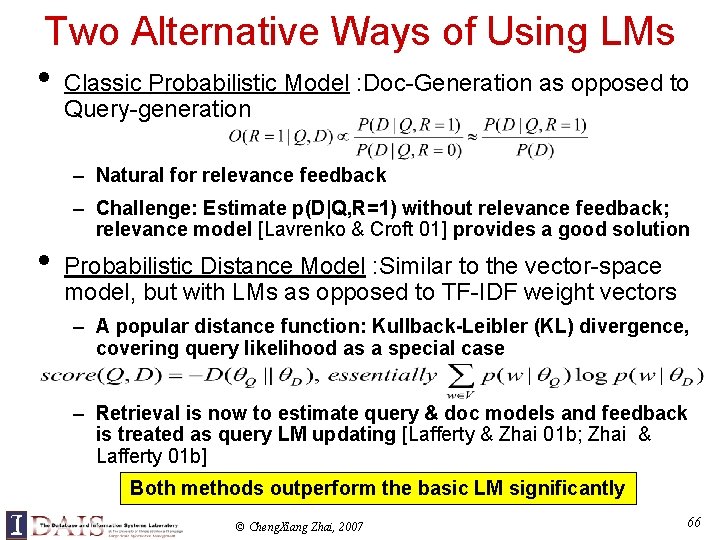

Two Alternative Ways of Using LMs (slide 66)
• Classic Probabilistic Model: Doc-generation as opposed to query-generation
  – Natural for relevance feedback
  – Challenge: Estimate p(D|Q, R=1) without relevance feedback; relevance model [Lavrenko & Croft 01] provides a good solution
• Probabilistic Distance Model: Similar to the vector-space model, but with LMs as opposed to TF-IDF weight vectors
  – A popular distance function: Kullback-Leibler (KL) divergence, covering query likelihood as a special case
  – Retrieval is now to estimate query & doc models, and feedback is treated as query LM updating [Lafferty & Zhai 01b; Zhai & Lafferty 01b]
Both methods outperform the basic LM significantly
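The claim that KL-divergence scoring covers query likelihood as a special case can be illustrated with a short sketch. When the query model is the empirical word distribution of the query, the negative KL divergence equals the length-normalized log query likelihood plus the query entropy, which is the same for every document. The function names and toy document models below are hypothetical, and the document models are assumed to be already smoothed (no zero probabilities).

```python
import math
from collections import Counter

def neg_kl(query_model, doc_model):
    """Score = -D(theta_Q || theta_D); higher is better."""
    return -sum(p * math.log(p / doc_model[w]) for w, p in query_model.items() if p > 0)

def log_query_likelihood(query_terms, doc_model):
    """log p(q|d) under a unigram document model."""
    return sum(math.log(doc_model[w]) for w in query_terms)

query = ["data", "mining", "data"]
query_model = {w: c / len(query) for w, c in Counter(query).items()}  # empirical (ML) query model

docs = {
    "d1": {"data": 0.5, "mining": 0.1, "the": 0.4},
    "d2": {"data": 0.2, "mining": 0.4, "the": 0.4},
}

rank_kl = sorted(docs, key=lambda d: neg_kl(query_model, docs[d]), reverse=True)
rank_ql = sorted(docs, key=lambda d: log_query_likelihood(query, docs[d]), reverse=True)
```

Replacing the empirical query model with a richer one, for example one updated from feedback documents, changes the KL ranking without changing the scoring function.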

![Relevance Model Estimation Lavrenko Croft 01 Question How to estimate PDQ Relevance Model Estimation [Lavrenko & Croft 01] • • Question: How to estimate P(D|Q,](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-67.jpg)

Relevance Model Estimation [Lavrenko & Croft 01] (slide 67)
• Question: How to estimate P(D|Q, R) (or p(w|Q, R)) without relevant documents?
• Key idea:
  – Treat query as observations about p(w|Q, R)
  – Approximate the model space with document models
• Two methods for decomposing p(w, Q)
  – Independent sampling (Bayesian model averaging)
  – Conditional sampling: p(w, Q) = p(w)p(Q|w) (original formula in [Lavrenko & Croft 01])

![Kernelbased Allocation Lavrenko 04 A general generative model for text An infinite mixture Kernel-based Allocation [Lavrenko 04] • A general generative model for text An infinite mixture](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-68.jpg)

Kernel-based Allocation [Lavrenko 04] (slide 68)
• A general generative model for text
  – An infinite mixture model
  – Kernel-based density function over the training data T, built from a kernel function
• Choices of the kernel function
  – Delta kernel: average probability of w1…wn over all training points
  – Dirichlet kernel: allow a training point to “spread” its influence

![Query Model Estimation Lafferty Zhai 01 b Zhai Lafferty 01 b Query Model Estimation [Lafferty & Zhai 01 b, Zhai & Lafferty 01 b] •](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-69.jpg)

Query Model Estimation [Lafferty & Zhai 01b, Zhai & Lafferty 01b] (slide 69)
• Question: How to estimate a better query model than the ML estimate based on the original query?
• “Massive feedback”: Improve a query model through co-occurrence patterns learned from
  – A document-term Markov chain that outputs the query [Lafferty & Zhai 01b]
  – Thesauri, corpus [Bai et al. 05, Collins-Thompson & Callan 05]
• Model-based feedback: Improve the estimate of query model by exploiting pseudo-relevance feedback
  – Update the query model by interpolating the original query model with a learned feedback model [Zhai & Lafferty 01b]
  – Estimate a more integrated mixture model using pseudo-feedback documents [Tao & Zhai 06]

![Feedback as Model Interpolation Zhai Lafferty 01 b Document D Results Query Q Feedback as Model Interpolation [Zhai & Lafferty 01 b] Document D Results Query Q](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-70.jpg)

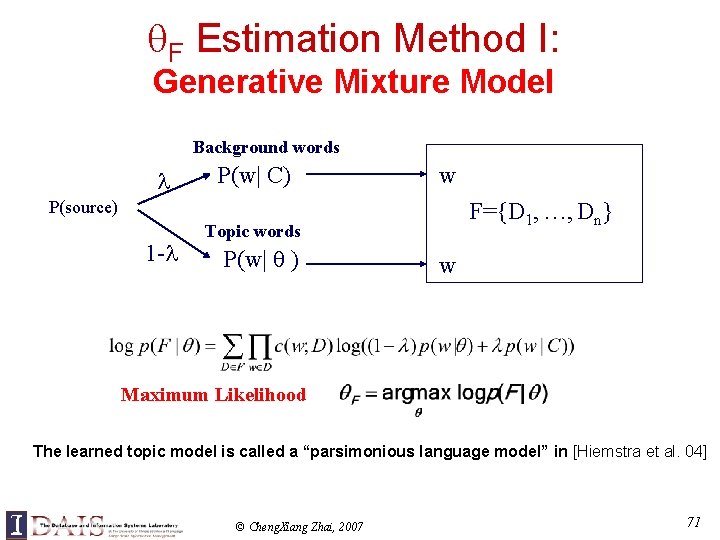

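Feedback as Model Interpolation [Zhai & Lafferty 01b] (slide 70)
• The query model estimated from query Q is interpolated with a feedback model estimated from the feedback docs F = {d1, d2, …, dn}; the updated query model is then compared with the model of document D to produce results
• α = 0: no feedback
• α = 1: full feedback
• The feedback model is estimated with a generative model or by divergence minimization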
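A minimal sketch of the interpolation step, assuming the updated query model is (1 − α) times the original query model plus α times the feedback model. The function name and the toy feedback model (including the word "passenger") are hypothetical.

```python
def interpolate(query_model, feedback_model, alpha):
    """Updated query model: (1 - alpha) * theta_Q + alpha * theta_F."""
    vocab = set(query_model) | set(feedback_model)
    return {
        w: (1.0 - alpha) * query_model.get(w, 0.0) + alpha * feedback_model.get(w, 0.0)
        for w in vocab
    }

query_model = {"airport": 0.5, "security": 0.5}
feedback_model = {"airport": 0.3, "security": 0.3, "passenger": 0.4}  # made-up values

no_feedback = interpolate(query_model, feedback_model, alpha=0.0)
full_feedback = interpolate(query_model, feedback_model, alpha=1.0)
half = interpolate(query_model, feedback_model, alpha=0.5)
```

With `alpha=0.5` the expansion word "passenger" enters the query model with probability 0.2 while the original query words keep most of the mass.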

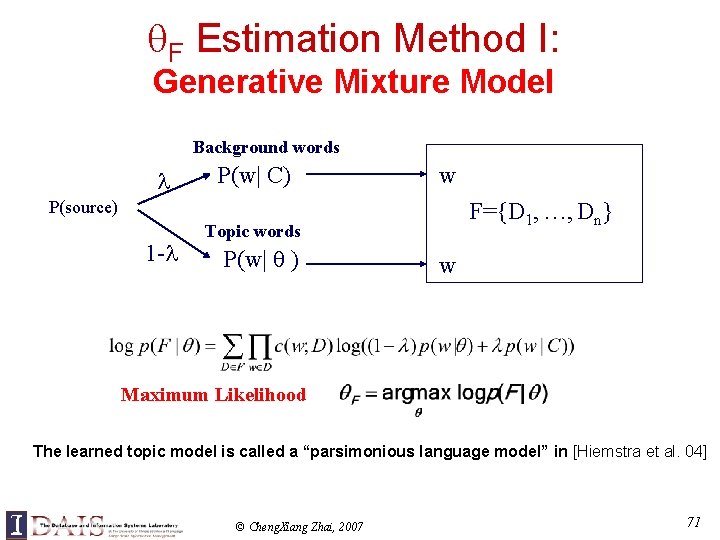

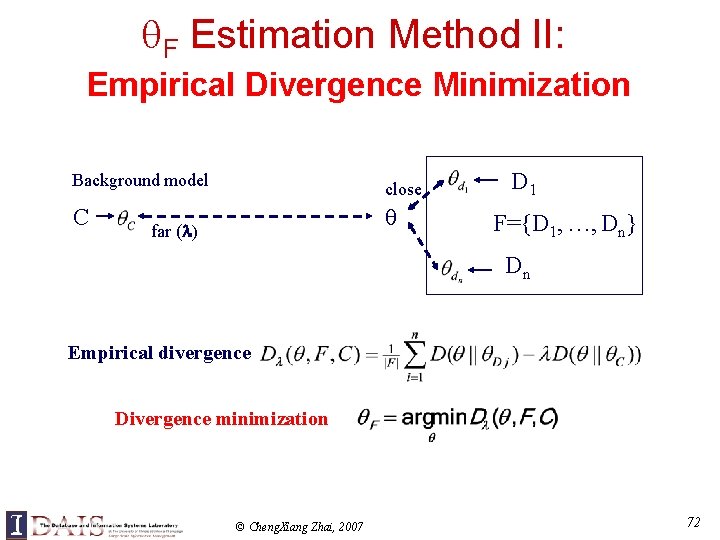

θ_F Estimation Method I: Generative Mixture Model (slide 71)
• Each word w in F = {D1, …, Dn} is generated from one of two sources:
  – Background words: P(w|C), chosen with P(source) = λ
  – Topic words: P(w|θ), chosen with P(source) = 1 − λ
• θ is estimated by maximum likelihood
The learned topic model is called a “parsimonious language model” in [Hiemstra et al. 04]
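The maximum likelihood estimate for this two-component mixture has no closed form, and EM is a common way to compute it. The sketch below is one such EM loop under stated assumptions: λ is fixed in advance, the collection model `p_coll` covers every word in the feedback counts, and the toy counts are invented for illustration.

```python
from collections import Counter

def estimate_feedback_model(feedback_counts, p_coll, lam, iterations=50):
    """EM for p(w|theta) in the mixture (1 - lam) * p(w|theta) + lam * p(w|C)."""
    vocab = list(feedback_counts)
    total = sum(feedback_counts.values())
    theta = {w: feedback_counts[w] / total for w in vocab}  # start from the ML estimate
    for _ in range(iterations):
        # E-step: probability that an occurrence of w came from the topic model.
        from_topic = {
            w: (1 - lam) * theta[w] / ((1 - lam) * theta[w] + lam * p_coll[w])
            for w in vocab
        }
        # M-step: re-estimate theta from the counts attributed to the topic model.
        weighted = {w: feedback_counts[w] * from_topic[w] for w in vocab}
        norm = sum(weighted.values())
        theta = {w: weighted[w] / norm for w in vocab}
    return theta

# Hypothetical pooled counts from feedback documents and a collection model.
feedback_counts = Counter({"the": 50, "airport": 20, "security": 20, "of": 10})
p_coll = {"the": 0.6, "of": 0.3, "airport": 0.05, "security": 0.05}

theta_f = estimate_feedback_model(feedback_counts, p_coll, lam=0.5)
```

Common words that the background model already explains lose probability in the learned topic model, while the topical words gain it.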

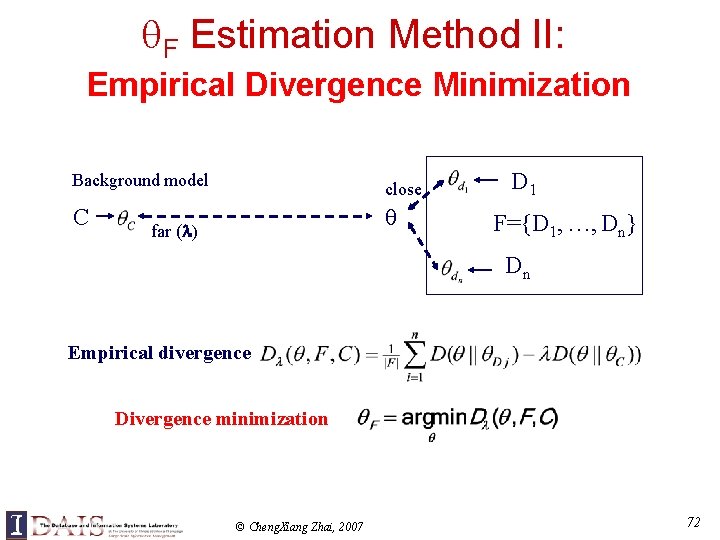

θ_F Estimation Method II: Empirical Divergence Minimization (slide 72)
• Find a model θ that is close to the feedback documents F = {D1, …, Dn} and far from the background model C, with λ weighting the background term
• The estimate is obtained by minimizing this empirical divergence

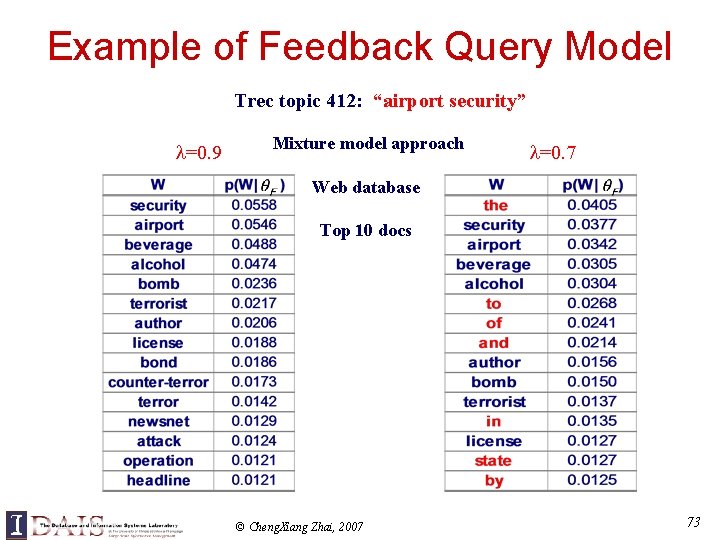

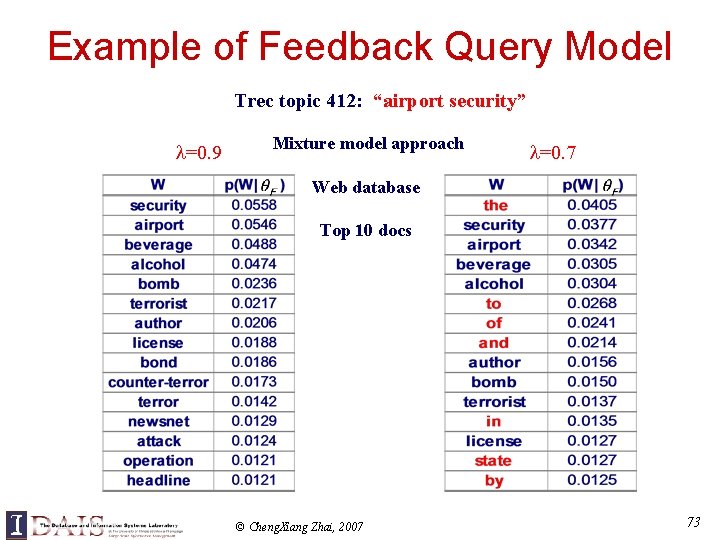

Example of Feedback Query Model (slide 73)
TREC topic 412: “airport security”; mixture model approach; Web database; top 10 docs
[Figure: learned feedback query models for λ = 0.9 and λ = 0.7]

![Modelbased feedback Improves over Simple LM Zhai Lafferty 01 b Translation models Relevance Model-based feedback Improves over Simple LM [Zhai & Lafferty 01 b] Translation models, Relevance](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-74.jpg)

Model-based feedback Improves over Simple LM [Zhai & Lafferty 01b] (slide 74)
Translation models, relevance models, and feedback-based query models have all been shown to improve performance significantly over the simple LMs
(Parameter tuning is necessary in many cases, but see [Tao & Zhai 06] for “parameter-free” pseudo feedback)

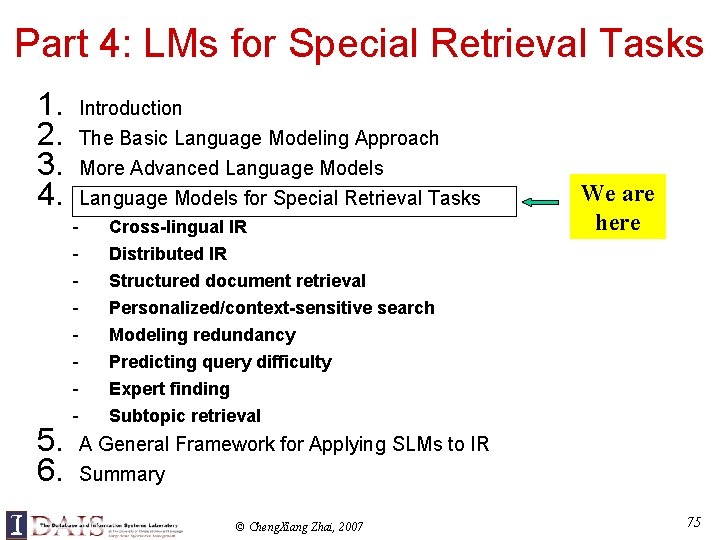

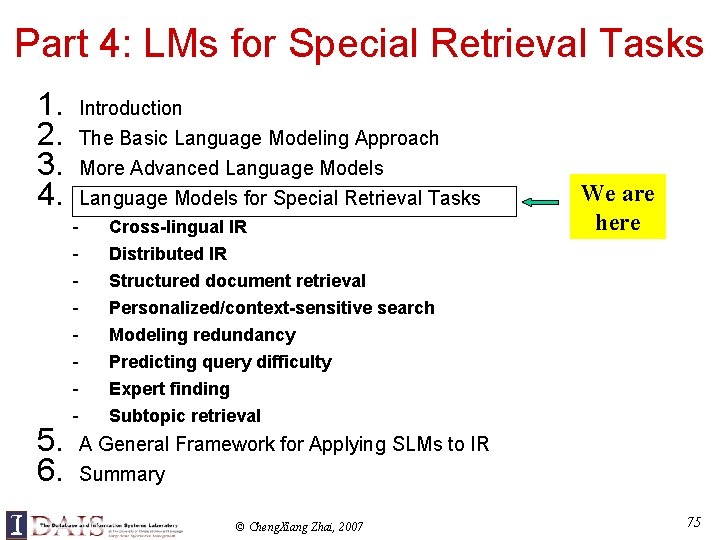

Part 4: LMs for Special Retrieval Tasks (slide 75)
1. Introduction
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks (we are here)
  - Cross-lingual IR
  - Distributed IR
  - Structured document retrieval
  - Personalized/context-sensitive search
  - Modeling redundancy
  - Predicting query difficulty
  - Expert finding
  - Subtopic retrieval
5. A General Framework for Applying SLMs to IR
6. Summary

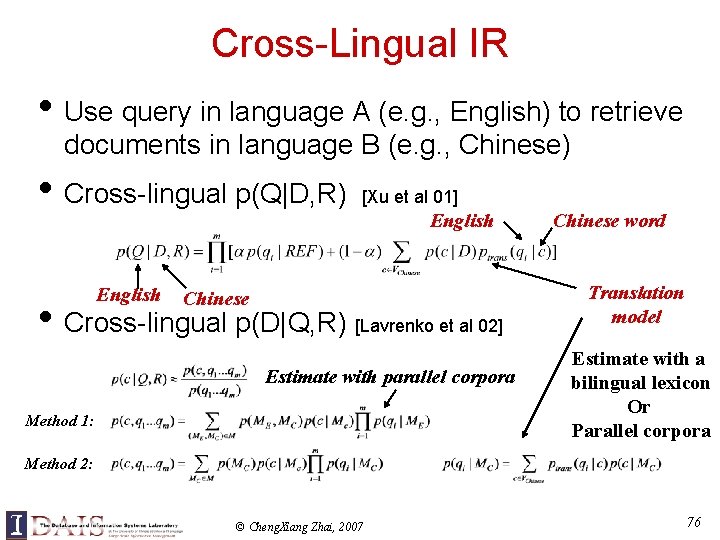

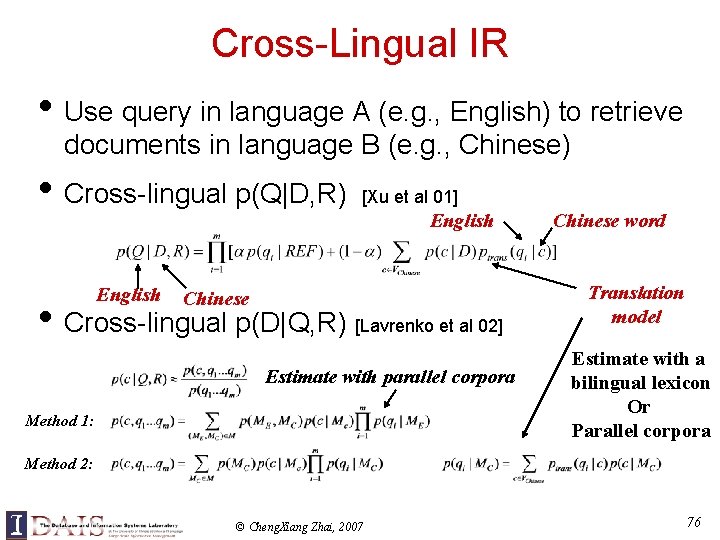

Cross-Lingual IR (slide 76)
• Use query in language A (e.g., English) to retrieve documents in language B (e.g., Chinese)
• Cross-lingual p(Q|D, R) [Xu et al 01]: English query words are generated from Chinese words in the document through a translation model, estimated with a bilingual lexicon or parallel corpora
• Cross-lingual p(D|Q, R) [Lavrenko et al 02]: two estimation methods (Method 1 and Method 2 in the figure); estimate with parallel corpora

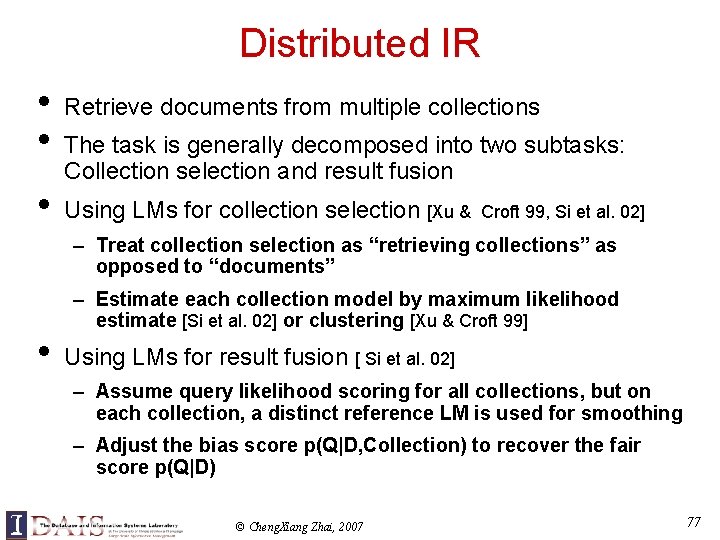

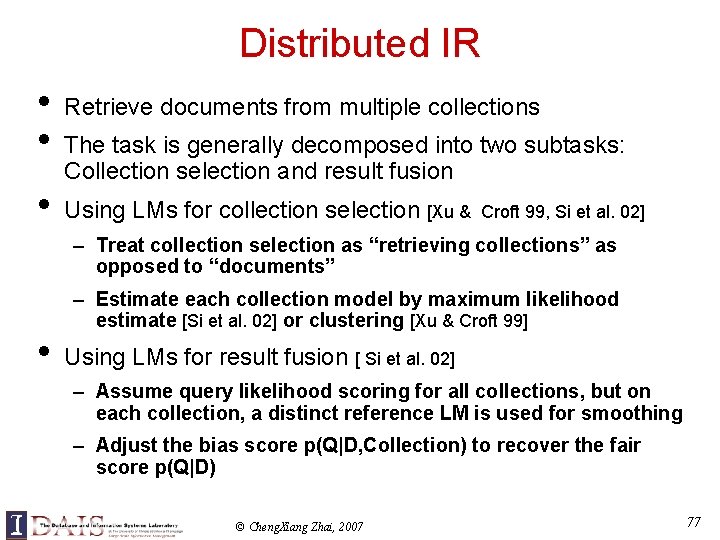

Distributed IR (slide 77)
• Retrieve documents from multiple collections
• The task is generally decomposed into two subtasks: collection selection and result fusion
• Using LMs for collection selection [Xu & Croft 99, Si et al. 02]
  – Treat collection selection as “retrieving collections” as opposed to “documents”
  – Estimate each collection model by maximum likelihood estimate [Si et al. 02] or clustering [Xu & Croft 99]
• Using LMs for result fusion [Si et al. 02]
  – Assume query likelihood scoring for all collections, but on each collection, a distinct reference LM is used for smoothing
  – Adjust the biased score p(Q|D, Collection) to recover the fair score p(Q|D)

![Structured Document Retrieval Ogilvie Callan 03 D Title D 1 Abstract D 2 Structured Document Retrieval [Ogilvie & Callan 03] D Title D 1 Abstract D 2](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-78.jpg)

Structured Document Retrieval [Ogilvie & Callan 03] (slide 78)
• A document D is split into parts D1, …, Dk, e.g. D1 = Title, D2 = Abstract, D3 = Body-Part 1, then Body-Part 2, and so on
  - Want to combine different parts of a document with appropriate weights
  - Anchor text can be treated as a “part” of a document
  - Applicable to XML retrieval
• To generate a query word, select a part Dj and generate the word using Dj
• The “part selection” probability serves as the weight for Dj and can be trained using EM
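The generation process described here amounts to scoring each query word with a weighted mixture of part-level language models. The sketch below assumes fixed part weights (the slide notes they can be trained with EM, which is not shown) and already-smoothed part models; all names and numbers are illustrative.

```python
import math

def structured_log_likelihood(query_terms, part_models, part_weights):
    """log p(Q|D) where each query word is drawn from a weighted mixture of part LMs."""
    score = 0.0
    for term in query_terms:
        mixture = sum(part_weights[part] * model[term] for part, model in part_models.items())
        score += math.log(mixture)
    return score

# Hypothetical part models for one document.
part_models = {
    "title": {"language": 0.5, "models": 0.4, "retrieval": 0.1},
    "body": {"language": 0.1, "models": 0.3, "retrieval": 0.6},
}
part_weights = {"title": 0.7, "body": 0.3}  # "part selection" probabilities, assumed given

score = structured_log_likelihood(["language", "retrieval"], part_models, part_weights)
title_only = structured_log_likelihood(
    ["language", "retrieval"], part_models, {"title": 1.0, "body": 0.0}
)
```

Putting all the weight on one part reduces the score to ordinary query likelihood on that part alone.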

![PersonalizedContextSensitive Search Shen et al 05 Tan et al 06 User information and Personalized/Context-Sensitive Search [Shen et al. 05, Tan et al. 06] • User information and](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-79.jpg)

Personalized/Context-Sensitive Search [Shen et al. 05, Tan et al. 06] (slide 79)
• User information and search context can be used to estimate a better query model
  – Context-independent query LM: estimated from the query alone
  – Context-sensitive query LM: estimated from the query together with user information and search context
• Refinement of this model leads to specific retrieval formulas
• Simple models often end up interpolating many unigram language models based on different sources of evidence, e.g., short-term search history [Shen et al. 05] or long-term search history [Tan et al. 06]

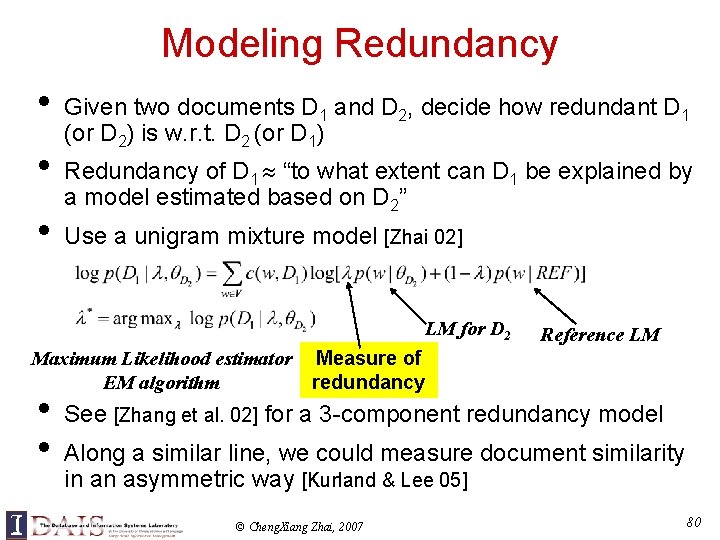

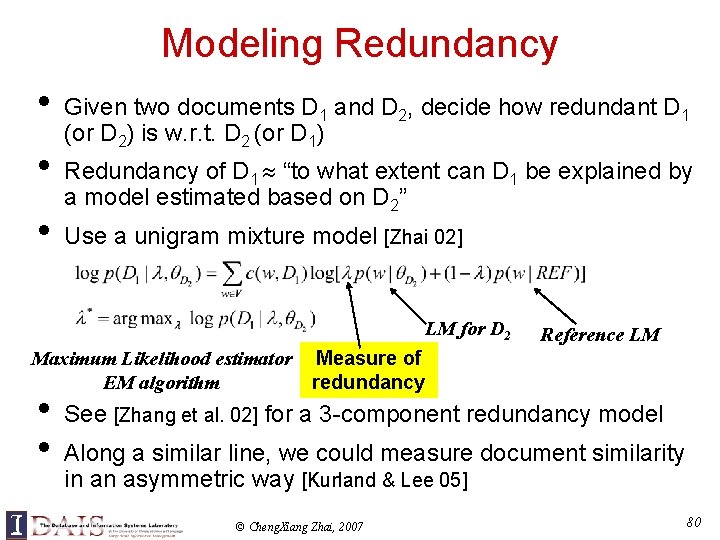

Modeling Redundancy (slide 80)
• Given two documents D1 and D2, decide how redundant D1 (or D2) is w.r.t. D2 (or D1)
• Redundancy of D1: “to what extent can D1 be explained by a model estimated based on D2”
• Use a unigram mixture model [Zhai 02]
  – Components: the LM for D2 and a reference LM
  – The mixing weight, set by the maximum likelihood estimator using the EM algorithm, is the measure of redundancy
• See [Zhang et al. 02] for a 3-component redundancy model
• Along a similar line, we could measure document similarity in an asymmetric way [Kurland & Lee 05]

![Predicting Query Difficulty CronenTownsend et al 02 Observations Discriminative queries tend Predicting Query Difficulty • • [Cronen-Townsend et al. 02] Observations: – Discriminative queries tend](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-81.jpg)

Predicting Query Difficulty [Cronen-Townsend et al. 02] (slide 81)
• Observations:
  – Discriminative queries tend to be easier
  – Comparison of the query model and the collection model can indicate how discriminative a query is
• Method:
  – Define “query clarity” as the KL-divergence between an estimated query model or relevance model and the collection LM
  – An enriched query LM can be estimated by exploiting pseudo feedback (e.g., relevance model)
• Correlation between the clarity scores and retrieval performance is found
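Query clarity as defined here is a single KL-divergence computation. The sketch below uses base-2 logarithms, which is a choice made for this example, and toy query and collection models that are not from the tutorial.

```python
import math

def clarity(query_model, collection_model):
    """KL divergence (in bits) between a query model and the collection LM."""
    return sum(
        p * math.log2(p / collection_model[w])
        for w, p in query_model.items()
        if p > 0
    )

# Hypothetical collection model and two query models.
collection_model = {"the": 0.5, "of": 0.3, "airport": 0.1, "security": 0.1}
focused_query = {"airport": 0.5, "security": 0.5}  # concentrated on topical words
vague_query = dict(collection_model)                 # looks just like the collection

focused_score = clarity(focused_query, collection_model)
vague_score = clarity(vague_query, collection_model)
```

A query model that matches the collection gets a clarity of zero, while one concentrated on rare words scores higher, matching the observation that discriminative queries tend to be easier.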

![Expert Finding Balog et al 06 Fang Zhai 07 Task Given Expert Finding [Balog et al. 06, Fang & Zhai 07] • • Task: Given](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-82.jpg)

Expert Finding [Balog et al. 06, Fang & Zhai 07] (slide 82)
• Task: Given a topic T, a list of candidates {Ci}, and a collection of support documents S = {Di}, rank the candidates according to the likelihood that a candidate C is an expert on T
• Retrieval analogy:
  – Query = topic T
  – Document = candidate C
  – Rank according to P(R=1|T, C)
  – Similar derivations to those on slides 55-56 and 64 can be made
• Candidate generation model
• Topic generation model

![Subtopic Retrieval Zhai 02 Zhai et al 03 Subtopic retrieval Aim at Subtopic Retrieval [Zhai 02, Zhai et al 03] • • Subtopic retrieval: Aim at](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-83.jpg)

Subtopic Retrieval [Zhai 02, Zhai et al 03] (slide 83)
• Subtopic retrieval: Aim at retrieving as many distinct subtopics of the query topic as possible
  – E.g., retrieve “different applications of robotics”
  – Need to go beyond independent relevance
• Two methods explored in [Zhai 02]
  – Maximal Marginal Relevance:
    • Maximizing subtopic coverage indirectly through redundancy elimination
    • LMs can be used to model redundancy
  – Maximal Diverse Relevance:
    • Maximizing subtopic coverage directly through subtopic modeling
    • Define a retrieval function based on subtopic representation of query and documents
    • Mixture LMs can be used to model subtopics (essentially clustering)

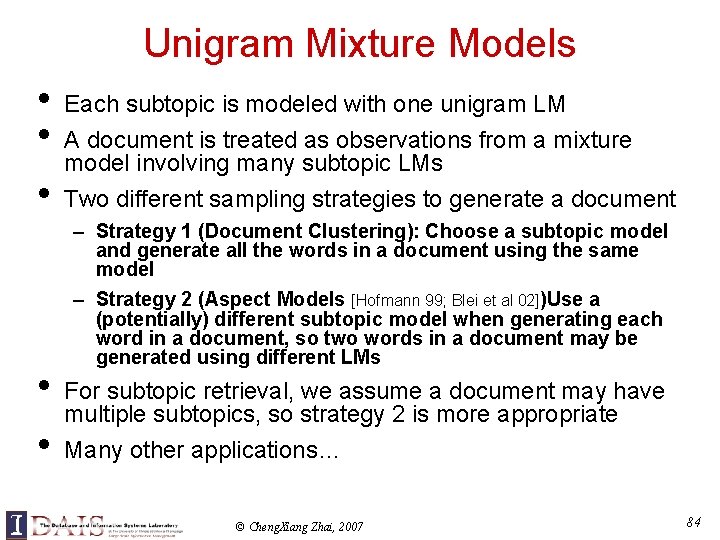

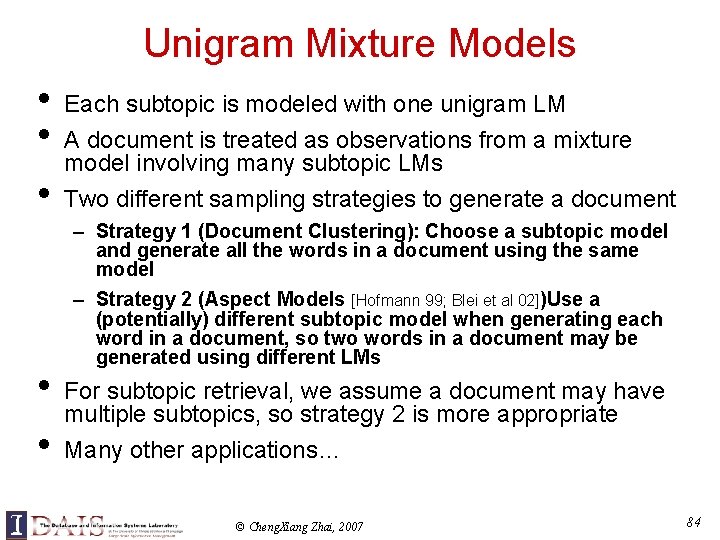

Unigram Mixture Models (slide 84)
• Each subtopic is modeled with one unigram LM
• A document is treated as observations from a mixture model involving many subtopic LMs
• Two different sampling strategies to generate a document
  – Strategy 1 (Document Clustering): Choose a subtopic model and generate all the words in a document using the same model
  – Strategy 2 (Aspect Models [Hofmann 99; Blei et al 02]): Use a (potentially) different subtopic model when generating each word in a document, so two words in a document may be generated using different LMs
• For subtopic retrieval, we assume a document may have multiple subtopics, so strategy 2 is more appropriate
• Many other applications…

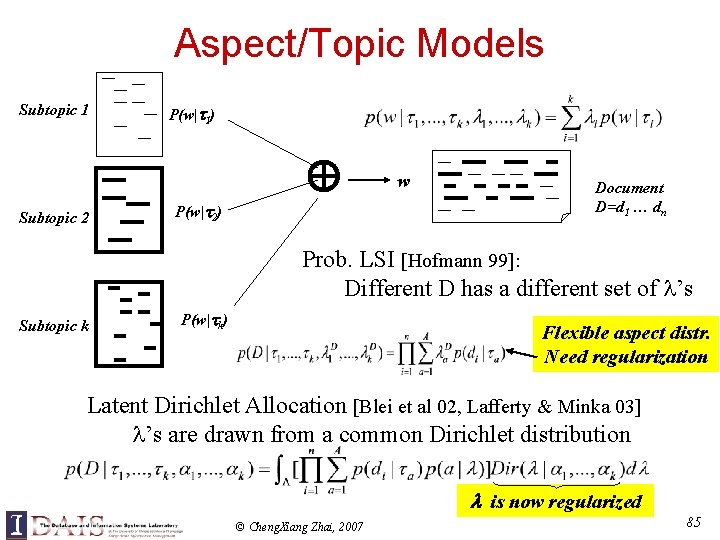

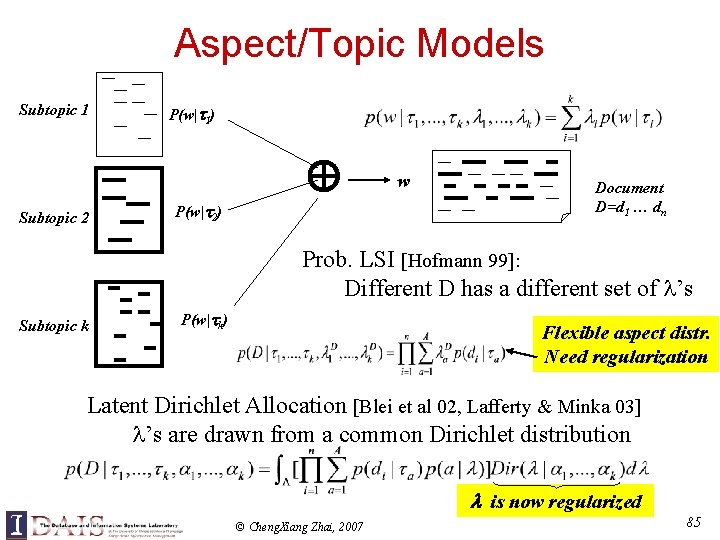

Aspect/Topic Models (slide 85)
• A document D = d1 … dn is generated from k subtopic models, P(w|θ1), P(w|θ2), …, P(w|θk), combined with document-specific mixing weights
• Prob. LSI [Hofmann 99]: each D has a different set of mixing weights; the aspect distribution is flexible and needs regularization
• Latent Dirichlet Allocation [Blei et al 02, Lafferty & Minka 03]: the mixing weights are drawn from a common Dirichlet distribution, so they are now regularized

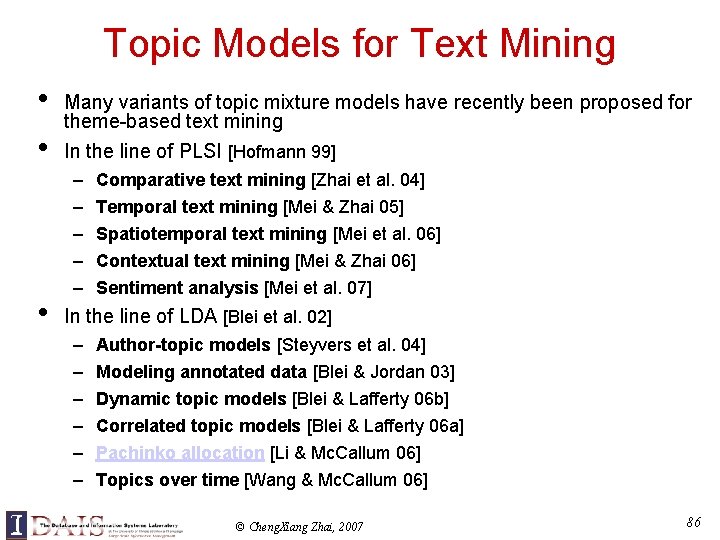

Topic Models for Text Mining (slide 86)
• Many variants of topic mixture models have recently been proposed for theme-based text mining
• In the line of PLSI [Hofmann 99]
  – Comparative text mining [Zhai et al. 04]
  – Temporal text mining [Mei & Zhai 05]
  – Spatiotemporal text mining [Mei et al. 06]
  – Contextual text mining [Mei & Zhai 06]
  – Sentiment analysis [Mei et al. 07]
• In the line of LDA [Blei et al. 02]
  – Author-topic models [Steyvers et al. 04]
  – Modeling annotated data [Blei & Jordan 03]
  – Dynamic topic models [Blei & Lafferty 06b]
  – Correlated topic models [Blei & Lafferty 06a]
  – Pachinko allocation [Li & McCallum 06]
  – Topics over time [Wang & McCallum 06]

Part 5: A General Framework for Applying SLMs to IR (slide 87)
1. Introduction
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR (we are here)
  - Risk minimization framework
  - Special cases
6. Summary

Risk Minimization: Motivation (slide 88)
• Long-standing IR challenges
  – Improve IR theory
    • Develop theoretically sound and empirically effective models
    • Go beyond the limited traditional notion of relevance (independent, topical relevance)
  – Improve IR practice
    • Optimize retrieval parameters automatically
• SLMs are very promising tools …
  – How can we systematically exploit SLMs in IR?
  – Can SLMs offer anything hard/impossible to achieve in traditional IR?

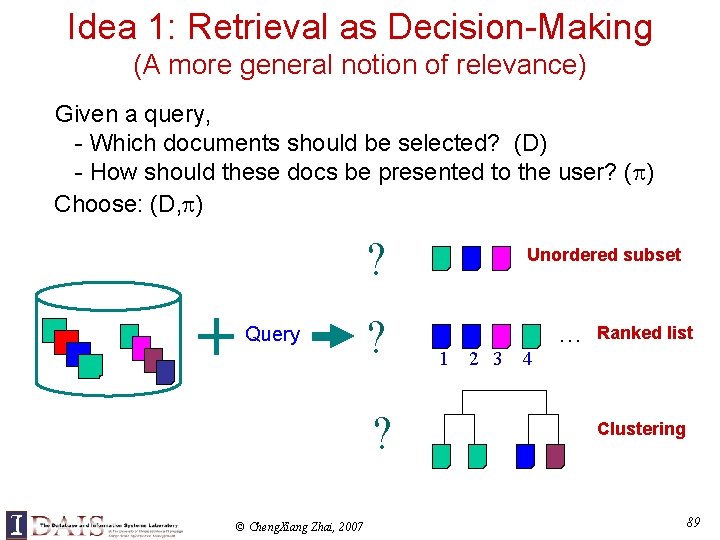

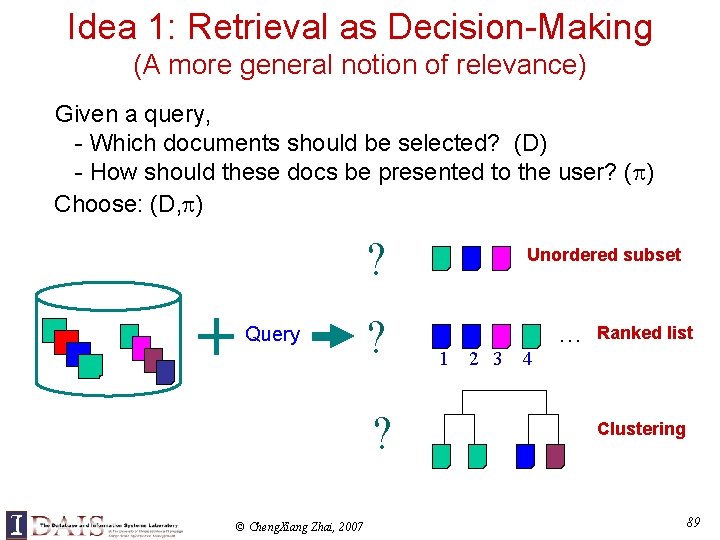

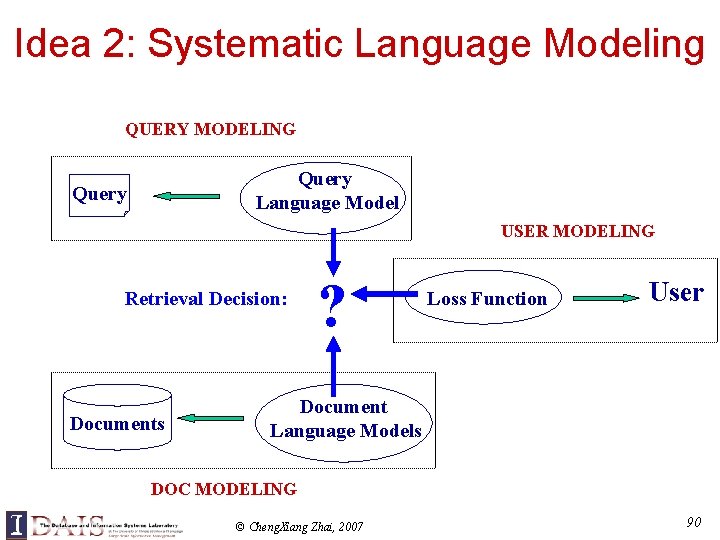

Idea 1: Retrieval as Decision-Making (slide 89)
(A more general notion of relevance)
Given a query,
- Which documents should be selected? (D)
- How should these docs be presented to the user? (π)
Choose: (D, π)
Possible presentations: unordered subset, ranked list (1, 2, 3, 4, …), clustering

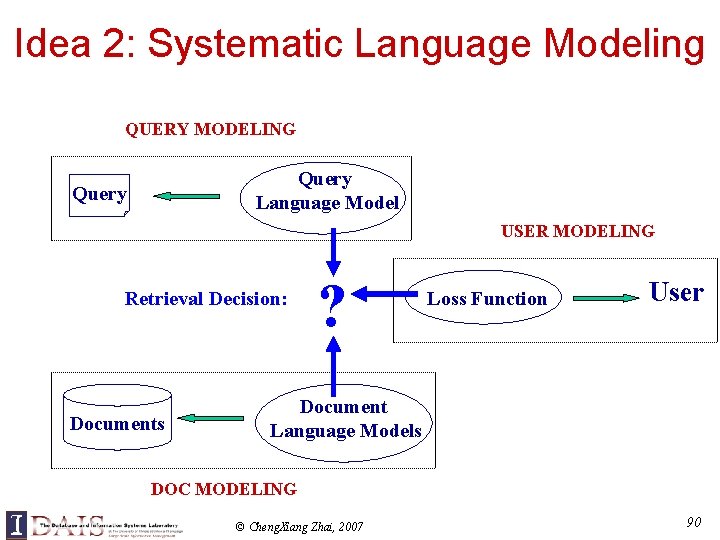

Idea 2: Systematic Language Modeling (slide 90)
• Query modeling: Query → Query Language Model
• Doc modeling: Documents → Document Language Models
• User modeling: User → Loss Function
• Retrieval decision: ?

![Generative Model of Document Query Lafferty Zhai 01 b Us er U Generative Model of Document & Query [Lafferty & Zhai 01 b] Us er U](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-91.jpg)

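Generative Model of Document & Query [Lafferty & Zhai 01b] (slide 91)
[Figure: a user U generates the query q and a source S generates the document d; R is the relevance variable linking query and document. Labels mark which variables are observed, partially observed, and inferred.]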

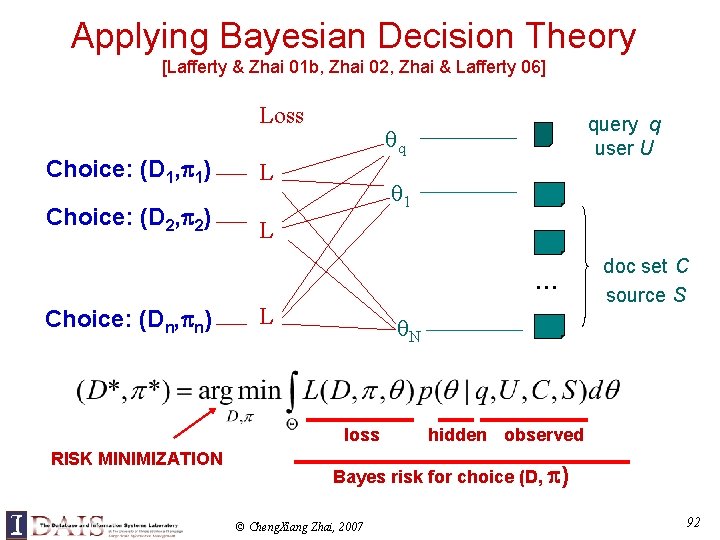

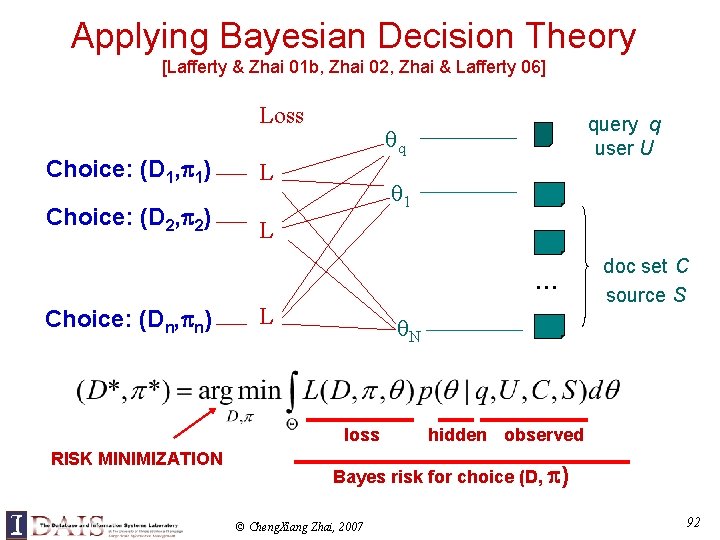

Applying Bayesian Decision Theory [Lafferty & Zhai 01b, Zhai 02, Zhai & Lafferty 06] (slide 92)
• Observed: query q, user U, doc set C, source S
• Hidden: the query model and the document models 1 … N
• Choices: (D1, π1), (D2, π2), …, (Dn, πn), each with a loss L
• Risk minimization: select the choice (D, π) with the smallest Bayes risk

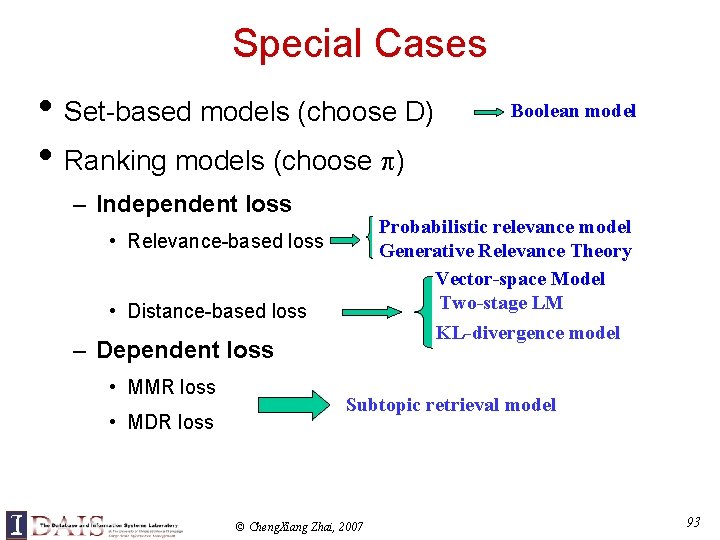

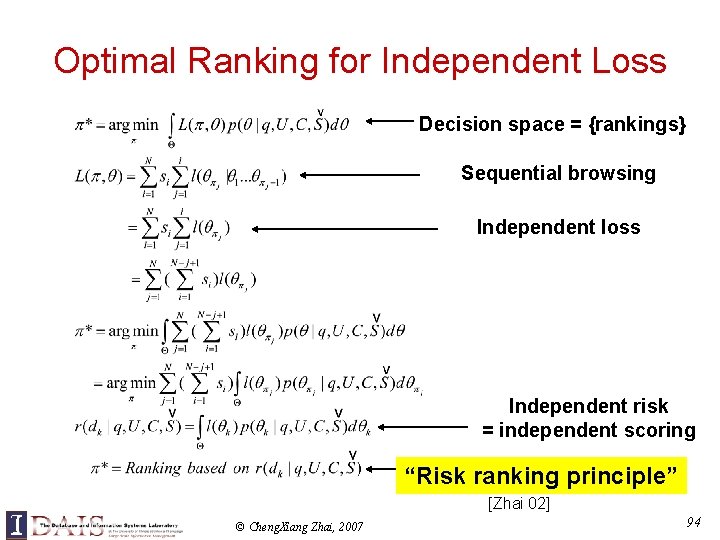

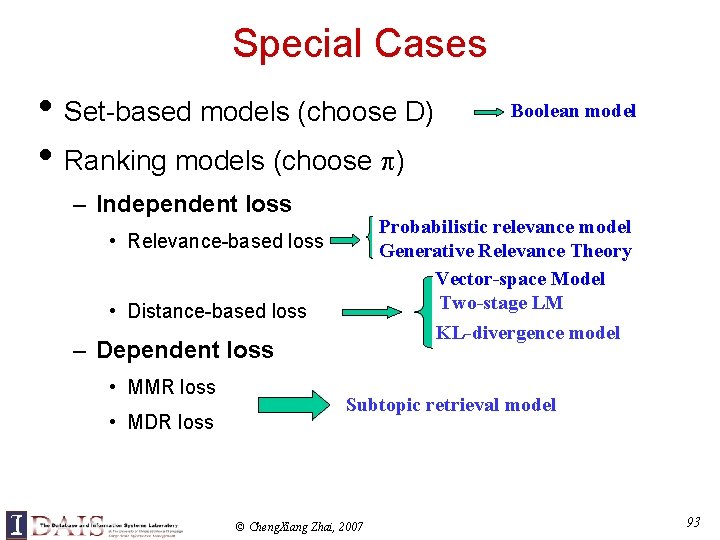

Special Cases (slide 93)
• Set-based models (choose D)
• Ranking models (choose π)
  – Independent loss
    • Relevance-based loss
    • Distance-based loss
  – Dependent loss
    • MMR loss
    • MDR loss
• Models covered as special cases: Boolean model, probabilistic relevance model, Generative Relevance Theory, vector-space model, two-stage LM, KL-divergence model, subtopic retrieval model

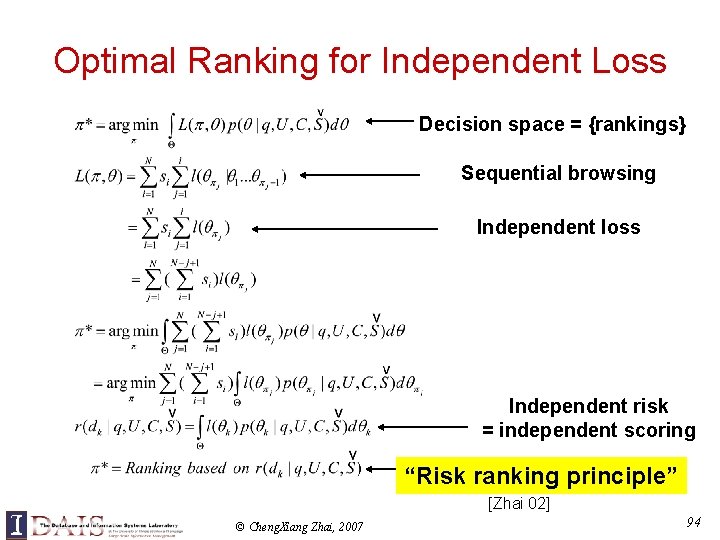

Optimal Ranking for Independent Loss (slide 94)
• Decision space = {rankings}
• Sequential browsing
• Independent loss
• Independent risk = independent scoring: the “risk ranking principle” [Zhai 02]

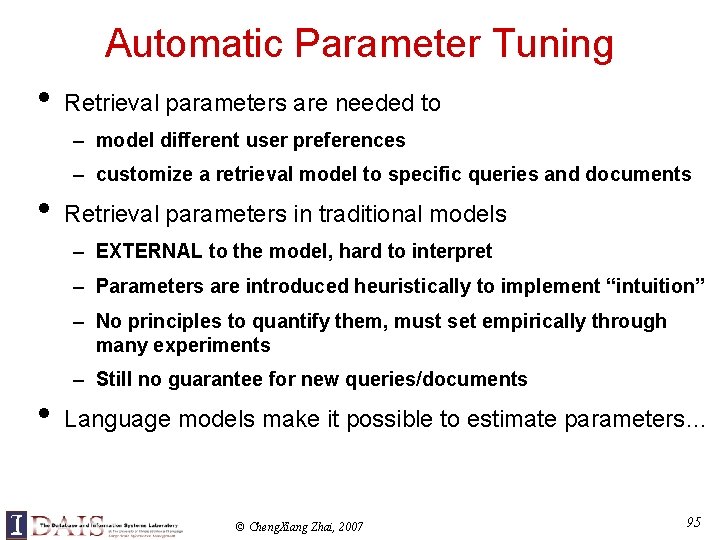

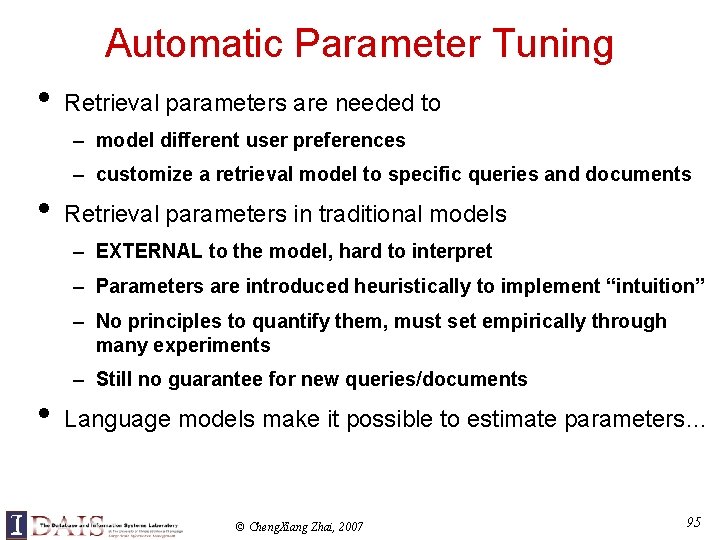

Automatic Parameter Tuning (slide 95)
• Retrieval parameters are needed to
  – model different user preferences
  – customize a retrieval model to specific queries and documents
• Retrieval parameters in traditional models
  – EXTERNAL to the model, hard to interpret
  – Parameters are introduced heuristically to implement “intuition”
  – No principles to quantify them, must set empirically through many experiments
  – Still no guarantee for new queries/documents
• Language models make it possible to estimate parameters…

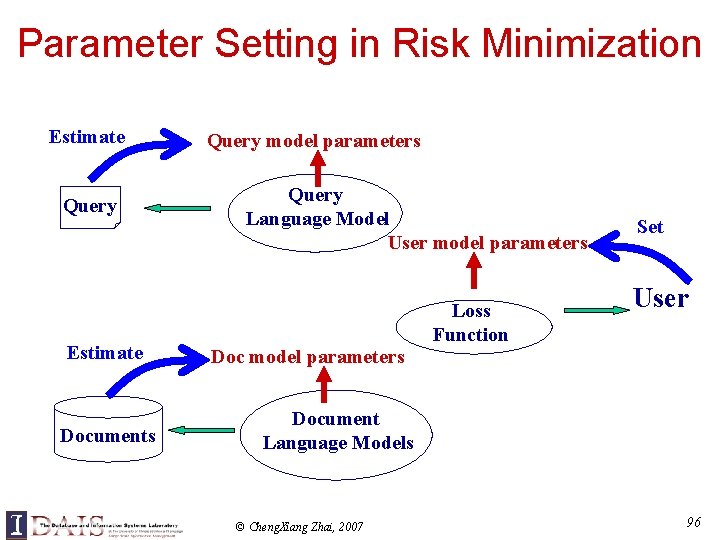

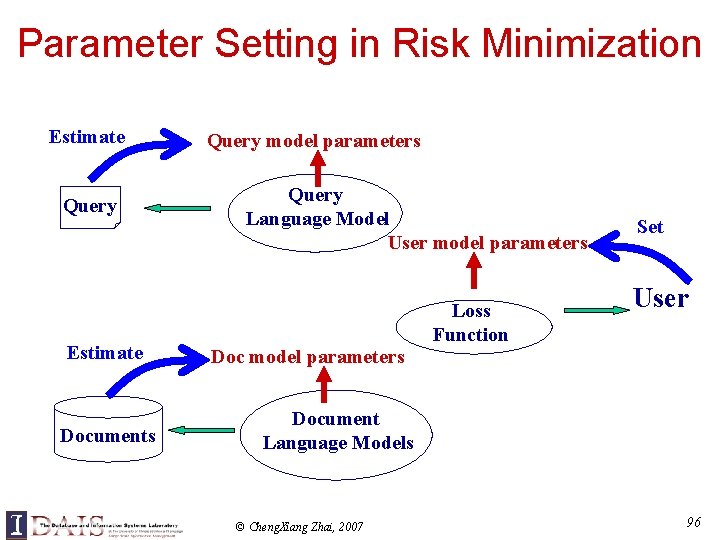

Parameter Setting in Risk Minimization (slide 96)
• Query → Query Language Model: query model parameters are estimated
• Documents → Document Language Models: doc model parameters are estimated
• User → Loss Function: user model parameters are set

![Generative Relevance Hypothesis Lavrenko 04 Generative Relevance Hypothesis For a Generative Relevance Hypothesis [Lavrenko 04] • • • Generative Relevance Hypothesis: – For a](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-97.jpg)

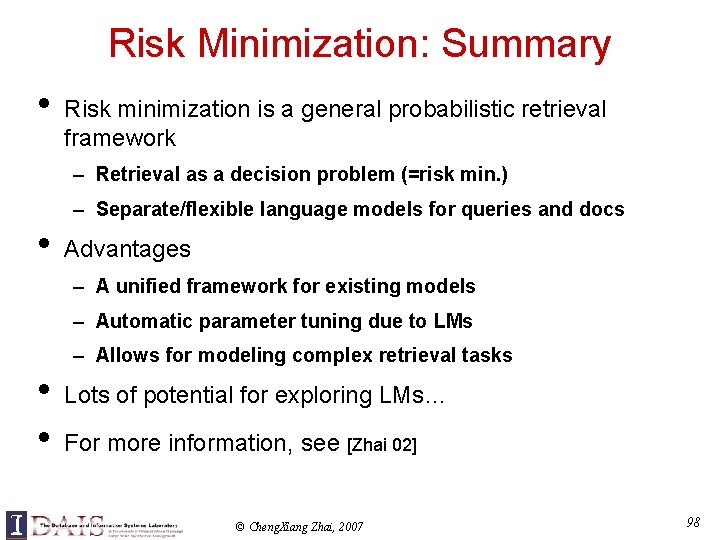

Generative Relevance Hypothesis [Lavrenko 04] (slide 97)
• Generative Relevance Hypothesis:
  – For a given information need, queries expressing that need and documents relevant to that need can be viewed as independent random samples from the same underlying generative model
• A special case of risk minimization when document models and query models are in the same space
• Implications for retrieval models: “the same underlying generative model” makes it possible to
  – Match queries and documents even if they are in different languages or media
  – Estimate/improve a relevant document model based on example queries or vice versa

Risk Minimization: Summary (slide 98)
• Risk minimization is a general probabilistic retrieval framework
  – Retrieval as a decision problem (= risk min.)
  – Separate/flexible language models for queries and docs
• Advantages
  – A unified framework for existing models
  – Automatic parameter tuning due to LMs
  – Allows for modeling complex retrieval tasks
• Lots of potential for exploring LMs…
• For more information, see [Zhai 02]

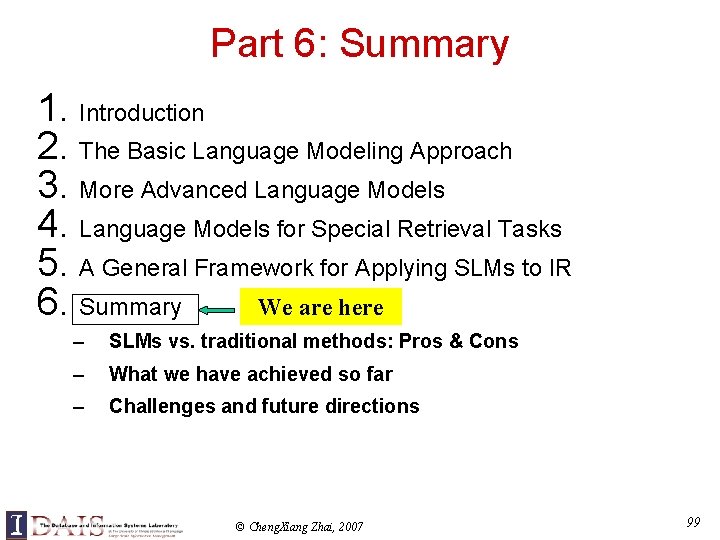

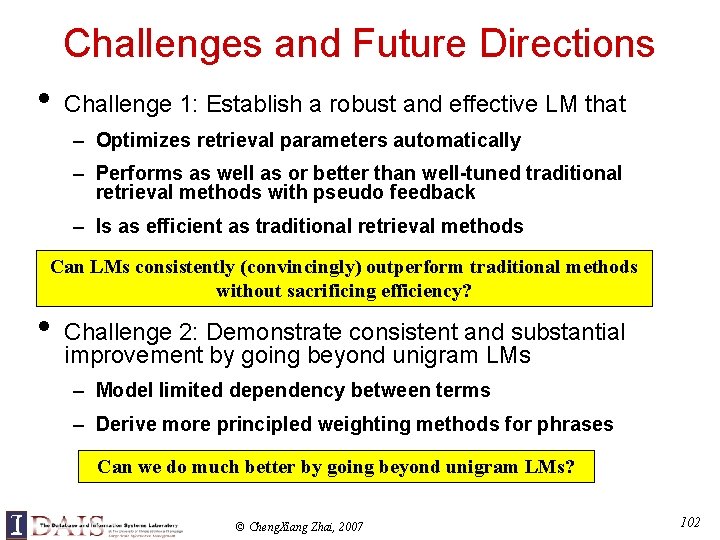

Part 6: Summary (slide 99)
1. Introduction
2. The Basic Language Modeling Approach
3. More Advanced Language Models
4. Language Models for Special Retrieval Tasks
5. A General Framework for Applying SLMs to IR
6. Summary (we are here)
  – SLMs vs. traditional methods: Pros & Cons
  – What we have achieved so far
  – Challenges and future directions

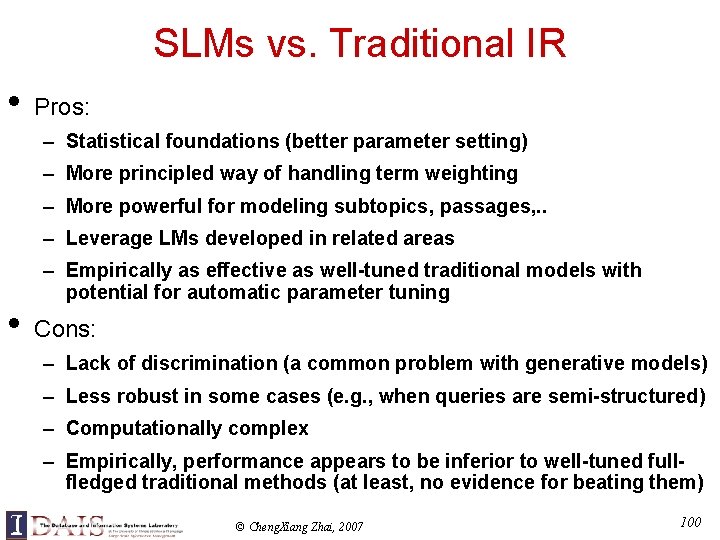

SLMs vs. Traditional IR (slide 100)
• Pros:
  – Statistical foundations (better parameter setting)
  – More principled way of handling term weighting
  – More powerful for modeling subtopics, passages, …
  – Leverage LMs developed in related areas
  – Empirically as effective as well-tuned traditional models with potential for automatic parameter tuning
• Cons:
  – Lack of discrimination (a common problem with generative models)
  – Less robust in some cases (e.g., when queries are semi-structured)
  – Computationally complex
  – Empirically, performance appears to be inferior to well-tuned full-fledged traditional methods (at least, no evidence for beating them)

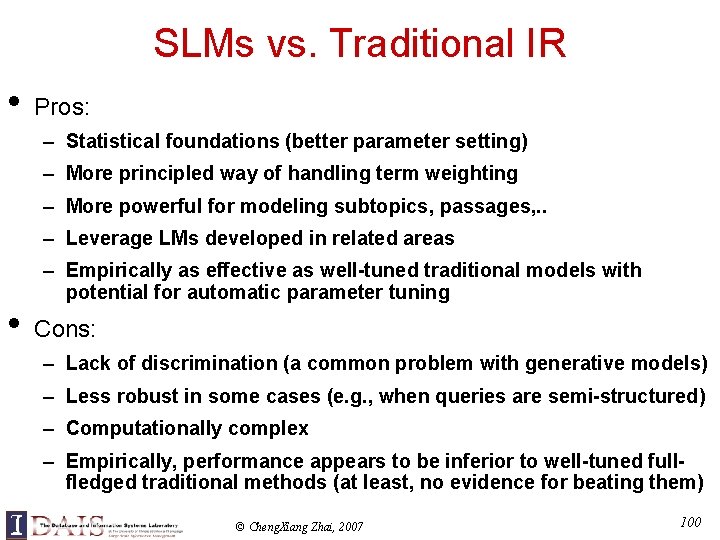

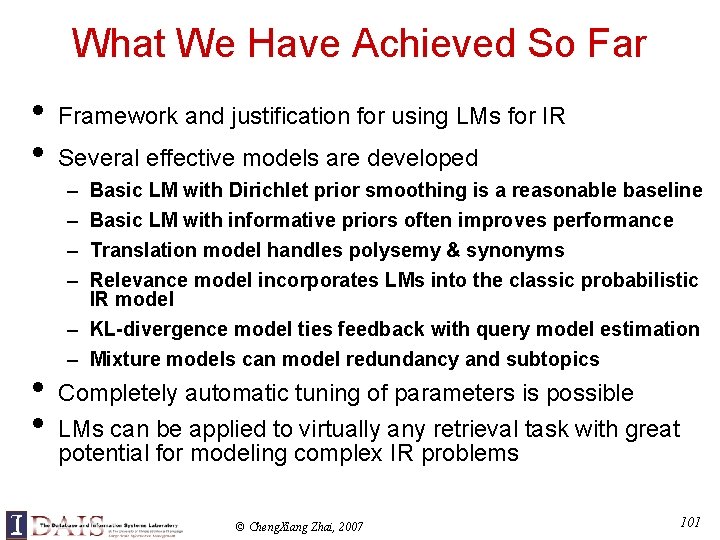

What We Have Achieved So Far (slide 101)
• Framework and justification for using LMs for IR
• Several effective models are developed
  – Basic LM with Dirichlet prior smoothing is a reasonable baseline
  – Basic LM with informative priors often improves performance
  – Translation model handles polysemy & synonyms
  – Relevance model incorporates LMs into the classic probabilistic IR model
  – KL-divergence model ties feedback with query model estimation
  – Mixture models can model redundancy and subtopics
• Completely automatic tuning of parameters is possible
• LMs can be applied to virtually any retrieval task with great potential for modeling complex IR problems

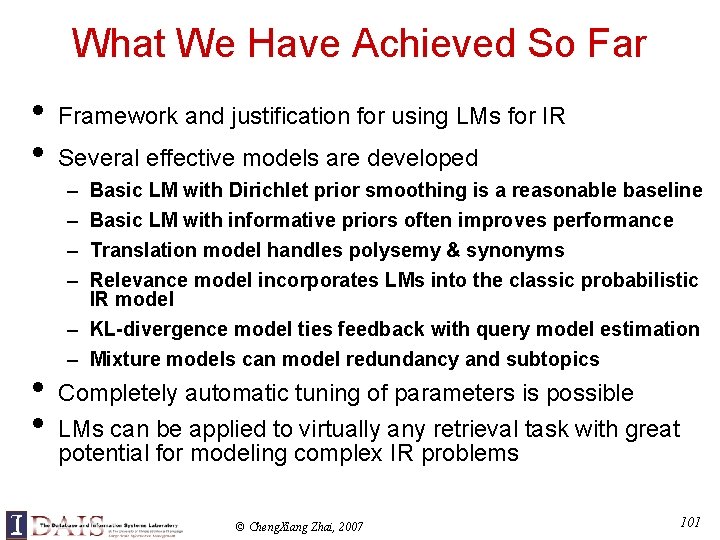

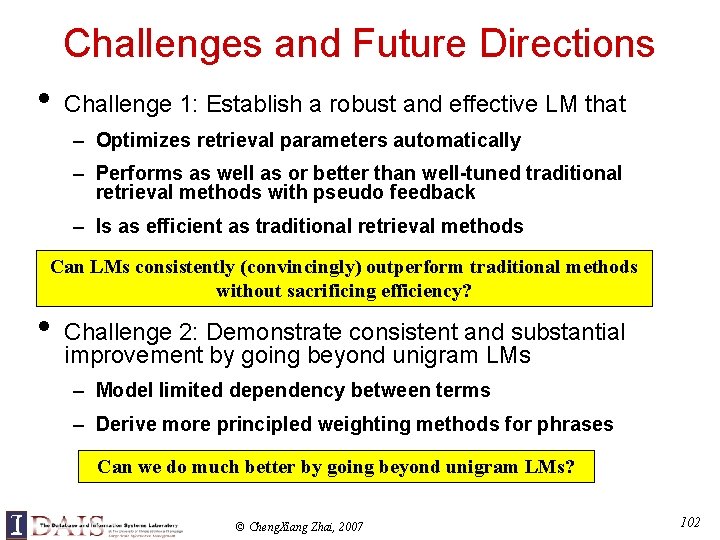

Challenges and Future Directions
• Challenge 1: Establish a robust and effective LM that
  – Optimizes retrieval parameters automatically
  – Performs as well as or better than well-tuned traditional retrieval methods with pseudo feedback
  – Is as efficient as traditional retrieval methods
  Can LMs consistently (convincingly) outperform traditional methods without sacrificing efficiency?
• Challenge 2: Demonstrate consistent and substantial improvement by going beyond unigram LMs
  – Model limited dependency between terms
  – Derive more principled weighting methods for phrases
  Can we do much better by going beyond unigram LMs?

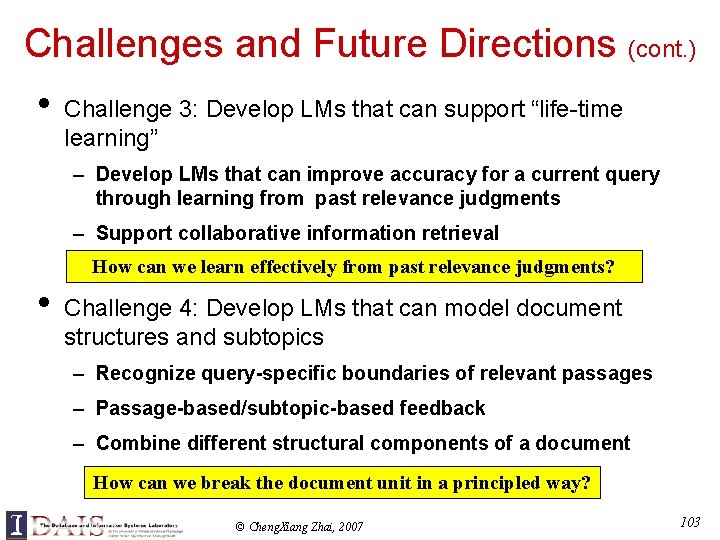

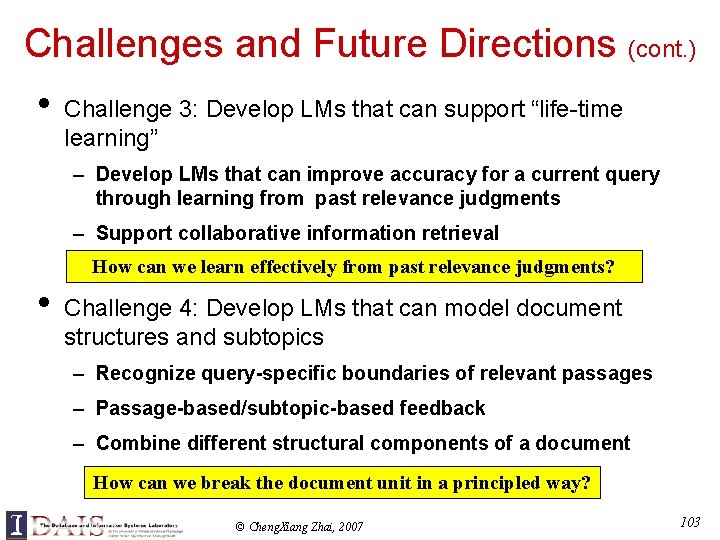

Challenges and Future Directions (cont.)
• Challenge 3: Develop LMs that can support "life-time learning"
  – Develop LMs that can improve accuracy for a current query through learning from past relevance judgments
  – Support collaborative information retrieval
  How can we learn effectively from past relevance judgments?
• Challenge 4: Develop LMs that can model document structures and subtopics
  – Recognize query-specific boundaries of relevant passages
  – Passage-based/subtopic-based feedback
  – Combine different structural components of a document
  How can we break the document unit in a principled way?

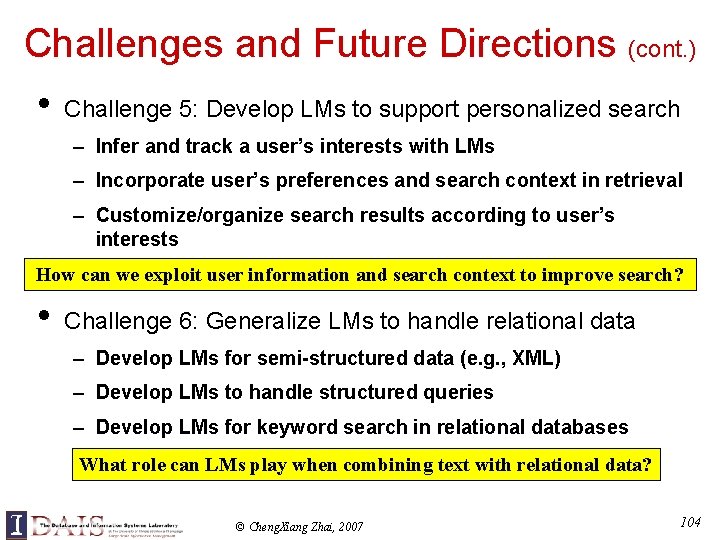

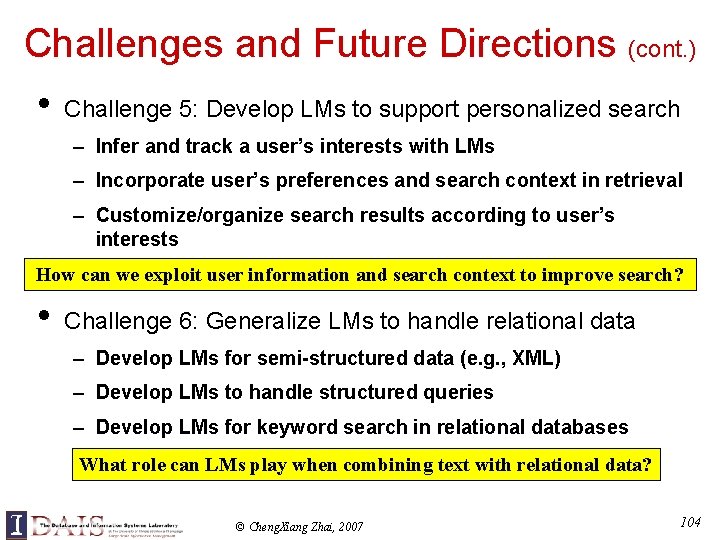

Challenges and Future Directions (cont.)
• Challenge 5: Develop LMs to support personalized search
  – Infer and track a user's interests with LMs
  – Incorporate user's preferences and search context in retrieval
  – Customize/organize search results according to user's interests
  How can we exploit user information and search context to improve search?
• Challenge 6: Generalize LMs to handle relational data
  – Develop LMs for semi-structured data (e.g., XML)
  – Develop LMs to handle structured queries
  – Develop LMs for keyword search in relational databases
  What role can LMs play when combining text with relational data?

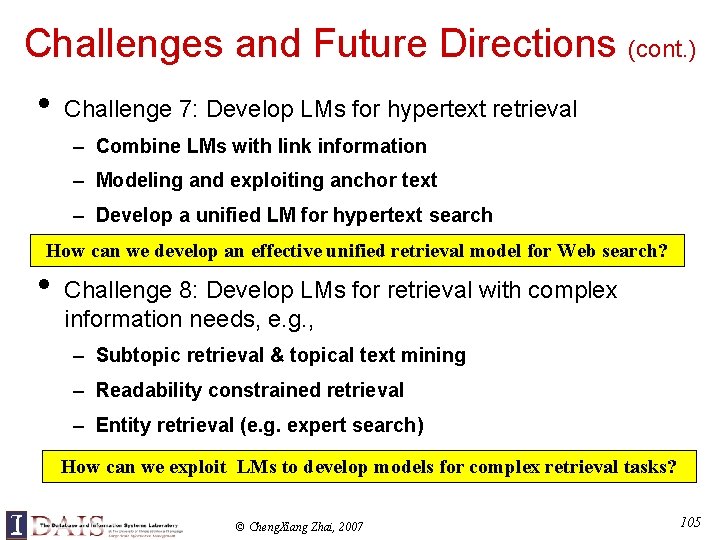

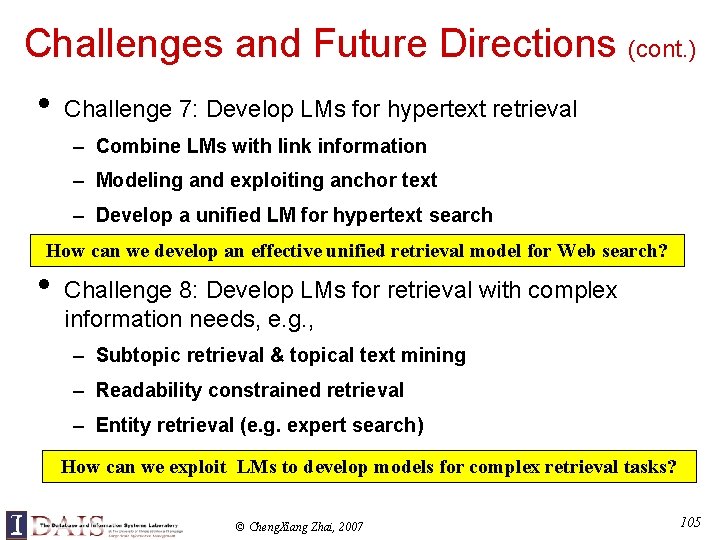

Challenges and Future Directions (cont.)
• Challenge 7: Develop LMs for hypertext retrieval
  – Combine LMs with link information
  – Modeling and exploiting anchor text
  – Develop a unified LM for hypertext search
  How can we develop an effective unified retrieval model for Web search?
• Challenge 8: Develop LMs for retrieval with complex information needs, e.g.,
  – Subtopic retrieval & topical text mining
  – Readability constrained retrieval
  – Entity retrieval (e.g., expert search)
  How can we exploit LMs to develop models for complex retrieval tasks?

![References Agichtein Cucerzan 05 E Agichtein and S Cucerzan Predicting accuracy of extracting References [Agichtein & Cucerzan 05] E. Agichtein and S. Cucerzan, Predicting accuracy of extracting](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-106.jpg)

References

[Agichtein & Cucerzan 05] E. Agichtein and S. Cucerzan, Predicting accuracy of extracting information from unstructured text collections, Proceedings of ACM CIKM 2005, pages 413-420.

[Baeza-Yates & Ribeiro-Neto 99] R. Baeza-Yates and B. Ribeiro-Neto, Modern Information Retrieval, Addison-Wesley, 1999.

[Bai et al. 05] Jing Bai, Dawei Song, Peter Bruza, Jian-Yun Nie, Guihong Cao, Query expansion using term relationships in language models for information retrieval, Proceedings of ACM CIKM 2005, pages 688-695.

[Balog et al. 06] K. Balog, L. Azzopardi, M. de Rijke, Formal models for expert finding in enterprise corpora, Proceedings of ACM SIGIR 2006, pages 43-50.

[Berger & Lafferty 99] A. Berger and J. Lafferty, Information retrieval as statistical translation, Proceedings of ACM SIGIR 1999, pages 222-229.

[Berger 01] A. Berger, Statistical machine learning for information retrieval, Ph.D. dissertation, Carnegie Mellon University, 2001.

[Blei et al. 02] D. Blei, A. Ng, and M. Jordan, Latent Dirichlet allocation, in T. G. Dietterich, S. Becker, and Z. Ghahramani, editors, Advances in Neural Information Processing Systems 14, Cambridge, MA, MIT Press, 2002.

[Blei & Jordan 03] D. Blei and M. Jordan, Modeling annotated data, Proceedings of ACM SIGIR 2003, pages 127-134.

[Blei & Lafferty 06a] D. Blei and J. Lafferty, Correlated topic models, Advances in Neural Information Processing Systems 18, 2006.

[Blei & Lafferty 06b] D. Blei and J. Lafferty, Dynamic topic models, Proceedings of ICML 2006, pages 113-120.

[Cao et al. 05] Guihong Cao, Jian-Yun Nie, Jing Bai, Integrating word relationships into language models, Proceedings of ACM SIGIR 2005, pages 298-305.

[Carbonell and Goldstein 98] J. Carbonell and J. Goldstein, The use of MMR, diversity-based reranking for reordering documents and producing summaries, Proceedings of SIGIR'98, pages 335-336.

[Chen & Goodman 98] S. F. Chen and J. T. Goodman, An empirical study of smoothing techniques for language modeling, Technical Report TR-10-98, Harvard University.

[Collins-Thompson & Callan 05] K. Collins-Thompson and J. Callan, Query expansion using random walk models, Proceedings of ACM CIKM 2005, pages 704-711.

![References cont CronenTownsend et al 02 Steve CronenTownsend Yun Zhou and W Bruce References (cont. ) [Cronen-Townsend et al. 02] Steve Cronen-Townsend, Yun Zhou, and W. Bruce](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-107.jpg)

References (cont.)

[Cronen-Townsend et al. 02] Steve Cronen-Townsend, Yun Zhou, and W. Bruce Croft, Predicting query performance, Proceedings of the ACM Conference on Research in Information Retrieval (SIGIR), 2002.

[Croft & Lafferty 03] W. B. Croft and J. Lafferty (eds), Language Modeling for Information Retrieval, Kluwer Academic Publishers, 2003.

[Fang et al. 04] H. Fang, T. Tao and C. Zhai, A formal study of information retrieval heuristics, Proceedings of ACM SIGIR 2004, pages 49-56.

[Fang & Zhai 07] H. Fang and C. Zhai, Probabilistic models for expert finding, Proceedings of ECIR 2007.

[Fox 83] E. Fox, Extending the Boolean and Vector Space Models of Information Retrieval with P-Norm Queries and Multiple Concept Types, Ph.D. thesis, Cornell University, 1983.

[Fuhr 01] N. Fuhr, Language models and uncertain inference in information retrieval, Proceedings of the Language Modeling and IR workshop, pages 6-11.

[Gao et al. 04] J. Gao, J. Nie, G. Wu, and G. Cao, Dependence language model for information retrieval, Proceedings of ACM SIGIR 2004.

[Good 53] I. J. Good, The population frequencies of species and the estimation of population parameters, Biometrika, 40(3 and 4): 237-264, 1953.

[Greiff & Morgan 03] W. Greiff and W. Morgan, Contributions of Language Modeling to the Theory and Practice of IR, in W. B. Croft and J. Lafferty (eds), Language Modeling for Information Retrieval, Kluwer Academic Publishers, 2003.

[Grossman & Frieder 04] D. Grossman and O. Frieder, Information Retrieval: Algorithms and Heuristics, 2nd Ed, Springer, 2004.

[He & Ounis 05] Ben He and Iadh Ounis, A study of the Dirichlet priors for term frequency normalisation, Proceedings of ACM SIGIR 2005, pages 465-471.

[Hiemstra & Kraaij 99] D. Hiemstra and W. Kraaij, Twenty-One at TREC-7: Ad-hoc and Cross-language track, Proceedings of the Seventh Text REtrieval Conference (TREC-7), 1999.

[Hiemstra 01] D. Hiemstra, Using Language Models for Information Retrieval, Ph.D. dissertation, University of Twente, Enschede, The Netherlands, January 2001.

[Hiemstra 02] D. Hiemstra, Term-specific smoothing for the language modeling approach to information retrieval: the importance of a query term, Proceedings of ACM SIGIR 2002, pages 35-41.

[Hiemstra et al. 04] D. Hiemstra, S. Robertson, and H. Zaragoza, Parsimonious language models for information retrieval, Proceedings of ACM SIGIR 2004.

![References cont Hofmann 99 T Hofmann Probabilistic latent semantic indexing In Proceedings on References (cont. ) [Hofmann 99] T. Hofmann. Probabilistic latent semantic indexing. In Proceedings on](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-108.jpg)

References (cont.)

[Hofmann 99] T. Hofmann, Probabilistic latent semantic indexing, Proceedings of the 22nd annual international ACM SIGIR 1999, pages 50-57.

[Jarvelin & Kekalainen 02] K. Jarvelin and J. Kekalainen, Cumulated gain-based evaluation of IR techniques, ACM TOIS, Vol. 20, No. 4, pages 422-446, 2002.

[Jelinek 98] F. Jelinek, Statistical Methods for Speech Recognition, Cambridge: MIT Press, 1998.

[Jelinek & Mercer 80] F. Jelinek and R. L. Mercer, Interpolated estimation of Markov source parameters from sparse data, in E. S. Gelsema and L. N. Kanal, editors, Pattern Recognition in Practice, Amsterdam, North-Holland, 1980.

[Jeon et al. 03] J. Jeon, V. Lavrenko and R. Manmatha, Automatic Image Annotation and Retrieval using Cross-media Relevance Models, Proceedings of ACM SIGIR 2003.

[Jin et al. 02] R. Jin, A. Hauptmann, and C. Zhai, Title language models for information retrieval, Proceedings of ACM SIGIR 2002.

[Kalt 96] T. Kalt, A new probabilistic model of text classification and retrieval, University of Massachusetts Technical Report TR 98-18, 1996.

[Katz 87] S. M. Katz, Estimation of probabilities from sparse data for the language model component of a speech recognizer, IEEE Transactions on Acoustics, Speech and Signal Processing, volume ASSP-35: 400-401.

[Kraaij et al. 02] W. Kraaij, T. Westerveld, D. Hiemstra, The Importance of Prior Probabilities for Entry Page Search, Proceedings of SIGIR 2002, pages 27-34.

[Kraaij 04] W. Kraaij, Variations on Language Modeling for Information Retrieval, Ph.D. thesis, University of Twente, 2004.

[Kurland & Lee 04] O. Kurland and L. Lee, Corpus structure, language models, and ad hoc information retrieval, Proceedings of ACM SIGIR 2004.

[Kurland et al. 05] Oren Kurland, Lillian Lee, Carmel Domshlak, Better than the real thing?: iterative pseudo-query processing using cluster-based language models, Proceedings of ACM SIGIR 2005, pages 19-26.

[Kurland & Lee 05] Oren Kurland and Lillian Lee, PageRank without hyperlinks: structural re-ranking using links induced by language models, Proceedings of ACM SIGIR 2005, pages 306-313.

[Lafferty and Zhai 01a] J. Lafferty and C. Zhai, Probabilistic IR models based on query and document generation, Proceedings of the Language Modeling and IR workshop, pages 1-5.

[Lafferty & Zhai 01b] J. Lafferty and C. Zhai, Document language models, query models, and risk minimization for information retrieval, Proceedings of ACM SIGIR 2001, pages 111-119.

[Lavrenko & Croft 01] V. Lavrenko and W. B. Croft, Relevance-based language models, Proceedings of ACM SIGIR 2001, pages 120-127.

![References cont Lavrenko et al 02 V Lavrenko M Choquette and W Croft References (cont. ) [Lavrenko et al. 02] V. Lavrenko, M. Choquette, and W. Croft.](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-109.jpg)

References (cont.)

[Lavrenko et al. 02] V. Lavrenko, M. Choquette, and W. Croft, Cross-lingual relevance models, Proceedings of SIGIR 2002, pages 175-182.

[Lavrenko 04] V. Lavrenko, A generative theory of relevance, Ph.D. thesis, University of Massachusetts, 2004.

[Li & Croft 03] X. Li and W. B. Croft, Time-Based Language Models, Proceedings of CIKM'03, 2003.

[Li & McCallum 06] W. Li and A. McCallum, Pachinko Allocation: DAG-structured Mixture Models of Topic Correlations, Proceedings of ICML 2006, pages 577-584.

[Liu & Croft 02] X. Liu and W. B. Croft, Passage retrieval based on language models, Proceedings of CIKM 2002, pages 1519.

[Liu & Croft 04] X. Liu and W. B. Croft, Cluster-based retrieval using language models, Proceedings of ACM SIGIR 2004.

[MacKay & Peto 95] D. MacKay and L. Peto, A hierarchical Dirichlet language model, Natural Language Engineering, 1(3): 289-307, 1995.

[Maron & Kuhns 60] M. E. Maron and J. L. Kuhns, On relevance, probabilistic indexing and information retrieval, Journal of the ACM, 7: 216-244.

[McCallum & Nigam 98] A. McCallum and K. Nigam, A comparison of event models for Naïve Bayes text classification, AAAI-1998 Learning for Text Categorization Workshop, pages 41-48, 1998.

[Mei & Zhai 05] Q. Mei and C. Zhai, Discovering evolutionary theme patterns from text – An exploration of temporal text mining, Proceedings of ACM KDD 2005, pages 198-207.

[Mei & Zhai 06] Q. Mei and C. Zhai, A mixture model for contextual text mining, Proceedings of ACM KDD 2006, pages 649-655.

[Mei et al. 06] Q. Mei, C. Liu, H. Su, and C. Zhai, A probabilistic approach to spatiotemporal theme pattern mining on weblogs, Proceedings of WWW 2006, pages 533-542.

[Mei et al. 07] Q. Mei, X. Ling, M. Wondra, H. Su, C. Zhai, Topic Sentiment Mixture: Modeling facets and opinions in Weblogs, Proceedings of WWW 2007.

[Miller et al. 99] D. R. H. Miller, T. Leek, and R. M. Schwartz, A hidden Markov model information retrieval system, Proceedings of ACM SIGIR 1999, pages 214-221.

[Minka & Lafferty 03] T. Minka and J. Lafferty, Expectation-propagation for the generative aspect model, Proceedings of the UAI 2002, pages 352-359.

![References cont Nallanati Allan 02 Ramesh Nallapati and James Allan Capturing term References (cont. ) [Nallanati & Allan 02] Ramesh Nallapati and James Allan, Capturing term](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-110.jpg)

References (cont.)

[Nallapati & Allan 02] Ramesh Nallapati and James Allan, Capturing term dependencies using a language model based on sentence trees, Proceedings of CIKM 2002, pages 383-390.

[Nallapati et al 03] R. Nallapati, W. B. Croft, and J. Allan, Relevant query feedback in statistical language modeling, Proceedings of CIKM 2003.

[Ney et al. 94] H. Ney, U. Essen, and R. Kneser, On Structuring Probabilistic Dependencies in Stochastic Language Modeling, Comput. Speech and Lang., 8(1), pages 1-28.

[Ng 00] K. Ng, A maximum likelihood ratio information retrieval model, in E. Voorhees and D. Harman, editors, Proceedings of the Eighth Text REtrieval Conference (TREC-8), pages 483-492, 2000.

[Ogilvie & Callan 03] P. Ogilvie and J. Callan, Combining Document Representations for Known Item Search, Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2003), pages 143-150.

[Ponte & Croft 98] J. M. Ponte and W. B. Croft, A language modeling approach to information retrieval, Proceedings of ACM SIGIR 1998, pages 275-281.

[Ponte 98] J. M. Ponte, A language modeling approach to information retrieval, Ph.D. dissertation, University of Massachusetts, Amherst, MA, September 1998.

[Ponte 01] J. Ponte, Is information retrieval anything more than smoothing?, Proceedings of the Workshop on Language Modeling and Information Retrieval, pages 37-41, 2001.

[Robertson & Sparck Jones 76] S. Robertson and K. Sparck Jones, Relevance Weighting of Search Terms, JASIS, 27, pages 129-146, 1976.

[Robertson 77] S. E. Robertson, The probability ranking principle in IR, Journal of Documentation, 33: 294-304, 1977.

[Robertson & Walker 94] S. E. Robertson and S. Walker, Some simple effective approximations to the 2-Poisson model for probabilistic weighted retrieval, Proceedings of ACM SIGIR 1994, pages 232-241.

[Rosenfeld 00] R. Rosenfeld, Two decades of statistical language modeling: where do we go from here?, Proceedings of IEEE, volume 88.

[Salton et al. 75] G. Salton, A. Wong and C. S. Yang, A vector space model for automatic indexing, Communications of the ACM, 18(11): 613-620.

[Salton & Buckley 88] G. Salton and C. Buckley, Term weighting approaches in automatic text retrieval, Information Processing and Management, 24(5), pages 513-523, 1988.

![References cont Shannon 48 Shannon C E 1948 A mathematical theory of References (cont. ) [Shannon 48] Shannon, C. E. (1948). . A mathematical theory of](https://slidetodoc.com/presentation_image_h/e553ddddea26dffa7e20497194e23b1b/image-111.jpg)