Modern Information Retrieval Chapter 5 Query Operations 89522022

- Slides: 33

Modern Information Retrieval Chapter 5 Query Operations Presenter: 林秉儀 Student ID: 89522022

Introduction • It is difficult to formulate queries that are well designed for retrieval purposes. • The initial query formulation can be improved through query expansion and term reweighting. The approaches are based on: – feedback information from the user – information derived from the set of documents initially retrieved (called the local set of documents) – global information derived from the document collection

User Relevance Feedback • The user is presented with a list of the retrieved documents and, after examining them, marks those which are relevant. • Two basic operations: – Query expansion: addition of new terms from the relevant documents – Term reweighting: modification of term weights based on the user's relevance judgements

User Relevance Feedback • User relevance feedback is used to: – expand queries with the vector model – reweight query terms with the probabilistic model – reweight query terms with a variant of the probabilistic model

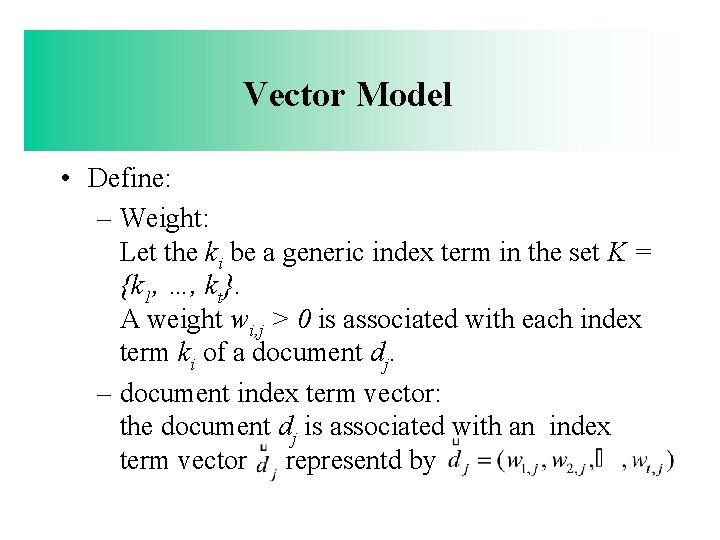

Vector Model • Define: – Weight: let $k_i$ be a generic index term in the set $K = \{k_1, \ldots, k_t\}$. A weight $w_{i,j} > 0$ is associated with each index term $k_i$ of a document $d_j$. – Document index term vector: the document $d_j$ is associated with an index term vector $\vec{d_j}$ represented by $\vec{d_j} = (w_{1,j}, w_{2,j}, \ldots, w_{t,j})$

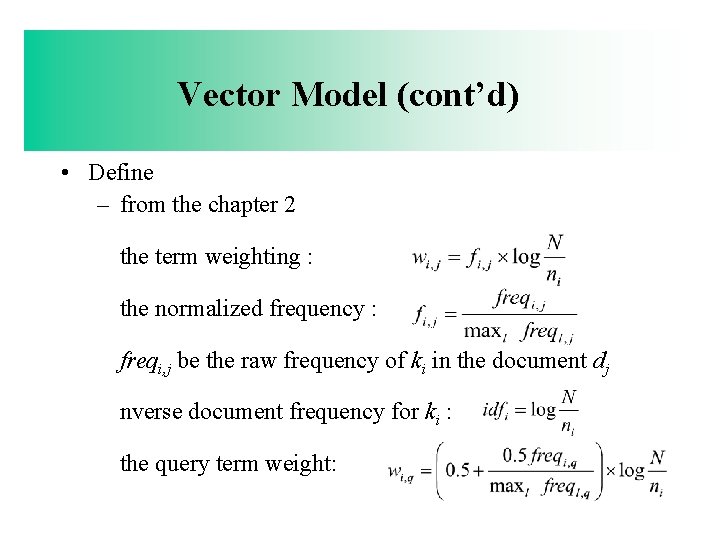

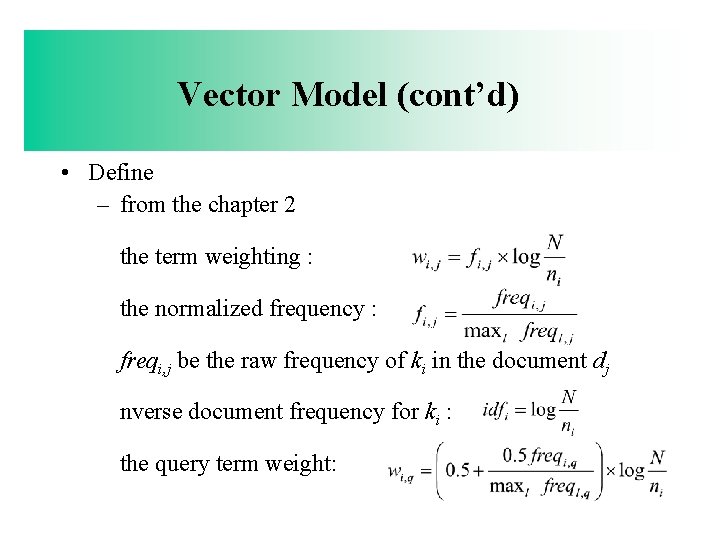

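Vector Model (cont’d) • Define, from Chapter 2: – the term weighting: $w_{i,j} = f_{i,j} \times idf_i$ – the normalized frequency: $f_{i,j} = \frac{freq_{i,j}}{\max_l freq_{l,j}}$, where $freq_{i,j}$ is the raw frequency of $k_i$ in the document $d_j$ – the inverse document frequency for $k_i$: $idf_i = \log \frac{N}{n_i}$, where $N$ is the total number of documents and $n_i$ is the number of documents which contain $k_i$ – the query term weight: $w_{i,q} = \left(0.5 + \frac{0.5\, freq_{i,q}}{\max_l freq_{l,q}}\right) \times \log \frac{N}{n_i}$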

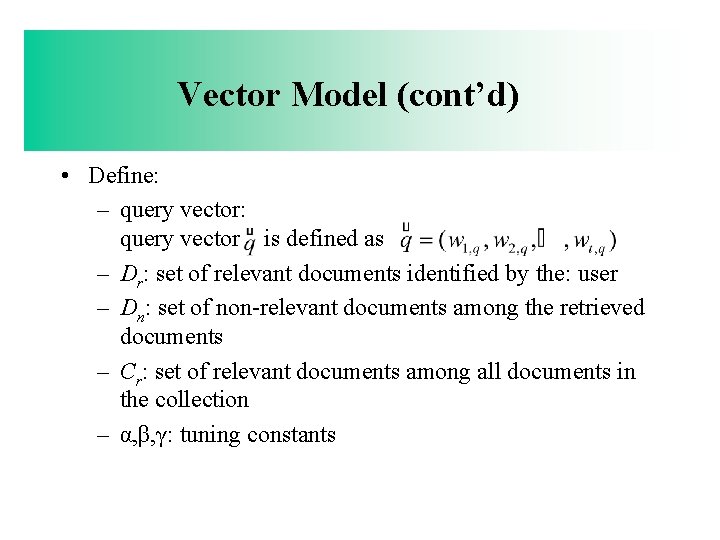
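
The document weighting $w_{i,j} = f_{i,j} \times idf_i$ can be sketched in Python. This is a minimal illustration rather than a reference implementation: it assumes each document is a non-empty list of already tokenized terms, the function name `tf_idf_weights` and the toy collection are hypothetical, and the natural logarithm is an arbitrary choice of base.

```python
import math
from collections import Counter

def tf_idf_weights(docs):
    """Return one {term: w_ij} dict per document, with w_ij = f_ij * idf_i."""
    n_docs = len(docs)                                   # N
    # n_i: number of documents that contain the term k_i
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        raw = Counter(doc)                               # freq_ij
        max_freq = max(raw.values())                     # max_l freq_lj
        weights.append({
            term: (freq / max_freq) * math.log(n_docs / doc_freq[term])
            for term, freq in raw.items()
        })
    return weights

# Hypothetical three-document collection.
docs = [["query", "expansion", "query"], ["term", "weight"], ["query", "term"]]
weights = tf_idf_weights(docs)
```

A term that occurs in every document gets $idf_i = \log 1 = 0$, so it contributes nothing to the ranking.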

Vector Model (cont’d) • Define: – Query vector: the query vector $\vec{q}$ is defined as $\vec{q} = (w_{1,q}, w_{2,q}, \ldots, w_{t,q})$ – $D_r$: set of relevant documents identified by the user among the retrieved documents – $D_n$: set of non-relevant documents among the retrieved documents – $C_r$: set of relevant documents among all documents in the collection – $\alpha$, $\beta$, $\gamma$: tuning constants

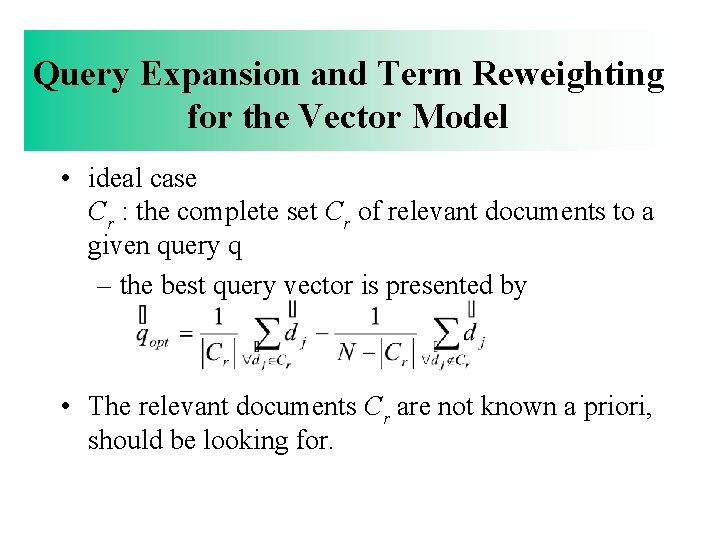

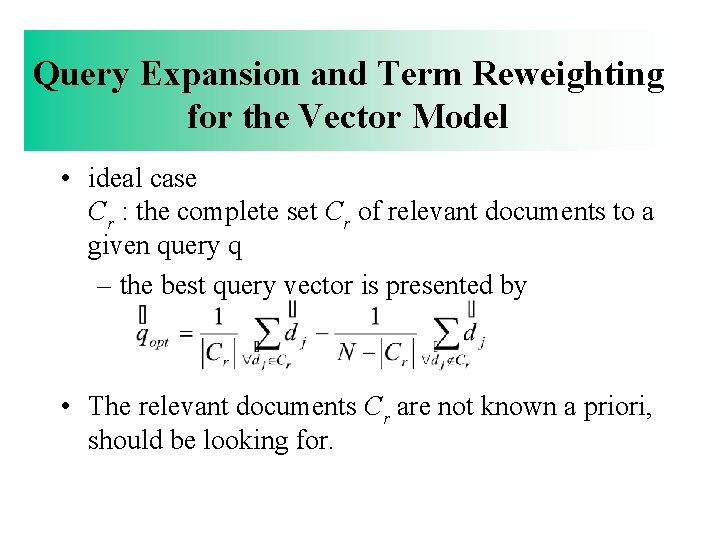

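Query Expansion and Term Reweighting for the Vector Model • Ideal case: the complete set $C_r$ of documents relevant to a given query $q$ is known in advance – the best query vector is then given by $\vec{q}_{opt} = \frac{1}{|C_r|} \sum_{\vec{d_j} \in C_r} \vec{d_j} - \frac{1}{N - |C_r|} \sum_{\vec{d_j} \notin C_r} \vec{d_j}$ • In practice the relevant documents $C_r$ are not known a priori; they are exactly what the search is looking for.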

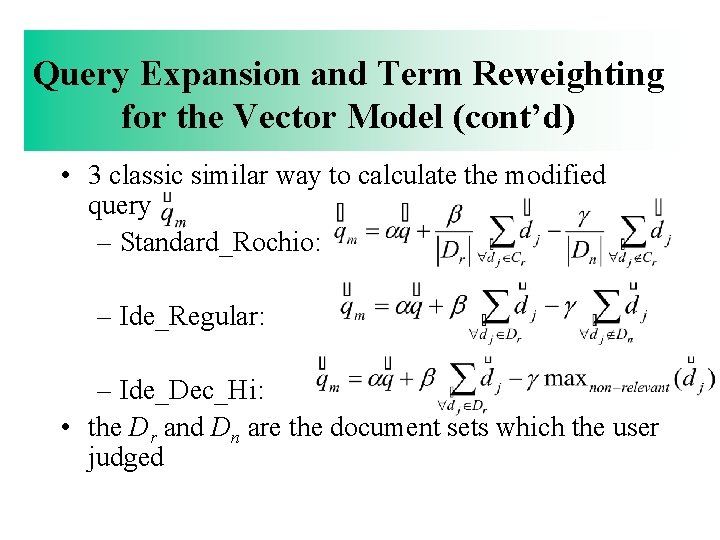

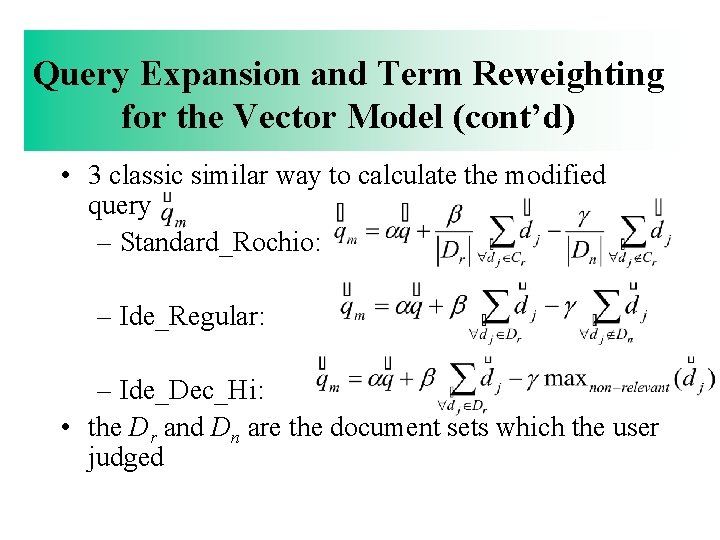

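Query Expansion and Term Reweighting for the Vector Model (cont’d) • Three classic and similar ways to calculate the modified query $\vec{q}_m$: – Standard_Rocchio: $\vec{q}_m = \alpha \vec{q} + \frac{\beta}{|D_r|} \sum_{\vec{d_j} \in D_r} \vec{d_j} - \frac{\gamma}{|D_n|} \sum_{\vec{d_j} \in D_n} \vec{d_j}$ – Ide_Regular: $\vec{q}_m = \alpha \vec{q} + \beta \sum_{\vec{d_j} \in D_r} \vec{d_j} - \gamma \sum_{\vec{d_j} \in D_n} \vec{d_j}$ – Ide_Dec_Hi: $\vec{q}_m = \alpha \vec{q} + \beta \sum_{\vec{d_j} \in D_r} \vec{d_j} - \gamma \max_{non\text{-}relevant}(\vec{d_j})$, where $\max_{non\text{-}relevant}(\vec{d_j})$ is the highest ranked non-relevant document • $D_r$ and $D_n$ are the sets of retrieved documents which the user judged relevant and non-relevant, respectively.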

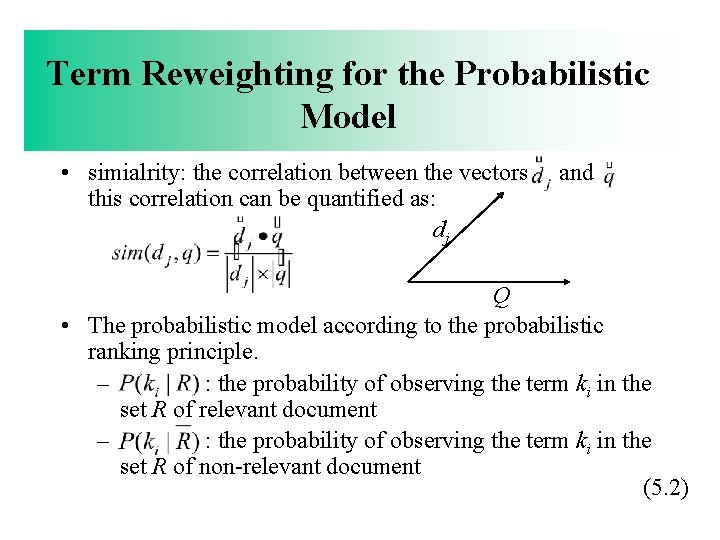

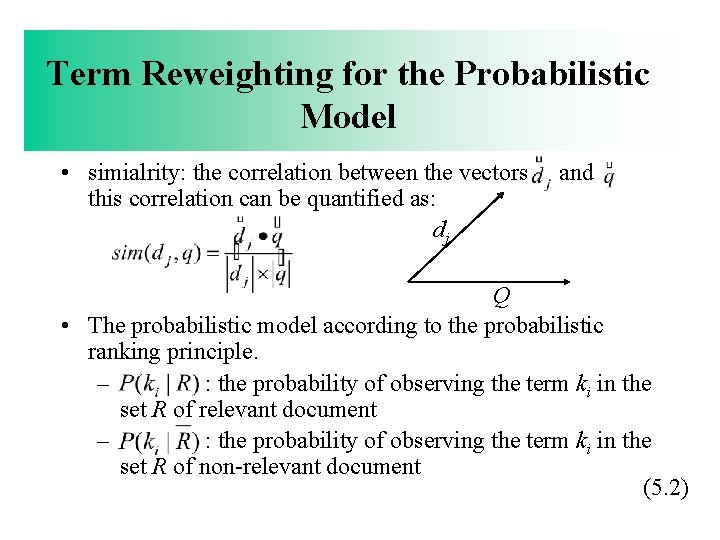
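
The three feedback formulas differ only in how the relevant and non-relevant documents are aggregated, which a short Python sketch makes explicit. The sketch assumes dense vectors stored as equal-length lists of floats and assumes that `d_n` is given in rank order; the function names and the default constants of 1.0 are illustrative choices, not values prescribed by the slides.

```python
def vec_sum(vectors, size):
    """Component-wise sum of a list of equal-length vectors."""
    total = [0.0] * size
    for vec in vectors:
        for i, value in enumerate(vec):
            total[i] += value
    return total

def modified_query(q, d_r, d_n, alpha=1.0, beta=1.0, gamma=1.0,
                   variant="standard_rocchio"):
    """Return q_m for 'standard_rocchio', 'ide_regular' or 'ide_dec_hi'."""
    size = len(q)
    pos = vec_sum(d_r, size)
    if variant == "ide_dec_hi":
        # Assumption: d_n is in rank order, so d_n[0] is the highest
        # ranked non-relevant document.
        neg = list(d_n[0]) if d_n else [0.0] * size
    else:
        neg = vec_sum(d_n, size)
    if variant == "standard_rocchio":
        # Rocchio averages over the judged sets instead of summing.
        pos = [x / len(d_r) for x in pos] if d_r else pos
        neg = [x / len(d_n) for x in neg] if d_n else neg
    return [alpha * q[i] + beta * pos[i] - gamma * neg[i] for i in range(size)]

# Hypothetical judged documents over a three-term vocabulary.
q = [1.0, 0.0, 0.0]
d_r = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0]]
d_n = [[0.0, 0.0, 2.0], [0.0, 2.0, 0.0]]
q_m = modified_query(q, d_r, d_n)
```

Terms that appear only in the relevant documents enter the modified query with a positive weight, which is the query expansion step; terms already in the query have their weights adjusted, which is the reweighting step.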

Term Reweighting for the Probabilistic Model • Similarity: the correlation between the vectors $\vec{d_j}$ and $\vec{q}$; this correlation can be quantified as the cosine of the angle between them. • The probabilistic model ranks documents according to the probabilistic ranking principle. – $P(k_i|R)$: the probability of observing the term $k_i$ in the set $R$ of relevant documents – $P(k_i|\bar{R})$: the probability of observing the term $k_i$ in the set $\bar{R}$ of non-relevant documents

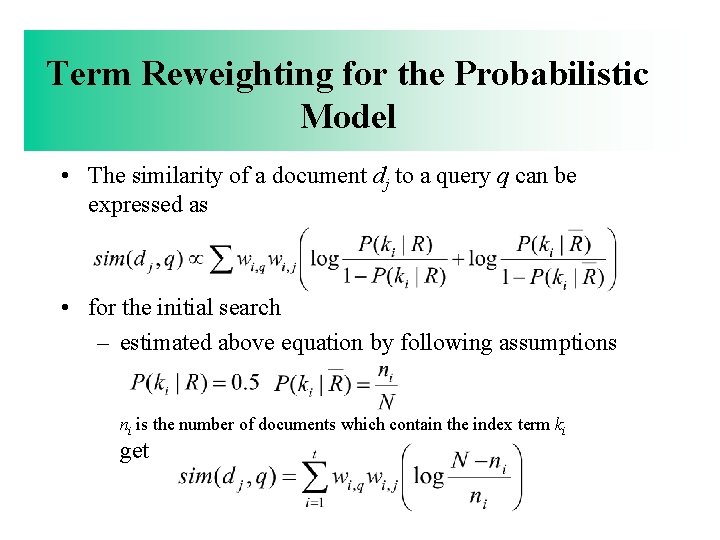

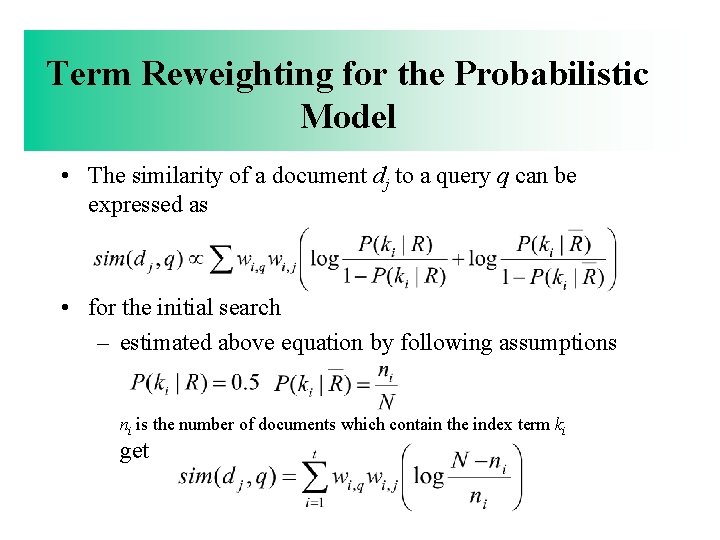

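Term Reweighting for the Probabilistic Model • The similarity of a document $d_j$ to a query $q$ can be expressed as $sim(d_j, q) \sim \sum_{i=1}^{t} w_{i,q}\, w_{i,j} \left( \log\frac{P(k_i|R)}{1 - P(k_i|R)} + \log\frac{1 - P(k_i|\bar{R})}{P(k_i|\bar{R})} \right)$ • For the initial search, the equation above is estimated under the following assumptions: $P(k_i|R) = 0.5$ and $P(k_i|\bar{R}) = \frac{n_i}{N}$, where $n_i$ is the number of documents which contain the index term $k_i$ and $N$ is the total number of documents in the collection. This gives $sim_{initial}(d_j, q) = \sum_{i=1}^{t} w_{i,q}\, w_{i,j} \log\frac{N - n_i}{n_i}$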

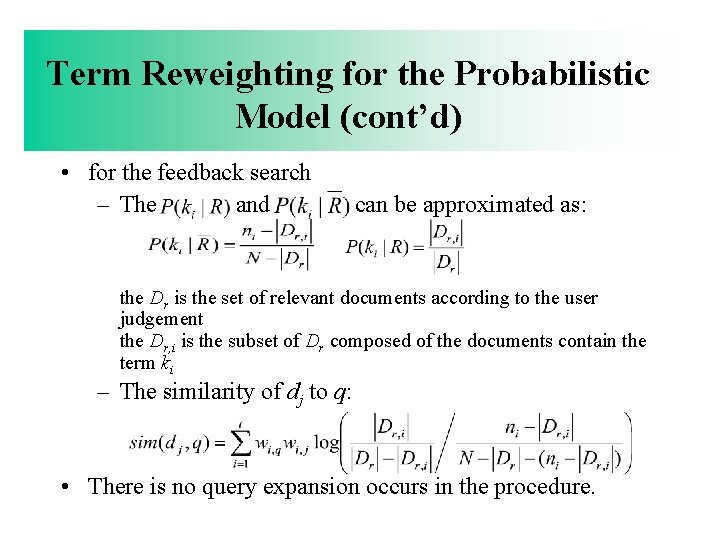

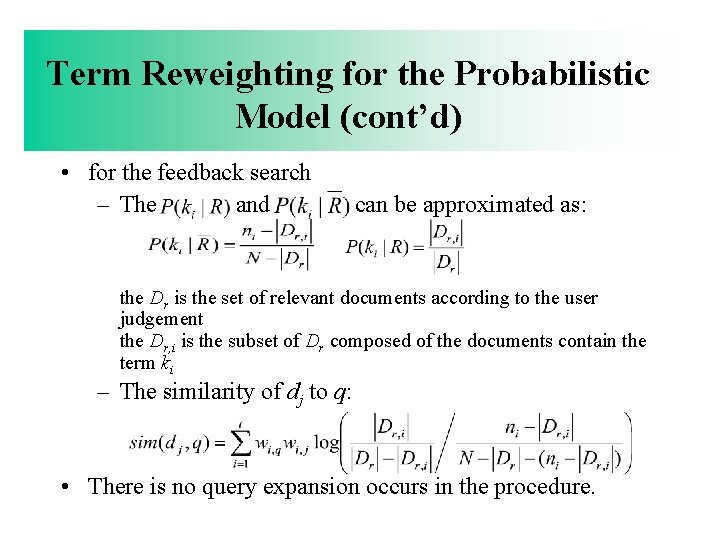

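Term Reweighting for the Probabilistic Model (cont’d) • For the feedback search – $P(k_i|R)$ and $P(k_i|\bar{R})$ can be approximated as $P(k_i|R) = \frac{|D_{r,i}|}{|D_r|}$ and $P(k_i|\bar{R}) = \frac{n_i - |D_{r,i}|}{N - |D_r|}$, where $D_r$ is the set of relevant documents according to the user's judgement and $D_{r,i}$ is the subset of $D_r$ composed of the documents which contain the term $k_i$ – The similarity of $d_j$ to $q$: $sim(d_j, q) = \sum_{i=1}^{t} w_{i,q}\, w_{i,j} \log\left[ \frac{|D_{r,i}|}{|D_r| - |D_{r,i}|} \div \frac{n_i - |D_{r,i}|}{N - |D_r| - (n_i - |D_{r,i}|)} \right]$ • No query expansion occurs in this procedure; only the term weights change.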

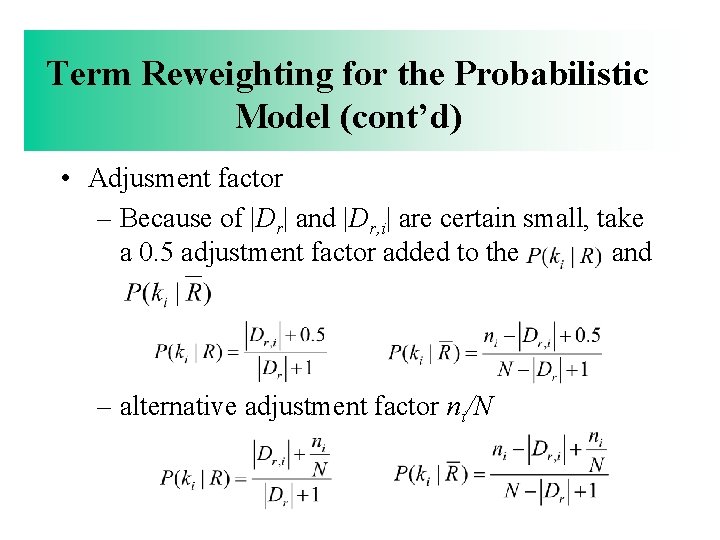

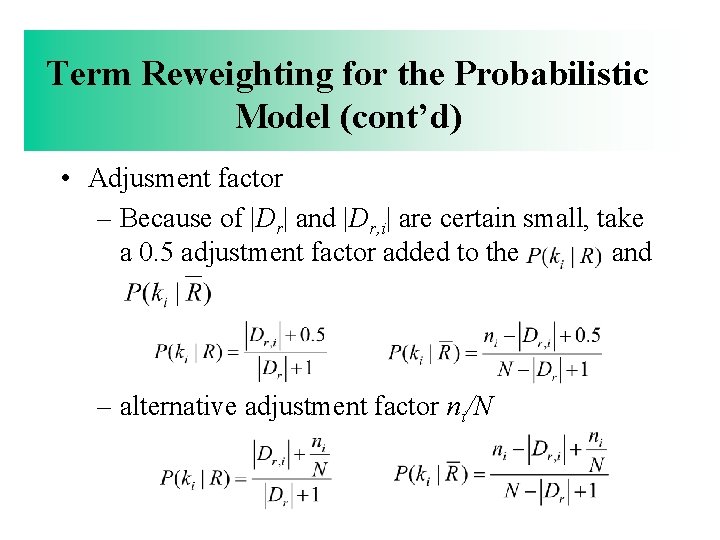

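Term Reweighting for the Probabilistic Model (cont’d) • Adjustment factor – Because $|D_r|$ and $|D_{r,i}|$ are usually small, an adjustment factor of 0.5 is added to the estimates of $P(k_i|R)$ and $P(k_i|\bar{R})$: $P(k_i|R) = \frac{|D_{r,i}| + 0.5}{|D_r| + 1}$, $P(k_i|\bar{R}) = \frac{n_i - |D_{r,i}| + 0.5}{N - |D_r| + 1}$ – Alternative adjustment factor $n_i/N$: $P(k_i|R) = \frac{|D_{r,i}| + n_i/N}{|D_r| + 1}$, $P(k_i|\bar{R}) = \frac{n_i - |D_{r,i}| + n_i/N}{N - |D_r| + 1}$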

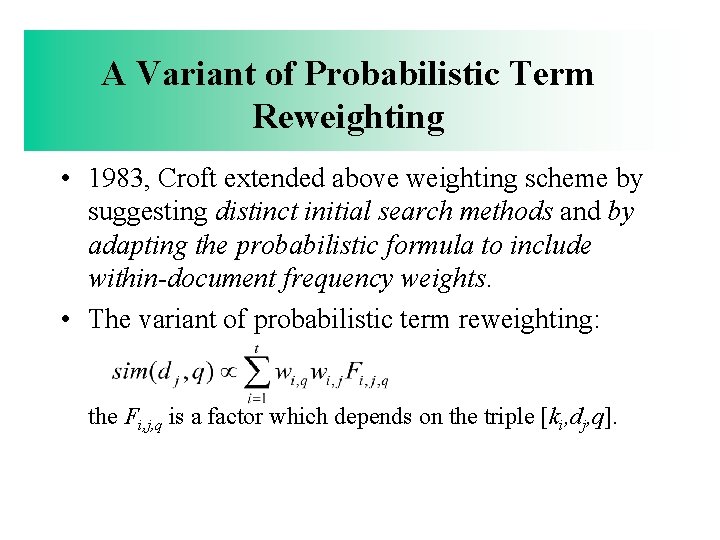

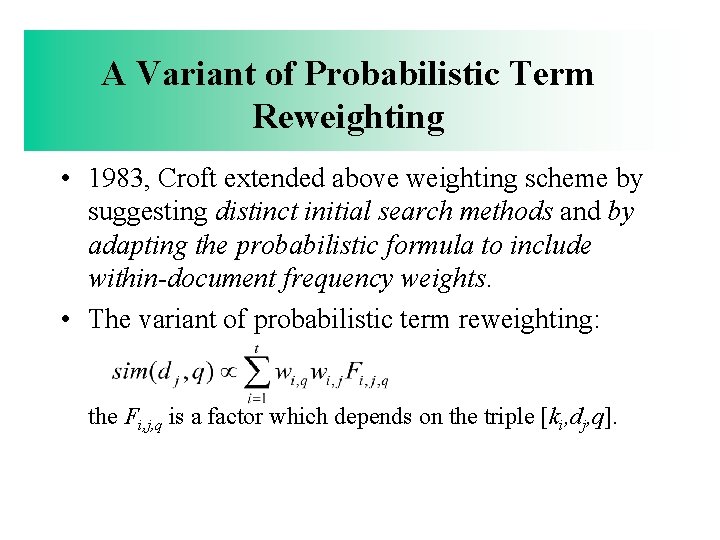
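
The per-term weights of the probabilistic model, for both the initial search and the feedback search, can be sketched as follows. This is an illustrative sketch under stated assumptions: the function names are hypothetical, the initial weight assumes $0 < n_i < N$, and the feedback weight uses the smoothed estimates $P(k_i|R) = (|D_{r,i}| + a)/(|D_r| + 1)$ and $P(k_i|\bar{R}) = (n_i - |D_{r,i}| + a)/(N - |D_r| + 1)$ with the adjustment factor $a$ as a parameter.

```python
import math

def initial_weight(n_i, n_docs):
    """Initial search: log((N - n_i) / n_i), from P(k_i|R) = 0.5 and
    P(k_i|not R) = n_i / N. Assumes 0 < n_i < N."""
    return math.log((n_docs - n_i) / n_i)

def feedback_weight(n_i, n_docs, size_dr, size_dri, adjust=0.5):
    """Feedback search with an adjustment factor added to both estimates.

    size_dr  = |D_r|   (documents the user judged relevant)
    size_dri = |D_r,i| (those of them that contain the term k_i)
    """
    p_rel = (size_dri + adjust) / (size_dr + 1)
    p_non = (n_i - size_dri + adjust) / (n_docs - size_dr + 1)
    return math.log(p_rel / (1 - p_rel)) + math.log((1 - p_non) / p_non)

# Hypothetical counts: 100 documents, the term occurs in 10 of them,
# and in 3 of the 4 documents judged relevant.
w_initial = initial_weight(10, 100)
w_feedback = feedback_weight(10, 100, 4, 3)
w_alternative = feedback_weight(10, 100, 4, 3, adjust=10 / 100)
```

With binary index term weights, the similarity of a document to the query is the sum of these weights over the terms that the document and the query share.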

A Variant of Probabilistic Term Reweighting • In 1983, Croft extended the above weighting scheme by suggesting distinct initial search methods and by adapting the probabilistic formula to include within-document frequency weights. • The variant of probabilistic term reweighting: $sim(d_j, q) \sim \sum_{i=1}^{t} w_{i,q}\, w_{i,j}\, F_{i,j,q}$, where $F_{i,j,q}$ is a factor which depends on the triple $[k_i, d_j, q]$.

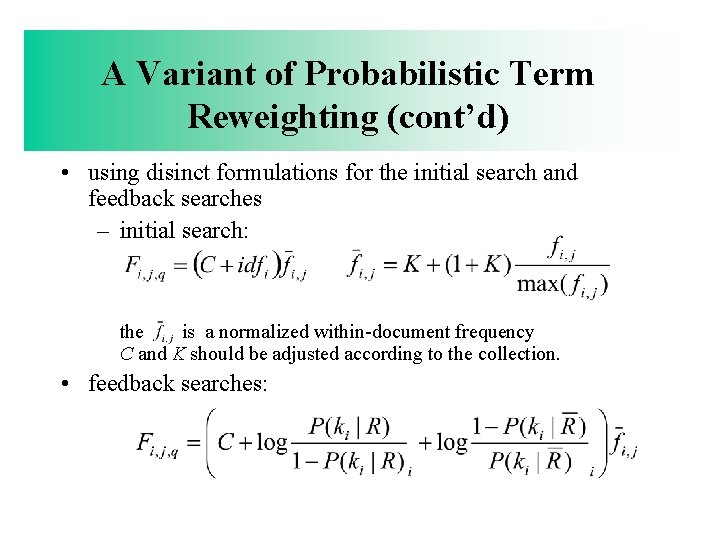

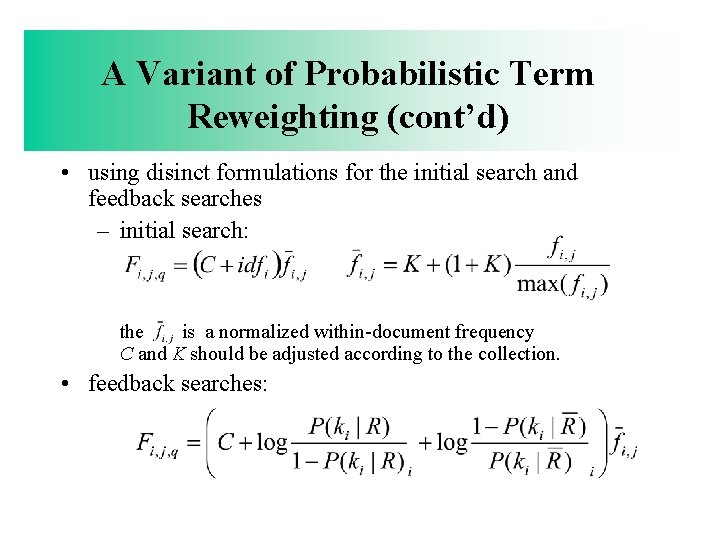

A Variant of Probabilistic Term Reweighting (cont’d) • Distinct formulations are used for the initial search and the feedback searches – initial search: here $f_{i,j}$ is a normalized within-document frequency, and the constants $C$ and $K$ should be adjusted according to the collection – feedback searches:

Automatic Local Analysis • Clustering: the grouping of documents which satisfy a set of common properties. • The goal is to obtain a description for a larger cluster of relevant documents automatically, by identifying terms which are related to the query terms, such as: – Synonyms – Stemming variations – Terms at a distance of at most $k$ words from a query term

Automatic Local Analysis (cont’d) • In a local strategy, the documents retrieved for a given query $q$ are examined at query time to determine terms for query expansion. • Two basic types of local strategy: – Local clustering – Local context analysis • Local strategies suit intranet environments but not Web documents.

Query Expansion Through Local Clustering • Local feedback strategies expand the query with terms correlated to the query terms. Such correlated terms are those present in local clusters built from the local document set.

Query Expansion Through Local Clustering (cont’d) • Definition: – Stem: let $V(s)$ be a non-empty subset of words which are grammatical variants of each other. A canonical form $s$ of $V(s)$ is called a stem. Example: if $V(s) = \{polish, polishing, polished\}$ then $s = polish$. – $D_l$: the local document set, i.e. the set of documents retrieved for a given query $q$ • Strategies for building local clusters: – Association clusters – Metric clusters – Scalar clusters

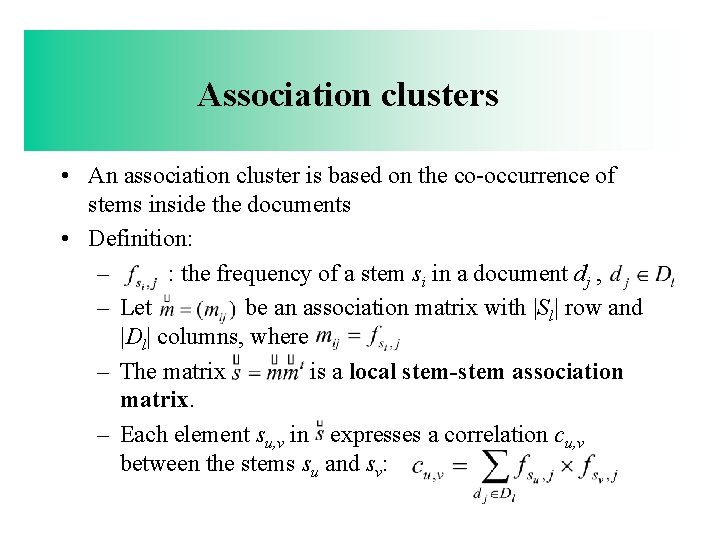

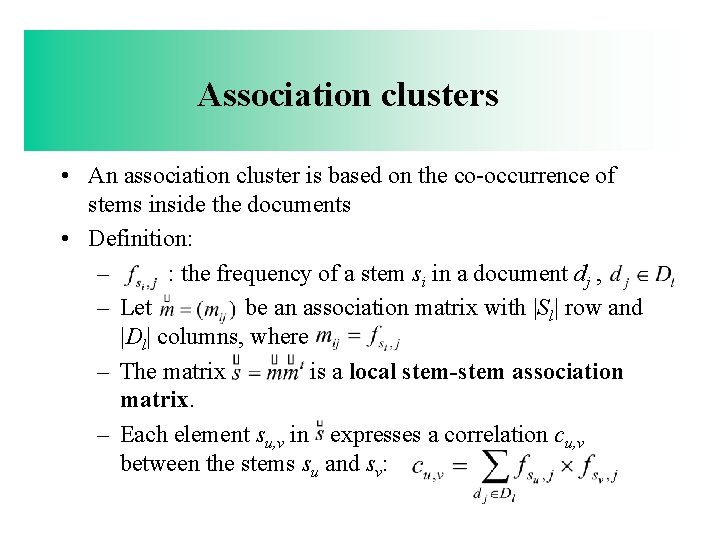

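Association Clusters • An association cluster is based on the co-occurrence of stems inside the documents. • Definition: – $f_{s_i,j}$: the frequency of a stem $s_i$ in a document $d_j \in D_l$ – Let $\vec{m} = (m_{ij})$ be an association matrix with $|S_l|$ rows and $|D_l|$ columns, where $S_l$ is the set of distinct stems in the local document set $D_l$ and $m_{ij} = f_{s_i,j}$. – The matrix $\vec{s} = \vec{m}\vec{m}^t$ is a local stem-stem association matrix. – Each element $s_{u,v}$ in $\vec{s}$ expresses a correlation $c_{u,v}$ between the stems $s_u$ and $s_v$: $c_{u,v} = \sum_{d_j \in D_l} f_{s_u,j} \times f_{s_v,j}$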

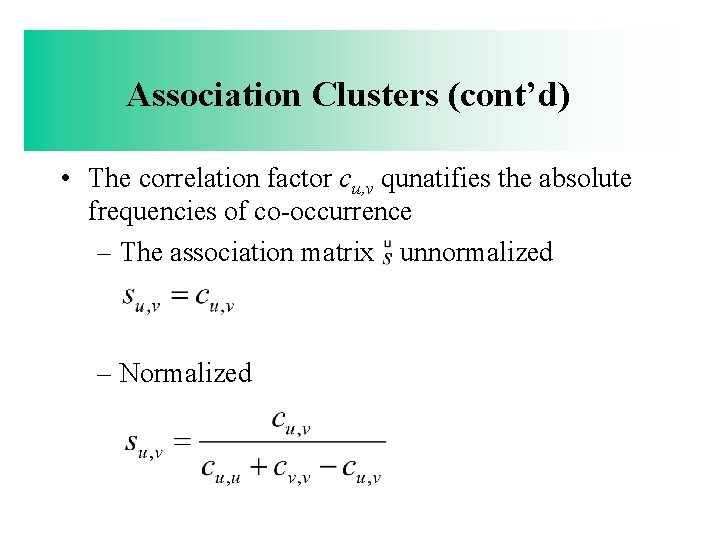

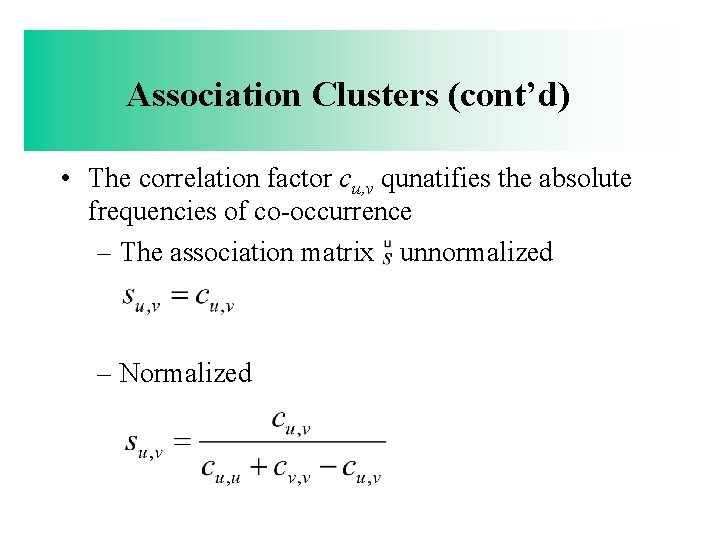

Association Clusters (cont’d) • The correlation factor $c_{u,v}$ quantifies the absolute frequencies of co-occurrence. – Unnormalized association matrix: $s_{u,v} = c_{u,v}$ – Normalized association matrix: $s_{u,v} = \frac{c_{u,v}}{c_{u,u} + c_{v,v} - c_{u,v}}$

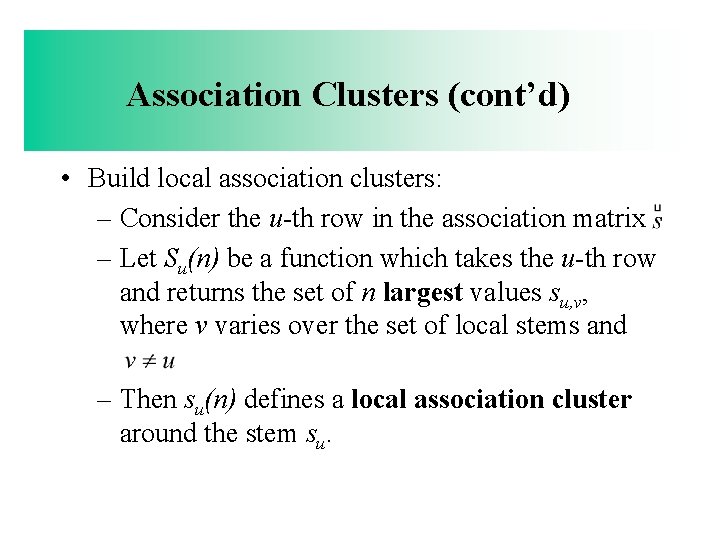

Association Clusters (cont’d) • Build local association clusters: – Consider the $u$-th row in the association matrix. – Let $S_u(n)$ be a function which takes the $u$-th row and returns the set of the $n$ largest values $s_{u,v}$, where $v$ varies over the set of local stems and $v \neq u$. – Then $S_u(n)$ defines a local association cluster around the stem $s_u$.

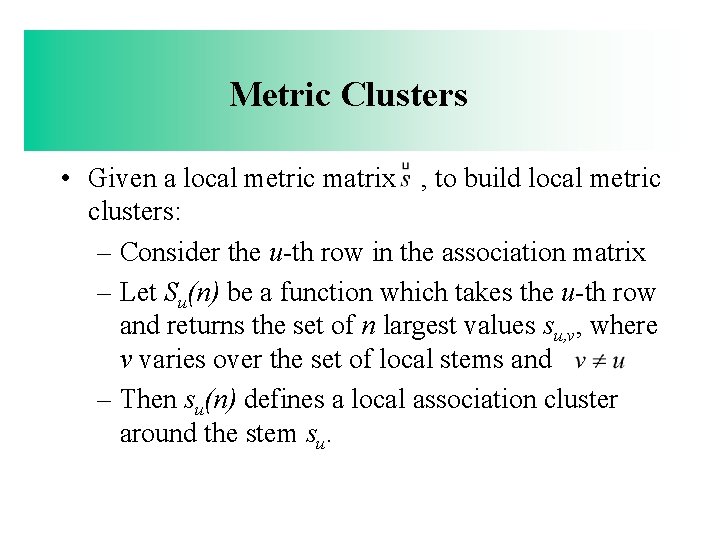
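
Building association clusters can be sketched in a few lines of Python. The sketch assumes the stem-by-document frequency matrix is a list of rows, one row per stem, and that every stem occurs at least once in the local document set; the function names and the toy matrix are hypothetical.

```python
def association_matrix(freq):
    """freq[u][j] is the frequency of stem u in local document j.
    Returns c with c[u][v] = sum_j freq[u][j] * freq[v][j], i.e. m * m^T."""
    n = len(freq)
    return [[sum(a * b for a, b in zip(freq[u], freq[v])) for v in range(n)]
            for u in range(n)]

def normalize(c):
    """Normalized association: s[u][v] = c[u][v] / (c[u][u] + c[v][v] - c[u][v])."""
    n = len(c)
    return [[c[u][v] / (c[u][u] + c[v][v] - c[u][v]) for v in range(n)]
            for u in range(n)]

def cluster(s, u, n):
    """Indices of the n stems v != u with the largest s[u][v]."""
    others = [v for v in range(len(s)) if v != u]
    return sorted(others, key=lambda v: s[u][v], reverse=True)[:n]

# Hypothetical local set: 3 stems (rows) in 3 documents (columns).
freq = [[2, 0, 1],
        [1, 1, 0],
        [0, 3, 1]]
c = association_matrix(freq)
s = normalize(c)
unnormalized_neighbors = cluster(c, 1, 1)
normalized_neighbors = cluster(s, 1, 1)
```

In this toy matrix the two variants pick different neighbors for stem 1: the unnormalized factor favors the very frequent stem 2, while the normalized factor favors stem 0.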

Metric Clusters • Two terms which occur in the same sentence seem more correlated than two terms which occur far apart in a document. • It might be worthwhile to factor in the distance between two terms in the computation of their correlation factor.

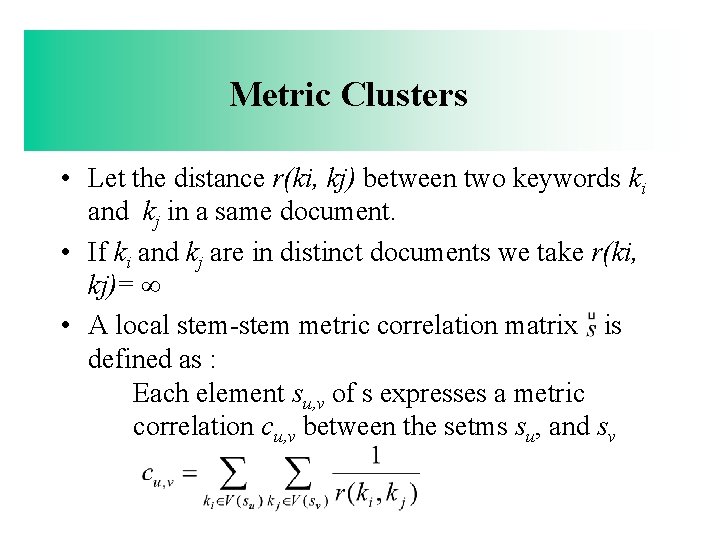

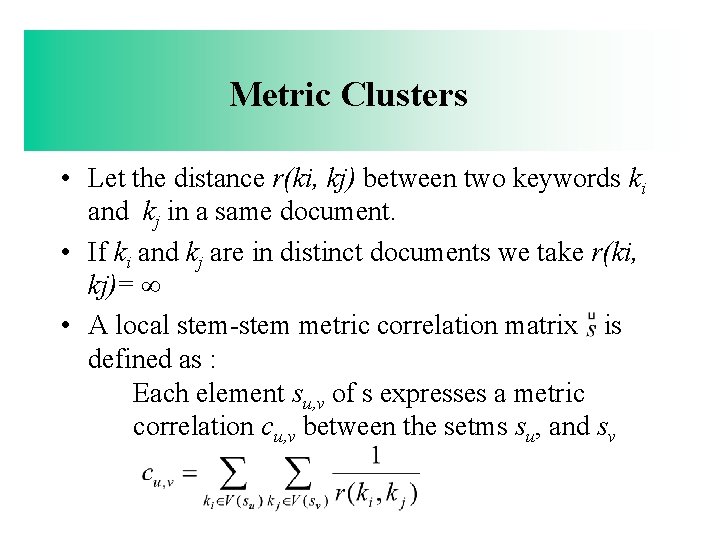

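Metric Clusters • Let the distance $r(k_i, k_j)$ between two keywords $k_i$ and $k_j$ in the same document be given by the number of words between them. • If $k_i$ and $k_j$ are in distinct documents we take $r(k_i, k_j) = \infty$. • A local stem-stem metric correlation matrix $\vec{s}$ is defined as follows: each element $s_{u,v}$ of $\vec{s}$ expresses a metric correlation $c_{u,v}$ between the stems $s_u$ and $s_v$, $c_{u,v} = \sum_{k_i \in V(s_u)} \sum_{k_j \in V(s_v)} \frac{1}{r(k_i, k_j)}$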

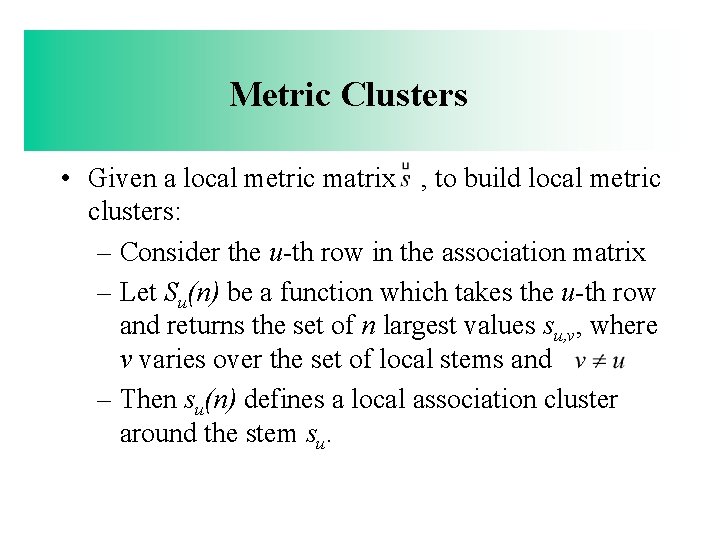

Metric Clusters • Given a local metric matrix $\vec{s}$, to build local metric clusters: – Consider the $u$-th row in the metric correlation matrix. – Let $S_u(n)$ be a function which takes the $u$-th row and returns the set of the $n$ largest values $s_{u,v}$, where $v$ varies over the set of local stems and $v \neq u$. – Then $S_u(n)$ defines a local metric cluster around the stem $s_u$.

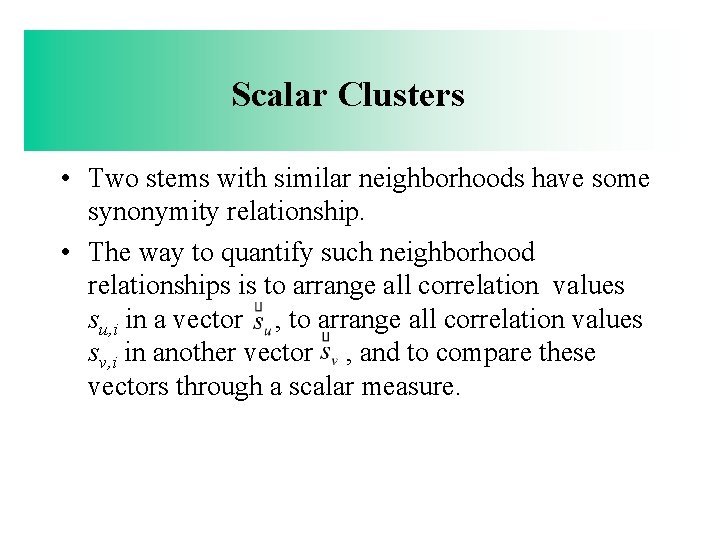
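
A metric correlation factor can be sketched as below. The slides leave two details open, so the sketch makes explicit assumptions: the distance $r(k_i, k_j)$ is taken as the absolute difference of word positions (so adjacent words have distance 1), and every pair of occurrences inside the same document contributes $1/r$. Occurrences in different documents contribute nothing, which matches $r = \infty$. The function name and the toy data are hypothetical.

```python
def metric_correlation(docs, variants_u, variants_v):
    """c_uv = sum over co-occurring word pairs of 1 / r(k_i, k_j).

    docs       : list of documents, each a list of words
    variants_u : set V(s_u) of words reduced to the stem s_u
    variants_v : set V(s_v) of words reduced to the stem s_v
    """
    total = 0.0
    for doc in docs:
        pos_u = [p for p, word in enumerate(doc) if word in variants_u]
        pos_v = [p for p, word in enumerate(doc) if word in variants_v]
        # Assumption: distance = difference of word positions; identical
        # positions (possible only when u == v) are skipped.
        total += sum(1.0 / abs(a - b) for a in pos_u for b in pos_v if a != b)
    return total

# Hypothetical local document set.
docs = [["polish", "the", "shoe"], ["shoes", "polished"]]
c_uv = metric_correlation(docs, {"polish", "polished"}, {"shoe", "shoes"})
```

Once the factors are collected into a matrix, the cluster around a stem is obtained by taking the $n$ largest entries of its row, as for association clusters.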

Scalar Clusters • Two stems with similar neighborhoods have some synonymity relationship. • One way to quantify such neighborhood relationships is to arrange all correlation values $s_{u,i}$ in a vector $\vec{s_u}$, to arrange all correlation values $s_{v,i}$ in another vector $\vec{s_v}$, and to compare these vectors through a scalar measure.

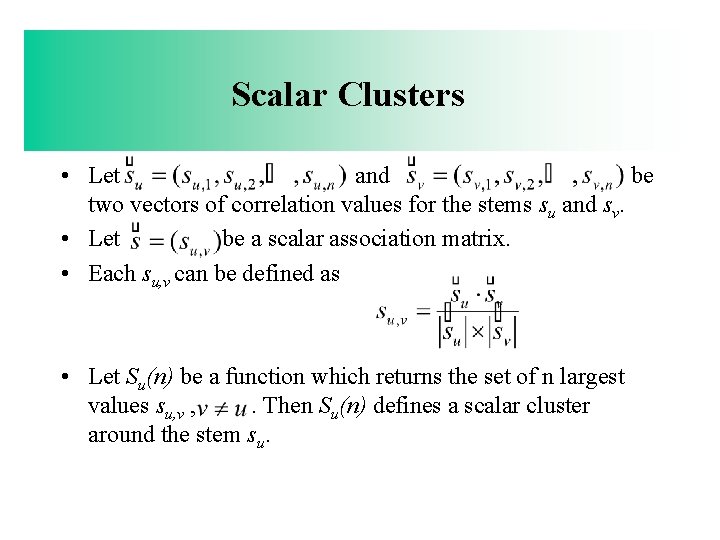

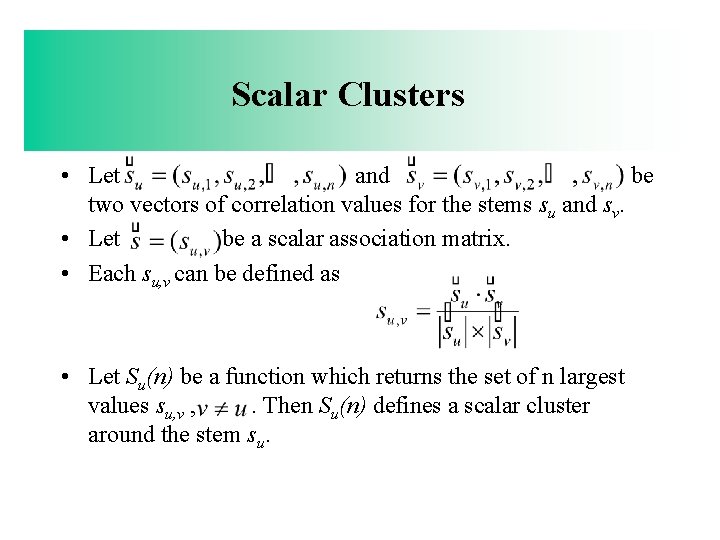

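Scalar Clusters • Let $\vec{s_u} = (s_{u,1}, s_{u,2}, \ldots, s_{u,n})$ and $\vec{s_v} = (s_{v,1}, s_{v,2}, \ldots, s_{v,n})$ be two vectors of correlation values for the stems $s_u$ and $s_v$. • Let $\vec{s} = (s_{u,v})$ be a scalar association matrix. • Each $s_{u,v}$ can be defined as $s_{u,v} = \frac{\vec{s_u} \cdot \vec{s_v}}{|\vec{s_u}| \times |\vec{s_v}|}$ • Let $S_u(n)$ be a function which returns the set of the $n$ largest values $s_{u,v}$, $v \neq u$. Then $S_u(n)$ defines a scalar cluster around the stem $s_u$.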

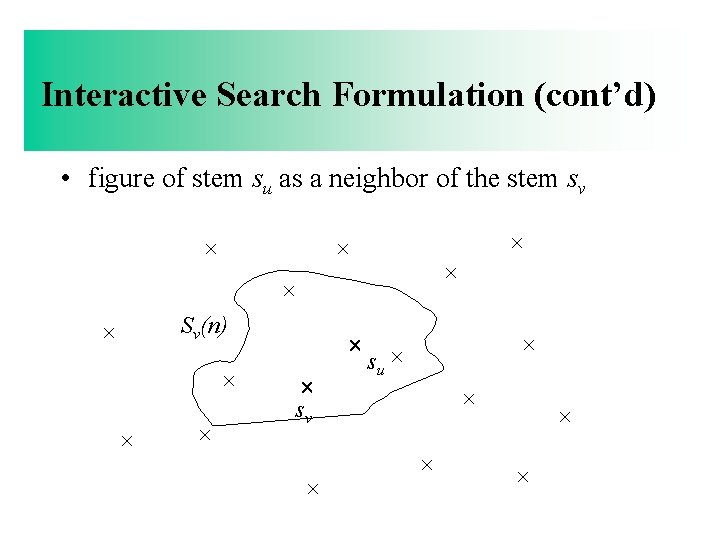
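
The scalar measure is the cosine of the angle between two rows of a correlation matrix, which a short sketch can illustrate. The input matrix is assumed to be a square list of rows (for instance an association or metric matrix), the function name is hypothetical, and returning 0.0 for an all-zero row is a convention chosen here.

```python
import math

def scalar_matrix(s):
    """Return the matrix whose (u, v) entry is the cosine between rows u and v of s."""
    def cosine(a, b):
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        # Convention for this sketch: an all-zero row has similarity 0.
        return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0
    return [[cosine(row_u, row_v) for row_v in s] for row_u in s]

# Hypothetical 3 x 3 correlation matrix.
correlations = [[5, 2, 1],
                [2, 2, 3],
                [1, 3, 10]]
scalar = scalar_matrix(correlations)
```

Two stems that never co-occur can still score highly here if they correlate with the same third stems, which is how scalar clusters capture the synonymity relationship.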

Interactive Search Formulation • Stems (or terms) that belong to clusters associated with the query stems (or terms) can be used to expand the original query. • A stem $s_u$ which belongs to a cluster (of size $n$) associated with another stem $s_v$ (i.e. $s_u \in S_v(n)$) is said to be a neighbor of $s_v$.

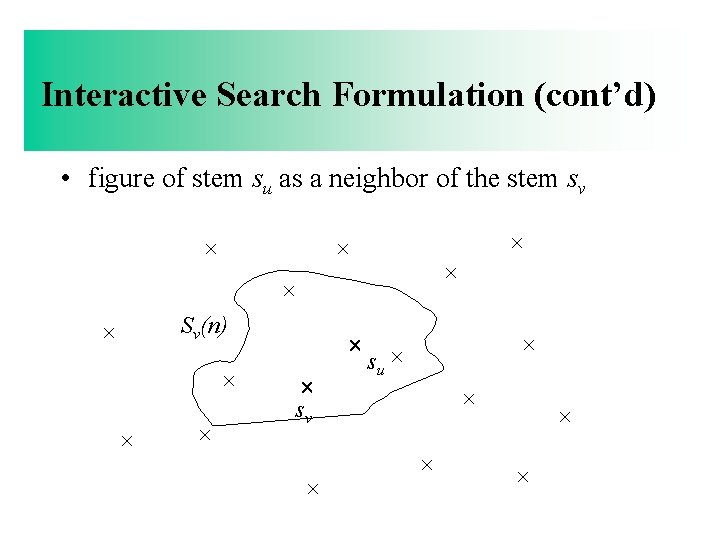

Interactive Search Formulation (cont’d) • Figure: the stem $s_u$ as a neighbor of the stem $s_v$, i.e. $s_u$ lies inside the cluster $S_v(n)$ built around $s_v$.

Interactive Search Formulation (cont’d) • For each stem $s_v \in q$, select $m$ neighbor stems from the cluster $S_v(n)$ (which might be of type association, metric, or scalar) and add them to the query. • Hopefully, the additional neighbor stems will retrieve new relevant documents. • $S_v(n)$ may be composed of stems obtained using normalized or unnormalized correlation factors. – A normalized cluster tends to group stems which are rarer. – An unnormalized cluster tends to group stems because of their large frequencies.
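
The expansion step itself is simple once the clusters exist. The sketch below assumes each cluster is stored as a list of neighbor stems ordered by decreasing correlation; the function name, the dictionary layout, and the example stems are hypothetical.

```python
def expand_query(query_stems, clusters, m):
    """Add up to m neighbor stems per query stem, keeping the original order.

    clusters maps a stem s_v to its cluster S_v(n), given as a list of
    neighbor stems sorted by decreasing correlation with s_v.
    """
    expanded = list(query_stems)
    for stem in query_stems:
        for neighbor in clusters.get(stem, [])[:m]:
            if neighbor not in expanded:       # avoid duplicate stems
                expanded.append(neighbor)
    return expanded

# Hypothetical cluster around the stem "polish".
clusters = {"polish": ["shoe", "wax", "shine"]}
expanded = expand_query(["polish"], clusters, 2)
```

Query stems without a cluster are left as they are, so the expanded query always contains the original one.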

Interactive Search Formulation (cont’d) • Information about correlated stems can be used to improve the search. – Let two stems $s_u$ and $s_v$ be correlated with a correlation factor $c_{u,v}$. – If $c_{u,v}$ is larger than a predefined threshold, then a neighbor stem of $s_u$ can also be interpreted as a neighbor stem of $s_v$, and vice versa. – This provides greater flexibility, particularly with Boolean queries. – Consider the expression $(s_u + s_v)$, where the $+$ symbol stands for disjunction. – Let $s_u'$ be a neighbor stem of $s_u$. – Then one can try both $(s_u' + s_v)$ and $(s_u + s_u')$ as synonym search expressions, because of the correlation given by $c_{u,v}$.

Query Expansion Through Local Context Analysis • The local context analysis procedure operates in three steps: – 1. Retrieve the top $n$ ranked passages using the original query. This is accomplished by breaking up the documents initially retrieved by the query into fixed-length passages (for instance, of size 300 words) and ranking these passages as if they were documents. – 2. For each concept $c$ in the top ranked passages, the similarity $sim(q, c)$ between the whole query $q$ (not individual query terms) and the concept $c$ is computed using a variant of tf-idf ranking.

Query Expansion Through Local Context Analysis – 3. The top $m$ ranked concepts (according to $sim(q, c)$) are added to the original query $q$. Each added concept is assigned a weight given by $1 - 0.9 \times i/m$, where $i$ is the position of the concept in the final concept ranking. The terms in the original query $q$ might be stressed by assigning a weight equal to 2 to each of them.
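
The weighting in step 3 can be sketched as follows. The sketch assumes that the concepts arrive already ranked by $sim(q, c)$, that the position $i$ is counted from 1, and that a concept which is already a query term keeps the query-term weight; the function name and the example concepts are hypothetical.

```python
def expansion_weights(ranked_concepts, m, original_terms, original_weight=2.0):
    """Weights for the expanded query: 2 for original terms and
    1 - 0.9 * i / m for the concept at position i (1-based, assumed)."""
    weights = {term: original_weight for term in original_terms}
    for i, concept in enumerate(ranked_concepts[:m], start=1):
        # setdefault keeps the weight of a concept that is already a query term
        weights.setdefault(concept, 1 - 0.9 * i / m)
    return weights

# Hypothetical ranking of four concepts, of which the top m = 3 are added.
weights = expansion_weights(["retrieval", "feedback", "cluster", "index"], 3, ["query"])
```

With $m = 3$ the added concepts receive weights of about 0.7, 0.4 and 0.1, so even the best added concept counts for less than half of an original query term.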