ITEC 502 Chapter 7 Virtual Memory MiJung Choi

- Slides: 52

ITEC 502 Computer Systems and Practice
Chapter 7: Virtual Memory
Mi-Jung Choi, mjchoi@postech.ac.kr
DPNM Lab., Dept. of CSE, POSTECH

Contents
§ Background
§ Demand Paging
  – Process Creation
§ Page Replacement
§ Allocation of Frames
  – Thrashing

Background
§ Virtual memory: the separation of user logical memory from physical memory
  – allows only part of a program to be in memory for execution
  – allows the logical address space to be much larger than the physical address space
  – allows address spaces to be shared by several processes
  – allows for more efficient process creation
§ Virtual memory can be implemented via:
  – Demand paging
  – Demand segmentation

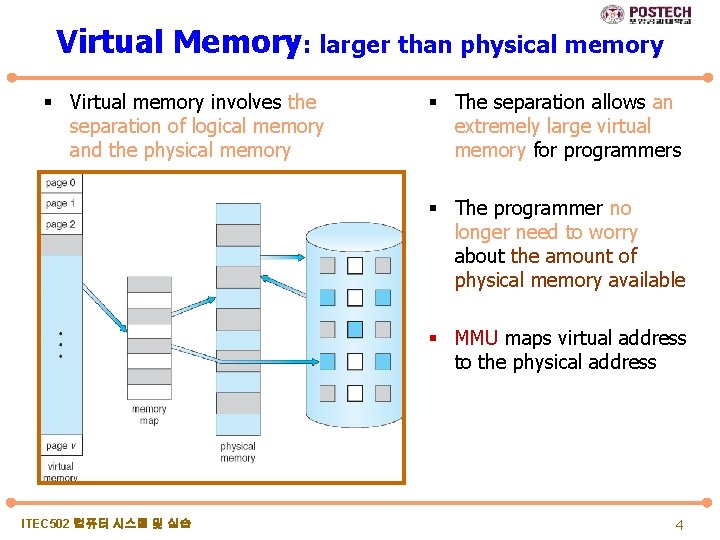

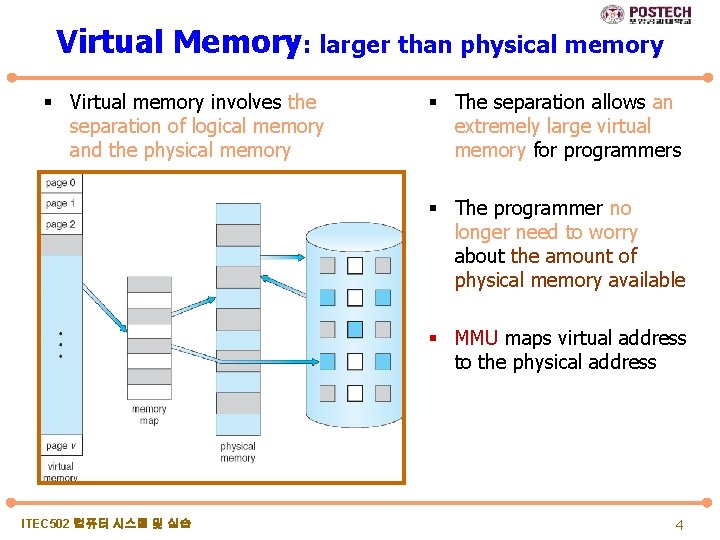

Virtual Memory: larger than physical memory
§ Virtual memory involves the separation of logical memory from physical memory
§ The separation gives programmers an extremely large virtual memory
§ The programmer no longer needs to worry about the amount of physical memory available
§ The MMU maps each virtual address to a physical address

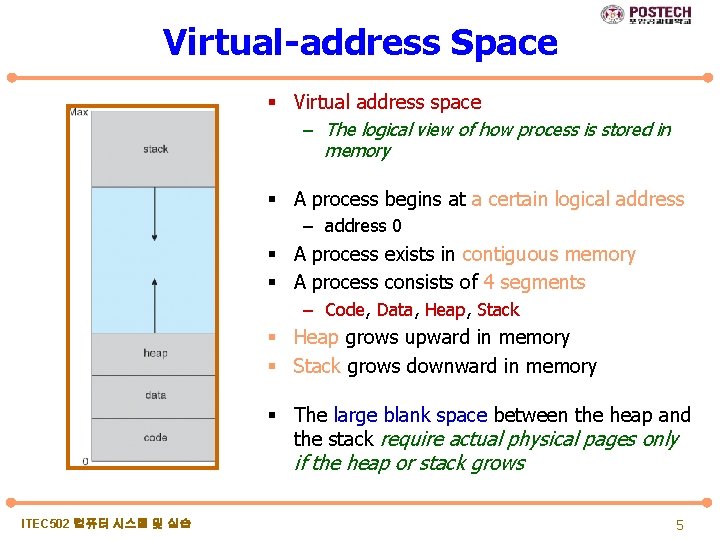

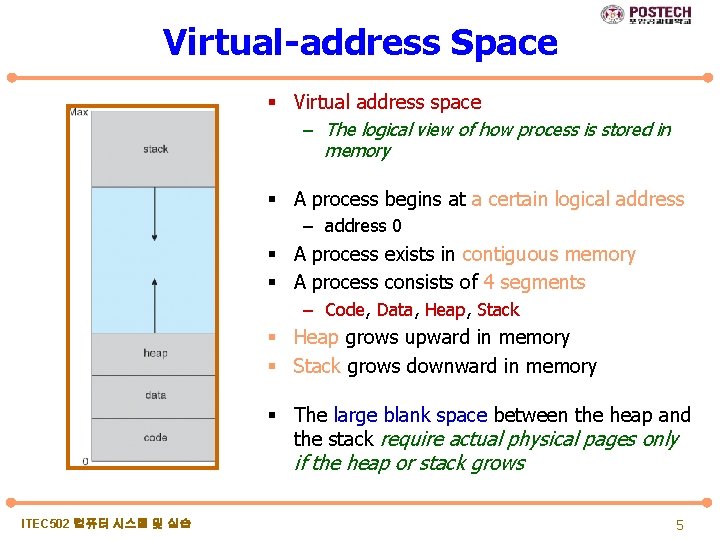

Virtual-address Space
§ Virtual address space
  – The logical view of how a process is stored in memory
§ A process begins at a certain logical address, for example address 0
§ A process exists in contiguous memory (in this logical view)
§ A process consists of 4 segments
  – Code, Data, Heap, Stack
§ The heap grows upward in memory
§ The stack grows downward in memory
§ The large blank space between the heap and the stack requires actual physical pages only if the heap or stack grows

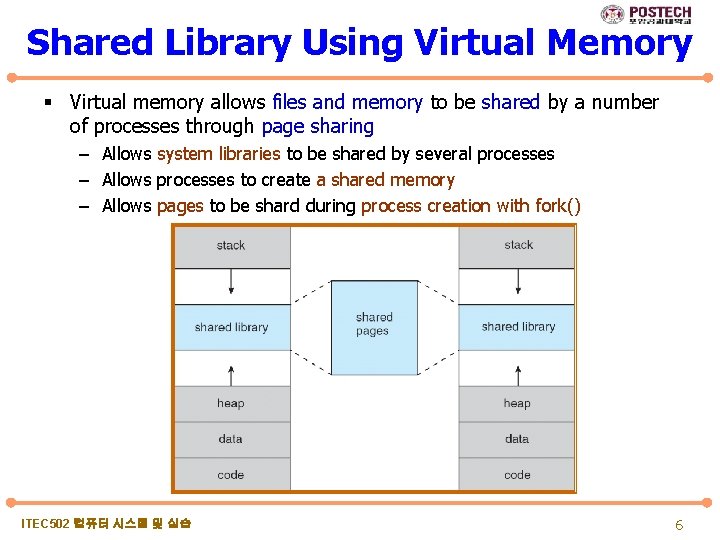

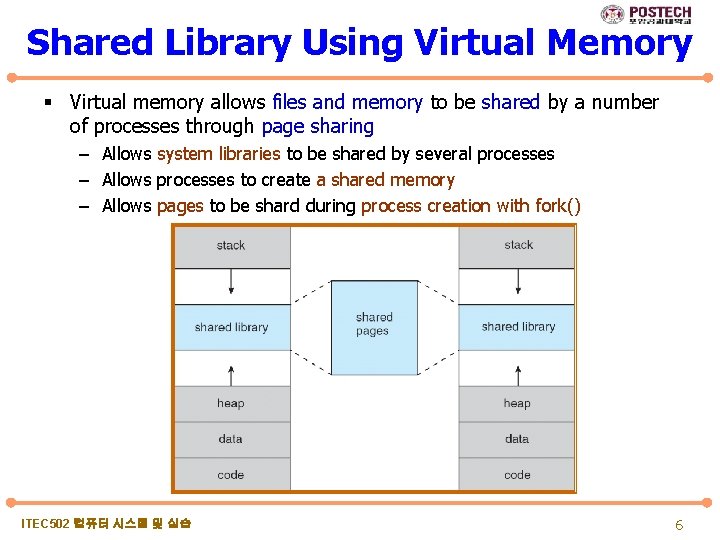

Shared Library Using Virtual Memory
§ Virtual memory allows files and memory to be shared by a number of processes through page sharing
  – Allows system libraries to be shared by several processes
  – Allows processes to create shared memory
  – Allows pages to be shared during process creation with fork()

Demand Paging
§ A virtual memory management strategy
§ Pages are loaded into memory only when they are needed during program execution (compare dynamic loading)
  – Less I/O needed
  – Less memory needed
  – Faster response
  – More users
§ A page is needed → there is a reference to it
  – invalid reference → abort
  – not-in-memory → bring to memory

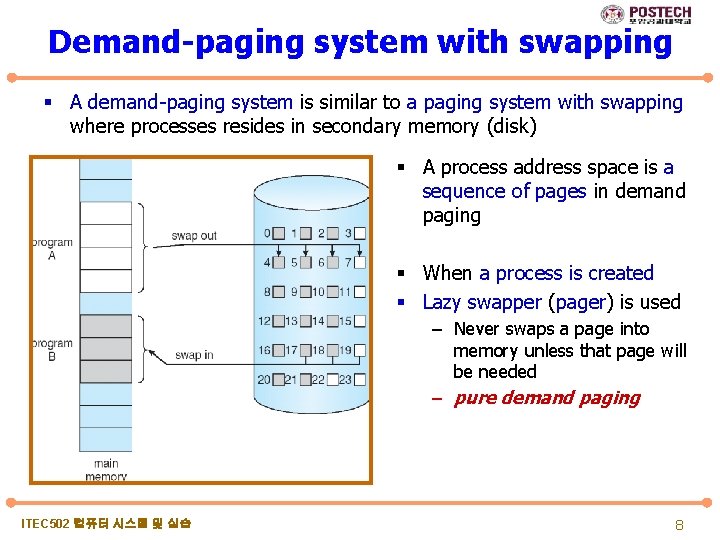

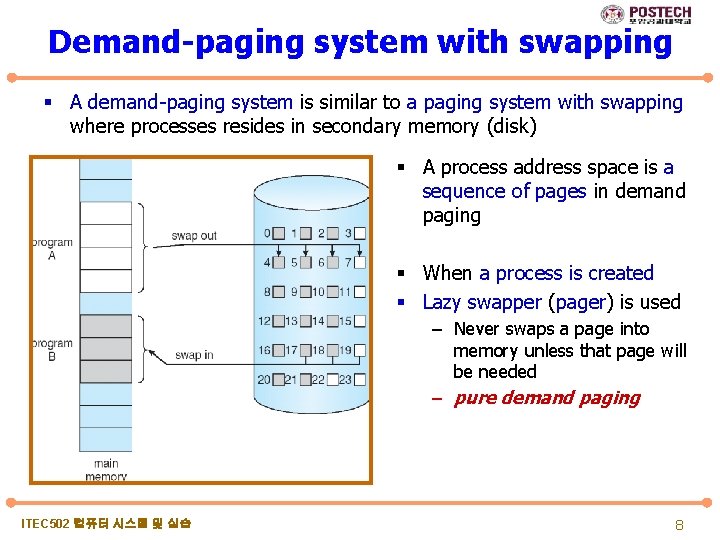

Demand-paging system with swapping
§ A demand-paging system is similar to a paging system with swapping, where processes reside in secondary memory (disk)
§ In demand paging, a process address space is a sequence of pages
§ When a process is created, a lazy swapper (pager) is used
  – It never swaps a page into memory unless that page will be needed
  – This is pure demand paging

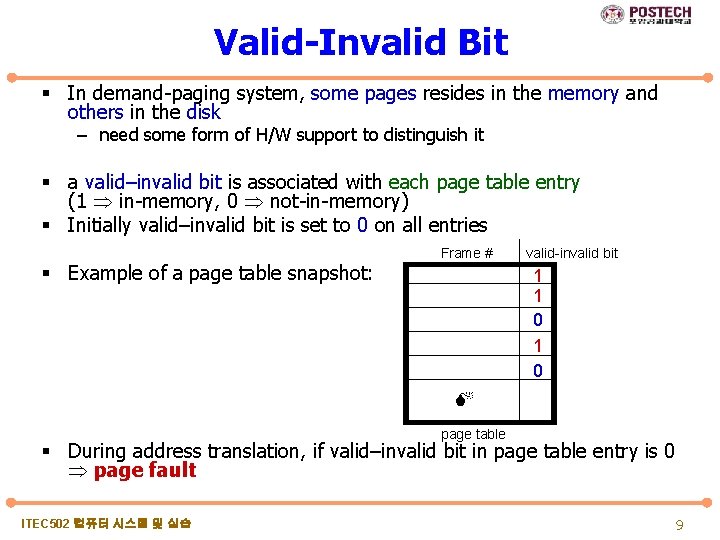

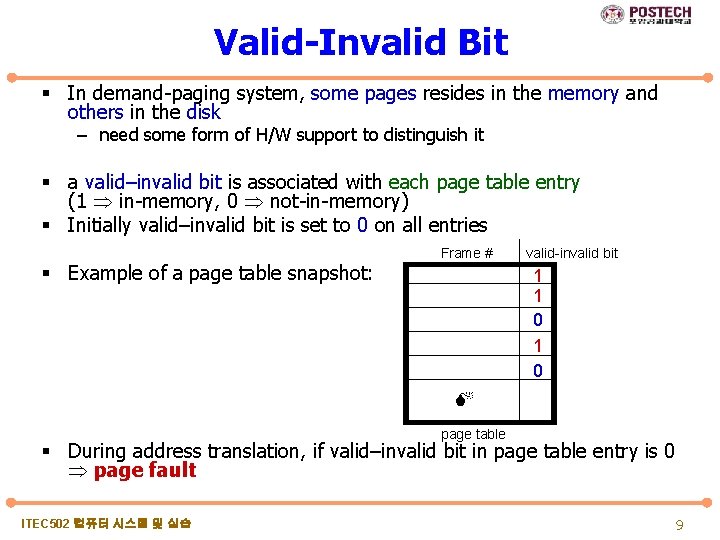

Valid-Invalid Bit
§ In a demand-paging system, some pages reside in memory and others on disk
  – some form of H/W support is needed to distinguish them
§ A valid–invalid bit is associated with each page table entry (1 = in memory, 0 = not in memory)
§ Initially the valid–invalid bit is set to 0 on all entries
§ [Figure: page table snapshot with columns "Frame #" and "valid-invalid bit"]
§ During address translation, if the valid–invalid bit in the page table entry is 0 → page fault

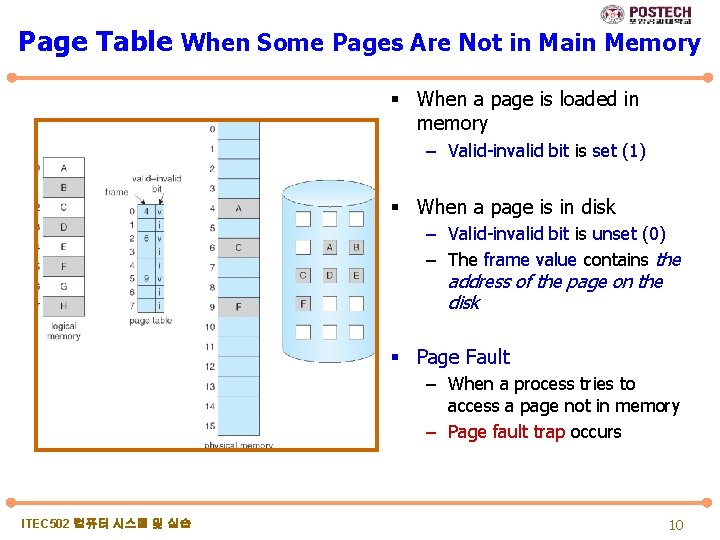

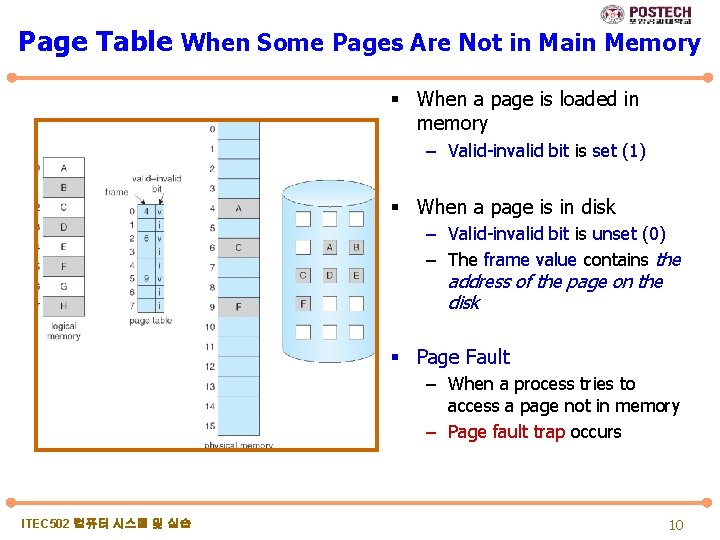

Page Table When Some Pages Are Not in Main Memory
§ When a page is loaded in memory
  – The valid-invalid bit is set (1)
§ When a page is on disk
  – The valid-invalid bit is unset (0)
  – The frame value contains the address of the page on the disk
§ Page fault
  – Occurs when a process tries to access a page that is not in memory
  – A page fault trap is raised

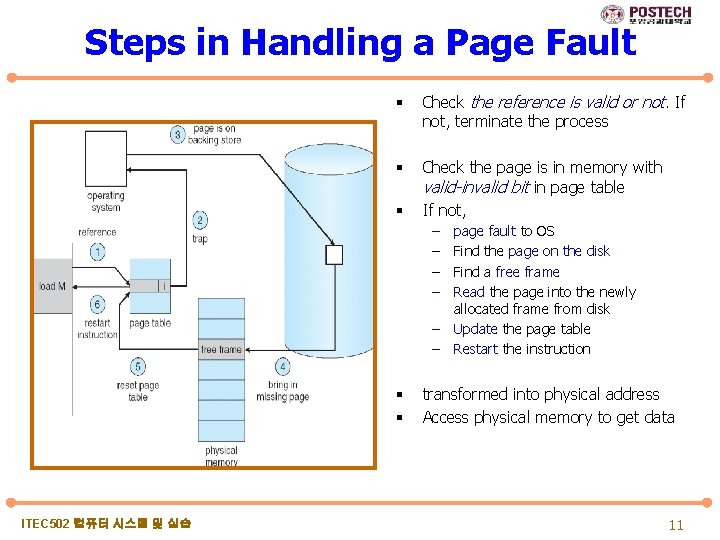

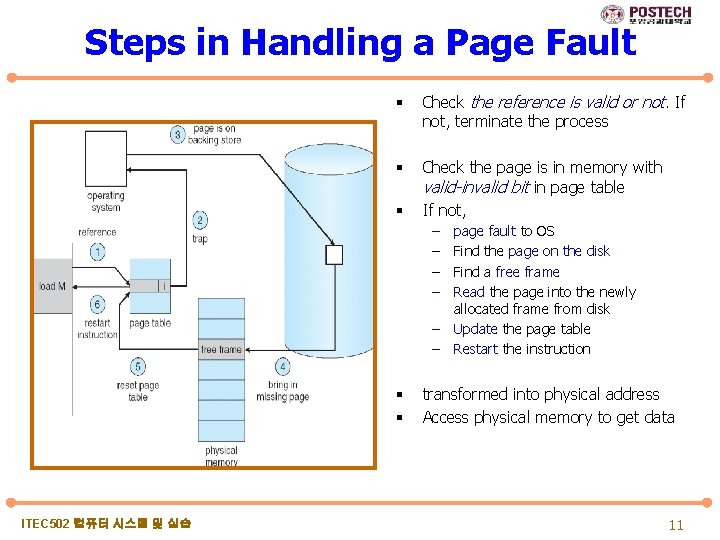

Steps in Handling a Page Fault
§ Check whether the reference is valid. If not, terminate the process
§ Check whether the page is in memory, using the valid-invalid bit in the page table. If it is, the address is transformed into a physical address and physical memory is accessed to get the data
§ If not, a page fault traps to the OS
  – Find the page on the disk
  – Find a free frame
  – Read the page from disk into the newly allocated frame
§ Update the page table
§ Restart the instruction
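The page-fault steps on this slide can be sketched as a toy simulation. This is only an illustration under stated assumptions: the names `PageTableEntry` and `DemandPager`, the dictionary standing in for the disk, and the choice of Python exceptions for "terminate the process" and "no free frame" are all hypothetical and not part of the slides.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PageTableEntry:
    frame: Optional[int] = None  # frame number, meaningful only when valid
    valid: bool = False          # valid-invalid bit: False = not in memory


class DemandPager:
    """Toy pager: pure demand paging without replacement (hypothetical model)."""

    def __init__(self, num_pages: int, num_frames: int, disk: Dict[int, str]):
        self.page_table = [PageTableEntry() for _ in range(num_pages)]
        self.free_frames: List[int] = list(range(num_frames))
        self.memory: List[Optional[str]] = [None] * num_frames
        self.disk = disk         # page number -> page contents on "disk"
        self.page_faults = 0

    def access(self, page: int) -> Optional[str]:
        # Invalid reference: the real OS would terminate the process.
        if not 0 <= page < len(self.page_table):
            raise MemoryError(f"invalid reference to page {page}")
        entry = self.page_table[page]
        if not entry.valid:                       # bit is 0: page fault
            self.page_faults += 1
            if not self.free_frames:
                raise RuntimeError("no free frame: page replacement needed")
            frame = self.free_frames.pop(0)       # find a free frame
            self.memory[frame] = self.disk[page]  # read the page from disk
            entry.frame, entry.valid = frame, True  # update the page table
        # "Restart the instruction": the access now succeeds.
        return self.memory[entry.frame]
```

Accessing the same page twice faults only the first time; the second access finds the valid bit set and goes straight to memory.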

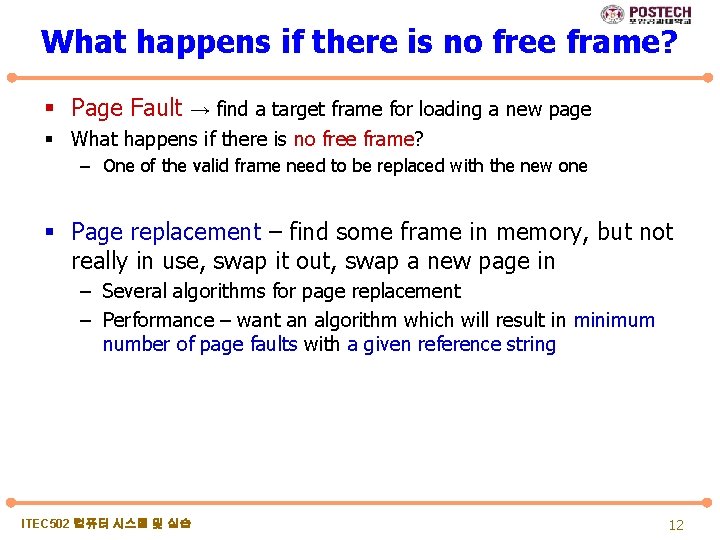

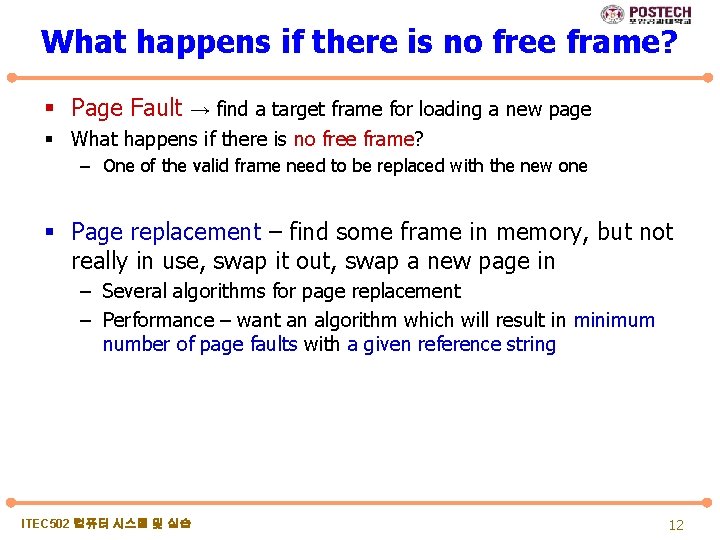

What happens if there is no free frame?
§ Page fault → find a target frame for loading the new page
§ What happens if there is no free frame?
  – One of the frames in use needs to be replaced with the new page
§ Page replacement
  – Find some page in memory that is not really in use and swap it out, then swap the new page in
  – Several algorithms exist for page replacement
  – Performance: we want an algorithm that results in the minimum number of page faults for a given reference string

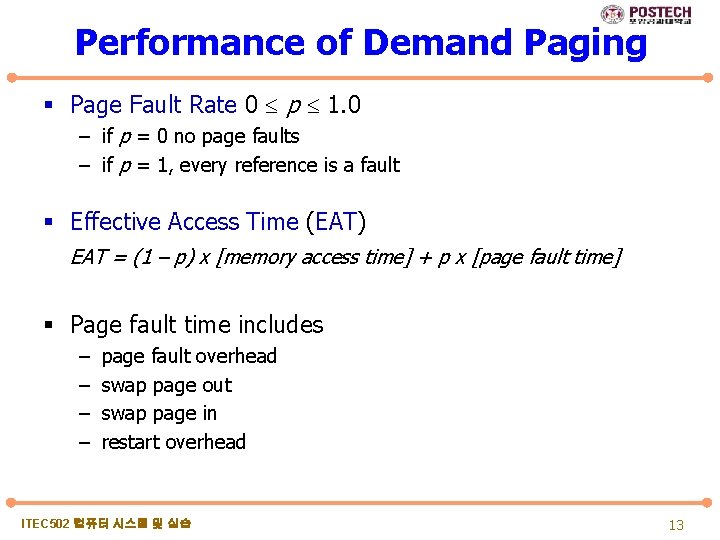

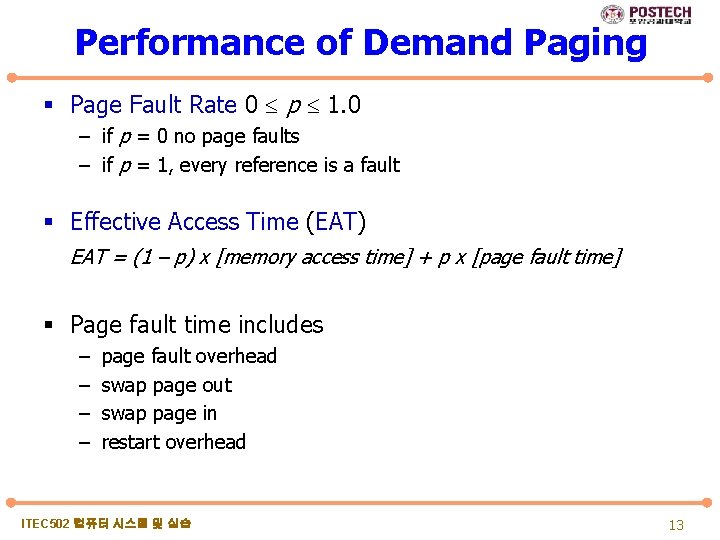

Performance of Demand Paging
§ Page-fault rate p, 0 ≤ p ≤ 1.0
  – if p = 0, no page faults
  – if p = 1, every reference is a fault
§ Effective Access Time (EAT)
  EAT = (1 – p) x [memory access time] + p x [page fault time]
§ Page fault time includes
  – page fault overhead
  – swap page out
  – swap page in
  – restart overhead

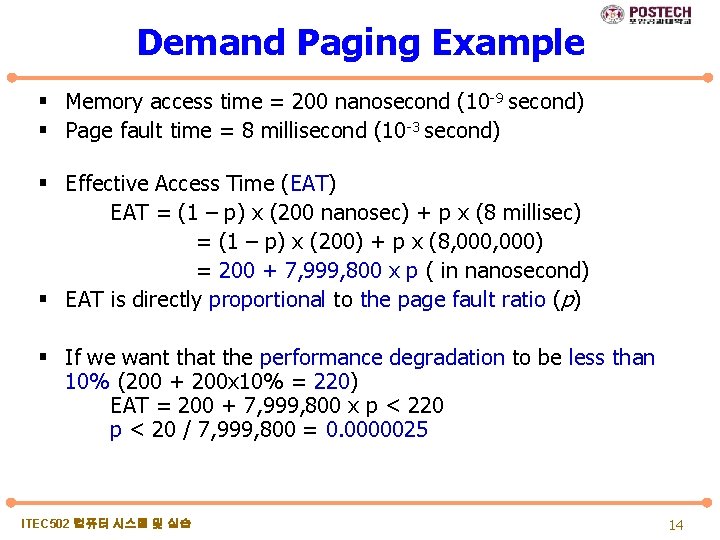

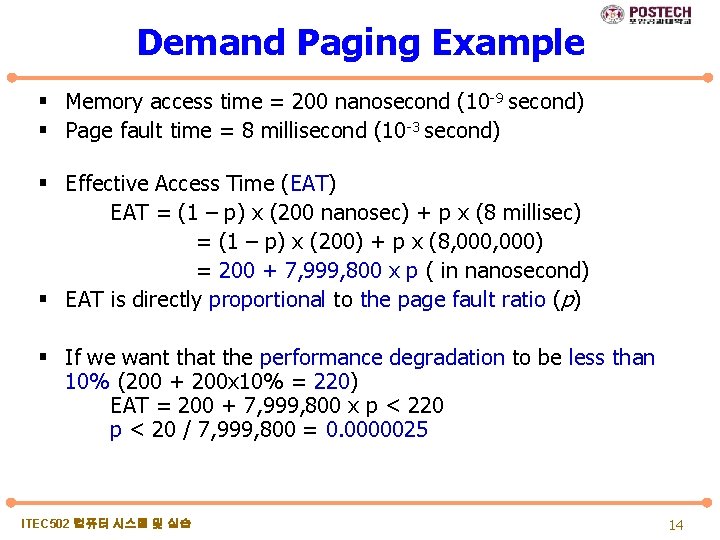

Demand Paging Example
§ Memory access time = 200 nanoseconds (1 ns = 10^-9 second)
§ Page fault time = 8 milliseconds (1 ms = 10^-3 second) = 8,000,000 nanoseconds
§ Effective Access Time (EAT)
  EAT = (1 – p) x (200 nanosec) + p x (8 millisec)
      = (1 – p) x 200 + p x 8,000,000
      = 200 + 7,999,800 x p (in nanoseconds)
§ EAT increases linearly with the page-fault rate p
§ To keep the performance degradation below 10% (200 + 200 x 10% = 220):
  EAT = 200 + 7,999,800 x p < 220
  p < 20 / 7,999,800 ≈ 0.0000025
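The arithmetic on this slide can be checked with a few lines of Python. The function names and default arguments below are illustrative assumptions; the numbers (200 ns memory access, 8 ms page-fault time, 10% slowdown) come from the slide.

```python
def effective_access_time(p, memory_ns=200.0, fault_ns=8_000_000.0):
    """EAT in nanoseconds for page-fault rate p (0 <= p <= 1)."""
    return (1 - p) * memory_ns + p * fault_ns


def max_fault_rate(slowdown, memory_ns=200.0, fault_ns=8_000_000.0):
    """Largest p for which EAT stays below memory_ns * (1 + slowdown).

    Solves memory_ns + (fault_ns - memory_ns) * p < memory_ns * (1 + slowdown).
    """
    return slowdown * memory_ns / (fault_ns - memory_ns)


if __name__ == "__main__":
    print(effective_access_time(0.001))  # one fault per 1,000 references
    print(max_fault_rate(0.10))          # bound for a 10% slowdown
```

Under these numbers, even one fault per 1,000 references pushes the EAT to roughly 8.2 microseconds, about 40 times slower than a plain memory access.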

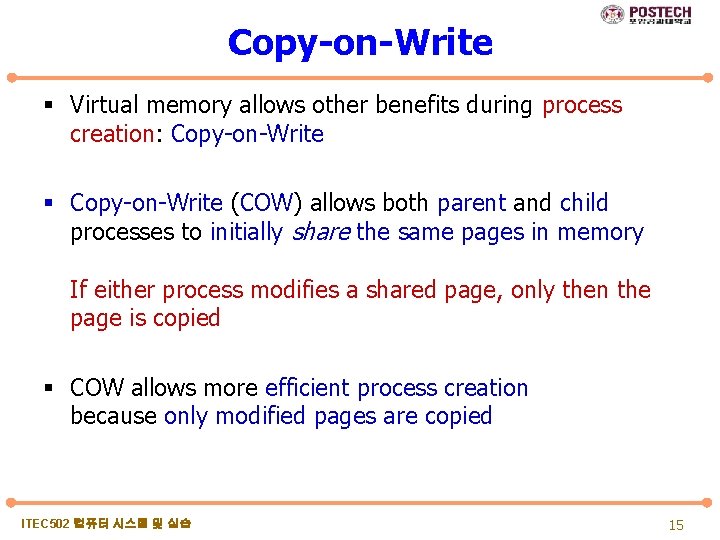

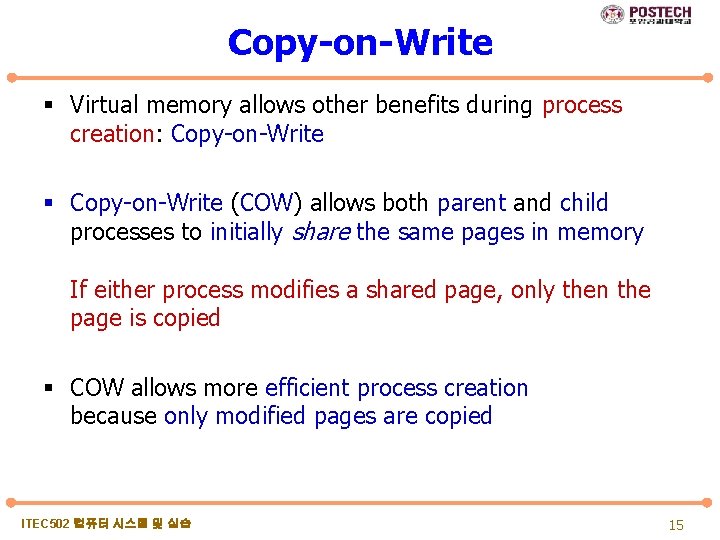

Copy-on-Write
§ Virtual memory allows other benefits during process creation: Copy-on-Write
§ Copy-on-Write (COW) allows both parent and child processes to initially share the same pages in memory
  – Only when either process modifies a shared page is that page copied
§ COW allows more efficient process creation because only modified pages are copied

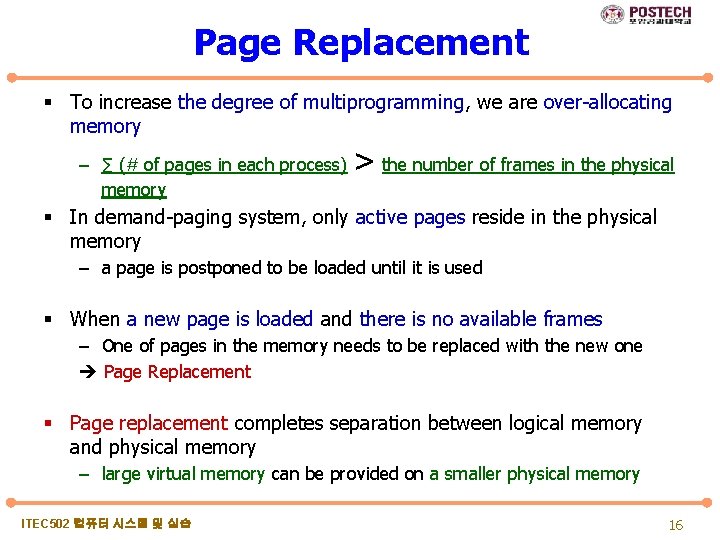

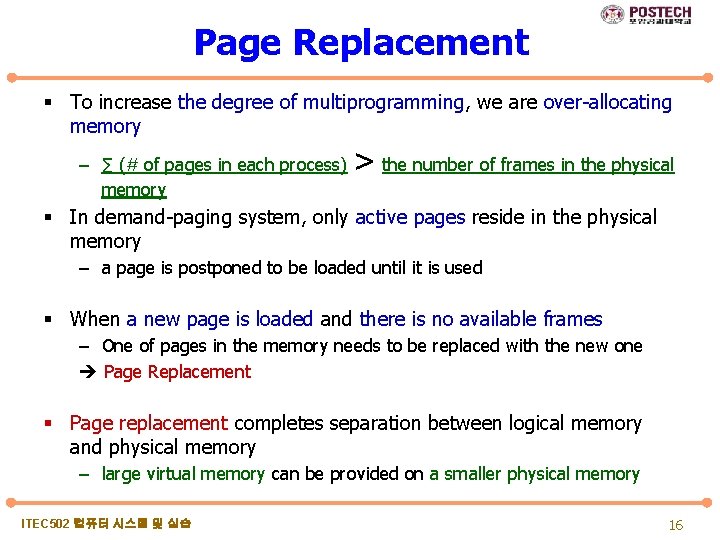

Page Replacement
§ To increase the degree of multiprogramming, we over-allocate memory
  – Σ (# of pages in each process) > the number of frames in physical memory
§ In a demand-paging system, only active pages reside in physical memory
  – loading a page is postponed until the page is used
§ When a new page must be loaded and there are no available frames
  – One of the pages in memory needs to be replaced with the new one → page replacement
§ Page replacement completes the separation between logical memory and physical memory
  – a large virtual memory can be provided on a smaller physical memory

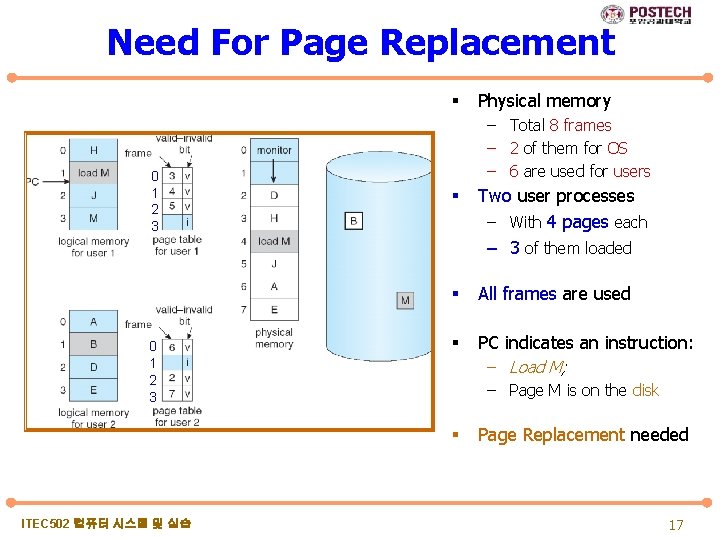

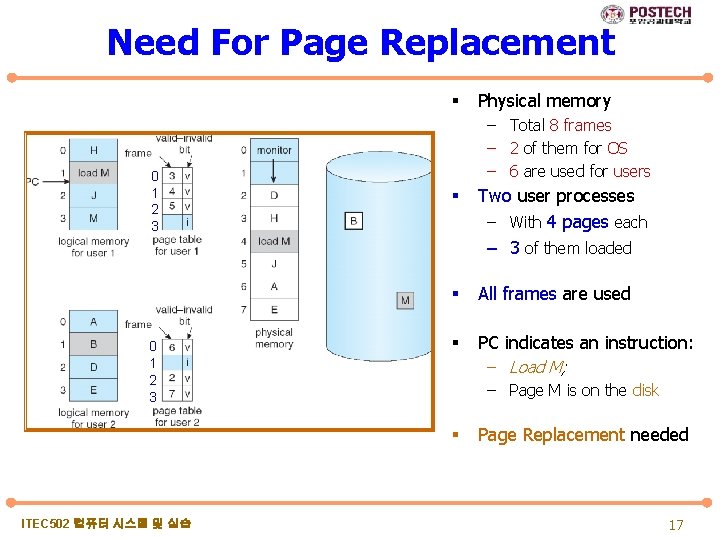

Need For Page Replacement
§ Physical memory
  – Total 8 frames
  – 2 of them for the OS
  – 6 are used for users
§ Two user processes
  – With 4 pages each
  – 3 of them loaded
§ All frames are used
§ The PC indicates an instruction:
  – Load M;
  – Page M is on the disk
§ Page replacement is needed

Basic Page Replacement
1. Find the location of the desired page on disk
2. Find a free frame:
   - If there is a free frame, use it
   - If there is no free frame, use a page replacement algorithm to select a victim frame
3. Read the desired page into the (newly) free frame; update the page and frame tables
4. Restart the process

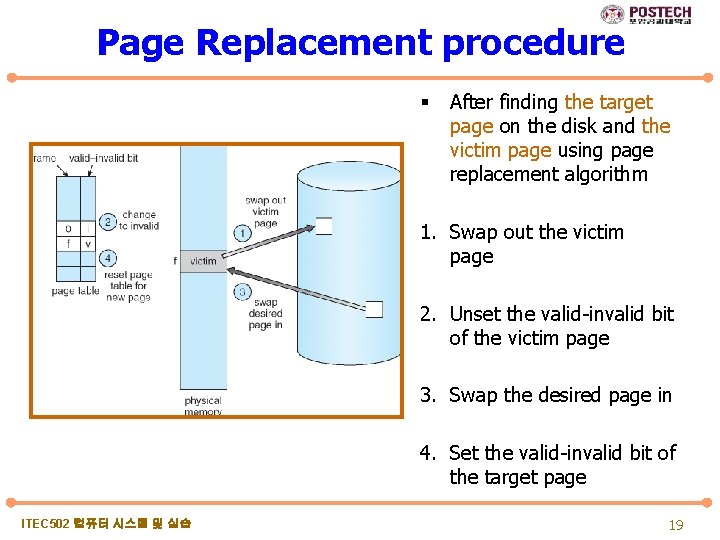

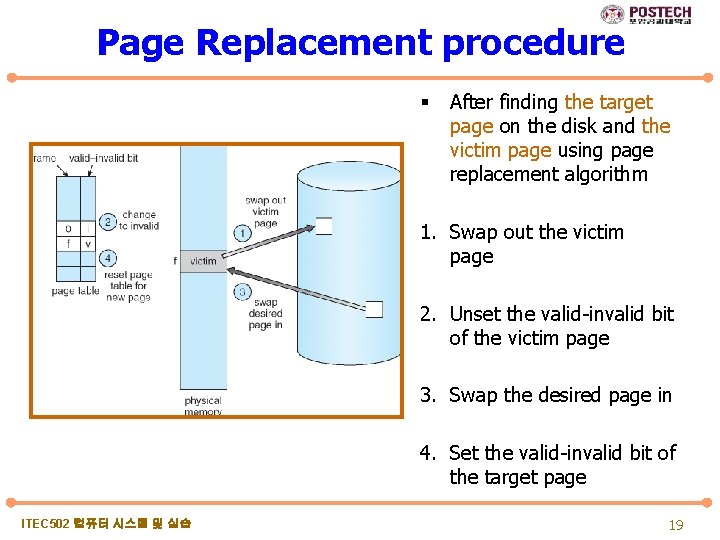

Page Replacement procedure
§ After finding the target page on the disk and choosing the victim page with a page replacement algorithm:
1. Swap out the victim page
2. Unset the valid-invalid bit of the victim page
3. Swap the desired page in
4. Set the valid-invalid bit of the target page

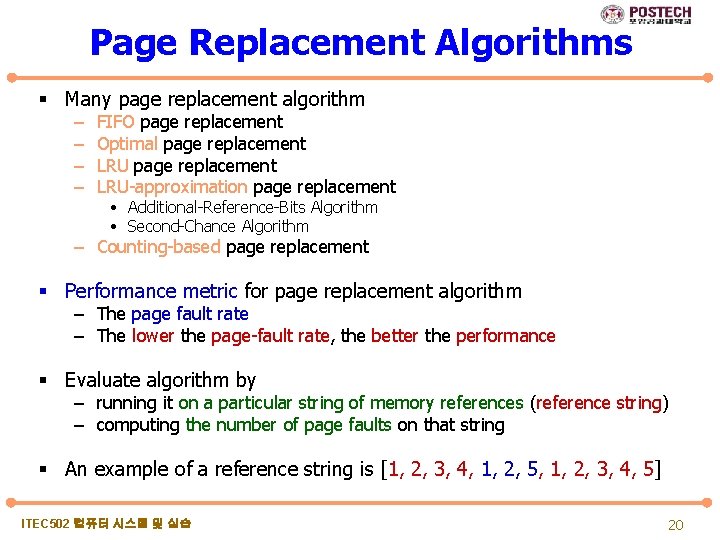

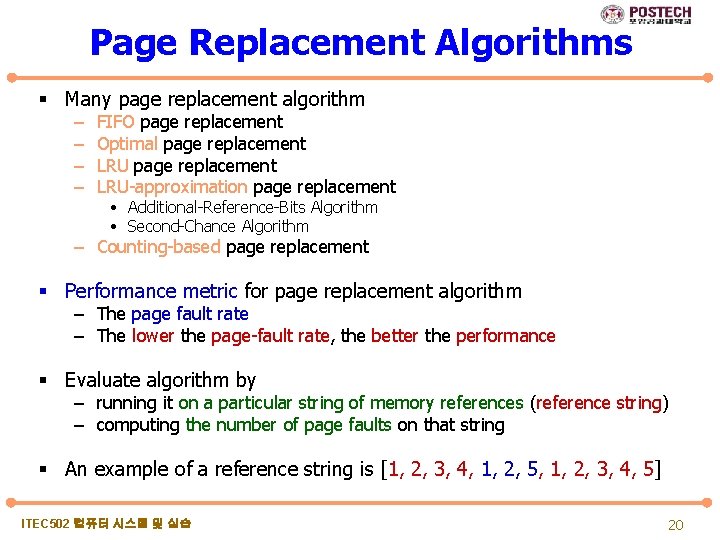

Page Replacement Algorithms
§ Many page replacement algorithms
  – FIFO page replacement
  – Optimal page replacement
  – LRU-approximation page replacement
    • Additional-Reference-Bits Algorithm
    • Second-Chance Algorithm
  – Counting-based page replacement
§ Performance metric for a page replacement algorithm
  – The page-fault rate
  – The lower the page-fault rate, the better the performance
§ Evaluate an algorithm by
  – running it on a particular string of memory references (reference string)
  – computing the number of page faults on that string
§ An example of a reference string is [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]

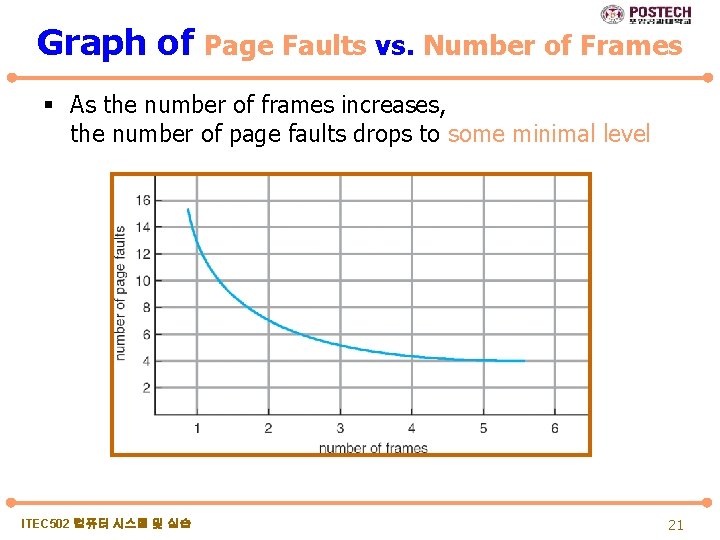

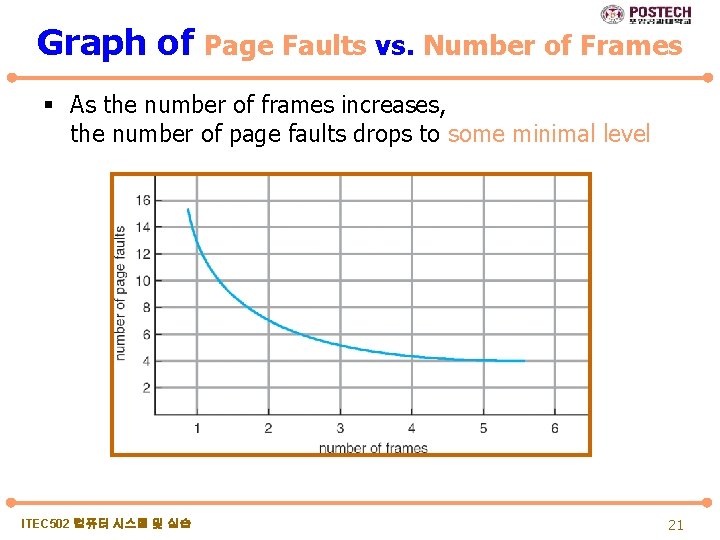

Graph of Page Faults vs. Number of Frames
§ As the number of frames increases, the number of page faults drops to some minimal level

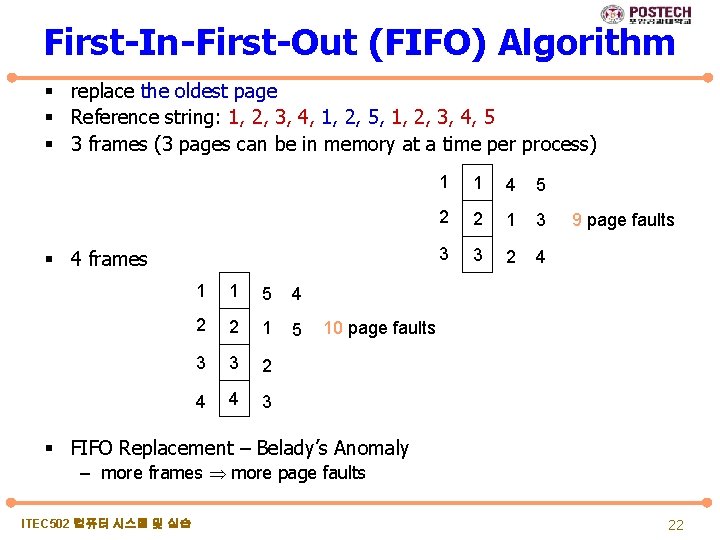

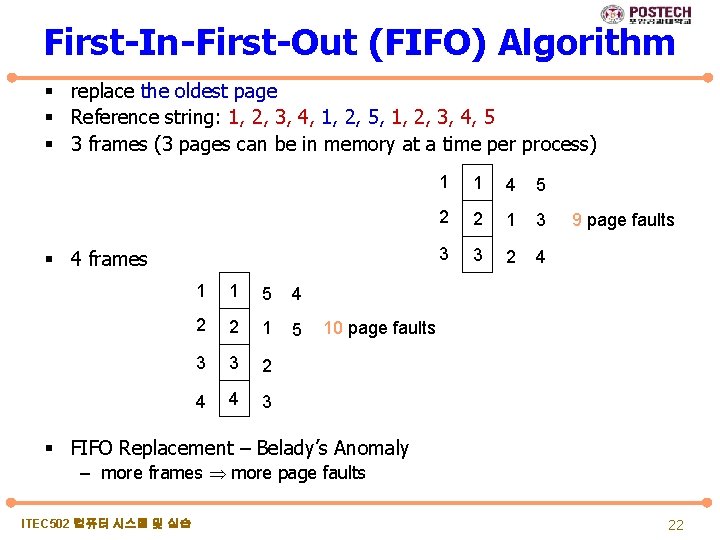

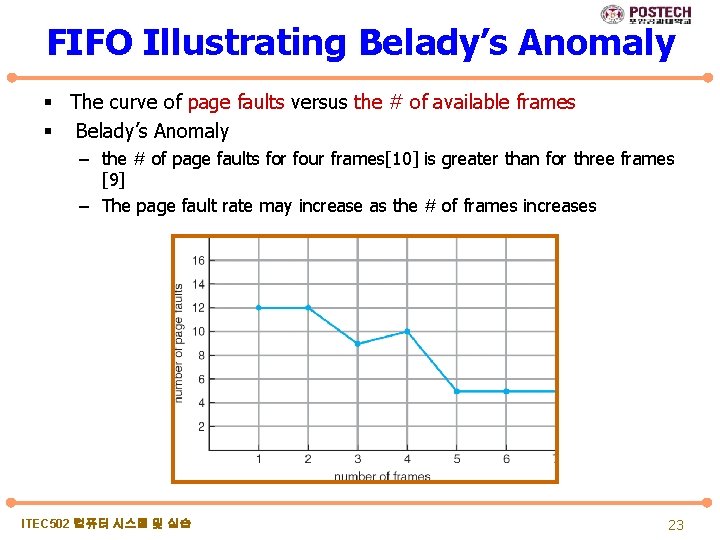

First-In-First-Out (FIFO) Algorithm
§ Replace the oldest page
§ Reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
§ 3 frames (3 pages can be in memory at a time per process): 9 page faults
§ 4 frames: 10 page faults
§ FIFO Replacement – Belady’s Anomaly
  – more frames can mean more page faults

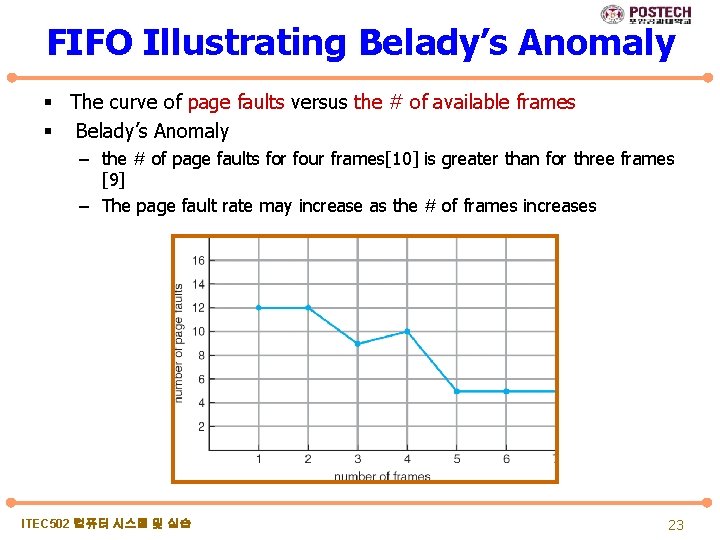

FIFO Illustrating Belady’s Anomaly
§ The curve of page faults versus the # of available frames
§ Belady’s Anomaly
  – the # of page faults for four frames (10) is greater than for three frames (9)
  – The page-fault rate may increase as the # of frames increases

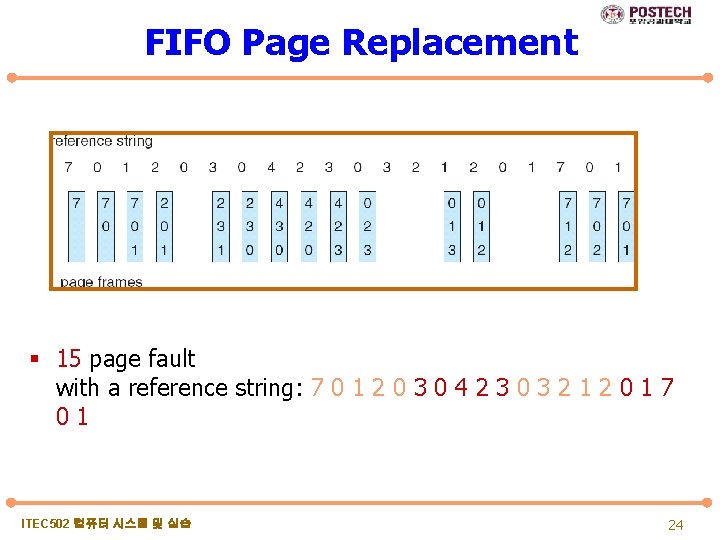

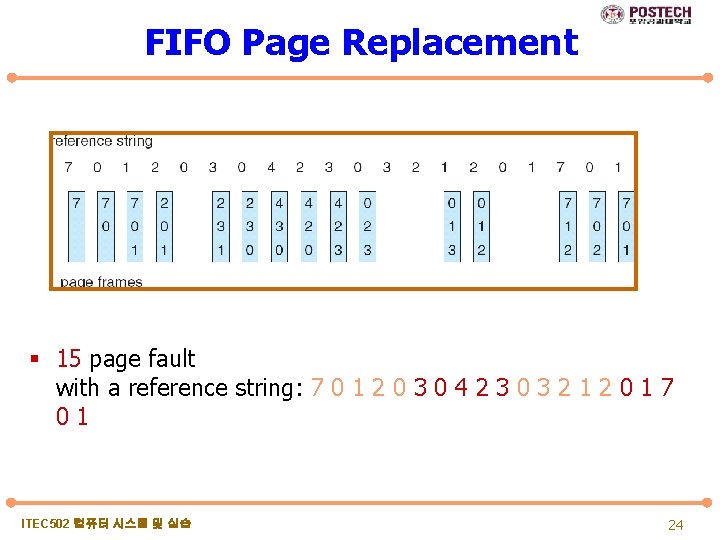

FIFO Page Replacement
§ 15 page faults (3 frames) with the reference string: 7 0 1 2 0 3 0 4 2 3 0 3 2 1 2 0 1 7 0 1
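The FIFO fault counts quoted on these slides (9, 10, and 15) can be reproduced with a short simulation. This is a minimal sketch, not a kernel implementation; the function name `fifo_faults` is an assumption, and the 3-frame setting for the second reference string is inferred from the fault count.

```python
from collections import deque


def fifo_faults(refs, num_frames):
    """Count page faults under FIFO replacement."""
    queue = deque()    # pages in load order, oldest on the left
    resident = set()   # same pages, for O(1) membership tests
    faults = 0
    for page in refs:
        if page in resident:
            continue                            # hit: FIFO order is unchanged
        faults += 1
        if len(queue) == num_frames:
            resident.discard(queue.popleft())   # evict the oldest page
        queue.append(page)
        resident.add(page)
    return faults


if __name__ == "__main__":
    belady = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
    for frames in (3, 4):
        print(frames, "frames:", fifo_faults(belady, frames), "faults")
```

Running it on the first reference string with 3 and then 4 frames shows Belady's anomaly directly: the fault count goes up when a frame is added.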

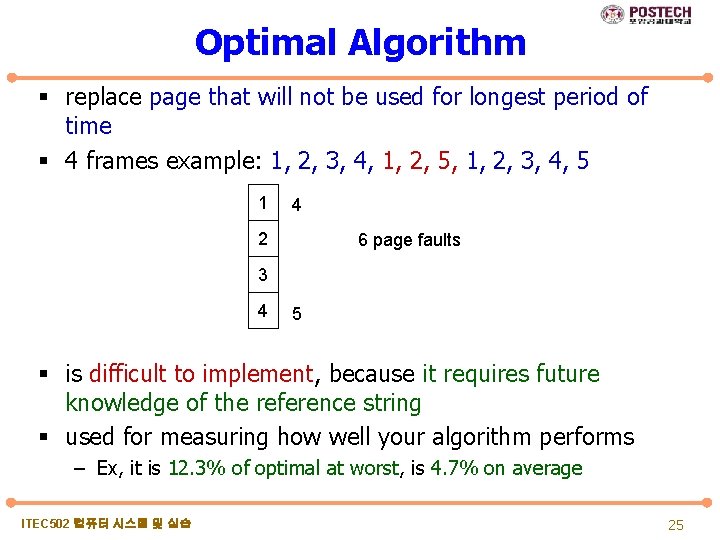

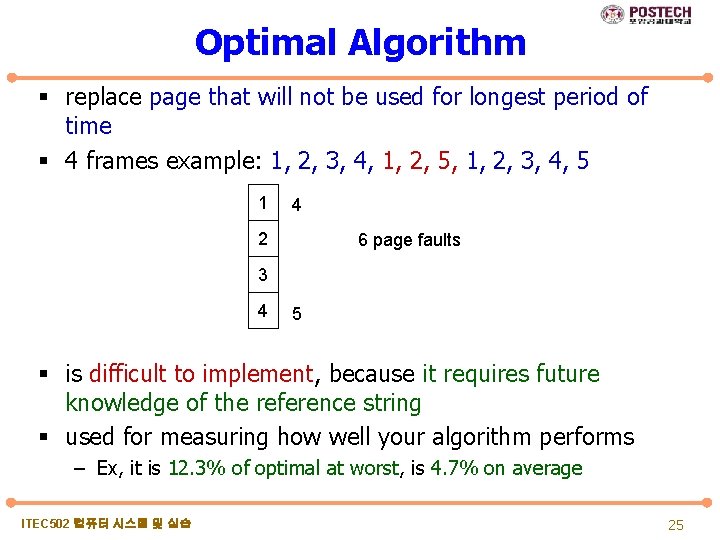

Optimal Algorithm
§ Replace the page that will not be used for the longest period of time
§ 4 frames example: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
  – 6 page faults
§ Difficult to implement, because it requires future knowledge of the reference string
§ Used for measuring how well another algorithm performs
  – e.g., an algorithm is within 12.3% of optimal at worst and within 4.7% on average

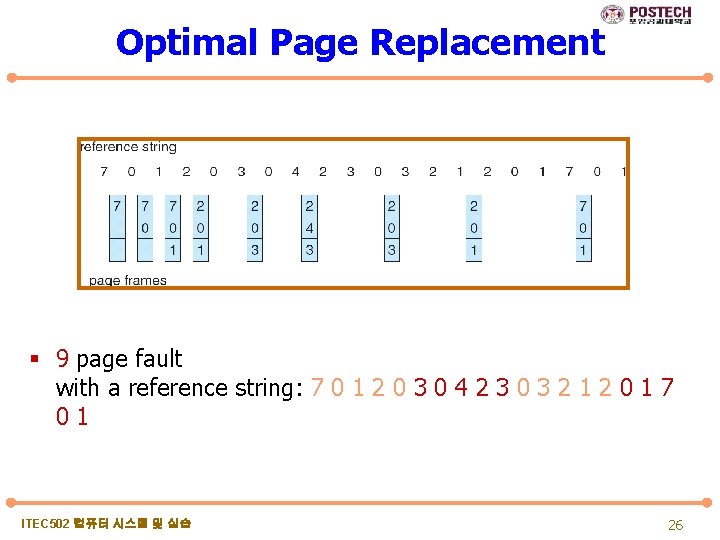

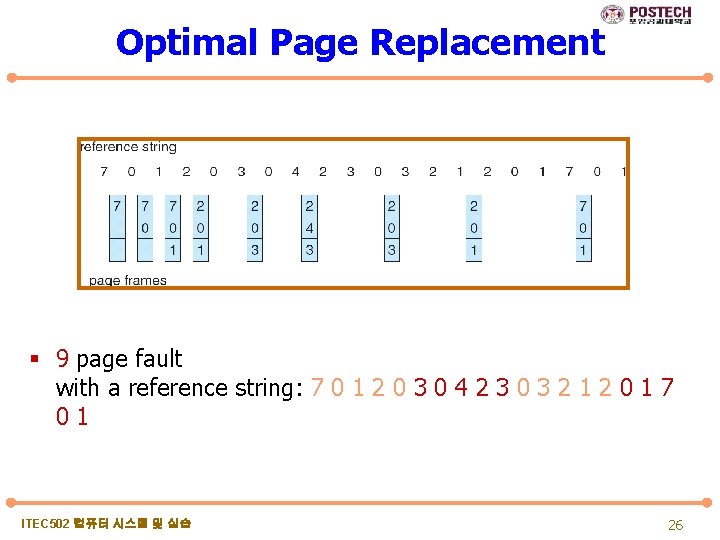

Optimal Page Replacement
§ 9 page faults (3 frames) with the reference string: 7 0 1 2 0 3 0 4 2 3 0 3 2 1 2 0 1 7 0 1
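Because the optimal algorithm needs the whole reference string in advance, it is easy to simulate offline even though it cannot run in a real pager. The sketch below is one straightforward way to do that; the name `optimal_faults` is an assumption, and the quadratic scan of the future references is chosen for clarity, not speed.

```python
def optimal_faults(refs, num_frames):
    """Count page faults under optimal (farthest-future-use) replacement."""
    refs = list(refs)
    frames = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            future = refs[i + 1:]

            def next_use(p):
                # Distance to the next reference; never used again = infinity.
                return future.index(p) if p in future else float("inf")

            # Evict the resident page whose next use lies farthest ahead.
            frames.remove(max(frames, key=next_use))
        frames.add(page)
    return faults


if __name__ == "__main__":
    print(optimal_faults([1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5], 4))
```

The result serves as a lower bound: no other replacement policy can produce fewer faults on the same string with the same number of frames.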

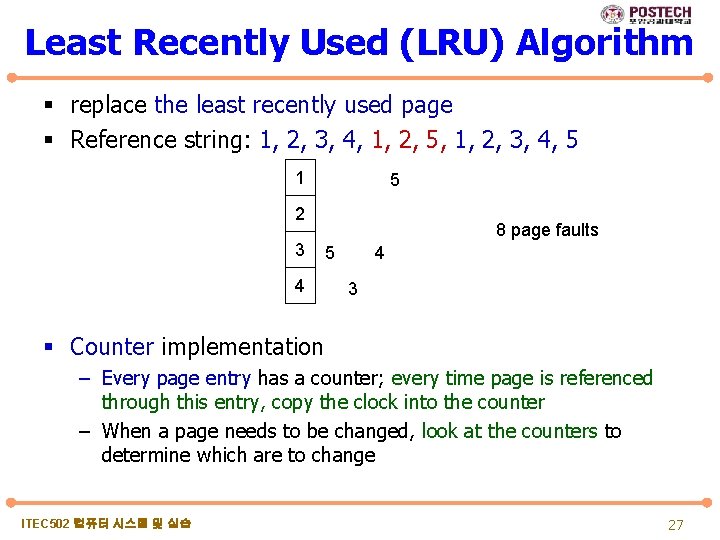

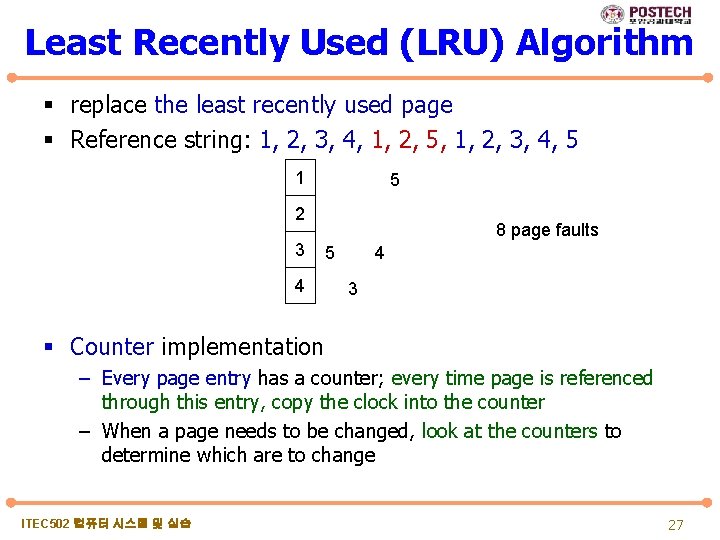

Least Recently Used (LRU) Algorithm
§ Replace the least recently used page
§ Reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
  – 8 page faults (4 frames)
§ Counter implementation
  – Every page entry has a counter; every time the page is referenced through this entry, copy the clock into the counter
  – When a page needs to be replaced, look at the counters to find the page with the smallest (oldest) value

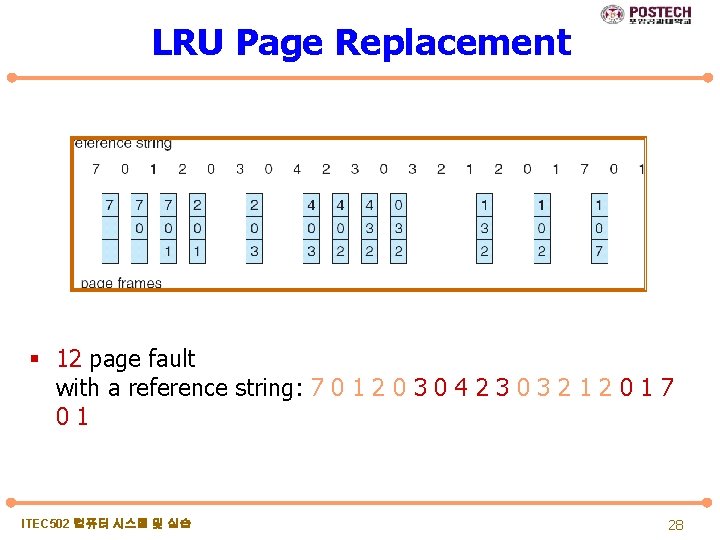

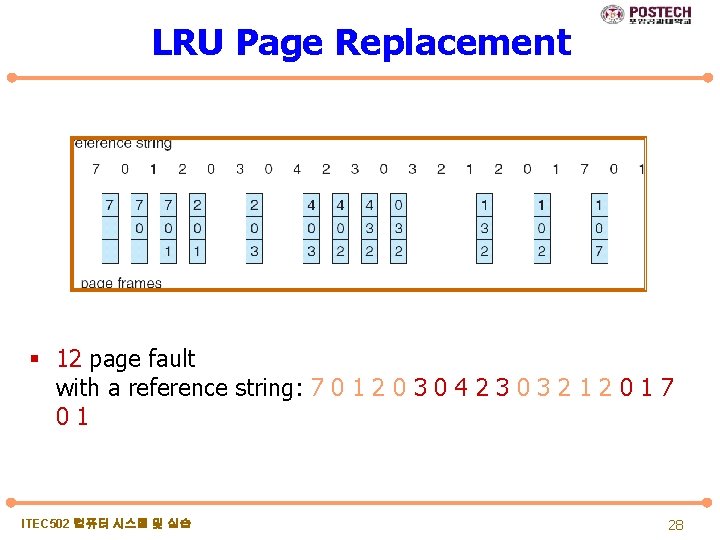

LRU Page Replacement
§ 12 page faults (3 frames) with the reference string: 7 0 1 2 0 3 0 4 2 3 0 3 2 1 2 0 1 7 0 1

LRU Algorithm (Cont.)
§ Stack implementation
  – Keep a stack of page numbers in doubly linked form
  – Whenever a page is referenced:
    • It is removed from its position in the stack and put on the top
  – No search is needed for replacement: the LRU page is always at the bottom
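A simulation in the spirit of the stack implementation can use Python's `collections.OrderedDict`, which keeps its keys in a doubly linked order: the last key plays the role of the top of the stack and the first key is the LRU page. The function name `lru_faults` is an assumption made for this sketch.

```python
from collections import OrderedDict


def lru_faults(refs, num_frames):
    """Count page faults under LRU replacement."""
    stack = OrderedDict()  # first key = LRU page, last key = most recent
    faults = 0
    for page in refs:
        if page in stack:
            stack.move_to_end(page)    # referenced: move to the top
            continue
        faults += 1
        if len(stack) == num_frames:
            stack.popitem(last=False)  # evict from the bottom, no search
        stack[page] = True
    return faults


if __name__ == "__main__":
    print(lru_faults([1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5], 4))
```

Unlike FIFO, LRU is a stack algorithm, so adding frames never increases its fault count on a given reference string.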

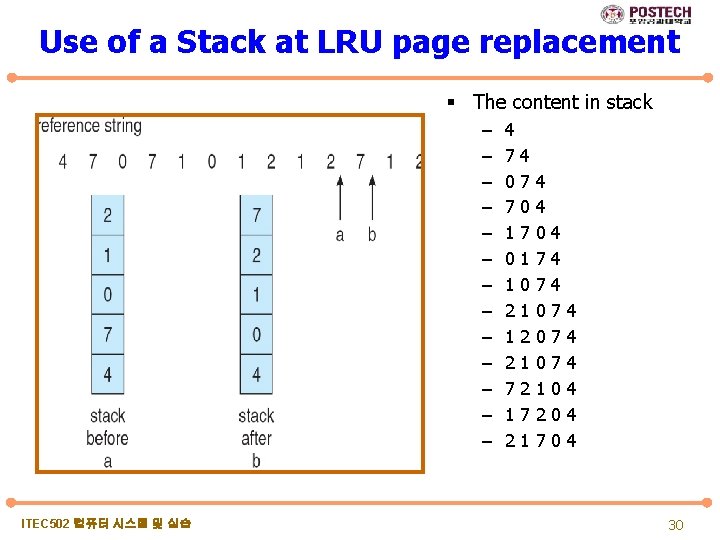

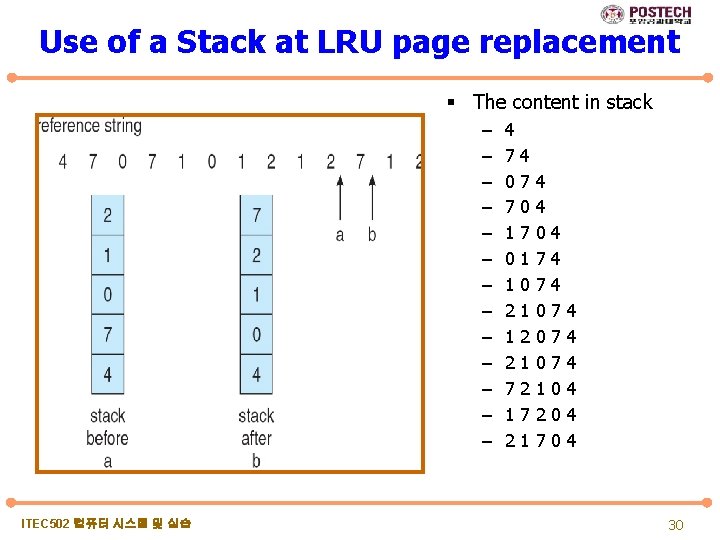

Use of a Stack at LRU page replacement
§ [Figure: contents of the LRU stack as pages are referenced]

LRU Approximation Algorithms
§ Reference-bit algorithm
  – Each page is associated with a bit (reference bit), initially set to 0
  – When the page is referenced, the corresponding bit is set to 1
  – On a page fault, replace a page whose reference bit is 0 (if one exists). We do not know the order of use, however
  – Then reset all reference bits to 0
§ Second-chance algorithm
  – Needs a reference bit
  – When a page must be replaced, search for a victim page
  – If reference bit = 0, replace this page
  – If reference bit = 1, then
    • set the reference bit to 0
    • leave the page in memory
    • move to the next page in clock order and repeat the check

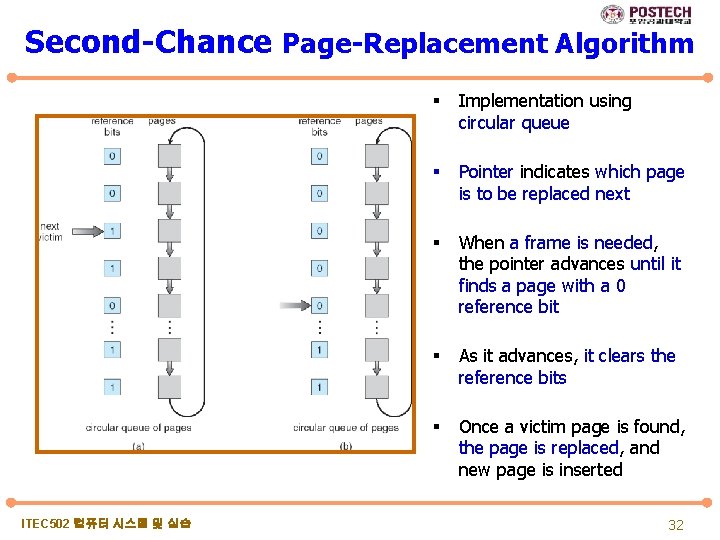

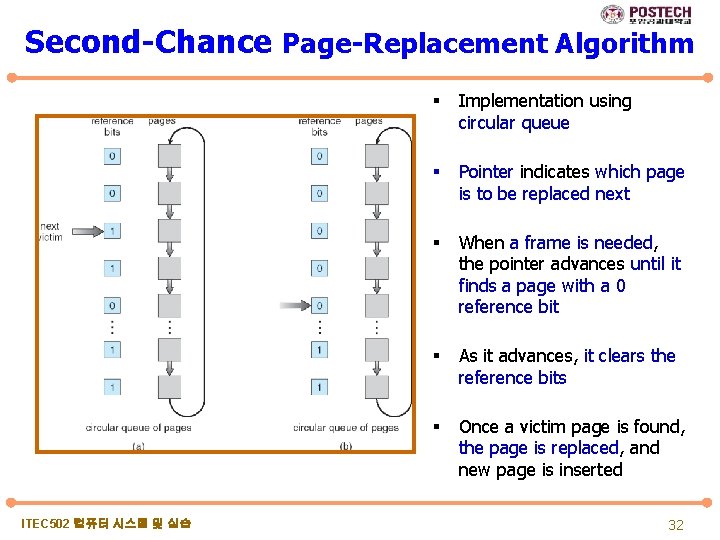

Second-Chance Page-Replacement Algorithm
§ Implemented using a circular queue
§ A pointer indicates which page is to be replaced next
§ When a frame is needed, the pointer advances until it finds a page with a 0 reference bit
§ As it advances, it clears the reference bits
§ Once a victim page is found, the page is replaced, and the new page is inserted
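The circular-queue description above can be sketched as follows. Two details are assumptions, since the slide leaves them open: a newly loaded page starts with reference bit 0, and the pointer moves past the frame it has just filled. The function name `second_chance_faults` is also hypothetical.

```python
def second_chance_faults(refs, num_frames):
    """Count page faults under second-chance (clock) replacement."""
    frames = [None] * num_frames  # circular queue of resident pages
    ref_bit = [0] * num_frames    # one reference bit per frame
    where = {}                    # page -> index of the frame holding it
    hand = 0                      # pointer to the next replacement candidate
    faults = 0
    for page in refs:
        if page in where:
            ref_bit[where[page]] = 1        # hit: set the reference bit
            continue
        faults += 1
        while ref_bit[hand] == 1:           # give a second chance
            ref_bit[hand] = 0
            hand = (hand + 1) % num_frames
        victim = frames[hand]
        if victim is not None:
            del where[victim]
        frames[hand] = page
        where[page] = hand
        ref_bit[hand] = 0                   # assumption: new page starts at 0
        hand = (hand + 1) % num_frames
    return faults


if __name__ == "__main__":
    print(second_chance_faults([1, 2, 1, 3, 1], 2))
```

On the string 1, 2, 1, 3, 1 with two frames, the re-referenced page 1 survives the fault on page 3, so the final reference to 1 is a hit; plain FIFO would have evicted it.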

Counting Algorithms
§ Keep a counter of the number of references that have been made to each page
§ LFU Algorithm: replaces the page with the smallest count
§ MFU Algorithm: replaces the page with the largest count
  – based on the argument that the page with the smallest count was probably just brought in and has yet to be used

Allocation of Frames
§ How do we allocate the fixed amount of free memory among the various processes?
  – With 93 free frames and two processes, how many frames does each process get?
§ Constraints
  – We can’t allocate more than the total number of available frames
  – Each process needs at least a minimum number of frames
§ Two major allocation schemes
  – equal allocation
  – proportional allocation

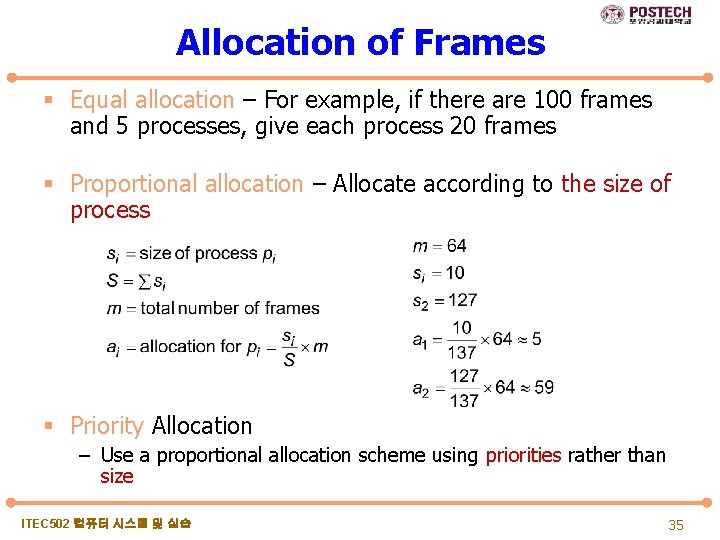

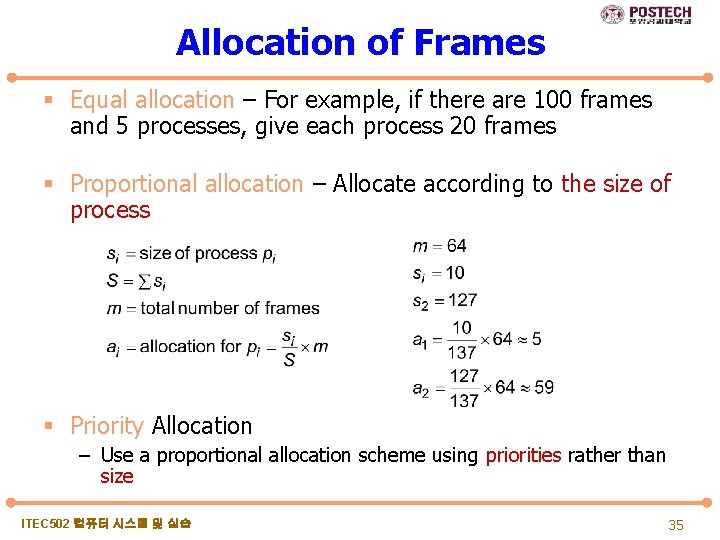

Allocation of Frames
§ Equal allocation
  – For example, if there are 100 frames and 5 processes, give each process 20 frames
§ Proportional allocation
  – Allocate according to the size of the process
§ Priority allocation
  – Use a proportional allocation scheme based on priorities rather than size
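The two schemes can be compared with a small calculation. The sketch assumes the usual proportional rule, where process i of size s_i receives about (s_i / Σ s) x m of the m available frames, rounded down; the function names and the example sizes (10 and 127 pages, 62 frames) are illustrative and not taken from the slides.

```python
def equal_allocation(total_frames, num_processes):
    """Give every process the same share; return (shares, leftover frames)."""
    share = total_frames // num_processes
    return [share] * num_processes, total_frames - share * num_processes


def proportional_allocation(total_frames, sizes):
    """Give each process frames in proportion to its size (rounded down)."""
    total_size = sum(sizes)
    shares = [size * total_frames // total_size for size in sizes]
    return shares, total_frames - sum(shares)


if __name__ == "__main__":
    print(equal_allocation(93, 2))                  # the 93-frame question
    print(proportional_allocation(62, [10, 127]))   # hypothetical sizes
```

Frames left over after rounding down could be kept as a free-frame pool; a real allocator would also enforce the per-process minimum mentioned on the previous slide.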

Global vs. Local Allocation
§ Global replacement
  – a process selects a replacement frame from the set of all frames
  – one process can take a frame from another
§ Local replacement
  – each process selects from only its own set of allocated frames

Thrashing
§ If a process does not have “enough” frames, the page-fault rate is very high. This leads to:
  – low CPU utilization
  – the operating system thinks that it needs to increase the degree of multiprogramming
  – another process is added to the system
§ Thrashing: a process is busy swapping pages in and out
  – High paging activity
  – A process is thrashing if it is spending more time paging than executing

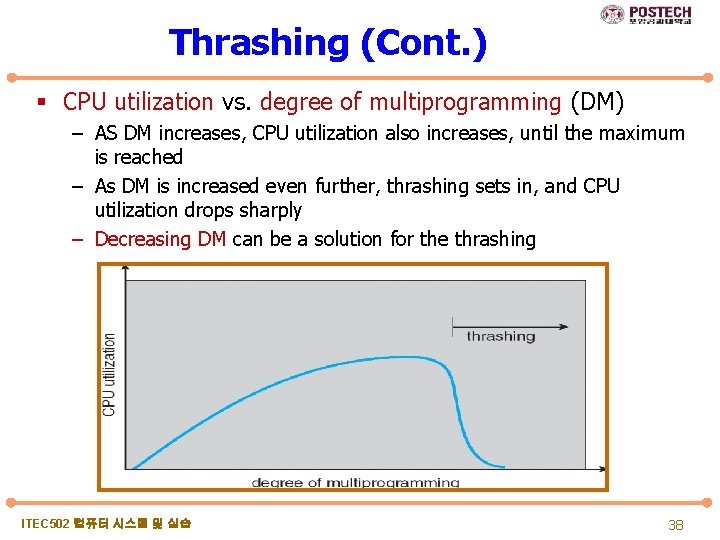

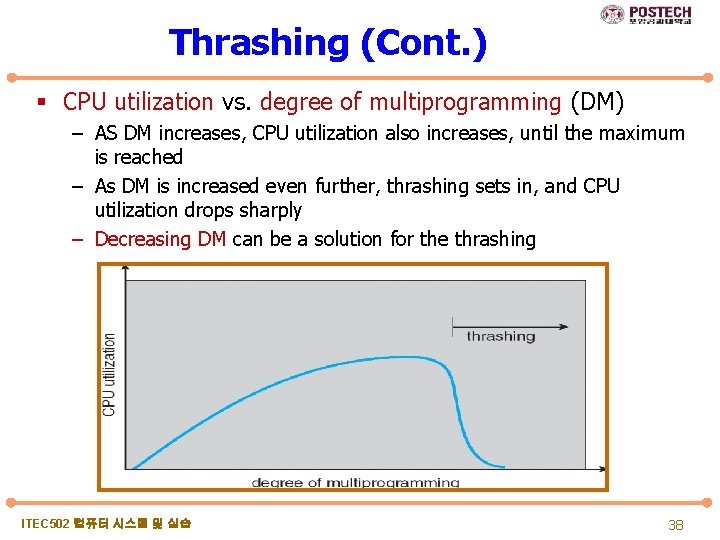

Thrashing (Cont.)
§ CPU utilization vs. degree of multiprogramming (DM)
  – As DM increases, CPU utilization also increases, until the maximum is reached
  – As DM is increased even further, thrashing sets in, and CPU utilization drops sharply
  – Decreasing DM can be a remedy for thrashing

Demand Paging and Thrashing
§ To prevent thrashing, we must give each process a proper number of frames in a demand-paging scheme
  – How do we know how many frames it needs?
  – The working-set model, based on locality, is one solution
§ Locality model
  – As a process executes, it moves from locality to locality
  – A locality is a set of pages that are actively used together
  – A program is generally composed of several different localities
  – The localities may overlap
§ Why does thrashing occur?
  – Σ (size of locality) > total memory size

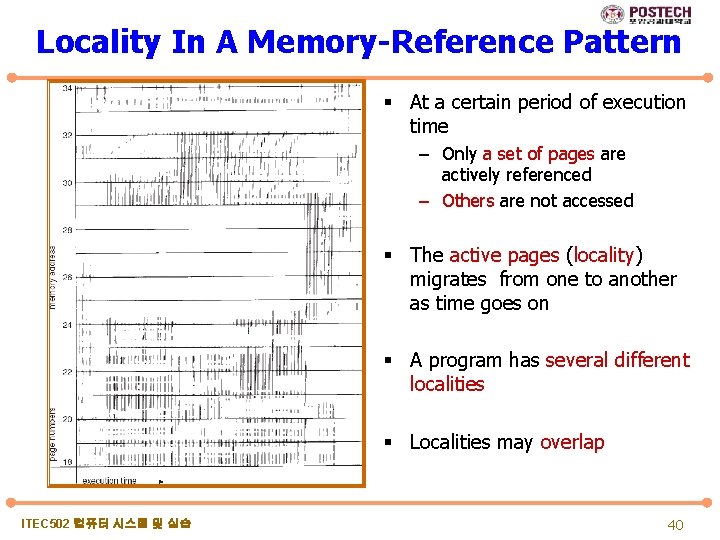

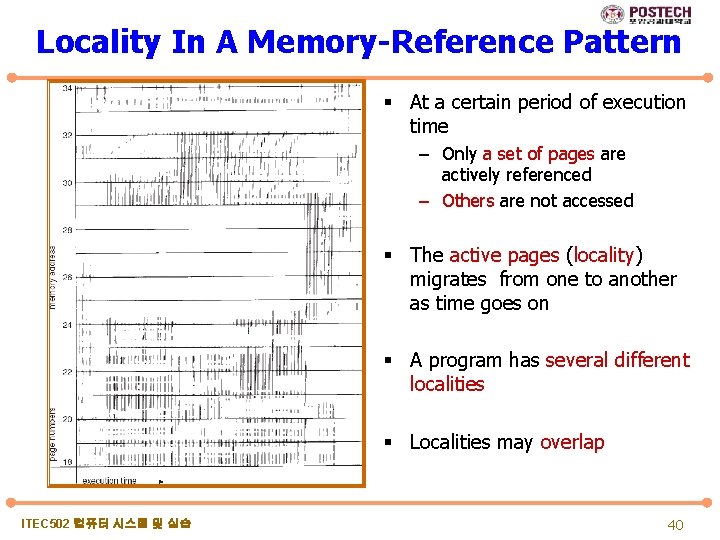

Locality In A Memory-Reference Pattern
§ During a certain period of execution time
  – Only a set of pages is actively referenced
  – Others are not accessed
§ The set of active pages (the locality) shifts from one set to another as time goes on
§ A program has several different localities
§ Localities may overlap

Working-Set Model
§ Δ = working-set window = a fixed number of page references
  – Example: 10,000 instructions
§ WSSi (working-set size of process Pi) = total number of pages referenced in the most recent Δ (varies in time)
  – if Δ is too small, it will not encompass the entire locality
  – if Δ is too large, it will encompass several localities
  – if Δ = ∞, it will encompass the entire program
§ D = Σ WSSi = total demand for frames
§ if D > m (total number of available frames) → thrashing
§ Policy: if D > m, then suspend one of the processes

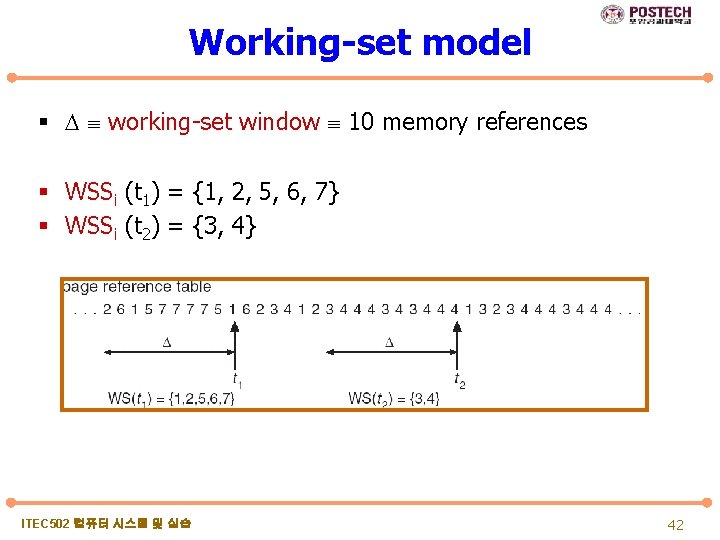

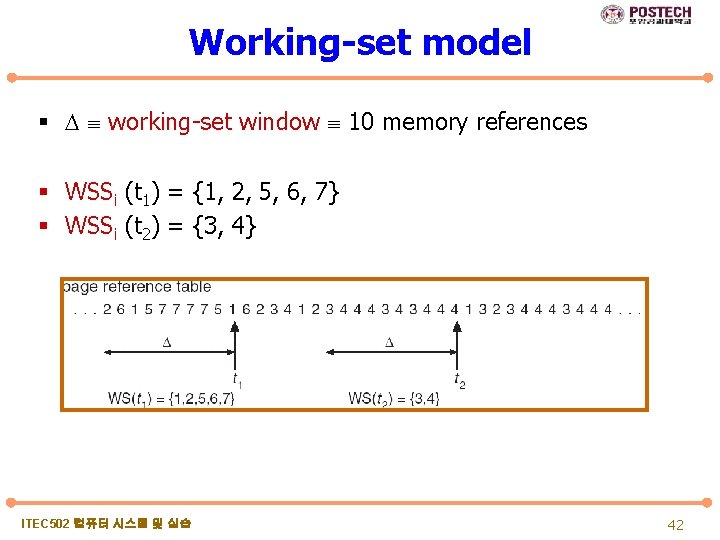

Working-set model
§ Working-set window Δ = 10 memory references
§ Working set at t1 = {1, 2, 5, 6, 7}, so WSSi(t1) = 5
§ Working set at t2 = {3, 4}, so WSSi(t2) = 2
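The working-set definition and the D > m test can be sketched directly. The reference string below is a hypothetical one, constructed so that a window of 10 references yields the two sets shown on this slide; the function names are assumptions as well.

```python
def working_set(refs, t, delta):
    """Pages referenced in the `delta` most recent references up to time t.

    `t` counts references from 1, so t = 10 means "after the 10th reference".
    """
    start = max(0, t - delta)
    return set(refs[start:t])


def total_demand(working_sets):
    """D = sum of the working-set sizes of all processes."""
    return sum(len(ws) for ws in working_sets)


def is_thrashing(working_sets, available_frames):
    """True when the total demand D exceeds the m available frames."""
    return total_demand(working_sets) > available_frames


if __name__ == "__main__":
    # Hypothetical reference string for one process.
    refs = [2, 6, 1, 5, 7, 7, 7, 7, 5, 1,
            3, 4, 4, 4, 3, 4, 3, 4, 4, 4]
    print(working_set(refs, t=10, delta=10))
    print(working_set(refs, t=20, delta=10))
```

With these two working sets treated as belonging to two processes, D = 5 + 2 = 7, so under the slide's policy a process would be suspended only if fewer than 7 frames were available.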

Keeping Track of the Working Set
§ Approximate with an interval timer + a reference bit
§ Example: Δ = 10,000
  – The timer interrupts after every 5,000 time units
  – Keep 2 bits in memory for each page
  – Whenever the timer interrupts, copy the reference bits and then reset all of them to 0
  – If one of the bits in memory = 1 → the page is in the working set
§ Why is this not completely accurate?
  – We can get the WSS only every 5,000 time units
§ Improvement: 10 bits and an interrupt every 1,000 time units
  – WSS every 1,000 time units

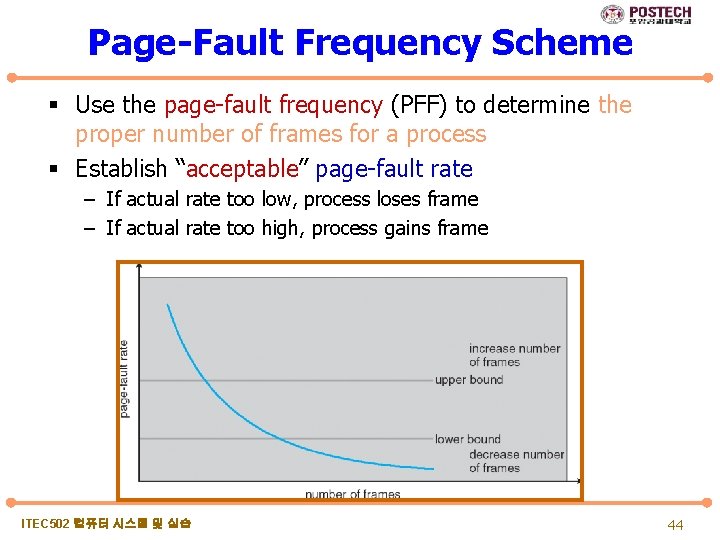

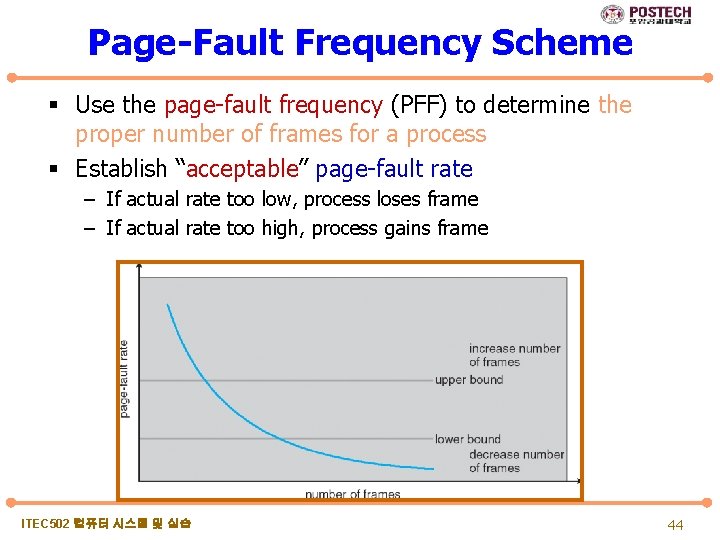

Page-Fault Frequency Scheme
§ Use the page-fault frequency (PFF) to determine the proper number of frames for a process
§ Establish an “acceptable” page-fault rate
  – If the actual rate is too low, the process loses a frame
  – If the actual rate is too high, the process gains a frame

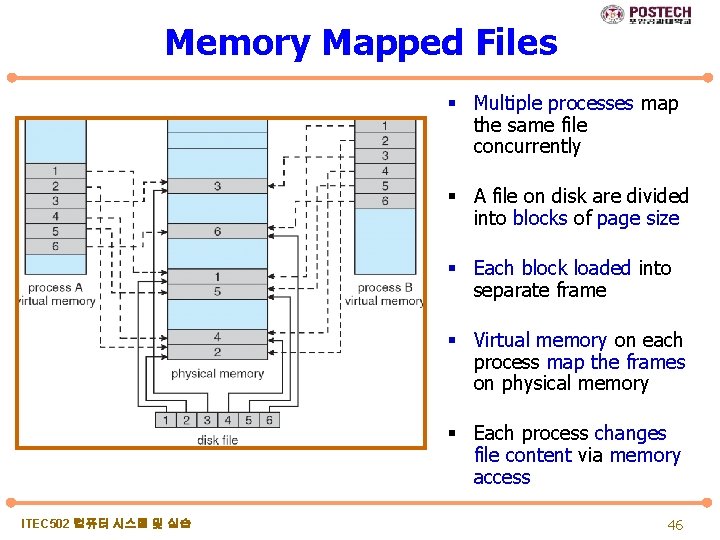

Memory-Mapped Files
§ Memory-mapped file I/O allows file I/O to be treated as routine memory access
  – by mapping a disk block to a page in memory
§ A file is initially read using demand paging
  – A page-sized portion of the file is read from the file system into a physical page
  – Subsequent reads and writes to the file are treated as ordinary memory accesses
§ Simplifies file access by treating file I/O through memory
  – no need for read() and write() system calls
§ Also allows several processes to map the same file
  – by allowing the pages in memory to be shared
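As a small illustration of file access through memory, Python's standard `mmap` module lets a file be modified with ordinary slice assignment instead of explicit read() and write() calls. The helper names and the temporary file are assumptions made for this sketch.

```python
import mmap
import os
import tempfile


def overwrite_prefix_via_mmap(path, new_bytes):
    """Overwrite the first bytes of a file through a memory mapping."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole file.
        with mmap.mmap(f.fileno(), 0) as mapped:
            mapped[:len(new_bytes)] = new_bytes  # plain memory write
            mapped.flush()                       # push dirty pages to the file


def demo():
    """Create a scratch file, edit it via mmap, and return its contents."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"hello world")
        overwrite_prefix_via_mmap(path, b"HELLO")
        with open(path, "rb") as f:
            return f.read()
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

The mapping cannot grow the file, so the slice being assigned must have the same length as the bytes written into it.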

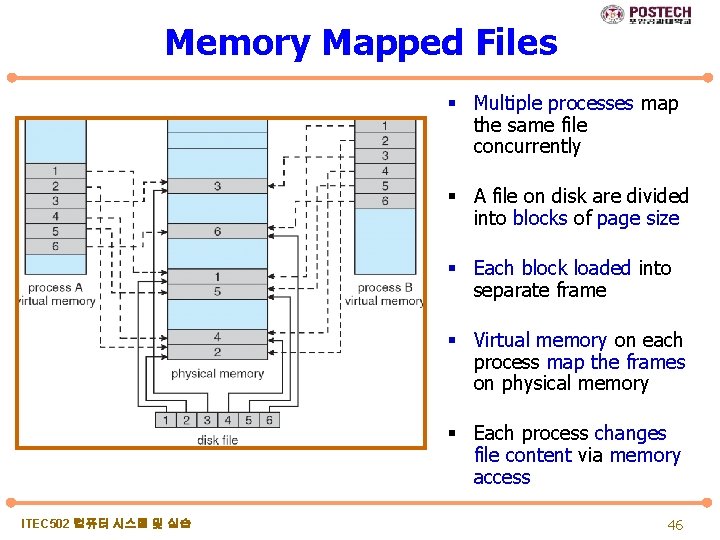

Memory Mapped Files
§ Multiple processes can map the same file concurrently
§ A file on disk is divided into blocks of page size
§ Each block is loaded into a separate frame
§ The virtual memory of each process maps to the frames in physical memory
§ Each process changes file content via memory access

Other Issues – Prepaging
§ Prepaging
  – reduces the large number of page faults that occur at process startup
  – prepage all or some of the pages a process will need, before they are referenced
  – But if prepaged pages are unused, I/O and memory are wasted
  – Assume s pages are prepaged and a fraction α (0 ≤ α ≤ 1) of them is used
    • Is the cost of the s x α saved page faults greater or less than the cost of prepaging s x (1 – α) unnecessary pages?
    • α close to 0 → prepaging loses
    • α close to 1 → prepaging wins

Other Issues – Page Size
§ Page size selection must take into consideration:
  – Fragmentation (internal)
    • A large page size increases internal fragmentation
  – Page table size
    • Decreasing the page size increases the number of pages
  – I/O overhead
    • A large page size increases the I/O transfer time per page
  – Locality
    • A large page size reduces the number of pages needed to cover a locality

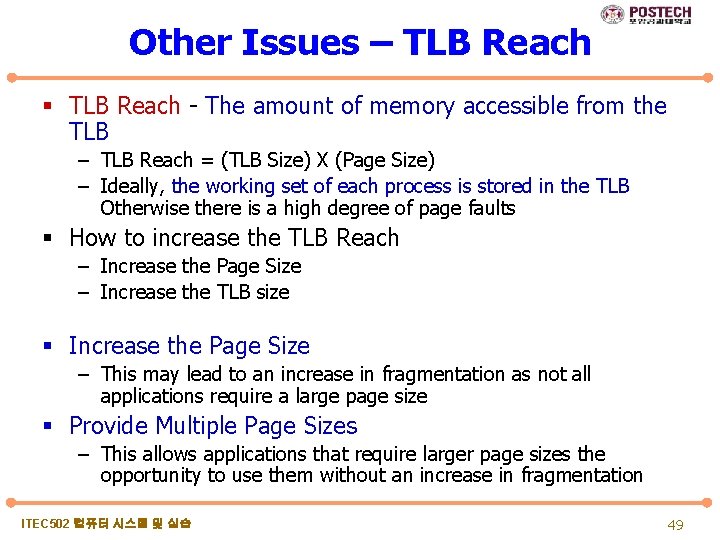

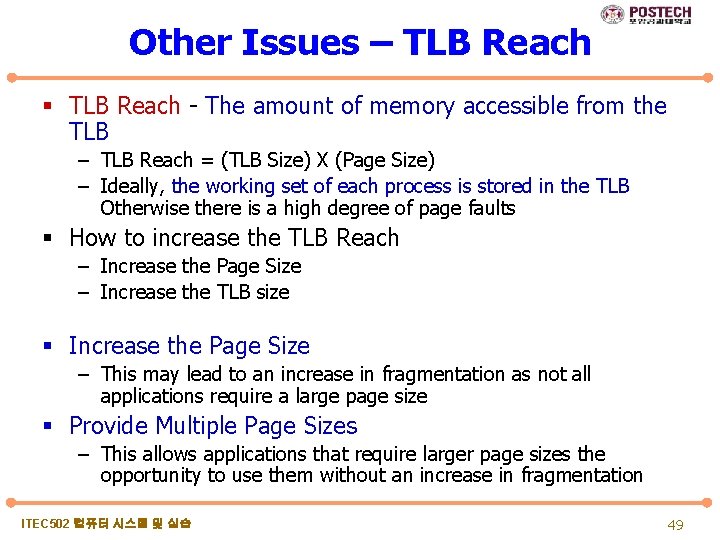

Other Issues – TLB Reach
§ TLB Reach: the amount of memory accessible from the TLB
  – TLB Reach = (TLB Size) x (Page Size)
  – Ideally, the working set of each process is stored in the TLB; otherwise there is a high rate of TLB misses
§ How to increase the TLB Reach
  – Increase the page size
  – Increase the TLB size
§ Increase the page size
  – This may lead to an increase in fragmentation, as not all applications require a large page size
§ Provide multiple page sizes
  – This allows applications that require larger page sizes to use them without an increase in fragmentation

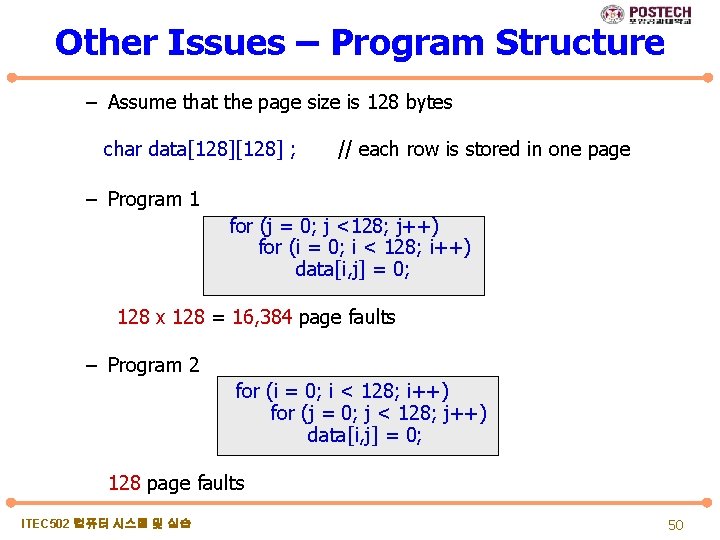

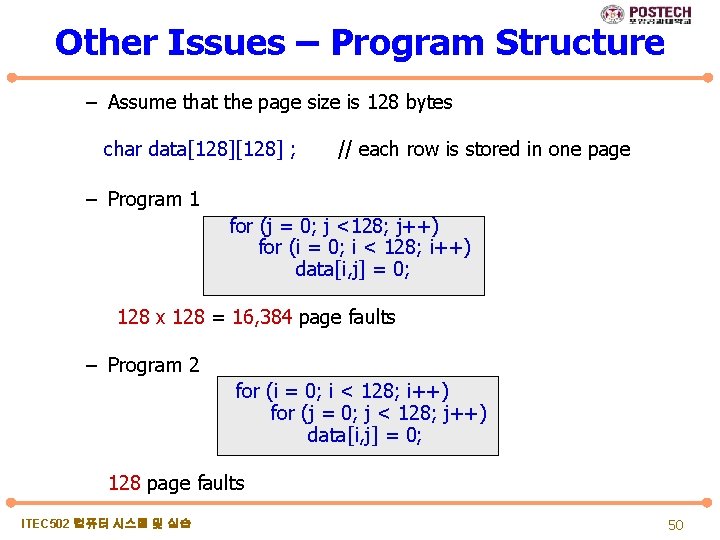

Other Issues – Program Structure
– Assume that the page size is 128 bytes
    char data[128][128];   // each row is stored in one page
– Program 1
    for (j = 0; j < 128; j++)
        for (i = 0; i < 128; i++)
            data[i][j] = 0;
  128 x 128 = 16,384 page faults (when the process has fewer than 128 frames for the array)
– Program 2
    for (i = 0; i < 128; i++)
        for (j = 0; j < 128; j++)
            data[i][j] = 0;
  128 page faults
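The two fault counts can be reproduced by simulating the accesses. The sketch assumes that the array's rows are the only pages involved, that the process is given fewer than 128 frames for them, and that replacement is LRU; the function names are hypothetical.

```python
from collections import OrderedDict


def count_faults(accesses, num_frames):
    """Count LRU page faults; the page of data[i][j] is its row number i."""
    resident = OrderedDict()
    faults = 0
    for i, _j in accesses:
        if i in resident:
            resident.move_to_end(i)
            continue
        faults += 1
        if len(resident) == num_frames:
            resident.popitem(last=False)  # evict the least recently used row
        resident[i] = True
    return faults


def column_order(n=128):
    """Program 1: the inner loop walks down a column, touching n pages."""
    return ((i, j) for j in range(n) for i in range(n))


def row_order(n=128):
    """Program 2: the inner loop stays inside one row, that is, one page."""
    return ((i, j) for i in range(n) for j in range(n))


if __name__ == "__main__":
    print(count_faults(column_order(), num_frames=64))
    print(count_faults(row_order(), num_frames=64))
```

With 128 or more frames the column-order loop would also fault only 128 times, which is why the frame limit matters for the comparison.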

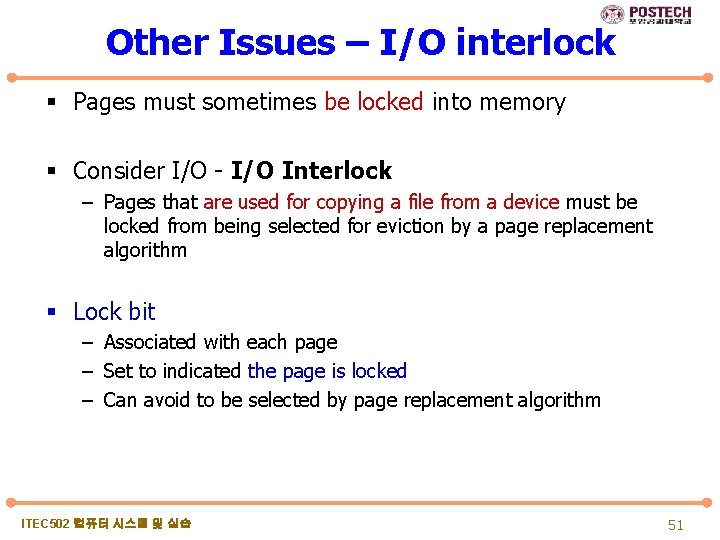

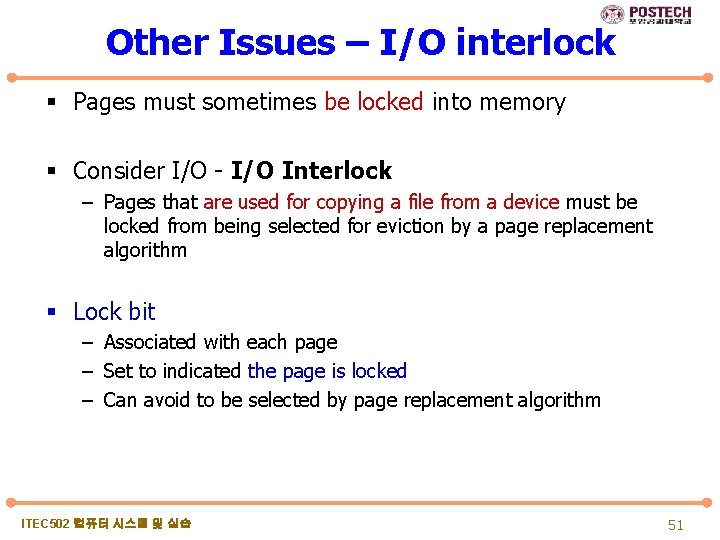

Other Issues – I/O interlock
§ Pages must sometimes be locked into memory
§ Consider I/O: I/O interlock
  – Pages that are used for copying a file from a device must be locked so that a page replacement algorithm cannot select them for eviction
§ Lock bit
  – Associated with each page
  – Set to indicate that the page is locked
  – A locked page is not selected by the page replacement algorithm

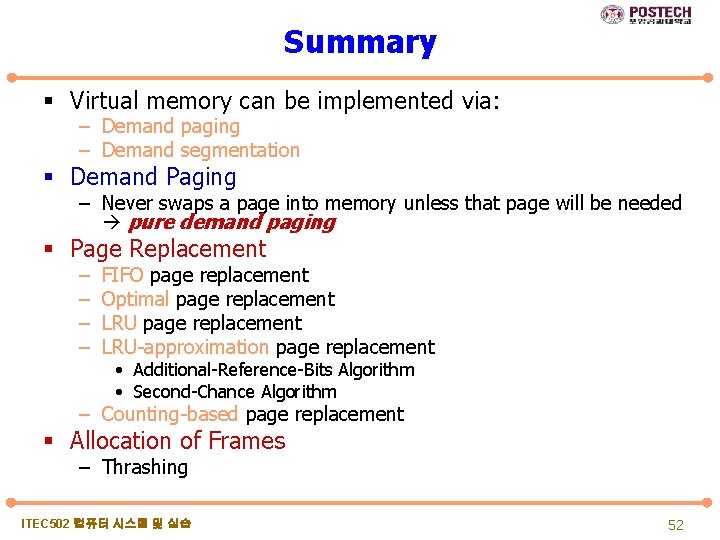

Summary
§ Virtual memory can be implemented via:
  – Demand paging
  – Demand segmentation
§ Demand Paging
  – Never swaps a page into memory unless that page will be needed (pure demand paging)
§ Page Replacement
  – FIFO page replacement
  – Optimal page replacement
  – LRU-approximation page replacement
    • Additional-Reference-Bits Algorithm
    • Second-Chance Algorithm
  – Counting-based page replacement
§ Allocation of Frames
  – Thrashing