
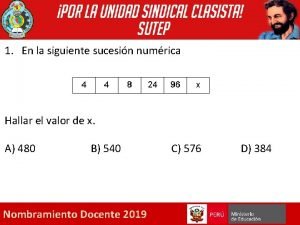

- Slides: 93

Hadoop Workshop Immersion 2018

Introduction

What is Hadoop? • Apache top-level project: an open-source implementation of frameworks for reliable, scalable, distributed computing and data storage. • It is a flexible and highly available architecture for large-scale computation and data processing on a network of commodity hardware. • Designed to answer the question: “How to process big data with reasonable cost and time?”

Hadoop Timeline • 2005: Doug Cutting and Michael J. Cafarella developed Hadoop to support distribution for the Nutch search engine project. • The project was funded by Yahoo. • 2006: Yahoo gave the project to the Apache Software Foundation.

Hadoop Milestones • 2008: Hadoop wins the Terabyte Sort Benchmark (sorted 1 terabyte of data in 209 seconds, compared to the previous record of 297 seconds). • 2009: Avro and Chukwa became new members of the Hadoop framework family. • 2010: Hadoop's HBase, Hive and Pig subprojects completed, adding more computational power to the Hadoop framework. • 2011: ZooKeeper completed. • 2013: Hadoop 1.1.2 and Hadoop 2.0.3 alpha; Ambari, Cassandra and Mahout have been added.

Wait... So what is it? • Hadoop: an open-source software framework that supports data-intensive distributed applications, licensed under the Apache v2 license. • Goals / Requirements: • Abstract and facilitate the storage and processing of large and/or rapidly growing data sets • Structured and non-structured data • Simple programming models • High scalability and availability • Use commodity (cheap!) hardware with little redundancy • Fault-tolerance • Move computation rather than data

Mathematical Background • Based on functional programming concepts: • map takes a function and a collection of values and applies that function to every element of the collection individually. • map f: X -> Y, {X} => {Y} • reduce takes a binary function and a collection of values and applies the function pairwise, folding the collection into a single value. • reduce g: Y x Y -> Y, {Y} => Y • Example: (reduce '+' (map length '(() (a) (ab) (abc)))) = (reduce '+' '(0 1 2 3)) = 0 + 1 + 2 + 3 = 6
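The same example can be written with Python's built-in `map` and `functools.reduce`. This is only an illustrative sketch; the list `words` stands in for the four sequences of lengths 0, 1, 2 and 3.

```python
from functools import reduce
from operator import add

# Four "sequences" of lengths 0, 1, 2 and 3 (illustrative input).
words = ["", "a", "ab", "abc"]

# map: apply len to every element individually.
lengths = list(map(len, words))

# reduce: fold the mapped values pairwise with a binary function.
total = reduce(add, lengths)

print(lengths, total)
```

Because `add` is associative and commutative, the fold could be split across machines and the partial sums combined in any order, which is the property MapReduce relies on.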

Motivation • Many separate attempts to write programs that process large amounts of data. • Data: • Crawled web documents • Web request logs • Output of jobs: • Inverted indices • Graph structure of web documents • Summaries of pages crawled per host • Most frequent queries in a given day • Computations are simple! • Data is complex (very large)!

Map Reduce Infrastructure • How to parallelize the computation • Distribute the data • Handle failures • Balance load • Can run on commodity boxes

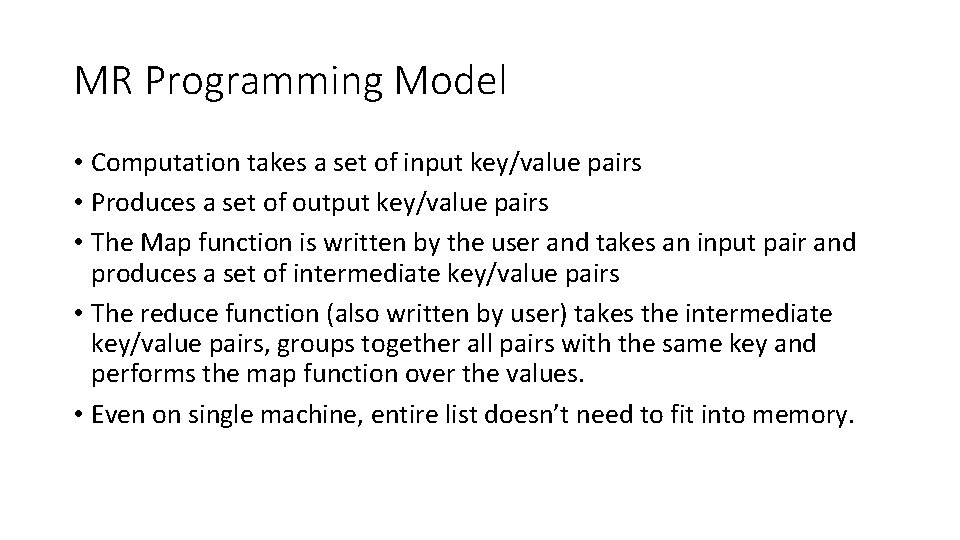

MR Programming Model • Computation takes a set of input key/value pairs • Produces a set of output key/value pairs • The map function is written by the user; it takes an input pair and produces a set of intermediate key/value pairs • The library groups together all intermediate pairs with the same key and passes them to the reduce function (also written by the user), which merges the values for that key. • Values are supplied through an iterator, so even on a single machine the entire list doesn’t need to fit into memory.

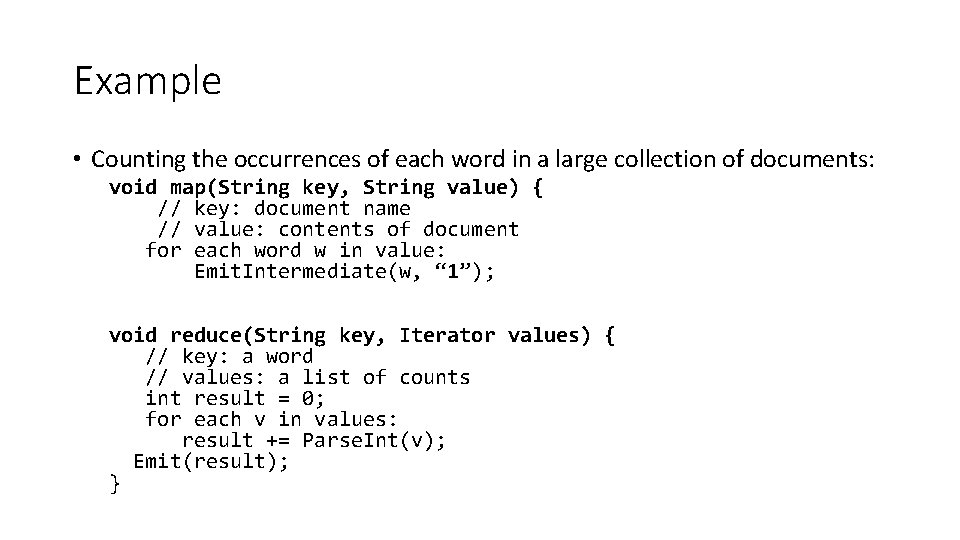

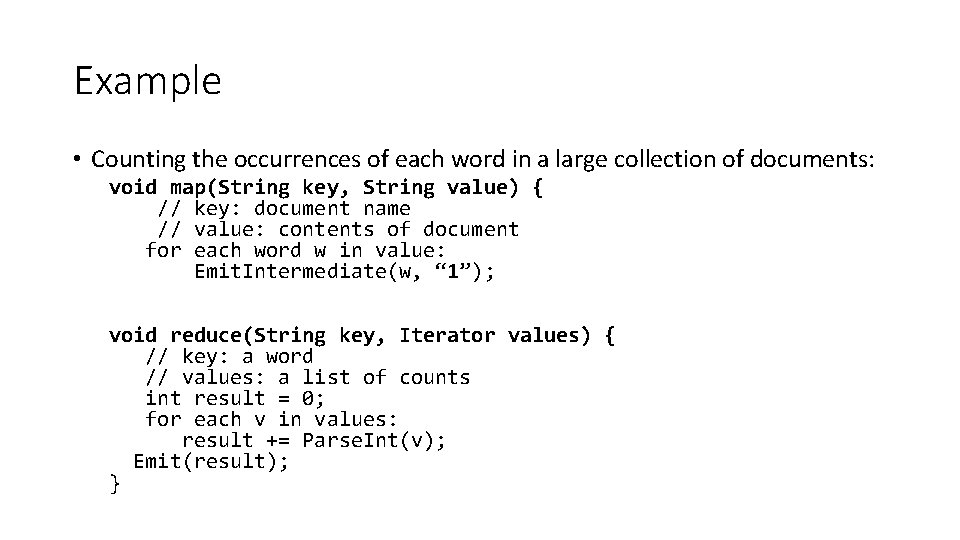

Example • Counting the occurrences of each word in a large collection of documents:

    void map(String key, String value) {
      // key: document name
      // value: contents of document
      for each word w in value:
        EmitIntermediate(w, "1");
    }

    void reduce(String key, Iterator values) {
      // key: a word
      // values: a list of counts
      int result = 0;
      for each v in values:
        result += ParseInt(v);
      Emit(result);
    }
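A runnable, single-process version of the word count may help make the pseudocode concrete. This is a minimal sketch, not Hadoop or Google code: `map_fn`, `reduce_fn` and `run_word_count` are hypothetical names, and the grouping step that the MapReduce library normally performs is simulated with a dictionary.

```python
from collections import defaultdict

def map_fn(doc_name, contents):
    """Emit an intermediate (word, 1) pair for every word in the document."""
    for word in contents.split():
        yield word, 1

def reduce_fn(word, counts):
    """Sum all counts that were emitted for one word."""
    return word, sum(counts)

def run_word_count(documents):
    """Simulate the library: group intermediate pairs by key, then reduce."""
    groups = defaultdict(list)
    for name, text in documents.items():
        for word, count in map_fn(name, text):
            groups[word].append(count)
    return dict(reduce_fn(word, counts) for word, counts in sorted(groups.items()))

docs = {"d1": "the quick fox", "d2": "the lazy dog the end"}
counts = run_word_count(docs)
print(counts)
```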

Types • map (k1, v1) -> list(k2, v2) • reduce (k2, list(v2)) -> list(v2)

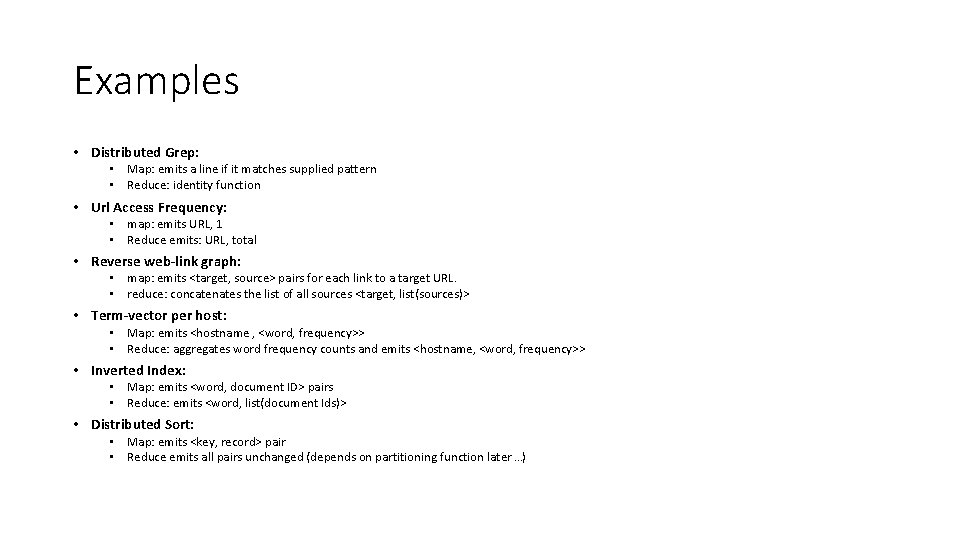

Examples • Distributed grep: • Map: emits a line if it matches the supplied pattern • Reduce: identity function • URL access frequency: • Map: emits <URL, 1> • Reduce: emits <URL, total count> • Reverse web-link graph: • Map: emits <target, source> pairs for each link to a target URL • Reduce: concatenates the list of all sources and emits <target, list(sources)> • Term vector per host: • Map: emits <hostname, <word, frequency>> • Reduce: aggregates word frequency counts and emits <hostname, <word, frequency>> • Inverted index: • Map: emits <word, document ID> pairs • Reduce: emits <word, list(document IDs)> • Distributed sort: • Map: emits <key, record> pairs • Reduce: emits all pairs unchanged (ordering depends on the partitioning function, covered later)

Assumptions for Google • Machines are dual-processor x86 machines running Linux with 2-4 GB RAM. • Commodity networking hardware is used: either 100 Mb/s or 1 Gb/s. • A cluster consists of hundreds or thousands of machines, so failures are common. • Storage is provided by inexpensive IDE disks attached to individual machines. A distributed file system manages the data on these disks. • Users submit jobs to a scheduling system. Each job consists of a set of tasks and is mapped by the scheduler to available machines.

Distributed File Systems

Trends 1. Component failures are the norm rather than the exception. • Some machines are down; some are broken and will never be fixed. 2. Files are huge by traditional standards. • Multiple GBs • 100’s of large files • Small files are supported, but the system is not optimized for them. 3. Files are mutated by appends rather than overwrites. • Once written, files typically don’t change. • Files are often only read, and then sequentially. • Files are often read or written by many entities at a time. 4. Co-design of applications and file system APIs. • Bandwidth is more important than latency. • Mostly for offline processing

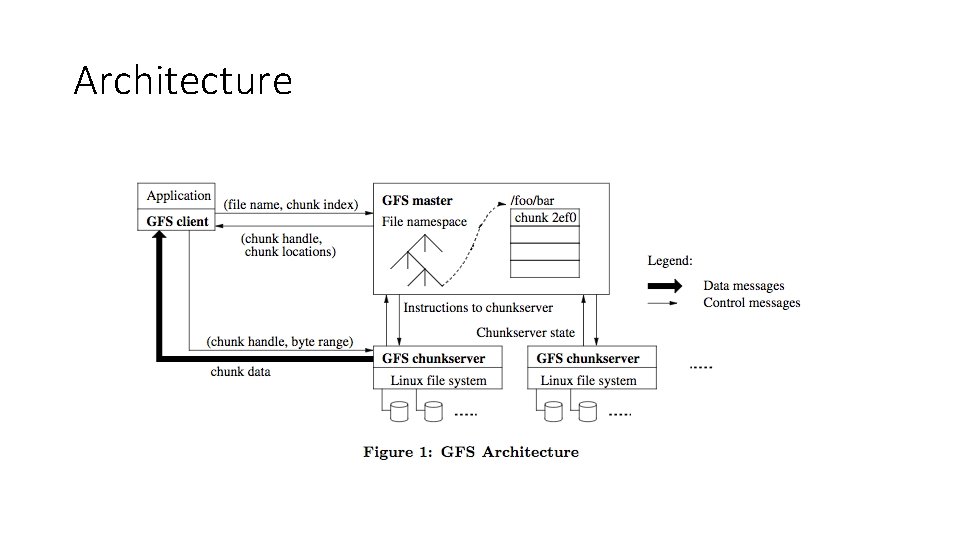

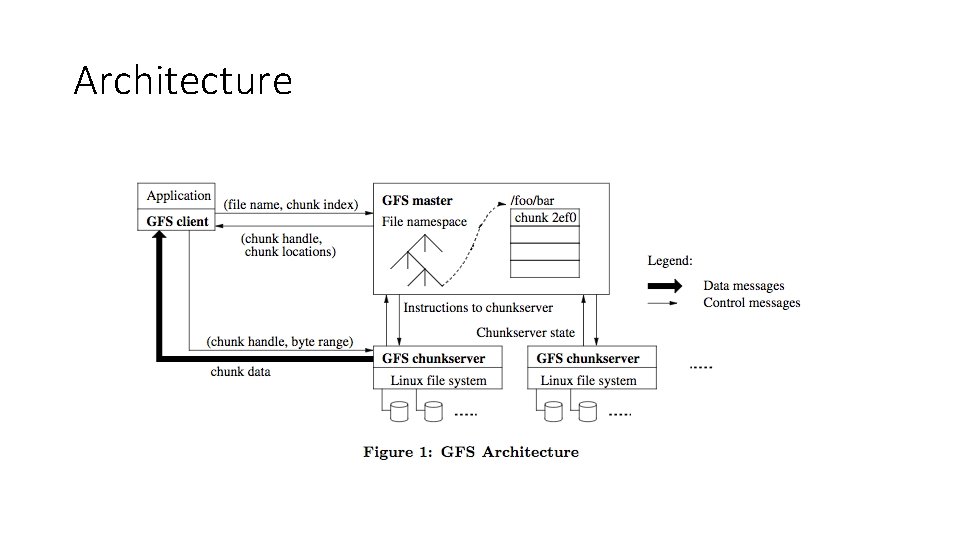

Architecture • A single master and multiple chunkservers accessed by multiple clients. • Clients and chunkservers may be on the same machine. • Files are divided into fixed-size chunks. • Each chunk has a globally unique chunk handle assigned by the master. • Chunkservers store chunks on local disks. • Each chunk is replicated on multiple chunkservers (default replication is 3). • Master maintains all metadata: access control, namespace, mapping from files to chunks and current locations of chunks. • Master also controls chunk lease management, garbage collection of orphaned chunks and chunk migration between chunkservers. • Master periodically communicates with each chunkserver using heartbeat messages.

Clients • Clients communicate with the master for metadata operations. • Data-bearing communications go directly to chunkservers. • No POSIX API support; the client does not hook into vnodes, inodes, etc. • Neither client nor chunkserver caches file data, so there are no cache coherence issues.

Architecture

Single Master • Having a single master simplifies the design and enables more complex behavior. • Need to ensure it doesn’t become a bottleneck. • Reads/writes of data do not go through the master. • Clients ask the master where to go for data (this location information can be cached). • The chunk itself is read from a chunkserver.

Simple Read Example 1. Client translates file name and byte offset into a chunk index within the file (chunk size is fixed). 2. Client sends the master a request containing the file name and chunk index. 3. Master replies with the corresponding chunk handle and the locations of the replicas. 4. Client caches this information, keyed by file name and chunk index. 5. Client sends a request to one of the replicas (the closest). • Request specifies the chunk handle and a byte range within the chunk. 6. Further reads of the same chunk require no more master interaction. 7. Client can ask for multiple chunks at once, and the master can also reply with information for neighboring chunks.
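The read steps above can be sketched in a few lines of Python. Everything here is hypothetical scaffolding rather than GFS code: `FakeMaster`, `Client` and `plan_read` are invented names, and the replica list is assumed to be ordered closest-first so that "closest" is simply the first entry.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # fixed chunk size of 64 MB

def locate(offset):
    """Step 1: translate a byte offset into (chunk index, offset within chunk)."""
    return offset // CHUNK_SIZE, offset % CHUNK_SIZE

class FakeMaster:
    """Hypothetical stand-in for the master's file-to-chunk table."""
    def __init__(self, table):
        self.table = table
        self.lookups = 0

    def find_chunk(self, name, index):
        self.lookups += 1
        return self.table[(name, index)]  # (chunk handle, replica locations)

class Client:
    def __init__(self, master):
        self.master = master
        self.cache = {}  # (file name, chunk index) -> (handle, replicas)

    def plan_read(self, name, offset):
        index, within = locate(offset)
        key = (name, index)
        if key not in self.cache:          # steps 2-4: ask master once, then cache
            self.cache[key] = self.master.find_chunk(name, index)
        handle, replicas = self.cache[key]
        return handle, replicas[0], within  # step 5: read from the closest replica

master = FakeMaster({
    ("/logs/a", 0): ("h0", ["cs1", "cs2", "cs3"]),
    ("/logs/a", 1): ("h1", ["cs2", "cs4", "cs5"]),
})
client = Client(master)
first = client.plan_read("/logs/a", 70 * 1024 * 1024)
second = client.plan_read("/logs/a", 100 * 1024 * 1024)
print(first, second, master.lookups)
```

Both reads fall into chunk 1, so the second one is served from the client's cache and the master is contacted only once.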

Chunk Size • Chunk size is 64 MB! • Each replica is a plain Linux file on a chunkserver. • Lazy space allocation avoids wasting space to internal fragmentation. • Large chunk size: (+) Reduces the client’s need to go to the master. (+) Client can keep a persistent TCP connection to the chunkserver (it performs more operations on a larger chunk). (+) Reduces the size of the metadata. (-) Might create hotspots; can be mitigated by a higher replication factor for popular chunks.

Metadata • Master stores three types of metadata: 1. File and chunk namespaces 2. Mapping from files to chunks 3. Locations of each chunk’s replicas • All kept in memory. • (1) and (2) are kept persistent by logging mutations to an operation log. • Operation log is kept on local disk and replicated to remote machines. • Chunk locations are not persisted. • At startup the master asks each chunkserver which chunks it has.

Data Structures • Keeping data structures in memory is fast. • Master can scan its tables for: • Garbage collection • Re-replication (after chunkserver failure) • Chunk migration to balance load and disk space • But capacity is limited by the amount of RAM available. • Master keeps about 64 bytes of metadata per 64 MB chunk. • Can always add more RAM.
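A quick back-of-the-envelope calculation shows why the in-memory design is workable. The sketch below assumes exactly 64 bytes of chunk metadata per 64 MB chunk, counts only chunk metadata (not the file namespace), and uses binary units; `master_memory_bytes` is a hypothetical helper.

```python
CHUNK_SIZE = 64 * 2**20      # 64 MB per chunk
METADATA_PER_CHUNK = 64      # bytes of master metadata per chunk (assumed upper bound)

def master_memory_bytes(stored_bytes):
    """Chunk metadata the master must hold for a given amount of file data."""
    chunks = -(-stored_bytes // CHUNK_SIZE)  # ceiling division
    return chunks * METADATA_PER_CHUNK

one_pib = 2**50
print(master_memory_bytes(one_pib) / 2**30, "GiB of metadata for 1 PiB of data")
```

Under these assumptions the ratio is about one million to one: a petabyte of file data needs on the order of a gigabyte of master memory.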

Chunk Locations • Rather than keep persistent information in master, chunkservers have final say. • Simplifies design. • Not rare events: Chunkservers go offline, get renamed, restart, get added, etc.

Operation Log • Records changes to metadata. • Only persistent record of metadata. • Serves as a logical timeline that defines the order of concurrent operations. • Metadata changes must be logged before they become visible to clients. • The log is replicated before the master responds to a client request as successful. • Recovery happens by replaying the log file. • Master can also checkpoint its state to keep the log file small. • Recovery: load the latest checkpoint, then replay the log records written after it. • Master switches to a new log file while checkpointing, so new updates are written to the new log and the checkpoint is built from the old log.

Consistency Model • File creation is handled by the master. • Locking guarantees correctness. • Operation log defines a global order over these operations. • A file region is consistent if all clients see the same data, regardless of which replica they access. • A file region is defined if it is consistent and clients see the mutation in its entirety. • Concurrent successful mutations leave a region undefined but consistent: data is mingled from many writes. • A failed mutation leaves the file region inconsistent: clients may see different data. • Mutations may be writes or record appends.

Defined & Consistency • After a sequence of successful mutations, the mutated file region is guaranteed to be defined and to contain the data written by the last mutation. • GFS guarantees this by: • Applying mutations to a chunk in the same order on all its replicas. • Using chunk version numbers to detect any replica that has become stale because it missed mutations while its chunkserver was down. • Stale replicas are not returned by the master when queried for chunk locations, and are garbage collected as soon as possible. • Clients cache chunk locations, so they may briefly read from a stale replica; this window is limited by: • The cache entry’s timeout. • The next open of the same file.

Application Use Cases • Writer generates a file from beginning to end using file appends. • Checkpoints may include application-level checksums. • Appending is far more efficient than random writes. • Writers can restart incrementally from a checkpoint. • Many writers concurrently append to a file for merged results. • Readers may deal with duplicates by using checksums. • Or filter them out using unique identifiers within records.

Chunk Leases • Master grants a chunk lease to one of the replicas, called the primary. • The primary picks a serial order for all mutations to the chunk. • All other replicas follow this ordering when applying updates. • Mutation order is defined by lease grant order and by the serial numbers assigned by the primary. • Leases time out after 60 seconds. • Primary can request and receive extensions if mutations are ongoing. • Requests/grants are piggybacked onto the heartbeat messages. • Master may revoke a lease before expiration (for instance on file rename). • If master/primary communication breaks down, the lease can be re-assigned after it expires.

Control Flow 1. Client asks the master which chunkserver holds the current lease for the chunk and for the locations of the other replicas. (The master may have to grant a lease first.) 2. Master replies with the identity of the primary and the locations of the secondaries. • Cached for future mutations. • Client only needs to contact the master again when the primary’s lease expires. 3. Client pushes data to all replicas, in any order. • Each chunkserver stores the data in an LRU cache until the data is used or aged out. 4. Once all replicas have acknowledged receiving the data, the client sends a write request to the primary. • Primary assigns consecutive serial numbers to all the mutations. 5. The primary forwards the write request to all of the secondary replicas. • Each replica must apply mutations in the same order. 6. Secondaries all reply indicating the operation has been completed. 7. Primary replies to the client with an indication of any failures, which will trigger retries of steps 3-7.

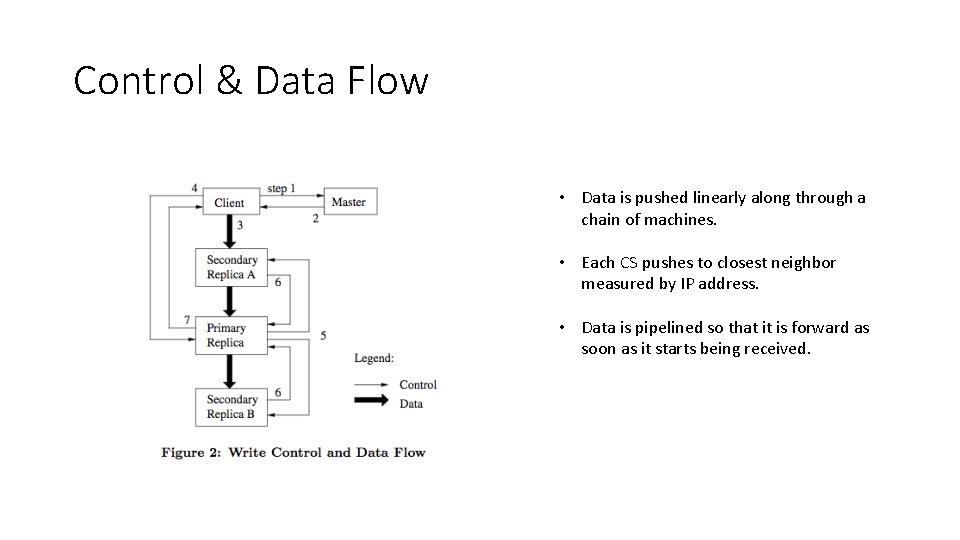

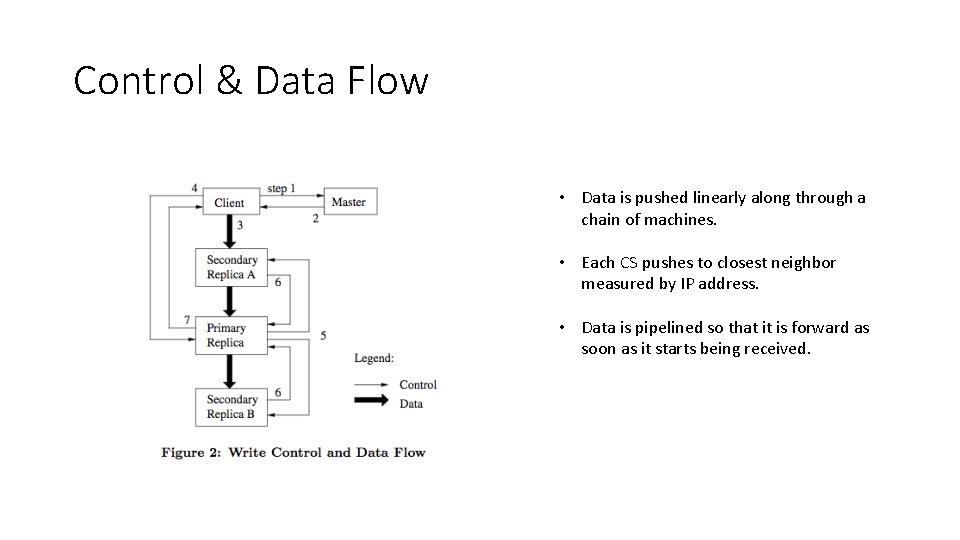

Control & Data Flow • Data is pushed linearly along a chain of machines. • Each chunkserver pushes to its closest neighbor, with distance estimated from IP addresses. • Data is pipelined: it is forwarded as soon as it starts being received.

Master Operations • Namespace locking • Snapshotting /home/user to /save/user will prevent /home/user/foo from being created. • Acquires read locks on /home and /save and write locks on /home/user and /save/user. • Creation of file /home/user/foo: • acquires read locks on /home and /home/user and a write lock on /home/user/foo.
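The locking rule can be checked with a small sketch. The helper names `locks_for` and `conflicts` are hypothetical; the snapshot's lock sets are copied from the slide, and a conflict is assumed to occur whenever one operation holds a write lock on a path that the other locks in any mode.

```python
def locks_for(path):
    """Read locks on every ancestor directory, write lock on the full path."""
    parts = [p for p in path.split("/") if p]
    prefixes = ["/" + "/".join(parts[:i]) for i in range(1, len(parts) + 1)]
    return set(prefixes[:-1]), {prefixes[-1]}

def conflicts(a, b):
    """Two lock sets conflict if either one write-locks a path the other locks."""
    (read_a, write_a), (read_b, write_b) = a, b
    return bool(write_a & (read_b | write_b)) or bool(write_b & (read_a | write_a))

# Lock sets for snapshotting /home/user to /save/user, as listed on the slide.
snapshot = ({"/home", "/save"}, {"/home/user", "/save/user"})
create_foo = locks_for("/home/user/foo")
create_bar = locks_for("/home/user/bar")

print(conflicts(snapshot, create_foo))    # the two operations are serialized
print(conflicts(create_foo, create_bar))  # creations in one directory can proceed together
```

The creation needs a read lock on /home/user while the snapshot holds a write lock on it, so the two cannot run at the same time. Two creations in the same directory only share read locks on the directory, so they do not block each other.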

Chunk Placement • When a chunk is created, the master chooses where to place it: • (1) Place new replicas on chunkservers with below-average disk space utilization. • (2) Limit the number of recent creations on each chunkserver: creation typically indicates heavy write traffic to come. • (3) Spread replicas across racks (physically dispersed to avoid loss of data if a rack, server farm, etc. goes dark).

Chunk Re-Replication • Master re-replicates a chunk when number of replicas drops below threshold. • Can be prioritized based on: • How far below threshold chunk is. • Live files over recently deleted files. • Client requests for chunk. • Master tells new replicas to copy over data. • Limit number of active copies.

Master Garbage Collection • Deletion is logged immediately. • File is renamed to a hidden name. • It can still be read. • The hidden file is only removed after k days (a configurable interval). • When the hidden file is removed, all its metadata is deleted. • Heartbeat messages are used to coordinate cleanup of data on chunkservers.

GFS Client code • GFS client code breaks a write into multiple write operations if it crosses a chunk boundary.

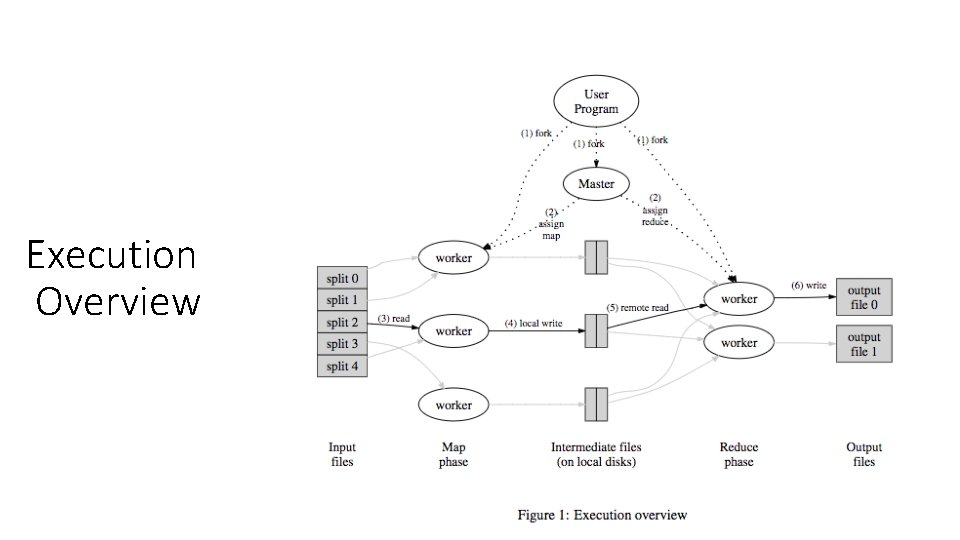

Execution Overview

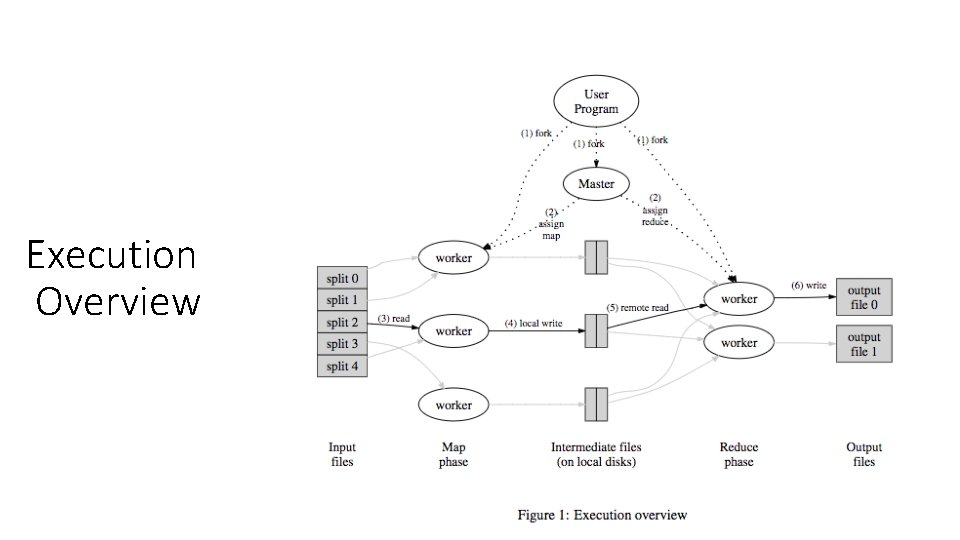

Execution Overview • Map invocations are distributed across multiple machines by automatically partitioning the input data into M splits. • Each split can be processed by a different machine. • Reduce invocations are distributed by partitioning the intermediate key space into R pieces: hash(key) mod R • The number of partitions (R) and the partitioning function are supplied by the user.

Execution Overview

Execution overview steps 1. Split input files into M pieces (typically 16 to 64 MB each). The number of map tasks is based on M. 2. Master (controller) assigns tasks to idle machines in the cluster. 3. Map worker reads its assigned split, parses out key/value pairs and passes each one to the user’s map function; the intermediate pairs it produces are buffered in memory. 4. Periodically, buffered pairs are written to local disk, partitioned into R regions by the partitioning function. The locations on local disk are sent back to the master. 5. Master notifies reducers of the available data. 6. Reducer copies the data over from the mappers. 7. Reducer sorts the data by intermediate key so that all occurrences of the same key are grouped together. 8. Reducer iterates over the sorted data and calls the user’s reduce function with each key and its set of intermediate values. Output is written to the output file for that reduce partition. 9. In the end there are R resulting files.
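The steps above can be simulated in one process to show how M splits and R partitions interact. This is a sketch under stated assumptions, not the real runtime: `run_job` and `partition` are hypothetical names, nested lists stand in for the R regions on each mapper's local disk, and `zlib.crc32` is used as a deterministic stand-in for the hash function.

```python
import zlib
from itertools import groupby
from operator import itemgetter

def partition(key, r):
    """Partitioning function: hash(key) mod R (crc32 chosen for determinism)."""
    return zlib.crc32(key.encode("utf-8")) % r

def run_job(splits, map_fn, reduce_fn, r):
    # Steps 3-4: each map task writes its pairs into R regions.
    regions = [[[] for _ in range(r)] for _ in splits]
    for m, split in enumerate(splits):
        for key, value in map_fn(split):
            regions[m][partition(key, r)].append((key, value))

    outputs = []
    for p in range(r):
        # Step 6: reducer p copies region p from every mapper.
        pairs = [kv for m in range(len(splits)) for kv in regions[m][p]]
        # Step 7: sort by intermediate key so equal keys are adjacent.
        pairs.sort(key=itemgetter(0))
        # Step 8: call reduce once per key with all of its values.
        outputs.append([
            reduce_fn(key, [value for _, value in group])
            for key, group in groupby(pairs, key=itemgetter(0))
        ])
    return outputs  # Step 9: R result "files"

splits = ["a b a", "b c", "a c c"]  # M = 3
result = run_job(
    splits,
    map_fn=lambda text: ((word, 1) for word in text.split()),
    reduce_fn=lambda key, values: (key, sum(values)),
    r=2,
)
print(result)
```

Each key lands in exactly one of the R outputs, and each output is sorted by key.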

Master Data Structures • For each map and reduce task master stores the state: • Idle, in-progress or completed. • And identity of the worker machine. • Master is the conduit that propagates the locations from mapper to reducer tasks.

Fault Tolerance • The master pings every worker periodically. • If there is no response, the worker is marked as failed. • Tasks it was running are reset to idle and can be rescheduled on other workers. • Map tasks must be re-executed even if the failure happens after they completed, since their intermediate output is stored locally on the failed worker. • If a map task moves from worker A to worker B, reducers that have not yet read its output read from B instead of A.

Master failure • The master could write periodic checkpoints; if the master task dies, a new copy can be started from the last checkpointed state. • However, the implementation simply aborts the job if the master fails; the job can then be restarted by the user or by the job scheduler.

Semantics & Failure • If the user’s map and reduce functions are deterministic, the same output is regenerated after a failure (or by multiple runs of the same job). • Each mapper writes R temporary files; each reducer writes one temporary file. Writes are atomic. • Reducer renames its file from the temporary name to the final output name using the atomic rename operation of the underlying file system. • The result is semantically the same as if all mappers and reducers had run sequentially as one job on a supercomputer. • If the user’s map or reduce function is non-deterministic, multiple runs of the same job can produce different output.

Locality • GFS is used to distribute the data across the cluster. • Master uses this information when assigning mapper jobs to machines. • Assign map job to chunkserver that has a replica of the data. • Or as close as possible. • Avoid having to transfer large files across the network!

Task granularity • Each worker can run multiple map and reduce tasks. • M, R >> W (number of workers) • Allows for better dynamic load balancing. • Speeds up recovery when a worker fails. • Many small tasks are safer than fewer larger tasks! • Master needs to keep O(M * R) state in memory and make O(M + R) scheduling decisions. • Example: M = 200,000, R = 5,000 and W = 2,000.

Back up tasks • Straggler: a single task that takes a long time to complete when everything else is done. • Might be a bad disk • Too many tasks running on the host • Bugs: one such bug turned off processor caches • When an MR operation is close to completion, the master can schedule backup executions of the remaining in-progress tasks. • Whichever execution finishes first is used for the next stage.

Partitioning Functions • Consider the case where keys are URLs and we want all entries for a single host to end up in the same output file. • The user can specify hash(Hostname(urlkey)) mod R as the partitioning function, causing all URLs from the same host to end up in the same output file.
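A hostname-based partitioner is short enough to sketch directly. `host_partition` is a hypothetical name, `urllib.parse.urlparse` plays the role of `Hostname(urlkey)`, and `zlib.crc32` is an assumed stand-in for the hash function (Python's built-in `hash` on strings varies between processes, which would break partitioning across machines).

```python
import zlib
from urllib.parse import urlparse

def host_partition(url, r):
    """hash(Hostname(urlkey)) mod R: all URLs of one host map to one partition."""
    host = urlparse(url).hostname or ""
    return zlib.crc32(host.encode("utf-8")) % r

R = 8
p1 = host_partition("http://example.com/a", R)
p2 = host_partition("http://example.com/b?x=1", R)
print(p1, p2)
```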

Ordering Guarantees • Within a partition, intermediate keys/value pairs are processed in increasing key order • Makes it easy to generate sorted output files per partition • Makes random access lookups by key easier. • Users also prefer this.

Combiner Function • Sometimes there is significant repetition in the intermediate keys produced by each map task. • The user can specify an optional combiner function. • Consider word counts over documents: a single mapper produces the pair <the, "1"> hundreds of times, and all of these would need to be transmitted to the reducer. Instead, we do local reducing on the mapper before transmitting. • Combiner function is run on mapper hosts after map is completed. • Typically the same code is used for both reducer and combiner. • The output of a combiner is still sent to the reducer. • Reducer function needs to be commutative and associative.
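The effect of a combiner is easy to see on a single map task's output. This is a minimal sketch with hypothetical names (`map_words`, `combine`); it only illustrates local pre-aggregation and does not model the network transfer itself.

```python
from collections import Counter

def map_words(text):
    """Map output for one split: one (word, 1) pair per occurrence."""
    return [(word, 1) for word in text.split()]

def combine(pairs):
    """Local reduce on the mapper host: sum counts per key before sending."""
    local = Counter()
    for key, value in pairs:
        local[key] += value
    return sorted(local.items())

raw = map_words("the cat and the dog and the bird")
combined = combine(raw)
print(len(raw), "pairs before combining,", len(combined), "after")
```

Summation is commutative and associative, so summing partial sums on the reducer gives the same totals as summing the raw ones.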

Side-Effects • MR users can produce auxiliary files as additional outputs. • Each mapper writes to a temporary file and renames it once complete. • The application (user mapper) is responsible for making these writes atomic.

Skipping bad records • Allow mappers to skip bad records. • Could be due to bugs or faulty hardware in data collection, or corrupted data. • Allow jobs to skip % of bad records. • If you have 1 billion records, some will be bad. Period.

Local Execution • Allow map reduce jobs to be run on local machine on small slice of data to check for correctness and debugging purposes.

Status information • Master runs an HTTP server so users can track the status of the job. • How many tasks have been completed, are in progress, have failed, etc.

Counters • MR library provides counter facility to count number of events. • Each worker updates counters locally. • Counts are sent back to master • Master aggregates these counters and displays on the status page • Can avoid double counting by ensuring multiple instances of same job don’t affect the count. • Useful for debugging, sanity checking and business insights.

What can’t MR do (well) • Any N^2 or N^3 operation. • That is if you have a set of records and you want to look at all pairs of records or triples of records, etc. • MR really needs to be done individually across each record.

Introduction to Java APIs

Overview • Hadoop MapReduce is a software framework for easily writing applications which process vast amounts of data (multi-terabyte datasets) in parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.

Jobs • A MapReduce job usually splits the input data-set into independent chunks which are processed by the map tasks in a completely parallel manner. • The framework sorts the outputs of the maps, which are then input to the reduce tasks. • Typically both the input and the output of the job are stored in a filesystem. • The framework takes care of scheduling tasks, monitoring them and re-executing the failed tasks.

Storage • Typically the compute nodes and the storage nodes are the same, that is, the MapReduce framework and the Hadoop Distributed File System (see HDFS Architecture Guide) are running on the same set of nodes. • This configuration allows the framework to effectively schedule tasks on the nodes where data is already present, resulting in very high aggregate bandwidth across the cluster.

Framework • The MapReduce framework consists of a single master JobTracker and one slave TaskTracker per cluster node. • The master is responsible for scheduling the jobs' component tasks on the slaves, monitoring them and re-executing the failed tasks. • The slaves execute the tasks as directed by the master.

Job Tracker • Minimally, applications specify the input/output locations and supply map and reduce functions via implementations of appropriate interfaces and/or abstract classes. • These, and other job parameters, comprise the job configuration. • The Hadoop job client then submits the job (jar/executable etc.) and configuration to the JobTracker, which then assumes the responsibility of distributing the software/configuration to the slaves, scheduling tasks and monitoring them, and providing status and diagnostic information to the job client.

Language • Although the Hadoop framework is implemented in Java™, MapReduce applications need not be written in Java. • Hadoop Streaming is a utility which allows users to create and run jobs with any executables (e.g. shell utilities) as the mapper and/or the reducer. • Hadoop Pipes is a SWIG-compatible C++ API to implement MapReduce applications (non-JNI™ based).

Input & Output • The MapReduce framework operates exclusively on <key, value> pairs, that is, the framework views the input to the job as a set of <key, value> pairs and produces a set of <key, value> pairs as the output of the job, conceivably of different types. • The key and value classes have to be serializable by the framework and hence need to implement the Writable interface. • Additionally, the key classes have to implement the WritableComparable interface to facilitate sorting by the framework. • Input and output types of a MapReduce job: • (input) <k1, v1> -> map -> <k2, v2> -> combine -> <k2, v2> -> reduce -> <k3, v3> (output)

Mapper

Mapper • Mapper maps input key/value pairs to a set of intermediate key/value pairs. • Maps are the individual tasks that transform input records into intermediate records. The transformed intermediate records do not need to be of the same type as the input records. A given input pair may map to zero or many output pairs. • The Hadoop MapReduce framework spawns one map task for each InputSplit generated by the InputFormat for the job.

Mapper Configuration • Overall, Mapper implementations are passed the JobConf for the job via the JobConfigurable.configure(JobConf) method and can override it to initialize themselves. • The framework then calls map(WritableComparable, Writable, OutputCollector, Reporter) for each key/value pair in the InputSplit for that task. • Applications can then override the Closeable.close() method to perform any required cleanup.

Mapper Output • Output pairs do not need to be of the same types as input pairs. • A given input pair may map to zero or many output pairs. • Output pairs are collected with calls to OutputCollector.collect(WritableComparable, Writable). • Applications can use the Reporter to report progress, set application-level status messages and update Counters, or just indicate that they are alive.

Intermediary Output • All intermediate values associated with a given output key are subsequently grouped by the framework, and passed to the Reducer(s) to determine the final output. • Users can control the grouping by specifying a Comparator via JobConf.setOutputKeyComparatorClass(Class). • The Mapper outputs are sorted and then partitioned per Reducer. • The total number of partitions is the same as the number of reduce tasks for the job. • Users can control which keys (and hence records) go to which Reducer by implementing a custom Partitioner.

Combiner • Users can optionally specify a combiner, via JobConf.setCombinerClass(Class), to perform local aggregation of the intermediate outputs, which helps to cut down the amount of data transferred from the Mapper to the Reducer. • The intermediate, sorted outputs are always stored in a simple (key-len, key, value-len, value) format. • Applications can control if, and how, the intermediate outputs are to be compressed and the CompressionCodec to be used via the JobConf.

How many Maps? • The number of maps is usually driven by the total size of the inputs, that is, the total number of blocks of the input files. • The right level of parallelism for maps seems to be around 10-100 maps per node, although it has been set up to 300 maps for very CPU-light map tasks. • Task setup takes a while, so it is best if the maps take at least a minute to execute. • Thus, if you expect 10 TB of input data and have a blocksize of 128 MB, you'll end up with 82,000 maps, unless setNumMapTasks(int) (which only provides a hint to the framework) is used to set it even higher.
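The 82,000 figure can be reproduced with one line of arithmetic. The sketch assumes one map task per block and binary units (1 TB = 2^40 bytes, 1 MB = 2^20 bytes); `num_map_tasks` is a hypothetical helper, not a Hadoop API.

```python
def num_map_tasks(input_bytes, block_bytes):
    """One map per input block, rounding up for a final partial block."""
    return -(-input_bytes // block_bytes)  # ceiling division

maps = num_map_tasks(10 * 2**40, 128 * 2**20)
print(maps)  # 81920, i.e. roughly 82,000 maps
```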

Reducer

Reducer • Reducer reduces a set of intermediate values which share a key to a smaller set of values. • The number of reduces for the job is set by the user via JobConf.setNumReduceTasks(int). • Overall, Reducer implementations are passed the JobConf for the job via the JobConfigurable.configure(JobConf) method and can override it to initialize themselves. • The framework then calls the reduce(WritableComparable, Iterator, OutputCollector, Reporter) method for each <key, (list of values)> pair in the grouped inputs. • Applications can then override the Closeable.close() method to perform any required cleanup.

Reducer Phases • Reducer has 3 primary phases: shuffle, sort and reduce. • Shuffle: Input to the Reducer is the sorted output of the mappers. In this phase the framework fetches the relevant partition of the output of all the mappers, via HTTP. • Sort: The framework groups Reducer inputs by keys (since different mappers may have output the same key) in this stage. The shuffle and sort phases occur simultaneously; while map outputs are being fetched they are merged. • Reduce: In this phase the reduce(WritableComparable, Iterator, OutputCollector, Reporter) method is called for each <key, (list of values)> pair in the grouped inputs.
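Because each mapper's output is already sorted, the sort phase amounts to a merge. The following sketch uses Python's `heapq.merge` and `itertools.groupby` to illustrate the idea on in-memory lists; the data and variable names are made up, and the real framework merges files fetched over HTTP rather than lists.

```python
import heapq
from itertools import groupby
from operator import itemgetter

# Already-sorted partitions fetched from two mappers (illustrative data).
map_outputs = [
    [("apple", 1), ("cat", 1)],
    [("apple", 1), ("bird", 1), ("cat", 1)],
]

# Shuffle + sort: merge the sorted runs without re-sorting everything.
merged = heapq.merge(*map_outputs, key=itemgetter(0))

# Group equal keys so reduce sees <key, (list of values)>.
grouped = [
    (key, [value for _, value in group])
    for key, group in groupby(merged, key=itemgetter(0))
]
print(grouped)
```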

Reducer Output • The output of the reduce task is typically written to the FileSystem via OutputCollector.collect(WritableComparable, Writable). • Applications can use the Reporter to report progress, set application-level status messages and update Counters, or just indicate that they are alive. • The output of the Reducer is not sorted.

How Many Reducers? • The right number of reduces seems to be 0.95 or 1.75 multiplied by (<no. of nodes> * mapred.tasktracker.reduce.tasks.maximum). • Increasing the number of reduces increases the framework overhead, but improves load balancing and lowers the cost of failures. • The scaling factors above are slightly less than whole numbers to reserve a few reduce slots in the framework for speculative tasks and failed tasks.
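As a worked example of the rule of thumb, the sketch below evaluates both factors for a hypothetical cluster of 10 nodes with 2 reduce slots each. `suggested_reduces` is an invented helper, and rounding down is an assumption made here so that a few slots stay free.

```python
def suggested_reduces(nodes, slots_per_node, factor=0.95):
    """factor * (<no. of nodes> * reduce slots per node), rounded down."""
    return int(factor * nodes * slots_per_node)

one_wave = suggested_reduces(10, 2)         # all reduces can start at once
two_waves = suggested_reduces(10, 2, 1.75)  # faster nodes run a second wave
print(one_wave, two_waves)
```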

Reducer NONE • It is legal to set the number of reduce tasks to zero if no reduction is desired. • In this case the outputs of the map tasks go directly to the FileSystem, into the output path set by setOutputPath(Path). • The framework does not sort the map outputs before writing them out to the FileSystem.

Others

Partitioner • Partitioner partitions the key space. • Partitioner controls the partitioning of the keys of the intermediate map outputs. • The key (or a subset of the key) is used to derive the partition, typically by a hash function. • The total number of partitions is the same as the number of reduce tasks for the job. • Hence this controls which of the m reduce tasks the intermediate key (and hence the record) is sent to for reduction. • HashPartitioner is the default Partitioner.

Reporter • Reporter is a facility for MapReduce applications to report progress, set application-level status messages and update Counters. • Mapper and Reducer implementations can use the Reporter to report progress or just indicate that they are alive. • In scenarios where the application takes a significant amount of time to process individual key/value pairs, this is crucial since the framework might assume that the task has timed out and kill that task. • Another way to avoid this is to set the configuration parameter mapred.task.timeout to a high-enough value (or even set it to zero for no time-outs). • Applications can also update Counters using the Reporter.

OutputCollector • OutputCollector is a generalization of the facility provided by the MapReduce framework to collect data output by the Mapper or the Reducer (either the intermediate outputs or the output of the job). • Hadoop MapReduce comes bundled with a library of generally useful mappers, reducers, and partitioners.

Job Configuration • JobConf represents a MapReduce job configuration. • JobConf is typically used to specify the Mapper, combiner (if any), Partitioner, Reducer, InputFormat, OutputFormat and OutputCommitter implementations. • JobConf also indicates the set of input files (setInputPaths(JobConf, Path...)/addInputPath(JobConf, Path)) and (setInputPaths(JobConf, String)/addInputPaths(JobConf, String)) and where the output files should be written (setOutputPath(Path)).

Job Input

Job Input • InputFormat describes the input specification for a MapReduce job. • The MapReduce framework relies on the InputFormat of the job to: • Validate the input specification of the job. • Split up the input file(s) into logical InputSplit instances, each of which is then assigned to an individual Mapper. • Provide the RecordReader implementation used to glean input records from the logical InputSplit for processing by the Mapper.

FileInputFormat • The default behavior of file-based InputFormat implementations, typically sub-classes of FileInputFormat, is to split the input into logical InputSplit instances based on the total size, in bytes, of the input files. • However, the FileSystem blocksize of the input files is treated as an upper bound for input splits. • A lower bound on the split size can be set via mapred.min.split.size.

TextInputFormat • Clearly, logical splits based on input size are insufficient for many applications since record boundaries must be respected. • In such cases, the application should implement a RecordReader, which is responsible for respecting record boundaries and presents a record-oriented view of the logical InputSplit to the individual task. • TextInputFormat is the default InputFormat. • If TextInputFormat is the InputFormat for a given job, the framework detects input files with the .gz extension and automatically decompresses them using the appropriate CompressionCodec.

InputSplit • InputSplit represents the data to be processed by an individual Mapper. • Typically InputSplit presents a byte-oriented view of the input, and it is the responsibility of RecordReader to process and present a record-oriented view. • FileSplit is the default InputSplit. It sets map.input.file to the path of the input file for the logical split.

RecordReader • RecordReader reads <key, value> pairs from an InputSplit. • Typically the RecordReader converts the byte-oriented view of the input, provided by the InputSplit, and presents a record-oriented view to the Mapper implementations for processing. • RecordReader thus assumes the responsibility of processing record boundaries and presents the tasks with keys and values.
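How a reader can turn a byte-oriented split into whole records is sketched below in plain Java. It is a simplified illustration and not Hadoop's implementation: the class LineSplitReaderSketch and its methods are hypothetical, the input is an in-memory byte array rather than a file, and records are assumed to be newline-terminated lines keyed by their byte offset. The convention assumed here is that a split which does not start at offset 0 skips its first (possibly partial) line, and every split reads through the line that begins at or before its end, so each line is emitted exactly once.

```java
import java.nio.charset.StandardCharsets;
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Illustrative only: a line-oriented reader over a byte range, with no Hadoop classes. */
final class LineSplitReaderSketch {

    /** Index just past the next '\n' at or after pos, or data.length if there is none. */
    static int endOfLine(byte[] data, int pos) {
        while (pos < data.length && data[pos] != '\n') {
            pos++;
        }
        return Math.min(pos + 1, data.length);
    }

    /** Returns (byte offset, line text) records owned by the split [start, start + length). */
    static List<Map.Entry<Long, String>> readSplit(byte[] data, int start, int length) {
        int end = start + length;
        int pos = start;
        if (start != 0) {
            // The line containing 'start' belongs to the previous split; skip it.
            pos = endOfLine(data, start);
        }
        List<Map.Entry<Long, String>> records = new ArrayList<>();
        // A line starting exactly at 'end' is still read here, because the next split skips it.
        while (pos <= end && pos < data.length) {
            int next = endOfLine(data, pos);
            int textEnd = (data[next - 1] == '\n') ? next - 1 : next;
            String line = new String(data, pos, textEnd - pos, StandardCharsets.UTF_8);
            records.add(new SimpleEntry<>((long) pos, line));
            pos = next;
        }
        return records;
    }

    public static void main(String[] args) {
        byte[] data = "alpha\nbeta\ngamma\n".getBytes(StandardCharsets.UTF_8);
        System.out.println(readSplit(data, 0, 8));  // first split cuts "beta" in half
        System.out.println(readSplit(data, 8, 9));  // second split starts mid-line
    }
}
```

For the 17-byte input above, the split covering bytes 0 to 7 yields the records at offsets 0 and 6 ("alpha", "beta"), and the split covering bytes 8 to 16 yields only the record at offset 11 ("gamma"), even though the boundary falls in the middle of "beta".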

Job Output • OutputFormat describes the output-specification for a MapReduce job. • The MapReduce framework relies on the OutputFormat of the job to: • Validate the output-specification of the job; for example, check that the output directory doesn't already exist. • Provide the RecordWriter implementation used to write the output files of the job. Output files are stored in a FileSystem. • TextOutputFormat is the default OutputFormat.

OutputCommitter • OutputCommitter describes the commit of task output for a MapReduce job. • FileOutputCommitter is the default OutputCommitter. • Job setup/cleanup tasks occupy map or reduce slots, whichever is free on the TaskTracker. • The JobCleanup task, TaskCleanup tasks and JobSetup task have the highest priority, in that order.

RecordWriter • RecordWriter writes the output <key, value> pairs to an output file. • RecordWriter implementations write the job outputs to the FileSystem.
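The two output-side responsibilities described above (validating the output specification, then writing <key, value> pairs) can be sketched in plain Java. This is an illustration under stated assumptions, not Hadoop code: the class TextOutputSketch and its members are hypothetical, the writer appends to an in-memory StringBuilder instead of a FileSystem, IllegalStateException stands in for whatever error the real framework raises, and the tab-separated "key, tab, value, newline" layout is assumed as the text format.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Illustrative only: output validation plus a text record writer, with no Hadoop classes. */
final class TextOutputSketch {

    /** Rejects the job up front if the output directory already exists. */
    static void checkOutputSpecs(Path outputDir) {
        if (Files.exists(outputDir)) {
            throw new IllegalStateException("Output directory " + outputDir + " already exists");
        }
    }

    /** Writes each pair as one line: key, a tab, the value, then a newline. */
    static final class LineRecordWriter {
        private final StringBuilder out;

        LineRecordWriter(StringBuilder out) {
            this.out = out;
        }

        void write(Object key, Object value) {
            out.append(key).append('\t').append(value).append('\n');
        }
    }

    public static void main(String[] args) {
        // Hypothetical output location; the check passes only if it does not exist yet.
        checkOutputSpecs(Paths.get("wordcount-output-" + System.nanoTime()));

        StringBuilder sink = new StringBuilder();
        LineRecordWriter writer = new LineRecordWriter(sink);
        writer.write("hadoop", 1);
        writer.write("hello", 2);
        System.out.print(sink);
    }
}
```

Failing before any task runs is the point of the check: a job that silently overwrote an existing directory could destroy the results of an earlier run.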

Trivial Example • WordCount.java
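The slides name WordCount.java without reproducing it. As a stand-in, here is a minimal plain-Java sketch of the word-count idea. It is not the Hadoop example itself: the class WordCountSketch, its map, reduce and run methods, and the in-memory grouping step that plays the role of the framework's shuffle are all assumptions made for illustration. The map step emits (word, 1) for each token of a line, and the reduce step sums the counts collected for each word.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative only: word count as map, group-by-key, reduce, with no Hadoop classes. */
final class WordCountSketch {

    /** Map step: one input line becomes a list of (word, 1) pairs. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty()) {
                out.add(new SimpleEntry<>(token, 1));
            }
        }
        return out;
    }

    /** Reduce step: all counts emitted for one word are summed. */
    static int reduce(String word, List<Integer> counts) {
        int sum = 0;
        for (int count : counts) {
            sum += count;
        }
        return sum;
    }

    /** Drives map, groups values by key (sorted), then reduces each group. */
    static Map<String, Integer> run(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> pair : map(line)) {
                grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
            }
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("hello world", "hello hadoop")));
    }
}
```

For the two input lines in main, the sketch prints {hadoop=1, hello=2, world=1}.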

Writable wrappers for Java primitives
