Final Exam Topics CSE 564 Computer Architecture Summer


- Slides: 78

Final Exam Topics CSE 564 Computer Architecture Summer 2017 Department of Computer Science and Engineering Yonghong Yan yan@oakland.edu www.secs.oakland.edu/~yan

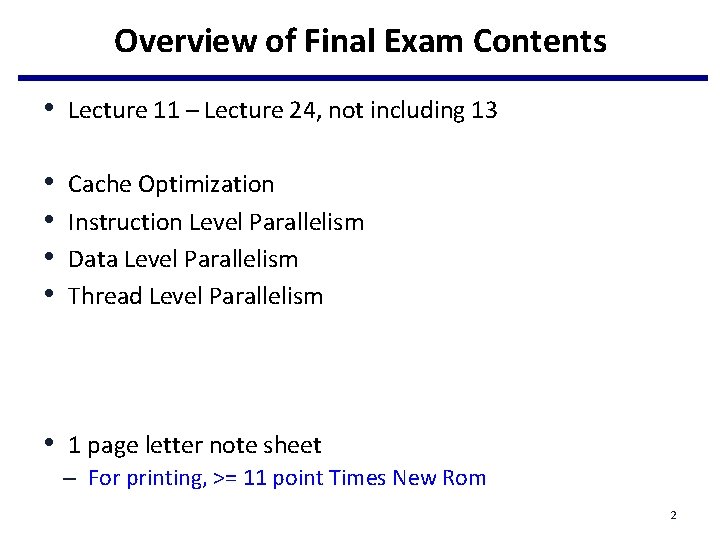

Overview of Final Exam Contents

- Lectures 11 to 24, not including 13
  - Cache Optimization
  - Instruction Level Parallelism
  - Data Level Parallelism
  - Thread Level Parallelism
- 1 page letter note sheet
  - For printing, >= 11 point Times New Roman

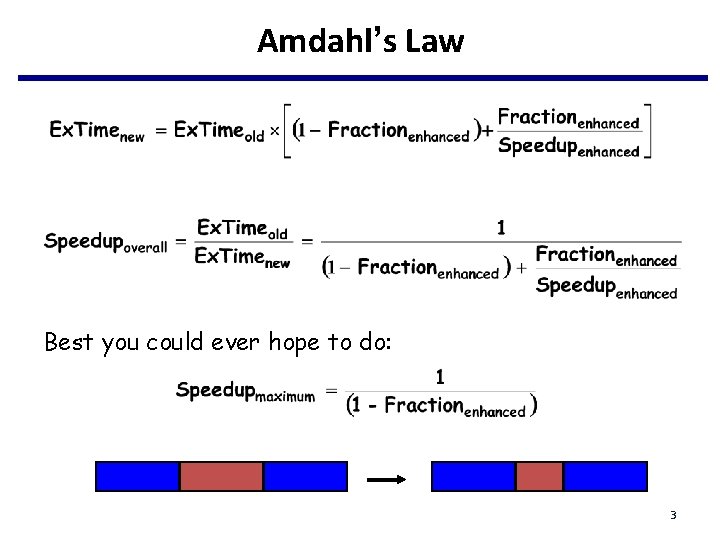

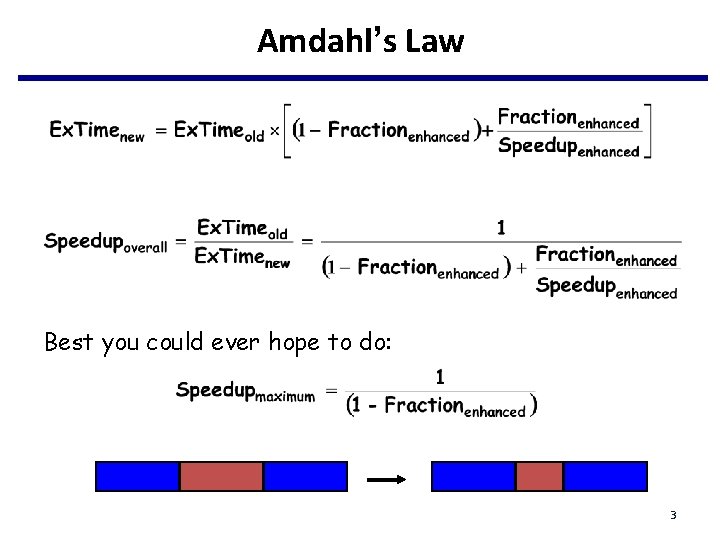

Amdahl’s Law

Best you could ever hope to do:
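The formulas for this slide live in the images, so here is the standard statement of Amdahl's Law as a reference sketch. The symbols $F$ (fraction of execution time that can use the enhancement) and $S$ (speedup of that enhanced fraction) are notation chosen here, not taken from the slide.

$$
\text{Speedup}_{\text{overall}} = \frac{1}{(1 - F) + \dfrac{F}{S}},
\qquad
\text{Speedup}_{\text{max}} = \lim_{S \to \infty} \text{Speedup}_{\text{overall}} = \frac{1}{1 - F}
$$

As an illustrative example with made-up numbers: if $F = 0.9$ and $S = 10$, the overall speedup is $1 / (0.1 + 0.09) \approx 5.26$, and no value of $S$ can push it past $1 / 0.1 = 10$.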

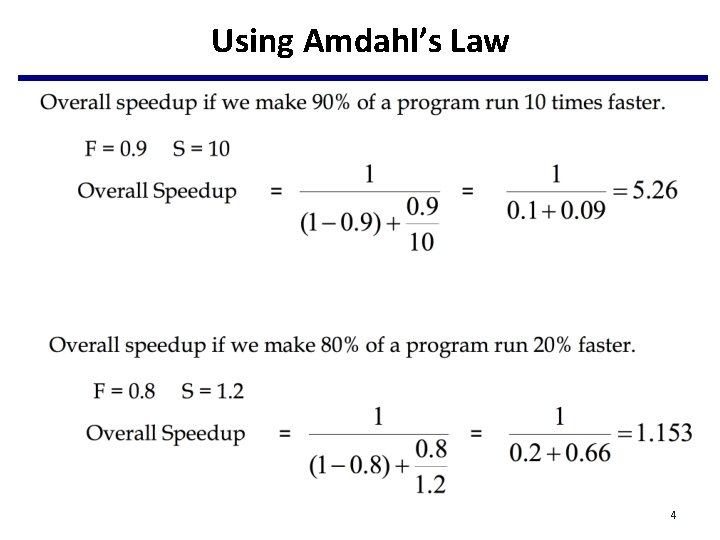

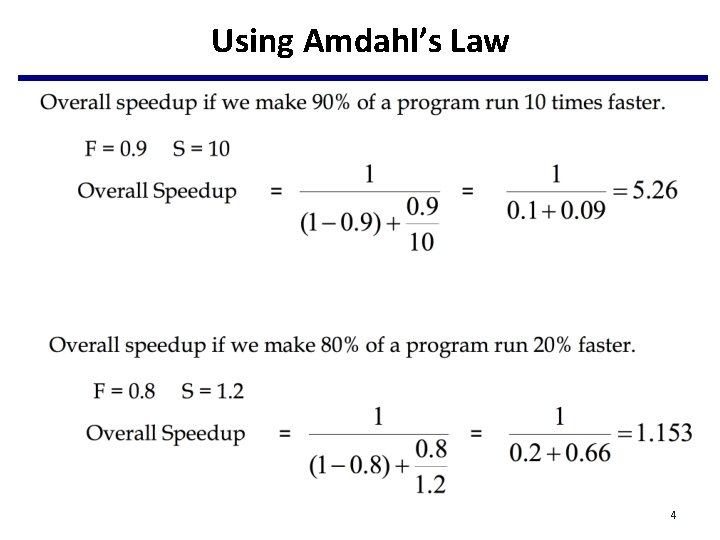

Using Amdahl’s Law

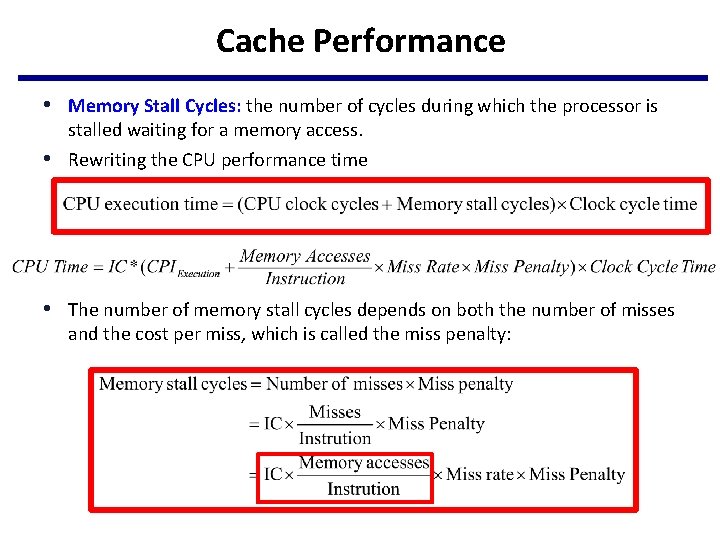

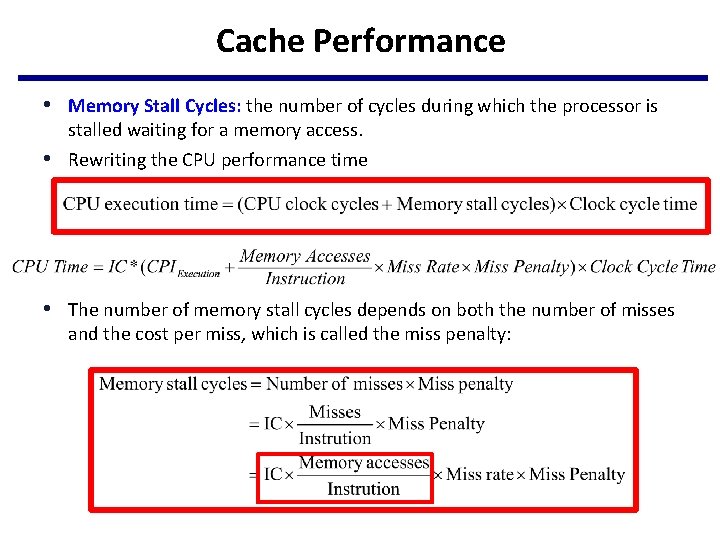

Cache Performance

- Memory stall cycles: the number of cycles during which the processor is stalled waiting for a memory access.
- Rewriting the CPU performance time
- The number of memory stall cycles depends on both the number of misses and the cost per miss, which is called the miss penalty:
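The equations themselves are in the slide images. A reconstruction in the usual textbook form, where $IC$ (instruction count) is notation assumed here, would be:

$$
\text{CPU time} = (\text{CPU execution clock cycles} + \text{Memory stall cycles}) \times \text{Clock cycle time}
$$

$$
\text{Memory stall cycles} = \text{Number of misses} \times \text{Miss penalty}
= IC \times \frac{\text{Memory accesses}}{\text{Instruction}} \times \text{Miss rate} \times \text{Miss penalty}
$$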

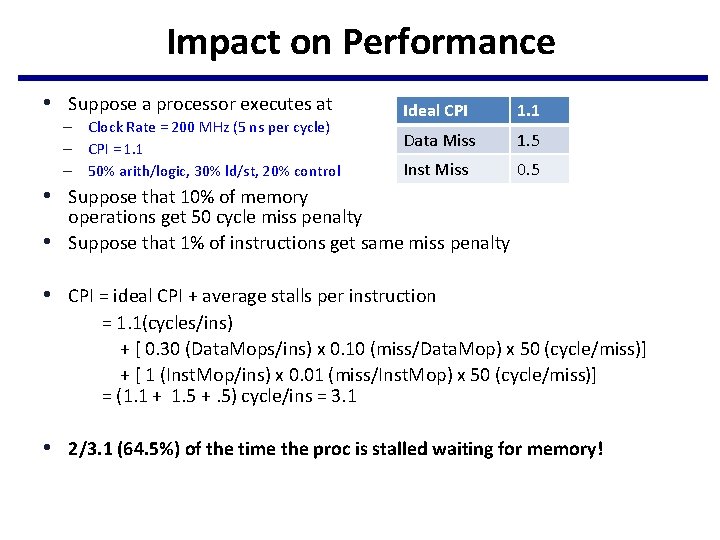

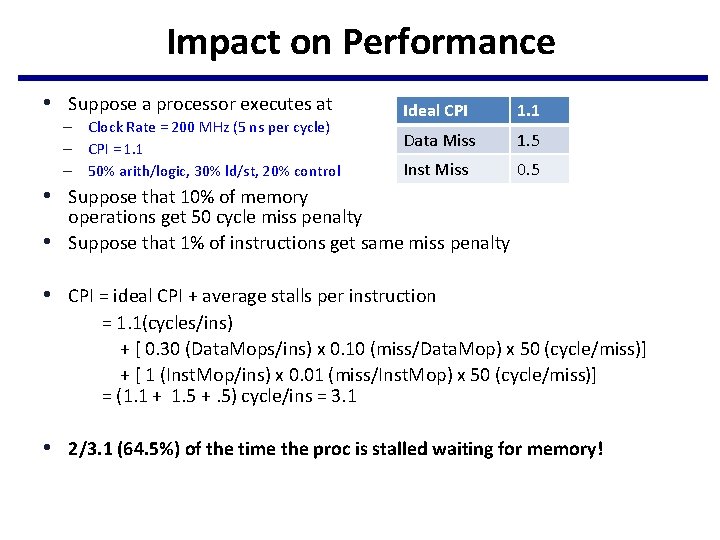

Impact on Performance

- Suppose a processor executes at
  - Clock rate = 200 MHz (5 ns per cycle)
  - Ideal CPI = 1.1
  - 50% arith/logic, 30% ld/st, 20% control
- Suppose that 10% of memory operations get a 50-cycle miss penalty
- Suppose that 1% of instructions get the same miss penalty
- CPI = ideal CPI + average stalls per instruction
  = 1.1 (cycles/ins) + [0.30 (DataMops/ins) x 0.10 (miss/DataMop) x 50 (cycles/miss)] + [1 (InstMop/ins) x 0.01 (miss/InstMop) x 50 (cycles/miss)]
  = (1.1 + 1.5 + 0.5) cycles/ins = 3.1
- 2/3.1 (64.5%) of the time the processor is stalled waiting for memory!
- CPI breakdown (chart): ideal CPI 1.1, data miss 1.5, inst miss 0.5
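The arithmetic on this slide can be checked with a small C sketch. The function and parameter names below are illustrative assumptions, not part of the course material; the model simply adds data-miss and instruction-miss stalls to the ideal CPI.

```c
#include <assert.h>

/* Effective CPI = ideal CPI + data-miss stalls + instruction-miss stalls.
 * All names are hypothetical; rates are fractions, penalty is in cycles. */
double effective_cpi(double ideal_cpi,
                     double data_ops_per_instr, double data_miss_rate,
                     double instr_miss_rate, double miss_penalty)
{
    assert(ideal_cpi > 0.0 && miss_penalty >= 0.0);
    double data_stalls  = data_ops_per_instr * data_miss_rate * miss_penalty;
    double instr_stalls = 1.0 * instr_miss_rate * miss_penalty; /* one fetch per instruction */
    return ideal_cpi + data_stalls + instr_stalls;
}

/* Fraction of execution time spent stalled on memory. */
double memory_stall_fraction(double ideal_cpi, double cpi)
{
    assert(cpi > 0.0);
    return (cpi - ideal_cpi) / cpi;
}
```

With the slide's numbers, `effective_cpi(1.1, 0.30, 0.10, 0.01, 50.0)` gives about 3.1, and `memory_stall_fraction(1.1, 3.1)` gives about 0.645.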

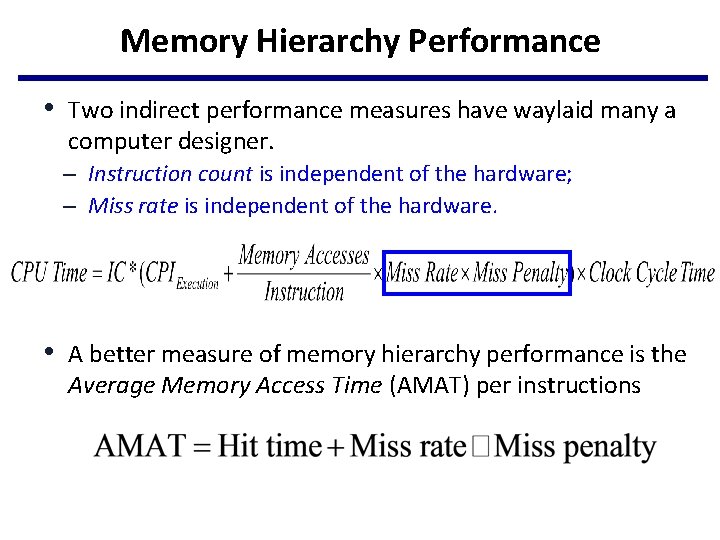

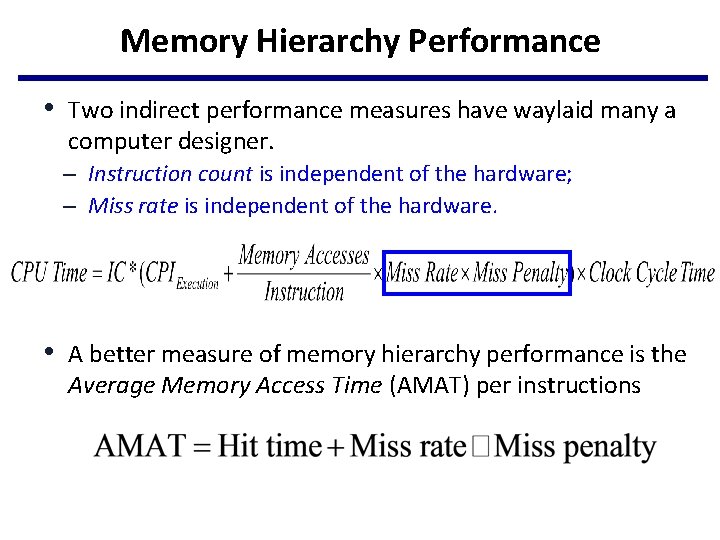

Memory Hierarchy Performance

- Two indirect performance measures have waylaid many a computer designer.
  - Instruction count is independent of the hardware;
  - Miss rate is independent of the hardware.
- A better measure of memory hierarchy performance is the Average Memory Access Time (AMAT) per instruction
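The AMAT formula is in the slide image. Its standard form, with the two-level extension written in notation assumed here (subscripts L1 and L2), is:

$$
\text{AMAT} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}
$$

$$
\text{AMAT} = \text{Hit time}_{L1} + \text{Miss rate}_{L1} \times \left(\text{Hit time}_{L2} + \text{Miss rate}_{L2} \times \text{Miss penalty}_{L2}\right)
$$

As an illustrative example with made-up numbers: a 1-cycle hit time, a 5% miss rate, and a 50-cycle miss penalty give $1 + 0.05 \times 50 = 3.5$ cycles.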

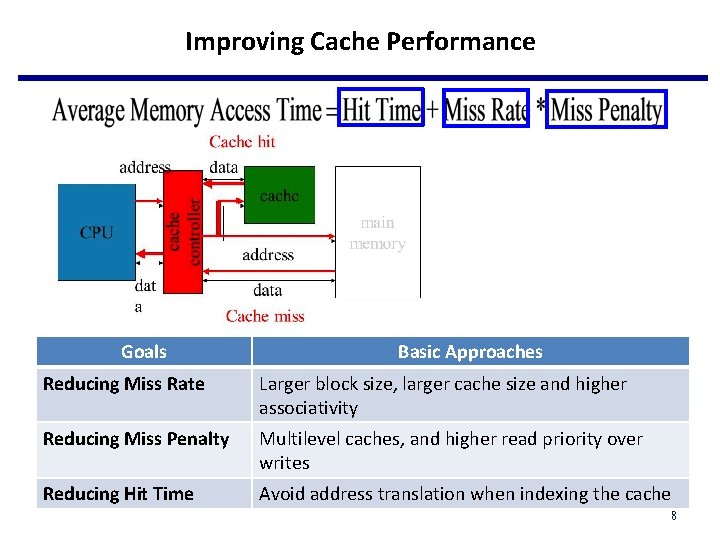

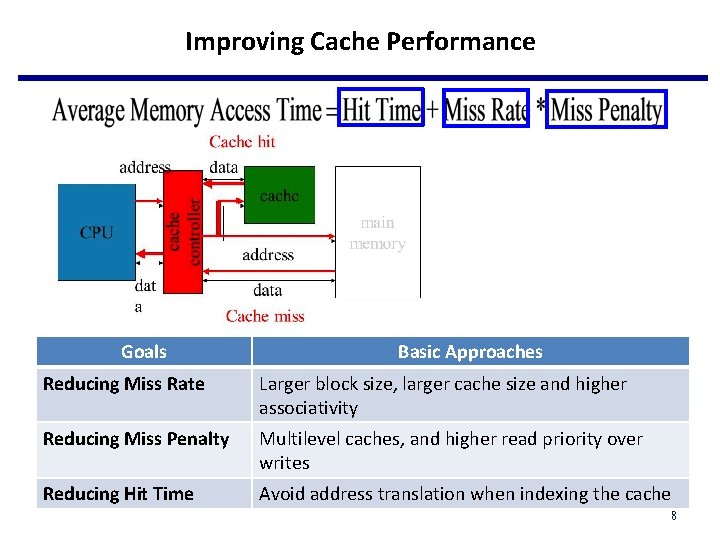

Improving Cache Performance

| Goals | Basic Approaches |
| --- | --- |
| Reducing Miss Rate | Larger block size, larger cache size and higher associativity |
| Reducing Miss Penalty | Multilevel caches, and higher read priority over writes |
| Reducing Hit Time | Avoid address translation when indexing the cache |

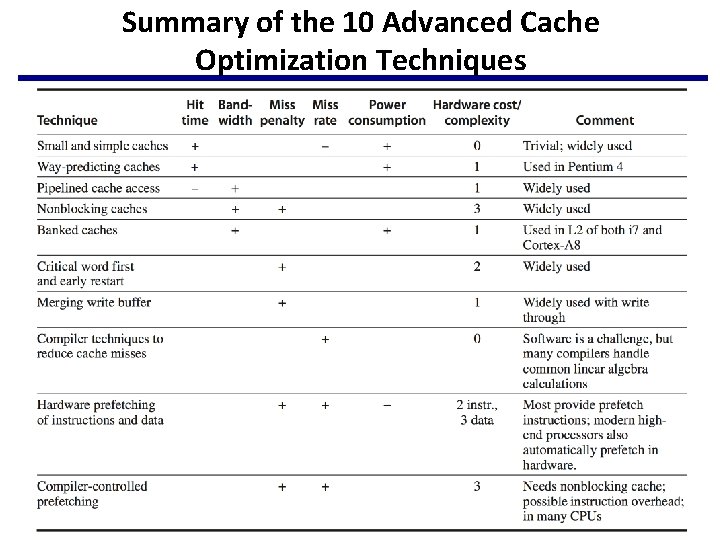

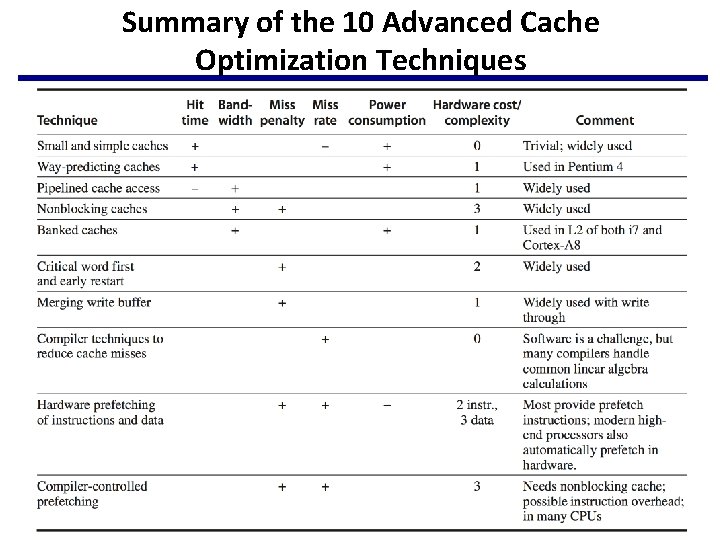

Summary of the 10 Advanced Cache Optimization Techniques

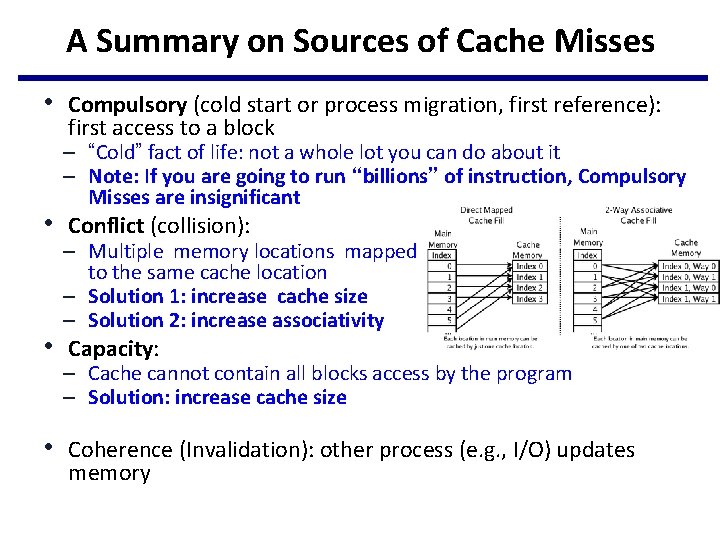

A Summary on Sources of Cache Misses

- Compulsory (cold start or process migration, first reference): first access to a block
  - “Cold” fact of life: not a whole lot you can do about it
  - Note: If you are going to run “billions” of instructions, compulsory misses are insignificant
- Conflict (collision):
  - Multiple memory locations mapped to the same cache location
  - Solution 1: increase cache size
  - Solution 2: increase associativity
- Capacity:
  - Cache cannot contain all blocks accessed by the program
  - Solution: increase cache size
- Coherence (invalidation): other process (e.g., I/O) updates memory

![8. Reducing Misses by Compiler Optimizations](https://slidetodoc.com/presentation_image_h2/d5193769f711ed203da06c8790155bb2/image-11.jpg)

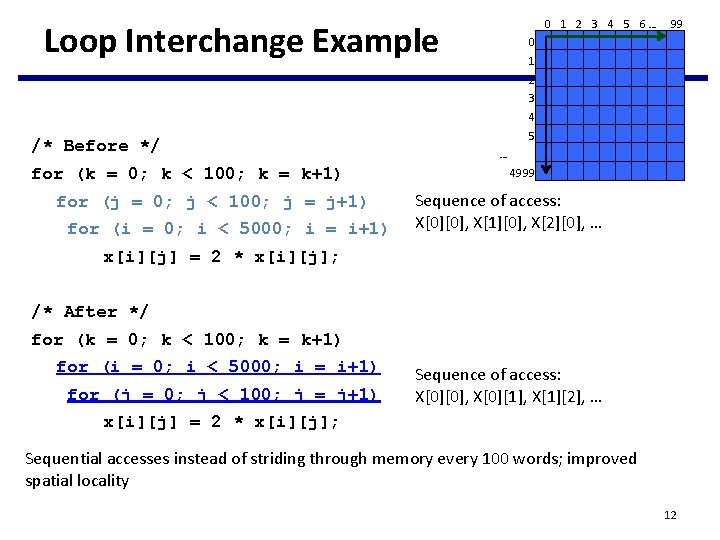

8. Reducing Misses by Compiler Optimizations

Software-only Approach

- McFarling [1989] reduced misses by 75% in software on an 8 KB direct-mapped cache with 4-byte blocks
- Instructions
  - Reorder procedures in memory to reduce conflict misses
  - Profiling to look at conflicts (using tools they developed)
- Data
  - Loop interchange: Change nesting of loops to access data in memory order
  - Blocking: Improve temporal locality by accessing blocks of data repeatedly vs. going down whole columns or rows
  - Merging arrays: Improve spatial locality by single array of compound elements vs. 2 arrays
  - Loop fusion: Combine 2 independent loops that have same looping and some variable overlap
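Blocking is the least obvious of the data optimizations listed above, so a minimal C sketch may help. It assumes square row-major matrices and uses hypothetical function names; the blocked version visits `bs` x `bs` tiles so the working set of `b` stays small, and it accumulates into `c`, which the caller must zero first.

```c
#include <assert.h>
#include <stddef.h>

/* Naive i-j-k multiply: c = a * b, all n x n, row-major. */
void matmul_naive(size_t n, const double *a, const double *b, double *c)
{
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < n; j++) {
            double r = 0.0;
            for (size_t k = 0; k < n; k++)
                r += a[i * n + k] * b[k * n + j];
            c[i * n + j] = r;
        }
}

/* Blocked multiply: c += a * b using bs x bs tiles of b.
 * The caller must zero c beforehand; bs is the (assumed) blocking factor. */
void matmul_blocked(size_t n, size_t bs,
                    const double *a, const double *b, double *c)
{
    assert(bs > 0);
    for (size_t jj = 0; jj < n; jj += bs)
        for (size_t kk = 0; kk < n; kk += bs)
            for (size_t i = 0; i < n; i++)
                for (size_t j = jj; j < jj + bs && j < n; j++) {
                    double r = 0.0;
                    for (size_t k = kk; k < kk + bs && k < n; k++)
                        r += a[i * n + k] * b[k * n + j];
                    c[i * n + j] += r;   /* partial sum for this tile */
                }
}
```

Both functions compute the same product; only the order in which elements are touched differs, which is what improves temporal locality when the tile fits in the cache.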

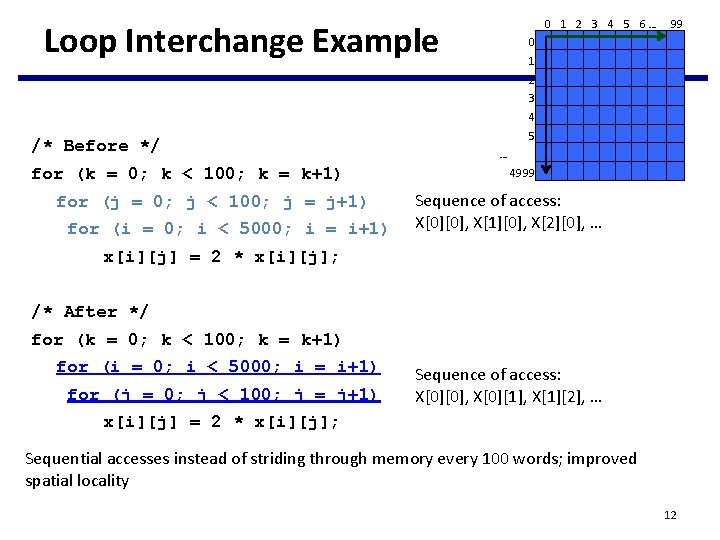

Loop Interchange Example

    /* Before */
    for (k = 0; k < 100; k = k+1)
        for (j = 0; j < 100; j = j+1)
            for (i = 0; i < 5000; i = i+1)
                x[i][j] = 2 * x[i][j];

Sequence of access: x[0][0], x[1][0], x[2][0], …

    /* After */
    for (k = 0; k < 100; k = k+1)
        for (i = 0; i < 5000; i = i+1)
            for (j = 0; j < 100; j = j+1)
                x[i][j] = 2 * x[i][j];

Sequence of access: x[0][0], x[0][1], x[0][2], …

Sequential accesses instead of striding through memory every 100 words; improved spatial locality. (Figure: array x with rows 0 to 4999 and columns 0 to 99.)

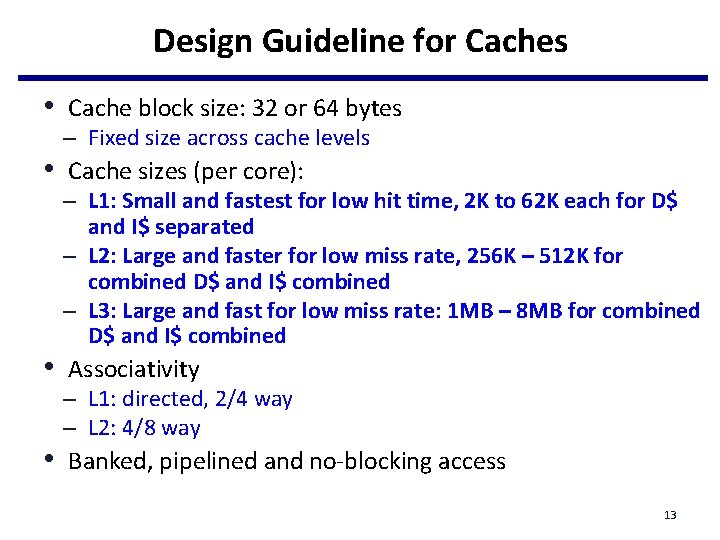

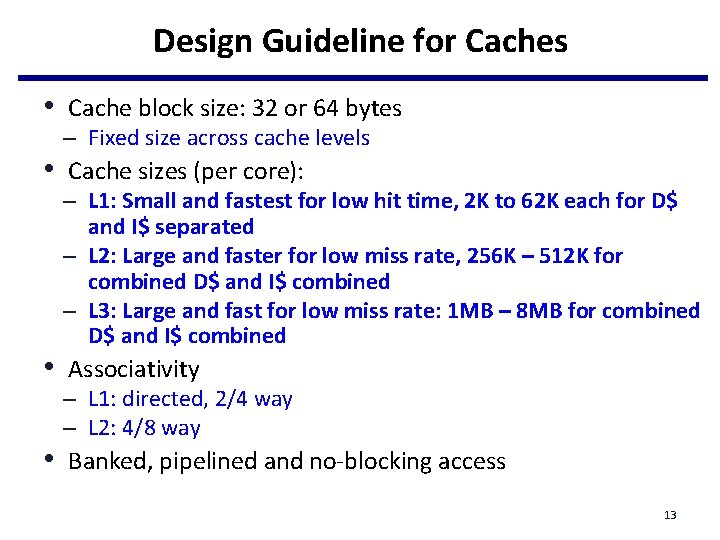

Design Guideline for Caches

- Cache block size: 32 or 64 bytes
  - Fixed size across cache levels
- Cache sizes (per core):
  - L1: Small and fastest for low hit time, 2 K to 62 K each for separate D$ and I$
  - L2: Large and faster for low miss rate, 256 K to 512 K for combined D$ and I$
  - L3: Large and fast for low miss rate, 1 MB to 8 MB for combined D$ and I$
- Associativity
  - L1: direct-mapped, 2/4 way
  - L2: 4/8 way
- Banked, pipelined and non-blocking access

Topics for Instruction Level Parallelism

- ILP Introduction, Compiler Techniques and Branch Prediction
  - 3.1, 3.2, 3.3
- Dynamic Scheduling (OOO)
  - 3.4, 3.5 and C.5, C.6 and C.7 (FP pipeline and scoreboard)
- Hardware Speculation and Static Superscalar/VLIW
  - 3.6, 3.7
- Dynamic Scheduling, Multiple Issue and Speculation
  - 3.8, 3.9
- ILP Limitations and SMT
  - 3.10, 3.11, 3.12

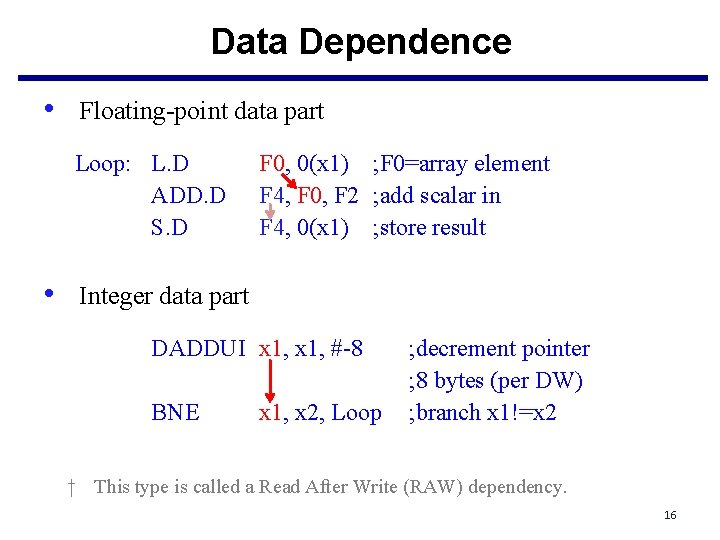

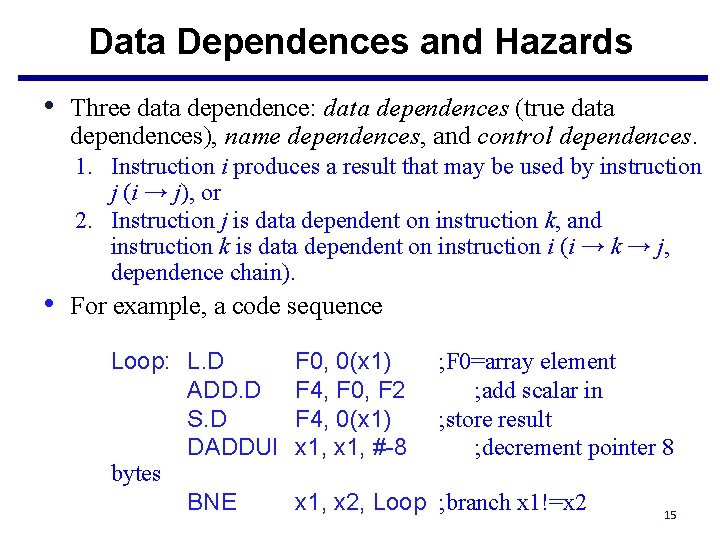

Data Dependences and Hazards

- Three types of dependences: data dependences (true data dependences), name dependences, and control dependences.
- Instruction j is data dependent on instruction i if either:
  1. Instruction i produces a result that may be used by instruction j (i → j), or
  2. Instruction j is data dependent on instruction k, and instruction k is data dependent on instruction i (i → k → j, dependence chain).
- For example, a code sequence

      Loop: L.D    F0, 0(x1)     ; F0=array element
            ADD.D  F4, F0, F2    ; add scalar in F2
            S.D    F4, 0(x1)     ; store result
            DADDUI x1, x1, #-8   ; decrement pointer 8 bytes
            BNE    x1, x2, Loop  ; branch x1!=x2

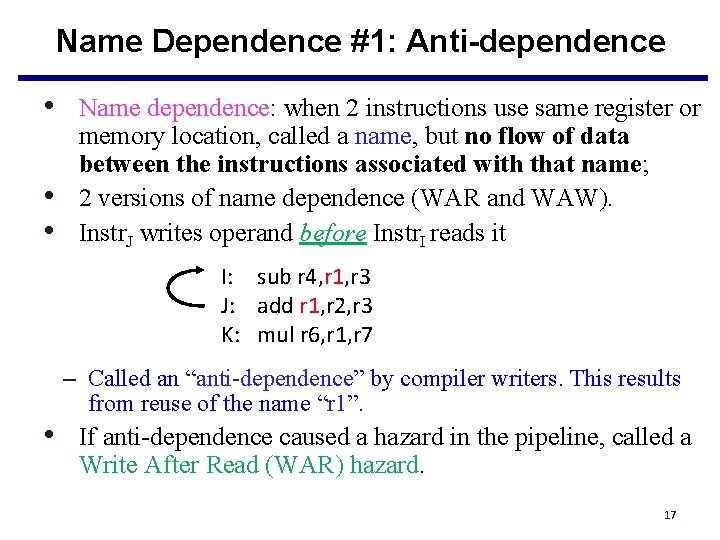

Data Dependence

- Floating-point data part

      Loop: L.D    F0, 0(x1)     ; F0=array element
            ADD.D  F4, F0, F2    ; add scalar in F2
            S.D    F4, 0(x1)     ; store result

- Integer data part

            DADDUI x1, x1, #-8   ; decrement pointer 8 bytes (per DW)
            BNE    x1, x2, Loop  ; branch x1!=x2

- This type is called a Read After Write (RAW) dependency.

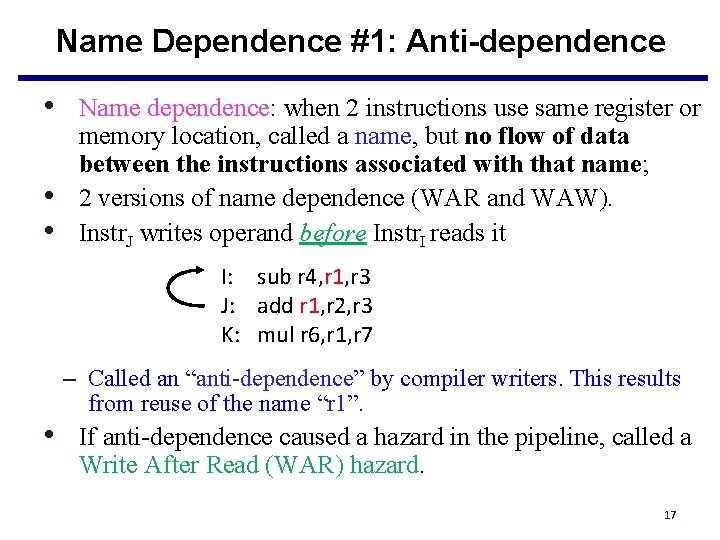

Name Dependence #1: Anti-dependence

- Name dependence: when 2 instructions use the same register or memory location, called a name, but there is no flow of data between the instructions associated with that name; 2 versions of name dependence (WAR and WAW).
- Instr. J writes operand before Instr. I reads it

      I: sub r4, r1, r3
      J: add r1, r2, r3
      K: mul r6, r1, r7

  - Called an “anti-dependence” by compiler writers. This results from reuse of the name “r1”.
- If an anti-dependence causes a hazard in the pipeline, it is called a Write After Read (WAR) hazard.

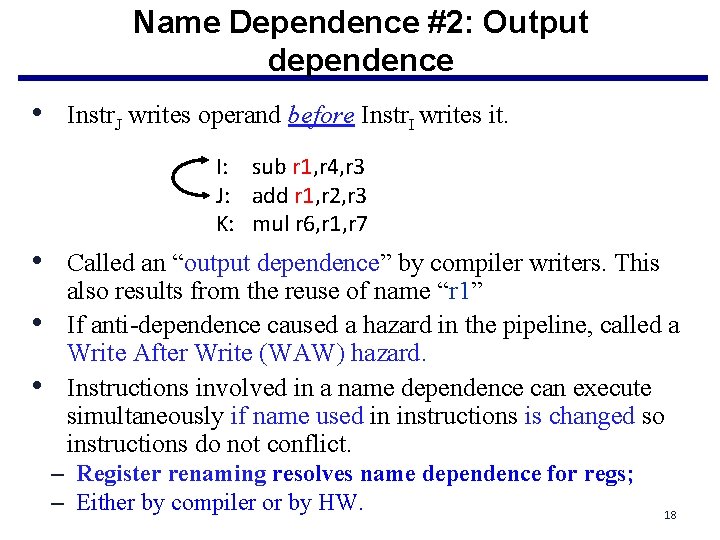

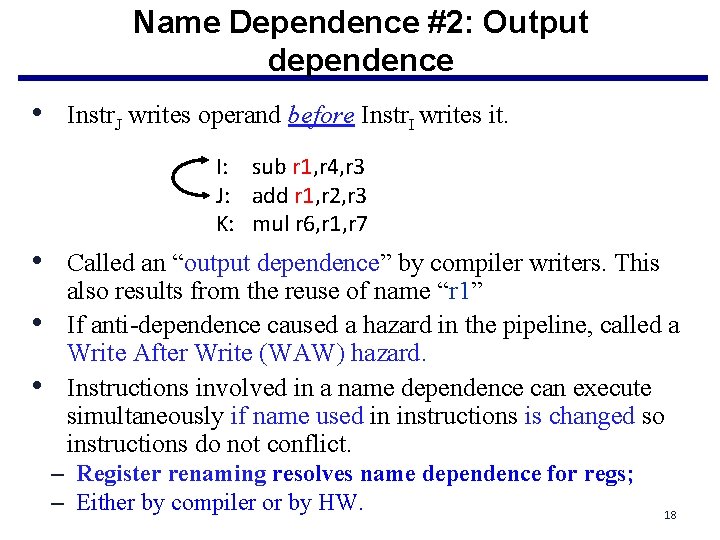

Name Dependence #2: Output dependence

- Instr. J writes operand before Instr. I writes it.

      I: sub r1, r4, r3
      J: add r1, r2, r3
      K: mul r6, r1, r7

- Called an “output dependence” by compiler writers. This also results from the reuse of name “r1”.
- If an output dependence causes a hazard in the pipeline, it is called a Write After Write (WAW) hazard.
- Instructions involved in a name dependence can execute simultaneously if the name used in the instructions is changed so the instructions do not conflict.
  - Register renaming resolves name dependence for regs;
  - Either by compiler or by HW.

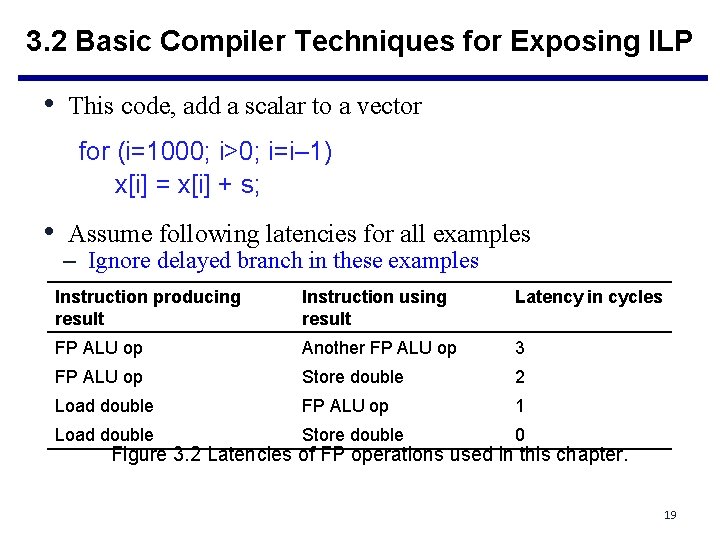

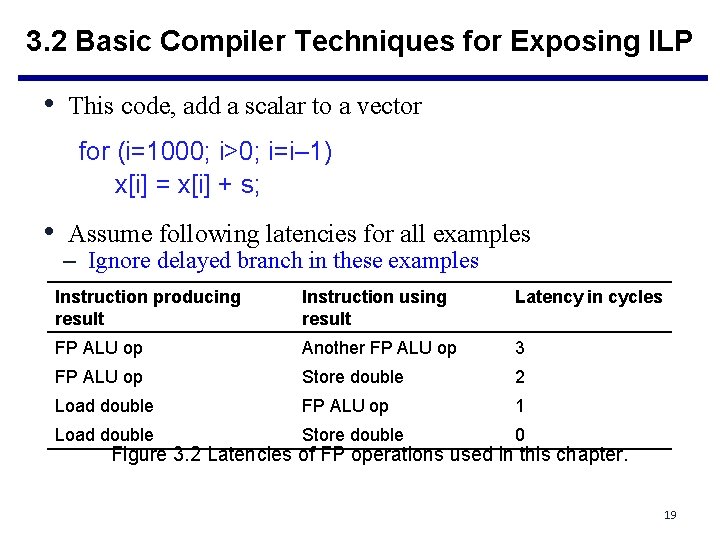

3.2 Basic Compiler Techniques for Exposing ILP

- This code adds a scalar to a vector

      for (i=1000; i>0; i=i-1)
          x[i] = x[i] + s;

- Assume following latencies for all examples
  - Ignore delayed branch in these examples

| Instruction producing result | Instruction using result | Latency in cycles |
| --- | --- | --- |
| FP ALU op | Another FP ALU op | 3 |
| FP ALU op | Store double | 2 |
| Load double | FP ALU op | 1 |
| Load double | Store double | 0 |

Figure 3.2 Latencies of FP operations used in this chapter.

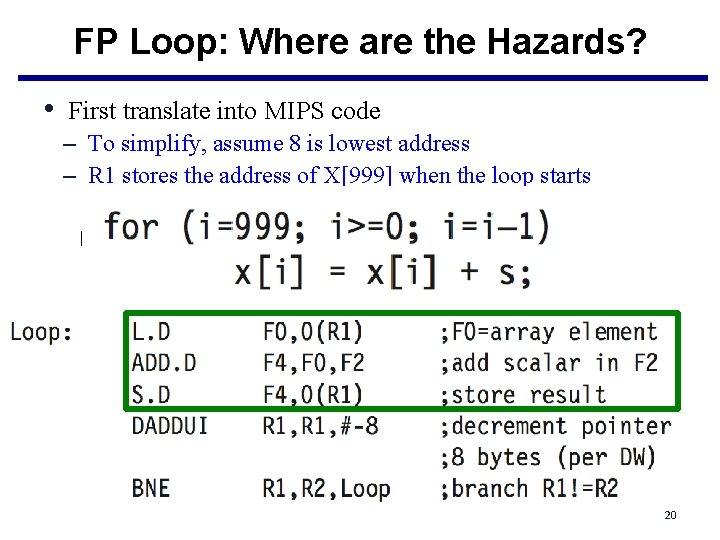

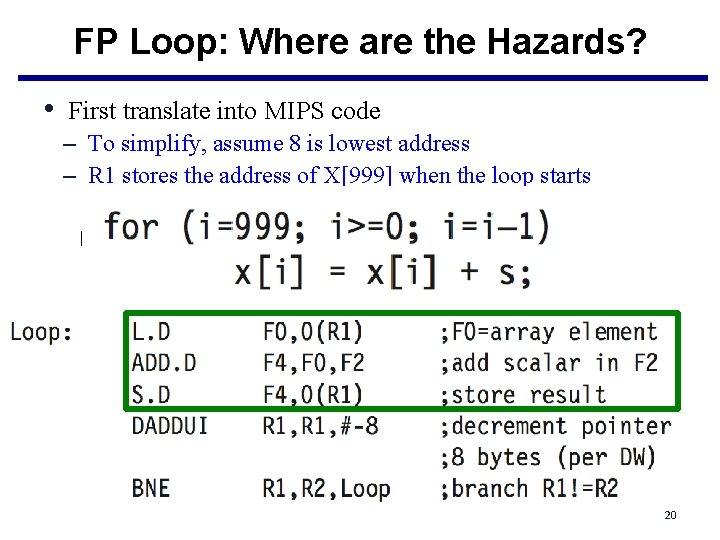

FP Loop: Where are the Hazards?

- First translate into MIPS code
  - To simplify, assume 8 is lowest address
  - R1 stores the address of X[999] when the loop starts

      Loop: L.D    F0, 0(R1)    ; F0=vector element
            ADD.D  F4, F0, F2   ; add scalar from F2
            S.D    0(R1), F4    ; store result
            DADDUI R1, R1, #-8  ; decrement pointer 8 B (DW)
            BNEZ   R1, Loop     ; branch R1!=zero

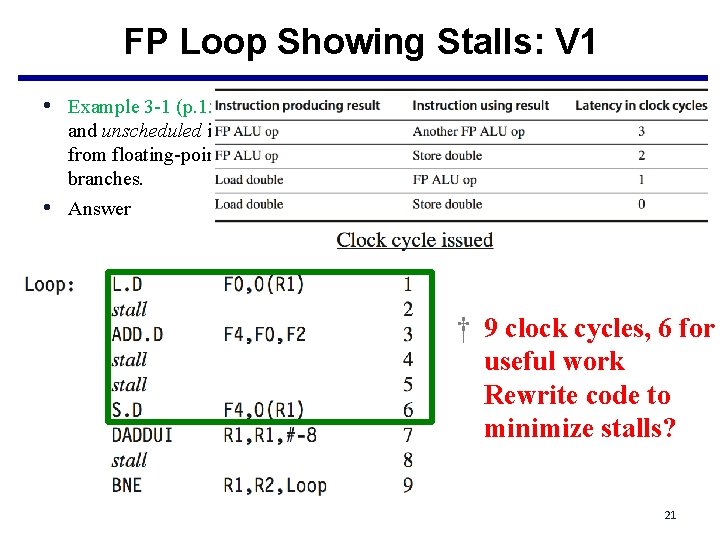

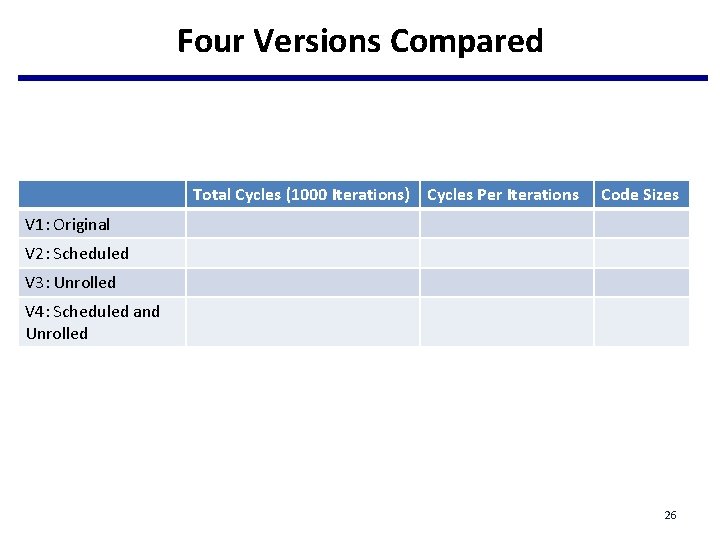

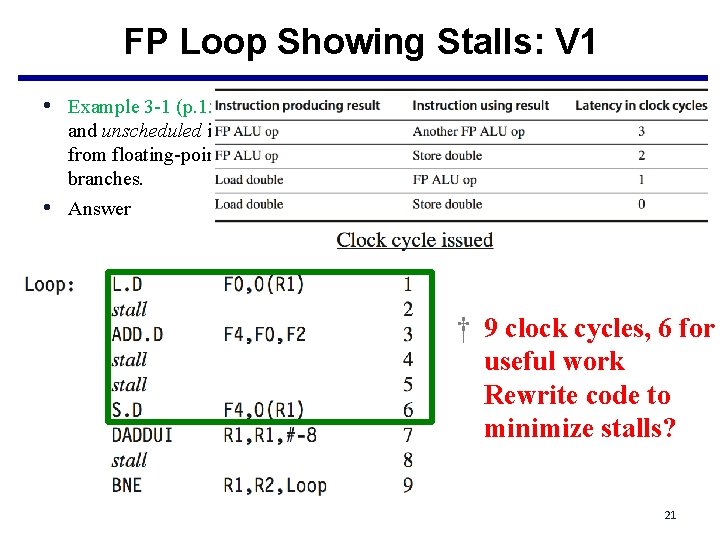

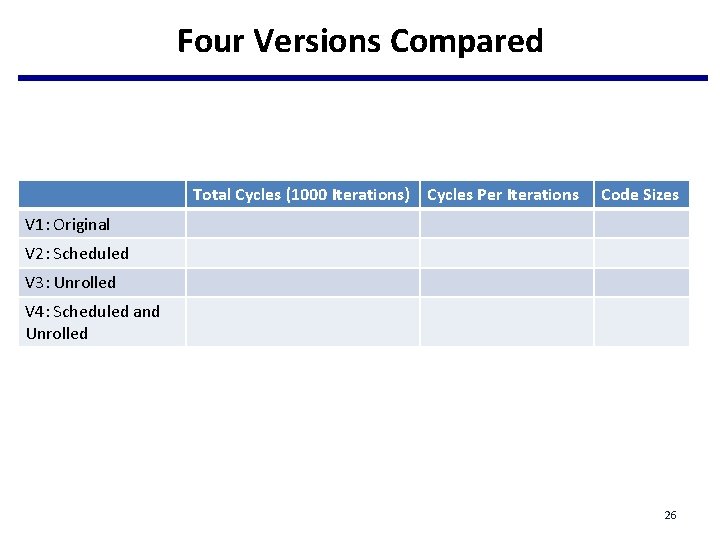

FP Loop Showing Stalls: V1

- Example 3-1 (p. 158): Show how the loop would look on MIPS, both scheduled and unscheduled, including any stalls or idle clock cycles. Schedule for delays from floating-point operations, but remember that we are ignoring delayed branches.
- Answer: 9 clock cycles, 6 for useful work
- Rewrite code to minimize stalls?

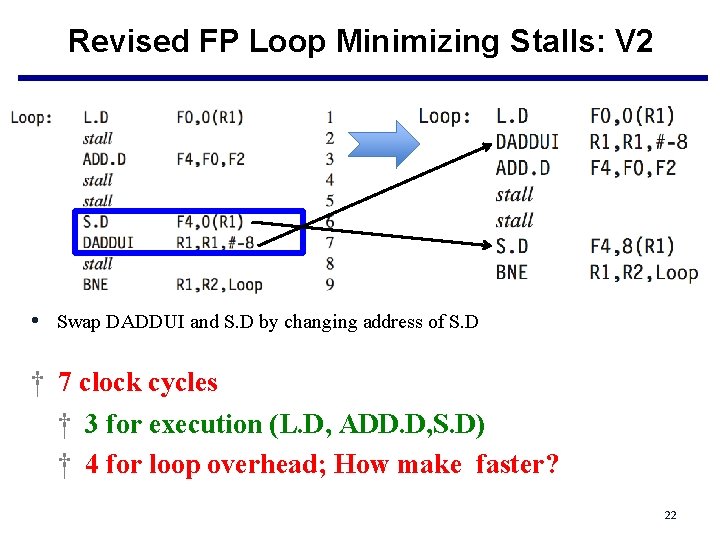

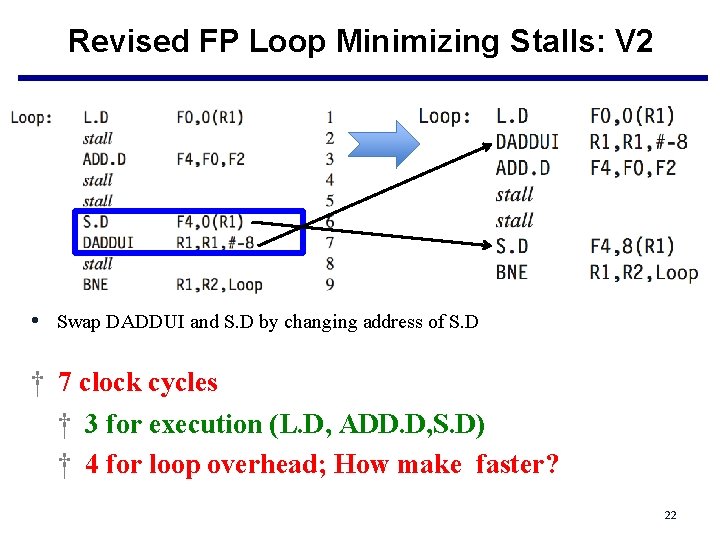

Revised FP Loop Minimizing Stalls: V2

- Swap DADDUI and S.D by changing address of S.D
- 7 clock cycles
  - 3 for execution (L.D, ADD.D, S.D)
  - 4 for loop overhead
- How to make it faster?

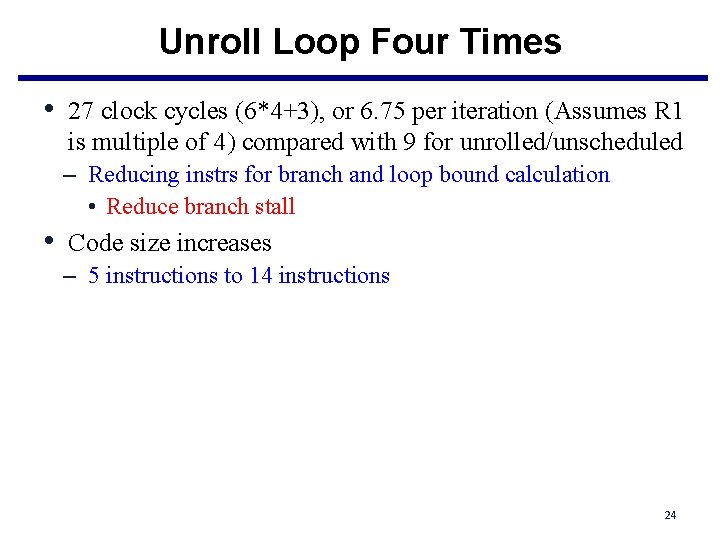

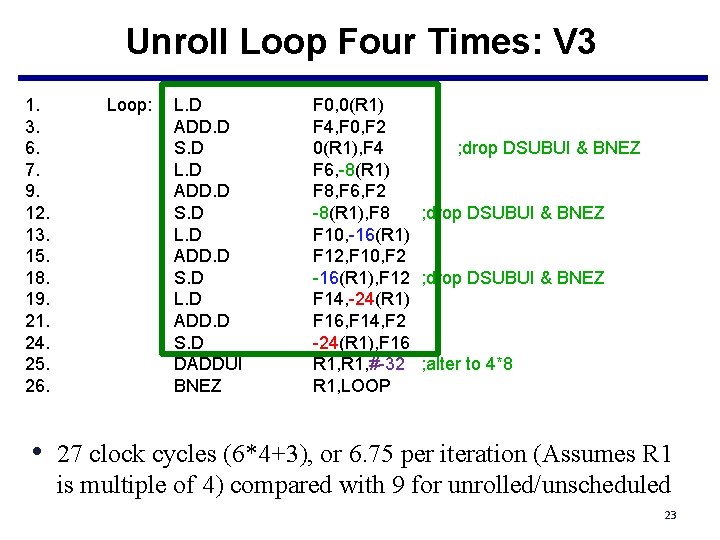

Unroll Loop Four Times: V3

The number in the first column is the clock cycle in which each instruction issues.

     1  Loop: L.D    F0, 0(R1)
     3        ADD.D  F4, F0, F2
     6        S.D    0(R1), F4      ; drop DSUBUI & BNEZ
     7        L.D    F6, -8(R1)
     9        ADD.D  F8, F6, F2
    12        S.D    -8(R1), F8     ; drop DSUBUI & BNEZ
    13        L.D    F10, -16(R1)
    15        ADD.D  F12, F10, F2
    18        S.D    -16(R1), F12   ; drop DSUBUI & BNEZ
    19        L.D    F14, -24(R1)
    21        ADD.D  F16, F14, F2
    24        S.D    -24(R1), F16
    25        DADDUI R1, R1, #-32   ; alter to 4*8
    26        BNEZ   R1, LOOP

- 27 clock cycles (6*4+3), or 6.75 per iteration (assumes R1 is a multiple of 4), compared with 9 for the original unscheduled loop (V1)

Unroll Loop Four Times

- 27 clock cycles (6*4+3), or 6.75 per iteration (assumes R1 is a multiple of 4), compared with 9 for the original unscheduled loop (V1)
  - Reduces instructions for branch and loop bound calculation
- Reduces branch stalls
- Code size increases
  - 5 instructions to 14 instructions
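At the source level, the same transformation can be sketched in C. The function name is hypothetical and the array is 0-based here, unlike the slide's `x[1..1000]`. As on the slide, the sketch assumes the trip count is a multiple of 4, so no cleanup loop is needed.

```c
#include <assert.h>
#include <stddef.h>

/* Adds s to x[0..n-1], walking downward as in the slide's loop,
 * with the body unrolled four times. Requires n % 4 == 0. */
void add_scalar_unrolled4(double *x, size_t n, double s)
{
    assert(n % 4 == 0);
    for (size_t i = n; i > 0; i -= 4) {   /* one test and branch per 4 elements */
        x[i - 1] += s;
        x[i - 2] += s;
        x[i - 3] += s;
        x[i - 4] += s;
    }
}
```

The four statements in the body are independent of one another, which is what gives a scheduler (compiler or hardware) room to overlap the loads, adds and stores.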

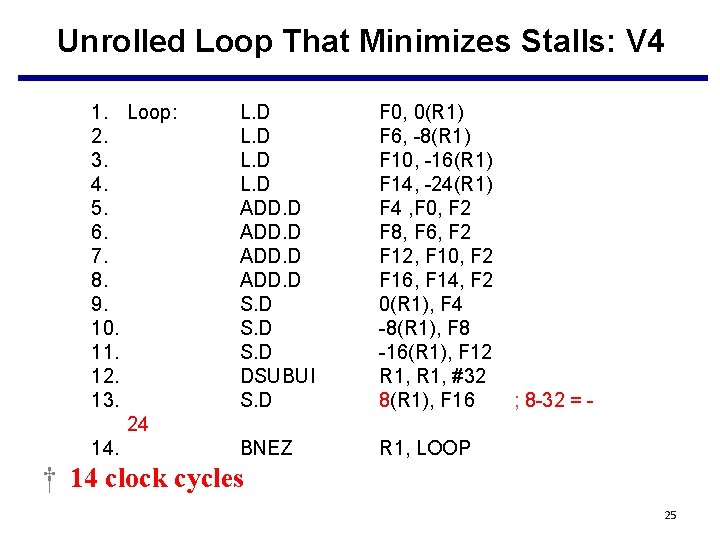

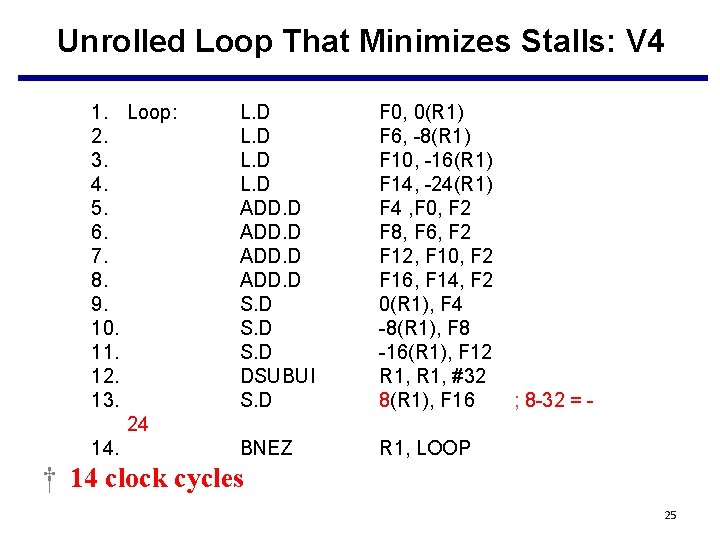

Unrolled Loop That Minimizes Stalls: V4

     1  Loop: L.D    F0, 0(R1)
     2        L.D    F6, -8(R1)
     3        L.D    F10, -16(R1)
     4        L.D    F14, -24(R1)
     5        ADD.D  F4, F0, F2
     6        ADD.D  F8, F6, F2
     7        ADD.D  F12, F10, F2
     8        ADD.D  F16, F14, F2
     9        S.D    0(R1), F4
    10        S.D    -8(R1), F8
    11        S.D    -16(R1), F12
    12        DSUBUI R1, R1, #32
    13        S.D    8(R1), F16     ; 8-32 = -24
    14        BNEZ   R1, LOOP

- 14 clock cycles

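Four Versions Compared

Values are taken from the V1 to V4 slides (total cycles = cycles per iteration x 1000).

| Version | Total Cycles (1000 Iterations) | Cycles Per Iteration | Code Size (instructions) |
| --- | --- | --- | --- |
| V1: Original | 9000 | 9 | 5 |
| V2: Scheduled | 7000 | 7 | 5 |
| V3: Unrolled | 6750 | 6.75 | 14 |
| V4: Scheduled and Unrolled | 3500 | 3.5 | 14 |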

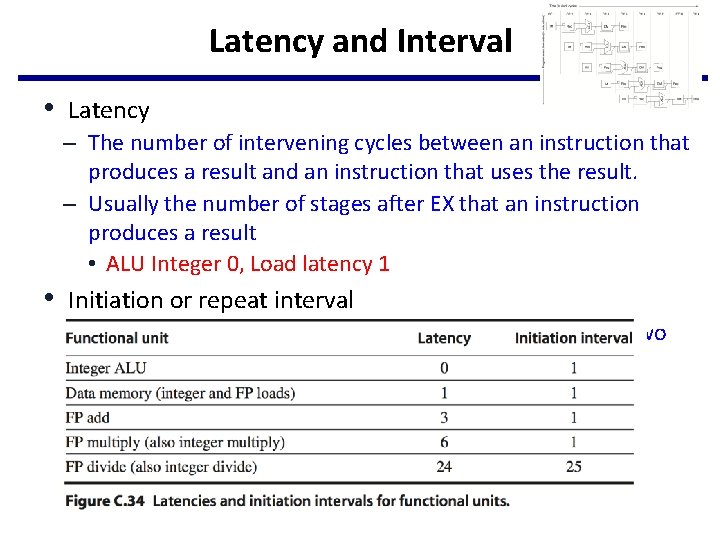

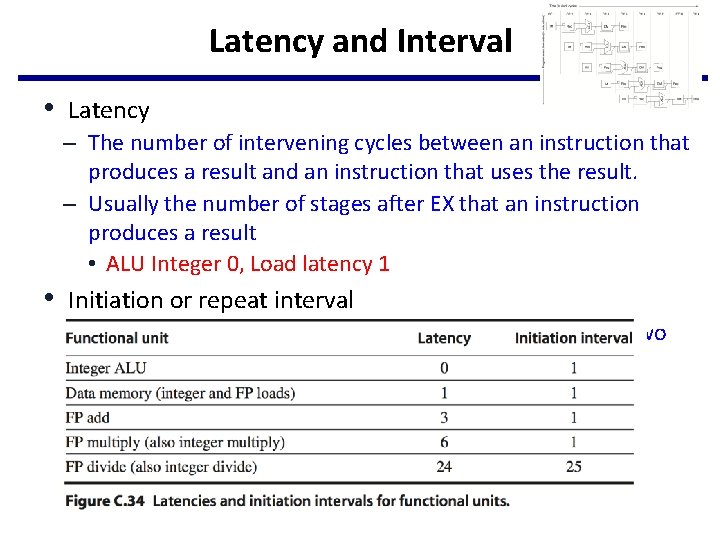

Latency and Interval

- Latency
  - The number of intervening cycles between an instruction that produces a result and an instruction that uses the result.
  - Usually the number of stages after EX in which an instruction produces a result
    - Integer ALU: latency 0; load: latency 1
- Initiation or repeat interval
  - The number of cycles that must elapse between issuing two operations of a given type.

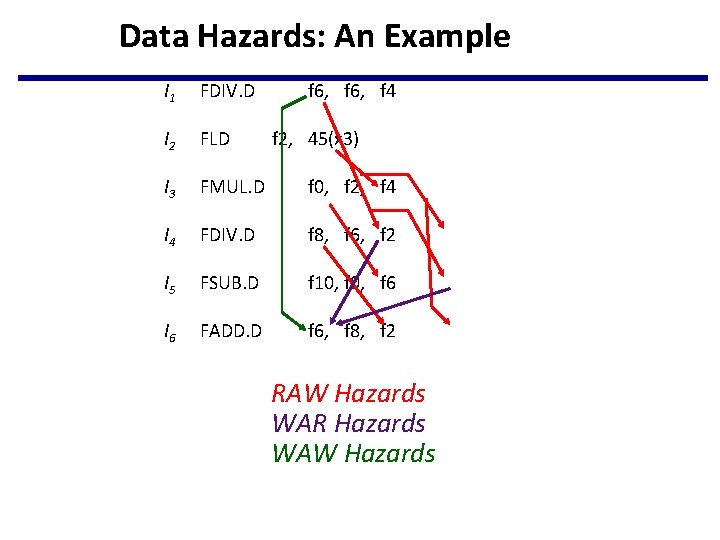

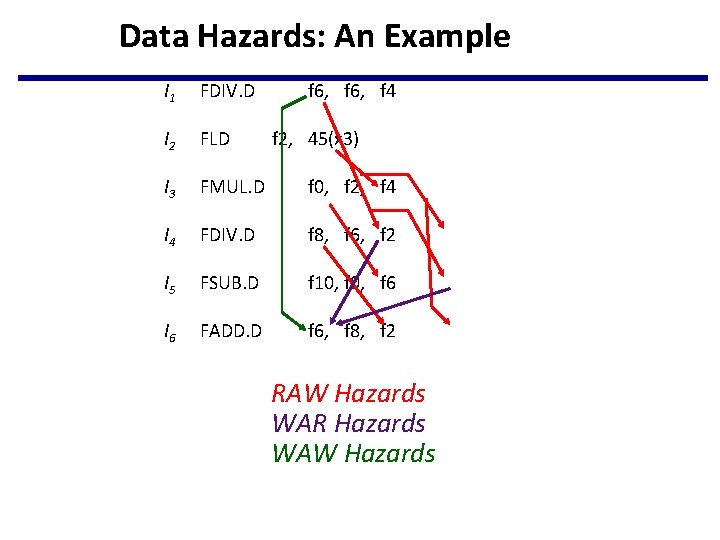

Data Hazards: An Example

    I1  FDIV.D  f6, f6, f4
    I2  FLD     f2, 45(x3)
    I3  FMUL.D  f0, f2, f4
    I4  FDIV.D  f8, f6, f2
    I5  FSUB.D  f10, f0, f6
    I6  FADD.D  f6, f8, f2

(Figure: arrows between the instructions mark the RAW hazards, WAR hazards and WAW hazards.)

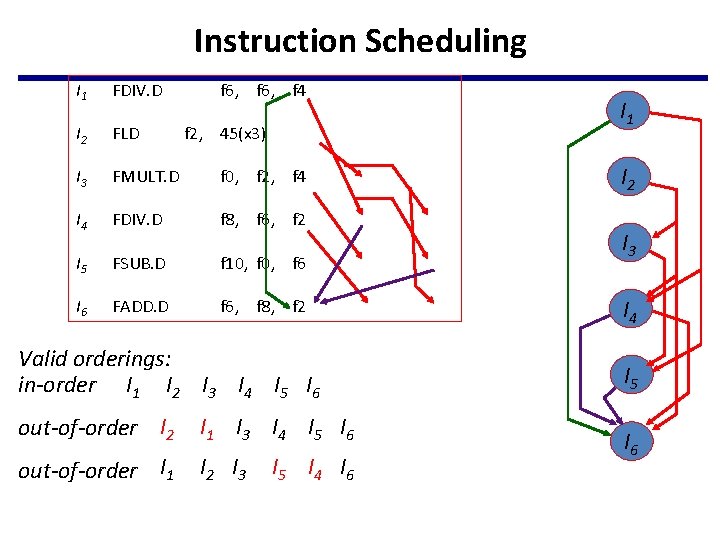

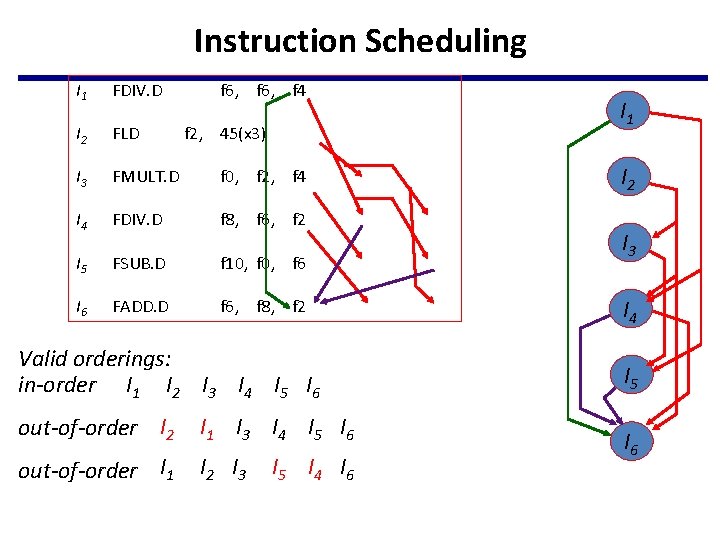

Instruction Scheduling

    I1  FDIV.D  f6, f6, f4
    I2  FLD     f2, 45(x3)
    I3  FMUL.D  f0, f2, f4
    I4  FDIV.D  f8, f6, f2
    I5  FSUB.D  f10, f0, f6
    I6  FADD.D  f6, f8, f2

Valid orderings:

- in-order: I1 I2 I3 I4 I5 I6
- out-of-order: I2 I1 I3 I4 I5 I6
- out-of-order: I1 I2 I3 I5 I4 I6

(Figure: dependence graph over I1 to I6.)

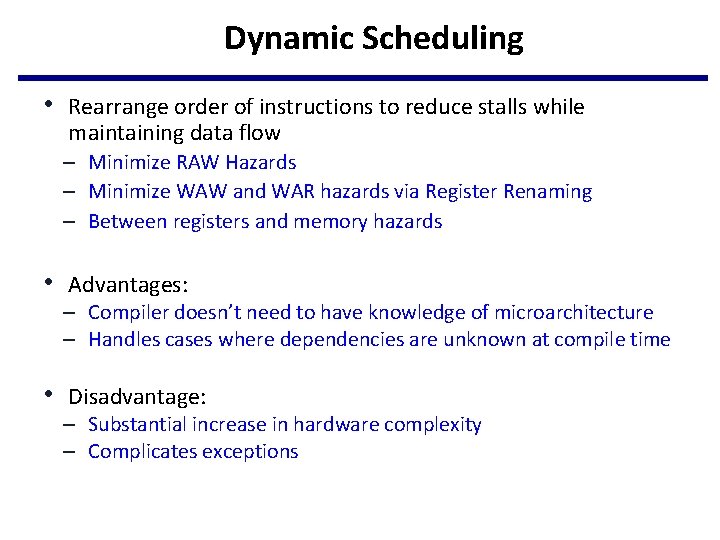

Dynamic Scheduling

- Rearrange order of instructions to reduce stalls while maintaining data flow
  - Minimize RAW hazards
  - Minimize WAW and WAR hazards via register renaming
  - Between registers and memory hazards
- Advantages:
  - Compiler doesn’t need to have knowledge of microarchitecture
  - Handles cases where dependencies are unknown at compile time
- Disadvantages:
  - Substantial increase in hardware complexity
  - Complicates exceptions

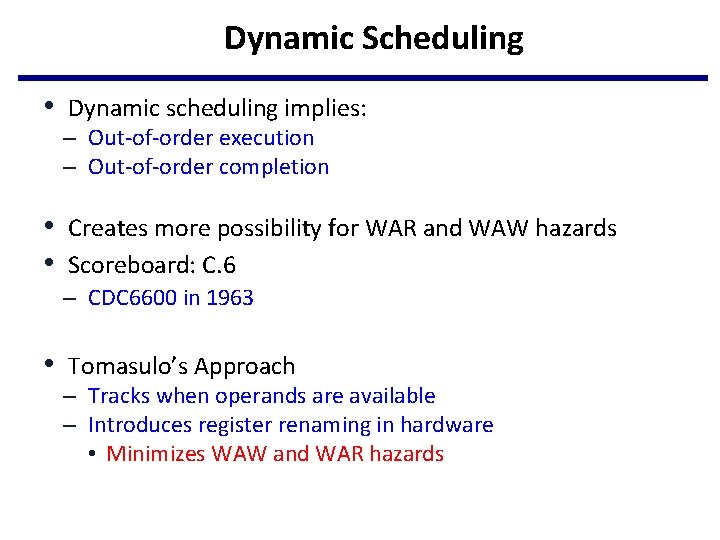

Dynamic Scheduling

- Dynamic scheduling implies:
  - Out-of-order execution
  - Out-of-order completion
- Creates more possibility for WAR and WAW hazards
- Scoreboard: C.6
  - CDC 6600 in 1963
- Tomasulo’s Approach
  - Tracks when operands are available
  - Introduces register renaming in hardware
    - Minimizes WAW and WAR hazards

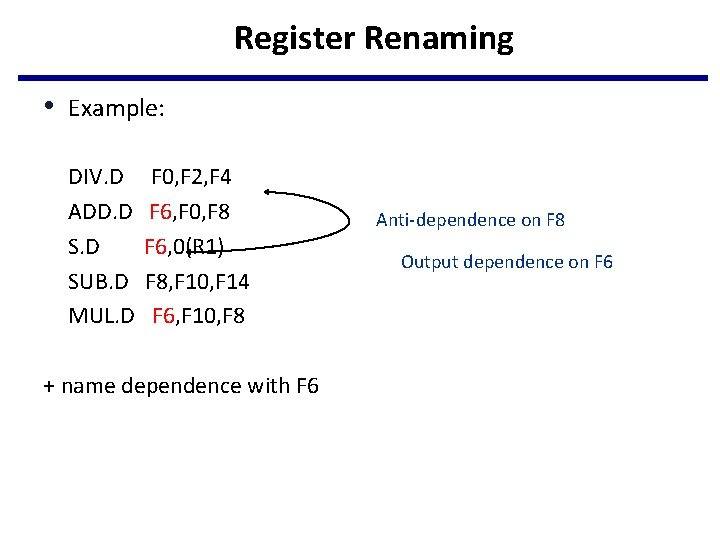

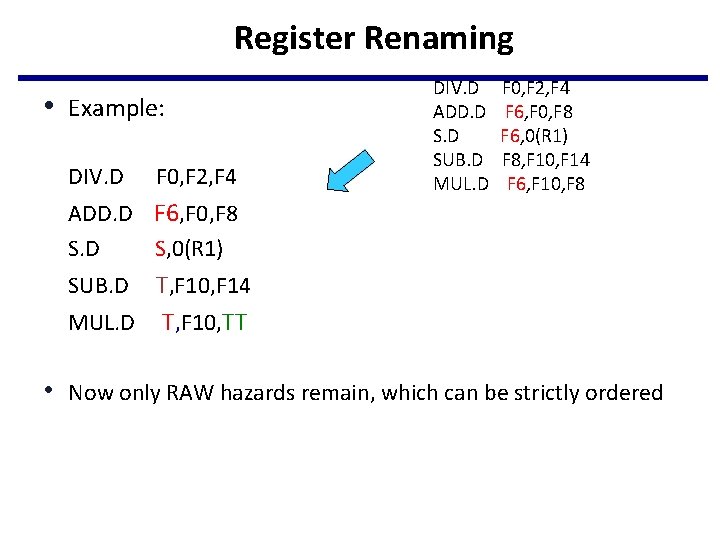

Register Renaming

- Example:

      DIV.D  F0, F2, F4
      ADD.D  F6, F0, F8
      S.D    F6, 0(R1)
      SUB.D  F8, F10, F14
      MUL.D  F6, F10, F8

- Name dependences:
  - Anti-dependence on F8 (ADD.D reads F8, SUB.D writes F8)
  - Output dependence on F6 (ADD.D and MUL.D both write F6)

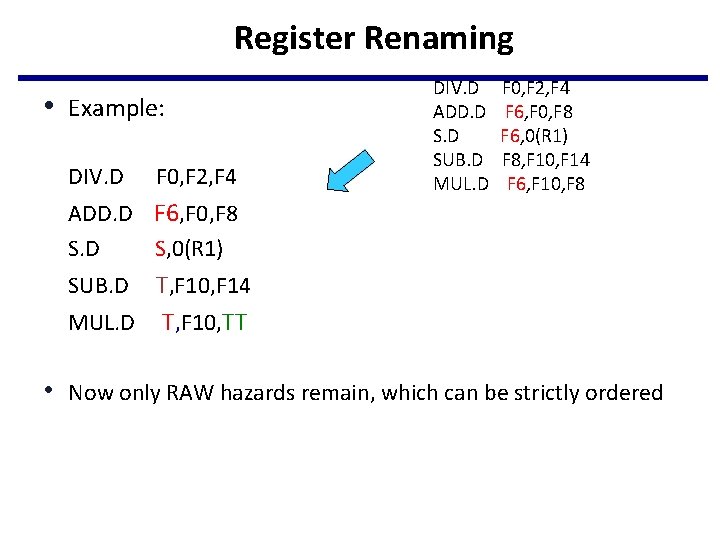

Register Renaming

- Example, before renaming:

      DIV.D  F0, F2, F4
      ADD.D  F6, F0, F8
      S.D    F6, 0(R1)
      SUB.D  F8, F10, F14
      MUL.D  F6, F10, F8

- After renaming with temporaries S and T:

      DIV.D  F0, F2, F4
      ADD.D  S, F0, F8
      S.D    S, 0(R1)
      SUB.D  T, F10, F14
      MUL.D  F6, F10, T

- Now only RAW hazards remain, which can be strictly ordered

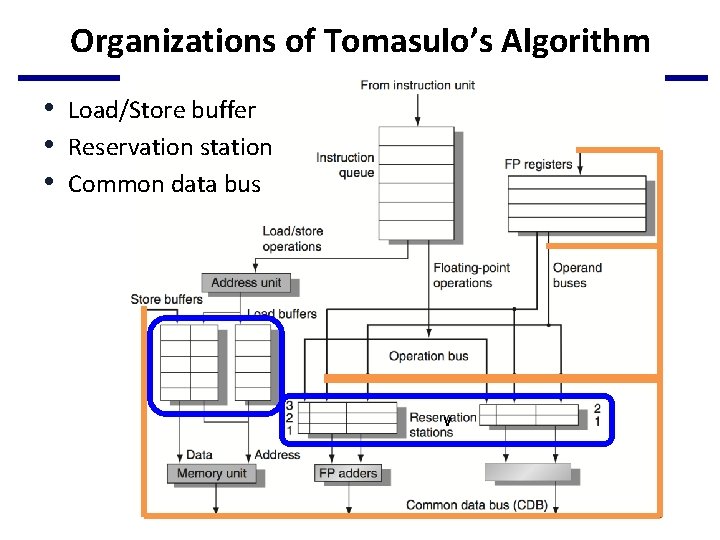

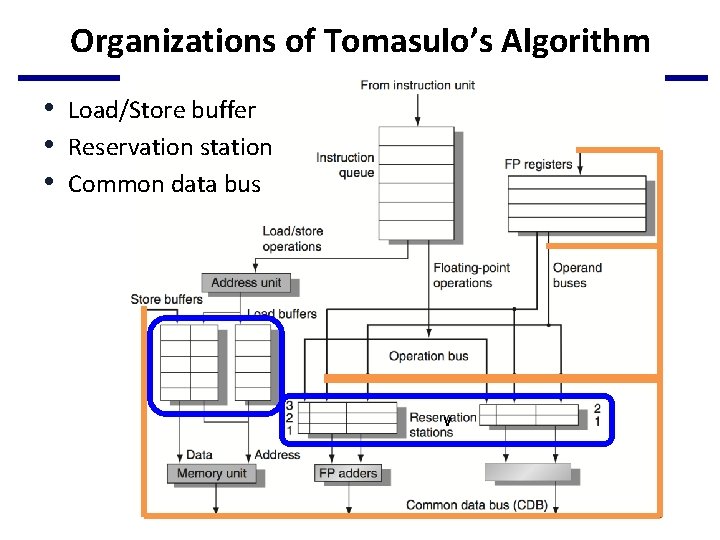

Organizations of Tomasulo’s Algorithm

- Load/Store buffer
- Reservation station
- Common data bus

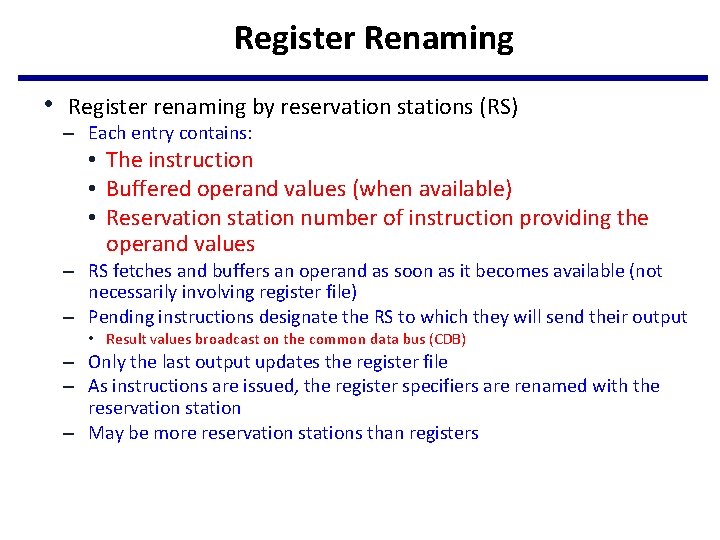

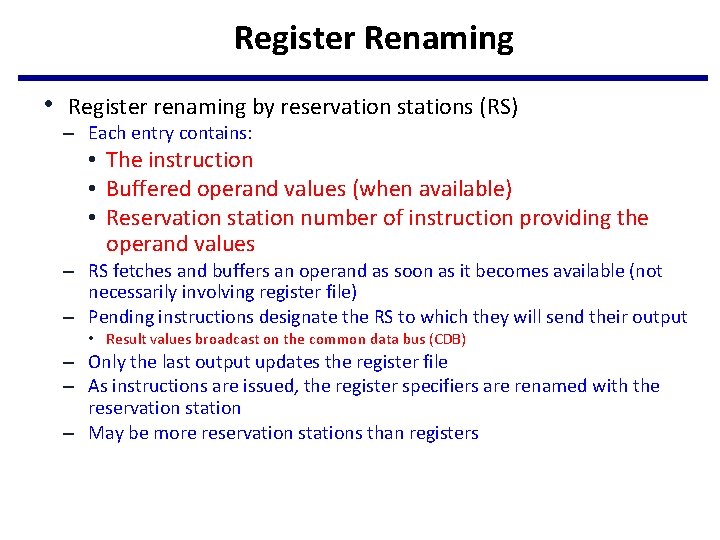

Register Renaming

- Register renaming by reservation stations (RS)
  - Each entry contains:
    - The instruction
    - Buffered operand values (when available)
    - Reservation station number of instruction providing the operand values
  - RS fetches and buffers an operand as soon as it becomes available (not necessarily involving register file)
  - Pending instructions designate the RS to which they will send their output
    - Result values broadcast on the common data bus (CDB)
  - Only the last output updates the register file
  - As instructions are issued, the register specifiers are renamed with the reservation station
  - May be more reservation stations than registers

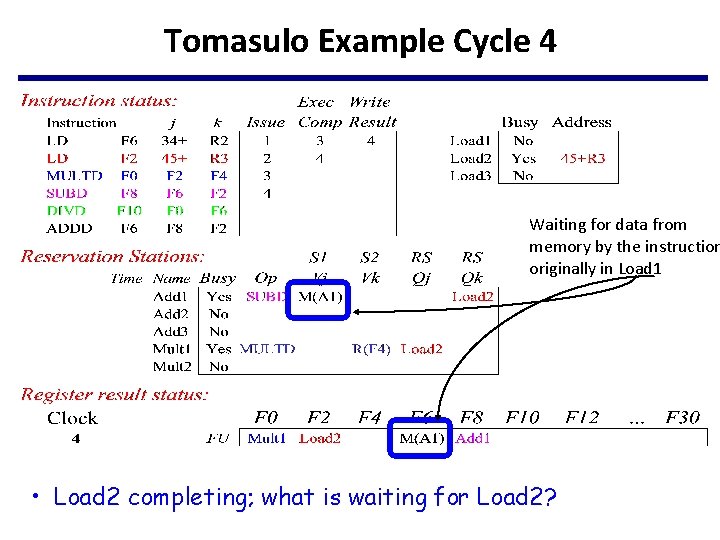

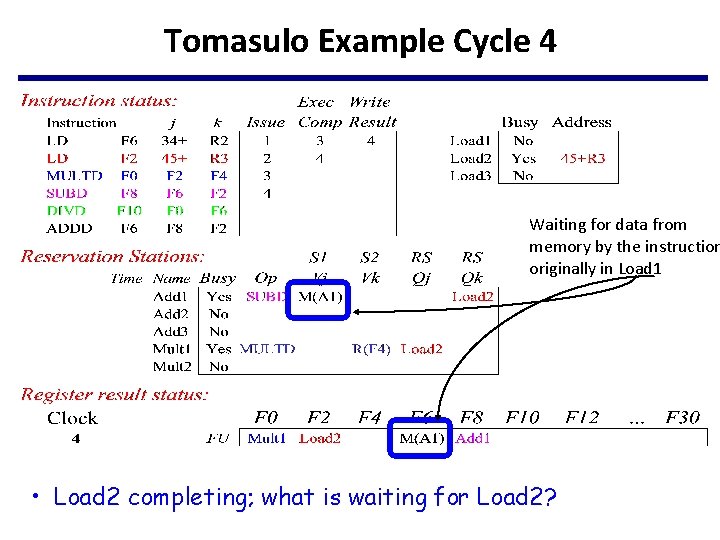

Tomasulo Example Cycle 4 Waiting for data from memory by the instruction originally in Load 1 • Load 2 completing; what is waiting for Load 2?

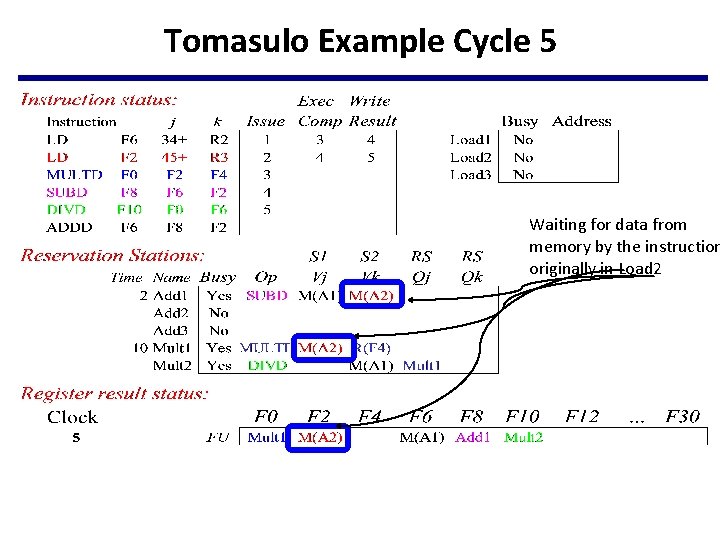

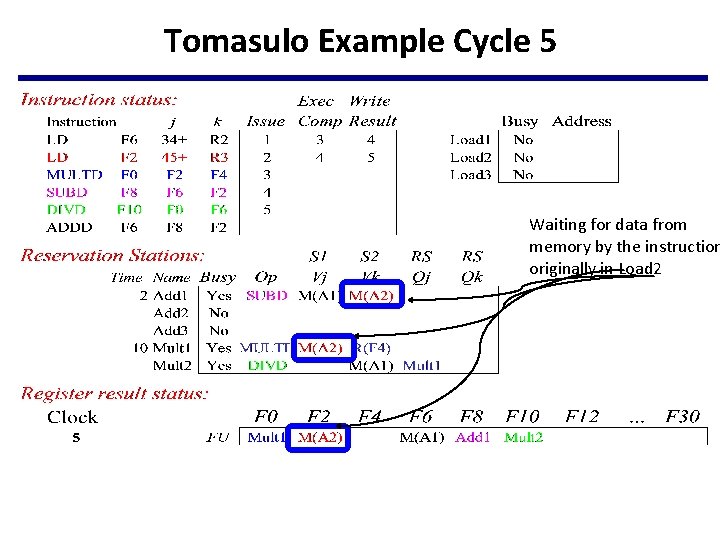

Tomasulo Example Cycle 5 Waiting for data from memory by the instruction originally in Load 2

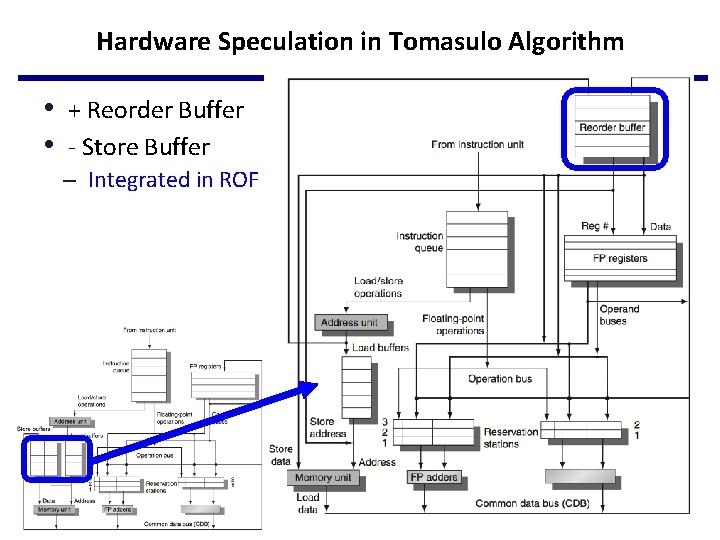

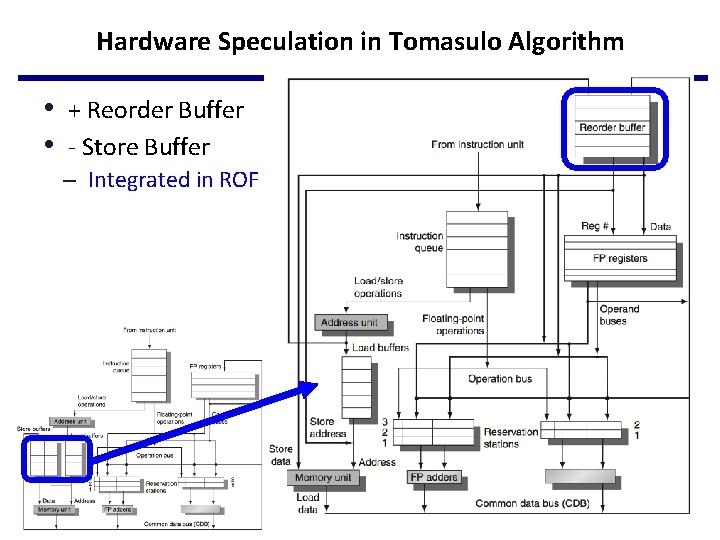

Hardware Speculation in Tomasulo Algorithm

- Added: Reorder Buffer (ROB)
- Removed: Store Buffer
  - Integrated in ROB

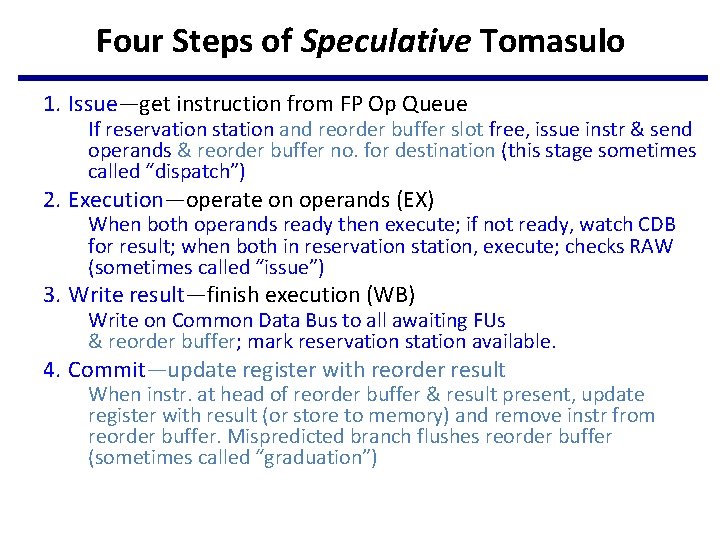

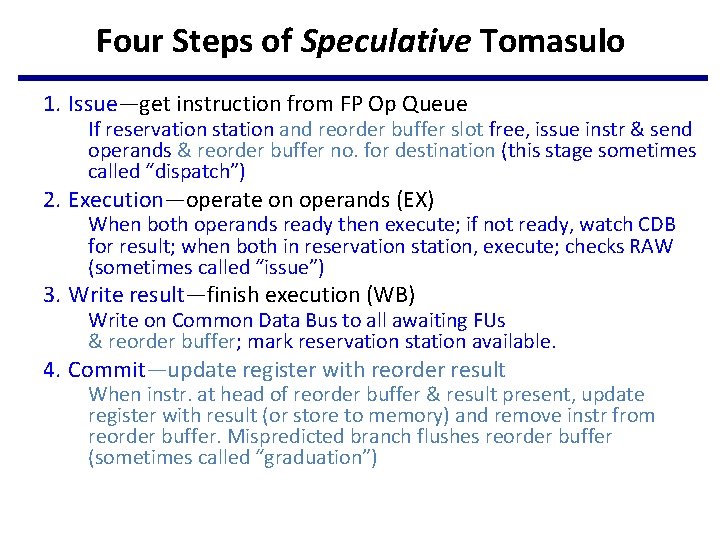

Four Steps of Speculative Tomasulo

1. Issue: get instruction from FP Op Queue. If a reservation station and a reorder buffer slot are free, issue instr and send operands and reorder buffer no. for destination (this stage is sometimes called “dispatch”).
2. Execution: operate on operands (EX). When both operands are ready, execute; if not ready, watch CDB for result; when both are in the reservation station, execute; checks RAW (sometimes called “issue”).
3. Write result: finish execution (WB). Write on Common Data Bus to all awaiting FUs and reorder buffer; mark reservation station available.
4. Commit: update register with reorder result. When instr. is at head of reorder buffer and result is present, update register with result (or store to memory) and remove instr from reorder buffer. Mispredicted branch flushes reorder buffer (sometimes called “graduation”).

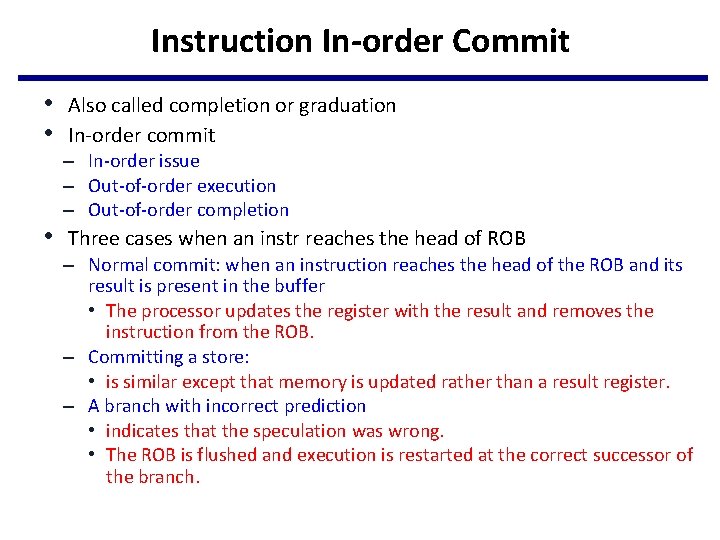

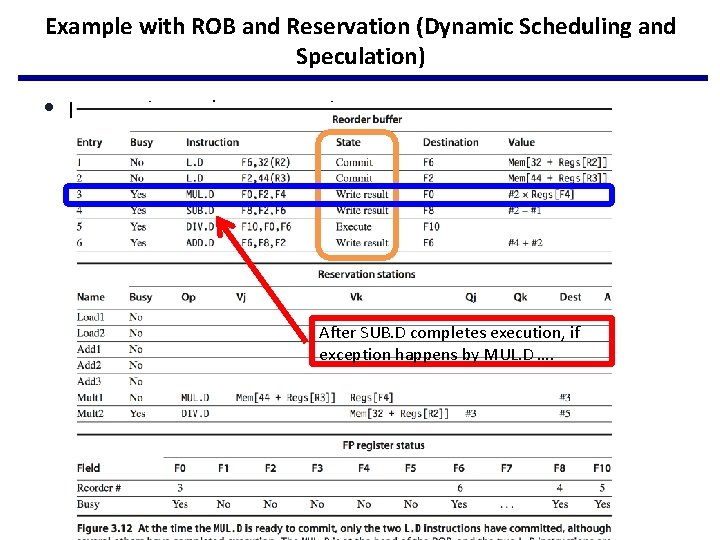

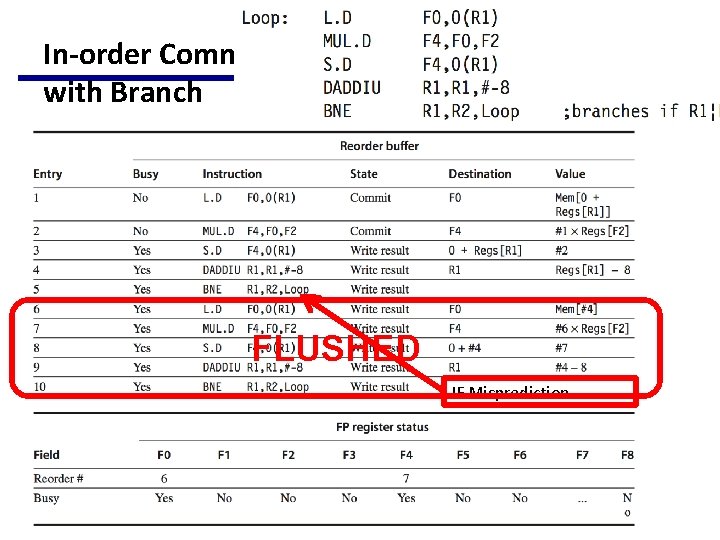

Instruction In-order Commit

- Also called completion or graduation
- In-order commit
  - In-order issue
  - Out-of-order execution
  - Out-of-order completion
- Three cases when an instr reaches the head of ROB
  - Normal commit: when an instruction reaches the head of the ROB and its result is present in the buffer
    - The processor updates the register with the result and removes the instruction from the ROB.
  - Committing a store:
    - is similar except that memory is updated rather than a result register.
  - A branch with incorrect prediction
    - indicates that the speculation was wrong.
    - The ROB is flushed and execution is restarted at the correct successor of the branch.

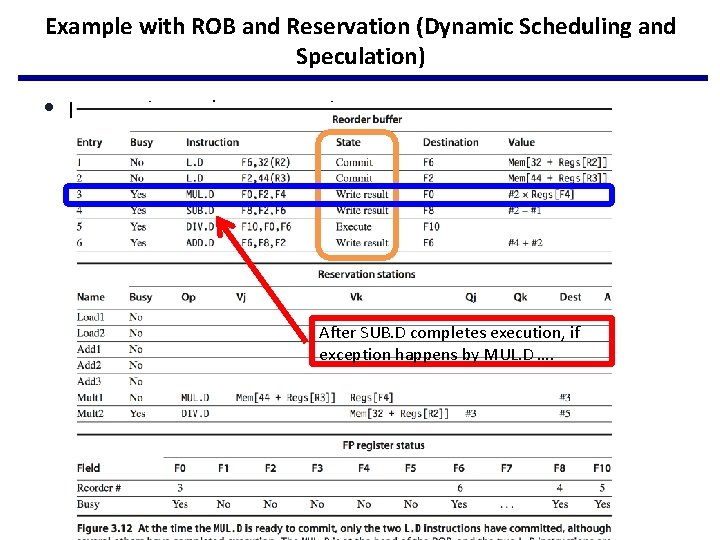

Example with ROB and Reservation (Dynamic Scheduling and Speculation)

- MUL.D is ready to commit
- After SUB.D completes execution, if an exception is raised by MUL.D ….

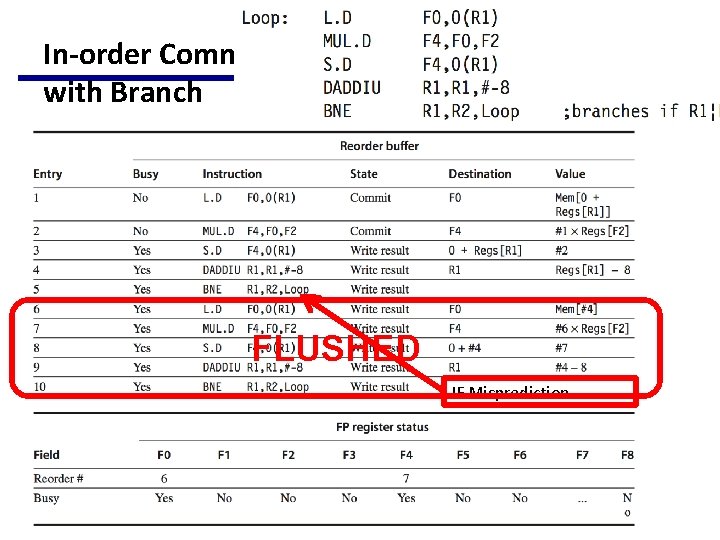

In-order Commit with Branch FLUSHED IF Misprediction

Lecture 17: Instruction Level Parallelism -- Hardware Speculation and VLIW (Static Superscalar) CSE 564 Computer Architecture Fall 2016 Department of Computer Science and Engineering Yonghong Yan yan@oakland.edu www.secs.oakland.edu/~yan

Topics for Instruction Level Parallelism

- Static Superscalar/VLIW
  - 3.6, 3.7
- Dynamic Scheduling, Multiple Issue and Speculation
  - 3.8, 3.9
- ILP Limitations and SMT
  - 3.10, 3.11, 3.12

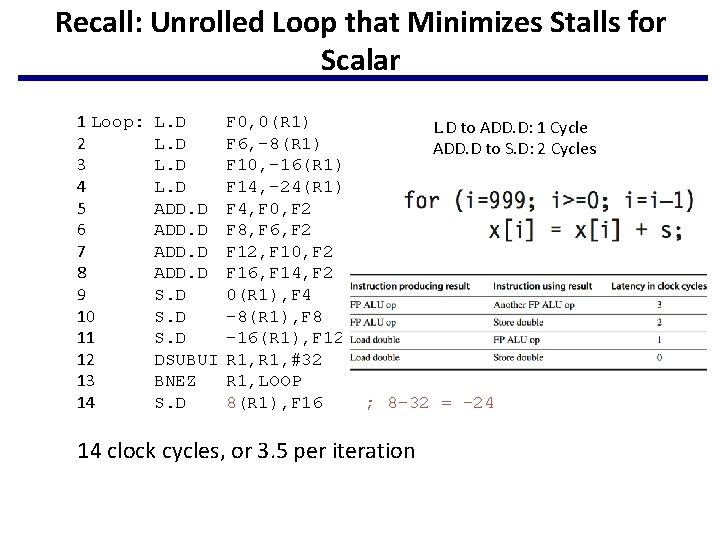

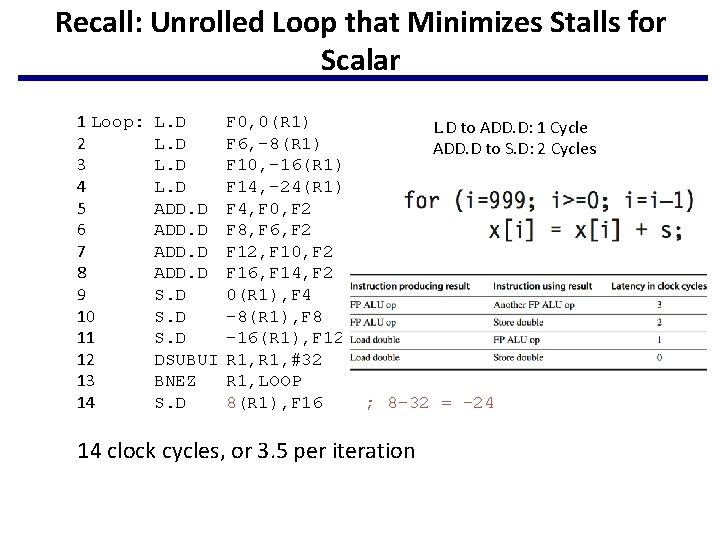

Recall: Unrolled Loop that Minimizes Stalls for Scalar

     1  Loop: L.D    F0, 0(R1)
     2        L.D    F6, -8(R1)
     3        L.D    F10, -16(R1)
     4        L.D    F14, -24(R1)
     5        ADD.D  F4, F0, F2
     6        ADD.D  F8, F6, F2
     7        ADD.D  F12, F10, F2
     8        ADD.D  F16, F14, F2
     9        S.D    0(R1), F4
    10        S.D    -8(R1), F8
    11        S.D    -16(R1), F12
    12        DSUBUI R1, R1, #32
    13        BNEZ   R1, LOOP
    14        S.D    8(R1), F16     ; 8-32 = -24

- L.D to ADD.D: 1 cycle
- ADD.D to S.D: 2 cycles
- 14 clock cycles, or 3.5 per iteration

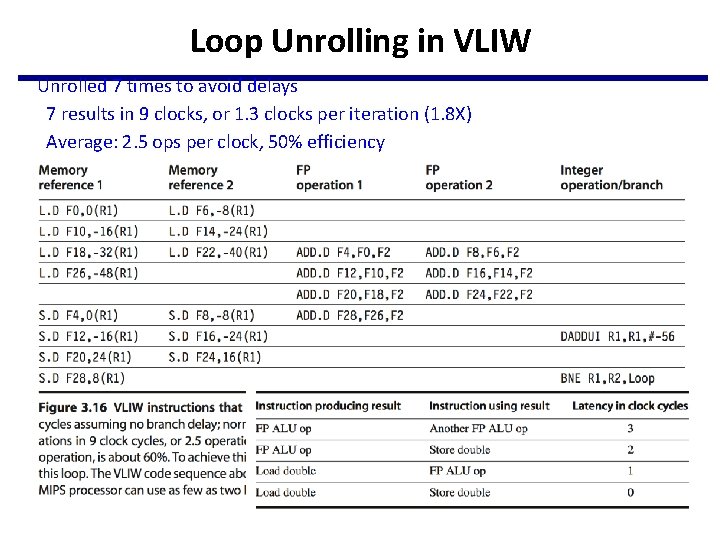

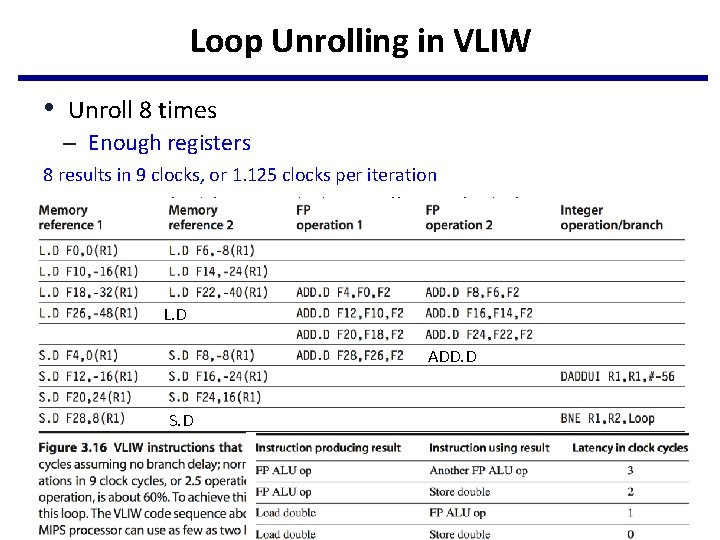

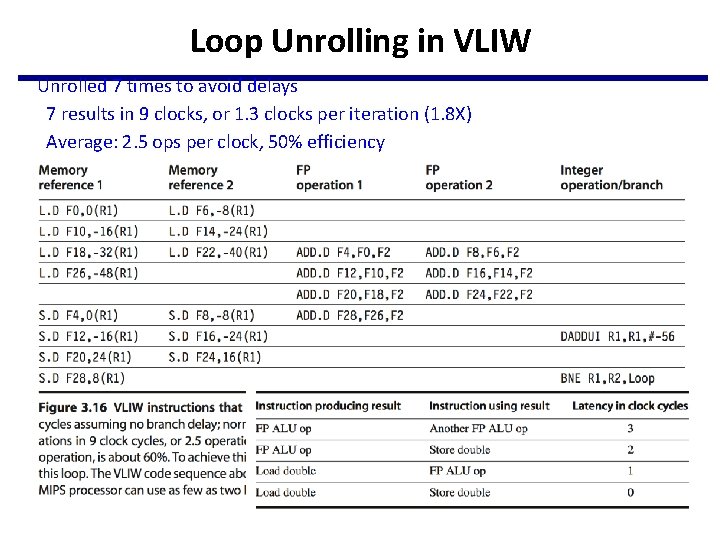

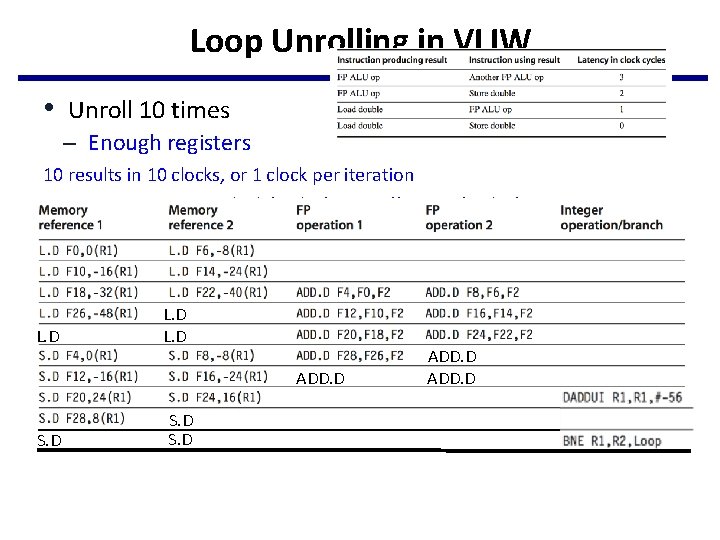

Loop Unrolling in VLIW

- Unrolled 7 times to avoid delays
- 7 results in 9 clocks, or 1.3 clocks per iteration (1.8X)
- Average: 2.5 ops per clock, 50% efficiency
- Note: Need more registers in VLIW (15 vs. 6 in SS)

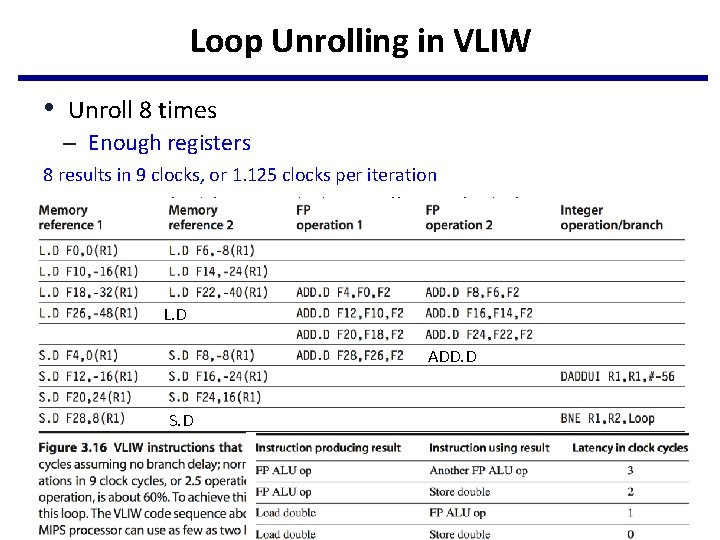

Loop Unrolling in VLIW

- Unroll 8 times
  - Enough registers
- 8 results in 9 clocks, or 1.125 clocks per iteration
- Average: 2.89 (26/9) ops per clock, 58% efficiency (26/45)

(Figure: VLIW schedule of the L.D, ADD.D and S.D operations.)

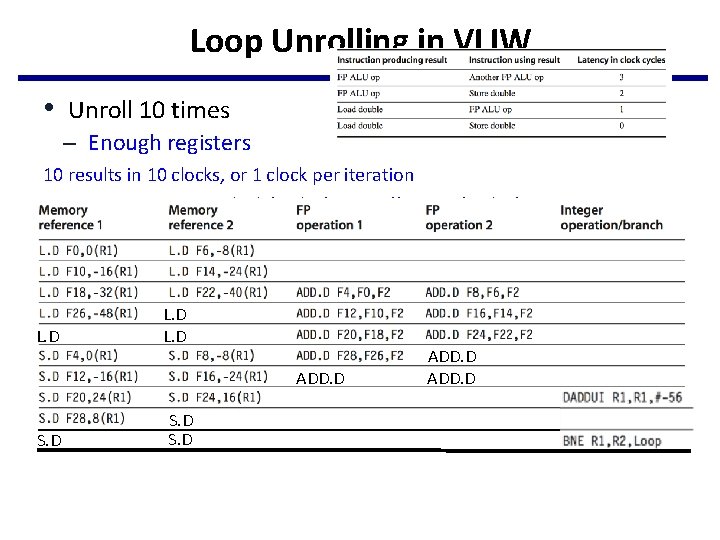

Loop Unrolling in VLIW

- Unroll 10 times
  - Enough registers
- 10 results in 10 clocks, or 1 clock per iteration
- Average: 3.2 ops per clock (32/10), 64% efficiency (32/50)

(Figure: VLIW schedule of the L.D, ADD.D and S.D operations.)
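The efficiency figures on the three VLIW slides follow one pattern, sketched here under two assumptions that the slides imply but do not state: each unrolled copy contributes 3 operations (L.D, ADD.D, S.D) plus 2 for loop overhead, and each VLIW instruction has 5 operation slots (consistent with $45 = 9 \times 5$ and $50 = 10 \times 5$). The symbols $u$ (unroll factor) and $c$ (clock cycles) are notation chosen here.

$$
\text{ops}(u) = 3u + 2, \qquad
\text{ops per clock} = \frac{3u + 2}{c}, \qquad
\text{efficiency} = \frac{3u + 2}{5c}
$$

$$
u = 8,\ c = 9:\ \frac{26}{9} \approx 2.89,\ \frac{26}{45} \approx 58\%
\qquad
u = 10,\ c = 10:\ \frac{32}{10} = 3.2,\ \frac{32}{50} = 64\%
$$

For $u = 7$, $c = 9$ the same formula gives $23/9 \approx 2.56$ ops per clock and $23/45 \approx 51\%$, which the slide rounds to 2.5 and 50%.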

Very Important Terms

- Dynamic Scheduling: Out-of-order Execution
- Speculation: In-order Commit
- Superscalar: Multiple Issue

| Techniques | Goals | Implementation | Addressing | Approaches |
| --- | --- | --- | --- | --- |
| Dynamic Scheduling | Out-of-order execution | Reservation Stations, Load/Store Buffer and CDB | Data hazards (RAW, WAR) | Register renaming |
| Speculation | In-order commit | Branch Prediction (BHT/BTB) and Reorder Buffer | Control hazards (branch, func, exception) | Prediction and misprediction recovery |
| Superscalar/VLIW | Multiple issue | Software and Hardware | To increase IPC | By compiler or hardware |

Lecture 18: Instruction Level Parallelism -- Dynamic Scheduling, Multiple Issue, and Speculation CSE 564 Computer Architecture Fall 2016 Department of Computer Science and Engineering Yonghong Yan yan@oakland.edu www.secs.oakland.edu/~yan

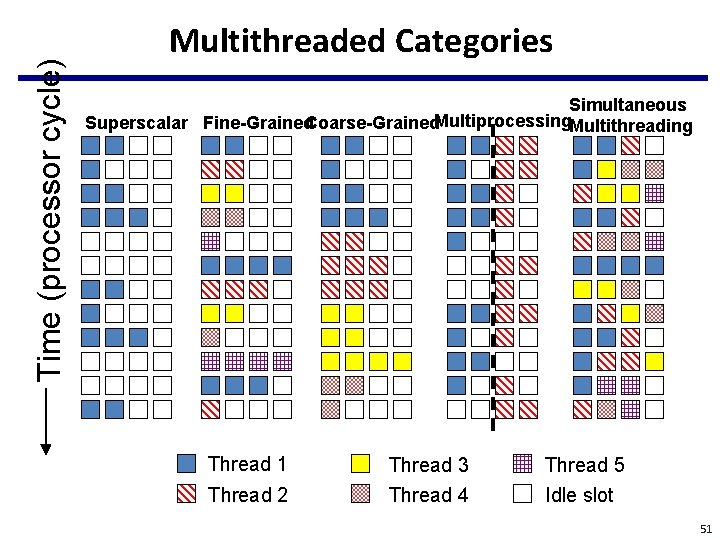

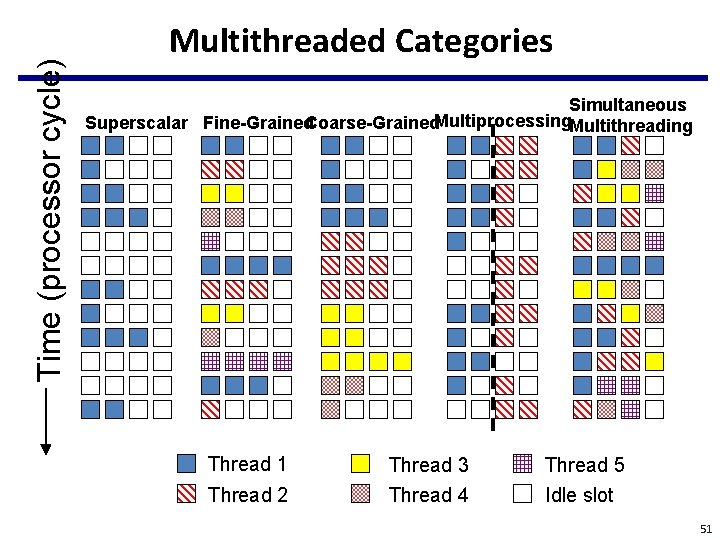

Multithreaded Categories (figure: issue slots over time, in processor cycles, for Superscalar, Fine-Grained, Coarse-Grained, Multiprocessing, and Simultaneous Multithreading; legend: Thread 1 to Thread 5 and idle slot)

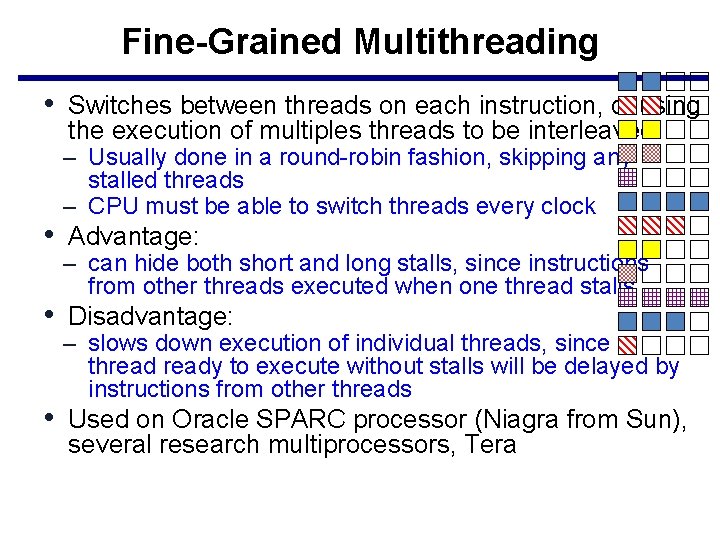

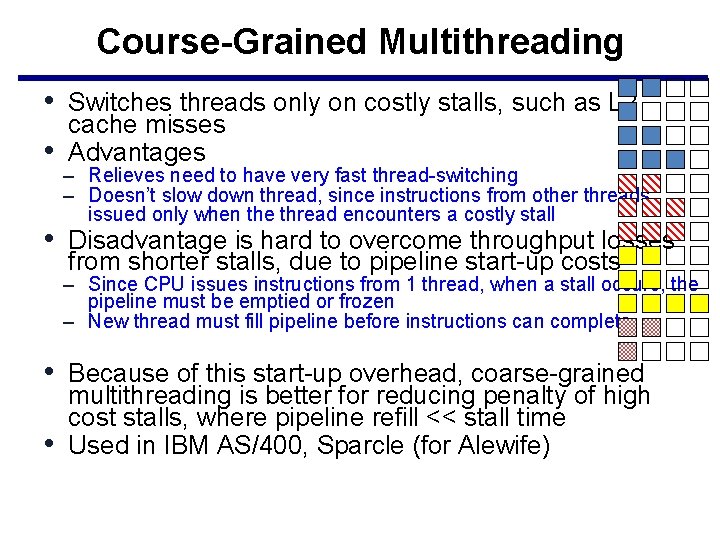

Coarse-Grained Multithreading

- Switches threads only on costly stalls, such as L2 cache misses
- Advantages
  - Relieves need to have very fast thread-switching
  - Doesn’t slow down thread, since instructions from other threads are issued only when the thread encounters a costly stall
- Disadvantage: it is hard to overcome throughput losses from shorter stalls, due to pipeline start-up costs
  - Since the CPU issues instructions from 1 thread, when a stall occurs, the pipeline must be emptied or frozen
  - New thread must fill pipeline before instructions can complete
- Because of this start-up overhead, coarse-grained multithreading is better for reducing the penalty of high-cost stalls, where pipeline refill << stall time
- Used in IBM AS/400, Sparcle (for Alewife)

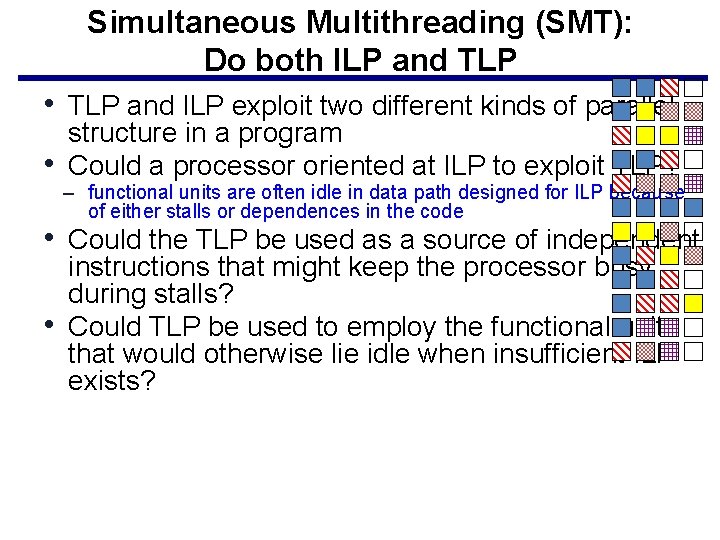

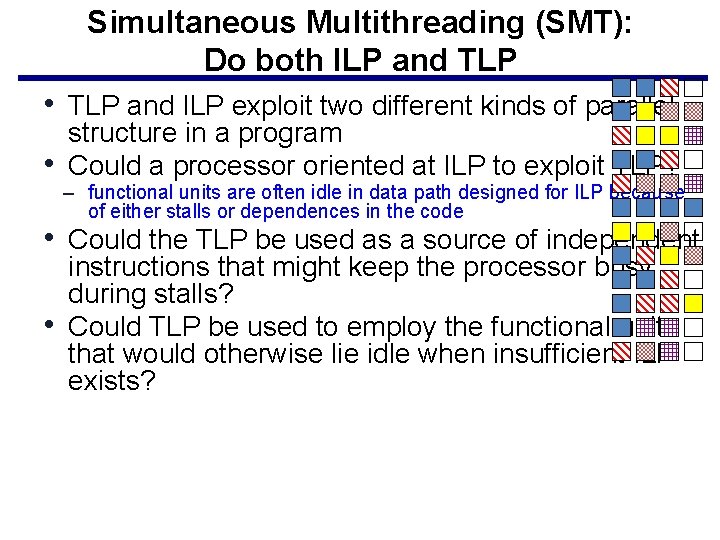

Fine-Grained Multithreading

- Switches between threads on each instruction, causing the execution of multiple threads to be interleaved
  - Usually done in a round-robin fashion, skipping any stalled threads
  - CPU must be able to switch threads every clock
- Advantage:
  - can hide both short and long stalls, since instructions from other threads are executed when one thread stalls
- Disadvantage:
  - slows down execution of individual threads, since a thread ready to execute without stalls will be delayed by instructions from other threads
- Used on Oracle SPARC processors (Niagara from Sun), several research multiprocessors, Tera

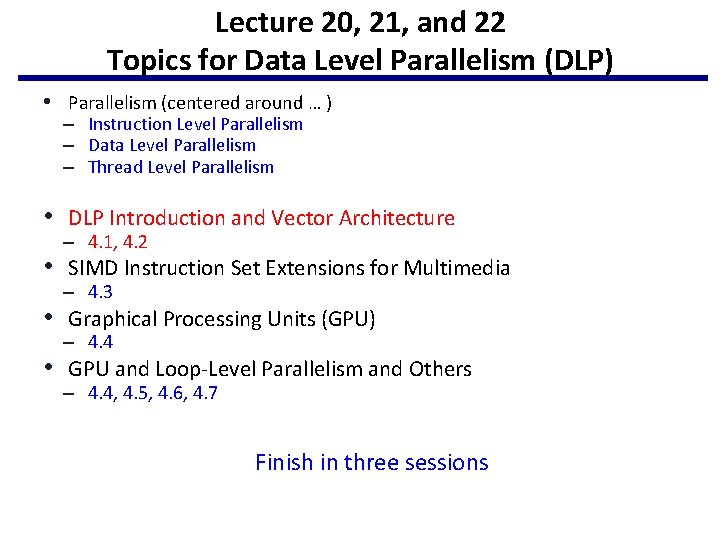

Simultaneous Multithreading (SMT): Do both ILP and TLP

- TLP and ILP exploit two different kinds of parallel structure in a program
- Could a processor oriented at ILP also exploit TLP?
  - functional units are often idle in a data path designed for ILP because of either stalls or dependences in the code
- Could the TLP be used as a source of independent instructions that might keep the processor busy during stalls?
- Could TLP be used to employ the functional units that would otherwise lie idle when insufficient ILP exists?

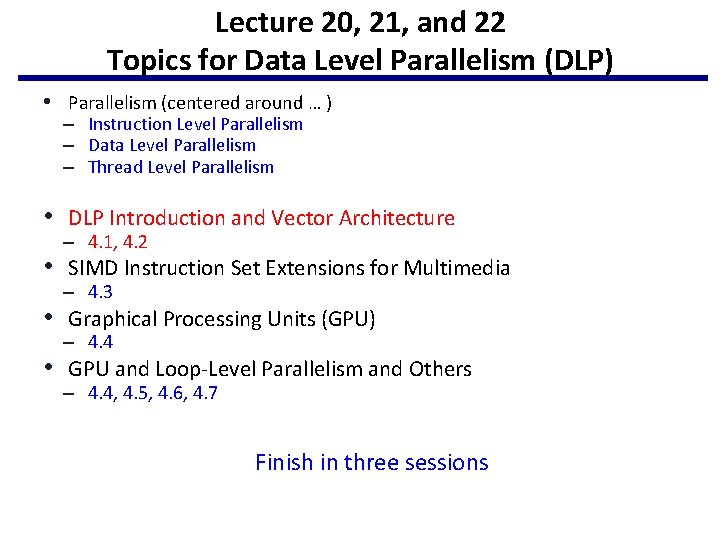

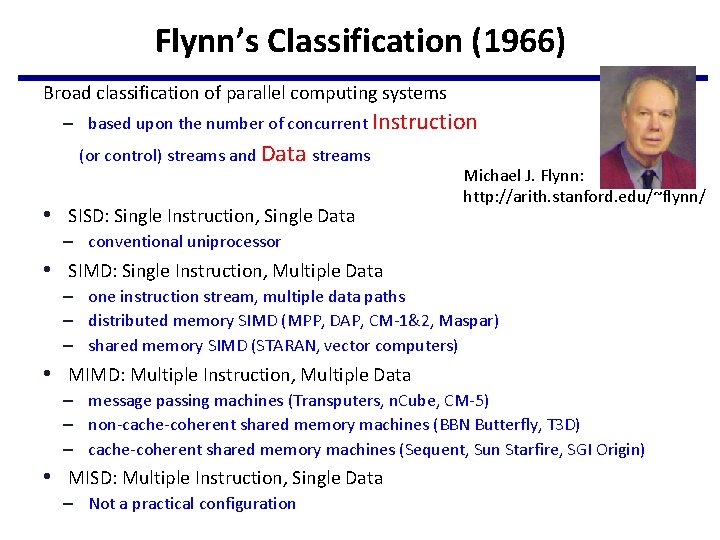

Lecture 20, 21, and 22 Topics for Data Level Parallelism (DLP)

- Parallelism (centered around … )
  - Instruction Level Parallelism
  - Data Level Parallelism
  - Thread Level Parallelism
- DLP Introduction and Vector Architecture
  - 4.1, 4.2
- SIMD Instruction Set Extensions for Multimedia
  - 4.3
- Graphical Processing Units (GPU)
  - 4.4
- GPU and Loop-Level Parallelism and Others
  - 4.4, 4.5, 4.6, 4.7

Finish in three sessions

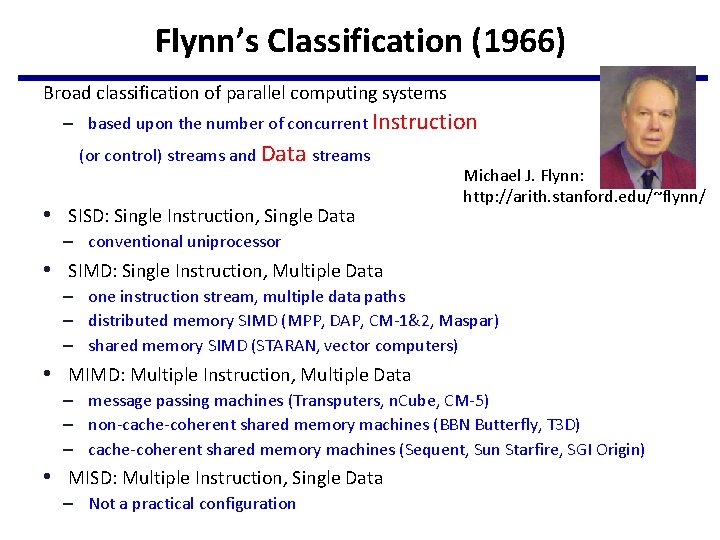

Flynn’s Classification (1966)

Broad classification of parallel computing systems, based upon the number of concurrent Instruction (or control) streams and Data streams. Michael J. Flynn: http://arith.stanford.edu/~flynn/

- SISD: Single Instruction, Single Data
  - conventional uniprocessor
- SIMD: Single Instruction, Multiple Data
  - one instruction stream, multiple data paths
  - distributed memory SIMD (MPP, DAP, CM-1&2, Maspar)
  - shared memory SIMD (STARAN, vector computers)
- MIMD: Multiple Instruction, Multiple Data
  - message passing machines (Transputers, nCube, CM-5)
  - non-cache-coherent shared memory machines (BBN Butterfly, T3D)
  - cache-coherent shared memory machines (Sequent, Sun Starfire, SGI Origin)
- MISD: Multiple Instruction, Single Data
  - Not a practical configuration

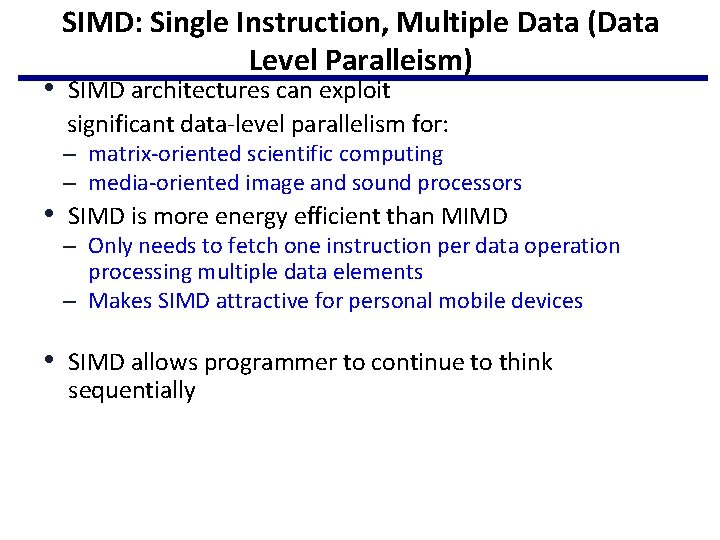

SIMD: Single Instruction, Multiple Data (Data Level Parallelism)

- SIMD architectures can exploit significant data-level parallelism for:
  - matrix-oriented scientific computing
  - media-oriented image and sound processors
- SIMD is more energy efficient than MIMD
  - Only needs to fetch one instruction per data operation processing multiple data elements
  - Makes SIMD attractive for personal mobile devices
- SIMD allows programmer to continue to think sequentially

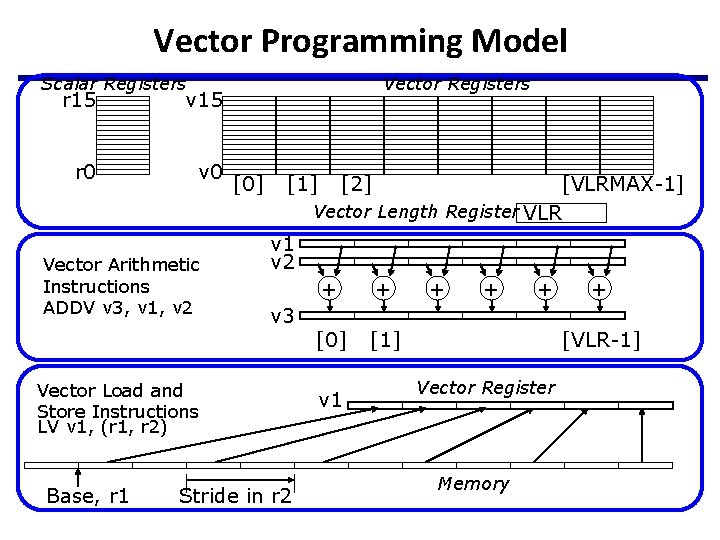

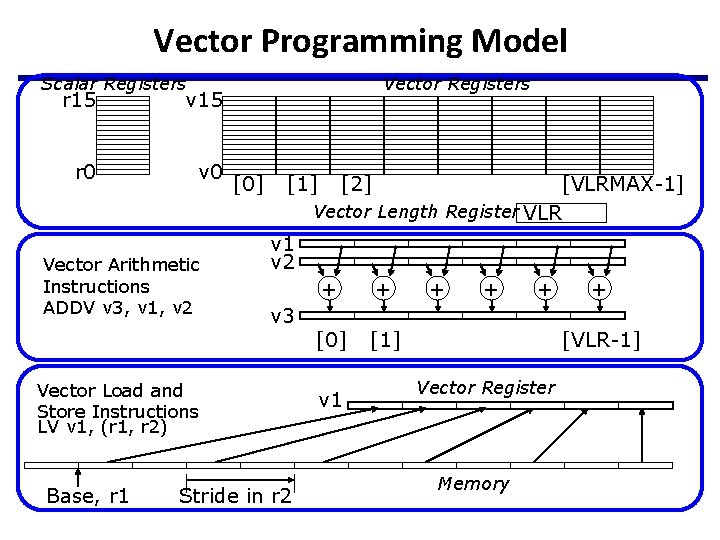

Vector Programming Model

- Scalar registers: r0 to r15
- Vector registers: v0 to v15, each holding elements [0], [1], …, [VLRMAX-1]
- Vector Length Register: VLR
- Vector arithmetic instructions: ADDV v3, v1, v2 (element-wise v3 = v1 + v2 over elements [0] to [VLR-1])
- Vector load and store instructions: LV v1, (r1, r2) (base address in r1, stride in r2; moves data between memory and a vector register)

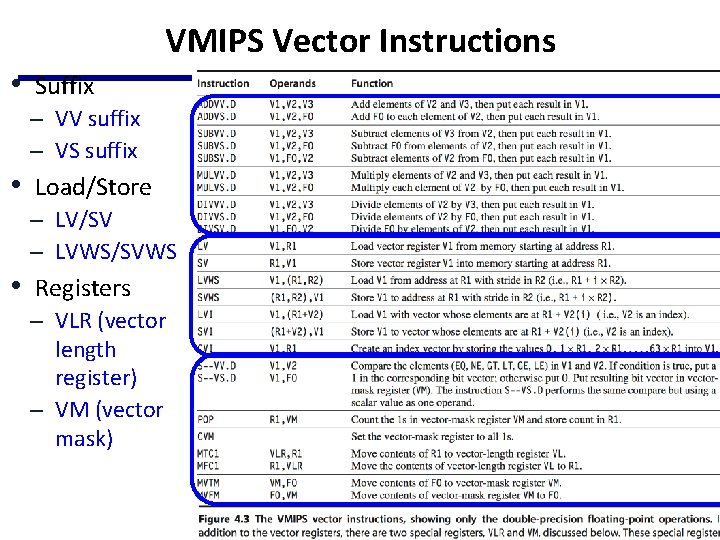

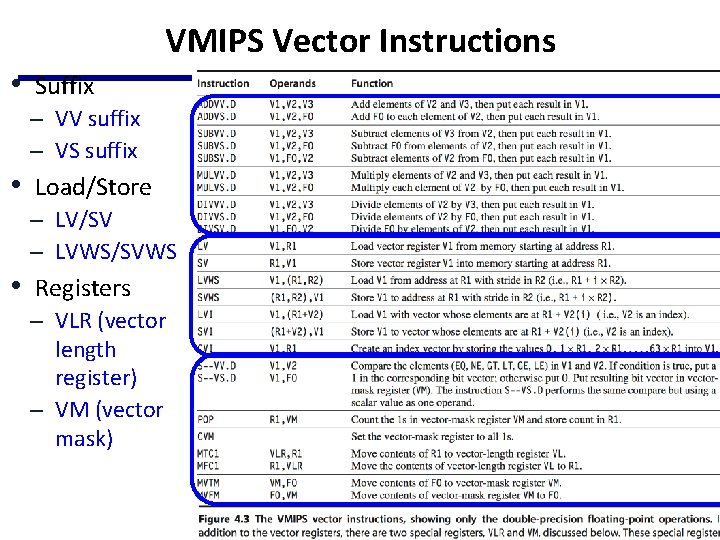

VMIPS Vector Instructions

- Suffix
  - VV suffix
  - VS suffix
- Load/Store
  - LV/SV
  - LVWS/SVWS
- Registers
  - VLR (vector length register)
  - VM (vector mask)

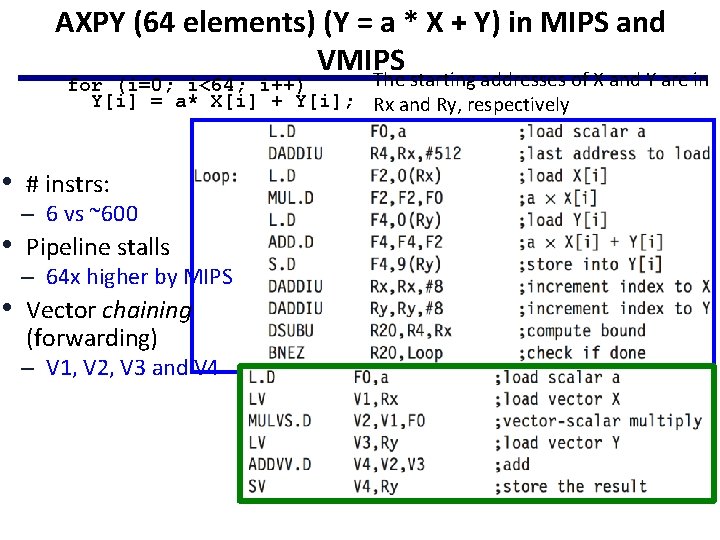

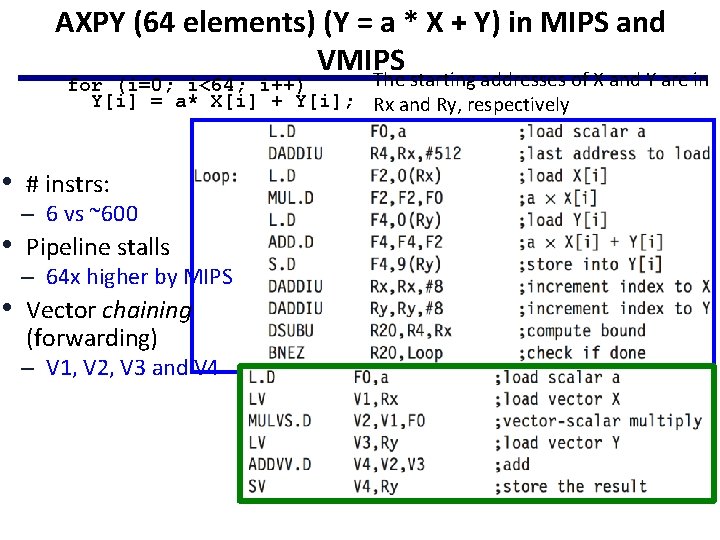

AXPY (64 elements) (Y = a * X + Y) in MIPS and VMIPS

    for (i=0; i<64; i++)
        Y[i] = a * X[i] + Y[i];

The starting addresses of X and Y are in Rx and Ry, respectively.

- # instrs:
  - 6 vs ~600
- Pipeline stalls
  - 64x higher by MIPS
- Vector chaining (forwarding)
  - V1, V2, V3 and V4

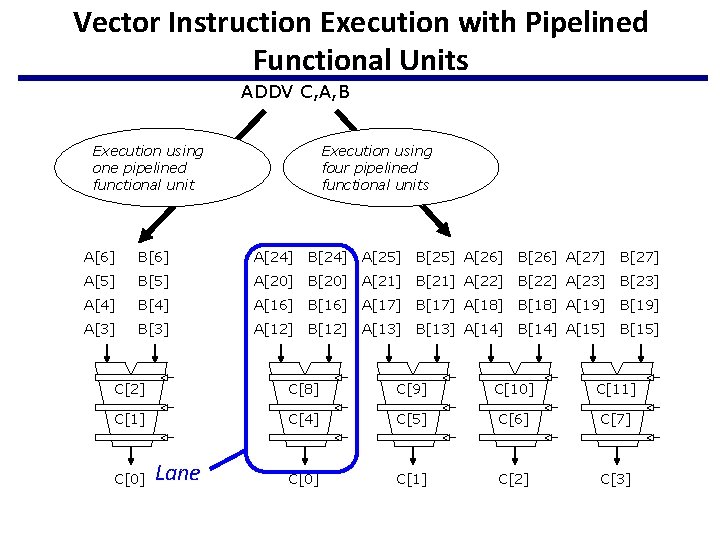

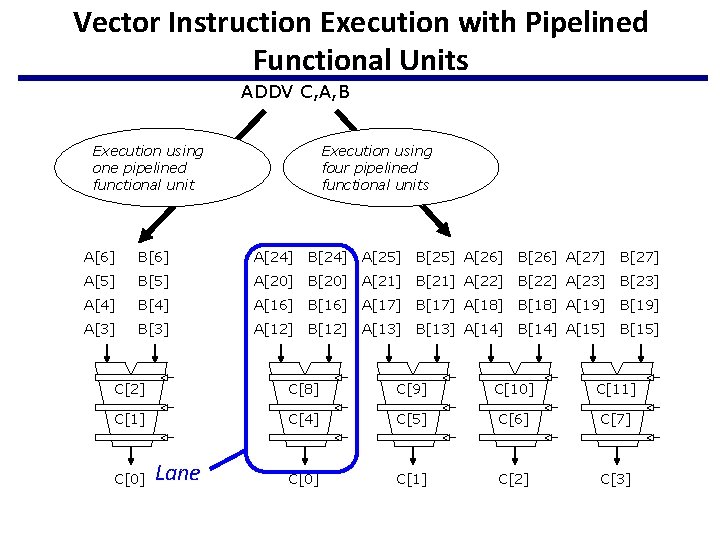

Vector Instruction Execution with Pipelined Functional Units (figure: ADDV C, A, B executed using one pipelined functional unit, completing one element C[i] per cycle, versus four pipelined functional units (lanes), completing four elements per cycle)

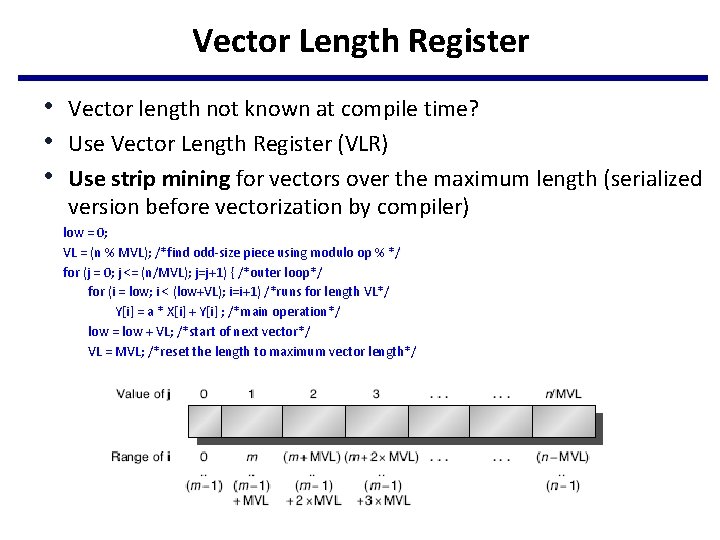

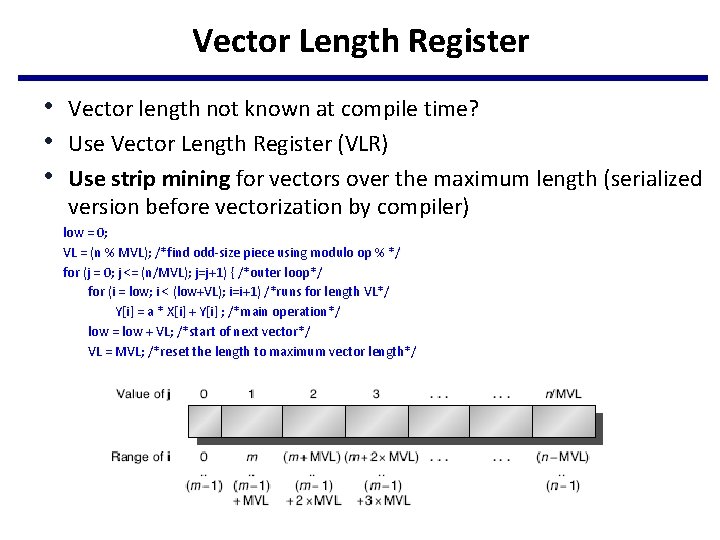

Vector Length Register

- Vector length not known at compile time?
- Use Vector Length Register (VLR)
- Use strip mining for vectors over the maximum length (serialized version before vectorization by compiler)

      low = 0;
      VL = (n % MVL);                          /* find odd-size piece using modulo op % */
      for (j = 0; j <= (n/MVL); j=j+1) {       /* outer loop */
          for (i = low; i < (low+VL); i=i+1)   /* runs for length VL */
              Y[i] = a * X[i] + Y[i];          /* main operation */
          low = low + VL;                      /* start of next vector */
          VL = MVL;                            /* reset the length to maximum vector length */
      }
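A self-contained C version of the strip-mined loop is sketched below so it can be compiled and checked. The function name and the choice of `MVL = 64` are assumptions for illustration; on a real vector machine the inner loop would be a single sequence of vector instructions executed with VLR set to `vl`.

```c
#include <assert.h>
#include <stddef.h>

#define MVL 64  /* assumed maximum vector length */

/* y = a * x + y over n elements, processed in strips of at most MVL.
 * The first strip handles the odd-size piece (n % MVL elements). */
void axpy_strip_mined(size_t n, double a, const double *x, double *y)
{
    assert(x != NULL && y != NULL);
    size_t low = 0;
    size_t vl = n % MVL;                          /* odd-size first strip */
    for (size_t j = 0; j <= n / MVL; j++) {       /* one iteration per strip */
        for (size_t i = low; i < low + vl; i++)   /* stands in for one vector op of length vl */
            y[i] = a * x[i] + y[i];
        low += vl;                                /* start of next strip */
        vl = MVL;                                 /* remaining strips are full length */
    }
}
```

For example, with `n = 150` the strips have lengths 22, 64 and 64; when `n` is a multiple of `MVL` the first strip is simply empty.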

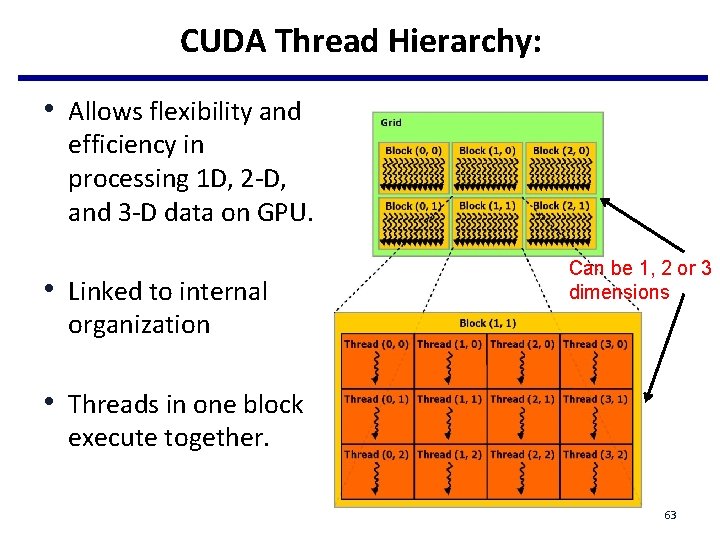

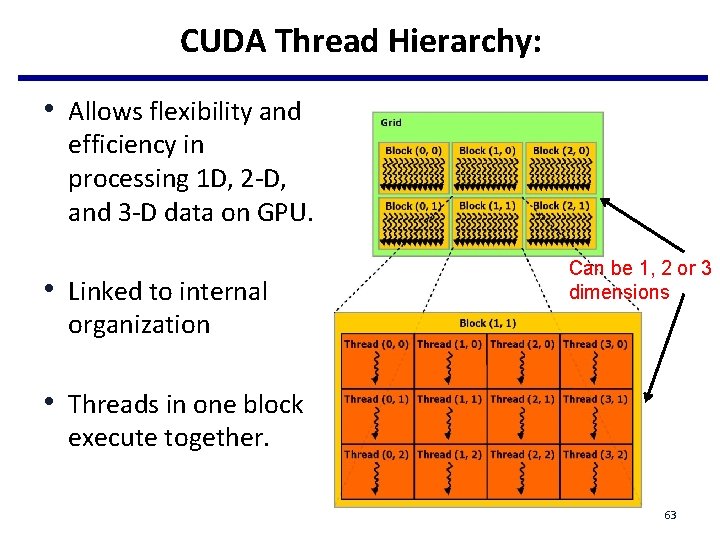

CUDA Thread Hierarchy:

- Allows flexibility and efficiency in processing 1-D, 2-D, and 3-D data on GPU.
- Linked to internal organization
- Threads in one block execute together.
- (Figure label: can be 1, 2 or 3 dimensions)

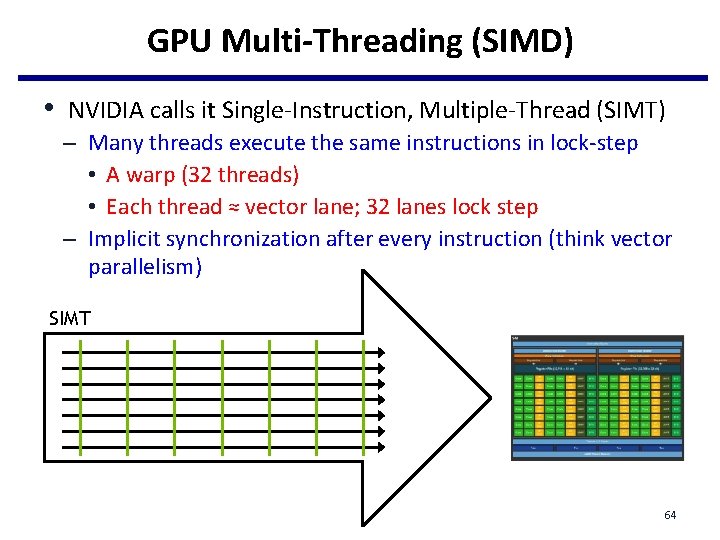

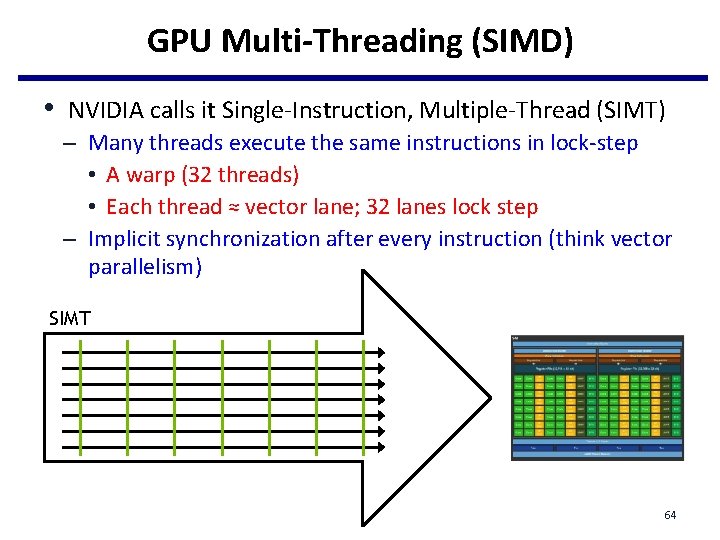

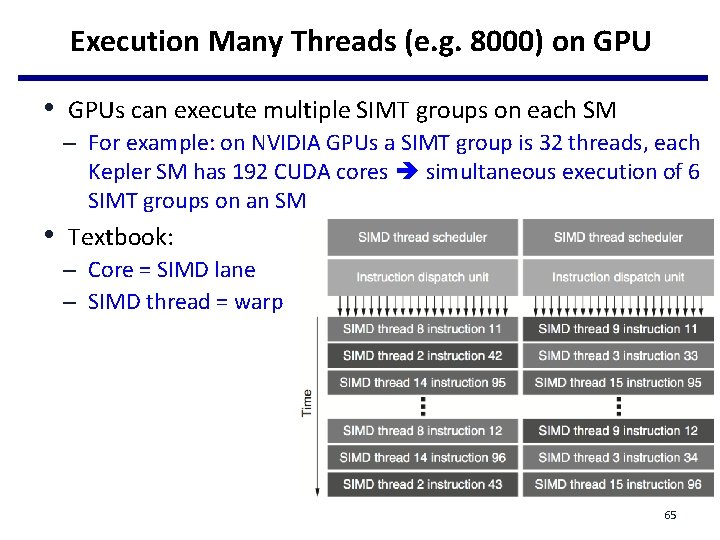

GPU Multi-Threading (SIMD)

- NVIDIA calls it Single-Instruction, Multiple-Thread (SIMT)
  - Many threads execute the same instructions in lock-step
    - A warp (32 threads)
    - Each thread ≈ vector lane; 32 lanes in lock-step
  - Implicit synchronization after every instruction (think vector parallelism)

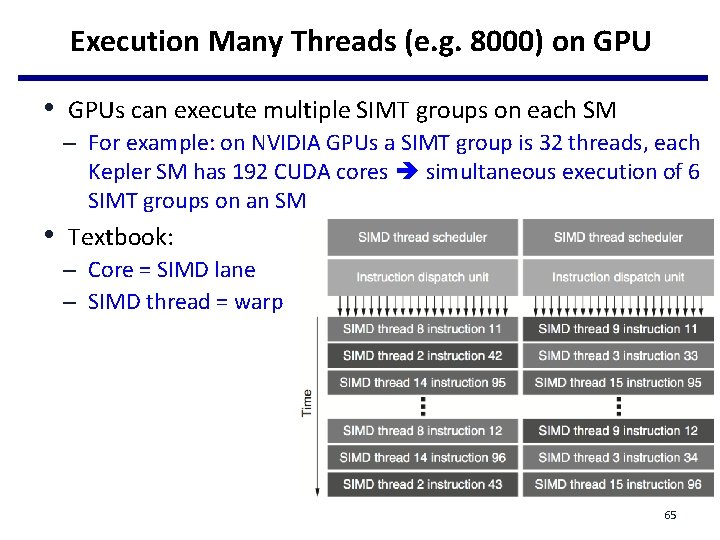

Executing Many Threads (e.g. 8000) on GPU

- GPUs can execute multiple SIMT groups on each SM
  - For example: on NVIDIA GPUs a SIMT group is 32 threads, and each Kepler SM has 192 CUDA cores, which allows simultaneous execution of 6 SIMT groups on an SM (192 / 32 = 6)
- Textbook:
  - Core = SIMD lane
  - SIMD thread = warp

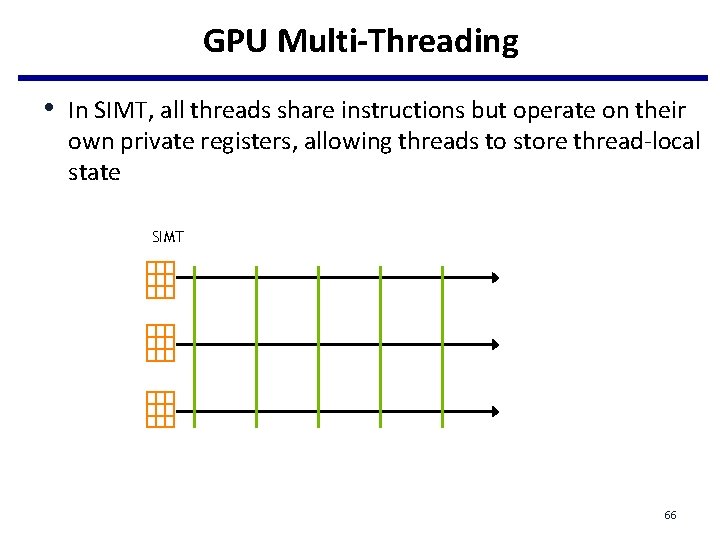

GPU Multi-Threading

- In SIMT, all threads share instructions but operate on their own private registers, allowing threads to store thread-local state

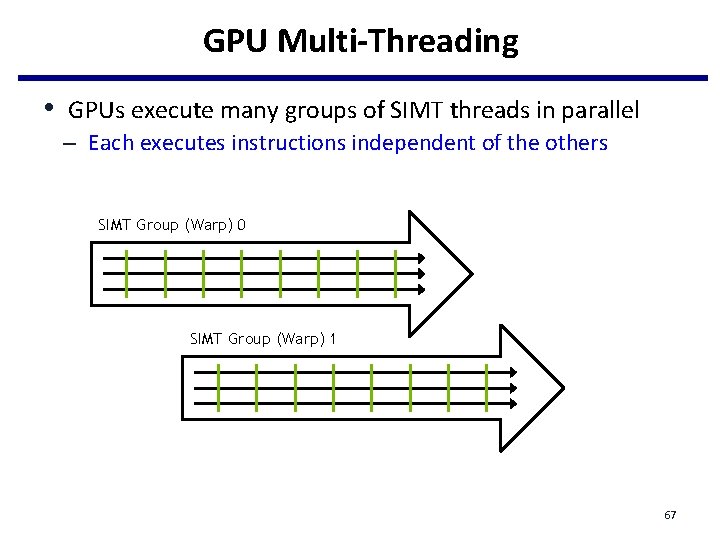

GPU Multi-Threading

- GPUs execute many groups of SIMT threads in parallel
  - Each executes instructions independent of the others

(Figure: SIMT Group (Warp) 0 and SIMT Group (Warp) 1.)

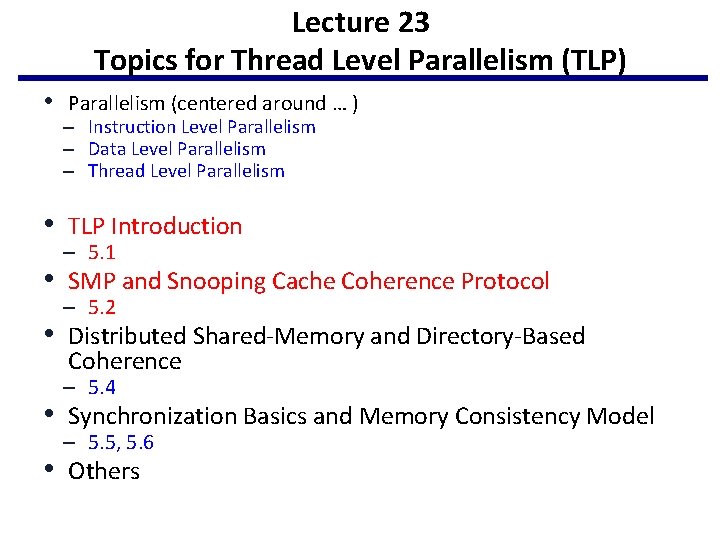

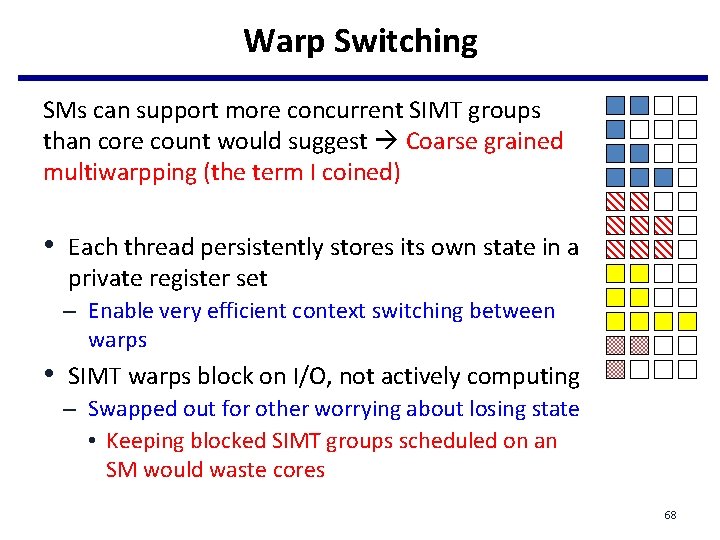

Warp Switching

SMs can support more concurrent SIMT groups than core count would suggest: coarse-grained multiwarping (the term I coined)

- Each thread persistently stores its own state in a private register set
  - Enables very efficient context switching between warps
- SIMT warps that block on I/O are not actively computing
  - They are swapped out for other warps, with no worrying about losing state
- Keeping blocked SIMT groups scheduled on an SM would waste cores

Lecture 23 Topics for Thread Level Parallelism (TLP)

- Parallelism (centered around … )
  - Instruction Level Parallelism
  - Data Level Parallelism
  - Thread Level Parallelism
- TLP Introduction
  - 5.1
- SMP and Snooping Cache Coherence Protocol
  - 5.2
- Distributed Shared-Memory and Directory-Based Coherence
  - 5.4
- Synchronization Basics and Memory Consistency Model
  - 5.5, 5.6
- Others

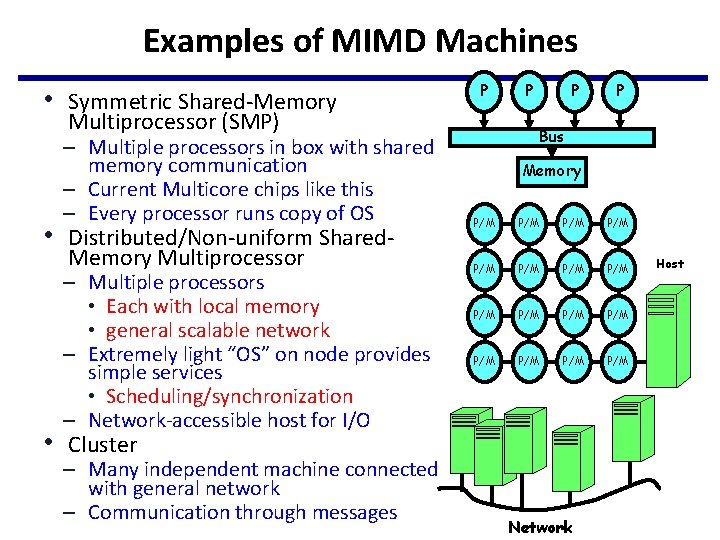

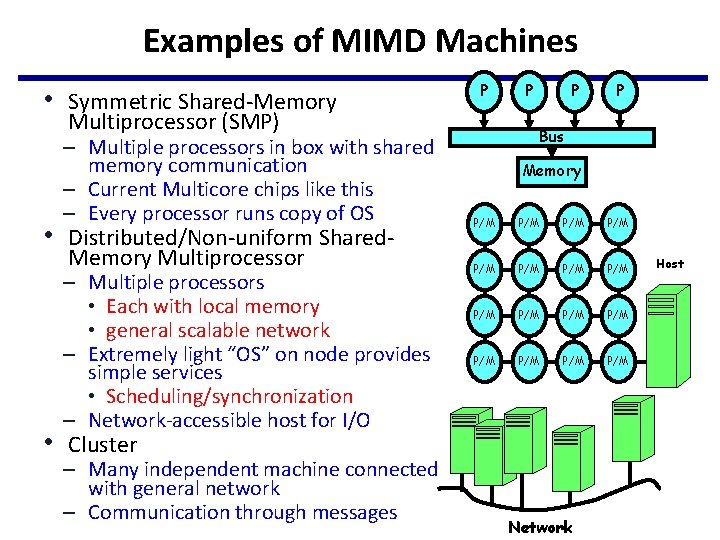

Examples of MIMD Machines

- Symmetric Shared-Memory Multiprocessor (SMP)
  - Multiple processors in box with shared memory communication
  - Current multicore chips are like this
  - Every processor runs copy of OS
- Distributed/Non-uniform Shared-Memory Multiprocessor
  - Multiple processors
    - Each with local memory
    - General scalable network
  - Extremely light “OS” on node provides simple services
    - Scheduling/synchronization
  - Network-accessible host for I/O
- Cluster
  - Many independent machines connected with general network
  - Communication through messages

(Figures: SMP with processors P on a shared bus to memory; distributed shared memory with P/M nodes and a host on a network; cluster of P nodes on a network.)

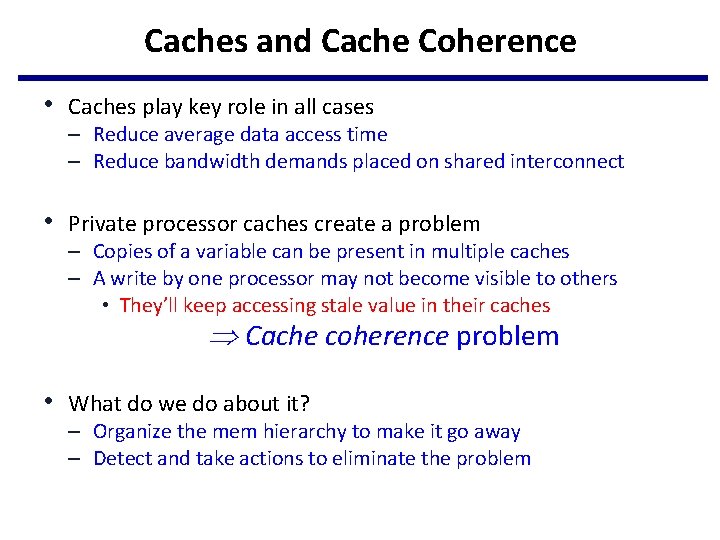

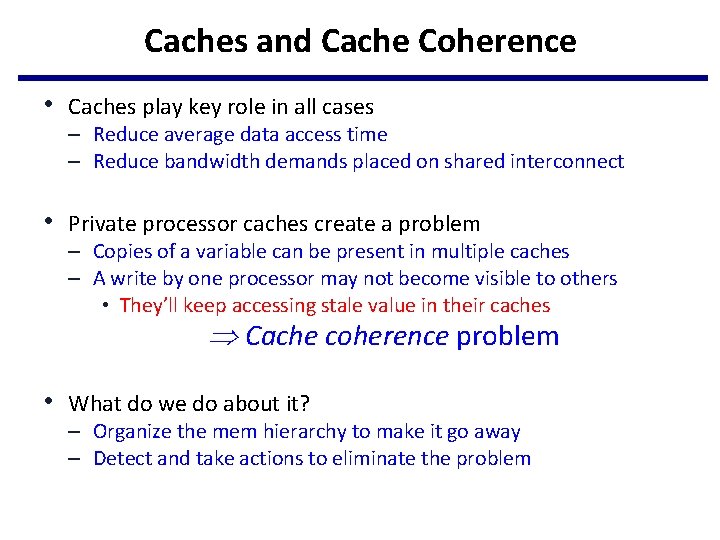

Caches and Cache Coherence

- Caches play key role in all cases
  - Reduce average data access time
  - Reduce bandwidth demands placed on shared interconnect
- Private processor caches create a problem
  - Copies of a variable can be present in multiple caches
  - A write by one processor may not become visible to others
    - They’ll keep accessing stale value in their caches
  - This is the cache coherence problem
- What do we do about it?
  - Organize the mem hierarchy to make it go away
  - Detect and take actions to eliminate the problem

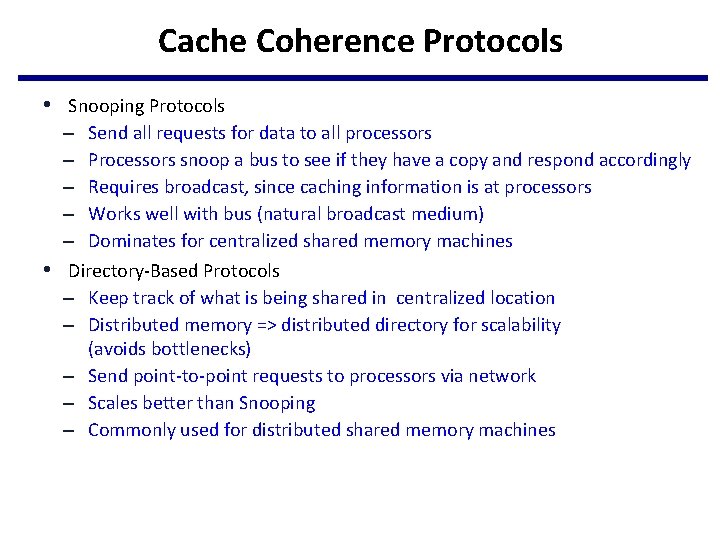

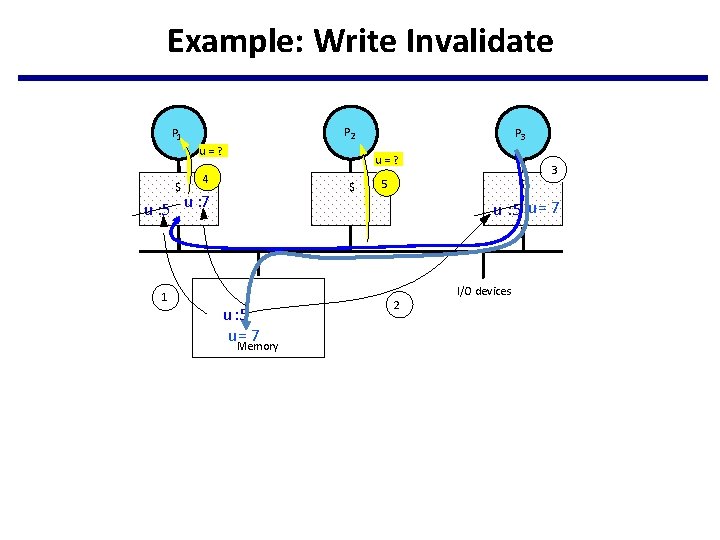

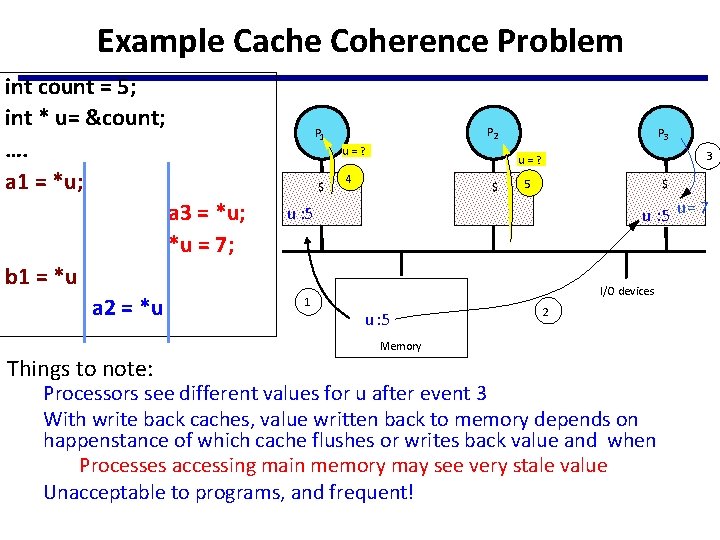

Example Cache Coherence Problem

    int count = 5;
    int *u = &count;
    ….

Accesses shown in the figure: a1 = *u; a2 = *u; a3 = *u; *u = 7; b1 = *u;

(Figure: processors P1, P2 and P3, each with a private cache ($), connected to memory and I/O devices. Memory holds u:5. Events are numbered 1 to 5: two caches load u:5, P3 then writes u = 7 (event 3), and the later reads on P1 and P2 (events 4 and 5) are marked u = ?.)

Things to note:

- Processors see different values for u after event 3
- With write-back caches, the value written back to memory depends on happenstance of which cache flushes or writes back its value and when
- Processes accessing main memory may see very stale value
- Unacceptable to programs, and frequent!

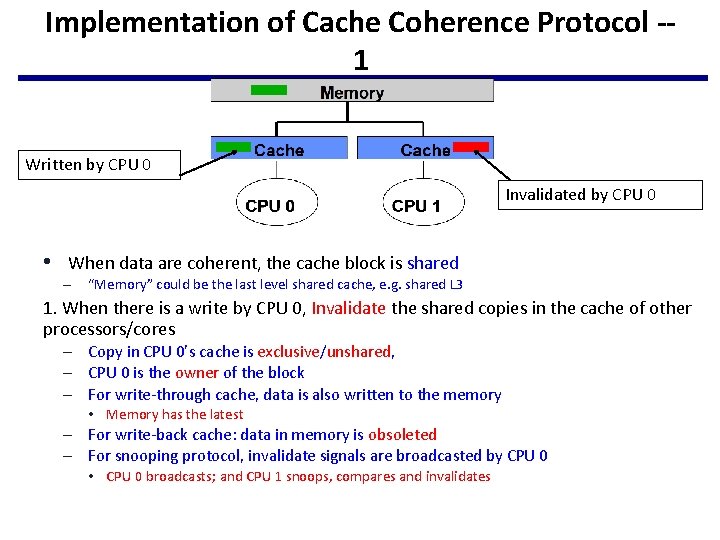

Cache Coherence Protocols

- Snooping Protocols
  - Send all requests for data to all processors
  - Processors snoop a bus to see if they have a copy and respond accordingly
  - Requires broadcast, since caching information is at processors
  - Works well with bus (natural broadcast medium)
  - Dominates for centralized shared memory machines
- Directory-Based Protocols
  - Keep track of what is being shared in centralized location
  - Distributed memory => distributed directory for scalability (avoids bottlenecks)
  - Send point-to-point requests to processors via network
  - Scales better than Snooping
  - Commonly used for distributed shared memory machines

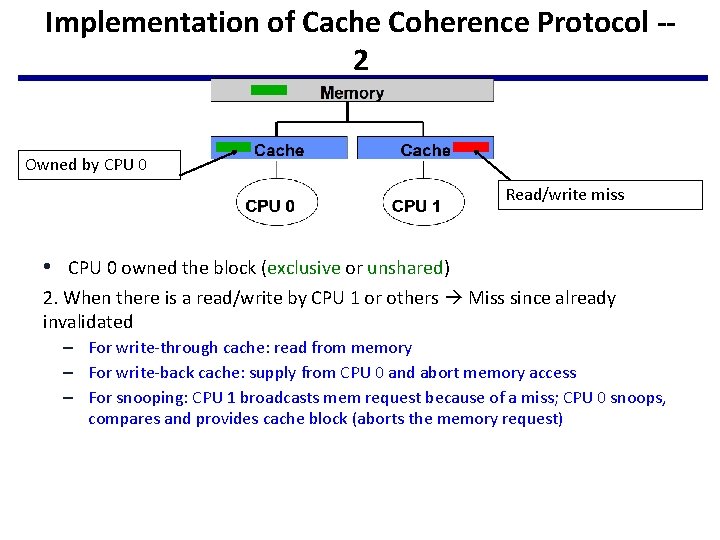

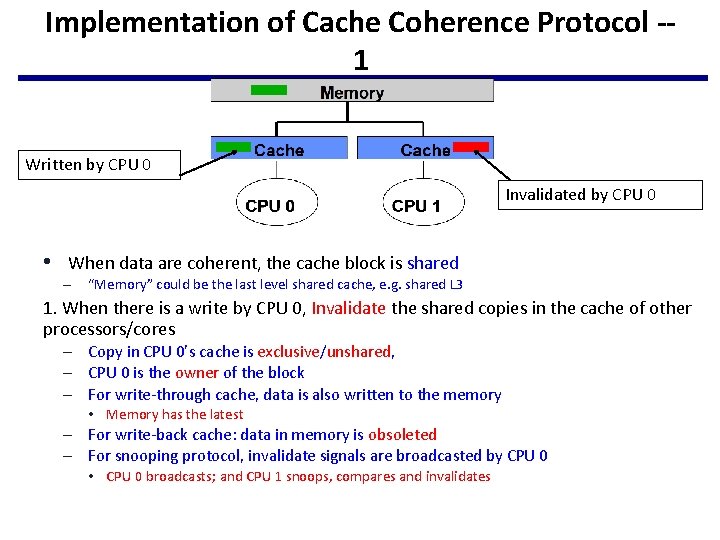

Implementation of Cache Coherence Protocol -1

(Figure labels: written by CPU 0; invalidated by CPU 0.)

- When data are coherent, the cache block is shared
  - “Memory” could be the last level shared cache, e.g. shared L3

1. When there is a write by CPU 0, invalidate the shared copies in the caches of other processors/cores
   - Copy in CPU 0’s cache is exclusive/unshared
   - CPU 0 is the owner of the block
   - For write-through cache, data is also written to the memory
     - Memory has the latest
   - For write-back cache: data in memory is obsolete
   - For snooping protocol, invalidate signals are broadcast by CPU 0
     - CPU 0 broadcasts; CPU 1 snoops, compares and invalidates

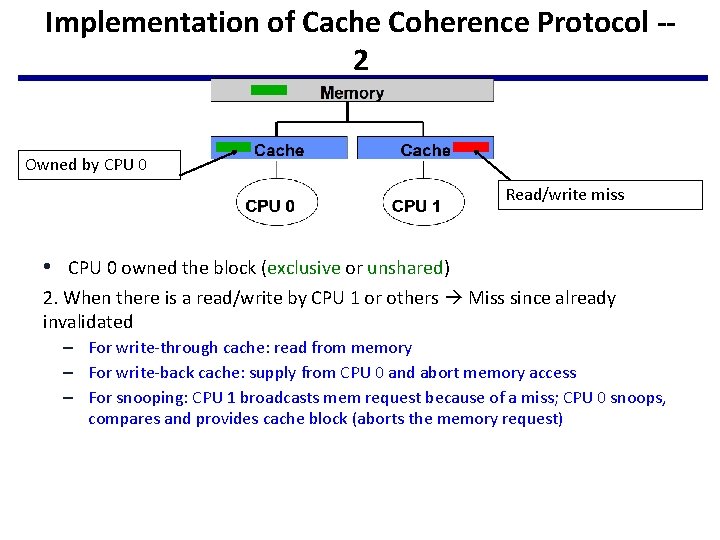

Implementation of Cache Coherence Protocol -2

(Figure labels: owned by CPU 0; read/write miss.)

- CPU 0 owns the block (exclusive or unshared)

2. When there is a read/write by CPU 1 or others, it is a miss, since the copy was already invalidated
   - For write-through cache: read from memory
   - For write-back cache: supply from CPU 0 and abort memory access
   - For snooping: CPU 1 broadcasts mem request because of a miss; CPU 0 snoops, compares and provides cache block (aborts the memory request)

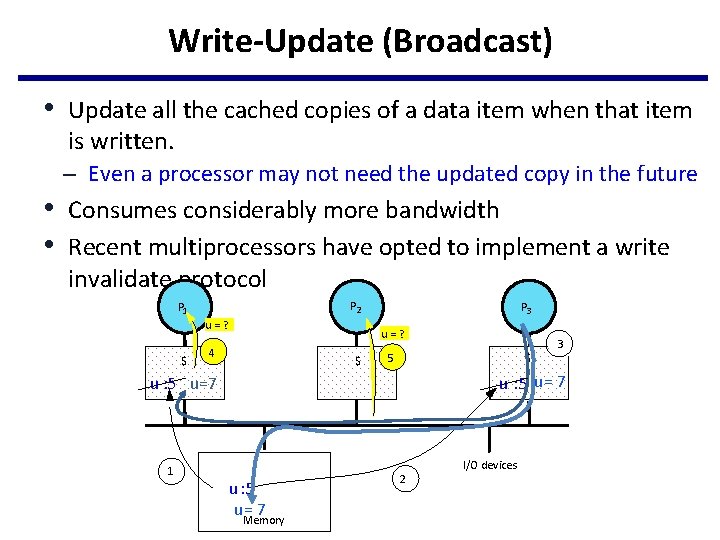

Basic Snoopy Protocols

- Write Invalidate Protocol:
  - Multiple readers, single writer
  - Write to shared data: an invalidate is sent to all caches, which snoop and invalidate any copies
  - Read miss:
    - Write-through: memory is always up-to-date
    - Write-back: snoop in caches to find most recent copy
- Write Update Protocol (typically write through):
  - Write to shared data: broadcast on bus, processors snoop, and update any copies
  - Read miss: memory is always up-to-date
- Write serialization: bus serializes requests!
  - Bus is single point of arbitration
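To make the write-invalidate behavior concrete, here is a toy C model of a single shared word `u` cached by three processors. Everything in it (the struct, the function names, the write-through simplification) is an illustrative assumption, not a description of a real protocol implementation: a write invalidates all other copies and updates memory, and a read miss refills from memory.

```c
#include <assert.h>
#include <stdbool.h>

#define NCPU 3

/* Toy write-invalidate, write-through model for one shared word u. */
struct bus_system {
    int  memory;        /* value of u in memory */
    int  cache[NCPU];   /* each processor's cached copy of u */
    bool valid[NCPU];   /* whether that copy is valid */
};

/* Read u on one CPU; a miss refills from memory, which is always
 * up-to-date under write-through. */
int cpu_read(struct bus_system *s, int cpu)
{
    assert(cpu >= 0 && cpu < NCPU);
    if (!s->valid[cpu]) {
        s->cache[cpu] = s->memory;
        s->valid[cpu] = true;
    }
    return s->cache[cpu];
}

/* Write u on one CPU: broadcast an invalidate, then update the local
 * copy and memory. */
void cpu_write(struct bus_system *s, int cpu, int value)
{
    assert(cpu >= 0 && cpu < NCPU);
    for (int other = 0; other < NCPU; other++)   /* other caches snoop and invalidate */
        if (other != cpu)
            s->valid[other] = false;
    s->cache[cpu] = value;
    s->valid[cpu] = true;
    s->memory = value;                           /* write-through */
}
```

Replaying the slides' scenario with index 0 standing for P1 and index 2 for P3: after two reads of `u` (value 5) and a write of 7 by P3, P1's stale copy is invalid, so its next read misses and returns 7 rather than 5.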

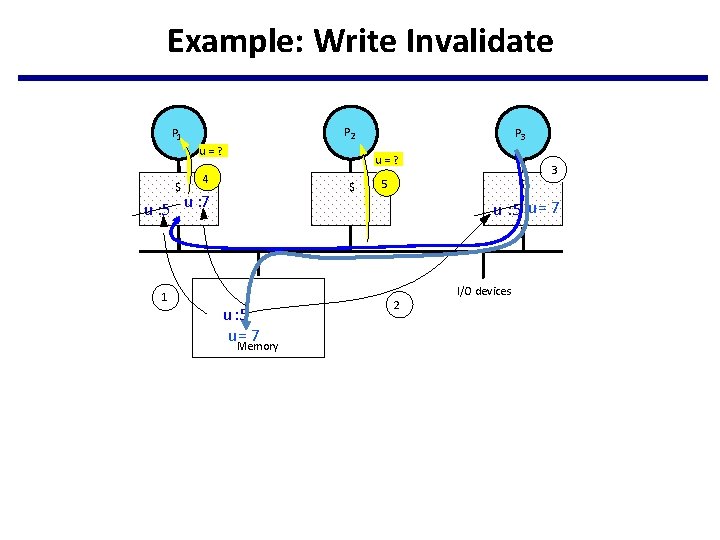

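Example: Write Invalidate (figure: processors P1, P2 and P3 with private caches ($), memory and I/O devices; after P3 writes u = 7 at event 3, the other cached copies of u:5 are invalidated, memory is updated to u:7, and the later reads at events 4 and 5 obtain u = 7)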

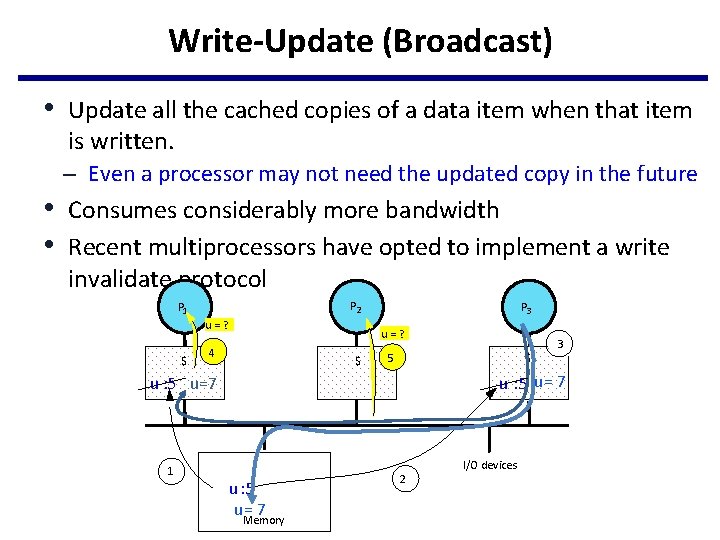

Write-Update (Broadcast)

- Update all the cached copies of a data item when that item is written.
  - Even if a processor may not need the updated copy in the future
- Consumes considerably more bandwidth
- Recent multiprocessors have opted to implement a write invalidate protocol

(Figure: processors P1, P2 and P3 with private caches ($), memory and I/O devices; when P3 writes u = 7 at event 3, the cached copies of u:5 and memory are all updated to u = 7.)