Engineering a Safer World
Nancy Leveson, MIT




Outline • Accident Causation in Complex Systems: STAMP • New Analysis Methods – Hazard Analysis – Accident Analysis – Security Analysis • Does it Work? Evaluations

Why We Need a New Approach to Safety “Without changing our patterns of thought, we will not be able to solve the problems we created with our current patterns of thought.” Albert Einstein • Traditional safety engineering approaches were developed for relatively simple electro-mechanical systems • The nature of accidents in complex, software-intensive systems is changing • The role of humans in systems is changing • We need new ways to deal with safety in complex systems

Accident Causality Models • Underlie all our efforts to engineer for safety • Explain why accidents occur • Determine the way we prevent and investigate accidents • You may not be aware you are using one, but you are • Impose patterns on accidents “All models are wrong, some models are useful” George Box

Traditional Ways to Cope with Complexity 1. Analytic Reduction 2. Statistics

Analytic Reduction • Divide system into distinct parts for analysis: – Physical aspects → separate physical components – Behavior → events over time • Examine parts separately • Assumes such separation does not distort phenomenon – Each component or subsystem operates independently – Analysis results not distorted when components are considered separately – Components act the same when examined singly as when playing their part in the whole – Events not subject to feedback loops and non-linear interactions

Chain-of-Events Accident Causality Model • Explains accidents in terms of multiple events, sequenced as a forward chain over time. – Simple, direct relationship between events in chain • Events almost always involve component failure, human error, or energy-related event • Forms the basis for most safety engineering and reliability engineering analysis (e.g., FTA, PRA, FMECA, Event Trees, etc.) and design (e.g., redundancy, overdesign, safety margins, …).

Domino “Chain of events” Model. Event-based example, DC-10: Cargo door fails → causes → Floor collapses → causes → Hydraulics fail → causes → Airplane crashes. © Copyright John Thomas 2013

Chain-of-events example

Accident with No Component Failures • Mars Polar Lander – Have to slow down spacecraft to land safely – Use Martian gravity, parachute, descent engines (controlled by software) – Software knows the spacecraft has landed from sensitive sensors on the landing legs, and cuts off the engines when it determines it has landed. – But the sensors generate “noise” (false signals) when the parachute opens – Software not supposed to be operating at that time, but software engineers decided to start it early to even out the load on the processor – Software thought spacecraft had landed and shut down descent engines
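The Mars Polar Lander loss can be sketched as a control loop whose process model goes wrong even though every component behaves as designed. This is a hypothetical illustration: the altitudes, the 10 m step, and the name `simulate_descent` are assumptions rather than mission data, and the spurious signal simply marks the point in the descent where the slide says the sensors generate noise.

```python
NOISE_ALTITUDE_M = 1500   # hypothetical altitude of the spurious leg-sensor signal
MONITOR_ALTITUDE_M = 40   # hypothetical altitude where monitoring was meant to start


def simulate_descent(start_monitoring_early: bool) -> str:
    """Simplified powered descent; returns where and why the engines were cut off."""
    altitude = 2000
    believes_landed = False  # the software's process model of the spacecraft state
    while altitude > 0:
        # The sensor behaves exactly as built and emits a transient at this
        # point in the descent. No component has failed.
        spurious_signal = altitude == NOISE_ALTITUDE_M
        monitoring = start_monitoring_early or altitude <= MONITOR_ALTITUDE_M
        if monitoring and spurious_signal:
            believes_landed = True  # process model now disagrees with reality
        if believes_landed:
            return f"engines cut at {altitude} m: crash"
        altitude -= 10
    return "engines cut at touchdown: safe landing"


print(simulate_descent(start_monitoring_early=False))
print(simulate_descent(start_monitoring_early=True))
```

Only the software's start time differs between the two runs; the hazard comes from the interaction between sensor behavior and the control algorithm, not from a failed part.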

Types of Accidents • Component Failure Accidents – Single or multiple component failures – Usually assume random failure • Component Interaction Accidents – Arise in interactions among components – Related to interactive and dynamic complexity – Behavior can no longer be planned, understood, anticipated, or guarded against – Exacerbated by introduction of computers and software

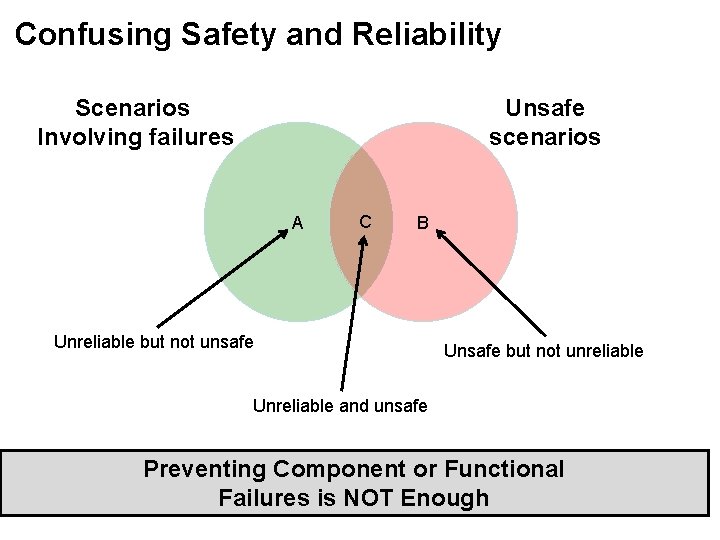

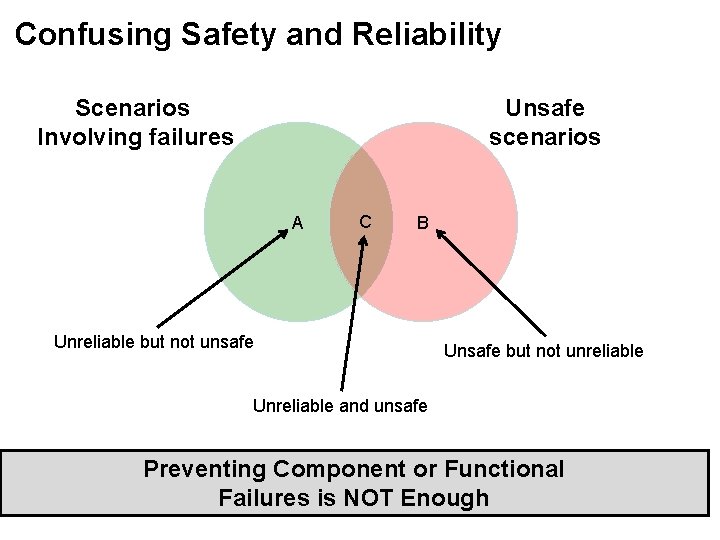

Confusing Safety and Reliability [Figure: two overlapping sets, “scenarios involving failures” and “unsafe scenarios”, forming three regions (labeled A, B, C in the original): unreliable but not unsafe, unsafe but not unreliable, and unreliable and unsafe.] Preventing Component or Functional Failures is NOT Enough
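The three regions of the figure are ordinary set operations. A minimal sketch with illustrative labels: the Challenger and Mars Polar Lander entries come from examples in this talk, while "standby display fails" is a hypothetical scenario.

```python
# Illustrative scenario labels; "standby display fails" is hypothetical.
involves_failure = {"Challenger O-ring fails to seal", "standby display fails"}
unsafe = {"Challenger O-ring fails to seal", "Mars Polar Lander cuts engines early"}

unreliable_not_unsafe = involves_failure - unsafe
# These hazards remain even if every failure scenario is eliminated.
unsafe_not_unreliable = unsafe - involves_failure
unreliable_and_unsafe = involves_failure & unsafe

print(sorted(unreliable_not_unsafe))
print(sorted(unsafe_not_unreliable))
print(sorted(unreliable_and_unsafe))
```

The non-empty `unsafe_not_unreliable` set is the point of the slide: driving failures to zero still leaves that region untouched.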

Analytic Reduction does not Handle • Component interaction accidents • Systemic factors (affecting all components and barriers) • Software • Human behavior (in a non-superficial way) • System design errors • Indirect or non-linear interactions and complexity • Migration of systems toward greater risk over time

Summary • The world of engineering is changing. • If safety engineering does not change with it, it will become more and more irrelevant. • Trying to shoehorn new technology and new levels of complexity into old methods does not work

Systems Theory • Developed for systems that are – Too complex for complete analysis • Separation into (interacting) subsystems distorts the results • The most important properties are emergent – Too organized for statistics • Too much underlying structure that distorts the statistics • Developed for biology (von Bertalanffy) and engineering (Norbert Wiener) • Basis of system engineering and “System Safety”

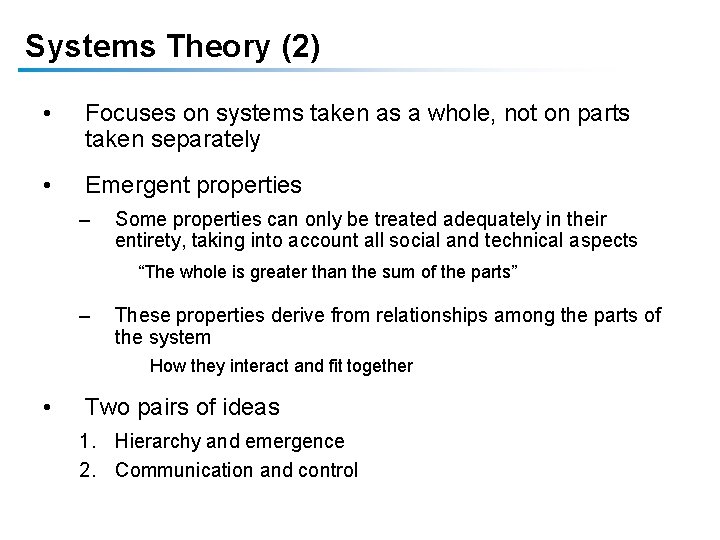

Systems Theory (2) • Focuses on systems taken as a whole, not on parts taken separately • Emergent properties – Some properties can only be treated adequately in their entirety, taking into account all social and technical aspects: “The whole is greater than the sum of the parts” – These properties derive from relationships among the parts of the system: how they interact and fit together • Two pairs of ideas: 1. Hierarchy and emergence 2. Communication and control

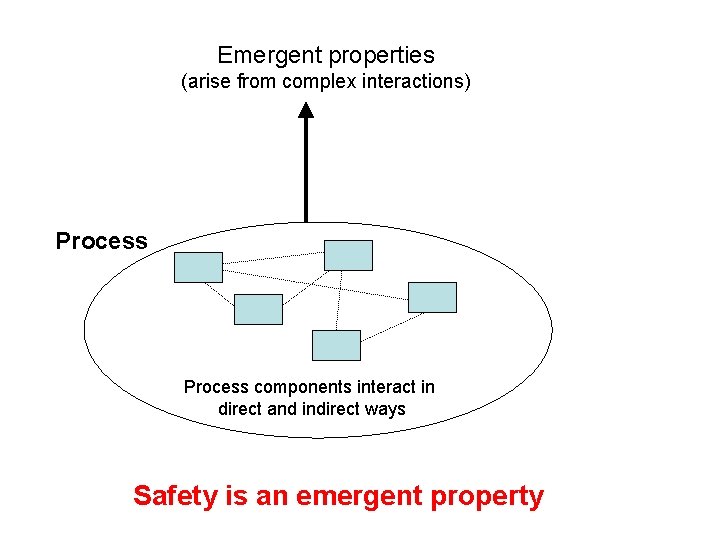

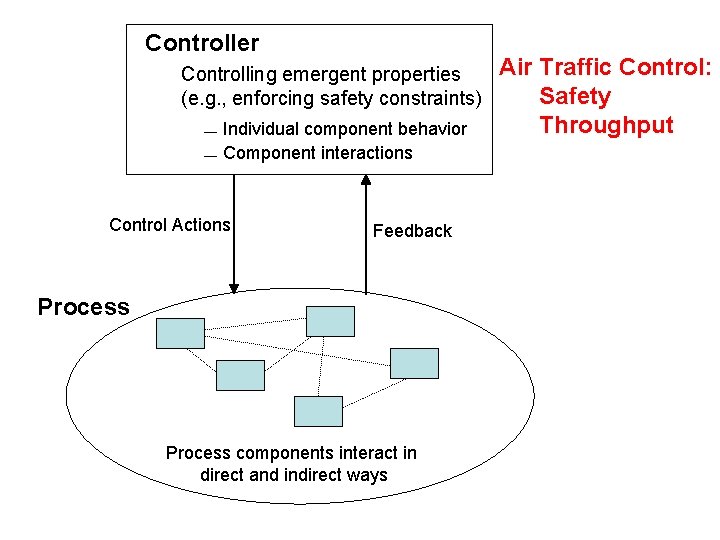

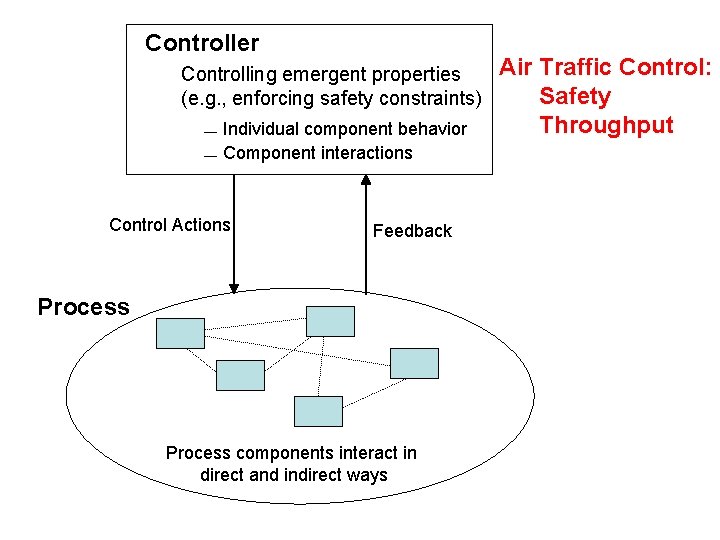

[Figure: process components interact in direct and indirect ways; emergent properties arise from these complex interactions.] Safety is an emergent property

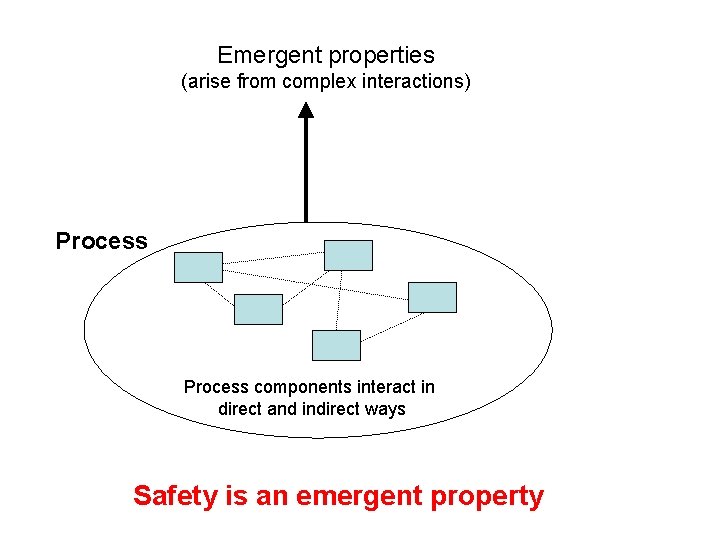

[Figure: a controller controls emergent properties (e.g., by enforcing safety constraints) through control actions and feedback, acting on individual component behavior and component interactions; process components interact in direct and indirect ways.]

Air traffic control example: the emergent properties being controlled are safety and throughput.

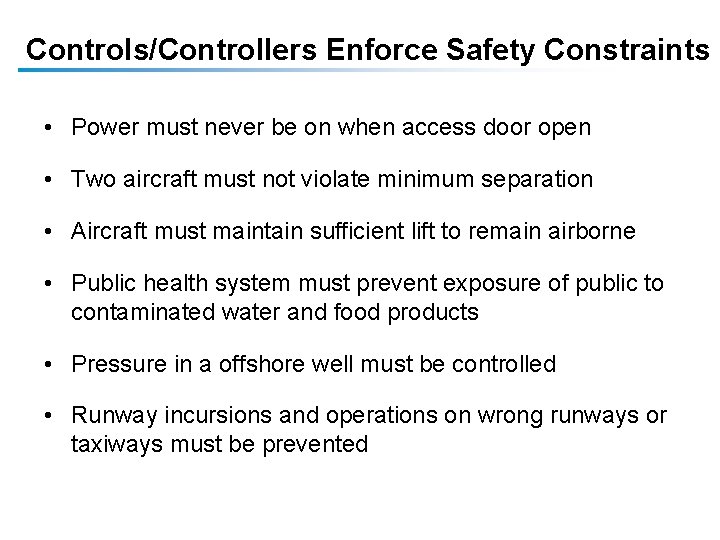

Controls/Controllers Enforce Safety Constraints • Power must never be on when access door open • Two aircraft must not violate minimum separation • Aircraft must maintain sufficient lift to remain airborne • Public health system must prevent exposure of public to contaminated water and food products • Pressure in an offshore well must be controlled • Runway incursions and operations on wrong runways or taxiways must be prevented
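The first constraint in this list can be written as a predicate over system state and enforced by a controller instead of by preventing part failures. A minimal sketch, assuming a hypothetical interlock with four command strings; `MachineState`, `constraint_holds`, and `interlock` are illustrative names.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class MachineState:
    power_on: bool
    door_open: bool


def constraint_holds(s: MachineState) -> bool:
    # Safety constraint: power must never be on when the access door is open.
    return not (s.power_on and s.door_open)


def interlock(s: MachineState, request: str) -> MachineState:
    """Hypothetical interlock controller: applies a request while enforcing the constraint."""
    if request == "open_door":
        s = replace(s, door_open=True, power_on=False)  # cut power as the door opens
    elif request == "close_door":
        s = replace(s, door_open=False)
    elif request == "power_on":
        if not s.door_open:  # refuse a command that would violate the constraint
            s = replace(s, power_on=True)
    elif request == "power_off":
        s = replace(s, power_on=False)
    return s


state = MachineState(power_on=False, door_open=False)
for command in ["power_on", "open_door", "power_on", "close_door", "power_on"]:
    state = interlock(state, command)
    print(command, state, constraint_holds(state))
```

The constraint holds after every command because the controller rejects or compensates for unsafe requests, whatever the operator asks for.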

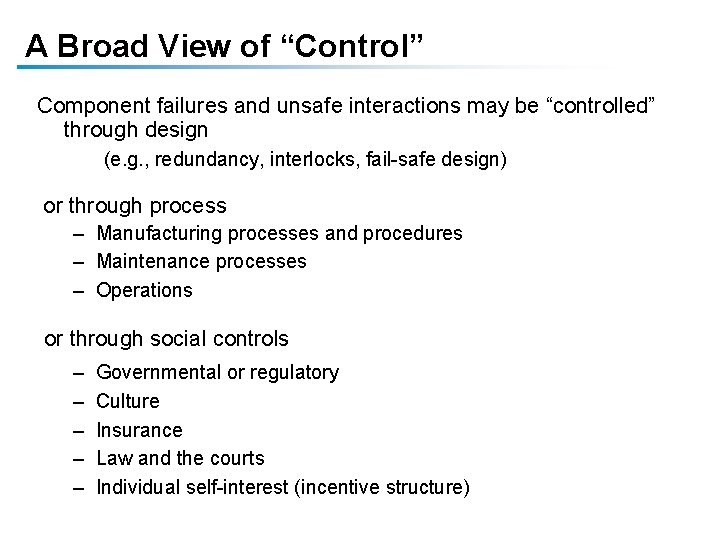

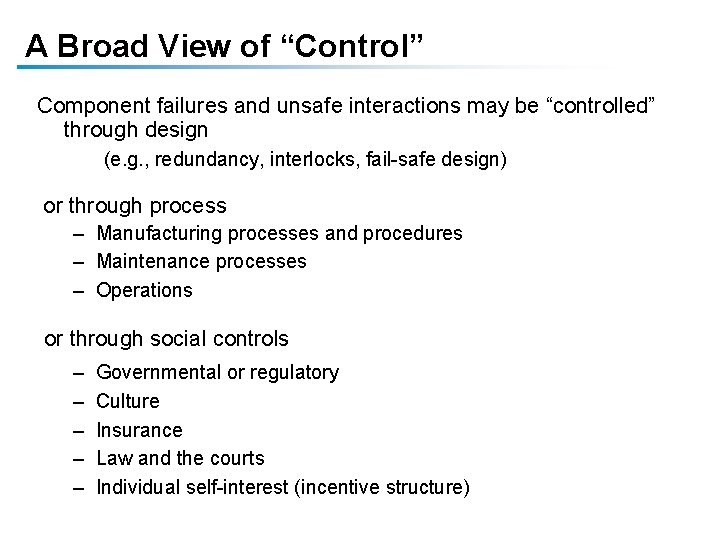

A Broad View of “Control” Component failures and unsafe interactions may be “controlled” through design (e.g., redundancy, interlocks, fail-safe design) or through process – Manufacturing processes and procedures – Maintenance processes – Operations or through social controls – Governmental or regulatory – Culture – Insurance – Law and the courts – Individual self-interest (incentive structure)

There may be multiple controllers, processes, and levels of control. [Figure: two controllers, each controlling its own physical process (Physical Process 1 and Physical Process 2), with various types of communication between them.] Each controller enforces specific constraints, which together enforce the system-level constraints (emergent properties).

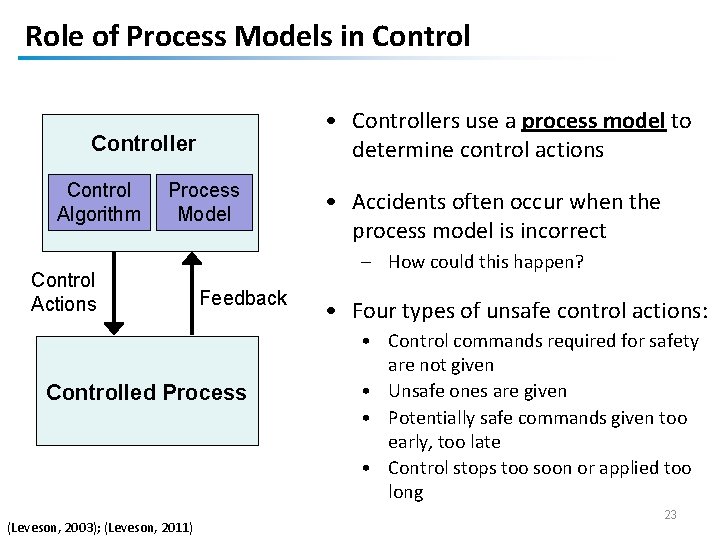

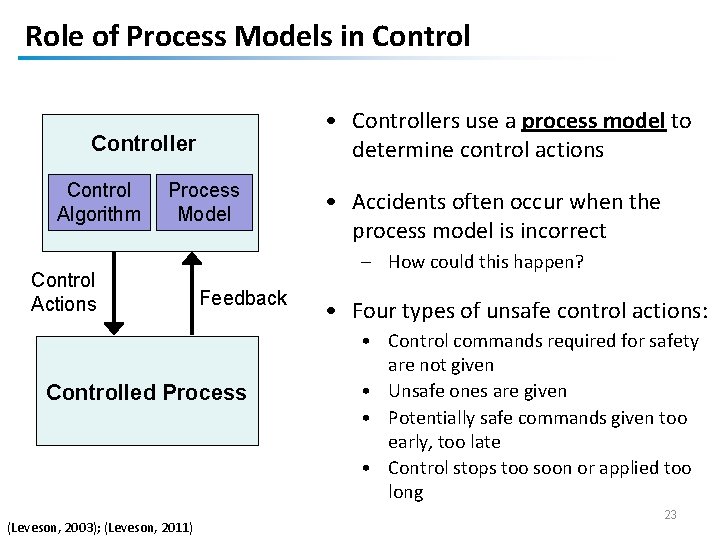

Role of Process Models in Control [Figure: a controller, containing a control algorithm and a process model, issues control actions to the controlled process and receives feedback from it (Leveson, 2003; Leveson, 2011).] • Controllers use a process model to determine control actions • Accidents often occur when the process model is incorrect – How could this happen? • Four types of unsafe control actions: – Control commands required for safety are not given – Unsafe ones are given – Potentially safe commands given too early or too late – Control stops too soon or applied too long
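The four types of unsafe control action can be read as a decision procedure over one control action in one context. A minimal sketch, assuming the context is summarized by two flags plus a safe start window and a safe duration range; `Context`, `Issued`, and `classify` are hypothetical names, and real STPA contexts are richer than this.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class UCAType(Enum):
    NOT_PROVIDED = "control command required for safety is not given"
    UNSAFE_PROVIDED = "unsafe command is given"
    WRONG_TIMING = "potentially safe command given too early or too late"
    WRONG_DURATION = "control stopped too soon or applied too long"


@dataclass(frozen=True)
class Context:
    required: bool                      # not providing the action is hazardous here
    hazardous: bool = False             # providing the action at all is hazardous here
    earliest: float = 0.0               # safe window for starting the action
    latest: float = float("inf")
    min_duration: float = 0.0           # safe range for how long it is applied
    max_duration: float = float("inf")


@dataclass(frozen=True)
class Issued:
    start: float
    duration: float


def classify(ctx: Context, issued: Optional[Issued]) -> Optional[UCAType]:
    """Return the type of unsafe control action, or None if the behavior is safe."""
    if issued is None:
        return UCAType.NOT_PROVIDED if ctx.required else None
    if ctx.hazardous:
        return UCAType.UNSAFE_PROVIDED
    if not ctx.earliest <= issued.start <= ctx.latest:
        return UCAType.WRONG_TIMING
    if not ctx.min_duration <= issued.duration <= ctx.max_duration:
        return UCAType.WRONG_DURATION
    return None


ctx = Context(required=True, earliest=10, latest=20, min_duration=5, max_duration=8)
print(classify(ctx, Issued(start=25, duration=6)))
```

The ordering of checks is a design choice: a command that should never be given is reported as unsafe before its timing or duration is examined.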

Example Safety Control Structure

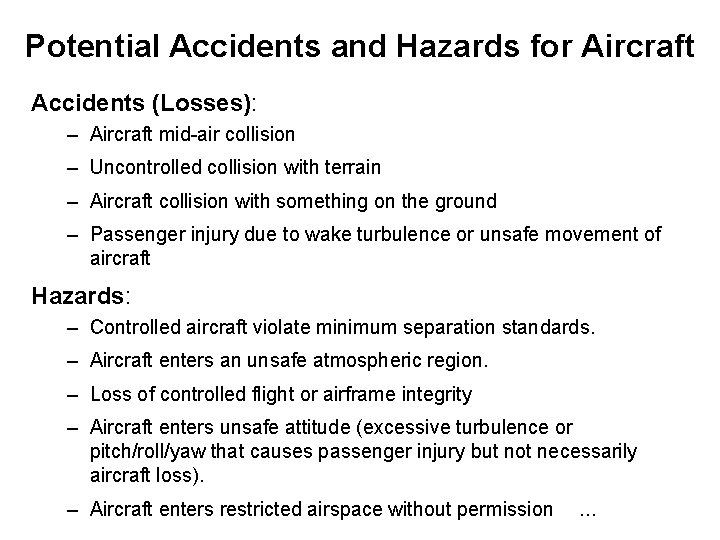

Potential Accidents and Hazards for Aircraft Accidents (Losses): – Aircraft mid-air collision – Uncontrolled collision with terrain – Aircraft collision with something on the ground – Passenger injury due to wake turbulence or unsafe movement of aircraft Hazards: – Controlled aircraft violate minimum separation standards. – Aircraft enters an unsafe atmospheric region. – Loss of controlled flight or airframe integrity – Aircraft enters unsafe attitude (excessive turbulence or pitch/roll/yaw that causes passenger injury but not necessarily aircraft loss). – Aircraft enters restricted airspace without permission …

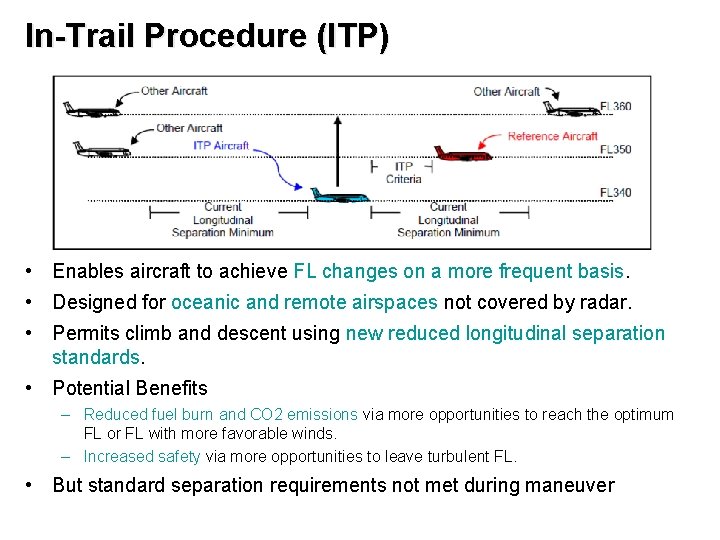

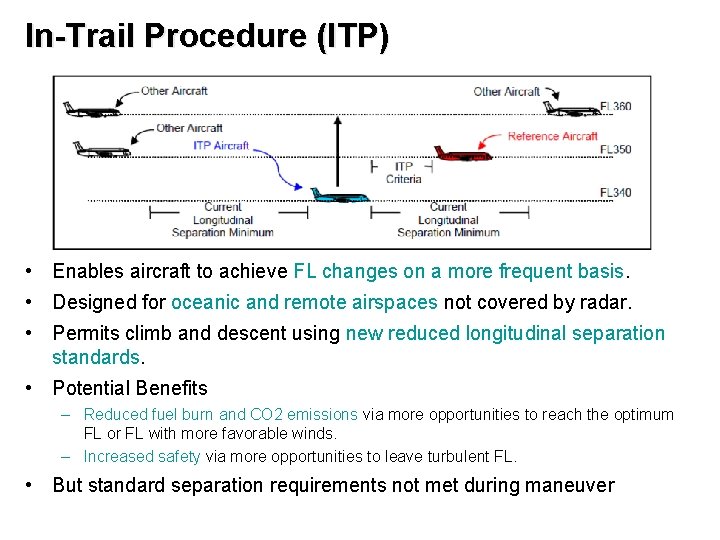

In-Trail Procedure (ITP) • Enables aircraft to achieve flight level (FL) changes on a more frequent basis. • Designed for oceanic and remote airspaces not covered by radar. • Permits climb and descent using new reduced longitudinal separation standards. • Potential Benefits – Reduced fuel burn and CO2 emissions via more opportunities to reach the optimum FL or FL with more favorable winds. – Increased safety via more opportunities to leave turbulent FL. • But standard separation requirements are not met during the maneuver

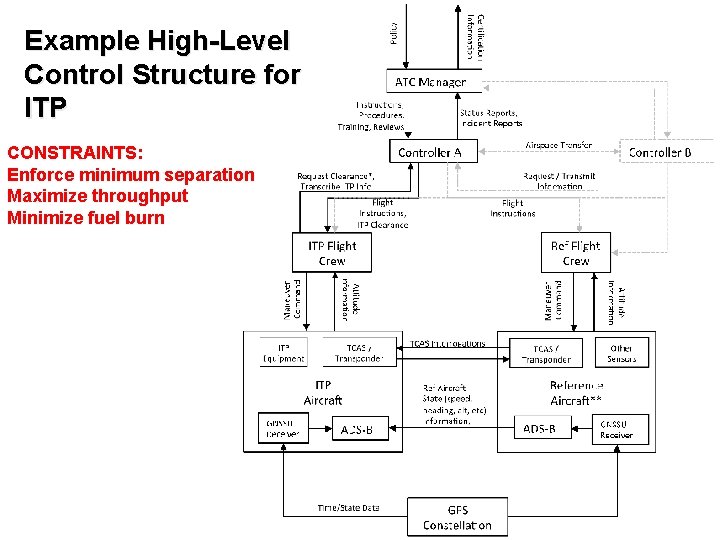

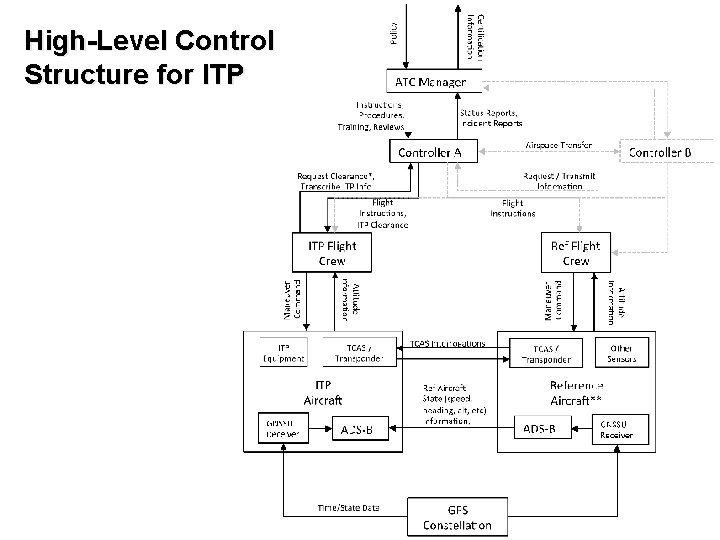

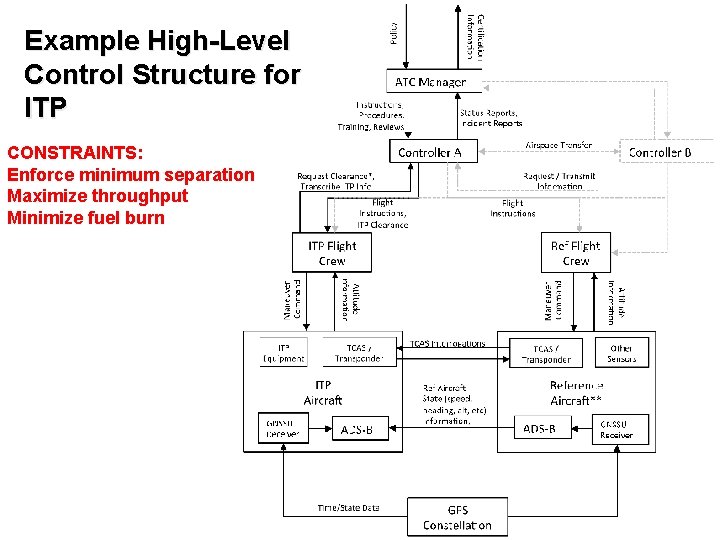

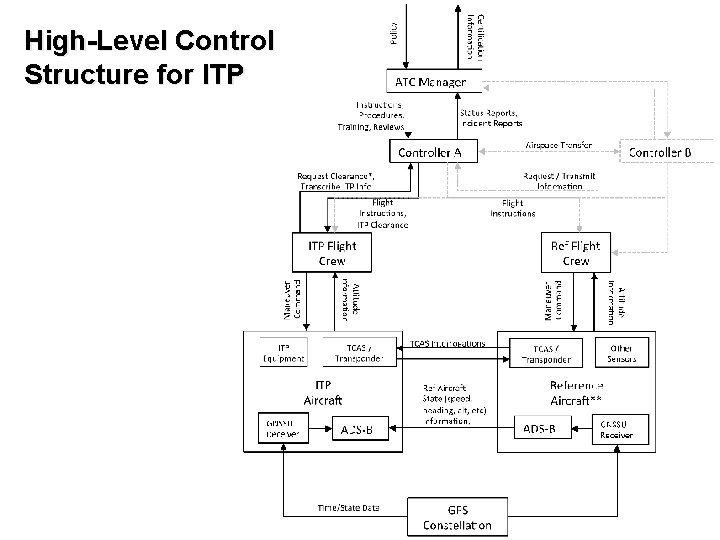

Example High-Level Control Structure for ITP. Constraints: enforce minimum separation; maximize throughput; minimize fuel burn

STAMP: System-Theoretic Accident Model and Processes A new accident causality model based on Systems Theory (vs. Reliability Theory)

STAMP: Safety as a Control Problem • Safety is an emergent property that arises when system components interact with each other within a larger environment – A set of constraints related to behavior of system components (physical, human, social) enforces that property – Accidents occur when interactions violate those constraints (a lack of appropriate constraints on the interactions) • Goal is to control the behavior of the components and systems as a whole to ensure safety constraints are enforced in the operating system.

Safety as a Dynamic Control Problem • Examples – O-ring did not control propellant gas release by sealing gap in field joint of Challenger Space Shuttle – Software did not adequately control descent speed of Mars Polar Lander – At Texas City, did not control the level of liquids in the ISOM tower – In Deepwater Horizon, did not control the pressure in the well – Financial system did not adequately control the use of financial instruments

Safety as a Dynamic Control Problem • Events are the result of inadequate control – Result from lack of enforcement of safety constraints in system design and operations • Systems are dynamic processes that are continually changing and adapting to achieve their goals • A change in emphasis: from “prevent failures” to “enforce safety constraints on system behavior”

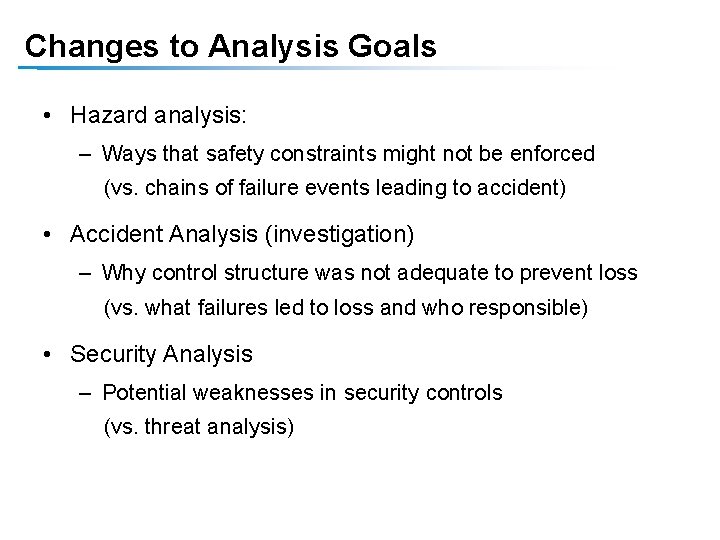

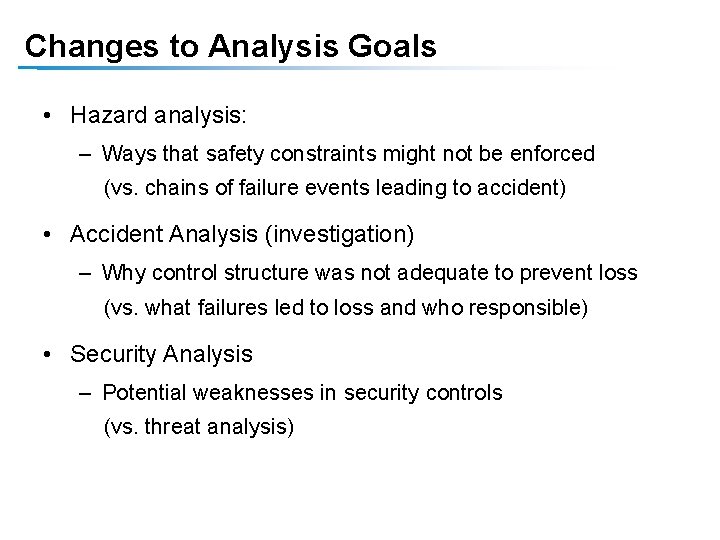

Changes to Analysis Goals • Hazard analysis: – Ways that safety constraints might not be enforced (vs. chains of failure events leading to accident) • Accident Analysis (investigation) – Why control structure was not adequate to prevent loss (vs. what failures led to loss and who responsible) • Security Analysis – Potential weaknesses in security controls (vs. threat analysis)

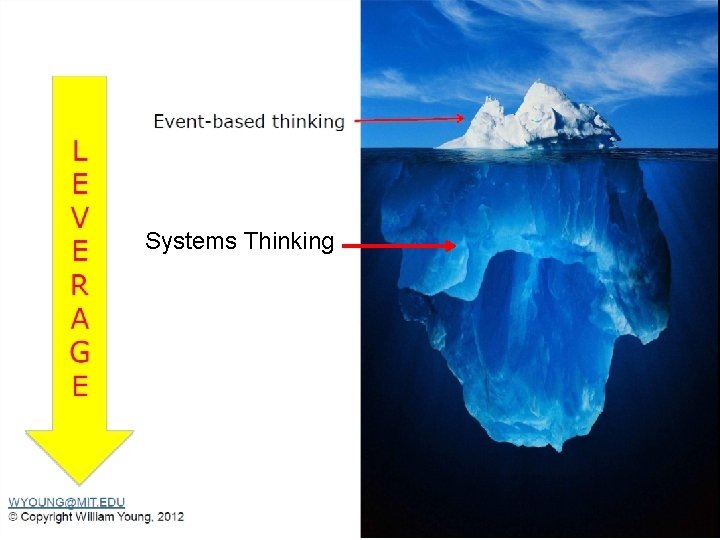

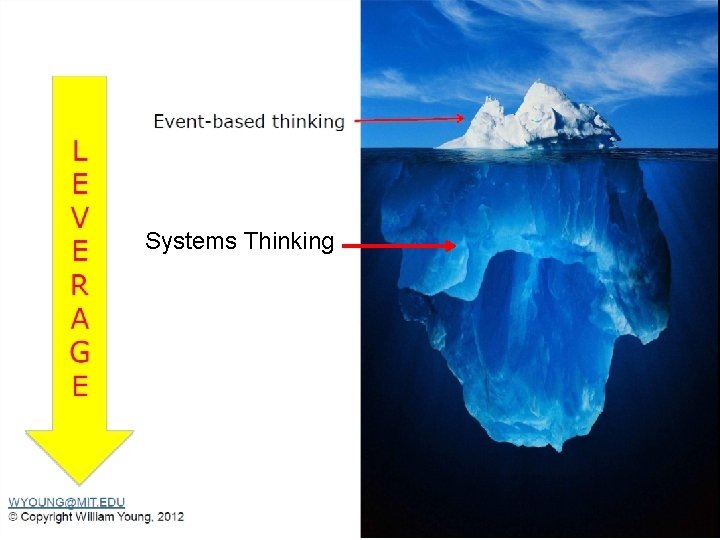

Systems Thinking

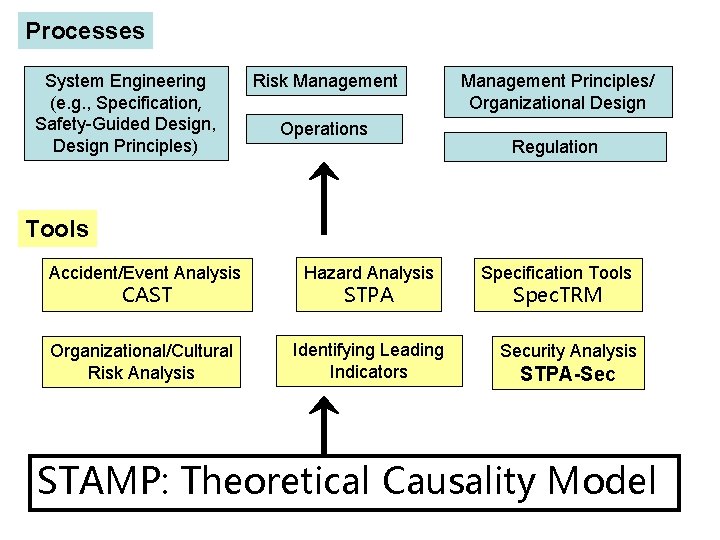

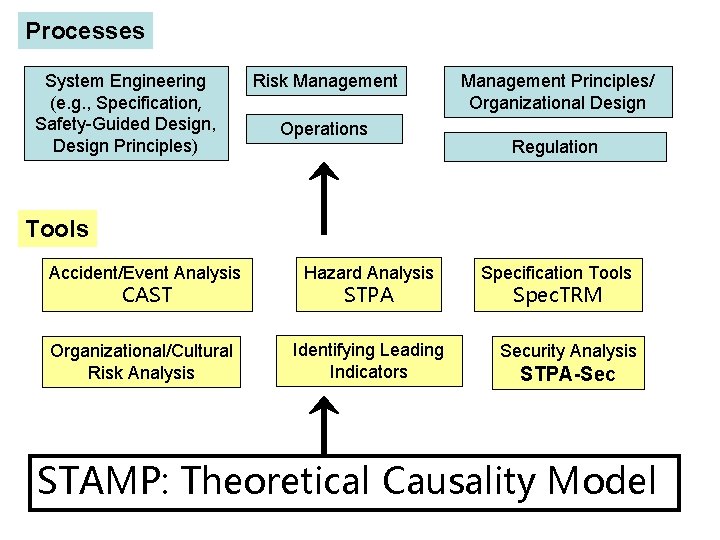

[Figure: STAMP: Theoretical Causality Model as the foundation. Tools built on it: accident/event analysis (CAST), hazard analysis (STPA), security analysis (STPA-Sec), specification tools (SpecTRM), organizational/cultural risk analysis, and identifying leading indicators. Processes they support: system engineering (e.g., specification, safety-guided design, design principles), risk management, operations, management principles/organizational design, and regulation.]

Outline • Accident Causation in Complex Systems: STAMP • New Analysis Methods – Hazard Analysis – Accident Analysis – Security Analysis • Does it Work? Evaluations

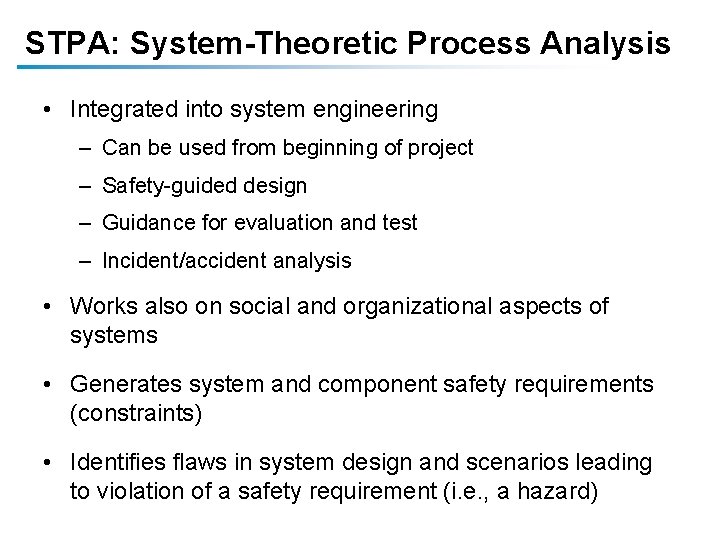

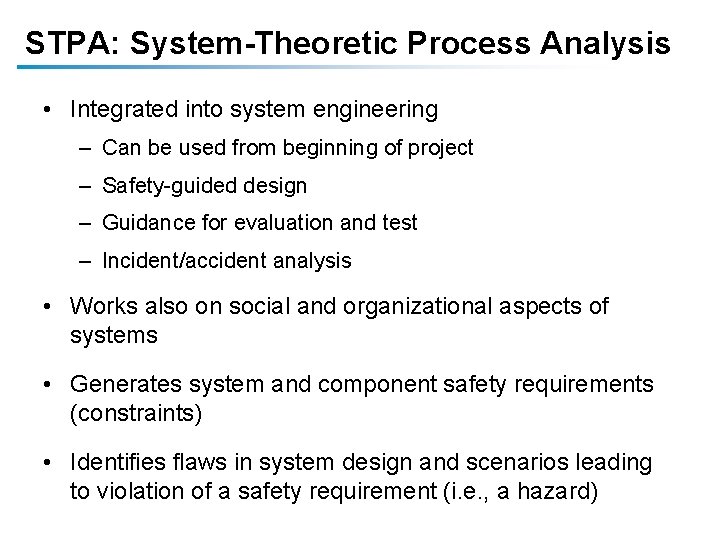

STPA: System-Theoretic Process Analysis • Integrated into system engineering – Can be used from beginning of project – Safety-guided design – Guidance for evaluation and test – Incident/accident analysis • Works also on social and organizational aspects of systems • Generates system and component safety requirements (constraints) • Identifies flaws in system design and scenarios leading to violation of a safety requirement (i.e., a hazard)

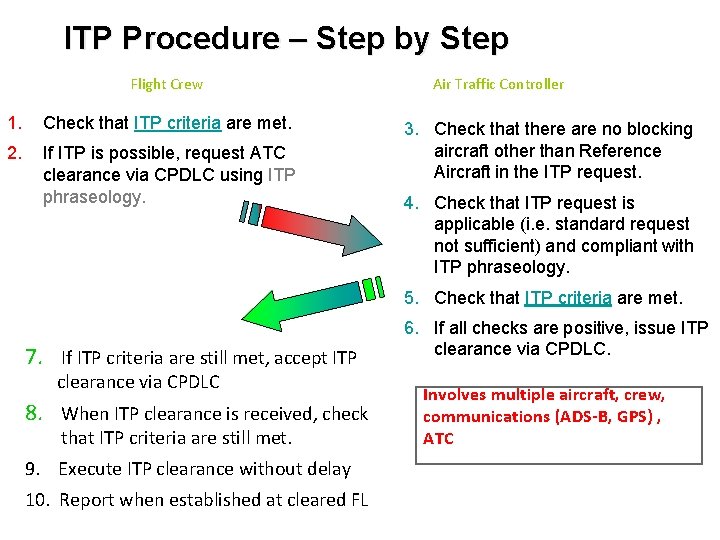

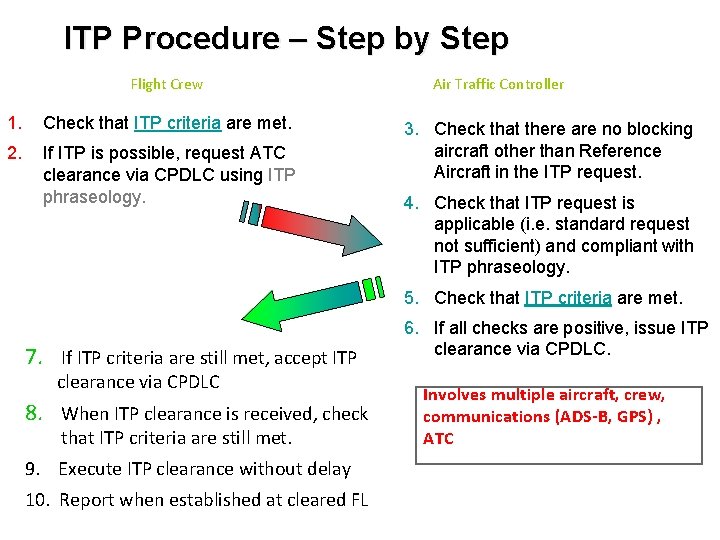

ITP Procedure – Step by Step. Flight Crew: 1. Check that ITP criteria are met. 2. If ITP is possible, request ATC clearance via CPDLC using ITP phraseology. Air Traffic Controller: 3. Check that there are no blocking aircraft other than Reference Aircraft in the ITP request. 4. Check that ITP request is applicable (i.e., standard request not sufficient) and compliant with ITP phraseology. 5. Check that ITP criteria are met. 6. If all checks are positive, issue ITP clearance via CPDLC. Flight Crew: 7. If ITP criteria are still met, accept ITP clearance via CPDLC. 8. When ITP clearance is received, check that ITP criteria are still met. 9. Execute ITP clearance without delay. 10. Report when established at cleared FL. Involves multiple aircraft, crew, communications (ADS-B, GPS), ATC
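The numbered procedure is a sequence of guarded transitions, which makes ordering errors such as executing before approval easy to reject mechanically. A minimal sketch, assuming the criteria checks are reduced to booleans supplied by the caller; `ITPState`, `ITPProcedure`, and the method names are hypothetical, and the model ignores timing and CPDLC message content.

```python
from enum import Enum, auto


class ITPState(Enum):
    IDLE = auto()
    REQUESTED = auto()
    CLEARED = auto()
    EXECUTING = auto()
    ESTABLISHED = auto()


class ITPProcedure:
    """Hypothetical model of the ITP request/clearance sequence."""

    def __init__(self) -> None:
        self.state = ITPState.IDLE
        self.log: list[str] = []

    def _move(self, expected: ITPState, new: ITPState, step: str) -> None:
        # Refuse any step taken out of order.
        if self.state is not expected:
            raise RuntimeError(f"'{step}' not allowed in state {self.state.name}")
        self.state = new
        self.log.append(step)

    def crew_request(self, criteria_met: bool) -> None:  # steps 1-2
        if not criteria_met:
            raise RuntimeError("ITP criteria not met: do not request")
        self._move(ITPState.IDLE, ITPState.REQUESTED, "request clearance via CPDLC")

    def atc_decide(self, no_blocking_aircraft: bool, request_compliant: bool,
                   criteria_met: bool) -> None:  # steps 3-6
        if no_blocking_aircraft and request_compliant and criteria_met:
            self._move(ITPState.REQUESTED, ITPState.CLEARED, "issue ITP clearance")
        else:
            self._move(ITPState.REQUESTED, ITPState.IDLE, "deny ITP request")

    def crew_execute(self, criteria_still_met: bool) -> None:  # steps 7-9
        if not criteria_still_met:
            raise RuntimeError("ITP criteria no longer met: do not execute")
        self._move(ITPState.CLEARED, ITPState.EXECUTING, "execute ITP clearance")

    def crew_report(self) -> None:  # step 10
        self._move(ITPState.EXECUTING, ITPState.ESTABLISHED,
                   "report established at cleared FL")


itp = ITPProcedure()
itp.crew_request(criteria_met=True)
itp.atc_decide(no_blocking_aircraft=True, request_compliant=True, criteria_met=True)
itp.crew_execute(criteria_still_met=True)
itp.crew_report()
print(itp.state.name, itp.log)
```

Calling `crew_execute` while the request is still pending raises an error, which is one mechanical way to enforce the constraint that the crew must not execute the ITP before ATC approval.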

High-Level Control Structure for ITP

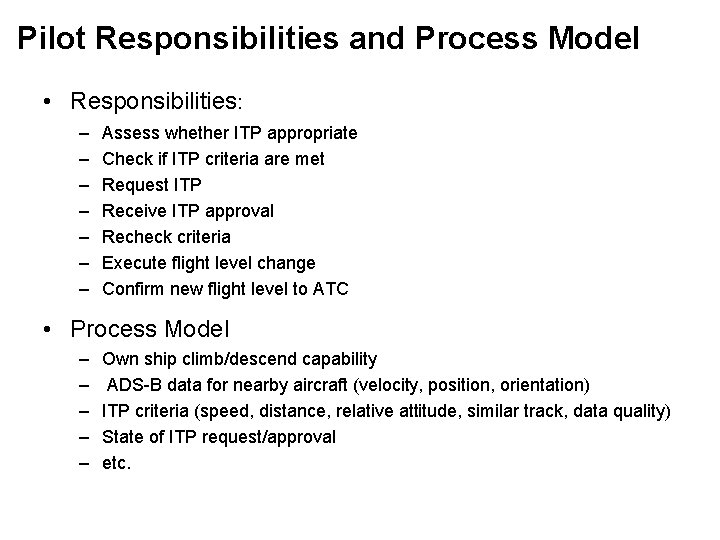

Pilot Responsibilities and Process Model • Responsibilities: – Assess whether ITP appropriate – Check if ITP criteria are met – Request ITP – Receive ITP approval – Recheck criteria – Execute flight level change – Confirm new flight level to ATC • Process Model – Own ship climb/descend capability – ADS-B data for nearby aircraft (velocity, position, orientation) – ITP criteria (speed, distance, relative attitude, similar track, data quality) – State of ITP request/approval – etc.

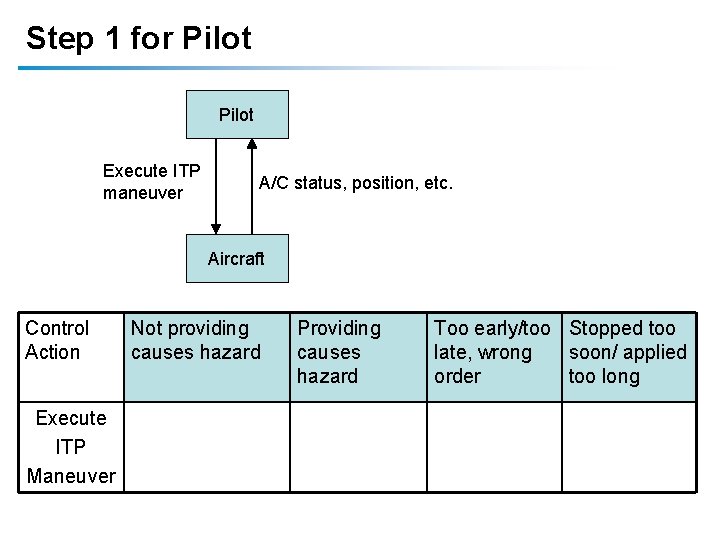

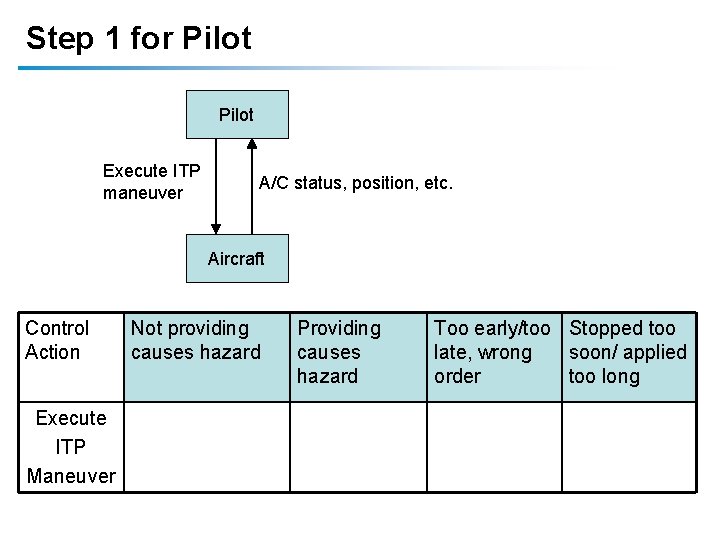

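Step 1 for Pilot

[Figure: the pilot issues the control action “Execute ITP maneuver” to the aircraft and receives A/C status, position, etc. as feedback.]

| Control Action | Not providing causes hazard | Providing causes hazard | Too early/too late, wrong order | Stopped too soon/applied too long |
|---|---|---|---|---|
| Execute ITP maneuver | | | | |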

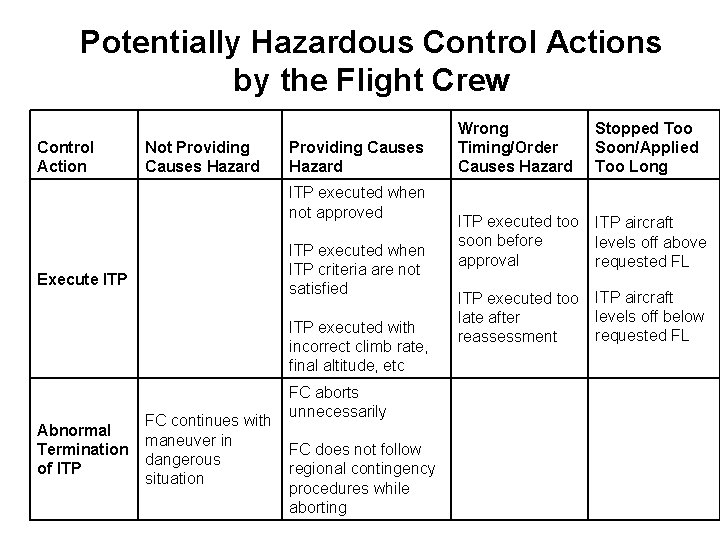

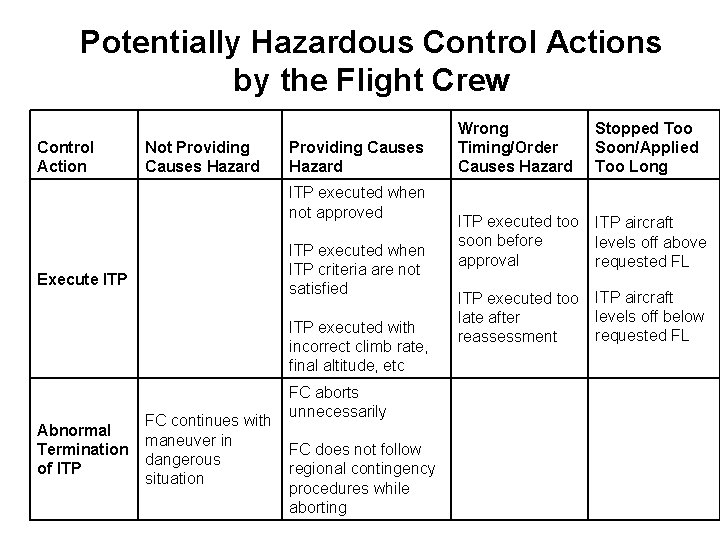

Potentially Hazardous Control Actions by the Flight Crew

| Control Action | Not Providing Causes Hazard | Providing Causes Hazard | Wrong Timing/Order Causes Hazard | Stopped Too Soon/Applied Too Long |
|---|---|---|---|---|
| Execute ITP | | ITP executed when not approved; ITP executed when ITP criteria are not satisfied; ITP executed with incorrect climb rate, final altitude, etc. | ITP executed too soon before approval; ITP executed too late after reassessment | ITP aircraft levels off above requested FL; ITP aircraft levels off below requested FL |
| Abnormal Termination of ITP | FC continues with maneuver in dangerous situation | FC aborts unnecessarily; FC does not follow regional contingency procedures while aborting | | |

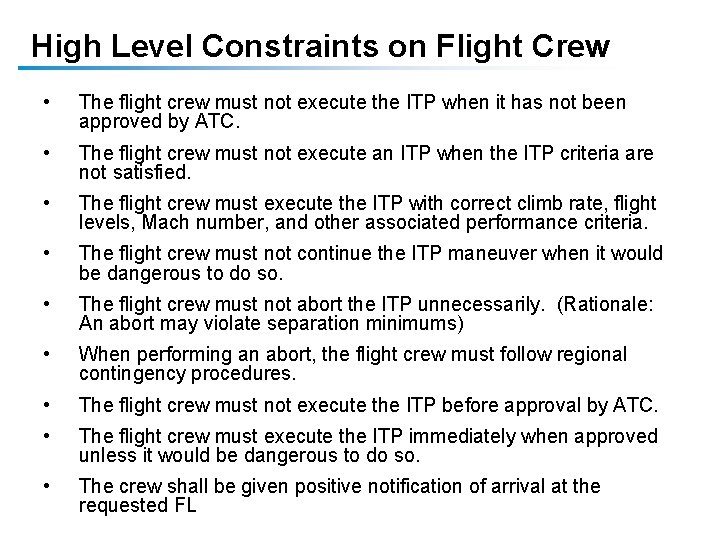

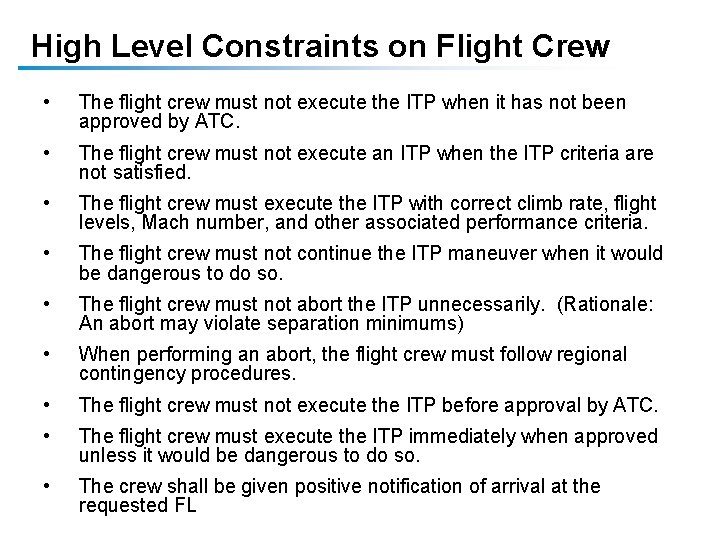
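Because every control action is checked against the same four guidewords, the flight crew table is a grid that can be kept as data. A minimal sketch, assuming a plain dictionary layout; `uca_table` and `empty_cells` are hypothetical names. It lists the cells left blank so an analyst confirms explicitly that no hazard exists there rather than skipping them.

```python
GUIDEWORDS = (
    "not providing",
    "providing",
    "wrong timing/order",
    "stopped too soon/applied too long",
)

# Flight crew entries transcribed from the table above; a missing key means
# no hazardous case was listed for that guideword.
uca_table: dict[str, dict[str, list[str]]] = {
    "Execute ITP": {
        "providing": [
            "ITP executed when not approved",
            "ITP executed when ITP criteria are not satisfied",
            "ITP executed with incorrect climb rate, final altitude, etc.",
        ],
        "wrong timing/order": [
            "ITP executed too soon before approval",
            "ITP executed too late after reassessment",
        ],
        "stopped too soon/applied too long": [
            "ITP aircraft levels off above requested FL",
            "ITP aircraft levels off below requested FL",
        ],
    },
    "Abnormal termination of ITP": {
        "not providing": ["FC continues with maneuver in dangerous situation"],
        "providing": [
            "FC aborts unnecessarily",
            "FC does not follow regional contingency procedures while aborting",
        ],
    },
}


def empty_cells(table: dict[str, dict[str, list[str]]]) -> list[tuple[str, str]]:
    """List (control action, guideword) cells with no entry, for explicit review."""
    return [
        (action, word)
        for action, row in table.items()
        for word in GUIDEWORDS
        if not row.get(word)
    ]


for action, word in empty_cells(uca_table):
    print(f"Review: is '{action}' ever hazardous under '{word}'?")
```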

High Level Constraints on Flight Crew • The flight crew must not execute the ITP when it has not been approved by ATC. • The flight crew must not execute an ITP when the ITP criteria are not satisfied. • The flight crew must execute the ITP with correct climb rate, flight levels, Mach number, and other associated performance criteria. • The flight crew must not continue the ITP maneuver when it would be dangerous to do so. • The flight crew must not abort the ITP unnecessarily. (Rationale: An abort may violate separation minimums) • When performing an abort, the flight crew must follow regional contingency procedures. • The flight crew must not execute the ITP before approval by ATC. • The flight crew must execute the ITP immediately when approved unless it would be dangerous to do so. • The crew shall be given positive notification of arrival at the requested FL

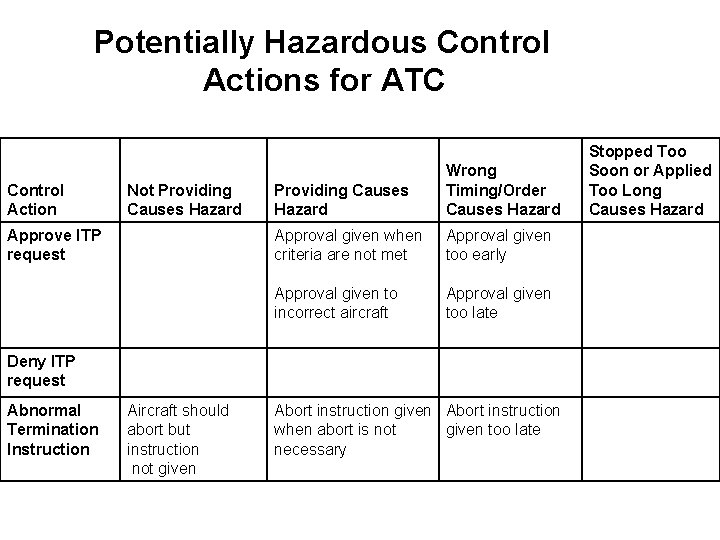

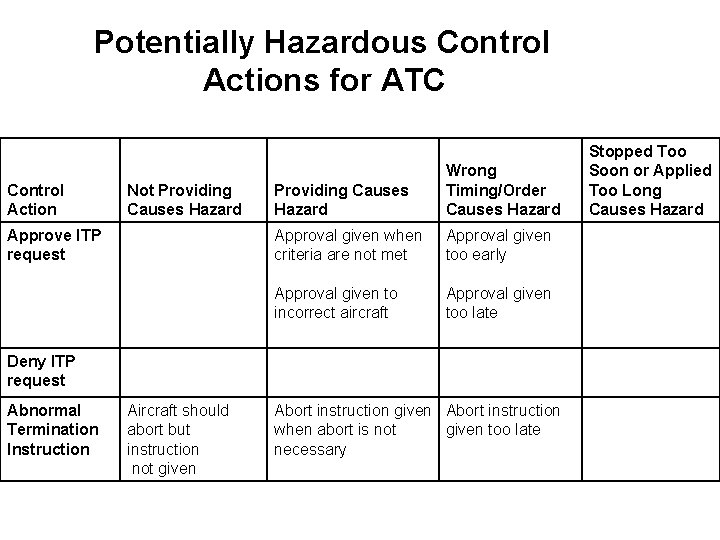

Potentially Hazardous Control Actions for ATC

| Control Action | Not Providing Causes Hazard | Providing Causes Hazard | Wrong Timing/Order Causes Hazard | Stopped Too Soon or Applied Too Long Causes Hazard |
|---|---|---|---|---|
| Approve ITP request | | Approval given when criteria are not met; Approval given to incorrect aircraft | Approval given too early; Approval given too late | |
| Deny ITP request | | | | |
| Abnormal Termination Instruction | Aircraft should abort but instruction not given | Abort instruction given when abort is not necessary | Abort instruction given too late | |

High-Level Constraints on ATC • Approval of an ITP request must be given only when the ITP criteria are met. • Approval must be given to the requesting aircraft only. • Approval must not be given too early or too late [needs to be clarified as to the actual time limits] • An abnormal termination instruction must be given when continuing the ITP would be unsafe. • An abnormal termination instruction must not be given when it is not required to maintain safety and would result in a loss of separation. • An abnormal termination instruction must be given immediately if an abort is required.

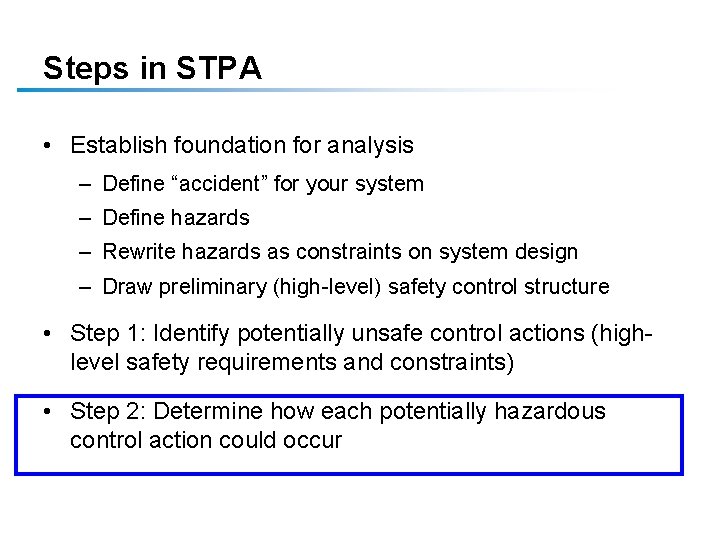

Steps in STPA • Establish foundation for analysis – Define “accident” for your system – Define hazards – Rewrite hazards as constraints on system design – Draw preliminary (high-level) safety control structure • Step 1: Identify potentially unsafe control actions (high-level safety requirements and constraints) • Step 2: Determine how each potentially hazardous control action could occur
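The foundation step produces linked artifacts: accidents, hazards that can lead to them, and constraints rewritten from the hazards. A minimal sketch of a traceability check using the aircraft accidents and hazards listed earlier; the hazard-to-accident links are hypothetical illustrations, not taken from the slides, and `Hazard` and `trace_problems` are hypothetical names.

```python
from dataclasses import dataclass

ACCIDENTS = {
    "A1": "Aircraft mid-air collision",
    "A2": "Uncontrolled collision with terrain",
    "A3": "Aircraft collision with something on the ground",
    "A4": "Passenger injury due to wake turbulence or unsafe movement of aircraft",
}


@dataclass
class Hazard:
    ident: str
    text: str
    accidents: list[str]   # accident ids this hazard can lead to (hypothetical links)
    constraint: str = ""   # the hazard rewritten as a constraint on system design


HAZARDS = [
    Hazard("H1", "Controlled aircraft violate minimum separation standards", ["A1"],
           "Controlled aircraft must not violate minimum separation standards"),
    Hazard("H2", "Aircraft enters an unsafe atmospheric region",
           ["A2", "A4"]),  # constraint deliberately left unwritten
    Hazard("H3", "Loss of controlled flight or airframe integrity", ["A2"],
           "Aircraft must maintain controlled flight and airframe integrity"),
]


def trace_problems(hazards: list[Hazard], accidents: dict[str, str]) -> list[str]:
    """Report hazards that do not trace to a known accident or lack a constraint."""
    problems = []
    for h in hazards:
        if not h.accidents:
            problems.append(f"{h.ident}: not linked to any accident")
        problems += [f"{h.ident}: unknown accident {a}"
                     for a in h.accidents if a not in accidents]
        if not h.constraint:
            problems.append(f"{h.ident}: no safety constraint yet")
    return problems


print(trace_problems(HAZARDS, ACCIDENTS))
```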

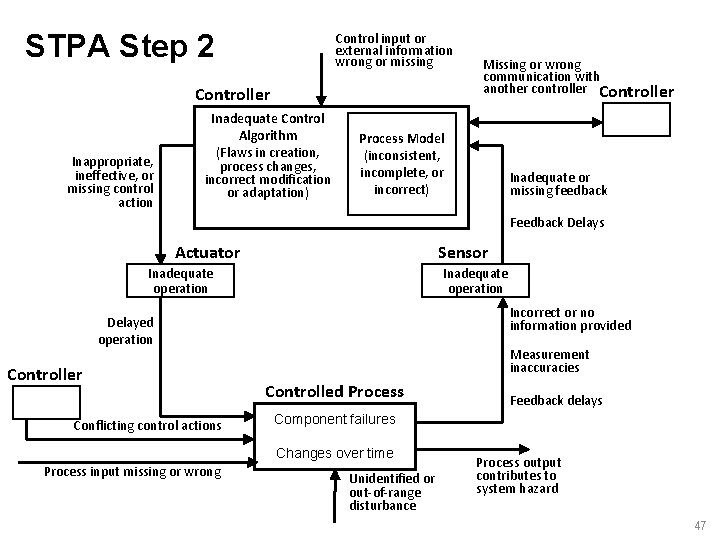

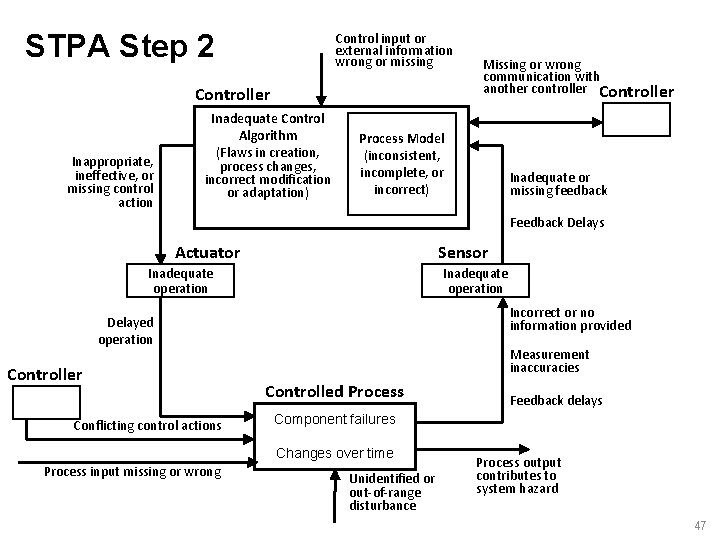

STPA Step 2 [Figure: causal factors around a control loop. Controller: control input or external information wrong or missing; inadequate control algorithm (flaws in creation, process changes, incorrect modification or adaptation); process model inconsistent, incomplete, or incorrect; missing or wrong communication with another controller; inappropriate, ineffective, or missing control action. Actuator: inadequate operation; delayed operation. Controlled process: component failures; changes over time; process input missing or wrong; unidentified or out-of-range disturbance; conflicting control actions from another controller; process output contributes to system hazard. Sensor: inadequate operation; incorrect or no information provided; measurement inaccuracies. Feedback: inadequate or missing feedback; feedback delays.]
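Step 2 walks each unsafe control action around the control loop, asking whether each causal factor could produce it. A minimal sketch that turns the factors in the diagram into review questions; the grouping of sensor and feedback items under one key and the names `CAUSAL_FACTORS` and `scenario_prompts` are assumptions. The prompts only seed the analysis; building a causal scenario still takes engineering judgment.

```python
# Causal factors transcribed from the Step 2 control-loop diagram, grouped by
# the loop element where they arise.
CAUSAL_FACTORS: dict[str, list[str]] = {
    "controller": [
        "wrong or missing control input or external information",
        "an inadequate control algorithm",
        "an inconsistent, incomplete, or incorrect process model",
        "missing or wrong communication with another controller",
    ],
    "actuator": ["inadequate actuator operation", "delayed actuator operation"],
    "controlled process": [
        "component failures",
        "changes over time",
        "process input missing or wrong",
        "an unidentified or out-of-range disturbance",
        "conflicting control actions from another controller",
    ],
    "sensor/feedback": [
        "inadequate sensor operation",
        "incorrect or no information provided",
        "measurement inaccuracies",
        "inadequate or missing feedback",
        "feedback delays",
    ],
}


def scenario_prompts(uca: str) -> list[str]:
    """Turn one unsafe control action into questions for building causal scenarios."""
    return [
        f"Could '{uca}' occur because of {factor} [{element}]?"
        for element, factors in CAUSAL_FACTORS.items()
        for factor in factors
    ]


for prompt in scenario_prompts("Pilot executes maneuver when criteria not met")[:3]:
    print(prompt)
```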

Example Causal Analysis • Unsafe control action: Pilot executes maneuver when criteria not met • Possible Causes? – Thinks criteria met (incorrect process model) • Inadequate feedback provided by ITP box • Feedback delayed or corrupted • Receives incorrect info from ATC or ADS-B • ATC thinks criteria are met and safe to perform maneuver but it is not –…
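The first cause above, an incorrect process model fed by delayed feedback, can be made concrete with a little arithmetic. A minimal sketch under loud assumptions: the 15 NM threshold, the 30 kt closing speed, and the 60 s report age are hypothetical values, the real ITP criteria involve more than distance, and `criteria_met` and `believed_vs_actual` are hypothetical names.

```python
ITP_MIN_DISTANCE_NM = 15.0  # hypothetical distance criterion, for illustration only


def criteria_met(distance_nm: float) -> bool:
    """Hypothetical single ITP criterion: enough longitudinal distance."""
    return distance_nm >= ITP_MIN_DISTANCE_NM


def believed_vs_actual(actual_gap_nm: float, closing_speed_kt: float,
                       report_age_s: float) -> tuple[float, float]:
    """Gap the crew believes (stale report treated as current) vs. the real gap.

    closing_speed_kt > 0 means the aircraft are getting closer, so an older
    report shows a larger gap than exists now.
    """
    closed_since_report_nm = closing_speed_kt * report_age_s / 3600.0
    believed_gap_nm = actual_gap_nm + closed_since_report_nm
    return believed_gap_nm, actual_gap_nm


believed, actual = believed_vs_actual(actual_gap_nm=14.9, closing_speed_kt=30.0,
                                      report_age_s=60.0)
print(f"believed {believed:.1f} NM, criteria met: {criteria_met(believed)}")
print(f"actual   {actual:.1f} NM, criteria met: {criteria_met(actual)}")
```

Nothing fails here: the data are correct but old, and the crew's process model says the criteria are met when they are not.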

Is it Practical? • STPA has been or is being used in a large variety of industries – Spacecraft – Air Traffic Control – UAVs (RPAs) – Defense – Automobiles (GM, Ford, Nissan?) – Medical Devices and Hospital Safety – Chemical plants – Oil and Gas – Nuclear and Electrical Power – CO2 Capture, Transport, and Storage – Etc.

Is it Practical? (2) Social and Managerial • Analysis of the management structure of the space shuttle program (post-Columbia) • Risk management in the development of NASA’s new manned space program (Constellation) • NASA Mission control ─ re-planning and changing mission control procedures safely • Food safety • Safety in pharmaceutical drug development • Risk analysis of outpatient GI surgery at Beth Israel Deaconess Hospital • Analysis and prevention of corporate fraud

Does it Work? • Most of these systems are very complex (e.g., the U.S. Missile Defense System) • In all cases where a comparison was made: – STPA found the same hazard causes as the old methods – Plus it found more causes than traditional methods – In some evaluations, found accidents that had occurred that other methods missed – Cost was orders of magnitude less than the traditional hazard analysis methods

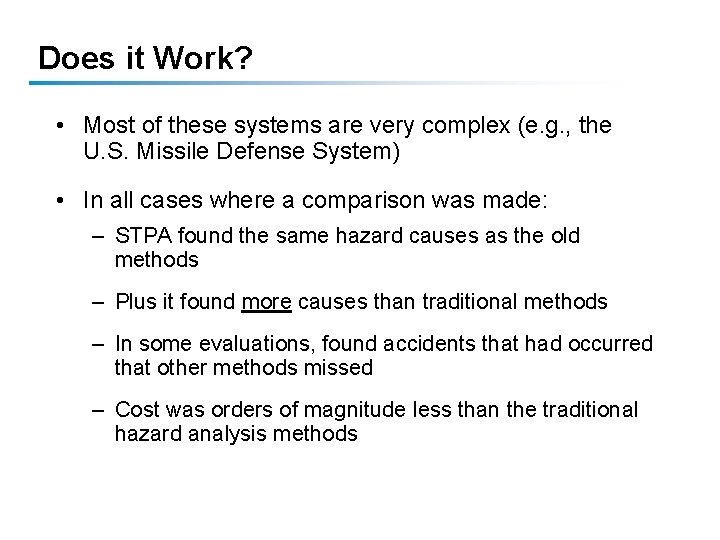

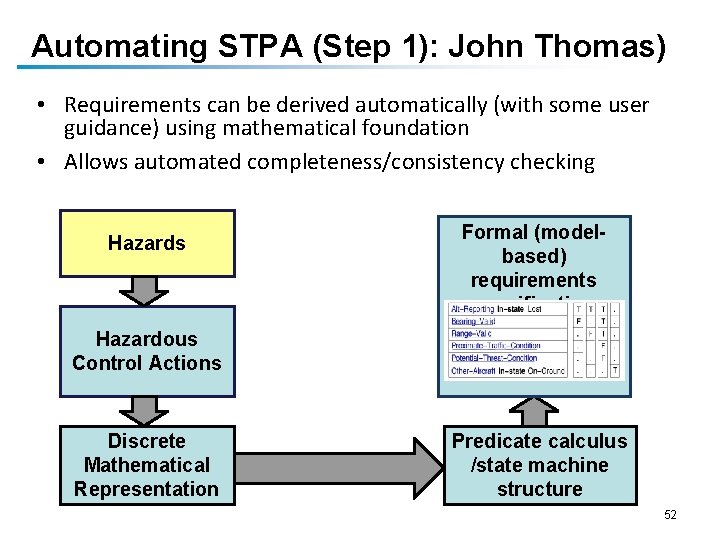

Automating STPA (Step 1) (John Thomas) • Requirements can be derived automatically (with some user guidance) using a mathematical foundation • Allows automated completeness/consistency checking [Figure: hazards and hazardous control actions are captured in a discrete mathematical representation (predicate calculus/state machine structure), from which a formal (model-based) requirements specification is derived.]
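One way to see how completeness and consistency checks can be automated is a context table: enumerate every combination of process-model values and record whether a control action is hazardous when provided or when not provided. A minimal sketch in that spirit, not John Thomas's actual tooling; the variable names `approved` and `criteria_met` and the function `context_table` are hypothetical.

```python
from itertools import product
from typing import Callable

Ctx = dict[str, bool]


def context_table(variables: dict[str, list[bool]],
                  hazardous_if_provided: Callable[[Ctx], bool],
                  hazardous_if_not_provided: Callable[[Ctx], bool]) -> list[tuple[Ctx, str]]:
    """Enumerate every combination of process-model values and derive a requirement.

    Enumerating the full product makes the table complete by construction;
    a CONFLICT row flags an inconsistency that needs a design change.
    """
    names = list(variables)
    rows = []
    for values in product(*(variables[n] for n in names)):
        ctx = dict(zip(names, values))
        hp, hnp = hazardous_if_provided(ctx), hazardous_if_not_provided(ctx)
        if hp and hnp:
            verdict = "CONFLICT"
        elif hp:
            verdict = "must not provide"
        elif hnp:
            verdict = "must provide"
        else:
            verdict = "may provide"
        rows.append((ctx, verdict))
    return rows


# "Execute ITP" with two hypothetical process-model variables. Providing is
# hazardous without approval or with unmet criteria (from the flight crew table);
# that table lists no hazard for not providing.
rows = context_table(
    {"approved": [True, False], "criteria_met": [True, False]},
    hazardous_if_provided=lambda c: not (c["approved"] and c["criteria_met"]),
    hazardous_if_not_provided=lambda c: False,
)
for ctx, verdict in rows:
    print(ctx, verdict)
```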

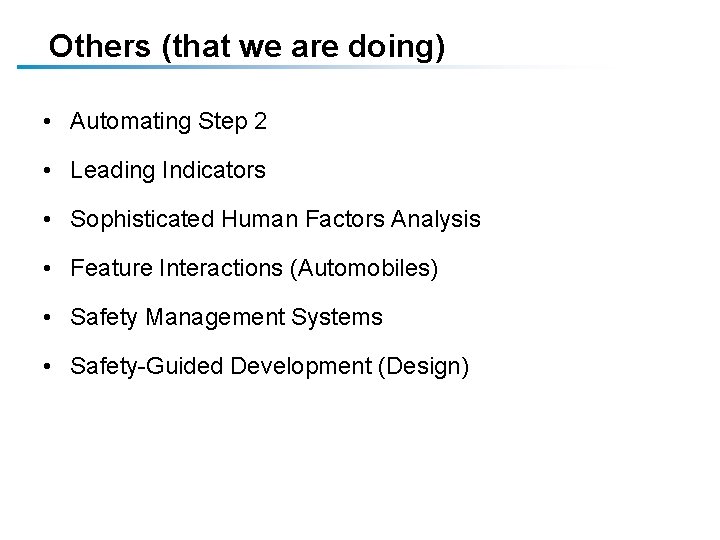

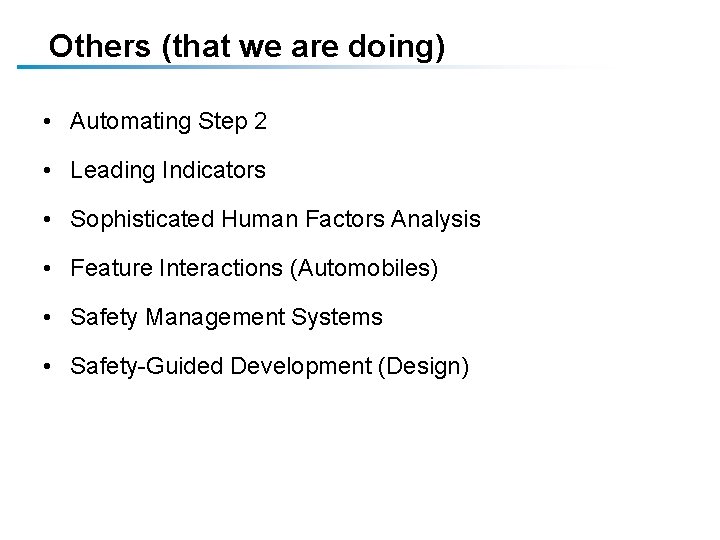

Others (that we are doing) • Automating Step 2 • Leading Indicators • Sophisticated Human Factors Analysis • Feature Interactions (Automobiles) • Safety Management Systems • Safety-Guided Development (Design)

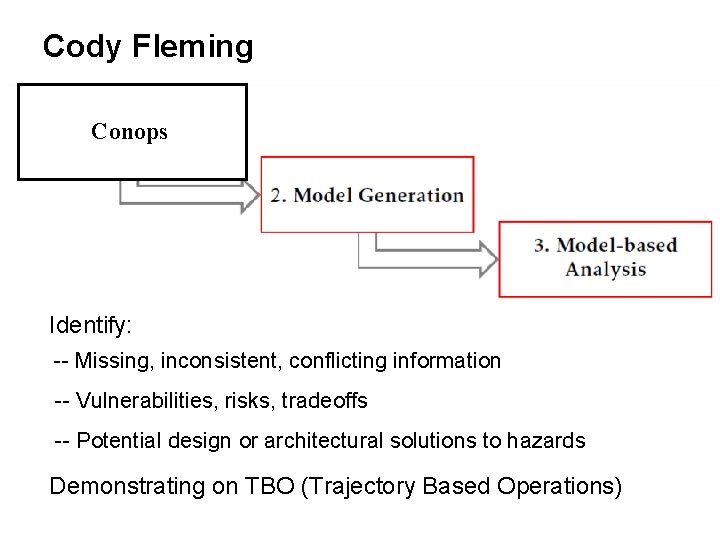

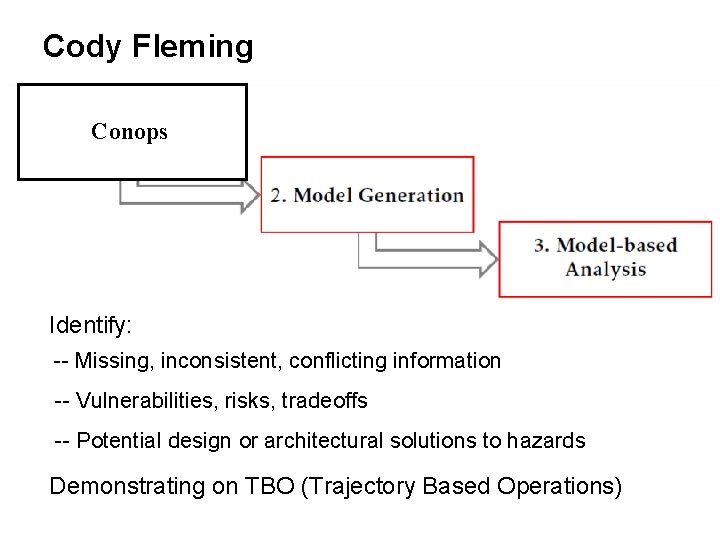

Cody Fleming: ConOps • Identify: – Missing, inconsistent, conflicting information – Vulnerabilities, risks, tradeoffs – Potential design or architectural solutions to hazards • Demonstrating on TBO (Trajectory Based Operations)

Others (that we are doing) • Changes in Complex Systems – Air Traffic Control (NextGen) • ITP • Interval Management (IMS) – UAS (unmanned aircraft systems) in national airspace • Workplace (Occupational) Safety • Some Current Applications – Hospital Patient Safety – Flight Test (Air Force) – Security in aircraft networks – Defense systems

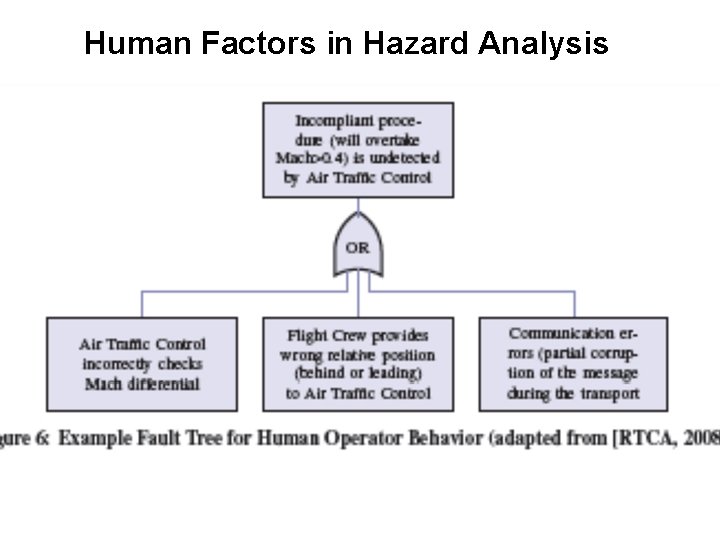

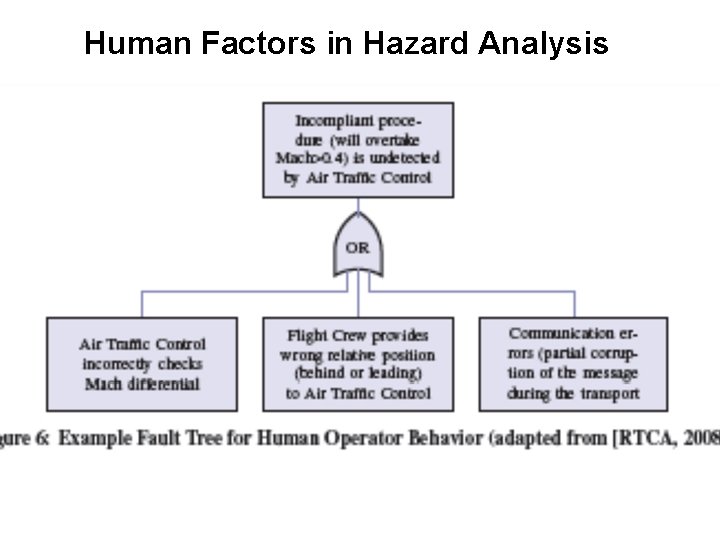

Human Factors in Hazard Analysis

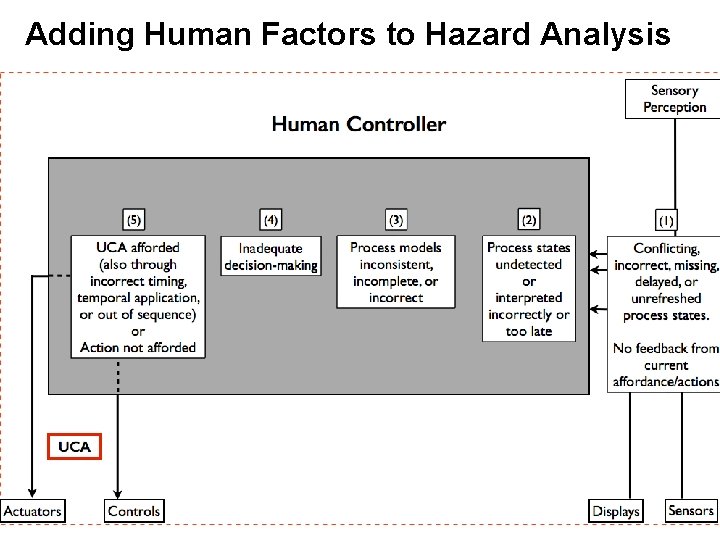

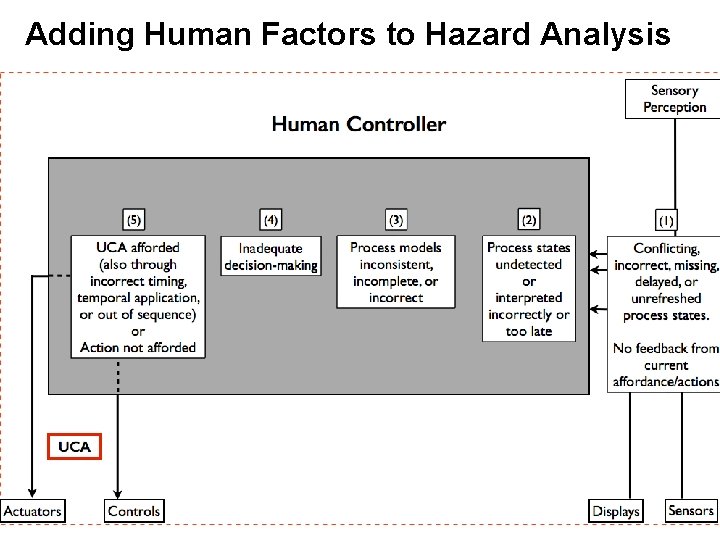

Adding Human Factors to Hazard Analysis

Outline • Accident Causation in Complex Systems: STAMP • New Analysis Methods – Hazard Analysis – Accident Analysis – Security Analysis • Does it Work? Evaluations

Common Traps in Understanding Accident Causes • Root cause seduction and oversimplification • Narrow views of human error • Hindsight bias • Focus on finding someone or something to blame

Root Cause Seduction • Assuming there is a root cause gives us an illusion of control. – Usually focus on operator error or technical failures – Ignore systemic and management factors – Leads to a sophisticated “whack a mole” game • Fix symptoms but not process that led to those symptoms • In continual fire-fighting mode • Having the same accident over and over

Oversimplification of Causes • Almost always there is: – Operator “error” – Flawed management decision making – Flaws in the physical design of equipment – Safety culture problems – Regulatory deficiencies – Etc. • Need to determine why safety control structure was ineffective in preventing the loss.

Blame is the Enemy of Safety • Two possible goals for an accident investigation: – Find who to blame – Understand why occurred so can prevent in future • Blame is a legal or moral concept, not an engineering one • Focus on blame can: – Prevent openness during investigation – Lead to finger pointing and cover ups – Lead to people not reporting errors and problems before accidents

Human Error: Traditional View • Operator error is cause of most incidents and accidents • So do something about human involved (fire them, retrain, admonish) • Or do something about humans in general – Marginalize them by putting in more automation – Rigidify their work by creating more rules and procedures

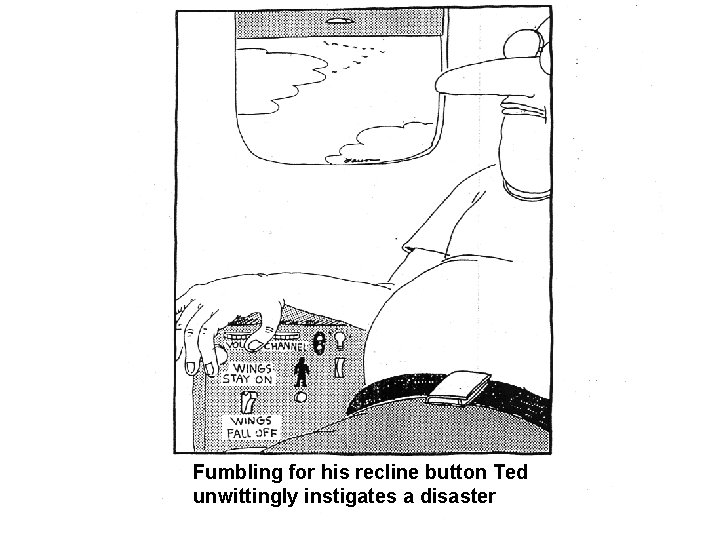

Fumbling for his recline button, Ted unwittingly instigates a disaster

Human Error: Systems View (Sidney Dekker, Jens Rasmussen, Leveson) • Human error is a symptom, not a cause • All behavior affected by context (system) in which occurs • To do something about error, must look at system in which people work: – Design of equipment – Usefulness of procedures – Existence of goal conflicts and production pressures • Human error is a sign that a system needs to be redesigned

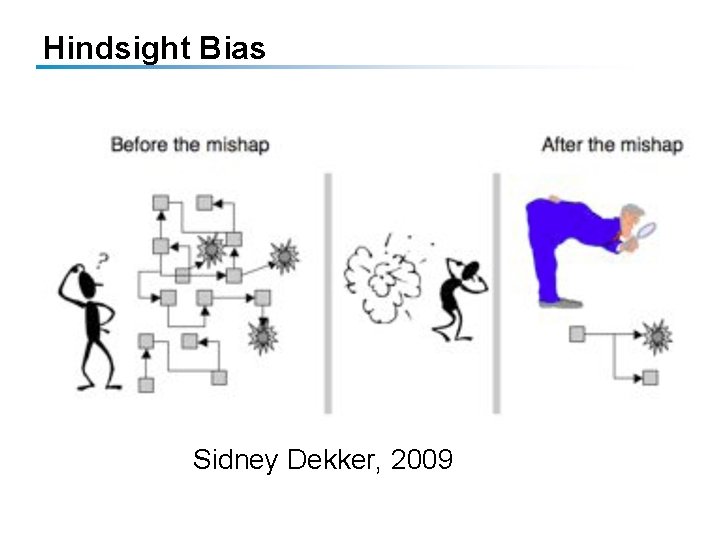

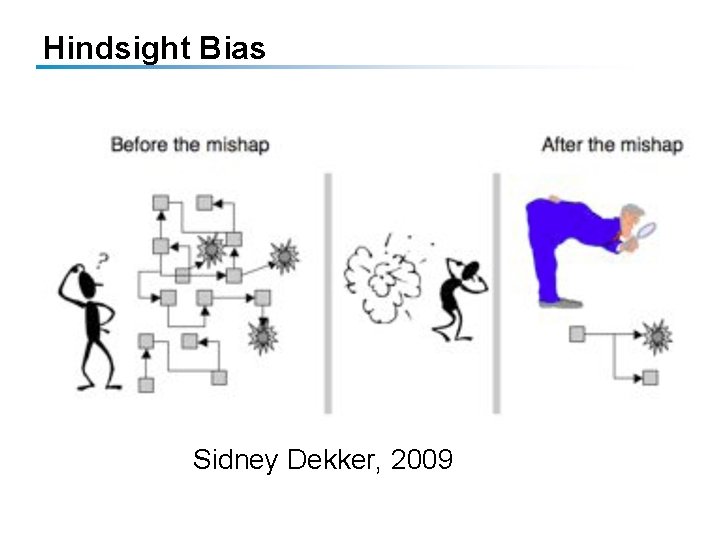

Hindsight Bias Sidney Dekker, 2009

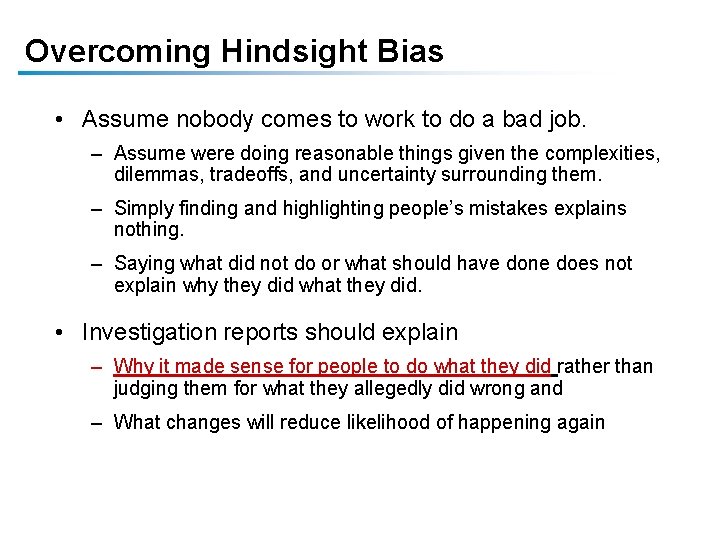

Overcoming Hindsight Bias • Assume nobody comes to work to do a bad job. – Assume they were doing reasonable things given the complexities, dilemmas, tradeoffs, and uncertainty surrounding them. – Simply finding and highlighting people’s mistakes explains nothing. – Saying what they did not do or what they should have done does not explain why they did what they did. • Investigation reports should explain – Why it made sense for people to do what they did rather than judging them for what they allegedly did wrong and – What changes will reduce likelihood of happening again

Comair 5191 (Lexington), Sept. 2006. Analysis using CAST by Paul Nelson, Comair pilot and human factors expert (for report: http://sunnyday.mit.edu/papers/nelson-thesis.pdf)

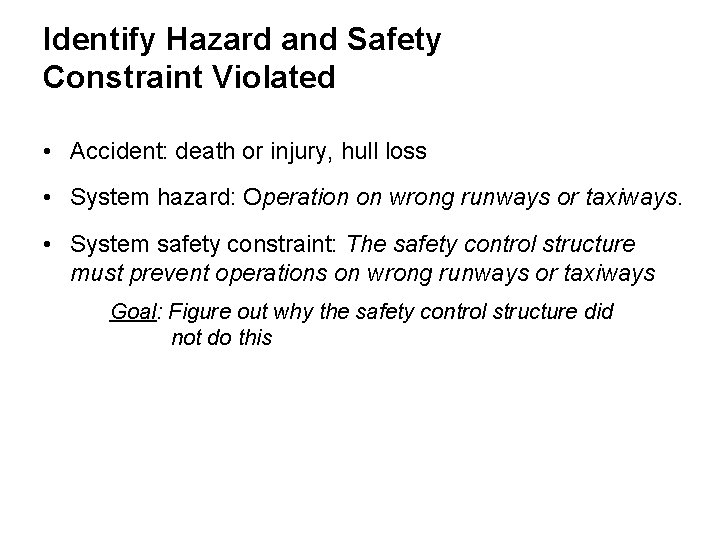

Identify Hazard and Safety Constraint Violated • Accident: death or injury, hull loss • System hazard: Operation on wrong runways or taxiways. • System safety constraint: The safety control structure must prevent operations on wrong runways or taxiways Goal: Figure out why the safety control structure did not do this

Start with Physical System (Aircraft) • Failures: None • Unsafe Interactions – Took off on wrong runway – Runway too short for that aircraft to become safely airborne Then add controller of aircraft to determine why on that runway

Flight Crew Aircraft

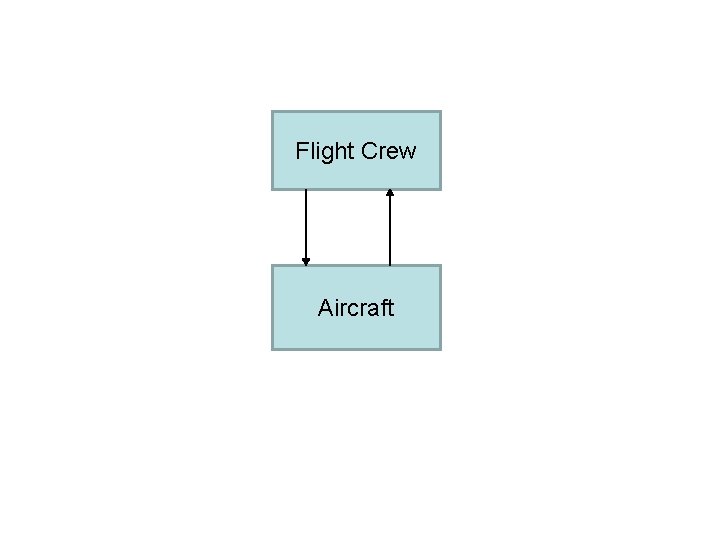

Component Analysis in CAST • Safety responsibilities/constraints • Unsafe control actions Why? • Mental/process model flaws • Contextual/environmental influences
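The four headings of a CAST component analysis can be kept as one record per component, which makes it easy to spot an analysis that lists unsafe control actions without answering "why?". A minimal sketch; `ComponentAnalysis` and `open_questions` are hypothetical names, and the entries are copied from the 5191 flight crew analysis that follows.

```python
from dataclasses import dataclass, field


@dataclass
class ComponentAnalysis:
    """One CAST component entry: what it should do, what it did, and why."""
    component: str
    responsibilities: list[str] = field(default_factory=list)
    unsafe_control_actions: list[str] = field(default_factory=list)
    process_model_flaws: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)

    def open_questions(self) -> list[str]:
        """Parts of the analysis still missing; unsafe actions alone explain nothing."""
        missing = []
        if not self.responsibilities:
            missing.append("safety responsibilities/constraints")
        if self.unsafe_control_actions and not self.process_model_flaws:
            missing.append("why: mental/process model flaws")
        if self.unsafe_control_actions and not self.context:
            missing.append("why: contextual/environmental influences")
        return missing


crew = ComponentAnalysis(
    component="5191 Flight Crew",
    responsibilities=["Take off safely from the planned runway."],
    unsafe_control_actions=["Taxied to runway 26 instead of continuing to runway 22."],
    process_model_flaws=["Believed they were on runway 22 when the takeoff was initiated."],
)
print(crew.open_questions())
```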

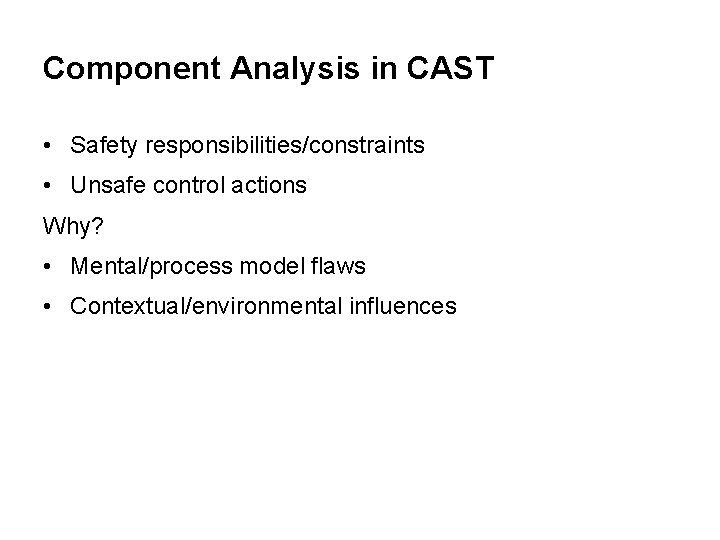

5191 Flight Crew Safety Requirements and Constraints: • Operate the aircraft in accordance with company procedures, ATC clearances and FAA regulations. • Safely taxi the aircraft to the intended departure runway. • Take off safely from the planned runway. Unsafe Control Actions: • Taxied to runway 26 instead of continuing to runway 22. • Did not use the airport signage to confirm their position short of the runway. • Did not confirm runway heading and compass heading matched. • Held a 40-second conversation in violation of the “sterile cockpit” rule

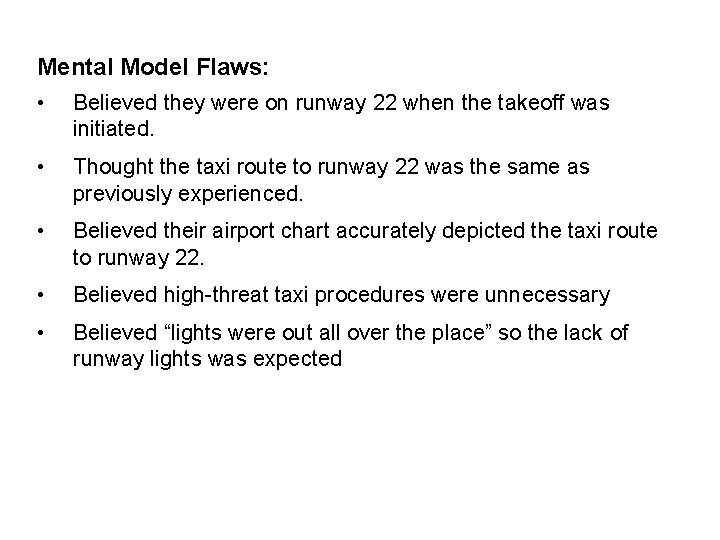

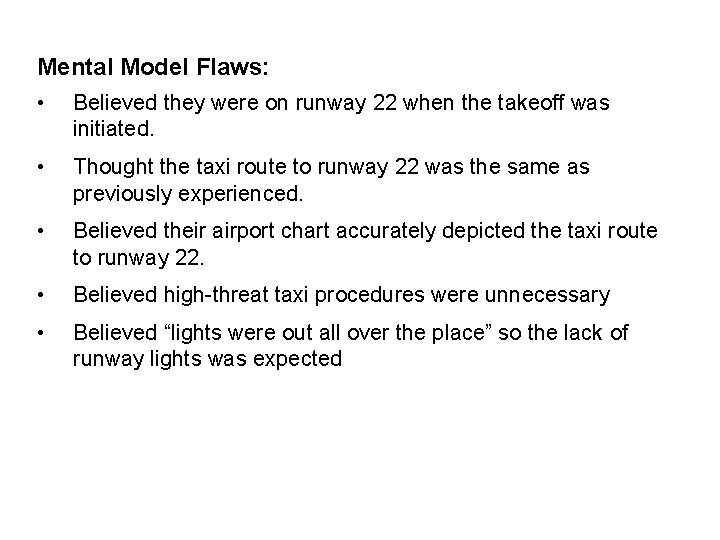

Mental Model Flaws: • Believed they were on runway 22 when the takeoff was initiated. • Thought the taxi route to runway 22 was the same as previously experienced. • Believed their airport chart accurately depicted the taxi route to runway 22. • Believed high-threat taxi procedures were unnecessary • Believed “lights were out all over the place” so the lack of runway lights was expected

Context in Which Decisions Made: • No communication that the taxi route to the departure runway was different than indicated on the airport diagram • No known reason for high-threat taxi procedures • Dark out • Comair had no specified procedures to confirm compass heading with runway • Sleep loss fatigue • Runways 22 and 26 looked very similar from that position • Comair in bankruptcy, tried to maximize efficiency – Demanded large wage concessions from pilots – Economic pressures a stressor and frequent topic of conversation for pilots

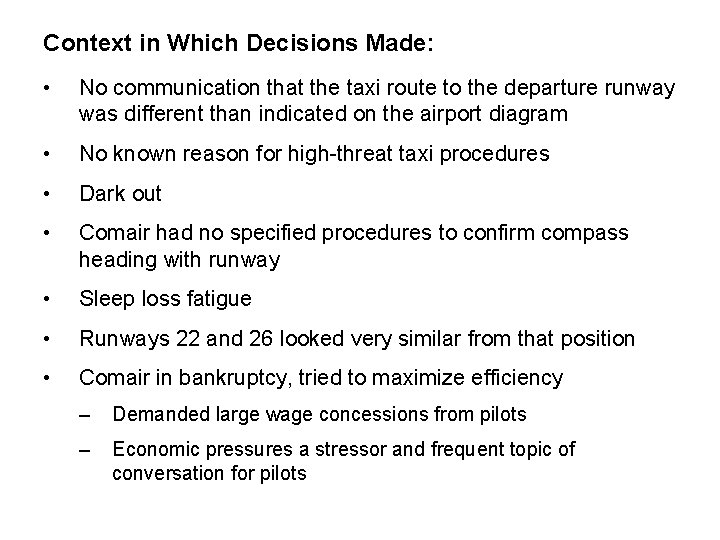

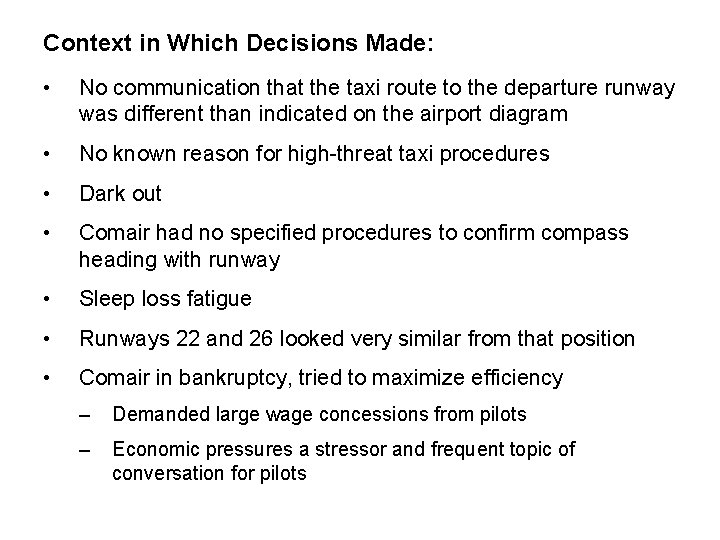

The Airport Diagram: What the Crew Had vs. What the Crew Needed

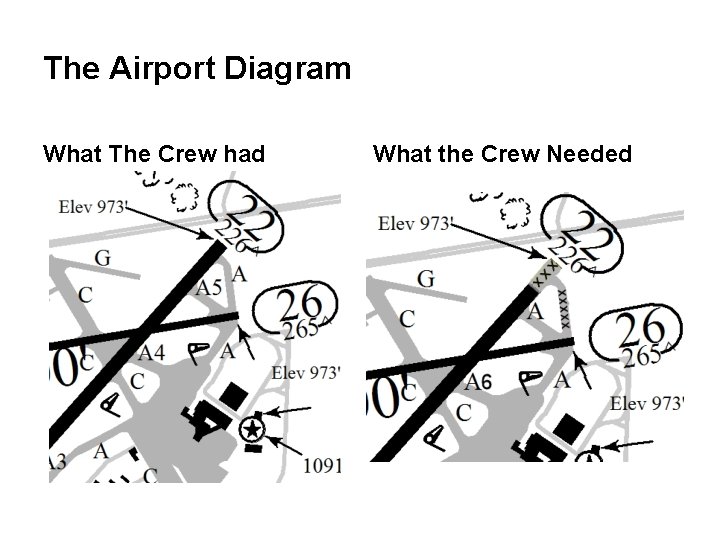

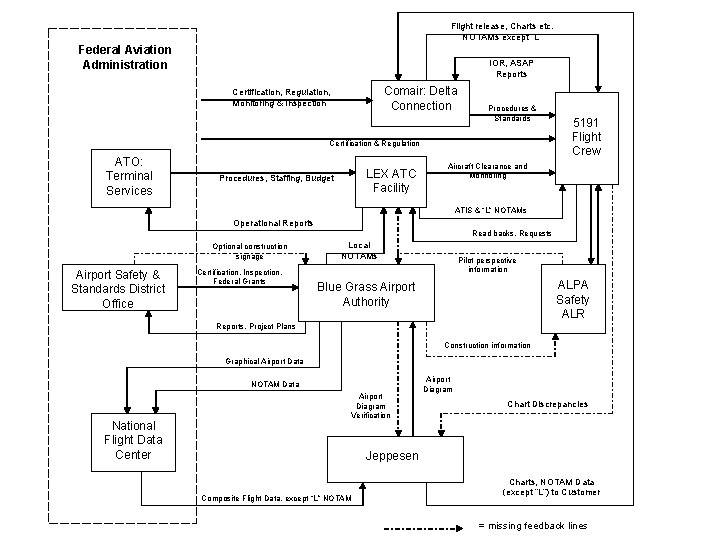

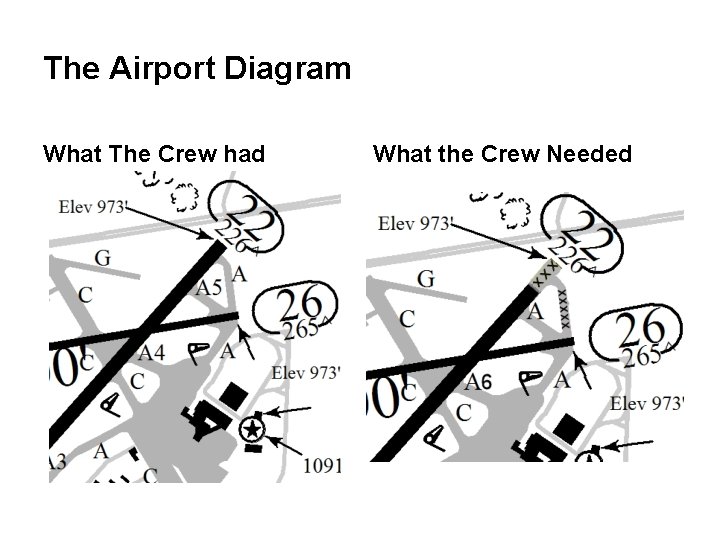

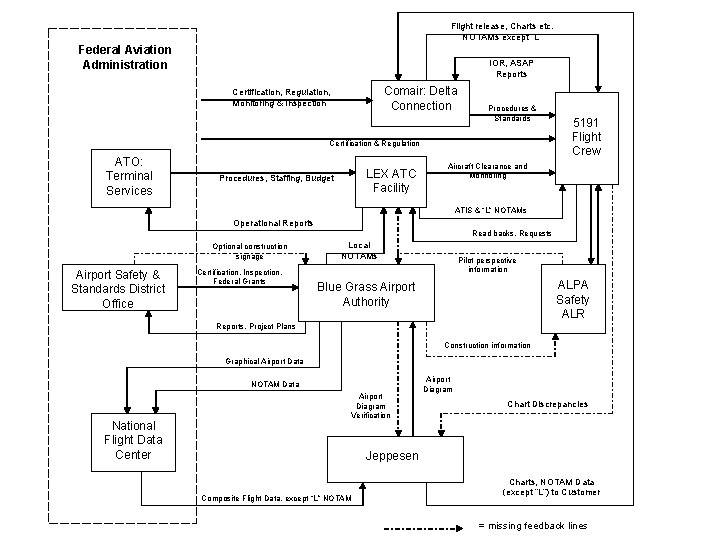

[Figure: safety control structure for Comair 5191. Components: Federal Aviation Administration; Comair (Delta Connection); ATO: Terminal Services; LEX ATC Facility; 5191 Flight Crew; Aircraft; Airport Safety & Standards District Office; Blue Grass Airport Authority; ALPA Safety; National Flight Data Center; Jeppesen. Labeled links include: flight release, charts, etc. (NOTAMs except “L”); IOR and ASAP reports; certification, regulation, monitoring & inspection; procedures & standards; certification & regulation; procedures, staffing, budget; clearance and monitoring; ATIS & “L” NOTAMs; operational reports; read backs, requests; optional construction signage; certification, inspection, federal grants; local NOTAMs; pilot perspective information; reports, project plans; construction information; graphical airport data; NOTAM data; airport diagram verification; airport diagram; chart discrepancies; composite flight data (except “L” NOTAMs); charts and NOTAM data (except “L”) to customers. A legend marks missing feedback lines.]
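Missing feedback lines can be found mechanically once the control structure is stored as two edge lists. A minimal sketch with a simplified, partial set of edges drawn from the Comair 5191 analysis: the Airport Authority's missing feedback from airport users reflects its unsafe control actions listed below, and the "operational reports" link from the crew to Comair is an assumption. `CONTROL`, `FEEDBACK`, and `missing_feedback` are hypothetical names.

```python
# Simplified, partial edges; the crew-to-Comair feedback link is assumed.
CONTROL = [
    ("LEX ATC Facility", "5191 Flight Crew"),           # clearance and monitoring
    ("Comair", "5191 Flight Crew"),                     # flight release, charts
    ("Blue Grass Airport Authority", "Airport users"),  # construction signage
]
FEEDBACK = [
    ("5191 Flight Crew", "LEX ATC Facility"),           # read backs, requests
    ("5191 Flight Crew", "Comair"),                     # operational reports
]


def missing_feedback(control: list[tuple[str, str]],
                     feedback: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Control links whose controlled side sends nothing back to the controller."""
    returned = set(feedback)
    return sorted((c, p) for c, p in control if (p, c) not in returned)


print(missing_feedback(CONTROL, FEEDBACK))
```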

Comair (Delta Connection) Airlines Safety Requirements and Constraints • Responsible for safe, timely transport of passengers within their established route system • Ensure crews have available all necessary information for each flight • Facilitate a flight deck environment that enables crew focus on flight safety actions during critical phases of flight • Develop procedures to ensure proper taxi route progression and runway confirmation Unsafe Control Actions: • Internal processes did not provide LEX local NOTAM on the flight release, even though it was faxed to Comair from LEX • In order to advance corporate strategies, tactics were used that fostered work environment stress precluding crew focus ability during critical phases of flight. • Did not develop or train procedures for take off runway confirmation.

Comair (2) Process Model Flaws: • Trusted the ATIS broadcast would provide local NOTAMs to crews • Believed tactics promoting corporate strategy had no connection to safety • Believed formal procedures and training emphasis of runway confirmation methods were unnecessary Context in Which Decisions Made: • In bankruptcy.

Blue Grass Airport Authority (LEX) Safety Requirements and Constraints: • Establish and maintain a facility for the safe arrival and departure of aircraft to service the community. • Operate the airport according to FAA certification standards, FAA regulations (FARs) and airport safety bulletin guidelines (ACs). • Ensure taxiway changes are marked in a manner to be clearly understood by aircraft operators.

Airport Authority Unsafe Control Actions: • Relied solely on FAA guidelines for determining adequate signage during construction. • Did not seek FAA-acceptable options other than NOTAMs to inform airport users of the known airport chart inaccuracies. • Changed taxiway A5 to Alpha without communicating the change by other than minimum signage. • Did not establish feedback pathways to obtain operational safety information from airport users.

Airport Authority Process Model Flaws: • Believed compliance with FAA guidelines and inspections would equal adequate safety. • Believed the NOTAM system would provide understandable information about inconsistencies of published documents. • Believed airport users would provide feedback if they were confused. Context in Which Decisions Made: • The last three FAA inspections demonstrated complete compliance with FAA regulations and guidelines. • Last minute change from Safety Plans Construction Document phase III implementation plan.

Airport Safety & Standards Office Safety Requirements and Constraints: • Establish airport design, construction, maintenance, operational and safety standards and issue operational certificates accordingly. • Ensure airport improvement project grant compliance and release of grant money accordingly. • Perform airport inspections and surveillance. Enforce compliance if problems found. • Review and approve Safety Plans Construction Documents in a timely manner, consistent with safety. • Assure all stakeholders participate in developing methods to maintain operational safety during construction periods.

Airport Safety & Standards Office Unsafe Control Actions: • The FAA review/acceptance process was inconsistent, accepting the original phase IIIA (Paving and Lighting) Safety Plans Construction Documents and then rejecting them during the transition between phases II and IIIA. • Did not require all stakeholders to be part of the meetings where methods of maintaining operational safety during construction were decided (i.e., a pilot representative was not present). • Focused on inaccurate runway length depiction without consideration of taxiway discrepancies. • Did not require methods in addition to NOTAMs to assure safety during periods of construction when the LEX Airport physical environment differed from the LEX Airport charts.

Airport Safety & Standards Office Process Model Flaws • Did not believe pilot input was necessary for development of safe surface movement operations. • No recognition of negative effects of changes on safety. • Belief that the accepted practice of using NOTAMs to advise crews of charting differences was sufficient for safety. Context in Which Decisions Made: • Priority to keep Airport Facility Directory accurate.

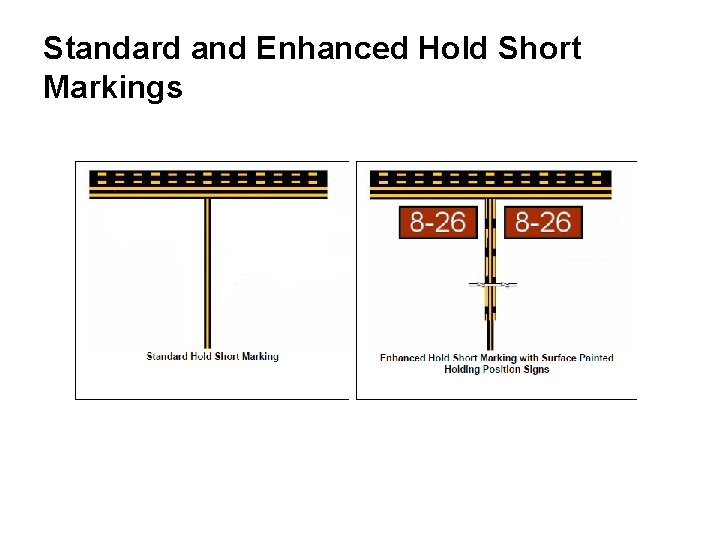

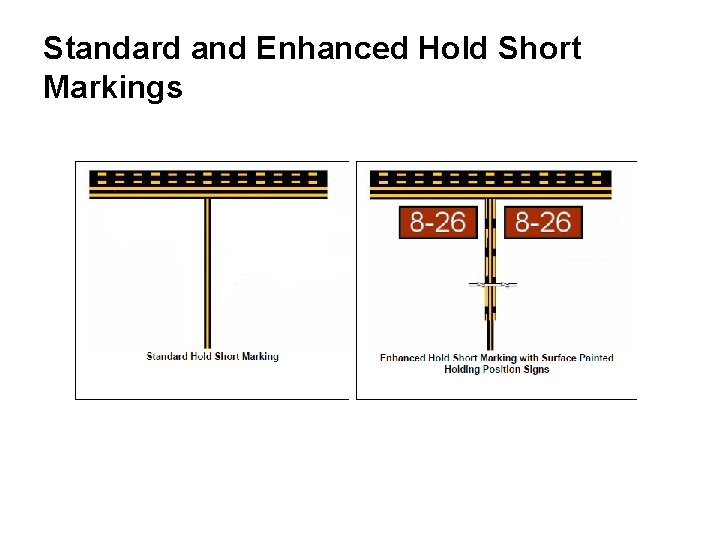

Standard and Enhanced Hold Short Markings

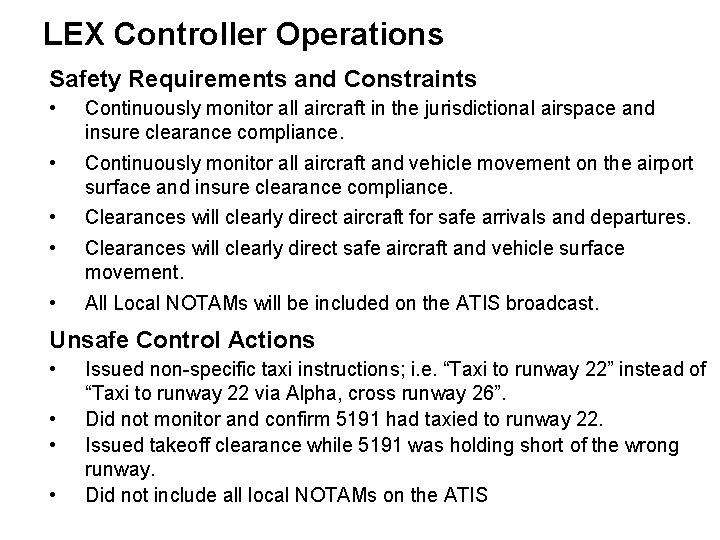

LEX Controller Operations Safety Requirements and Constraints • Continuously monitor all aircraft in the jurisdictional airspace and ensure clearance compliance. • Continuously monitor all aircraft and vehicle movement on the airport surface and ensure clearance compliance. • Clearances will clearly direct aircraft for safe arrivals and departures. • Clearances will clearly direct safe aircraft and vehicle surface movement. • All Local NOTAMs will be included on the ATIS broadcast. Unsafe Control Actions • Issued non-specific taxi instructions, i.e., “Taxi to runway 22” instead of “Taxi to runway 22 via Alpha, cross runway 26”. • Did not monitor and confirm 5191 had taxied to runway 22. • Issued takeoff clearance while 5191 was holding short of the wrong runway. • Did not include all local NOTAMs on the ATIS.

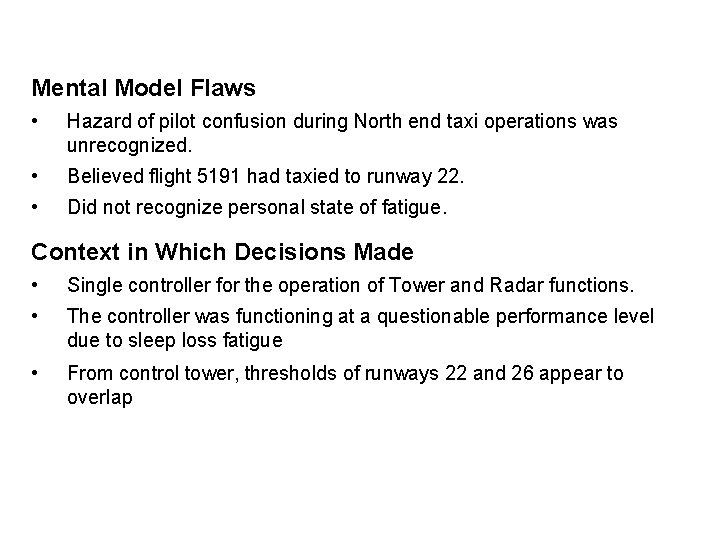

Mental Model Flaws • Hazard of pilot confusion during north-end taxi operations was unrecognized. • Believed flight 5191 had taxied to runway 22. • Did not recognize personal state of fatigue. Context in Which Decisions Made • Single controller for the operation of Tower and Radar functions. • The controller was functioning at a questionable performance level due to sleep-loss fatigue. • From the control tower, the thresholds of runways 22 and 26 appear to overlap.

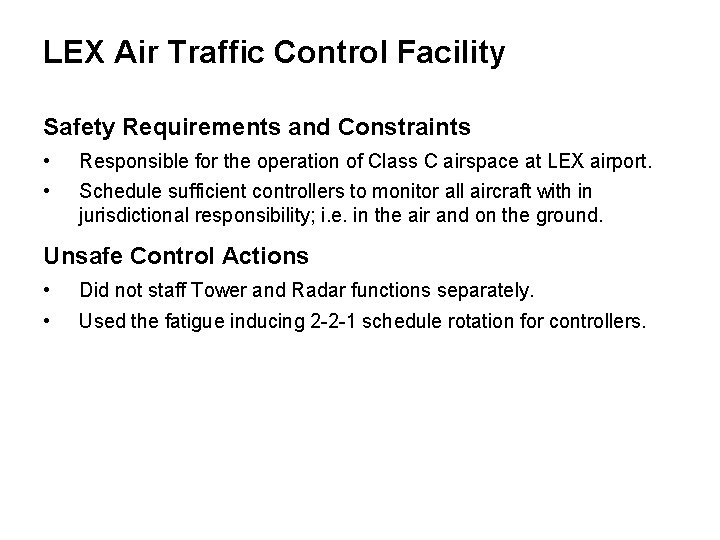

LEX Air Traffic Control Facility Safety Requirements and Constraints • Responsible for the operation of Class C airspace at LEX airport. • Schedule sufficient controllers to monitor all aircraft within jurisdictional responsibility, i.e., in the air and on the ground. Unsafe Control Actions • Did not staff Tower and Radar functions separately. • Used the fatigue-inducing 2-2-1 schedule rotation for controllers.

LEX Air Traffic Control Facility (2) Mental Model Flaws • Believed “verbal” guidance requiring 2 controllers was merely a preferred condition. • Believed controllers would manage fatigue resulting from use of the 2-2-1 rotating shift. Context in Which Decisions Made • Requests for increased staffing were ignored. • Overtime budget was insufficient to make up for the reduced staffing.

Air Traffic Organization: Terminal Services Safety Requirements and Constraints • Ensure appropriate ATC Facilities are established to safely and efficiently guide aircraft in and out of airports. • Establish budgets for operation and staffing levels which maintain safety guidelines. • Ensure compliance with minimum facility staffing guidelines. • Provide duty/rest period policies which ensure controllers can perform safely. Unsafe Control Actions • Issued verbal guidance that Tower and Radar functions were to be separately manned, instead of specifying this in official staffing policies. • Did not confirm the minimum 2-controller guidance was being followed. • Did not monitor the safety effects of limiting overtime.

Process Model Flaws • Believed “verbal” guidance (minimum staffing of 2 controllers) was clear. • Believed staffing with one controller was rare and that, if it was unavoidable (due to sick calls, etc.), the facility would coordinate with the Air Route Traffic Control Center (ARTCC) to control traffic. • Believed limiting overtime budget was unrelated to safety. • Believed controller fatigue was rare and a personal matter, up to the individual to evaluate and mitigate. Context in Which Decisions Made • Budget constraints. • Air traffic controller contract negotiations. Feedback • Verbal communication during quarterly meetings. • No feedback pathways for monitoring controller fatigue.
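The ATO entries lack a check that the "minimum 2 controllers" guidance was actually followed. The code below is a minimal sketch of such a feedback check. It assumes a hypothetical roster format (`ShiftPeriod`, with one named controller per position) and flags any period in which Tower and Radar were combined under a single person.

```python
from __future__ import annotations

from dataclasses import dataclass

# Minimum staffing from the verbal guidance described above.
MIN_CONTROLLERS = 2


@dataclass
class ShiftPeriod:
    """Hypothetical roster entry: who works each position during one period."""
    label: str
    tower: str
    radar: str


def staffing_violations(roster: list[ShiftPeriod]) -> list[str]:
    """Return labels of periods staffed by fewer than MIN_CONTROLLERS people."""
    return [
        period.label
        for period in roster
        if len({period.tower, period.radar}) < MIN_CONTROLLERS
    ]


roster = [
    ShiftPeriod("day", tower="controller A", radar="controller B"),
    ShiftPeriod("midnight", tower="controller C", radar="controller C"),
]
print(staffing_violations(roster))  # ['midnight']
```

Sending such a report to ATO is one possible feedback channel, beyond verbal communication at quarterly meetings. It would let ATO test its belief that single-controller staffing was rare.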

[Figure: safety control structure for the Comair 5191 accident. Components: Federal Aviation Administration; ATO: Terminal Services; LEX ATC Facility; Airport Safety & Standards District Office; Blue Grass Airport Authority; National Flight Data Center; Jeppesen; ALPA Safety; Comair: Delta Connection; 5191 Flight Crew; Aircraft. Links are labeled with control actions (e.g., certification, regulation, monitoring & inspection; procedures, staffing, budget; clearances; local NOTAMs; charts and NOTAM data except “L”) and feedback (e.g., IOR and ASAP reports; read backs and requests; airport diagram chart discrepancies). Missing feedback lines are marked.]
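The marking of missing feedback lines suggests a mechanical check: every control link should have a feedback link running the other way. Here is a minimal sketch over a hypothetical, heavily simplified subset of the structure. The link labels come from the slides, but which links are included and how they are named is an assumption for illustration.

```python
from __future__ import annotations

# Hypothetical subset of the control structure.
# Control links: (controller, controlled component) -> control action.
CONTROL = {
    ("ATO: Terminal Services", "LEX ATC Facility"): "procedures, staffing, budget",
    ("LEX Controller", "5191 Flight Crew"): "clearances",
    ("Jeppesen", "Comair"): "charts, NOTAM data (except 'L')",
}

# Feedback links: (controlled component, controller) -> feedback channel.
FEEDBACK = {
    ("LEX ATC Facility", "ATO: Terminal Services"): "verbal communication at quarterly meetings",
    ("5191 Flight Crew", "LEX Controller"): "read backs, requests",
}


def missing_feedback(control: dict, feedback: dict) -> list[tuple[str, str]]:
    """Return control links with no feedback link in the reverse direction."""
    return [(ctrl, proc) for (ctrl, proc) in control if (proc, ctrl) not in feedback]


print(missing_feedback(CONTROL, FEEDBACK))  # [('Jeppesen', 'Comair')]
```

The Jeppesen result is consistent with the slide noting that customer feedback channels were inadequate for reporting charting inaccuracies. A check like this finds only absent links. It cannot find links that exist but carry too little information.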

Jeppesen Safety Requirements and Constraints • Creation of accurate aviation navigation charts and information data for safe operation of aircraft in the NAS. • Assure airport charts reflect the most recent NFDC data. Unsafe Control Actions • Insufficient analysis of the software that processed incoming NFDC data to assure the original design assumptions matched those of the application. • Did not make available to the NAS airport structure the type of information needed to generate the 10-8 “Yellow Sheet” airport construction chart.

Jeppesen (2) Process Model Flaws • Believed the Document Control System software always generated a notice of received NFDC data requiring analyst evaluation. • Believed any extended airport construction included phase and time data as a normal part of FAA-submitted paperwork. Context in Which Decisions Made • The Document Control System software generated notices of received NFDC data. • Preferred chart provider to airlines. Feedback • Customer feedback channels are inadequate for providing information about charting inaccuracies.

National Flight Data Center Safety Requirements and Constraints • Collect, collate, validate, store, and disseminate aeronautical information detailing the physical description and operational status of all components of the National Airspace System (NAS). • Operate the US NOTAM system to create, validate, publish, and disseminate NOTAMs. • Provide safety-critical NAS information in a format which is understandable to pilots. • NOTAM dissemination methods will ensure pilot operators receive all necessary information.

Unsafe Control Actions • Did not use the FAA Human Factors Design Guide principles to update the NOTAM text format. • Limited dissemination of local NOTAMs (NOTAM-L). • Used multiple publications to disseminate NOTAMs, none of which individually contained all NOTAM information. Process Model Flaws • Believed the NOTAM system successfully communicated NAS changes. Context in Which Decisions Made • The NOTAM system's history of operation spanning more than 70 years. • Format based on teletypes. Coordination • No coordination between the FAA human factors branch and the NFDC on using HF design principles for the NOTAM format revision.
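The NFDC dissemination flaw is a set-coverage question: NOTAMs went out through several publications, and none of them was complete. Below is a minimal sketch with hypothetical publication names and NOTAM identifiers. It collects every NOTAM that appears anywhere, then reports what each individual source is missing.

```python
from __future__ import annotations


def coverage_gaps(publications: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each publication to the NOTAMs published elsewhere but not in it."""
    all_notams: set[str] = set().union(*publications.values())
    return {name: all_notams - ids for name, ids in publications.items()}


def complete_sources(publications: dict[str, set[str]]) -> list[str]:
    """Publications a pilot could rely on alone (nothing missing)."""
    return [name for name, missing in coverage_gaps(publications).items() if not missing]


# Hypothetical example: "L-1" stands for a local NOTAM with limited dissemination.
publications = {
    "Publication A": {"N-1", "N-2"},
    "Publication B": {"N-1", "N-3"},
    "Local channel": {"L-1"},
}
print(complete_sources(publications))                        # []
print(sorted(coverage_gaps(publications)["Publication A"]))  # ['L-1', 'N-3']
```

An empty result from `complete_sources` is exactly the condition the slide describes: no single publication contains all NOTAM information.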

Federal Aviation Administration Safety Requirements and Constraints • Establish and administer the National Aviation Transportation System. • Coordinate the internal branches of the FAA to monitor and enforce compliance with safety guidelines and regulations. • Provide budgets which assure the ability of each branch to operate according to safe policies and procedures. • Provide regulations to ensure safety-critical operators can function unimpaired. • Provide and require components to prevent runway incursions.

Unsafe Control Actions: • Controller and crew duty/rest regulations were not updated to be consistent with modern scientific knowledge about fatigue and its causes. • Required enhanced taxiway markings at only 15% of air carrier airports: those with more than 1.5 million passenger enplanements per year. Mental Model Flaws • Enhanced taxiway markings unnecessary except for the largest US airports. • Crew/controller duty/rest regulations are safe. Context in Which Decisions Made • FAA funding battles with the US Congress. • Industry pressure to leave duty/rest regulations alone.

NTSB “Findings” Probable Cause: • FC’s failure to use available cues and aids to identify the airplane’s location on the airport surface during taxi. • FC’s failure to cross-check and verify that the airplane was on the correct runway before takeoff. • Contributing to the accident were the flight crew’s nonpertinent conversation during taxi, which resulted in a loss of positional awareness, and the Federal Aviation Administration’s (FAA) failure to require that all runway crossings be authorized only by specific air traffic control (ATC) clearances.

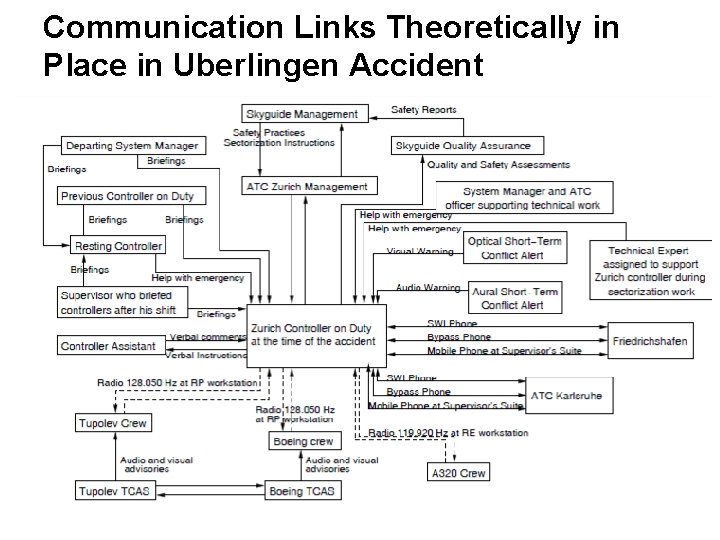

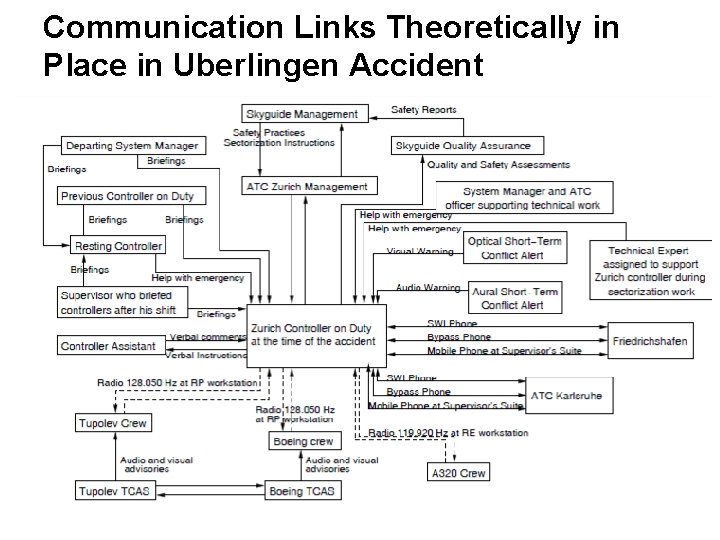

Communication Links Theoretically in Place in Uberlingen Accident

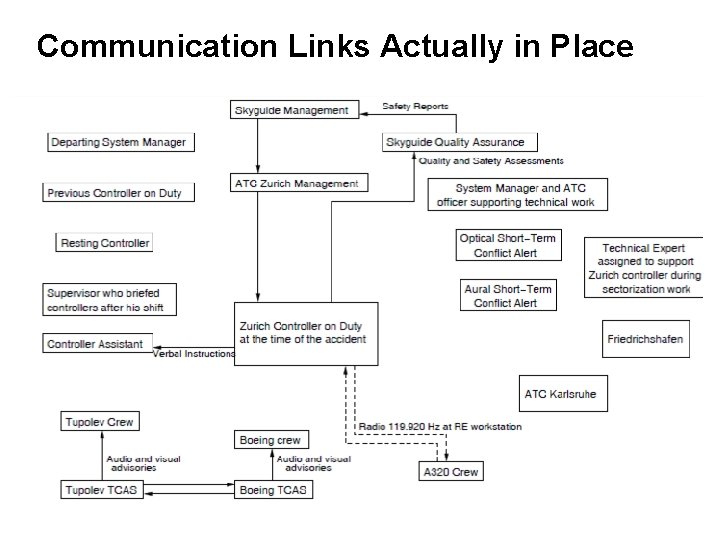

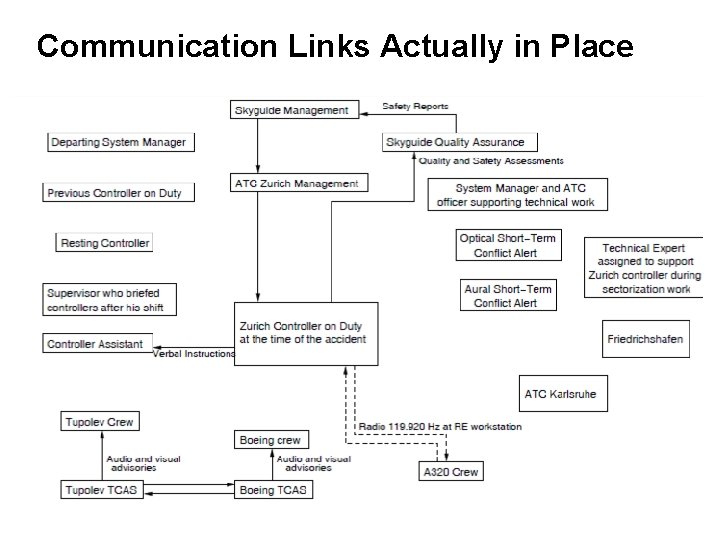

Communication Links Actually in Place

CAST (Causal Analysis using System Theory) • Identify the system hazard violated and the system safety design constraints • Construct the safety control structure as it was designed to work – Component responsibilities (requirements) – Control actions and feedback loops • For each component, determine if it fulfilled its responsibilities or provided inadequate control. – If inadequate control, why? (including changes over time) – Context – Process Model Flaws

CAST (2) • For humans, why did it make sense for them to do what they did (to reduce hindsight bias) • Examine coordination and communication • Consider dynamics and migration to higher risk • Determine the changes that could eliminate the inadequate control (lack of enforcement of system safety constraints) in the future. • Generate recommendations

CAST (3) • Continuous Improvement – Assigning responsibility for implementing recommendations – Follow-up to ensure implemented – Feedback channels to determine whether changes effective • If not, why not?
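In practice, the CAST steps fill in one record per component and then track each recommendation until feedback shows it worked. The code below is a minimal sketch of that bookkeeping, using hypothetical `ComponentAnalysis` and `Recommendation` types. The example entry reuses wording from the LEX ATC Facility slides, but the recommendation and its owner are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Recommendation:
    text: str
    owner: Optional[str] = None       # who is responsible for implementing it
    implemented: bool = False
    effective: Optional[bool] = None  # None until feedback shows whether it worked


@dataclass
class ComponentAnalysis:
    """One CAST entry per component of the safety control structure."""
    name: str
    responsibilities: list[str]
    unsafe_control_actions: list[str] = field(default_factory=list)
    process_model_flaws: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)
    recommendations: list[Recommendation] = field(default_factory=list)

    def inadequate_control(self) -> bool:
        return bool(self.unsafe_control_actions)


def open_items(analyses: list[ComponentAnalysis]) -> list[tuple[str, str]]:
    """Inadequate control without a recommendation, or recommendations still
    lacking an owner, implementation, or evidence of effectiveness."""
    items = []
    for a in analyses:
        if a.inadequate_control() and not a.recommendations:
            items.append((a.name, "no recommendation yet"))
        for r in a.recommendations:
            if r.owner is None or not r.implemented or r.effective is not True:
                items.append((a.name, r.text))
    return items


facility = ComponentAnalysis(
    name="LEX Air Traffic Control Facility",
    responsibilities=["Schedule sufficient controllers to monitor all aircraft"],
    unsafe_control_actions=["Did not staff Tower and Radar functions separately"],
    process_model_flaws=["Believed verbal 2-controller guidance was merely preferred"],
    context=["Requests for increased staffing were ignored"],
)
print(open_items([facility]))  # [('LEX Air Traffic Control Facility', 'no recommendation yet')]

# Hypothetical recommendation: assigned and implemented, not yet shown effective.
facility.recommendations.append(
    Recommendation("Staff Tower and Radar separately", owner="ATO", implemented=True)
)
print(open_items([facility]))  # [('LEX Air Traffic Control Facility', 'Staff Tower and Radar separately')]
```

An item leaves the list only once the feedback step confirms that the change was effective. This matches the "If not, why not?" loop in CAST (3).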

Conclusions • The model used in accident or incident analysis determines what we look for, how we go about looking for “facts”, and what facts we see as relevant. • A linear chain of events promotes looking for something that broke or went wrong in the proximal sequence of events prior to the accident. • In accidents where nothing physically broke, we currently look for operator error. • Unless we look further, we limit our learning and almost guarantee future accidents related to the same factors. • Goal is to learn how to improve the safety control structure

Evaluating CAST on Real Accidents • Used on many types of accidents – Aviation – Trains – Chemical plants and off-shore oil drilling – Road Tunnels – Medical devices – Etc. • All CAST analyses so far have identified important causal factors omitted from official accident reports

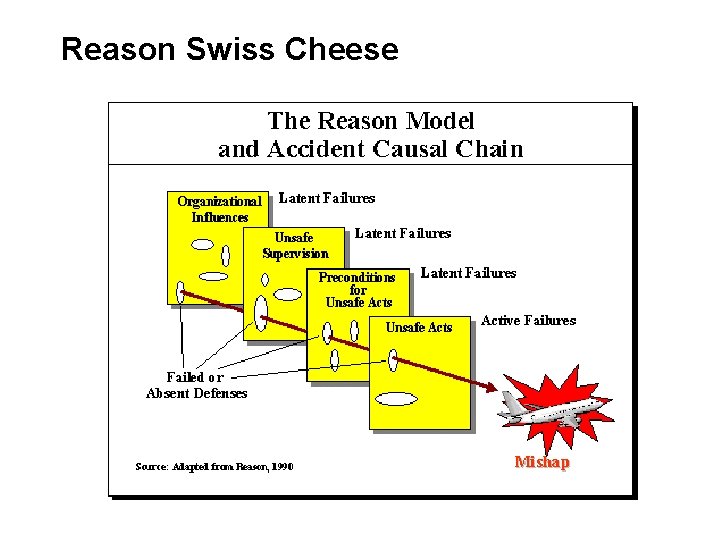

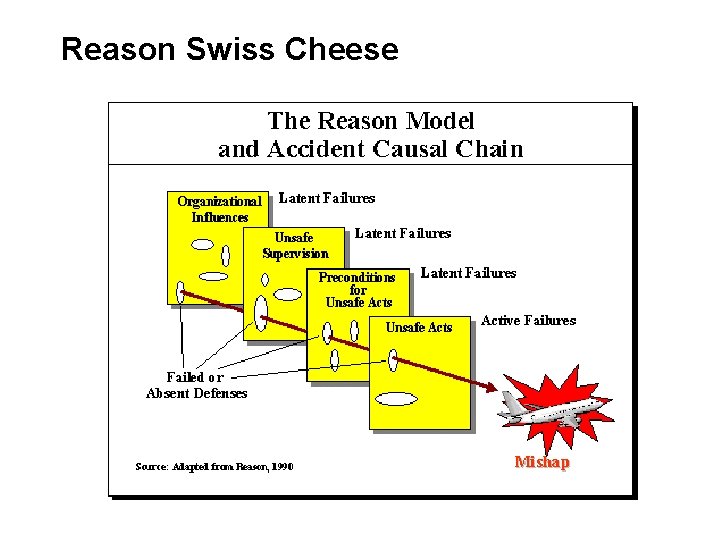

Evaluations (2) • Jon Hickey, US Coast Guard applied to aviation training accidents – US Coast Guard currently uses HFACS (based on Swiss Cheese Model) – Spate of recent accidents but couldn’t find any common factors – Using CAST, found common systemic factors not identified by HFACS – USCG now in process of adopting CAST • Dutch Safety Agency using it on a large variety of accidents (aircraft, railroads, traffic accidents, child abuse, medicine, airport runway incursions, etc. )


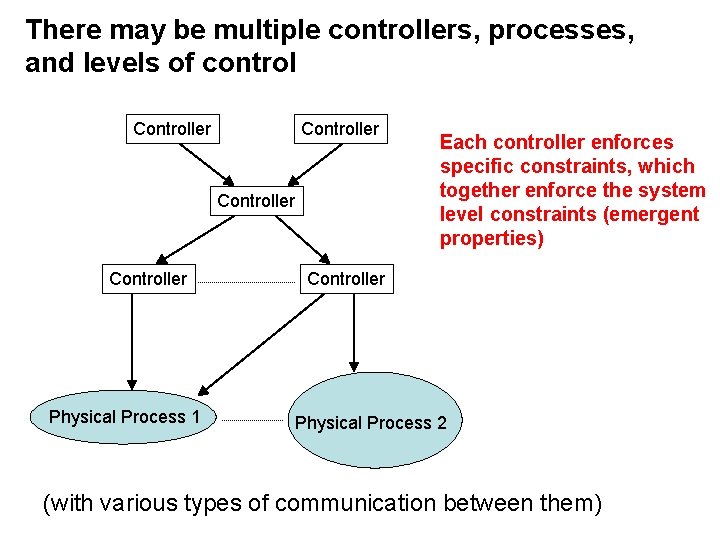

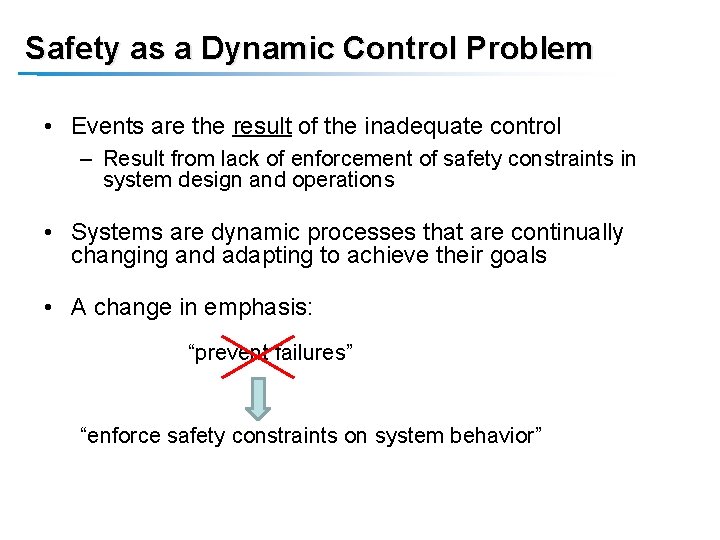

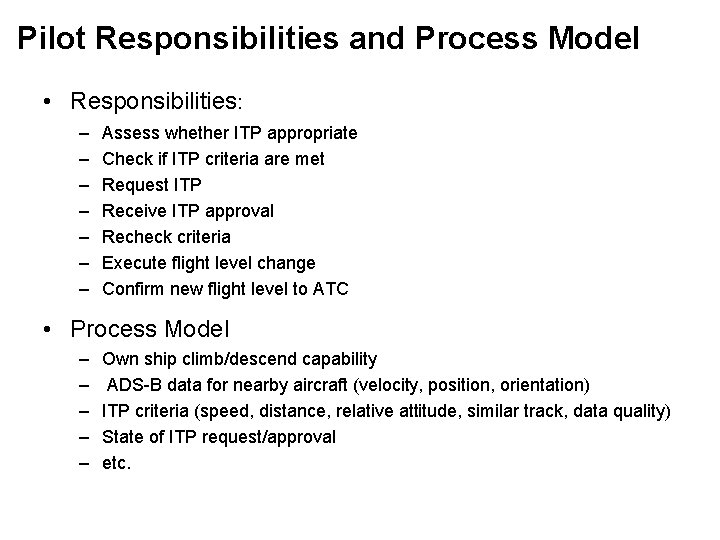

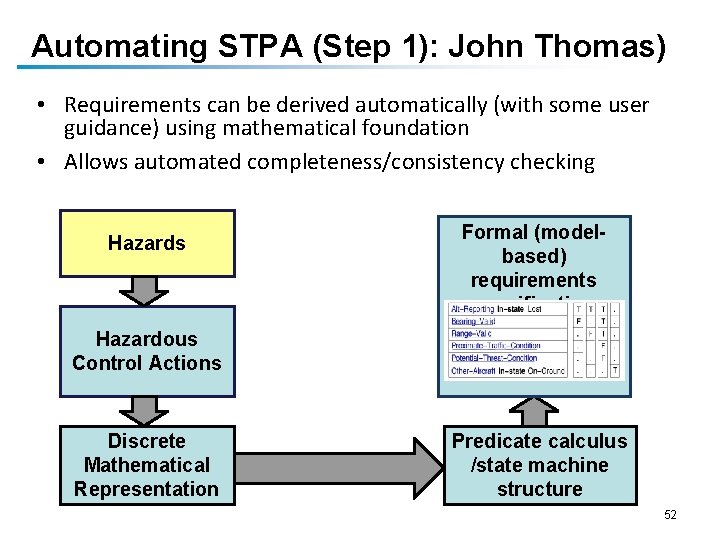

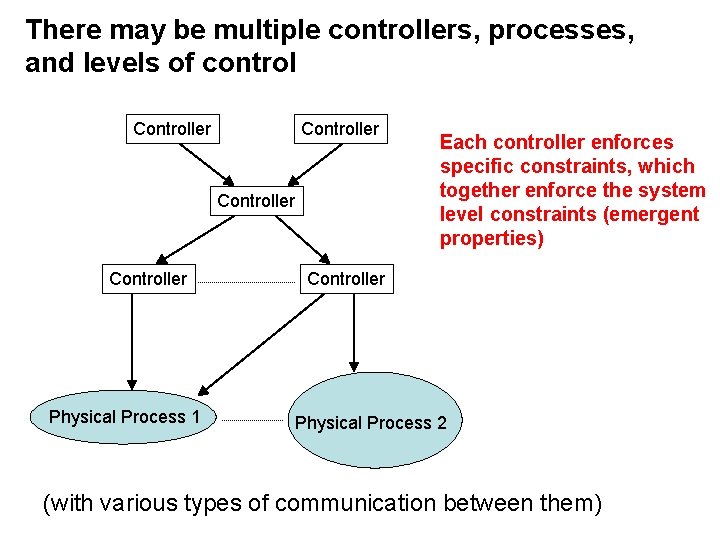

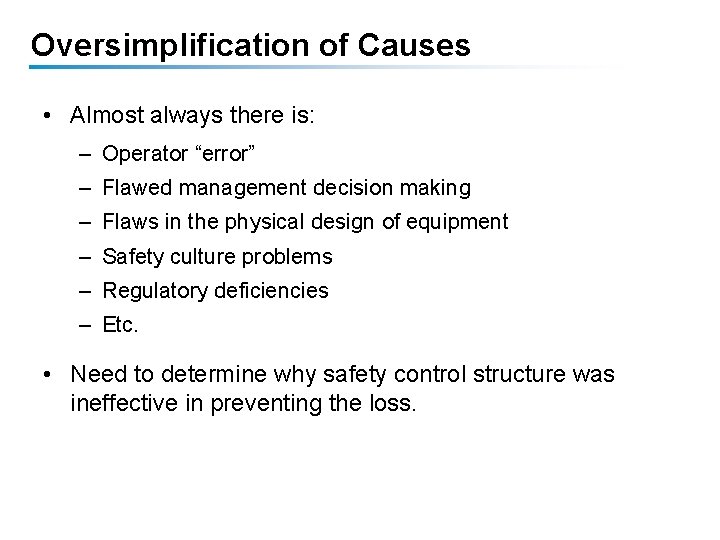

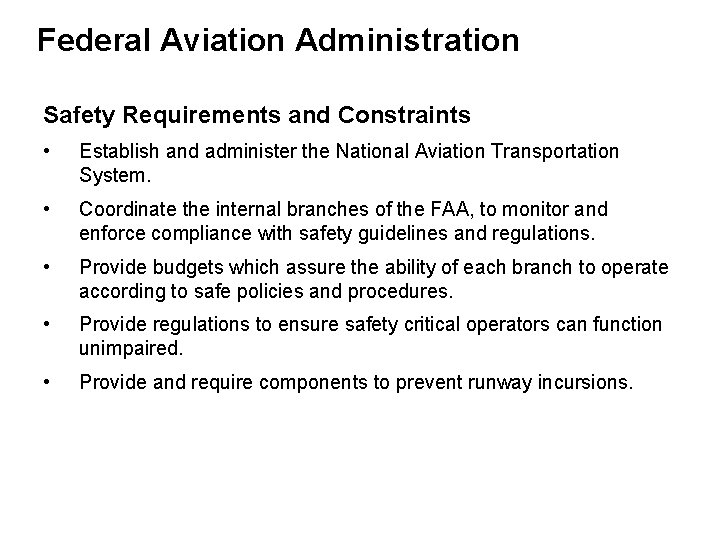

Strategy vs. Tactics • Current security practice focuses primarily on tactics – Cyber security often framed as a battle between adversaries and defenders (tactics) – Requires correctly identifying attackers’ motives, capabilities, and targeting • Can reframe problem in terms of strategy – Identify and control system vulnerabilities (vs. reacting to potential threats) – Top-down vs. bottom-up tactics approach – Tactics tackled later

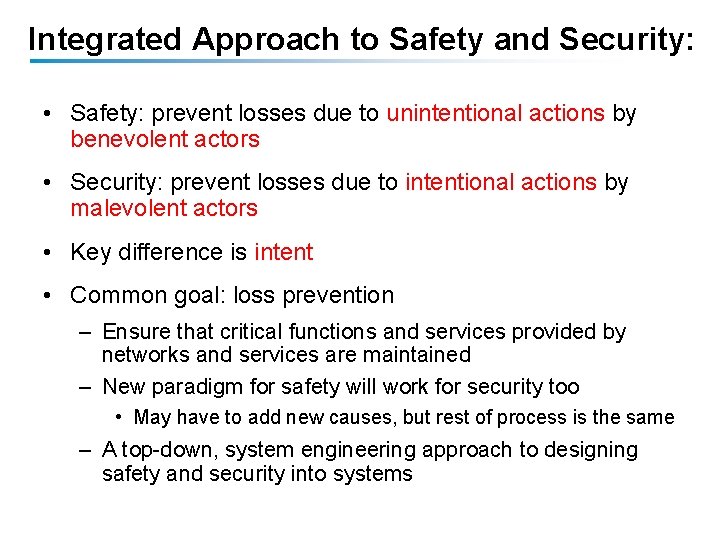

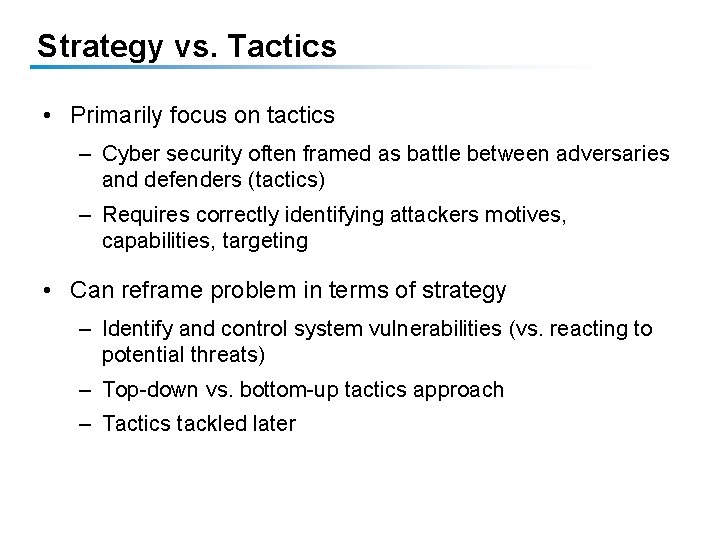

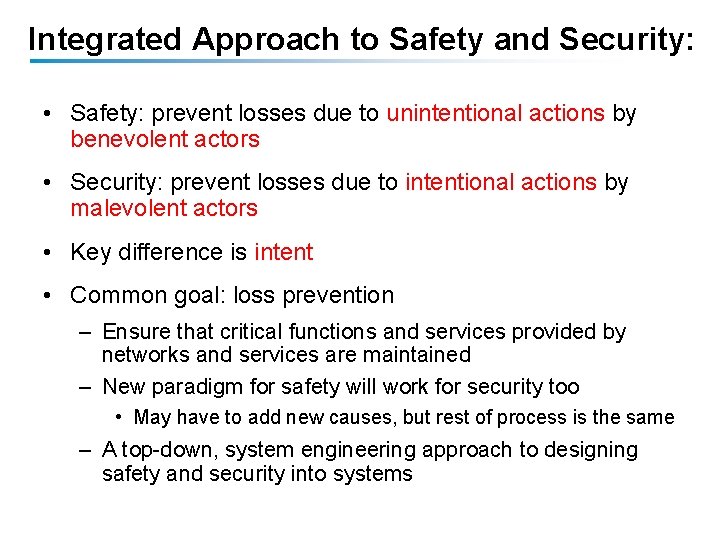

Integrated Approach to Safety and Security: • Safety: prevent losses due to unintentional actions by benevolent actors • Security: prevent losses due to intentional actions by malevolent actors • Key difference is intent • Common goal: loss prevention – Ensure that critical functions and services provided by networks and services are maintained – New paradigm for safety will work for security too • May have to add new causes, but rest of process is the same – A top-down, system engineering approach to designing safety and security into systems
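The claim that the process stays the same and only new causes are added can be made concrete by treating intent as one more attribute of a causal scenario. The code below is a minimal sketch under that assumption. The `Intent` and `CausalScenario` types and the valve example are hypothetical. The sketch shows only that one analysis over one control structure returns both safety and security scenarios.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Intent(Enum):
    UNINTENTIONAL = "safety"   # benevolent actor, e.g., a component failure
    INTENTIONAL = "security"   # malevolent actor, e.g., an attack


@dataclass(frozen=True)
class CausalScenario:
    """How a control action could become unsafe and lead to a loss."""
    control_action: str
    cause: str
    intent: Intent


def scenarios_for(action: str, scenarios: list[CausalScenario]) -> list[CausalScenario]:
    """Same analysis for safety and security: select by control action only."""
    return [s for s in scenarios if s.control_action == action]


scenarios = [
    CausalScenario("command relief valve", "pressure sensor fails low, valve not opened", Intent.UNINTENTIONAL),
    CausalScenario("command relief valve", "spoofed low pressure reading, valve not opened", Intent.INTENTIONAL),
    CausalScenario("set pump speed", "operator misreads display", Intent.UNINTENTIONAL),
]
for s in scenarios_for("command relief valve", scenarios):
    print(s.intent.value, "-", s.cause)
# safety - pressure sensor fails low, valve not opened
# security - spoofed low pressure reading, valve not opened
```

Both scenarios come out of the same analysis, and intent is read off afterwards. This keeps the "key difference is intent" distinction without building a separate control structure for security.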

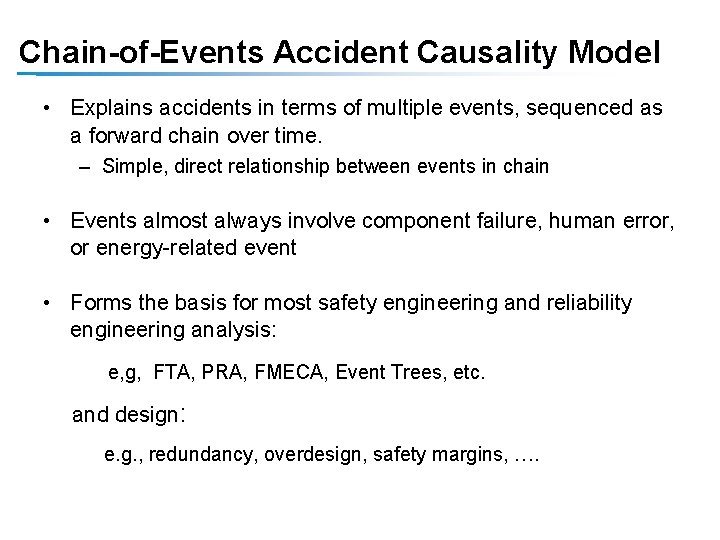

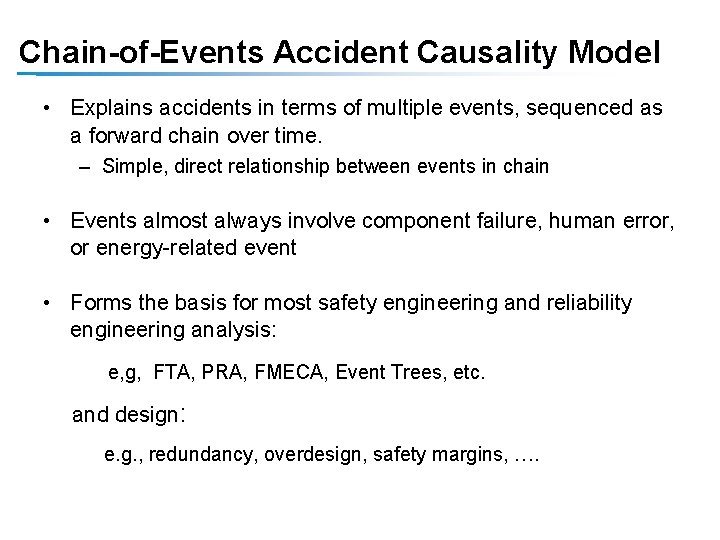


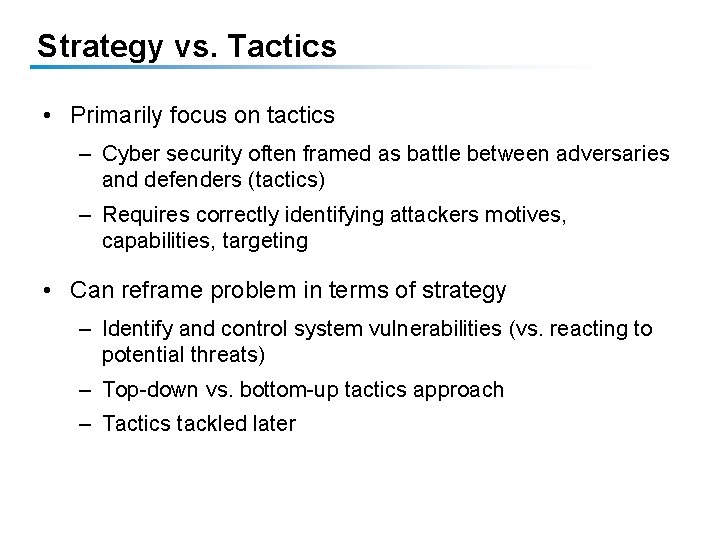

STPA-Sec Allows us to Address Security “Left of Design” [Bill Young] [Figure: cost of fix (low to high) across the system engineering phases Concept, Requirements, Design, Build, and Operate, spanning abstract systems to physical systems; labeled approaches: Secure Systems Thinking, System Security Requirements, Secure Systems Engineering, Cyber Security “Bolt-on”, Attack Response.] • Build security into the system, as with safety

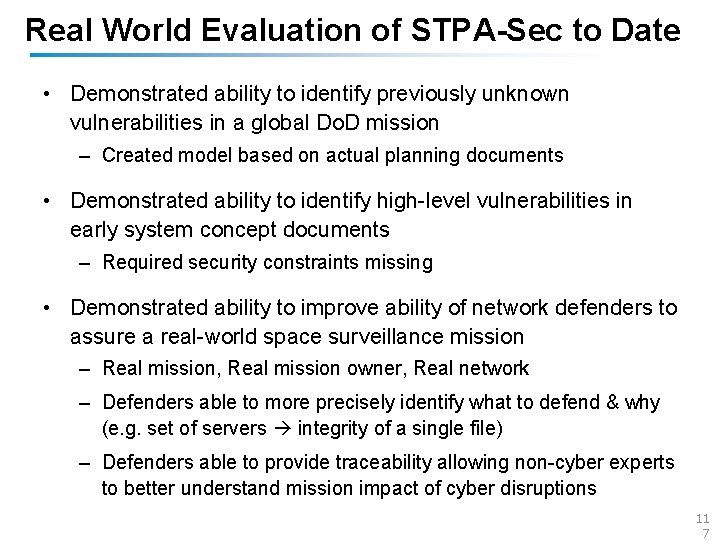

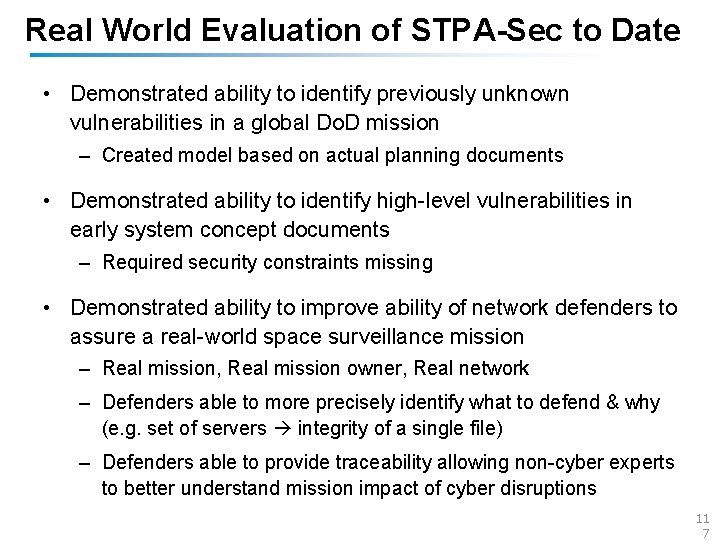

Real World Evaluation of STPA-Sec to Date • Demonstrated ability to identify previously unknown vulnerabilities in a global DoD mission – Created model based on actual planning documents • Demonstrated ability to identify high-level vulnerabilities in early system concept documents – Required security constraints missing • Demonstrated ability to improve the ability of network defenders to assure a real-world space surveillance mission – Real mission, real mission owner, real network – Defenders able to more precisely identify what to defend & why (e.g., from a set of servers to the integrity of a single file) – Defenders able to provide traceability allowing non-cyber experts to better understand mission impact of cyber disruptions

It’s still hungry … and I’ve been stuffing worms into it all day.

Reason Swiss Cheese