New Directions for NEST Research Nancy Lynch MIT

New Directions for NEST Research

Nancy Lynch, MIT

NEST Annual P.I. Meeting, Bar Harbor, Maine, July 12, 2002

My Group’s Work and NEST

- New building blocks (global services and distributed algorithms) for dynamic, fault-prone, distributed systems.
- Interacting state machine semantic models, including timing, hybrid continuous/discrete, and probabilistic behavior. Composition, abstraction.
- Formal methods/tools to support reasoning about distributed systems: conditional performance analysis methods, IOA language/tools.
- System modeling.

A Suggestion for NEST Research: a Middleware Service Catalog

- Virtually everyone here is developing middleware services:
  - Clock synchronization, location services, routing, reliable communication, consensus, group membership, group communication, object management services, publish-subscribe, network surveillance, reconfiguration, authentication, key distribution, …
- But it’s not always obvious exactly what these services guarantee:
  - API, functionality, conditional performance guarantees, fault-tolerance guarantees

Middleware Service Catalog

- Idea: Create and maintain a catalog of specifications for NEST middleware services.
  - High-level descriptions of requirements
    - Assumptions and guarantees
    - API, functionality, conditional performance, fault-tolerance
    - Formal, informal
  - Models of the distributed algorithms used in the various implementations.
  - Claims about the properties satisfied by the algorithms.
  - Models for the underlying platforms.
- Why this would be useful:
  - Another kind of output, complementary to demos.
  - Basis for discussion/clarification/comparison.
  - Will help bring implementations together.
  - Basis for formal analysis.
  - Can help in developing algorithmic theory for NEST-like systems.

Building Blocks for High-Performance, Fault-Tolerant Distributed Systems

Nancy Lynch, MIT

NEST Annual P.I. Meeting, Bar Harbor, Maine, July 12, 2002

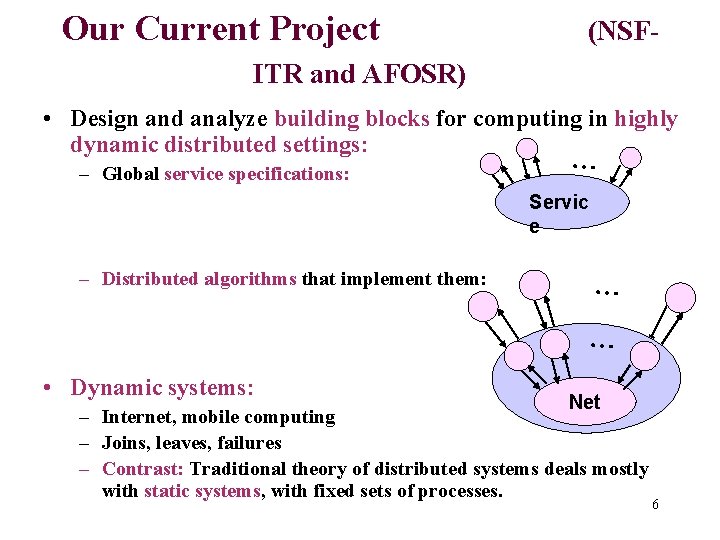

Our Current Project (NSF-ITR and AFOSR)

- Design and analyze building blocks for computing in highly dynamic distributed settings:
  - Global service specifications
  - Distributed algorithms that implement them
- Dynamic systems:
  - Internet, mobile computing
  - Joins, leaves, failures
  - Contrast: Traditional theory of distributed systems deals mostly with static systems, with fixed sets of processes.

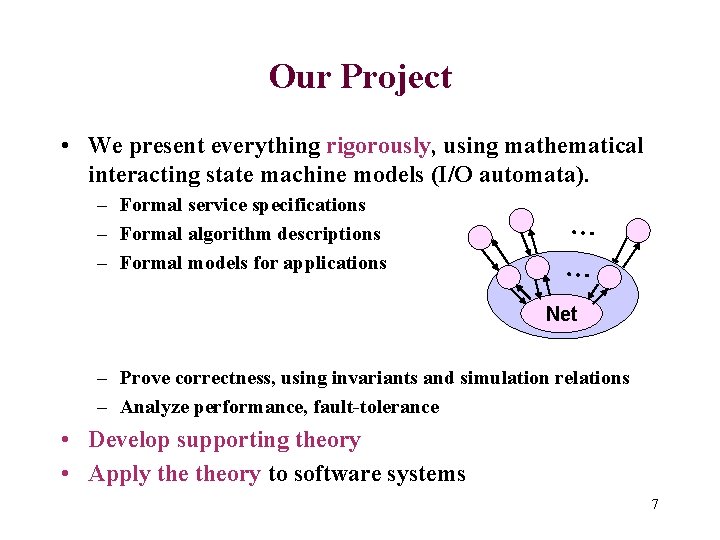

Our Project

- We present everything rigorously, using mathematical interacting state machine models (I/O automata).
  - Formal service specifications
  - Formal algorithm descriptions
  - Formal models for applications
  - Prove correctness, using invariants and simulation relations
  - Analyze performance, fault-tolerance
- Develop supporting theory
- Apply theory to software systems

![Current Subprojects Scalable group communication Khazan Keidar Lynch Shvartsman Dynamic Atomic Broadcast Current Subprojects • Scalable group communication [Khazan, Keidar, Lynch, Shvartsman] • Dynamic Atomic Broadcast](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-8.jpg)

Current Subprojects

- Scalable group communication [Khazan, Keidar, Lynch, Shvartsman]
- Dynamic Atomic Broadcast [Bar-Joseph, Keidar, Lynch]
- Reconfigurable Atomic Memory [Lynch, Shvartsman]
- Communication protocols [Livadas, Lynch, Keidar, Bakr]
- Peer-to-peer computing [Lynch, Malkhi, Ratajczak, Stoica]
- Fault-tolerant consensus [Keidar, Rajsbaum]
- Foundations [Lynch, Segala, Vaandrager, Kirli]
- Applications:
  - Toy helicopter [Mitra, Wang, Feron]
  - Video streaming [Livadas, Nguyen, Zakhor]
  - Unmanned flight control [Ha, Kochocki, Tanzman]
  - Agent programming [Kawabe]

People

- Project leader: Nancy Lynch
- Postdocs: Idit Keidar, Dilsun Kirli
- Ph.D. students: Roger Khazan, Carl Livadas, Ziv Bar-Joseph, Rui Fan, Sayan Mitra, Seth Gilbert
- MEng students: Omar Bakr, Matt Bachmann, Vida Ha
- Other collaborators: Alex Shvartsman, Dahlia Malkhi, David Ratajczak, Ion Stoica, Sergio Rajsbaum, Roberto Segala, Frits Vaandrager, Yong Wang, Eric Feron, Thinh Nguyen, Avideh Zakhor, Joe Kochocki, Alan Tanzman, Yoshinobu Kawabe, …

This talk:

1. Scalable Group Communication
2. Dynamic Atomic Broadcast
3. Reconfigurable Atomic Memory

![1 Scalable Group Communication Keidar Khazan 00 02 Khazan 02 K K Lynch Shvartsman 1. Scalable Group Communication [Keidar, Khazan 00, 02] [Khazan 02] [K, K, Lynch, Shvartsman](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-11.jpg)

1. Scalable Group Communication [Keidar, Khazan 00, 02] [Khazan 02] [K, K, Lynch, Shvartsman 02]

Group Communication Services

- Cope with changing participants using abstract groups of client processes with changing membership sets.
- Processes communicate with group members indirectly, by sending messages to the group as a whole.
- GC services support management of groups:
  - Maintain membership information.
    - Form new views in response to changes.
  - Manage communication.
    - Communication respects views.
    - Provide guarantees about ordering, reliability of message delivery.
    - Virtual synchrony
- Systems: Isis, Transis, Totem, Ensemble, …

Group Communication Services

- Advantages:
  - High-level programming abstraction
  - Hides complexity of coping with changes
- Disadvantages:
  - Can be costly, especially when forming new views.
  - May have problems scaling to large networks.
- Applications:
  - Managing replicated data
  - Distributed multiplayer interactive games
  - Multi-media conferencing, collaborative work

![New GC Service for WANs Khazan New specification including virtual synchrony GCS New GC Service for WANs [Khazan] • New specification, including virtual synchrony. GCS •](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-14.jpg)

New GC Service for WANs [Khazan]

- New specification, including virtual synchrony.
- New algorithm:
  - Uses separate scalable membership service, implemented on a small set of membership servers [Keidar, Sussman, Marzullo, Dolev].
  - Multicast implemented on all the nodes.
  - View change uses only one round for state exchange, in parallel with membership service’s agreement on views.
  - Participants can join during view formation.

![New GC Service for WANs Distributed implementation Tarashchanskiy Safety proofs using new New GC Service for WANs • Distributed implementation [Tarashchanskiy] • Safety proofs, using new](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-15.jpg)

New GC Service for WANs

- Distributed implementation [Tarashchanskiy]
- Safety proofs, using new incremental proof methods [Keidar, Khazan, Lynch, Shvartsman 00].
- Liveness proofs
- Performance analysis
  - Analyze time from when network stabilizes until GCS announces new views.
  - Analyze message latency.
  - Conditional analysis, based on input, failure, and timing assumptions.
  - Compositional analysis, based on performance of Membership Service and Net.
- Also modeled and analyzed a data-management application running on top of the new GCS.

![2 EarlyDelivery Dynamic Atomic Broadcast BarJoseph Keidar Lynch DISC 02 DAB 16 2. Early-Delivery Dynamic Atomic Broadcast [Bar-Joseph, Keidar, Lynch, DISC 02] DAB 16](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-16.jpg)

2. Early-Delivery Dynamic Atomic Broadcast (DAB) [Bar-Joseph, Keidar, Lynch, DISC 02]

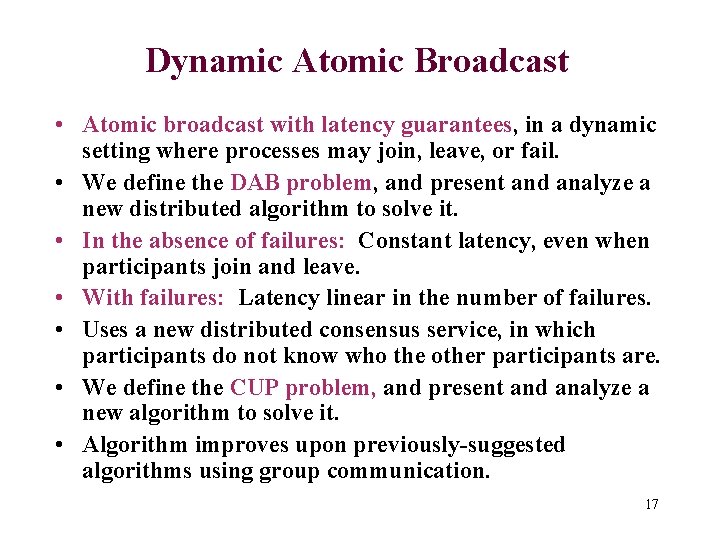

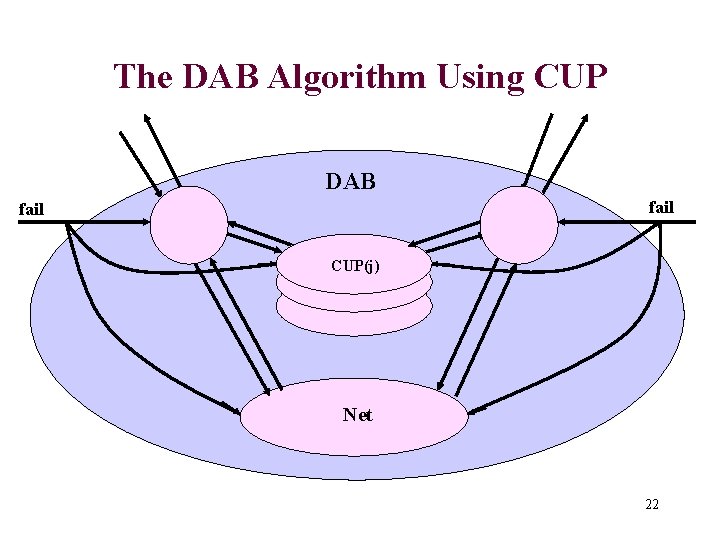

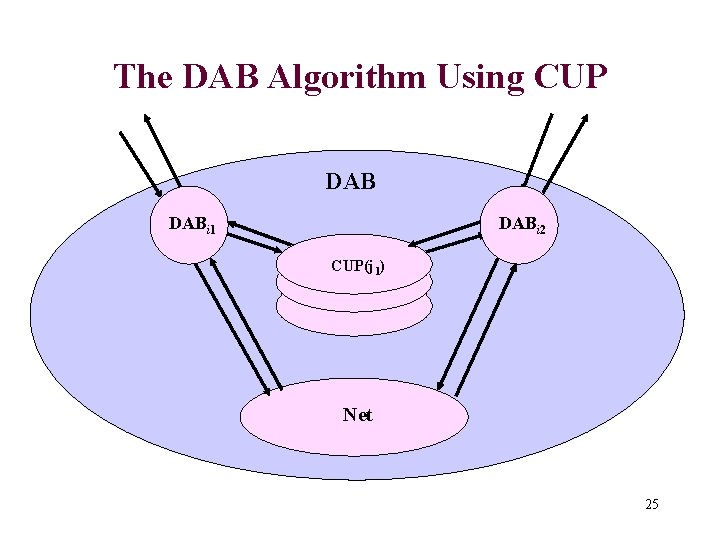

Dynamic Atomic Broadcast

- Atomic broadcast with latency guarantees, in a dynamic setting where processes may join, leave, or fail.
- We define the DAB problem, and present and analyze a new distributed algorithm to solve it.
- In the absence of failures: Constant latency, even when participants join and leave.
- With failures: Latency linear in the number of failures.
- Uses a new distributed consensus service, in which participants do not know who the other participants are.
- We define the CUP problem, and present and analyze a new algorithm to solve it.
- Algorithm improves upon previously suggested algorithms using group communication.

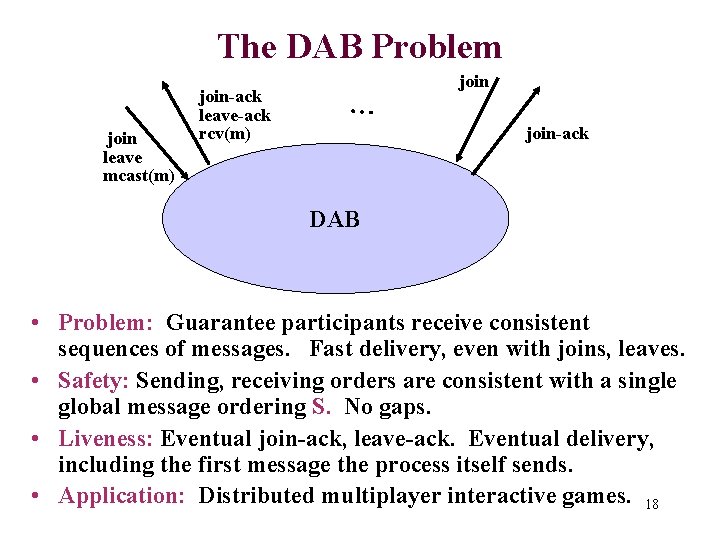

The DAB Problem

(Diagram: DAB service interface with actions join, leave, mcast(m), join-ack, leave-ack, rcv(m).)

- Problem: Guarantee participants receive consistent sequences of messages. Fast delivery, even with joins, leaves.
- Safety: Sending, receiving orders are consistent with a single global message ordering S. No gaps.
- Liveness: Eventual join-ack, leave-ack. Eventual delivery, including the first message the process itself sends.
- Application: Distributed multiplayer interactive games.

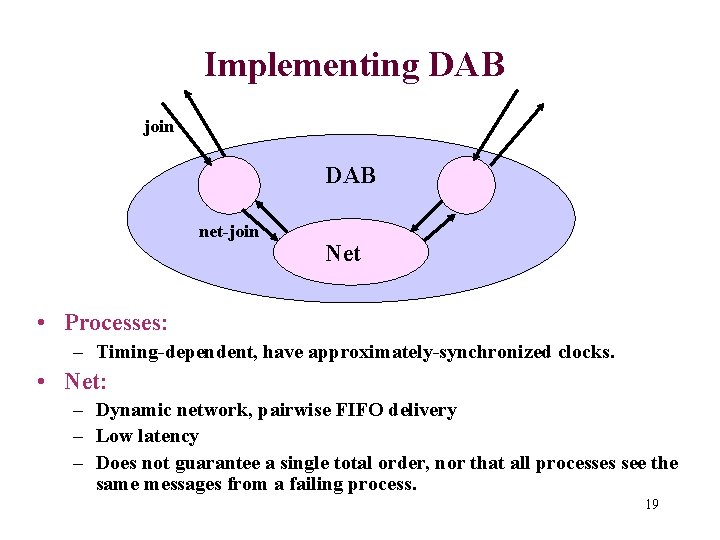

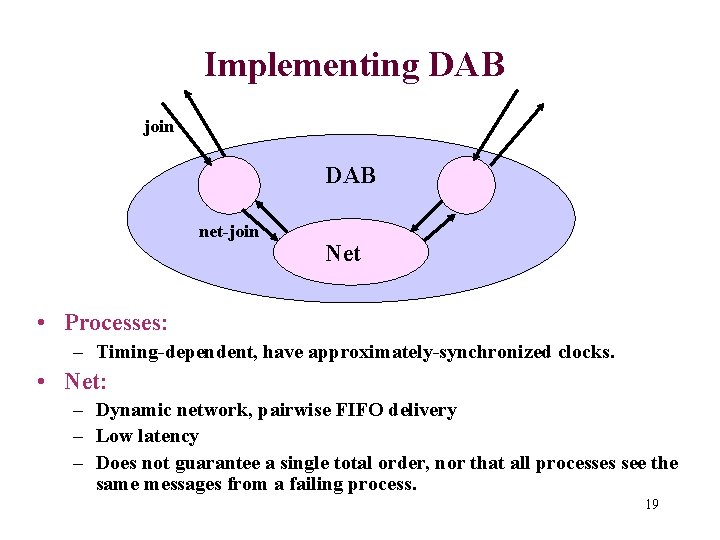

Implementing DAB

(Diagram labels: join, DAB, net-join, Net.)

- Processes:
  - Timing-dependent, have approximately synchronized clocks.
- Net:
  - Dynamic network, pairwise FIFO delivery
  - Low latency
  - Does not guarantee a single total order, nor that all processes see the same messages from a failing process.

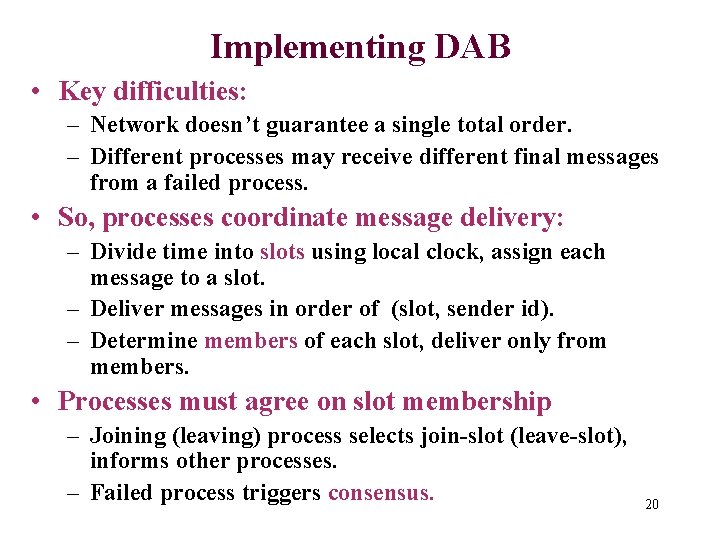

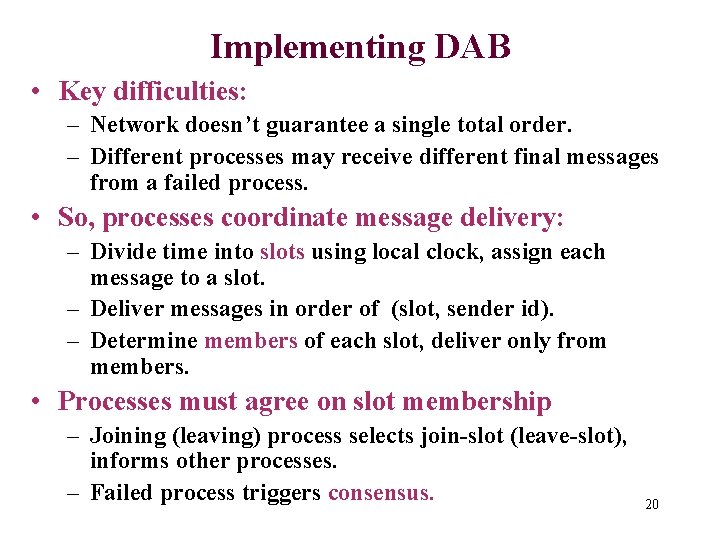

Implementing DAB

- Key difficulties:
  - Network doesn’t guarantee a single total order.
  - Different processes may receive different final messages from a failed process.
- So, processes coordinate message delivery:
  - Divide time into slots using local clock, assign each message to a slot.
  - Deliver messages in order of (slot, sender id).
  - Determine members of each slot, deliver only from members.
- Processes must agree on slot membership
  - Joining (leaving) process selects join-slot (leave-slot), informs other processes.
  - Failed process triggers consensus.
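The slot-based delivery rule above can be illustrated with a small sketch. It is not the DAB algorithm itself: it assumes slot membership has already been agreed, uses integer millisecond timestamps, and the names `Message`, `slot_of`, `delivery_order`, and `SLOT_LENGTH_MS` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

SLOT_LENGTH_MS = 100  # illustrative slot length; not a value from the talk


@dataclass(frozen=True)
class Message:
    sender: int        # sender id
    send_time_ms: int  # sender's local clock reading when it multicast the message
    payload: str


def slot_of(send_time_ms: int) -> int:
    """Assign a message to a slot based on the sender's local clock."""
    return send_time_ms // SLOT_LENGTH_MS


def delivery_order(messages: List[Message],
                   members_by_slot: Dict[int, Set[int]]) -> List[Message]:
    """Order messages by (slot, sender id), delivering only from slot members.

    members_by_slot is assumed to be the membership every process has agreed
    on (via join-slot/leave-slot announcements, or consensus after a failure).
    """
    eligible = [m for m in messages
                if m.sender in members_by_slot.get(slot_of(m.send_time_ms), set())]
    # sorted() is stable, so several messages from one sender in one slot
    # keep their arrival order (FIFO per sender).
    return sorted(eligible, key=lambda m: (slot_of(m.send_time_ms), m.sender))


# Process 3 is a member of slot 0 only (say, its failure slot was agreed to be 1).
members = {0: {1, 2, 3}, 1: {1, 2}}
received = [
    Message(2, 30, "b"),
    Message(1, 120, "c"),
    Message(3, 150, "lost"),  # sent in slot 1, where 3 is not a member
    Message(1, 40, "a"),
    Message(3, 90, "d"),
]
print([m.payload for m in delivery_order(received, members)])  # ['a', 'b', 'd', 'c']
```

Because every process applies the same deterministic rule to the same agreed membership, all processes deliver the same sequence regardless of the order in which the network handed them the messages.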

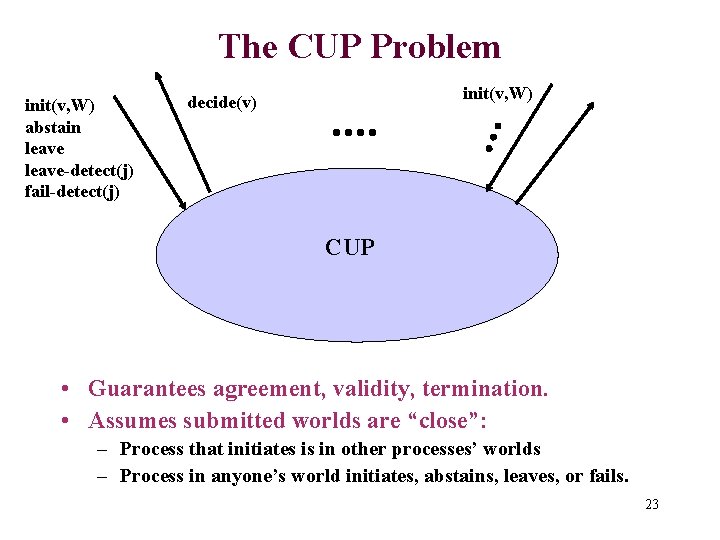

Using Consensus for DAB

- When process j fails, a consensus service is used to agree on j’s failure slot.
- Requires a new kind of consensus service, which:
  - Does not assume participants are known a priori; lets each participant say who it thinks the other participants are.
  - Allows processes to abstain.
  - Example: i joins around when consensus starts. j1 thinks i is participating, j2 thinks not. i cannot participate as usual, because j2 ignores it, but cannot be silent, because j1 waits for it. So i abstains.
- We define new Consensus with Unknown Participants (CUP) service.
- Use separate CUP(j) service to decide on failure slot for j.

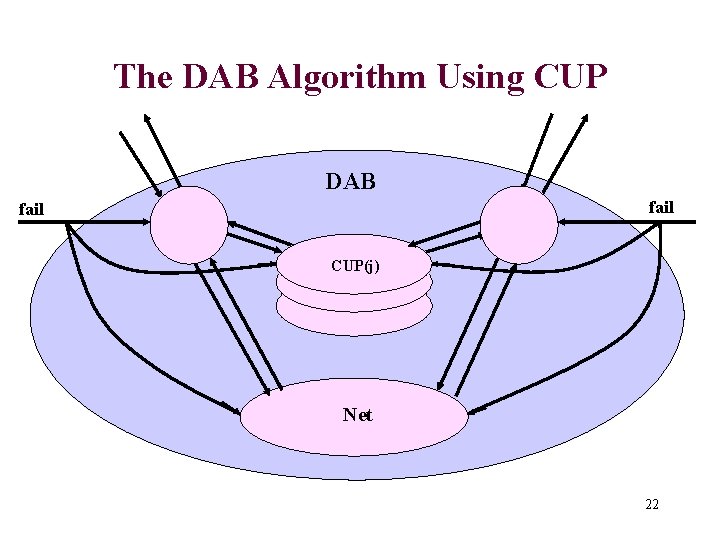

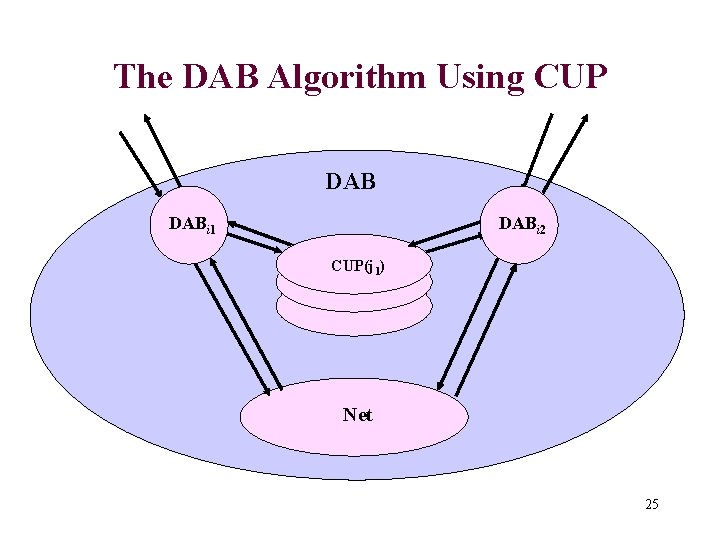

The DAB Algorithm Using CUP

(Diagram labels: DAB, fail, DAB processes i1 and i2, CUP(j), Net.)

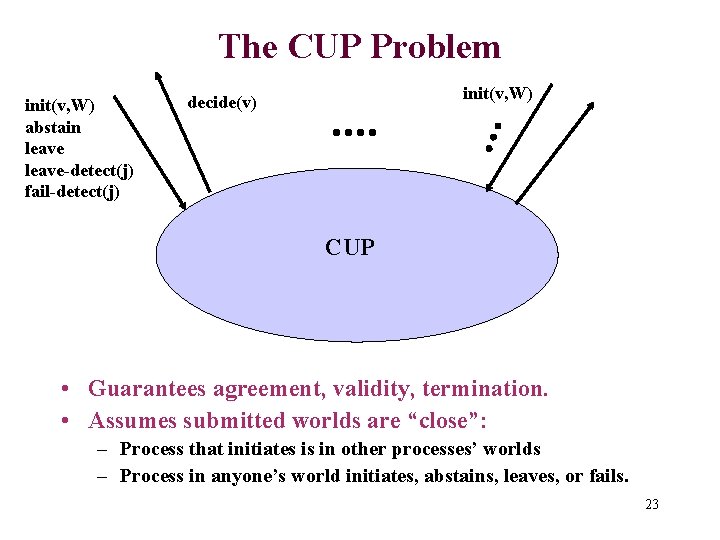

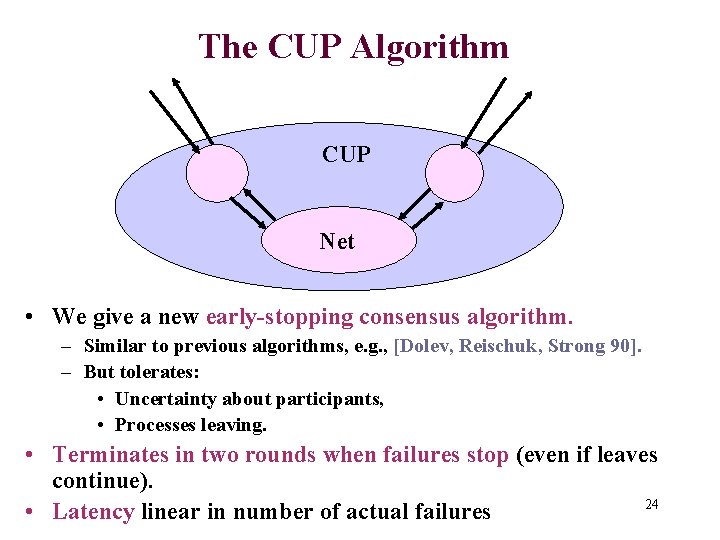

The CUP Problem

(Diagram: CUP service interface with actions init(v, W), abstain, leave-detect(j), fail-detect(j), decide(v).)

- Guarantees agreement, validity, termination.
- Assumes submitted worlds are “close”:
  - Process that initiates is in other processes’ worlds
  - Process in anyone’s world initiates, abstains, leaves, or fails.
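One possible reading of the "closeness" assumption can be written as a predicate over the submitted worlds. This is a hedged interpretation of the two bullets, not the formal definition from the paper; the function name `worlds_are_close` and its argument names are hypothetical.

```python
from typing import Dict, Set


def worlds_are_close(worlds: Dict[str, Set[str]],
                     accounted_for: Set[str]) -> bool:
    """Check the two closeness conditions, as interpreted here.

    worlds: maps each initiating process p to the world W it passed to init(v, W).
    accounted_for: every process that initiates, abstains, leaves, or fails.
    """
    initiators = set(worlds)
    # Condition 1: each initiator appears in every other initiator's world.
    for p in initiators:
        for q in initiators:
            if p != q and p not in worlds[q]:
                return False
    # Condition 2: anyone mentioned in some world initiates, abstains,
    # leaves, or fails (nobody is waited for forever).
    mentioned = set().union(*worlds.values())
    return mentioned <= accounted_for


# The abstain example: j1 believes i participates, j2 does not, so i abstains.
worlds = {"j1": {"j1", "j2", "i"}, "j2": {"j1", "j2"}}
print(worlds_are_close(worlds, accounted_for={"j1", "j2", "i"}))  # True
print(worlds_are_close(worlds, accounted_for={"j1", "j2"}))       # False
```

The second call shows why abstaining matters in this reading: if i stays silent, j1's world contains a process that is never accounted for.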

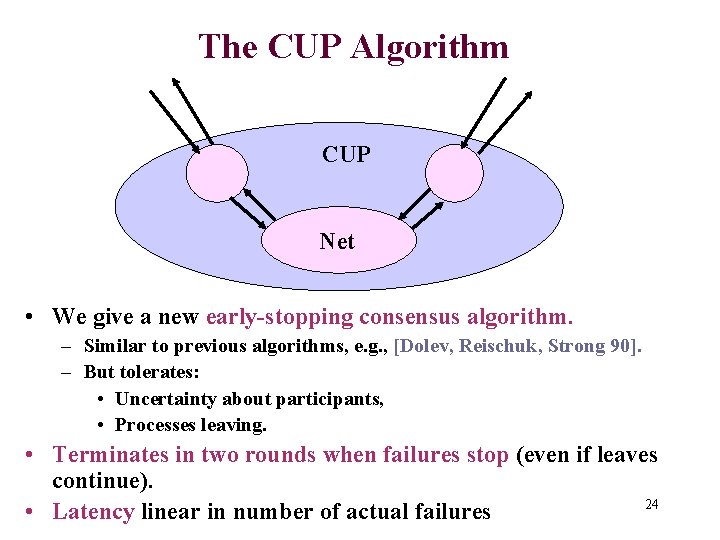

The CUP Algorithm

- We give a new early-stopping consensus algorithm.
  - Similar to previous algorithms, e.g., [Dolev, Reischuk, Strong 90].
  - But tolerates:
    - Uncertainty about participants,
    - Processes leaving.
- Terminates in two rounds when failures stop (even if leaves continue).
- Latency linear in number of actual failures

The DAB Algorithm Using CUP

(Diagram labels: DAB processes i1 and i2, CUP(j1), Net.)

Discussion: DAB

- Modular: DAB algorithm, CUP, Network
- Modularity needed for keeping the complexity under control.
- Initial presentation was intertwined, not modular.
- Correctness of CUP (agreement, validity, termination) used to prove correctness of DAB (atomic broadcast safety and liveness guarantees).
- Latency bounds for CUP used to prove latency bounds for DAB.

![3 Reconfigurable Atomic Memory for Basic Objects Lynch Shvartsman DISC 02 RAMBO 27 3. Reconfigurable Atomic Memory for Basic Objects [Lynch, Shvartsman, DISC 02] RAMBO 27](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-27.jpg)

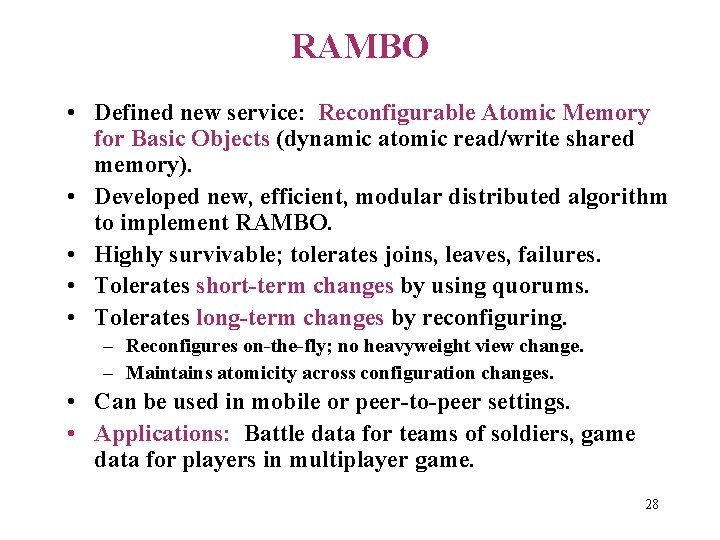

3. Reconfigurable Atomic Memory for Basic Objects (RAMBO) [Lynch, Shvartsman, DISC 02]

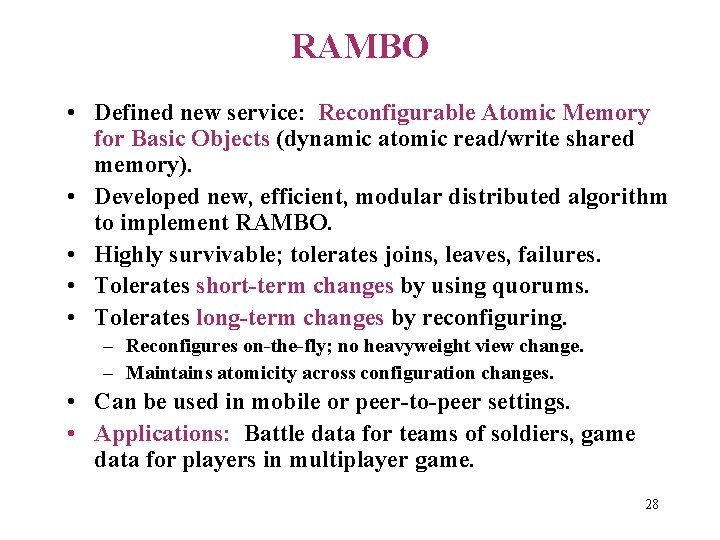

RAMBO

- Defined new service: Reconfigurable Atomic Memory for Basic Objects (dynamic atomic read/write shared memory).
- Developed new, efficient, modular distributed algorithm to implement RAMBO.
- Highly survivable; tolerates joins, leaves, failures.
- Tolerates short-term changes by using quorums.
- Tolerates long-term changes by reconfiguring.
  - Reconfigures on-the-fly; no heavyweight view change.
  - Maintains atomicity across configuration changes.
- Can be used in mobile or peer-to-peer settings.
- Applications: Battle data for teams of soldiers, game data for players in multiplayer game.

![Static QuorumBased Atomic ReadWrite Memory Implementation Attiya BarNoy Dolev Read Write use two Static Quorum-Based Atomic Read/Write Memory Implementation [Attiya, Bar-Noy, Dolev] • Read, Write use two](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-29.jpg)

Static Quorum-Based Atomic Read/Write Memory Implementation [Attiya, Bar-Noy, Dolev]

- Read, Write use two phases:
  - Phase 1: Read (value, tag) from a read-quorum
  - Phase 2: Write (value, tag) to a write-quorum
- Write determines largest tag in phase 1, picks a larger one, writes new (value, tag) in phase 2.
- Read determines latest (value, tag) in phase 1, propagates it in phase 2, then returns the value.
  - Could return unconfirmed value after phase 1.
- Highly concurrent.
- Quorum intersection property implies atomicity.
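The two-phase read and write can be sketched as a sequential, in-memory simulation. This is only an illustration under simplifying assumptions: no real network, concurrency, or failures; quorums are passed in explicitly by the caller; and tags are modeled as (sequence number, writer id) pairs, which is one common choice rather than something stated on the slide. The names `Replica` and `Client` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Tuple

Tag = Tuple[int, int]  # (sequence number, writer id), compared lexicographically


@dataclass
class Replica:
    tag: Tag = (0, 0)
    value: Any = None

    def query(self) -> Tuple[Tag, Any]:
        return self.tag, self.value

    def update(self, tag: Tag, value: Any) -> None:
        # Keep only the newest (value, tag) pair.
        if tag > self.tag:
            self.tag, self.value = tag, value


class Client:
    def __init__(self, client_id: int, replicas: Dict[int, Replica]):
        self.client_id = client_id
        self.replicas = replicas

    def _phase1(self, read_quorum: Iterable[int]) -> Tuple[Tag, Any]:
        """Phase 1: collect (tag, value) from a read-quorum, return the latest."""
        return max((self.replicas[r].query() for r in read_quorum),
                   key=lambda tv: tv[0])

    def _phase2(self, write_quorum: Iterable[int], tag: Tag, value: Any) -> None:
        """Phase 2: propagate (value, tag) to a write-quorum."""
        for r in write_quorum:
            self.replicas[r].update(tag, value)

    def write(self, value: Any, read_quorum, write_quorum) -> None:
        (seq, _), _ = self._phase1(read_quorum)       # largest tag seen
        self._phase2(write_quorum, (seq + 1, self.client_id), value)

    def read(self, read_quorum, write_quorum) -> Any:
        tag, value = self._phase1(read_quorum)        # latest (value, tag)
        self._phase2(write_quorum, tag, value)        # propagate before returning
        return value


replicas = {i: Replica() for i in range(5)}
alice, bob = Client(1, replicas), Client(2, replicas)
alice.write("x", read_quorum={0, 1, 2}, write_quorum={2, 3, 4})
print(bob.read(read_quorum={0, 1, 2}, write_quorum={0, 1, 3}))    # x
bob.write("y", read_quorum={0, 3, 4}, write_quorum={0, 1, 2})
print(alice.read(read_quorum={2, 3, 4}, write_quorum={2, 3, 4}))  # y
```

In the example every read-quorum shares at least one replica with the write-quorum of the preceding write, which is the intersection property that lets the reader find the latest tag.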

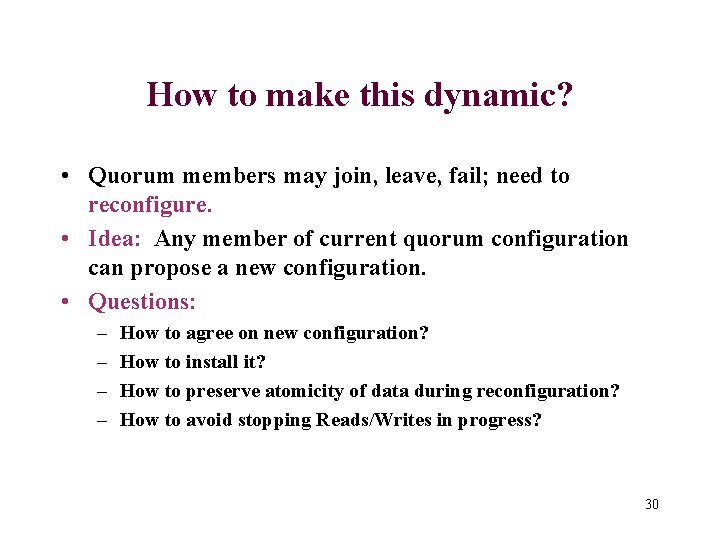

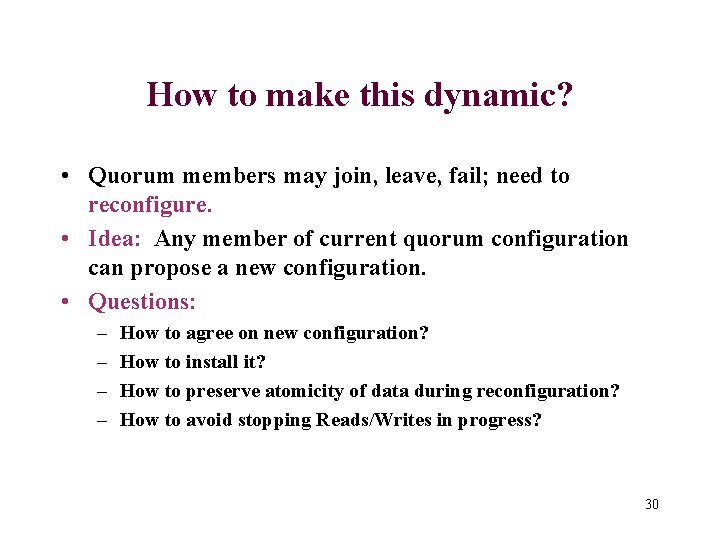

How to make this dynamic?

- Quorum members may join, leave, fail; need to reconfigure.
- Idea: Any member of current quorum configuration can propose a new configuration.
- Questions:
  - How to agree on new configuration?
  - How to install it?
  - How to preserve atomicity of data during reconfiguration?
  - How to avoid stopping Reads/Writes in progress?

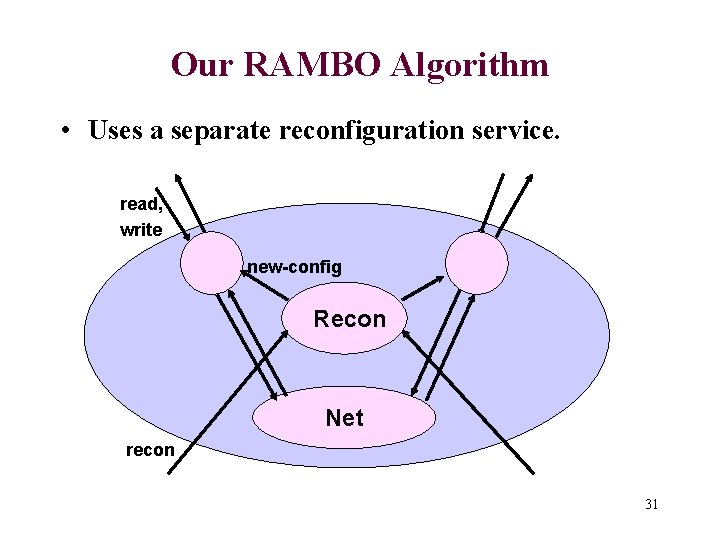

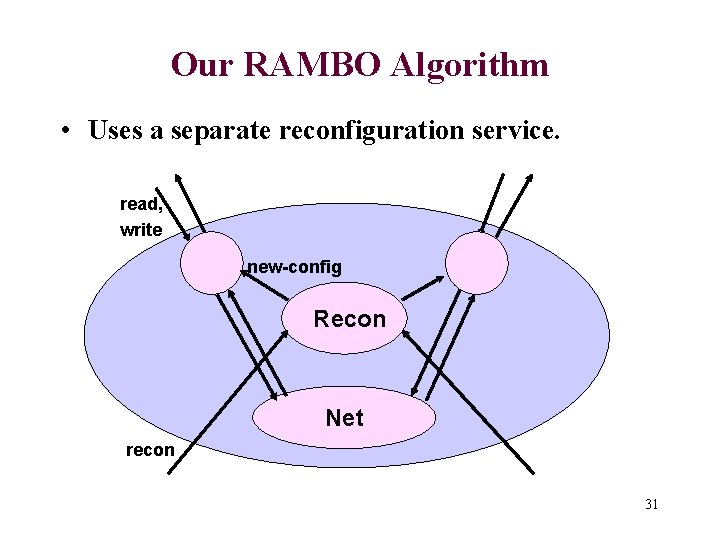

Our RAMBO Algorithm

- Uses a separate reconfiguration service.

(Diagram labels: read, write, recon, new-config, Recon, Net.)

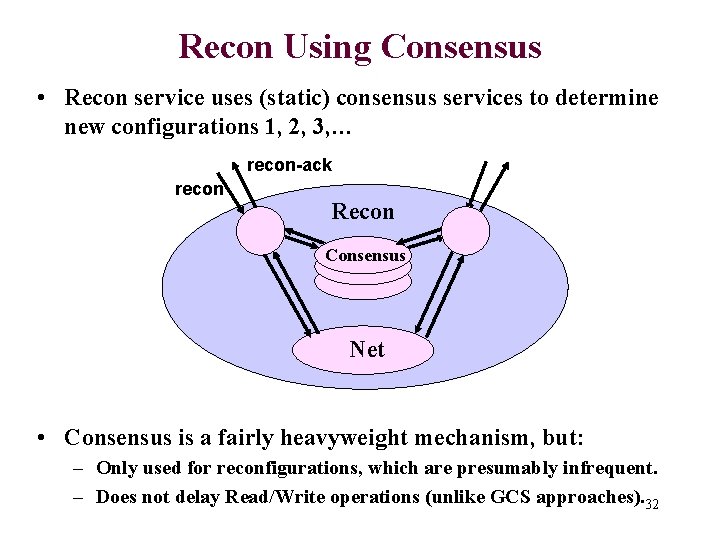

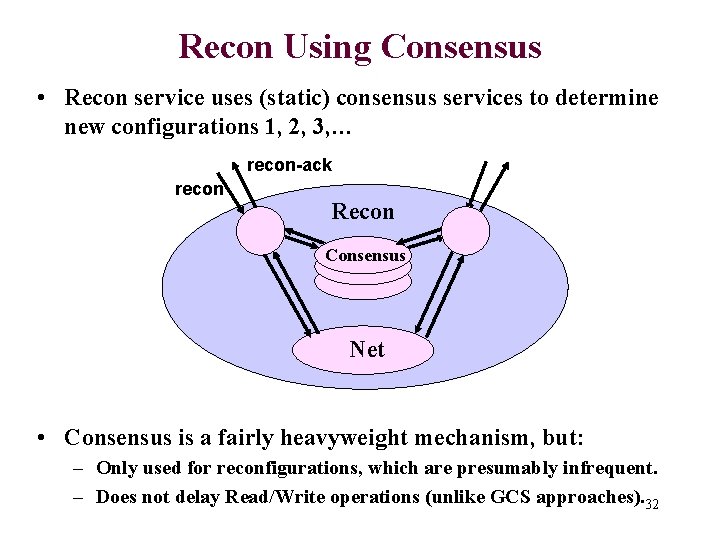

Recon Using Consensus

- Recon service uses (static) consensus services to determine new configurations 1, 2, 3, …
- Consensus is a fairly heavyweight mechanism, but:
  - Only used for reconfigurations, which are presumably infrequent.
  - Does not delay Read/Write operations (unlike GCS approaches).

(Diagram labels: recon, recon-ack, Recon, Consensus, Net.)

![Consensus Implementation initv decidev initv Consensus Use a variant of Paxos algorithm Lamport Consensus Implementation init(v) decide(v) init(v) Consensus • Use a variant of Paxos algorithm [Lamport]](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-33.jpg)

Consensus Implementation

(Diagram: Consensus service interface with actions init(v), decide(v).)

- Use a variant of Paxos algorithm [Lamport]
- Agreement, validity guaranteed absolutely.
- Termination guaranteed when underlying system stabilizes.
- Leader chosen using failure detectors; conducts two-phase algorithm with retries.

Read/Write Algorithm using Recon

(Diagram labels: read, write, new-config, Recon, Net.)

- Read/write processes run two-phase static quorum-based algorithm, using current configuration.
- Use gossiping and fixed point tests rather than highly structured communication.
- When Recon provides new configuration, R/W uses both.
- Do not abort R/W in progress, but do extra work to access additional processes needed for new quorums.

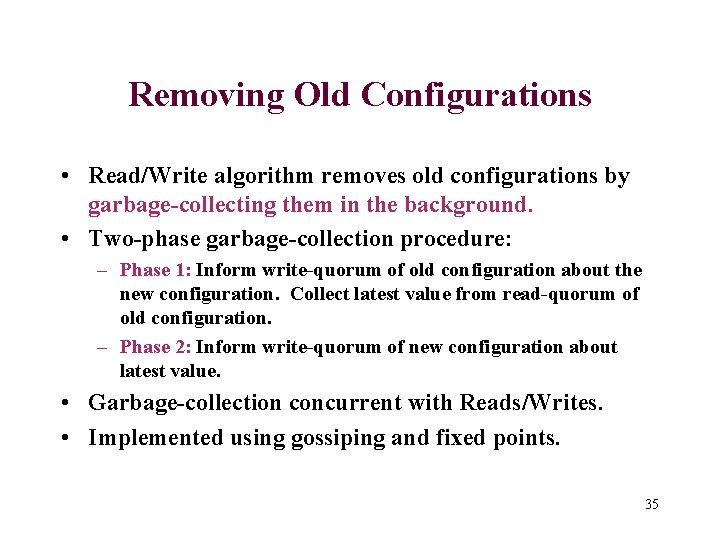

Removing Old Configurations

- Read/Write algorithm removes old configurations by garbage-collecting them in the background.
- Two-phase garbage-collection procedure:
  - Phase 1: Inform write-quorum of old configuration about the new configuration. Collect latest value from read-quorum of old configuration.
  - Phase 2: Inform write-quorum of new configuration about latest value.
- Garbage-collection concurrent with Reads/Writes.
- Implemented using gossiping and fixed points.
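The two garbage-collection phases can be sketched sequentially as follows. This is a simplified illustration, not RAMBO's implementation: the real procedure runs concurrently with reads and writes and is driven by gossip and fixed-point tests, whereas here quorums are given explicitly, tags are plain integers, and `Node`, `newer_config`, and `garbage_collect` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional, Tuple


@dataclass
class Node:
    tag: int = 0
    value: Any = None
    newer_config: Optional[str] = None  # set once the node learns of a newer configuration


def garbage_collect(nodes: Dict[str, Node],
                    old_read_quorum: Iterable[str],
                    old_write_quorum: Iterable[str],
                    new_write_quorum: Iterable[str],
                    new_config: str) -> Tuple[int, Any]:
    """Retire an old configuration in two phases; returns the (tag, value) carried over."""
    # Phase 1a: tell a write-quorum of the old configuration about the new one,
    # so later operations that touch the old configuration learn to use the new one.
    for n in old_write_quorum:
        nodes[n].newer_config = new_config
    # Phase 1b: collect the latest (tag, value) from a read-quorum of the old configuration.
    newest = max((nodes[n] for n in old_read_quorum), key=lambda node: node.tag)
    tag, value = newest.tag, newest.value
    # Phase 2: install that latest value at a write-quorum of the new configuration.
    for n in new_write_quorum:
        if tag > nodes[n].tag:
            nodes[n].tag, nodes[n].value = tag, value
    return tag, value


# Old configuration: a, b, c. New configuration: d, e, f.
nodes = {name: Node() for name in "abcdef"}
nodes["b"] = Node(tag=3, value="v3")
print(garbage_collect(nodes, {"a", "b"}, {"b", "c"}, {"d", "e"}, "config-2"))  # (3, 'v3')
```

After the call, a write-quorum of the new configuration holds the latest value, so the old configuration is no longer needed to preserve atomicity in this simplified model.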

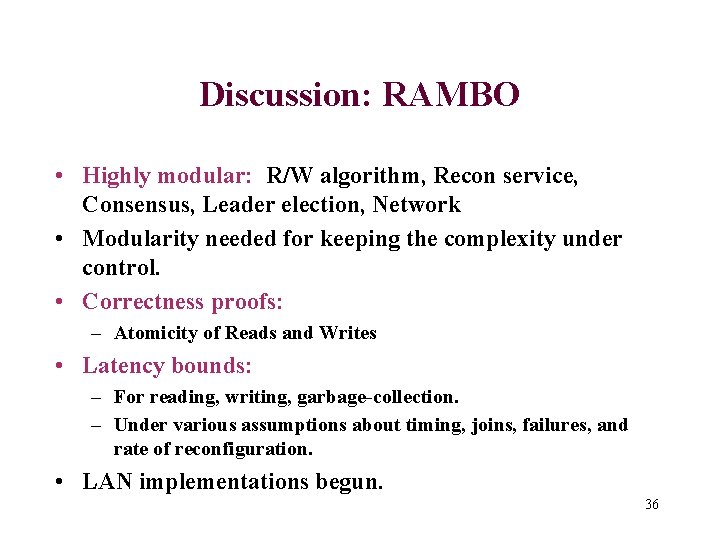

Discussion: RAMBO

- Highly modular: R/W algorithm, Recon service, Consensus, Leader election, Network
- Modularity needed for keeping the complexity under control.
- Correctness proofs:
  - Atomicity of Reads and Writes
- Latency bounds:
  - For reading, writing, garbage-collection.
  - Under various assumptions about timing, joins, failures, and rate of reconfiguration.
- LAN implementations begun.

Foundations: Hybrid, Timed, Probabilistic Models

![Hybrid IO Automata HIOA Lynch Segala Vaandrager 01 02 Mathematical model for hybrid Hybrid I/O Automata (HIOA) [Lynch, Segala, Vaandrager 01, 02] • Mathematical model for hybrid](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-38.jpg)

Hybrid I/O Automata (HIOA) [Lynch, Segala, Vaandrager 01, 02]

- Mathematical model for hybrid (continuous/discrete) system components.
- Discrete actions, continuous trajectories
- Supports composition, levels of abstraction.
- Case studies:
  - Automated transportation systems
  - Quanser helicopter system [Mitra, Wang, Feron, Lynch]

![Timed IO Automata Probabilistic Timed IO Automata Lynch Segala Vaandrager Kirli Timed I/O Automata, Probabilistic, … • Timed I/O Automata [Lynch, Segala, Vaandrager, Kirli]: –](https://slidetodoc.com/presentation_image/60484449f52905a94b163f204eac6382/image-39.jpg)

Timed I/O Automata, Probabilistic, …

- Timed I/O Automata [Lynch, Segala, Vaandrager, Kirli]:
  - For modeling and analyzing timing-based systems, most of the building blocks of our AFOSR project.
  - Support composition, abstraction.
  - Collecting ideas from many research papers.
- Probabilistic I/O automata [Lynch, Segala, Vaandrager]:
  - For modeling systems with random behavior.
  - Composition, abstraction aspects still need development.
  - Need to be combined with timed/hybrid models.

Conclusions

- Three main building blocks (services and algorithms) for dynamic systems:
  - Scalable Group Communication
  - Dynamic Atomic Broadcast
  - Reconfigurable Atomic Memory
- Auxiliary building blocks: group membership, Consensus with Unknown Participants, reconfiguration
- Much remains to be done, to produce a “complete” set of useful building blocks for dynamic systems, and a good algorithmic theory for this area.
- Connections with NEST?