ECE 462/562: ISA and Datapath Review, Ali Akoglu


- Slides: 89


Instruction Set Architecture

- A very important abstraction
  - interface between hardware and low-level software
  - standardizes instructions, machine language bit patterns, etc.
  - advantage: different implementations of the same architecture
- Modern instruction set architectures:
  - IA-32, PowerPC, MIPS, SPARC, ARM, and others

MIPS arithmetic

- All instructions have 3 operands
- Operand order is fixed (destination first)
- Example:
  - C code: `a = b + c`
  - MIPS 'code': `add a, b, c`

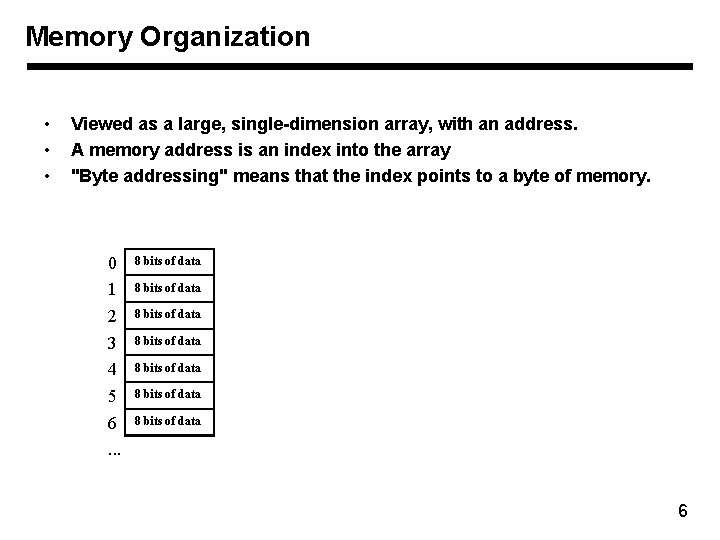

MIPS arithmetic

- Design principle: simplicity favors regularity.
- Of course this complicates some things...
  - C code: `a = b + c + d;`
  - MIPS code: `add a, b, c` followed by `add a, a, d`
- Operands must be registers; only 32 registers are provided
- Each register contains 32 bits

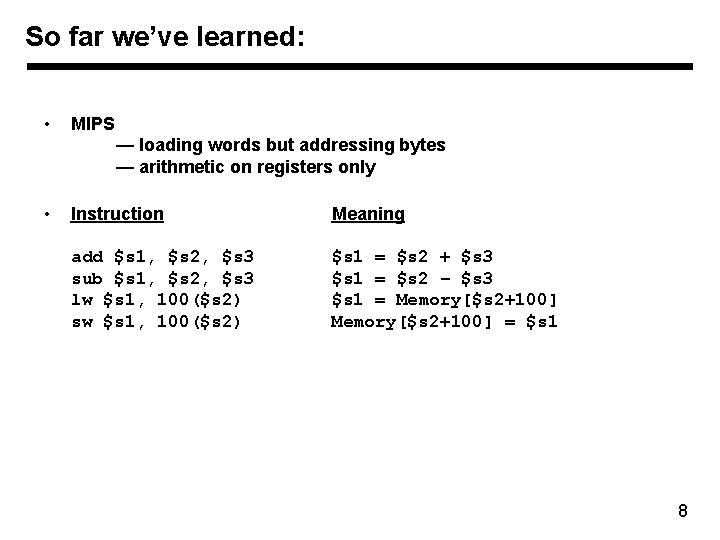

Registers vs. Memory

- Operands of arithmetic instructions must be registers; only 32 registers are provided
- Compiler associates variables with registers
- What about programs with lots of variables?
- [Figure: Processor (Control, Datapath), Memory, Input, Output (I/O).]

Memory Organization

- Viewed as a large, single-dimension array, with an address.
- A memory address is an index into the array
- "Byte addressing" means that the index points to a byte of memory.
- [Figure: byte addresses 0, 1, 2, 3, 4, 5, 6, ..., each holding 8 bits of data.]

Memory Organization

- Bytes are nice, but most data items use larger "words"
- For MIPS, a word is 32 bits or 4 bytes.
- [Figure: word addresses 0, 4, 8, 12, ..., each holding 32 bits of data.]
- Registers hold 32 bits of data
- 2^32 bytes with byte addresses from 0 to 2^32 - 1
- 2^30 words with byte addresses 0, 4, 8, ..., 2^32 - 4
- Words are aligned, i.e., what are the least 2 significant bits of a word address?
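
The alignment question on this slide can be checked with a few lines of Python. This is only a sketch: the helper names `is_word_aligned` and `word_index` are made up for illustration and are not part of the slides.

```python
WORD_BYTES = 4  # a MIPS word is 32 bits, i.e. 4 bytes


def is_word_aligned(byte_address: int) -> bool:
    """A byte address is word aligned when its two least significant bits are 0."""
    return byte_address & 0b11 == 0


def word_index(byte_address: int) -> int:
    """Index of the word that contains this byte (drop the two low bits)."""
    return byte_address >> 2


# Word addresses 0, 4, 8, 12 all end in binary 00; address 13 does not.
aligned = [addr for addr in (0, 4, 8, 12, 13) if is_word_aligned(addr)]
```

Because every word address is a multiple of 4, its two low bits are always 00, which is why 2^32 byte addresses cover only 2^30 words.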

So far we've learned:

- MIPS
  - loading words but addressing bytes
  - arithmetic on registers only

| Instruction | Meaning |
| --- | --- |
| `add $s1, $s2, $s3` | `$s1 = $s2 + $s3` |
| `sub $s1, $s2, $s3` | `$s1 = $s2 - $s3` |
| `lw $s1, 100($s2)` | `$s1 = Memory[$s2+100]` |
| `sw $s1, 100($s2)` | `Memory[$s2+100] = $s1` |

![Instructions Load and store instructions Example C code A12 h Instructions • • Load and store instructions Example: C code: A[12] = h +](https://slidetodoc.com/presentation_image/408d2ac127f68e1cc0020fafe4520556/image-9.jpg)

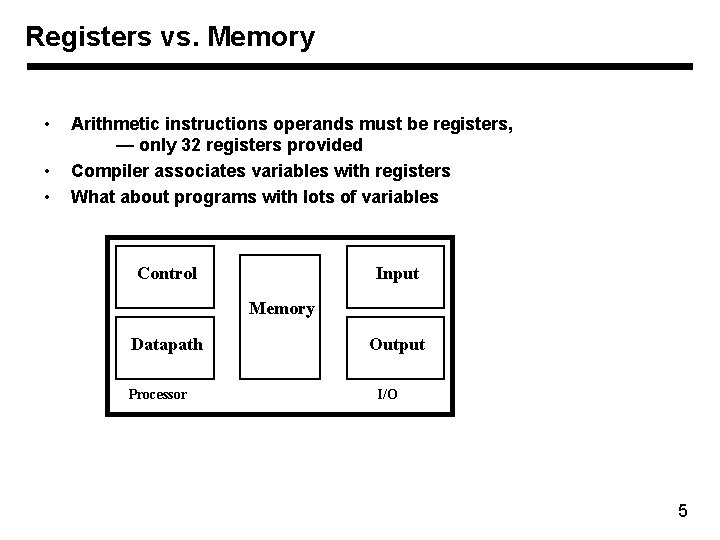

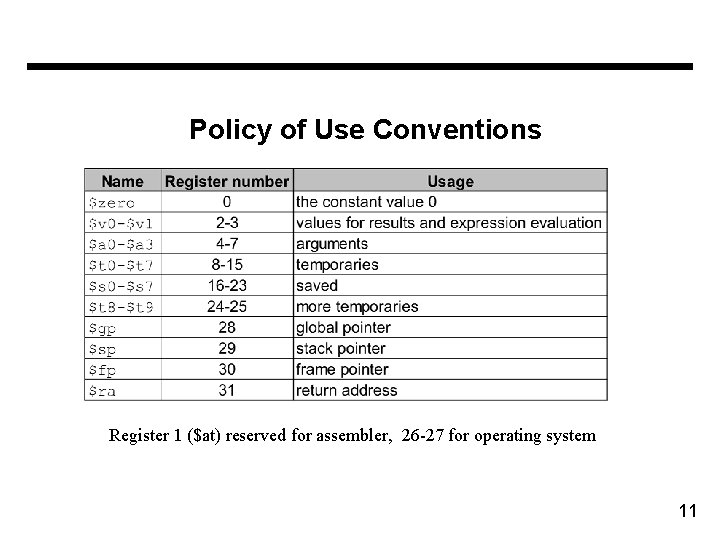

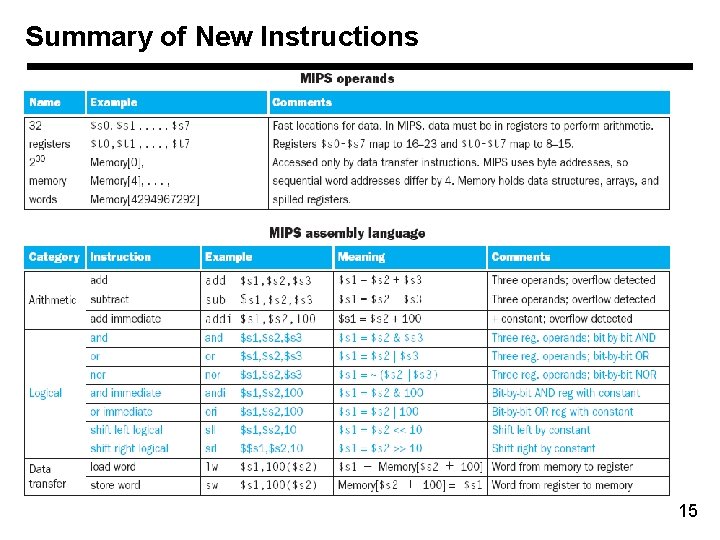

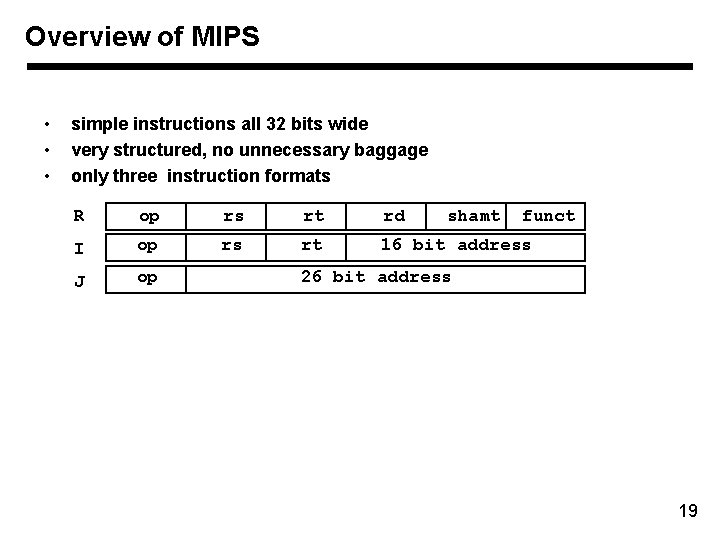

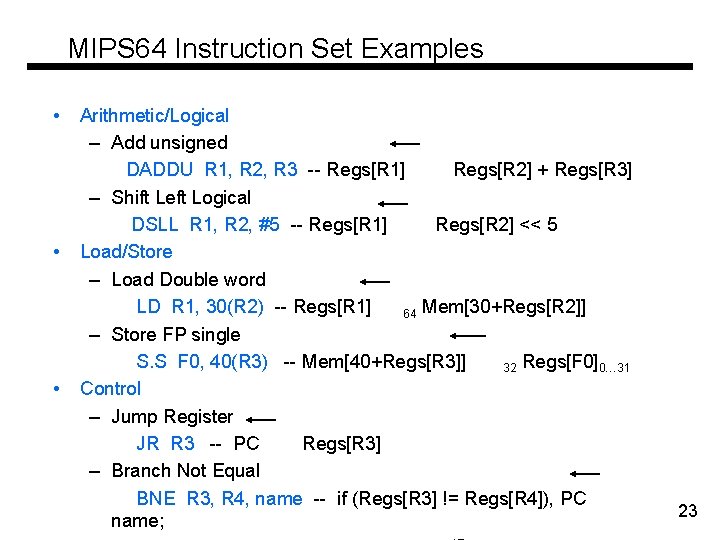

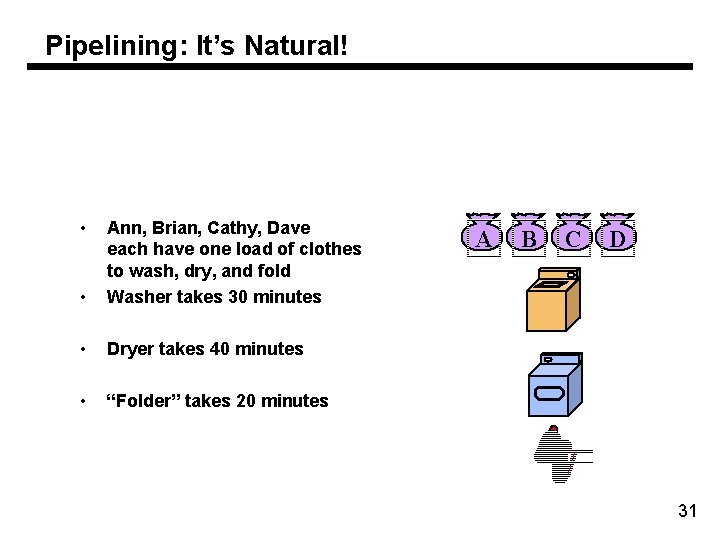

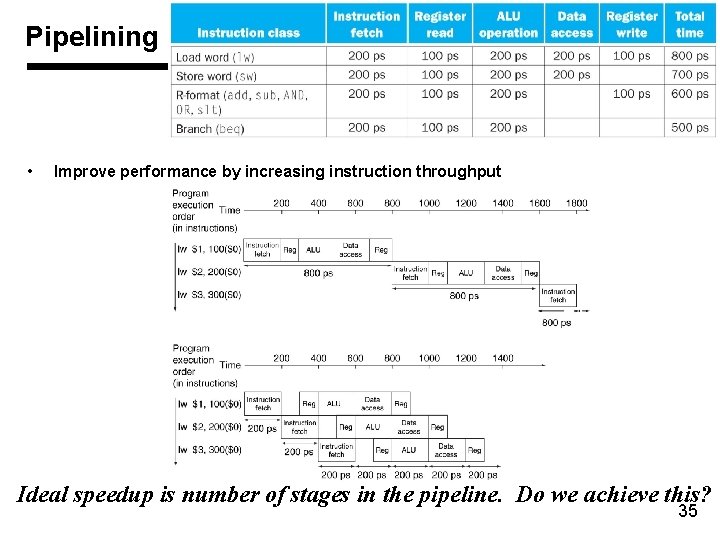

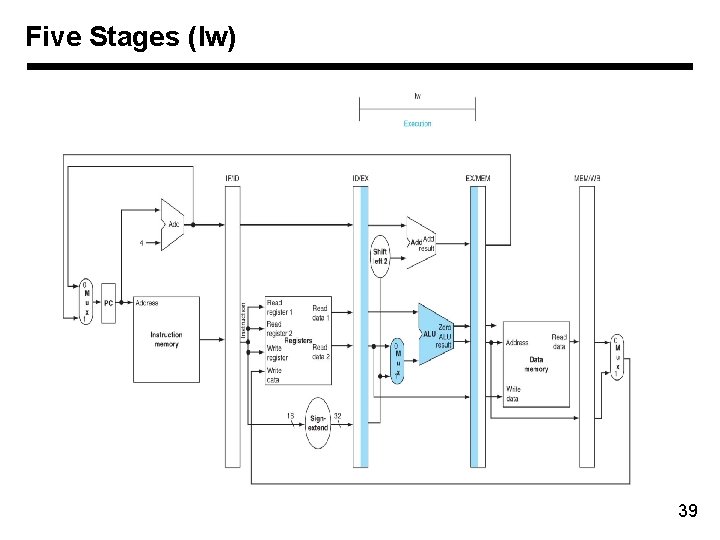

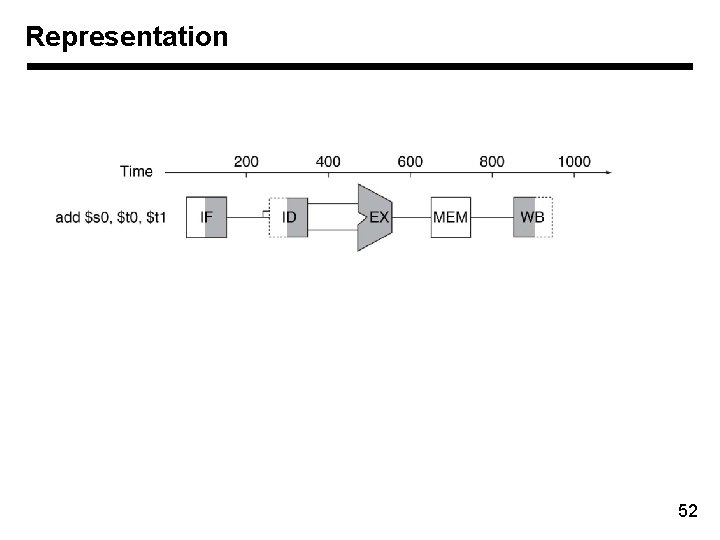

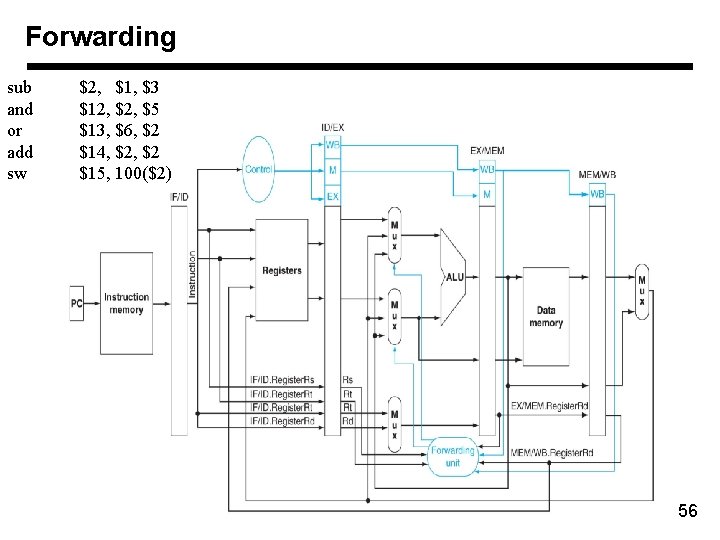

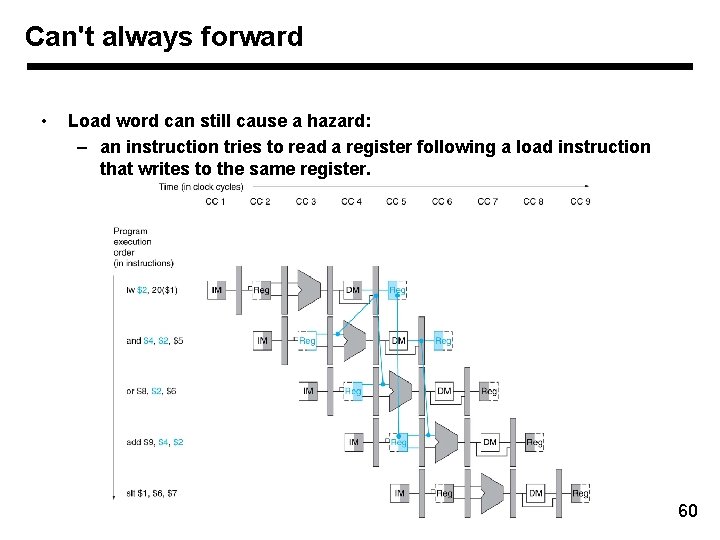

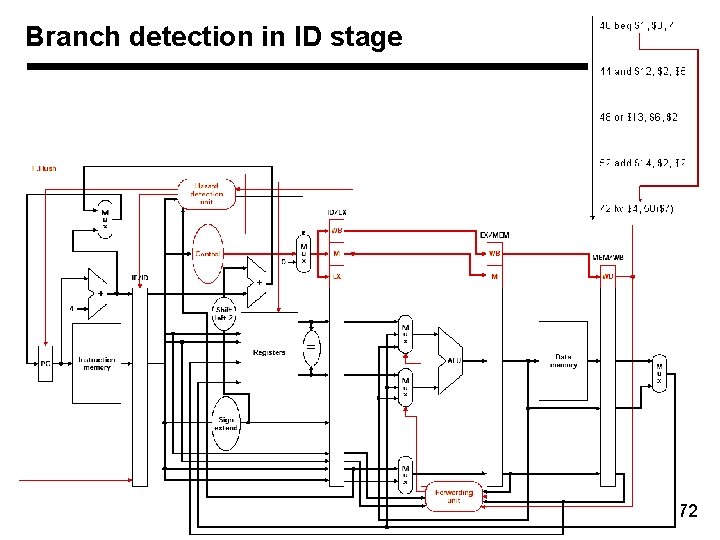

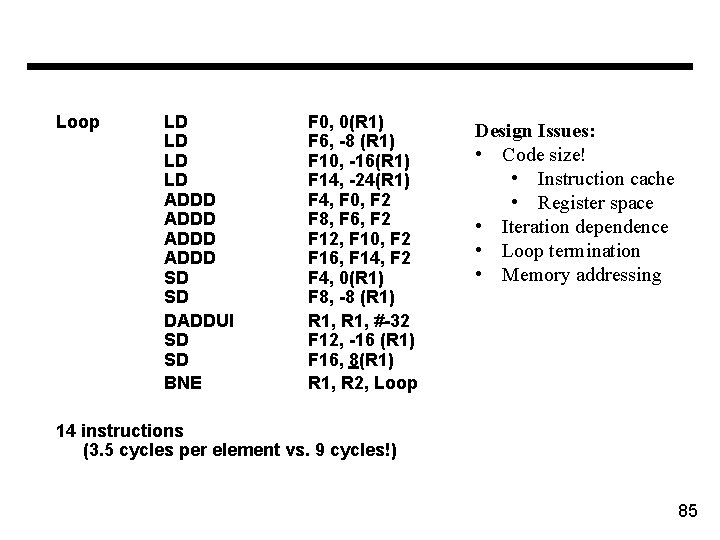

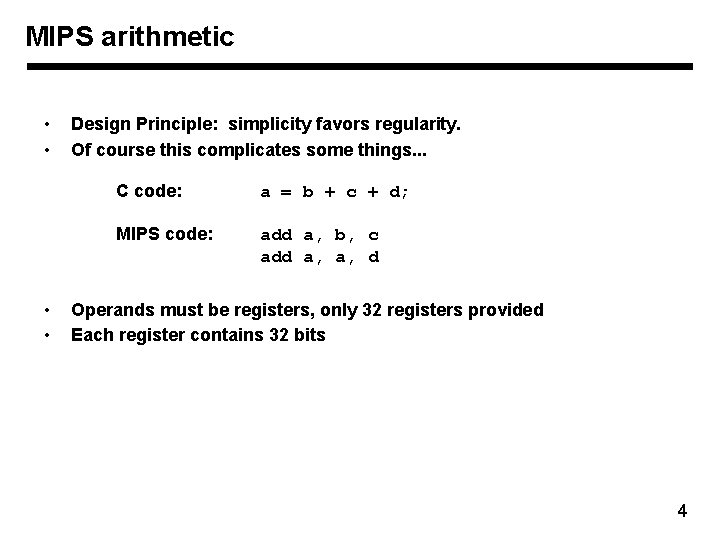

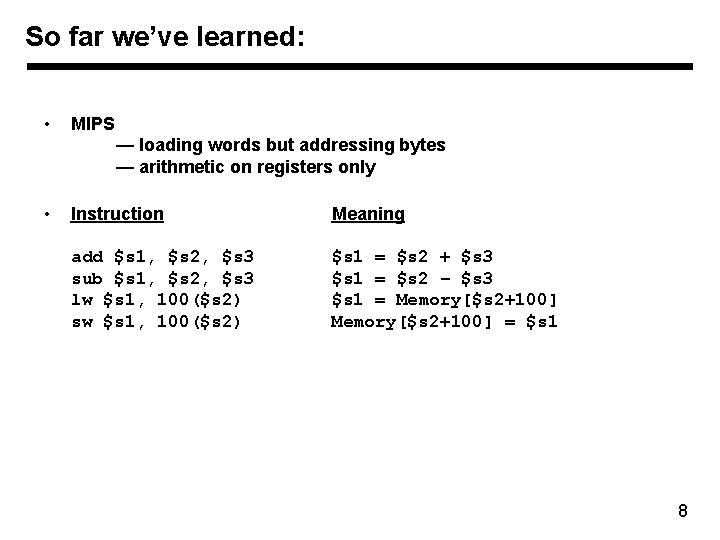

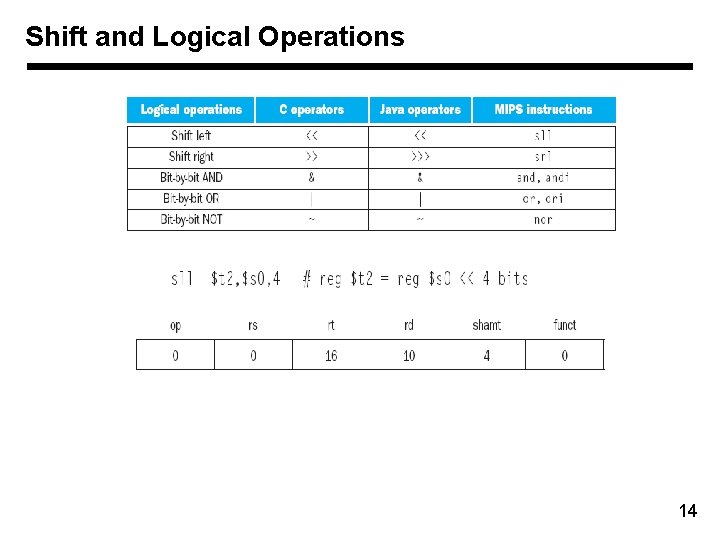

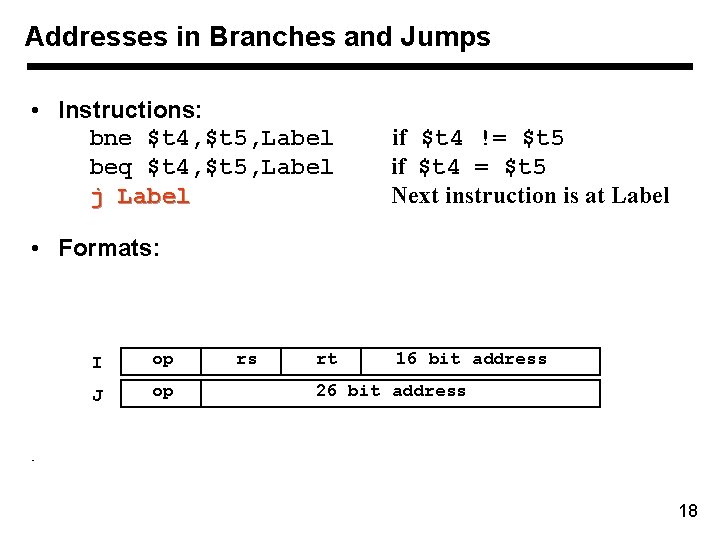

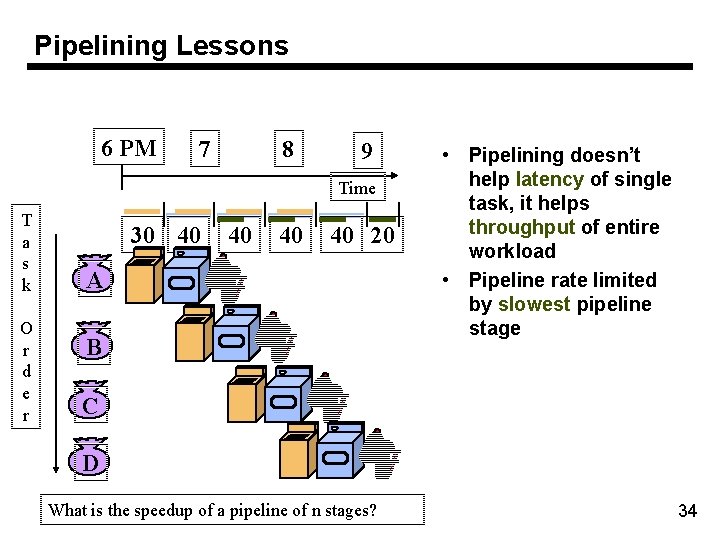

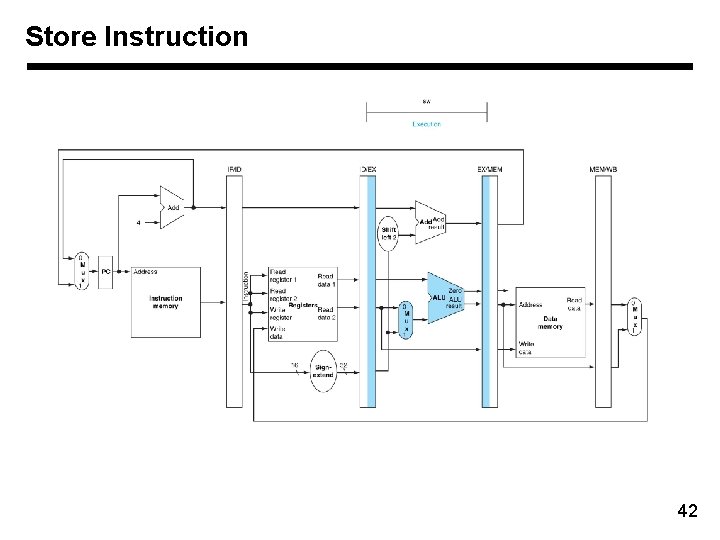

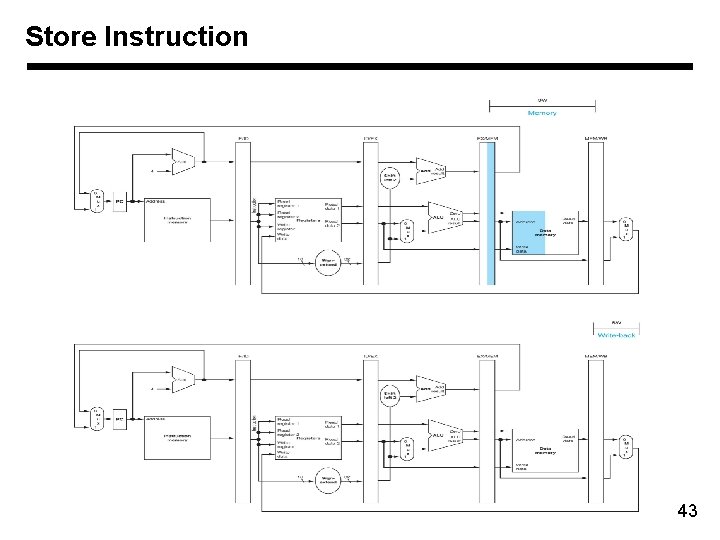

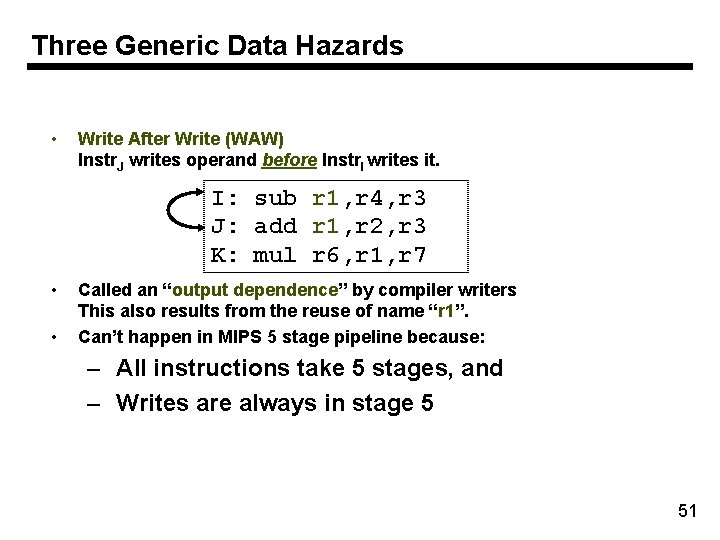

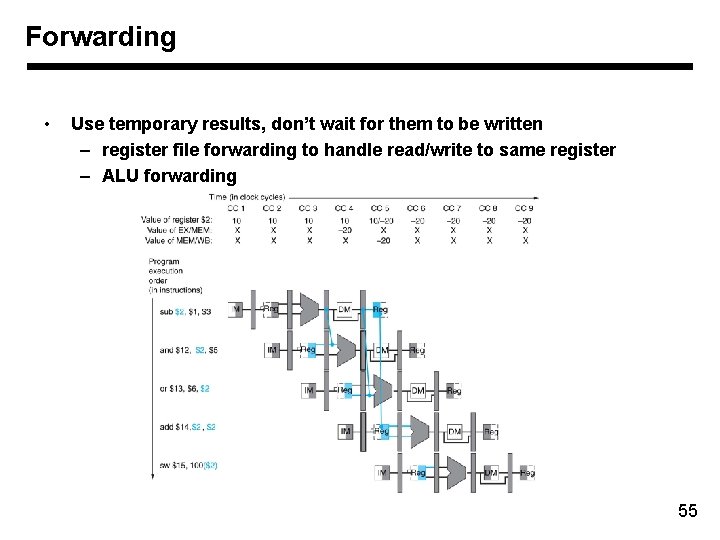

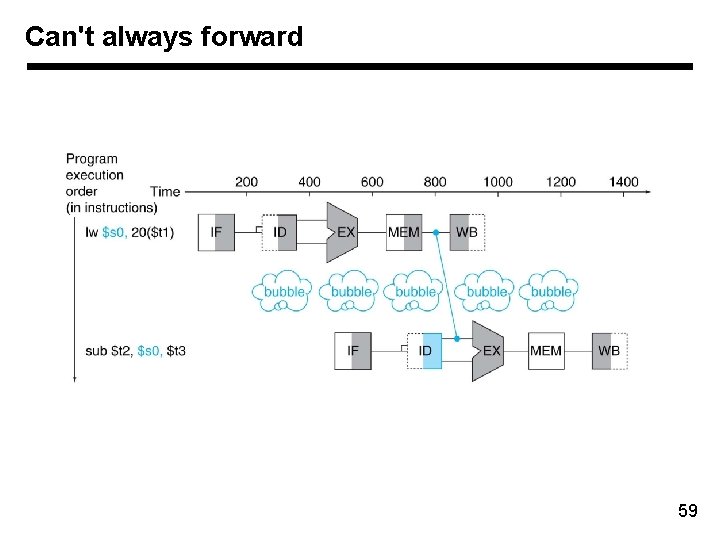

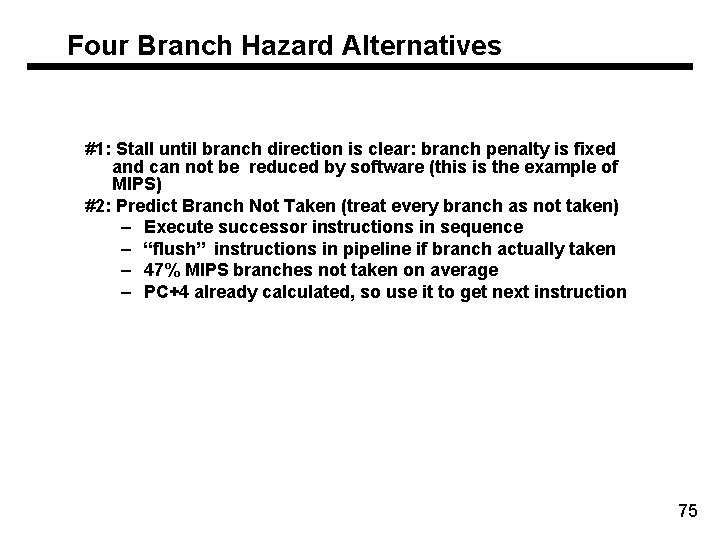

Instructions

- Load and store instructions
- Example:
  - C code: `A[12] = h + A[8];`
  - `$s3` stores the base address of A and `$s2` stores h
  - MIPS code:
    - `lw $t0, 32($s3)`
    - `add $t0, $s2, $t0`
    - `sw $t0, 48($s3)`
- Remember arithmetic operands are registers, not memory! Can't write: `add 48($s3), $s2, 32($s3)`
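
To see why the offsets are 32 and 48 (element index times 4 bytes), the three instructions can be mimicked in Python. This is a rough sketch, not a MIPS simulator: the function name `run_example`, the base address 0, and the dictionary-based memory are assumptions for illustration, and 32-bit overflow is ignored.

```python
def run_example(h: int, a: list) -> list:
    """Mimic lw/add/sw for A[12] = h + A[8] on a word array `a`."""
    base = 0  # hypothetical base address of A, held in $s3
    memory = {base + 4 * i: v for i, v in enumerate(a)}  # byte address -> word
    regs = {"$s2": h, "$s3": base, "$t0": 0}

    regs["$t0"] = memory[regs["$s3"] + 32]    # lw  $t0, 32($s3)  (A[8]  = byte offset 8*4)
    regs["$t0"] = regs["$s2"] + regs["$t0"]   # add $t0, $s2, $t0
    memory[regs["$s3"] + 48] = regs["$t0"]    # sw  $t0, 48($s3)  (A[12] = byte offset 12*4)

    return [memory[base + 4 * i] for i in range(len(a))]


result = run_example(100, list(range(16)))  # A[i] = i initially, h = 100
```

Only `A[12]` changes; the addition itself happens entirely in registers, which is the point of the slide.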

![Summary A300hA300 Lw t 0 1200t 1 Add t 0 s 2 t 0 Summary A[300]=h+A[300] Lw $t 0, 1200($t 1) Add $t 0, $s 2, $t 0](https://slidetodoc.com/presentation_image/408d2ac127f68e1cc0020fafe4520556/image-10.jpg)

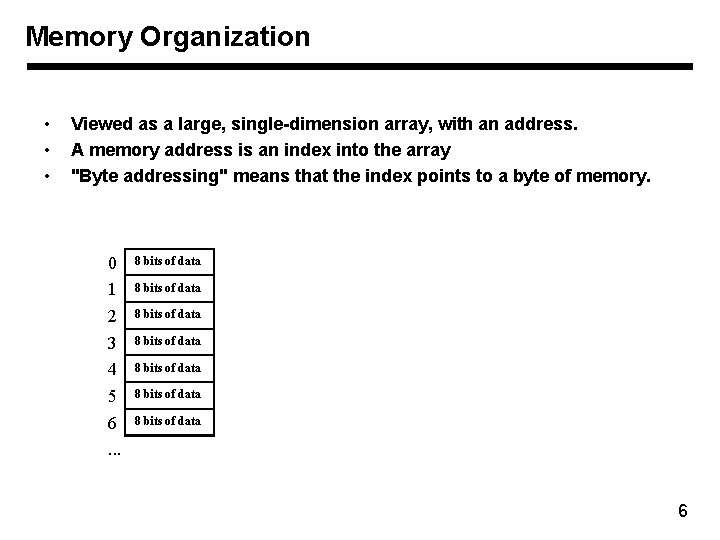

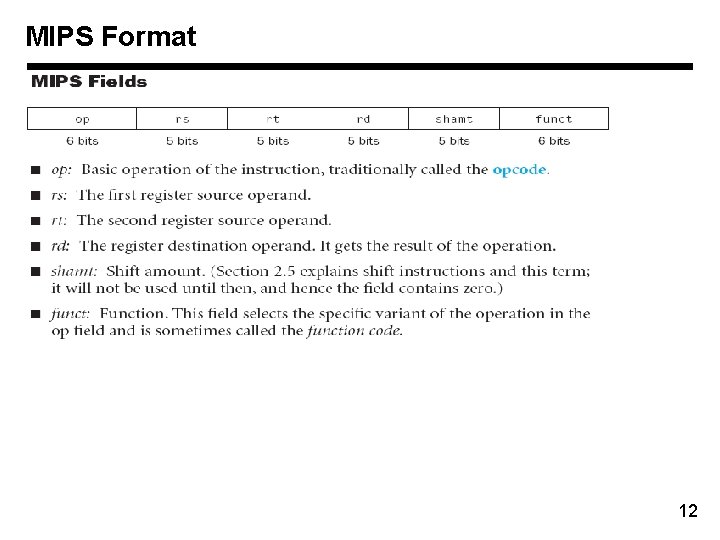

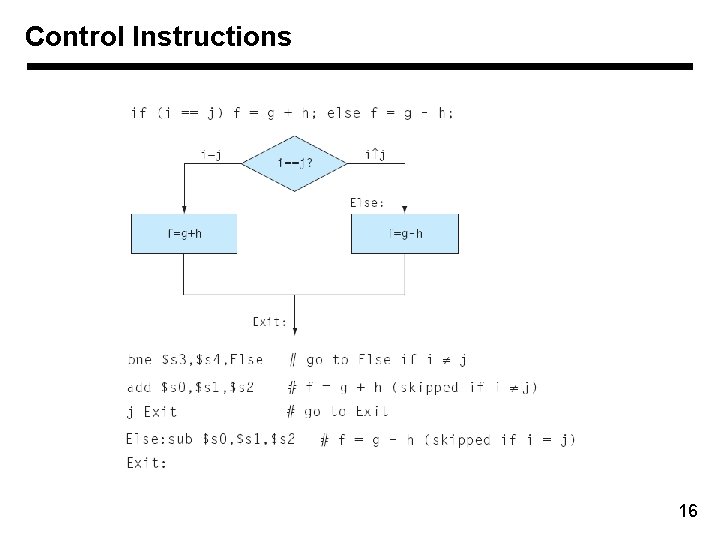

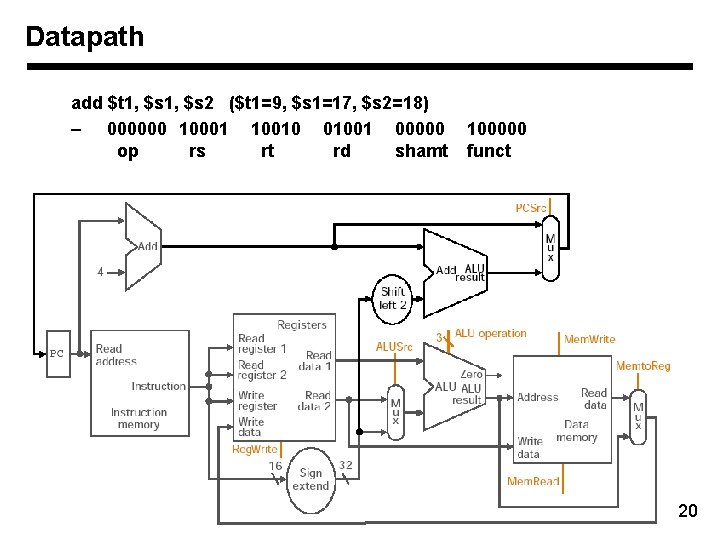

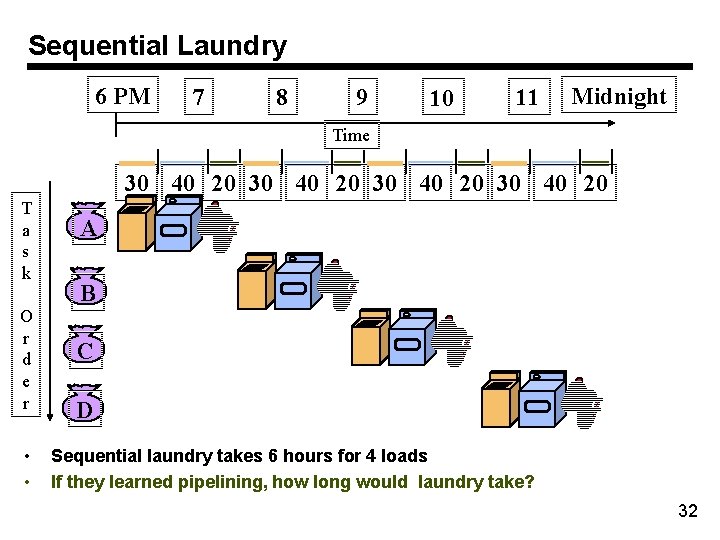

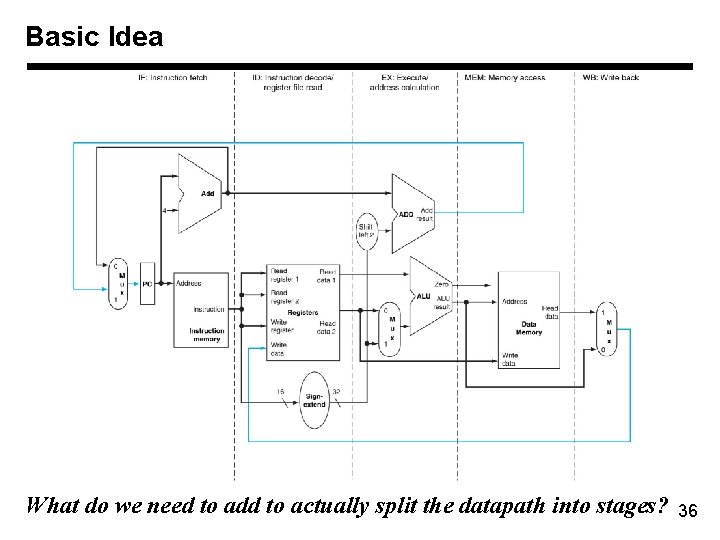

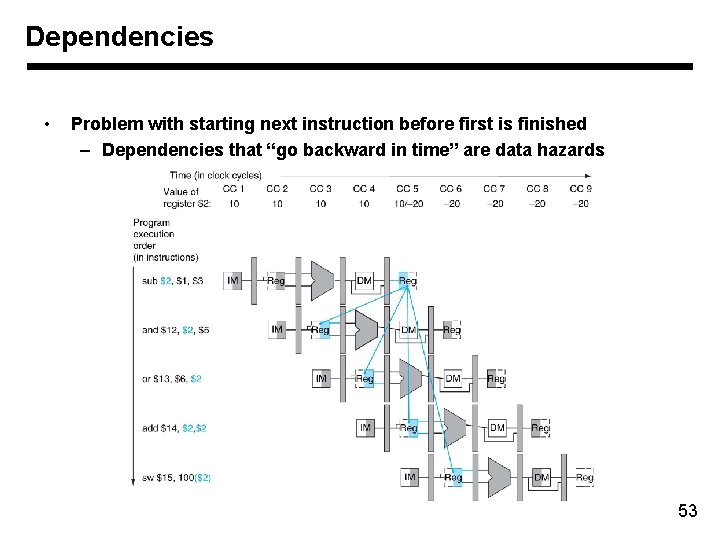

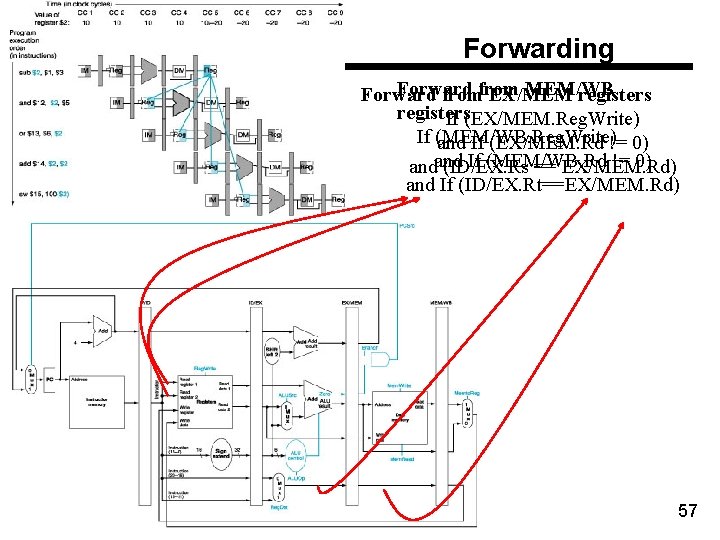

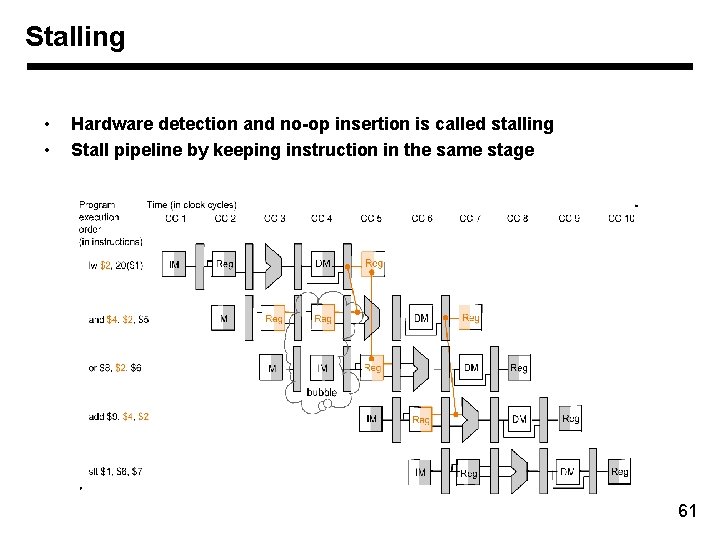

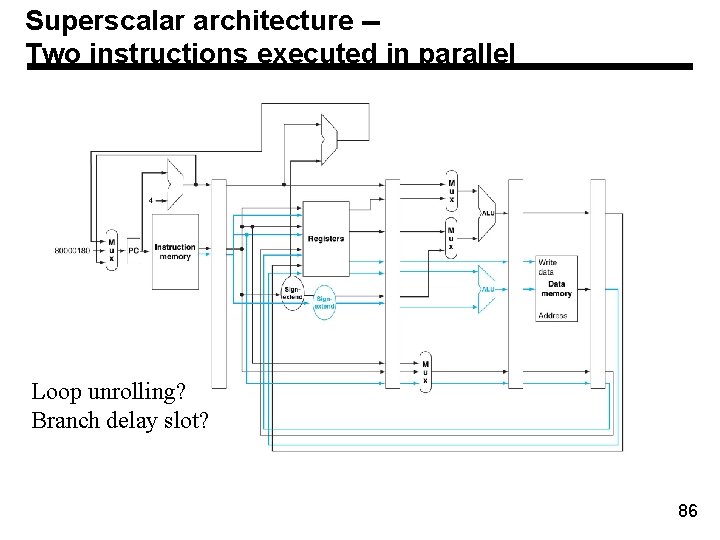

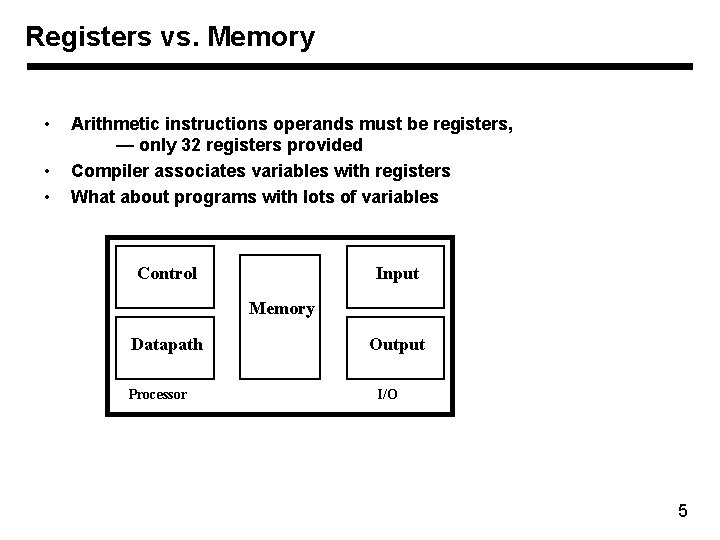

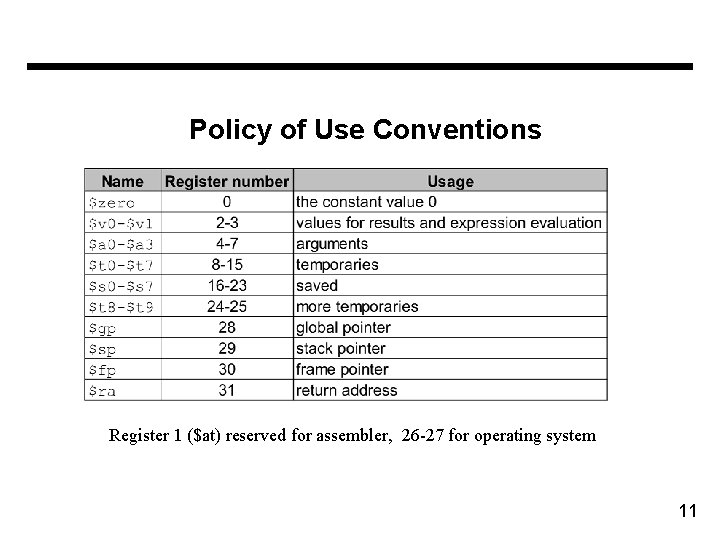

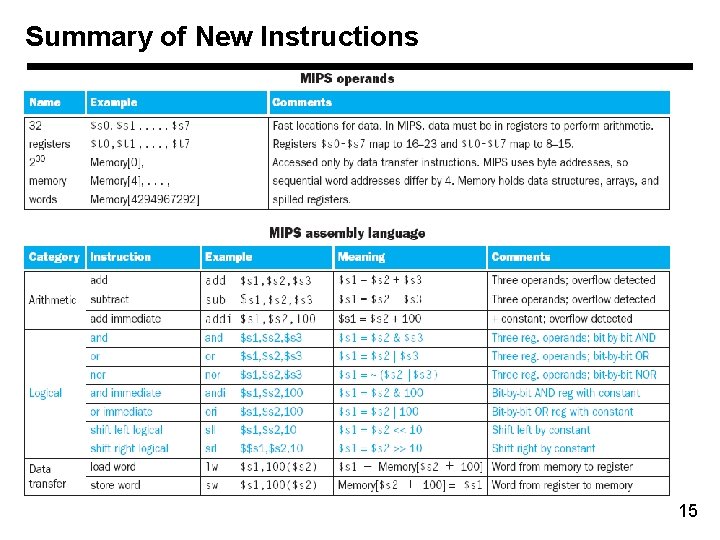

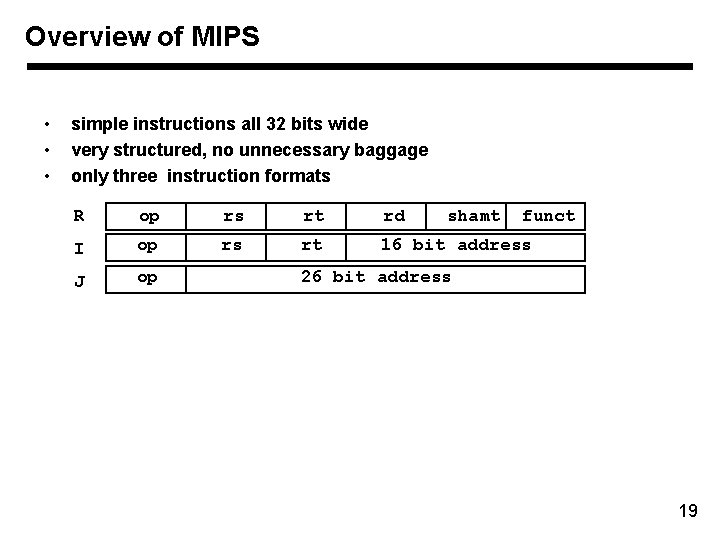

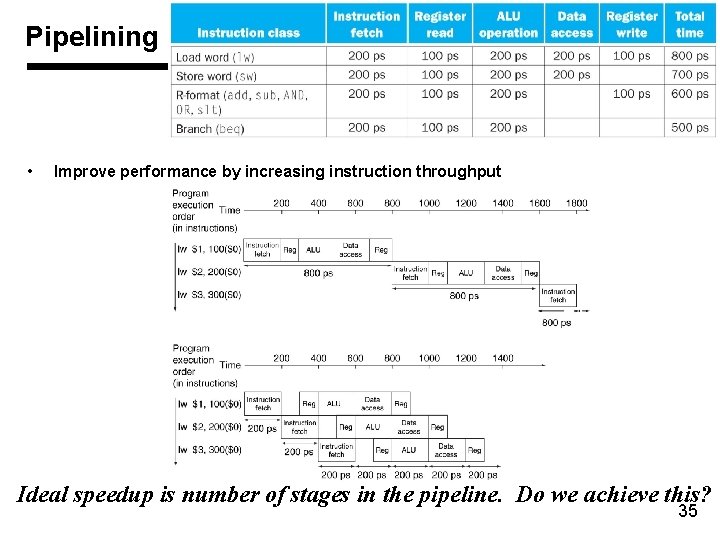

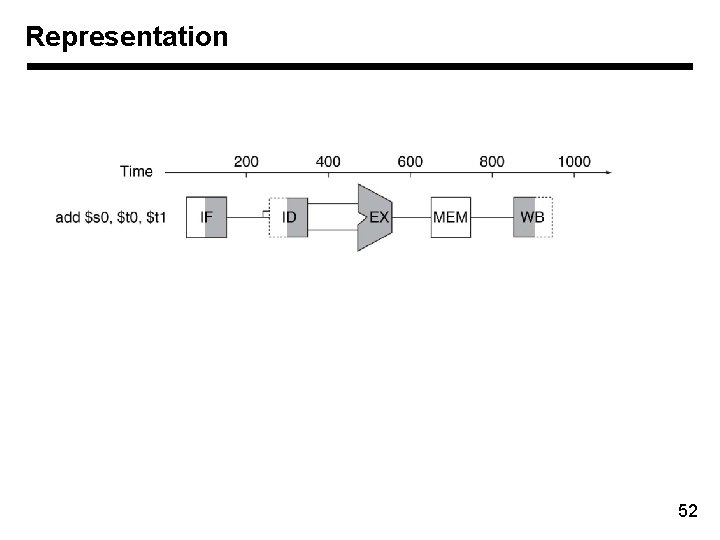

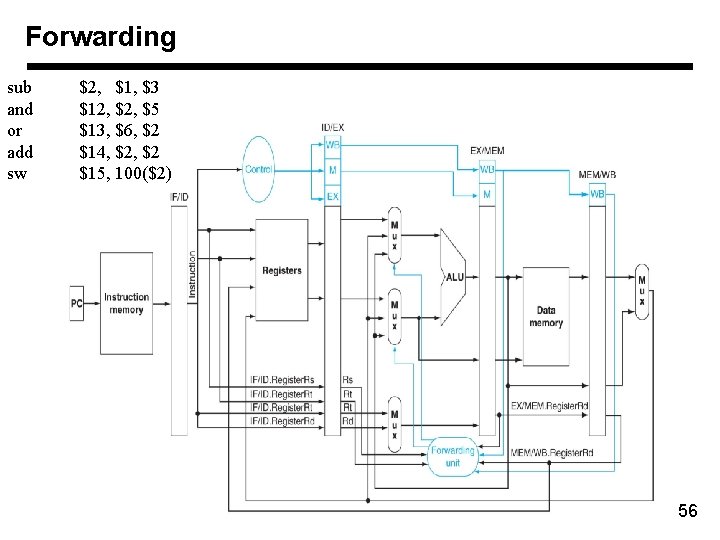

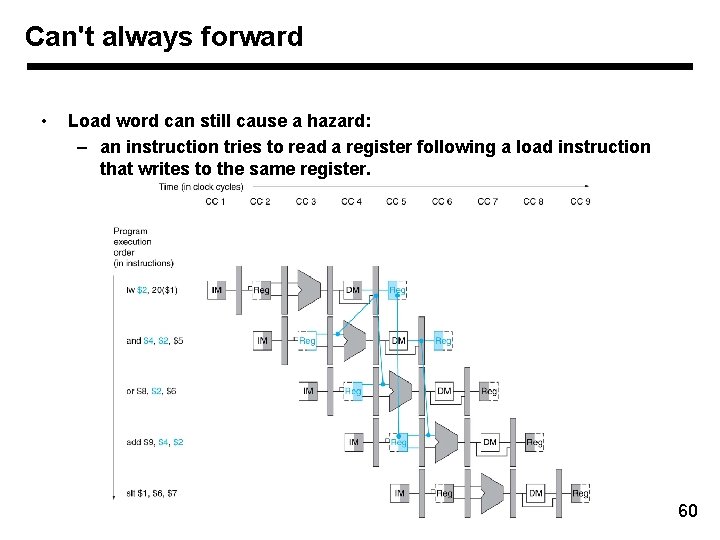

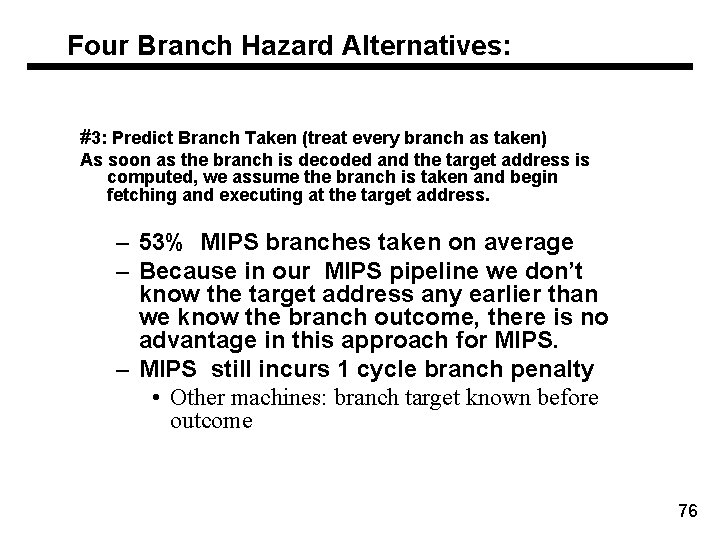

Summary

`A[300] = h + A[300]`, where `$t1` = base address of A, `$s2` stores h, and `$t0` is used as a temporary register.

| Instruction | Fields | Values |
| --- | --- | --- |
| `lw $t0, 1200($t1)` | op, rs, rt, address | 35, 9, 8, 1200 |
| `add $t0, $s2, $t0` | op, rs, rt, rd, shamt, funct | 0, 18, 8, 8, 0, 32 |
| `sw $t0, 1200($t1)` | op, rs, rt, address | 43, 9, 8, 1200 |
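
The field values above can be packed into 32-bit machine words. The sketch below assumes the standard MIPS field widths (6/5/5/5/5/6 bits for R-type, 6/5/5/16 for I-type); the function names `encode_r` and `encode_i` are illustrative only.

```python
def encode_r(op, rs, rt, rd, shamt, funct):
    """Pack R-type fields (6, 5, 5, 5, 5, 6 bits) into one 32-bit word."""
    return (op << 26) | (rs << 21) | (rt << 16) | (rd << 11) | (shamt << 6) | funct


def encode_i(op, rs, rt, imm):
    """Pack I-type fields (6, 5, 5, 16 bits); the immediate is masked to 16 bits."""
    return (op << 26) | (rs << 21) | (rt << 16) | (imm & 0xFFFF)


program = [
    encode_i(35, 9, 8, 1200),      # lw  $t0, 1200($t1)
    encode_r(0, 18, 8, 8, 0, 32),  # add $t0, $s2, $t0
    encode_i(43, 9, 8, 1200),      # sw  $t0, 1200($t1)
]
hex_words = [format(word, "08x") for word in program]
```

Note that `lw` and `sw` differ only in the opcode field, since both use the same base register, data register, and offset.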

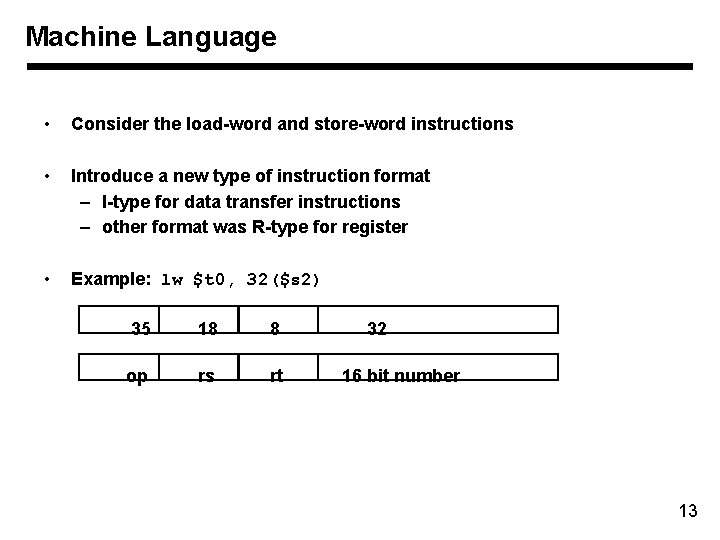

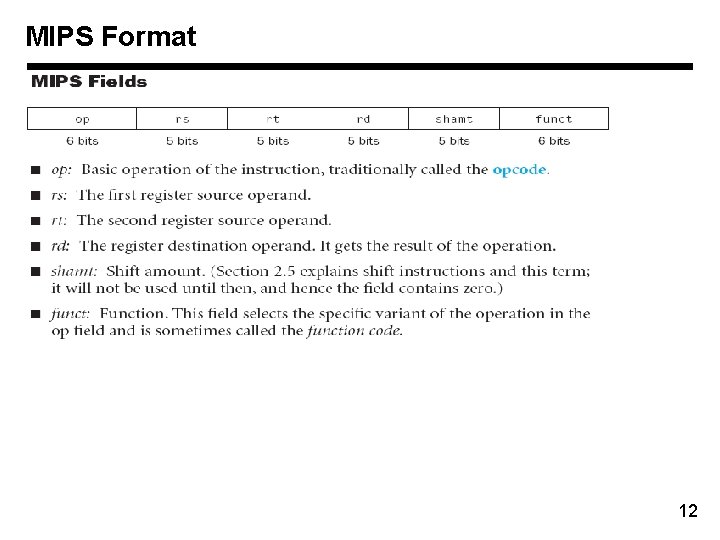

Policy of Use Conventions

- Register 1 (`$at`) is reserved for the assembler; registers 26-27 are reserved for the operating system

MIPS Format

Machine Language

- Consider the load-word and store-word instructions
- Introduce a new type of instruction format
  - I-type for data transfer instructions
  - the other format was R-type, for register instructions
- Example: `lw $t0, 32($s2)`

| op | rs | rt | 16 bit number |
| --- | --- | --- | --- |
| 35 | 18 | 8 | 32 |

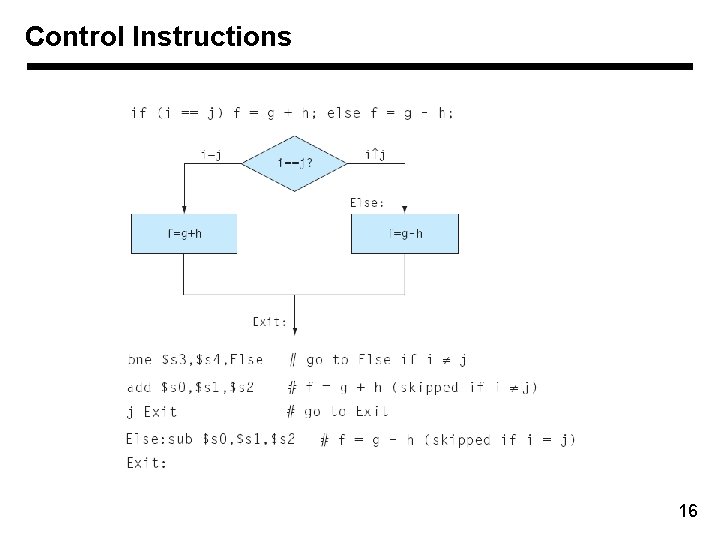

Shift and Logical Operations

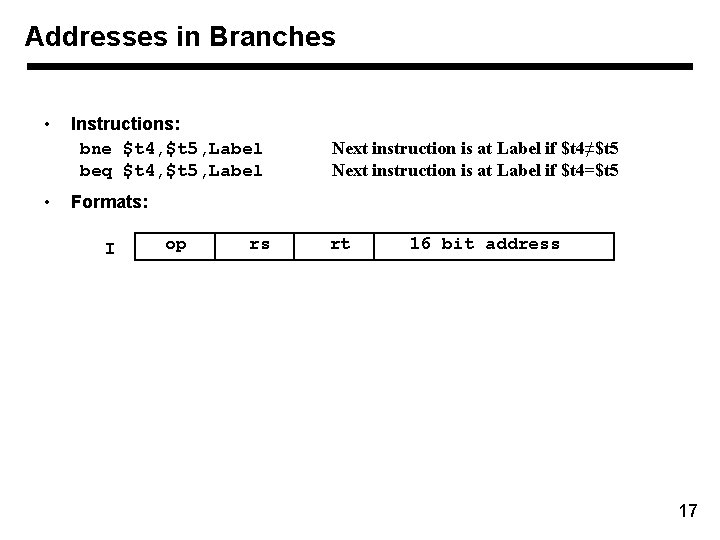

Summary of New Instructions

Control Instructions

Addresses in Branches

- Instructions:
  - `bne $t4, $t5, Label`: next instruction is at Label if `$t4` ≠ `$t5`
  - `beq $t4, $t5, Label`: next instruction is at Label if `$t4` = `$t5`
- Formats:
  - I: op, rs, rt, 16 bit address

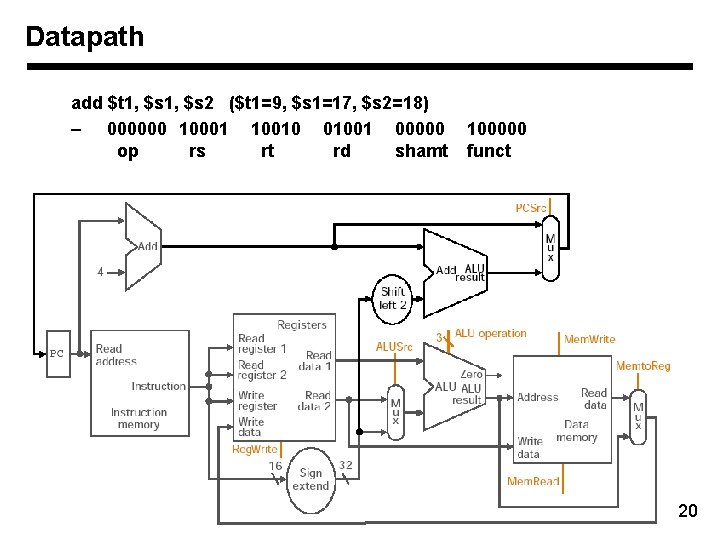

Addresses in Branches and Jumps

- Instructions:
  - `bne $t4, $t5, Label`: next instruction is at Label if `$t4 != $t5`
  - `beq $t4, $t5, Label`: next instruction is at Label if `$t4 = $t5`
  - `j Label`: next instruction is at Label
- Formats:
  - I: op, rs, rt, 16 bit address
  - J: op, 26 bit address
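
The slide gives only the field widths. As a sketch of how those fields become full 32-bit addresses, the code below follows the usual MIPS convention, which the slide itself does not spell out: branch offsets count words relative to PC + 4, and jumps keep the upper 4 bits of PC + 4. The function names are illustrative.

```python
def branch_target(pc: int, offset16: int) -> int:
    """Target of beq/bne: (PC + 4) plus the sign-extended 16-bit word offset times 4."""
    if offset16 & 0x8000:       # sign-extend the 16-bit field
        offset16 -= 0x10000
    return (pc + 4 + (offset16 << 2)) & 0xFFFFFFFF


def jump_target(pc: int, addr26: int) -> int:
    """Target of j: upper 4 bits of PC + 4, then the 26-bit field, then two zero bits."""
    return ((pc + 4) & 0xF0000000) | ((addr26 & 0x03FFFFFF) << 2)


forward = branch_target(0x00400000, 3)        # skip 3 instructions past PC + 4
backward = branch_target(0x00400010, 0xFFFF)  # offset -1: branch to itself
jump = jump_target(0x00400000, 0x00100004)
```

Storing word offsets rather than byte offsets is what lets 16 and 26 bits reach four times farther, since instruction addresses always end in 00.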

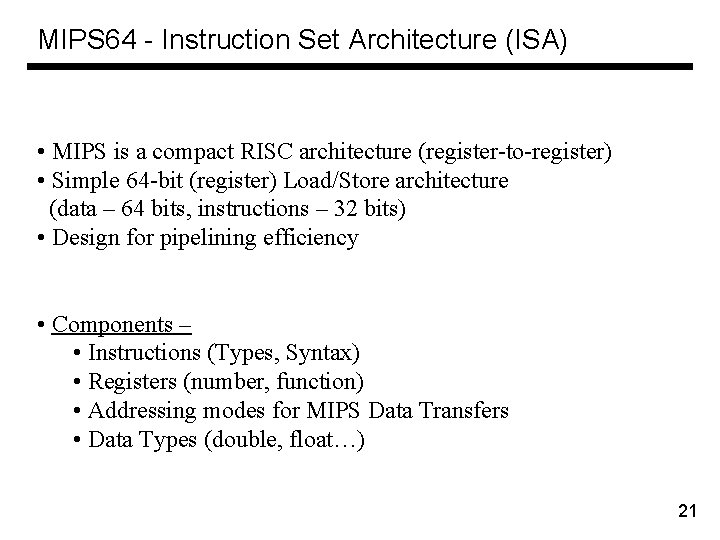

Overview of MIPS

- simple instructions, all 32 bits wide
- very structured, no unnecessary baggage
- only three instruction formats:
  - R: op, rs, rt, rd, shamt, funct
  - I: op, rs, rt, 16 bit address
  - J: op, 26 bit address

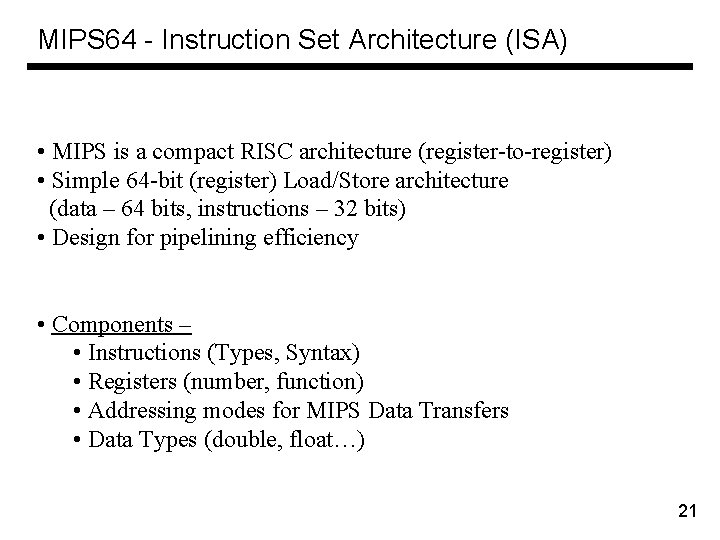

Datapath

- `add $t1, $s1, $s2` (`$t1` = 9, `$s1` = 17, `$s2` = 18)

| op | rs | rt | rd | shamt | funct |
| --- | --- | --- | --- | --- | --- |
| 000000 | 10001 | 10010 | 01001 | 00000 | 100000 |
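
The bit pattern above can be split back into its fields, which is roughly what the datapath wiring does when it routes instruction bits to the register file and ALU control. A minimal sketch, with `decode_r` as an illustrative name:

```python
def decode_r(word: int) -> tuple:
    """Split a 32-bit R-type word into (op, rs, rt, rd, shamt, funct)."""
    return (
        (word >> 26) & 0x3F,  # op:    bits 31-26
        (word >> 21) & 0x1F,  # rs:    bits 25-21
        (word >> 16) & 0x1F,  # rt:    bits 20-16
        (word >> 11) & 0x1F,  # rd:    bits 15-11
        (word >> 6) & 0x1F,   # shamt: bits 10-6
        word & 0x3F,          # funct: bits 5-0
    )


# The six fields from the slide, concatenated into one binary string.
word = int("000000" "10001" "10010" "01001" "00000" "100000", 2)
fields = decode_r(word)
```

Here `fields` recovers rs = 17 (`$s1`), rt = 18 (`$s2`), and rd = 9 (`$t1`).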

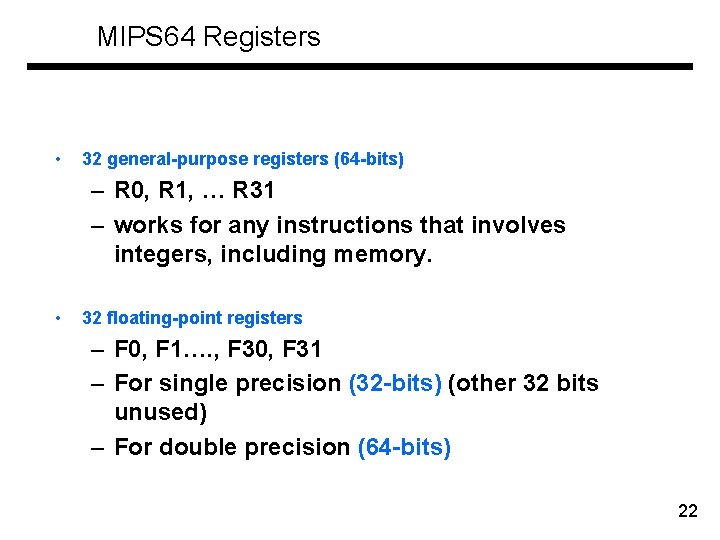

MIPS64 - Instruction Set Architecture (ISA)

- MIPS is a compact RISC architecture (register-to-register)
- Simple 64-bit (register) load/store architecture (data: 64 bits, instructions: 32 bits)
- Designed for pipelining efficiency
- Components:
  - Instructions (types, syntax)
  - Registers (number, function)
  - Addressing modes for MIPS data transfers
  - Data types (double, float, ...)

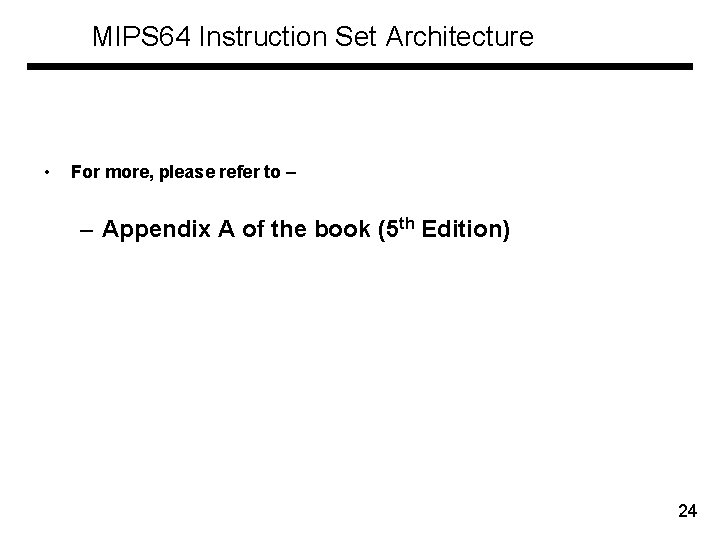

MIPS64 Registers

- 32 general-purpose registers (64 bits): R0, R1, ..., R31
  - work for any instruction that involves integers, including memory
- 32 floating-point registers: F0, F1, ..., F30, F31
  - for single precision (32 bits; the other 32 bits are unused)
  - for double precision (64 bits)

MIPS64 Instruction Set Examples

- Arithmetic/Logical
  - Add unsigned: `DADDU R1, R2, R3` means Regs[R1] ← Regs[R2] + Regs[R3]
  - Shift left logical: `DSLL R1, R2, #5` means Regs[R1] ← Regs[R2] << 5
- Load/Store
  - Load double word: `LD R1, 30(R2)` means Regs[R1] ← Mem[30 + Regs[R2]] (64 bits transferred)
  - Store FP single: `S.S F0, 40(R3)` means Mem[40 + Regs[R3]] ← bits 0..31 of Regs[F0] (32 bits transferred)
- Control
  - Jump register: `JR R3` means PC ← Regs[R3]
  - Branch not equal: `BNE R3, R4, name` means if (Regs[R3] != Regs[R4]) PC ← name

MIPS64 Instruction Set Architecture

- For more, please refer to Appendix A of the book (5th Edition)

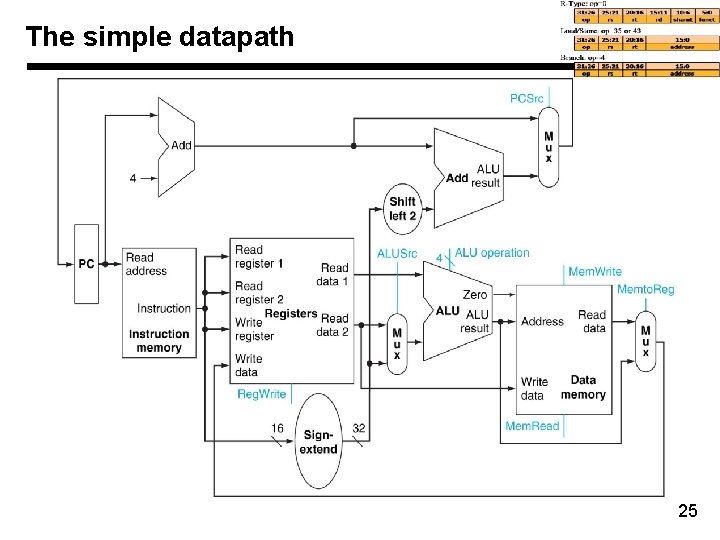

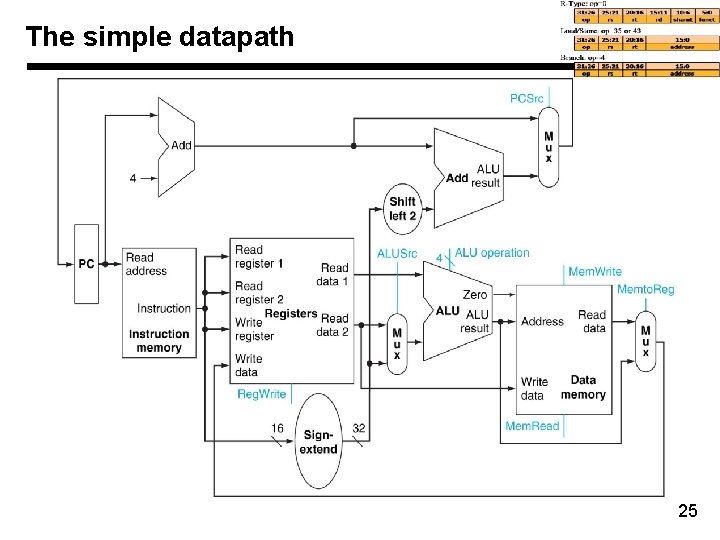

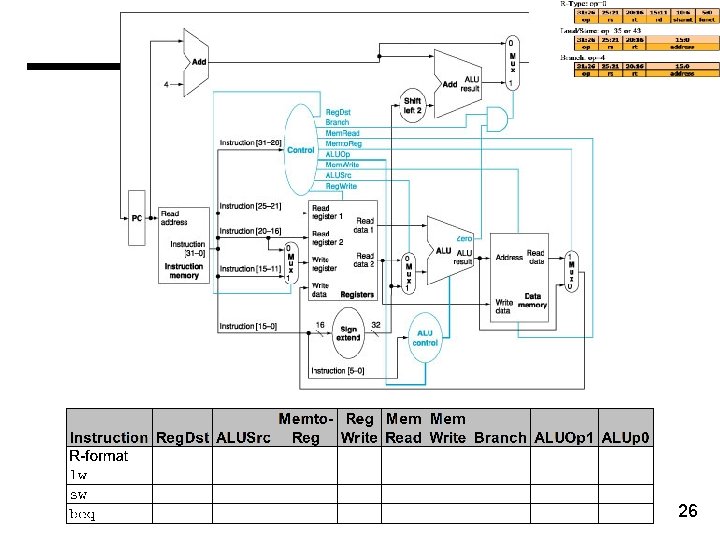

The simple datapath

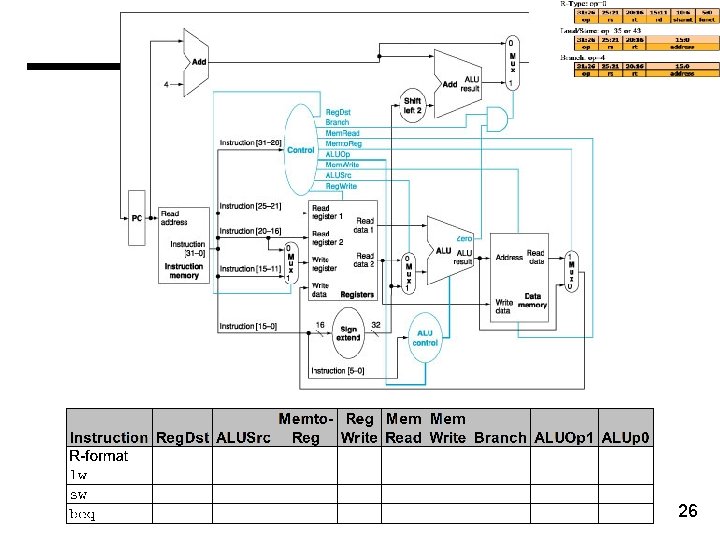


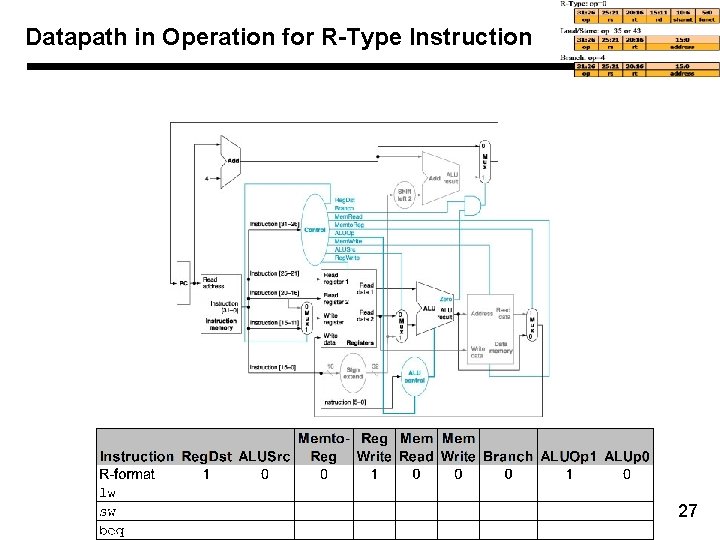

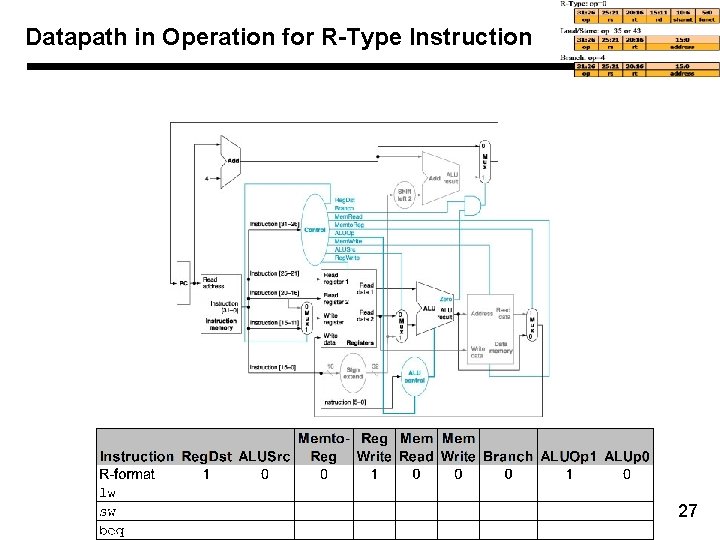

Datapath in Operation for R-Type Instruction

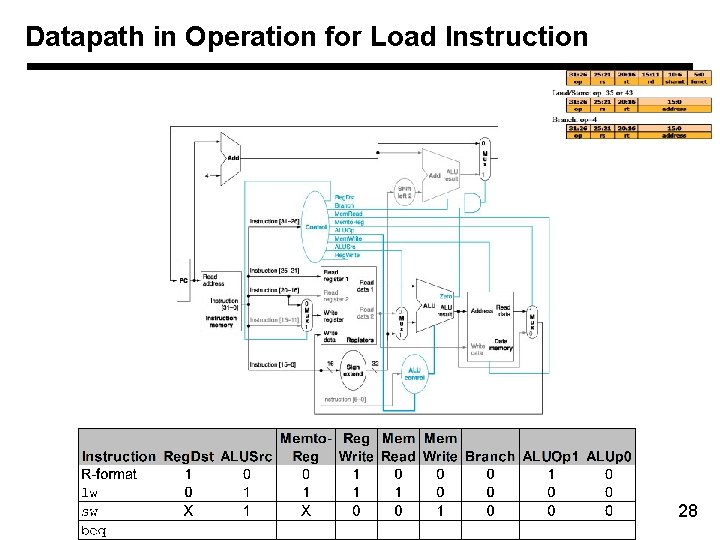

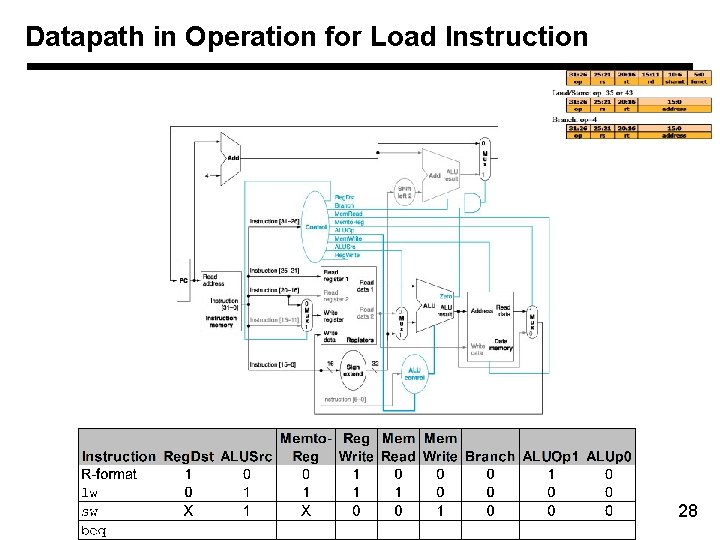

Datapath in Operation for Load Instruction

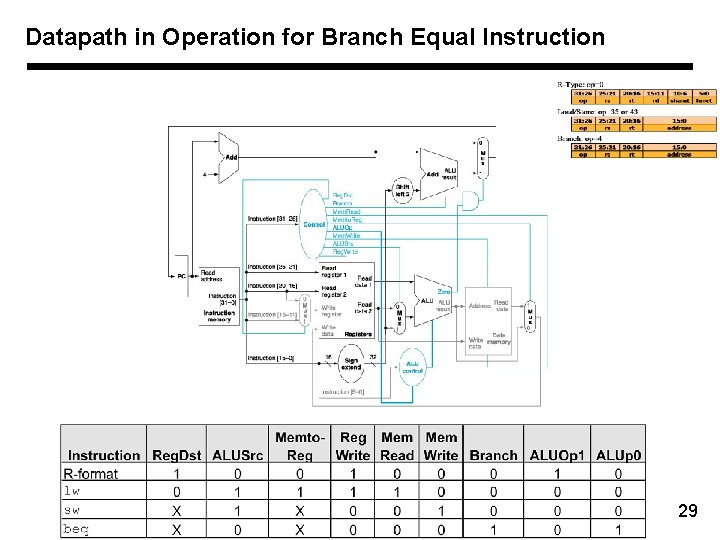

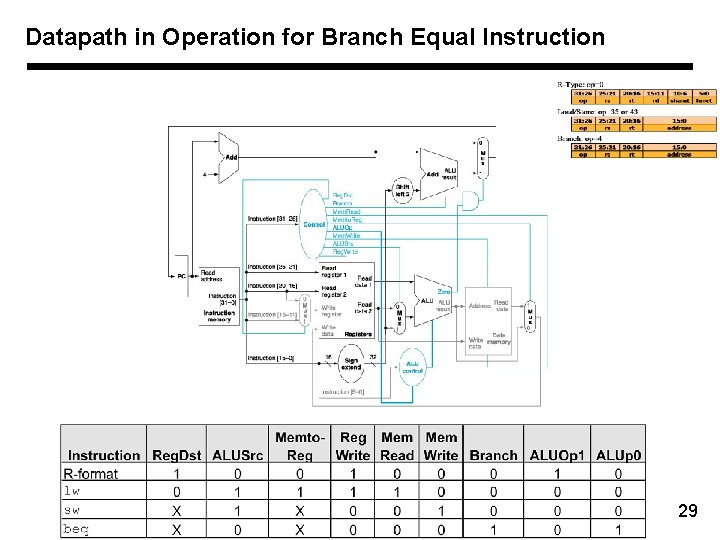

Datapath in Operation for Branch Equal Instruction

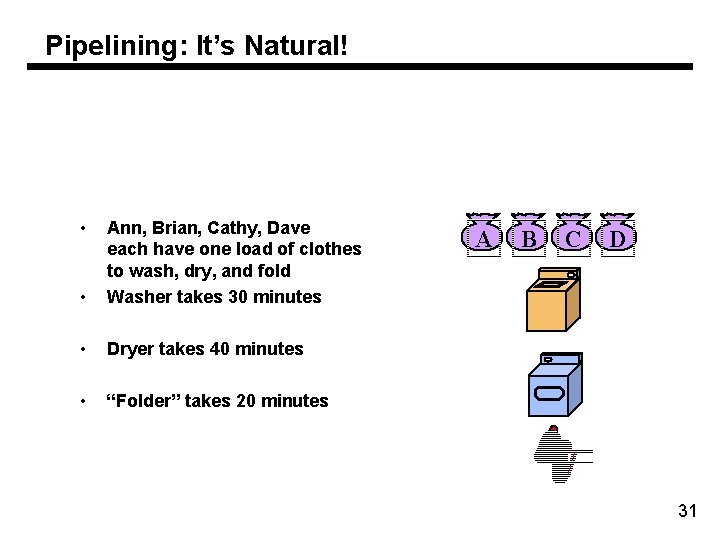

Single Cycle Problems

- Wasteful of area
  - Each unit is used once per clock cycle
- Clock cycle is equal to the worst case scenario
  - Will reducing the delay of the common case help?

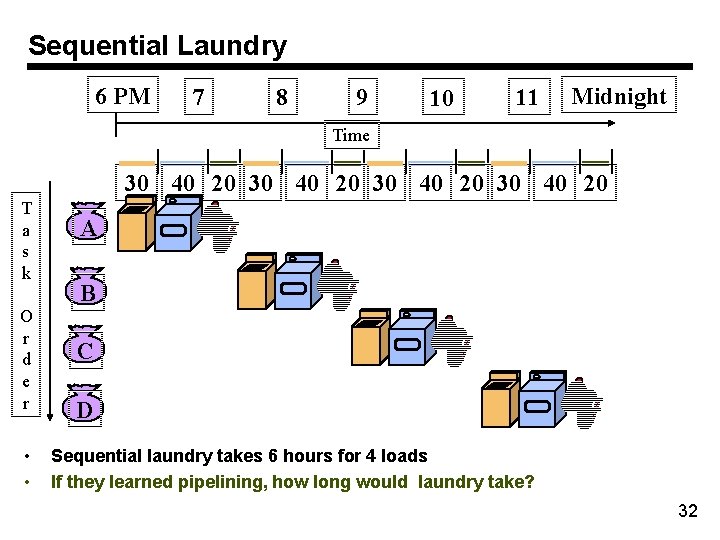

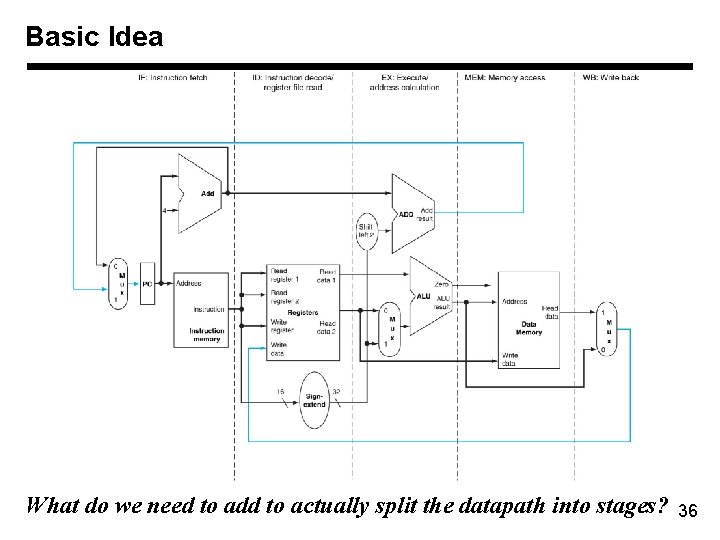

Pipelining: It's Natural!

- Ann, Brian, Cathy, and Dave each have one load of clothes to wash, dry, and fold
- Washer takes 30 minutes
- Dryer takes 40 minutes
- "Folder" takes 20 minutes

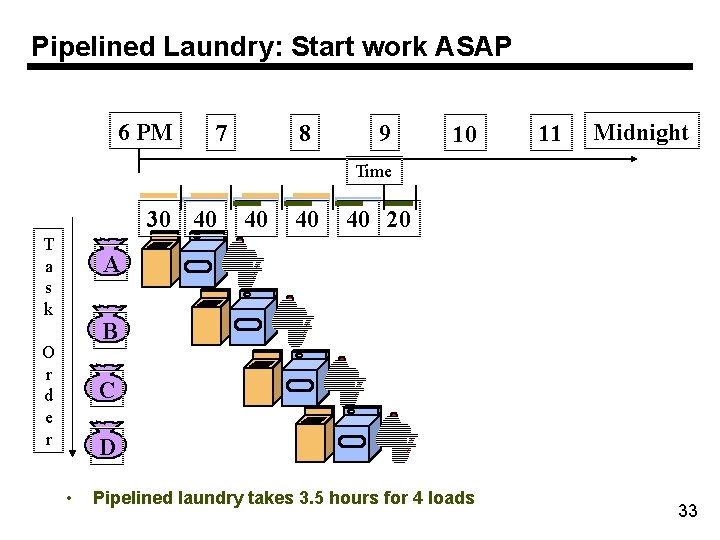

Sequential Laundry

- [Figure: timeline from 6 PM to midnight; loads A, B, C, D in task order, each taking 30, 40, and 20 minutes back to back.]
- Sequential laundry takes 6 hours for 4 loads
- If they learned pipelining, how long would laundry take?

Pipelined Laundry: Start work ASAP

- [Figure: timeline starting at 6 PM; loads A, B, C, D overlap, with time segments of 30, 40, 40, 40, 40, and 20 minutes.]
- Pipelined laundry takes 3.5 hours for 4 loads
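
The 6 hour and 3.5 hour figures can be reproduced with a small simulation. This is a sketch under one assumption not stated on the slides: a finished load may wait between stages until the next stage is free. The names `sequential_minutes` and `pipelined_minutes` are illustrative.

```python
STAGES = [30, 40, 20]  # washer, dryer, folder (minutes)


def sequential_minutes(loads, stages=STAGES):
    """Each load runs through every stage before the next load starts."""
    return loads * sum(stages)


def pipelined_minutes(loads, stages=STAGES):
    """A load enters a stage once it has left the previous one and the stage is free."""
    free_at = [0] * len(stages)  # time at which each stage becomes free
    finish = 0
    for _ in range(loads):
        t = 0  # every load is ready at time 0
        for s, duration in enumerate(stages):
            start = max(t, free_at[s])
            t = start + duration
            free_at[s] = t
        finish = t
    return finish


seq = sequential_minutes(4)  # 360 minutes = 6 hours
pipe = pipelined_minutes(4)  # 210 minutes = 3.5 hours
```

The pipelined total is 30 + 4 x 40 + 20 minutes: the 40-minute dryer is the slowest stage, so it sets the rate at which loads complete.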

Pipelining Lessons

- Pipelining doesn't help the latency of a single task; it helps the throughput of the entire workload
- Pipeline rate is limited by the slowest pipeline stage
- What is the speedup of a pipeline of n stages?

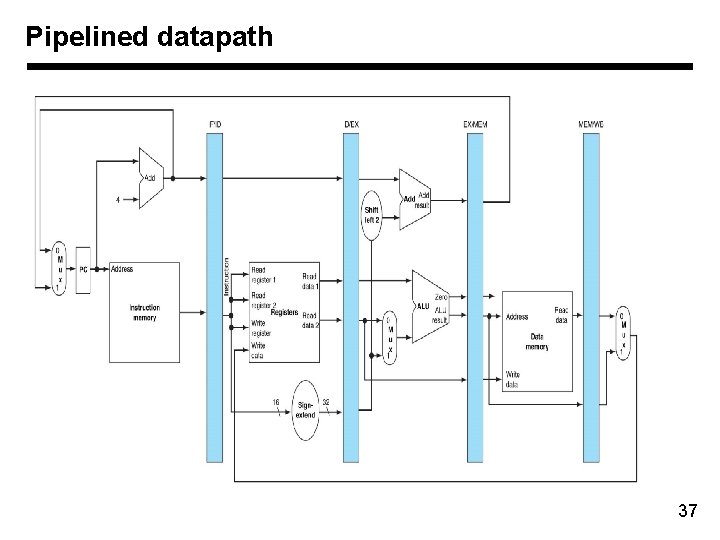

Pipelining

- Improve performance by increasing instruction throughput
- Ideal speedup is the number of stages in the pipeline. Do we achieve this?

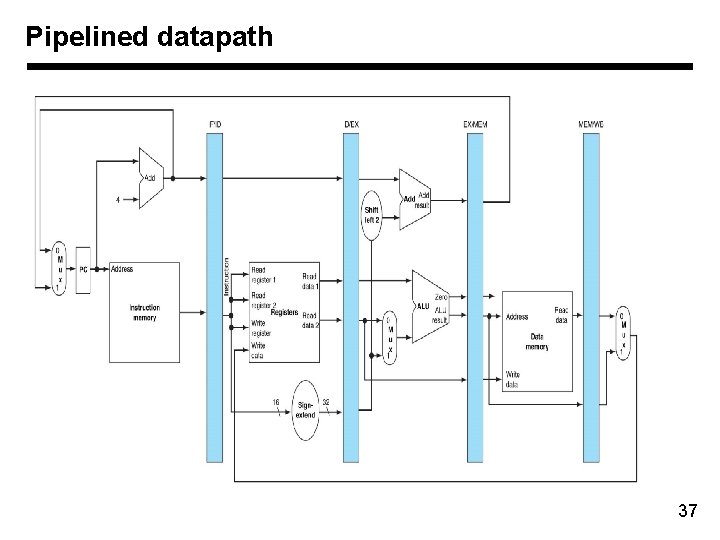

Basic Idea

- What do we need to add to actually split the datapath into stages?

Pipelined datapath

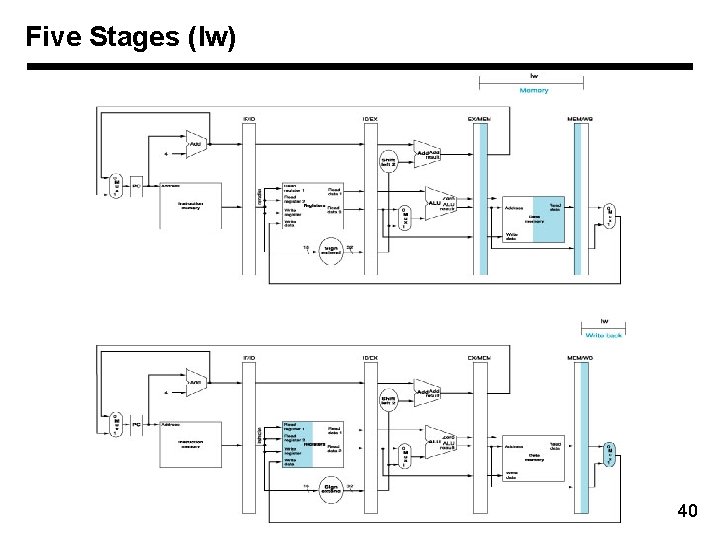

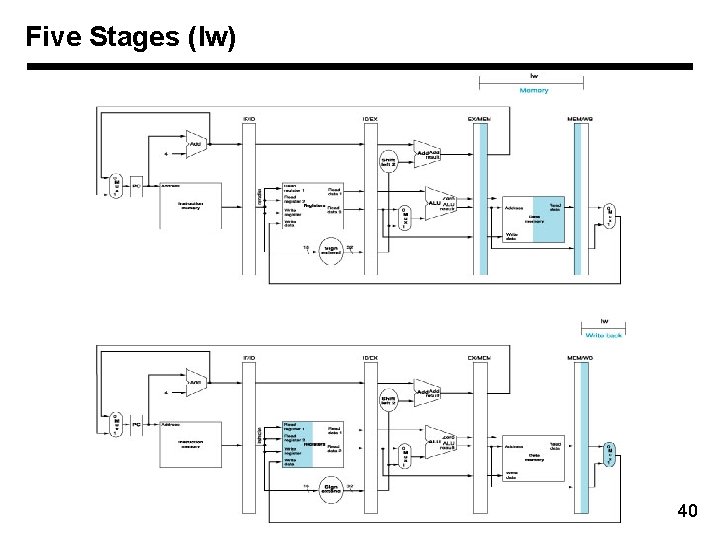

Five Stages (lw)

- Figure legend for memory and registers: left half shaded = write, right half shaded = read

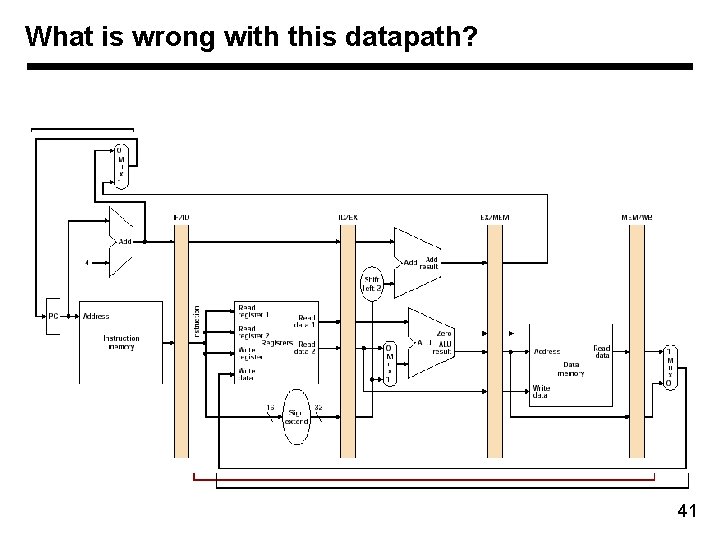

What is wrong with this datapath?

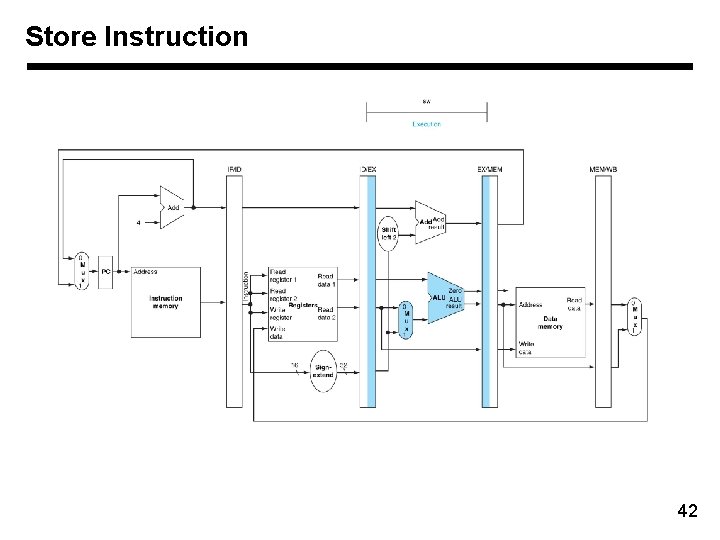

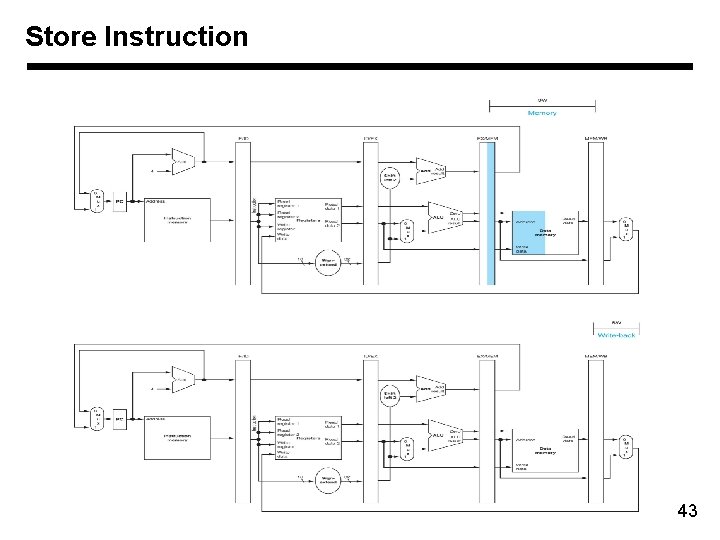

Store Instruction

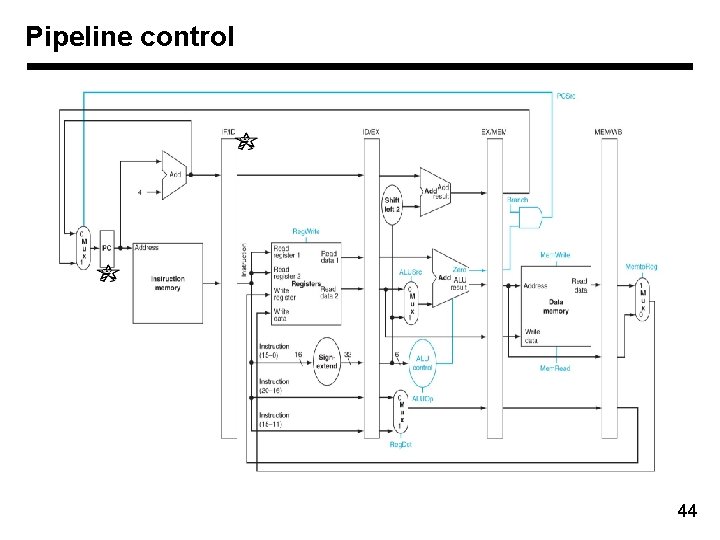

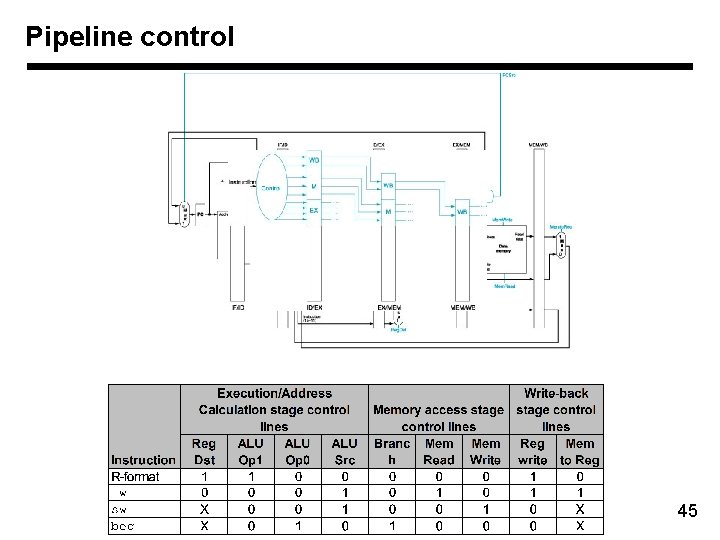

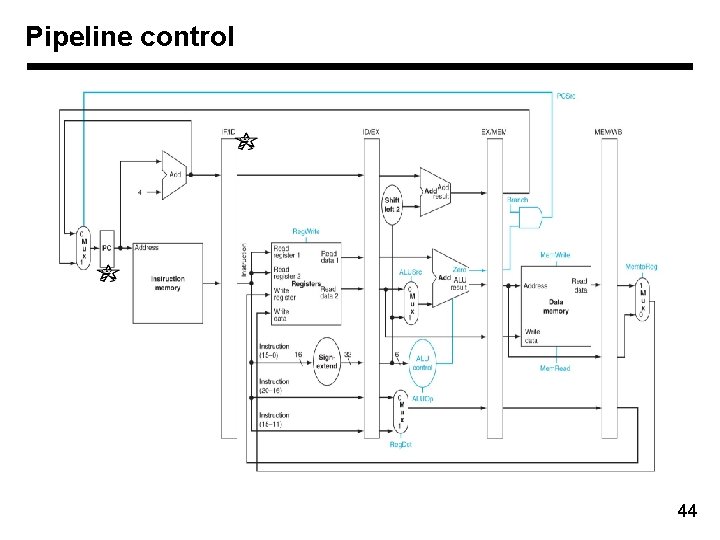

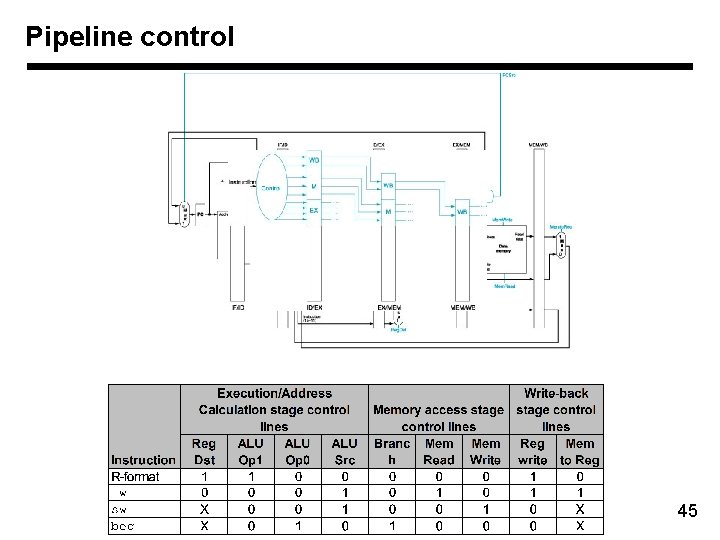

Pipeline control

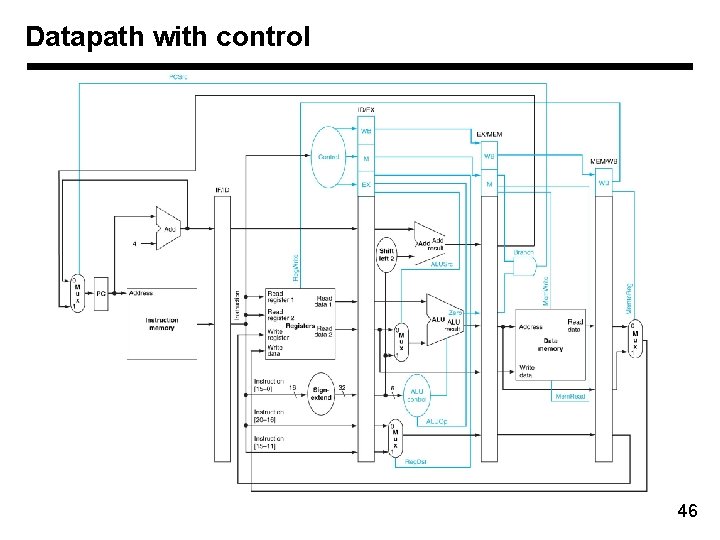

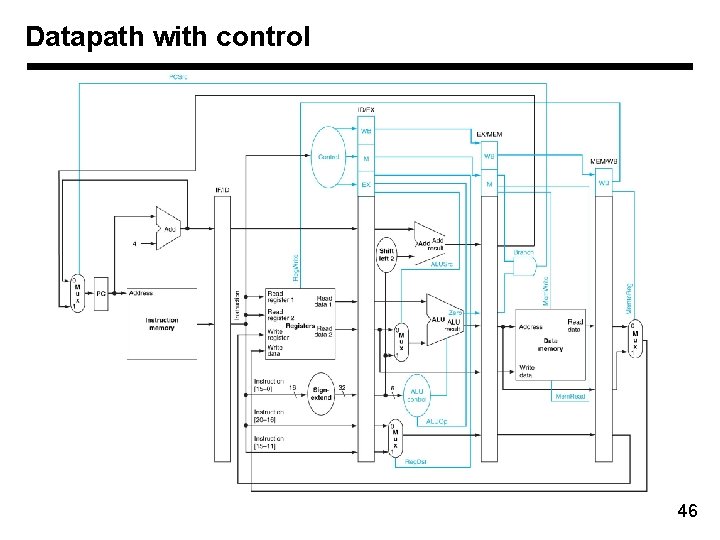

Datapath with control

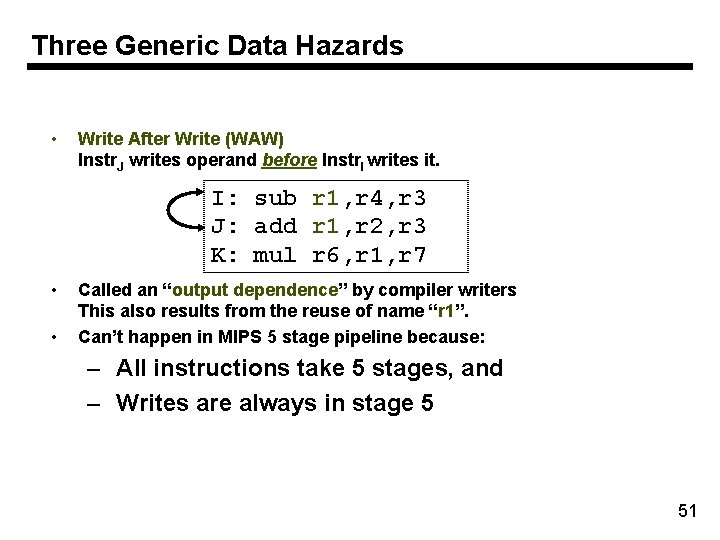

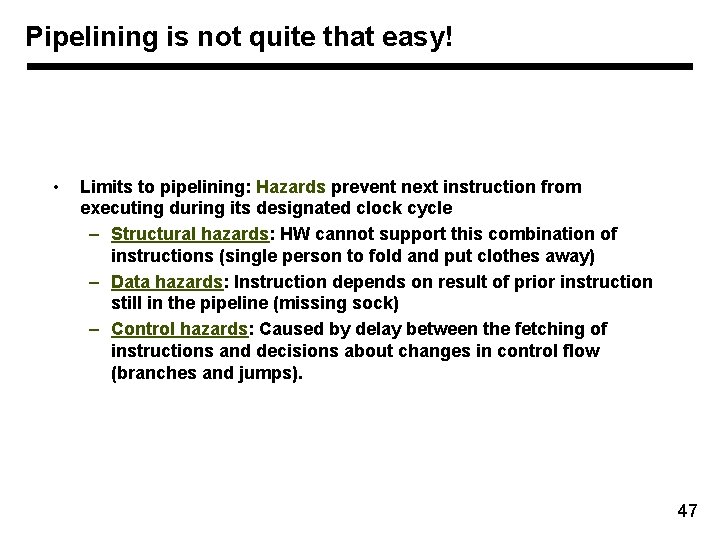

Pipelining is not quite that easy!

- Limits to pipelining: hazards prevent the next instruction from executing during its designated clock cycle
  - Structural hazards: HW cannot support this combination of instructions (single person to fold and put clothes away)
  - Data hazards: instruction depends on the result of a prior instruction still in the pipeline (missing sock)
  - Control hazards: caused by the delay between the fetching of instructions and decisions about changes in control flow (branches and jumps)

One Memory Port/Structural Hazards (Figure A.4, Page A-14)

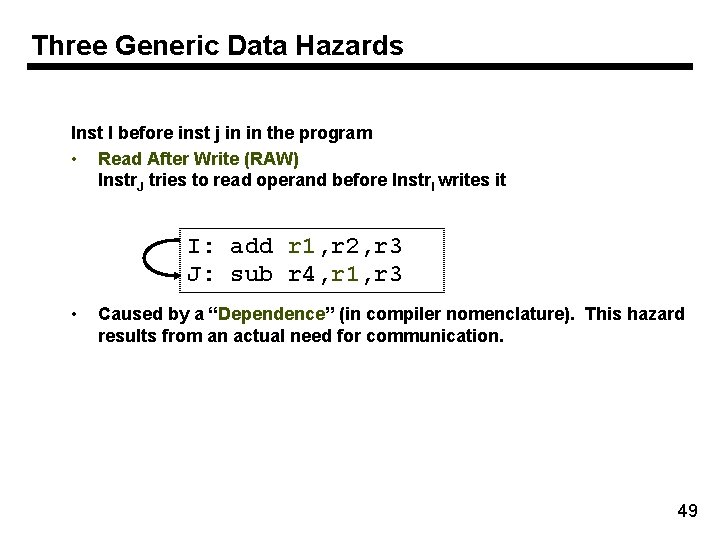

Three Generic Data Hazards

Instr. I comes before Instr. J in the program.

- Read After Write (RAW): Instr. J tries to read an operand before Instr. I writes it
  - I: `add r1, r2, r3`
  - J: `sub r4, r1, r3`
- Caused by a "dependence" (in compiler nomenclature). This hazard results from an actual need for communication.

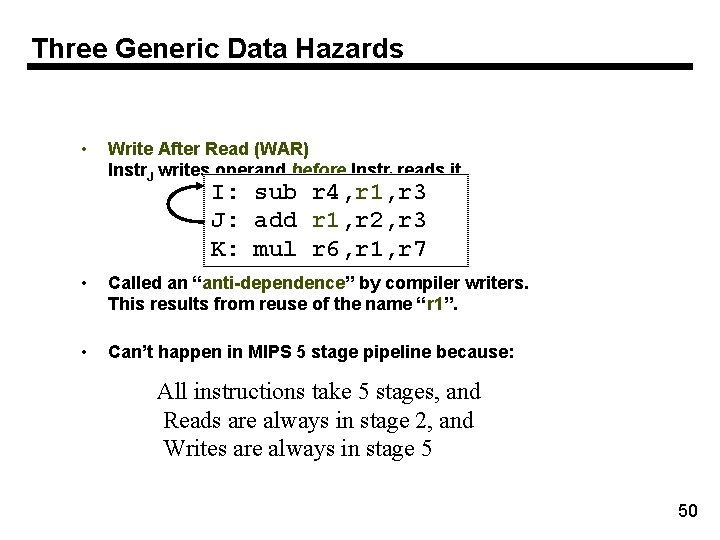

Three Generic Data Hazards

- Write After Read (WAR): Instr. J writes an operand before Instr. I reads it
  - I: `sub r4, r1, r3`
  - J: `add r1, r2, r3`
  - K: `mul r6, r1, r7`
- Called an "anti-dependence" by compiler writers. This results from reuse of the name "r1".
- Can't happen in the MIPS 5-stage pipeline because:
  - all instructions take 5 stages, and
  - reads are always in stage 2, and
  - writes are always in stage 5

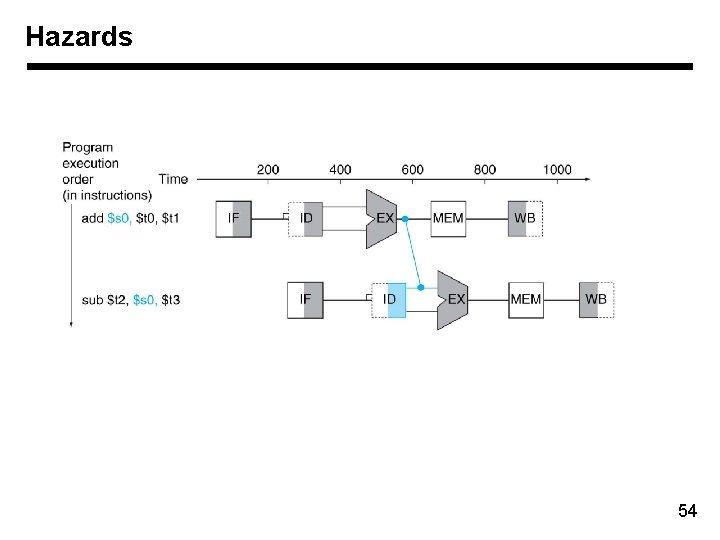

Three Generic Data Hazards

- Write After Write (WAW): Instr. J writes an operand before Instr. I writes it
  - I: `sub r1, r4, r3`
  - J: `add r1, r2, r3`
  - K: `mul r6, r1, r7`
- Called an "output dependence" by compiler writers. This also results from the reuse of the name "r1".
- Can't happen in the MIPS 5-stage pipeline because:
  - all instructions take 5 stages, and
  - writes are always in stage 5
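
The three hazard classes come down to comparing register names between an earlier instruction I and a later instruction J. The sketch below classifies the slide examples; the `Instr` tuple and `data_hazards` function are illustrative names, and the check is purely name-based (it says which dependences exist, not whether a given pipeline actually stalls on them).

```python
from typing import NamedTuple


class Instr(NamedTuple):
    op: str
    dest: str
    srcs: tuple


def data_hazards(i: Instr, j: Instr) -> set:
    """Name-based dependences between earlier instruction i and later instruction j."""
    found = set()
    if i.dest in j.srcs:
        found.add("RAW")  # j reads what i writes (true dependence)
    if j.dest in i.srcs:
        found.add("WAR")  # j writes what i reads (anti-dependence)
    if i.dest == j.dest:
        found.add("WAW")  # both write the same register (output dependence)
    return found


raw = data_hazards(Instr("add", "r1", ("r2", "r3")), Instr("sub", "r4", ("r1", "r3")))
war = data_hazards(Instr("sub", "r4", ("r1", "r3")), Instr("add", "r1", ("r2", "r3")))
waw = data_hazards(Instr("sub", "r1", ("r4", "r3")), Instr("add", "r1", ("r2", "r3")))
```

Only RAW reflects real data flow; WAR and WAW arise from reusing a register name, which is why the in-order 5-stage pipeline never trips over them.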

Representation

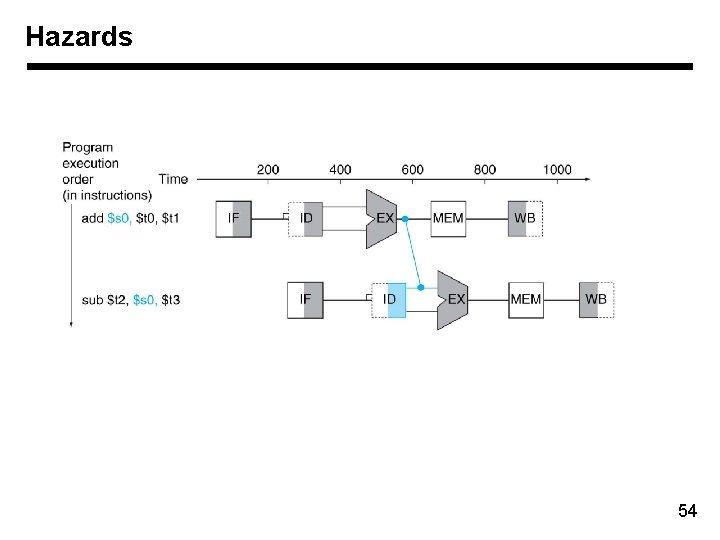

Dependencies

- Problem with starting the next instruction before the first is finished
  - Dependencies that "go backward in time" are data hazards

Hazards

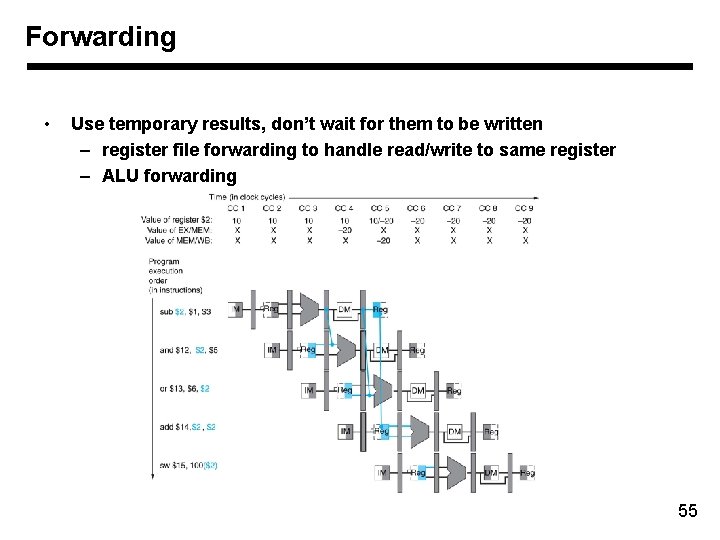

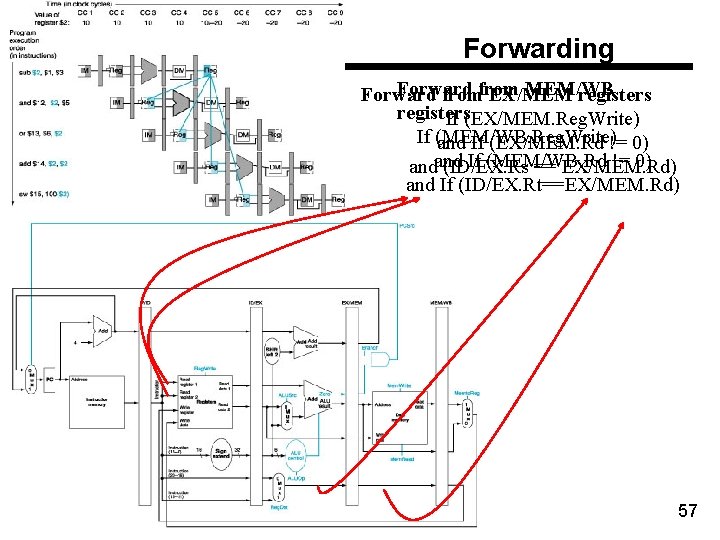

Forwarding

- Use temporary results, don't wait for them to be written
  - register file forwarding to handle read/write to the same register
  - ALU forwarding

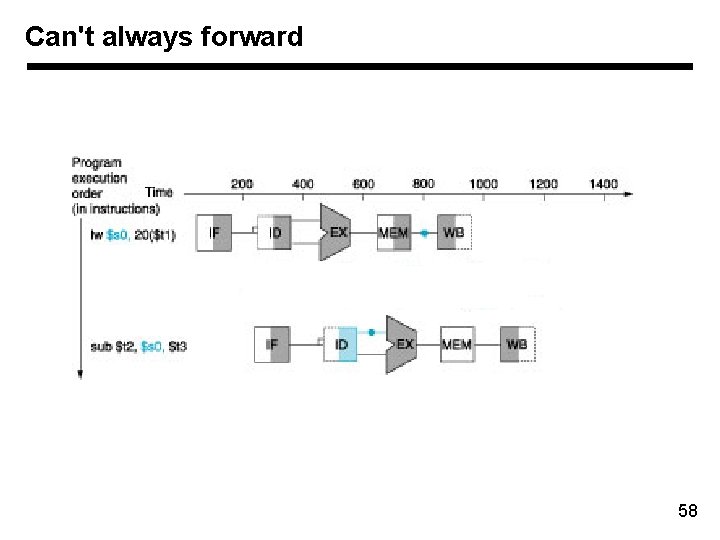

Forwarding

- `sub $2, $1, $3`
- `and $12, $2, $5`
- `or $13, $6, $2`
- `add $14, $2, $2`
- `sw $15, 100($2)`

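Forwarding

- Forward from EX/MEM registers if:
  - EX/MEM.RegWrite, and
  - EX/MEM.Rd != 0, and
  - ID/EX.Rs == EX/MEM.Rd (first ALU operand) or ID/EX.Rt == EX/MEM.Rd (second ALU operand)
- Forward from MEM/WB registers if:
  - MEM/WB.RegWrite, and
  - MEM/WB.Rd != 0, and
  - ID/EX.Rs == MEM/WB.Rd (first ALU operand) or ID/EX.Rt == MEM/WB.Rd (second ALU operand)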
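
The forwarding conditions can be written as a small selection function, applied once per ALU source register. This is a sketch, not the slide's hardware: the dictionary layout and the name `forward_select` are assumptions, and giving EX/MEM priority over MEM/WB (because it holds the more recent result) follows the usual textbook treatment.

```python
def forward_select(src_reg: int, ex_mem: dict, mem_wb: dict) -> str:
    """Pick where one ALU operand comes from: 'EX/MEM', 'MEM/WB', or 'REGFILE'."""
    # The most recent producer wins, so EX/MEM is checked first.
    if ex_mem["RegWrite"] and ex_mem["Rd"] != 0 and ex_mem["Rd"] == src_reg:
        return "EX/MEM"
    if mem_wb["RegWrite"] and mem_wb["Rd"] != 0 and mem_wb["Rd"] == src_reg:
        return "MEM/WB"
    return "REGFILE"


# Pipeline-register contents while later instructions sit in EX
# (hypothetical snapshot for: sub $2,$1,$3 / and $12,$2,$5 / or $13,$6,$2).
sub_result = {"RegWrite": True, "Rd": 2}
and_result = {"RegWrite": True, "Rd": 12}
idle = {"RegWrite": False, "Rd": 0}

and_rs = forward_select(2, ex_mem=sub_result, mem_wb=idle)       # and reads $2
and_rt = forward_select(5, ex_mem=sub_result, mem_wb=idle)       # and reads $5
or_rt = forward_select(2, ex_mem=and_result, mem_wb=sub_result)  # or reads $2
```

The `Rd != 0` test matters because register 0 always reads as zero, so a result nominally written to it must never be forwarded.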

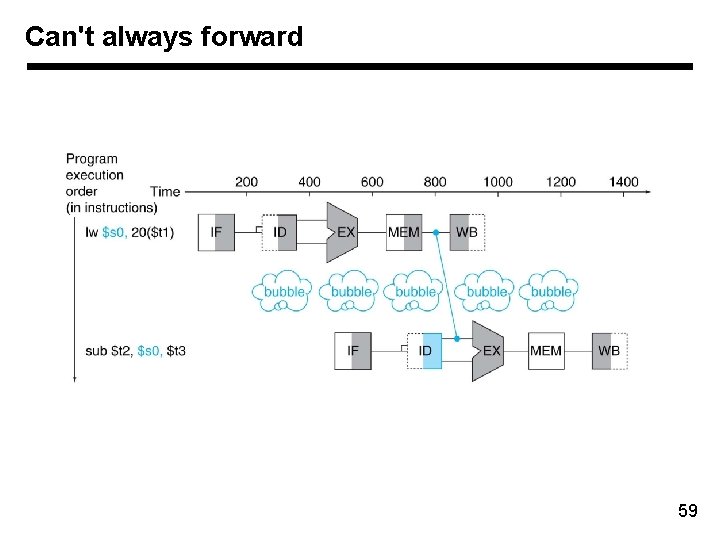

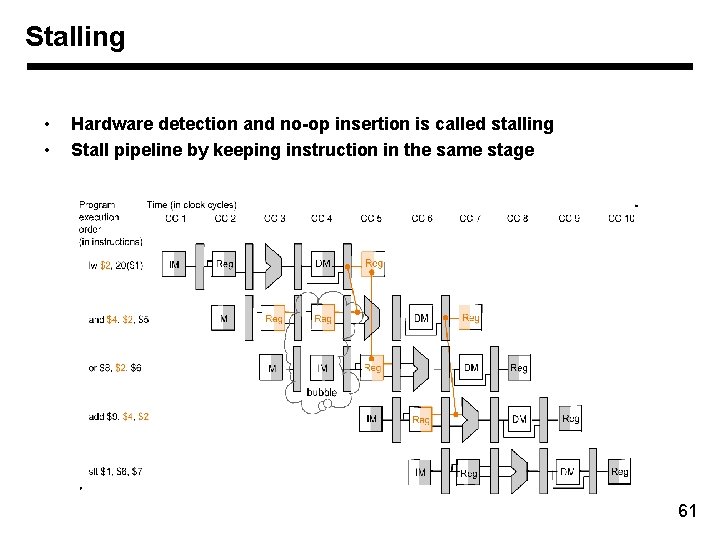

Can't always forward

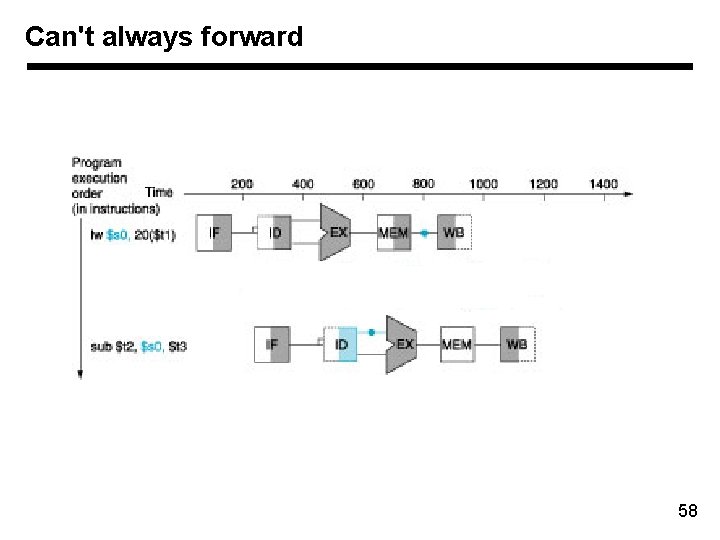

Can't always forward

- Load word can still cause a hazard:
  - an instruction tries to read a register following a load instruction that writes to the same register

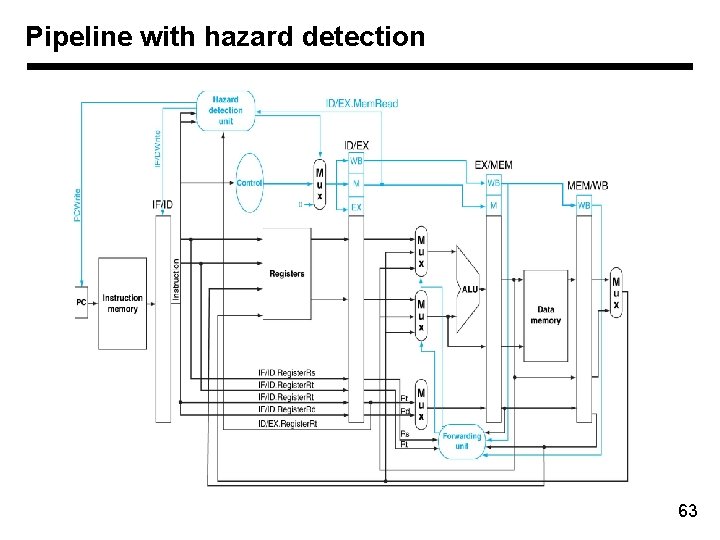

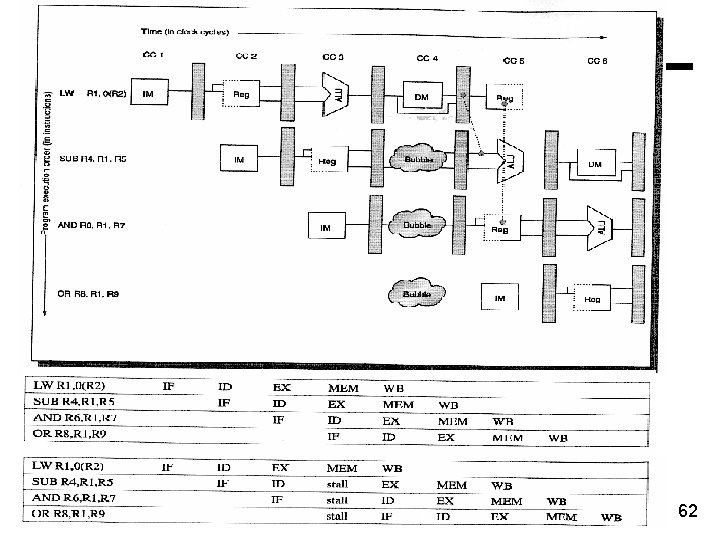

Stalling

- Hardware detection and no-op insertion is called stalling
- Stall the pipeline by keeping an instruction in the same stage
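
A load-use hazard check can be sketched as follows. The condition used here (the instruction in EX is a load whose destination `Rt` matches a source register of the instruction in ID) is the usual textbook test rather than something stated on the slide, and the names `must_stall`, `MemRead`, `Rs`, and `Rt` are assumed for illustration.

```python
def must_stall(id_ex: dict, if_id: dict) -> bool:
    """True when the instruction in ID needs the result of a load still in EX."""
    return bool(id_ex["MemRead"]) and id_ex["Rt"] in (if_id["Rs"], if_id["Rt"])


# lw $2, 20($1) is in EX; and $4, $2, $5 is in ID and reads $2.
load_in_ex = {"MemRead": True, "Rt": 2}
and_in_id = {"Rs": 2, "Rt": 5}
stall_needed = must_stall(load_in_ex, and_in_id)

# An ALU instruction in EX never triggers this check; forwarding covers it.
add_in_ex = {"MemRead": False, "Rt": 2}
no_stall = must_stall(add_in_ex, and_in_id)
```

When the check fires, the hardware holds the PC and the IF/ID register for one cycle and sends a no-op down the pipeline, after which forwarding from MEM/WB can supply the loaded value.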

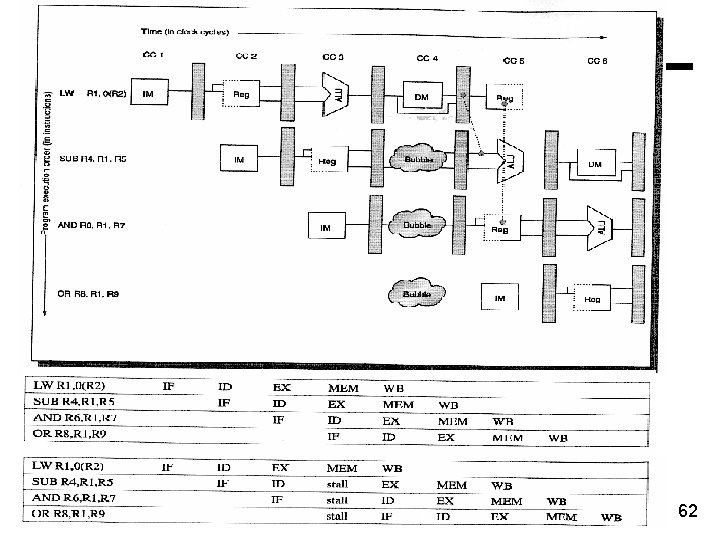


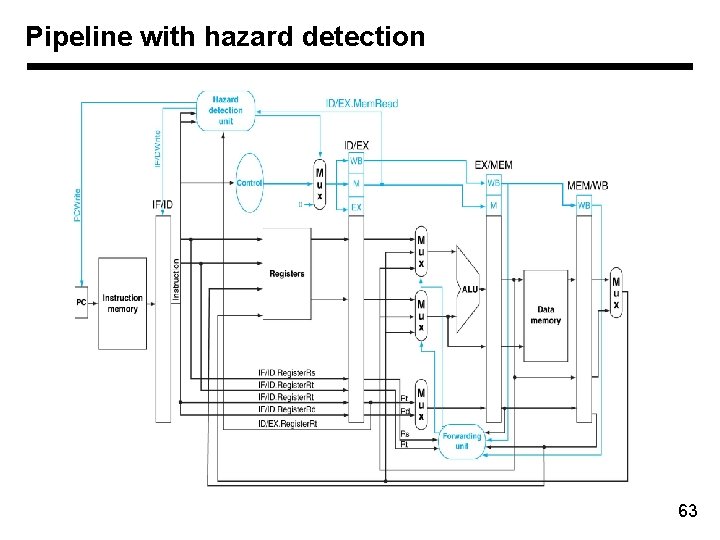

Pipeline with hazard detection

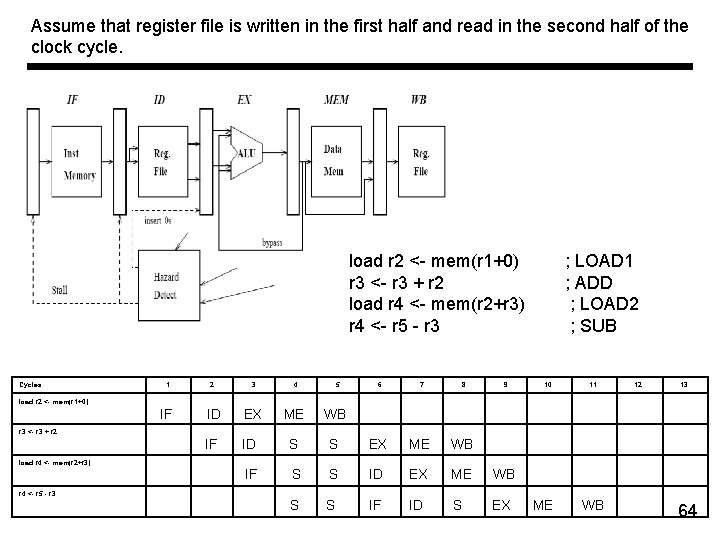

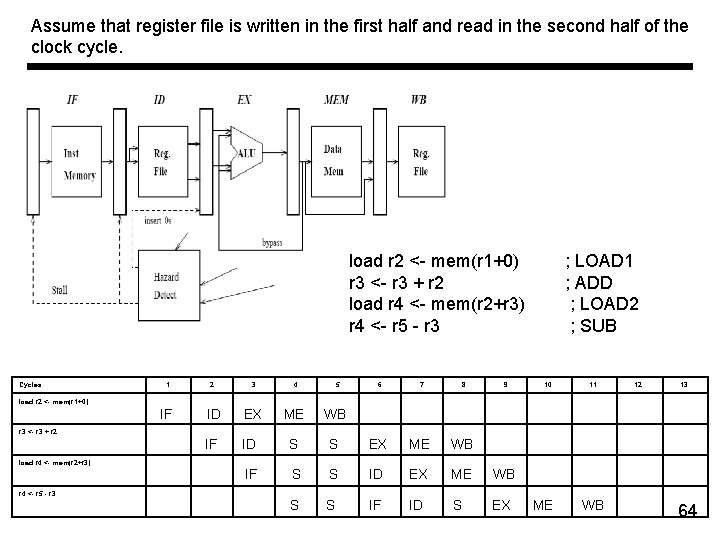

Assume that the register file is written in the first half and read in the second half of the clock cycle.

- LOAD 1: `load r2 <- mem(r1+0)`
- ADD: `r3 <- r3 + r2`
- LOAD 2: `load r4 <- mem(r2+r3)`
- SUB: `r4 <- r5 - r3`

[Pipeline timing diagram: cycles 1 to 13, one row per instruction, showing the IF, ID, EX, ME, WB stages and stall (S) cycles.]

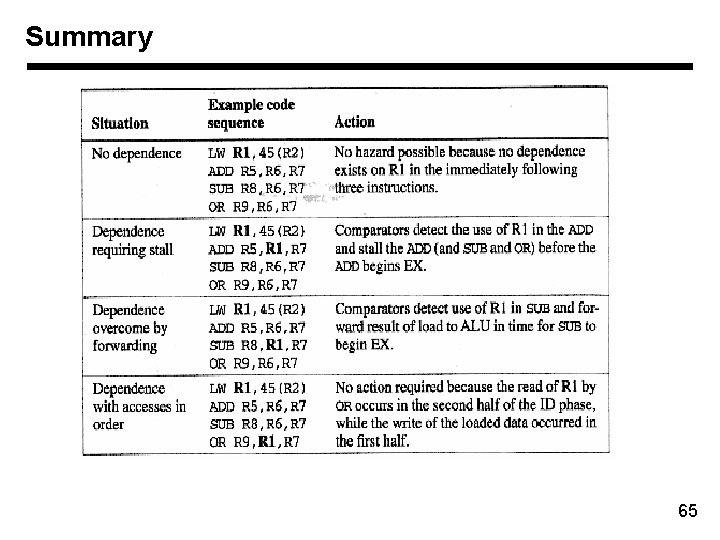

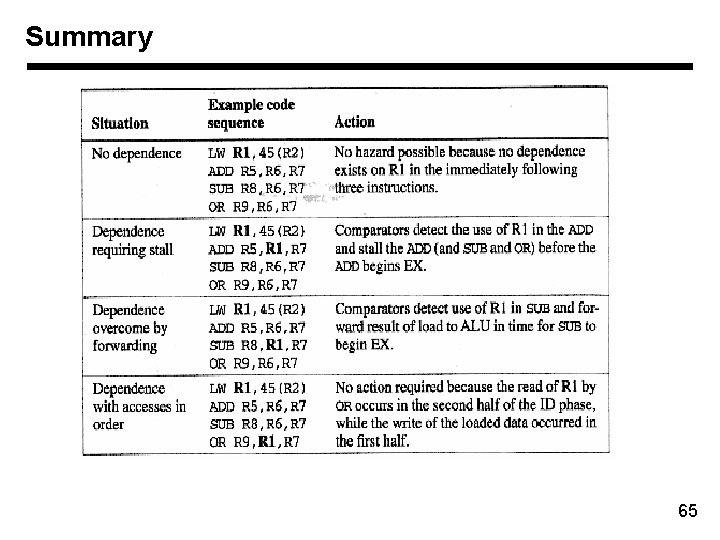

Summary

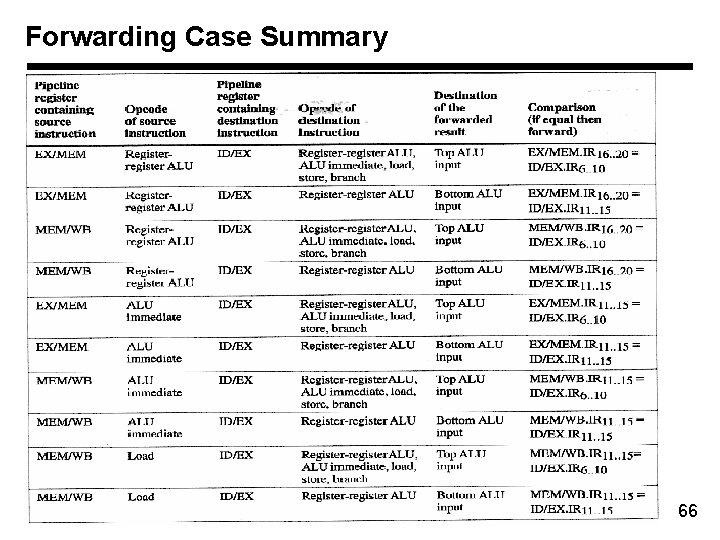

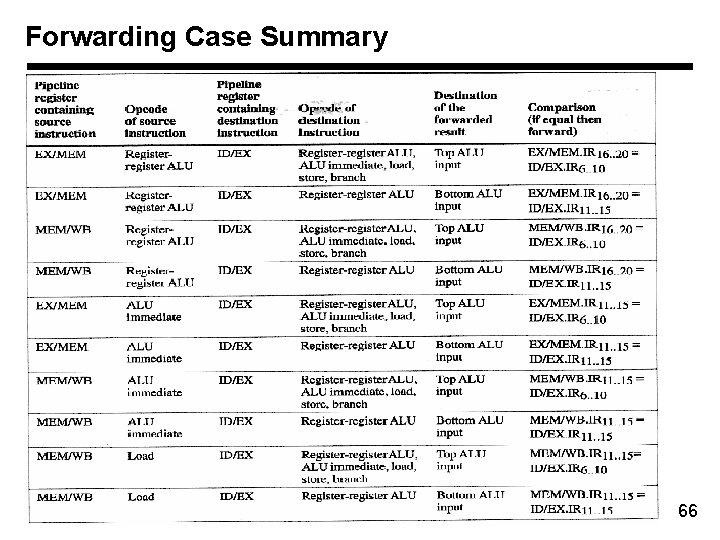

Forwarding Case Summary

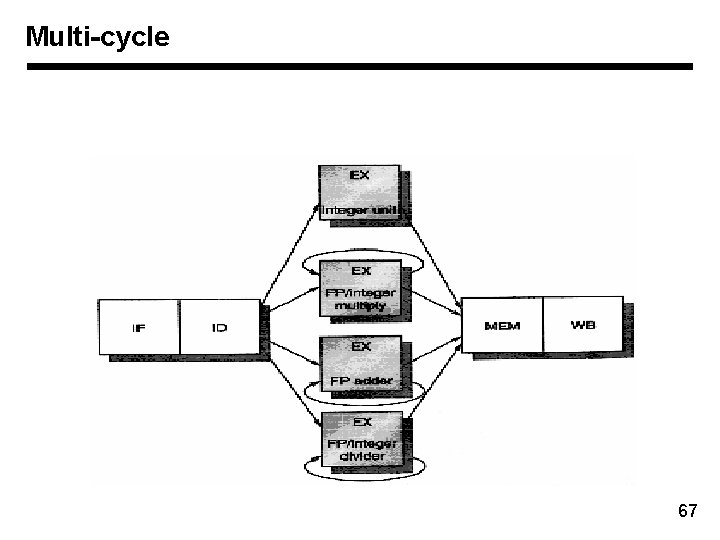

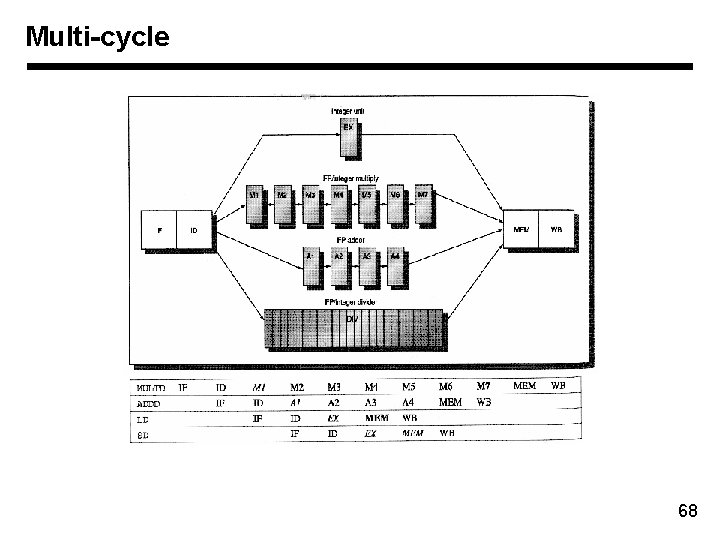

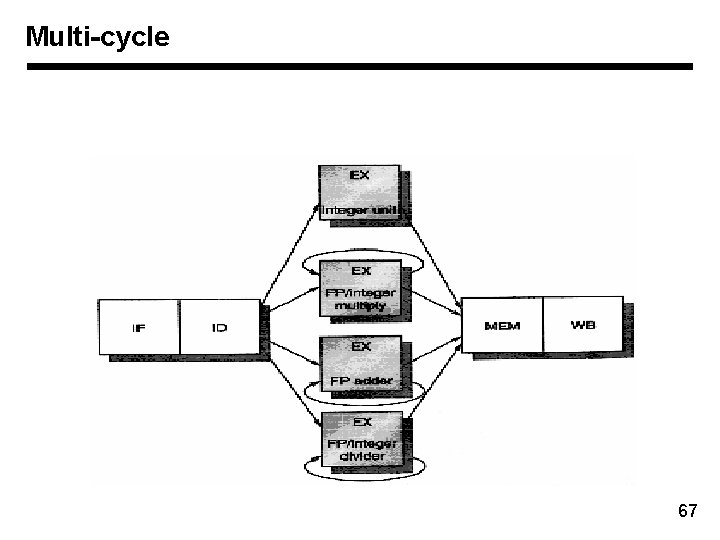

Multi-cycle

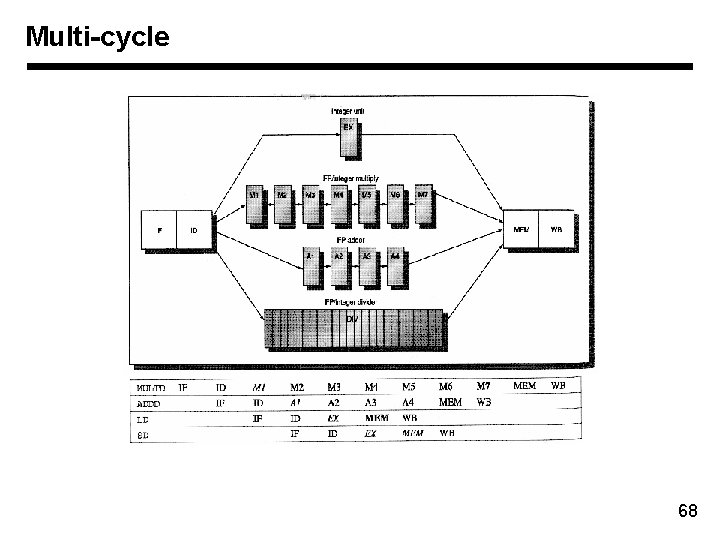


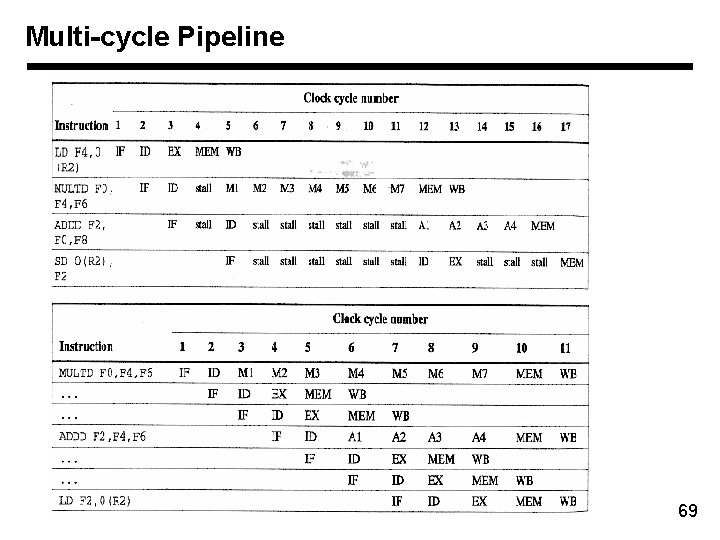

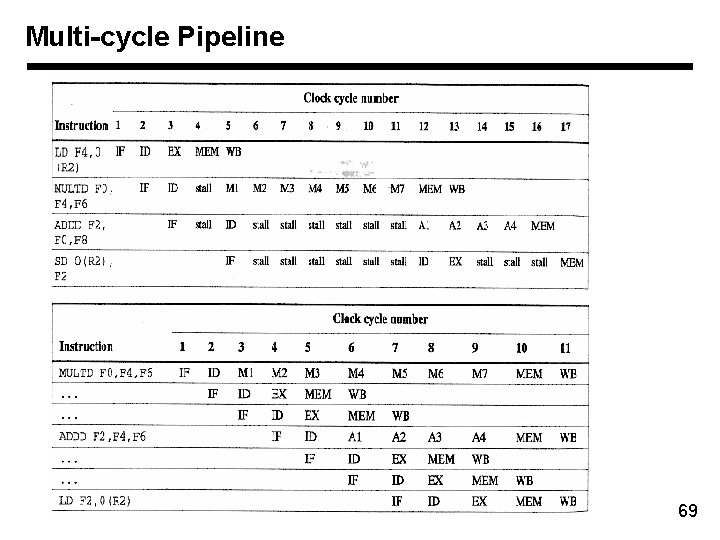

Multi-cycle Pipeline

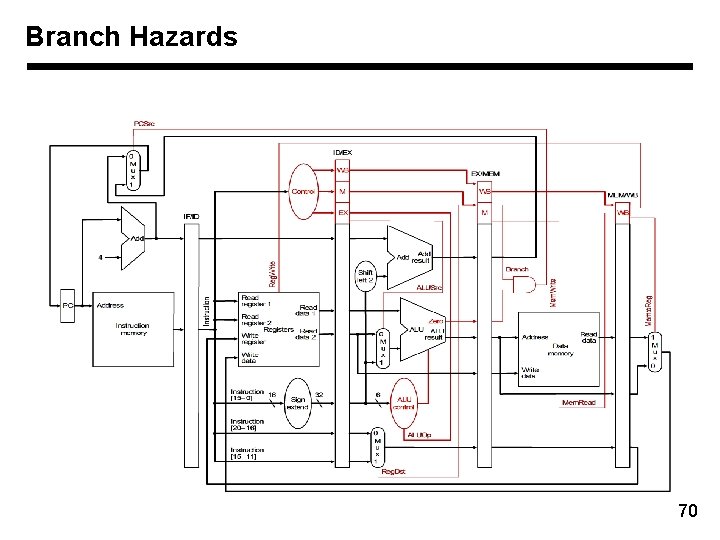

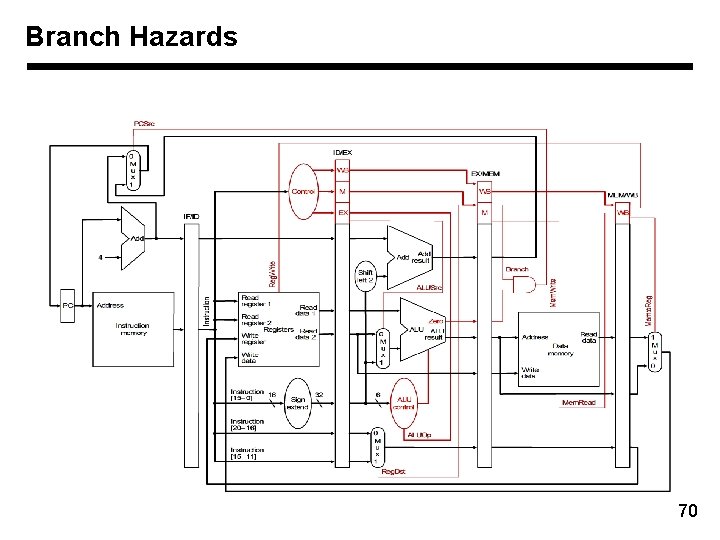

Branch Hazards

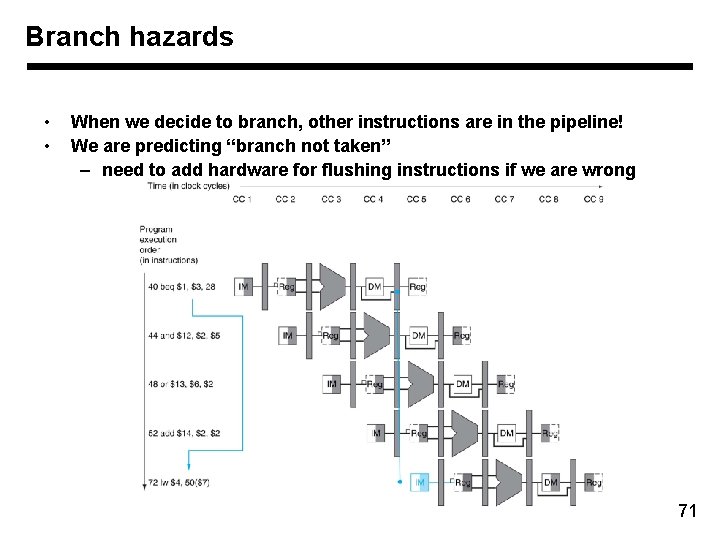

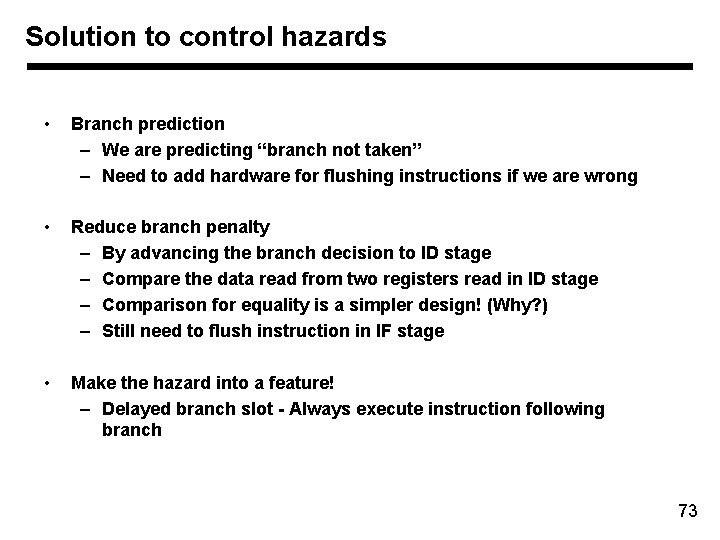

Branch hazards

- When we decide to branch, other instructions are in the pipeline!
- We are predicting "branch not taken"
  - need to add hardware for flushing instructions if we are wrong

Branch detection in ID stage

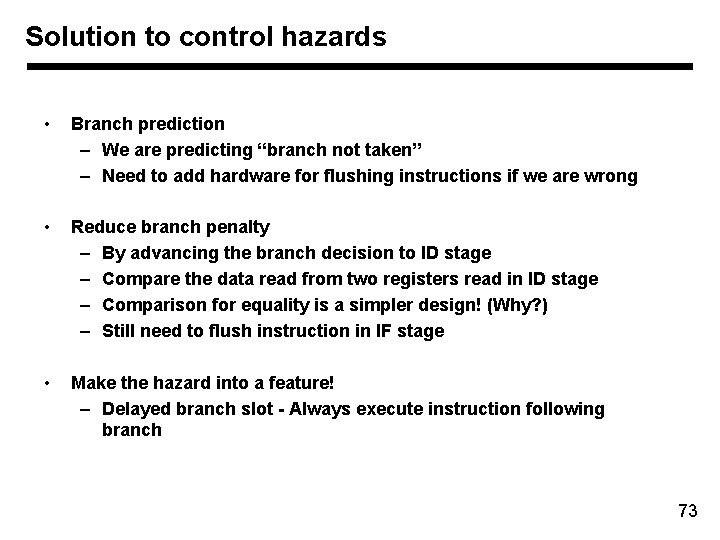

Solution to control hazards

- Branch prediction
  - We are predicting "branch not taken"
  - Need to add hardware for flushing instructions if we are wrong
- Reduce branch penalty
  - By advancing the branch decision to the ID stage
  - Compare the data read from the two registers in the ID stage
  - Comparison for equality is a simpler design! (Why?)
  - Still need to flush the instruction in the IF stage
- Make the hazard into a feature!
  - Delayed branch slot: always execute the instruction following the branch

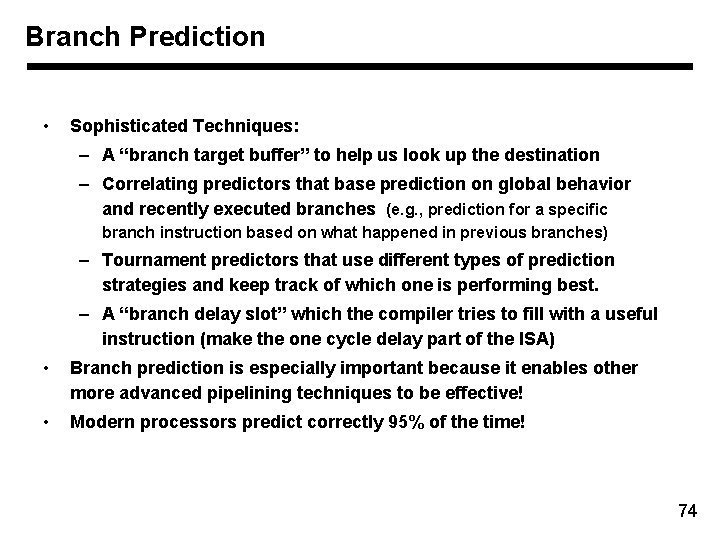

Branch Prediction

- Sophisticated techniques:
  - A "branch target buffer" to help us look up the destination
  - Correlating predictors that base prediction on global behavior and recently executed branches (e.g., prediction for a specific branch instruction based on what happened in previous branches)
  - Tournament predictors that use different types of prediction strategies and keep track of which one is performing best
  - A "branch delay slot" which the compiler tries to fill with a useful instruction (make the one cycle delay part of the ISA)
- Branch prediction is especially important because it enables other more advanced pipelining techniques to be effective!
- Modern processors predict correctly 95% of the time!

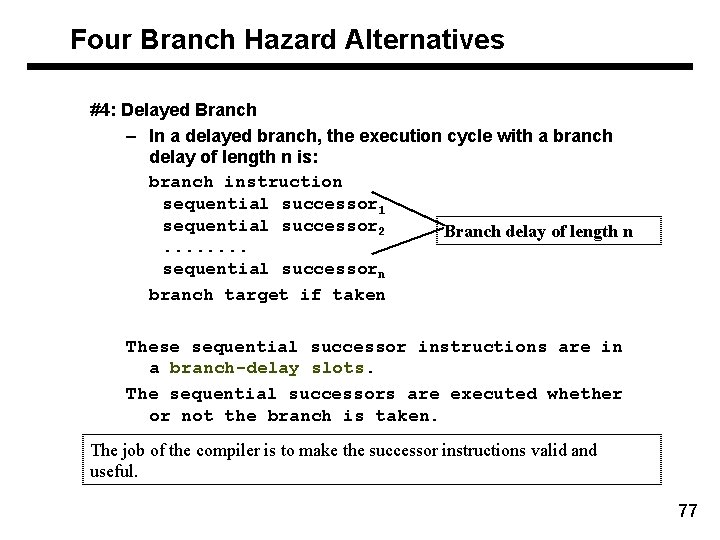

Four Branch Hazard Alternatives

- #1: Stall until branch direction is clear: the branch penalty is fixed and cannot be reduced by software (this is the example of MIPS)
- #2: Predict Branch Not Taken (treat every branch as not taken)
  - Execute successor instructions in sequence
  - "Flush" instructions in the pipeline if the branch is actually taken
  - 47% of MIPS branches are not taken on average
  - PC+4 is already calculated, so use it to get the next instruction

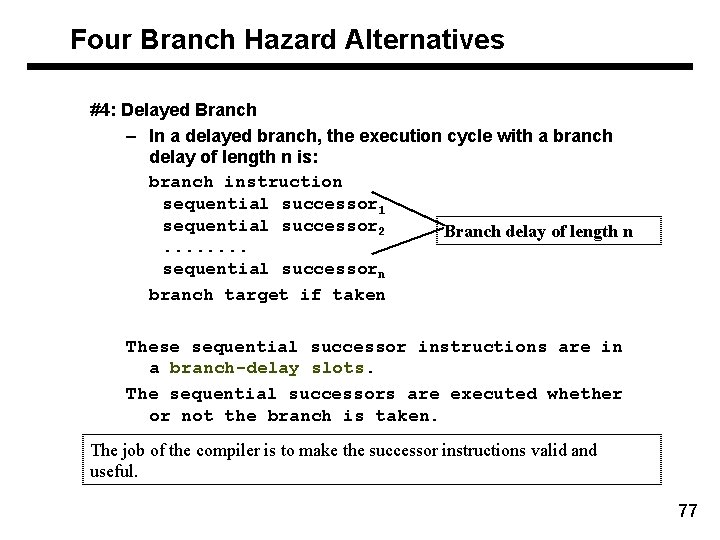

Four Branch Hazard Alternatives

- #3: Predict Branch Taken (treat every branch as taken)
  - As soon as the branch is decoded and the target address is computed, we assume the branch is taken and begin fetching and executing at the target address
  - 53% of MIPS branches are taken on average
  - Because in our MIPS pipeline we don't know the target address any earlier than we know the branch outcome, there is no advantage in this approach for MIPS
  - MIPS still incurs a 1 cycle branch penalty
  - Other machines: branch target known before outcome
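
The taken/not-taken percentages translate into an average penalty per branch. The sketch below assumes a 1 cycle penalty whenever the pipeline guessed wrong (matching the 1 cycle figure on the slide) and zero penalty otherwise; the function name `average_branch_penalty` is illustrative.

```python
def average_branch_penalty(taken_fraction, penalty_taken, penalty_not_taken):
    """Expected stall cycles per branch, weighted by how often branches are taken."""
    return taken_fraction * penalty_taken + (1 - taken_fraction) * penalty_not_taken


TAKEN = 0.53  # fraction of MIPS branches taken, from the slides

# Alternative #1: always stall one cycle, whatever the outcome.
stall_always = average_branch_penalty(TAKEN, 1, 1)

# Alternative #2: predict not taken, pay one cycle only when the branch is taken.
predict_not_taken = average_branch_penalty(TAKEN, 1, 0)
```

Under these assumptions, predict-not-taken cuts the average penalty from 1 cycle to about 0.53 cycles per branch, since only the 53% of branches that are taken pay for a flush.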

Four Branch Hazard Alternatives

- #4: Delayed Branch
  - In a delayed branch, the execution cycle with a branch delay of length n is:
    - branch instruction
    - sequential successor 1
    - sequential successor 2
    - ...
    - sequential successor n
    - branch target if taken
  - These sequential successor instructions are in the branch-delay slots
  - The sequential successors are executed whether or not the branch is taken
  - The job of the compiler is to make the successor instructions valid and useful

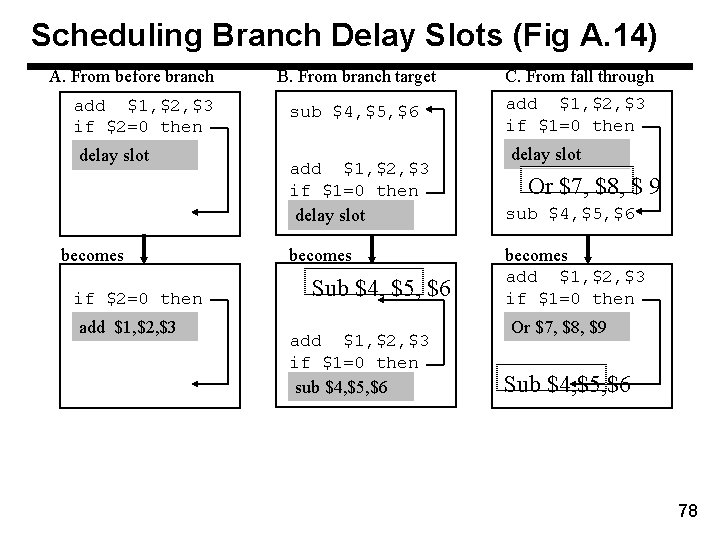

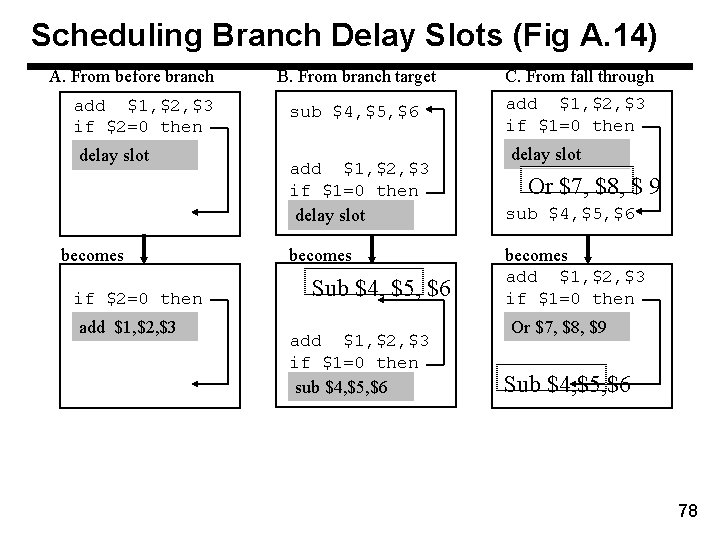

Scheduling Branch Delay Slots (Fig A.14)

| Strategy | Before scheduling | After scheduling |
| --- | --- | --- |
| A. From before branch | `add $1, $2, $3`; `if $2 = 0 then`; (delay slot) | `if $2 = 0 then`; `add $1, $2, $3` |
| B. From branch target | `sub $4, $5, $6` (at target); `add $1, $2, $3`; `if $1 = 0 then`; (delay slot) | `sub $4, $5, $6` (at target); `add $1, $2, $3`; `if $1 = 0 then`; `sub $4, $5, $6` |
| C. From fall through | `add $1, $2, $3`; `if $1 = 0 then`; (delay slot); `or $7, $8, $9`; `sub $4, $5, $6` | `add $1, $2, $3`; `if $1 = 0 then`; `or $7, $8, $9`; `sub $4, $5, $6` |

Delayed Branch

- Where to get instructions to fill the branch delay slot?
  - Before the branch instruction: this is the best choice if feasible
  - From the target address: only valuable when the branch is taken
  - From fall through: only valuable when the branch is not taken
- Compiler effectiveness for a single branch delay slot:
  - Fills about 60% of branch delay slots
  - About 80% of instructions executed in branch delay slots are useful in computation
  - About 50% (60% x 80%) of slots are usefully filled
- Delayed branch downside: as processors go to deeper pipelines and multiple issue, the branch delay grows and more than one delay slot is needed
  - Delayed branching has lost popularity compared to more expensive but more flexible dynamic approaches
  - Growth in available transistors has made dynamic approaches relatively cheaper

Improving Performance

- Try to avoid stalls! E.g., reorder these instructions:
  - `lw $t0, 0($t1)`
  - `lw $t2, 4($t1)`
  - `sw $t2, 0($t1)`
  - `sw $t0, 4($t1)`
- Dynamic pipeline scheduling
  - Hardware chooses which instructions to execute next
  - Will execute instructions out of order (e.g., doesn't wait for a dependency to be resolved, but rather keeps going!)
  - Speculates on branches and keeps the pipeline full (may need to roll back if the prediction is incorrect)
- Trying to exploit instruction-level parallelism

Advanced Pipelining

- Increase the depth of the pipeline
- Start more than one instruction each cycle (multiple issue)
- Loop unrolling to expose more ILP (better scheduling)
- "Superscalar" processors
  - DEC Alpha 21264: 9 stage pipeline, 6 instruction issue
- All modern processors are superscalar and issue multiple instructions, usually with some limitations (e.g., different "pipes")

![Source For i1000 i0 ii1 xi xi s Direct translation Source: For ( i=1000; i>0; i=i-1 ) x[i] = x[i] + s; Direct translation:](https://slidetodoc.com/presentation_image/408d2ac127f68e1cc0020fafe4520556/image-82.jpg)

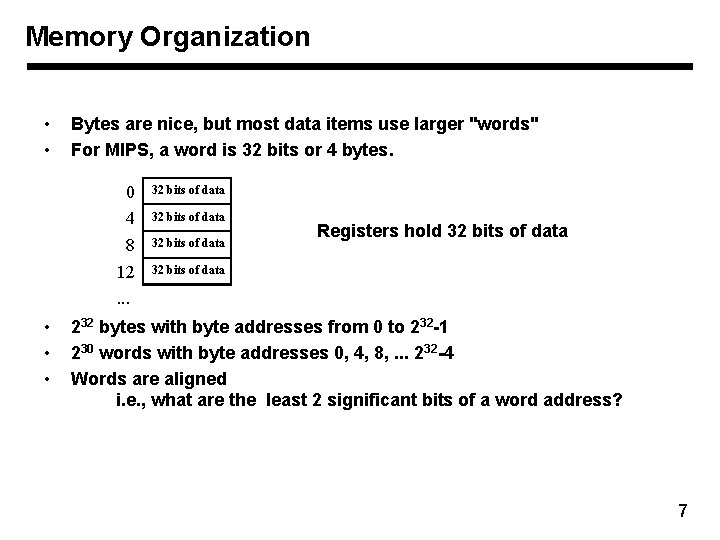

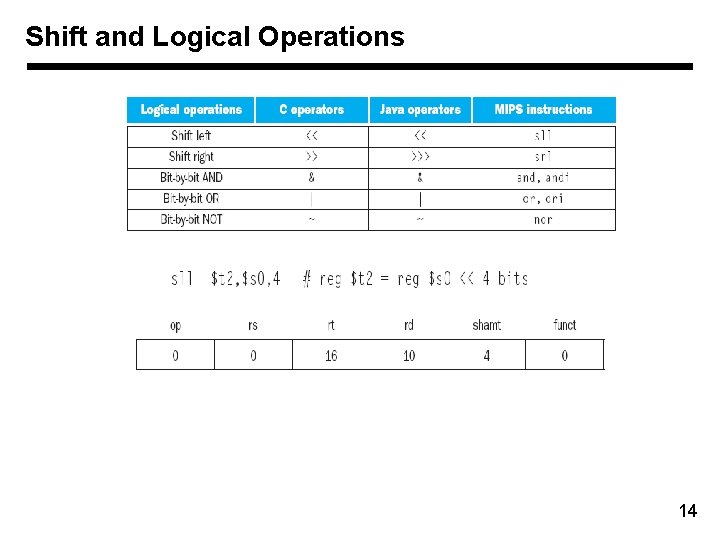

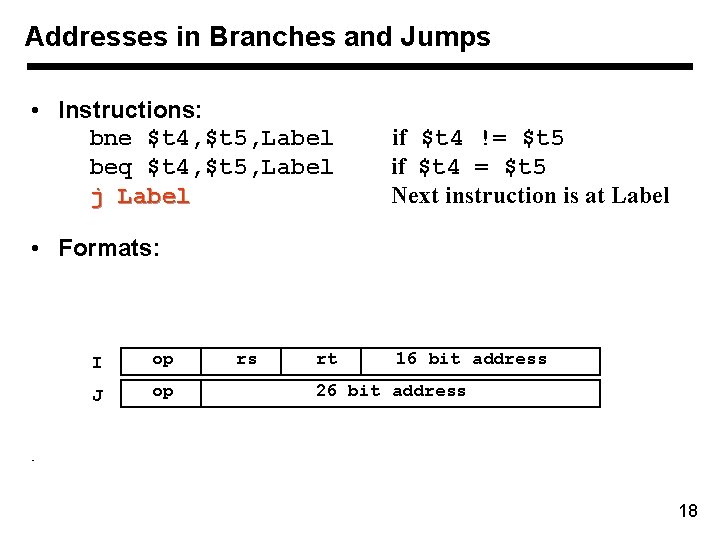

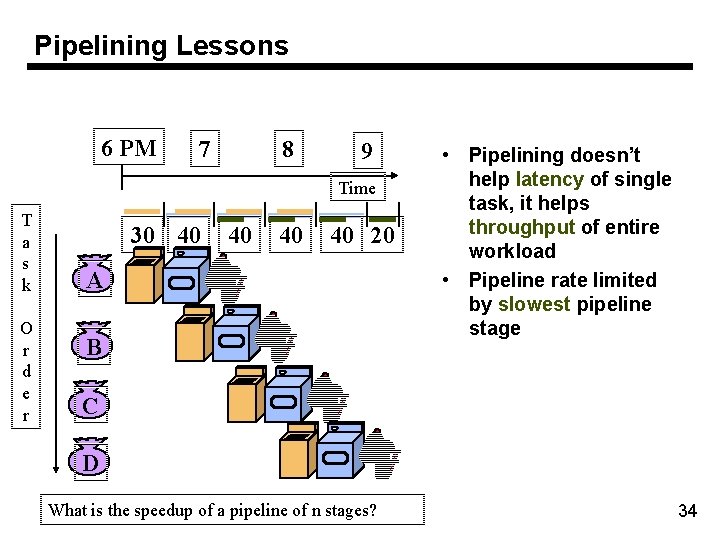

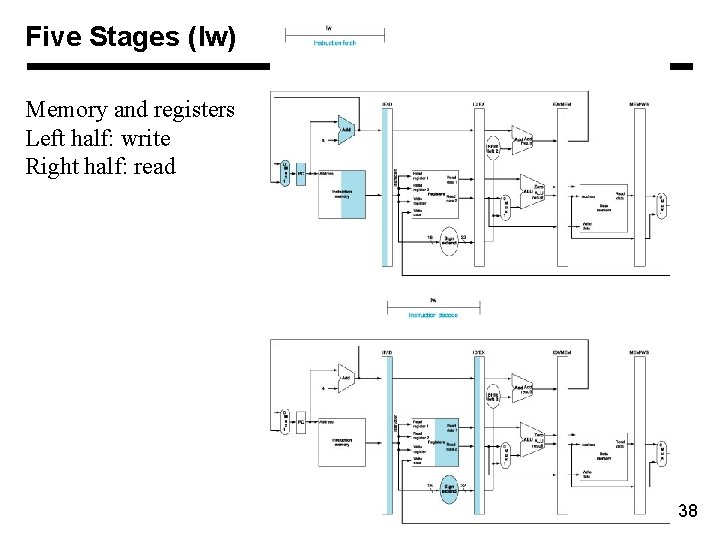

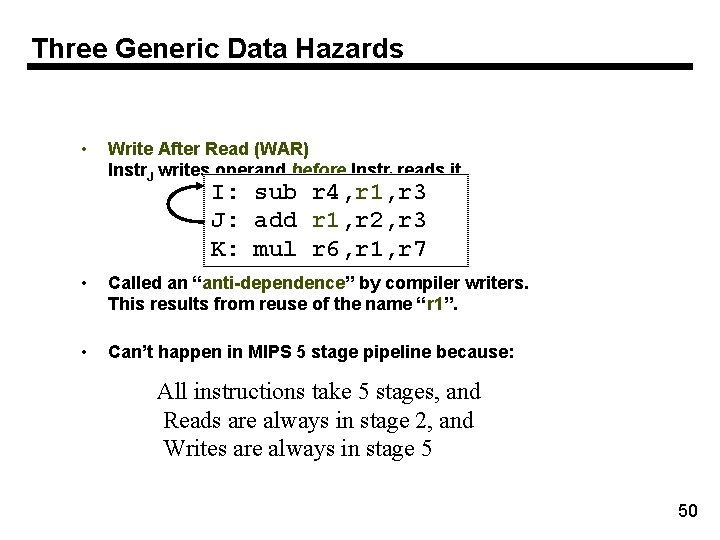

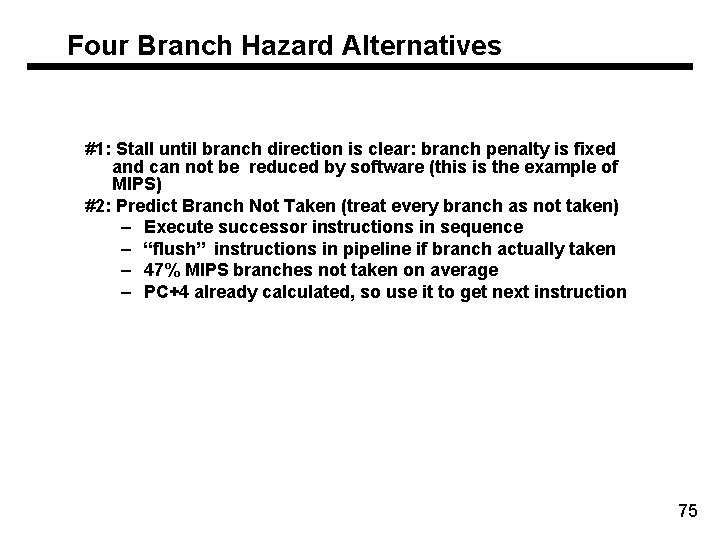

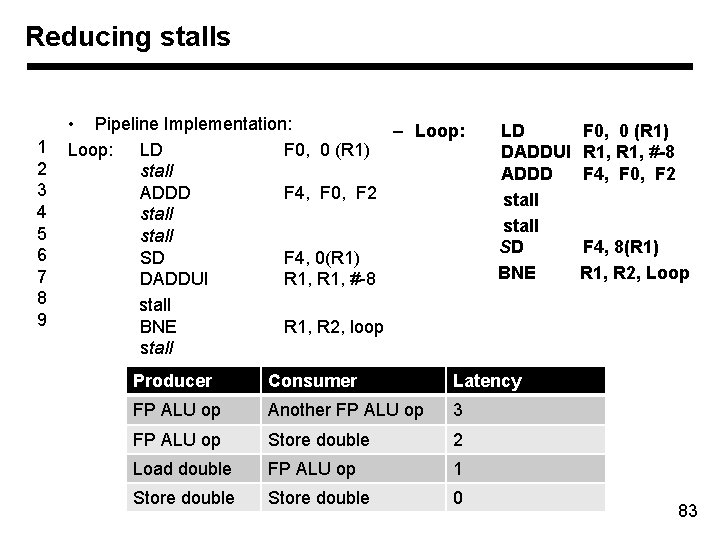

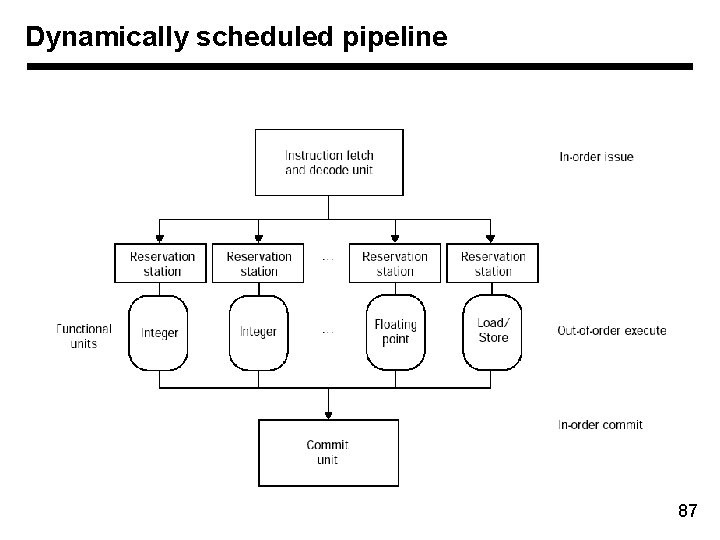

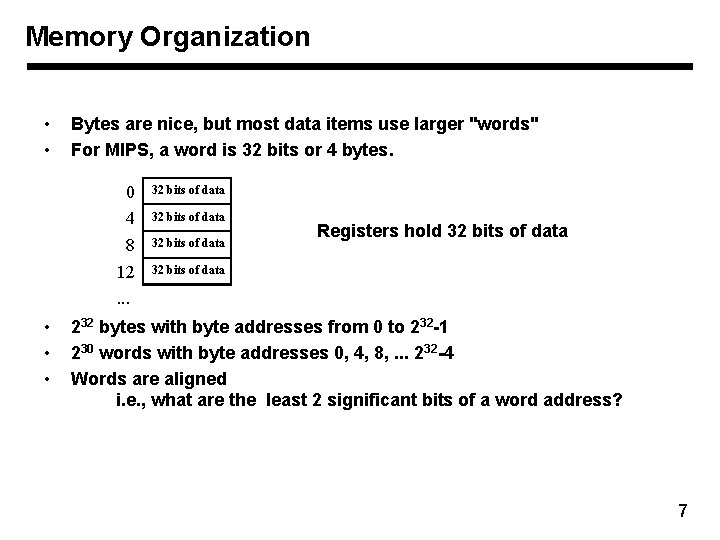

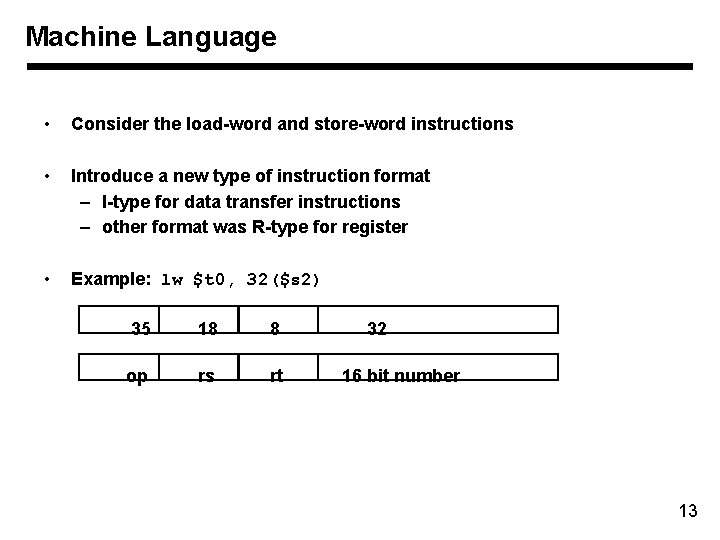

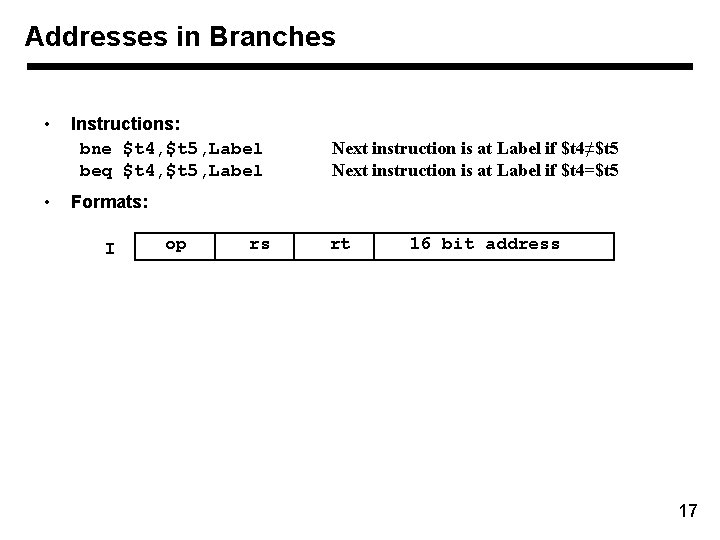

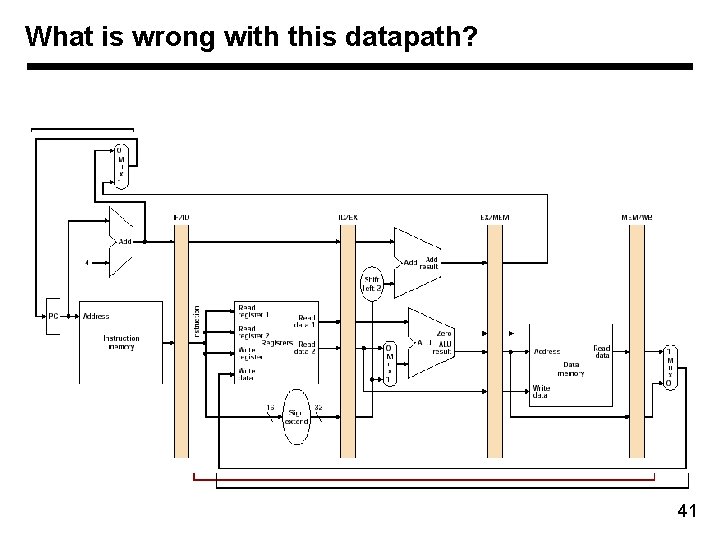

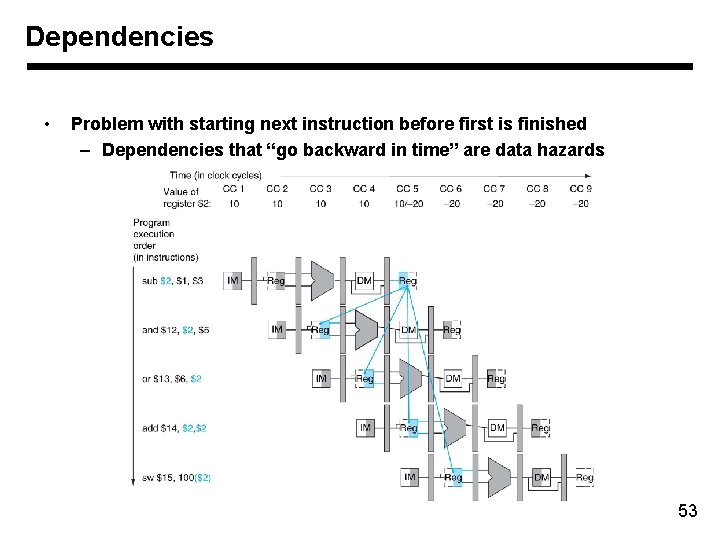

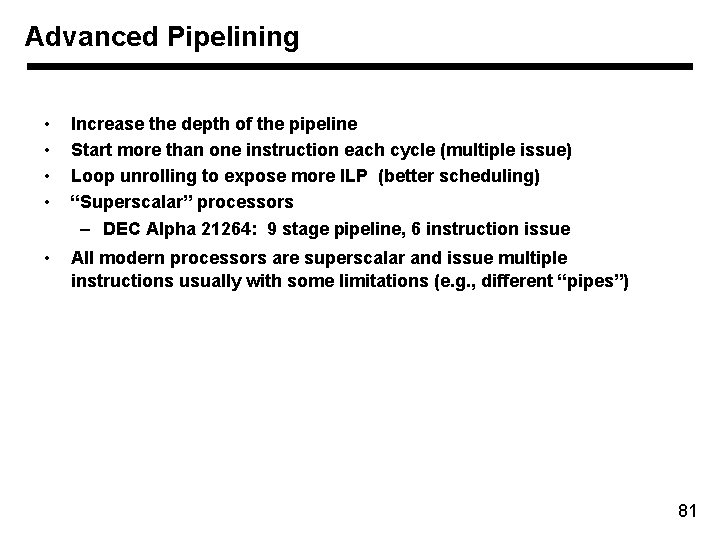

Source: `for (i = 1000; i > 0; i = i - 1) x[i] = x[i] + s;`

Direct translation:

| Label | Instruction | Comment |
| --- | --- | --- |
| Loop: | `LD F0, 0(R1)` | R1 points to x[1000] |
| | `ADDD F4, F0, F2` | F2 = scalar value |
| | `SD F4, 0(R1)` | |
| | `DADDUI R1, R1, #-8` | |
| | `BNE R1, R2, Loop` | R2 = last element |

| Producer | Consumer | Latency |
| --- | --- | --- |
| FP ALU op | Another FP ALU op | 3 |
| FP ALU op | Store double | 2 |
| Load double | FP ALU op | 1 |
| Load double | Store double | 0 |

Assume 1 cycle latency from unsigned integer arithmetic to a dependent instruction.

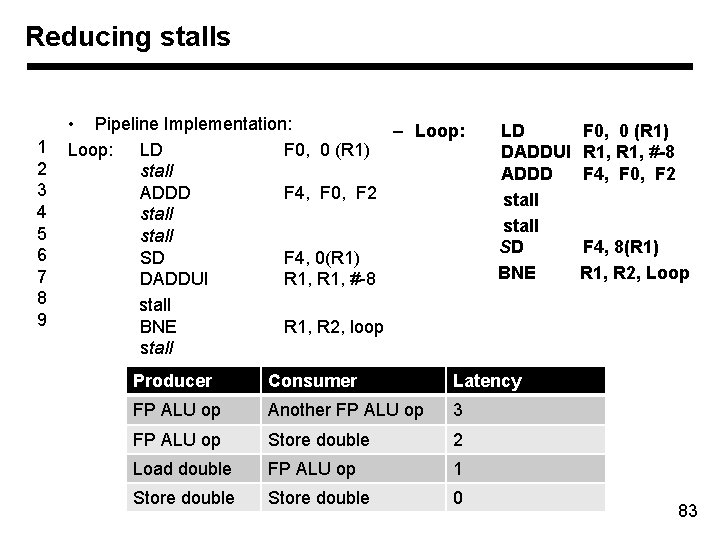

Reducing stalls

- Pipeline implementation, in issue order (the slide numbers the cycles 1 to 9):
  - `Loop: LD F0, 0(R1)`
  - stall
  - `ADDD F4, F0, F2`
  - stall
  - `SD F4, 0(R1)`
  - `DADDUI R1, R1, #-8`
  - stall
  - `BNE R1, R2, Loop`
  - stall
- Rescheduled to reduce stalls:
  - `Loop: LD F0, 0(R1)`
  - `DADDUI R1, R1, #-8`
  - `ADDD F4, F0, F2`
  - stall
  - `SD F4, 8(R1)`
  - `BNE R1, R2, Loop`

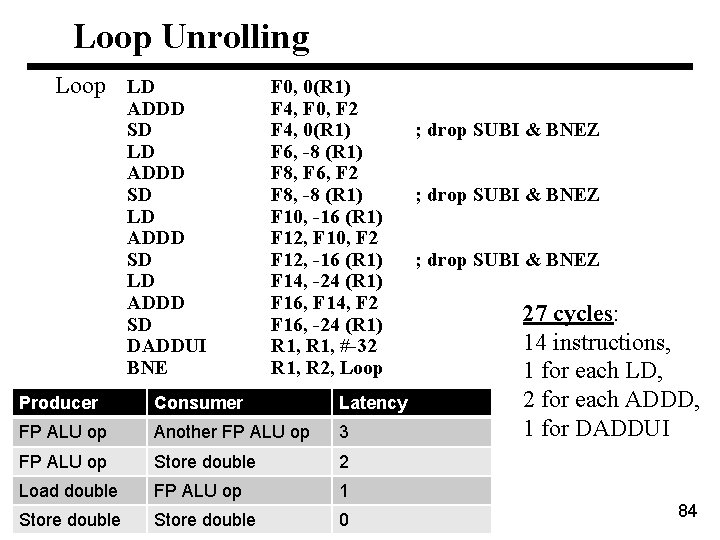

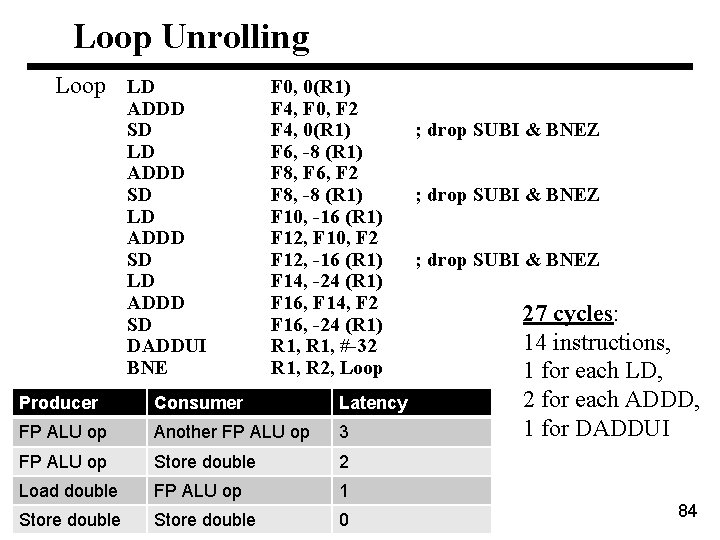

Loop Unrolling

| Label | Instruction | Comment |
| --- | --- | --- |
| Loop: | `LD F0, 0(R1)` | |
| | `ADDD F4, F0, F2` | |
| | `SD F4, 0(R1)` | drop SUBI & BNEZ |
| | `LD F6, -8(R1)` | |
| | `ADDD F8, F6, F2` | |
| | `SD F8, -8(R1)` | drop SUBI & BNEZ |
| | `LD F10, -16(R1)` | |
| | `ADDD F12, F10, F2` | |
| | `SD F12, -16(R1)` | drop SUBI & BNEZ |
| | `LD F14, -24(R1)` | |
| | `ADDD F16, F14, F2` | |
| | `SD F16, -24(R1)` | |
| | `DADDUI R1, R1, #-32` | |
| | `BNE R1, R2, Loop` | |

27 cycles: 14 instructions, plus stalls of 1 for each LD, 2 for each ADDD, and 1 for DADDUI.

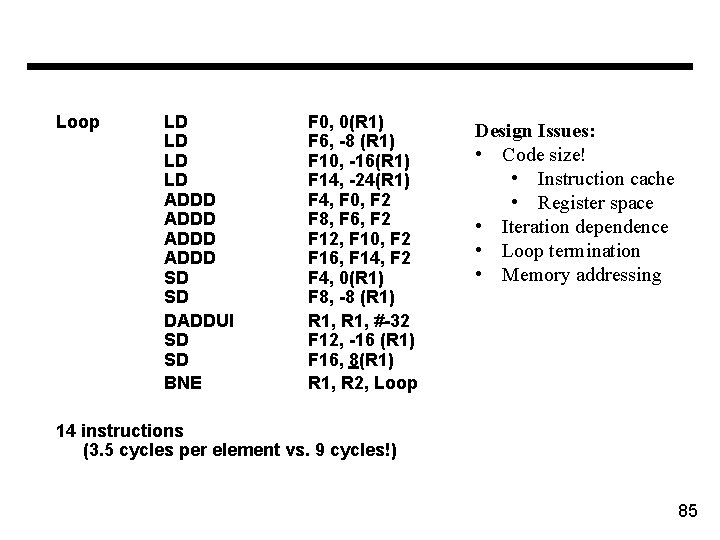

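Unrolled loop, scheduled:

| Label | Instruction |
| --- | --- |
| Loop: | `LD F0, 0(R1)` |
| | `LD F6, -8(R1)` |
| | `LD F10, -16(R1)` |
| | `LD F14, -24(R1)` |
| | `ADDD F4, F0, F2` |
| | `ADDD F8, F6, F2` |
| | `ADDD F12, F10, F2` |
| | `ADDD F16, F14, F2` |
| | `SD F4, 0(R1)` |
| | `SD F8, -8(R1)` |
| | `DADDUI R1, R1, #-32` |
| | `SD F12, 16(R1)` |
| | `SD F16, 8(R1)` |
| | `BNE R1, R2, Loop` |

The two stores after `DADDUI` use offsets adjusted by +32 (16 = -16 + 32, 8 = -24 + 32) because R1 has already been decremented.

14 instructions (3.5 cycles per element vs. 9 cycles!)

Design issues:

- Code size!
- Instruction cache
- Register space
- Iteration dependence
- Loop termination
- Memory addressing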
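
The transformation itself is easier to see at source level. The sketch below is in Python rather than MIPS and is only an illustration: the function names are made up, and the unrolled version assumes the element count is a multiple of 4 (the "loop termination" design issue listed above).

```python
def add_scalar_rolled(x, s):
    """x[i] = x[i] + s for i = n, n-1, ..., 1 (x[0] unused), one element per iteration."""
    i = len(x) - 1
    while i > 0:
        x[i] += s
        i -= 1
    return x


def add_scalar_unrolled4(x, s):
    """Same loop unrolled 4 times: one index update and one branch per 4 elements."""
    i = len(x) - 1
    assert i % 4 == 0, "sketch only handles element counts divisible by 4"
    while i > 0:
        x[i] += s      # offset 0
        x[i - 1] += s  # offset -8 in the MIPS version
        x[i - 2] += s  # offset -16
        x[i - 3] += s  # offset -24
        i -= 4         # corresponds to DADDUI R1, R1, #-32
    return x


data = [0.0] + [float(k) for k in range(1, 1001)]  # x[1..1000], x[0] unused
rolled = add_scalar_rolled(list(data), 2.5)
unrolled = add_scalar_unrolled4(list(data), 2.5)
cycles_per_element = 14 / 4  # 14 stall-free cycles cover 4 elements
```

Both versions produce the same array; the unrolled one simply amortizes the loop overhead over four elements and gives the scheduler independent operations to interleave.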

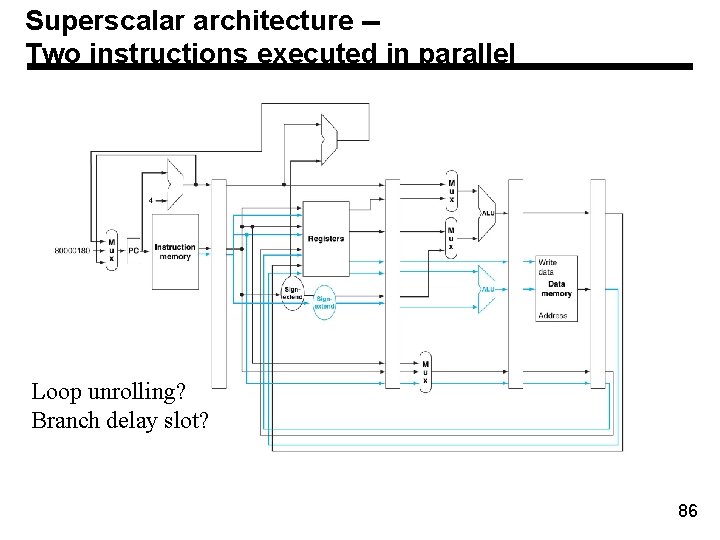

Superscalar architecture

- Two instructions executed in parallel
- Loop unrolling? Branch delay slot?

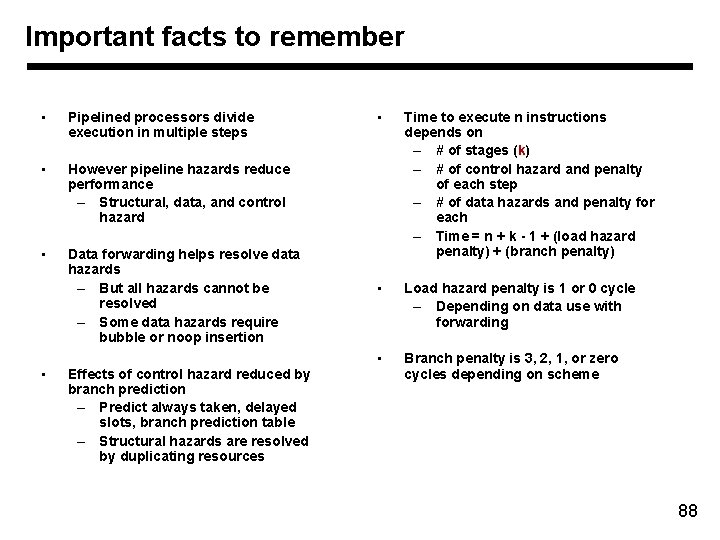

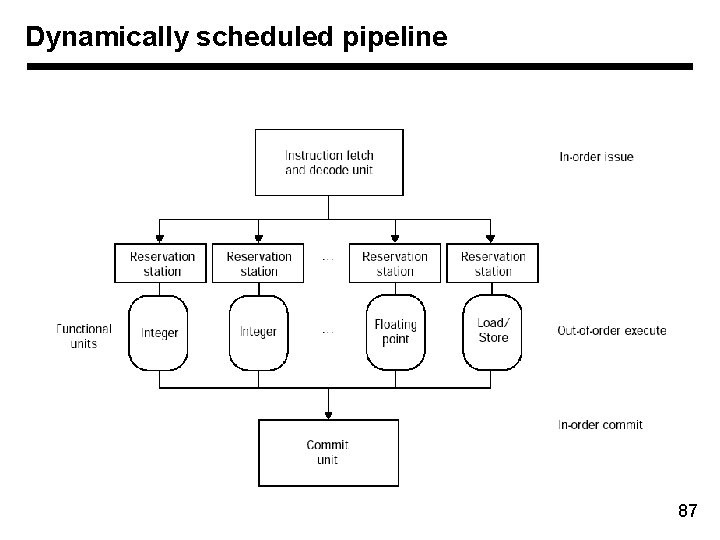

Dynamically scheduled pipeline

Important facts to remember

- Pipelined processors divide execution into multiple steps
- However, pipeline hazards reduce performance
  - Structural, data, and control hazards
- Data forwarding helps resolve data hazards
  - But not all hazards can be resolved
  - Some data hazards require bubble or no-op insertion
- Effects of control hazards are reduced by branch prediction
  - Predict always taken, delayed slots, branch prediction table
- Structural hazards are resolved by duplicating resources
- Time to execute n instructions depends on
  - # of stages (k)
  - # of control hazards and the penalty of each
  - # of data hazards and the penalty for each
  - Time = n + k - 1 + (load hazard penalty) + (branch penalty)
- Load hazard penalty is 1 or 0 cycles
  - Depending on data use with forwarding
- Branch penalty is 3, 2, 1, or zero cycles depending on the scheme
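
The timing formula can be turned into a small calculator. This is a sketch: the function names and the example stall counts are made up, and the speedup comparison assumes an unpipelined machine that spends k cycles of the same length on every instruction.

```python
def pipeline_cycles(n, k, load_stalls=0, branch_stalls=0):
    """Time = n + k - 1 + (load hazard penalty) + (branch penalty), in cycles."""
    return n + k - 1 + load_stalls + branch_stalls


def speedup_over_unpipelined(n, k, load_stalls=0, branch_stalls=0):
    """Ratio of n * k unpipelined cycles to the pipelined cycle count."""
    return (n * k) / pipeline_cycles(n, k, load_stalls, branch_stalls)


fill_only = pipeline_cycles(4, 5)                     # 4 instructions, 5 stages
ideal = speedup_over_unpipelined(1000, 5)             # close to k = 5
with_hazards = speedup_over_unpipelined(1000, 5, load_stalls=100, branch_stalls=50)
```

With no hazards the speedup approaches the number of stages as n grows; every stall cycle added by a load or branch hazard pulls it back down.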

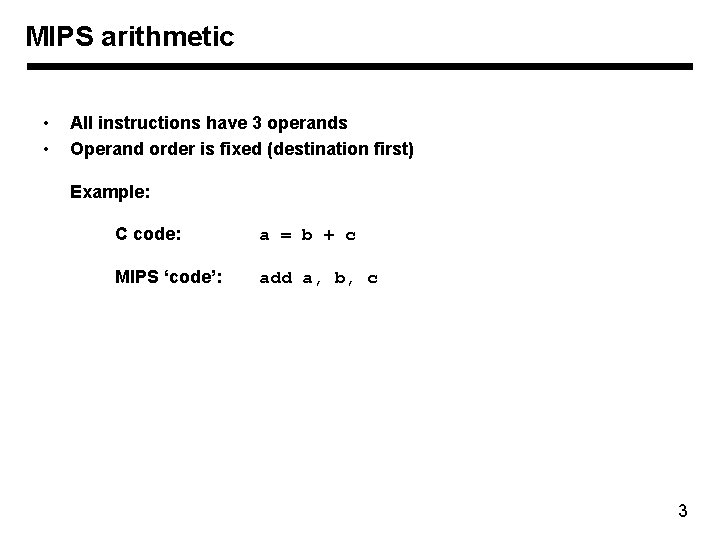

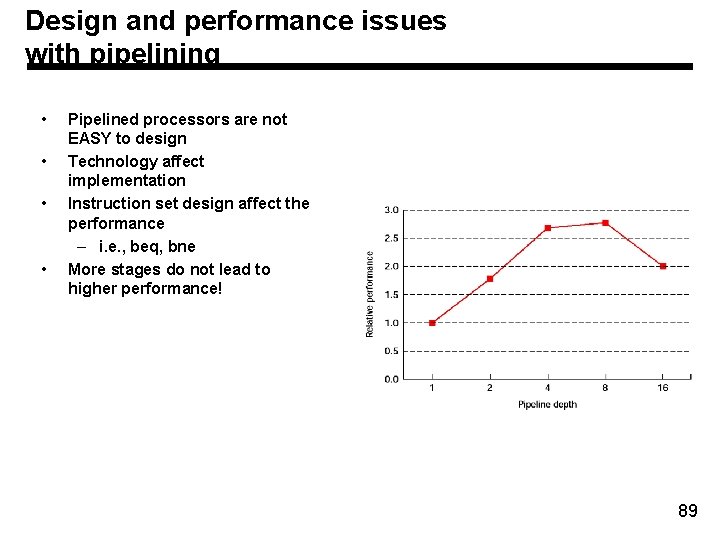

Design and performance issues with pipelining

- Pipelined processors are not EASY to design
- Technology affects implementation
- Instruction set design affects the performance, i.e., beq, bne
- More stages do not necessarily lead to higher performance!