Computational Intelligence for Data Mining Wodzisaw Duch Department

![Neurofuzzy system Fuzzy: m(x)=0, 1 (no/yes) replaced by a degree m(x) [0, 1]. Triangular, Neurofuzzy system Fuzzy: m(x)=0, 1 (no/yes) replaced by a degree m(x) [0, 1]. Triangular,](https://slidetodoc.com/presentation_image/79f4e7f8da15c762ce1072a8b82b0f2e/image-39.jpg)

- Slides: 63

Computational Intelligence for Data Mining Włodzisław Duch Department of Informatics Nicholas Copernicus University Torun, Poland With help from R. Adamczak, K. Grąbczewski K. Grudziński, N. Jankowski, A. Naud http: //www. phys. uni. torun. pl/kmk WCCI 2002, Honolulu, HI

Group members

Plan What this tutorial is about ? • • • How to discover knowledge in data; how to create comprehensible models of data; how to evaluate new data. 1. 2. 3. 4. 5. 6. 7. 8. 9. AI, CI & Data Mining Forms of useful knowledge Ghost. Miner philosophy Exploration & Visualization Rule-based data analysis Neurofuzzy models Neural models Similarity-based models Committees of models

AI, CI & DM Artificial Intelligence: symbolic models of knowledge. • Higher-level cognition: reasoning, problem solving, planning, heuristic search for solutions. • Machine learning, inductive, rule-based methods. • Technology: expert systems. Computational Intelligence, Soft Computing: methods inspired by many sources: • biology – evolutionary, immune, neural computing • statistics, patter recognition • probability – Bayesian networks • logic – fuzzy, rough … Perception, object recognition. Data Mining, Knowledge Discovery in Databases. • discovery of interesting patterns, rules, knowledge. • building predictive data models.

Forms of useful knowledge AI/Machine Learning camp: Neural nets are black boxes. Unacceptable! Symbolic rules forever. But. . . knowledge accessible to humans is in: • symbols, • similarity to prototypes, • images, visual representations. What type of explanation is satisfactory? Interesting question for cognitive scientists. Different answers in different fields.

Forms of knowledge • Humans remember examples of each category and refer to such examples – as similaritybased or nearest-neighbors methods do. • Humans create prototypes out of many examples – as Gaussian classifiers, RBF networks, neurofuzzy systems do. • Logical rules are the highest form of summarization of knowledge. Types of explanation: • exemplar-based: prototypes and similarity; • logic-based: symbols and rules; • visualization-based: maps, diagrams, relations. . .

Ghost. Miner Philosophy Ghost. Miner, data mining tools from our lab. • Separate the process of model building and knowledge discovery from model use => Ghost. Miner Developer & Ghost. Miner Analyzer • There is no free lunch – provide different type of tools for knowledge discovery. Decision tree, neural, neurofuzzy, similaritybased, committees. • Provide tools for visualization of data. • Support the process of knowledge discovery/model building and evaluating, organizing it into projects.

Wine data example Chemical analysis of wine from grapes grown in the same region in Italy, but derived from three different cultivars. Task: recognize the source of wine sample. 13 quantities measured, continuous features: • • • alcohol content ash content magnesium content flavanoids content proanthocyanins phenols content • OD 280/D 315 of diluted wines • • malic acid content alkalinity of ash total phenols content nonanthocyanins phenols content • color intensity • hue • proline.

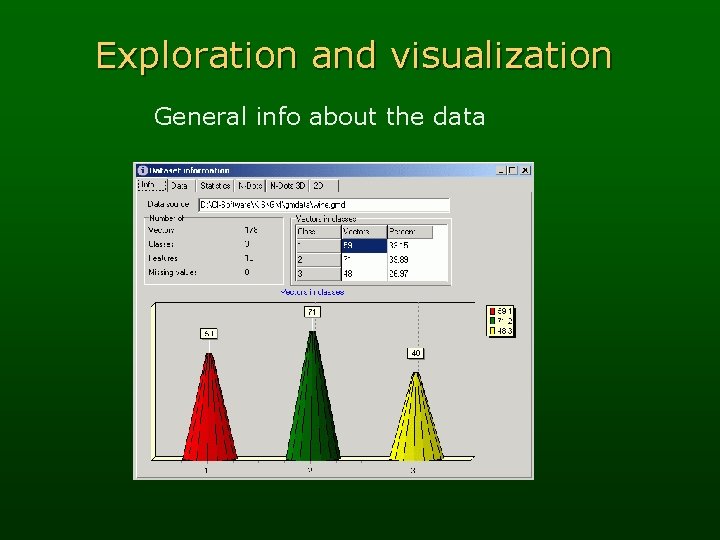

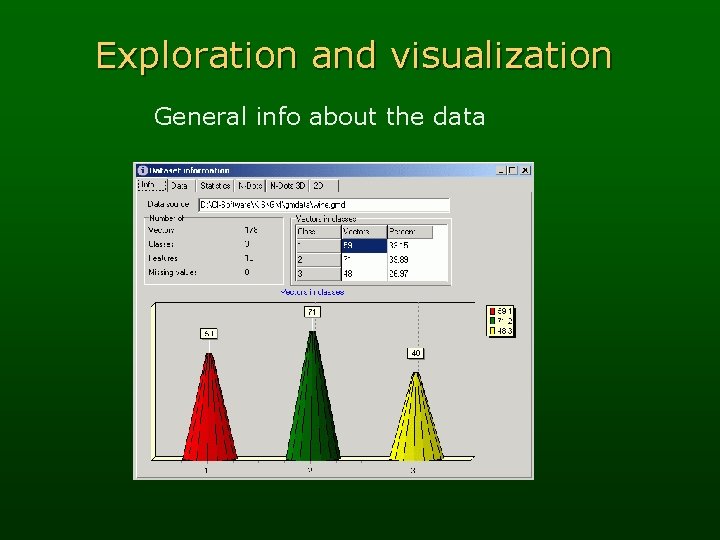

Exploration and visualization General info about the data

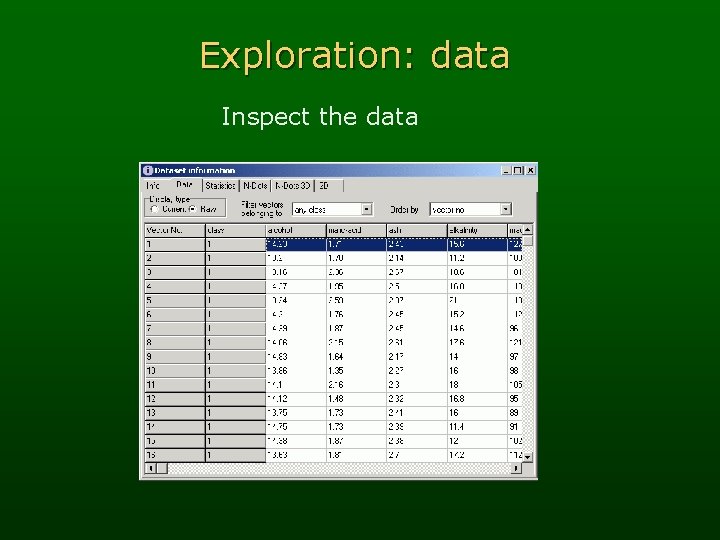

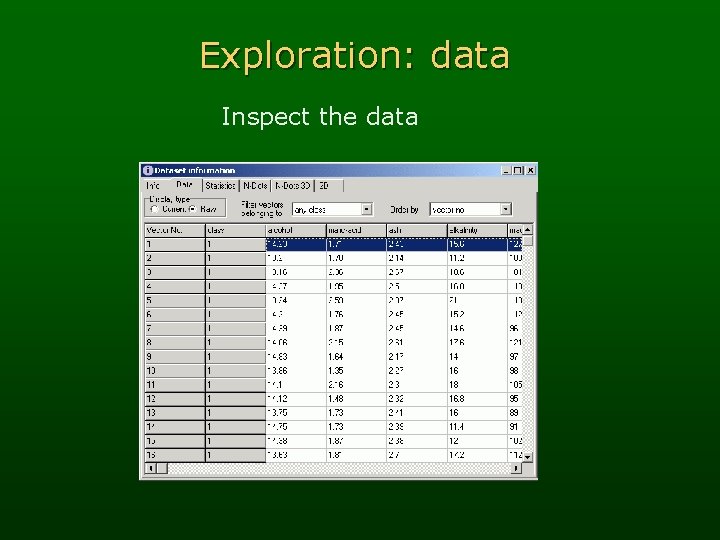

Exploration: data Inspect the data

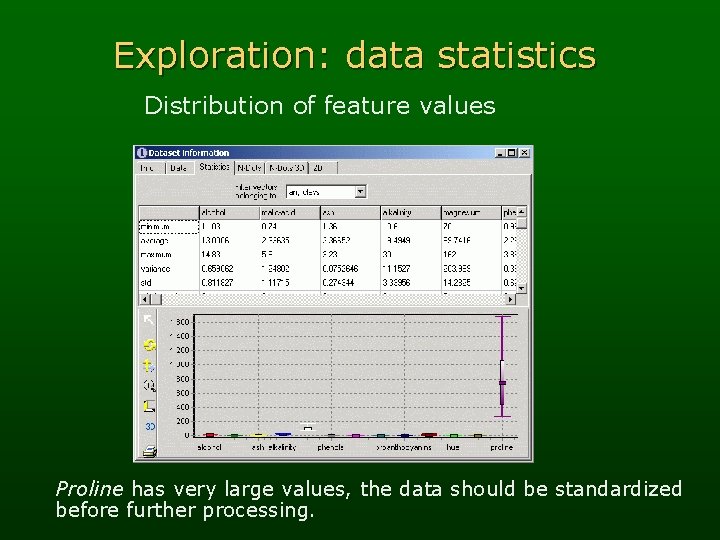

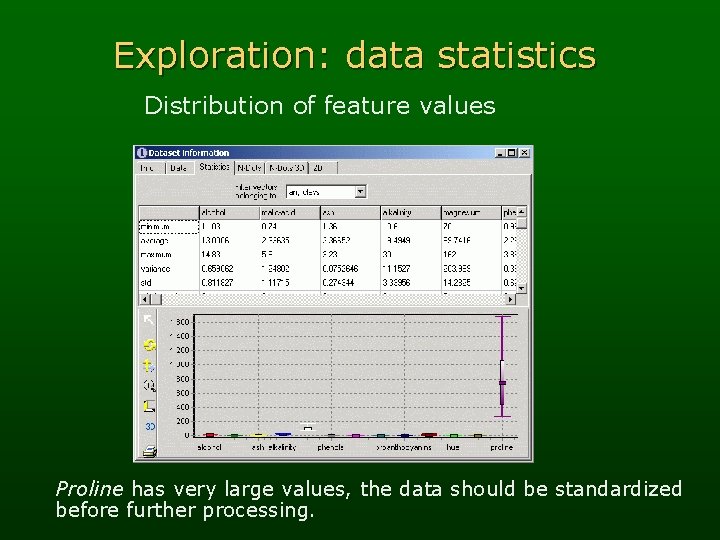

Exploration: data statistics Distribution of feature values Proline has very large values, the data should be standardized before further processing.

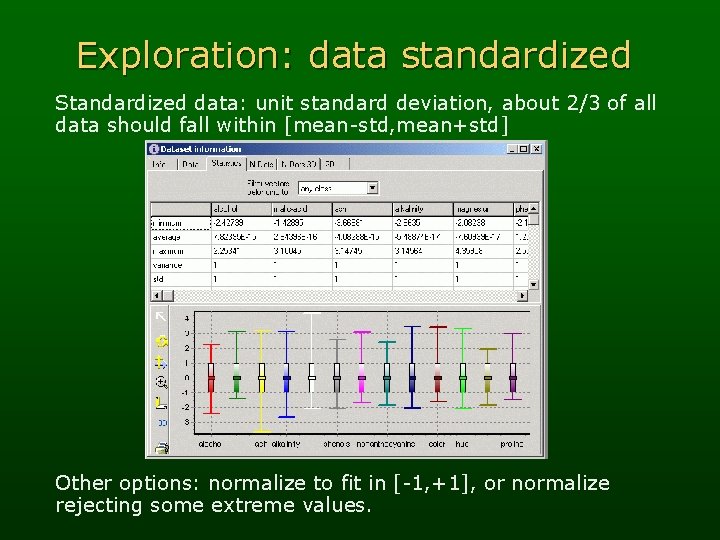

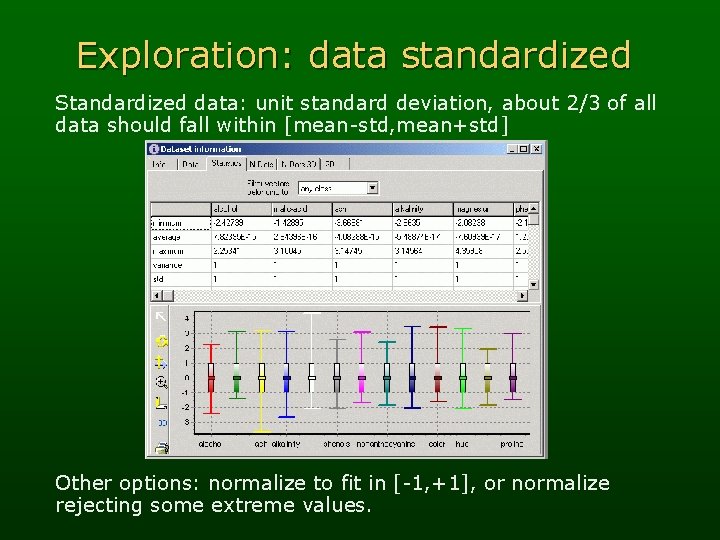

Exploration: data standardized Standardized data: unit standard deviation, about 2/3 of all data should fall within [mean-std, mean+std] Other options: normalize to fit in [-1, +1], or normalize rejecting some extreme values.

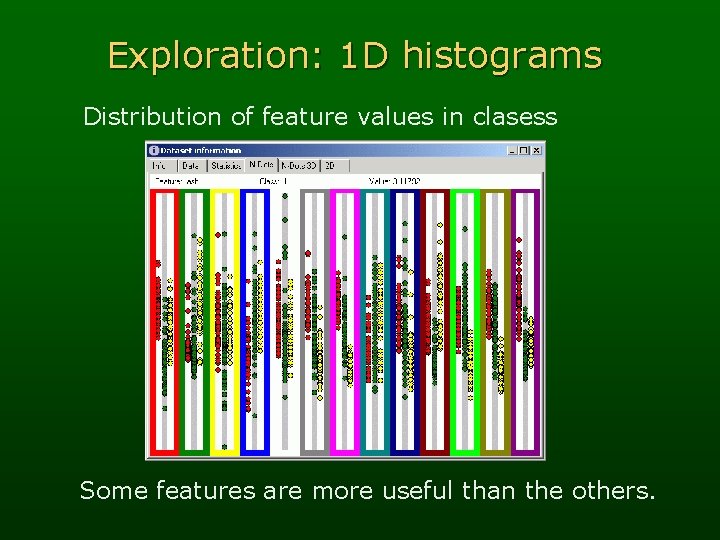

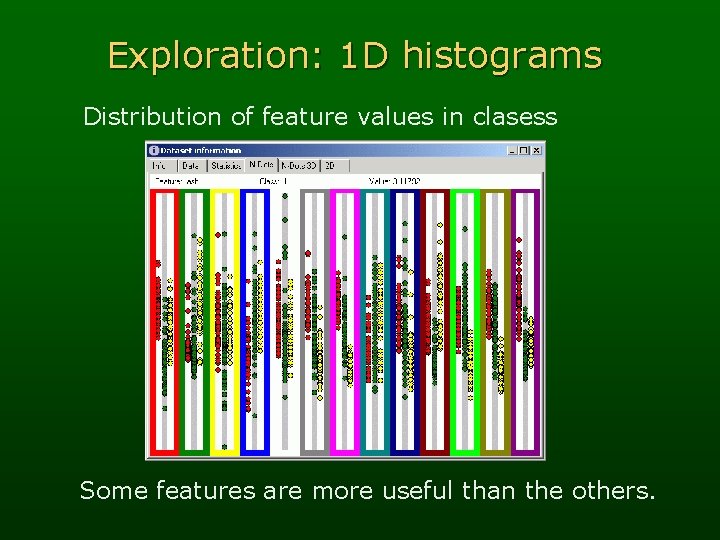

Exploration: 1 D histograms Distribution of feature values in clasess Some features are more useful than the others.

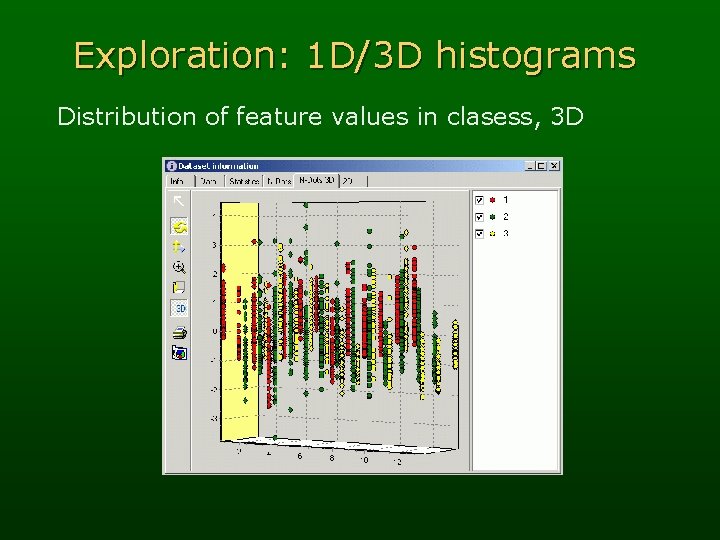

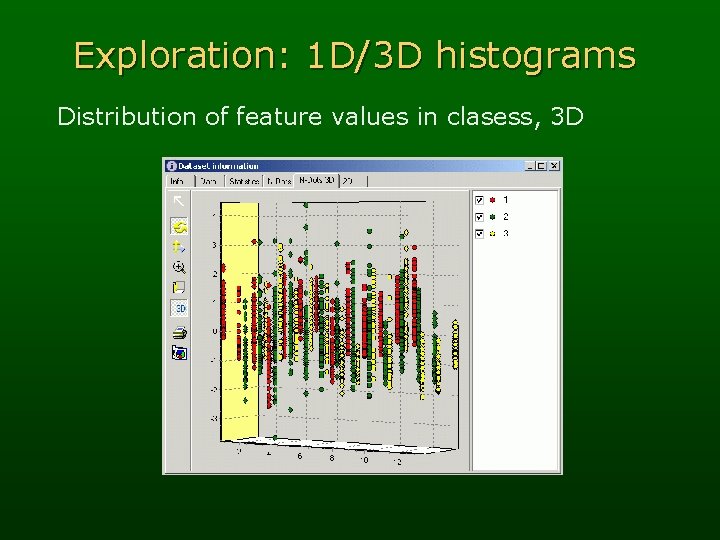

Exploration: 1 D/3 D histograms Distribution of feature values in clasess, 3 D

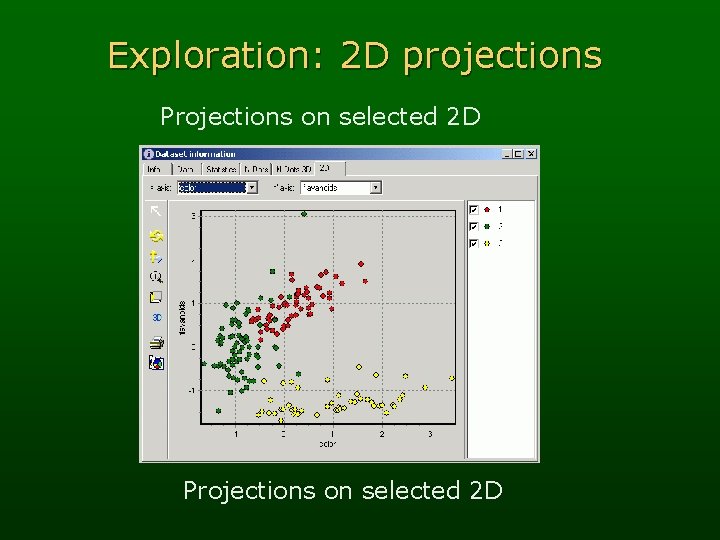

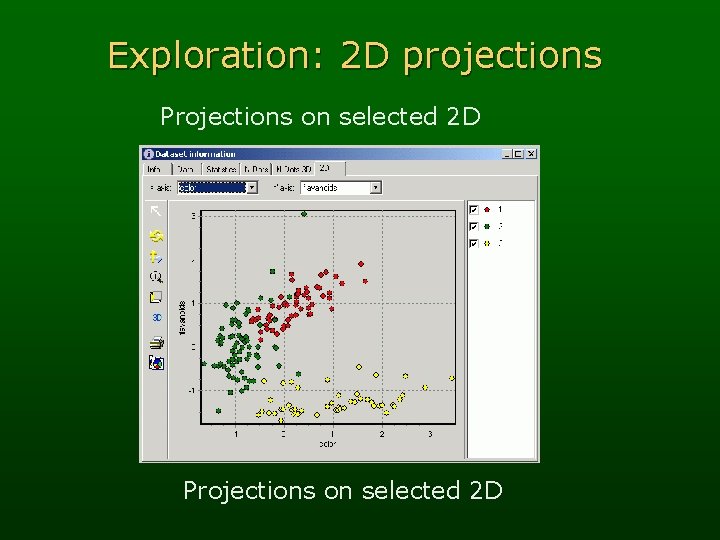

Exploration: 2 D projections Projections on selected 2 D

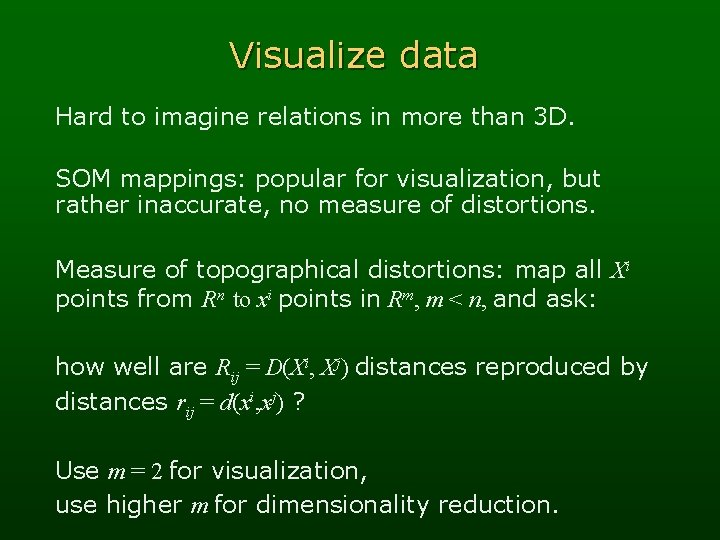

Visualize data Hard to imagine relations in more than 3 D. SOM mappings: popular for visualization, but rather inaccurate, no measure of distortions. Measure of topographical distortions: map all Xi points from Rn to xi points in Rm, m < n, and ask: how well are Rij = D(Xi, Xj) distances reproduced by distances rij = d(xi, xj) ? Use m = 2 for visualization, use higher m for dimensionality reduction.

Visualize data: MDS Multidimensional scaling: invented in psychometry by Torgerson (1952), re-invented by Sammon (1969) and myself (1994) … Minimize measure of topographical distortions moving the x coordinates.

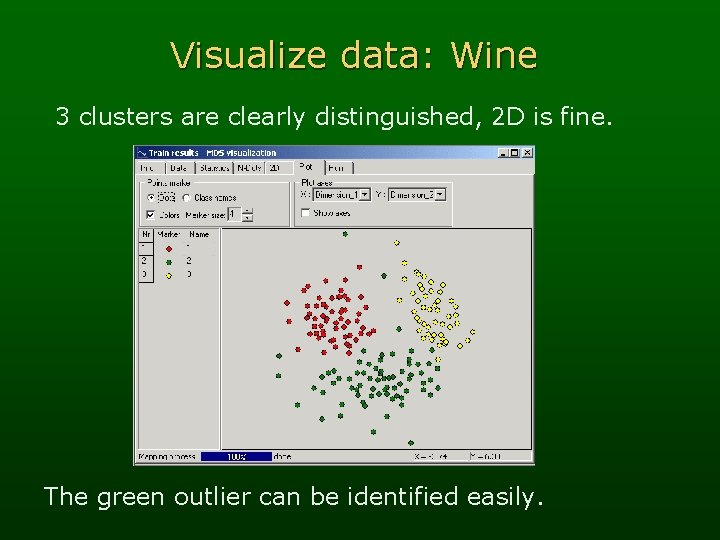

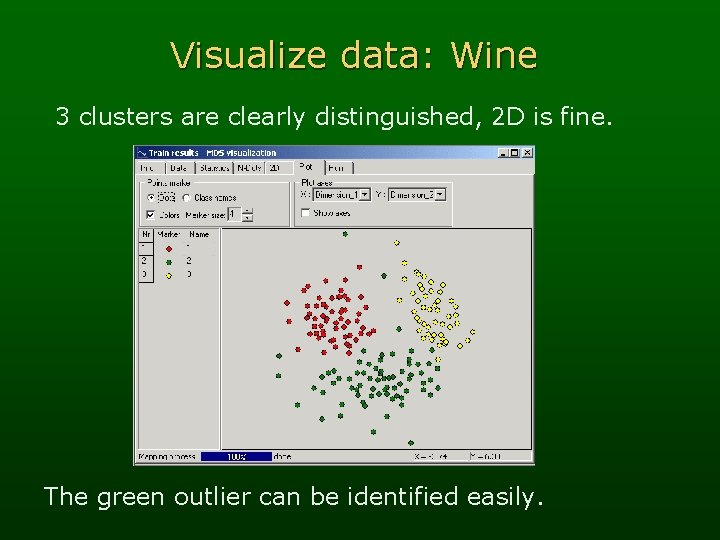

Visualize data: Wine 3 clusters are clearly distinguished, 2 D is fine. The green outlier can be identified easily.

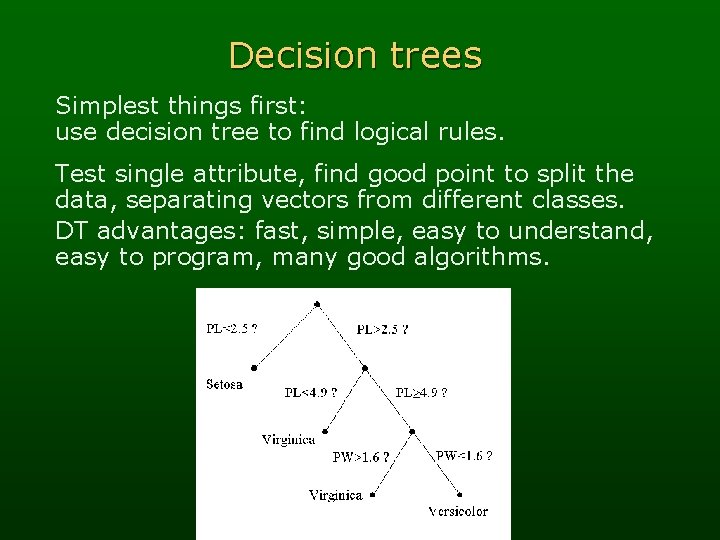

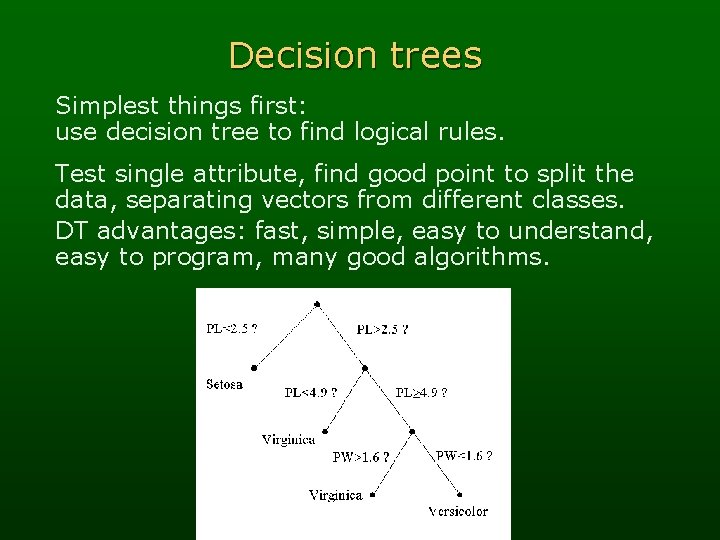

Decision trees Simplest things first: use decision tree to find logical rules. Test single attribute, find good point to split the data, separating vectors from different classes. DT advantages: fast, simple, easy to understand, easy to program, many good algorithms.

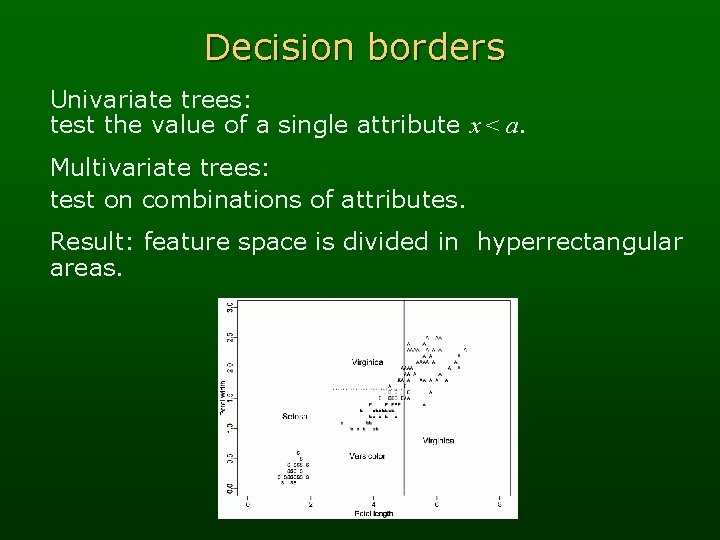

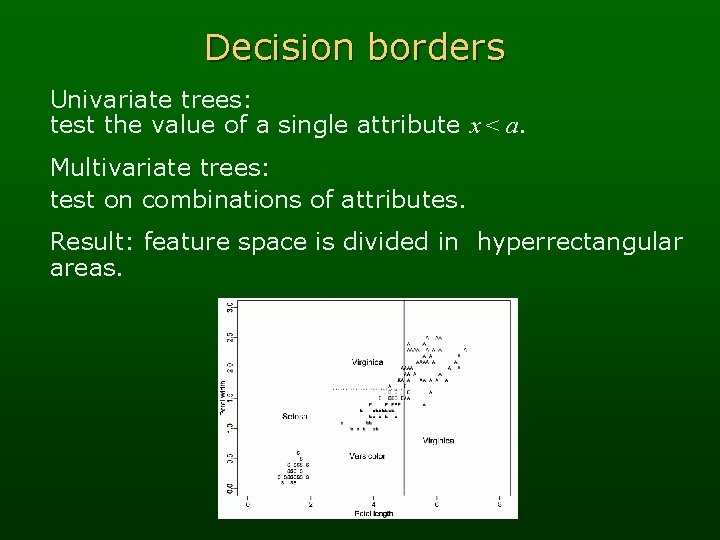

Decision borders Univariate trees: test the value of a single attribute x < a. Multivariate trees: test on combinations of attributes. Result: feature space is divided in hyperrectangular areas.

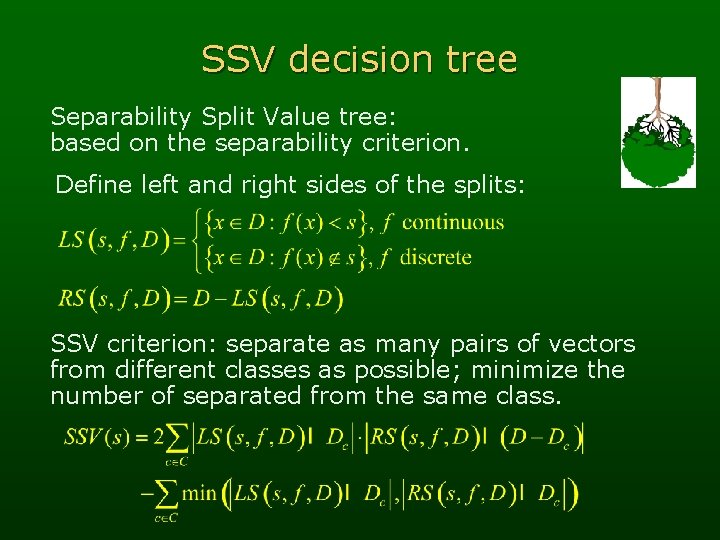

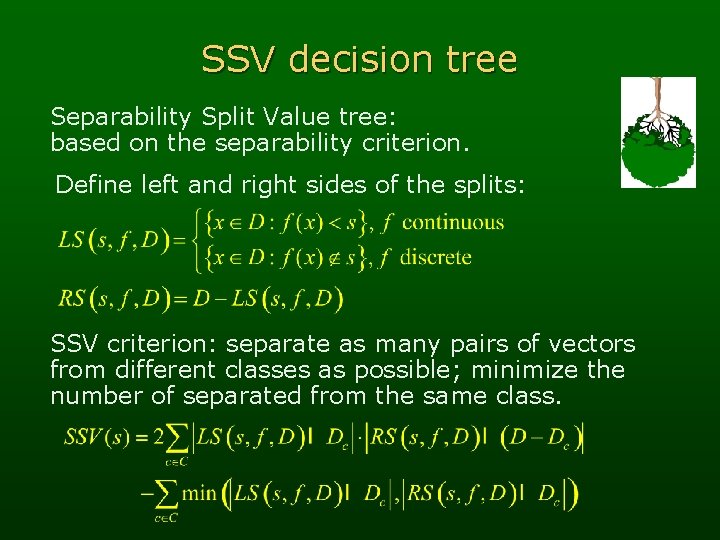

SSV decision tree Separability Split Value tree: based on the separability criterion. Define left and right sides of the splits: SSV criterion: separate as many pairs of vectors from different classes as possible; minimize the number of separated from the same class.

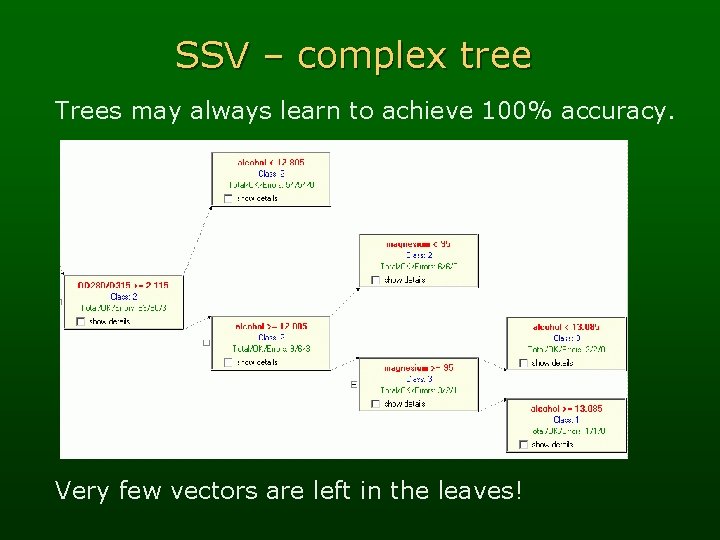

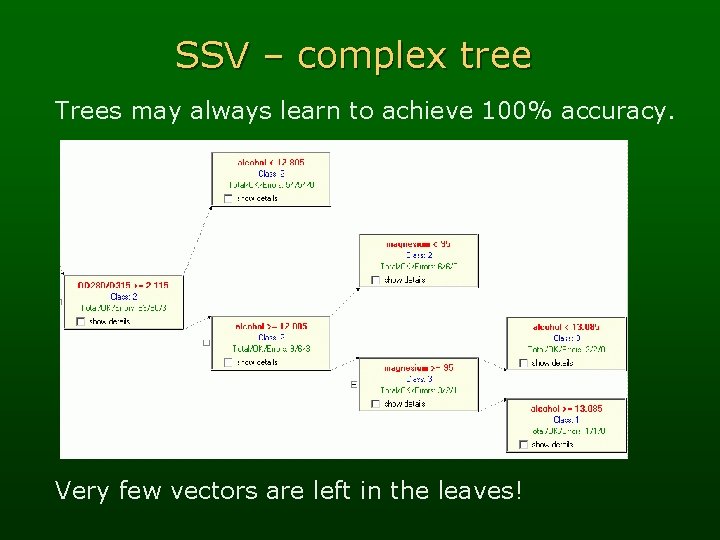

SSV – complex tree Trees may always learn to achieve 100% accuracy. Very few vectors are left in the leaves!

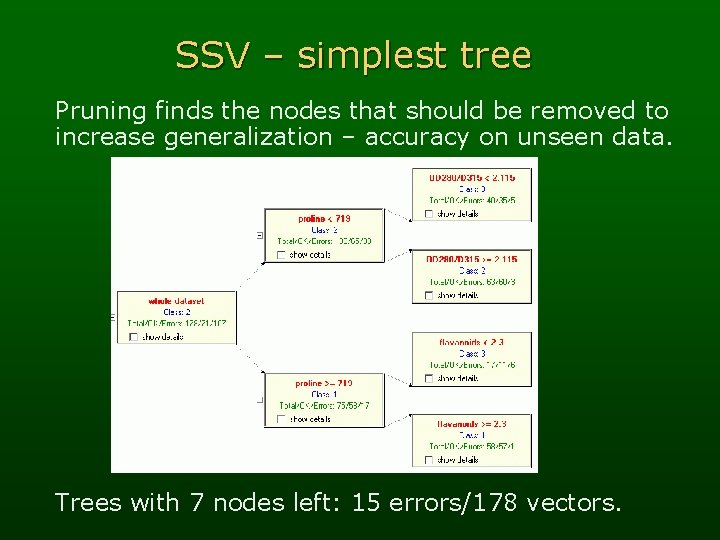

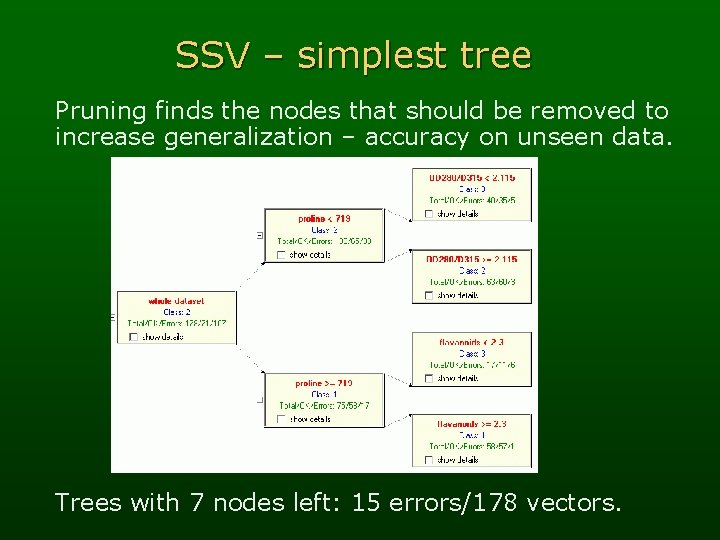

SSV – simplest tree Pruning finds the nodes that should be removed to increase generalization – accuracy on unseen data. Trees with 7 nodes left: 15 errors/178 vectors.

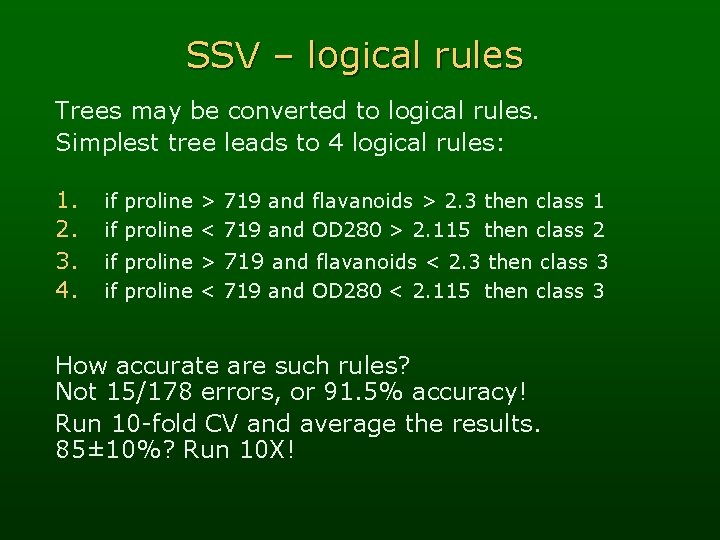

SSV – logical rules Trees may be converted to logical rules. Simplest tree leads to 4 logical rules: 1. 2. 3. 4. if proline > 719 and flavanoids > 2. 3 then class 1 if proline < 719 and OD 280 > 2. 115 then class 2 if proline > 719 and flavanoids < 2. 3 then class 3 if proline < 719 and OD 280 < 2. 115 then class 3 How accurate are such rules? Not 15/178 errors, or 91. 5% accuracy! Run 10 -fold CV and average the results. 85± 10%? Run 10 X!

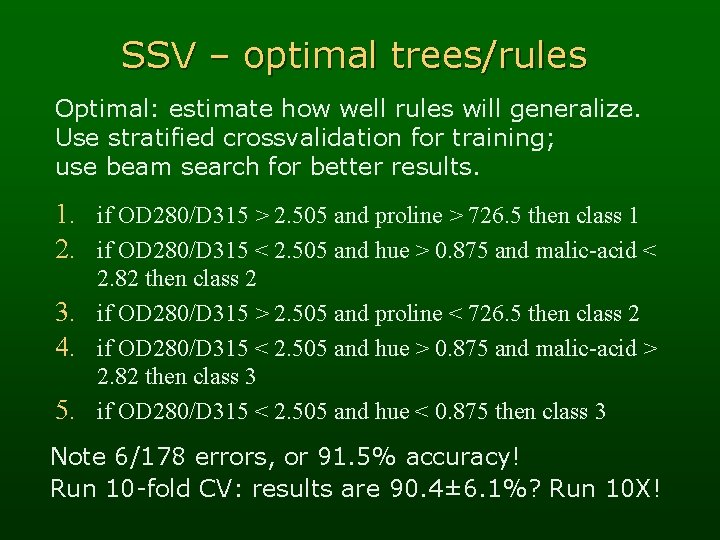

SSV – optimal trees/rules Optimal: estimate how well rules will generalize. Use stratified crossvalidation for training; use beam search for better results. 1. if OD 280/D 315 > 2. 505 and proline > 726. 5 then class 1 2. if OD 280/D 315 < 2. 505 and hue > 0. 875 and malic-acid < 2. 82 then class 2 3. if OD 280/D 315 > 2. 505 and proline < 726. 5 then class 2 4. if OD 280/D 315 < 2. 505 and hue > 0. 875 and malic-acid > 2. 82 then class 3 5. if OD 280/D 315 < 2. 505 and hue < 0. 875 then class 3 Note 6/178 errors, or 91. 5% accuracy! Run 10 -fold CV: results are 90. 4± 6. 1%? Run 10 X!

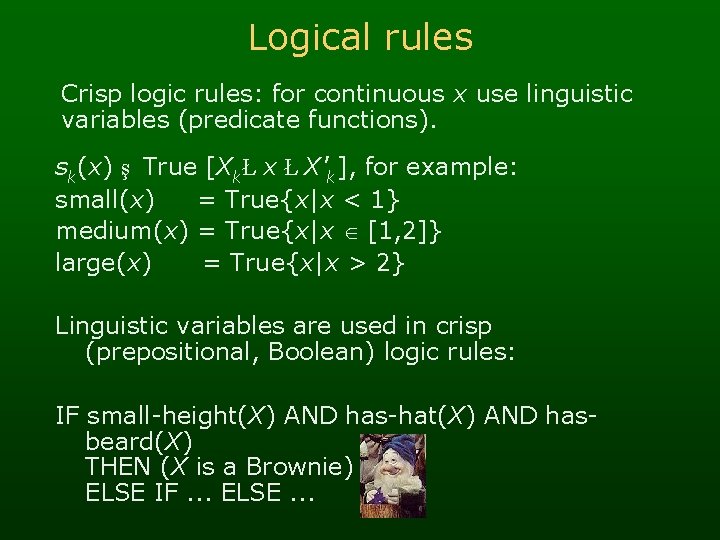

Logical rules Crisp logic rules: for continuous x use linguistic variables (predicate functions). sk(x) ş True [XkŁ x Ł X'k], for example: small(x) = True{x|x < 1} medium(x) = True{x|x [1, 2]} large(x) = True{x|x > 2} Linguistic variables are used in crisp (prepositional, Boolean) logic rules: IF small-height(X) AND has-hat(X) AND hasbeard(X) THEN (X is a Brownie) ELSE IF. . . ELSE. . .

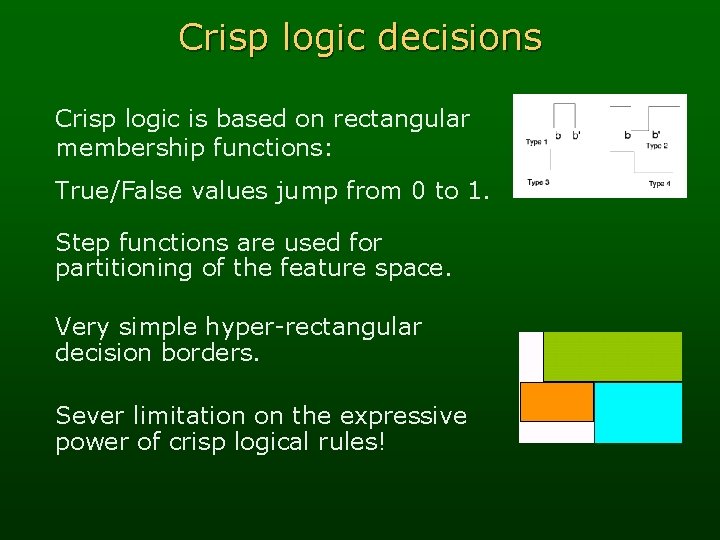

Crisp logic decisions Crisp logic is based on rectangular membership functions: True/False values jump from 0 to 1. Step functions are used for partitioning of the feature space. Very simple hyper-rectangular decision borders. Sever limitation on the expressive power of crisp logical rules!

Logical rules - advantages Logical rules, if simple enough, are preferable. • Rules may expose limitations of black box • • solutions. Only relevant features are used in rules. Rules may sometimes be more accurate than NN and other CI methods. Overfitting is easy to control, rules usually have small number of parameters. Rules forever !? A logical rule about logical rules is: IF the number of rules is relatively small AND the accuracy is sufficiently high. THEN rules may be an optimal choice.

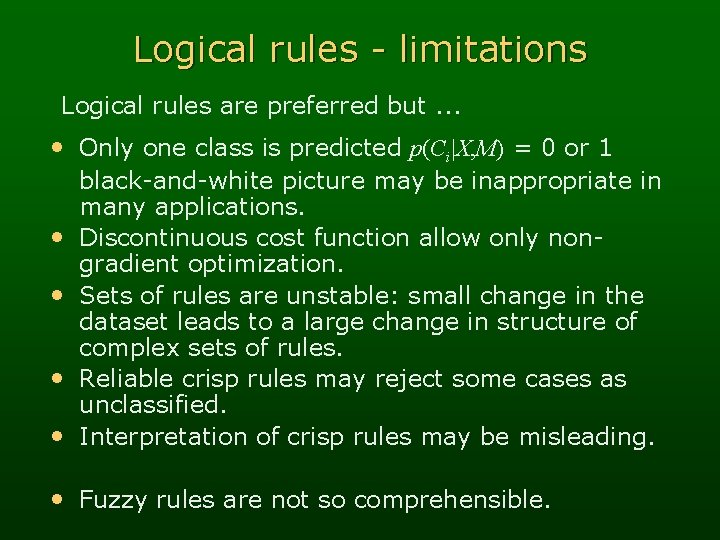

Logical rules - limitations Logical rules are preferred but. . . • Only one class is predicted p(Ci|X, M) = 0 or 1 • • black-and-white picture may be inappropriate in many applications. Discontinuous cost function allow only nongradient optimization. Sets of rules are unstable: small change in the dataset leads to a large change in structure of complex sets of rules. Reliable crisp rules may reject some cases as unclassified. Interpretation of crisp rules may be misleading. • Fuzzy rules are not so comprehensible.

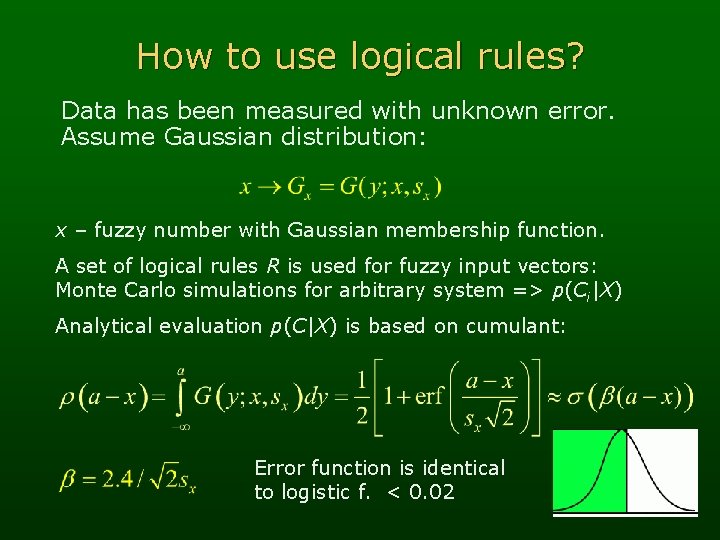

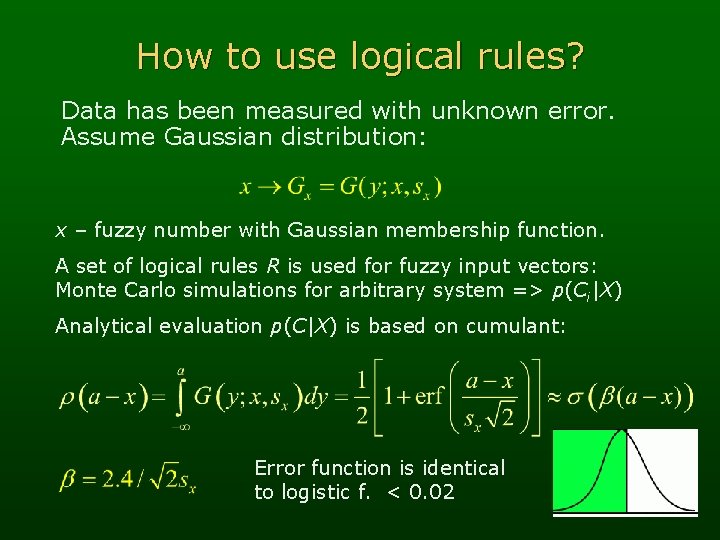

How to use logical rules? Data has been measured with unknown error. Assume Gaussian distribution: x – fuzzy number with Gaussian membership function. A set of logical rules R is used for fuzzy input vectors: Monte Carlo simulations for arbitrary system => p(Ci|X) Analytical evaluation p(C|X) is based on cumulant: Error function is identical to logistic f. < 0. 02

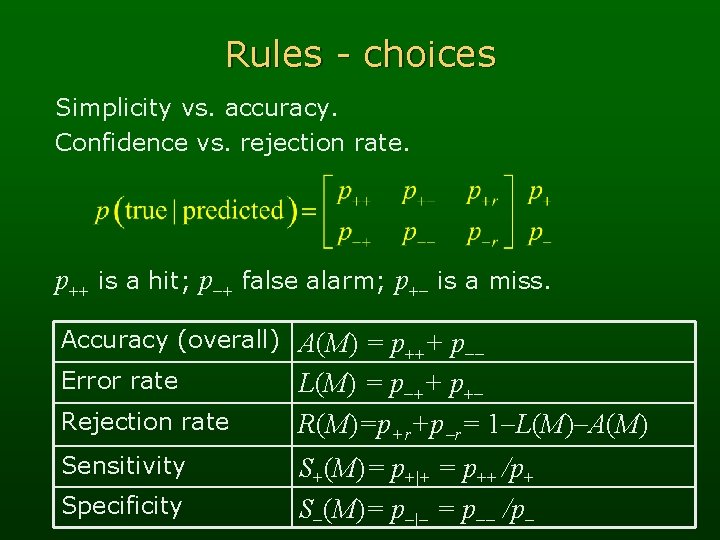

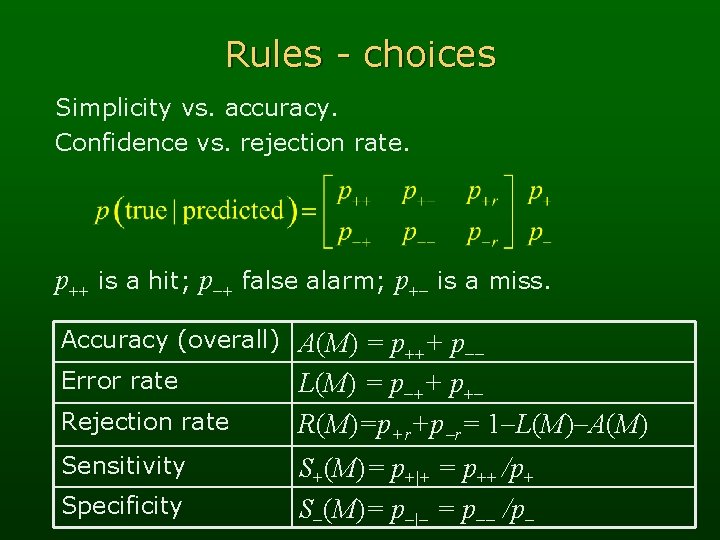

Rules - choices Simplicity vs. accuracy. Confidence vs. rejection rate. p++ is a hit; p-+ false alarm; p+- is a miss. Accuracy (overall) A(M) = p+++ p-Error rate Rejection rate Sensitivity Specificity L(M) = p-++ p+R(M)=p+r+p-r= 1 -L(M)-A(M) S+(M)= p+|+ = p++ /p+ S-(M)= p-|- = p-- /p-

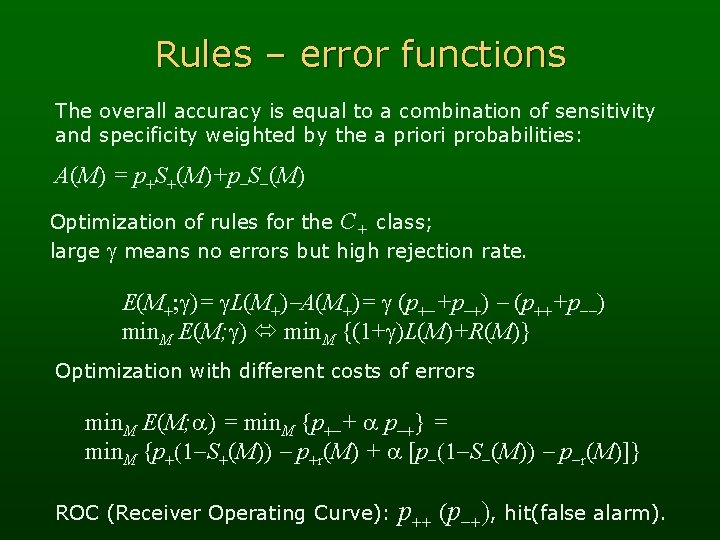

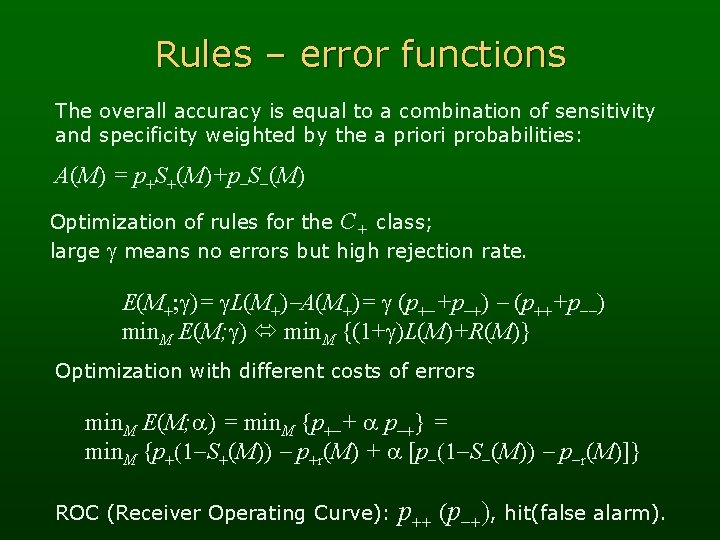

Rules – error functions The overall accuracy is equal to a combination of sensitivity and specificity weighted by the a priori probabilities: A(M) = p+S+(M)+p-S-(M) Optimization of rules for the C+ class; large g means no errors but high rejection rate. E(M+; g)= g. L(M+)-A(M+)= g (p+-+p-+) - (p+++p--) min. M E(M; g) min. M {(1+g)L(M)+R(M)} Optimization with different costs of errors min. M E(M; a) = min. M {p+-+ a p-+} = min. M {p+(1 -S+(M)) - p+r(M) + a [p-(1 -S-(M)) - p-r(M)]} ROC (Receiver Operating Curve): p++ (p-+), hit(false alarm).

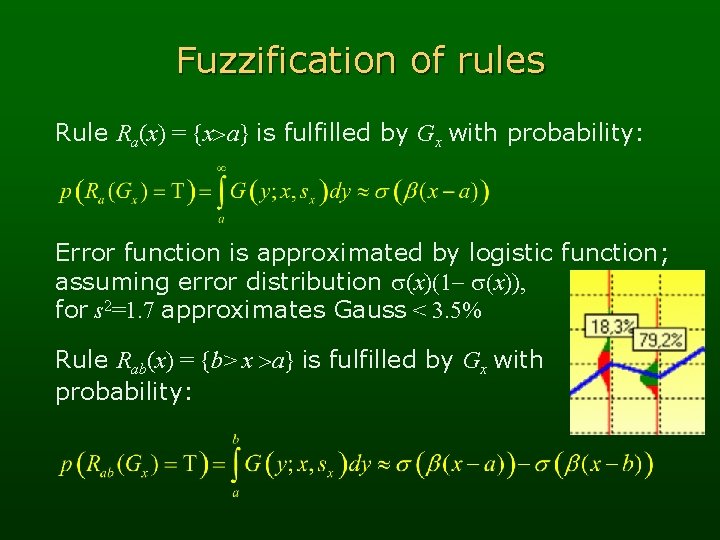

Fuzzification of rules Rule Ra(x) = {x>a} is fulfilled by Gx with probability: Error function is approximated by logistic function; assuming error distribution s(x)(1 - s(x)), for s 2=1. 7 approximates Gauss < 3. 5% Rule Rab(x) = {b> x >a} is fulfilled by Gx with probability:

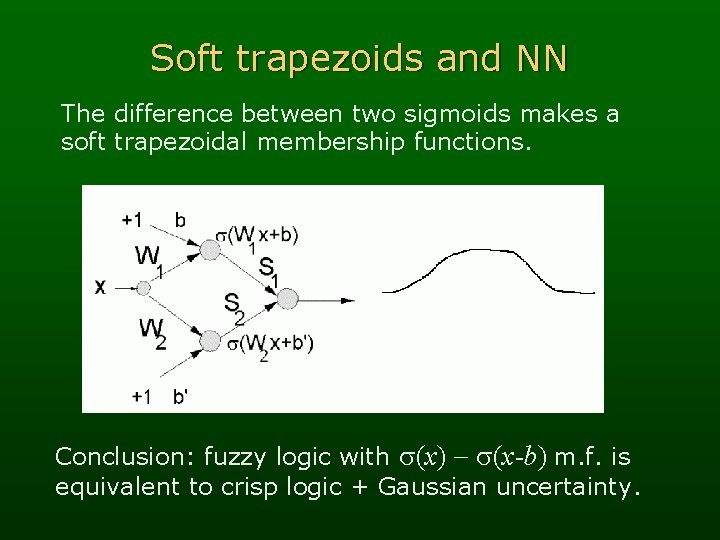

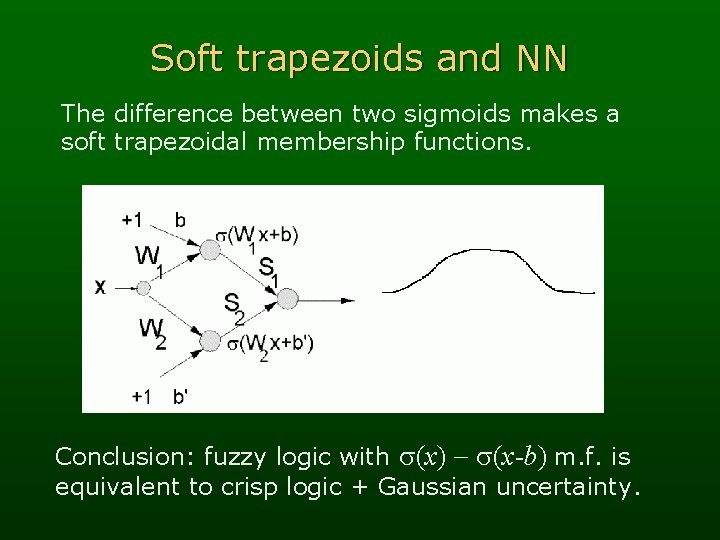

Soft trapezoids and NN The difference between two sigmoids makes a soft trapezoidal membership functions. Conclusion: fuzzy logic with s(x) - s(x-b) m. f. is equivalent to crisp logic + Gaussian uncertainty.

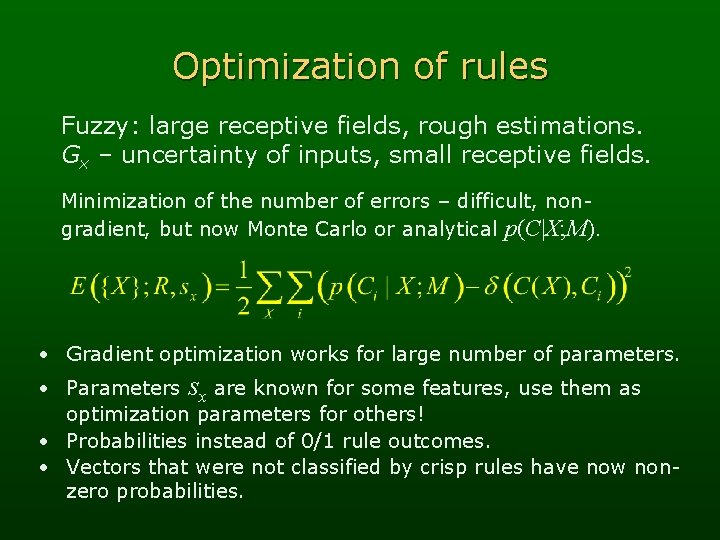

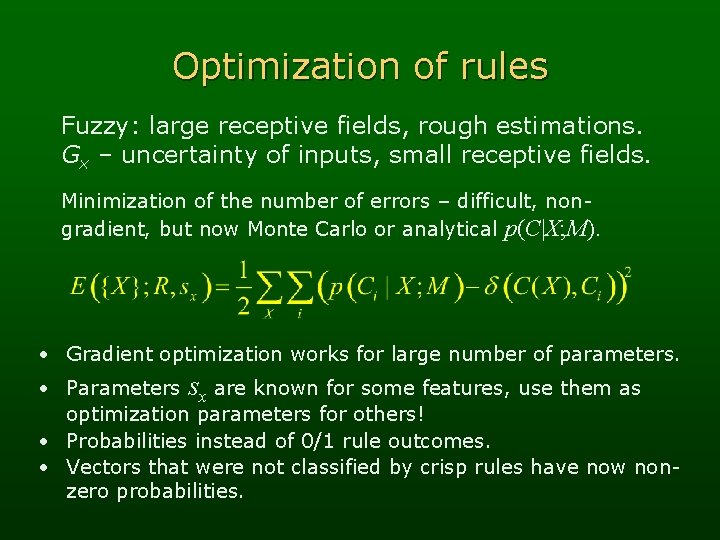

Optimization of rules Fuzzy: large receptive fields, rough estimations. Gx – uncertainty of inputs, small receptive fields. Minimization of the number of errors – difficult, nongradient, but now Monte Carlo or analytical p(C|X; M). • Gradient optimization works for large number of parameters. • Parameters sx are known for some features, use them as optimization parameters for others! • Probabilities instead of 0/1 rule outcomes. • Vectors that were not classified by crisp rules have now nonzero probabilities.

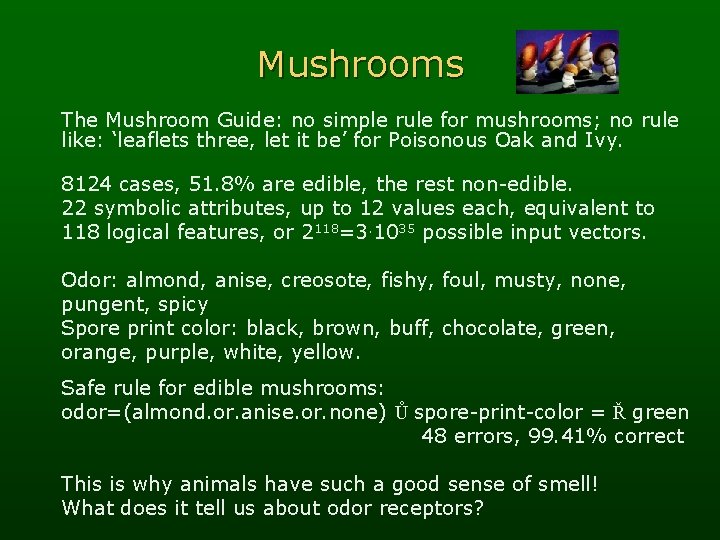

Mushrooms The Mushroom Guide: no simple rule for mushrooms; no rule like: ‘leaflets three, let it be’ for Poisonous Oak and Ivy. 8124 cases, 51. 8% are edible, the rest non-edible. 22 symbolic attributes, up to 12 values each, equivalent to 118 logical features, or 2118=3. 1035 possible input vectors. Odor: almond, anise, creosote, fishy, foul, musty, none, pungent, spicy Spore print color: black, brown, buff, chocolate, green, orange, purple, white, yellow. Safe rule for edible mushrooms: odor=(almond. or. anise. or. none) Ů spore-print-color = Ř green 48 errors, 99. 41% correct This is why animals have such a good sense of smell! What does it tell us about odor receptors?

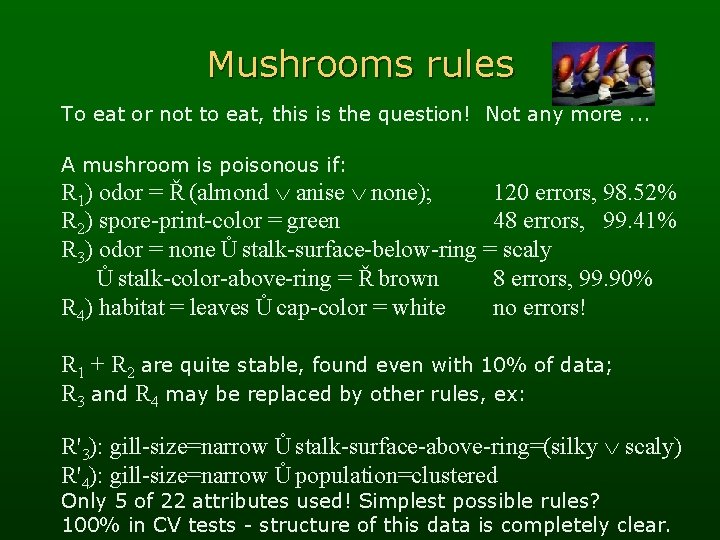

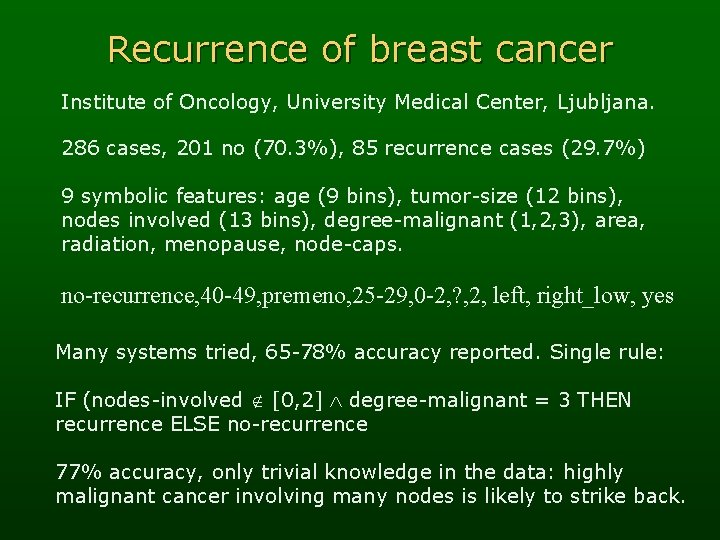

Mushrooms rules To eat or not to eat, this is the question! Not any more. . . A mushroom is poisonous if: R 1) odor = Ř (almond Ú anise Ú none); 120 errors, 98. 52% R 2) spore-print-color = green 48 errors, 99. 41% R 3) odor = none Ů stalk-surface-below-ring = scaly Ů stalk-color-above-ring = Ř brown 8 errors, 99. 90% R 4) habitat = leaves Ů cap-color = white no errors! R 1 + R 2 are quite stable, found even with 10% of data; R 3 and R 4 may be replaced by other rules, ex: R'3): gill-size=narrow Ů stalk-surface-above-ring=(silky Ú scaly) R'4): gill-size=narrow Ů population=clustered Only 5 of 22 attributes used! Simplest possible rules? 100% in CV tests - structure of this data is completely clear.

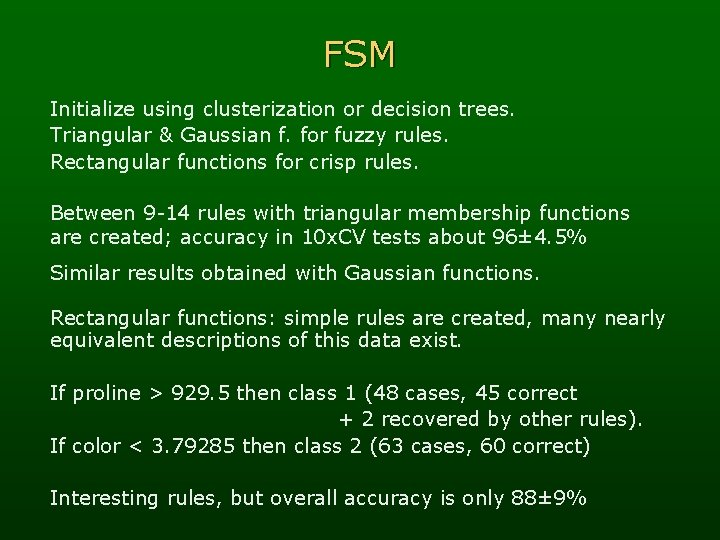

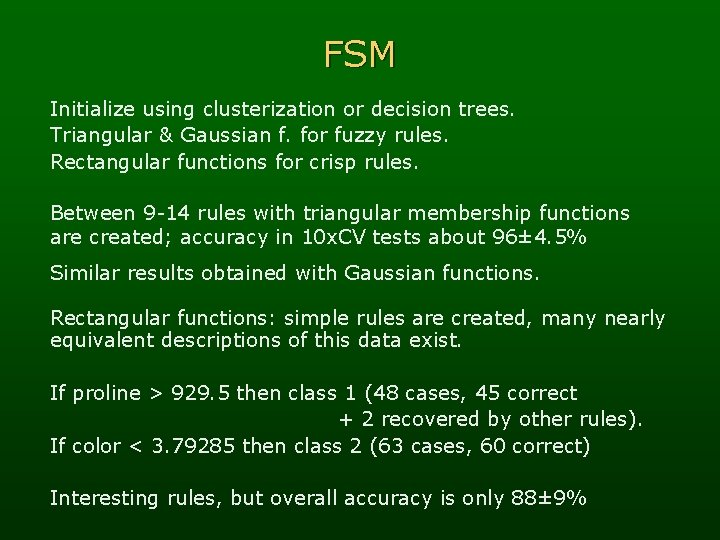

Recurrence of breast cancer Institute of Oncology, University Medical Center, Ljubljana. 286 cases, 201 no (70. 3%), 85 recurrence cases (29. 7%) 9 symbolic features: age (9 bins), tumor-size (12 bins), nodes involved (13 bins), degree-malignant (1, 2, 3), area, radiation, menopause, node-caps. no-recurrence, 40 -49, premeno, 25 -29, 0 -2, ? , 2, left, right_low, yes Many systems tried, 65 -78% accuracy reported. Single rule: IF (nodes-involved [0, 2] degree-malignant = 3 THEN recurrence ELSE no-recurrence 77% accuracy, only trivial knowledge in the data: highly malignant cancer involving many nodes is likely to strike back.

![Neurofuzzy system Fuzzy mx0 1 noyes replaced by a degree mx 0 1 Triangular Neurofuzzy system Fuzzy: m(x)=0, 1 (no/yes) replaced by a degree m(x) [0, 1]. Triangular,](https://slidetodoc.com/presentation_image/79f4e7f8da15c762ce1072a8b82b0f2e/image-39.jpg)

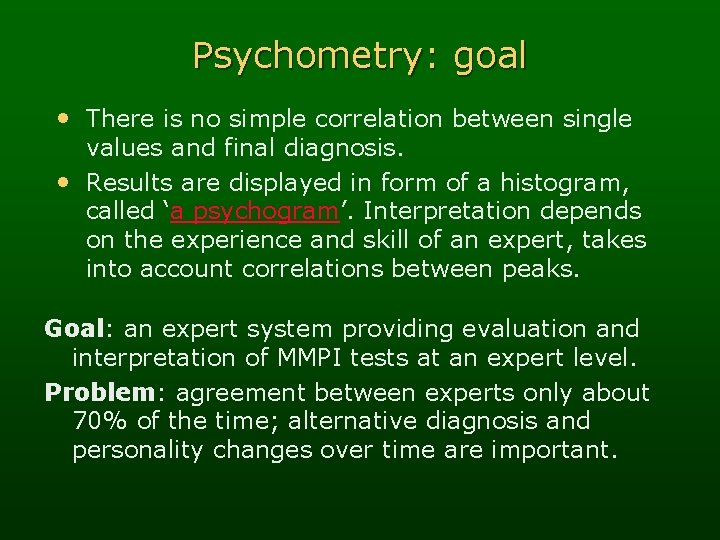

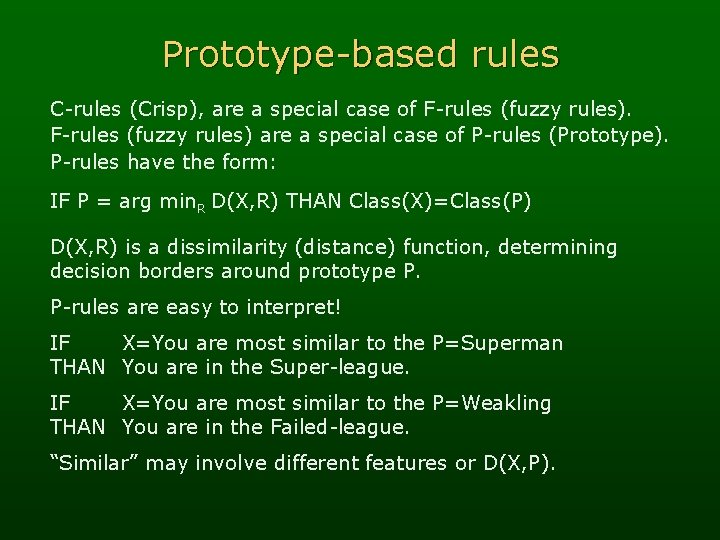

Neurofuzzy system Fuzzy: m(x)=0, 1 (no/yes) replaced by a degree m(x) [0, 1]. Triangular, trapezoidal, Gaussian or other membership f. M. f-s in many dimensions: Feature Space Mapping (FSM) neurofuzzy system. Neural adaptation, estimation of probability density distribution (PDF) usingle hidden layer network (RBF-like) with nodes realizing separable functions:

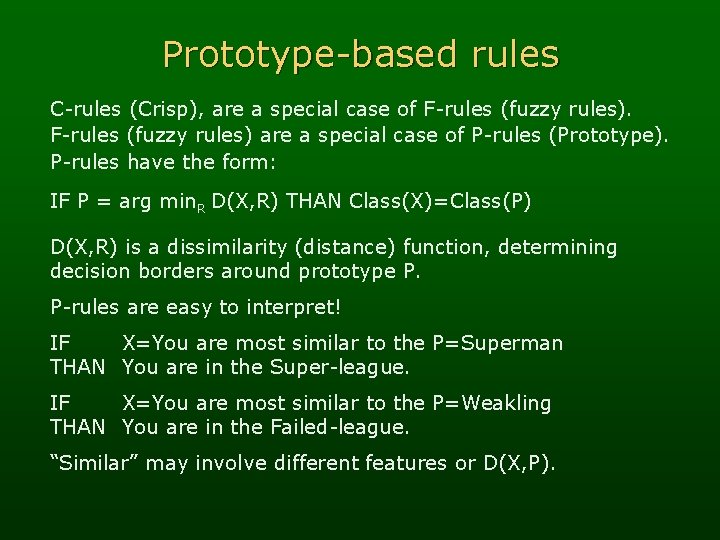

FSM Initialize using clusterization or decision trees. Triangular & Gaussian f. for fuzzy rules. Rectangular functions for crisp rules. Between 9 -14 rules with triangular membership functions are created; accuracy in 10 x. CV tests about 96± 4. 5% Similar results obtained with Gaussian functions. Rectangular functions: simple rules are created, many nearly equivalent descriptions of this data exist. If proline > 929. 5 then class 1 (48 cases, 45 correct + 2 recovered by other rules). If color < 3. 79285 then class 2 (63 cases, 60 correct) Interesting rules, but overall accuracy is only 88± 9%

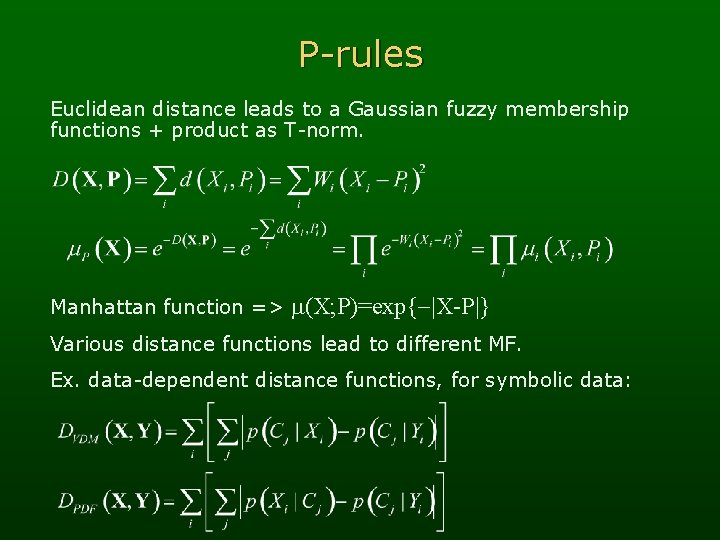

Prototype-based rules C-rules (Crisp), are a special case of F-rules (fuzzy rules) are a special case of P-rules (Prototype). P-rules have the form: IF P = arg min. R D(X, R) THAN Class(X)=Class(P) D(X, R) is a dissimilarity (distance) function, determining decision borders around prototype P. P-rules are easy to interpret! IF X=You are most similar to the P=Superman THAN You are in the Super-league. IF X=You are most similar to the P=Weakling THAN You are in the Failed-league. “Similar” may involve different features or D(X, P).

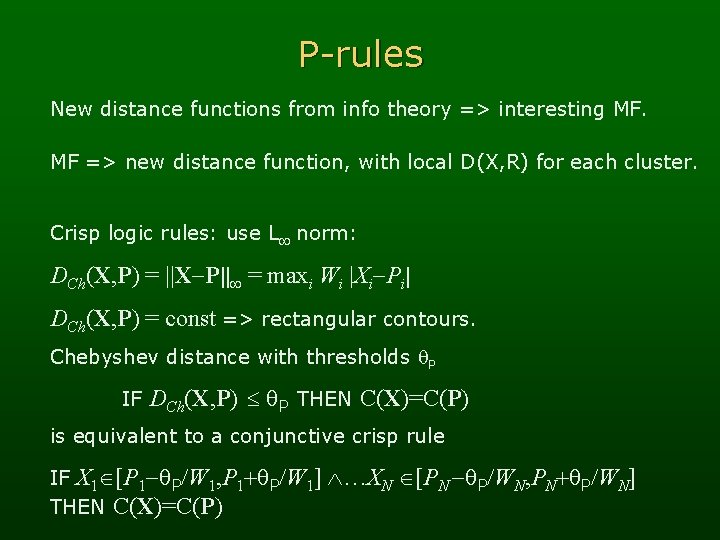

P-rules Euclidean distance leads to a Gaussian fuzzy membership functions + product as T-norm. Manhattan function => m(X; P)=exp{-|X-P|} Various distance functions lead to different MF. Ex. data-dependent distance functions, for symbolic data:

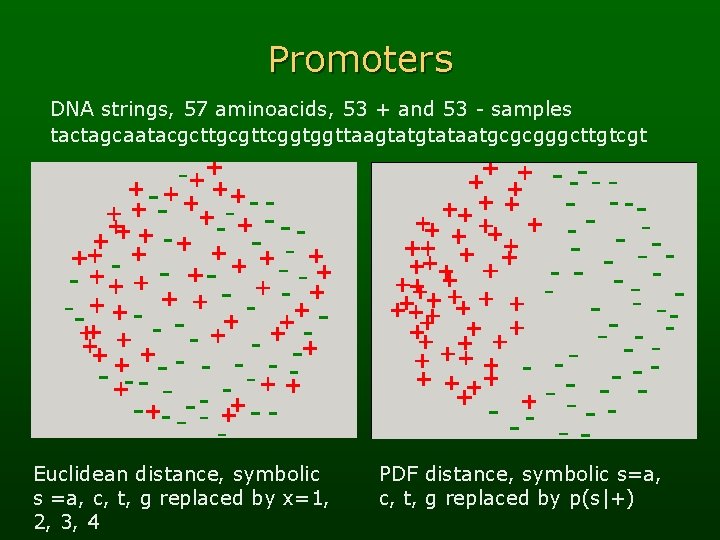

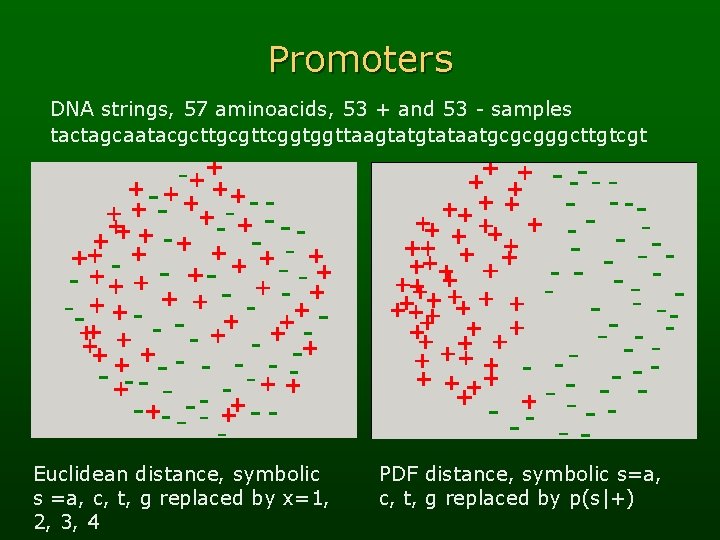

Promoters DNA strings, 57 aminoacids, 53 + and 53 - samples tactagcaatacgcttgcgttcggtggttaagtataatgcgcgggcttgtcgt Euclidean distance, symbolic s =a, c, t, g replaced by x=1, 2, 3, 4 PDF distance, symbolic s=a, c, t, g replaced by p(s|+)

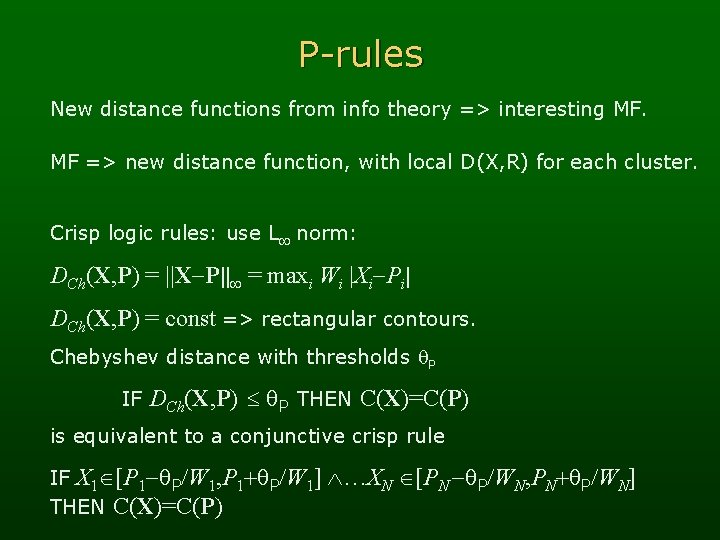

P-rules New distance functions from info theory => interesting MF. MF => new distance function, with local D(X, R) for each cluster. Crisp logic rules: use L norm: DCh(X, P) = ||X-P|| = maxi Wi |Xi-Pi| DCh(X, P) = const => rectangular contours. Chebyshev distance with thresholds P IF DCh(X, P) P THEN C(X)=C(P) is equivalent to a conjunctive crisp rule IF X 1 [P 1 - P/W 1, P 1+ P/W 1] THEN C(X)=C(P) …XN [PN - P/WN, PN+ P/WN]

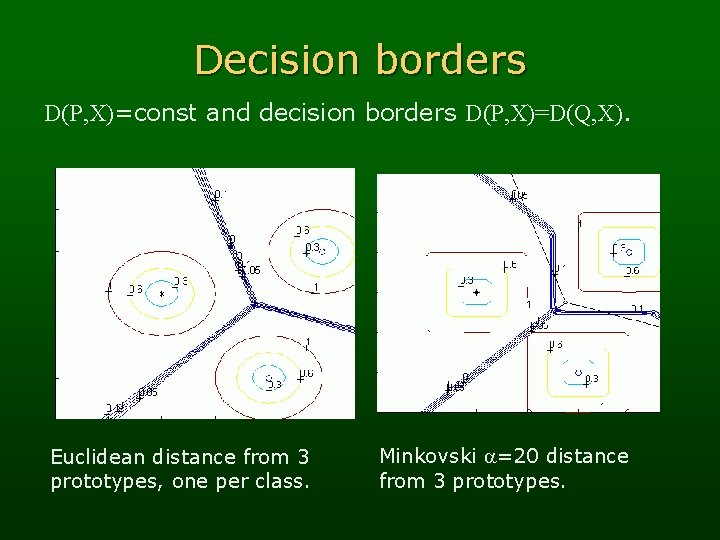

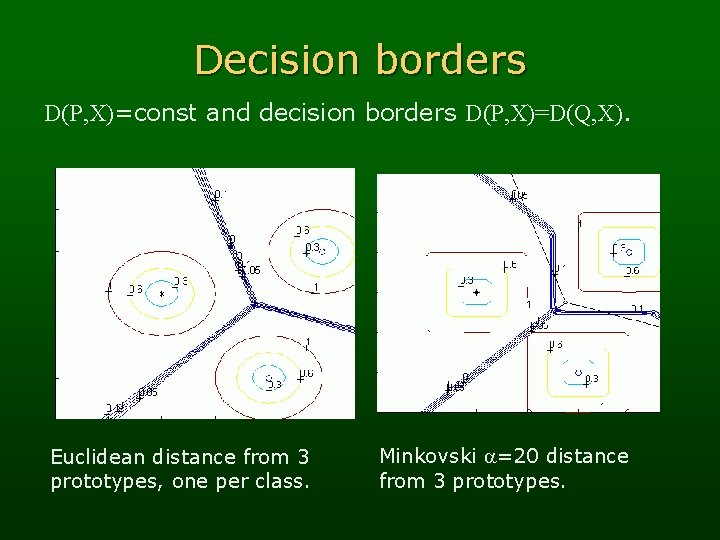

Decision borders D(P, X)=const and decision borders D(P, X)=D(Q, X). Euclidean distance from 3 prototypes, one per class. Minkovski a=20 distance from 3 prototypes.

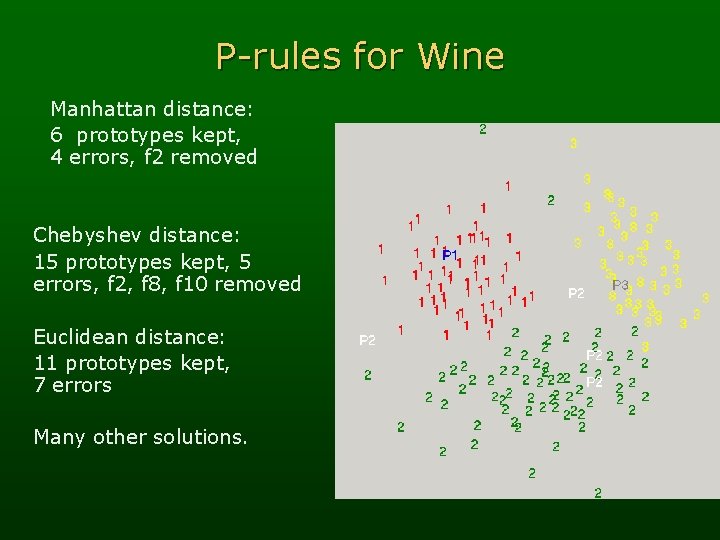

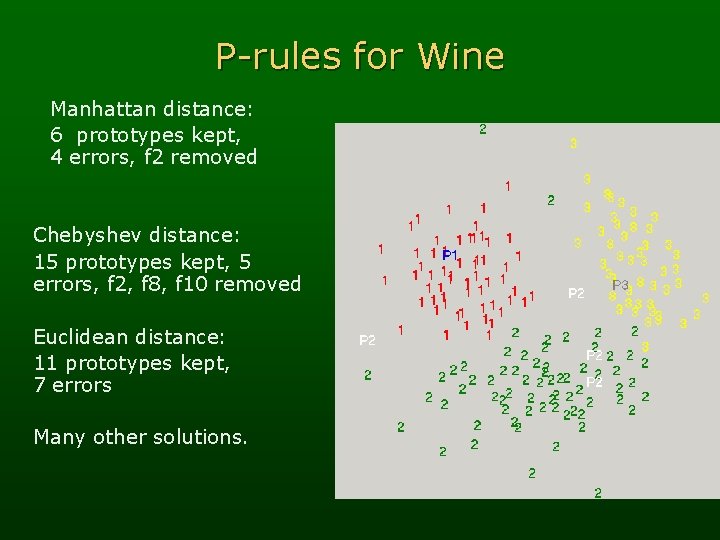

P-rules for Wine Manhattan distance: 6 prototypes kept, 4 errors, f 2 removed Chebyshev distance: 15 prototypes kept, 5 errors, f 2, f 8, f 10 removed Euclidean distance: 11 prototypes kept, 7 errors Many other solutions.

Neural networks • MLP – Multilayer Perceptrons, most popular NN models. Use soft hyperplanes for discrimination. Results are difficult to interpret, complex decision borders. Prediction, approximation: infinite number of classes. • RBF – Radial Basis Functions. RBF with Gaussian functions are equivalent to fuzzy systems with Gaussian membership functions, but … No feature selection => complex rules. Other radial functions => not separable! Use separable functions, not radial => FSM. • Many methods to convert MLP NN to logical rules.

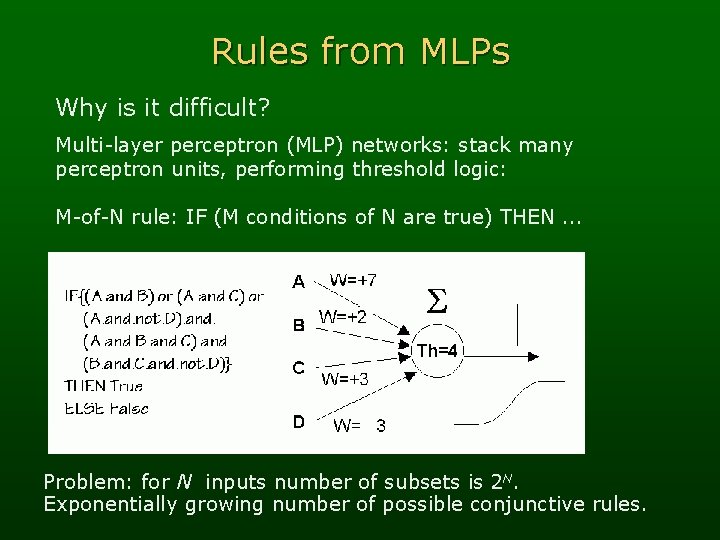

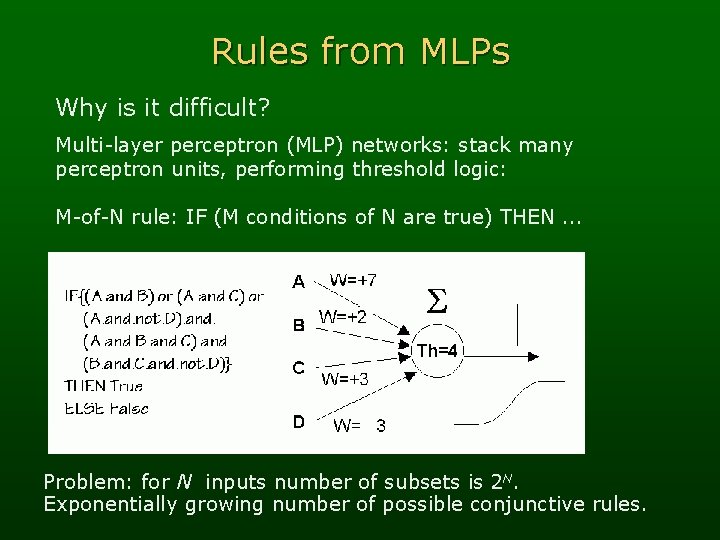

Rules from MLPs Why is it difficult? Multi-layer perceptron (MLP) networks: stack many perceptron units, performing threshold logic: M-of-N rule: IF (M conditions of N are true) THEN. . . Problem: for N inputs number of subsets is 2 N. Exponentially growing number of possible conjunctive rules.

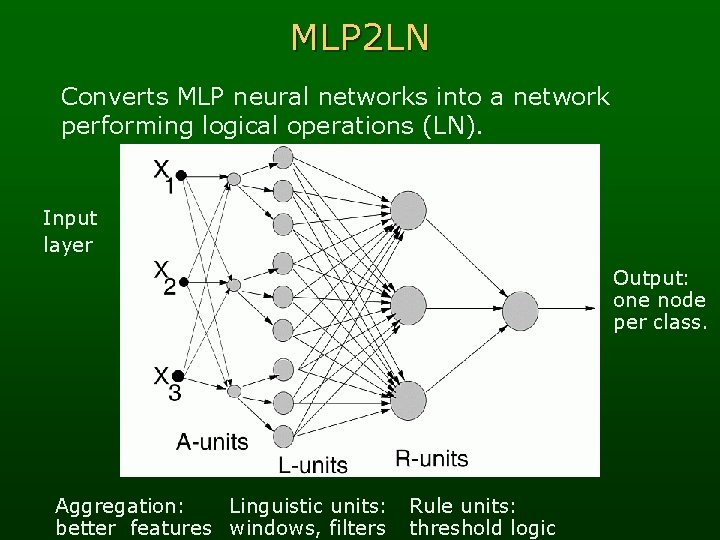

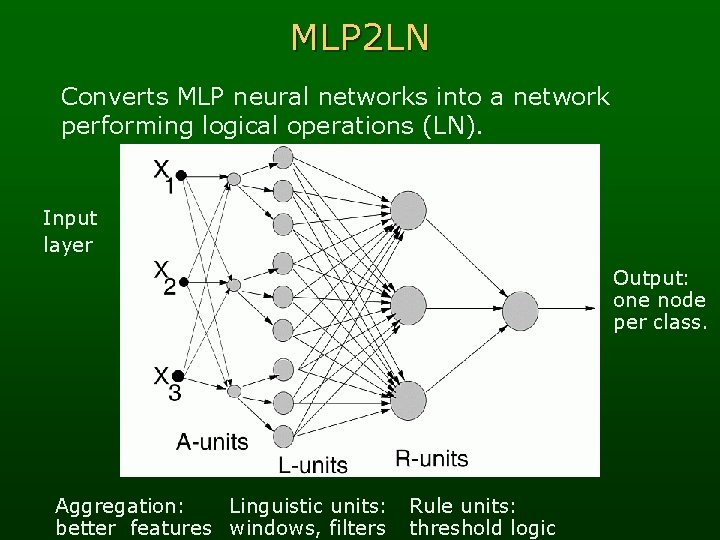

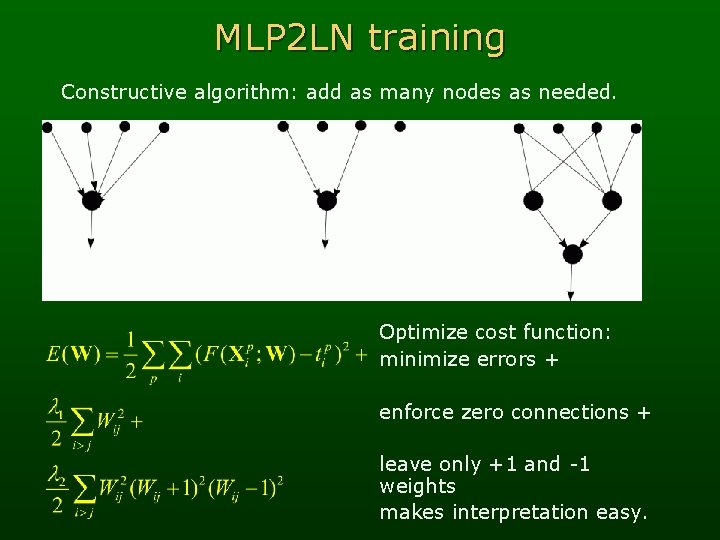

MLP 2 LN Converts MLP neural networks into a network performing logical operations (LN). Input layer Output: one node per class. Aggregation: Linguistic units: better features windows, filters Rule units: threshold logic

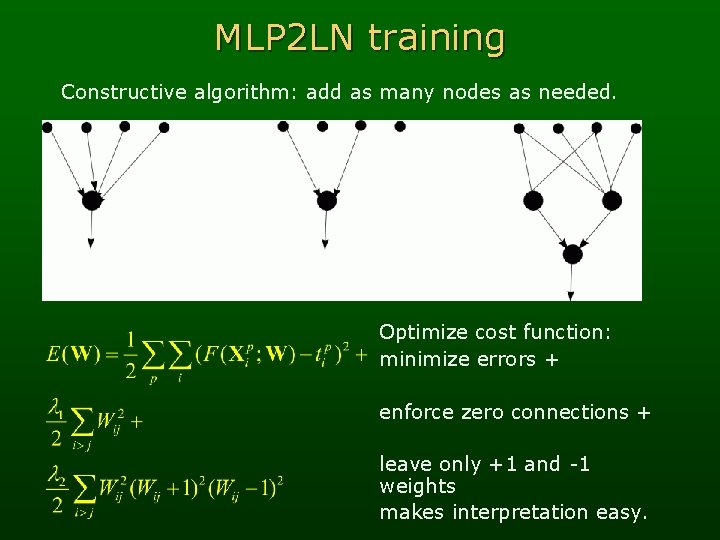

MLP 2 LN training Constructive algorithm: add as many nodes as needed. Optimize cost function: minimize errors + enforce zero connections + leave only +1 and -1 weights makes interpretation easy.

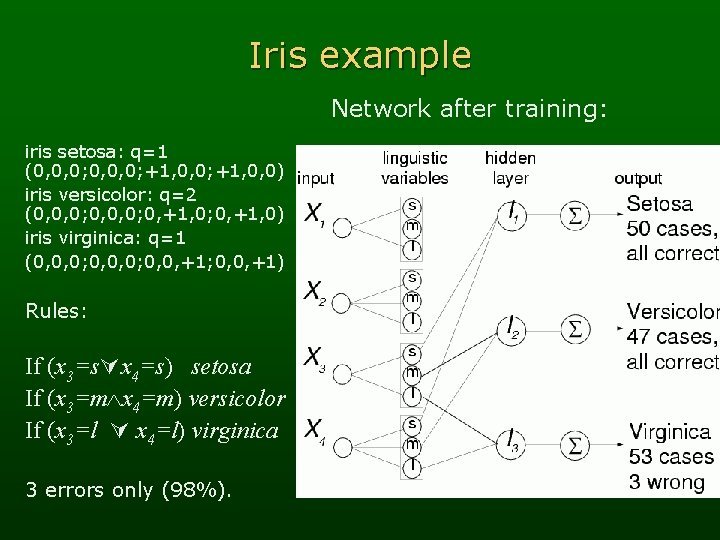

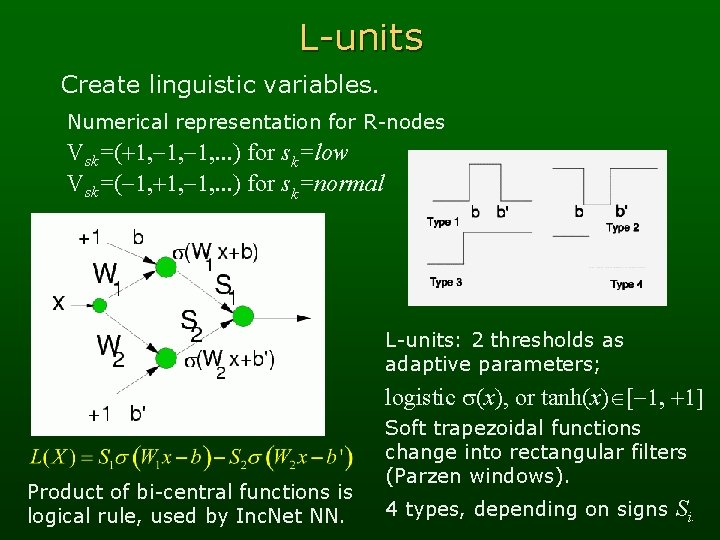

L-units Create linguistic variables. Numerical representation for R-nodes Vsk=(+1, -1, . . . ) for sk=low Vsk=(-1, +1, -1, . . . ) for sk=normal L-units: 2 thresholds as adaptive parameters; logistic s(x), or tanh(x) [-1, +1] Product of bi-central functions is logical rule, used by Inc. Net NN. Soft trapezoidal functions change into rectangular filters (Parzen windows). 4 types, depending on signs Si.

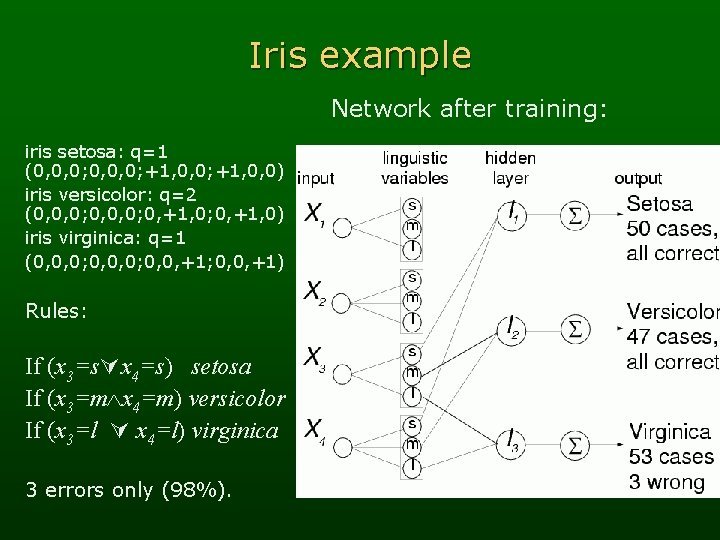

Iris example Network after training: iris setosa: q=1 (0, 0, 0; +1, 0, 0) iris versicolor: q=2 (0, 0, 0; 0, +1, 0) iris virginica: q=1 (0, 0, 0; 0, 0, +1; 0, 0, +1) Rules: If (x 3=s x 4=s) setosa If (x 3=m x 4=m) versicolor If (x 3=l x 4=l) virginica 3 errors only (98%).

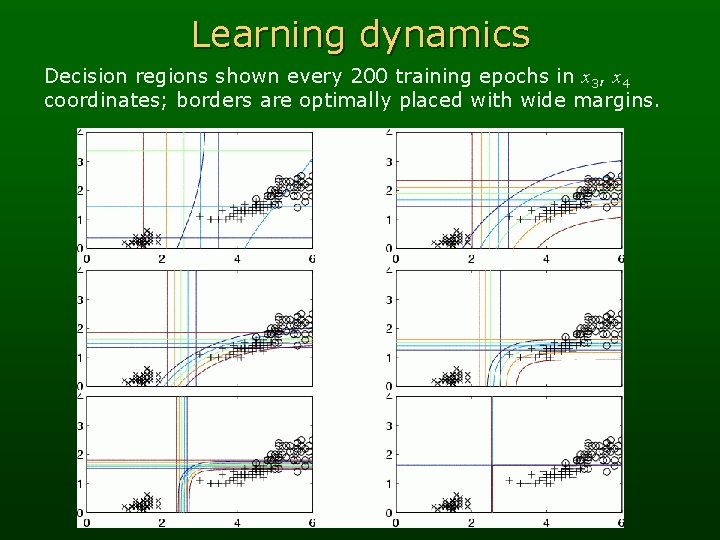

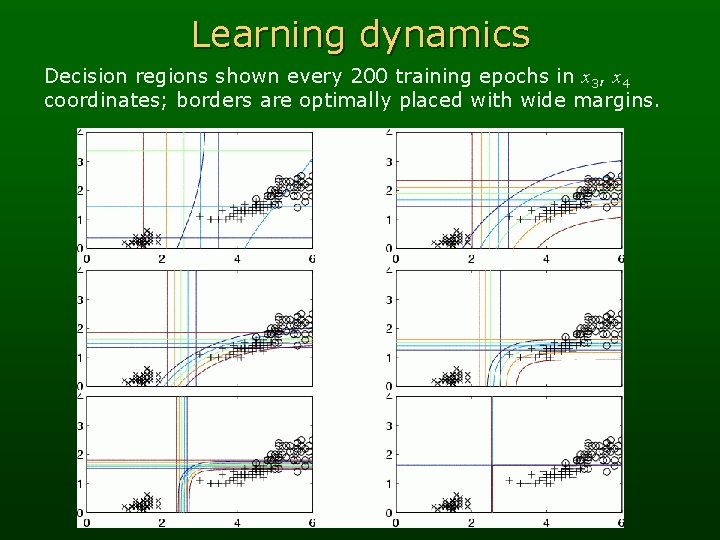

Learning dynamics Decision regions shown every 200 training epochs in x 3, x 4 coordinates; borders are optimally placed with wide margins.

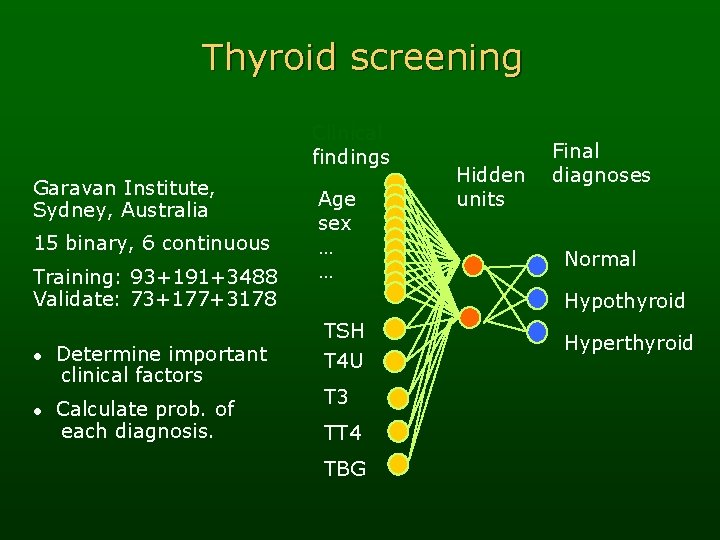

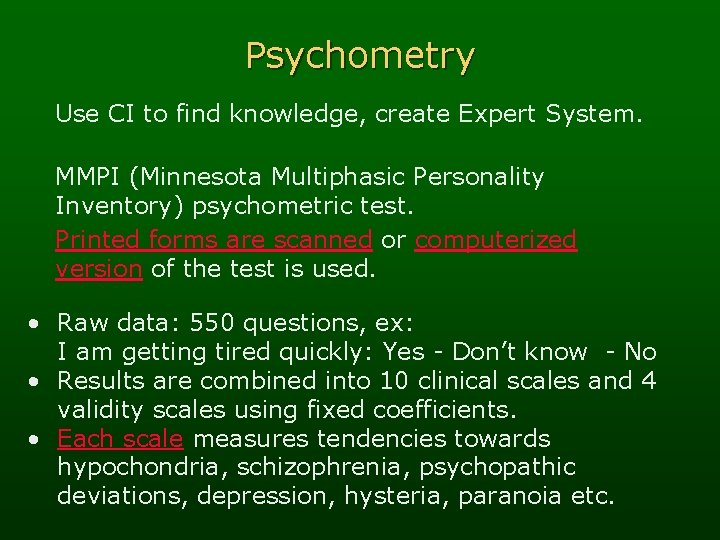

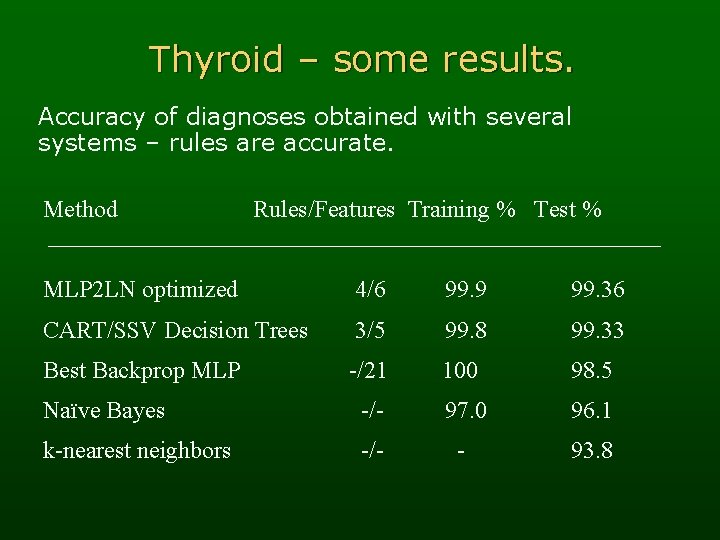

Thyroid screening Clinical findings Garavan Institute, Sydney, Australia 15 binary, 6 continuous Training: 93+191+3488 Validate: 73+177+3178 l l Determine important clinical factors Calculate prob. of each diagnosis. Age sex … … Hidden units Final diagnoses Normal Hypothyroid TSH T 4 U T 3 TT 4 TBG Hyperthyroid

Thyroid – some results. Accuracy of diagnoses obtained with several systems – rules are accurate. Method Rules/Features Training % Test % MLP 2 LN optimized 4/6 99. 9 99. 36 CART/SSV Decision Trees 3/5 99. 8 99. 33 Best Backprop MLP -/21 100 98. 5 Naïve Bayes -/- 97. 0 96. 1 k-nearest neighbors -/- - 93. 8

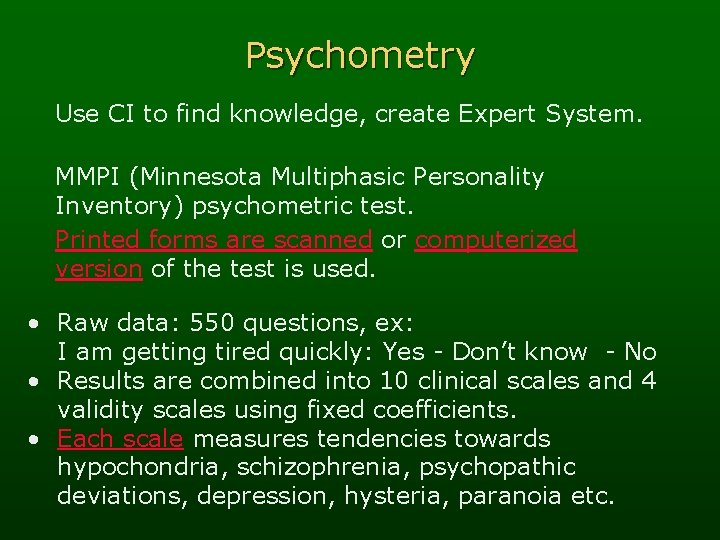

Psychometry Use CI to find knowledge, create Expert System. MMPI (Minnesota Multiphasic Personality Inventory) psychometric test. Printed forms are scanned or computerized version of the test is used. • Raw data: 550 questions, ex: I am getting tired quickly: Yes - Don’t know - No • Results are combined into 10 clinical scales and 4 validity scales using fixed coefficients. • Each scale measures tendencies towards hypochondria, schizophrenia, psychopathic deviations, depression, hysteria, paranoia etc.

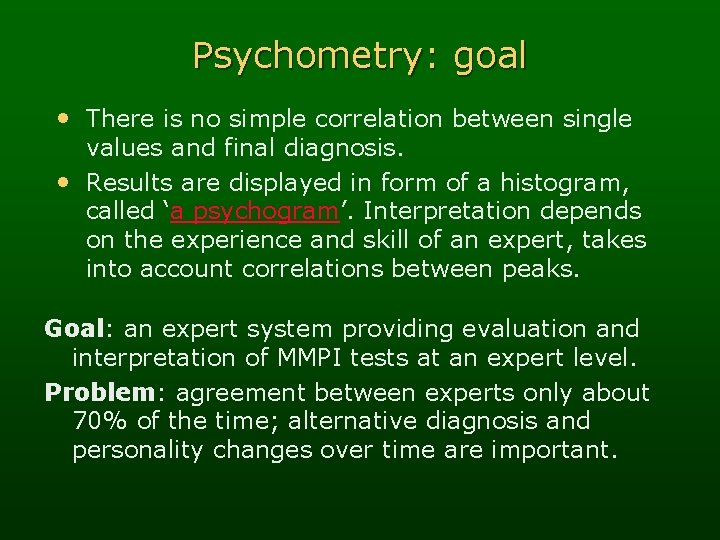

Psychometry: goal • There is no simple correlation between single values and final diagnosis. • Results are displayed in form of a histogram, called ‘a psychogram’. Interpretation depends on the experience and skill of an expert, takes into account correlations between peaks. Goal: an expert system providing evaluation and interpretation of MMPI tests at an expert level. Problem: agreement between experts only about 70% of the time; alternative diagnosis and personality changes over time are important.

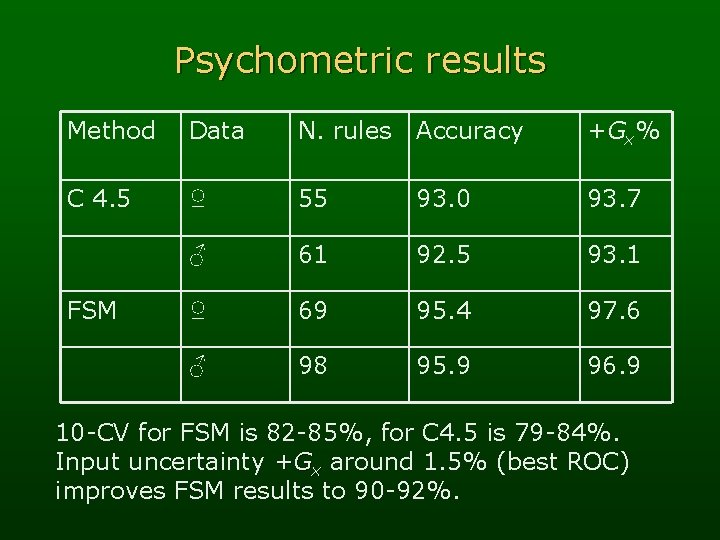

Psychometric data 1600 cases for woman, same number for men. 27 classes: norm, psychopathic, schizophrenia, paranoia, neurosis, mania, simulation, alcoholism, drug addiction, criminal tendencies, abnormal behavior due to. . . Extraction of logical rules: 14 scales = features. Define linguistic variables and use FSM, MLP 2 LN, SSV - giving about 2 -3 rules/class.

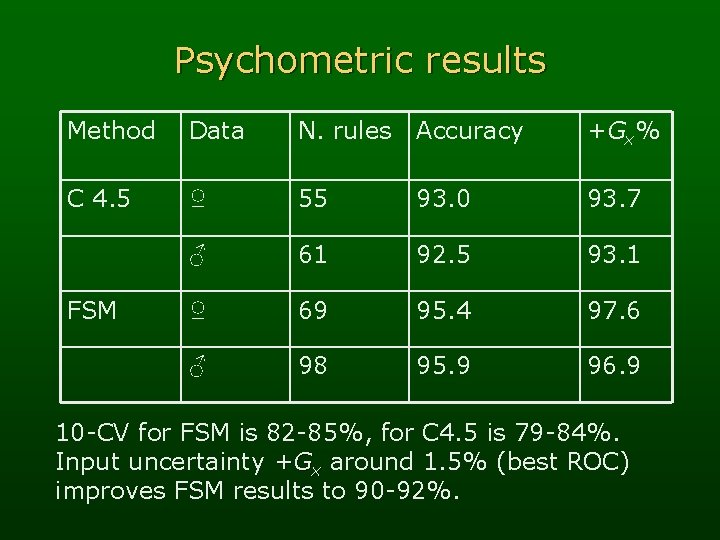

Psychometric results Method Data N. rules Accuracy +Gx% C 4. 5 ♀ 55 93. 0 93. 7 ♂ 61 92. 5 93. 1 ♀ 69 95. 4 97. 6 ♂ 98 95. 9 96. 9 FSM 10 -CV for FSM is 82 -85%, for C 4. 5 is 79 -84%. Input uncertainty +Gx around 1. 5% (best ROC) improves FSM results to 90 -92%.

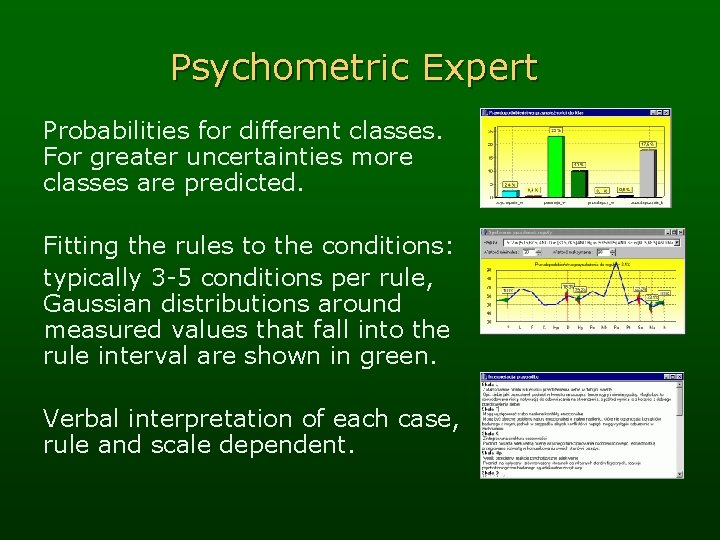

Psychometric Expert Probabilities for different classes. For greater uncertainties more classes are predicted. Fitting the rules to the conditions: typically 3 -5 conditions per rule, Gaussian distributions around measured values that fall into the rule interval are shown in green. Verbal interpretation of each case, rule and scale dependent.

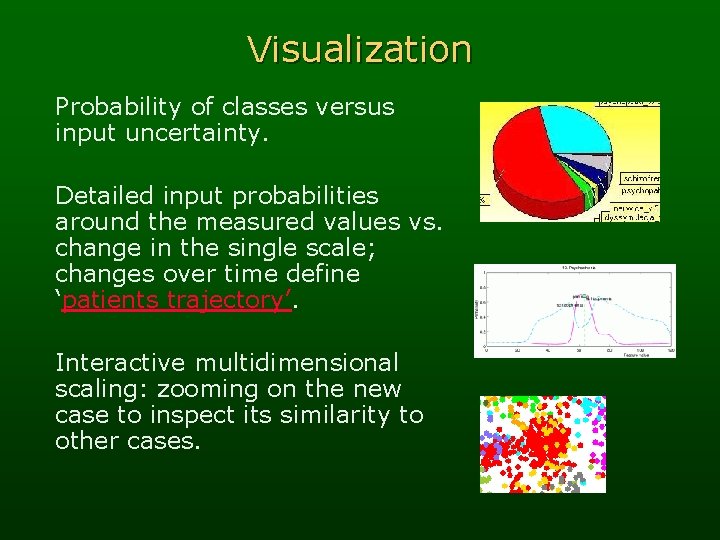

Visualization Probability of classes versus input uncertainty. Detailed input probabilities around the measured values vs. change in the single scale; changes over time define ‘patients trajectory’. Interactive multidimensional scaling: zooming on the new case to inspect its similarity to other cases.

Summary Computational intelligence methods: neural, decision trees, similarity-based & other, help to understand the data. Understanding data: achieved by rules, prototypes, visualization. Small is beautiful => simple is the best! Simplest possible, but not simpler - regularization of models; accurate but not too accurate - handling of uncertainty; high confidence, but not paranoid - rejecting some cases. • Challenges: hierarchical systems, discovery of theories rather than data models, integration with image/signal analysis, reasoning in complex domains/objects, applications in bioinformatics, text analysis. . .

References We are slowly getting there. All this and more is included in the Ghostminer, data mining software (in collaboration with Fujitsu) just released … http: //www. fqspl. com. pl/ghostminer/ Many papers, comparison of results for numerous datasets are kept at: http: //www. phys. uni. torun. pl/kmk See also my homepage at: http: //www. phys. uni. torun. pl/~duch for this and other presentations and some papers.