CISC 4631 Data Mining Lecture 09 Association Rule


CISC 4631 Data Mining Lecture 09: Association Rule Mining. These slides are based on the slides by • Tan, Steinbach and Kumar (textbook authors) • Prof. F. Provost (Stern, NYU) • Prof. B. Liu, UIC

What Is Association Mining? • Association rule mining: – Finding frequent patterns, associations, correlations, or causal structures among sets of items or objects in transaction databases, relational databases, and other information repositories. • Applications: – Market Basket analysis, cross-marketing, catalog design, loss-leader analysis, clustering, classification, etc.

![Association Mining Examples Rule form Body Head support confidence buysx Association Mining? • Examples. – – – Rule form: “Body Head [support, confidence]”. buys(x,](https://slidetodoc.com/presentation_image/2f36c4251839dc956cabe172a7b9efc3/image-3.jpg)

Association Mining? • Examples: – Rule form: “Body → Head [support, confidence]” – buys(x, “diapers”) → buys(x, “beers”) [0.5%, 60%] – buys(x, "bread") → buys(x, "milk") [0.6%, 65%] – major(x, "CS") ∧ takes(x, "DB") → grade(x, "A") [1%, 75%] – age(X, 30-45) ∧ income(X, 50K-75K) → buys(X, SUVcar) – age=“30-45”, income=“50K-75K” → car=“SUV”

Market-basket analysis and finding associations • Do items occur together? (more than I might expect) • Proposed by Agrawal et al. in 1993. • It is an important data mining model studied extensively by the database and data mining community. • Assume all data are categorical. • No good algorithm for numeric data. • Initially used for Market Basket Analysis to find how items purchased by customers are related. • Example: Bread → Milk [sup = 5%, conf = 100%]

Association Rule: Basic Concepts • Given: (1) database of transactions, (2) each transaction is a list of items (purchased by a customer in a visit) • Find: all rules that correlate the presence of one set of items with that of another set of items – E.g., 98% of people who purchase tires and auto accessories also get automotive services done • Applications – * → Maintenance Agreement (What should the store do to boost Maintenance Agreement sales?) – Home Electronics → * (What other products should the store stock up on?) – Detecting “ping-pong”ing of patients, faulty “collisions”

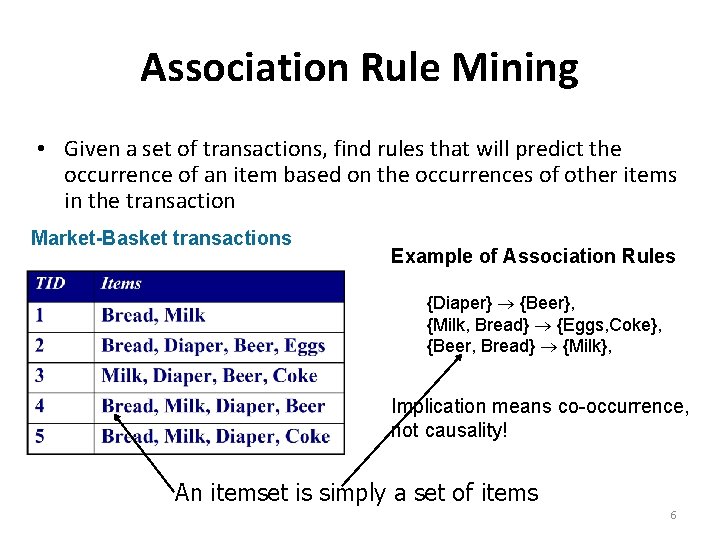

Association Rule Mining • Given a set of transactions, find rules that will predict the occurrence of an item based on the occurrences of other items in the transaction • Examples of association rules from market-basket transactions: {Diaper} → {Beer}, {Milk, Bread} → {Eggs, Coke}, {Beer, Bread} → {Milk} • Implication means co-occurrence, not causality! • An itemset is simply a set of items

Association Rule Mining – We are interested in rules that are • non-trivial (and possibly unexpected) • actionable • easily explainable

Examples from a Supermarket • Can you think of association rules from a supermarket? • Let’s say you identify association rules from a supermarket, how might you exploit them? – That is, if you are the store manager, how might you make money? • Assume you have a rule of the form X → Y

Supermarket examples • If you have a rule X → Y, you could: – Run a sale on X if you want to increase sales of Y – Locate the two items near each other – Locate the two items far from each other to make the shopper walk through the store – Print out a coupon on checkout for Y if shopper bought X but not Y

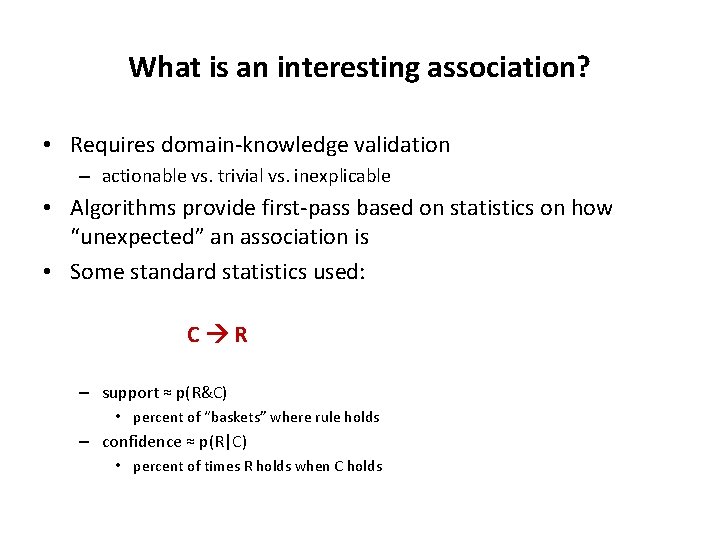

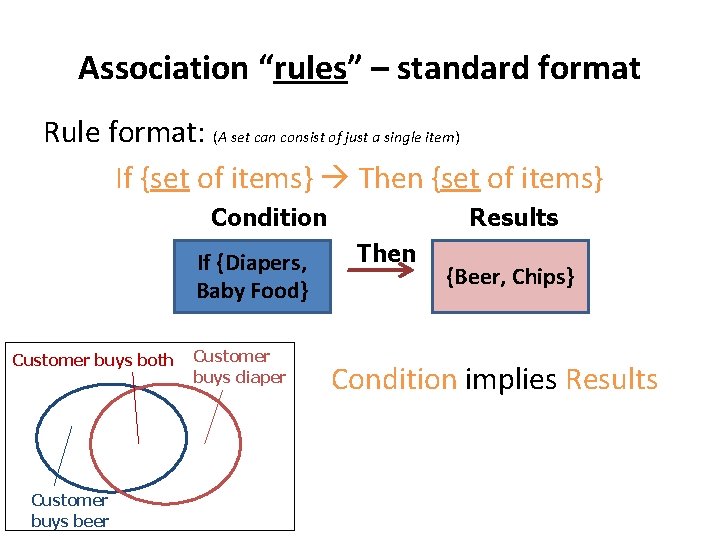

Association “rules” – standard format • Rule format: If {set of items} Then {set of items} (a set can consist of just a single item) • Example: If {Diapers, Baby Food} Then {Beer, Chips} • The If part is the Condition and the Then part is the Results: Condition implies Results • [Figure: Venn diagram of customers who buy diapers, customers who buy beer, and customers who buy both]

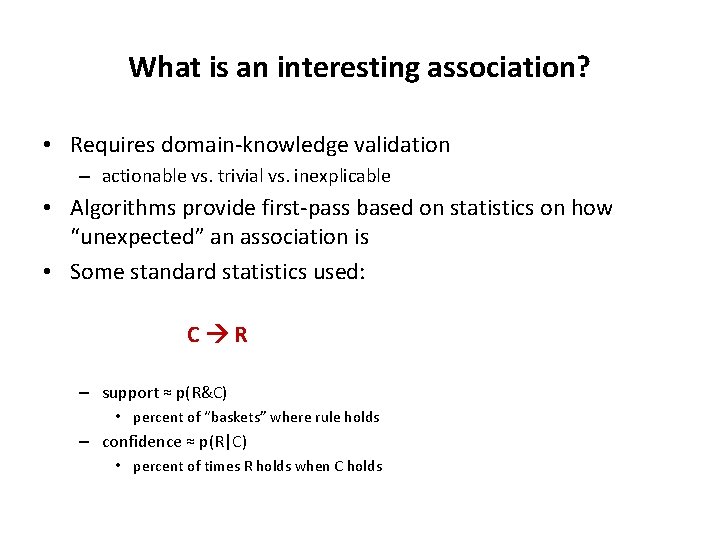

What is an interesting association? • Requires domain-knowledge validation – actionable vs. trivial vs. inexplicable • Algorithms provide a first pass based on statistics on how “unexpected” an association is • Some standard statistics used, for a rule C → R (Condition → Results): – support ≈ p(R&C) • percent of “baskets” where rule holds – confidence ≈ p(R|C) • percent of times R holds when C holds

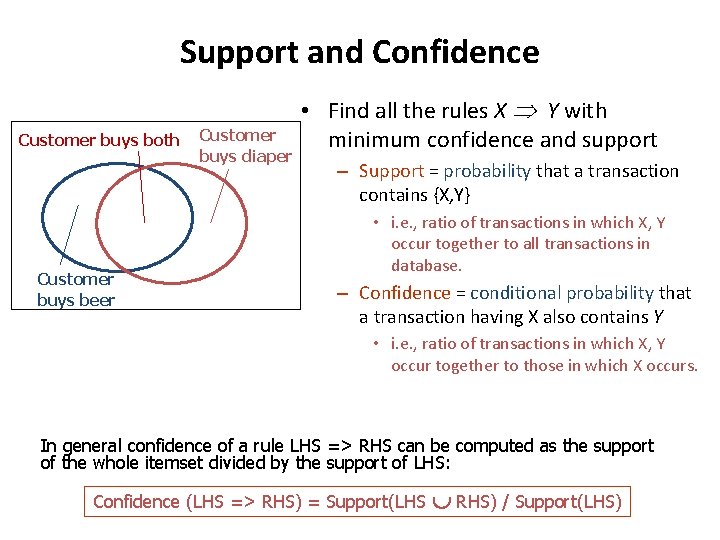

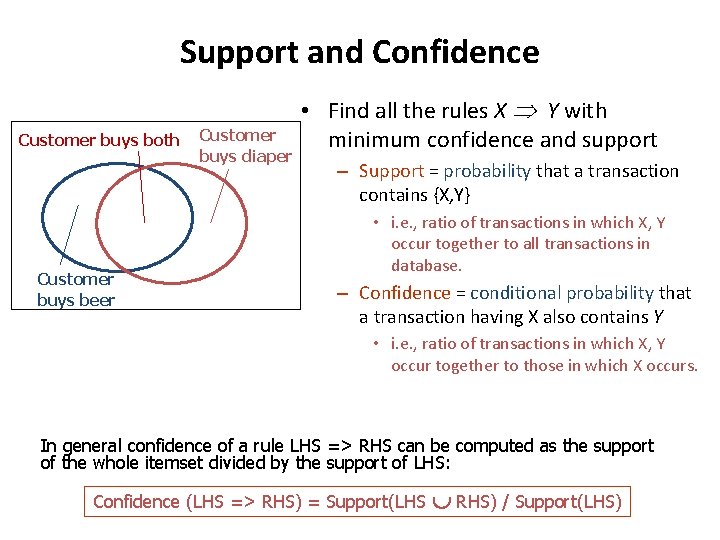

Support and Confidence • Find all the rules X → Y with minimum confidence and support – Support = probability that a transaction contains {X, Y} • i.e., ratio of transactions in which X, Y occur together to all transactions in database. – Confidence = conditional probability that a transaction having X also contains Y • i.e., ratio of transactions in which X, Y occur together to those in which X occurs. • In general, the confidence of a rule LHS => RHS can be computed as the support of the whole itemset divided by the support of LHS: Confidence(LHS => RHS) = Support(LHS ∪ RHS) / Support(LHS) • [Figure: Venn diagram of customers who buy diapers, customers who buy beer, and customers who buy both]
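The two definitions above can be sketched in a few lines of Python. This is a minimal illustration, assuming each transaction is stored as a set of item names; the five baskets are made-up illustrative data, and `support` and `confidence` are hypothetical helper names, not part of any library.

```python
from fractions import Fraction

# Illustrative baskets (an assumption, not data taken from the slide).
transactions = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return Fraction(hits, len(transactions))

def confidence(lhs, rhs, transactions):
    """Support(LHS ∪ RHS) / Support(LHS); assumes LHS occurs at least once."""
    both = support(set(lhs) | set(rhs), transactions)
    return both / support(lhs, transactions)

print(support({"Diaper", "Beer"}, transactions))       # 3/5
print(confidence({"Diaper"}, {"Beer"}, transactions))  # 3/4
```

Exact fractions are used here only so the ratios print the way they would be written by hand; floats would work equally well.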

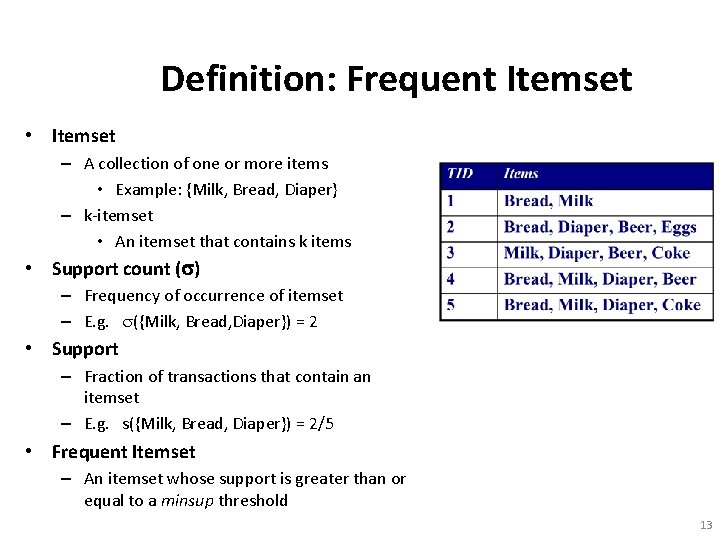

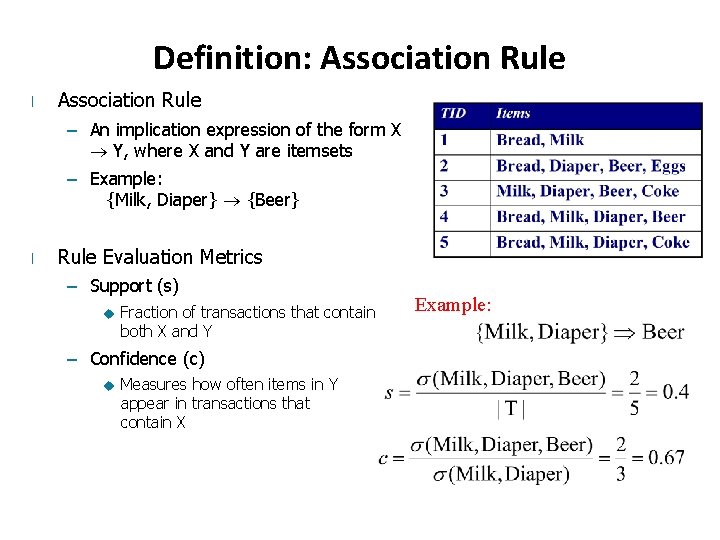

Definition: Frequent Itemset • Itemset – A collection of one or more items • Example: {Milk, Bread, Diaper} – k-itemset • An itemset that contains k items • Support count (σ) – Frequency of occurrence of an itemset – E.g., σ({Milk, Bread, Diaper}) = 2 • Support – Fraction of transactions that contain an itemset – E.g., s({Milk, Bread, Diaper}) = 2/5 • Frequent Itemset – An itemset whose support is greater than or equal to a minsup threshold

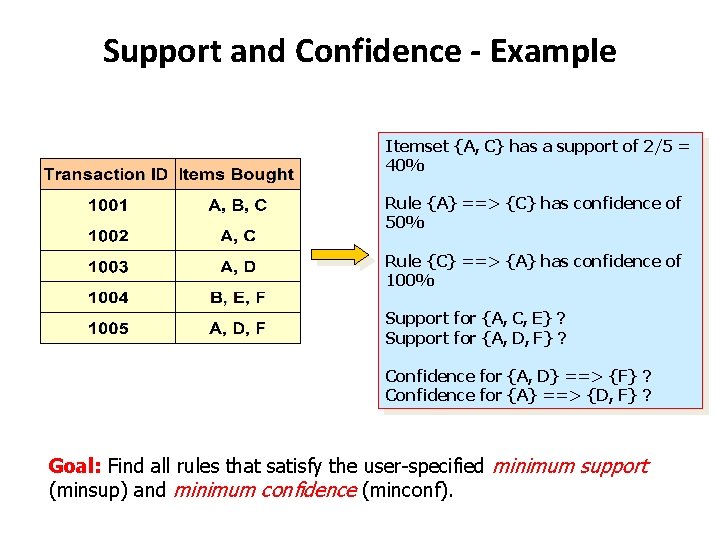

Definition: Association Rule • Association Rule – An implication expression of the form X → Y, where X and Y are itemsets – Example: {Milk, Diaper} → {Beer} • Rule Evaluation Metrics – Support (s) • Fraction of transactions that contain both X and Y – Confidence (c) • Measures how often items in Y appear in transactions that contain X • Example:

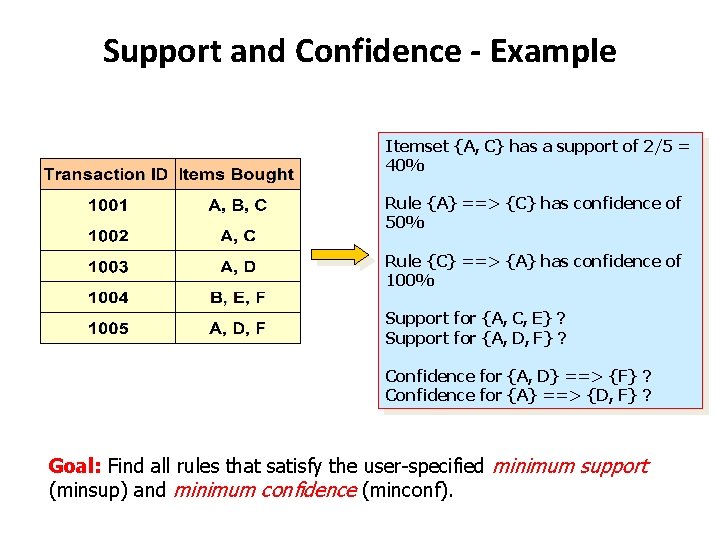

Support and Confidence - Example • Itemset {A, C} has a support of 2/5 = 40% • Rule {A} ==> {C} has confidence of 50% • Rule {C} ==> {A} has confidence of 100% • Support for {A, C, E}? • Support for {A, D, F}? • Confidence for {A, D} ==> {F}? • Confidence for {A} ==> {D, F}? • Goal: Find all rules that satisfy the user-specified minimum support (minsup) and minimum confidence (minconf).

Example • Transaction data:

| TID | Items |
|---|---|
| t1 | Beef, Chicken, Milk |
| t2 | Beef, Cheese |
| t3 | Cheese, Boots |
| t4 | Beef, Chicken, Cheese |
| t5 | Beef, Chicken, Clothes, Cheese, Milk |
| t6 | Chicken, Clothes, Milk |
| t7 | Chicken, Milk, Clothes |

• Assume: minsup = 30%, minconf = 80% • An example frequent itemset: {Chicken, Clothes, Milk} [sup = 3/7] • Association rules from the itemset: – Clothes → Milk, Chicken [sup = 3/7, conf = 3/3] – … – Clothes, Chicken → Milk [sup = 3/7, conf = 3/3]

CS 583, Bing Liu, UIC

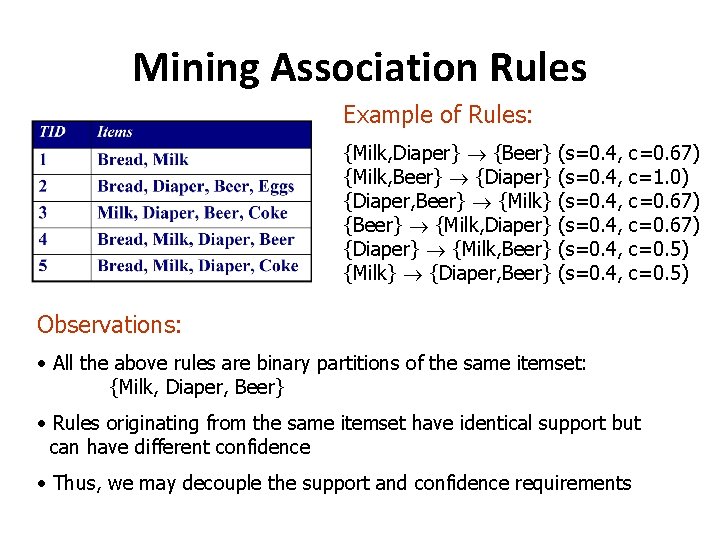

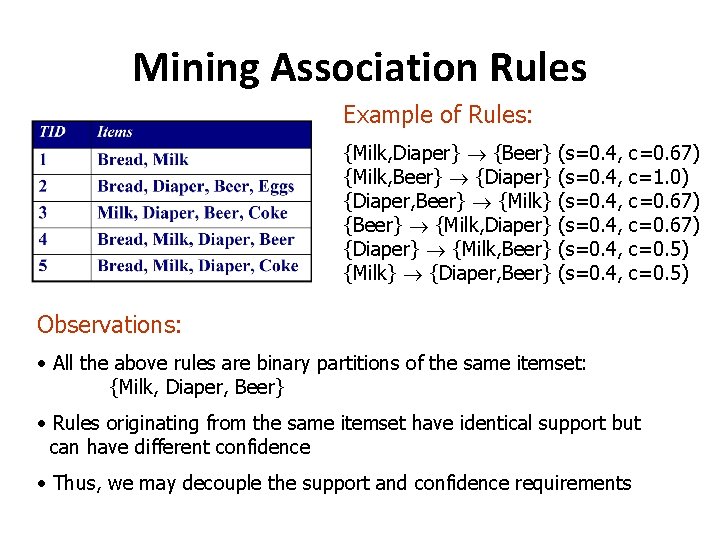

Mining Association Rules • Example of Rules: – {Milk, Diaper} → {Beer} (s=0.4, c=0.67) – {Milk, Beer} → {Diaper} (s=0.4, c=1.0) – {Diaper, Beer} → {Milk} (s=0.4, c=0.67) – {Beer} → {Milk, Diaper} (s=0.4, c=0.67) – {Diaper} → {Milk, Beer} (s=0.4, c=0.5) – {Milk} → {Diaper, Beer} (s=0.4, c=0.5) • Observations: – All the above rules are binary partitions of the same itemset: {Milk, Diaper, Beer} – Rules originating from the same itemset have identical support but can have different confidence – Thus, we may decouple the support and confidence requirements
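The observation that all binary partitions of one itemset share a support but differ in confidence can be checked with a short sketch. The five baskets below are an assumed dataset (the slide's transaction table is an image), chosen so that it reproduces the s=0.4 and c=0.67 / 1.0 / 0.5 values quoted above; `count` and `rules_from_itemset` are hypothetical helper names.

```python
from itertools import combinations

# Assumed baskets, consistent with the support/confidence values on the slide.
transactions = [
    {"Bread", "Milk"},
    {"Bread", "Diaper", "Beer", "Eggs"},
    {"Milk", "Diaper", "Beer", "Coke"},
    {"Bread", "Milk", "Diaper", "Beer"},
    {"Bread", "Milk", "Diaper", "Coke"},
]

def count(itemset):
    """Support count: number of transactions containing the whole itemset."""
    return sum(1 for t in transactions if itemset <= t)

def rules_from_itemset(itemset):
    """Yield (lhs, rhs, support, confidence) for every binary partition."""
    itemset = frozenset(itemset)
    n = len(transactions)
    for r in range(1, len(itemset)):
        for lhs in combinations(sorted(itemset), r):
            lhs = frozenset(lhs)
            rhs = itemset - lhs
            yield lhs, rhs, count(itemset) / n, count(itemset) / count(lhs)

rules = list(rules_from_itemset({"Milk", "Diaper", "Beer"}))
for lhs, rhs, s, c in rules:
    print(sorted(lhs), "->", sorted(rhs), f"s={s:.2f} c={c:.2f}")
```

Every rule reports the same support because the numerator is always the count of the full itemset; only the denominator, the count of the left-hand side, changes.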

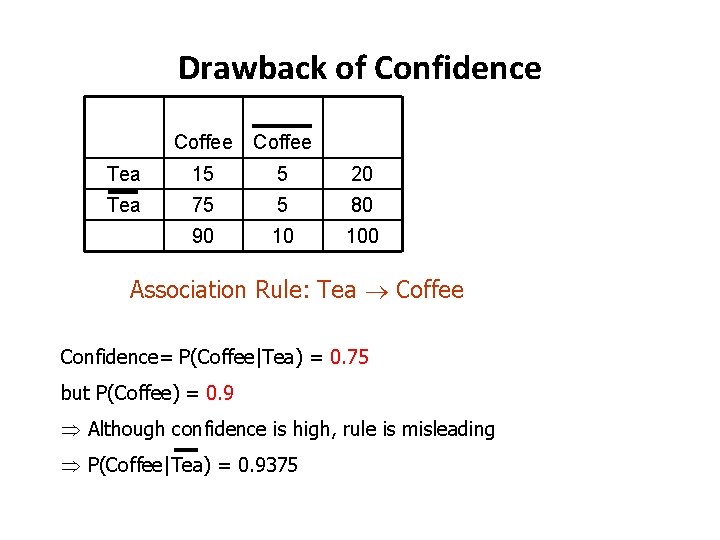

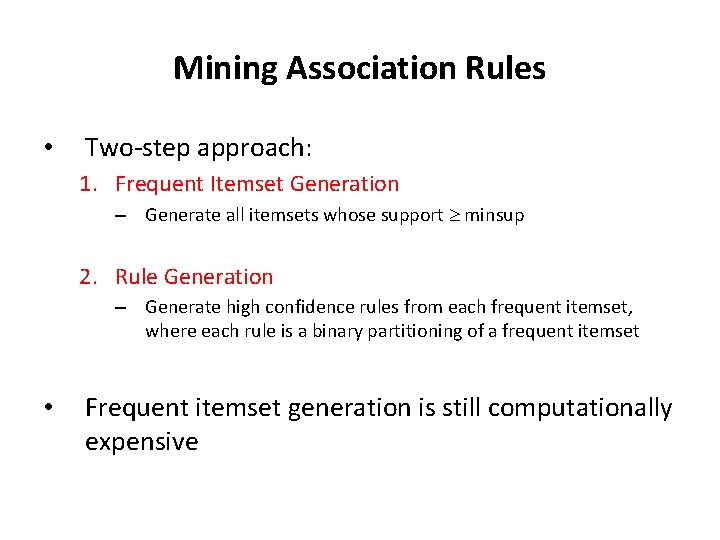

Drawback of Confidence

| | Coffee | ¬Coffee | Total |
|---|---|---|---|
| Tea | 15 | 5 | 20 |
| ¬Tea | 75 | 5 | 80 |
| Total | 90 | 10 | 100 |

Association Rule: Tea → Coffee. Confidence = P(Coffee|Tea) = 0.75, but P(Coffee) = 0.9. Although confidence is high, the rule is misleading: P(Coffee|¬Tea) = 0.9375.
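The arithmetic behind the tea and coffee example can be reproduced directly from the four cell counts. This is only a sketch; the variable names are illustrative.

```python
# Cell counts from the tea/coffee contingency table (100 people in total).
tea_coffee, tea_no_coffee = 15, 5
no_tea_coffee, no_tea_no_coffee = 75, 5
total = tea_coffee + tea_no_coffee + no_tea_coffee + no_tea_no_coffee

# Confidence of Tea -> Coffee is P(Coffee | Tea).
conf_tea = tea_coffee / (tea_coffee + tea_no_coffee)

# Baseline: how common coffee is overall, and among non-tea drinkers.
p_coffee = (tea_coffee + no_tea_coffee) / total
conf_no_tea = no_tea_coffee / (no_tea_coffee + no_tea_no_coffee)

print(conf_tea, p_coffee, conf_no_tea)  # 0.75 0.9 0.9375
```

Since P(Coffee|Tea) is below both P(Coffee) and P(Coffee|¬Tea), knowing that someone drinks tea actually lowers the chance that they drink coffee, even though the rule's confidence looks high in isolation.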

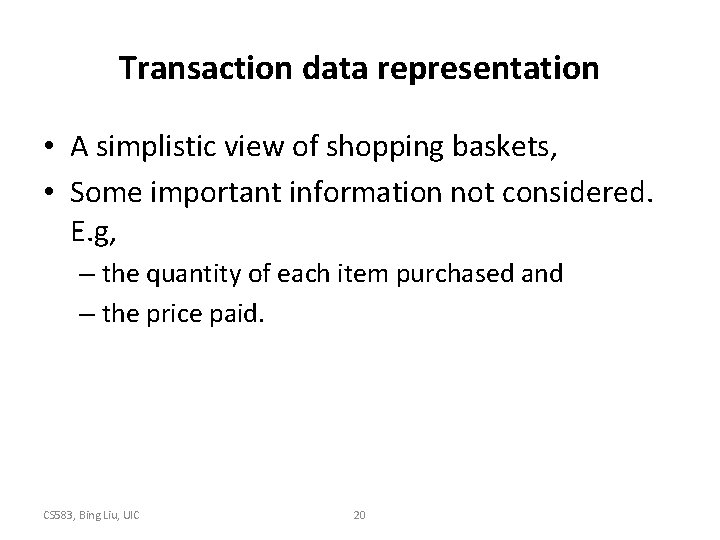

Mining Association Rules • Two-step approach: 1. Frequent Itemset Generation – Generate all itemsets whose support ≥ minsup 2. Rule Generation – Generate high confidence rules from each frequent itemset, where each rule is a binary partitioning of a frequent itemset • Frequent itemset generation is still computationally expensive

Transaction data representation • A simplistic view of shopping baskets. • Some important information is not considered, e.g., – the quantity of each item purchased and – the price paid.

Many mining algorithms • There are a large number of them! • They use different strategies and data structures. • Their resulting sets of rules are all the same. – Given a transaction data set T, a minimum support and a minimum confidence, the set of association rules existing in T is uniquely determined. • Any algorithm should find the same set of rules, although their computational efficiencies and memory requirements may be different. • We study only one: the Apriori Algorithm

The Apriori algorithm • The best known algorithm • Two steps: – Find all itemsets that have minimum support (frequent itemsets, also called large itemsets). – Use frequent itemsets to generate rules. • E.g., a frequent itemset {Chicken, Clothes, Milk} [sup = 3/7] and one rule from the frequent itemset: Clothes → Milk, Chicken [sup = 3/7, conf = 3/3]

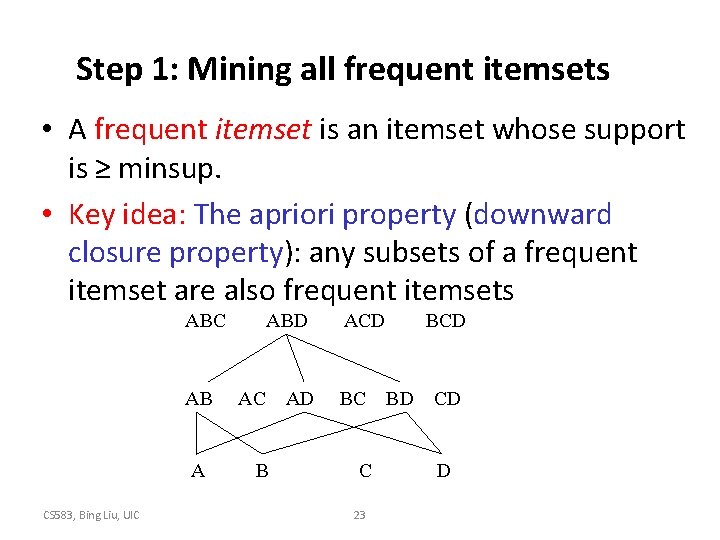

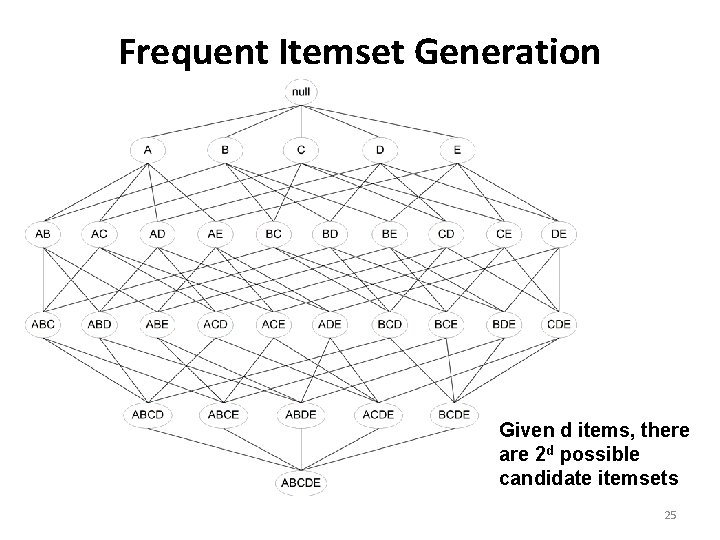

Step 1: Mining all frequent itemsets • A frequent itemset is an itemset whose support is ≥ minsup. • Key idea: The apriori property (downward closure property): any subset of a frequent itemset is also a frequent itemset • [Figure: itemset lattice over items A, B, C, D, with 3-itemsets ABC, ABD, ACD, BCD; 2-itemsets AB, AC, AD, BC, BD, CD; and 1-itemsets A, B, C, D]

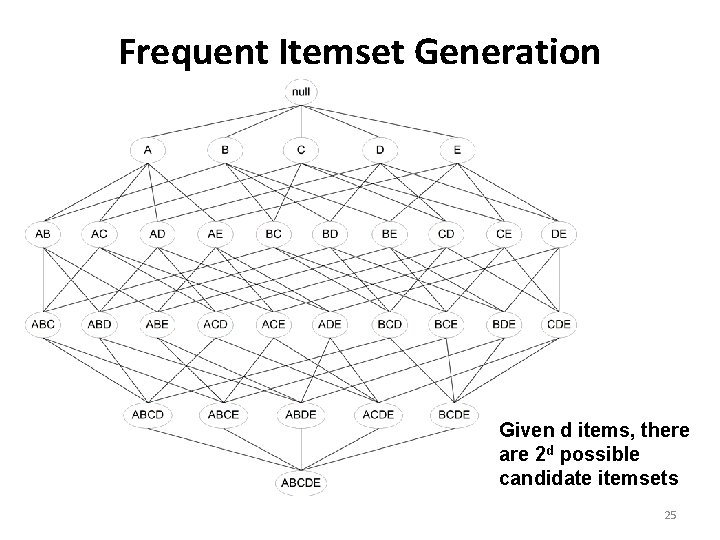

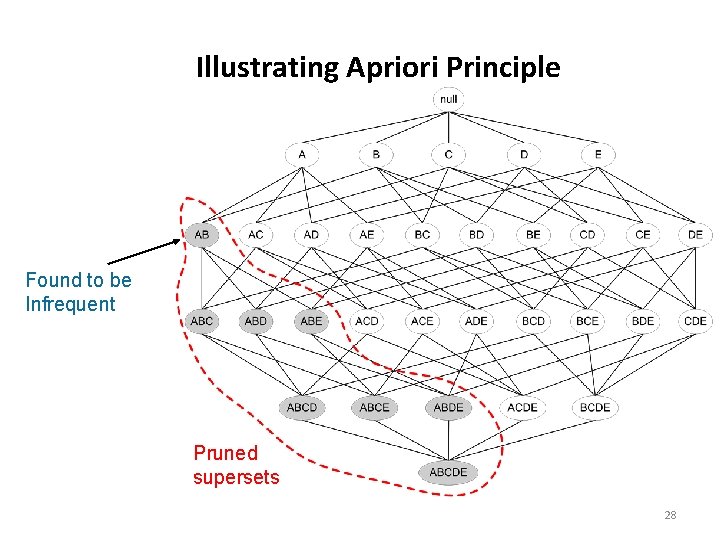

Steps in Association Rule Discovery • Find the frequent itemsets – Frequent itemsets are the sets of items that have minimum support – Support is “downward closed”, so a subset of a frequent itemset must also be a frequent itemset • if {AB} is a frequent itemset, both {A} and {B} are frequent itemsets • this also means that if an itemset doesn’t satisfy minimum support, none of its supersets will either (this is essential for pruning the search space) – Iteratively find frequent itemsets with cardinality from 1 to k (k-itemsets) • Use the frequent itemsets to generate association rules

Frequent Itemset Generation • Given d items, there are 2^d possible candidate itemsets
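To see why brute-force enumeration does not scale, here is a small sketch that lists every subset of a set of d items. The item names and the `all_itemsets` helper are hypothetical; the count includes the empty itemset, so there are 2^d - 1 non-empty candidates.

```python
from itertools import combinations

def all_itemsets(items):
    """Yield every subset of `items`, from size 0 up to size len(items)."""
    items = sorted(items)
    for k in range(len(items) + 1):
        for combo in combinations(items, k):
            yield frozenset(combo)

items = ["A", "B", "C", "D", "E"]
candidates = list(all_itemsets(items))
print(len(candidates), 2 ** len(items))  # 32 32
```

With only 30 items the same enumeration would already produce over a billion candidates, which is why the search space has to be pruned.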

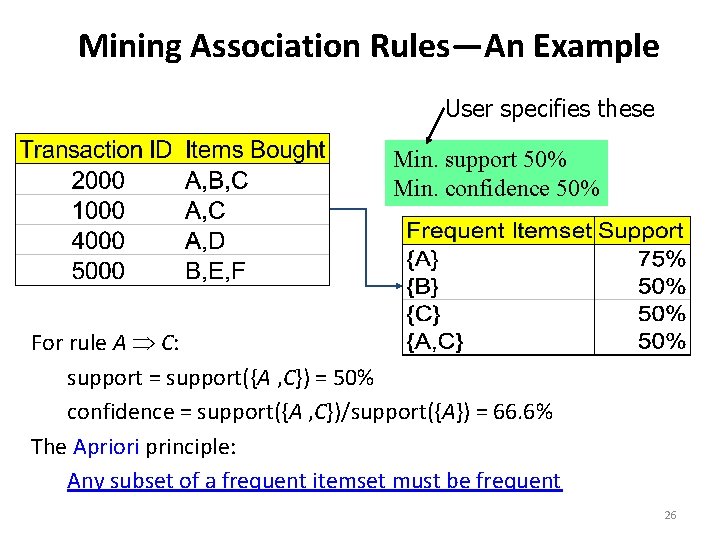

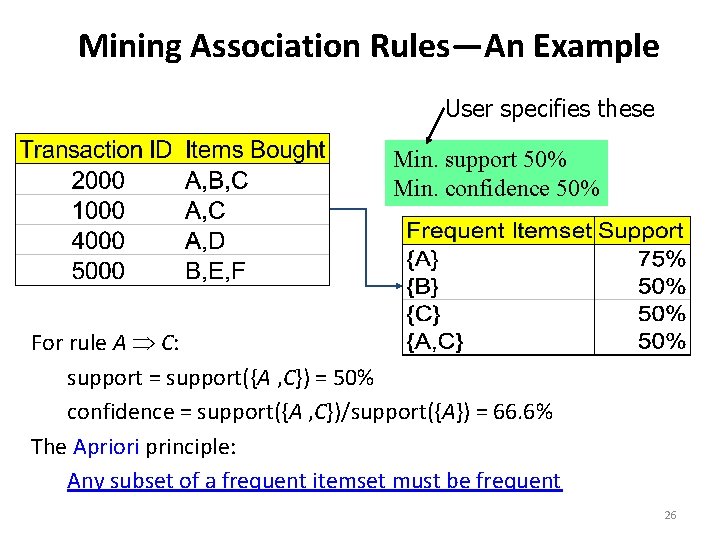

Mining Association Rules: An Example • User specifies these: Min. support 50%, Min. confidence 50% • For rule A → C: support = support({A, C}) = 50%; confidence = support({A, C})/support({A}) = 66.6% • The Apriori principle: Any subset of a frequent itemset must be frequent

Mining Frequent Itemsets: the Key Step • Find the frequent itemsets: the sets of items that have minimum support – A subset of a frequent itemset must also be a frequent itemset • i.e., if {AB} is a frequent itemset, both {A} and {B} must be frequent itemsets. Why? Make sure you can explain this. – Iteratively find frequent itemsets with cardinality from 1 to k (k-itemsets) • Use the frequent itemsets to generate association rules – This step is more straightforward and requires less computation, so we focus on the first step

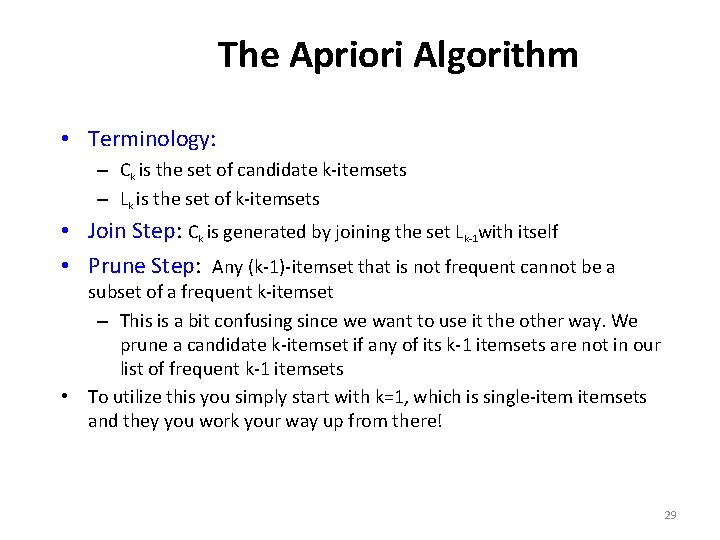

Illustrating Apriori Principle • [Figure: itemset lattice in which one itemset is found to be infrequent and all of its supersets are pruned]

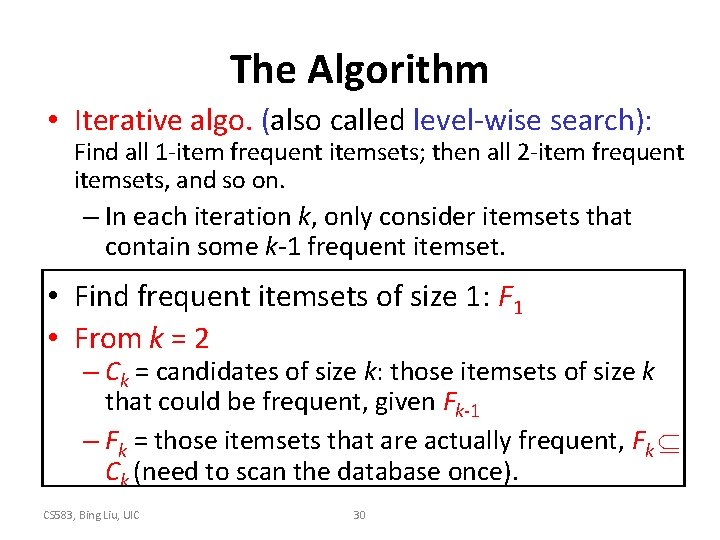

The Apriori Algorithm • Terminology: – Ck is the set of candidate k-itemsets – Lk is the set of frequent k-itemsets • Join Step: Ck is generated by joining the set Lk-1 with itself • Prune Step: Any (k-1)-itemset that is not frequent cannot be a subset of a frequent k-itemset – This is a bit confusing since we want to use it the other way. We prune a candidate k-itemset if any of its (k-1)-subsets is not in our list of frequent (k-1)-itemsets • To utilize this you simply start with k=1, which is the single-item itemsets, and then you work your way up from there!

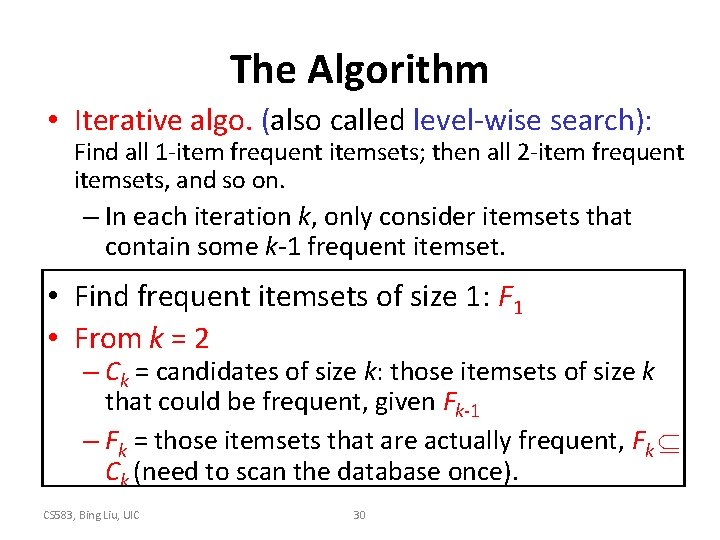

The Algorithm • Iterative algorithm (also called level-wise search): Find all 1-item frequent itemsets; then all 2-item frequent itemsets, and so on. – In each iteration k, only consider itemsets that contain some frequent (k-1)-itemset. • Find frequent itemsets of size 1: F1 • From k = 2 – Ck = candidates of size k: those itemsets of size k that could be frequent, given Fk-1 – Fk = those itemsets that are actually frequent, Fk ⊆ Ck (need to scan the database once).

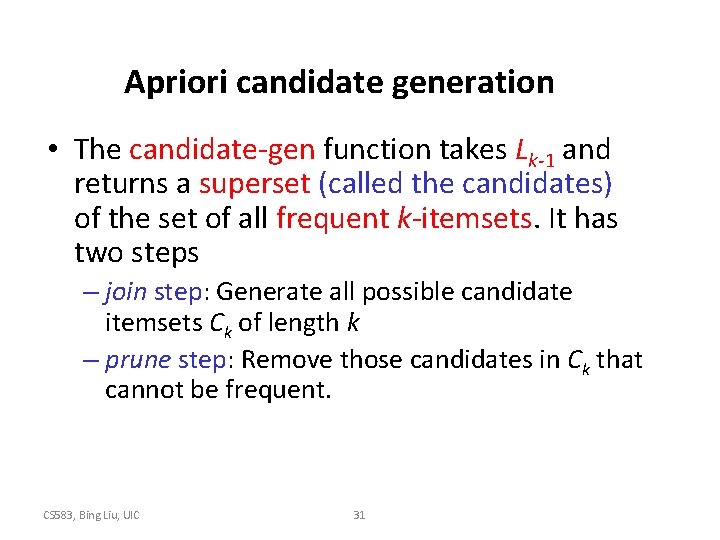

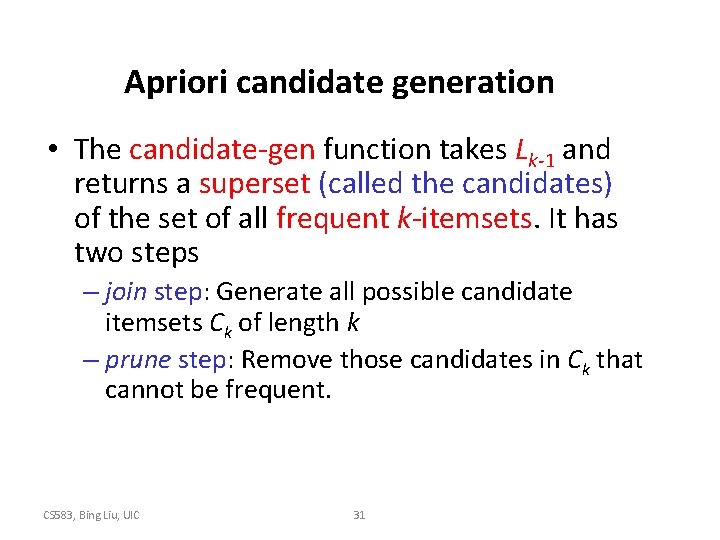

Apriori candidate generation • The candidate-gen function takes Lk-1 and returns a superset (called the candidates) of the set of all frequent k-itemsets. It has two steps – join step: Generate all possible candidate itemsets Ck of length k – prune step: Remove those candidates in Ck that cannot be frequent.

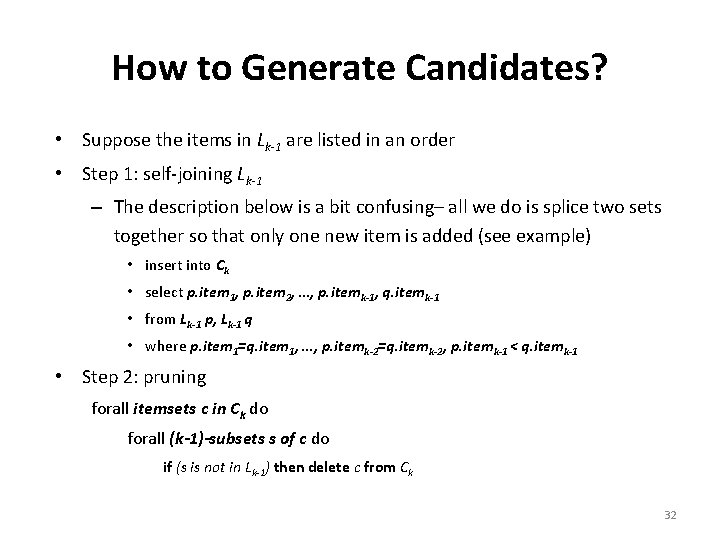

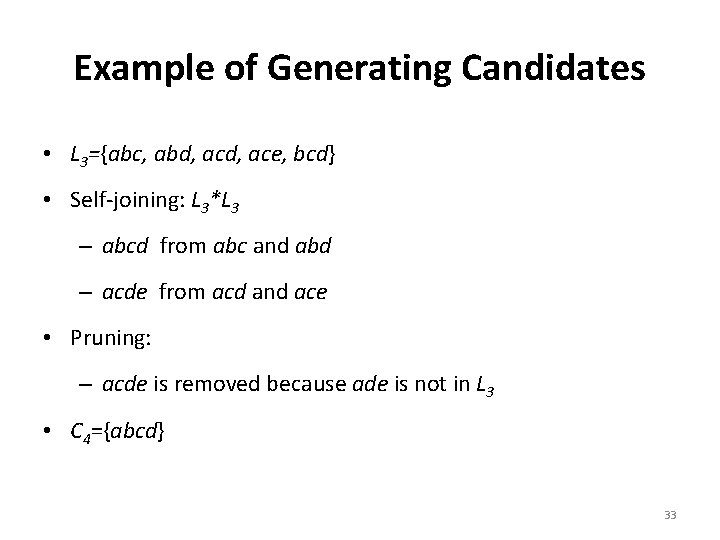

How to Generate Candidates? • Suppose the items in Lk-1 are listed in an order • Step 1: self-joining Lk-1 – The description below is a bit confusing; all we do is splice two sets together so that only one new item is added (see example)

insert into Ck select p.item1, p.item2, …, p.itemk-1, q.itemk-1 from Lk-1 p, Lk-1 q where p.item1 = q.item1, …, p.itemk-2 = q.itemk-2, p.itemk-1 < q.itemk-1

• Step 2: pruning

forall itemsets c in Ck do: forall (k-1)-subsets s of c do: if (s is not in Lk-1) then delete c from Ck

Example of Generating Candidates • L3={abc, abd, acd, ace, bcd} • Self-joining: L3*L3 – abcd from abc and abd – acde from acd and ace • Pruning: – acde is removed because ade is not in L3 • C4={abcd}
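The join and prune steps can be sketched in Python as follows. This is a hedged illustration rather than a reference implementation: `candidate_gen` is a hypothetical name, itemsets are represented as sorted tuples, and the input L3 includes acd, since the join "acde from acd and ace" requires it.

```python
from itertools import combinations

def candidate_gen(prev_frequent):
    """Build candidate k-itemsets from the frequent (k-1)-itemsets.

    Itemsets are handled as sorted tuples so that the "same first k-2
    items" test of the join step is a simple prefix comparison.
    """
    prev = sorted(tuple(sorted(s)) for s in prev_frequent)
    if not prev:
        return set()
    prev_set = set(prev)
    k = len(prev[0]) + 1
    candidates = set()
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            # Join step: equal on the first k-2 items, p's last item < q's last.
            if p[:-1] == q[:-1] and p[-1] < q[-1]:
                c = p + (q[-1],)
                # Prune step: every (k-1)-subset of c must itself be frequent.
                if all(s in prev_set for s in combinations(c, k - 1)):
                    candidates.add(c)
    return candidates

L3 = ["abc", "abd", "acd", "ace", "bcd"]
C4 = candidate_gen(L3)
print({"".join(c) for c in C4})  # {'abcd'}
```

The join also produces acde, but it is discarded in the prune step because its subset ade is not in L3.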

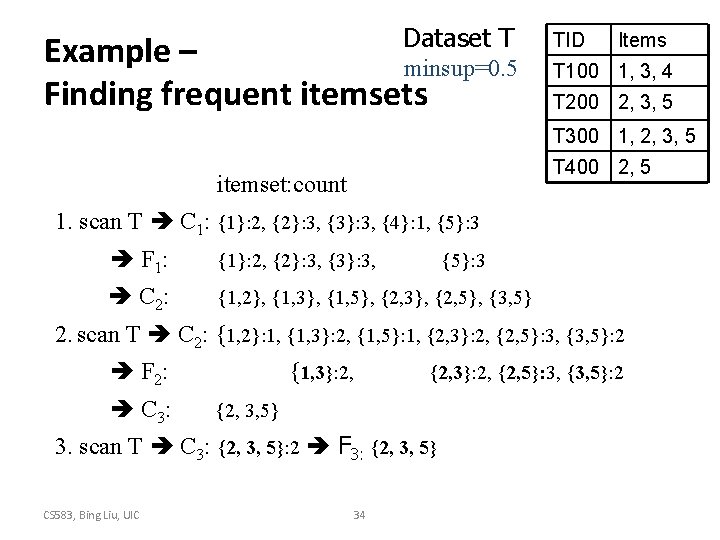

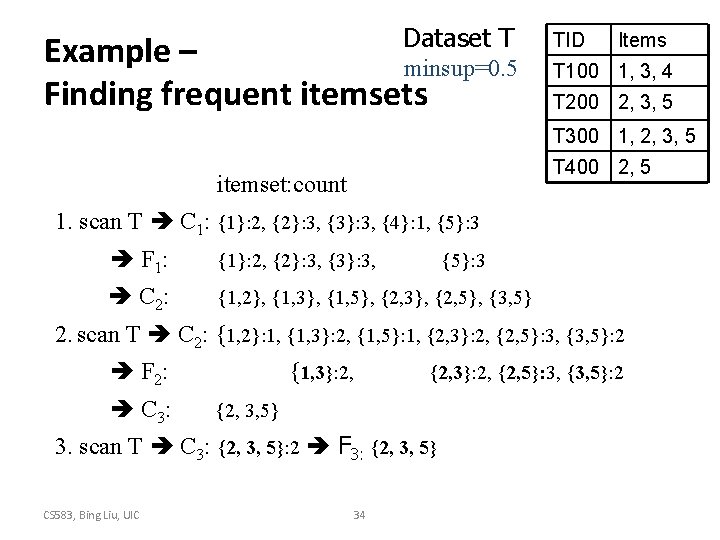

Example: Finding frequent itemsets (minsup = 0.5)

Dataset T:

| TID | Items |
|---|---|
| T100 | 1, 3, 4 |
| T200 | 2, 3, 5 |
| T300 | 1, 2, 3, 5 |
| T400 | 2, 5 |

Notation: itemset: count

1. Scan T → C1: {1}: 2, {2}: 3, {3}: 3, {4}: 1, {5}: 3 → F1: {1}: 2, {2}: 3, {3}: 3, {5}: 3 → C2: {1, 2}, {1, 3}, {1, 5}, {2, 3}, {2, 5}, {3, 5}
2. Scan T → C2: {1, 2}: 1, {1, 3}: 2, {1, 5}: 1, {2, 3}: 2, {2, 5}: 3, {3, 5}: 2 → F2: {1, 3}: 2, {2, 3}: 2, {2, 5}: 3, {3, 5}: 2 → C3: {2, 3, 5}
3. Scan T → C3: {2, 3, 5}: 2 → F3: {2, 3, 5}
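The trace above can be reproduced with a compact level-wise sketch. This is a minimal illustration that assumes the whole dataset fits in memory and counts candidates by scanning every transaction; the function name `apriori` and its return format (a dict from sorted item tuples to support counts) are choices made for this example only.

```python
from itertools import combinations

def apriori(transactions, minsup):
    """Return {itemset (sorted tuple): support count} for all frequent itemsets."""
    n = len(transactions)

    # Pass 1: count single items and keep the frequent ones (F1).
    counts = {}
    for t in transactions:
        for item in t:
            counts[(item,)] = counts.get((item,), 0) + 1
    frequent = {c: v for c, v in counts.items() if v / n >= minsup}
    all_frequent = dict(frequent)

    k = 2
    while frequent:
        prev = sorted(frequent)
        prev_set = set(prev)
        # Candidate generation: join on a shared (k-2)-prefix, then prune.
        candidates = set()
        for i, p in enumerate(prev):
            for q in prev[i + 1:]:
                if p[:-1] == q[:-1]:
                    c = p + (q[-1],)
                    if all(s in prev_set for s in combinations(c, k - 1)):
                        candidates.add(c)
        # One scan of the data to count the surviving candidates.
        counts = {c: sum(1 for t in transactions if set(c) <= t)
                  for c in candidates}
        frequent = {c: v for c, v in counts.items() if v / n >= minsup}
        all_frequent.update(frequent)
        k += 1
    return all_frequent

T = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]
result = apriori(T, minsup=0.5)
for itemset in sorted(result, key=lambda s: (len(s), s)):
    print(itemset, result[itemset])
```

On dataset T this yields the four frequent 1-itemsets, the four frequent 2-itemsets and the single frequent 3-itemset (2, 3, 5) with count 2.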

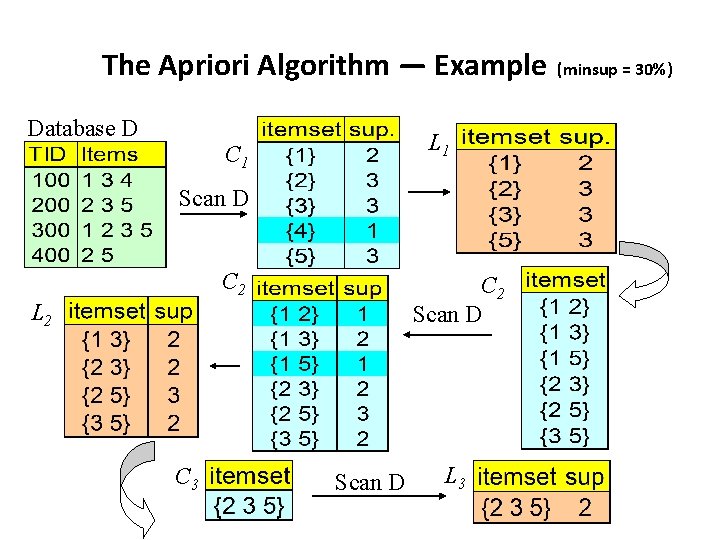

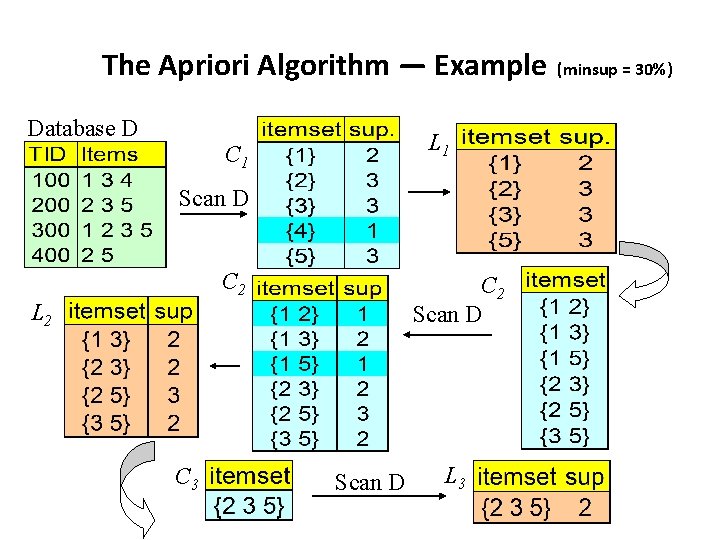

The Apriori Algorithm: Example (minsup = 30%) • [Figure: Database D is scanned to produce C1 and L1, scanned again to produce C2 and L2, and scanned a third time to produce C3 and L3]

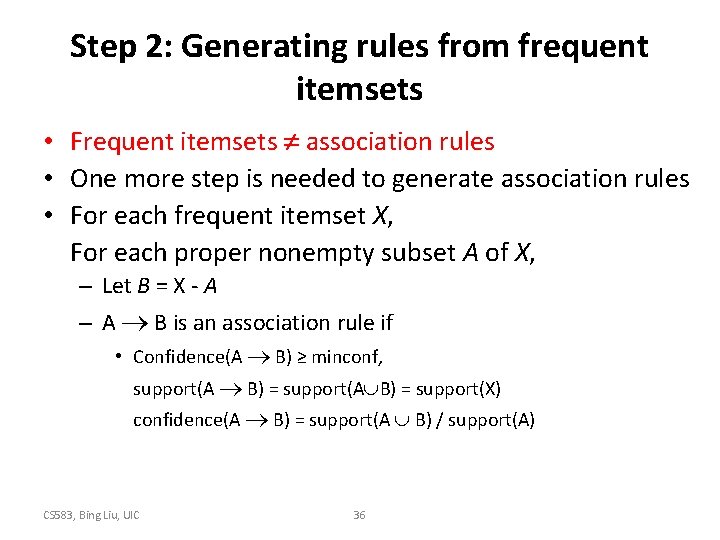

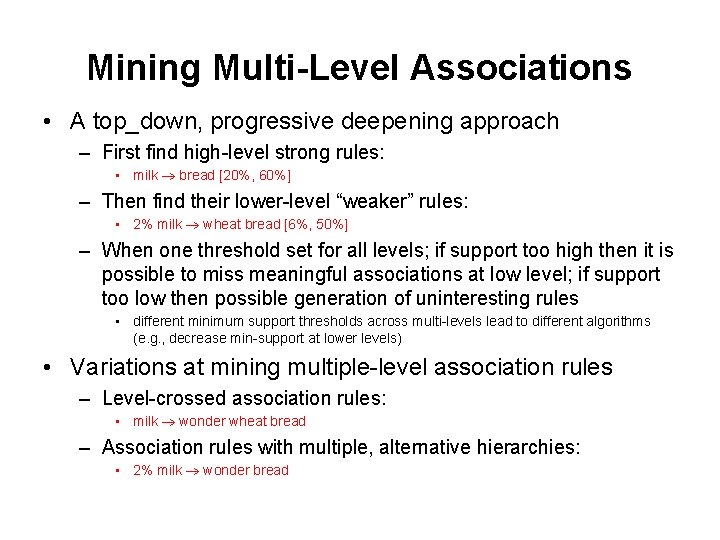

Step 2: Generating rules from frequent itemsets • Frequent itemsets ≠ association rules • One more step is needed to generate association rules • For each frequent itemset X, for each proper nonempty subset A of X: – Let B = X - A – A → B is an association rule if confidence(A → B) ≥ minconf, where • support(A → B) = support(A ∪ B) = support(X) • confidence(A → B) = support(A ∪ B) / support(A)

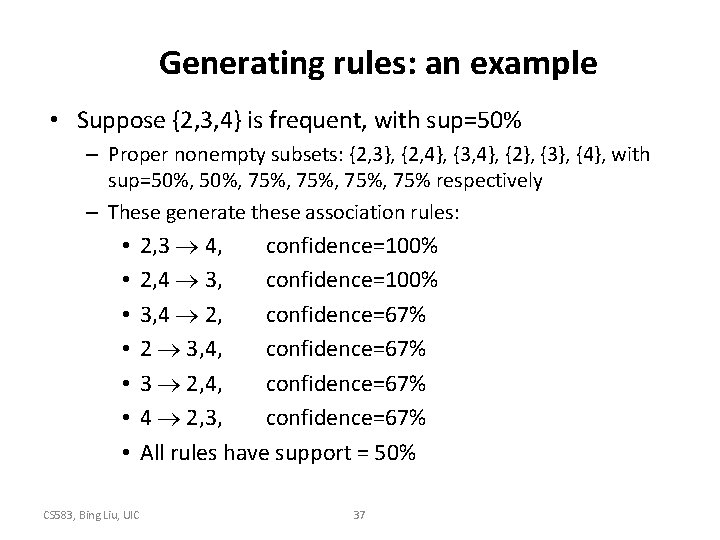

Generating rules: an example • Suppose {2, 3, 4} is frequent, with sup=50% – Proper nonempty subsets: {2, 3}, {2, 4}, {3, 4}, {2}, {3}, {4}, with sup=50%, 50%, 75%, 75%, 75%, 75% respectively – These generate these association rules: • 2, 3 → 4, confidence=100% • 2, 4 → 3, confidence=100% • 3, 4 → 2, confidence=67% • 2 → 3, 4, confidence=67% • 3 → 2, 4, confidence=67% • 4 → 2, 3, confidence=67% • All rules have support = 50%
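Rule generation needs only the recorded supports, not the raw data. The sketch below assumes the subset supports implied by the confidences in this example (50% for {2, 3} and {2, 4}, 75% for the rest); `gen_rules` is a hypothetical helper, and the minconf value of 0.8 is an assumption chosen for illustration.

```python
from itertools import combinations

# Supports recorded during itemset generation (values implied by the example).
support = {
    frozenset({2, 3, 4}): 0.50,
    frozenset({2, 3}): 0.50,
    frozenset({2, 4}): 0.50,
    frozenset({3, 4}): 0.75,
    frozenset({2}): 0.75,
    frozenset({3}): 0.75,
    frozenset({4}): 0.75,
}

def gen_rules(itemset, support, minconf):
    """Return (A, B, support, confidence) for each rule A -> B meeting minconf."""
    itemset = frozenset(itemset)
    rules = []
    for r in range(1, len(itemset)):
        for a in combinations(sorted(itemset), r):
            a = frozenset(a)
            conf = support[itemset] / support[a]
            if conf >= minconf:
                rules.append((a, itemset - a, support[itemset], conf))
    return rules

all_rules = gen_rules({2, 3, 4}, support, minconf=0.0)
strong = gen_rules({2, 3, 4}, support, minconf=0.8)  # minconf is an assumption
for a, b, s, c in strong:
    print(sorted(a), "->", sorted(b), f"sup={s:.0%} conf={c:.0%}")
```

With minconf = 0.8 only the two 100%-confidence rules survive; lowering the threshold to 0.6 would keep all six.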

Generating rules: summary • To recap, in order to obtain A → B, we need to have support(A ∪ B) and support(A) • All the required information for confidence computation has already been recorded in itemset generation. No need to see the data T any more. • This step is not as time-consuming as frequent itemset generation.

On Apriori Algorithm • Seems to be very expensive • Level-wise search • K = the size of the largest itemset • It makes at most K passes over data • In practice, K is bounded (10). • The algorithm is very fast. Under some conditions, all rules can be found in linear time. • Scale up to large data sets

Granularity of items • One exception to the “ease” of applying association rules is selecting the granularity of the items. • Should you choose: – diet coke? – coke product? – soft drink? – beverage? • Should you include more than one level of granularity? Be careful • (Some association finding techniques allow you to represent hierarchies explicitly)

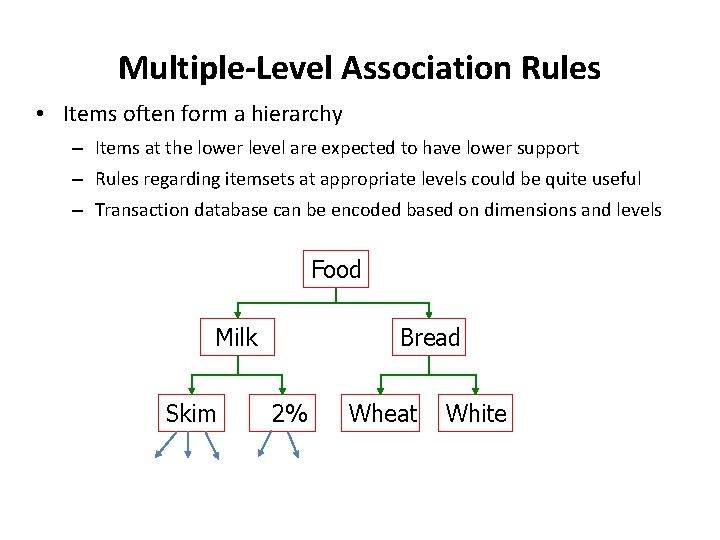

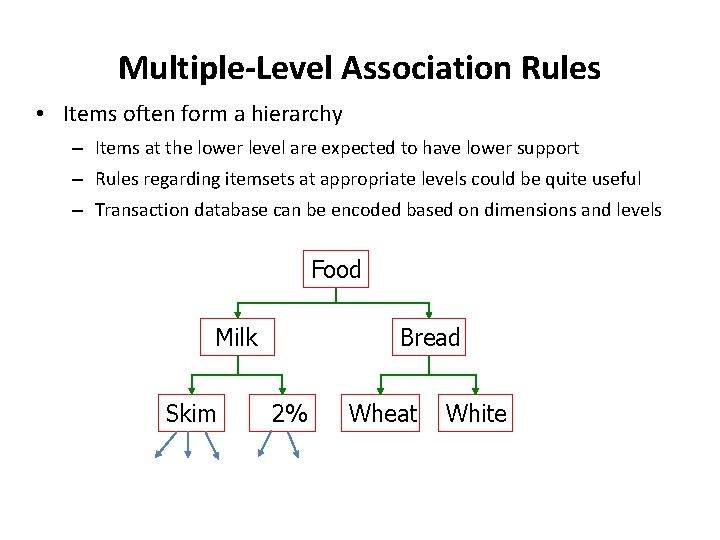

Multiple-Level Association Rules • Items often form a hierarchy – Items at the lower level are expected to have lower support – Rules regarding itemsets at appropriate levels could be quite useful – Transaction database can be encoded based on dimensions and levels • [Figure: item hierarchy with Food at the root; Food splits into Milk and Bread; Milk splits into Skim and 2%; Bread splits into Wheat and White]

Mining Multi-Level Associations • A top-down, progressive deepening approach – First find high-level strong rules: • milk → bread [20%, 60%] – Then find their lower-level “weaker” rules: • 2% milk → wheat bread [6%, 50%] – When one threshold is set for all levels: if support is too high, it is possible to miss meaningful associations at the low level; if support is too low, uninteresting rules may be generated • different minimum support thresholds across multi-levels lead to different algorithms (e.g., decrease min-support at lower levels) • Variations at mining multiple-level association rules – Level-crossed association rules: • milk → Wonder wheat bread – Association rules with multiple, alternative hierarchies: • 2% milk → Wonder bread
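One simple way to mine at a higher level is to rewrite each transaction with its items replaced by their parents in the hierarchy, then compute support as usual. The sketch below assumes a hypothetical `parent` mapping shaped like the Food / Milk / Bread hierarchy and five made-up baskets; it is meant only to show why lower-level itemsets tend to have lower support.

```python
# Hypothetical one-level-up mapping, shaped like the slide's item hierarchy.
parent = {
    "skim milk": "milk", "2% milk": "milk",
    "wheat bread": "bread", "white bread": "bread",
}

# Made-up low-level baskets, for illustration only.
transactions = [
    {"2% milk", "wheat bread"},
    {"skim milk", "white bread"},
    {"2% milk", "white bread"},
    {"skim milk"},
    {"wheat bread"},
]

def generalize(transaction, mapping):
    """Replace each item by its parent category (items without one are kept)."""
    return {mapping.get(item, item) for item in transaction}

def support(itemset, txns):
    """Fraction of transactions containing every item in `itemset`."""
    return sum(1 for t in txns if set(itemset) <= t) / len(txns)

high_level = [generalize(t, parent) for t in transactions]

print(support({"milk", "bread"}, high_level))             # 0.6
print(support({"2% milk", "wheat bread"}, transactions))  # 0.2
```

Here the high-level pair {milk, bread} clears a 50% threshold while the specific pair {2% milk, wheat bread} does not, which is the motivation for lowering min-support at lower levels.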

Rule Generation • Now that you have the frequent itemsets, you can generate association rules – Split the frequent itemsets in all possible ways and prune if the confidence is below the min_confidence threshold • Rules that are left are called strong rules – You may be given a rule template that constrains the rules • Rules with only one item on the right side • Rules with two items on the left and one on the right – Rules of form {X, Y} → Z

Rules from Previous Example • What are the strong rules of the form {X, Y} → Z if the confidence threshold is 75%? – We start with {2, 3, 5} • {2, 3} → 5 (confidence = 2/2 = 100%): STRONG • {3, 5} → 2 (confidence = 2/2 = 100%): STRONG • {2, 5} → 3 (confidence = 2/3 ≈ 67%): PRUNE! • Note that in general you don’t just look at the frequent itemsets of maximum length. If we wanted strong rules of form X → Y we would look at the frequent 2-itemsets (F2)

Interestingness Measurements • Objective measures – Two popular measurements: • Support • Confidence • Subjective measures (Silberschatz & Tuzhilin, KDD 95) A rule (pattern) is interesting if – it is unexpected (surprising to the user); and/or – actionable (the user can do something with it)

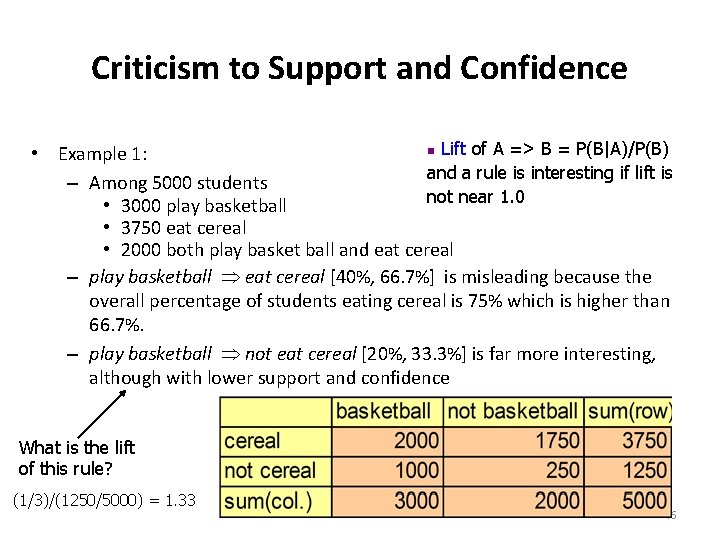

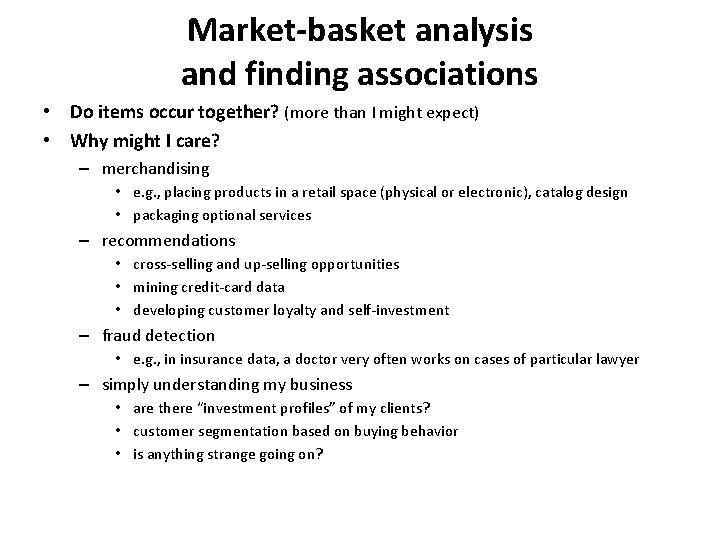

Criticism to Support and Confidence • Lift of A => B = P(B|A)/P(B), and a rule is interesting if lift is not near 1.0 • Example 1: – Among 5000 students • 3000 play basketball • 3750 eat cereal • 2000 both play basketball and eat cereal – play basketball → eat cereal [40%, 66.7%] is misleading because the overall percentage of students eating cereal is 75%, which is higher than 66.7%. – play basketball → not eat cereal [20%, 33.3%] is far more interesting, although with lower support and confidence • What is the lift of this rule? (1/3)/(1250/5000) = 1.33
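The lift values for the basketball and cereal example can be computed from the raw counts. A minimal sketch follows; the `lift` helper and its argument names are hypothetical.

```python
def lift(n_total, n_a, n_b, n_ab):
    """Lift of A => B = P(B|A) / P(B), computed from raw counts."""
    return (n_ab / n_a) / (n_b / n_total)

n = 5000
basketball = 3000
cereal = 3750
both = 2000

# play basketball => eat cereal
lift_cereal = lift(n, basketball, cereal, both)

# play basketball => not eat cereal
no_cereal = n - cereal                    # 1250 students
basketball_no_cereal = basketball - both  # 1000 students
lift_no_cereal = lift(n, basketball, no_cereal, basketball_no_cereal)

print(round(lift_cereal, 2), round(lift_no_cereal, 2))  # 0.89 1.33
```

A lift below 1 for the first rule says that basketball players are less likely than average to eat cereal, even though the rule has 66.7% confidence; the second rule's lift of about 1.33 is further from 1 and therefore more informative.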

Customer Number vs. Transaction ID • In the homework you may have a problem where there is a customer id for each transaction – You can be asked to do association analysis based on the customer id • If this is so, you need to aggregate the transactions to the customer level
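Aggregating to the customer level amounts to grouping items by customer id instead of transaction id before mining. The sketch below uses made-up rows of the form (customer id, transaction id, item); the row layout and the `baskets_by` helper are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical purchase rows: (customer_id, transaction_id, item).
rows = [
    ("c1", "t1", "bread"), ("c1", "t1", "milk"), ("c1", "t2", "beer"),
    ("c2", "t3", "diaper"), ("c2", "t4", "beer"), ("c2", "t4", "diaper"),
]

def baskets_by(rows, key_index):
    """Group items into one basket per key (0 = customer, 1 = transaction)."""
    baskets = defaultdict(set)
    for row in rows:
        baskets[row[key_index]].add(row[2])
    return dict(baskets)

by_transaction = baskets_by(rows, 1)
by_customer = baskets_by(rows, 0)

print(len(by_transaction), len(by_customer))  # 4 2
print(sorted(by_customer["c1"]))              # ['beer', 'bread', 'milk']
```

Note that the choice of basket changes the results: at the customer level, bread and beer co-occur for c1 even though they were never bought in the same visit.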

Market-basket analysis and finding associations • Do items occur together? (more than I might expect) • Why might I care? – merchandising • e.g., placing products in a retail space (physical or electronic), catalog design • packaging optional services – recommendations • cross-selling and up-selling opportunities • mining credit-card data • developing customer loyalty and self-investment – fraud detection • e.g., in insurance data, a doctor very often works on cases of a particular lawyer – simply understanding my business • are there “investment profiles” of my clients? • customer segmentation based on buying behavior • is anything strange going on?

Virtual items • If you’re interested in including other possible variables, you can create “virtual items” • gift-wrap, used-coupon, new-store, winter-holidays, bought-nothing, …

Associations: Pros and Cons • Pros – can quickly mine patterns describing business/customers/etc. without major effort in problem formulation – virtual items allow much flexibility – unparalleled tool for hypothesis generation • Cons – unfocused • not clear exactly how to apply mined “knowledge” • only hypothesis generation – can produce many, many rules! • may only be a few nuggets among them (or none)

Association Rules • Association rule types: – Actionable Rules – contain high-quality, actionable information – Trivial Rules – information already well-known by those familiar with the business – Inexplicable Rules – no explanation and do not suggest action • Trivial and Inexplicable Rules occur most often